Data Mining Concepts and Techniques Mining Frequent Patterns

- Slides: 59

Data Mining: Concepts and Techniques Mining Frequent Patterns & Association Rules Dr. Maher Abuhamdeh

What Is Frequent Pattern Analysis?

- Frequent pattern: a pattern (a set of items, subsequences, substructures, etc.) that occurs frequently in a data set
- First proposed by Agrawal, Imielinski, and Swami [AIS93] in the context of frequent itemsets and association rule mining
- Motivation: finding inherent regularities in data
  - What products were often purchased together? Tea and diapers?!
  - What are the subsequent purchases after buying a PC?
  - What kinds of DNA are sensitive to this new drug?
  - Can we automatically classify web documents?
- Applications
  - Basket data analysis, cross-marketing, catalog design, sale campaign analysis, Web log (click stream) analysis, and DNA sequence analysis

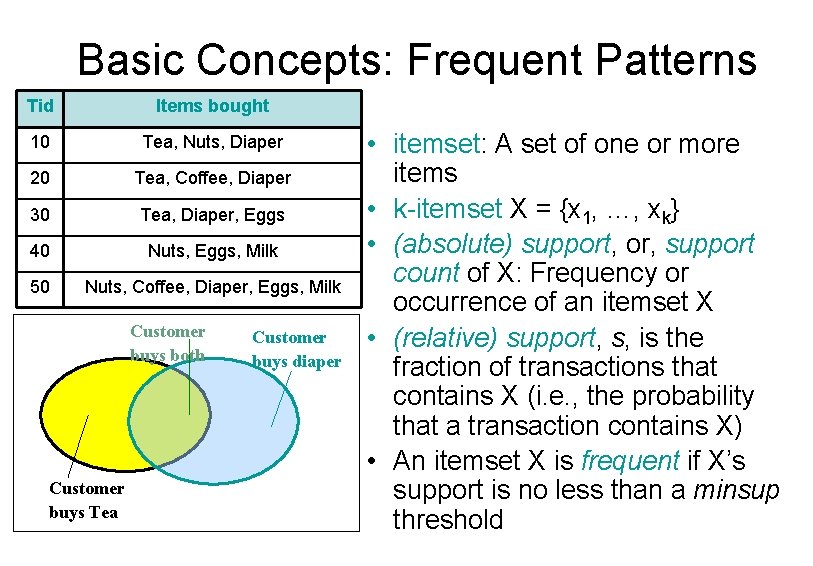

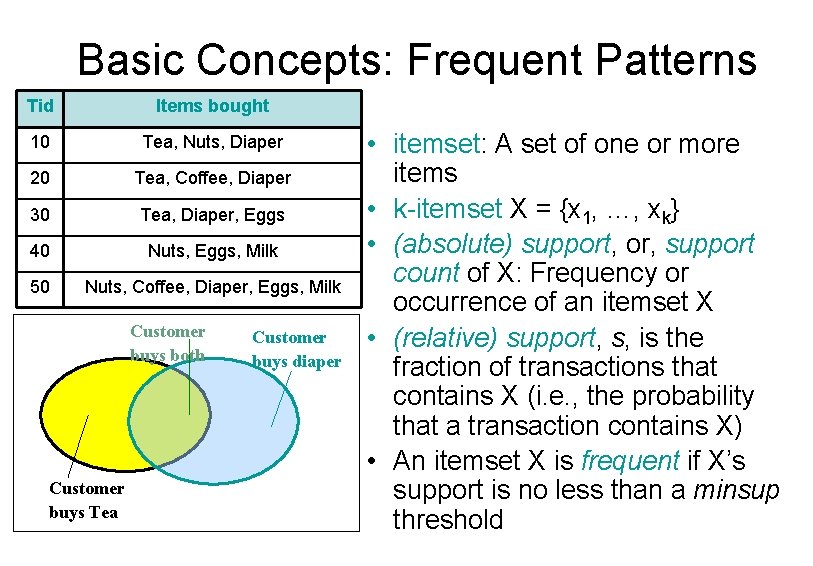

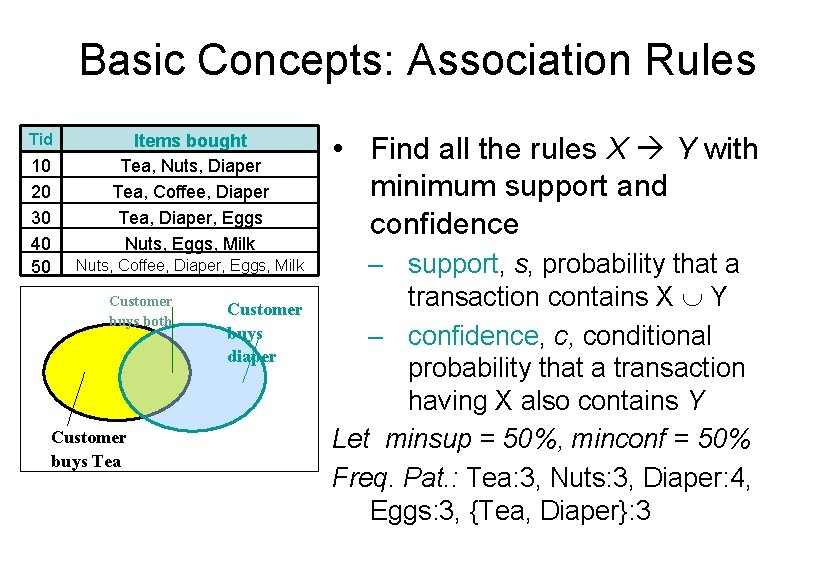

Basic Concepts: Frequent Patterns

| Tid | Items bought |
|---|---|
| 10 | Tea, Nuts, Diaper |
| 20 | Tea, Coffee, Diaper |
| 30 | Tea, Diaper, Eggs |
| 40 | Nuts, Eggs, Milk |
| 50 | Nuts, Coffee, Diaper, Eggs, Milk |

(Figure labels: customer buys Tea; customer buys diaper; customer buys both.)

- itemset: a set of one or more items
- k-itemset: $X = \{x_1, \ldots, x_k\}$
- (absolute) support, or support count, of X: the frequency (number of occurrences) of itemset X
- (relative) support, s: the fraction of transactions that contain X (i.e., the probability that a transaction contains X)
- An itemset X is frequent if X's support is no less than a minsup threshold

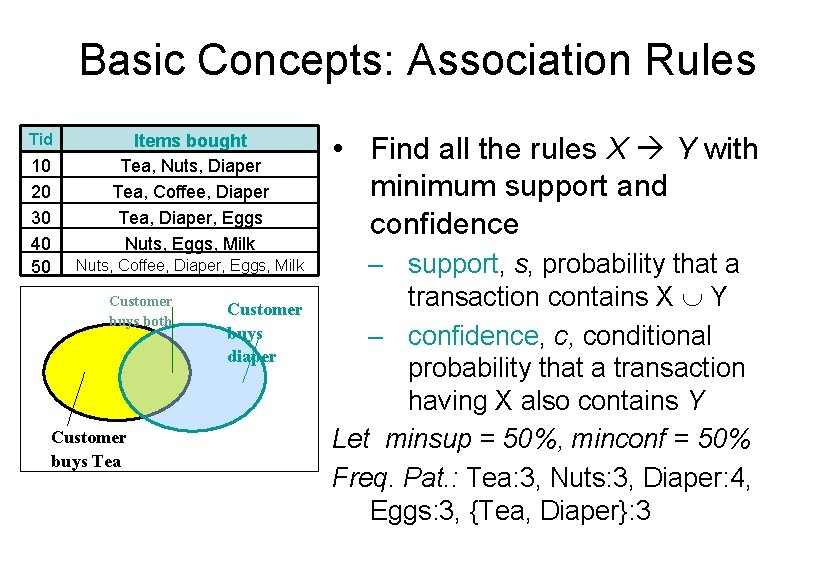
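The definitions of absolute and relative support can be sketched in a few lines of Python. This is a minimal illustration, not part of the original slides; the function names `support_count`, `relative_support`, and `is_frequent` are hypothetical, and the data is the five-transaction table from the slide.

```python
# Transactions from the slide's table (Tid 10 to 50), one set of items each.
transactions = [
    {"Tea", "Nuts", "Diaper"},
    {"Tea", "Coffee", "Diaper"},
    {"Tea", "Diaper", "Eggs"},
    {"Nuts", "Eggs", "Milk"},
    {"Nuts", "Coffee", "Diaper", "Eggs", "Milk"},
]


def support_count(itemset, transactions):
    """Absolute support: number of transactions containing every item."""
    itemset = set(itemset)
    return sum(1 for t in transactions if itemset <= t)


def relative_support(itemset, transactions):
    """Relative support: fraction of transactions containing the itemset."""
    return support_count(itemset, transactions) / len(transactions)


def is_frequent(itemset, transactions, minsup):
    """An itemset is frequent if its relative support is at least minsup."""
    return relative_support(itemset, transactions) >= minsup


print(support_count({"Tea", "Diaper"}, transactions))     # 3
print(relative_support({"Tea", "Diaper"}, transactions))  # 0.6
print(is_frequent({"Milk"}, transactions, 0.5))           # False
```

With minsup = 50%, an itemset needs to appear in at least 3 of the 5 transactions, which is why {Milk} (2 transactions) is not frequent.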

Basic Concepts: Association Rules

| Tid | Items bought |
|---|---|
| 10 | Tea, Nuts, Diaper |
| 20 | Tea, Coffee, Diaper |
| 30 | Tea, Diaper, Eggs |
| 40 | Nuts, Eggs, Milk |
| 50 | Nuts, Coffee, Diaper, Eggs, Milk |

(Figure labels: customer buys Tea; customer buys diaper; customer buys both.)

- Find all the rules X → Y with minimum support and confidence
  - support, s: probability that a transaction contains X ∪ Y
  - confidence, c: conditional probability that a transaction having X also contains Y

Let minsup = 50%, minconf = 50%.

Frequent patterns: Tea: 3, Nuts: 3, Diaper: 4, Eggs: 3, {Tea, Diaper}: 3

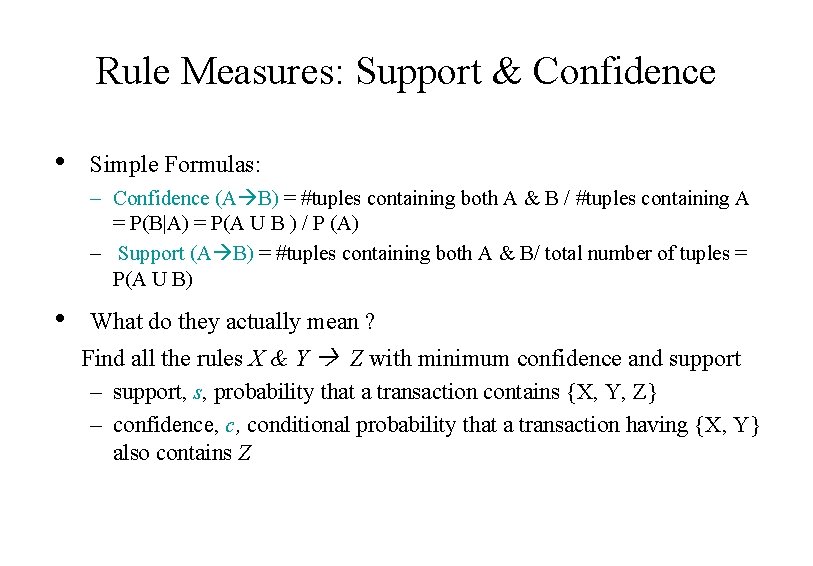

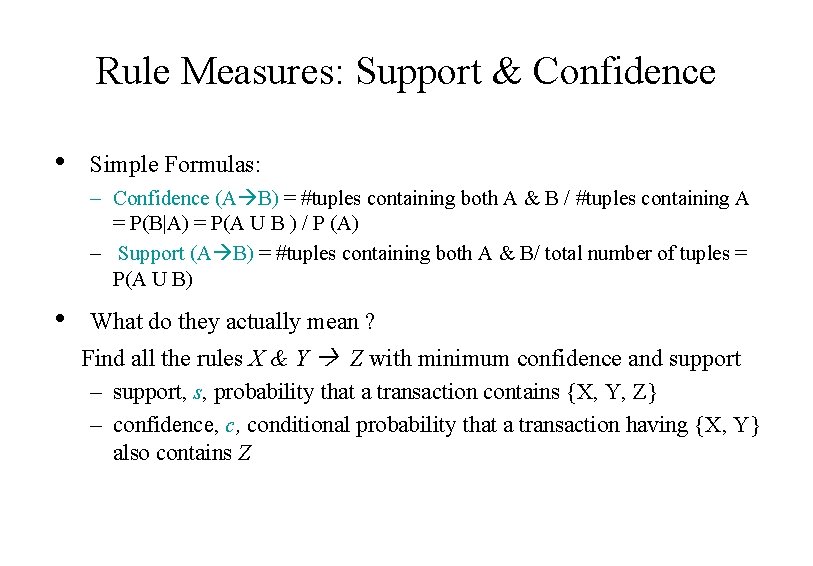

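Rule Measures: Support & Confidence

- Simple formulas:
  - $\text{Confidence}(A \rightarrow B) = \dfrac{\#\text{tuples containing both } A \text{ and } B}{\#\text{tuples containing } A} = P(B \mid A) = \dfrac{P(A \cup B)}{P(A)}$
  - $\text{Support}(A \rightarrow B) = \dfrac{\#\text{tuples containing both } A \text{ and } B}{\text{total number of tuples}} = P(A \cup B)$
  - Here $P(A \cup B)$ is the probability that a transaction contains every item of the itemset $A \cup B$, i.e. both A and B; it is not the probability of "A or B".
- What do they actually mean? Find all the rules X & Y → Z with minimum confidence and support
  - support, s: probability that a transaction contains {X, Y, Z}
  - confidence, c: conditional probability that a transaction having {X, Y} also contains Z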

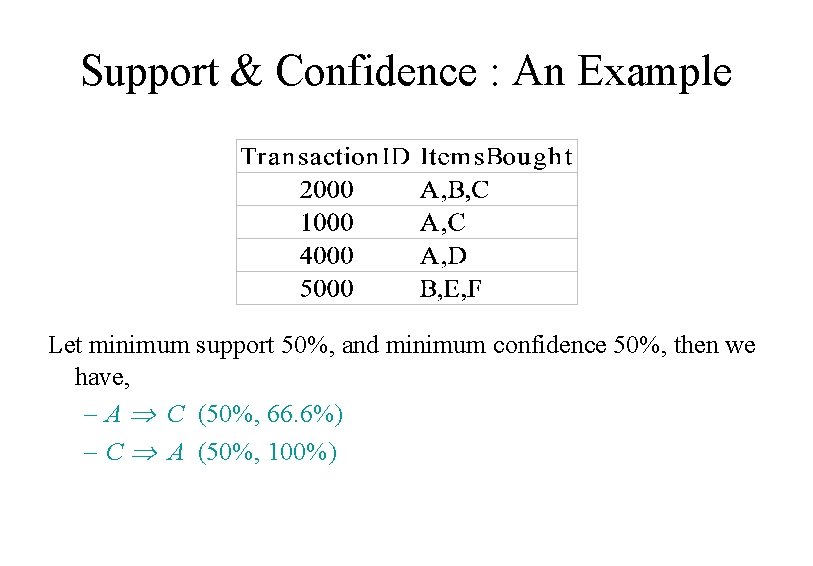

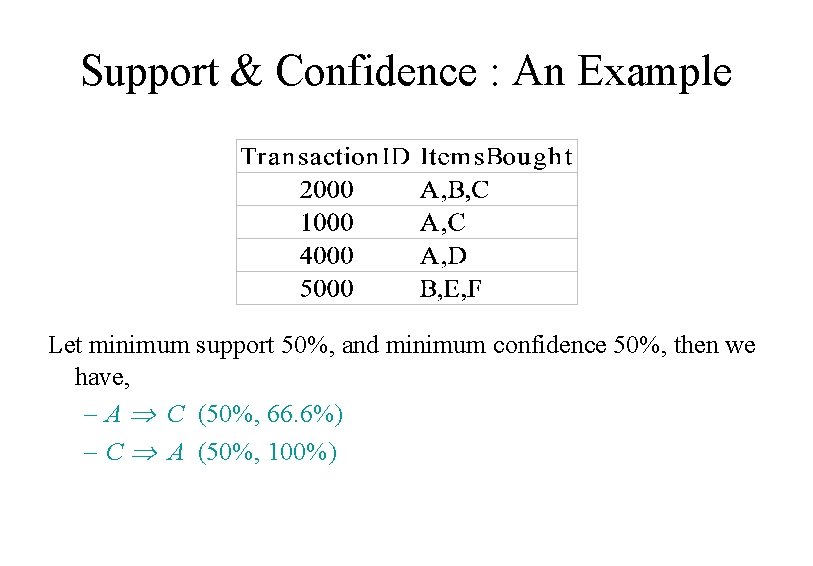
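The two formulas can be checked numerically on the Tea/Diaper transactions from the earlier slide. The sketch below is illustrative only; `rule_metrics` is a hypothetical helper name, not something defined in the slides.

```python
# Five transactions from the "Basic Concepts" slides.
transactions = [
    {"Tea", "Nuts", "Diaper"},
    {"Tea", "Coffee", "Diaper"},
    {"Tea", "Diaper", "Eggs"},
    {"Nuts", "Eggs", "Milk"},
    {"Nuts", "Coffee", "Diaper", "Eggs", "Milk"},
]


def rule_metrics(antecedent, consequent, transactions):
    """Return (support, confidence) of the rule antecedent -> consequent."""
    a = set(antecedent)
    both = a | set(consequent)
    n_a = sum(1 for t in transactions if a <= t)        # tuples containing A
    n_both = sum(1 for t in transactions if both <= t)  # tuples containing A and B
    support = n_both / len(transactions)
    confidence = n_both / n_a if n_a else 0.0
    return support, confidence


print(rule_metrics({"Tea"}, {"Diaper"}, transactions))  # (0.6, 1.0)
print(rule_metrics({"Diaper"}, {"Tea"}, transactions))  # (0.6, 0.75)
```

Both rules have the same support because support depends only on the combined itemset, while confidence depends on which side is the antecedent.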

Support & Confidence: An Example

Let the minimum support be 50% and the minimum confidence be 50%. Then we have:

- A → C (50%, 66.6%)
- C → A (50%, 100%)

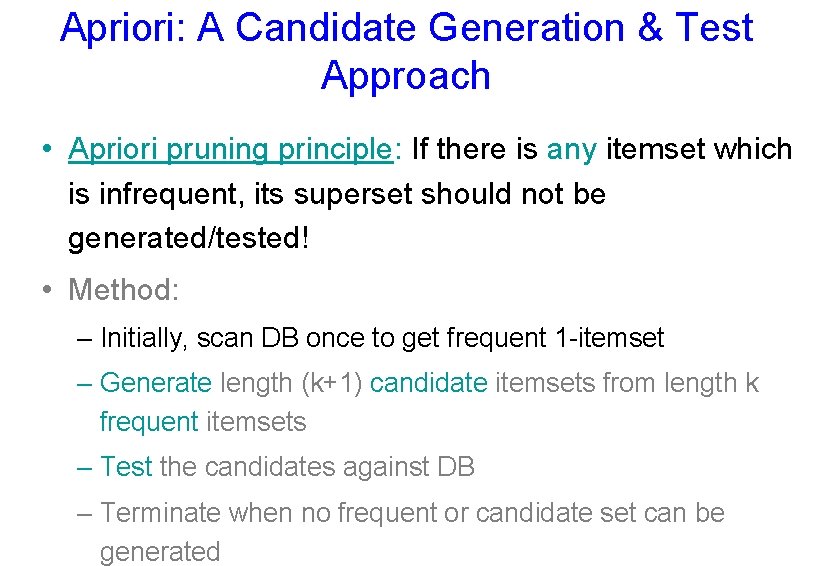

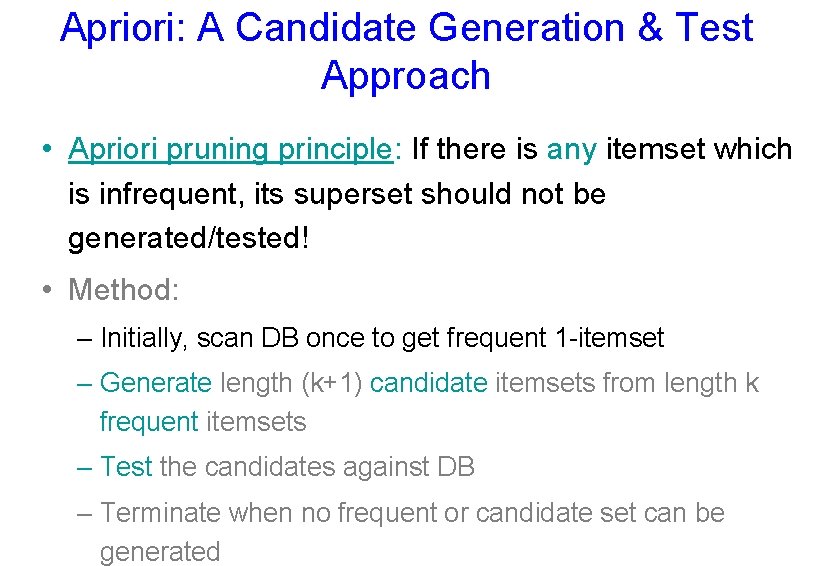

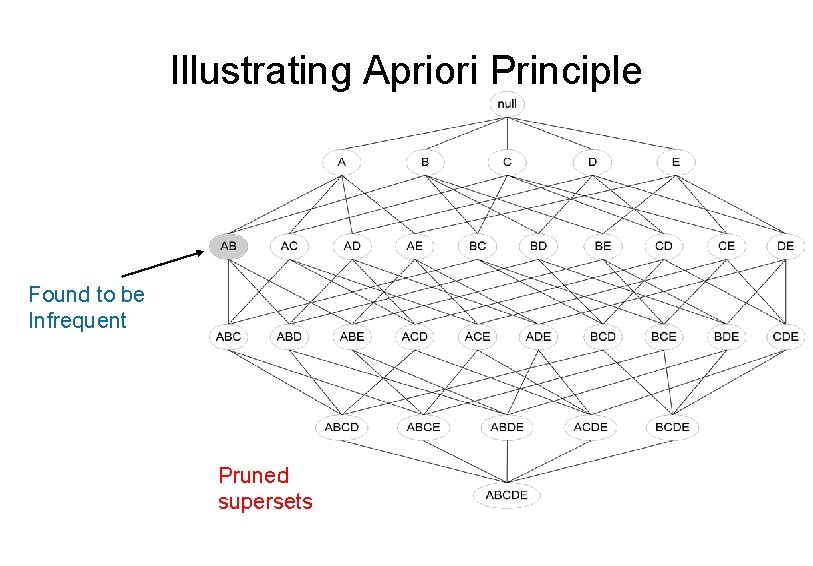

Apriori: A Candidate Generation & Test Approach

- Apriori pruning principle: if any itemset is infrequent, its supersets should not be generated or tested!
- Method:
  - Initially, scan the DB once to get the frequent 1-itemsets
  - Generate length-(k+1) candidate itemsets from length-k frequent itemsets
  - Test the candidates against the DB
  - Terminate when no frequent or candidate set can be generated

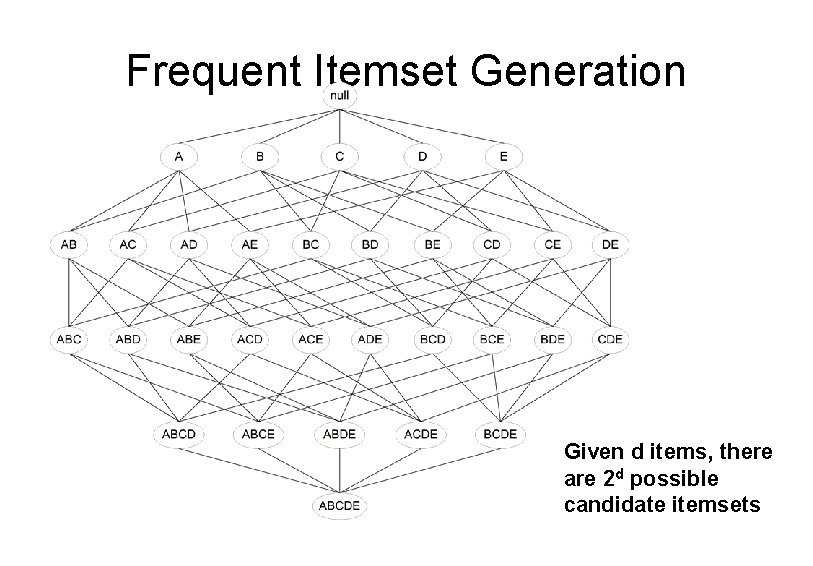

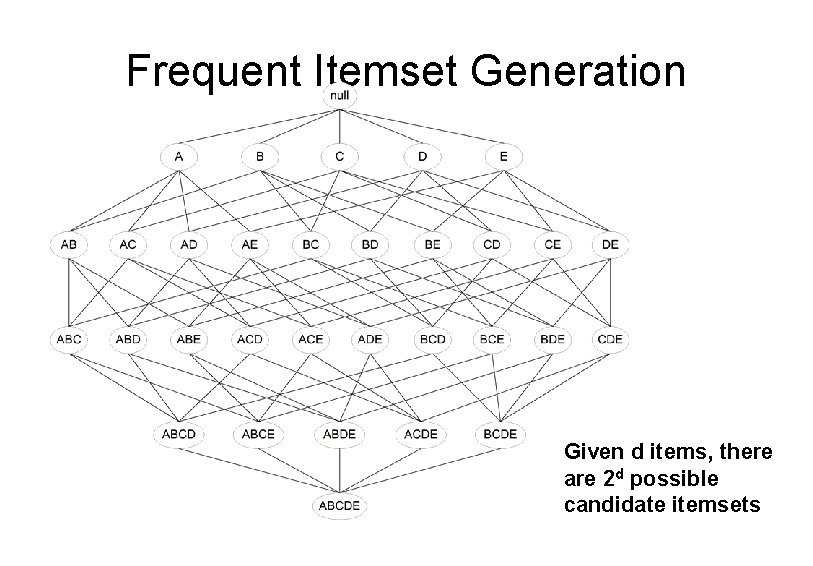

Frequent Itemset Generation

Given d items, there are $2^d$ possible candidate itemsets.

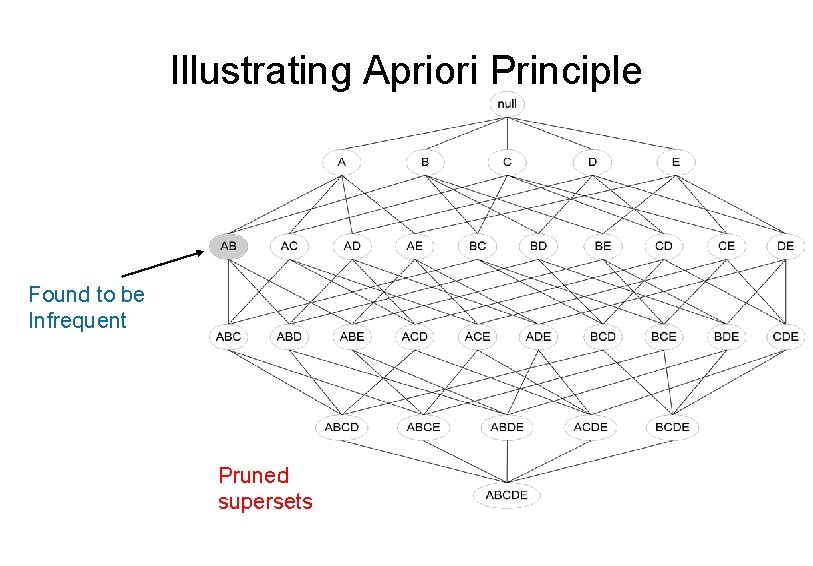
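To make the $2^d$ figure concrete, the following sketch enumerates the whole itemset lattice for a small, made-up item list (the five item names are an assumption for illustration). The count includes the empty set.

```python
from itertools import combinations


def all_itemsets(items):
    """Yield every subset of items, from the empty set up to the full set."""
    items = sorted(items)
    for k in range(len(items) + 1):
        for combo in combinations(items, k):
            yield frozenset(combo)


items = ["A", "B", "C", "D", "E"]  # d = 5, hypothetical item names
lattice = list(all_itemsets(items))
print(len(lattice))  # 32, i.e. 2**5
```

Brute-force counting over this lattice is what Apriori's pruning is meant to avoid, since the number of candidates doubles with every additional item.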

Illustrating Apriori Principle (figure labels: itemset found to be infrequent; pruned supersets)

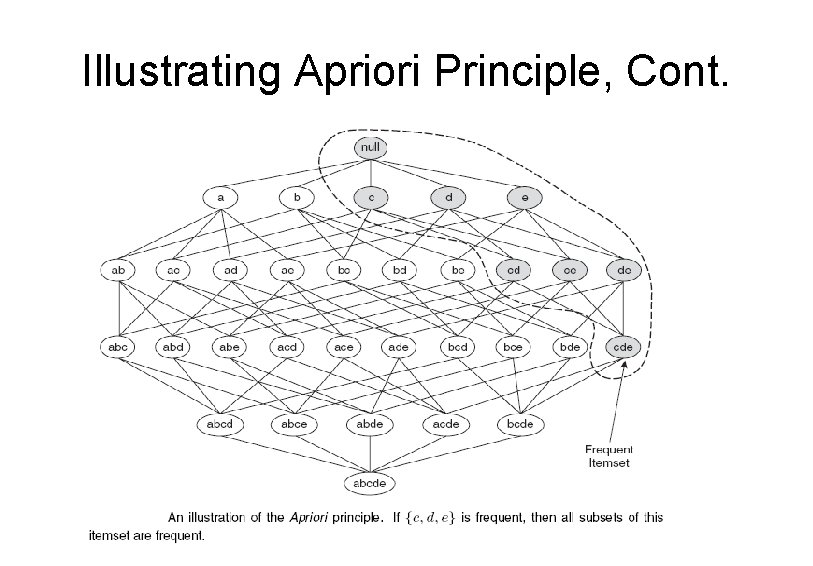

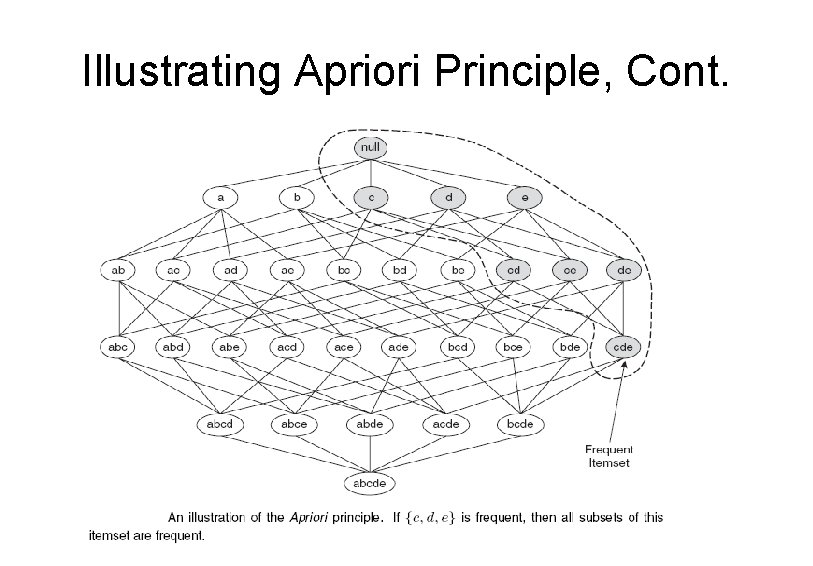

Illustrating Apriori Principle, Cont.

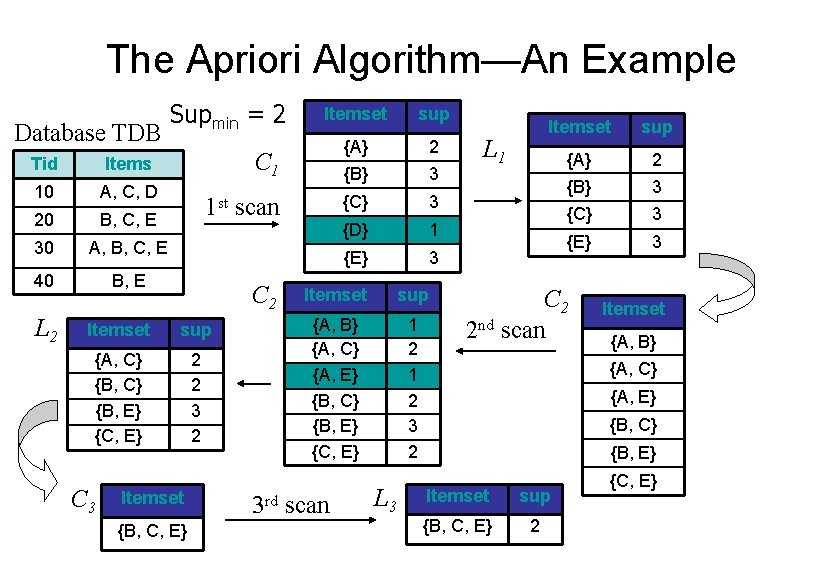

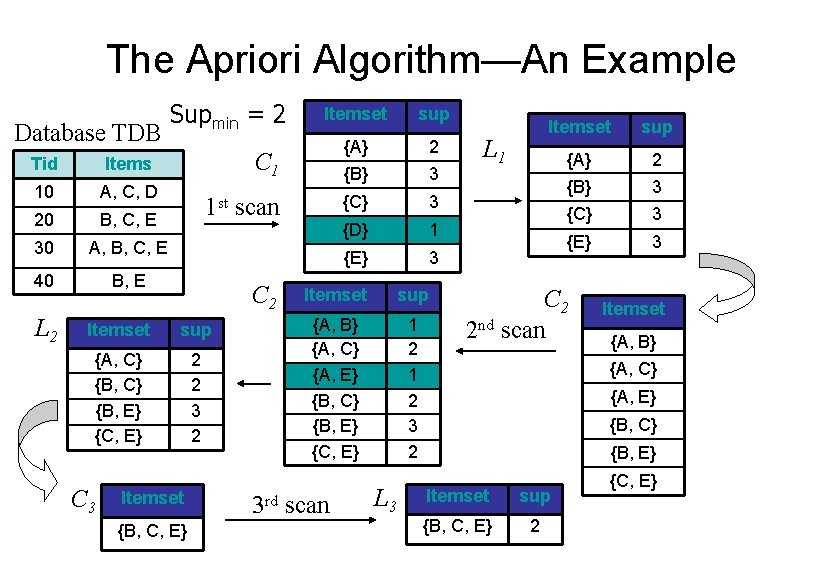

The Apriori Algorithm: An Example

Database TDB (Sup_min = 2):

| Tid | Items |
|---|---|
| 10 | A, C, D |
| 20 | B, C, E |
| 30 | A, B, C, E |
| 40 | B, E |

1st scan, C1:

| Itemset | sup |
|---|---|
| {A} | 2 |
| {B} | 3 |
| {C} | 3 |
| {D} | 1 |
| {E} | 3 |

L1: {A}: 2, {B}: 3, {C}: 3, {E}: 3

C2 (generated from L1): {A, B}, {A, C}, {A, E}, {B, C}, {B, E}, {C, E}

2nd scan, C2 with counts:

| Itemset | sup |
|---|---|
| {A, B} | 1 |
| {A, C} | 2 |
| {A, E} | 1 |
| {B, C} | 2 |
| {B, E} | 3 |
| {C, E} | 2 |

L2: {A, C}: 2, {B, C}: 2, {B, E}: 3, {C, E}: 2

C3 (generated from L2): {B, C, E}

3rd scan, L3: {B, C, E}: 2

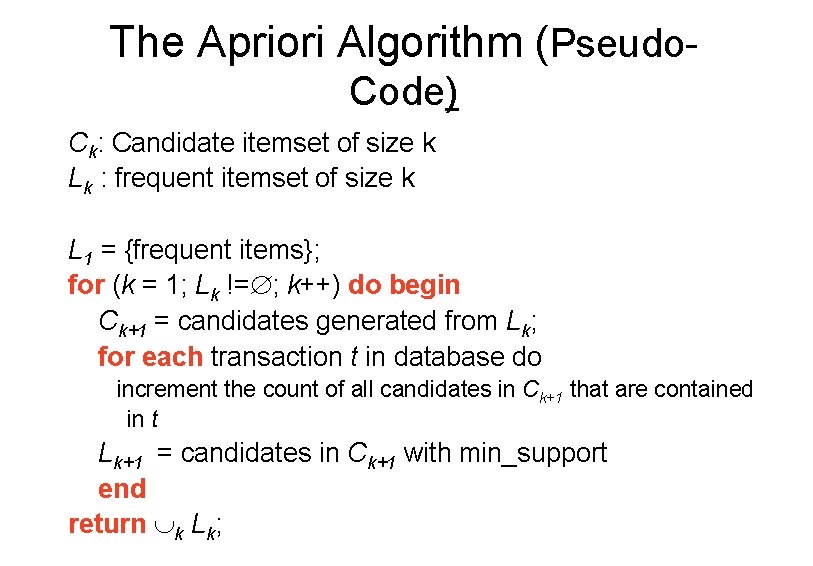

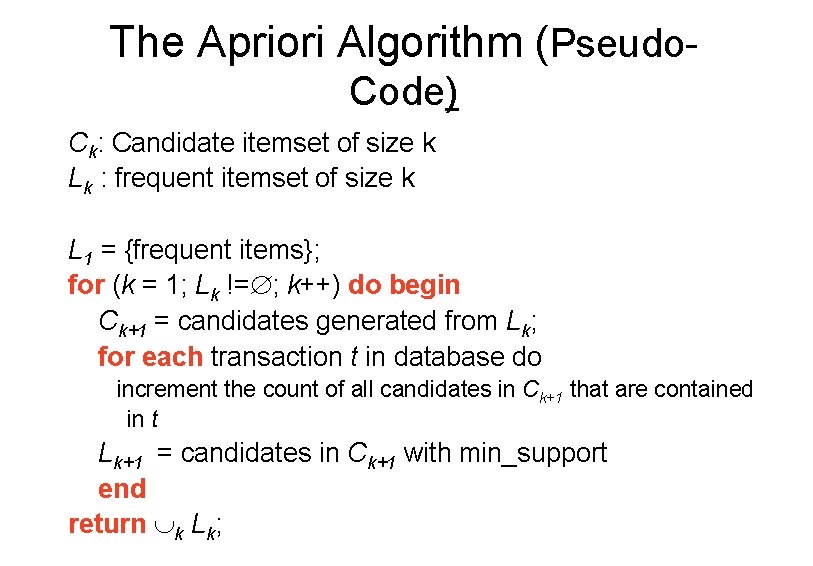

The Apriori Algorithm (Pseudocode)

- $C_k$: candidate itemsets of size k
- $L_k$: frequent itemsets of size k

- $L_1$ = {frequent items}
- for (k = 1; $L_k \neq \emptyset$; k++) do
  - $C_{k+1}$ = candidates generated from $L_k$
  - for each transaction t in the database: increment the count of all candidates in $C_{k+1}$ that are contained in t
  - $L_{k+1}$ = candidates in $C_{k+1}$ with min_support
- return $\bigcup_k L_k$

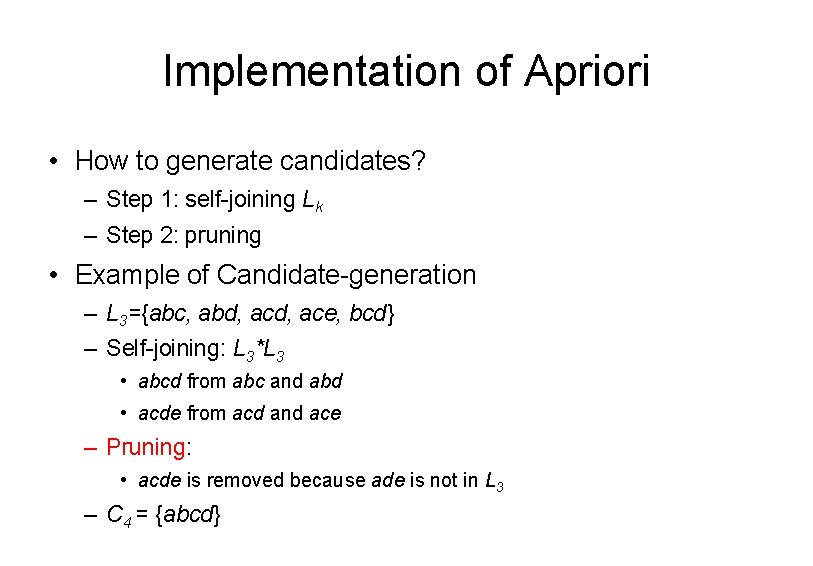

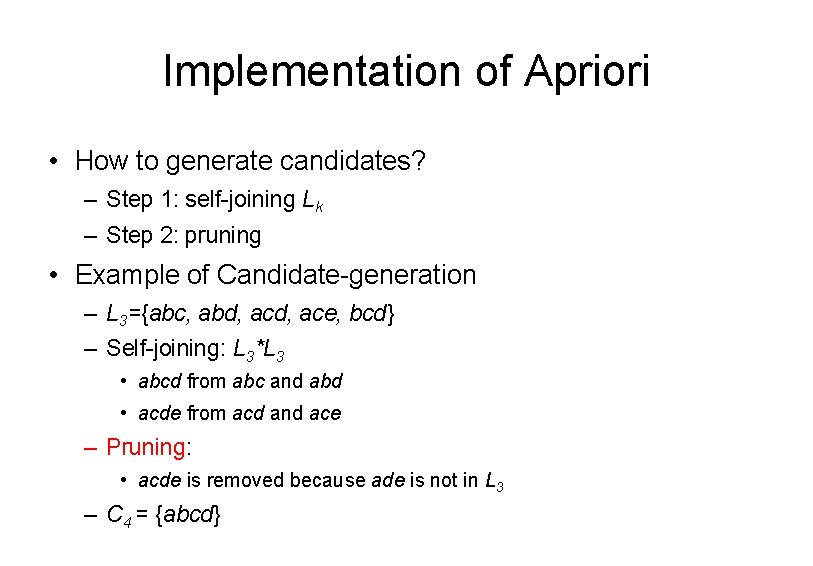
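The pseudocode can be turned into a small runnable sketch. This is one possible Python rendering under simplifying assumptions (candidates are built by taking unions of pairs of frequent k-itemsets, and support is an absolute count); the function name `apriori` and its parameters are illustrative, not taken from the slides. It is run on the four-transaction database TDB from the earlier example.

```python
from itertools import combinations


def apriori(transactions, min_count):
    """Return {itemset: support count} for every frequent itemset."""
    transactions = [frozenset(t) for t in transactions]

    # L1: one scan to count single items.
    counts = {}
    for t in transactions:
        for item in t:
            key = frozenset([item])
            counts[key] = counts.get(key, 0) + 1
    current = {s: c for s, c in counts.items() if c >= min_count}
    frequent = dict(current)

    k = 1
    while current:
        # C(k+1): unions of two frequent k-itemsets that have k+1 items and
        # whose k-subsets are all frequent (the Apriori pruning principle).
        candidates = set()
        for a, b in combinations(list(current), 2):
            union = a | b
            if len(union) == k + 1 and all(
                frozenset(sub) in current for sub in combinations(union, k)
            ):
                candidates.add(union)

        # Test the candidates against the database.
        counts = {c: 0 for c in candidates}
        for t in transactions:
            for c in candidates:
                if c <= t:
                    counts[c] += 1

        # L(k+1): candidates that reach the minimum support count.
        current = {s: c for s, c in counts.items() if c >= min_count}
        frequent.update(current)
        k += 1
    return frequent


tdb = [{"A", "C", "D"}, {"B", "C", "E"}, {"A", "B", "C", "E"}, {"B", "E"}]
result = apriori(tdb, min_count=2)
for itemset in sorted(result, key=lambda s: (len(s), sorted(s))):
    print(sorted(itemset), result[itemset])
```

On TDB this yields nine frequent itemsets, the largest being {B, C, E} with support 2, in line with the worked example.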

Implementation of Apriori

- How to generate candidates?
  - Step 1: self-joining $L_k$
  - Step 2: pruning
- Example of candidate generation
  - $L_3$ = {abc, abd, acd, ace, bcd}
  - Self-joining: $L_3 * L_3$
    - abcd from abc and abd
    - acde from acd and ace
  - Pruning:
    - acde is removed because ade is not in $L_3$
  - $C_4$ = {abcd}

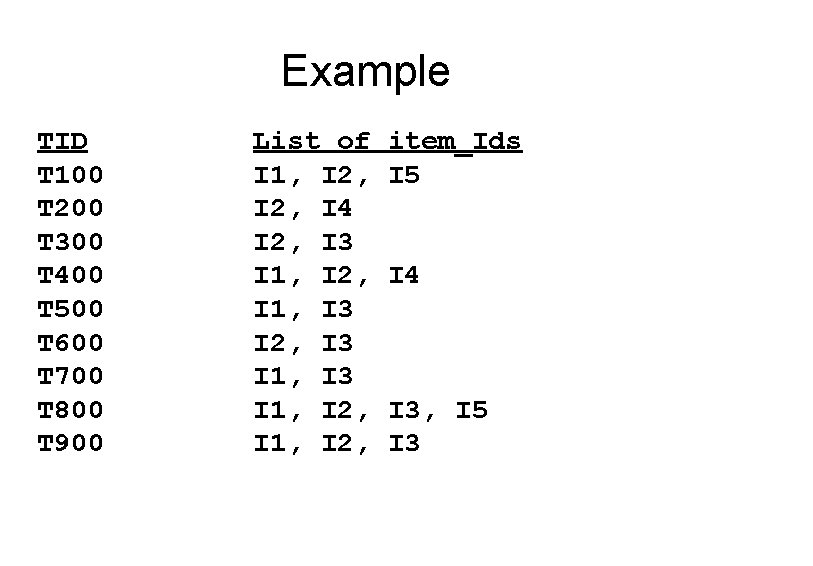

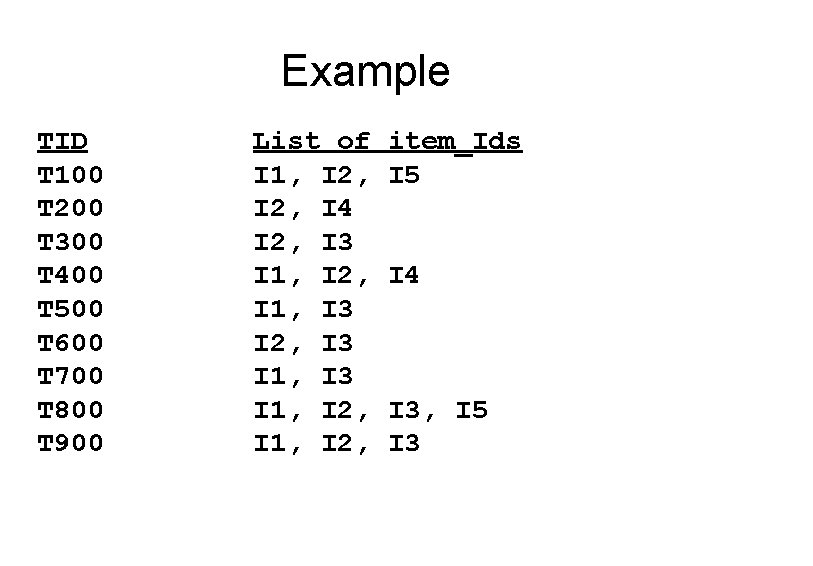
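The self-join and prune steps can be sketched as follows. Itemsets are represented as sorted tuples so that two k-itemsets are joined only when their first k-1 items agree. The function name `apriori_gen` is an illustrative choice, and the input assumes that acd belongs to L3, as the join "acde from acd and ace" implies.

```python
from itertools import combinations


def apriori_gen(prev_frequent):
    """Generate (k+1)-candidates from frequent k-itemsets (sorted tuples)."""
    prev = sorted(prev_frequent)
    prev_set = set(prev)
    k = len(prev[0]) if prev else 0
    candidates = []
    for i, p in enumerate(prev):
        for q in prev[i + 1:]:
            # Step 1, self-join: same first k-1 items, different last item.
            if p[:-1] == q[:-1]:
                cand = p + (q[-1],)
                # Step 2, prune: every k-subset must itself be frequent.
                if all(sub in prev_set for sub in combinations(cand, k)):
                    candidates.append(cand)
    return candidates


L3 = [tuple(s) for s in ("abc", "abd", "acd", "ace", "bcd")]
C4 = apriori_gen(L3)
print(["".join(c) for c in C4])  # ['abcd']
```

The join produces abcd and acde; acde is then dropped because its subset ade is not in L3.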

Example

| TID | List of item_IDs |
|---|---|
| T100 | I1, I2, I5 |
| T200 | I2, I4 |
| T300 | I2, I3 |
| T400 | I1, I2, I4 |
| T500 | I1, I3 |
| T600 | I2, I3 |
| T700 | I1, I3 |
| T800 | I1, I2, I3, I5 |
| T900 | I1, I2, I3 |

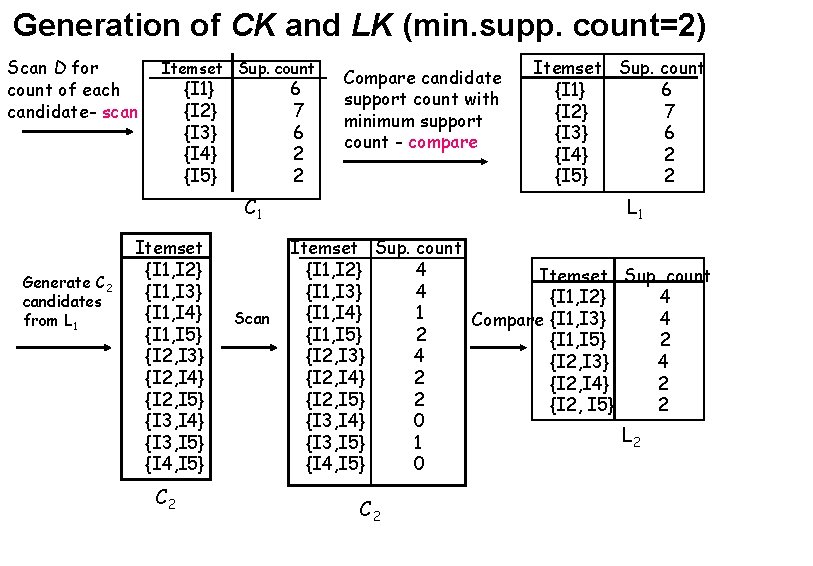

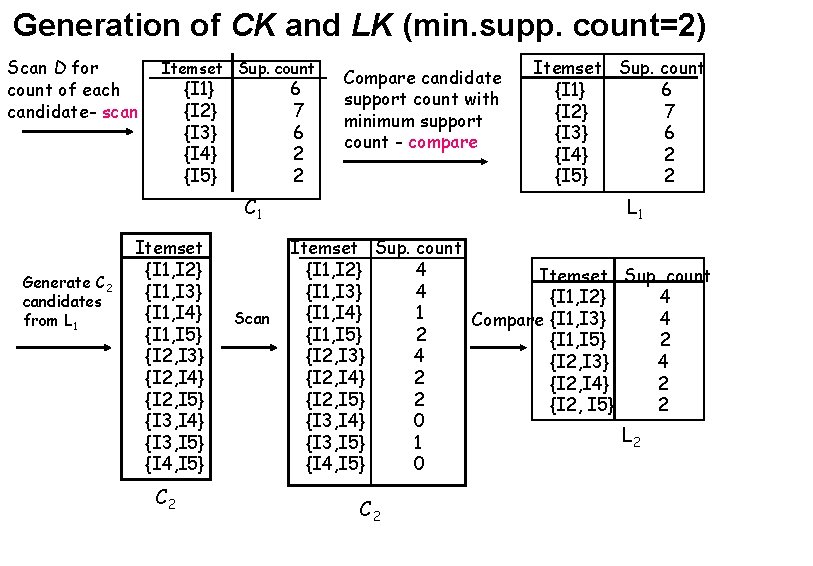

Generation of $C_k$ and $L_k$ (min. support count = 2)

Scan D for the count of each candidate (C1), then compare each candidate's support count with the minimum support count (L1). All five candidates meet the minimum, so L1 = C1:

| Itemset | Sup. count |
|---|---|
| {I1} | 6 |
| {I2} | 7 |
| {I3} | 6 |
| {I4} | 2 |
| {I5} | 2 |

Generate C2 candidates from L1 and scan D for their counts (C2):

| Itemset | Sup. count |
|---|---|
| {I1, I2} | 4 |
| {I1, I3} | 4 |
| {I1, I4} | 1 |
| {I1, I5} | 2 |
| {I2, I3} | 4 |
| {I2, I4} | 2 |
| {I2, I5} | 2 |
| {I3, I4} | 0 |
| {I3, I5} | 1 |
| {I4, I5} | 0 |

Compare candidate support counts with the minimum support count (L2):

| Itemset | Sup. count |
|---|---|
| {I1, I2} | 4 |
| {I1, I3} | 4 |
| {I1, I5} | 2 |
| {I2, I3} | 4 |
| {I2, I4} | 2 |
| {I2, I5} | 2 |

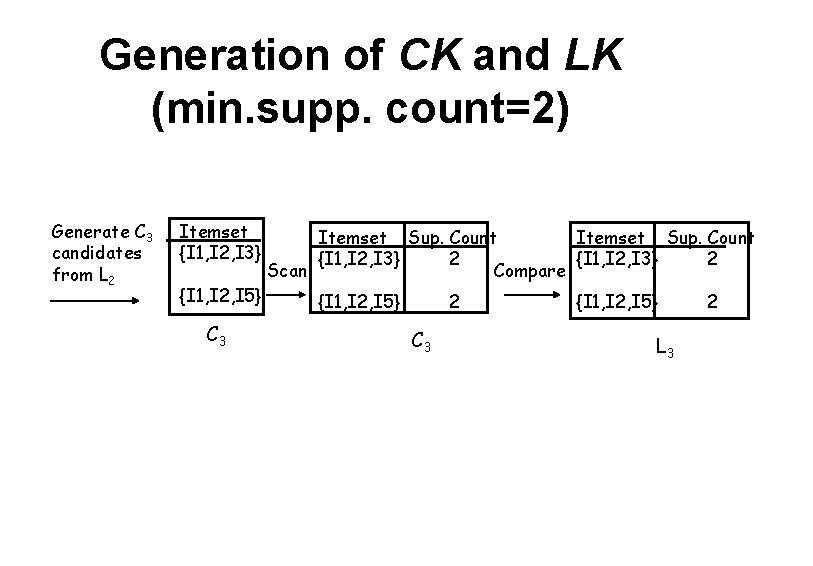

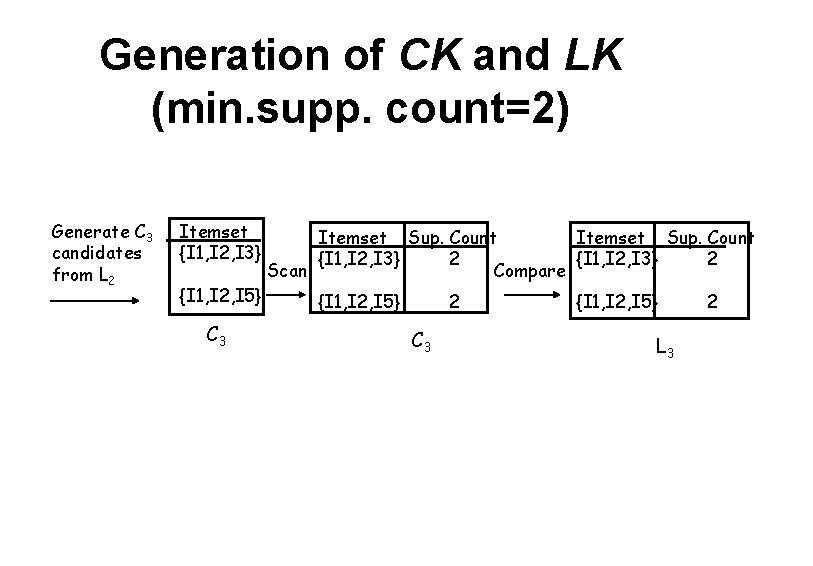

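Generation of $C_k$ and $L_k$ (min. support count = 2), continued

Generate C3 candidates from L2: {I1, I2, I3}, {I1, I2, I5}

Scan D for the count of each candidate (C3):

| Itemset | Sup. count |
|---|---|
| {I1, I2, I3} | 2 |
| {I1, I2, I5} | 2 |

Compare with the minimum support count (L3): {I1, I2, I3}: 2, {I1, I2, I5}: 2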

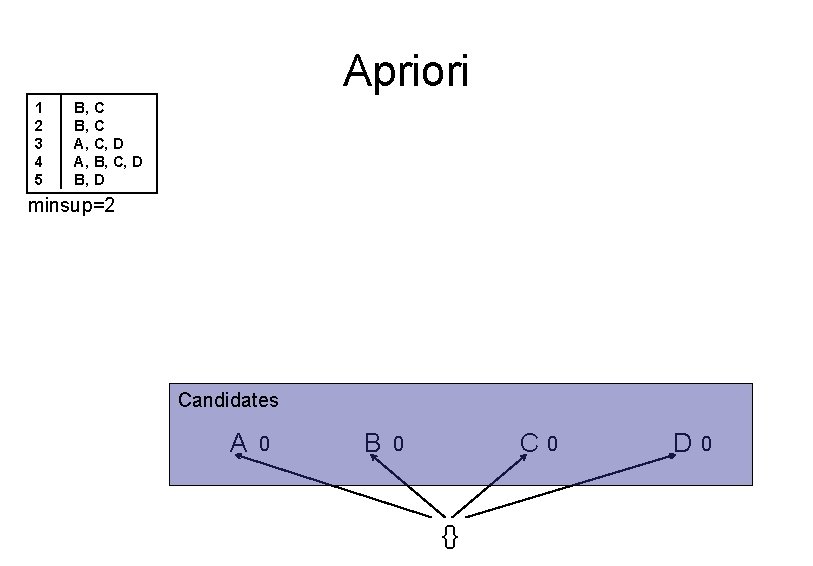

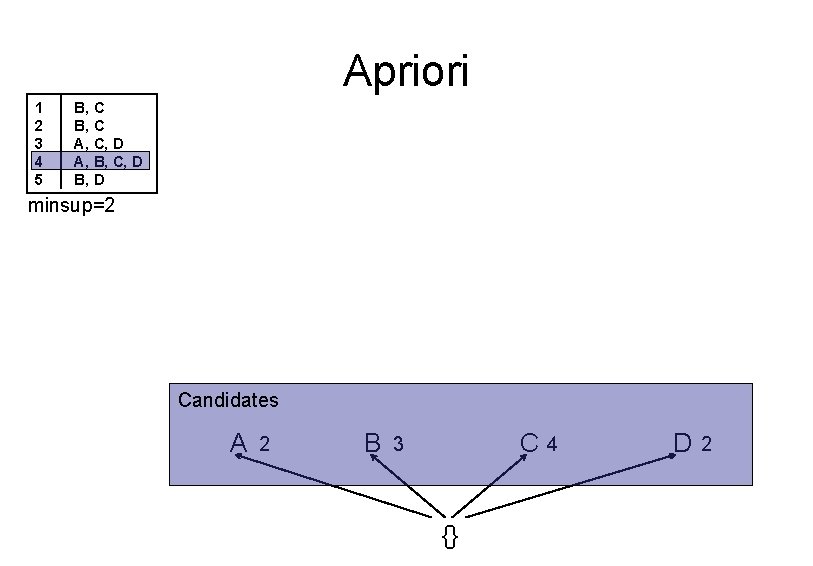

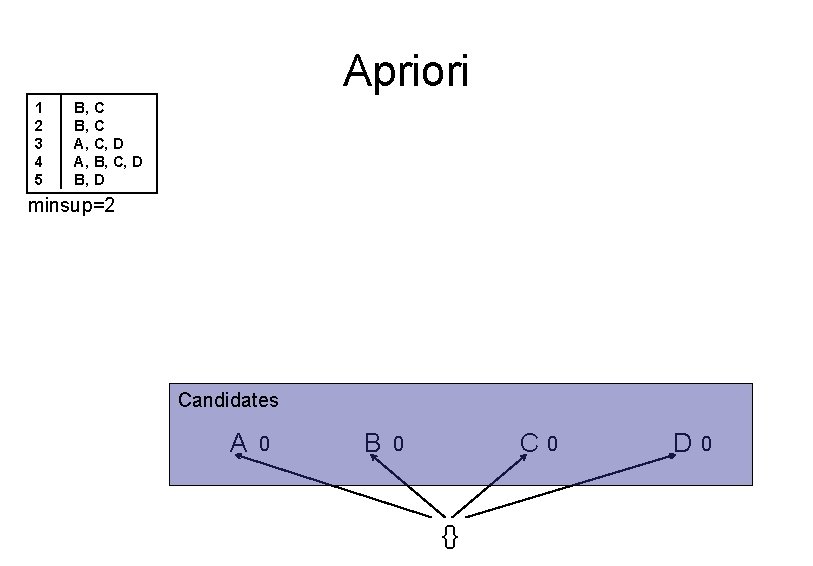

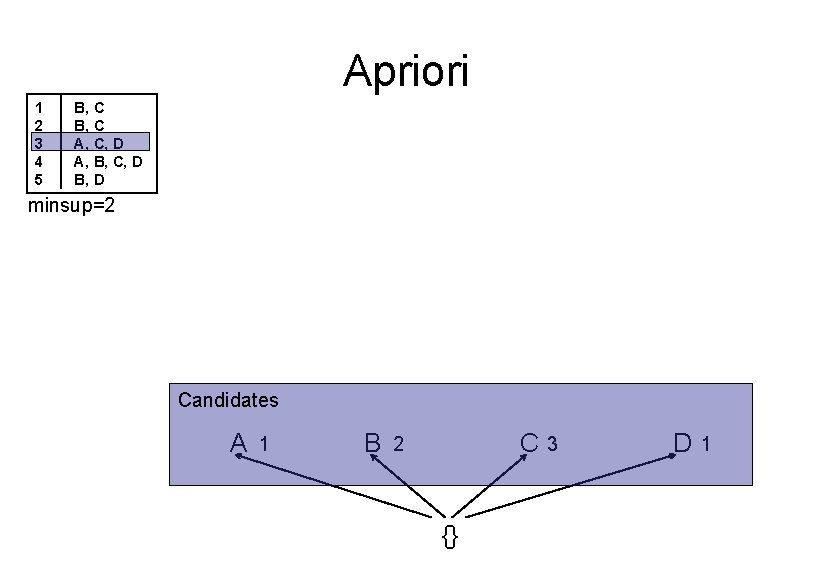

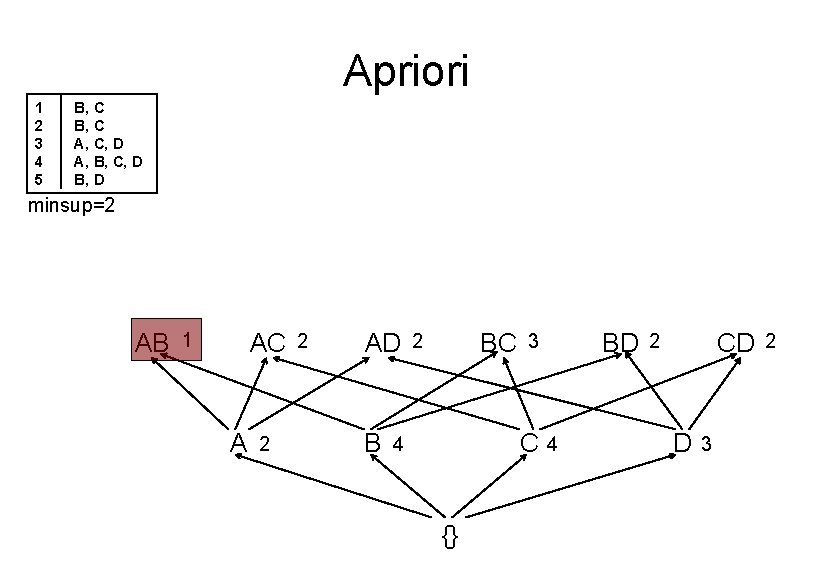
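The scan and compare steps of this example can be replayed in a few lines. The transaction list below is an assumption: it is a reconstruction chosen so that its support counts agree with the C1, C2, and C3 tables on the slides. The helper names `scan` and `compare` are hypothetical.

```python
from itertools import combinations

# Assumed nine-transaction database (reconstruction, see lead-in).
D = [
    {"I1", "I2", "I5"}, {"I2", "I4"}, {"I2", "I3"},
    {"I1", "I2", "I4"}, {"I1", "I3"}, {"I2", "I3"},
    {"I1", "I3"}, {"I1", "I2", "I3", "I5"}, {"I1", "I2", "I3"},
]
MIN_COUNT = 2


def scan(candidates, transactions):
    """Scan step: count the transactions that contain each candidate."""
    return {c: sum(1 for t in transactions if c <= t) for c in candidates}


def compare(counts, min_count):
    """Compare step: keep candidates that reach the minimum support count."""
    return {c: n for c, n in counts.items() if n >= min_count}


items = sorted(set().union(*D))
l1 = compare(scan([frozenset([i]) for i in items], D), MIN_COUNT)

c2 = {a | b for a, b in combinations(l1, 2)}
l2 = compare(scan(c2, D), MIN_COUNT)

# C3: 3-item unions of frequent pairs whose 2-subsets are all frequent.
c3 = {
    a | b
    for a, b in combinations(l2, 2)
    if len(a | b) == 3
    and all(frozenset(s) in l2 for s in combinations(a | b, 2))
}
l3 = compare(scan(c3, D), MIN_COUNT)

print(len(c2), len(l2))  # 10 6
for itemset, n in sorted(l3.items(), key=lambda kv: sorted(kv[0])):
    print(sorted(itemset), n)
```

Ten candidate pairs shrink to six frequent pairs, and pruning leaves only two candidate triples, both with support 2.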

Apriori (minsup = 2)

Transactions: 1: {B, C}; 2: {B, C}; 3: {A, C, D}; 4: {A, B, C, D}; 5: {B, D}

Candidate 1-itemset counts before the scan: A: 0, B: 0, C: 0, D: 0

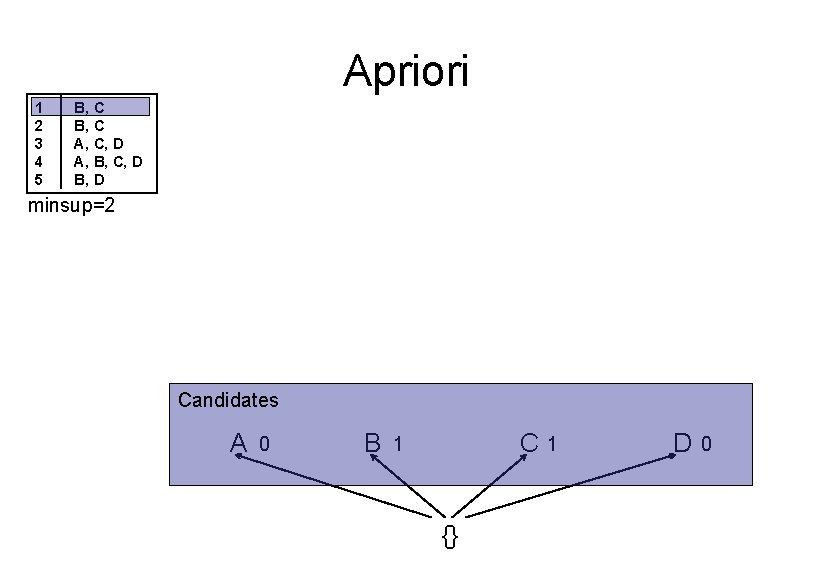

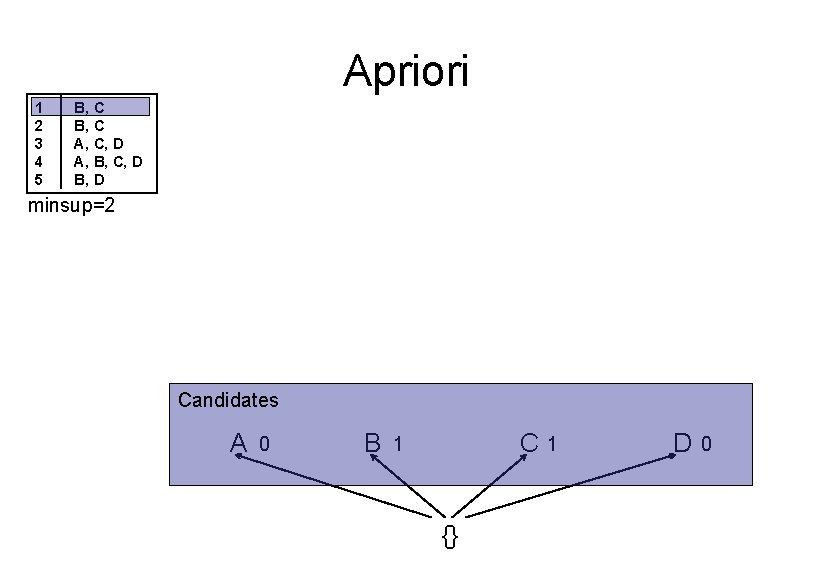

Apriori (minsup = 2): candidate counts after scanning transaction 1 ({B, C}): A: 0, B: 1, C: 1, D: 0

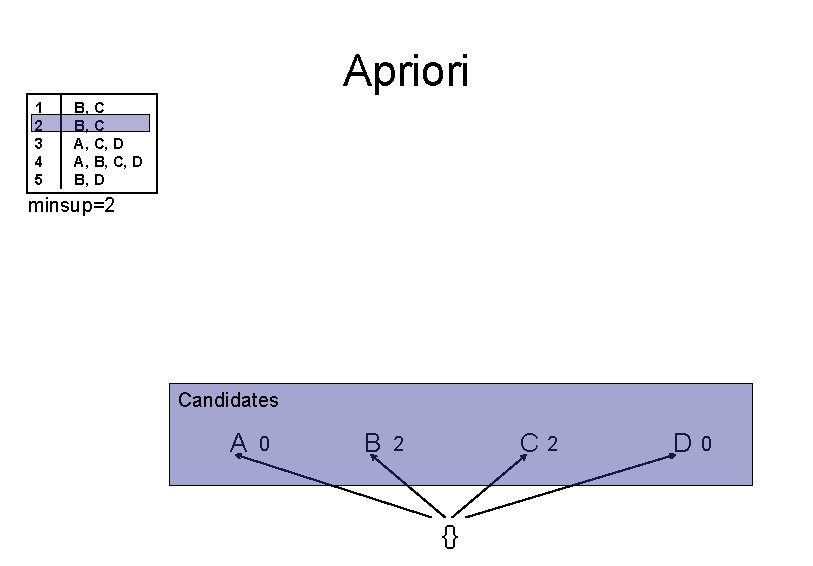

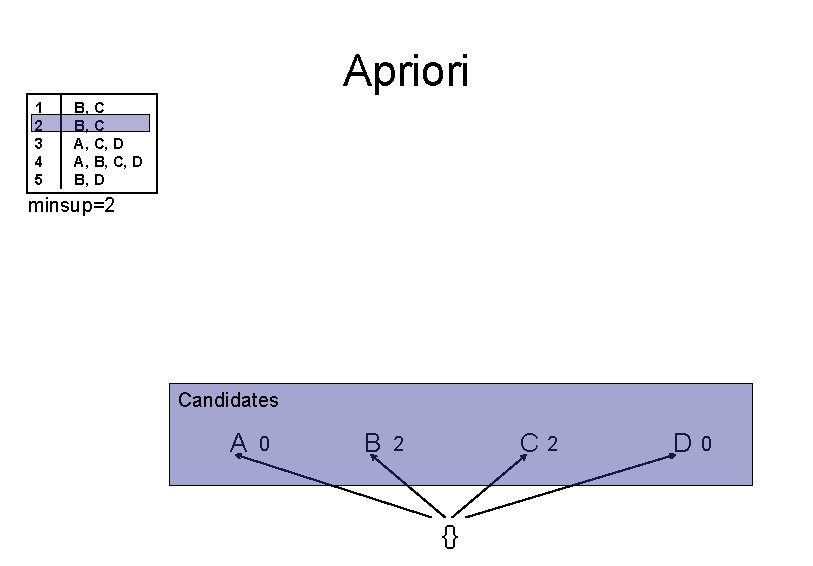

Apriori (minsup = 2): candidate counts after scanning transaction 2 ({B, C}): A: 0, B: 2, C: 2, D: 0

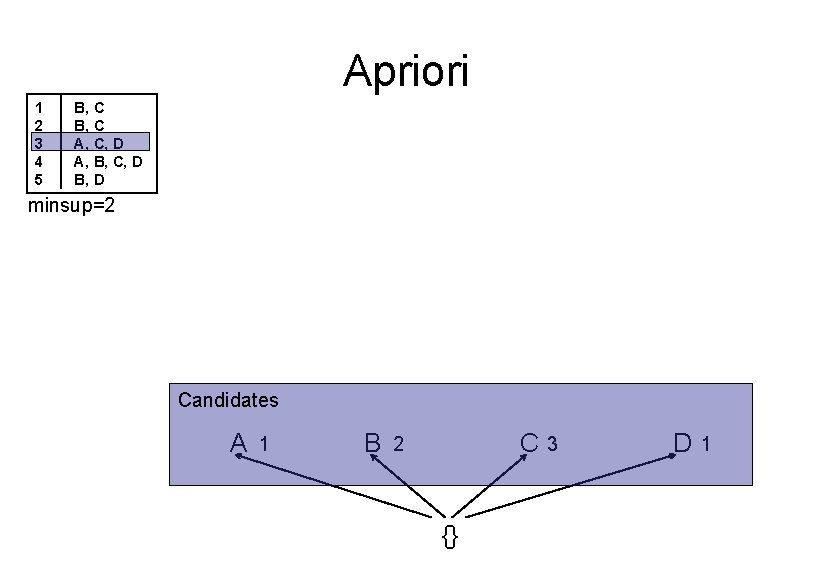

Apriori (minsup = 2): candidate counts after scanning transaction 3 ({A, C, D}): A: 1, B: 2, C: 3, D: 1

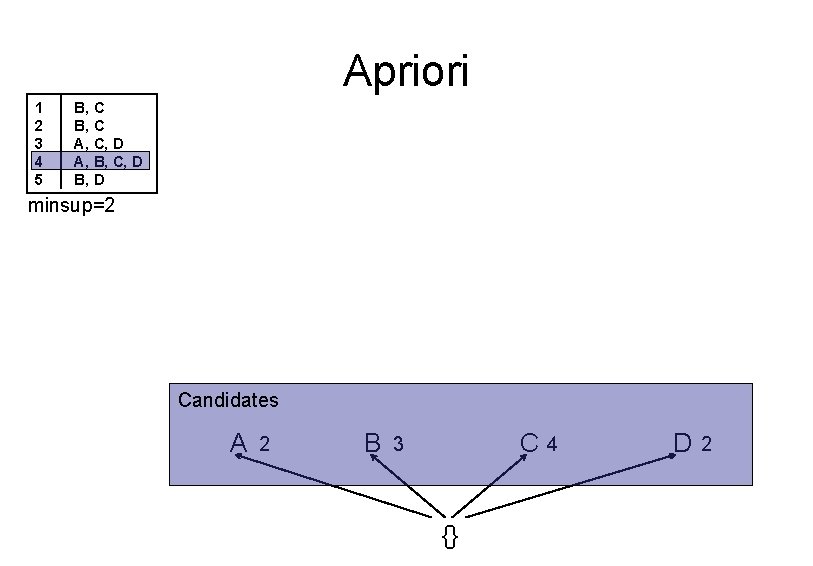

Apriori (minsup = 2): candidate counts after scanning transaction 4 ({A, B, C, D}): A: 2, B: 3, C: 4, D: 2

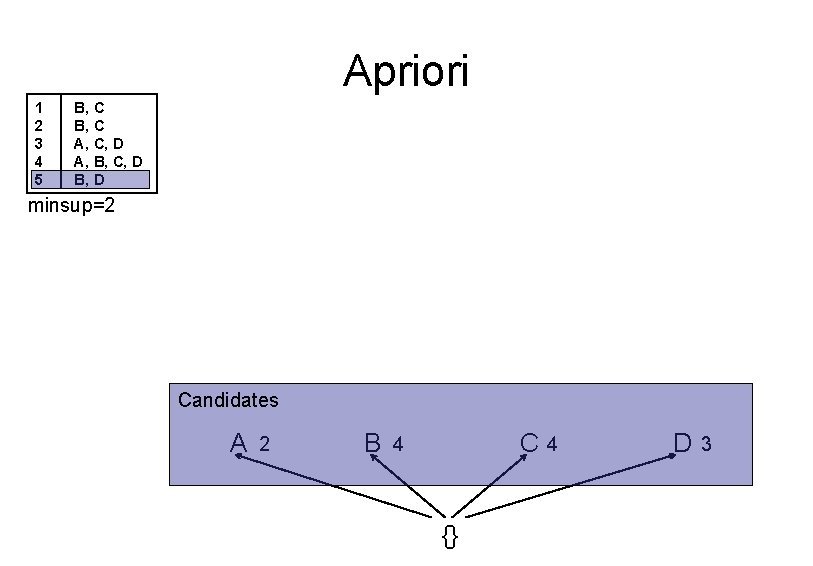

Apriori (minsup = 2): candidate counts after scanning transaction 5 ({B, D}): A: 2, B: 4, C: 4, D: 3. All four items meet minsup.

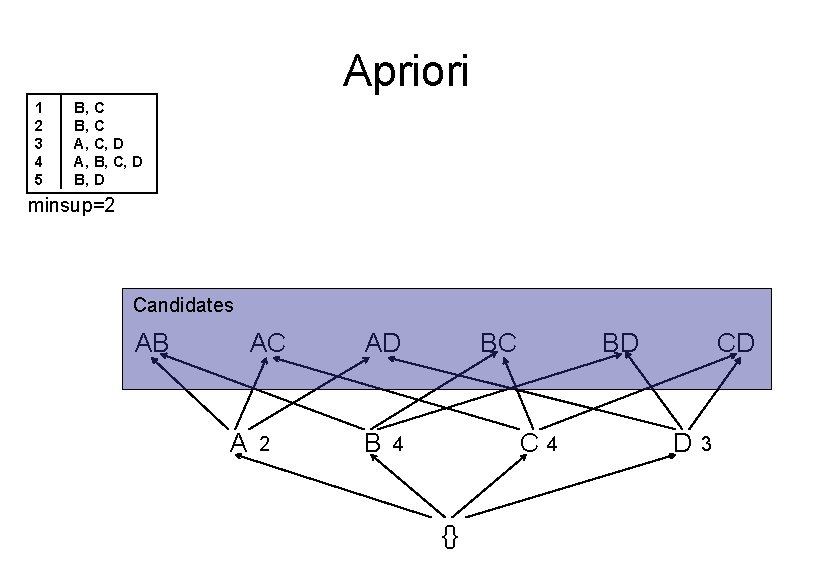

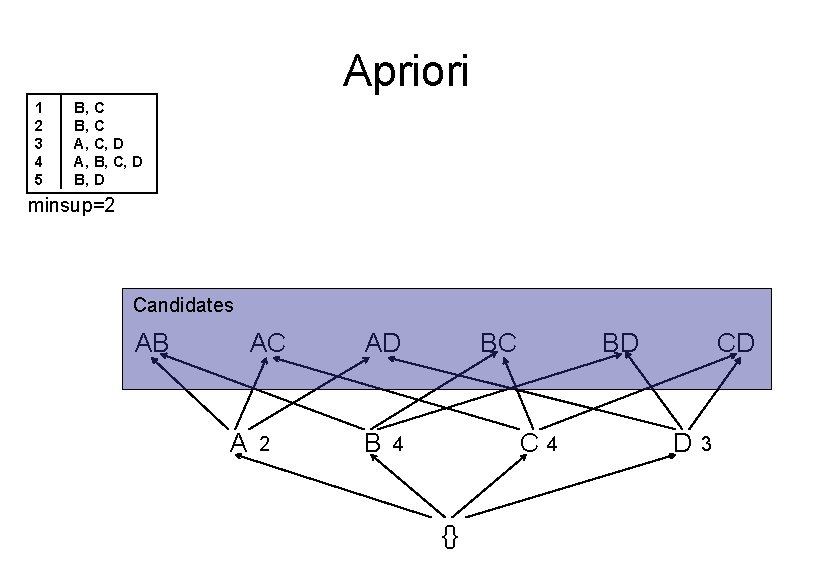
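The frame-by-frame counting in this animation can be reproduced with a short loop that snapshots the counters after each transaction. The sketch assumes the five transactions are {B, C}, {B, C}, {A, C, D}, {A, B, C, D}, {B, D}, which is what the running counts on the slides imply; `running_counts` is a hypothetical helper name.

```python
# Assumed transactions, inferred from the running counts on the slides.
transactions = [
    {"B", "C"},
    {"B", "C"},
    {"A", "C", "D"},
    {"A", "B", "C", "D"},
    {"B", "D"},
]


def running_counts(transactions, candidates):
    """Return one snapshot of the candidate counts per scanned transaction.

    Candidates are strings of single-letter items, e.g. "AC" for {A, C}.
    """
    counts = {c: 0 for c in candidates}
    snapshots = []
    for t in transactions:
        for c in candidates:
            if set(c) <= t:
                counts[c] += 1
        snapshots.append(dict(counts))
    return snapshots


singles = running_counts(transactions, ["A", "B", "C", "D"])
for tid, snap in enumerate(singles, start=1):
    print(tid, snap)

pairs = running_counts(transactions, ["AB", "AC", "AD", "BC", "BD", "CD"])
print(pairs[-1])
```

The last snapshot of each pass is what gets compared against minsup = 2 before the next level of candidates is generated.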

Apriori (minsup = 2): 1-itemset counts A: 2, B: 4, C: 4, D: 3. Candidate 2-itemsets generated from them: AB, AC, AD, BC, BD, CD

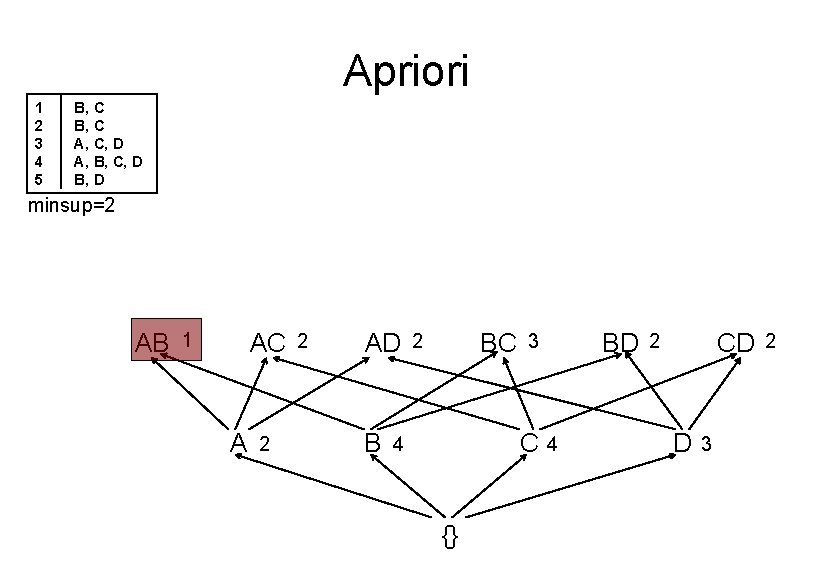

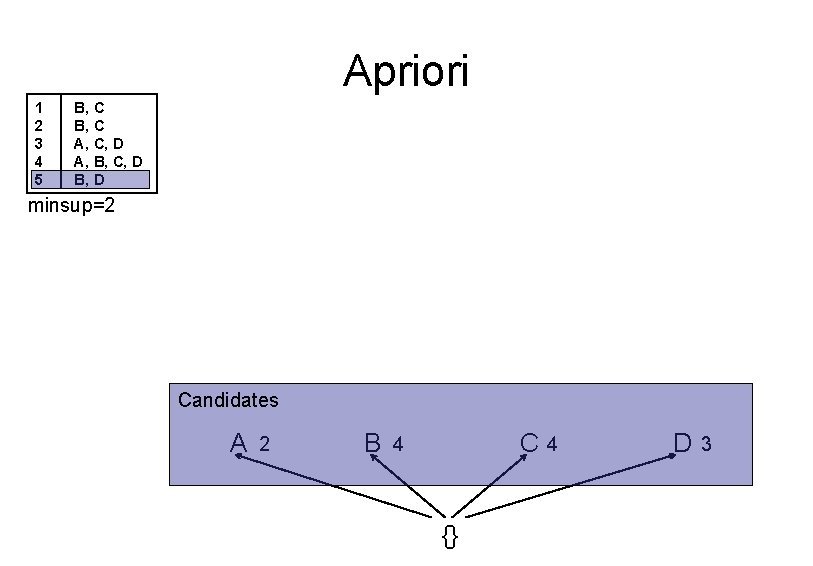

Apriori (minsup = 2): 2-itemset counts after the second scan: AB: 1, AC: 2, AD: 2, BC: 3, BD: 2, CD: 2. Only AB falls below minsup.

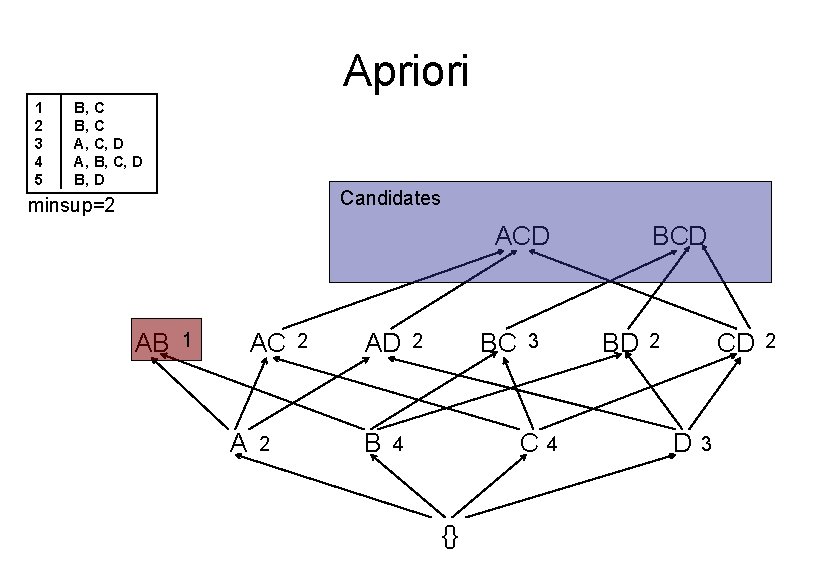

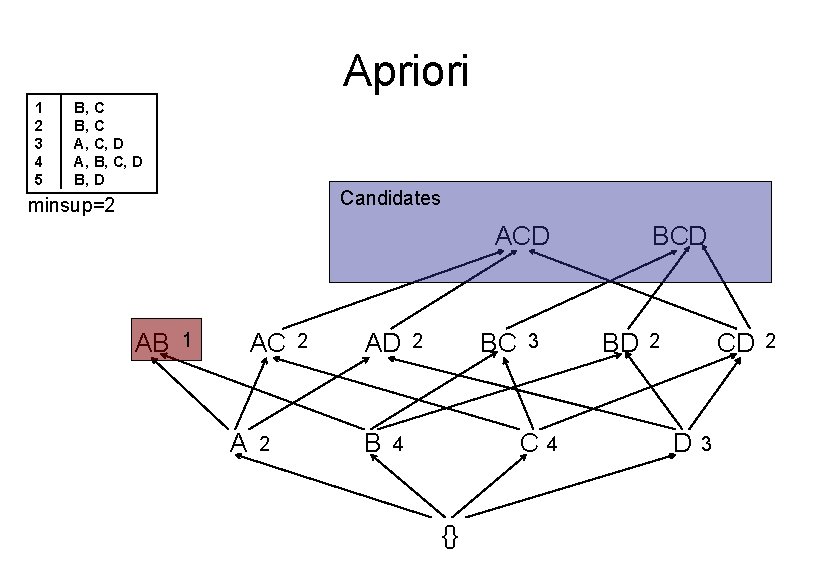

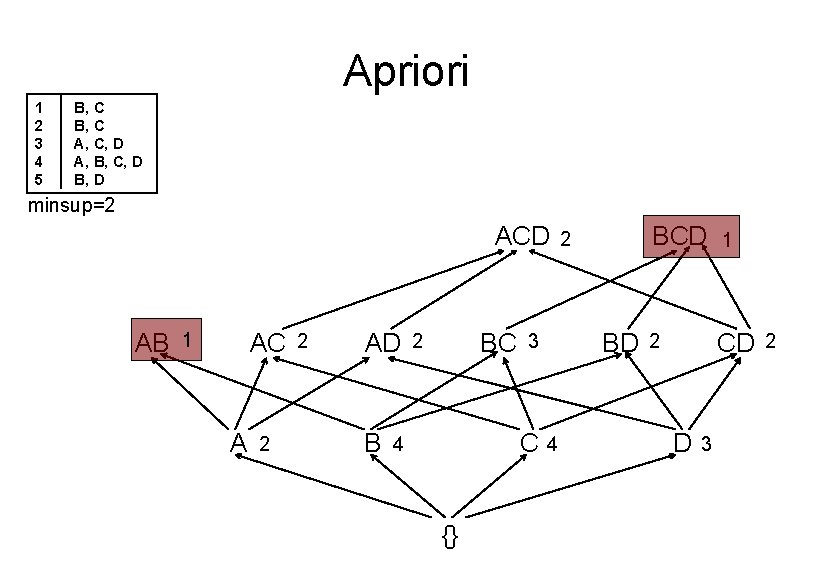

Apriori (minsup = 2): candidate 3-itemsets: ACD, BCD. ABC and ABD are not generated because their subset AB is infrequent.

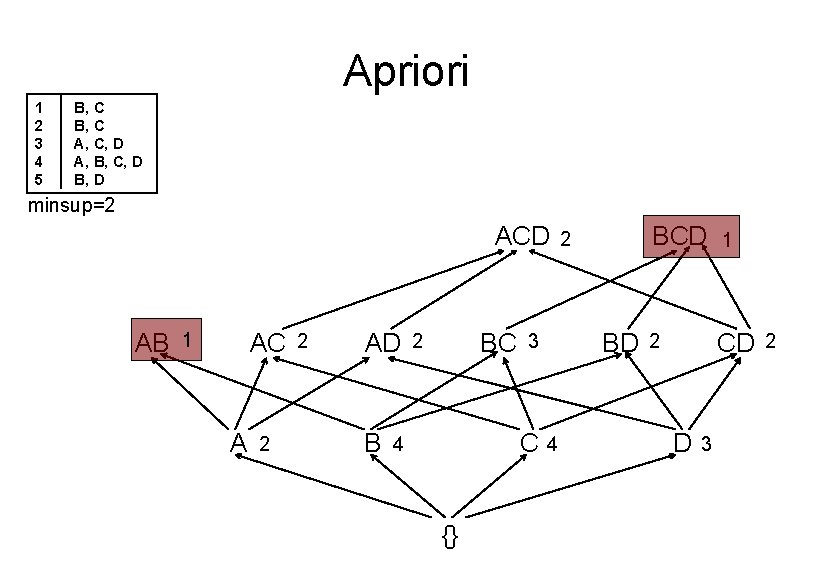

Apriori (minsup = 2): 3-itemset counts after the third scan: ACD: 2, BCD: 1. Only ACD is frequent.

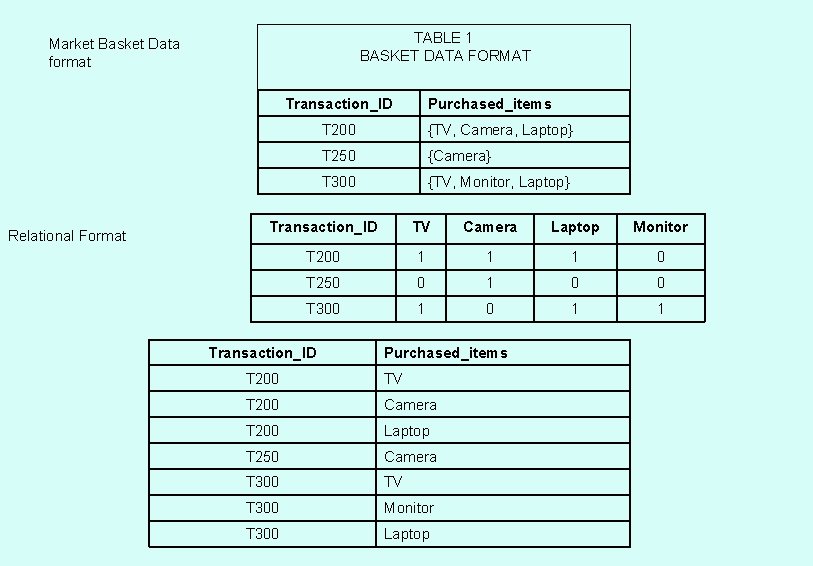

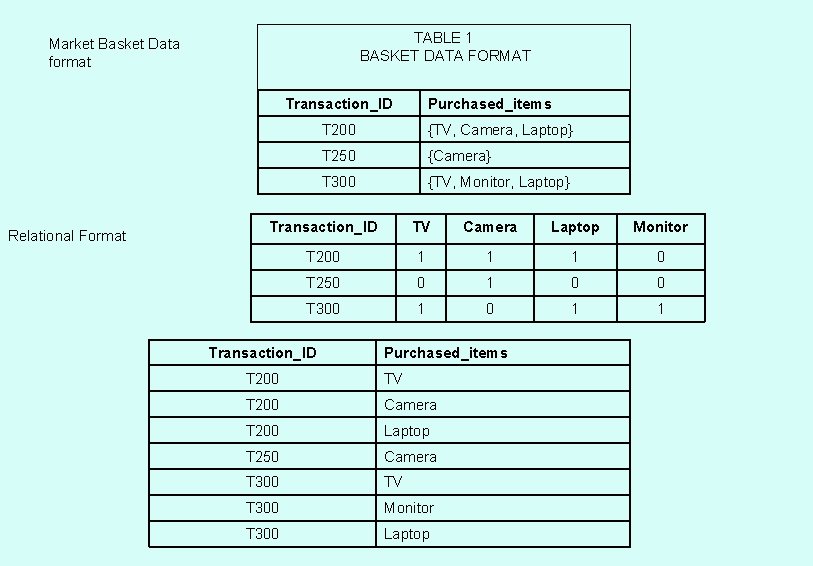

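TABLE 1: BASKET DATA FORMAT

Market basket data format:

| Transaction_ID | Purchased_items |
|---|---|
| T200 | {TV, Camera, Laptop} |
| T250 | {Camera} |
| T300 | {TV, Monitor, Laptop} |

The same data as one 0/1 column per item:

| Transaction_ID | TV | Camera | Laptop | Monitor |
|---|---|---|---|---|
| T200 | 1 | 1 | 1 | 0 |
| T250 | 0 | 1 | 0 | 0 |
| T300 | 1 | 0 | 1 | 1 |

Relational format:

| Transaction_ID | Purchased_items |
|---|---|
| T200 | TV |
| T200 | Camera |
| T200 | Laptop |
| T250 | Camera |
| T300 | TV |
| T300 | Monitor |
| T300 | Laptop |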
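Converting between these layouts is mechanical. The sketch below starts from the basket layout and derives the other two; the function names `to_relational` and `to_binary_matrix` and the column order are illustrative assumptions.

```python
# Basket layout: one entry per transaction, holding its purchased items.
baskets = {
    "T200": ["TV", "Camera", "Laptop"],
    "T250": ["Camera"],
    "T300": ["TV", "Monitor", "Laptop"],
}


def to_relational(baskets):
    """One (Transaction_ID, item) row per purchased item."""
    return [(tid, item) for tid, items in baskets.items() for item in items]


def to_binary_matrix(baskets, columns):
    """One 0/1 flag per item column for each transaction."""
    return {
        tid: [1 if col in items else 0 for col in columns]
        for tid, items in baskets.items()
    }


columns = ["TV", "Camera", "Laptop", "Monitor"]
print(to_relational(baskets)[:3])
print(to_binary_matrix(baskets, columns)["T300"])  # [1, 0, 1, 1]
```

The basket layout is the natural input for Apriori and FP-growth, while the 0/1 layout is convenient when the data already lives in a fixed-width table.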

FP Growth (Han, Pei, Yin 2000)

- One problematic aspect of Apriori is the candidate generation
  - Source of exponential growth
- Another approach is to use a divide-and-conquer strategy
- Idea: compress the database into a frequent pattern tree representing frequent items

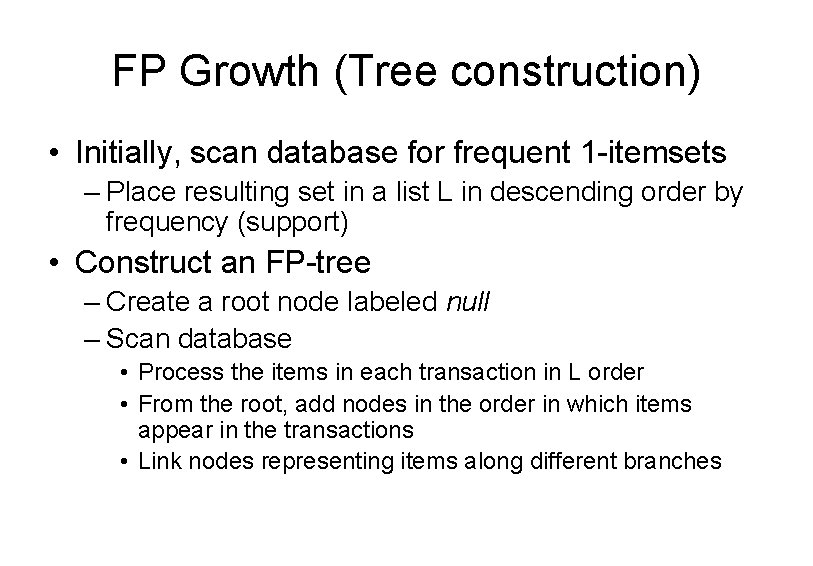

FP Growth (Tree construction)

- Initially, scan the database for frequent 1-itemsets
  - Place the resulting set in a list L in descending order by frequency (support)
- Construct an FP-tree
  - Create a root node labeled null
  - Scan the database
    - Process the items in each transaction in L order
    - From the root, add nodes in the order in which items appear in the transactions
    - Link nodes representing items along different branches

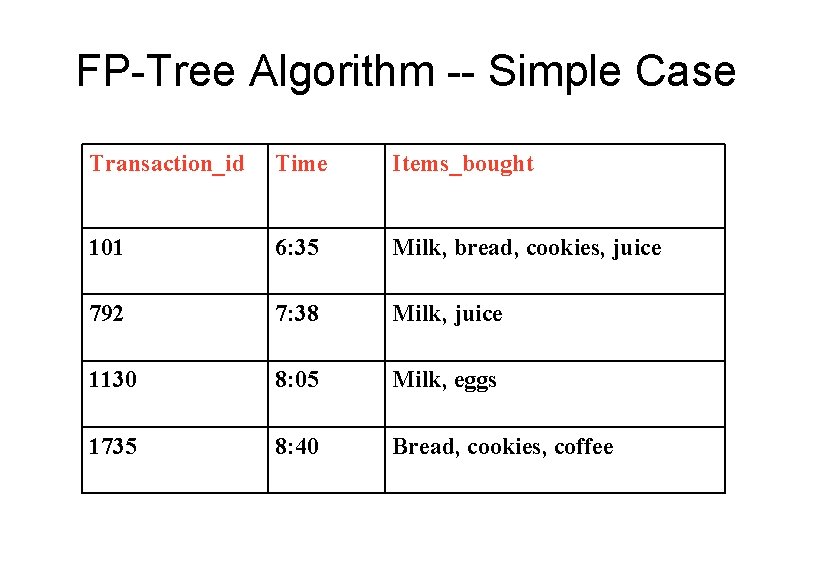

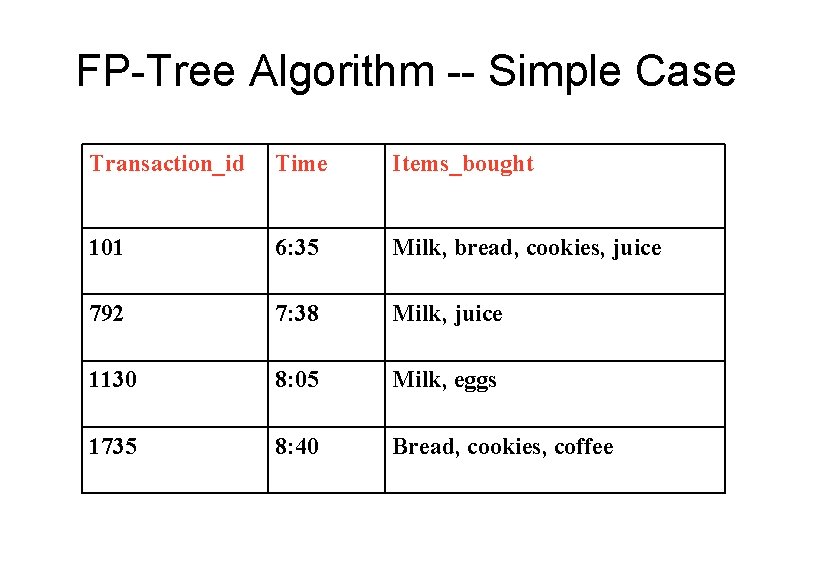

FP-Tree Algorithm: Simple Case

| Transaction_id | Time | Items_bought |
|---|---|---|
| 101 | 6:35 | Milk, bread, cookies, juice |
| 792 | 7:38 | Milk, juice |
| 1130 | 8:05 | Milk, eggs |
| 1735 | 8:40 | Bread, cookies, coffee |

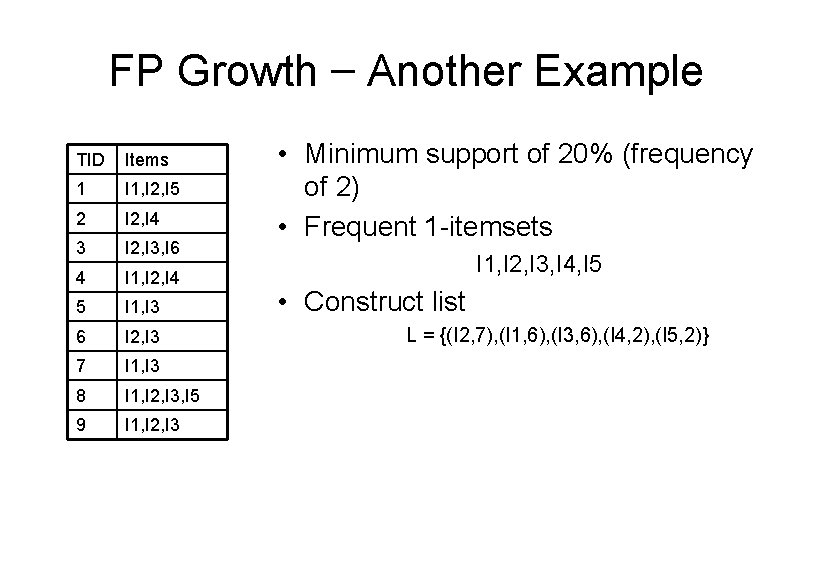

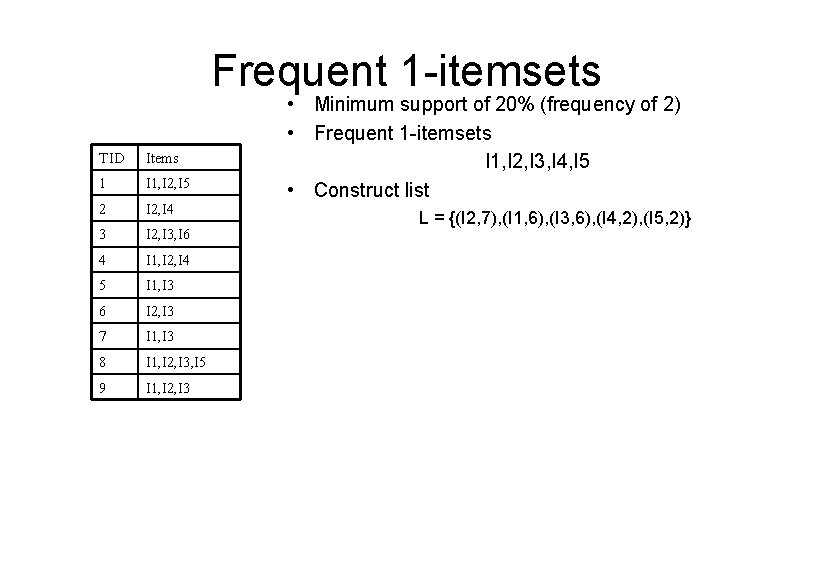

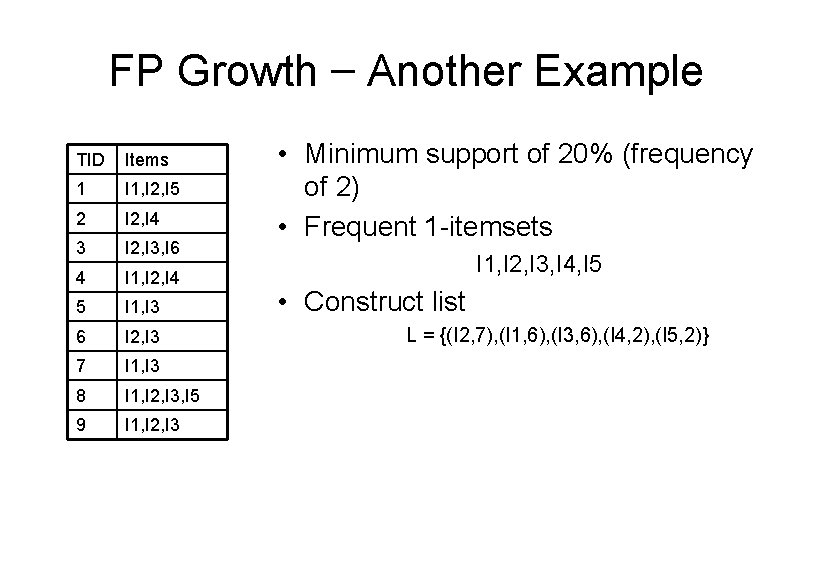

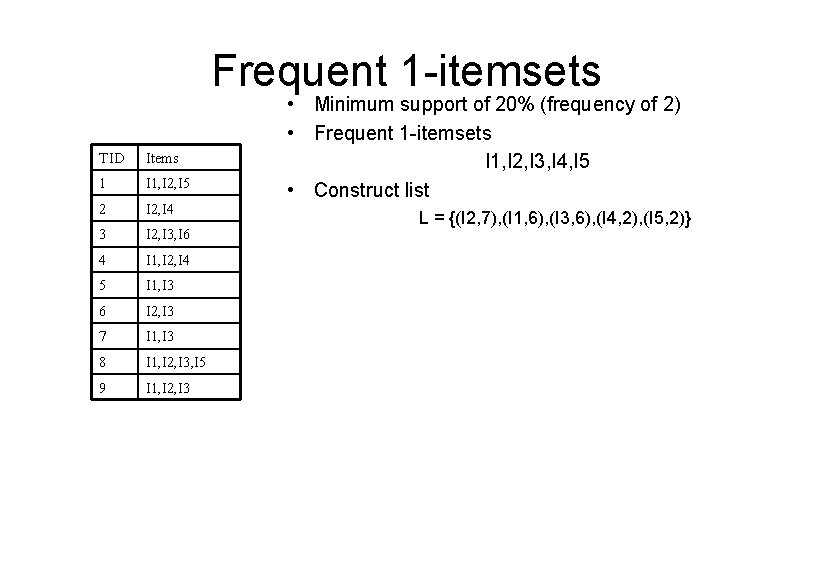

FP Growth – Another Example

| TID | Items |
|---|---|
| 1 | I1, I2, I5 |
| 2 | I2, I4 |
| 3 | I2, I3, I6 |
| 4 | I1, I2, I4 |
| 5 | I1, I3 |
| 6 | I2, I3 |
| 7 | I1, I3 |
| 8 | I1, I2, I3, I5 |
| 9 | I1, I2, I3 |

- Minimum support of 20% (frequency of 2)
- Frequent 1-itemsets: I1, I2, I3, I4, I5
- Construct list L = {(I2, 7), (I1, 6), (I3, 6), (I4, 2), (I5, 2)}

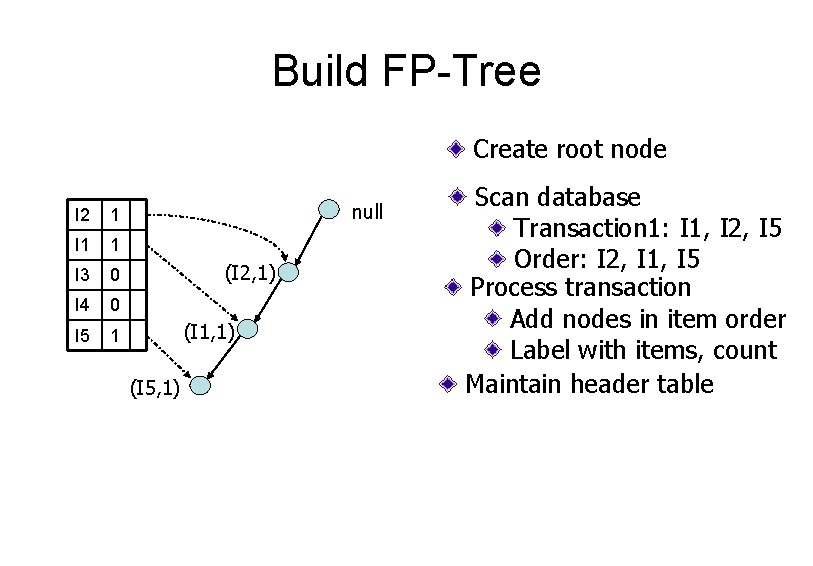

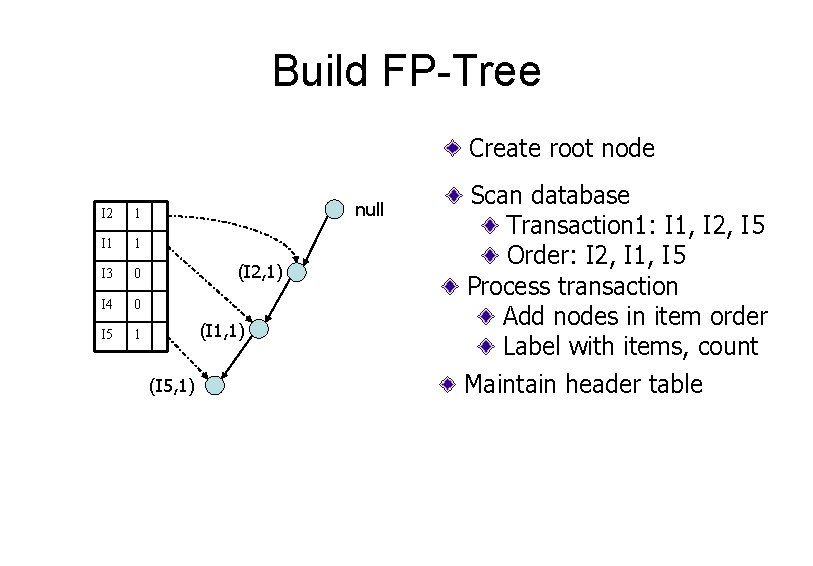

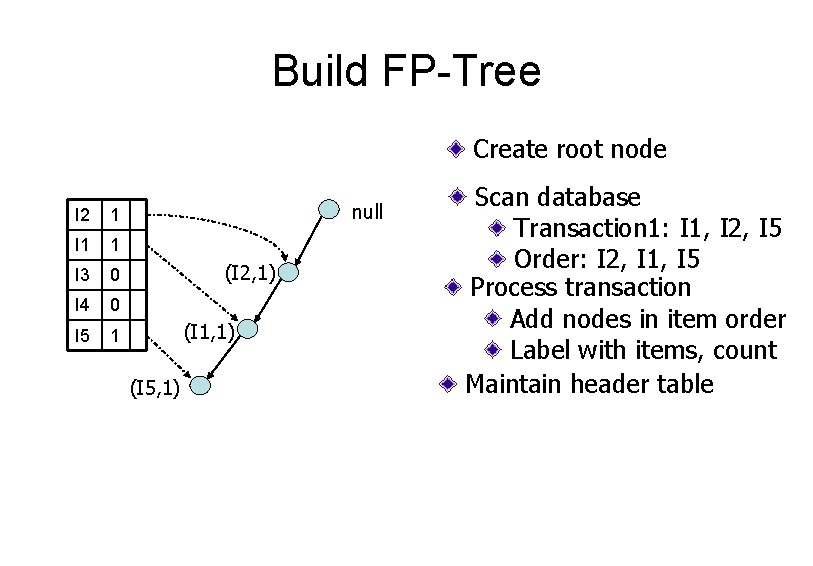

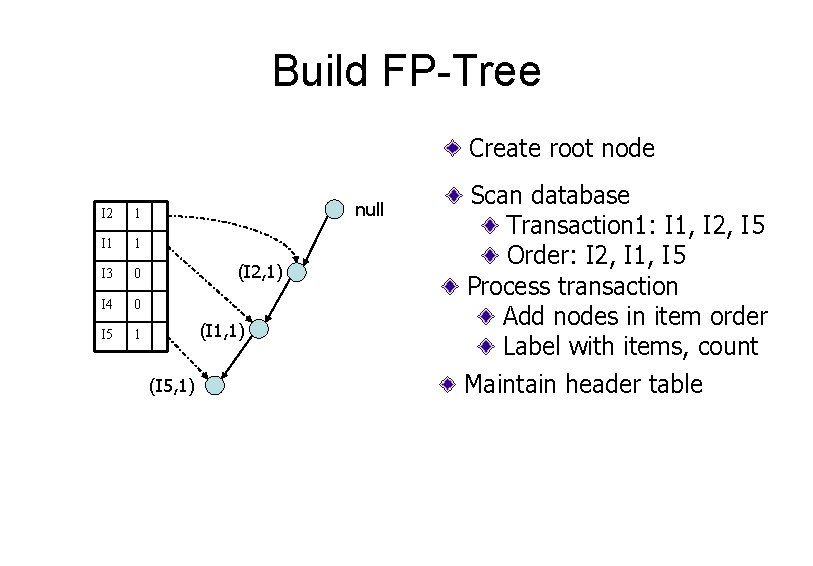

Build FP-Tree

- Create root node (null)
- Scan database
  - Transaction 1: I1, I2, I5
  - Order: I2, I1, I5
- Process transaction
  - Add nodes in item order
  - Label with items, count
- Maintain header table

Header table after transaction 1: I2: 1, I1: 1, I3: 0, I4: 0, I5: 1

Tree after transaction 1: null → (I2, 1) → (I1, 1) → (I5, 1)

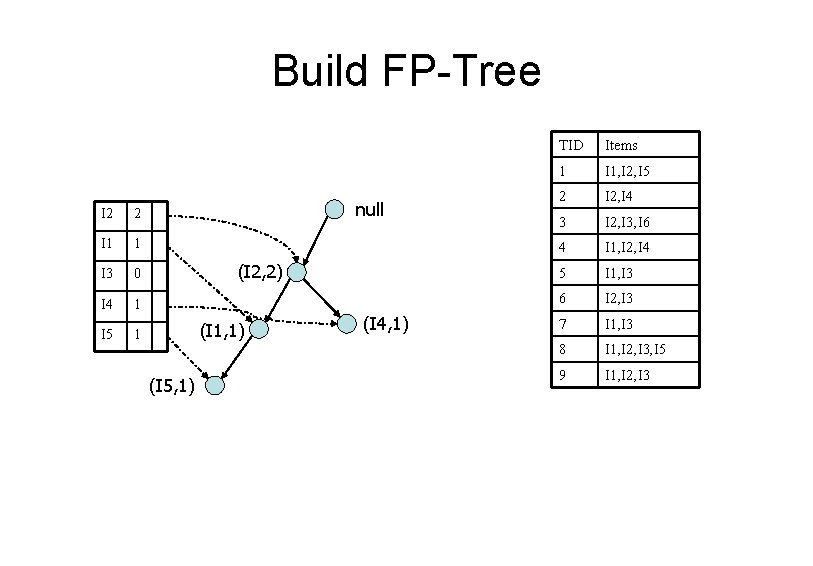

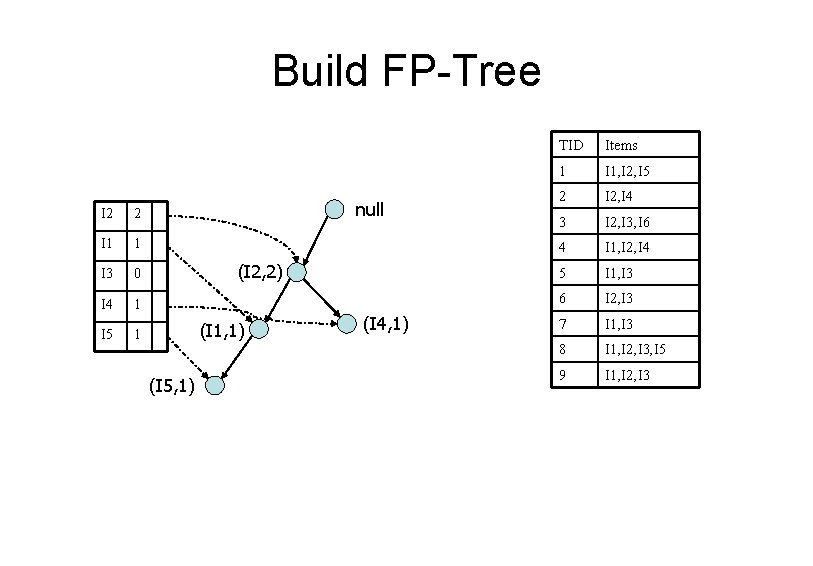

Build FP-Tree (after transaction 2: I2, I4)

Header table: I2: 2, I1: 1, I3: 0, I4: 1, I5: 1

Tree: null → (I2, 2), which now has two children: (I1, 1) → (I5, 1), and (I4, 1)

The transactions are the nine listed in the table for this example (TID 1 to 9).

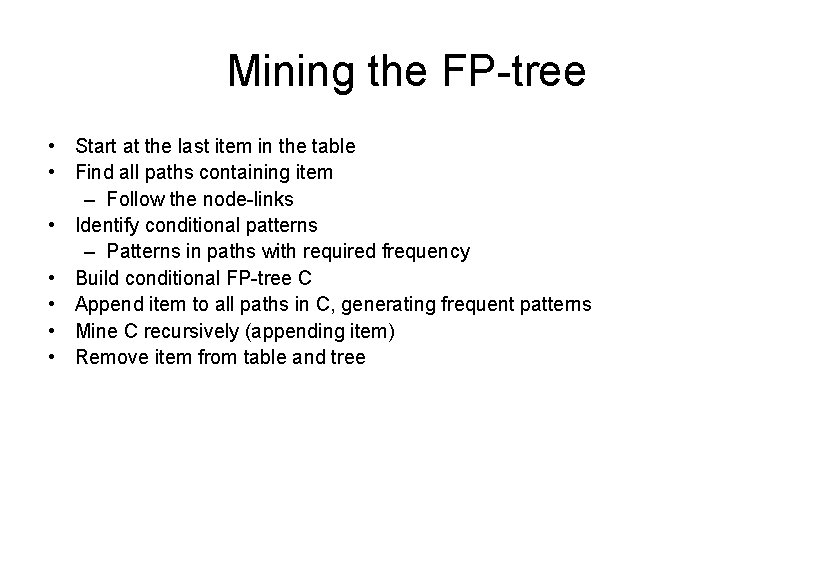

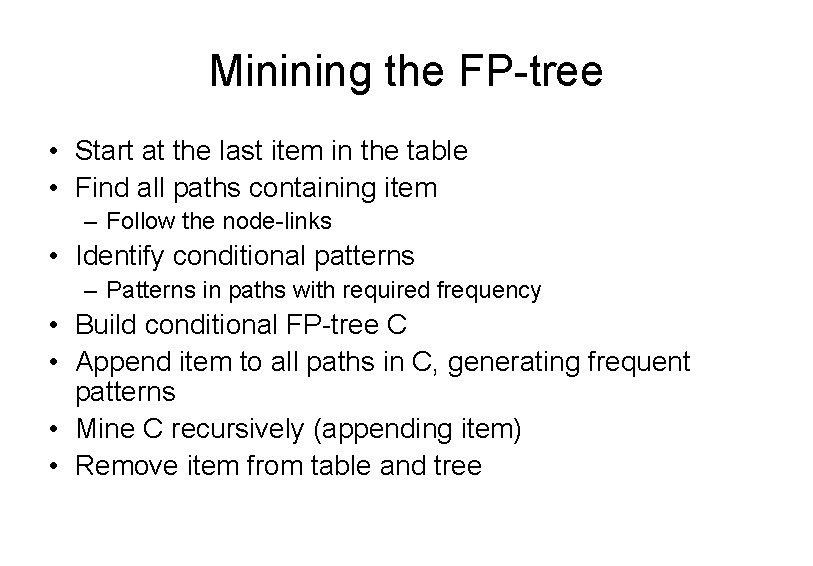

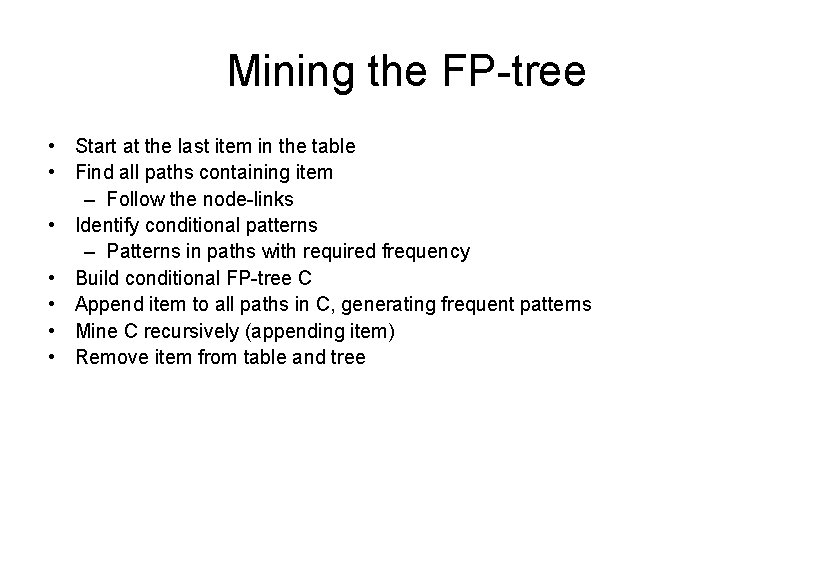
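The tree-building steps can be sketched in Python as below. This is a minimal illustration, not the slides' own code: the class `FPNode`, the function `build_fp_tree`, and the tie-breaking rule (equal counts are ordered by item name) are assumptions made here. The tie-break happens to reproduce the list L = I2, I1, I3, I4, I5 used in the example.

```python
from collections import Counter


class FPNode:
    """One FP-tree node: an item, its count, a parent link, and children."""

    def __init__(self, item, parent):
        self.item = item
        self.count = 0
        self.parent = parent
        self.children = {}


def build_fp_tree(transactions, min_count):
    """Return (root, header, order) for the FP-tree of the transactions."""
    # Pass 1: find frequent items and order them by descending support.
    counts = Counter(item for t in transactions for item in set(t))
    frequent = {i: c for i, c in counts.items() if c >= min_count}
    order = sorted(frequent, key=lambda i: (-frequent[i], i))
    rank = {item: r for r, item in enumerate(order)}

    # Pass 2: insert each transaction's frequent items in L order.
    root = FPNode(None, None)
    header = {item: [] for item in order}  # item -> its nodes (node-links)
    for t in transactions:
        node = root
        for item in sorted((i for i in set(t) if i in rank), key=rank.get):
            child = node.children.get(item)
            if child is None:
                child = FPNode(item, node)
                node.children[item] = child
                header[item].append(child)
            child.count += 1
            node = child
    return root, header, order


transactions = [
    ["I1", "I2", "I5"], ["I2", "I4"], ["I2", "I3", "I6"],
    ["I1", "I2", "I4"], ["I1", "I3"], ["I2", "I3"],
    ["I1", "I3"], ["I1", "I2", "I3", "I5"], ["I1", "I2", "I3"],
]
root, header, order = build_fp_tree(transactions, min_count=2)
print(order)                       # ['I2', 'I1', 'I3', 'I4', 'I5']
print(root.children["I2"].count)   # 7
print(root.children["I1"].count)   # 2
```

Here the header table stores a plain list of nodes per item instead of a linked chain of pointers; either representation lets the mining step reach every node of a given item.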

Mining the FP-tree

- Start at the last item in the table
- Find all paths containing the item
  - Follow the node-links
- Identify conditional patterns
  - Patterns in paths with the required frequency
- Build conditional FP-tree C
- Append the item to all paths in C, generating frequent patterns
- Mine C recursively (appending the item)
- Remove the item from the table and tree

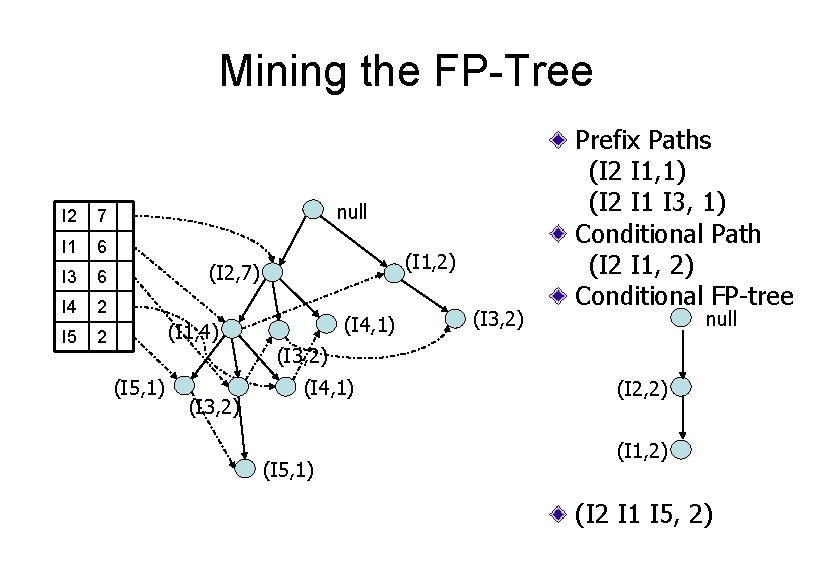

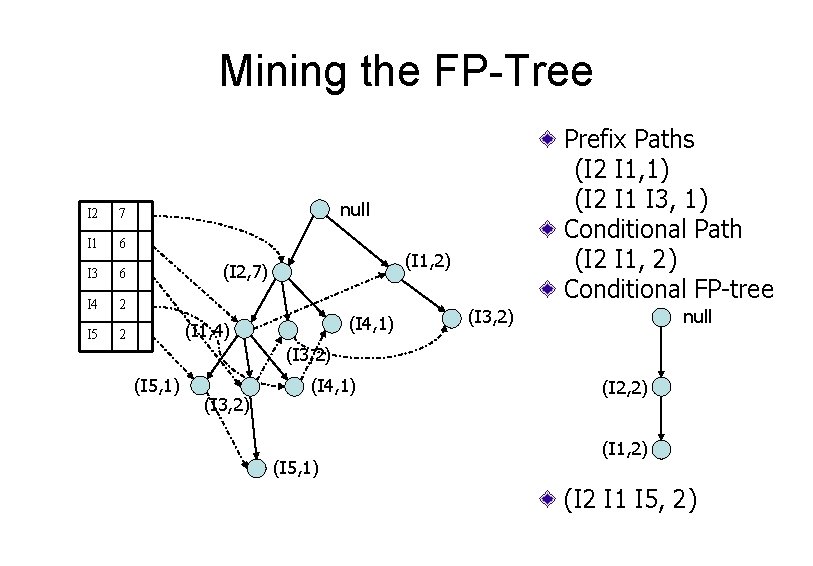

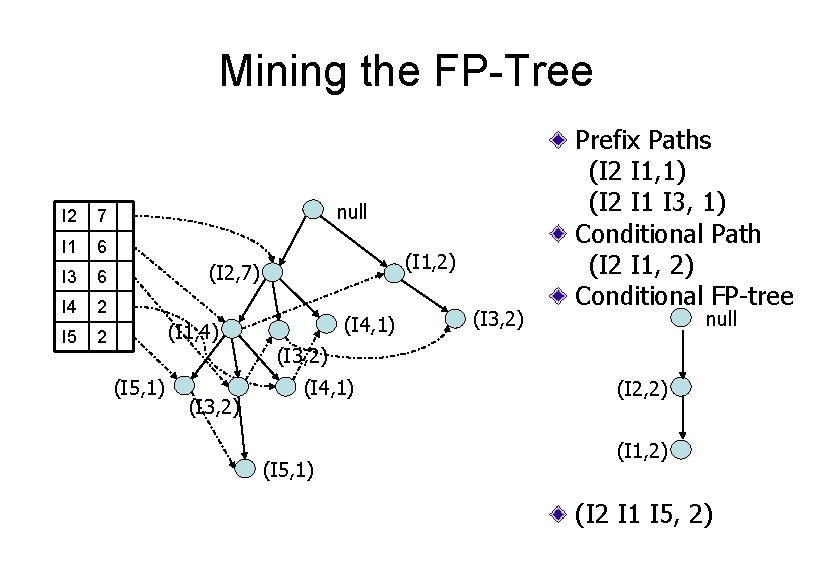

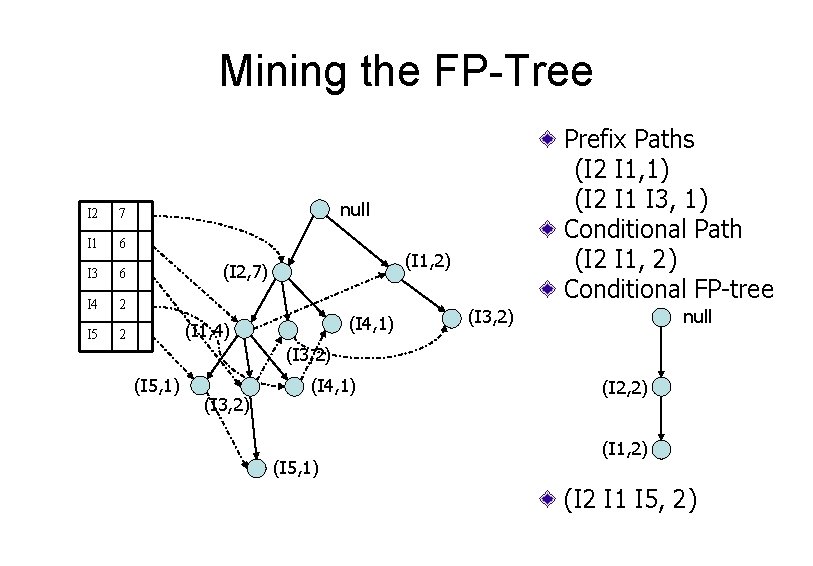

Mining the FP-Tree

Header table: I2: 7, I1: 6, I3: 6, I4: 2, I5: 2

FP-tree:

- null
  - (I2, 7)
    - (I1, 4)
      - (I5, 1)
      - (I4, 1)
      - (I3, 2)
        - (I5, 1)
    - (I4, 1)
    - (I3, 2)
  - (I1, 2)
    - (I3, 2)

Mining I5:

- Prefix paths: (I2 I1, 1), (I2 I1 I3, 1)
- Conditional path: (I2 I1, 2)
- Conditional FP-tree: null → (I2, 2) → (I1, 2)
- Frequent pattern: (I2 I1 I5, 2)

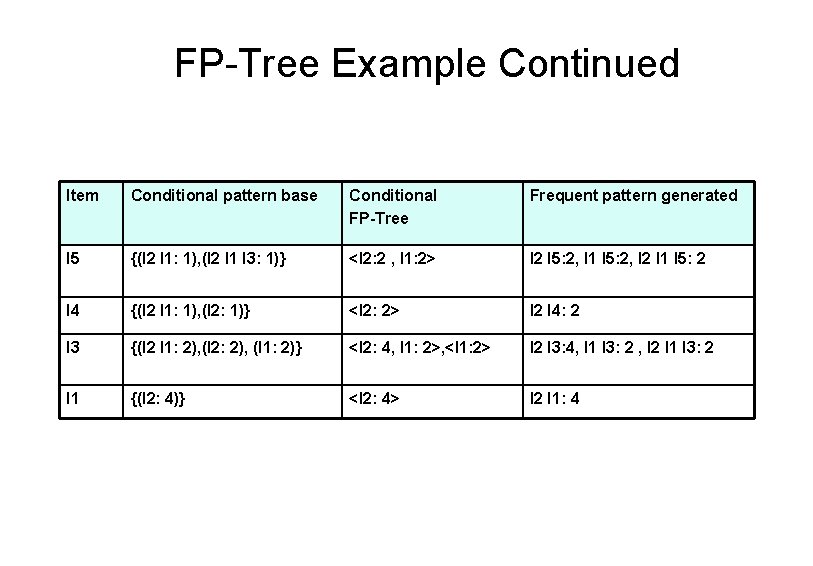

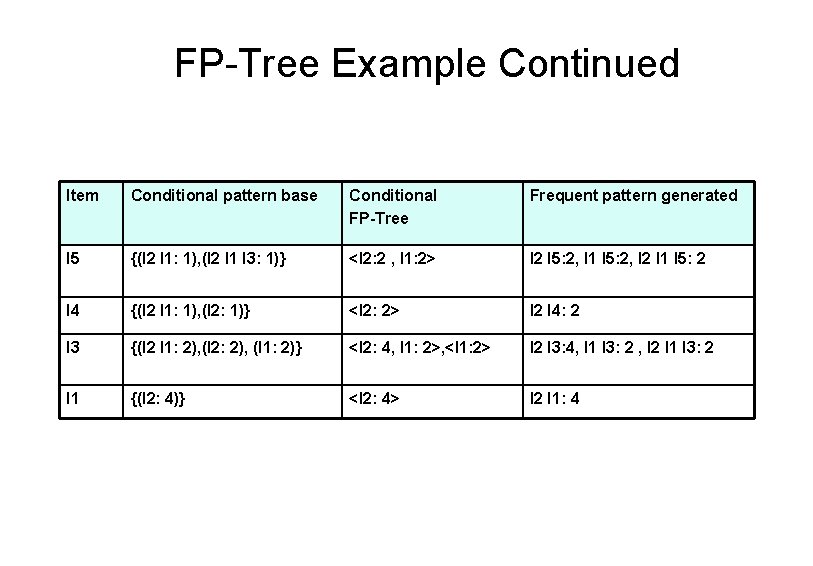

FP-Tree Example Continued

| Item | Conditional pattern base | Conditional FP-tree | Frequent patterns generated |
|---|---|---|---|
| I5 | {(I2 I1: 1), (I2 I1 I3: 1)} | <I2: 2, I1: 2> | I2 I5: 2, I1 I5: 2, I2 I1 I5: 2 |
| I4 | {(I2 I1: 1), (I2: 1)} | <I2: 2> | I2 I4: 2 |
| I3 | {(I2 I1: 2), (I2: 2), (I1: 2)} | <I2: 4, I1: 2>, <I1: 2> | I2 I3: 4, I1 I3: 4, I2 I1 I3: 2 |
| I1 | {(I2: 4)} | <I2: 4> | I2 I1: 4 |
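The mining procedure can be sketched as a short recursive program that builds a tree, reads off each item's conditional pattern base, and recurses on it. This is a compact illustration under stated assumptions, not a tuned implementation: the names `Node`, `build_tree`, `prefix_paths`, and `fp_growth` are hypothetical, ties in support are broken by item name, and the header table is a plain dictionary of node lists.

```python
from collections import Counter


class Node:
    def __init__(self, item, parent):
        self.item, self.parent = item, parent
        self.count = 0
        self.children = {}


def build_tree(weighted, min_count):
    """Build an FP-tree from (items, count) pairs; return its header table."""
    counts = Counter()
    for items, n in weighted:
        for item in set(items):
            counts[item] += n
    keep = sorted((i for i in counts if counts[i] >= min_count),
                  key=lambda i: (-counts[i], i))
    rank = {item: r for r, item in enumerate(keep)}
    root = Node(None, None)
    header = {item: [] for item in keep}
    for items, n in weighted:
        node = root
        for item in sorted((i for i in set(items) if i in rank), key=rank.get):
            if item not in node.children:
                node.children[item] = Node(item, node)
                header[item].append(node.children[item])
            node = node.children[item]
            node.count += n
    return header


def prefix_paths(nodes):
    """Conditional pattern base: (path above the node, node count) pairs."""
    base = []
    for node in nodes:
        path, parent = [], node.parent
        while parent.item is not None:
            path.append(parent.item)
            parent = parent.parent
        if path:
            base.append((path[::-1], node.count))
    return base


def fp_growth(weighted, min_count, suffix=frozenset()):
    """Return {pattern: support} for all frequent patterns ending in suffix."""
    patterns = {}
    for item, nodes in build_tree(weighted, min_count).items():
        pattern = suffix | {item}
        patterns[pattern] = sum(n.count for n in nodes)
        # Recurse on the conditional pattern base of this item.
        patterns.update(fp_growth(prefix_paths(nodes), min_count, pattern))
    return patterns


transactions = [
    ["I1", "I2", "I5"], ["I2", "I4"], ["I2", "I3", "I6"],
    ["I1", "I2", "I4"], ["I1", "I3"], ["I2", "I3"],
    ["I1", "I3"], ["I1", "I2", "I3", "I5"], ["I1", "I2", "I3"],
]
patterns = fp_growth([(t, 1) for t in transactions], min_count=2)
for p in sorted(patterns, key=lambda s: (len(s), sorted(s))):
    print(sorted(p), patterns[p])
```

On the nine-transaction example this finds 13 frequent patterns, including {I1, I3} with support 4 and {I1, I2, I5} with support 2.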


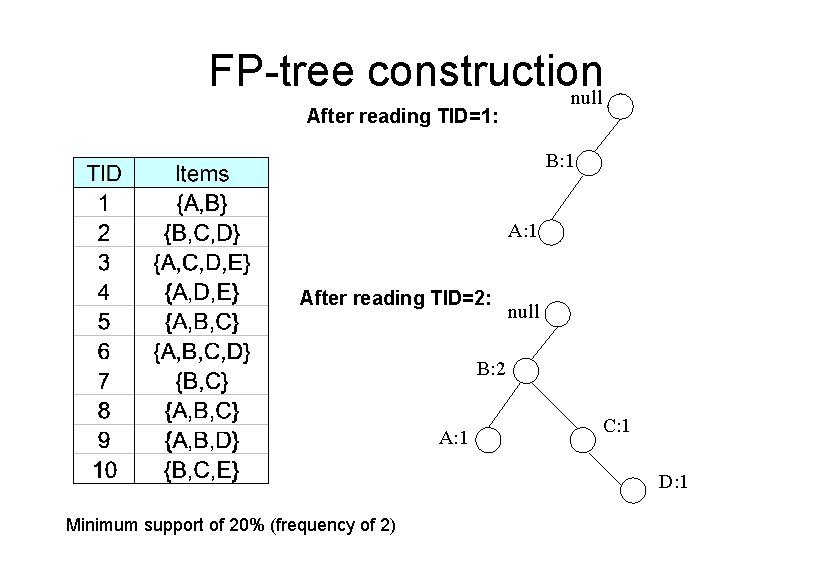

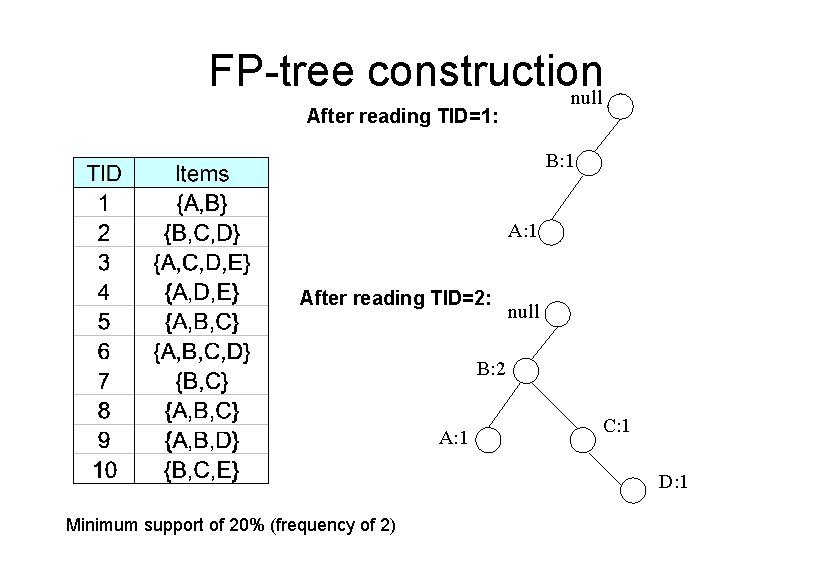

FP-tree construction (minimum support of 20%, i.e. a frequency of 2)

The figures show the tree, rooted at null, after reading TID=1 and after reading TID=2. Node labels after TID=1: B: 1. Node labels after TID=2: B: 2, A: 1, C: 1, D: 1.

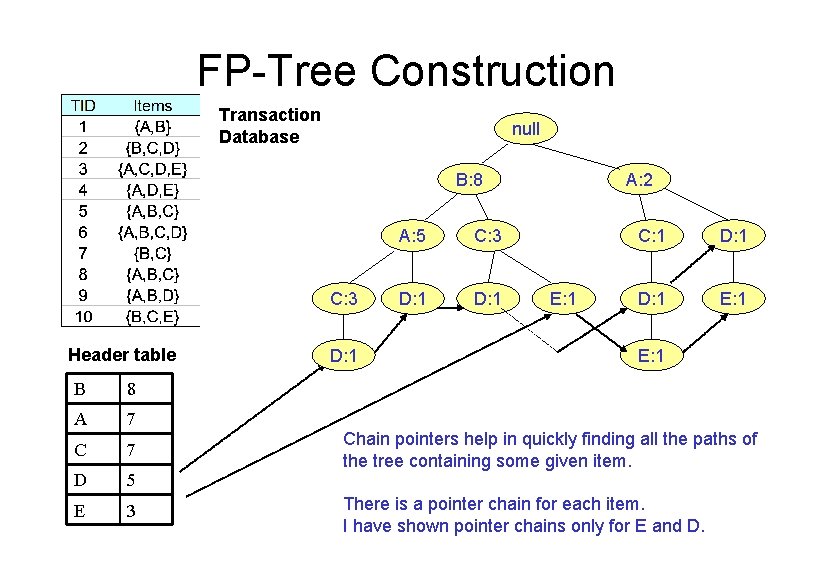

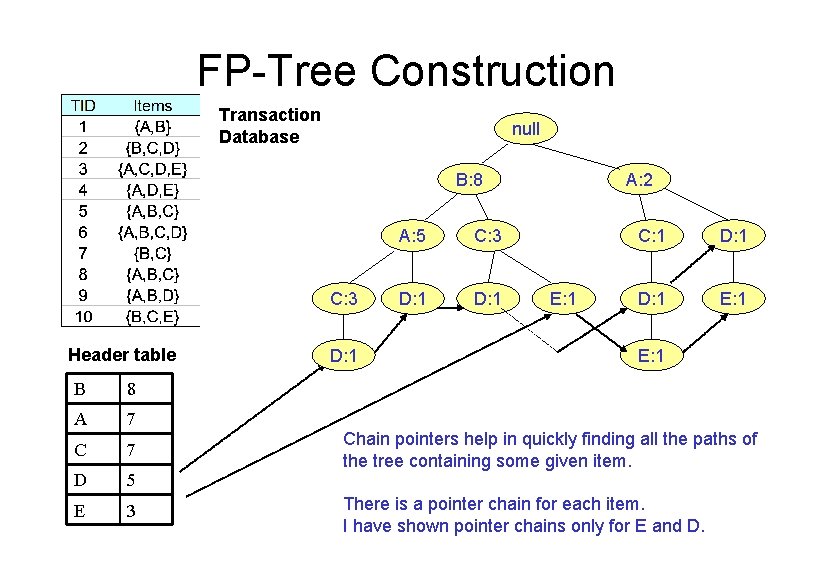

FP-Tree Construction

Header table:

| Item | Support |
|---|---|
| B | 8 |
| A | 7 |
| C | 7 |
| D | 5 |
| E | 3 |

(Figure: the transaction database and the complete FP-tree built from it.)

Chain pointers help in quickly finding all the paths of the tree that contain a given item. There is a pointer chain for each item; only the chains for E and D are shown.

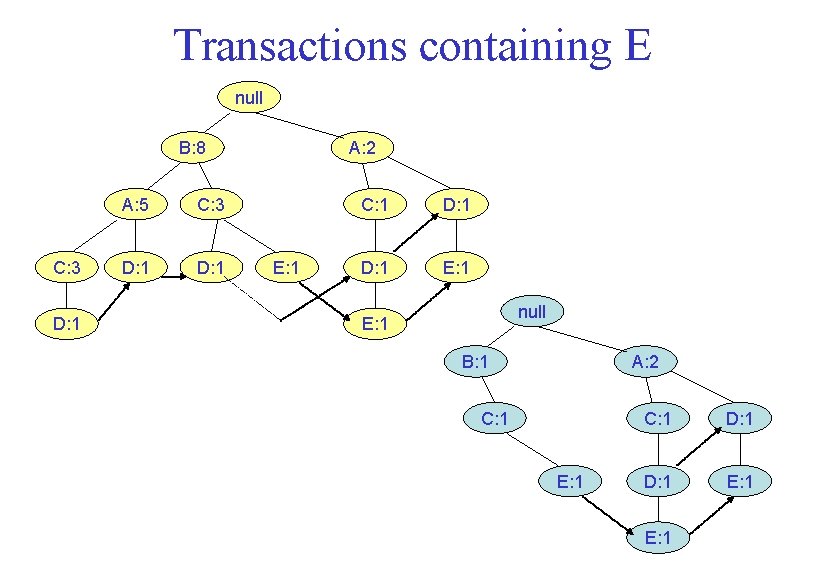

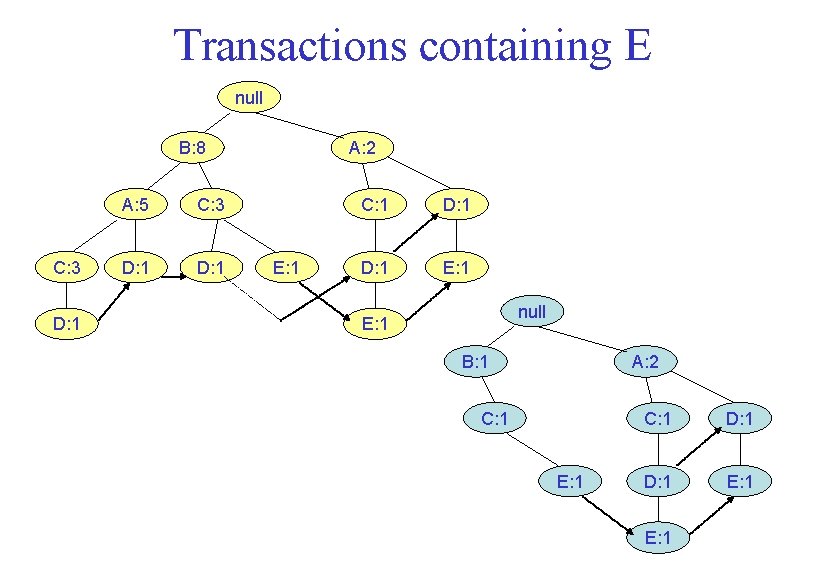

Transactions containing E (figure: the FP-tree, and the paths of the tree that end in E)

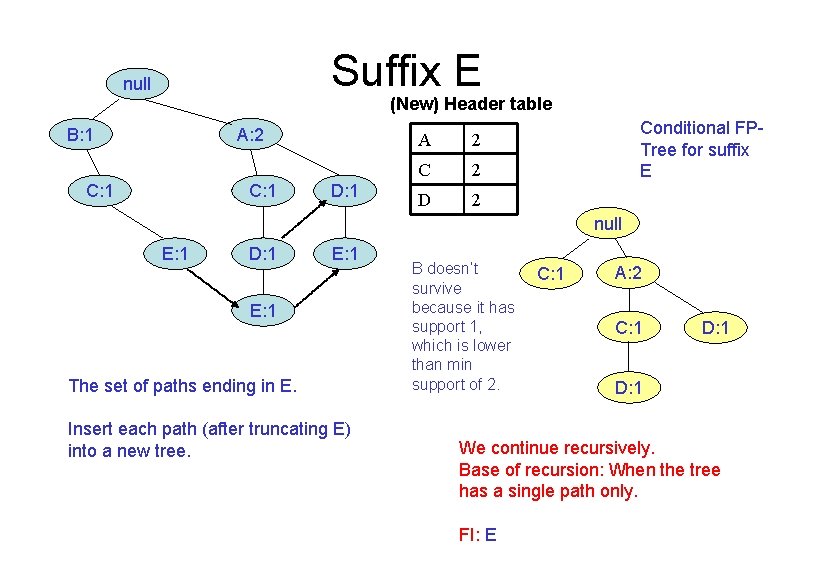

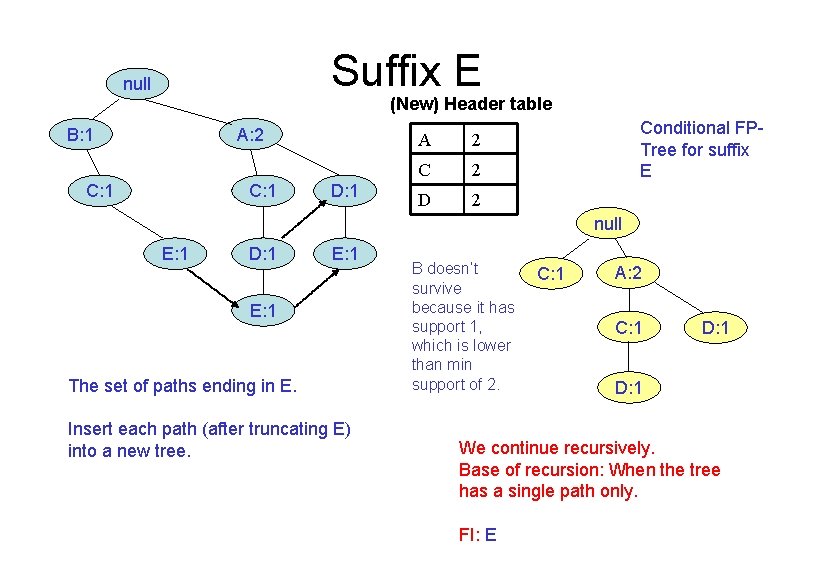

Suffix E

(New) header table: A: 2, C: 2, D: 2

Conditional FP-tree for suffix E: take the set of paths ending in E and insert each path (after truncating E) into a new tree. B doesn't survive because it has support 1, which is lower than the min support of 2.

We continue recursively. Base of recursion: when the tree has a single path only.

FI: E

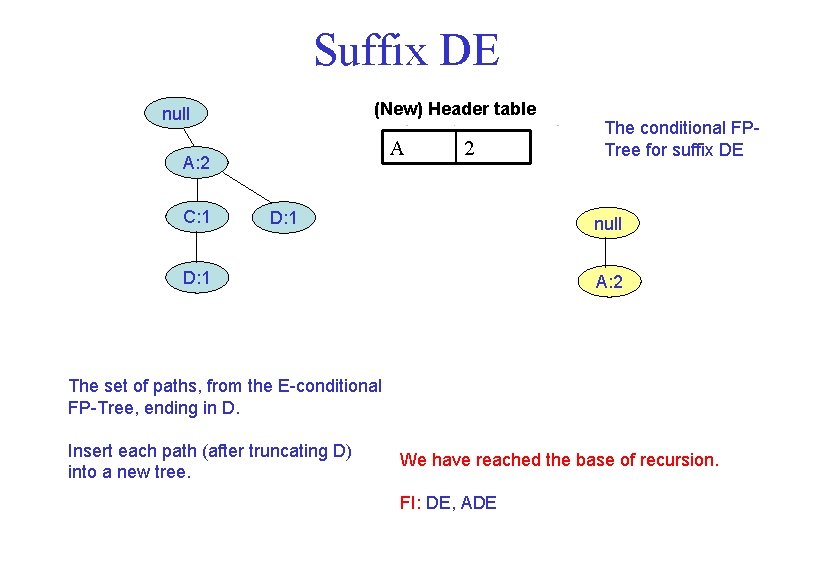

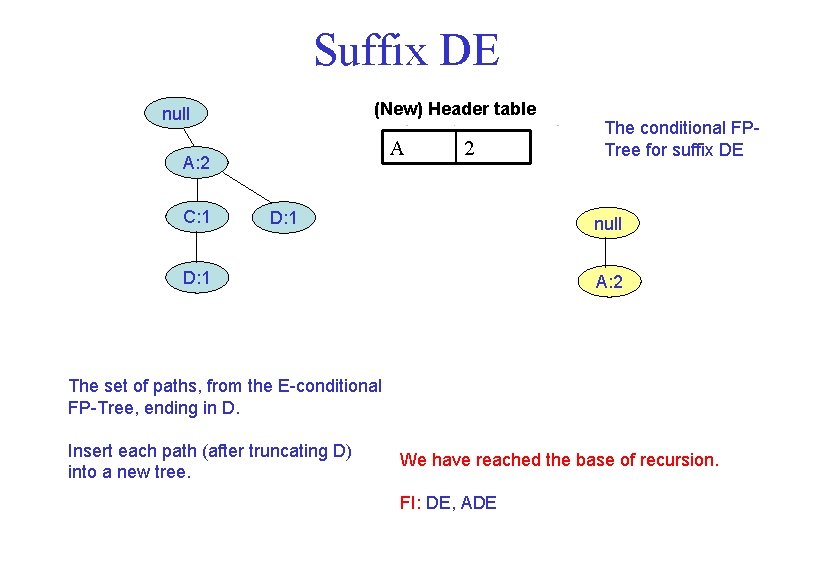

Suffix DE

(New) header table: A: 2

Conditional FP-tree for suffix DE (null → A: 2): take the set of paths, from the E-conditional FP-tree, ending in D. Insert each path (after truncating D) into a new tree.

We have reached the base of recursion.

FI: DE, ADE

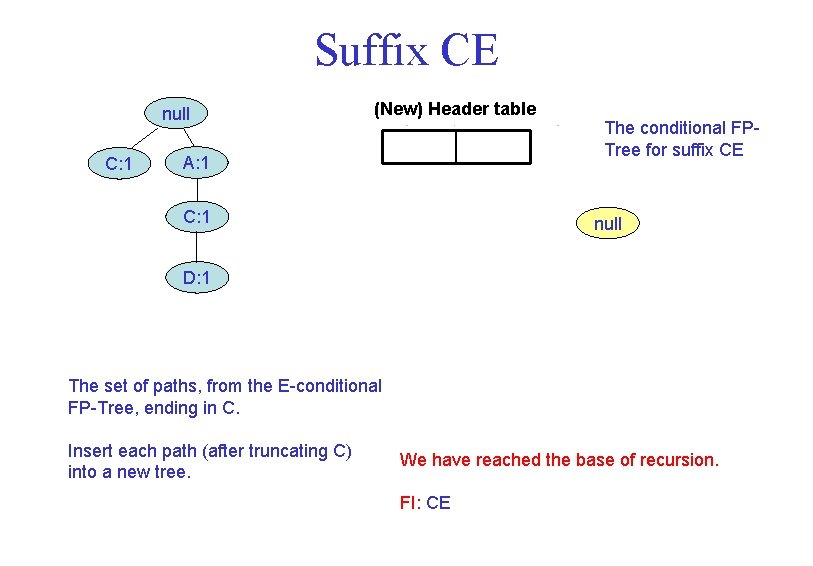

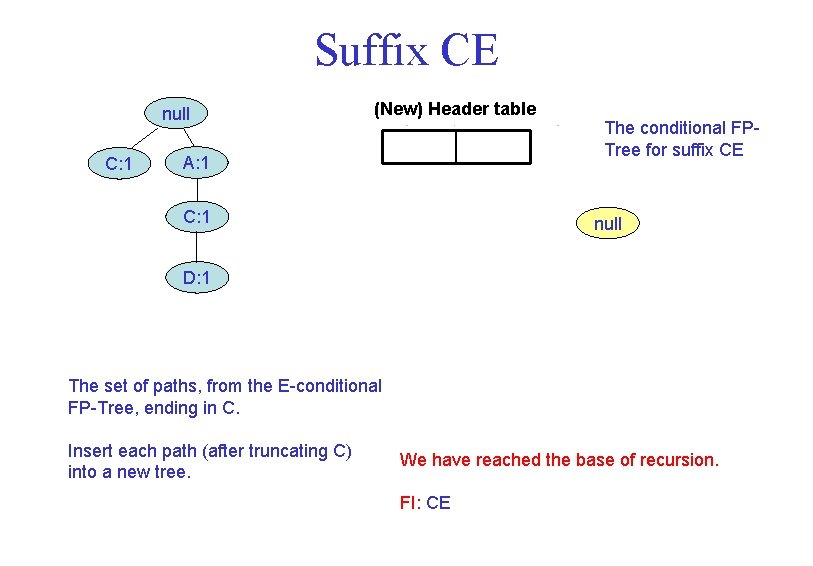

Suffix CE

Conditional FP-tree for suffix CE: take the set of paths, from the E-conditional FP-tree, ending in C. Insert each path (after truncating C) into a new tree.

We have reached the base of recursion.

FI: CE

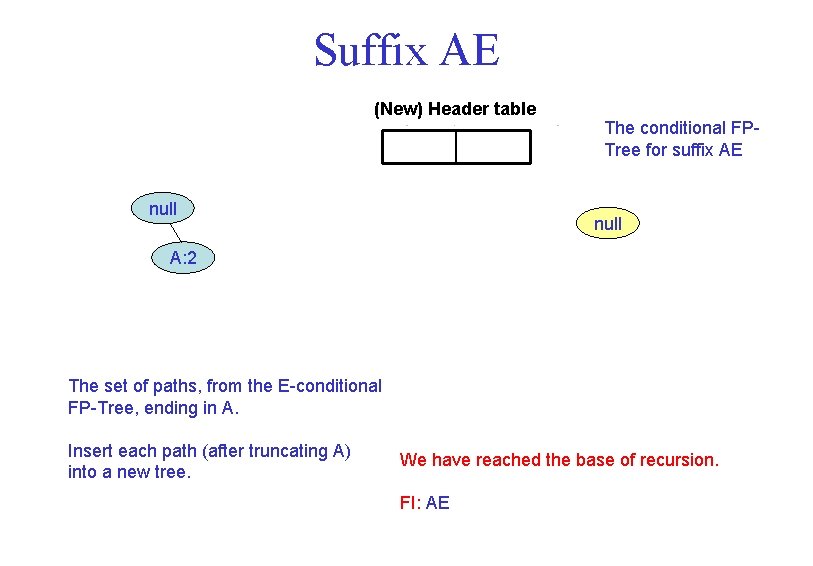

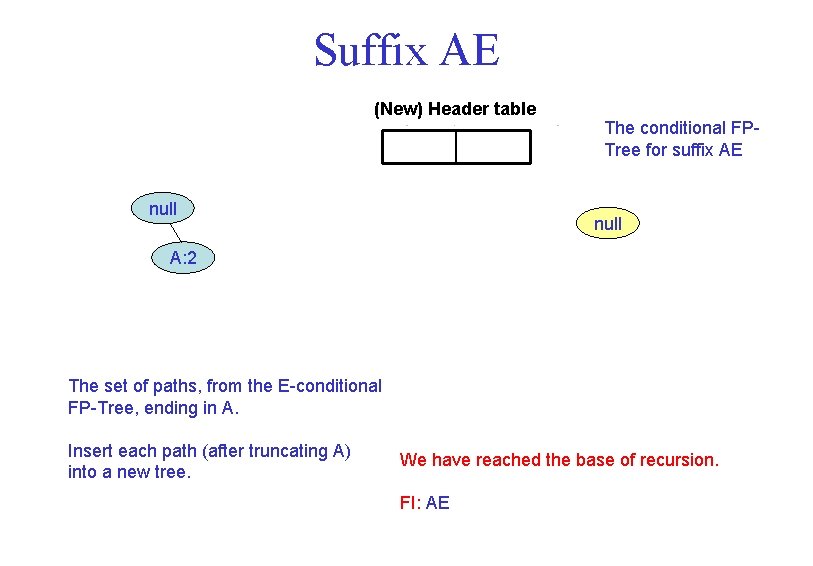

Suffix AE

Conditional FP-tree for suffix AE: take the set of paths, from the E-conditional FP-tree, ending in A. Insert each path (after truncating A) into a new tree.

We have reached the base of recursion.

FI: AE

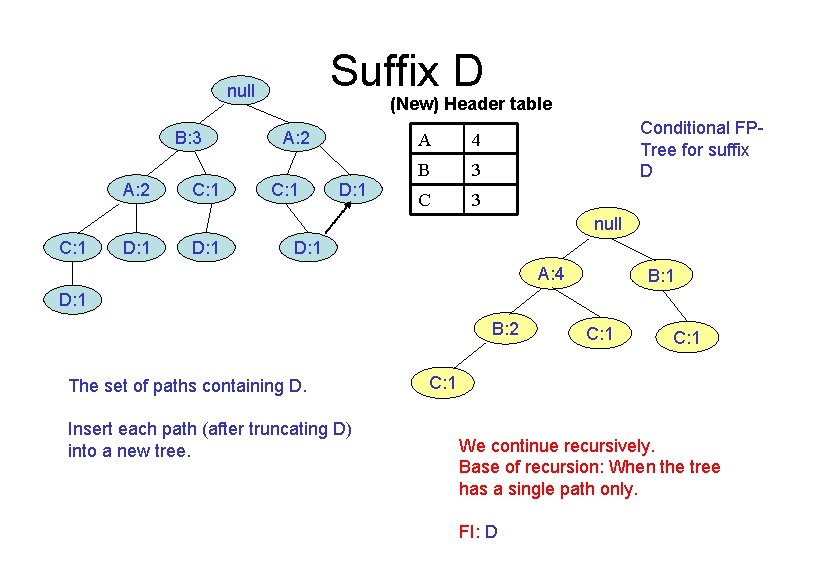

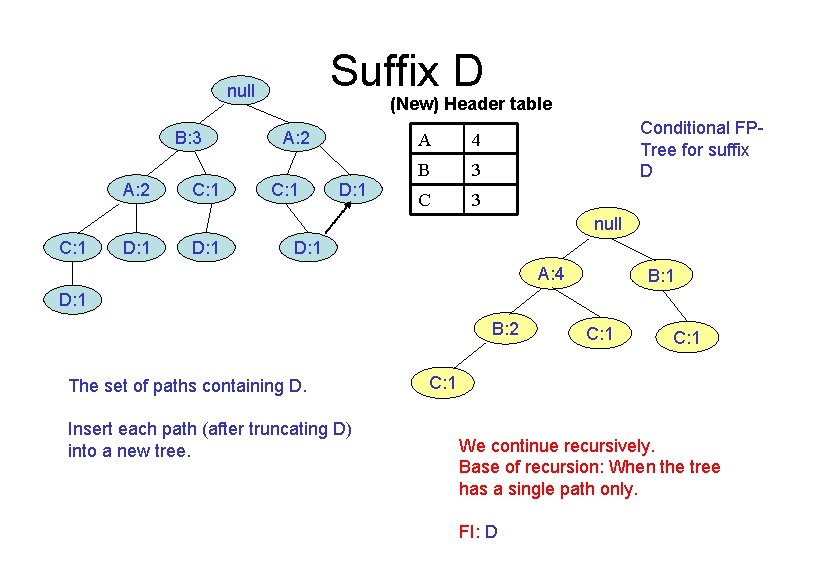

Suffix D

(New) header table: A: 4, B: 3

Conditional FP-tree for suffix D: take the set of paths containing D. Insert each path (after truncating D) into a new tree.

We continue recursively. Base of recursion: when the tree has a single path only.

FI: D

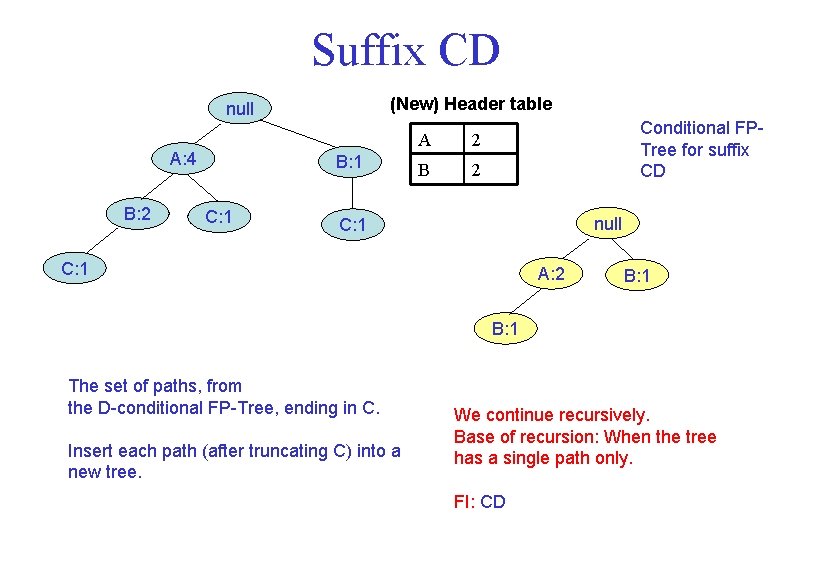

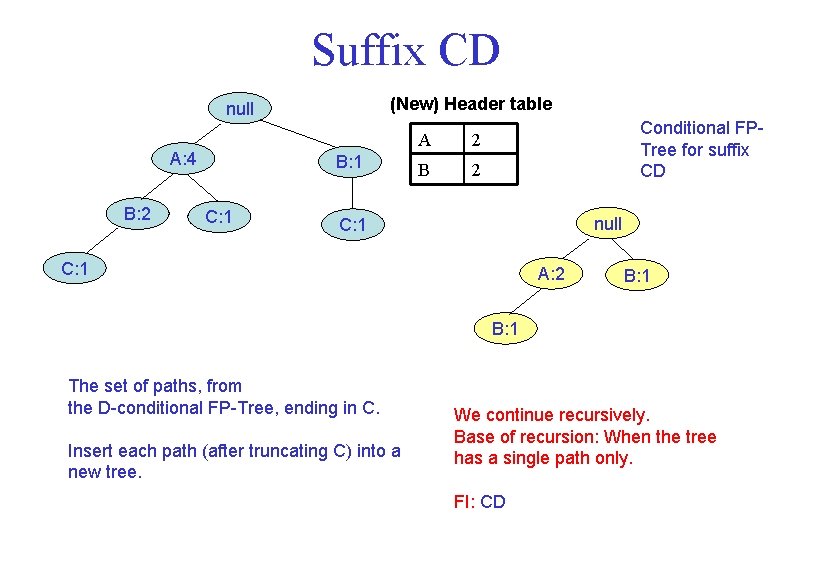

Suffix CD

(New) header table: A: 2, B: 2

Conditional FP-tree for suffix CD: take the set of paths, from the D-conditional FP-tree, ending in C. Insert each path (after truncating C) into a new tree.

We continue recursively. Base of recursion: when the tree has a single path only.

FI: CD

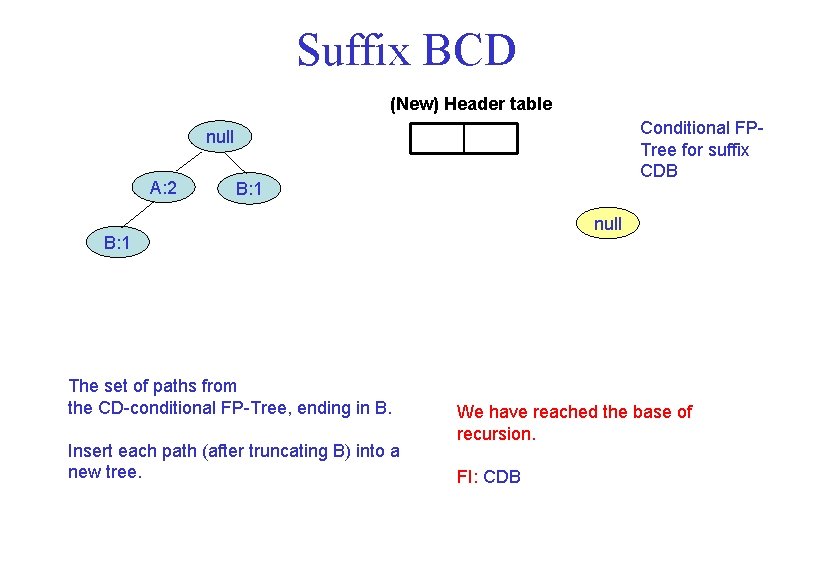

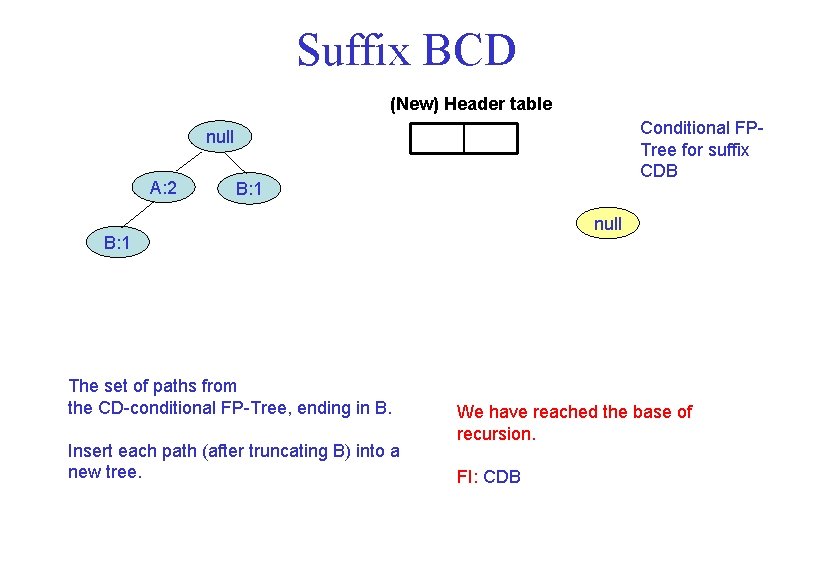

Suffix BCD

Conditional FP-tree for suffix BCD: take the set of paths, from the CD-conditional FP-tree, ending in B. Insert each path (after truncating B) into a new tree.

We have reached the base of recursion.

FI: CDB

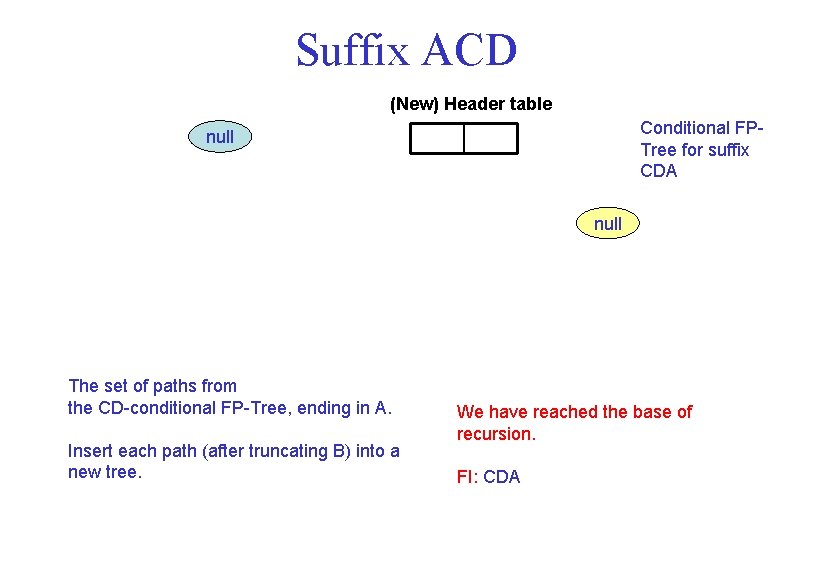

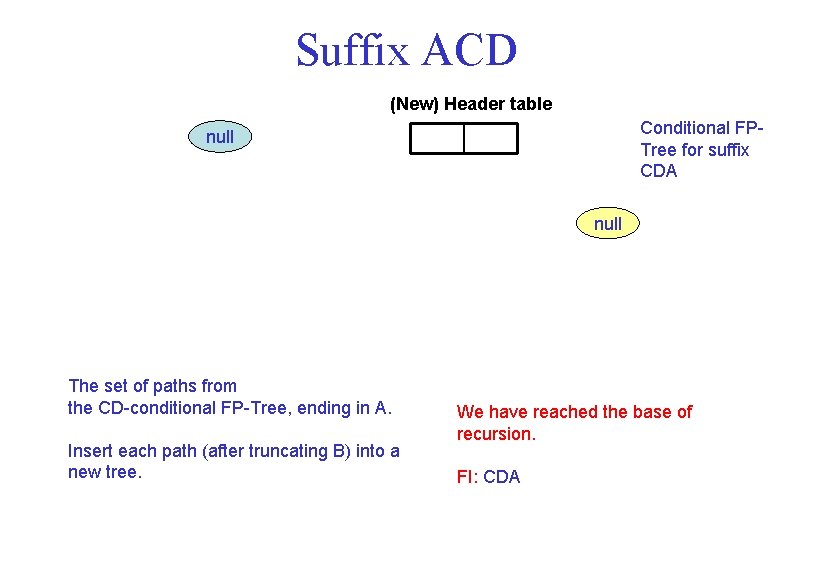

Suffix ACD

Conditional FP-tree for suffix ACD: take the set of paths, from the CD-conditional FP-tree, ending in A. Insert each path (after truncating A) into a new tree.

We have reached the base of recursion.

FI: CDA

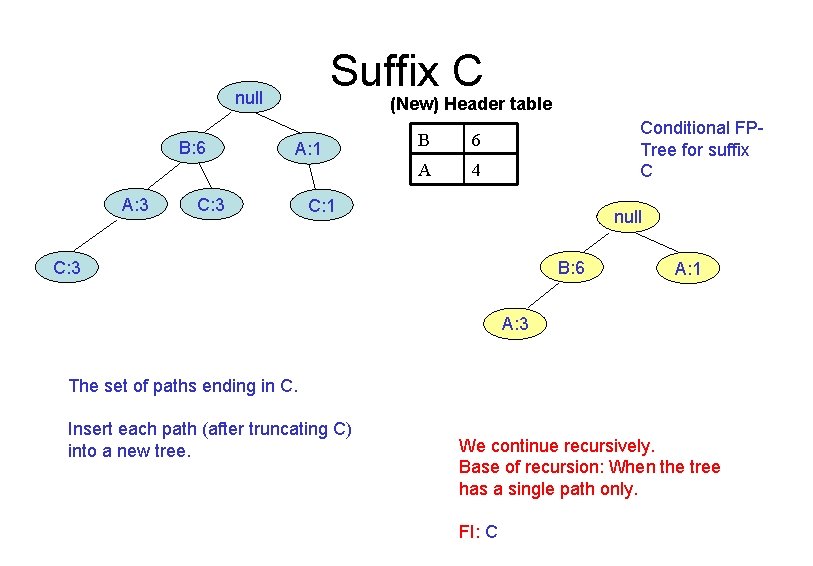

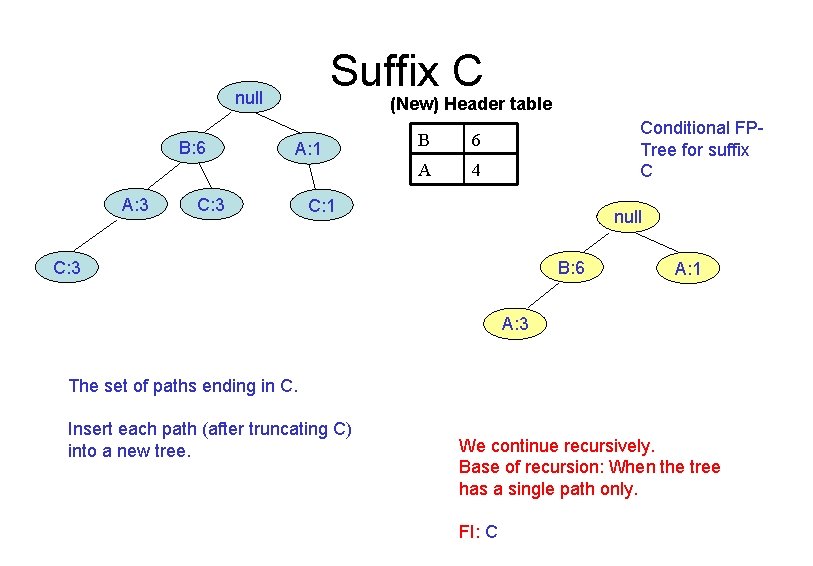

Suffix C

(New) header table: B: 6, A: 4

Conditional FP-tree for suffix C: take the set of paths ending in C. Insert each path (after truncating C) into a new tree.

We continue recursively. Base of recursion: when the tree has a single path only.

FI: C

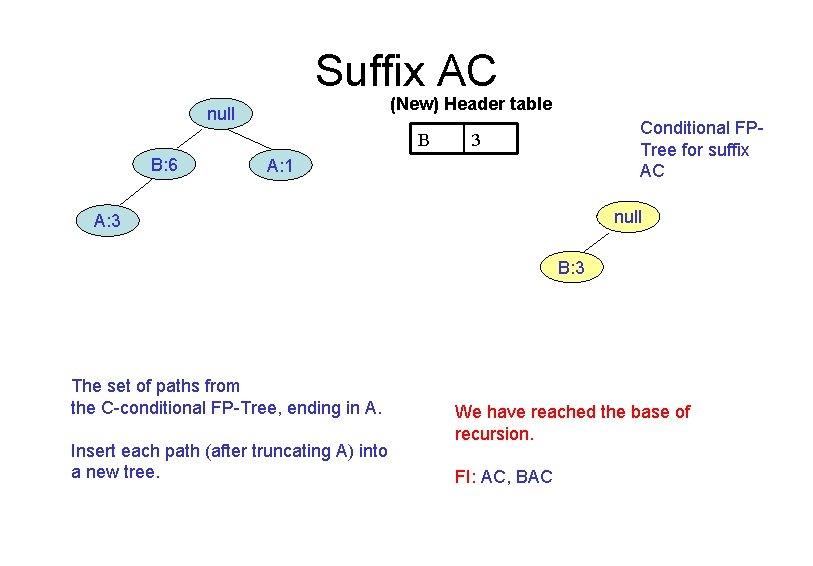

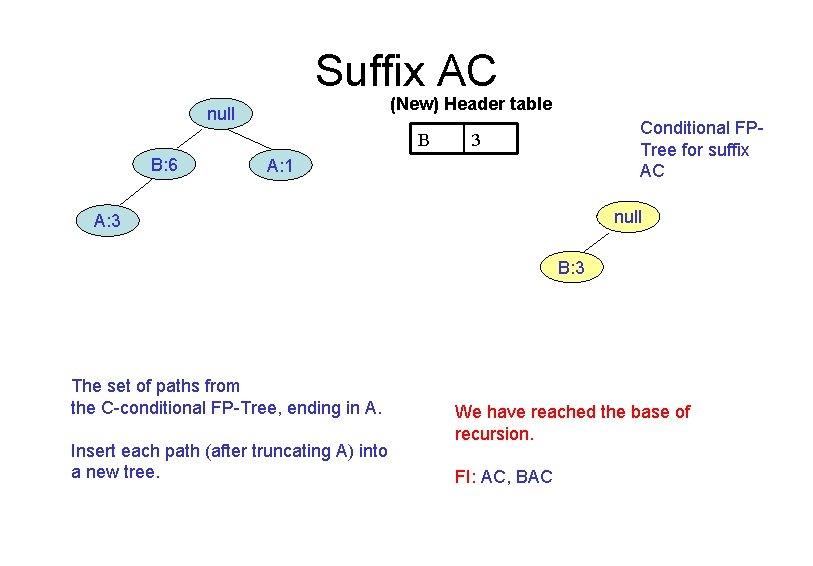

Suffix AC

(New) header table: B: 3

Conditional FP-tree for suffix AC (null → B: 3): take the set of paths, from the C-conditional FP-tree, ending in A. Insert each path (after truncating A) into a new tree.

We have reached the base of recursion.

FI: AC, BAC

Suffix BC

Conditional FP-tree for suffix BC (null): take the set of paths, from the C-conditional FP-tree, ending in B. Insert each path (after truncating B) into a new tree.

We have reached the base of recursion.

FI: BC

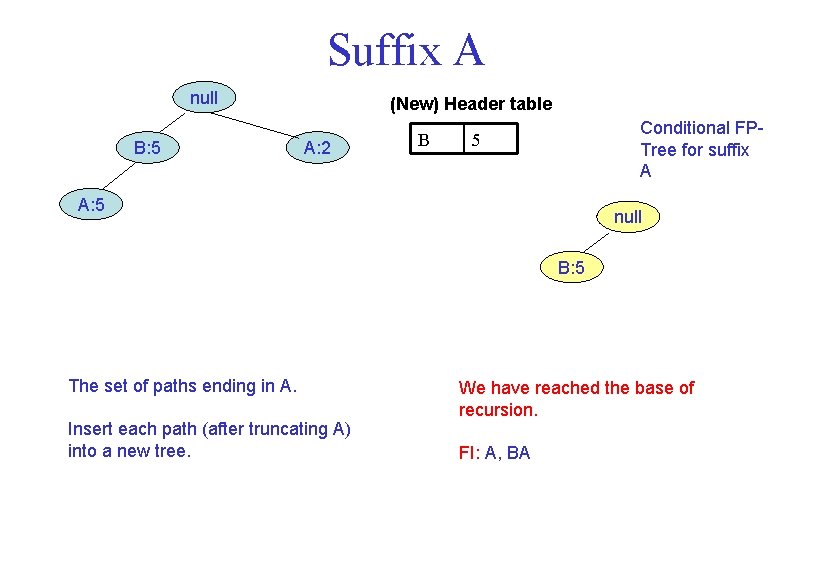

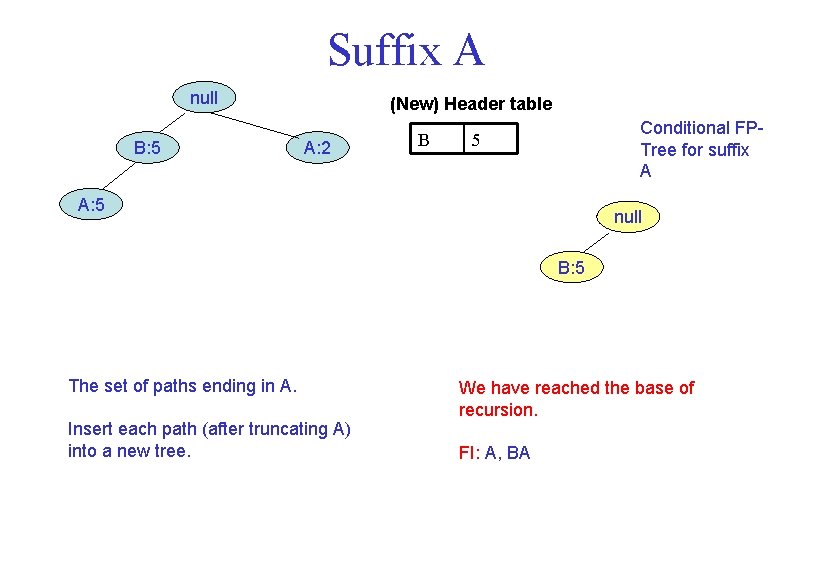

Suffix A

(New) header table: B: 5

Conditional FP-tree for suffix A (null → B: 5): take the set of paths ending in A. Insert each path (after truncating A) into a new tree.

We have reached the base of recursion.

FI: A, BA

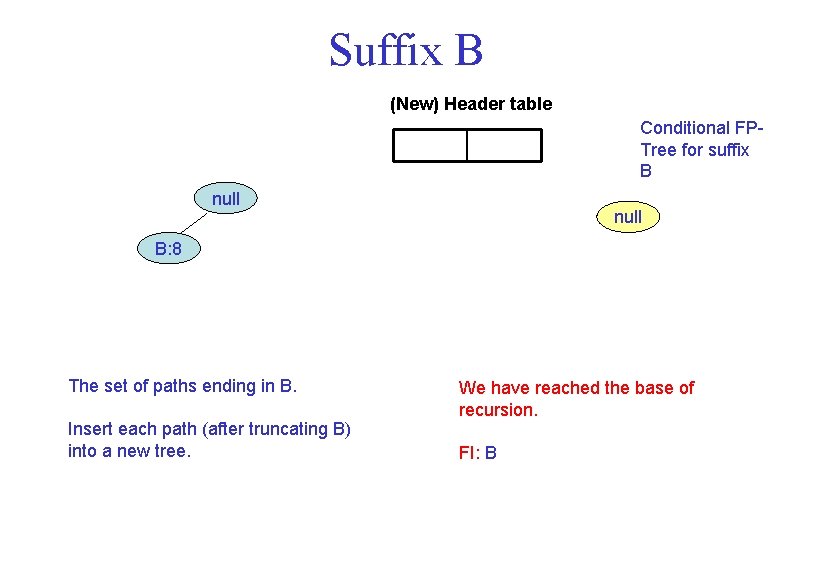

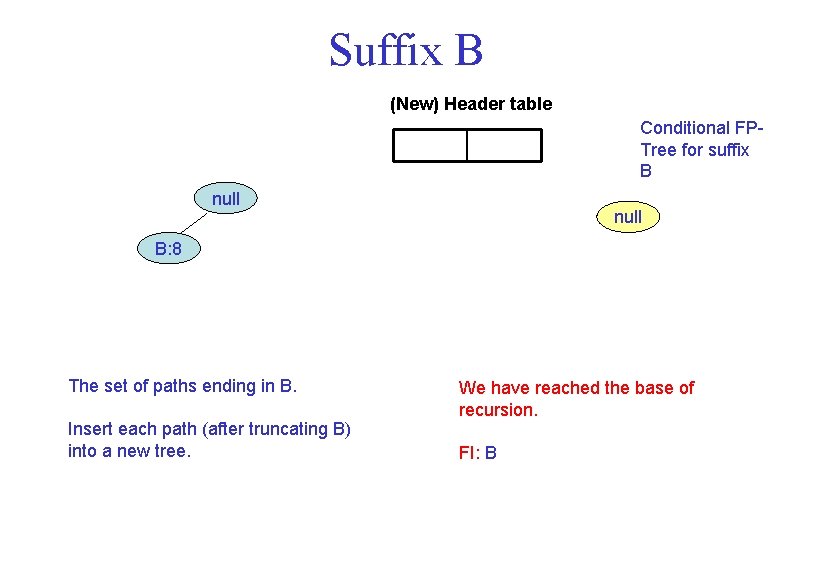

Suffix B

Conditional FP-tree for suffix B (null): take the set of paths ending in B (here the single node B: 8). Insert each path (after truncating B) into a new tree.

We have reached the base of recursion.

FI: B

Apriori: Advantages and Disadvantages

- Generating all candidate itemsets and testing them against the minimum support is expensive in both space and time.
- Apriori is slow compared with FP-growth.
- Apriori is easy to implement.

FP Growth: Advantages and Disadvantages

- No candidate generation, no candidate test
- No repeated scans of the entire database
- Basic operations: counting local frequent items and building sub-FP-trees; no pattern search and matching
- Faster than Apriori
- The FP-tree is expensive to build
- The FP-tree may not fit in memory