One-way ANOVA: Analysis of Variance, Week 8 & 9

- Slides: 41


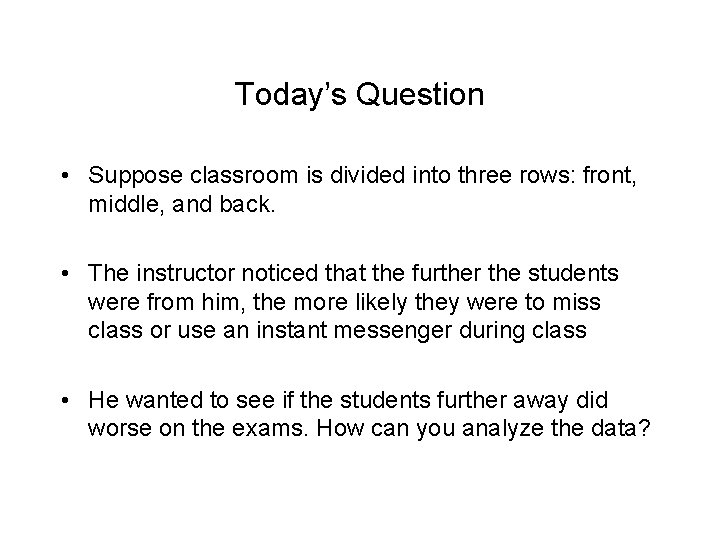

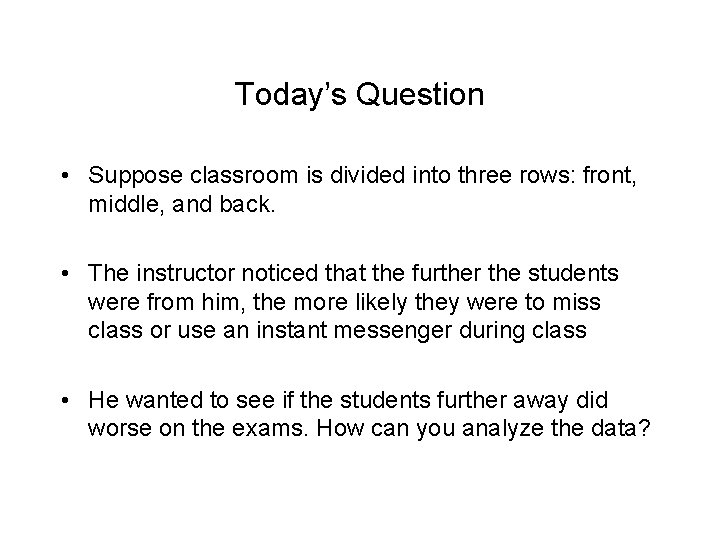

Today’s Question
• Suppose a classroom is divided into three rows: front, middle, and back.
• The instructor noticed that the farther students sat from him, the more likely they were to miss class or use an instant messenger during class.
• He wanted to see whether the students sitting farther away did worse on the exams. How can you analyze the data?

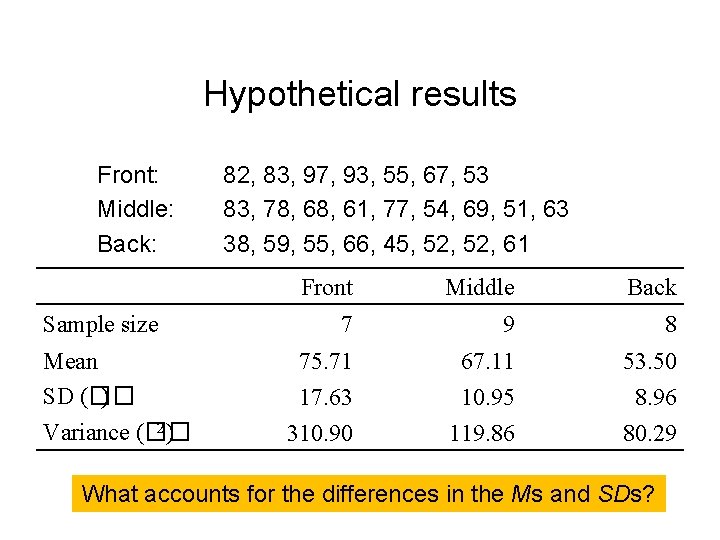

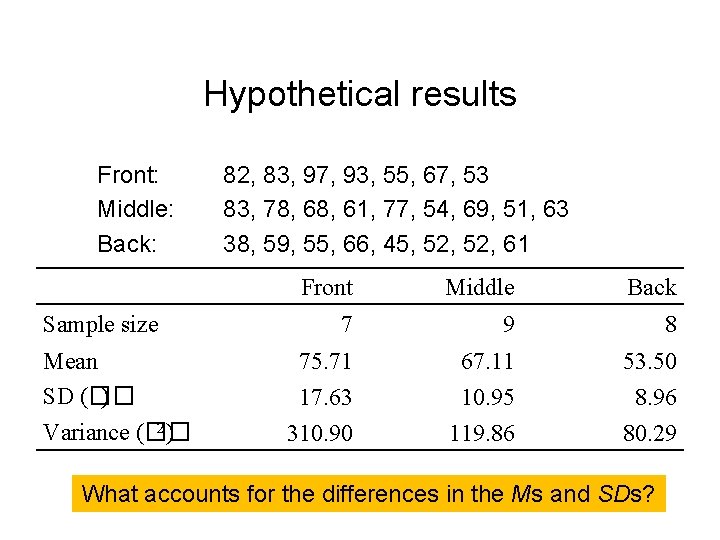

Hypothetical results

| | Front | Middle | Back |
|---|---|---|---|
| Scores | 82, 83, 97, 93, 55, 67, 53 | 83, 78, 61, 77, 54, 69, 51, 63, 68 | 38, 59, 55, 66, 45, 52, 61, 52 |
| Sample size (n) | 7 | 9 | 8 |
| Mean (M) | 75.71 | 67.11 | 53.50 |
| SD (s) | 17.63 | 10.95 | 8.96 |
| Variance (s²) | 310.90 | 119.86 | 80.29 |

What accounts for the differences in the means and SDs?
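As a check on the table, here is a short Python sketch (standard library only) that recomputes each group's n, mean, SD, and variance. One assumption: the last Middle score (68) and the last Back score (52) are missing from the raw slide extraction. They are the unique values implied by the reported means (9 × 67.11 ≈ 604 and 8 × 53.50 = 428), and with them the script reproduces the reported SDs and variances.

```python
from statistics import mean, stdev, variance

# Exam scores by seating row. The final Middle score (68) and final Back
# score (52) are ASSUMED: recovered from the reported group means.
groups = {
    "Front": [82, 83, 97, 93, 55, 67, 53],
    "Middle": [83, 78, 61, 77, 54, 69, 51, 63, 68],
    "Back": [38, 59, 55, 66, 45, 52, 61, 52],
}

for name, scores in groups.items():
    # stdev() and variance() use the sample (n - 1) denominator
    print(f"{name:<6} n={len(scores)} M={mean(scores):.2f} "
          f"SD={stdev(scores):.2f} var={variance(scores):.2f}")
```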

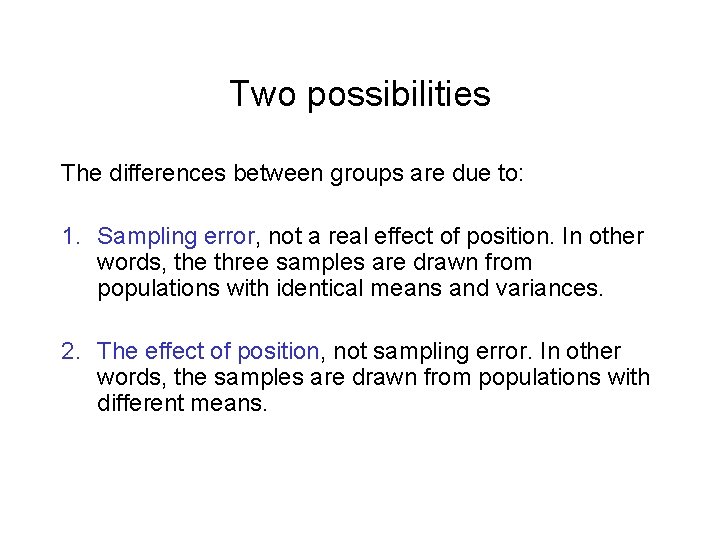

Two possibilities
The differences between groups are due to either:
1. Sampling error, not a real effect of position. In other words, the three samples are drawn from populations with identical means and variances.
2. The effect of position, not sampling error. In other words, the samples are drawn from populations with different means.

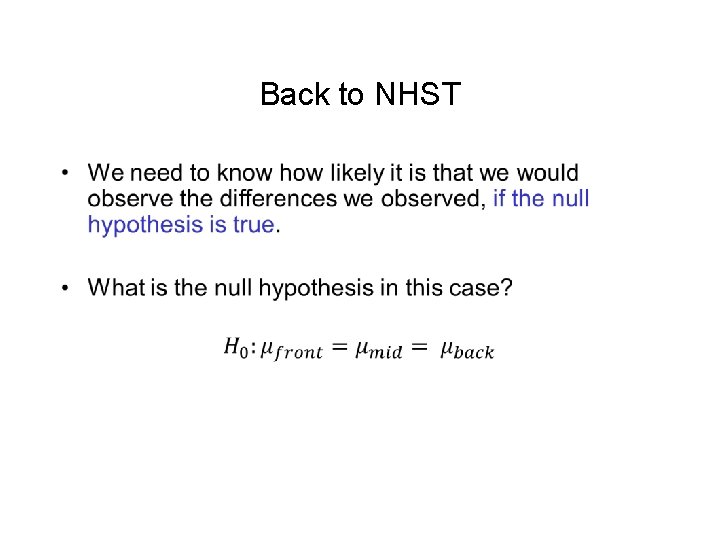

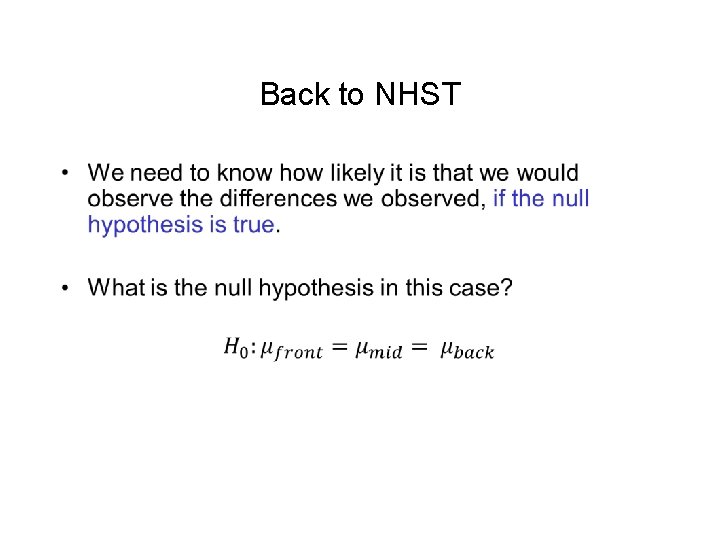

Back to NHST

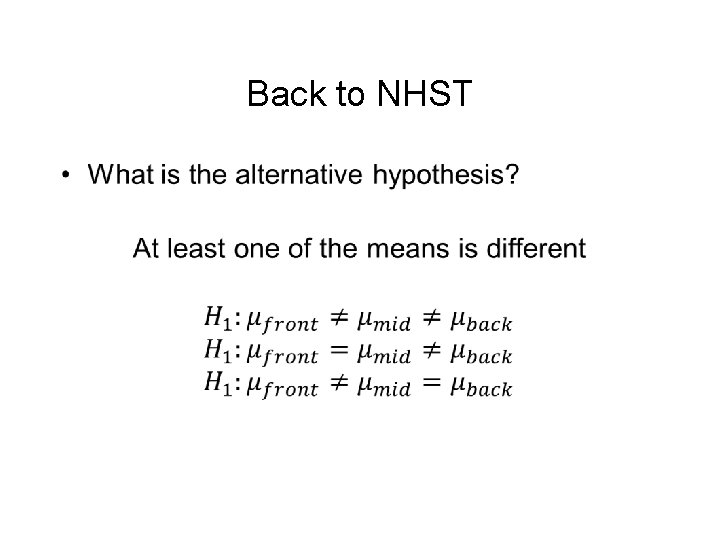

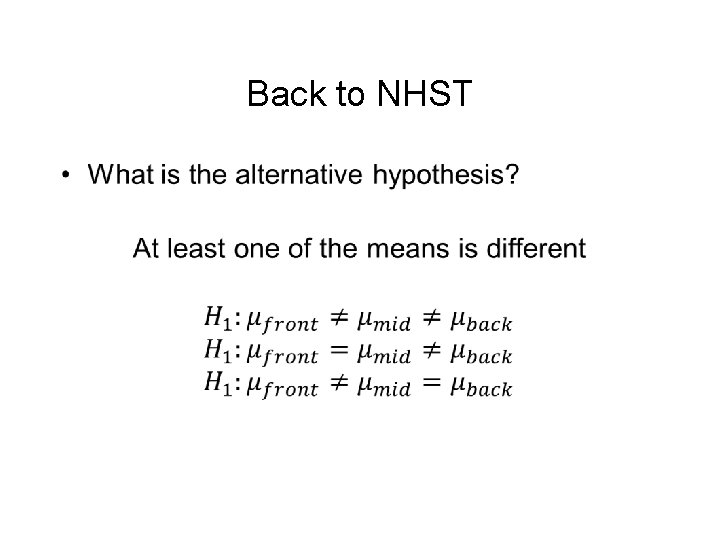


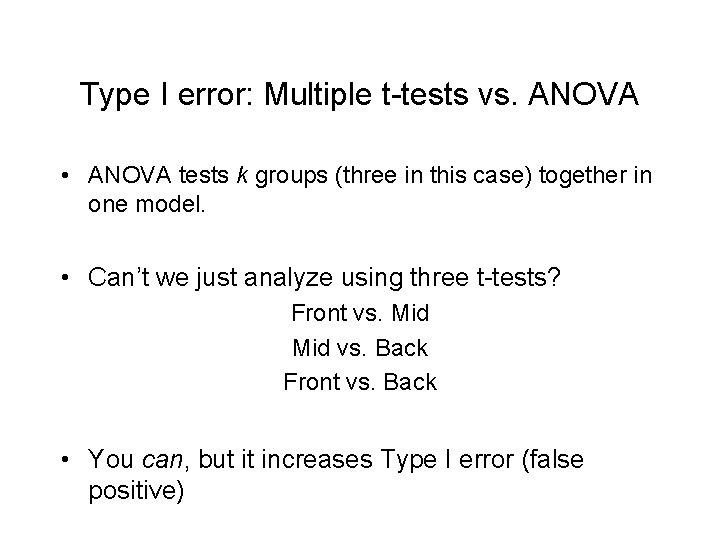

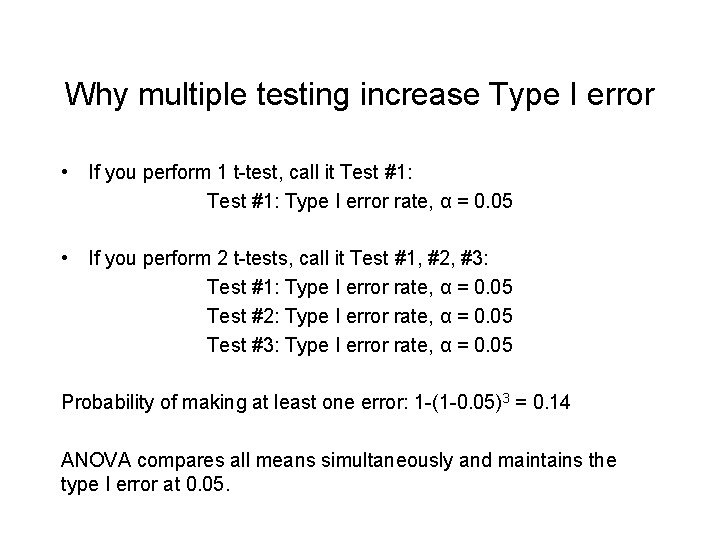

Type I error: Multiple t-tests vs. ANOVA
• ANOVA tests all k groups (three in this case) together in one model.
• Can’t we just analyze the data using three t-tests: Front vs. Middle, Middle vs. Back, and Front vs. Back?
• You can, but running several tests inflates the overall Type I error rate (the chance of at least one false positive).

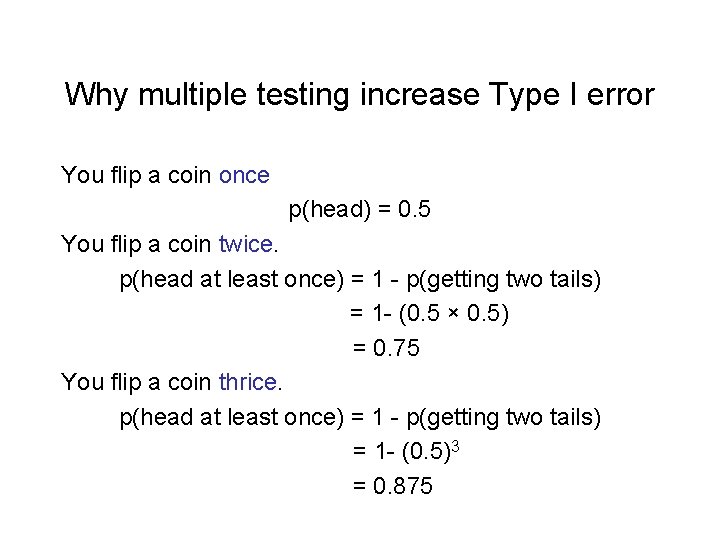

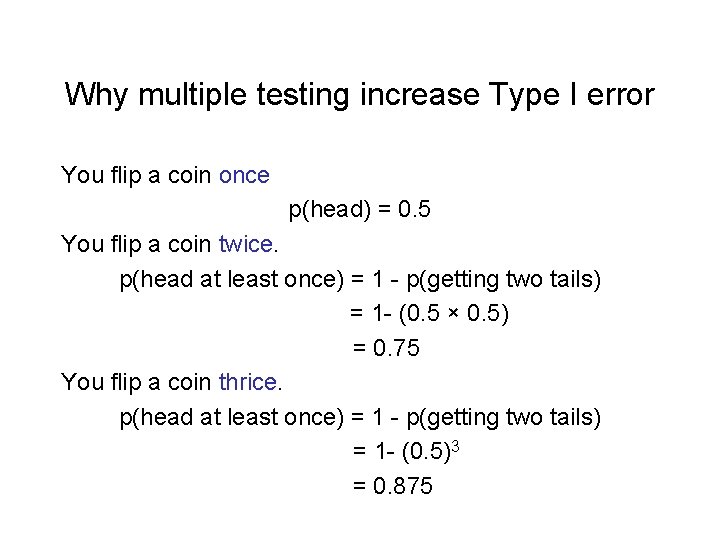

Why multiple testing increases Type I error
• You flip a coin once: p(head) = 0.5
• You flip a coin twice: p(head at least once) = 1 - p(two tails) = 1 - (0.5 × 0.5) = 0.75
• You flip a coin three times: p(head at least once) = 1 - p(three tails) = 1 - (0.5)³ = 0.875
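The coin-flip arithmetic can be sketched in a few lines of Python: an exact formula, plus a quick Monte Carlo check for three flips. The function name `p_at_least_one_head` is illustrative only, and the flips are assumed to be independent.

```python
import random

def p_at_least_one_head(n_flips: int, p_head: float = 0.5) -> float:
    """Exact P(at least one head) = 1 - P(all tails), assuming independent flips."""
    return 1 - (1 - p_head) ** n_flips

for n in (1, 2, 3):
    print(n, p_at_least_one_head(n))  # 0.5, 0.75, 0.875

# Monte Carlo check for three flips: the simulated proportion should be near 0.875
random.seed(1)
trials = 100_000
hits = sum(any(random.random() < 0.5 for _ in range(3)) for _ in range(trials))
print(hits / trials)
```

The more chances you give yourself, the more likely at least one "hit" becomes. The same logic applies to false positives across several t-tests.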

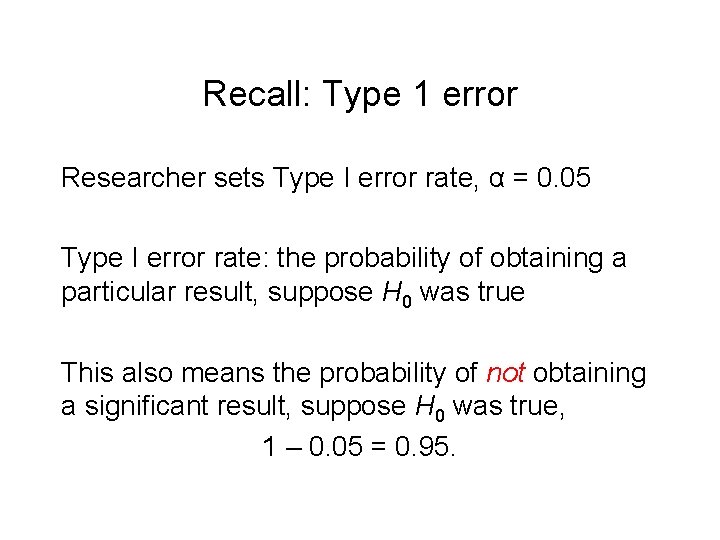

Recall: Type I error
• The researcher sets the Type I error rate, α = 0.05.
• Type I error rate: the probability of obtaining a significant result when H0 is true.
• This also means that the probability of not obtaining a significant result when H0 is true is 1 - 0.05 = 0.95.

Why multiple testing increases Type I error
• If you perform 1 t-test (Test #1): Type I error rate, α = 0.05
• If you perform 3 t-tests (Tests #1, #2, #3):
  – Test #1: Type I error rate, α = 0.05
  – Test #2: Type I error rate, α = 0.05
  – Test #3: Type I error rate, α = 0.05
  – Probability of making at least one Type I error (treating the tests as independent): 1 - (1 - 0.05)³ ≈ 0.14
• ANOVA compares all means simultaneously in a single test, so the Type I error rate stays at 0.05.
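To see how quickly this grows, the sketch below applies the same formula, 1 - (1 - α)^m, to every pairwise t-test among k groups (m = k(k - 1)/2). It assumes the tests are independent and every null hypothesis is true. Real pairwise tests on shared data are not fully independent, so treat the numbers as an approximation.

```python
def familywise_error(alpha: float, n_tests: int) -> float:
    """P(at least one Type I error) across n_tests tests, assuming the tests
    are independent and every null hypothesis is true."""
    return 1 - (1 - alpha) ** n_tests

for k in (3, 4, 5):
    m = k * (k - 1) // 2  # number of pairwise t-tests among k groups
    print(f"{k} groups -> {m} t-tests -> familywise error = {familywise_error(0.05, m):.3f}")
```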

The real problem: Randomness
• What sorts of random variation are there?
• Three sources:
  1. Total (SStotal)
  2. Between (SSbetween)
  3. Within (SSwithin)

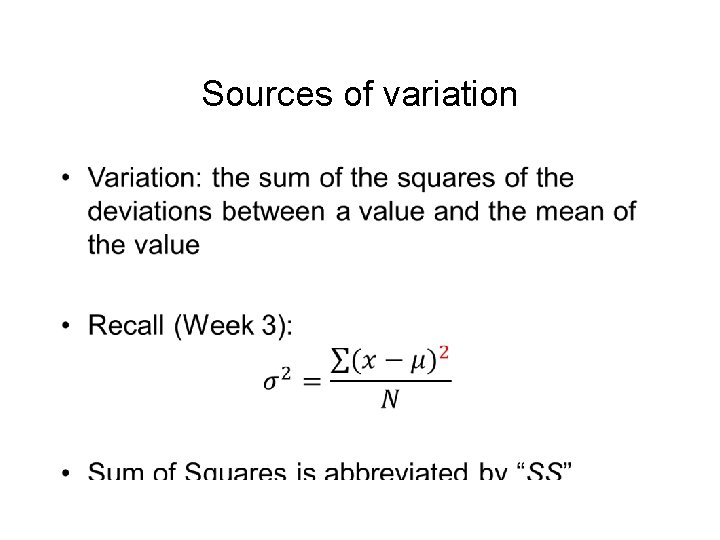

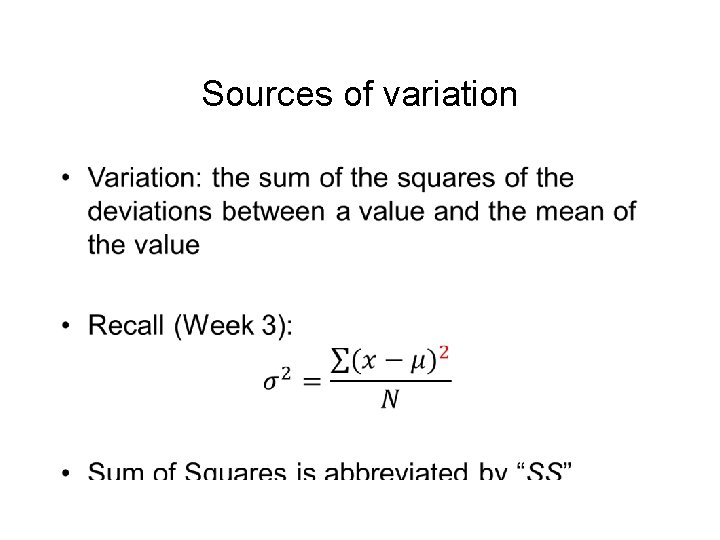

Sources of variation

• SStotal: Are all values identical?
  – Obviously not; there is some variation in the data
  – This is called the total variation

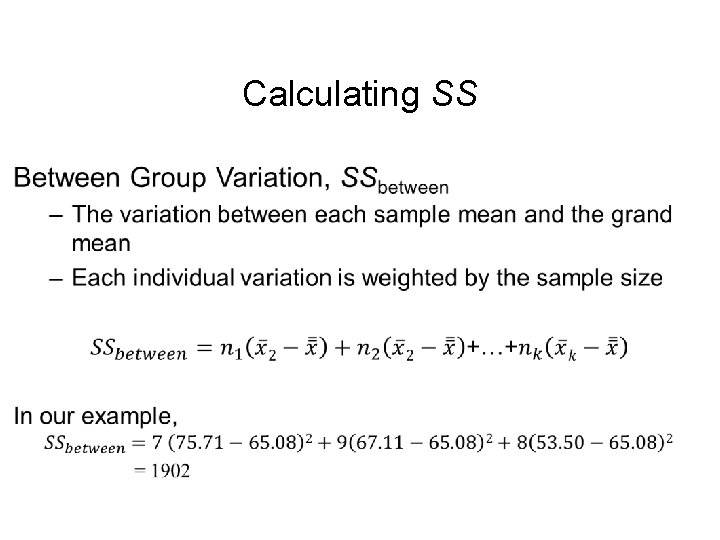

• Are all sample means identical?
  – No, there is some variation between the groups
  – This is called the between-group variation
  – Sometimes called the variation due to the factor
  – Denoted SSbetween: variation between the groups

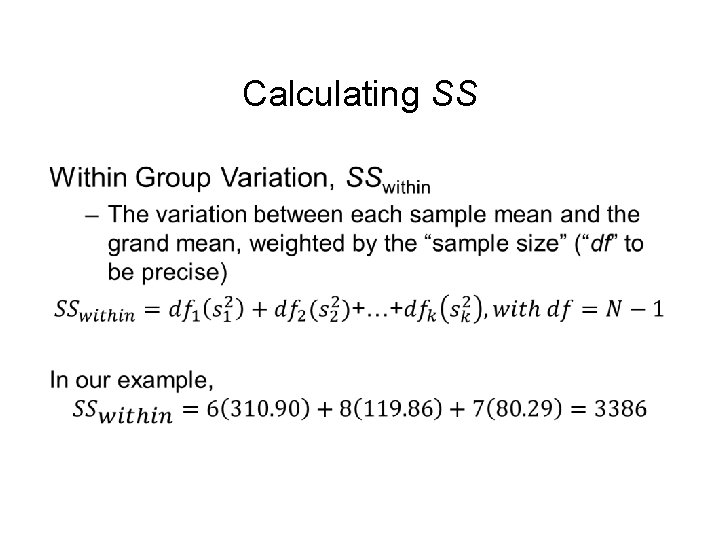

• Is each value within each group identical?
  – No, there is some variation within the groups
  – This is called the within-group variation
  – Sometimes called the error variation
  – Denoted SSwithin: variation within the groups

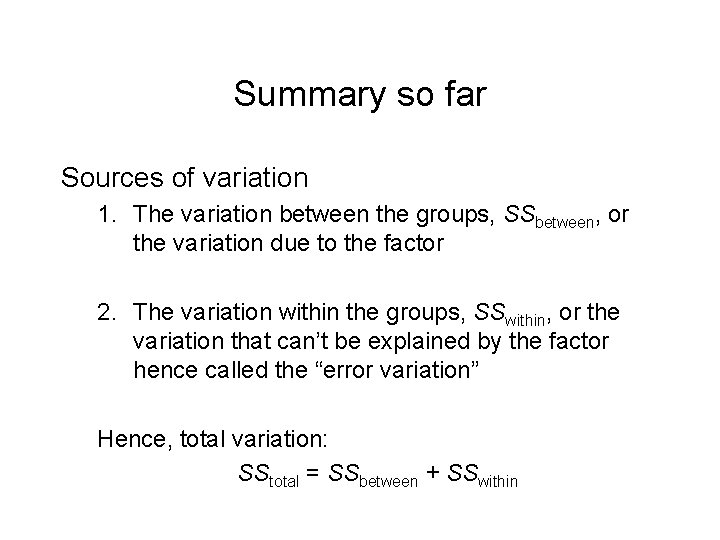

Summary so far
Sources of variation:
1. The variation between the groups, SSbetween, or the variation due to the factor
2. The variation within the groups, SSwithin, or the variation that can’t be explained by the factor, hence called the “error variation”
Hence, the total variation is: SStotal = SSbetween + SSwithin

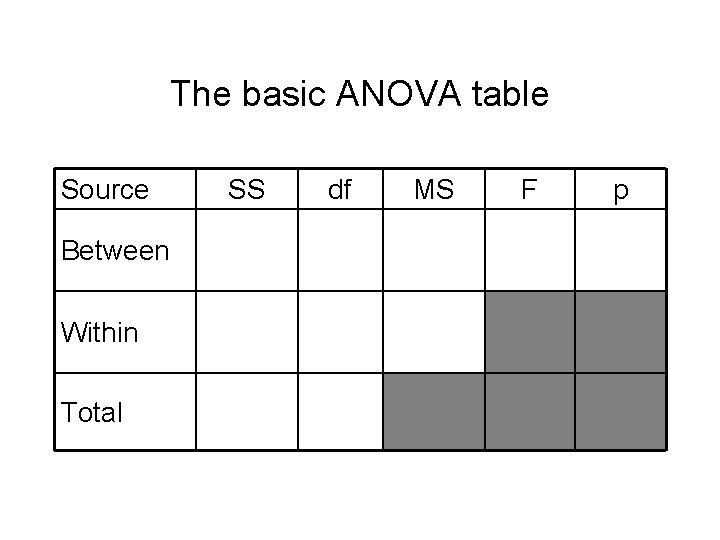

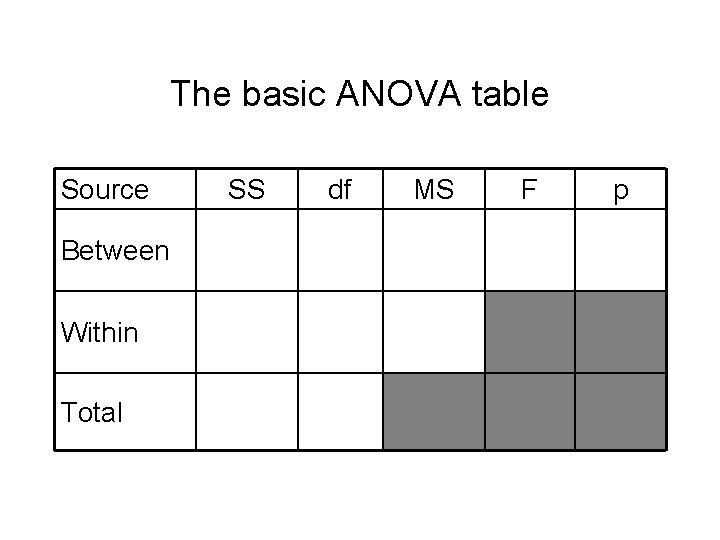

The basic ANOVA table

| Source | SS | df | MS | F | p |
|---|---|---|---|---|---|
| Between | | | | | |
| Within | | | | | |
| Total | | | | | |

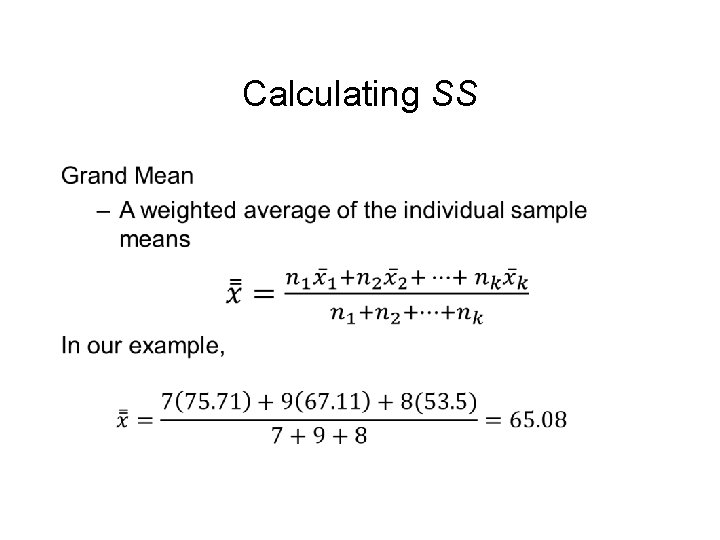

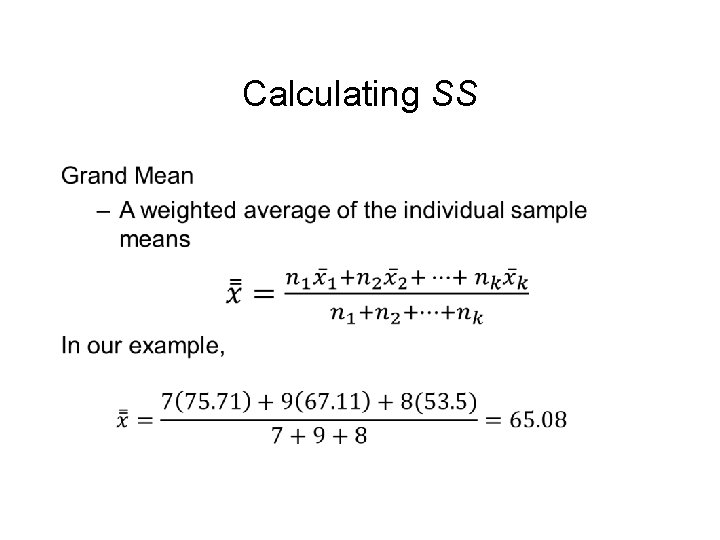

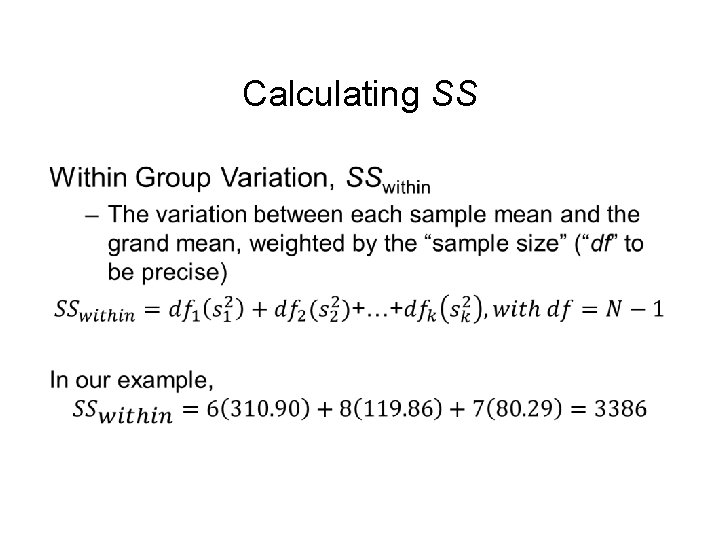

Calculating SS


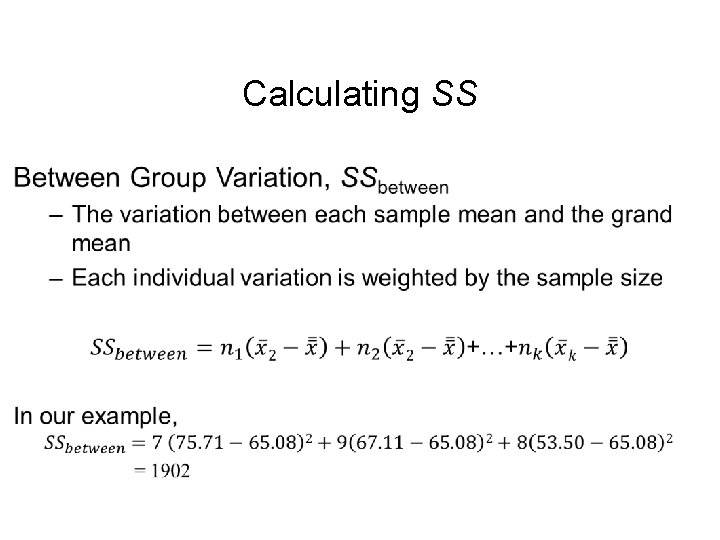


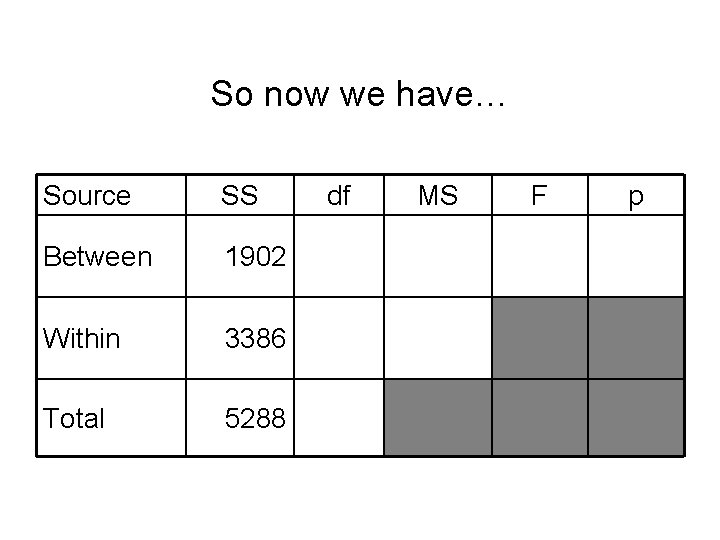

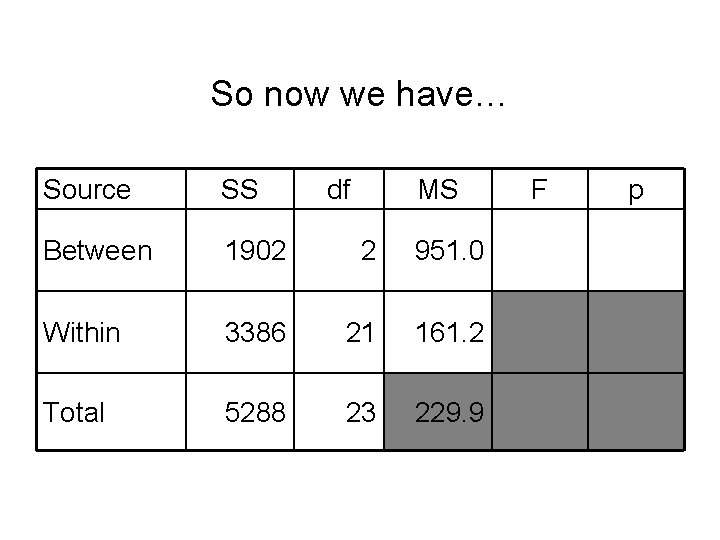

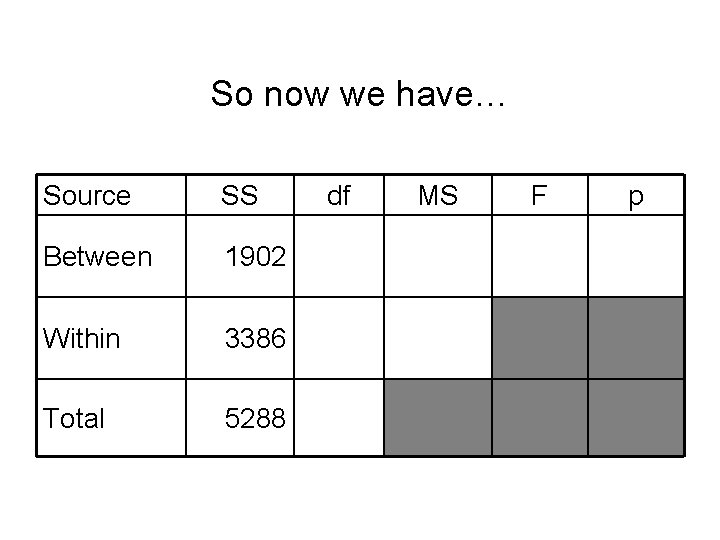
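The SS formulas on these slides survive only as images. The Python sketch below follows the standard one-way definitions and checks that SStotal = SSbetween + SSwithin. Two assumptions carry over: the last Middle score (68) and the last Back score (52) are recovered from the reported group means. With them, the totals round to the 1902, 3386, and 5288 in the table.

```python
from statistics import mean

# Exam scores by row; 68 (Middle) and 52 (Back) are ASSUMED, recovered from
# the reported group means.
groups = {
    "Front": [82, 83, 97, 93, 55, 67, 53],
    "Middle": [83, 78, 61, 77, 54, 69, 51, 63, 68],
    "Back": [38, 59, 55, 66, 45, 52, 61, 52],
}

all_scores = [x for scores in groups.values() for x in scores]
grand_mean = mean(all_scores)
group_means = {name: mean(scores) for name, scores in groups.items()}

# SS_total: every score's squared deviation from the grand mean
ss_total = sum((x - grand_mean) ** 2 for x in all_scores)

# SS_between: each group mean's squared deviation from the grand mean, weighted by n
ss_between = sum(len(scores) * (group_means[name] - grand_mean) ** 2
                 for name, scores in groups.items())

# SS_within: every score's squared deviation from its own group mean
ss_within = sum((x - group_means[name]) ** 2
                for name, scores in groups.items() for x in scores)

print(f"SS_between = {ss_between:.1f}")
print(f"SS_within  = {ss_within:.1f}")
print(f"SS_total   = {ss_total:.1f}")

# The partition SS_total = SS_between + SS_within holds up to float rounding
assert abs(ss_total - (ss_between + ss_within)) < 1e-6
```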

So now we have…

| Source | SS | df | MS | F | p |
|---|---|---|---|---|---|
| Between | 1902 | | | | |
| Within | 3386 | | | | |
| Total | 5288 | | | | |

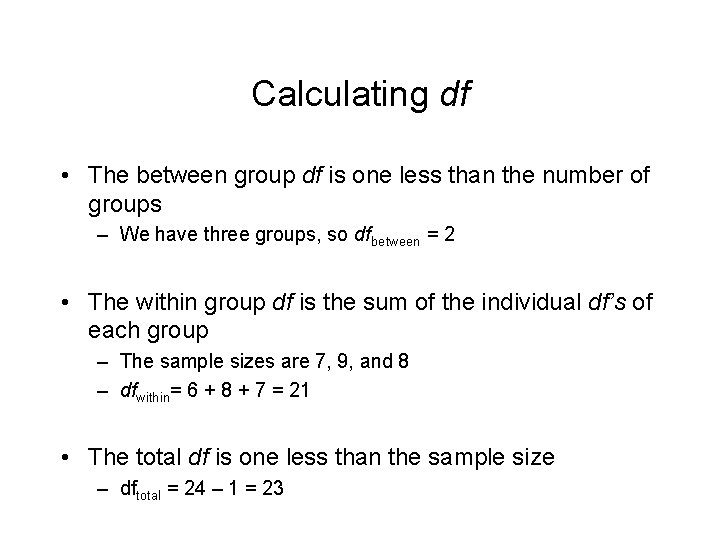

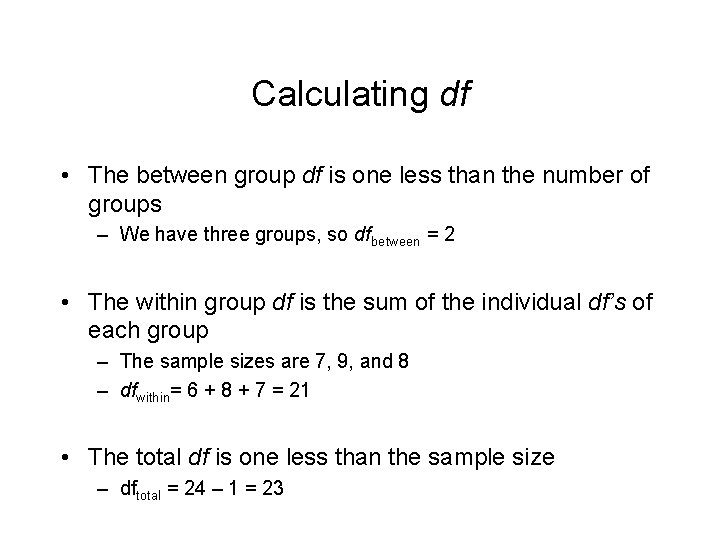

Calculating df
• The between-group df is one less than the number of groups
  – We have three groups, so dfbetween = 3 - 1 = 2
• The within-group df is the sum of the individual df’s of each group
  – The sample sizes are 7, 9, and 8
  – dfwithin = 6 + 8 + 7 = 21
• The total df is one less than the total sample size
  – N = 7 + 9 + 8 = 24, so dftotal = 24 - 1 = 23

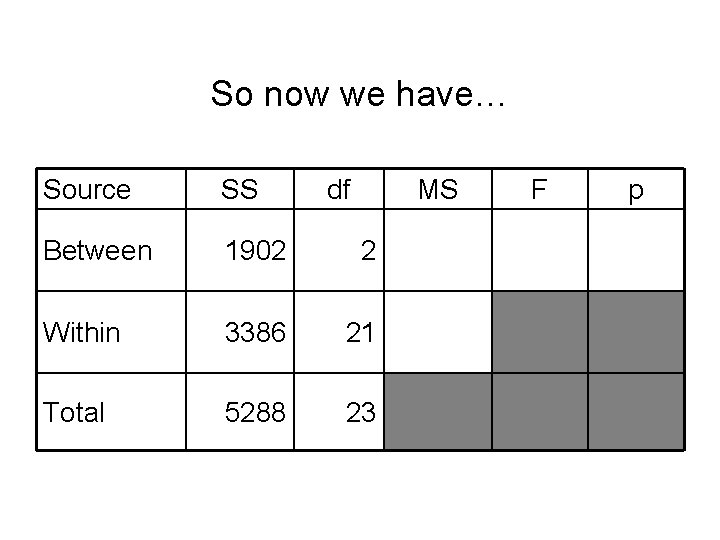

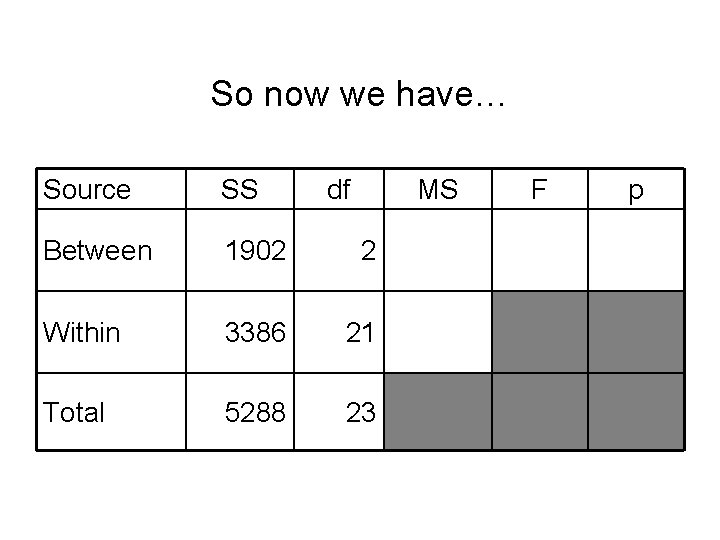

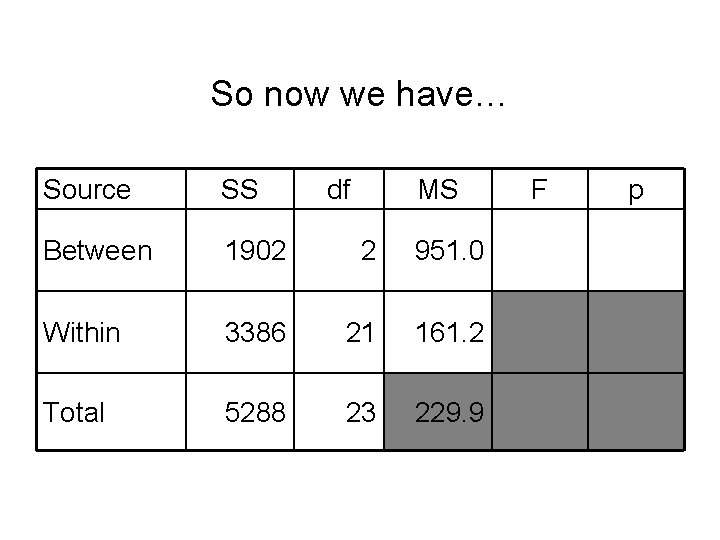

So now we have…

| Source | SS | df | MS | F | p |
|---|---|---|---|---|---|
| Between | 1902 | 2 | | | |
| Within | 3386 | 21 | | | |
| Total | 5288 | 23 | | | |

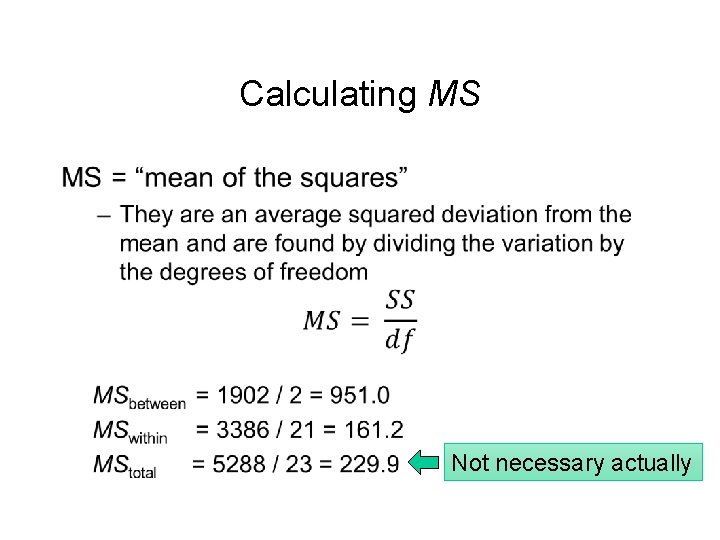

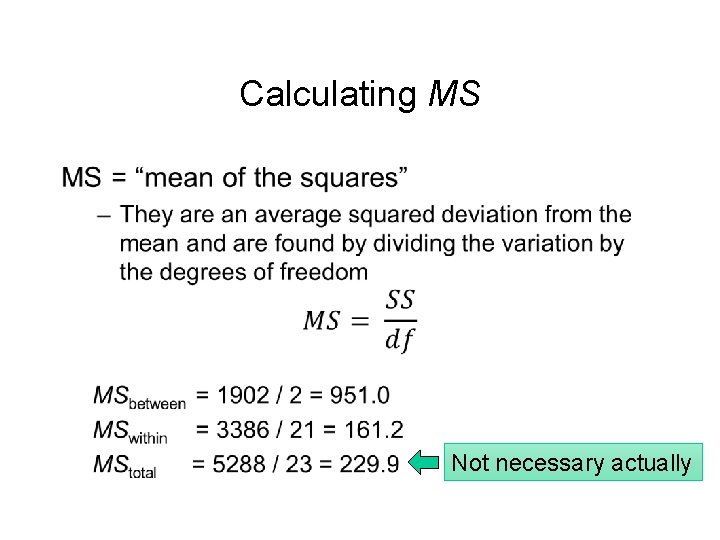

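Calculating MS
• Each mean square is its sum of squares divided by its df: MS = SS / df
• The total MS is not actually necessary: the F-ratio uses only MSbetween and MSwithin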

So now we have…

| Source | SS | df | MS | F | p |
|---|---|---|---|---|---|
| Between | 1902 | 2 | 951.0 | | |
| Within | 3386 | 21 | 161.2 | | |
| Total | 5288 | 23 | 229.9 | | |
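The df and MS columns follow mechanically from the SS values and the group sizes. Here is a minimal Python sketch of that bookkeeping. The dictionary layout is just one convenient choice and is not meant to reflect any particular statistics package.

```python
# SS values from the table; group sizes from the hypothetical results
ss = {"Between": 1902, "Within": 3386, "Total": 5288}
n_per_group = [7, 9, 8]          # Front, Middle, Back
k = len(n_per_group)             # number of groups
N = sum(n_per_group)             # total sample size (24)

df = {
    "Between": k - 1,                            # 3 - 1 = 2
    "Within": sum(n - 1 for n in n_per_group),   # 6 + 8 + 7 = 21, i.e. N - k
    "Total": N - 1,                              # 24 - 1 = 23
}
# Mean square = SS / df for each source of variation
ms = {source: ss[source] / df[source] for source in ss}

for source in ("Between", "Within", "Total"):
    print(f"{source:<8} SS={ss[source]:>5} df={df[source]:>2} MS={ms[source]:6.1f}")

# df partitions the same way SS does
assert df["Between"] + df["Within"] == df["Total"]
```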

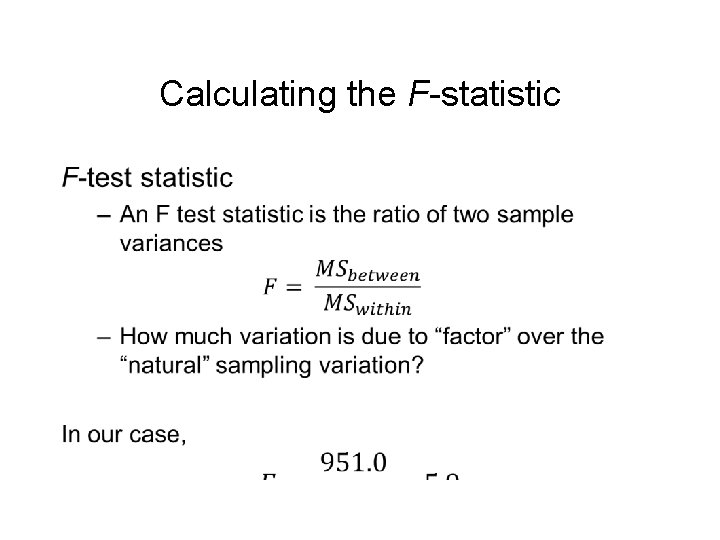

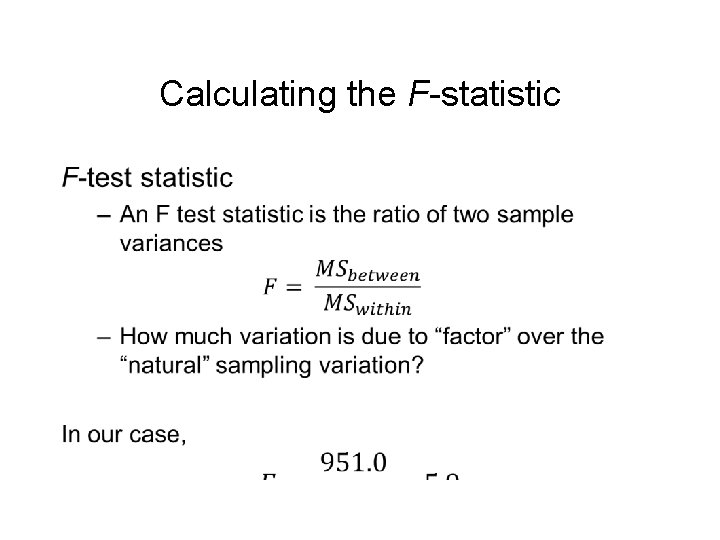

Calculating the F-statistic

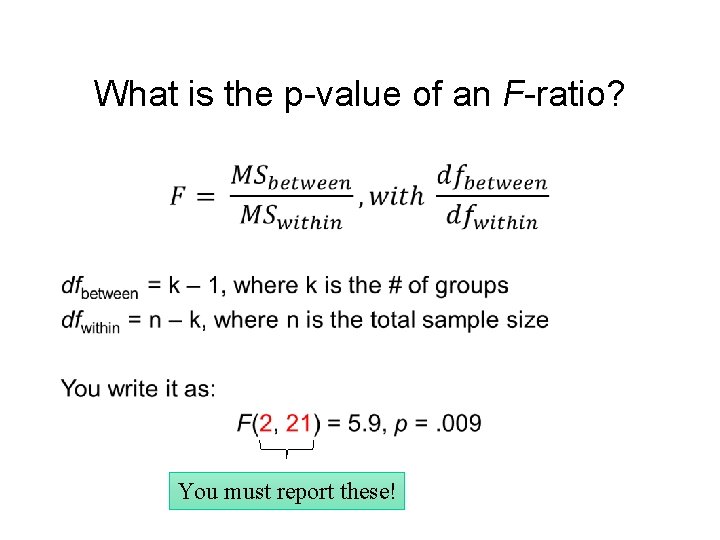

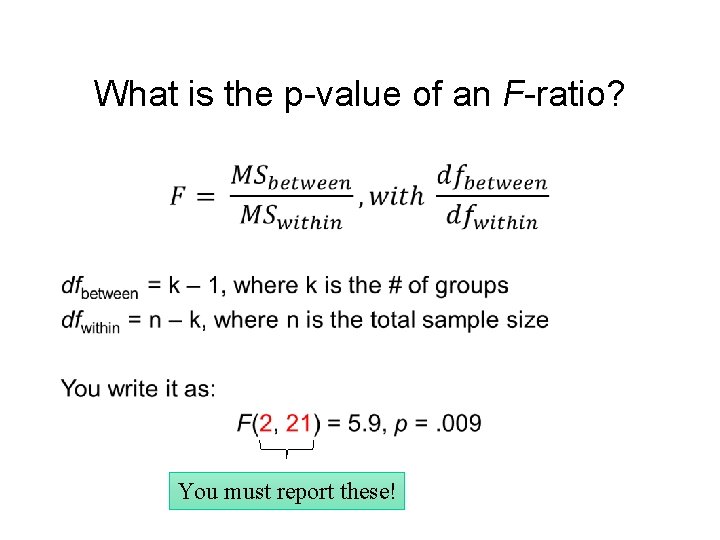
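The F-ratio formula is shown on the slide only as an image. Written out with the values from the table, the arithmetic is:

```latex
F = \frac{MS_{\text{between}}}{MS_{\text{within}}}
  = \frac{SS_{\text{between}} / df_{\text{between}}}{SS_{\text{within}} / df_{\text{within}}}
  = \frac{1902 / 2}{3386 / 21}
  = \frac{951.0}{161.2}
  \approx 5.90
```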

What is the p-value of an F-ratio? • You must report these!

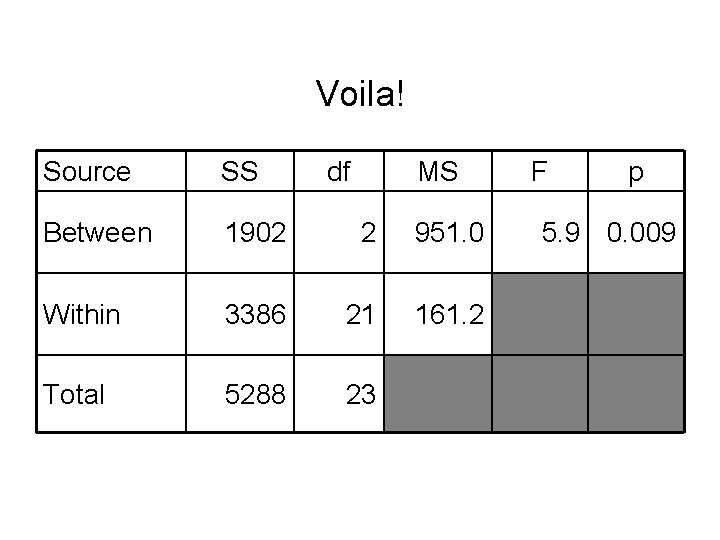

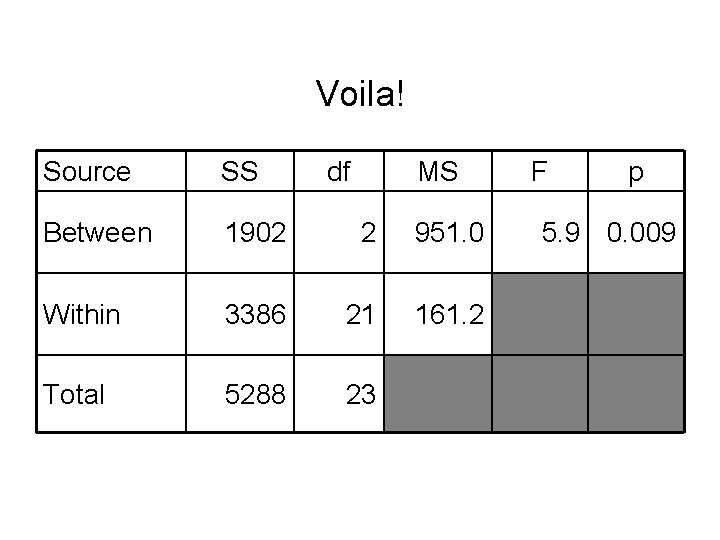
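The p-value is the upper-tail area of the F distribution with (dfbetween, dfwithin) degrees of freedom. In general you would use software, for example `scipy.stats.f.sf(F, dfn, dfd)`. When the numerator df is exactly 2, as it is here with three groups, the tail has a simple closed form, (1 + 2F/df2)^(-df2/2). The standard-library sketch below relies on that special case, and the helper name is illustrative only.

```python
def f_sf_df1_equals_2(f_value: float, df2: int) -> float:
    """Upper-tail probability P(F > f_value) for an F(2, df2) distribution.
    The closed form (1 + 2f/df2) ** (-df2/2) holds ONLY when the numerator
    df is 2 (i.e., three groups in a one-way ANOVA)."""
    return (1 + 2 * f_value / df2) ** (-df2 / 2)

ms_between = 1902 / 2
ms_within = 3386 / 21
f_value = ms_between / ms_within
p_value = f_sf_df1_equals_2(f_value, df2=21)
print(f"F(2, 21) = {f_value:.2f}, p = {p_value:.3f}")
```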

Voila!

| Source | SS | df | MS | F | p |
|---|---|---|---|---|---|
| Between | 1902 | 2 | 951.0 | 5.9 | 0.009 |
| Within | 3386 | 21 | 161.2 | | |
| Total | 5288 | 23 | | | |

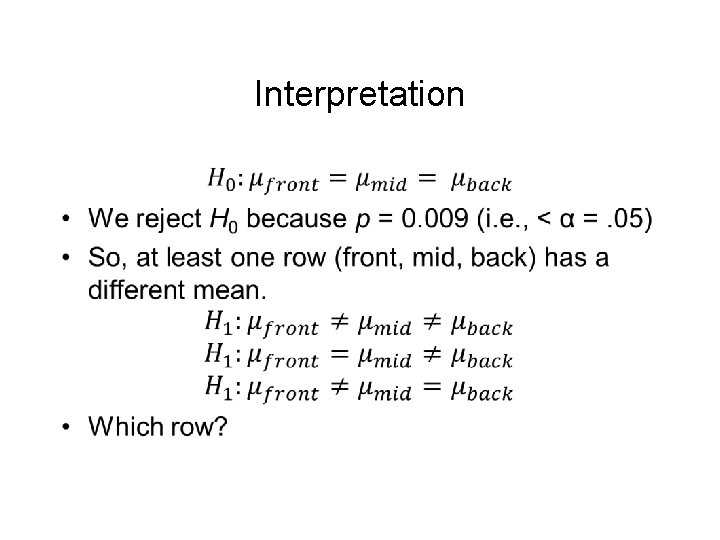

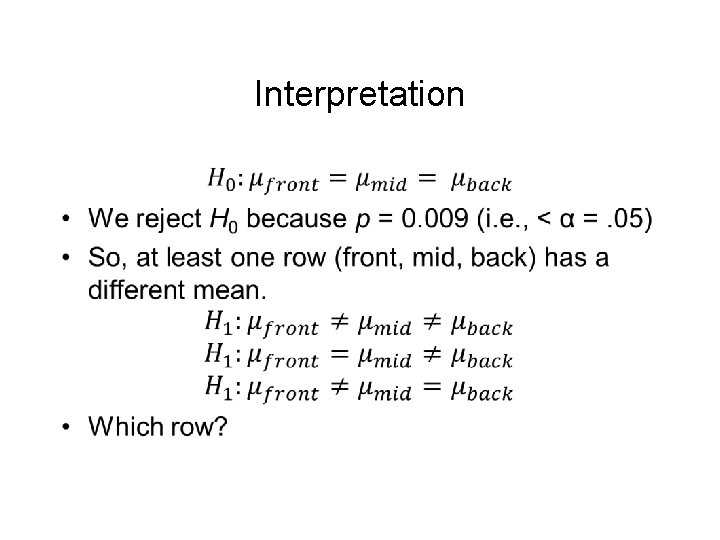

Interpretation

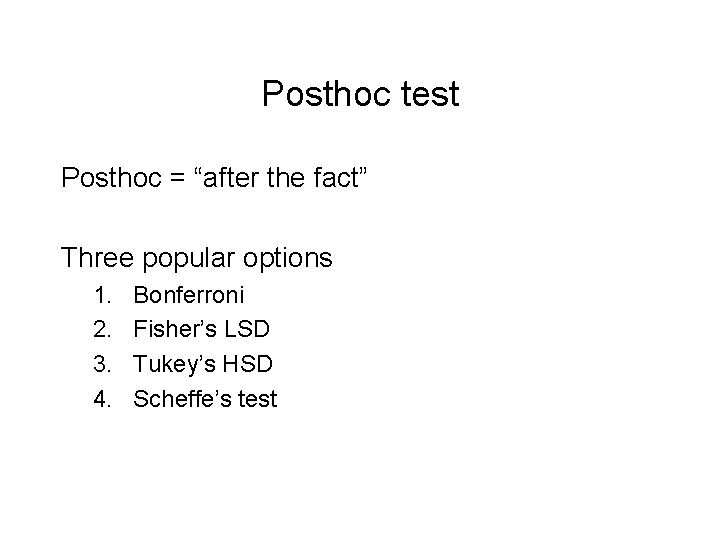

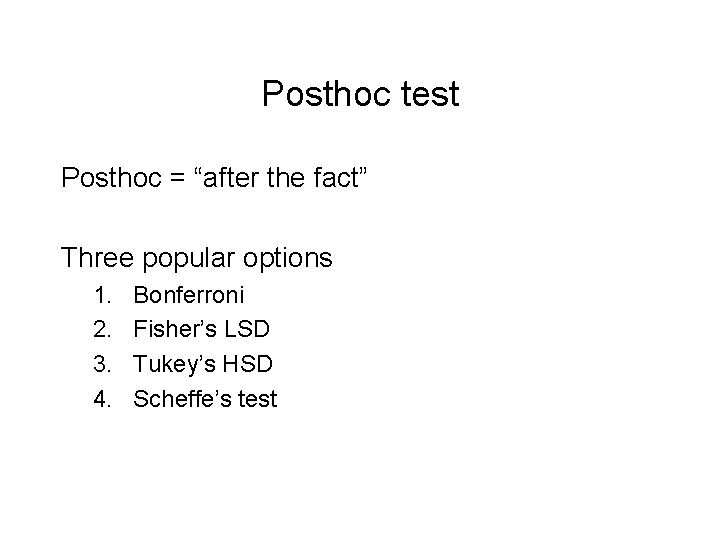

Post hoc tests
Post hoc = “after the fact”
Four popular options:
1. Bonferroni
2. Fisher’s LSD
3. Tukey’s HSD
4. Scheffe’s test

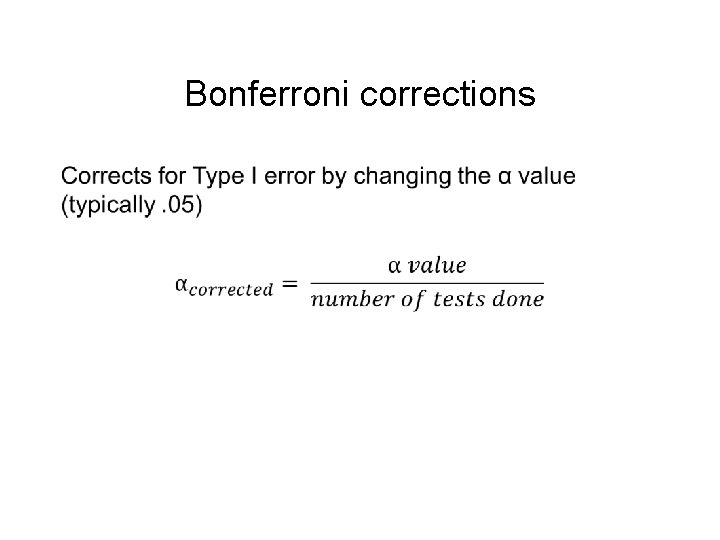

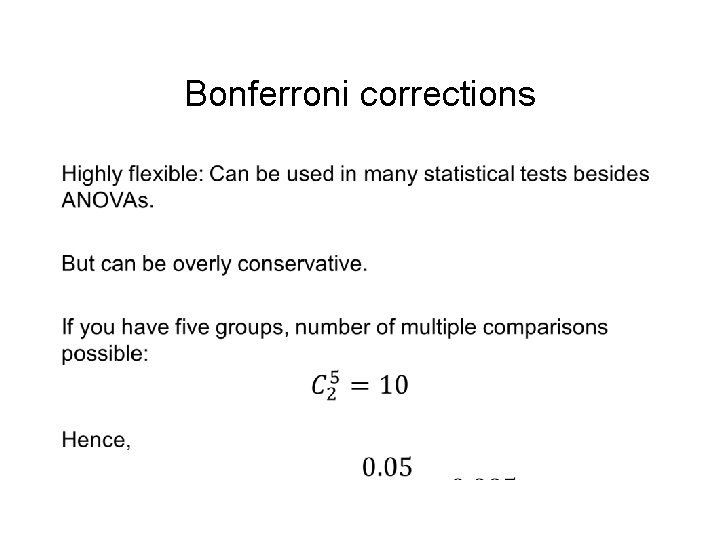

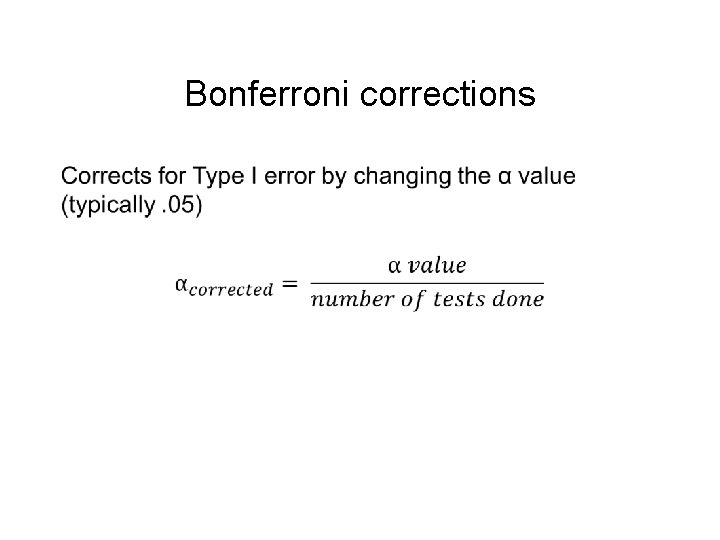

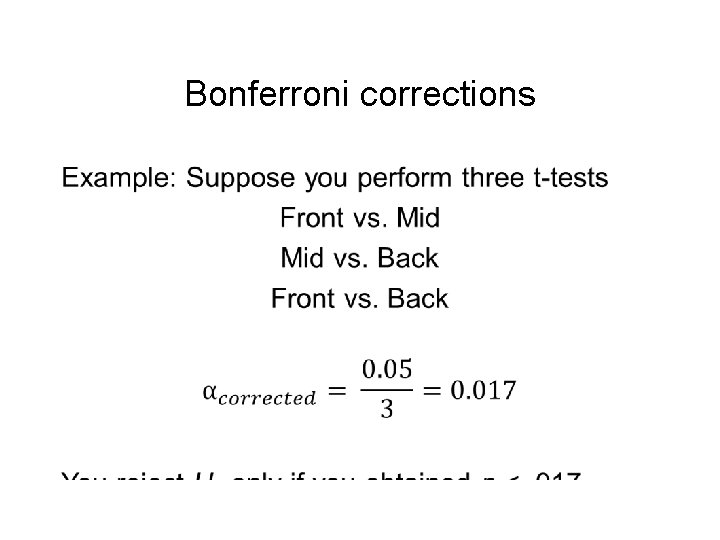

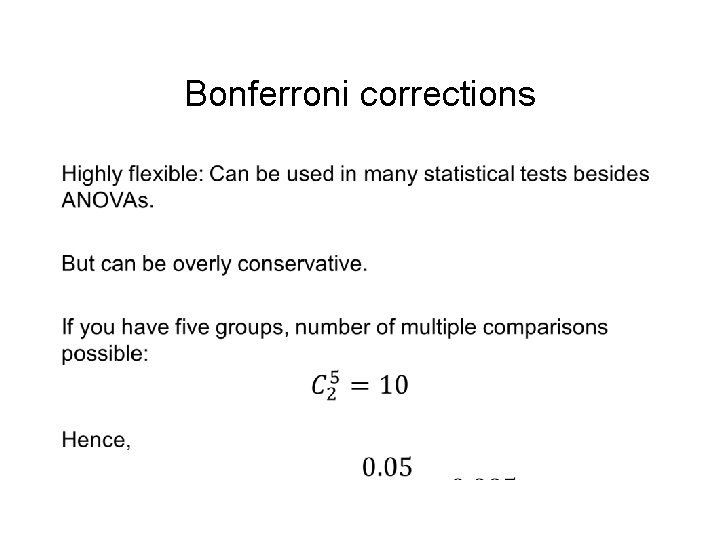

Bonferroni corrections

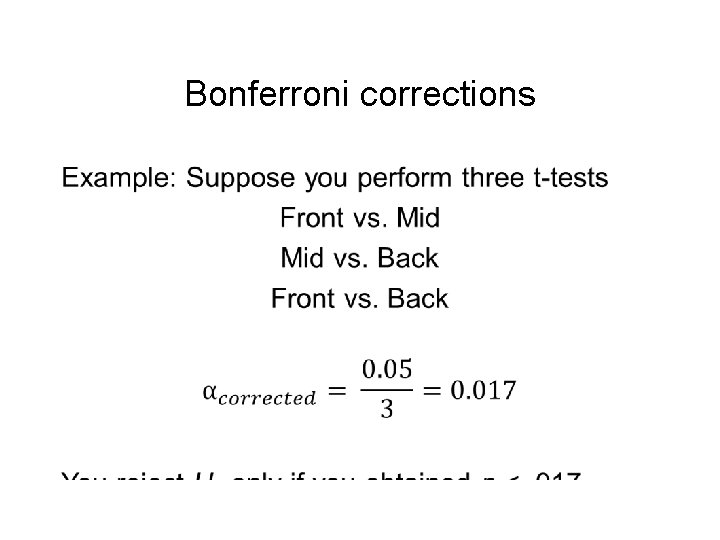
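The Bonferroni formulas survive only as images. Here is a sketch of the standard rule: divide α by the number of comparisons m, or equivalently multiply each p-value by m and cap the result at 1. The function names are illustrative and do not come from any library.

```python
def bonferroni_alpha(alpha: float, n_comparisons: int) -> float:
    """Per-comparison alpha that keeps the familywise rate at or below alpha."""
    return alpha / n_comparisons

def bonferroni_adjust(p_values: list[float]) -> list[float]:
    """Equivalent view: multiply each p-value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

k = 3                      # Front, Middle, Back
m = k * (k - 1) // 2       # 3 pairwise comparisons
print(f"Test each pairwise comparison at alpha = {bonferroni_alpha(0.05, m):.4f}")
```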



Others
1. Fisher’s LSD – least conservative
2. Tukey’s HSD – somewhere in between
3. Scheffe’s test – most conservative
The choice depends on how conservative you want to be. We will cover these in more detail in the coming tutorial.

Three assumptions of one-way ANOVA
1. Normality: scores within each population are normally distributed.
ANOVA is fairly robust to deviations from normality. Nevertheless, normality is still an assumption.

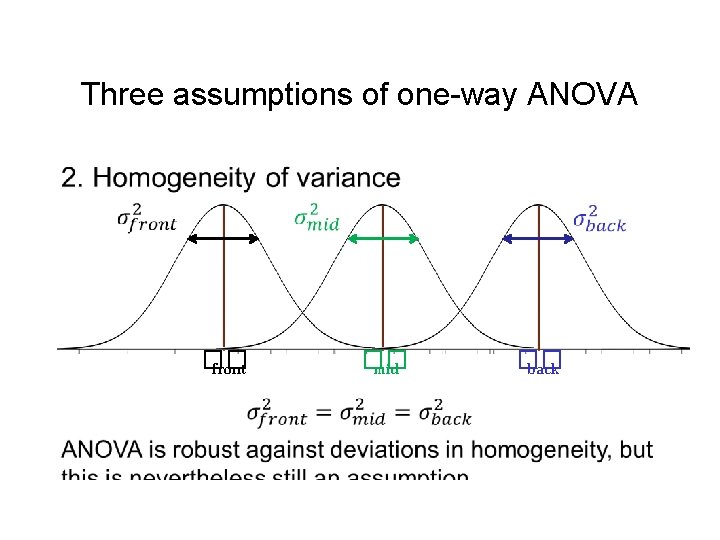

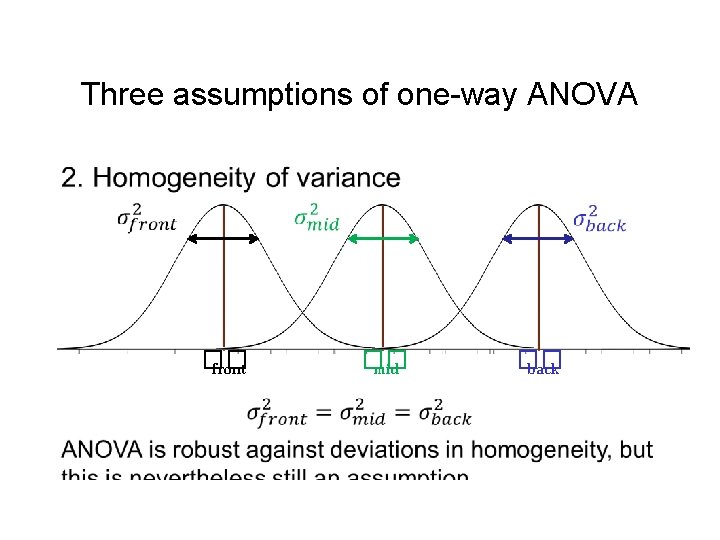

2. Homogeneity of variance: the population variances are equal, σ²front = σ²mid = σ²back

3. Independence of observations
Achieved through random assignment. Dependent observations are dealt with in SRM II (repeated-measures ANOVA), but the idea is similar to the dependent-samples t-test.
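One common way to check the equal-variance assumption, which these slides do not cover, is Levene's test. In its original form, it is simply a one-way ANOVA run on each score's absolute deviation from its group mean. The sketch below implements that mean-centered version with the standard library. Note that `scipy.stats.levene` defaults to `center='median'` (the Brown-Forsythe variant), so its result can differ. The p-value line uses the F(2, df2) closed form, which is valid here only because there are three groups. The recovered scores 68 and 52 are assumptions, as before.

```python
from statistics import mean

def one_way_f(groups):
    """Return (F, df_between, df_within) for a one-way ANOVA on a list of groups."""
    all_x = [x for g in groups for x in g]
    grand = mean(all_x)
    means = [mean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between = len(groups) - 1
    df_within = len(all_x) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within), df_between, df_within

def levene_mean_centered(groups):
    """Levene's W: a one-way ANOVA on absolute deviations from each group's mean."""
    abs_devs = []
    for g in groups:
        m = mean(g)
        abs_devs.append([abs(x - m) for x in g])
    return one_way_f(abs_devs)

groups = [
    [82, 83, 97, 93, 55, 67, 53],            # Front
    [83, 78, 61, 77, 54, 69, 51, 63, 68],    # Middle (68 ASSUMED, from reported mean)
    [38, 59, 55, 66, 45, 52, 61, 52],        # Back   (52 ASSUMED, from reported mean)
]
w, df1, df2 = levene_mean_centered(groups)

# Closed-form upper tail of F(2, df2); valid ONLY because df1 == 2 here
assert df1 == 2
p = (1 + 2 * w / df2) ** (-df2 / 2)
print(f"Levene W({df1}, {df2}) = {w:.2f}, p = {p:.3f}")
```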

Family of ANOVAs
1. Repeated-measures ANOVA
2. Mixed ANOVA
3. Multi-way ANOVAs
They share the same core principle: “treatment” variation relative to some “natural” variation.

An alternative to ANOVAs in general
Some people (like me) find ANOVA too rigid at times. Another method is contrast analysis (not covered in SRM I or II). Briefly, in contrast analysis you assign weights that express an a priori predicted pattern in the data, and then test that predicted pattern directly.
Rosenthal, R., Rosnow, R. L., & Rubin, D. B. (2000). Contrasts and effect sizes in behavioral research: A correlational approach. New York: Cambridge University Press.
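As a rough illustration of the idea, the sketch below tests a single a priori contrast. It uses the usual one-df contrast computation: L = Σ wᵢMᵢ, SScontrast = L² / Σ(wᵢ²/nᵢ), and F = SScontrast / MSwithin, compared against F(1, dfwithin). The weights (+1, 0, -1), which predict a front-to-back decline, are a hypothetical choice for illustration. The recovered scores 68 and 52 are assumptions, as before.

```python
from statistics import mean

groups = {
    "Front": [82, 83, 97, 93, 55, 67, 53],
    "Middle": [83, 78, 61, 77, 54, 69, 51, 63, 68],   # 68 ASSUMED, from reported mean
    "Back": [38, 59, 55, 66, 45, 52, 61, 52],         # 52 ASSUMED, from reported mean
}
# HYPOTHETICAL a priori prediction: scores fall from front to back.
# Contrast weights must sum to zero.
weights = {"Front": 1, "Middle": 0, "Back": -1}
assert sum(weights.values()) == 0

means = {name: mean(g) for name, g in groups.items()}
ns = {name: len(g) for name, g in groups.items()}

# MS_within (pooled error variance), the same denominator as the one-way ANOVA
ss_within = sum((x - means[name]) ** 2 for name, g in groups.items() for x in g)
df_within = sum(ns.values()) - len(groups)
ms_within = ss_within / df_within

# Contrast estimate L = sum(w_i * M_i) and its one-df sum of squares
L = sum(weights[name] * means[name] for name in groups)
ss_contrast = L ** 2 / sum(weights[name] ** 2 / ns[name] for name in groups)
f_contrast = ss_contrast / ms_within  # evaluate against F(1, df_within)

print(f"L = {L:.2f}, SS_contrast = {ss_contrast:.1f}, F(1, {df_within}) = {f_contrast:.2f}")
```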

Summary
• One-way ANOVA is used when there are two or more conditions (groups) and a continuous outcome variable.
• The independent variable must be categorical.
• The F-statistic is the ratio of between-group variation to within-group variation.

Last important note
• The t-statistic is a special case of the F-statistic, with one numerator (between-groups) degree of freedom.
• Try this: analyze a two-group dataset with both a t-test and an ANOVA.
• You will get: t² = F
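You can try the exercise with the Front and Back groups from the example. The sketch below computes the pooled-variance (equal-variance) independent-samples t and the one-way ANOVA F on the same two groups, then compares t² with F. The identity holds for the pooled t only, not for Welch's t. The last Back score (52) is recovered from the reported mean and is an assumption.

```python
from statistics import mean, variance

def pooled_t(a, b):
    """Independent-samples t-statistic using the pooled (equal-variance) SD."""
    na, nb = len(a), len(b)
    sp2 = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / (na + nb - 2)
    return (mean(a) - mean(b)) / (sp2 * (1 / na + 1 / nb)) ** 0.5

def one_way_f(groups):
    """One-way ANOVA F = MS_between / MS_within."""
    all_x = [x for g in groups for x in g]
    grand = mean(all_x)
    means = [mean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_between, df_within = len(groups) - 1, len(all_x) - len(groups)
    return (ss_between / df_between) / (ss_within / df_within)

front = [82, 83, 97, 93, 55, 67, 53]
back = [38, 59, 55, 66, 45, 52, 61, 52]   # last score ASSUMED, from reported mean

t = pooled_t(front, back)
F = one_way_f([front, back])
print(f"t = {t:.3f}, t^2 = {t ** 2:.3f}, F = {F:.3f}")
```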