Chapter 16: Analysis of Variance and Covariance

- Slides: 18

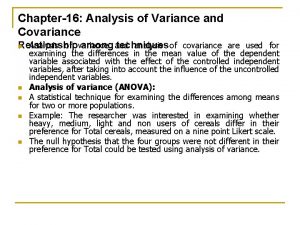

Relationship among techniques

- Analysis of variance and analysis of covariance are used for examining the differences in the mean values of the dependent variable associated with the effect of the controlled independent variables, after taking into account the influence of the uncontrolled independent variables.
- Analysis of variance (ANOVA): A statistical technique for examining the differences among means for two or more populations.
- Example: A researcher is interested in whether heavy, medium, light, and non-users of cereals differ in their preference for Total cereal, measured on a nine-point Likert scale. The null hypothesis that the four groups do not differ in their preference for Total can be tested using analysis of variance.

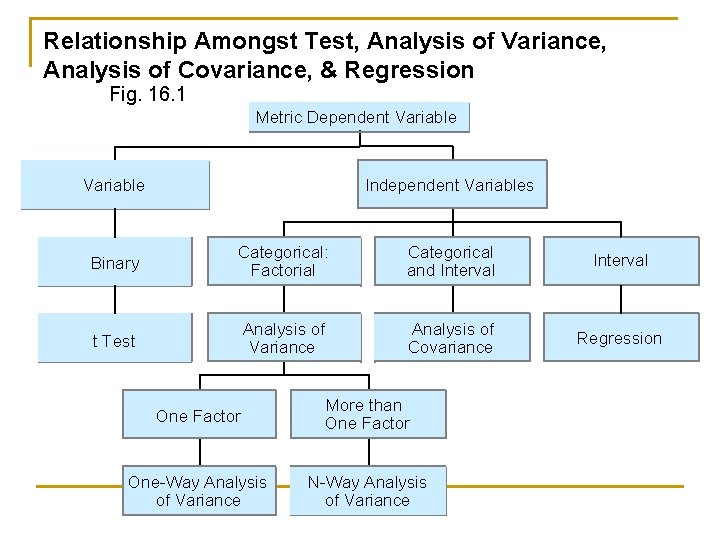

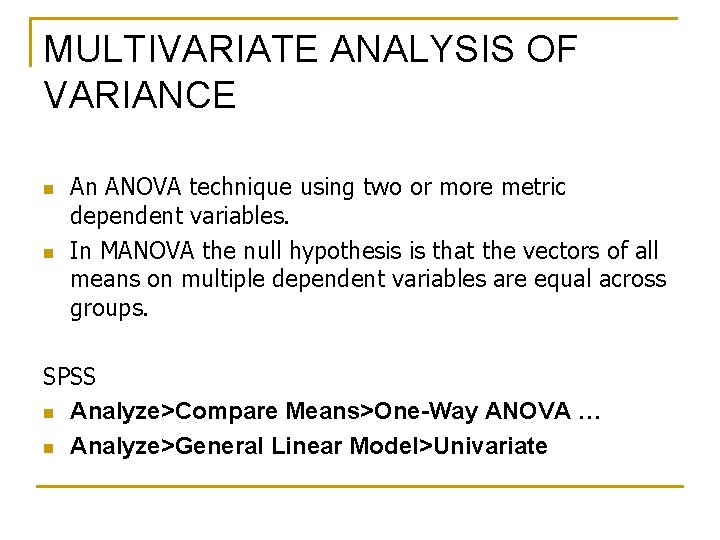

Fig. 16.1: Relationship amongst t test, analysis of variance, analysis of covariance, and regression

- Metric dependent variable
  - One independent variable, binary: t test
  - One or more independent variables
    - Categorical (factorial): analysis of variance
      - One factor: one-way analysis of variance
      - More than one factor: n-way analysis of variance
    - Categorical and interval: analysis of covariance
    - Interval: regression

Relationship among techniques

- FACTORS: Categorical independent variables are called factors. All independent variables must be categorical to use ANOVA.
- TREATMENT: In ANOVA, a particular combination of factor levels or categories is called a treatment.
- One-way analysis of variance involves only one categorical variable, that is, a single factor.
- N-way analysis of variance involves two or more factors.
- Analysis of covariance (ANCOVA): An advanced analysis of variance procedure in which the effects of one or more metric-scaled extraneous variables are removed from the dependent variable before conducting the ANOVA.

One-way analysis of variance

- Marketing researchers are often interested in examining the differences in the mean values of the dependent variable for several categories of a single independent variable or factor.
- Example: Do the various segments differ in terms of their volume of product consumption? Do the brand evaluations of groups exposed to different commercials vary?
- The answers to these and similar questions can be determined by conducting a one-way analysis of variance.

Statistics associated with one-way analysis of variance:

- eta² (η²): The strength of the effect of X (the independent variable or factor) on Y (the dependent variable) is measured by η². Its value varies between 0 and 1.
- F statistic: The null hypothesis that the category means are equal in the population is tested by an F statistic based on the ratio of the mean square related to X to the mean square related to error.
- Mean square: The sum of squares divided by the appropriate degrees of freedom.
- SS between: Also denoted by SSx, this is the variation in Y related to the variation in the means of the categories of X.
- SS within: Also referred to as SSerror, this is the variation in Y due to the variation within each of the categories of X.
- SSy: The total variation in Y.

CONDUCTING ONE WAY ANALYSIS OF VARIANCE:

- Step 1: Identify the dependent and independent variables.
- Step 2: Decompose the total variation.
- Step 3: Measure the effects.
- Step 4: Test the significance.
- Step 5: Interpret the results.

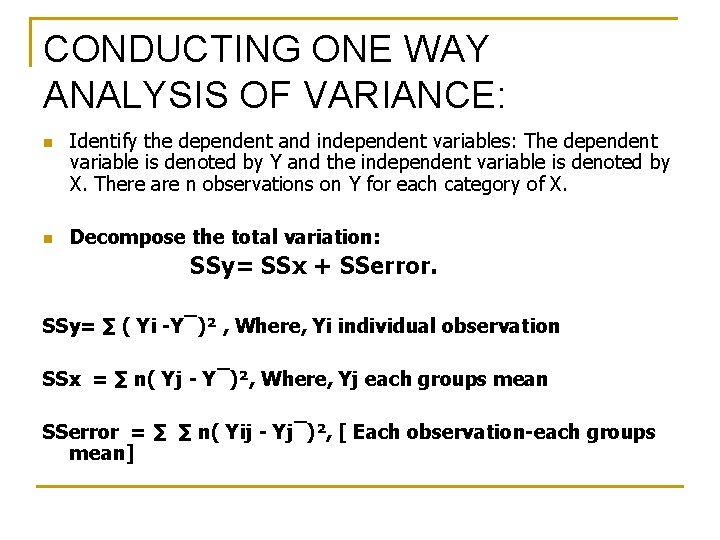

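CONDUCTING ONE WAY ANALYSIS OF VARIANCE:

- Identify the dependent and independent variables: The dependent variable is denoted by Y and the independent variable by X. X has c categories, and there are n observations on Y for each category of X, so the total sample size is N = n × c.
- Decompose the total variation:

$$SS_y = SS_x + SS_{error}$$

$$SS_y = \sum_{i=1}^{N} (Y_i - \bar{Y})^2$$

where $Y_i$ is an individual observation and $\bar{Y}$ is the grand mean.

$$SS_x = \sum_{j=1}^{c} n\,(\bar{Y}_j - \bar{Y})^2$$

where $\bar{Y}_j$ is the mean of category j.

$$SS_{error} = \sum_{j=1}^{c} \sum_{i=1}^{n} (Y_{ij} - \bar{Y}_j)^2$$

where $Y_{ij}$ is the i-th observation in category j, so each term is an observation minus its own group mean.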
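The decomposition of the total variation can be sketched in a few lines of Python. This is a minimal illustration, not part of the original slides: the `groups` data and the category names are hypothetical, and each category has the same number of observations.

```python
# Hypothetical data: three categories of X, four observations of Y in each.
groups = {
    "low": [3, 4, 5, 4],
    "medium": [6, 7, 6, 5],
    "high": [8, 9, 10, 9],
}


def mean(values):
    return sum(values) / len(values)


all_values = [y for ys in groups.values() for y in ys]
grand_mean = mean(all_values)

# Total variation: every observation against the grand mean.
ss_y = sum((y - grand_mean) ** 2 for y in all_values)

# Between-category variation: each group mean against the grand mean,
# weighted by the number of observations in the group.
ss_x = sum(len(ys) * (mean(ys) - grand_mean) ** 2 for ys in groups.values())

# Within-category variation: every observation against its own group mean.
ss_error = sum((y - mean(ys)) ** 2 for ys in groups.values() for y in ys)

print(round(ss_y, 3), round(ss_x, 3), round(ss_error, 3))
```

With these numbers, `ss_x + ss_error` reproduces `ss_y` up to floating-point rounding, which is a quick check that the decomposition was computed correctly.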

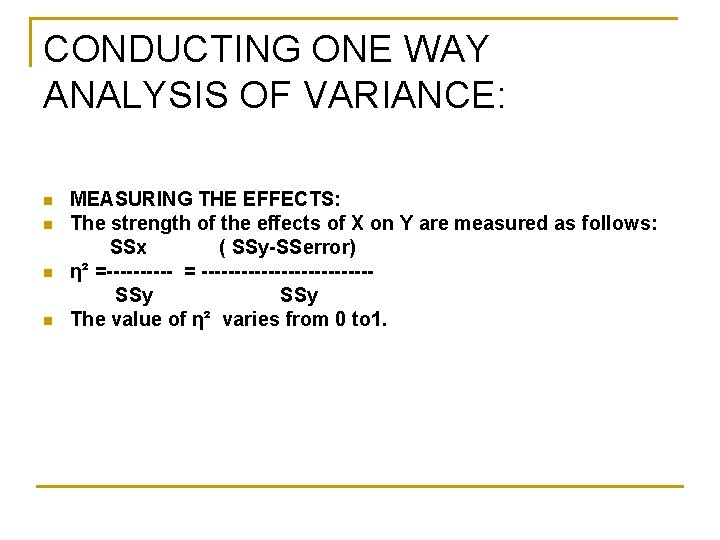

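CONDUCTING ONE WAY ANALYSIS OF VARIANCE:

- MEASURING THE EFFECTS: The strength of the effect of X on Y is measured as follows:

$$\eta^2 = \frac{SS_x}{SS_y} = \frac{SS_y - SS_{error}}{SS_y}$$

The value of η² varies from 0 to 1. It is 0 when all category means are equal, so that X has no effect on Y, and 1 when there is no variability within the categories but some variability between them.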
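As a small illustration of the η² formula, the following Python sketch computes it from the two sums of squares. The function name `eta_squared` and the input values are hypothetical and chosen only for the example.

```python
def eta_squared(ss_x: float, ss_error: float) -> float:
    """Share of the total variation in Y that is related to X."""
    ss_y = ss_x + ss_error  # total variation, SSy = SSx + SSerror
    if ss_y == 0:
        raise ValueError("Y has no variation, so eta squared is undefined")
    return ss_x / ss_y


# Hypothetical sums of squares.
print(eta_squared(ss_x=50.0, ss_error=150.0))  # 0.25
```

Here a quarter of the variation in Y is associated with differences between the category means.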

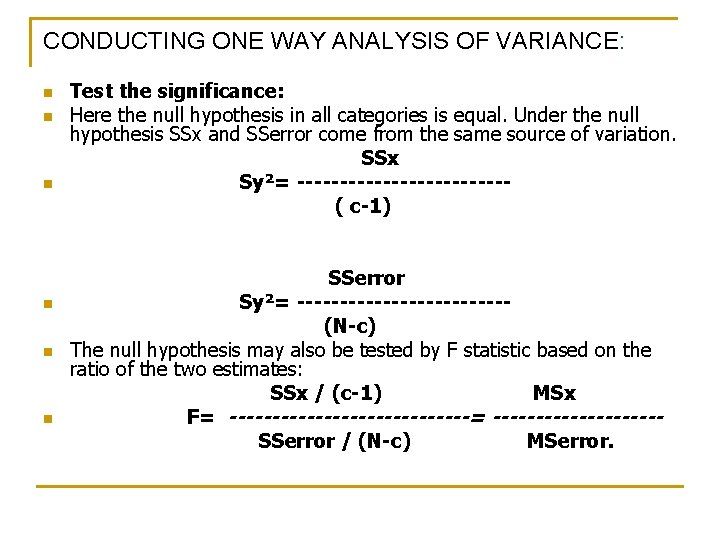

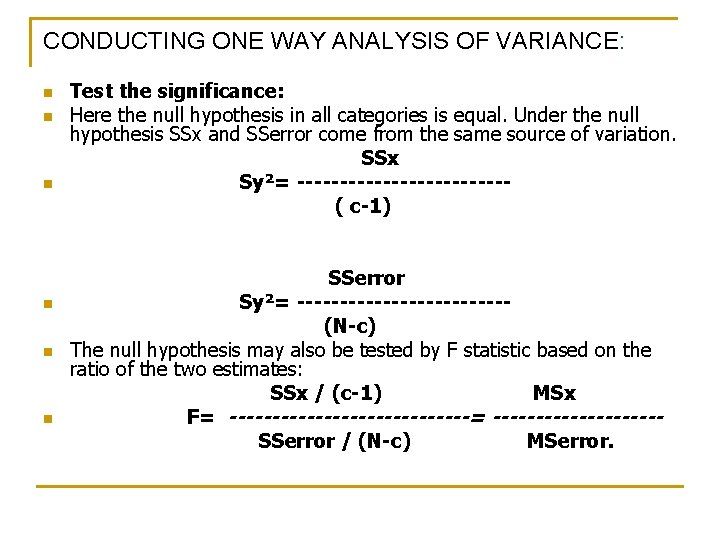

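CONDUCTING ONE WAY ANALYSIS OF VARIANCE:

- Test the significance: The null hypothesis is that the category means are equal in the population.
- Under the null hypothesis, SSx and SSerror come from the same source of variation, so the population variance of Y can be estimated from either one:

$$S_y^2 = \frac{SS_x}{c - 1} = MS_x$$

$$S_y^2 = \frac{SS_{error}}{N - c} = MS_{error}$$

- The null hypothesis may be tested by the F statistic based on the ratio of these two estimates:

$$F = \frac{SS_x / (c - 1)}{SS_{error} / (N - c)} = \frac{MS_x}{MS_{error}}$$

This statistic follows the F distribution with (c − 1) and (N − c) degrees of freedom.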
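The F ratio can be sketched in Python as follows. The helper `one_way_f` and the numbers passed to it are hypothetical. The sketch stops at the F value and its degrees of freedom, which would then be compared with a critical value from an F table or statistical software.

```python
def one_way_f(ss_x: float, ss_error: float, c: int, n_total: int):
    """Return (F, df_numerator, df_denominator) for a one-way ANOVA."""
    df_x = c - 1              # degrees of freedom for the factor
    df_error = n_total - c    # degrees of freedom for error
    ms_x = ss_x / df_x
    ms_error = ss_error / df_error
    return ms_x / ms_error, df_x, df_error


# Hypothetical example: 4 categories, 24 observations in total.
f_stat, df_num, df_den = one_way_f(ss_x=90.0, ss_error=60.0, c=4, n_total=24)
print(f_stat, df_num, df_den)  # 10.0 3 20
```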

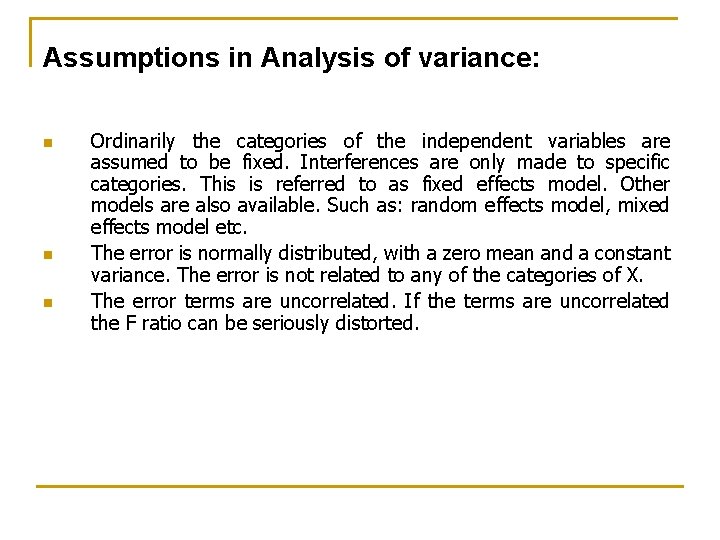

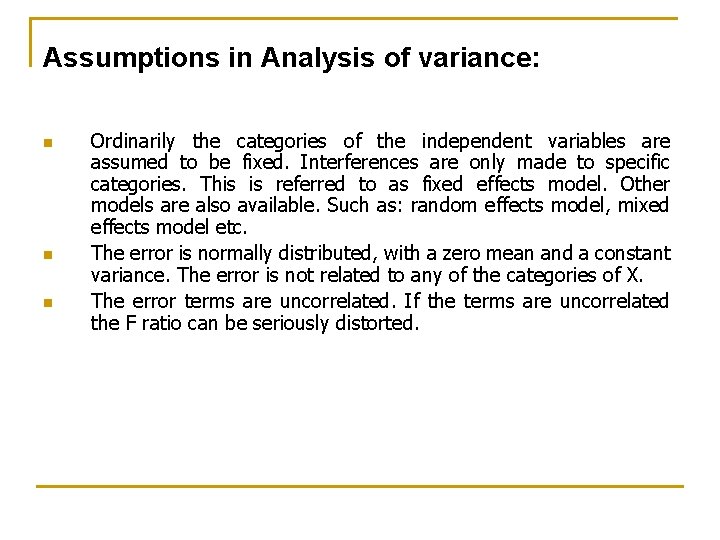

Assumptions in analysis of variance:

- Ordinarily, the categories of the independent variable are assumed to be fixed. Inferences are made only to the specific categories considered. This is referred to as the fixed-effects model. Other models are also available, such as the random-effects model and the mixed-effects model.
- The error term is normally distributed, with a zero mean and a constant variance. The error is not related to any of the categories of X.
- The error terms are uncorrelated. If the error terms are correlated, the F ratio can be seriously distorted.

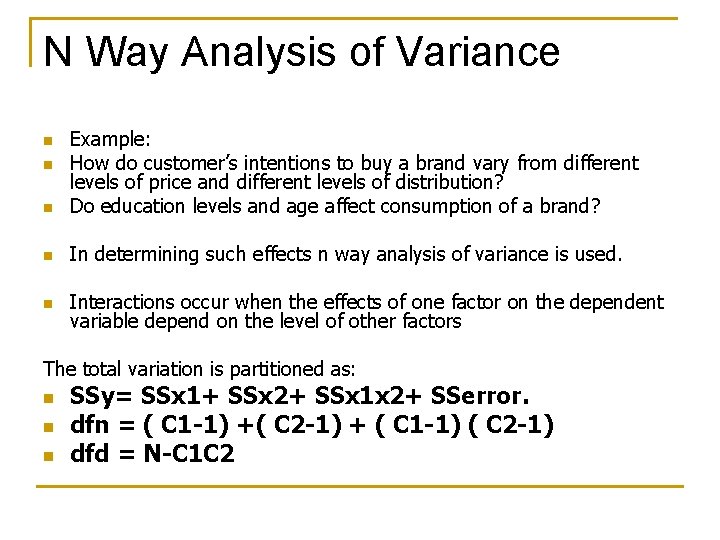

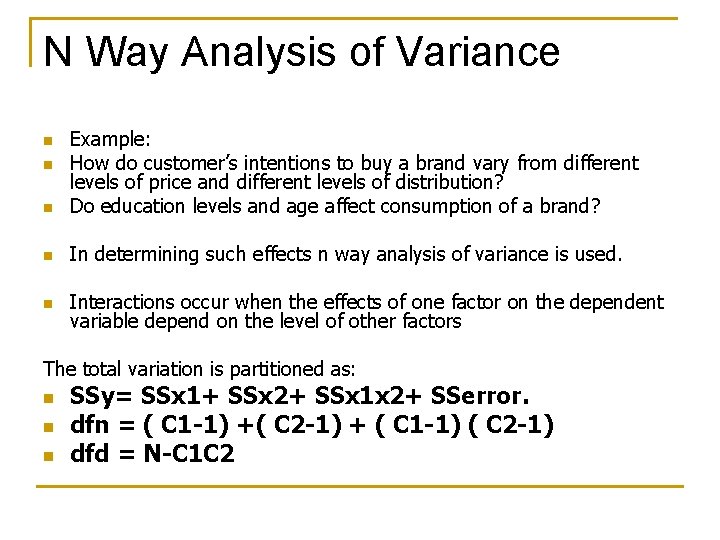

N-way analysis of variance

- Example: How do customers' intentions to buy a brand vary with different levels of price and different levels of distribution? Do education level and age affect consumption of a brand?
- N-way analysis of variance is used to determine such effects.
- Interactions occur when the effect of one factor on the dependent variable depends on the level of the other factors.
- For two factors X1 and X2 with c1 and c2 categories, the total variation is partitioned as:

$$SS_y = SS_{x_1} + SS_{x_2} + SS_{x_1 x_2} + SS_{error}$$

- The degrees of freedom for the numerator and denominator of the overall F test are:

$$df_n = (c_1 - 1) + (c_2 - 1) + (c_1 - 1)(c_2 - 1)$$

$$df_d = N - c_1 c_2$$
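The two-factor partition can be illustrated with a short Python sketch. It assumes a balanced design (the same number of observations in every cell). The `cells` data, with price and distribution as the two factors, are hypothetical.

```python
from collections import defaultdict

# Hypothetical balanced design: (price level, distribution level) -> purchase intentions.
cells = {
    ("low", "narrow"): [4, 5, 6],
    ("low", "wide"): [7, 8, 9],
    ("high", "narrow"): [2, 3, 4],
    ("high", "wide"): [3, 4, 5],
}


def mean(values):
    return sum(values) / len(values)


all_values = [y for ys in cells.values() for y in ys]
grand_mean = mean(all_values)

# Pool the observations by level of each factor.
by_x1, by_x2 = defaultdict(list), defaultdict(list)
for (level_1, level_2), ys in cells.items():
    by_x1[level_1].extend(ys)
    by_x2[level_2].extend(ys)


def between_ss(grouping):
    """Weighted squared deviations of group means from the grand mean."""
    return sum(len(ys) * (mean(ys) - grand_mean) ** 2 for ys in grouping.values())


ss_y = sum((y - grand_mean) ** 2 for y in all_values)
ss_x1 = between_ss(by_x1)                     # main effect of factor 1
ss_x2 = between_ss(by_x2)                     # main effect of factor 2
ss_x1x2 = between_ss(cells) - ss_x1 - ss_x2   # interaction (balanced design only)
ss_error = sum((y - mean(ys)) ** 2 for ys in cells.values() for y in ys)

c1, c2 = len(by_x1), len(by_x2)
df_n = (c1 - 1) + (c2 - 1) + (c1 - 1) * (c2 - 1)
df_d = len(all_values) - c1 * c2

print(ss_y, ss_x1, ss_x2, ss_x1x2, ss_error, df_n, df_d)
```

Obtaining the interaction term by subtraction is only valid because the design is balanced. With unequal cell sizes the sums of squares no longer add up this simply.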

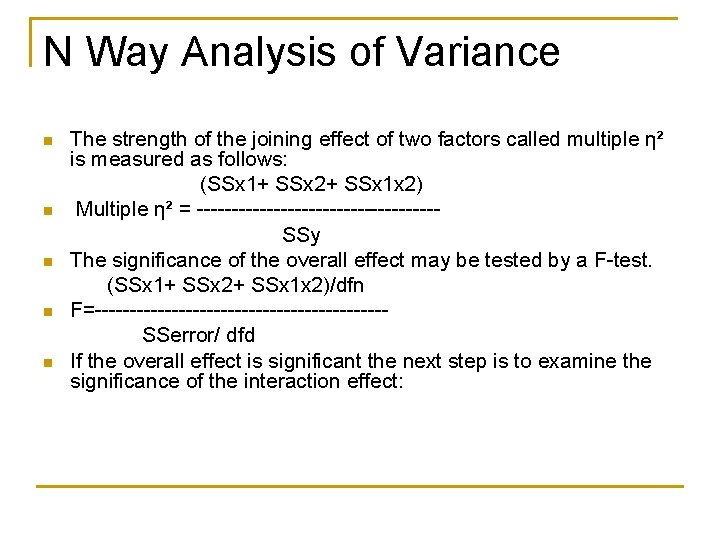

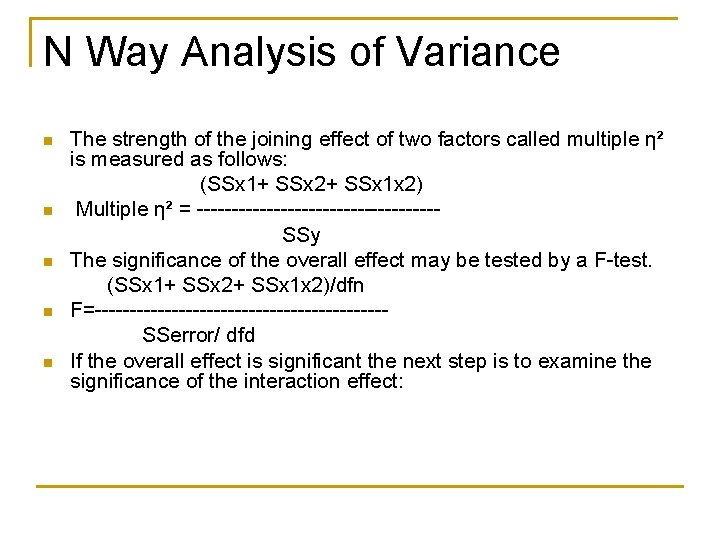

N-way analysis of variance

- The strength of the joint effect of the two factors, called the overall effect or multiple η², is measured as follows:

$$\text{multiple } \eta^2 = \frac{SS_{x_1} + SS_{x_2} + SS_{x_1 x_2}}{SS_y}$$

- The significance of the overall effect may be tested by an F test:

$$F = \frac{(SS_{x_1} + SS_{x_2} + SS_{x_1 x_2}) / df_n}{SS_{error} / df_d}$$

- If the overall effect is significant, the next step is to examine the significance of the interaction effect.
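A minimal Python sketch of the overall effect, assuming the sums of squares have already been computed. The function name `overall_effect` and the input values are hypothetical.

```python
def overall_effect(ss_x1, ss_x2, ss_x1x2, ss_error, df_n, df_d):
    """Return (multiple eta squared, overall F) for a two-factor ANOVA."""
    ss_explained = ss_x1 + ss_x2 + ss_x1x2
    ss_y = ss_explained + ss_error
    multiple_eta_sq = ss_explained / ss_y
    f_overall = (ss_explained / df_n) / (ss_error / df_d)
    return multiple_eta_sq, f_overall


# Hypothetical 2 x 2 design with 12 observations: df_n = 3, df_d = 12 - 4 = 8.
eta_sq, f_overall = overall_effect(27.0, 12.0, 3.0, 8.0, df_n=3, df_d=8)
print(eta_sq, f_overall)  # 0.84 14.0
```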

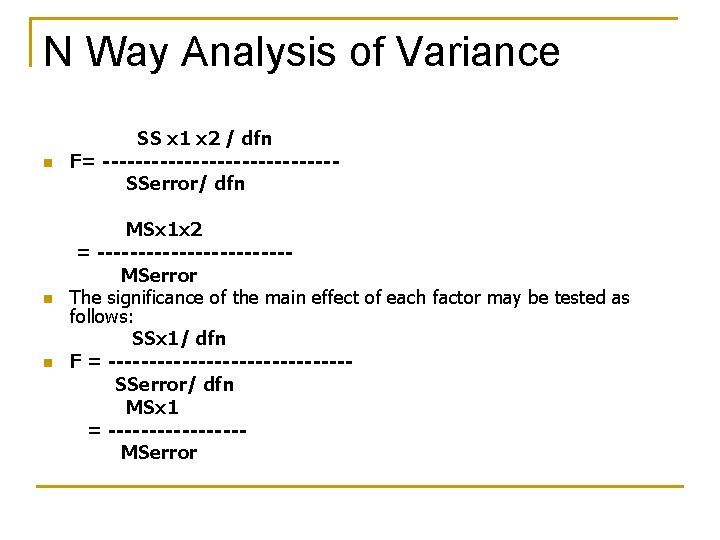

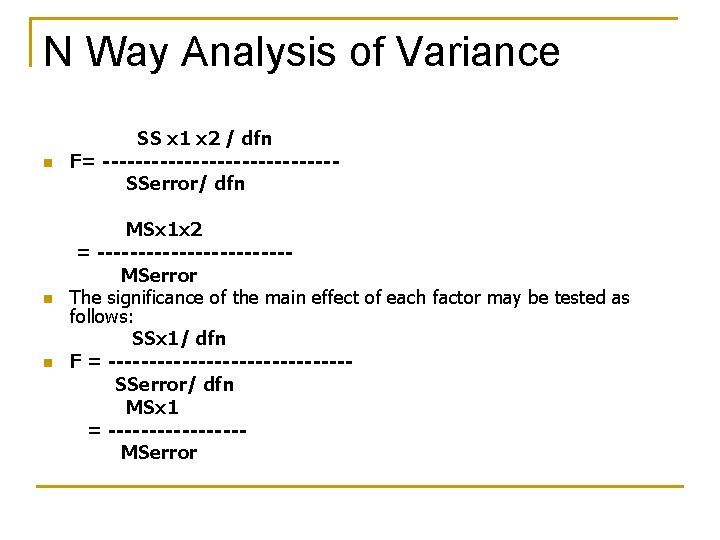

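N-way analysis of variance

- The significance of the interaction effect is tested as follows:

$$F = \frac{SS_{x_1 x_2} / df_n}{SS_{error} / df_d} = \frac{MS_{x_1 x_2}}{MS_{error}}$$

where $df_n = (c_1 - 1)(c_2 - 1)$ and $df_d = N - c_1 c_2$.

- The significance of the main effect of each factor may be tested as follows, shown here for X1:

$$F = \frac{SS_{x_1} / df_n}{SS_{error} / df_d} = \frac{MS_{x_1}}{MS_{error}}$$

where $df_n = c_1 - 1$ and $df_d = N - c_1 c_2$.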
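The interaction and main-effect tests share the same form: a mean square for the effect divided by the mean square for error. The Python sketch below makes the degrees of freedom explicit. The helper `effect_f` and the numbers are hypothetical, and the error degrees of freedom are taken as N − c1·c2.

```python
def effect_f(ss_effect: float, df_effect: int, ss_error: float, df_error: int) -> float:
    """F ratio for one effect: MS_effect / MS_error."""
    return (ss_effect / df_effect) / (ss_error / df_error)


# Hypothetical 2 x 2 design with N = 12 observations.
c1, c2, n_total = 2, 2, 12
df_error = n_total - c1 * c2  # 8

f_interaction = effect_f(3.0, (c1 - 1) * (c2 - 1), 8.0, df_error)
f_main_x1 = effect_f(27.0, c1 - 1, 8.0, df_error)
f_main_x2 = effect_f(12.0, c2 - 1, 8.0, df_error)

print(f_interaction, f_main_x1, f_main_x2)  # 3.0 27.0 12.0
```

Note that every test uses the same error term in the denominator. Only the numerator degrees of freedom change from one effect to the next.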

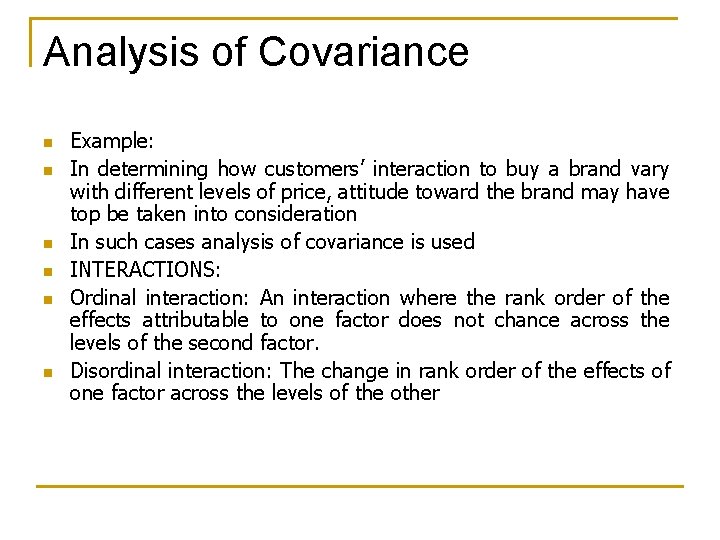

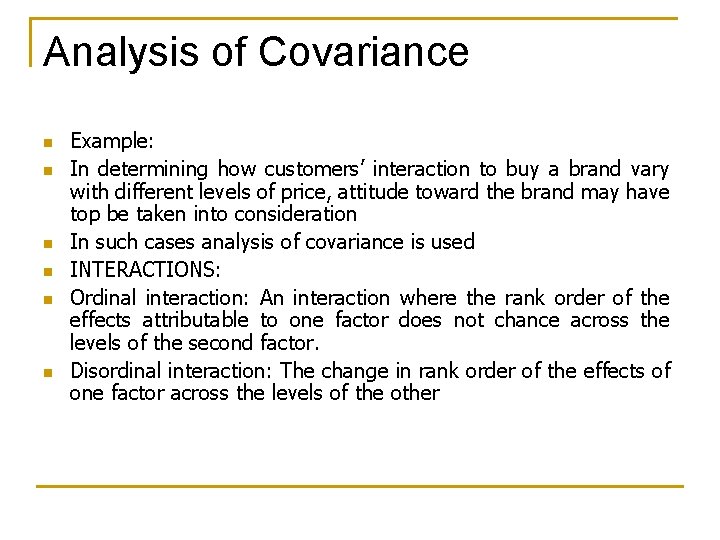

Analysis of covariance

- Example: In determining how customers' intentions to buy a brand vary with different levels of price, attitude toward the brand may have to be taken into consideration. In such cases analysis of covariance is used.

INTERACTIONS:

- Ordinal interaction: An interaction in which the rank order of the effects attributable to one factor does not change across the levels of the second factor.
- Disordinal interaction: An interaction in which the rank order of the effects of one factor changes across the levels of the other factor.
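The distinction between ordinal and disordinal interactions can be illustrated with a small Python sketch that compares the rank order of one factor's cell means across the levels of the other factor. It assumes that an interaction is already known to be present and that no cell means are tied. The function names and cell means are hypothetical.

```python
def rank_orders(cell_means):
    """For each level of factor 2, list the factor-1 levels from lowest to highest mean."""
    levels_1 = sorted({level_1 for level_1, _ in cell_means})
    levels_2 = sorted({level_2 for _, level_2 in cell_means})
    return {
        level_2: sorted(levels_1, key=lambda level_1: cell_means[(level_1, level_2)])
        for level_2 in levels_2
    }


def interaction_type(cell_means):
    """Classify an interaction by whether the rank order is preserved."""
    orders = list(rank_orders(cell_means).values())
    same_everywhere = all(order == orders[0] for order in orders)
    return "ordinal" if same_everywhere else "disordinal"


# Hypothetical cell means keyed by (price level, distribution level).
ordinal_means = {
    ("low", "narrow"): 5.0, ("high", "narrow"): 3.0,
    ("low", "wide"): 8.0, ("high", "wide"): 4.0,
}
disordinal_means = {
    ("low", "narrow"): 5.0, ("high", "narrow"): 3.0,
    ("low", "wide"): 4.0, ("high", "wide"): 7.0,
}

print(interaction_type(ordinal_means))     # ordinal
print(interaction_type(disordinal_means))  # disordinal
```

In the first set of means a low price yields the higher mean at both distribution levels. In the second set the order reverses under wide distribution.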

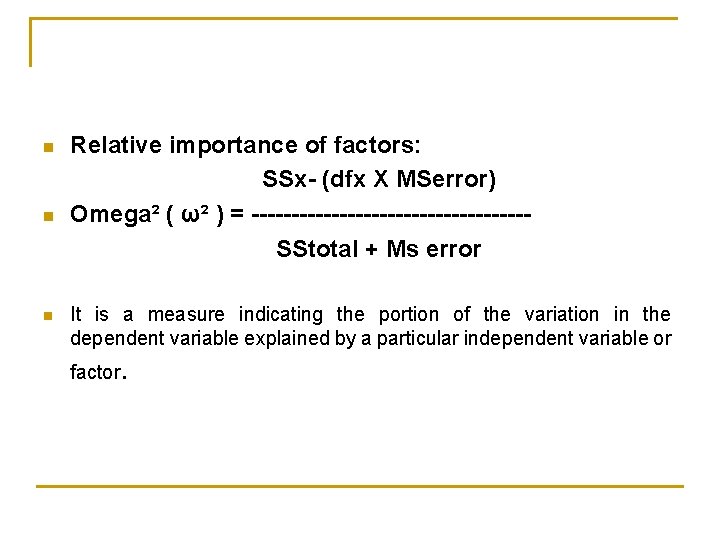

Relative importance of factors:

$$\omega^2 = \frac{SS_x - (df_x \times MS_{error})}{SS_{total} + MS_{error}}$$

Omega squared (ω²) is a measure indicating the proportion of the variation in the dependent variable explained by a particular independent variable or factor.
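The ω² formula translates directly into Python. The function name `omega_squared` and the input values below are hypothetical, chosen to compare two factors from the same analysis.

```python
def omega_squared(ss_x: float, df_x: int, ms_error: float, ss_total: float) -> float:
    """Proportion of variation in Y explained by one factor (omega squared)."""
    return (ss_x - df_x * ms_error) / (ss_total + ms_error)


# Hypothetical two-factor analysis: SS_total = 50, MS_error = 1.
omega_x1 = omega_squared(ss_x=27.0, df_x=1, ms_error=1.0, ss_total=50.0)
omega_x2 = omega_squared(ss_x=12.0, df_x=1, ms_error=1.0, ss_total=50.0)

print(round(omega_x1, 3), round(omega_x2, 3))  # 0.51 0.216
```

In this illustration the first factor accounts for roughly half of the variation and the second for about a fifth, so the first factor is the more important of the two.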

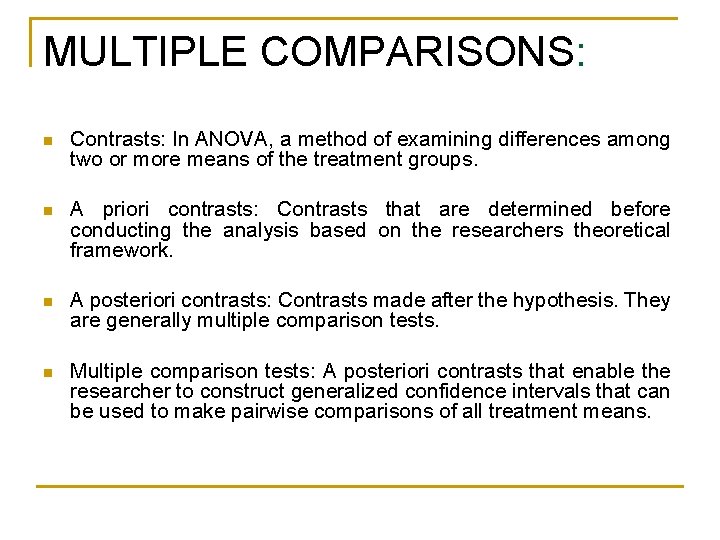

MULTIPLE COMPARISONS:

- Contrasts: In ANOVA, a method of examining differences among two or more means of the treatment groups.
- A priori contrasts: Contrasts that are determined before conducting the analysis, based on the researcher's theoretical framework.
- A posteriori contrasts: Contrasts made after the analysis. They are generally multiple comparison tests.
- Multiple comparison tests: A posteriori contrasts that enable the researcher to construct generalized confidence intervals that can be used to make pairwise comparisons of all treatment means.

REPEATED MEASURES ANOVA:

An ANOVA technique used when respondents are exposed to more than one treatment condition and repeated measurements are obtained. Here:

- SStotal = SSbetween people + SSwithin people
- SSwithin people = SSx + SSerror

NON METRIC ANALYSIS OF VARIANCE:

- Nonmetric ANOVA: An ANOVA technique for examining the difference in the central tendencies of more than two groups when the dependent variable is measured on an ordinal scale.
- k-sample median test: A nonparametric test used to examine differences among groups when the dependent variable is measured on an ordinal scale.
- Kruskal-Wallis one-way analysis of variance: A nonmetric ANOVA test that uses the rank value of each case, not merely its location relative to the median.
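The repeated measures partition can be sketched in Python as follows. The `scores` table is hypothetical: each row is one respondent and each column is one treatment condition, with every respondent measured under every condition.

```python
# Hypothetical data: 4 respondents (rows) x 3 treatment conditions (columns).
scores = [
    [5, 6, 8],
    [4, 6, 7],
    [6, 7, 9],
    [5, 5, 8],
]


def mean(values):
    return sum(values) / len(values)


n_people = len(scores)
n_treatments = len(scores[0])
all_values = [y for row in scores for y in row]
grand_mean = mean(all_values)

ss_total = sum((y - grand_mean) ** 2 for y in all_values)

# Variation between respondents: each respondent's mean against the grand mean.
ss_between_people = sum(n_treatments * (mean(row) - grand_mean) ** 2 for row in scores)

# Variation within respondents: each score against that respondent's own mean.
ss_within_people = sum((y - mean(row)) ** 2 for row in scores for y in row)

# Treatment effect: each treatment mean against the grand mean.
treatment_means = [mean(column) for column in zip(*scores)]
ss_x = sum(n_people * (m - grand_mean) ** 2 for m in treatment_means)

# Whatever within-people variation the treatments do not explain is error.
ss_error = ss_within_people - ss_x

print(round(ss_total, 3), round(ss_between_people, 3),
      round(ss_within_people, 3), round(ss_x, 3), round(ss_error, 3))
```

Removing the between-people variation before testing the treatment effect is what distinguishes this design from a one-way ANOVA on the same scores.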

MULTIVARIATE ANALYSIS OF VARIANCE

- Multivariate analysis of variance (MANOVA): An ANOVA technique using two or more metric dependent variables.
- In MANOVA the null hypothesis is that the vectors of means on the multiple dependent variables are equal across groups.

SPSS

- Analyze > Compare Means > One-Way ANOVA …
- Analyze > General Linear Model > Univariate

Multivariate analysis of variance and covariance

Multivariate analysis of variance and covariance Analysis of variance and covariance

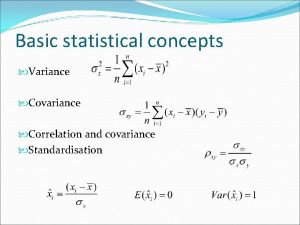

Analysis of variance and covariance Correlation variance

Correlation variance Usage variance formula

Usage variance formula Covariance and standard deviation

Covariance and standard deviation Kovariansi

Kovariansi Covariance between two random variables

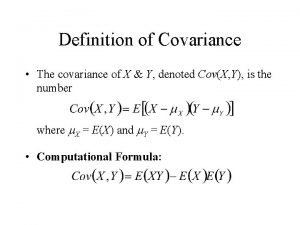

Covariance between two random variables Definition of covariance

Definition of covariance Covariance formula

Covariance formula Formule covariance

Formule covariance Joint probability density function calculator

Joint probability density function calculator Joint random variables

Joint random variables Covariance

Covariance Julia covariance matrix

Julia covariance matrix Correlation covariance

Correlation covariance Covariance of two time series

Covariance of two time series Covariance of joint distribution

Covariance of joint distribution Covariance matrix

Covariance matrix Covariance

Covariance