Data Integration with Apache NiFi, Marco Garcia

- Slides: 35

Data Integration with Apache NiFi. Marco Garcia, CTO and Founder of Cetax; Tutor.Pro. mgarcia@cetax.com.br, https://www.linkedin.com/in/mgarciacetax/

About the Speaker

With more than 20 years of IT experience, 18 of them dedicated exclusively to Business Intelligence, Data Warehousing, and Big Data, Marco Garcia is certified by Kimball University in the USA, where he was taught in person by Ralph Kimball, one of the leading data warehousing gurus.

- First Hortonworks Certified Instructor in LATAM
- Data Architect and Instructor at Cetax Consultoria

Data Flows

Where do we find data flow?

- Remote sensor delivery (Internet of Things, IoT)
- Intra-site, inter-site, and global distribution (Enterprise)
- Ingest for feeding analytics (Big Data)
- Data processing (simple event processing)

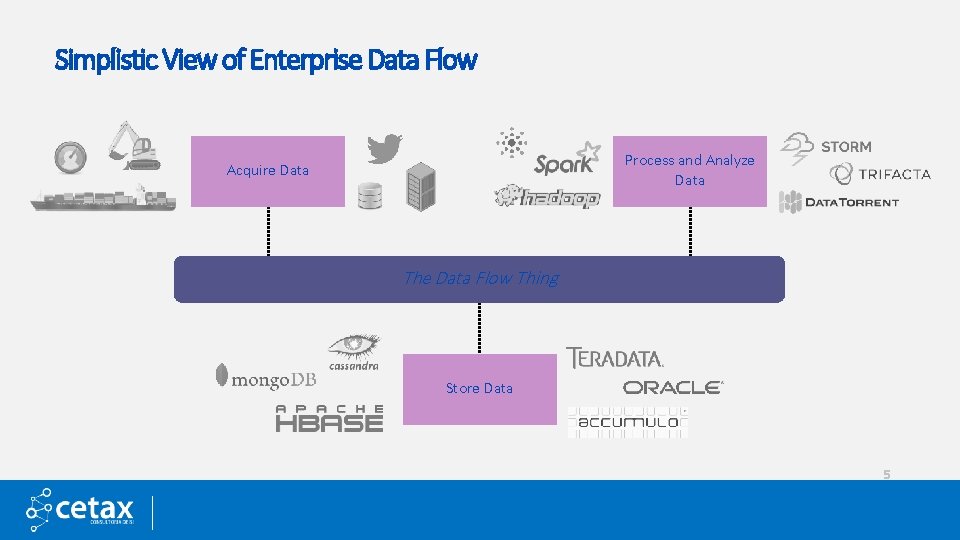

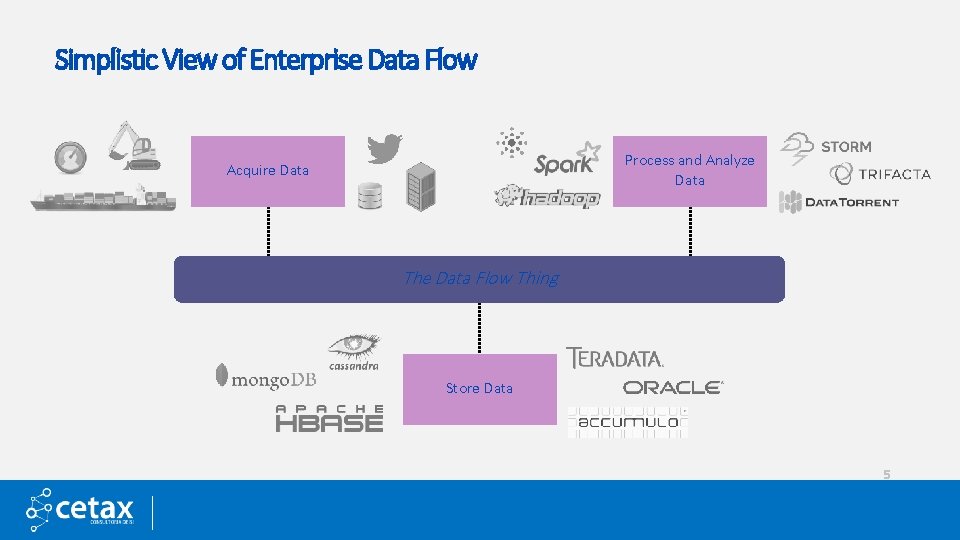

Simplistic View of Enterprise Data Flow

Diagram labels: Acquire Data; Store Data; Process and Analyze Data; The Data Flow Thing.

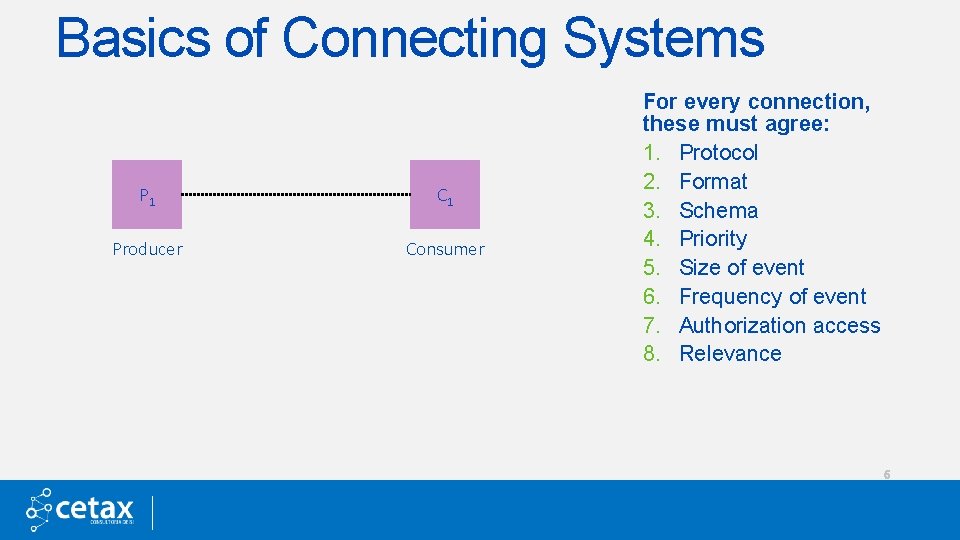

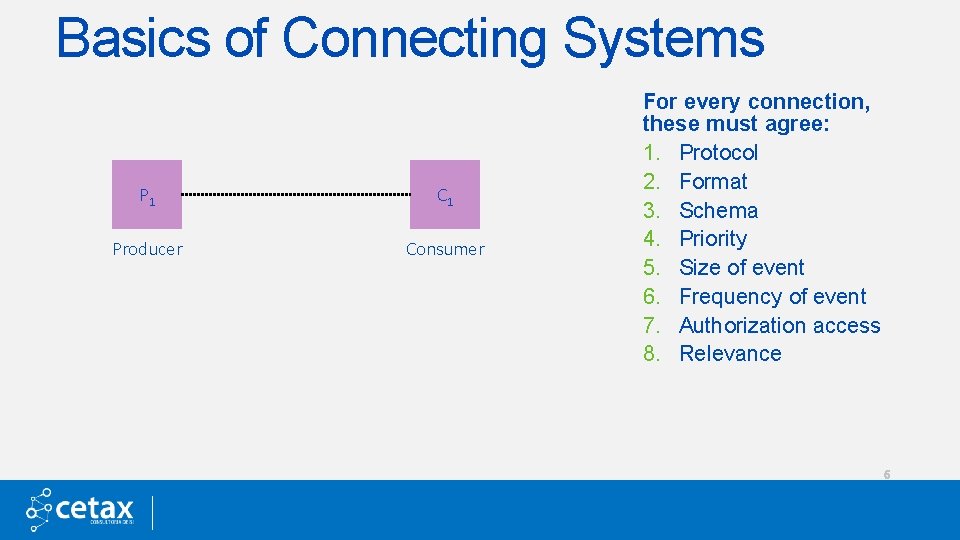

Basics of Connecting Systems

Diagram: a producer (P1) connected to a consumer (C1).

For every connection, these must agree:

1. Protocol
2. Format
3. Schema
4. Priority
5. Size of event
6. Frequency of event
7. Authorization access
8. Relevance
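
The eight points above can be read as a contract that both sides of a connection must satisfy. As a minimal sketch, assuming a hypothetical `Endpoint` record and `disagreements` helper (neither is part of NiFi), the check might look like this:

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Endpoint:
    """Hypothetical description of one side of a connection (not a NiFi API)."""
    protocol: str           # 1. protocol, e.g. "https", "sftp"
    data_format: str        # 2. format, e.g. "json", "csv"
    schema: str             # 3. schema name/version
    priority: int           # 4. priority
    event_bytes: int        # 5. producer: typical event size; consumer: largest it accepts
    events_per_second: int  # 6. producer: emit rate; consumer: rate it can absorb
    auth_scheme: str        # 7. authorization/access scheme
    topic: str              # 8. stand-in for "relevance": what the data is about


def disagreements(producer: Endpoint, consumer: Endpoint) -> List[str]:
    """List the points on which the two sides of a connection do not agree."""
    problems = [
        name
        for name in ("protocol", "data_format", "schema", "priority", "auth_scheme", "topic")
        if getattr(producer, name) != getattr(consumer, name)
    ]
    # Size and frequency are treated as capacities: the consumer must absorb what is sent.
    if producer.event_bytes > consumer.event_bytes:
        problems.append("event_bytes")
    if producer.events_per_second > consumer.events_per_second:
        problems.append("events_per_second")
    return problems


producer = Endpoint("https", "json", "orders-v2", 1, 2_048, 500, "oauth2", "orders")
consumer = Endpoint("https", "json", "orders-v1", 1, 1_024, 1_000, "oauth2", "orders")
print(disagreements(producer, consumer))  # ['schema', 'event_bytes']
```

Treating size and frequency as capacities rather than exact matches is a design choice of this sketch; a real integration would usually negotiate them.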

IoT Is Driving New Requirements

IoAT Data Grows Faster Than We Consume It

The opportunity: unlock transformational business value from a full fidelity of data and analytics for all data.

Much of the new data exists in flight between systems and devices as part of the Internet of Anything.

Figure: new and traditional data sources compared with our ability to consume data. New sources (Internet of Anything): sensors and machines, geolocation, server logs, clickstream, web & social. Traditional sources: files & emails, ERP, CRM, SCM.

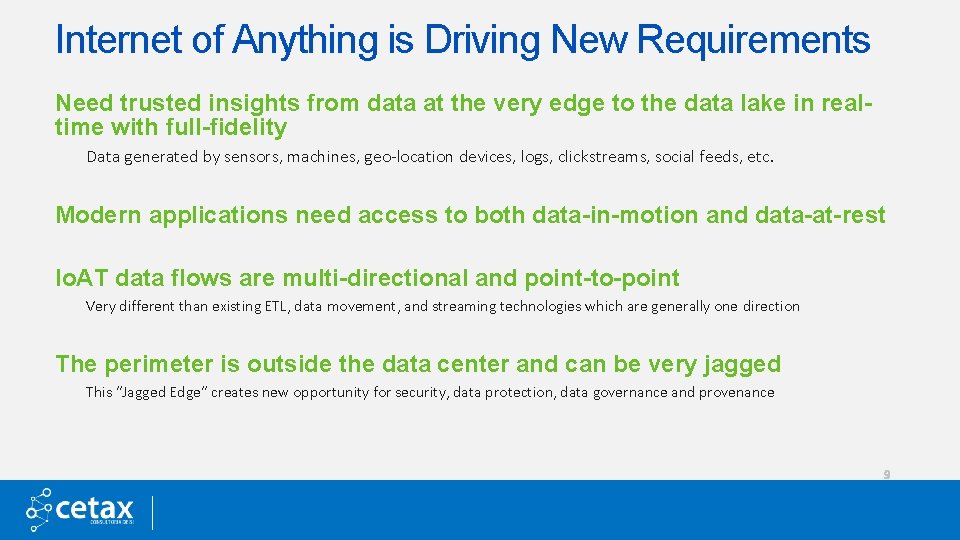

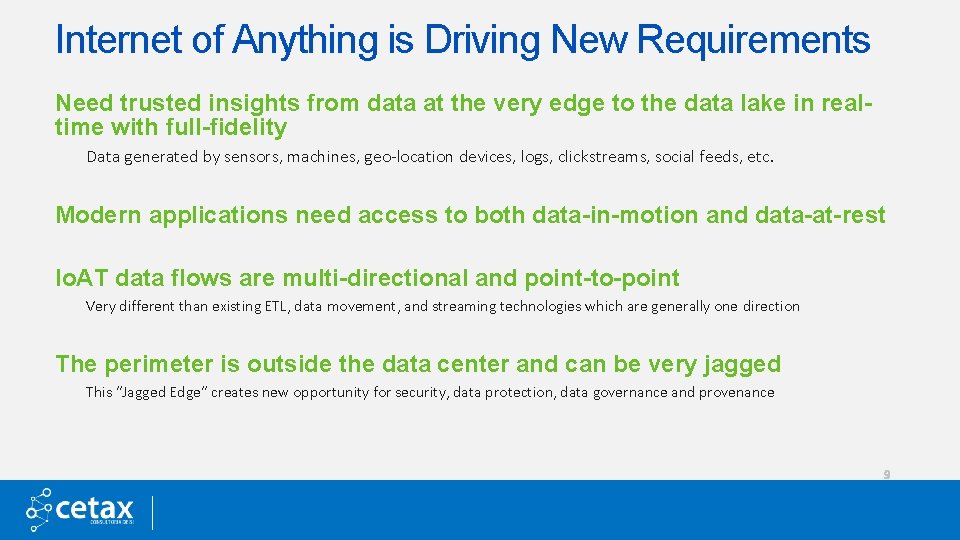

Internet of Anything Is Driving New Requirements

- Need trusted insights from data at the very edge to the data lake, in real time and with full fidelity
- Data is generated by sensors, machines, geolocation devices, logs, clickstreams, social feeds, etc.
- Modern applications need access to both data in motion and data at rest
- IoAT data flows are multi-directional and point-to-point, very different from existing ETL, data movement, and streaming technologies, which are generally one-directional
- The perimeter is outside the data center and can be very jagged
- This "jagged edge" creates new opportunities for security, data protection, data governance, and provenance

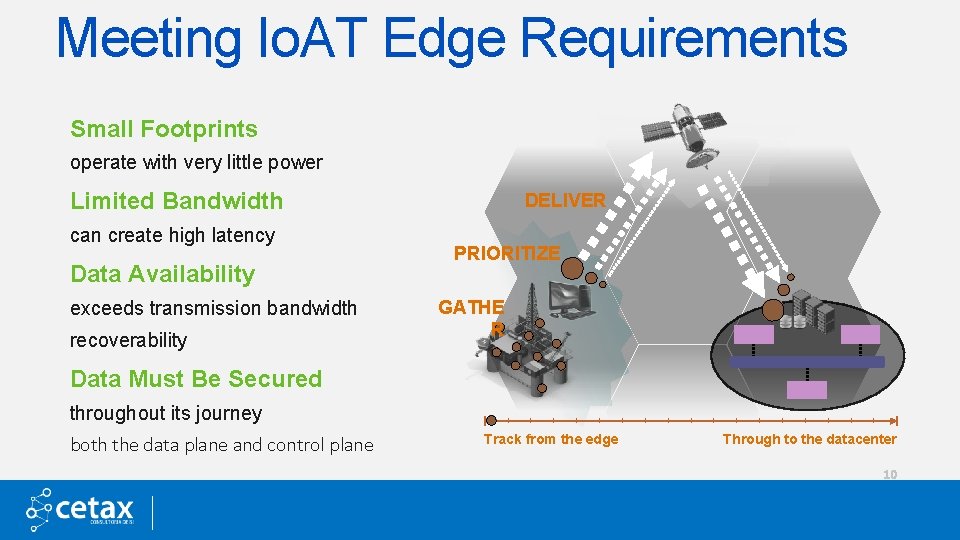

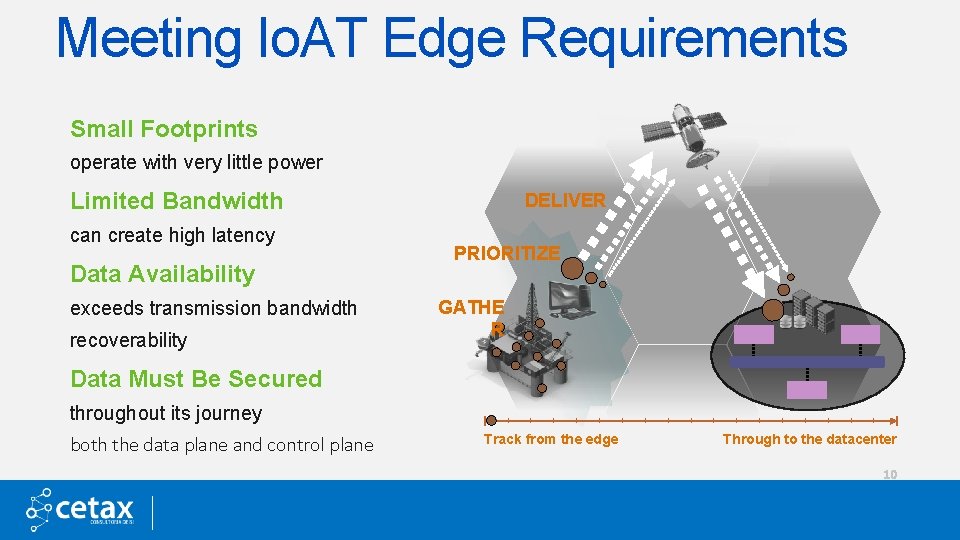

Meeting IoAT Edge Requirements

- Small footprints: operate with very little power
- Limited bandwidth: can create high latency
- Data availability: exceeds transmission bandwidth; recoverability
- Data must be secured throughout its journey, on both the data plane and the control plane
- Track from the edge through to the data center

Diagram stages: gather, prioritize, deliver.
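
The gather, prioritize, deliver stages can be illustrated with a small priority buffer. This is a hypothetical sketch (the `EdgeBuffer` class and its byte-budget model are assumptions, not MiNiFi or NiFi code): urgent readings leave first, and whatever does not fit in the current bandwidth window stays buffered instead of being lost.

```python
import heapq
from itertools import count
from typing import List, Tuple


class EdgeBuffer:
    """Hypothetical edge-side buffer: gather, prioritize, then deliver within a byte budget."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, bytes]] = []
        self._arrival = count()  # tie-breaker: equal priorities leave in arrival order

    def gather(self, payload: bytes, priority: int) -> None:
        # Lower number = more urgent (heapq is a min-heap).
        heapq.heappush(self._heap, (priority, next(self._arrival), payload))

    def deliver(self, budget_bytes: int) -> List[bytes]:
        """Send the most urgent payloads that fit into this window's bandwidth budget.

        Strict priority: stop at the first payload that does not fit instead of
        skipping ahead, so urgent data is never overtaken by less urgent data.
        """
        sent = []
        while self._heap and len(self._heap[0][2]) <= budget_bytes:
            _, _, payload = heapq.heappop(self._heap)
            budget_bytes -= len(payload)
            sent.append(payload)
        return sent

    def backlog(self) -> int:
        """Payloads still waiting locally (kept for a later window, not dropped)."""
        return len(self._heap)


buf = EdgeBuffer()
buf.gather(b"temp=21.5", priority=5)
buf.gather(b"ALARM:overheat", priority=0)
buf.gather(b"temp=21.6", priority=5)
print(buf.deliver(budget_bytes=25))  # [b'ALARM:overheat', b'temp=21.5']
print(buf.backlog())                 # 1
```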

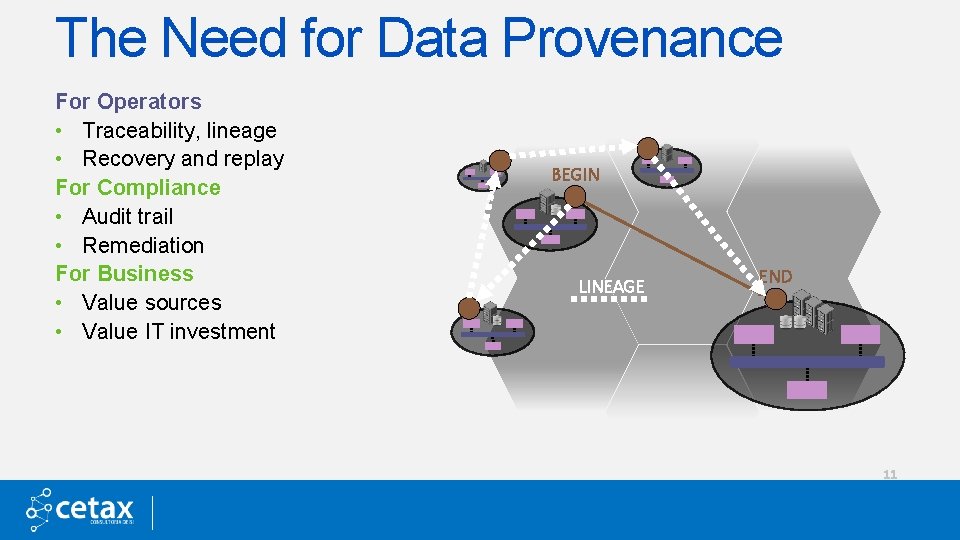

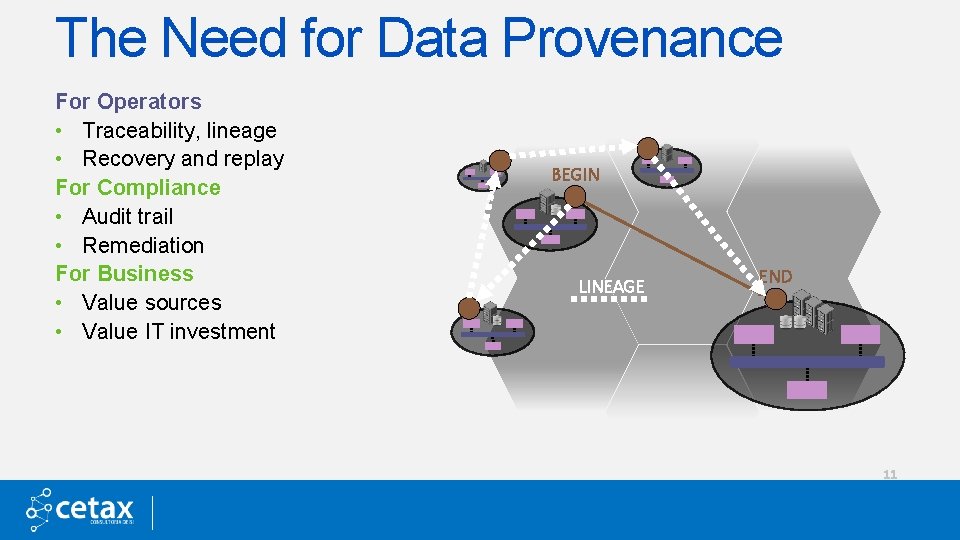

The Need for Data Provenance

- For operators
  - Traceability, lineage
  - Recovery and replay
- For compliance
  - Audit trail
  - Remediation
- For business
  - Value sources
  - Value IT investment

Diagram: lineage from BEGIN to END.

The Need for Fine-Grained Security and Compliance

It's not enough to say you have encrypted communications:

- Enterprise authorization services: entitlements change often
- People and systems with different roles require different access levels
- Tagged/classified data
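
Tag-based access control can be sketched in a few lines. The `TaggedData` and `Principal` types and the rule "a principal must hold every tag on the data" are illustrative assumptions, not NiFi's authorization model:

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass(frozen=True)
class TaggedData:
    content: bytes
    tags: FrozenSet[str]  # classification labels attached as the data was ingested


@dataclass(frozen=True)
class Principal:
    name: str
    entitlements: FrozenSet[str]  # assumed to come from an authorization service at request time


def can_read(principal: Principal, item: TaggedData) -> bool:
    """Allow access only if the principal is entitled to every tag on the item."""
    return item.tags <= principal.entitlements


def visible(principal: Principal, items: List[TaggedData]) -> List[TaggedData]:
    return [item for item in items if can_read(principal, item)]


records = [
    TaggedData(b"order totals", frozenset({"internal"})),
    TaggedData(b"customer addresses", frozenset({"internal", "pii"})),
]
analyst = Principal("analyst", frozenset({"internal"}))
auditor = Principal("auditor", frozenset({"internal", "pii"}))
print([r.content for r in visible(analyst, records)])  # [b'order totals']
print(len(visible(auditor, records)))                  # 2
```

Because entitlements are consulted at read time rather than baked into the data, a change in someone's entitlements takes effect on the next request.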

Real-time Data Flow

It's not just about how quickly you move data; it's about how quickly you can change behavior and seize new opportunities.

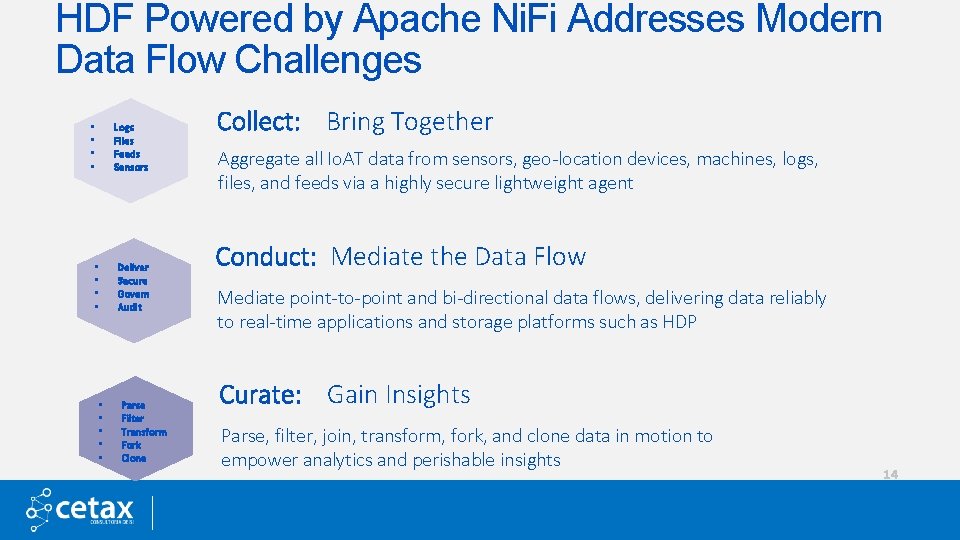

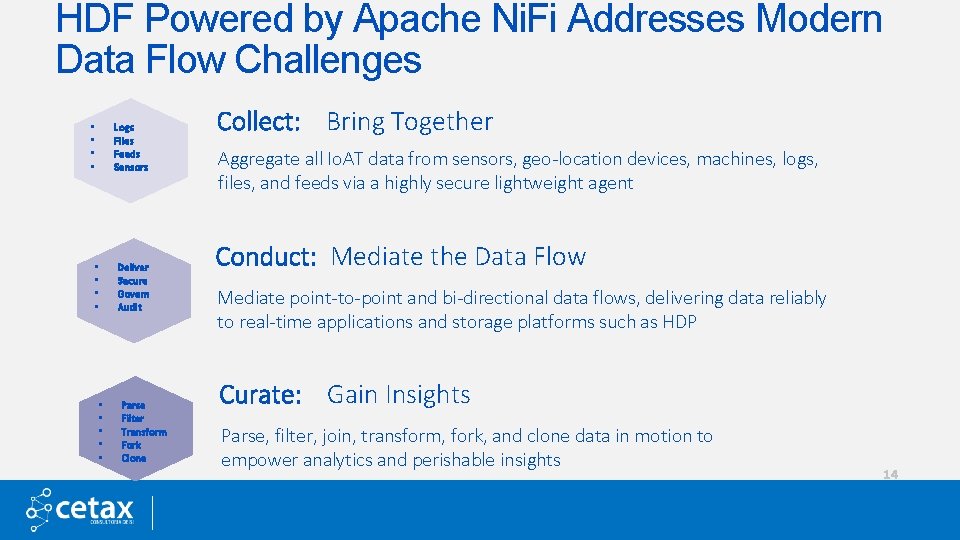

HDF Powered by Apache NiFi Addresses Modern Data Flow Challenges

Diagram: sources (logs, files, feeds, sensors); deliver, secure, govern, audit; parse, filter, transform, fork, clone.

- Collect (bring together): aggregate all IoAT data from sensors, geolocation devices, machines, logs, files, and feeds via a highly secure, lightweight agent.
- Conduct (mediate the data flow): mediate point-to-point and bi-directional data flows, delivering data reliably to real-time applications and storage platforms such as HDP.
- Curate (gain insights): parse, filter, join, transform, fork, and clone data in motion to empower analytics and perishable insights.

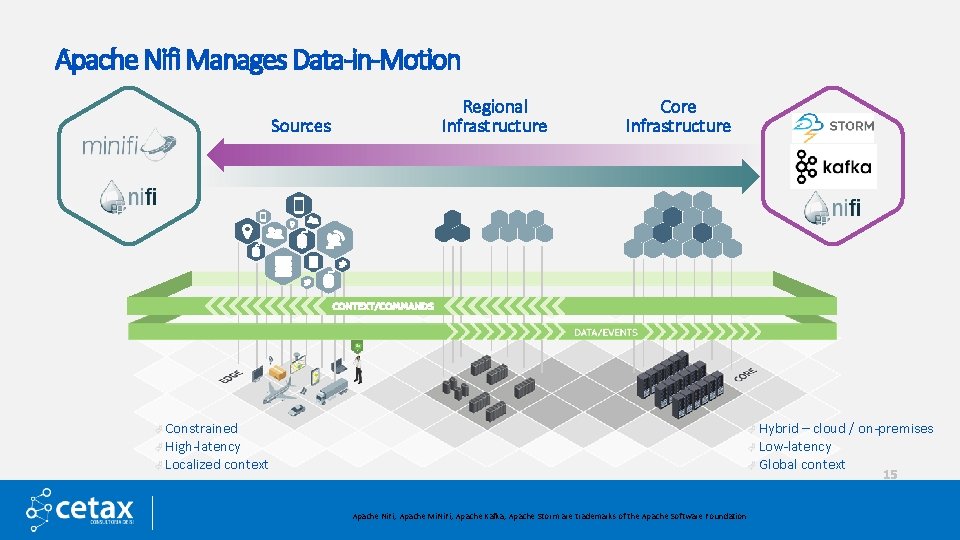

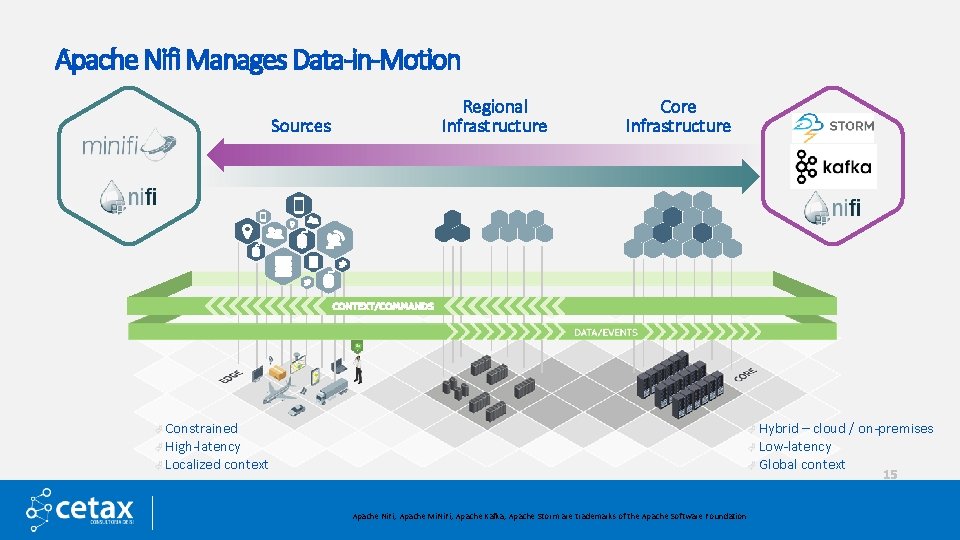

Apache NiFi Manages Data-in-Motion

Figure columns: Sources, Regional Infrastructure, Core Infrastructure. Characteristics range from constrained, high-latency, localized context at the sources to hybrid (cloud/on-premises), low-latency, global context in the core.

Apache NiFi, Apache MiNiFi, Apache Kafka, and Apache Storm are trademarks of the Apache Software Foundation.

NiFi: Developed by the National Security Agency

Developed by the NSA over 8 years, then declassified.

"NSA's innovators work on some of the most challenging national security problems imaginable." "Commercial enterprises could use it to quickly control, manage, and analyze the flow of information from geographically dispersed sites, creating comprehensive situational awareness." (Linda L. Burger, Director of the NSA Technology Transfer Program)

A Brief History

- 2006: NiagaraFiles (NiFi) was first conceived at the National Security Agency (NSA).
- November 2014: NiFi is donated to the Apache Software Foundation (ASF) through the NSA's Technology Transfer Program and enters the ASF incubator.
- July 2015: NiFi reaches ASF top-level project status.

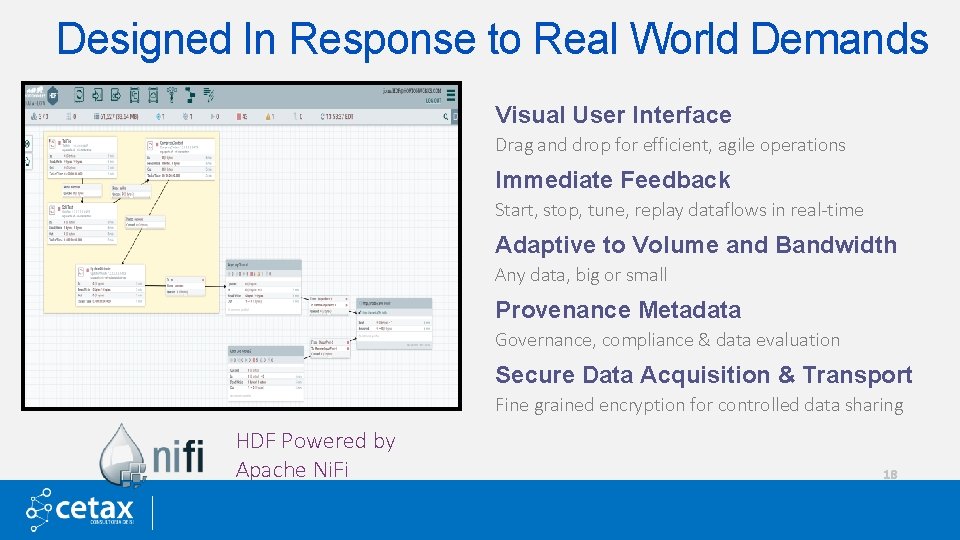

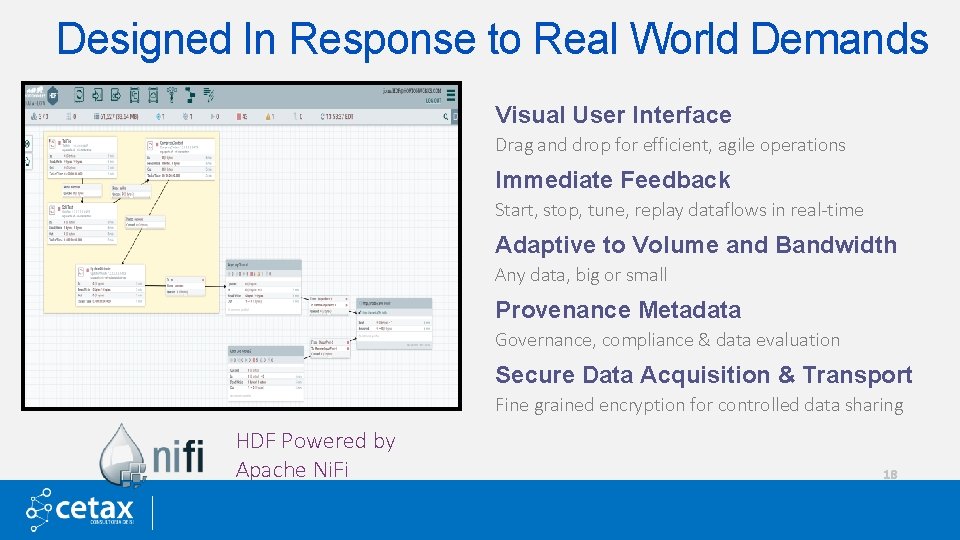

Designed in Response to Real-World Demands

- Visual user interface: drag and drop for efficient, agile operations
- Immediate feedback: start, stop, tune, and replay dataflows in real time
- Adaptive to volume and bandwidth: any data, big or small
- Provenance metadata: governance, compliance, and data evaluation
- Secure data acquisition and transport: fine-grained encryption for controlled data sharing

HDF Powered by Apache NiFi

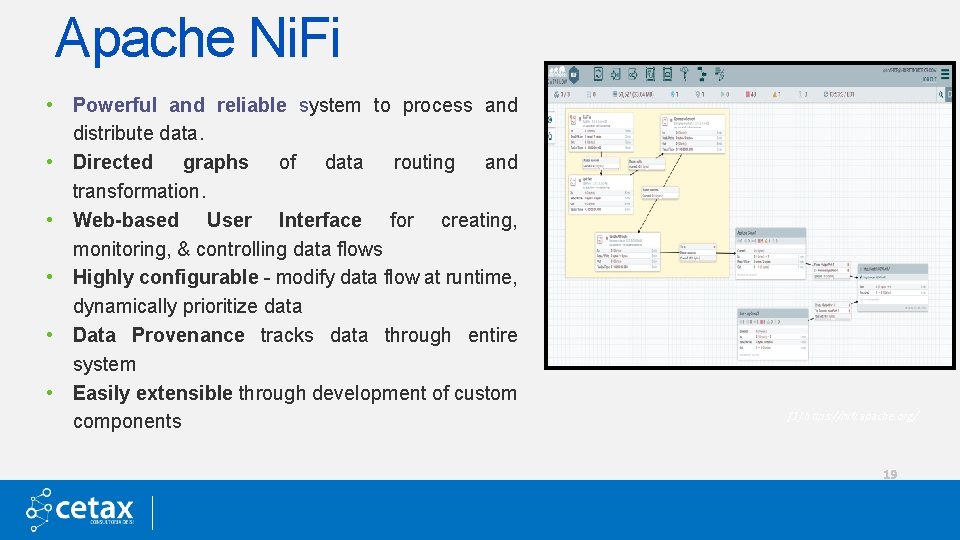

Apache NiFi

- Powerful and reliable system to process and distribute data
- Directed graphs of data routing and transformation
- Web-based user interface for creating, monitoring, and controlling data flows
- Highly configurable: modify data flow at runtime, dynamically prioritize data
- Data provenance tracks data through the entire system
- Easily extensible through development of custom components

Source: https://nifi.apache.org/

NiFi Use Cases

- Ingest Logs for Cyber Security: integrated and secure log collection for real-time data analytics and threat detection
- Feed Data to Streaming Analytics: accelerate big data ROI by streaming data into analytics systems such as Apache Storm or Apache Spark Streaming
- Data Warehouse Offload: convert source data to streaming data and use HDF for data movement before delivering it for ETL processing. This enables ETL processing to be offloaded to Hadoop without having to change source systems.
- Move Data Internally: optimize resource utilization by moving data between data centers, or between on-premises and cloud infrastructure
- Capture IoT Data: transport disparate and often remote IoT data in real time, despite any limitations in device footprint, power, or connectivity, while avoiding data loss
- Big Data Ingest: easily and efficiently ingest data into Hadoop

NiFi Architecture

Apache NiFi: The Three Key Concepts

- Manage the flow of information
- Data provenance
- Secure the control plane and data plane

Apache NiFi - Key Features

- Guaranteed delivery
- Data buffering
  - Back pressure
  - Pressure release
- Prioritized queuing
- Flow-specific QoS
  - Latency vs. throughput
  - Loss tolerance
- Data provenance
- Recovery/recording: a rolling log of fine-grained history
- Visual command and control
- Flow templates
- Multi-tenant authorization
- Designed for extension
- Clustering
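
Back pressure and pressure release are easiest to see on a single connection. The following is a hypothetical sketch, assuming a connection with an object-count threshold, a byte-size threshold, and an age-based expiration; it mirrors the idea, not NiFi's actual classes:

```python
from collections import deque
from typing import Deque, Optional, Tuple


class Connection:
    """Hypothetical bounded queue between two processors (a sketch, not NiFi's implementation).

    Back pressure: once either threshold is reached, upstream offers are refused.
    Pressure release: data older than `expire_after_s` can be dropped to make room.
    """

    def __init__(self, max_objects: int, max_bytes: int, expire_after_s: Optional[float] = None) -> None:
        self.max_objects = max_objects
        self.max_bytes = max_bytes
        self.expire_after_s = expire_after_s
        self._queue: Deque[Tuple[float, bytes]] = deque()  # (enqueue time, content)
        self._bytes = 0

    def is_back_pressured(self) -> bool:
        return len(self._queue) >= self.max_objects or self._bytes >= self.max_bytes

    def offer(self, content: bytes, now: float) -> bool:
        """Upstream side: enqueue unless back pressure is engaged."""
        if self.is_back_pressured():
            return False
        self._queue.append((now, content))
        self._bytes += len(content)
        return True

    def poll(self) -> Optional[bytes]:
        """Downstream side: take the oldest item, or None if the queue is empty."""
        if not self._queue:
            return None
        _, content = self._queue.popleft()
        self._bytes -= len(content)
        return content

    def release_pressure(self, now: float) -> int:
        """Drop items older than the expiration age; return how many were dropped."""
        if self.expire_after_s is None:
            return 0
        dropped = 0
        while self._queue and now - self._queue[0][0] > self.expire_after_s:
            _, content = self._queue.popleft()
            self._bytes -= len(content)
            dropped += 1
        return dropped


conn = Connection(max_objects=2, max_bytes=1_000, expire_after_s=60)
print(conn.offer(b"a", now=0), conn.offer(b"b", now=10))  # True True
print(conn.offer(b"c", now=20))                           # False: object threshold reached
print(conn.release_pressure(now=65))                      # 1 ("a" is 65 s old)
print(conn.offer(b"c", now=65), conn.poll())              # True b'b'
```

Refusing the offer, rather than dropping data, is what lets the upstream side slow down (in NiFi, the upstream processor simply stops being scheduled); expiration is the explicit, configurable case where loss is tolerated.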

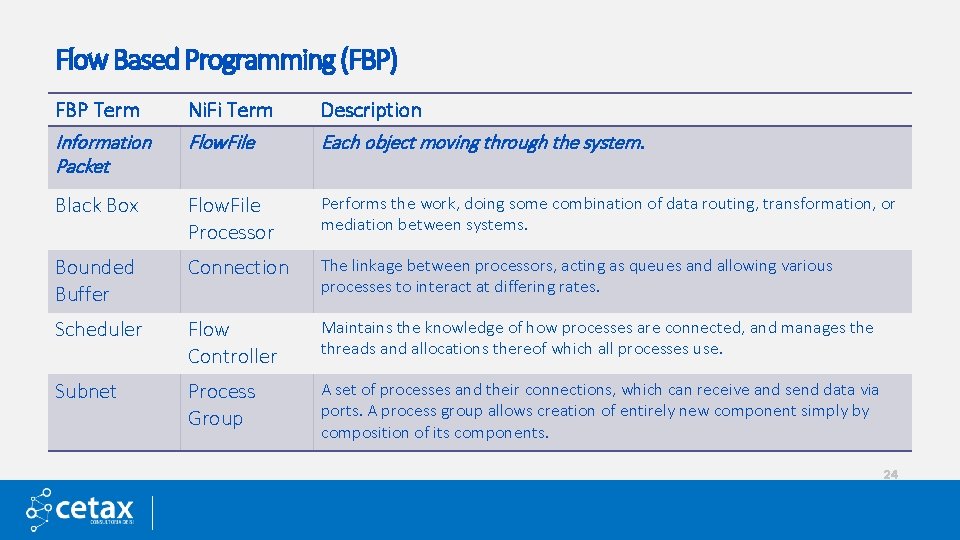

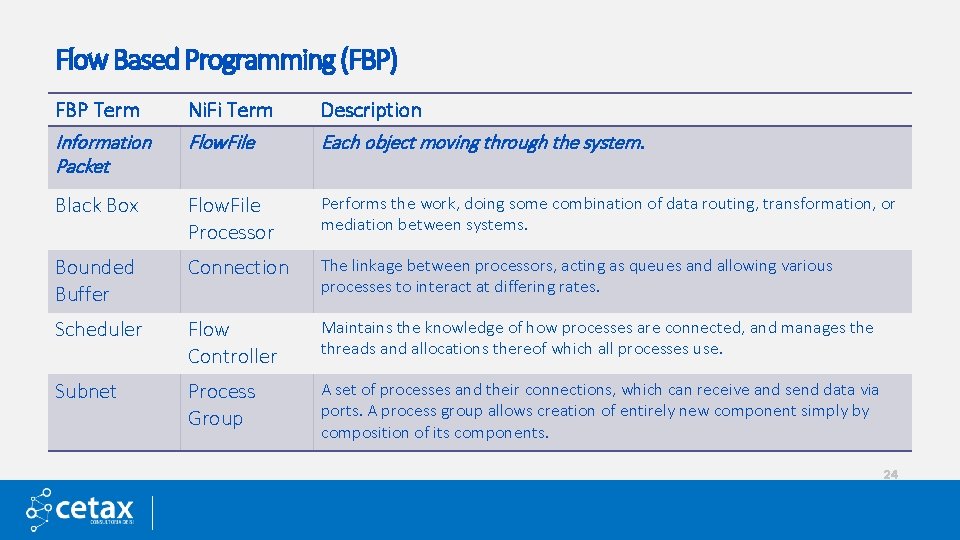

Flow Based Programming (FBP)

| FBP Term | NiFi Term | Description |
|---|---|---|
| Information Packet | FlowFile | Each object moving through the system. |
| Black Box | FlowFile Processor | Performs the work, doing some combination of data routing, transformation, or mediation between systems. |
| Bounded Buffer | Connection | The linkage between processors, acting as queues and allowing various processes to interact at differing rates. |
| Scheduler | Flow Controller | Maintains the knowledge of how processes are connected, and manages the threads and allocations thereof which all processes use. |
| Subnet | Process Group | A set of processes and their connections, which can receive and send data via ports. A process group allows the creation of an entirely new component simply by composition of its components. |
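
To make the FBP vocabulary concrete, here is a toy mapping of the table onto Python. Everything in it (`FlowFile`, `FlowController`, the processor-as-function convention) is a hypothetical illustration, not NiFi's Java API; queues are unbounded and the graph is assumed acyclic for brevity.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Callable, Deque, Dict, List


@dataclass
class FlowFile:
    """Information packet: key/value attributes plus the content bytes."""
    content: bytes
    attributes: Dict[str, str] = field(default_factory=dict)


# Black box: a processor consumes one FlowFile and emits zero or more FlowFiles.
Processor = Callable[[FlowFile], List[FlowFile]]


class FlowController:
    """Scheduler: knows how processors are connected and decides which one runs next."""

    def __init__(self) -> None:
        self.processors: Dict[str, Processor] = {}
        self.downstream: Dict[str, List[str]] = {}
        # One queue per processor input; a real connection would be bounded.
        self.queues: Dict[str, Deque[FlowFile]] = {}

    def add(self, name: str, processor: Processor, downstream: List[str]) -> None:
        self.processors[name] = processor
        self.downstream[name] = downstream
        self.queues[name] = deque()

    def run(self, entry: str, flowfile: FlowFile) -> List[FlowFile]:
        """Enqueue one FlowFile, run processors until every queue drains, return sink output."""
        self.queues[entry].append(flowfile)
        sink: List[FlowFile] = []
        while any(self.queues.values()):  # assumes an acyclic graph, or this may never end
            for name, queue in self.queues.items():
                while queue:
                    for out in self.processors[name](queue.popleft()):
                        targets = self.downstream[name]
                        if not targets:  # no outgoing connection: treat as a sink
                            sink.append(out)
                        for target in targets:
                            self.queues[target].append(out)
        return sink


controller = FlowController()
controller.add("upper", lambda ff: [FlowFile(ff.content.upper(), {**ff.attributes, "step": "upper"})], ["split"])
controller.add("split", lambda ff: [FlowFile(part, dict(ff.attributes)) for part in ff.content.split(b",")], [])
result = controller.run("upper", FlowFile(b"a,b,c", {"source": "demo"}))
print([ff.content for ff in result])  # [b'A', b'B', b'C']
```

A process group would wrap such a controller behind named input and output ports; that layer is omitted here.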

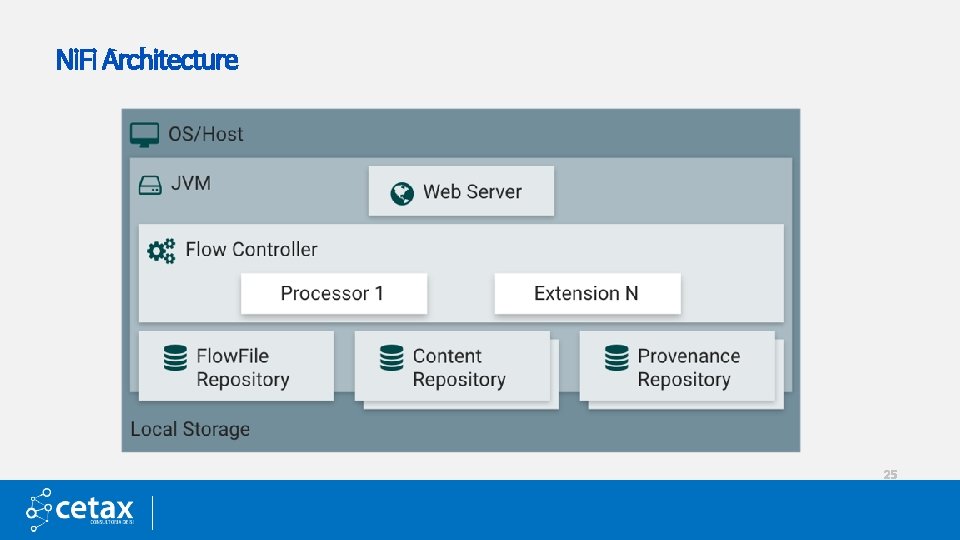

NiFi Architecture

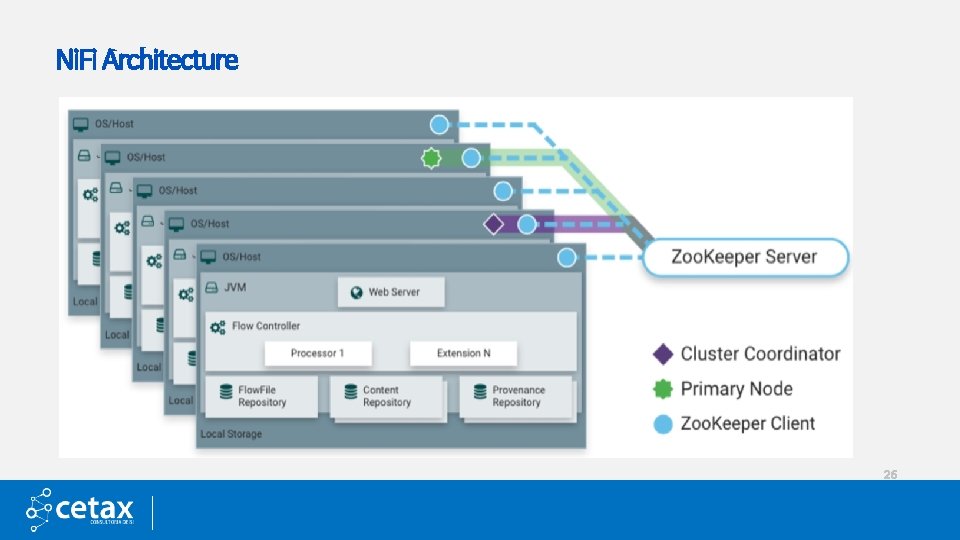

NiFi Architecture

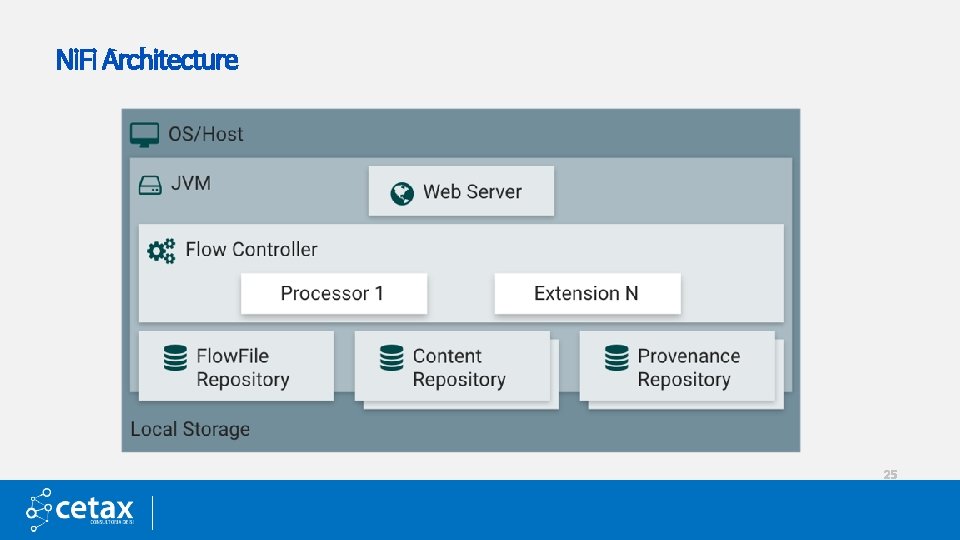

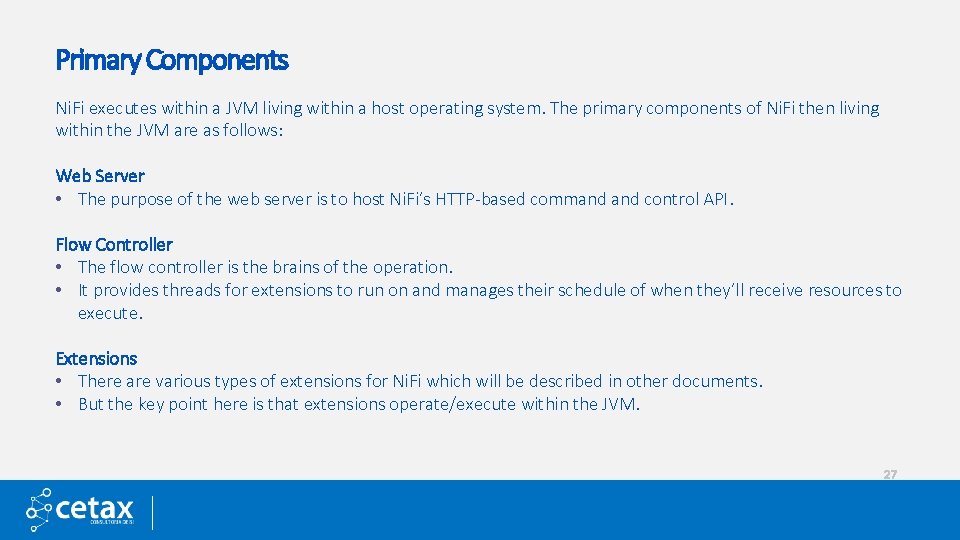

Primary Components

NiFi executes within a JVM on a host operating system. The primary components of NiFi within the JVM are as follows:

- Web Server: hosts NiFi's HTTP-based command and control API.
- Flow Controller: the brains of the operation. It provides threads for extensions to run on and manages the schedule of when they receive resources to execute.
- Extensions: there are various types of NiFi extensions, which are described in other documents. The key point here is that extensions operate and execute within the JVM.

Primary Components (cont.)

- FlowFile Repository
  - The FlowFile Repository is where NiFi keeps track of the state of what it knows about a given FlowFile that is presently active in the flow.
  - The default approach is a persistent write-ahead log that lives on a specified disk partition.
- Content Repository
  - The Content Repository is where the actual content bytes of a given FlowFile live.
  - The default approach stores blocks of data in the file system.
  - More than one file system storage location can be specified, so that different physical partitions are engaged to reduce contention on any single volume.
- Provenance Repository
  - The Provenance Repository is where all provenance event data is stored.
  - The repository construct is pluggable, with the default implementation using one or more physical disk volumes.
  - Within each location, event data is indexed and searchable.
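
The write-ahead idea behind the FlowFile Repository can be sketched as follows. This is a hypothetical, simplified model (JSON lines, one file, no checkpointing), not NiFi's on-disk format: every change is appended and synced to disk before it is applied in memory, so replaying the log after a crash rebuilds the same state.

```python
import json
import os
import tempfile
from typing import Dict


class FlowFileRepository:
    """Hypothetical write-ahead log: each change is made durable before it is applied.

    Omits checkpointing (periodic snapshot plus log truncation), so the log grows forever.
    """

    def __init__(self, path: str) -> None:
        self.path = path
        self.state: Dict[str, dict] = {}
        if os.path.exists(path):
            # Recovery: replay every logged change, in order, to rebuild the state.
            with open(path, encoding="utf-8") as log:
                for line in log:
                    self._apply(json.loads(line))

    def update(self, flowfile_id: str, attributes: dict) -> None:
        self._log({"op": "update", "id": flowfile_id, "attributes": attributes})

    def delete(self, flowfile_id: str) -> None:
        self._log({"op": "delete", "id": flowfile_id})

    def _log(self, record: dict) -> None:
        with open(self.path, "a", encoding="utf-8") as log:
            log.write(json.dumps(record) + "\n")
            log.flush()
            os.fsync(log.fileno())  # on disk before the in-memory view changes
        self._apply(record)

    def _apply(self, record: dict) -> None:
        if record["op"] == "delete":
            self.state.pop(record["id"], None)
        else:
            self.state[record["id"]] = record["attributes"]


path = os.path.join(tempfile.mkdtemp(), "flowfile-repo.wal")
repo = FlowFileRepository(path)
repo.update("ff-1", {"filename": "a.csv", "queue": "to-parse"})
repo.update("ff-2", {"filename": "b.csv", "queue": "to-parse"})
repo.delete("ff-1")                   # e.g. ff-1 finished processing
recovered = FlowFileRepository(path)  # simulates a restart after a crash
print(recovered.state)  # {'ff-2': {'filename': 'b.csv', 'queue': 'to-parse'}}
```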

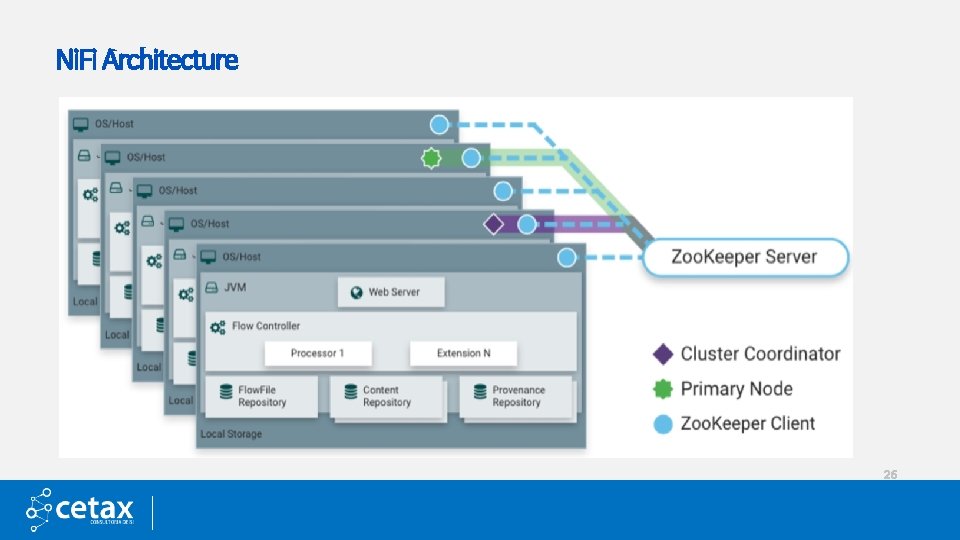

NiFi Cluster

Starting with the NiFi 1.x/HDF 2.x release, a zero-master clustering paradigm is employed.

- NiFi Cluster Coordinator: the node in a NiFi cluster that is responsible for managing the nodes in the cluster. It determines which nodes are allowed in the cluster and provides the most up-to-date flow to newly joining nodes.
- Nodes: each cluster is made up of one or more nodes. The nodes do the actual data processing.
- Primary Node: every cluster has one Primary Node. On this node, it is possible to run "isolated processors", which are scheduled to run only on the Primary Node.
- ZooKeeper Server: used to automatically elect the Primary Node and the Cluster Coordinator.

NiFi clustering is covered in detail in the following lessons.
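
Primary Node and Cluster Coordinator election can be illustrated with the widely documented ZooKeeper leader-election recipe, in which each candidate creates an ephemeral sequential znode and the lowest sequence number wins. The in-memory `ElectionPath` class below is a hypothetical simulation of that recipe, not a ZooKeeper client, and NiFi's own election code may differ in detail:

```python
import itertools
from typing import Dict, Optional


class ElectionPath:
    """In-memory stand-in for one ZooKeeper election path (not a ZooKeeper client).

    Each candidate gets an increasing sequence number, like an ephemeral sequential
    znode; the lowest surviving number is the leader.
    """

    def __init__(self) -> None:
        self._sequence = itertools.count()
        self._candidates: Dict[str, int] = {}

    def join(self, node: str) -> None:
        self._candidates[node] = next(self._sequence)

    def session_expired(self, node: str) -> None:
        # Ephemeral znodes vanish when the owning session ends (e.g. the node crashes).
        self._candidates.pop(node, None)

    def leader(self) -> Optional[str]:
        if not self._candidates:
            return None
        return min(self._candidates, key=self._candidates.__getitem__)


# Two roles are elected; each gets its own election path here.
primary_node = ElectionPath()
cluster_coordinator = ElectionPath()
for node in ("node-b", "node-a", "node-c"):
    primary_node.join(node)
    cluster_coordinator.join(node)

print(primary_node.leader())  # node-b (joined first)
primary_node.session_expired("node-b")
cluster_coordinator.session_expired("node-b")
print(primary_node.leader(), cluster_coordinator.leader())  # node-a node-a
```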

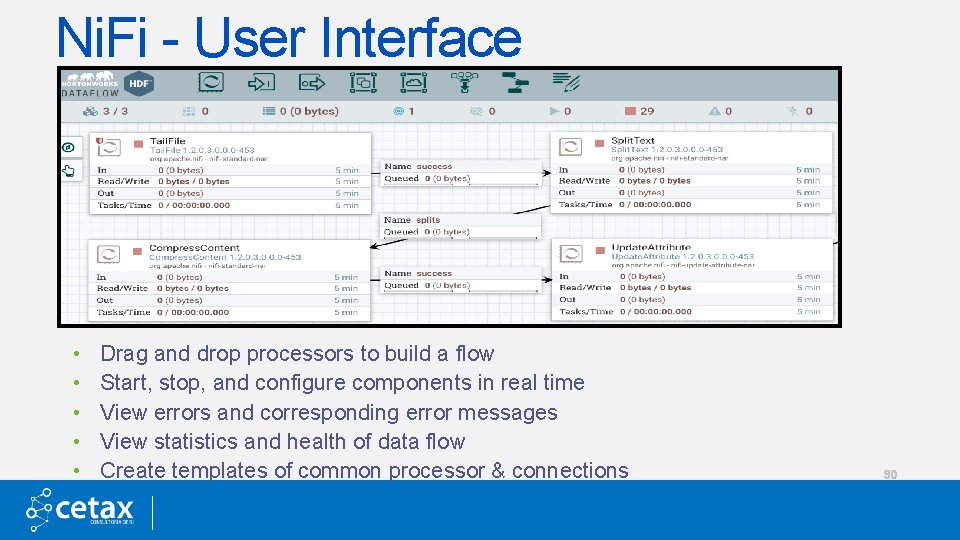

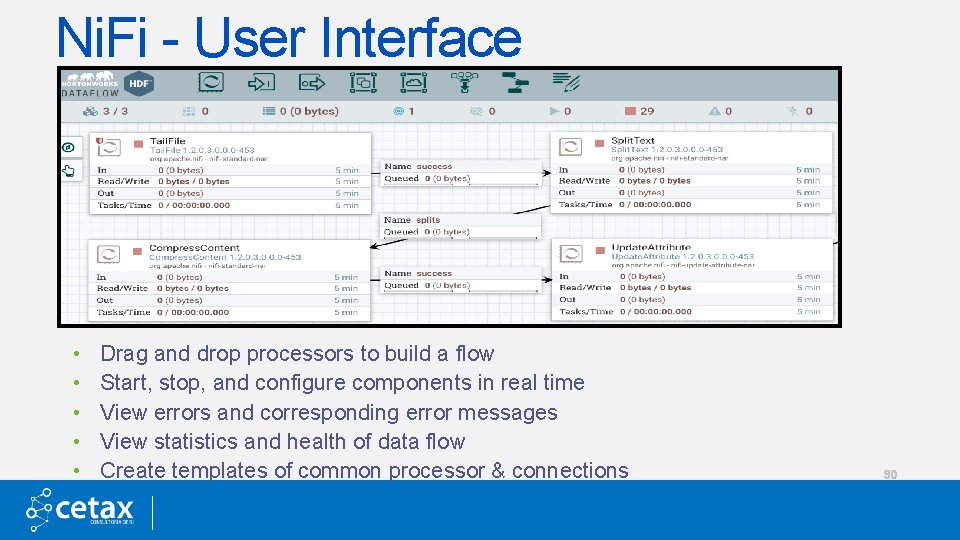

NiFi - User Interface

- Drag and drop processors to build a flow
- Start, stop, and configure components in real time
- View errors and corresponding error messages
- View statistics and health of the data flow
- Create templates of common processors and connections

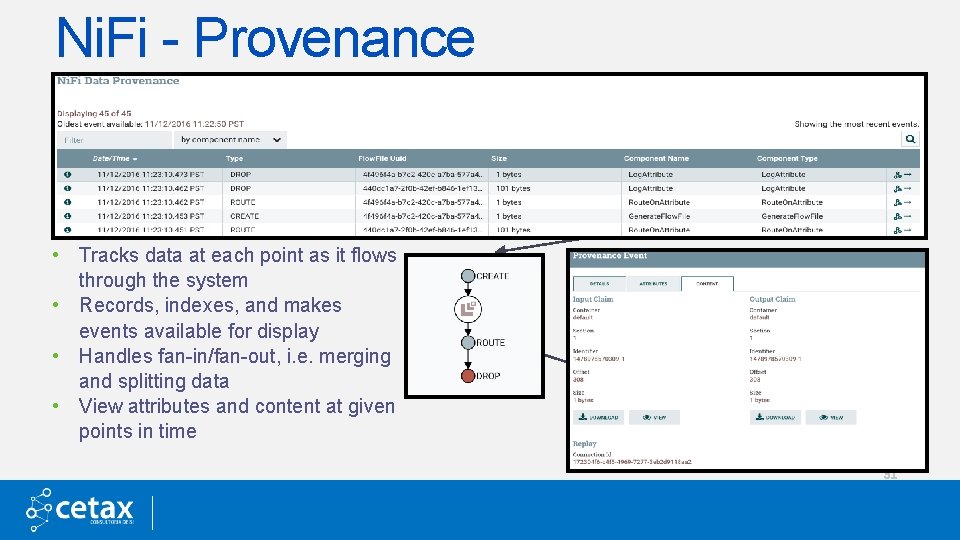

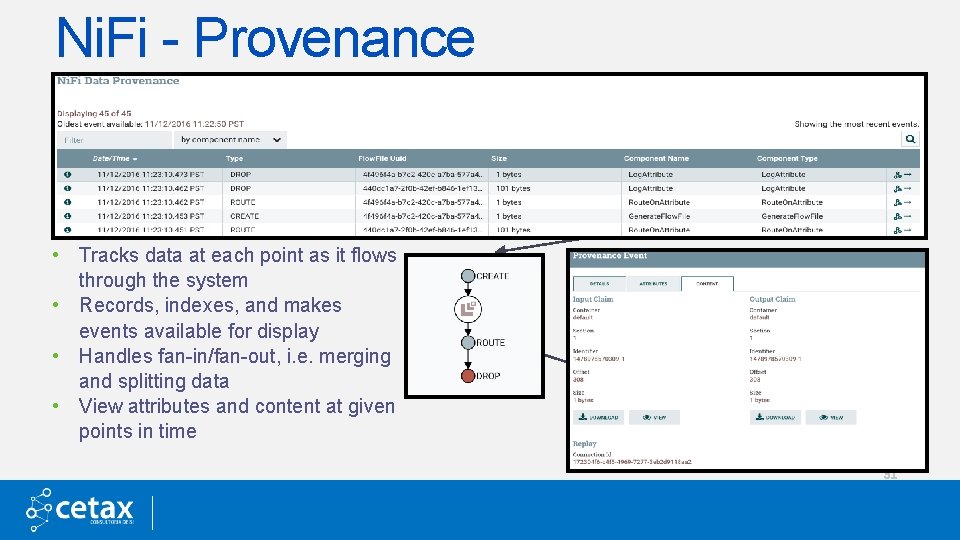

NiFi - Provenance

- Tracks data at each point as it flows through the system
- Records, indexes, and makes events available for display
- Handles fan-in/fan-out, i.e. merging and splitting data
- View attributes and content at given points in time
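
Lineage across fan-out and fan-in can be reconstructed by walking parent links between events. This sketch assumes a simplified, hypothetical event record (`event_type`, `parents`, `children`); the event type names echo NiFi's provenance vocabulary, but the data structure is not NiFi's:

```python
from dataclasses import dataclass
from typing import List, Set, Tuple


@dataclass(frozen=True)
class ProvenanceEvent:
    event_type: str            # e.g. "RECEIVE", "FORK" (split), "JOIN" (merge)
    parents: Tuple[str, ...]   # FlowFile ids the event consumed
    children: Tuple[str, ...]  # FlowFile ids the event produced
    timestamp: int


def ancestors(events: List[ProvenanceEvent], flowfile_id: str) -> Set[str]:
    """Walk parent links backwards to find every FlowFile this one was derived from."""
    found: Set[str] = set()
    frontier = [flowfile_id]
    while frontier:
        current = frontier.pop()
        for event in events:
            if current in event.children:
                for parent in event.parents:
                    if parent not in found:
                        found.add(parent)
                        frontier.append(parent)
    return found


log = [
    ProvenanceEvent("RECEIVE", (), ("ff-1",), 1),
    ProvenanceEvent("RECEIVE", (), ("ff-4",), 2),
    ProvenanceEvent("FORK", ("ff-1",), ("ff-2", "ff-3"), 3),  # fan-out: split
    ProvenanceEvent("JOIN", ("ff-3", "ff-4"), ("ff-5",), 4),  # fan-in: merge
]
print(sorted(ancestors(log, "ff-5")))  # ['ff-1', 'ff-3', 'ff-4']
print(sorted(ancestors(log, "ff-2")))  # ['ff-1']
```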

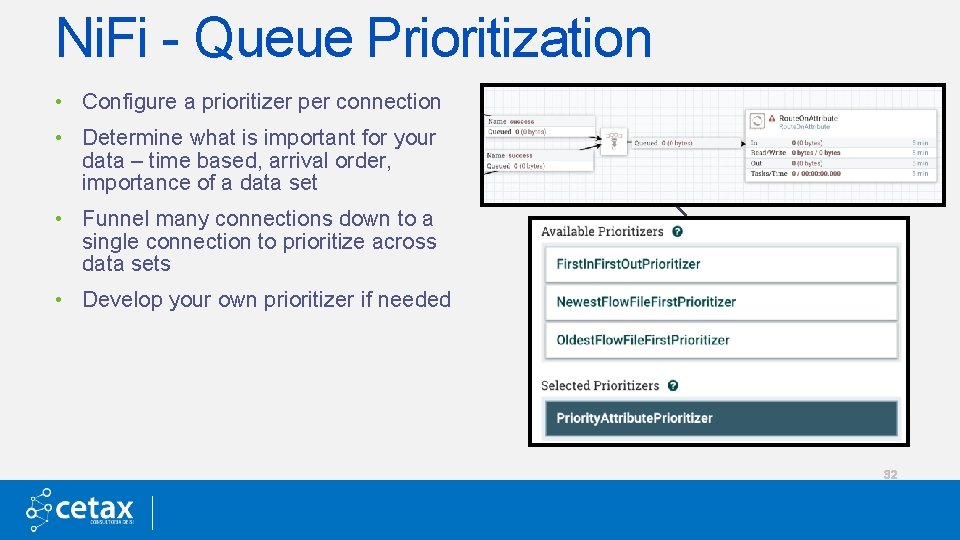

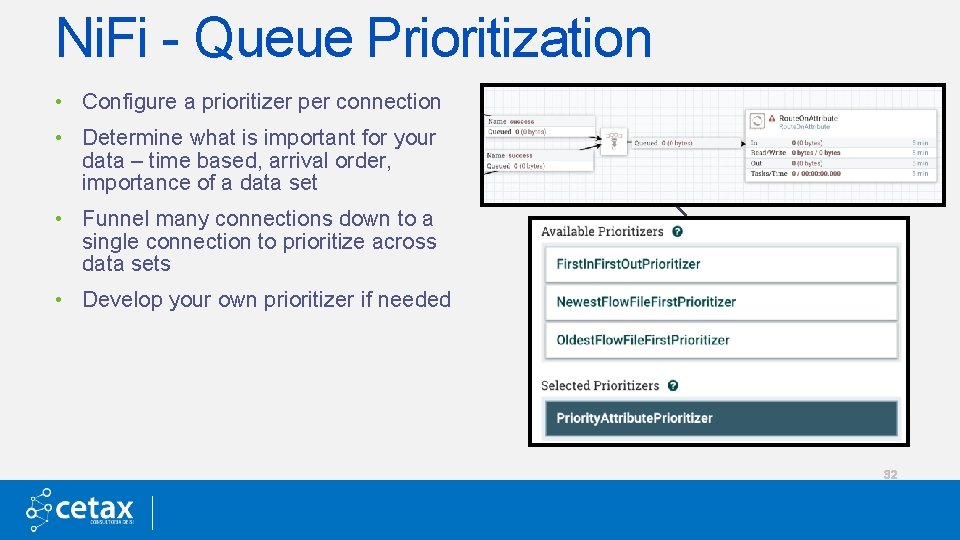

NiFi - Queue Prioritization

- Configure a prioritizer per connection
- Determine what is important for your data: time-based, arrival order, importance of a data set
- Funnel many connections down to a single connection to prioritize across data sets
- Develop your own prioritizer if needed
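
Chained prioritizers can be modeled as sort keys. The function names below loosely echo NiFi's built-in FirstInFirstOutPrioritizer, OldestFlowFileFirstPrioritizer, and PriorityAttributePrioritizer, but this is a hypothetical Python sketch of the ordering idea only; it assumes numeric `priority` attribute values.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class QueuedFlowFile:
    name: str
    entered_flow_at: int  # when the data first entered the flow
    queued_at: int        # when it entered this connection
    attributes: Dict[str, str] = field(default_factory=dict)


# A prioritizer is modeled as a sort key: smaller keys are served first.
Prioritizer = Callable[[QueuedFlowFile], Tuple]


def first_in_first_out(ff: QueuedFlowFile) -> Tuple:
    return (ff.queued_at,)


def oldest_first(ff: QueuedFlowFile) -> Tuple:
    return (ff.entered_flow_at,)


def priority_attribute(ff: QueuedFlowFile) -> Tuple:
    # Lower numeric "priority" values first; FlowFiles without the attribute go last.
    value = ff.attributes.get("priority")
    return (0, int(value)) if value is not None else (1, 0)


def order(queue: List[QueuedFlowFile], prioritizers: List[Prioritizer]) -> List[QueuedFlowFile]:
    """Apply prioritizers in sequence: each later one only breaks ties left by earlier ones."""
    return sorted(queue, key=lambda ff: tuple(p(ff) for p in prioritizers))


logs = [QueuedFlowFile("routine-log", entered_flow_at=1, queued_at=10)]
alerts = [
    QueuedFlowFile("report", entered_flow_at=3, queued_at=12, attributes={"priority": "5"}),
    QueuedFlowFile("alert", entered_flow_at=5, queued_at=11, attributes={"priority": "1"}),
]
funnel = logs + alerts  # a funnel merges several connections into one queue
print([ff.name for ff in order(funnel, [priority_attribute, first_in_first_out])])
# ['alert', 'report', 'routine-log']
print([ff.name for ff in order(funnel, [oldest_first])])
# ['routine-log', 'report', 'alert']
```

Concatenating the two lists plays the role of the funnel: once data sets share one queue, a single prioritizer chain orders them against each other.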

NiFi - Extensibility

Built from the ground up with extensions in mind.

Service-loader pattern for:

- Processors
- Controller Services
- Reporting Tasks
- Prioritizers

Extensions packaged as NiFi Archives (NARs):

- Deploy to the NiFi lib directory and restart
- Provides ClassLoader isolation
- Same model as standard components
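
The service-loader idea, where the framework discovers implementations of known extension types without hard-coding them, can be mimicked in Python with a self-registering base class. This is a hypothetical analogy only: NiFi itself relies on Java's `ServiceLoader` and NAR packaging, and nothing below reproduces NAR ClassLoader isolation.

```python
from typing import Dict, Type


class Extension:
    """Base for pluggable components; concrete subclasses register themselves on definition."""

    registry: Dict[str, Dict[str, Type["Extension"]]] = {}
    kind = "extension"

    def __init_subclass__(cls, abstract: bool = False, **kwargs) -> None:
        super().__init_subclass__(**kwargs)
        if not abstract:
            Extension.registry.setdefault(cls.kind, {})[cls.__name__] = cls


class Processor(Extension, abstract=True):
    kind = "processor"

    def on_trigger(self, content: bytes) -> bytes:
        raise NotImplementedError


class ReportingTask(Extension, abstract=True):
    kind = "reporting-task"

    def report(self, stats: Dict[str, int]) -> str:
        raise NotImplementedError


# "Custom" extensions: defining the class is enough to make it discoverable.
class ReverseContent(Processor):
    def on_trigger(self, content: bytes) -> bytes:
        return content[::-1]


class QueueSizeReporter(ReportingTask):
    def report(self, stats: Dict[str, int]) -> str:
        return f"queued={stats['queued']}"


def load(kind: str, name: str) -> Extension:
    """Look an extension up by type and class name, then instantiate it."""
    return Extension.registry[kind][name]()


print(sorted(Extension.registry))                              # ['processor', 'reporting-task']
print(load("processor", "ReverseContent").on_trigger(b"abc"))  # b'cba'
```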

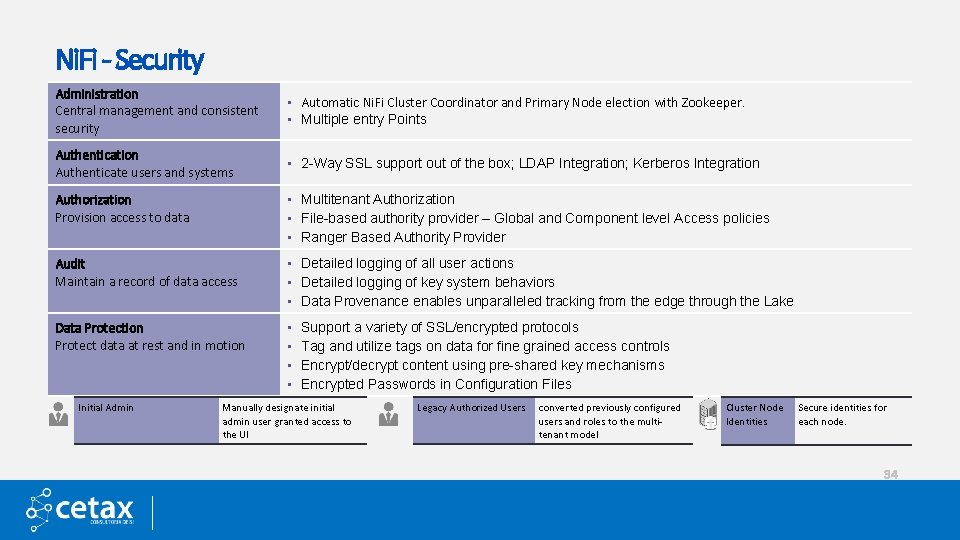

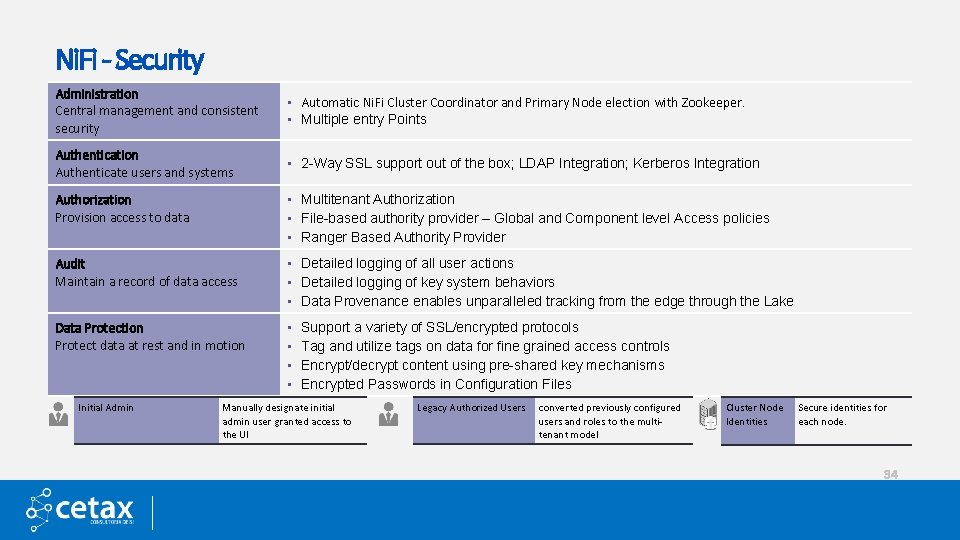

NiFi - Security

- Administration (central management and consistent security)
  - Automatic NiFi Cluster Coordinator and Primary Node election with ZooKeeper
  - Multiple entry points
- Authentication (authenticate users and systems)
  - 2-way SSL support out of the box
  - LDAP integration
  - Kerberos integration
- Authorization (provision access to data)
  - Multi-tenant authorization
  - File-based authority provider: global and component-level access policies
  - Ranger-based authority provider
- Audit (maintain a record of data access)
  - Detailed logging of all user actions
  - Detailed logging of key system behaviors
  - Data provenance enables unparalleled tracking from the edge through the data lake
- Data Protection (protect data at rest and in motion)
  - Support for a variety of SSL/encrypted protocols
  - Tag data and use the tags for fine-grained access controls
  - Encrypt/decrypt content using pre-shared key mechanisms
  - Encrypted passwords in configuration files
- Initial Admin: manually designate the initial admin user granted access to the UI
- Legacy Authorized Users: convert previously configured users and roles to the multi-tenant model
- Cluster Node Identities: secure identities for each node
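
Component-level policies can be sketched as a lookup that falls back to the enclosing process group. The flow, users, and policy table below are hypothetical, and the sketch only loosely models NiFi's documented behavior that a component without its own policy inherits one from its parent process group; it is not the file-based authorizer's format or API.

```python
from typing import Dict, Optional, Set, Tuple

# Hypothetical flow: root group > "ingest" group > "fetch-logs" processor.
PARENT: Dict[str, Optional[str]] = {"root": None, "ingest": "root", "fetch-logs": "ingest"}

# Access policies keyed by (component, action) -> users granted that action.
POLICIES: Dict[Tuple[str, str], Set[str]] = {
    ("root", "view"): {"admin"},
    ("root", "modify"): {"admin"},
    ("ingest", "view"): {"admin", "ops"},  # override: ops may view the ingest group
}


def effective_users(component: str, action: str) -> Set[str]:
    """Use the component's own policy if present, else inherit from the nearest ancestor."""
    node: Optional[str] = component
    while node is not None:
        users = POLICIES.get((node, action))
        if users is not None:
            return users
        node = PARENT[node]
    return set()  # no policy anywhere: deny by default


def is_authorized(user: str, component: str, action: str) -> bool:
    return user in effective_users(component, action)


print(is_authorized("ops", "fetch-logs", "view"))    # True (inherited from "ingest")
print(is_authorized("ops", "fetch-logs", "modify"))  # False (inherited from "root")
```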

Thank you! Visit us: www.cetax.com.br. We're hiring!