External Sorting: Chapter 13 (Sec. 13.1-13.5)

- Slides: 28

External Sorting
Chapter 13 (Sec. 13.1-13.5): Ramakrishnan & Gehrke, and Chapter 11 (Sec. 11.4-11.5): Garcia-Molina et al. (R2), OR Chapter 2 (Sec. 2.4-2.5): Garcia-Molina et al. (R1).
NOTE: sorting using B-trees will be assigned for reading after we cover B-trees.
CPSC 404, Laks V. S. Lakshmanan

What you will learn from this set of lectures
- How do we efficiently sort a file so large that it won't all fit in memory?
- What knobs are available to tune the performance of such a sort algorithm, and how do we tune them?

Motivation
- A classic problem in computer science!
- Data requested in sorted order
  - e.g., arrange movie records in increasing order of ratings.
- Why is it important?
  - It is the first step in bulk loading (i.e., creating) a B+ tree index.
  - It is also useful for eliminating duplicate copies in a collection of records and for aggregation (Why?) [Can you think of any other strategy for these tasks?]
  - (Later) for efficient joins of relations, i.e., the sort-merge algorithm.
- Problem: sort 1 GB of data with 50 MB of RAM.
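A minimal Python sketch of why sorted input helps with duplicate elimination and aggregation, assuming records are plain comparable values that are already sorted: equal records (or records sharing a grouping key) end up adjacent, so a single sequential pass suffices. The names `dedup_sorted` and `count_by_key` are hypothetical, chosen for illustration.

```python
from itertools import groupby

def dedup_sorted(records):
    """Drop duplicate copies from a sorted sequence in one pass."""
    out = []
    for r in records:
        # After sorting, equal records are adjacent, so compare only with the last one kept.
        if not out or out[-1] != r:
            out.append(r)
    return out

def count_by_key(sorted_records, key):
    """Aggregate (here: COUNT per group) over records sorted by `key`."""
    # groupby only merges *consecutive* equal keys, which is why the input must be sorted.
    return [(k, sum(1 for _ in grp)) for k, grp in groupby(sorted_records, key=key)]

ratings = sorted([4, 2, 4, 5, 2, 4])
print(dedup_sorted(ratings))                   # [2, 4, 5]
print(count_by_key(ratings, key=lambda r: r))  # [(2, 2), (4, 3), (5, 1)]
```

On unsorted input, the same single pass would miss duplicates that are not adjacent, which is the point of sorting first.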

I/O Computation Model
- Disk I/O (reading or writing a block) is very expensive compared to processing the block once it is in memory.
- Random block accesses are very common.
- Reasonable model for computation using secondary storage: count only disk I/Os.

Desiderata for good DBMS algorithms
- Overlap I/O and CPU processing as much as possible.
- Use as much data from each block read as possible; this depends on record clustering.
- Cluster records that are accessed together in consecutive blocks/pages.
- Buffer frequently accessed blocks in memory.

Merge Sort Overview
- Idea: given B buffer pages of main memory
- (Sort phase)
  - read in B pages of data at a time and sort them internally
  - suppose the entire data is stored in m * B pages
  - the sort phase produces m sorted runs (sublists) of B pages each
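The sort phase can be sketched in Python as follows, assuming the file is simulated by an in-memory list of pages (each page a list of records) and that Python's built-in `sorted` stands in for "sort internally". The function name `make_runs` is hypothetical.

```python
def make_runs(pages, B):
    """Sort phase: read B pages at a time, sort their records, emit one sorted run per load."""
    runs = []
    for start in range(0, len(pages), B):
        # Gather the records of the next B pages (one "memory load").
        chunk = [rec for page in pages[start:start + B] for rec in page]
        runs.append(sorted(chunk))  # in-memory sort of this load
    return runs

print(make_runs([[5, 1], [4, 2], [9, 3], [8, 7], [6, 0]], B=2))
# [[1, 2, 4, 5], [3, 7, 8, 9], [0, 6]]
```

When the number of pages is not a multiple of B, the last run is simply shorter.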

Merge Sort Overview (contd.)
- (Merge phase) repeatedly merge two sorted runs
  - (Pass 0: the sort phase)
  - Pass 1 produces m/2 sorted runs of 2B pages each
  - Pass 2 produces m/4 sorted runs of 4B pages each
  - continue until one sorted run of m * B pages is produced
- The 2-way merge can be optimized to a k-way merge, where k can be as large as B-1 (see Sec. 13.2 and the corresponding chapters of R1 OR R2).
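To see why a larger fan-in matters, here is a small Python sketch that counts merge passes by simulating how the number of runs shrinks, assuming every pass (including Pass 0) reads and writes each page exactly once. The function names are hypothetical; the numbers used below (250,000 pages, 20 runs, B = 12,800 so k = B-1 = 12,799) come from the running example in these slides.

```python
import math

def merge_passes(num_runs, fan_in):
    """Number of merge passes needed to reduce num_runs sorted runs to one."""
    passes = 0
    while num_runs > 1:
        num_runs = math.ceil(num_runs / fan_in)  # each pass merges groups of fan_in runs
        passes += 1
    return passes

def total_page_ios(n_pages, num_runs, fan_in):
    """Pass 0 plus all merge passes; each pass reads and writes every page once."""
    return 2 * n_pages * (1 + merge_passes(num_runs, fan_in))

print(merge_passes(20, 2))                 # 5  (20 -> 10 -> 5 -> 3 -> 2 -> 1)
print(merge_passes(20, 12_799))            # 1
print(total_page_ios(250_000, 20, 2))      # 3000000
print(total_page_ios(250_000, 20, 12_799)) # 1000000
```

With a 2-way merge the example needs five merge passes; with a (B-1)-way merge a single merge pass suffices, which is exactly the two-phase structure of 2PMMS.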

Sorting Example
Setup:
- 10M records of 100 bytes = 1 GB file.
  - Stored on the Megatron 747 disk, with 4 KB blocks, each holding 40 records + header information.
  - Suppose each cylinder = 1 MB, so the entire file takes up 1,000 cylinders, i.e., 250 × 1,000 = 250,000 blocks.
- 50 MB of available main memory = 50 × 2^20 / (4 × 2^10) = 12,800 blocks ≈ 1/20th of the file.
- Sort by the primary key field.
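The setup arithmetic can be checked with a few lines of Python; all constants are taken from the slide, and the variable names are illustrative only.

```python
import math

RECORD_BYTES = 100
N_RECORDS = 10_000_000
RECORDS_PER_BLOCK = 40      # a 4 KB block holds 40 records + header
BLOCK_BYTES = 4 * 2**10
MEMORY_BYTES = 50 * 2**20

file_bytes = N_RECORDS * RECORD_BYTES              # 10^9 bytes, i.e. ~1 GB
file_blocks = N_RECORDS // RECORDS_PER_BLOCK       # 250,000 blocks
memory_blocks = MEMORY_BYTES // BLOCK_BYTES        # 12,800 blocks
sublists = math.ceil(file_blocks / memory_blocks)  # memory loads needed in Phase 1

print(file_bytes, file_blocks, memory_blocks, sublists)  # 1000000000 250000 12800 20
```

Since 250,000 / 12,800 ≈ 19.5, Phase 1 needs 20 memory loads, the last one only partly full.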

Merge Sort
- Common main-memory sorting algorithms don't optimize disk I/Os. Variants of merge sort do better.
- Merge = take two sorted lists and repeatedly choose the smaller of the "heads" of the lists (head = first among the unchosen).
- Example: merging 1, 3, 4, 8 with 2, 5, 7, 9 gives 1, 2, 3, 4, 5, 7, 8, 9.
- Merge sort is based on a recursive algorithm: divide the list of records into two parts, recursively merge-sort the parts, and merge the resulting lists.
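A minimal in-memory Python sketch of the merge step and the recursive merge sort described above. It is not disk-aware; it only illustrates the "repeatedly take the smaller head" rule on the slide's example.

```python
def merge(a, b):
    """Merge two sorted lists by repeatedly taking the smaller head."""
    i = j = 0
    out = []
    while i < len(a) and j < len(b):
        if a[i] <= b[j]:
            out.append(a[i])
            i += 1
        else:
            out.append(b[j])
            j += 1
    out.extend(a[i:])  # at most one of these two tails is non-empty
    out.extend(b[j:])
    return out

def merge_sort(xs):
    """Recursive merge sort: split, sort each half, merge."""
    if len(xs) <= 1:
        return list(xs)
    mid = len(xs) // 2
    return merge(merge_sort(xs[:mid]), merge_sort(xs[mid:]))

print(merge([1, 3, 4, 8], [2, 5, 7, 9]))  # [1, 2, 3, 4, 5, 7, 8, 9]
```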

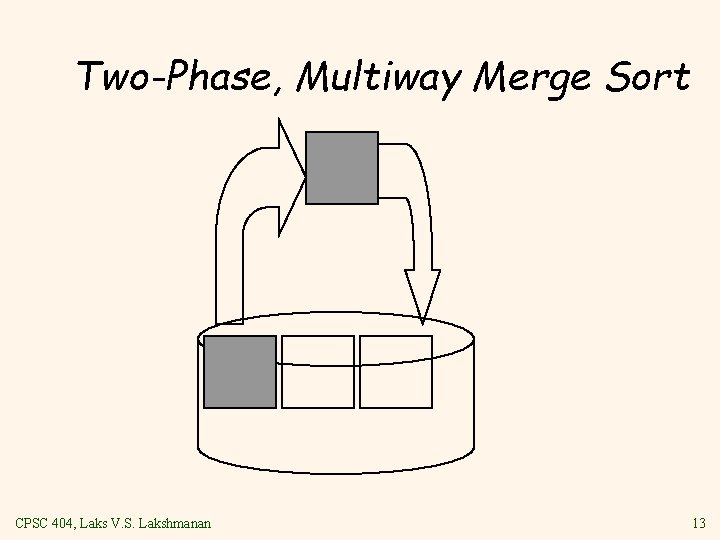

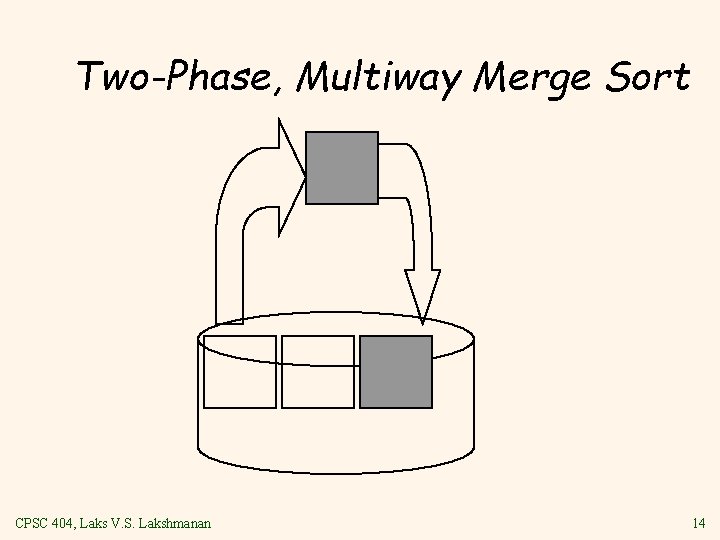

Two Phase, Multiway Merge Sort (2PMMS)
- "Vanilla" merge sort is still not very good in the disk I/O model.
- It makes log2 n passes, so each record is read from and written to disk log2 n times.
- 2PMMS: 2 reads + 2 writes per block.
Phase 1
1. Fill the buffer with records.
2. Sort them using your favorite main-memory sort.
3. Write the sorted sublist to disk.
4. Repeat until all records have been put into one of the sorted sublists.
- Sorted sublist = SSL = sorted run.


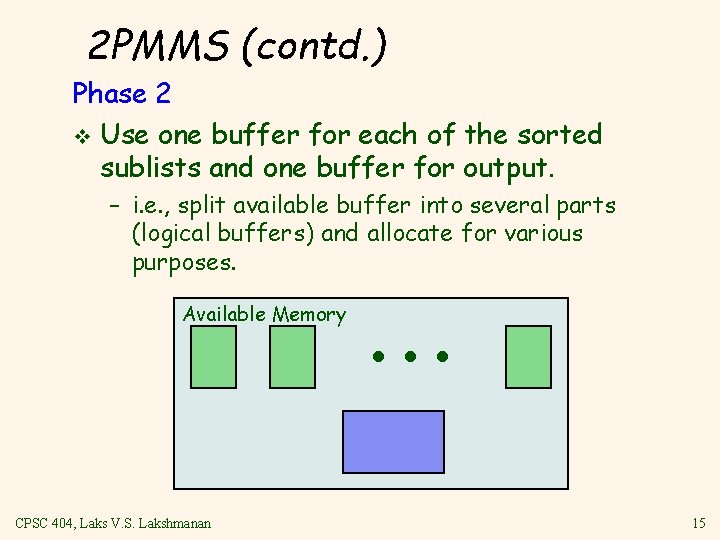

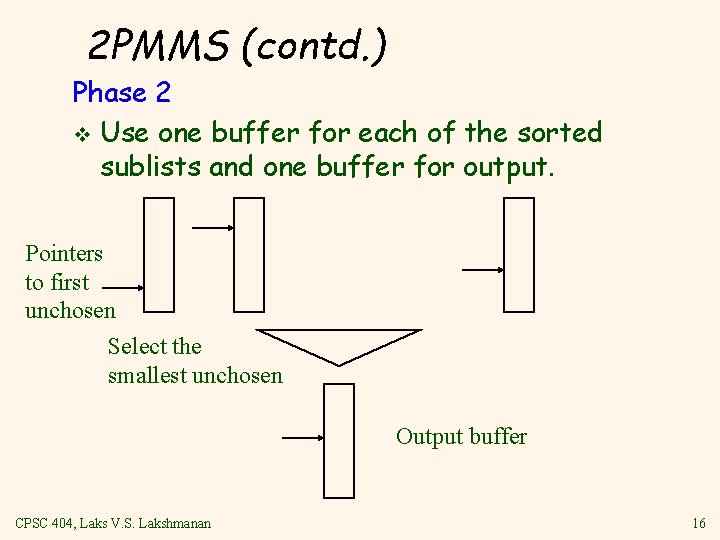

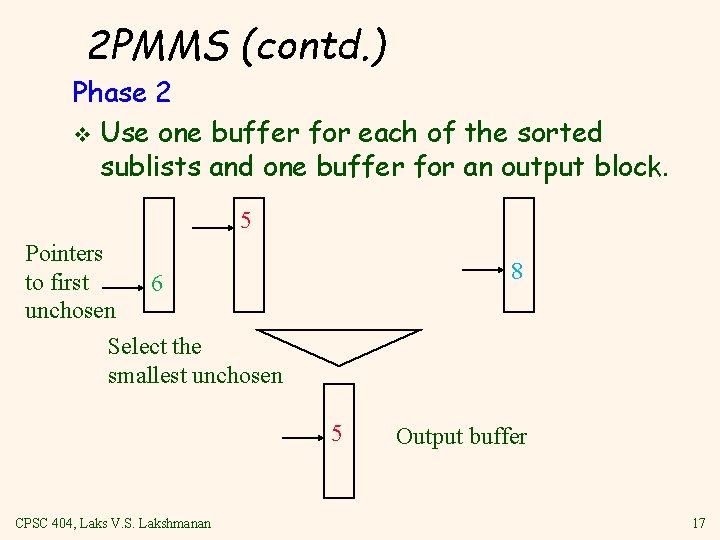

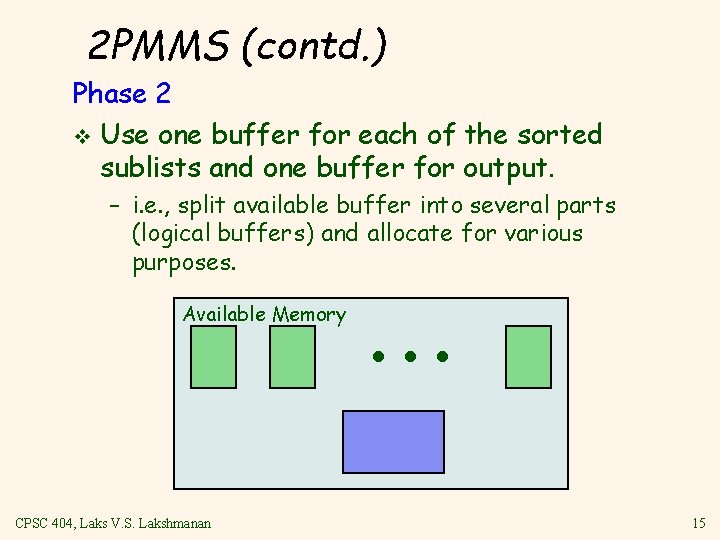

2PMMS (contd.): Phase 2
- Use one buffer for each of the sorted sublists and one buffer for output.
  - i.e., split the available memory into several parts (logical buffers) and allocate them for these purposes.
(Figure: available memory divided into logical buffers.)

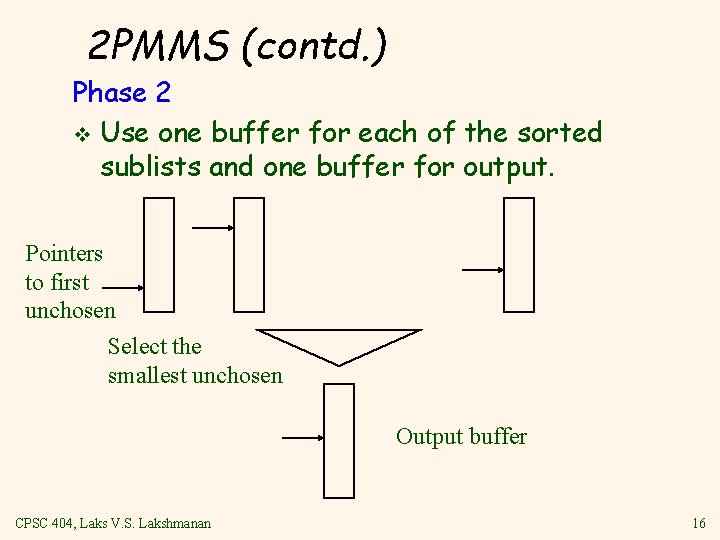

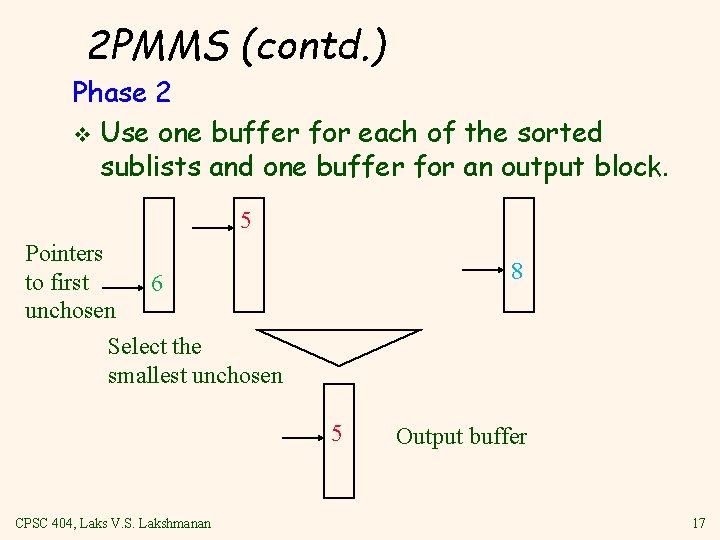

2PMMS (contd.): Phase 2
- Use one buffer for each of the sorted sublists and one buffer for output.
(Figure labels: pointers to the first unchosen records; select the smallest unchosen; output buffer.)

2PMMS (contd.): Phase 2
- Use one buffer for each of the sorted sublists and one buffer for an output block.
(Figure: pointers to the first unchosen records, with keys 5, 6, and 8 shown; the smallest unchosen record, 5, is selected and moved to the output buffer.)

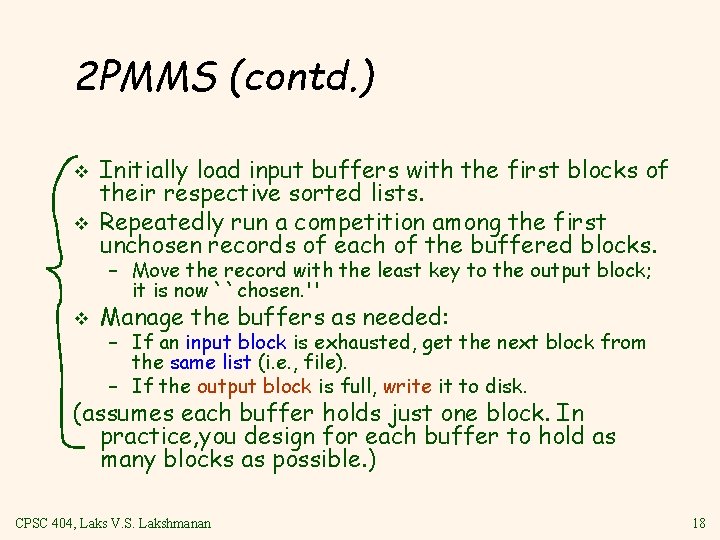

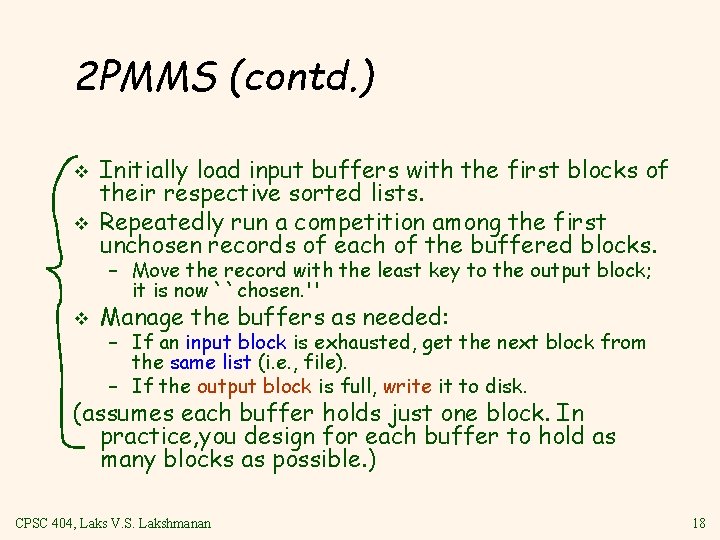

2PMMS (contd.)
- Initially, load the input buffers with the first blocks of their respective sorted lists.
- Repeatedly run a competition among the first unchosen records of each of the buffered blocks.
  - Move the record with the least key to the output block; it is now "chosen."
- Manage the buffers as needed:
  - If an input block is exhausted, get the next block from the same list (i.e., file).
  - If the output block is full, write it to disk.
(This assumes each buffer holds just one block. In practice, you design each buffer to hold as many blocks as possible.)
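The Phase 2 procedure above can be sketched in Python as follows. This is a sketch under stated assumptions: each sorted sublist is simulated by an in-memory list that is read back `block_size` records at a time, records are their own keys, and each buffer holds exactly one block. The "competition" is implemented with a min-heap from Python's `heapq` module, a common choice that the slides do not prescribe; `two_pmms_phase2` is a hypothetical name.

```python
import heapq

def two_pmms_phase2(runs, block_size):
    """Merge sorted sublists with one input buffer per sublist and one output buffer."""
    # Simulated disk: each sorted sublist split into blocks of block_size records.
    blocks = [[run[i:i + block_size] for i in range(0, len(run), block_size)] for run in runs]
    buf = [b[0] if b else [] for b in blocks]  # initially load the first block of each sublist
    blk = [0] * len(runs)                      # which block of each sublist is buffered
    pos = [0] * len(runs)                      # first unchosen record in each input buffer
    heap = [(buf[r][0], r) for r in range(len(runs)) if buf[r]]
    heapq.heapify(heap)
    out_buf, written = [], []
    while heap:
        key, r = heapq.heappop(heap)  # competition: least first-unchosen key wins
        out_buf.append(key)           # the record is now "chosen"
        if len(out_buf) == block_size:  # output block full: "write" it to disk
            written.append(out_buf)
            out_buf = []
        pos[r] += 1
        if pos[r] == len(buf[r]):     # input block exhausted: fetch next block of the same sublist
            blk[r] += 1
            buf[r] = blocks[r][blk[r]] if blk[r] < len(blocks[r]) else []
            pos[r] = 0
        if buf[r]:                    # sublist not finished: its next record enters the competition
            heapq.heappush(heap, (buf[r][pos[r]], r))
    if out_buf:                       # flush the last, partly filled output block
        written.append(out_buf)
    return written

print(two_pmms_phase2([[1, 3, 4, 8], [2, 5, 7, 9]], block_size=2))
# [[1, 2], [3, 4], [5, 7], [8, 9]]
```

A heap makes each competition O(log k) for k sublists instead of a linear scan over all buffer heads; with only 20 sublists, as in the running example, either choice is dwarfed by disk I/O time.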

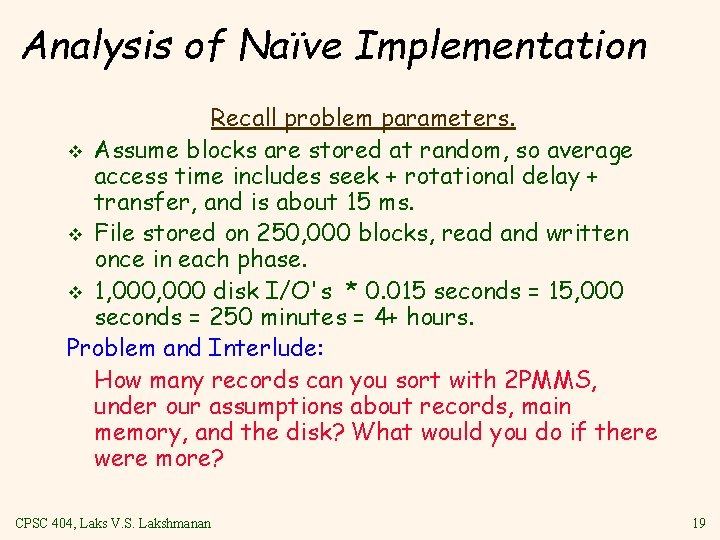

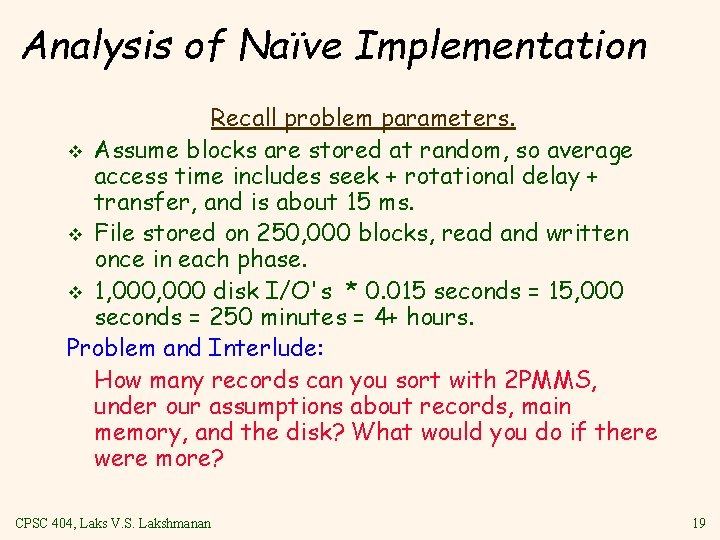

Analysis of Naïve Implementation
- Recall the problem parameters.
- Assume blocks are stored at random, so the average access time includes seek + rotational delay + transfer, and is about 15 ms.
- The file is stored on 250,000 blocks, each read and written once in each phase: 4 × 250,000 = 1,000,000 disk I/Os.
- 1,000,000 disk I/Os × 0.015 seconds = 15,000 seconds = 250 minutes = 4+ hours.
Problem and Interlude: How many records can you sort with 2PMMS, under our assumptions about records, main memory, and the disk? What would you do if there were more?
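The naïve cost estimate as a short Python calculation, using only the slide's parameters (250,000 blocks, 4 I/Os per block, about 15 ms per random access). Integer milliseconds are used to keep the arithmetic exact.

```python
BLOCKS = 250_000
IOS_PER_BLOCK = 4       # read + write in Phase 1, read + write in Phase 2
RANDOM_ACCESS_MS = 15   # seek + rotational delay + transfer

total_ios = BLOCKS * IOS_PER_BLOCK
total_seconds = total_ios * RANDOM_ACCESS_MS // 1000

print(total_ios, total_seconds, total_seconds // 60)  # 1000000 15000 250
```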

Improving the Running Time of 2PMMS
Here are some techniques that sometimes make secondary-memory algorithms more efficient:
1. Group blocks by cylinder ("cylindrification").
2. Replace one big disk by several smaller disks.
3. Mirror disks = multiple copies of the same data.
4. "Prefetching" or "double buffering."
5. Disk scheduling; the "elevator" algorithm.

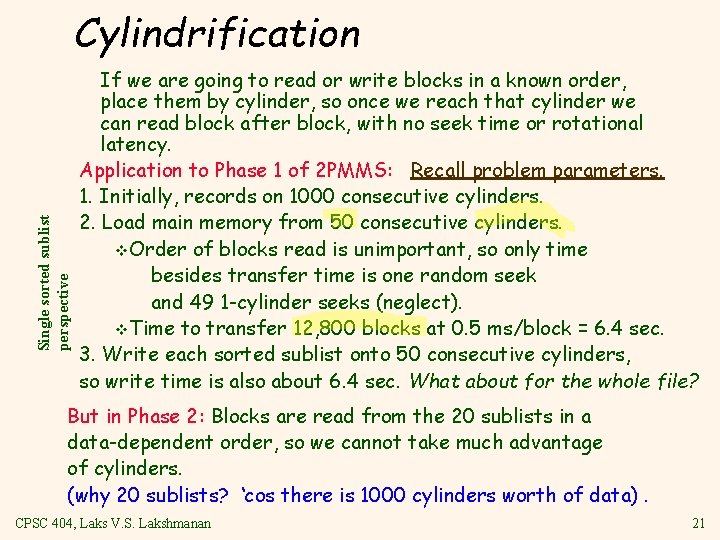

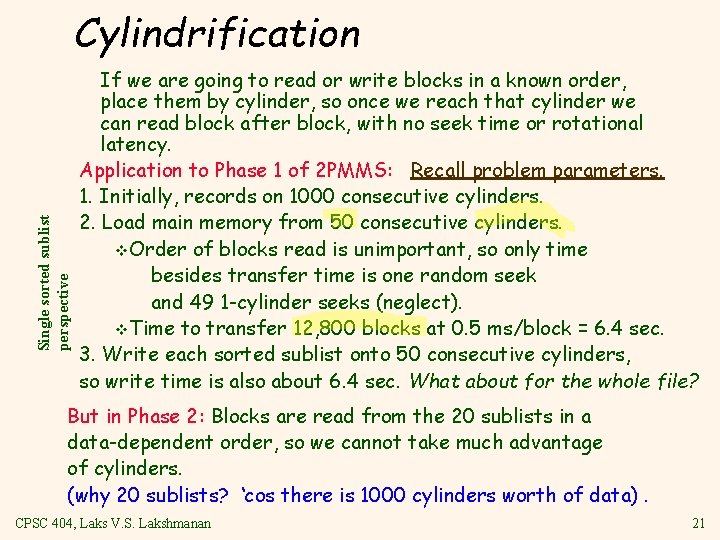

Cylindrification
If we are going to read or write blocks in a known order, place them by cylinder, so that once we reach that cylinder we can read block after block, with no seek time or rotational latency.
Application to Phase 1 of 2PMMS (single sorted sublist perspective). Recall the problem parameters.
1. Initially, the records are on 1,000 consecutive cylinders.
2. Load main memory from 50 consecutive cylinders.
   - The order of blocks read is unimportant, so the only time besides transfer time is one random seek and 49 one-cylinder seeks (negligible).
   - Time to transfer 12,800 blocks at 0.5 ms/block = 6.4 sec.
3. Write each sorted sublist onto 50 consecutive cylinders, so the write time is also about 6.4 sec.
What about the whole file?
But in Phase 2, blocks are read from the 20 sublists in a data-dependent order, so we cannot take much advantage of cylinders. (Why 20 sublists? Because there are 1,000 cylinders' worth of data and each sublist occupies 50 cylinders: 1,000 / 50 = 20.)
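A back-of-the-envelope Python calculation for Phase 1 with cylindrification, assuming (as the slide does) that only the 0.5 ms/block transfer time matters and that the one random seek per memory load and the one-cylinder seeks can be neglected. The "whole file" figure simply extends the same assumption to all 250,000 blocks.

```python
TRANSFER_MS = 0.5            # per-block transfer time, from the slide
BLOCKS_PER_SUBLIST = 12_800  # one memory load = 50 cylinders
FILE_BLOCKS = 250_000        # 1,000 cylinders

sublist_read_s = BLOCKS_PER_SUBLIST * TRANSFER_MS / 1000  # reading one memory load
phase1_s = 2 * FILE_BLOCKS * TRANSFER_MS / 1000           # read + write of the whole file

print(sublist_read_s, phase1_s)  # 6.4 250.0
```

Under these assumptions Phase 1 takes roughly 250 seconds (just over 4 minutes), compared with about 2 hours for Phase 1 alone in the naïve random-access analysis.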

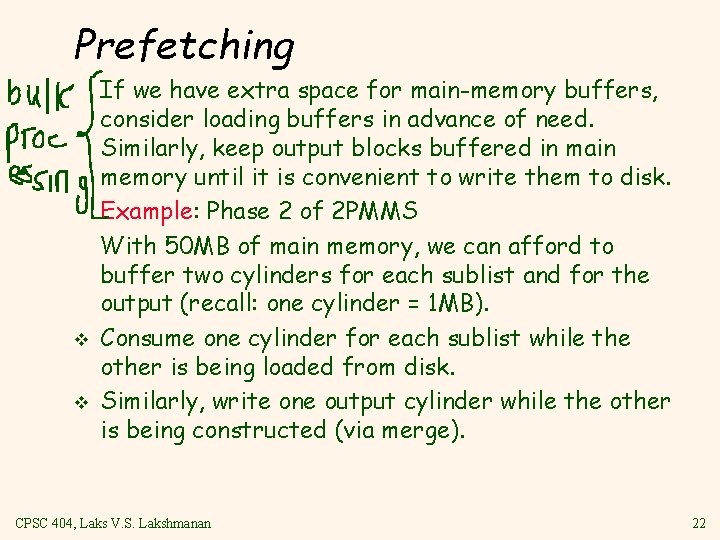

Prefetching
- If we have extra space for main-memory buffers, consider loading buffers in advance of need.
- Similarly, keep output blocks buffered in main memory until it is convenient to write them to disk.
Example: Phase 2 of 2PMMS
With 50 MB of main memory, we can afford to buffer two cylinders for each sublist and for the output (recall: one cylinder = 1 MB). Consume one cylinder of each sublist while the other is being loaded from disk. Similarly, write one output cylinder while the other is being constructed (via merge).
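A quick Python check that the two-cylinders-per-stream plan fits in memory, using the slide's numbers (20 sublists plus one output stream, 1 MB per cylinder, 50 MB of memory).

```python
MEMORY_MB = 50
CYLINDER_MB = 1
SUBLISTS = 20

streams = SUBLISTS + 1                                       # 20 input sublists + 1 output
cylinders_per_stream = MEMORY_MB // (streams * CYLINDER_MB)  # largest whole number that fits
used_mb = streams * cylinders_per_stream * CYLINDER_MB

print(cylinders_per_stream, used_mb)  # 2 42
```

Two cylinders per stream use 42 MB, while three would need 63 MB, so two is the most this memory budget allows.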

Prefetching (contd.)
- Thus, seek and rotational latency are made negligible for Phase 1 (thanks to cylindrification) and are minimized for Phase 2 (thanks to prefetching).
- So the total time for Phase 2 is approximately that of one read plus one write of the whole file, but one cylinderful at a time.
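A rough Python estimate of the improved total running time, assuming only the 0.5 ms/block transfer time counts in both phases. The seeks at each cylinder boundary are ignored, so this is an optimistic figure. The naïve total of 15,000 seconds comes from the earlier analysis.

```python
TRANSFER_MS = 0.5
FILE_BLOCKS = 250_000
NAIVE_TOTAL_S = 15_000  # naive analysis: 1,000,000 I/Os x 15 ms

phase2_s = 2 * FILE_BLOCKS * TRANSFER_MS / 1000  # one read + one write of the whole file
phase1_s = phase2_s                              # cylindrified Phase 1 moves the same volume
total_s = phase1_s + phase2_s

print(phase2_s, total_s, NAIVE_TOTAL_S / total_s)  # 250.0 500.0 30.0
```

Under these assumptions, cylindrification plus prefetching brings the sort from over 4 hours down to under 10 minutes, roughly a 30× improvement.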

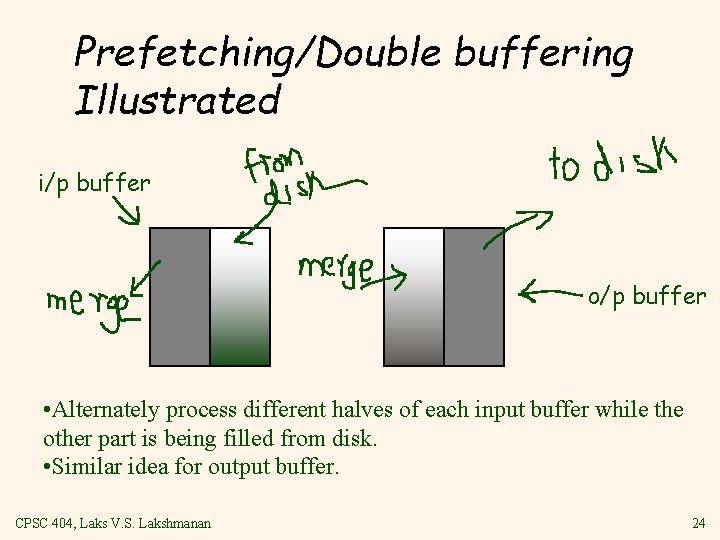

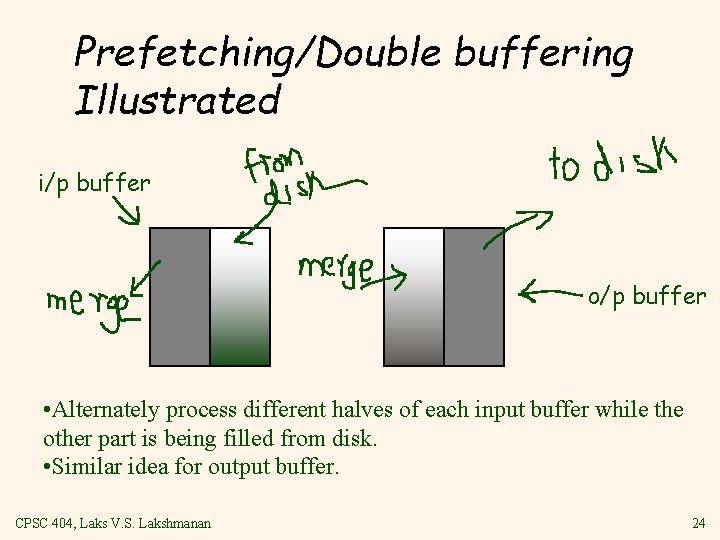

Prefetching/Double Buffering Illustrated
(Figure labels: i/p buffer, o/p buffer.)
- Alternately process the two halves of each input buffer: process one half while the other is being filled from disk.
- The same idea applies to the output buffer.
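A minimal double-buffering sketch in Python. A background thread from `concurrent.futures.ThreadPoolExecutor` stands in for asynchronous disk I/O: while the caller processes block i (one "half" of the buffer), block i+1 (the other half) is already being read. The function names `prefetched` and `fake_read` are hypothetical, and `fake_read` only simulates a slow disk read with `time.sleep`; a real DBMS would issue asynchronous I/O to the disk instead.

```python
from concurrent.futures import ThreadPoolExecutor
import time

def prefetched(read_block, n_blocks):
    """Yield blocks 0..n_blocks-1, reading block i+1 in the background while block i is processed."""
    with ThreadPoolExecutor(max_workers=1) as io:
        pending = io.submit(read_block, 0) if n_blocks > 0 else None
        for i in range(n_blocks):
            block = pending.result()  # wait until this half of the buffer is filled
            if i + 1 < n_blocks:
                pending = io.submit(read_block, i + 1)  # start filling the other half
            yield block               # the caller processes this half meanwhile

def fake_read(i):
    # Hypothetical stand-in for reading block (or cylinder) i from disk.
    time.sleep(0.01)
    return [i] * 3

total = sum(sum(block) for block in prefetched(fake_read, 5))
print(total)  # 30
```

The output side works symmetrically: hand a full output half to the background writer and keep filling the other half.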

Using B+ Trees for Sorting
- Scenario: the table to be sorted has a B+ tree index on the sorting column(s).
- Idea: retrieve records in order by traversing the leaf pages.
- Is this a good idea?
- Cases to consider:
  - B+ tree is clustered: good idea!
  - B+ tree is not clustered: could be a very bad idea!

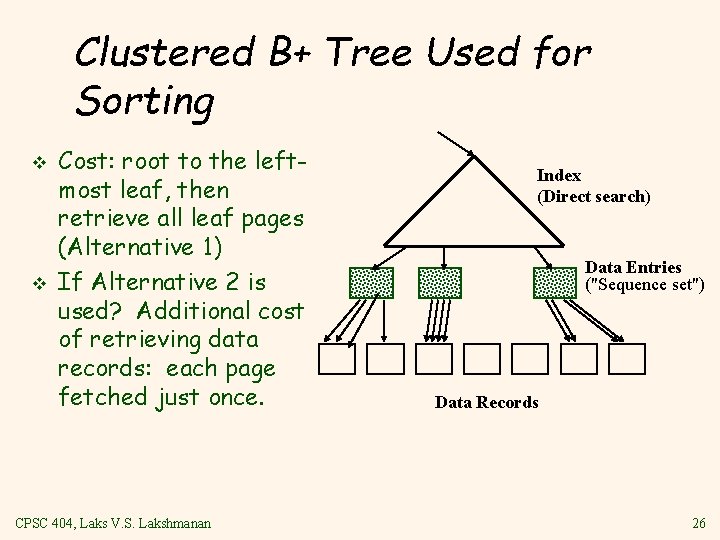

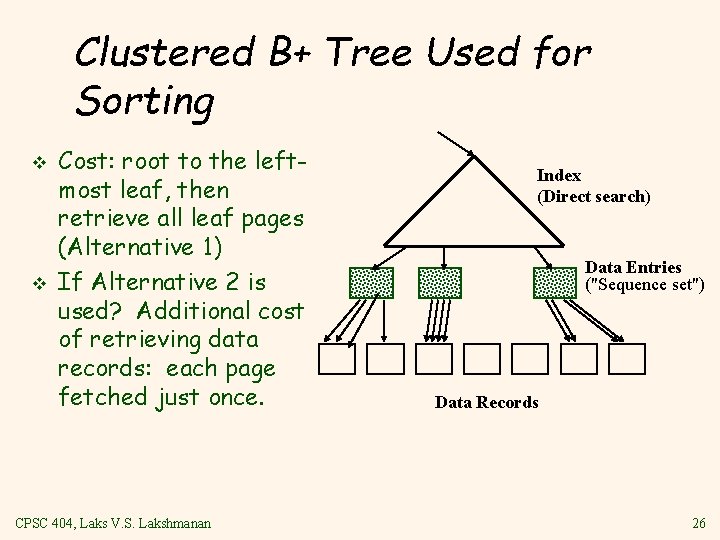

Clustered B+ Tree Used for Sorting
- Cost: go from the root to the leftmost leaf, then retrieve all leaf pages (Alternative 1).
- If Alternative 2 is used? Additional cost of retrieving the data records: each page is fetched just once.
(Figure labels: Index (directs search); Data Entries ("Sequence set"); Data Records.)

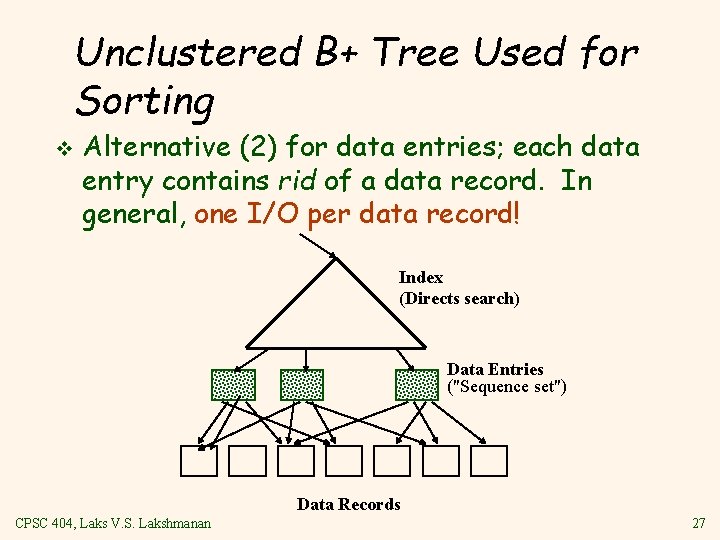

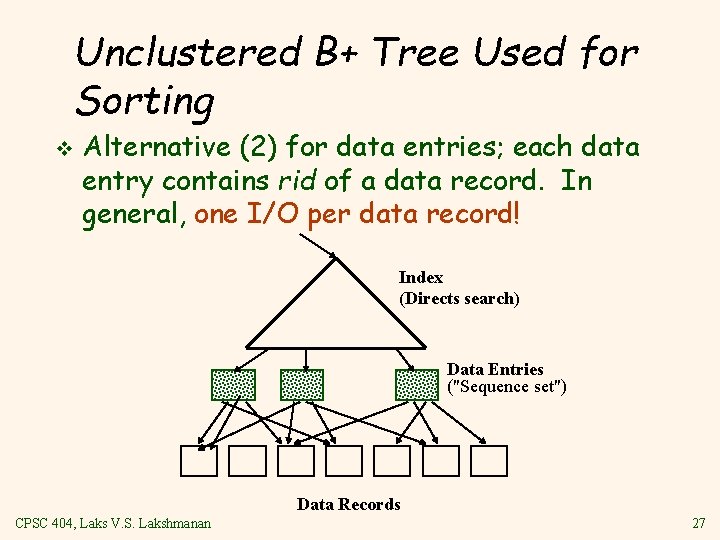

Unclustered B+ Tree Used for Sorting
- Alternative (2) for data entries; each data entry contains the rid of a data record. In general, one I/O per data record!
(Figure labels: Index (directs search); Data Entries ("Sequence set"); Data Records.)
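A rough Python cost model contrasting the two cases, counting page I/Os only. Assumptions: `height` is the number of non-leaf index pages read on the way from the root to the leftmost leaf; with Alternative 1 the leaves hold the records, so `data_pages` is 0. The sizes below are hypothetical (a 3-level path and 50,000 leaf pages), except that 250,000 data pages and 10M records match the running example. The function names are illustrative only.

```python
def clustered_sort_cost(height, leaf_pages, data_pages=0):
    # Root-to-leftmost-leaf path, then every leaf page once;
    # with Alternative 2, each data page is also fetched just once.
    return height + leaf_pages + data_pages

def unclustered_sort_cost(height, leaf_pages, n_records):
    # Alternative 2, unclustered: in general one I/O per data record.
    return height + leaf_pages + n_records

print(clustered_sort_cost(3, 50_000, 250_000))       # 300003
print(unclustered_sort_cost(3, 50_000, 10_000_000))  # 10050003
```

Even under these generous assumptions, the unclustered case costs about ten times the 1,000,000 I/Os of 2PMMS, and its I/Os are random rather than sequential.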

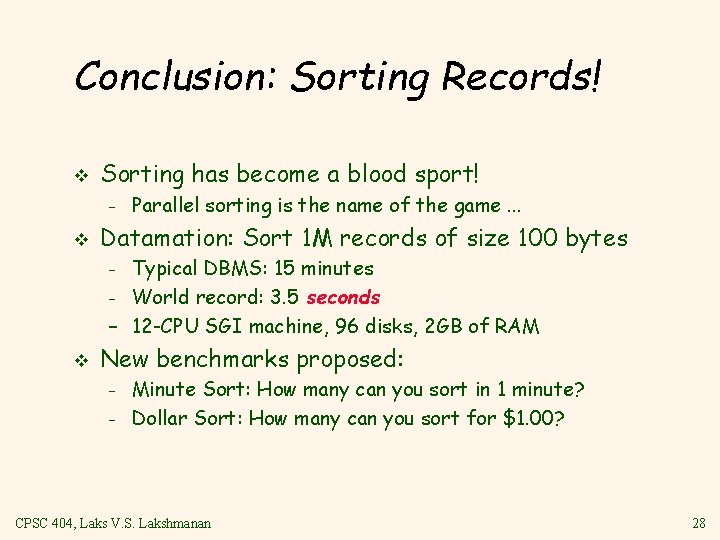

Conclusion: Sorting Records!
- Sorting has become a blood sport!
  - Parallel sorting is the name of the game...
- Datamation: sort 1M records of size 100 bytes
  - Typical DBMS: 15 minutes
  - World record: 3.5 seconds
    - 12-CPU SGI machine, 96 disks, 2 GB of RAM
- New benchmarks proposed:
  - Minute Sort: how many can you sort in 1 minute?
  - Dollar Sort: how many can you sort for $1.00?