Detecting Misunderstandings in the CMU Communicator Spoken Dialog System


Detecting Misunderstandings in the CMU Communicator Spoken Dialog System

Presented by: Dan Bohus
Joint work with: Paul Carpenter, Chun Jin, Daniel Wilson, Rong Zhang, Alex Rudnicky
Carnegie Mellon University – 2002

What's a Spoken Dialog System?

- A human talking to a computer
- Taking turns in a goal-oriented dialog

03-08-2002, Detecting Misunderstandings in the CMU Communicator…

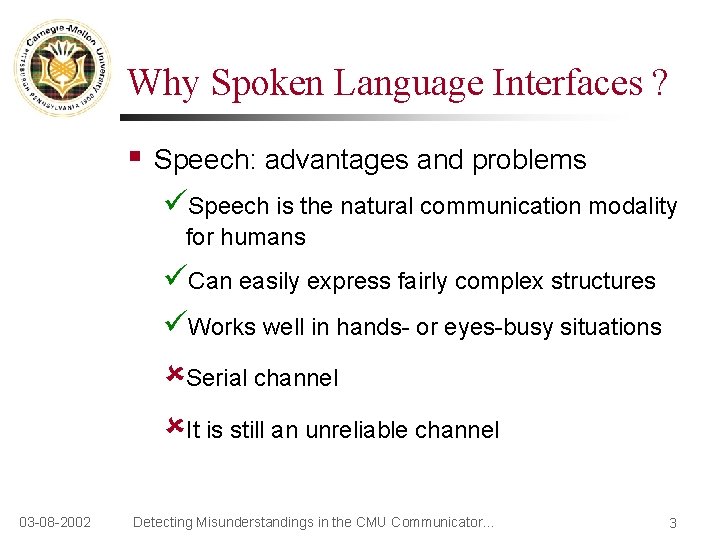

Why Spoken Language Interfaces?

- Speech: advantages and problems
  - Speech is the natural communication modality for humans
  - It can easily express fairly complex structures
  - It works well in hands-busy or eyes-busy situations
  - It is a serial channel
  - It is still an unreliable channel

Sample Spoken Dialog Systems

- Interactive Voice Response (IVR) systems
- Information access systems
  - Air-travel planning (Communicator)
  - Weather information over the phone (Jupiter)
  - E-mail access over the phone (ELVIS)
  - UA baggage claims (Simon)
- Other systems: guidance, personal assistants, taskable agents, etc.

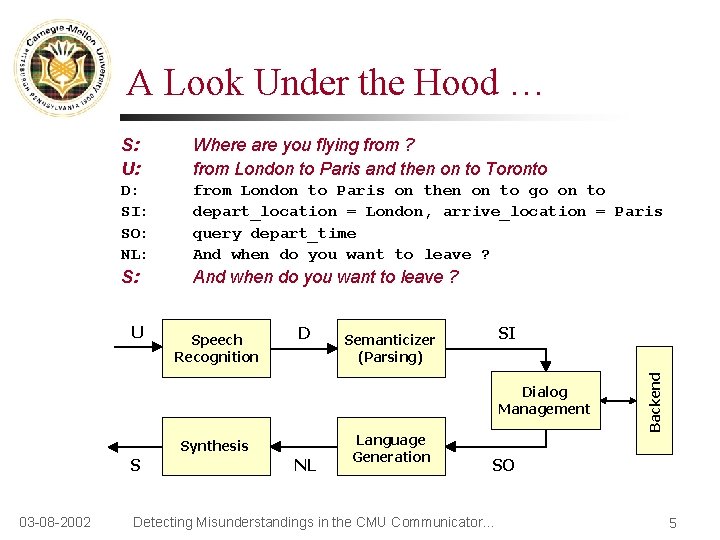

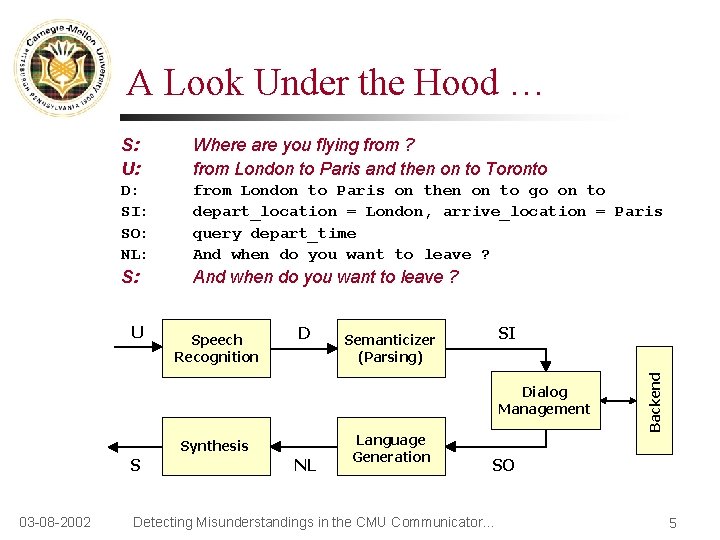

A Look Under the Hood…

- S: Where are you flying from?
- U (user): from London to Paris and then on to Toronto
- D (Speech Recognition output): from London to Paris on then on to go on to
- SI (Semanticizer output): depart_location = London, arrive_location = Paris
- SO (Dialog Management output): query depart_time
- NL (Language Generation output): And when do you want to leave?
- S (Synthesis output): And when do you want to leave?

(Figure: U → Speech Recognition → D → Semanticizer (Parsing) → SI → Dialog Management, which consults the Backend → SO → Language Generation → NL → Synthesis → S)
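The data flow on this slide can be pictured as a chain of stages, each consuming the previous stage's output. The sketch below is a minimal illustration under stated assumptions: `Turn`, `recognize`, `semanticize`, `manage_dialog`, `generate` and `run` are hypothetical names, and the stages are stubs that simply reproduce the slide's example values instead of calling a real recognizer, parser, backend or synthesizer.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    """Intermediate representations for one user turn, named as on the slide."""
    U: str                                  # what the user said (text stands in for audio)
    D: str = ""                             # decoded word string (Speech Recognition)
    SI: dict = field(default_factory=dict)  # concepts from the Semanticizer (parser)
    SO: str = ""                            # action chosen by Dialog Management
    NL: str = ""                            # prompt text from Language Generation


# Hypothetical stand-ins that reproduce the slide's example, not real components.
def recognize(turn: Turn) -> Turn:
    turn.D = "from London to Paris on then on to go on to"  # misrecognition included
    return turn


def semanticize(turn: Turn) -> Turn:
    turn.SI = {"depart_location": "London", "arrive_location": "Paris"}
    return turn


def manage_dialog(turn: Turn) -> Turn:
    # Assumed policy: ask for the first required slot that is still missing.
    required = ["depart_location", "arrive_location", "depart_time"]
    missing = [slot for slot in required if slot not in turn.SI]
    turn.SO = f"query {missing[0]}" if missing else "done"
    return turn


def generate(turn: Turn) -> Turn:
    prompts = {"query depart_time": "And when do you want to leave?"}
    turn.NL = prompts.get(turn.SO, "")
    return turn


PIPELINE = [recognize, semanticize, manage_dialog, generate]


def run(user_utterance: str) -> Turn:
    """Push one utterance through every stage; turn.NL would go on to Synthesis."""
    turn = Turn(U=user_utterance)
    for stage in PIPELINE:
        turn = stage(turn)
    return turn
```

In this toy run, `run("from London to Paris and then on to Toronto")` ends with `NL == "And when do you want to leave?"`: the "on to Toronto" leg is already lost after recognition, and no later stage can recover it.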

Roadmap

- Intro to Spoken Dialog Systems
- The Problem: Misunderstandings
- A Learning Solution
- Experiments and Results
- Conclusion

Speech Recognition

- Speech recognition is the main driver behind the development of spoken dialog systems (SDS).
- But it is problematic:
  - Input signal quality
  - Accents, non-native speakers
  - Spoken language disfluencies: stutters, false starts, /mm/, /um/
- Typical word error rates: 20-30%

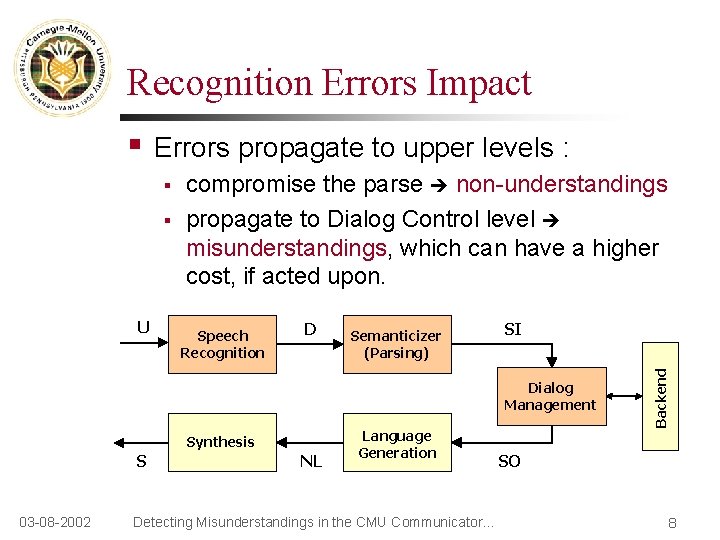

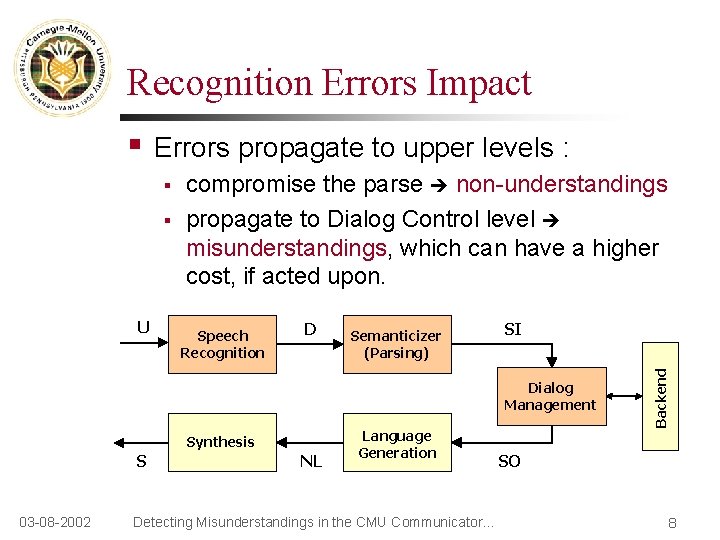

Recognition Errors Impact

- Errors propagate to upper levels:
  - They compromise the parse, leading to non-understandings
  - They propagate to the dialog control level as misunderstandings, which can have a higher cost if acted upon

(Figure: U → Speech Recognition → D → Semanticizer (Parsing) → SI → Dialog Management, which consults the Backend → SO → Language Generation → NL → Synthesis → S)

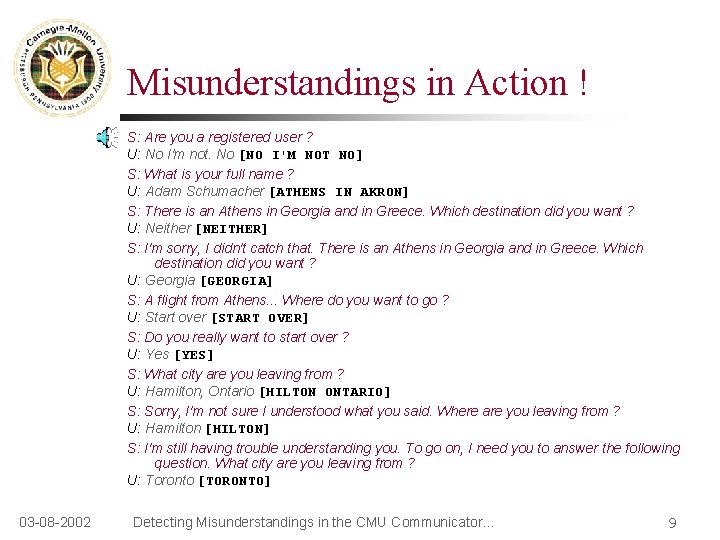

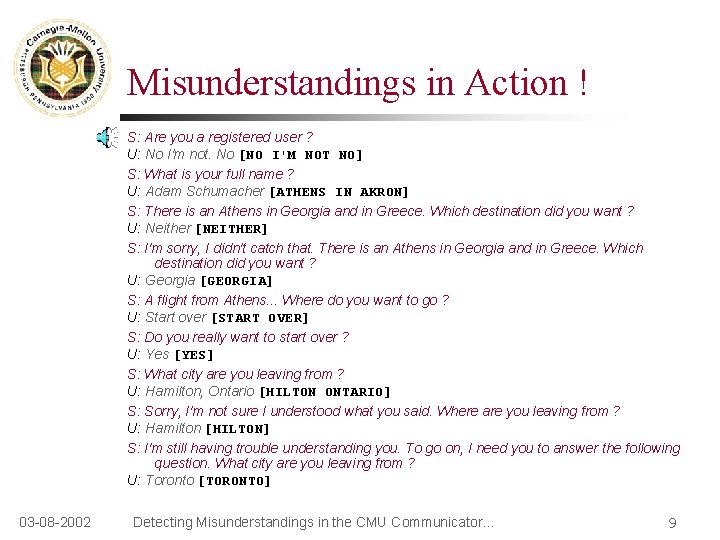

Misunderstandings in Action!

The recognizer's output for each user turn is shown in brackets.

- S: Are you a registered user?
- U: No I'm not. No [NO I'M NOT NO]
- S: What is your full name?
- U: Adam Schumacher [ATHENS IN AKRON]
- S: There is an Athens in Georgia and in Greece. Which destination did you want?
- U: Neither [NEITHER]
- S: I'm sorry, I didn't catch that. There is an Athens in Georgia and in Greece. Which destination did you want?
- U: Georgia [GEORGIA]
- S: A flight from Athens... Where do you want to go?
- U: Start over [START OVER]
- S: Do you really want to start over?
- U: Yes [YES]
- S: What city are you leaving from?
- U: Hamilton, Ontario [HILTON ONTARIO]
- S: Sorry, I'm not sure I understood what you said. Where are you leaving from?
- U: Hamilton [HILTON]
- S: I'm still having trouble understanding you. To go on, I need you to answer the following question. What city are you leaving from?
- U: Toronto [TORONTO]

Addressing the Problem

- Wait for speech recognition (SR) technology to reach better performance
- Increase the robustness of systems when faced with poor recognition:
  - Detect misunderstandings
  - Use recovery techniques

Problem Formulation

- Given an input utterance and the current state of the system, detect whether the utterance was correctly perceived by the system (the confidence annotation problem).

Roadmap

- Intro to Spoken Dialog Systems
- The Problem: Detecting Misunderstandings
- A Learning Solution
- Experiments and Results
- Conclusion

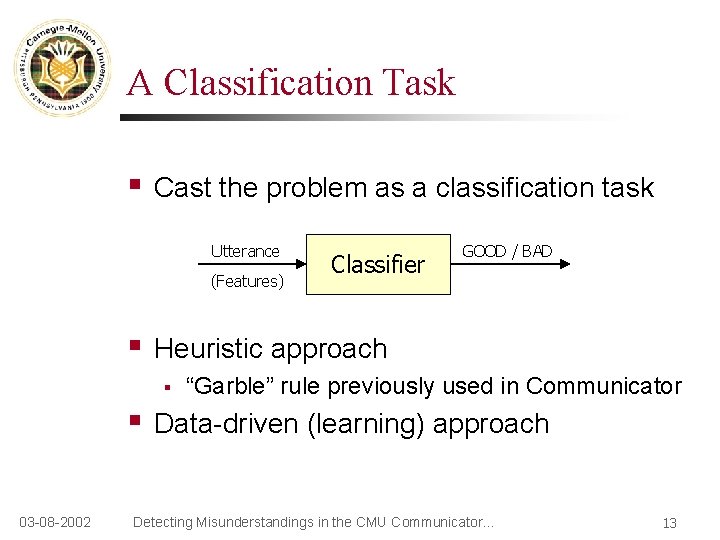

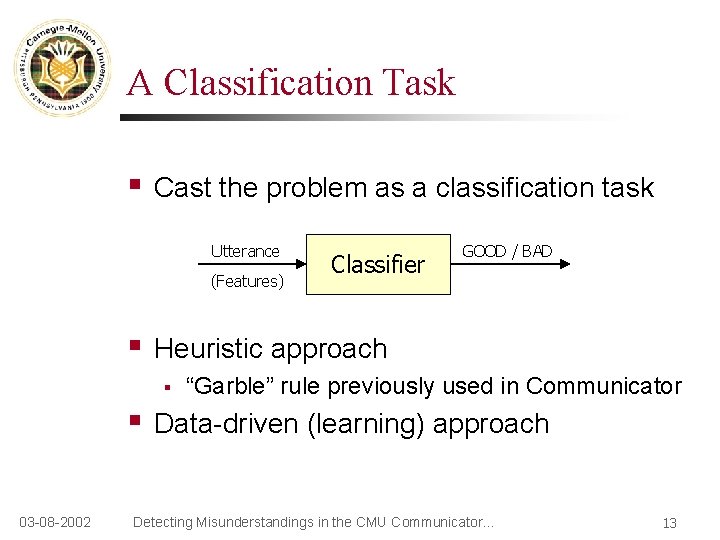

A Classification Task

- Cast the problem as a classification task: Utterance (features) → Classifier → GOOD / BAD
- Heuristic approach: the "Garble" rule previously used in Communicator
- Data-driven (learning) approach

A Data-Driven Approach

- Machine learning approach:
  - Learn to classify from a labeled training corpus: Features + GOOD/BAD labels → Classifier (learn mode)
  - Use the trained classifier to classify new instances: Features → Classifier → GOOD/BAD
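The two modes can be made concrete with a very small learner. This is a minimal sketch assuming a decision stump (a single-feature threshold rule) as the classifier, chosen purely for illustration; it is not one of the six techniques compared later, and `StumpClassifier` and the toy feature values are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple


class StumpClassifier:
    """One-feature threshold rule: one label above the threshold, the other below."""

    def fit(self, X: List[Sequence[float]], y: List[str]) -> "StumpClassifier":
        # Learn mode: try every (feature, threshold, polarity), keep the fewest errors.
        best: Optional[Tuple[int, int, float, str]] = None
        for j in range(len(X[0])):
            for t in sorted({x[j] for x in X}):
                for above in ("GOOD", "BAD"):
                    below = "BAD" if above == "GOOD" else "GOOD"
                    errors = sum((above if x[j] > t else below) != label
                                 for x, label in zip(X, y))
                    if best is None or errors < best[0]:
                        best = (errors, j, t, above)
        _, self.feature, self.threshold, self.above = best
        self.below = "BAD" if self.above == "GOOD" else "GOOD"
        return self

    def predict(self, x: Sequence[float]) -> str:
        # Classify mode: apply the learned rule to a new utterance's features.
        return self.above if x[self.feature] > self.threshold else self.below


# Hypothetical training data: [UncoveredPerc, ExpectedConcepts] per utterance.
X = [[0.0, 1], [10.0, 1], [36.0, 0], [50.0, 0]]
y = ["GOOD", "GOOD", "BAD", "BAD"]
clf = StumpClassifier().fit(X, y)
```

Here `clf.predict([20.0, 1])` returns `"BAD"` because the learned rule is "UncoveredPerc above 10.0 means BAD". Thresholds are taken from training values for simplicity; midpoints between neighboring values would usually generalize better.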

Ingredients

- Three ingredients are needed for a machine learning approach:
  - A corpus of labeled data to use for training
  - A set of relevant features
  - A classification technique

Roadmap

- Intro to Spoken Dialog Systems
- The Problem: Misunderstandings
- A Learning Solution
  - Training corpus
  - Features
  - Classification techniques
- Experiments and Results
- Conclusion

Corpus – Sources

- Collected 2 months of sessions
  - October and November 1999
  - About 300 sessions
  - Both developer and outsider calls
- Eliminated conversations with < 5 turns
  - Developers calling to check if the system is online
  - Wrong-number calls

Corpus – Structure

- The logs
  - Generated automatically by various system modules
  - Serve as a source of features for classification (they also contain the decoded utterances)
- The transcripts (the actual utterances)
  - Produced and double-checked by a human annotator
  - Provide a basis for labeling

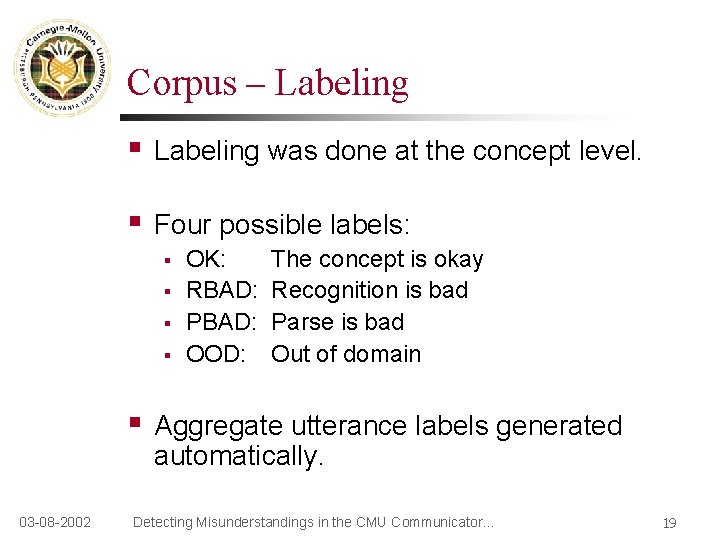

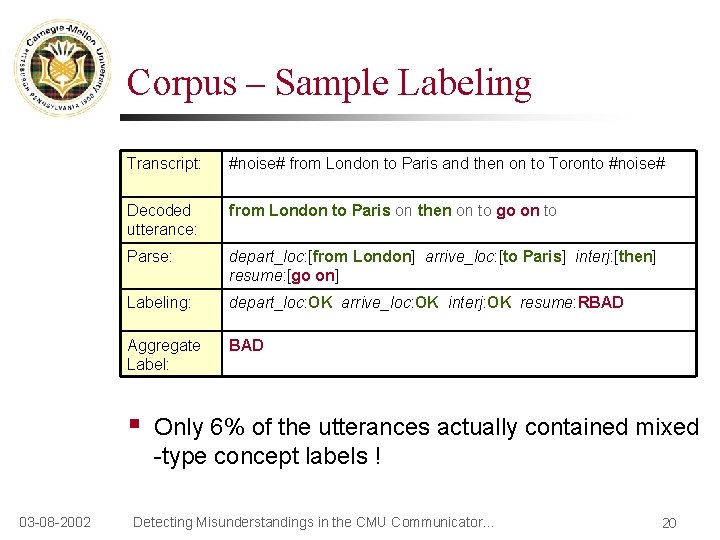

Corpus – Labeling

- Labeling was done at the concept level.
- Four possible labels:
  - OK: the concept is okay
  - RBAD: recognition is bad
  - PBAD: parse is bad
  - OOD: out of domain
- Aggregate utterance labels were generated automatically.

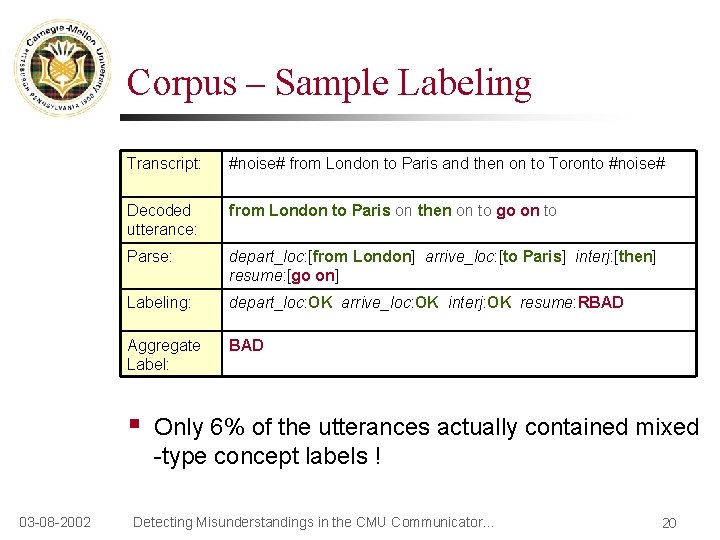

Corpus – Sample Labeling

- Transcript: #noise# from London to Paris and then on to Toronto #noise#
- Decoded utterance: from London to Paris on then on to go on to
- Parse: depart_loc: [from London], arrive_loc: [to Paris], interj: [then], resume: [go on]
- Labeling: depart_loc: OK, arrive_loc: OK, interj: OK, resume: RBAD
- Aggregate label: BAD
- Only 6% of the utterances actually contained mixed-type concept labels!
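The slides do not spell out the aggregation rule, so the following is a minimal sketch under two assumptions consistent with the sample: an utterance is BAD as soon as any of its concepts carries a non-OK label (RBAD, PBAD or OOD), and "mixed" means OK and non-OK labels occur in the same utterance, so such utterances can be dropped as in the corpus summary. The function names are hypothetical.

```python
from typing import Dict, List

# Assumption: every label other than OK makes a concept "bad".
BAD_LABELS = {"RBAD", "PBAD", "OOD"}


def aggregate_label(concept_labels: Dict[str, str]) -> str:
    """Assumed rule: an utterance is BAD as soon as one of its concepts is bad."""
    return "BAD" if any(label in BAD_LABELS for label in concept_labels.values()) else "GOOD"


def is_mixed(concept_labels: Dict[str, str]) -> bool:
    """True when OK concepts and bad concepts occur in the same utterance."""
    labels = set(concept_labels.values())
    return "OK" in labels and bool(labels & BAD_LABELS)


def binary_corpus(utterances: List[Dict[str, str]]) -> List[str]:
    """Discard mixed-label utterances, then keep one GOOD/BAD label per utterance."""
    return [aggregate_label(u) for u in utterances if not is_mixed(u)]


sample = {"depart_loc": "OK", "arrive_loc": "OK", "interj": "OK", "resume": "RBAD"}
```

Applied to `sample`, `aggregate_label` gives `"BAD"` as on the slide, while `is_mixed` flags it as one of the mixed-type utterances.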

Corpus – Summary

- Started with 2 months of dialog sessions
- Eliminated short, ill-formed sessions
- Transcribed the corpus
- Labeled it at the concept level
- Discarded mixed-label utterances
- Result:
  - 4550 binary-labeled utterances
  - 311 dialogs

Features – Sources

- Traditionally, features are extracted from the speech recognition layer [Chase].
- In an SDS, there are at least 2 other orthogonal knowledge sources:
  - The parser
  - The dialog manager

(Figure: features drawn from three levels: Speech, Parsing, Dialog)

Features – Speech Recognition Level

- Transcript: #noise# from London to Paris and then on to Toronto #noise#
- Decoded: from London to Paris on then on to ?go? on to (?go? marks a word the recognizer is unconfident about)
- Parse: depart_loc: [from London], arrive_loc: [to Paris], interj: [then], resume: [?go? on]
- WordNumber (11)
- UnconfidentPerc = % of unconfident words (9%)
  - This feature already captures other decoder-level features
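Both speech-level features follow directly from the decoded string. A minimal sketch, assuming the decoder marks an unconfident word by wrapping it in question marks as in `?go?` above; `speech_features` is a hypothetical name.

```python
def speech_features(decoded: str) -> dict:
    """WordNumber and UnconfidentPerc for one decoded utterance."""
    words = decoded.split()
    # Assumed convention: a word wrapped in '?' (e.g. '?go?') is unconfident.
    unconfident = [w for w in words
                   if len(w) > 2 and w.startswith("?") and w.endswith("?")]
    n = len(words)
    return {
        "WordNumber": n,
        "UnconfidentPerc": 100.0 * len(unconfident) / n if n else 0.0,
    }


features = speech_features("from London to Paris on then on to ?go? on to")
```

For the example utterance this yields 11 words, 1 of them unconfident, so UnconfidentPerc is 100/11, about 9%, matching the slide.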

Features – Parser Level

- Transcript: #noise# from London to Paris and then on to Toronto #noise#
- Decoded: from London to Paris on then on to ?go? on to
- Parse: depart_loc: [from London], arrive_loc: [to Paris], interj: [then], resume: [?go? on]
- UncoveredPerc = % of words uncovered by the parse (36%)
- GapNumber = # of unparsed fragments (3)
- FragmentationScore = # of transitions between parsed and unparsed fragments (5)
- Garble = flag computed by a heuristic rule based on parse coverage and fragmentation
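The three coverage-based numbers can be computed from a per-word mask of which decoded words the parse covers. This is a minimal sketch assuming concept spans are given as half-open word-index ranges; `coverage_mask` and `parse_features` are hypothetical names, and the Garble heuristic is not reproduced because its rule is not given here.

```python
from typing import List, Tuple


def coverage_mask(n_words: int, spans: List[Tuple[int, int]]) -> List[bool]:
    """Mark words covered by parsed concepts; spans are [start, end) word indices."""
    mask = [False] * n_words
    for start, end in spans:
        for i in range(start, end):
            mask[i] = True
    return mask


def parse_features(mask: List[bool]) -> dict:
    n = len(mask)
    uncovered = mask.count(False)
    # Each maximal run of uncovered words counts as one unparsed fragment (gap).
    gaps = sum(1 for i, covered in enumerate(mask)
               if not covered and (i == 0 or mask[i - 1]))
    # Count every switch between a parsed word and an unparsed word.
    transitions = sum(1 for i in range(1, n) if mask[i] != mask[i - 1])
    return {
        "UncoveredPerc": 100.0 * uncovered / n if n else 0.0,
        "GapNumber": gaps,
        "FragmentationScore": transitions,
    }


words = "from London to Paris on then on to go on to".split()
# depart_loc, arrive_loc, interj, resume, as in the parse above
mask = coverage_mask(len(words), [(0, 2), (2, 4), (5, 6), (8, 10)])
result = parse_features(mask)
```

For the example, 4 of 11 words are uncovered (about 36%), they form 3 gaps ("on", "on to", "to"), and the parsed/unparsed status switches 5 times, reproducing the slide's values.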

Features – Parser Level (2)

- Transcript: #noise# from London to Paris and then on to Toronto #noise#
- Decoded: from London to Paris on then on to ?go? on to
- Parse: depart_loc: [from London], arrive_loc: [to Paris], interj: [then], resume: [?go? on]
- ConceptBigram = bigram concept model score:
  - $P(c_1 \ldots c_n) \approx P(c_n \mid c_{n-1})\, P(c_{n-1} \mid c_{n-2}) \cdots P(c_2 \mid c_1)\, P(c_1)$
  - Probabilities trained from a corpus
- ConceptNumber (4)
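The bigram factorization above can be trained by counting concept pairs in parsed utterances. A minimal sketch, assuming add-one smoothing (the slides do not say how unseen bigrams are handled) and scoring in log space to avoid underflow; `ConceptBigram` and the toy corpus are hypothetical. ConceptNumber is simply the length of the concept sequence.

```python
import math
from collections import Counter
from typing import List, Sequence


class ConceptBigram:
    """Bigram model over concept sequences, trained from a corpus of parsed utterances."""

    def __init__(self, corpus: List[Sequence[str]]):
        self.first = Counter()    # how often each concept starts an utterance
        self.history = Counter()  # how often each concept is followed by another one
        self.pairs = Counter()    # counts of (previous concept, concept) pairs
        self.vocab = set()
        for seq in corpus:
            if not seq:
                continue
            self.first[seq[0]] += 1
            self.vocab.update(seq)
            for prev, cur in zip(seq, seq[1:]):
                self.history[prev] += 1
                self.pairs[(prev, cur)] += 1
        self.n_seqs = sum(self.first.values())

    def log_prob(self, seq: Sequence[str]) -> float:
        """log P(c_1) + sum over k of log P(c_k | c_{k-1}), with add-one smoothing."""
        if not seq:
            raise ValueError("empty concept sequence")
        v = len(self.vocab) + 1  # one extra slot for concepts never seen in training
        score = math.log((self.first[seq[0]] + 1) / (self.n_seqs + v))
        for prev, cur in zip(seq, seq[1:]):
            score += math.log((self.pairs[(prev, cur)] + 1) / (self.history[prev] + v))
        return score


model = ConceptBigram([["depart_loc", "arrive_loc"],
                       ["depart_loc", "arrive_loc", "depart_time"],
                       ["arrive_loc"]])
```

On this toy corpus the familiar order `["depart_loc", "arrive_loc"]` scores higher than the unseen order `["arrive_loc", "depart_loc"]`, which is the kind of signal the ConceptBigram feature is meant to carry.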

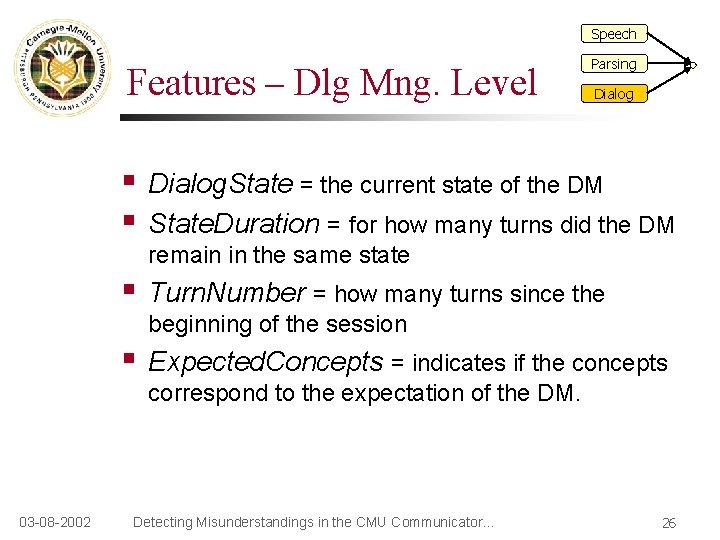

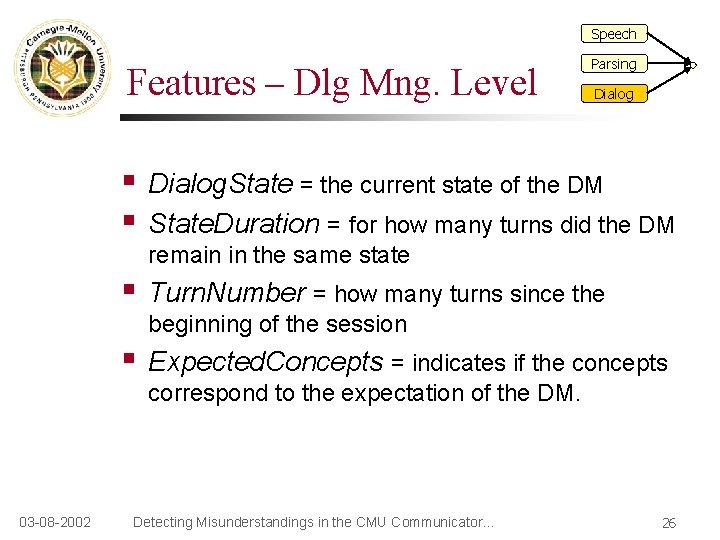

Features – Dialog Management Level

- DialogState = the current state of the dialog manager (DM)
- StateDuration = the number of turns the DM has remained in the same state
- TurnNumber = the number of turns since the beginning of the session
- ExpectedConcepts = indicates whether the concepts correspond to the expectation of the DM
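The dialog-level features only need the DM's state history and its current expectations. A minimal sketch, assuming the DM exposes its state at every turn and the set of concepts it expects in the current state, and reading ExpectedConcepts as "every parsed concept was expected"; `dialog_features` and the state names are hypothetical.

```python
from typing import List, Set


def dialog_features(states: List[str], concepts: Set[str], expected: Set[str]) -> dict:
    """Dialog-level features for the latest turn.

    states:   DM state at each turn so far, oldest first (hypothetical input format)
    concepts: concepts parsed from the current utterance
    expected: concepts the DM expects in its current state (hypothetical input)
    """
    current = states[-1]
    duration = 0
    for state in reversed(states):  # count consecutive turns spent in the current state
        if state != current:
            break
        duration += 1
    return {
        "DialogState": current,
        "StateDuration": duration,
        "TurnNumber": len(states),
        # Assumed reading: non-empty, and every parsed concept was expected.
        "ExpectedConcepts": bool(concepts) and concepts <= expected,
    }


f = dialog_features(["greeting", "ask_origin", "ask_origin", "ask_origin"],
                    {"depart_loc"}, {"depart_loc"})
```

Here the DM has been in `ask_origin` for 3 of the 4 turns, and the parsed concept matches what it asked for.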

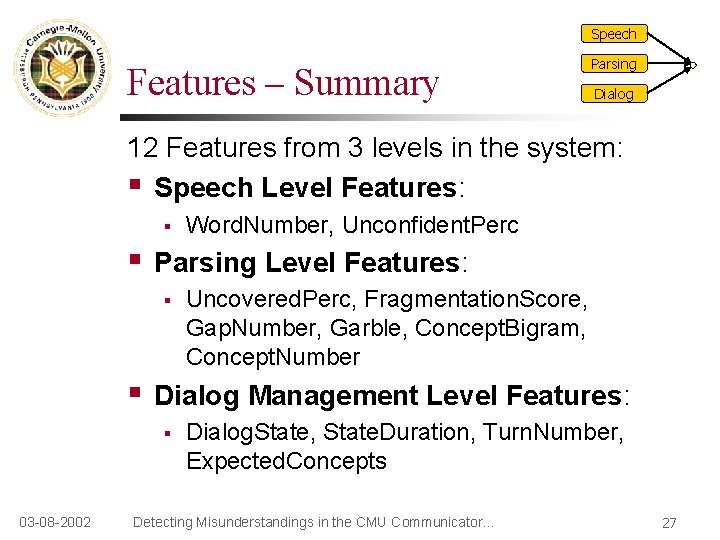

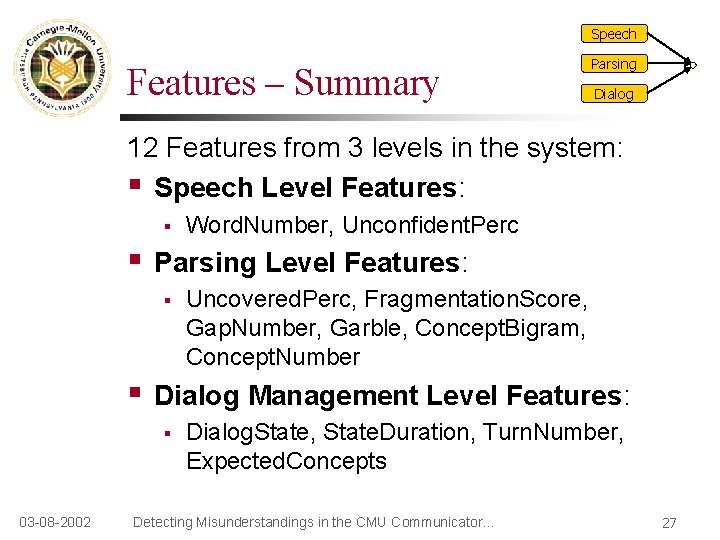

Features – Summary

12 features from 3 levels in the system:

- Speech level features: WordNumber, UnconfidentPerc
- Parsing level features: UncoveredPerc, FragmentationScore, GapNumber, Garble, ConceptBigram, ConceptNumber
- Dialog management level features: DialogState, StateDuration, TurnNumber, ExpectedConcepts

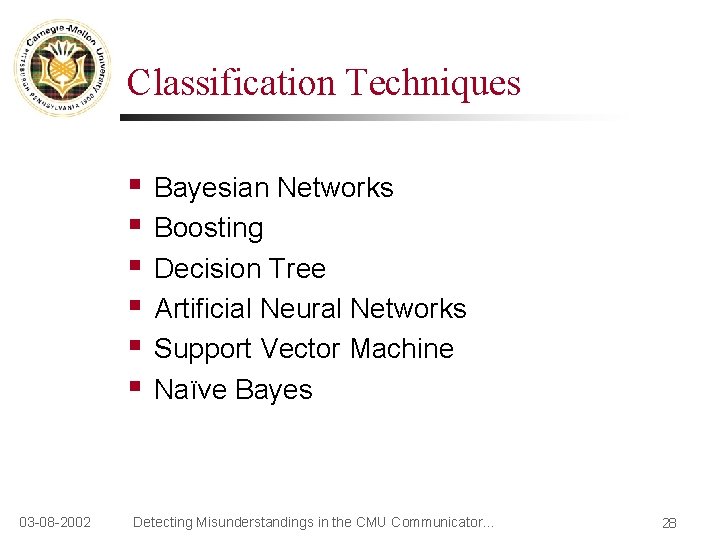

Classification Techniques

- Bayesian Networks
- Boosting
- Decision Tree
- Artificial Neural Networks
- Support Vector Machine
- Naïve Bayes

Roadmap

- Intro to Spoken Dialog Systems
- The Problem: Detecting Misunderstandings
- A Learning Approach
  - Training corpus
  - Features
  - Classification techniques
- Experiments and Results
- Conclusion

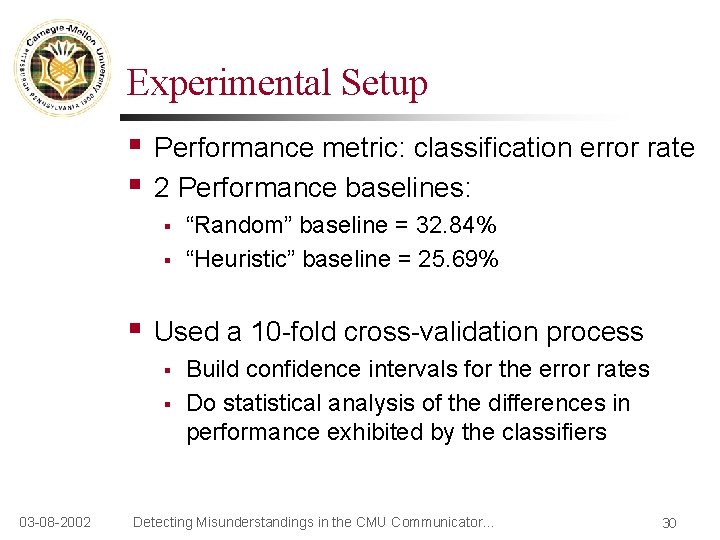

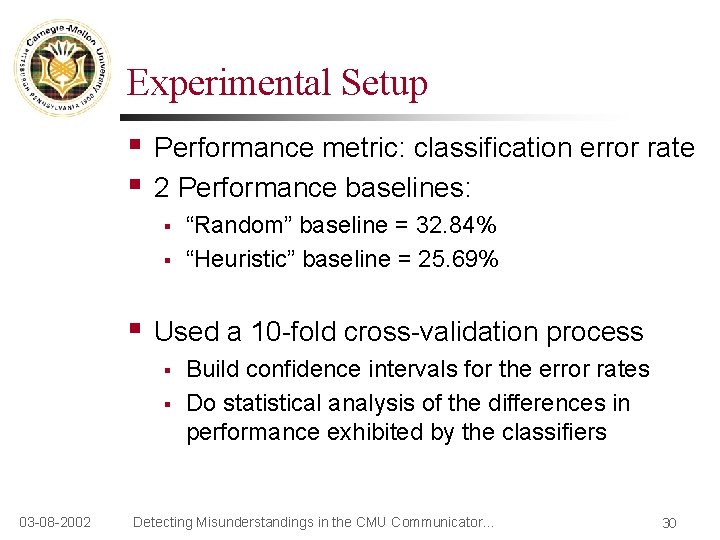

Experimental Setup

- Performance metric: classification error rate
- 2 performance baselines:
  - "Random" baseline = 32.84%
  - "Heuristic" baseline = 25.69%
- Used a 10-fold cross-validation process to:
  - Build confidence intervals for the error rates
  - Do a statistical analysis of the differences in performance exhibited by the classifiers
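A 10-fold cross-validation loop with an interval on the mean fold error might look like the sketch below. It assumes a 95% normal-approximation interval (the slides do not say how the intervals were built) and uses a hypothetical majority-label learner as the baseline. The "Random" baseline's 0.00% false-rejection rate in the later results table suggests it answers OK for every utterance, which is what this learner does when GOOD is the majority label. `train_majority` and `cross_validate` are hypothetical names.

```python
import math
import random
from collections import Counter
from typing import Callable, List, Sequence, Tuple

Predictor = Callable[[Sequence[float]], str]


def train_majority(X: List[Sequence[float]], y: List[str]) -> Predictor:
    """Baseline learner: always answer the most frequent training label."""
    label = Counter(y).most_common(1)[0][0]
    return lambda x: label


def cross_validate(X, y, train: Callable[..., Predictor], k: int = 10,
                   seed: int = 0) -> Tuple[float, float, float]:
    """Return mean fold error and a 95% normal-approximation interval around it."""
    order = list(range(len(y)))
    random.Random(seed).shuffle(order)
    folds = [order[i::k] for i in range(k)]  # k disjoint, near-equal folds
    errors = []
    for fold in folds:
        held_out = set(fold)
        train_idx = [i for i in order if i not in held_out]
        predict = train([X[i] for i in train_idx], [y[i] for i in train_idx])
        wrong = sum(predict(X[i]) != y[i] for i in fold)
        errors.append(wrong / len(fold))
    mean = sum(errors) / k
    sd = math.sqrt(sum((e - mean) ** 2 for e in errors) / (k - 1))
    half_width = 1.96 * sd / math.sqrt(k)
    return mean, mean - half_width, mean + half_width


# Toy corpus: 67 GOOD and 33 BAD utterances with a dummy feature.
X = [[0.0]] * 100
y = ["GOOD"] * 67 + ["BAD"] * 33
mean, low, high = cross_validate(X, y, train_majority)
```

With 33% BAD utterances the majority baseline's mean error is exactly 0.33, mirroring how the "Random" baseline's error equals the share of BAD utterances. With only 10 folds, a Student t critical value (about 2.262 for 9 degrees of freedom) would give a somewhat wider, more conservative interval than 1.96.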

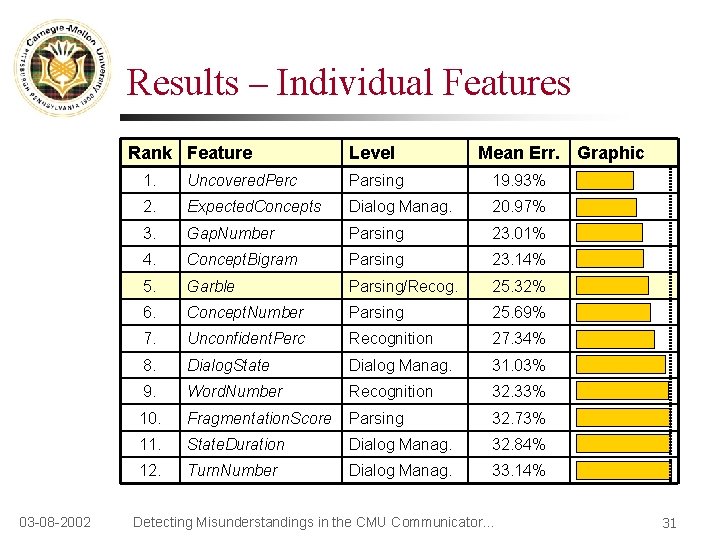

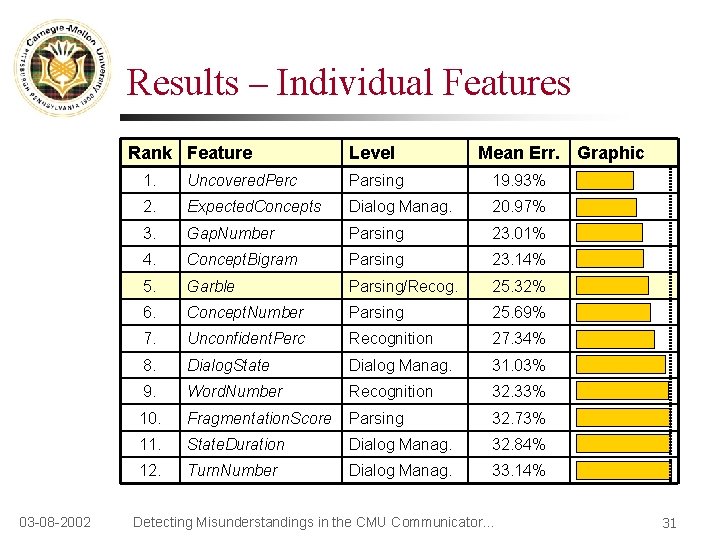

Results – Individual Features

| Rank | Feature | Level | Mean Err. |
|---|---|---|---|
| 1 | UncoveredPerc | Parsing | 19.93% |
| 2 | ExpectedConcepts | Dialog Management | 20.97% |
| 3 | GapNumber | Parsing | 23.01% |
| 4 | ConceptBigram | Parsing | 23.14% |
| 5 | Garble | Parsing/Recognition | 25.32% |
| 6 | ConceptNumber | Parsing | 25.69% |
| 7 | UnconfidentPerc | Recognition | 27.34% |
| 8 | DialogState | Dialog Management | 31.03% |
| 9 | WordNumber | Recognition | 32.33% |
| 10 | FragmentationScore | Parsing | 32.73% |
| 11 | StateDuration | Dialog Management | 32.84% |
| 12 | TurnNumber | Dialog Management | 33.14% |

Results – Classifiers

| Classifier | Mean Error |
|---|---|
| Random Baseline | 32.84% |
| “Heuristic” Baseline | 25.69% |
| AdaBoost | 16.59% |
| Decision Tree | 17.32% |
| Bayesian Network | 17.82% |
| SVM | 18.40% |
| Neural Network | 18.90% |
| Naïve Bayes | 21.65% |

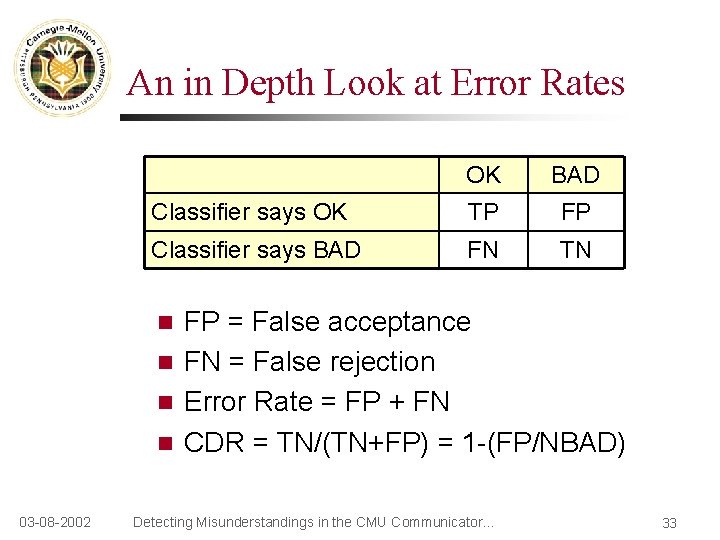

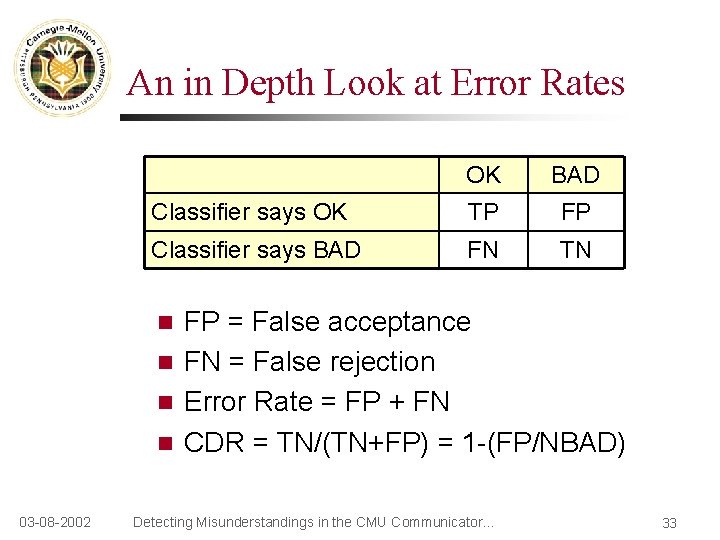

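An In-Depth Look at Error Rates

| | Actually OK | Actually BAD |
|---|---|---|
| Classifier says OK | TP | FP |
| Classifier says BAD | FN | TN |

- FP = false acceptance
- FN = false rejection
- Error rate = (FP + FN) / N, where N is the total number of utterances, i.e. the F/P rate plus the F/N rate
- CDR (correct detection rate) = TN / (TN + FP) = 1 - FP / N_BAD, where N_BAD = TN + FP is the number of BAD utterances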
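These definitions translate directly into code. A minimal sketch that counts the confusion-matrix cells from actual and predicted labels (assumed to be the strings "OK" and "BAD") and reports the false acceptance and false rejection rates as fractions of all utterances, plus the CDR as a fraction of BAD utterances; `error_breakdown` is a hypothetical name.

```python
from typing import Dict, Sequence


def error_breakdown(actual: Sequence[str], predicted: Sequence[str]) -> Dict[str, float]:
    """Labels are 'OK' or 'BAD'; all rates are fractions of N except the CDR."""
    pairs = list(zip(actual, predicted))
    n = len(pairs)
    fp = sum(a == "BAD" and p == "OK" for a, p in pairs)   # false acceptance
    fn = sum(a == "OK" and p == "BAD" for a, p in pairs)   # false rejection
    tn = sum(a == "BAD" and p == "BAD" for a, p in pairs)  # misunderstanding caught
    n_bad = tn + fp
    return {
        "error_rate": (fp + fn) / n,
        "fa_rate": fp / n,
        "fr_rate": fn / n,
        "cdr": tn / n_bad if n_bad else float("nan"),
    }


report = error_breakdown(["OK", "OK", "OK", "BAD", "BAD"],
                         ["OK", "BAD", "OK", "OK", "BAD"])
```

In this five-utterance example one BAD utterance is accepted and one OK utterance is rejected, so the error rate is 0.4 (0.2 + 0.2) and the CDR is 1/2.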

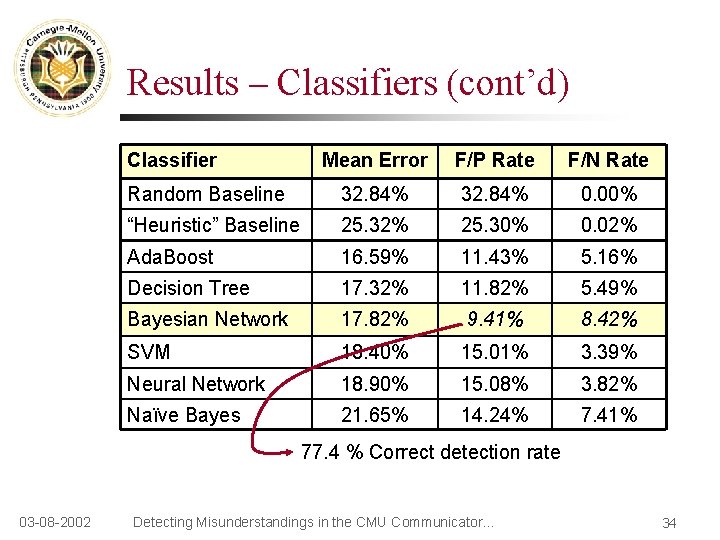

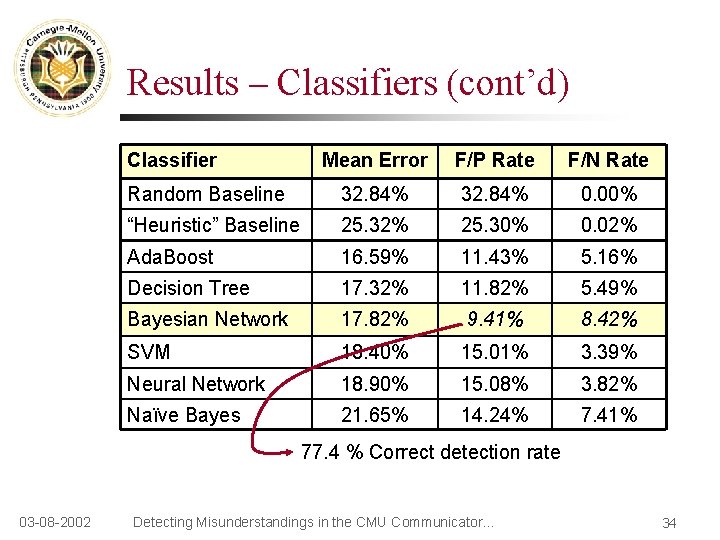

Results – Classifiers (cont’d)

| Classifier | Mean Error | F/P Rate | F/N Rate |
|---|---|---|---|
| Random Baseline | 32.84% | 32.84% | 0.00% |
| “Heuristic” Baseline | 25.32% | 25.30% | 0.02% |
| AdaBoost | 16.59% | 11.43% | 5.16% |
| Decision Tree | 17.32% | 11.82% | 5.49% |
| Bayesian Network | 17.82% | 9.41% | 8.42% |
| SVM | 18.40% | 15.01% | 3.39% |
| Neural Network | 18.90% | 15.08% | 3.82% |
| Naïve Bayes | 21.65% | 14.24% | 7.41% |

Correct detection rate: 77.4%

Conclusion

- Spoken dialog system performance is strongly impaired by misunderstandings.
- Increase the robustness of systems when faced with poor recognition:
  - Detect misunderstandings
  - Use recovery techniques

Conclusion (cont’d)

- Data-driven classification task:
  - Corpus
  - 12 features from 3 levels in the system
  - Empirically compared 6 classification techniques
- Data-driven misunderstanding detector:
  - Significant improvement over the previous heuristic classifier
  - Correctly detects 74% of the misunderstandings

Future Work

- Detect misunderstandings
  - Improve performance by adding new features
  - Identify the source of the error
- Use recovery techniques
  - Incorporate the confidence score into the dialog management process

Pointers

- "Is This Conversation On Track?", P. Carpenter, C. Jin, D. Wilson, R. Zhang, D. Bohus, A. Rudnicky, Eurospeech 2001, Aalborg, Denmark
- CMU Communicator
  - 1-412-268-1084
  - www.cs.cmu.edu/~dbohus/SDS

Ragam dialog (dialog style)

Ragam dialog (dialog style) Jendela tty

Jendela tty Detecting evolutionary forces in language change

Detecting evolutionary forces in language change Kerberos silver ticket

Kerberos silver ticket How do fraud symptoms help in detecting fraud

How do fraud symptoms help in detecting fraud Sniffer for detecting lost mobiles

Sniffer for detecting lost mobiles Detecting havex

Detecting havex Boston scientific latitude setup

Boston scientific latitude setup Netscape communicator

Netscape communicator Features of technical communication

Features of technical communication Communicator sage crm integration

Communicator sage crm integration Thrive by athena health

Thrive by athena health Characteristics of a competent communicator

Characteristics of a competent communicator The health communicator's social media toolkit

The health communicator's social media toolkit Step by step communicator

Step by step communicator Bpos software

Bpos software Positive violation valence

Positive violation valence Which communicator is motivated by mistrust of others?

Which communicator is motivated by mistrust of others? Violation valence

Violation valence What makes a competent communicator?

What makes a competent communicator? Jesus the master communicator

Jesus the master communicator Controlled situation communicator

Controlled situation communicator Athena communicator

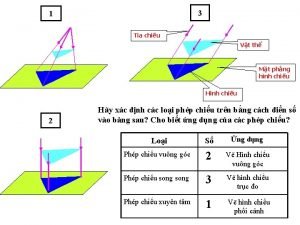

Athena communicator Vẽ hình chiếu đứng bằng cạnh của vật thể

Vẽ hình chiếu đứng bằng cạnh của vật thể Biện pháp chống mỏi cơ

Biện pháp chống mỏi cơ độ dài liên kết

độ dài liên kết Gấu đi như thế nào

Gấu đi như thế nào Các môn thể thao bắt đầu bằng tiếng chạy

Các môn thể thao bắt đầu bằng tiếng chạy Khi nào hổ mẹ dạy hổ con săn mồi

Khi nào hổ mẹ dạy hổ con săn mồi Thiếu nhi thế giới liên hoan

Thiếu nhi thế giới liên hoan điện thế nghỉ

điện thế nghỉ Một số thể thơ truyền thống

Một số thể thơ truyền thống Trời xanh đây là của chúng ta thể thơ

Trời xanh đây là của chúng ta thể thơ Ng-html

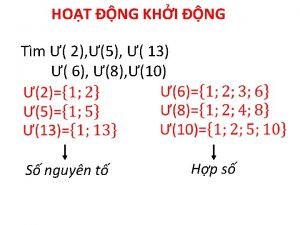

Ng-html Số nguyên tố là

Số nguyên tố là Fecboak

Fecboak Các châu lục và đại dương trên thế giới

Các châu lục và đại dương trên thế giới Chụp phim tư thế worms-breton

Chụp phim tư thế worms-breton Thế nào là hệ số cao nhất

Thế nào là hệ số cao nhất