CHAPTER 3 Chapter 11 Artificial Intelligence J Glenn

- Slides: 85

CHAPTER 3 Chapter 11 Artificial Intelligence J. Glenn Brookshear J. 蔡文能 2020/10/27 Copyright © 2009 Pearson Education, Inc. 1 Slide 11 -1

Chapter 11: Artificial Intelligence
• 11.1 Intelligence and Machines
• 11.2 Perception
• 11.3 Reasoning
• 11.4 Additional Areas of Research
• 11.5 Artificial Neural Networks
• 11.6 Robotics
• 11.7 Considering the Consequences


Home Wrecker


The Matrix

Screamers

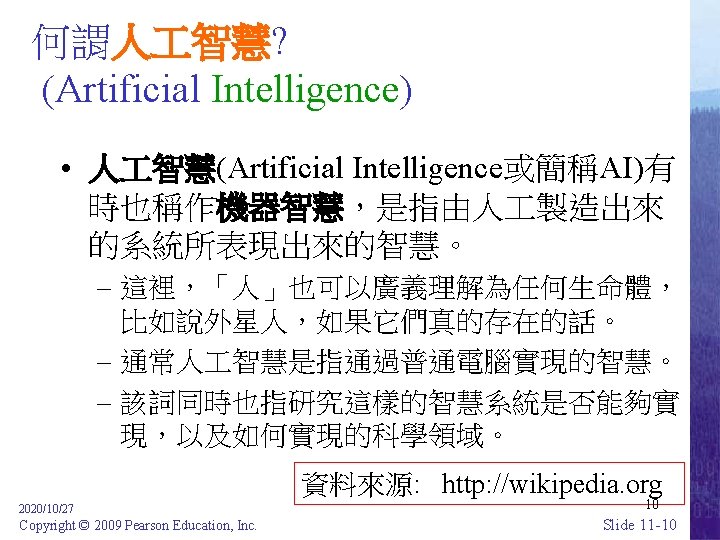

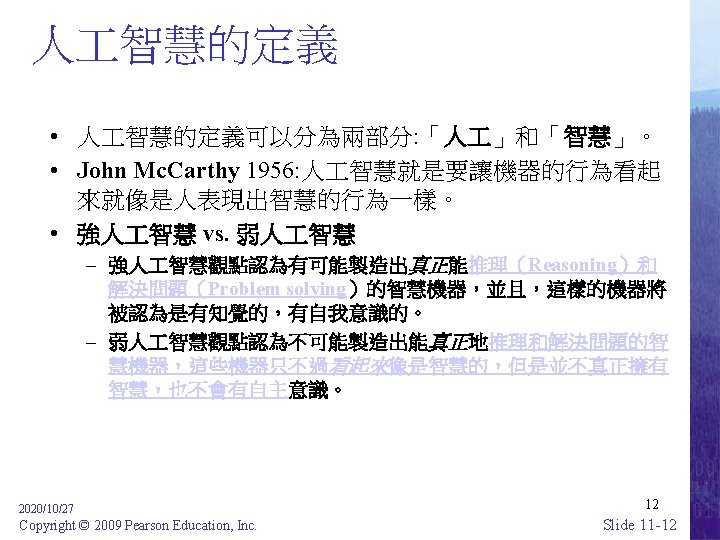


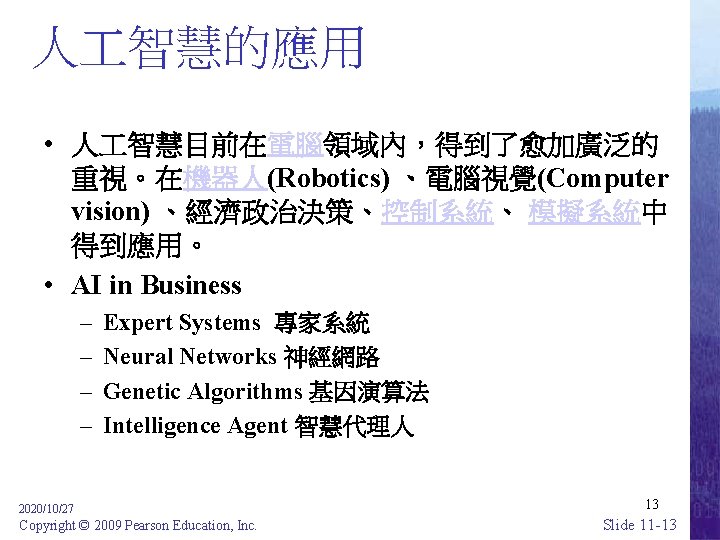

Applications of Artificial Intelligence
• Artificial intelligence is receiving increasingly wide attention in the computing field. It is applied in robotics, computer vision, economic and political decision-making, control systems, and simulation systems.
• AI in Business
– Expert Systems
– Neural Networks
– Genetic Algorithms
– Intelligent Agents

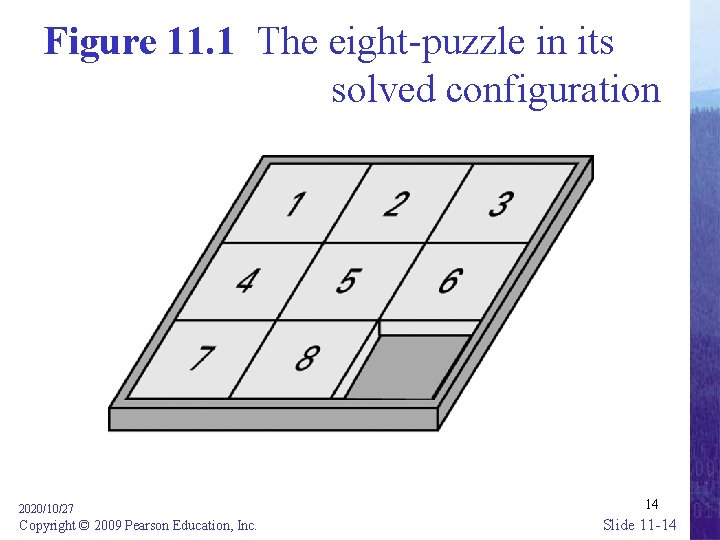

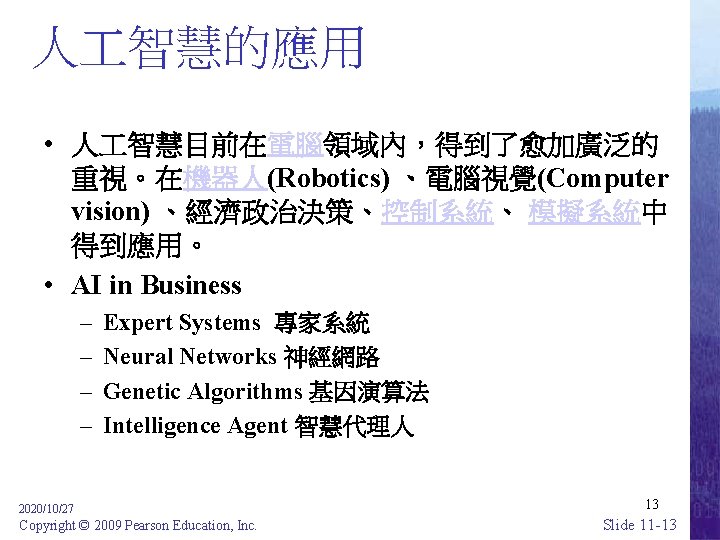

Figure 11.1 The eight-puzzle in its solved configuration

Figure 11.2 Our puzzle-solving machine

8-puzzle, Chess

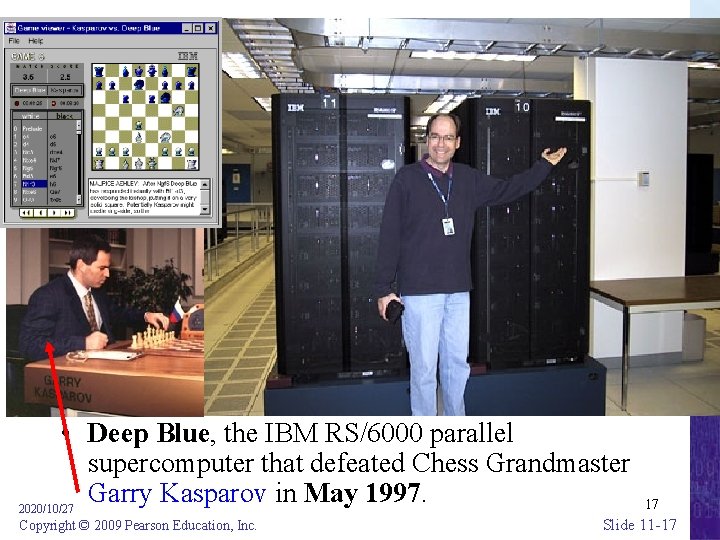

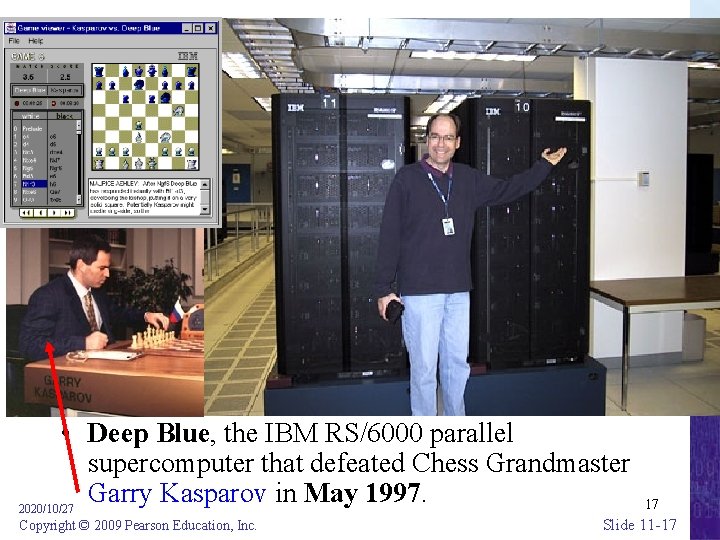

• Deep Blue, the IBM RS/6000 parallel supercomputer that defeated Chess Grandmaster Garry Kasparov in May 1997.

Research in Artificial Intelligence (1/3)
• Natural language processing
• AI search
• Pattern recognition
• Machine learning
• Knowledge-based systems
• Reasoning, logic programming
• Expert systems
• Neural networks
• Genetic algorithms
• Fuzzy theory, …

Research in Artificial Intelligence (2/3)
• Artificial intelligence began as an experimental field in the 1950s with such pioneers as Allen Newell and Herbert Simon, who founded the first artificial intelligence laboratory at Carnegie Mellon University, and John McCarthy and Marvin Minsky, who founded the MIT AI Lab in 1959 (now called MIT CSAIL).

Research in Artificial Intelligence (3/3)
• Seminal papers advancing the concept of machine intelligence include A Logical Calculus of the Ideas Immanent in Nervous Activity (1943), by Warren McCulloch and Walter Pitts; Computing Machinery and Intelligence (1950), by Alan Turing; and Man-Computer Symbiosis, by J. C. R. Licklider. See the Wikipedia articles on cybernetics and the Turing test for further discussion.

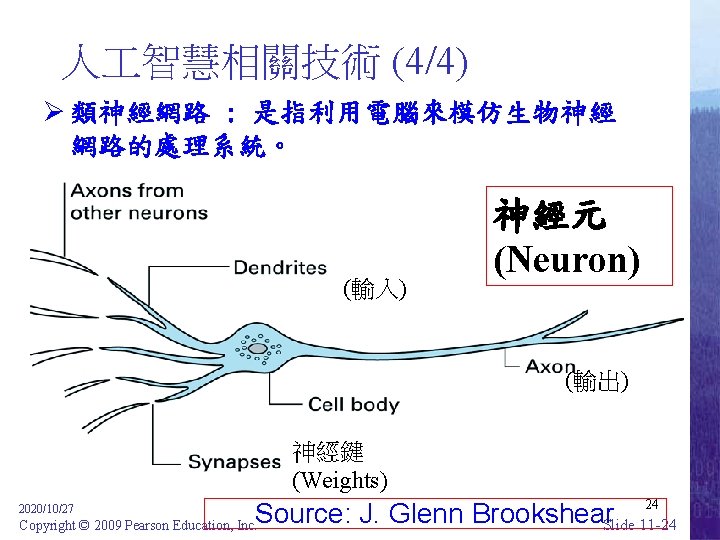

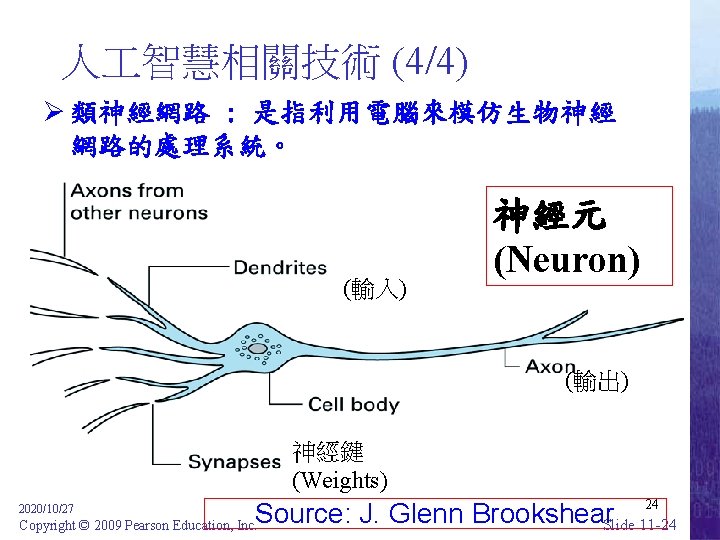

AI-Related Techniques (4/4)
➢ Artificial neural network: a processing system that uses a computer to imitate biological neural networks.
[Diagram labels: input, neuron, output, cell nucleus, synapses (weights)]
Source: J. Glenn Brookshear

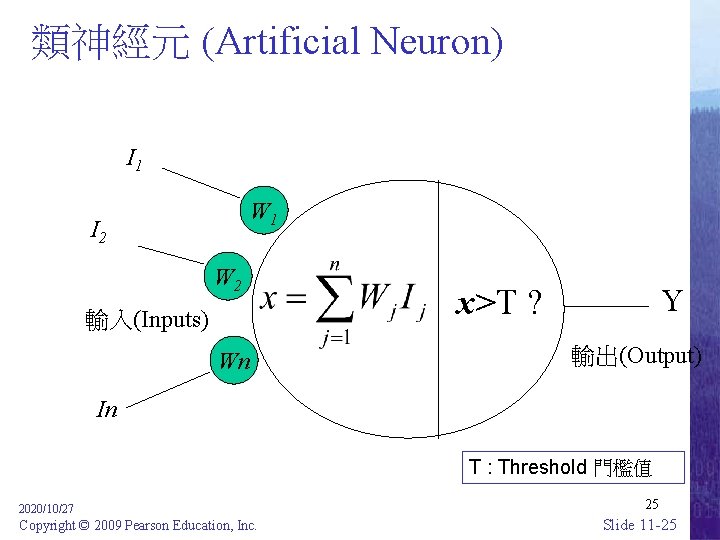

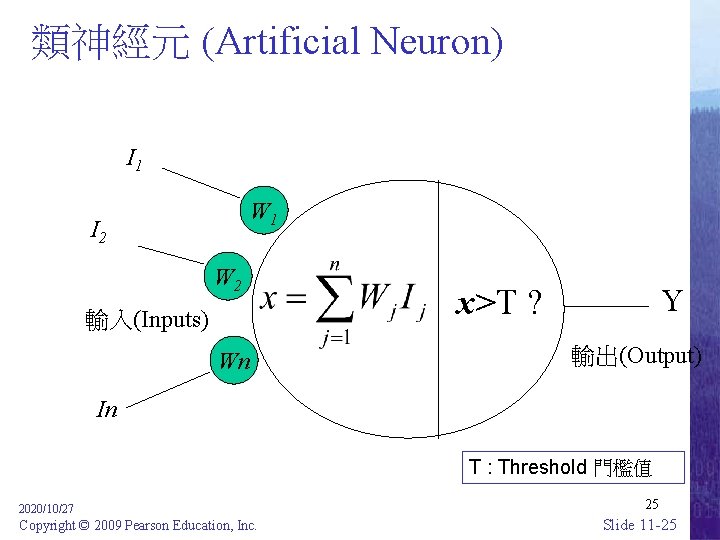

Artificial Neuron
[Diagram: inputs I1, I2, …, In with weights W1, W2, …, Wn feed a unit that tests x > T? and produces the output Y]
T: threshold

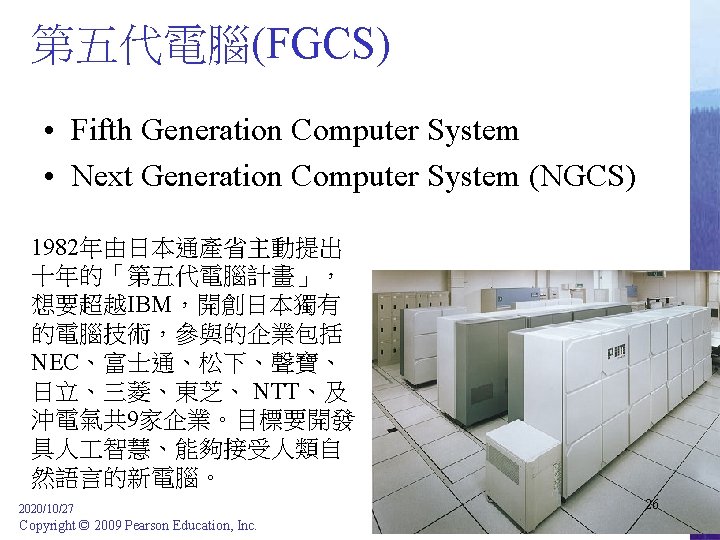

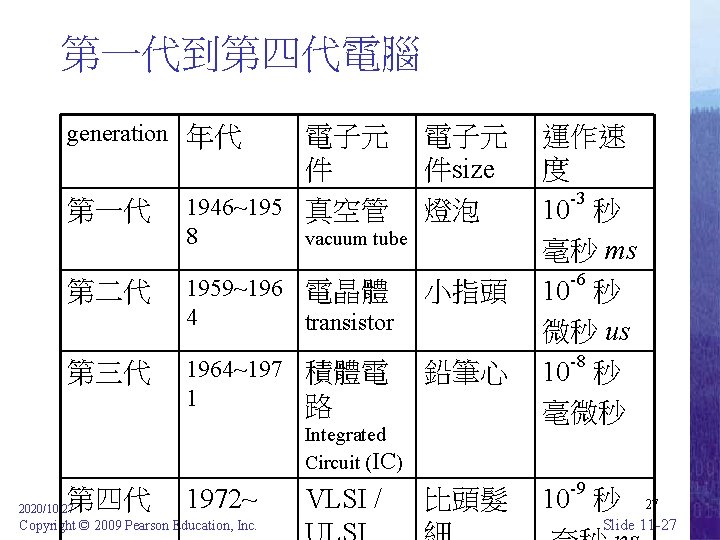

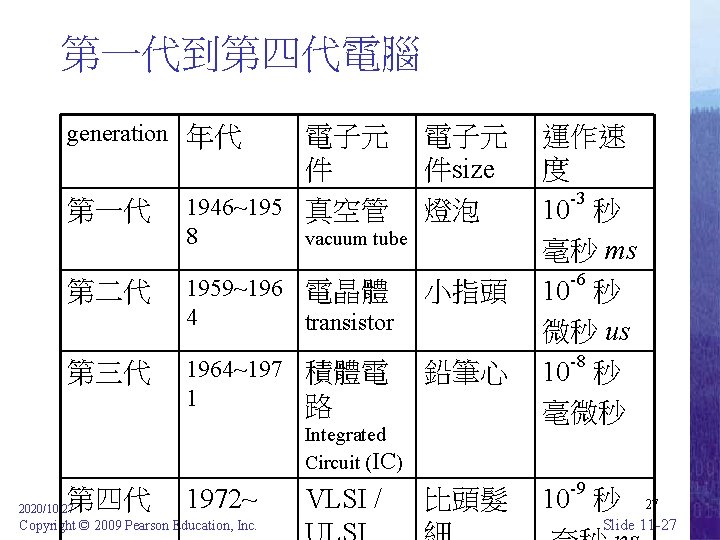

Computer generations, first through fourth

| Generation | Years | Electronic component | Component size | Operating speed |
|---|---|---|---|---|
| First | 1946~1958 | Vacuum tube | Light bulb | 10^-3 s (millisecond, ms) |
| Second | 1959~1964 | Transistor | Little finger | 10^-6 s (microsecond, us) |
| Third | 1964~1971 | Integrated circuit (IC) | Pencil lead | 10^-8 s |
| Fourth | 1972~ | VLSI | Compared with a hair … | 10^-9 s (nanosecond) |

Intelligent Agents
• Agent: A “device” that responds to stimuli from its environment
– Sensors
– Actuators
• Much of the research in artificial intelligence can be viewed in the context of building agents that behave intelligently

Levels of Intelligent Behavior
• Reflex: actions are predetermined responses to the input data
• More intelligent behavior requires knowledge of the environment and involves such activities as:
– Goal seeking
– Learning
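The reflex level can be pictured as a fixed lookup from stimulus to response. The sketch below is only an illustration: the stimulus and action names are hypothetical and do not come from the slides.

```python
# Hypothetical stimulus -> response table; names are illustrative only.
REFLEX_TABLE = {
    "obstacle_ahead": "turn_left",
    "clear_path": "move_forward",
    "low_battery": "return_to_dock",
}


def reflex_agent(stimulus: str) -> str:
    """Return the predetermined response for a sensed stimulus."""
    # No memory, no goal, no learning: unknown input gets a fixed default.
    return REFLEX_TABLE.get(stimulus, "do_nothing")
```

Goal seeking and learning would require the agent to keep state about its environment, which this table deliberately lacks.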

Approaches to Research in Artificial Intelligence
• Engineering track
– Performance oriented
• Theoretical track
– Simulation oriented

Turing Test
• Test setup: Human interrogator communicates with test subject by typewriter.
• Test: Can the human interrogator distinguish whether the test subject is human or machine?

Techniques for Understanding Images
• Template matching
• Image processing
– edge enhancement
– region finding
– smoothing
• Image analysis

Language Processing
• Syntactic Analysis
• Semantic Analysis
• Contextual Analysis

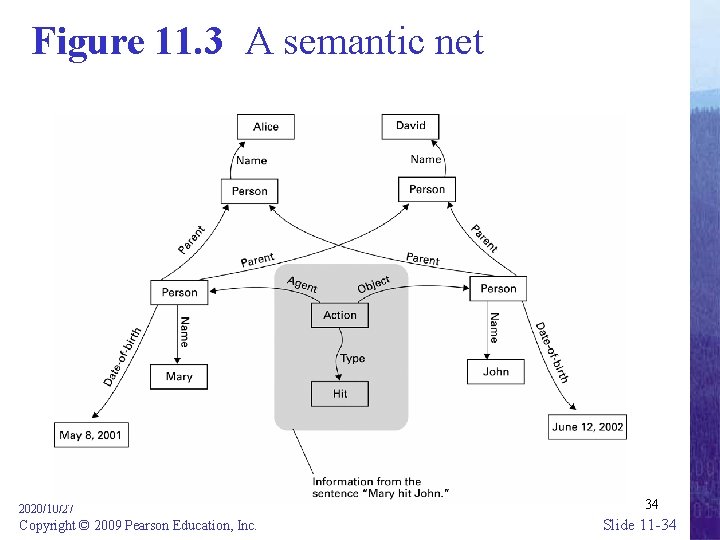

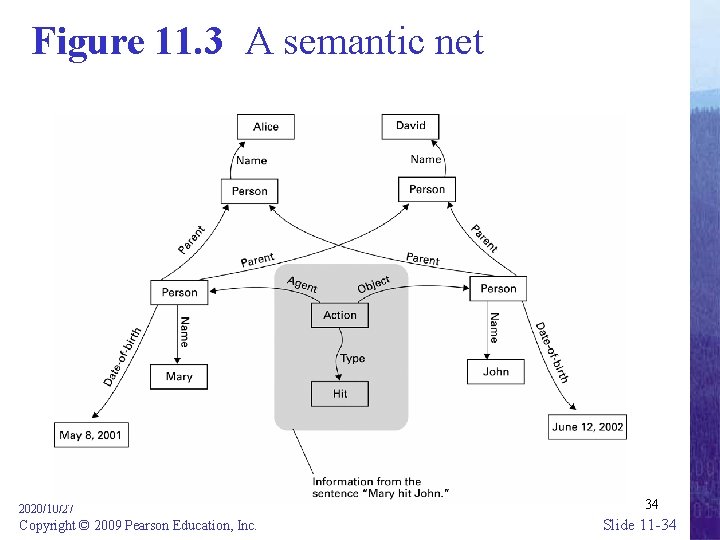

Figure 11.3 A semantic net

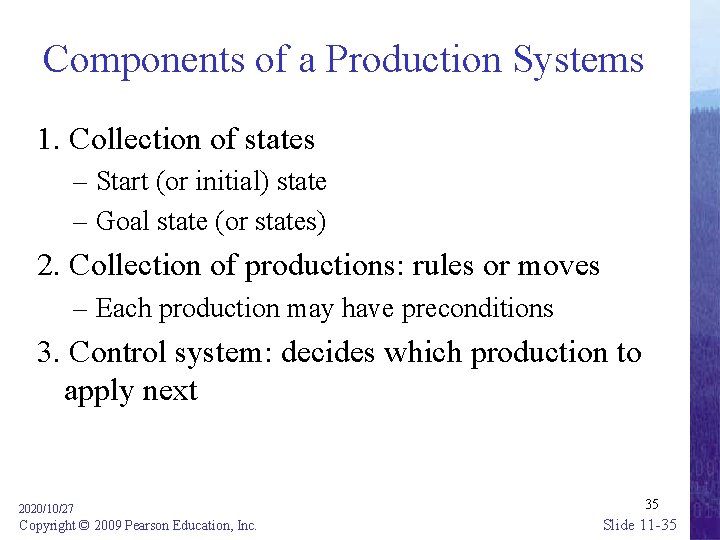

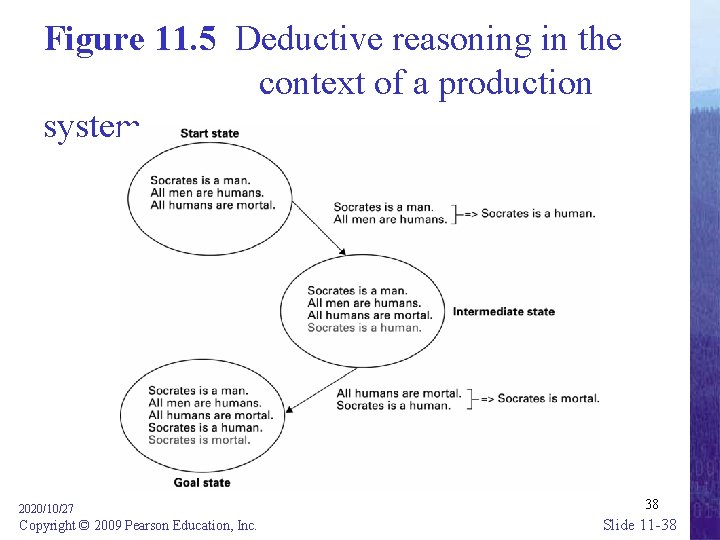

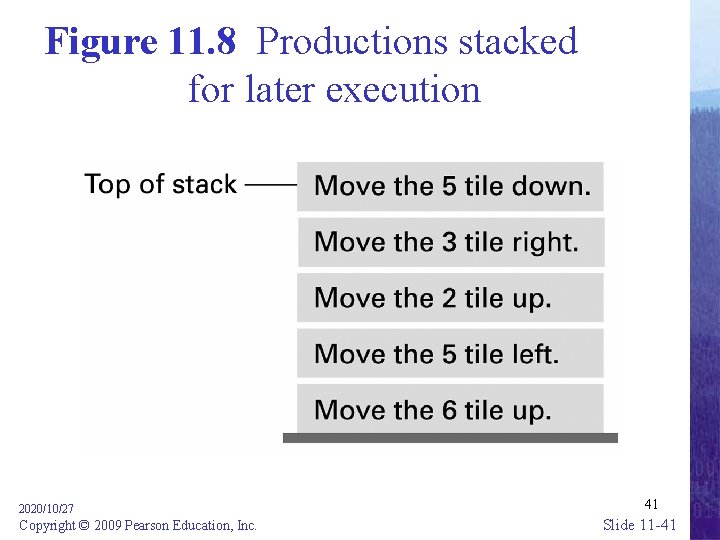

Components of a Production System
1. Collection of states
– Start (or initial) state
– Goal state (or states)
2. Collection of productions: rules or moves
– Each production may have preconditions
3. Control system: decides which production to apply next
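For the eight-puzzle, the first two components can be sketched as follows. The encoding is an assumption made for illustration: a state is a 9-tuple read row by row, 0 marks the hole, and each production moves the hole one step. The names `GOAL`, `MOVES`, and `successors` are hypothetical.

```python
GOAL = (1, 2, 3, 4, 5, 6, 7, 8, 0)  # solved configuration; 0 marks the hole

# Productions, expressed as (row, column) shifts of the hole.
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


def successors(state):
    """Yield (production, next_state) for each production whose precondition holds."""
    hole = state.index(0)
    row, col = divmod(hole, 3)
    for name, (dr, dc) in MOVES.items():
        r, c = row + dr, col + dc
        if 0 <= r < 3 and 0 <= c < 3:  # precondition: the hole stays on the board
            target = r * 3 + c
            nxt = list(state)
            nxt[hole], nxt[target] = nxt[target], nxt[hole]
            yield name, tuple(nxt)
```

The third component, the control system, is whatever strategy picks among the pairs that `successors` yields.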

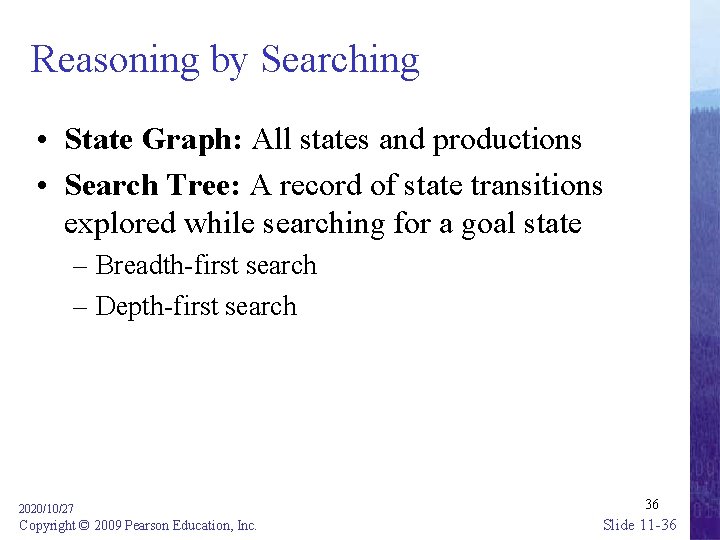

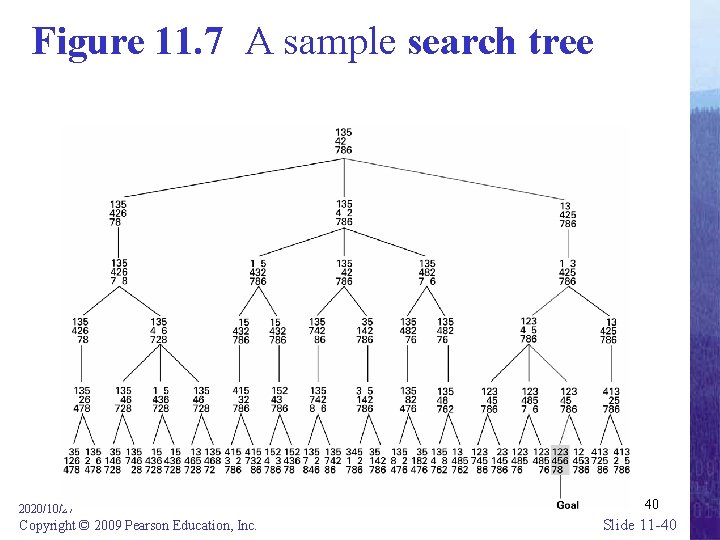

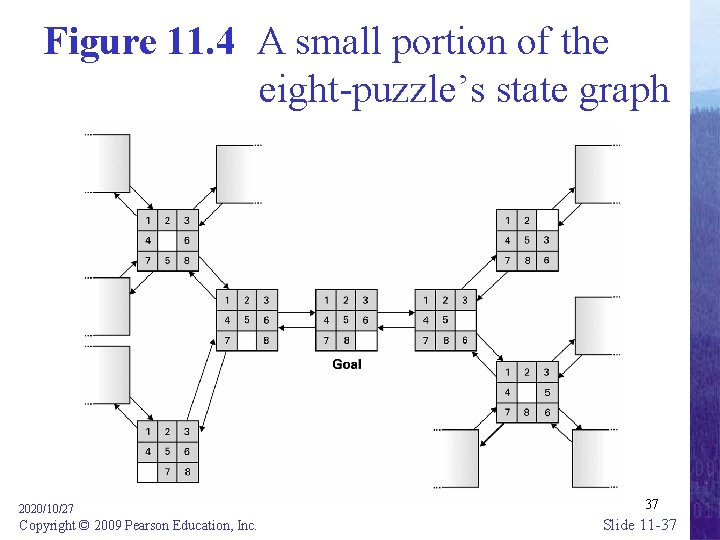

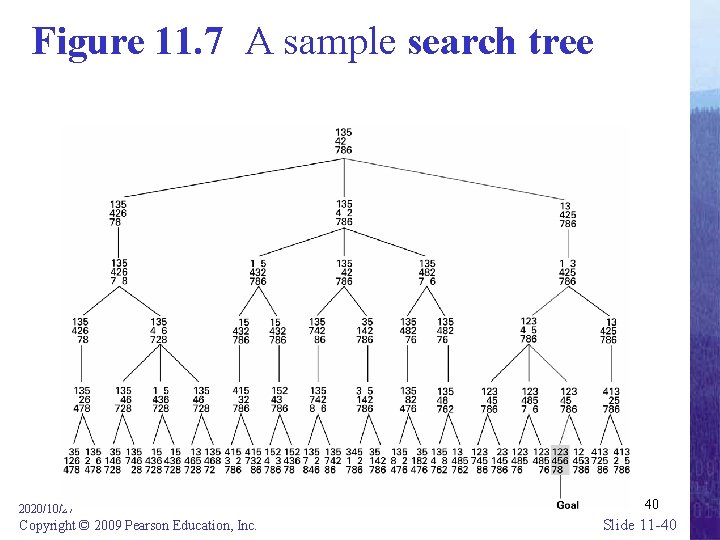

Reasoning by Searching
• State Graph: All states and productions
• Search Tree: A record of state transitions explored while searching for a goal state
– Breadth-first search
– Depth-first search
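A breadth-first search over the eight-puzzle state graph might look like the sketch below. The state encoding (9-tuple, 0 for the hole) and all function names are assumptions for illustration; the `parent` dictionary plays the role of the search tree.

```python
from collections import deque

GOAL = (1, 2, 3, 4, 5, 6, 7, 8, 0)  # 0 marks the hole


def neighbors(state):
    """Yield every state reachable by one legal move of the hole."""
    hole = state.index(0)
    row, col = divmod(hole, 3)
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        r, c = row + dr, col + dc
        if 0 <= r < 3 and 0 <= c < 3:
            nxt = list(state)
            nxt[hole], nxt[r * 3 + c] = nxt[r * 3 + c], nxt[hole]
            yield tuple(nxt)


def breadth_first_search(start, goal=GOAL):
    """Return a shortest list of states from start to goal, or None."""
    parent = {start: None}      # search tree: child -> parent
    frontier = deque([start])   # FIFO queue gives level-by-level expansion
    while frontier:
        state = frontier.popleft()
        if state == goal:
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in neighbors(state):
            if nxt not in parent:
                parent[nxt] = state
                frontier.append(nxt)
    return None
```

Replacing `popleft()` with `pop()` turns the queue into a stack and the traversal into a depth-first one, at the cost of losing the shortest-path guarantee.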

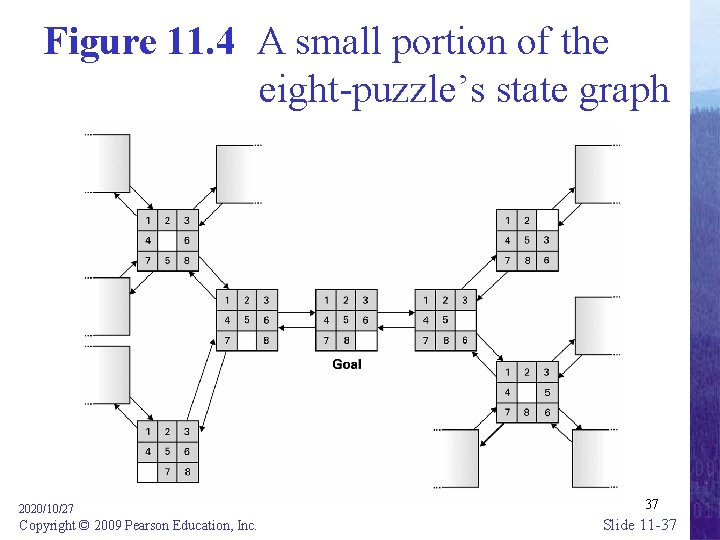

Figure 11.4 A small portion of the eight-puzzle’s state graph

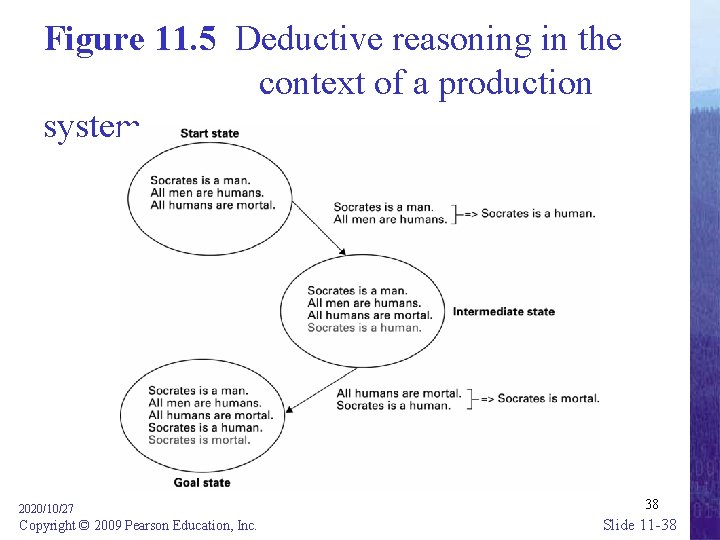

Figure 11.5 Deductive reasoning in the context of a production system

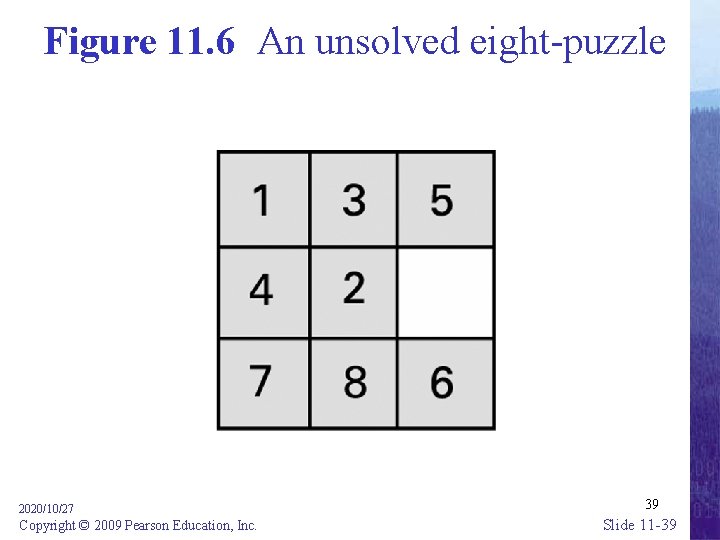

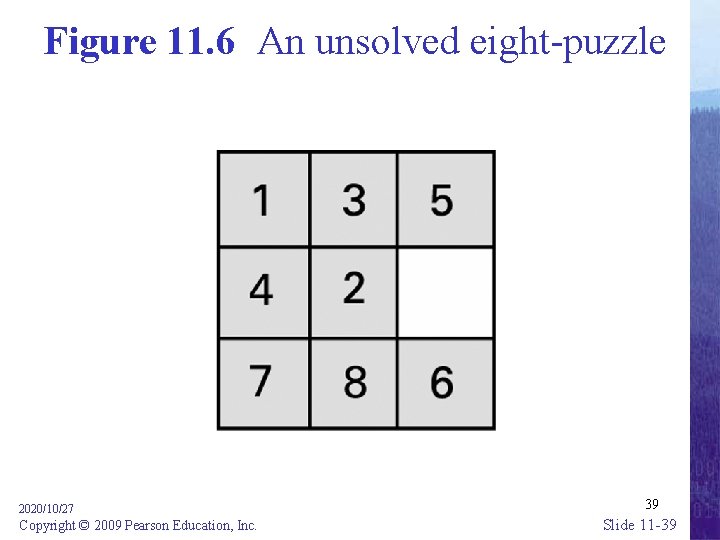

Figure 11.6 An unsolved eight-puzzle

Figure 11.7 A sample search tree

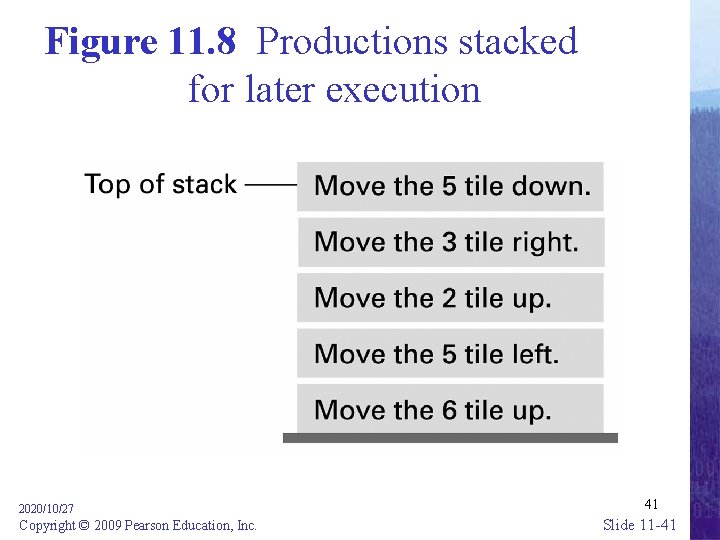

Figure 11.8 Productions stacked for later execution

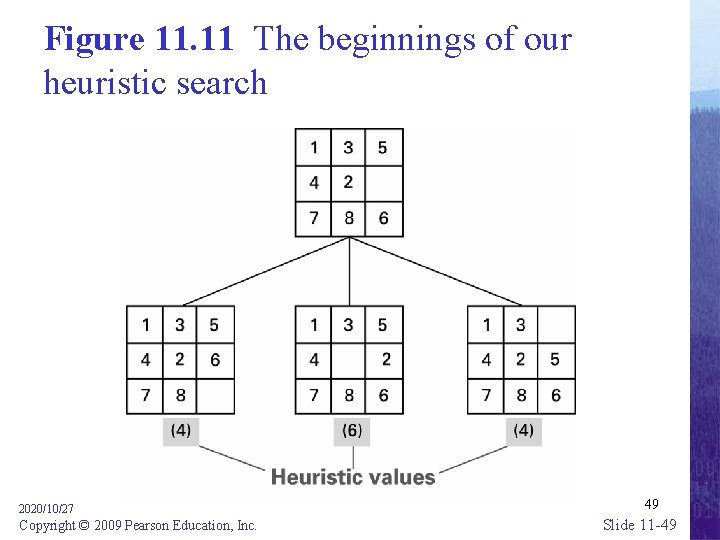

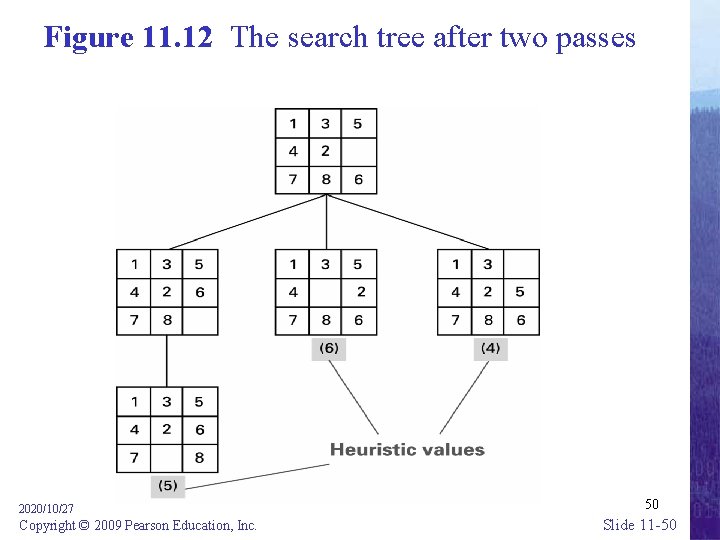

Heuristic Strategies
• Heuristic: A “rule of thumb” for making decisions
• Requirements for good heuristics
– Must be easier to compute than a complete solution
– Must provide a reasonable estimate of proximity to a goal
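One heuristic that meets both requirements for the eight-puzzle is the total distance of the tiles from their goal positions. The sketch below assumes a 9-tuple state with 0 for the hole; the function name `manhattan` is a hypothetical choice, and other heuristics (for example, counting misplaced tiles) would serve equally well as an illustration.

```python
GOAL = (1, 2, 3, 4, 5, 6, 7, 8, 0)  # 0 marks the hole


def manhattan(state, goal=GOAL):
    """Sum, over tiles 1..8, of row distance plus column distance to the goal slot."""
    total = 0
    for tile in range(1, 9):
        r1, c1 = divmod(state.index(tile), 3)
        r2, c2 = divmod(goal.index(tile), 3)
        total += abs(r1 - r2) + abs(c1 - c2)
    return total
```

The value is cheap to compute (a single pass over the tiles) and shrinks to 0 exactly when the puzzle is solved.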

Othello (Reversi): heuristic search
Rule of thumb: “golden corners, silver edges”

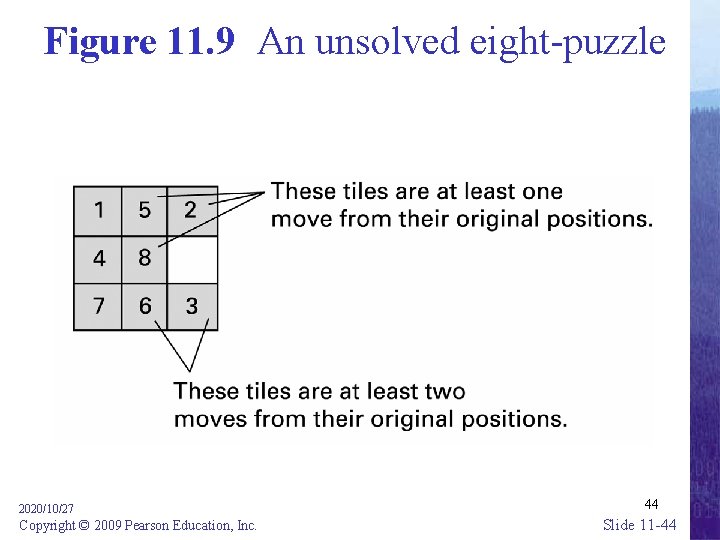

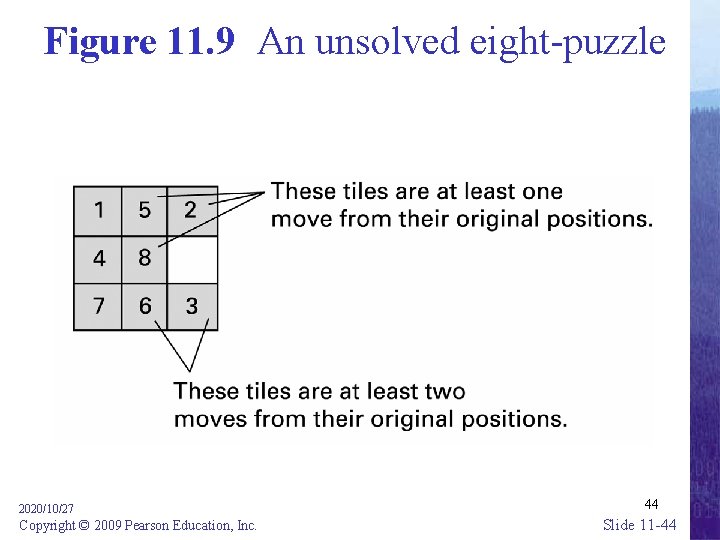

Figure 11.9 An unsolved eight-puzzle

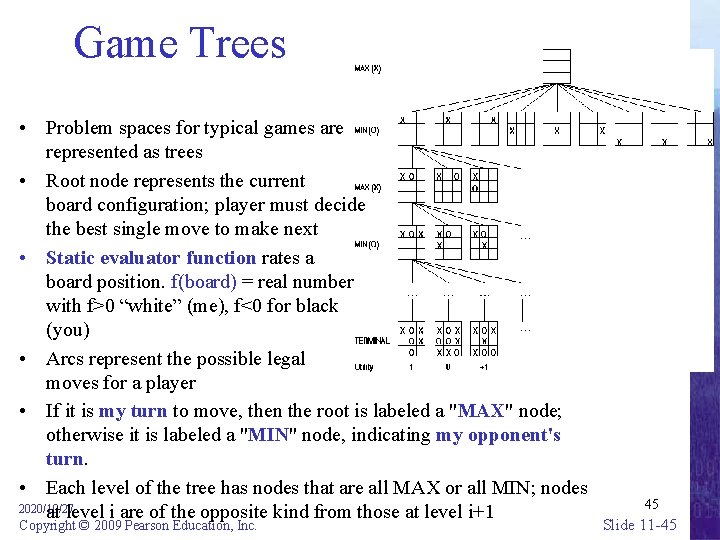

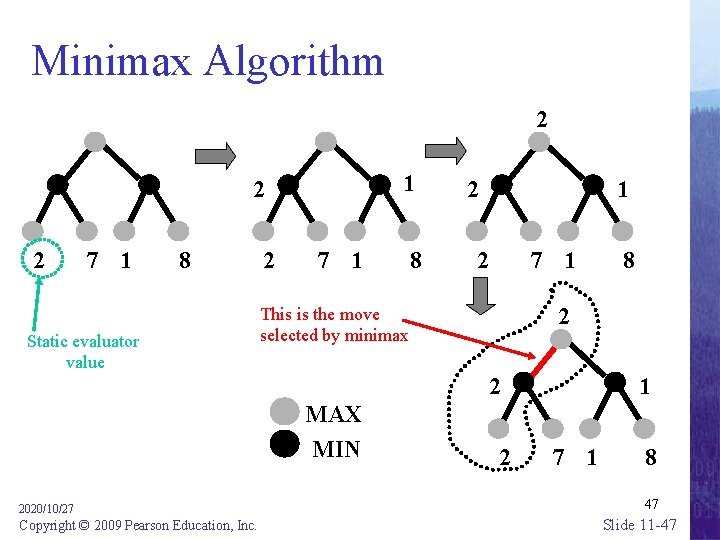

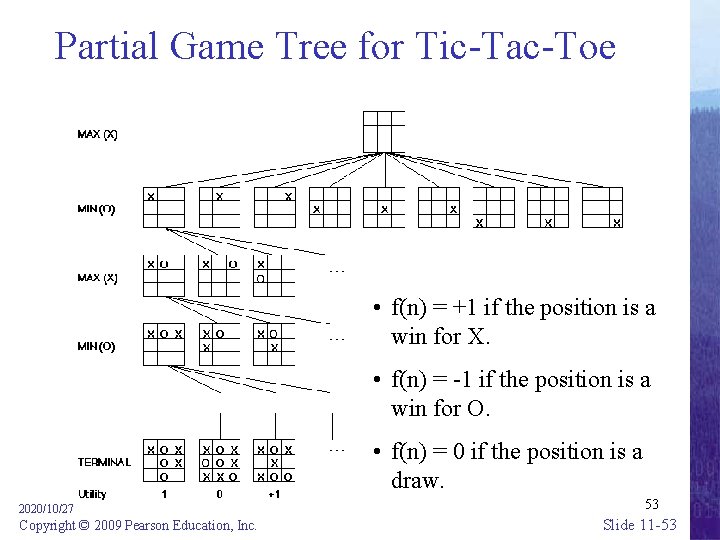

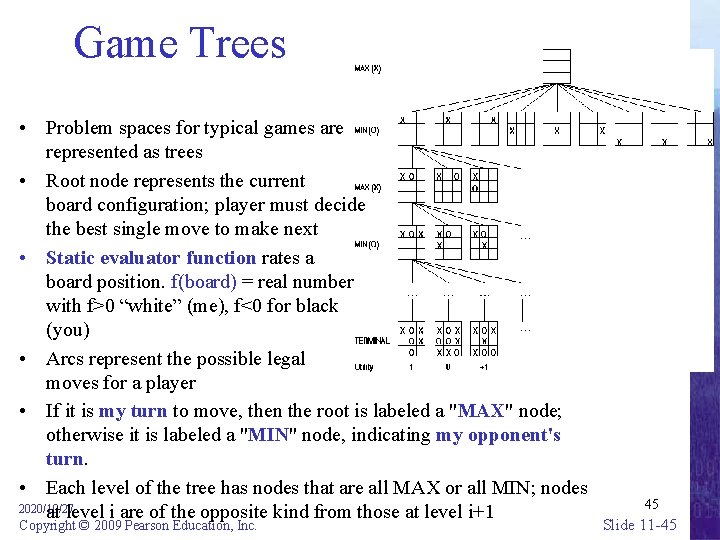

Game Trees
• Problem spaces for typical games are represented as trees
• The root node represents the current board configuration; the player must decide the best single move to make next
• A static evaluator function rates a board position: f(board) = real number, with f > 0 favoring “white” (me) and f < 0 favoring “black” (you)
• Arcs represent the possible legal moves for a player
• If it is my turn to move, the root is labeled a "MAX" node; otherwise it is labeled a "MIN" node, indicating my opponent's turn
• Each level of the tree has nodes that are all MAX or all MIN; nodes at level i are of the opposite kind from those at level i+1

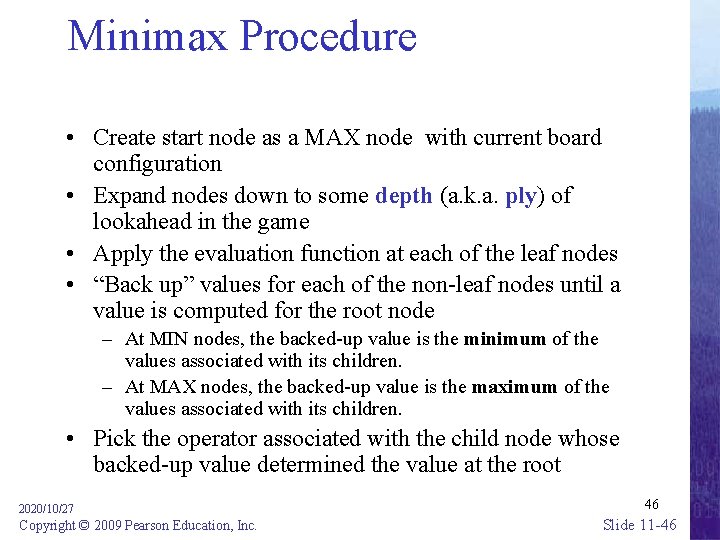

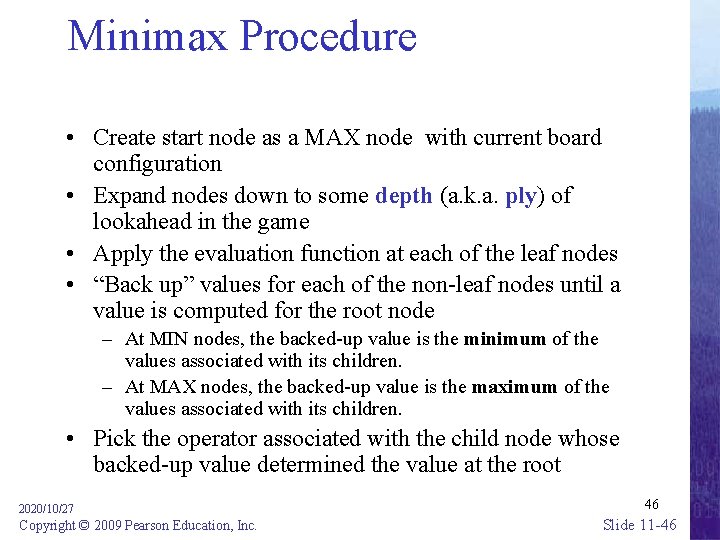

Minimax Procedure
• Create start node as a MAX node with current board configuration
• Expand nodes down to some depth (a.k.a. ply) of lookahead in the game
• Apply the evaluation function at each of the leaf nodes
• “Back up” values for each of the non-leaf nodes until a value is computed for the root node
– At MIN nodes, the backed-up value is the minimum of the values associated with its children.
– At MAX nodes, the backed-up value is the maximum of the values associated with its children.
• Pick the operator associated with the child node whose backed-up value determined the value at the root
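The backing-up step can be sketched on a tree that has already been expanded. The representation is an assumption for illustration: a leaf is a number (its static evaluator value) and an internal node is a list of children. The names `minimax` and `best_move` are hypothetical.

```python
def minimax(node, maximizing=True):
    """Back up values: max at MAX nodes, min at MIN nodes."""
    if not isinstance(node, list):
        return node  # leaf: static evaluator value
    values = [minimax(child, not maximizing) for child in node]
    return max(values) if maximizing else min(values)


def best_move(children):
    """Index of the root's child (a MIN node) with the highest backed-up value."""
    scores = [minimax(child, maximizing=False) for child in children]
    return scores.index(max(scores))


# Two-ply example: a MAX root over two MIN nodes with leaves 2, 7 and 1, 8.
tree = [[2, 7], [1, 8]]
root_value = minimax(tree)   # MIN nodes back up 2 and 1; the root takes 2
chosen = best_move(tree)     # the first move leads to the MIN node worth 2
```

A full game player would generate the children from the board and stop at a chosen ply instead of receiving a ready-made tree.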

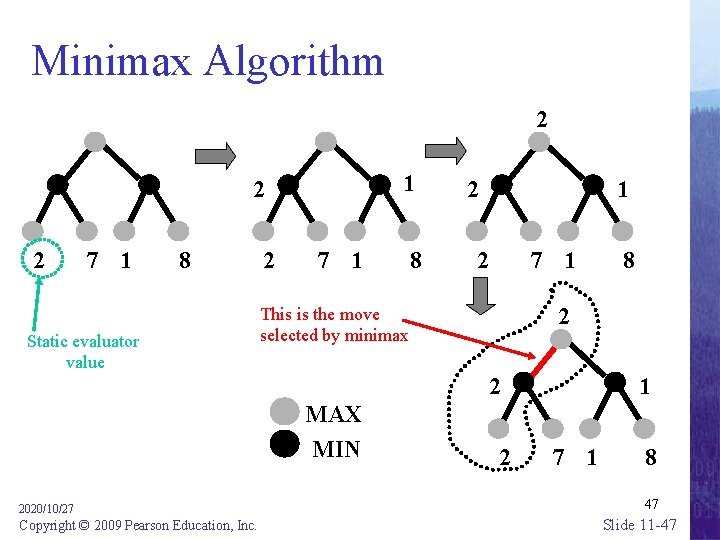

Minimax Algorithm
[Figure: a two-ply game tree. The leaves carry static evaluator values 2, 7, 1, 8; the two MIN nodes back up 2 and 1; the MAX root takes the value 2, which marks the move selected by minimax.]

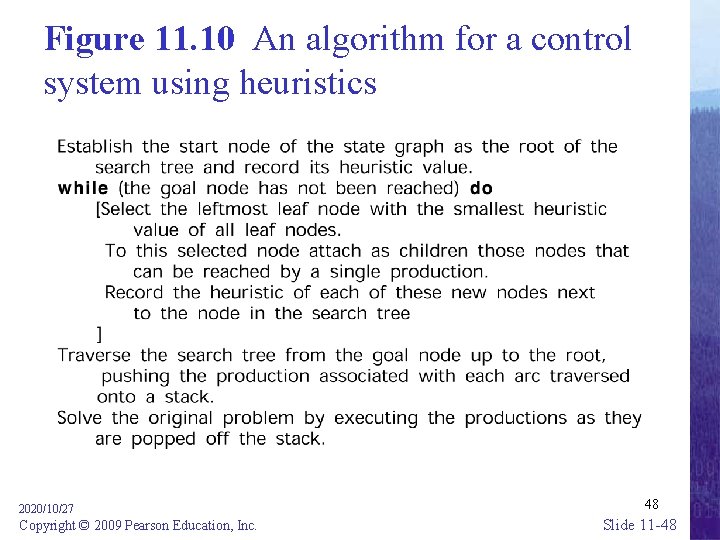

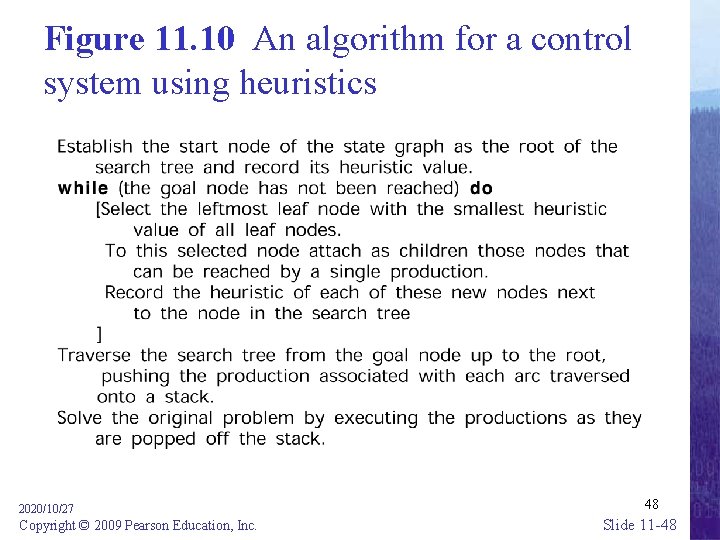

Figure 11.10 An algorithm for a control system using heuristics
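The figure's algorithm is not reproduced in this text, so the following is only a sketch of one common heuristic control strategy, greedy best-first search, which always expands the unexplored state with the smallest heuristic value. The state encoding, the choice of heuristic (total tile distance), and every name here are assumptions.

```python
import heapq

GOAL = (1, 2, 3, 4, 5, 6, 7, 8, 0)  # 0 marks the hole


def neighbors(state):
    """Yield every state reachable by one legal move of the hole."""
    hole = state.index(0)
    row, col = divmod(hole, 3)
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        r, c = row + dr, col + dc
        if 0 <= r < 3 and 0 <= c < 3:
            nxt = list(state)
            nxt[hole], nxt[r * 3 + c] = nxt[r * 3 + c], nxt[hole]
            yield tuple(nxt)


def heuristic(state):
    """Total row-plus-column distance of tiles 1..8 from their goal slots."""
    total = 0
    for tile in range(1, 9):
        r1, c1 = divmod(state.index(tile), 3)
        r2, c2 = divmod(GOAL.index(tile), 3)
        total += abs(r1 - r2) + abs(c1 - c2)
    return total


def best_first_search(start):
    """Return a list of states from start to GOAL, or None if unreachable."""
    parent = {start: None}
    frontier = [(heuristic(start), start)]  # min-heap ordered by heuristic value
    while frontier:
        _, state = heapq.heappop(frontier)
        if state == GOAL:
            path = []
            while state is not None:
                path.append(state)
                state = parent[state]
            return path[::-1]
        for nxt in neighbors(state):
            if nxt not in parent:
                parent[nxt] = state
                heapq.heappush(frontier, (heuristic(nxt), nxt))
    return None
```

Unlike breadth-first search, this strategy does not promise the shortest solution; it trades that guarantee for a much smaller search tree on typical inputs.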

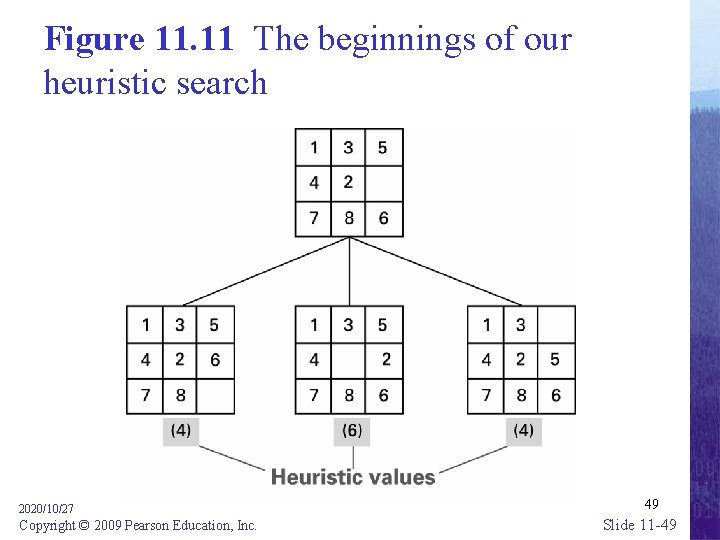

Figure 11.11 The beginnings of our heuristic search

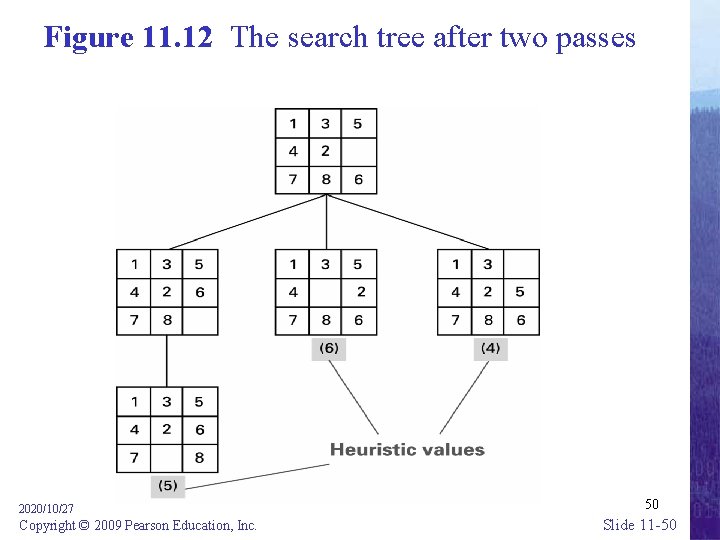

Figure 11.12 The search tree after two passes

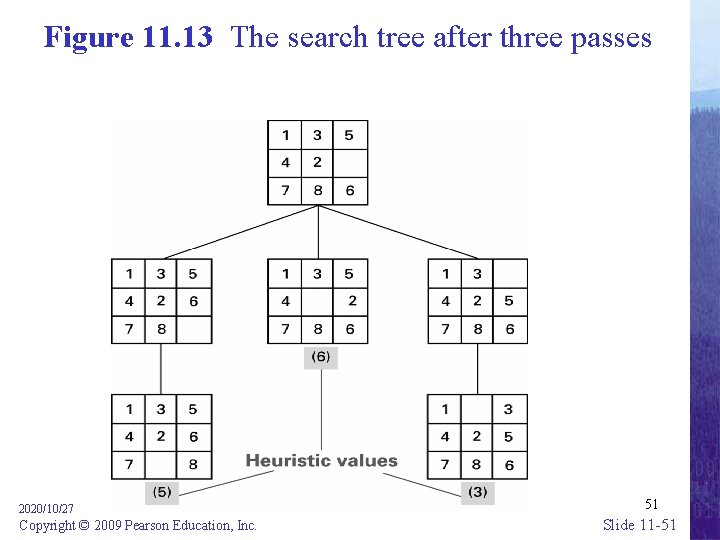

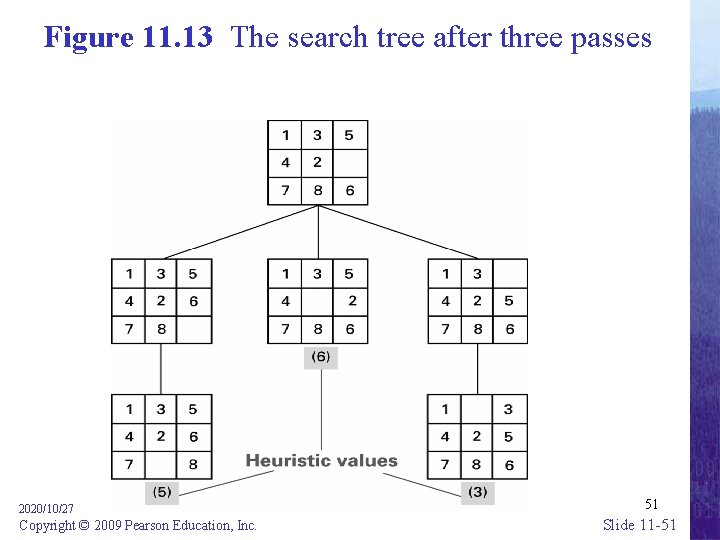

Figure 11.13 The search tree after three passes

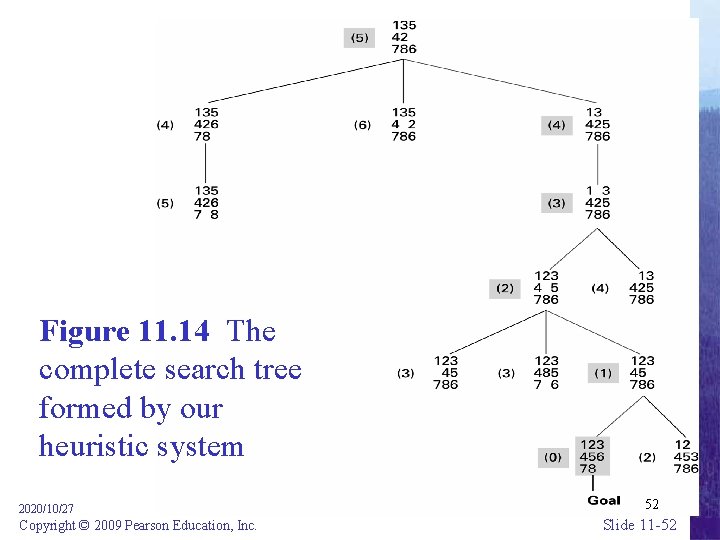

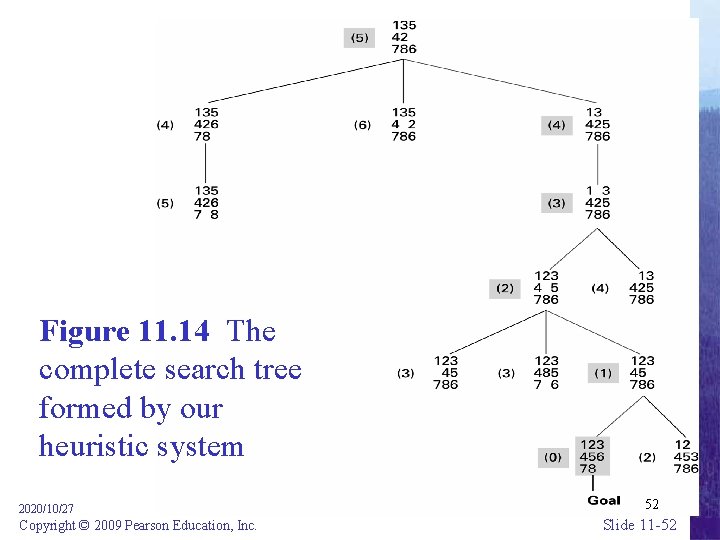

Figure 11.14 The complete search tree formed by our heuristic system

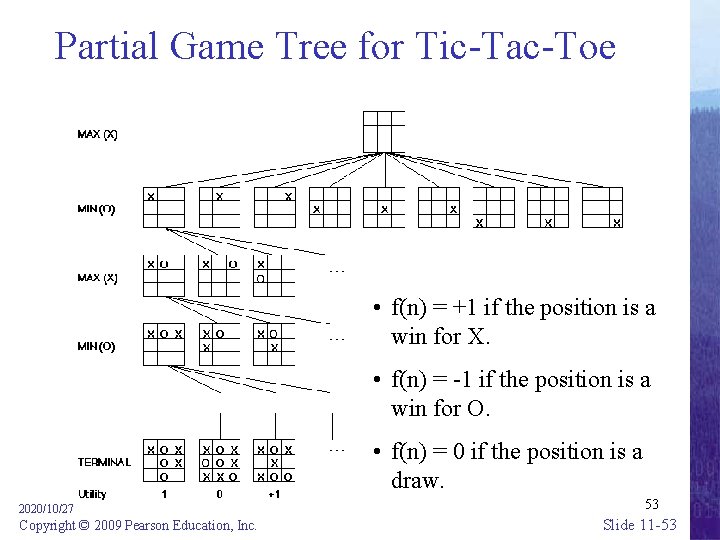

Partial Game Tree for Tic-Tac-Toe
• f(n) = +1 if the position is a win for X.
• f(n) = -1 if the position is a win for O.
• f(n) = 0 if the position is a draw.
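The evaluator f(n) above can be sketched as a scan over the eight winning lines. The board encoding (a 9-character string of 'X', 'O', and spaces, read row by row) and the names `LINES` and `evaluate` are assumptions for illustration.

```python
# Index triples for the three rows, three columns, and two diagonals.
LINES = [
    (0, 1, 2), (3, 4, 5), (6, 7, 8),
    (0, 3, 6), (1, 4, 7), (2, 5, 8),
    (0, 4, 8), (2, 4, 6),
]


def evaluate(board):
    """Return +1 for an X win, -1 for an O win, and 0 otherwise."""
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return 1 if board[a] == "X" else -1
    # Assumption: unfinished positions also score 0, like draws.
    return 0
```

Plugged into minimax as the leaf evaluator, this is enough to play the game out to the end, since the full tic-tac-toe tree is small.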

Handling Real-World Knowledge
• Representation and storage
• Accessing relevant information
– Meta-Reasoning
– Closed-World Assumption
• Frame problem

Learning
• Imitation
• Supervised Training
• Reinforcement
• Evolutionary Techniques

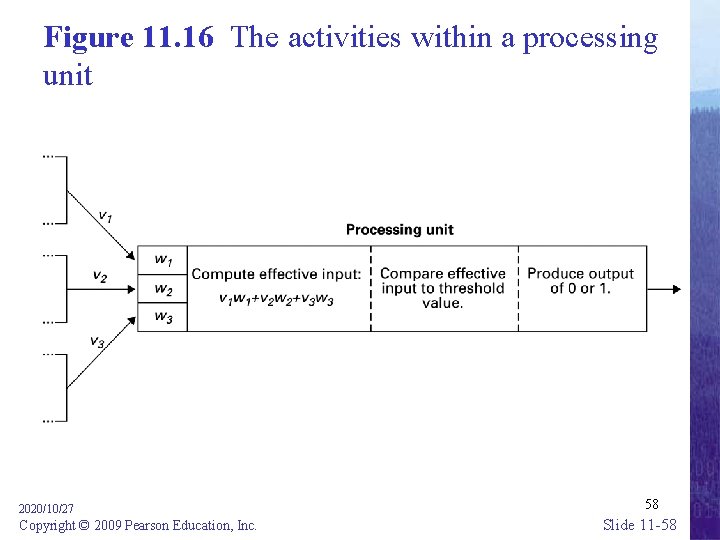

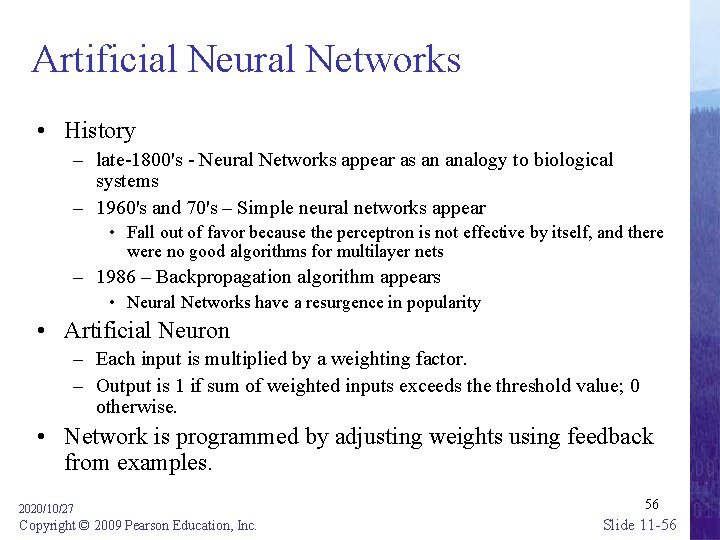

Artificial Neural Networks
• History
– late 1800s: neural networks appear as an analogy to biological systems
– 1960s and 70s: simple neural networks appear
  • Fall out of favor because the perceptron is not effective by itself, and there were no good algorithms for multilayer nets
– 1986: backpropagation algorithm appears
  • Neural networks have a resurgence in popularity
• Artificial Neuron
– Each input is multiplied by a weighting factor.
– Output is 1 if the sum of weighted inputs exceeds the threshold value; 0 otherwise.
• Network is programmed by adjusting weights using feedback from examples.
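The artificial neuron rule above fits in a few lines. In this sketch the function name and the example weights and thresholds are illustrative assumptions; only the rule itself (weighted sum compared with a threshold) comes from the slide.

```python
def threshold_neuron(inputs, weights, threshold):
    """Output 1 if the weighted sum of inputs exceeds the threshold, else 0."""
    weighted_sum = sum(i * w for i, w in zip(inputs, weights))
    return 1 if weighted_sum > threshold else 0


# Same weights, different thresholds: the unit acts as AND or as OR.
and_out = threshold_neuron((1, 1), (1, 1), threshold=1.5)
or_out = threshold_neuron((0, 1), (1, 1), threshold=0.5)
```

Changing only the threshold (or the weights) changes the function the unit computes, which is the sense in which a network is "programmed" by adjusting weights.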

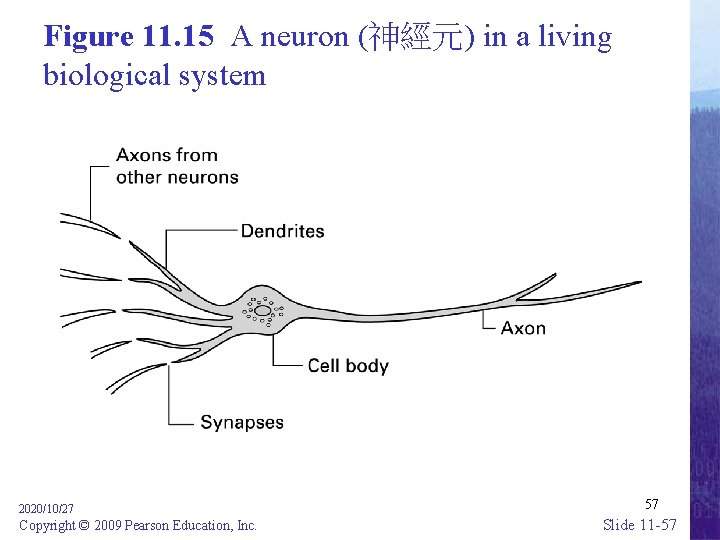

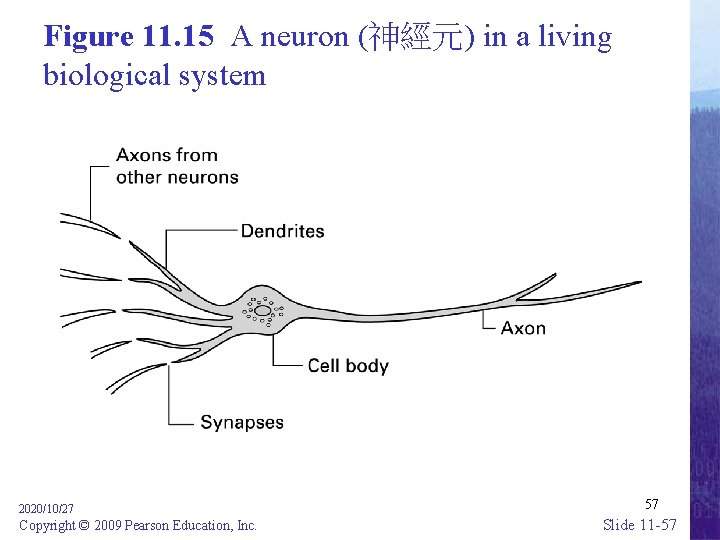

Figure 11.15 A neuron in a living biological system

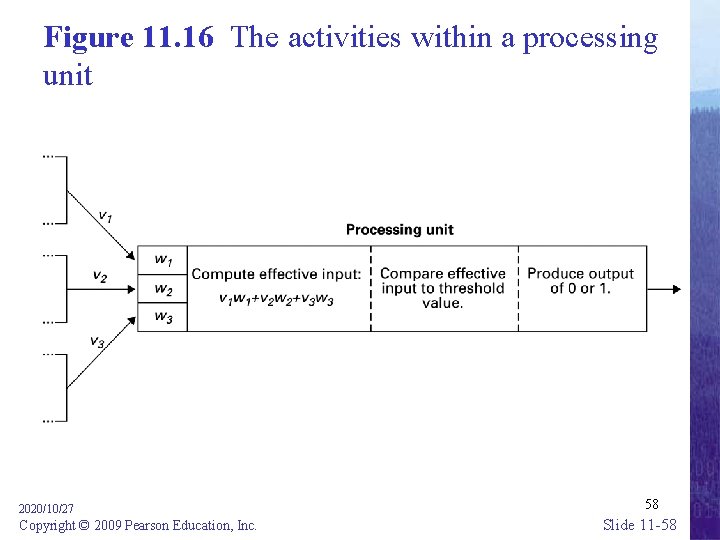

Figure 11.16 The activities within a processing unit

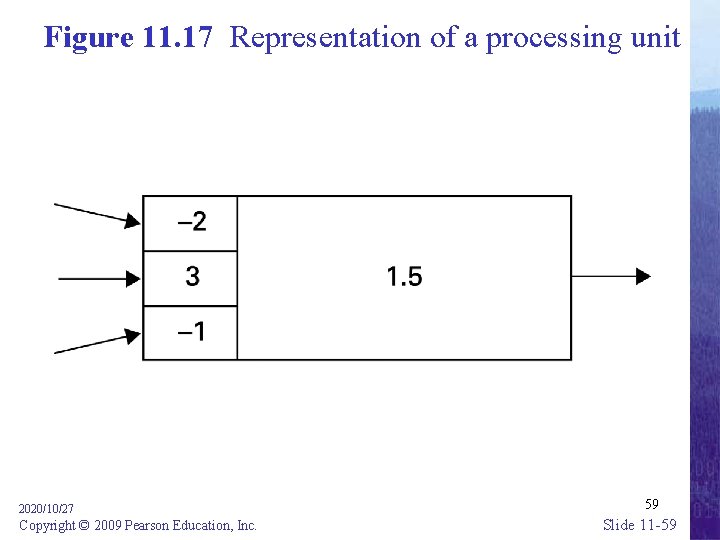

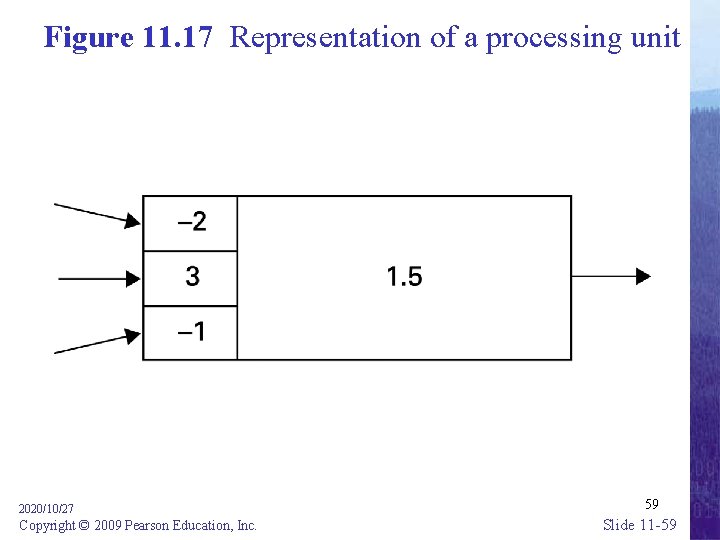

Figure 11.17 Representation of a processing unit

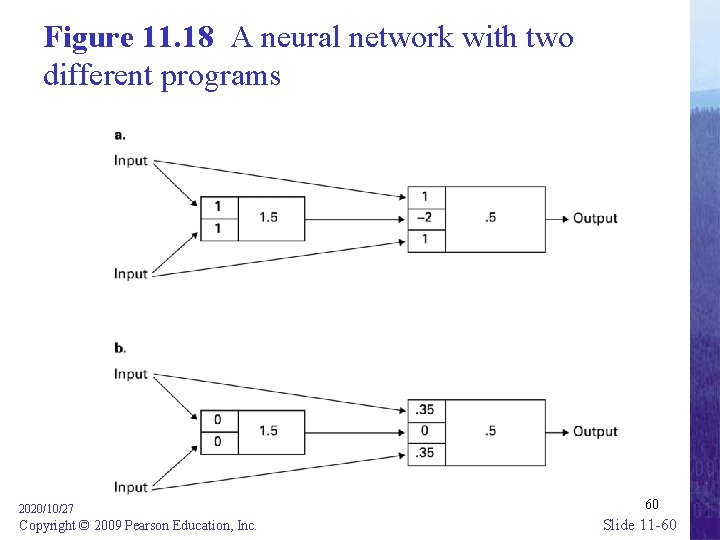

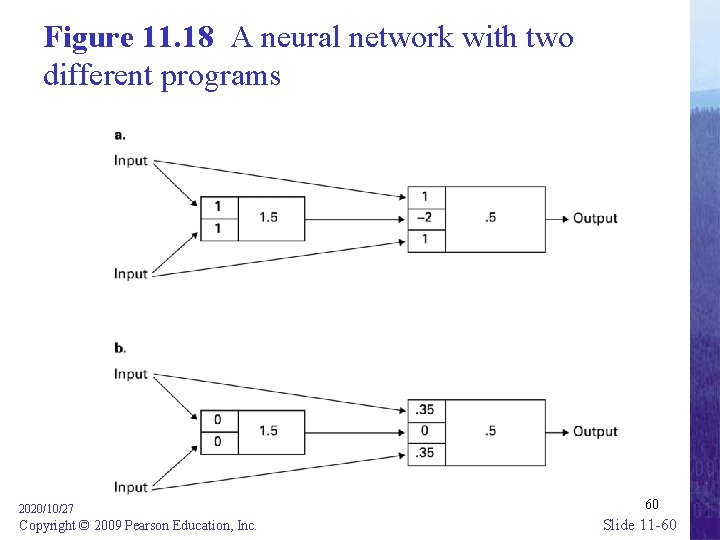

Figure 11.18 A neural network with two different programs

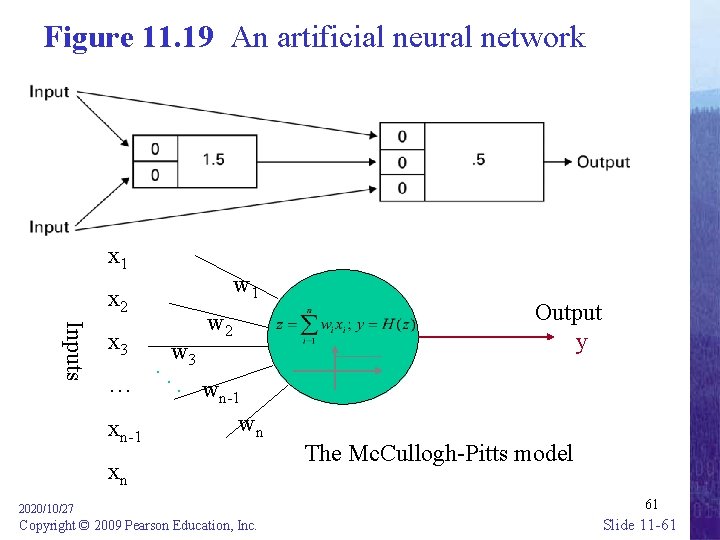

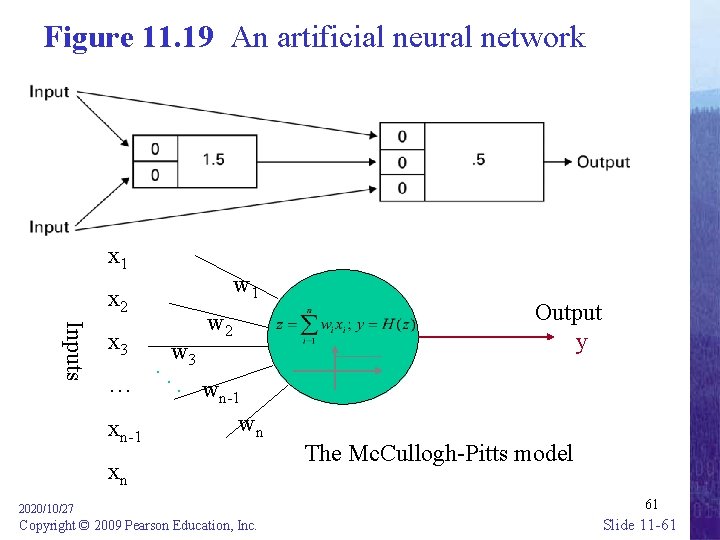

Figure 11.19 An artificial neural network
The McCulloch-Pitts model
[Diagram: inputs x1, x2, x3, …, xn-1, xn with weights w1, w2, w3, …, wn-1, wn feed a single unit that produces the output y]

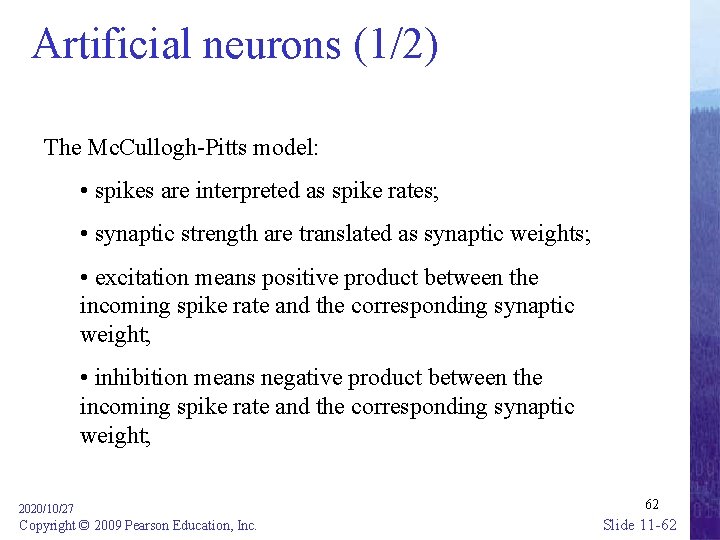

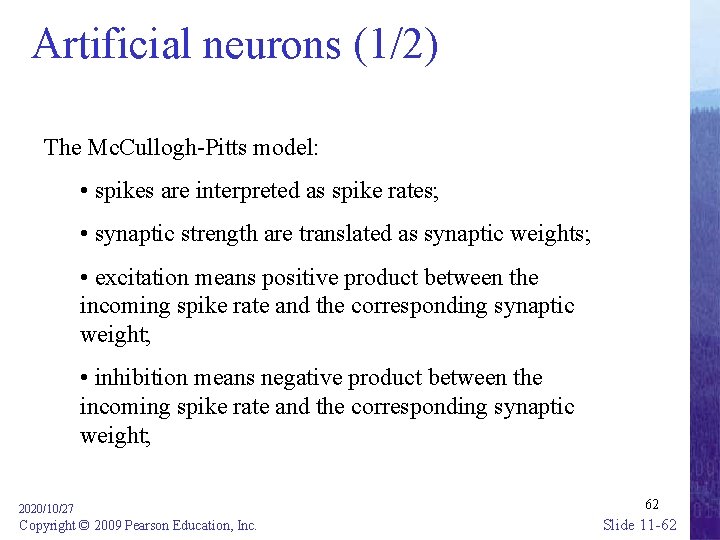

Artificial neurons (1/2)
The McCulloch-Pitts model:
• spikes are interpreted as spike rates;
• synaptic strengths are translated as synaptic weights;
• excitation means a positive product between the incoming spike rate and the corresponding synaptic weight;
• inhibition means a negative product between the incoming spike rate and the corresponding synaptic weight.

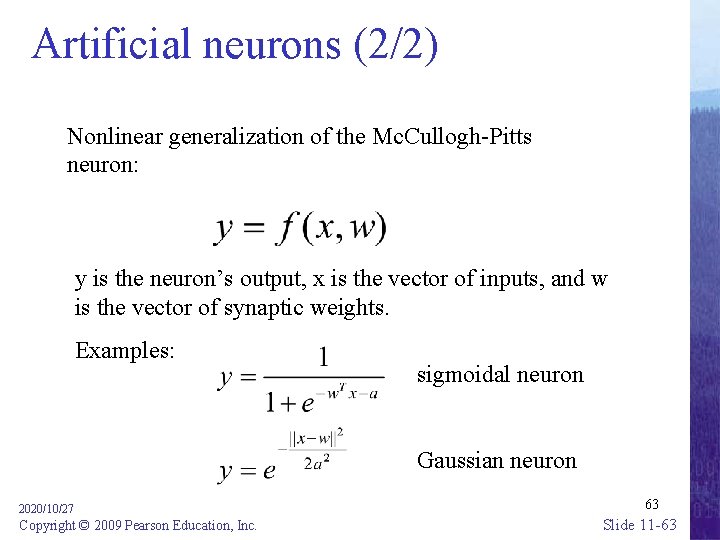

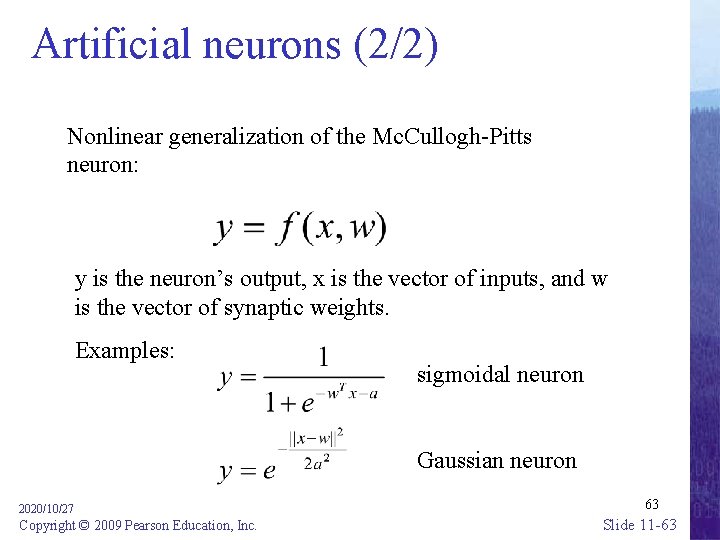

Artificial neurons (2/2)
Nonlinear generalization of the McCulloch-Pitts neuron: y is the neuron’s output, x is the vector of inputs, and w is the vector of synaptic weights.
Examples:
• sigmoidal neuron
• Gaussian neuron
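The slide's own formulas are not recoverable from this text. As a sketch, the two examples are often written as y = 1 / (1 + exp(-w·x - a)) for the sigmoidal neuron and y = exp(-||x - w||² / (2a²)) for the Gaussian neuron; the extra parameter `a` and both function names below are assumptions.

```python
import math


def sigmoidal_neuron(x, w, a=0.0):
    """y = 1 / (1 + exp(-(w . x) - a)); `a` is an assumed bias parameter."""
    s = sum(wi * xi for wi, xi in zip(w, x))
    return 1.0 / (1.0 + math.exp(-s - a))


def gaussian_neuron(x, w, a=1.0):
    """y = exp(-||x - w||^2 / (2 a^2)); `a` is an assumed width parameter."""
    d2 = sum((xi - wi) ** 2 for xi, wi in zip(x, w))
    return math.exp(-d2 / (2.0 * a * a))
```

The sigmoidal unit responds to the direction of x relative to w, while the Gaussian unit responds to how close x is to w, peaking at 1 when they coincide.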

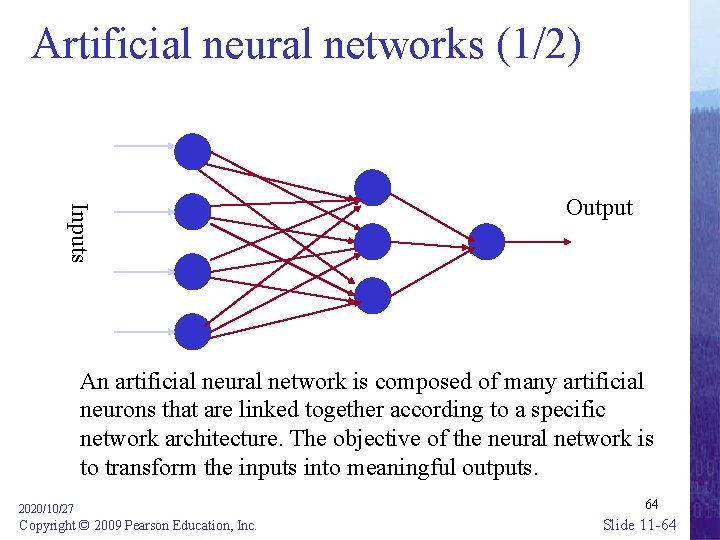

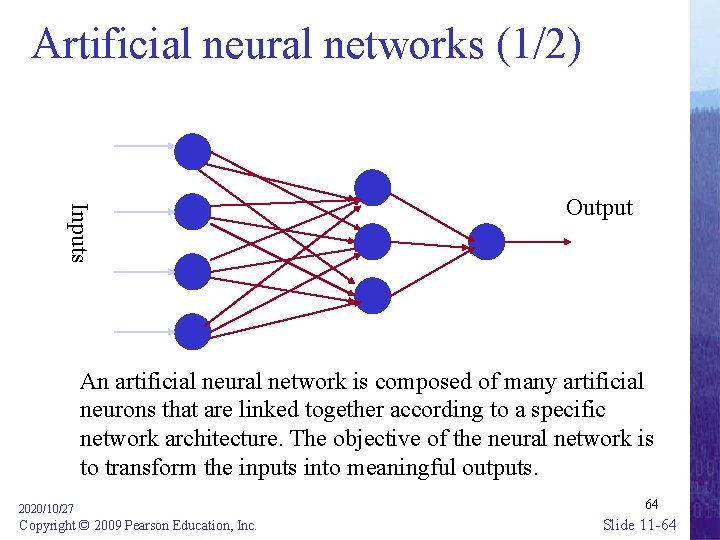

Artificial neural networks (1/2)
[Diagram: a network mapping inputs to an output]
An artificial neural network is composed of many artificial neurons that are linked together according to a specific network architecture. The objective of the neural network is to transform the inputs into meaningful outputs.

Artificial neural networks (2/2)
Tasks to be solved by artificial neural networks:
• controlling the movements of a robot based on self-perception and other information (e.g., visual information);
• deciding the category of potential food items (e.g., edible or non-edible) in an artificial world;
• recognizing a visual object (e.g., a familiar face);
• predicting where a moving object goes, when a robot wants to catch it.

Learning in biological systems
Learning = learning by adaptation
The young animal learns that the green fruits are sour, while the yellowish/reddish ones are sweet. The learning happens by adapting the fruit-picking behavior.
At the neural level the learning happens by changing the synaptic strengths, eliminating some synapses, and building new ones.

Learning as optimisation
➢ The objective of adapting the responses on the basis of the information received from the environment is to achieve a better state. E.g., the animal likes to eat many energy-rich, juicy fruits that fill its stomach and make it feel happy.
➢ In other words, the objective of learning in biological organisms is to optimise the amount of available resources or happiness, or in general to achieve a closer-to-optimal state.

Learning in biological neural networks
The learning rules of Hebb:
• synchronous activation increases the synaptic strength;
• asynchronous activation decreases the synaptic strength.
These rules fit with energy minimization principles.
➢ Maintaining synaptic strength needs energy, so it should be maintained where it is needed and not maintained where it is not.
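Hebb's two rules can be sketched as a single update, assuming activity is coded as +1 (active) or -1 (inactive) so that the product of the two activities is positive when they are synchronous and negative otherwise. The coding, the learning rate, and the function name are assumptions.

```python
def hebbian_update(weight, pre, post, rate=0.1):
    """Strengthen the synapse when pre and post agree, weaken it when they differ.

    pre, post: activity of the two connected neurons, coded as +1 or -1.
    """
    return weight + rate * pre * post
```

For example, starting from a weight of 0.5, synchronous activity moves the weight toward 0.6 and asynchronous activity moves it toward 0.4.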

Learning principle for artificial neural networks
ENERGY MINIMIZATION
We need an appropriate definition of energy for artificial neural networks. Given that definition, we can use mathematical optimisation techniques to find how to change the weights of the synaptic connections between neurons.
ENERGY = measure of task performance error

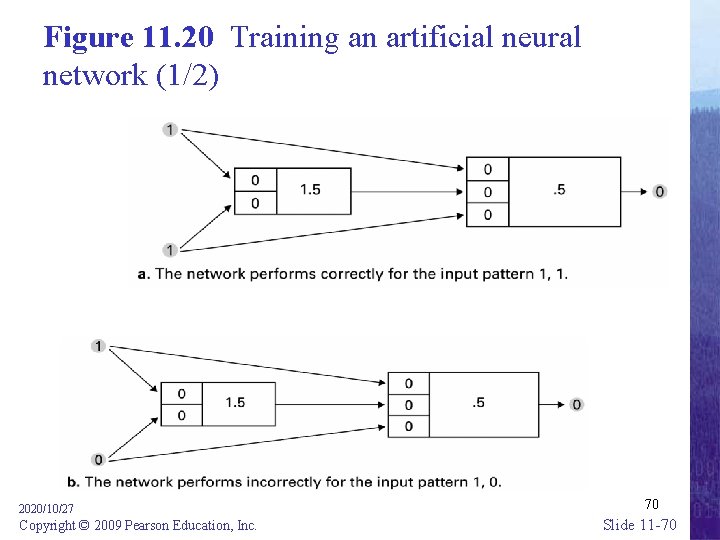

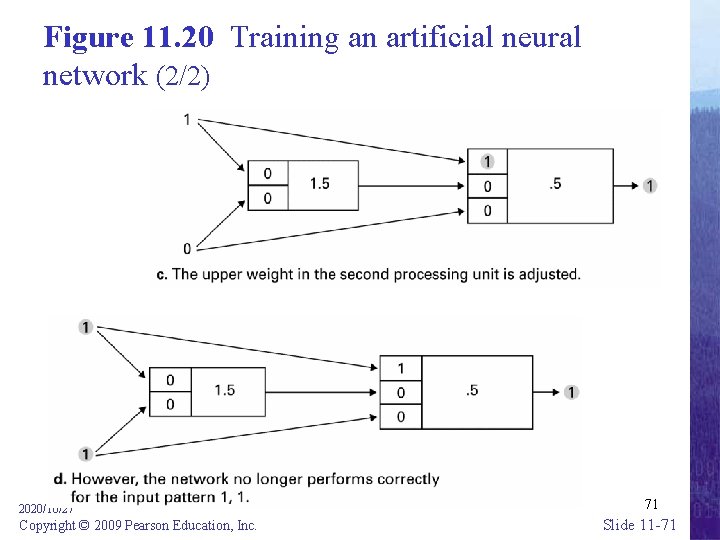

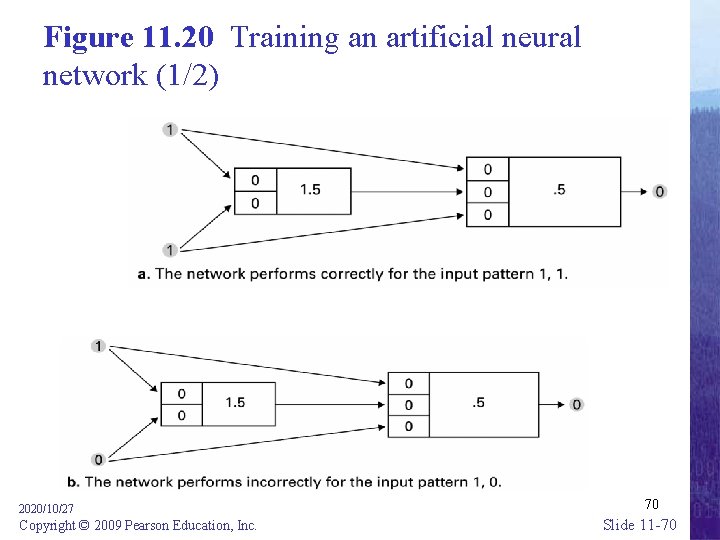

Figure 11.20 Training an artificial neural network (1/2)

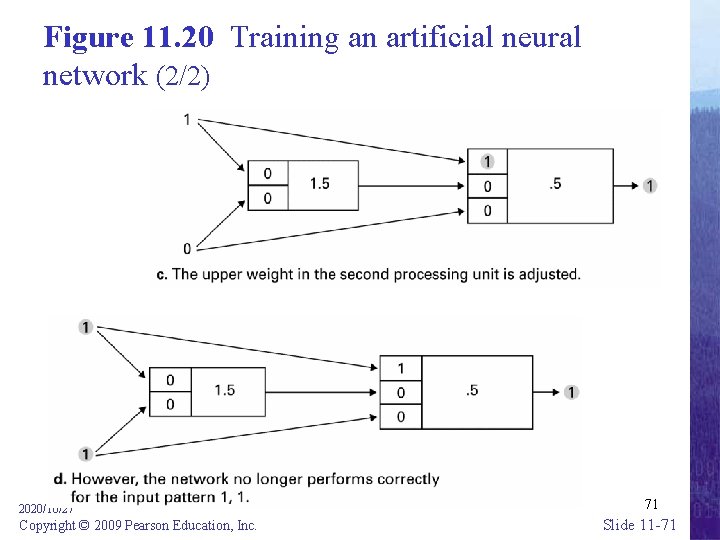

Figure 11.20 Training an artificial neural network (2/2)

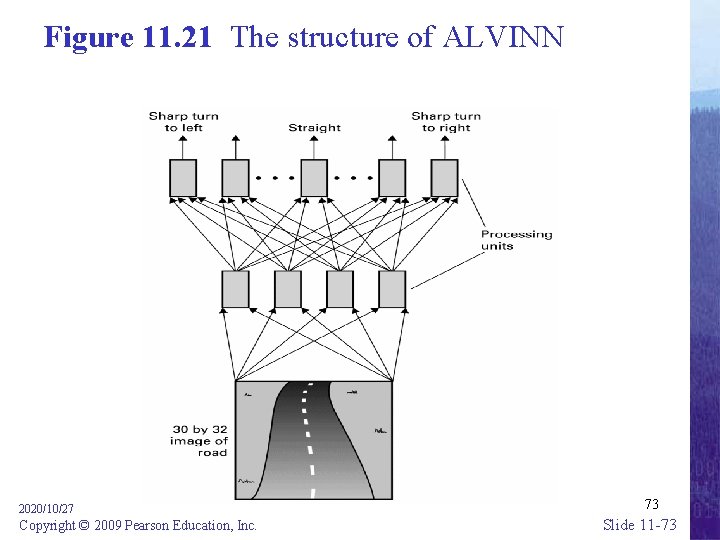

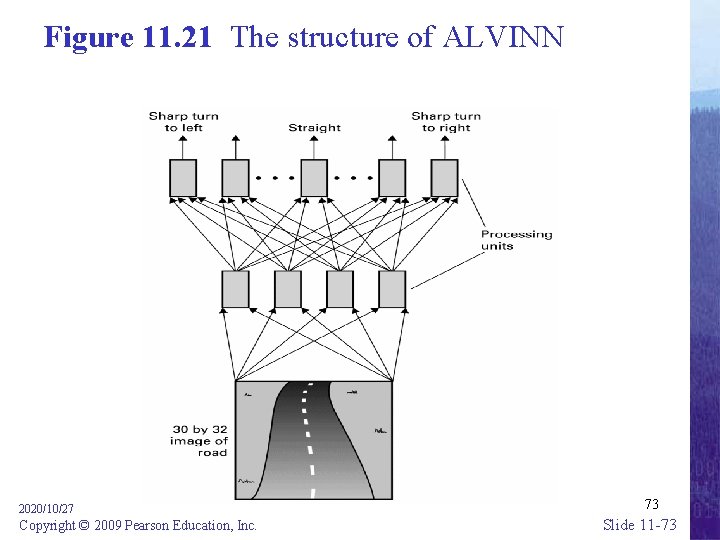

ALVINN
• Autonomous Land Vehicle In a Neural Network
• Robotic car
• Created in the 1980s by Dean Pomerleau
• 1995
– Drove 1000 miles in traffic at speeds of up to 120 MPH
– Steered the car coast to coast (throttle and brakes controlled by human)
• 30 x 32 image as input, 4 hidden units, and 30 outputs

Figure 11.21 The structure of ALVINN

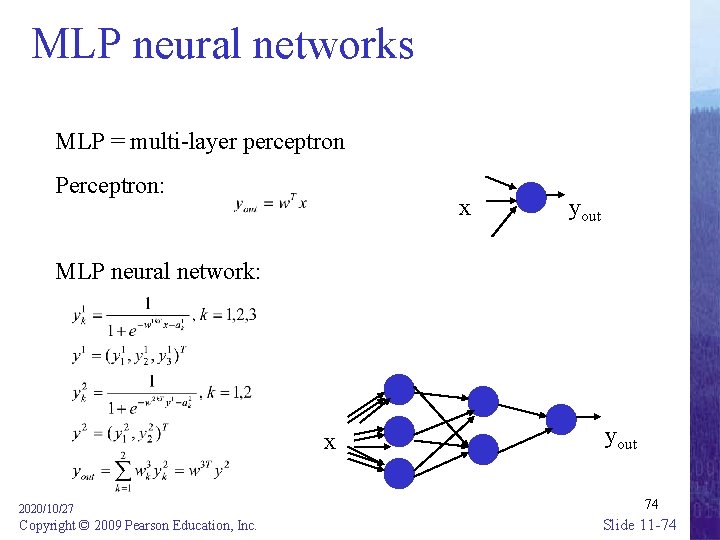

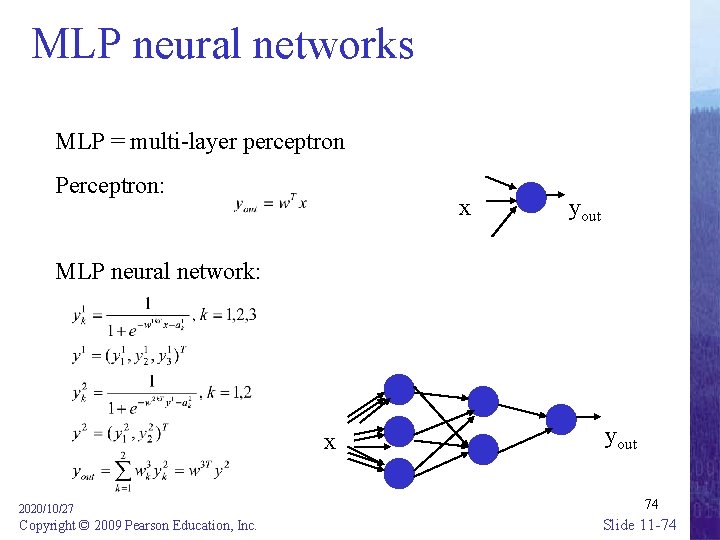

MLP neural networks
MLP = multi-layer perceptron
[Diagrams: a perceptron mapping input x to output yout; an MLP neural network mapping input x to output yout]

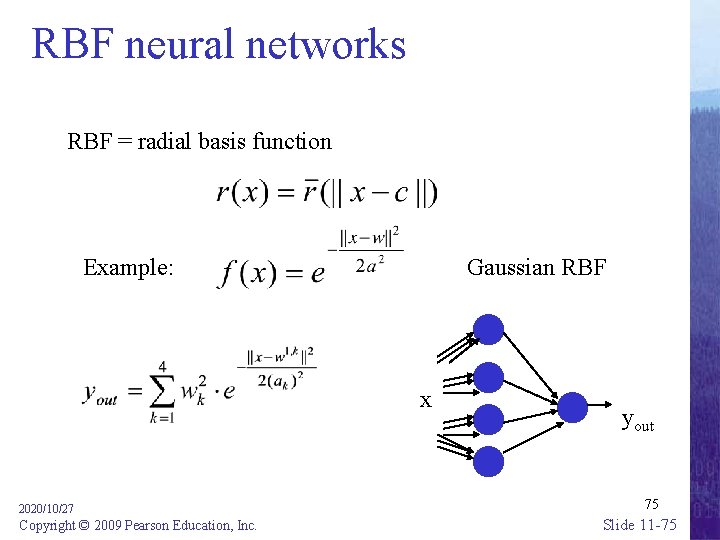

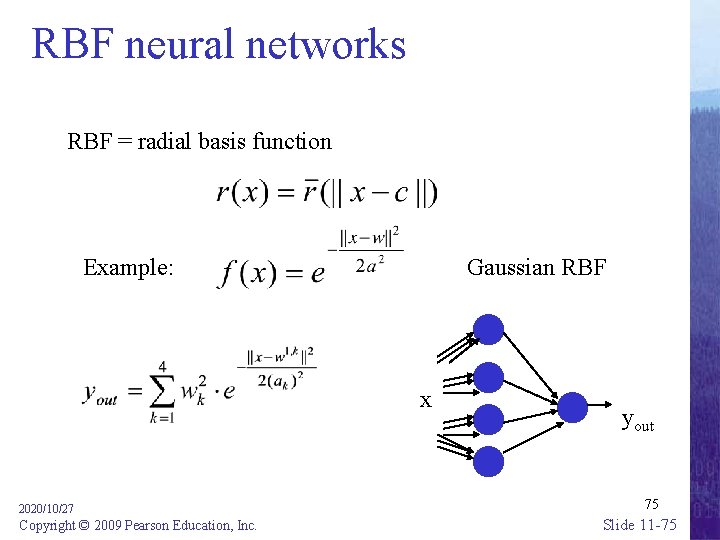

RBF neural networks
RBF = radial basis function
Example: Gaussian RBF
[Diagram: an RBF network mapping input x to output yout]

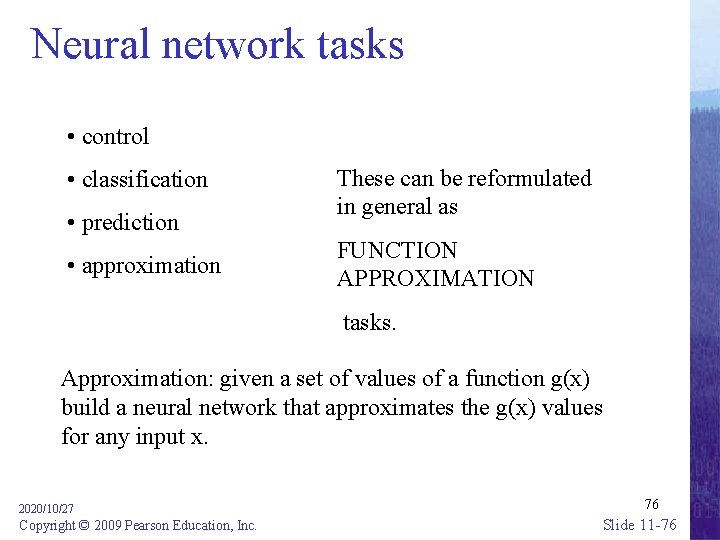

Neural network tasks
• control
• classification
• prediction
• approximation
These can be reformulated in general as FUNCTION APPROXIMATION tasks.
Approximation: given a set of values of a function g(x), build a neural network that approximates the g(x) values for any input x.

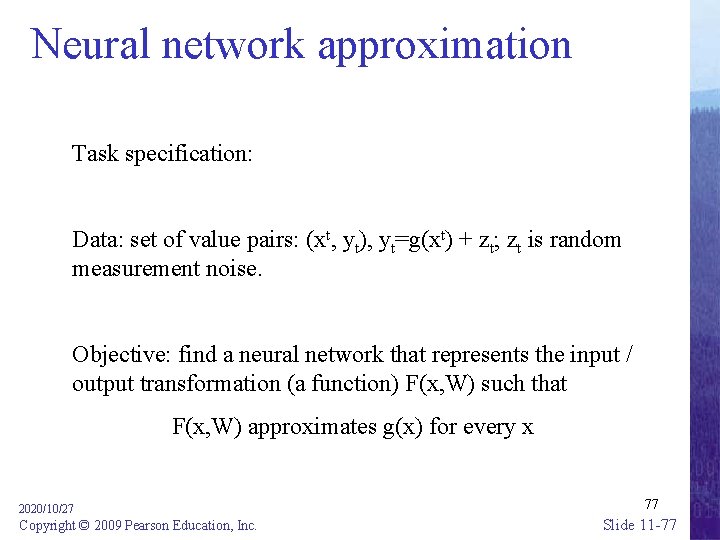

Neural network approximation
Task specification:
Data: a set of value pairs $(x_t, y_t)$ with $y_t = g(x_t) + z_t$, where $z_t$ is random measurement noise.
Objective: find a neural network that represents the input/output transformation (a function) $F(x, W)$ such that $F(x, W)$ approximates $g(x)$ for every $x$.

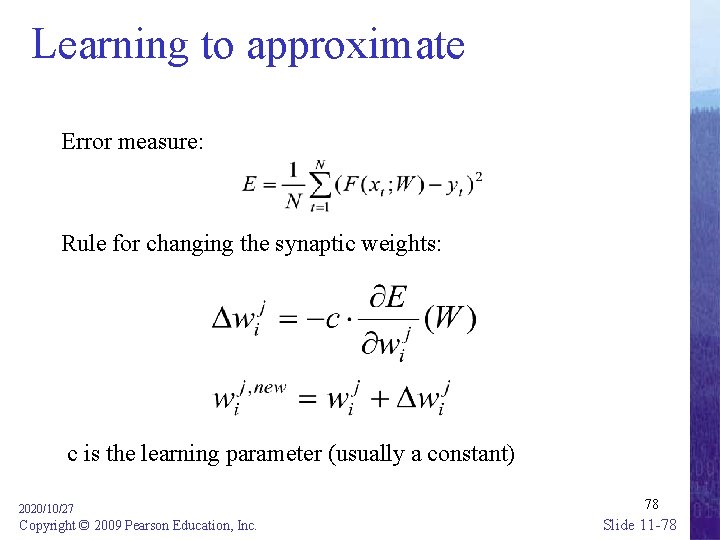

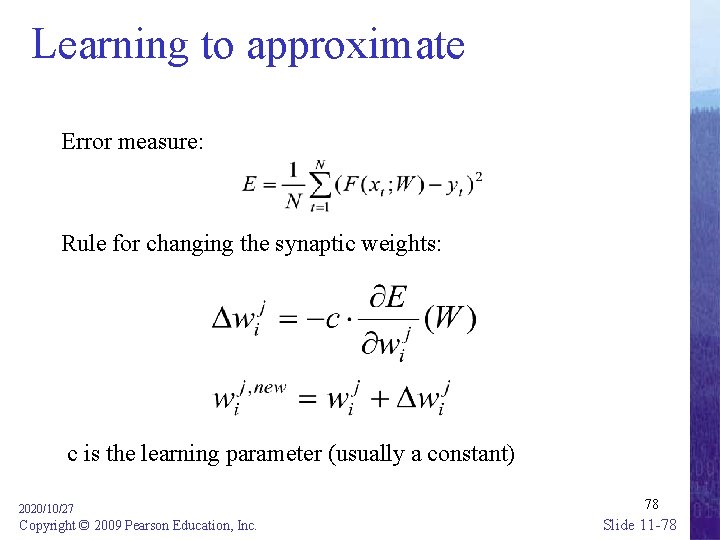

Learning to approximate
Error measure:
Rule for changing the synaptic weights:
c is the learning parameter (usually a constant)
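The slide's formulas are missing from this text. A common choice, assumed here, is the squared error E = mean over t of (F(x_t, W) - y_t)², with each weight changed by w ← w - c · ∂E/∂w. The sketch applies that rule to the simplest possible "network", F(x, W) = w0 + w1·x; the model, the data, and the function name are all illustrative assumptions.

```python
def train_linear(data, c=0.1, steps=2000):
    """Fit F(x, W) = w0 + w1 * x by gradient descent on the mean squared error."""
    w0, w1 = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        g0 = g1 = 0.0
        for x, y in data:
            err = (w0 + w1 * x) - y      # F(x_t, W) - y_t
            g0 += 2 * err / n            # dE/dw0
            g1 += 2 * err * x / n        # dE/dw1
        w0 -= c * g0                     # w <- w - c * dE/dw
        w1 -= c * g1
    return w0, w1


# Noise-free samples of g(x) = 2x + 1; training should recover (1, 2).
samples = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
w0, w1 = train_linear(samples)
```

If c is too large the updates overshoot and diverge; if it is too small, convergence is slow. That trade-off is why c is called the learning parameter.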

New methods for learning with neural networks
• Bayesian learning: the distribution of the neural network parameters is learnt
• Support vector learning: the minimal representative subset of the available data is used to calculate the synaptic weights of the neurons

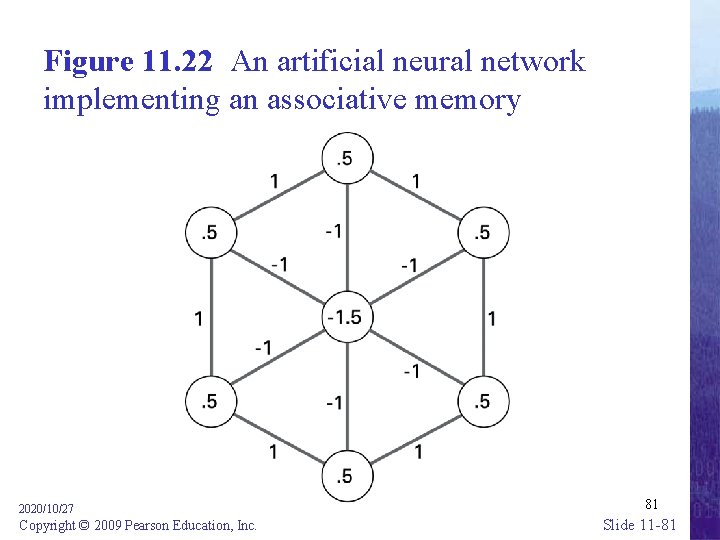

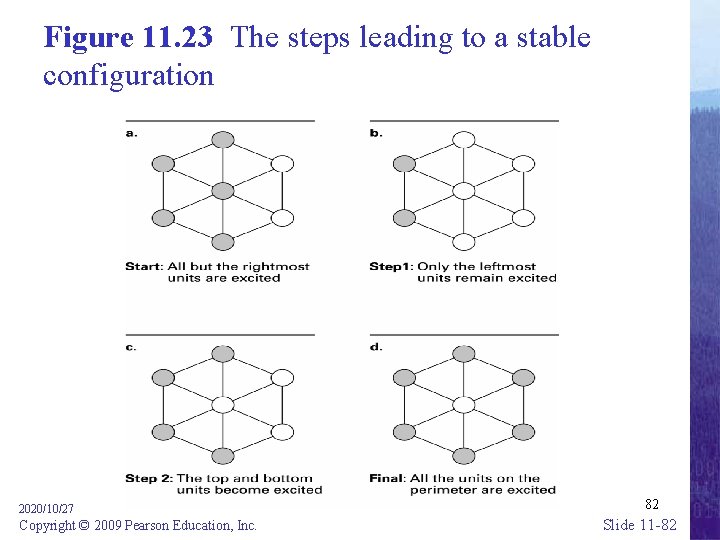

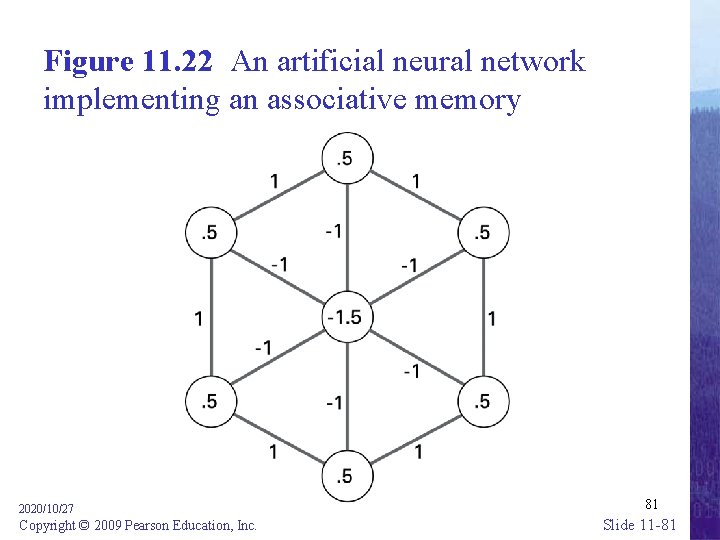

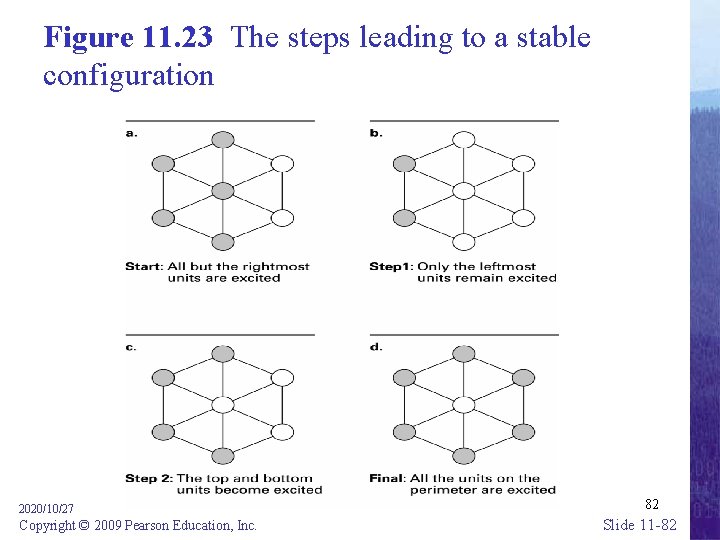

Associative Memory
• Associative memory: The retrieval of information relevant to the information at hand
• One direction of research seeks to build associative memory using neural networks that, when given a partial pattern, transition themselves to a completed pattern.
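Pattern completion of this kind can be sketched with a Hopfield-style network. This is a swapped-in, well-known technique chosen for illustration and is not necessarily the network in the chapter's figures; units take the values +1 or -1, and the function names are hypothetical.

```python
def train_hopfield(patterns):
    """Build a symmetric weight matrix from +1/-1 patterns (Hebbian outer products)."""
    n = len(patterns[0])
    w = [[0.0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:               # no self-connections
                    w[i][j] += p[i] * p[j] / n
    return w


def recall(w, state, sweeps=5):
    """Update units one at a time until the state settles (fixed number of sweeps)."""
    state = list(state)
    n = len(state)
    for _ in range(sweeps):
        for i in range(n):
            total = sum(w[i][j] * state[j] for j in range(n))
            state[i] = 1 if total >= 0 else -1
    return state


stored = [1, -1, 1, -1, 1, -1]
weights = train_hopfield([stored])
completed = recall(weights, [1, -1, 1, -1, 1, 1])  # last unit corrupted
```

Starting from the corrupted pattern, the network settles back into the stored one, which is the "transition to a completed pattern" described above.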

Figure 11.22 An artificial neural network implementing an associative memory

Figure 11.23 The steps leading to a stable configuration

Robotics
• Truly autonomous robots require progress in perception and reasoning.
• Major advances being made in mobility
• Plan development versus reactive responses
• Evolutionary robotics

Issues Raised by Artificial Intelligence
• When should a computer’s decision be trusted over a human’s?
• If a computer can do a job better than a human, when should a human do the job anyway?
• What would be the social impact if computer “intelligence” surpasses that of many humans?

Thank You! (Thanks for coming)
Chapter 11 Artificial Intelligence
蔡文能 tsaiwn@csie.nctu.edu.tw