Machine Learning: Foundations of Artificial Intelligence

- Slides: 43

Learning

- What is Learning?
  - Learning in AI is also called machine learning or pattern recognition.
  - The basic objective is to allow an intelligent agent to discover knowledge autonomously from experience.
- Let’s examine the definition more closely:
  - “an intelligent agent”: The ability to learn requires a prior level of intelligence and knowledge. Learning has to start from an existing level of capability.
  - “to discover autonomously”: Learning is fundamentally about an agent recognizing new facts for its own use and acquiring new abilities that reinforce its existing abilities. Literal programming, i.e., rote learning from instruction, is not useful.
  - “knowledge”: Whatever is learned has to be represented in some way that the agent can use. “If you can't represent it, you can't learn it” is a corollary of the slogan “Knowledge is power”.
  - “from experience”: Experience is typically a set of so-called training examples; examples may be categorized or not. They may be random or selected by a teacher. They may include explanations or not.

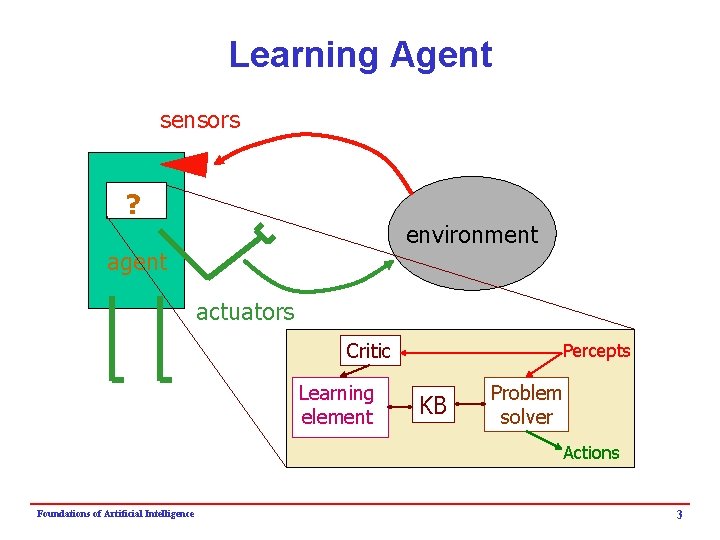

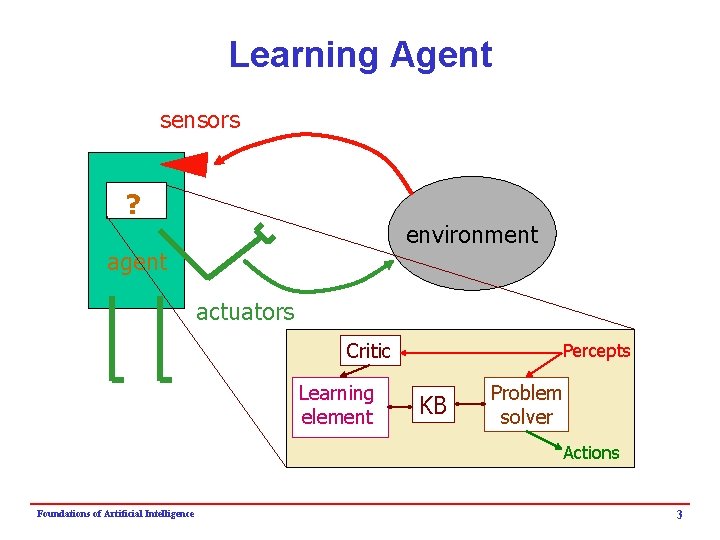

Learning Agent

Figure: the agent perceives the environment through sensors and acts on it through actuators. Labeled components: critic, learning element, percepts, KB, problem solver, actions.

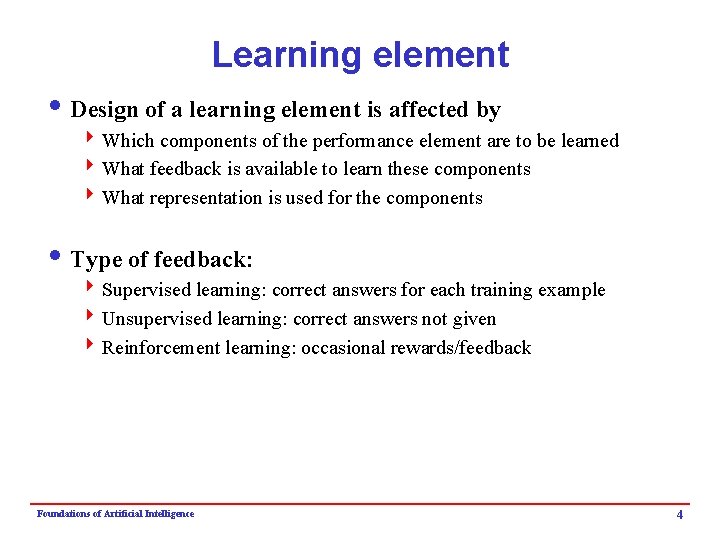

Learning element

- Design of a learning element is affected by:
  - which components of the performance element are to be learned
  - what feedback is available to learn these components
  - what representation is used for the components
- Type of feedback:
  - Supervised learning: correct answers for each training example
  - Unsupervised learning: correct answers not given
  - Reinforcement learning: occasional rewards/feedback

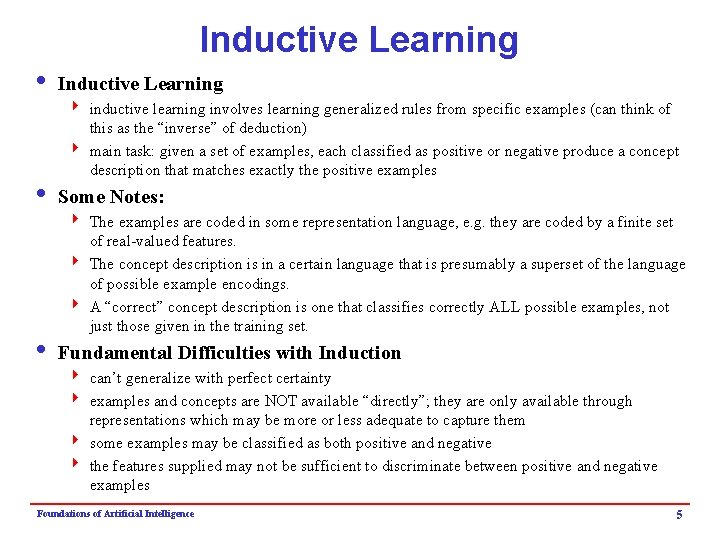

Inductive Learning

- Inductive Learning
  - Inductive learning involves learning generalized rules from specific examples (it can be thought of as the “inverse” of deduction).
  - Main task: given a set of examples, each classified as positive or negative, produce a concept description that matches exactly the positive examples.
- Some Notes:
  - The examples are coded in some representation language, e.g., by a finite set of real-valued features.
  - The concept description is in a certain language that is presumably a superset of the language of possible example encodings.
  - A “correct” concept description is one that correctly classifies ALL possible examples, not just those given in the training set.
- Fundamental Difficulties with Induction
  - We can’t generalize with perfect certainty.
  - Examples and concepts are NOT available “directly”; they are only available through representations, which may be more or less adequate to capture them.
  - Some examples may be classified as both positive and negative.
  - The features supplied may not be sufficient to discriminate between positive and negative examples.

Inductive Learning Frameworks

1. Function-learning formulation
2. Logic-inference formulation

Inductive learning

- Simplest form: learn a function from examples
  - f is the target function
  - An example is a pair (x, f(x))
  - Problem: given a training set of examples, find a hypothesis h such that h ≈ f
- This is a highly simplified model of real learning:
  - Ignores prior knowledge
  - Assumes examples are given

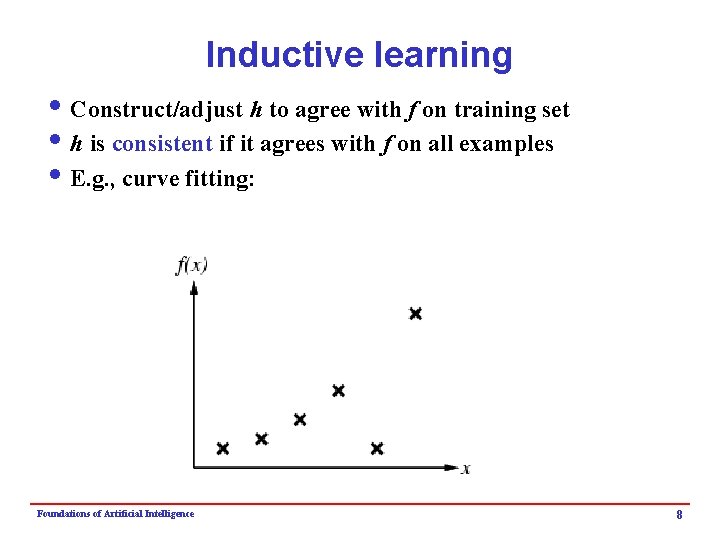

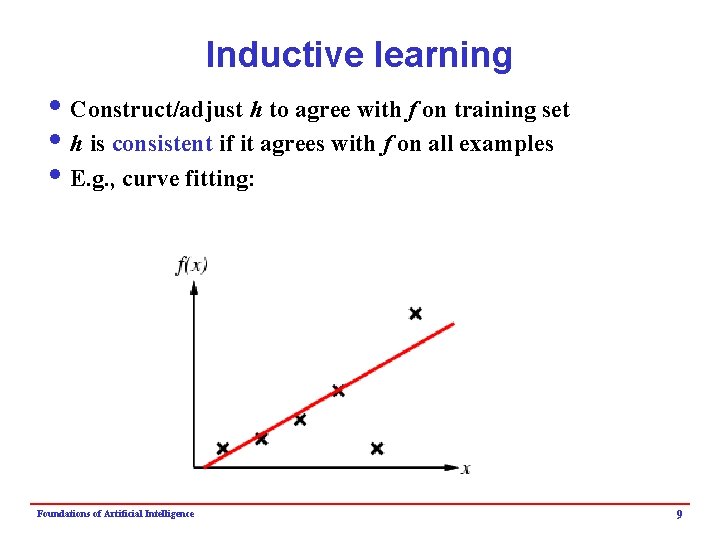

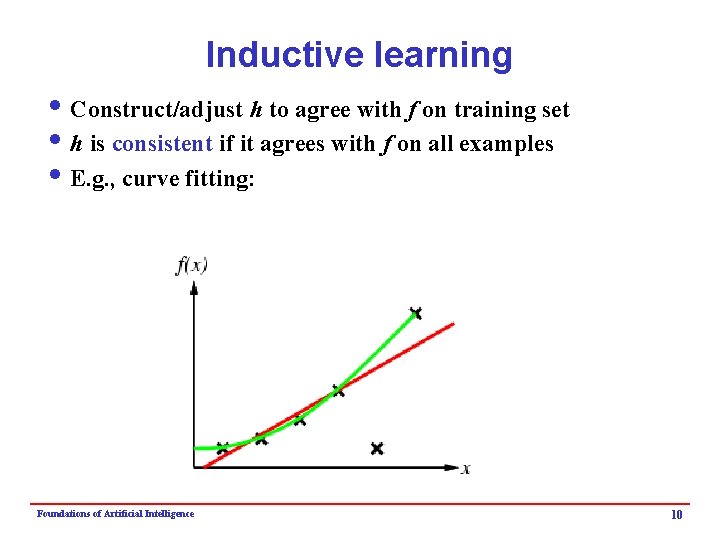

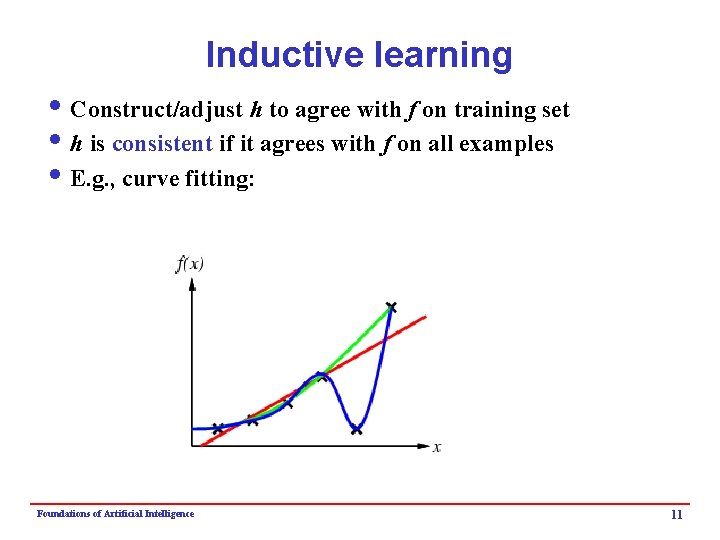

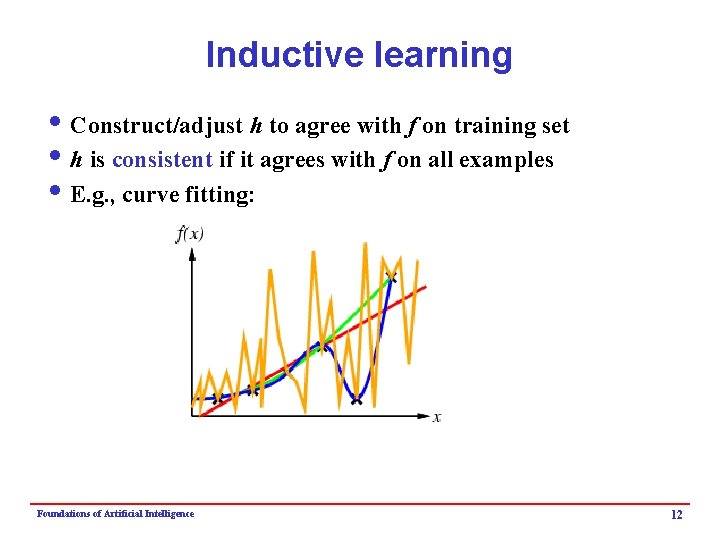

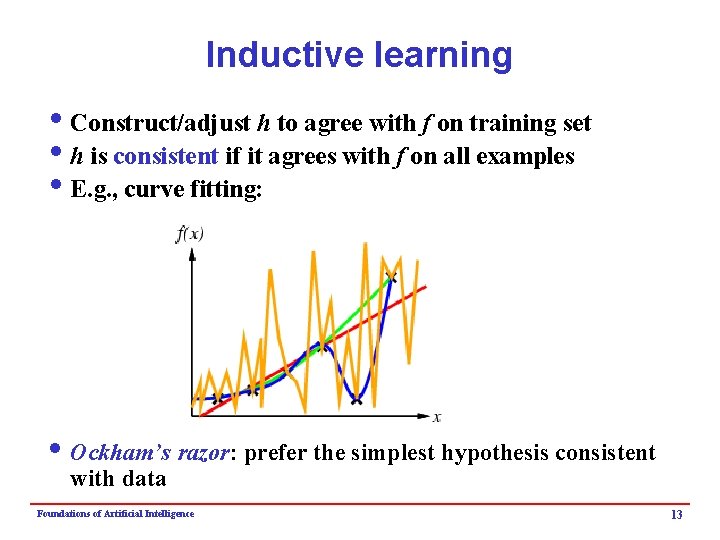

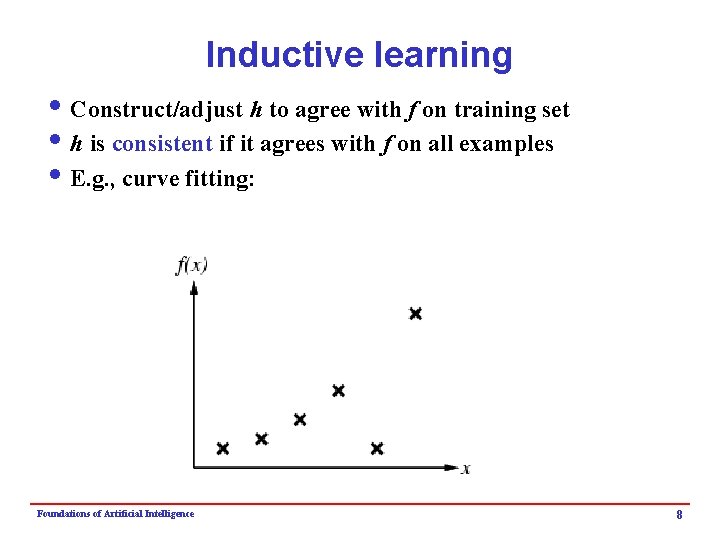

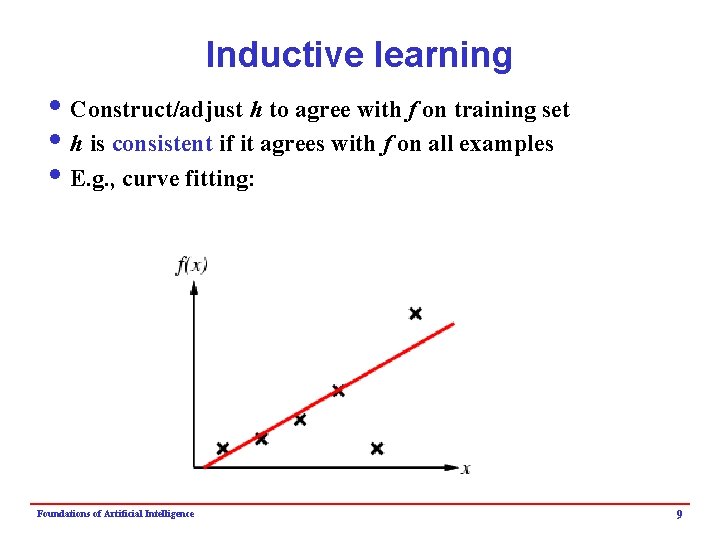

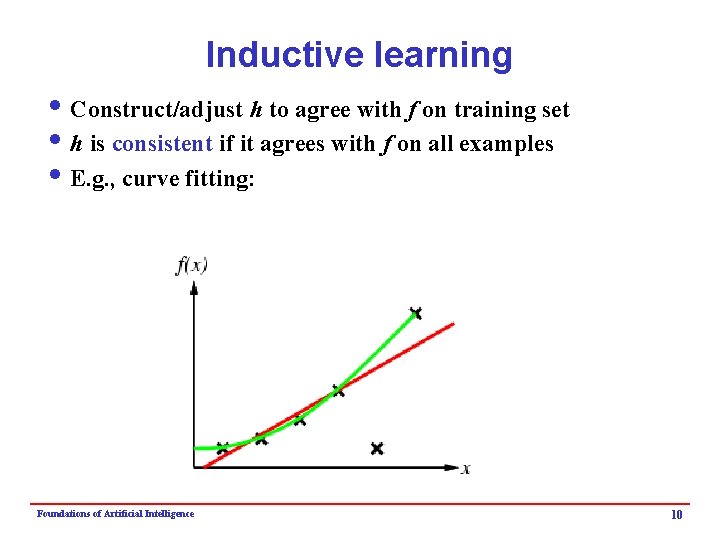

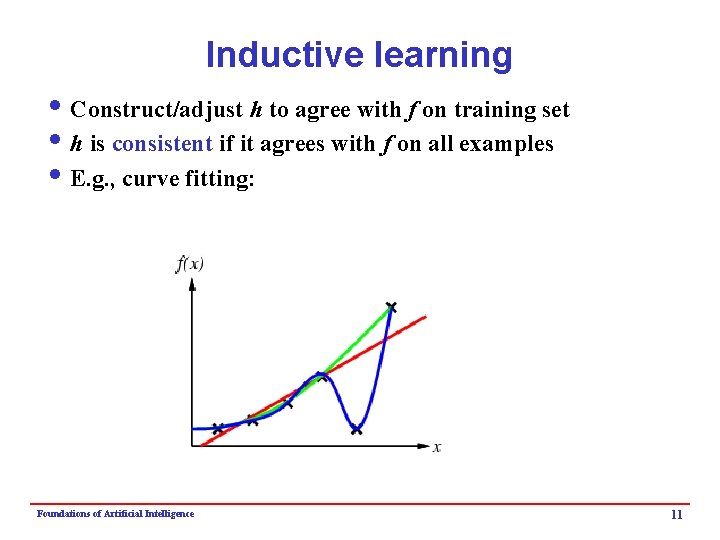

Inductive learning

- Construct/adjust h to agree with f on the training set
- h is consistent if it agrees with f on all examples
- Example: curve fitting


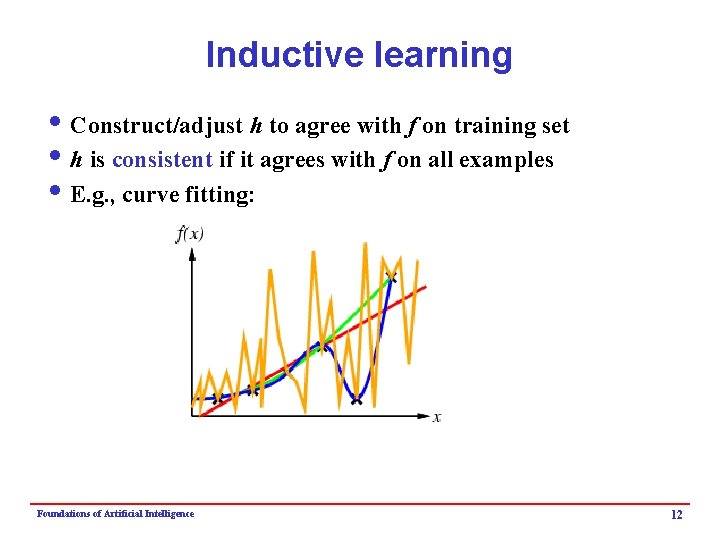


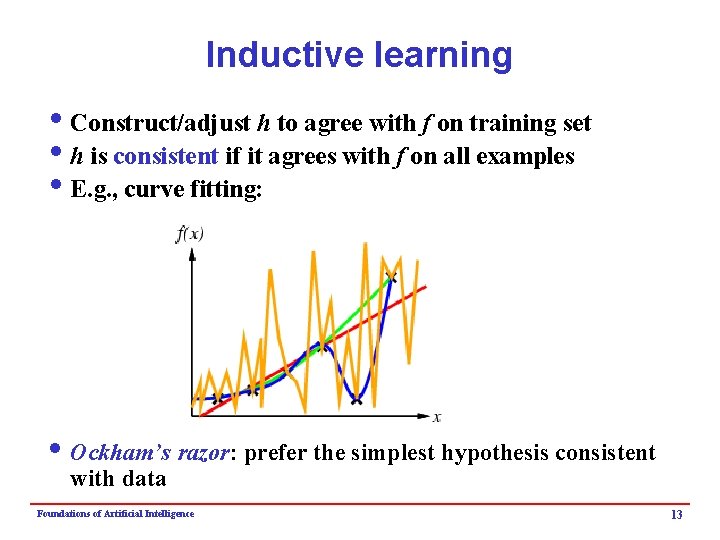

Inductive learning (continued)

- Ockham’s razor: prefer the simplest hypothesis consistent with the data
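The curve-fitting trade-off can be illustrated with a small sketch. The training set below is hypothetical (noisy samples of f(x) = 2x + 1), and the helper names `fit_line`, `interpolate`, and `max_error` are illustrative, not taken from the slides. The interpolating polynomial is consistent with every example, while the straight line is simpler and only approximately agrees with the data.

```python
def fit_line(points):
    """Least-squares line h(x) = a*x + b: a simple hypothesis."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    a = sxy / sxx
    b = mean_y - a * mean_x
    return lambda x: a * x + b


def interpolate(points):
    """Lagrange polynomial: a hypothesis consistent with every example."""
    def h(x):
        total = 0.0
        for i, (xi, yi) in enumerate(points):
            term = yi
            for j, (xj, _) in enumerate(points):
                if i != j:
                    term *= (x - xj) / (xi - xj)
            total += term
        return total
    return h


def max_error(h, points):
    """Largest disagreement between h and the training examples."""
    return max(abs(h(x) - y) for x, y in points)


# Hypothetical training set: noisy samples of f(x) = 2x + 1.
training = [(0.0, 1.1), (1.0, 2.9), (2.0, 5.2), (3.0, 6.8), (4.0, 9.1)]
line = fit_line(training)
poly = interpolate(training)

print(max_error(line, training))  # small but nonzero: not consistent
print(max_error(poly, training))  # zero up to rounding: consistent
print(line(6.0), poly(6.0))       # the hypotheses disagree away from the data
```

Both hypotheses fit the training set reasonably well, yet they make very different predictions at x = 6. This is the situation in which a preference such as Ockham’s razor is needed.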

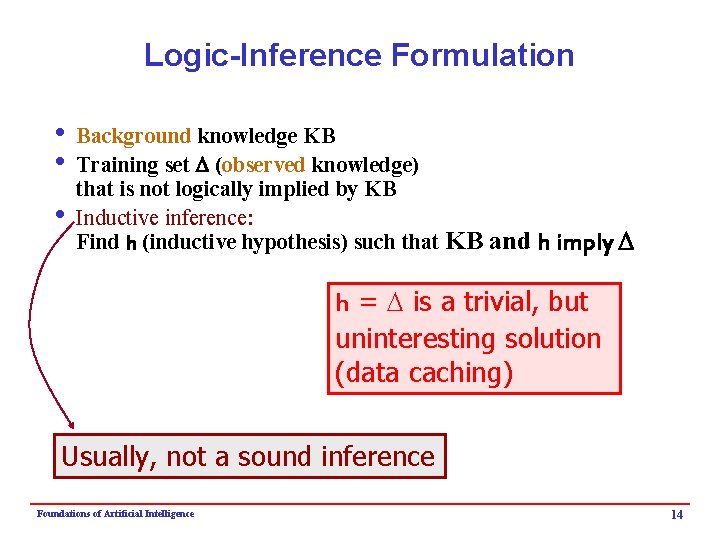

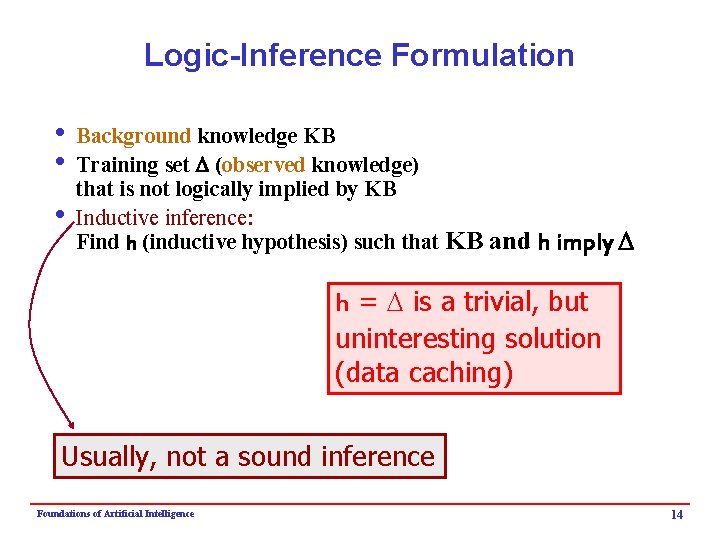

Logic-Inference Formulation

- Background knowledge KB
- Training set D (observed knowledge) that is not logically implied by KB
- Inductive inference: find h (inductive hypothesis) such that KB and h imply D
  - h = D is a trivial but uninteresting solution (data caching)
  - Usually, not a sound inference

![Rewarded Card Example: deck of cards, each designated by its rank r and suit s](https://slidetodoc.com/presentation_image_h/0f6adf649f7cd318b6fa618e4372680e/image-15.jpg)

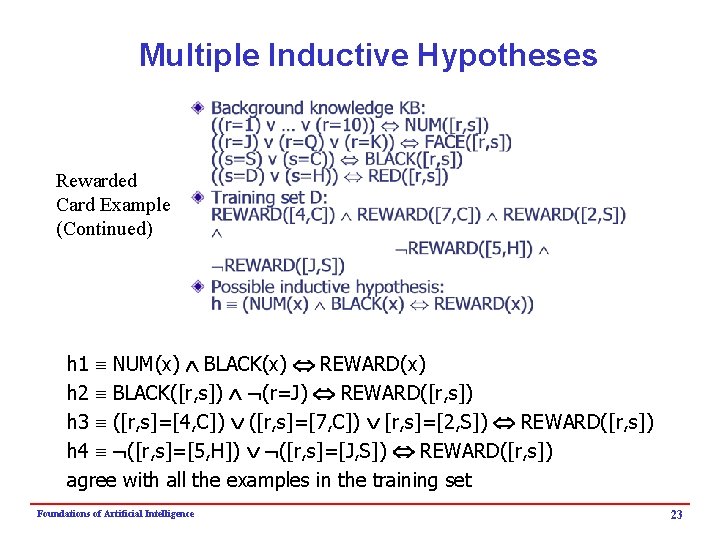

Rewarded Card Example

- Deck of cards, with each card designated by [r, s], its rank and suit, and some cards “rewarded”
- Background knowledge KB:
  - ((r=1) ∨ … ∨ (r=10)) ⇔ NUM(r)
  - ((r=J) ∨ (r=Q) ∨ (r=K)) ⇔ FACE(r)
  - ((s=S) ∨ (s=C)) ⇔ BLACK(s)
  - ((s=D) ∨ (s=H)) ⇔ RED(s)
- Training set D: REWARD([4, C]) ∧ REWARD([7, C]) ∧ REWARD([2, S]) ∧ ¬REWARD([5, H]) ∧ ¬REWARD([J, S])
- Possible inductive hypothesis: h ≡ (NUM(r) ∧ BLACK(s) ⇔ REWARD([r, s]))

Note: There are several possible inductive hypotheses.

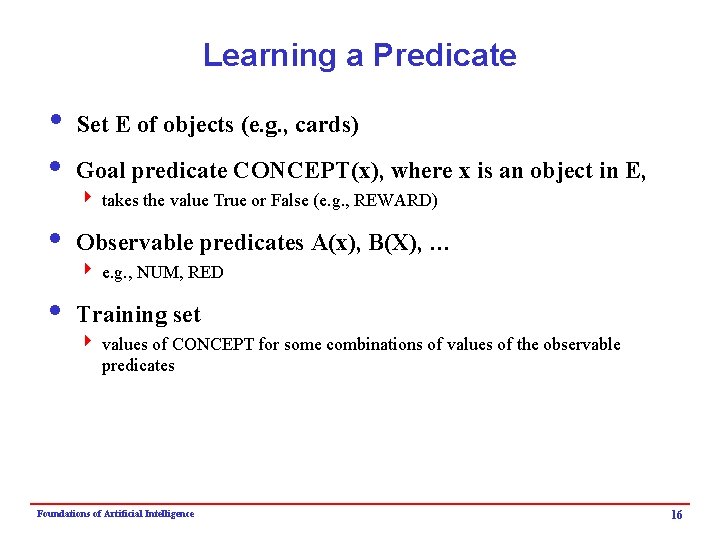

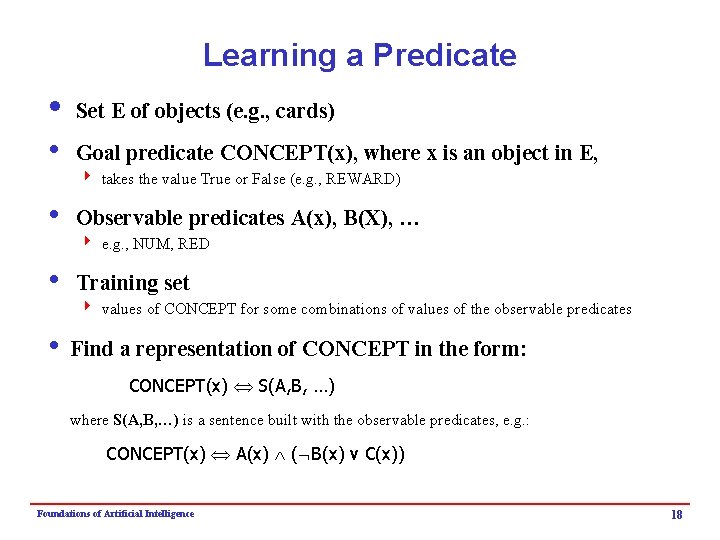

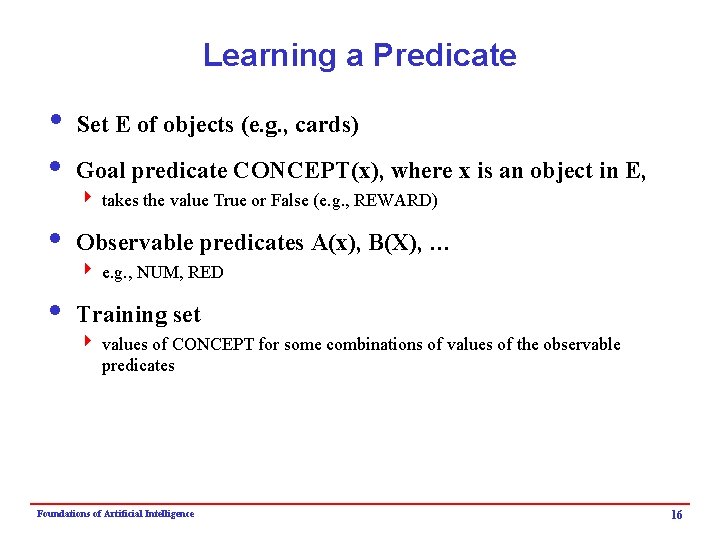

Learning a Predicate

- Set E of objects (e.g., cards)
- Goal predicate CONCEPT(x), where x is an object in E
  - takes the value True or False (e.g., REWARD)
- Observable predicates A(x), B(x), …
  - e.g., NUM, RED
- Training set
  - values of CONCEPT for some combinations of values of the observable predicates

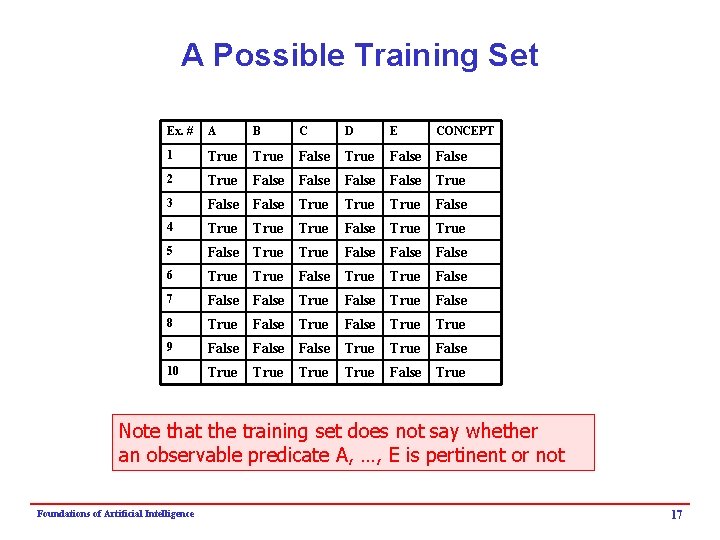

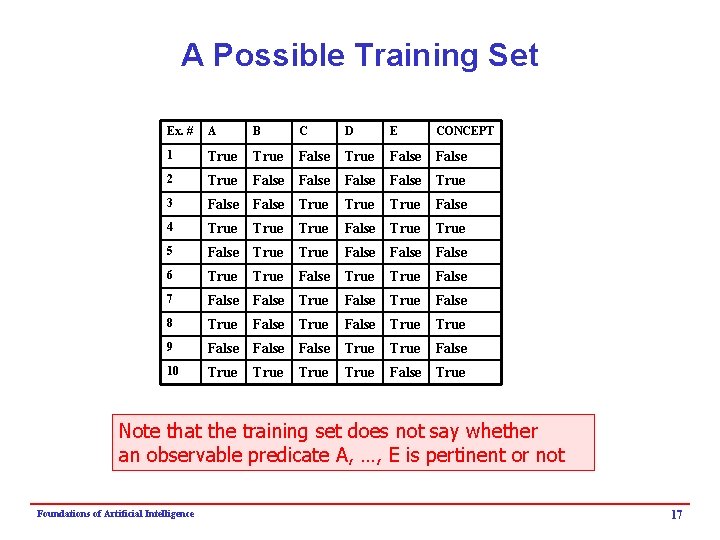

A Possible Training Set

Ex. # A B C D E CONCEPT 1 True False 2 True False True 3 False True False 4 True False True 5 False True False 6 True False 7 False True False 8 True False True 9 False True False 10 True False True

Note that the training set does not say whether an observable predicate A, …, E is pertinent or not.

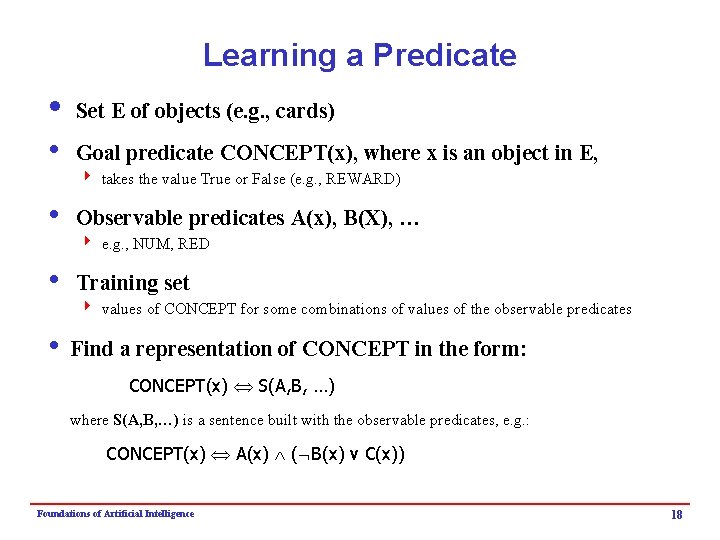

Learning a Predicate (continued)

- Find a representation of CONCEPT in the form CONCEPT(x) ⇔ S(A, B, …), where S(A, B, …) is a sentence built with the observable predicates, e.g.: CONCEPT(x) ⇔ A(x) ∧ (¬B(x) ∨ C(x))

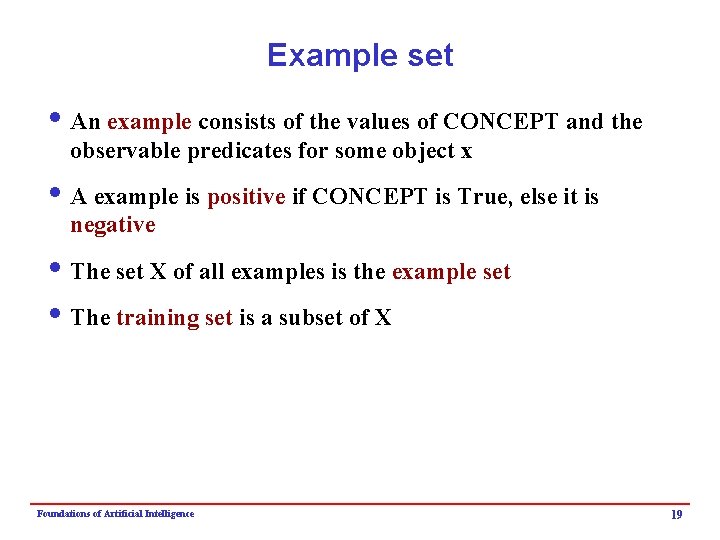

Example set

- An example consists of the values of CONCEPT and the observable predicates for some object x
- An example is positive if CONCEPT is True; otherwise it is negative
- The set X of all examples is the example set
- The training set is a subset of X

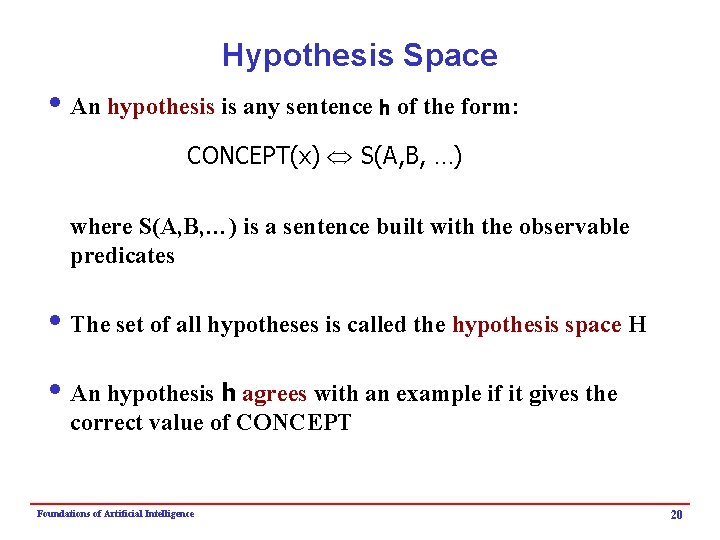

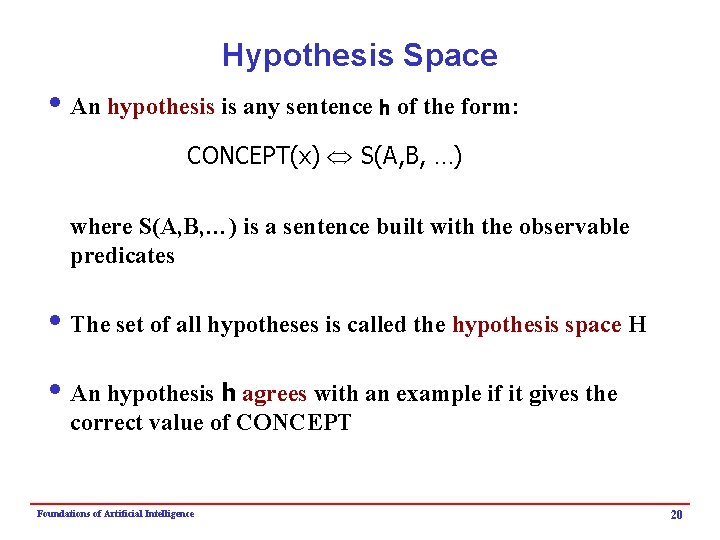

Hypothesis Space

- A hypothesis is any sentence h of the form CONCEPT(x) ⇔ S(A, B, …), where S(A, B, …) is a sentence built with the observable predicates
- The set of all hypotheses is called the hypothesis space H
- A hypothesis h agrees with an example if it gives the correct value of CONCEPT

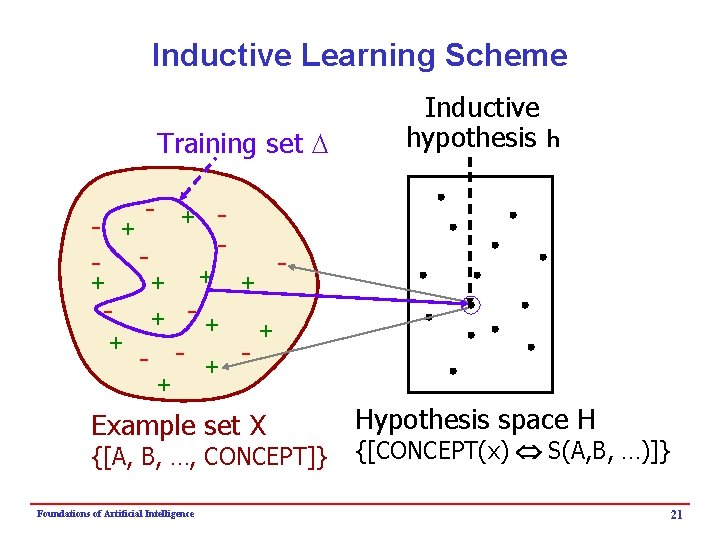

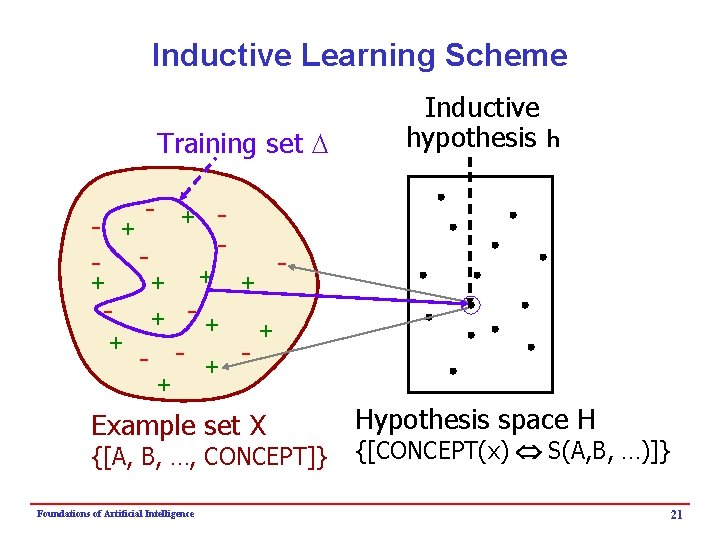

Inductive Learning Scheme

Figure: the training set D (positive and negative examples) is drawn from the example set X = {[A, B, …, CONCEPT]}; the inductive hypothesis h is chosen from the hypothesis space H = {[CONCEPT(x) ⇔ S(A, B, …)]}.

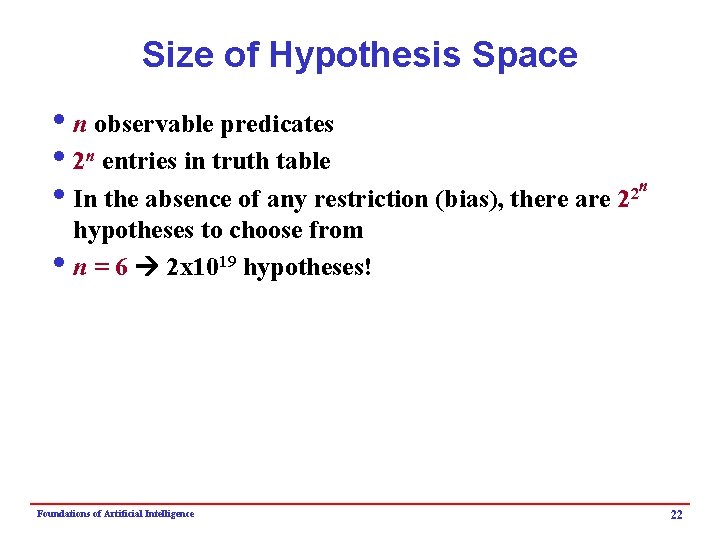

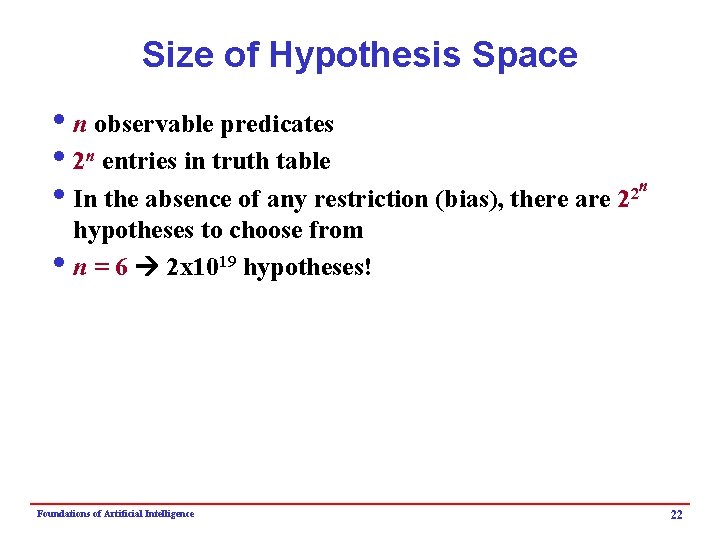

Size of Hypothesis Space

- $n$ observable predicates
- $2^n$ entries in the truth table
- In the absence of any restriction (bias), there are $2^{2^n}$ hypotheses to choose from
- $n = 6$ gives about $2 \times 10^{19}$ hypotheses!
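The count is easy to check numerically. The following minimal sketch uses illustrative function names and relies on Python's arbitrary-precision integers: each of the $2^n$ truth-table rows can independently be mapped to True or False, which gives $2^{2^n}$ Boolean functions.

```python
def truth_table_rows(n):
    """Number of distinct value combinations of n Boolean predicates."""
    return 2 ** n


def unrestricted_hypotheses(n):
    """Number of Boolean functions of n predicates (one output bit per row)."""
    return 2 ** truth_table_rows(n)


for n in (1, 2, 3, 6):
    print(n, truth_table_rows(n), unrestricted_hypotheses(n))

# For n = 6 this prints 18446744073709551616, i.e. roughly 2 x 10^19.
```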

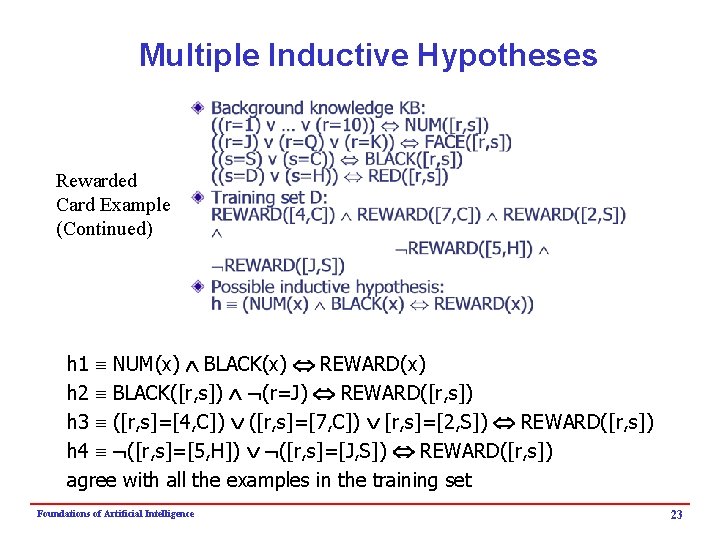

Multiple Inductive Hypotheses

Rewarded Card Example (Continued)

- h1 ≡ NUM(x) ∧ BLACK(x) ⇔ REWARD(x)
- h2 ≡ BLACK([r, s]) ∧ ¬(r=J) ⇔ REWARD([r, s])
- h3 ≡ ([r, s]=[4, C]) ∨ ([r, s]=[7, C]) ∨ ([r, s]=[2, S]) ⇔ REWARD([r, s])
- h4 ≡ ¬([r, s]=[5, H]) ∧ ¬([r, s]=[J, S]) ⇔ REWARD([r, s])

All four hypotheses agree with all the examples in the training set.
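The claim that several hypotheses agree with the same training set can be checked mechanically. The sketch below rests on an assumption: [5, H] and [J, S] are treated as non-rewarded cards, which is the reading under which all four hypotheses are consistent with the data. The encoding of cards as `(rank, suit)` string pairs and the function names are illustrative.

```python
NUMBER_RANKS = {str(i) for i in range(1, 11)}


def num(r):
    return r in NUMBER_RANKS


def black(s):
    return s in {"S", "C"}


# Training set: (card, rewarded?). The two negative labels are an assumption.
TRAINING = [
    (("4", "C"), True),
    (("7", "C"), True),
    (("2", "S"), True),
    (("5", "H"), False),
    (("J", "S"), False),
]

HYPOTHESES = {
    "h1": lambda r, s: num(r) and black(s),
    "h2": lambda r, s: black(s) and r != "J",
    "h3": lambda r, s: (r, s) in {("4", "C"), ("7", "C"), ("2", "S")},
    "h4": lambda r, s: (r, s) not in {("5", "H"), ("J", "S")},
}


def agrees(h, examples):
    """True if h predicts the recorded reward value for every example."""
    return all(h(r, s) == rewarded for (r, s), rewarded in examples)


for name, h in HYPOTHESES.items():
    print(name, agrees(h, TRAINING), h("Q", "S"))
```

Every hypothesis agrees with the training set, but on the unseen card [Q, S] they split: h1 and h3 predict no reward, while h2 and h4 predict a reward. The training data alone cannot decide among them.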

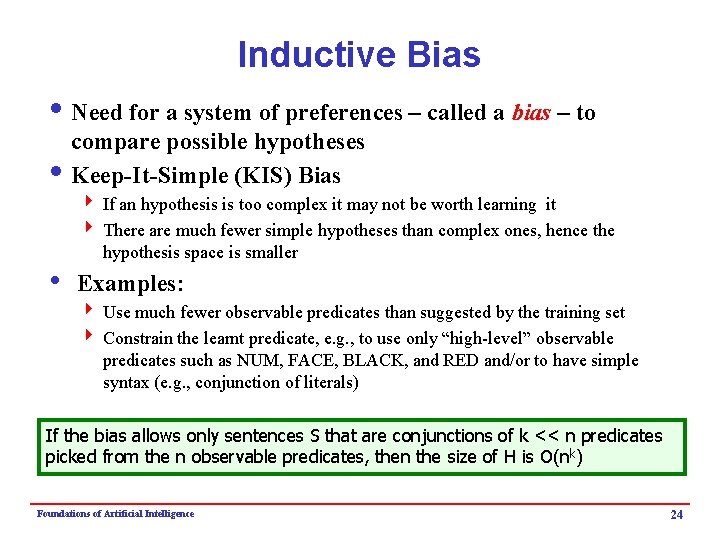

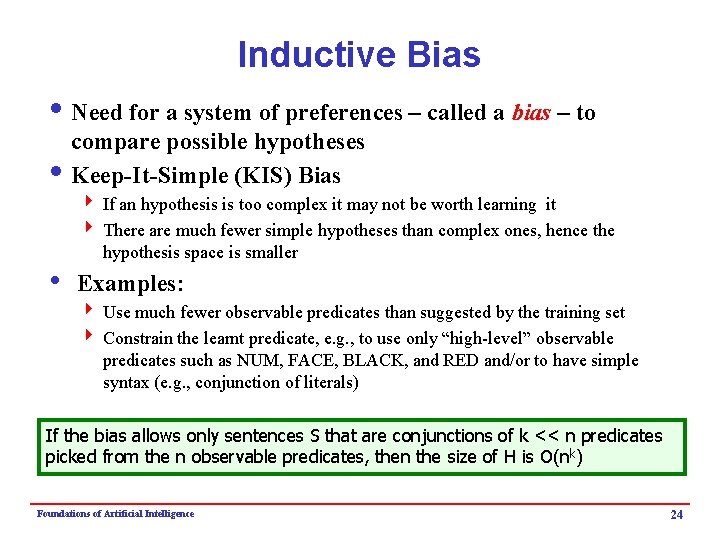

Inductive Bias

- Need for a system of preferences, called a bias, to compare possible hypotheses
- Keep-It-Simple (KIS) Bias
  - If a hypothesis is too complex, it may not be worth learning
  - There are far fewer simple hypotheses than complex ones, hence the hypothesis space is smaller
- Examples:
  - Use far fewer observable predicates than suggested by the training set
  - Constrain the learnt predicate, e.g., to use only “high-level” observable predicates such as NUM, FACE, BLACK, and RED, and/or to have simple syntax (e.g., a conjunction of literals)

If the bias allows only sentences S that are conjunctions of $k \ll n$ predicates picked from the $n$ observable predicates, then the size of H is $O(n^k)$.
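To get a feel for how much such a bias shrinks the search, the sketch below compares the restricted and unrestricted counts. It assumes, for simplicity, that a conjunction uses exactly k distinct predicates without negation; the values n = 20 and k = 2 are arbitrary illustrative choices.

```python
from math import comb


def conjunctions_of_k(n, k):
    """Ways to pick k of the n observable predicates for a conjunction."""
    return comb(n, k)


def unrestricted(n):
    """Number of Boolean functions of n predicates, with no bias at all."""
    return 2 ** (2 ** n)


n, k = 20, 2
print(conjunctions_of_k(n, k))        # 190
print(n ** k)                         # 400, the O(n^k) bound
print(unrestricted(n).bit_length())   # 1048577 bits to write the count down
```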

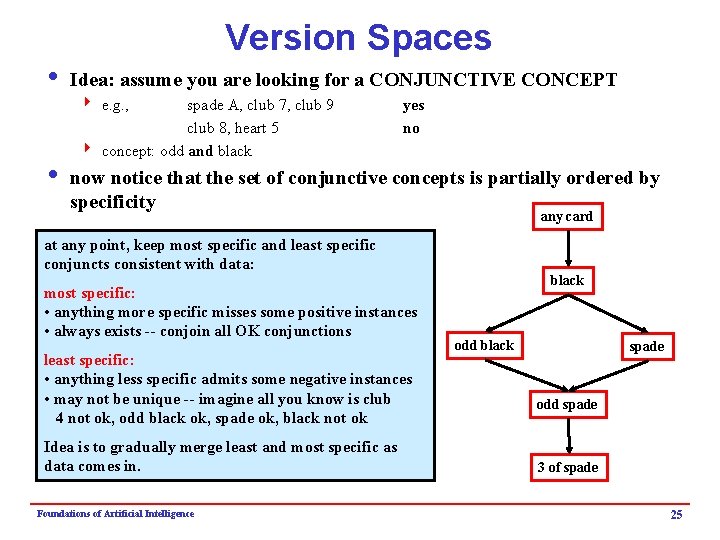

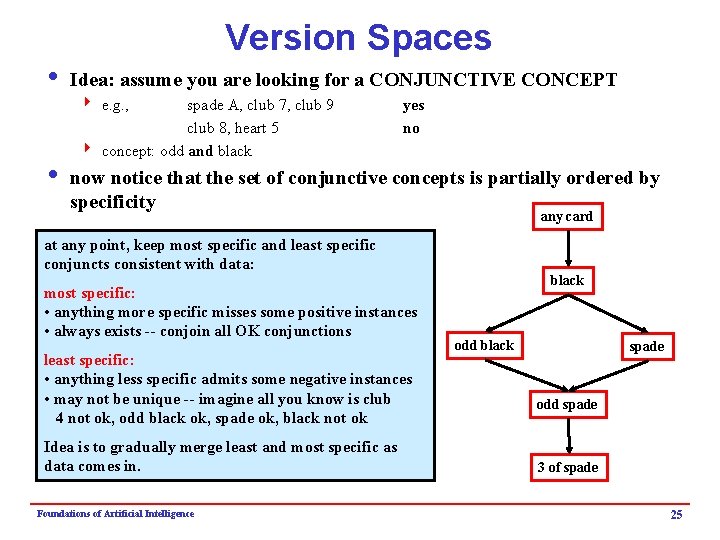

Version Spaces

- Idea: assume you are looking for a CONJUNCTIVE CONCEPT
  - e.g., spade A, club 7, club 9: yes; club 8, heart 5: no
  - concept: odd and black
- Now notice that the set of conjunctive concepts is partially ordered by specificity.
- At any point, keep the most specific and least specific conjuncts consistent with the data:
  - most specific:
    - anything more specific misses some positive instances
    - always exists: conjoin all OK conjunctions
  - least specific:
    - anything less specific admits some negative instances
    - may not be unique: imagine all you know is club 4 not ok, odd black ok, spade ok, black not ok
- The idea is to gradually merge the least and most specific concepts as data comes in.

Figure: part of the specificity ordering, with nodes “any card”, “black”, “odd”, “odd black”, “spade”, “odd spade”, and “3 of spades”.

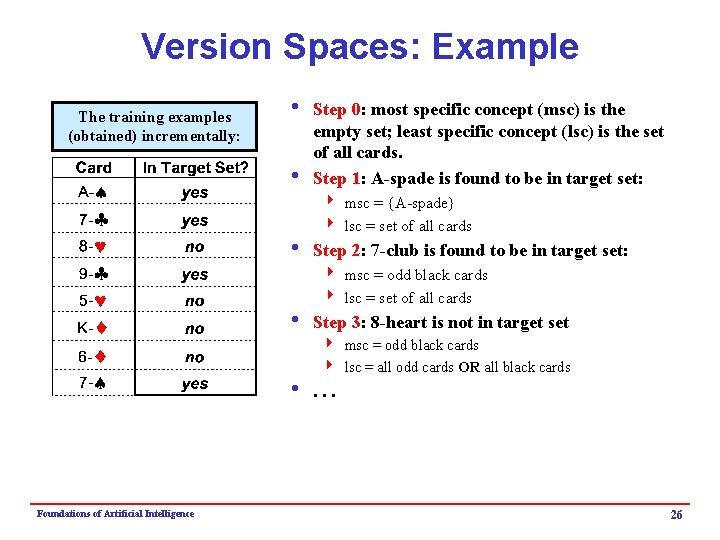

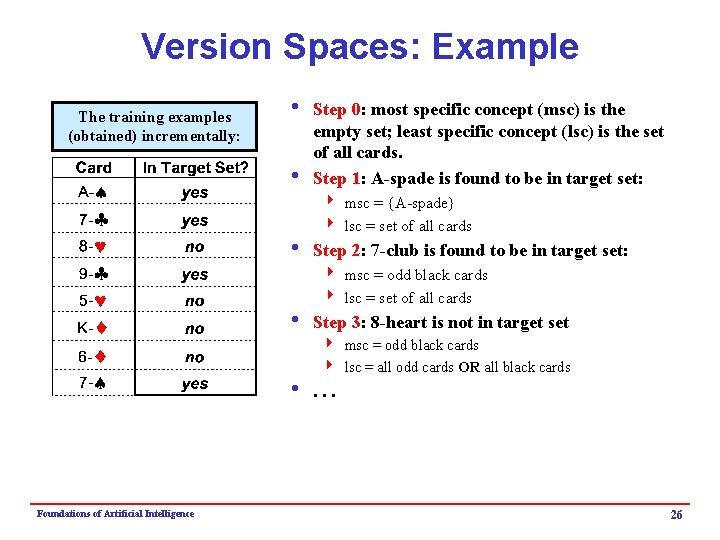

Version Spaces: Example

The training examples are obtained incrementally:

- Step 0: the most specific concept (msc) is the empty set; the least specific concept (lsc) is the set of all cards.
- Step 1: A-spade is found to be in the target set:
  - msc = {A-spade}
  - lsc = set of all cards
- Step 2: 7-club is found to be in the target set:
  - msc = odd black cards
  - lsc = set of all cards
- Step 3: 8-heart is not in the target set:
  - msc = odd black cards
  - lsc = all odd cards OR all black cards
- ...
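The msc half of this trace can be sketched in a few lines. This is only a partial illustration: it tracks the most specific conjunctive concept and does not maintain the lsc boundary. The attribute set (rank, suit, color, parity) and the convention that the ace has rank 1 are assumptions made for the example.

```python
RED_SUITS = {"heart", "diamond"}


def describe(rank, suit):
    """Attribute-value description of a card (ace is rank 1 by assumption)."""
    return {
        "rank": rank,
        "suit": suit,
        "color": "red" if suit in RED_SUITS else "black",
        "parity": "odd" if rank % 2 else "even",
    }


def generalize(msc, card):
    """Drop every constraint of msc that the new positive card violates."""
    if msc is None:  # no positive example yet: msc denotes the empty set
        return dict(card)
    return {key: value for key, value in msc.items() if card[key] == value}


def covers(concept, card):
    """True if the card satisfies every constraint of the conjunction."""
    return all(card[key] == value for key, value in concept.items())


msc = None
for rank, suit in [(1, "spade"), (7, "club")]:  # steps 1 and 2
    msc = generalize(msc, describe(rank, suit))

print(msc)                               # {'color': 'black', 'parity': 'odd'}
print(covers(msc, describe(8, "heart")))  # False: step 3 leaves msc unchanged
```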

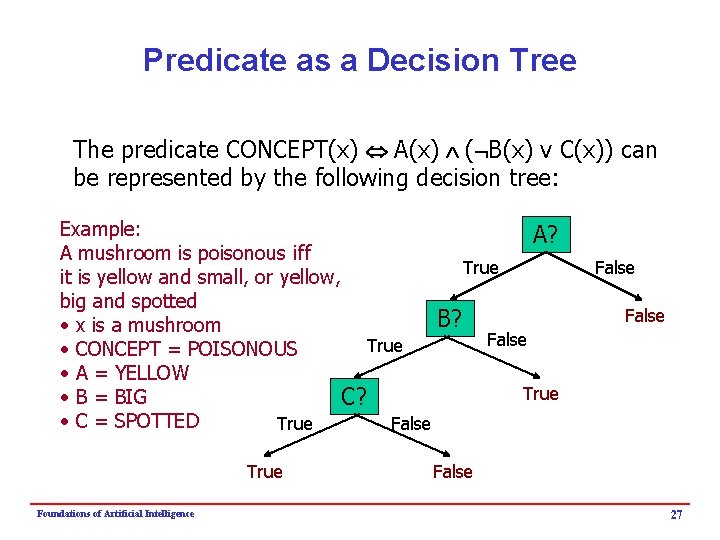

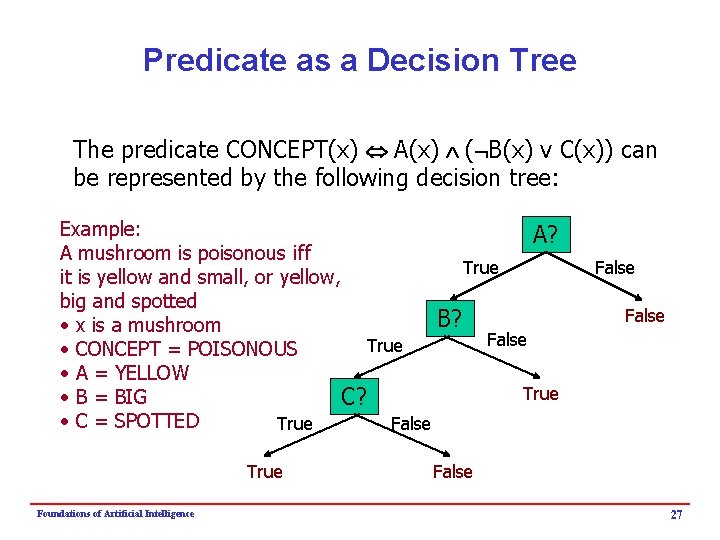

Predicate as a Decision Tree

The predicate CONCEPT(x) ⇔ A(x) ∧ (¬B(x) ∨ C(x)) can be represented by the following decision tree:

- A?
  - False: False
  - True: B?
    - False: True
    - True: C?
      - True: True
      - False: False

Example: a mushroom is poisonous iff it is yellow and small, or yellow, big and spotted.

- x is a mushroom
- CONCEPT = POISONOUS
- A = YELLOW
- B = BIG
- C = SPOTTED
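A quick way to convince oneself that a tree and a logical sentence express the same predicate is to compare them on every input. The sketch below encodes the mushroom rule stated above (yellow and small, or yellow, big and spotted) and a tree that tests YELLOW, then BIG, then SPOTTED; the function names are illustrative.

```python
from itertools import product


def poisonous_sentence(yellow, big, spotted):
    """Yellow and small, or yellow, big and spotted."""
    return (yellow and not big) or (yellow and big and spotted)


def poisonous_tree(yellow, big, spotted):
    """Decision tree testing YELLOW first, then BIG, then SPOTTED."""
    if not yellow:
        return False
    if not big:
        return True
    return spotted


# Exhaustive comparison over all 8 combinations of the three attributes.
same = all(
    poisonous_sentence(*values) == poisonous_tree(*values)
    for values in product([False, True], repeat=3)
)
print(same)  # True
```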

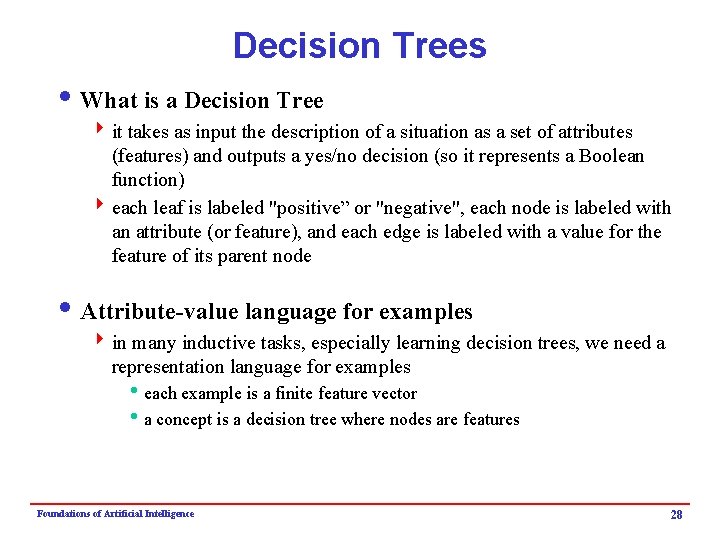

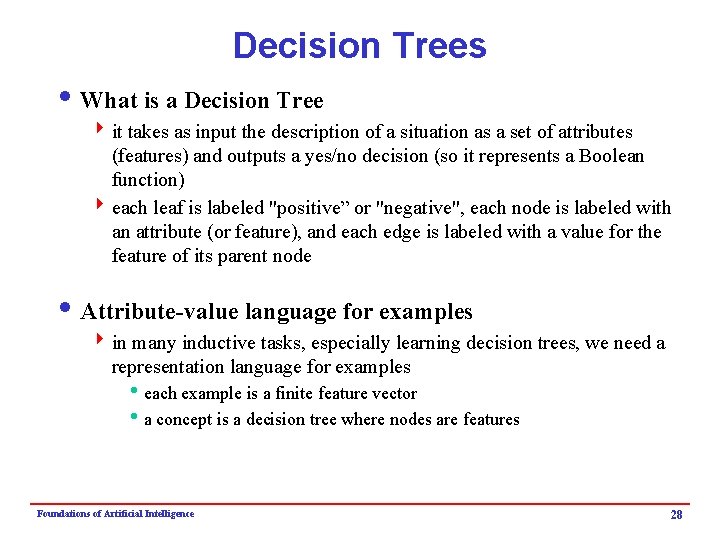

Decision Trees

- What is a Decision Tree?
  - It takes as input the description of a situation as a set of attributes (features) and outputs a yes/no decision (so it represents a Boolean function).
  - Each leaf is labeled “positive” or “negative”, each node is labeled with an attribute (or feature), and each edge is labeled with a value for the feature of its parent node.
- Attribute-value language for examples
  - In many inductive tasks, especially learning decision trees, we need a representation language for examples:
    - each example is a finite feature vector
    - a concept is a decision tree where nodes are features

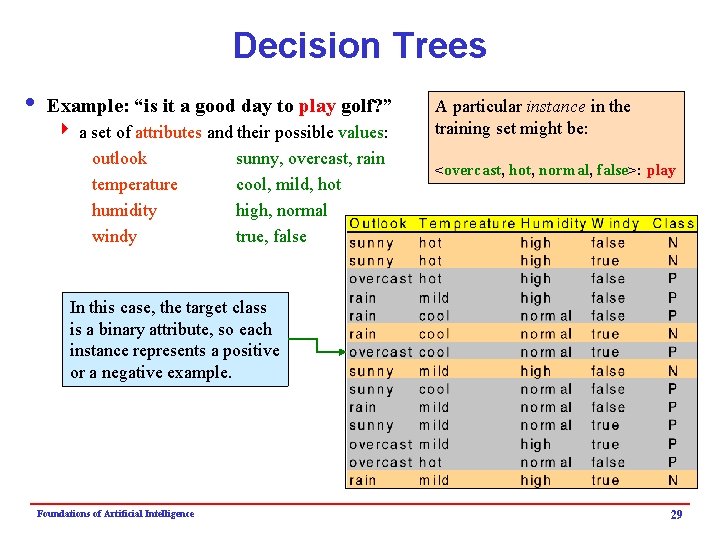

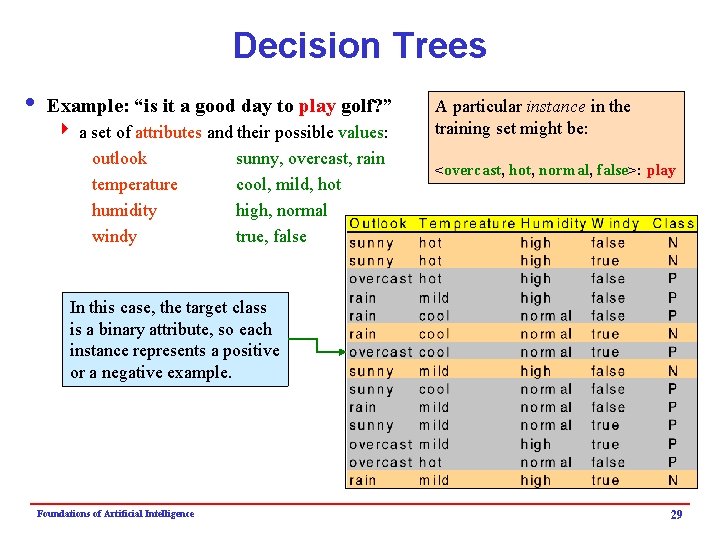

Decision Trees

- Example: “is it a good day to play golf?”
  - a set of attributes and their possible values:
    - outlook: sunny, overcast, rain
    - temperature: cool, mild, hot
    - humidity: high, normal
    - windy: true, false

A particular instance in the training set might be: <overcast, hot, normal, false>: play

In this case, the target class is a binary attribute, so each instance represents a positive or a negative example.

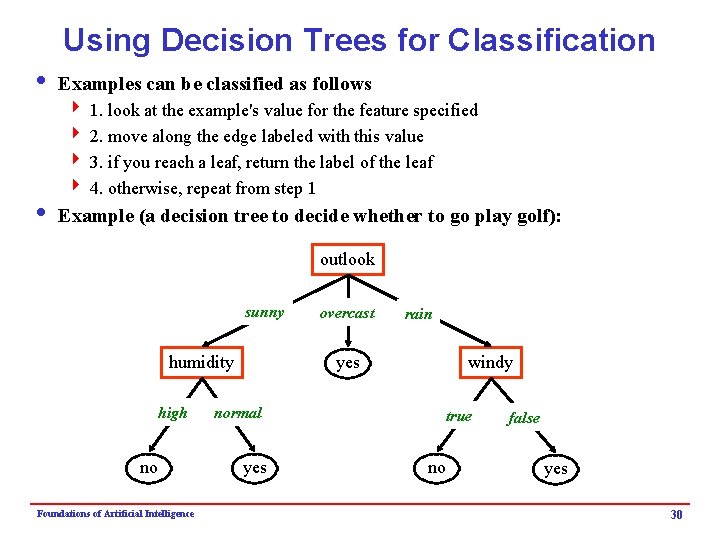

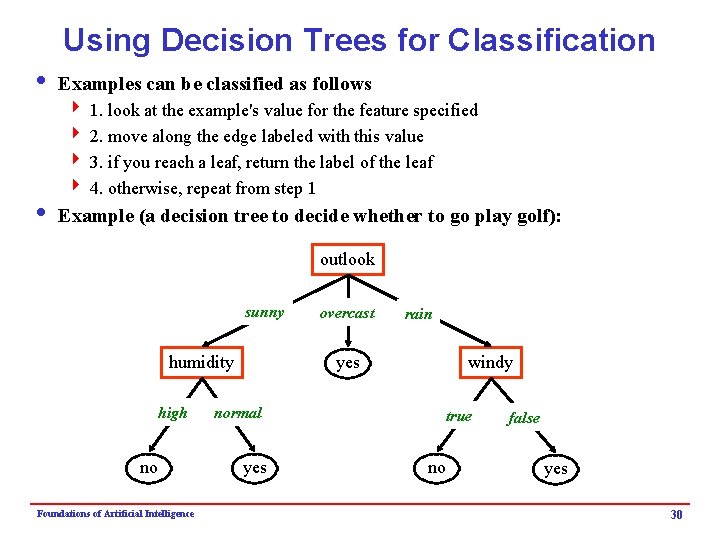

Using Decision Trees for Classification

- Examples can be classified as follows:
  1. look at the example's value for the feature specified
  2. move along the edge labeled with this value
  3. if you reach a leaf, return the label of the leaf
  4. otherwise, repeat from step 1
- Example (a decision tree to decide whether to go play golf):
  - outlook?
    - sunny: humidity?
      - high: no
      - normal: yes
    - overcast: yes
    - rain: windy?
      - true: no
      - false: yes
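The four classification steps translate directly into a short loop. In this sketch the tree is stored as nested `(feature, branches)` tuples with string leaves; that encoding, and the exact shape of the golf tree, are assumptions reconstructed from the flattened slide figure.

```python
# Internal node: (feature name, {feature value: subtree}); leaf: class label.
GOLF_TREE = (
    "outlook",
    {
        "sunny": ("humidity", {"high": "no", "normal": "yes"}),
        "overcast": "yes",
        "rain": ("windy", {"true": "no", "false": "yes"}),
    },
)


def classify(tree, example):
    """Walk from the root to a leaf, following the example's feature values."""
    while not isinstance(tree, str):       # step 4: repeat until a leaf
        feature, branches = tree           # step 1: feature tested here
        tree = branches[example[feature]]  # step 2: follow the matching edge
    return tree                            # step 3: return the leaf label


instance = {
    "outlook": "overcast",
    "temperature": "hot",
    "humidity": "normal",
    "windy": "false",
}
print(classify(GOLF_TREE, instance))  # yes
```

Note that the tree never looks at `temperature`: an attribute present in the examples need not appear in the learned concept.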

Classification: 3 Step Process

1. Model construction (Learning):
   - Each record (instance) is assumed to belong to a predefined class, as determined by one of the attributes, called the class label
   - The set of records used for construction of the model is called the training set
   - The model is usually represented in the form of classification rules (IF-THEN statements) or decision trees
2. Model Evaluation (Accuracy):
   - Estimate the accuracy rate of the model based on a test set
   - The known label of each test sample is compared to the classification produced by the model
   - Accuracy rate: percentage of test set samples correctly classified by the model
   - The test set must be independent of the training set; otherwise the accuracy estimate is over-optimistic and over-fitting goes undetected
3. Model Use (Classification):
   - The model is used to classify unseen instances (assigning class labels)
   - Predict the value of an actual attribute
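The evaluation step amounts to a simple count. In the sketch below, both the IF-THEN rule model and the four-record test set are hypothetical, made up only to show how the accuracy rate is computed from known labels.

```python
def accuracy(model, test_set):
    """Fraction of test records whose known label matches the prediction."""
    correct = sum(1 for features, label in test_set if model(features) == label)
    return correct / len(test_set)


def rule_model(example):
    """Hypothetical classification rules in IF-THEN form."""
    if example["outlook"] == "overcast":
        return "yes"
    if example["humidity"] == "high":
        return "no"
    return "yes"


# Hypothetical test set, kept separate from whatever data built the model.
test_set = [
    ({"outlook": "overcast", "humidity": "high"}, "yes"),
    ({"outlook": "sunny", "humidity": "high"}, "no"),
    ({"outlook": "rain", "humidity": "normal"}, "yes"),
    ({"outlook": "rain", "humidity": "high"}, "yes"),
]
print(accuracy(rule_model, test_set))  # 0.75
```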

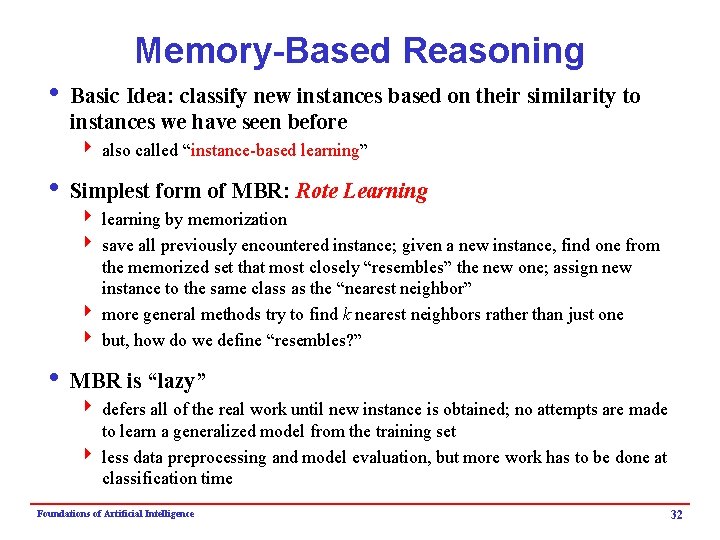

Memory-Based Reasoning

- Basic Idea: classify new instances based on their similarity to instances we have seen before
  - also called “instance-based learning”
- Simplest form of MBR: Rote Learning
  - learning by memorization
  - save all previously encountered instances; given a new instance, find the one from the memorized set that most closely “resembles” the new one; assign the new instance to the same class as this “nearest neighbor”
  - more general methods try to find the k nearest neighbors rather than just one
  - but how do we define “resembles”?
- MBR is “lazy”
  - defers all of the real work until a new instance is obtained; no attempt is made to learn a generalized model from the training set
  - less data preprocessing and model evaluation, but more work has to be done at classification time
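A minimal nearest-neighbor classifier makes the “lazy” character concrete: there is no training step, only a stored memory that is searched at classification time. The sketch assumes numeric feature vectors, Euclidean distance as the notion of “resembles”, and majority voting among the k neighbors; the data points are hypothetical.

```python
import math
from collections import Counter


def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def knn_classify(memory, query, k=1, distance=euclidean):
    """Label the query by majority vote among its k nearest stored instances."""
    neighbors = sorted(memory, key=lambda item: distance(item[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


# Hypothetical memorized instances: (feature vector, class label).
memory = [
    ((1.0, 1.0), "a"),
    ((1.2, 0.8), "a"),
    ((0.9, 1.4), "a"),
    ((5.0, 5.0), "b"),
    ((5.5, 4.5), "b"),
]

print(knn_classify(memory, (1.1, 1.0), k=1))  # a
print(knn_classify(memory, (5.2, 5.1), k=3))  # b
```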

MBR & Collaborative Filtering

- Collaborative Filtering or “Social Learning”
  - the idea is to give recommendations to a user based on the “ratings” of objects by other users
  - usually assumes that features in the data are similar objects (e.g., Web pages, music, movies, etc.)
  - usually requires “explicit” ratings of objects by users based on a rating scale
  - there have been some attempts to obtain ratings implicitly based on user behavior (mixed results; the problem is that implicit ratings are often binary)
- Nearest Neighbors Strategy:
  - Find similar users and predict the (weighted) average of their ratings
  - We can use any distance or similarity measure to compute similarity among users (user ratings on items are viewed as a vector)
  - In the case of ratings, the Pearson correlation coefficient r is often used to compute correlations
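The nearest-neighbors strategy can be sketched as follows. All ratings here are hypothetical, and two design choices are assumptions rather than part of the slides: correlation is computed only over items both users rated, and only positively correlated users contribute to the weighted average.

```python
import math


def pearson(a, b):
    """Pearson correlation r over the items rated by both users."""
    common = [item for item in a if item in b]
    if len(common) < 2:
        return 0.0
    xs = [a[item] for item in common]
    ys = [b[item] for item in common]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    sy = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    if sx == 0 or sy == 0:
        return 0.0
    return cov / (sx * sy)


def predict(target, others, item):
    """Similarity-weighted average of the item's ratings by similar users."""
    weighted_sum = total_weight = 0.0
    for ratings in others.values():
        if item not in ratings:
            continue
        weight = pearson(target, ratings)
        if weight > 0:  # assumption: ignore dissimilar users
            weighted_sum += weight * ratings[item]
            total_weight += weight
    return weighted_sum / total_weight if total_weight else None


# Hypothetical ratings on a 1-7 scale.
others = {
    "u1": {"m1": 7, "m2": 6, "m3": 2, "new_movie": 6},
    "u2": {"m1": 2, "m2": 1, "m3": 6, "new_movie": 2},
    "u3": {"m1": 6, "m2": 7, "m3": 1, "new_movie": 7},
}
new_user = {"m1": 7, "m2": 7, "m3": 1}
print(predict(new_user, others, "new_movie"))
```

With these numbers the new user correlates strongly with u1 and u3 and negatively with u2, so the prediction falls between 6 and 7.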

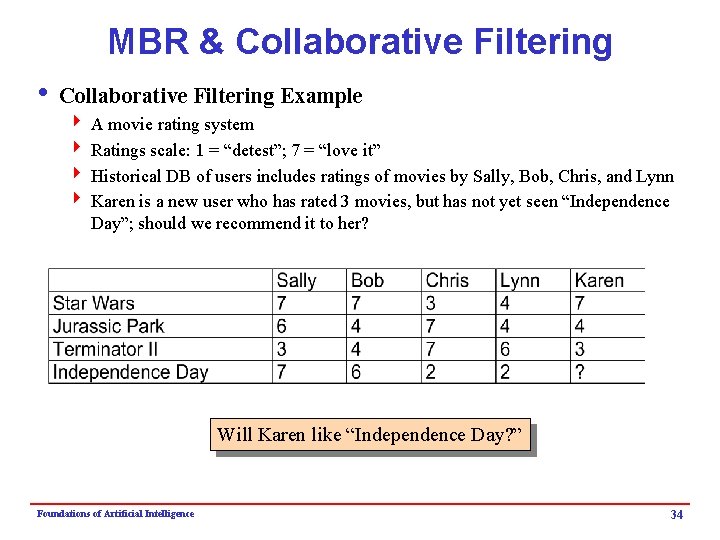

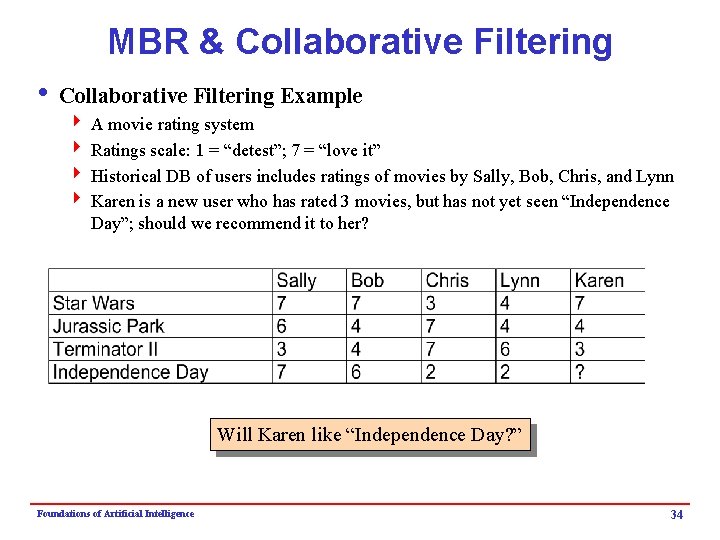

MBR & Collaborative Filtering

- Collaborative Filtering Example
  - A movie rating system
  - Ratings scale: 1 = “detest”; 7 = “love it”
  - Historical DB of users includes ratings of movies by Sally, Bob, Chris, and Lynn
  - Karen is a new user who has rated 3 movies, but has not yet seen “Independence Day”; should we recommend it to her?

Will Karen like “Independence Day”?

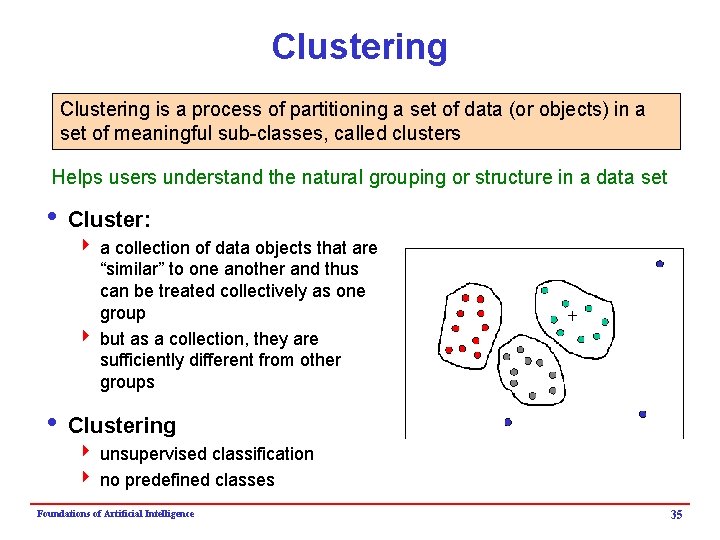

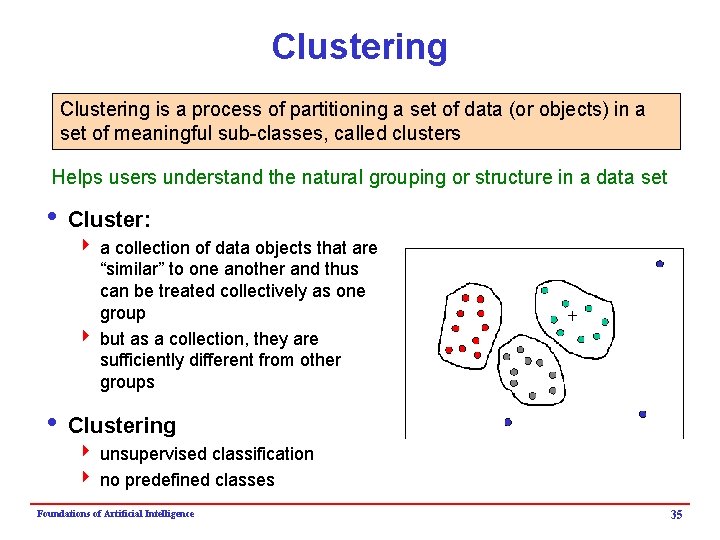

Clustering

Clustering is the process of partitioning a set of data (or objects) into a set of meaningful sub-classes, called clusters. It helps users understand the natural grouping or structure in a data set.

- Cluster:
  - a collection of data objects that are “similar” to one another and thus can be treated collectively as one group
  - but as a collection, they are sufficiently different from other groups
- Clustering
  - unsupervised classification
  - no predefined classes

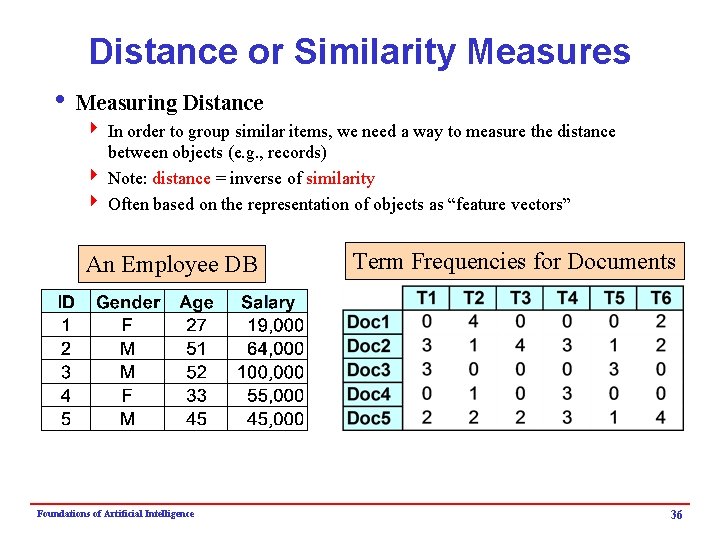

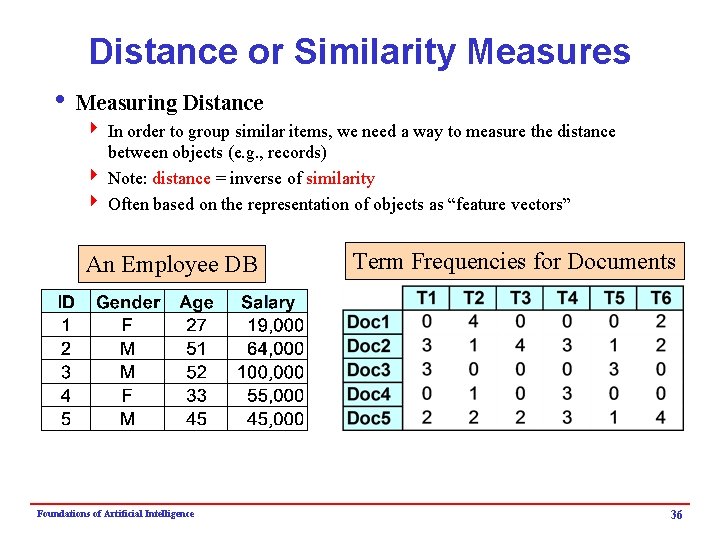

Distance or Similarity Measures

- Measuring Distance
  - In order to group similar items, we need a way to measure the distance between objects (e.g., records)
  - Note: distance is inversely related to similarity
  - Often based on the representation of objects as “feature vectors”

Figures: an employee DB; term frequencies for documents.

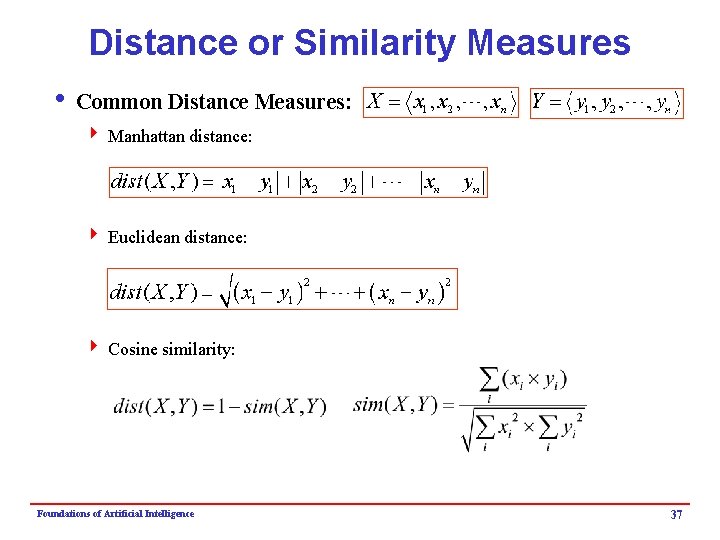

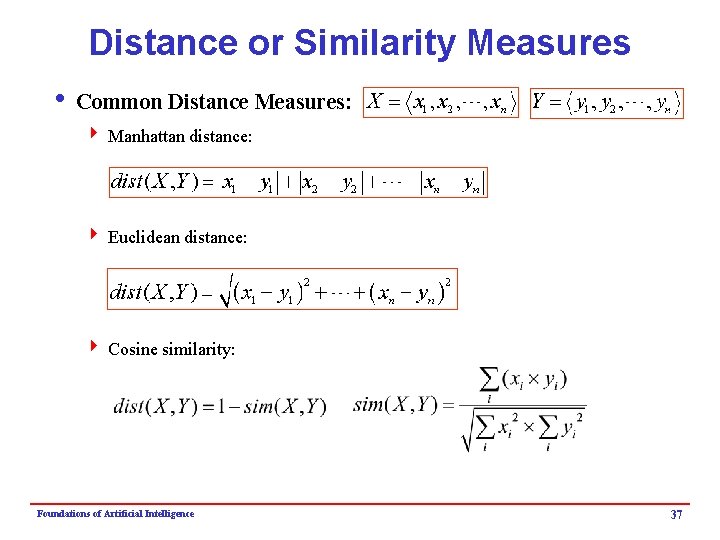

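Distance or Similarity Measures

- Common Distance Measures, for feature vectors $X = (x_1, \dots, x_n)$ and $Y = (y_1, \dots, y_n)$:
  - Manhattan distance: $d(X, Y) = \sum_{i=1}^{n} |x_i - y_i|$
  - Euclidean distance: $d(X, Y) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2}$
  - Cosine similarity: $\mathrm{sim}(X, Y) = \dfrac{\sum_{i=1}^{n} x_i y_i}{\sqrt{\sum_{i=1}^{n} x_i^2}\,\sqrt{\sum_{i=1}^{n} y_i^2}}$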
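The three measures are short enough to write out directly. The sketch below uses their standard textbook definitions on plain tuples of numbers; the sample vectors are arbitrary.

```python
import math


def manhattan(x, y):
    """Sum of absolute coordinate differences."""
    return sum(abs(a - b) for a, b in zip(x, y))


def euclidean(x, y):
    """Square root of the sum of squared coordinate differences."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x, y)))


def cosine_similarity(x, y):
    """Dot product divided by the product of the vector lengths."""
    dot = sum(a * b for a, b in zip(x, y))
    norm_x = math.sqrt(sum(a * a for a in x))
    norm_y = math.sqrt(sum(b * b for b in y))
    return dot / (norm_x * norm_y)


x, y = (1, 2, 3), (4, 6, 3)
print(manhattan(x, y))                    # 7
print(euclidean(x, y))                    # 5.0
print(cosine_similarity((1, 0), (0, 1)))  # 0.0
```

Cosine similarity ignores vector length, which is why it is a common choice for term-frequency vectors of documents with different sizes.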

What Is Good Clustering?

- A good clustering will produce high-quality clusters in which:
  - the intra-class (that is, intra-cluster) similarity is high
  - the inter-class similarity is low
- The quality of a clustering result depends on both the similarity measure used by the method and its implementation
- The quality of a clustering method is also measured by its ability to discover some or all of the hidden patterns
- The quality of a clustering result also depends on the definition and representation of the clusters chosen

Applications of Clustering

- Clustering has wide applications in Pattern Recognition
- Spatial Data Analysis:
  - create thematic maps in GIS by clustering feature spaces
  - detect spatial clusters and explain them in spatial data mining
- Image Processing
- Market Research
- Information Retrieval
  - Document or term categorization
  - Information visualization and IR interfaces
- Web Mining
  - Cluster Web usage data to discover groups of similar access patterns
  - Web Personalization

Learning by Discovery

- One example: AM by Doug Lenat at Stanford
  - a mathematical system
  - inputs: set theory (union, intersection, etc.); “how to do mathematics” (based on a book by Polya), e.g., if f is an interesting function of two arguments, then f(x, x) is an interesting function of one argument, etc.
  - speculated about what was interesting and made conjectures, etc.
- What AM discovered
  - integers (as an equivalence relation on the cardinality of sets)
  - addition (using disjoint union of sets)
  - multiplication
  - primes: 1 was interesting, the function returning the cardinality of the set of divisors was interesting, etc.
  - Goldbach’s conjecture: “every even number greater than 2 is the sum of two prime numbers” (note that AM did not prove it, just discovered that it was interesting)
- Why was AM so successful?
  - Connection between LISP and mathematics (mutations of small bits of LISP code are likely to be interesting)
  - Doesn’t extend to other domains
  - Lessons from EURISKO (fleet game)

Explanation-Based Learning

- Explanation-based learning (EBL) systems try to explain why each training instance belongs to the target concept.
  - The resulting “proof” is then generalized and saved.
  - If a new instance can be explained in the same manner as a previous instance, then it is also assumed to be a member of the target concept.
- Like macro-operators, EBL systems never learn to solve a problem that they couldn’t solve before (in principle).
  - However, they can become much more efficient at problem-solving by reorganizing the search space.
- One of the strengths of EBL is that the resulting “explanations” are typically easy to understand.
- One of the weaknesses of EBL is that it relies on a domain theory to generate the explanations.

Case-Based Learning

- Case-based reasoning (CBR) systems keep track of previously seen instances and apply them directly to new ones.
- In general, a CBR system simply stores each “case” that it experiences in a “case base”, which represents its memory of previous episodes.
- To reason about a new instance, the system consults its case base and finds the most similar case that it has seen before. The old case is then adapted and applied to the new situation.
- CBR is similar to reasoning by analogy. Many people believe that much of human learning is case-based in nature.

Connectionist Algorithms

- Connectionist models (also called neural networks) are inspired by the interconnectivity of the brain.
  - Connectionist networks typically consist of many nodes that are highly interconnected. When a node is activated, it sends signals to other nodes so that they are activated in turn.
- Using layers of nodes allows connectionist models to learn fairly complex functions.
- Neural networks are loosely modeled after the biological processes involved in cognition:
  1. Information processing involves many simple elements called neurons.
  2. Signals are transmitted between neurons using connecting links.
  3. Each link has a weight that controls the strength of its signal.
  4. Each neuron applies an activation function to the input that it receives from other neurons. This function determines its output.
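The four points above can be sketched as a forward pass through a tiny network. The sigmoid activation, the bias term, and all weight values are assumptions chosen for illustration; the slides do not specify them, and no learning rule is shown here.

```python
import math


def sigmoid(z):
    """A common activation function that squashes any input into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias=0.0, activation=sigmoid):
    """Weighted sum of the incoming signals, passed through the activation."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(total)


def layer(inputs, weight_rows, biases, activation=sigmoid):
    """One neuron per row of weights, all reading the same inputs."""
    return [neuron(inputs, w, b, activation) for w, b in zip(weight_rows, biases)]


# Hypothetical network: 2 inputs -> 2 hidden neurons -> 1 output neuron.
hidden = layer([1.0, 0.0], [[0.5, -0.4], [-0.3, 0.8]], [0.0, 0.1])
output = neuron(hidden, [1.2, -0.7], bias=0.05)
print(hidden, output)
```

Stacking `layer` calls in this way is what allows the network to represent more complex functions than a single neuron can.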