Artificial Intelligence The different levels of language analysis

![Charts: example [Oracio → GN GV • ] [Oracio → GN • GV] [GN Charts: example [Oracio → GN GV • ] [Oracio → GN • GV] [GN](https://slidetodoc.com/presentation_image/4eef0fcb3a213859f960c8576c26fea1/image-68.jpg)

- Slides: 92

Artificial Intelligence The different levels of language analysis Fall 2008 professor: Luigi Ceccaroni

The different levels of language analysis • A NL system must use considerable knowledge about the structure of the language itself, including: – What the words are – How words combine to form sentences – What the words mean – How word meanings contribute to sentence meaning –… 2

The different levels of language analysis • We cannot completely account for linguistic behavior without also taking into account: – humans’ general world knowledge – their reasoning abilities • For example, to participate in a conversation it is necessary: – Knowledge about the structure of the language, but also – Knowledge about the world in general – Knowledge about the conversational setting in 3 particular

The different levels of language analysis • The following are some of the different forms of knowledge relevant for NL understanding: – Phonetic and phonological knowledge – Morphological knowledge – Syntactic knowledge – Semantic knowledge – Pragmatic knowledge – Discourse knowledge – World knowledge 4

Phonetic and phonological knowledge • It concerns how words are related to the sounds that realize them • Such knowledge is crucial for speechbased systems 5

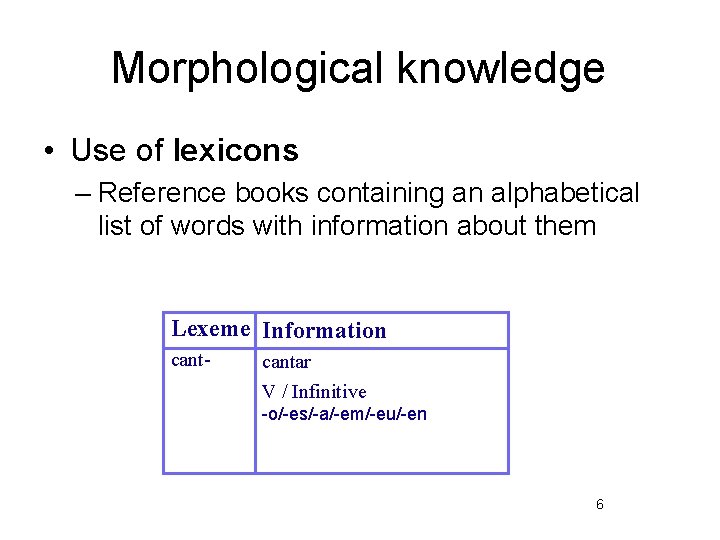

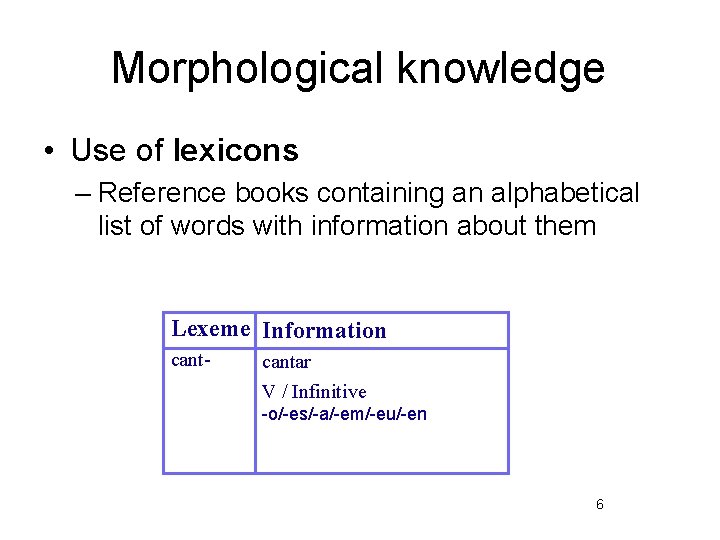

Morphological knowledge • Use of lexicons – Reference books containing an alphabetical list of words with information about them Lexeme Information cant- cantar V / Infinitive -o/-es/-a/-em/-eu/-en 6

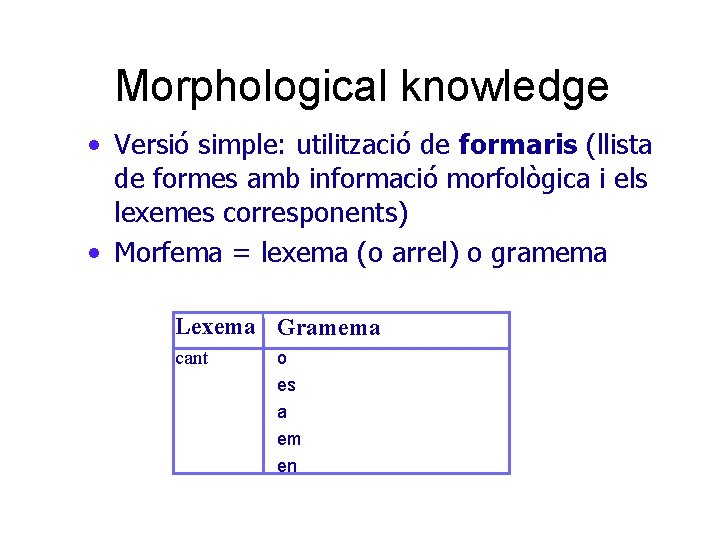

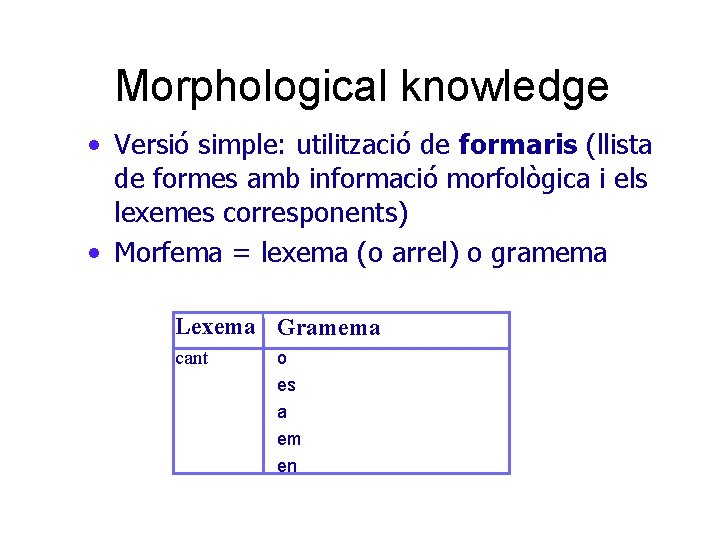

Morphological knowledge • Versió simple: utilització de formaris (llista de formes amb informació morfològica i els lexemes corresponents) • Morfema = lexema (o arrel) o gramema Lexema Gramema cant o es a em en

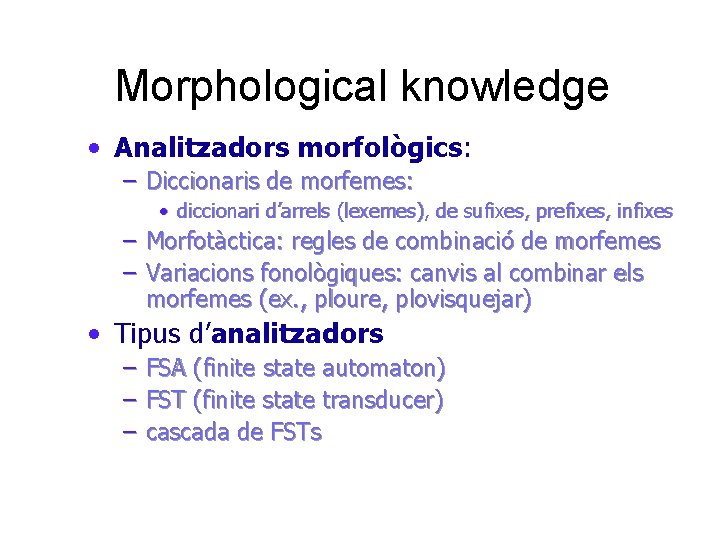

Morphological knowledge • Analitzadors morfològics: – Diccionaris de morfemes: • diccionari d’arrels (lexemes), de sufixes, prefixes, infixes – Morfotàctica: regles de combinació de morfemes – Variacions fonològiques: canvis al combinar els morfemes (ex. , ploure, plovisquejar) • Tipus d’analitzadors – – – FSA (finite state automaton) FST (finite state transducer) cascada de FSTs

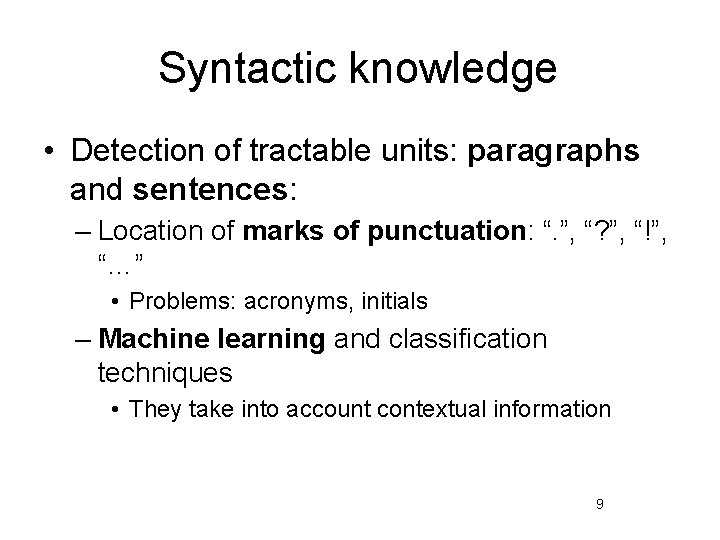

Syntactic knowledge • Detection of tractable units: paragraphs and sentences: – Location of marks of punctuation: “. ”, “? ”, “!”, “…” • Problems: acronyms, initials – Machine learning and classification techniques • They take into account contextual information 9

Syntactic knowledge • Detection of units of meaning – Recognition and adequate grouping of words • “/Parlarà/ /sens dubte/ /de/ /les/ /reestructuracions/ /urbanes/ /a/ /Sant Cugat/” • Assignment of grammatical categories • Addition of semantic information to lexical units (use of ontologies and dictionaries) • Acknowledgment and classification of 10 proper names and entities

Syntactic knowledge • Correspondence between orthographic and grammatical words – “dóna-m’ho”, “dímelo” (1 orthographic word, 3 grammatical words) – “sens dubte”, “sin embargo” (2 orthographic words, 1 p. grammatical word) • Homonymy – Same form, different grammatical categories: • “wheel” (rueda, ruleta): noun • “wheel” (empujar): verb 11

Syntactic knowledge • Polysemy – Same form and category, different meanings: • “bank” (grupo): data bank, electronic resource bank • “bank” (banco): bank account, bank balance • “bank” (ribera): river bank, river + burst its banks • Acronyms – “The cell’s DNA sample was identified by PRC, a process approved by the official UBI. ” 12

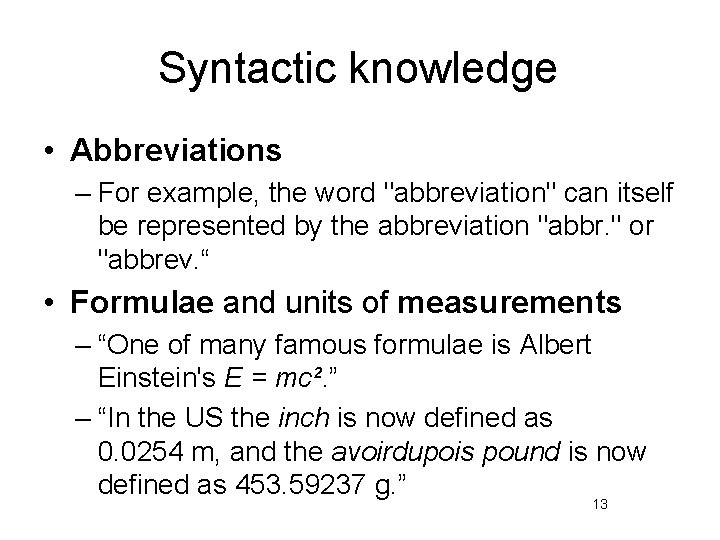

Syntactic knowledge • Abbreviations – For example, the word "abbreviation" can itself be represented by the abbreviation "abbr. " or "abbrev. “ • Formulae and units of measurements – “One of many famous formulae is Albert Einstein's E = mc². ” – “In the US the inch is now defined as 0. 0254 m, and the avoirdupois pound is now defined as 453. 59237 g. ” 13

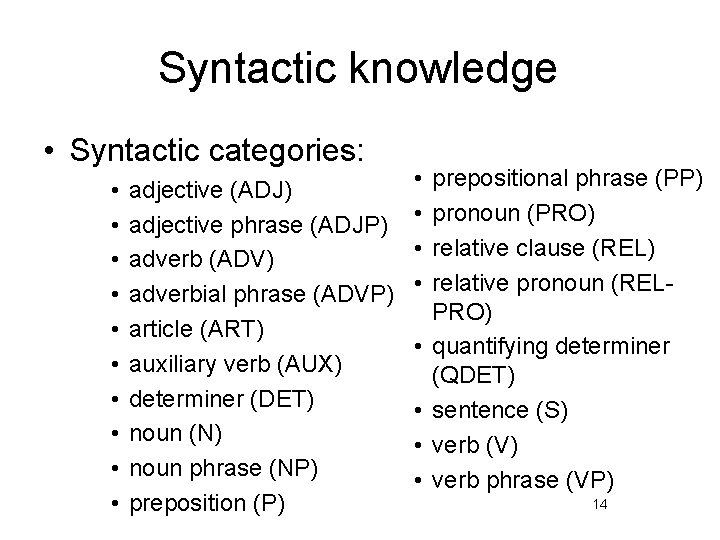

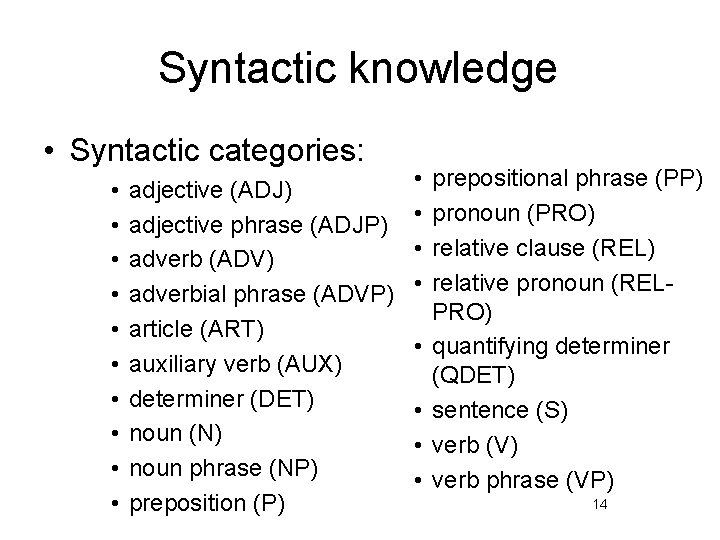

Syntactic knowledge • Syntactic categories: • • • adjective (ADJ) adjective phrase (ADJP) adverb (ADV) adverbial phrase (ADVP) article (ART) auxiliary verb (AUX) determiner (DET) noun (N) noun phrase (NP) preposition (P) • • prepositional phrase (PP) pronoun (PRO) relative clause (REL) relative pronoun (RELPRO) quantifying determiner (QDET) sentence (S) verb (V) verb phrase (VP) 14

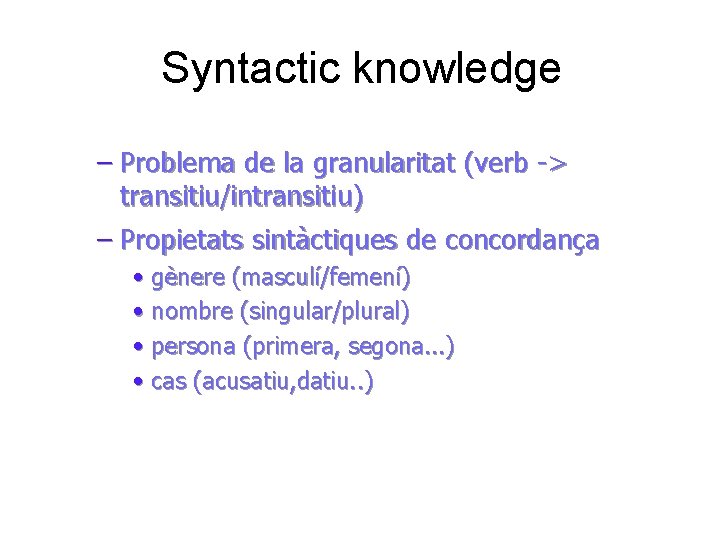

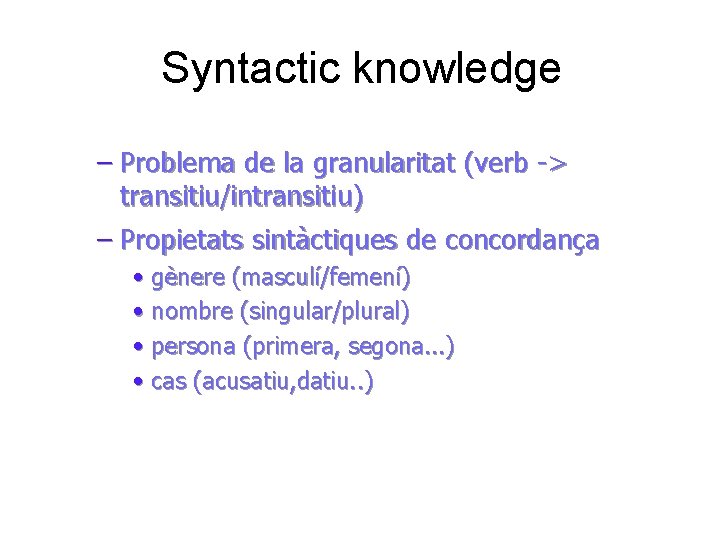

Syntactic knowledge – Problema de la granularitat (verb -> transitiu/intransitiu) – Propietats sintàctiques de concordança • gènere (masculí/femení) • nombre (singular/plural) • persona (primera, segona. . . ) • cas (acusatiu, datiu. . )

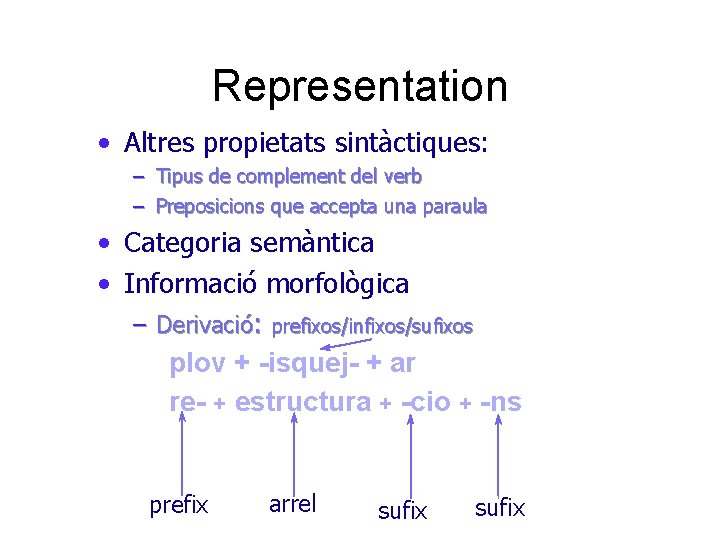

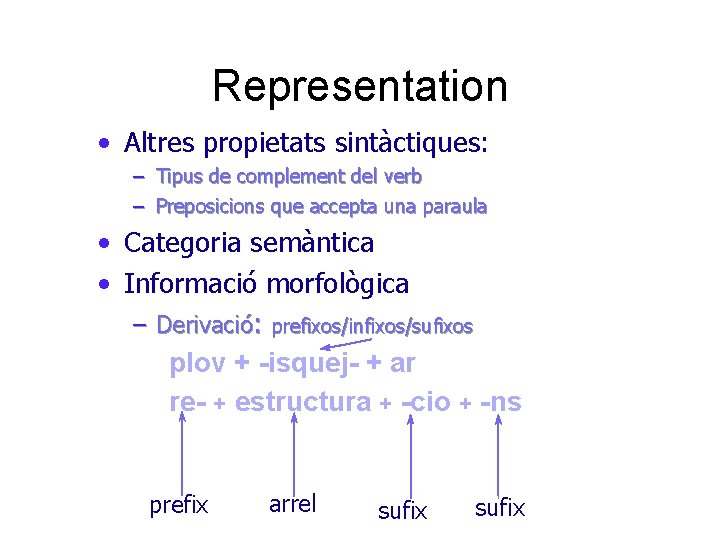

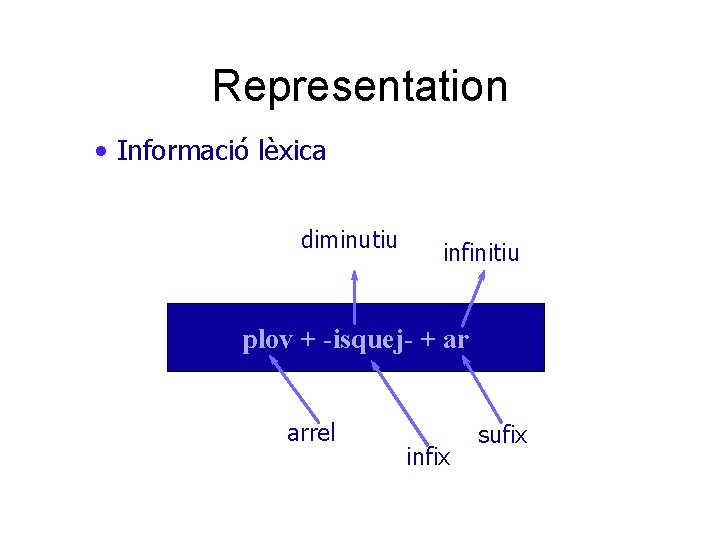

Representation • Altres propietats sintàctiques: – Tipus de complement del verb – Preposicions que accepta una paraula • Categoria semàntica • Informació morfològica – Derivació: prefixos/infixos/sufixos plov + -isquej- + ar re- + estructura + -cio + -ns prefix arrel sufix

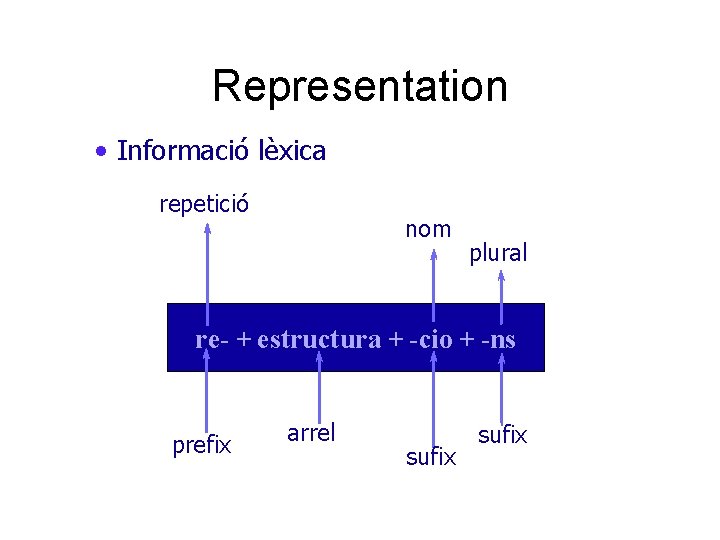

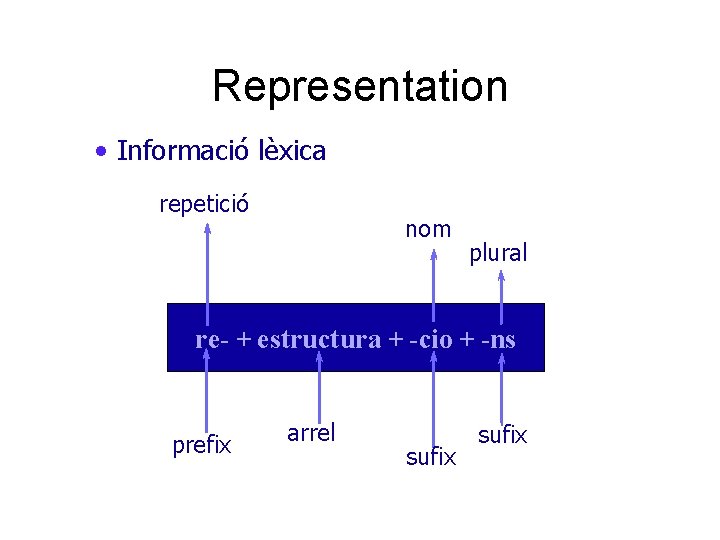

Representation • Informació lèxica repetició nom plural re- + estructura + -cio + -ns prefix arrel sufix

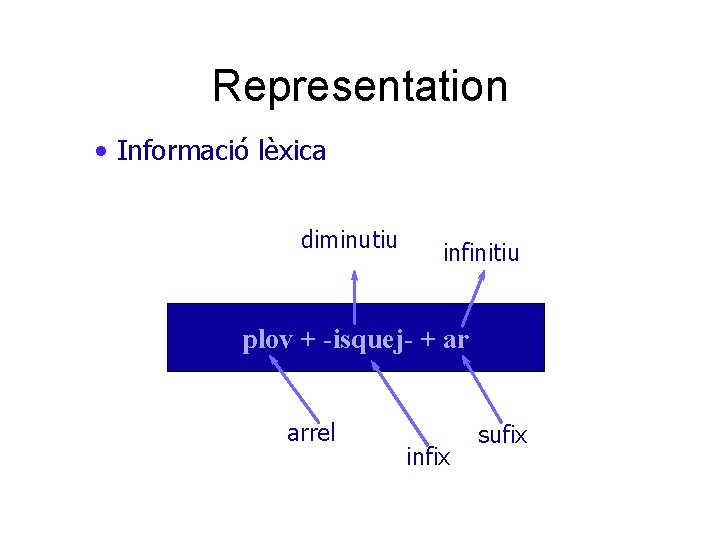

Representation • Informació lèxica diminutiu infinitiu plov + -isquej- + ar arrel infix sufix

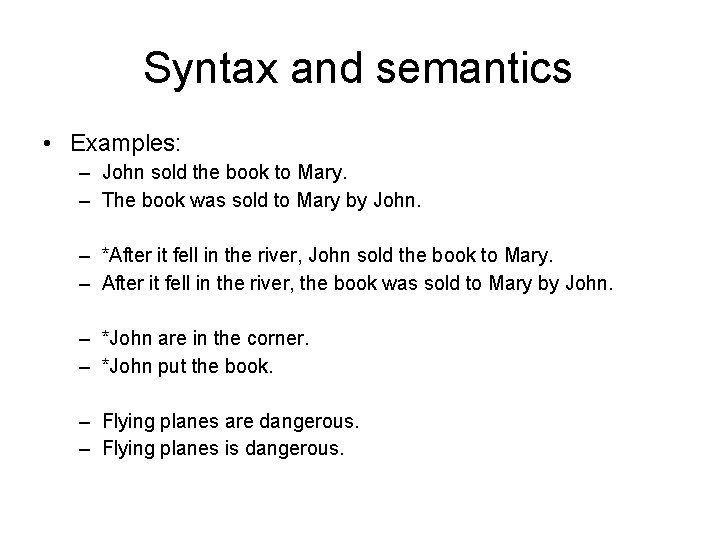

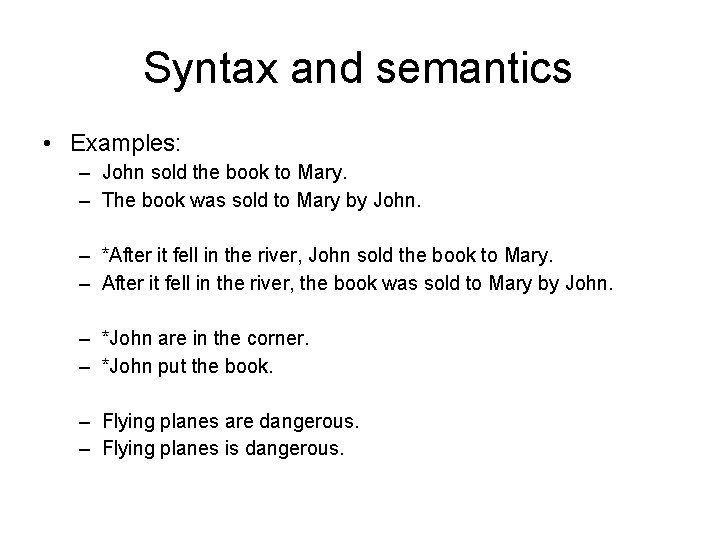

Syntax and semantics • Examples: – John sold the book to Mary. – The book was sold to Mary by John. – *After it fell in the river, John sold the book to Mary. – After it fell in the river, the book was sold to Mary by John. – *John are in the corner. – *John put the book. – Flying planes are dangerous. – Flying planes is dangerous.

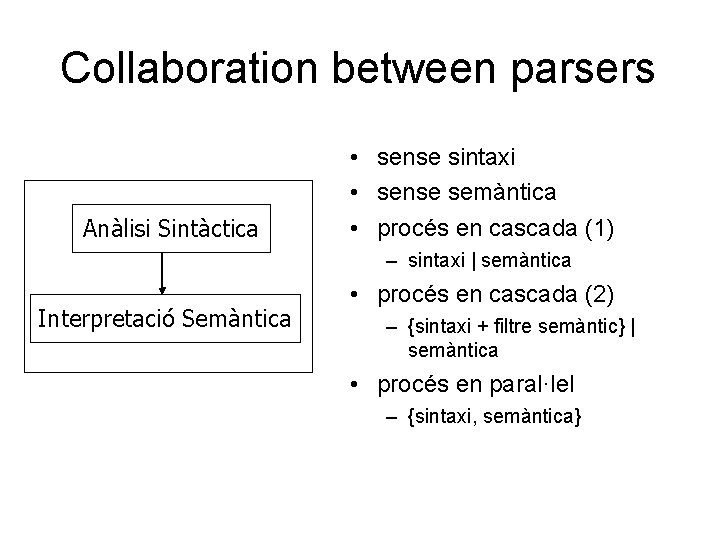

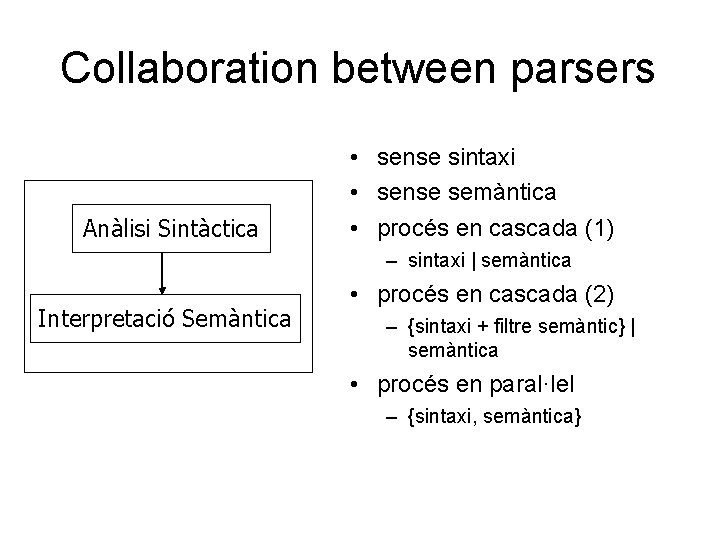

Collaboration between parsers Anàlisi Sintàctica • sense sintaxi • sense semàntica • procés en cascada (1) – sintaxi | semàntica Interpretació Semàntica • procés en cascada (2) – {sintaxi + filtre semàntic} | semàntica • procés en paral·lel – {sintaxi, semàntica}

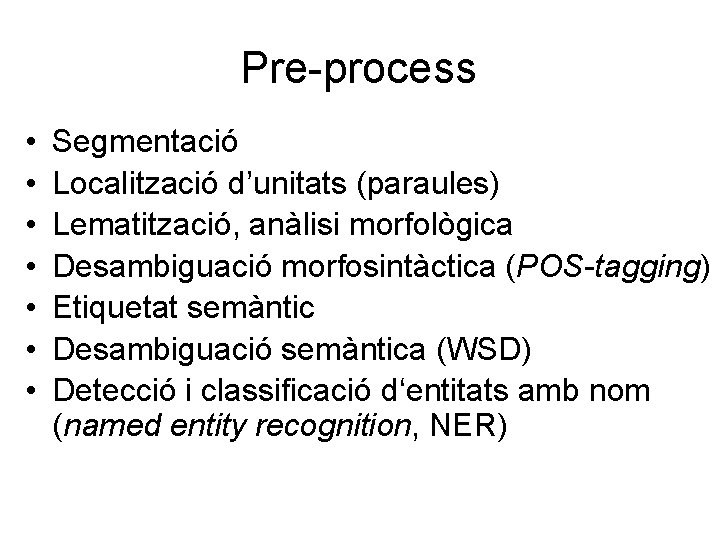

Pre-process • • Segmentació Localització d’unitats (paraules) Lematització, anàlisi morfològica Desambiguació morfosintàctica (POS-tagging) Etiquetat semàntic Desambiguació semàntica (WSD) Detecció i classificació d‘entitats amb nom (named entity recognition, NER)

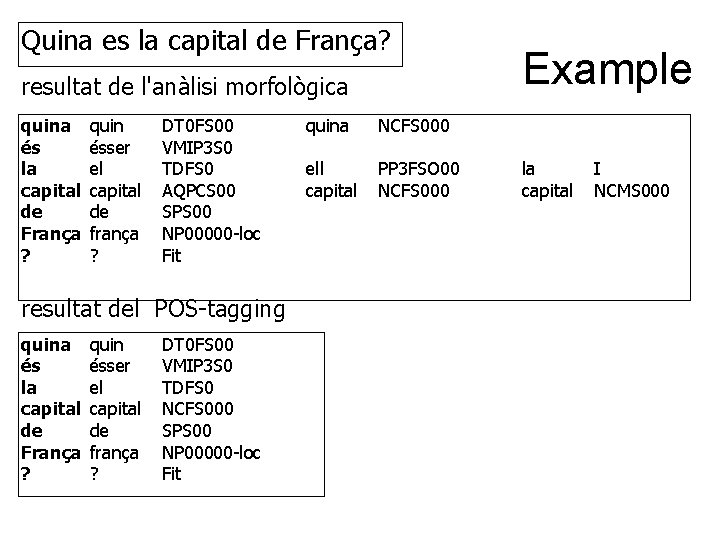

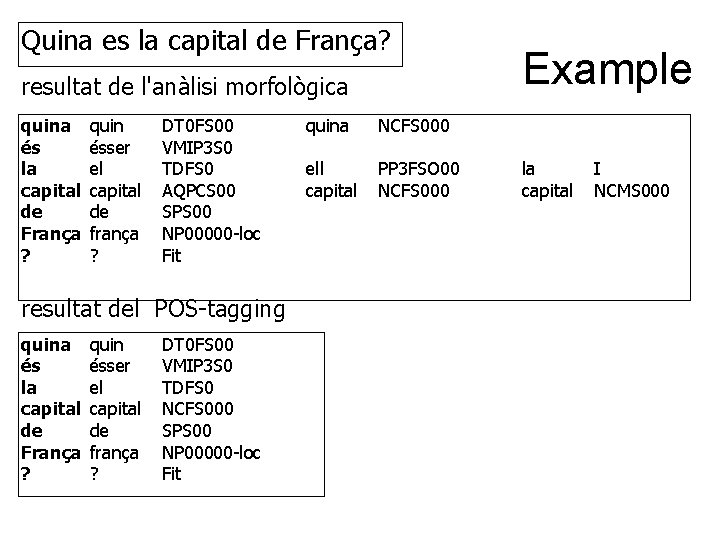

Quina es la capital de França? resultat de l'anàlisi morfològica quina és la capital de França ? quin ésser el capital de frança ? DT 0 FS 00 VMIP 3 S 0 TDFS 0 AQPCS 00 SPS 00 NP 00000 -loc Fit resultat del POS-tagging quina és la capital de França ? quin ésser el capital de frança ? DT 0 FS 00 VMIP 3 S 0 TDFS 0 NCFS 000 SPS 00 NP 00000 -loc Fit quina NCFS 000 ell capital PP 3 FSO 00 NCFS 000 Example la capital I NCMS 000

Post-process • Anàlisi semàntica - pragmàtica • Anàlisi il·locutiva – Reconeixement d’intencions

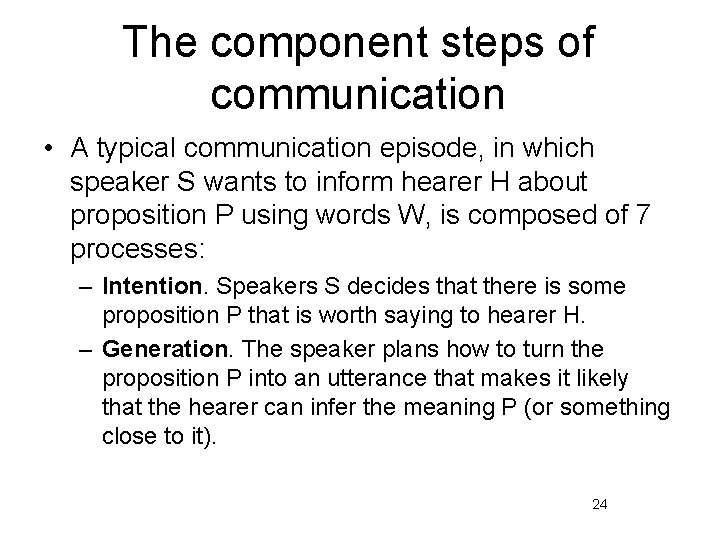

The component steps of communication • A typical communication episode, in which speaker S wants to inform hearer H about proposition P using words W, is composed of 7 processes: – Intention. Speakers S decides that there is some proposition P that is worth saying to hearer H. – Generation. The speaker plans how to turn the proposition P into an utterance that makes it likely that the hearer can infer the meaning P (or something close to it). 24

The component steps of communication – Synthesis. The speaker produces the physical realization W’ of the words W. This can be via ink on paper, vibrations in air, or some other medium. – Perception. H perceives W’ as W 2’ and decodes it as the words W 2. When the medium is speech, the perception is called speech recognition; when it is printing, it is called optical character recognition (OCR). Both are now commonplace. 25

The component steps of communication – Analysis. We divide it into 3 main parts: • Syntactic interpretation (or parsing) is the process of building a parse tree for an input string. The interior nodes of the parse tree represent phrases and the leaf nodes represent words. • Semantic interpretation is the process of extracting the meaning of an utterance. Utterances with several possible interpretations are said to be ambiguous. • Pragmatic interpretation takes into account the fact that the same words can have different meanings in different situations. 26

The component steps of communication – Disambiguation. H infers that S intended to convey Pi (where ideally Pi = P). Communication works because H does the work of figuring out which interpretation is the one S probably meant to convey. Disambiguation is a process that depends heavily on uncertain reasoning. – Incorporation. H decides to believe Pi (or not). A totally naive agent might believe everything it hears. A sophisticated agent treats the speech act as evidence for Pi, not confirmation of it. 27

Syntactic analysis • Objectives: – Determining if a sentence is syntactically correct – Creating a structure with information which can be used during the semantic analysis

Syntactic analysis • Alphabet (vocabulary): Σ • Concatenation operations • Σ* (free monoid): set of all strings that can be formed with symbols of Σ • Language: L ⊆ Σ* • Given a string w 1 n of Σ*: w 1 n = w 1, …, wn wi ∈ Σ – We have to determine if w 1 n ∈ L

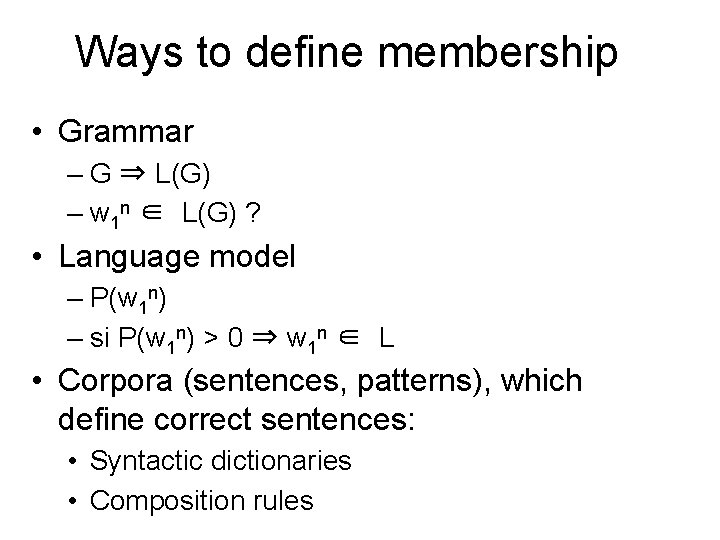

Ways to define membership • Grammar – G ⇒ L(G) – w 1 n ∈ L(G) ? • Language model – P(w 1 n) – si P(w 1 n) > 0 ⇒ w 1 n ∈ L • Corpora (sentences, patterns), which define correct sentences: • Syntactic dictionaries • Composition rules

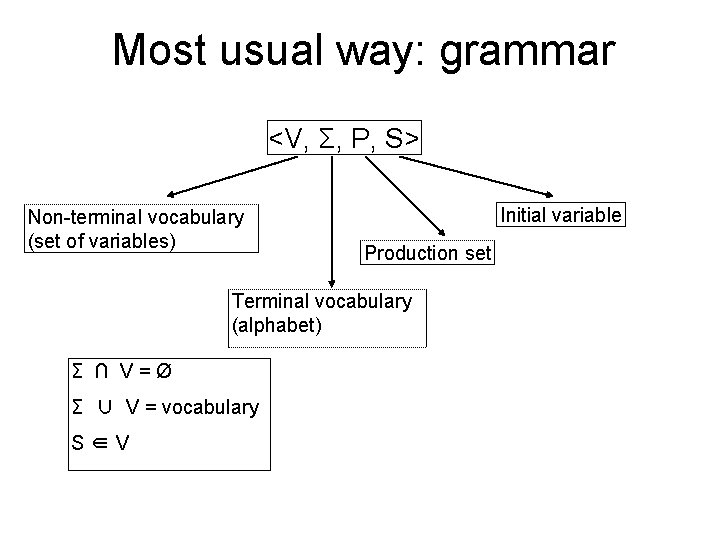

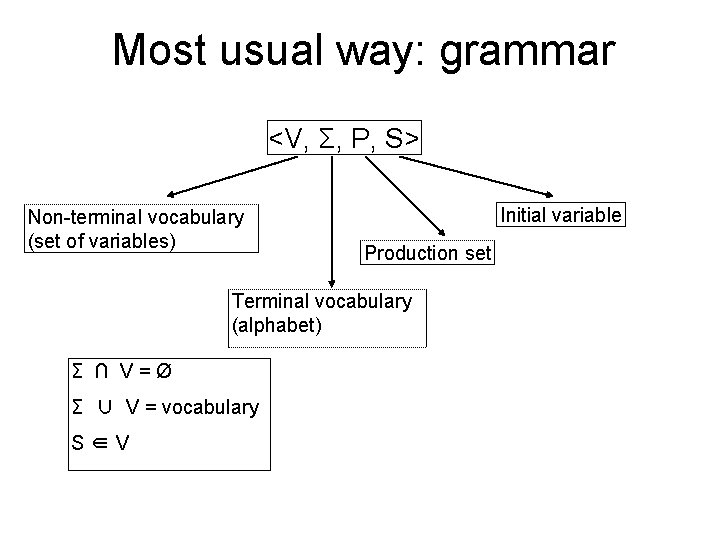

Most usual way: grammar <V, Σ, P, S> Non-terminal vocabulary (set of variables) Initial variable Production set Terminal vocabulary (alphabet) Σ ∩ V = Ø Σ ∪ V = vocabulary S ∈ V

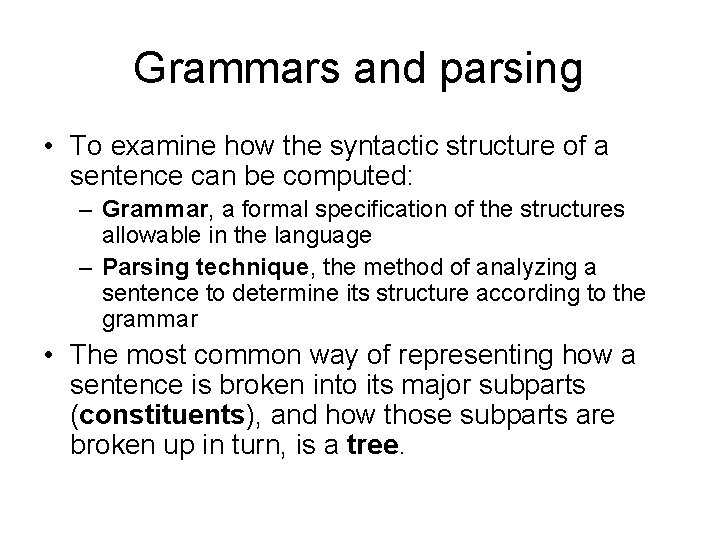

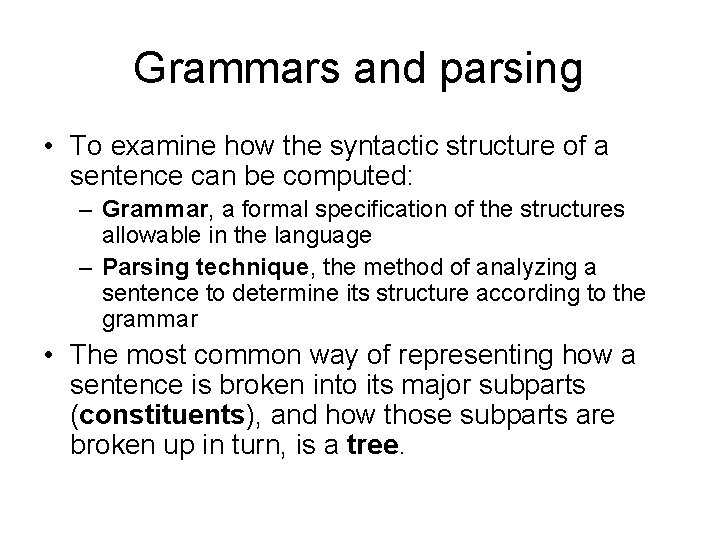

Grammars and parsing • To examine how the syntactic structure of a sentence can be computed: – Grammar, a formal specification of the structures allowable in the language – Parsing technique, the method of analyzing a sentence to determine its structure according to the grammar • The most common way of representing how a sentence is broken into its major subparts (constituents), and how those subparts are broken up in turn, is a tree.

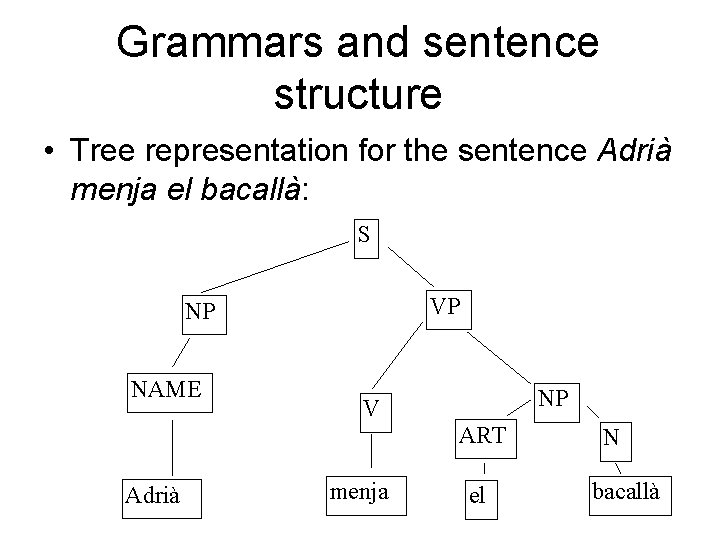

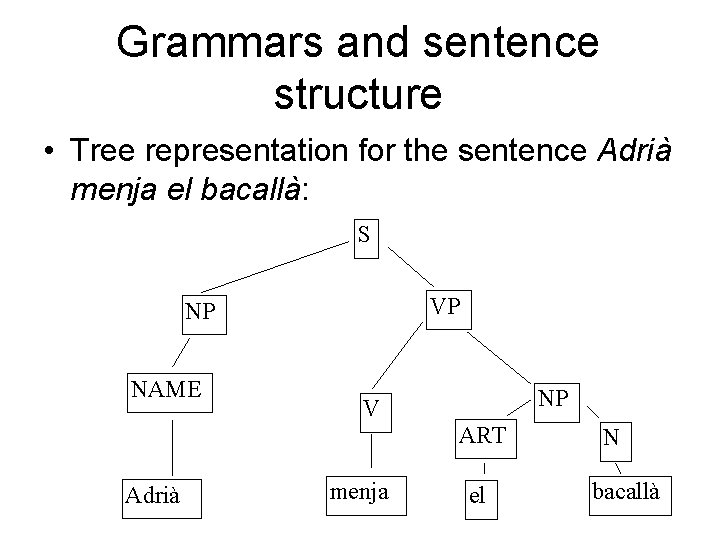

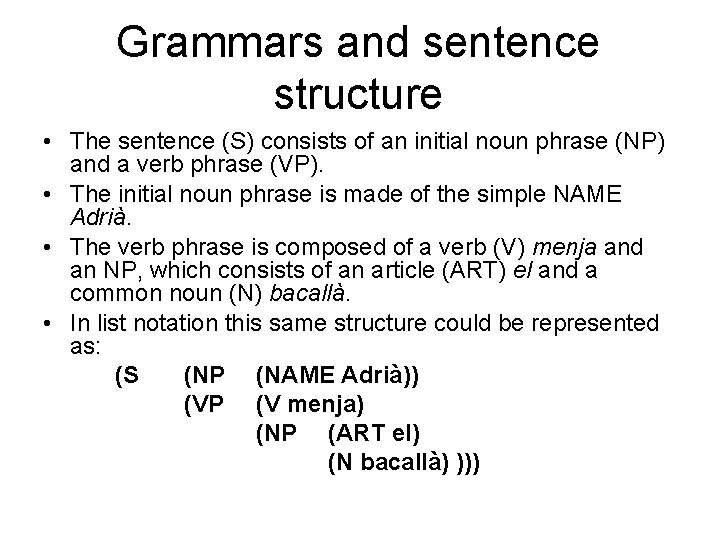

Grammars and sentence structure • Tree representation for the sentence Adrià menja el bacallà: S VP NP NAME NP V ART Adrià menja el N bacallà

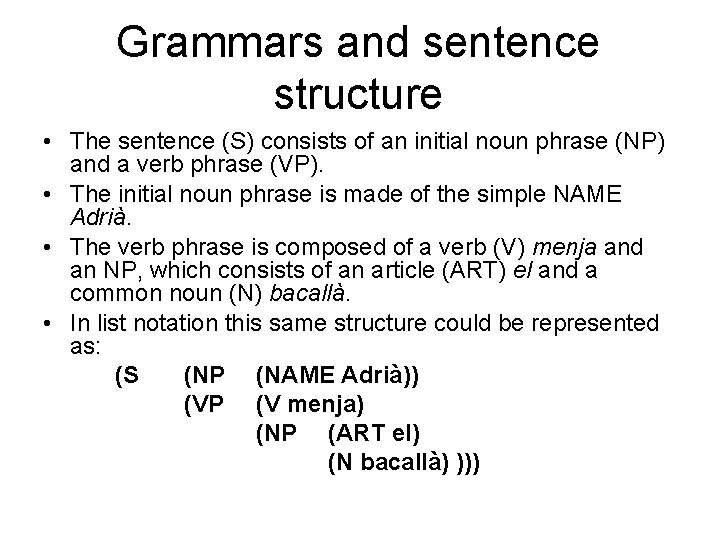

Grammars and sentence structure • The sentence (S) consists of an initial noun phrase (NP) and a verb phrase (VP). • The initial noun phrase is made of the simple NAME Adrià. • The verb phrase is composed of a verb (V) menja and an NP, which consists of an article (ART) el and a common noun (N) bacallà. • In list notation this same structure could be represented as: (S (NP (NAME Adrià)) (VP (V menja) (NP (ART el) (N bacallà) )))

Grammars and sentence structure • • To construct a tree structure for a sentence, you must know what structures are legal. A set of rewrite rules describes what tree structures are allowable. These rules say that a certain symbol may be expanded in the tree by a sequence of other symbols. A set of rules constitutes a grammar: 1. 2. 3. 4. 5. 6. 7. 8. S → NP VP VP → V NP NP → NAME NP → ART N NAME → Adrià V → menja ART → el N → bacallà

Grammars and sentence structure • Rule 1 says that an S may consist of an NP followed by a VP. • Rule 2 says that a VP may consist of a V followed by an NP. • Rules 3 and 4 say that an NP may consist of a NAME or may consist of an ART followed by an N. • Rules 5 - 8 define possible words for the categories. • Grammars consisting entirely of rules with a single symbol on the left-hand side, called the mother, are called context-free grammars (CFGs).

Grammars and sentence structure • Context-free grammars (CFGs) are a very important class of grammars because: – the formalism is powerful enough to describe most of the structure in natural languages, – yet is restricted enough so that efficient parsers can be built to analyze sentences. • Symbols that cannot be further decomposed in a grammar (the words Adrià, menja…) are called terminal symbols. • The other symbols, such as NP and VP, are called nonterminal symbols. • The grammatical symbols such as N and V that describe word categories are called lexical symbols. • Many words will be listed under multiple categories. For example, poder would be listed under V (can) and N (power). • Grammars have a special symbol called the start symbol. Usually, the start symbol is S (also meaning sentence).

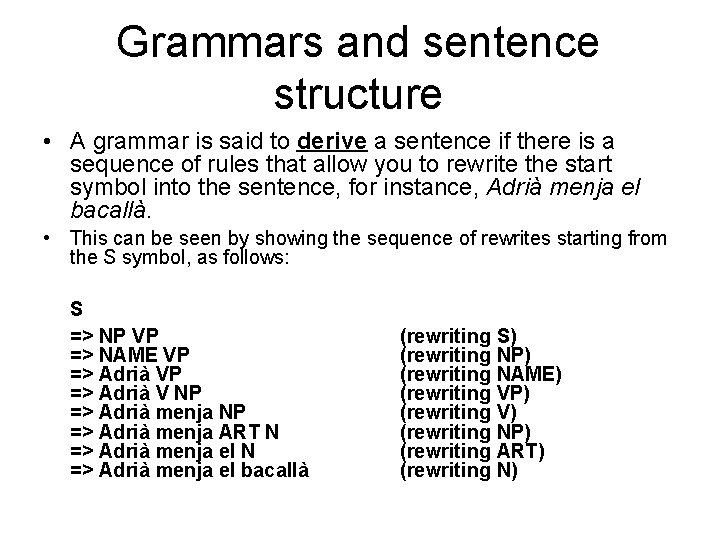

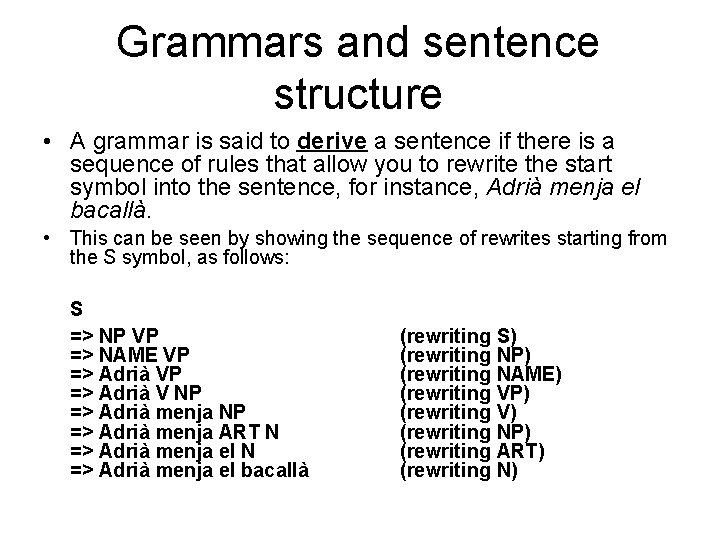

Grammars and sentence structure • A grammar is said to derive a sentence if there is a sequence of rules that allow you to rewrite the start symbol into the sentence, for instance, Adrià menja el bacallà. • This can be seen by showing the sequence of rewrites starting from the S symbol, as follows: S => NP VP => NAME VP => Adrià V NP => Adrià menja ART N => Adrià menja el bacallà (rewriting S) (rewriting NP) (rewriting NAME) (rewriting VP) (rewriting V) (rewriting NP) (rewriting ART) (rewriting N)

Grammars and sentence structure • Two important processes are based on derivations: – The first is sentence generation, which uses derivations to construct legal sentences. A simple generator could be implemented by randomly choosing rewrite rules, starting from the S symbol, until you have a sequence of words. The preceding example shows that the sentence Adrià menja el bacallà can be generated from the grammar. – The second process based on derivations is parsing, which identifies the structure of sentences given a grammar.

Parsing as a search procedure • In derivations, there are two basic methods of searching: – A top-down strategy starts with the S symbol and then searches through different ways to rewrite the symbols until the input sentence is generated, or until all possibilities have been explored. The preceding example demonstrates that Adrià menja el bacallà is a legal sentence by showing the derivation that could be found by this process.

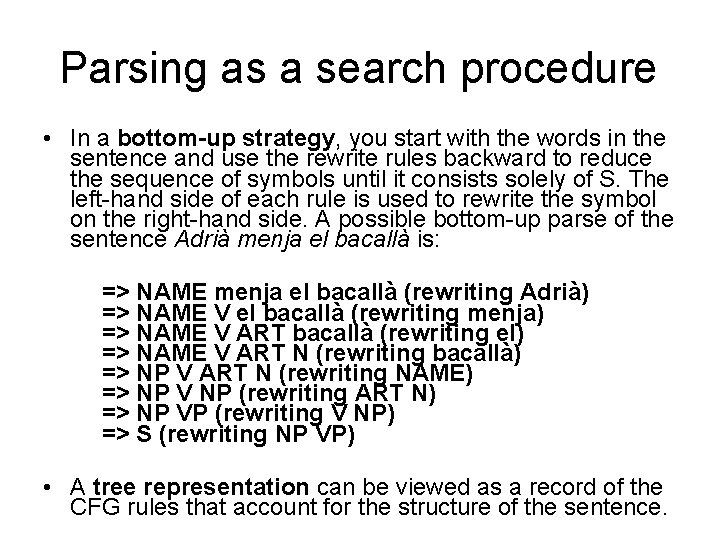

Parsing as a search procedure • In a bottom-up strategy, you start with the words in the sentence and use the rewrite rules backward to reduce the sequence of symbols until it consists solely of S. The left-hand side of each rule is used to rewrite the symbol on the right-hand side. A possible bottom-up parse of the sentence Adrià menja el bacallà is: => NAME menja el bacallà (rewriting Adrià) => NAME V el bacallà (rewriting menja) => NAME V ART bacallà (rewriting el) => NAME V ART N (rewriting bacallà) => NP V ART N (rewriting NAME) => NP V NP (rewriting ART N) => NP VP (rewriting V NP) => S (rewriting NP VP) • A tree representation can be viewed as a record of the CFG rules that account for the structure of the sentence.

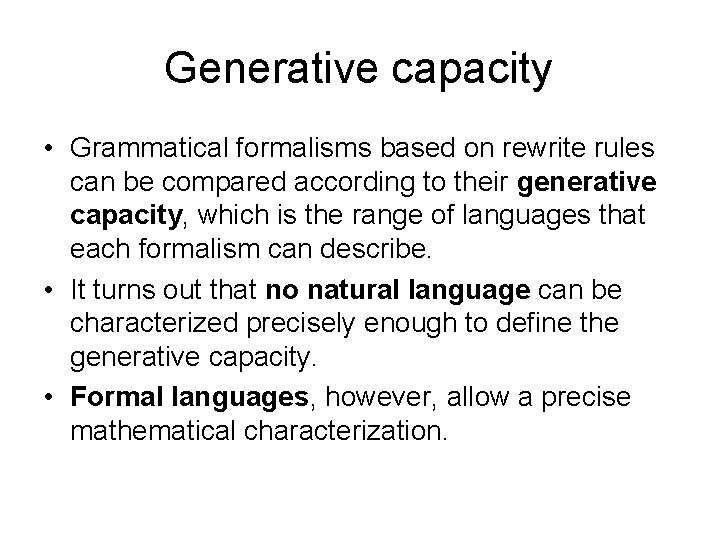

What makes a good grammar • In constructing a grammar for a language, you are interested in: – generality, the range of sentences the grammar analyzes correctly; – selectivity, the range of non-sentences it identifies as problematic; – understandability, the simplicity of the grammar itself.

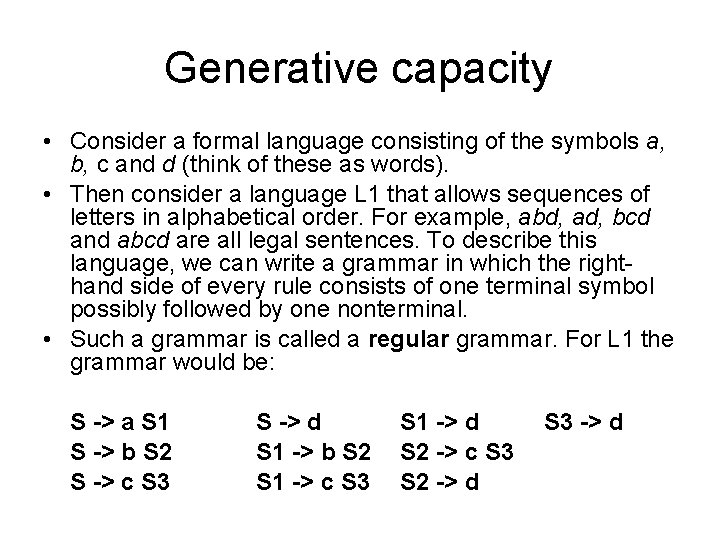

Generative capacity • Grammatical formalisms based on rewrite rules can be compared according to their generative capacity, which is the range of languages that each formalism can describe. • It turns out that no natural language can be characterized precisely enough to define the generative capacity. • Formal languages, however, allow a precise mathematical characterization.

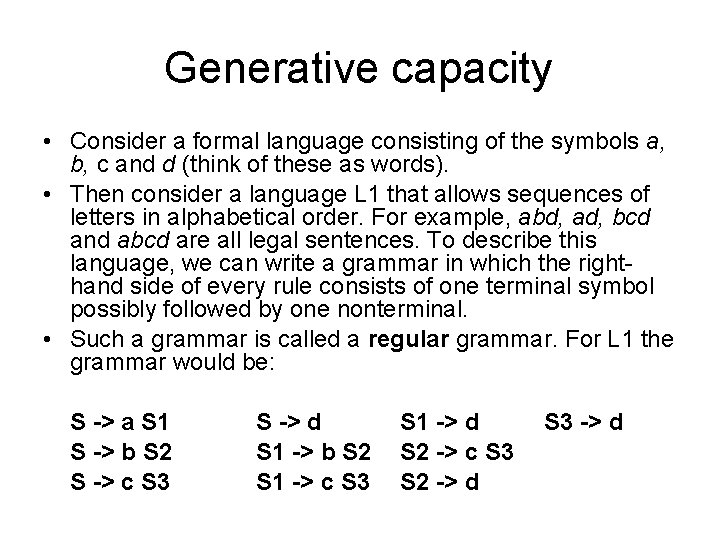

Generative capacity • Consider a formal language consisting of the symbols a, b, c and d (think of these as words). • Then consider a language L 1 that allows sequences of letters in alphabetical order. For example, abd, ad, bcd and abcd are all legal sentences. To describe this language, we can write a grammar in which the righthand side of every rule consists of one terminal symbol possibly followed by one nonterminal. • Such a grammar is called a regular grammar. For L 1 the grammar would be: S -> a S 1 S -> b S 2 S -> c S 3 S -> d S 1 -> b S 2 S 1 -> c S 3 S 1 -> d S 3 -> d S 2 -> c S 3 S 2 -> d

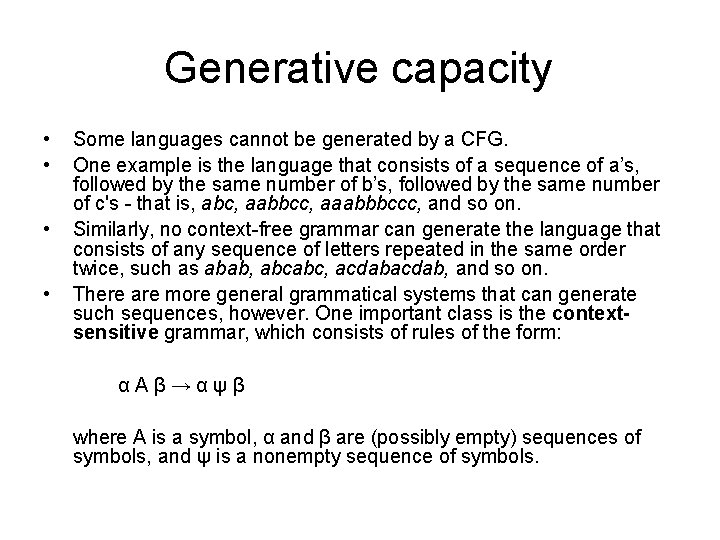

Generative capacity • Consider another language, L 2, that consists only of sentences that have a sequence of a’s followed by an equal number of b’s—that is, ab, aabb, aaabbb, and so on. You cannot write a regular grammar that can generate L 2 exactly. • A context-free grammar to generate L 2, however, is simple: S -> a b S -> a S b

Generative capacity • • Some languages cannot be generated by a CFG. One example is the language that consists of a sequence of a’s, followed by the same number of b’s, followed by the same number of c's - that is, abc, aabbcc, aaabbbccc, and so on. Similarly, no context-free grammar can generate the language that consists of any sequence of letters repeated in the same order twice, such as abab, abcabc, acdab, and so on. There are more general grammatical systems that can generate such sequences, however. One important class is the contextsensitive grammar, which consists of rules of the form: α A β → α ψ β where A is a symbol, α and β are (possibly empty) sequences of symbols, and ψ is a nonempty sequence of symbols.

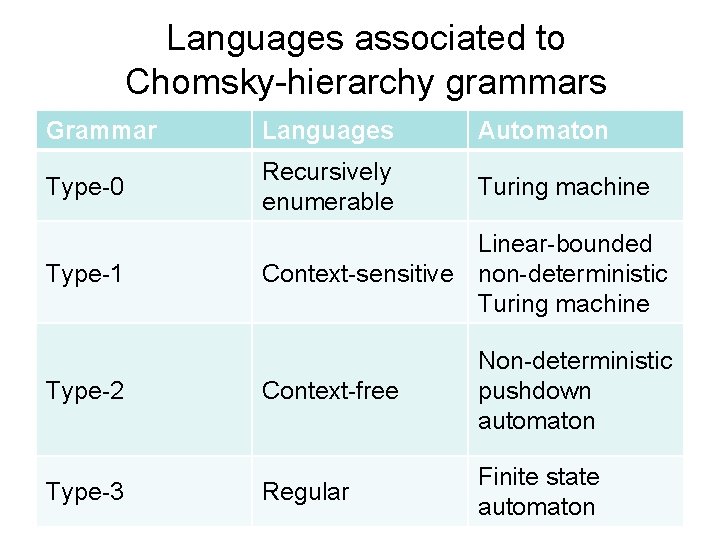

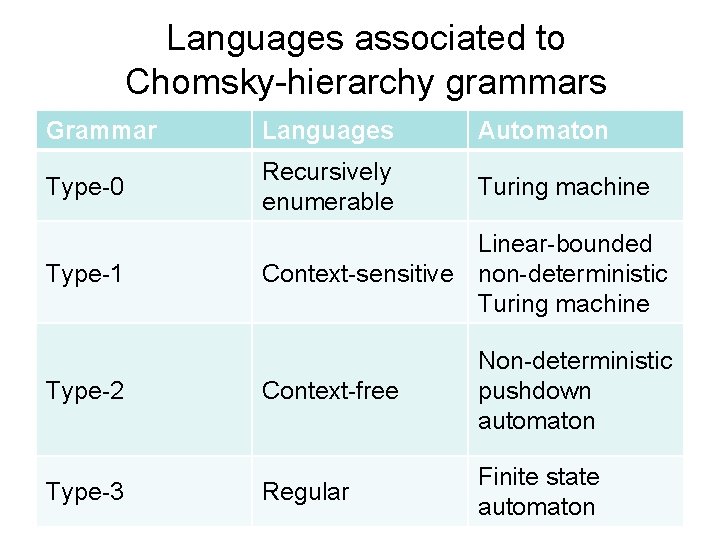

Generative capacity • Even more general are the type 0 grammars, which allow arbitrary rewrite rules. • Work in formal language theory began with Chomsky (1956). Since the languages generated by regular grammars are a subset of those generated by context-free grammars, which in turn are a subset of those generated by context-sensitive grammars, which in turn are a subset of those generated by type 0 languages, they form a hierarchy of languages (called the Chomsky Hierarchy).

Languages associated to Chomsky-hierarchy grammars Grammar Languages Automaton Type-0 Recursively enumerable Turing machine Type-1 Linear-bounded Context-sensitive non-deterministic Turing machine Type-2 Context-free Non-deterministic pushdown automaton Regular Finite state automaton Type-3

Grammaticality condition A sentence w (a string of Σ*) pertains to the language generated by grammar G, if grammar G can derive w starting from S, using production rules.

Obtaining the grammar • Definition of the terminal tagset (Σ) • Definition of the non-terminal tagset (V) • Definition of grammar rules (P): – Manual construction – Automatic construction • Grammatical inference (induction) – Semiautomatic construction

Grammars for language processing • • Minimum: context-free grammars (CFGs) Is NL a context-free language? No. Are CFGs sufficient? No. Solutions: – CFG + {procedural addition of context} – Logical grammars (unification) – Grammars enriched with statistical information (SCFGs) –…

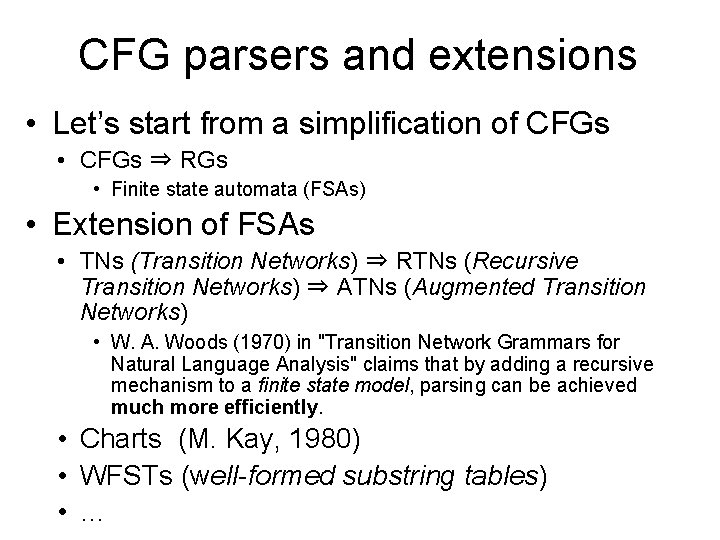

CFG parsers and extensions • Let’s start from a simplification of CFGs • CFGs ⇒ RGs • Finite state automata (FSAs) • Extension of FSAs • TNs (Transition Networks) ⇒ RTNs (Recursive Transition Networks) ⇒ ATNs (Augmented Transition Networks) • W. A. Woods (1970) in "Transition Network Grammars for Natural Language Analysis" claims that by adding a recursive mechanism to a finite state model, parsing can be achieved much more efficiently. • Charts (M. Kay, 1980) • WFSTs (well-formed substring tables) • …

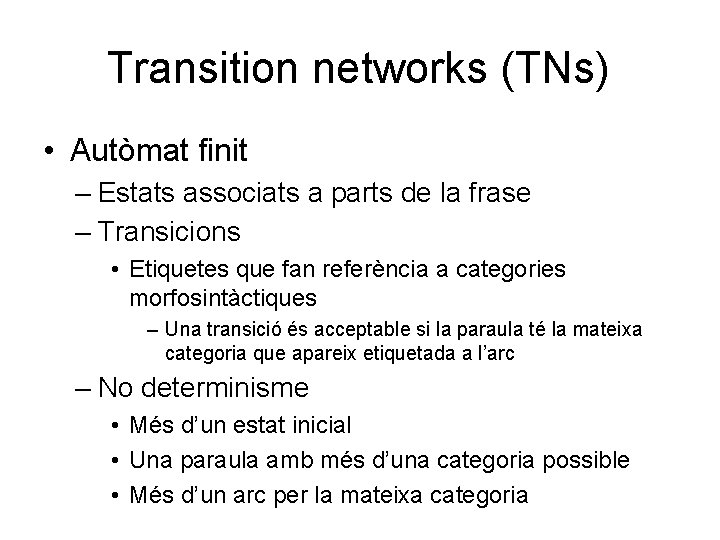

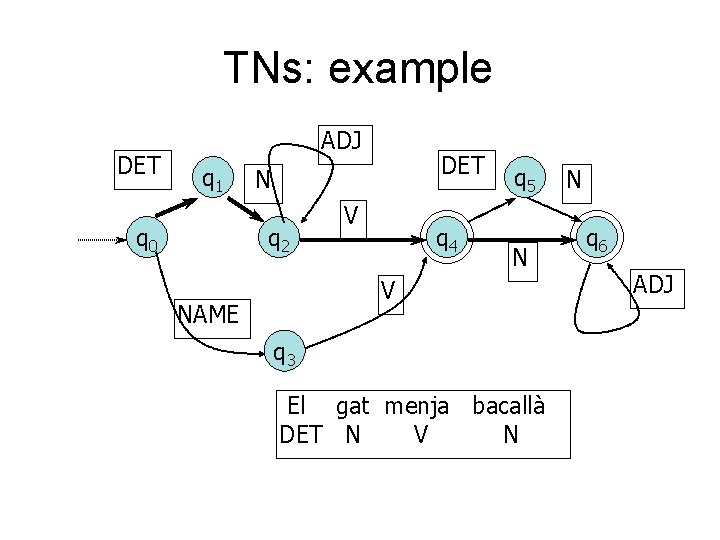

Transition networks (TNs) • Autòmat finit – Estats associats a parts de la frase – Transicions • Etiquetes que fan referència a categories morfosintàctiques – Una transició és acceptable si la paraula té la mateixa categoria que apareix etiquetada a l’arc – No determinisme • Més d’un estat inicial • Una paraula amb més d’una categoria possible • Més d’un arc per la mateixa categoria

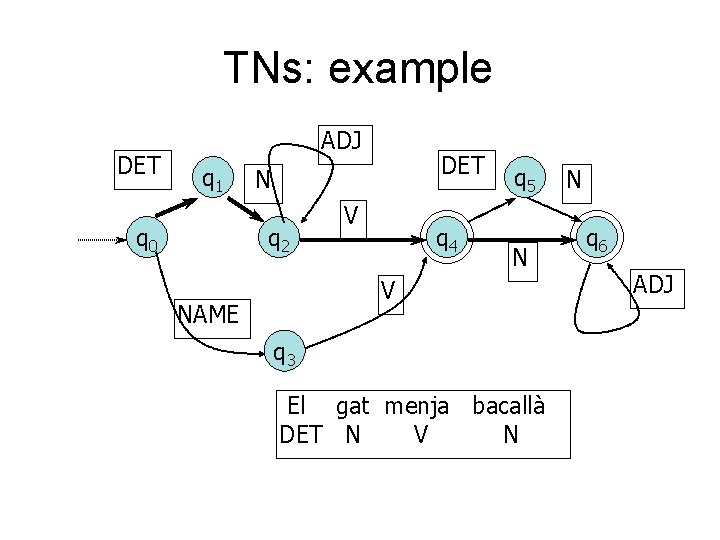

TNs: example DET ADJ q 1 q 0 DET N q 2 V q 4 q 5 N V NAME q 3 El gat menja bacallà DET N V N N q 6 ADJ

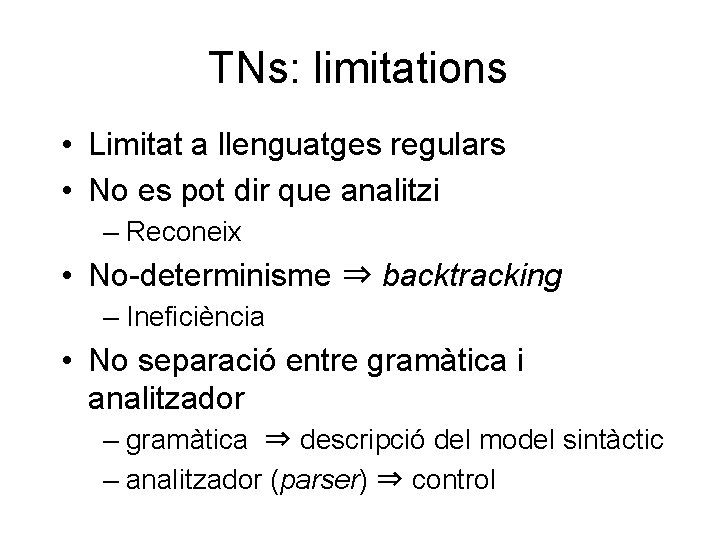

TNs: limitations • Limitat a llenguatges regulars • No es pot dir que analitzi – Reconeix • No-determinisme ⇒ backtracking – Ineficiència • No separació entre gramàtica i analitzador – gramàtica ⇒ descripció del model sintàctic – analitzador (parser) ⇒ control

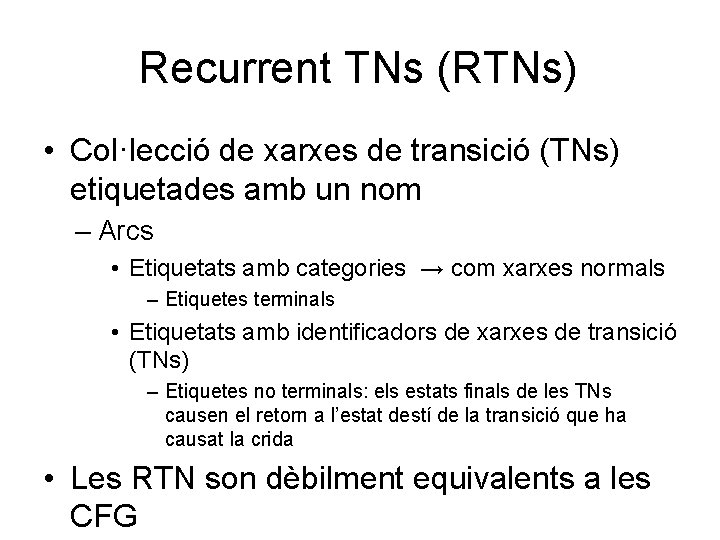

Recurrent TNs (RTNs) • Col·lecció de xarxes de transició (TNs) etiquetades amb un nom – Arcs • Etiquetats amb categories → com xarxes normals – Etiquetes terminals • Etiquetats amb identificadors de xarxes de transició (TNs) – Etiquetes no terminals: els estats finals de les TNs causen el retorn a l’estat destí de la transició que ha causat la crida • Les RTN son dèbilment equivalents a les CFG

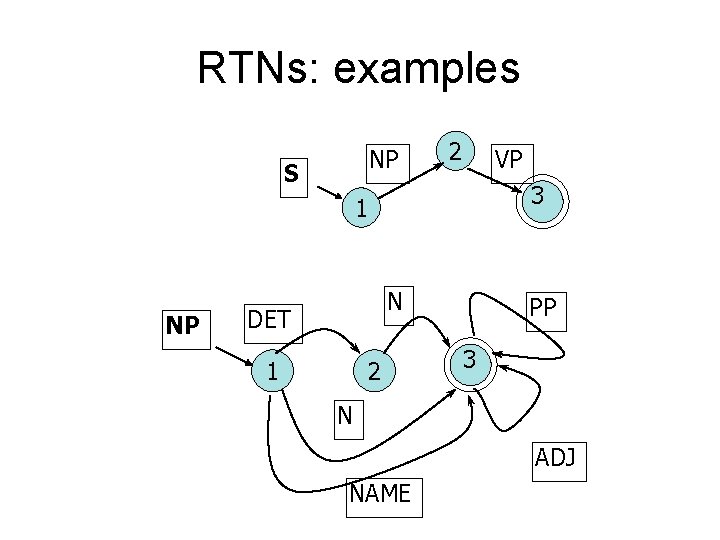

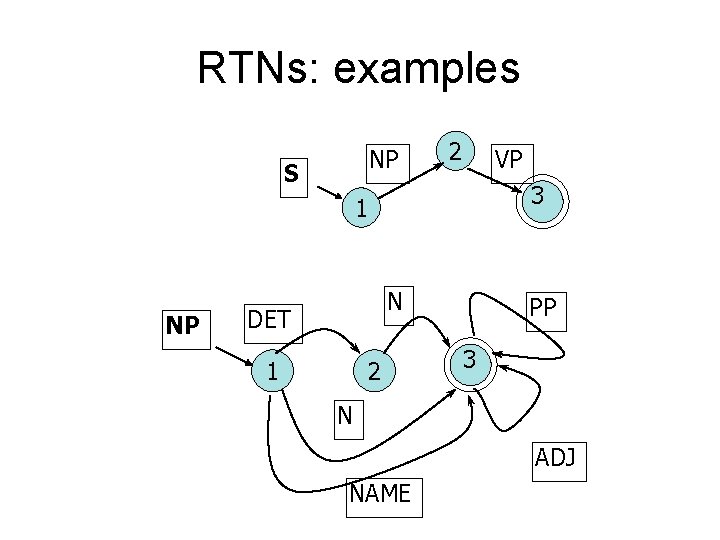

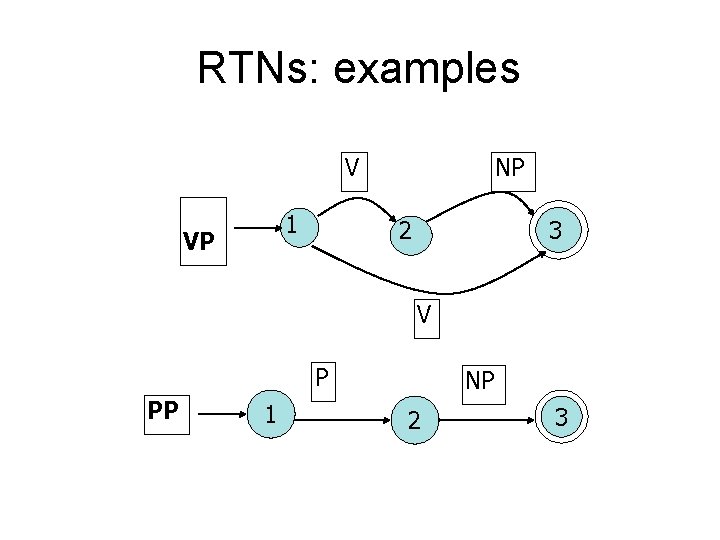

RTNs: examples NP S 2 VP 3 1 NP N DET 1 2 PP 3 N ADJ NAME

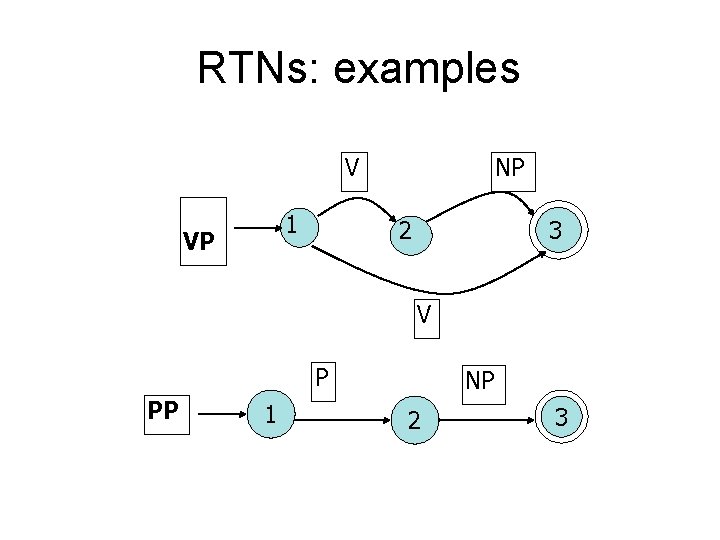

RTNs: examples V 1 VP NP 2 3 V P PP 1 NP 2 3

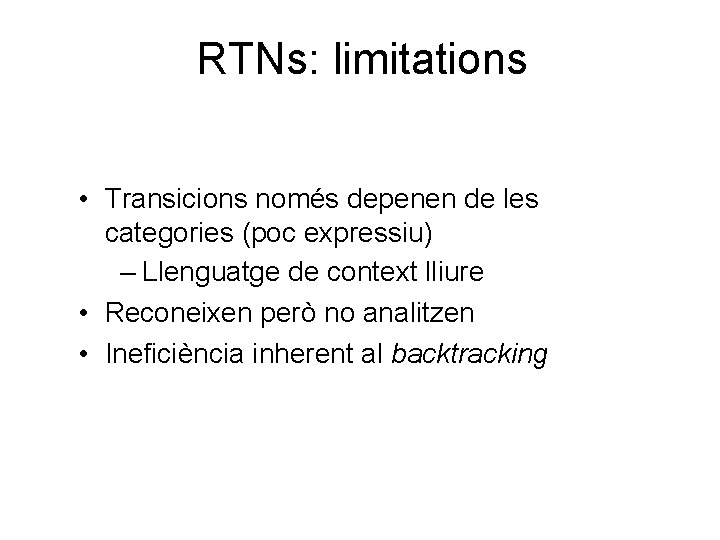

RTNs: limitations • Transicions només depenen de les categories (poc expressiu) – Llenguatge de context lliure • Reconeixen però no analitzen • Ineficiència inherent al backtracking

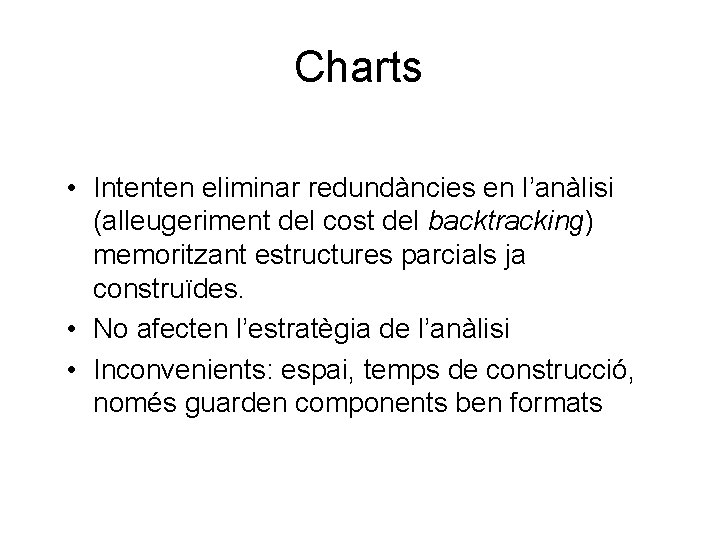

Charts • Intenten eliminar redundàncies en l’anàlisi (alleugeriment del cost del backtracking) memoritzant estructures parcials ja construïdes. • No afecten l’estratègia de l’anàlisi • Inconvenients: espai, temps de construcció, només guarden components ben formats

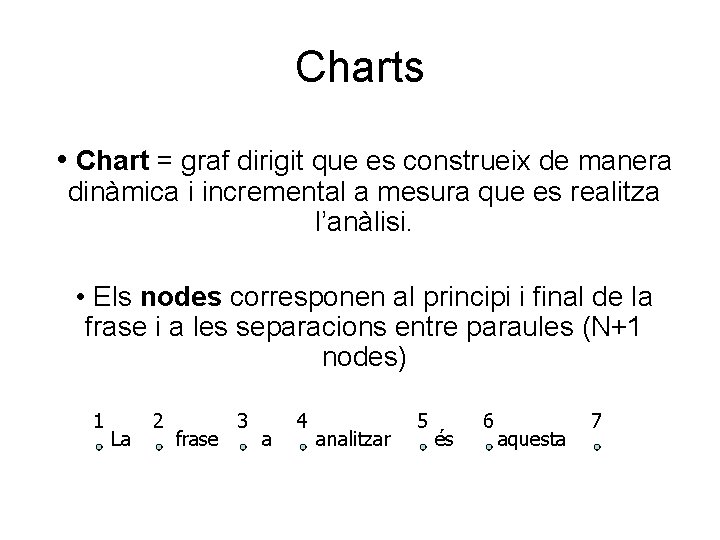

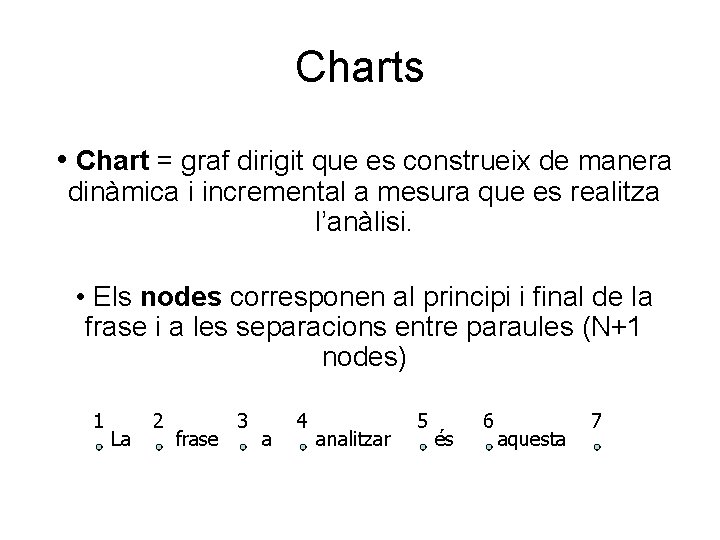

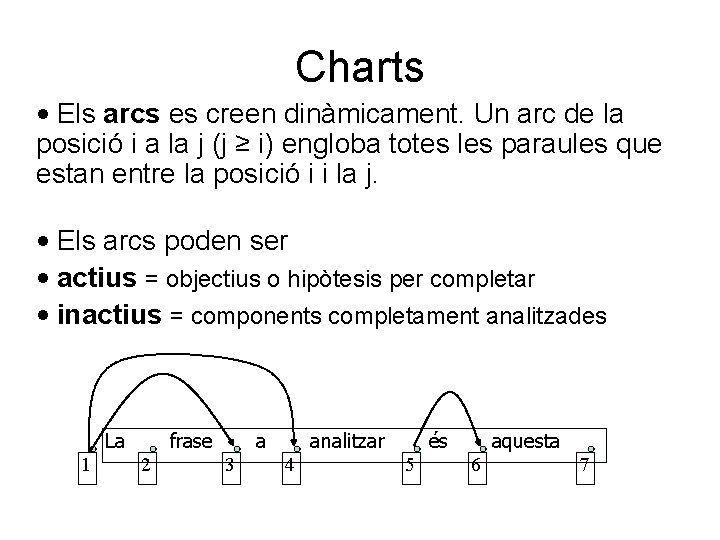

Charts • Chart = graf dirigit que es construeix de manera dinàmica i incremental a mesura que es realitza l’anàlisi. • Els nodes corresponen al principi i final de la frase i a les separacions entre paraules (N+1 nodes) 1 La 2 frase 3 a 4 analitzar 5 és 6 aquesta 7

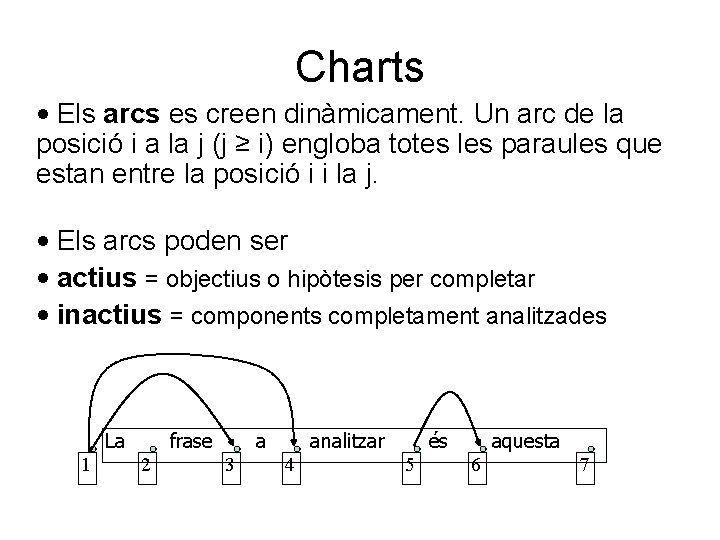

Charts • Els arcs es creen dinàmicament. Un arc de la posició i a la j (j ≥ i) engloba totes les paraules que estan entre la posició i i la j. • Els arcs poden ser • actius = objectius o hipòtesis per completar • inactius = components completament analitzades La 1 frase 2 a 3 analitzar 4 és 5 aquesta 6 7

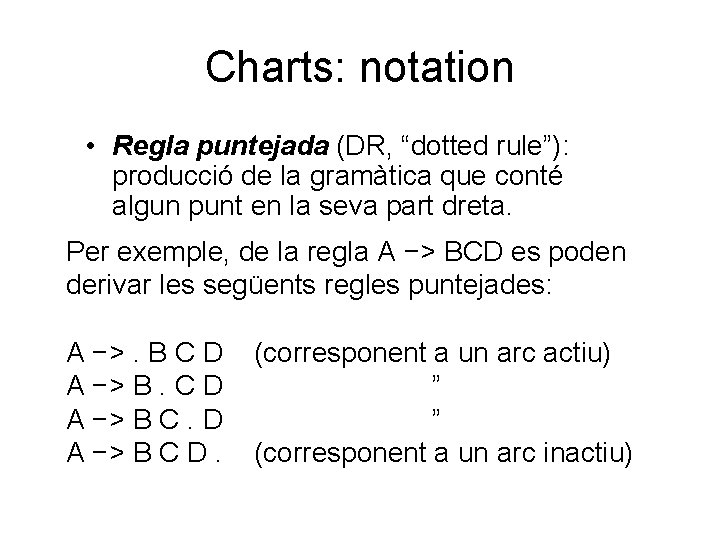

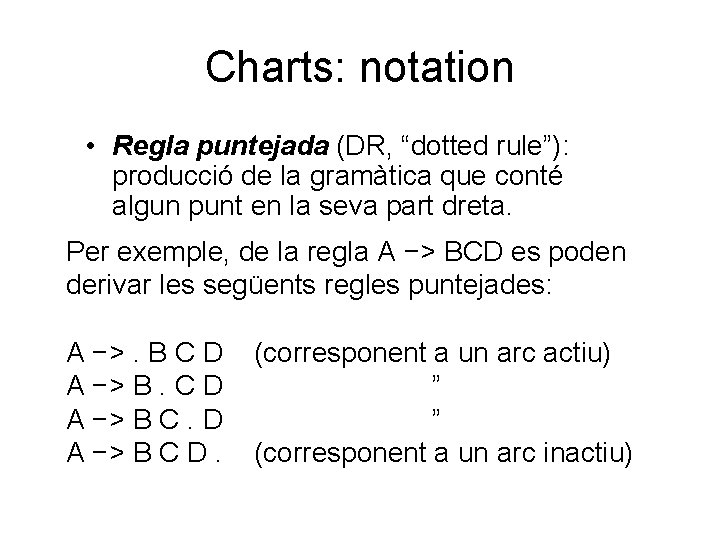

Charts: notation • Regla puntejada (DR, “dotted rule”): producció de la gramàtica que conté algun punt en la seva part dreta. Per exemple, de la regla A −> BCD es poden derivar les següents regles puntejades: A −>. B C D (corresponent a un arc actiu) A −> B. C D ” A −> B C D. (corresponent a un arc inactiu)

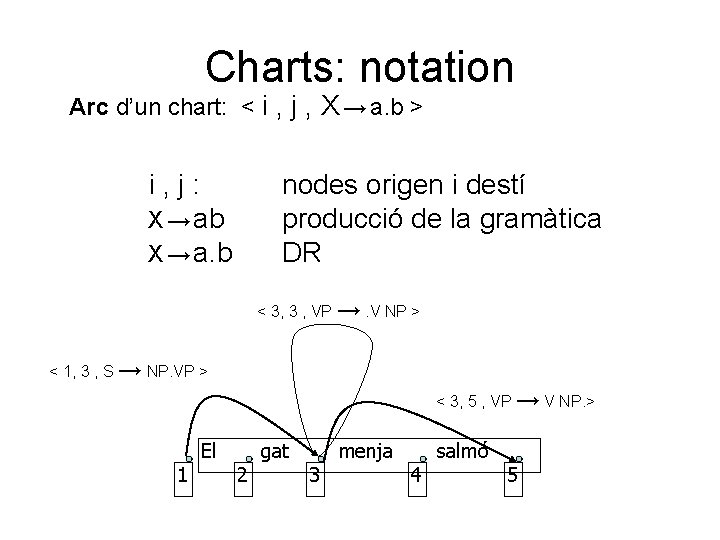

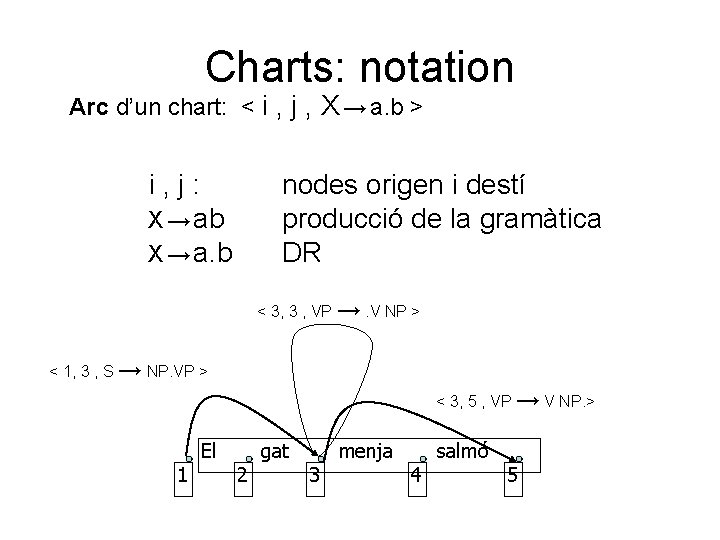

Charts: notation Arc d’un chart: < i , j , X → a. b > i , j : X → ab X → a. b nodes origen i destí producció de la gramàtica DR < 3, 3 , VP →. V NP > < 1, 3 , S → NP. VP > < 3, 5 , VP → V NP. > 1 El 2 gat 3 menja 4 salmó 5

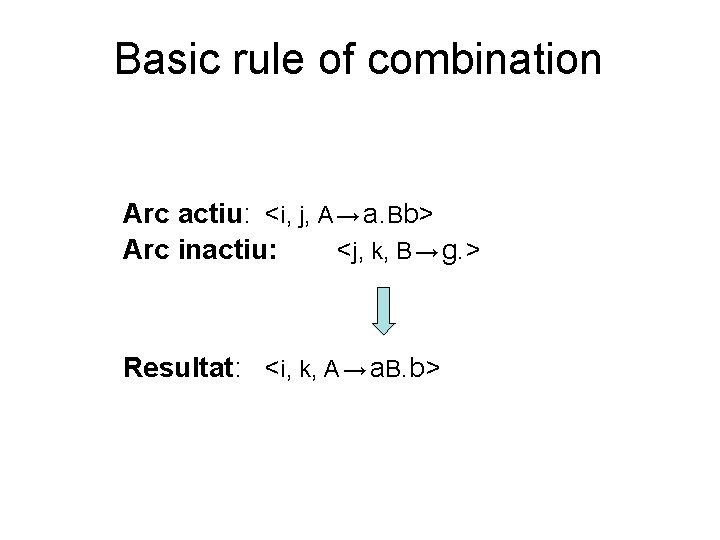

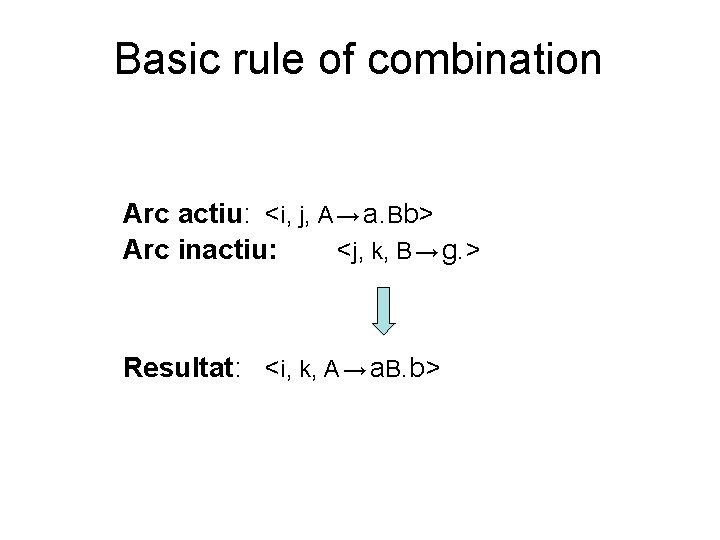

Basic rule of combination Arc actiu: <i, j, A → a. Bb> Arc inactiu: <j, k, B → g. > Resultat: <i, k, A → a. B. b>

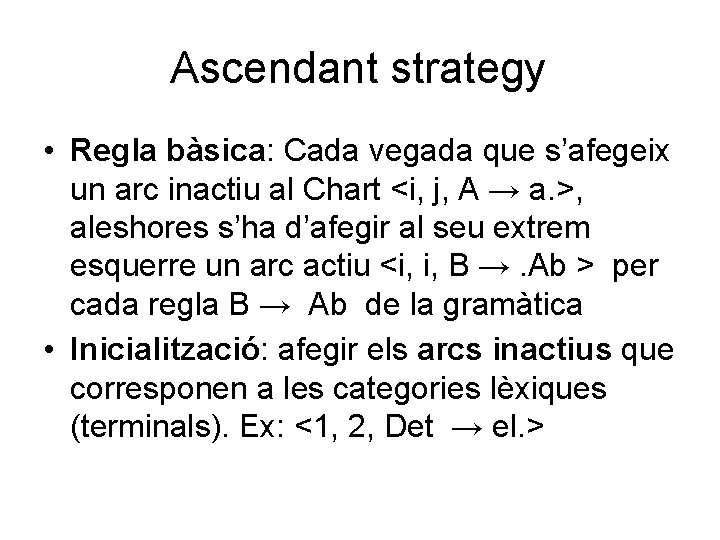

Ascendant strategy • Regla bàsica: Cada vegada que s’afegeix un arc inactiu al Chart <i, j, A → a. >, aleshores s’ha d’afegir al seu extrem esquerre un arc actiu <i, i, B →. Ab > per cada regla B → Ab de la gramàtica • Inicialització: afegir els arcs inactius que corresponen a les categories lèxiques (terminals). Ex: <1, 2, Det → el. >

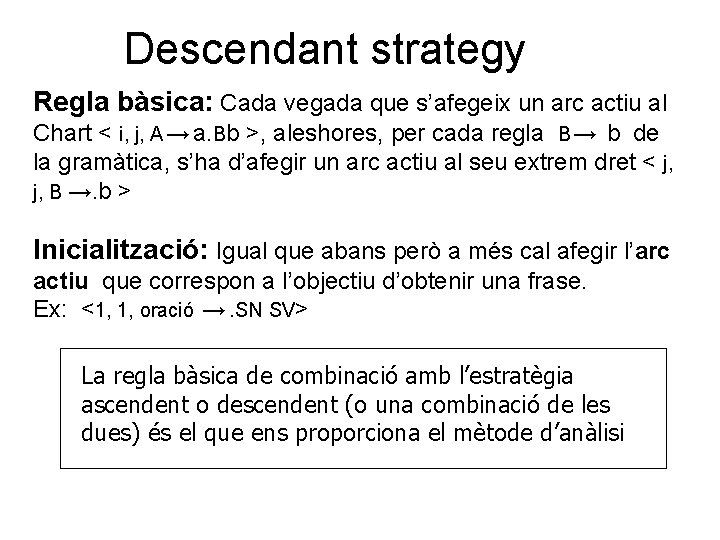

Descendant strategy Regla bàsica: Cada vegada que s’afegeix un arc actiu al Chart < i, j, A → a. Bb >, aleshores, per cada regla B → b de la gramàtica, s’ha d’afegir un arc actiu al seu extrem dret < j, B →. b > Inicialització: Igual que abans però a més cal afegir l’arc actiu que correspon a l’objectiu d’obtenir una frase. Ex: <1, 1, oració →. SN SV> La regla bàsica de combinació amb l’estratègia ascendent o descendent (o una combinació de les dues) és el que ens proporciona el mètode d’anàlisi

![Charts example Oracio GN GV Oracio GN GV GN Charts: example [Oracio → GN GV • ] [Oracio → GN • GV] [GN](https://slidetodoc.com/presentation_image/4eef0fcb3a213859f960c8576c26fea1/image-68.jpg)

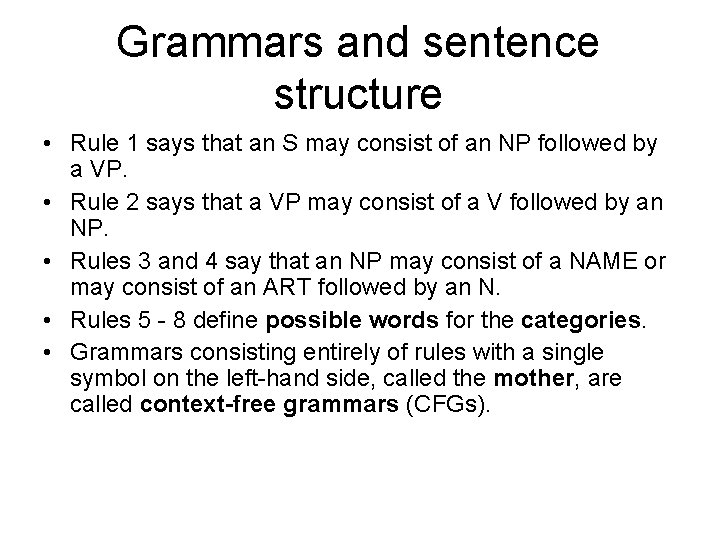

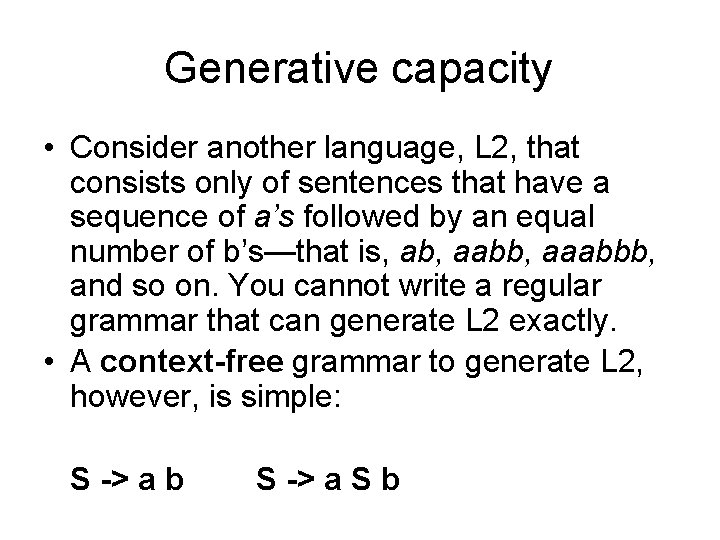

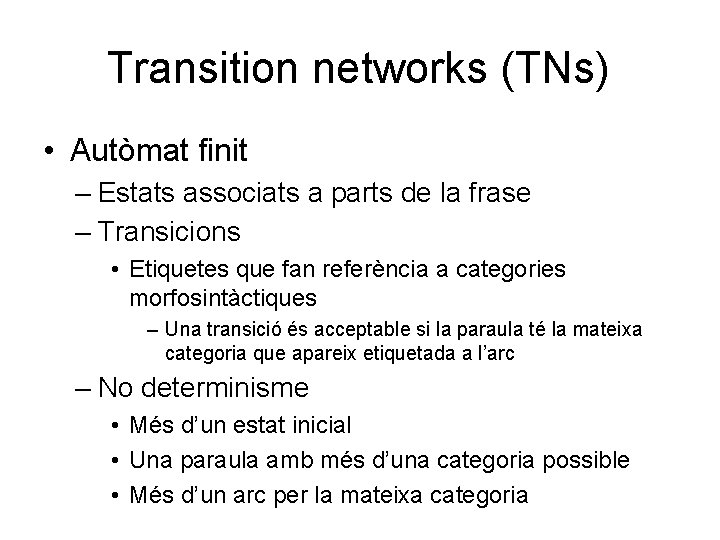

Charts: example [Oracio → GN GV • ] [Oracio → GN • GV] [GN → n • ] [GV → • vi] [Oracio → • GN GV] [GV → • vt GN] [GN → det • n] [GN → • det n] [GN → det n • ] [GV → vt • GN] [GV → vi • ][GN → • n] [det] [n] [vt] [vi] [n] el gat menja peix 0 1 2 3 4

Features (rasgos) • Context-free grammars provide the basis for most of the computational parsing mechanisms developed to date. • As they have been described, they would be inconvenient for capturing natural language. • The basic context-free mechanism can be extended defining constituents by a set of features. • This extension allows aspects of natural language such as agreement and subcategorization to be handled in an intuitive and concise way.

Feature systems and augmented grammars • In natural language there are often agreement restrictions between words and between phrases. • For example, the noun phrase (NP) un hombres is not correct because the article un indicates a single object while the noun hombres indicates a plural object. • The NP does not satisfy the number agreement restriction.

Feature systems and augmented grammars • There are many other forms of agreement, including – subject-verb agreement – gender agreement for pronouns – restrictions between the head of the phrase and the form of its complement. • Features are introduced to handle such phenomena. • A feature NUMBER might be defined that takes a value of either s (for singular) or p (for plural) and we then might write an augmented CFG rule such as NP → ART N only when NUMBER 1 agrees with NUMBER 2 • This rule says that a legal NP consists of an article (ART) followed by a noun (N), but only when the number feature of the first word agrees with the number feature of the second.

Feature systems and augmented grammars NP → ART N only when NUMBER 1 agrees with NUMBER 2 • This one rule is equivalent to two CFG rules that would use different terminal symbols for encoding singular and plural forms of all NPs, such as NP-SING → ART-SING NP-PLURAL → ART-PLURAL N-PLURAL • The two approaches seem similar in ease-of-use in this one example. • Though, in the second case, all rules in the grammar that use an NP on the right-hand side would need to be duplicated to include a rule for NP-SING and a rule for NP-PLURAL, effectively doubling the size of the grammar.

Feature systems and augmented grammars • Handling additional features, such as person agreement, would double the size of the grammar again, and so on. • Using features, the size of the augmented grammar remains the same as the original one, yet accounts for agreement constraints. • A constituent is defined as a feature structure (FS), a mapping from features to values that defines the relevant properties of the constituent.

Feature systems and augmented grammars • Example: a FS for a constituent ART 1 that represents a particular use of the word un: ART 1: (CAT ART ROOT un NUMBER s) • It is a constituent in the category ART that has its root in the word un and is singular. • Usually an abbreviation is used that gives the CAT value more prominence: ART 1: (ART ROOT un NUMBER s)

Feature systems and augmented grammars • FSs can be used to represent larger constituents as well. • FSs themselves can occur as values. • Special features based on the integers (1, 2, 3…) stand for the subconstituents (first, second…). • The representation of the NP constituent for the phrase un pez could be: NP 1: (NP NUMBER s 1 (ART ROOT un NUMBER s) 2 (N ROOT pez NUMBER s))

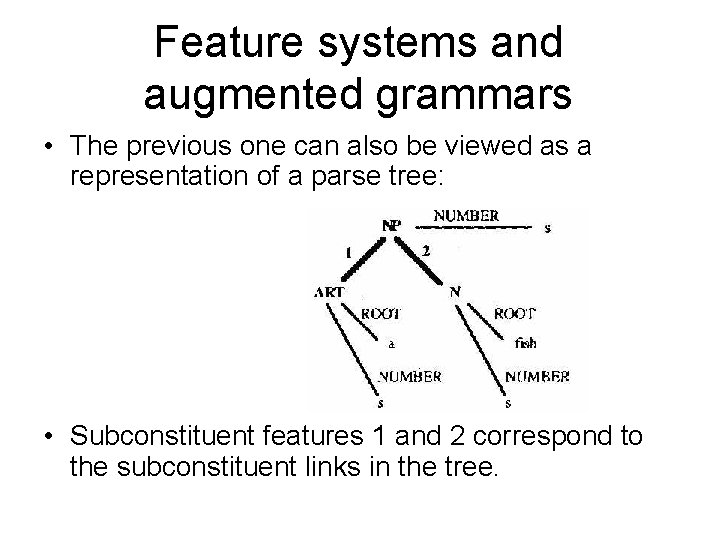

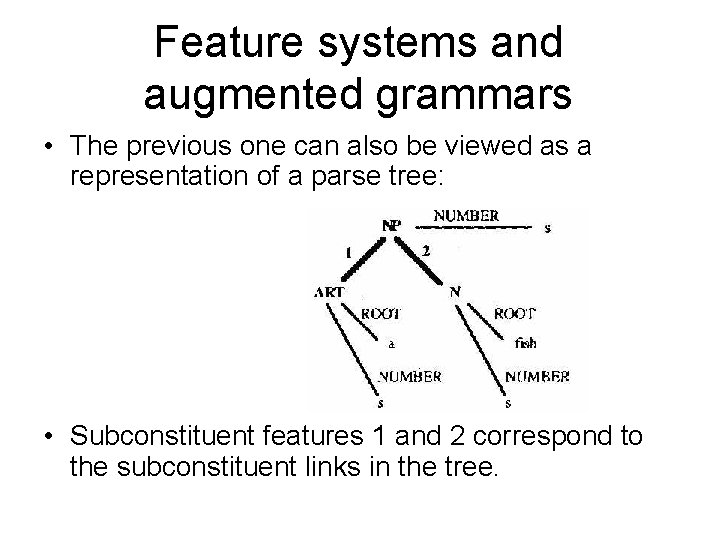

Feature systems and augmented grammars • The previous one can also be viewed as a representation of a parse tree: • Subconstituent features 1 and 2 correspond to the subconstituent links in the tree.

Feature systems and augmented grammars • The rules in an augmented grammar are stated in terms of FSs rather than simple categories. • Variables are allowed as feature values so that a rule can apply to a wide range of situations. • For example, a rule for simple NPs would be as follows: (NP NUMBER ? n): (ART NUMBER ? n) (N NUMBER ? n) • This says that an NP constituent can consist of two subconstituents, the first being an ART and the second being an N, in which the NUMBER feature in all three constituents is identical.

Feature systems and augmented grammars (NP NUMBER ? n): (ART NUMBER ? n) (N NUMBER ? n) • According to this rule, constituent NP 1 given previously is a legal constituent. • The constituent *(NP 1 (ART NUMBER s) 2 (N NUMBER s)) is not allowed by this rule because there is no NUMBER feature in the NP. • The constituent *(NP NUMBER s 1 (ART NUMBER s) 2 (N NUMBER p)) is not allowed because the NUMBER feature of the N constituent is not identical to the other two NUMBER features.

Feature systems and augmented grammars • Variables are also useful in specifying ambiguity in a constituent. • The word crisis is ambiguous between a singular and a plural reading. • The word might have two entries in the lexicon that differ only by the value of the NUMBER feature. • Alternatively, we could define a single entry that uses a variable as the value of the NUMBER feature: (N ROOT crisis NUMBER ? n) • This works because any value of the NUMBER feature is allowed for the word crisis.

Feature systems and augmented grammars • In many cases not just any value would work, but a range of values is possible. • We introduce constrained variables, which are variables that can only take a value out of a specified list. • For example, the variable ? n{s p} would be a variable that can take the value s or the value p. • When we write such variables, we will drop the variable name altogether and just list the possible values. • The word crisis might be represented by the constituent: (N ROOT crisis NUMBER ? n{s p}) or more simply as (N ROOT crisis NUMBER {s p})

Feature systems and augmented grammars • There is an interesting issue of whether an augmented CFG can describe languages that cannot be described by a simple CFG. • The answer depends on the constraints on what can be a feature value. • If the set of feature values is finite, then it would always be possible to create new constituent categories for every combination of features. Thus it is expressively equivalent to a CFG. • If the set of feature values is unconstrained then such grammars have arbitrary computational power. • In practice, even when the set of values is not explicitly restricted, this power is not used, and the standard parsing algorithms can be used on grammars that include features.

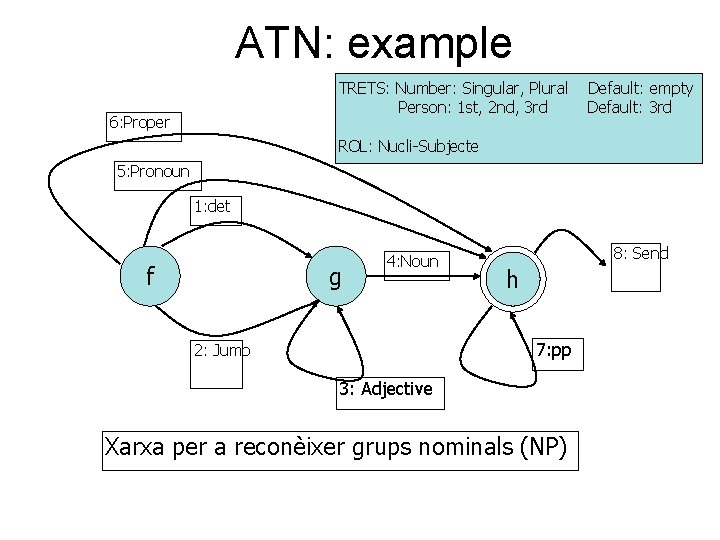

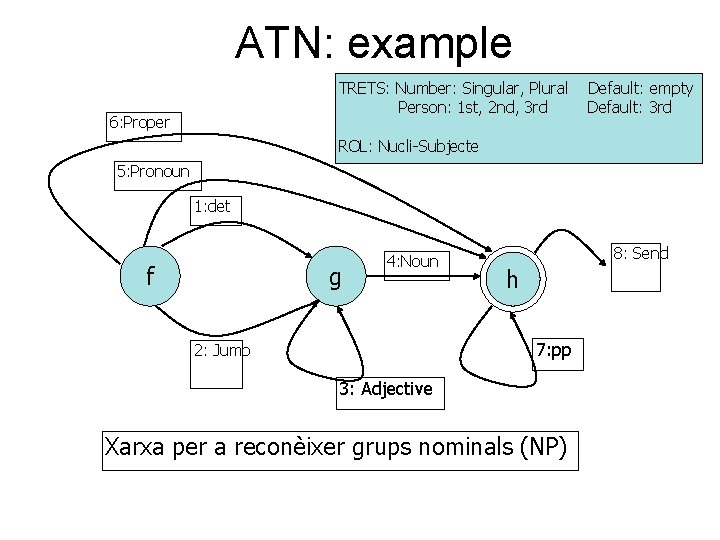

ATN: example TRETS: Number: Singular, Plural Person: 1 st, 2 nd, 3 rd 6: Proper Default: empty Default: 3 rd ROL: Nucli-Subjecte 5: Pronoun 1: det f g 4: Noun 8: Send h 7: pp 2: Jump 3: Adjective Xarxa per a reconèixer grups nominals (NP)

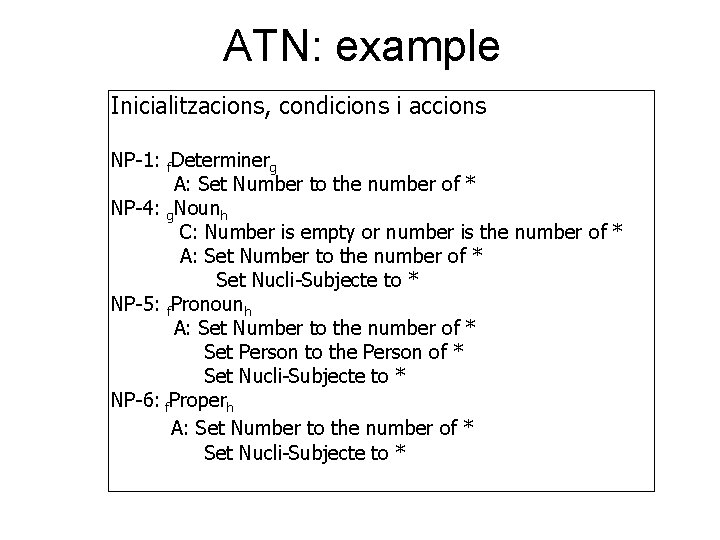

ATN: example Inicialitzacions, condicions i accions NP-1: f. Determinerg A: Set Number to the number of * NP-4: g. Nounh C: Number is empty or number is the number of * A: Set Number to the number of * Set Nucli-Subjecte to * NP-5: f. Pronounh A: Set Number to the number of * Set Person to the Person of * Set Nucli-Subjecte to * NP-6: f. Properh A: Set Number to the number of * Set Nucli-Subjecte to *

ATN: limitations • Són adequades per l’anàlisi descendent, però no resulta fàcil implementar una anàlisi ascendent o híbrida. • Redundància de les operacions de backtracking: – ineficiència • Problemes d’expressivitat notacional: – la gramàtica es barreja amb les accions

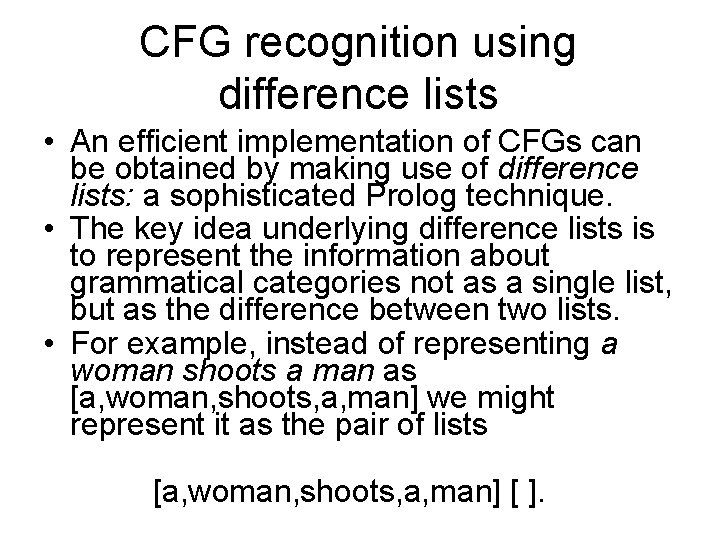

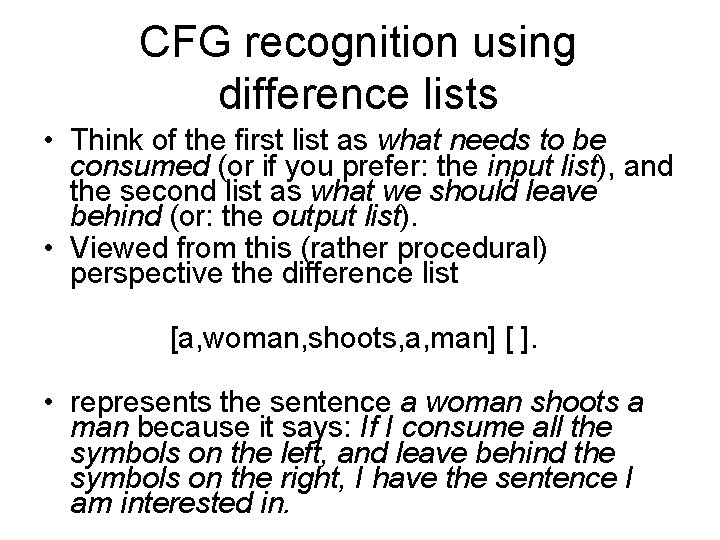

CFG recognition using difference lists • An efficient implementation of CFGs can be obtained by making use of difference lists: a sophisticated Prolog technique. • The key idea underlying difference lists is to represent the information about grammatical categories not as a single list, but as the difference between two lists. • For example, instead of representing a woman shoots a man as [a, woman, shoots, a, man] we might represent it as the pair of lists [a, woman, shoots, a, man] [ ].

CFG recognition using difference lists • Think of the first list as what needs to be consumed (or if you prefer: the input list), and the second list as what we should leave behind (or: the output list). • Viewed from this (rather procedural) perspective the difference list [a, woman, shoots, a, man] [ ]. • represents the sentence a woman shoots a man because it says: If I consume all the symbols on the left, and leave behind the symbols on the right, I have the sentence I am interested in.

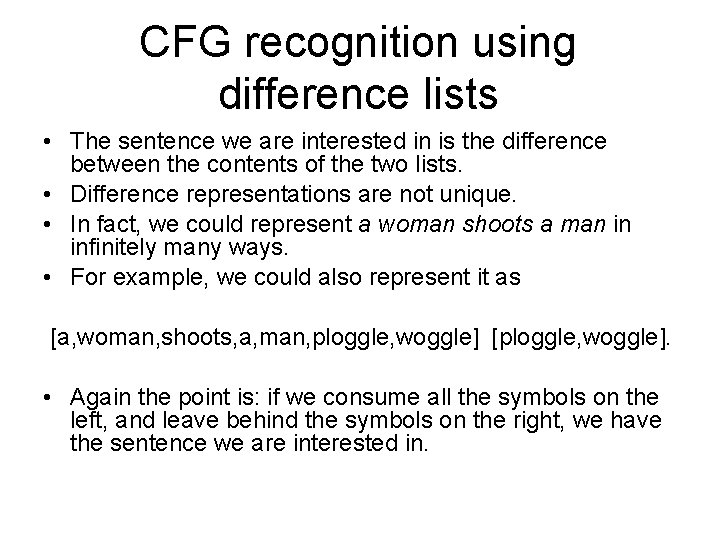

CFG recognition using difference lists • The sentence we are interested in is the difference between the contents of the two lists. • Difference representations are not unique. • In fact, we could represent a woman shoots a man in infinitely many ways. • For example, we could also represent it as [a, woman, shoots, a, man, ploggle, woggle] [ploggle, woggle]. • Again the point is: if we consume all the symbols on the left, and leave behind the symbols on the right, we have the sentence we are interested in.

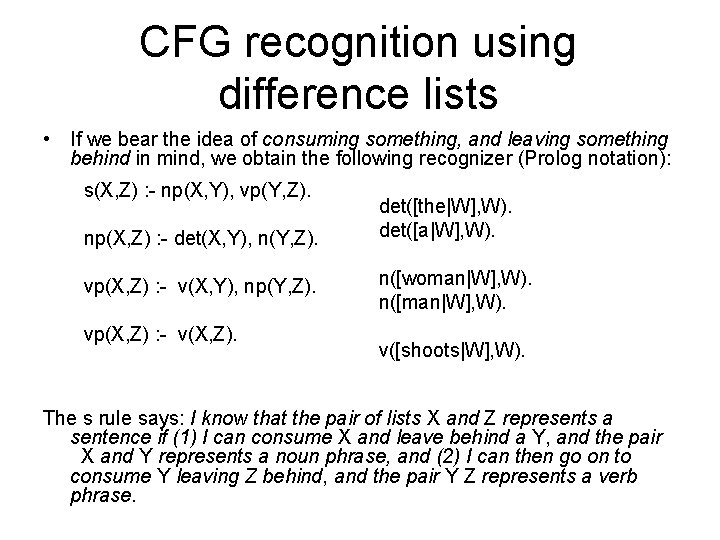

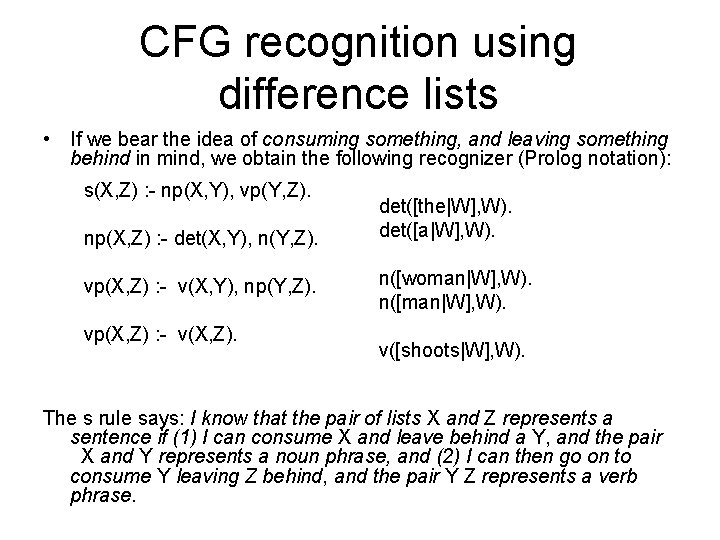

CFG recognition using difference lists • If we bear the idea of consuming something, and leaving something behind in mind, we obtain the following recognizer (Prolog notation): s(X, Z) : - np(X, Y), vp(Y, Z). np(X, Z) : - det(X, Y), n(Y, Z). vp(X, Z) : - v(X, Y), np(Y, Z). vp(X, Z) : - v(X, Z). det([the|W], W). det([a|W], W). n([woman|W], W). n([man|W], W). v([shoots|W], W). The s rule says: I know that the pair of lists X and Z represents a sentence if (1) I can consume X and leave behind a Y, and the pair X and Y represents a noun phrase, and (2) I can then go on to consume Y leaving Z behind, and the pair Y Z represents a verb phrase.

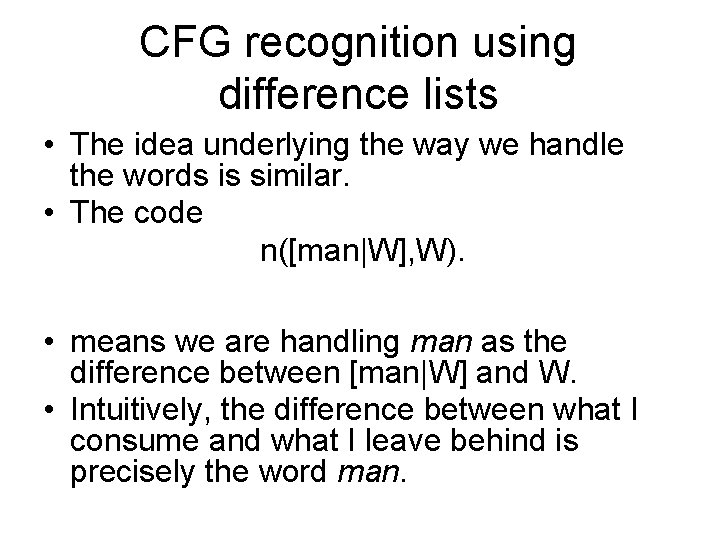

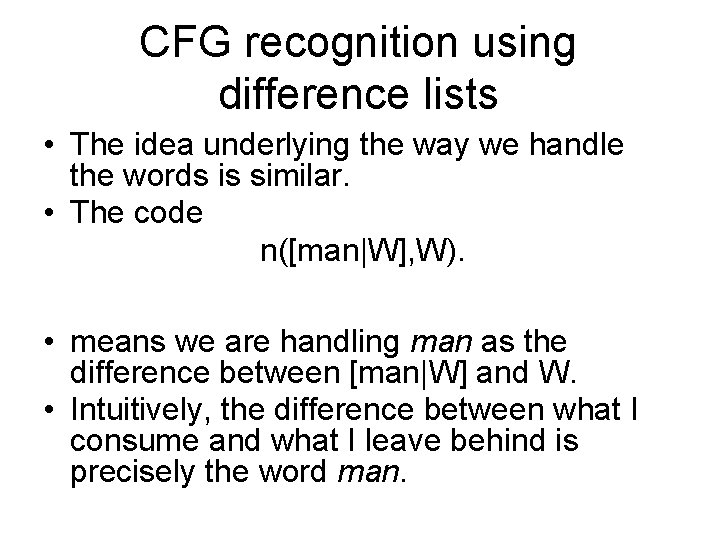

CFG recognition using difference lists • The idea underlying the way we handle the words is similar. • The code n([man|W], W). • means we are handling man as the difference between [man|W] and W. • Intuitively, the difference between what I consume and what I leave behind is precisely the word man.

CFG recognition using difference lists • How do we use such grammars? Here's how to recognize sentences: s([a, woman, shoots, a, man], []). yes • This asks whether we can get an s by consuming the symbols in [a, woman, shoots, a, man], leaving nothing behind.

CFG recognition using difference lists • Similarly, to generate all the sentences in the grammar, we ask s(X, []). • This asks: what values can you give to X, such that we get an s by consuming the symbols in X, leaving nothing behind? • The queries for other grammatical categories also work the same way. • For example, to find out if a woman is a noun phrase we ask: np([a, woman], []).

CFG recognition using difference lists • And we generate all the noun phrases in the grammar as follows: np(X, []). • It has to be admitted that this recognizer is not as easy to understand, at least at first, and it's a pain having to keep track of all those difference list variables. • This is where DCGs come in.