NEURAL NETWORKS

- Slides: 21

NEURAL NETWORKS REFERENCES:
- “Artificial Intelligence for Games”
- “Artificial Intelligence: A New Synthesis”
- https://mattmazur.com/2015/03/17/a-step-by-step-backpropagation-example/

HISTORY
- In the 70’s vs today
- Variants
  - (Multi-layer) Feed-forward (perceptron)
  - Hebbian
  - Recurrent
  - …

OVERVIEW
- Givens:
- Process:
- Using the Neural Network. Basically: a classifier.

EXAMPLE APPLICATION #1
- Modeling an enemy AI.
- Supervised training
- Unsupervised training

EXAMPLE APPLICATION #2 (I.E. THE BORING LAB)

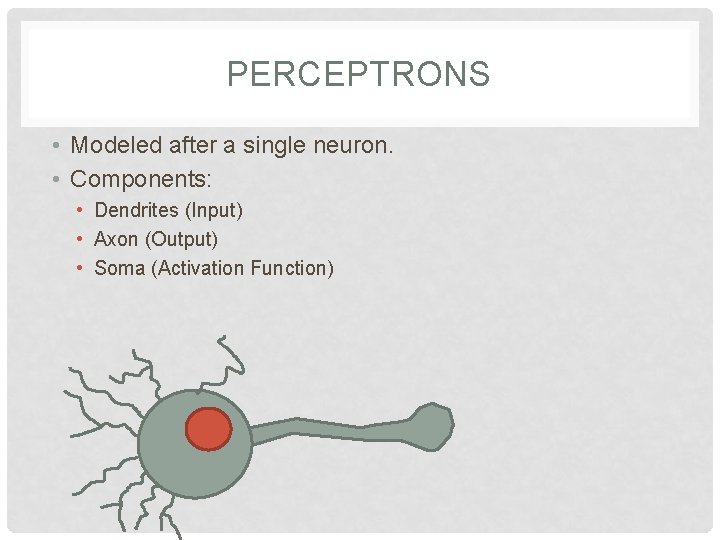

PERCEPTRONS
- Modeled after a single neuron.
- Components:
  - Dendrites (Input)
  - Axon (Output)
  - Soma (Activation Function)

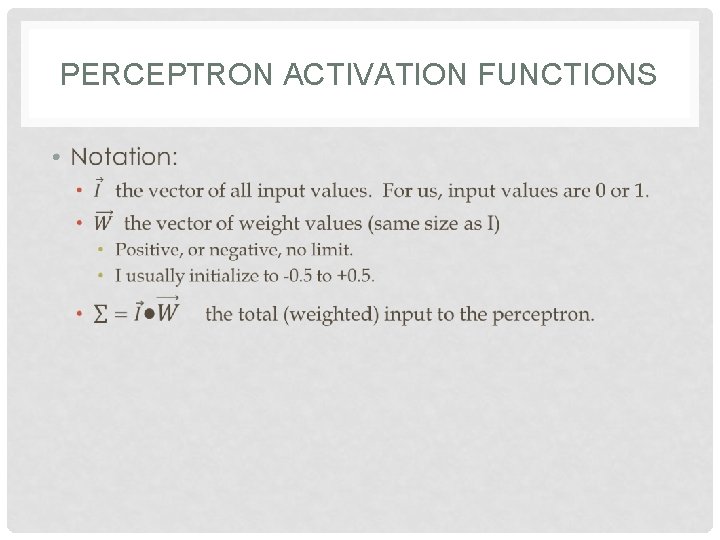

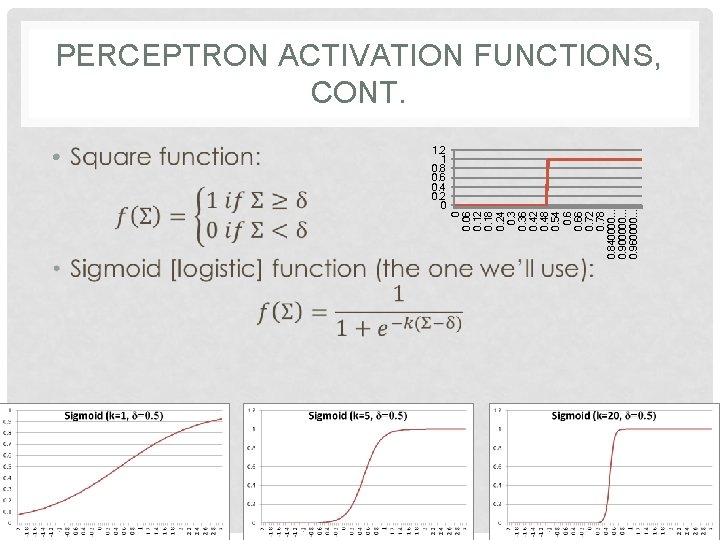

PERCEPTRON ACTIVATION FUNCTIONS

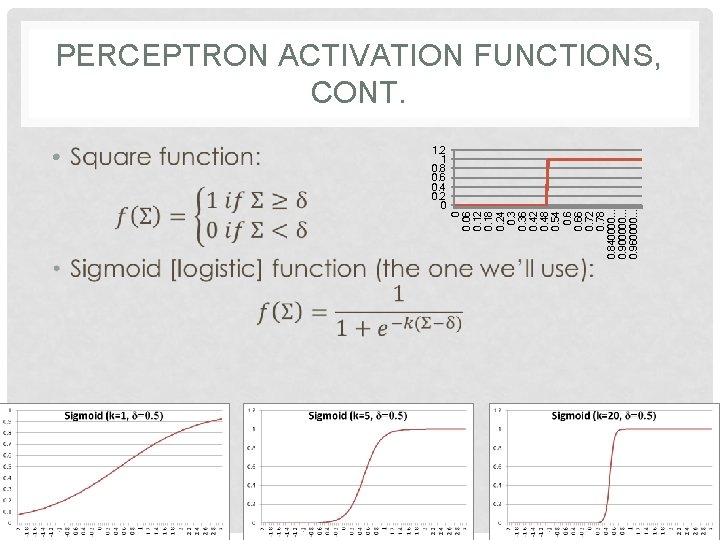

PERCEPTRON ACTIVATION FUNCTIONS, CONT.

[Plot: x-axis 0 to 0.96, y-axis 0 to 1.2]

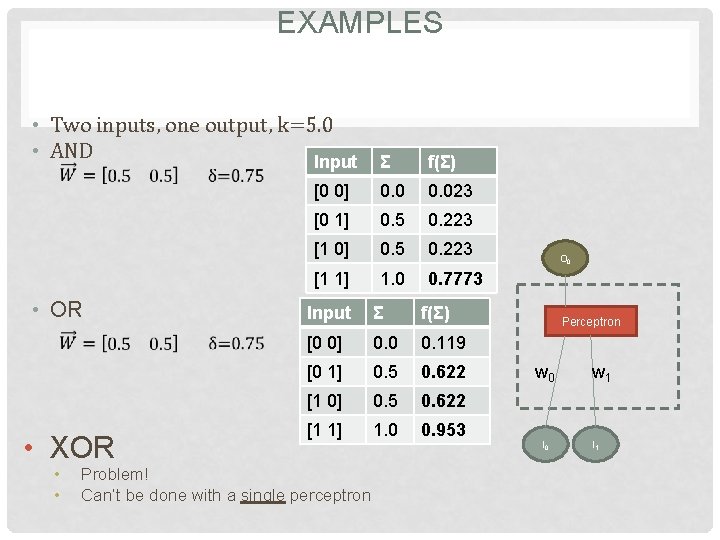

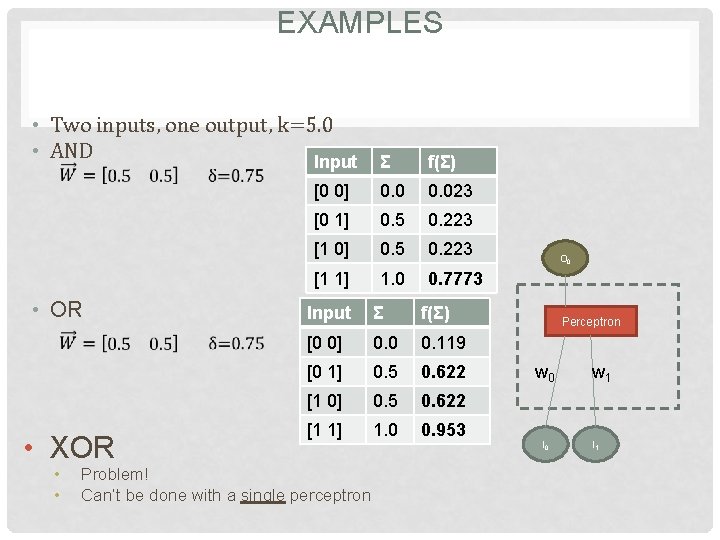
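The activation formulas themselves are in the slide images, so what follows is only a sketch of two common choices: a hard threshold and a logistic curve with steepness `k` and threshold `delta`. The function names and the exact logistic form are assumptions, not taken from the slides.

```python
import math

def step(sigma, delta=0.0):
    """Hard threshold: output 1.0 once the weighted sum reaches delta."""
    return 1.0 if sigma >= delta else 0.0

def sigmoid(sigma, delta=0.0, k=1.0):
    """Logistic curve centered on delta; a larger k gives a sharper transition."""
    return 1.0 / (1.0 + math.exp(-k * (sigma - delta)))

# Compare how steepness changes the output around a threshold of 0.5.
for k in (1.0, 5.0, 20.0):
    print(k, [round(sigmoid(s, delta=0.5, k=k), 3) for s in (0.0, 0.5, 1.0)])
```

With a large `k` the logistic curve approaches the hard threshold while staying differentiable, which matters once training enters the picture.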

EXAMPLES
- Two inputs, one output, k=5.0
- AND

| Input | Σ | f(Σ) |
|---|---|---|
| [0 0] | 0.0 | 0.023 |
| [0 1] | 0.5 | 0.223 |
| [1 0] | 0.5 | 0.223 |
| [1 1] | 1.0 | 0.7773 |

- OR

| Input | Σ | f(Σ) |
|---|---|---|
| [0 0] | 0.0 | 0.119 |
| [0 1] | 0.5 | 0.622 |
| [1 0] | 0.5 | 0.622 |
| [1 1] | 1.0 | 0.953 |

- XOR
  - Problem! Can’t be done with a single perceptron

[Diagram labels: output O0; Perceptron; weights w0, w1; inputs I0, I1]

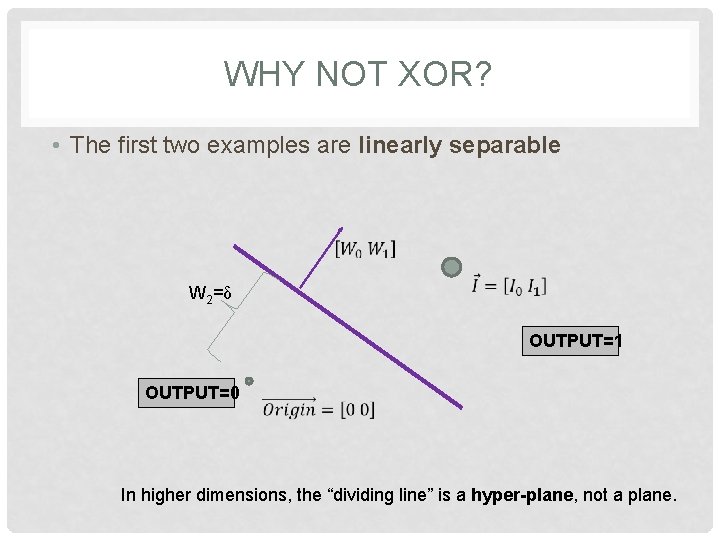

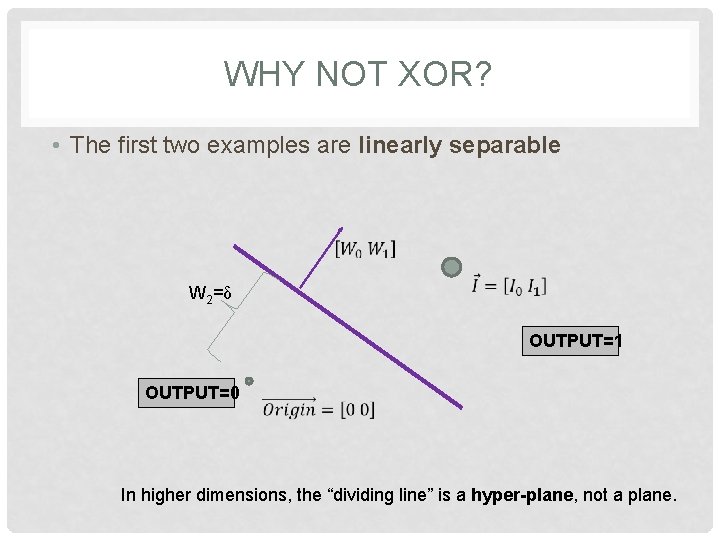

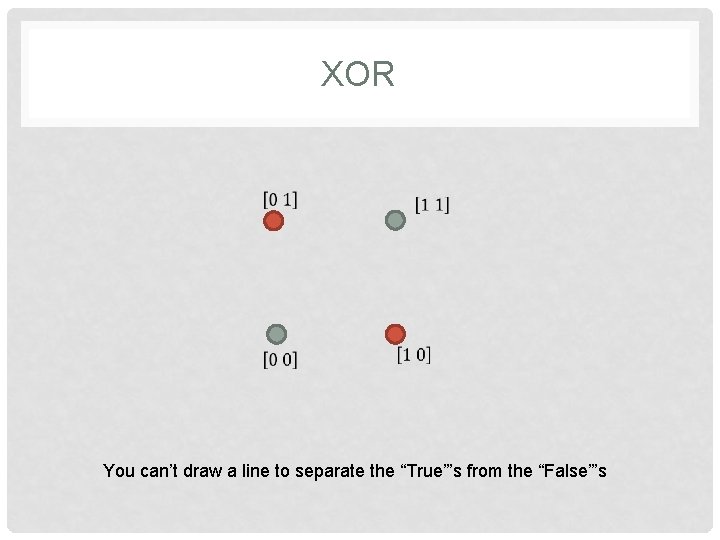
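The AND and OR numbers can be reproduced with a few lines. This is a sketch under assumptions: a logistic activation `1 / (1 + e^(-k(Σ - δ)))`, both weights equal to 0.5 (inferred from the Σ column), and the thresholds δ=0.75 for AND and δ=0.4 for OR that appear on the linear-separability figures. The helper names are illustrative.

```python
import math

def sigmoid(sigma, delta, k=5.0):
    """Assumed logistic activation with steepness k and threshold delta."""
    return 1.0 / (1.0 + math.exp(-k * (sigma - delta)))

def perceptron(inputs, weights, delta, k=5.0):
    """Return (weighted sum, activation) for one perceptron."""
    sigma = sum(i * w for i, w in zip(inputs, weights))
    return sigma, sigmoid(sigma, delta, k)

CASES = [(0, 0), (0, 1), (1, 0), (1, 1)]
WEIGHTS = (0.5, 0.5)               # assumed: inferred from the sums in the tables
GATES = {"AND": 0.75, "OR": 0.4}   # threshold (delta) per gate

for name, delta in GATES.items():
    print(name)
    for case in CASES:
        sigma, out = perceptron(case, WEIGHTS, delta)
        print(f"  {list(case)}  sum={sigma:.1f}  f={out:.3f}")
```

Only the threshold differs between the two gates: moving δ from 0.75 to 0.4 is enough to turn AND into OR.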

WHY NOT XOR?
- The first two examples are linearly separable
- In higher dimensions, the “dividing line” is a hyperplane rather than a line.

[Figure labels: W 2=δ, OUTPUT=1, OUTPUT=0]

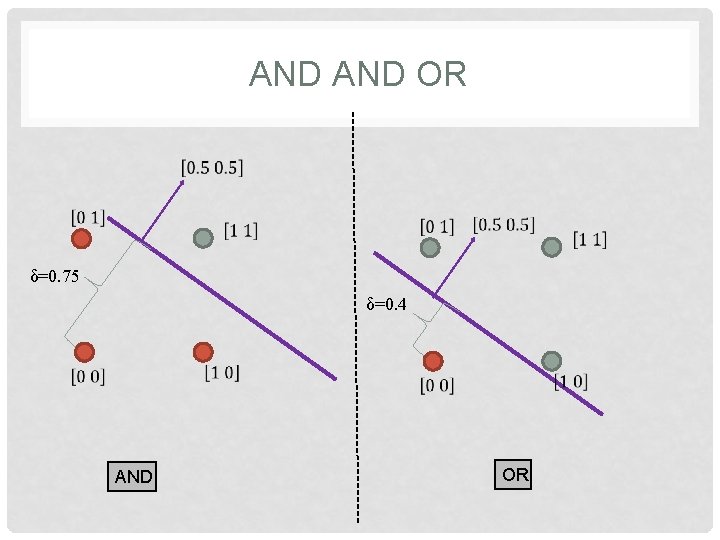

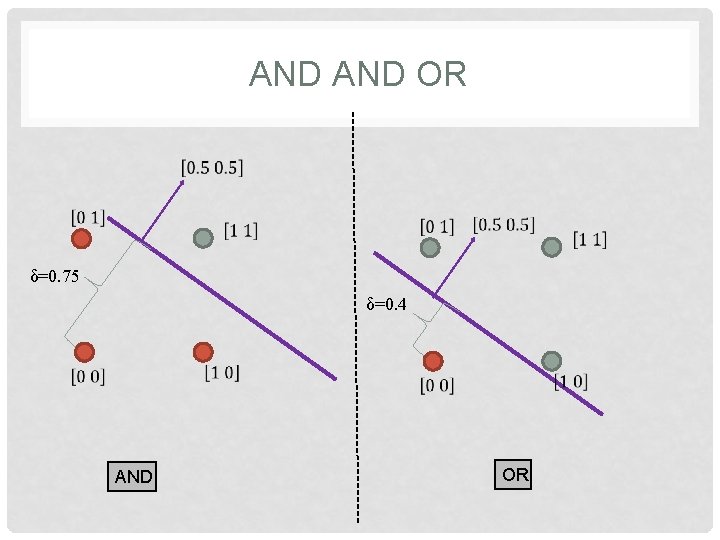

[Figure labels: AND, δ=0.75; OR, δ=0.4]

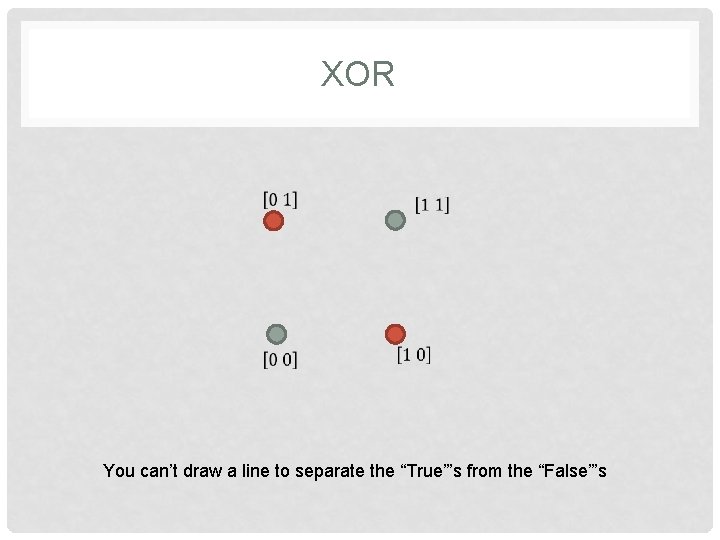

XOR
- You can’t draw a line to separate the “True”s from the “False”s

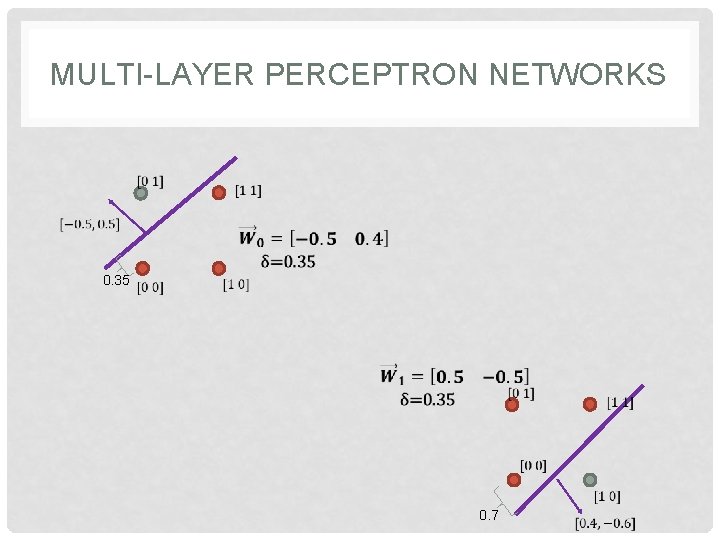

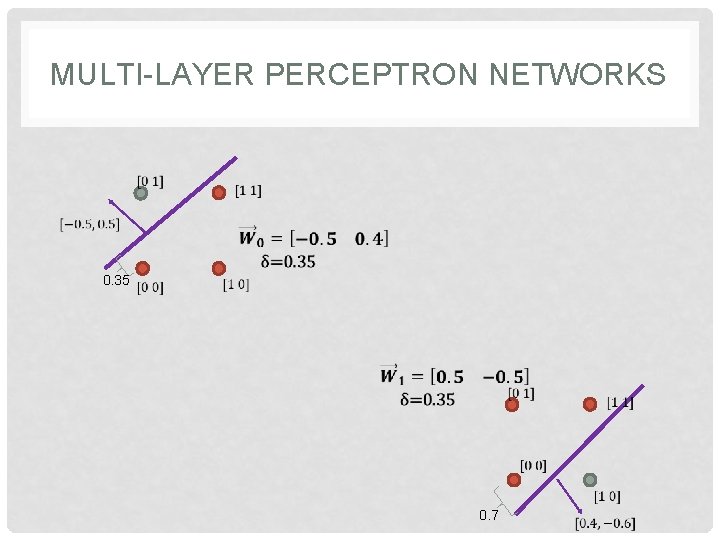
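One way to see this is to search for a separating line by brute force. The sketch below tries every combination of two weights and a threshold on a coarse grid, using a hard-threshold perceptron. The grid range and step are arbitrary assumptions, and a failed search is an illustration rather than a proof. The actual argument is short: XOR would need δ > 0, w0 ≥ δ and w1 ≥ δ, yet w0 + w1 < δ, which is impossible.

```python
import itertools

CASES = [(0, 0), (0, 1), (1, 0), (1, 1)]
TARGETS = {
    "AND": [0, 0, 0, 1],
    "OR":  [0, 1, 1, 1],
    "XOR": [0, 1, 1, 0],
}
GRID = [i / 10 for i in range(-20, 21)]   # -2.0 to 2.0 in steps of 0.1

def find_separator(target):
    """Return the first (w0, w1, delta) on the grid that reproduces target, else None."""
    for w0, w1, delta in itertools.product(GRID, repeat=3):
        outputs = [1 if a * w0 + b * w1 >= delta else 0 for a, b in CASES]
        if outputs == target:
            return w0, w1, delta
    return None

for name, target in TARGETS.items():
    print(name, find_separator(target))
```

AND and OR each print a weight/threshold triple; XOR prints `None`.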

MULTI-LAYER PERCEPTRON NETWORKS

[Figure labels: 0.35, 0.7]

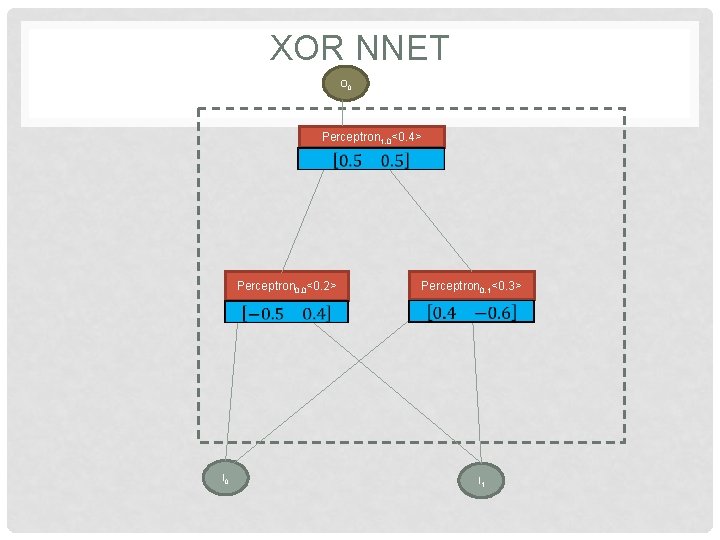

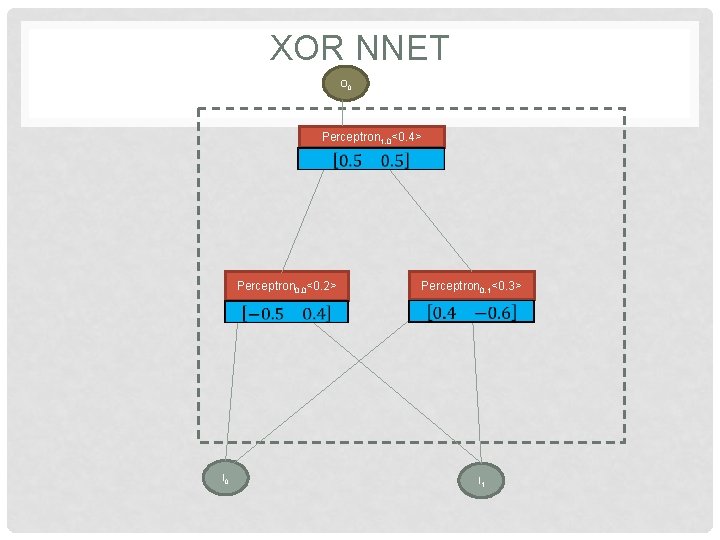

XOR NNET

[Diagram labels: output O0; Perceptron 1,0 <0.4>; Perceptron 0,1 <0.3>; Perceptron 0,0 <0.2>; inputs I0, I1]

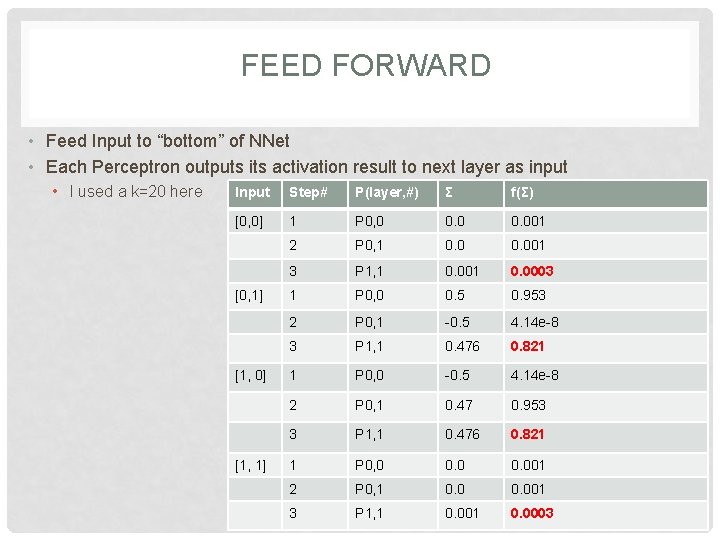

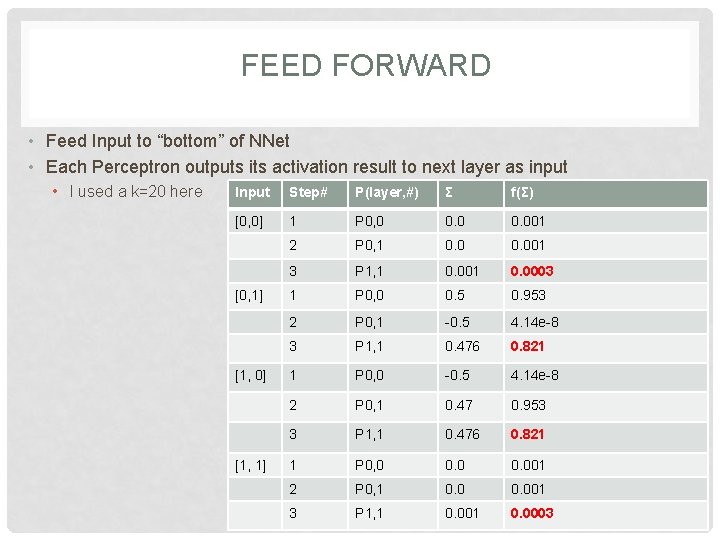

FEED FORWARD
- Feed Input to “bottom” of NNet
- Each Perceptron outputs its activation result to next layer as input
- I used a k=20 here

| Input | Step# | P(layer, #) | Σ | f(Σ) |
|---|---|---|---|---|
| [0, 0] | 1 | P 0,0 | | 0.001 |
| | 2 | P 0,1 | | 0.001 |
| | 3 | P 1,1 | | 0.0003 |
| [0, 1] | 1 | P 0,0 | 0.5 | 0.953 |
| | 2 | P 0,1 | -0.5 | 4.14e-8 |
| | 3 | P 1,1 | 0.476 | 0.821 |
| [1, 0] | 1 | P 0,0 | -0.5 | 4.14e-8 |
| | 2 | P 0,1 | 0.47 | 0.953 |
| | 3 | P 1,1 | 0.476 | 0.821 |
| [1, 1] | 1 | P 0,0 | | 0.001 |
| | 2 | P 0,1 | | 0.001 |
| | 3 | P 1,1 | | 0.0003 |

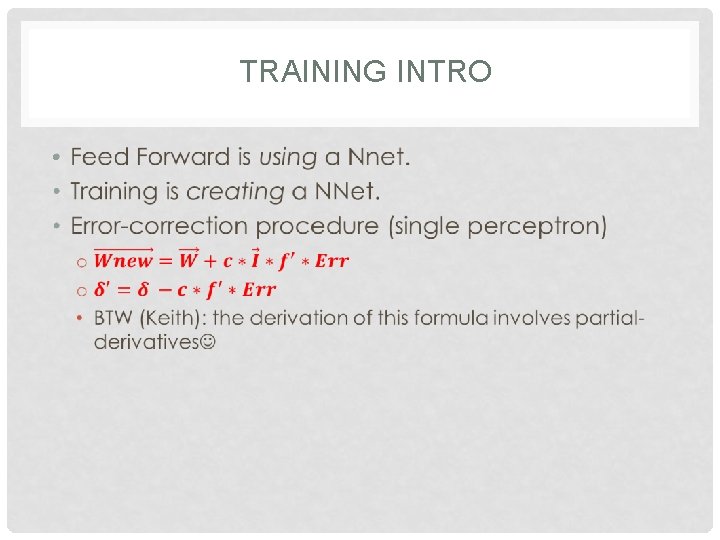

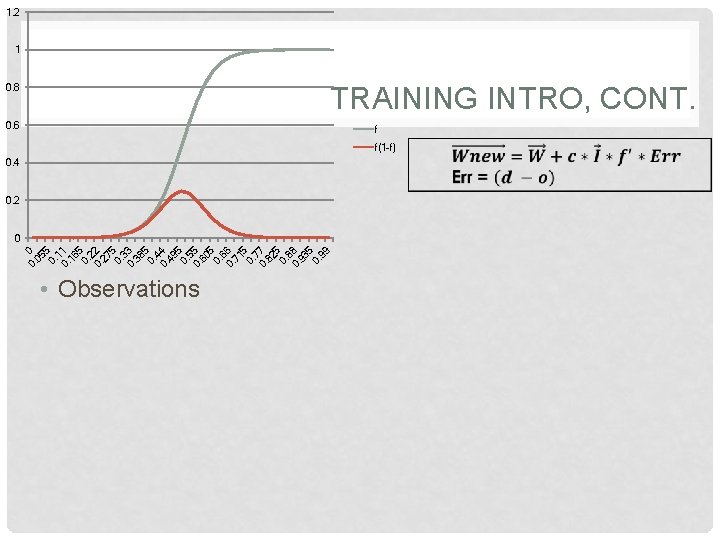

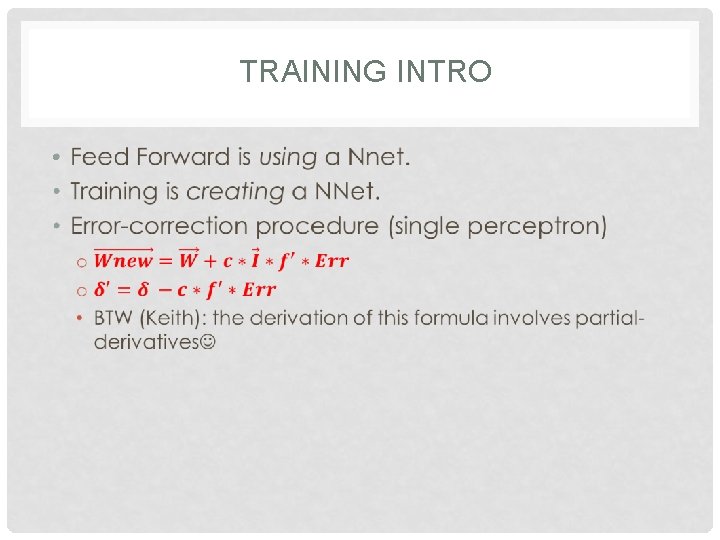
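The feed-forward pass can be sketched as a loop over layers. Every weight and threshold below is an assumption, chosen only because it reproduces outputs such as 0.953 and 0.821 with k=20 under a logistic activation; the real values are in the slide figures and may differ.

```python
import math

K = 20.0

def sigmoid(sigma, delta, k=K):
    """Assumed logistic activation with steepness k and threshold delta."""
    return 1.0 / (1.0 + math.exp(-k * (sigma - delta)))

# Each perceptron is (weights, delta). All numbers here are assumed values.
HIDDEN = [((-0.5, 0.5), 0.35),   # first hidden perceptron: fires for [0, 1]
          ((0.5, -0.5), 0.35)]   # second hidden perceptron: fires for [1, 0]
OUTPUT = [((0.5, 0.5), 0.4)]     # output perceptron: roughly an OR of the two
LAYERS = [HIDDEN, OUTPUT]

def feed_forward(inputs, layers=LAYERS):
    """Push inputs through each layer; a layer's outputs are the next layer's inputs."""
    values = list(inputs)
    for layer in layers:
        values = [sigmoid(sum(v * w for v, w in zip(values, weights)), delta)
                  for weights, delta in layer]
    return values

for case in [(0, 0), (0, 1), (1, 0), (1, 1)]:
    print(case, round(feed_forward(case)[0], 4))
```

Each hidden perceptron detects one of the two "exactly one input is on" cases, and the output perceptron combines them, which is how two layers get around the single-line limitation.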

TRAINING INTRO

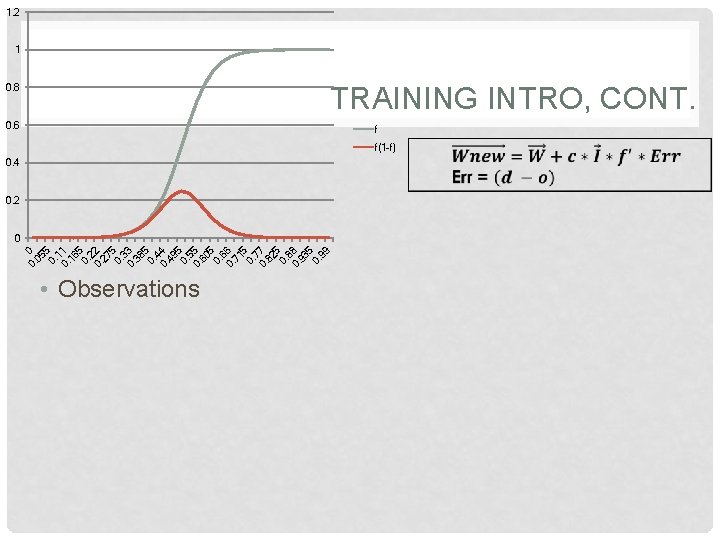

TRAINING INTRO, CONT.

[Plot: f and f(1 - f); x-axis 0.055 to 0.99, y-axis 0 to 1.2]

- Observations

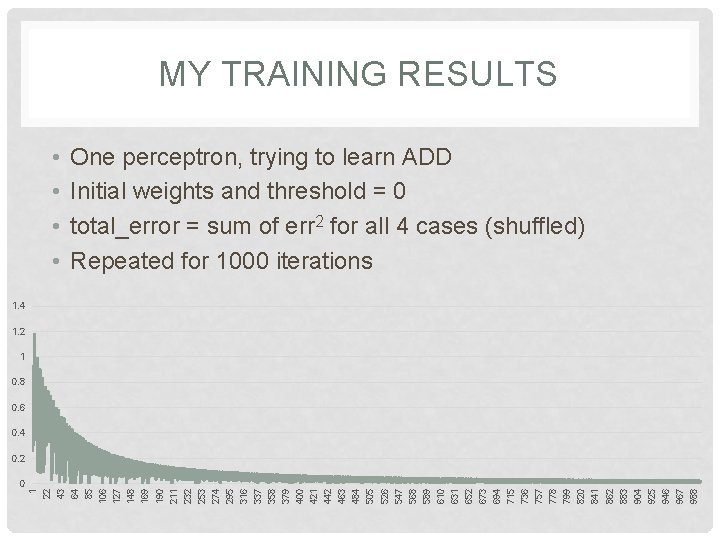

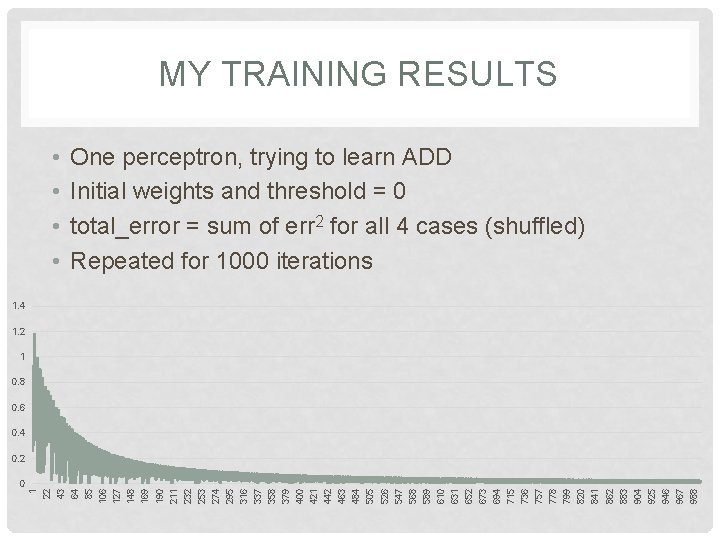
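The plot compares f with f(1 - f). Assuming the activation is the logistic function with steepness k and threshold δ (an assumption, since the slide's formula is in the image), the second curve is the derivative of the first up to the factor k:

$$
f(\Sigma) = \frac{1}{1 + e^{-k(\Sigma - \delta)}}, \qquad
\frac{df}{d\Sigma} = \frac{k\,e^{-k(\Sigma - \delta)}}{\left(1 + e^{-k(\Sigma - \delta)}\right)^{2}} = k\,f\,(1 - f)
$$

f(1 - f) peaks at 0.25 when f = 0.5 and falls toward 0 as f approaches 0 or 1, so a perceptron whose output is already saturated receives very small weight updates.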

MY TRAINING RESULTS
- One perceptron, trying to learn AND
- Initial weights and threshold = 0
- total_error = sum of err² for all 4 cases (shuffled)
- Repeated for 1000 iterations

[Plot: total_error (y-axis, 0 to 1.4) vs. iteration (x-axis, 1 to 988)]

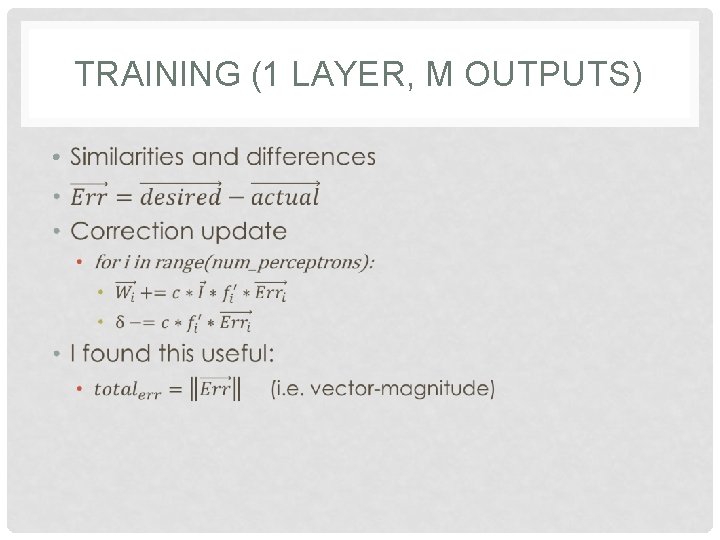

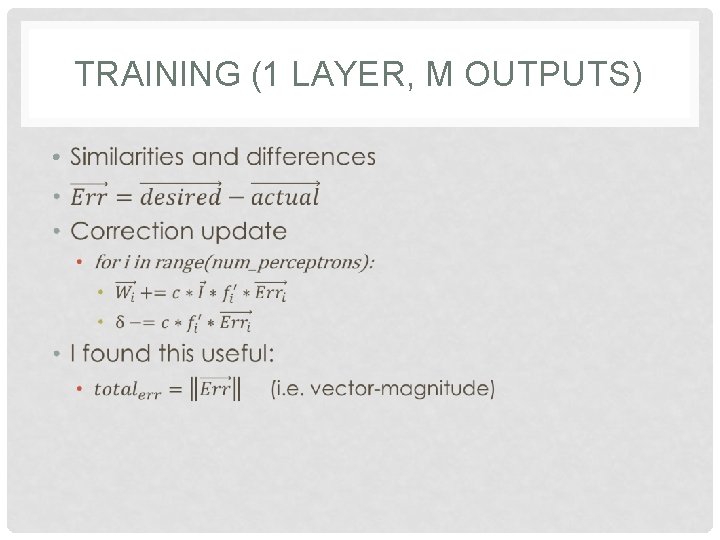
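A run like this can be sketched with a delta-rule update. The setup (one perceptron, weights and threshold starting at 0, four shuffled cases, 1000 iterations, total_error as the sum of squared errors) follows the slide. The learning rate, the logistic activation with k=5, and the exact update rule are assumptions, so the curve will not match the plot exactly.

```python
import math
import random

K = 5.0      # assumed steepness
RATE = 0.5   # assumed learning rate
CASES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]  # AND truth table

def sigmoid(sigma, delta, k=K):
    return 1.0 / (1.0 + math.exp(-k * (sigma - delta)))

def train(iterations=1000, seed=0):
    """Train one perceptron on AND; return (weights, delta, total_error per iteration)."""
    rng = random.Random(seed)
    weights, delta = [0.0, 0.0], 0.0
    cases = list(CASES)
    history = []
    for _ in range(iterations):
        rng.shuffle(cases)
        total_error = 0.0
        for inputs, target in cases:
            sigma = sum(i * w for i, w in zip(inputs, weights))
            out = sigmoid(sigma, delta)
            err = target - out
            total_error += err ** 2
            # Error scaled by the slope f(1 - f); constant factors fold into RATE.
            grad = err * out * (1.0 - out)
            weights = [w + RATE * grad * i for w, i in zip(weights, inputs)]
            delta -= RATE * grad   # raising delta lowers the output
        history.append(total_error)
    return weights, delta, history

weights, delta, history = train()
print("first total_error:", round(history[0], 3))
print("last total_error: ", round(history[-1], 3))
```

The threshold is trained alongside the weights, with the opposite sign, because increasing it pushes the output down.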

TRAINING (1 LAYER, M OUTPUTS)

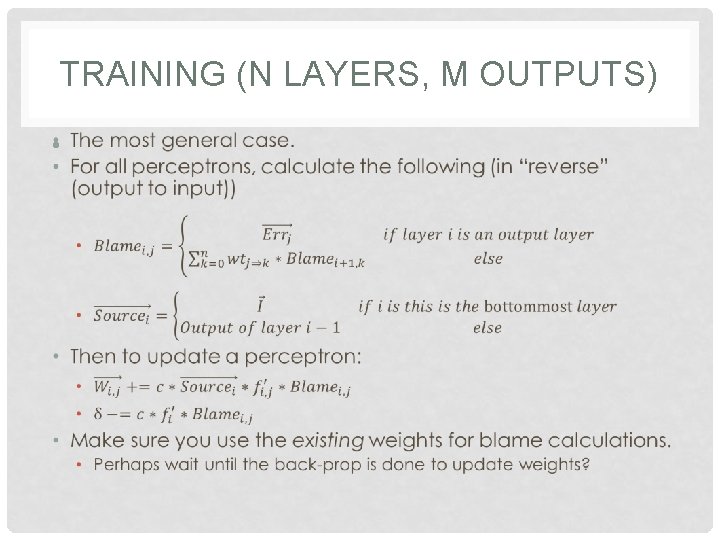

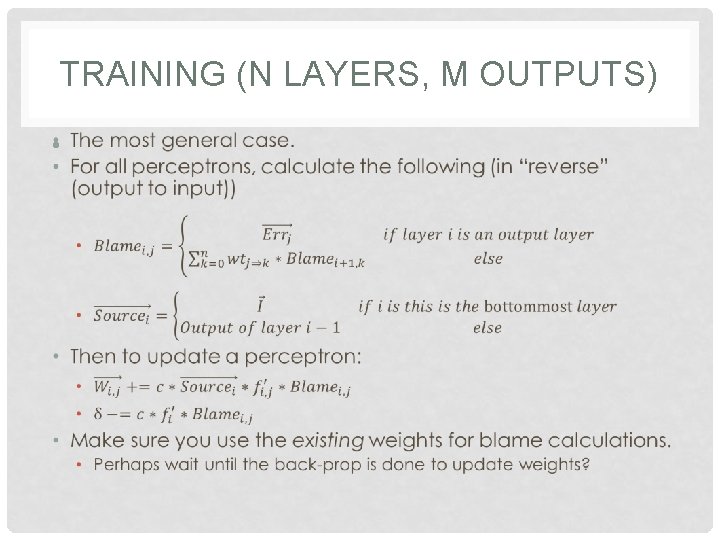

TRAINING (N LAYERS, M OUTPUTS)

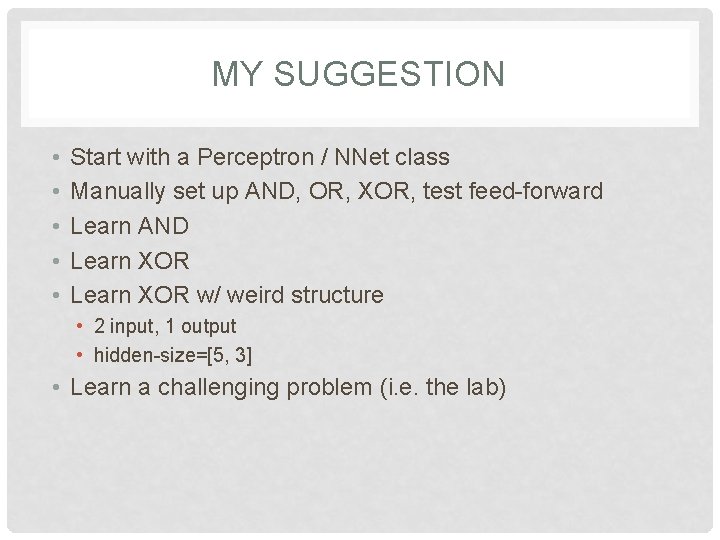

MY SUGGESTION
- Start with a Perceptron / NNet class
- Manually set up AND, OR, XOR, test feed-forward
- Learn AND
- Learn XOR w/ weird structure
  - 2 input, 1 output
  - hidden-size=[5, 3]
- Learn a challenging problem (i.e. the lab)
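As a starting point for the "Learn XOR" step, here is a minimal sketch of an `NNet` class with backpropagation, using 2 inputs, hidden sizes [5, 3], and 1 output. The class layout, method names, random initialization range, learning rate, and the plain logistic activation (k=1) are all assumptions, not the deck's implementation. Depending on the seed, training may stall before XOR is fully learned.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

class NNet:
    """Fully connected feed-forward net; sizes = [inputs, hidden..., outputs]."""

    def __init__(self, sizes, seed=0):
        rng = random.Random(seed)
        # weights[l][j][i]: weight from unit i of layer l to unit j of layer l + 1
        self.weights = [[[rng.uniform(-1, 1) for _ in range(n_in)]
                         for _ in range(n_out)]
                        for n_in, n_out in zip(sizes, sizes[1:])]
        # one threshold (delta) per perceptron
        self.deltas = [[rng.uniform(-1, 1) for _ in range(n_out)]
                       for n_out in sizes[1:]]

    def feed_forward(self, inputs):
        """Return the activations of every layer, input layer first."""
        acts = [list(inputs)]
        for w_layer, d_layer in zip(self.weights, self.deltas):
            prev = acts[-1]
            acts.append([sigmoid(sum(w * a for w, a in zip(ws, prev)) - d)
                         for ws, d in zip(w_layer, d_layer)])
        return acts

    def train_one(self, inputs, targets, rate=0.5):
        """One backpropagation step; returns the squared error before the update."""
        acts = self.feed_forward(inputs)
        out = acts[-1]
        # error term of each output unit: (target - out) * f'(sum)
        grads = [(t - o) * o * (1 - o) for t, o in zip(targets, out)]
        for l in range(len(self.weights) - 1, -1, -1):
            prev = acts[l]
            # error terms for the layer below, using the weights before they change
            below = [a * (1 - a) * sum(self.weights[l][j][i] * grads[j]
                                       for j in range(len(grads)))
                     for i, a in enumerate(prev)]
            for j, g in enumerate(grads):
                for i, a in enumerate(prev):
                    self.weights[l][j][i] += rate * g * a
                self.deltas[l][j] -= rate * g
            grads = below
        return sum((t - o) ** 2 for t, o in zip(targets, out))

XOR = [([0, 0], [0]), ([0, 1], [1]), ([1, 0], [1]), ([1, 1], [0])]
net = NNet([2, 5, 3, 1])
rng = random.Random(1)
for epoch in range(3000):
    rng.shuffle(XOR)
    total_error = sum(net.train_one(x, t) for x, t in XOR)
print("final total_error:", round(total_error, 4))
```

Computing the lower layer's error terms before updating the current layer's weights keeps each step a true gradient step; updating first is a common source of subtle bugs.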