The AI Security Paradox, Dr. Roman Yampolskiy (louisville.edu)

- Slides: 20

The AI Security Paradox. Dr. Roman Yampolskiy, Roman.Yampolskiy@louisville.edu. Computer Engineering and Computer Science, University of Louisville, cecs.louisville.edu/ry. Director, Cyber Security Lab. @romanyam, /roman.yampolskiy

What is AI Safety? AI + Cybersecurity = AI Safety & Security: the science and engineering aimed at creating safe and secure machines.

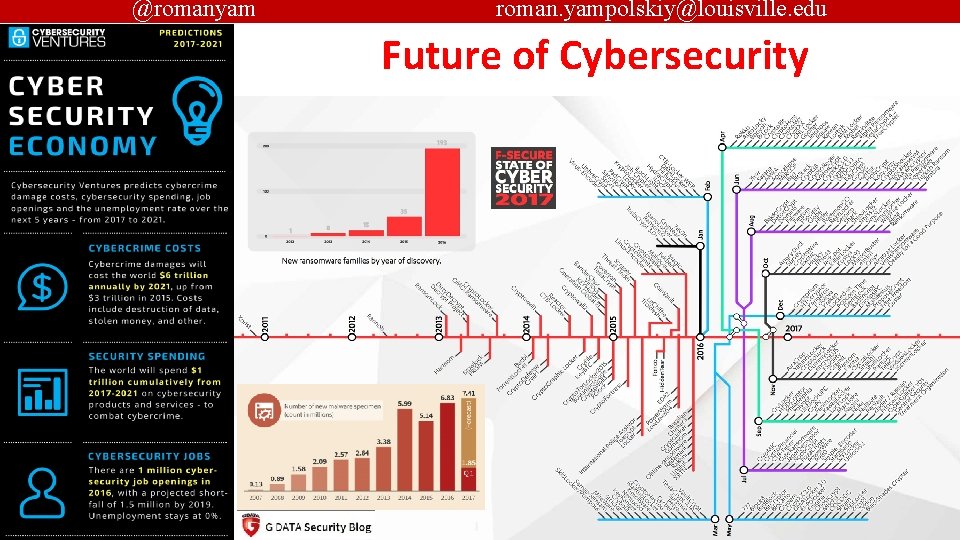

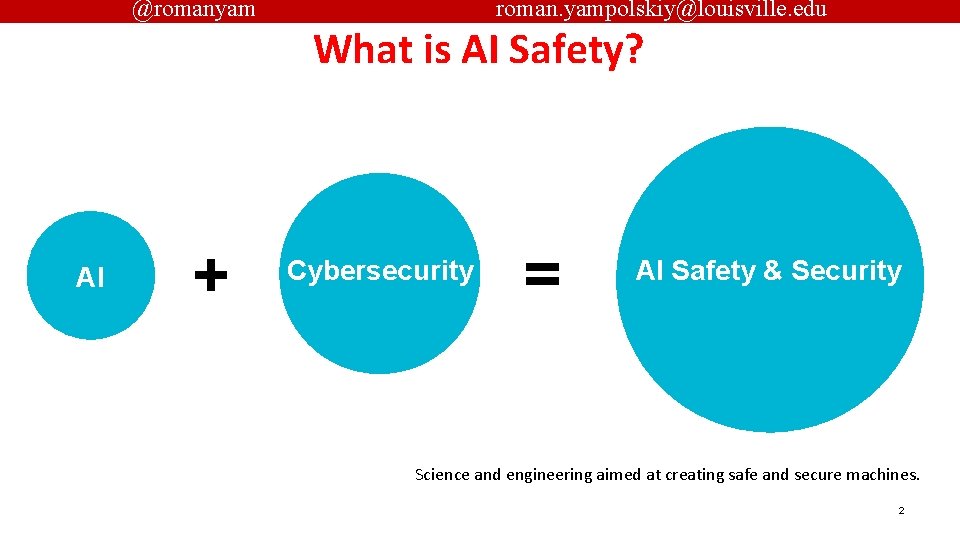

Future of Cybersecurity

AI for Cybersecurity (Example: IBM Watson)

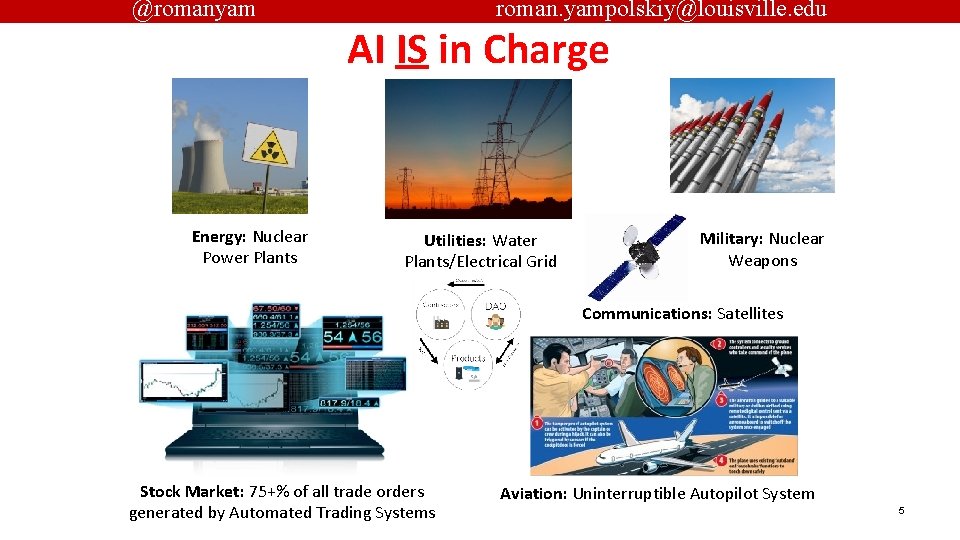

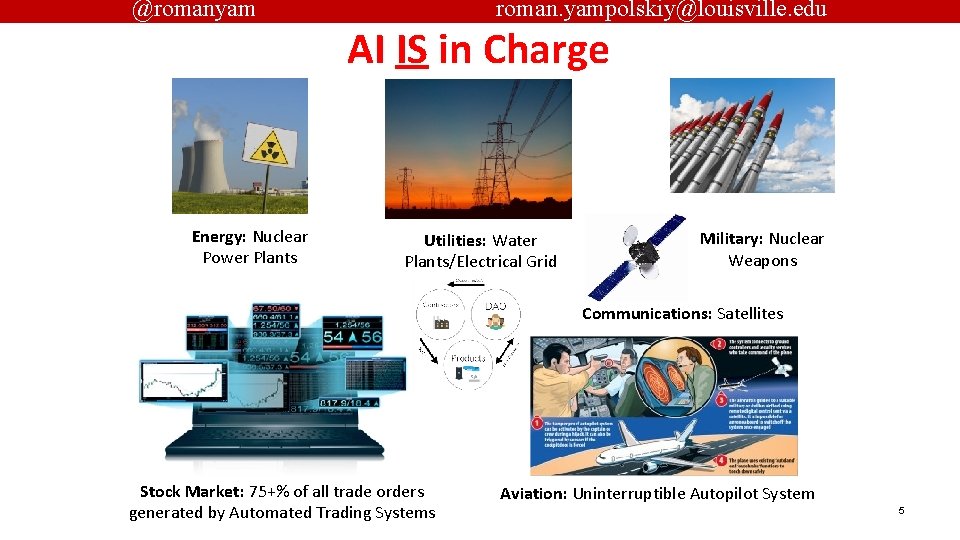

AI IS in Charge
• Energy: Nuclear Power Plants
• Utilities: Water Plants/Electrical Grid
• Military: Nuclear Weapons
• Communications: Satellites
• Stock Market: 75+% of all trade orders generated by Automated Trading Systems
• Aviation: Uninterruptible Autopilot System

What is Next? Superintelligence is Coming…

Super Smart

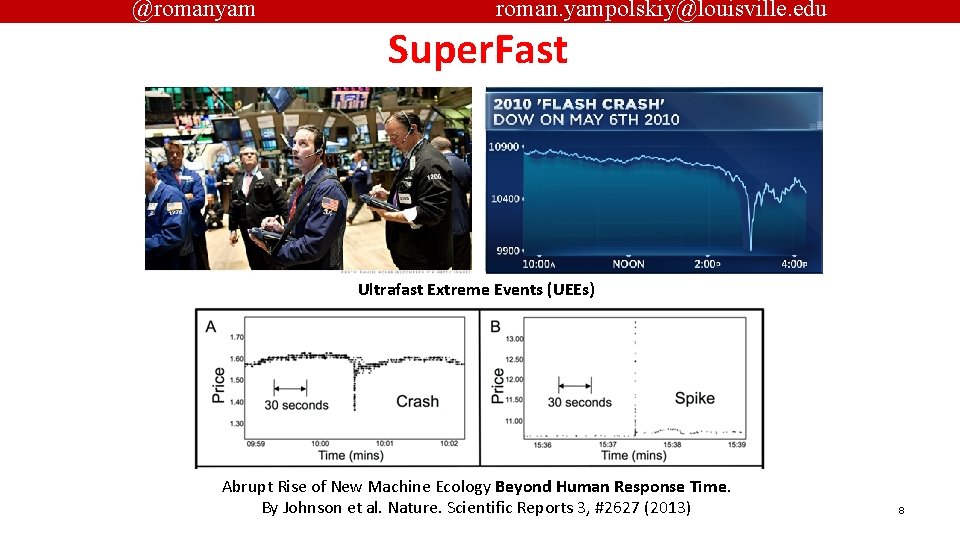

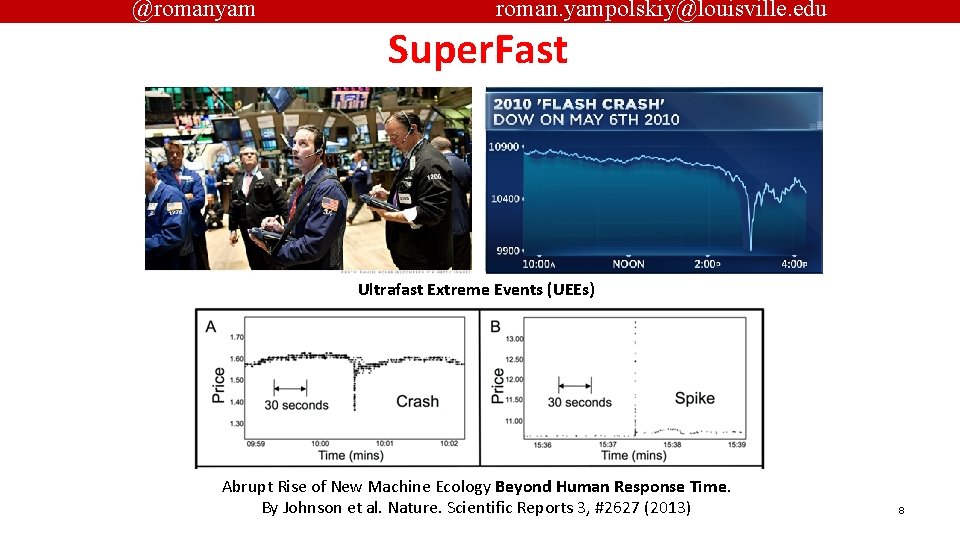

Super Fast. Ultrafast Extreme Events (UEEs). "Abrupt Rise of New Machine Ecology Beyond Human Response Time," by Johnson et al., Nature Scientific Reports 3, #2627 (2013).

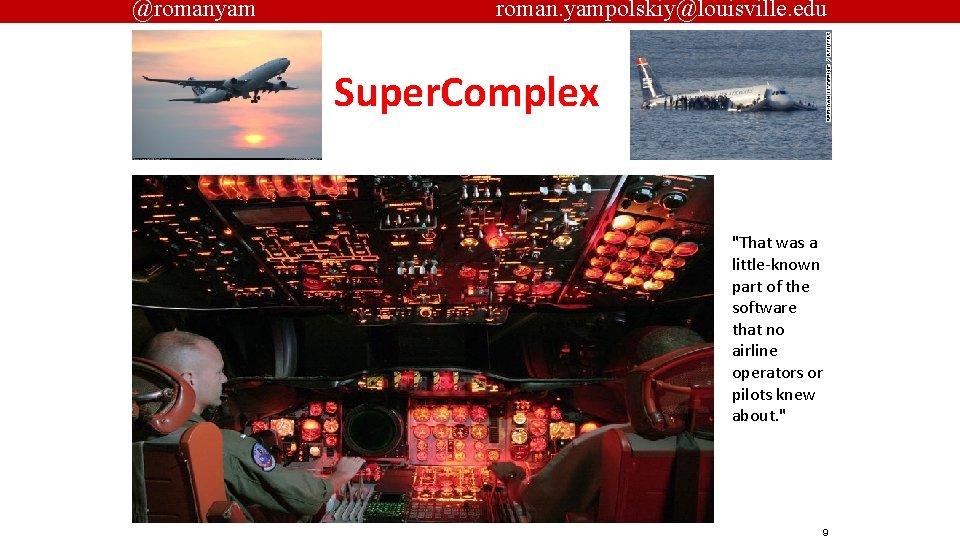

Super Complex. "That was a little-known part of the software that no airline operators or pilots knew about."

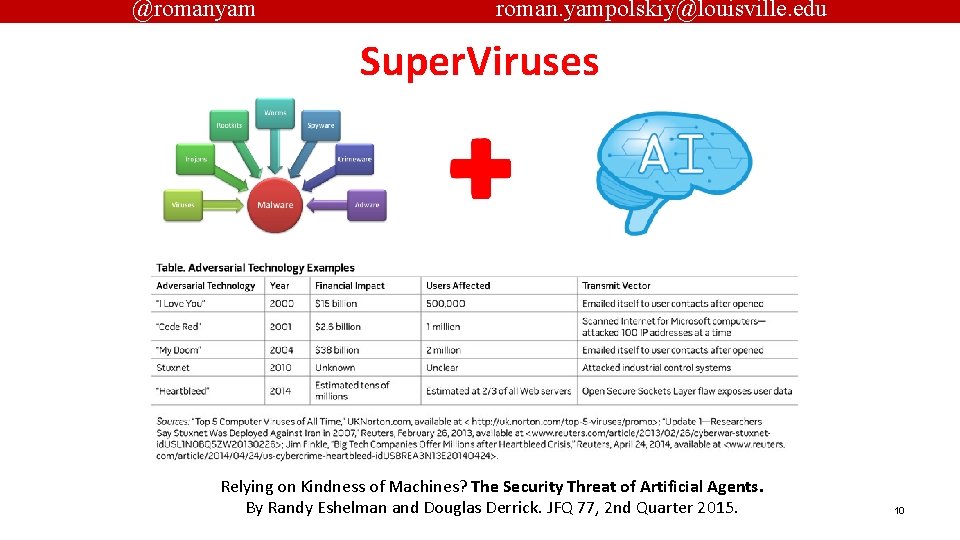

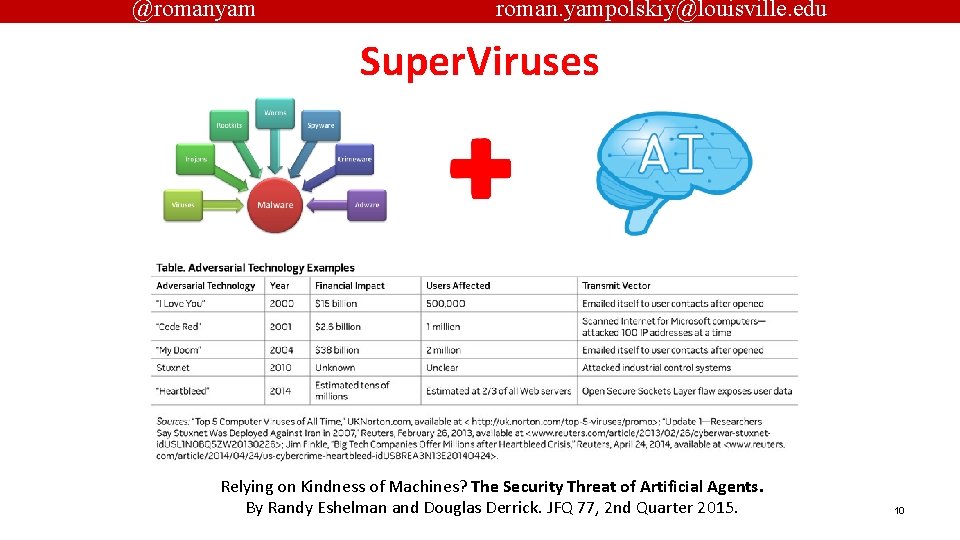

Super Viruses. "Relying on Kindness of Machines? The Security Threat of Artificial Agents," by Randy Eshelman and Douglas Derrick, JFQ 77, 2nd Quarter 2015.

Super Soldiers

Super Concerns
• “The development of full artificial intelligence could spell the end of the human race.”
• “I think we should be very careful about artificial intelligence”
• “…there’s some prudence in thinking about benchmarks that would indicate some general intelligence developing on the horizon.”
• "I am in the camp that is concerned about super intelligence"
• “…eventually they'll think faster than us and they'll get rid of the slow humans…”

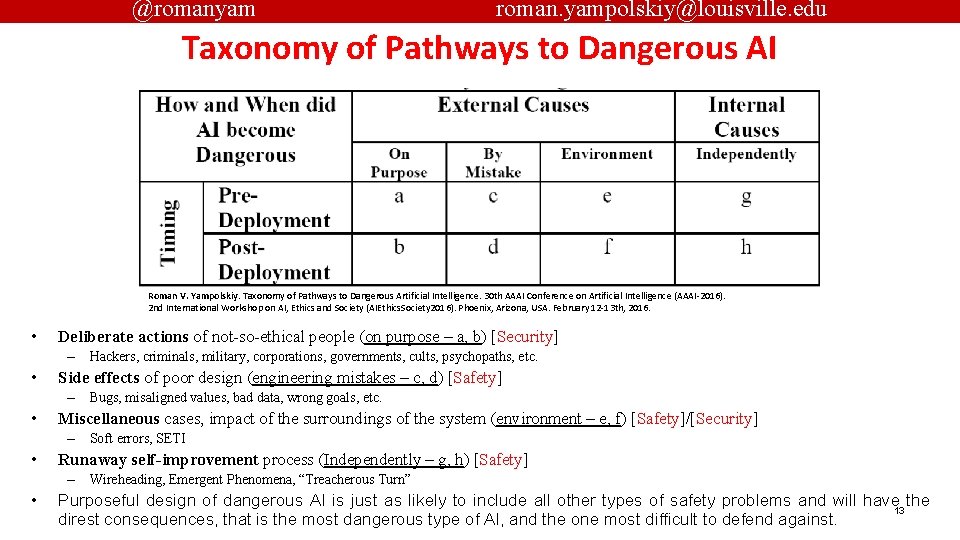

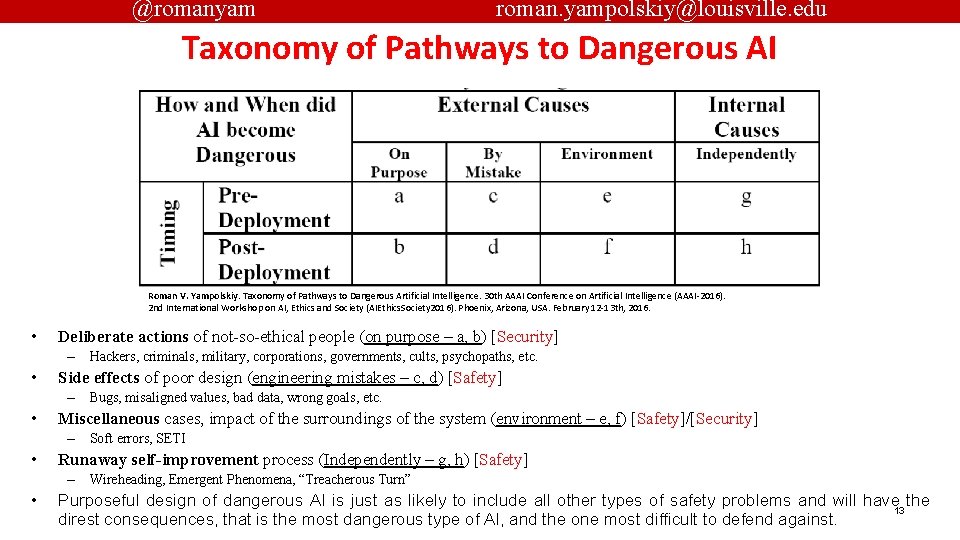

Taxonomy of Pathways to Dangerous AI
Roman V. Yampolskiy. Taxonomy of Pathways to Dangerous Artificial Intelligence. 30th AAAI Conference on Artificial Intelligence (AAAI-2016), 2nd International Workshop on AI, Ethics and Society (AIEthicsSociety 2016). Phoenix, Arizona, USA. February 12-13, 2016.
• Deliberate actions of not-so-ethical people (on purpose: a, b) [Security]
  – Hackers, criminals, military, corporations, governments, cults, psychopaths, etc.
• Side effects of poor design (engineering mistakes: c, d) [Safety]
  – Bugs, misaligned values, bad data, wrong goals, etc.
• Miscellaneous cases, impact of the surroundings of the system (environment: e, f) [Safety]/[Security]
  – Soft errors, SETI
• Runaway self-improvement process (independently: g, h) [Safety]
  – Wireheading, emergent phenomena, “Treacherous Turn”
• Purposeful design of dangerous AI is just as likely to include all other types of safety problems and will have the direst consequences; it is the most dangerous type of AI, and the one most difficult to defend against.

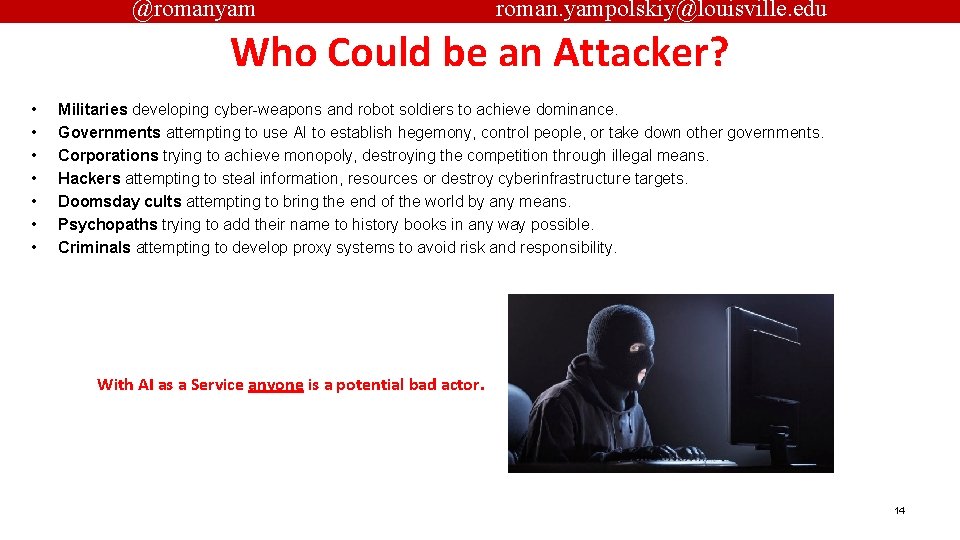

Who Could be an Attacker?
• Militaries developing cyber-weapons and robot soldiers to achieve dominance.
• Governments attempting to use AI to establish hegemony, control people, or take down other governments.
• Corporations trying to achieve a monopoly, destroying the competition through illegal means.
• Hackers attempting to steal information or resources, or to destroy cyberinfrastructure targets.
• Doomsday cults attempting to bring about the end of the world by any means.
• Psychopaths trying to add their name to history books in any way possible.
• Criminals attempting to develop proxy systems to avoid risk and responsibility.
With AI as a Service, anyone is a potential bad actor.

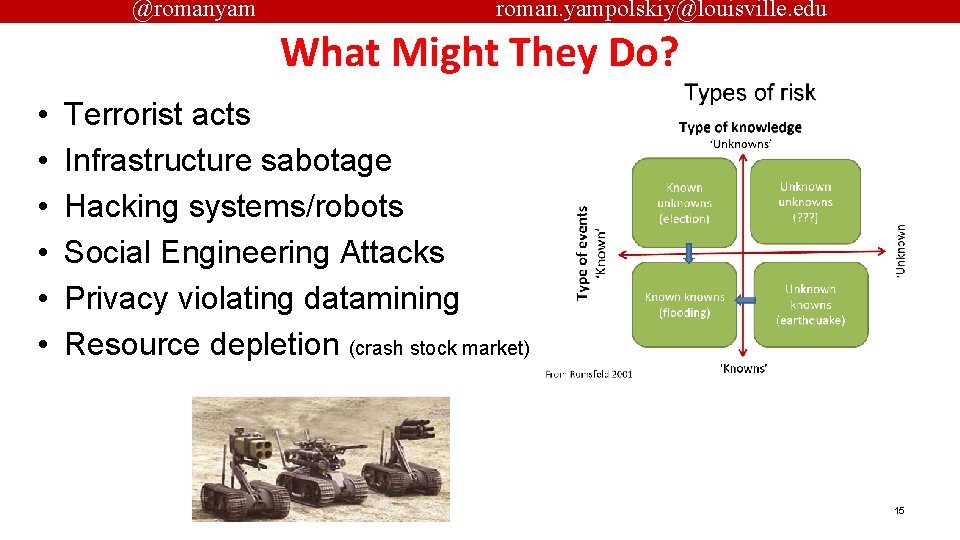

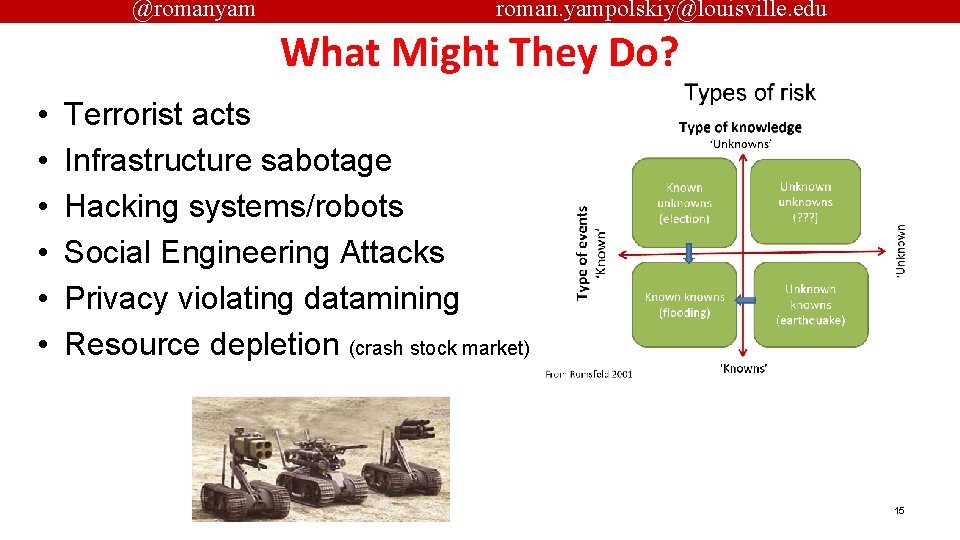

What Might They Do?
• Terrorist acts
• Infrastructure sabotage
• Hacking systems/robots
• Social engineering attacks
• Privacy-violating data mining
• Resource depletion (e.g., crashing the stock market)

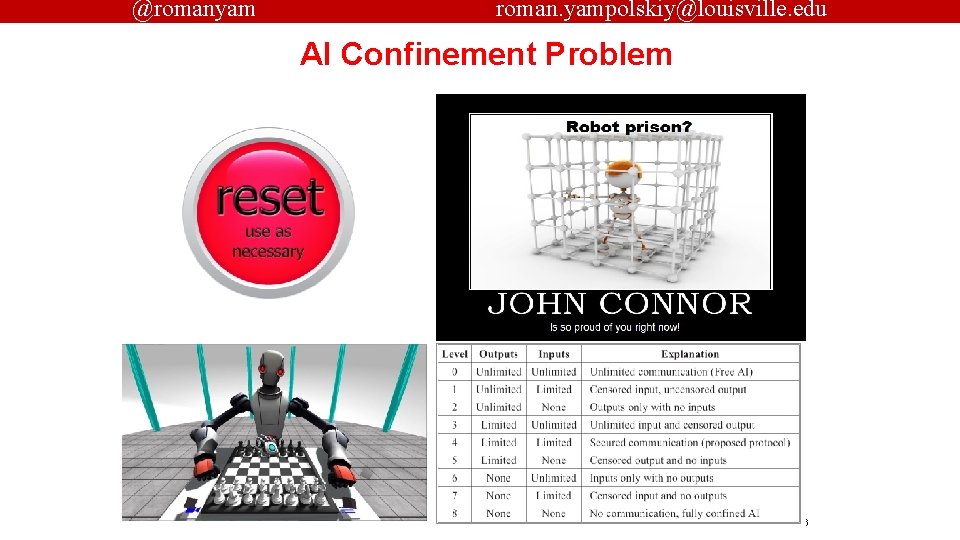

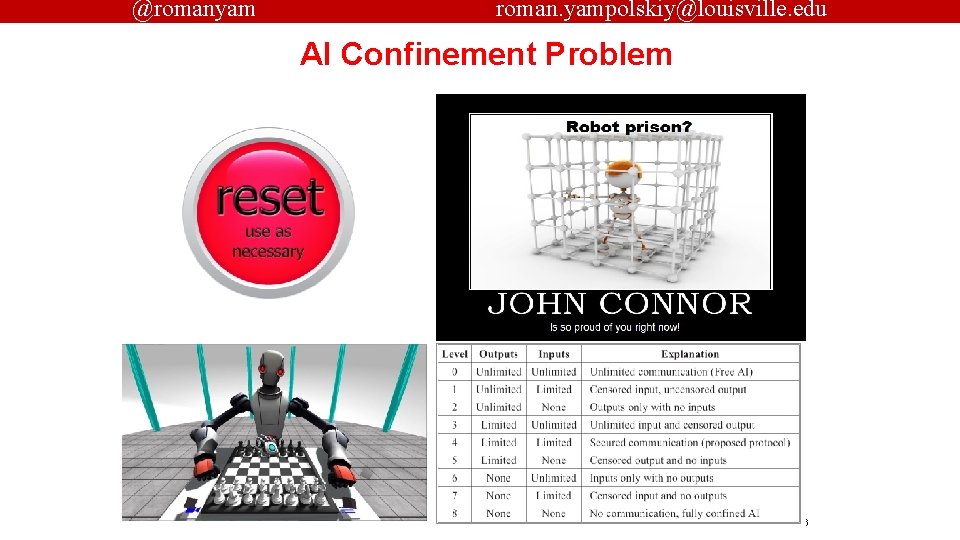

AI Confinement Problem

AI Regulation

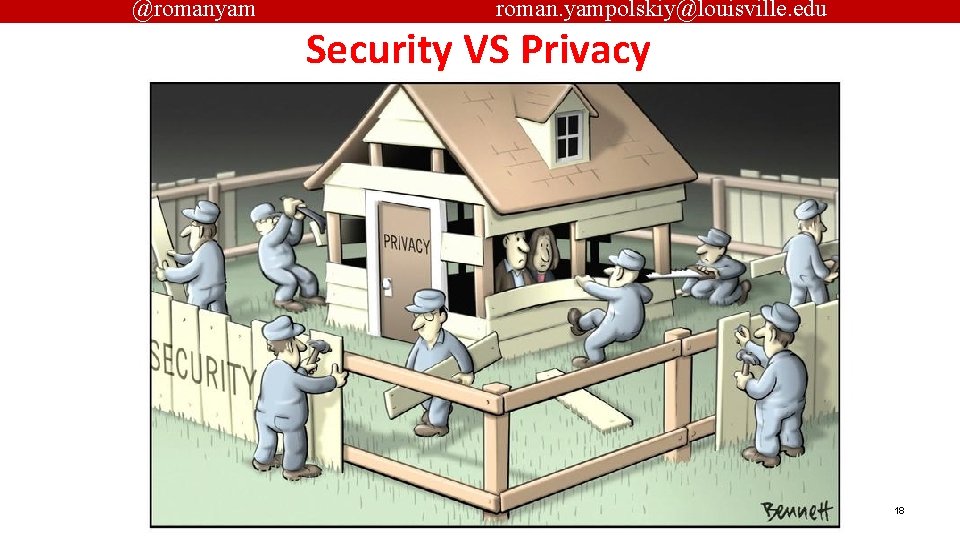

Security vs. Privacy

Conclusions
• AI failures and attacks will grow in frequency and severity in proportion to AI’s capability.
• Governments need to work to ensure the protection of citizens.

The End! Roman.Yampolskiy@louisville.edu. Director, Cyber Security Lab, Computer Engineering and Computer Science, University of Louisville, cecs.louisville.edu/ry. @romanyam, /Roman.Yampolskiy. All images used in this presentation are copyrighted to their respective owners and are used for educational purposes only.