Chapter 20 Part 3: Computational Lexical Semantics

- Slides: 69

Acknowledgements: these slides include material from Dan Jurafsky, Rada Mihalcea, Ray Mooney, Katrin Erk, and Ani Nenkova

Similarity Metrics
- Similarity metrics are useful not just for word sense disambiguation, but also for:
  - Finding topics of documents
  - Representing word meanings without reference to a fixed sense inventory
- We will start with dictionary-based (thesaurus-based) methods and then look at vector space models

Thesaurus-based word similarity
- We could use anything in the thesaurus:
  - Meronymy
  - Glosses
  - Example sentences
- In practice, by "thesaurus-based" we just mean using the is-a/subsumption/hypernym hierarchy
- We can define similarity between words or between senses

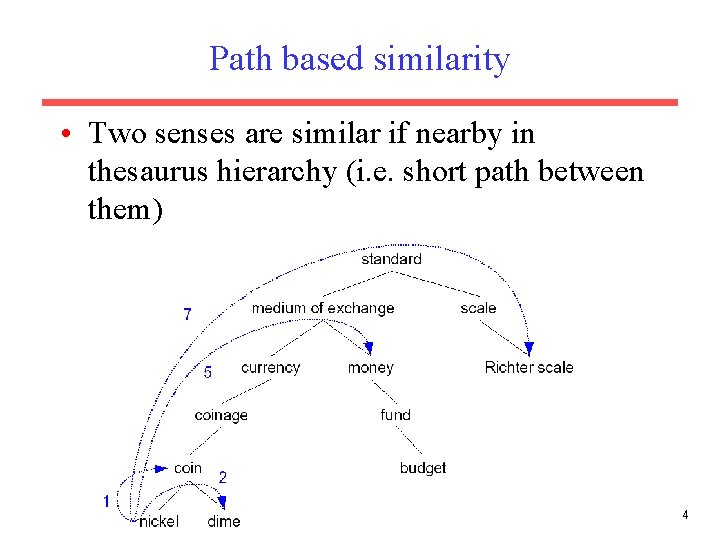

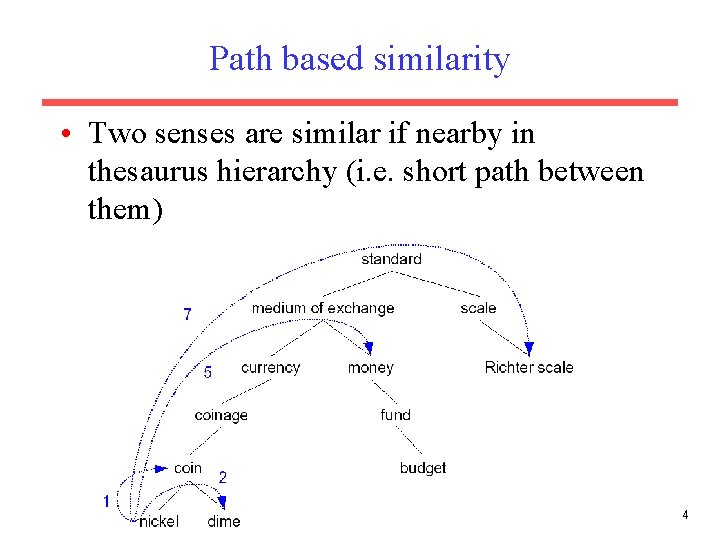

Path-based similarity
- Two senses are similar if they are nearby in the thesaurus hierarchy (i.e., there is a short path between them)

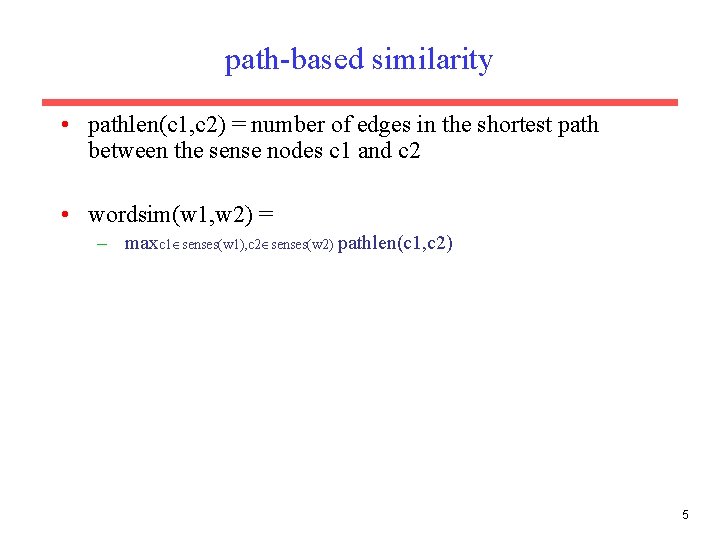

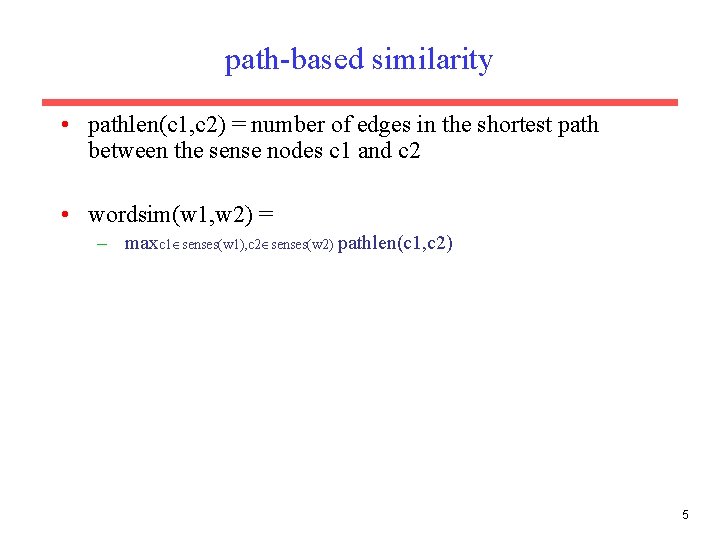

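Path-based similarity
- pathlen(c1, c2) = number of edges in the shortest path between the sense nodes c1 and c2
- Word similarity uses the closest pair of senses: $\mathrm{wordsim}(w_1, w_2) = \max_{c_1 \in \mathrm{senses}(w_1),\, c_2 \in \mathrm{senses}(w_2)} \left(-\mathrm{pathlen}(c_1, c_2)\right)$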
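
A minimal sketch of the two definitions above, assuming a tiny hand-built hypernym graph. The concept names and edges (`HYPERNYM`, `SENSES`) are hypothetical, loosely modeled on the nickel/money example, and are not WordNet data. `pathlen` runs a breadth-first search over the is-a links treated as undirected, and `wordsim` takes the sense pair with the largest value of −pathlen, i.e. the shortest path.

```python
from collections import deque
from itertools import product

# Hypothetical is-a edges (child -> parent); NOT real WordNet data.
HYPERNYM = {
    "nickel": "coin",
    "dime": "coin",
    "coin": "money",
    "budget": "fund",
    "fund": "money",
    "money": "medium_of_exchange",
    "medium_of_exchange": "standard",
    "scale": "standard",
}

# Hypothetical sense inventory: word -> its sense nodes (one sense each here,
# but wordsim works for any number of senses).
SENSES = {
    "nickel": ["nickel"],
    "dime": ["dime"],
    "budget": ["budget"],
    "scale": ["scale"],
}

# Undirected adjacency: a path may go up the hierarchy and back down.
ADJ = {}
for child, parent in HYPERNYM.items():
    ADJ.setdefault(child, set()).add(parent)
    ADJ.setdefault(parent, set()).add(child)


def pathlen(c1, c2):
    """Number of edges on the shortest path between sense nodes c1 and c2 (BFS)."""
    seen = {c1}
    queue = deque([(c1, 0)])
    while queue:
        node, dist = queue.popleft()
        if node == c2:
            return dist
        for nxt in ADJ.get(node, ()):
            if nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    raise ValueError(f"no path between {c1!r} and {c2!r}")


def wordsim(w1, w2):
    """Max over sense pairs of -pathlen: the closest pair of senses wins."""
    return max(-pathlen(c1, c2) for c1, c2 in product(SENSES[w1], SENSES[w2]))


print(wordsim("nickel", "dime"), wordsim("nickel", "budget"), wordsim("nickel", "scale"))
# prints: -2 -4 -5
```

Every edge costs 1 here, which is exactly the uniform-distance assumption criticized on the next slide.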

Problem with basic path-based similarity
- It assumes each link represents a uniform distance
- But some areas of WordNet are more developed than others
  - This depends on the people who created it
- Also, links deep in the hierarchy are intuitively narrower than links higher up [see the path-based similarity figure, e.g., nickel to money vs. nickel to standard]

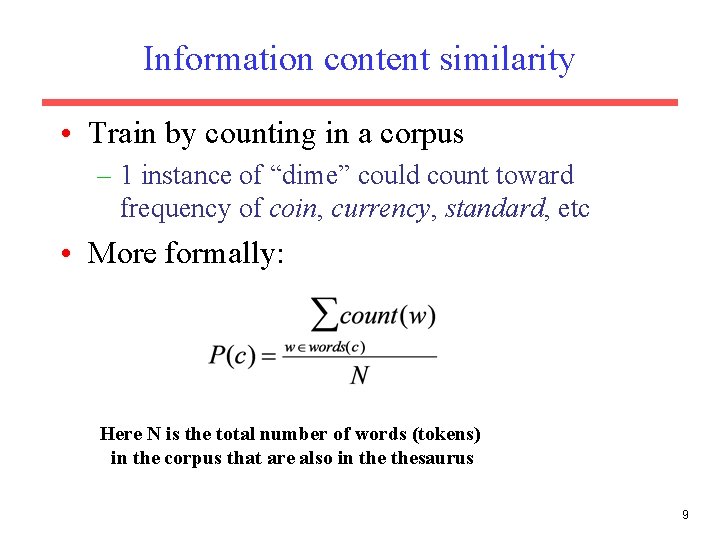

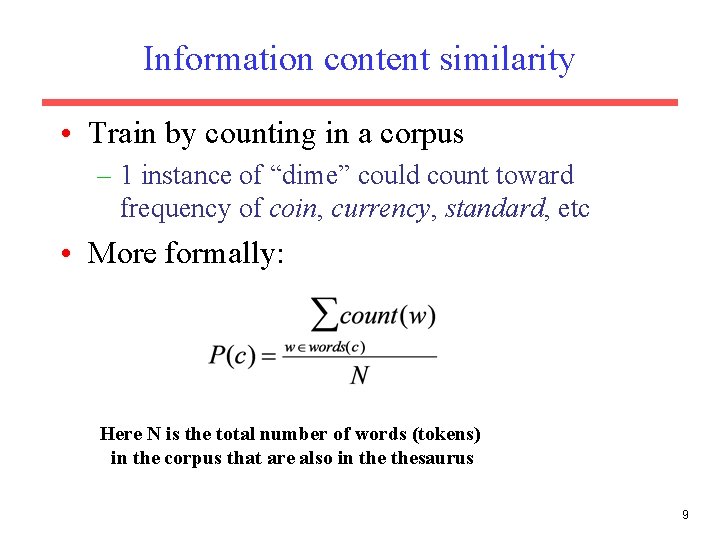

Information content similarity metrics
- Let's define P(c) as:
  - The probability that a randomly selected word in a corpus is an instance of concept c
  - A word is an instance of a concept if it appears below the concept in the WordNet hierarchy
  - We saw this idea when we covered selectional preferences

In particular:
- If there is a single node that is the ancestor of all nodes, then its probability is 1
- The lower a node is in the hierarchy, the lower its probability
- An occurrence of the word dime would count towards the frequency of coin, currency, standard, etc.

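Information content similarity
- Train by counting in a corpus
  - One instance of "dime" could count toward the frequency of coin, currency, standard, etc.
- More formally: $P(c) = \frac{\sum_{w \in \mathrm{words}(c)} \mathrm{count}(w)}{N}$, where words(c) is the set of words subsumed by c
- Here N is the total number of words (tokens) in the corpus that are also in the thesaurus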

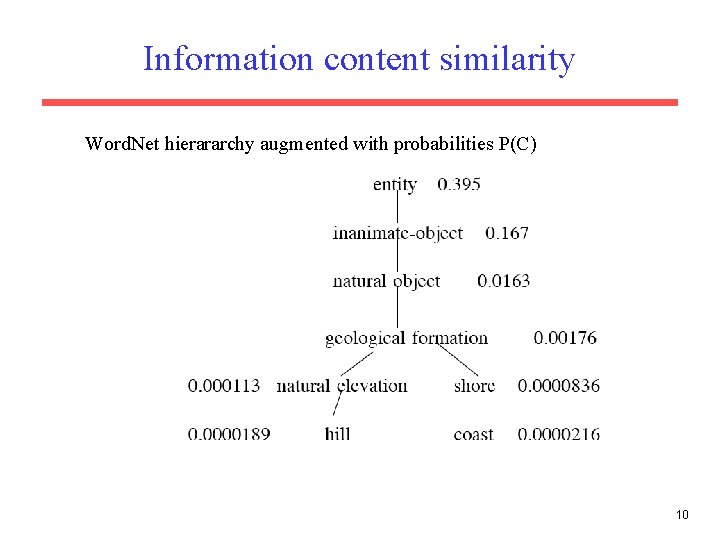

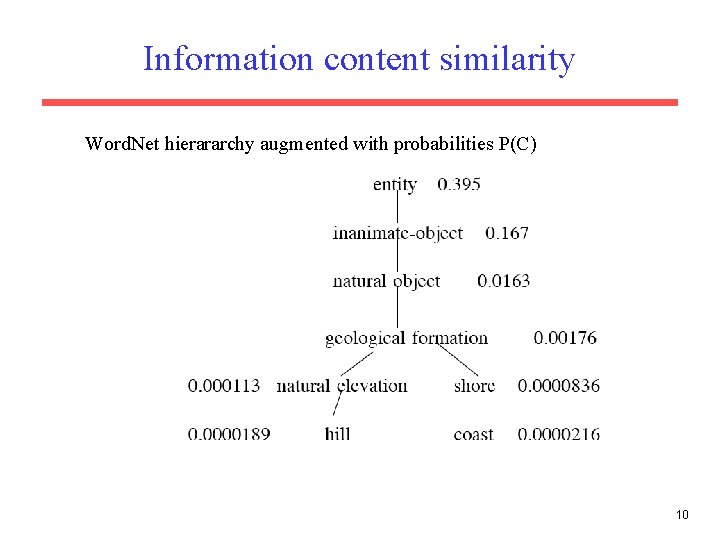
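
The counting procedure can be sketched as follows, assuming a toy hierarchy (`HYPERNYM` is hypothetical) and the simplification that each word maps to exactly one node; with real polysemous words, the count of a token would have to be shared among or assigned to its senses. Each in-thesaurus token is credited to its own node and to every ancestor, and N counts only tokens found in the hierarchy.

```python
from collections import Counter

# Hypothetical child -> parent is-a edges with a single root, "entity".
# Simplification (assumed): each word is exactly one node.
HYPERNYM = {
    "dime": "coin",
    "nickel": "coin",
    "coin": "currency",
    "currency": "standard",
    "standard": "entity",
    "lemon": "fruit",
    "fruit": "entity",
}
NODES = set(HYPERNYM) | set(HYPERNYM.values())


def ancestors_and_self(concept):
    """Yield the concept itself and every node above it, up to the root."""
    while concept is not None:
        yield concept
        concept = HYPERNYM.get(concept)


def concept_probabilities(tokens):
    """P(c) = (sum of counts of words at or below c) / N.

    N counts only tokens that are also in the hierarchy; each such token is
    credited to its own node and to all of that node's ancestors.
    """
    in_thesaurus = [t for t in tokens if t in NODES]
    n = len(in_thesaurus)
    if n == 0:
        raise ValueError("no corpus tokens found in the hierarchy")
    counts = Counter()
    for token in in_thesaurus:
        for c in ancestors_and_self(token):
            counts[c] += 1
    return {c: counts[c] / n for c in NODES}


p = concept_probabilities("the dime and a dime and a nickel and a lemon".split())
print(p["entity"], p["coin"], p["dime"], p["lemon"])  # prints: 1.0 0.75 0.5 0.25
```

As the slide says, the root gets probability 1 and probabilities shrink going down the hierarchy.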

Information content similarity: WordNet hierarchy augmented with probabilities P(c)

Information content: definitions
- Information content: $\mathrm{IC}(c) = -\log P(c)$
- Lowest common subsumer LCS(c1, c2):
  - I.e., the lowest node in the hierarchy that subsumes (is a hypernym of) both c1 and c2

Resnik method
- The similarity between two senses is related to their common information
- The more two senses have in common, the more similar they are
- Resnik measures the common information as the information content of the lowest common subsumer of the two senses:
  - $\mathrm{sim}_{\mathrm{Resnik}}(c_1, c_2) = -\log P(\mathrm{LCS}(c_1, c_2))$
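
A minimal sketch of IC, LCS, and Resnik similarity, assuming a toy hierarchy and made-up probabilities (`HYPERNYM` and `P` are hypothetical; in practice P(c) would come from corpus counts as described earlier). The LCS is found by walking from c1 toward the root and stopping at the first node that also lies on c2's path to the root. The natural log is used; the base only rescales the scores.

```python
import math

# Hypothetical hierarchy (child -> parent) and hypothetical P(c) values.
# P never decreases going up, and the single root has P = 1.
HYPERNYM = {
    "nickel": "coin",
    "dime": "coin",
    "coin": "money",
    "budget": "fund",
    "fund": "money",
    "money": "entity",
    "lemon": "fruit",
    "fruit": "entity",
}
P = {
    "nickel": 0.001, "dime": 0.002, "coin": 0.005,
    "budget": 0.004, "fund": 0.01, "money": 0.05,
    "lemon": 0.003, "fruit": 0.02, "entity": 1.0,
}


def ic(c):
    """Information content IC(c) = -log P(c) (natural log here)."""
    return -math.log(P[c])


def path_to_root(c):
    """c followed by its hypernyms, ending at the root."""
    path = [c]
    while c in HYPERNYM:
        c = HYPERNYM[c]
        path.append(c)
    return path


def lcs(c1, c2):
    """Lowest common subsumer: first node at or above c1 that also subsumes c2."""
    above_c2 = set(path_to_root(c2))
    return next(c for c in path_to_root(c1) if c in above_c2)


def sim_resnik(c1, c2):
    """Resnik similarity: -log P(LCS(c1, c2)), the IC of the lowest common subsumer."""
    return ic(lcs(c1, c2))


for pair in [("nickel", "dime"), ("nickel", "budget"), ("nickel", "lemon")]:
    print(pair, lcs(*pair), sim_resnik(*pair))
```

Pairs whose LCS is the root score 0, and pairs that meet lower in the hierarchy (at a less probable node) score higher.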

Example use:
- Yaw Gyamfi, Janyce Wiebe, Rada Mihalcea, and Cem Akkaya (2009). Integrating Knowledge for Subjectivity Sense Labeling. HLT-NAACL 2009.

What is subjectivity?
- The linguistic expression of somebody’s opinions, sentiments, emotions, evaluations, beliefs, and speculations (private states)
- This particular use of "subjectivity" was adapted from literary theory (Banfield 1982; Wiebe 1990)

Examples of Subjective Expressions
- References to private states
  - She was enthusiastic about the plan
- Descriptions
  - That would lead to disastrous consequences
  - What a freak show

Subjectivity Analysis
- Automatic extraction of subjectivity (opinions) from text or dialog

Subjectivity Analysis: Applications
- Opinion-oriented question answering: How do the Chinese regard the human rights record of the United States?
- Product review mining: What features of the ThinkPad T43 do customers like and which do they dislike?
- Review classification: Is a review positive or negative toward the movie?
- Tracking sentiments toward topics over time: Is anger ratcheting up or cooling down?
- Etc.

Subjectivity Lexicons
- Most approaches to subjectivity and sentiment analysis exploit subjectivity lexicons
  - Lists of keywords that have been gathered together because they have subjective uses: Brilliant, Difference, Hate, Interest, Love, …

Automatically Identifying Subjective Words
- Much work in this area: Hatzivassiloglou & McKeown ACL 97; Wiebe AAAI 00; Turney ACL 02; Kamps & Marx 2002; Wiebe, Riloff, & Wilson CoNLL 03; Yu & Hatzivassiloglou EMNLP 03; Kim & Hovy IJCNLP 05; Esuli & Sebastiani CIKM 05; Andreevskaia & Bergler EACL 06; etc.
- Subjectivity Lexicon available at: http://www.cs.pitt.edu/mpqa
  - Entries from several sources

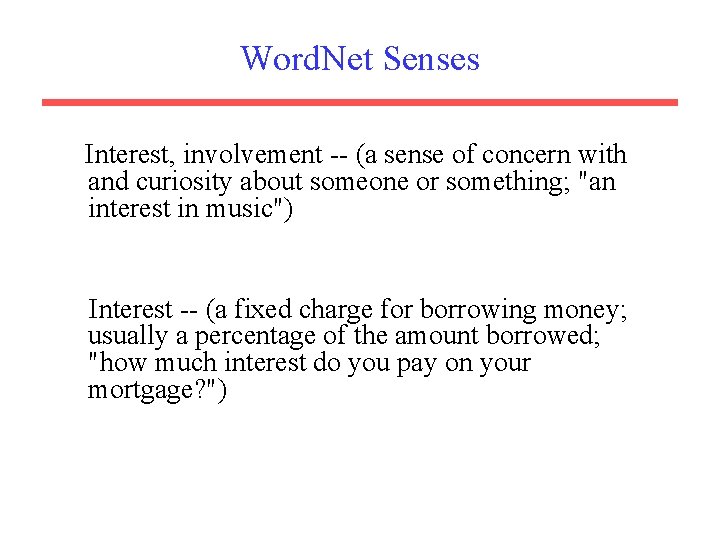

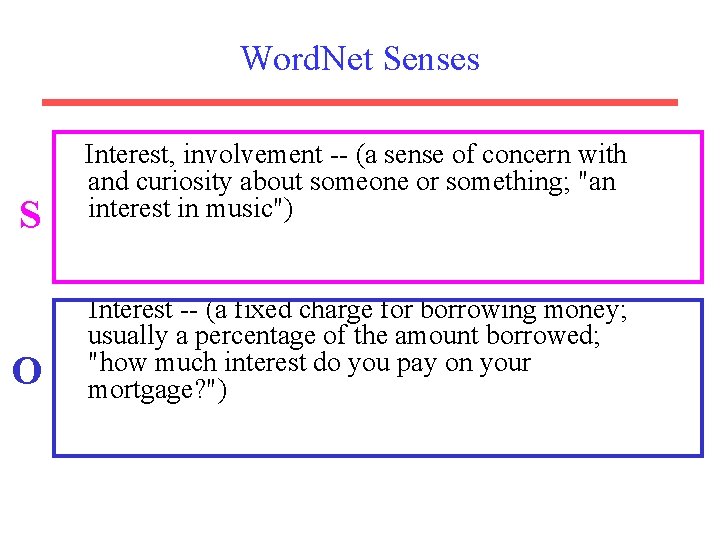

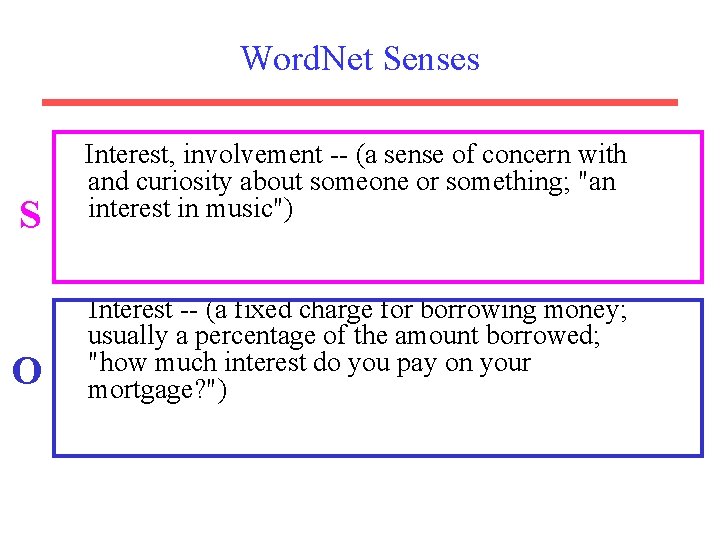

However…
- Consider the keyword "interest"
- It is in the subjectivity lexicon
- But what about "interest rate", for example?


WordNet senses of "interest" (S = subjective, O = objective):
- S: Interest, involvement -- (a sense of concern with and curiosity about someone or something; "an interest in music")
- O: Interest -- (a fixed charge for borrowing money; usually a percentage of the amount borrowed; "how much interest do you pay on your mortgage?")

Senses
- Even in subjectivity lexicons, many senses of the keywords are objective
- Thus, many appearances of keywords in texts are false hits

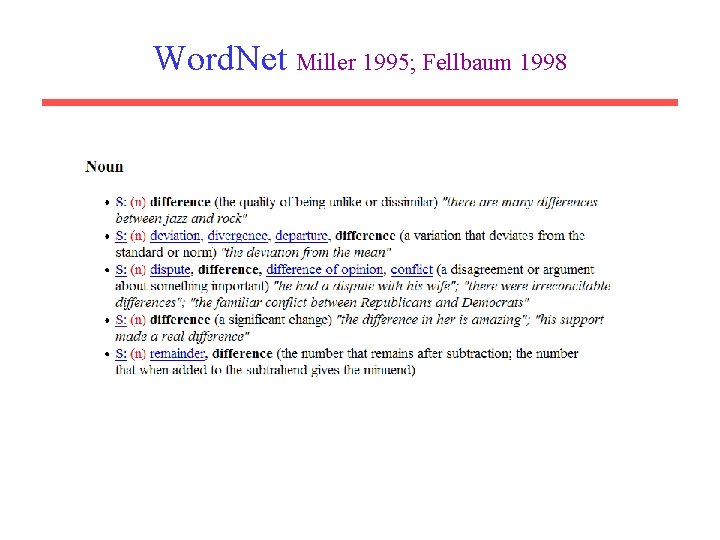

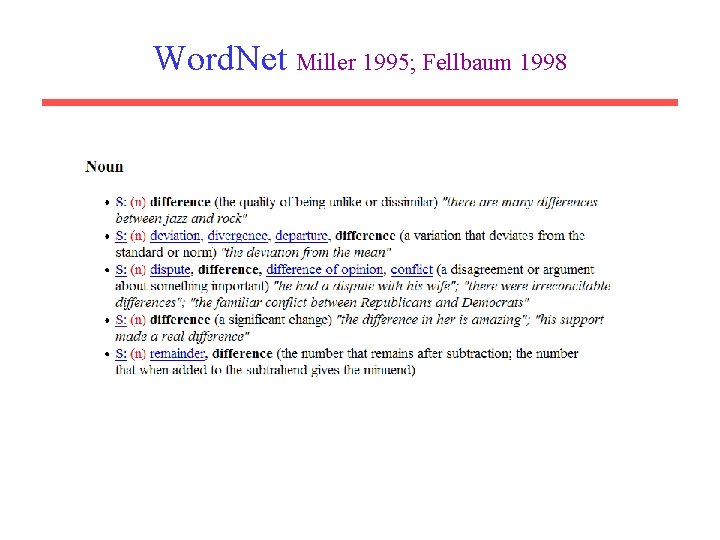

WordNet (Miller 1995; Fellbaum 1998)

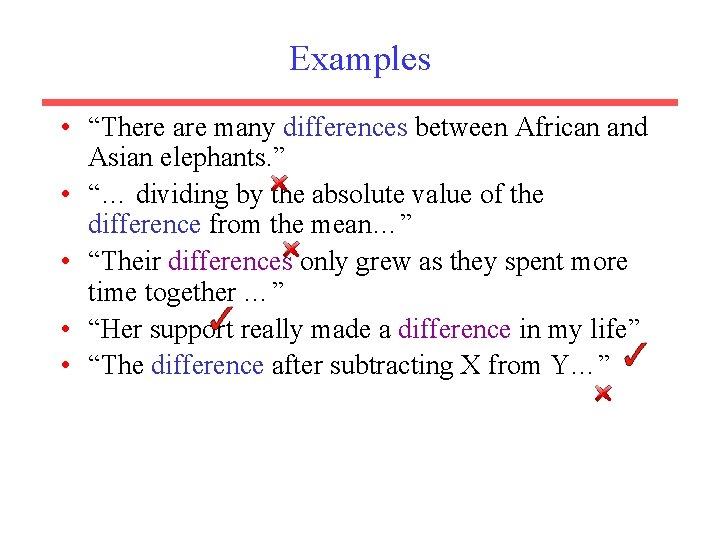

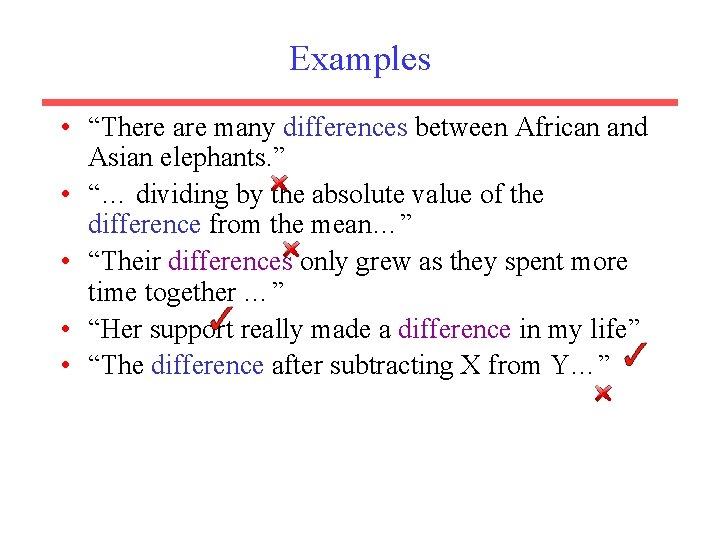

Examples
- “There are many differences between African and Asian elephants.”
- “… dividing by the absolute value of the difference from the mean …”
- “Their differences only grew as they spent more time together …”
- “Her support really made a difference in my life”
- “The difference after subtracting X from Y …”

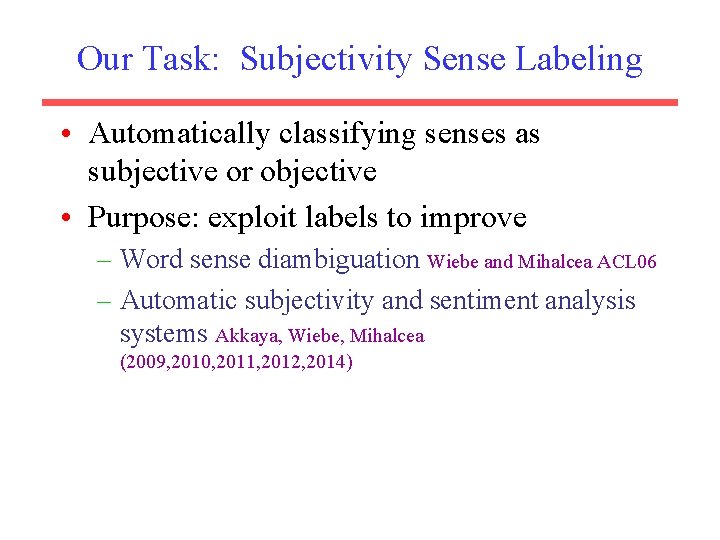

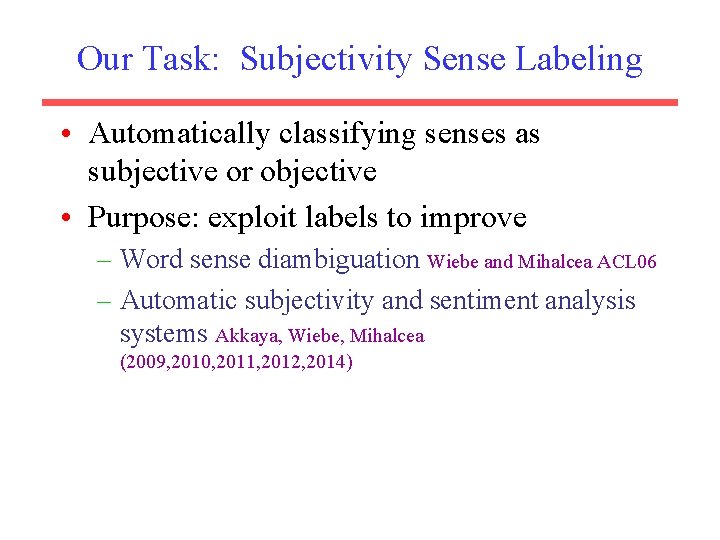

Our Task: Subjectivity Sense Labeling
- Automatically classifying senses as subjective or objective
- Purpose: exploit the labels to improve
  - Word sense disambiguation (Wiebe and Mihalcea ACL 06)
  - Automatic subjectivity and sentiment analysis systems (Akkaya, Wiebe, Mihalcea 2009, 2010, 2011, 2012, 2014)

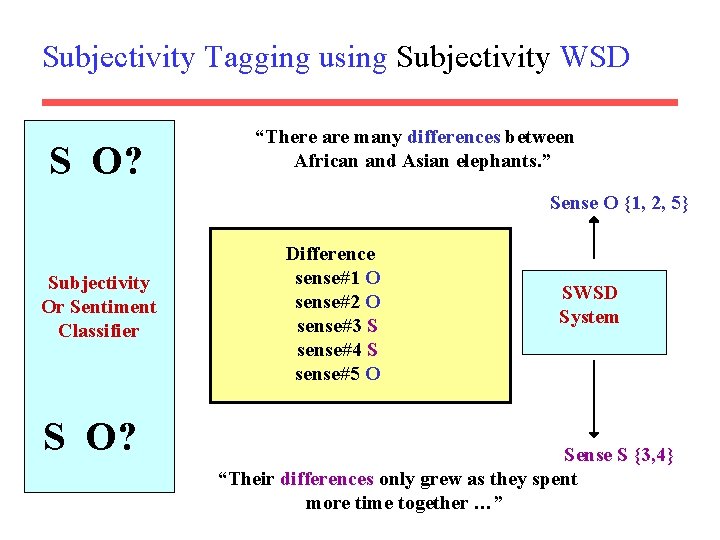

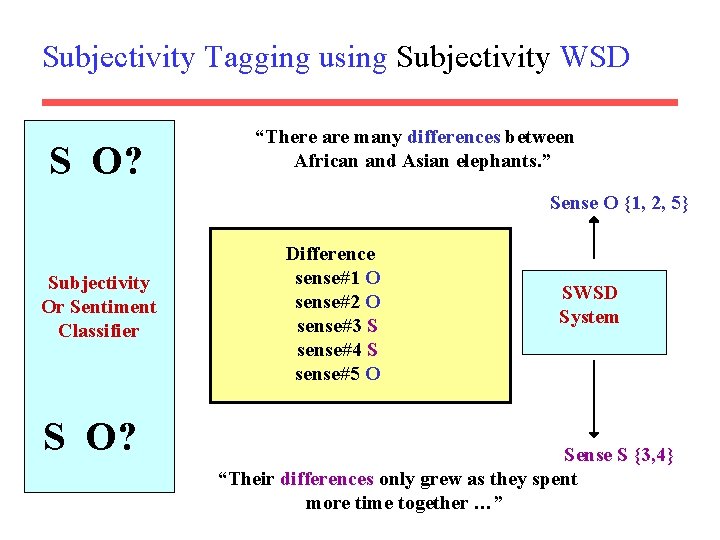

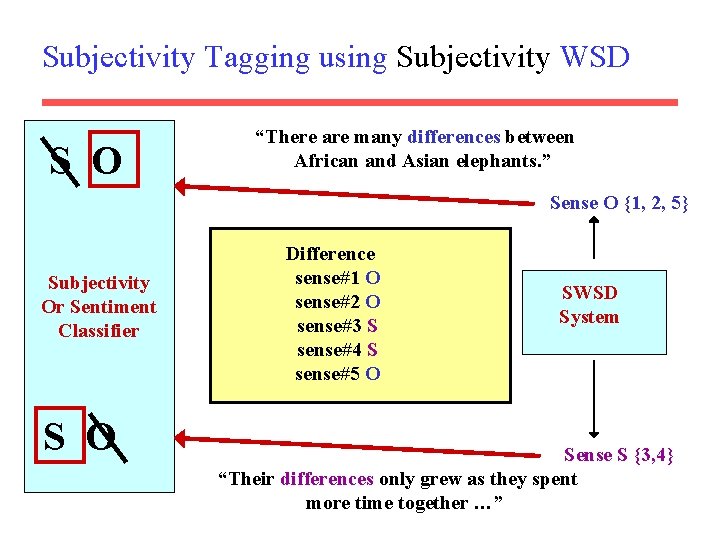

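Subjectivity Tagging using Subjectivity WSD
- Senses of "difference": sense#1 O, sense#2 O, sense#3 S, sense#4 S, sense#5 O
- The SWSD system decides whether an occurrence belongs to the objective sense set O {1, 2, 5} or the subjective sense set S {3, 4}, and passes that decision (S or O?) to the subjectivity or sentiment classifier
  - “There are many differences between African and Asian elephants.” → O {1, 2, 5}
  - “Their differences only grew as they spent more time together …” → S {3, 4}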

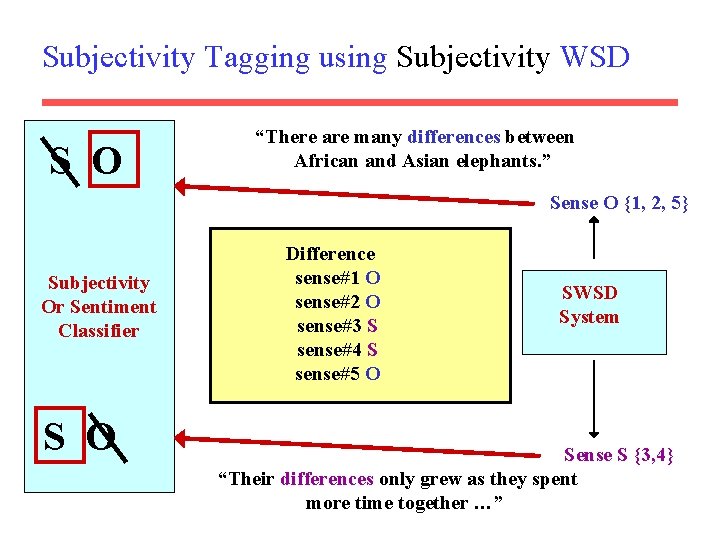
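
The pipeline on this slide can be sketched as a filter in front of plain lexicon matching. This is a hedged illustration only: `LEXICON` is a hypothetical mini-lexicon built from keywords mentioned on these slides, and `toy_swsd` is a hypothetical hand-written stand-in for a trained SWSD classifier, not the system from the paper. A keyword hit is kept as subjective evidence only when the SWSD decision is S.

```python
from typing import Callable, List

# Hypothetical mini-lexicon built from keywords mentioned on these slides.
LEXICON = {"brilliant", "difference", "differences", "hate", "interest", "love"}


def lexicon_hits(tokens: List[str]) -> List[str]:
    """Plain lexicon lookup: every keyword occurrence counts as subjective evidence."""
    return [t for t in tokens if t in LEXICON]


def swsd_filtered_hits(tokens: List[str],
                       swsd: Callable[[List[str], int], str]) -> List[str]:
    """Keep a keyword hit only if the SWSD system assigns it to the S sense set."""
    return [t for i, t in enumerate(tokens) if t in LEXICON and swsd(tokens, i) == "S"]


def toy_swsd(tokens: List[str], i: int) -> str:
    """Hypothetical stand-in for a trained SWSD classifier (NOT the paper's system):
    'difference(s) between ...' is treated as the objective set O {1, 2, 5}."""
    return "O" if "between" in tokens[i + 1:i + 3] else "S"


s1 = "there are many differences between african and asian elephants".split()
s2 = "their differences only grew as they spent more time together".split()
print(lexicon_hits(s1), swsd_filtered_hits(s1, toy_swsd))  # prints: ['differences'] []
print(lexicon_hits(s2), swsd_filtered_hits(s2, toy_swsd))  # prints: ['differences'] ['differences']
```

Plain lexicon lookup flags both sentences; the SWSD filter removes the false hit in the objective one.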


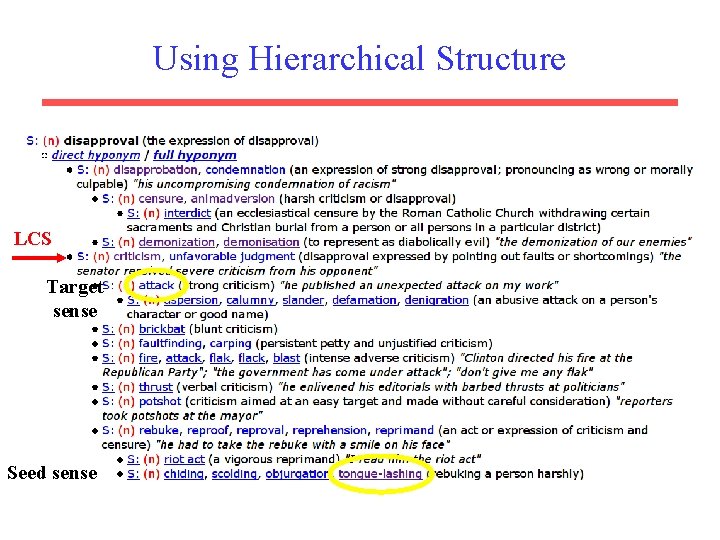

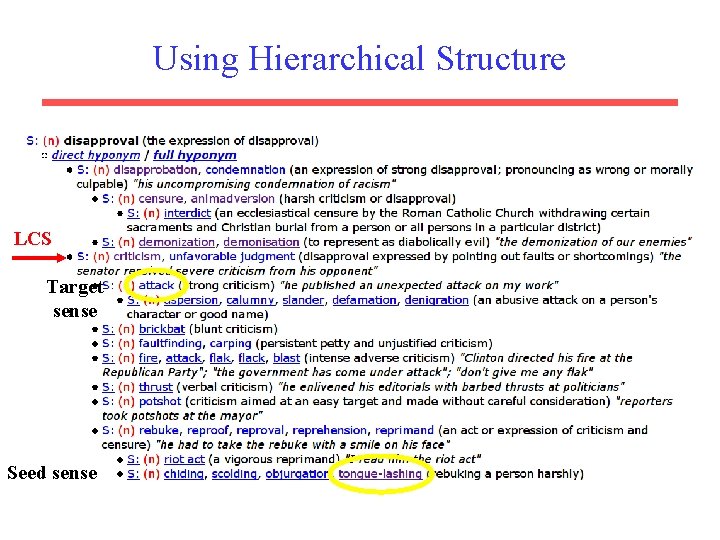

Using Hierarchical Structure (figure: LCS, target sense, seed sense)

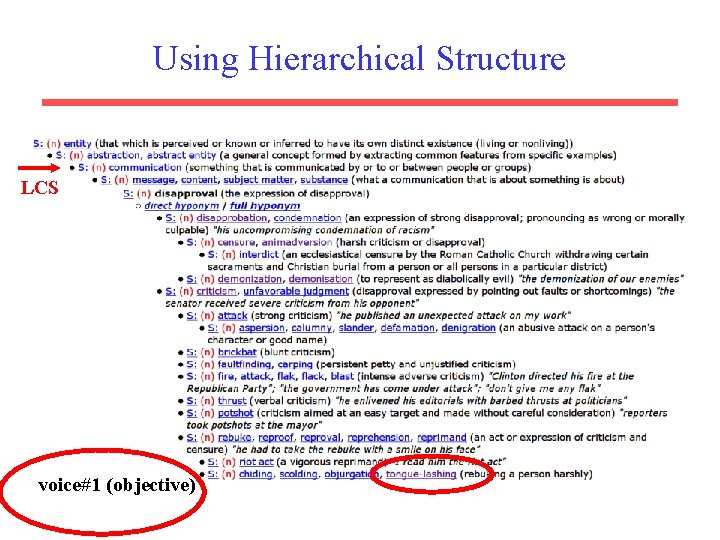

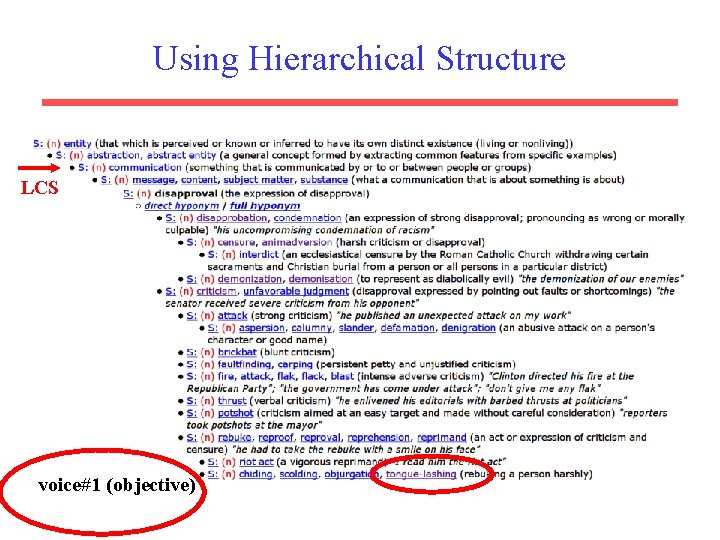

Using Hierarchical Structure (figure: LCS, voice#1 (objective))

- If you are interested in the entire approach and the experiments, please see the paper (it is on my website)

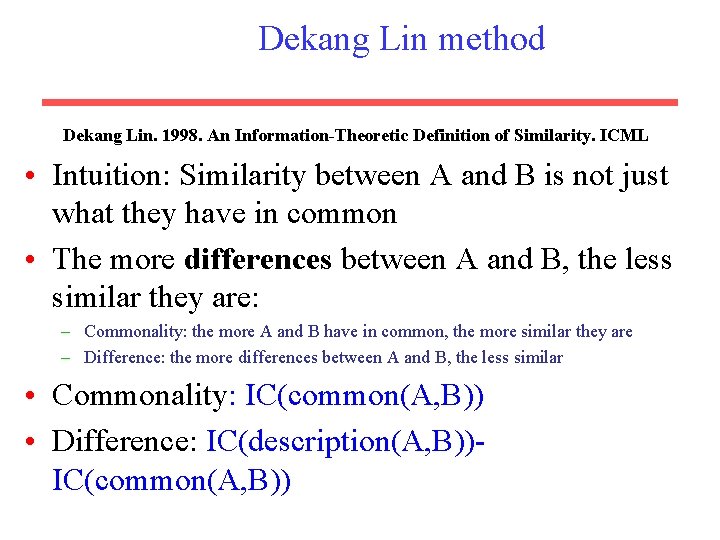

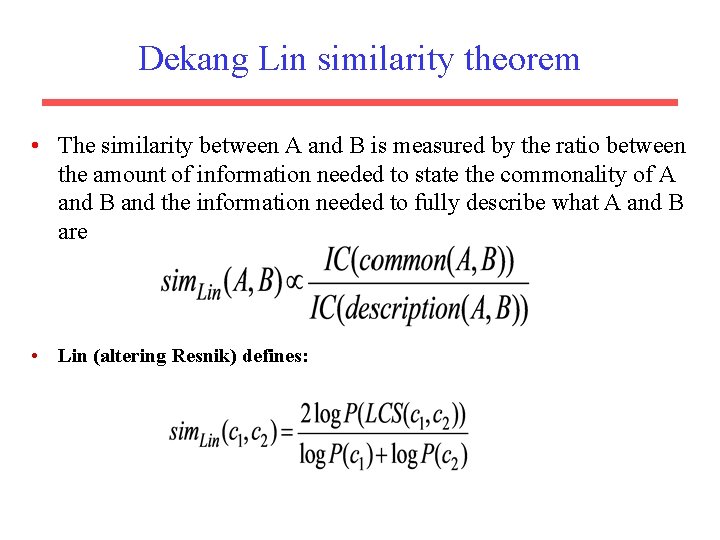

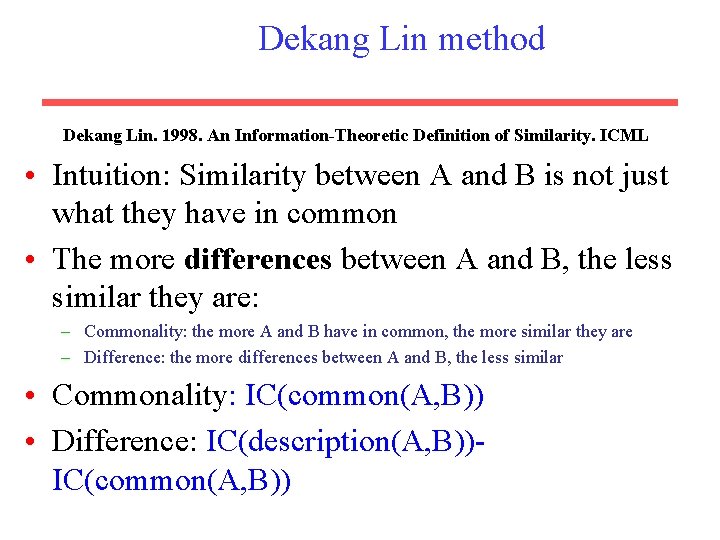

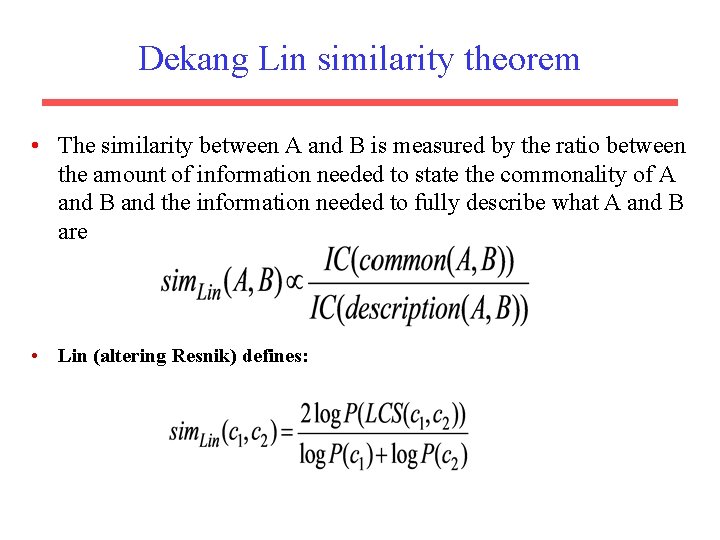

Dekang Lin method (Dekang Lin. 1998. An Information-Theoretic Definition of Similarity. ICML)
- Intuition: similarity between A and B is not just what they have in common
- The more differences between A and B, the less similar they are:
  - Commonality: the more A and B have in common, the more similar they are
  - Difference: the more differences between A and B, the less similar they are
- Commonality: IC(common(A, B))
- Difference: IC(description(A, B)) − IC(common(A, B))

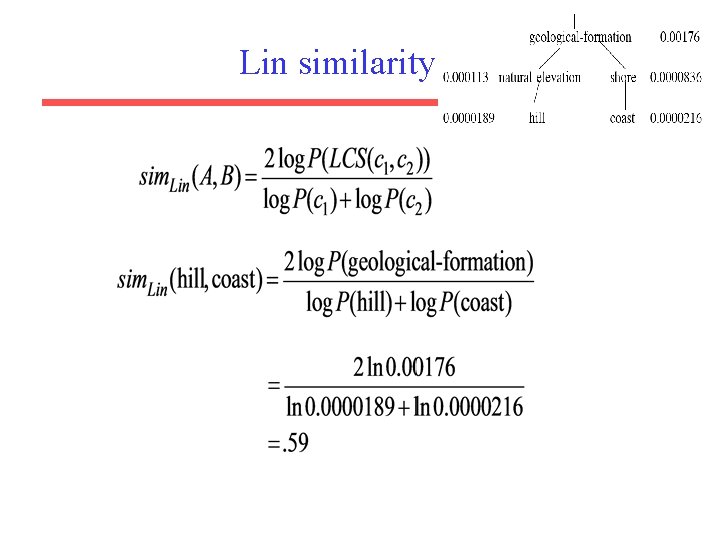

Dekang Lin similarity theorem
- The similarity between A and B is measured by the ratio between the amount of information needed to state the commonality of A and B and the information needed to fully describe what A and B are
- Lin (altering Resnik) defines:

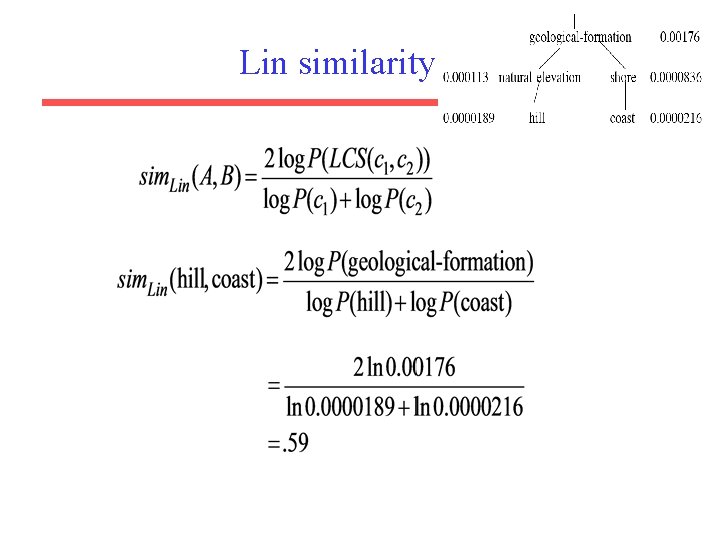

Lin similarity function

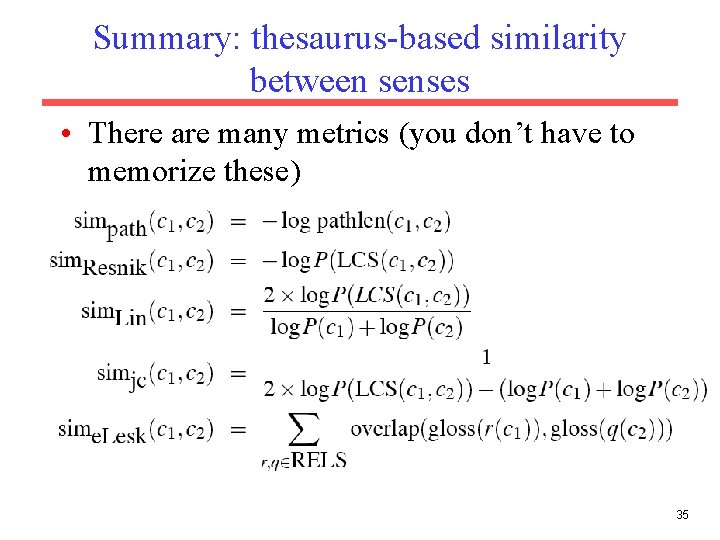

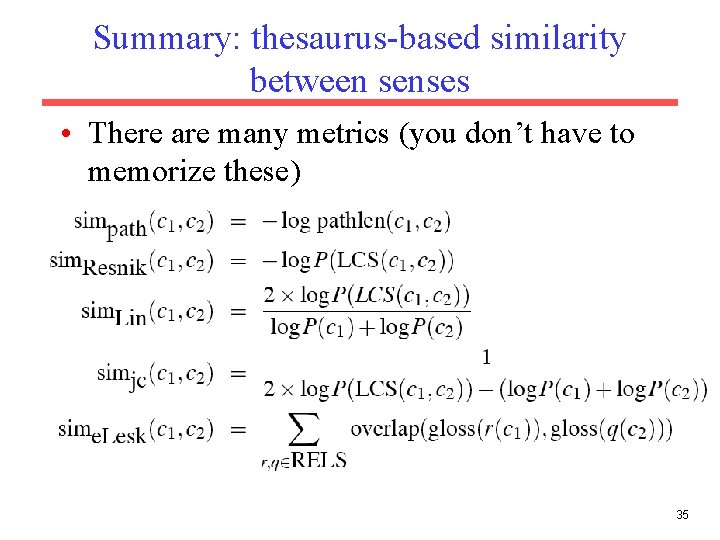
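
Lin's measure is usually written (e.g., in Jurafsky & Martin) as $\mathrm{sim}_{\mathrm{Lin}}(c_1, c_2) = \frac{2 \log P(\mathrm{LCS}(c_1, c_2))}{\log P(c_1) + \log P(c_2)}$. A minimal sketch that takes the three probabilities directly, so it does not depend on any particular hierarchy; the numbers in the example are hypothetical, not corpus estimates.

```python
import math


def sim_lin(p_c1, p_c2, p_lcs):
    """Lin similarity from P(c1), P(c2) and P(LCS(c1, c2)).

    sim_Lin = 2 * log P(LCS) / (log P(c1) + log P(c2)).
    The base of the logarithm cancels in the ratio.
    """
    denom = math.log(p_c1) + math.log(p_c2)
    if denom == 0.0:
        return 1.0  # both senses are the root (P = 1), so they are identical
    return 2 * math.log(p_lcs) / denom


# Hypothetical probabilities for nickel, dime, and their LCS coin.
print(round(sim_lin(0.001, 0.002, 0.005), 2))  # prints: 0.81
```

Unlike Resnik's score, this ratio is normalized by the information content of the two senses themselves. Because P(LCS) is at least as large as P(c1) and P(c2), the result lies between 0 (LCS is the root) and 1 (identical senses).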

Summary: thesaurus-based similarity between senses
- There are many metrics (you don’t have to memorize these)

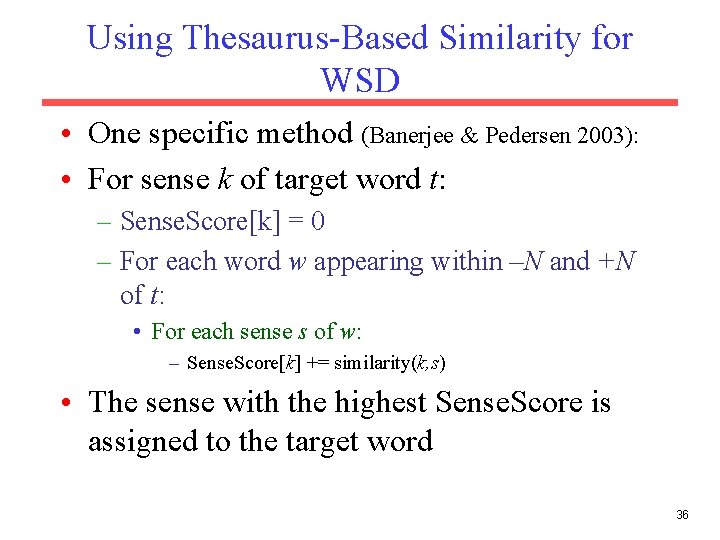

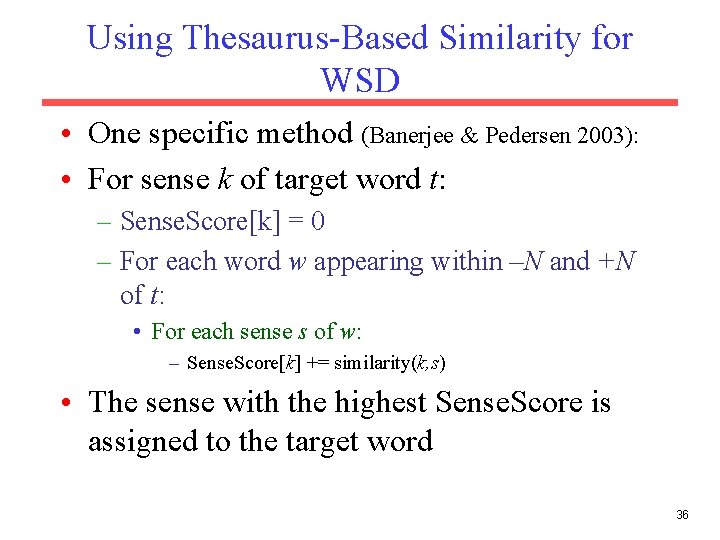

Using Thesaurus-Based Similarity for WSD
- One specific method (Banerjee & Pedersen 2003):
- For sense k of target word t:
  - SenseScore[k] = 0
  - For each word w appearing within −N and +N of t:
    - For each sense s of w:
      - SenseScore[k] += similarity(k, s)
- The sense with the highest SenseScore is assigned to the target word
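
The scoring loop above translates almost line for line into Python. In this sketch the sense inventory `SENSES`, the similarity table `SIM`, and the function names are hypothetical; `similarity` is a pluggable callable that could be backed by any of the measures above (Resnik, Lin, and so on).

```python
from typing import Callable, Dict, List


def disambiguate(tokens: List[str], target_index: int, n: int,
                 senses: Dict[str, List[str]],
                 similarity: Callable[[str, str], float]) -> str:
    """Return the sense k of tokens[target_index] with the highest SenseScore[k]."""
    target = tokens[target_index]
    lo = max(0, target_index - n)
    hi = min(len(tokens), target_index + n + 1)
    context = [tokens[i] for i in range(lo, hi) if i != target_index]
    sense_score = {}
    for k in senses[target]:
        sense_score[k] = 0.0
        for w in context:
            for s in senses.get(w, []):  # words with no listed senses add nothing
                sense_score[k] += similarity(k, s)
    return max(sense_score, key=sense_score.get)


# Hypothetical sense inventory and similarity scores, made up for illustration.
SENSES = {
    "bank": ["bank#river", "bank#finance"],
    "money": ["money#1"],
    "deposit": ["deposit#1", "deposit#2"],
}
SIM = {
    ("bank#finance", "money#1"): 0.8,
    ("bank#river", "money#1"): 0.1,
    ("bank#finance", "deposit#1"): 0.6,
    ("bank#river", "deposit#2"): 0.3,
}


def table_similarity(a: str, b: str) -> float:
    """Look up a precomputed similarity; unknown pairs score 0."""
    return SIM.get((a, b), 0.0)


tokens = "she put the money in the bank".split()
print(disambiguate(tokens, tokens.index("bank"), 4, SENSES, table_similarity))
# prints: bank#finance
```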

Problems with thesaurus-based meaning
- We don’t have a thesaurus for every language
- Even if we do, they have problems with recall
  - Many words are missing
  - Most (if not all) phrases are missing
  - Some connections between senses are missing
  - Thesauri work less well for verbs and adjectives
    - Adjectives and verbs have less structured hyponymy relations

Distributional models of meaning
- Also called vector-space models of meaning
- Offer much higher recall than hand-built thesauri
  - Although they tend to have lower precision
- Zellig Harris (1954): “oculist and eye-doctor … occur in almost the same environments…. If A and B have almost identical environments we say that they are synonyms.”
- Firth (1957): “You shall know a word by the company it keeps!”

Intuition of distributional word similarity
- Nida example:
  - A bottle of tesgüino is on the table
  - Everybody likes tesgüino
  - Tesgüino makes you drunk
  - We make tesgüino out of corn.
- From context words, humans can guess that tesgüino means
  - an alcoholic beverage like beer
- Intuition for the algorithm:
  - Two words are similar if they have similar word contexts.

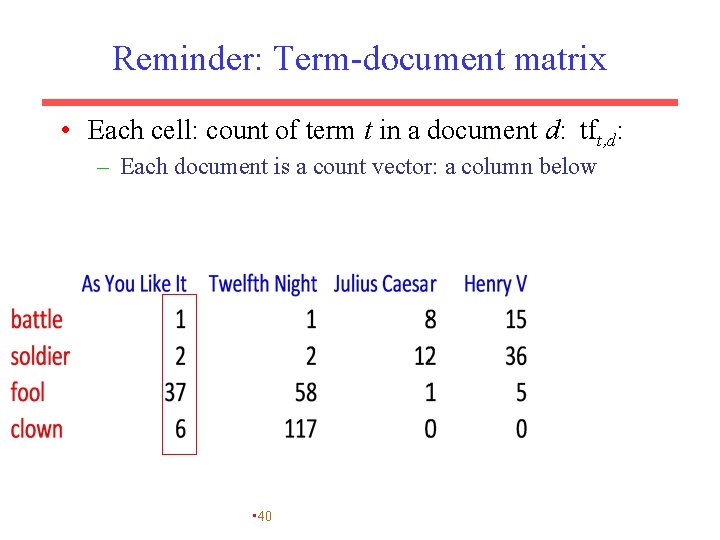

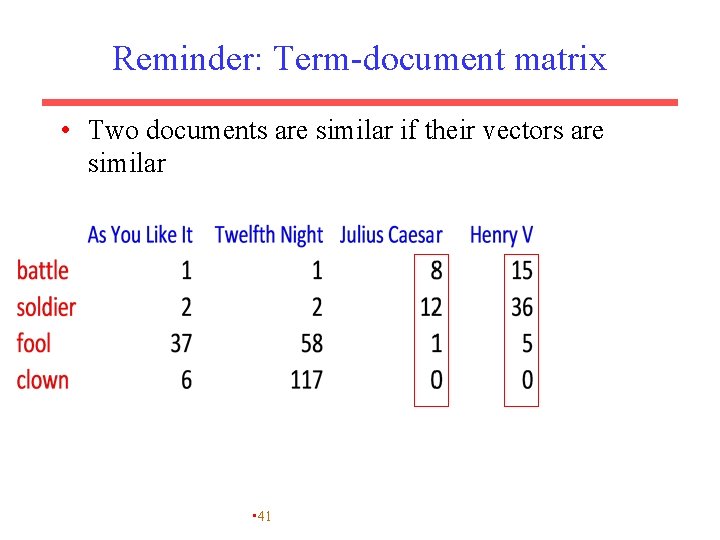

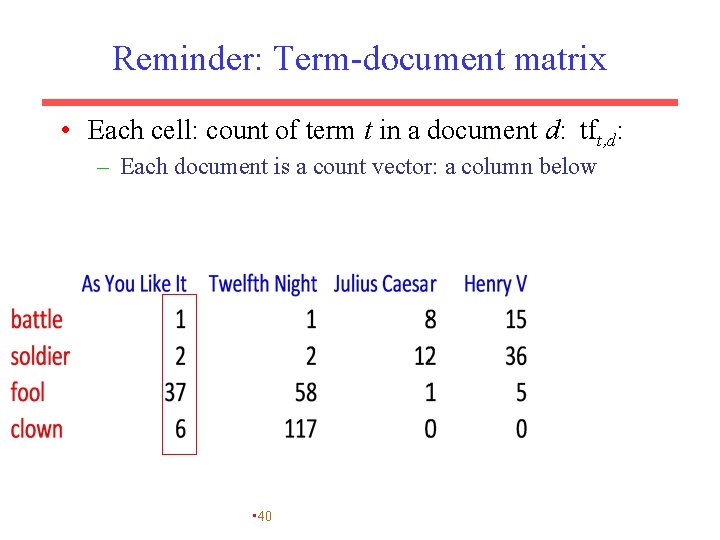

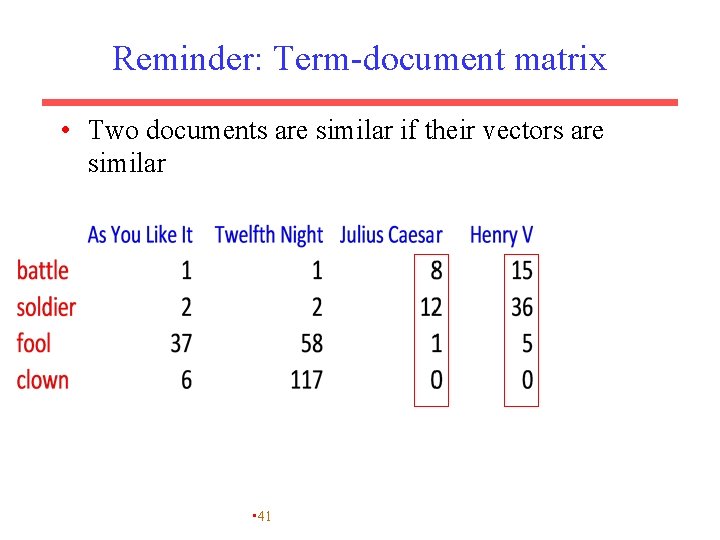

Reminder: Term-document matrix
- Each cell: count of term t in a document d: $\mathrm{tf}_{t,d}$
  - Each document is a count vector: a column below

Reminder: Term-document matrix
- Two documents are similar if their vectors are similar

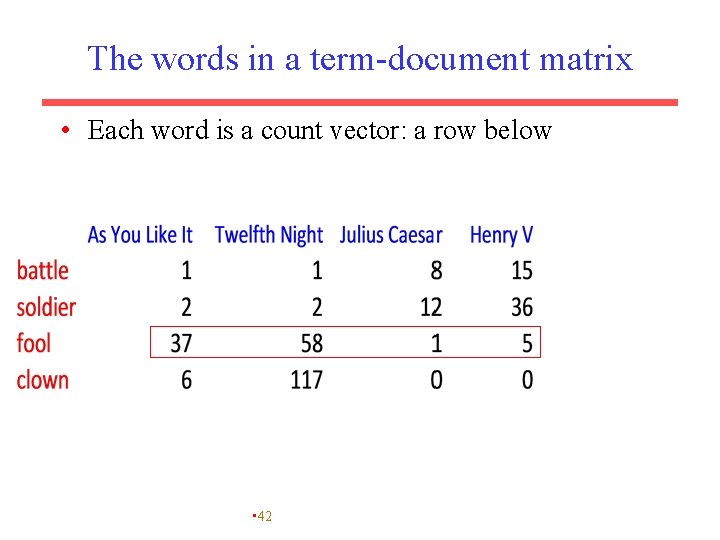

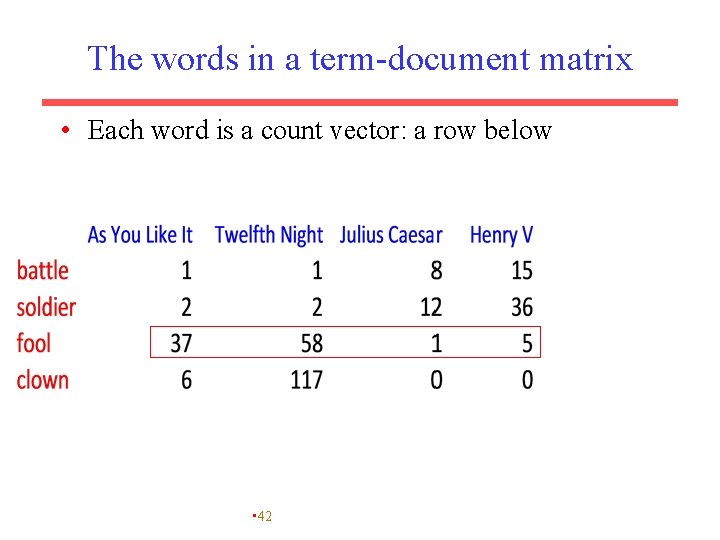

The words in a term-document matrix
- Each word is a count vector: a row below

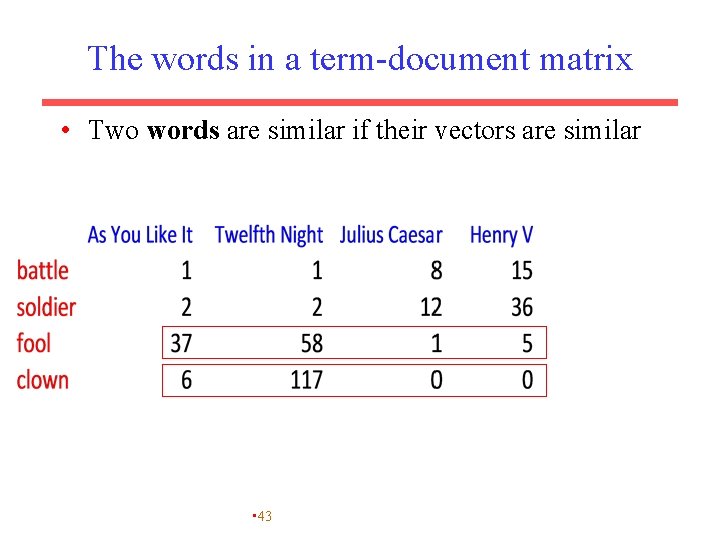

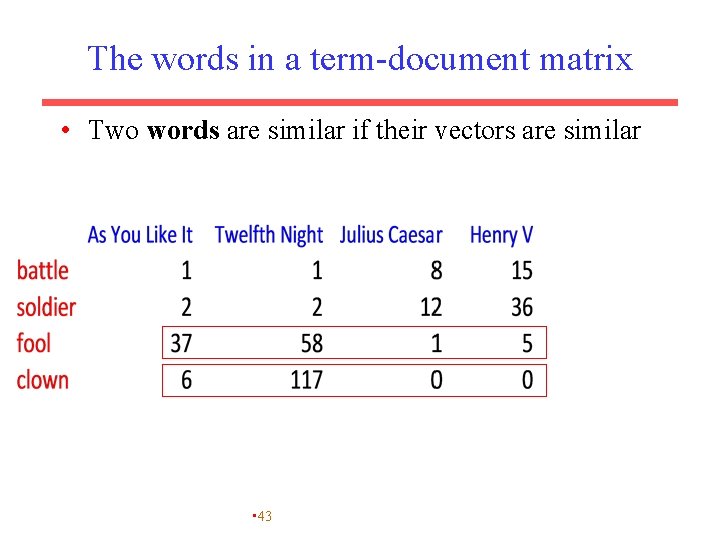

The words in a term-document matrix
- Two words are similar if their vectors are similar

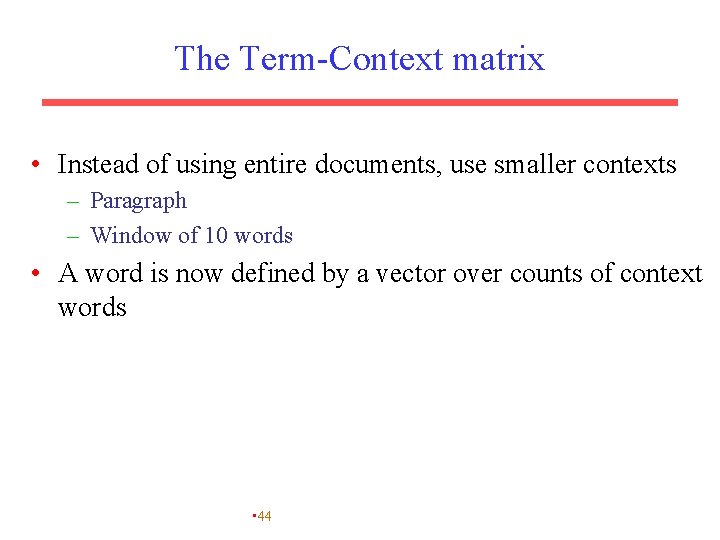
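
"Similar vectors" needs a concrete measure. The slides do not name one, so this sketch assumes cosine similarity, a common choice for count vectors. The counts in `MATRIX` are invented for illustration. The same function compares words (rows) or documents (columns).

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine of the angle between two count vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


# Toy term-document counts, invented for illustration.
# Rows are terms; columns are documents doc0..doc3.
TERMS = ["battle", "soldier", "fool", "clown"]
MATRIX = [
    [1, 0, 7, 13],    # battle
    [2, 1, 12, 30],   # soldier
    [36, 50, 1, 4],   # fool
    [5, 80, 0, 0],    # clown
]


def word_vector(term: str) -> List[int]:
    """A word is represented by its row."""
    return MATRIX[TERMS.index(term)]


def doc_vector(j: int) -> List[int]:
    """A document is represented by its column."""
    return [row[j] for row in MATRIX]


print(round(cosine(word_vector("battle"), word_vector("soldier")), 2),
      round(cosine(word_vector("battle"), word_vector("fool")), 2))
# prints: 0.99 0.1
```

Cosine ignores overall vector length, so a frequent word and a rare word with the same distribution over documents still come out as similar.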

The Term-Context matrix
- Instead of using entire documents, use smaller contexts
  - Paragraph
  - Window of 10 words
- A word is now defined by a vector over counts of context words
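
A minimal sketch of collecting term-context counts with a sliding window. Here `window` is the number of words taken on each side of the target, which is an assumption: the slide's "window of 10 words" could mean 10 in total or 10 per side. The function name is hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List


def term_context_counts(tokens: List[str], window: int) -> Dict[str, Counter]:
    """For each word, count the words within +/- window positions of it."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for i, word in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                counts[word][tokens[j]] += 1
    return counts


rows = term_context_counts("a tablespoonful of apricot preserve or jam".split(), 2)
print(sorted(rows["apricot"]))  # prints: ['of', 'or', 'preserve', 'tablespoonful']
```

Each `Counter` is one row of the term-context matrix; in a real corpus, rows are summed over every occurrence of the word.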

Sample contexts: 20 words (Brown corpus)
- equal amount of sugar, a sliced lemon, a tablespoonful of apricot preserve or jam, a pinch each of clove and nutmeg,
- on board for their enjoyment. Cautiously she sampled her first pineapple and another fruit whose taste she likened to that of
- of a recursive type well suited to programming on the digital computer. In finding the optimal R-stage policy from that of
- substantially affect commerce, for the purpose of gathering data and information necessary for the study authorized in the first section of this

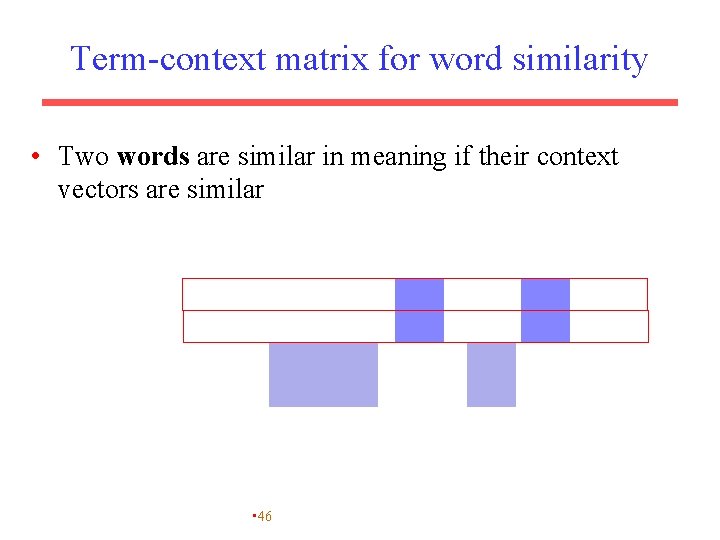

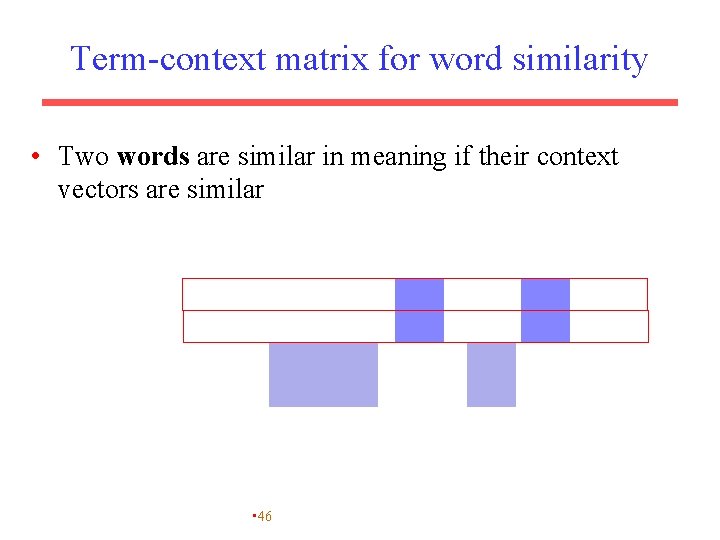

Term-context matrix for word similarity
- Two words are similar in meaning if their context vectors are similar

Should we use raw counts?
- For the term-document matrix
  - We used tf-idf instead of raw term counts
- For the term-context matrix
  - Positive Pointwise Mutual Information (PPMI) is common

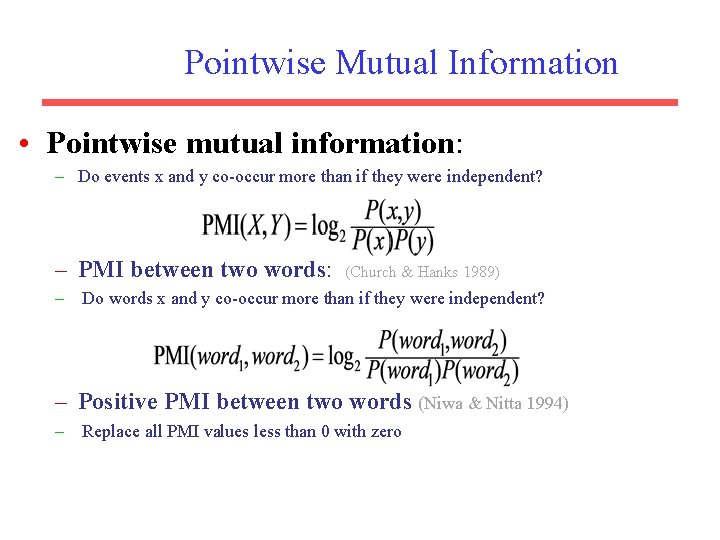

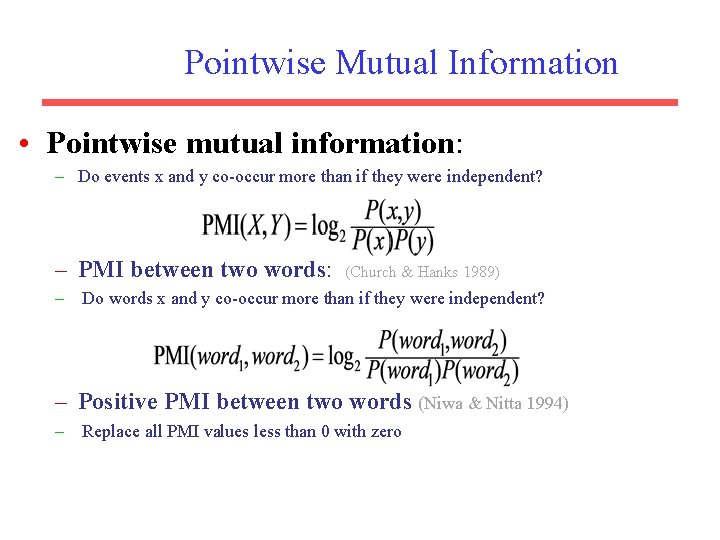

Pointwise Mutual Information
- Pointwise mutual information: do events x and y co-occur more than if they were independent?
  - $\mathrm{PMI}(x, y) = \log_2 \frac{P(x, y)}{P(x)\,P(y)}$
- PMI between two words (Church & Hanks 1989): do words x and y co-occur more than if they were independent?
- Positive PMI between two words (Niwa & Nitta 1994)
  - Replace all PMI values less than 0 with zero

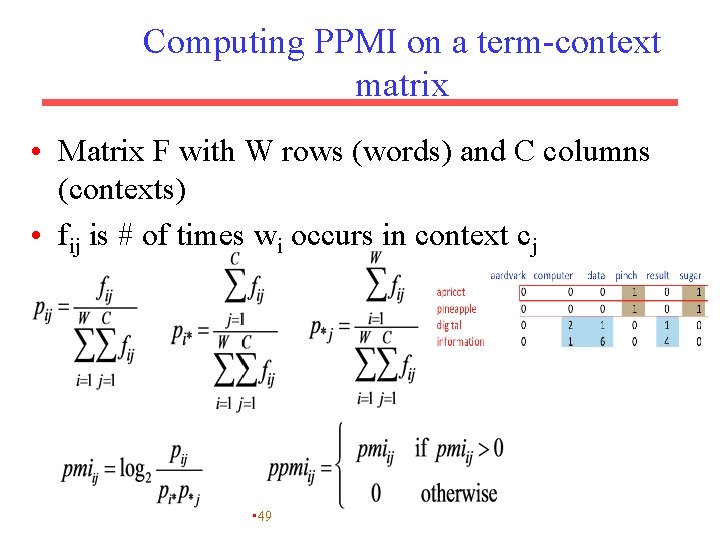

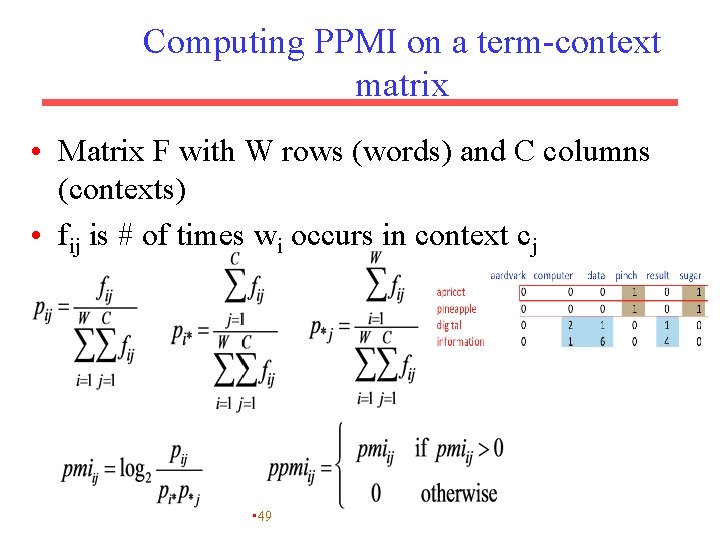

Computing PPMI on a term-context matrix
- Matrix F with W rows (words) and C columns (contexts)
- $f_{ij}$ is the number of times $w_i$ occurs in context $c_j$

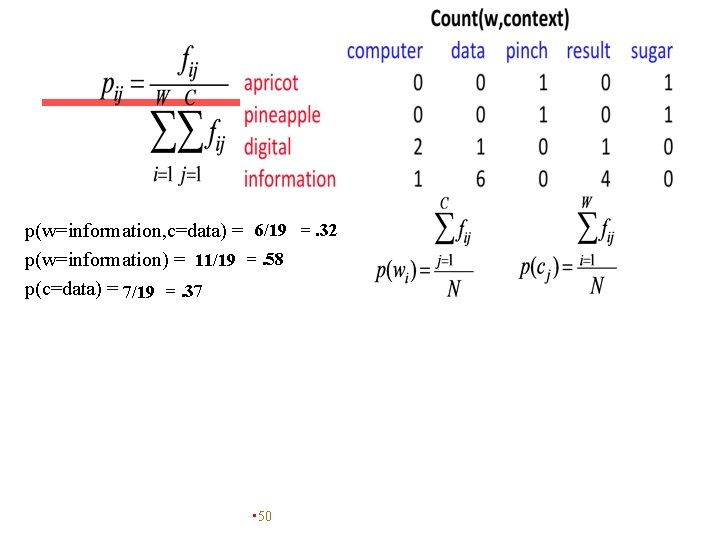

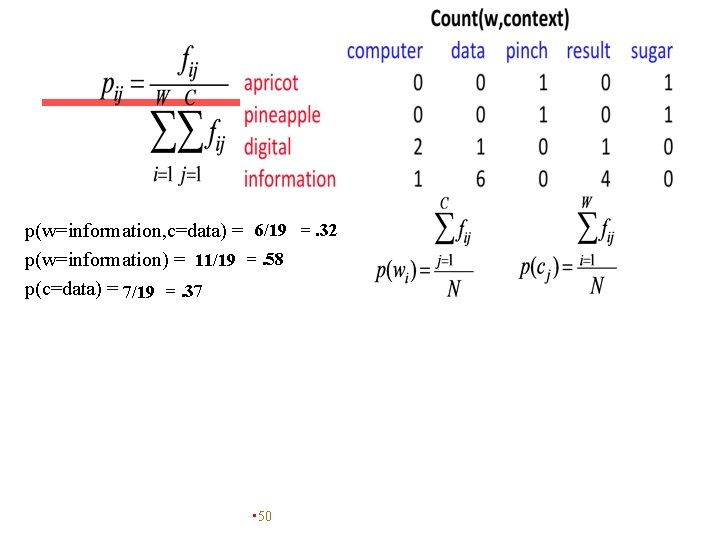

- $p(w = \text{information}, c = \text{data}) = 6/19 = .32$
- $p(w = \text{information}) = 11/19 = .58$
- $p(c = \text{data}) = 7/19 = .37$

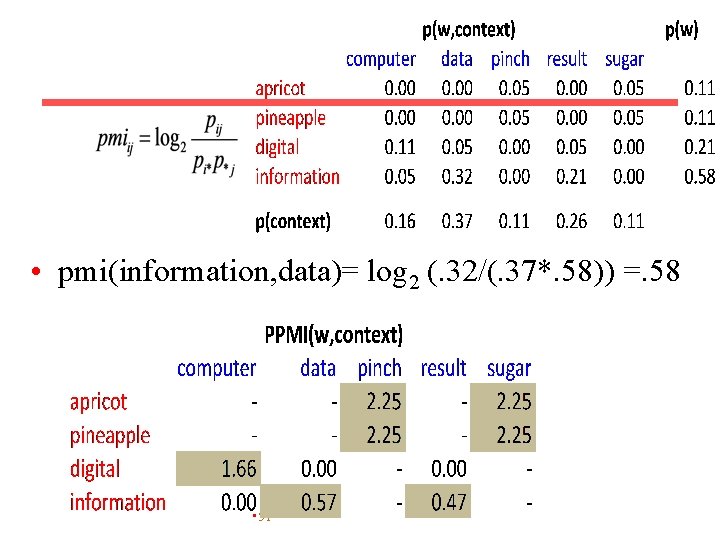

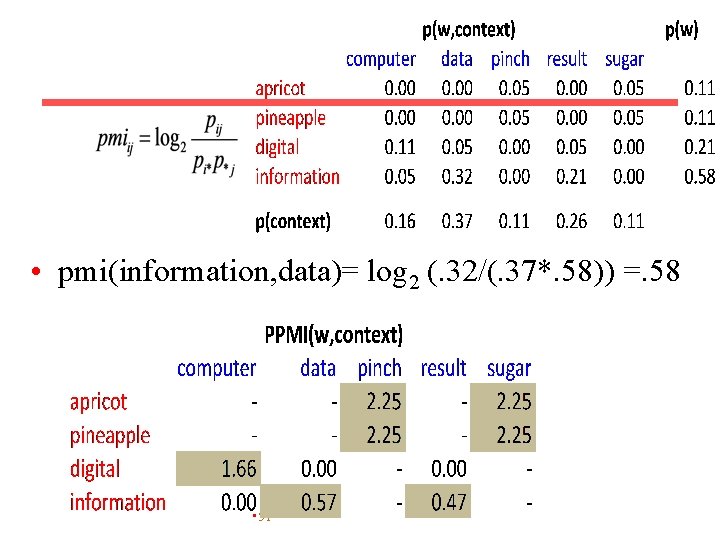

- $\mathrm{pmi}(\text{information}, \text{data}) = \log_2 \left( .32 / (.37 \times .58) \right) = .58$
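
The whole computation can be sketched for any W × C count matrix: joint probabilities $p_{ij} = f_{ij}/N$, row and column marginals, then $\log_2$ of the ratio, clipped at 0. The matrix `F` below is a toy matrix chosen so that it reproduces the totals quoted above (N = 19, f(information, data) = 6, information row = 11, data column = 7); the slide's own matrix is in the figure and may differ in its other cells. Without rounding the intermediate probabilities, the result is $\log_2(114/77) \approx 0.57$ rather than .58.

```python
import math
from typing import List


def ppmi(f: List[List[float]]) -> List[List[float]]:
    """PPMI for a W x C count matrix F (rows: words, columns: contexts)."""
    n = sum(sum(row) for row in f)              # N: total count
    p_w = [sum(row) / n for row in f]           # row marginals p_i*
    p_c = [sum(col) / n for col in zip(*f)]     # column marginals p_*j
    out = []
    for i, row in enumerate(f):
        out_row = []
        for j, count in enumerate(row):
            if count == 0:
                out_row.append(0.0)             # log 0 = -inf, clipped to 0
                continue
            pmi = math.log2((count / n) / (p_w[i] * p_c[j]))
            out_row.append(max(pmi, 0.0))       # keep only positive PMI
        out.append(out_row)
    return out


# Toy matrix matching the totals quoted on the slide (other cells illustrative).
WORDS = ["apricot", "pineapple", "digital", "information"]
CONTEXTS = ["computer", "data", "pinch", "result", "sugar"]
F = [
    [0, 0, 1, 0, 1],
    [0, 0, 1, 0, 1],
    [2, 1, 0, 1, 0],
    [1, 6, 0, 4, 0],
]

M = ppmi(F)
print(round(M[WORDS.index("information")][CONTEXTS.index("data")], 2))  # prints: 0.57
```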

Weighting PMI
- PMI is biased toward infrequent events
- Various weighting schemes help alleviate this
  - See Turney and Pantel (2010)
  - Add-one smoothing can also help
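
A small sketch of the smoothing idea: add k to every cell of the count matrix before computing PPMI (k = 1 is add-one). The 2 × 2 matrix is made up so that one word–context pair is seen exactly once; the other weighting schemes surveyed by Turney and Pantel are not shown.

```python
import math
from typing import List


def ppmi_add_k(f: List[List[float]], k: float) -> List[List[float]]:
    """PPMI computed after adding k to every cell of the count matrix."""
    counts = [[c + k for c in row] for row in f]
    n = sum(sum(row) for row in counts)
    p_w = [sum(row) / n for row in counts]
    p_c = [sum(col) / n for col in zip(*counts)]
    result = []
    for i, row in enumerate(counts):
        out_row = []
        for j, c in enumerate(row):
            if c == 0:
                out_row.append(0.0)  # unseen pair with k = 0: PMI is -inf, clipped to 0
            else:
                out_row.append(max(math.log2((c / n) / (p_w[i] * p_c[j])), 0.0))
        result.append(out_row)
    return result


# Made-up counts: the pair in cell (0, 0) was seen only once.
F = [[1, 0],
     [0, 100]]
print(round(ppmi_add_k(F, 0)[0][0], 2), round(ppmi_add_k(F, 1)[0][0], 2))
# prints: 6.66 4.54
```

A single co-occurrence of two rare items gets a very large PMI; add-one smoothing pulls it down, because the added counts dilute how exclusively the two rare items co-occur.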

Summary: vector space models
- Representing meaning through counts
  - Represent a document/sentence/context through its content words
- Proximity in semantic space ~ similarity between words

Summary: vector space models
- Uses:
  - Search
  - Inducing ontologies
  - Modeling human judgments of word similarity
  - Improving supervised word sense disambiguation
  - Word-sense discrimination: cluster words based on their vectors; the clusters may not correspond to any particular sense inventory

Senseval
- Standardized international "competition" on WSD
- Organized by the Association for Computational Linguistics (ACL) Special Interest Group on the Lexicon (SIGLEX)
  - Senseval 1: 1998
  - Senseval 2: 2001
  - Senseval 3: 2004
  - Senseval 4: 2007

Senseval 1: 1998
- Datasets for
  - English
  - French
  - Italian
- Lexical sample in English
  - Noun: accident, behavior, bet, disability, excess, float, giant, knee, onion, promise, rabbit, sack, scrap, shirt, steering
  - Verb: amaze, bet, bother, bury, calculate, consume, derive, float, invade, promise, sack, scrap, seize
  - Adjective: brilliant, deaf, floating, generous, giant, modest, slight, wooden
  - Indeterminate: band, bitter, hurdle, sanction, shake
- Total number of ambiguous English words tagged: 8,448

Senseval 1 English Sense Inventory
- Senses from the HECTOR lexicography project
- Multiple levels of granularity
  - Coarse grained (avg. 7.2 senses per word)
  - Fine grained (avg. 10.4 senses per word)

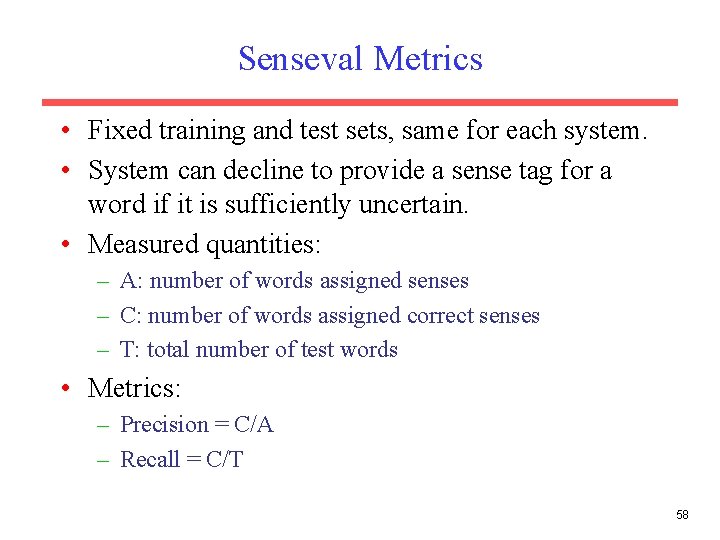

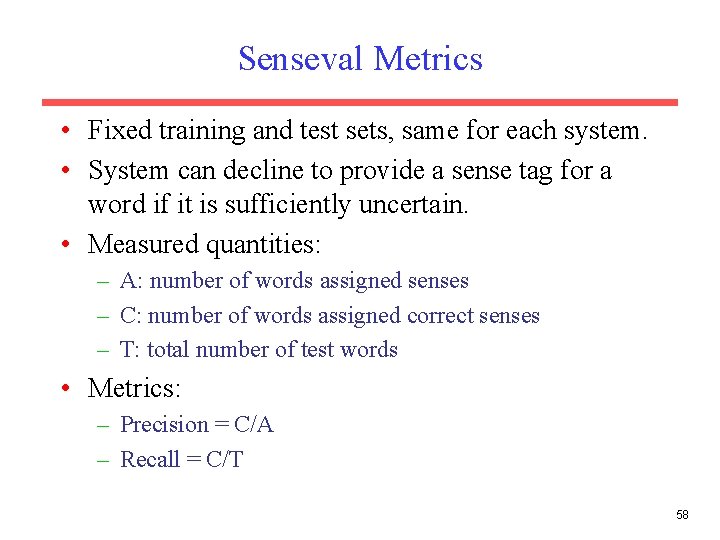

Senseval Metrics
- Fixed training and test sets, same for each system
- A system can decline to provide a sense tag for a word if it is sufficiently uncertain
- Measured quantities:
  - A: number of words assigned senses
  - C: number of words assigned correct senses
  - T: total number of test words
- Metrics:
  - Precision = C/A
  - Recall = C/T
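
The two metrics can be computed directly from a system's output, with `None` standing for a word the system declined to tag. The sense labels in the example are hypothetical. Returning 0.0 precision when nothing was tagged (A = 0) is an assumed convention, not part of the Senseval definition.

```python
from typing import Optional, Sequence, Tuple


def senseval_scores(predicted: Sequence[Optional[str]],
                    gold: Sequence[str]) -> Tuple[float, float]:
    """Return (precision, recall) = (C/A, C/T); None means the system declined."""
    if len(predicted) != len(gold):
        raise ValueError("predicted and gold must have the same length")
    t = len(gold)                                    # T: total test words
    a = sum(p is not None for p in predicted)        # A: words assigned a sense
    c = sum(p == g for p, g in zip(predicted, gold) if p is not None)  # C: correct
    precision = c / a if a else 0.0   # assumed convention when nothing was tagged
    recall = c / t if t else 0.0
    return precision, recall


pred = ["bet#1", None, "bet#2", "float#3"]
gold = ["bet#1", "bet#2", "bet#2", "float#1"]
print(senseval_scores(pred, gold))  # prints: (0.6666666666666666, 0.5)
```

Declining raises precision at the cost of recall; a system that tags every word has precision equal to recall.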

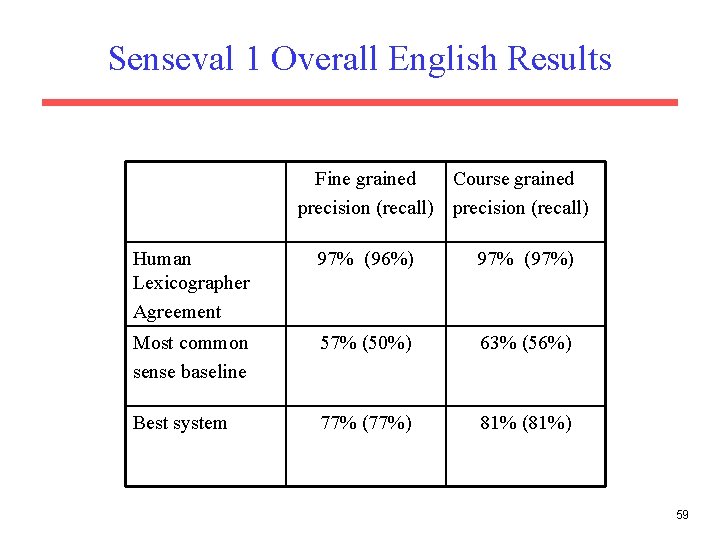

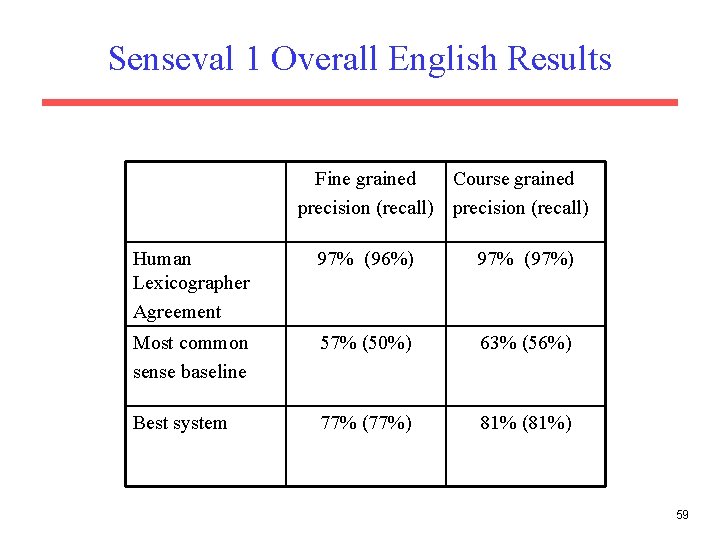

Senseval 1 Overall English Results

| | Fine grained: precision (recall) | Coarse grained: precision (recall) |
|---|---|---|
| Human lexicographer agreement | 97% (96%) | 97% (97%) |
| Most common sense baseline | 57% (50%) | 63% (56%) |
| Best system | 77% (77%) | 81% (81%) |

Senseval 2: 2001
- More languages: Chinese, Danish, Dutch, Czech, Basque, Estonian, Italian, Korean, Spanish, Swedish, Japanese, English
- Includes an "all-words" task as well as a lexical sample task
- Includes a "translation" task for Japanese, where senses correspond to distinct translations of a word into another language
- 35 teams competed, with over 90 systems entered

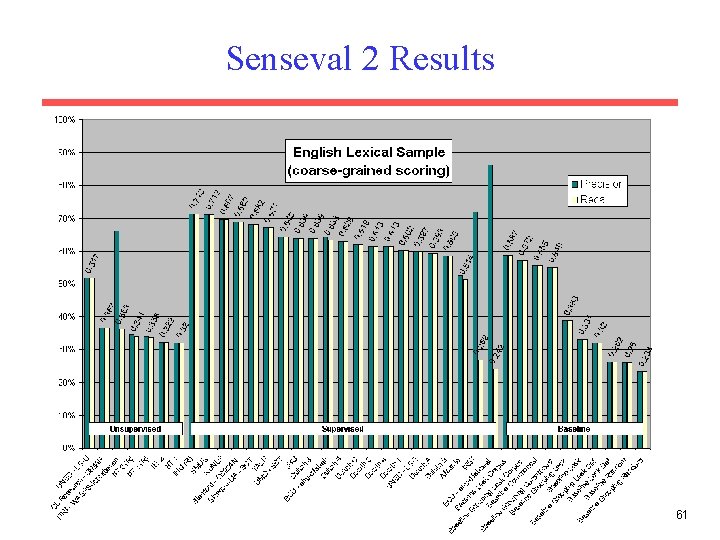

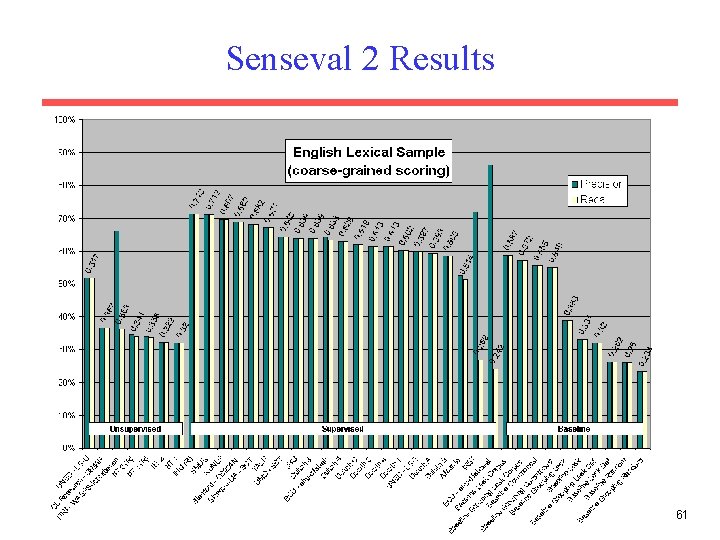

Senseval 2 Results

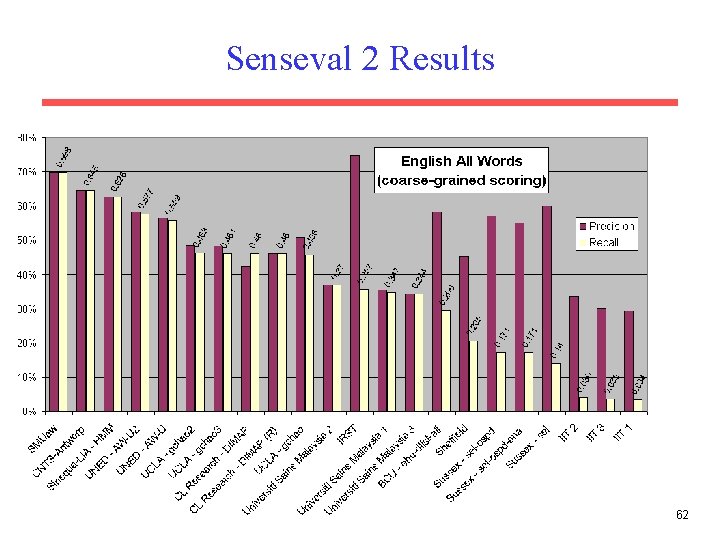

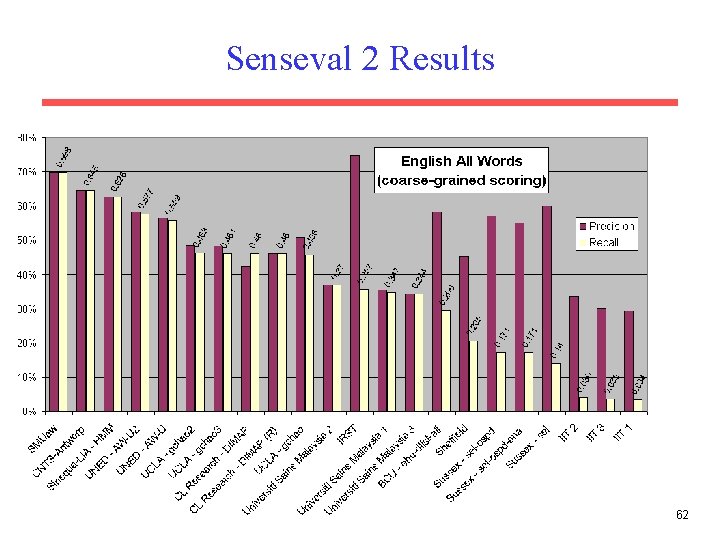

Senseval 2 Results

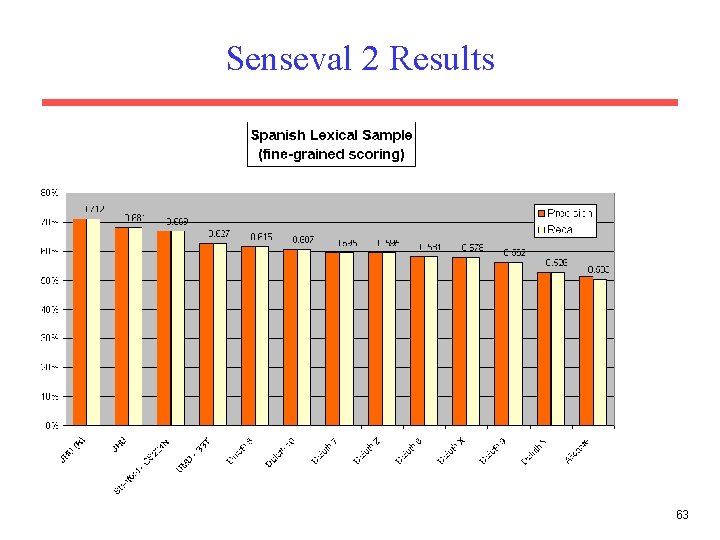

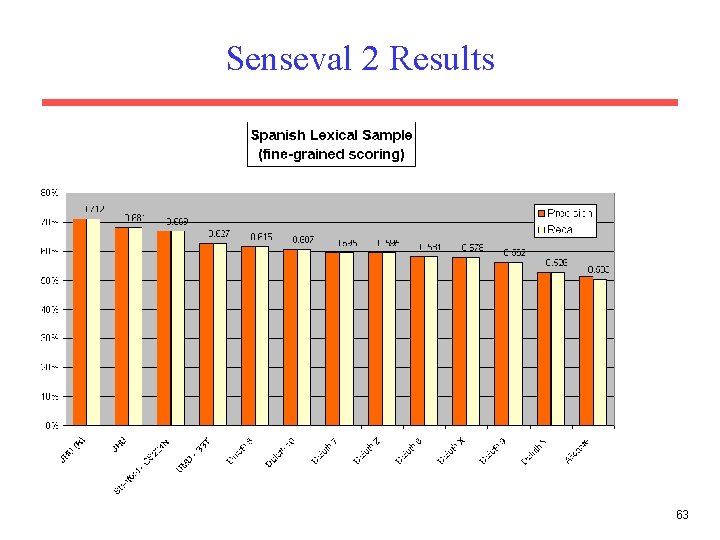

Senseval 2 Results

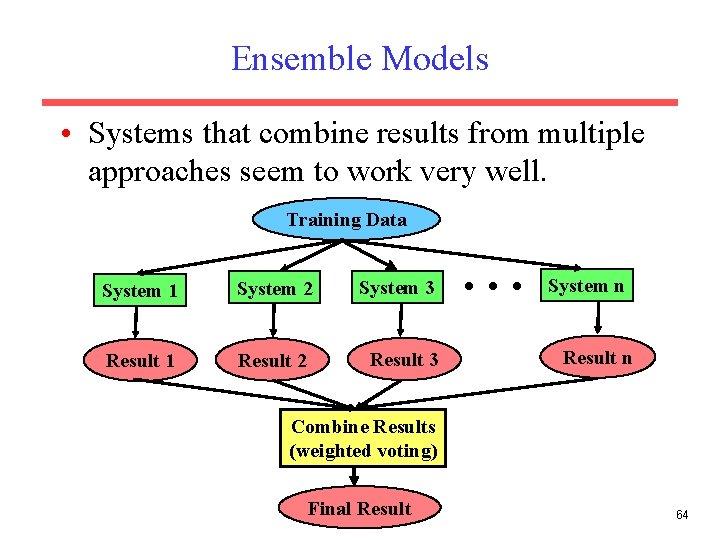

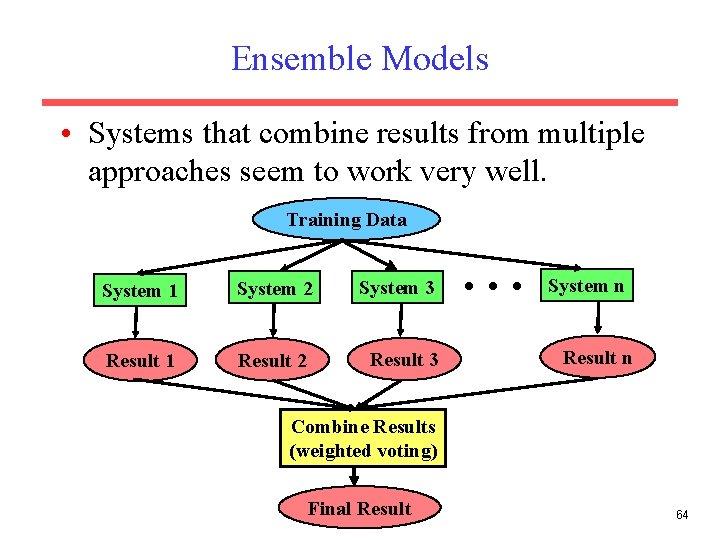

Ensemble Models
- Systems that combine results from multiple approaches seem to work very well
- Diagram: Training Data → System 1, System 2, System 3, …, System n → Result 1, Result 2, Result 3, …, Result n → Combine Results (weighted voting) → Final Result
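
The "Combine Results (weighted voting)" step can be sketched as summing each system's weight behind the sense label it proposes. The weights here are hypothetical; one plausible choice is each system's accuracy on held-out data, but the slide does not say how the Senseval ensembles set them.

```python
from collections import defaultdict
from typing import Dict, Sequence


def weighted_vote(predictions: Sequence[str], weights: Sequence[float]) -> str:
    """Combine one sense label per system; each label gets its system's weight."""
    if len(predictions) != len(weights):
        raise ValueError("need exactly one weight per system")
    totals: Dict[str, float] = defaultdict(float)
    for label, weight in zip(predictions, weights):
        totals[label] += weight
    # Highest total wins; on a tie, max() keeps the label seen first.
    return max(totals, key=totals.get)


# Hypothetical weights, e.g. each system's held-out accuracy.
print(weighted_vote(["sense#1", "sense#2", "sense#2"], [0.9, 0.3, 0.3]))  # prints: sense#1
print(weighted_vote(["sense#1", "sense#2", "sense#2"], [0.5, 0.3, 0.3]))  # prints: sense#2
```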

Senseval 3: 2004
- Some new languages: English, Italian, Basque, Catalan, Chinese, Romanian
- Some new tasks
  - Subcategorization acquisition
  - Semantic role labelling
  - Logical form

Senseval 3 English Lexical Sample
- Volunteers over the web were used to annotate senses of 60 ambiguous nouns, adjectives, and verbs
- Non-expert lexicographers achieved only 62.8% inter-annotator agreement for fine senses
- Best results were again in the low-70% accuracy range

Senseval 3: English All Words Task
- 5,000 words from the Wall Street Journal and the Brown corpus (editorial, news, and fiction)
- 2,212 words tagged with WordNet senses
- Inter-annotator agreement of 72.5% for people with advanced linguistics degrees
  - Most disagreements were on a smaller group of difficult words; only 38% of word types had any disagreement at all
- Most-common-sense baseline: 60.9% accuracy
- Best results from the competition: 65% accuracy

Other Approaches to WSD
- Active learning
- Unsupervised sense clustering
- Semi-supervised learning (Yarowsky 1995)
  - Bootstrap from a small number of labeled examples to exploit unlabeled data
  - Exploit "one sense per collocation" and "one sense per discourse" to create the labeled training data
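
A sketch of just the "one sense per discourse" heuristic: within each document, every occurrence of the target word is relabeled with that document's majority sense. This does not implement Yarowsky's full bootstrapping algorithm (which also relies on collocation-based classifiers). The document IDs and sense labels are hypothetical.

```python
from collections import Counter
from typing import Dict, List, Tuple


def one_sense_per_discourse(occurrences: List[Tuple[str, str]]) -> List[Tuple[str, str]]:
    """Relabel each occurrence of a target word with its document's majority sense.

    occurrences: (document_id, sense_label) pairs for one ambiguous word.
    """
    per_doc: Dict[str, Counter] = {}
    for doc, sense in occurrences:
        per_doc.setdefault(doc, Counter())[sense] += 1
    majority = {doc: counts.most_common(1)[0][0] for doc, counts in per_doc.items()}
    return [(doc, majority[doc]) for doc, _ in occurrences]


# Hypothetical labels for "plant", e.g. from a classifier trained on a few seeds.
labels = [("d1", "plant#living"), ("d1", "plant#factory"), ("d1", "plant#living"),
          ("d2", "plant#factory")]
print(one_sense_per_discourse(labels))
```

The relabeled occurrences can then be added to the training data for the next round of bootstrapping.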

Issues in WSD
- What is the right granularity of a sense inventory?
- Integrating WSD with other NLP tasks
  - Syntactic parsing
  - Semantic role labeling
  - Semantic parsing
- Does WSD actually improve performance on some real end-user task?
  - Information retrieval
  - Information extraction
  - Machine translation
  - Question answering
  - Sentiment analysis