
A Level-2 trigger algorithm for the identification of muons in the ATLAS Muon Spectrometer

Alessandro Di Mattia, on behalf of the ATLAS TDAQ group
Computing in High Energy Physics (CHEP), Interlaken, September 26-30, 2004

Outline:
- The ATLAS trigger
- μFast algorithm
- Relevant physics performance
- Implementation in the online framework
- Latency of the algorithm
- Conclusions

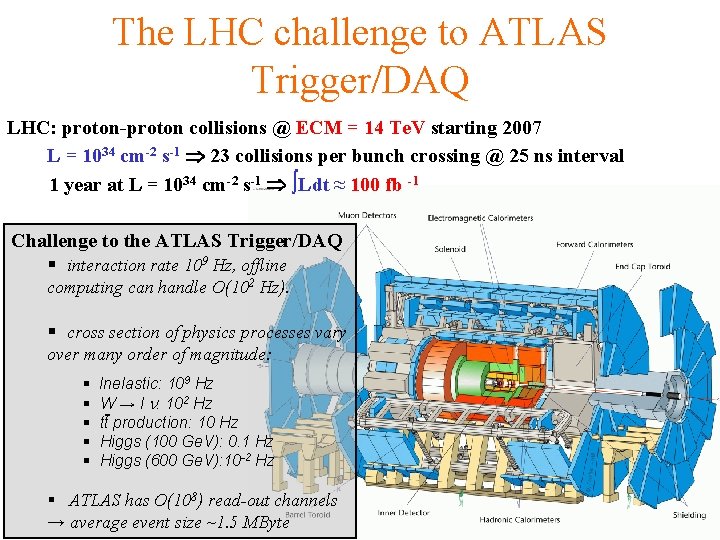

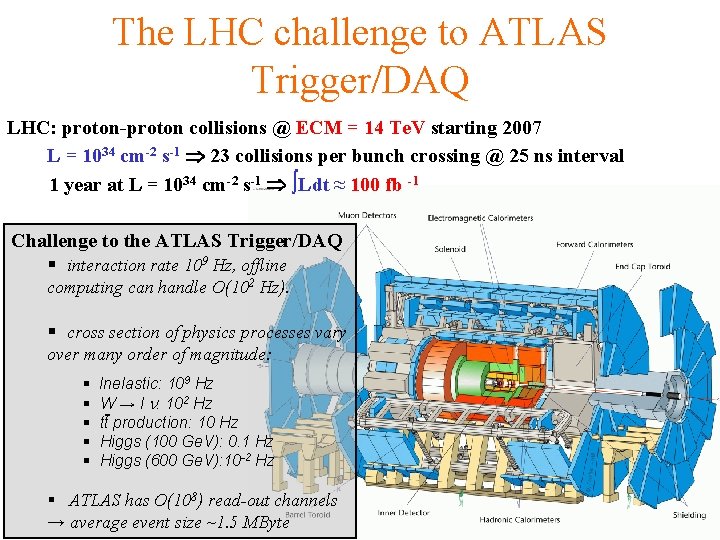

The LHC challenge to ATLAS Trigger/DAQ

LHC: proton-proton collisions at $E_{CM} = 14$ TeV, starting 2007.
- At $L = 10^{34}\,\mathrm{cm^{-2}\,s^{-1}}$: 23 collisions per bunch crossing, at 25 ns intervals.
- One year at $L = 10^{34}\,\mathrm{cm^{-2}\,s^{-1}}$: $\int L\,dt \approx 100\ \mathrm{fb^{-1}}$.

Challenge to the ATLAS Trigger/DAQ:
- the interaction rate is $10^9$ Hz, while offline computing can handle $O(10^2)$ Hz;
- the cross sections of physics processes vary over many orders of magnitude:
  - inelastic: $10^9$ Hz
  - $W \to \ell\nu$: $10^2$ Hz
  - $t\bar{t}$ production: 10 Hz
  - Higgs (100 GeV): 0.1 Hz
  - Higgs (600 GeV): $10^{-2}$ Hz
- ATLAS has $O(10^8)$ read-out channels, giving an average event size of ~1.5 MByte.

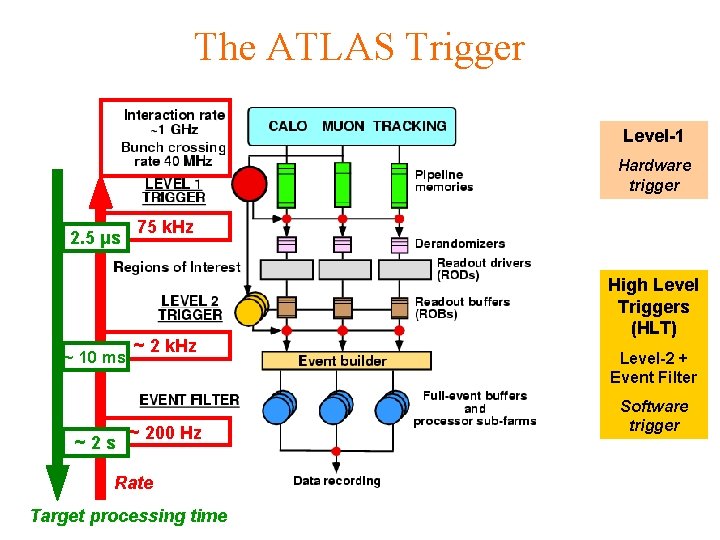

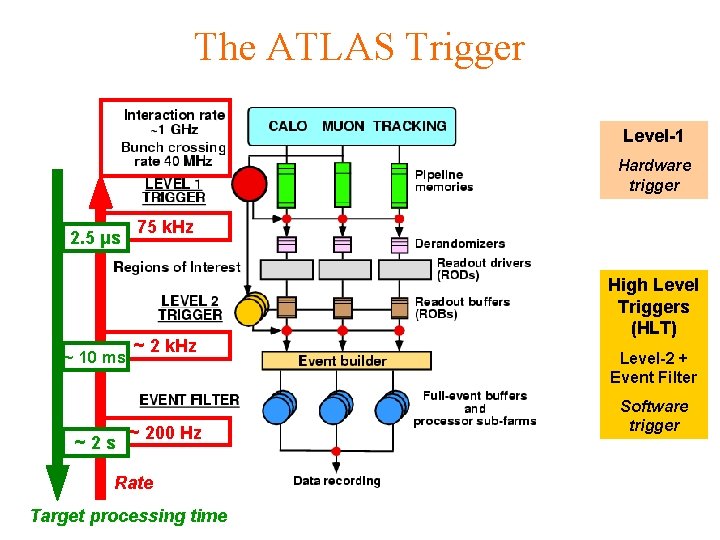

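The ATLAS Trigger

| Level | Type | Target processing time | Output rate |
|---|---|---|---|
| Level-1 | Hardware trigger | 2.5 μs | 75 kHz |
| Level-2 | Software trigger (HLT) | ~10 ms | – |
| Event Filter | Software trigger (HLT) | ~2 s | ~200 Hz |

The High Level Triggers (HLT) are Level-2 plus the Event Filter.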
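A back-of-envelope sketch of what these numbers imply, using only values quoted on the slides: the rejection each stage must provide, the output bandwidth to storage, and (via Little's law) how many events Level-2 must hold in processing at once. The "events in flight" figure assumes the Level-2 input rate equals the Level-1 accept rate and that each event occupies one processing slot for the full target time; it is an illustration, not a farm-sizing result.

```python
# Nominal values quoted on the slides above.
INTERACTION_RATE_HZ = 1e9   # pp interaction rate at L = 1e34 cm^-2 s^-1
L1_ACCEPT_HZ = 75e3         # Level-1 output rate = Level-2 input rate
EF_ACCEPT_HZ = 200.0        # Event Filter output rate
L2_TIME_S = 10e-3           # Level-2 target processing time per event
EVENT_SIZE_MB = 1.5         # average event size

def rejection(rate_in_hz, rate_out_hz):
    """Rejection factor needed to go from rate_in to rate_out."""
    return rate_in_hz / rate_out_hz

def events_in_flight(rate_hz, time_per_event_s):
    """Little's law: mean number of events being processed at once."""
    return rate_hz * time_per_event_s

def bandwidth_mb_s(rate_hz, event_size_mb):
    """Data volume per second leaving a trigger stage."""
    return rate_hz * event_size_mb

l1_rejection = rejection(INTERACTION_RATE_HZ, L1_ACCEPT_HZ)
total_rejection = rejection(INTERACTION_RATE_HZ, EF_ACCEPT_HZ)
l2_parallel_events = events_in_flight(L1_ACCEPT_HZ, L2_TIME_S)
storage_mb_s = bandwidth_mb_s(EF_ACCEPT_HZ, EVENT_SIZE_MB)

print(f"Level-1 rejection      ~ {l1_rejection:.3g}")
print(f"Overall rejection      ~ {total_rejection:.3g}")
print(f"Events in flight at L2 ~ {l2_parallel_events:.0f}")
print(f"Output to storage      ~ {storage_mb_s:.0f} MB/s")
```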

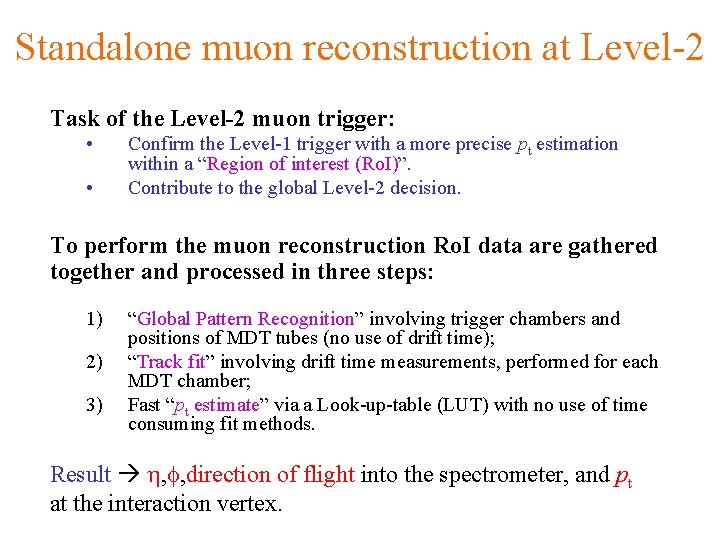

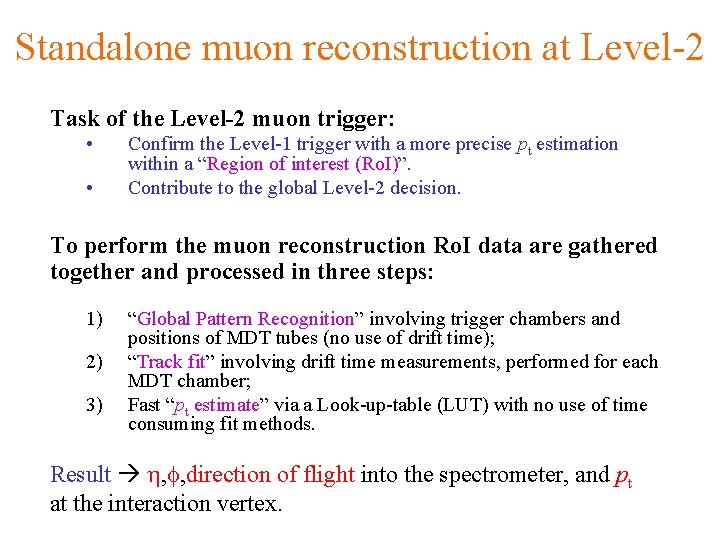

Standalone muon reconstruction at Level-2

Task of the Level-2 muon trigger:
- confirm the Level-1 trigger with a more precise $p_T$ estimate within a “Region of Interest” (RoI);
- contribute to the global Level-2 decision.

To perform the muon reconstruction, the RoI data are gathered together and processed in three steps:
1. “Global pattern recognition”, involving the trigger chambers and the positions of the MDT tubes (no use of drift time);
2. “Track fit”, involving the drift-time measurements, performed for each MDT chamber;
3. fast “$p_T$ estimate” via a look-up table (LUT), with no use of time-consuming fit methods.

Result: η, φ, direction of flight into the spectrometer, and $p_T$ at the interaction vertex.

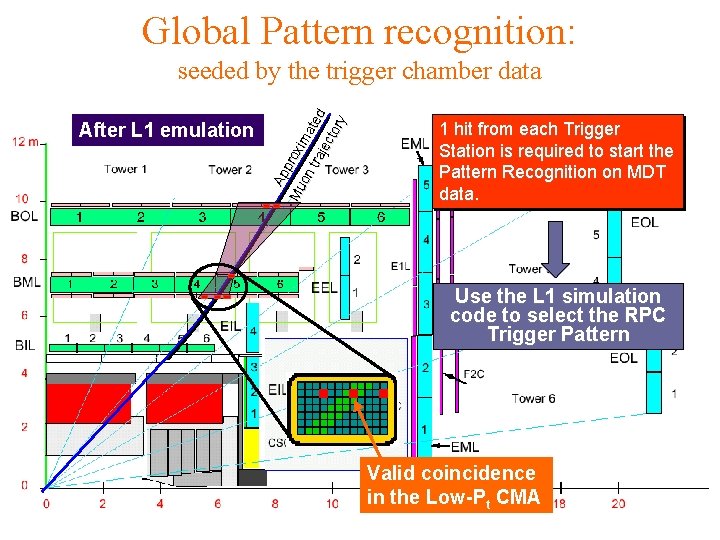

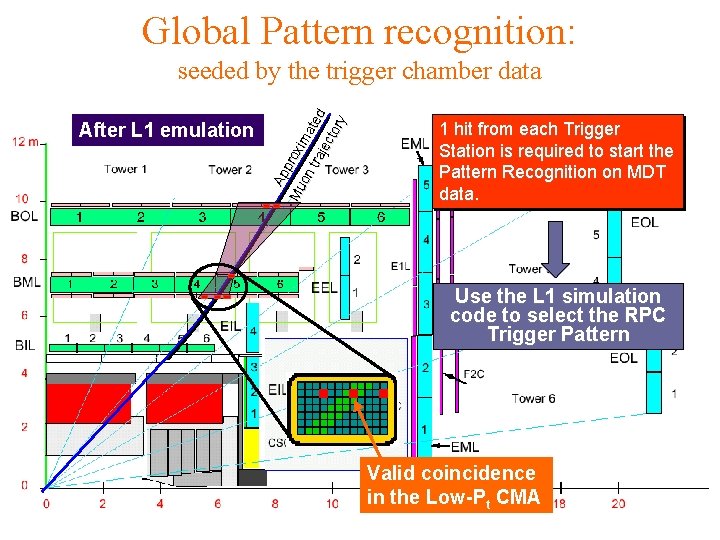

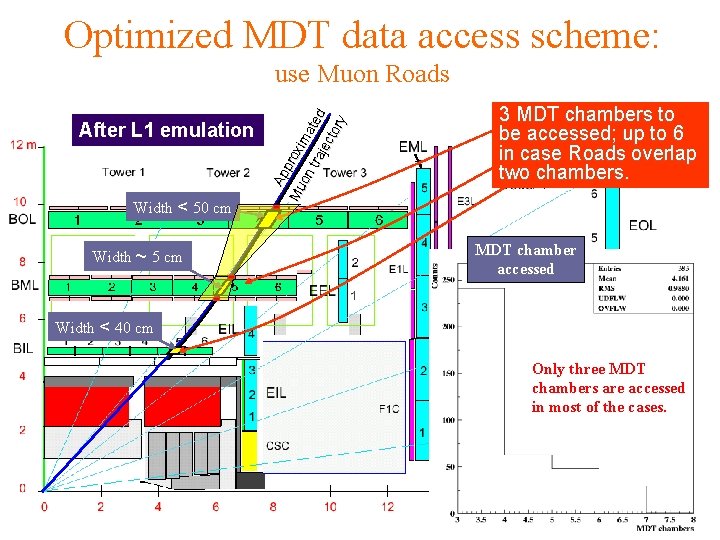

Global pattern recognition, seeded by the trigger-chamber data

- Use the Level-1 simulation code to select the RPC trigger pattern (valid coincidence in the low-$p_T$ CMA).
- One hit from each trigger station is required to start the pattern recognition on the MDT data.

[Figure: approximated muon trajectory after the Level-1 emulation.]

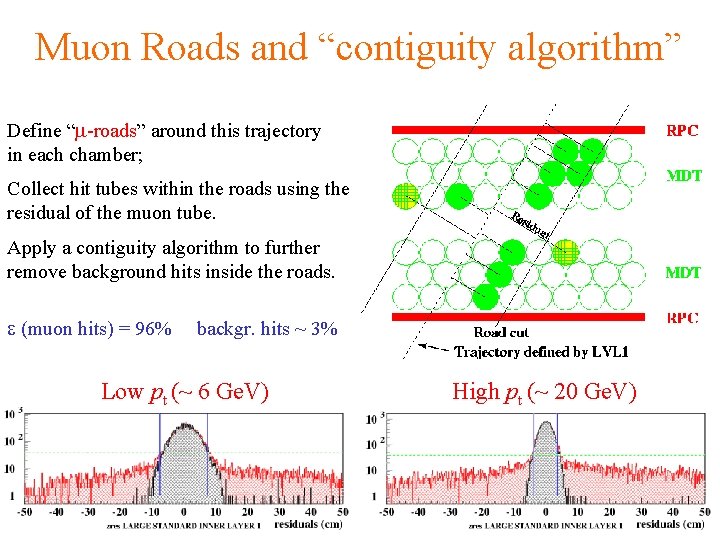

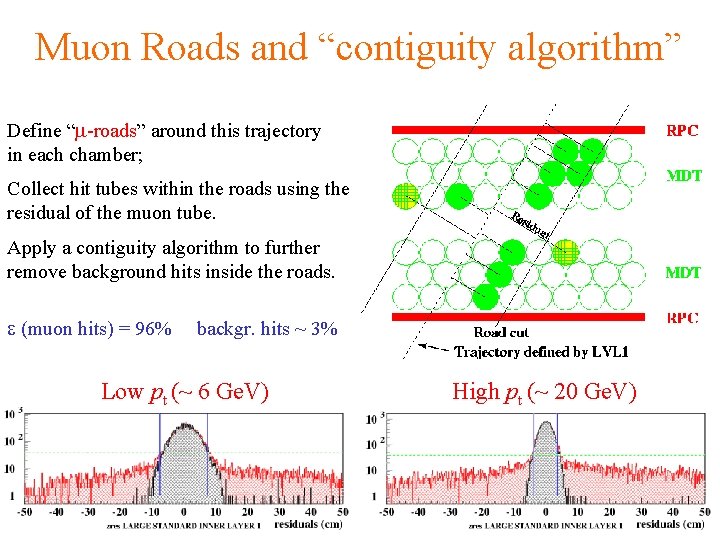

Muon roads and “contiguity algorithm”

- Define “μ-roads” around this trajectory in each chamber.
- Collect the hit tubes within the roads, using the residual of each tube with respect to the trajectory.
- Apply a contiguity algorithm to further remove background hits inside the roads.

Efficiency for muon hits: ε ≈ 96%; background hits: ~3%.

[Figure panels: low $p_T$ (~6 GeV) and high $p_T$ (~20 GeV).]
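The slides give neither the road widths nor the exact contiguity criterion, so the sketch below is only an illustration under stated assumptions: a road is an expected position per tube layer plus a fixed half-width, and the hypothetical contiguity rule keeps the longest chain of hits on consecutive layers whose positions change by at most `max_step`. `TubeHit`, `road_hits` and `contiguity_filter` are illustrative names, not μFast code.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class TubeHit:
    layer: int   # tube layer index within the chamber
    z: float     # tube-centre position along the measuring direction (cm)

def road_hits(hits, road_centre, road_half_width):
    """Keep hits whose residual w.r.t. the road centre lies inside the road.
    road_centre is a callable: layer -> expected trajectory position."""
    return [h for h in hits if abs(h.z - road_centre(h.layer)) <= road_half_width]

def contiguity_filter(hits, max_step):
    """Hypothetical contiguity rule: starting from every hit, follow the
    nearest hit on each next layer while the step stays <= max_step, and
    return the longest chain found."""
    by_layer = {}
    for h in hits:
        by_layer.setdefault(h.layer, []).append(h)
    best = []
    for start_layer in sorted(by_layer):
        for start in by_layer[start_layer]:
            chain = [start]
            layer = start_layer + 1
            while layer in by_layer:
                nxt = min(by_layer[layer], key=lambda h: abs(h.z - chain[-1].z))
                if abs(nxt.z - chain[-1].z) > max_step:
                    break
                chain.append(nxt)
                layer += 1
            if len(chain) > len(best):
                best = chain
    return best

hits = [TubeHit(0, 10.0), TubeHit(1, 10.5), TubeHit(1, 14.0),
        TubeHit(2, 11.0), TubeHit(2, 30.0), TubeHit(3, 11.5)]
in_road = road_hits(hits, lambda layer: 10.0 + 0.5 * layer, 5.0)
kept = contiguity_filter(in_road, 1.0)
print([h.z for h in kept])
```

Here the hit at 30 cm falls outside the road, while the hit at 14 cm survives the road cut but not the contiguity step.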

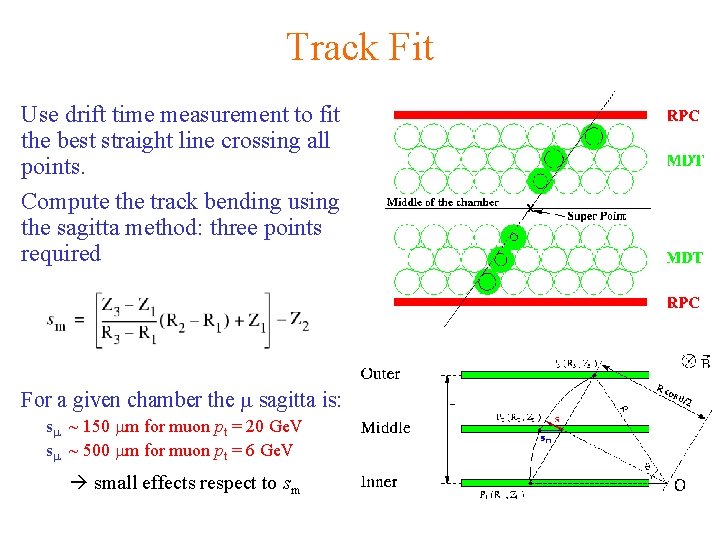

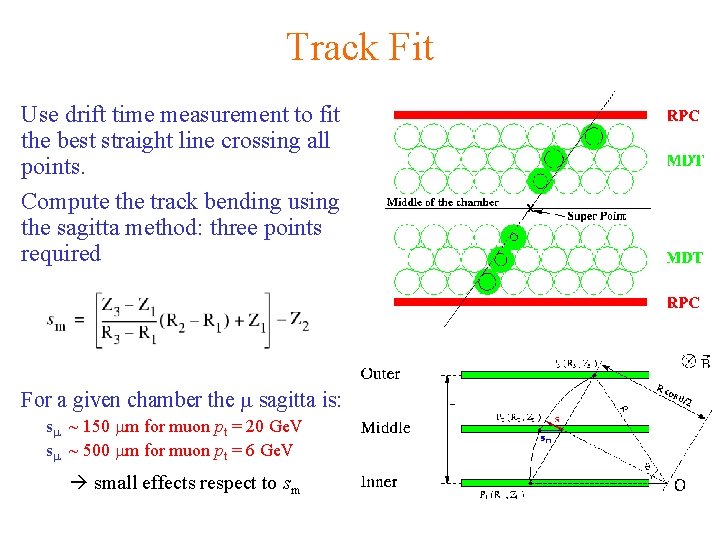

Track fit

- Use the drift-time measurements to fit the best straight line crossing all points.
- Compute the track bending using the sagitta method: three points are required.

For a given chamber configuration the muon sagitta is:
- $s_\mu \sim 150$ mm for a muon with $p_T = 20$ GeV;
- $s_\mu \sim 500$ mm for a muon with $p_T = 6$ GeV.

Other effects are small with respect to $s_\mu$.
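The sagitta of three points is the distance of the middle point from the chord joining the outer two. A minimal sketch, assuming each station (inner, middle, outer) has already been reduced to one point in the bending plane, for example from the per-chamber straight-line fit; the function name and the signed convention are illustrative choices.

```python
import math

def sagitta(p1, p2, p3):
    """Signed distance of the middle point p2 from the chord p1-p3.
    Points are (x, y) tuples in the bending plane; the result has the
    same units as the inputs and its sign gives the bending direction."""
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    dx, dy = x3 - x1, y3 - y1
    chord = math.hypot(dx, dy)
    if chord == 0.0:
        raise ValueError("outer points coincide")
    # 2D cross product of the chord with (p2 - p1), divided by the chord length
    return (dx * (y2 - y1) - dy * (x2 - x1)) / chord

print(sagitta((0.0, 0.0), (5.0, 1.0), (10.0, 0.0)))   # middle point 1 unit off the chord
```

Three aligned points give a sagitta of zero, i.e. an infinitely stiff track.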

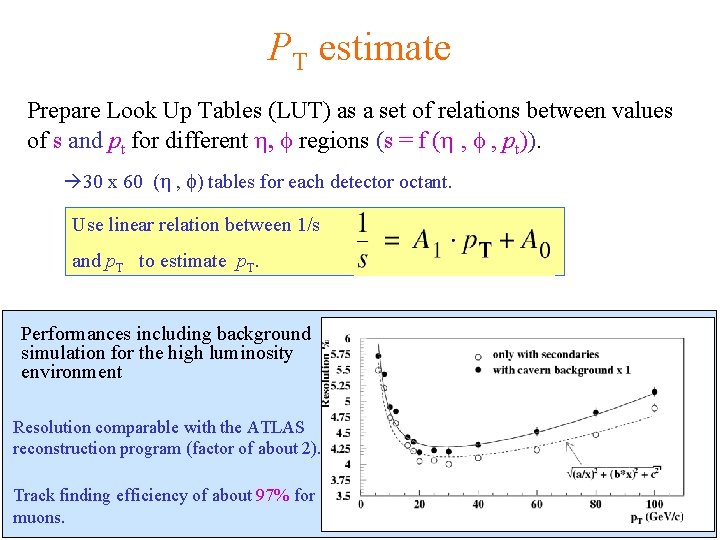

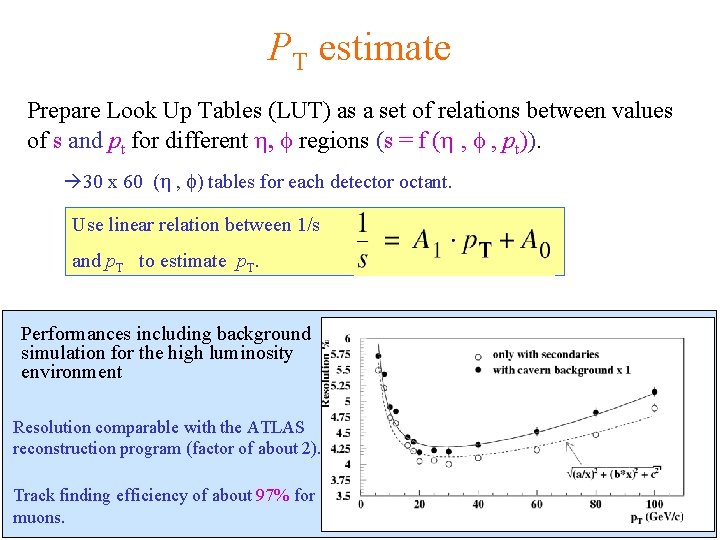

$p_T$ estimate

- Prepare look-up tables (LUT) as a set of relations between the values of the sagitta $s$ and $p_T$ for different (η, φ) regions: $s = f(\eta, \phi, p_T)$.
- 30 × 60 (η, φ) tables for each detector octant.
- Use the linear relation between $1/s$ and $p_T$ to estimate $p_T$.

Performance, including the background simulation for the high-luminosity environment:
- resolution comparable with the ATLAS reconstruction program (within a factor of about 2);
- track-finding efficiency of about 97% for muons.
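A sketch of a per-octant LUT lookup, assuming the linear relation is stored as $p_T = A(\eta,\phi)/s + B(\eta,\phi)$ in 30 × 60 bins. The η range (`ETA_MAX = 1.0`) and the constants used below are assumptions: with $A = 3000$ mm·GeV and $B = 0$ in every bin, the two reference values on the track-fit slide are reproduced exactly (150 mm × 20 GeV = 500 mm × 6 GeV = 3000 mm·GeV). The real tables hold per-bin constants that are not given here; `PtLut` is a hypothetical name.

```python
import math

N_ETA, N_PHI = 30, 60               # (eta, phi) bins per octant, as on the slide
ETA_MAX = 1.0                       # assumed |eta| range covered by the barrel LUT
PHI_OCTANT = 2.0 * math.pi / 8.0    # angular width of one detector octant

class PtLut:
    """Per-octant LUT holding (A, B) so that pT = A / s + B (linear in 1/s)."""

    def __init__(self, a, b):
        # a, b: N_ETA x N_PHI nested lists of calibration constants
        self.a, self.b = a, b

    @staticmethod
    def bin_of(eta, phi_in_octant):
        """Map (|eta|, phi within the octant) to LUT indices, clamping at the edges."""
        ie = min(int(abs(eta) / ETA_MAX * N_ETA), N_ETA - 1)
        ip = min(int(phi_in_octant / PHI_OCTANT * N_PHI), N_PHI - 1)
        return ie, ip

    def pt(self, eta, phi_in_octant, sagitta_mm):
        ie, ip = self.bin_of(eta, phi_in_octant)
        return self.a[ie][ip] / sagitta_mm + self.b[ie][ip]

# Illustrative constants only (see lead-in): A = 3000 mm*GeV, B = 0 everywhere.
lut = PtLut([[3000.0] * N_PHI for _ in range(N_ETA)],
            [[0.0] * N_PHI for _ in range(N_ETA)])
print(lut.pt(0.3, 0.1, 150.0), lut.pt(-0.3, 0.1, 500.0))
```

A table lookup plus one division keeps the cost per track constant, which is the point of avoiding a fit at this stage.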

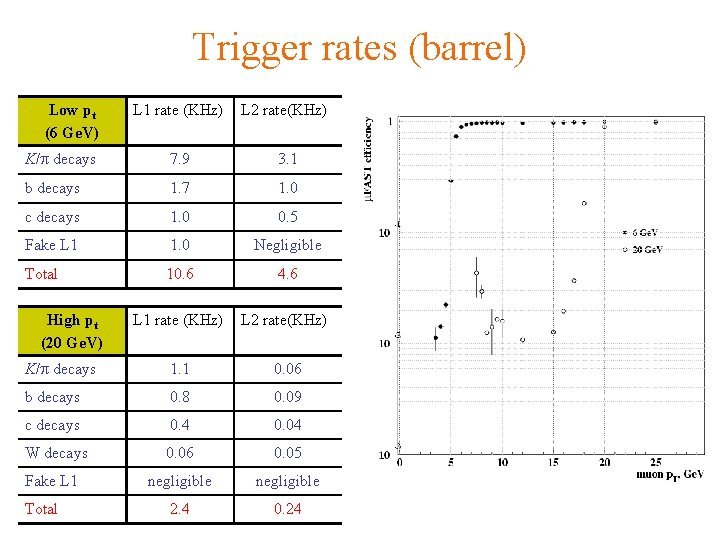

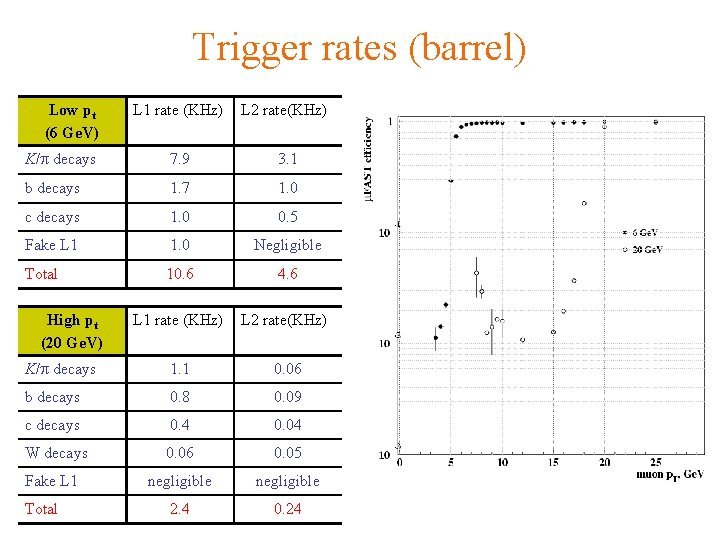

Trigger rates (barrel)

Low $p_T$ (6 GeV):

| Source | L1 rate (kHz) | L2 rate (kHz) |
|---|---|---|
| K/π decays | 7.9 | 3.1 |
| b decays | 1.7 | 1.0 |
| c decays | 1.0 | 0.5 |
| Fake L1 | 1.0 | negligible |
| Total | 10.6 | 4.6 |

High $p_T$ (20 GeV):

| Source | L1 rate (kHz) | L2 rate (kHz) |
|---|---|---|
| K/π decays | 1.1 | 0.06 |
| b decays | 0.8 | 0.09 |
| c decays | 0.4 | 0.04 |
| W decays | 0.06 | 0.05 |
| Fake L1 | negligible | negligible |
| Total | 2.4 | 0.24 |
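The Level-2 rejection factor is simply the ratio of the Level-1 to the Level-2 total rate. The short sketch below computes it from the tables and checks that the individual high-$p_T$ contributions add up to the quoted totals within rounding.

```python
# Totals from the barrel trigger-rate tables (kHz).
RATES_KHZ = {
    "low pT (6 GeV)":   {"L1": 10.6, "L2": 4.6},
    "high pT (20 GeV)": {"L1": 2.4,  "L2": 0.24},
}

# High-pT contributions: K/pi, b, c, W decays (fake L1 negligible).
HIGH_PT_L1 = [1.1, 0.8, 0.4, 0.06]
HIGH_PT_L2 = [0.06, 0.09, 0.04, 0.05]

def l2_rejection(rates):
    """Level-2 rejection factor = Level-1 accept rate / Level-2 accept rate."""
    return rates["L1"] / rates["L2"]

for name, rates in RATES_KHZ.items():
    print(f"{name}: L2 rejection = {l2_rejection(rates):.1f}")
print(f"high pT sums: L1 = {sum(HIGH_PT_L1):.2f} kHz, L2 = {sum(HIGH_PT_L2):.2f} kHz")
```

The rejection is about 2.3 at 6 GeV and 10 at 20 GeV: at low $p_T$ most Level-1 triggers come from real muons (K/π, b and c decays) that Level-2 cannot remove by a $p_T$ cut alone.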

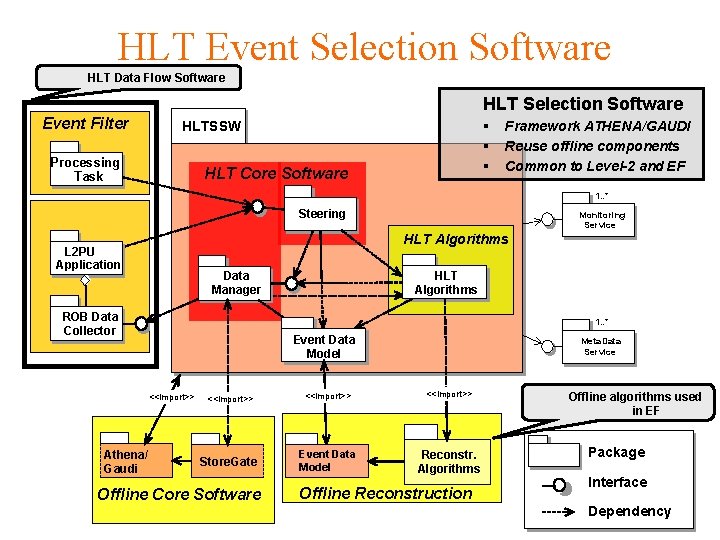

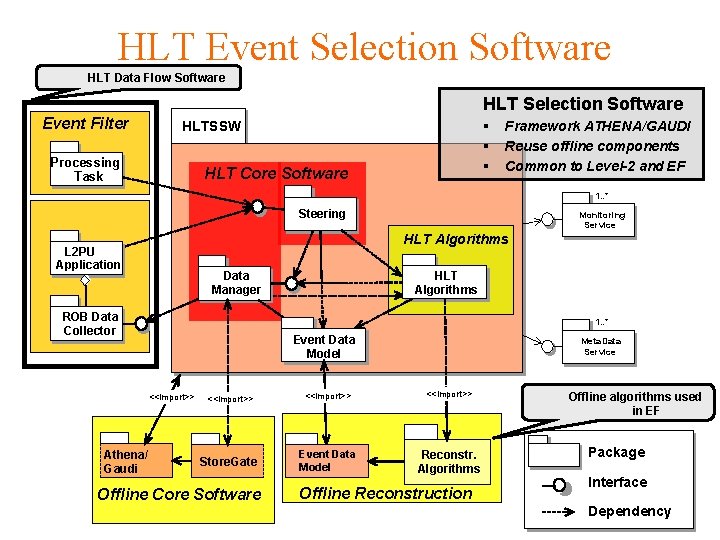

HLT Event Selection Software

- Framework: ATHENA/GAUDI.
- Reuse of offline components.
- Common to Level-2 and the Event Filter (EF).

[Figure: package diagram relating the HLT Data Flow Software (L2PU Application, ROB Data Collector, Event Filter Processing Task), the HLT Selection Software (HLTSSW: Steering, HLT Algorithms, Data Manager, Event Data Model, HLT Core Software) and the offline software (Athena/Gaudi, Monitoring Service, MetaData Service, StoreGate, Event Data Model, Reconstruction Algorithms, Offline Core Software, Offline Reconstruction); offline algorithms are used in the EF.]

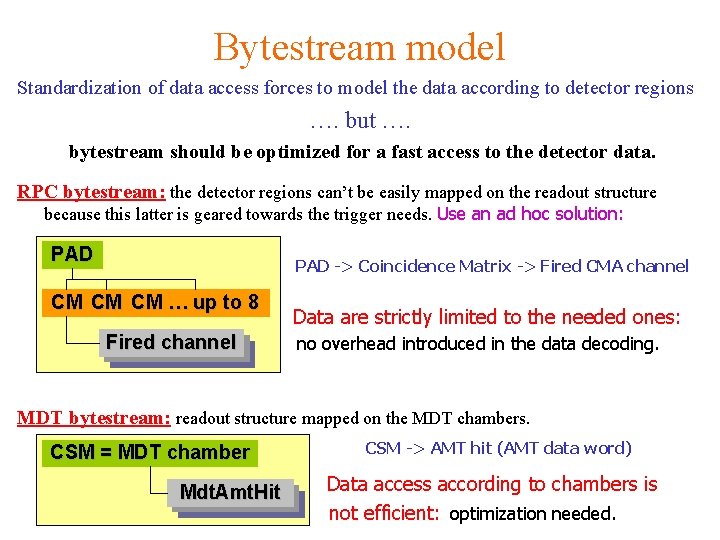

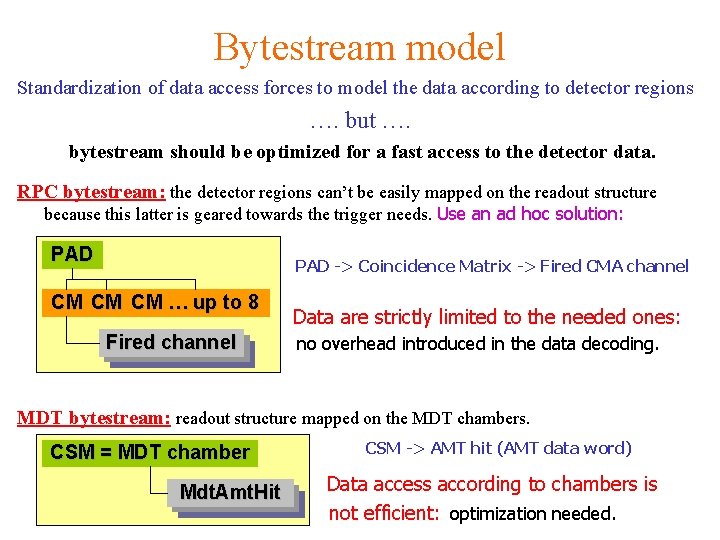

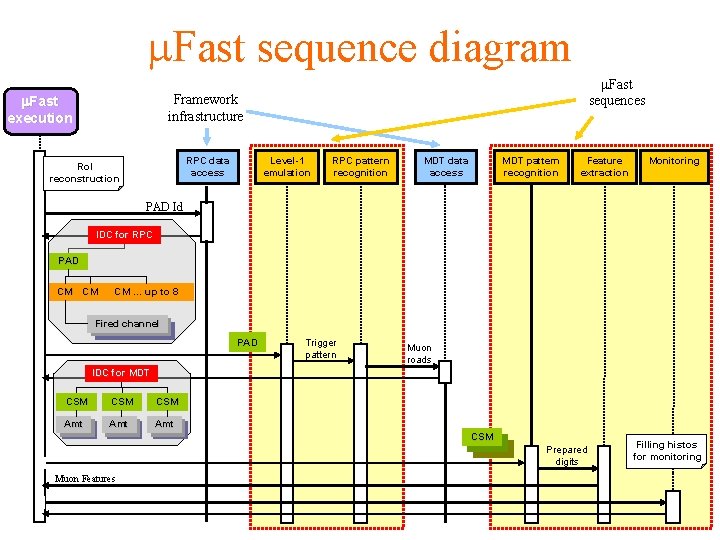

Bytestream model

Standardization of the data access forces the data to be modelled according to detector regions... but the bytestream should be optimized for fast access to the detector data.

RPC bytestream: the detector regions cannot easily be mapped onto the readout structure, because the latter is geared towards the trigger needs. An ad hoc solution is used: PAD -> Coincidence Matrix (CM, up to 8 per PAD) -> fired CMA channel. The data are strictly limited to those needed, so no overhead is introduced in the data decoding.

MDT bytestream: the readout structure is mapped onto the MDT chambers (one CSM = one MDT chamber): CSM -> AMT hit (AMT data word, MdtAmtHit). Data access organized by chambers is not efficient: optimization is needed.

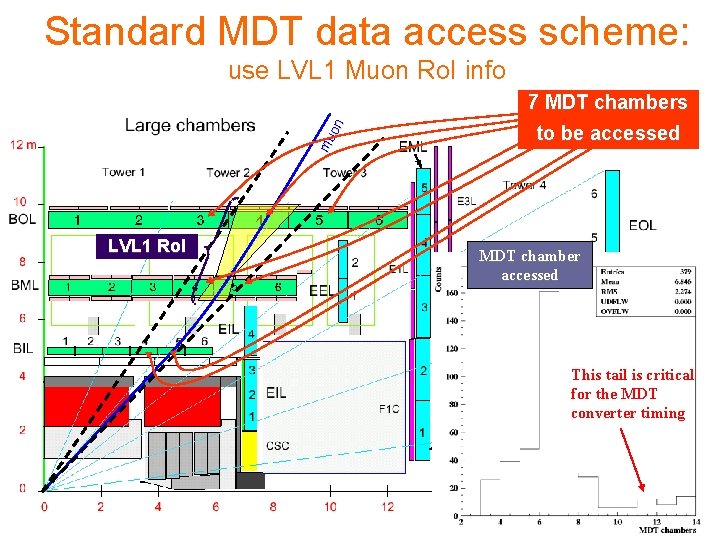

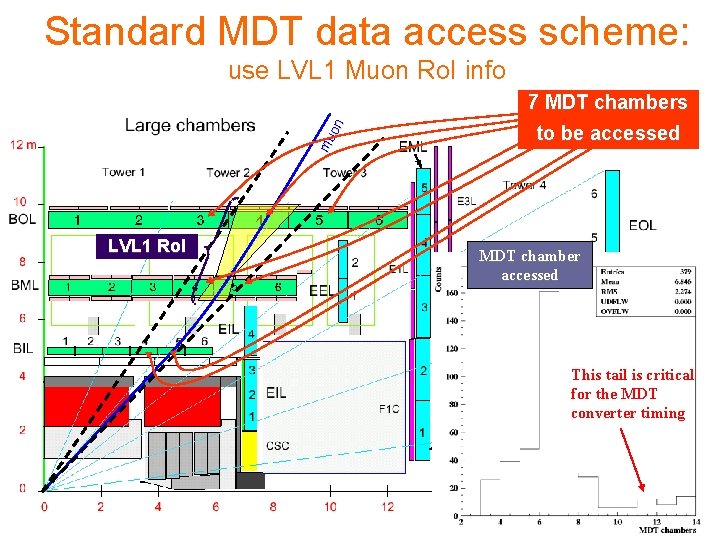

Standard MDT data access scheme: use the LVL1 muon RoI information.

- 7 MDT chambers to be accessed per LVL1 RoI.
- This tail is critical for the MDT converter timing.

[Figure: muon track, LVL1 RoI and the MDT chambers accessed.]

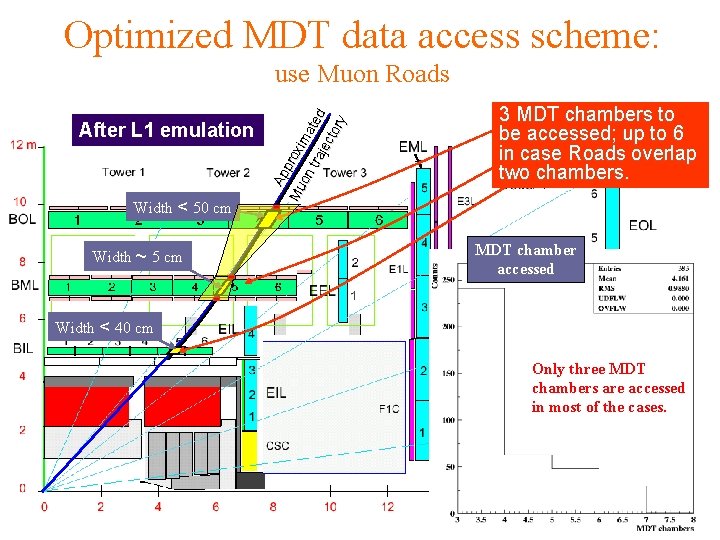

Optimized MDT data access scheme: use the muon roads defined after the Level-1 emulation.

- 3 MDT chambers to be accessed; up to 6 when the roads overlap two chambers.
- Only three MDT chambers are accessed in most cases.

[Figure: approximated muon trajectory after the Level-1 emulation, with road widths of < 50 cm, ~5 cm and < 40 cm, and the MDT chambers accessed.]
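Selecting chambers by roads amounts to an interval-overlap test per station: a chamber is read only if its extent intersects the road in that station, so a road crossing a chamber boundary pulls in two chambers. A minimal sketch; the chamber names, extents and the one-dimensional road model are hypothetical, and the real selection also uses the sector and the track path.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Chamber:
    name: str      # hypothetical chamber label
    station: str   # "inner", "middle" or "outer"
    zmin: float    # extent along the road coordinate (cm)
    zmax: float

def chambers_for_roads(chambers, roads):
    """roads: dict station -> (centre, half_width), in cm.
    Return the names of the chambers overlapping the road of their station."""
    selected = []
    for ch in chambers:
        if ch.station not in roads:
            continue
        centre, half = roads[ch.station]
        lo, hi = centre - half, centre + half
        if ch.zmax >= lo and ch.zmin <= hi:   # closed-interval overlap test
            selected.append(ch.name)
    return selected

CHAMBERS = [Chamber("I1", "inner", 0, 200), Chamber("I2", "inner", 200, 400),
            Chamber("M1", "middle", 0, 250), Chamber("M2", "middle", 250, 500),
            Chamber("O1", "outer", 0, 300), Chamber("O2", "outer", 300, 600)]

# Road half-widths loosely follow the slide (~5 cm and < 40 cm full widths).
inside = chambers_for_roads(CHAMBERS, {"inner": (100.0, 2.5), "middle": (150.0, 20.0),
                                       "outer": (100.0, 20.0)})
boundary = chambers_for_roads(CHAMBERS, {"inner": (100.0, 2.5), "middle": (150.0, 20.0),
                                         "outer": (290.0, 20.0)})
print(inside, boundary)
```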

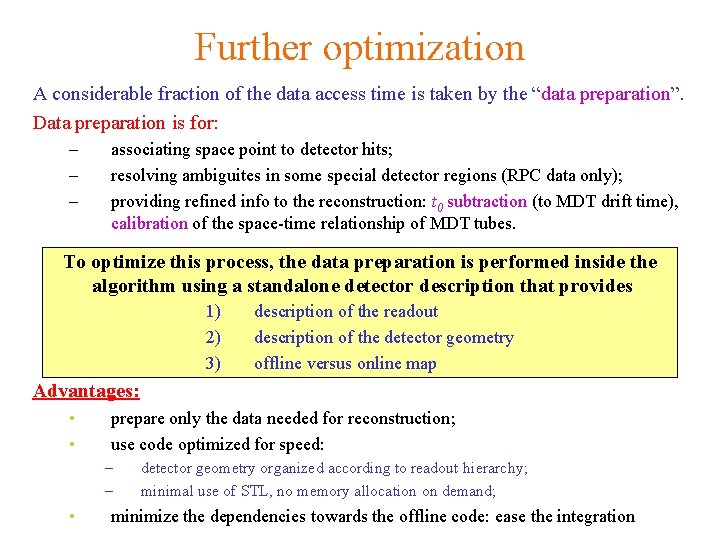

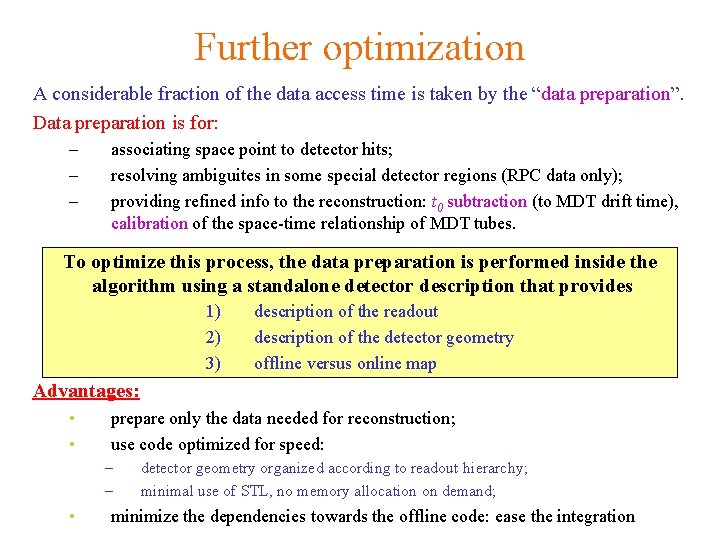

Further optimization

A considerable fraction of the data access time is taken by the “data preparation”. Data preparation consists of:
- associating space points to the detector hits;
- resolving ambiguities in some special detector regions (RPC data only);
- providing refined information to the reconstruction: $t_0$ subtraction for the MDT drift time, and calibration of the space-time relationship of the MDT tubes.

To optimize this process, the data preparation is performed inside the algorithm, using a standalone detector description that provides:
1. a description of the readout;
2. a description of the detector geometry;
3. the offline versus online map.

Advantages:
- only the data needed for the reconstruction are prepared;
- the code is optimized for speed:
  - the detector geometry is organized according to the readout hierarchy;
  - minimal use of STL, no memory allocation on demand;
- the dependencies on the offline code are minimized, which eases the integration.
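The MDT part of the data preparation can be sketched as two steps: subtract $t_0$ from the raw TDC time, then convert the drift time to a drift radius through a tabulated space-time (r-t) relation. Linear interpolation between table points and clamping outside the table are assumptions of this sketch, and the r-t table in the usage line is purely illustrative, not an MDT calibration.

```python
from bisect import bisect_right

def drift_radius(tdc_time_ns, t0_ns, rt_times_ns, rt_radii_mm):
    """Prepare one MDT hit: subtract t0, then convert the drift time into a
    drift radius by linear interpolation in a tabulated r(t) relation
    (rt_times_ns must be increasing). Times outside the table are clamped."""
    t = tdc_time_ns - t0_ns
    if t <= rt_times_ns[0]:
        return rt_radii_mm[0]
    if t >= rt_times_ns[-1]:
        return rt_radii_mm[-1]
    i = bisect_right(rt_times_ns, t) - 1          # table interval containing t
    t_lo, t_hi = rt_times_ns[i], rt_times_ns[i + 1]
    r_lo, r_hi = rt_radii_mm[i], rt_radii_mm[i + 1]
    return r_lo + (r_hi - r_lo) * (t - t_lo) / (t_hi - t_lo)

# Illustrative r-t table (not a real calibration).
RT_T = [0.0, 100.0, 300.0, 700.0]
RT_R = [0.0, 3.0, 8.0, 14.6]
print(drift_radius(800.0, 600.0, RT_T, RT_R))   # t = 200 ns after t0 subtraction
```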

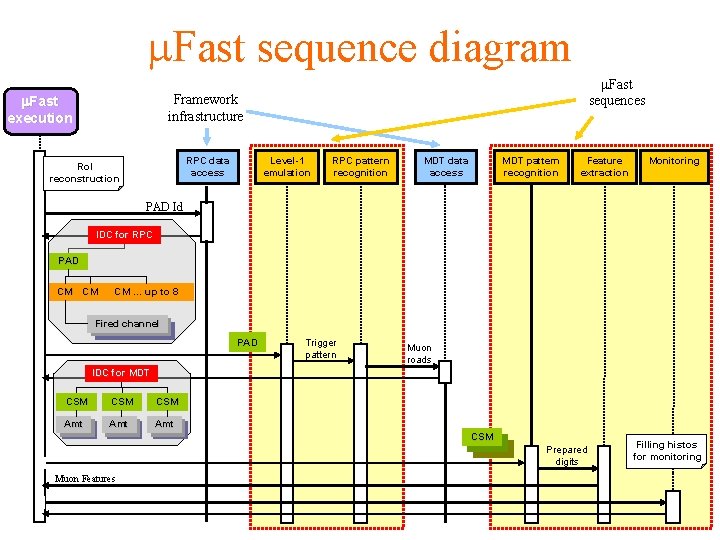

μFast sequence diagram

[Figure: μFast sequence diagram, showing the framework infrastructure and the μFast execution. The RoI reconstruction proceeds through: RPC data access (PAD Id -> IDC for RPC -> PAD -> CM, up to 8 -> fired channels); Level-1 emulation (trigger pattern); RPC pattern recognition (muon roads); MDT data access (IDC for MDT -> CSM -> AMT hits -> prepared digits); MDT pattern recognition; feature extraction (muon features); monitoring (filling histograms).]

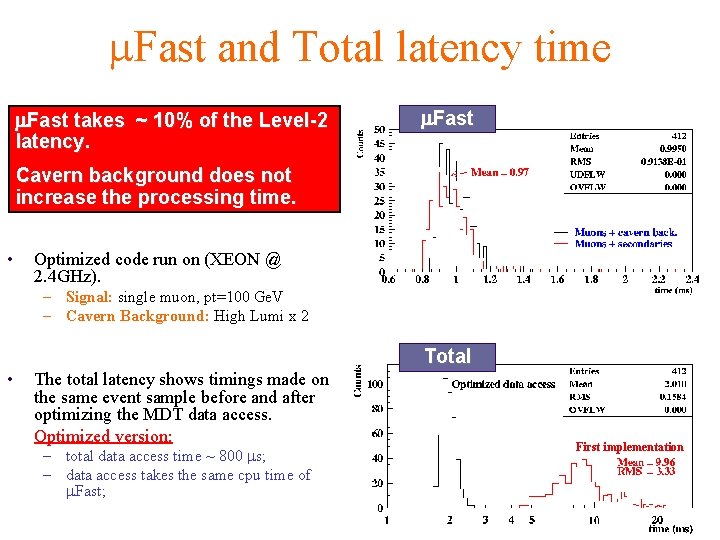

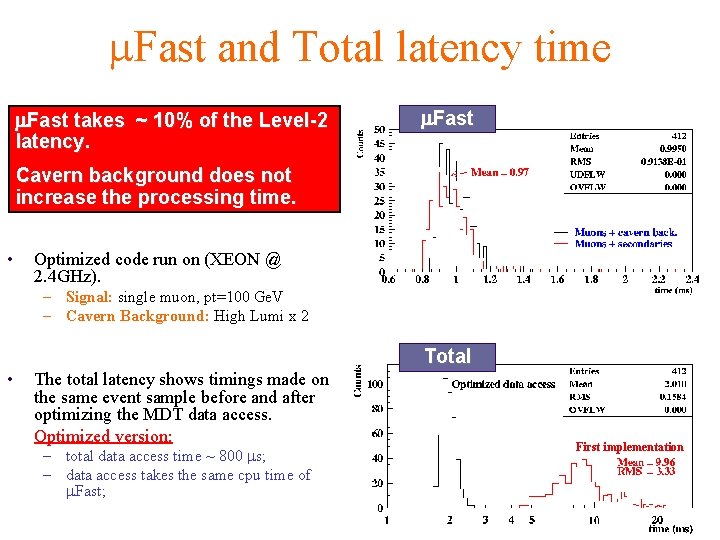

μFast and total latency time

- μFast takes ~10% of the Level-2 latency.
- Cavern background does not increase the μFast processing time.
- Optimized code run on a XEON @ 2.4 GHz:
  - signal: single muon, $p_T = 100$ GeV;
  - cavern background: high luminosity × 2.
- The total latency plot shows timings on the same event sample before (first implementation) and after optimizing the MDT data access. Optimized version:
  - total data access time ~800 μs;
  - the data access takes the same CPU time as μFast.

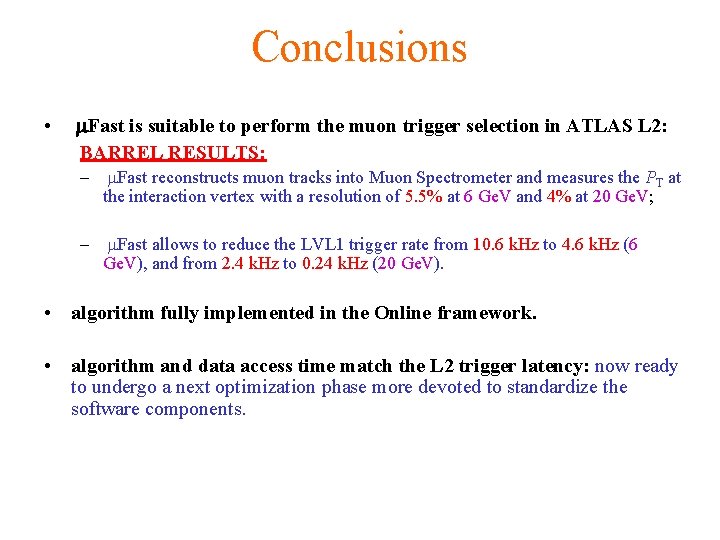

Conclusions
- μFast is suitable to perform the muon trigger selection in the ATLAS Level-2. Barrel results:
  - μFast reconstructs muon tracks in the Muon Spectrometer and measures the $p_T$ at the interaction vertex with a resolution of 5.5% at 6 GeV and 4% at 20 GeV;
  - μFast reduces the LVL1 trigger rate from 10.6 kHz to 4.6 kHz (6 GeV) and from 2.4 kHz to 0.24 kHz (20 GeV).
- The algorithm is fully implemented in the online framework.
- The algorithm and data access times match the Level-2 trigger latency: the code is now ready for a further optimization phase, devoted mainly to standardizing the software components.

Backup transparencies

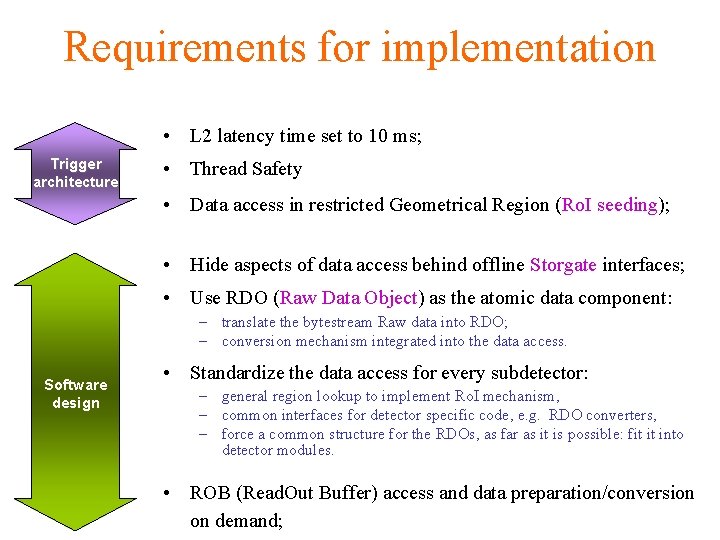

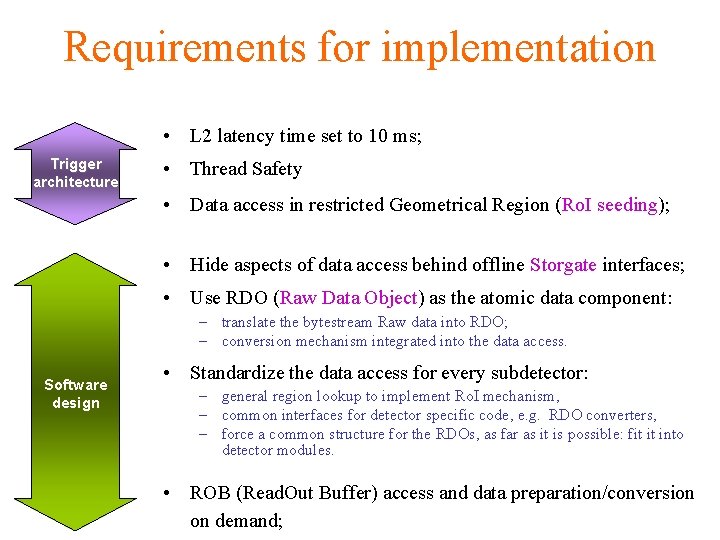

Requirements for implementation (trigger architecture and software design)
- Level-2 latency time set to 10 ms.
- Thread safety.
- Data access in restricted geometrical regions (RoI seeding).
- Hide the aspects of data access behind the offline StoreGate interfaces.
- Use the RDO (Raw Data Object) as the atomic data component:
  - translate the bytestream raw data into RDOs;
  - integrate the conversion mechanism into the data access.
- Standardize the data access for every subdetector:
  - general region lookup to implement the RoI mechanism;
  - common interfaces for detector-specific code, e.g. RDO converters;
  - force a common structure for the RDOs as far as possible: fit them into detector modules.
- ROB (ReadOut Buffer) access and data preparation/conversion on demand.

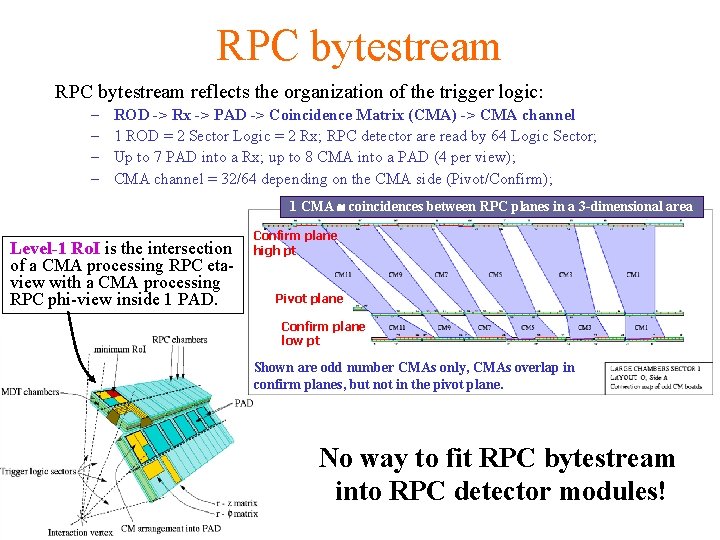

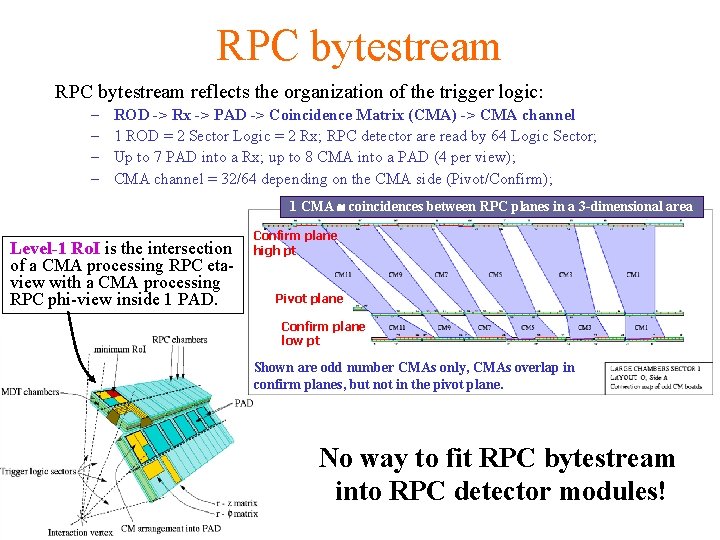

The RPC bytestream reflects the organization of the trigger logic: ROD -> Rx -> PAD -> Coincidence Matrix (CMA) -> CMA channel.
- 1 ROD = 2 Sector Logic = 2 Rx; the RPC detectors are read out by 64 Sector Logic;
- up to 7 PADs in one Rx; up to 8 CMAs in one PAD (4 per view);
- 32 or 64 channels per CMA, depending on the CMA side (pivot/confirm);
- 1 CMA computes coincidences between RPC planes in a 3-dimensional area.

A Level-1 RoI is the intersection of a CMA processing the RPC η view with a CMA processing the RPC φ view inside one PAD.

[Figure: confirm plane (high $p_T$), pivot plane and confirm plane (low $p_T$); only odd-numbered CMAs are shown. CMAs overlap in the confirm planes, but not in the pivot plane.]

There is no way to fit the RPC bytestream into RPC detector modules!
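The readout counts above fix the size of the RPC bytestream hierarchy. The sketch below only multiplies the quoted maxima; the number of RoIs per PAD is an upper bound under the assumption that every (η-CMA, φ-CMA) pair inside a PAD can define an RoI, which the slide does not state explicitly.

```python
SECTOR_LOGICS = 64      # Sector Logic reading out the RPC detectors
SL_PER_ROD = 2          # 1 ROD = 2 Sector Logic = 2 Rx (1 Rx per Sector Logic)
MAX_PADS_PER_RX = 7
MAX_CMAS_PER_PAD = 8    # 4 per view (eta, phi)
CMAS_PER_VIEW = MAX_CMAS_PER_PAD // 2

n_rods = SECTOR_LOGICS // SL_PER_ROD                   # RODs for the RPC system
max_pads_total = SECTOR_LOGICS * MAX_PADS_PER_RX       # upper bound on PADs
max_cmas_per_rx = MAX_PADS_PER_RX * MAX_CMAS_PER_PAD
# Assumption: any eta-CMA x phi-CMA pair in one PAD may define an RoI.
max_roi_per_pad = CMAS_PER_VIEW * CMAS_PER_VIEW

print(n_rods, max_pads_total, max_cmas_per_rx, max_roi_per_pad)
```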

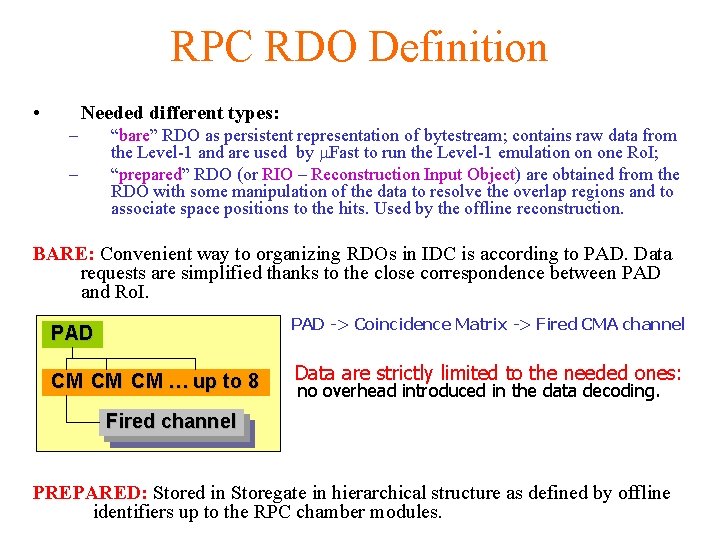

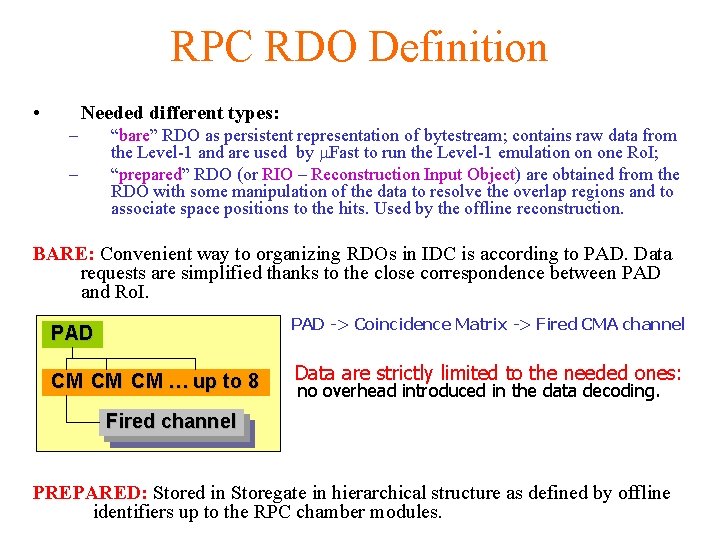

RPC RDO definition

Different types are needed:
- “bare” RDOs, as the persistent representation of the bytestream: they contain the raw data from the Level-1 and are used by μFast to run the Level-1 emulation on one RoI;
- “prepared” RDOs (or RIOs, Reconstruction Input Objects), obtained from the RDOs by manipulating the data to resolve the overlap regions and to associate space positions to the hits. They are used by the offline reconstruction.

BARE: the convenient way to organize the RDOs in the IDC is by PAD. Data requests are simplified thanks to the close correspondence between PAD and RoI: PAD -> Coincidence Matrix (CM, up to 8 per PAD) -> fired CMA channel. The data are strictly limited to those needed: no overhead is introduced in the data decoding.

PREPARED: stored in StoreGate in a hierarchical structure, as defined by the offline identifiers, up to the RPC chamber modules.
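The “bare” RPC RDO layout can be pictured as a container keyed by PAD, so that serving an RoI is a single lookup. A minimal sketch of that idea; the class names and the plain dict standing in for the IDC are hypothetical, not the ATLAS classes.

```python
from dataclasses import dataclass, field

@dataclass
class CoincidenceMatrix:
    cma_id: int
    fired: list = field(default_factory=list)   # fired CMA channel numbers

@dataclass
class Pad:
    pad_id: int
    cms: list = field(default_factory=list)     # up to 8 CoincidenceMatrix

    def add_cm(self, cm):
        if len(self.cms) >= 8:
            raise ValueError("a PAD holds at most 8 coincidence matrices")
        self.cms.append(cm)

class RpcPadContainer:
    """IDC-like container keyed by PAD id: one RoI request -> one lookup."""

    def __init__(self):
        self._pads = {}

    def add(self, pad):
        self._pads[pad.pad_id] = pad

    def for_roi(self, pad_id):
        return self._pads.get(pad_id)   # None if the PAD sent no data

container = RpcPadContainer()
pad = Pad(5)
for i in range(8):
    pad.add_cm(CoincidenceMatrix(i, [i]))
container.add(pad)
print(len(container.for_roi(5).cms))
```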

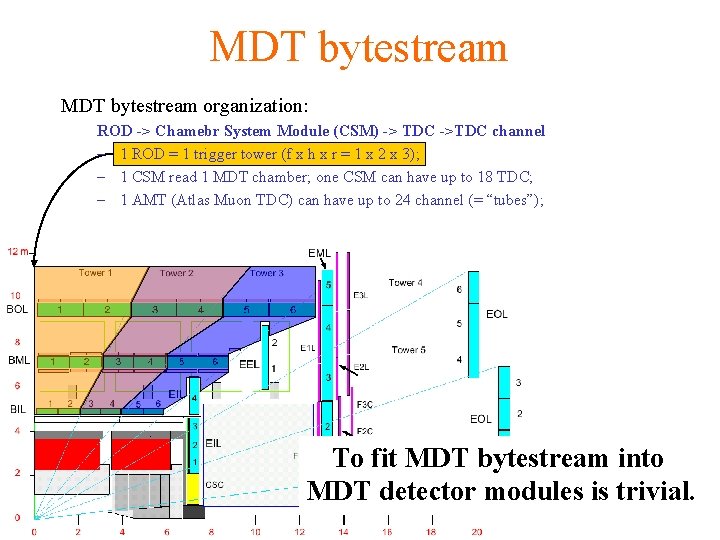

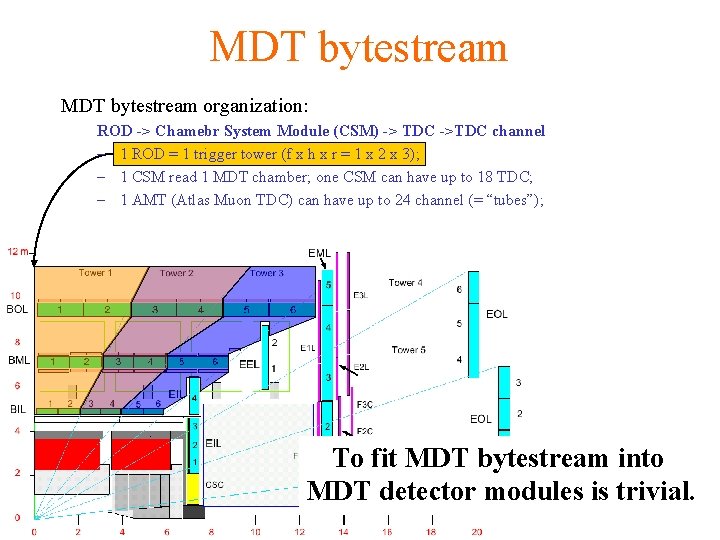

MDT bytestream organization: ROD -> Chamber System Module (CSM) -> TDC -> TDC channel.
- 1 ROD = 1 trigger tower (φ × η × r = 1 × 2 × 3);
- 1 CSM reads out 1 MDT chamber; one CSM can have up to 18 TDCs;
- 1 AMT (ATLAS Muon TDC) can have up to 24 channels (= tubes).

Fitting the MDT bytestream into MDT detector modules is trivial.
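The limits above bound the size of one CSM and one ROD. A short sketch multiplying them out; the chambers-per-ROD figure assumes one MDT chamber (hence one CSM) per φ × η × r cell of the trigger tower, an interpretation not spelled out on the slide.

```python
MAX_TDCS_PER_CSM = 18        # AMT chips per CSM
MAX_CHANNELS_PER_AMT = 24    # tubes per AMT
TOWER_PHI, TOWER_ETA, TOWER_R = 1, 2, 3

max_tubes_per_csm = MAX_TDCS_PER_CSM * MAX_CHANNELS_PER_AMT
# Assumption: one chamber/CSM per (phi, eta, r) cell of the trigger tower.
csms_per_rod = TOWER_PHI * TOWER_ETA * TOWER_R
max_tubes_per_rod = csms_per_rod * max_tubes_per_csm

print(max_tubes_per_csm, csms_per_rod, max_tubes_per_rod)
```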

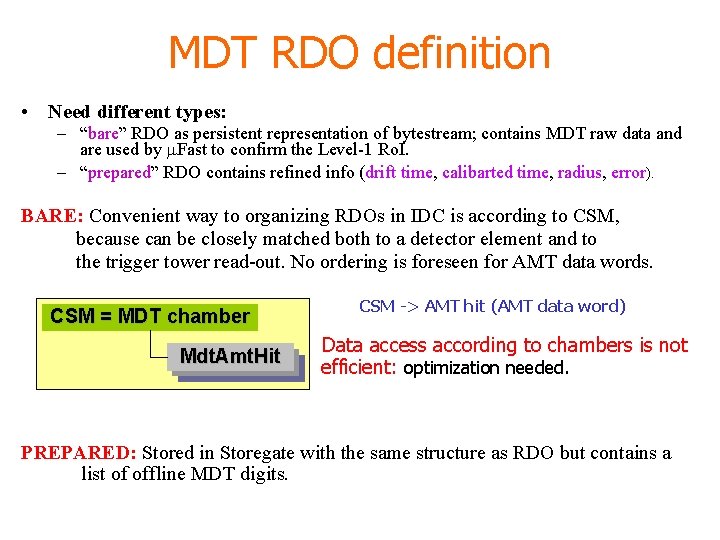

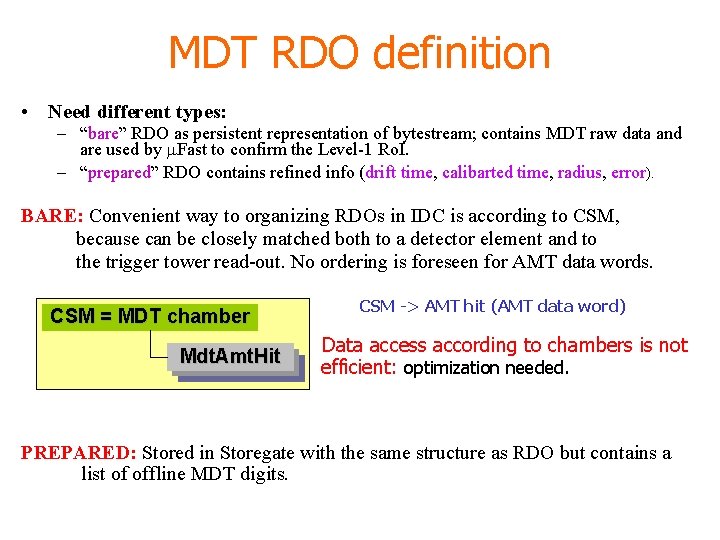

MDT RDO definition

Different types are needed:
- “bare” RDOs, as the persistent representation of the bytestream: they contain the MDT raw data and are used by μFast to confirm the Level-1 RoI;
- “prepared” RDOs, containing refined information (drift time, calibrated time, radius, error).

BARE: the convenient way to organize the RDOs in the IDC is by CSM, because a CSM can be closely matched both to a detector element and to the trigger-tower readout. No ordering is foreseen for the AMT data words. CSM = MDT chamber: CSM -> AMT hit (AMT data word, MdtAmtHit). Data access organized by chambers is not efficient: optimization is needed.

PREPARED: stored in StoreGate with the same structure as the RDOs, but containing a list of offline MDT digits.
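Because no ordering is foreseen for the AMT data words, picking the hits of a few tubes out of a CSM means scanning every word, so the cost grows with the chamber occupancy. A minimal sketch of a CSM-keyed bare RDO; `AmtHit`, `Csm` and `hits_in_tubes` are hypothetical names, and the address limits are the 18 TDCs × 24 channels quoted above.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class AmtHit:
    tdc: int       # AMT (TDC) number inside the CSM, 0..17
    channel: int   # channel (tube) inside the AMT, 0..23
    time: int      # raw TDC count

    def __post_init__(self):
        if not (0 <= self.tdc < 18 and 0 <= self.channel < 24):
            raise ValueError("AMT address outside the CSM/AMT limits")

@dataclass
class Csm:
    chamber: str                                # hypothetical chamber label
    hits: list = field(default_factory=list)    # unordered AMT data words

    def hits_in_tubes(self, wanted):
        """AMT words are unordered, so every word is scanned: the cost is
        linear in the number of hits stored for the chamber."""
        return [h for h in self.hits if (h.tdc, h.channel) in wanted]

csm = Csm("chamber-A")
csm.hits += [AmtHit(0, 1, 100), AmtHit(3, 5, 200), AmtHit(0, 2, 150)]
print([h.time for h in csm.hits_in_tubes({(0, 1), (0, 2)})])
```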

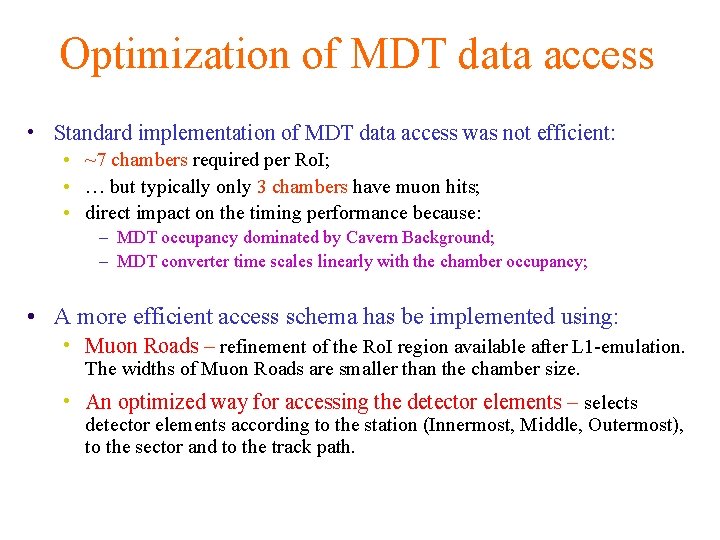

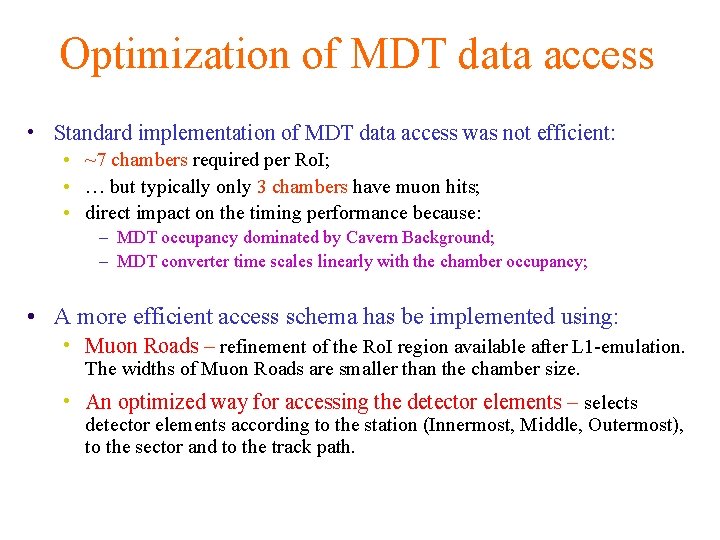

Optimization of MDT data access
- The standard implementation of the MDT data access was not efficient:
  - ~7 chambers required per RoI;
  - ... but typically only 3 chambers have muon hits;
  - direct impact on the timing performance, because:
    - the MDT occupancy is dominated by the cavern background;
    - the MDT converter time scales linearly with the chamber occupancy.
- A more efficient access scheme has been implemented, using:
  - Muon Roads: a refinement of the RoI region, available after the L1 emulation; the widths of the muon roads are smaller than the chamber size;
  - an optimized way of accessing the detector elements, which selects them according to the station (innermost, middle, outermost), the sector and the track path.
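Since the converter time scales linearly with occupancy, a simple cost model shows why going from ~7 to 3 chambers matters. The sketch assumes each accessed chamber costs a fixed overhead plus a per-hit cost; the constants and the occupancy are hypothetical. With equal occupancy per chamber the saving is 3/7 regardless of the constants.

```python
def converter_time_us(occupancies, t_fixed_us, t_per_hit_us):
    """Linear cost model: each accessed chamber costs a fixed overhead plus
    a per-hit decoding cost (all constants hypothetical)."""
    return sum(t_fixed_us + t_per_hit_us * n for n in occupancies)

OCCUPANCY = 40          # hypothetical hits per chamber, background dominated
T_FIXED_US = 5.0        # hypothetical per-chamber overhead
T_PER_HIT_US = 0.5      # hypothetical per-hit cost

standard = converter_time_us([OCCUPANCY] * 7, T_FIXED_US, T_PER_HIT_US)
optimized = converter_time_us([OCCUPANCY] * 3, T_FIXED_US, T_PER_HIT_US)
print(standard, optimized, optimized / standard)
```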

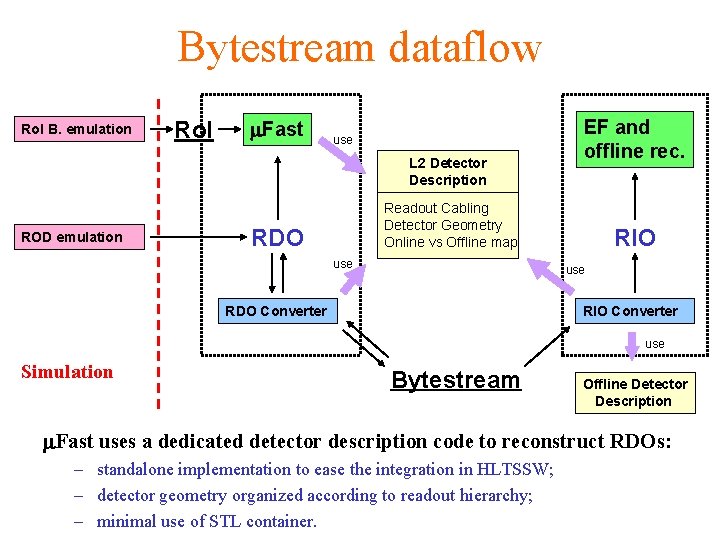

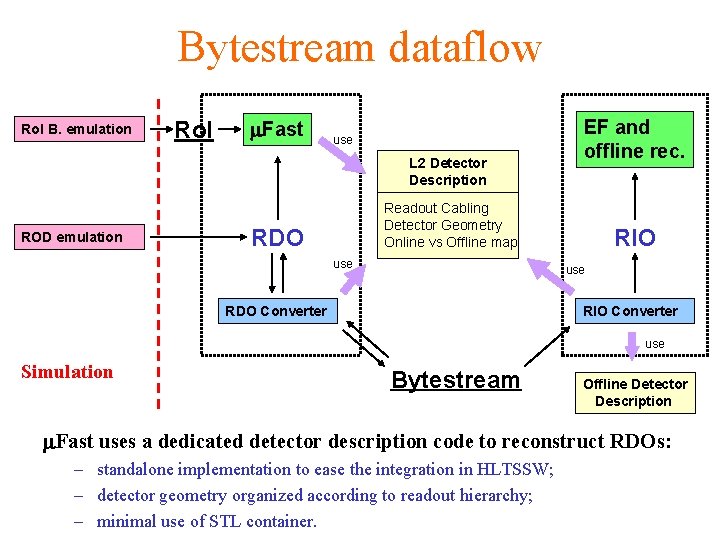

Bytestream dataflow

[Figure: bytestream dataflow between simulation, ROD emulation, the bytestream, the RDO and RIO converters, the offline detector description (used by the EF and offline reconstruction) and the L2 detector description (readout cabling, detector geometry, online vs offline map) used by μFast on the RoI data.]

μFast uses dedicated detector-description code to reconstruct RDOs:
- standalone implementation, to ease the integration in HLTSSW;
- detector geometry organized according to the readout hierarchy;
- minimal use of STL containers.

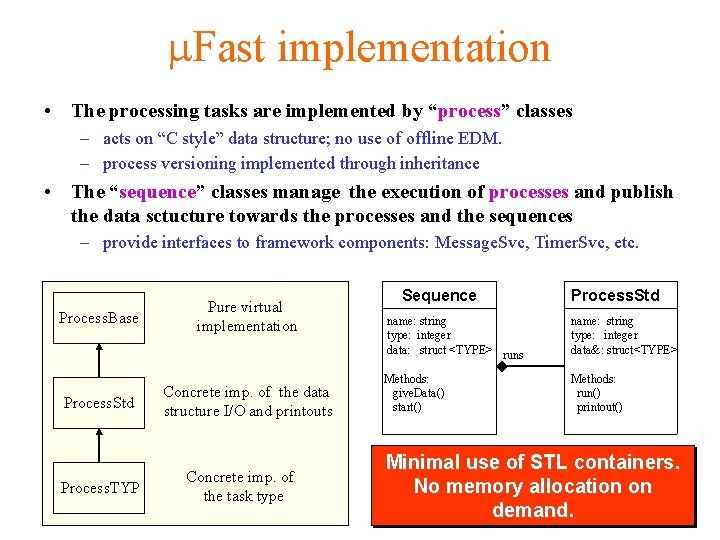

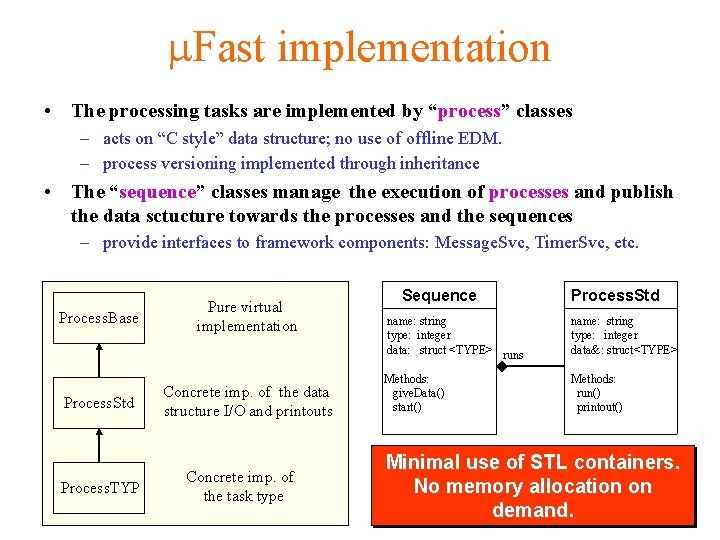

μFast implementation
- The processing tasks are implemented by “process” classes:
  - they act on “C-style” data structures; the offline EDM is not used;
  - process versioning is implemented through inheritance.
- The “sequence” classes manage the execution of the processes and publish the data structures towards the processes and the sequences:
  - they provide interfaces to framework components: MessageSvc, TimerSvc, etc.

[Figure: class diagram. ProcessBase (pure virtual implementation) -> ProcessStd (concrete implementation of the data structure, I/O and printouts) -> ProcessTYP (concrete implementation of the task type). A Sequence (name: string, type: integer, data: struct<TYPE>; methods: giveData(), start()) runs ProcessStd objects (name: string, type: integer, data&: struct<TYPE>; methods: run(), printout()).]

Minimal use of STL containers. No memory allocation on demand.
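The process/sequence split can be mirrored in a few classes: an abstract base, a standard layer holding a reference to data owned by the sequence (the `data&` member), and a concrete task type. This is a Python sketch of the pattern under those assumptions, not the μFast C++ code; `CountHitsProcess` is a hypothetical task, and `give_data()` stands in for `giveData()`. The data structure is created once by the sequence and shared, echoing the “no memory allocation on demand” rule.

```python
from abc import ABC, abstractmethod

class ProcessBase(ABC):
    """Pure-virtual interface of a processing task."""

    @abstractmethod
    def run(self): ...

    @abstractmethod
    def printout(self): ...

class ProcessStd(ProcessBase):
    """Common layer: keeps a reference to the data owned by the sequence
    (no copy) and implements the printout."""

    def __init__(self, name, ptype, data):
        self.name, self.type, self.data = name, ptype, data

    def printout(self):
        return f"{self.name} (type {self.type}): {self.data}"

class CountHitsProcess(ProcessStd):
    """Hypothetical concrete task type (a "ProcessTYP")."""

    def run(self):
        self.data["n_hits"] = len(self.data["hits"])

class Sequence:
    """Owns the data structure, publishes it to its processes and runs them."""

    def __init__(self, name, ptype, data):
        self.name, self.type, self.data = name, ptype, data
        self.processes = []

    def give_data(self):
        return self.data

    def add(self, process_cls, name):
        self.processes.append(process_cls(name, self.type, self.give_data()))

    def start(self):
        for process in self.processes:
            process.run()

seq = Sequence("muFast", 0, {"hits": [3, 7, 9], "n_hits": 0})
seq.add(CountHitsProcess, "count")
seq.start()
print(seq.processes[0].printout())
```

Versioning a task then means subclassing `ProcessStd` again, without touching the sequence.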