Uncertainty Russell and Norvig Chapter 14 15 Koller

- Slides: 55

Uncertainty Russell and Norvig: Chapter 14, 15 Koller article on BNs CMCS 424 Spring 2002 April 23

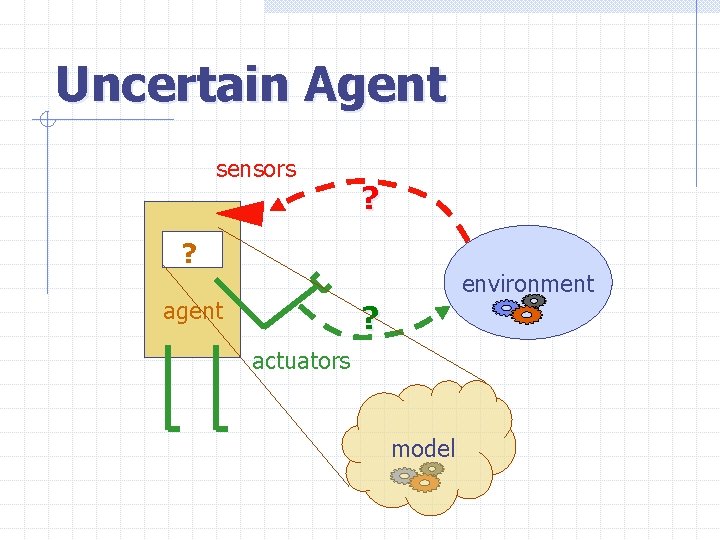

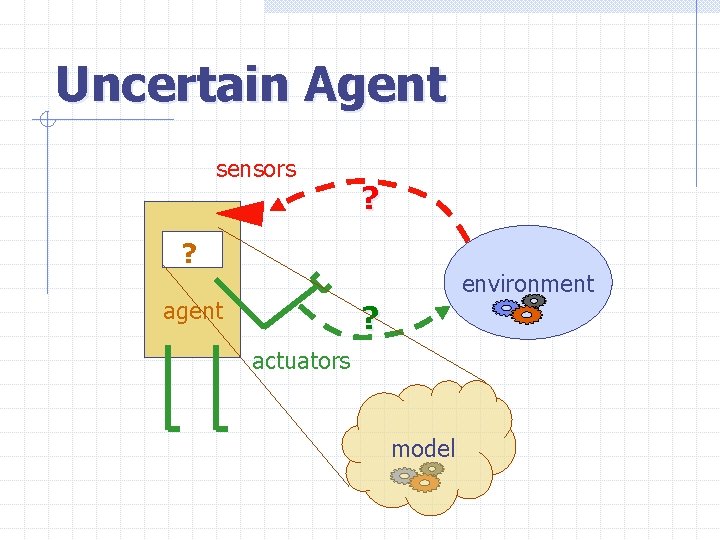

Uncertain Agent sensors ? ? environment agent ? actuators model

An Old Problem …

Types of Uncertainty in prior knowledge E. g. , some causes of a disease are unknown and are not represented in the background knowledge of a medical-assistant agent

Types of Uncertainty For example, to drive my car in the morning: Uncertainty in prior knowledge • It must not have been stolen during the night E. g. , some causes of a disease are unknown and are • It must not have in flat tires not represented the background knowledge of a • There must be gasagent in the tank medical-assistant • The battery must not be dead Uncertainty in actions E. g. , ignition actionsmust are represented with relatively short lists • The work preconditions, while lists are in fact arbitrary • Iofmust not have lost thethese car keys long • No truck should obstruct the driveway • I must not have suddenly become blind or paralytic Etc… Not only would it not be possible to list all of them, but would trying to do so be efficient?

Types of Uncertainty in prior knowledge E. g. , some causes of a disease are unknown and are not represented in the background knowledge of a medical-assistant agent Uncertainty in actions E. g. , actions are represented with relatively short lists of preconditions, while these lists are. Courtesy in fact. R. arbitrary Chatila long Uncertainty in perception E. g. , sensors do not return exact or complete information about the world; a robot never knows exactly its position

Types of Uncertainty in prior knowledge E. g. , some causes of of a disease are unknown and are Sources uncertainty: not represented in the background knowledge of a medical-assistant agent 1. Ignorance Uncertainty in actions E. g. , actions are represented with relatively short lists 2. Laziness (efficiency? ) of preconditions, while these lists are in fact arbitrary long Uncertainty in perception E. g. , sensors do not return exact What we call uncertainty isoracomplete summary information about the world; a robot never knows of all thatitsisposition not explicitly taken into account exactly in the agent’s KB

Questions How to represent uncertainty in knowledge? How to perform inferences with uncertain knowledge? Which action to choose under uncertainty?

How do we deal with uncertainty? Implicit: n n Ignore what you are uncertain of when you can Build procedures that are robust to uncertainty Explicit: n n Build a model of the world that describe uncertainty about its state, dynamics, and observations Reason about the effect of actions given the model

Handling Uncertainty Approaches: 1. Default reasoning 2. Worst-case reasoning 3. Probabilistic reasoning

Default Reasoning Creed: The world is fairly normal. Abnormalities are rare So, an agent assumes normality, until there is evidence of the contrary E. g. , if an agent sees a bird x, it assumes that x can fly, unless it has evidence that x is a penguin, an ostrich, a dead bird, a bird with broken wings, …

Representation in Logic BIRD(x) ABF(x) FLIES(x) Very active research field in the 80’s PENGUINS(x) AB (x) F Non-monotonic logics: defaults, circumscription, BROKEN-WINGS(x) AB (x) closed-world assumptions F BIRD(Tweety) Applications to databases … Default rule: Unless ABF(Tweety) can be proven True, assume it is False But what to do if several defaults are contradictory? Which ones to keep? Which one to reject?

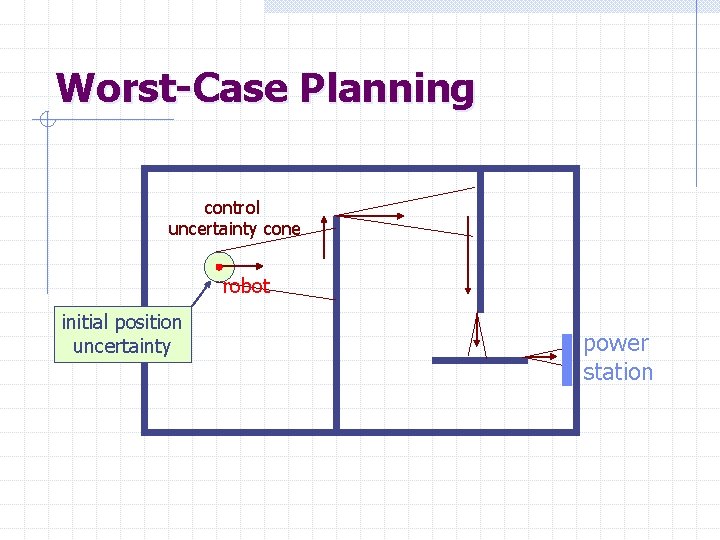

Worst-Case Reasoning Creed: Just the opposite! The world is ruled by Murphy’s Law Uncertainty is defined by sets, e. g. , the set possible outcomes of an action, the set of possible positions of a robot The agent assumes the worst case, and chooses the actions that maximizes a utility function in this case Example: Adversarial search

Probabilistic Reasoning Creed: The world is not divided between “normal” and “abnormal”, nor is it adversarial. Possible situations have various likelihoods (probabilities) The agent has probabilistic beliefs – pieces of knowledge with associated probabilities (strengths) – and chooses its actions to maximize the expected value of some utility function

How do we represent Uncertainty? We need to answer several questions: What do we represent & how we represent it? n What language do we use to represent our uncertainty? What are the semantics of our representation? What can we do with the representations? n What queries can be answered? How do we answer them? How do we construct a representation? n Can we ask an expert? Can we learn from data?

Probability A well-known and well-understood framework for uncertainty Clear semantics Provides principled answers for: n n n Combining evidence Predictive & Diagnostic reasoning Incorporation of new evidence Intuitive (at some level) to human experts Can be learned

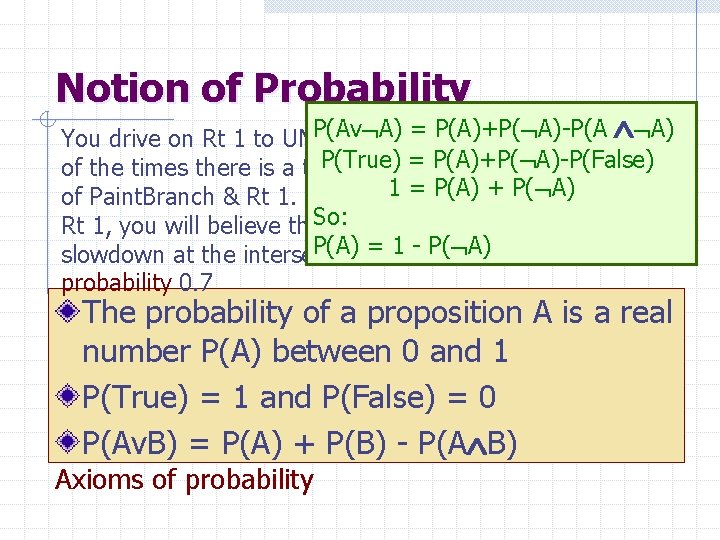

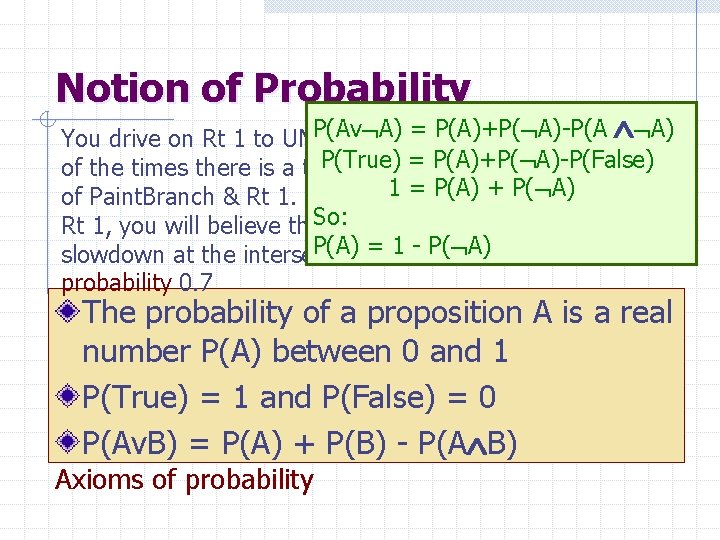

Notion of Probability P(Av A) = P(A)+P( A)-P(A A) You drive on Rt 1 to UMD often, and you notice that 70% P(True) = P(A)+P( A)-P(False) of the times there is a traffic slowdown at the intersection = P(A) P( A) of Paint. Branch & Rt 1. The next 1 time you+plan to drive on So: the proposition “there is a Rt 1, you will believe that P(A) =of 1 PB - P( A) slowdown at the intersection & Rt 1” is True with probability 0. 7 The probability of a proposition A is a real number P(A) between 0 and 1 P(True) = 1 and P(False) = 0 P(Av. B) = P(A) + P(B) - P(A B) Axioms of probability

Frequency Interpretation Draw a ball from a bag containing n balls of the same size, r red and s yellow. The probability that the proposition A = “the ball is red” is true corresponds to the relative frequency with which we expect to draw a red ball P(A) = r/n

Subjective Interpretation There are many situations in which there is no objective frequency interpretation: n n On a windy day, just before paragliding from the top of El Capitan, you say “there is probability 0. 05 that I am going to die” You have worked hard on your AI class and you believe that the probability that you will get an A is 0. 9

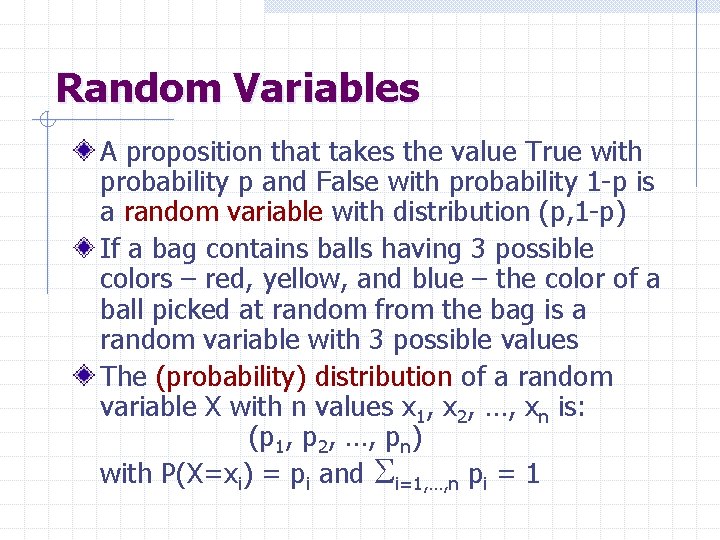

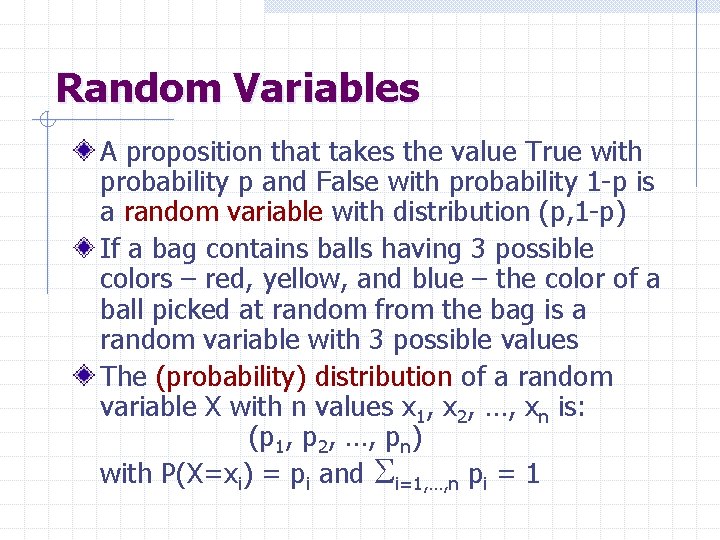

Random Variables A proposition that takes the value True with probability p and False with probability 1 -p is a random variable with distribution (p, 1 -p) If a bag contains balls having 3 possible colors – red, yellow, and blue – the color of a ball picked at random from the bag is a random variable with 3 possible values The (probability) distribution of a random variable X with n values x 1, x 2, …, xn is: (p 1, p 2, …, pn) with P(X=xi) = pi and Si=1, …, n pi = 1

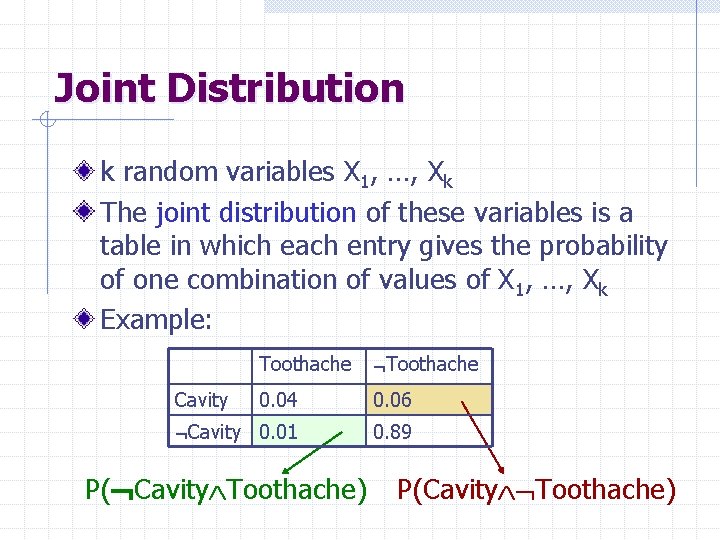

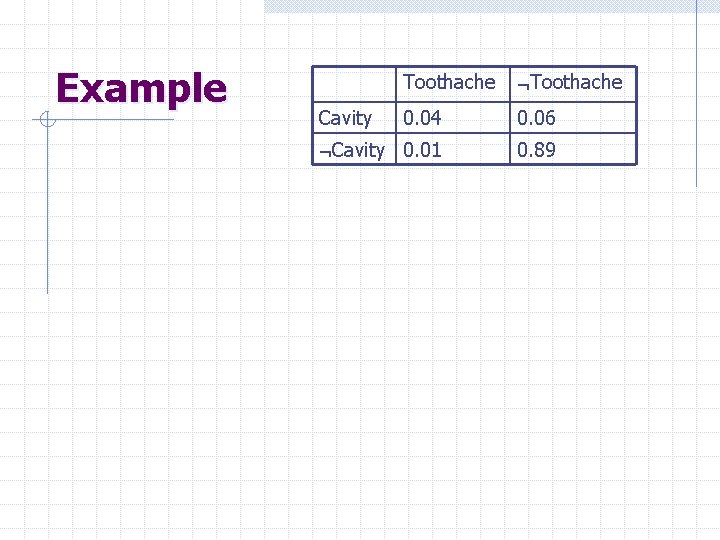

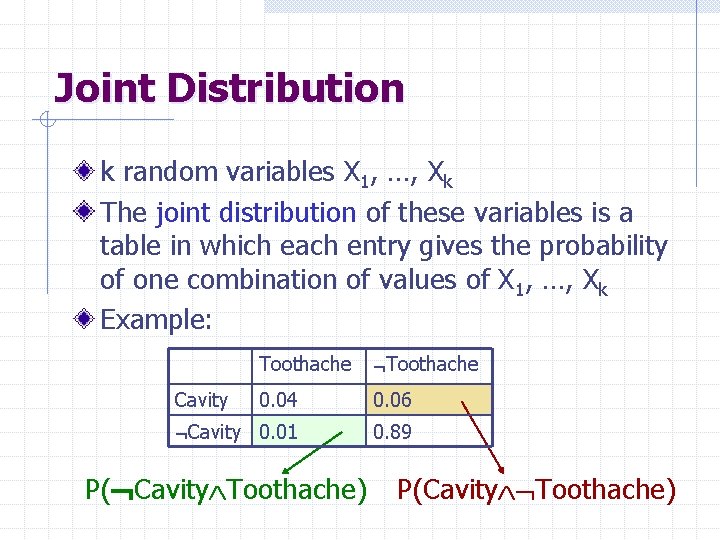

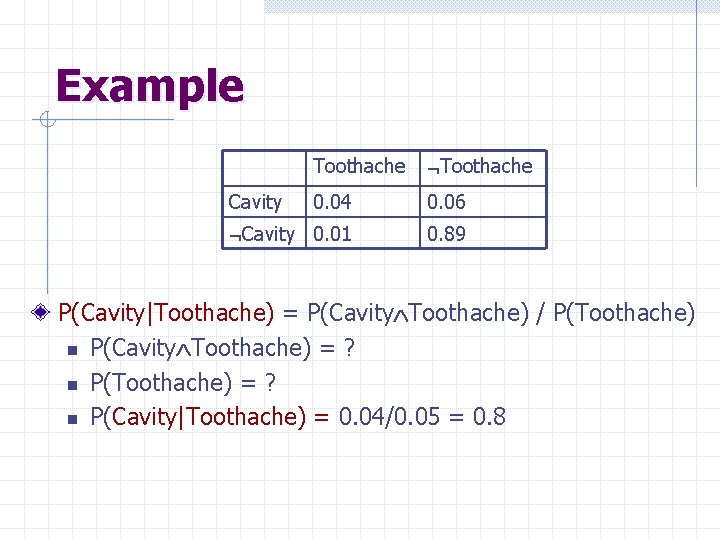

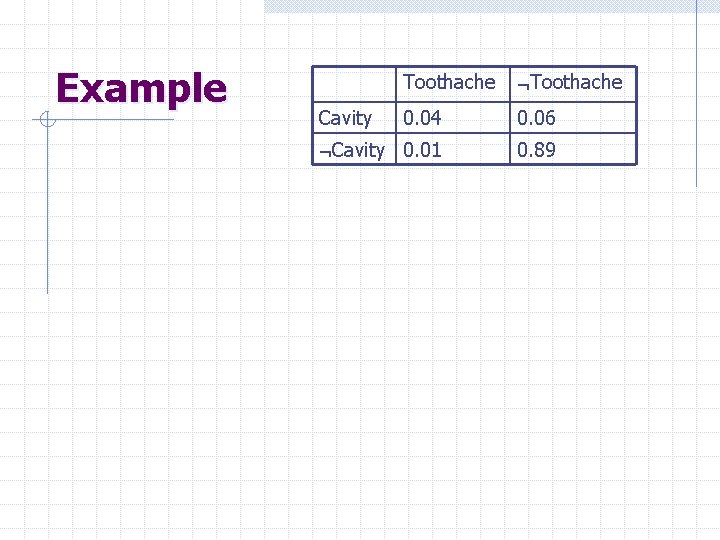

Joint Distribution k random variables X 1, …, Xk The joint distribution of these variables is a table in which each entry gives the probability of one combination of values of X 1, …, Xk Example: Toothache 0. 04 0. 06 Cavity 0. 01 0. 89 Cavity P( Cavity Toothache) P(Cavity Toothache)

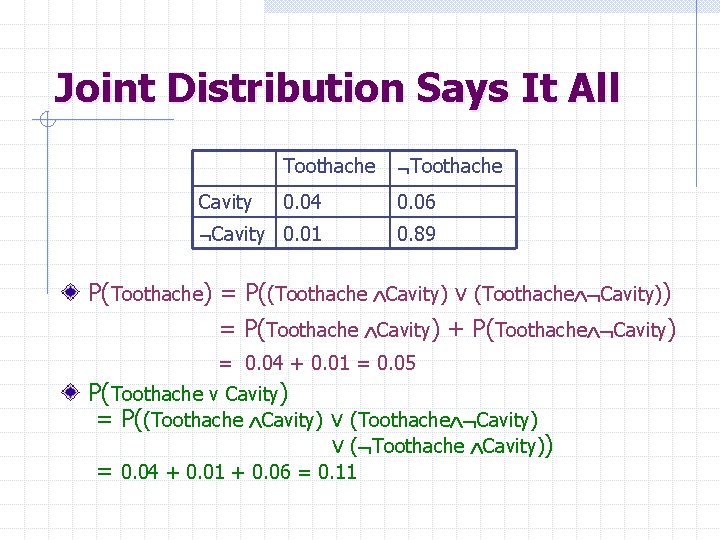

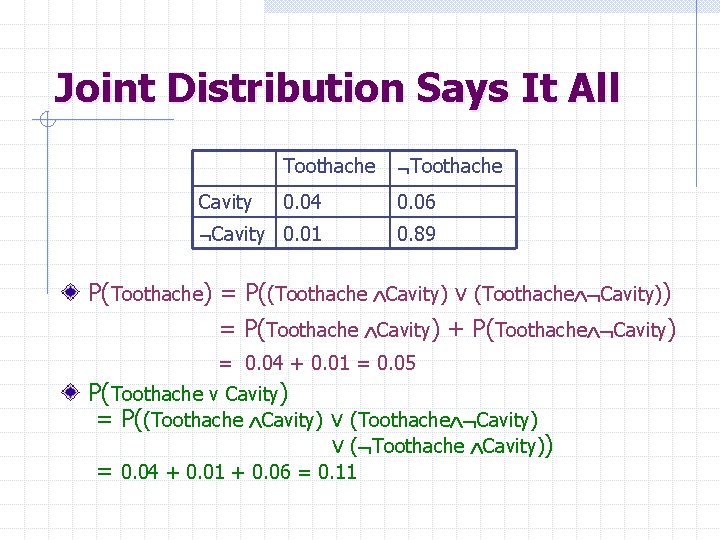

Joint Distribution Says It All Toothache 0. 04 0. 06 Cavity 0. 01 0. 89 Cavity P(Toothache) = P((Toothache Cavity) v (Toothache Cavity)) = P(Toothache Cavity) + P(Toothache Cavity) = 0. 04 + 0. 01 = 0. 05 P(Toothache v Cavity) = P((Toothache Cavity) v ( Toothache Cavity)) = 0. 04 + 0. 01 + 0. 06 = 0. 11

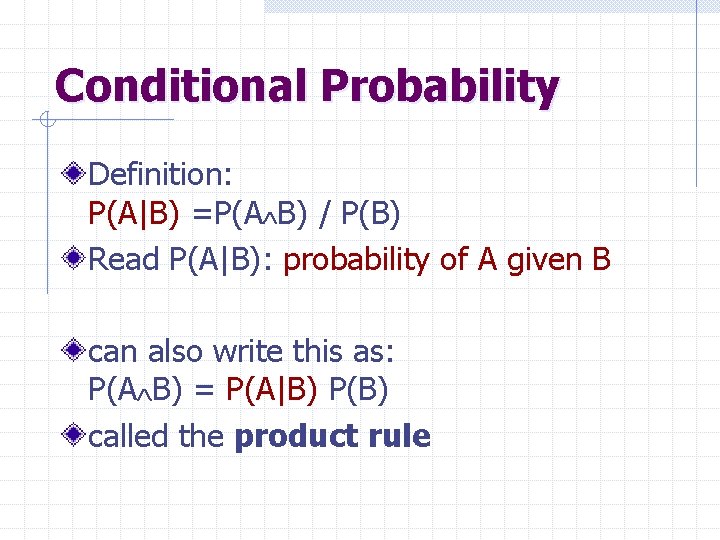

Conditional Probability Definition: P(A|B) =P(A B) / P(B) Read P(A|B): probability of A given B can also write this as: P(A B) = P(A|B) P(B) called the product rule

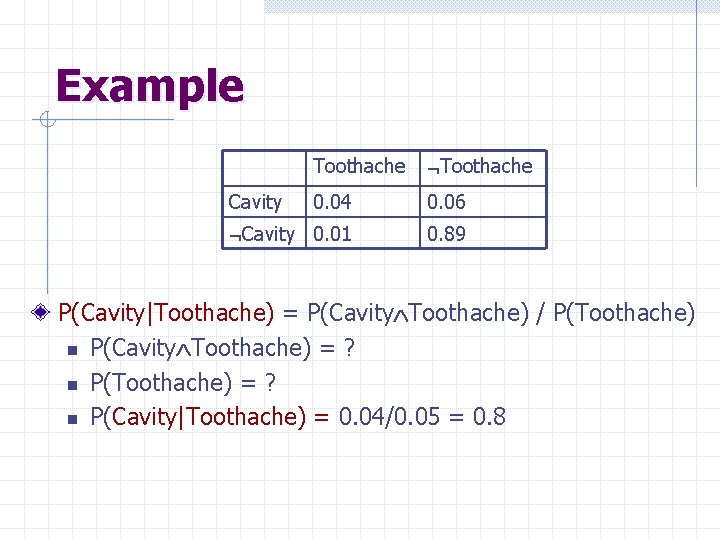

Example Toothache 0. 04 0. 06 Cavity 0. 01 0. 89 Cavity P(Cavity|Toothache) = P(Cavity Toothache) / P(Toothache) n P(Cavity Toothache) = ? n P(Cavity|Toothache) = 0. 04/0. 05 = 0. 8

Generalization P(A B C) = P(A|B, C) P(B|C) P(C)

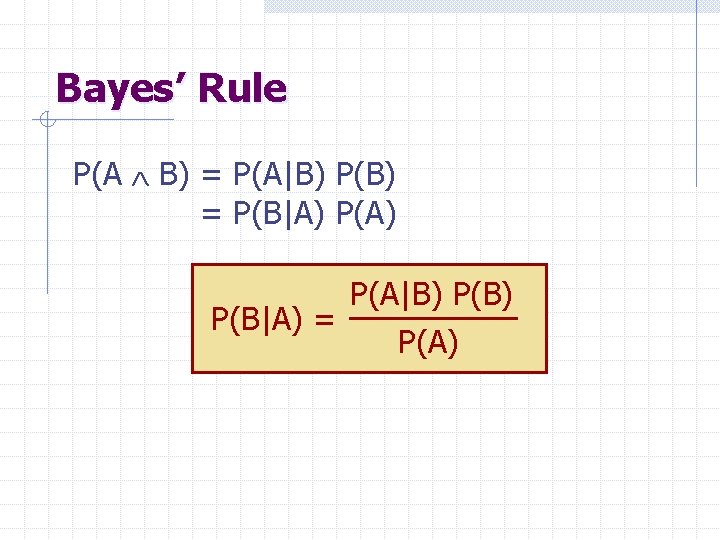

Bayes’ Rule P(A B) = P(A|B) P(B) = P(B|A) P(A|B) P(B|A) = P(A)

Example Toothache 0. 04 0. 06 Cavity 0. 01 0. 89 Cavity

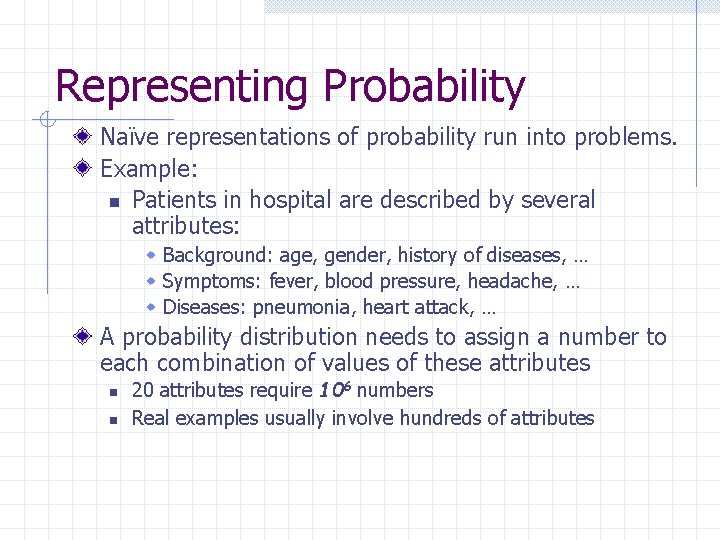

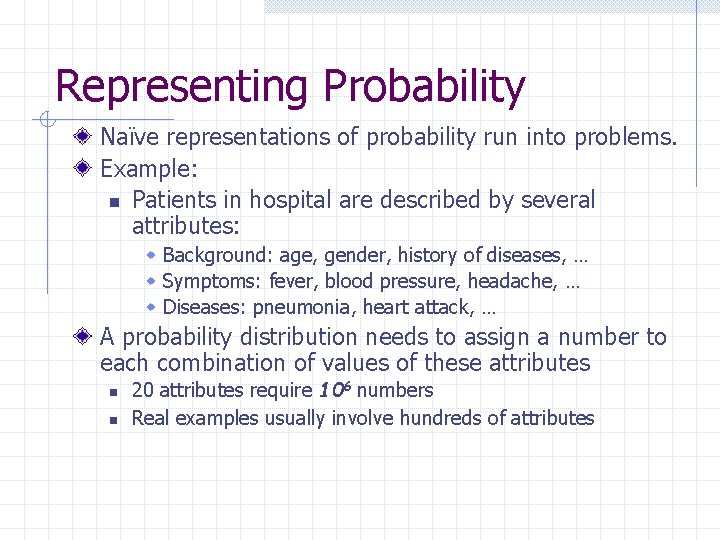

Representing Probability Naïve representations of probability run into problems. Example: n Patients in hospital are described by several attributes: w Background: age, gender, history of diseases, … w Symptoms: fever, blood pressure, headache, … w Diseases: pneumonia, heart attack, … A probability distribution needs to assign a number to each combination of values of these attributes n n 20 attributes require 106 numbers Real examples usually involve hundreds of attributes

Practical Representation Key idea -- exploit regularities Here we focus on exploiting conditional independence properties

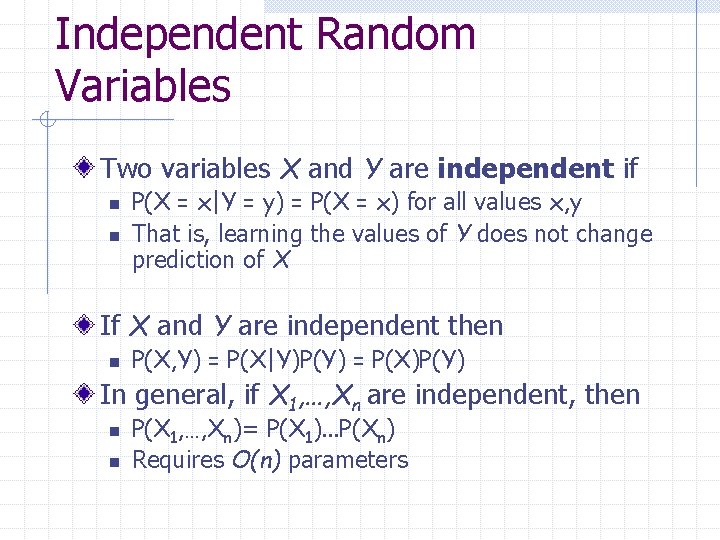

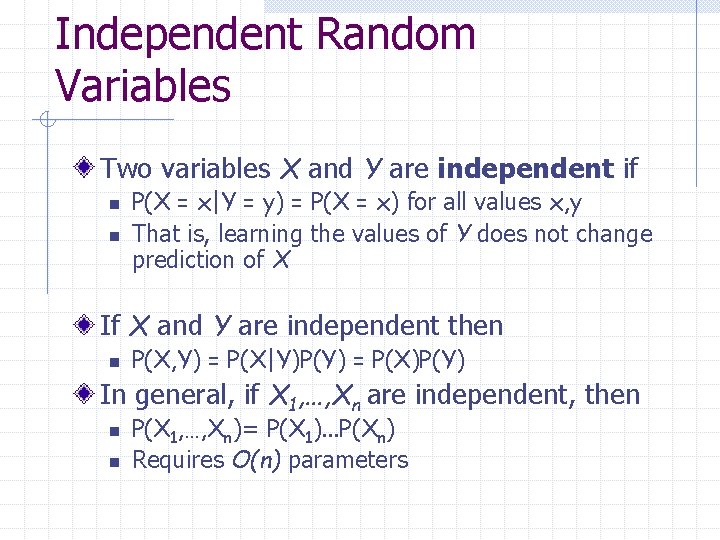

Independent Random Variables Two variables X and Y are independent if n n P(X = x|Y = y) = P(X = x) for all values x, y That is, learning the values of Y does not change prediction of X If X and Y are independent then n P(X, Y) = P(X|Y)P(Y) = P(X)P(Y) In general, if X 1, …, Xn are independent, then n n P(X 1, …, Xn)= P(X 1). . . P(Xn) Requires O(n) parameters

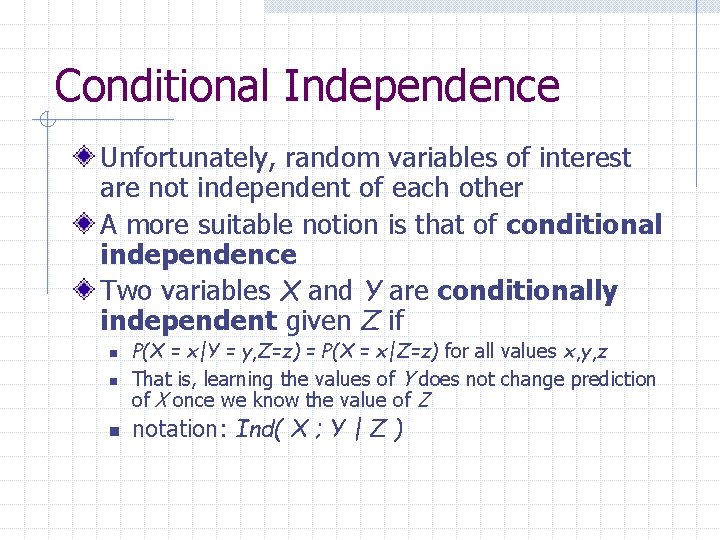

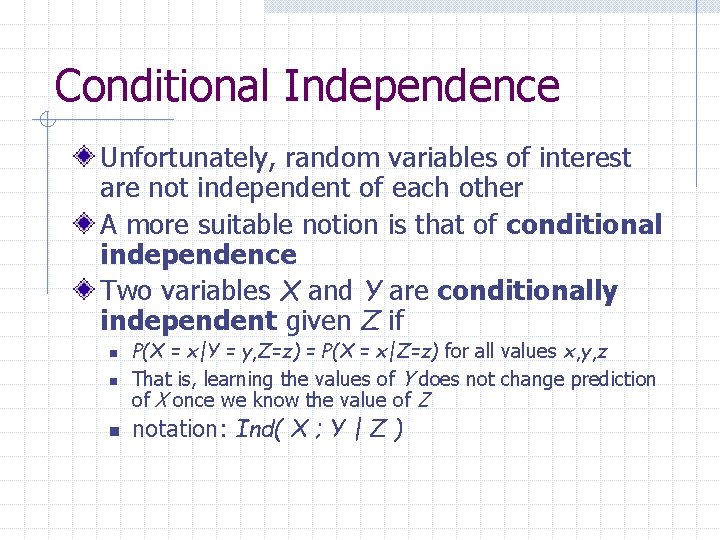

Conditional Independence Unfortunately, random variables of interest are not independent of each other A more suitable notion is that of conditional independence Two variables X and Y are conditionally independent given Z if n n n P(X = x|Y = y, Z=z) = P(X = x|Z=z) for all values x, y, z That is, learning the values of Y does not change prediction of X once we know the value of Z notation: Ind( X ; Y | Z )

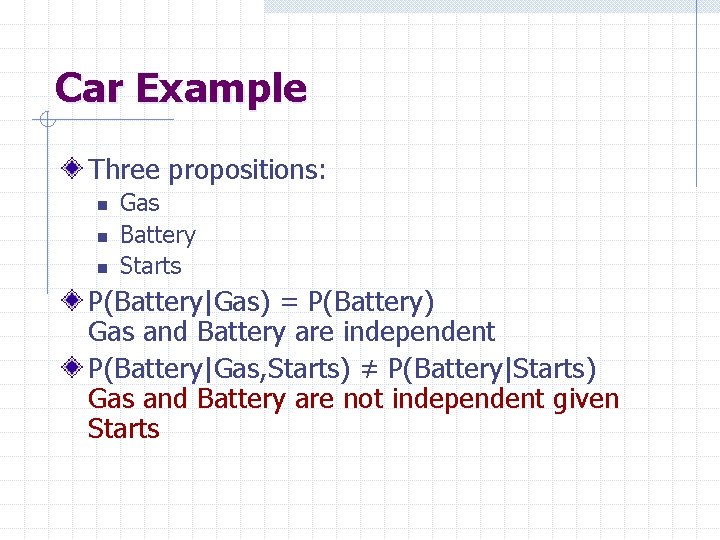

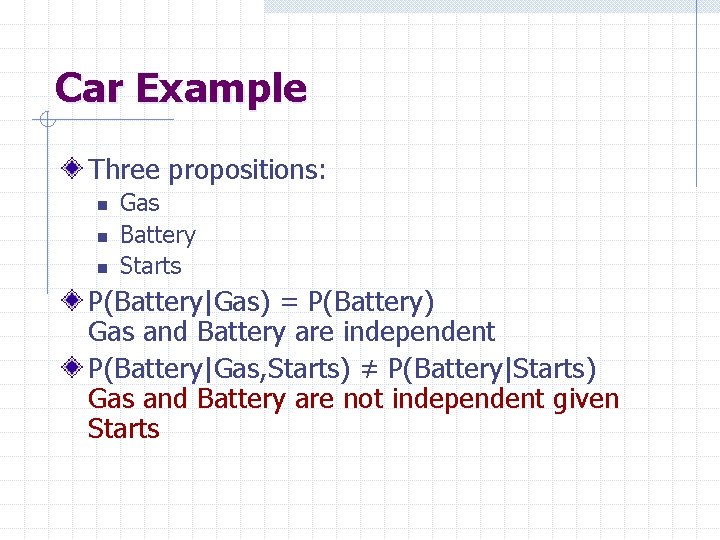

Car Example Three propositions: n n n Gas Battery Starts P(Battery|Gas) = P(Battery) Gas and Battery are independent P(Battery|Gas, Starts) ≠ P(Battery|Starts) Gas and Battery are not independent given Starts

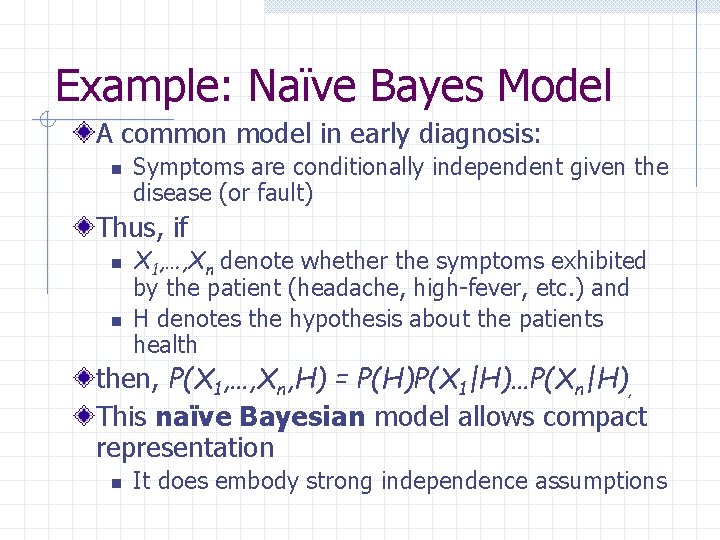

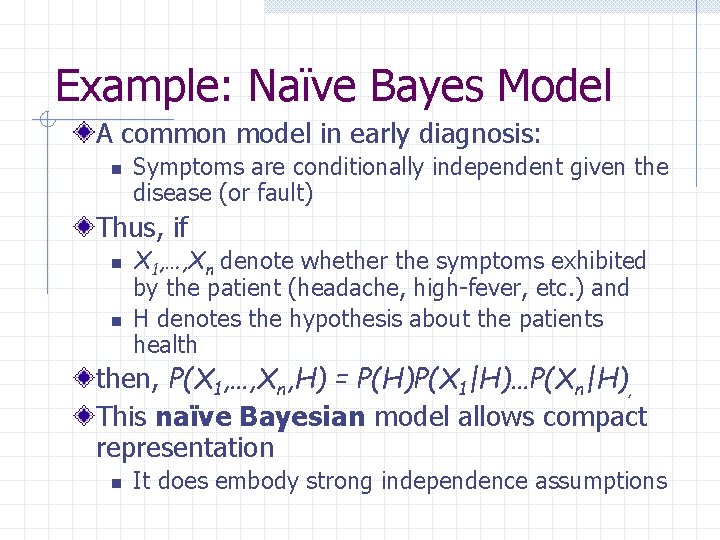

Example: Naïve Bayes Model A common model in early diagnosis: n Symptoms are conditionally independent given the disease (or fault) Thus, if n n X 1, …, Xn denote whether the symptoms exhibited by the patient (headache, high-fever, etc. ) and H denotes the hypothesis about the patients health then, P(X 1, …, Xn, H) = P(H)P(X 1|H)…P(Xn|H), This naïve Bayesian model allows compact representation n It does embody strong independence assumptions

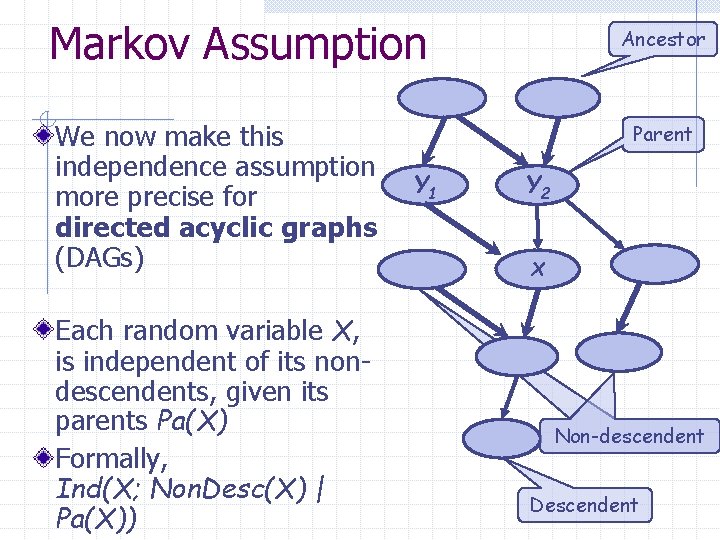

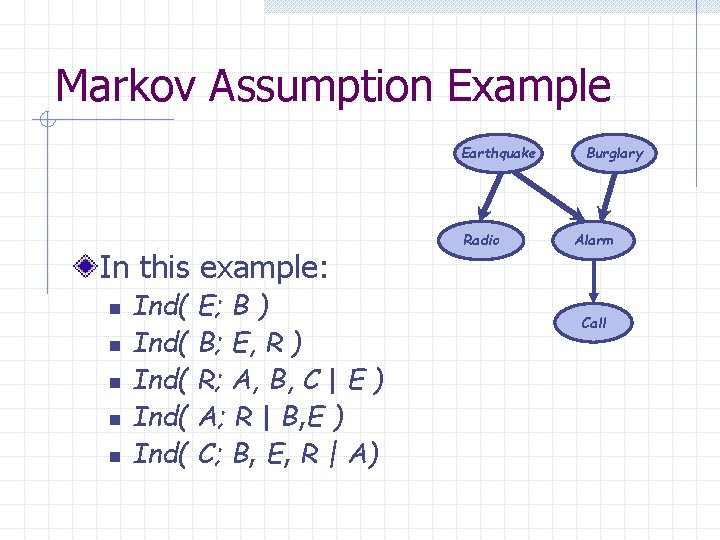

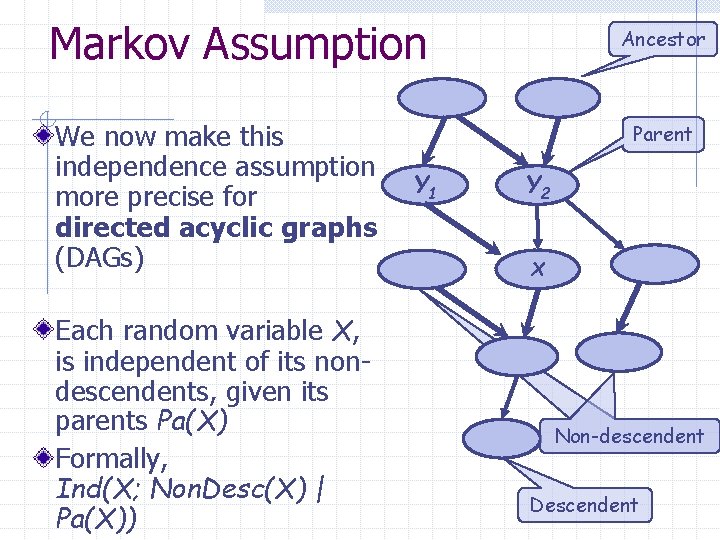

Markov Assumption We now make this independence assumption more precise for directed acyclic graphs (DAGs) Each random variable X, is independent of its nondescendents, given its parents Pa(X) Formally, Ind(X; Non. Desc(X) | Pa(X)) Ancestor Parent Y 1 Y 2 X Non-descendent Descendent

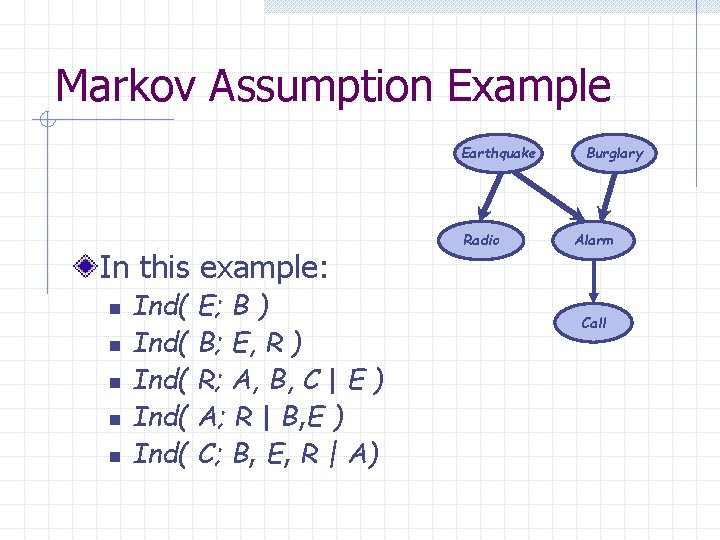

Markov Assumption Example Earthquake In this example: n n n Ind( E; B ) Ind( B; E, R ) Ind( R; A, B, C | E ) Ind( A; R | B, E ) Ind( C; B, E, R | A) Radio Burglary Alarm Call

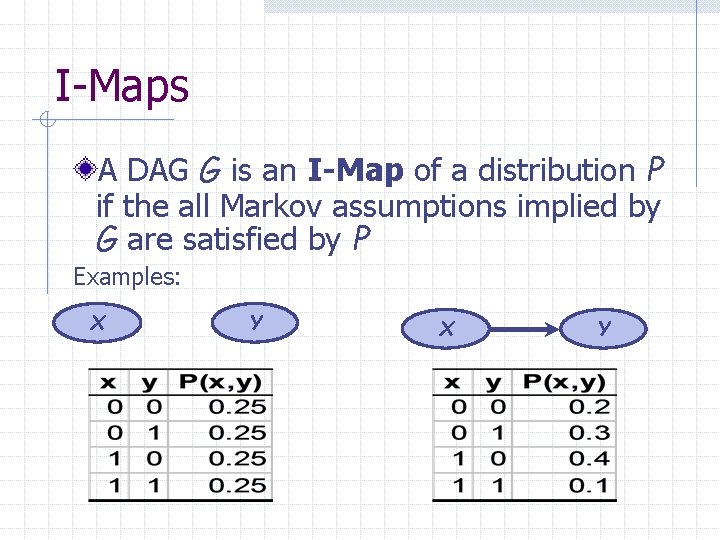

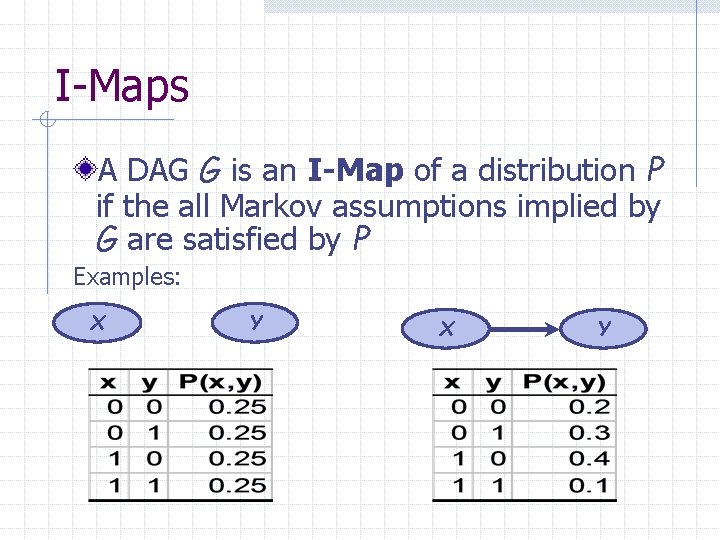

I-Maps A DAG G is an I-Map of a distribution P if the all Markov assumptions implied by G are satisfied by P Examples: X Y

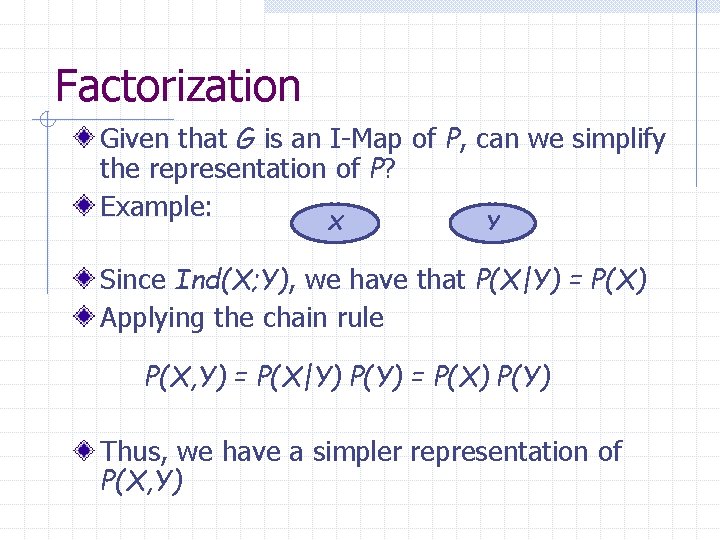

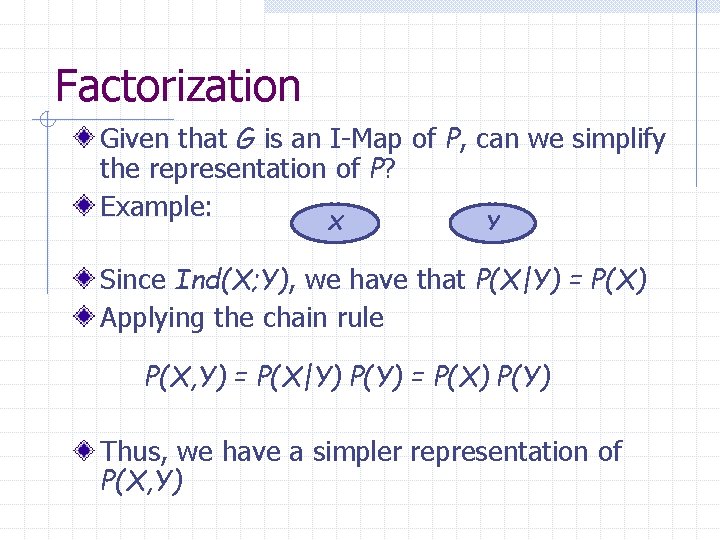

Factorization Given that G is an I-Map of P, can we simplify the representation of P? Example: X Y Since Ind(X; Y), we have that P(X|Y) = P(X) Applying the chain rule P(X, Y) = P(X|Y) P(Y) = P(X) P(Y) Thus, we have a simpler representation of P(X, Y)

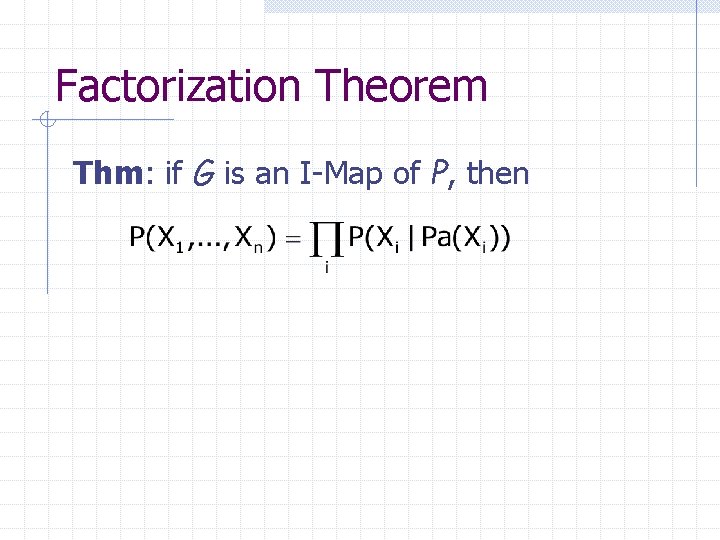

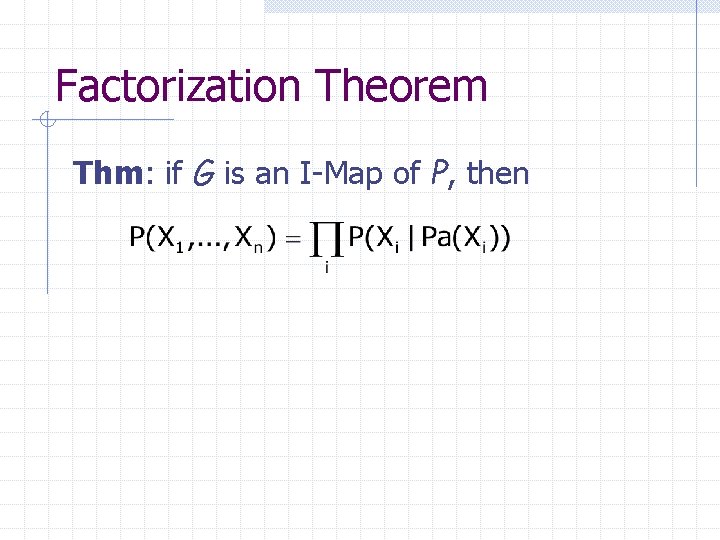

Factorization Theorem Thm: if G is an I-Map of P, then

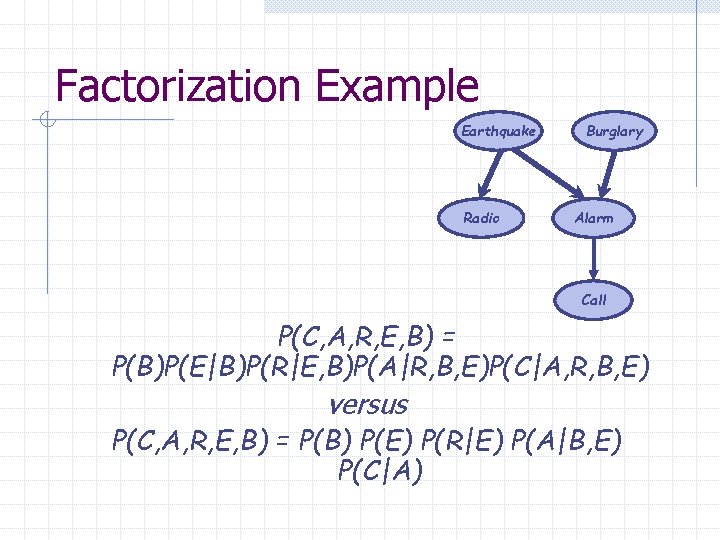

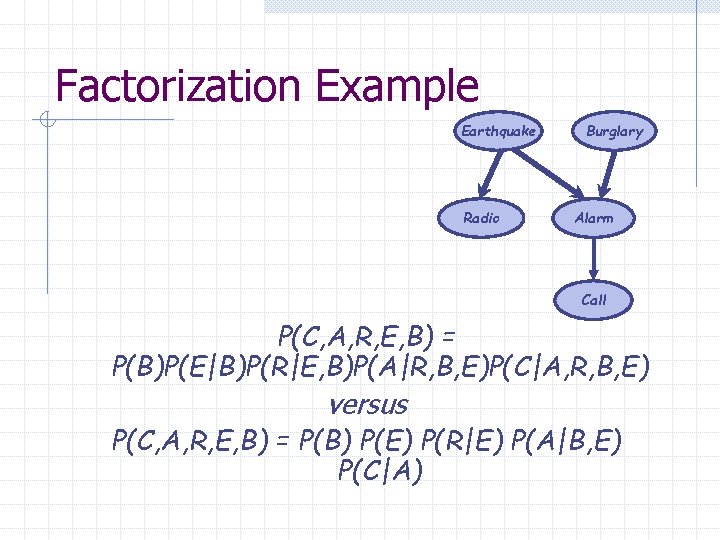

Factorization Example Earthquake Radio Burglary Alarm Call P(C, A, R, E, B) = P(B)P(E|B)P(R|E, B)P(A|R, B, E)P(C|A, R, B, E) versus P(C, A, R, E, B) = P(B) P(E) P(R|E) P(A|B, E) P(C|A)

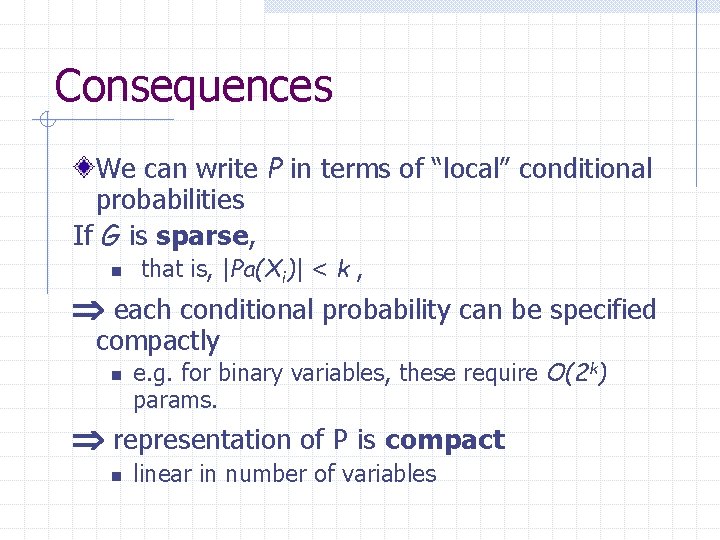

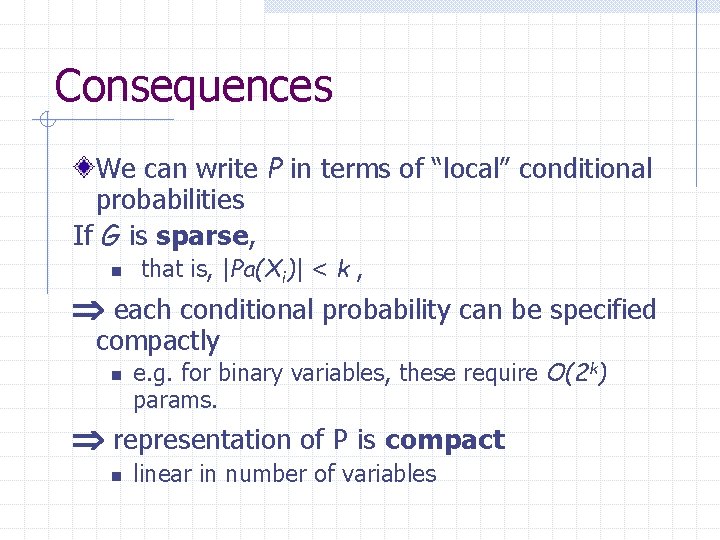

Consequences We can write P in terms of “local” conditional probabilities If G is sparse, n that is, |Pa(Xi)| < k , each conditional probability can be specified compactly n e. g. for binary variables, these require O(2 k) params. representation of P is compact n linear in number of variables

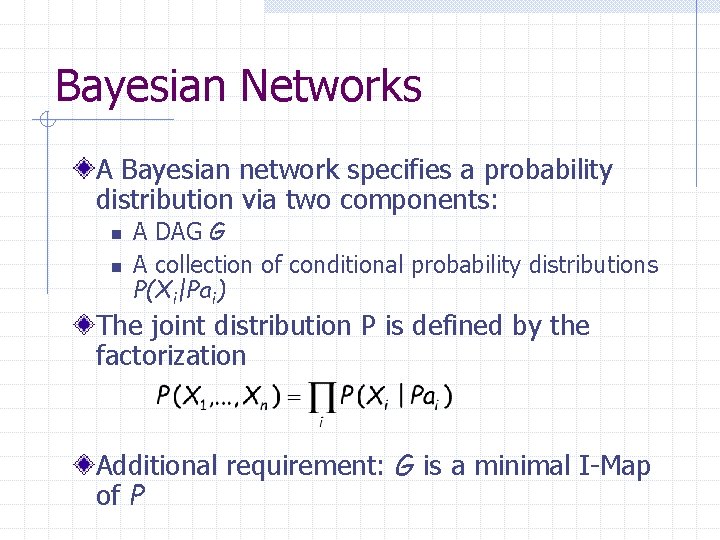

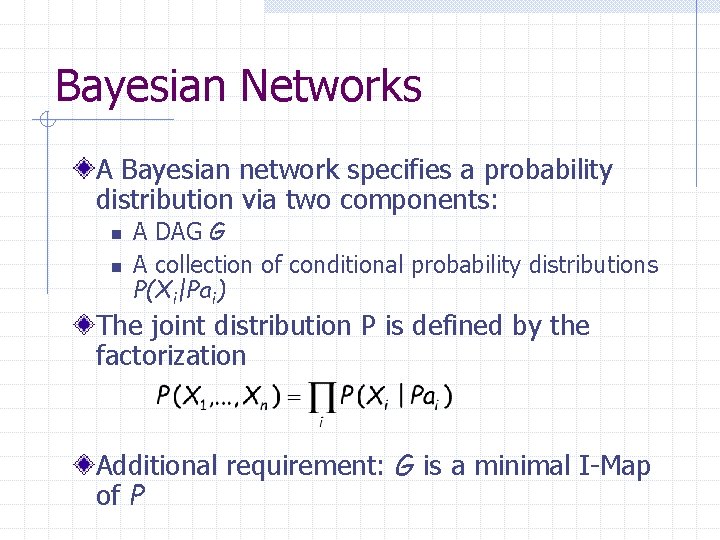

Bayesian Networks A Bayesian network specifies a probability distribution via two components: n n A DAG G A collection of conditional probability distributions P(Xi|Pai) The joint distribution P is defined by the factorization Additional requirement: G is a minimal I-Map of P

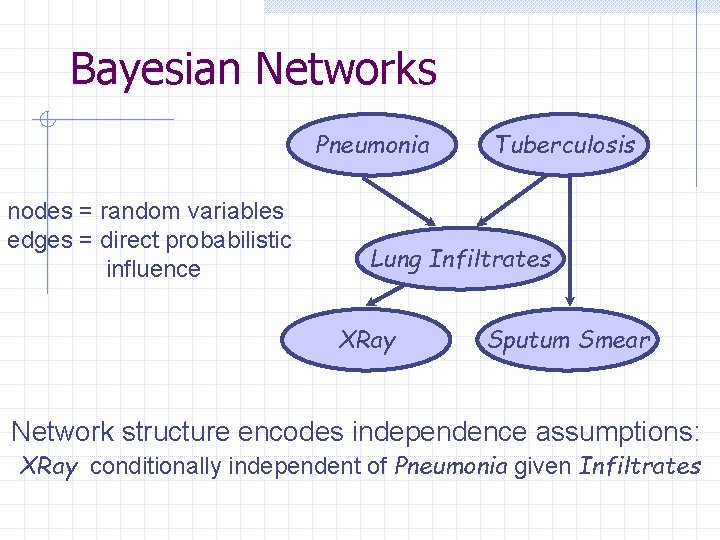

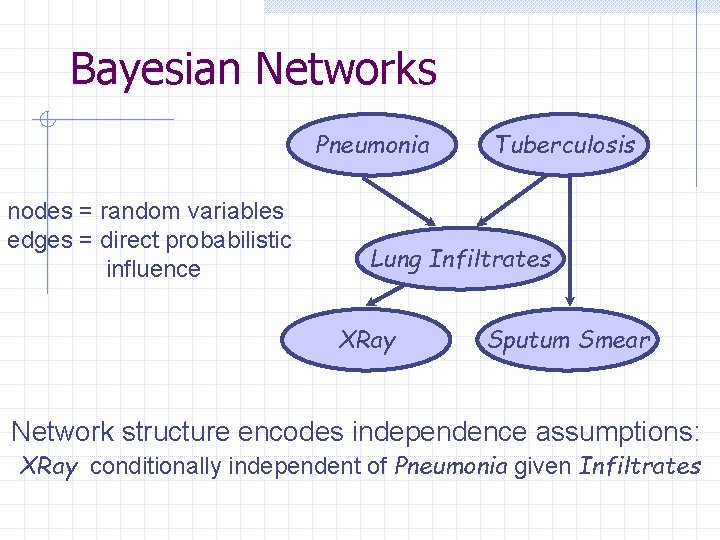

Bayesian Networks Pneumonia nodes = random variables edges = direct probabilistic influence Tuberculosis Lung Infiltrates XRay Sputum Smear Network structure encodes independence assumptions: XRay conditionally independent of Pneumonia given Infiltrates

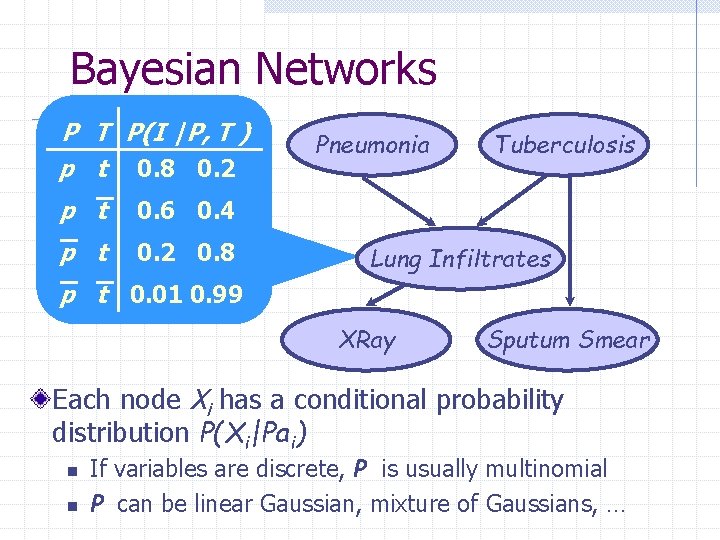

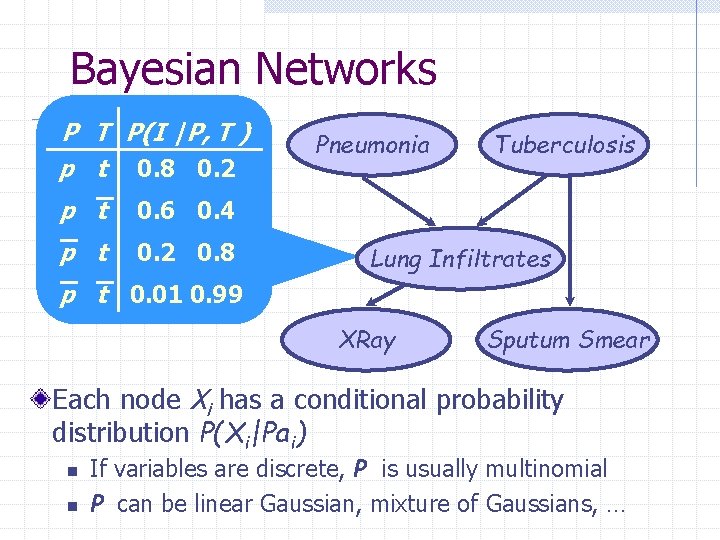

Bayesian Networks P T P(I |P, T ) p t 0. 8 0. 2 p t 0. 6 0. 4 p t 0. 2 0. 8 Pneumonia Tuberculosis Lung Infiltrates p t 0. 01 0. 99 XRay Sputum Smear Each node Xi has a conditional probability distribution P(Xi|Pai) n n If variables are discrete, P is usually multinomial P can be linear Gaussian, mixture of Gaussians, …

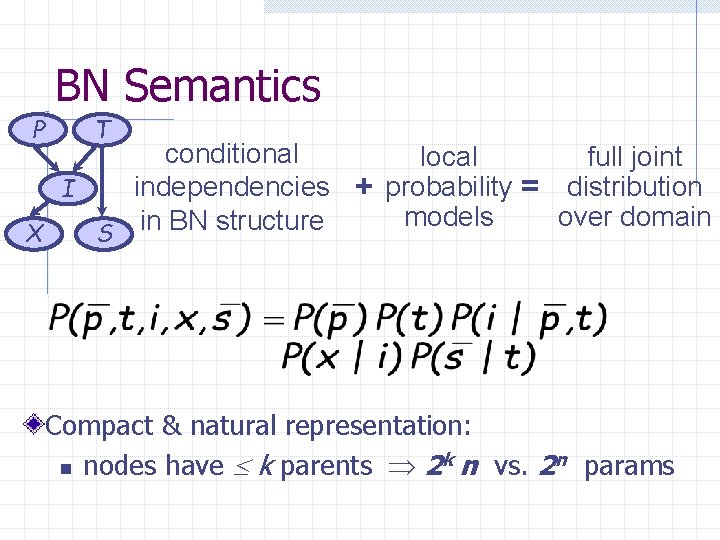

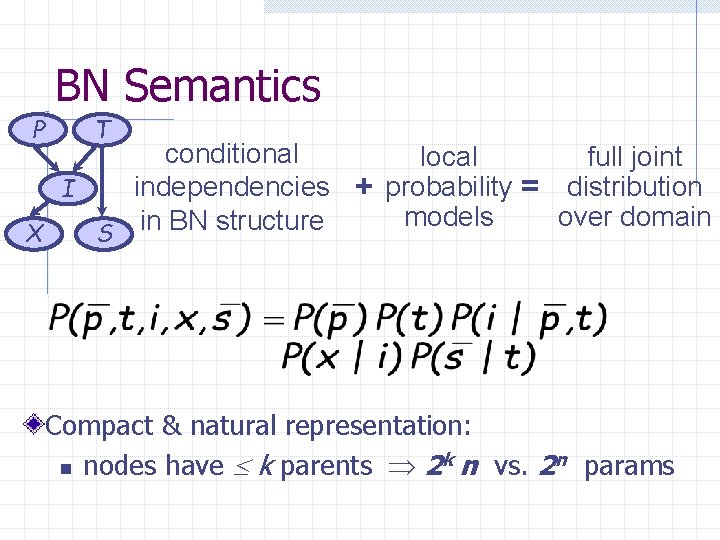

BN Semantics P T conditional local full joint independencies + probability = distribution I models over domain in BN structure X S Compact & natural representation: n nodes have k parents 2 k n vs. 2 n params

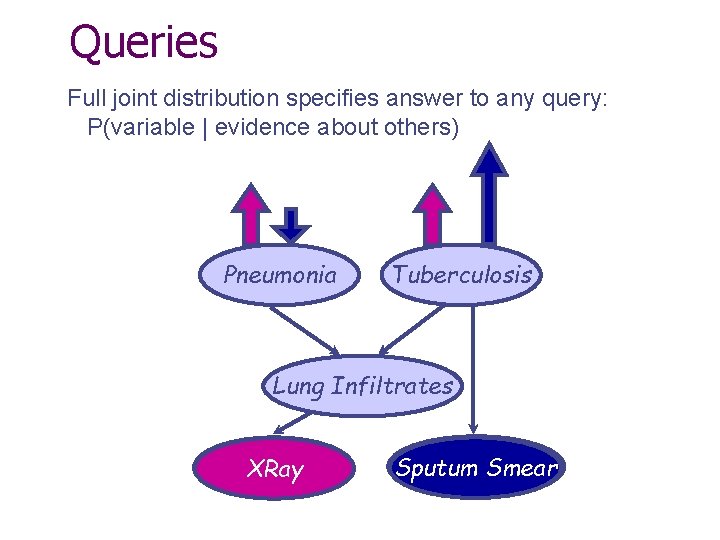

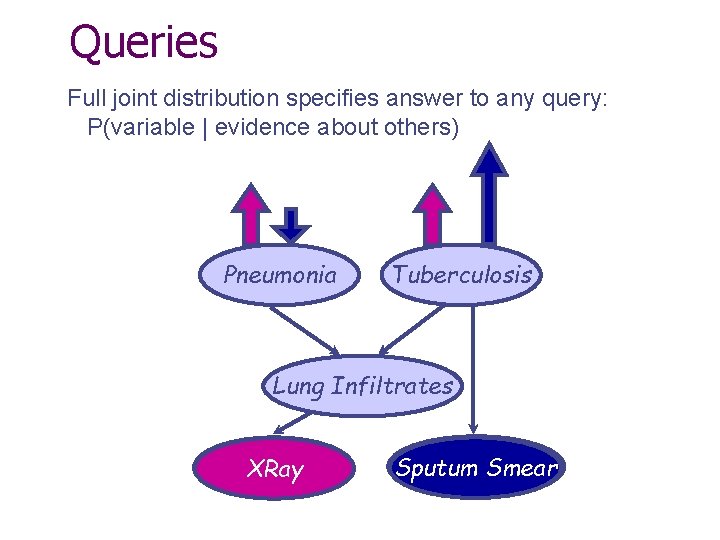

Queries Full joint distribution specifies answer to any query: P(variable | evidence about others) Pneumonia Tuberculosis Lung Infiltrates XRay Sputum Smear

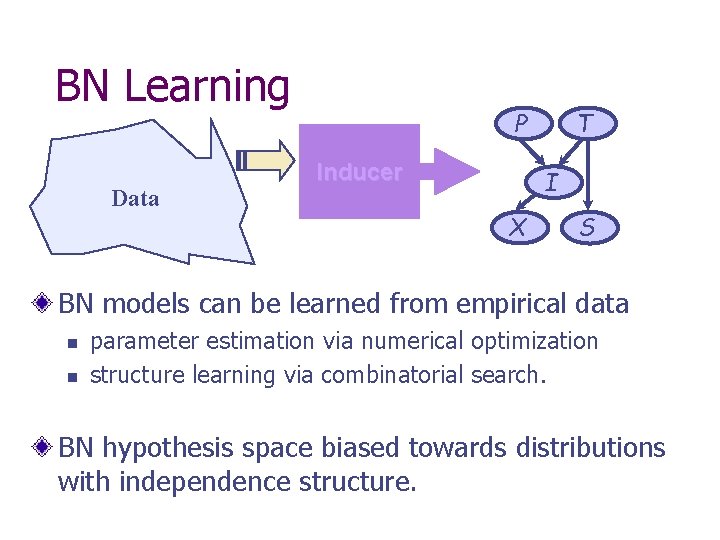

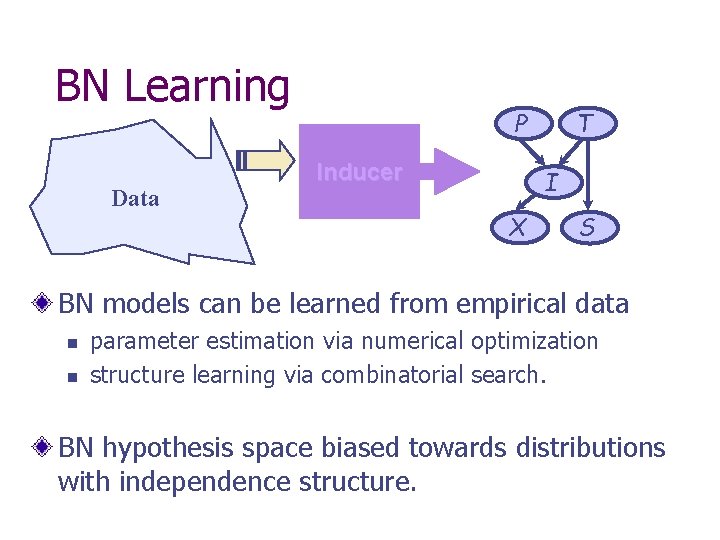

BN Learning Data P Inducer T I X S BN models can be learned from empirical data n n parameter estimation via numerical optimization structure learning via combinatorial search. BN hypothesis space biased towards distributions with independence structure.

Questions How to represent uncertainty in knowledge? If a goal is terribly important, an agent may be better to aperform inferences with action off. How choosing less efficient, but less uncertain than a more efficient one uncertain knowledge? Which action to choose under uncertainty? But if the goal is also extremely urgent, and the less uncertain action is deemed too slow, then the agent may take its chance with the faster, but more uncertain action

Summary Types of uncertainty Default/worst-case/probabilistic reasoning Probability Theory Bayesian Networks Making decisions under uncertainty Exciting Research Area!

References Russell & Norvig, chapters 14, 15 Daphne Koller’s BN notes, available from the class web page Jean-Claude Latombe’s excellent lecture notes, http: //robotics. stanford. edu/~latombe/cs 121/w inter 02/home. htm Nir Friedman’s excellent lecture notes, http: //www. cs. huji. ac. il/~pmai/

Questions How to represent uncertainty in knowledge? How to perform inferences with uncertain knowledge? When a doctor receives lab analysis results for some patient, how do they change his prior knowledge about the health condition of this patient?

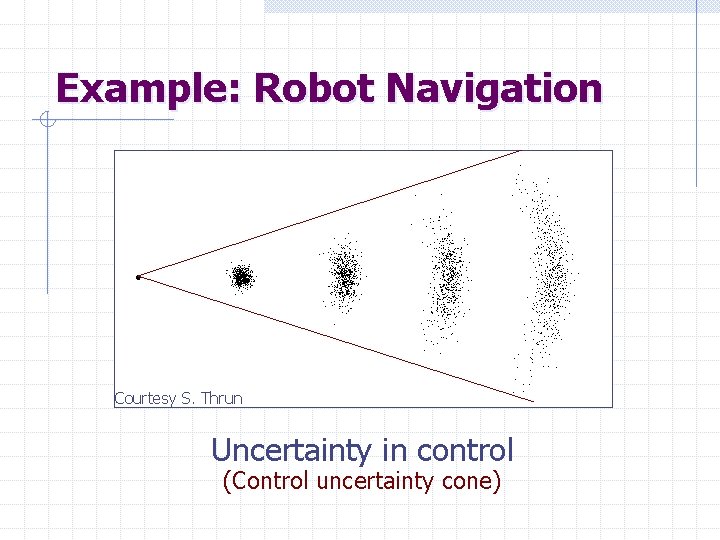

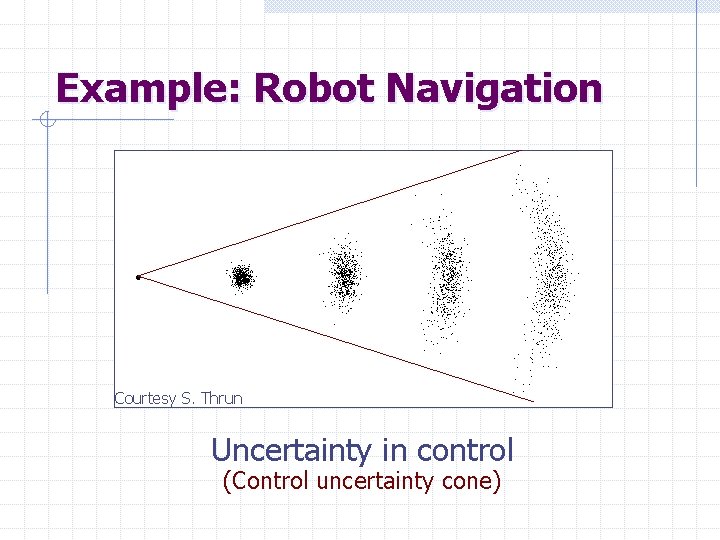

Example: Robot Navigation Courtesy S. Thrun Uncertainty in control (Control uncertainty cone)

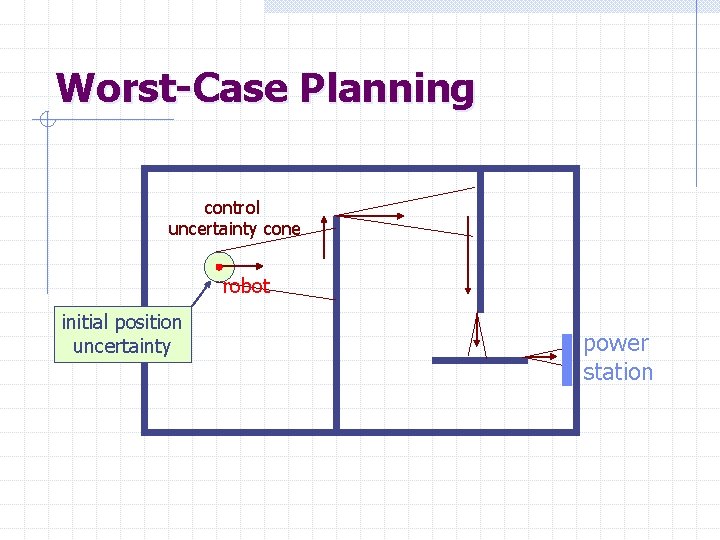

Worst-Case Planning control uncertainty cone robot initial position uncertainty power station

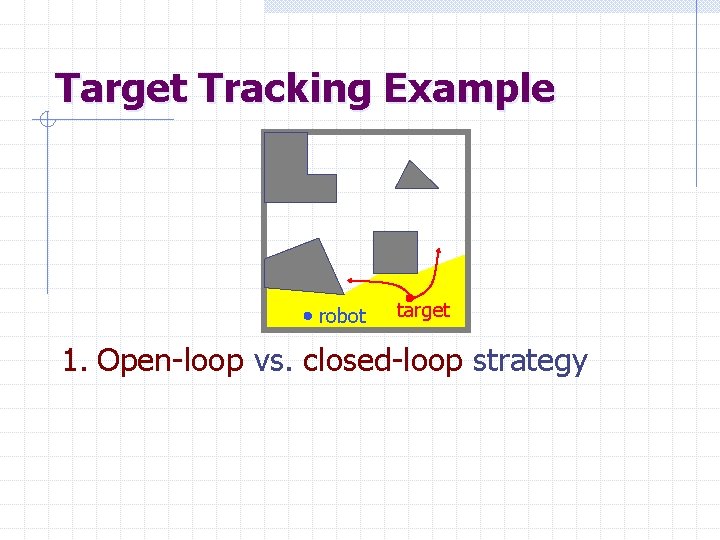

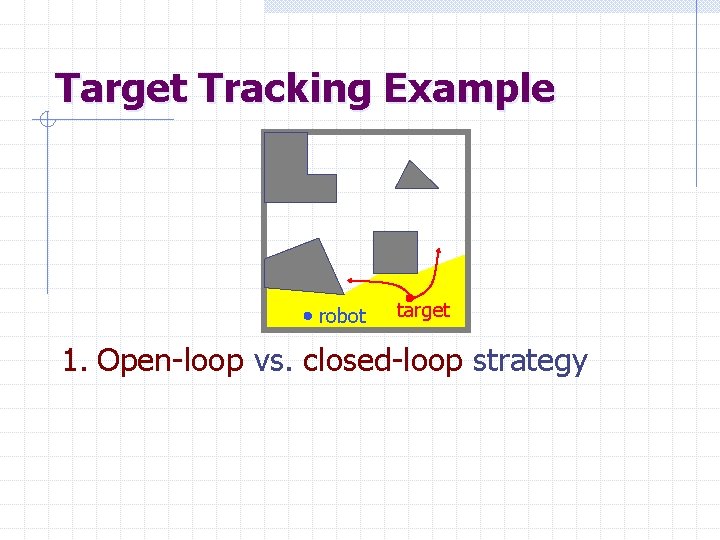

Target Tracking Example robot target 1. Open-loop vs. closed-loop strategy

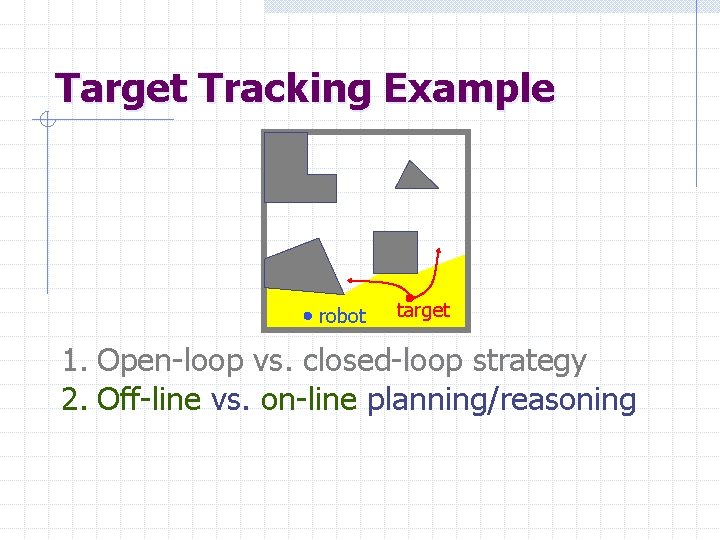

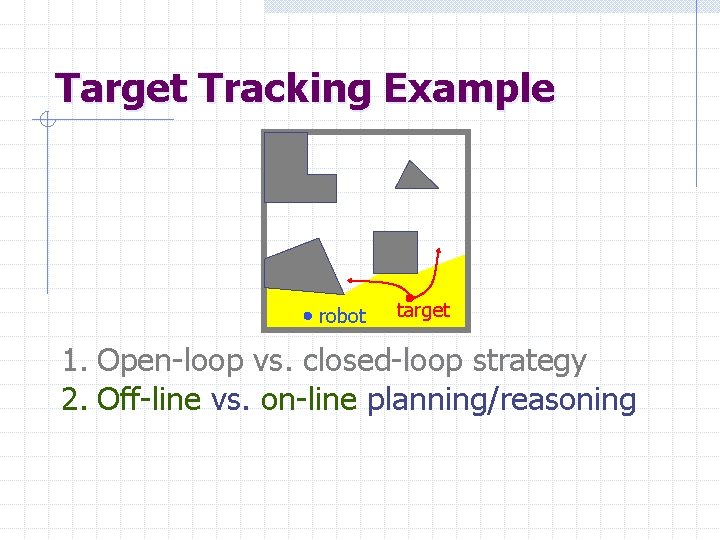

Target Tracking Example robot target 1. Open-loop vs. closed-loop strategy 2. Off-line vs. on-line planning/reasoning

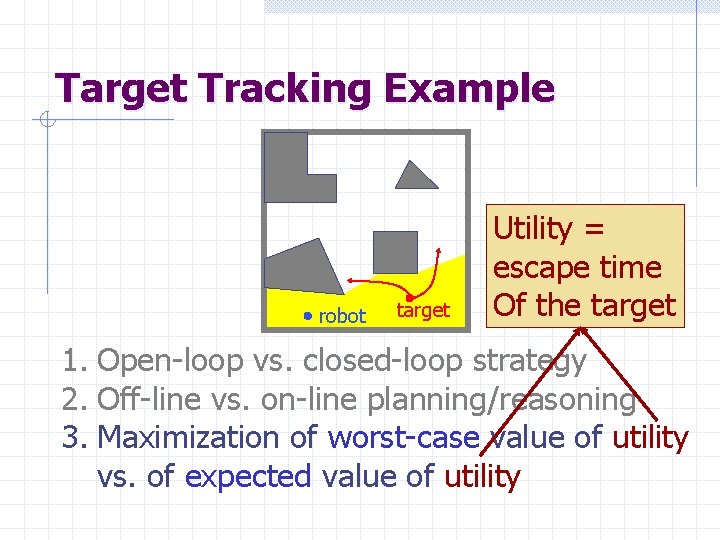

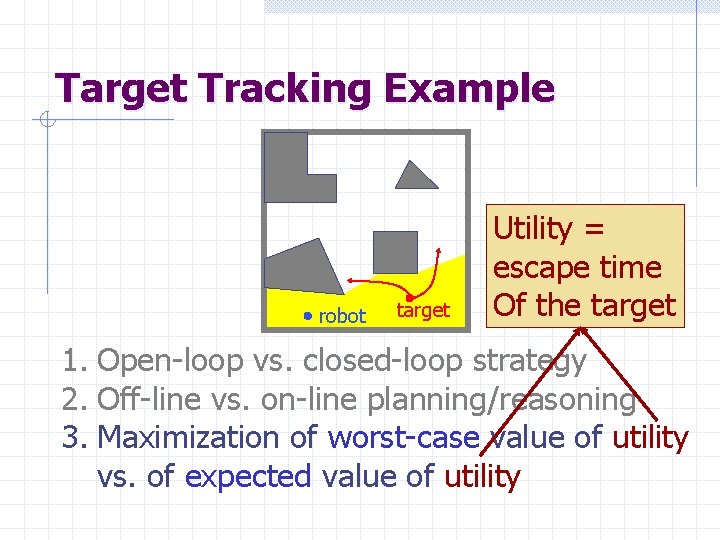

Target Tracking Example robot target Utility = escape time Of the target 1. Open-loop vs. closed-loop strategy 2. Off-line vs. on-line planning/reasoning 3. Maximization of worst-case value of utility vs. of expected value of utility

Russell and norvig

Russell and norvig How dogs communicate

How dogs communicate Russell norvig

Russell norvig Peter norvig design patterns

Peter norvig design patterns Russel norvig

Russel norvig Marianne koller

Marianne koller Concept of equivalence in translation

Concept of equivalence in translation Ghardaa

Ghardaa Tension pneumothorax

Tension pneumothorax Koller equivalence

Koller equivalence Daphne koller

Daphne koller Kristina koller

Kristina koller Korymbiform

Korymbiform Koller equivalence

Koller equivalence Chapter 31 postwar uncertainty

Chapter 31 postwar uncertainty Chapter 15 section 1 postwar uncertainty

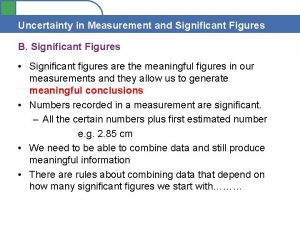

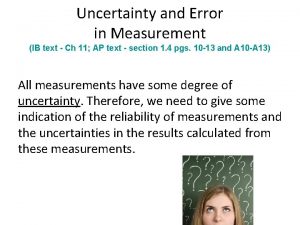

Chapter 15 section 1 postwar uncertainty Uncertainty in measurement and significant digits

Uncertainty in measurement and significant digits Risk and uncertainty continuum

Risk and uncertainty continuum Position paper importance

Position paper importance Power distance map

Power distance map Uncertainty vs risk

Uncertainty vs risk Laray m. barna (1997)

Laray m. barna (1997) Multiplying and dividing uncertainties

Multiplying and dividing uncertainties Uncertainty of a protractor

Uncertainty of a protractor Lesson 4 modern ideas and uncertainty

Lesson 4 modern ideas and uncertainty Measurements and their uncertainty answer key

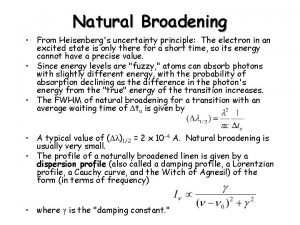

Measurements and their uncertainty answer key Uncertainty relation and natural line width

Uncertainty relation and natural line width Capital budgeting under uncertainty

Capital budgeting under uncertainty Risk and uncertainty in farm management

Risk and uncertainty in farm management Risk and uncertainty in managerial economics

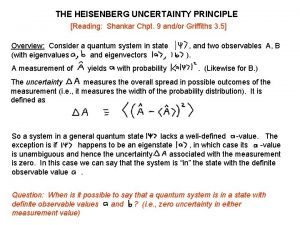

Risk and uncertainty in managerial economics Heisenberg uncertainty principle mathematically

Heisenberg uncertainty principle mathematically The trailside killer

The trailside killer Clay lawson and russell odom

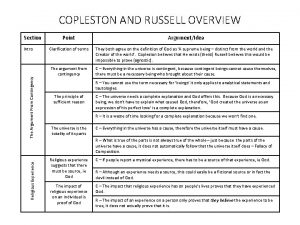

Clay lawson and russell odom Copleston and russell debate summary

Copleston and russell debate summary Russell and taylor operations management

Russell and taylor operations management School based apprenticeships and traineeships

School based apprenticeships and traineeships Russell quarterly economic and market review

Russell quarterly economic and market review Copleston and russell debate summary

Copleston and russell debate summary Russell quarterly economic and market review

Russell quarterly economic and market review Random uncertainty

Random uncertainty What are significant figures

What are significant figures Uncertainty in subtraction

Uncertainty in subtraction Uncertainties higher physics

Uncertainties higher physics How to calculate experimental uncertainty

How to calculate experimental uncertainty Uncertainty of slope

Uncertainty of slope Triple beam balance uncertainty

Triple beam balance uncertainty Reiters

Reiters Implied uncertainty spectrum

Implied uncertainty spectrum Pearson uncertainty map

Pearson uncertainty map Uncertainty for micrometer

Uncertainty for micrometer Heisenbergs uncertainty

Heisenbergs uncertainty Propagating uncertainty physics

Propagating uncertainty physics Pearson's uncertainty map example

Pearson's uncertainty map example Optimism in face of uncertainty

Optimism in face of uncertainty Maxwell equation tattoo

Maxwell equation tattoo Design stage uncertainty

Design stage uncertainty