Heuristic Search (Russell and Norvig, Chapter 4)


Current Bag of Tricks

- Search algorithm
- Modified search algorithm
- Avoiding repeated states (the check itself can sometimes cost more time than it saves, for example when the search space is very large)

Best-First Search

- Define a function $f$ that maps each node $N$ to a real number $f(N)$, called the evaluation function, whose value depends on the contents of the state associated with $N$.
- Order the nodes in the fringe by increasing value of $f(N)$. How the fringe is kept ordered matters; a heap is one option. Why?
- $f(N)$ can be any function you want, but will it work?
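One way to keep the fringe ordered by $f$ is a binary heap, which makes both insertion and removal of the smallest node logarithmic in the fringe size. The sketch below is only an illustration under stated assumptions: the function name `best_first_search`, its callback parameters, and the toy integer-line problem are hypothetical and do not come from the slides.

```python
import heapq
from itertools import count

def best_first_search(start, is_goal, successors, f):
    """Expand fringe nodes in increasing order of f(state); return a path or None."""
    tie = count()  # tie-breaker so the heap never has to compare states
    fringe = [(f(start), next(tie), start, [start])]
    visited = {start}  # avoid repeated states
    while fringe:
        _, _, state, path = heapq.heappop(fringe)  # node with the smallest f
        if is_goal(state):
            return path
        for nxt in successors(state):
            if nxt not in visited:
                visited.add(nxt)
                heapq.heappush(fringe, (f(nxt), next(tie), nxt, path + [nxt]))
    return None

# Hypothetical toy problem: walk along the integers from 0 to 5,
# with f(N) = h(N) = distance to 5 (greedy best-first).
path = best_first_search(
    start=0,
    is_goal=lambda s: s == 5,
    successors=lambda s: [s - 1, s + 1],
    f=lambda s: abs(5 - s),
)
print(path)
```

Swapping in a different `f` changes the search strategy without touching the loop, which is the point of the "any function you want" remark.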

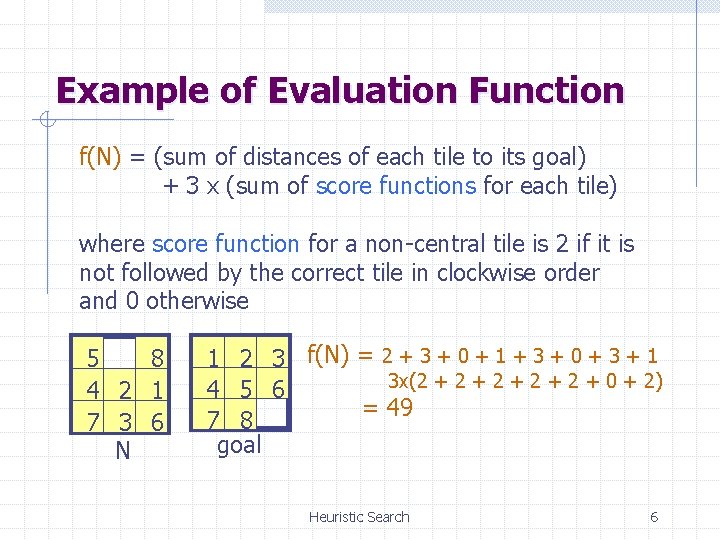

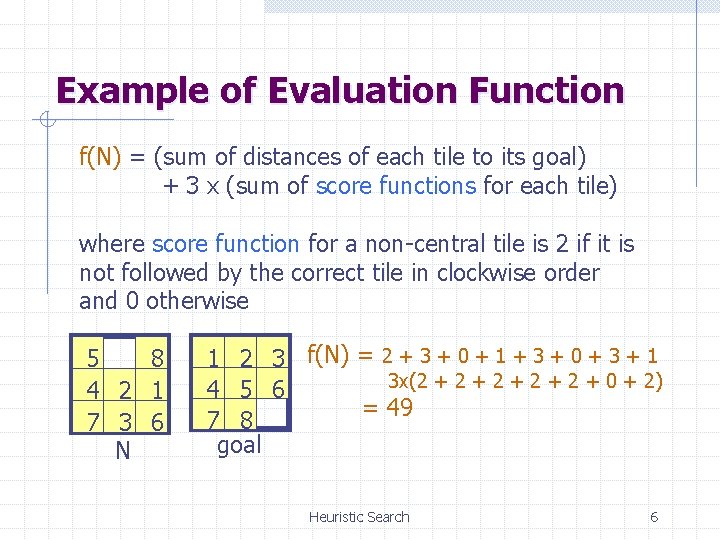

Example of Evaluation Function

$f(N)$ = (sum of the distances of each tile to its goal position) + 3 × (sum of the score functions of each tile), where the score function of a non-central tile is 2 if it is not followed by the correct tile in clockwise order and 0 otherwise.

- State $N$ (rows top to bottom, _ is the blank): 5 _ 8 / 4 2 1 / 7 3 6
- Goal: 1 2 3 / 4 5 6 / 7 8 _
- $f(N) = (2 + 3 + 0 + 1 + 3 + 0 + 3 + 1) + 3 \times (\text{sum of the tile scores}) = 49$

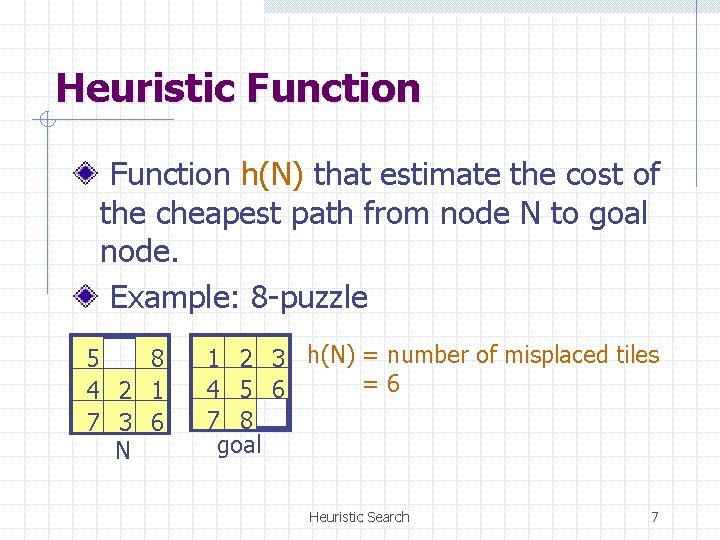

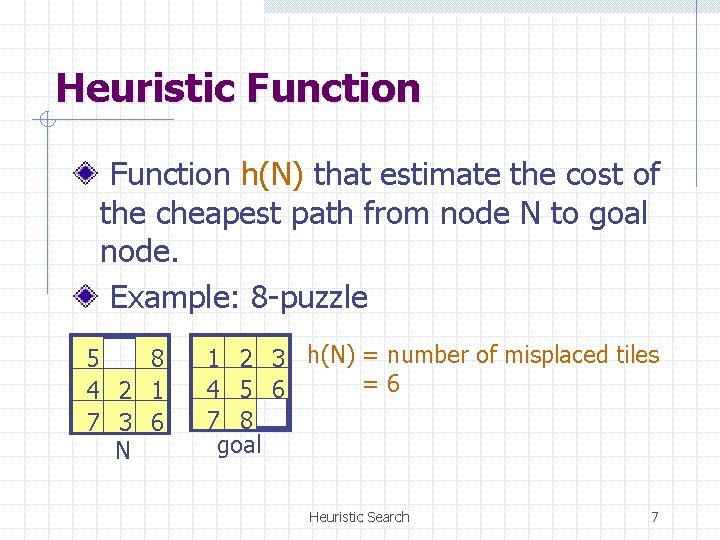

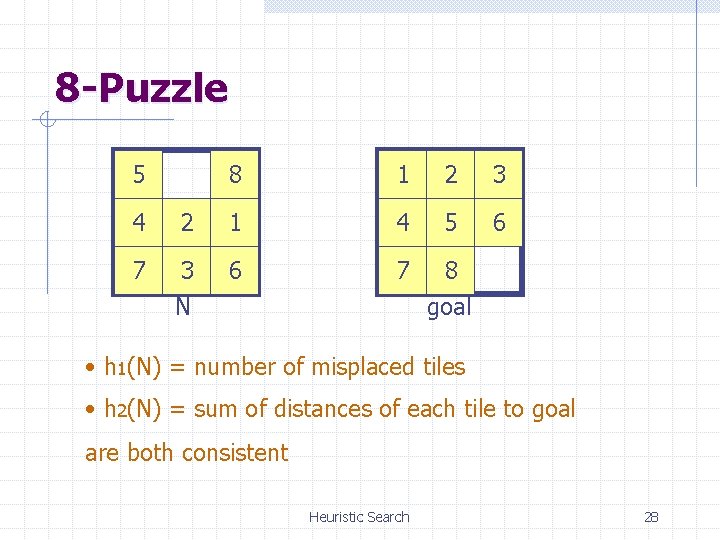

Heuristic Function

$h(N)$ estimates the cost of the cheapest path from node $N$ to a goal node.

Example (8-puzzle), with $N$ = 5 _ 8 / 4 2 1 / 7 3 6 and goal = 1 2 3 / 4 5 6 / 7 8 _ (rows top to bottom, _ is the blank):

- $h(N)$ = number of misplaced tiles = 6

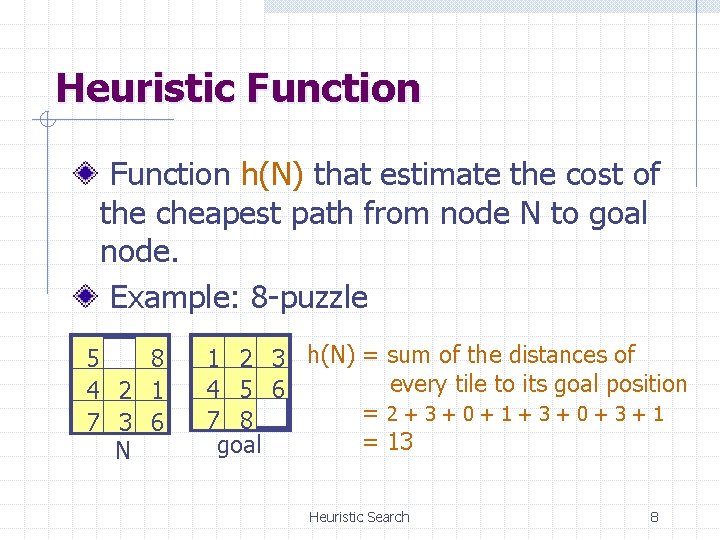

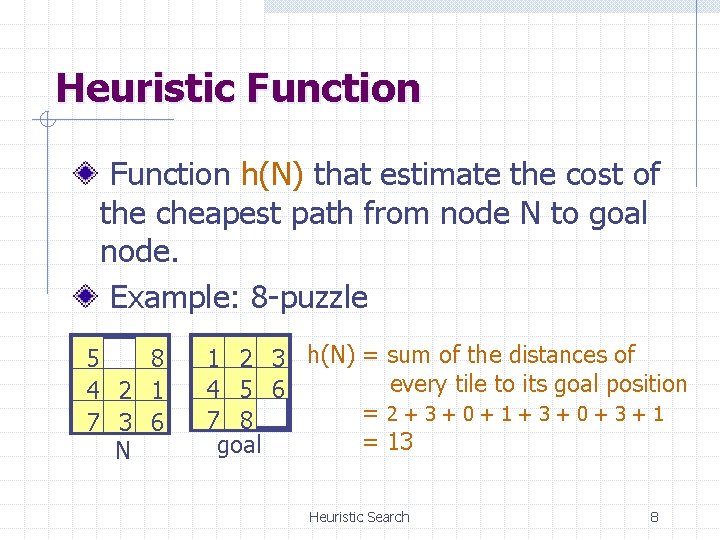

Heuristic Function

$h(N)$ estimates the cost of the cheapest path from node $N$ to a goal node.

Example (8-puzzle), with $N$ = 5 _ 8 / 4 2 1 / 7 3 6 and goal = 1 2 3 / 4 5 6 / 7 8 _ (rows top to bottom, _ is the blank):

- $h(N)$ = sum of the distances of every tile to its goal position = 2 + 3 + 0 + 1 + 3 + 0 + 3 + 1 = 13
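The two 8-puzzle heuristics can be checked with a few lines of code. This is a minimal sketch that assumes the board layout 5 _ 8 / 4 2 1 / 7 3 6 (blank in the middle of the top row), which is the arrangement of the listed tiles that reproduces the slide values 6 and 13; the function names are illustrative only.

```python
# 0 stands for the blank; boards are tuples read row by row.
N = (5, 0, 8,
     4, 2, 1,
     7, 3, 6)
GOAL = (1, 2, 3,
        4, 5, 6,
        7, 8, 0)

def misplaced_tiles(state, goal=GOAL):
    """Count the tiles (blank excluded) that are not on their goal square."""
    return sum(1 for s, g in zip(state, goal) if s != 0 and s != g)

def manhattan_distance(state, goal=GOAL):
    """Sum over tiles of the row distance plus column distance to the goal square."""
    total = 0
    for index, tile in enumerate(state):
        if tile == 0:
            continue  # the blank is not a tile
        goal_index = goal.index(tile)
        total += abs(index // 3 - goal_index // 3) + abs(index % 3 - goal_index % 3)
    return total

print(misplaced_tiles(N), manhattan_distance(N))
```

Each misplaced tile is at least one move from its goal square, so the second value can never be smaller than the first.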

Robot Navigation

$h(N)$ = straight-line distance from node $N$, at position $(x_N, y_N)$, to the goal at $(x_g, y_g)$:

$$h(N) = \sqrt{(x_g - x_N)^2 + (y_g - y_N)^2}$$

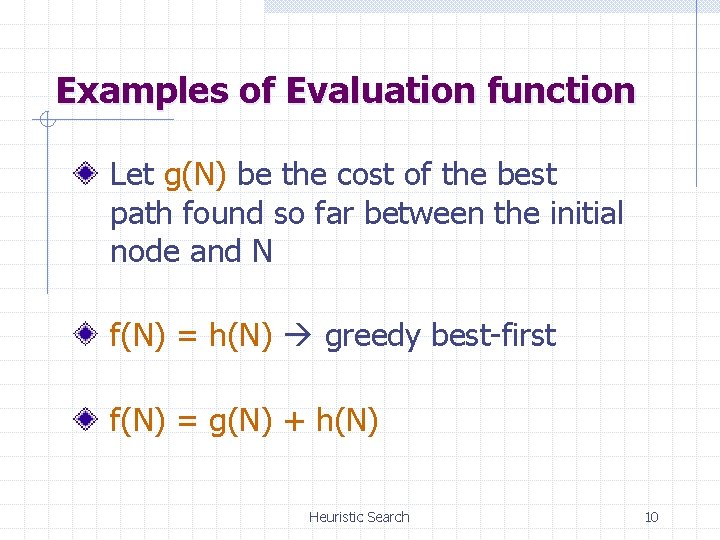

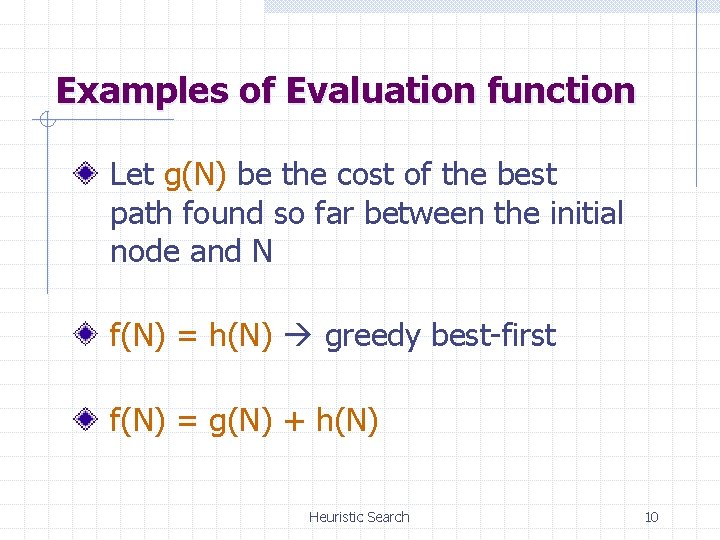

Examples of Evaluation Function

Let $g(N)$ be the cost of the best path found so far between the initial node and $N$.

- $f(N) = h(N)$: greedy best-first search
- $f(N) = g(N) + h(N)$

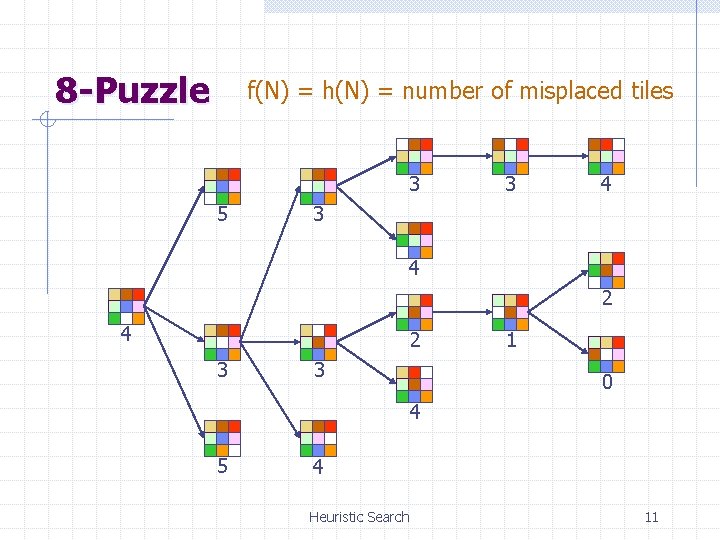

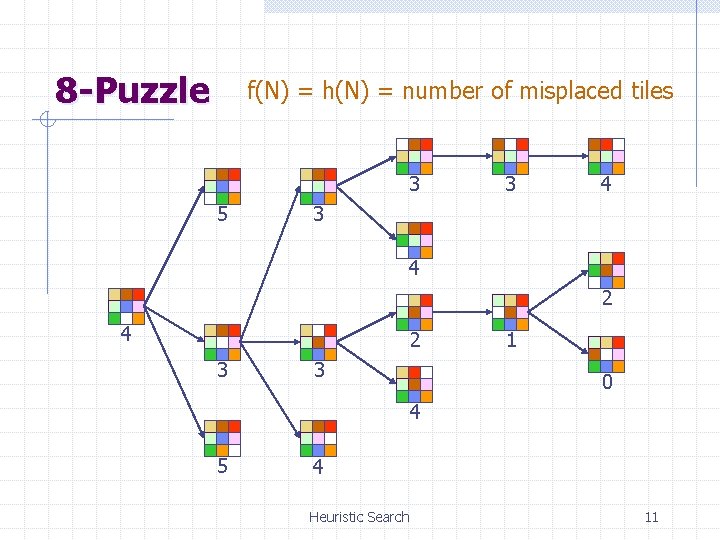

8-Puzzle: $f(N) = h(N)$ = number of misplaced tiles (figure: search tree with each node labelled by its $f$ value)

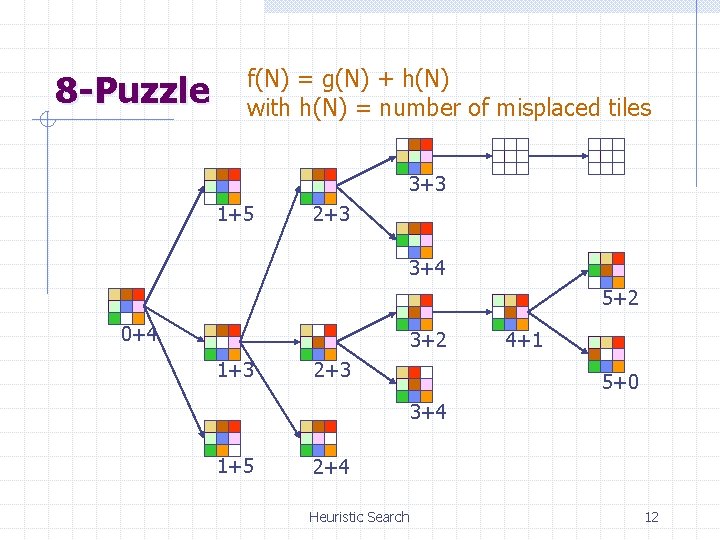

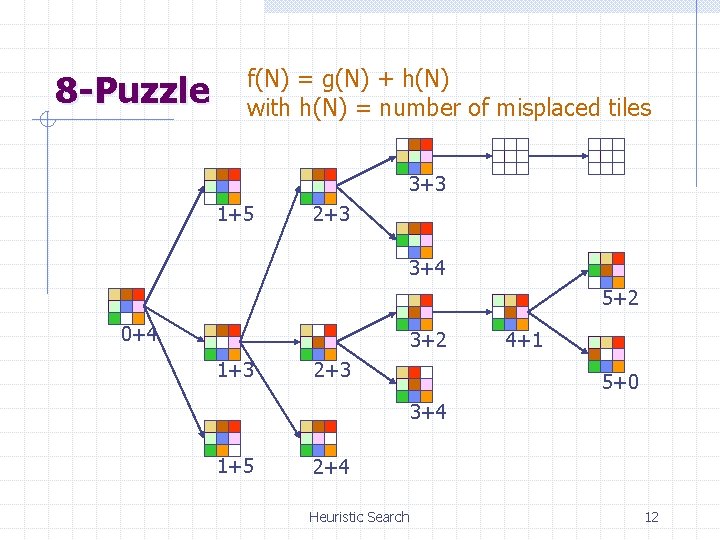

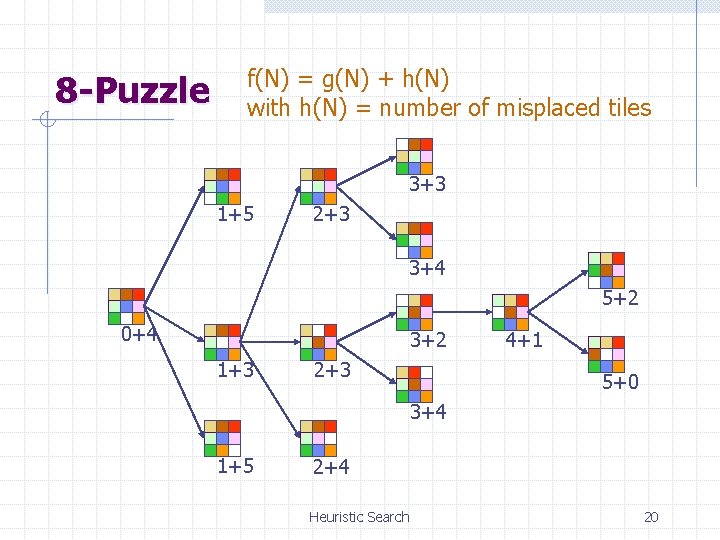

8-Puzzle: $f(N) = g(N) + h(N)$ with $h(N)$ = number of misplaced tiles (figure: search tree with each node labelled $g + h$, from $0 + 4$ at the root to $5 + 0$ at the goal)

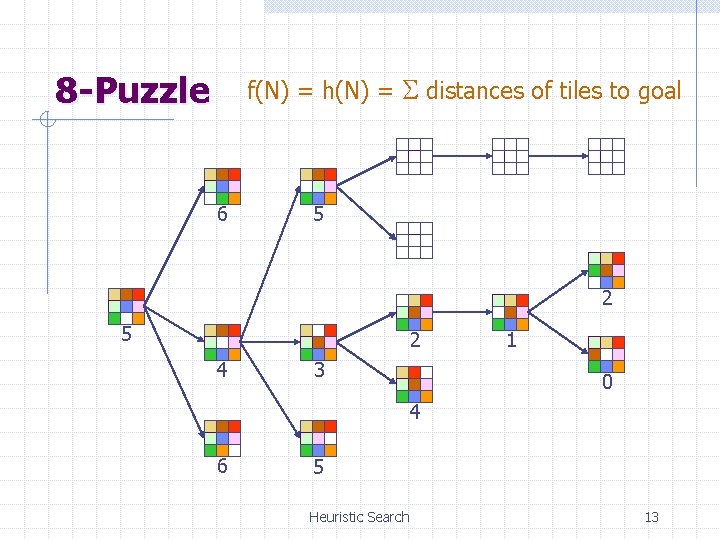

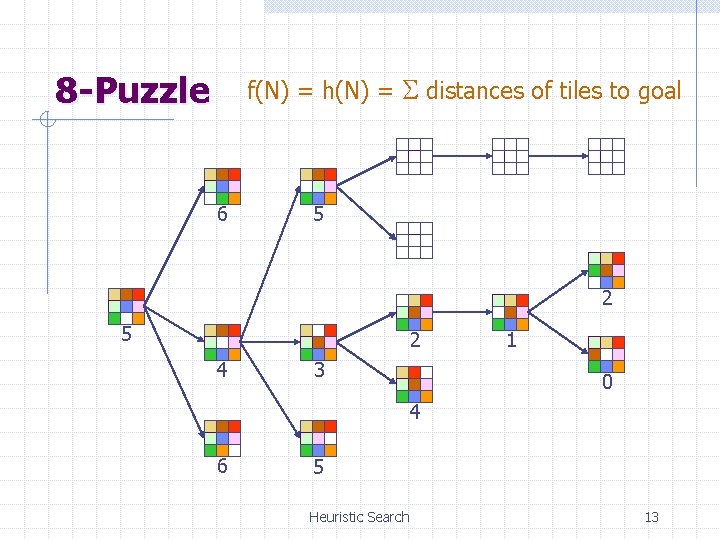

8-Puzzle: $f(N) = h(N)$ = sum of the distances of the tiles to their goal positions (figure: search tree with each node labelled by its $f$ value)

Can We Prove Anything?

- If the state space is finite and we avoid repeated states, the search is complete, but in general it is not optimal.
- If the state space is finite and we do not avoid repeated states, the search is in general not complete.
- If the state space is infinite, the search is in general not complete.

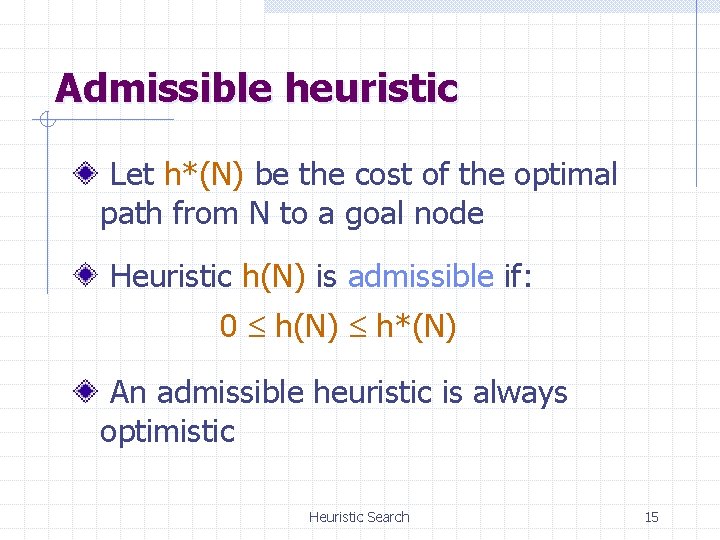

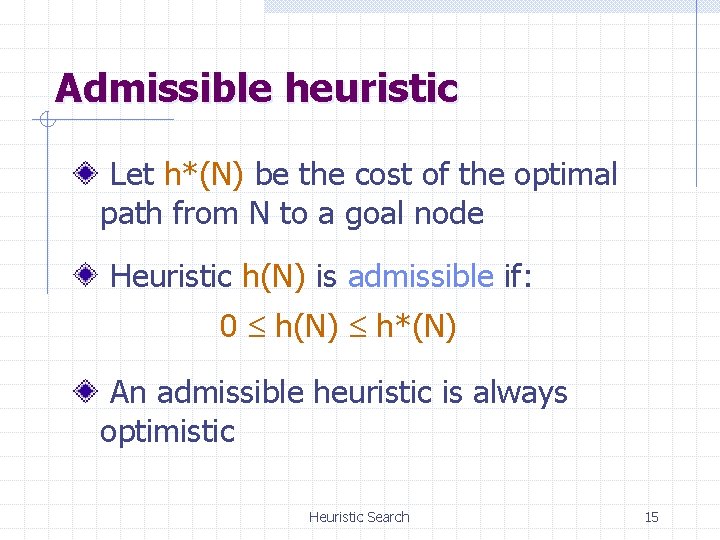

Admissible Heuristic

- Let $h^*(N)$ be the cost of the optimal path from $N$ to a goal node.
- The heuristic $h(N)$ is admissible if $0 \le h(N) \le h^*(N)$.
- An admissible heuristic is always optimistic.

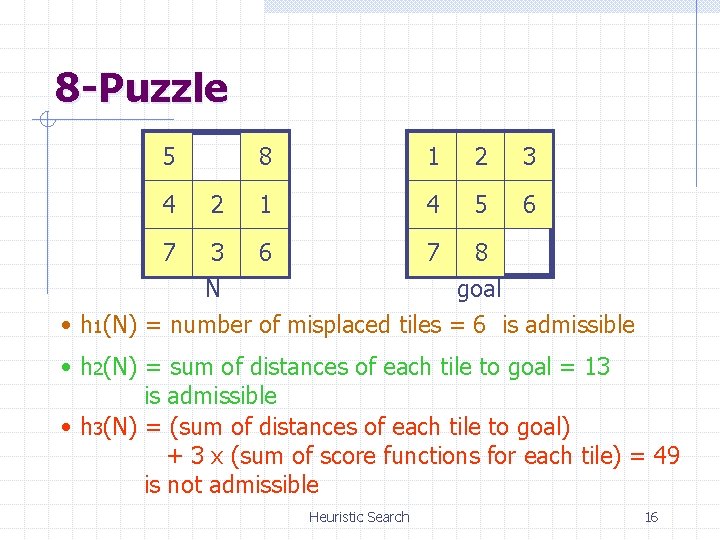

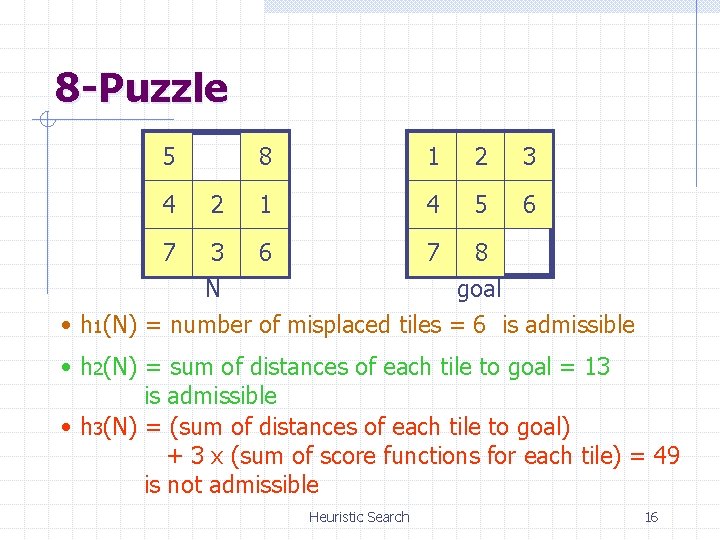

8-Puzzle

With $N$ = 5 _ 8 / 4 2 1 / 7 3 6 and goal = 1 2 3 / 4 5 6 / 7 8 _ (rows top to bottom, _ is the blank):

- $h_1(N)$ = number of misplaced tiles = 6 is admissible
- $h_2(N)$ = sum of the distances of each tile to its goal position = 13 is admissible
- $h_3(N)$ = (sum of the distances of each tile to its goal position) + 3 × (sum of the score functions of each tile) = 49 is not admissible

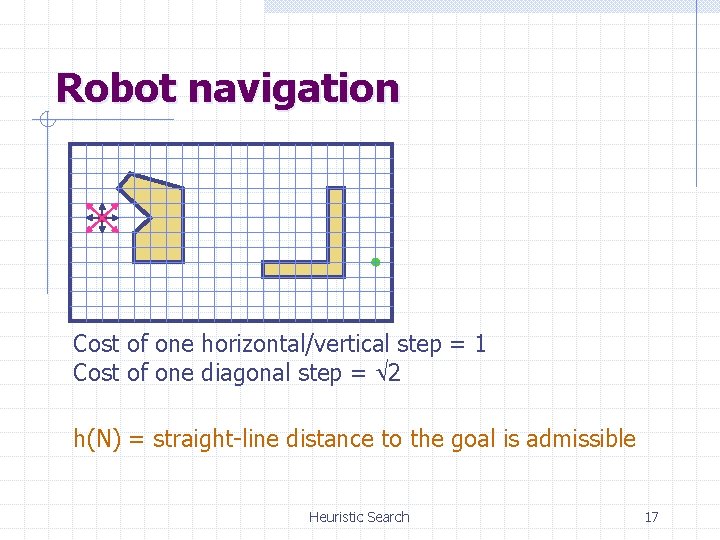

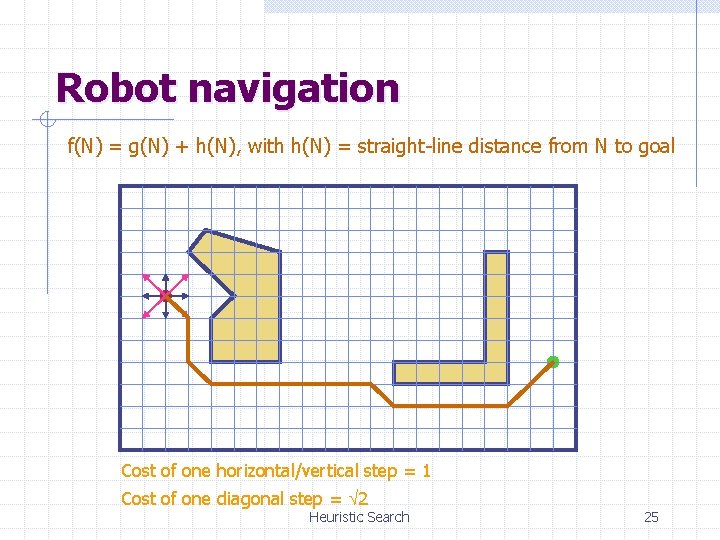

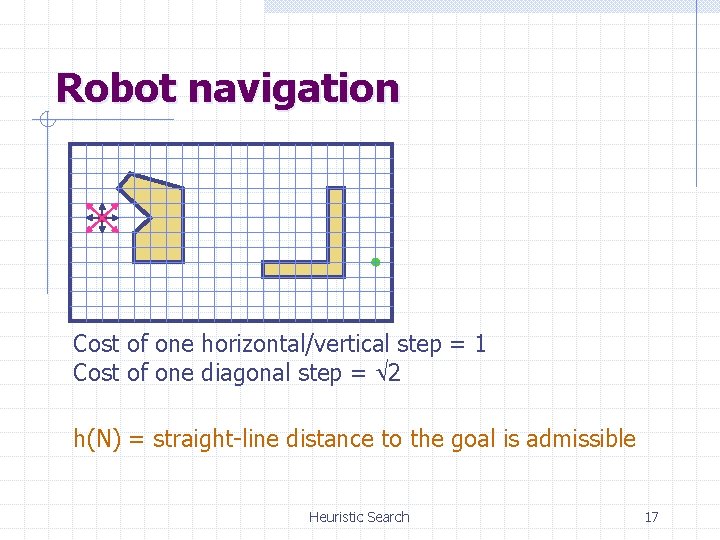

Robot Navigation

- Cost of one horizontal/vertical step = 1
- Cost of one diagonal step = 2
- $h(N)$ = straight-line distance to the goal is admissible

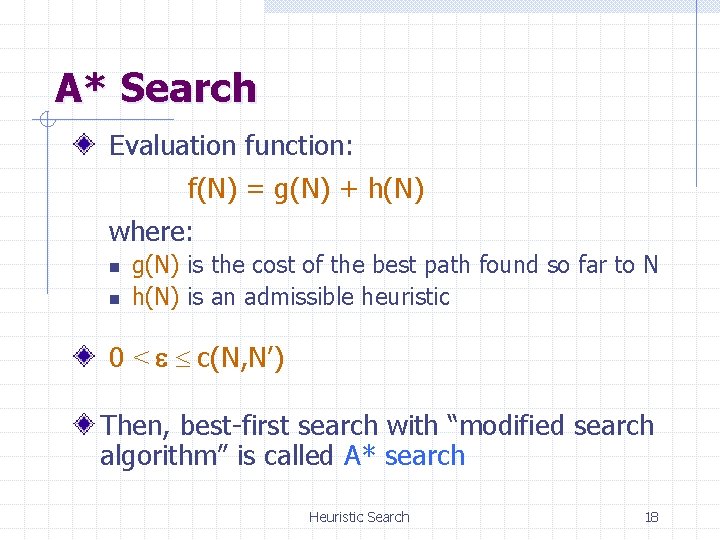

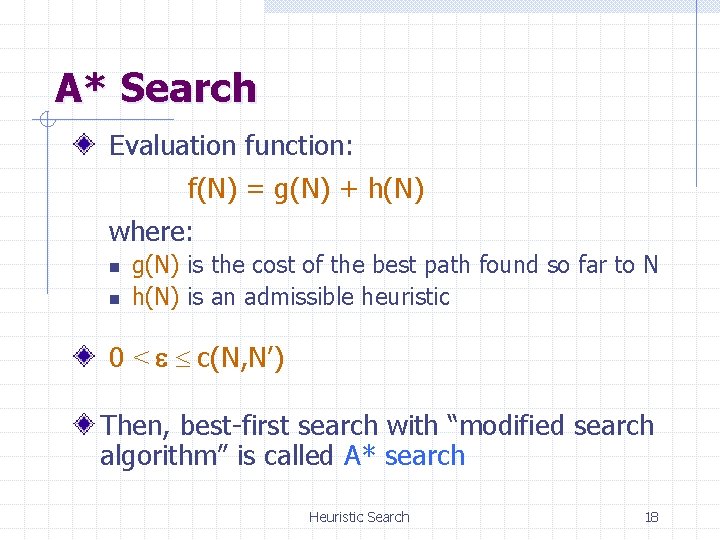

A* Search

Evaluation function: $f(N) = g(N) + h(N)$, where:

- $g(N)$ is the cost of the best path found so far to $N$
- $h(N)$ is an admissible heuristic
- every arc cost is positive: $0 < c(N, N')$

Then best-first search with the "modified search algorithm" is called A* search.
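To make the definition concrete, here is a minimal sketch of A* without removal of repeated states. The graph `EDGES`, its costs, and the heuristic table `H` are hypothetical values chosen so that `H` is admissible; none of them come from the slides.

```python
import heapq
from itertools import count

def a_star(start, goal, edges, h):
    """A* without repeated-state removal; edges[s] is a list of (successor, cost)."""
    tie = count()  # tie-breaker so heap entries never compare paths
    fringe = [(h[start], next(tie), 0, start, [start])]  # (f, tie, g, state, path)
    while fringe:
        _, _, g, state, path = heapq.heappop(fringe)  # smallest f = g + h first
        if state == goal:
            return g, path
        for nxt, cost in edges.get(state, []):
            g2 = g + cost
            heapq.heappush(fringe, (g2 + h[nxt], next(tie), g2, nxt, path + [nxt]))
    return None

# Hypothetical example graph with positive arc costs.
EDGES = {
    "S": [("A", 1), ("B", 4)],
    "A": [("B", 2), ("G", 6)],
    "B": [("G", 2)],
}
H = {"S": 4, "A": 3, "B": 2, "G": 0}  # never exceeds the true remaining cost

result = a_star("S", "G", EDGES, H)
print(result)
```

The goal test is applied when a node is removed from the fringe, not when it is generated; testing at generation time would return the more expensive path S, A, G in this example.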

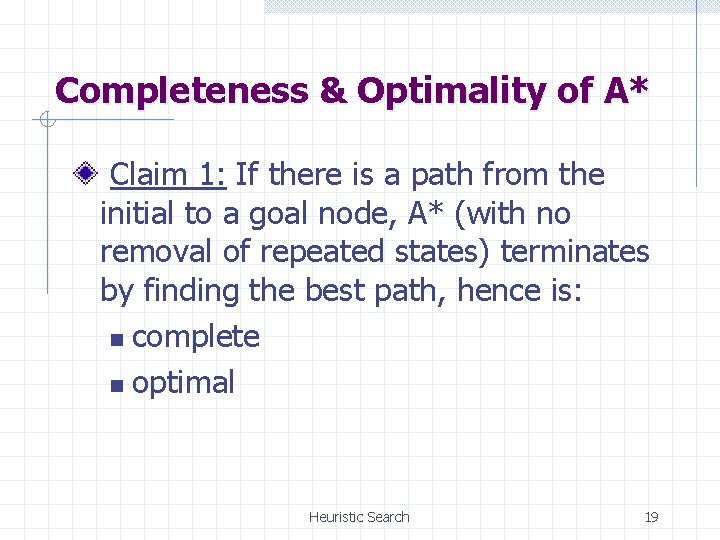

Completeness & Optimality of A*

Claim 1: If there is a path from the initial node to a goal node, A* (with no removal of repeated states) terminates by finding the best path, hence it is:

- complete
- optimal

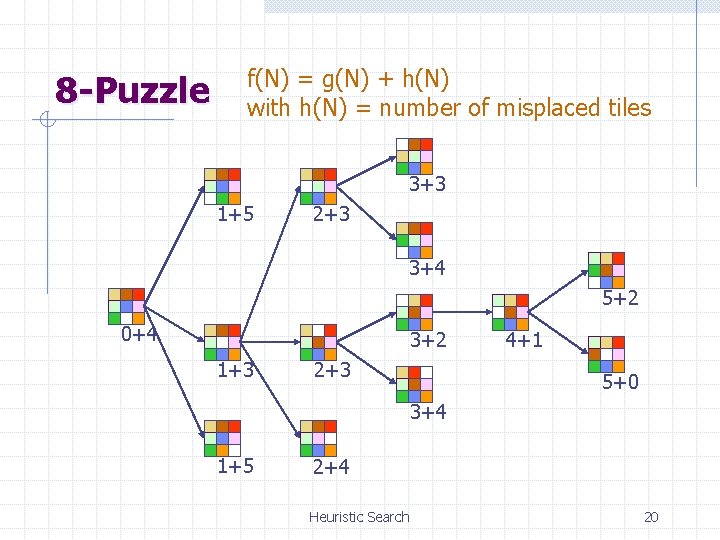

8-Puzzle: $f(N) = g(N) + h(N)$ with $h(N)$ = number of misplaced tiles (figure: search tree with each node labelled $g + h$, from $0 + 4$ at the root to $5 + 0$ at the goal)

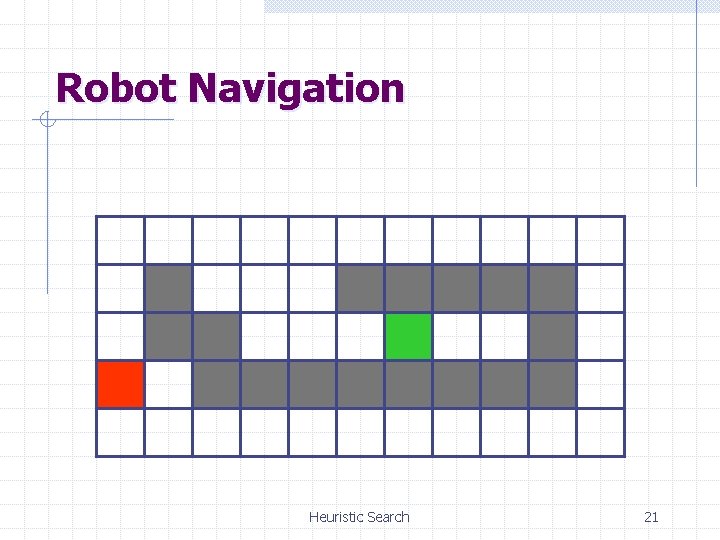

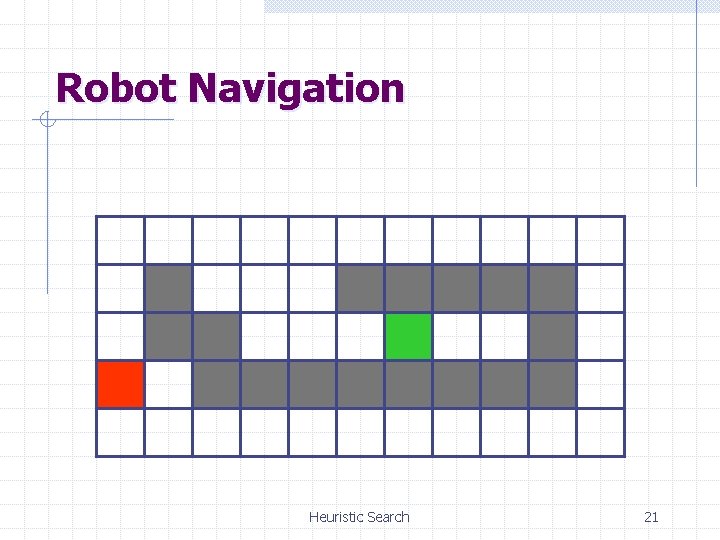

Robot Navigation

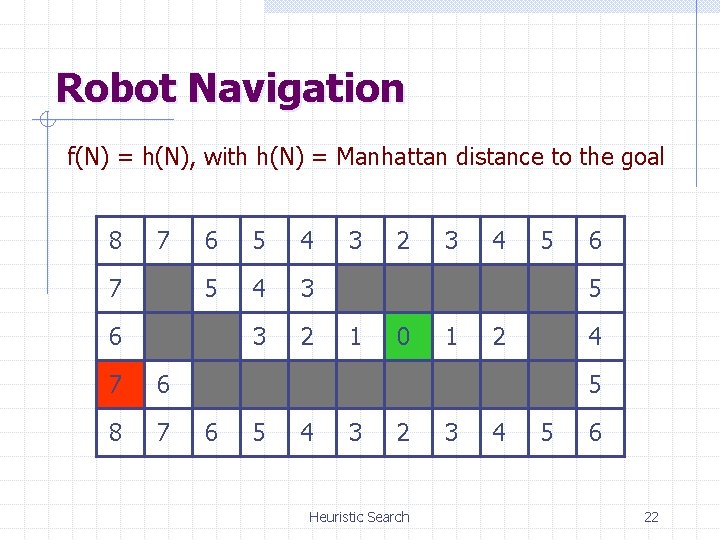

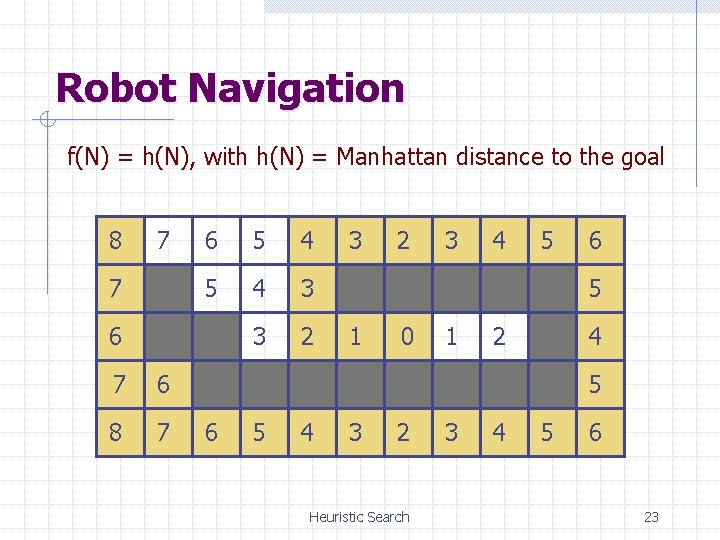

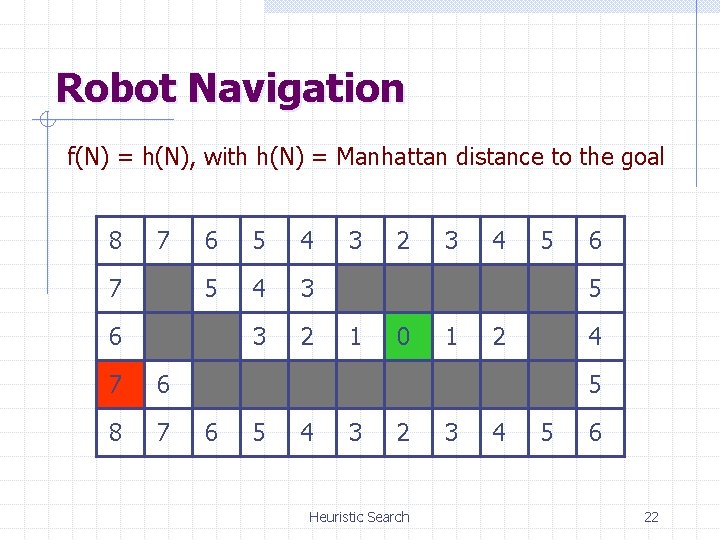

Robot Navigation f(N) = h(N), with h(N) = Manhattan distance to the goal 8 7 7 6 5 4 3 3 2 6 7 6 8 7 3 2 3 4 5 6 5 1 0 1 2 4 5 6 5 4 3 2 Heuristic Search 3 4 5 6 22

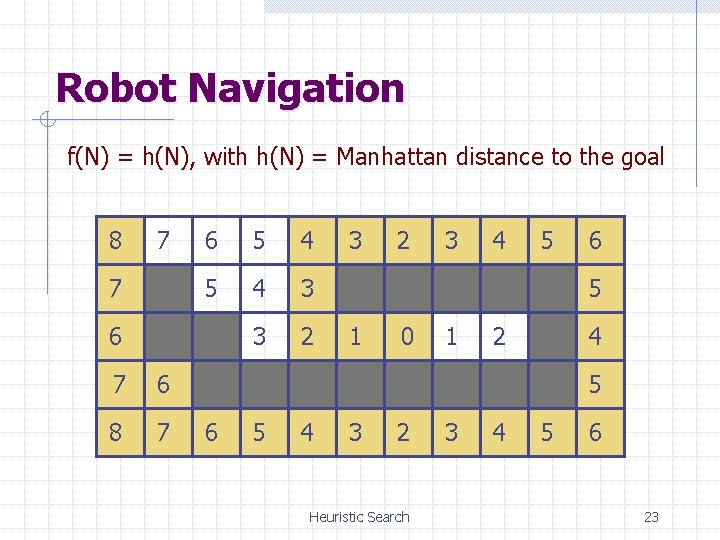

Robot Navigation f(N) = h(N), with h(N) = Manhattan distance to the goal 8 7 7 6 5 4 3 3 2 6 77 6 8 7 3 2 3 4 5 6 5 1 00 1 2 4 5 6 5 4 3 2 Heuristic Search 3 4 5 6 23

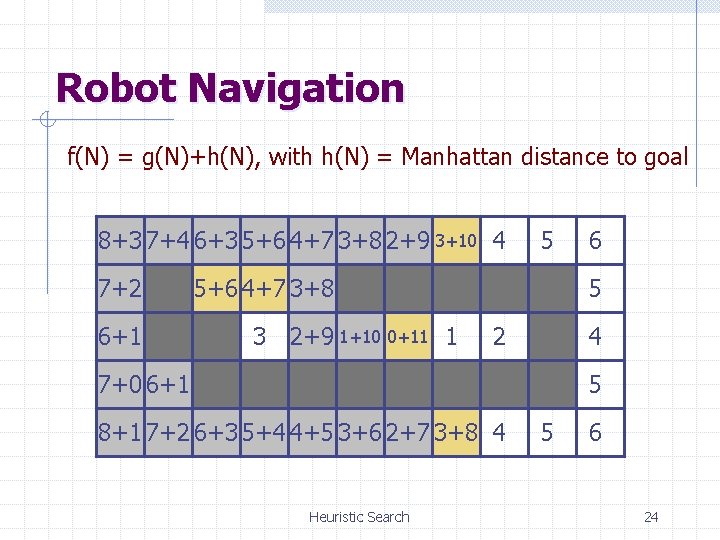

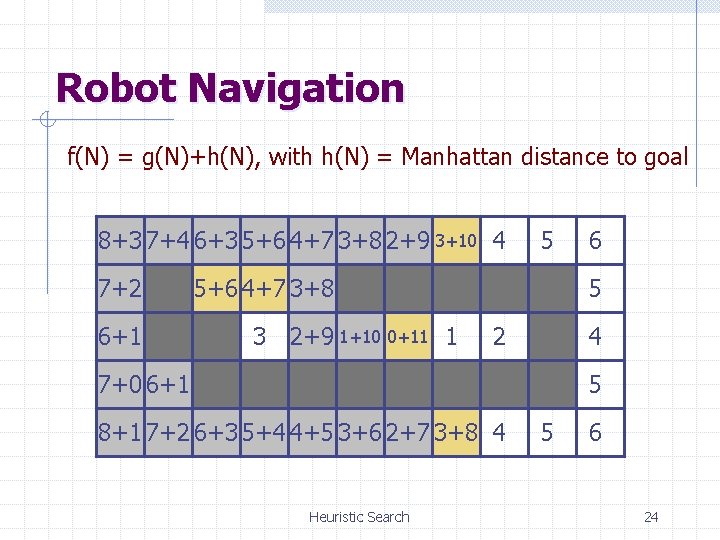

Robot Navigation: $f(N) = g(N) + h(N)$, with $h(N)$ = Manhattan distance to the goal (figure: grid with each expanded cell labelled $g + h$, from $0 + 11$ at the start to $7 + 0$ at the goal)

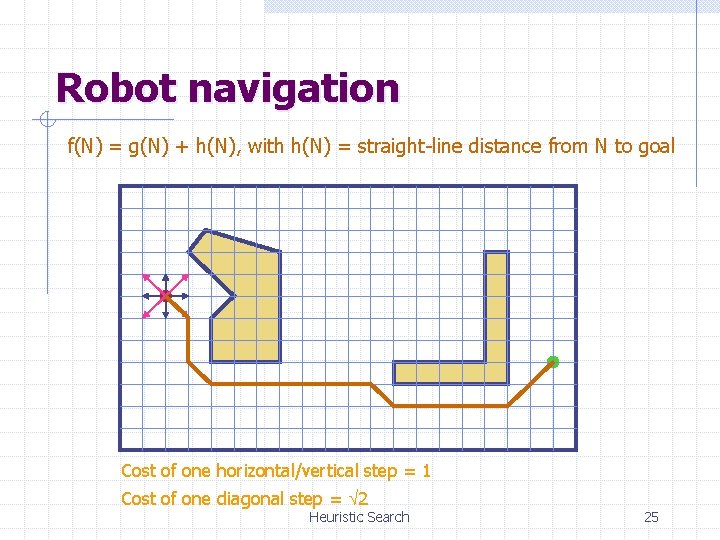

Robot Navigation

- $f(N) = g(N) + h(N)$, with $h(N)$ = straight-line distance from $N$ to the goal
- Cost of one horizontal/vertical step = 1
- Cost of one diagonal step = 2

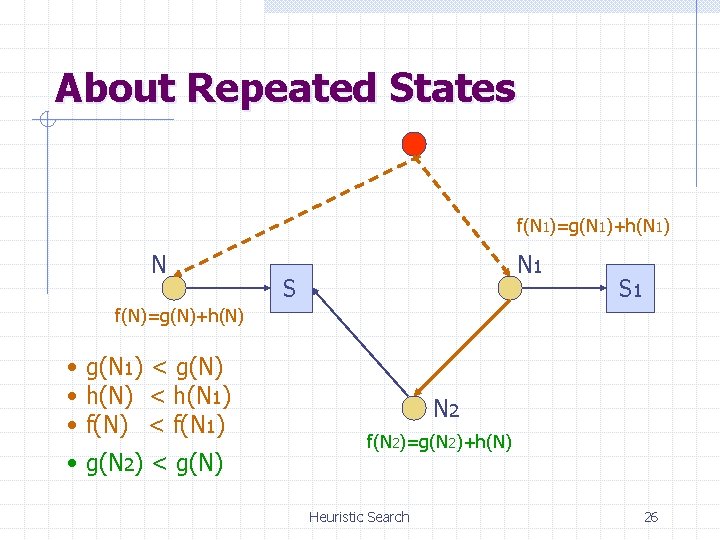

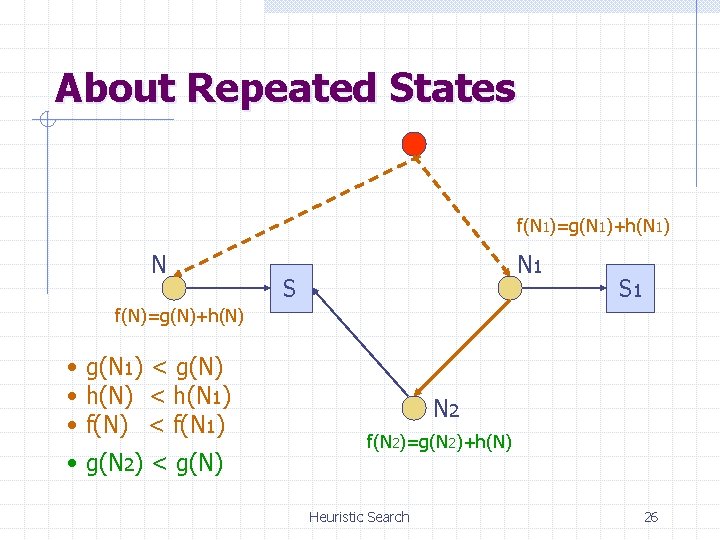

About Repeated States

Figure: nodes $N$, $N_1$ and $N_2$ with states $S$ and $S_1$.

- $f(N) = g(N) + h(N)$ and $f(N_1) = g(N_1) + h(N_1)$
- $g(N_1) < g(N)$
- $h(N) < h(N_1)$
- $f(N) < f(N_1)$
- $f(N_2) = g(N_2) + h(N)$ with $g(N_2) < g(N)$

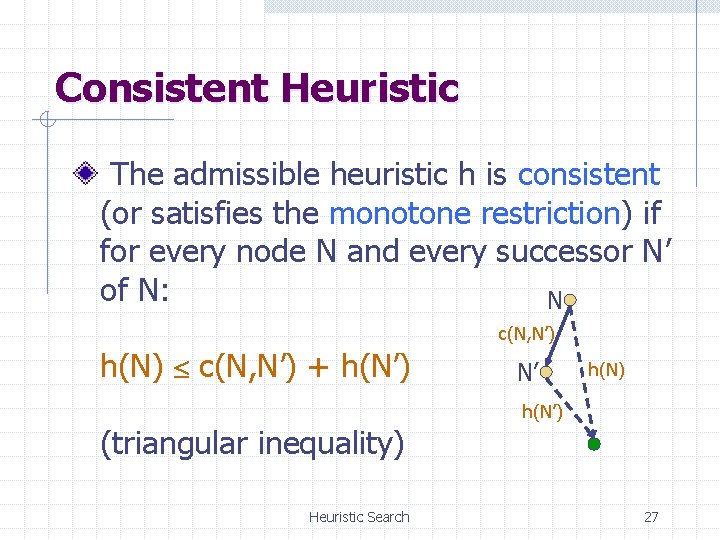

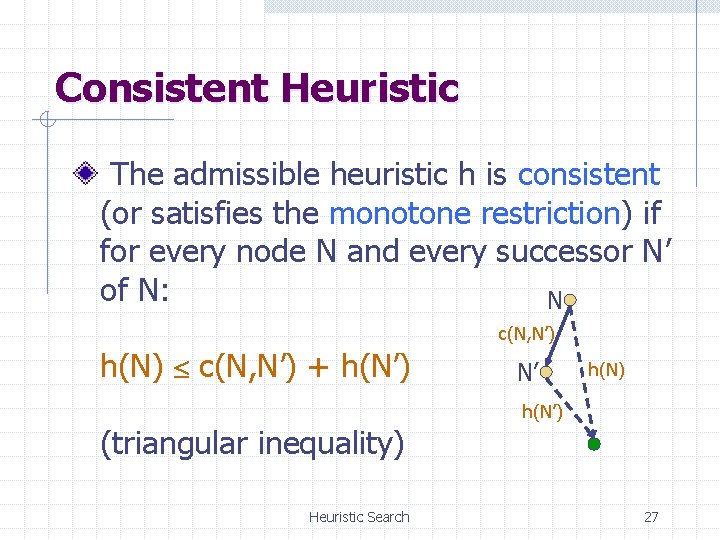

Consistent Heuristic

The admissible heuristic $h$ is consistent (or satisfies the monotone restriction) if, for every node $N$ and every successor $N'$ of $N$:

$$h(N) \le c(N, N') + h(N')$$

(triangular inequality)
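On a small explicit graph, the triangular inequality can be checked arc by arc. The sketch below assumes a hypothetical graph `EDGES` and two hypothetical heuristic tables; `H_BAD` is still admissible on this graph but violates the inequality on the arc from S to A.

```python
def is_consistent(edges, h):
    """Check h(N) <= c(N, N') + h(N') for every arc (N, N') of the graph."""
    return all(
        h[n] <= cost + h[n2]
        for n, successors in edges.items()
        for n2, cost in successors
    )

# Hypothetical graph: edges[N] lists (successor N', cost c(N, N')).
EDGES = {
    "S": [("A", 1), ("B", 4)],
    "A": [("B", 2), ("G", 6)],
    "B": [("G", 2)],
}
H_GOOD = {"S": 4, "A": 3, "B": 2, "G": 0}
H_BAD = {"S": 4, "A": 1, "B": 2, "G": 0}  # h(S) = 4 > c(S, A) + h(A) = 2

print(is_consistent(EDGES, H_GOOD), is_consistent(EDGES, H_BAD))
```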

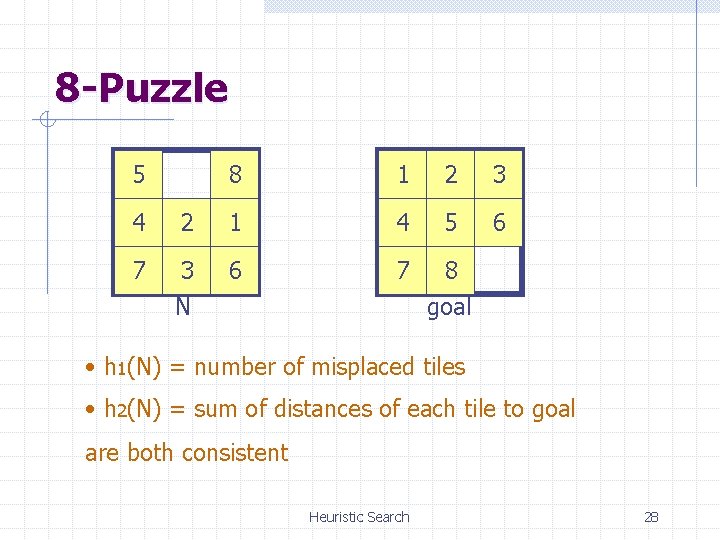

8-Puzzle

With $N$ = 5 _ 8 / 4 2 1 / 7 3 6 and goal = 1 2 3 / 4 5 6 / 7 8 _ (rows top to bottom, _ is the blank):

- $h_1(N)$ = number of misplaced tiles
- $h_2(N)$ = sum of the distances of each tile to its goal position

are both consistent.

Robot Navigation

- Cost of one horizontal/vertical step = 1
- Cost of one diagonal step = 2
- $h(N)$ = straight-line distance to the goal is consistent

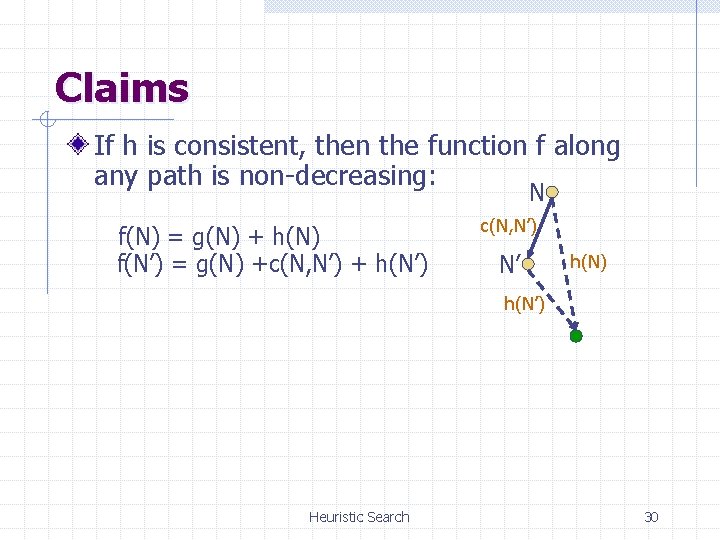

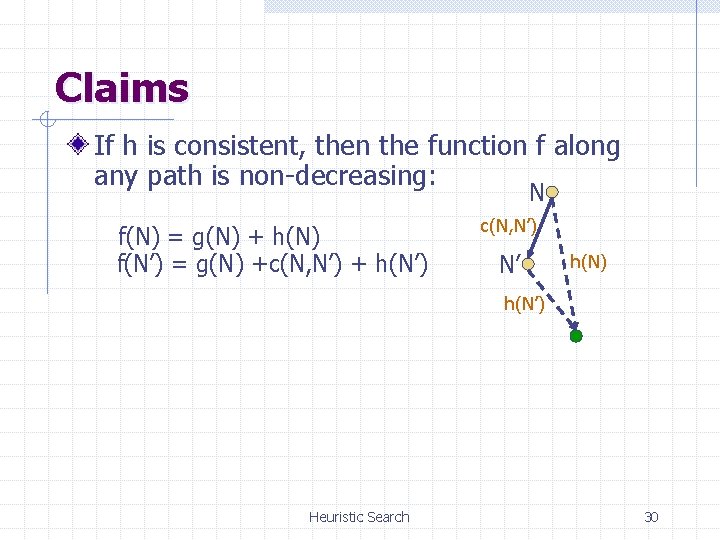


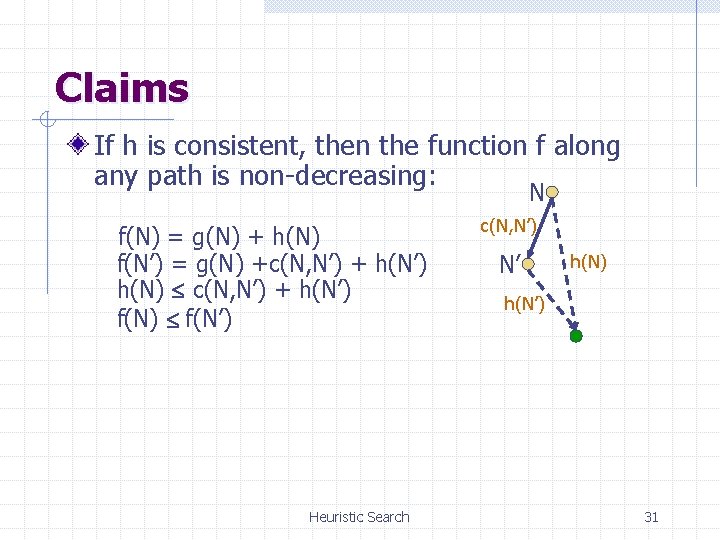

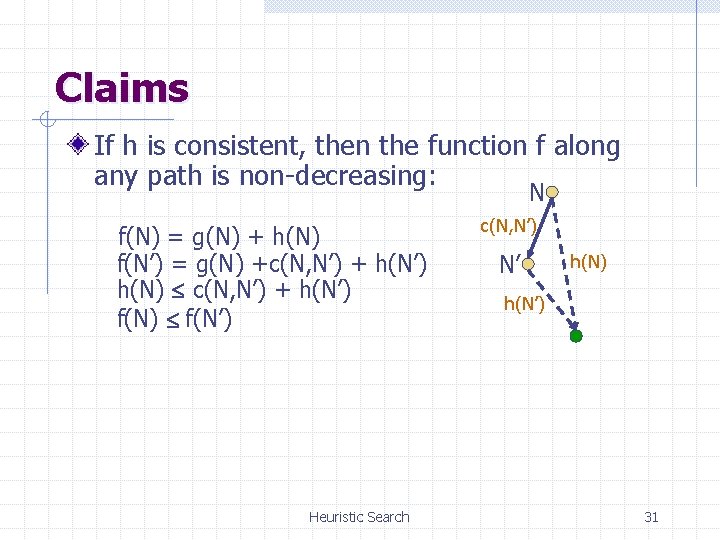

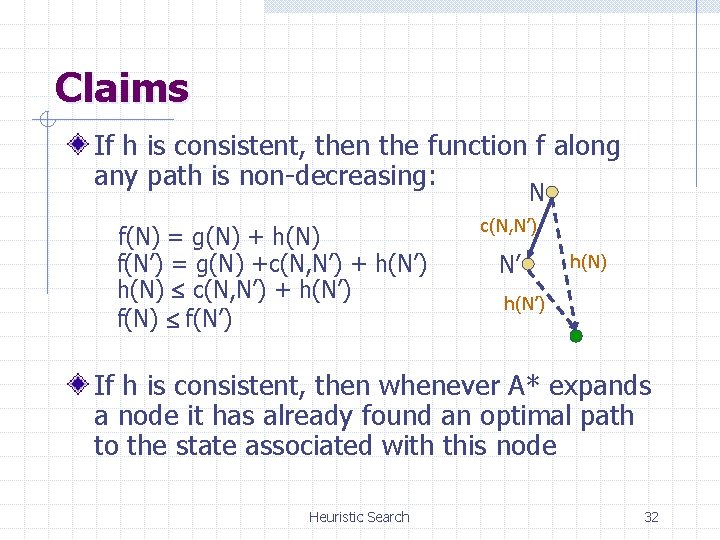


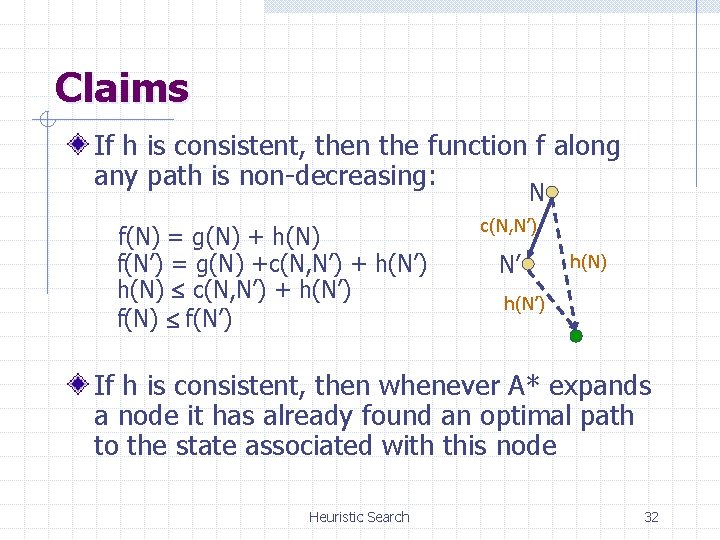

Claims

If $h$ is consistent, then the function $f$ along any path is non-decreasing. For a node $N$ and a successor $N'$ of $N$:

- $f(N) = g(N) + h(N)$
- $f(N') = g(N) + c(N, N') + h(N')$
- $h(N) \le c(N, N') + h(N')$
- hence $f(N) \le f(N')$

If $h$ is consistent, then whenever A* expands a node it has already found an optimal path to the state associated with this node.

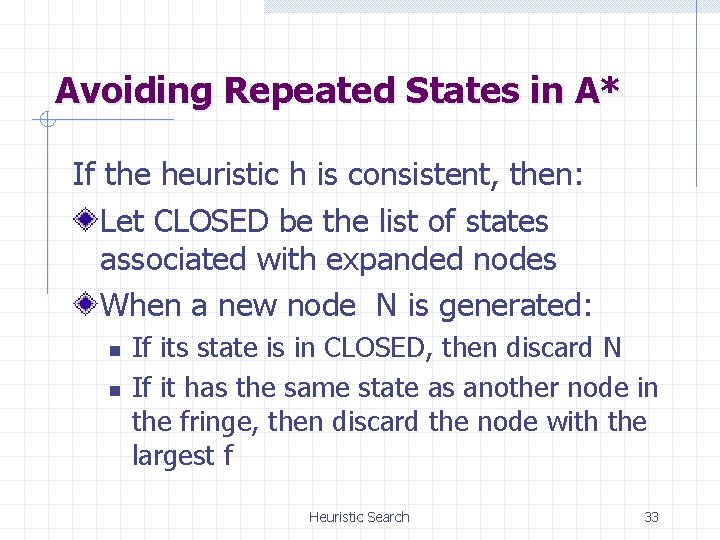

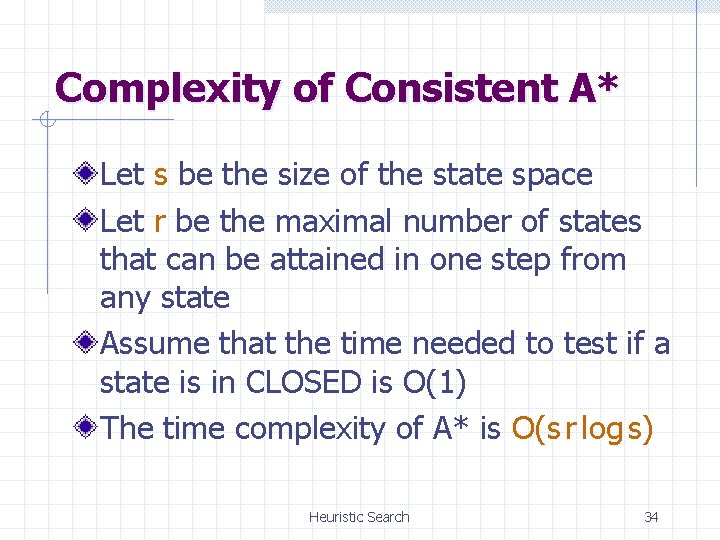

Avoiding Repeated States in A*

If the heuristic $h$ is consistent, then:

- Let CLOSED be the list of states associated with expanded nodes.
- When a new node $N$ is generated:
  - If its state is in CLOSED, then discard $N$.
  - If it has the same state as another node in the fringe, then discard the node with the largest $f$.
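The CLOSED rule can be sketched as follows. Note one deliberate substitution: instead of searching the fringe for a duplicate and discarding the node with the larger $f$, this sketch leaves duplicates in the heap and skips them when they are popped (lazy deletion), which has the same effect when $h$ is consistent. The graph and heuristic values are hypothetical.

```python
import heapq
from itertools import count

def a_star_closed(start, goal, edges, h):
    """A* with a CLOSED set; assumes h is consistent."""
    tie = count()
    fringe = [(h[start], next(tie), 0, start, [start])]  # (f, tie, g, state, path)
    closed = set()  # states of nodes that have been expanded
    while fringe:
        _, _, g, state, path = heapq.heappop(fringe)
        if state in closed:
            continue  # a copy with smaller or equal f was expanded earlier
        if state == goal:
            return g, path
        closed.add(state)
        for nxt, cost in edges.get(state, []):
            if nxt not in closed:  # discard nodes whose state is in CLOSED
                g2 = g + cost
                heapq.heappush(fringe, (g2 + h[nxt], next(tie), g2, nxt, path + [nxt]))
    return None

# Hypothetical graph with cycles (arcs in both directions).
EDGES = {
    "S": [("A", 1), ("B", 4)],
    "A": [("S", 1), ("B", 2), ("G", 6)],
    "B": [("A", 2), ("S", 4), ("G", 2)],
}
H = {"S": 4, "A": 3, "B": 2, "G": 0}  # consistent on this graph

result = a_star_closed("S", "G", EDGES, H)
print(result)
```

Using a hash set for CLOSED gives the constant-time membership test that the complexity analysis of consistent A* relies on.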

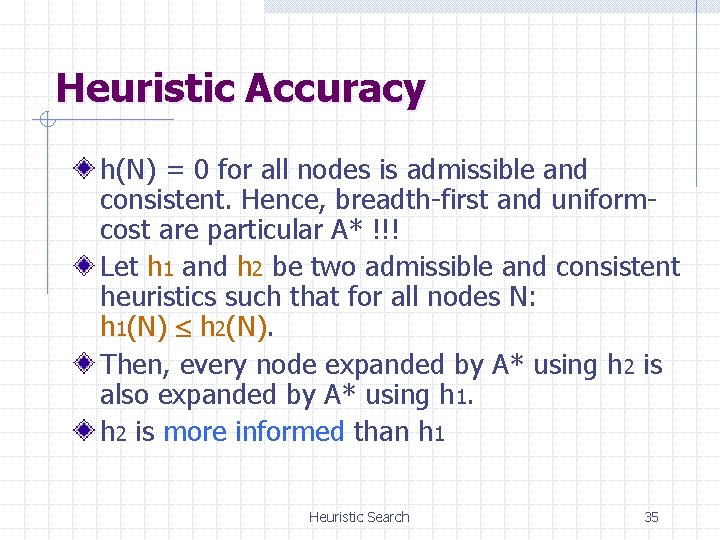

Complexity of Consistent A*

- Let $s$ be the size of the state space.
- Let $r$ be the maximal number of states that can be attained in one step from any state.
- Assume that the time needed to test whether a state is in CLOSED is $O(1)$.
- The time complexity of A* is $O(s \, r \log s)$.

Heuristic Accuracy

- $h(N) = 0$ for all nodes is admissible and consistent. Hence, breadth-first and uniform-cost search are particular cases of A*.
- Let $h_1$ and $h_2$ be two admissible and consistent heuristics such that $h_1(N) \le h_2(N)$ for all nodes $N$. Then every node expanded by A* using $h_2$ is also expanded by A* using $h_1$.
- $h_2$ is more informed than $h_1$.

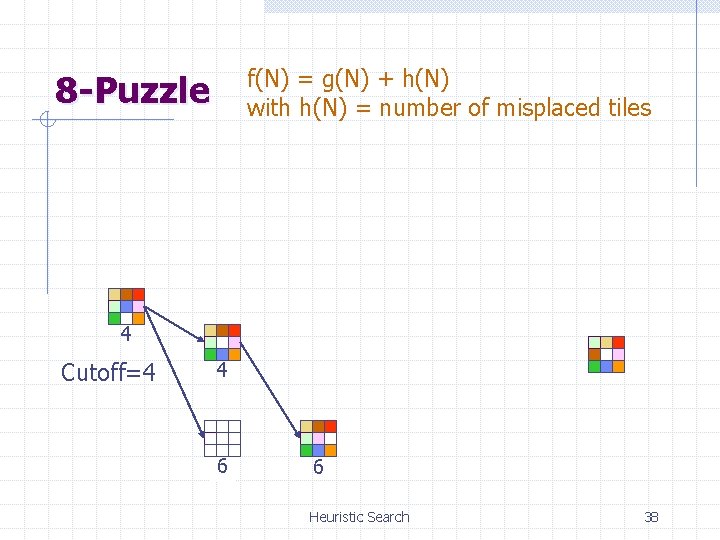

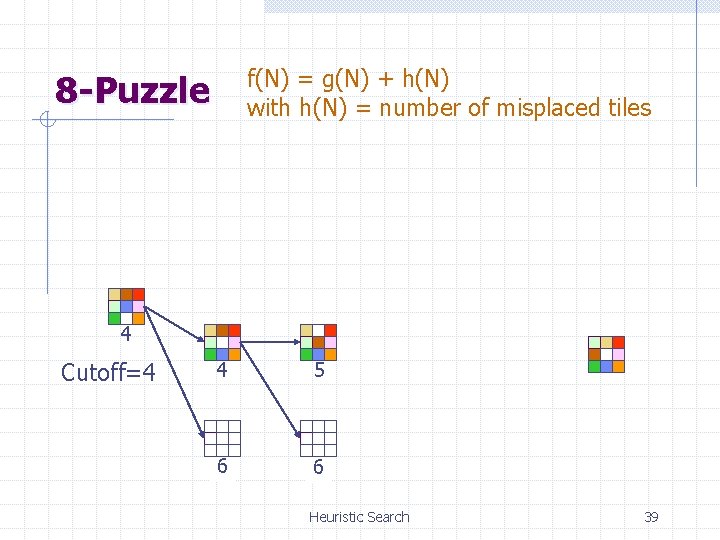

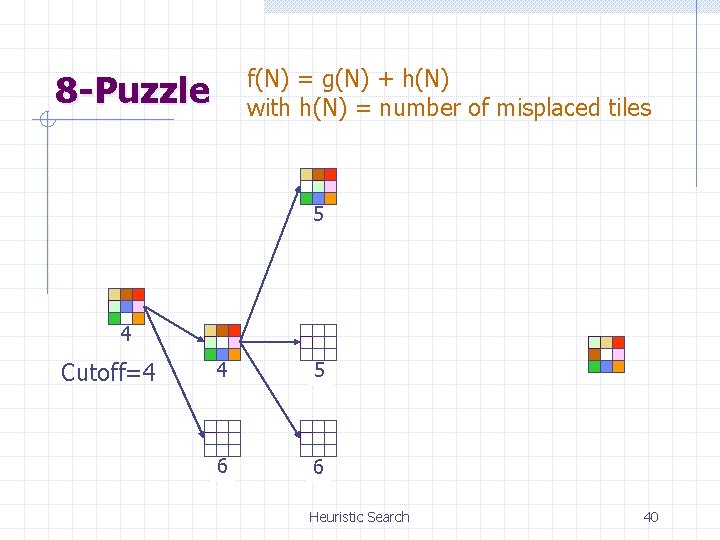

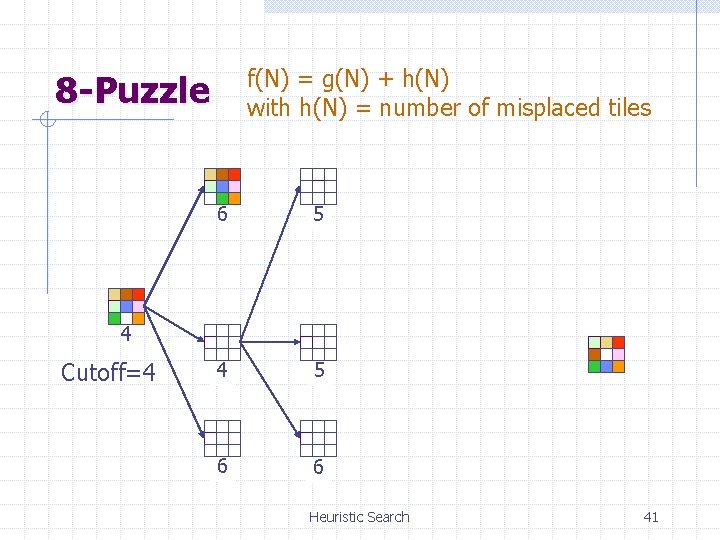

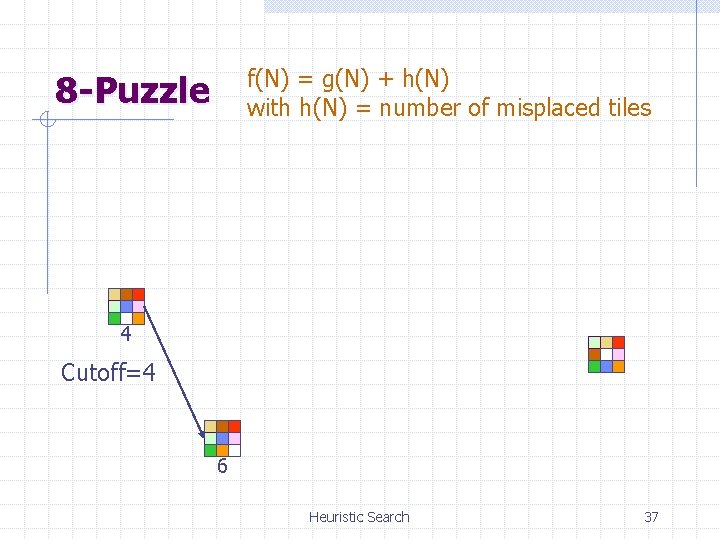

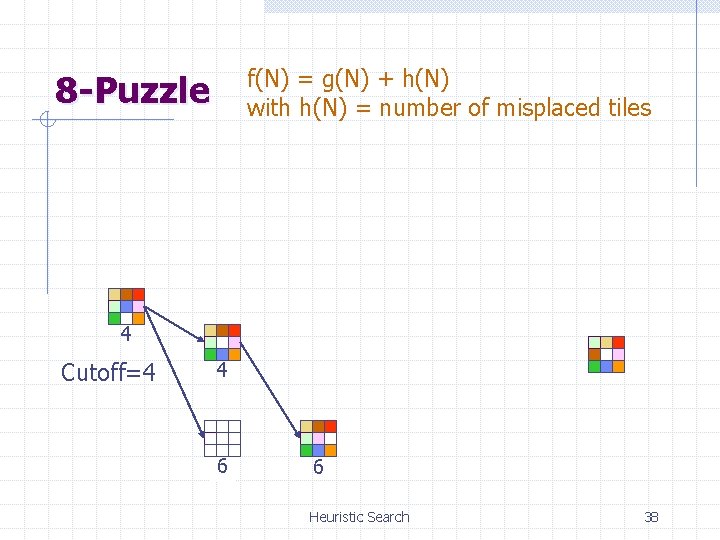

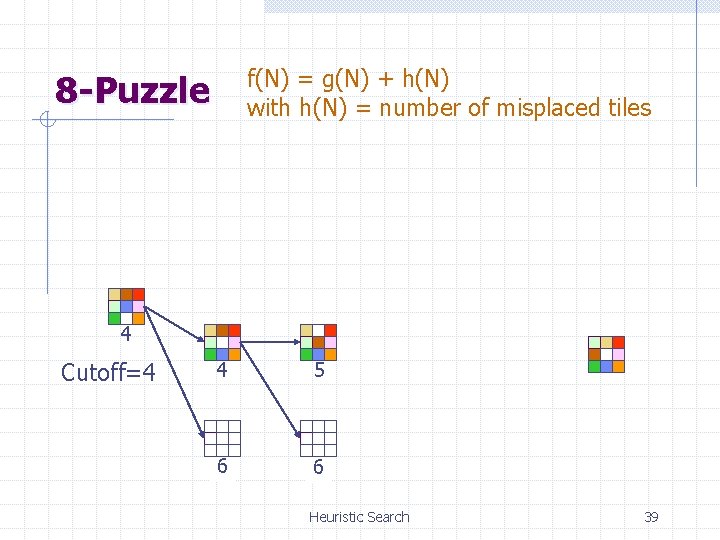

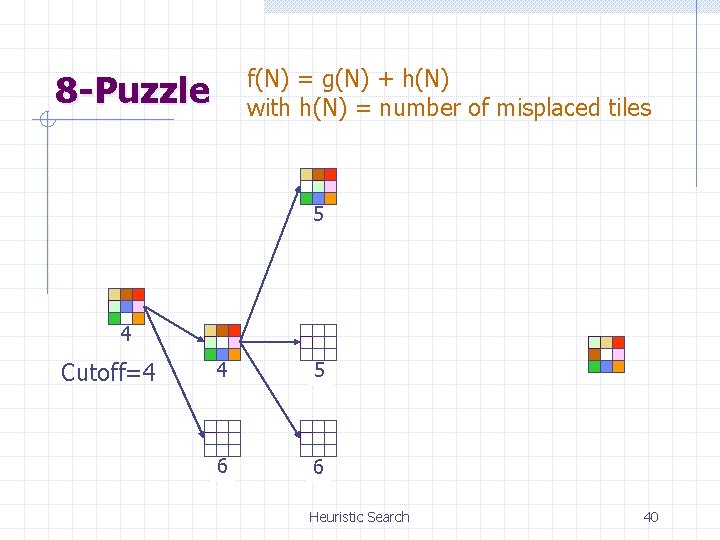

Iterative Deepening A* (IDA*)

- Use $f(N) = g(N) + h(N)$ with admissible and consistent $h$.
- Each iteration is a depth-first search with a cutoff on the value of $f$ of expanded nodes.
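A compact sketch of the iteration scheme is given below. It assumes a common choice that the slide does not spell out: the first cutoff is $f$ of the initial node, and each new cutoff is the smallest $f$ value that exceeded the previous one. The graph and heuristic table are hypothetical.

```python
import math

def ida_star(start, goal, edges, h):
    """Repeated depth-first searches, each bounded by a cutoff on f = g + h."""
    def dfs(state, g, cutoff, path):
        f = g + h[state]
        if f > cutoff:
            return f, None  # report the f value that exceeded the cutoff
        if state == goal:
            return f, list(path)
        smallest = math.inf
        for nxt, cost in edges.get(state, []):
            if nxt in path:  # avoid cycles along the current path
                continue
            path.append(nxt)
            exceeded, found = dfs(nxt, g + cost, cutoff, path)
            path.pop()
            if found is not None:
                return exceeded, found
            smallest = min(smallest, exceeded)
        return smallest, None

    cutoff = h[start]
    while cutoff < math.inf:
        cutoff, found = dfs(start, 0, cutoff, [start])
        if found is not None:
            return cutoff, found  # cutoff now equals the solution cost
    return None

# Hypothetical graph with cycles and a consistent heuristic.
EDGES = {
    "S": [("A", 1), ("B", 4)],
    "A": [("S", 1), ("B", 2), ("G", 6)],
    "B": [("A", 2), ("S", 4), ("G", 2)],
}
H = {"S": 4, "A": 3, "B": 2, "G": 0}

result = ida_star("S", "G", EDGES, H)
print(result)
```

In this example the first iteration (cutoff 4) fails and reports 5 as the smallest exceeded value; the second iteration (cutoff 5) finds the goal. Only the current path is stored, so memory use grows with the depth of the search rather than with the number of generated nodes.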

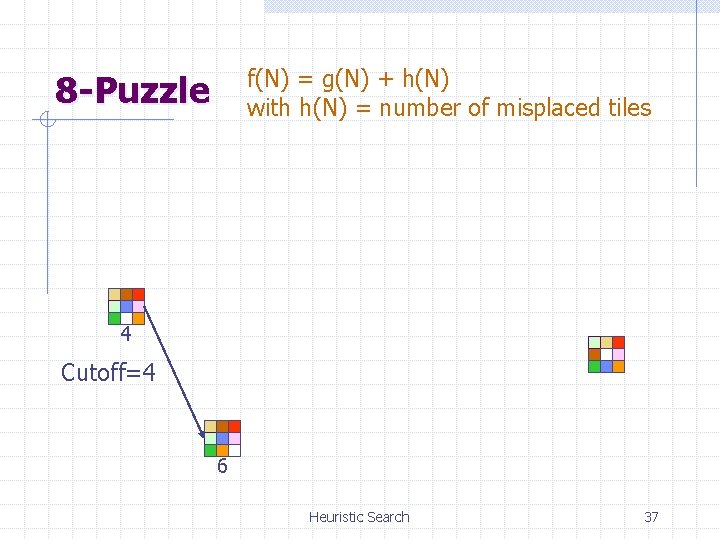

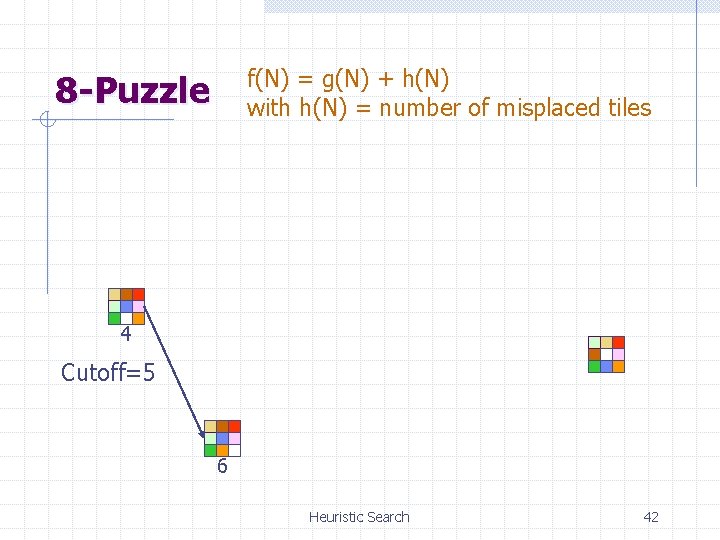

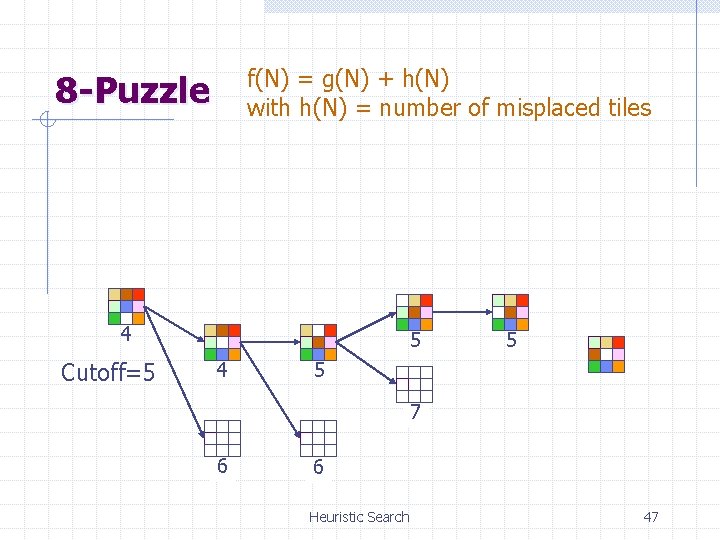

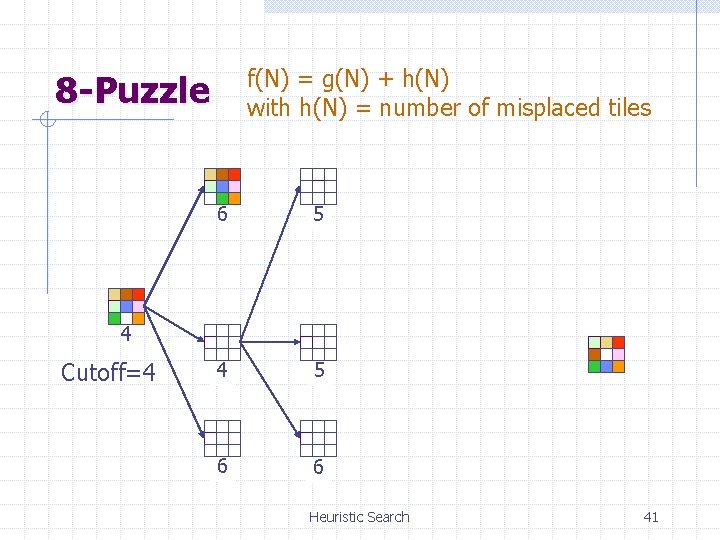

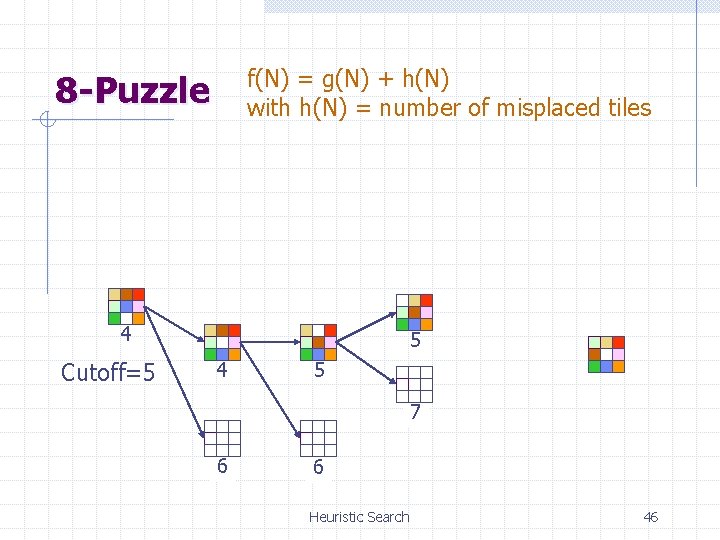

8-Puzzle: IDA* with $f(N) = g(N) + h(N)$, $h(N)$ = number of misplaced tiles, cutoff = 4 (figure sequence: step-by-step depth-first expansion of the search tree, each node labelled by its $f$ value)

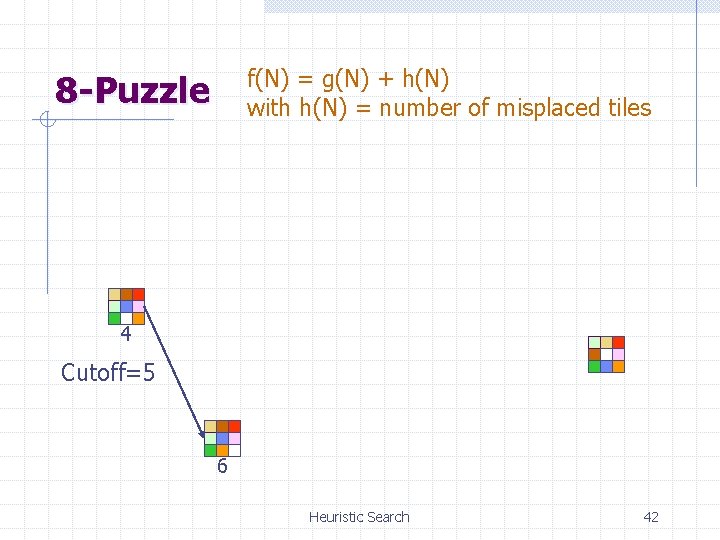

f(N) = g(N) + h(N) with h(N) = number of misplaced tiles 8 -Puzzle 4 Cutoff=5 6 Heuristic Search 42

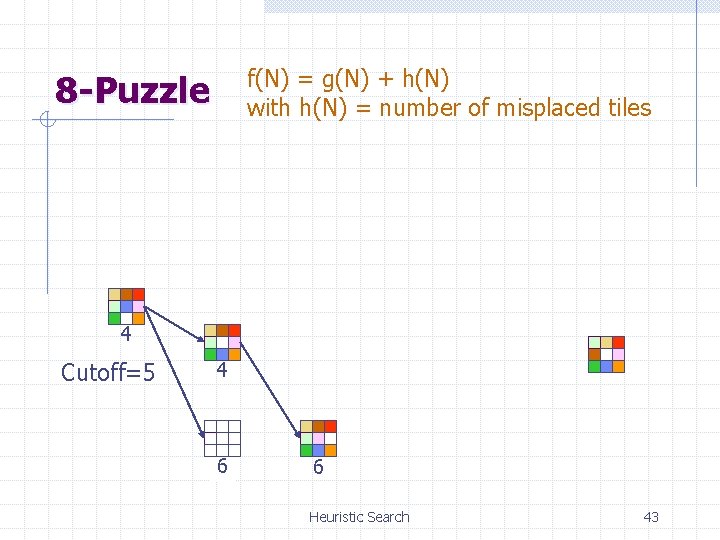

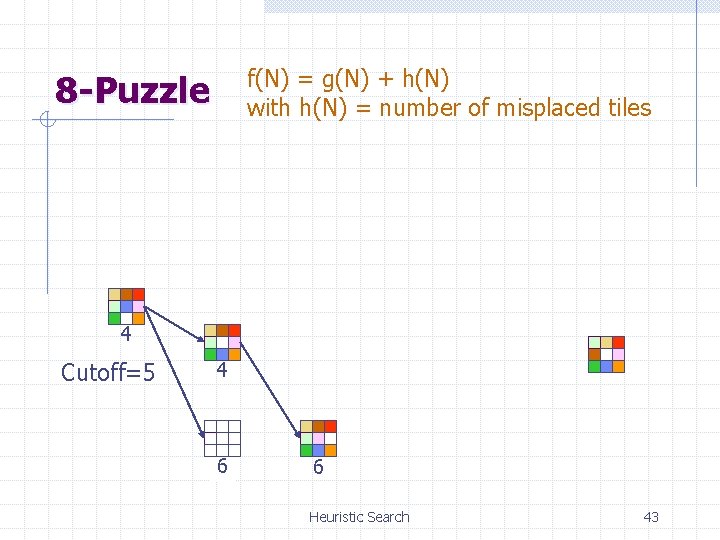

f(N) = g(N) + h(N) with h(N) = number of misplaced tiles 8 -Puzzle 4 Cutoff=5 4 6 6 Heuristic Search 43

f(N) = g(N) + h(N) with h(N) = number of misplaced tiles 8 -Puzzle 4 Cutoff=5 4 5 6 6 Heuristic Search 44

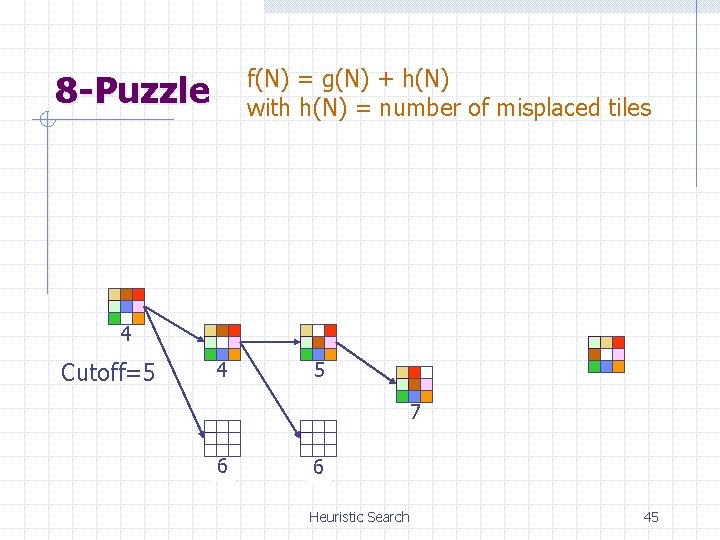

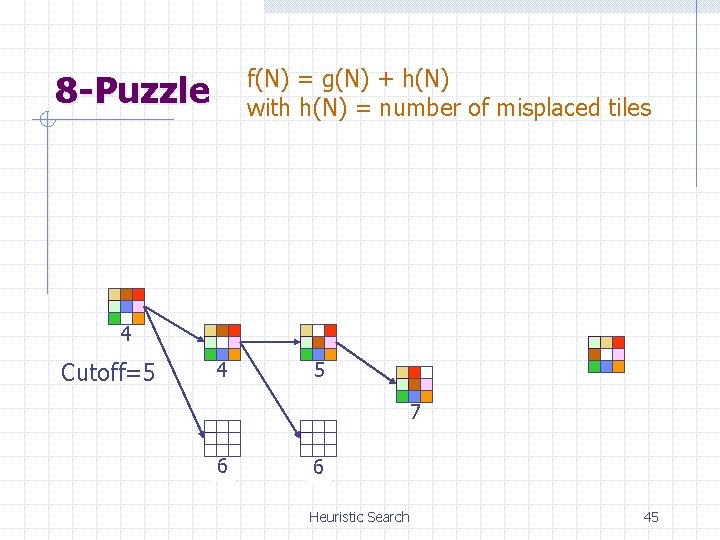

f(N) = g(N) + h(N) with h(N) = number of misplaced tiles 8 -Puzzle 4 Cutoff=5 4 5 7 6 6 Heuristic Search 45

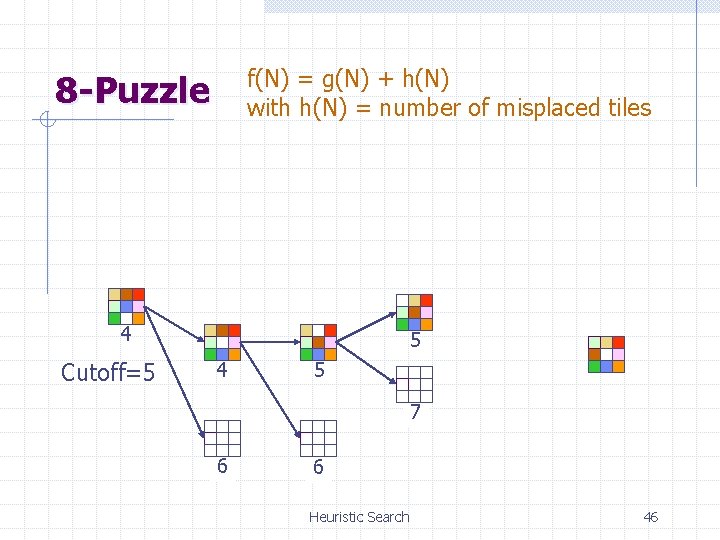

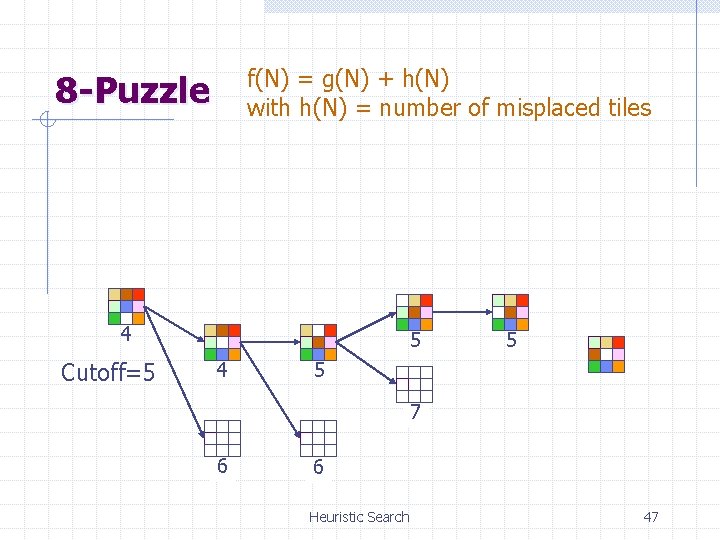

f(N) = g(N) + h(N) with h(N) = number of misplaced tiles 8 -Puzzle 4 Cutoff=5 5 4 5 7 6 6 Heuristic Search 46

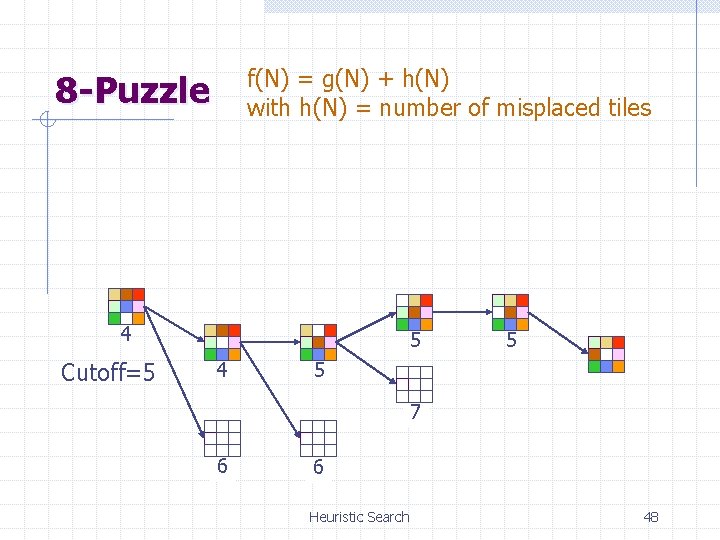

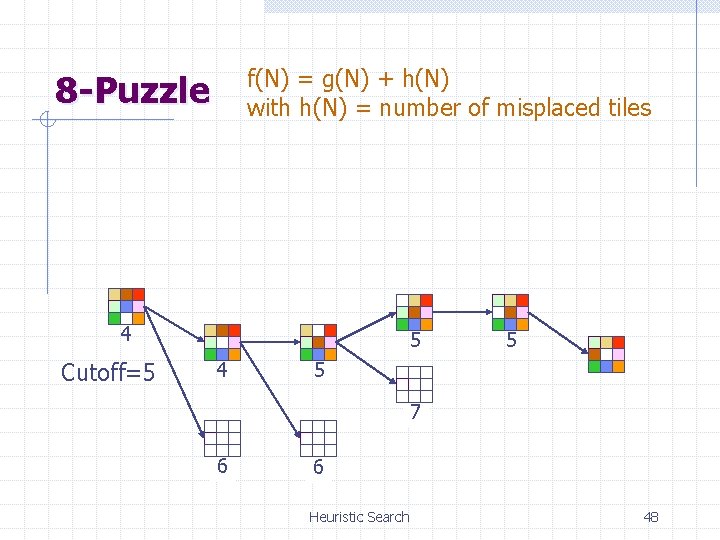

f(N) = g(N) + h(N) with h(N) = number of misplaced tiles 8 -Puzzle 4 Cutoff=5 5 4 5 5 7 6 6 Heuristic Search 47

f(N) = g(N) + h(N) with h(N) = number of misplaced tiles 8 -Puzzle 4 Cutoff=5 5 4 5 5 7 6 6 Heuristic Search 48

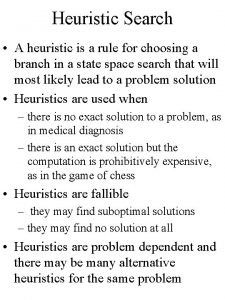

About Heuristics

- Heuristics are intended to orient the search along promising paths.
- The time spent computing heuristics must be recovered by a better search.
- After all, a heuristic function could consist of solving the problem; then it would perfectly guide the search.
- Deciding which node to expand is sometimes called meta-reasoning.
- Heuristics may not always look like numbers and may involve a large amount of knowledge.

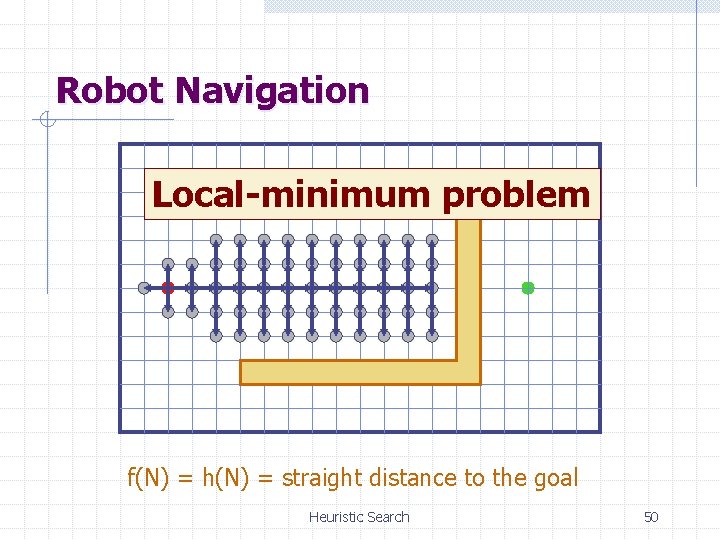

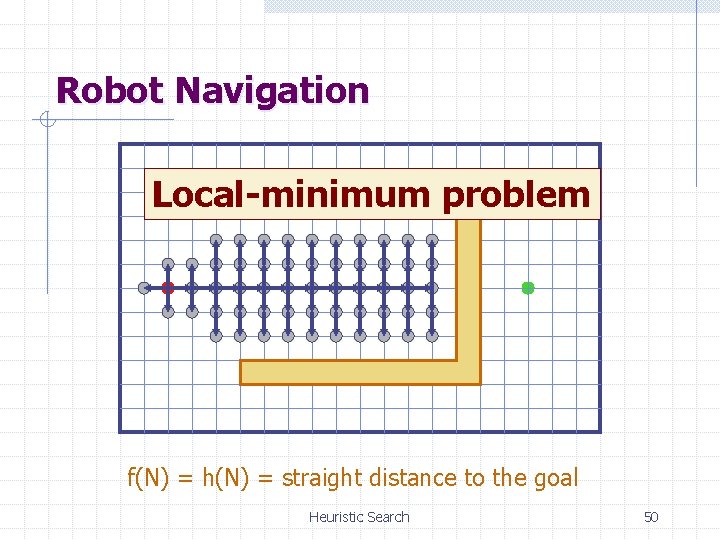

Robot Navigation: Local-Minimum Problem

$f(N) = h(N)$ = straight-line distance to the goal

What's the Issue?

- Search is an iterative local procedure.
- Good heuristics should provide some global look-ahead (at low computational cost).

Other Search Techniques Steepest descent (~ greedy best-first with no search) may get stuck into local minimum Heuristic Search 52

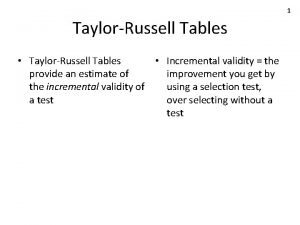

Other Search Techniques Steepest descent (~ greedy best-first with no search) may get stuck into local minimum Simulated annealing Heuristic Search 53

Other Search Techniques Steepest descent (~ greedy best-first with no search) may get stuck into local minimum Simulated annealing Genetic algorithms Heuristic Search 54

Informed Search Methods

Best-First Search

- Evaluation function: a number purporting to describe the desirability of expanding the node.
- Best-first search: the node with the best evaluation is expanded first.
- The data structure matters. Use a queue, but should it be implemented as a linked list?

Greedy Search

- Heuristic function: $h(n)$ = estimated cost of the cheapest path from the state at node $n$ to a goal state.
- Expand the node that appears closest to the goal from the current state.
- Hill climbing method

Iterative Improvement Algorithms

- Hill climbing method
- Simulated annealing
- Neural networks
- Genetic algorithms

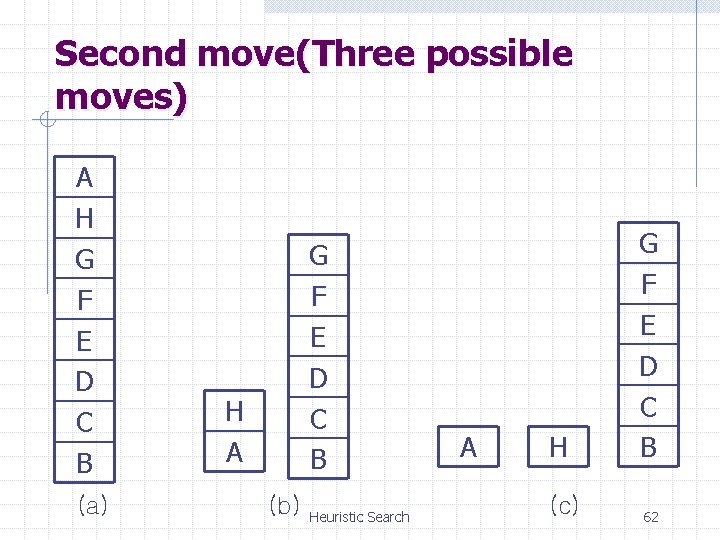

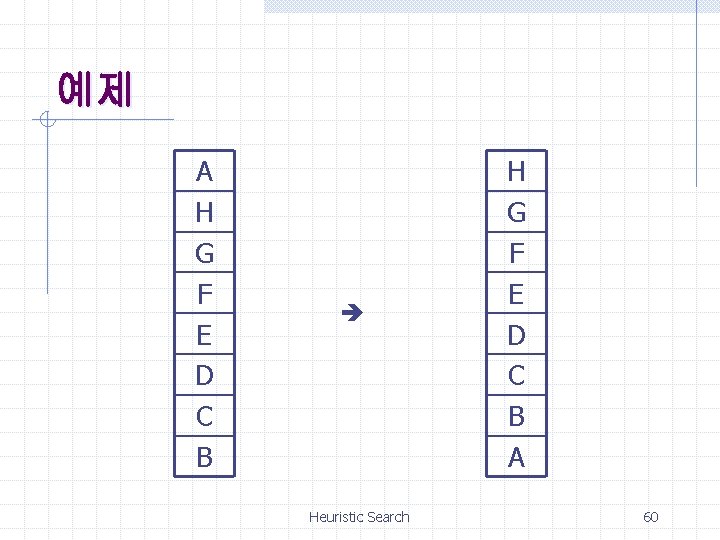

Example

- Initial state (one stack, listed top to bottom): A H G F E D C B
- Goal state (one stack, listed top to bottom): H G F E D C B A

First move: A is moved to the table, leaving the stack H G F E D C B (top to bottom).

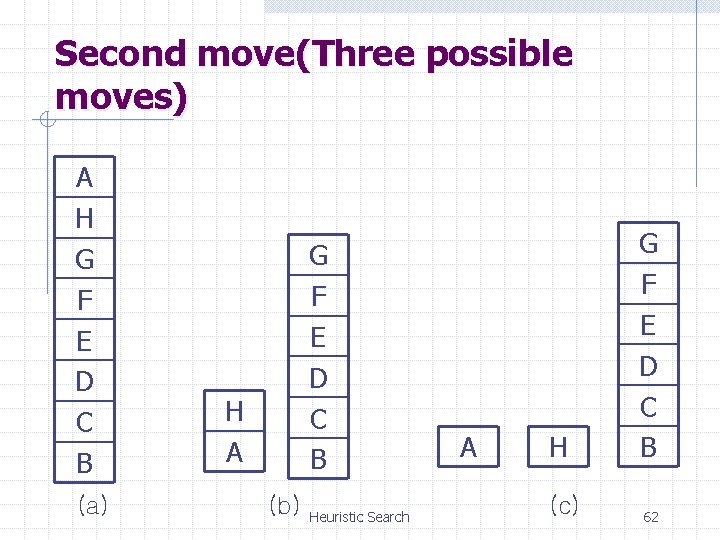

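Second move (three possible moves), stacks listed top to bottom:

- (a) A is put back on H: A H G F E D C B
- (b) H is moved onto A: stacks H A and G F E D C B
- (c) H is moved to the table: stacks A, H, and G F E D C B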

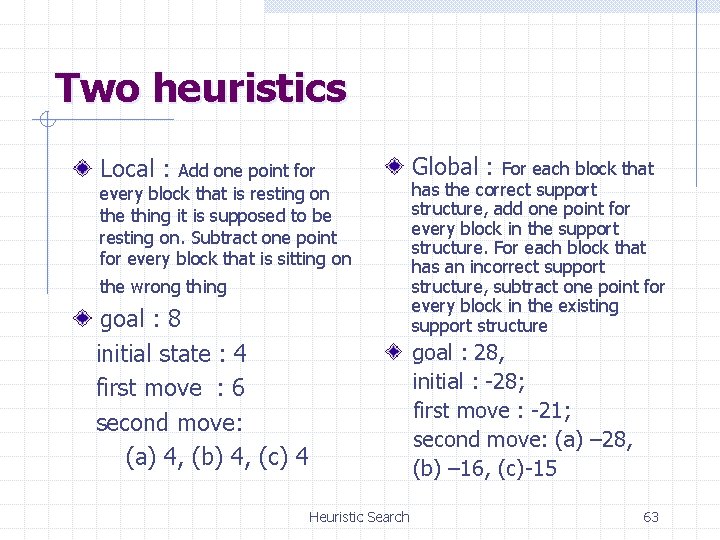

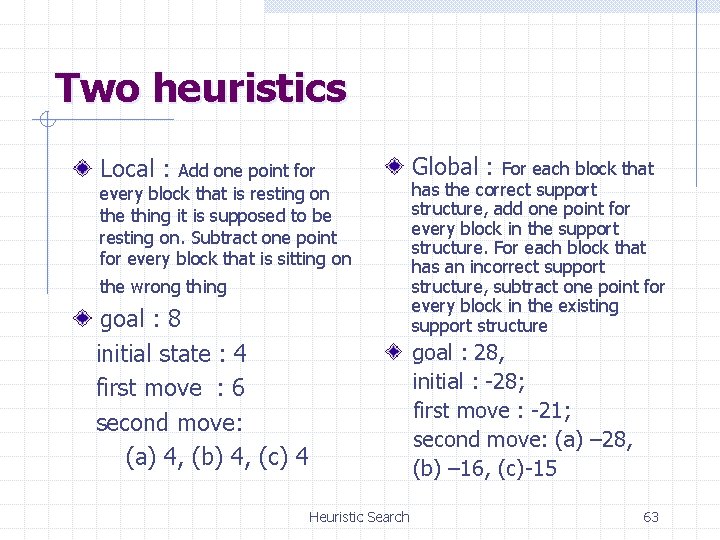

Two Heuristics

- Local: add one point for every block that is resting on the thing it is supposed to be resting on; subtract one point for every block that is sitting on the wrong thing.
  - goal: 8; initial state: 4; first move: 6; second move: (a) 4, (b) 4, (c) 4
- Global: for each block that has the correct support structure, add one point for every block in the support structure; for each block that has an incorrect support structure, subtract one point for every block in the existing support structure.
  - goal: 28; initial state: -28; first move: -21; second move: (a) -28, (b) -16, (c) -15
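Both scoring rules are easy to reproduce in code. The sketch below assumes a representation that is not in the slides: each stack is a string of block names listed bottom to top, and the goal is the single stack `"ABCDEFGH"` (A on the table, H on top).

```python
GOAL = "ABCDEFGH"  # goal stack, bottom to top

def local_score(stacks):
    """+1 for each block resting on the right thing, -1 otherwise."""
    score = 0
    for stack in stacks:
        for i, block in enumerate(stack):
            below = stack[i - 1] if i > 0 else None  # None means the table
            j = GOAL.index(block)
            wanted = GOAL[j - 1] if j > 0 else None
            score += 1 if below == wanted else -1
    return score

def global_score(stacks):
    """+k or -k per block, where k is the number of blocks underneath it."""
    score = 0
    for stack in stacks:
        for i, block in enumerate(stack):
            correct = stack[:i] == GOAL[:GOAL.index(block)]
            score += i if correct else -i
    return score

initial = ["BCDEFGHA"]           # A sits on top of the stack
first_move = ["BCDEFGH", "A"]    # A moved to the table
move_a = ["BCDEFGHA"]            # A put back on H
move_b = ["BCDEFG", "AH"]        # H moved onto A
move_c = ["BCDEFG", "A", "H"]    # H moved to the table

for state in (initial, first_move, move_a, move_b, move_c):
    print(local_score(state), global_score(state))
```

The local score rates all three second moves equally (4), so hill climbing from the first move (score 6) sees no improving step, while the global score prefers move (c).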

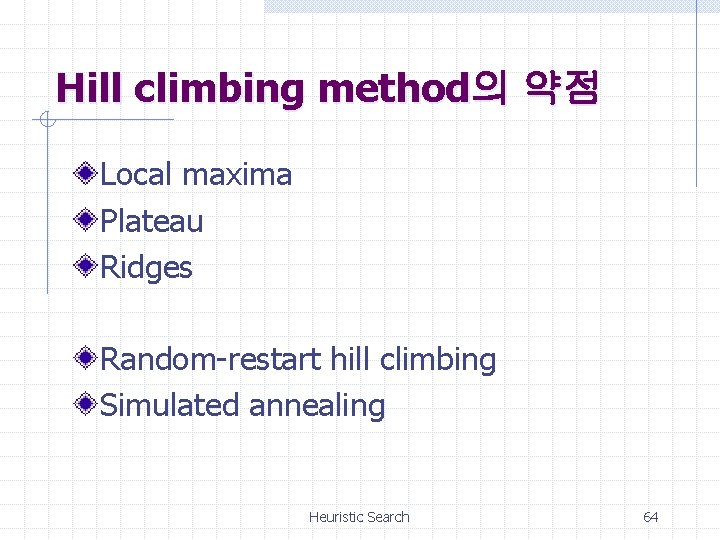

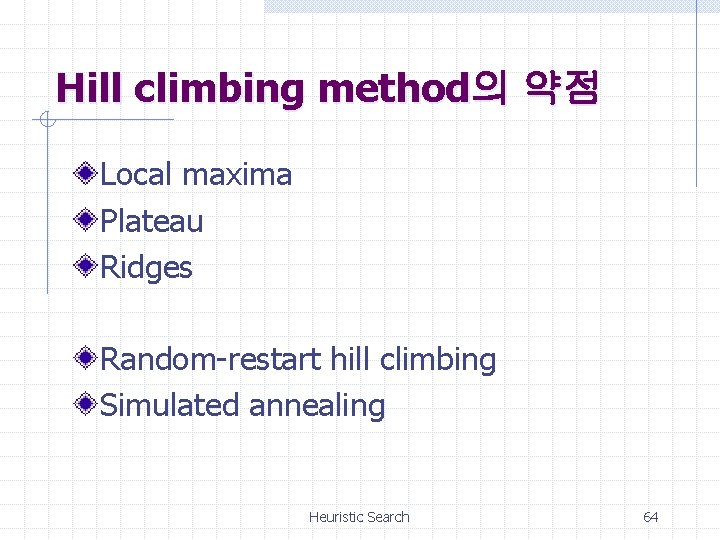

Weaknesses of the Hill Climbing Method

- Local maxima
- Plateaus
- Ridges

Remedies: random-restart hill climbing, simulated annealing

![Simulated annealing algorithm](https://slidetodoc.com/presentation_image_h2/67a3b11e78d23a17265e05e2dc5e2844/image-65.jpg)

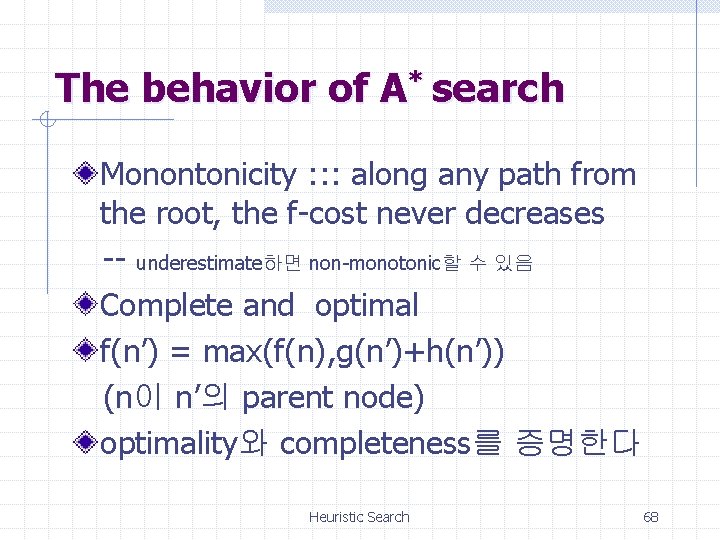

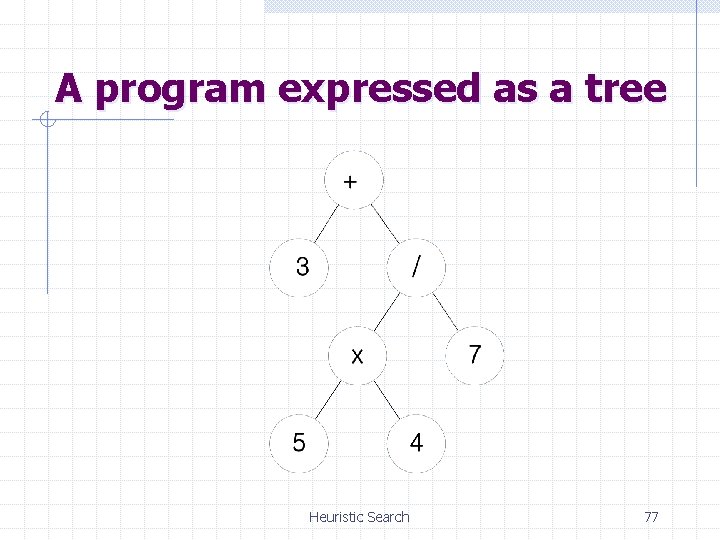

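Simulated Annealing Algorithm

for $t \leftarrow 1$ to $\infty$ do:

- $T \leftarrow$ schedule[$t$]
- if $T = 0$ then return current
- next $\leftarrow$ a randomly selected successor of current
- $\Delta E \leftarrow$ Value[next] - Value[current]
- if $\Delta E > 0$ then current $\leftarrow$ next
- else current $\leftarrow$ next only with probability $e^{\Delta E / T}$

Mobility: $P = e^{\Delta E / T}$ (in physics, $P = e^{-\Delta E / kT}$). Physical processes generally move toward lower energy, but there is a nonzero probability of a transition to a higher-energy state.

- $\Delta E$: positive change in energy level
- $T$: temperature
- $k$: Boltzmann's constant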
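The loop above translates almost line by line into code. This is a minimal sketch, assuming a hypothetical linear cooling schedule and a toy objective (maximize $-(x - 3)^2$ over the integers); the function and parameter names are illustrative, and the result of a run depends on the random seed.

```python
import math
import random

def simulated_annealing(start, value, successors, schedule, rng):
    """Maximize value(state); schedule(t) gives the temperature at step t."""
    current = start
    t = 1
    while True:
        T = schedule(t)
        if T <= 0:
            return current
        nxt = rng.choice(successors(current))  # randomly selected successor
        delta_e = value(nxt) - value(current)
        # Always accept an improvement; accept a worse state with
        # probability e^(delta_e / T), which shrinks as T cools.
        if delta_e > 0 or rng.random() < math.exp(delta_e / T):
            current = nxt
        t += 1

# Hypothetical toy problem and cooling schedule.
def value(x):
    return -(x - 3) ** 2

def successors(x):
    return [x - 1, x + 1]

def linear_schedule(t):
    return max(0.0, 2.0 * (1 - t / 500))

best = simulated_annealing(10, value, successors, linear_schedule, random.Random(0))
print(best, value(best))
```

Passing the random generator in as a parameter keeps runs reproducible, which is convenient when comparing cooling schedules.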

Heuristic Search

- $f(n) = g(n) + h(n)$
- $f(n)$ = estimated cost of the cheapest solution through $n$
- Best-first search

Minimizing the Total Path Cost (A* Algorithm)

- Admissible heuristic: an $h$ function that never overestimates the cost to reach the goal.
- If $h$ is admissible, $f(n)$ never overestimates the actual cost of the best solution through $n$.

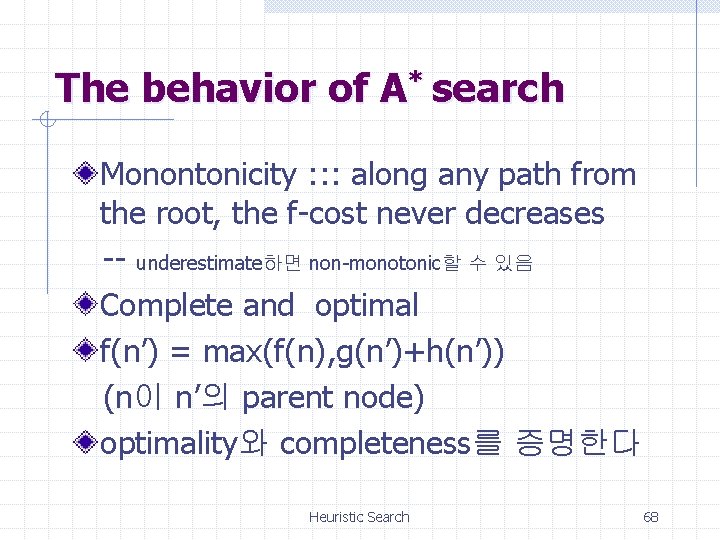

The Behavior of A* Search

- Monotonicity: along any path from the root, the $f$-cost never decreases. When $h$ underestimates, $f$ can be non-monotonic.
- In that case use $f(n') = \max(f(n), g(n') + h(n'))$, where $n$ is the parent node of $n'$.
- A* is complete and optimal; both properties can be proved.

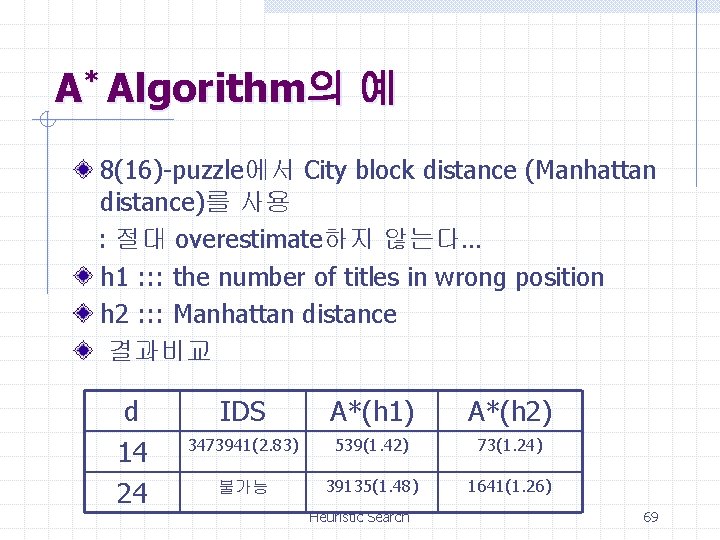

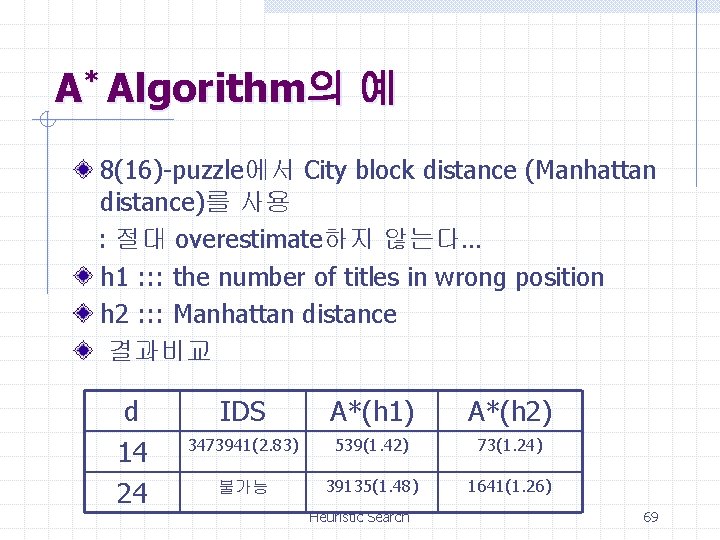

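Example of the A* Algorithm

In the 8(16)-puzzle, use the city block distance (Manhattan distance): it never overestimates.

- $h_1$: the number of tiles in the wrong position
- $h_2$: Manhattan distance

Comparison of results (nodes generated, with the effective branching factor in parentheses):

| d | IDS | A*($h_1$) | A*($h_2$) |
|---|---|---|---|
| 14 | 3473941 (2.83) | 539 (1.42) | 73 (1.24) |
| 24 | infeasible | 39135 (1.48) | 1641 (1.26) |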

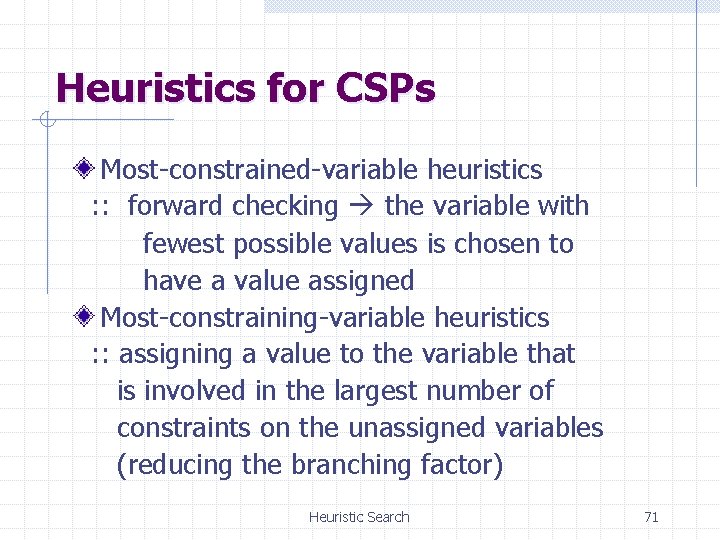

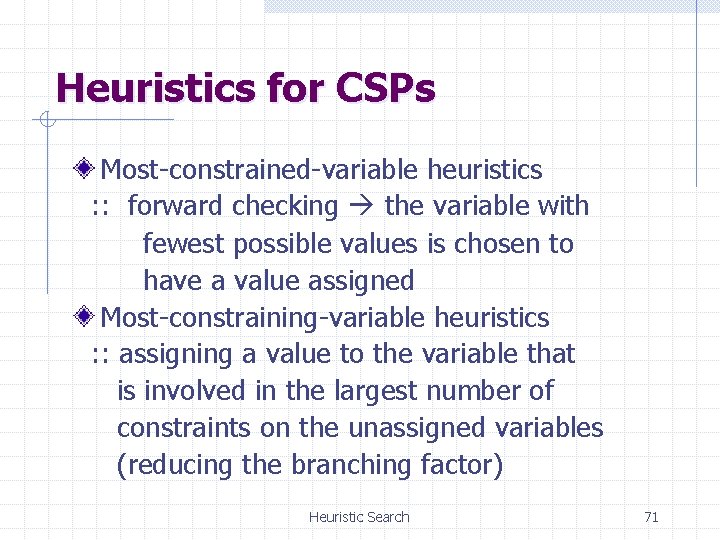

Heuristics for CSPs

- Most-constrained-variable heuristic (used with forward checking): the variable with the fewest possible values is chosen to have a value assigned.
- Most-constraining-variable heuristic: assign a value to the variable that is involved in the largest number of constraints on the unassigned variables (reducing the branching factor).

Example

Memory-Bounded Search

- Simplified Memory-Bounded A*

Machine Evolution

Evolution

- Generations of descendants
  - Production of descendants changed from their parents
  - Selective survival
- Search processes
  - Searching for high peaks in the hyperspace

Applications

- Function optimization
  - The maximum of a function (John Holland)
- Solving specific problems
  - Control of reactive agents
  - Classifier systems
  - Genetic programming

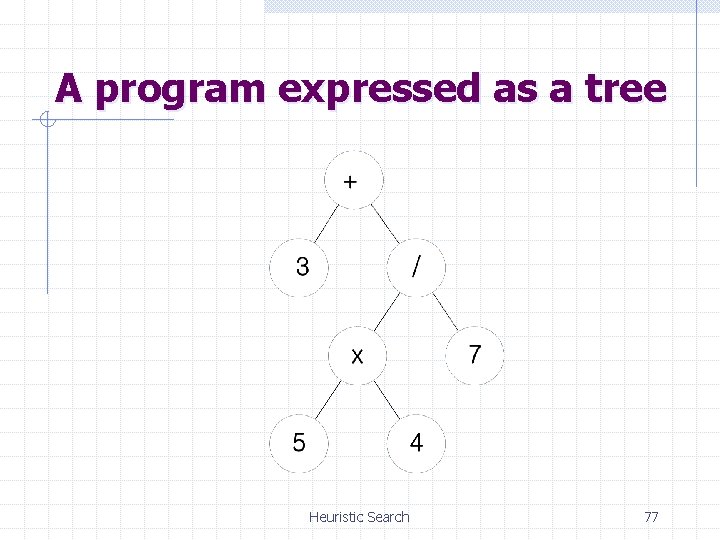

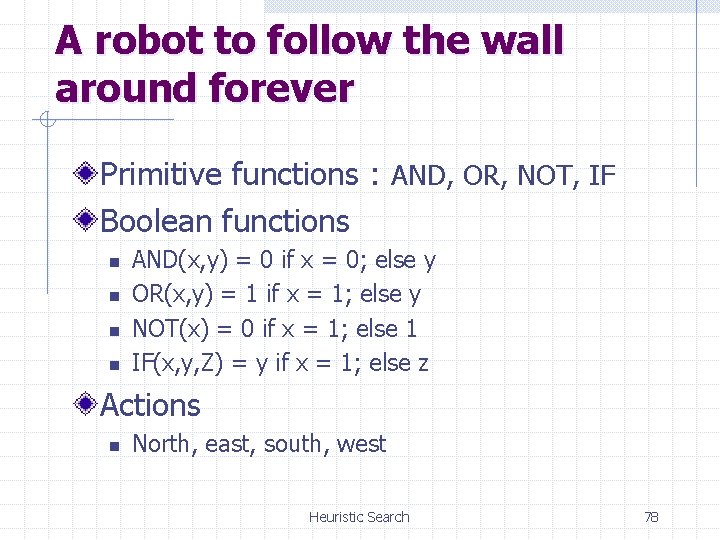

A program expressed as a tree

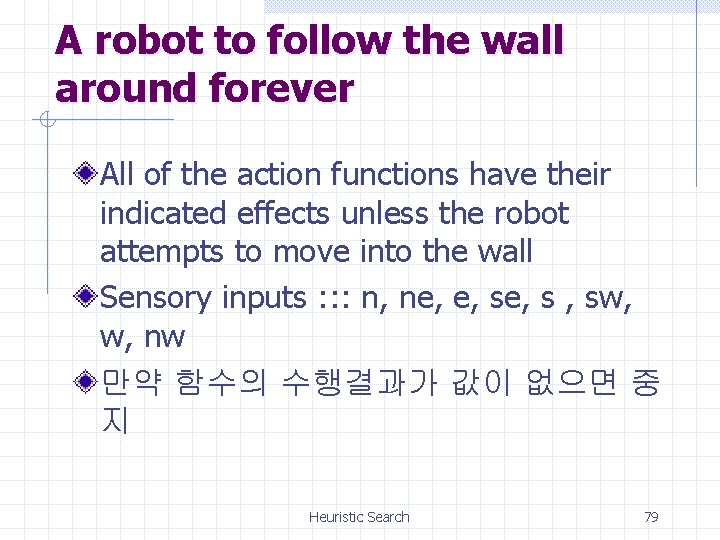

A Robot to Follow the Wall Around Forever

- Primitive functions: AND, OR, NOT, IF
- Boolean functions:
  - AND(x, y) = 0 if x = 0; else y
  - OR(x, y) = 1 if x = 1; else y
  - NOT(x) = 0 if x = 1; else 1
  - IF(x, y, z) = y if x = 1; else z
- Actions: north, east, south, west
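The four primitives and a program tree built from them can be sketched as follows. The nested-tuple encoding, the `evaluate` helper, and the sample program are assumptions made for illustration; only the definitions of AND, OR, NOT and IF are taken from the slide.

```python
def AND(x, y):
    return 0 if x == 0 else y

def OR(x, y):
    return 1 if x == 1 else y

def NOT(x):
    return 0 if x == 1 else 1

def IF(x, y, z):
    return y if x == 1 else z

PRIMITIVES = {"AND": AND, "OR": OR, "NOT": NOT, "IF": IF}

def evaluate(program, sensors):
    """Evaluate a program tree; leaves are sensor names or action names."""
    if isinstance(program, str):
        # A sensor name yields its 0/1 reading; any other leaf is an action.
        return sensors.get(program, program)
    op, *args = program
    return PRIMITIVES[op](*(evaluate(arg, sensors) for arg in args))

# Hypothetical sensor readings and program: go east if there is a wall
# to the north or north-east, otherwise go north.
sensors = {"n": 1, "ne": 0, "e": 0, "s": 0, "sw": 0, "w": 0, "nw": 0}
program = ("IF", ("OR", "n", "ne"), "east", "north")
print(evaluate(program, sensors))
```

Because every function accepts and returns plain values, any subtree can be swapped for any other, which is what makes crossover and mutation on these trees straightforward.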

A Robot to Follow the Wall Around Forever

- All of the action functions have their indicated effects unless the robot attempts to move into the wall.
- Sensory inputs: n, ne, e, s, sw, w, nw
- If evaluating a function yields no value, execution halts.

A robot in a grid world

A wall-following program

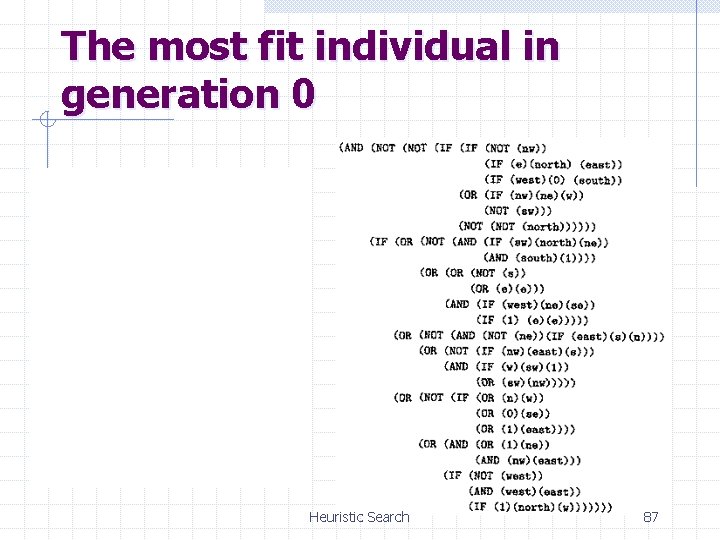

The GP Process

- Generation 0: start with a population of random programs built from functions, constants, and sensory inputs
  - 5000 random programs
- Final: generation 62
- Fitness is the number of cells next to the wall that are visited during 60 steps; 32 cells is a perfect score. Fitness is measured over runs from 10 starting positions.

Generation of Populations I

The (i+1)th generation:

- 10% is copied from the i-th generation: pick 7 programs at random from the population of 5000 and select the best one (tournament selection).
- 90% is produced by crossover: select two programs (a mother and a father) by the method above, then put a randomly chosen subtree of the father in place of a subtree of the mother.
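Tournament selection and subtree crossover can be sketched on programs encoded as nested tuples. The encoding, the helper names, and the choice to pick the replaced subtree of the mother uniformly at random are assumptions for illustration, not details given in the slides.

```python
import random

def tournament_select(population, fitness, rng, size=7):
    """Pick `size` programs at random and return the fittest of them."""
    return max(rng.sample(population, size), key=fitness)

def subtree_paths(tree, path=()):
    """Yield the index path of every subtree (the whole tree has path ())."""
    yield path
    if isinstance(tree, tuple):
        for i, child in enumerate(tree[1:], start=1):  # tree[0] is the operator
            yield from subtree_paths(child, path + (i,))

def get_subtree(tree, path):
    for i in path:
        tree = tree[i]
    return tree

def replace_subtree(tree, path, new):
    """Return a copy of tree with the subtree at `path` replaced by `new`."""
    if not path:
        return new
    i = path[0]
    return tree[:i] + (replace_subtree(tree[i], path[1:], new),) + tree[i + 1:]

def crossover(mother, father, rng):
    """Put a random subtree of the father in place of a random subtree of the mother."""
    donor = get_subtree(father, rng.choice(list(subtree_paths(father))))
    target = rng.choice(list(subtree_paths(mother)))
    return replace_subtree(mother, target, donor)

# Hypothetical parent programs.
rng = random.Random(0)
mother = ("IF", ("AND", "sw", "ne"), "east", "north")
father = ("OR", "e", ("NOT", "w"))
child = crossover(mother, father, rng)
print(child)
```

Because the trees are immutable tuples, crossover never modifies the parents, so the same individual can safely be selected again in a later tournament.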

Crossover

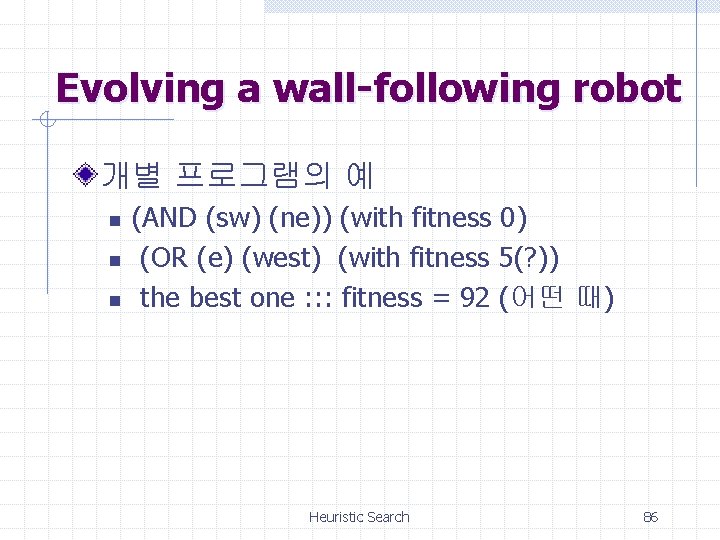

Generation of Populations II

- Mutation: 1% of the programs are selected by tournament. A randomly chosen subtree is removed and replaced by a new subtree generated in the same way as the individuals of the first generation.

Evolving a Wall-Following Robot

Examples of individual programs:

- (AND (sw) (ne)) (with fitness 0)
- (OR (e) (west)) (with fitness 5(?))
- the best one: fitness = 92 (in some runs)

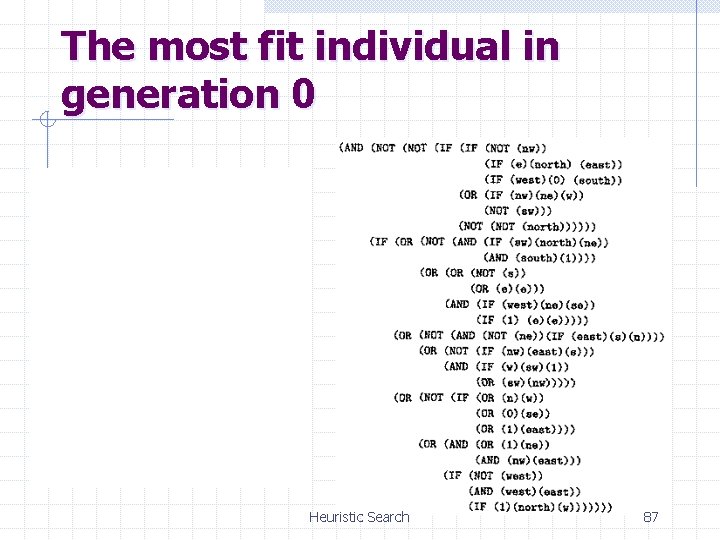

The most fit individual in generation 0

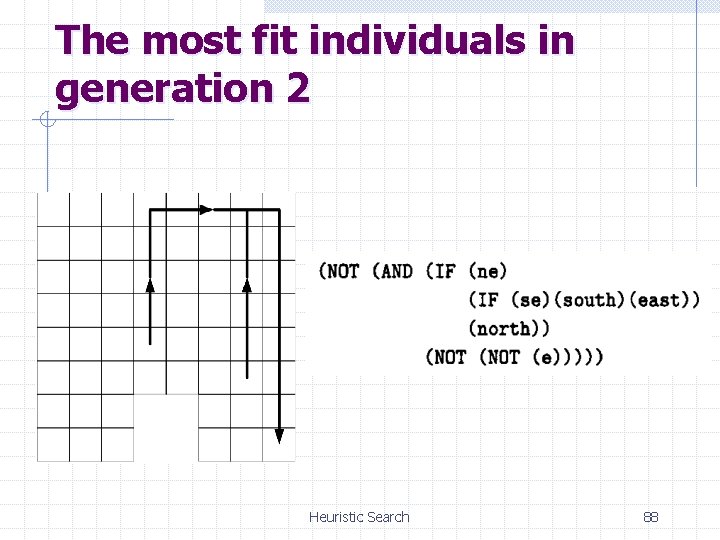

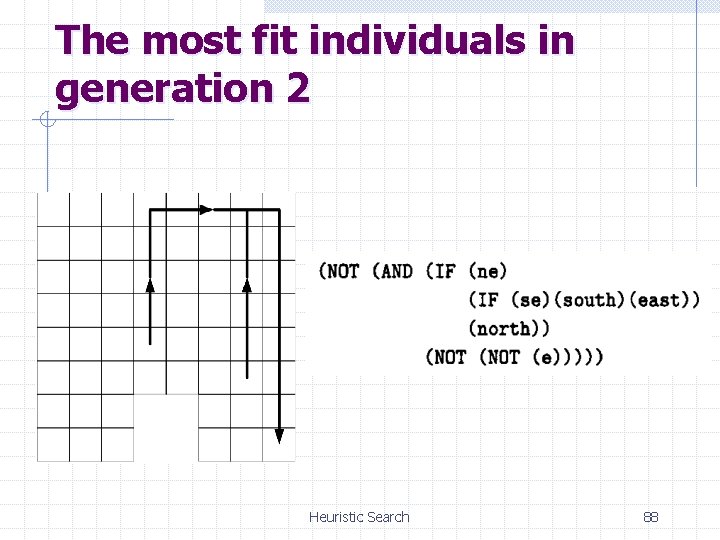

The most fit individuals in generation 2

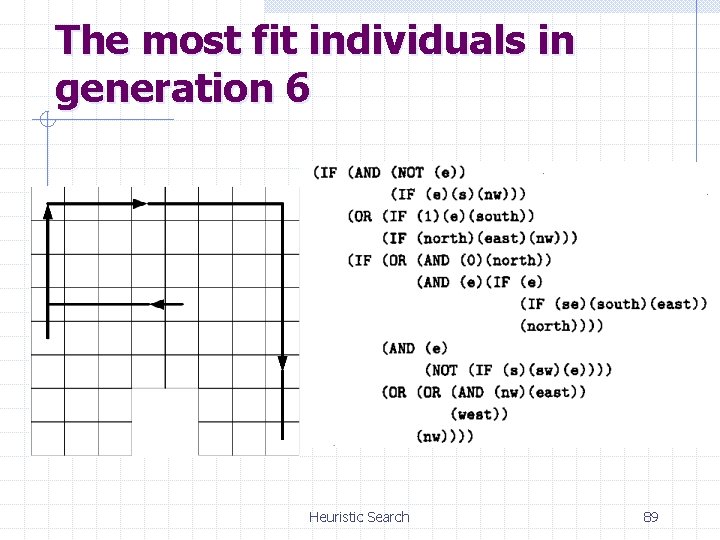

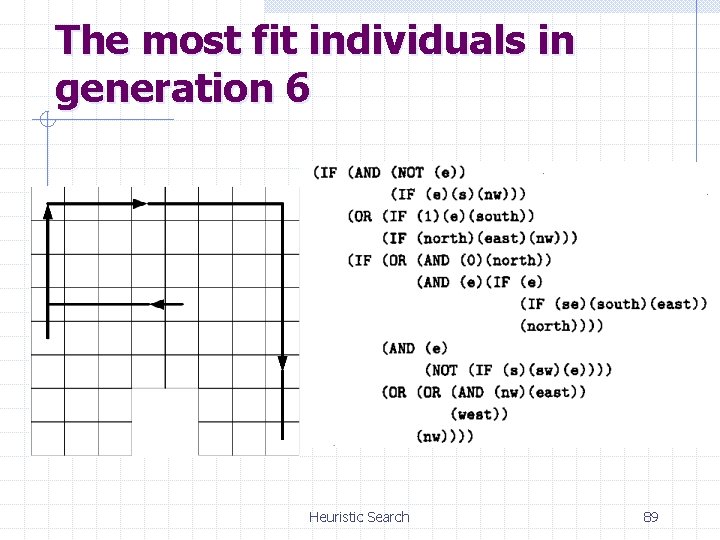

The most fit individuals in generation 6

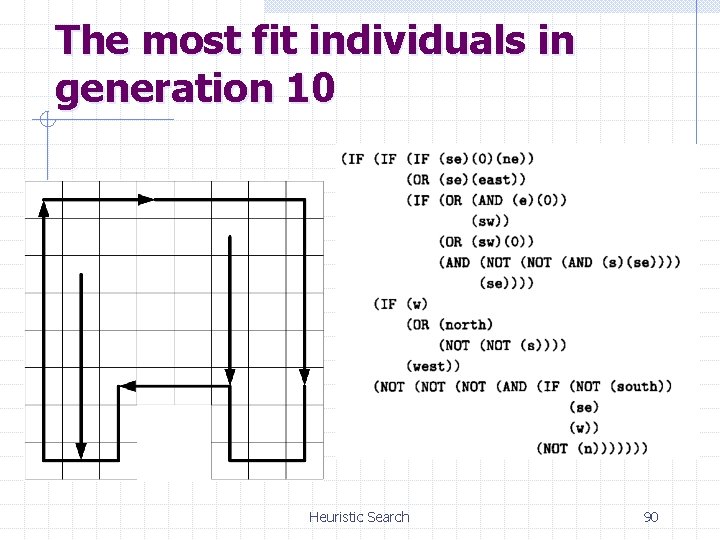

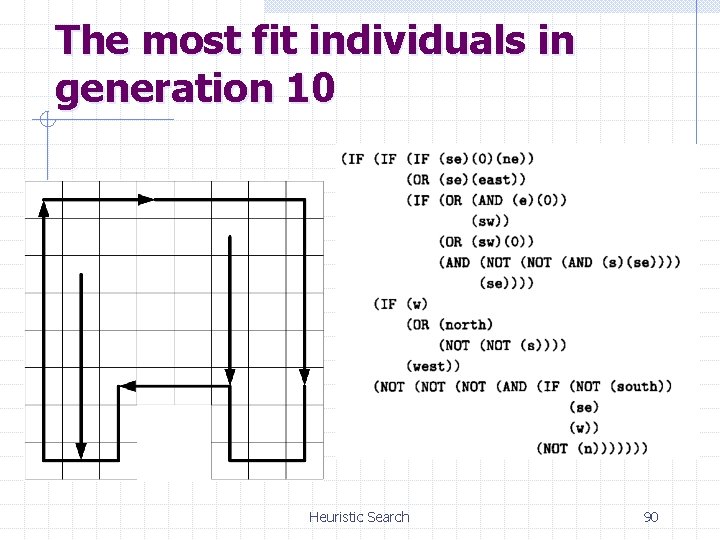

The most fit individuals in generation 10

Fitness as a function of generation number

Homework

- Specify fitness functions for use in evolving agents that:
  - control an elevator
  - control stop lights on a city main street
- Determine what the words genotype and phenotype mean in evolutionary theory.
- Why do you think mutation might or might not be helpful in evolutionary processes that use crossover?

When to Use Search Techniques?

- The search space is small, and
  - there are no other available techniques, or
  - it is not worth the effort to develop a more efficient technique
- The search space is large, and
  - there are no other available techniques, and
  - there exist "good" heuristics

Summary

- Heuristic function
- Best-first search
- Admissible heuristic and A*
- A* is complete and optimal
- Consistent heuristic and repeated states
- Heuristic accuracy
- IDA*

Russel and norvig

Russel and norvig Russell norvig

Russell norvig Russell norvig

Russell norvig Blind search dan heuristic search

Blind search dan heuristic search Peter norvig design patterns

Peter norvig design patterns Russel norvig

Russel norvig Generate and test heuristic search

Generate and test heuristic search Heuristic search adalah

Heuristic search adalah Heuristic search methods

Heuristic search methods Informed (heuristic) search strategies

Informed (heuristic) search strategies Heuristic search

Heuristic search Heuristic search

Heuristic search Informed search and uninformed search in ai

Informed search and uninformed search in ai Uninformed search methods

Uninformed search methods Federated discovery

Federated discovery Local search vs global search

Local search vs global search Federated search vs distributed search

Federated search vs distributed search Https://images.search.yahoo.com/search/images

Https://images.search.yahoo.com/search/images Best first search in ai

Best first search in ai Video.search.yahoo.com

Video.search.yahoo.com Httptw

Httptw One disadvantage of binary search

One disadvantage of binary search Linear search vs binary search

Linear search vs binary search Http://tw

Http://tw Semantic search vs cognitive search

Semantic search vs cognitive search Which search strategy is called as blind search

Which search strategy is called as blind search Http://search.yahoo.com/search?ei=utf-8

Http://search.yahoo.com/search?ei=utf-8 Criminal profiling serial killers

Criminal profiling serial killers Russell odom and clay lawson

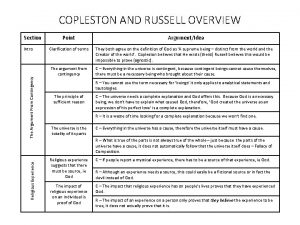

Russell odom and clay lawson Copleston and russell debate summary

Copleston and russell debate summary Russell and taylor operations management

Russell and taylor operations management Russell and atwell guardian

Russell and atwell guardian Russell quarterly economic and market review

Russell quarterly economic and market review Copleston and russell debate summary

Copleston and russell debate summary Russell quarterly economic and market review

Russell quarterly economic and market review Cognitive walkthrough and heuristic evaluation

Cognitive walkthrough and heuristic evaluation Admissible and consistent heuristic example

Admissible and consistent heuristic example Define horror genre

Define horror genre Bryant traction

Bryant traction John russell northrop grumman

John russell northrop grumman Value philosophy

Value philosophy The plot against people

The plot against people Russell chuderewicz penn state

Russell chuderewicz penn state Hr diagram supergiants

Hr diagram supergiants Fph eportfolio

Fph eportfolio Dr. stuart russell

Dr. stuart russell Diagram bintang

Diagram bintang Fundador de los testigos de jehova charles taze russell

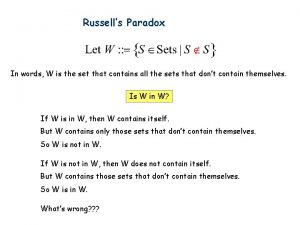

Fundador de los testigos de jehova charles taze russell Russell’s paradox

Russell’s paradox Russell's paradox

Russell's paradox Bertrand russell on denoting

Bertrand russell on denoting Celia russell

Celia russell Paradosso di russell insiemi

Paradosso di russell insiemi How to avoid foolish opinions summary

How to avoid foolish opinions summary Hr diagram worksheet answers

Hr diagram worksheet answers Russell wallace day

Russell wallace day Adeline russell

Adeline russell Plaque index scoring criteria

Plaque index scoring criteria Russell vs copleston debate

Russell vs copleston debate Bulimia deutsch

Bulimia deutsch Define incremental validity

Define incremental validity John russell houston

John russell houston Summary of what is the horror genre by sharon russell

Summary of what is the horror genre by sharon russell Er first snowfall

Er first snowfall Richard russell rick riordan jr

Richard russell rick riordan jr Lynne russell

Lynne russell One line jesus quotes

One line jesus quotes Russell c hibbeler

Russell c hibbeler 20 21

20 21 Stock watering definition apush

Stock watering definition apush M russell ballard age

M russell ballard age Richard russell military contributions

Richard russell military contributions Hertzsprung russell diagramm entfernungsbestimmung

Hertzsprung russell diagramm entfernungsbestimmung Russell's paradox

Russell's paradox Bertrand russell prose style

Bertrand russell prose style Russell loveridge

Russell loveridge Russell sign bulimia image

Russell sign bulimia image Simple matching coefficient

Simple matching coefficient Sunshine.chpc.utah.edu/labs/star life

Sunshine.chpc.utah.edu/labs/star life Russell herman

Russell herman Service environment

Service environment Pixabay

Pixabay Dr steven kahn

Dr steven kahn Richard russell quotes

Richard russell quotes John morgan russell

John morgan russell Deirdre russell

Deirdre russell Diagramma h r

Diagramma h r Russell c hibbeler

Russell c hibbeler Phyllis russell

Phyllis russell Bertrand russell biografia

Bertrand russell biografia Sarah russell aged care

Sarah russell aged care Lala rhoads

Lala rhoads Hertzsprung-russell diagram

Hertzsprung-russell diagram Periapical granuloma

Periapical granuloma Russell betts

Russell betts