Adversarial Search and Game Playing Russell and Norvig

- Slides: 74

Adversarial Search and Game Playing. Russell and Norvig: Chapter 5; Russell and Norvig: Chapter 6. CS 121 – Winter 2003

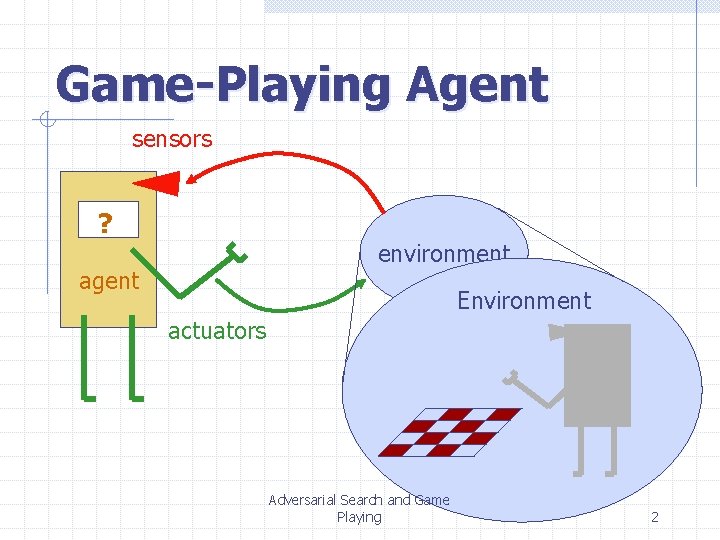

Game-Playing Agent (figure: the agent perceives its environment through sensors and acts on it through actuators)

Perfect Two-Player Game
- Two players, MAX and MIN, take turns (with MAX playing first)
- State space
- Initial state
- Successor function
- Terminal test
- Score function, which tells whether a terminal state is a win (for MAX), a loss, or a draw
- Perfect knowledge of states, no uncertainty in the successor function

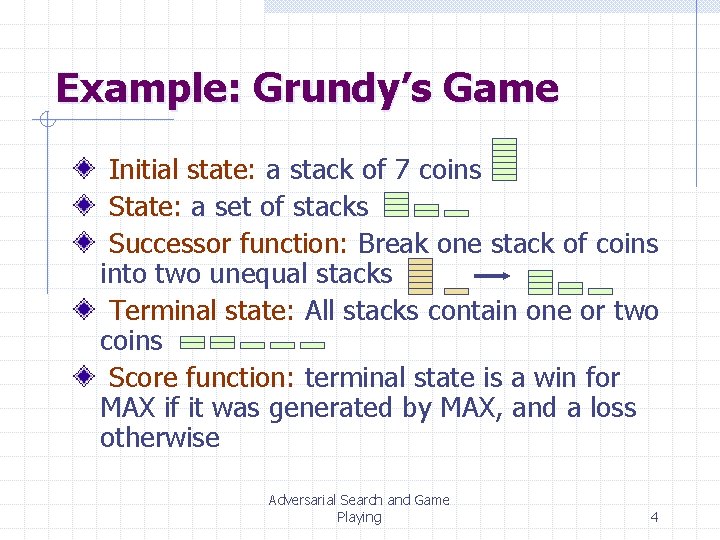

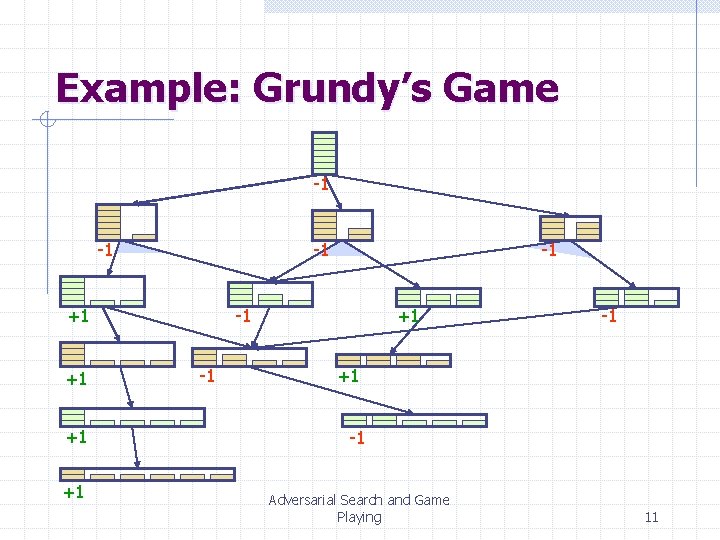

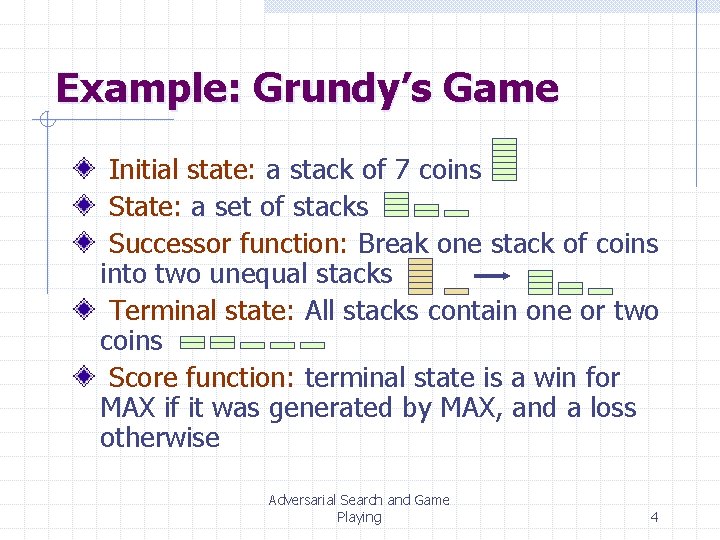

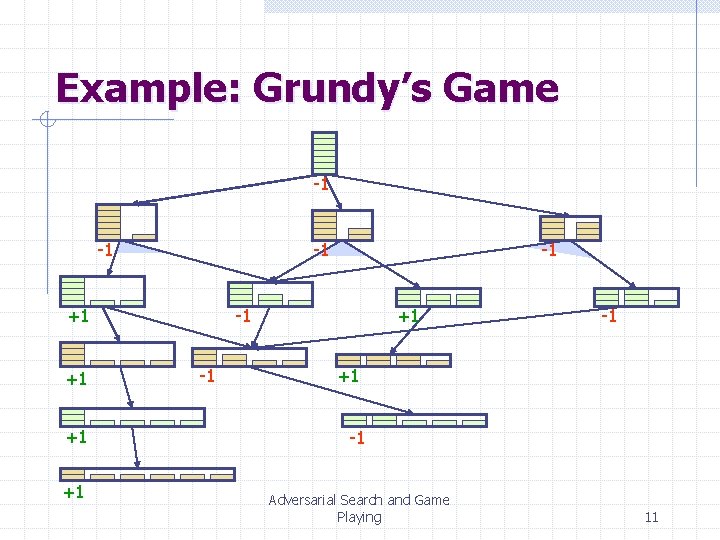

Example: Grundy’s Game
- Initial state: a stack of 7 coins
- State: a set of stacks
- Successor function: break one stack of coins into two unequal stacks
- Terminal state: all stacks contain one or two coins
- Score function: a terminal state is a win for MAX if it was generated by MAX, and a loss otherwise
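The five components of Grundy’s game can be written down directly. The sketch below is one possible Python encoding, assuming a state is a sorted tuple of stack sizes (a multiset, since two stacks may hold the same number of coins); `State`, `INITIAL`, `successors`, and `is_terminal` are illustrative names, not part of the slides.

```python
from typing import List, Tuple

# Assumption: a state is a sorted tuple of stack sizes (a multiset of stacks).
State = Tuple[int, ...]

INITIAL: State = (7,)  # a single stack of 7 coins


def successors(state: State) -> List[State]:
    """Every state reachable by breaking one stack into two unequal stacks."""
    result = set()
    for i, n in enumerate(state):
        rest = state[:i] + state[i + 1:]
        # Split n into (a, n - a) with a < n - a, so the two new stacks are unequal.
        for a in range(1, (n + 1) // 2):
            result.add(tuple(sorted(rest + (a, n - a))))
    return sorted(result)


def is_terminal(state: State) -> bool:
    """Terminal when every stack holds one or two coins (no legal split remains)."""
    return all(n <= 2 for n in state)


print(successors(INITIAL))  # [(1, 6), (2, 5), (3, 4)]
```

Using a set before sorting removes duplicate successors that arise when two stacks have the same size.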

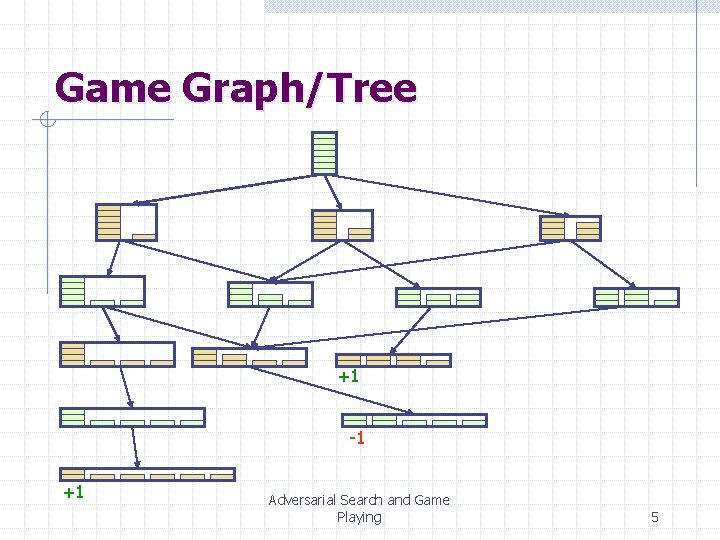

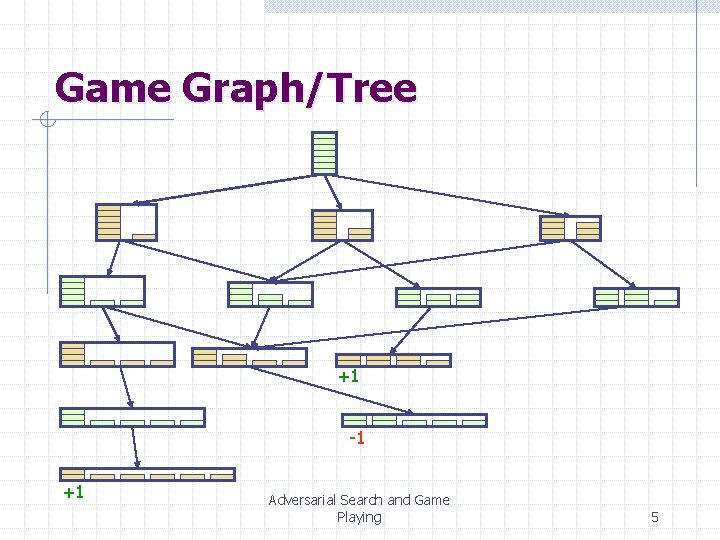

Game Graph/Tree (figure: terminal states labeled +1 or −1)

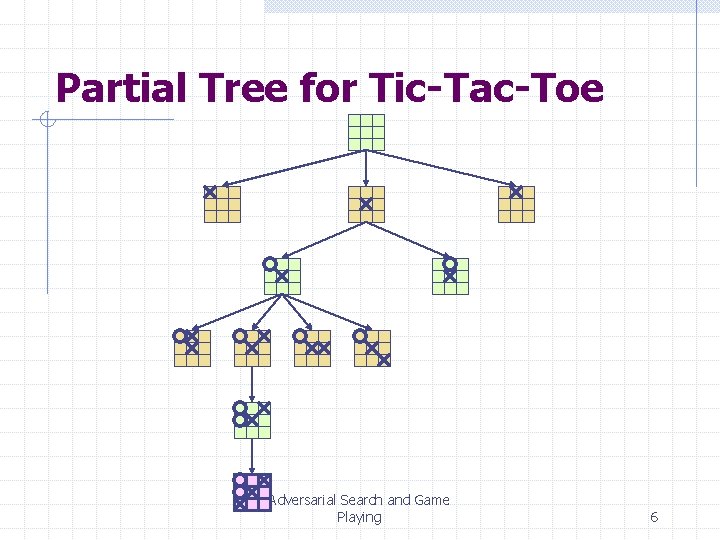

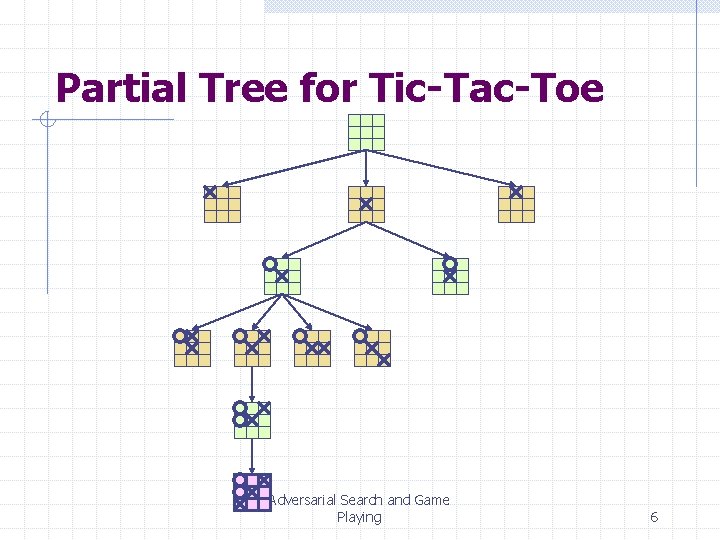

Partial Tree for Tic-Tac-Toe

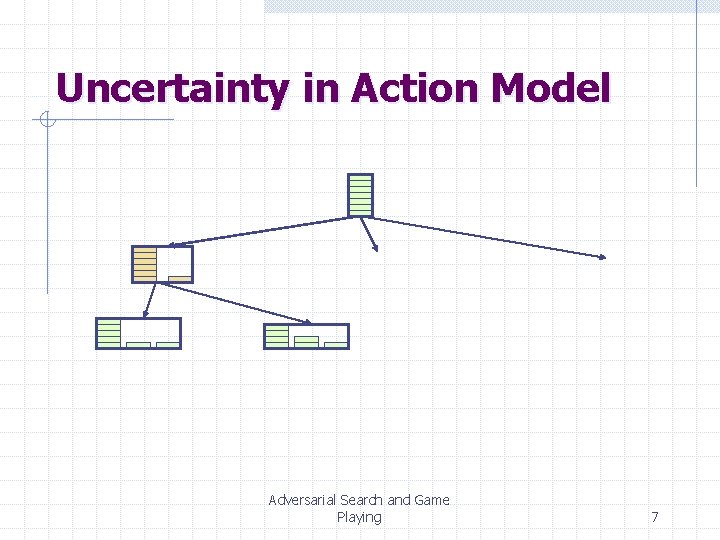

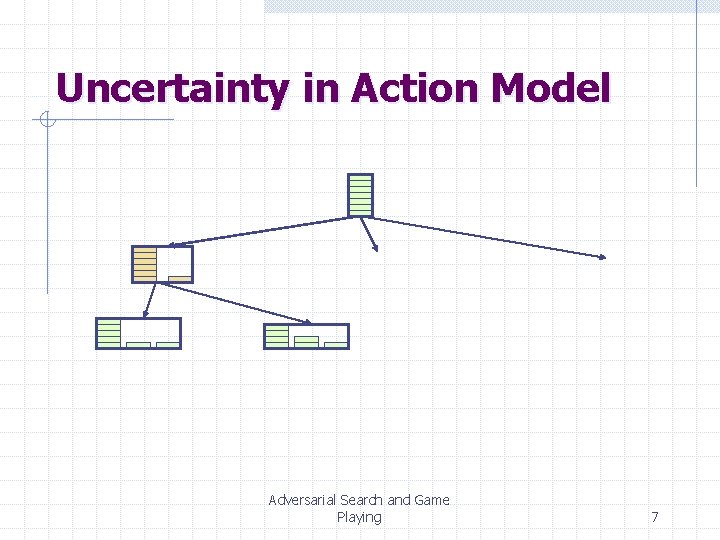

Uncertainty in Action Model

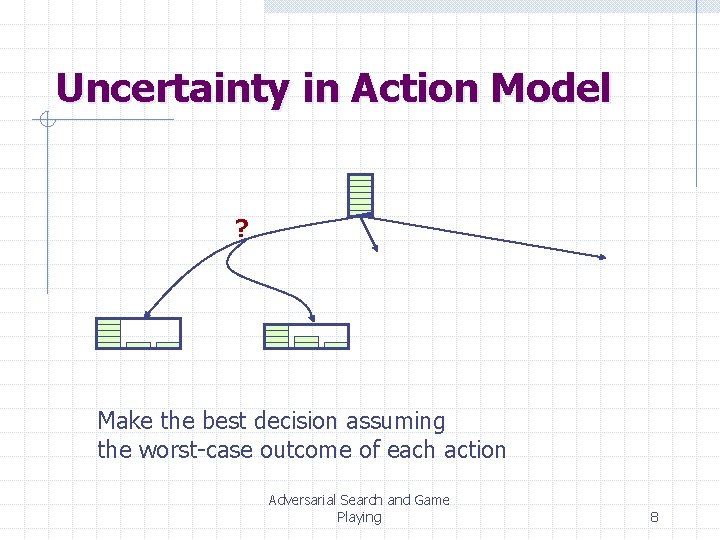

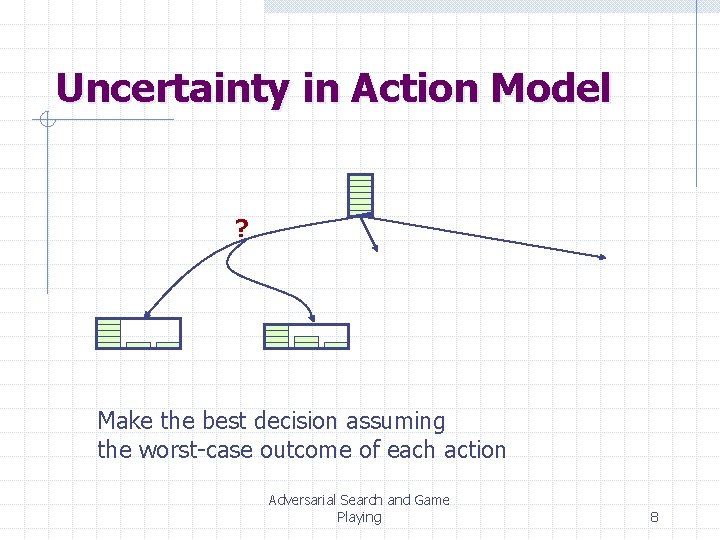

Make the best decision assuming the worst-case outcome of each action.
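Choosing the action whose worst-case outcome is best is a maximin rule. A minimal Python sketch, assuming each action’s possible outcomes are already known as a list of scores; the function name `worst_case_choice` and the sample actions are hypothetical:

```python
from typing import Dict, List, Tuple


def worst_case_choice(outcomes: Dict[str, List[int]]) -> Tuple[str, int]:
    """Pick the action whose worst possible outcome is best (a maximin choice).

    outcomes maps each action to the scores it might lead to; which one
    actually occurs is assumed to be chosen by an adversary.
    """
    best = max(outcomes, key=lambda action: min(outcomes[action]))
    return best, min(outcomes[best])


# Hypothetical actions and scores, for illustration only.
print(worst_case_choice({"a1": [3, 12, 8], "a2": [2, 4, 6], "a3": [14, 5, 2]}))
# ('a1', 3)
```

Action a3 could reach 14, but the rule ignores that because its worst outcome (2) is lower than a1’s (3).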

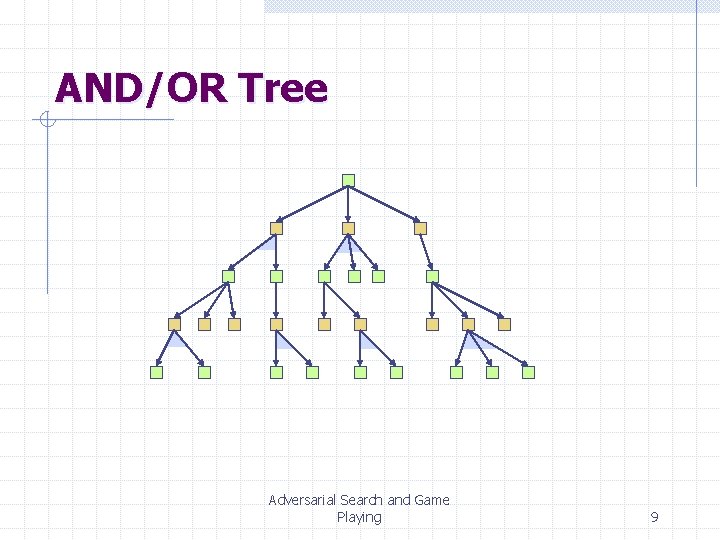

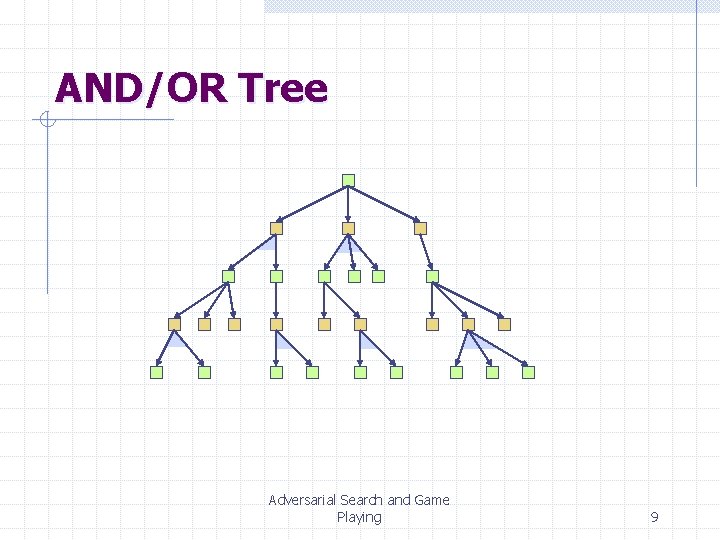

AND/OR Tree

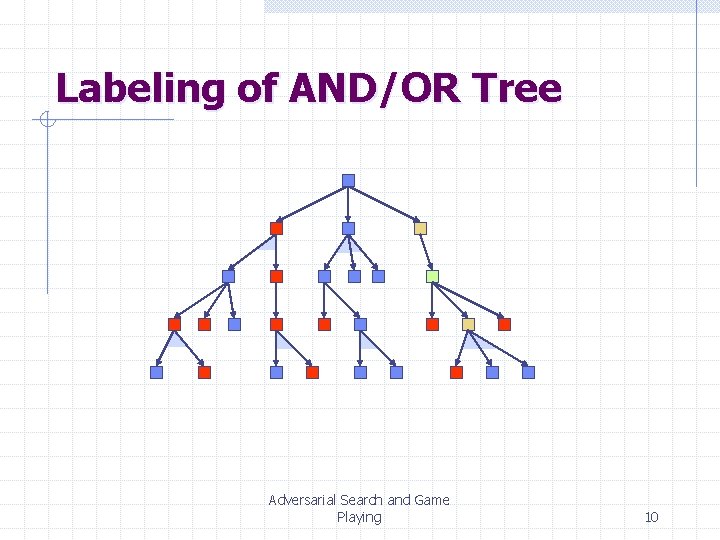

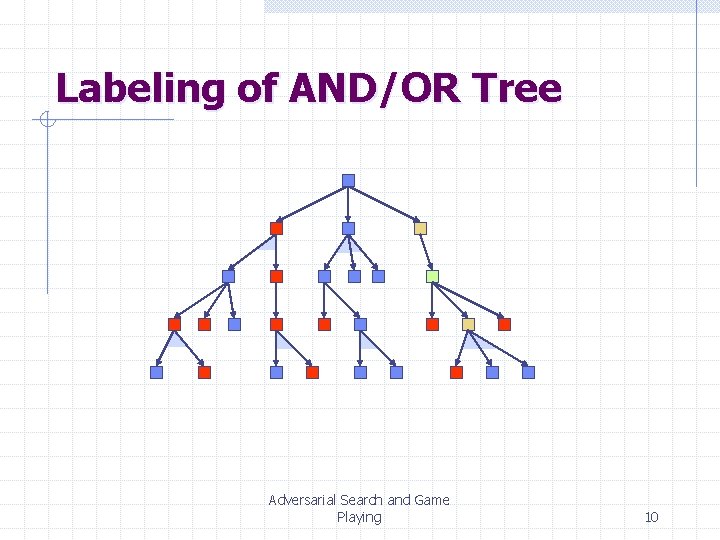

Labeling of AND/OR Tree

Example: Grundy’s Game (figure: the game tree with each node labeled +1 or −1)
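Labeling the AND/OR tree bottom-up solves Grundy’s game exactly, since this tree is small enough to reach every terminal state. In the Python sketch below (which assumes a tuple-of-stack-sizes encoding), a MAX node acts as an OR node (one winning move suffices) and a MIN node as an AND node (every MIN reply must lead to a win for MAX). `label` is a hypothetical name; memoizing with `functools.lru_cache` merges repeated states, so the search runs over the game graph rather than the full tree.

```python
from functools import lru_cache
from typing import List, Tuple

State = Tuple[int, ...]  # assumption: sorted tuple of stack sizes


def successors(state: State) -> List[State]:
    """Break one stack into two unequal stacks (the rules of Grundy's game)."""
    result = set()
    for i, n in enumerate(state):
        rest = state[:i] + state[i + 1:]
        for a in range(1, (n + 1) // 2):
            result.add(tuple(sorted(rest + (a, n - a))))
    return sorted(result)


@lru_cache(maxsize=None)
def label(state: State, max_to_move: bool) -> int:
    """+1 if MAX can force a win from `state`, -1 otherwise."""
    children = successors(state)
    if not children:
        # Terminal: a win for MAX iff MAX generated it, i.e. iff MIN is to move now.
        return -1 if max_to_move else +1
    values = [label(child, not max_to_move) for child in children]
    # OR node (MAX to move): max; AND node (MIN to move): min.
    return max(values) if max_to_move else min(values)


print(label((7,), True))  # -1
```

With this encoding, the 7-coin initial state is labeled −1: whatever MAX does, MIN can force a win.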

But in general the search tree is too big for the search to reach the terminal states!

Examples: Checkers has about $10^{40}$ nodes; Chess has about $10^{120}$ nodes.

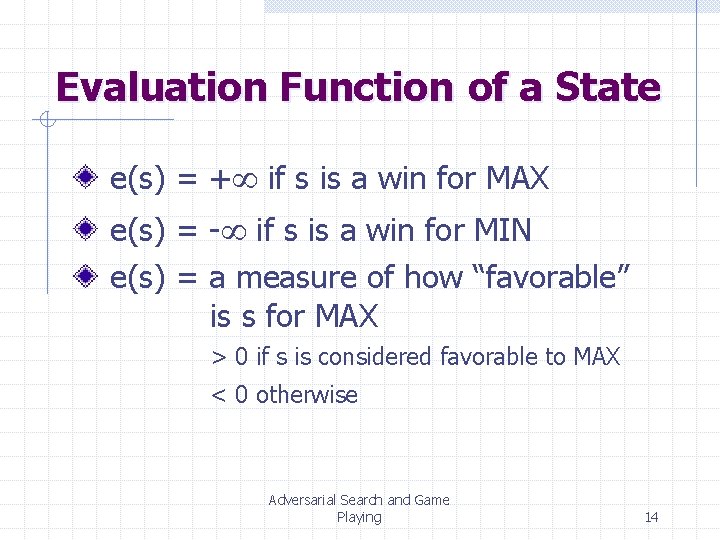

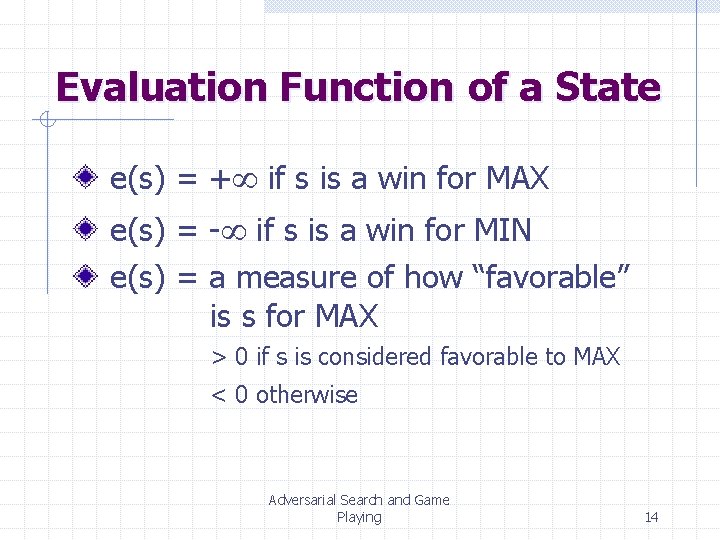

Evaluation Function of a State
- e(s) = +∞ if s is a win for MAX
- e(s) = −∞ if s is a win for MIN
- Otherwise, e(s) is a measure of how “favorable” s is for MAX: e(s) > 0 if s is considered favorable to MAX, and e(s) < 0 otherwise

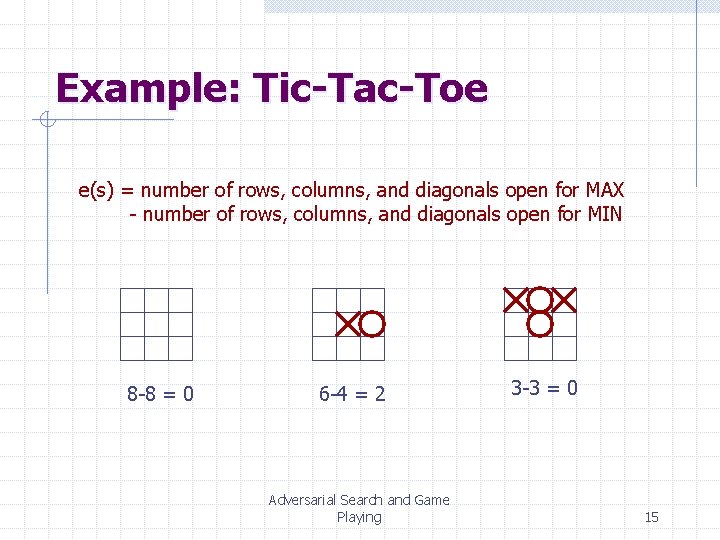

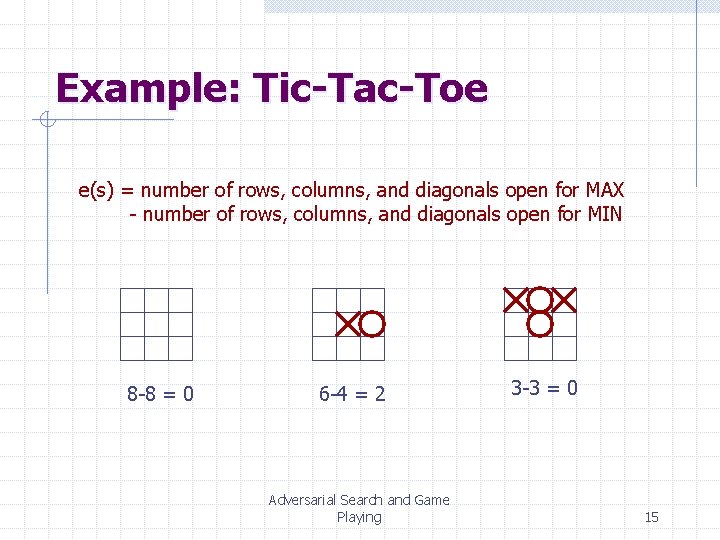

Example: Tic-Tac-Toe
e(s) = (number of rows, columns, and diagonals open for MAX) − (number of rows, columns, and diagonals open for MIN). The three example boards in the figure score 8 − 8 = 0, 6 − 4 = 2, and 3 − 3 = 0.
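The open-lines evaluation can be computed directly from a board. The Python sketch below assumes the board is a list of 9 cells holding `"X"` (MAX), `"O"` (MIN), or `" "`. Returning ±∞ for a completed line follows the definition of e(s) above. The names `LINES` and `evaluate` are illustrative.

```python
import math
from typing import List

# The 8 lines of a 3x3 board; squares are indexed 0..8 row by row.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8),   # rows
         (0, 3, 6), (1, 4, 7), (2, 5, 8),   # columns
         (0, 4, 8), (2, 4, 6)]              # diagonals


def evaluate(board: List[str], max_mark: str = "X", min_mark: str = "O") -> float:
    """e(s) = (#lines open for MAX) - (#lines open for MIN).

    A line is open for a player when the opponent has no mark on it.
    A completed line is a win: +inf for MAX, -inf for MIN.
    """
    for line in LINES:
        marks = {board[i] for i in line}
        if marks == {max_mark}:
            return math.inf
        if marks == {min_mark}:
            return -math.inf
    open_max = sum(all(board[i] != min_mark for i in line) for line in LINES)
    open_min = sum(all(board[i] != max_mark for i in line) for line in LINES)
    return open_max - open_min


board = [" "] * 9
board[4] = "X"  # MAX in the center
board[1] = "O"  # MIN on the top edge
print(evaluate(board))  # 2
```

With X in the center and O on the top edge, 6 lines remain open for MAX and 4 for MIN, so e(s) = 6 − 4 = 2.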

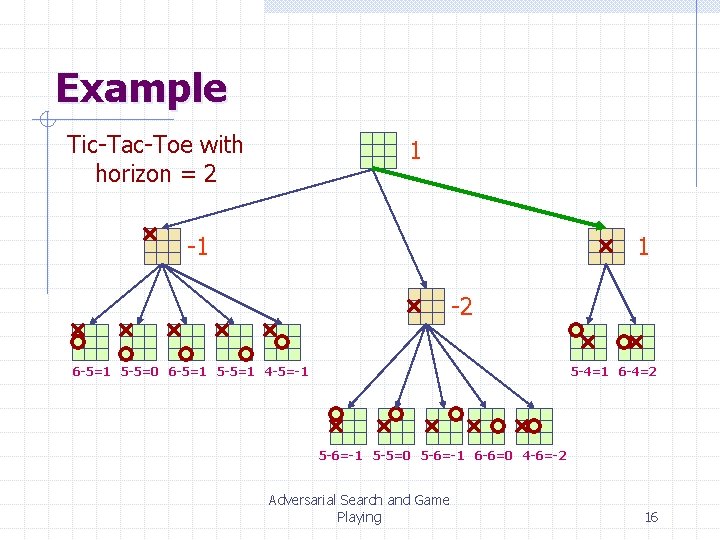

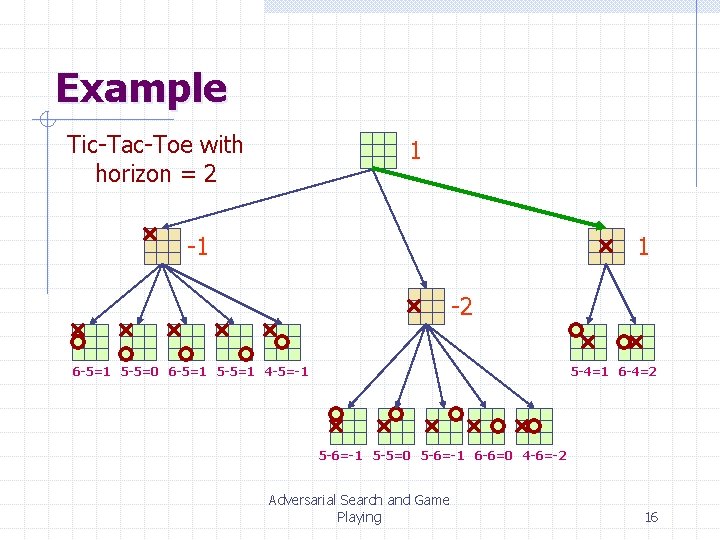

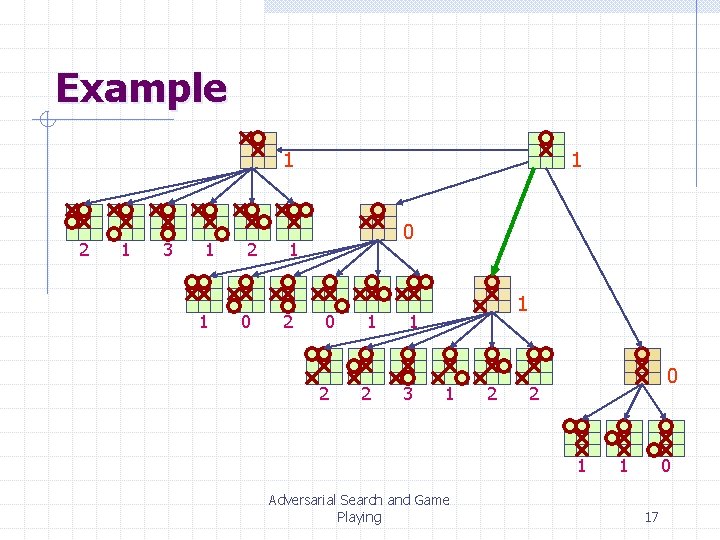

Example: Tic-Tac-Toe with horizon = 2 (figure: e(s) is computed at every leaf two plies below the current state, e.g. 6 − 5 = 1 or 4 − 6 = −2, and the values are backed up toward the root)

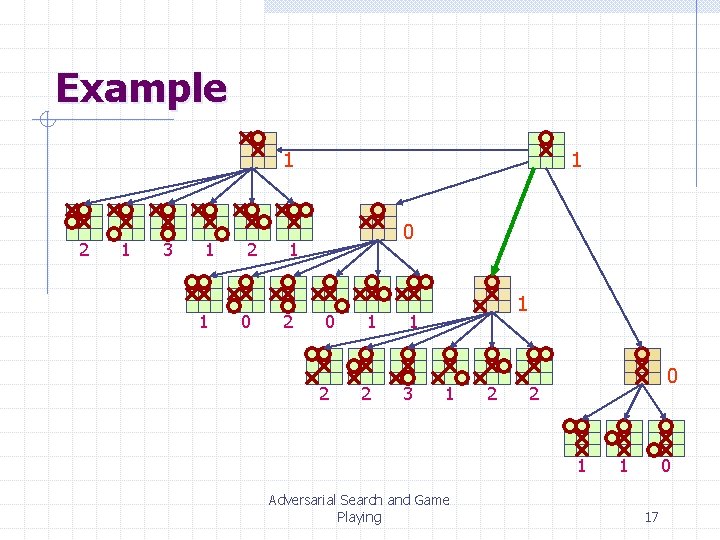

Example (figure: backed-up values in a small game tree)

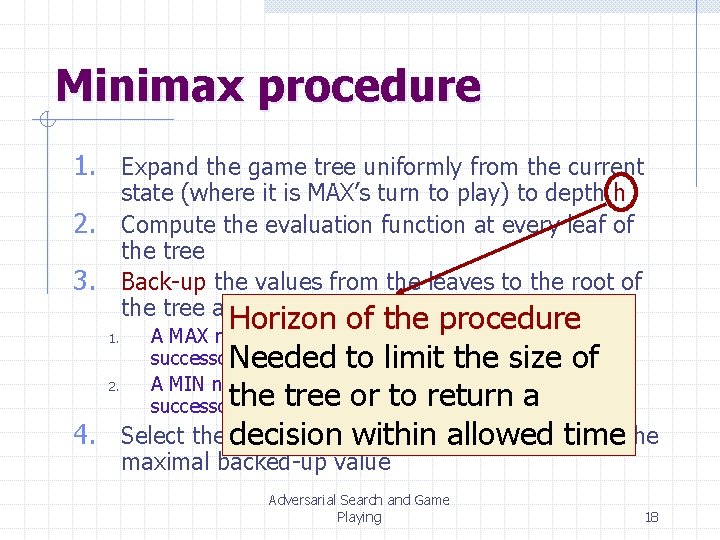

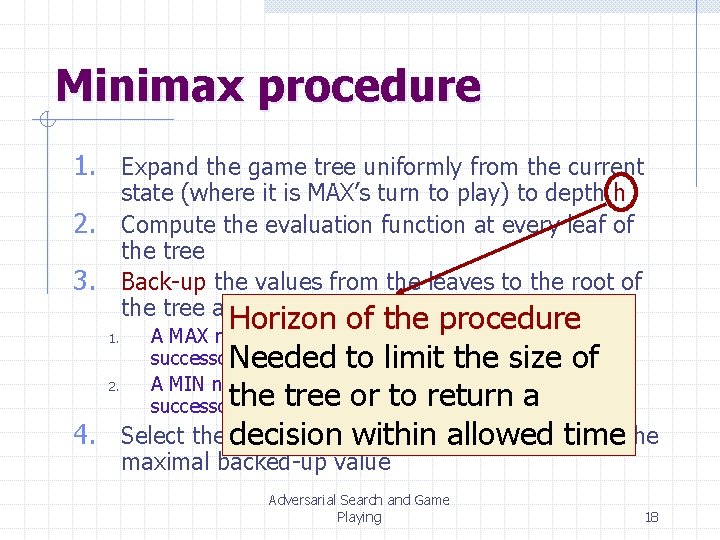

Minimax procedure
1. Expand the game tree uniformly from the current state (where it is MAX’s turn to play) to depth h, the horizon of the procedure. The horizon is needed to limit the size of the tree or to return a decision within the allowed time.
2. Compute the evaluation function at every leaf of the tree.
3. Back up the values from the leaves to the root of the tree as follows:
   - A MAX node gets the maximum of the evaluation of its successors.
   - A MIN node gets the minimum of the evaluation of its successors.
4. Select the move toward the MIN node that has the maximal backed-up value.
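The procedure can be sketched in Python as follows. As a deliberate design choice, the sketch backs up values depth-first through recursion rather than building the whole depth-h tree in memory first. This produces the same backed-up values while storing only the current path. The game is supplied as two hypothetical callables, `successors` and `evaluate`, and the toy tree is invented for illustration.

```python
from typing import Any, Callable, List, Tuple


def minimax_value(state: Any, depth: int, max_to_move: bool,
                  successors: Callable[[Any], List[Any]],
                  evaluate: Callable[[Any], float]) -> float:
    """Backed-up value of `state` when the tree is expanded `depth` more plies."""
    children = successors(state)
    if depth == 0 or not children:
        return evaluate(state)  # a leaf of the depth-limited tree
    values = [minimax_value(c, depth - 1, not max_to_move, successors, evaluate)
              for c in children]
    return max(values) if max_to_move else min(values)


def minimax_decision(state: Any, h: int, successors, evaluate) -> Tuple[Any, float]:
    """Step 4: move toward the MIN node with the maximal backed-up value (h >= 1)."""
    children = successors(state)
    values = [minimax_value(c, h - 1, False, successors, evaluate) for c in children]
    best = max(range(len(children)), key=lambda i: values[i])
    return children[best], values[best]


# Toy tree (illustrative): internal nodes are lists, leaves are e(s) at the horizon.
TREE = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
succ = lambda s: s if isinstance(s, list) else []
ev = lambda s: s
print(minimax_decision(TREE, 2, succ, ev))  # ([3, 12, 8], 3)
```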

Game Playing (for MAX)
Repeat until win, lose, or draw:
1. Select a move using the Minimax procedure
2. Execute the move
3. Observe MIN’s move

Issues
- Choice of the horizon
- Size of memory needed
- Number of nodes examined

Adaptive horizon
- Wait for quiescence
- Extend singular nodes / secondary search

Note that the horizon may then differ from one path of the tree to another.
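Waiting for quiescence can be sketched as a small change to depth-limited minimax. At the horizon, a state is evaluated only if it is quiet; otherwise, the search continues for a bounded number of extra plies. This Python sketch assumes a game-specific `is_quiet` test (hypothetical; in chess it might mean that no capture is pending) and uses a cap `max_extra` chosen by us. Singular extensions and secondary search are not shown.

```python
from typing import Any, Callable, List


def quiescent_value(state: Any, depth: int, max_to_move: bool,
                    successors: Callable[[Any], List[Any]],
                    evaluate: Callable[[Any], float],
                    is_quiet: Callable[[Any], bool],
                    max_extra: int = 4) -> float:
    """Depth-limited minimax that does not stop at the horizon on a non-quiet state.

    Past the horizon (depth <= 0) the search continues while the state is not
    quiet, for at most `max_extra` additional plies.
    """
    children = successors(state)
    past_horizon = depth <= 0
    if not children or (past_horizon and (is_quiet(state) or depth <= -max_extra)):
        return evaluate(state)
    values = [quiescent_value(c, depth - 1, not max_to_move, successors, evaluate,
                              is_quiet, max_extra) for c in children]
    return max(values) if max_to_move else min(values)


def node(value, quiet=True, children=()):
    """Hypothetical test node: a static value, a quiet flag, and children."""
    return {"value": value, "quiet": quiet, "children": list(children)}


# A looks good statically (5) but is not quiet: MIN's only reply drops it to -10.
A = node(5, quiet=False, children=[node(-10)])
B = node(1, children=[node(2), node(1)])
root = node(0, children=[A, B])
succ = lambda n: n["children"]
ev = lambda n: n["value"]

print(quiescent_value(root, 1, True, succ, ev, lambda n: n["quiet"]))  # 1
print(quiescent_value(root, 1, True, succ, ev, lambda n: True))        # 5
```

With horizon 1 and no extension, MAX is misled by A’s static value. The extension looks one ply past A and finds the refutation.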


Alpha-Beta Procedure
- Generate the game tree to depth h in a depth-first manner
- Back up estimates (alpha and beta values) of the evaluation function whenever possible
- Prune branches that cannot change the final decision

Example

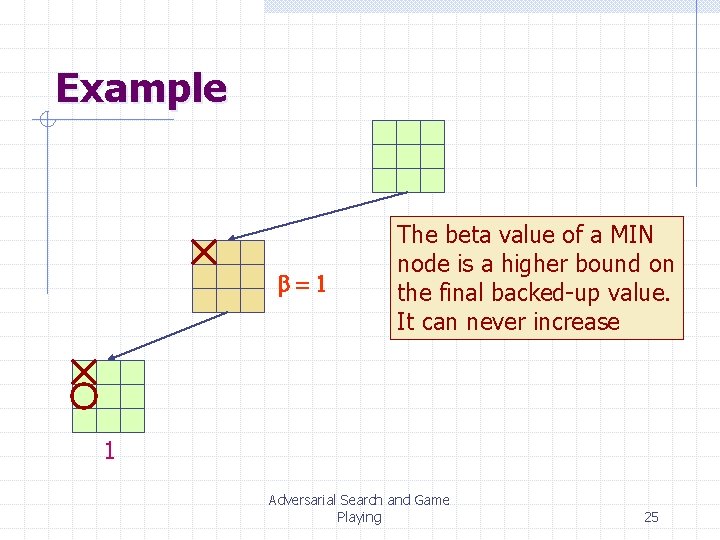

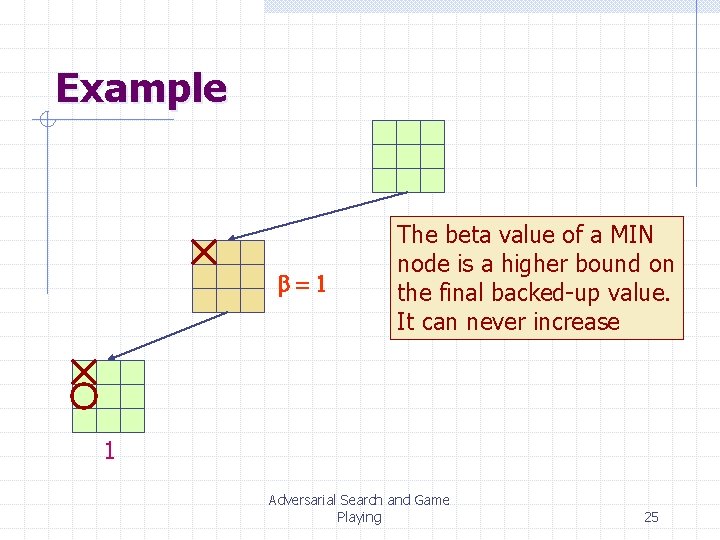

Example (β = 1): The beta value of a MIN node is an upper bound on its final backed-up value. It can never increase.

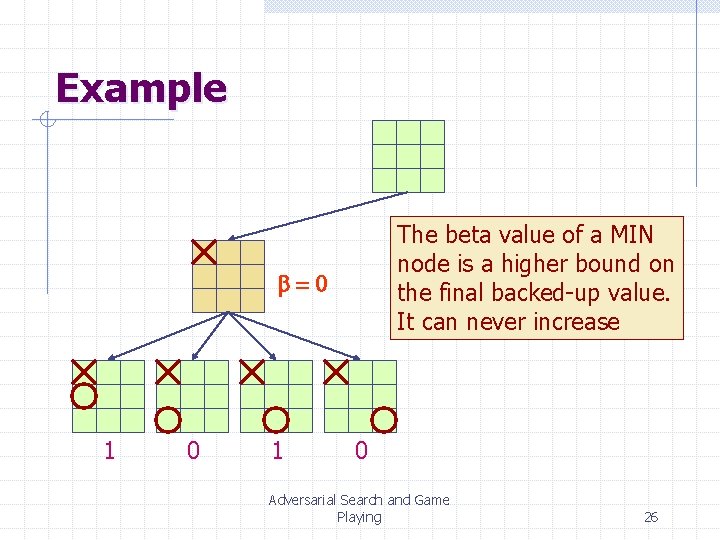

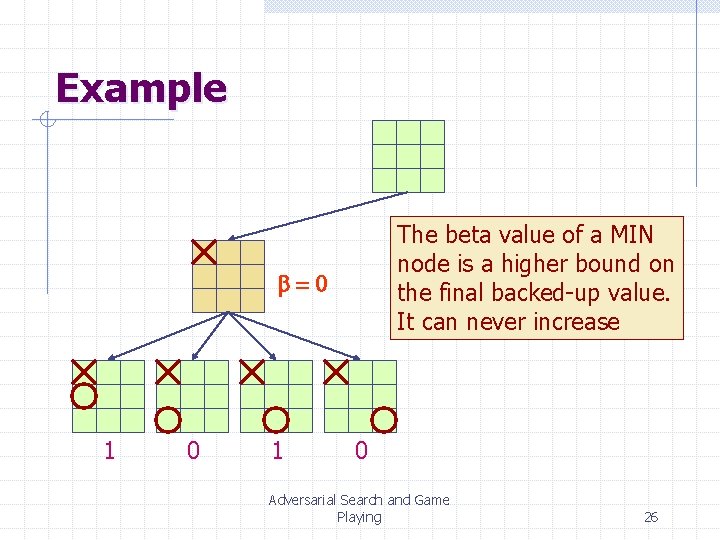

Example (β = 0)

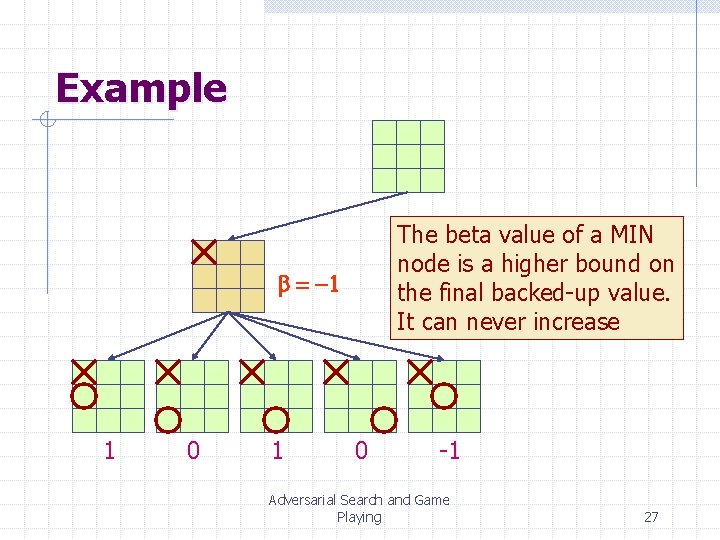

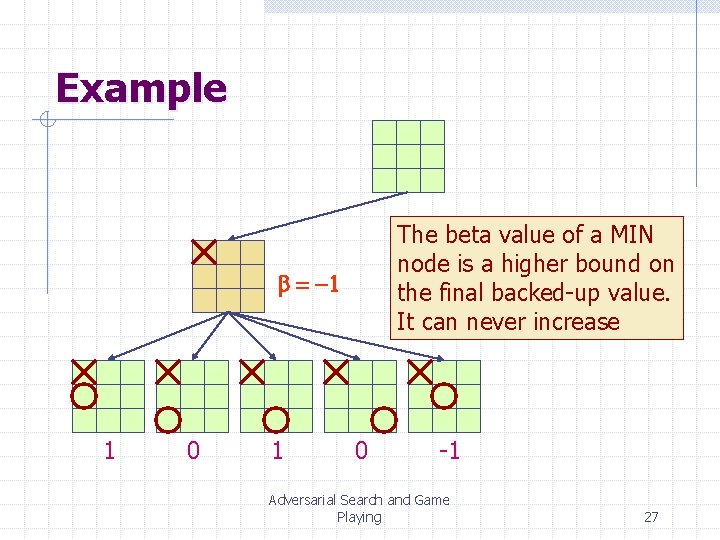

Example (β = −1)

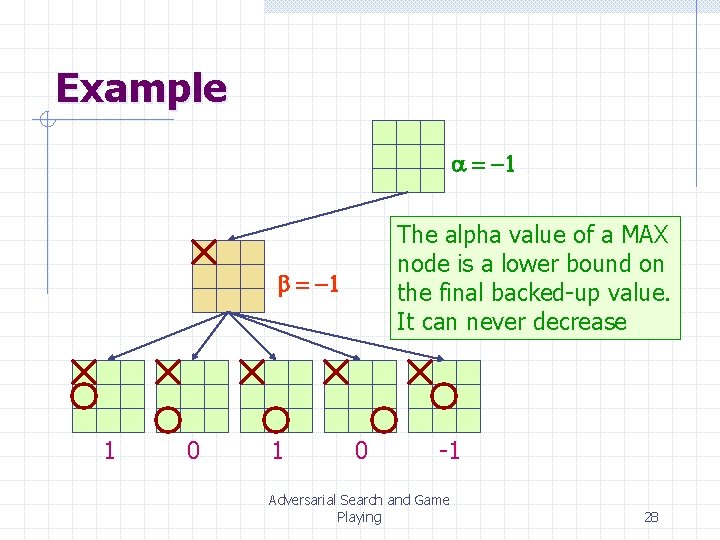

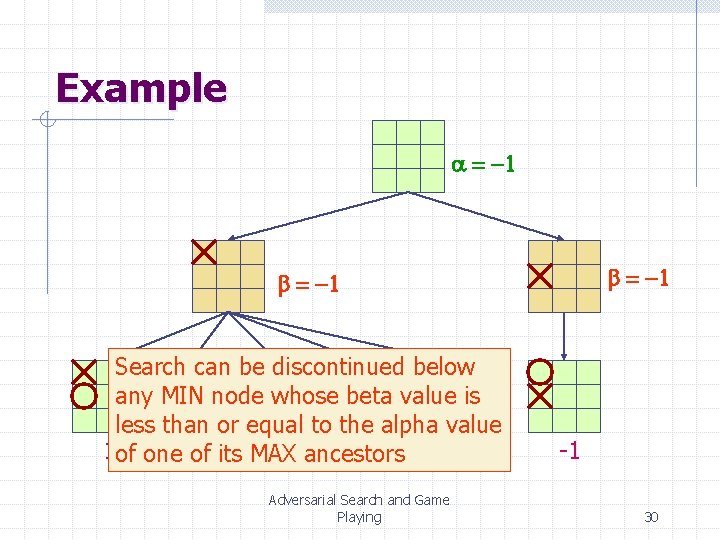

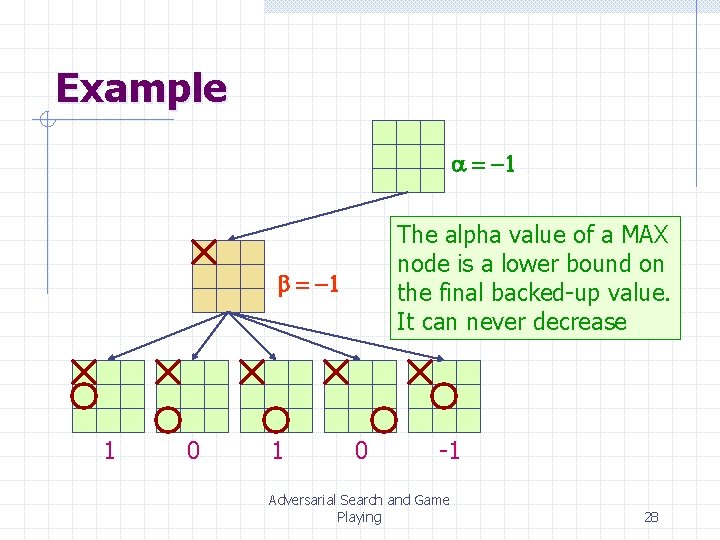

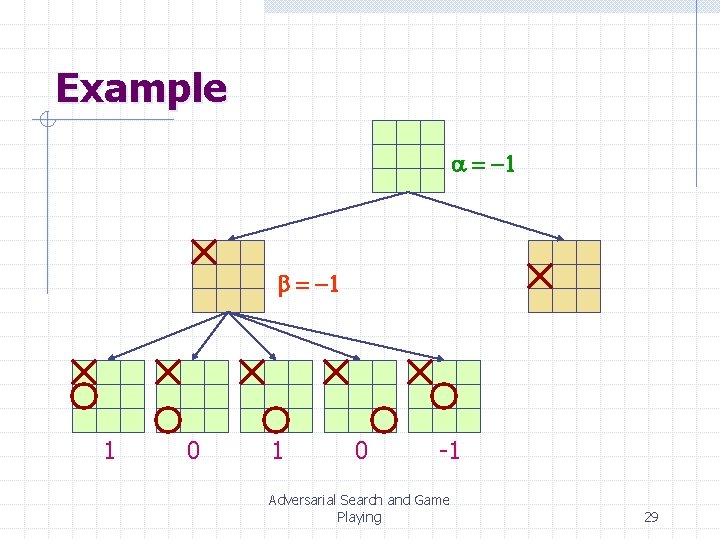

Example (α = −1, β = −1): The alpha value of a MAX node is a lower bound on its final backed-up value. It can never decrease.

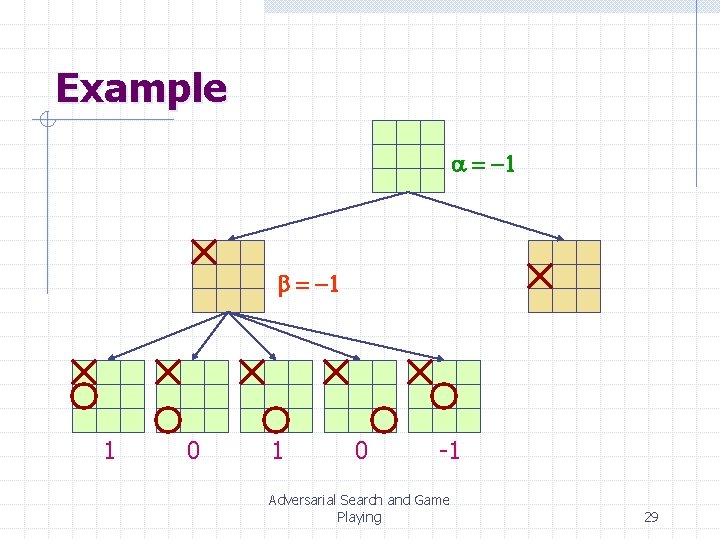

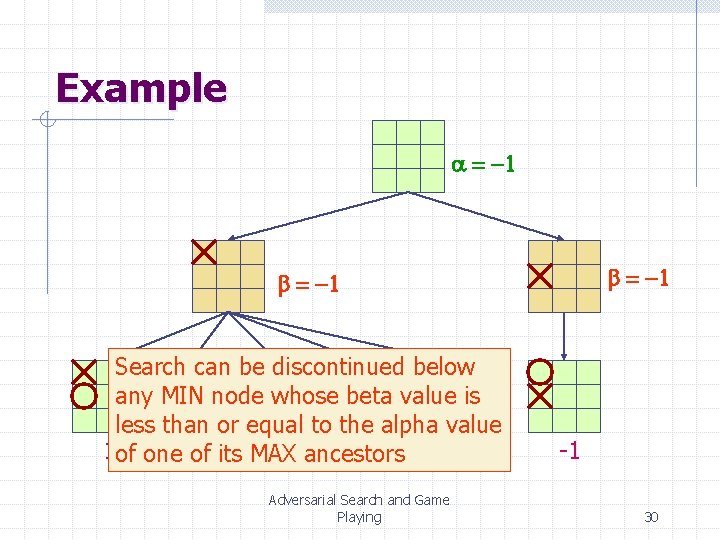

Example (α = −1, β = −1)

Example (α = −1, β = −1): Search can be discontinued below any MIN node whose beta value is less than or equal to the alpha value of one of its MAX ancestors.

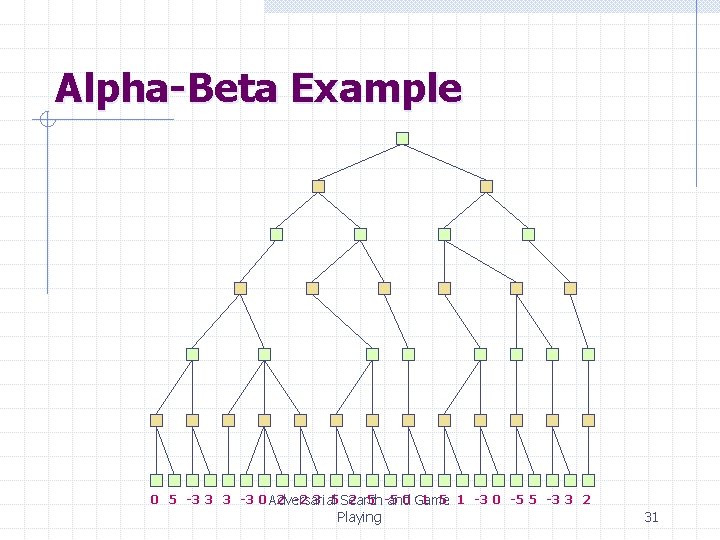

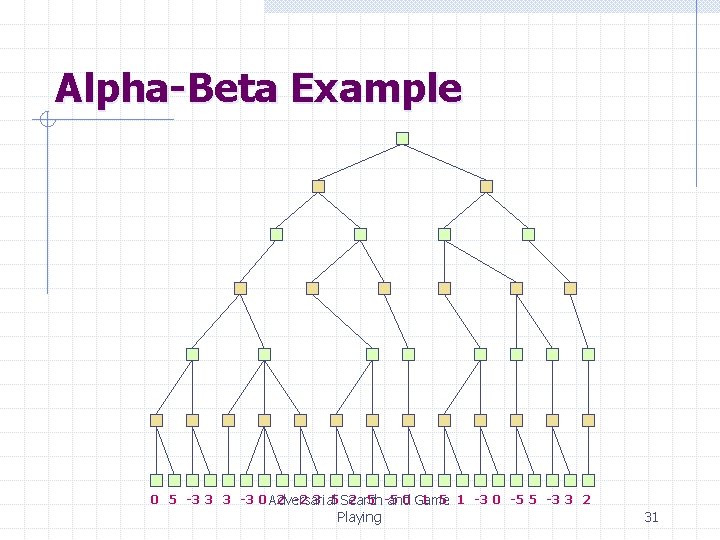

Alpha-Beta Example (figure: a game tree whose leaf values are 0, 5, −3, 3, 3, −3, 0, 2, −2, 3, 5, 2, 5, −5, 0, 1, 5, 1, −3, 0, −5, 5, −3, 3, 2; the following frames step through the alpha-beta search on it)

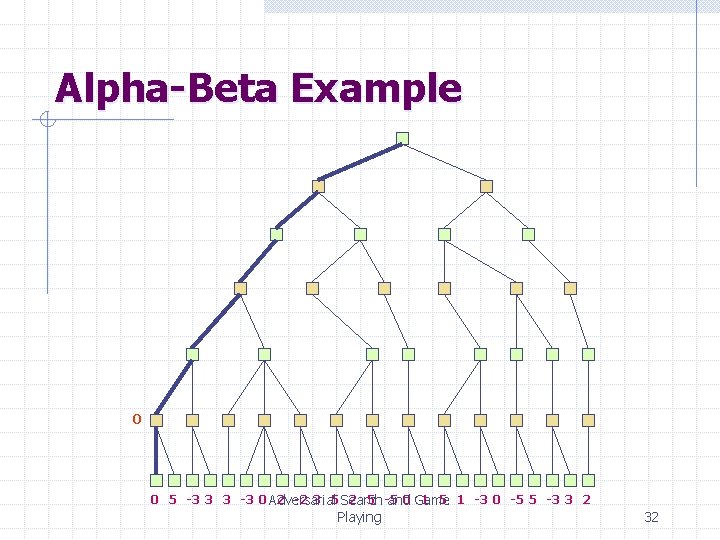

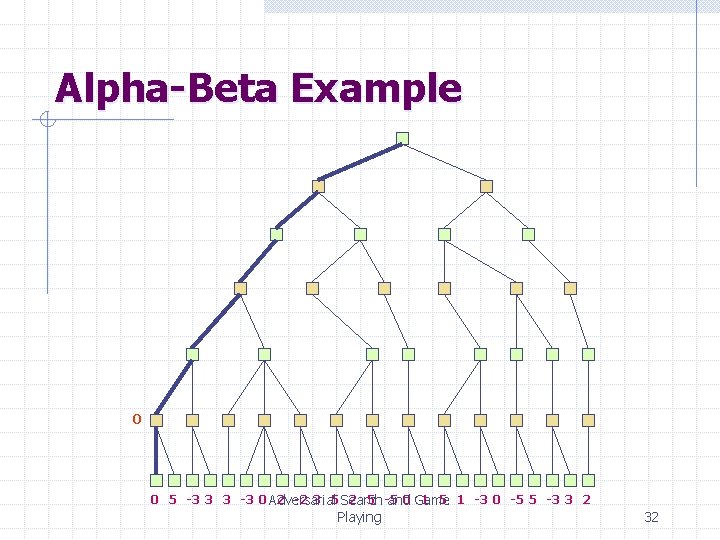


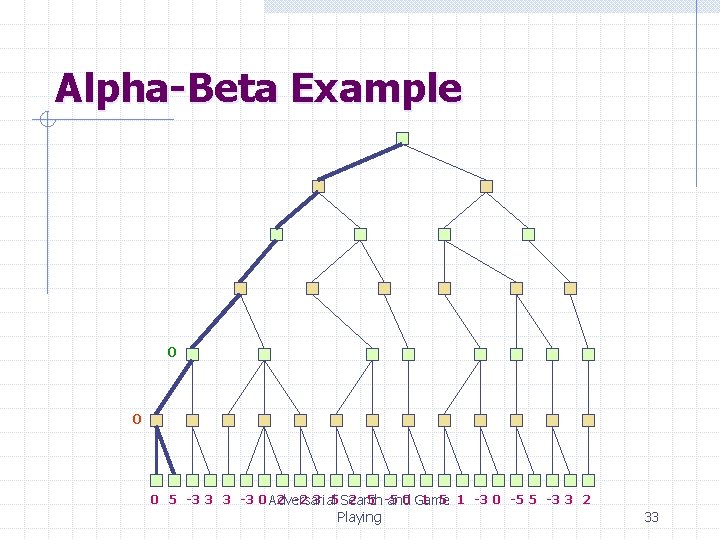

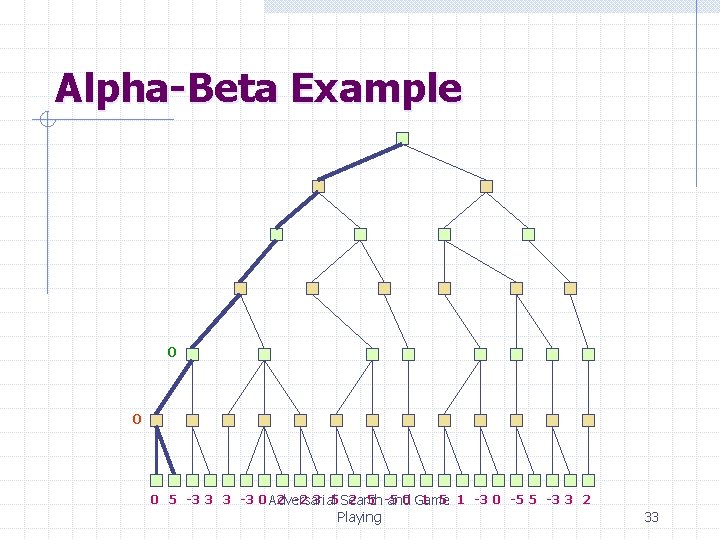


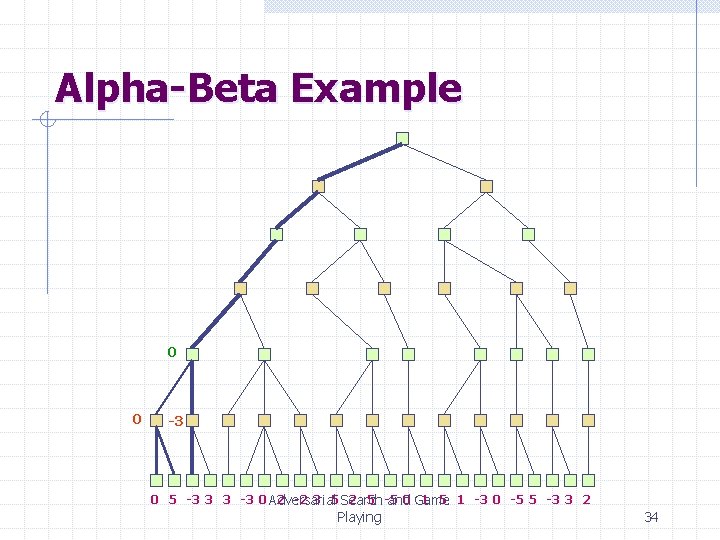

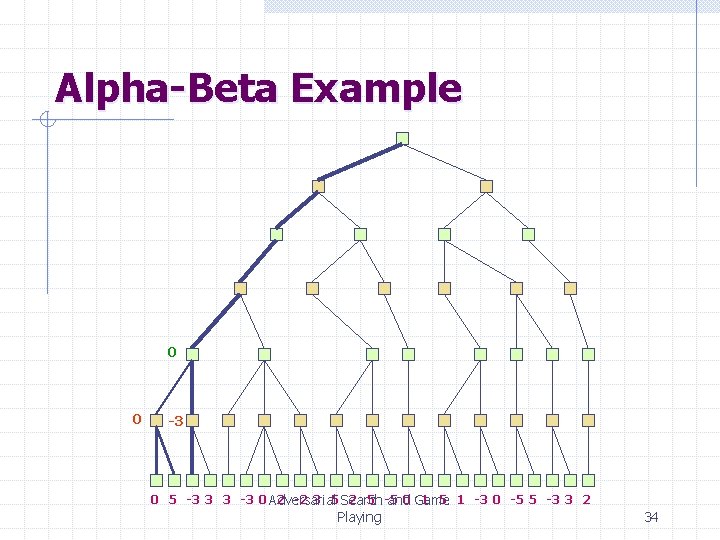


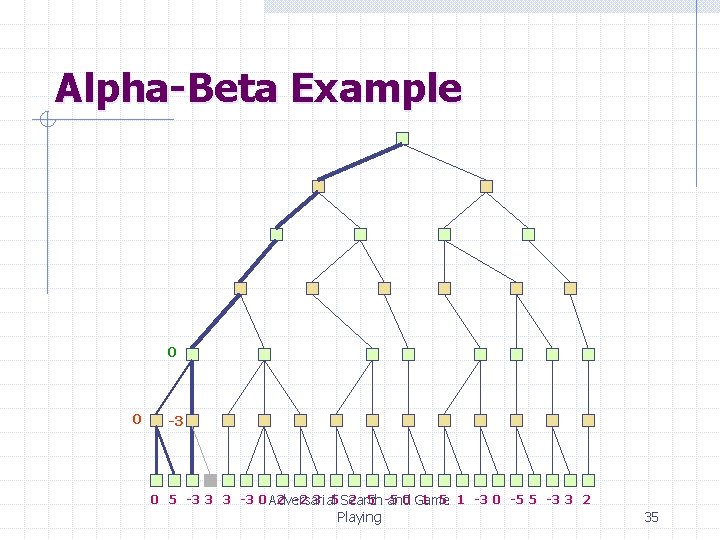

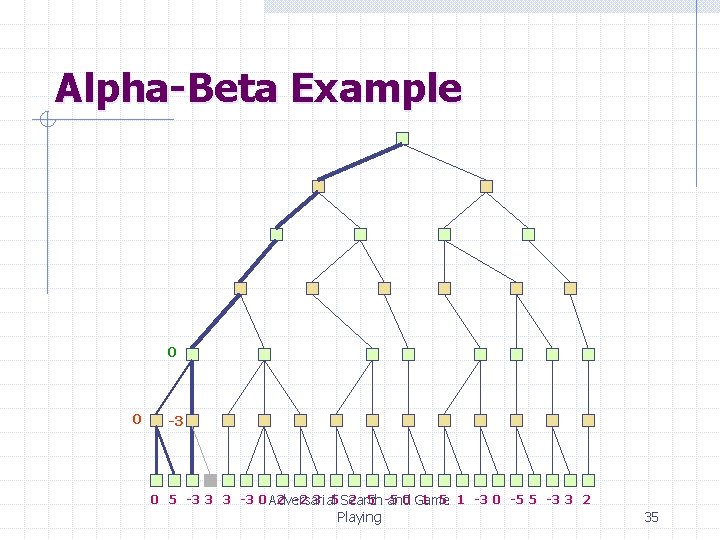


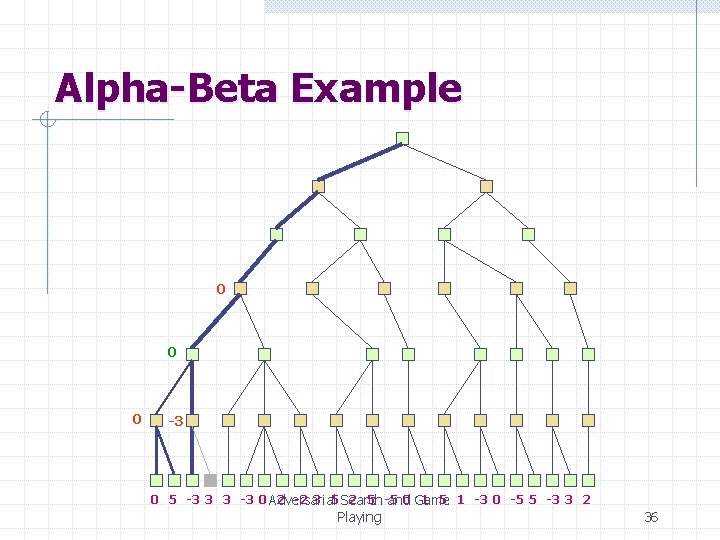

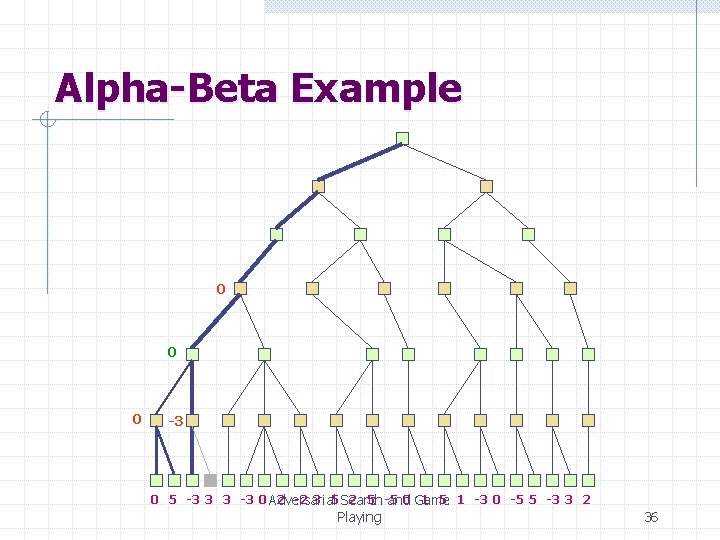


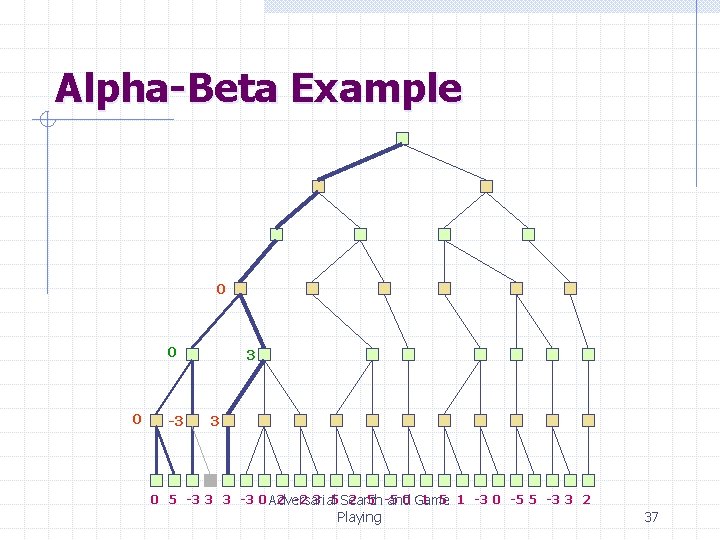

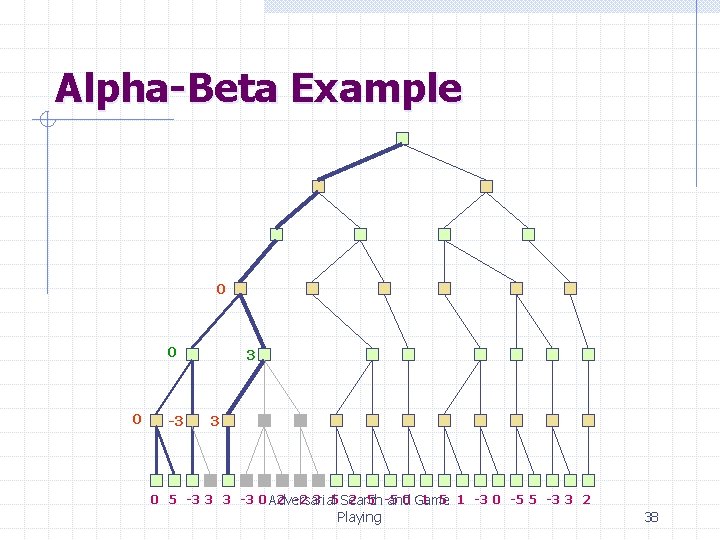

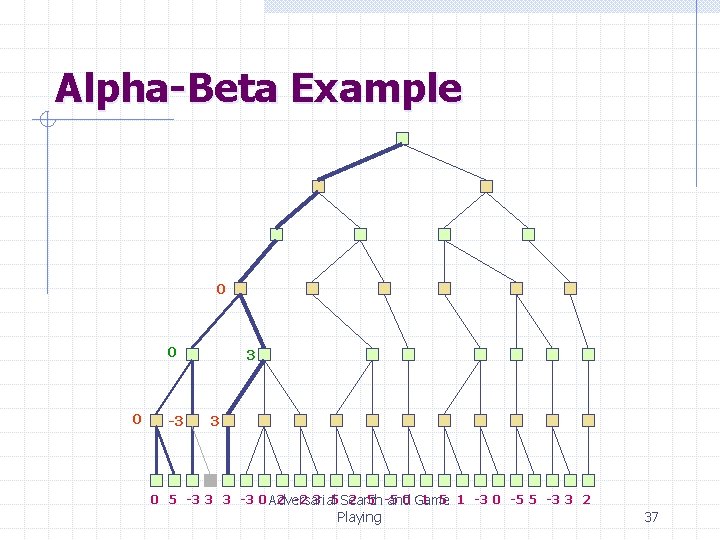


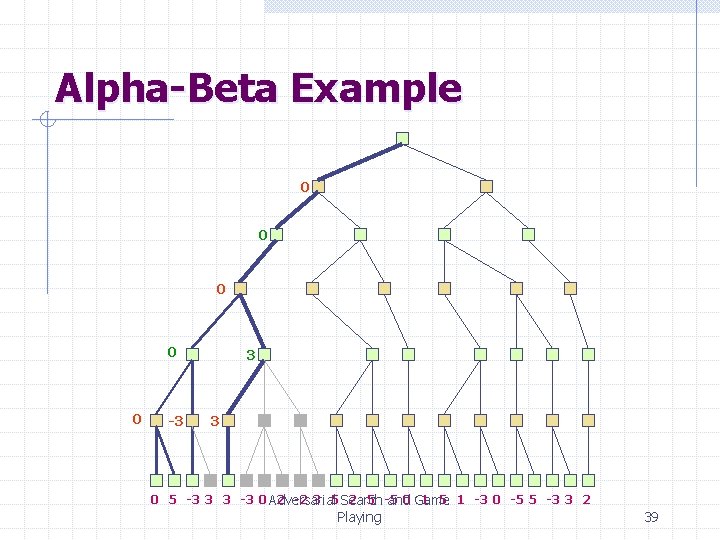

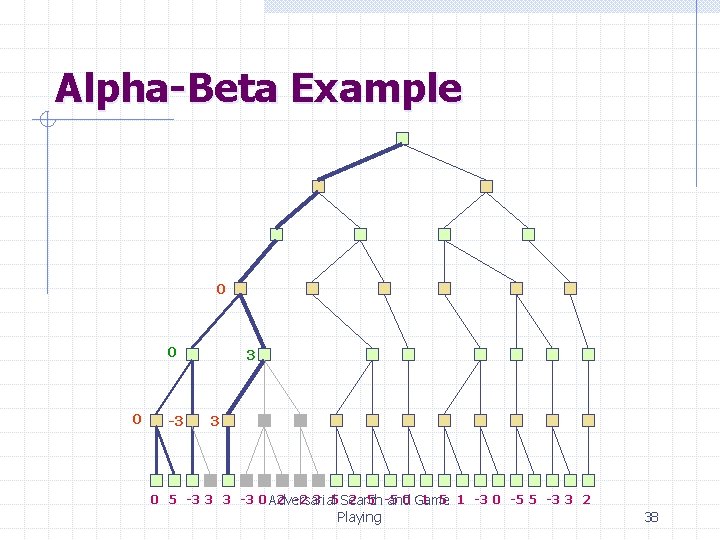


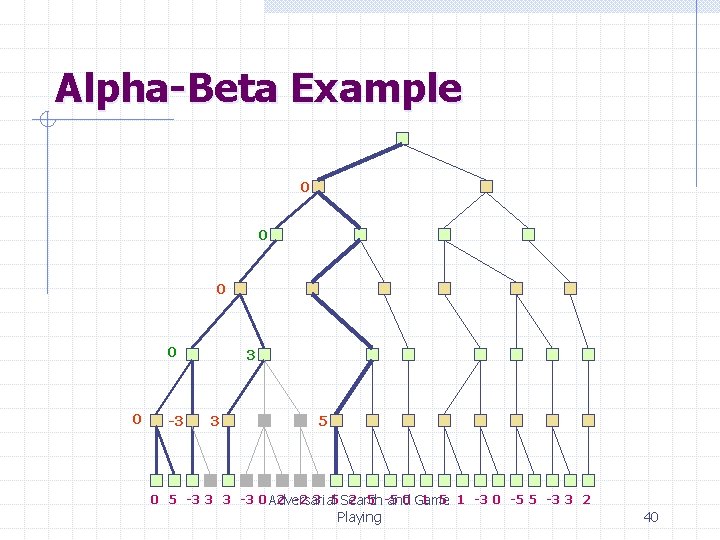

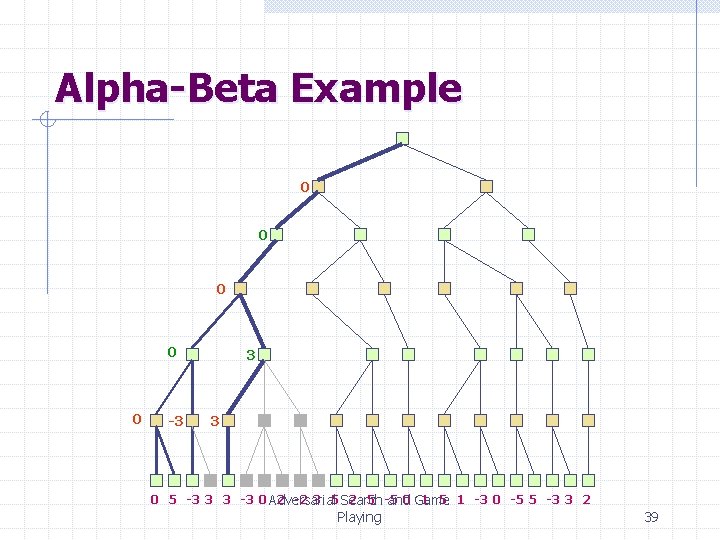


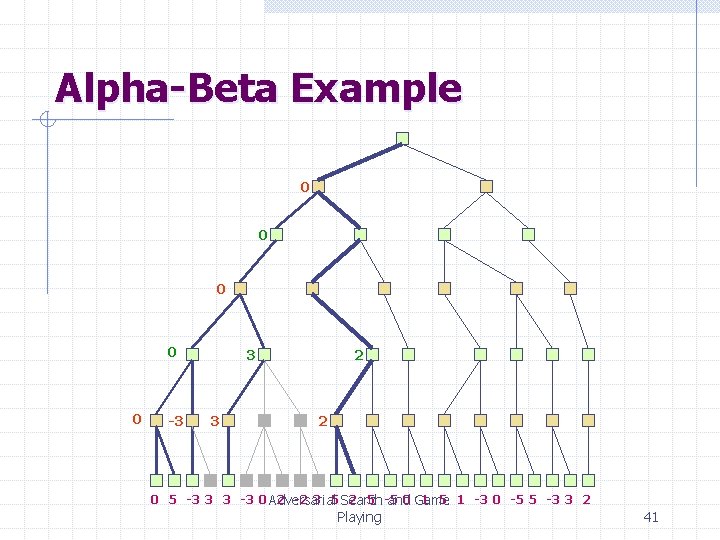

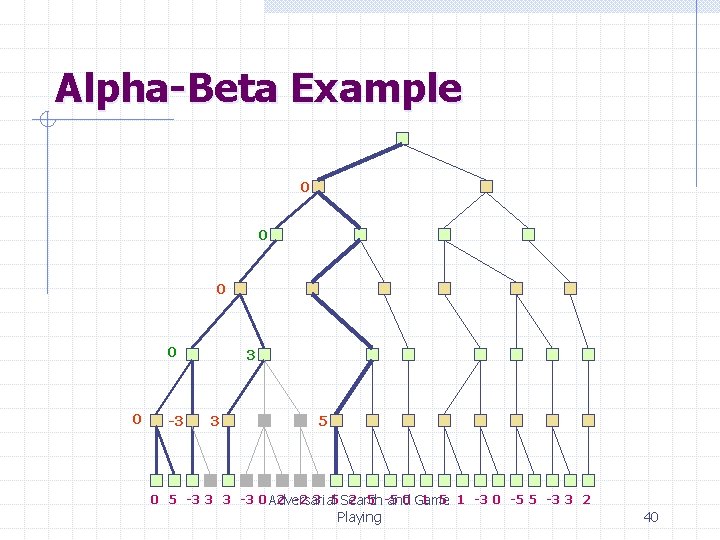


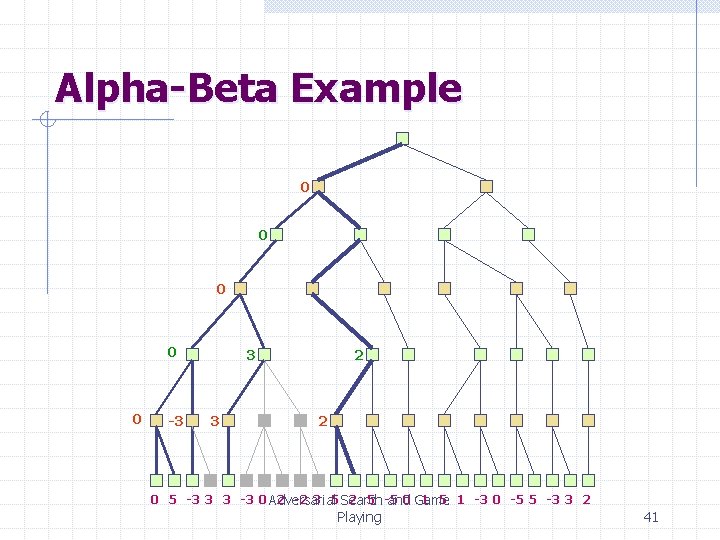


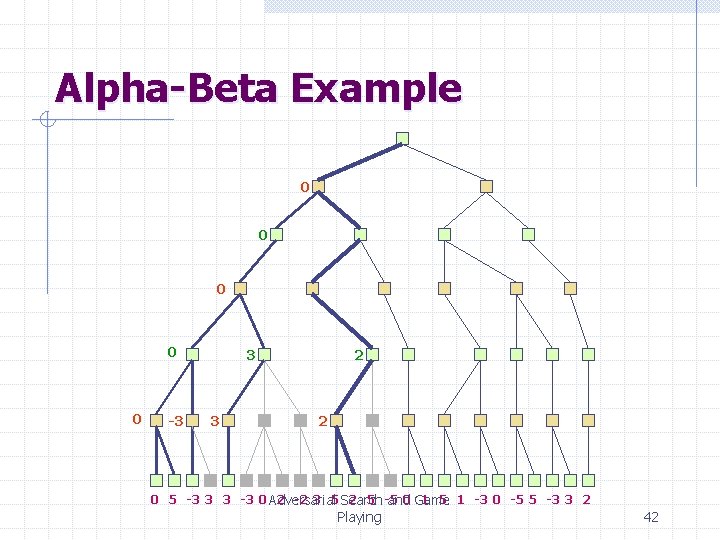

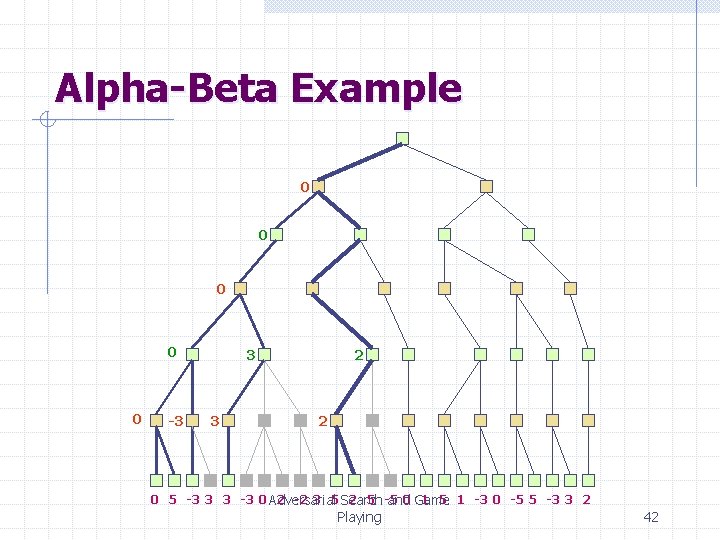


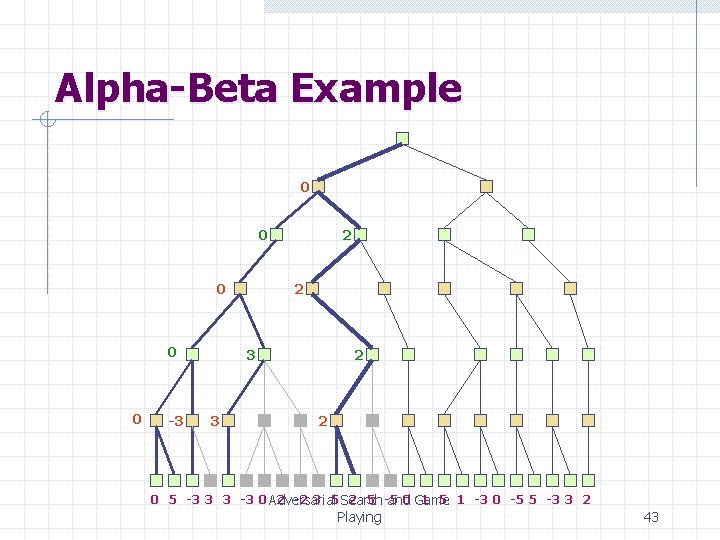

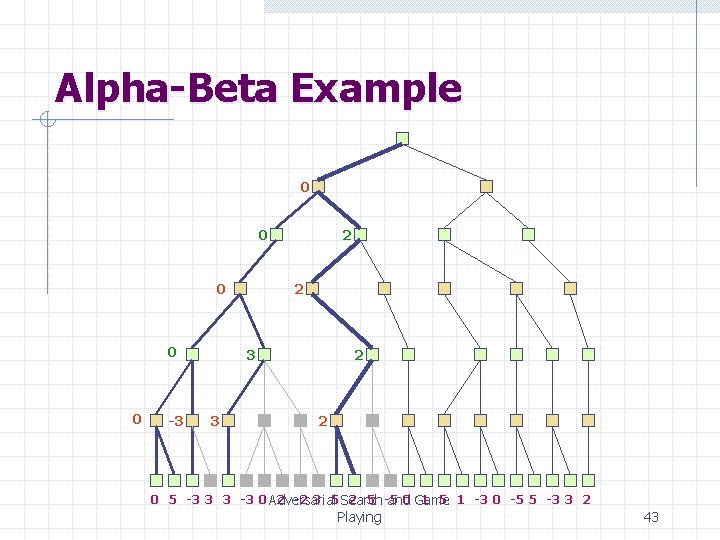


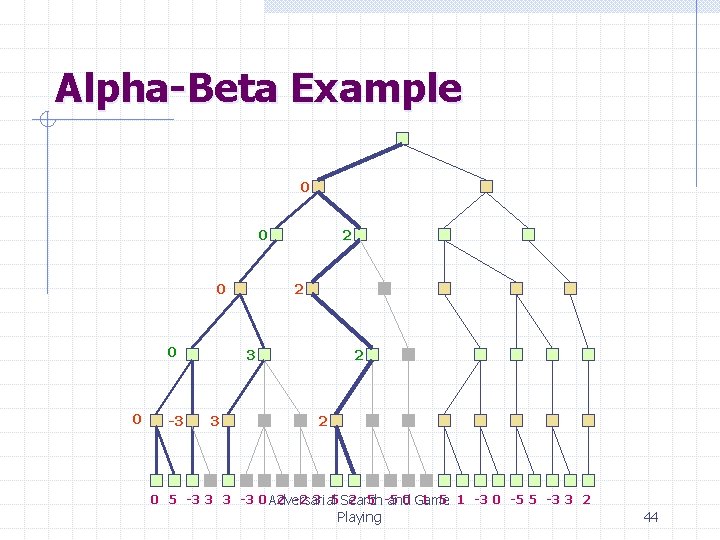

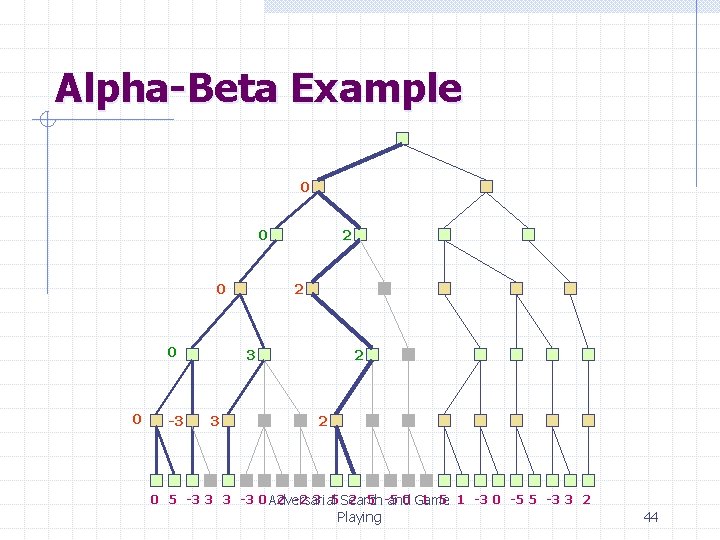


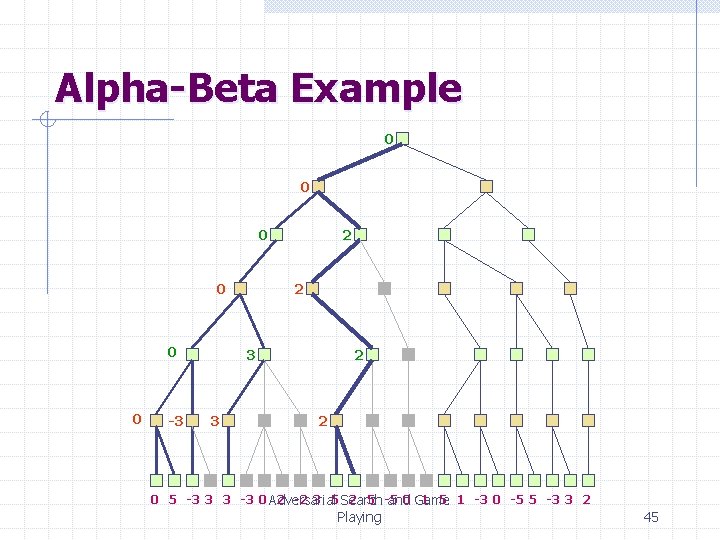

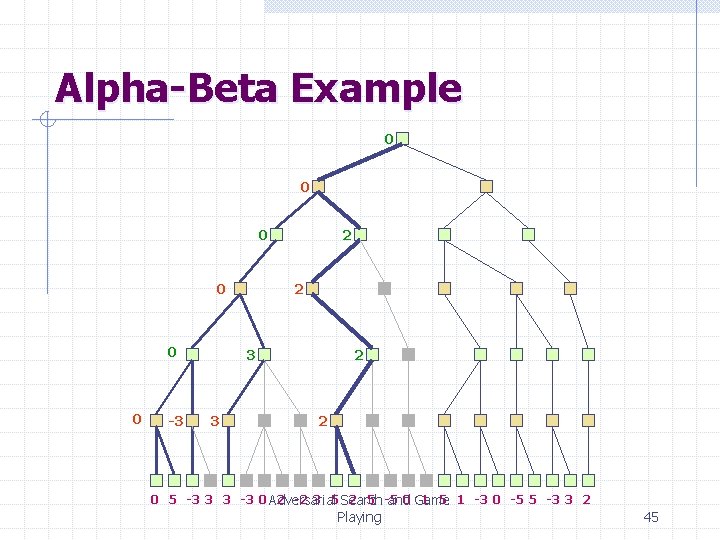


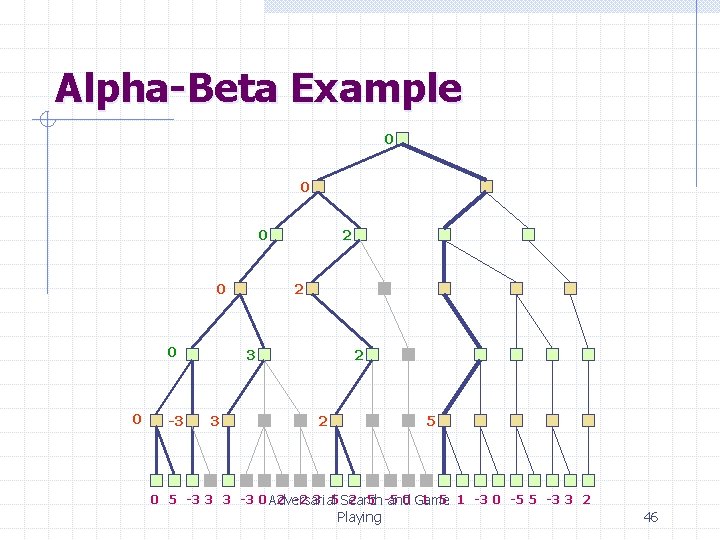

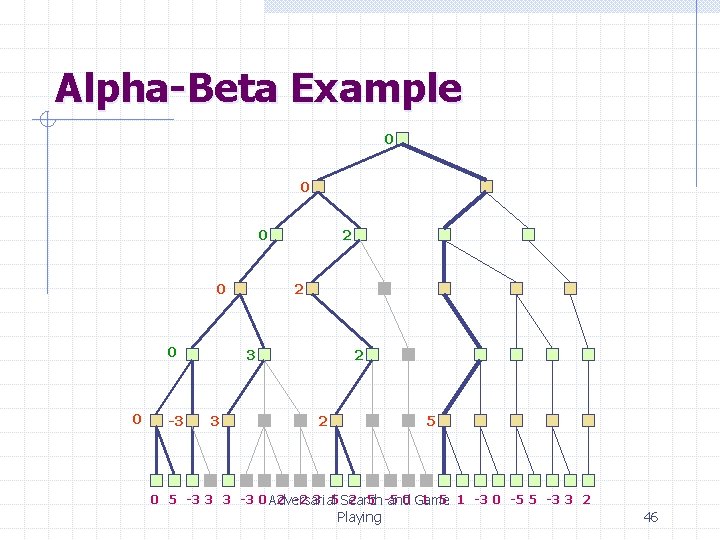


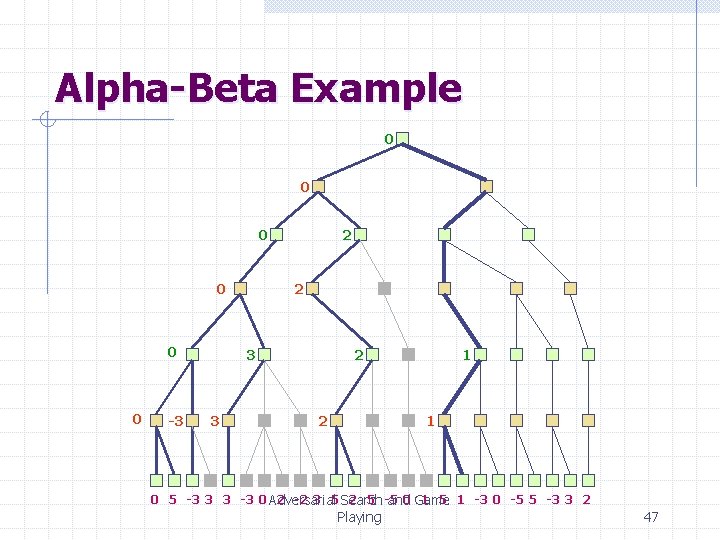

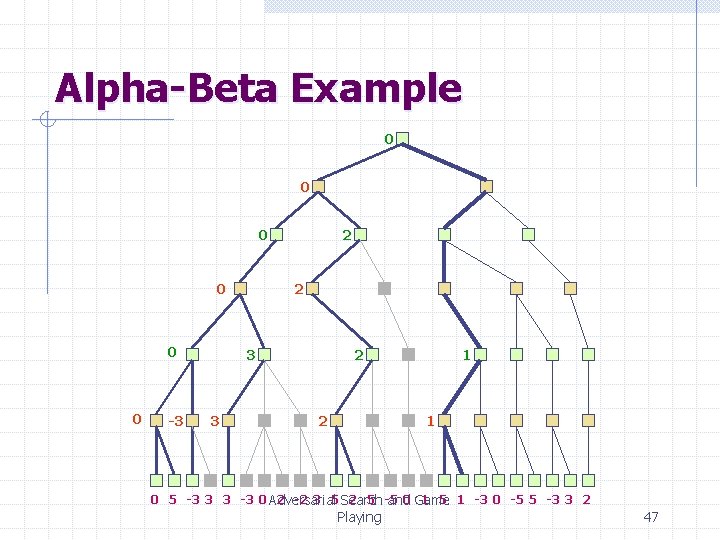


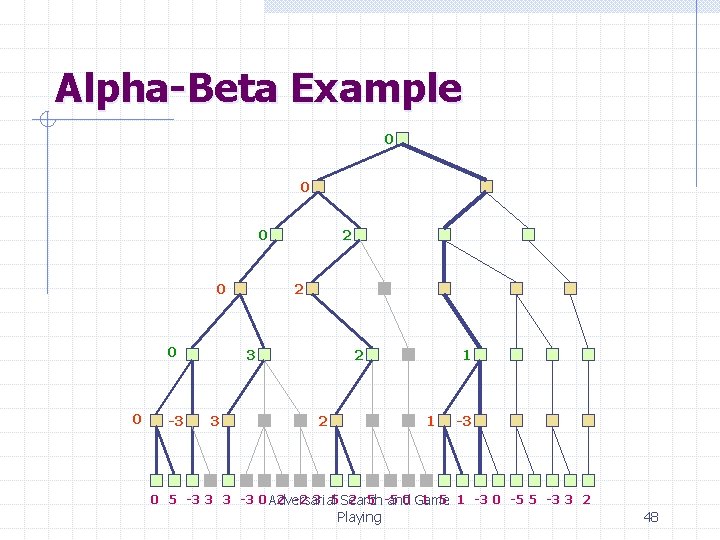

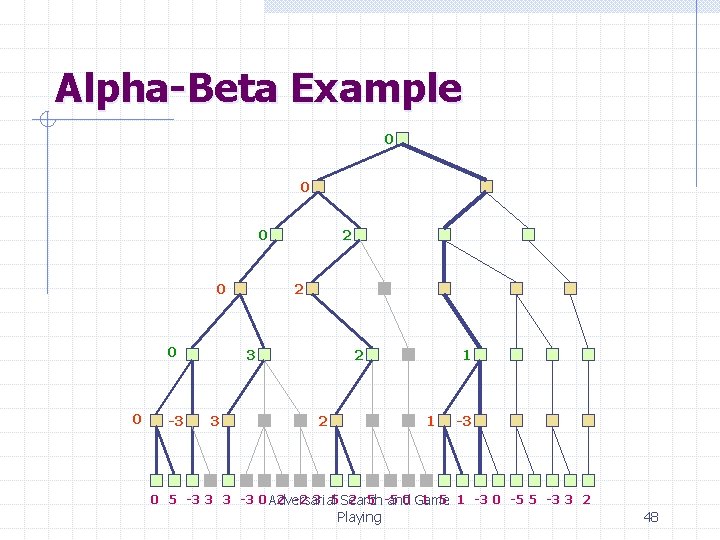

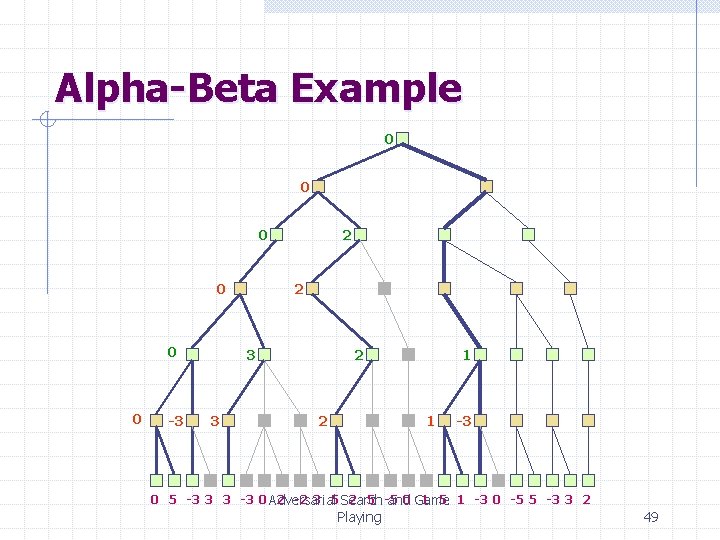


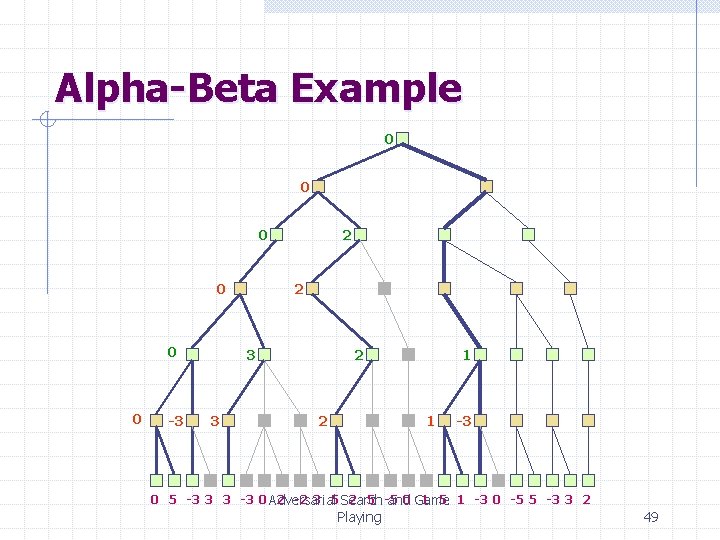


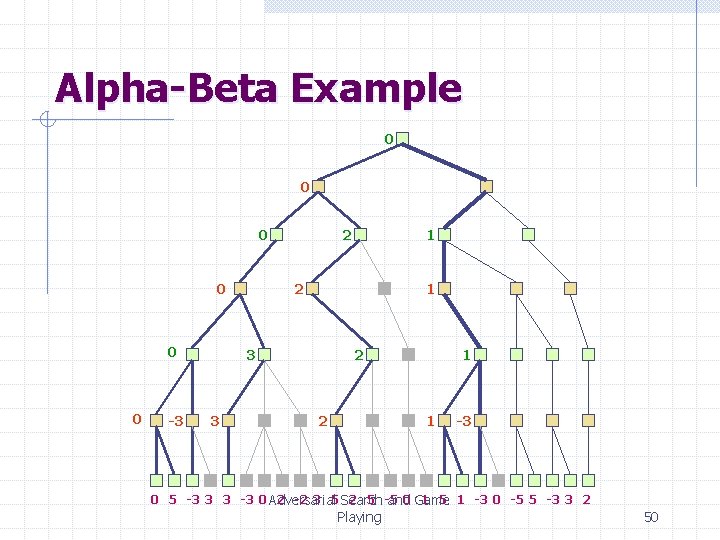

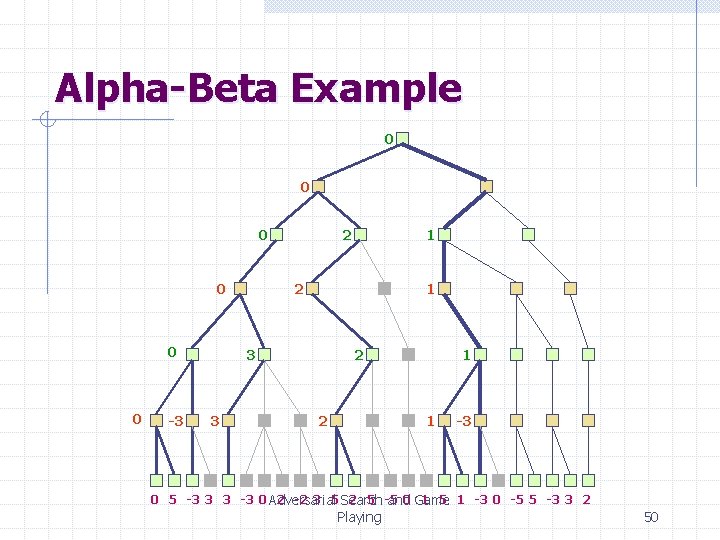


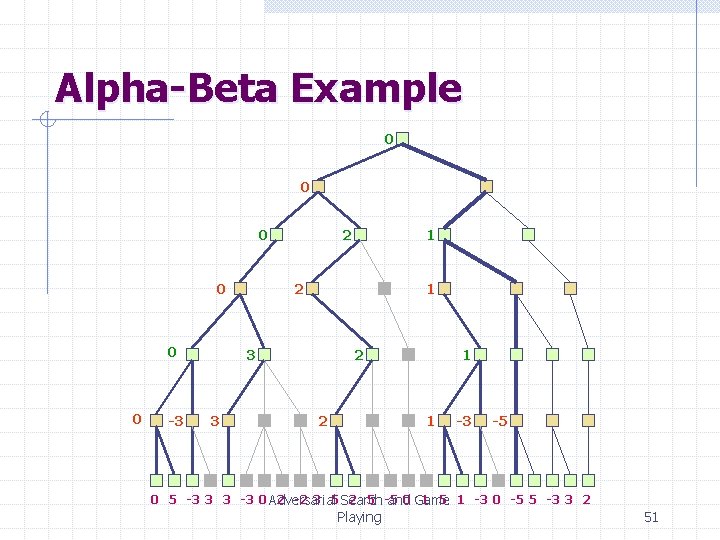

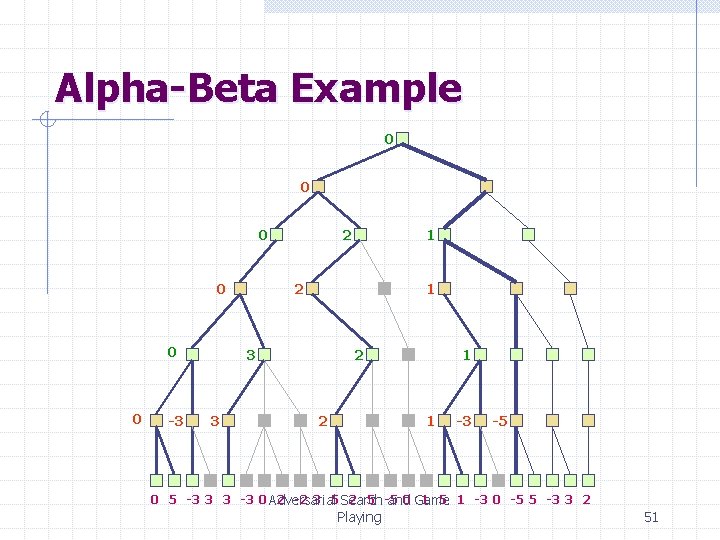


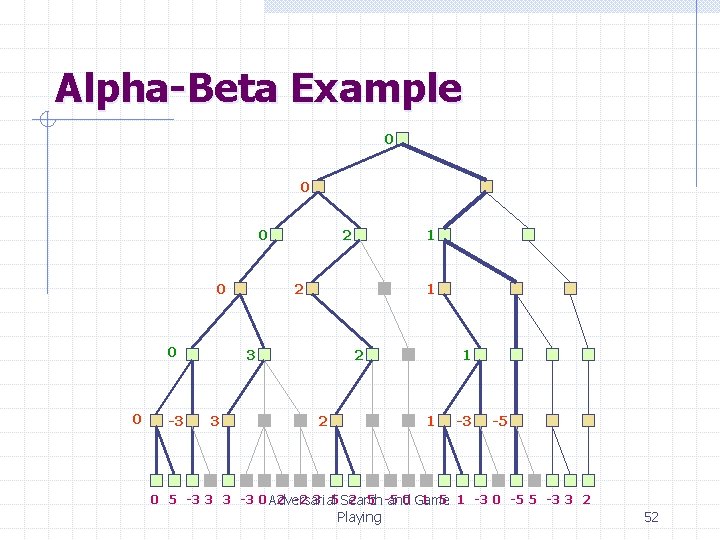

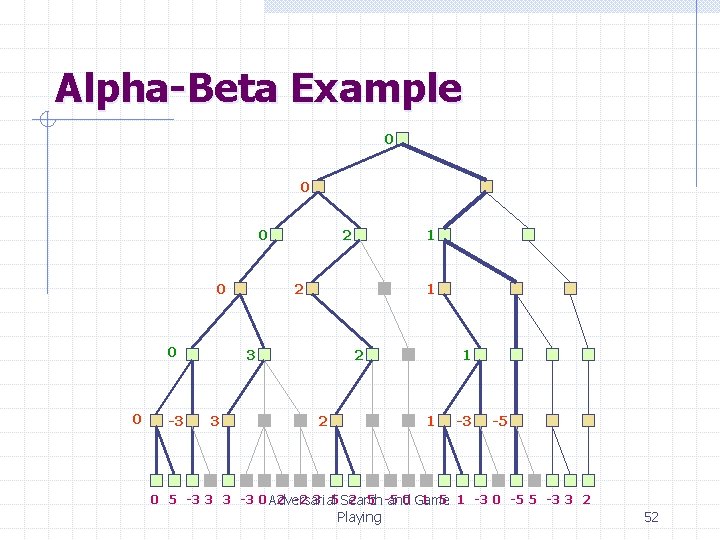


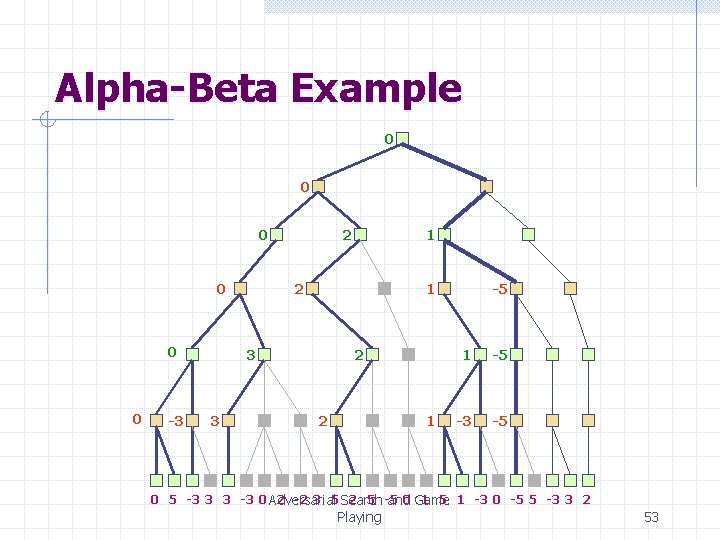

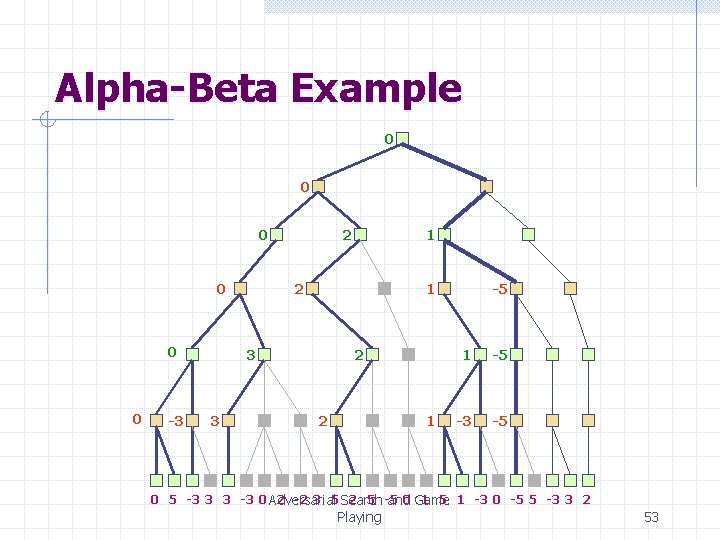


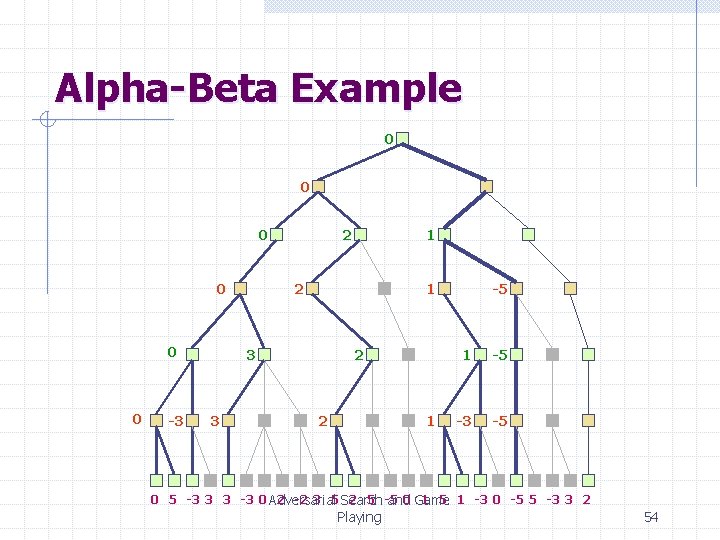

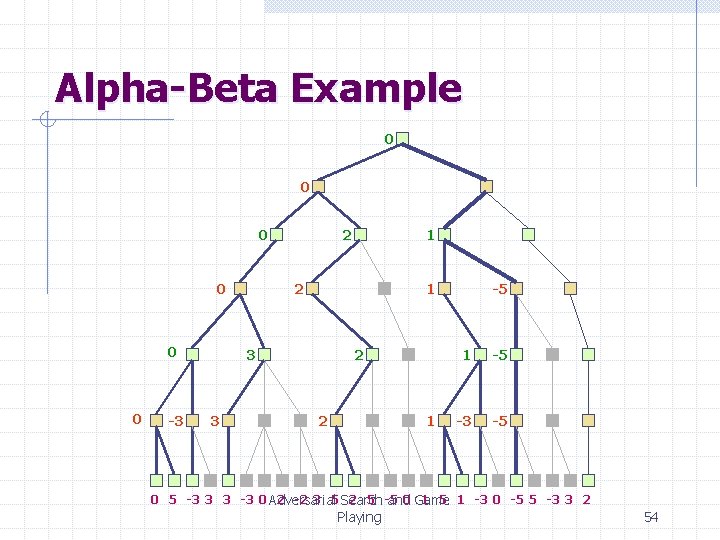


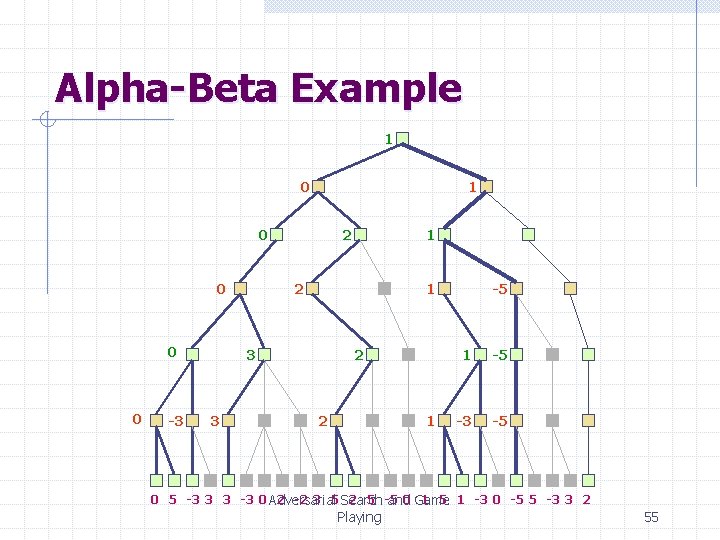

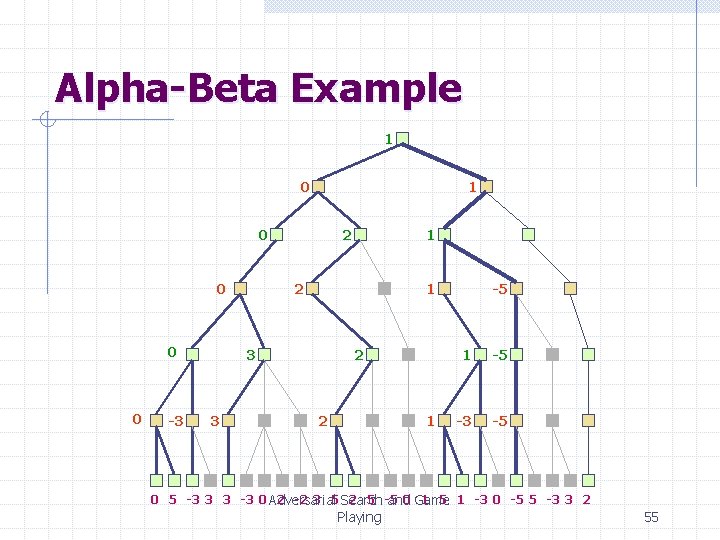


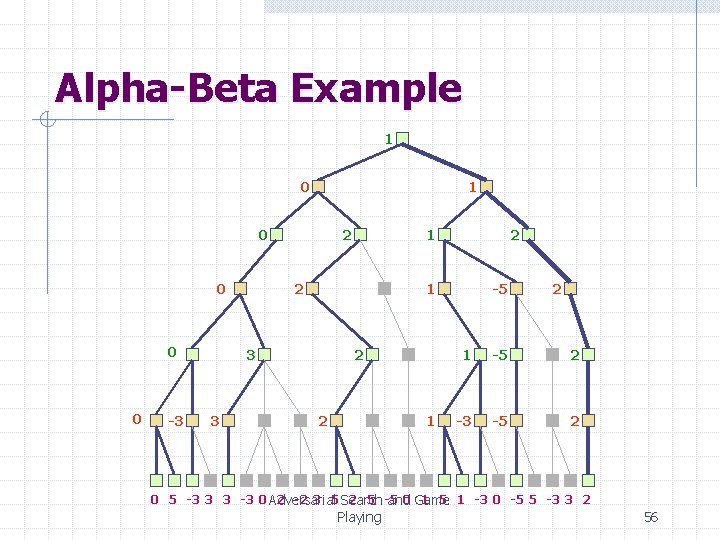

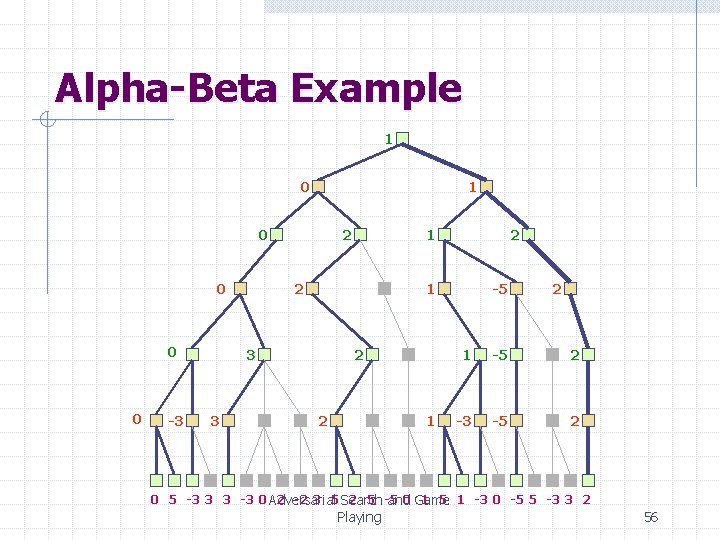

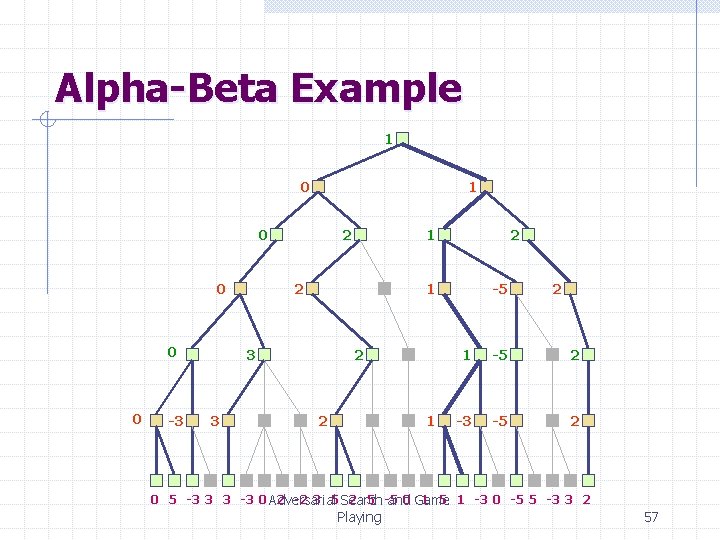


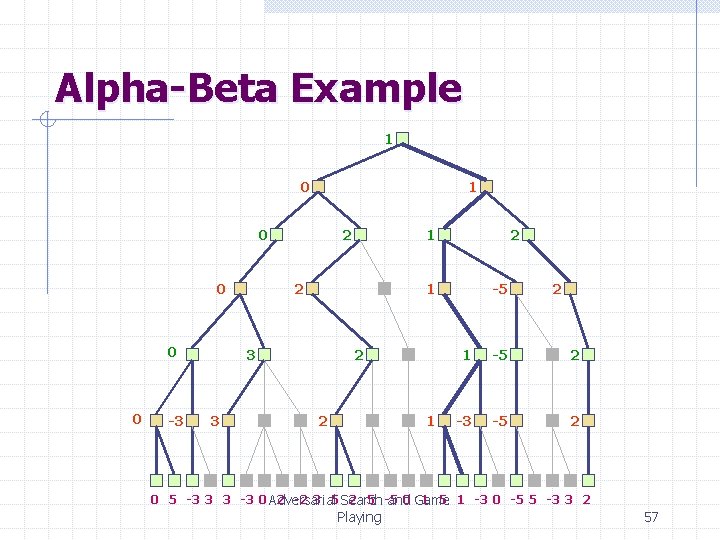


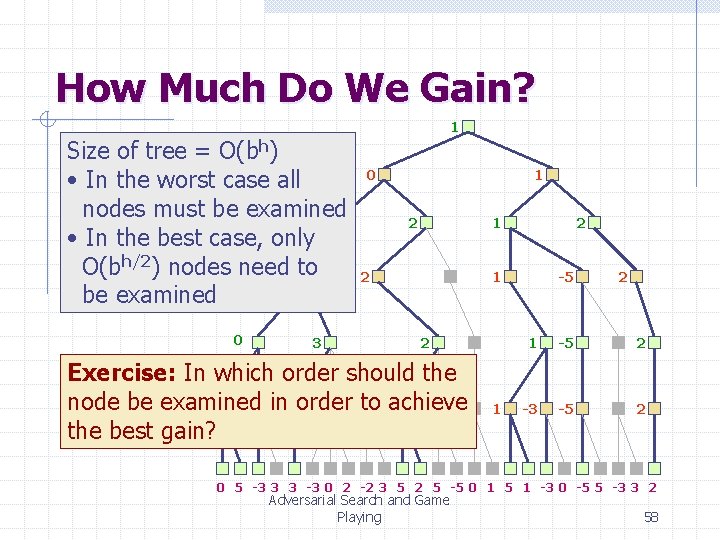

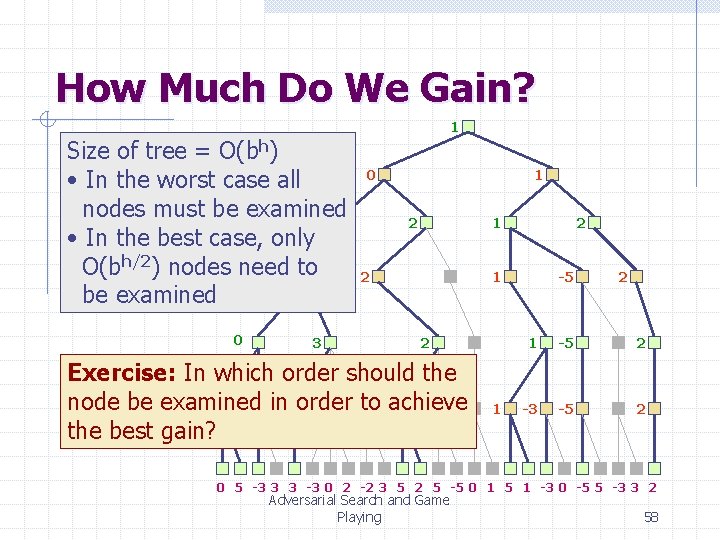

How Much Do We Gain?
- Size of tree = $O(b^h)$, where b is the branching factor and h the depth
- In the worst case, all nodes must be examined
- In the best case, only $O(b^{h/2})$ nodes need to be examined

Exercise: In which order should the nodes be examined to achieve the best gain?
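The gain can be measured on a toy tree. The Python sketch below (all names and the random tree are assumptions made for illustration) builds a uniform tree with b = 3, h = 4, and distinct leaf values. It then counts how many leaves alpha-beta examines, first in the given order and then after reordering every node so that its best child comes first. That reordering is one answer to the exercise: examine the best move first at every node.

```python
import math
import random
from typing import List, Union

Tree = Union[int, List["Tree"]]  # a leaf value or a list of subtrees


def minimax(node: Tree, max_to_move: bool) -> int:
    if not isinstance(node, list):
        return node
    values = [minimax(c, not max_to_move) for c in node]
    return max(values) if max_to_move else min(values)


def alphabeta(node: Tree, alpha: float, beta: float, max_to_move: bool,
              counter: List[int]) -> float:
    if not isinstance(node, list):
        counter[0] += 1  # one more leaf examined
        return node
    if max_to_move:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, alpha, beta, False, counter))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # prune the remaining children
    else:
        value = math.inf
        for child in node:
            value = min(value, alphabeta(child, alpha, beta, True, counter))
            beta = min(beta, value)
            if beta <= alpha:
                break
    return value


def build(b: int, h: int, leaves) -> Tree:
    """Uniform tree of branching factor b and depth h; leaves come from an iterator."""
    if h == 0:
        return next(leaves)
    return [build(b, h - 1, leaves) for _ in range(b)]


def best_first(node: Tree, max_to_move: bool) -> Tree:
    """Reorder children so the best move for the player to move comes first."""
    if not isinstance(node, list):
        return node
    kids = [best_first(c, not max_to_move) for c in node]
    kids.sort(key=lambda c: minimax(c, not max_to_move), reverse=max_to_move)
    return kids


def leaves_examined(tree: Tree) -> int:
    counter = [0]
    alphabeta(tree, -math.inf, math.inf, True, counter)
    return counter[0]


b, h = 3, 4
values = random.Random(0).sample(range(1000), b ** h)  # distinct leaf values
tree = build(b, h, iter(values))
print("all leaves:", b ** h)
print("alpha-beta, given order:", leaves_examined(tree))
print("alpha-beta, best-first order:", leaves_examined(best_first(tree, True)))
```

With perfect ordering and distinct leaves, the count equals the minimal-tree size $b^{\lceil h/2\rceil} + b^{\lfloor h/2\rfloor} - 1$ (17 of 81 leaves here), which is the $O(b^{h/2})$ best case. The count for the given order depends on the random leaves.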

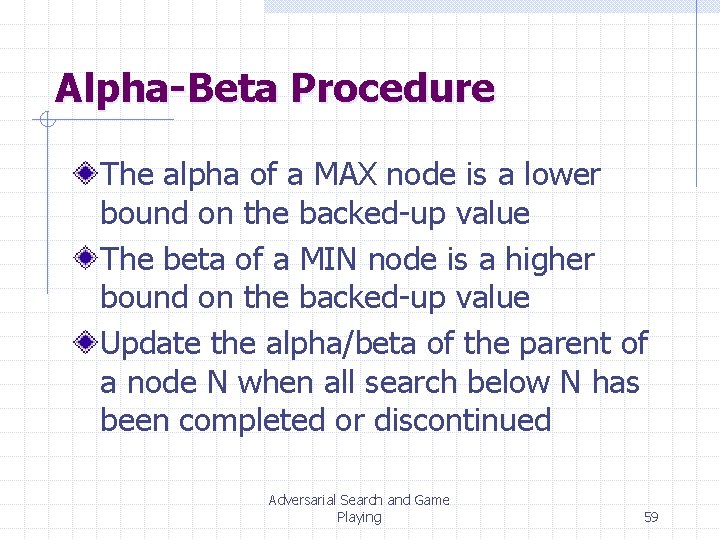

Alpha-Beta Procedure
- The alpha of a MAX node is a lower bound on its backed-up value
- The beta of a MIN node is an upper bound on its backed-up value
- Update the alpha/beta of the parent of a node N when all search below N has been completed or discontinued

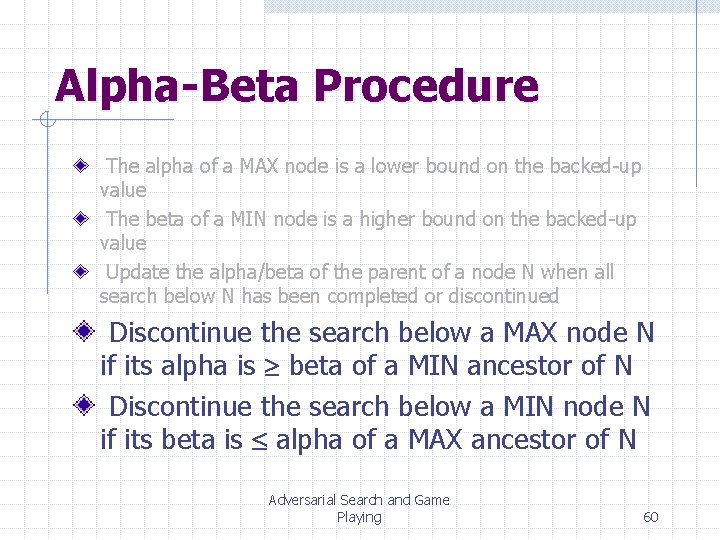

- Discontinue the search below a MAX node N if its alpha is ≥ the beta of a MIN ancestor of N
- Discontinue the search below a MIN node N if its beta is ≤ the alpha of a MAX ancestor of N
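The alpha-beta rules fit in a single recursive function. Below is a Python sketch of depth-limited alpha-beta that takes the same kind of hypothetical `successors`/`evaluate` callables as a plain minimax search. Alpha and beta are passed down the tree, so each node compares against the tightest bound set by any of its ancestors.

```python
import math
from typing import Any, Callable, List


def alphabeta(state: Any, depth: int, alpha: float, beta: float, max_to_move: bool,
              successors: Callable[[Any], List[Any]],
              evaluate: Callable[[Any], float]) -> float:
    """Depth-first, depth-limited minimax with alpha-beta pruning.

    alpha: lower bound MAX is already guaranteed on the path to this node.
    beta:  upper bound MIN is already guaranteed on the path to this node.
    """
    children = successors(state)
    if depth == 0 or not children:
        return evaluate(state)
    if max_to_move:
        value = -math.inf
        for child in children:
            value = max(value, alphabeta(child, depth - 1, alpha, beta, False,
                                         successors, evaluate))
            alpha = max(alpha, value)  # a MAX node's alpha never decreases
            if alpha >= beta:          # alpha >= beta of a MIN ancestor: stop here
                break
        return value
    value = math.inf
    for child in children:
        value = min(value, alphabeta(child, depth - 1, alpha, beta, True,
                                     successors, evaluate))
        beta = min(beta, value)        # a MIN node's beta never increases
        if beta <= alpha:              # beta <= alpha of a MAX ancestor: stop here
            break
    return value


# Toy tree (illustrative): lists are internal nodes, numbers are leaf evaluations.
TREE = [[3, 12, 8], [2, 4, 6], [14, 5, 2]]
succ = lambda s: s if isinstance(s, list) else []
seen = []  # records which leaves were actually evaluated


def ev(s):
    seen.append(s)
    return s


print(alphabeta(TREE, 2, -math.inf, math.inf, True, succ, ev))  # 3
print(seen)  # [3, 12, 8, 2, 14, 5, 2]
```

On the toy tree, the search abandons the second MIN node after its first leaf (2 ≤ α = 3), so only 7 of the 9 leaves are evaluated.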

Alpha-Beta + …
- Iterative deepening
- Singular extensions
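Iterative deepening runs the depth-limited search with h = 1, 2, 3, ... and keeps the decision of the deepest search that finished, so a move is always available when time runs out. The Python sketch below makes a few simplifying assumptions: plain minimax stands in for alpha-beta, the clock is checked only between iterations, and `iterative_deepening`, `max_depth`, and `time_budget_s` are hypothetical names.

```python
import time
from typing import Any, Optional, Tuple


def value(state, depth, max_to_move, successors, evaluate) -> float:
    """Plain depth-limited minimax (alpha-beta could be substituted here)."""
    children = successors(state)
    if depth == 0 or not children:
        return evaluate(state)
    vals = [value(c, depth - 1, not max_to_move, successors, evaluate) for c in children]
    return max(vals) if max_to_move else min(vals)


def iterative_deepening(state, successors, evaluate, max_depth: int,
                        time_budget_s: float) -> Optional[Tuple[Any, float, int]]:
    """Search to depth 1, 2, ... and keep the move from the deepest completed search.

    Simplification: the clock is checked only between iterations, so the
    last iteration may overrun the budget.
    """
    deadline = time.monotonic() + time_budget_s
    best = None
    children = successors(state)
    for depth in range(1, max_depth + 1):
        if time.monotonic() >= deadline:
            break
        vals = [value(c, depth - 1, False, successors, evaluate) for c in children]
        i = max(range(len(children)), key=lambda k: vals[k])
        best = (children[i], vals[i], depth)  # (move, backed-up value, depth reached)
    return best


def node(v, children=()):
    """Hypothetical test node: a static evaluation and a list of children."""
    return {"v": v, "children": list(children)}


A = node(5, [node(-10)])         # looks good at depth 1, refuted at depth 2
B = node(1, [node(2), node(1)])
root = node(0, [A, B])
succ = lambda n: n["children"]
ev = lambda n: n["v"]

move, v, d = iterative_deepening(root, succ, ev, max_depth=2, time_budget_s=1.0)
print(move is B, v, d)  # True 1 2
```

The depth-1 search prefers A, but the depth-2 search sees MIN’s reply and switches to B.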

Checkers (© Jonathan Schaeffer)

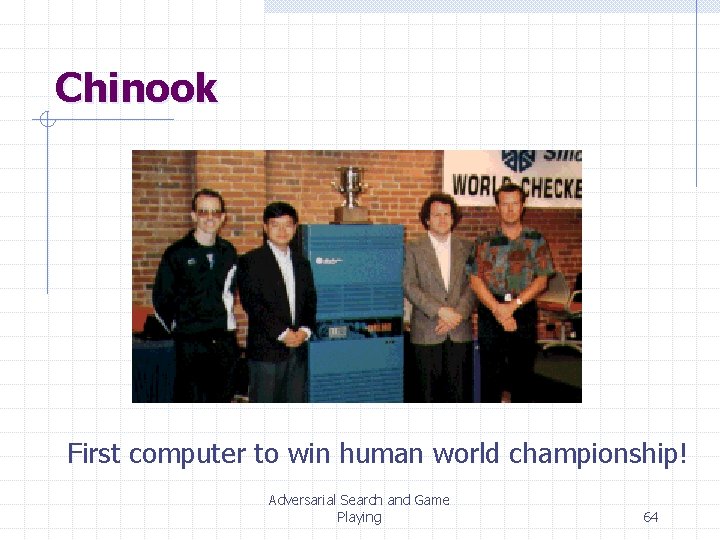

Chinook vs. Tinsley
- Name: Marion Tinsley
- Profession: Teaches mathematics
- Hobby: Checkers
- Record: Over 42 years, lost only 3 (!) games of checkers

(© Jonathan Schaeffer)

Chinook: First computer to win a human world championship!

Chess

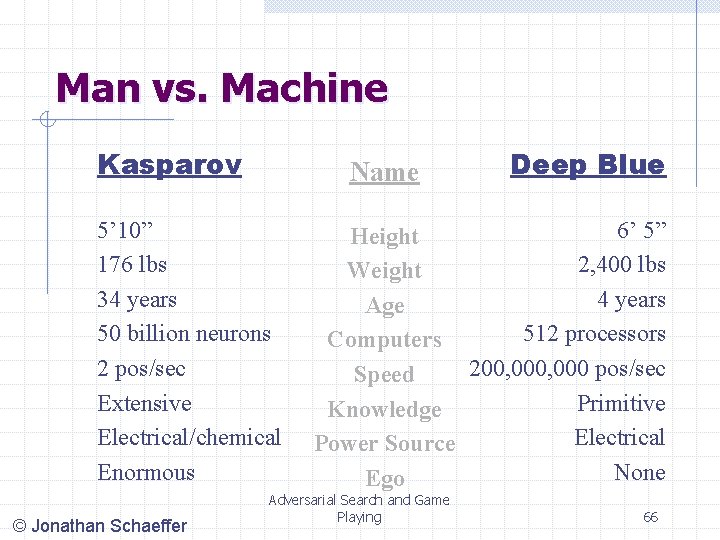

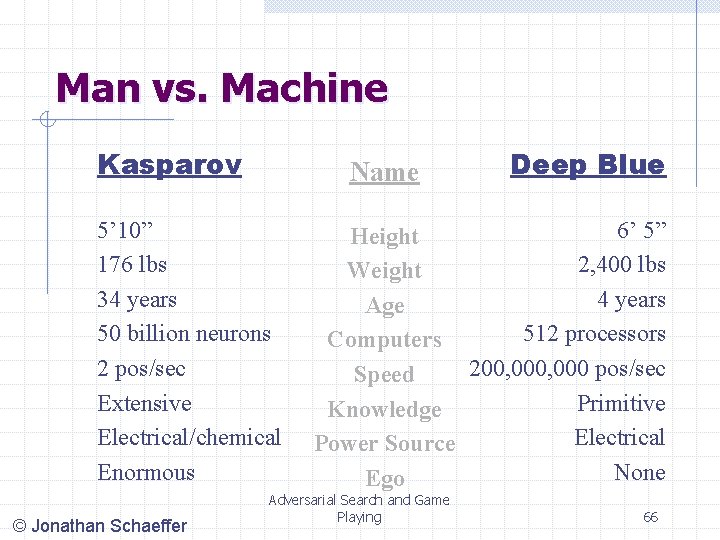

Man vs. Machine (© Jonathan Schaeffer)

| Name | Kasparov | Deep Blue |
|---|---|---|
| Height | 5’ 10” | 6’ 5” |
| Weight | 176 lbs | 2,400 lbs |
| Age | 34 years | 4 years |
| Computers | 50 billion neurons | 512 processors |
| Speed | 2 pos/sec | 200,000 pos/sec |
| Knowledge | Extensive | Primitive |
| Power Source | Electrical/chemical | Electrical |
| Ego | Enormous | None |

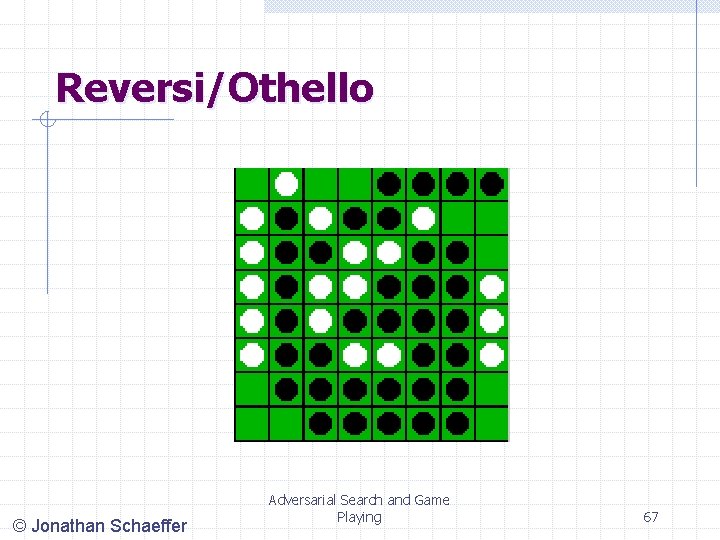

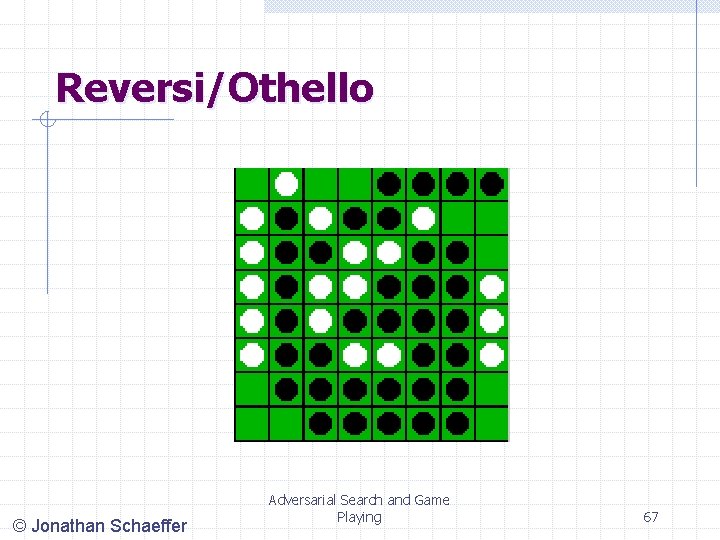

Reversi/Othello (© Jonathan Schaeffer)

Othello
- Name: Takeshi Murakami
- Title: World Othello Champion
- Crime: Man crushed by machine

(© Jonathan Schaeffer)

Go: On the One Side
- Name: Chen Zhixing
- Author: Handtalk (Goemate)
- Profession: Retired
- Computer skills: self-taught assembly language programmer
- Accomplishments: dominated computer Go for 4 years

(© Jonathan Schaeffer)

Go: And on the Other
Gave Handtalk a 9-stone handicap and still easily beat the program, thereby winning $15,000. (© Jonathan Schaeffer)

Perspective on Games: Pro
“Saying Deep Blue doesn’t really think about chess is like saying an airplane doesn’t really fly because it doesn’t flap its wings.” (Drew McDermott)

(© Jonathan Schaeffer)

Perspective on Games: Con
“Chess is the Drosophila of artificial intelligence. However, computer chess has developed much as genetics might have if the geneticists had concentrated their efforts starting in 1910 on breeding racing Drosophila. We would have some science, but mainly we would have very fast fruit flies.” (John McCarthy)

(© Jonathan Schaeffer)

Other Games
- Multi-player games, with or without alliances
- Games with randomness in the successor function (e.g., rolling dice)
- Incompletely known states (e.g., card games)

Summary
- Two-player games as a domain where action models are uncertain
- Optimal decision in the worst case
- Game tree
- Evaluation function / backed-up value
- Minimax procedure
- Alpha-beta procedure

Russell and norvig

Russell and norvig How dogs communicate

How dogs communicate Russell norvig

Russell norvig Adversarial search problems uses

Adversarial search problems uses Peter norvig design patterns

Peter norvig design patterns Russel norvig

Russel norvig Jillian and dawn are playing a game

Jillian and dawn are playing a game Greek vase shapes

Greek vase shapes Playing a decent game of table tennis (ping-pong).

Playing a decent game of table tennis (ping-pong). Foreshadowing examples in the most dangerous game

Foreshadowing examples in the most dangerous game Complete a interrogação abaixo usando o past continuous

Complete a interrogação abaixo usando o past continuous Adversarial stakeholders

Adversarial stakeholders The adversarial system

The adversarial system What is adversary system

What is adversary system Adversarial personalized ranking for recommendation

Adversarial personalized ranking for recommendation Pgd

Pgd Quantum generative adversarial learning

Quantum generative adversarial learning On adaptive attacks to adversarial example defenses

On adaptive attacks to adversarial example defenses Adversarial interview

Adversarial interview Adversarial system law definition

Adversarial system law definition Adversarial examples

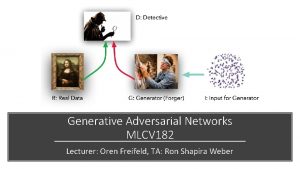

Adversarial examples Generative adversarial network

Generative adversarial network Spectral normalization gan

Spectral normalization gan Conditional generator

Conditional generator Adversarial multi-task learning for text classification

Adversarial multi-task learning for text classification Voice conversion

Voice conversion Adversarial training

Adversarial training Certified defenses against adversarial examples

Certified defenses against adversarial examples The limitations of deep learning in adversarial settings.

The limitations of deep learning in adversarial settings. Adversarial patch

Adversarial patch Melody randford

Melody randford Informed search and uninformed search in ai

Informed search and uninformed search in ai A formal approach to game design and game research

A formal approach to game design and game research The blind search algorithms are.

The blind search algorithms are. Federated discovery

Federated discovery Local search vs global search

Local search vs global search Federated search vs distributed search

Federated search vs distributed search Mail @ malaysia.images.search.yahoo.com

Mail @ malaysia.images.search.yahoo.com Best first search in ai

Best first search in ai Blind search adalah

Blind search adalah Video.search.yahoo.com search video

Video.search.yahoo.com search video Videos yahoo search

Videos yahoo search Binary search tree advantages and disadvantages

Binary search tree advantages and disadvantages Linear search vs binary search

Linear search vs binary search Images search yahoo

Images search yahoo Multilingual semantical markup

Multilingual semantical markup Comparison of uninformed search strategies

Comparison of uninformed search strategies Http://search.yahoo.com/search?ei=utf-8

Http://search.yahoo.com/search?ei=utf-8 Abcde games

Abcde games The farming game rules

The farming game rules Game lab game theory

Game lab game theory Liar game game theory

Liar game game theory Liar game game theory

Liar game game theory Achilles and ajax playing dice

Achilles and ajax playing dice Chess with the devil

Chess with the devil My brother and sister playing tennis at 11am yesterday

My brother and sister playing tennis at 11am yesterday Is playing

Is playing Lesson 1 construct an equilateral triangle

Lesson 1 construct an equilateral triangle David carpenter serial killer

David carpenter serial killer James russell odom

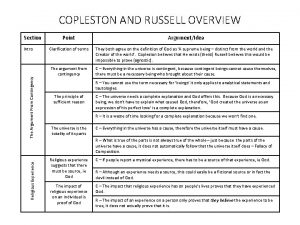

James russell odom Copleston and russell debate summary

Copleston and russell debate summary Russell and taylor operations management

Russell and taylor operations management Russell and atwell guardian

Russell and atwell guardian Russell quarterly economic and market review

Russell quarterly economic and market review Copleston and russell debate summary

Copleston and russell debate summary Russell quarterly economic and market review

Russell quarterly economic and market review Diagram on the playing area in volleyball

Diagram on the playing area in volleyball I like play badminton

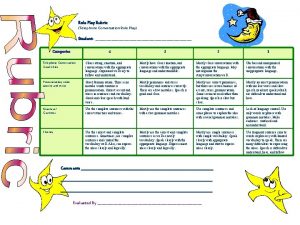

I like play badminton Analytic rubrics sample for role playing

Analytic rubrics sample for role playing I enjoy playing basketball revision

I enjoy playing basketball revision You say we are playing football indirect speech

You say we are playing football indirect speech Role-playing dimensions power bi

Role-playing dimensions power bi Playing nice in the sandbox

Playing nice in the sandbox Design a museum exhibit holistic rubric

Design a museum exhibit holistic rubric Copy the paragraph below

Copy the paragraph below