TDC 561 Network Programming Week 10 Performance Aspects

- Slides: 29

TDC 561 Network Programming

Week 10: Performance Aspects of End-to-End (Transport) Protocols and API Programming

Distributed Systems Frameworks (DS 520 -95 -103) DISC’ 99

Camelia Zlatea, Ph.D.

Email: czlatea@cs.depaul.edu

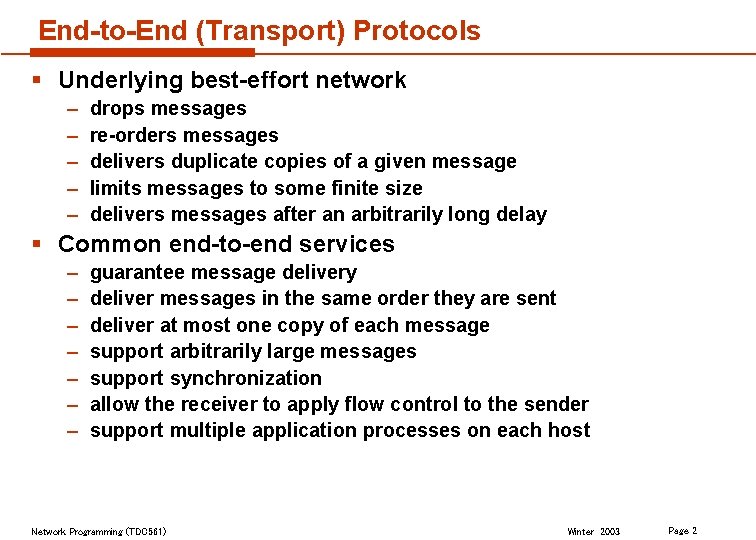

End-to-End (Transport) Protocols

- Underlying best-effort network
  - drops messages
  - re-orders messages
  - delivers duplicate copies of a given message
  - limits messages to some finite size
  - delivers messages after an arbitrarily long delay
- Common end-to-end services
  - guarantee message delivery
  - deliver messages in the same order they are sent
  - deliver at most one copy of each message
  - support arbitrarily large messages
  - support synchronization
  - allow the receiver to apply flow control to the sender
  - support multiple application processes on each host

Network Programming (TDC 561), Winter 2003

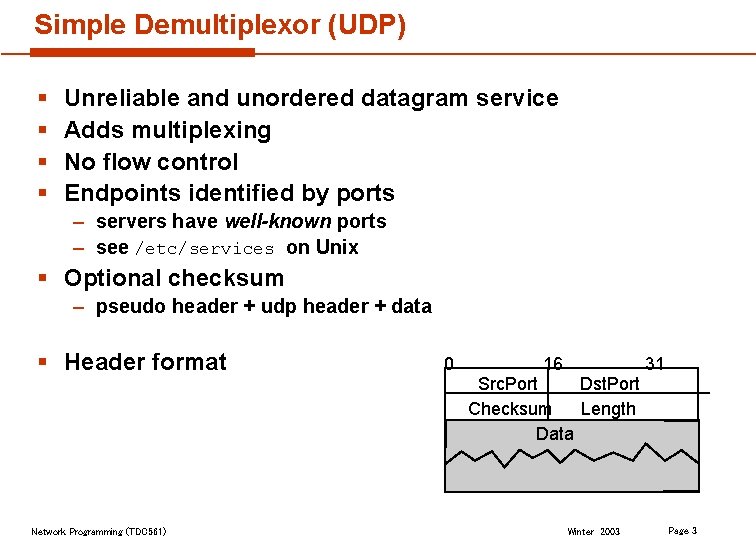

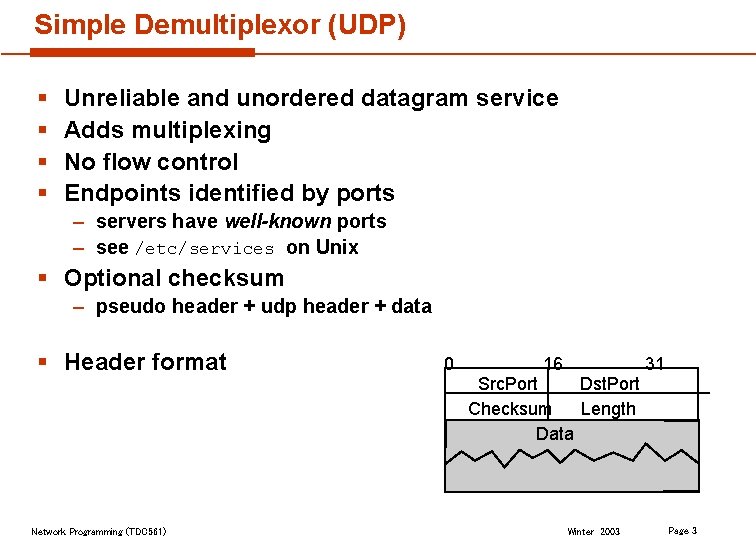

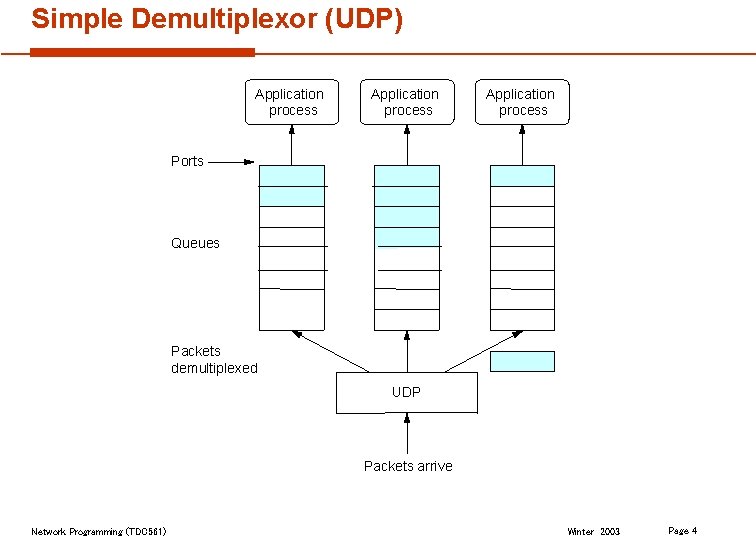

Simple Demultiplexor (UDP)

- Unreliable and unordered datagram service
- Adds multiplexing
- No flow control
- Endpoints identified by ports
  - servers have well-known ports
  - see /etc/services on Unix
- Optional checksum
  - pseudo header + UDP header + data
- Header format (32 bits per row; field order as in RFC 768)

| Bits 0-15 | Bits 16-31 |
|---|---|
| SrcPort | DstPort |
| Length | Checksum |
| Data (variable length) | |
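The header layout can be sketched in a few lines of Python. This is only an illustration of the four 16-bit fields, assuming the RFC 768 order (SrcPort, DstPort, Length, Checksum); the helper names `build_udp_datagram` and `parse_udp_datagram` are hypothetical, and the checksum is left at 0 (meaning "not computed" over IPv4) rather than calculated over the pseudo header.

```python
import struct

# Four 16-bit fields in network (big-endian) byte order: 8 bytes in total.
UDP_HEADER = struct.Struct("!HHHH")

def build_udp_datagram(src_port, dst_port, payload, checksum=0):
    """Prepend a UDP header to payload; checksum 0 means 'not computed'."""
    length = UDP_HEADER.size + len(payload)  # Length covers header plus data
    return UDP_HEADER.pack(src_port, dst_port, length, checksum) + payload

def parse_udp_datagram(datagram):
    """Split a datagram into its header fields and its data."""
    src_port, dst_port, length, checksum = UDP_HEADER.unpack_from(datagram)
    data = datagram[UDP_HEADER.size:length]
    return src_port, dst_port, length, checksum, data

datagram = build_udp_datagram(5000, 53, b"hello")
print(parse_udp_datagram(datagram))
```

Demultiplexing then amounts to using the parsed DstPort to pick the queue of the application process bound to that port.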

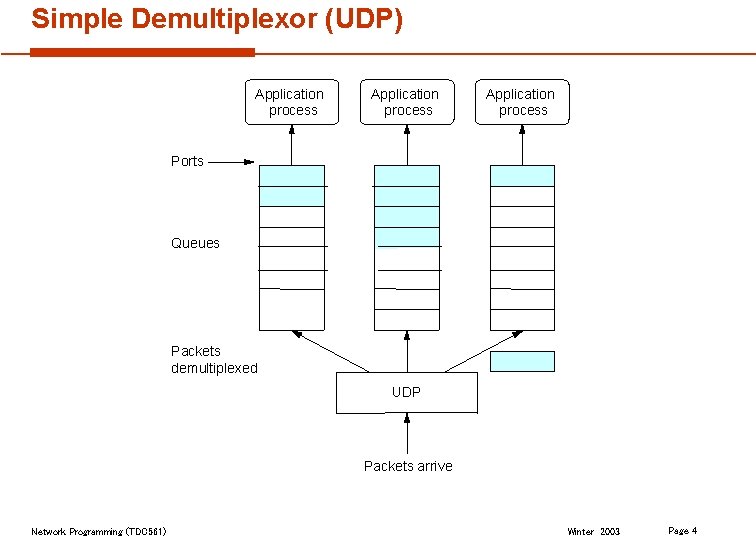

Simple Demultiplexor (UDP)

Figure: packets arrive at UDP and are demultiplexed into per-port queues, each of which is read by an application process.

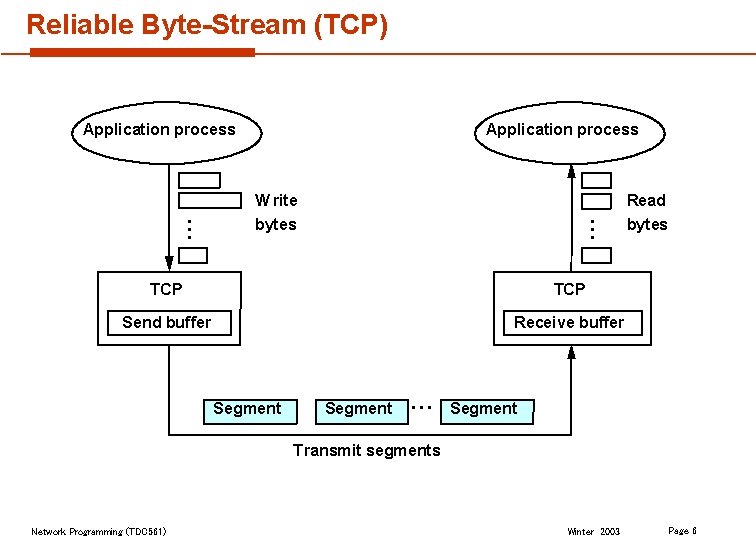

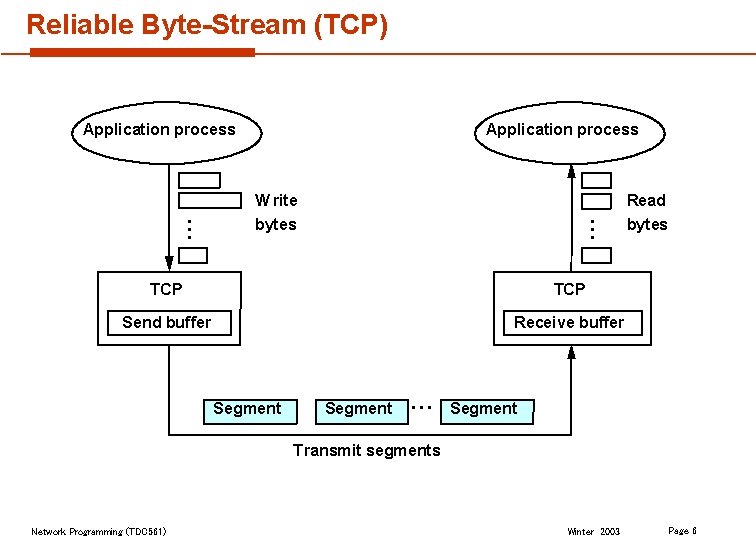

Reliable Byte-Stream (TCP)

- Connection-oriented
- Byte-stream
  - sending process writes some number of bytes
  - TCP breaks them into segments and sends them via IP
  - receiving process reads some number of bytes
- Full duplex
- Flow control: keep sender from overrunning receiver
- Congestion control: keep sender from overrunning network

Reliable Byte-Stream (TCP)

Figure: the sending application process writes bytes into the TCP send buffer, TCP transmits them as segments, and the receiving application process reads bytes from the TCP receive buffer.

End-to-End Issues

TCP is based on the sliding window protocol used at the data link level, but the situation is very different.

- Potentially connects many different hosts
  - need explicit connection establishment and termination
- Potentially different RTT (Round Trip Time)
  - need adaptive timeout mechanism
- Potentially long delay in network
  - need to be prepared for arrival of very old packets
- Potentially different capacity at destination
  - need to accommodate different amounts of buffering
- Potentially different network capacity
  - need to be prepared for network congestion

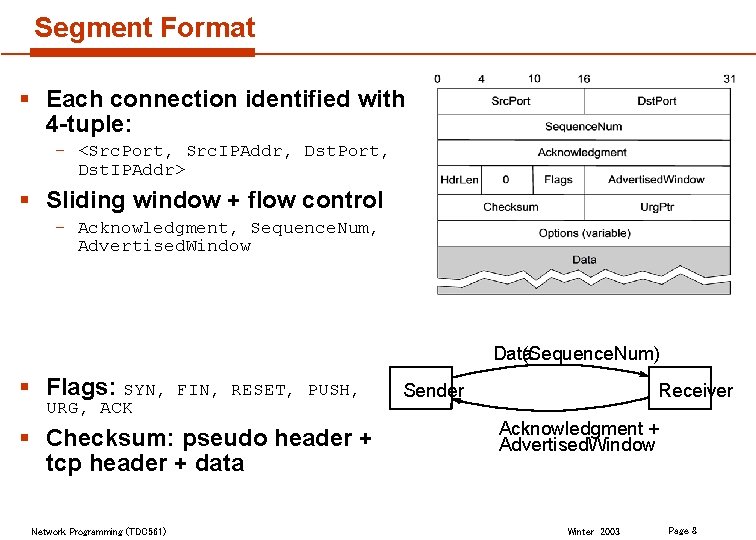

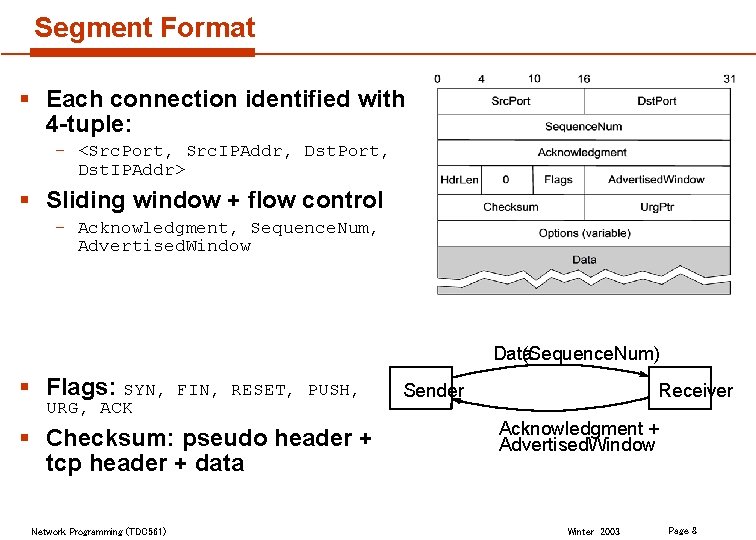

Segment Format

- Each connection identified with a 4-tuple:
  - <SrcPort, SrcIPAddr, DstPort, DstIPAddr>
- Sliding window + flow control
  - Acknowledgment, SequenceNum, AdvertisedWindow
  - sender to receiver: Data (SequenceNum)
  - receiver to sender: Acknowledgment + AdvertisedWindow
- Flags: SYN, FIN, RESET, PUSH, URG, ACK
- Checksum: pseudo header + TCP header + data

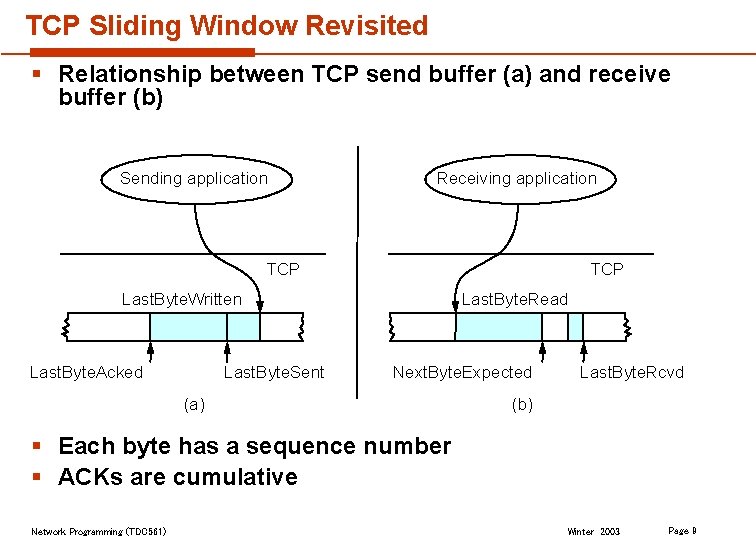

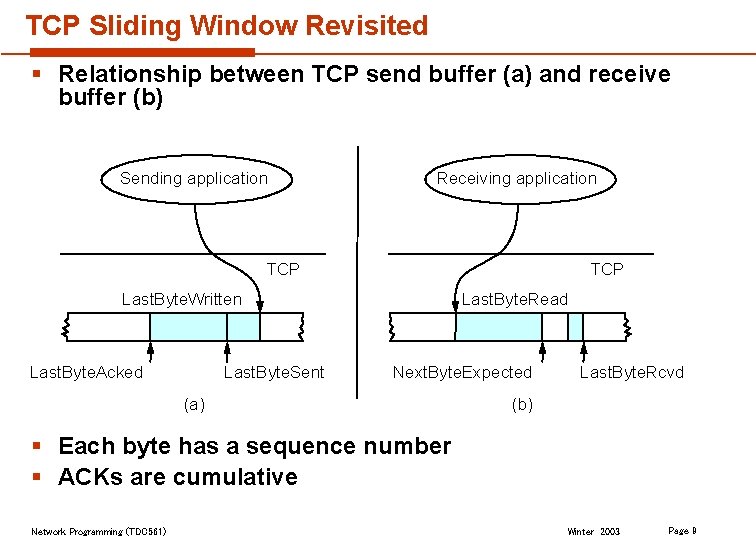

TCP Sliding Window Revisited

- Relationship between TCP send buffer (a) and receive buffer (b)

Figure: (a) the sending application writes into the TCP send buffer, which is marked by LastByteAcked, LastByteSent, and LastByteWritten; (b) the receiving application reads from the TCP receive buffer, which is marked by LastByteRead, NextByteExpected, and LastByteRcvd.

- Each byte has a sequence number
- ACKs are cumulative

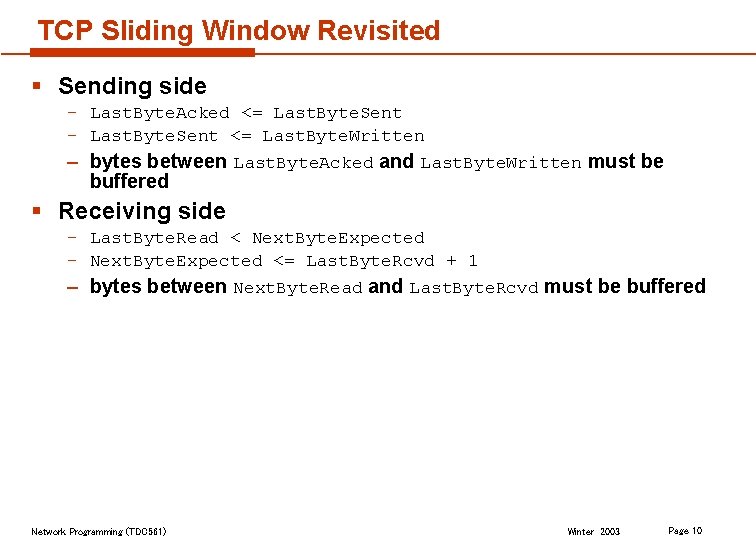

TCP Sliding Window Revisited

- Sending side
  - LastByteAcked ≤ LastByteSent
  - LastByteSent ≤ LastByteWritten
  - bytes between LastByteAcked and LastByteWritten must be buffered
- Receiving side
  - LastByteRead < NextByteExpected
  - NextByteExpected ≤ LastByteRcvd + 1
  - bytes between LastByteRead and LastByteRcvd must be buffered

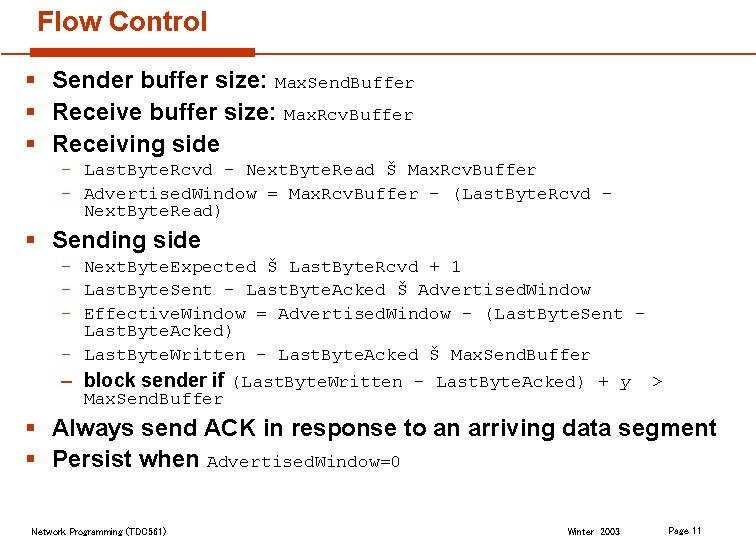

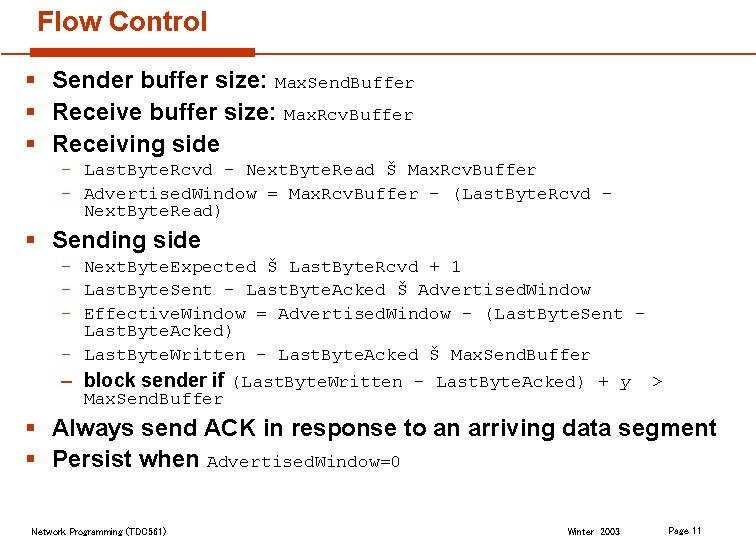

Flow Control

- Send buffer size: MaxSendBuffer
- Receive buffer size: MaxRcvBuffer
- Receiving side
  - NextByteExpected ≤ LastByteRcvd + 1
  - LastByteRcvd - LastByteRead ≤ MaxRcvBuffer
  - AdvertisedWindow = MaxRcvBuffer - (LastByteRcvd - LastByteRead)
- Sending side
  - LastByteSent - LastByteAcked ≤ AdvertisedWindow
  - EffectiveWindow = AdvertisedWindow - (LastByteSent - LastByteAcked)
  - LastByteWritten - LastByteAcked ≤ MaxSendBuffer
  - block the sender if (LastByteWritten - LastByteAcked) + y > MaxSendBuffer, where y is the number of bytes the application is trying to write
- Always send an ACK in response to an arriving data segment
- Persist when AdvertisedWindow = 0
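The flow-control bookkeeping above can be written out as a small sketch. The class and method names are hypothetical, the read pointer is taken to be LastByteRead, and clamping the effective window at zero is an added assumption rather than something the slide states.

```python
from dataclasses import dataclass

@dataclass
class Receiver:
    max_rcv_buffer: int
    last_byte_rcvd: int
    last_byte_read: int

    def advertised_window(self):
        # Free space left in the receive buffer.
        return self.max_rcv_buffer - (self.last_byte_rcvd - self.last_byte_read)

@dataclass
class Sender:
    max_send_buffer: int
    last_byte_written: int
    last_byte_sent: int
    last_byte_acked: int

    def effective_window(self, advertised_window):
        # How much more may be sent now; clamped at 0 (assumption).
        in_flight = self.last_byte_sent - self.last_byte_acked
        return max(0, advertised_window - in_flight)

    def would_block(self, y):
        # True if writing y more bytes would overflow the send buffer.
        return (self.last_byte_written - self.last_byte_acked) + y > self.max_send_buffer

receiver = Receiver(max_rcv_buffer=4096, last_byte_rcvd=3000, last_byte_read=1000)
sender = Sender(max_send_buffer=8192, last_byte_written=9000,
                last_byte_sent=3500, last_byte_acked=2000)
window = receiver.advertised_window()
print(window, sender.effective_window(window), sender.would_block(2000))
```

With these sample numbers the receiver has 2000 unread bytes buffered, so it advertises the remaining 2096 bytes, of which 1500 are already in flight.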

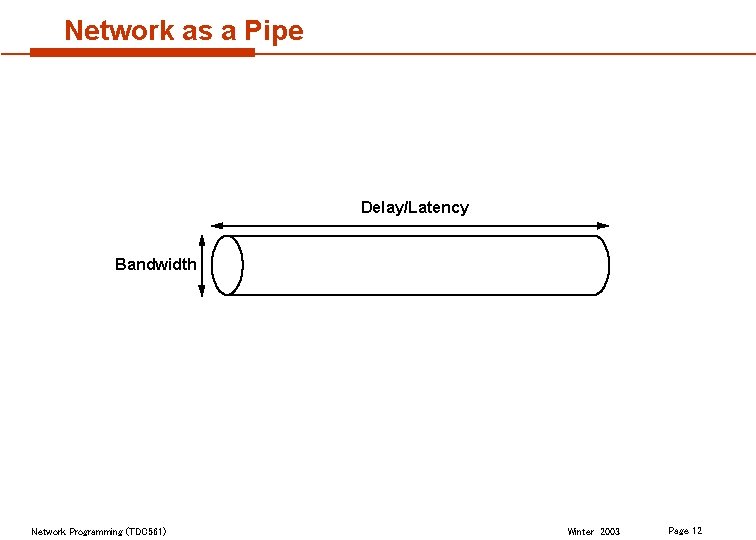

Network as a Pipe

Figure: the network drawn as a pipe, labeled with Delay/Latency and Bandwidth.

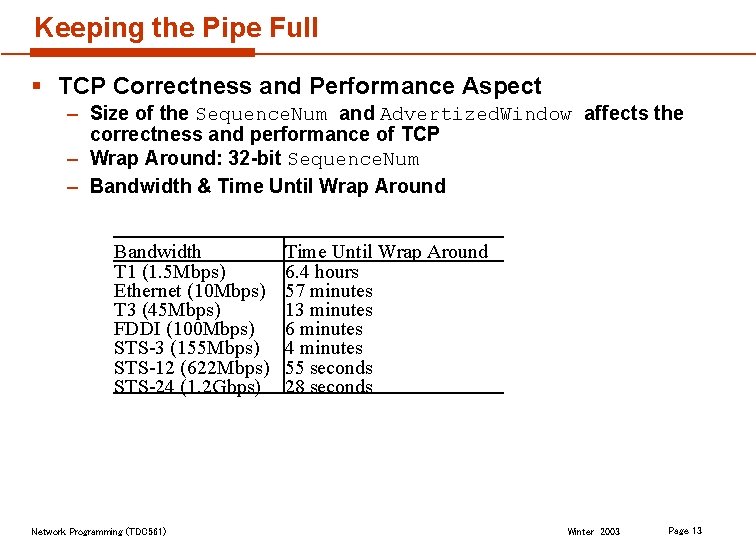

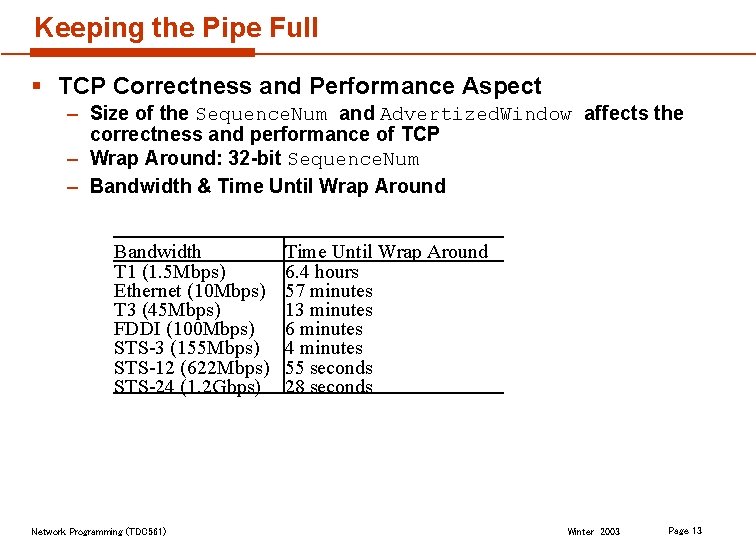

Keeping the Pipe Full

- TCP Correctness and Performance Aspect
  - The sizes of the SequenceNum and AdvertisedWindow fields affect the correctness and performance of TCP
  - Wrap around: 32-bit SequenceNum
  - Bandwidth and time until wrap around

| Bandwidth | Time Until Wrap Around |
|---|---|
| T1 (1.5 Mbps) | 6.4 hours |
| Ethernet (10 Mbps) | 57 minutes |
| T3 (45 Mbps) | 13 minutes |
| FDDI (100 Mbps) | 6 minutes |
| STS-3 (155 Mbps) | 4 minutes |
| STS-12 (622 Mbps) | 55 seconds |
| STS-24 (1.2 Gbps) | 28 seconds |
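The wrap-around times follow from dividing the 32-bit sequence space (one sequence number per byte) by the link rate. A minimal sketch, assuming the nominal link rates listed above and a hypothetical helper name:

```python
SEQUENCE_SPACE_BYTES = 2 ** 32  # one sequence number per byte

def seconds_until_wraparound(bandwidth_bps):
    """Time to send 2**32 bytes when transmitting continuously at bandwidth_bps."""
    return SEQUENCE_SPACE_BYTES * 8 / bandwidth_bps

LINKS_BPS = {
    "T1": 1.5e6,
    "Ethernet": 10e6,
    "T3": 45e6,
    "FDDI": 100e6,
    "STS-3": 155e6,
    "STS-12": 622e6,
    "STS-24": 1.2e9,
}

for name, bps in LINKS_BPS.items():
    print(f"{name}: {seconds_until_wraparound(bps):.0f} s")
```

The faster the link, the sooner an old segment with a reused sequence number could still be in the network, which is why the field size is a correctness issue and not only a performance one.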

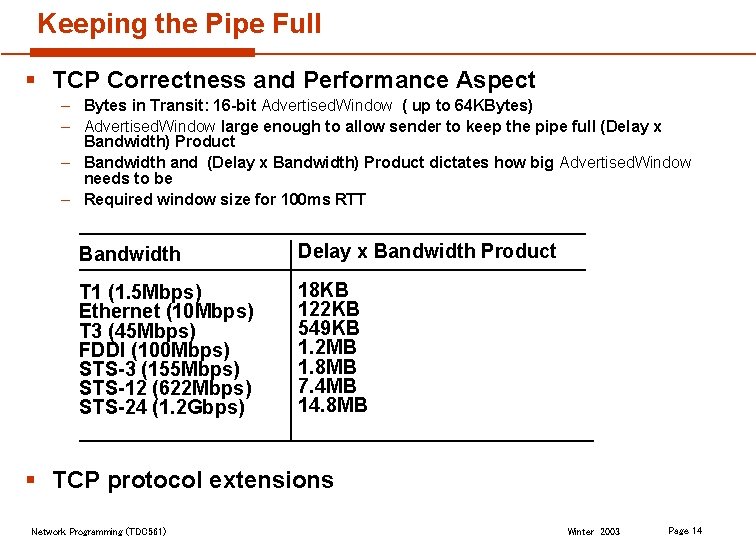

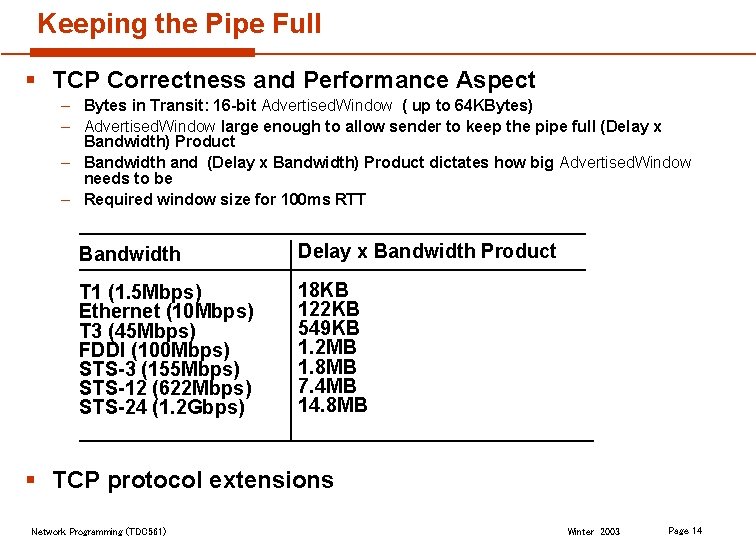

Keeping the Pipe Full

- TCP Correctness and Performance Aspect
  - Bytes in transit: 16-bit AdvertisedWindow (up to 64 KB)
  - AdvertisedWindow must be large enough to let the sender keep the pipe full, that is, to cover the (Delay x Bandwidth) product
  - Bandwidth and the (Delay x Bandwidth) product dictate how big AdvertisedWindow needs to be
  - Required window size for 100 ms RTT

| Bandwidth | Delay x Bandwidth Product |
|---|---|
| T1 (1.5 Mbps) | 18 KB |
| Ethernet (10 Mbps) | 122 KB |
| T3 (45 Mbps) | 549 KB |
| FDDI (100 Mbps) | 1.2 MB |
| STS-3 (155 Mbps) | 1.8 MB |
| STS-12 (622 Mbps) | 7.4 MB |
| STS-24 (1.2 Gbps) | 14.8 MB |

- TCP protocol extensions
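The required window is simply bandwidth times RTT, converted to bytes. The sketch below uses integer arithmetic and hypothetical helper names; it also checks the result against the largest value a 16-bit AdvertisedWindow can carry without extensions.

```python
MAX_16_BIT_WINDOW = 2 ** 16 - 1  # largest value a 16-bit field can hold

def required_window_bytes(bandwidth_bps, rtt_ms):
    """Delay x bandwidth product in bytes (bits/s * ms / 1000 / 8)."""
    return bandwidth_bps * rtt_ms // 8000

def fits_in_16_bit_window(bandwidth_bps, rtt_ms):
    return required_window_bytes(bandwidth_bps, rtt_ms) <= MAX_16_BIT_WINDOW

for name, bps in [("T1", 1_500_000), ("Ethernet", 10_000_000), ("T3", 45_000_000)]:
    need = required_window_bytes(bps, 100)
    print(f"{name}: {need} bytes, fits in 16 bits: {fits_in_16_bit_window(bps, 100)}")
```

Of the links in the table, only T1 fits at a 100 ms RTT, which is the motivation for the TCP protocol extensions mentioned in the last bullet.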

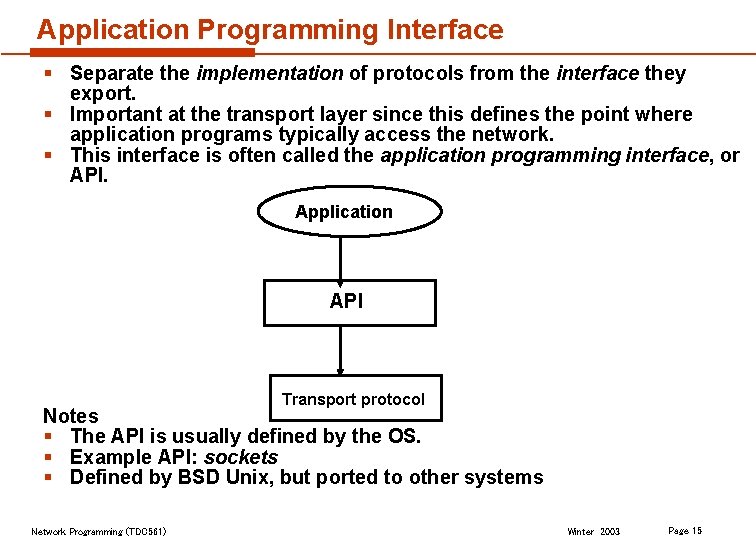

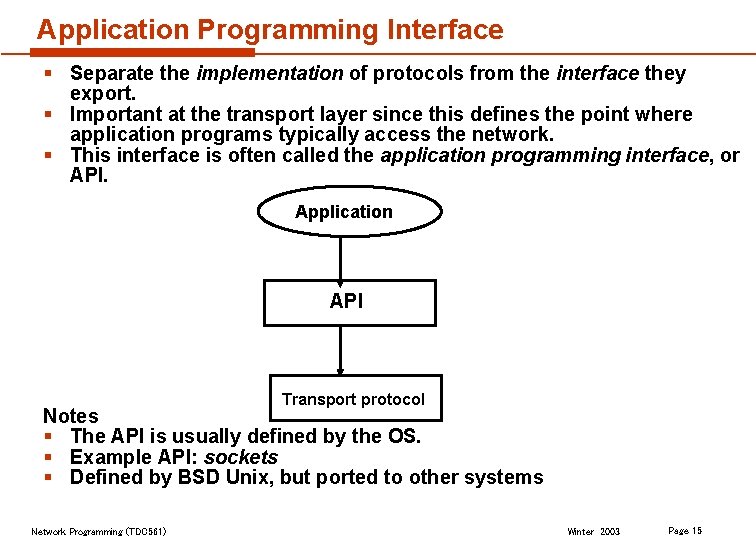

Application Programming Interface

- Separate the implementation of protocols from the interface they export.
- Important at the transport layer, since this defines the point where application programs typically access the network.
- This interface is often called the application programming interface, or API.

Figure: the API sits between the application and the transport protocol.

Notes

- The API is usually defined by the OS.
- Example API: sockets
- Defined by BSD Unix, but ported to other systems

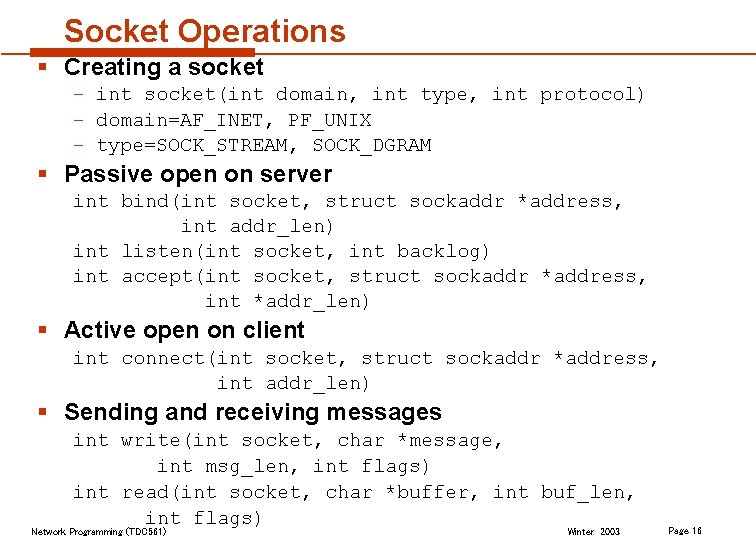

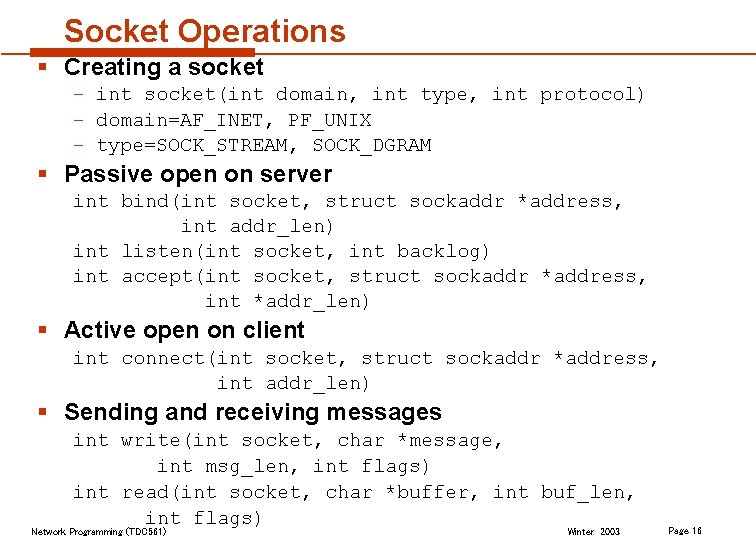

Socket Operations

- Creating a socket
  - `int socket(int domain, int type, int protocol)`
  - domain = AF_INET, PF_UNIX
  - type = SOCK_STREAM, SOCK_DGRAM
- Passive open on server
  - `int bind(int socket, struct sockaddr *address, int addr_len)`
  - `int listen(int socket, int backlog)`
  - `int accept(int socket, struct sockaddr *address, int *addr_len)`
- Active open on client
  - `int connect(int socket, struct sockaddr *address, int addr_len)`
- Sending and receiving messages (the calls that take a flags argument are send and recv; write and read take only three arguments)
  - `int send(int socket, char *message, int msg_len, int flags)`
  - `int recv(int socket, char *buffer, int buf_len, int flags)`
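The same sequence of calls can be tried out with Python's `socket` module, which wraps the BSD sockets API. The following is a minimal loopback sketch, not a production server: the function names `serve_once` and `echo_roundtrip` are hypothetical, the server handles a single connection, and port 0 is used so the OS picks a free port.

```python
import socket
import threading

def serve_once(listener):
    """Accept one connection and echo back everything the client sends."""
    conn, _addr = listener.accept()      # passive open completes here
    with conn:
        while True:
            data = conn.recv(1024)
            if not data:                 # empty read: client closed its side
                break
            conn.sendall(data)

def echo_roundtrip(message):
    # Passive open on the server: socket, bind, listen.
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))      # port 0: let the OS choose a free port
    listener.listen(1)
    port = listener.getsockname()[1]
    server = threading.Thread(target=serve_once, args=(listener,))
    server.start()

    # Active open on the client: socket, connect.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as client:
        client.connect(("127.0.0.1", port))
        client.sendall(message)
        client.shutdown(socket.SHUT_WR)  # signal the end of our byte stream
        chunks = []
        while True:
            data = client.recv(1024)
            if not data:
                break
            chunks.append(data)

    server.join()
    listener.close()
    return b"".join(chunks)

print(echo_roundtrip(b"hello, TDC 561"))
```

The client reads in a loop because TCP is a byte stream: a single `recv` may return fewer bytes than were sent in one `sendall`.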

Performance

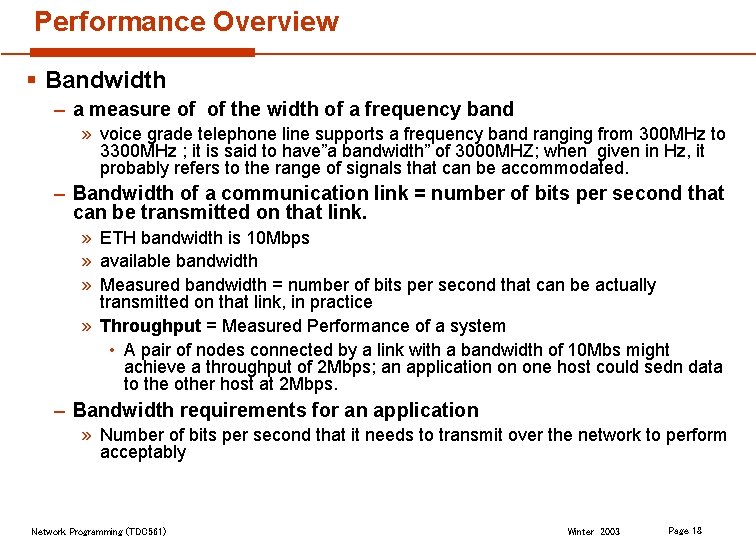

Performance Overview

- Bandwidth
  - In signal terms, a measure of the width of a frequency band
    - a voice-grade telephone line supports a frequency band ranging from 300 Hz to 3300 Hz, so it is said to have a bandwidth of 3000 Hz; a bandwidth given in Hz usually refers to the range of signals that can be accommodated
  - Bandwidth of a communication link = number of bits per second that can be transmitted on that link
    - Ethernet bandwidth is 10 Mbps
    - available bandwidth
    - measured bandwidth = number of bits per second that can actually be transmitted on that link in practice
    - throughput = measured performance of a system
      - A pair of nodes connected by a link with a bandwidth of 10 Mbps might achieve a throughput of only 2 Mbps; an application on one host could then send data to the other host at 2 Mbps.
  - Bandwidth requirements for an application
    - number of bits per second that it needs to transmit over the network to perform acceptably

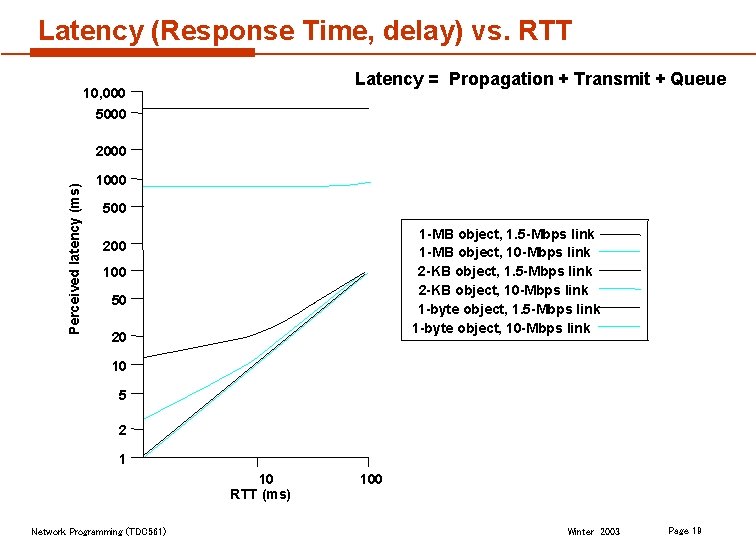

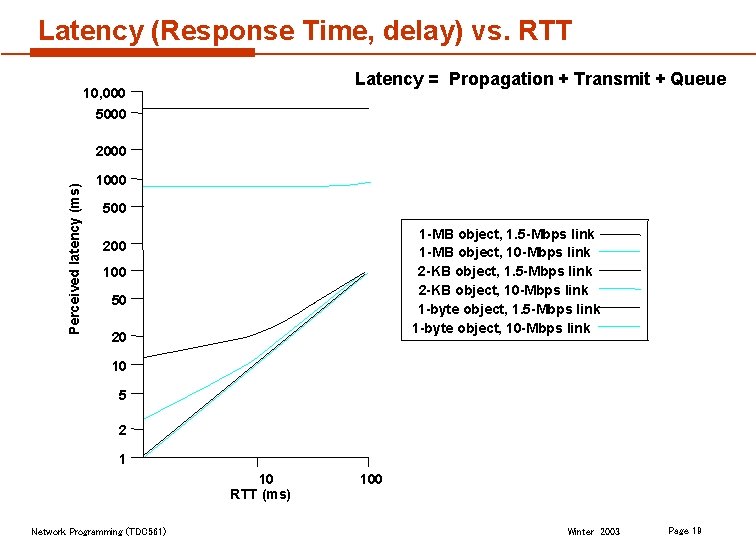

Latency (Response Time, Delay) vs. RTT

Latency = Propagation + Transmit + Queue

Figure: perceived latency (ms, log scale from 1 to 10,000) versus RTT (ms), with one curve for each combination of object size (1 byte, 2 KB, 1 MB) and link speed (1.5 Mbps, 10 Mbps).

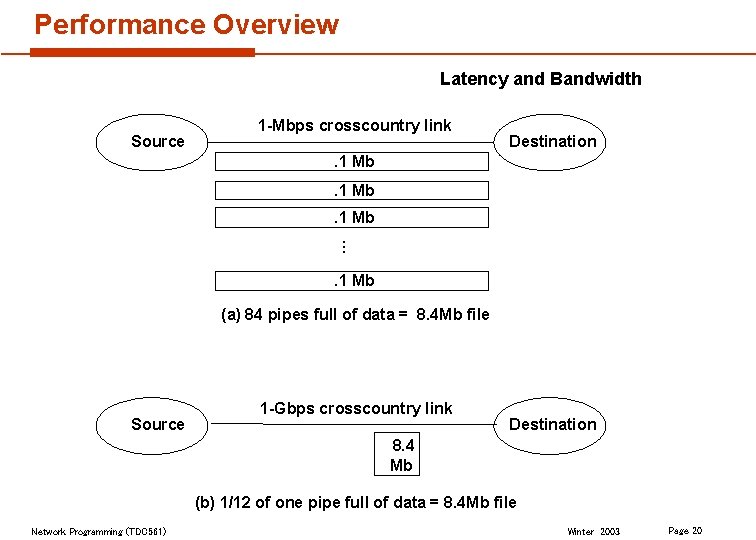

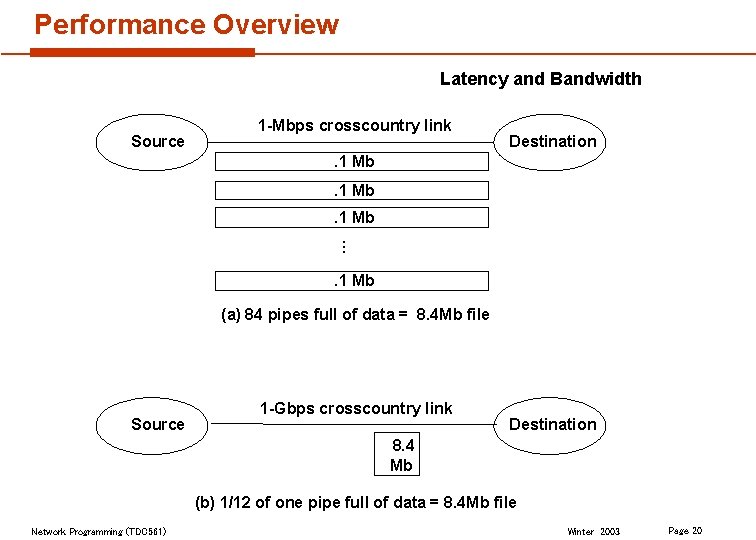

Performance Overview: Latency and Bandwidth

Figure: a source and a destination joined by a cross-country link. (a) On a 1-Mbps link, one pipe full holds 0.1 Mb, so an 8.4 Mb file is 84 pipes full of data. (b) On a 1-Gbps link, the same 8.4 Mb file is 1/12 of one pipe full of data.

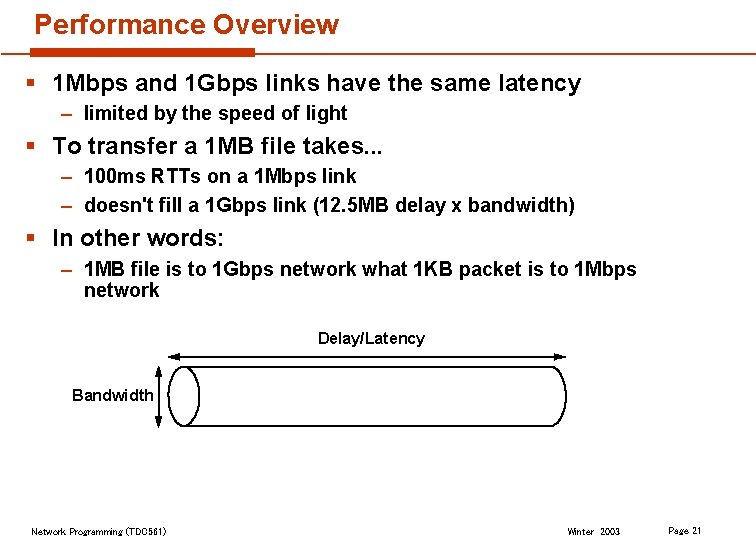

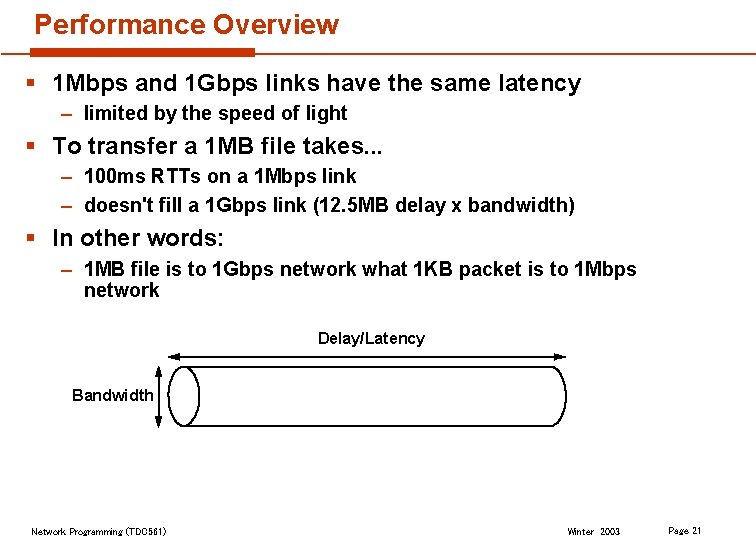

Performance Overview

- 1 Mbps and 1 Gbps links have the same latency
  - limited by the speed of light
- To transfer a 1 MB file
  - takes about 84 RTTs of 100 ms each on a 1 Mbps link (8.4 Mb of data at 0.1 Mb per RTT)
  - doesn't fill a 1 Gbps link even once (12.5 MB delay x bandwidth)
- In other words:
  - a 1 MB file is to a 1 Gbps network what a 1 KB packet is to a 1 Mbps network

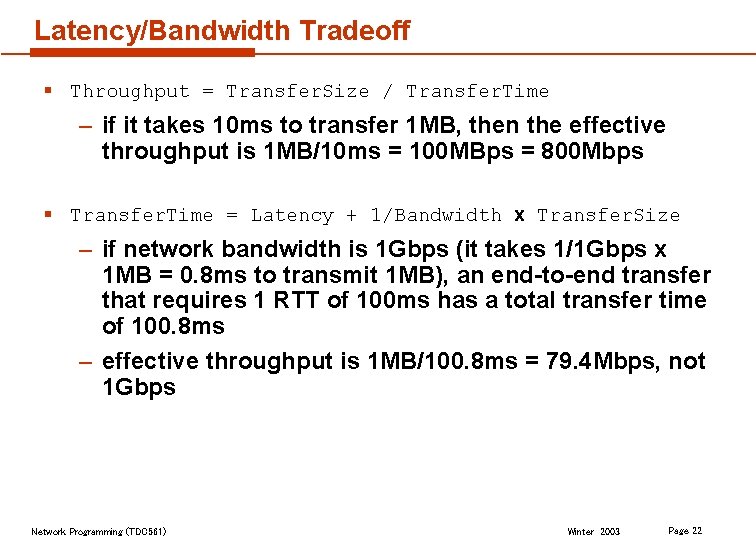

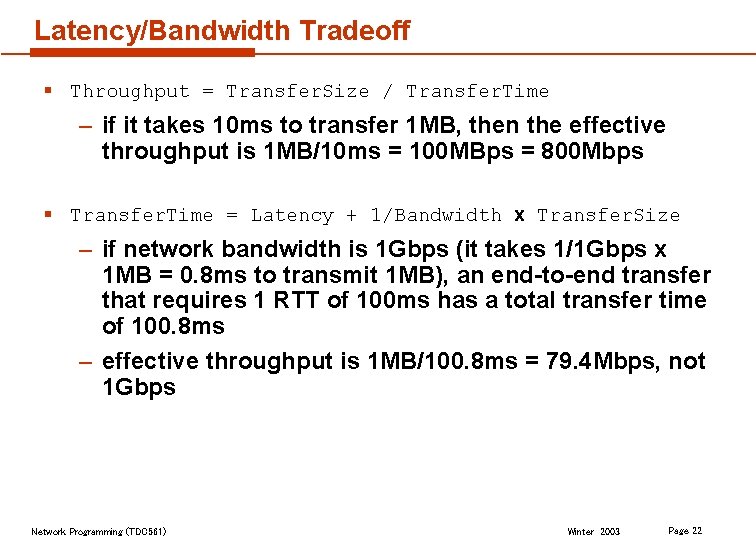

Latency/Bandwidth Tradeoff

- Throughput = TransferSize / TransferTime
  - if it takes 10 ms to transfer 1 MB, then the effective throughput is 1 MB / 10 ms = 100 MBps = 800 Mbps
- TransferTime = Latency + 1/Bandwidth x TransferSize
  - if network bandwidth is 1 Gbps, it takes 1/1 Gbps x 1 MB = 8 ms to transmit 1 MB (taking 1 MB as 8 Mb), so an end-to-end transfer that requires 1 RTT of 100 ms has a total transfer time of 108 ms
  - effective throughput is 1 MB / 108 ms = 74.1 Mbps, not 1 Gbps
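The two formulas can be evaluated directly. This is a sketch under the assumption that 1 MB is taken as 8,000,000 bits; the function names are hypothetical.

```python
def transfer_time_s(latency_s, bandwidth_bps, size_bits):
    """TransferTime = Latency + TransferSize / Bandwidth."""
    return latency_s + size_bits / bandwidth_bps

def effective_throughput_bps(latency_s, bandwidth_bps, size_bits):
    """Throughput = TransferSize / TransferTime."""
    return size_bits / transfer_time_s(latency_s, bandwidth_bps, size_bits)

ONE_MB_BITS = 8_000_000  # assumption: 1 MB = 10**6 bytes

# A 1 MB transfer over a 1 Gbps network that needs one 100 ms RTT.
time_s = transfer_time_s(0.1, 1e9, ONE_MB_BITS)
rate_bps = effective_throughput_bps(0.1, 1e9, ONE_MB_BITS)
print(f"transfer time: {time_s * 1000:.1f} ms")
print(f"effective throughput: {rate_bps / 1e6:.1f} Mbps")
```

Raising `size_bits` while holding the latency fixed moves the result toward the raw link bandwidth, since the fixed RTT is amortized over more data.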

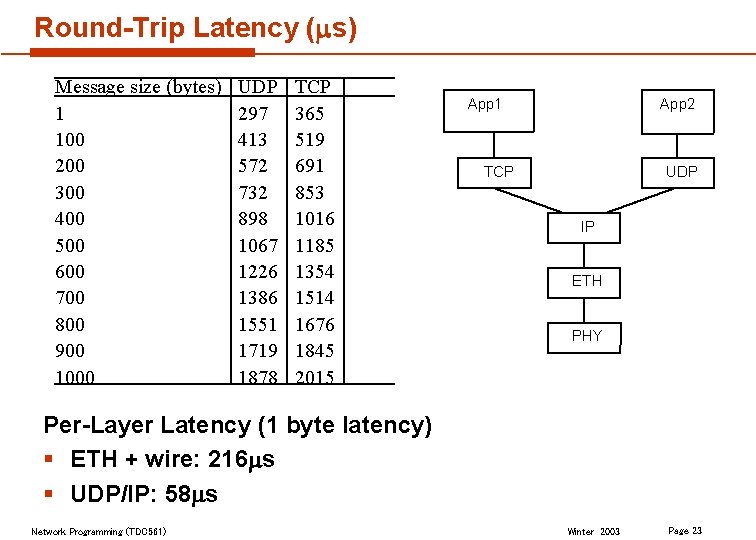

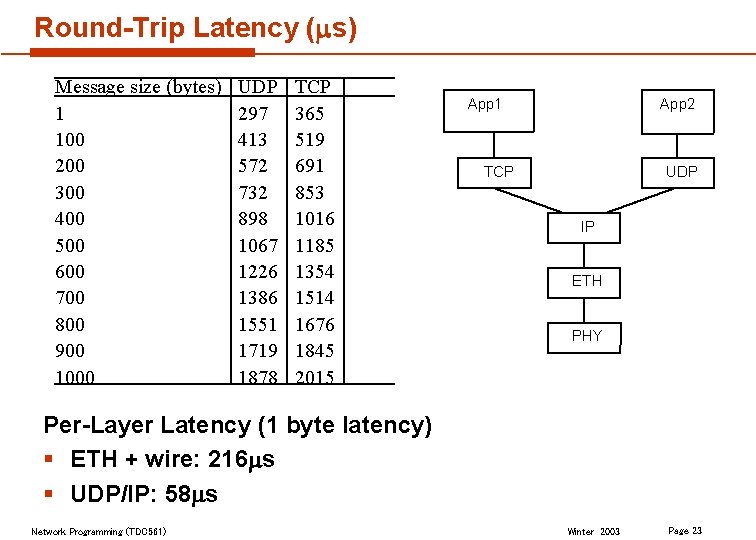

Round-Trip Latency (µs)

| Message size (bytes) | UDP | TCP |
|---|---|---|
| 1 | 297 | 365 |
| 100 | 413 | 519 |
| 200 | 572 | 691 |
| 300 | 732 | 853 |
| 400 | 898 | 1016 |
| 500 | 1067 | 1185 |
| 600 | 1226 | 1354 |
| 700 | 1386 | 1514 |
| 800 | 1551 | 1676 |
| 900 | 1719 | 1845 |
| 1000 | 1878 | 2015 |

Figure: protocol stack with App1 and App2 on top of TCP and UDP, which run over IP, ETH, and PHY.

Per-Layer Latency (1-byte latency)

- ETH + wire: 216 µs
- UDP/IP: 58 µs
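Two quantities can be read off the latency measurements with simple arithmetic: the average cost of each additional payload byte, and the extra latency TCP adds over UDP at each size. The sketch below copies the measured values and leaves them in the table's own time unit; the function names are hypothetical.

```python
# Measured round-trip latencies, in the table's time unit.
SIZES = [1, 100, 200, 300, 400, 500, 600, 700, 800, 900, 1000]
UDP = [297, 413, 572, 732, 898, 1067, 1226, 1386, 1551, 1719, 1878]
TCP = [365, 519, 691, 853, 1016, 1185, 1354, 1514, 1676, 1845, 2015]

def per_byte_cost(sizes, latencies):
    """Average extra round-trip latency per additional payload byte."""
    return (latencies[-1] - latencies[0]) / (sizes[-1] - sizes[0])

def tcp_minus_udp(udp, tcp):
    """Extra round-trip latency of TCP over UDP at each message size."""
    return [t - u for u, t in zip(udp, tcp)]

print(f"UDP per-byte cost: {per_byte_cost(SIZES, UDP):.2f}")
print(f"TCP per-byte cost: {per_byte_cost(SIZES, TCP):.2f}")
print("TCP - UDP:", tcp_minus_udp(UDP, TCP))
```

The fixed part of the latency (the 1-byte case) is what the per-layer breakdown attributes to the individual layers; the per-byte slope is nearly the same for both protocols.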

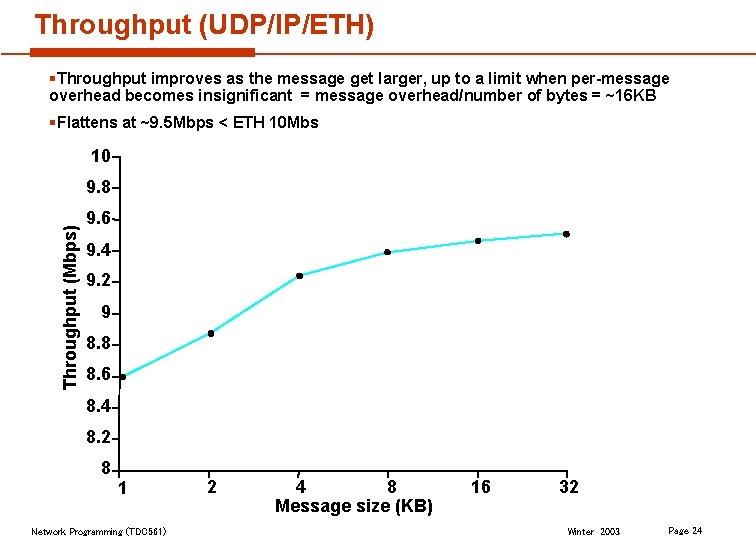

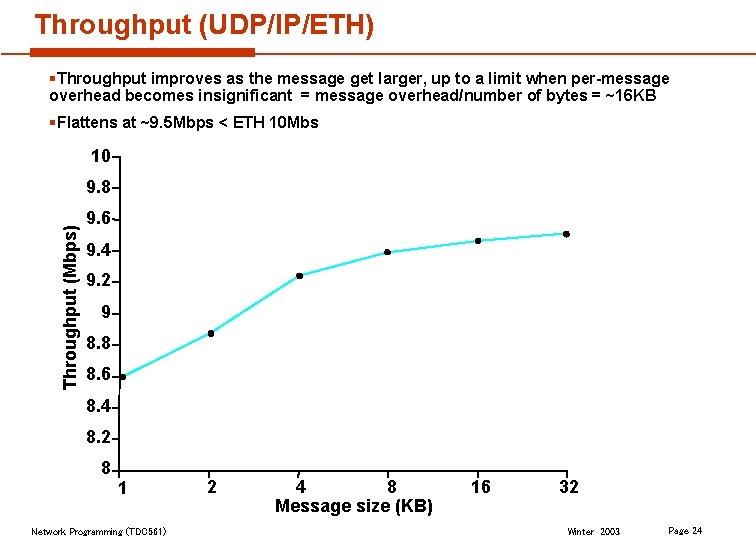

Throughput (UDP/IP/ETH)

- Throughput improves as messages get larger, up to the point (around 16 KB) where the per-message overhead, amortized over the number of bytes in the message, becomes insignificant
- Flattens at ~9.5 Mbps, just below the 10 Mbps Ethernet bandwidth

Figure: throughput (Mbps, axis from 8 to 10) versus message size (KB, from 1 to 32).

- Notes
  - transferring a large amount of data helps improve the effective throughput; in the limit, an infinitely large transfer size causes the effective throughput to approach the network bandwidth
  - having to endure more than one RTT will hurt the effective throughput for any transfer of finite size, and will be most noticeable for small transfers

Implications

- Congestion control
  - feedback-based mechanisms require an RTT to adjust
  - can send 10 MB in one 100 ms RTT on a 1 Gbps network
  - that 10 MB might congest a router and lead to massive losses
  - can lose half a delay x bandwidth product's worth of data during slow start
  - reservations work for continuous streams (e.g., video), but require an extra RTT for bulk transfers
- Retransmissions
  - retransmitting a packet costs 1 RTT
  - dropping even one packet (cell) halves effective bandwidth
  - retransmission also implies buffering at the sender
  - possible solution: forward error correction (FEC)
- Trading bandwidth for latency
  - each RTT is precious
  - willing to "waste" bandwidth to save latency
  - example: pre-fetching

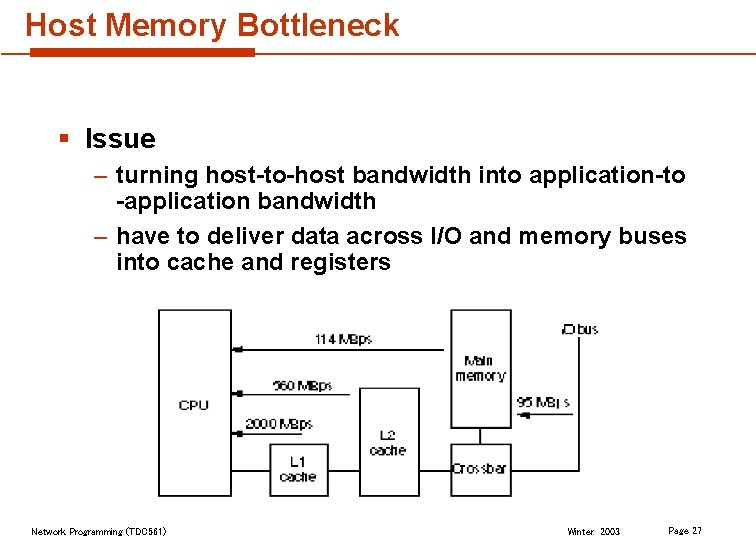

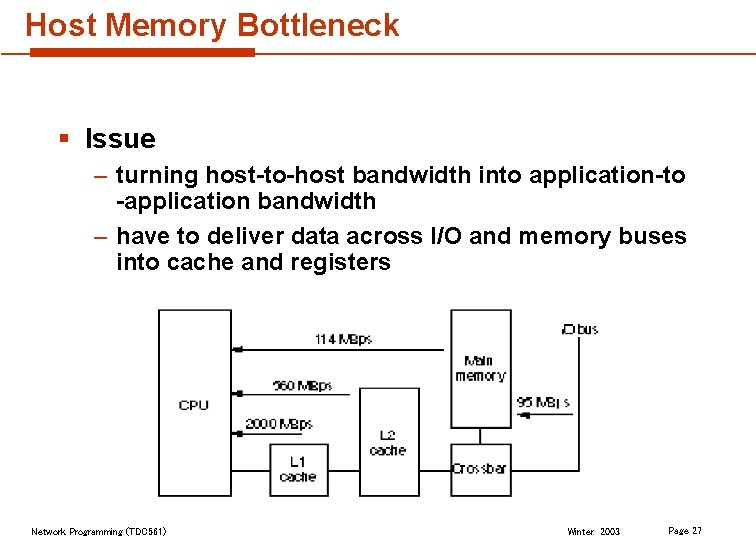

Host Memory Bottleneck

- Issue
  - turning host-to-host bandwidth into application-to-application bandwidth
  - have to deliver data across I/O and memory buses into cache and registers

- Memory bandwidth
  - I/O bus must keep up with network speed (currently does for STS-12, assuming peak rate is achievable)
  - 114 MBps (measured number) is only slightly faster than the I/O bus; can't afford to go across the memory bus twice
  - caches are of questionable value (rather small)
  - many reasons to access buffers
    - user/kernel boundary
    - certain protocols (reassembly, checksumming)
    - network device and its driver
- Same latency/bandwidth problems as high-speed networks

Integrated Services

- High-speed networks have enabled new applications
  - they also need "deliver on time" assurances from the network
- Applications that are sensitive to the timeliness of data are called real-time applications
  - voice
  - video
  - industrial control
- Timeliness guarantees must come from inside the network
  - end hosts cannot correct for late packets the way they can correct for lost packets
- Need more than best-effort
  - IETF is standardizing extensions to the best-effort model