TDC 561 Network Programming, Week 7: Client/Server Design

TDC 561 Network Programming
Week 7: Client/Server Design Alternatives
Case Study: TFTP
Camelia Zlatea, Ph.D. (email: czlatea@cs.depaul.edu)
Distributed Systems Frameworks (DS 520-95-103), DISC’99

References
- Douglas Comer and David Stevens, Internetworking with TCP/IP, Volume III: Client-Server Programming (BSD Unix and ANSI C), 2nd edition, 1996 (ISBN 0-13-260969-X), Chap. 2, 8
- W. Richard Stevens, UNIX Network Programming, Volume 1: Networking APIs: Sockets and XTI, 2nd edition, 1998 (ISBN 0-13-490012-X), Chap. 7, 27

Network Programming (TDC 561) Winter 2003 Page 2

Server Design
- Iterative, connectionless
- Iterative, connection-oriented
- Concurrent, connectionless
- Concurrent, connection-oriented

Concurrent Server Design Alternatives
- Single-process concurrency
- One child process per client
- Spawn one thread per client
- Pre-forking multiple processes
- Pre-threaded server

One thread per client
- Similar to forking one child per client: call pthread_create() instead of fork().
- Using threads makes it easier (less overhead) for the per-client threads to share information.
- Sharing information must be done carefully:
  - Mutual exclusion: pthread_mutex
  - Synchronization: pthread_cond
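
A minimal sketch of the mutual-exclusion point, assuming a hypothetical counter clients_served that every per-client thread updates when it finishes (the names are illustrative, not from the slides). Without the mutex, two threads could read the same old value and one increment would be lost.

```c
#include <pthread.h>
#include <stddef.h>

/* Hypothetical shared state updated by every per-client thread. */
static long clients_served = 0;
static pthread_mutex_t stats_lock = PTHREAD_MUTEX_INITIALIZER;

/* Called by a client thread when it has finished with its client. */
void record_client_done(void)
{
    pthread_mutex_lock(&stats_lock);     /* mutual exclusion: one updater at a time */
    clients_served++;
    pthread_mutex_unlock(&stats_lock);
}

/* Read the counter under the same lock so the value is consistent. */
long get_clients_served(void)
{
    pthread_mutex_lock(&stats_lock);
    long n = clients_served;
    pthread_mutex_unlock(&stats_lock);
    return n;
}

/* Stand-in for a per-client thread: pretends to serve *arg clients. */
void *fake_client_thread(void *arg)
{
    int count = *(const int *)arg;
    for (int i = 0; i < count; i++)
        record_client_done();
    return NULL;
}
```

If eight such threads each record 1000 clients, the lock guarantees that the final count is exactly 8000.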

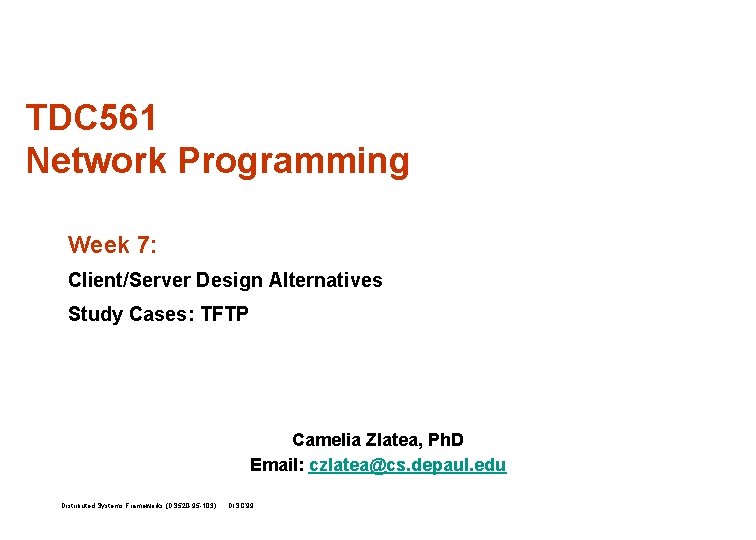

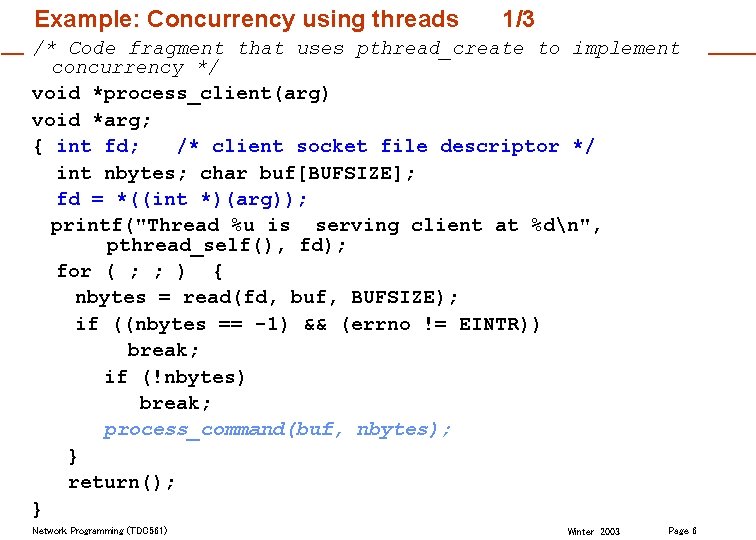

Example: Concurrency using threads 1/3

    /* Code fragment that uses pthread_create to implement concurrency */
    void *process_client(void *arg)
    {
        int fd = (int)(intptr_t)arg;   /* client socket file descriptor, passed by value */
        ssize_t nbytes;
        char buf[BUFSIZE];

        printf("Thread %lu is serving client at fd %d\n",
               (unsigned long)pthread_self(), fd);
        for ( ; ; ) {
            nbytes = read(fd, buf, BUFSIZE);
            if (nbytes == -1 && errno == EINTR)
                continue;              /* interrupted by a signal: retry */
            if (nbytes <= 0)
                break;                 /* EOF (0) or a real error (-1) */
            process_command(buf, nbytes);
        }
        close(fd);
        return NULL;
    }

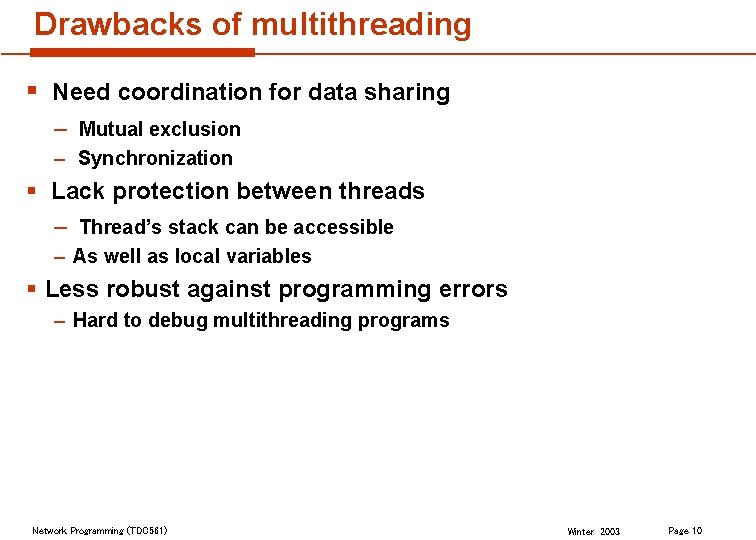

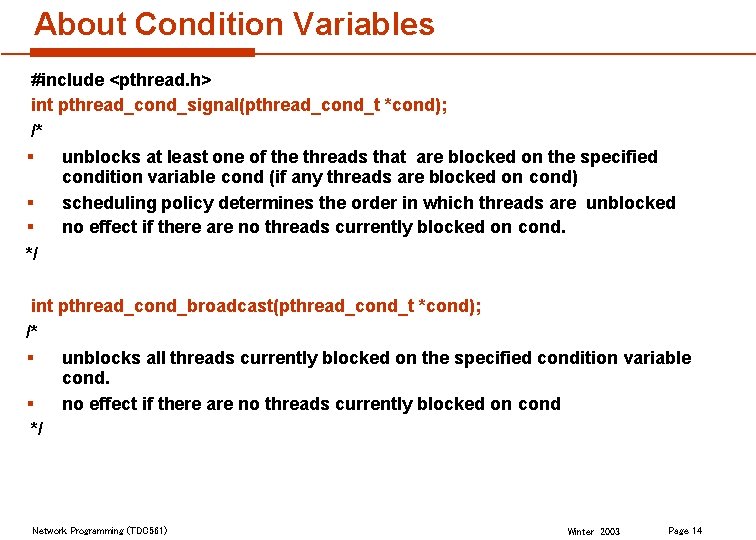

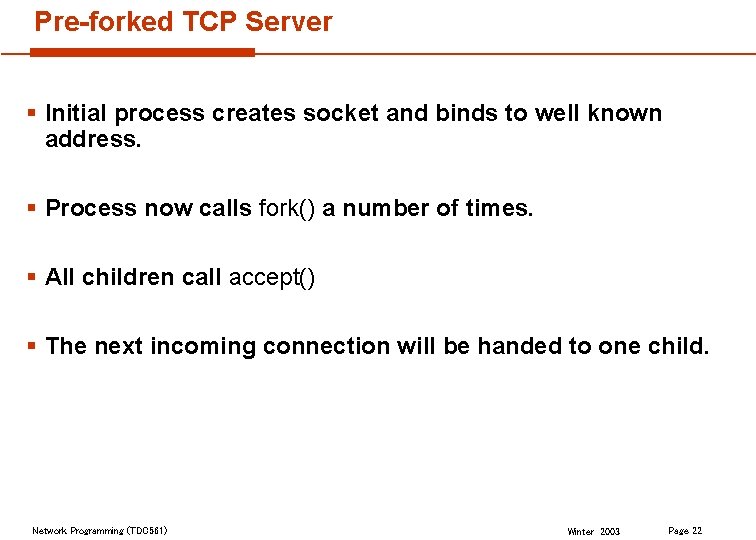

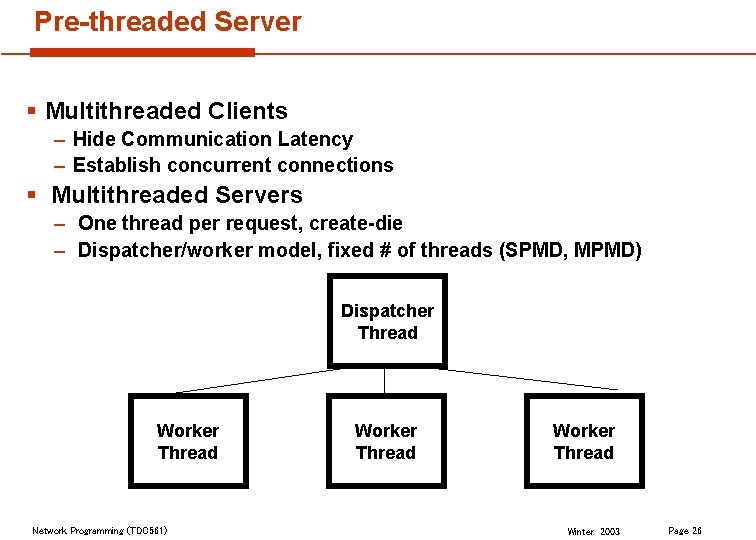

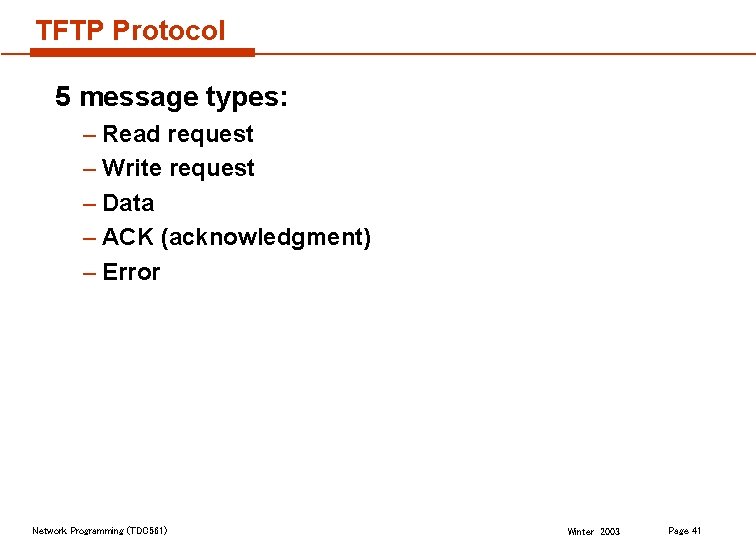

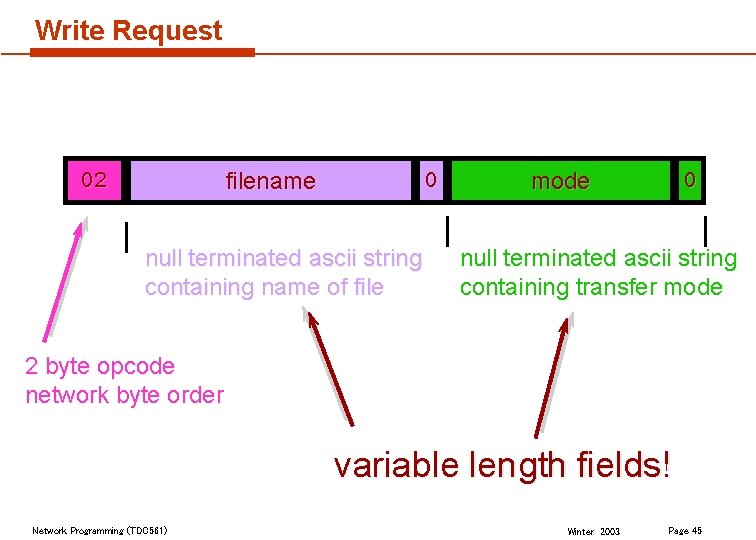

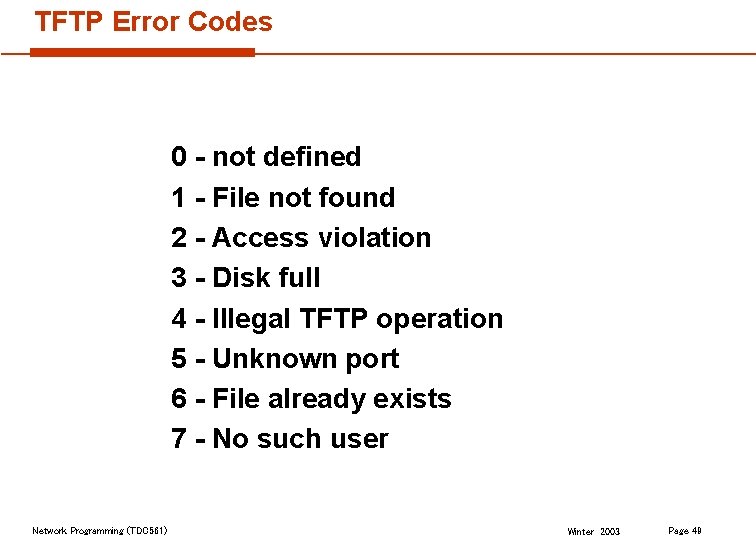

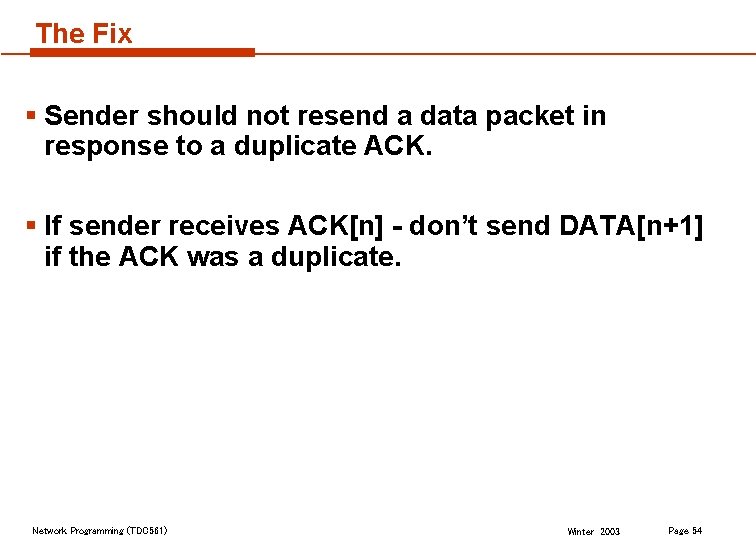

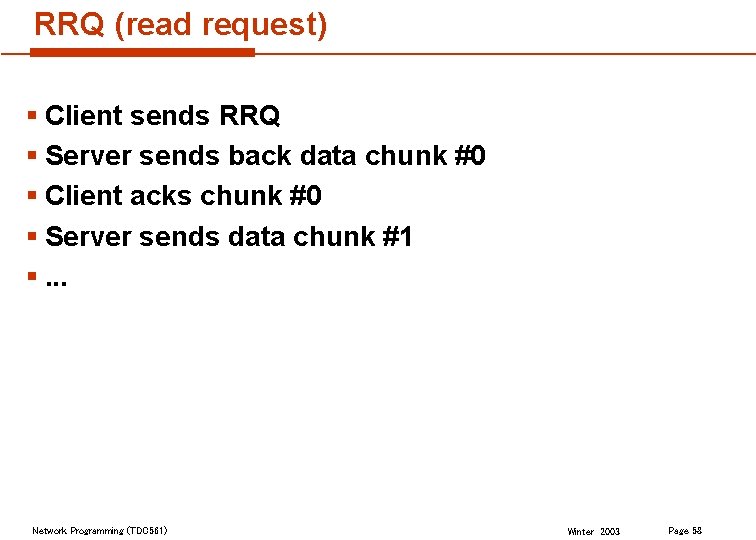

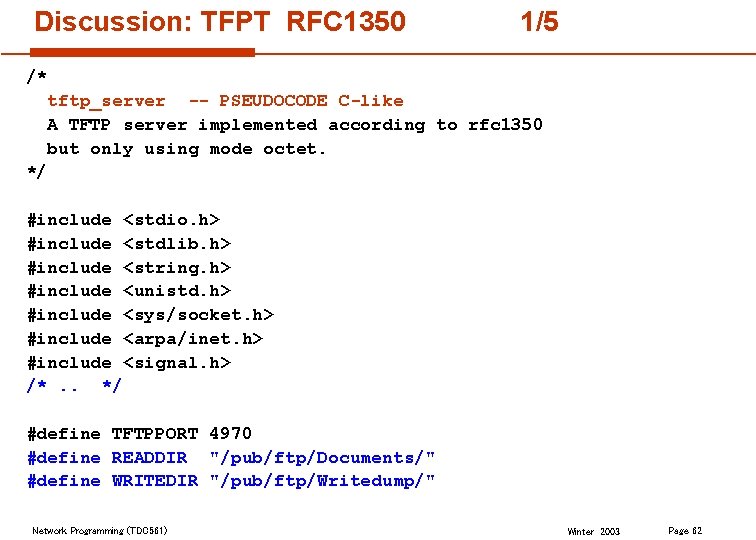

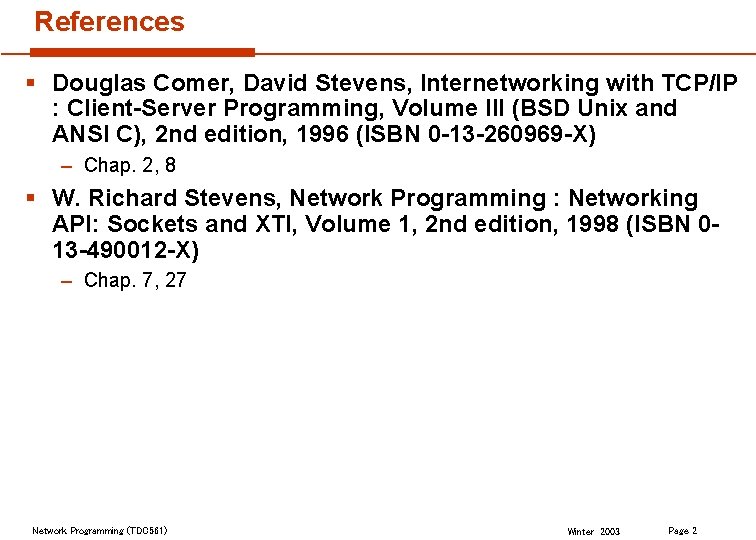

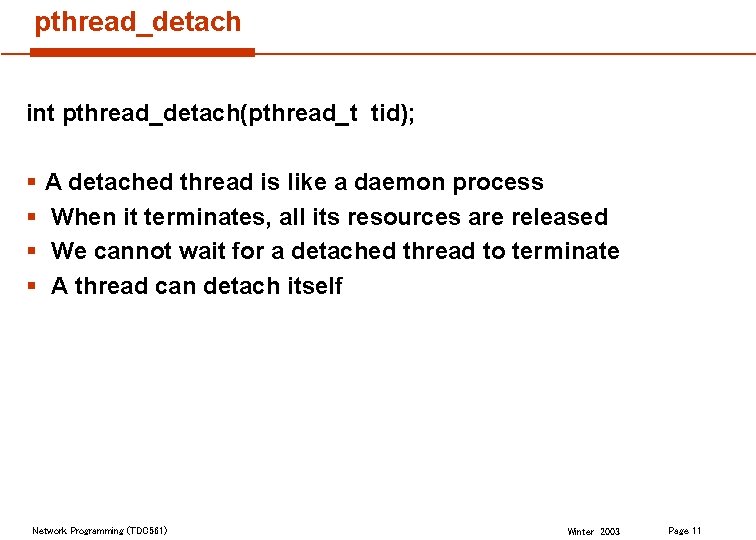

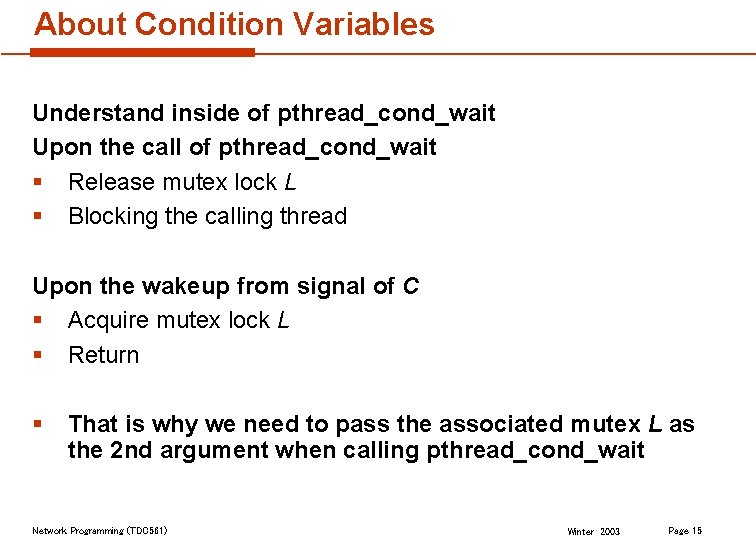

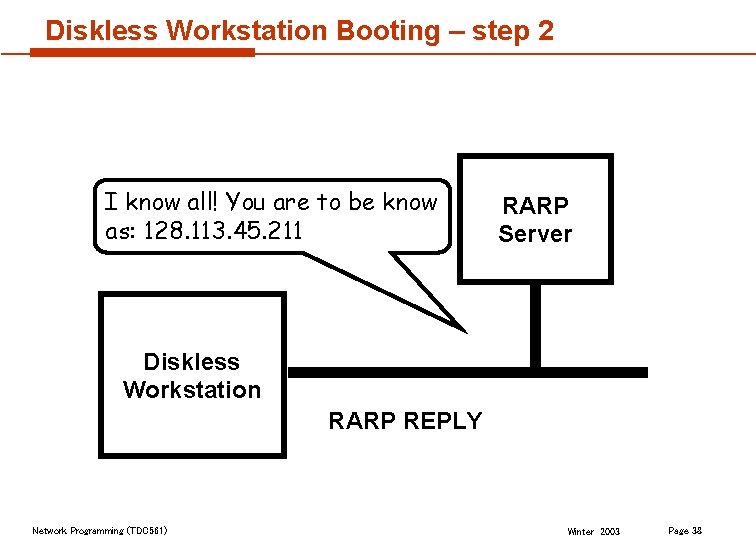

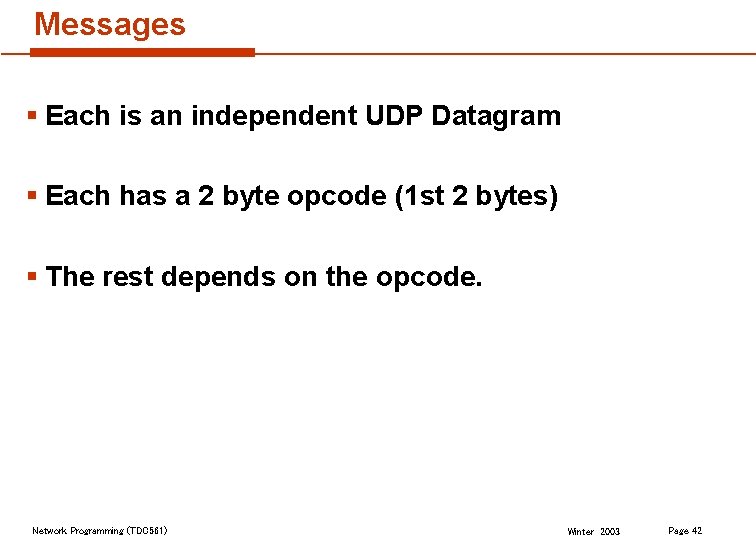

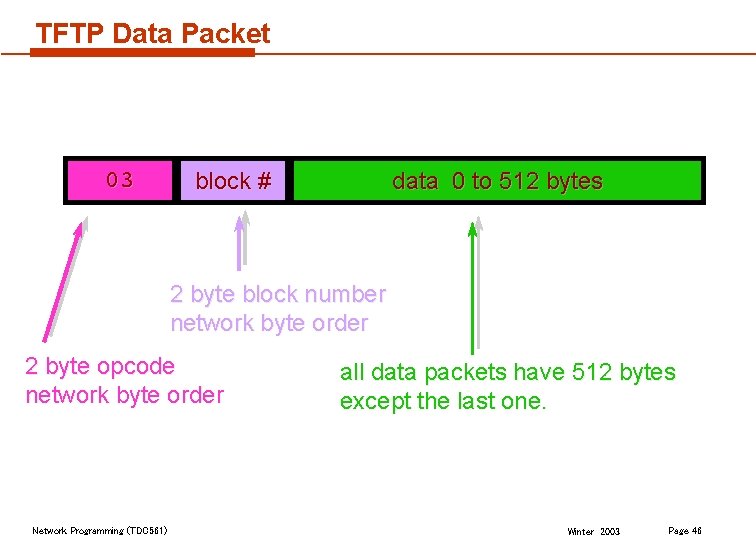

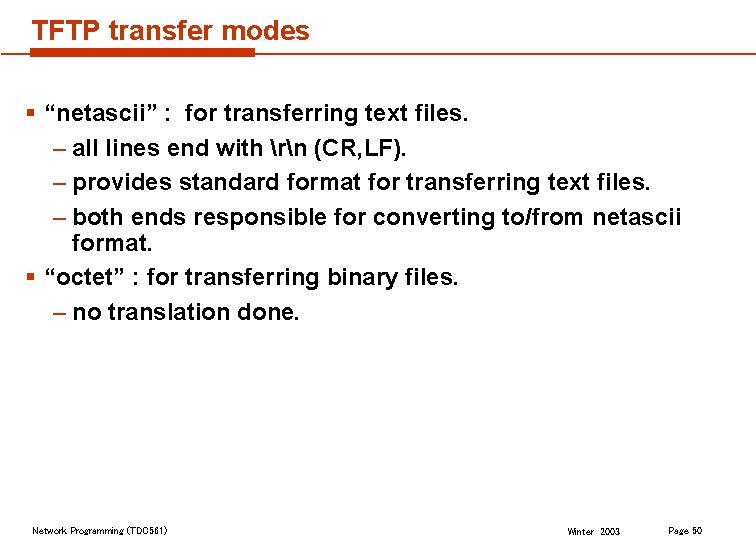

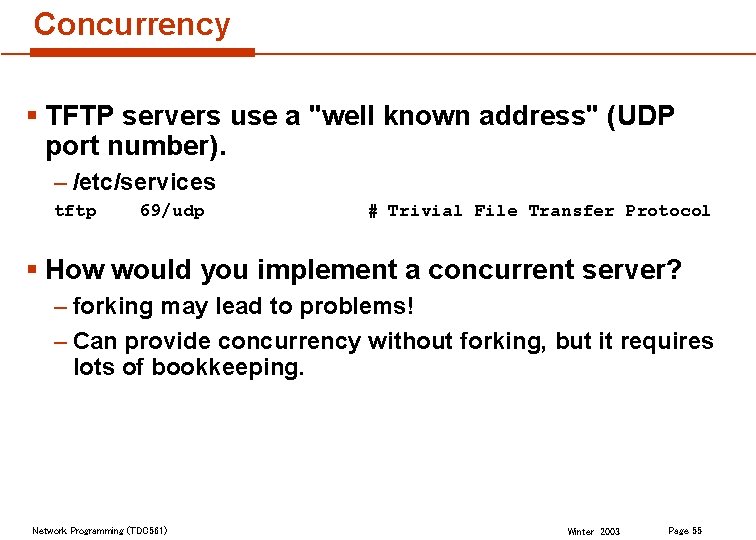

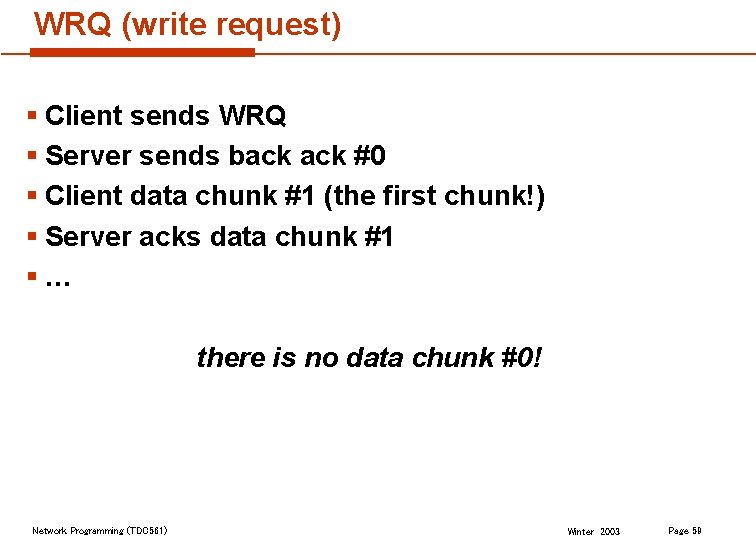

![Example Concurrency using threads 23 mainint argc char argv Variable declaration section Example: Concurrency using threads 2/3 main(int argc, char *argv[]) { /* Variable declaration section](https://slidetodoc.com/presentation_image_h/30c6e2dde5080f61845af1fa8b4d823e/image-7.jpg)

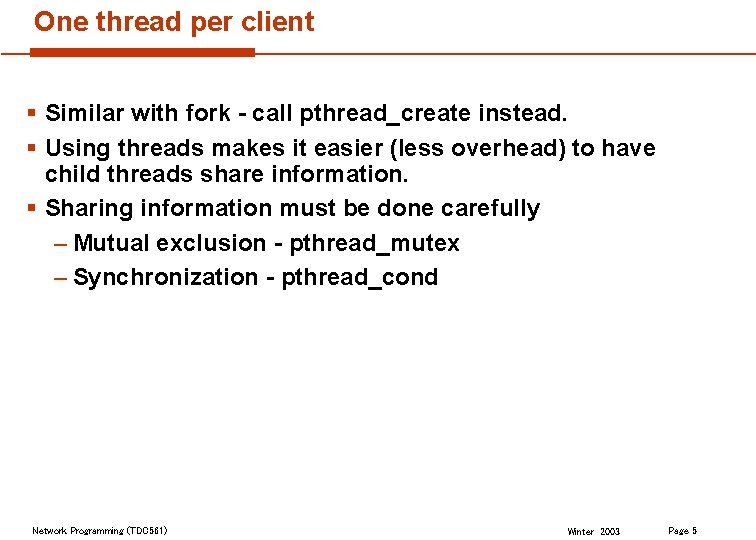

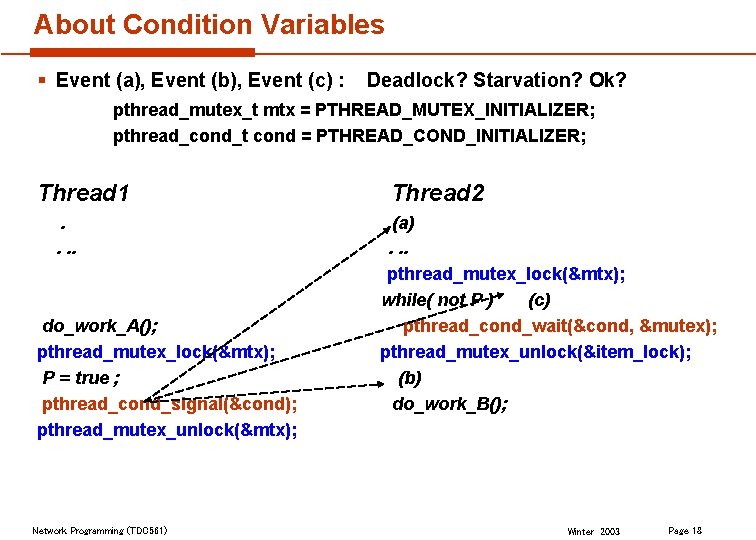

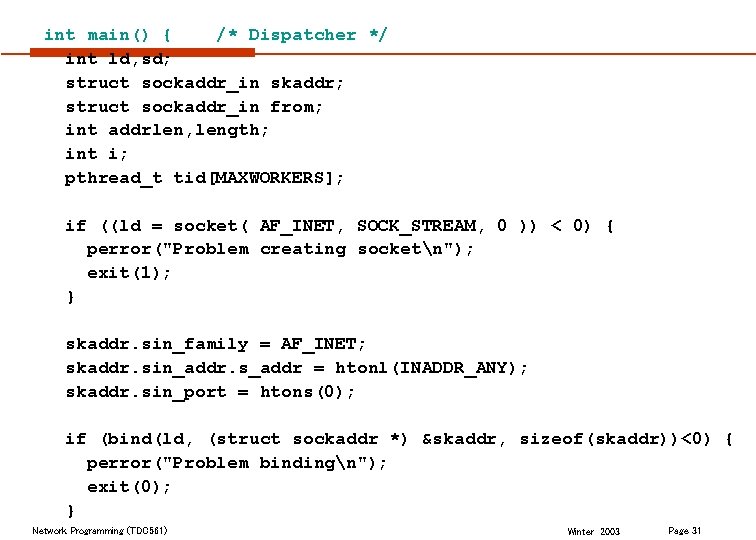

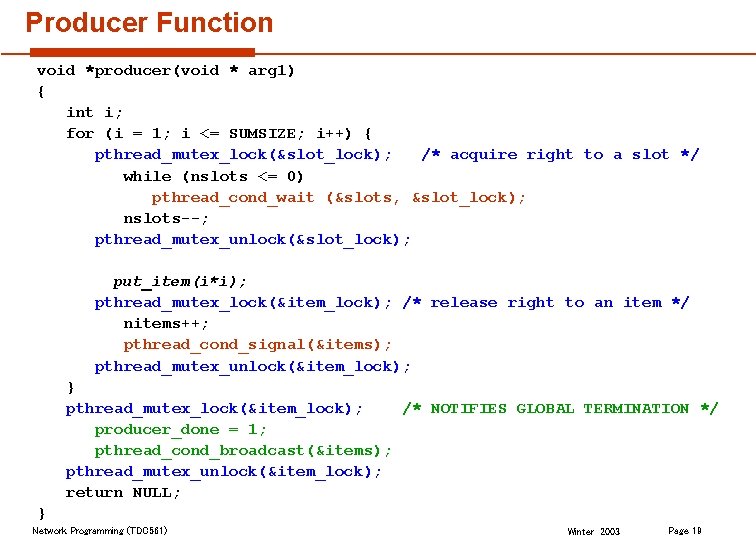

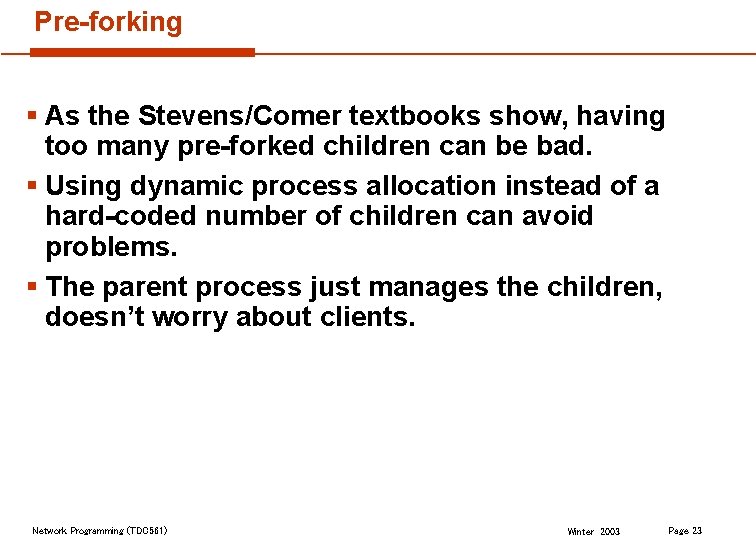

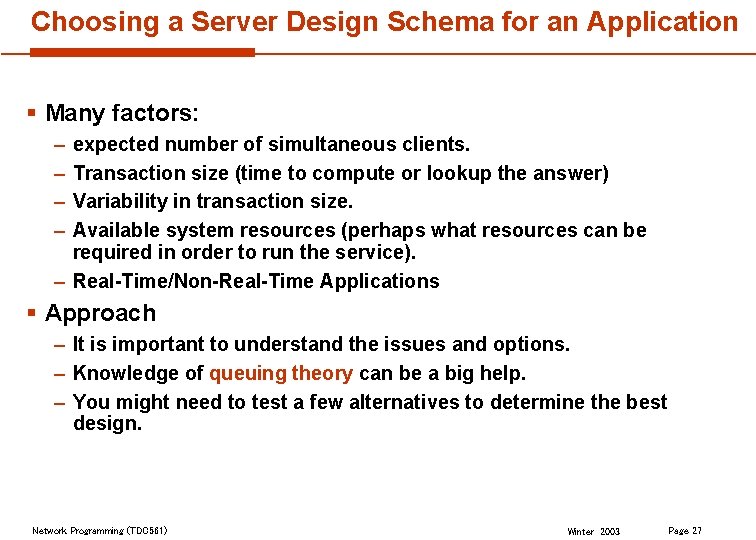

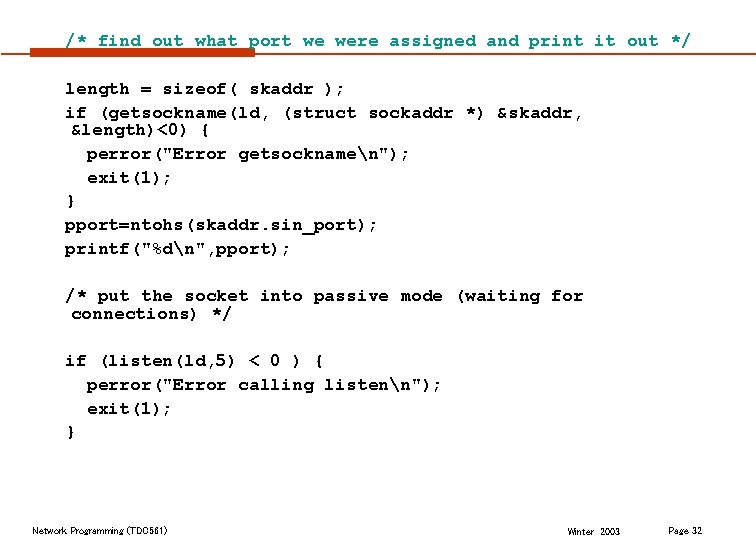

Example: Concurrency using threads 2/3

    int main(int argc, char *argv[])
    {
        /* Variable declaration section */
        /* The calls socket(), bind(), and listen() */

        while (1) {   /* infinite accept() loop */
            sinsize = sizeof(theiraddr);   /* value-result argument: reset before each call */
            newfd = accept(sockfd, (struct sockaddr *)&theiraddr, &sinsize);
            if (newfd < 0) {   /* error in accept() */
                perror("accept");
                exit(-1);
            }

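Example: Concurrency using threads 3/3

            /* pass the descriptor by value so the next accept() cannot overwrite it */
            if ((error = pthread_create(&tid, NULL, process_client,
                                        (void *)(intptr_t)newfd)) != 0) {
                fprintf(stderr, "Could not create thread: %s\n", strerror(error));
                close(newfd);
            } else {
                pthread_detach(tid);   /* never joined: release its resources when it exits */
            }
        }
    }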

Multithreading Benefits
- Provide concurrency
  - Introduce another level of scheduling
  - Fine control of multithreading with priorities
  - Tolerate communication latency
  - Overlap I/O with CPU work
- Provide parallelism
  - Allow either SPMD or MPMD styles
  - Partition computation domains
  - Use multiple CPUs for speedup
- Provide resource/data sharing
  - Dynamically allocated memory can be shared
  - Pointers are valid in all threads
  - Multiple threads can share resources
  - Threads share the same set of open files
  - Threads share the working directory

SPMD = Single Program, Multiple Data; MPMD = Multiple Program, Multiple Data.

Drawbacks of multithreading
- Data sharing needs coordination
  - Mutual exclusion
  - Synchronization
- No protection between threads
  - A thread's stack, and therefore its local variables, can be accessed by other threads
- Less robust against programming errors
  - Multithreaded programs are hard to debug

pthread_detach: int pthread_detach(pthread_t tid);
- A detached thread is like a daemon process.
- When it terminates, all its resources are released.
- We cannot wait for (join) a detached thread to terminate.
- A thread can detach itself: pthread_detach(pthread_self()).
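
Since a detached thread cannot be joined, it needs another way to tell the rest of the program that its work is done. A minimal sketch, assuming the thread reports completion through a flag protected by a mutex and a condition variable (all names here are hypothetical):

```c
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

static pthread_mutex_t done_lock = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t  done_cond = PTHREAD_COND_INITIALIZER;
static bool job_done = false;

/* Worker that detaches itself: its resources are released as soon as it returns. */
static void *detached_job(void *arg)
{
    (void)arg;
    pthread_detach(pthread_self());

    /* ... serve one client here ... */

    /* Nobody can join this thread, so it reports completion explicitly. */
    pthread_mutex_lock(&done_lock);
    job_done = true;
    pthread_cond_signal(&done_cond);
    pthread_mutex_unlock(&done_lock);
    return NULL;
}

/* Start the detached worker and wait for its completion report (not for its exit). */
int run_detached_and_wait(void)
{
    pthread_t tid;
    if (pthread_create(&tid, NULL, detached_job, NULL) != 0)
        return -1;
    pthread_mutex_lock(&done_lock);
    while (!job_done)
        pthread_cond_wait(&done_cond, &done_lock);
    pthread_mutex_unlock(&done_lock);
    return 0;
}
```

A thread can also be created already detached by setting PTHREAD_CREATE_DETACHED with pthread_attr_setdetachstate() on the attribute object passed to pthread_create().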

About Condition Variables
- A condition variable is used to block until some event occurs, which may mean waiting for an unbounded or unpredictable time.
- It provides atomic operations for waiting and signaling: testing and blocking are indivisible.
- It is associated with some shared variables, which are protected by a mutex lock.
- Condition variable C:
  - Condition
    - shared variables S
    - predicate P of S
  - A mutex lock L
  - Operations
    - Mutex lock: pthread_mutex_lock
    - Mutex unlock: pthread_mutex_unlock
    - Condition variable wait: pthread_cond_wait
    - Condition variable signal: pthread_cond_signal or pthread_cond_broadcast

About Condition Variables
- Whenever a thread changes one of the variables in S, it signals on the condition variable C.
- The signal wakes up a waiting thread, which then checks whether P is now satisfied:
  - The signal does not guarantee that P has become true.
  - It only tells the blocked thread that one of the variables in S has changed.
  - The signaled thread should retest P (the Boolean predicate).
  - When using condition variables, there is always a Boolean predicate (an invariant) associated with each condition wait that must be true before the thread proceeds.
  - Returning from pthread_cond_wait() does not imply anything about the value of this predicate (POSIX also allows spurious wakeups), so the predicate should always be re-evaluated.

About Condition Variables (declared in <pthread.h>)
- int pthread_cond_signal(pthread_cond_t *cond);
  - Unblocks at least one of the threads that are blocked on the condition variable cond (if any threads are blocked on cond).
  - The scheduling policy determines the order in which threads are unblocked.
  - Has no effect if no threads are currently blocked on cond.
- int pthread_cond_broadcast(pthread_cond_t *cond);
  - Unblocks all threads currently blocked on the condition variable cond.
  - Has no effect if no threads are currently blocked on cond.
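
The difference between the two calls shows up when several threads wait on the same predicate. The sketch below (all names hypothetical) starts four waiters and releases all of them with a single pthread_cond_broadcast(); because each waiter re-tests the predicate go, a thread that had not yet started waiting does not block at all.

```c
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

#define NWAITERS 4

static pthread_mutex_t go_lock = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t  go_cond = PTHREAD_COND_INITIALIZER;
static bool go = false;   /* the predicate the waiters test */
static int  woken = 0;    /* how many waiters got past the wait */

static void *waiter(void *arg)
{
    (void)arg;
    pthread_mutex_lock(&go_lock);
    while (!go)                          /* re-test the predicate after every wakeup */
        pthread_cond_wait(&go_cond, &go_lock);
    woken++;
    pthread_mutex_unlock(&go_lock);
    return NULL;
}

/* Start the waiters, release them all with one broadcast, and return how many got through. */
int broadcast_demo(void)
{
    pthread_t t[NWAITERS];
    int n = 0;
    while (n < NWAITERS && pthread_create(&t[n], NULL, waiter, NULL) == 0)
        n++;

    pthread_mutex_lock(&go_lock);
    go = true;
    pthread_cond_broadcast(&go_cond);    /* wake every blocked waiter at once */
    pthread_mutex_unlock(&go_lock);

    for (int i = 0; i < n; i++)
        pthread_join(t[i], NULL);
    return woken;                        /* all joined: safe to read without the lock */
}
```

With pthread_cond_signal() in place of the broadcast, only one blocked waiter is guaranteed to wake; any others already blocked could stay blocked, and the joins would hang.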

About Condition Variables: inside pthread_cond_wait
- Upon the call of pthread_cond_wait:
  - Release mutex lock L
  - Block the calling thread (releasing L and blocking happen atomically)
- Upon wakeup by a signal on C:
  - Reacquire mutex lock L
  - Return
- That is why we need to pass the associated mutex L as the 2nd argument when calling pthread_cond_wait.

About Condition Variables
Events (a) and (b): deadlock? starvation? OK?

    pthread_mutex_t mtx = PTHREAD_MUTEX_INITIALIZER;
    pthread_cond_t cond = PTHREAD_COND_INITIALIZER;

    /* Thread 1 */
    do_work_A();
    pthread_cond_signal(&cond);

    /* Thread 2 */
    /* (a) */
    pthread_cond_wait(&cond, &mtx);
    /* (b) */
    do_work_B();

About Condition Variables
Events (a), (b), and (c): deadlock? starvation? OK?

    pthread_mutex_t mtx = PTHREAD_MUTEX_INITIALIZER;
    pthread_cond_t cond = PTHREAD_COND_INITIALIZER;

    /* Thread 1 */
    do_work_A();
    P = true;
    pthread_cond_signal(&cond);

    /* Thread 2 */
    /* (a) */
    while (!P)
        /* (c) */ pthread_cond_wait(&cond, &mtx);
    /* (b) */
    do_work_B();

About Condition Variables
Events (a), (b), and (c): deadlock? starvation? OK?

    pthread_mutex_t mtx = PTHREAD_MUTEX_INITIALIZER;
    pthread_cond_t cond = PTHREAD_COND_INITIALIZER;

    /* Thread 1 */
    do_work_A();
    pthread_mutex_lock(&mtx);
    P = true;
    pthread_cond_signal(&cond);
    pthread_mutex_unlock(&mtx);

    /* Thread 2 */
    /* (a) */
    pthread_mutex_lock(&mtx);
    while (!P)
        /* (c) */ pthread_cond_wait(&cond, &mtx);
    pthread_mutex_unlock(&mtx);
    /* (b) */
    do_work_B();
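
The pattern in the last fragment, where P is changed and tested only while holding the mutex and Thread 2 re-tests P in a loop, can be packaged as a small reusable type. A minimal sketch, assuming a hypothetical event_t (not part of Pthreads). Because P is set under the lock, a signal sent before Thread 2 starts waiting is not lost: Thread 2 finds P already true and never blocks.

```c
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

/* Predicate P together with the mutex L and condition variable C that protect it. */
typedef struct {
    pthread_mutex_t mtx;
    pthread_cond_t  cond;
    bool            p;
} event_t;

void event_init(event_t *e)
{
    pthread_mutex_init(&e->mtx, NULL);
    pthread_cond_init(&e->cond, NULL);
    e->p = false;
}

/* Thread 1's side: change P only while holding the lock, then signal. */
void event_set(event_t *e)
{
    pthread_mutex_lock(&e->mtx);
    e->p = true;
    pthread_cond_signal(&e->cond);
    pthread_mutex_unlock(&e->mtx);
}

/* Thread 2's side: test P under the lock and re-test it after every wakeup. */
void event_wait(event_t *e)
{
    pthread_mutex_lock(&e->mtx);
    while (!e->p)
        pthread_cond_wait(&e->cond, &e->mtx);
    pthread_mutex_unlock(&e->mtx);
}

/* Thread start routine that runs event_set() from another thread. */
void *event_setter_thread(void *arg)
{
    event_set((event_t *)arg);
    return NULL;
}
```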

Producer Function

    void *producer(void *arg1)
    {
        int i;

        for (i = 1; i <= SUMSIZE; i++) {
            pthread_mutex_lock(&slot_lock);    /* acquire right to a slot */
            while (nslots <= 0)
                pthread_cond_wait(&slots, &slot_lock);
            nslots--;
            pthread_mutex_unlock(&slot_lock);
            put_item(i * i);
            pthread_mutex_lock(&item_lock);    /* release right to an item */
            nitems++;
            pthread_cond_signal(&items);
            pthread_mutex_unlock(&item_lock);
        }
        pthread_mutex_lock(&item_lock);        /* NOTIFIES GLOBAL TERMINATION */
        producer_done = 1;
        pthread_cond_broadcast(&items);
        pthread_mutex_unlock(&item_lock);
        return NULL;
    }

Consumer Function

    void *consumer(void *arg2)
    {
        int myitem;

        for ( ; ; ) {
            pthread_mutex_lock(&item_lock);    /* acquire right to an item */
            while ((nitems <= 0) && !producer_done)
                pthread_cond_wait(&items, &item_lock);
            if ((nitems <= 0) && producer_done) {   /* DISTRIBUTED GLOBAL TERMINATION */
                pthread_mutex_unlock(&item_lock);
                break;
            }
            nitems--;
            pthread_mutex_unlock(&item_lock);
            get_item(&myitem);
            sum += myitem;   /* safe with one consumer; protect sum with a mutex if there are several */
            pthread_mutex_lock(&slot_lock);    /* release right to a slot */
            nslots++;
            pthread_cond_signal(&slots);
            pthread_mutex_unlock(&slot_lock);
        }
        return NULL;
    }
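
The two functions above rely on globals and on put_item()/get_item(), which the slides do not show. One way to fill them in, assuming a circular buffer of NSLOTS entries guarded by its own mutex and exactly one producer and one consumer (the buffer names and sizes are assumptions), gives a runnable version:

```c
#include <pthread.h>
#include <stddef.h>

#define NSLOTS  8     /* buffer size (assumed) */
#define SUMSIZE 100   /* producer emits i*i for i = 1..SUMSIZE */

static int buffer[NSLOTS];
static int bufin = 0, bufout = 0;
static pthread_mutex_t buffer_lock = PTHREAD_MUTEX_INITIALIZER;

static pthread_mutex_t slot_lock = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t  slots = PTHREAD_COND_INITIALIZER;
static int nslots = NSLOTS;

static pthread_mutex_t item_lock = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t  items = PTHREAD_COND_INITIALIZER;
static int nitems = 0;
static int producer_done = 0;

static long sum = 0;   /* written only by the single consumer */

static void put_item(int item)
{
    pthread_mutex_lock(&buffer_lock);
    buffer[bufin] = item;
    bufin = (bufin + 1) % NSLOTS;
    pthread_mutex_unlock(&buffer_lock);
}

static void get_item(int *itemp)
{
    pthread_mutex_lock(&buffer_lock);
    *itemp = buffer[bufout];
    bufout = (bufout + 1) % NSLOTS;
    pthread_mutex_unlock(&buffer_lock);
}

static void *producer(void *arg)
{
    (void)arg;
    for (int i = 1; i <= SUMSIZE; i++) {
        pthread_mutex_lock(&slot_lock);          /* acquire right to a slot */
        while (nslots <= 0)
            pthread_cond_wait(&slots, &slot_lock);
        nslots--;
        pthread_mutex_unlock(&slot_lock);
        put_item(i * i);
        pthread_mutex_lock(&item_lock);          /* release right to an item */
        nitems++;
        pthread_cond_signal(&items);
        pthread_mutex_unlock(&item_lock);
    }
    pthread_mutex_lock(&item_lock);              /* announce global termination */
    producer_done = 1;
    pthread_cond_broadcast(&items);
    pthread_mutex_unlock(&item_lock);
    return NULL;
}

static void *consumer(void *arg)
{
    int myitem;
    (void)arg;
    for (;;) {
        pthread_mutex_lock(&item_lock);          /* acquire right to an item */
        while (nitems <= 0 && !producer_done)
            pthread_cond_wait(&items, &item_lock);
        if (nitems <= 0 && producer_done) {      /* nothing left and producer finished */
            pthread_mutex_unlock(&item_lock);
            break;
        }
        nitems--;
        pthread_mutex_unlock(&item_lock);
        get_item(&myitem);
        sum += myitem;
        pthread_mutex_lock(&slot_lock);          /* release right to a slot */
        nslots++;
        pthread_cond_signal(&slots);
        pthread_mutex_unlock(&slot_lock);
    }
    return NULL;
}

/* Run one producer and one consumer to completion and return the consumer's sum. */
long run_producer_consumer(void)
{
    pthread_t p, c;
    pthread_create(&p, NULL, producer, NULL);
    pthread_create(&c, NULL, consumer, NULL);
    pthread_join(p, NULL);
    pthread_join(c, NULL);
    return sum;
}
```

With SUMSIZE = 100 the consumer's sum is 1*1 + 2*2 + ... + 100*100 = 338350.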

Pre-forked Server
- Creating a new process for each client is expensive.
- We can create a set of processes in advance, each of which can take care of a client.
- Each child process is an iterative server.

Pre-forked TCP Server
- The initial process creates a socket and binds it to a well-known address.
- The process then calls fork() a number of times.
- All children call accept() on the same listening socket.
- The next incoming connection is handed to one of the children.
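
A minimal sketch of the pre-forked design, assuming a loopback listening socket and a trivial per-client job that writes "hello\n" (both hypothetical, as are the function names). Each child is an iterative server blocked in accept() on the shared listening socket; where accept() is a kernel system call, each connection goes to exactly one of them.

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <signal.h>
#include <string.h>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

/* Child body: an iterative server that handles one client at a time, forever. */
static void child_main(int listenfd)
{
    for (;;) {
        int connfd = accept(listenfd, NULL, NULL);
        if (connfd < 0)
            continue;                         /* e.g. EINTR: just retry */
        const char msg[] = "hello\n";         /* hypothetical per-client work */
        (void)write(connfd, msg, sizeof msg - 1);
        close(connfd);
    }
}

/* Fork nchildren servers that all block in accept() on listenfd.
   Stores their PIDs in pids[] and returns how many were started. */
int prefork(int listenfd, int nchildren, pid_t pids[])
{
    int i;
    for (i = 0; i < nchildren; i++) {
        pid_t pid = fork();
        if (pid < 0)
            break;
        if (pid == 0) {
            child_main(listenfd);             /* never returns */
            _exit(0);
        }
        pids[i] = pid;
    }
    return i;
}

/* Parent cleanup: terminate and reap the children. */
void stop_children(const pid_t pids[], int n)
{
    for (int i = 0; i < n; i++) {
        kill(pids[i], SIGTERM);
        waitpid(pids[i], NULL, 0);
    }
}

/* TCP listening socket on 127.0.0.1 with a kernel-chosen port, stored in *port. */
int make_listener(unsigned short *port)
{
    struct sockaddr_in addr;
    socklen_t len = sizeof addr;
    int fd = socket(AF_INET, SOCK_STREAM, 0);
    if (fd < 0)
        return -1;
    memset(&addr, 0, sizeof addr);
    addr.sin_family = AF_INET;
    addr.sin_addr.s_addr = htonl(INADDR_LOOPBACK);
    addr.sin_port = htons(0);
    if (bind(fd, (struct sockaddr *)&addr, sizeof addr) < 0 ||
        listen(fd, 16) < 0 ||
        getsockname(fd, (struct sockaddr *)&addr, &len) < 0) {
        close(fd);
        return -1;
    }
    *port = ntohs(addr.sin_port);
    return fd;
}
```

The parent's remaining jobs are bookkeeping and, eventually, stopping the children with stop_children().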

Pre-forking
- As the Stevens and Comer textbooks show, having too many pre-forked children can be bad.
- Allocating processes dynamically, instead of hard-coding the number of children, can avoid this problem.
- The parent process then just manages the children; it does not deal with clients itself.

Sockets library vs. system call
- A pre-forked TCP server won't usually work the way we want if sockets are not part of the kernel:
  - accept() is then a library call, not an atomic operation.
- We can get around this with a locking scheme that ensures only one child calls accept() at a time.
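
One common choice for such a locking scheme is a POSIX fcntl() record lock on a shared file, so only the child holding the lock calls accept(). A minimal sketch, with hypothetical function names and a temporary lock file that the parent creates before forking:

```c
#include <errno.h>
#include <fcntl.h>
#include <stdlib.h>
#include <string.h>
#include <unistd.h>

static int lock_fd = -1;

/* Parent, before forking: create an anonymous lock file; children inherit lock_fd. */
int accept_lock_init(void)
{
    char path[] = "/tmp/accept.lock.XXXXXX";
    lock_fd = mkstemp(path);
    if (lock_fd < 0)
        return -1;
    unlink(path);              /* name removed; the open descriptor keeps the file alive */
    return 0;
}

/* Apply a whole-file record lock operation (l_start = 0, l_len = 0 means the whole file). */
static int set_lock(short type, int cmd)
{
    struct flock fl;
    int r;
    memset(&fl, 0, sizeof fl);
    fl.l_type = type;
    fl.l_whence = SEEK_SET;
    while ((r = fcntl(lock_fd, cmd, &fl)) < 0 && errno == EINTR)
        ;                      /* interrupted by a signal (e.g. SIGCHLD): retry */
    return r;
}

int accept_lock_wait(void)    { return set_lock(F_WRLCK, F_SETLKW); }  /* blocks until acquired */
int accept_lock_release(void) { return set_lock(F_UNLCK, F_SETLK); }
```

Each child would call accept_lock_wait() just before accept() and accept_lock_release() right after it. Record locks belong to processes, so the children exclude one another even though they share the inherited descriptor.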

Pre-threaded Server
- Same benefits as pre-forking.
- Alternatively, the main thread can make all the calls to accept() and hand off each client to an existing thread.
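
The second option, where only the main thread calls accept(), needs a thread-safe hand-off between that thread and the pool. A minimal sketch, assuming a bounded circular queue of descriptors (names and sizes are assumptions). To keep the example testable, the workers add up plain positive integers instead of serving sockets, and a negative value acts as a shutdown sentinel:

```c
#include <pthread.h>
#include <stddef.h>

#define QSIZE   32   /* capacity of the hand-off queue (assumed) */
#define MAXPOOL 16   /* upper bound on pool size for this sketch */

static int queue[QSIZE];
static int qhead = 0, qtail = 0, qcount = 0;
static pthread_mutex_t qlock = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t  not_empty = PTHREAD_COND_INITIALIZER;
static pthread_cond_t  not_full = PTHREAD_COND_INITIALIZER;

/* Main thread: hand one accepted descriptor to the pool. */
void handoff_put(int fd)
{
    pthread_mutex_lock(&qlock);
    while (qcount == QSIZE)
        pthread_cond_wait(&not_full, &qlock);
    queue[qtail] = fd;
    qtail = (qtail + 1) % QSIZE;
    qcount++;
    pthread_cond_signal(&not_empty);
    pthread_mutex_unlock(&qlock);
}

/* Worker thread: block until a descriptor is available, then take it. */
int handoff_get(void)
{
    pthread_mutex_lock(&qlock);
    while (qcount == 0)
        pthread_cond_wait(&not_empty, &qlock);
    int fd = queue[qhead];
    qhead = (qhead + 1) % QSIZE;
    qcount--;
    pthread_cond_signal(&not_full);
    pthread_mutex_unlock(&qlock);
    return fd;
}

static long handled_total = 0;
static pthread_mutex_t total_lock = PTHREAD_MUTEX_INITIALIZER;

/* Worker loop. In a server, fd would be a connected socket to serve and close;
   here it is just a number that gets added up. Negative means "exit". */
static void *pool_worker(void *arg)
{
    (void)arg;
    for (;;) {
        int fd = handoff_get();
        if (fd < 0)
            break;
        pthread_mutex_lock(&total_lock);
        handled_total += fd;
        pthread_mutex_unlock(&total_lock);
    }
    return NULL;
}

/* Start the pool, hand off jobs 1..njobs, then one sentinel per worker. */
long run_pool(int nworkers, int njobs)
{
    pthread_t tids[MAXPOOL];
    int n = 0;
    if (nworkers > MAXPOOL)
        nworkers = MAXPOOL;
    while (n < nworkers && pthread_create(&tids[n], NULL, pool_worker, NULL) == 0)
        n++;
    if (n == 0)
        return -1;
    for (int j = 1; j <= njobs; j++)
        handoff_put(j);
    for (int i = 0; i < n; i++)
        handoff_put(-1);
    for (int i = 0; i < n; i++)
        pthread_join(tids[i], NULL);
    return handled_total;
}
```

For example, run_pool(4, 100) returns 5050, the sum of the hand-off values 1 to 100.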

Pre-threaded Server
- Multithreaded clients
  - Hide communication latency
  - Establish concurrent connections
- Multithreaded servers
  - One thread per request (create, serve, die)
  - Dispatcher/worker model with a fixed number of threads (SPMD, MPMD)

[Figure: a dispatcher thread handing requests to several worker threads]

Choosing a Server Design Schema for an Application
- Many factors:
  - Expected number of simultaneous clients
  - Transaction size (time to compute or look up the answer)
  - Variability in transaction size
  - Available system resources (perhaps what resources are required to run the service)
  - Real-time vs. non-real-time application
- Approach
  - It is important to understand the issues and options.
  - Knowledge of queuing theory can be a big help.
  - You might need to test a few alternatives to determine the best design.
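
As a rough illustration of why queuing theory helps, consider the textbook M/M/1 model, which assumes Poisson arrivals at rate λ, exponentially distributed service times with mean 1/μ, and a single server. Under those assumptions the utilization ρ and the mean time T a request spends in the system are:

$$
\rho = \frac{\lambda}{\mu} < 1, \qquad T = \frac{1}{\mu - \lambda}
$$

$$
\mu = 100\ \text{req/s}: \quad \lambda = 80 \Rightarrow T = \tfrac{1}{20}\ \text{s} = 50\ \text{ms}, \qquad \lambda = 90 \Rightarrow T = \tfrac{1}{10}\ \text{s} = 100\ \text{ms}
$$

Raising the load from 80% to 90% of capacity doubles the mean response time in this model, which is why the expected client load and transaction size matter when choosing a design. Real servers rarely match these assumptions exactly, so measurements of the candidate designs are still needed.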

    /* PSEUDO-CODE OUTLINE for pre-threaded server using SPMD */
    /* global data and variables */
    #define MAXCLIENTS 10
    #define MAXWORKERS 10

    typedef struct {        /* worker information slot */
        int workernum;
        int sd;
    } workerstruct, *workerptr;

    pthread_mutex_t mtx = PTHREAD_MUTEX_INITIALIZER;
    pthread_cond_t cond[MAXWORKERS];                 /* one per worker; initialized in main() */
    pthread_cond_t idle = PTHREAD_COND_INITIALIZER;  /* signaled when a worker becomes idle */
    int client_sd[MAXCLIENTS];      /* active socket array */
    int worker_state[MAXWORKERS];   /* worker state array: 0 = idle, 1 = busy */
    workerptr new_wk[MAXWORKERS];   /* references to worker information slots */
    pthread_t tid[MAXWORKERS];

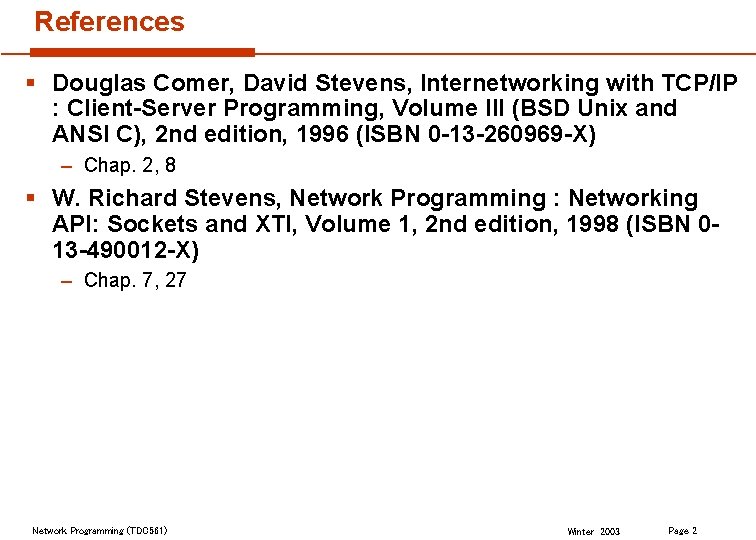

    void *handle_client(void *arg)
    {   /* worker thread */
        workerptr me = (workerptr) arg;
        int workernum = me->workernum;
        int sd = me->sd;
        char buf[BUFSIZE];
        int n;

        /* By default a new thread is joinable; we don't really want this
           (unless we do something special, we end up with the thread
           equivalent of zombies). So we explicitly detach the thread. */
        pthread_detach(pthread_self());
        printf("Thread %lu started for worker number %d (sd %d)\n",
               (unsigned long)pthread_self(), workernum, sd);

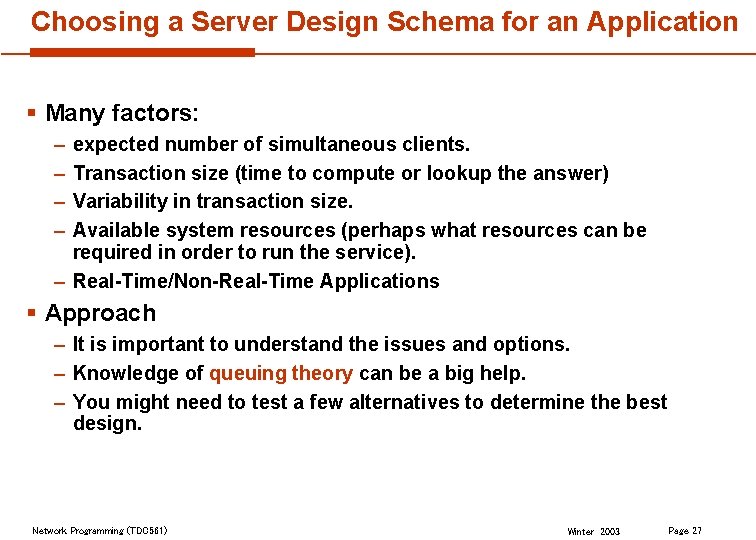

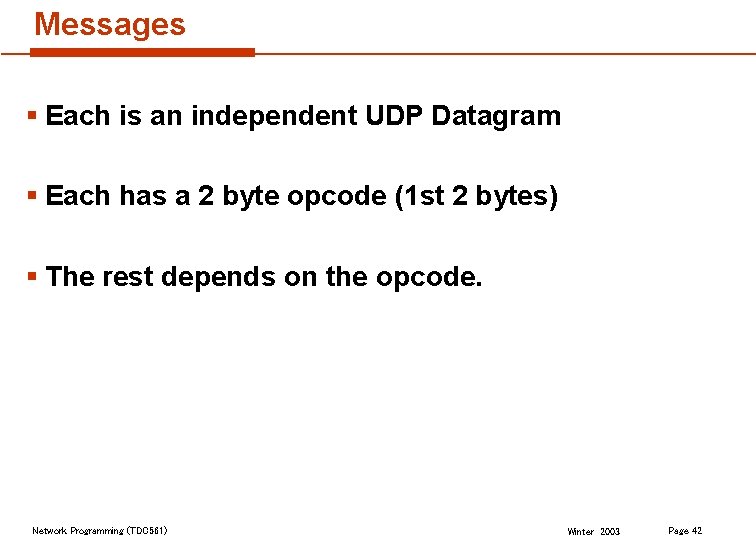

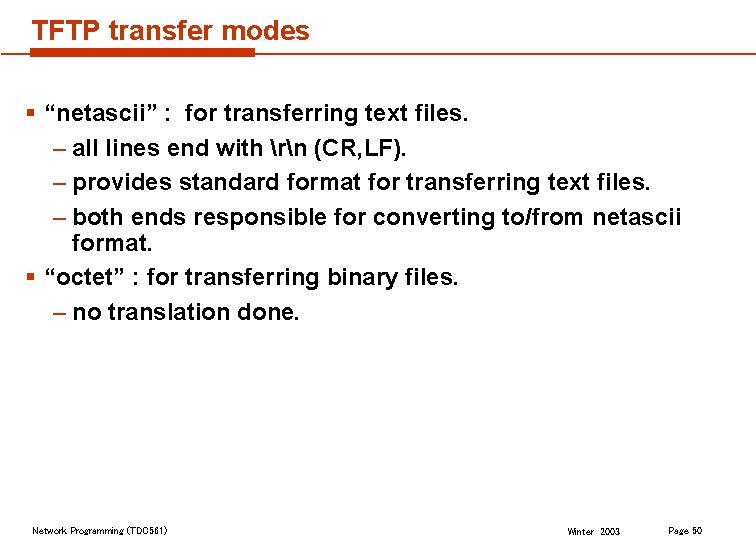

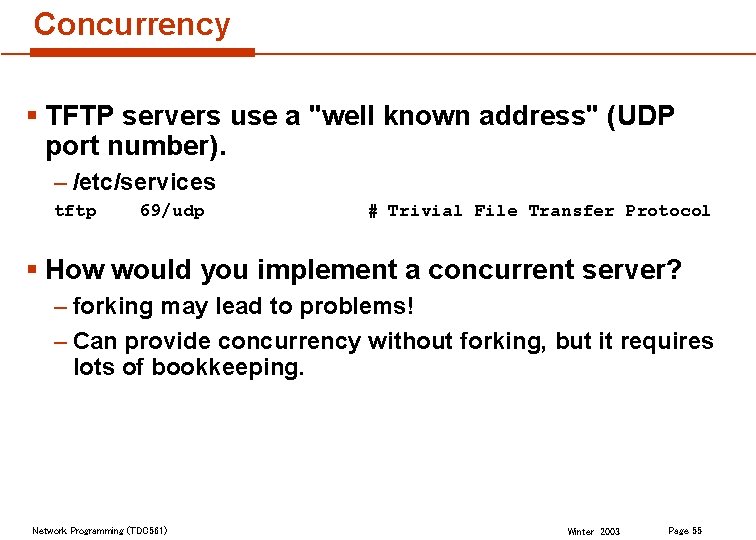

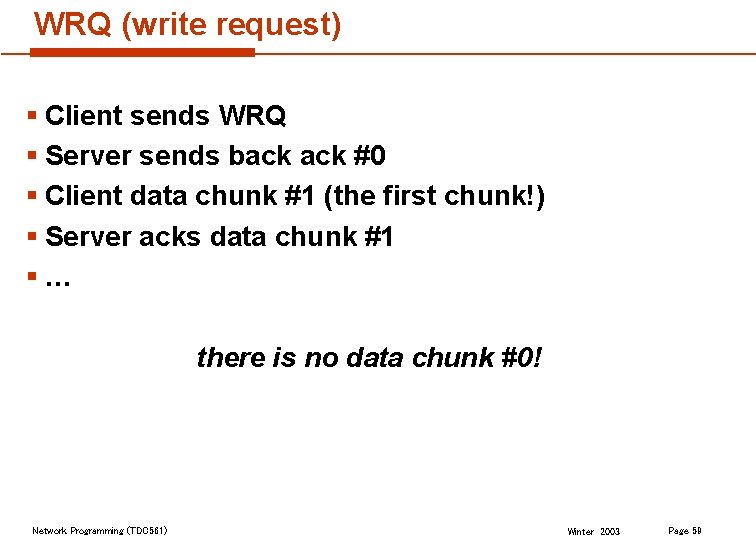

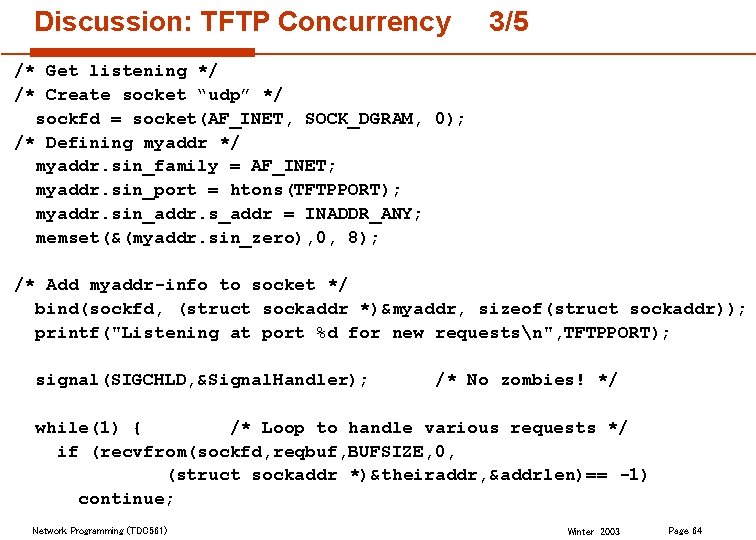

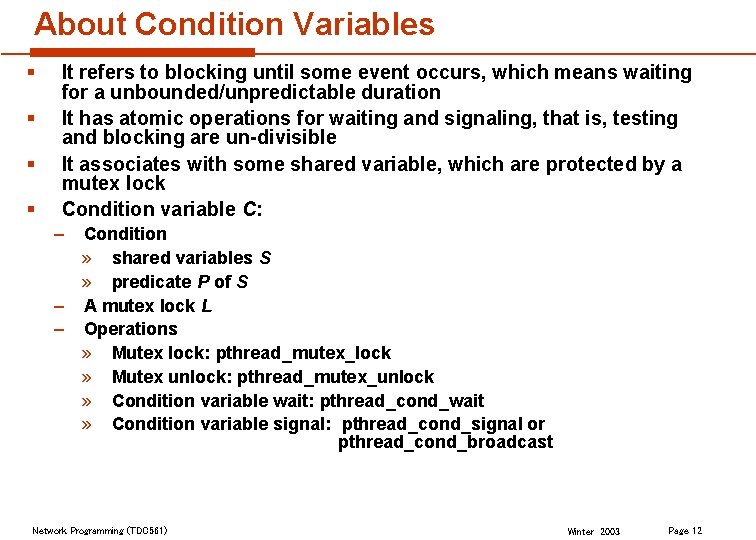

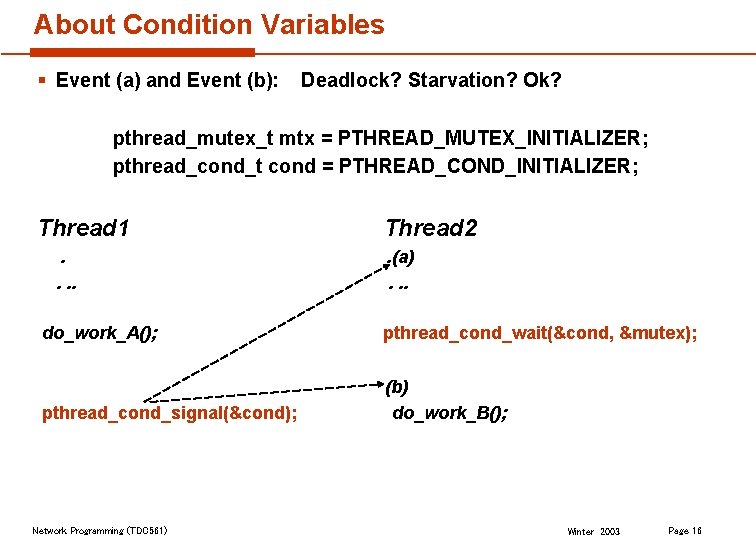

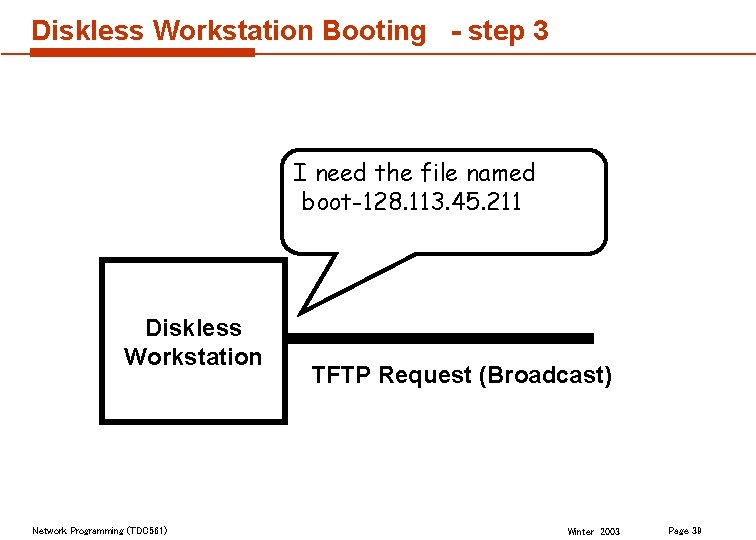

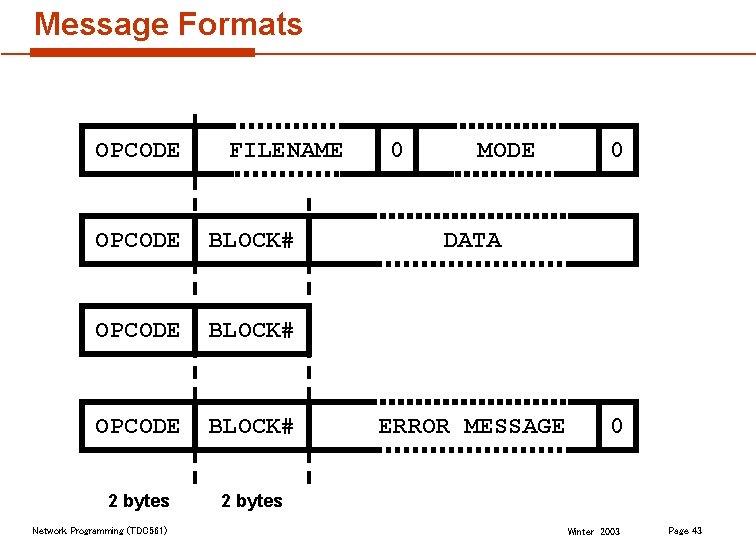

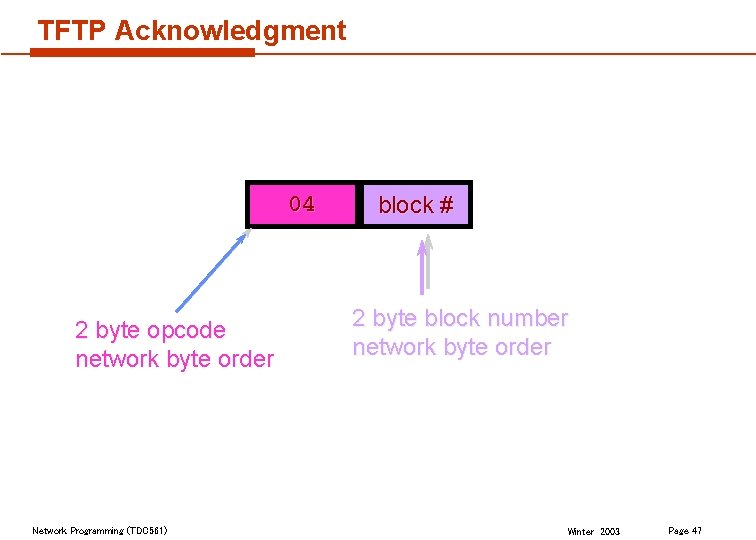

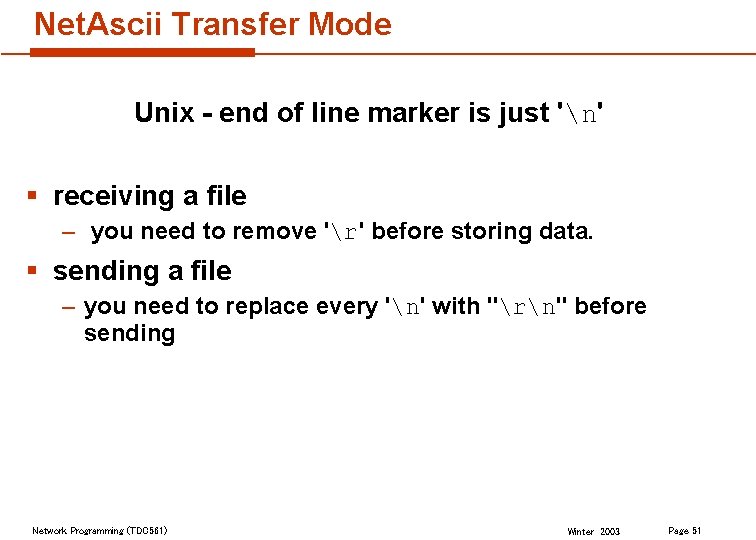

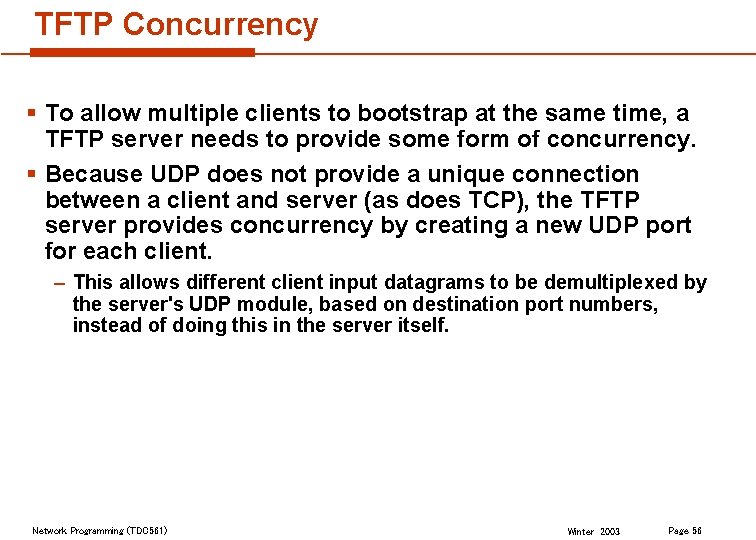

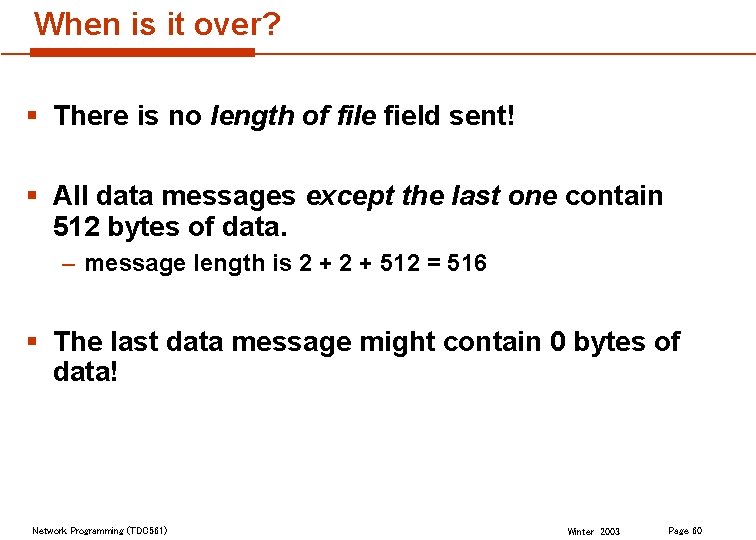

![while1 worker thread wait for work to do whileworkerstateworkernum while(1) { /* worker thread */ /* wait for work to do */ while(worker_state[workernum]==](https://slidetodoc.com/presentation_image_h/30c6e2dde5080f61845af1fa8b4d823e/image-30.jpg)

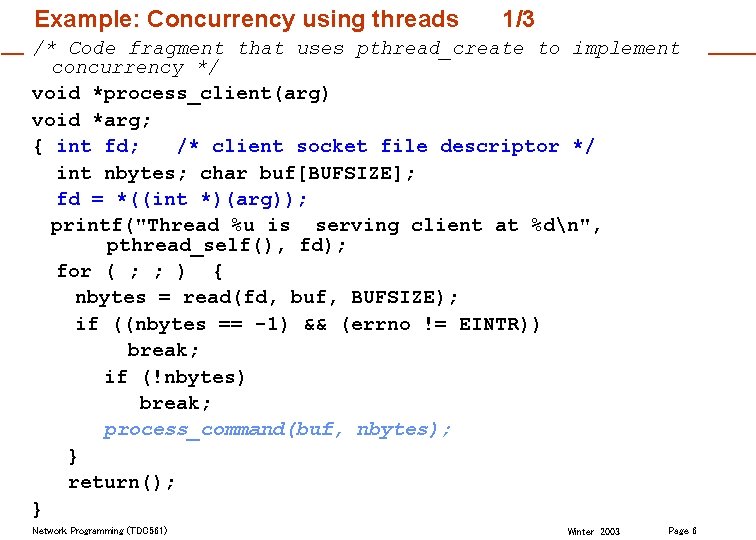

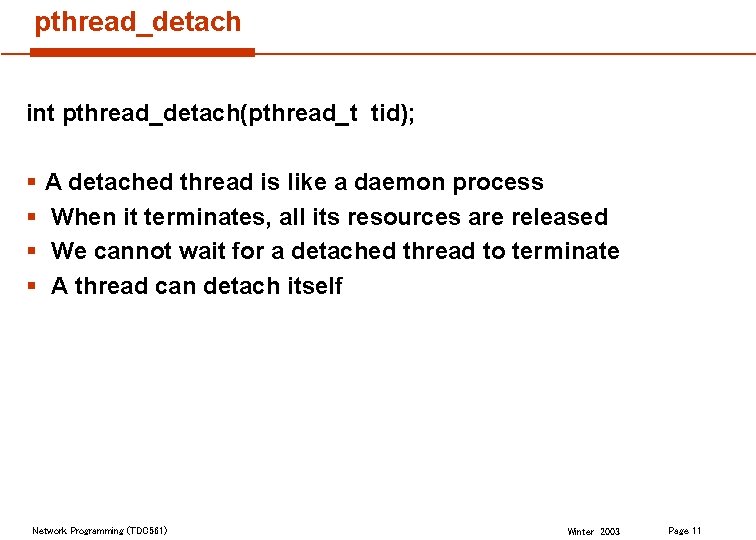

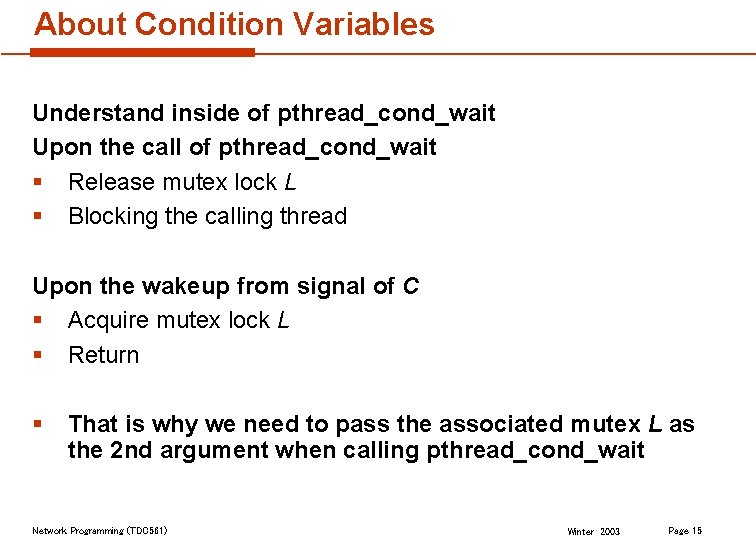

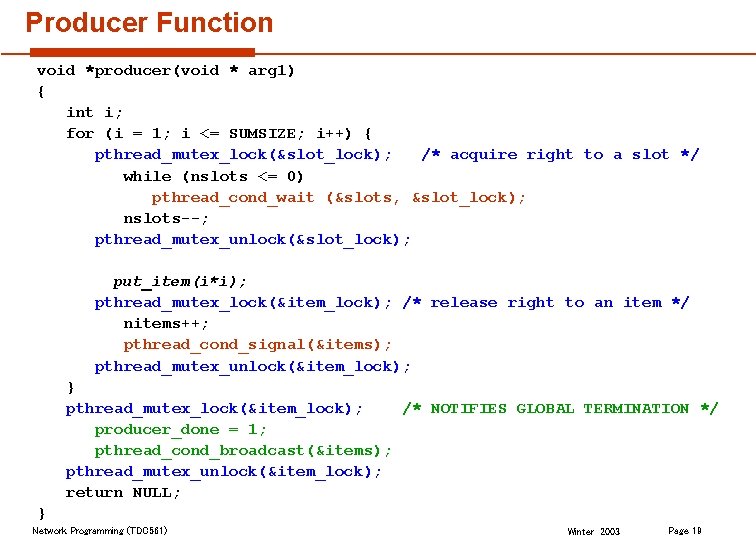

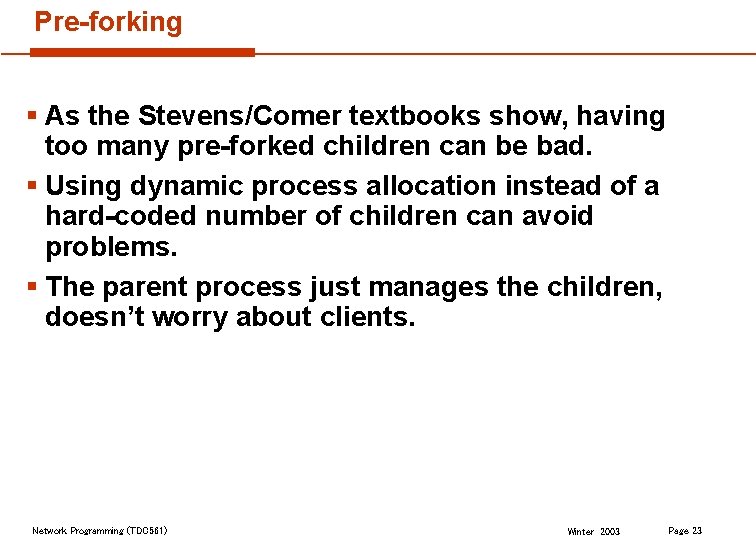

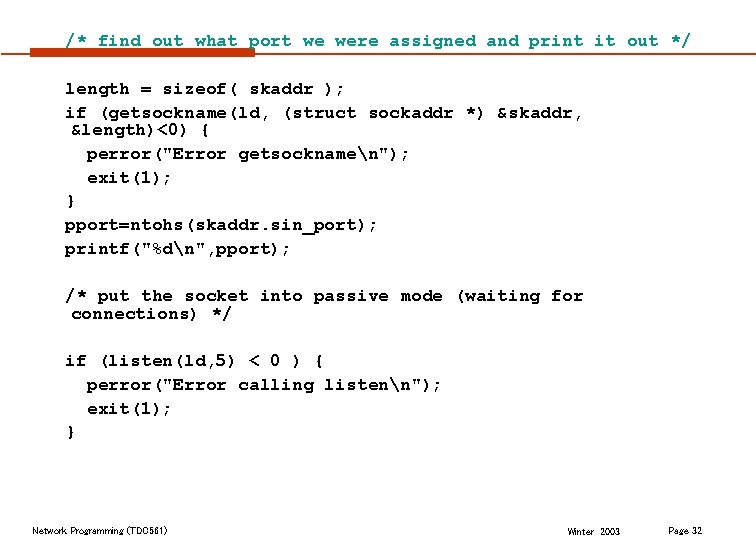

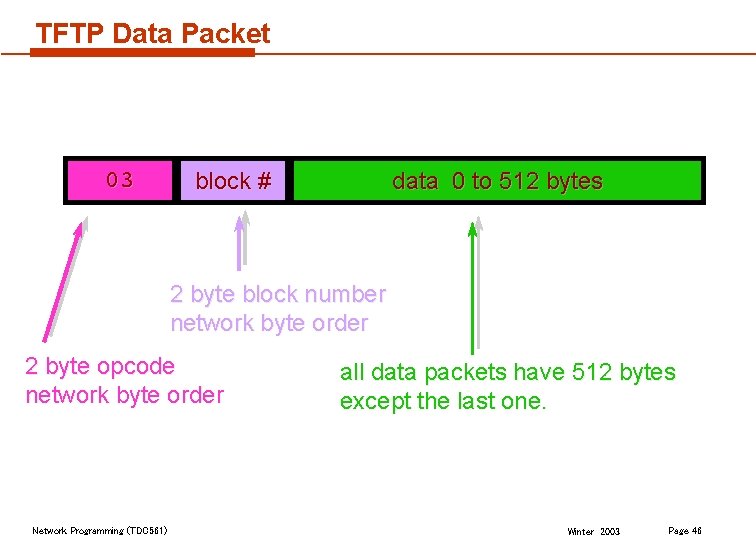

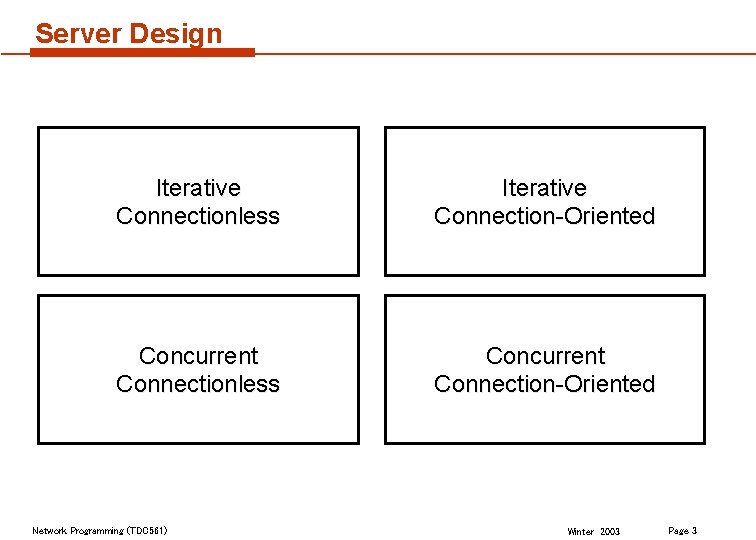

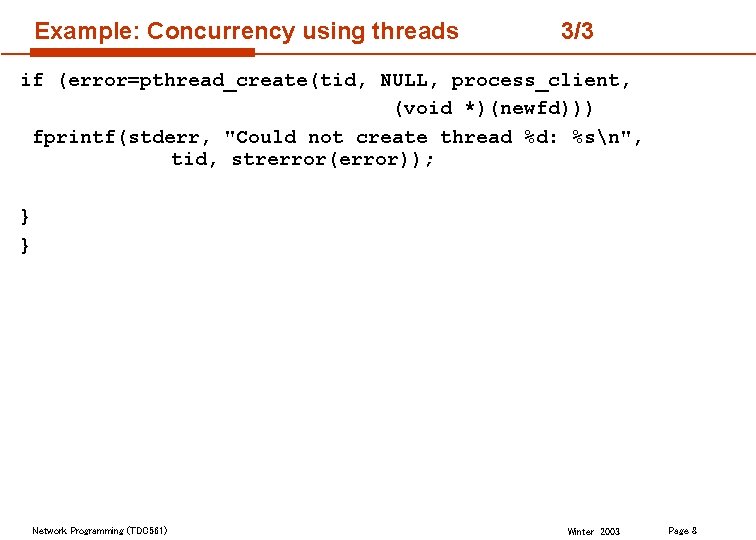

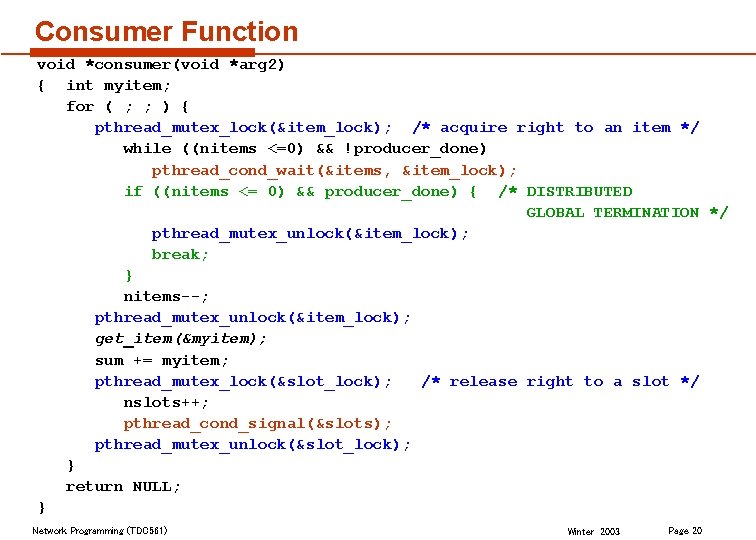

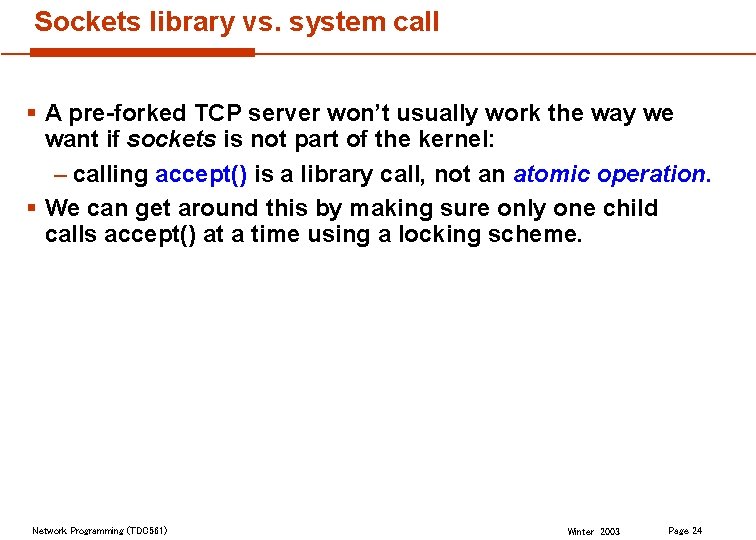

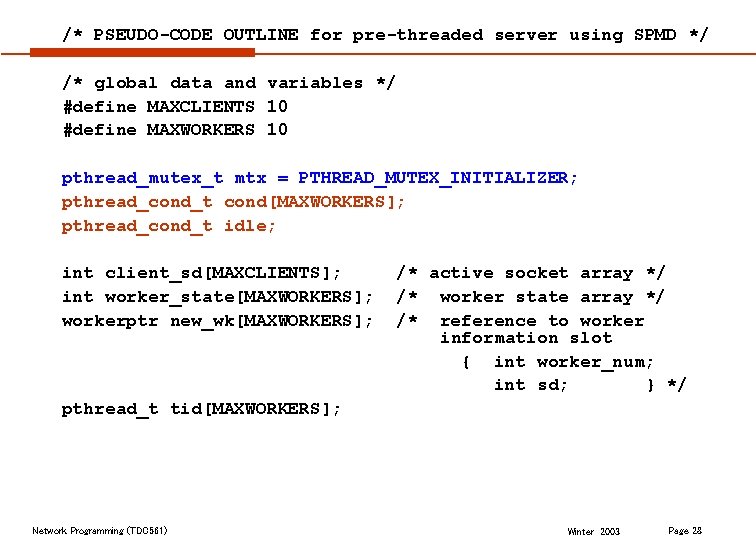

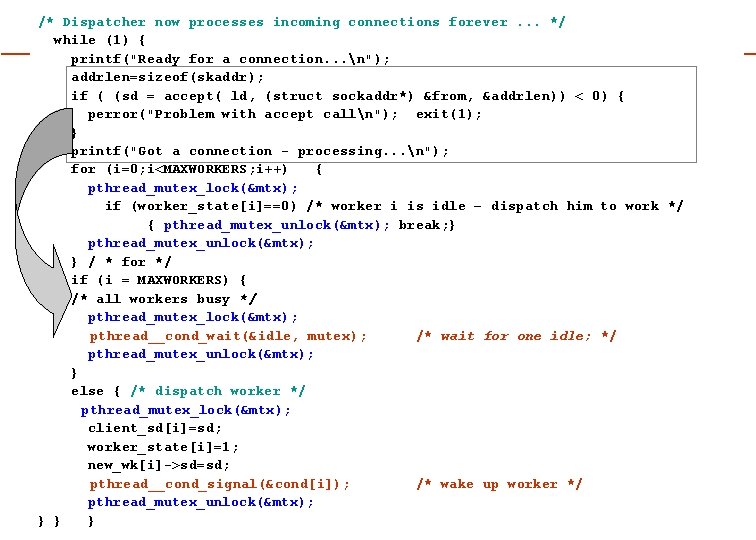

        while (1) {   /* worker thread main loop */
            /* wait for work to do: test the predicate only while holding mtx */
            pthread_mutex_lock(&mtx);
            while (worker_state[workernum] == 0)
                pthread_cond_wait(&cond[workernum], &mtx);
            sd = me->sd;   /* get the updated socket fd */
            pthread_mutex_unlock(&mtx);

            /* do the work requested */
            while ((n = read(sd, buf, BUFSIZE)) > 0) {
                do_work();
            }

            /* work done - set itself idle (assumes read() returned EOF) */
            pthread_mutex_lock(&mtx);
            close(client_sd[workernum]);
            worker_state[workernum] = 0;
            printf("Worker %d has completed work\n", workernum);
            pthread_cond_signal(&idle);   /* notifies dispatcher */
            pthread_mutex_unlock(&mtx);
        }   /* end while */

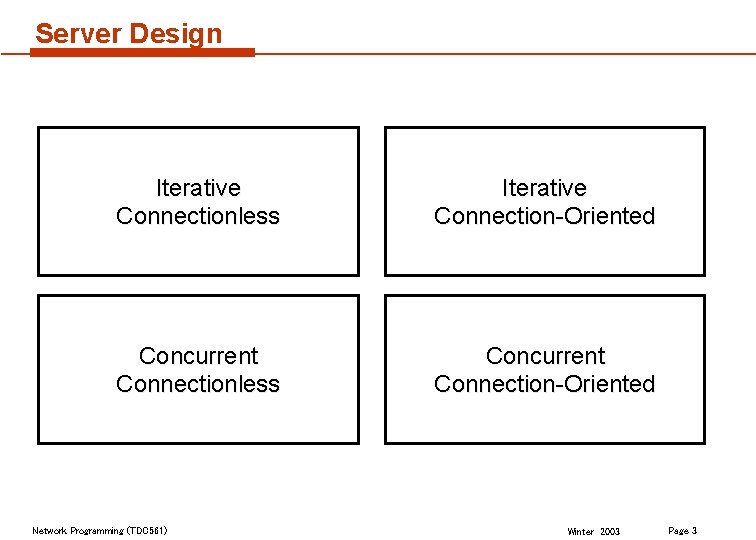

    int main()
    {   /* Dispatcher */
        int ld, sd;
        struct sockaddr_in skaddr;
        struct sockaddr_in from;
        socklen_t addrlen, length;
        int i;

        if ((ld = socket(AF_INET, SOCK_STREAM, 0)) < 0) {
            perror("Problem creating socket");
            exit(1);
        }
        memset(&skaddr, 0, sizeof(skaddr));
        skaddr.sin_family = AF_INET;
        skaddr.sin_addr.s_addr = htonl(INADDR_ANY);
        skaddr.sin_port = htons(0);   /* let the kernel choose a port */
        if (bind(ld, (struct sockaddr *) &skaddr, sizeof(skaddr)) < 0) {
            perror("Problem binding");
            exit(1);
        }

        /* find out what port we were assigned and print it out */
        length = sizeof(skaddr);
        if (getsockname(ld, (struct sockaddr *) &skaddr, &length) < 0) {
            perror("Error getsockname");
            exit(1);
        }
        int pport = ntohs(skaddr.sin_port);
        printf("%d\n", pport);

        /* put the socket into passive mode (waiting for connections) */
        if (listen(ld, 5) < 0) {
            perror("Error calling listen");
            exit(1);
        }

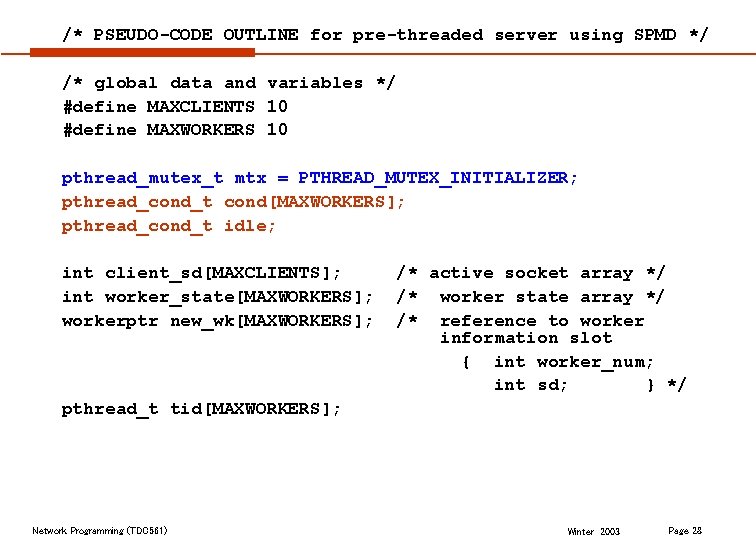

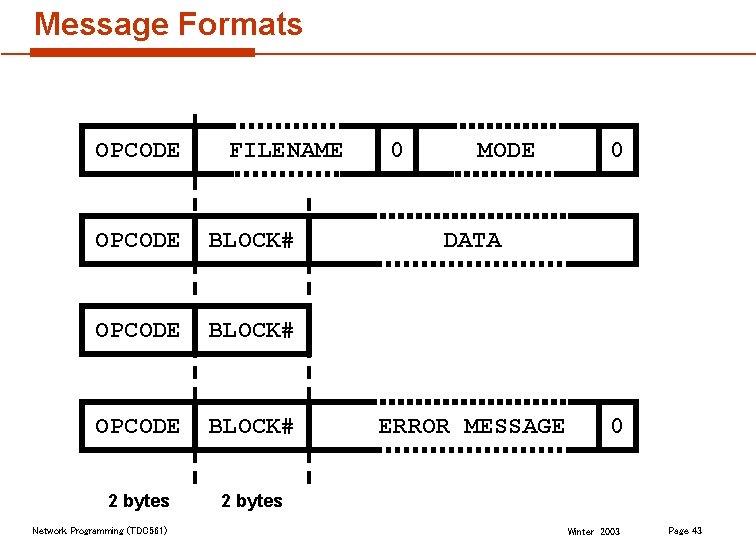

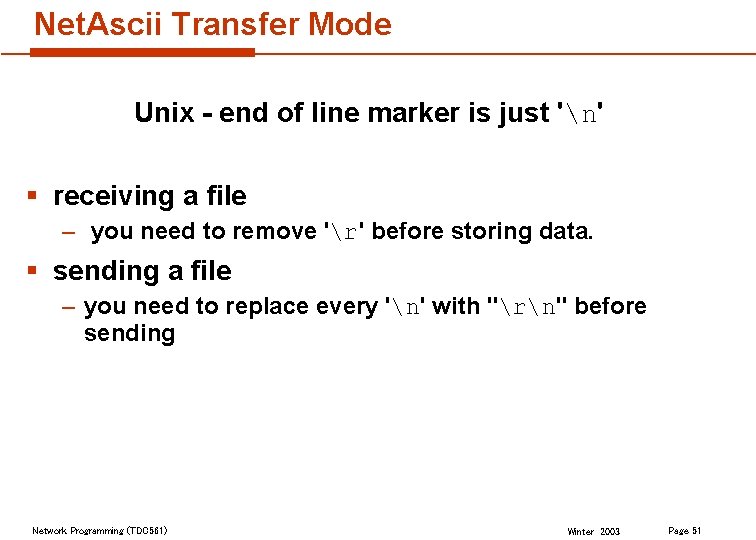

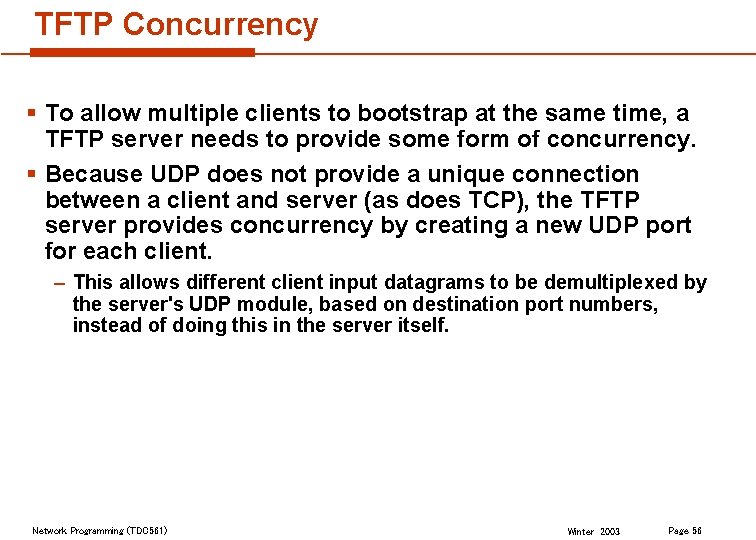

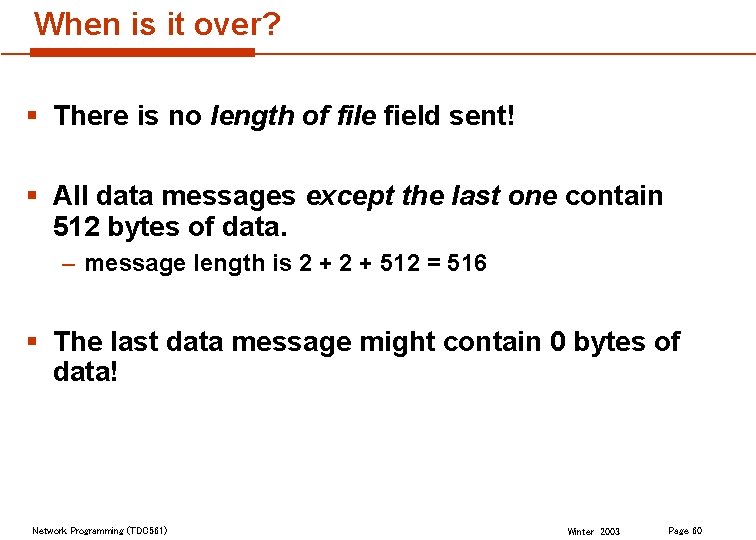

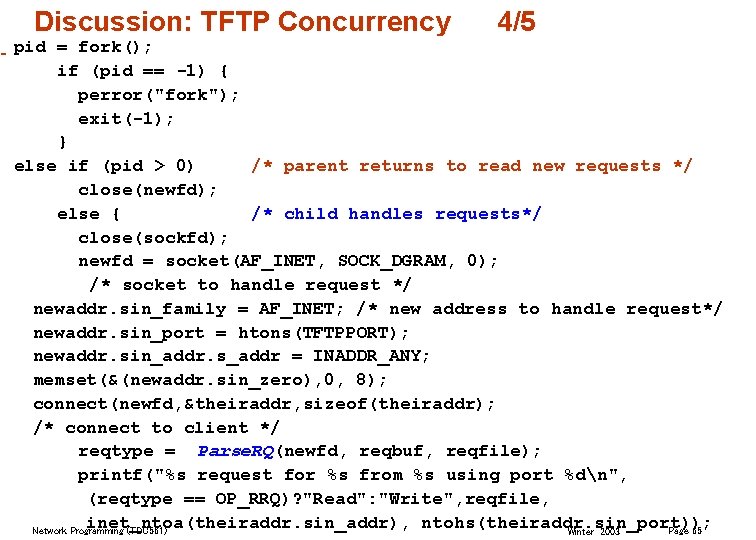

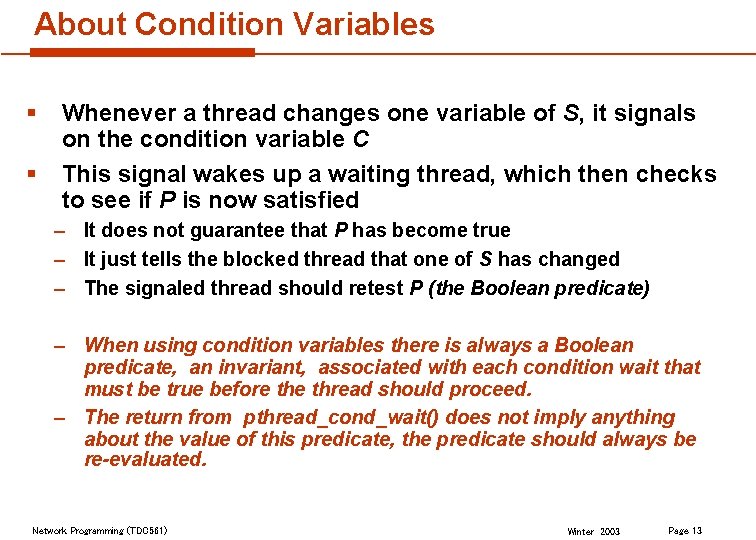

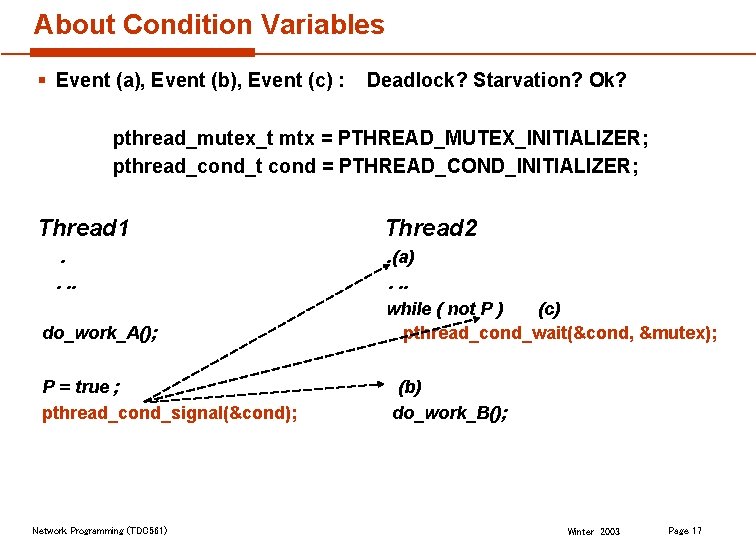

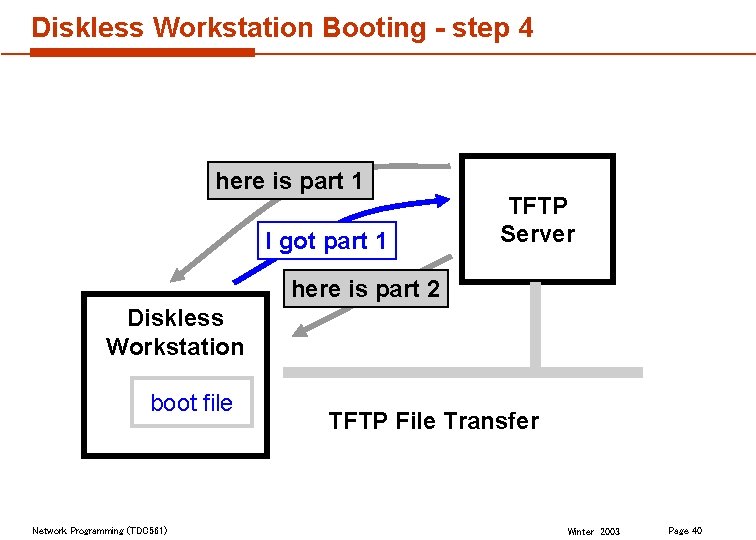

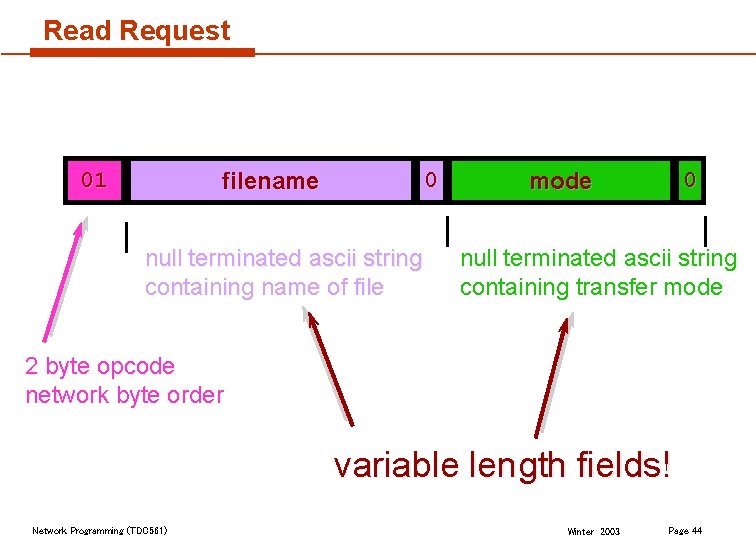

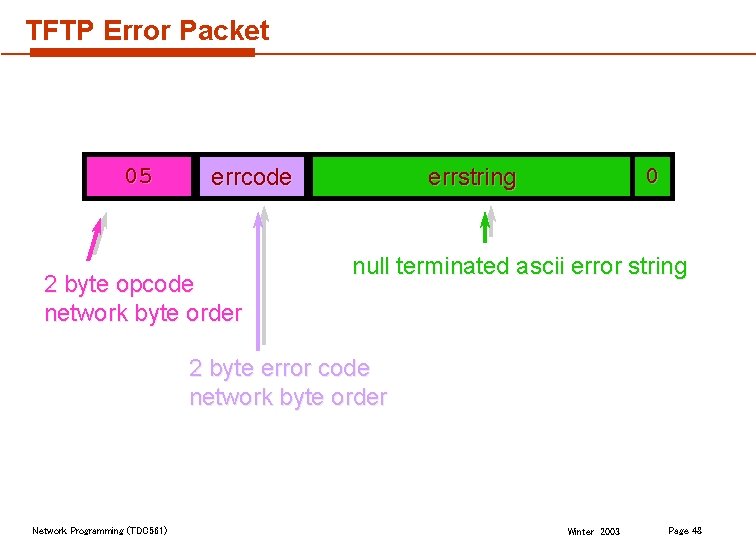

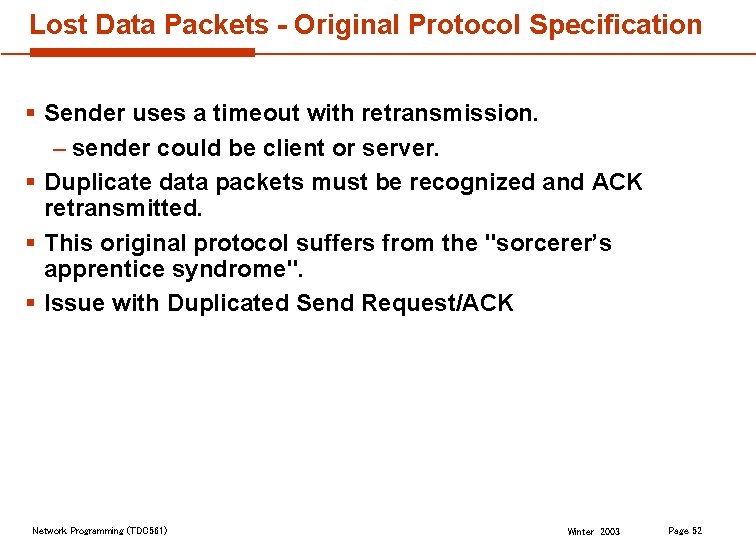

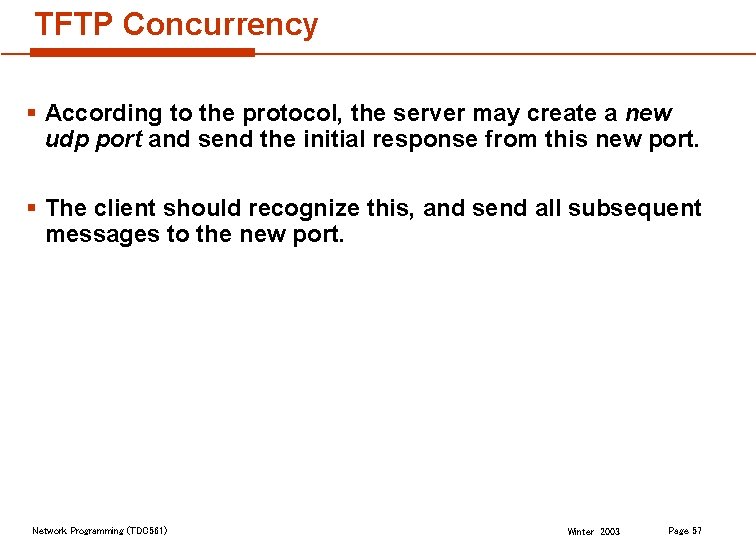

![do some initialization for i0 iMAXWORKERS i workerstatei0 newwki mallocsizeofworkerstruct /* do some initialization */ for (i=0; i<MAXWORKERS; i++) { worker_state[i]=0; new_wk[i] = malloc(sizeof(workerstruct));](https://slidetodoc.com/presentation_image_h/30c6e2dde5080f61845af1fa8b4d823e/image-33.jpg)

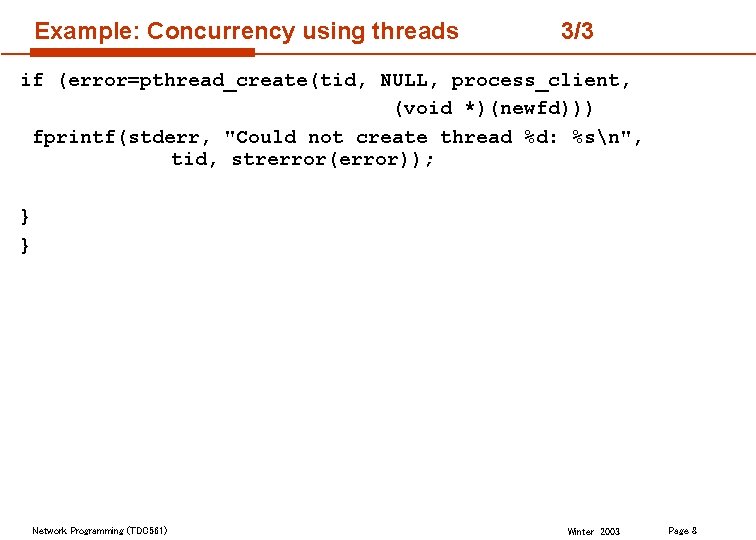

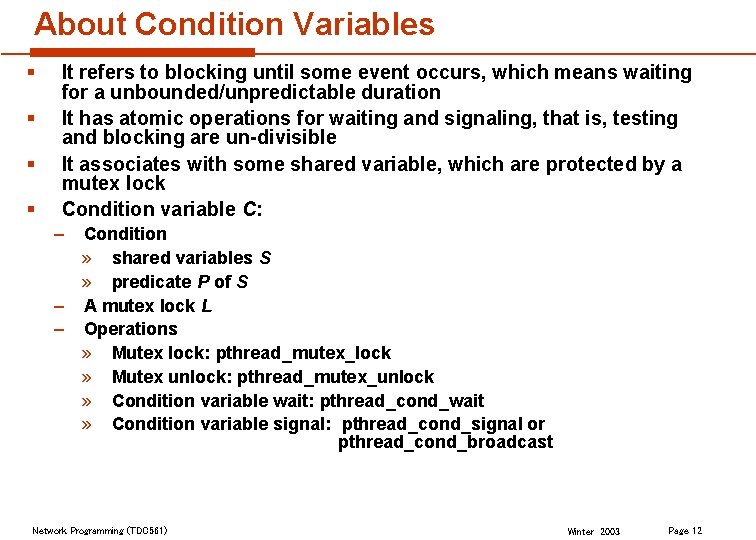

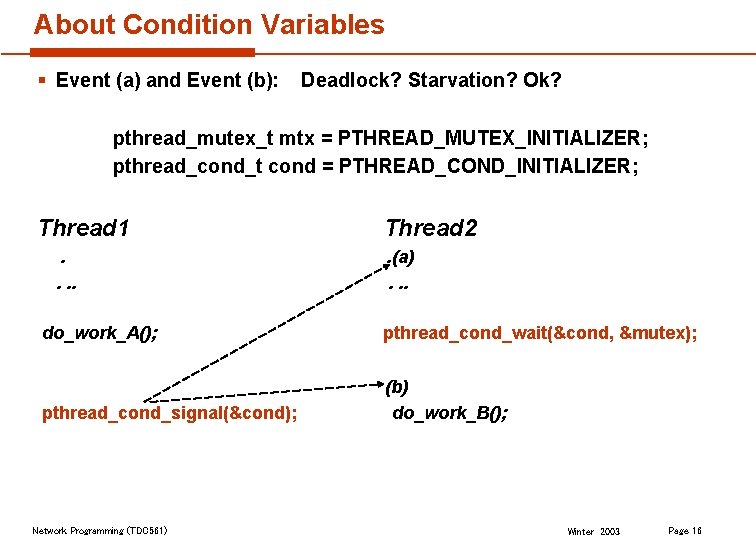

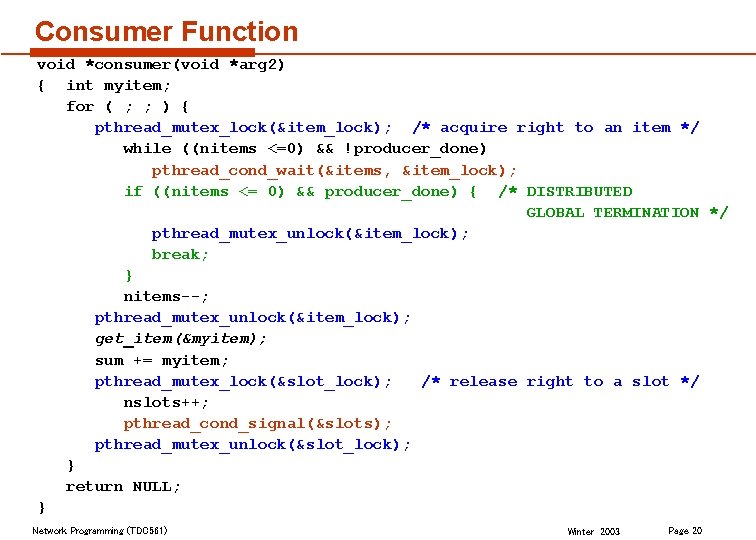

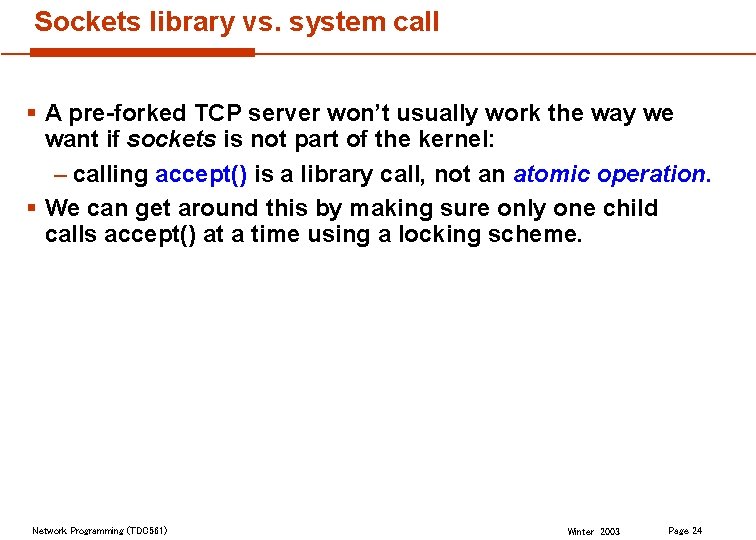

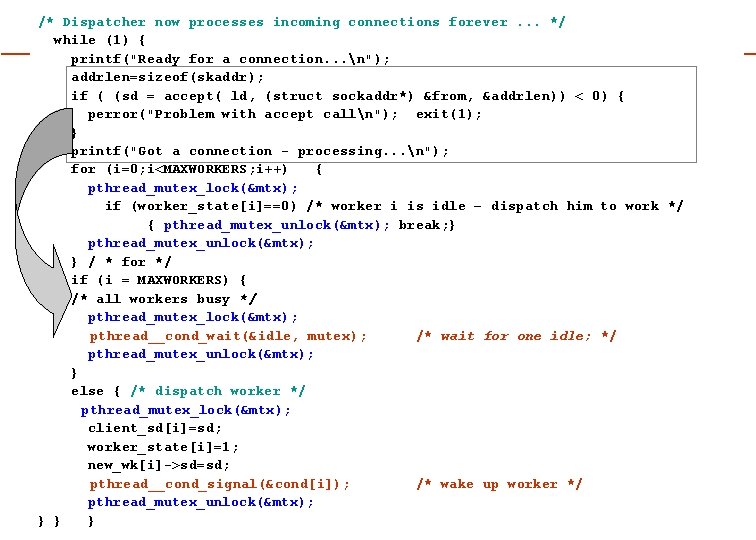

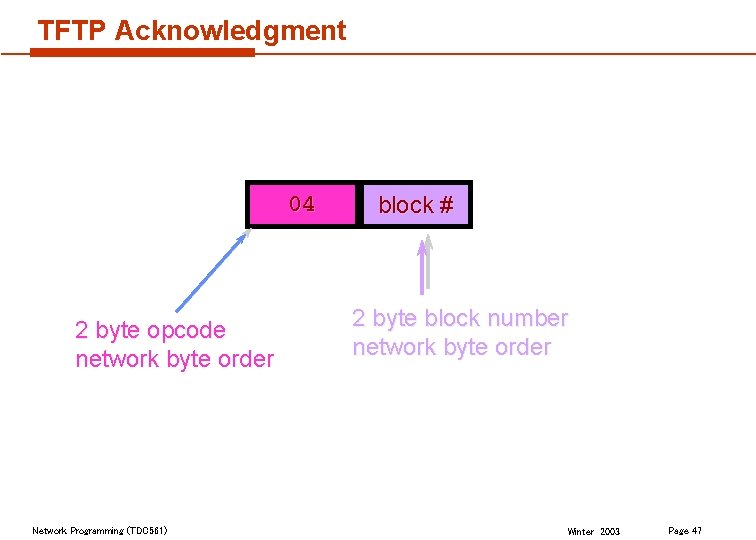

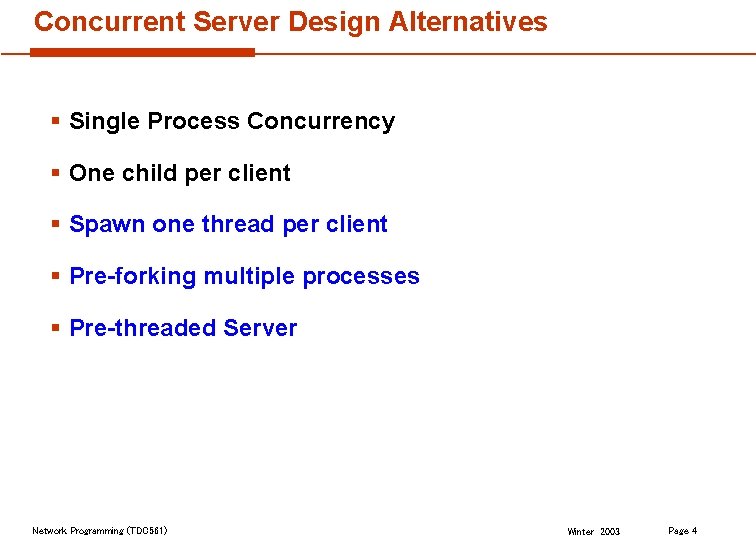

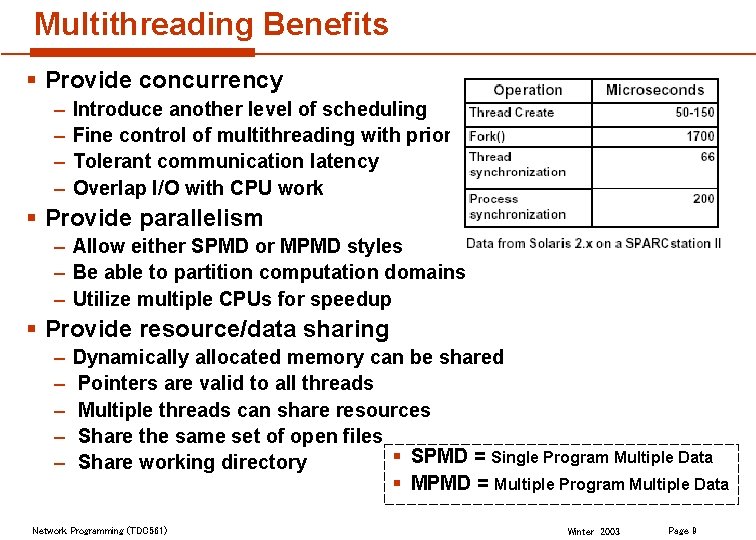

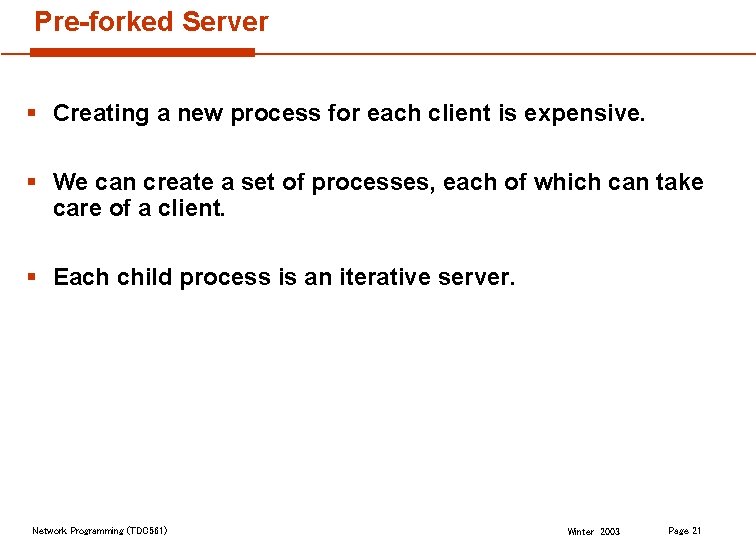

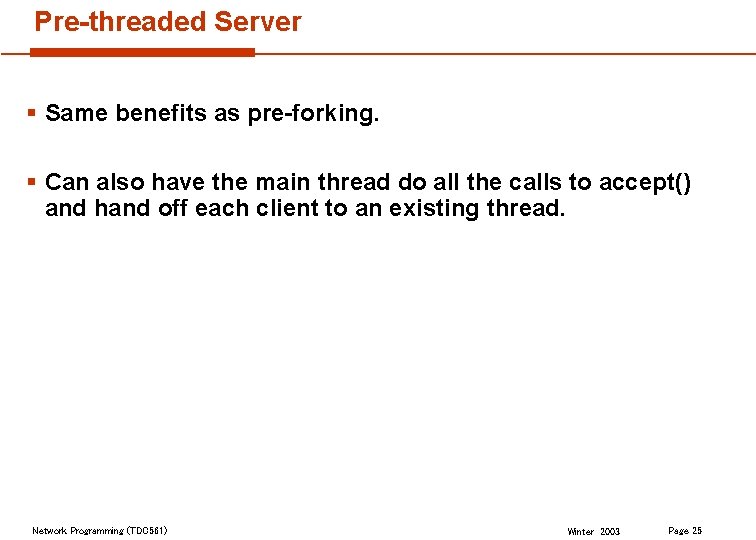

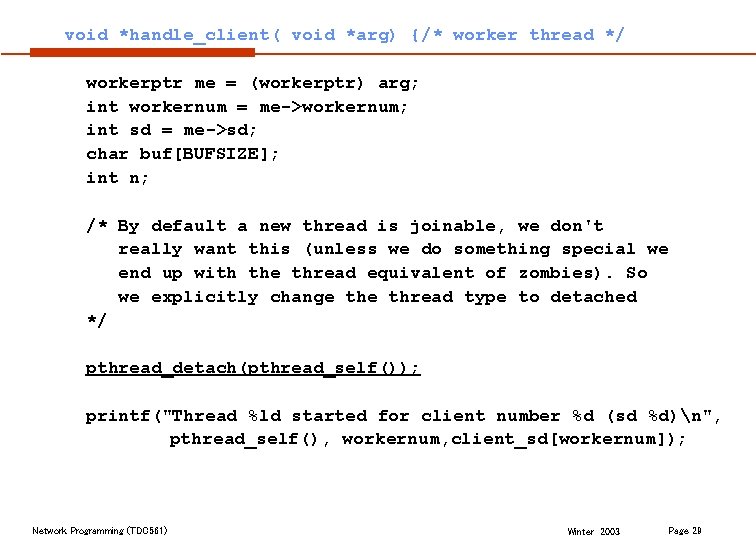

        /* do some initialization */
        for (i = 0; i < MAXWORKERS; i++) {
            worker_state[i] = 0;
            new_wk[i] = malloc(sizeof(workerstruct));
            if (new_wk[i] == NULL) {
                perror("malloc");
                exit(1);
            }
            new_wk[i]->workernum = i;
            new_wk[i]->sd = 0;
            pthread_cond_init(&cond[i], NULL);   /* PTHREAD_COND_INITIALIZER is only valid in a definition */
            pthread_create(&tid[i], NULL, handle_client, (void *) new_wk[i]);
        }

        /* Dispatcher now processes incoming connections forever... */
        while (1) {
            printf("Ready for a connection...\n");
            addrlen = sizeof(from);
            if ((sd = accept(ld, (struct sockaddr *) &from, &addrlen)) < 0) {
                perror("Problem with accept call");
                exit(1);
            }
            printf("Got a connection - processing...\n");

            pthread_mutex_lock(&mtx);
            for ( ; ; ) {   /* find an idle worker, waiting if all are busy */
                for (i = 0; i < MAXWORKERS; i++)
                    if (worker_state[i] == 0)   /* worker i is idle */
                        break;
                if (i < MAXWORKERS)
                    break;
                pthread_cond_wait(&idle, &mtx);   /* all busy: wait for one to go idle, then rescan */
            }
            /* dispatch worker i */
            client_sd[i] = sd;
            worker_state[i] = 1;
            new_wk[i]->sd = sd;
            pthread_cond_signal(&cond[i]);   /* wake up worker */
            pthread_mutex_unlock(&mtx);
        }
    }

Pre-Threaded Server (for Assignment #3)
- Safety requirement
  - Absence of deadlock (worker threads and dispatcher)
- Progress requirement
  - Absence of starvation (for either a worker thread or the dispatcher), given that there are incoming client requests
  - Fairness in handling incoming client requests (bound the waiting time for an external client, reduce the response time to an external client)
  - Guarantee distributed global termination for the server thread pool:
    - The dispatcher can decide to terminate the thread pool (dispatcher_is_done = true) and then notifies all worker threads about this (using pthread_cond_broadcast).
    - When a worker thread becomes idle and dispatcher_is_done is set, it does not block any more; it returns, and therefore the worker thread terminates.
    - Eventually, after a finite interval of time, all worker threads terminate.
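
The termination protocol described above can be sketched as follows, assuming a simple counter of pending requests in place of real client sockets (the counter and function names are hypothetical; only dispatcher_is_done comes from the slide). A worker exits only when it is idle and the flag is set, so queued work is drained before shutdown:

```c
#include <pthread.h>
#include <stdbool.h>
#include <stddef.h>

#define NWORKERS 4

static pthread_mutex_t pool_mtx = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t  work_cond = PTHREAD_COND_INITIALIZER;
static bool dispatcher_is_done = false;   /* set once by the dispatcher */
static int  pending_work = 0;             /* queued client requests (stand-in) */
static int  exited = 0;                   /* workers that have terminated */

static void *worker(void *arg)
{
    (void)arg;
    pthread_mutex_lock(&pool_mtx);
    for (;;) {
        while (pending_work == 0 && !dispatcher_is_done)
            pthread_cond_wait(&work_cond, &pool_mtx);
        if (pending_work == 0 && dispatcher_is_done)
            break;                        /* idle and told to stop: do not block again */
        pending_work--;
        pthread_mutex_unlock(&pool_mtx);
        /* ... serve one client here, without holding the lock ... */
        pthread_mutex_lock(&pool_mtx);
    }
    exited++;
    pthread_mutex_unlock(&pool_mtx);
    return NULL;
}

/* Dispatcher: queue `jobs` requests, then terminate the pool.
   Returns how many workers terminated. */
int run_and_shutdown(int jobs)
{
    pthread_t t[NWORKERS];
    int n = 0;
    while (n < NWORKERS && pthread_create(&t[n], NULL, worker, NULL) == 0)
        n++;

    pthread_mutex_lock(&pool_mtx);
    pending_work += jobs;
    dispatcher_is_done = true;            /* no more work will arrive */
    pthread_cond_broadcast(&work_cond);   /* wake every idle worker so it sees the flag */
    pthread_mutex_unlock(&pool_mtx);

    for (int i = 0; i < n; i++)
        pthread_join(t[i], NULL);
    return exited;
}

/* Work left unserved; call only after run_and_shutdown() has returned. */
int work_left(void) { return pending_work; }
```

Because the dispatcher broadcasts rather than signals, every idle worker wakes, sees the flag, and returns, so all the joins complete after a finite time.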

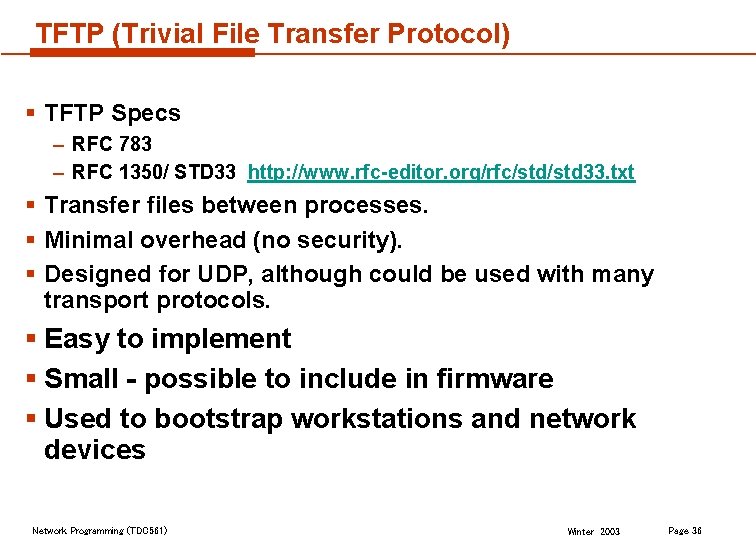

TFTP (Trivial File Transfer Protocol)
- TFTP specifications
  - RFC 783
  - RFC 1350 / STD 33: http://www.rfc-editor.org/rfc/std33.txt
- Transfers files between processes.
- Minimal overhead (no security).
- Designed for UDP, although it could be used with many transport protocols.
- Easy to implement.
- Small: possible to include in firmware.
- Used to bootstrap workstations and network devices.

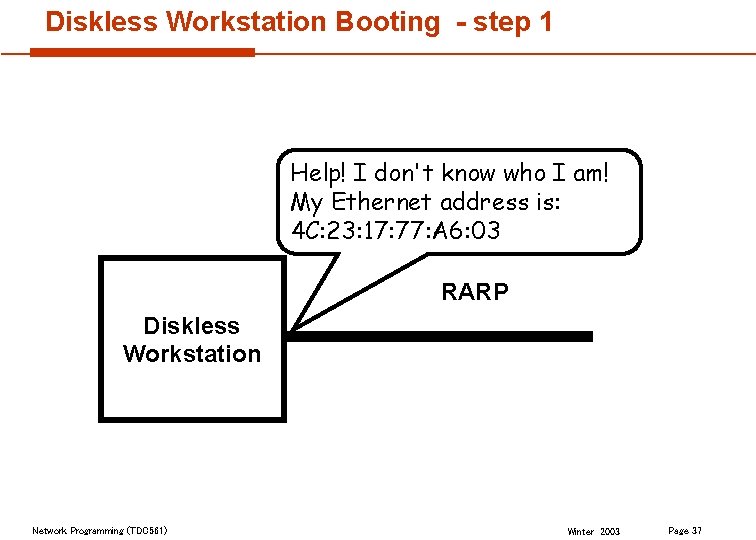

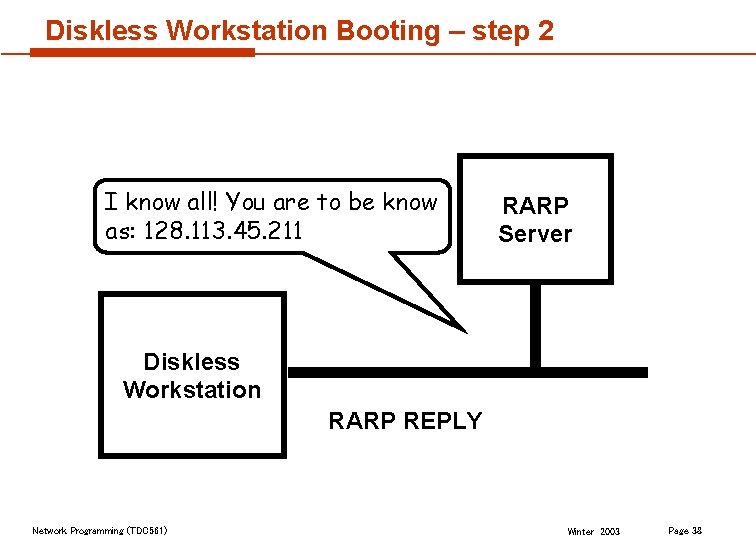

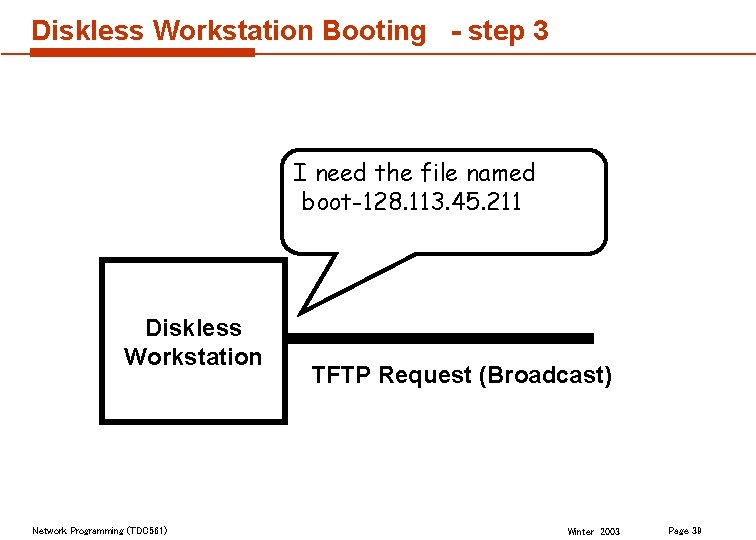

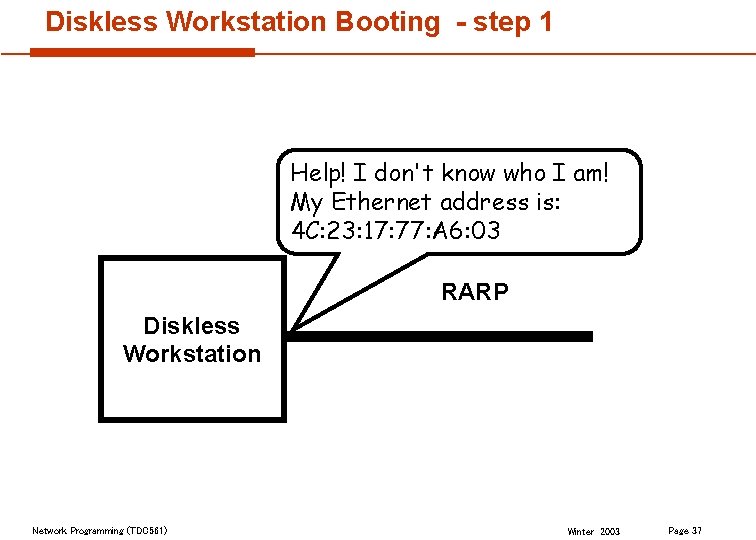

Diskless Workstation Booting - step 1
Diskless workstation (RARP request): "Help! I don't know who I am! My Ethernet address is: 4C:23:17:77:A6:03"

Diskless Workstation Booting - step 2
RARP server (RARP reply to the diskless workstation): "I know all! You are to be known as: 128.113.45.211"

Diskless Workstation Booting - step 3
Diskless workstation (TFTP request, broadcast): "I need the file named boot-128.113.45.211"

Diskless Workstation Booting - step 4
TFTP file transfer of the boot file from the TFTP server to the diskless workstation:
- TFTP server: "here is part 1"
- Diskless workstation: "I got part 1"
- TFTP server: "here is part 2"

TFTP Protocol
5 message types:
- Read request
- Write request
- Data
- ACK (acknowledgment)
- Error

Messages
- Each message is an independent UDP datagram.
- Each has a 2-byte opcode (the first 2 bytes).
- The rest depends on the opcode.
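
Because each message is a self-contained datagram, a receiver can dispatch on the first two bytes alone. A minimal sketch, assuming an enum whose OP_RRQ and OP_WRQ names match those used in the server code later in these slides (the other names and the function are assumptions):

```c
#include <stddef.h>
#include <stdint.h>

/* TFTP opcodes from RFC 1350. */
enum tftp_opcode { OP_RRQ = 1, OP_WRQ = 2, OP_DATA = 3, OP_ACK = 4, OP_ERROR = 5 };

/* Return the opcode of a received datagram, or -1 if it is too short or unknown.
   The opcode is the first 2 bytes, in network byte order (high byte first). */
int tftp_get_opcode(const uint8_t *pkt, size_t len)
{
    if (len < 2)
        return -1;
    int op = (pkt[0] << 8) | pkt[1];
    return (op >= OP_RRQ && op <= OP_ERROR) ? op : -1;
}
```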

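Message Formats

| Message | Fields |
|---|---|
| RRQ / WRQ | opcode (2 bytes), filename, 0, mode, 0 |
| DATA | opcode (2 bytes), block # (2 bytes), data |
| ACK | opcode (2 bytes), block # (2 bytes) |
| ERROR | opcode (2 bytes), error code (2 bytes), error message, 0 |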

Read Request (RRQ)
Layout: | 01 | filename | 0 | mode | 0 |
- opcode 01: 2 bytes, network byte order
- filename: null-terminated ASCII string containing the name of the file
- mode: null-terminated ASCII string containing the transfer mode
- The filename and mode are variable-length fields!

Write Request (WRQ)
Layout: | 02 | filename | 0 | mode | 0 |
- opcode 02: 2 bytes, network byte order
- filename: null-terminated ASCII string containing the name of the file
- mode: null-terminated ASCII string containing the transfer mode
- The filename and mode are variable-length fields!
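
Both request types share one layout and differ only in the opcode, so one builder covers them. A minimal sketch, assuming a caller-supplied buffer (the function name is hypothetical):

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Build an RRQ (opcode 1) or WRQ (opcode 2) packet:
       | opcode (2 bytes) | filename | 0 | mode | 0 |
   Returns the packet length, or -1 if it does not fit in buf. */
long tftp_build_request(uint8_t *buf, size_t buflen, uint16_t opcode,
                        const char *filename, const char *mode)
{
    size_t flen = strlen(filename), mlen = strlen(mode);
    size_t total = 2 + flen + 1 + mlen + 1;
    if (total > buflen)
        return -1;
    buf[0] = (uint8_t)(opcode >> 8);          /* network byte order: high byte first */
    buf[1] = (uint8_t)(opcode & 0xff);
    memcpy(buf + 2, filename, flen + 1);      /* includes the terminating 0 */
    memcpy(buf + 2 + flen + 1, mode, mlen + 1);
    return (long)total;
}
```

For example, a read request for boot in octet mode is 13 bytes: 00 01, then boot and a 0 byte, then octet and a 0 byte.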

TFTP Data Packet
Layout: | 03 | block # | data |
- opcode 03: 2 bytes, network byte order
- block number: 2 bytes, network byte order
- data: 0 to 512 bytes; all data packets have 512 bytes except the last one
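
A minimal sketch of building a DATA packet, with a hypothetical helper name. The 4-byte header plus at most 512 data bytes gives the 516-byte maximum packet size:

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define TFTP_SEGSIZE 512   /* maximum data bytes per DATA packet */

/* Build a DATA packet:  | 03 | block # (2 bytes) | data (0..512 bytes) |
   Returns the packet length (4 + datalen), or -1 on bad arguments. */
long tftp_build_data(uint8_t *buf, size_t buflen, uint16_t block,
                     const uint8_t *data, size_t datalen)
{
    if (datalen > TFTP_SEGSIZE || buflen < 4 + datalen)
        return -1;
    buf[0] = 0;
    buf[1] = 3;                               /* opcode DATA */
    buf[2] = (uint8_t)(block >> 8);           /* block number, network byte order */
    buf[3] = (uint8_t)(block & 0xff);
    if (datalen > 0)
        memcpy(buf + 4, data, datalen);
    return (long)(4 + datalen);
}
```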

TFTP Acknowledgment
Layout: | 04 | block # |
- opcode 04: 2 bytes, network byte order
- block number: 2 bytes, network byte order
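
A minimal sketch of building and validating ACK packets (function names are hypothetical). Checking the exact length and opcode lets the sender discard stray datagrams before it compares block numbers:

```c
#include <stddef.h>
#include <stdint.h>

/* Build an ACK packet: | 04 | block # (2 bytes) |  (always 4 bytes). */
void tftp_build_ack(uint8_t out[4], uint16_t block)
{
    out[0] = 0;
    out[1] = 4;                               /* opcode ACK */
    out[2] = (uint8_t)(block >> 8);           /* block number, network byte order */
    out[3] = (uint8_t)(block & 0xff);
}

/* Parse a received ACK. Returns 0 and stores the block number,
   or -1 if the datagram is not a well-formed ACK. */
int tftp_parse_ack(const uint8_t *pkt, size_t len, uint16_t *block)
{
    if (len != 4 || pkt[0] != 0 || pkt[1] != 4)
        return -1;
    *block = (uint16_t)((pkt[2] << 8) | pkt[3]);
    return 0;
}
```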

TFTP Error Packet
Layout: | 05 | errcode | errstring | 0 |
- opcode 05: 2 bytes, network byte order
- error code: 2 bytes, network byte order
- errstring: null-terminated ASCII error string

TFTP Error Codes
- 0: Not defined (see the error message)
- 1: File not found
- 2: Access violation
- 3: Disk full (or allocation exceeded)
- 4: Illegal TFTP operation
- 5: Unknown transfer ID (port)
- 6: File already exists
- 7: No such user
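
A minimal sketch of an ERROR packet builder, with the RFC 1350 codes as an enum (the identifier names are illustrative, not from a standard header):

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Error codes from RFC 1350 (identifier names are illustrative). */
enum tftp_error {
    TFTP_ERR_UNDEF    = 0,  /* not defined, see error message */
    TFTP_ERR_NOTFOUND = 1,  /* file not found */
    TFTP_ERR_ACCESS   = 2,  /* access violation */
    TFTP_ERR_DISKFULL = 3,  /* disk full or allocation exceeded */
    TFTP_ERR_BADOP    = 4,  /* illegal TFTP operation */
    TFTP_ERR_BADTID   = 5,  /* unknown transfer ID */
    TFTP_ERR_EXISTS   = 6,  /* file already exists */
    TFTP_ERR_NOUSER   = 7   /* no such user */
};

/* Build an ERROR packet: | 05 | errcode (2 bytes) | errstring | 0 |
   Returns the packet length, or -1 if it does not fit in buf. */
long tftp_build_error(uint8_t *buf, size_t buflen, uint16_t code, const char *msg)
{
    size_t mlen = strlen(msg);
    if (buflen < 4 + mlen + 1)
        return -1;
    buf[0] = 0;
    buf[1] = 5;                               /* opcode ERROR */
    buf[2] = (uint8_t)(code >> 8);            /* error code, network byte order */
    buf[3] = (uint8_t)(code & 0xff);
    memcpy(buf + 4, msg, mlen + 1);           /* includes the terminating 0 */
    return (long)(4 + mlen + 1);
}
```

For example, code TFTP_ERR_NOTFOUND with the message "File not found" produces a 19-byte packet: 4 header bytes, 14 message bytes, and the terminating 0.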

TFTP transfer modes
- "netascii": for transferring text files
  - All lines end with \r\n (CR, LF).
  - Provides a standard format for transferring text files.
  - Both ends are responsible for converting to/from netascii format.
- "octet": for transferring binary files
  - No translation is done.

Netascii Transfer Mode
On Unix, the end-of-line marker is just '\n'.
- Receiving a file: you need to remove the '\r' before each '\n' before storing the data.
- Sending a file: you need to replace every '\n' with "\r\n" before sending.
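
A minimal sketch of both conversions as described above (function names are hypothetical):

```c
#include <stddef.h>

/* Unix -> netascii (sending): every '\n' becomes "\r\n".
   out must have room for 2 * len bytes. Returns the number of bytes written. */
size_t to_netascii(const char *in, size_t len, char *out)
{
    size_t o = 0;
    for (size_t i = 0; i < len; i++) {
        if (in[i] == '\n')
            out[o++] = '\r';
        out[o++] = in[i];
    }
    return o;
}

/* netascii -> Unix (receiving): drop each '\r' that is immediately followed
   by '\n'. out must have room for len bytes. Returns the number of bytes written. */
size_t from_netascii(const char *in, size_t len, char *out)
{
    size_t o = 0;
    for (size_t i = 0; i < len; i++) {
        if (in[i] == '\r' && i + 1 < len && in[i + 1] == '\n')
            continue;
        out[o++] = in[i];
    }
    return o;
}
```

This sketch converts one buffer at a time. A real implementation also has to handle a "\r\n" pair split across two 512-byte DATA packets, and re-split the expanded text into 512-byte blocks when sending. The Telnet conventions that RFC 1350 refers to for netascii also send a bare CR as CR NUL, which is ignored here.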

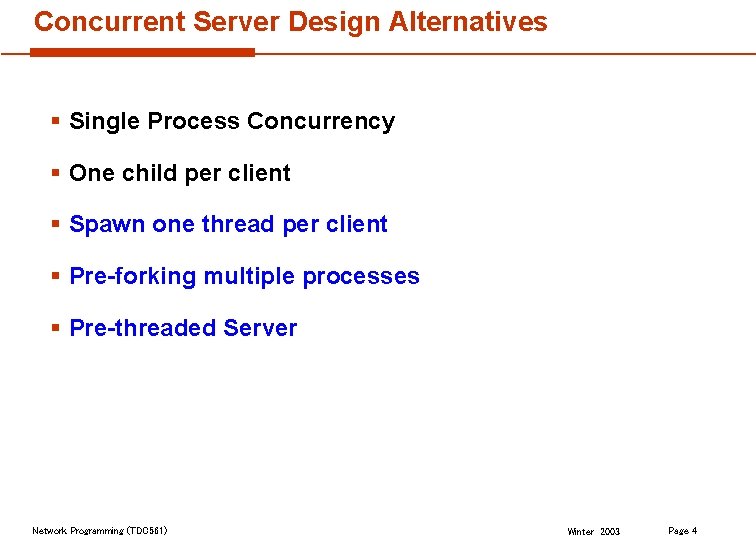

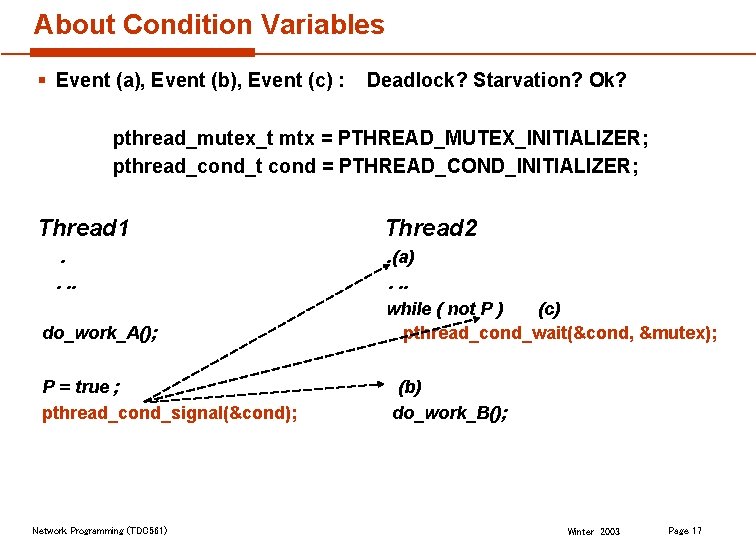

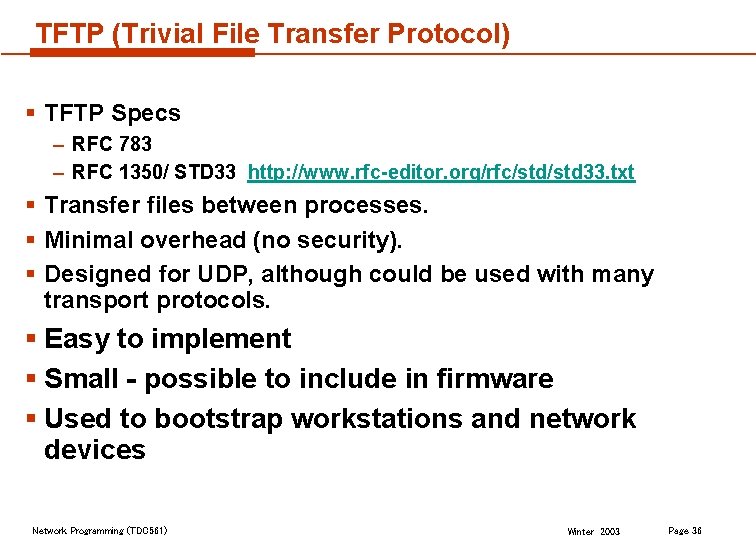

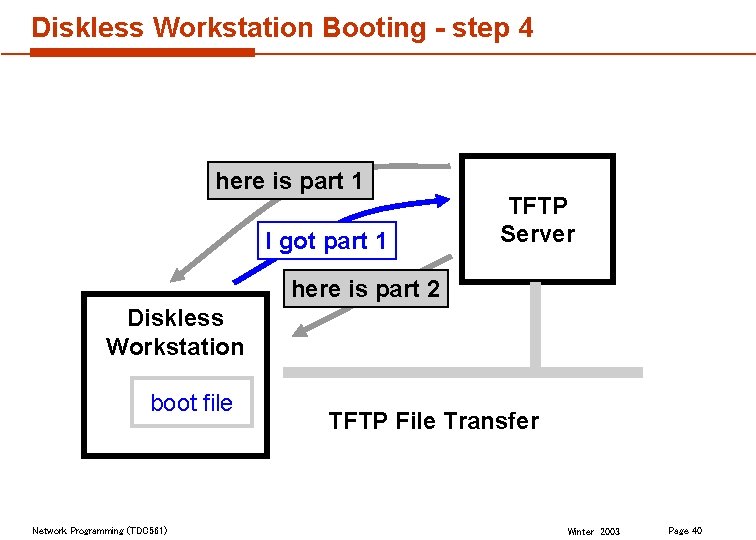

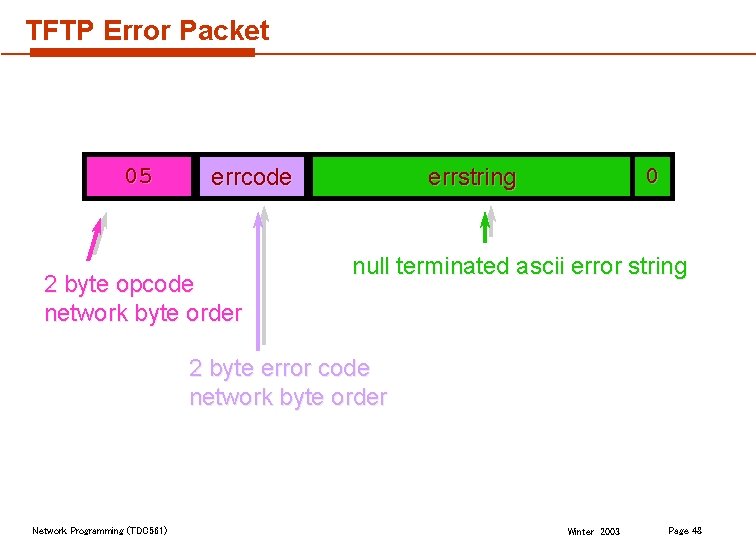

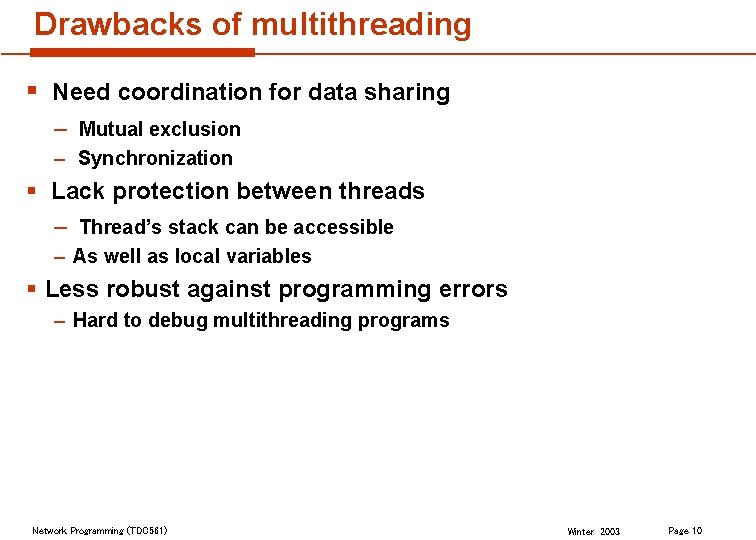

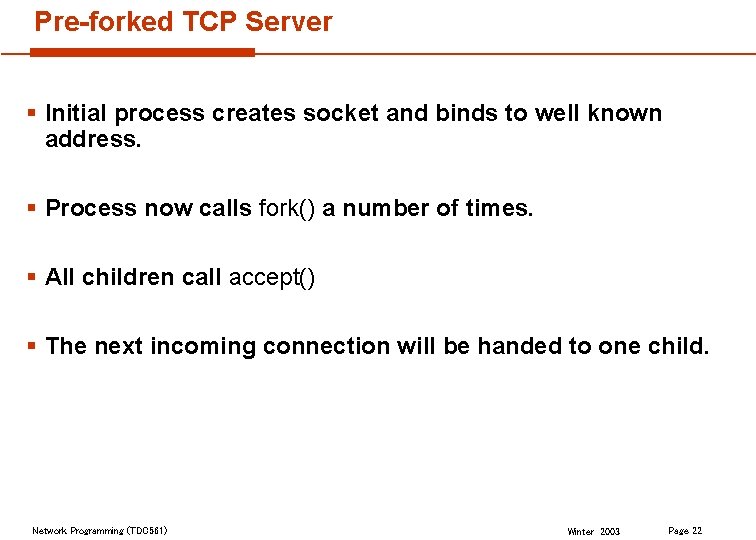

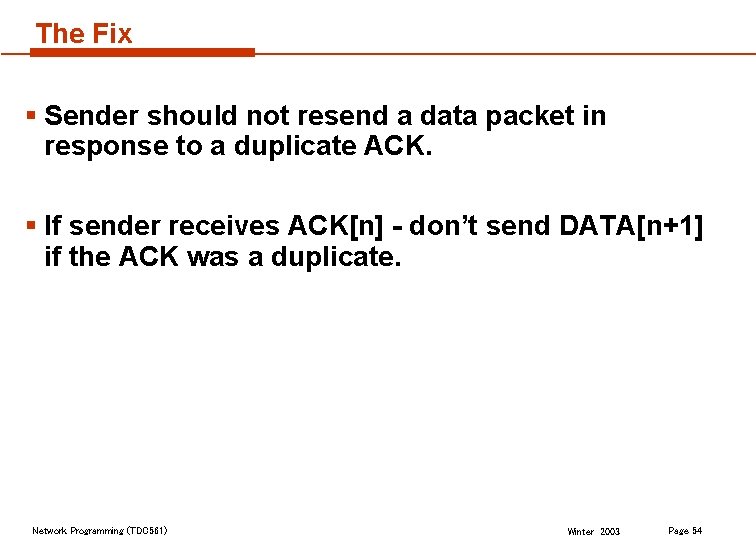

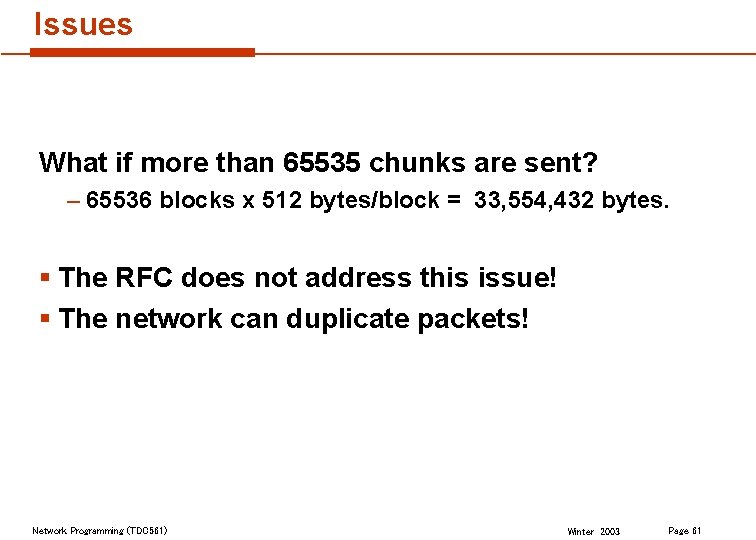

Lost Data Packets - Original Protocol Specification
- The sender uses a timeout with retransmission.
  - The sender could be the client or the server.
- Duplicate data packets must be recognized, and the ACK retransmitted.
- This original protocol suffers from the "Sorcerer’s Apprentice Syndrome".
- The issue comes from duplicated DATA and ACK packets.
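
The sender's timeout can be implemented by waiting for the reply with poll() before reading it. A minimal sketch, with a hypothetical helper name and the timeout given in milliseconds:

```c
#include <poll.h>
#include <sys/socket.h>
#include <sys/types.h>

/* Wait up to timeout_ms for the next datagram on fd.
   Returns the number of bytes received, 0 on timeout (the caller should
   retransmit its last packet), or -1 on error. TFTP packets are never
   shorter than 4 bytes, so 0 is unambiguous here. */
ssize_t recv_with_timeout(int fd, void *buf, size_t len, int timeout_ms)
{
    struct pollfd pfd = { .fd = fd, .events = POLLIN, .revents = 0 };
    int r = poll(&pfd, 1, timeout_ms);
    if (r == 0)
        return 0;                  /* timed out */
    if (r < 0)
        return -1;                 /* e.g. EINTR: the caller may simply retry */
    return recv(fd, buf, len, 0);
}
```

A sender would send its packet, call recv_with_timeout(), and retransmit the same packet each time it returns 0, giving up after some fixed number of attempts.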

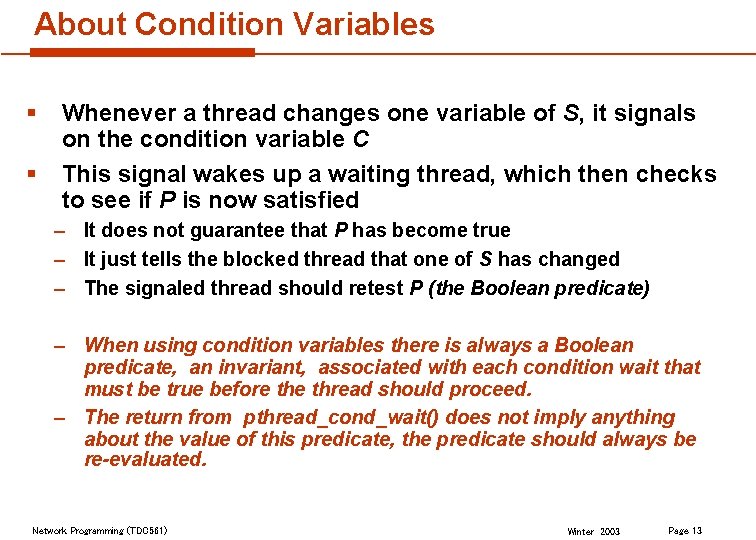

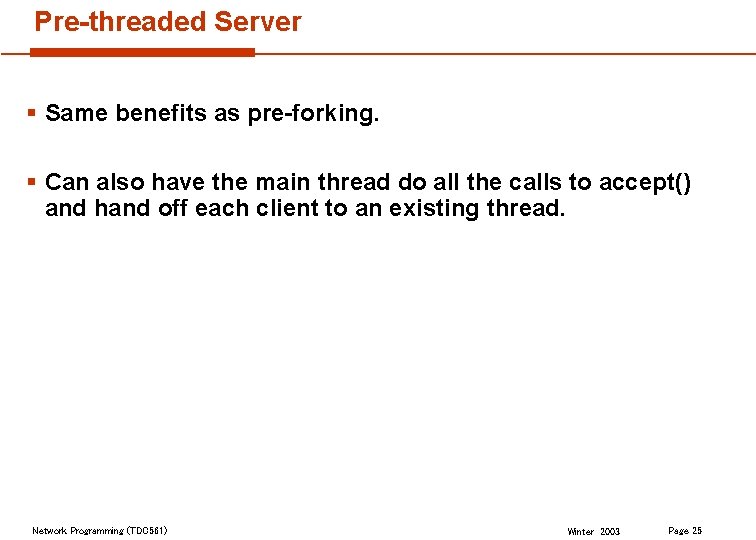

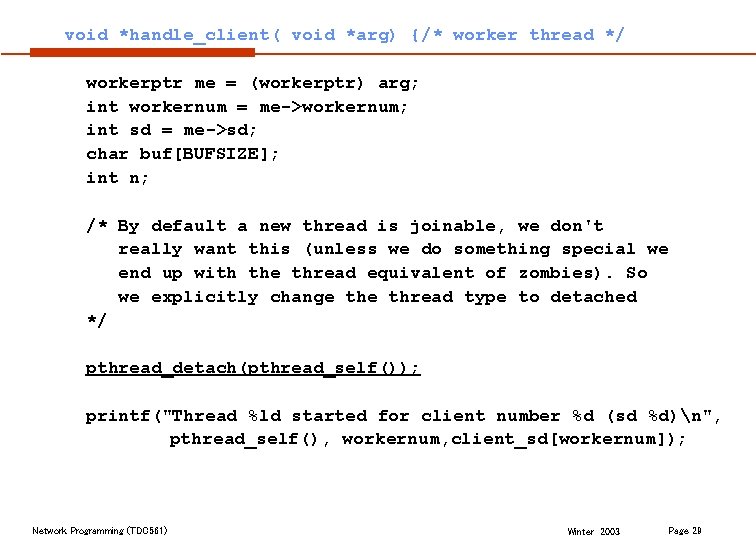

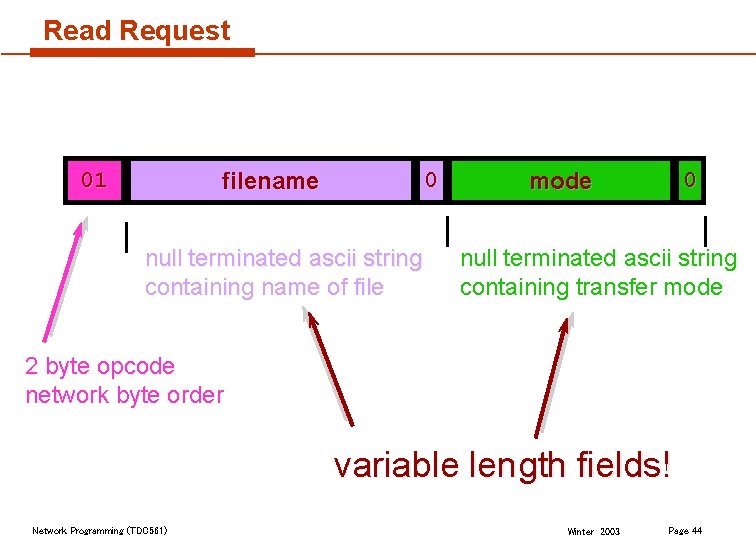

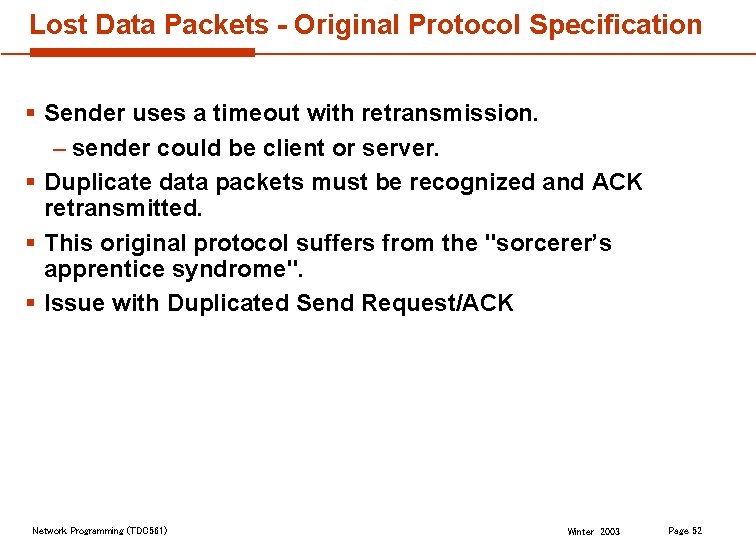

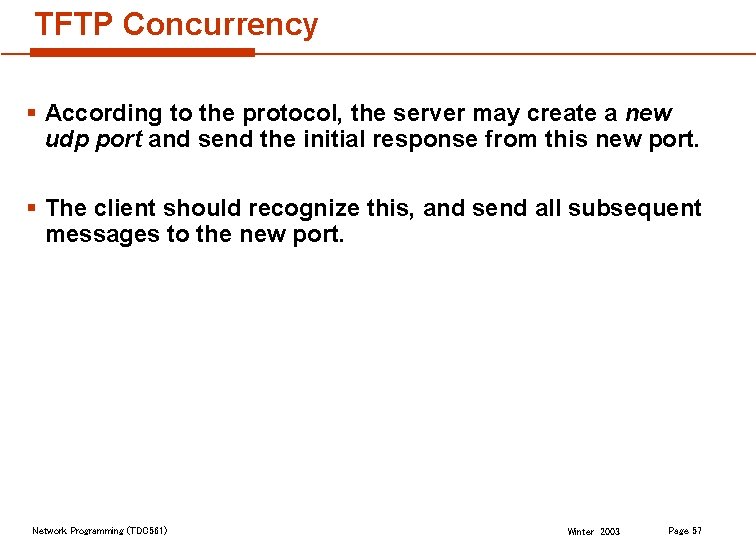

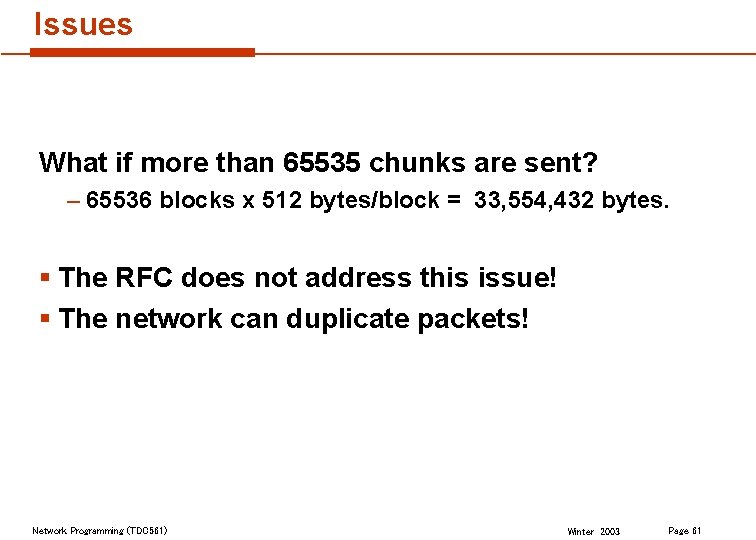

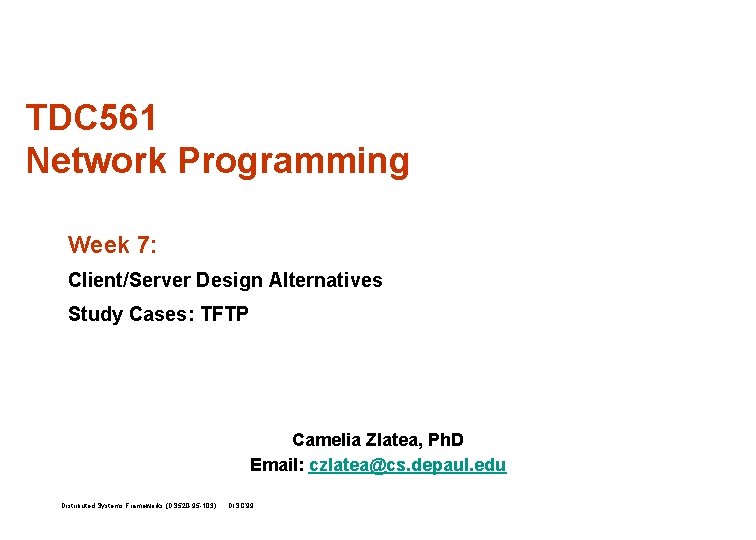

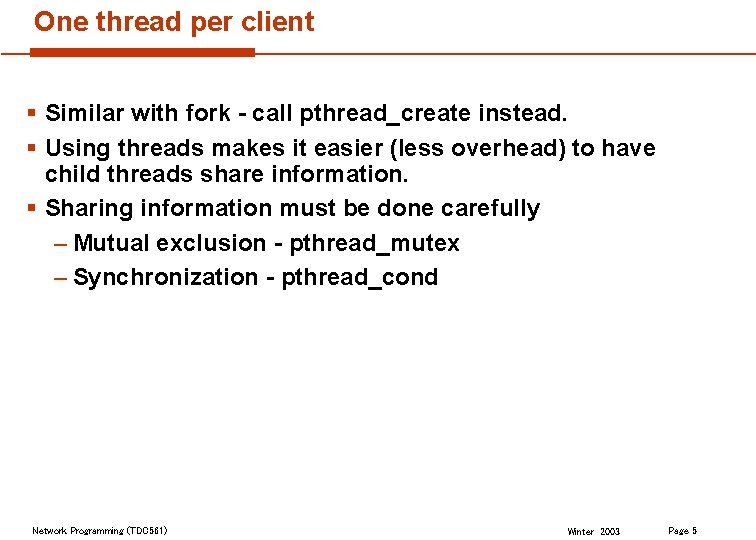

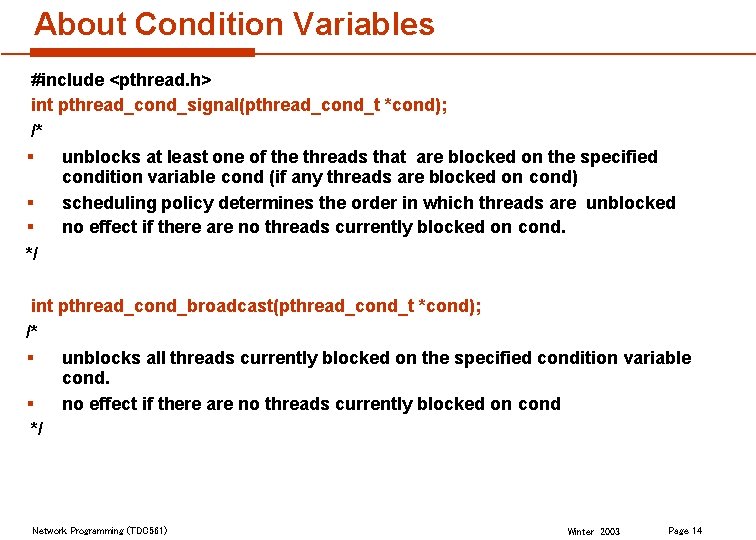

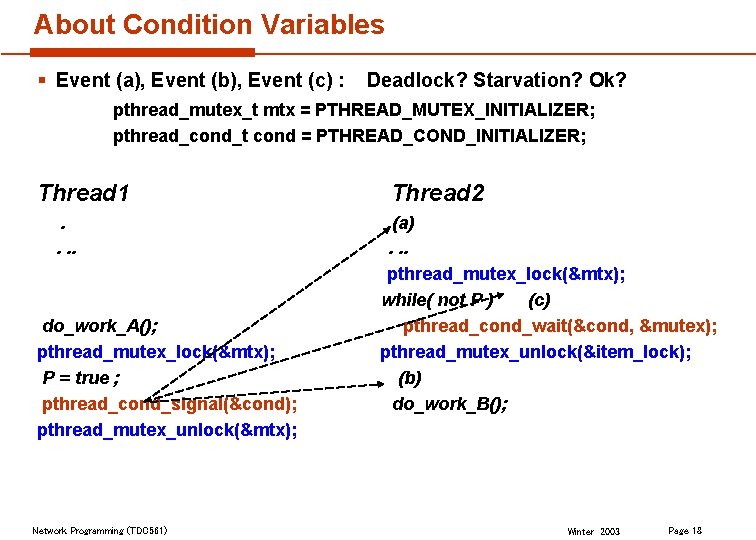

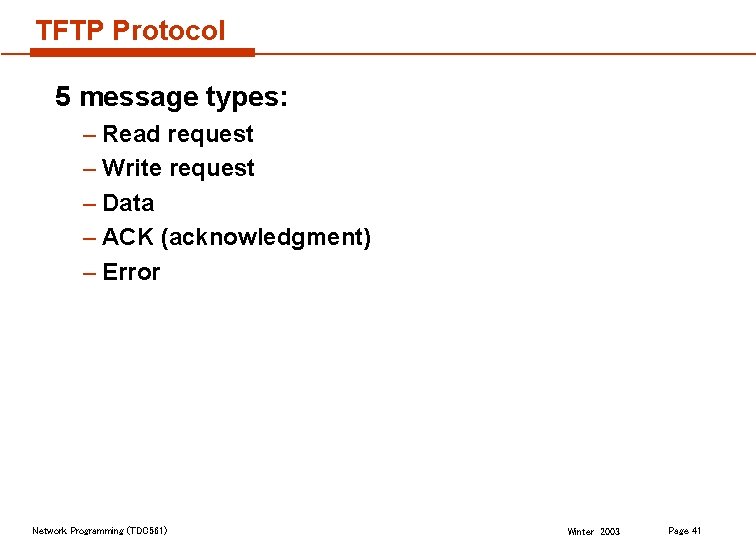

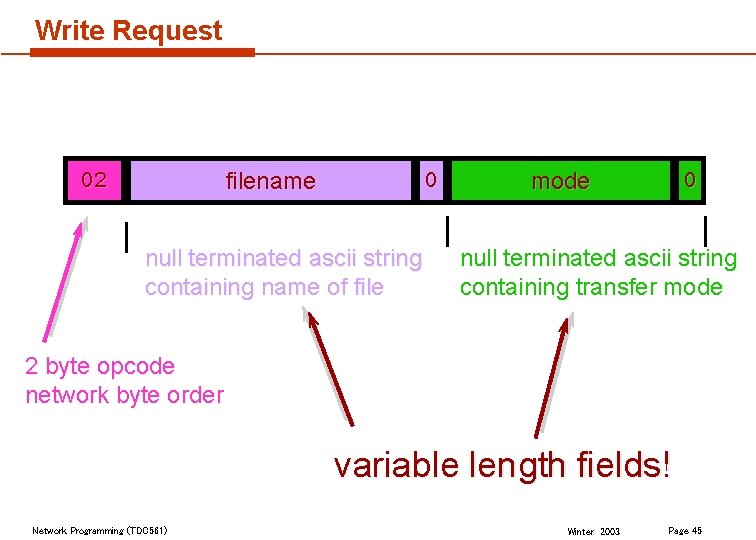

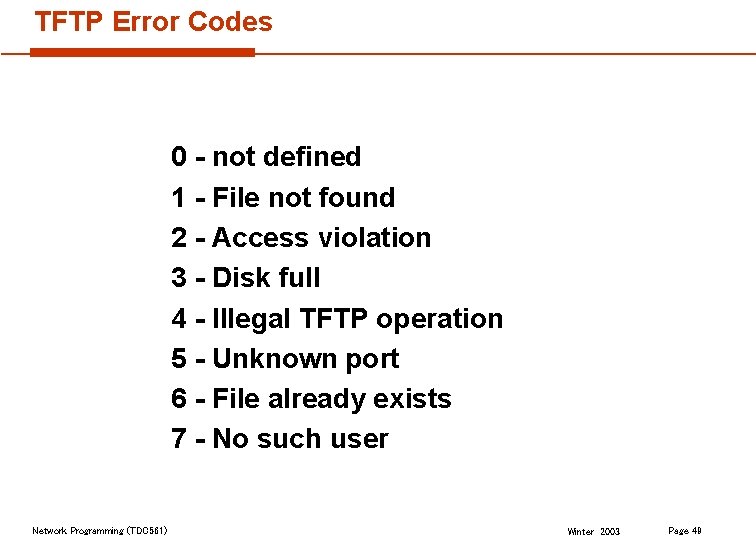

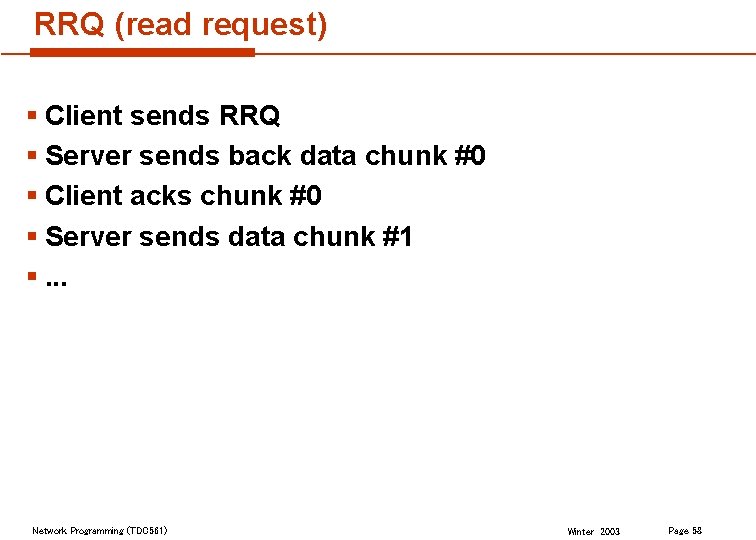

![TFTP Issue send DATAn time out retransmit DATAn receive ACKn send DATAn1 receive ACKn TFTP Issue send DATA[n] (time out) retransmit DATA[n] receive ACK[n] send DATA[n+1] receive ACK[n]](https://slidetodoc.com/presentation_image_h/30c6e2dde5080f61845af1fa8b4d823e/image-53.jpg)

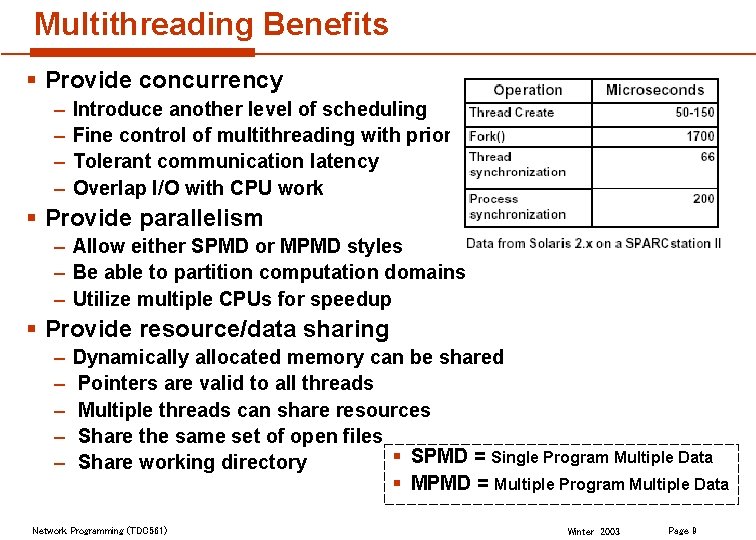

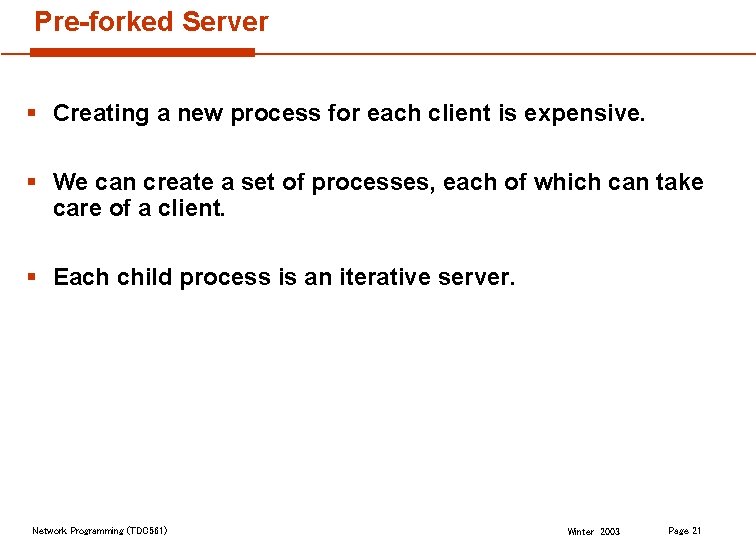

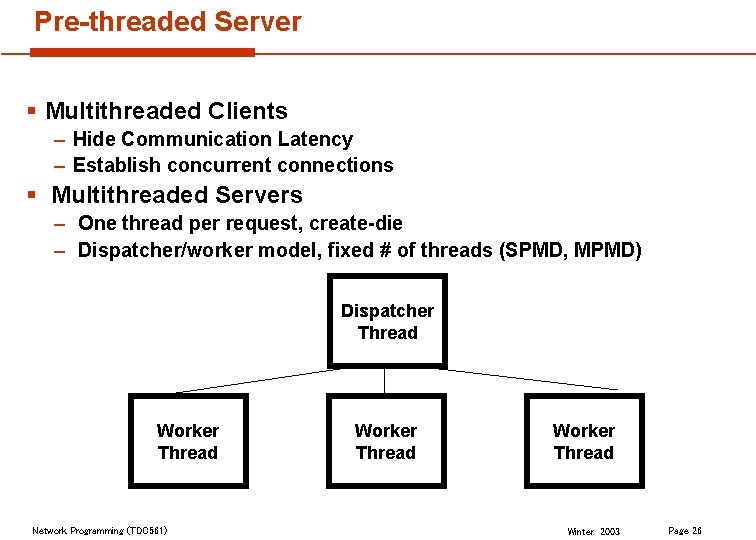

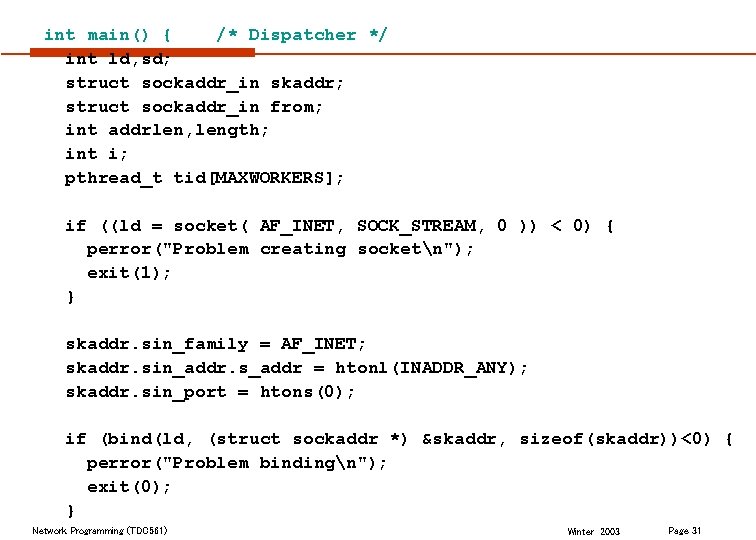

TFTP Issue (Sorcerer’s Apprentice Syndrome)

Sender:
- send DATA[n]
- (time out) retransmit DATA[n]
- receive ACK[n], send DATA[n+1]
- receive ACK[n] (dup), send DATA[n+1] (dup)
- ...

Receiver:
- receive DATA[n], send ACK[n]
- receive DATA[n] (dup), send ACK[n] (dup)
- receive DATA[n+1], send ACK[n+1]
- receive DATA[n+1] (dup), send ACK[n+1] (dup)

From then on, every DATA and ACK packet is sent twice.

The Fix
- The sender should not resend a data packet in response to a duplicate ACK.
- If the sender receives ACK[n], it must not send DATA[n+1] if that ACK is a duplicate.
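
The fix amounts to a little sender state. A minimal sketch, assuming a hypothetical tftp_sender structure that records the block in flight and whether it has already been acknowledged:

```c
#include <stdbool.h>
#include <stdint.h>

/* Sender-side state for one transfer (hypothetical type). */
typedef struct {
    uint16_t last_sent;   /* block number of the DATA packet in flight */
    bool     acked;       /* has last_sent already been acknowledged? */
} tftp_sender;

/* Record that DATA[block] has just been sent. */
void tftp_sent_data(tftp_sender *s, uint16_t block)
{
    s->last_sent = block;
    s->acked = false;
}

/* Handle an incoming ACK. Returns true only if DATA[last_sent + 1] should be
   sent now. A duplicate or stale ACK returns false: DATA is retransmitted only
   by the sender's own timeout, never in response to an ACK. */
bool tftp_on_ack(tftp_sender *s, uint16_t block)
{
    if (block != s->last_sent || s->acked)
        return false;
    s->acked = true;
    return true;
}
```

Replaying the trace above: once DATA[n+1] has gone out, the duplicate ACK[n] is ignored, so DATA[n+1] is no longer sent twice.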

Concurrency
- TFTP servers use a "well-known address" (UDP port number):
  - /etc/services: tftp 69/udp # Trivial File Transfer Protocol
- How would you implement a concurrent server?
  - Forking may lead to problems!
  - Concurrency can be provided without forking, but it requires lots of bookkeeping.
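
Instead of hard-coding the port, a program can look it up in the services database with getservbyname(). A minimal sketch (the function name is hypothetical); note that s_port is returned in network byte order:

```c
#include <arpa/inet.h>
#include <netdb.h>
#include <stddef.h>
#include <stdint.h>

/* Look up the TFTP port in the services database (/etc/services),
   falling back to the well-known port 69 if there is no entry. */
int tftp_server_port(void)
{
    struct servent *se = getservbyname("tftp", "udp");
    return se != NULL ? ntohs((uint16_t)se->s_port) : 69;
}
```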

TFTP Concurrency
- To allow multiple clients to bootstrap at the same time, a TFTP server needs to provide some form of concurrency.
- Because UDP does not provide a unique connection between a client and a server (as TCP does), the TFTP server provides concurrency by creating a new UDP port for each client.
  - This lets the server's UDP module demultiplex datagrams from different clients by destination port number, instead of the server doing it itself.

TFTP Concurrency
- According to the protocol, the server may create a new UDP port (its transfer ID, or TID) and send the initial response from this new port.
- The client should recognize this and send all subsequent messages to the new port.
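
On the server side, the new port can be obtained by binding a fresh UDP socket to port 0, so the kernel picks an unused port, and connecting it to the client's address, so only that client's datagrams are delivered to it. A minimal sketch (the function name is hypothetical):

```c
#include <arpa/inet.h>
#include <netinet/in.h>
#include <string.h>
#include <sys/socket.h>
#include <unistd.h>

/* Create the per-transfer socket: bound to a kernel-chosen port (the new TID)
   and connected to the client. Returns the fd and stores the port in *tid,
   or returns -1 on error. */
int open_transfer_socket(const struct sockaddr_in *client, unsigned short *tid)
{
    struct sockaddr_in local;
    socklen_t len = sizeof local;
    int fd = socket(AF_INET, SOCK_DGRAM, 0);
    if (fd < 0)
        return -1;
    memset(&local, 0, sizeof local);
    local.sin_family = AF_INET;
    local.sin_addr.s_addr = htonl(INADDR_ANY);
    local.sin_port = htons(0);                /* 0: let the kernel choose the port */
    if (bind(fd, (struct sockaddr *)&local, sizeof local) < 0 ||
        connect(fd, (const struct sockaddr *)client, sizeof *client) < 0 ||
        getsockname(fd, (struct sockaddr *)&local, &len) < 0) {
        close(fd);
        return -1;
    }
    *tid = ntohs(local.sin_port);
    return fd;
}
```

Because the socket is connected, the server can use send() and recv() for the rest of the transfer; the port stored in tid is what the client sees as the source port of the first reply.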

RRQ (read request)
- Client sends RRQ.
- Server sends back data chunk #1 (the RRQ is acknowledged by the first DATA packet).
- Client ACKs chunk #1.
- Server sends data chunk #2.
- ...

WRQ (write request)
- Client sends WRQ.
- Server sends back ACK #0.
- Client sends data chunk #1 (the first chunk!).
- Server ACKs data chunk #1.
- ... there is no data chunk #0!

When is it over?
- No file-length field is sent!
- All data messages except the last one contain 512 bytes of data.
  - The message length is then 2 + 2 + 512 = 516 bytes (opcode, block #, data).
- The last data message might contain 0 bytes of data!
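
The end-of-transfer rule has a consequence worth spelling out: a file of size bytes always takes size / 512 + 1 DATA packets (integer division). A minimal sketch (function names are hypothetical):

```c
#include <stddef.h>

#define TFTP_SEGSIZE 512

/* Number of DATA packets needed to send a file of `size` bytes. The receiver
   detects the end from the first packet with fewer than 512 data bytes, so a
   file whose size is an exact multiple of 512 (including 0) needs one extra,
   empty DATA packet. */
size_t tftp_block_count(size_t size)
{
    return size / TFTP_SEGSIZE + 1;
}

/* A received DATA packet of pktlen bytes (4-byte header included) is the last
   one when it carries fewer than 512 data bytes, i.e. pktlen < 516. */
int tftp_is_last_data(size_t pktlen)
{
    return pktlen < 4 + TFTP_SEGSIZE;
}
```

For example, a 1024-byte file takes three DATA packets: two full ones and a final empty one.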

Issues
- What if more than 65535 chunks are sent?
  - The block number is a 2-byte field; 65536 blocks x 512 bytes/block = 33,554,432 bytes (32 MB).
- The RFC does not address this issue!
- The network can duplicate packets!

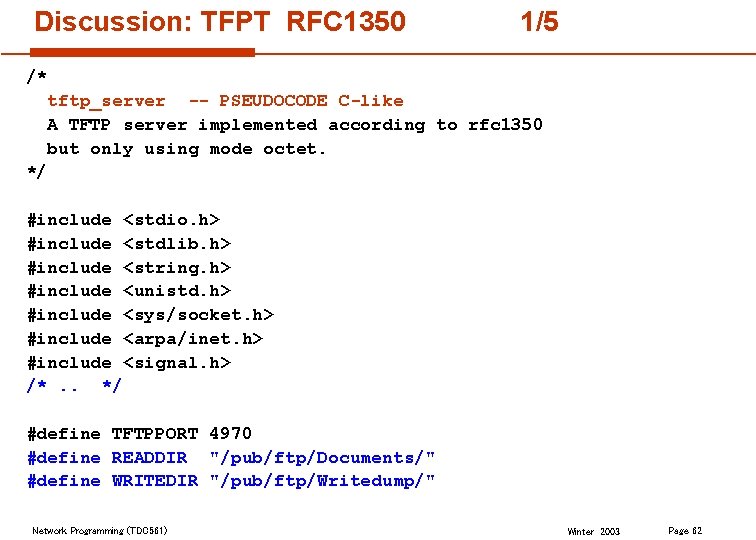

Discussion: TFTP RFC 1350 1/5

    /* tftp_server -- PSEUDOCODE C-like
       A TFTP server implemented according to RFC 1350, but only using mode octet. */
    #include <stdio.h>
    #include <stdlib.h>
    #include <string.h>
    #include <unistd.h>
    #include <signal.h>
    #include <sys/socket.h>
    #include <sys/wait.h>
    #include <netinet/in.h>
    #include <arpa/inet.h>
    /* ... */
    #define TFTPPORT 4970
    #define READDIR  "/pub/ftp/Documents/"
    #define WRITEDIR "/pub/ftp/Writedump/"

![Discussion TFPT RFC 1350 25 int mainint argc char argv int sockfd newfd Discussion: TFPT RFC 1350 2/5 int main(int argc, char *argv[]) { int sockfd, newfd,](https://slidetodoc.com/presentation_image_h/30c6e2dde5080f61845af1fa8b4d823e/image-63.jpg)

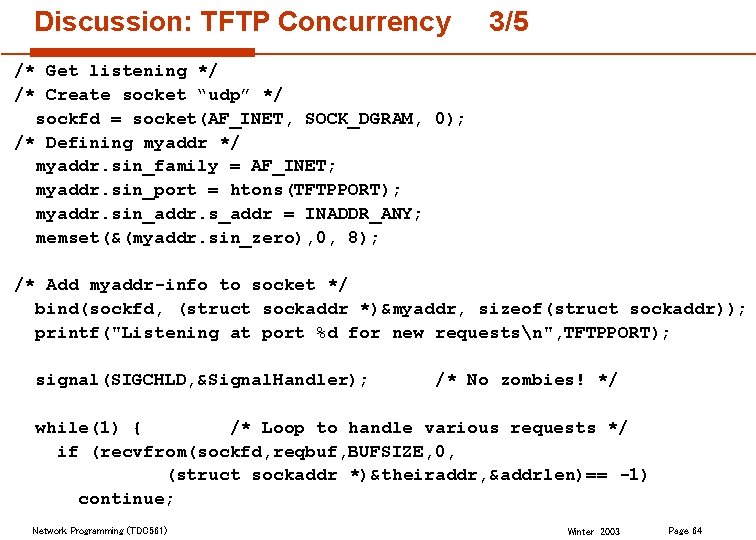

Discussion: TFTP RFC 1350 2/5

    int main(int argc, char *argv[])
    {
        int sockfd, newfd;
        pid_t pid;
        struct sockaddr_in myaddr;     /* local endpoint */
        struct sockaddr_in theiraddr;  /* remote endpoint */
        struct sockaddr_in newaddr;    /* handles requests */
        socklen_t addrlen;             /* value-result length for recvfrom() */
        unsigned short reqtype;
        char reqbuf[BUFSIZE];
        char reqfile[NAMESIZE], filename[NAMESIZE];

        if (argc != 1) {
            fprintf(stderr, "usage: %s\n", argv[0]);
            exit(1);
        }

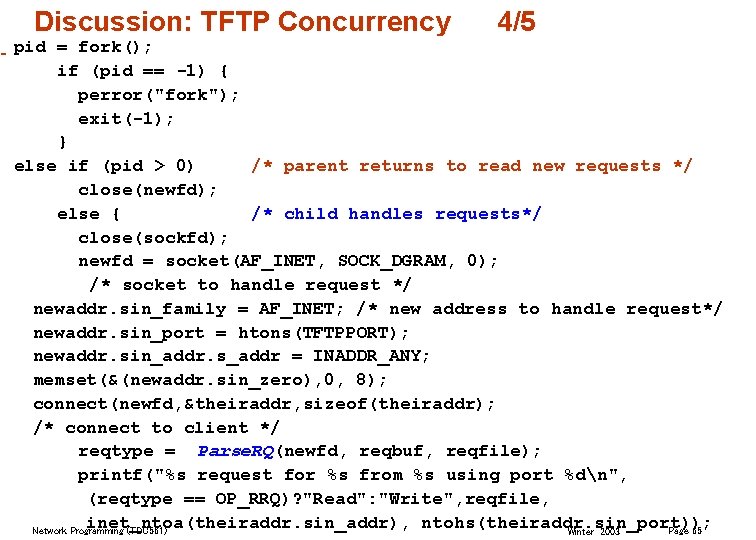

Discussion: TFTP Concurrency 3/5

        /* Get listening: create a UDP socket */
        sockfd = socket(AF_INET, SOCK_DGRAM, 0);
        /* Define myaddr */
        memset(&myaddr, 0, sizeof(myaddr));
        myaddr.sin_family = AF_INET;
        myaddr.sin_port = htons(TFTPPORT);
        myaddr.sin_addr.s_addr = htonl(INADDR_ANY);
        /* Add myaddr info to the socket */
        bind(sockfd, (struct sockaddr *)&myaddr, sizeof(myaddr));
        printf("Listening at port %d for new requests\n", TFTPPORT);
        signal(SIGCHLD, SignalHandler);   /* No zombies! */

        while (1) {   /* Loop to handle various requests */
            addrlen = sizeof(theiraddr);
            if (recvfrom(sockfd, reqbuf, BUFSIZE, 0,
                         (struct sockaddr *)&theiraddr, &addrlen) == -1)
                continue;

Discussion: TFTP Concurrency 4/5

            pid = fork();
            if (pid == -1) {
                perror("fork");
                exit(-1);
            } else if (pid > 0) {
                /* parent: newfd is only opened in the child, so there is
                   nothing to close; go back to read new requests */
                continue;
            } else {   /* child handles the request */
                close(sockfd);
                newfd = socket(AF_INET, SOCK_DGRAM, 0);   /* socket to handle request */
                memset(&newaddr, 0, sizeof(newaddr));     /* new address to handle request */
                newaddr.sin_family = AF_INET;
                newaddr.sin_port = htons(0);   /* new ephemeral port (TID); TFTPPORT is still bound by the parent */
                newaddr.sin_addr.s_addr = htonl(INADDR_ANY);
                bind(newfd, (struct sockaddr *)&newaddr, sizeof(newaddr));
                connect(newfd, (struct sockaddr *)&theiraddr, sizeof(theiraddr));   /* connect to client */
                reqtype = ParseRQ(newfd, reqbuf, reqfile);
                printf("%s request for %s from %s using port %d\n",
                       (reqtype == OP_RRQ) ? "Read" : "Write", reqfile,
                       inet_ntoa(theiraddr.sin_addr), ntohs(theiraddr.sin_port));

Discussion: TFTP Concurrency 5/5

                if (reqtype == OP_RRQ) {   /* read request */
                    /* bounded concatenation: never writes past filename[] */
                    snprintf(filename, sizeof(filename), "%s%s", READDIR, reqfile);
                    HandleRQ(newfd, filename, OP_RRQ);
                } else {                   /* write request */
                    snprintf(filename, sizeof(filename), "%s%s", WRITEDIR, reqfile);
                    HandleRQ(newfd, filename, OP_WRQ);
                }
                close(newfd);
                exit(0);   /* child is done */
            }
        }
        return 0;
    }
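
Since TFTP has no security, reqfile comes straight from the network, and a name such as ../../etc/passwd would escape READDIR or WRITEDIR. A minimal sketch of a stricter path builder (the function name is hypothetical); it conservatively rejects any name containing "..", even harmless ones:

```c
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Join dir and the client-supplied name into out. Rejects empty names,
   absolute names, and any name containing "..", so a request cannot escape
   dir, and reports truncation instead of silently cutting the path.
   Returns 0 on success, -1 otherwise. */
int build_tftp_path(char *out, size_t outlen, const char *dir, const char *name)
{
    if (name[0] == '\0' || name[0] == '/' || strstr(name, "..") != NULL)
        return -1;
    int n = snprintf(out, outlen, "%s%s", dir, name);
    if (n < 0 || (size_t)n >= outlen)
        return -1;                        /* output error or truncated */
    return 0;
}
```

The child would call it in place of the plain snprintf(), and answer with an ERROR packet (code 2, access violation) when it returns -1.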