Performance Issues: Application Programmer's View

John Cownie, HPC Benchmark Engineer

Agenda
• Running 64-bit and 32-bit codes under AMD64 (SuSE Linux)
• FPU and memory performance issues
• OS and memory layout (1P, 2P, 4P)
• Spectrum of application needs: memory vs. CPU
• Benchmark examples: STREAM, HPL
• Some real applications
• Conclusions

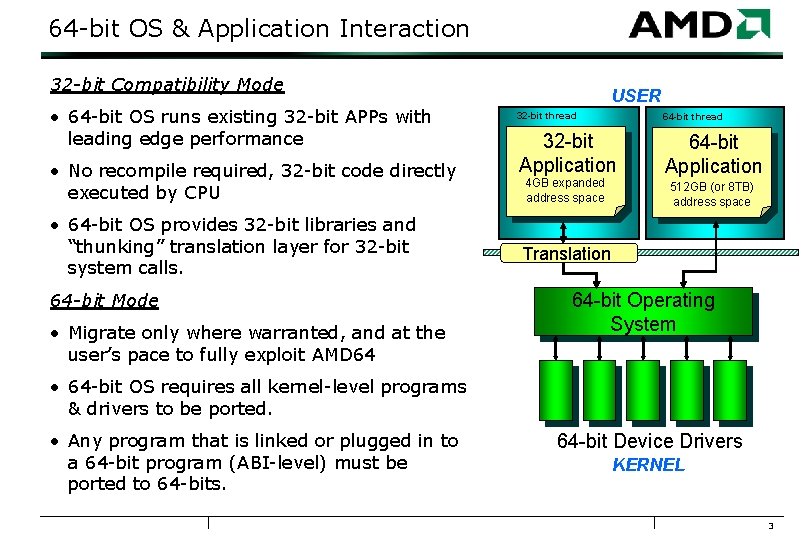

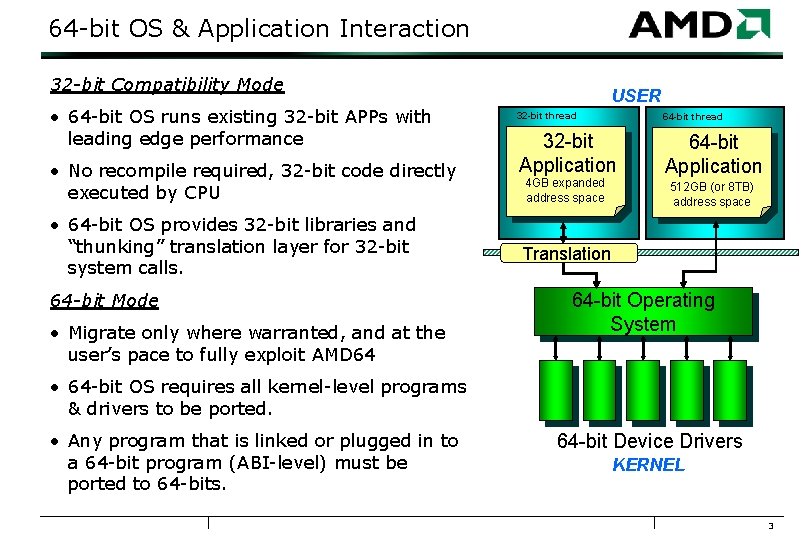

64-bit OS & Application Interaction

32-bit Compatibility Mode
• 64-bit OS runs existing 32-bit apps with leading-edge performance
• No recompile required; 32-bit code is directly executed by the CPU
• 64-bit OS provides 32-bit libraries and a "thunking" translation layer for 32-bit system calls

64-bit Mode
• Migrate only where warranted, and at the user's pace, to fully exploit AMD64
• 64-bit OS requires all kernel-level programs and drivers to be ported
• Any program that is linked or plugged in to a 64-bit program (ABI level) must be ported to 64 bits

[Diagram: in user space, a 32-bit application (32-bit thread, 4 GB expanded address space) reaches the 64-bit operating system through the translation layer, alongside a 64-bit application (64-bit thread, 512 GB or 8 TB address space); 64-bit device drivers sit at kernel level.]

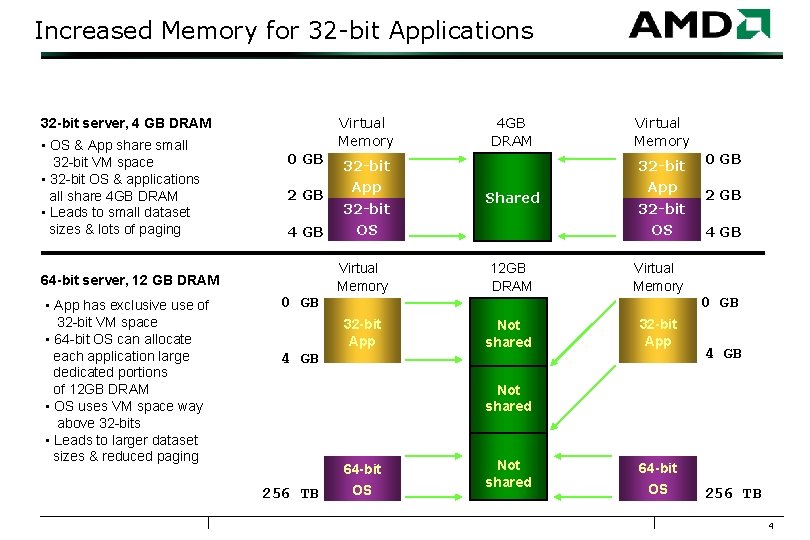

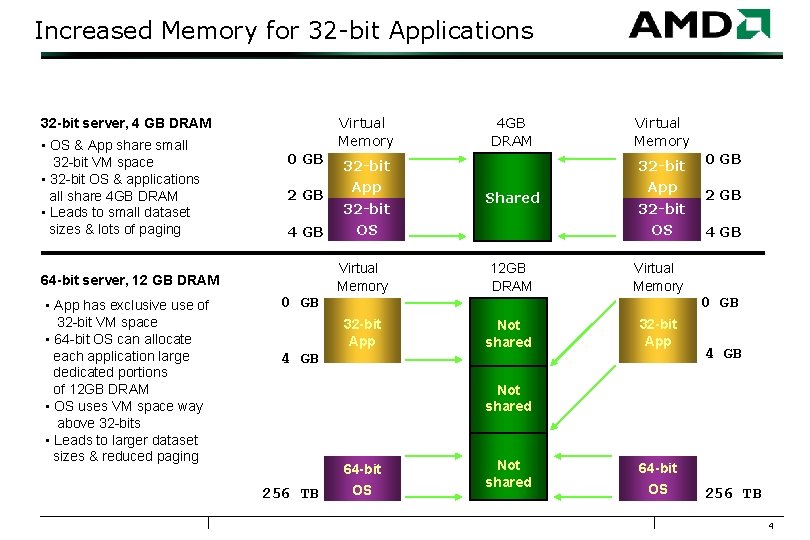

Increased Memory for 32-bit Applications

32-bit server, 4 GB DRAM
• OS and app share a small 32-bit VM space
• 32-bit OS and applications all share 4 GB DRAM
• Leads to small dataset sizes and lots of paging

64-bit server, 12 GB DRAM
• App has exclusive use of the 32-bit VM space
• 64-bit OS can allocate each application large dedicated portions of the 12 GB DRAM
• OS uses VM space way above 32 bits
• Leads to larger dataset sizes and reduced paging

[Diagram: on the 32-bit server, each 32-bit app shares its 0 to 4 GB virtual address range with the 32-bit OS (boundary at 2 GB) and all share 4 GB DRAM; on the 64-bit server, each 32-bit app has its 0 to 4 GB range to itself (not shared), and the 64-bit OS uses virtual addresses above it, up to 256 TB, backed by 12 GB DRAM.]

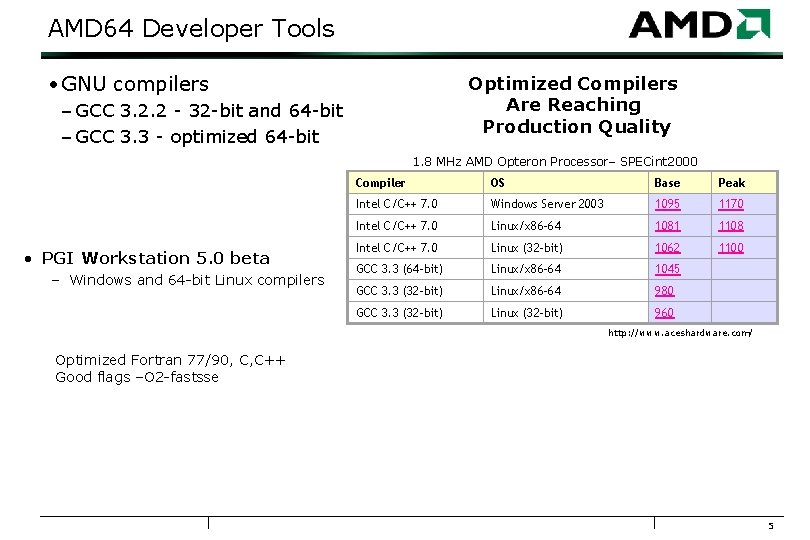

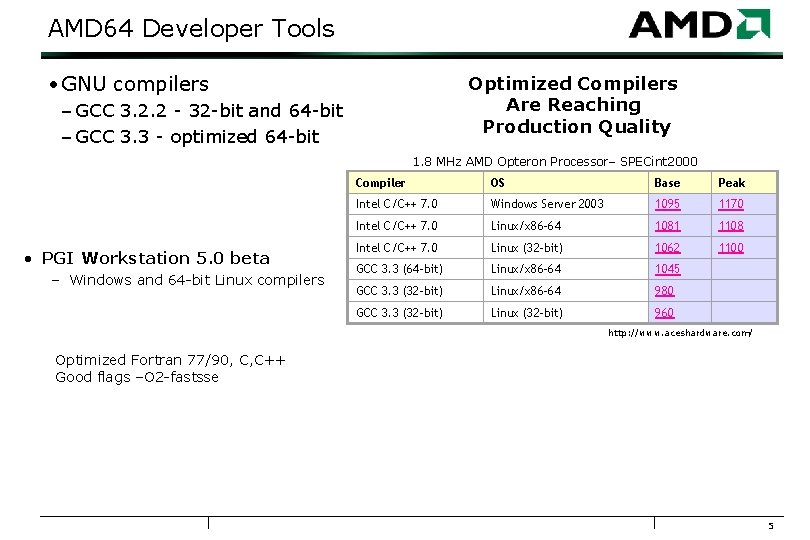

AMD64 Developer Tools: Optimized Compilers Are Reaching Production Quality
• GNU compilers
  – GCC 3.2.2: 32-bit and 64-bit
  – GCC 3.3: optimized 64-bit
• PGI Workstation 5.0 beta
  – Windows and 64-bit Linux compilers
  – Optimized Fortran 77/90, C, C++
  – Good flags: -O2 -fastsse

1.8 GHz AMD Opteron Processor, SPECint2000 (source: http://www.aceshardware.com/)

| Compiler | OS | Base | Peak |
|---|---|---|---|
| Intel C/C++ 7.0 | Windows Server 2003 | 1095 | 1170 |
| Intel C/C++ 7.0 | Linux/x86-64 | 1081 | 1108 |
| Intel C/C++ 7.0 | Linux (32-bit) | 1062 | 1100 |
| GCC 3.3 (64-bit) | Linux/x86-64 | 1045 | |
| GCC 3.3 (32-bit) | Linux/x86-64 | 980 | |
| GCC 3.3 (32-bit) | Linux (32-bit) | 960 | |

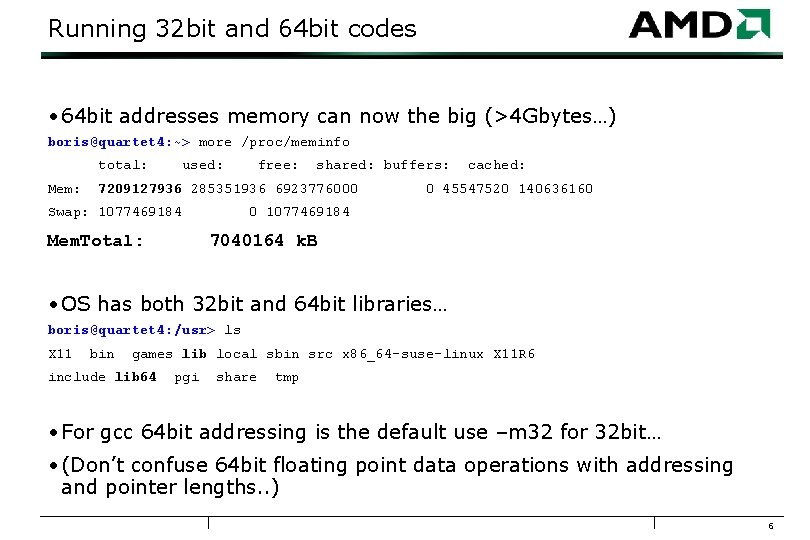

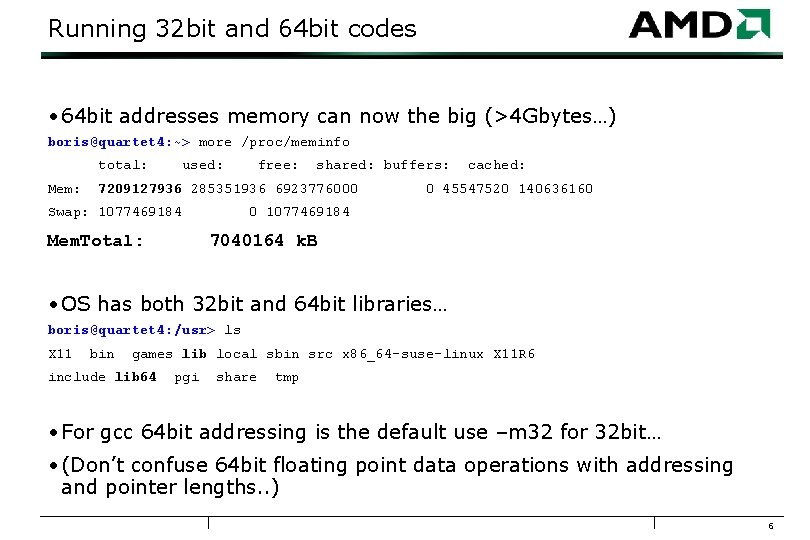

Running 32-bit and 64-bit codes
• With 64-bit addresses, memory can now be big (>4 GB):

    boris@quartet4:~> more /proc/meminfo
            total:      used:      free:  shared:  buffers:    cached:
    Mem:  7209127936  285351936 6923776000        0  45547520  140636160
    Swap: 1077469184          0 1077469184
    MemTotal:      7040164 kB

• The OS has both 32-bit and 64-bit libraries:

    boris@quartet4:/usr> ls
    X11    bin      games  lib  local  sbin  src  x86_64-suse-linux
    X11R6  include  lib64  pgi  share  tmp

• For gcc, 64-bit addressing is the default; use -m32 for 32-bit
• Don't confuse 64-bit floating point data operations with addressing and pointer lengths

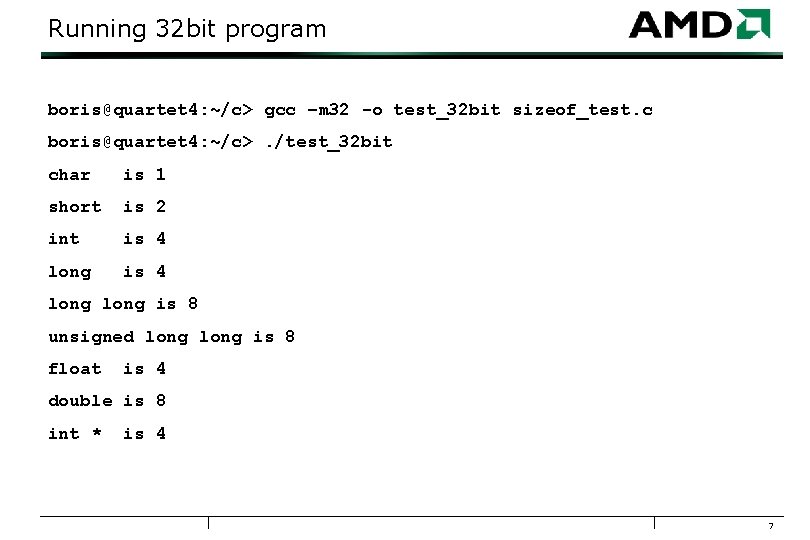

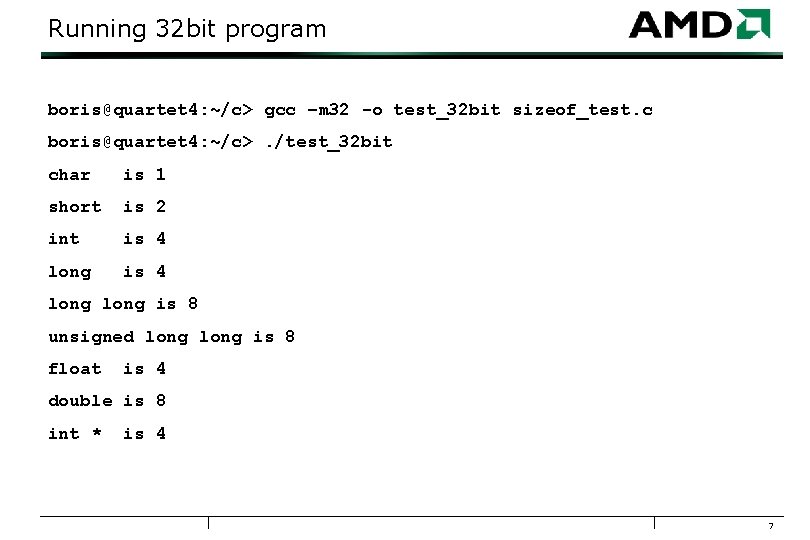

Running a 32-bit program

    boris@quartet4:~/c> gcc -m32 -o test_32bit sizeof_test.c
    boris@quartet4:~/c> ./test_32bit
    char is 1
    short is 2
    int is 4
    long is 4
    unsigned long is 4
    float is 4
    double is 8
    int * is 4

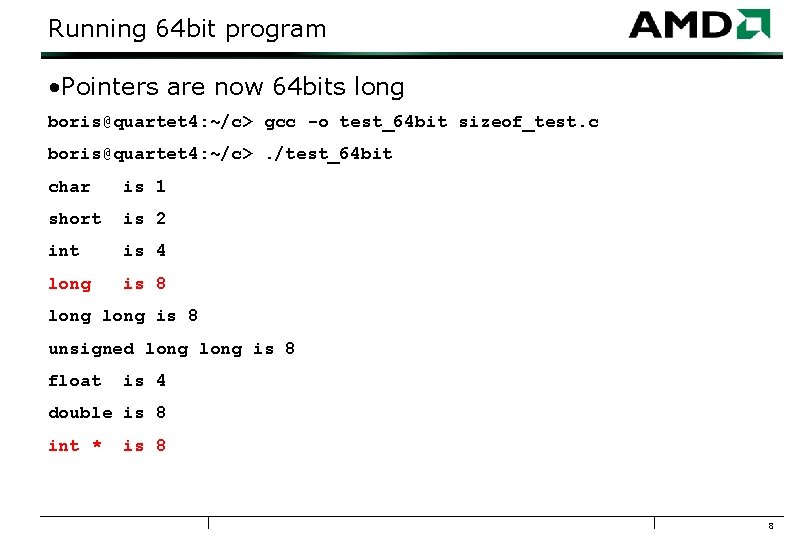

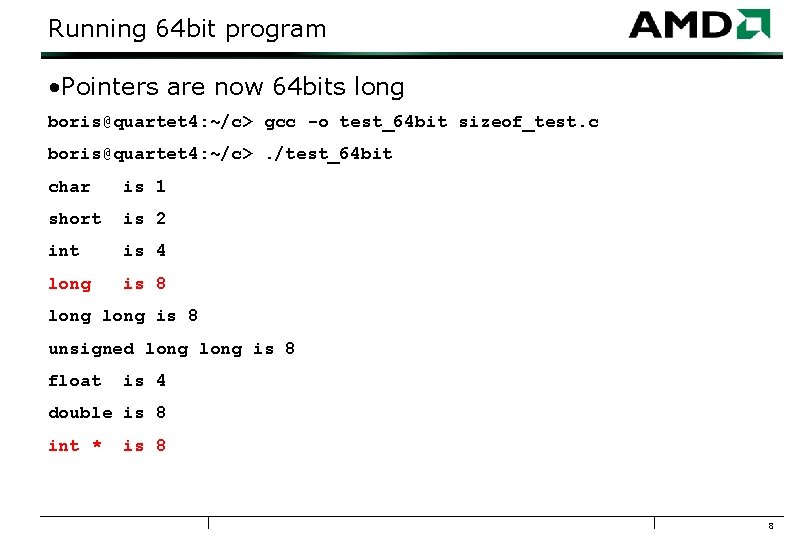

Running a 64-bit program
• Pointers are now 64 bits long

    boris@quartet4:~/c> gcc -o test_64bit sizeof_test.c
    boris@quartet4:~/c> ./test_64bit
    char is 1
    short is 2
    int is 4
    long is 8
    unsigned long is 8
    float is 4
    double is 8
    int * is 8
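The source of sizeof_test.c is not shown on the slides. As a rough stand-in, the following Python sketch uses the standard `ctypes` module to print the same table for whatever ABI the running interpreter was built against. The type list and the `type_sizes` helper are illustrative assumptions, not the original test program.

```python
import ctypes

# C types to probe; the labels mirror the sizeof_test output on the slides.
C_TYPES = [
    ("char", ctypes.c_char),
    ("short", ctypes.c_short),
    ("int", ctypes.c_int),
    ("long", ctypes.c_long),
    ("unsigned long", ctypes.c_ulong),
    ("float", ctypes.c_float),
    ("double", ctypes.c_double),
    ("int *", ctypes.POINTER(ctypes.c_int)),
]


def type_sizes():
    """Return {C type name: size in bytes} for this interpreter's ABI."""
    return {name: ctypes.sizeof(ctype) for name, ctype in C_TYPES}


if __name__ == "__main__":
    for name, size in type_sizes().items():
        print(f"{name} is {size}")
```

On a 64-bit Linux interpreter the pointer and `long` rows should report 8; a 32-bit build would report 4 for both.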

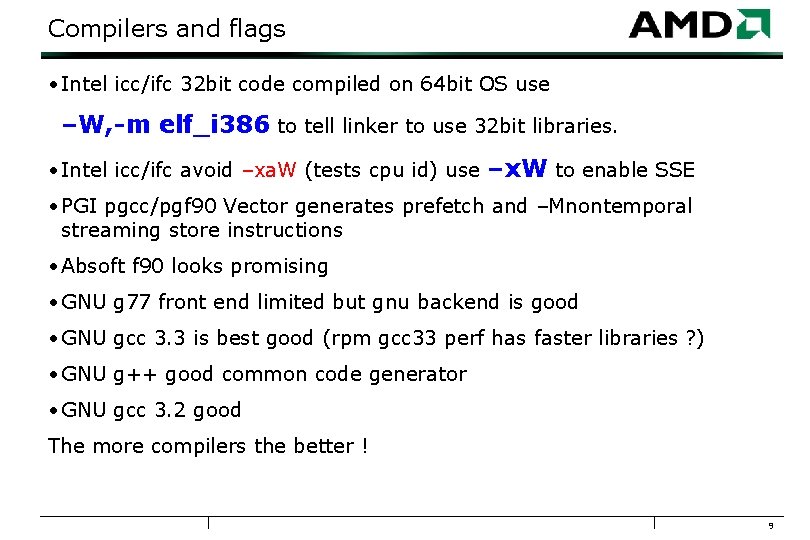

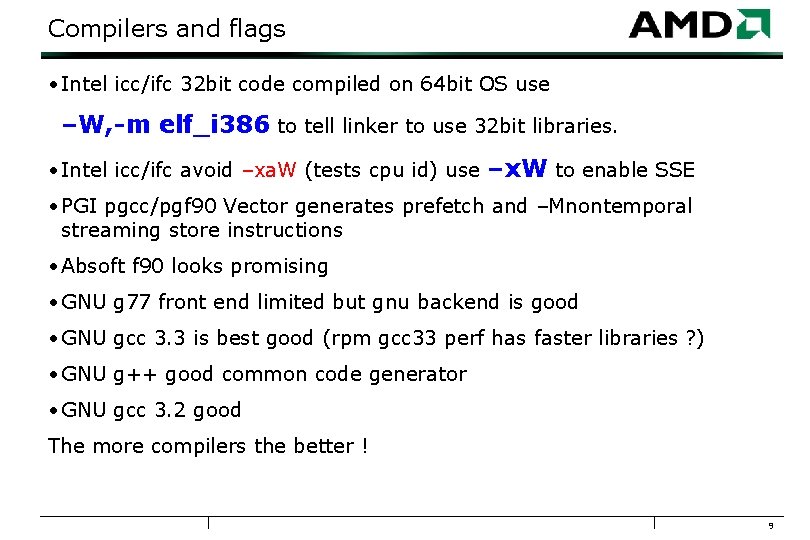

Compilers and flags
• Intel icc/ifc: for 32-bit code compiled on a 64-bit OS, use -Wl,-melf_i386 to tell the linker to use the 32-bit libraries
• Intel icc/ifc: avoid -axW (tests CPU ID); use -xW to enable SSE
• PGI pgcc/pgf90: the vectorizer generates prefetch instructions and, with -Mnontemporal, streaming store instructions
• Absoft f90 looks promising
• GNU g77: the front end is limited but the GNU back end is good
• GNU gcc 3.3 is the best of the GNU compilers (does the gcc33 perf rpm have faster libraries?)
• GNU g++: good common code generator
• GNU gcc 3.2: good

The more compilers the better!

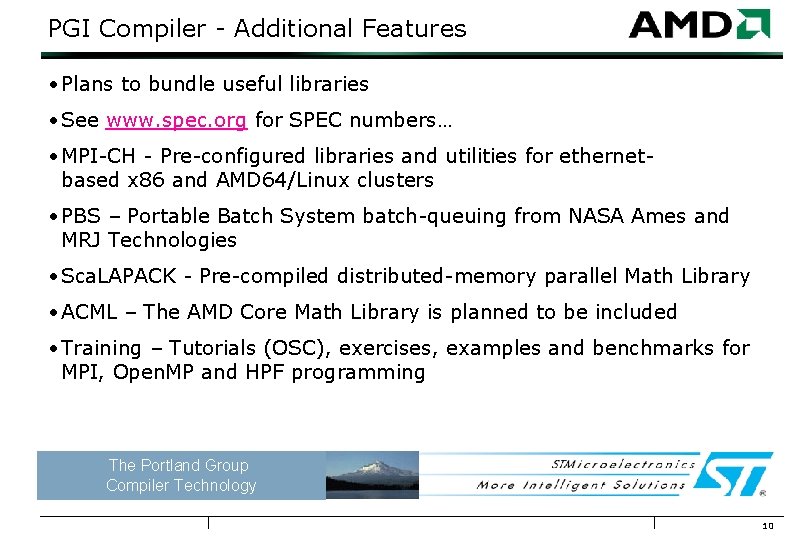

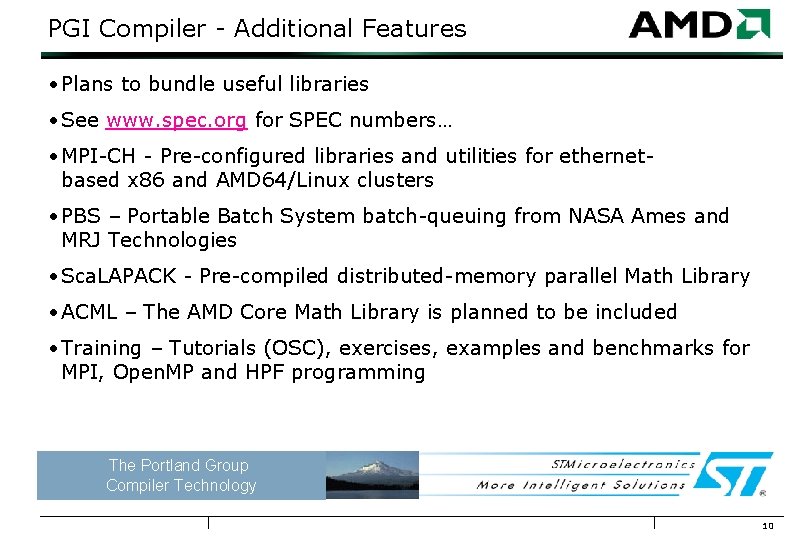

PGI Compiler: Additional Features (The Portland Group Compiler Technology)
• Plans to bundle useful libraries
• See www.spec.org for SPEC numbers
• MPICH: pre-configured libraries and utilities for Ethernet-based x86 and AMD64/Linux clusters
• PBS: Portable Batch System batch queuing from NASA Ames and MRJ Technologies
• ScaLAPACK: pre-compiled distributed-memory parallel math library
• ACML: the AMD Core Math Library is planned to be included
• Training: tutorials (OSC), exercises, examples and benchmarks for MPI, OpenMP and HPF programming

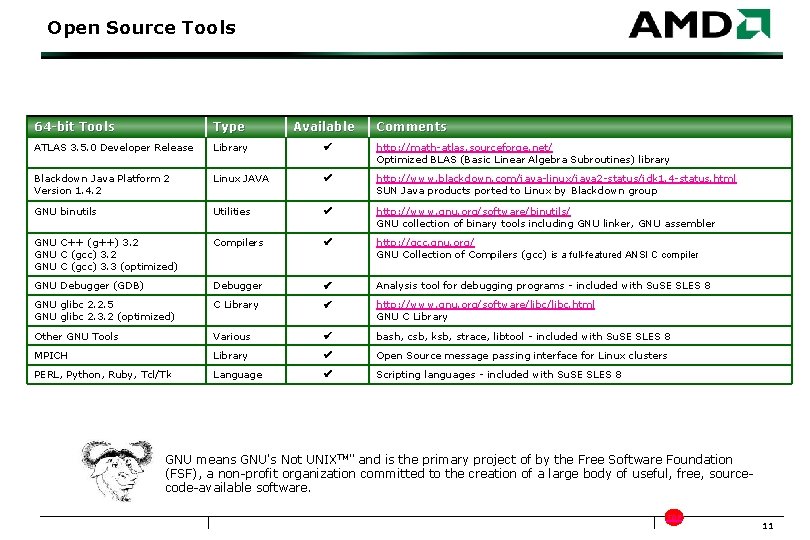

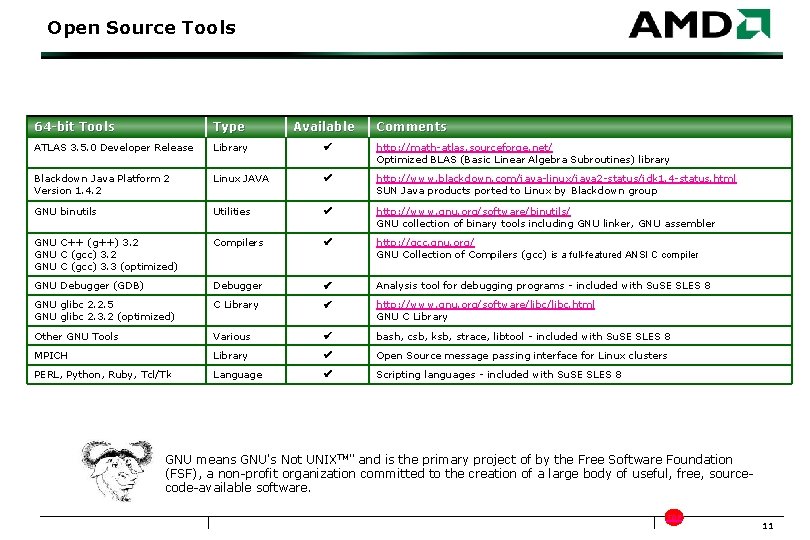

Open Source Tools

| 64-bit Tools | Type | Available | Comments |
|---|---|---|---|
| ATLAS 3.5.0 Developer Release | Library | http://math-atlas.sourceforge.net/ | Optimized BLAS (Basic Linear Algebra Subroutines) library |
| Blackdown Java Platform 2 Version 1.4.2 | Linux Java | http://www.blackdown.com/java-linux/java2-status/jdk1.4-status.html | Sun Java products ported to Linux by the Blackdown group |
| GNU binutils | Utilities | http://www.gnu.org/software/binutils/ | GNU collection of binary tools including the GNU linker and GNU assembler |
| GNU C++ (g++) 3.2, GNU C (gcc) 3.3 (optimized) | Compilers | http://gcc.gnu.org/ | GNU Collection of Compilers (gcc) is a full-featured ANSI C compiler |
| GNU Debugger (GDB) | Debugger | Included with SuSE SLES 8 | Analysis tool for debugging programs |
| GNU glibc 2.2.5, GNU glibc 2.3.2 (optimized) | C Library | http://www.gnu.org/software/libc.html | GNU C Library |
| Other GNU Tools | Various | Included with SuSE SLES 8 | bash, csh, ksh, strace, libtool |
| MPICH | Library | | Open source message passing interface for Linux clusters |
| Perl, Python, Ruby, Tcl/Tk | Language | Included with SuSE SLES 8 | Scripting languages |

GNU means "GNU's Not UNIX™" and is the primary project of the Free Software Foundation (FSF), a non-profit organization committed to the creation of a large body of useful, free, source-code-available software.

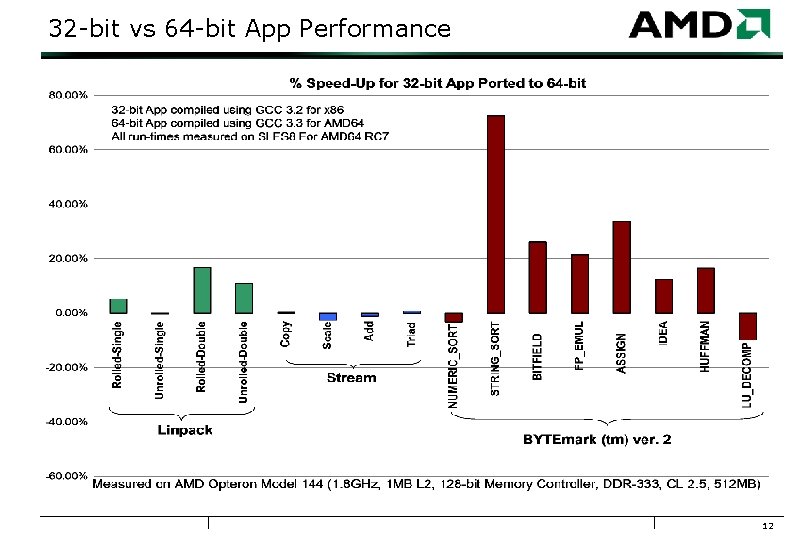

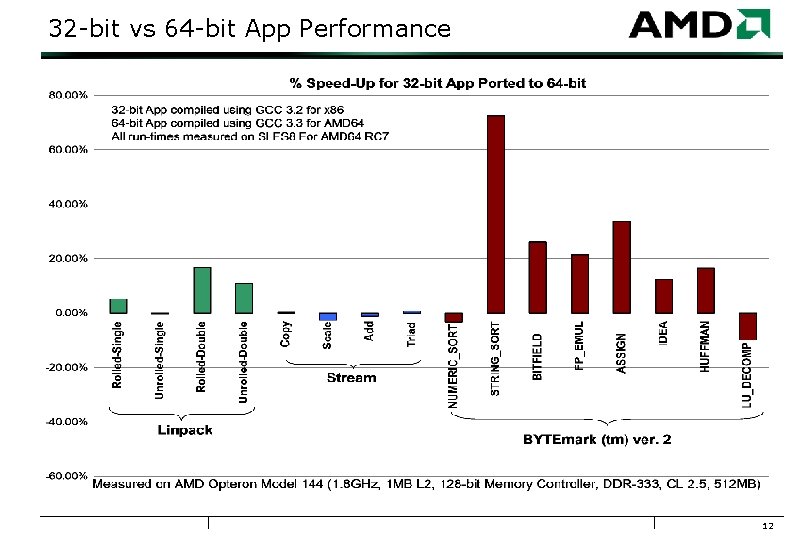

32-bit vs 64-bit App Performance

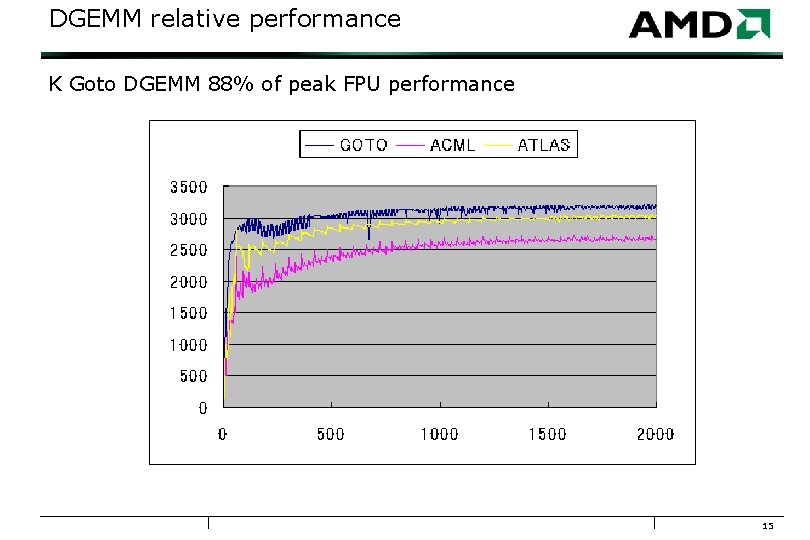

BLAS libraries
• 3 different BLAS libraries support 32-bit and 64-bit code:
  1. ACML (includes FFTs)
  2. ATLAS
  3. Goto
• Currently Goto has the fastest DGEMM: ~88% of peak on 1P HPL
• Compare with BLASBENCH and pick the best one for your application
• For FFTs also consider FFTW

Optimized Numerical Libraries: ACML
• AMD and The Numerical Algorithms Group (NAG) jointly developed the AMD Core Math Library (ACML)
• ACML includes:
  – Basic Linear Algebra Subroutines (BLAS) levels 1, 2 and 3
  – A wide variety of Fast Fourier Transforms (FFTs)
  – Linear Algebra Package (LAPACK)
• ACML has:
  – Fortran and C interfaces
  – Highly optimized routines for the AMD64 instruction set
  – Support for single, double, single-complex and double-complex data types
• Will be available for commercially available OSs
• ACML is freely downloadable from www.developwithamd.com/acml

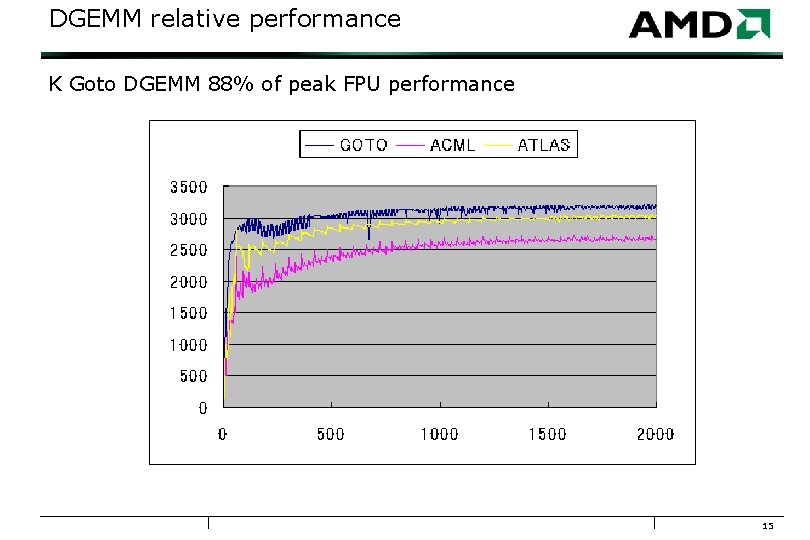

DGEMM relative performance
[Chart: K. Goto DGEMM reaches 88% of peak FPU performance.]

Floating Point and Memory Performance

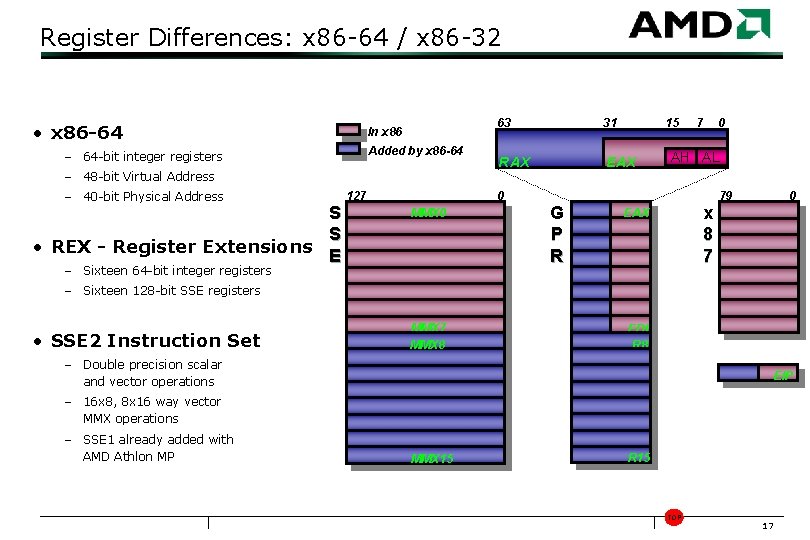

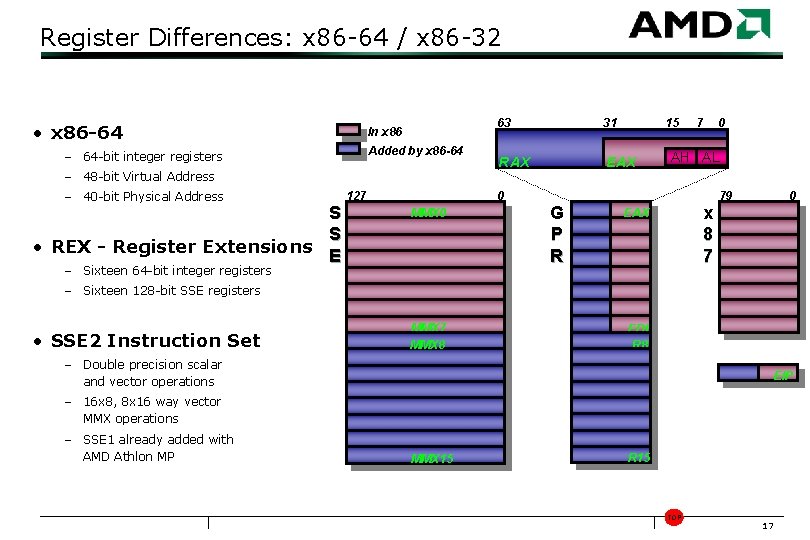

Register Differences: x86-64 / x86-32
• x86-64
  – 64-bit integer registers
  – 48-bit virtual address
  – 40-bit physical address
• REX (register extensions)
  – Sixteen 64-bit integer registers
  – Sixteen 128-bit SSE registers
• SSE2 instruction set
  – Double precision scalar and vector operations
  – 16x8, 8x16 way vector MMX operations
  – SSE1 already added with AMD Athlon MP

[Diagram: register file marking which registers exist in x86 and which are added by x86-64: EAX (with AH/AL) widened to 64-bit RAX, new general-purpose registers R8 to R15, and additional SSE registers starting at XMM8.]
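The address widths on this slide translate directly into address-space sizes. The sketch below is only the arithmetic, using binary units (1 GB = 2^30 bytes); the helper names are illustrative and not from the slides.

```python
def address_space_bytes(bits):
    """Number of distinct byte addresses reachable with `bits` address bits."""
    return 1 << bits


def human(nbytes):
    """Format a byte count using binary units (1 KB = 1024 bytes)."""
    units = ["B", "KB", "MB", "GB", "TB", "PB"]
    value = float(nbytes)
    index = 0
    while value >= 1024 and index < len(units) - 1:
        value /= 1024
        index += 1
    return f"{value:g} {units[index]}"


if __name__ == "__main__":
    for label, bits in [("32-bit pointer", 32),
                        ("40-bit physical address", 40),
                        ("48-bit virtual address", 48)]:
        print(f"{label}: {human(address_space_bytes(bits))}")
```

The 48-bit figure matches the 256 TB upper bound shown for the 64-bit OS on the virtual memory slide.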

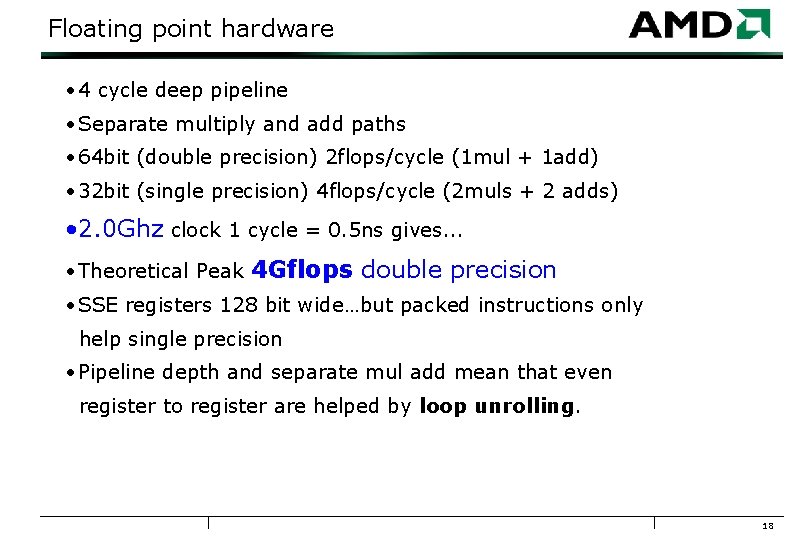

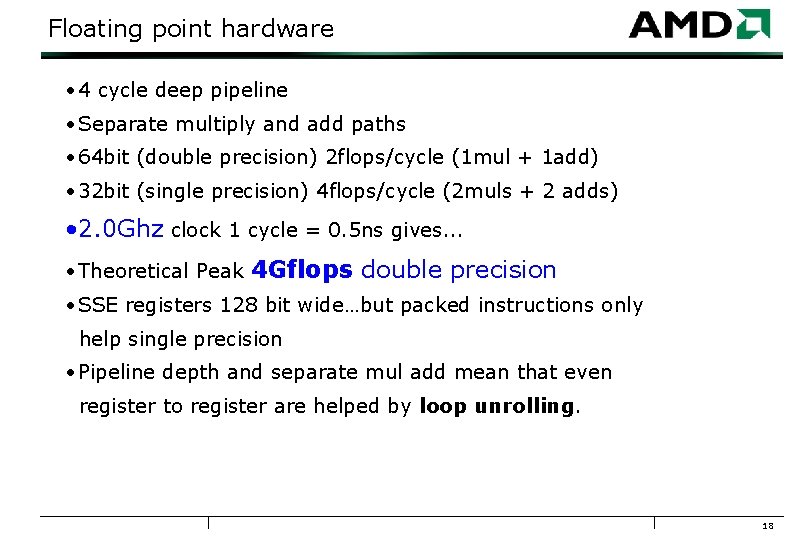

Floating point hardware
• 4-cycle-deep pipeline
• Separate multiply and add paths
• 64-bit (double precision): 2 flops/cycle (1 mul + 1 add)
• 32-bit (single precision): 4 flops/cycle (2 muls + 2 adds)
• 2.0 GHz clock: 1 cycle = 0.5 ns, which gives a theoretical peak of 4 Gflops double precision
• SSE registers are 128 bits wide, but packed instructions only help single precision
• Pipeline depth and the separate mul and add paths mean that even register-to-register code is helped by loop unrolling
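The peak figures on this slide follow from clock rate times flops per cycle. A minimal sketch of that arithmetic, with illustrative function names:

```python
def cycle_time_ns(clock_ghz):
    """Length of one clock cycle in nanoseconds."""
    return 1.0 / clock_ghz


def peak_gflops(clock_ghz, flops_per_cycle):
    """Theoretical peak: every cycle retires the maximum number of flops."""
    return clock_ghz * flops_per_cycle


if __name__ == "__main__":
    clock = 2.0  # GHz, as on the slide
    print("cycle time (ns):", cycle_time_ns(clock))
    print("double precision peak (Gflops):", peak_gflops(clock, 2))  # 1 mul + 1 add
    print("single precision peak (Gflops):", peak_gflops(clock, 4))  # 2 muls + 2 adds
```

Reaching the peak requires a multiply and an add to issue together every cycle, which is why the slide points to loop unrolling: independent operations are needed to keep the 4-cycle pipeline full.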

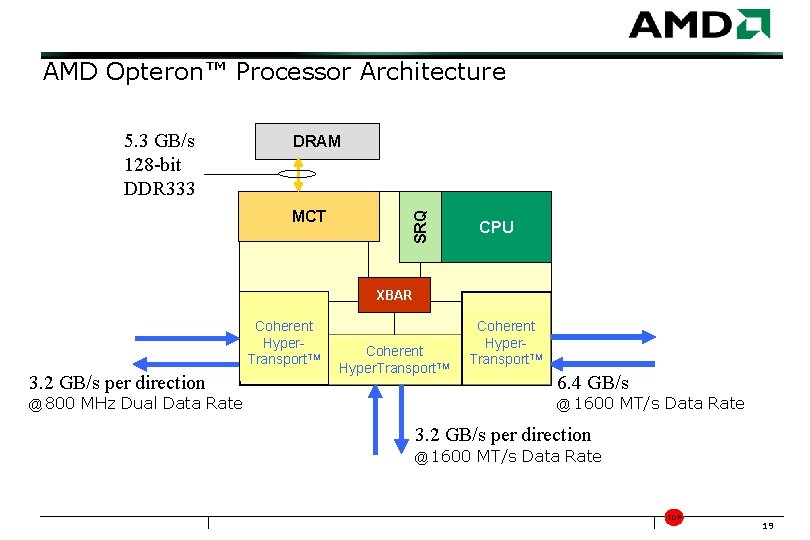

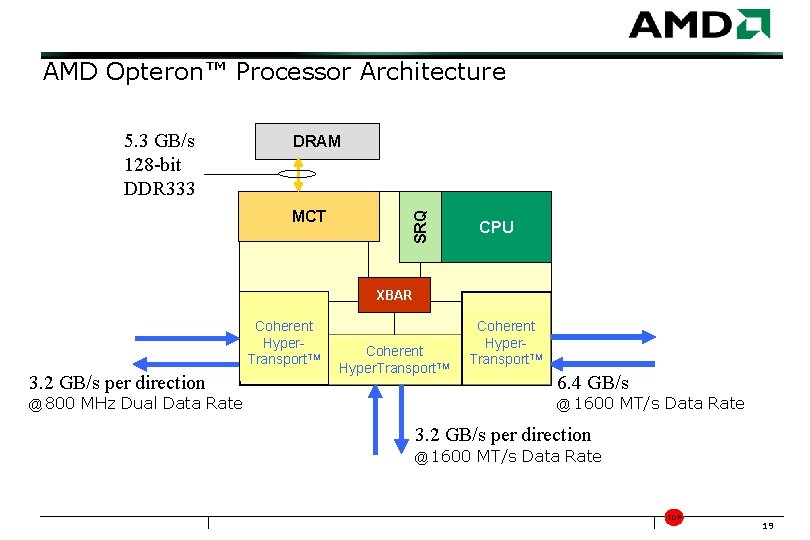

AMD Opteron™ Processor Architecture
[Block diagram. Blocks: CPU, SRQ, XBAR, MCT, DRAM and coherent HyperTransport™ links.]
• DRAM: 128-bit DDR333, 5.3 GB/s
• Coherent HyperTransport™: 3.2 GB/s per direction at 800 MHz dual data rate (1600 MT/s), 6.4 GB/s in total

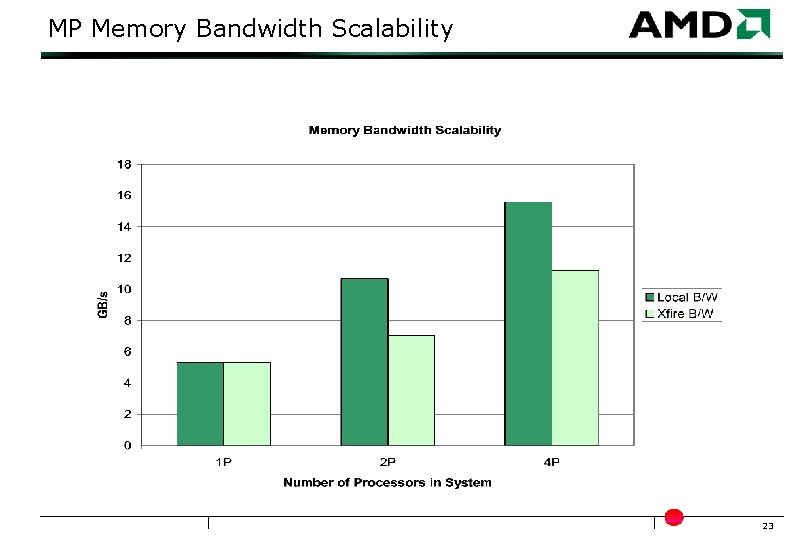

Main Memory hardware
• Dual on-chip memory controllers
• Remote memory accesses go via HyperTransport
• Bandwidth scales with more processors
• Latency is very good on 1P, 2P and 4P (cache probes on 2P and 4P)
• Local memory latency is less than remote memory latency
  – 2P machine: 1 hop (worst case)
  – 4P machine: 2 hops (worst case)
• Memory DIMMs: 333 MHz (PC2700) or 266 MHz (PC2100)
• Can interleave memory banks (BIOS)
• Can interleave processor memory (BIOS)

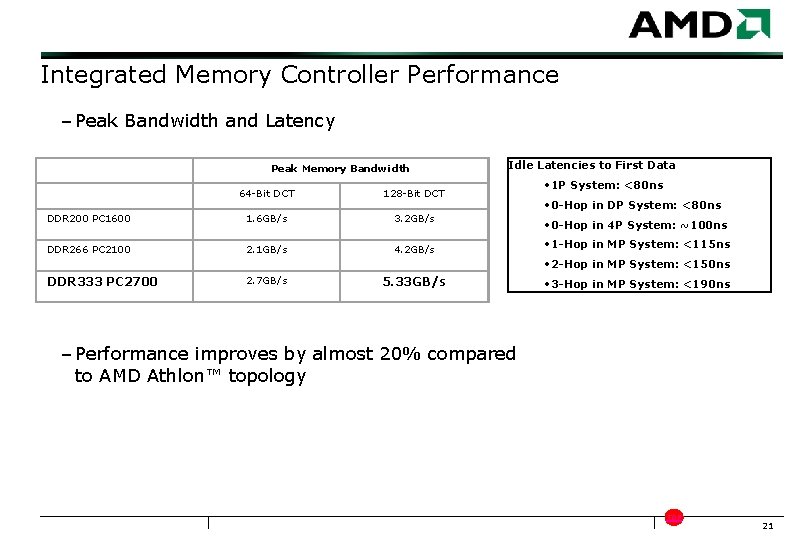

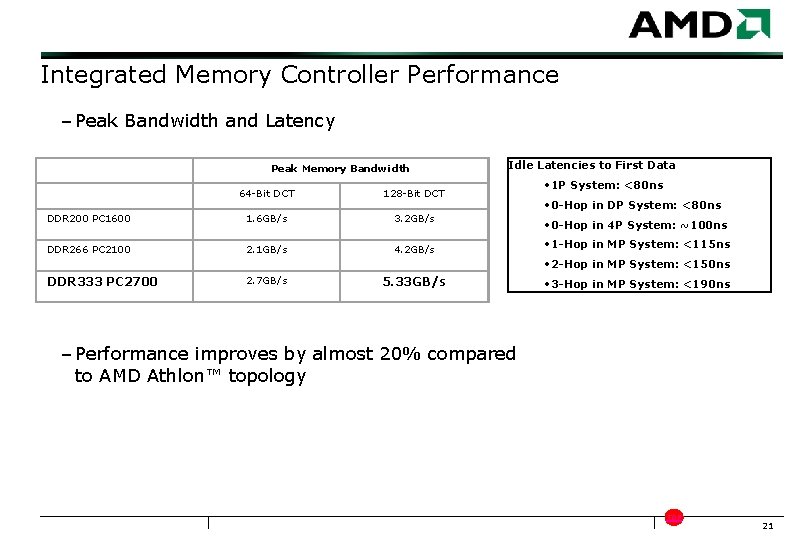

Integrated Memory Controller Performance: Peak Bandwidth and Latency

Peak Memory Bandwidth

| Memory | 64-bit DCT | 128-bit DCT |
|---|---|---|
| DDR200 PC1600 | 1.6 GB/s | 3.2 GB/s |
| DDR266 PC2100 | 2.1 GB/s | 4.2 GB/s |
| DDR333 PC2700 | 2.7 GB/s | 5.33 GB/s |

Idle Latencies to First Data
• 1P system: <80 ns
• 0-hop in DP system: <80 ns
• 0-hop in 4P system: ~100 ns
• 1-hop in MP system: <115 ns
• 2-hop in MP system: <150 ns
• 3-hop in MP system: <190 ns

Performance improves by almost 20% compared to the AMD Athlon™ topology.
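The peak bandwidth column can be reproduced as transfer rate times bus width. The sketch below assumes the nominal DDR transfer rates (200, 266.67 and 333.33 MT/s), which are not stated on the slide; the slide's figures appear to be rounded, so the 128-bit DDR266 value comes out slightly above the 4.2 GB/s shown.

```python
# Nominal transfer rates in millions of transfers per second (assumed values).
DDR_RATES_MT_S = {"DDR200": 200.0, "DDR266": 266.67, "DDR333": 333.33}


def peak_bandwidth_gb_s(mega_transfers_per_s, bus_width_bits):
    """Peak bandwidth in GB/s: transfers per second times bytes per transfer."""
    bytes_per_transfer = bus_width_bits / 8
    return mega_transfers_per_s * 1e6 * bytes_per_transfer / 1e9


if __name__ == "__main__":
    for name, rate in DDR_RATES_MT_S.items():
        narrow = peak_bandwidth_gb_s(rate, 64)
        wide = peak_bandwidth_gb_s(rate, 128)
        print(f"{name}: 64-bit {narrow:.2f} GB/s, 128-bit {wide:.2f} GB/s")
```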

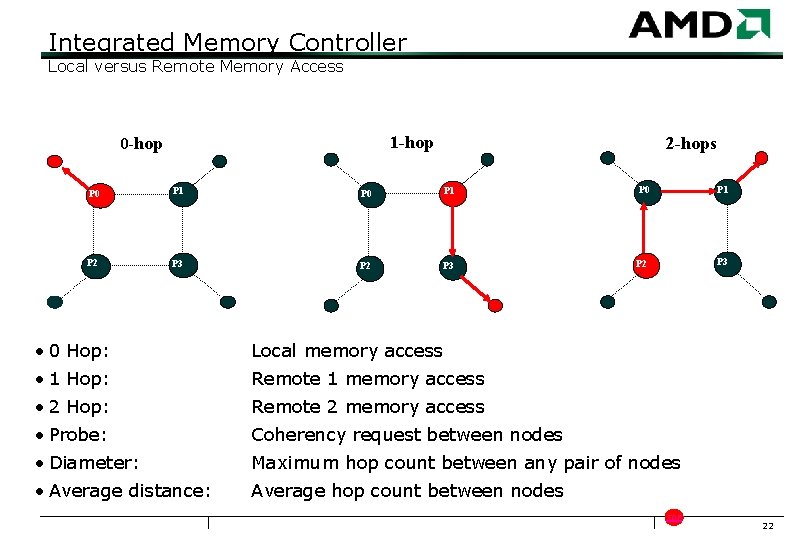

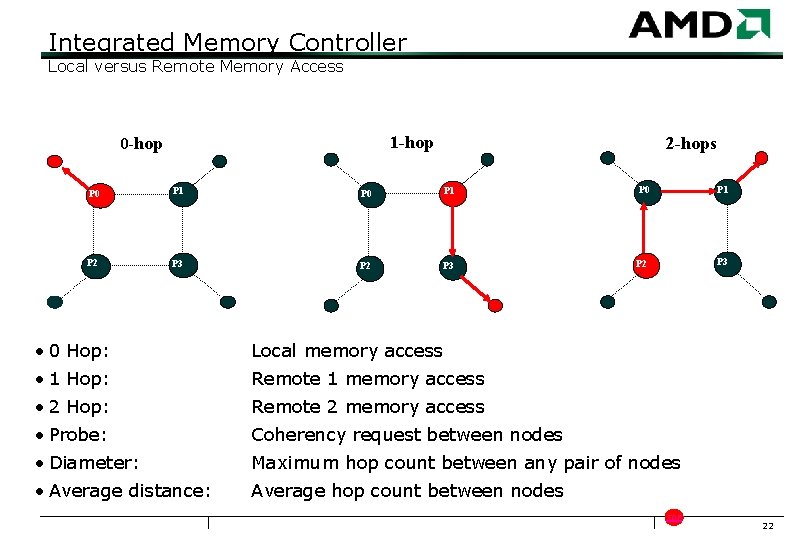

Integrated Memory Controller: Local versus Remote Memory Access
[Diagram: four processors P0, P1, P2 and P3, with 0-hop, 1-hop and 2-hop access paths marked.]
• 0 hop: local memory access
• 1 hop: remote-1 memory access
• 2 hop: remote-2 memory access
• Probe: coherency request between nodes
• Diameter: maximum hop count between any pair of nodes
• Average distance: average hop count between nodes
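Diameter and average distance can be computed for any link layout with a breadth-first search. The sketch below assumes a 4P square (P0-P1, P1-P3, P3-P2, P2-P0), which is a hypothetical wiring chosen to match the "2 hops worst case" figure, and it averages over all source and destination pairs including local (0-hop) accesses; both choices are assumptions, not taken from the slide.

```python
from collections import deque

# Hypothetical 4P square topology: each processor links to two neighbours.
SQUARE_4P = {0: [1, 2], 1: [0, 3], 2: [0, 3], 3: [1, 2]}
# 2P: a single link between the two processors.
PAIR_2P = {0: [1], 1: [0]}


def hops_from(source, links):
    """Breadth-first search: hop count from `source` to every node."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbour in links[node]:
            if neighbour not in dist:
                dist[neighbour] = dist[node] + 1
                queue.append(neighbour)
    return dist


def diameter(links):
    """Maximum hop count between any pair of nodes."""
    return max(max(hops_from(n, links).values()) for n in links)


def average_distance(links):
    """Mean hop count over all (source, destination) pairs, local included."""
    total = sum(sum(hops_from(n, links).values()) for n in links)
    return total / (len(links) ** 2)


if __name__ == "__main__":
    for name, topo in [("2P", PAIR_2P), ("4P square", SQUARE_4P)]:
        print(name, "diameter:", diameter(topo),
              "average distance:", average_distance(topo))
```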

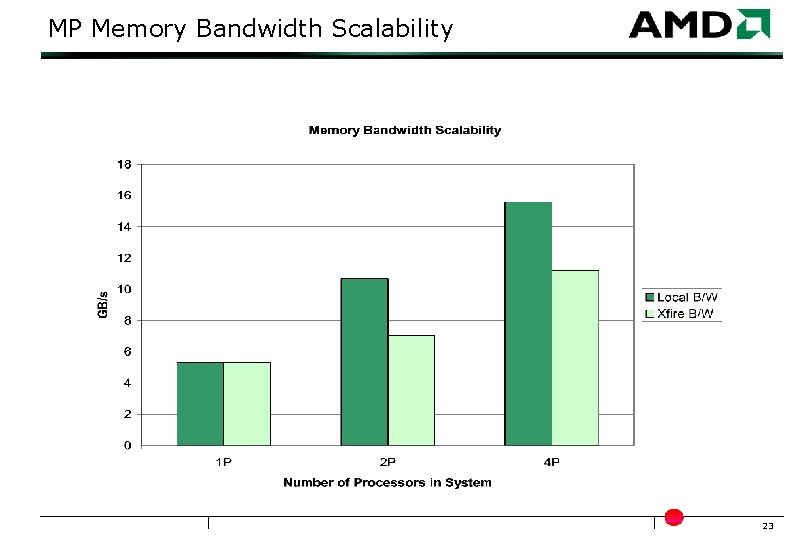

MP Memory Bandwidth Scalability

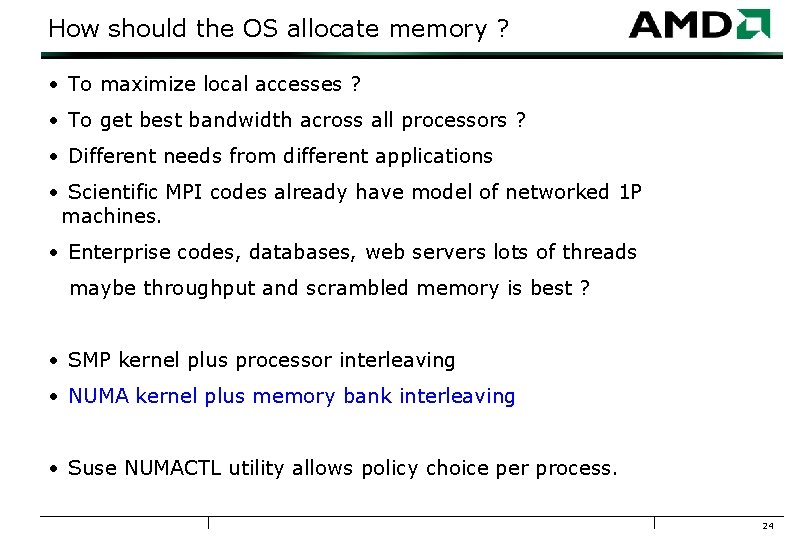

How should the OS allocate memory?
• To maximize local accesses?
• To get the best bandwidth across all processors?
• Different applications have different needs
  – Scientific MPI codes already have a model of networked 1P machines
  – Enterprise codes, databases and web servers run lots of threads; maybe throughput and scrambled memory is best?
• SMP kernel plus processor interleaving
• NUMA kernel plus memory bank interleaving
• The SuSE numactl utility allows a policy choice per process

MPI libraries (shmem buffers where?)
• Argonne MPICH: compile with gcc -O2 -funroll-all-loops
• Myrinet, Quadrics and Dolphin also have MPI libraries based on MPICH
• On an MP machine, MPI uses shared memory in the box
• Where do the MPI message buffers go?
  – Currently it just mallocs one chunk of space for all processors
  – OK for 2P, not so good for a 4P machine (2 hops worst case, contention)
• Scope to improve MPI performance on a 4P NUMA machine with better buffer placement

Synthetic Benchmarks

Spectrum of application needs
• Some codes are memory limited (BIG data)
• Others are CPU bound (kernels and SMALL data)
• ALL memory codes like low latency to memory!
• Example in the BLAS libraries:
  – BLAS 1, vector-vector: memory intensive (STREAM)
  – BLAS 2, matrix-vector
  – BLAS 3, matrix-matrix: CPU intensive (DGEMM, HPL)
• A faster CPU will not help memory-bound problems
• PREFETCH can sometimes hide memory latency (on predictable memory access patterns)
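One way to see why the BLAS levels span this spectrum is to count flops per memory word touched. The sketch below uses idealized textbook counts for daxpy, dgemv and dgemm on n-sized operands, assuming each operand is moved once; these counts are an assumption for illustration and ignore cache effects.

```python
def blas_intensity(level, n):
    """Idealized flops per 8-byte word moved for one representative kernel."""
    if level == 1:
        # daxpy, y = a*x + y: 2n flops; read x, read y, write y.
        flops, words = 2 * n, 3 * n
    elif level == 2:
        # dgemv, y = A*x + y: 2n^2 flops; n^2 matrix words plus 3n vector words.
        flops, words = 2 * n * n, n * n + 3 * n
    elif level == 3:
        # dgemm, C = A*B + C: 2n^3 flops; read A, B, C and write C.
        flops, words = 2 * n ** 3, 4 * n * n
    else:
        raise ValueError("BLAS level must be 1, 2 or 3")
    return flops / words


if __name__ == "__main__":
    for level in (1, 2, 3):
        print(f"BLAS {level}: {blas_intensity(level, 1000):.2f} flops per word")
```

Levels 1 and 2 stay at or below about two flops per word however large n gets, so memory bandwidth sets the pace; level 3 grows with n, which is what lets DGEMM run close to peak.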

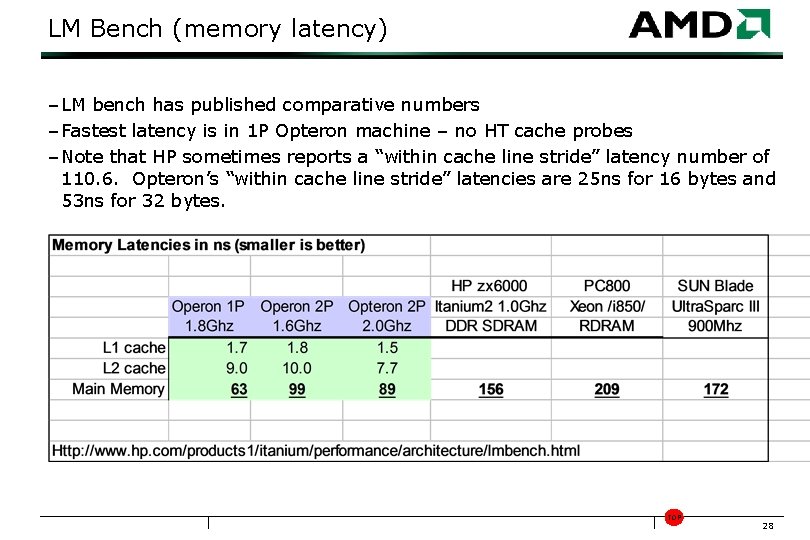

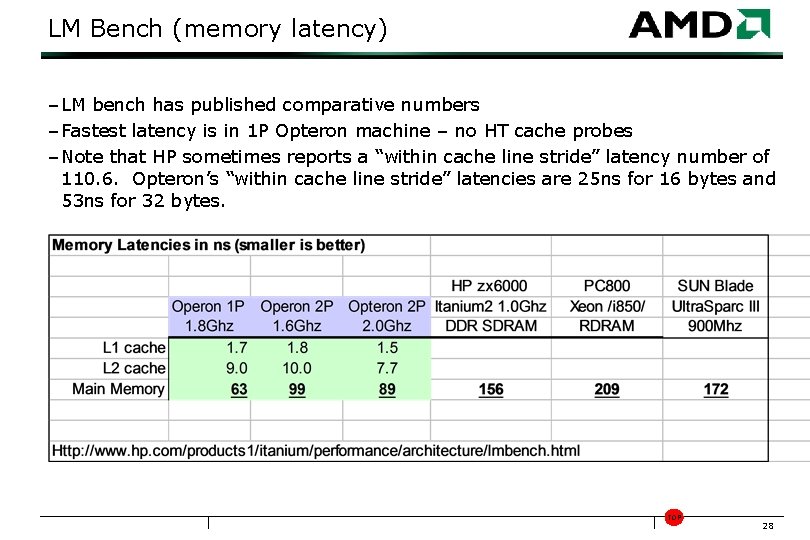

LMbench (memory latency)
– LMbench has published comparative numbers
– Fastest latency is in the 1P Opteron machine (no HT cache probes)
– Note that HP sometimes reports a "within cache line stride" latency number of 110.6. Opteron's "within cache line stride" latencies are 25 ns for 16 bytes and 53 ns for 32 bytes.

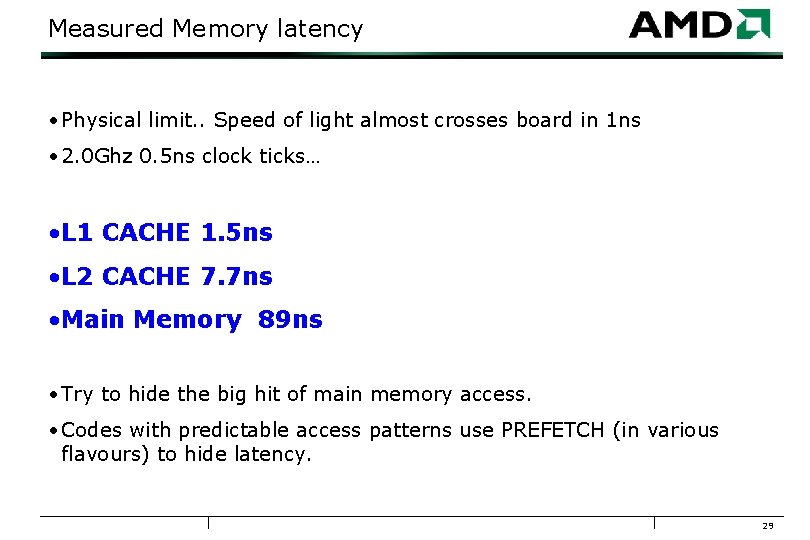

Measured Memory latency
• Physical limit: the speed of light almost crosses the board in 1 ns
• 2.0 GHz: 0.5 ns clock ticks
• L1 cache: 1.5 ns
• L2 cache: 7.7 ns
• Main memory: 89 ns
• Try to hide the big hit of main memory access
• Codes with predictable access patterns use PREFETCH (in various flavours) to hide latency
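Converting these latencies into clock cycles makes the cost of a main memory access easier to judge. A small sketch of the conversion, using the figures on the slide and the 2 flops/cycle double precision peak quoted earlier in the deck; the names are illustrative:

```python
CLOCK_GHZ = 2.0
LATENCY_NS = {"L1 cache": 1.5, "L2 cache": 7.7, "Main memory": 89.0}


def latency_cycles(latency_ns, clock_ghz=CLOCK_GHZ):
    """Latency in clock cycles (GHz equals cycles per nanosecond)."""
    return latency_ns * clock_ghz


def flops_forgone(latency_ns, flops_per_cycle=2, clock_ghz=CLOCK_GHZ):
    """Peak flops that could have issued while waiting for one access."""
    return latency_cycles(latency_ns, clock_ghz) * flops_per_cycle


if __name__ == "__main__":
    for level, ns in LATENCY_NS.items():
        print(f"{level}: {latency_cycles(ns):.1f} cycles, "
              f"{flops_forgone(ns):.0f} peak flops forgone")
```

An unhidden main memory access costs roughly 178 cycles here, which is the "big hit" that prefetching tries to overlap with useful work.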

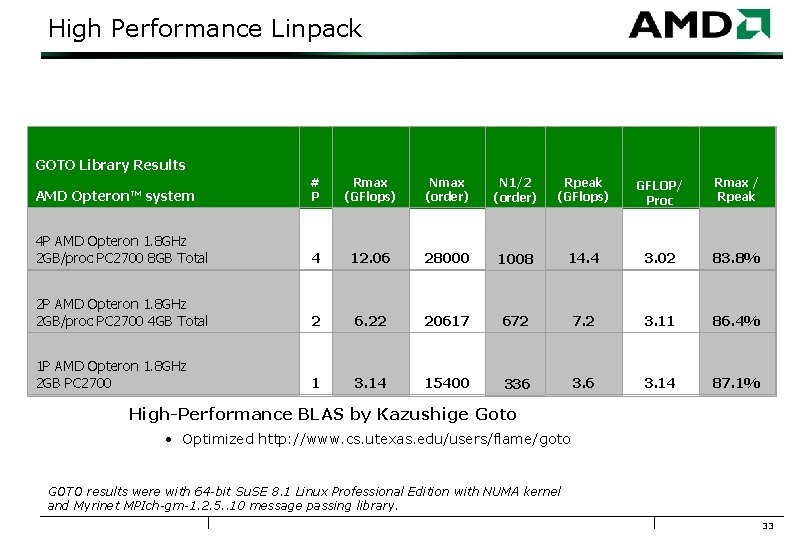

High Performance Linpack
• A CPU-bound problem (memory system not stressed)
• Solves Ax = b using LU factorization
• The result is the peak Gflops rate achieved for a matrix of size N x N
• Almost all time is spent in DGEMM
• Uses MPI message passing
• The larger N the better: fill the memory on each node
• Used in the www.top500.org ranking of supercomputers
• N(half) is the problem size at which half of the Nmax Gflops rate is achieved; it is a measure of overhead
• The current number one machine is the Earth Simulator (40 Tflops); Cray Red Storm (10,000 Opterons) has comparable peak
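"Fill memory per node" can be made concrete: the N x N double precision matrix needs 8 N^2 bytes, and the LU factorization costs about (2/3) N^3 flops to leading order. The sketch below uses those two relations; the 80% memory fraction is a rule-of-thumb assumption, not a figure from the slides, and the function names are illustrative.

```python
import math


def matrix_bytes(n, word_bytes=8):
    """Memory for an n x n matrix of double precision words."""
    return n * n * word_bytes


def largest_n(mem_bytes, fraction=0.8, word_bytes=8):
    """Largest N whose matrix fits in `fraction` of memory (assumed 80%)."""
    return int(math.sqrt(fraction * mem_bytes / word_bytes))


def lu_flops(n):
    """Leading-order operation count for LU factorization."""
    return (2.0 / 3.0) * n ** 3


def run_time_seconds(n, sustained_gflops):
    """Approximate run time at a given sustained rate."""
    return lu_flops(n) / (sustained_gflops * 1e9)


if __name__ == "__main__":
    n = 28000
    print("matrix size (GB):", matrix_bytes(n) / 1e9)
    print("largest N for 8 GB:", largest_n(8 * 2 ** 30))
    print("run time at 12 Gflops (s):", round(run_time_seconds(n, 12.0)))
```

Because work grows as N^3 while data grows as N^2, larger problems spend proportionally more time in DGEMM and less in communication and other overheads.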

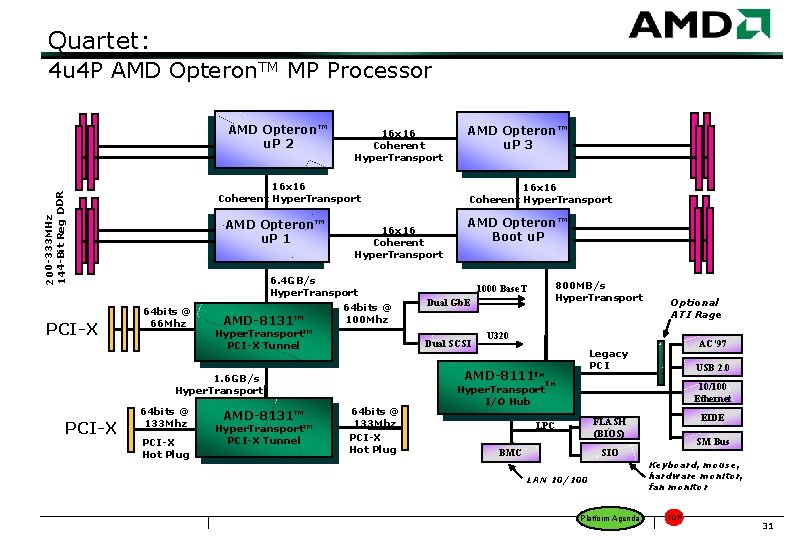

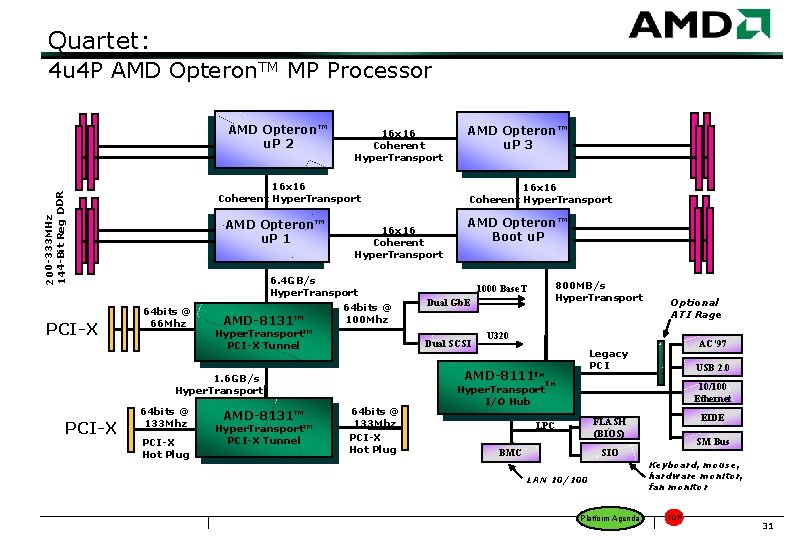

Quartet: 4U 4P AMD Opteron™ MP system
[Block diagram. Labels: AMD Opteron™ boot processor plus processors 1, 2 and 3, joined by 16x16 coherent HyperTransport links (6.4 GB/s); 200-333 MHz 144-bit registered DDR memory; two AMD-8131™ HyperTransport™ PCI-X tunnels with hot-plug PCI-X slots (64 bits at 66, 100 and 133 MHz), dual GbE (1000BaseT) and dual U320 SCSI; AMD-8111™ HyperTransport I/O hub with legacy PCI, USB 2.0, 10/100 Ethernet, AC'97, EIDE, LPC, SM bus, BIOS flash and SIO; optional ATI Rage graphics; BMC with 10/100 LAN, keyboard, mouse, hardware monitor and fan monitor; 800 MB/s and 1.6 GB/s HyperTransport I/O links.]

MPI vs threaded BLAS?
• BLAS libraries can use thread-level parallelism to exploit an MP node
• MPI can treat the processors of an MP node as separate machines talking via shmem
• Which is best?
• The NUMA kernel allocates memory locally for each process
• But in-the-box MPI on 4P has memory issues
• MPI and single-threaded BLAS perform best with the NUMA kernel
• Mixing OpenMP and MPI is possible and maybe sensible
• Generally, static up-front decomposition feels better?

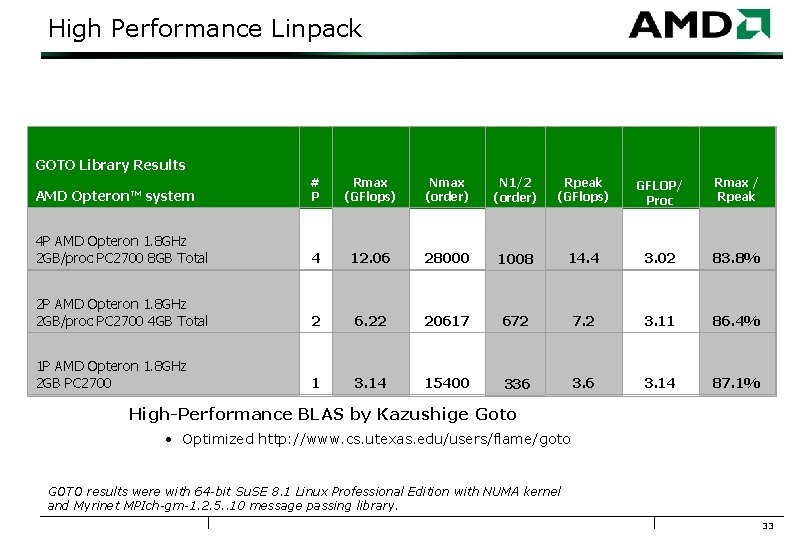

High Performance Linpack: GOTO Library Results

| AMD Opteron™ system | # P | Rmax (GFlops) | Nmax (order) | N1/2 (order) | Rpeak (GFlops) | GFLOP/Proc | Rmax/Rpeak |
|---|---|---|---|---|---|---|---|
| 4P AMD Opteron 1.8 GHz, 2 GB/proc PC2700, 8 GB total | 4 | 12.06 | 28000 | 1008 | 14.4 | 3.02 | 83.8% |
| 2P AMD Opteron 1.8 GHz, 2 GB/proc PC2700, 4 GB total | 2 | 6.22 | 20617 | 672 | 7.2 | 3.11 | 86.4% |
| 1P AMD Opteron 1.8 GHz, 2 GB PC2700 | 1 | 3.14 | 15400 | 336 | | 3.14 | 87.1% |

• Optimized High-Performance BLAS by Kazushige Goto: http://www.cs.utexas.edu/users/flame/goto
• GOTO results were with 64-bit SuSE 8.1 Linux Professional Edition with the NUMA kernel and the Myrinet mpich-gm-1.2.5..10 message passing library
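The Rpeak and Rmax/Rpeak columns can be cross-checked from the clock rate and the 2 flops/cycle double precision peak given earlier in the deck. The sketch below recomputes them from the Rmax values in the table; the function names are illustrative.

```python
# (processors, Rmax in Gflops) taken from the results table.
RESULTS = [(4, 12.06), (2, 6.22), (1, 3.14)]


def rpeak_gflops(procs, clock_ghz=1.8, flops_per_cycle=2):
    """Theoretical peak for `procs` processors."""
    return procs * clock_ghz * flops_per_cycle


def efficiency(rmax, rpeak):
    """Fraction of theoretical peak actually sustained."""
    return rmax / rpeak


if __name__ == "__main__":
    for procs, rmax in RESULTS:
        rpeak = rpeak_gflops(procs)
        print(f"{procs}P: Rpeak {rpeak:.1f} Gflops, "
              f"{rmax / procs:.2f} Gflops/proc, "
              f"efficiency {100 * efficiency(rmax, rpeak):.1f}%")
```

By this arithmetic the 1P peak is 3.6 Gflops, and efficiency falls only a few points from 1P to 4P.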

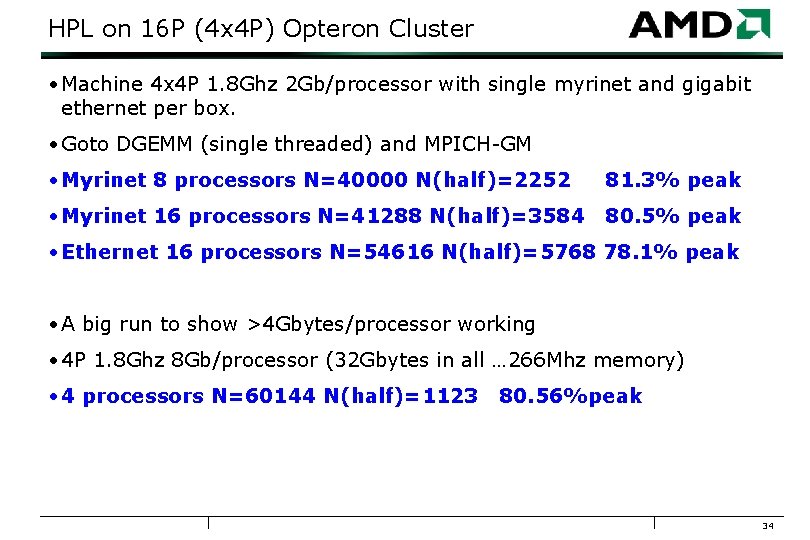

HPL on 16P (4 x 4P) Opteron Cluster
• Machine: 4 x 4P, 1.8 GHz, 2 GB/processor, with a single Myrinet and gigabit Ethernet per box
• Goto DGEMM (single threaded) and MPICH-GM
• Myrinet, 8 processors: N=40000, N(half)=2252, 81.3% of peak
• Myrinet, 16 processors: N=41288, N(half)=3584, 80.5% of peak
• Ethernet, 16 processors: N=54616, N(half)=5768, 78.1% of peak
• A big run to show >4 GB/processor working:
  – 4P 1.8 GHz, 8 GB/processor (32 GB in all, 266 MHz memory)
  – 4 processors: N=60144, N(half)=1123, 80.56% of peak

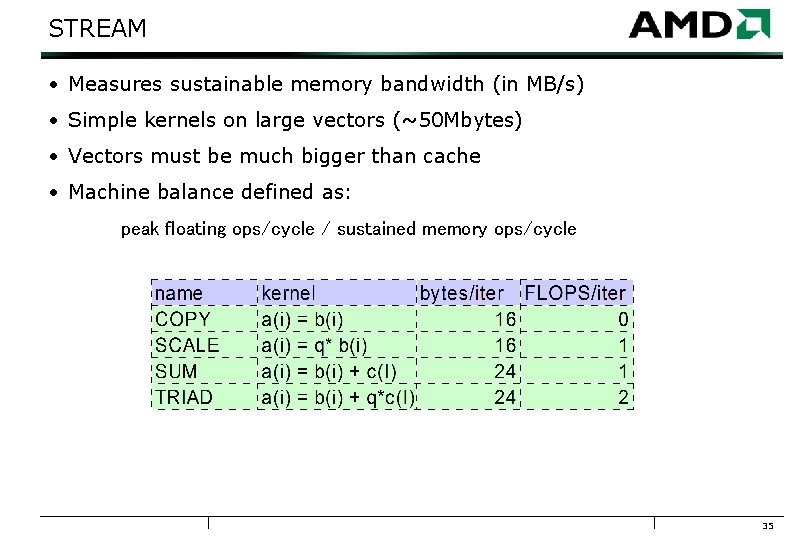

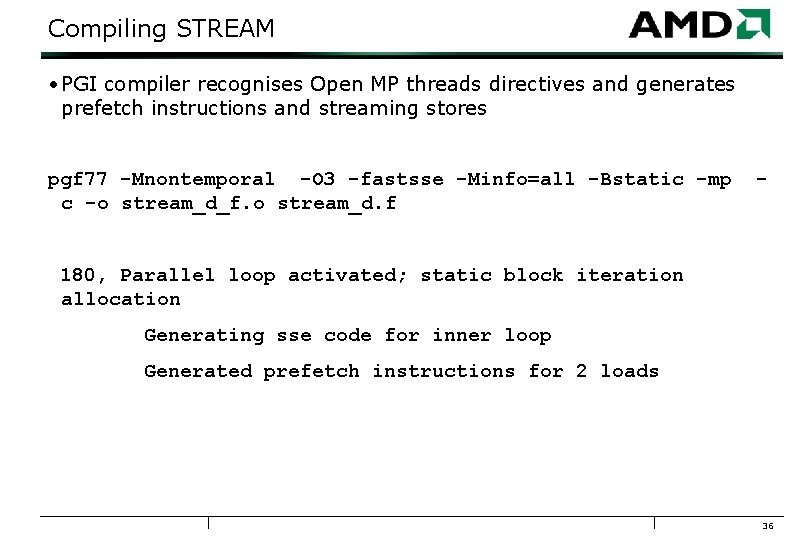

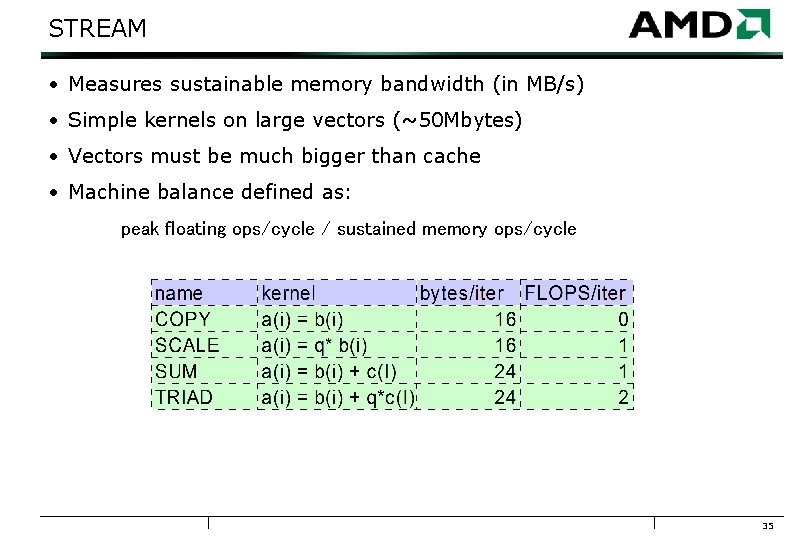

STREAM
• Measures sustainable memory bandwidth (in MB/s)
• Simple kernels on large vectors (~50 MB)
• Vectors must be much bigger than cache
• Machine balance is defined as: (peak floating point ops/cycle) / (sustained memory ops/cycle)
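The machine balance definition can be turned into a small calculation. In the sketch below, a memory op is taken to be one 8-byte word, and the 4000 MB/s sustained bandwidth is a made-up round number for illustration, not a measured result from this deck.

```python
def sustained_words_per_cycle(bandwidth_mb_s, clock_ghz, word_bytes=8):
    """Sustained memory words moved per clock cycle."""
    words_per_second = bandwidth_mb_s * 1e6 / word_bytes
    cycles_per_second = clock_ghz * 1e9
    return words_per_second / cycles_per_second


def machine_balance(peak_flops_per_cycle, words_per_cycle):
    """Peak flops per cycle divided by sustained memory words per cycle."""
    return peak_flops_per_cycle / words_per_cycle


if __name__ == "__main__":
    # Illustrative inputs: 2.0 GHz clock, 2 flops/cycle, 4000 MB/s (hypothetical).
    words = sustained_words_per_cycle(4000.0, 2.0)
    print("words per cycle:", words)
    print("machine balance:", machine_balance(2, words))
```

A balance of 8 would mean a code needs about eight flops per word fetched from memory before the floating point units, rather than the memory system, become the limit.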

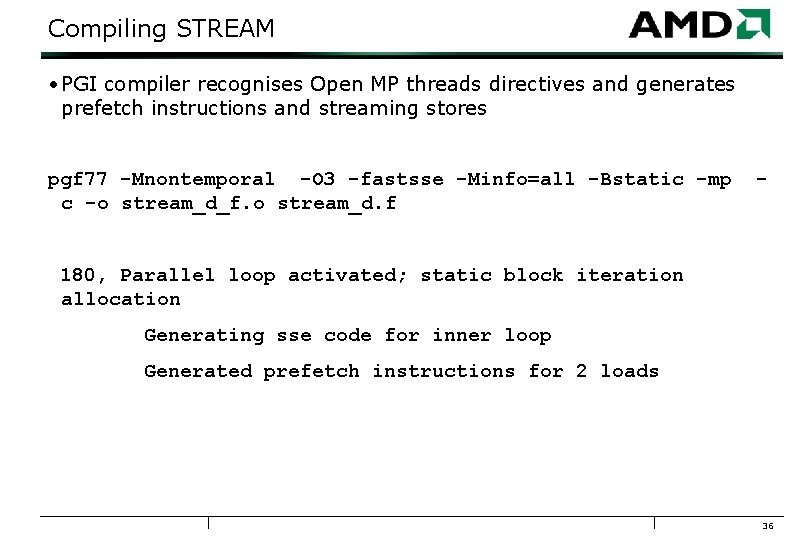

Compiling STREAM
• The PGI compiler recognises OpenMP threads directives and generates prefetch instructions and streaming stores

    pgf77 -Mnontemporal -O3 -fastsse -Minfo=all -Bstatic -mp -c -o stream_d_f.o stream_d.f
    180, Parallel loop activated; static block iteration allocation
         Generating sse code for inner loop
         Generated prefetch instructions for 2 loads

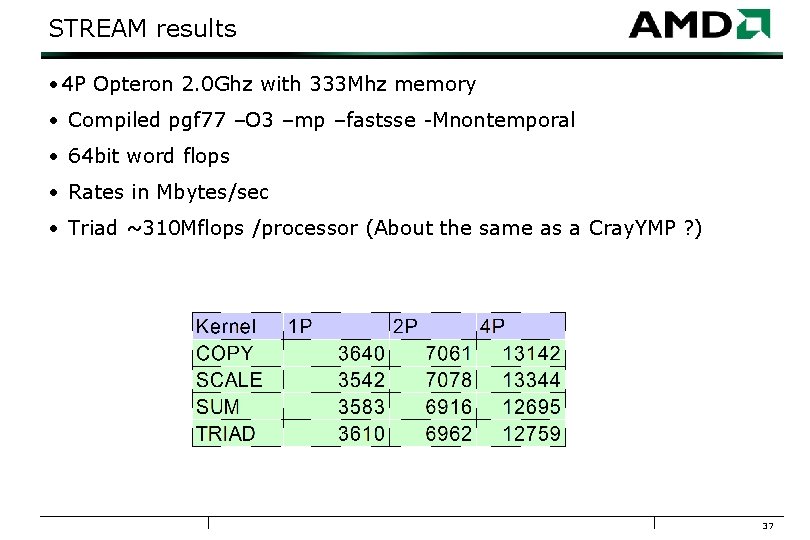

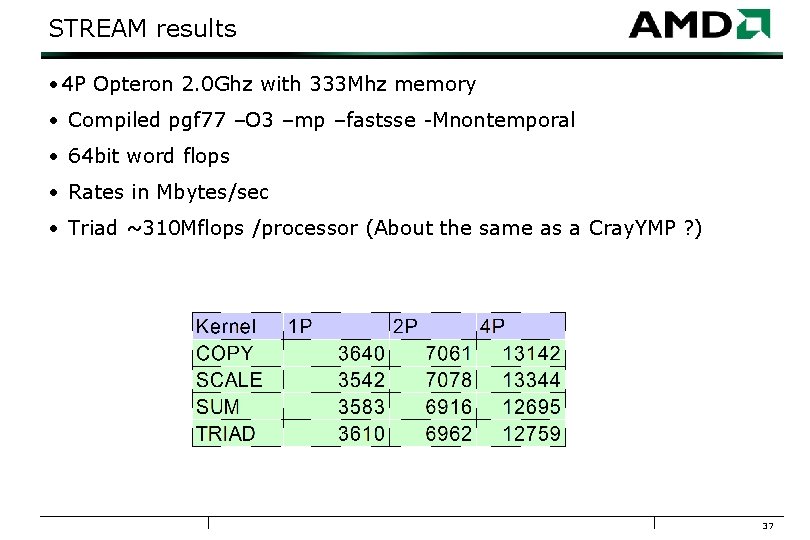

STREAM results
• 4P Opteron 2.0 GHz with 333 MHz memory
• Compiled with pgf77 -O3 -mp -fastsse -Mnontemporal
• Flops are on 64-bit words
• Rates in MB/s
• Triad: ~310 Mflops/processor (about the same as a Cray Y-MP?)
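The Triad flop rate and the bandwidth it implies are tied together by the kernel itself: each iteration of `a[i] = b[i] + q*c[i]` does 2 flops and, by STREAM's counting, moves 24 bytes (two loads and one store of 8-byte words). The sketch below applies that conversion to the ~310 Mflops figure; the helper names are illustrative.

```python
TRIAD_FLOPS_PER_ITER = 2    # one multiply and one add
TRIAD_BYTES_PER_ITER = 24   # load b[i], load c[i], store a[i], 8 bytes each


def triad(b, c, q):
    """Reference Triad kernel on plain Python lists."""
    return [bi + q * ci for bi, ci in zip(b, c)]


def triad_mbytes_per_s(mflops):
    """Memory traffic implied by a Triad flop rate."""
    iterations_per_us = mflops / TRIAD_FLOPS_PER_ITER
    return iterations_per_us * TRIAD_BYTES_PER_ITER


if __name__ == "__main__":
    print(triad([1.0, 2.0], [3.0, 4.0], 2.0))
    print("MB/s per processor at 310 Mflops:", triad_mbytes_per_s(310.0))
```

At 12 bytes per flop, Triad performance tracks memory bandwidth rather than the 4 Gflops floating point peak.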

Application Benchmarks

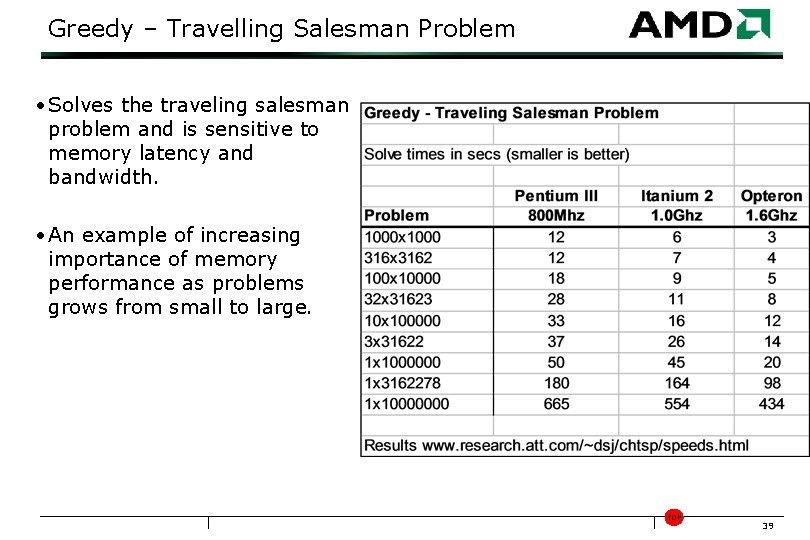

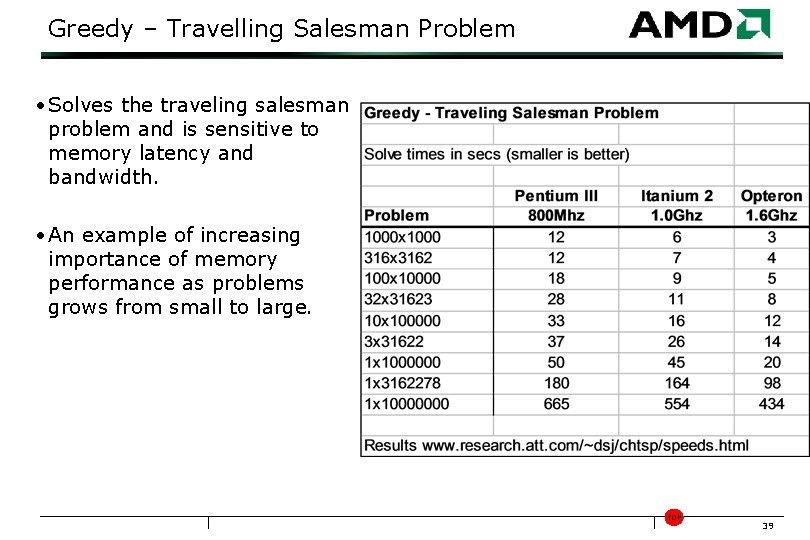

Greedy: Travelling Salesman Problem
• Solves the travelling salesman problem and is sensitive to memory latency and bandwidth
• An example of the increasing importance of memory performance as problems grow from small to large

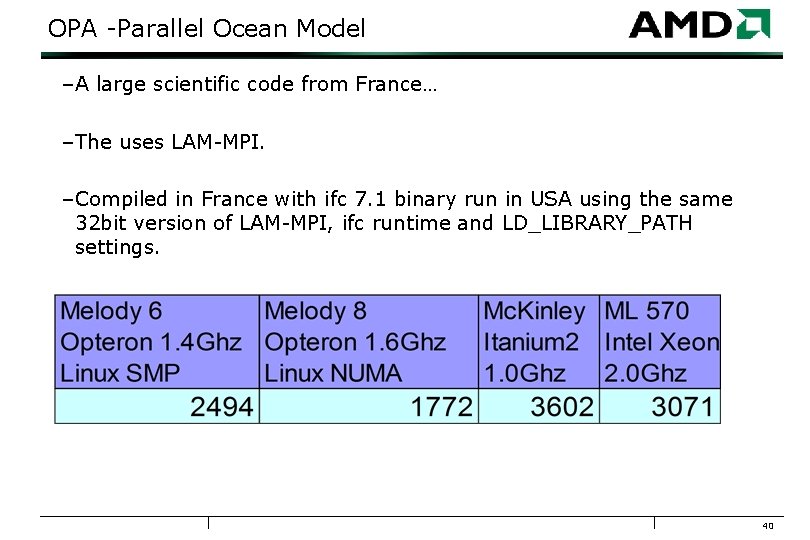

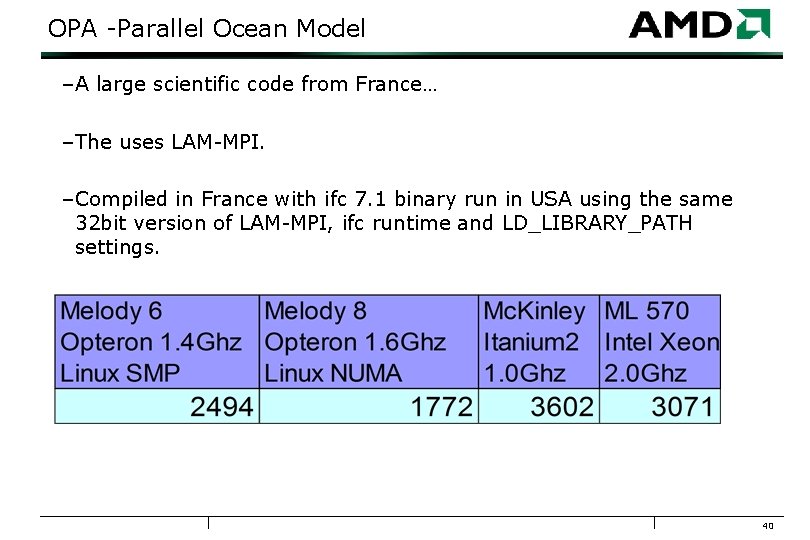

OPA: Parallel Ocean Model
– A large scientific code from France
– Uses LAM-MPI
– Compiled in France with ifc 7.1; the binary was run in the USA using the same 32-bit version of LAM-MPI, the ifc runtime and LD_LIBRARY_PATH settings

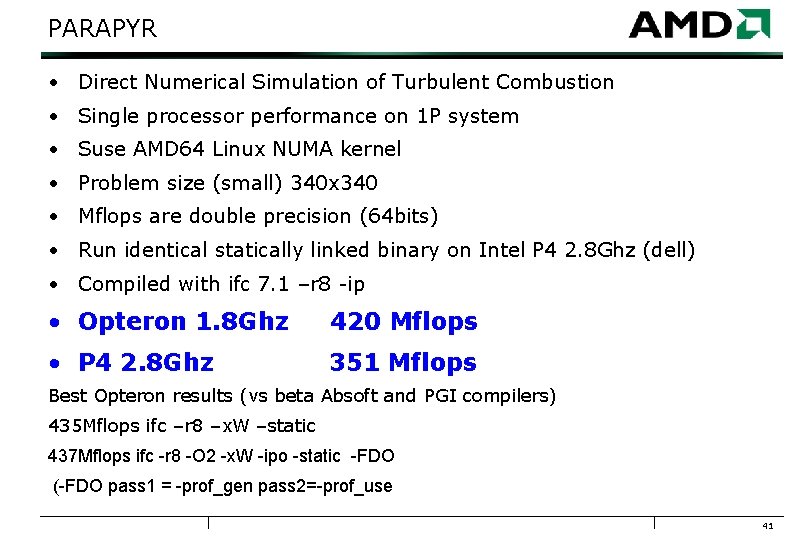

PARAPYR
• Direct numerical simulation of turbulent combustion
• Single processor performance on a 1P system
• SuSE AMD64 Linux NUMA kernel
• Problem size (small): 340 x 340
• Mflops are double precision (64 bits)
• Identical statically linked binary also run on an Intel P4 2.8 GHz (Dell)
• Compiled with ifc 7.1 -r8 -ip
  – Opteron 1.8 GHz: 420 Mflops
  – P4 2.8 GHz: 351 Mflops
• Best Opteron results (vs. beta Absoft and PGI compilers):
  – 435 Mflops: ifc -r8 -xW -static
  – 437 Mflops: ifc -r8 -O2 -xW -ipo -static -FDO (-FDO: pass 1 = -prof_gen, pass 2 = -prof_use)
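Since the two machines run at different clock rates, it can help to normalize the PARAPYR figures per GHz as well as comparing them directly. A small sketch using the Mflops numbers for the identical binary; the helper names are illustrative:

```python
# (Mflops, clock in GHz) for the identical statically linked binary.
RESULTS = {"Opteron 1.8 GHz": (420.0, 1.8), "P4 2.8 GHz": (351.0, 2.8)}


def mflops_per_ghz(mflops, clock_ghz):
    """Sustained Mflops delivered per GHz of clock."""
    return mflops / clock_ghz


def speedup(mflops_a, mflops_b):
    """How many times faster result A is than result B."""
    return mflops_a / mflops_b


if __name__ == "__main__":
    for name, (mflops, ghz) in RESULTS.items():
        print(f"{name}: {mflops_per_ghz(mflops, ghz):.0f} Mflops per GHz")
    ratio = speedup(RESULTS["Opteron 1.8 GHz"][0], RESULTS["P4 2.8 GHz"][0])
    print(f"Opteron / P4: {ratio:.2f}x")
```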


Conclusions
• Use an AMD64 64-bit OS with NUMA support
• 32-bit compiled applications run well
• Know your application's memory or CPU needs (so you know what to expect)
• 64-bit compilers need work (as ever)
• Competitive processors: Itanium 1.5 GHz and Xeon 3.0 GHz have higher peak FLOPs but relatively poor memory scaling
  – Highly tuned benchmarks that make heavy use of floating point and that fit in cache or make low use of the memory system perform better on Itanium
  – The Xeon memory system does not scale well
• Opteron has excellent memory system performance and scalability, in both bandwidth and latency
  – Codes that depend on memory latency or bandwidth perform better on Opteron
  – Codes with a mix of integer and floating point will perform better on Opteron
  – Code that is not highly tuned will likely perform better on Opteron
• More upside from MPI 4P memory layout (MPICH2?) and 64-bit compilers

AMD, the AMD Arrow logo, AMD Athlon, AMD Opteron, 3DNow! and combinations thereof, AMD-8100, AMD-8111, AMD-8131 and AMD-8151 are trademarks of Advanced Micro Devices, Inc. HyperTransport is a licensed trademark of the HyperTransport Technology Consortium. Microsoft and Windows are registered trademarks of Microsoft Corporation in the U.S. and/or other jurisdictions. Pentium and MMX are registered trademarks of Intel Corporation in the U.S. and/or other jurisdictions. SPEC and SPECfp are registered trademarks of Standard Performance Evaluation Corporation in the U.S. and/or other jurisdictions. Other product and company names used in this presentation are for identification purposes only and may be trademarks of their respective companies.