Optimal Defense Against Jamming Attacks in Cognitive Radio

Optimal Defense Against Jamming Attacks in Cognitive Radio Networks Using the Markov Decision Process Approach
Yongle Wu, Beibei Wang, and K. J. Ray Liu
Presenter: Wayne Hsiao
Advisor: Frank, Yeong-Sung Lin

Agenda
• Introduction
• Related Works
• System Model
• Optimal Strategy with Perfect Knowledge
  o Markov Models
  o Markov Decision Process
• Learning the Parameters
• Simulation Results

Introduction
• Cognitive radio technology has been receiving growing attention
• A cognitive radio network contains
  o Unlicensed users (secondary users)
  o Spectrum holders (primary users)
• Secondary users usually compete for limited spectrum resources
  o Game theory has been widely applied as a flexible and appropriate tool to model and analyze their behavior in the network

Introduction
• Cognitive radio networks are vulnerable to malicious attacks
• Security countermeasures are
  o Crucial to the successful deployment of cognitive radio networks
• We mainly focus on the jamming attack
  o One of the major threats to cognitive radio networks
  o Several malicious attackers intend to interrupt the communications of secondary users by injecting interference

Introduction
• A secondary user can hop across multiple bands to reduce the probability of being jammed
  o The optimal defense strategy is derived with a Markov decision process (MDP)
• The optimal strategy strikes a balance between the cost associated with hopping and the damage caused by attackers

Introduction
• To determine the optimal strategy, the secondary user needs some information
  o Such as the number of attackers
• Maximum Likelihood Estimation (MLE)
  o This paper proposes a learning process in which the secondary user estimates the useful parameters based on past observations

Related Works
• The problem becomes more complicated in a cognitive radio network
  o Primary users' access has to be taken into consideration
• We consider the scenario of
  o A single-radio secondary user
  o Whose defense strategy is to hop across different bands

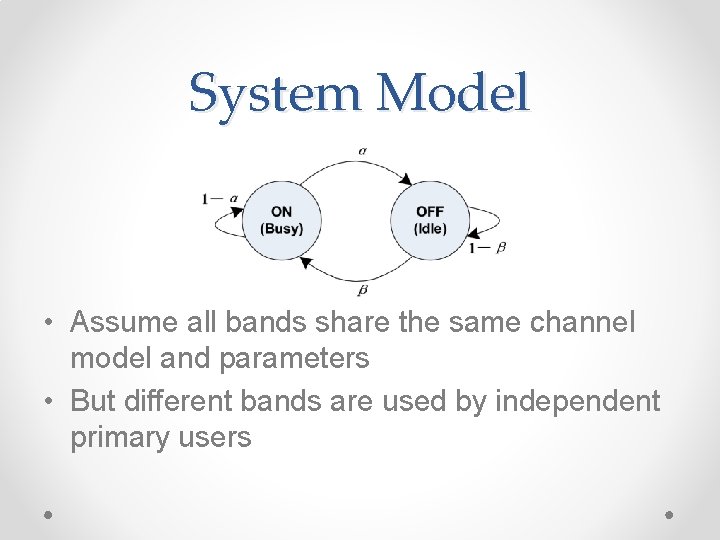

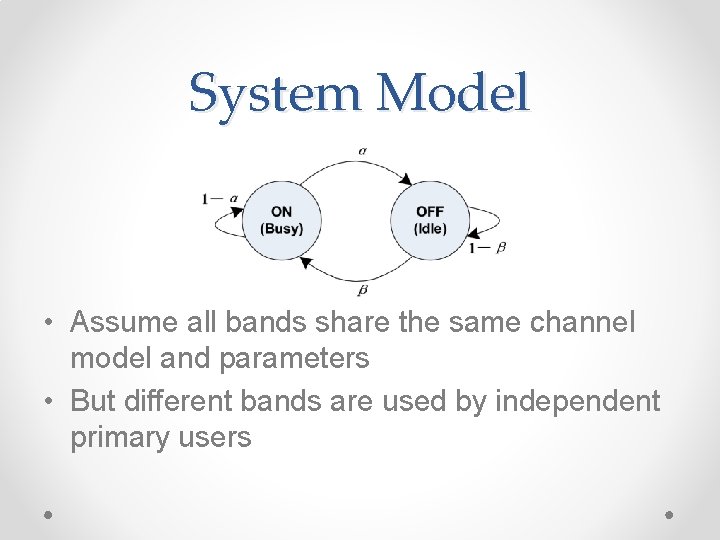

System Model
• A secondary user opportunistically accesses one of M predefined licensed bands
• Each licensed band is time-slotted
• The access pattern of primary users can be characterized by an ON-OFF model
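A minimal Python sketch of the ON-OFF model for a single band, simulated as a two-state Markov chain. The parameters β and γ only appear later in the slides, so their roles here are an assumption: β is taken as the probability that an idle band becomes busy (OFF to ON) and γ as the probability that a busy band becomes idle (ON to OFF). Under that convention the long-run busy fraction is β/(β + γ). The function name `simulate_on_off` is hypothetical.

```python
import random


def simulate_on_off(beta, gamma, n_slots, seed=0):
    """Simulate one band's primary user as a two-state Markov chain.

    Assumed convention: beta = P(OFF -> ON), gamma = P(ON -> OFF).
    Returns a list of booleans, True when the primary user is ON (busy).
    """
    rng = random.Random(seed)
    busy = rng.random() < beta / (beta + gamma)  # start in the steady state
    trace = []
    for _ in range(n_slots):
        trace.append(busy)
        if busy:
            busy = rng.random() >= gamma  # leave ON with probability gamma
        else:
            busy = rng.random() < beta    # enter ON with probability beta
    return trace


beta, gamma = 0.01, 0.1  # values from the simulation slide
trace = simulate_on_off(beta, gamma, 200_000)
busy_fraction = sum(trace) / len(trace)
steady_state = beta / (beta + gamma)
print(f"empirical busy fraction: {busy_fraction:.4f}")
print(f"steady-state prediction: {steady_state:.4f}")
```

With the simulation slide's values β = 0.01 and γ = 0.1, the assumed convention predicts a busy fraction of about 0.091, so a band is idle most of the time.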

System Model
• Assume all bands share the same channel model and parameters
• But different bands are used by independent primary users

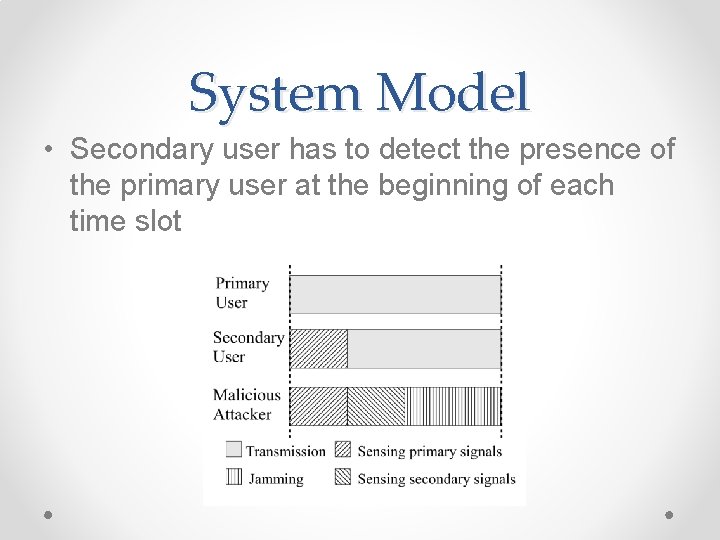

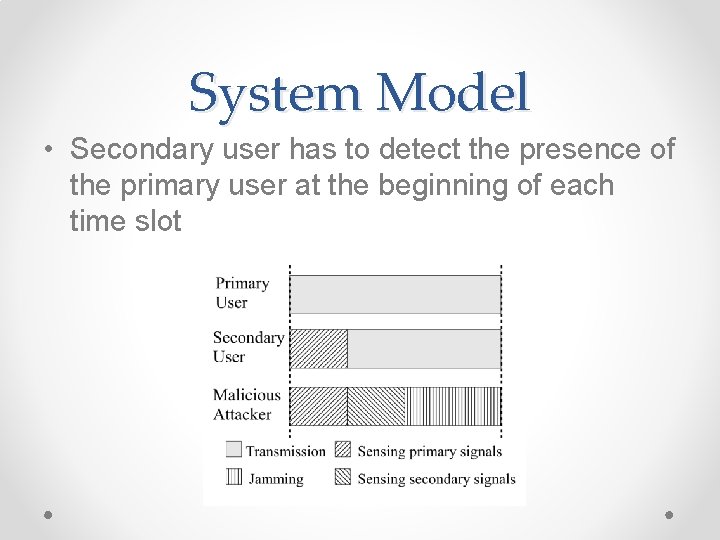

System Model
• The secondary user has to detect the presence of the primary user at the beginning of each time slot

System Model
• Communication gain R
  o Obtained when the primary user is absent from that band
• The cost associated with hopping is C
• We assume there are m (m ≥ 1) malicious single-radio attackers
• Attackers do not want to interfere with primary users
  o Because primary users' usage of the spectrum is enforced by their ownership of the bands

System Model
• On finding the secondary user
  o An attacker immediately injects jamming power, which makes the secondary user fail to decode data packets
• We assume that the secondary user suffers a significant loss L when jammed
• When all the attackers coordinate to maximize the damage
  o They detect m distinct bands in a time slot

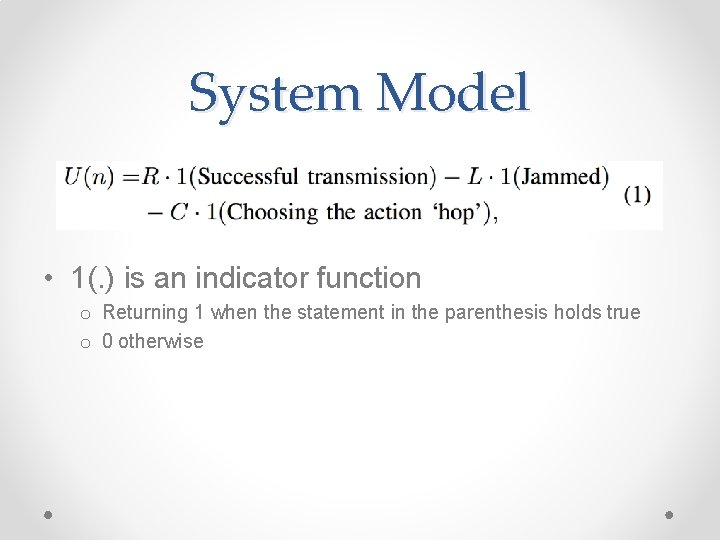

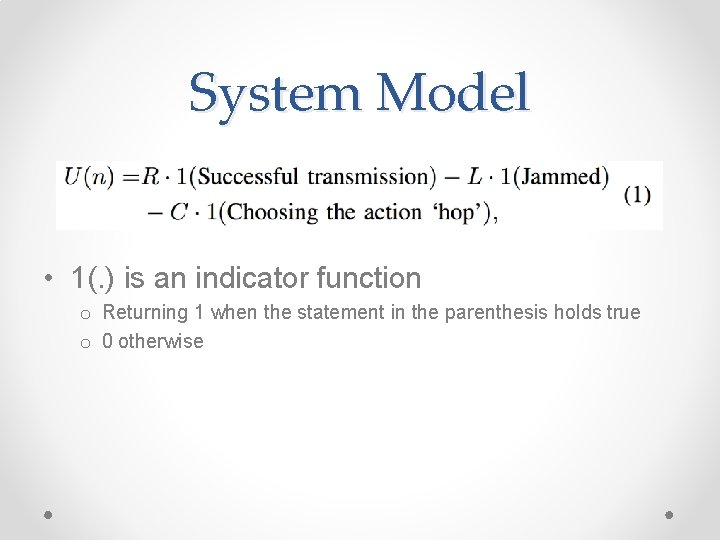

System Model
• The longer the secondary user stays in a band, the higher the risk of being exposed to attackers
• At the end of each time slot, the secondary user decides
  o To stay, or
  o To hop
• The secondary user receives an immediate payoff U(n) in the nth time slot

System Model
• 1(·) is an indicator function
  o Returns 1 when the statement in the parentheses holds true
  o Returns 0 otherwise
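The exact expression for U(n) is only shown as an image. A minimal sketch, assuming the payoff combines the quantities defined above as U(n) = R·1(transmission succeeds) − L·1(jammed) − C·1(hopped), where the hop cost is charged in the slot in which the new band is first used. The function name and the loss value L = 50 are hypothetical; R = 5 and C = 1 come from the simulation slide.

```python
def immediate_payoff(state, hopped, R, C, L):
    """Immediate payoff U(n) for one slot, under an assumed form:

        U(n) = R*1(success) - L*1(jammed) - C*1(hopped into this slot)

    state is "P" (primary user present), "J" (jammed), or an int K >= 1
    (the K-th consecutive successful slot in the same band).
    """
    success = isinstance(state, int)  # integer states 1, 2, 3, ... are successes
    jammed = state == "J"
    return R * int(success) - L * int(jammed) - C * int(hopped)


# R and C follow the simulation slide; L = 50 is a hypothetical value.
for state, hopped in [(1, True), (4, False), ("J", False), ("P", True)]:
    print(state, hopped, immediate_payoff(state, hopped, R=5, C=1, L=50))
```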

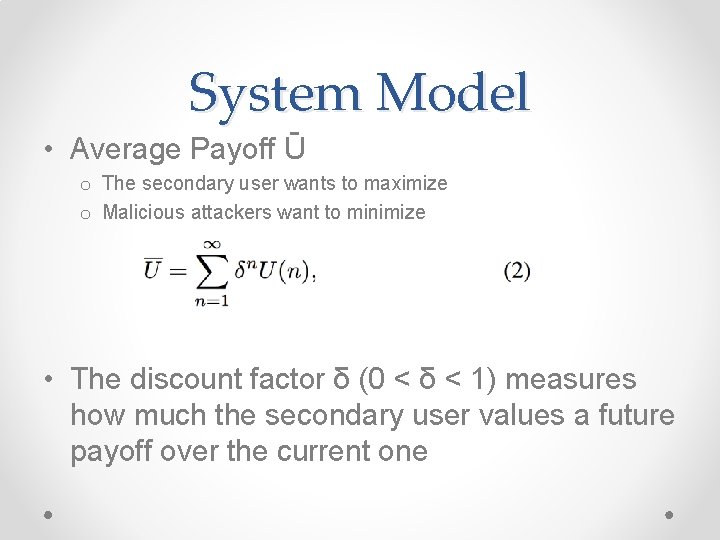

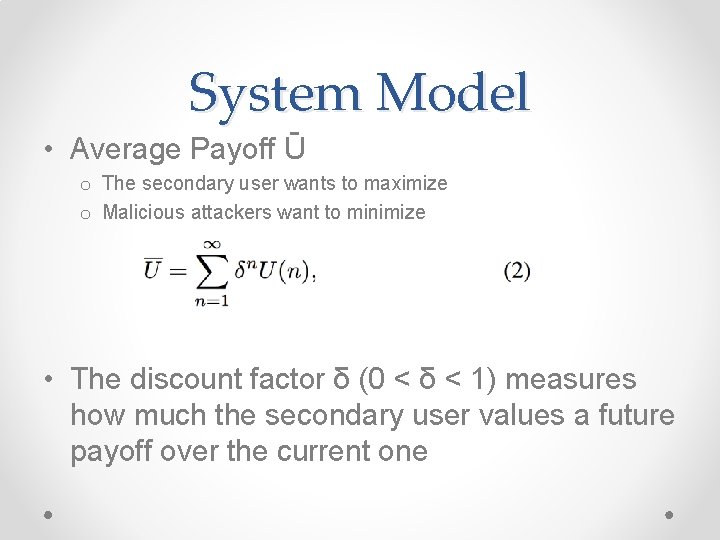

System Model
• Average payoff Ū
  o The secondary user wants to maximize it
  o Malicious attackers want to minimize it
• The discount factor δ (0 < δ < 1) measures how much the secondary user values a future payoff relative to the current one
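A short sketch of the discounted total, assuming the average payoff is Ū = Σ_{n≥1} δ^{n−1} U(n) with no extra normalization (the slide's exact formula is in the image). A constant payoff R received forever is worth R/(1 − δ), which for R = 5 and δ = 0.95 is 100. `discounted_payoff` is a hypothetical helper.

```python
def discounted_payoff(payoffs, delta):
    """Discounted sum  sum_{n>=1} delta**(n-1) * U(n)  over a finite trace.

    The normalization (no (1 - delta) factor, discounting starting at n = 1)
    is an assumption for illustration.
    """
    if not 0 < delta < 1:
        raise ValueError("delta must lie in (0, 1)")
    # enumerate starts at 0, which matches the exponent n - 1 for n = 1, 2, ...
    return sum(delta ** n * u for n, u in enumerate(payoffs))


print(discounted_payoff([1, 1, 1], 0.5))    # 1.75
print(discounted_payoff([5] * 2000, 0.95))  # close to R / (1 - delta) = 100
```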

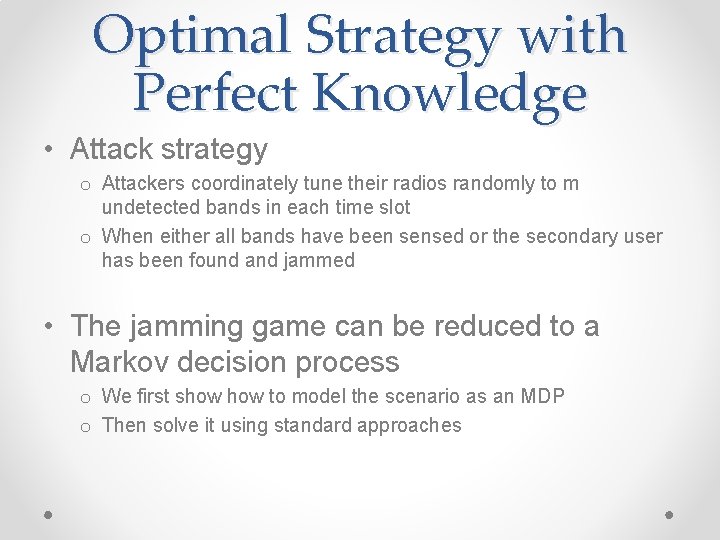

Optimal Strategy with Perfect Knowledge
• Attack strategy
  o Attackers coordinately tune their radios at random to m undetected bands in each time slot
  o This continues until either all bands have been sensed or the secondary user has been found and jammed
• The jamming game can be reduced to a Markov decision process
  o We first show how to model the scenario as an MDP
  o Then we solve it using standard approaches
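The attackers' sweep can be simulated directly. The sketch below assumes no primary users and a user that never leaves its band, and counts the slot in which the coordinated attackers first sense that band. When m divides M the band is equally likely to fall in any of the M/m batches, so with M = 60 and m = 6 each of the first ten slots should catch the user about 10% of the time. Equivalently, after K unsuccessful slots the next slot finds the user with probability m/(M − Km), the conditional probability used for the stay transitions. `slots_until_found` is a hypothetical name.

```python
import random
from collections import Counter


def slots_until_found(M, m, user_band, rng):
    """Coordinated sweep: in each slot the m attackers sense m distinct bands
    that have not yet been sensed in this sweep. Returns the 1-based slot in
    which the user's band is sensed. Primary users are ignored here."""
    undetected = list(range(M))
    slot = 0
    while undetected:
        slot += 1
        batch = set(rng.sample(undetected, min(m, len(undetected))))
        if user_band in batch:
            return slot
        undetected = [b for b in undetected if b not in batch]
    raise RuntimeError("every band is sensed once per sweep, so this is unreachable")


M, m, trials = 60, 6, 20_000
rng = random.Random(1)
counts = Counter(slots_until_found(M, m, 0, rng) for _ in range(trials))
freq = {k: counts[k] / trials for k in sorted(counts)}
print(freq)  # roughly m/M = 0.1 for each of slots 1..10
```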

Optimal Strategy with Perfect Knowledge
• At the end of the nth time slot, the secondary user
  o Observes the state of the current time slot S(n)
  o Chooses an action a(n): whether or not to tune the radio to a new band, which takes effect at the beginning of the next time slot
• S(n) = P
  o The primary user occupied the band in the nth time slot
• S(n) = J
  o The secondary user was jammed in the nth time slot

Optimal Strategy with Perfect Knowledge
• a(n) = h
  o The secondary user hops to a new band
• If the secondary user has transmitted a packet successfully in the time slot, it can choose
  o 'to hop' (a(n) = h)
  o 'to stay' (a(n) = s)
• S(n) = K
  o This is the Kth consecutive slot with successful transmission in the same band
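The state definitions translate into a small update rule. This sketch assumes the outcome of the next slot is summarized by two flags, whether the primary user is present and whether an attacker jams the user, with the primary user taking precedence because attackers avoid bands occupied by primary users. The rule that s is infeasible in P or J is taken from a later slide. `next_state` is a hypothetical helper.

```python
def next_state(state, action, primary_present, jammed):
    """Return S(n+1) from S(n), a(n), and what happens in slot n+1.

    States: "P", "J", or an int K >= 1. Actions: "h" (hop) or "s" (stay).
    """
    if action not in ("h", "s"):
        raise ValueError(f"unknown action {action!r}")
    if action == "s" and state in ("P", "J"):
        raise ValueError("'s' is not feasible in state P or J")
    if primary_present:
        return "P"  # attackers leave primary users alone
    if jammed:
        return "J"
    # A success after a hop restarts the count; after a stay it extends it.
    return 1 if action == "h" else state + 1


print(next_state(3, "s", primary_present=False, jammed=False))   # 4
print(next_state(3, "h", primary_present=False, jammed=False))   # 1
print(next_state("J", "h", primary_present=True, jammed=False))  # P
```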

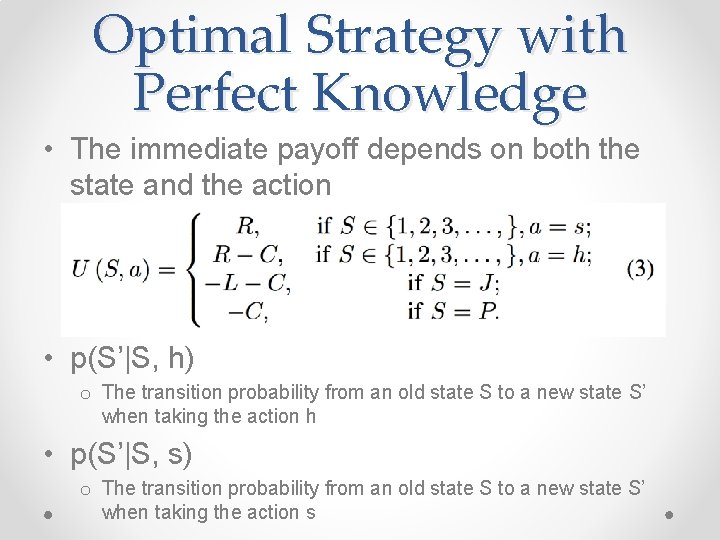

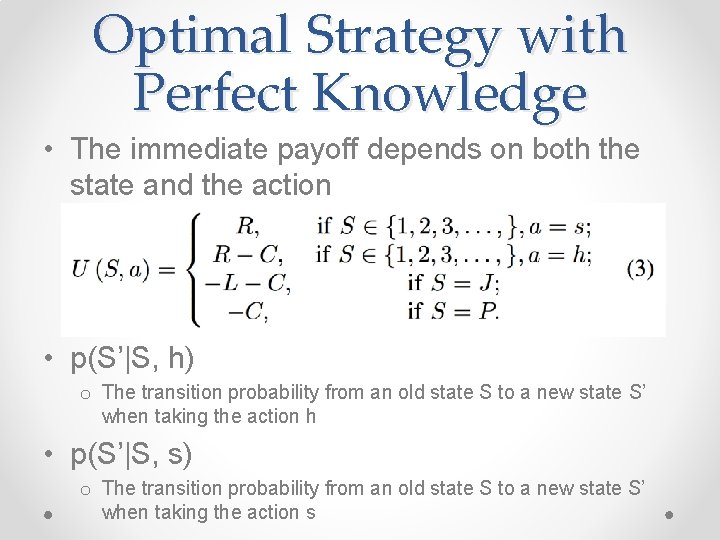

Optimal Strategy with Perfect Knowledge
• The immediate payoff depends on both the state and the action
• p(S′|S, h)
  o The transition probability from an old state S to a new state S′ when taking the action h
• p(S′|S, s)
  o The transition probability from an old state S to a new state S′ when taking the action s

Optimal Strategy with Perfect Knowledge
• If the secondary user hops to a new band, the transition probabilities do not depend on the old state
• The only possible new states are
  o P (the new band is occupied by the primary user)
  o J (transmission in the new band is detected by an attacker)
  o 1 (successful transmission begins in the new band)

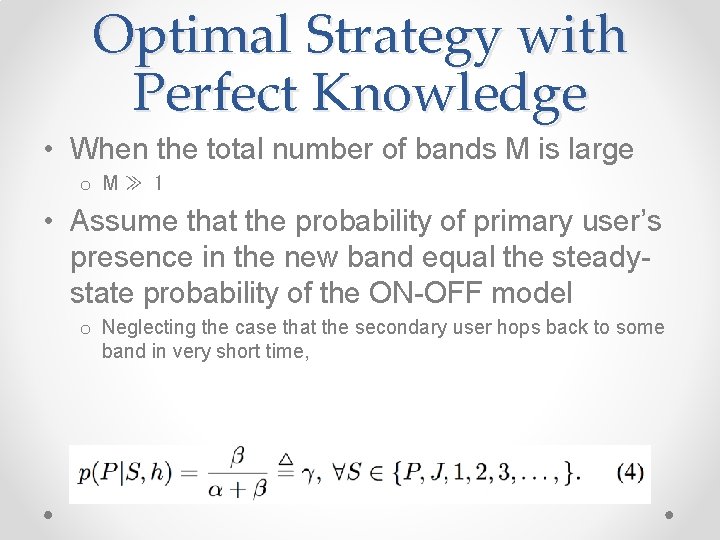

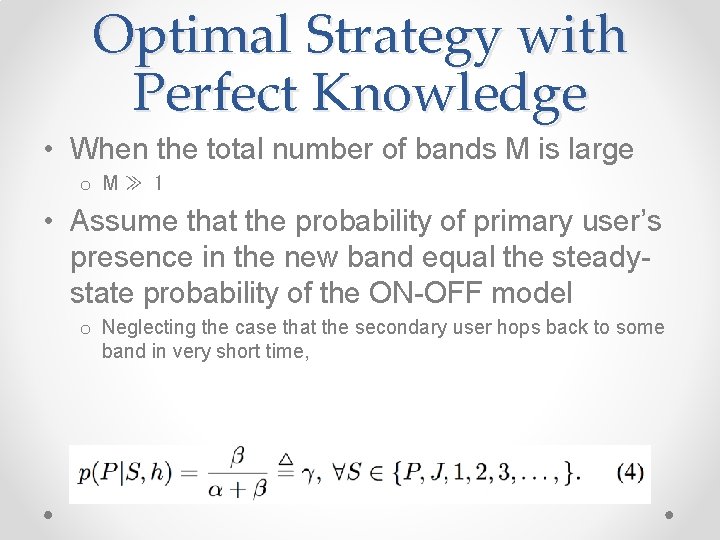

Optimal Strategy with Perfect Knowledge
• When the total number of bands M is large (M ≫ 1)
  o Assume that the probability of the primary user's presence in the new band equals the steady-state probability of the ON-OFF model
  o This neglects the case in which the secondary user hops back to some band within a very short time

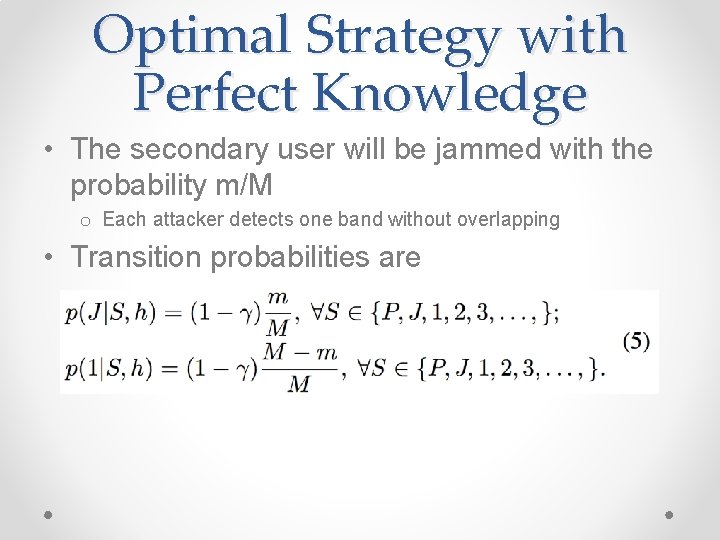

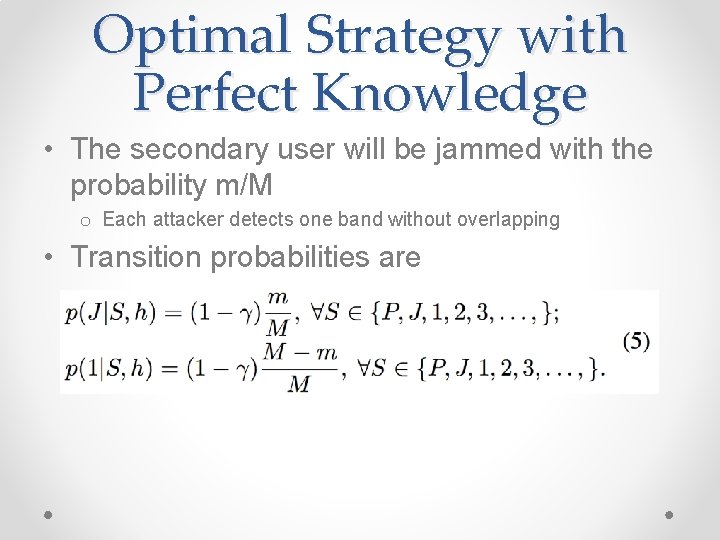

Optimal Strategy with Perfect Knowledge
• The secondary user will be jammed with probability m/M
  o Each attacker detects one band, without overlapping
• The transition probabilities are

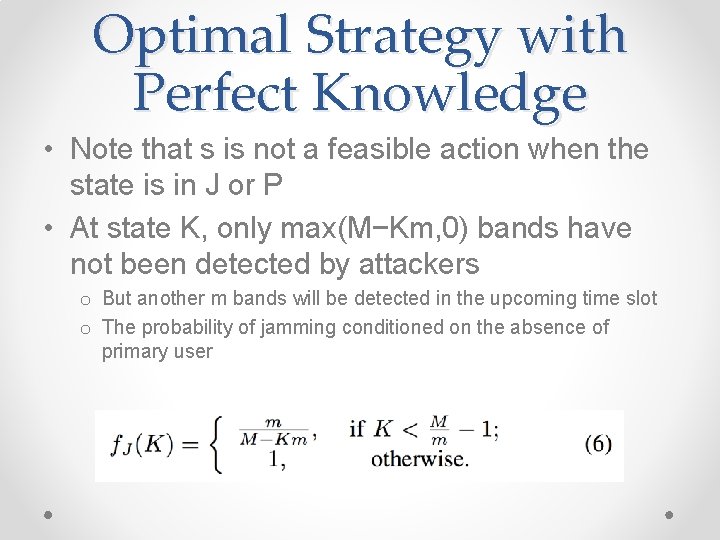

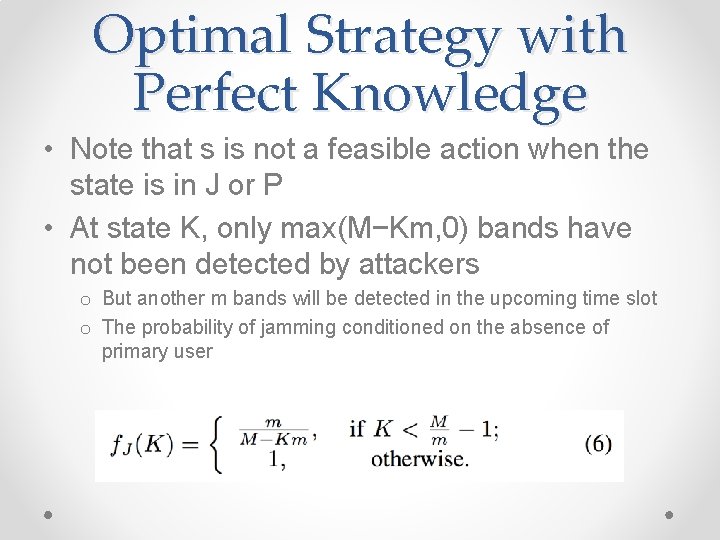
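A sketch of p(S′|S, h) under two assumptions: β is the OFF-to-ON probability and γ the ON-to-OFF probability, so a freshly chosen band is busy with probability β/(β + γ); and the jamming probability m/M applies only when that band is free of the primary user, since attackers avoid primary users. The number of attackers m = 6 is hypothetical; the other values come from the simulation slide.

```python
def hop_transitions(M, m, beta, gamma):
    """p(S'|S, h): identical for every old state S.

    Assumes beta = P(OFF -> ON) and gamma = P(ON -> OFF), so the steady-state
    probability that the new band is busy is beta / (beta + gamma).
    """
    p_busy = beta / (beta + gamma)
    rho = m / M  # chance that one of the m attackers senses the new band
    return {
        "P": p_busy,
        "J": (1 - p_busy) * rho,
        1: (1 - p_busy) * (1 - rho),
    }


# Parameters from the simulation slide, with a hypothetical m = 6 attackers.
probs = hop_transitions(M=60, m=6, beta=0.01, gamma=0.1)
print({s: round(p, 4) for s, p in probs.items()})  # {'P': 0.0909, 'J': 0.0909, 1: 0.8182}
```

Because the result does not depend on the old state S, one dictionary serves every row of the hop transition matrix.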

Optimal Strategy with Perfect Knowledge
• Note that s is not a feasible action when the state is J or P
• At state K, only max(M − Km, 0) bands have not been detected by attackers
  o But another m bands will be detected in the upcoming time slot
  o The probability of jamming, conditioned on the absence of the primary user, is therefore

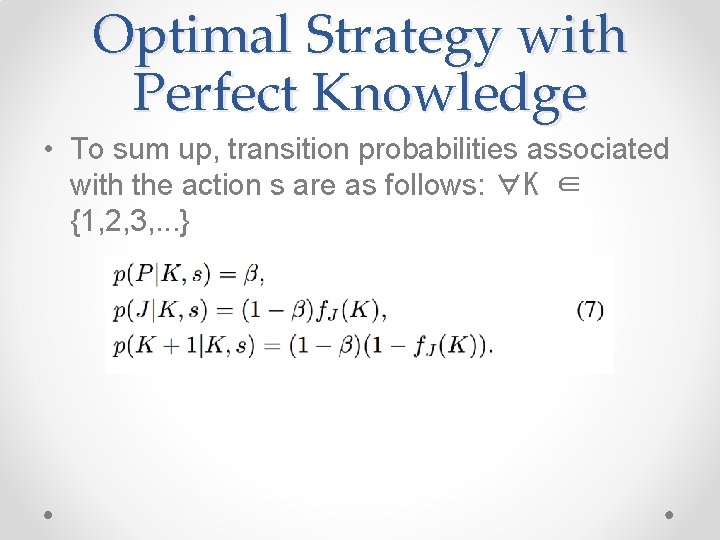

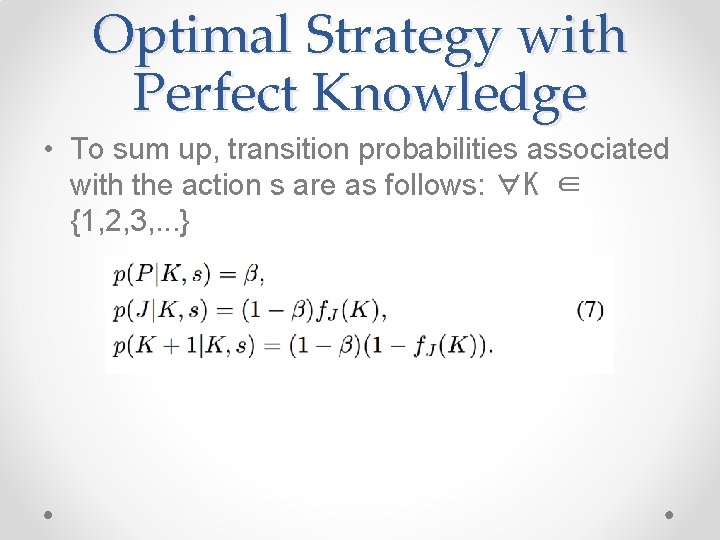

Optimal Strategy with Perfect Knowledge
• To sum up, the transition probabilities associated with the action s are as follows, ∀K ∈ {1, 2, 3, ...}:
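A sketch of p(S′|K, s), built from the counting argument on the previous slide: after K successful slots, M − Km bands remain unsensed, the attackers sense m of them in the next slot, and the primary user returns with probability β (assumed here to be the OFF-to-ON probability). When no more than m bands remain unsensed, jamming is certain and state K + 1 cannot be reached. The values below are illustrative (m = 6 is hypothetical).

```python
def stay_transitions(K, M, m, beta):
    """p(S'|K, s) for a user that has had K successful slots in its band.

    Assumes beta = P(OFF -> ON) for the primary user of the current band.
    """
    if K < 1:
        raise ValueError("'s' is only feasible in success states K >= 1")
    undetected = max(M - K * m, 0)  # bands the attackers have not sensed yet
    # Jamming probability given that the primary user stays away.
    p_jam = 1.0 if undetected <= m else m / undetected
    probs = {"P": beta, "J": (1 - beta) * p_jam}
    if p_jam < 1.0:
        probs[K + 1] = (1 - beta) * (1 - p_jam)
    return probs


for K in (1, 5, 9):
    print(K, stay_transitions(K, M=60, m=6, beta=0.01))
```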

Markov Decision Process
• If the secondary user stays in the same band for too long, he/she will eventually be found by an attacker
  o p(K + 1|K, s) = 0 if K > M/m − 1
• Therefore, we can limit the state S to a finite set, where
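One way to size the finite state set, derived here from the stay transitions rather than copied from the slide: state K can be reached only if at least one band is still unsensed after K slots, that is Km < M, so the largest success state is ⌈M/m⌉ − 1 = ⌊(M − 1)/m⌋. The helper names are hypothetical.

```python
def max_success_state(M, m):
    """Largest reachable K: staying from K-1 into K needs M - K*m > 0,
    i.e. at least one band still unsensed after K slots."""
    if not 1 <= m < M:
        raise ValueError("need 1 <= m < M")
    return (M - 1) // m


def state_space(M, m):
    """Finite state set: P, J and the success states 1..K_max."""
    return ["P", "J"] + list(range(1, max_success_state(M, m) + 1))


for m in (1, 6, 7):
    print(m, max_success_state(60, m), len(state_space(60, m)))
```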

Markov Decision Process
• An MDP consists of four important components
  o A finite set of states
  o A finite set of actions
  o Transition probabilities
  o Immediate payoffs
• The optimal defense strategy can be obtained by solving the MDP

Markov Decision Process
• A policy is defined as a mapping from a state to an action
  o π : S(n) → a(n)
• A policy π specifies an action π(S) to take whenever the user is in state S
• Among all possible policies, the optimal policy is the one that maximizes the expected discounted payoff

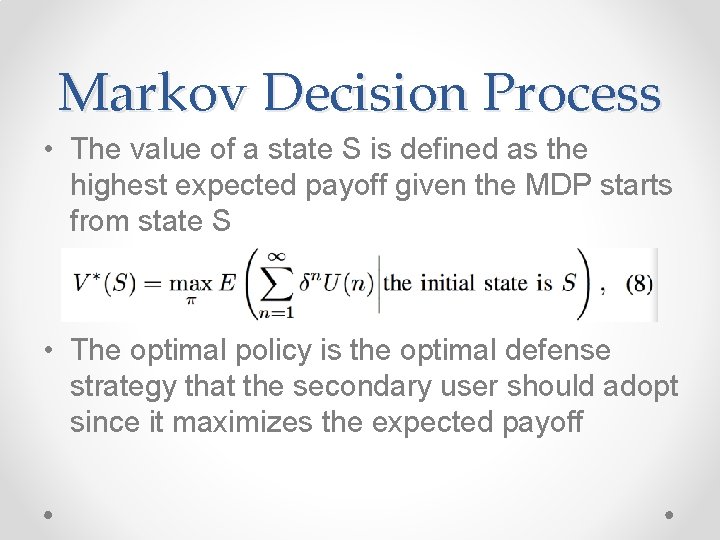

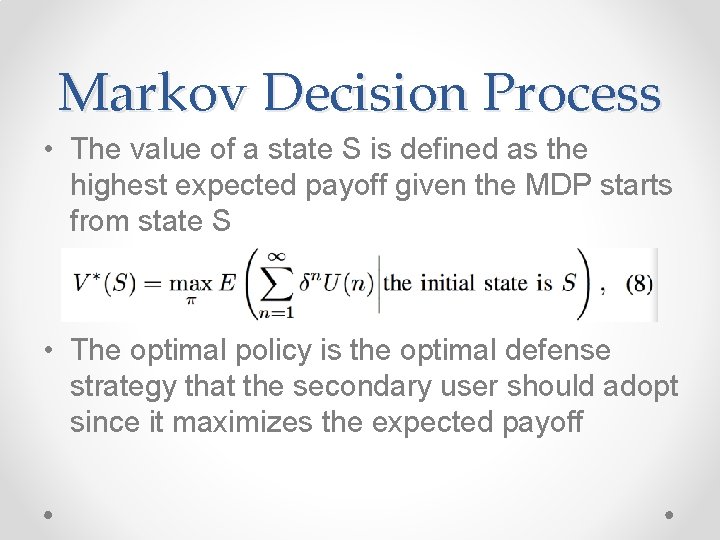

Markov Decision Process
• The value of a state S is defined as the highest expected payoff given that the MDP starts from state S
• The optimal policy is the optimal defense strategy that the secondary user should adopt, since it maximizes the expected payoff
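Before maximizing over policies, it helps to compute the expected discounted payoff of one fixed policy. The sketch below repeatedly applies V(S) ← Σ_{S′} p(S′|S, π(S)) [g(S′) + δV(S′)] − C·1(π(S) = h), where g is the payoff of the slot that lands in S′ (R for a success state, −L for J, 0 for P). The transition forms, the payoff g, m = 6 and L = 50 are assumptions for illustration; the function names are hypothetical.

```python
def hop_transitions(M, m, beta, gamma):
    p_busy = beta / (beta + gamma)  # assumed: beta = OFF->ON, gamma = ON->OFF
    rho = m / M
    return {"P": p_busy, "J": (1 - p_busy) * rho, 1: (1 - p_busy) * (1 - rho)}


def stay_transitions(K, M, m, beta):
    undetected = max(M - K * m, 0)
    p_jam = 1.0 if undetected <= m else m / undetected
    probs = {"P": beta, "J": (1 - beta) * p_jam}
    if p_jam < 1.0:
        probs[K + 1] = (1 - beta) * (1 - p_jam)
    return probs


def slot_gain(state, R, L):
    """Payoff of a slot that ends in `state`; the hop cost C is charged separately."""
    if state == "J":
        return -L
    return 0.0 if state == "P" else R


def evaluate_policy(policy, M, m, beta, gamma, R, C, L, delta, tol=1e-10):
    """V^pi(S) for a fixed policy (dict state -> action), by repeatedly applying
    V(S) <- sum_S' p(S'|S, pi(S)) * (g(S') + delta * V(S')) - C * 1(pi(S) == "h")."""
    V = {S: 0.0 for S in policy}
    while True:
        new_V = {}
        for S, a in policy.items():
            if a == "h":
                trans, cost = hop_transitions(M, m, beta, gamma), C
            else:
                trans, cost = stay_transitions(S, M, m, beta), 0.0
            new_V[S] = sum(p * (slot_gain(S2, R, L) + delta * V[S2])
                           for S2, p in trans.items()) - cost
        if max(abs(new_V[S] - V[S]) for S in V) < tol:
            return new_V
        V = new_V


params = dict(M=60, m=6, beta=0.01, gamma=0.1, R=5, C=1, L=50, delta=0.95)
K_max = (params["M"] - 1) // params["m"]
states = ["P", "J", *range(1, K_max + 1)]
always_hop = {S: "h" for S in states}
V = evaluate_policy(always_hop, **params)
print({S: round(v, 3) for S, v in V.items()})
```

For the always-hopping policy every state leads to the same next-slot distribution, so all states share one value r/(1 − δ), where r is the expected payoff of a single hop (about −29.09 with these numbers). The value of a state as defined on the slide is the largest such V^π(S) over all policies.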

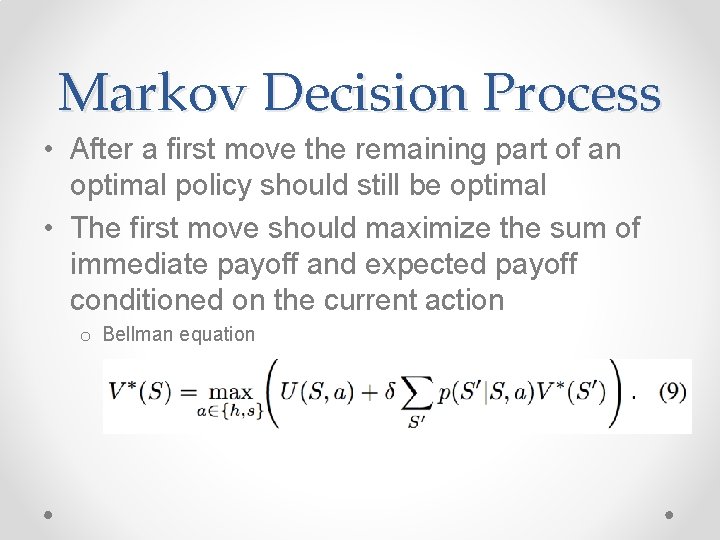

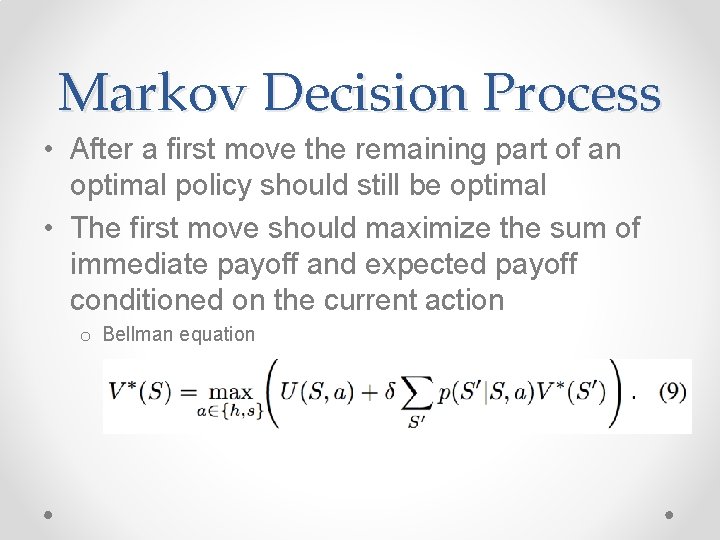

Markov Decision Process
• After the first move, the remaining part of an optimal policy should still be optimal
• The first move should maximize the sum of the immediate payoff and the expected future payoff conditioned on the current action
  o Bellman equation

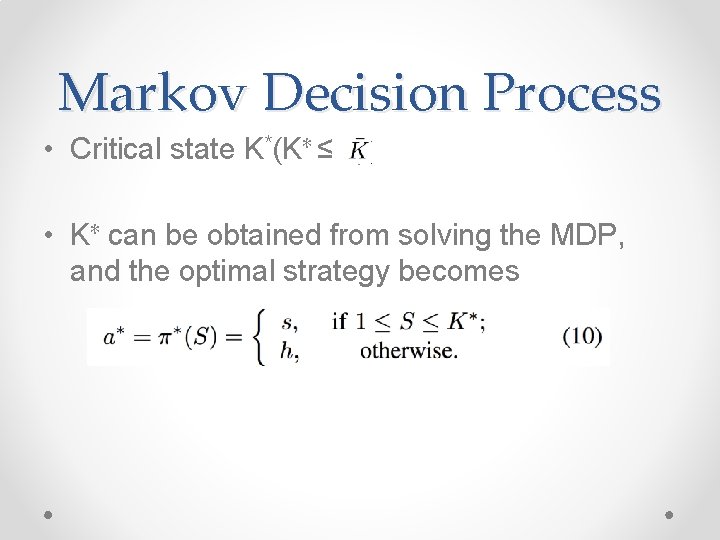

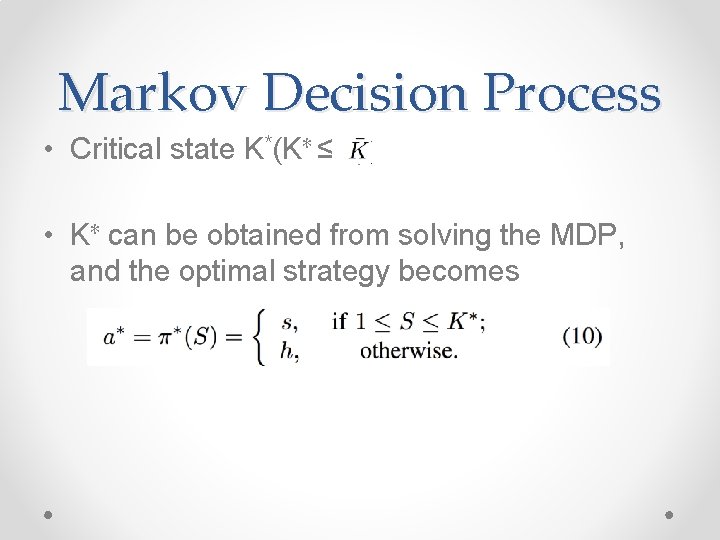

Markov Decision Process
• Critical state K∗ (K∗ ≤ )
• K∗ can be obtained by solving the MDP, and the optimal strategy becomes
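The slides solve the MDP with "standard approaches" without naming one. Value iteration is one such approach: it applies the Bellman equation as an update until the values stop changing, and the maximizing action in each state gives the policy. The sketch then reports the smallest success state at which the computed policy hops, which plays the role of K∗ if the optimal policy switches from staying to hopping at a single state. The transition forms, the payoff g, m = 6 and L = 50 are assumptions; the function names are hypothetical.

```python
def hop_transitions(M, m, beta, gamma):
    p_busy = beta / (beta + gamma)  # assumed: beta = OFF->ON, gamma = ON->OFF
    rho = m / M
    return {"P": p_busy, "J": (1 - p_busy) * rho, 1: (1 - p_busy) * (1 - rho)}


def stay_transitions(K, M, m, beta):
    undetected = max(M - K * m, 0)
    p_jam = 1.0 if undetected <= m else m / undetected
    probs = {"P": beta, "J": (1 - beta) * p_jam}
    if p_jam < 1.0:
        probs[K + 1] = (1 - beta) * (1 - p_jam)
    return probs


def slot_gain(state, R, L):
    if state == "J":
        return -L
    return 0.0 if state == "P" else R


def value_iteration(M, m, beta, gamma, R, C, L, delta, tol=1e-10):
    """Solve V(S) = max_a [ sum_S' p(S'|S,a) (g(S') + delta V(S')) - C 1(a = h) ]."""
    K_max = (M - 1) // m
    states = ["P", "J", *range(1, K_max + 1)]
    V = {S: 0.0 for S in states}
    while True:
        new_V, policy = {}, {}
        for S in states:
            options = [("h", hop_transitions(M, m, beta, gamma), C)]
            if isinstance(S, int):  # 's' is infeasible in P and J
                options.append(("s", stay_transitions(S, M, m, beta), 0.0))
            best_a, best_q = None, float("-inf")
            for a, trans, cost in options:
                q = sum(p * (slot_gain(S2, R, L) + delta * V[S2])
                        for S2, p in trans.items()) - cost
                if q > best_q:
                    best_a, best_q = a, q
            new_V[S], policy[S] = best_q, best_a
        if max(abs(new_V[S] - V[S]) for S in states) < tol:
            return new_V, policy, K_max
        V = new_V


def critical_state(policy, K_max):
    """Smallest success state K at which the computed policy hops (K_max + 1 if none)."""
    return next((K for K in range(1, K_max + 1) if policy[K] == "h"), K_max + 1)


params = dict(M=60, m=6, beta=0.01, gamma=0.1, R=5, C=1, L=50, delta=0.95)
V, policy, K_max = value_iteration(**params)
print("policy:", policy)
print("first hopping state:", critical_state(policy, K_max))
```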

Learning the Parameters
• A learning scheme
  o Maximum Likelihood Estimation (MLE)
• The secondary user simply sets a value KL as an initial guess of the optimal critical state K∗
• And follows strategy (10) with this estimate during the whole learning period

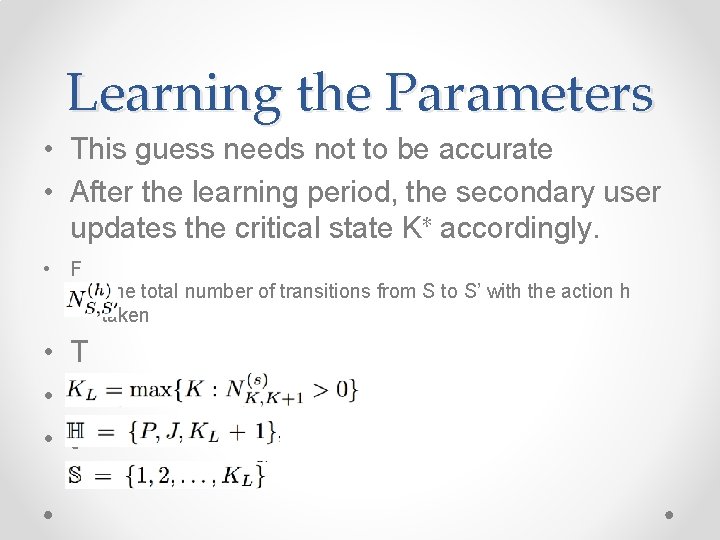

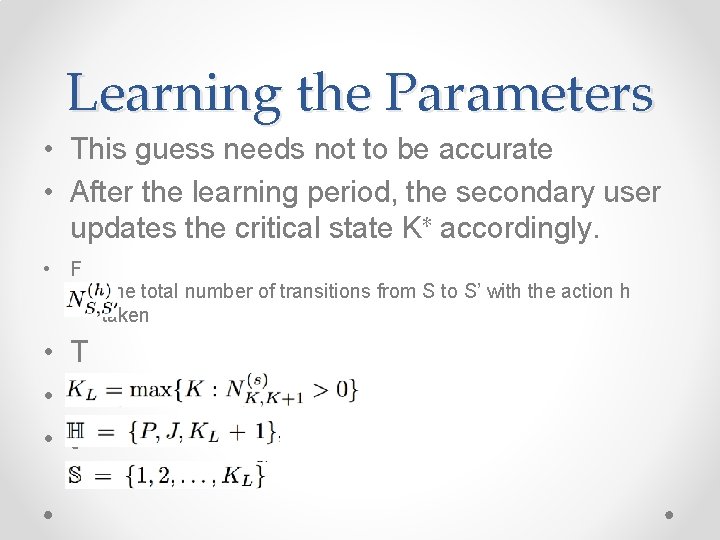

Learning the Parameters
• This guess need not be accurate
• After the learning period, the secondary user updates the critical state K∗ accordingly
• F
  o The total number of transitions from S to S′ with the action h taken
• T
• t
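The transition counts used for the MLE are tallies of observed (S, a, S′) triples. A minimal sketch that keeps them in a single `Counter` keyed by the full triple, so counts for either action (h or s) can be read off; the trajectory below is made up for illustration and `count_transitions` is a hypothetical helper.

```python
from collections import Counter


def count_transitions(states, actions):
    """Count (S, a, S') tuples in an observed trajectory.

    states[n] is S(n) and actions[n] is a(n), chosen at the end of slot n,
    so len(actions) must equal len(states) - 1.
    """
    if len(actions) != len(states) - 1:
        raise ValueError("need exactly one action between consecutive states")
    return Counter(zip(states[:-1], actions, states[1:]))


history = [1, 2, "J", 1, "P", 1, 2, 3]
actions = ["s", "s", "h", "h", "h", "s", "s"]
F = count_transitions(history, actions)
print(F[(1, "s", 2)], F[("J", "h", 1)], sum(F.values()))  # 2 1 7
```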

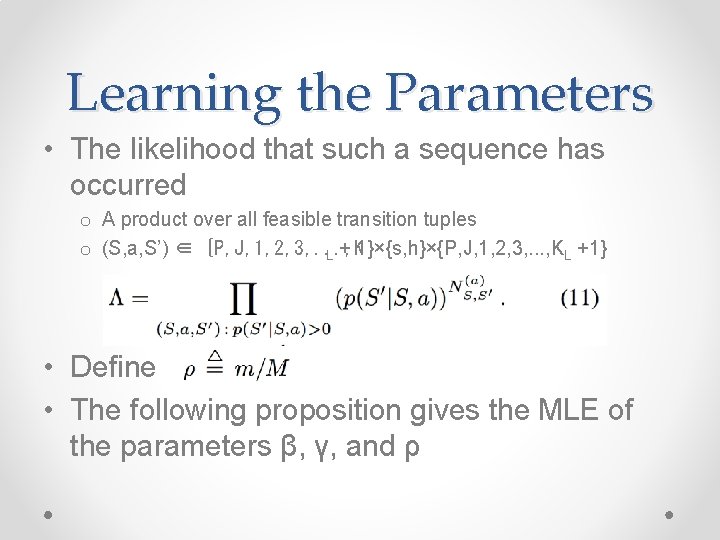

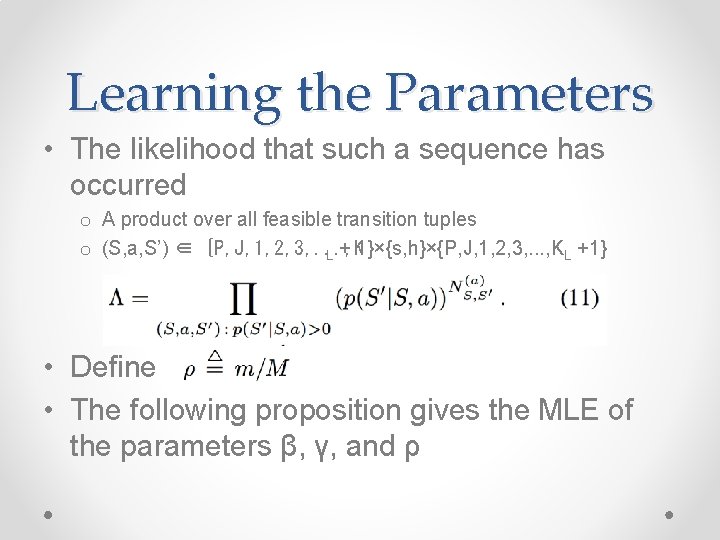

Learning the Parameters
• The likelihood that such a sequence has occurred is
  o A product over all feasible transition tuples
  o (S, a, S′) ∈ {P, J, 1, 2, 3, ..., KL + 1} × {s, h} × {P, J, 1, 2, 3, ..., KL + 1}
• Define
• The following proposition gives the MLE of the parameters β, γ, and ρ

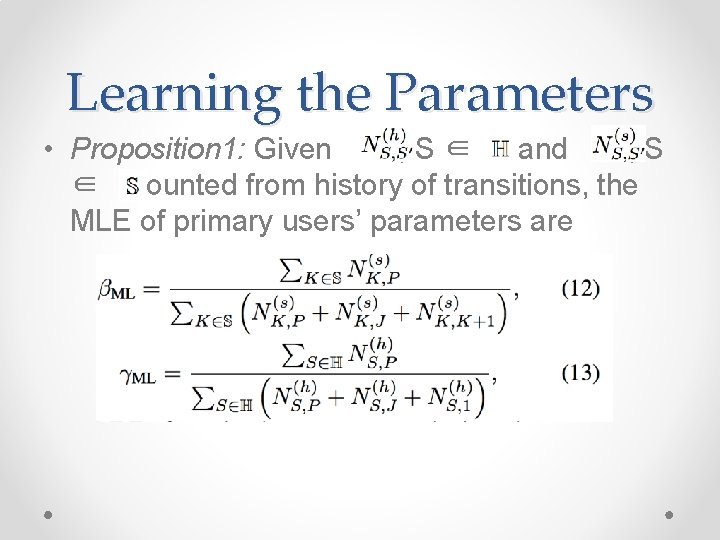

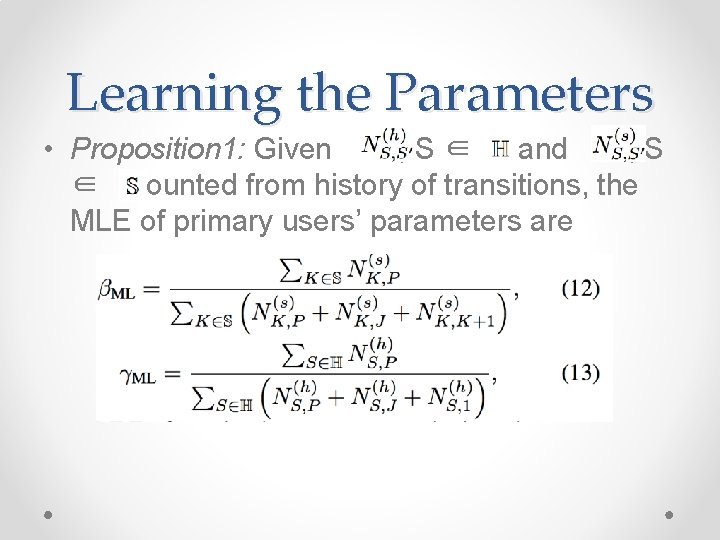

Learning the Parameters
• Proposition 1: Given , S ∈ and , S ∈ counted from the history of transitions, the MLEs of the primary users' parameters are

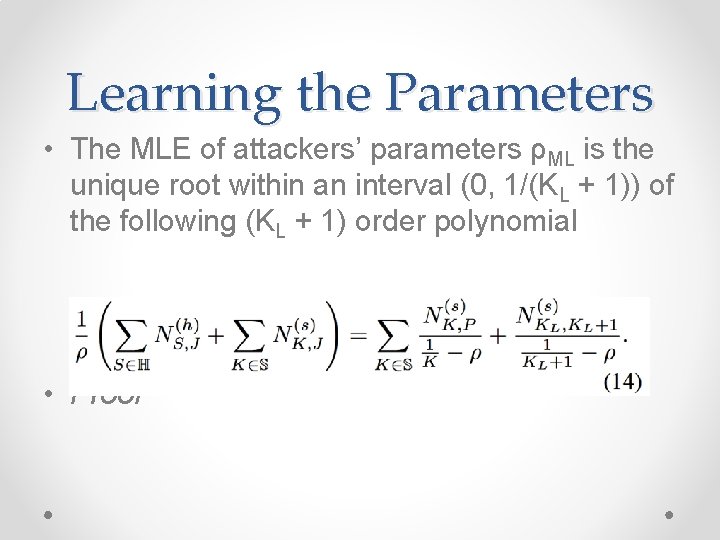

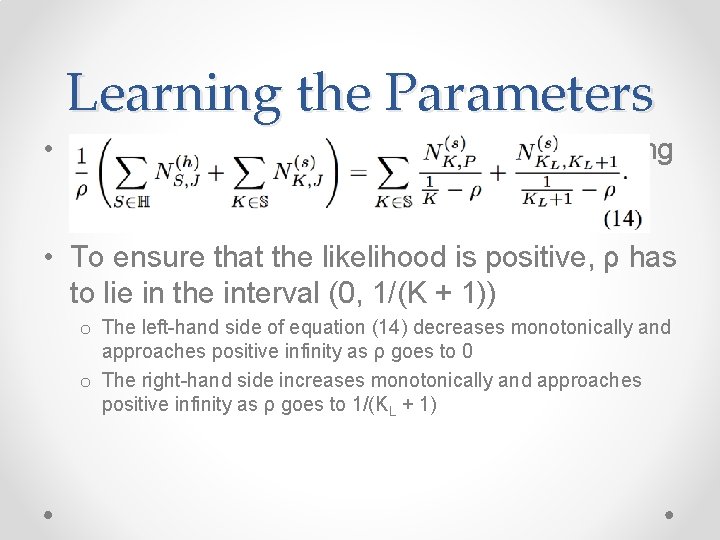

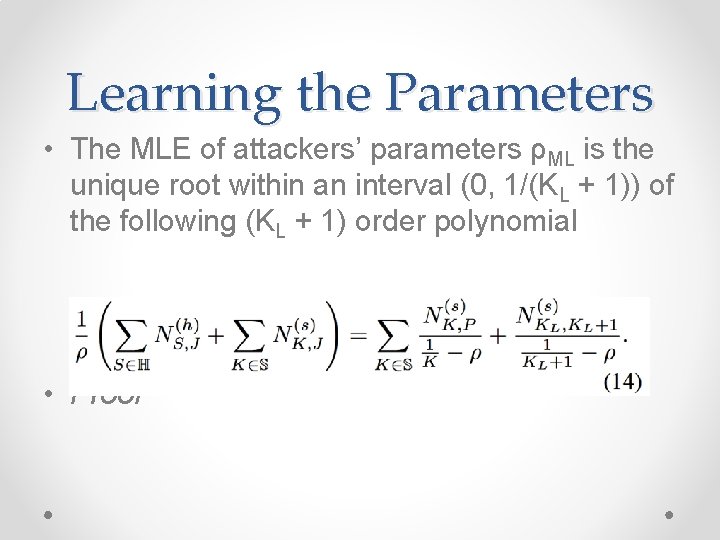
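Proposition 1 gives the estimates of β and γ in an image. The sketch below derives closed forms under assumed transition probabilities: after a stay the primary user returns with probability β, and after a hop the new band is busy with probability π = β/(β + γ). Each of these is a Bernoulli factor in the likelihood, so β̂ is the fraction of stays that ended in P, π̂ is the fraction of hops that ended in P, and γ̂ = β̂(1 − π̂)/π̂ by the invariance of the MLE. This may not match the paper's (12) and (13) term for term; `mle_primary` and the counts are hypothetical.

```python
def mle_primary(F):
    """MLEs of beta and gamma from transition counts F[(S, a, S')].

    Assumed model: after a stay the primary user returns with probability
    beta; after a hop the new band is busy with probability
    pi = beta / (beta + gamma). Then gamma = beta * (1 - pi) / pi.
    """
    counts = {("s", True): 0, ("s", False): 0, ("h", True): 0, ("h", False): 0}
    for (S, a, S2), n in F.items():
        counts[(a, S2 == "P")] += n  # split each action's outcomes into P / not P
    if min(counts.values()) == 0:
        raise ValueError("each outcome class must be observed at least once")
    beta_hat = counts[("s", True)] / (counts[("s", True)] + counts[("s", False)])
    pi_hat = counts[("h", True)] / (counts[("h", True)] + counts[("h", False)])
    gamma_hat = beta_hat * (1 - pi_hat) / pi_hat
    return beta_hat, gamma_hat


# Hypothetical counts: 1 of 100 stays and 1 of 11 hops ended in state P.
F = {(1, "s", "P"): 1, (1, "s", 2): 99, ("P", "h", "P"): 1, ("J", "h", 1): 10}
beta_hat, gamma_hat = mle_primary(F)
print(f"beta_ML = {beta_hat:.4f}, gamma_ML = {gamma_hat:.4f}")
```

The estimate of γ is only meaningful when it lands in (0, 1]; with very few hops into occupied bands, π̂ is noisy and γ̂ can overshoot.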

Learning the Parameters
• The MLE of the attackers' parameter, ρML, is the unique root within the interval (0, 1/(KL + 1)) of the following polynomial of order KL + 1
• Proof

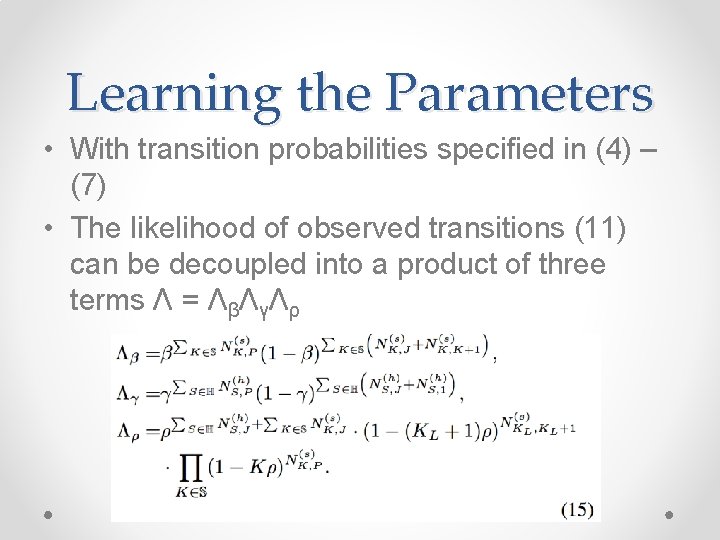

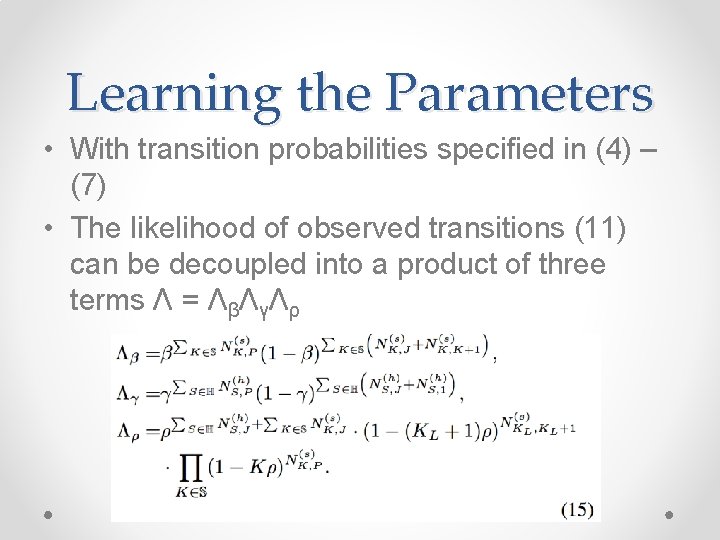

Learning the Parameters
• With the transition probabilities specified in (4) to (7)
• The likelihood of the observed transitions (11) can be decoupled into a product of three terms: Λ = Λβ Λγ Λρ

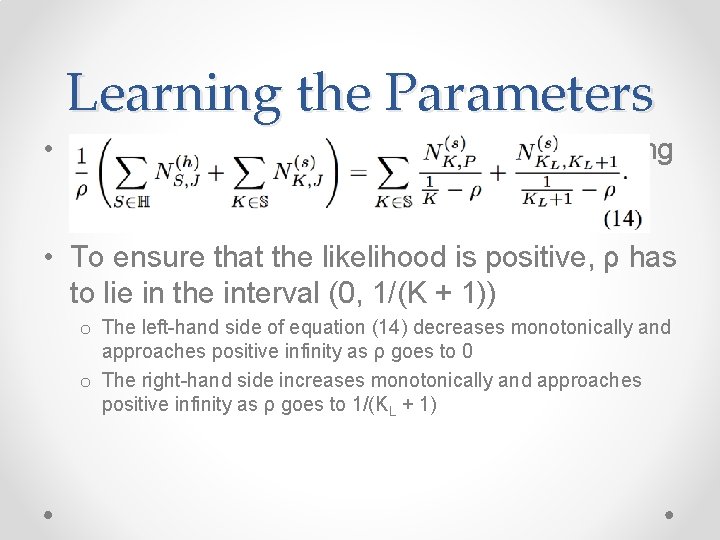

Learning the Parameters
• By differentiating ln Λβ, ln Λγ, and ln Λρ and setting the derivatives to 0
  o We obtain the MLE (12), (13), and (14)
• To ensure that the likelihood is positive, ρ has to lie in the interval (0, 1/(KL + 1))
  o The left-hand side of equation (14) decreases monotonically and approaches positive infinity as ρ goes to 0
  o The right-hand side increases monotonically and approaches positive infinity as ρ goes to 1/(KL + 1)
  o Hence the two sides intersect exactly once in the interval, which gives the unique root ρML

Learning the Parameters
• After the learning period, the secondary user rounds M · ρML to the nearest integer as an estimate of m
• It then calculates the optimal strategy using the MDP approach described in the previous section
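A sketch of solving for ρML numerically and rounding M·ρML. It assumes the ρ-dependent transition probabilities are ρ and 1 − ρ after a hop, and ρ/(1 − Kρ) and (1 − (K + 1)ρ)/(1 − Kρ) after a stay in state K (each conditioned on the primary user staying away). Rather than forming the polynomial (14), it finds the zero of the derivative of ln Λρ by bisection, a generic root finder swapped in for illustration, on (0, 1/(KL + 1)), taking KL as the largest state from which the user stayed. The counts are hypothetical and chosen to be exactly proportional to the model probabilities at ρ = 0.1, so the estimate should recover 0.1 and m = 6 for M = 60.

```python
def rho_score(rho, F):
    """d/d(rho) of ln(Lambda_rho) under the assumed transition model:
      hop -> J: rho                      hop -> 1: 1 - rho
      stay at K -> J: rho/(1 - K*rho)    stay at K -> K+1: (1 - (K+1)*rho)/(1 - K*rho)
    Outcomes in state P do not involve rho and are skipped."""
    d = 0.0
    for (S, a, S2), n in F.items():
        if S2 == "P":
            continue
        if a == "h":
            d += n / rho if S2 == "J" else -n / (1 - rho)
        elif S2 == "J":
            d += n * (1 / rho + S / (1 - S * rho))
        else:  # stay from S into S + 1
            d += n * (-(S + 1) / (1 - (S + 1) * rho) + S / (1 - S * rho))
    return d


def mle_rho(F, iters=100):
    """Bisection for the root of the score on (0, 1/(K_L + 1)), taking K_L as
    the largest state from which the user stayed."""
    stayed_from = [S for (S, a, _S2), n in F.items() if a == "s" and n > 0]
    upper = 1.0 / (max(stayed_from) + 1) if stayed_from else 1.0
    lo, hi = upper * 1e-12, upper * (1 - 1e-12)
    if not rho_score(lo, F) > 0 > rho_score(hi, F):
        raise ValueError("no sign change: the maximum may sit on the boundary")
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if rho_score(mid, F) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Hypothetical counts, proportional to the model probabilities at rho = 0.1.
F = {
    ("P", "h", "J"): 100, ("P", "h", 1): 900,
    (1, "s", "J"): 100, (1, "s", 2): 800,
    (2, "s", "J"): 100, (2, "s", 3): 700,
}
M = 60
rho_hat = mle_rho(F)
m_hat = round(M * rho_hat)  # nearest integer (Python rounds exact halves to even)
print(f"rho_ML = {rho_hat:.6f}, estimated m = {m_hat}")
```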

Simulation Result
• Communication gain R = 5
• Hopping cost C = 1
• Total number of bands M = 60
• Discount factor δ = 0.95
• Primary users' access pattern
  o β = 0.01, γ = 0.1

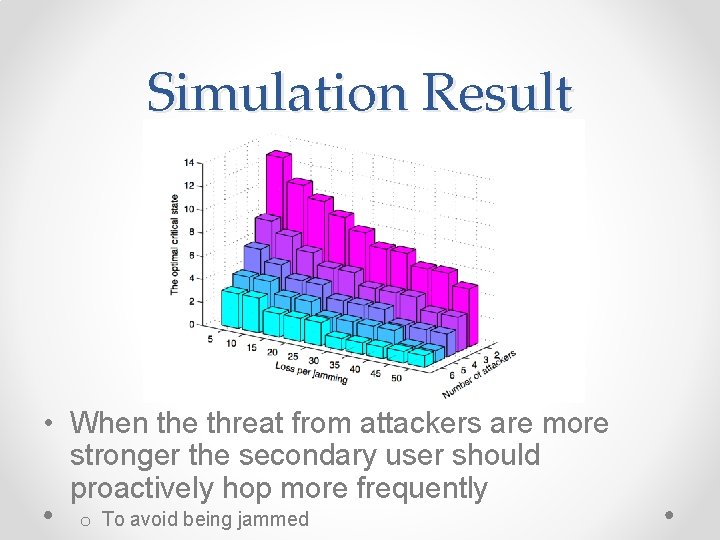

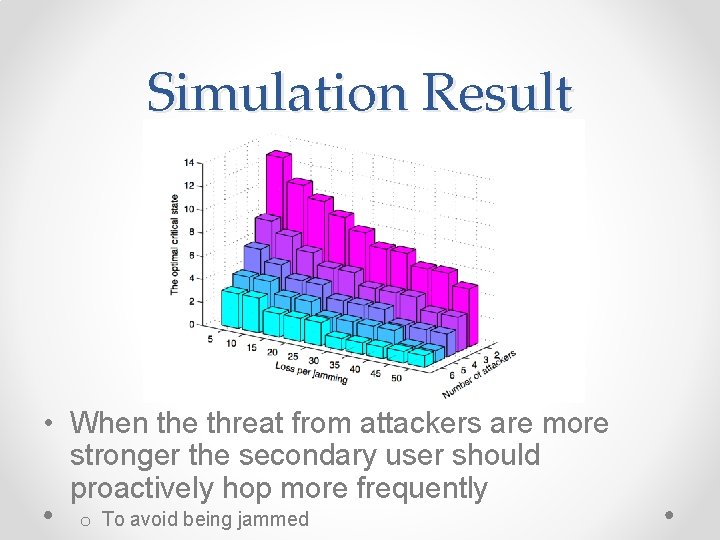

Simulation Result
• When the threat from attackers is stronger, the secondary user should proactively hop more frequently
  o To avoid being jammed

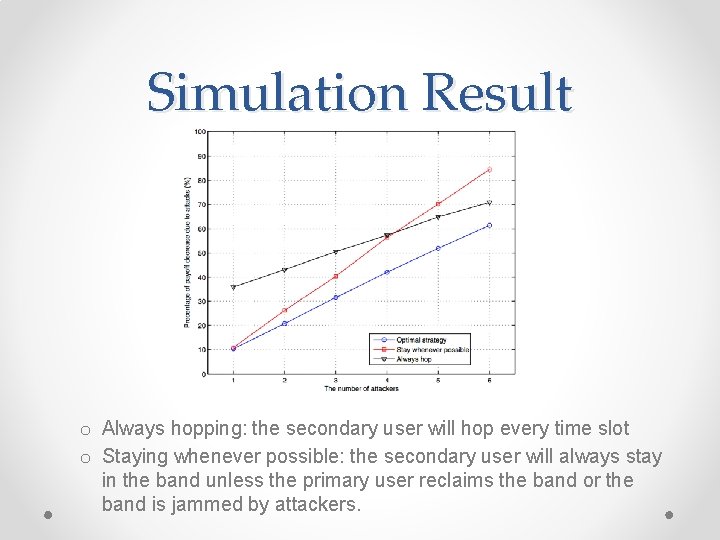

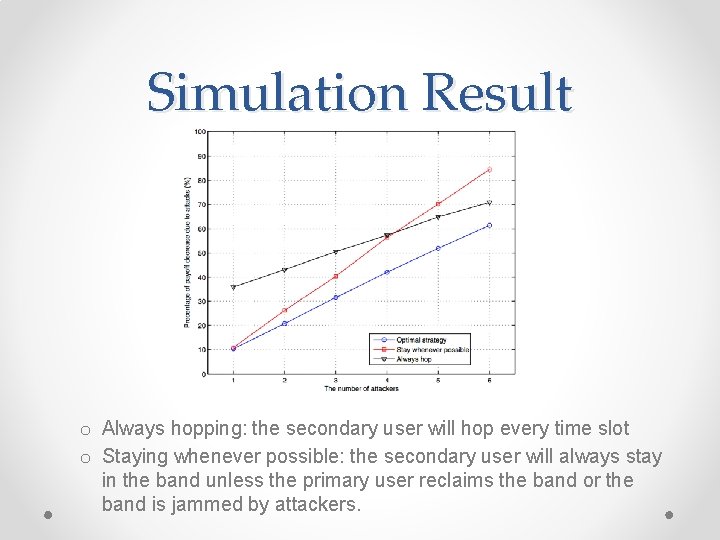

Simulation Result
  o Always hopping: the secondary user hops in every time slot
  o Staying whenever possible: the secondary user always stays in the band unless the primary user reclaims the band or the band is jammed by attackers
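The two reference strategies on this slide can be compared with the MDP policy under the same model. The sketch evaluates each fixed strategy and runs value iteration for the optimal one, all with assumed transition forms, an assumed payoff (R per success, −L per jam, 0 for P, minus C per hop), and the hypothetical values m = 6 and L = 50; it does not reproduce the paper's figures. By construction, the optimal values should be at least as large as those of either fixed strategy in every state.

```python
def hop_transitions(M, m, beta, gamma, **_):
    p_busy = beta / (beta + gamma)  # assumed ON-OFF convention
    rho = m / M
    return {"P": p_busy, "J": (1 - p_busy) * rho, 1: (1 - p_busy) * (1 - rho)}


def stay_transitions(K, M, m, beta, **_):
    undetected = max(M - K * m, 0)
    p_jam = 1.0 if undetected <= m else m / undetected
    probs = {"P": beta, "J": (1 - beta) * p_jam}
    if p_jam < 1.0:
        probs[K + 1] = (1 - beta) * (1 - p_jam)
    return probs


def q_value(S, a, V, prm):
    """Expected discounted payoff of action a in state S, continuing with V."""
    trans = hop_transitions(**prm) if a == "h" else stay_transitions(S, **prm)
    gain = {"P": 0.0, "J": -prm["L"]}  # success states K earn R
    total = sum(p * (gain.get(S2, prm["R"]) + prm["delta"] * V[S2])
                for S2, p in trans.items())
    return total - (prm["C"] if a == "h" else 0.0)


def solve(prm, policy=None, tol=1e-10):
    """policy=None: value iteration (max over feasible actions).
    Otherwise: evaluation of the fixed policy, a function state -> action."""
    K_max = (prm["M"] - 1) // prm["m"]
    states = ["P", "J", *range(1, K_max + 1)]
    V = {S: 0.0 for S in states}
    while True:
        new_V = {}
        for S in states:
            feasible = ["h", "s"] if isinstance(S, int) else ["h"]
            actions = feasible if policy is None else [policy(S)]
            new_V[S] = max(q_value(S, a, V, prm) for a in actions)
        if max(abs(new_V[S] - V[S]) for S in states) < tol:
            return new_V
        V = new_V


def always_hop(S):
    return "h"


def stay_whenever_possible(S):
    return "s" if isinstance(S, int) else "h"


prm = dict(M=60, m=6, beta=0.01, gamma=0.1, R=5, C=1, L=50, delta=0.95)
V_opt = solve(prm)
V_hop = solve(prm, always_hop)
V_stay = solve(prm, stay_whenever_possible)
for name, V in [("MDP policy", V_opt), ("always hopping", V_hop),
                ("staying whenever possible", V_stay)]:
    print(f"{name:>26}: V(1) = {V[1]:.3f}")
```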