Neural Networks for Computer Vision, academic year 2019-20


Neural Networks for Computer Vision (Neurónové siete pre počítačové videnie), academic year 2019-20, Lecture 10: Generative Models. Doc. RNDr. Milan Ftáčnik, CSc.; RNDr. Zuzana Černeková, PhD.

Overview

- Unsupervised learning
- Generative models
  - PixelRNN and PixelCNN
  - Variational Autoencoders (VAE)
  - Generative Adversarial Networks (GAN)

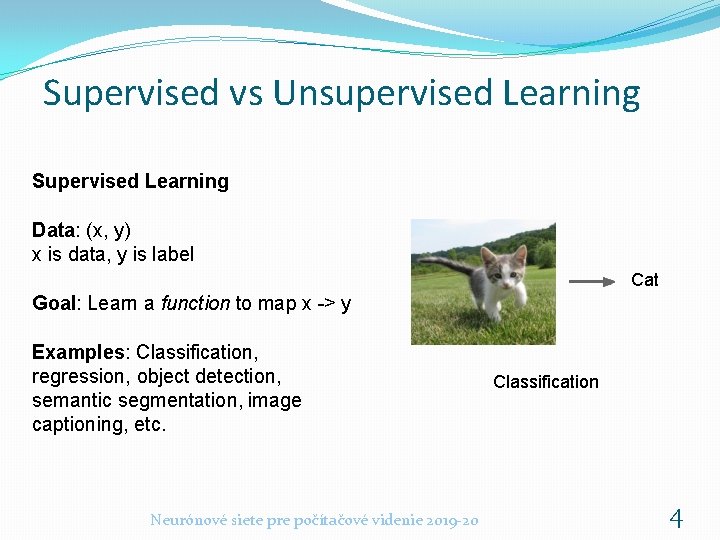

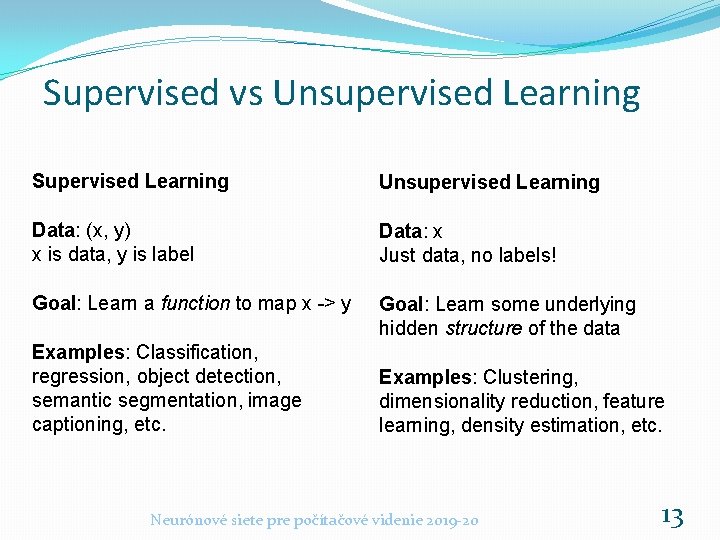

Supervised vs. Unsupervised Learning

Supervised learning:
- Data: (x, y), where x is the data and y is the label
- Goal: learn a function to map x -> y
- Examples: classification, regression, object detection, semantic segmentation, image captioning, etc.

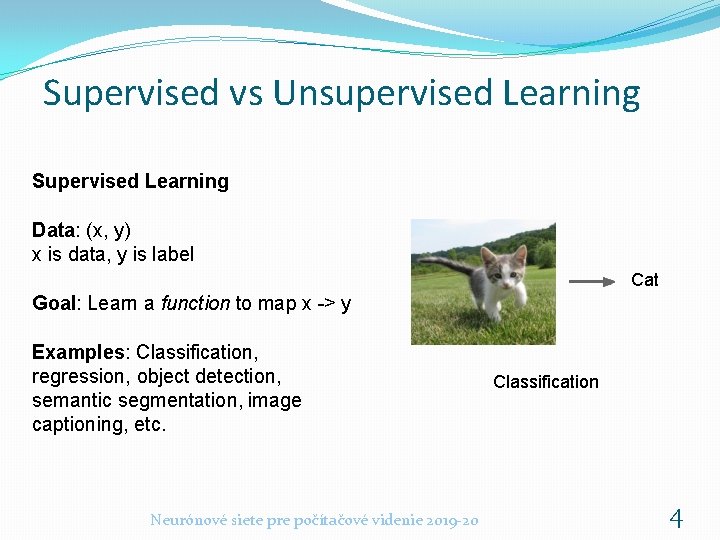

Supervised example (classification): an image labeled “Cat”.

Supervised example (object detection): objects localized and labeled DOG, CAT.

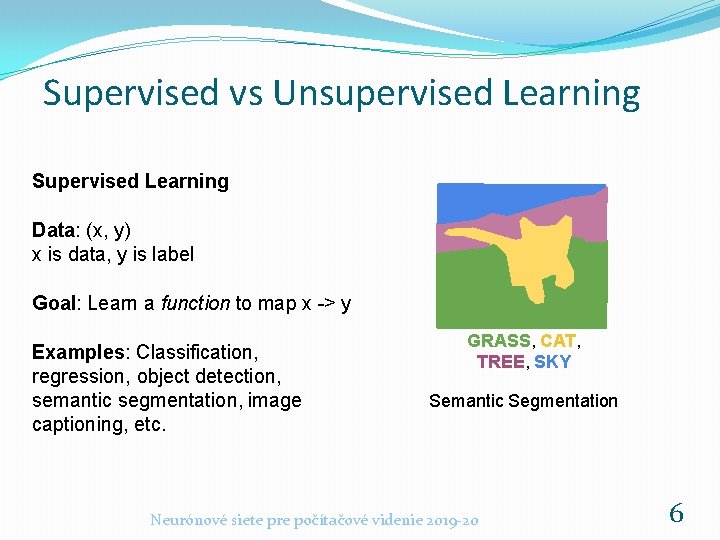

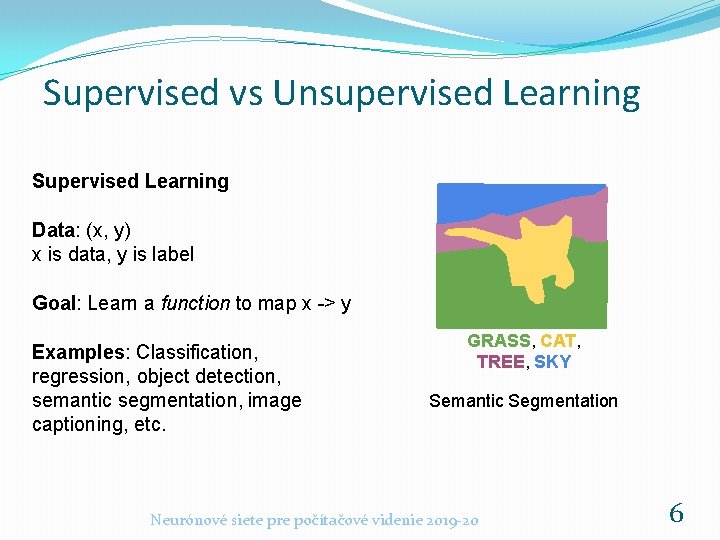

Supervised example (semantic segmentation): each pixel labeled GRASS, CAT, TREE, or SKY.

Supervised example (image captioning): “A cat sitting on a suitcase on the floor”.

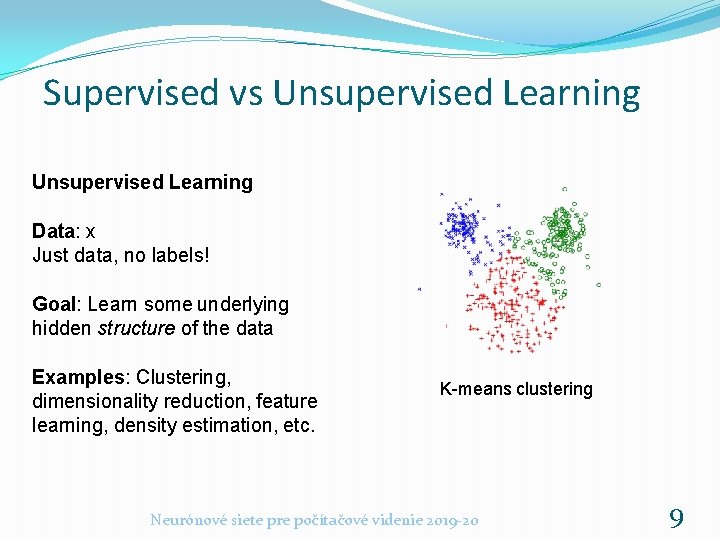

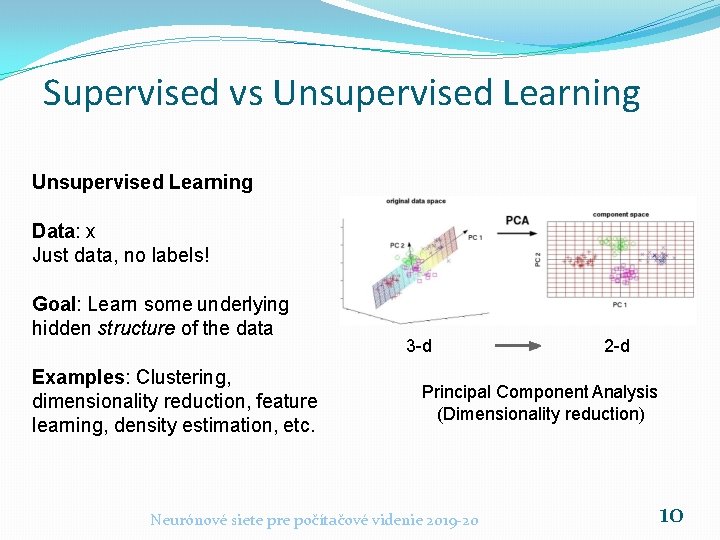

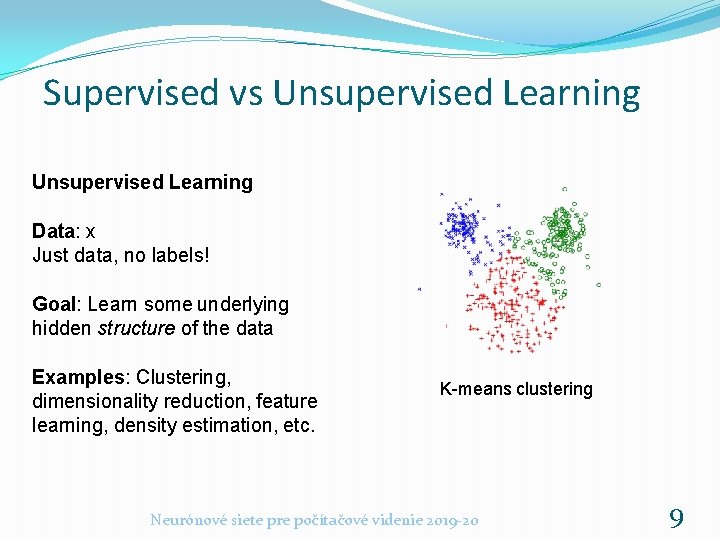

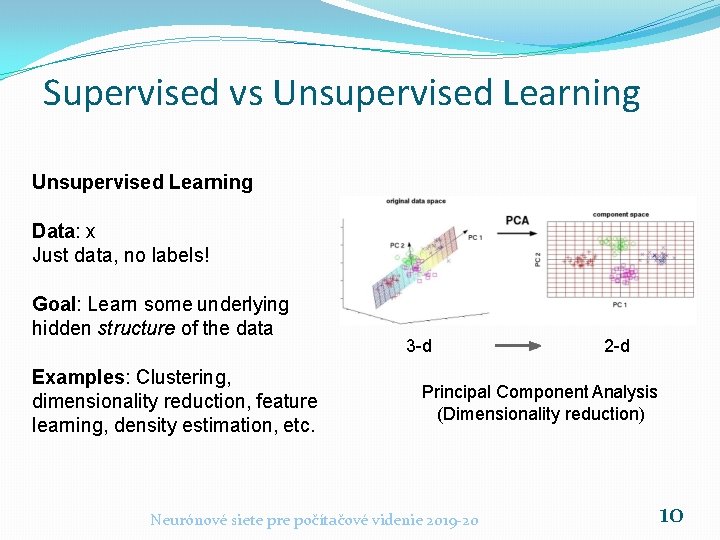

Unsupervised learning:
- Data: x, just data, no labels!
- Goal: learn some underlying hidden structure of the data
- Examples: clustering, dimensionality reduction, feature learning, density estimation, etc.

Unsupervised example (clustering): K-means clustering.

Unsupervised example (dimensionality reduction): Principal Component Analysis, projecting 3-D data to 2-D.

Unsupervised example (feature learning): autoencoders.

Unsupervised example (density estimation): 1-D and 2-D density estimation. Figure copyright Ian Goodfellow, 2016. Reproduced with permission.

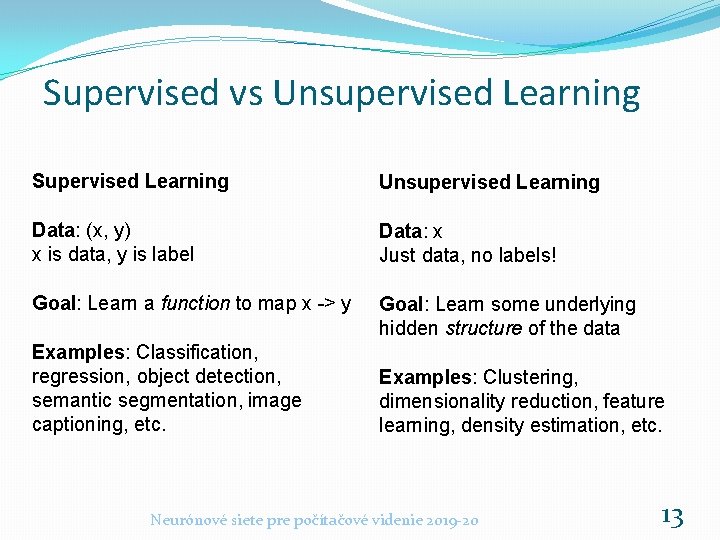

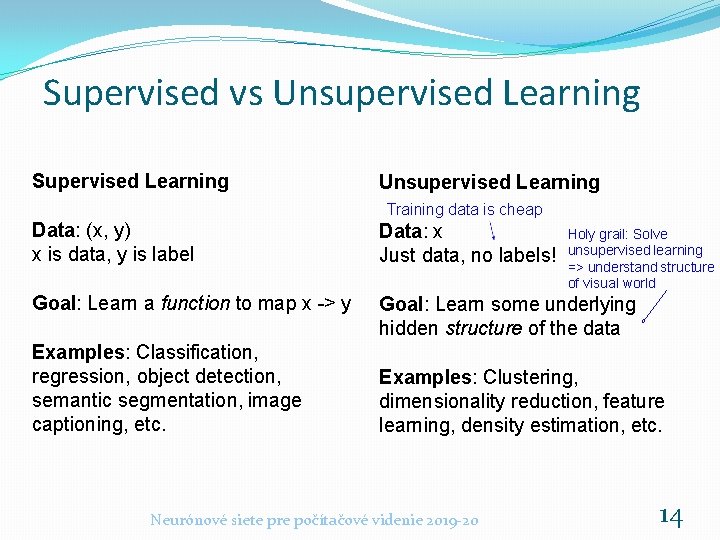

Supervised vs. Unsupervised Learning

| | Supervised learning | Unsupervised learning |
|---|---|---|
| Data | (x, y): x is data, y is label | x: just data, no labels! |
| Goal | Learn a function to map x -> y | Learn some underlying hidden structure of the data |
| Examples | Classification, regression, object detection, semantic segmentation, image captioning, etc. | Clustering, dimensionality reduction, feature learning, density estimation, etc. |

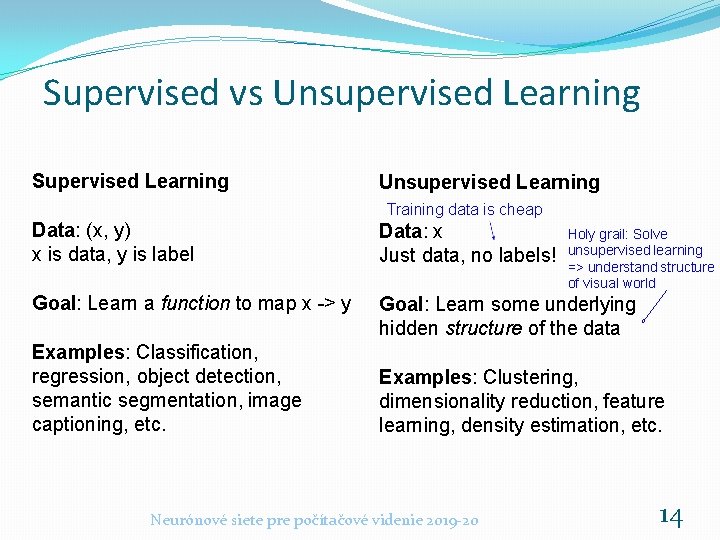

Unsupervised learning: training data is cheap, since no labels are needed. Holy grail: solve unsupervised learning => understand the structure of the visual world.

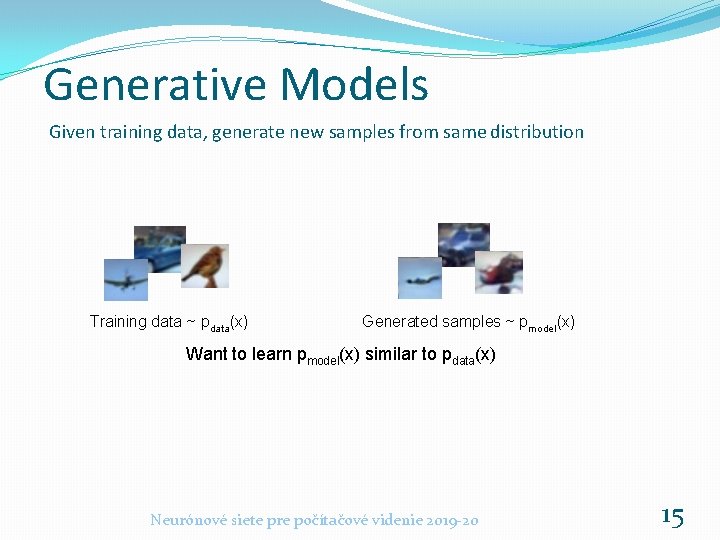

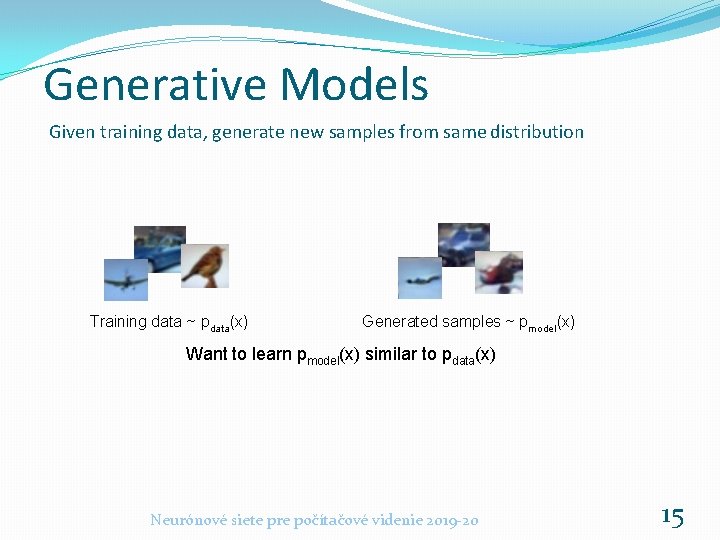

Generative Models

Given training data, generate new samples from the same distribution.
- Training data $\sim p_\text{data}(x)$
- Generated samples $\sim p_\text{model}(x)$

We want to learn $p_\text{model}(x)$ similar to $p_\text{data}(x)$.
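To make the goal concrete, here is a minimal sketch in Python with NumPy (the library and the Gaussian model family are assumptions for illustration, not part of the lecture). It fits the simplest possible $p_\text{model}$, a single multivariate Gaussian estimated by maximum likelihood, to samples from $p_\text{data}$, and then draws new samples from it. The models in this lecture replace the Gaussian with far more flexible neural-network-based models.

```python
import numpy as np


def fit_gaussian(samples: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Maximum-likelihood fit of a multivariate Gaussian p_model to data of shape (N, D)."""
    mean = samples.mean(axis=0)
    # bias=True divides by N, which is the maximum-likelihood covariance estimate
    cov = np.cov(samples, rowvar=False, bias=True)
    return mean, cov


def sample_model(mean: np.ndarray, cov: np.ndarray, n: int, rng: np.random.Generator) -> np.ndarray:
    """Draw n new samples from the fitted p_model."""
    return rng.multivariate_normal(mean, cov, size=n)


rng = np.random.default_rng(0)
# Stand-in for "training data ~ p_data(x)": a correlated 2-D Gaussian (hypothetical data)
true_mean = np.array([2.0, -1.0])
true_cov = np.array([[1.0, 0.6], [0.6, 0.5]])
training_data = rng.multivariate_normal(true_mean, true_cov, size=5000)

mean, cov = fit_gaussian(training_data)
generated = sample_model(mean, cov, n=1000, rng=rng)
```

Because the data here really is Gaussian, the fitted parameters land close to the true ones; for images, no such simple family is adequate, which motivates the models that follow.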

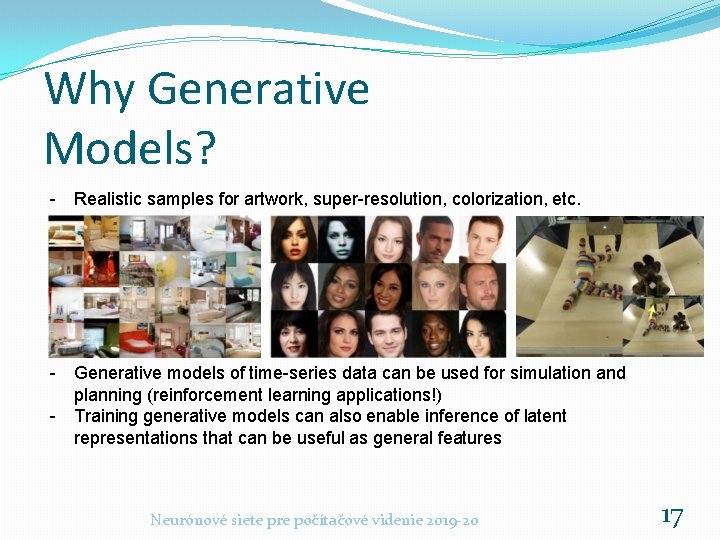

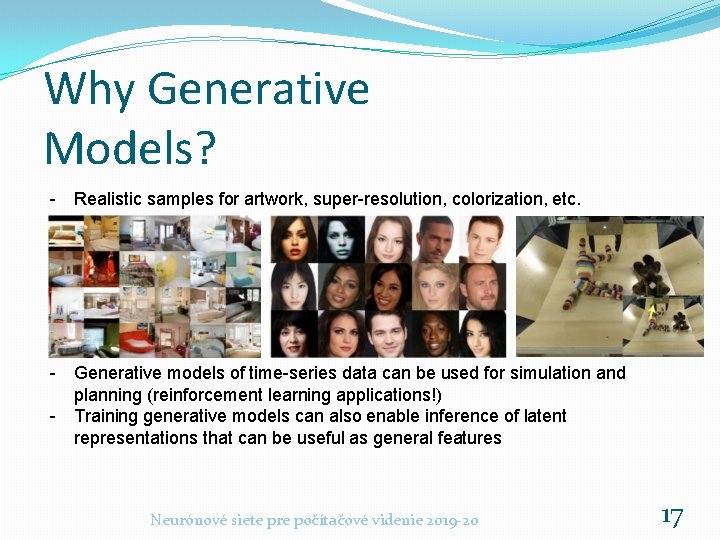

Why Generative Models?

- Realistic samples for artwork, super-resolution, colorization, etc.
- Generative models of time-series data can be used for simulation and planning (reinforcement learning applications!)
- Training generative models can also enable inference of latent representations that can be useful as general features

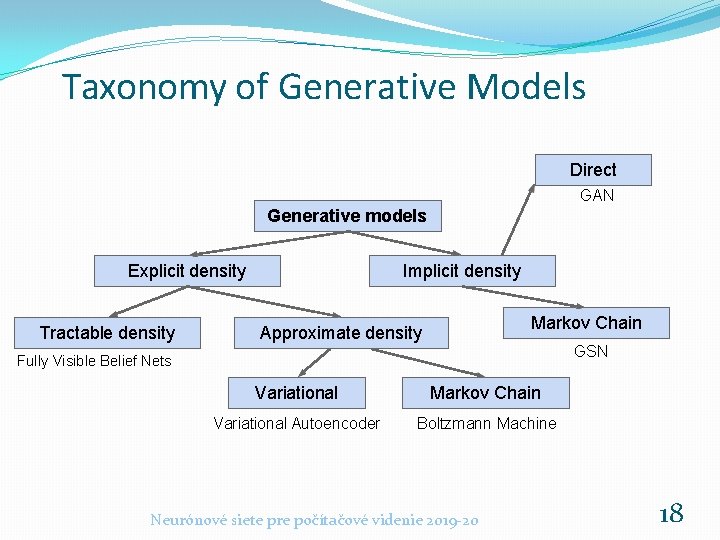

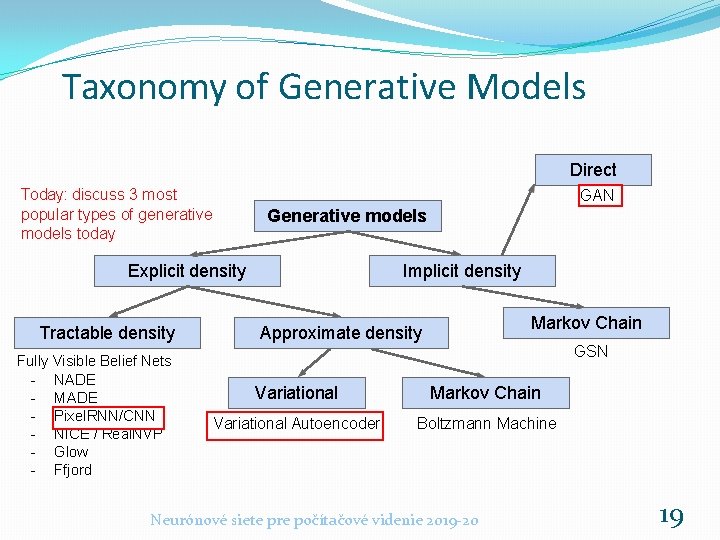

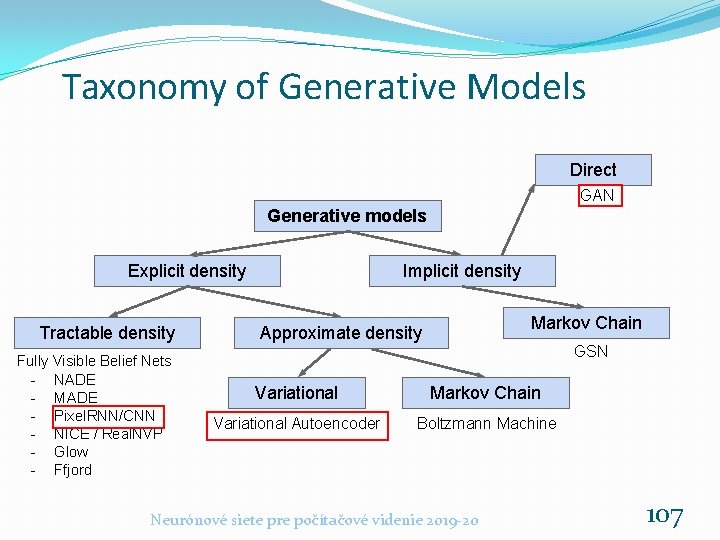

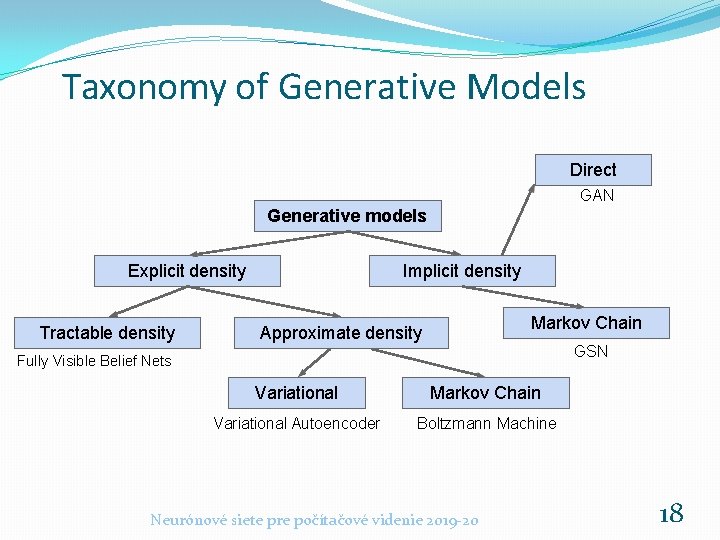

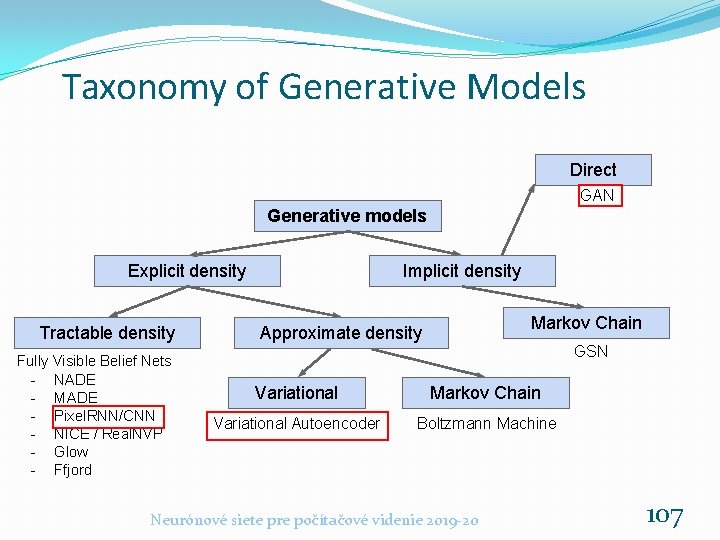

Taxonomy of Generative Models

- Generative models
  - Explicit density
    - Tractable density: Fully Visible Belief Nets
    - Approximate density
      - Variational: Variational Autoencoder
      - Markov Chain: Boltzmann Machine
  - Implicit density
    - Direct: GAN
    - Markov Chain: GSN

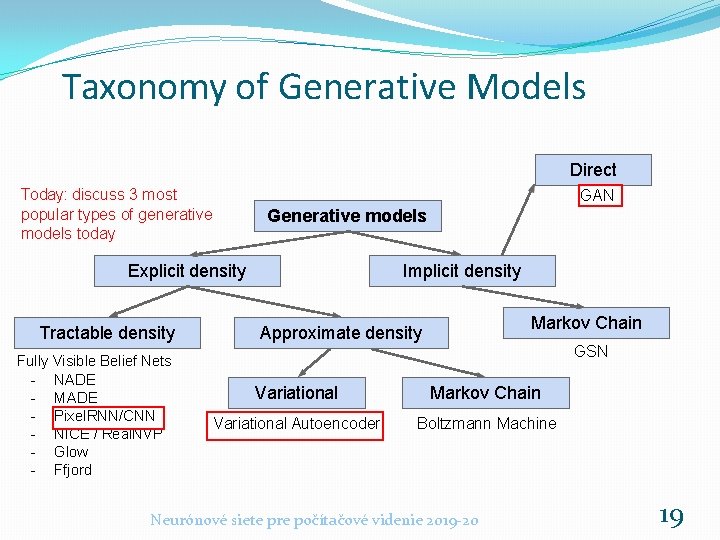

Today we discuss the 3 most popular types of generative models: PixelRNN/CNN (tractable density), the Variational Autoencoder (approximate density), and GAN (implicit density). Examples of tractable-density models: Fully Visible Belief Nets (NADE, MADE, PixelRNN/CNN), NICE / RealNVP, Glow, FFJORD.

PixelRNN and PixelCNN

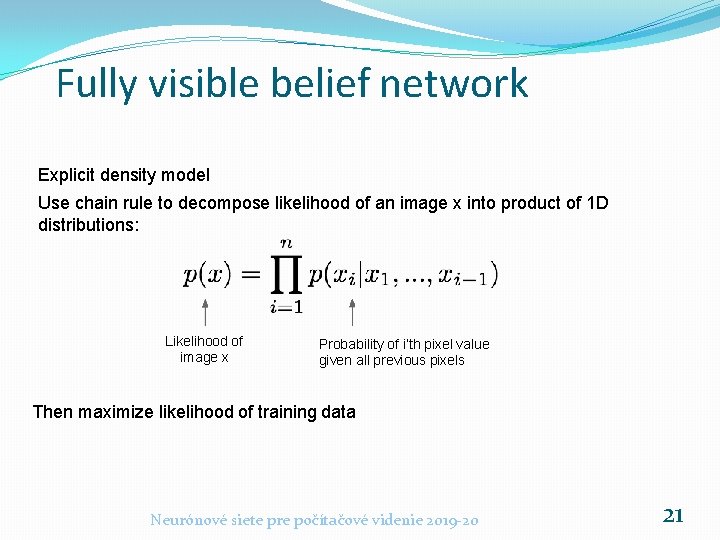

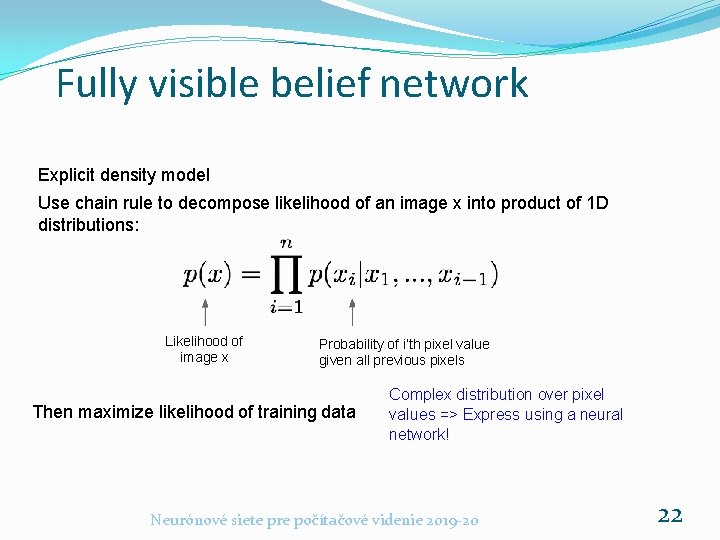

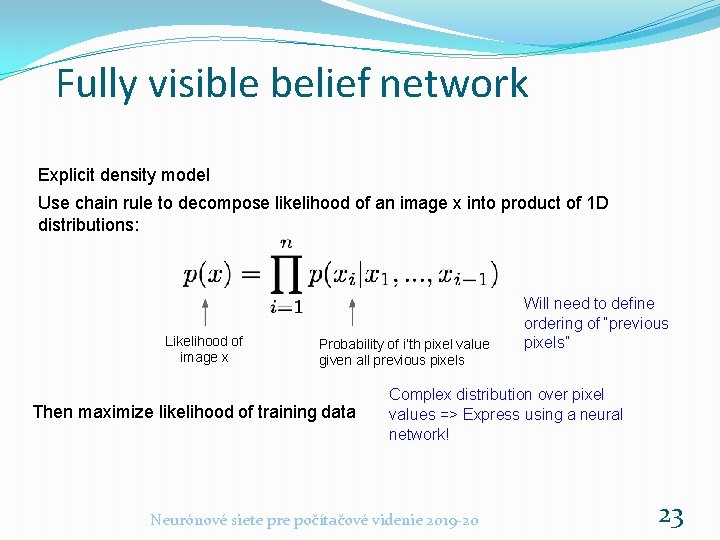

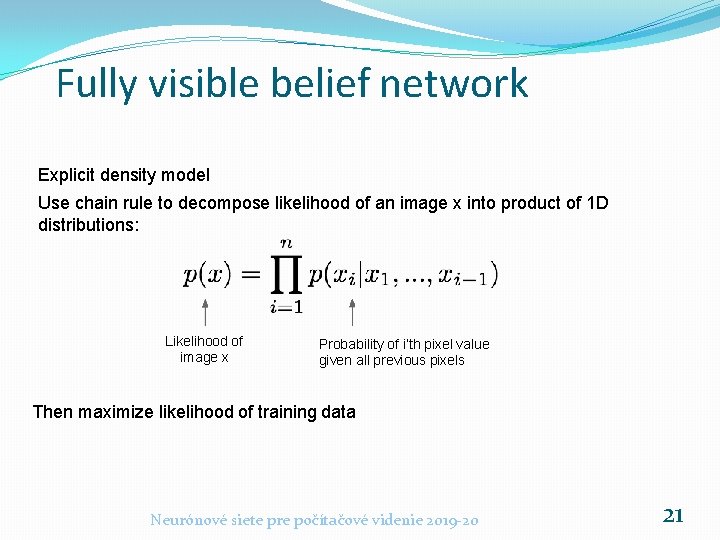

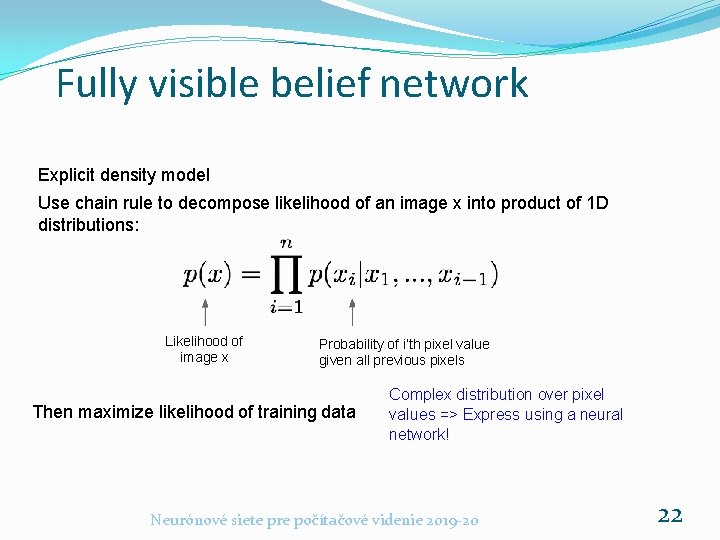

Fully visible belief network

Explicit density model. Use the chain rule to decompose the likelihood of an image x into a product of 1-D distributions:

$$p(x) = \prod_{i=1}^{n} p(x_i \mid x_1, \ldots, x_{i-1})$$

where $p(x)$ is the likelihood of image x and each factor is the probability of the i'th pixel value given all previous pixels. Then maximize the likelihood of the training data.

The distribution over pixel values is complex => express it using a neural network!

We will need to define an ordering of the “previous pixels”.
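The chain-rule factorization can be illustrated on a tiny binary "image" flattened into n pixels. The NumPy sketch below assumes each conditional is a logistic regression on the previous pixels (a fully visible sigmoid belief net); this simple conditional stands in for the neural network mentioned above, and all function names are hypothetical. Sampling walks the pixels in a fixed order, which is exactly the ordering of "previous pixels" the model needs.

```python
import itertools

import numpy as np


def conditional_p1(x: np.ndarray, i: int, weights: np.ndarray, bias: np.ndarray) -> float:
    """p(x_i = 1 | x_1, ..., x_{i-1}): logistic regression on the previous pixels only."""
    logit = bias[i] + weights[i, :i] @ x[:i]  # only pixels 0..i-1 contribute
    return float(1.0 / (1.0 + np.exp(-logit)))


def log_likelihood(x: np.ndarray, weights: np.ndarray, bias: np.ndarray) -> float:
    """Chain rule: log p(x) = sum_i log p(x_i | x_1, ..., x_{i-1})."""
    total = 0.0
    for i in range(len(x)):
        p1 = conditional_p1(x, i, weights, bias)
        total += np.log(p1 if x[i] == 1 else 1.0 - p1)
    return float(total)


def sample(n: int, weights: np.ndarray, bias: np.ndarray, rng: np.random.Generator) -> np.ndarray:
    """Generate the pixels one at a time in the fixed order 0, 1, ..., n-1."""
    x = np.zeros(n, dtype=int)
    for i in range(n):
        x[i] = int(rng.random() < conditional_p1(x, i, weights, bias))
    return x


def total_probability(weights: np.ndarray, bias: np.ndarray) -> float:
    """Sum p(x) over all 2^n binary images; a valid chain-rule model sums to 1."""
    n = len(bias)
    return float(sum(np.exp(log_likelihood(np.array(bits), weights, bias))
                     for bits in itertools.product([0, 1], repeat=n)))
```

Enumerating all $2^n$ images is only feasible for tiny n; it is included to check that the product of conditionals is a properly normalized density, which is what lets these models compute $p(x)$ exactly.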

![PixelRNN [van den Oord et al. 2016]: generate image pixels starting from the corner](https://slidetodoc.com/presentation_image_h2/6827347fcdcc7cd8b001e8a537924f38/image-23.jpg)

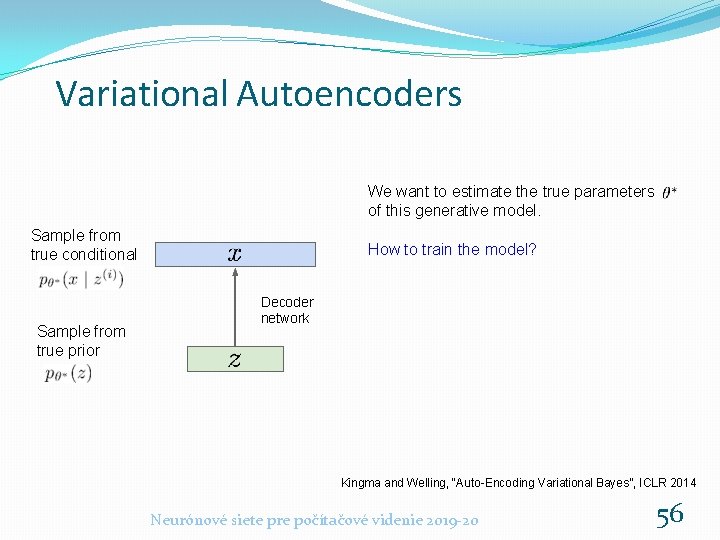

PixelRNN [van den Oord et al. 2016]

Generate image pixels starting from the corner. The dependency on previous pixels is modeled using an RNN (LSTM).

![PixelRNN [van den Oord et al. 2016]: generate image pixels starting from the corner](https://slidetodoc.com/presentation_image_h2/6827347fcdcc7cd8b001e8a537924f38/image-24.jpg)


![PixelRNN [van den Oord et al. 2016]: generate image pixels starting from the corner](https://slidetodoc.com/presentation_image_h2/6827347fcdcc7cd8b001e8a537924f38/image-25.jpg)


![PixelRNN [van den Oord et al. 2016]: generate image pixels starting from the corner](https://slidetodoc.com/presentation_image_h2/6827347fcdcc7cd8b001e8a537924f38/image-26.jpg)

Drawback: sequential generation is slow! Pixels are generated one at a time, each conditioned on all the previous ones.

![PixelCNN [van den Oord et al. 2016]: softmax loss at each pixel](https://slidetodoc.com/presentation_image_h2/6827347fcdcc7cd8b001e8a537924f38/image-27.jpg)

PixelCNN [van den Oord et al. 2016]

Still generate image pixels starting from the corner. The dependency on previous pixels is now modeled using a CNN over a context region, with a softmax loss at each pixel.

![PixelCNN [van den Oord et al. 2016]: softmax loss at each pixel](https://slidetodoc.com/presentation_image_h2/6827347fcdcc7cd8b001e8a537924f38/image-28.jpg)

Training: maximize the likelihood of the training images,

$$p(x) = \prod_{i=1}^{n} p(x_i \mid x_1, \ldots, x_{i-1})$$
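Maximizing this likelihood is the same as minimizing the softmax (cross-entropy) loss at each pixel, summed over pixels. A minimal NumPy sketch, assuming 8-bit grayscale pixels and a network that has already produced 256 logits per pixel (the network itself is omitted; in PixelCNN the logits at each position may depend only on previous pixels). Bits per dimension is a common way to report this likelihood; the function names are hypothetical.

```python
import numpy as np


def image_nll(logits: np.ndarray, image: np.ndarray) -> float:
    """Negative log-likelihood (in nats) of an 8-bit image under per-pixel softmax outputs.

    logits: (H, W, 256) unnormalized scores, one 256-way distribution per pixel
    image:  (H, W) integer intensities in [0, 255]
    """
    # log-softmax over the 256 intensity values, shifted for numerical stability
    shifted = logits - logits.max(axis=-1, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=-1, keepdims=True))
    h, w = image.shape
    # pick log p(x_ij) for the observed intensity of every pixel
    observed = log_probs[np.arange(h)[:, None], np.arange(w)[None, :], image]
    return float(-observed.sum())


def bits_per_dim(nll_nats: float, num_dims: int) -> float:
    """Convert a total NLL in nats to bits per dimension."""
    return nll_nats / (num_dims * np.log(2.0))


rng = np.random.default_rng(0)
image = rng.integers(0, 256, size=(4, 4))
uniform_logits = np.zeros((4, 4, 256))  # every intensity equally likely
print(bits_per_dim(image_nll(uniform_logits, image), image.size))  # ≈ 8.0, i.e. log2(256)
```

A model that assigns every intensity equal probability costs 8 bits per pixel; training pushes the logits of the observed intensities up and the number down.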

![PixelCNN [van den Oord et al. 2016]: softmax loss at each pixel](https://slidetodoc.com/presentation_image_h2/6827347fcdcc7cd8b001e8a537924f38/image-29.jpg)

Training is faster than PixelRNN: we can parallelize the convolutions, since the context region values are known from the training images. Generation must still proceed sequentially => still slow.
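Training can run in parallel because the convolution never looks at "future" pixels. The masked convolutions (types A and B) described by van den Oord et al. 2016 enforce this by zeroing the kernel weights below and to the right of the center. The NumPy sketch below is a simplified single-channel version (the real model also masks across the R, G, B channels); the function names are hypothetical. It computes the output at every position from a known image at once, which is why training parallelizes, while generation would have to rerun it once per new pixel.

```python
import numpy as np


def pixelcnn_mask(kernel_size: int, mask_type: str) -> np.ndarray:
    """Spatial mask for a single-channel PixelCNN-style masked convolution.

    Type "A" (first layer) also hides the center pixel; type "B" (later layers) keeps it.
    """
    if mask_type not in ("A", "B"):
        raise ValueError("mask_type must be 'A' or 'B'")
    mask = np.ones((kernel_size, kernel_size))
    c = kernel_size // 2
    first_hidden = c + 1 if mask_type == "B" else c
    mask[c, first_hidden:] = 0.0  # center row: pixels right of (and for "A", at) the center
    mask[c + 1:, :] = 0.0         # every row below the center
    return mask


def masked_conv2d(image: np.ndarray, kernel: np.ndarray, mask: np.ndarray) -> np.ndarray:
    """Same-size 2-D cross-correlation with zero padding, using only unmasked weights."""
    k = kernel.shape[0]
    pad = k // 2
    padded = np.pad(image, pad)  # zero padding on all sides
    weights = kernel * mask
    out = np.zeros(image.shape, dtype=float)
    for i in range(image.shape[0]):
        for j in range(image.shape[1]):
            # window centered on image[i, j]
            out[i, j] = np.sum(padded[i:i + k, j:j + k] * weights)
    return out
```

Changing a pixel therefore only affects outputs at later positions in raster order, so one pass over a training image yields every conditional at once.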

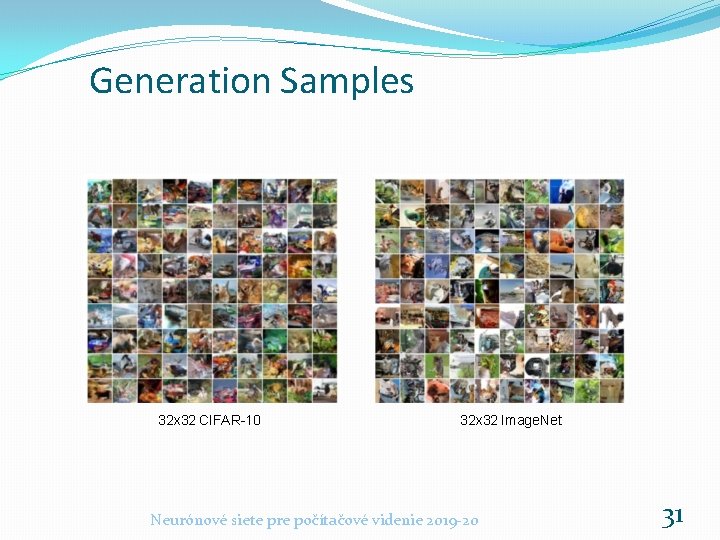

Generated samples: 32 × 32 CIFAR-10 and 32 × 32 ImageNet.

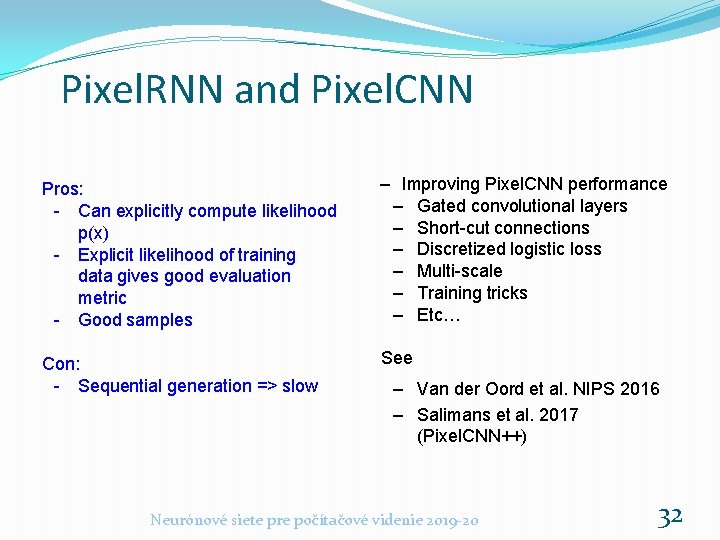

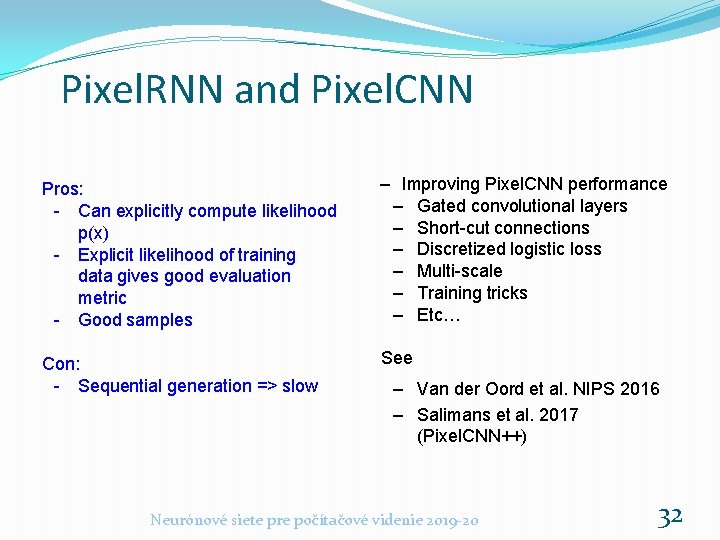

PixelRNN and PixelCNN

Pros:
- Can explicitly compute the likelihood p(x)
- The explicit likelihood of training data gives a good evaluation metric
- Good samples

Con:
- Sequential generation => slow

Improving PixelCNN performance:
- Gated convolutional layers
- Short-cut connections
- Discretized logistic loss
- Multi-scale
- Training tricks
- Etc.

See:
- van den Oord et al., NIPS 2016
- Salimans et al. 2017 (PixelCNN++)

Variational Autoencoders (VAE)

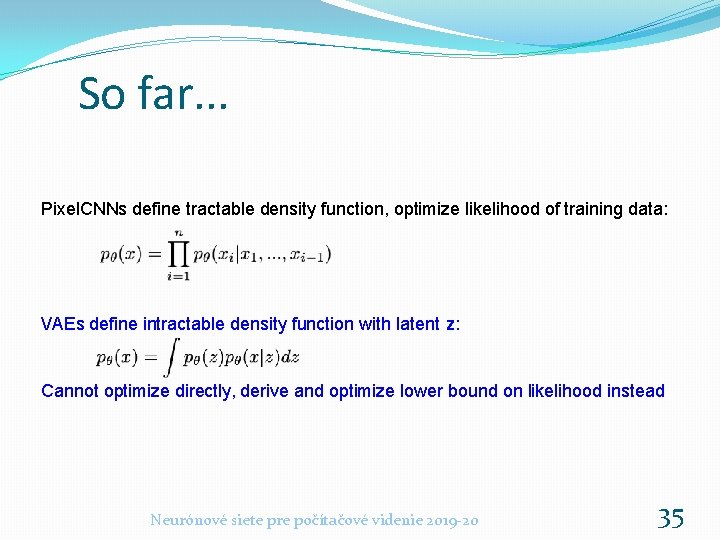

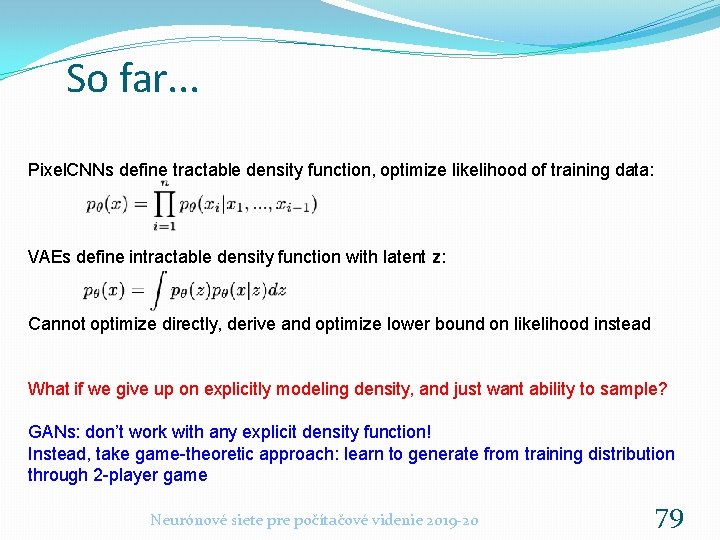

So far... PixelCNNs define a tractable density function and optimize the likelihood of the training data:

$$p(x) = \prod_{i=1}^{n} p(x_i \mid x_1, \ldots, x_{i-1})$$

VAEs define an intractable density function with latent z:

$$p_\theta(x) = \int p_\theta(z)\, p_\theta(x \mid z)\, dz$$

We cannot optimize it directly; instead, we derive and optimize a lower bound on the likelihood.

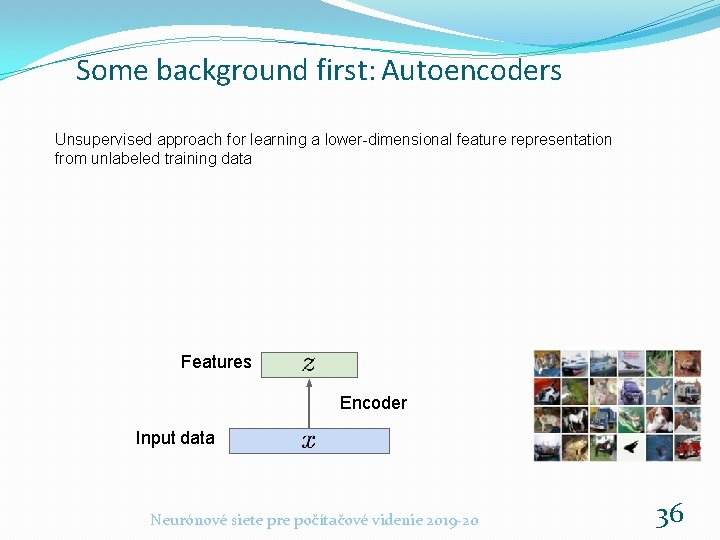

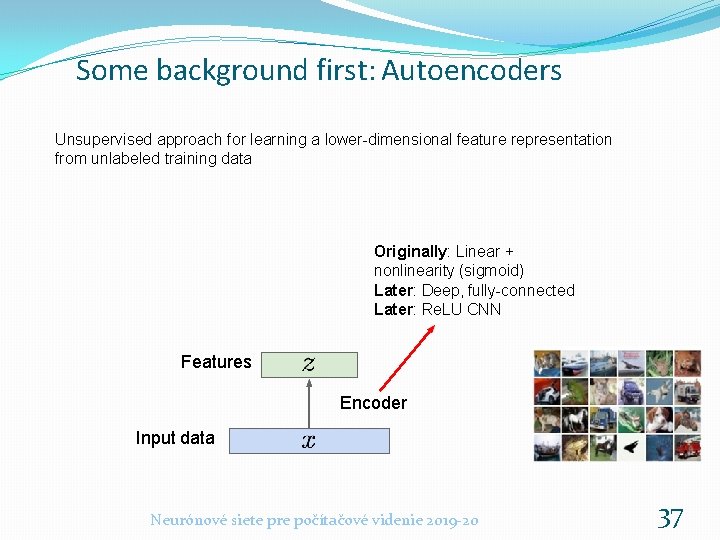

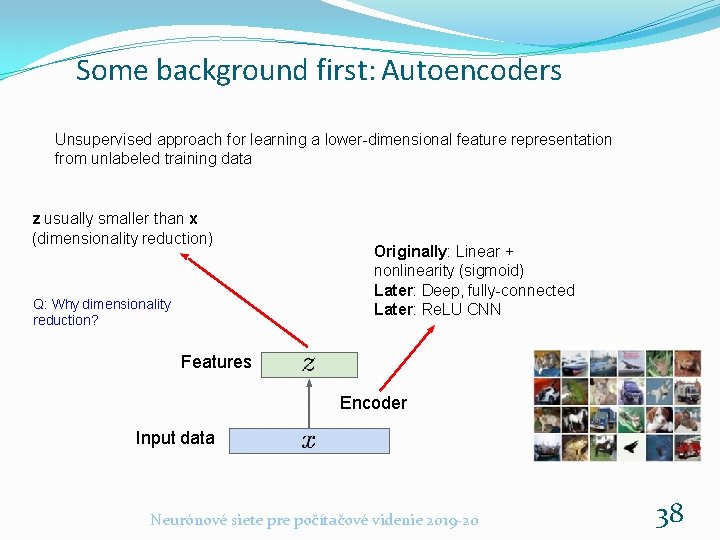

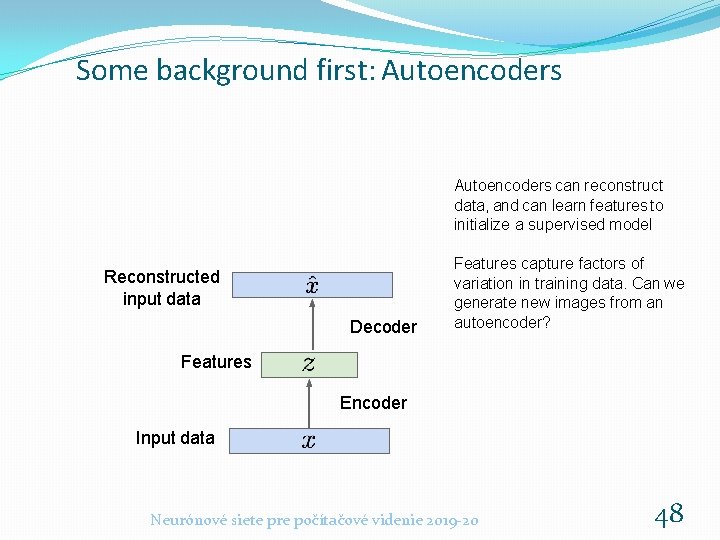

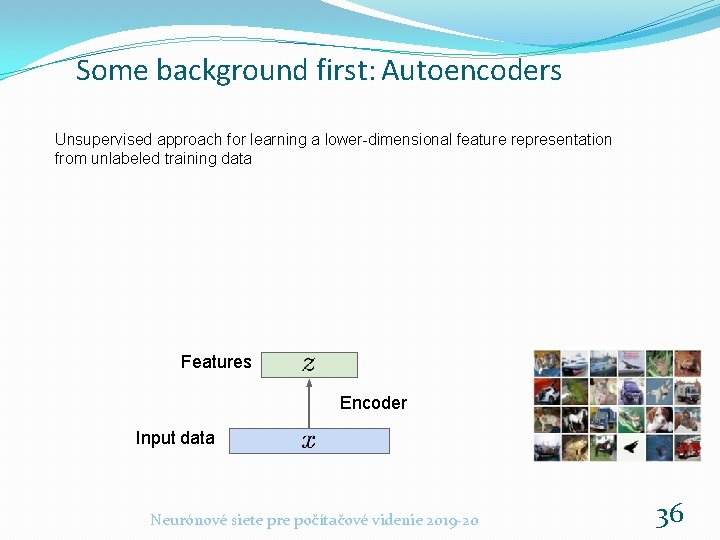

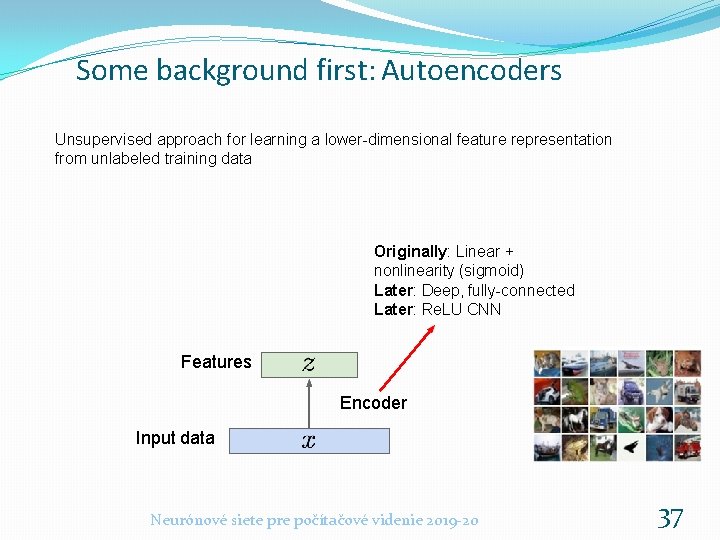

Some background first: Autoencoders

An unsupervised approach for learning a lower-dimensional feature representation from unlabeled training data: an encoder maps the input data x to features z.

Encoder architecture: originally linear + nonlinearity (sigmoid); later deep, fully-connected; later ReLU CNN.

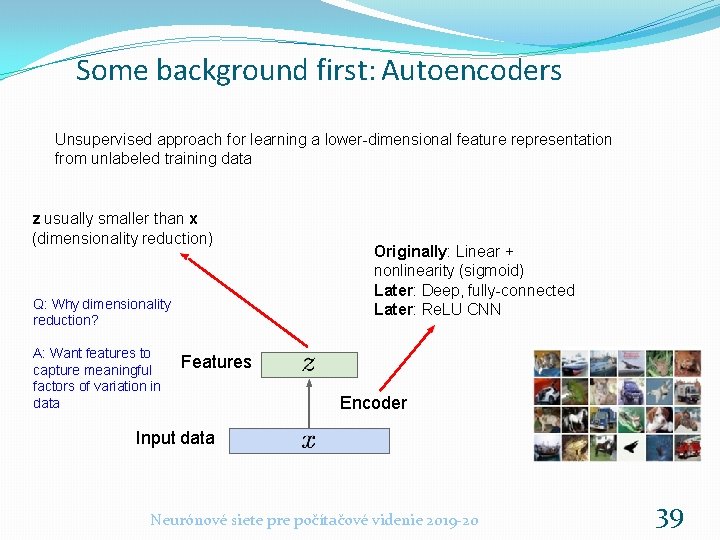

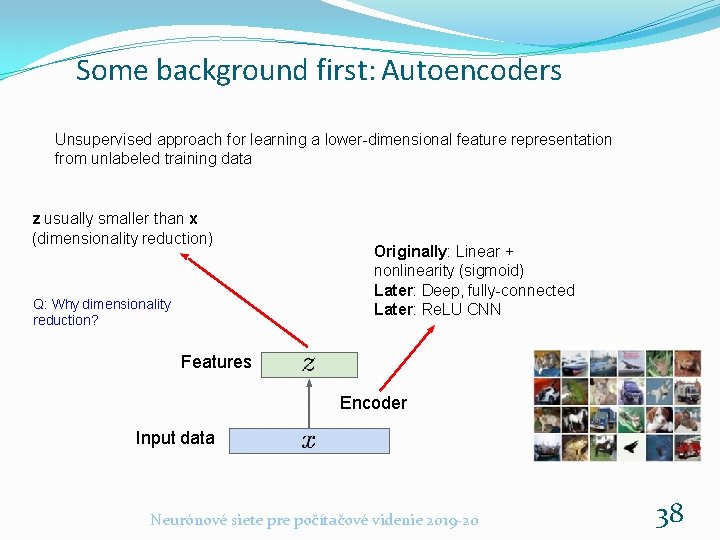

The features z are usually smaller than the input x (dimensionality reduction). Q: Why dimensionality reduction?

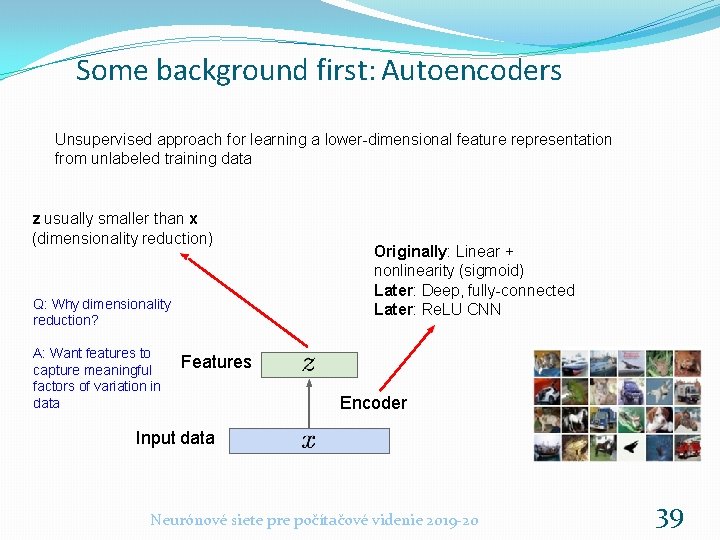

A: We want the features to capture meaningful factors of variation in the data.

How do we learn this feature representation?

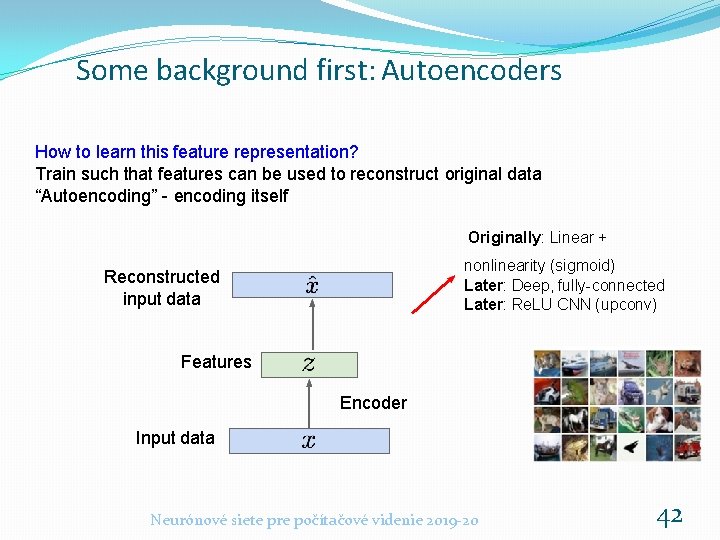

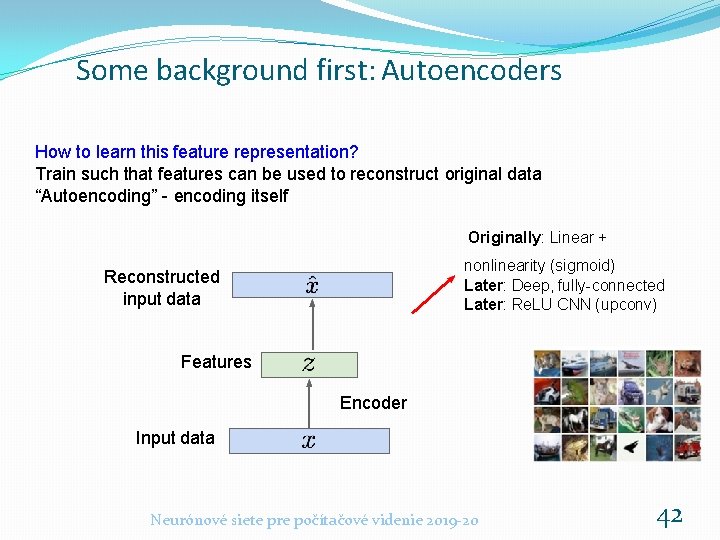

Train such that the features can be used to reconstruct the original data: “autoencoding” means encoding the input itself. A decoder maps the features back to reconstructed input data.

Decoder architecture: originally linear + nonlinearity (sigmoid); later deep, fully-connected; later ReLU CNN (upconv).

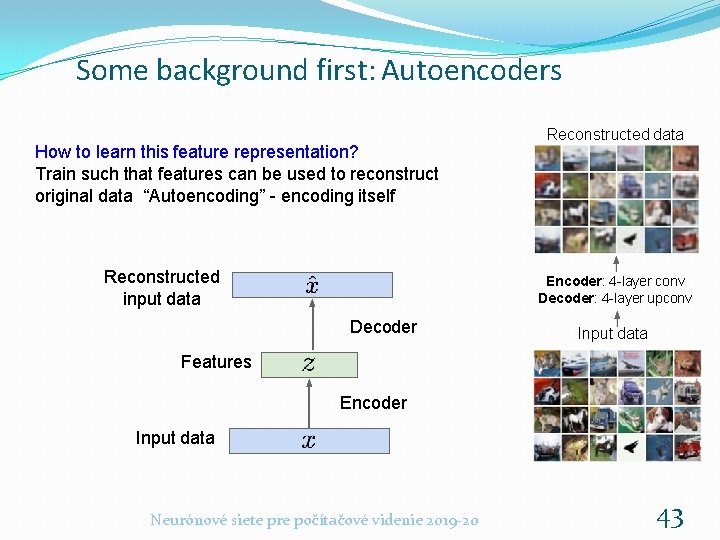

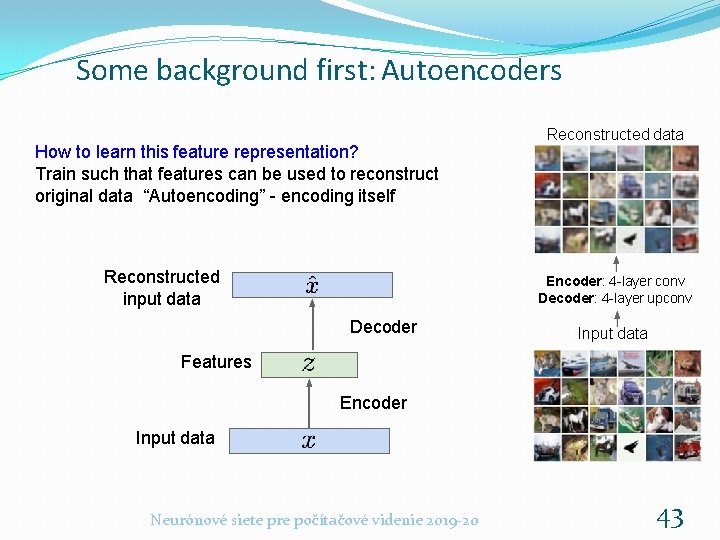

Example: the encoder is a 4-layer conv network and the decoder a 4-layer upconv network; the figure shows the reconstructed data next to the input data.

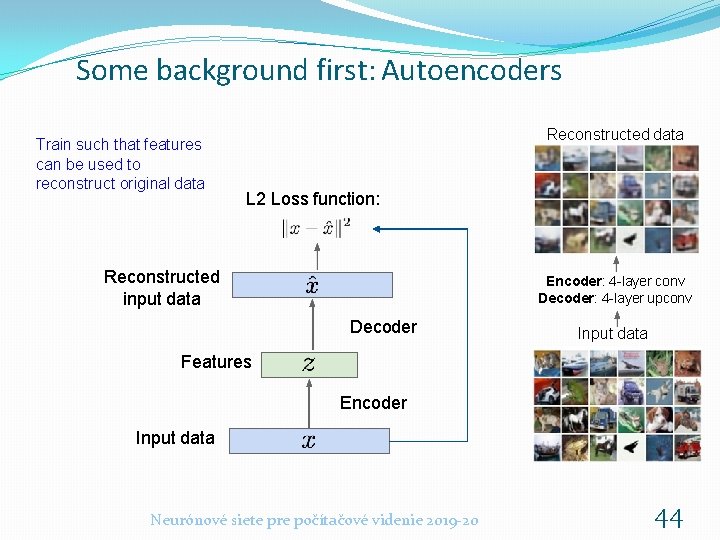

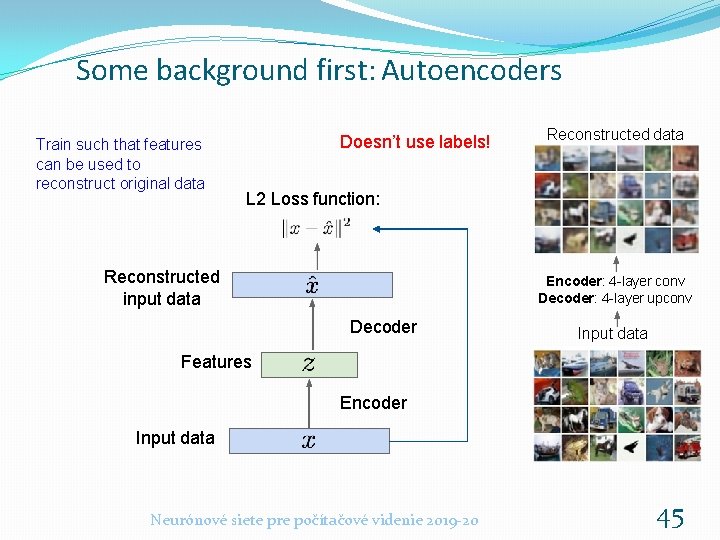

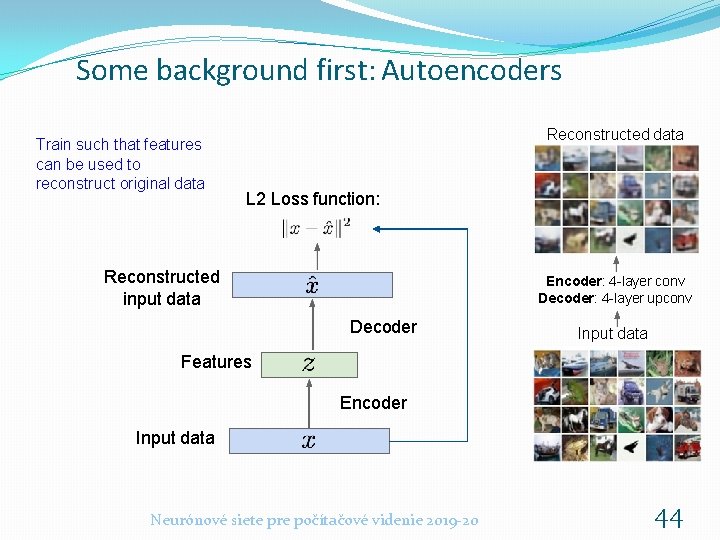

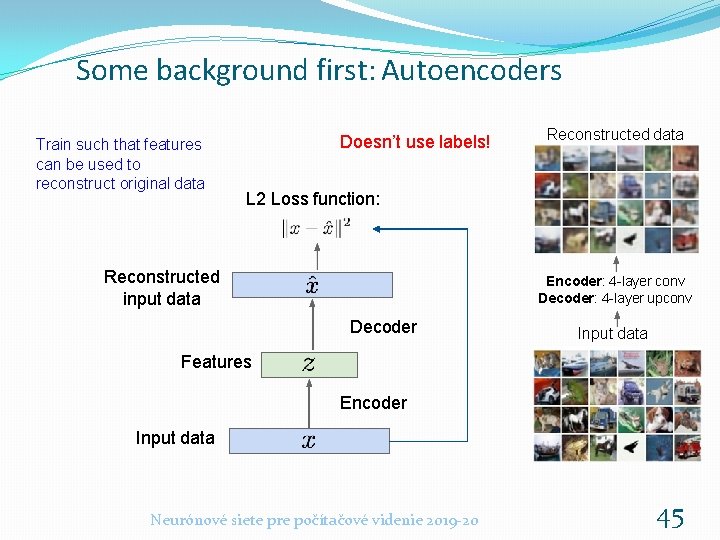

Train such that the features can be used to reconstruct the original data, using the L2 loss function $\|x - \hat{x}\|^2$ between the input data $x$ and the reconstructed input data $\hat{x}$.

The loss doesn't use labels!
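A minimal sketch of this training objective, assuming a purely linear encoder and decoder (the "originally linear" case without the nonlinearity) and plain gradient descent written out by hand in NumPy; the function name and hyperparameters are hypothetical. The loss is the mean over samples of $\|x - \hat{x}\|^2$, and no labels appear anywhere. At its optimum, a linear autoencoder with L2 loss spans the same subspace that PCA finds, which makes it a convenient toy.

```python
import numpy as np


def train_linear_autoencoder(x, latent_dim, lr=0.01, steps=1000, seed=0):
    """Gradient descent on the L2 reconstruction loss for a linear encoder/decoder.

    x: (N, D) unlabeled data. Returns encoder (D, K), decoder (K, D) and the loss history.
    """
    rng = np.random.default_rng(seed)
    n, d = x.shape
    w_enc = rng.normal(scale=0.1, size=(d, latent_dim))
    w_dec = rng.normal(scale=0.1, size=(latent_dim, d))
    losses = []
    for _ in range(steps):
        z = x @ w_enc          # encoder: features (N, K)
        x_hat = z @ w_dec      # decoder: reconstructed input data (N, D)
        err = x_hat - x
        losses.append(float(np.mean(np.sum(err ** 2, axis=1))))  # mean ||x - x_hat||^2
        grad_x_hat = 2.0 * err / n              # d loss / d x_hat
        grad_dec = z.T @ grad_x_hat             # d loss / d w_dec
        grad_enc = x.T @ (grad_x_hat @ w_dec.T)  # d loss / d w_enc (uses w_dec before update)
        w_dec -= lr * grad_dec
        w_enc -= lr * grad_enc
    return w_enc, w_dec, losses


rng = np.random.default_rng(1)
# Hypothetical unlabeled 5-D data that really lives near a 2-D subspace
x = rng.normal(size=(200, 2)) @ rng.normal(size=(2, 5)) + 0.05 * rng.normal(size=(200, 5))
w_enc, w_dec, losses = train_linear_autoencoder(x, latent_dim=2)
```

After training, `x @ w_enc` gives 2-D features for each sample; keeping only the encoder is exactly the "throw away the decoder" step that follows.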

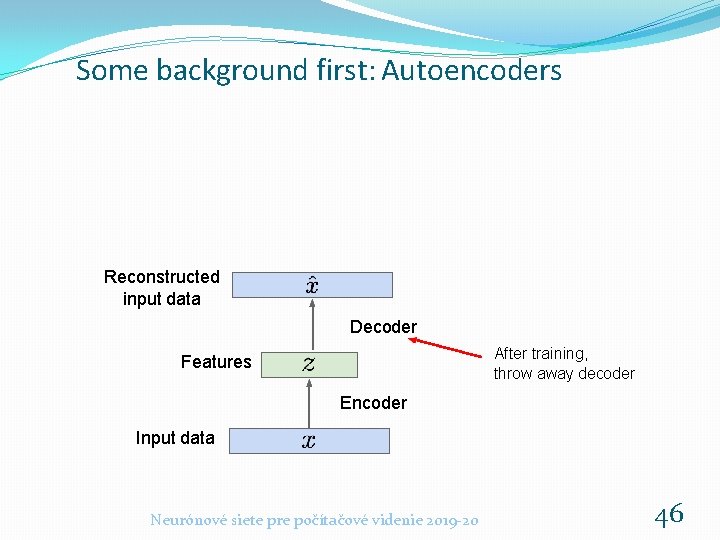

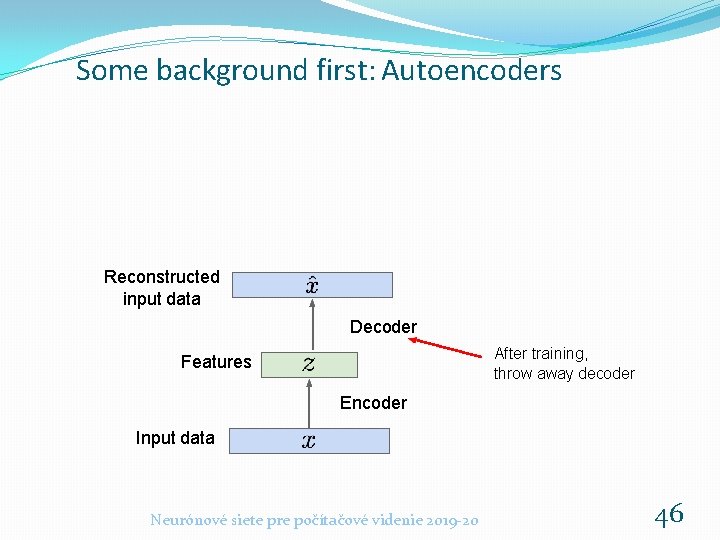

After training, throw away the decoder.

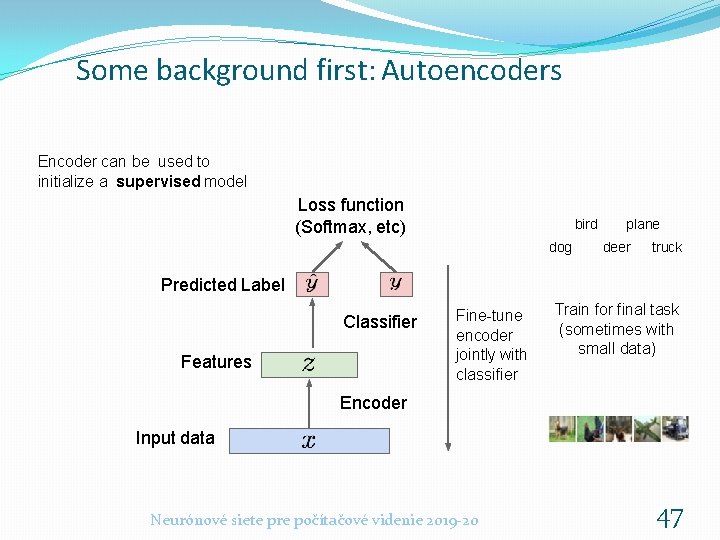

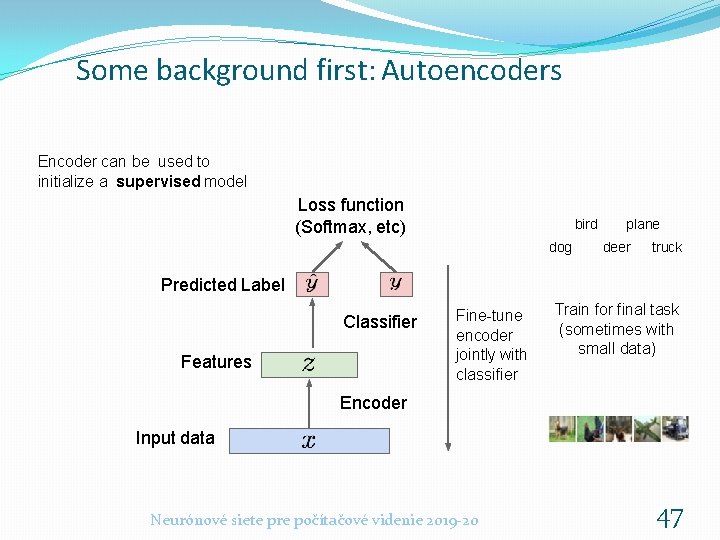

The encoder can be used to initialize a supervised model: input data → encoder → features → classifier → predicted label (e.g. bird, dog, plane, deer, truck), trained with a loss function (softmax, etc.). Fine-tune the encoder jointly with the classifier, training for the final task (sometimes with small data).

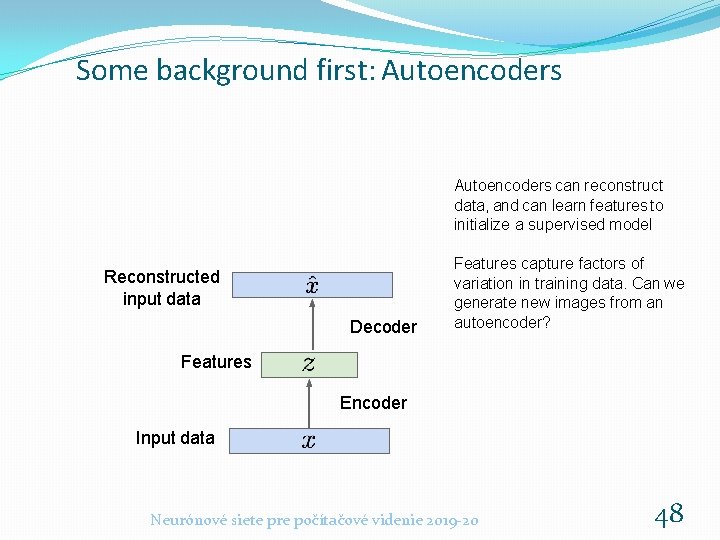

Autoencoders can reconstruct data and can learn features to initialize a supervised model. The features capture factors of variation in the training data. Can we generate new images from an autoencoder?

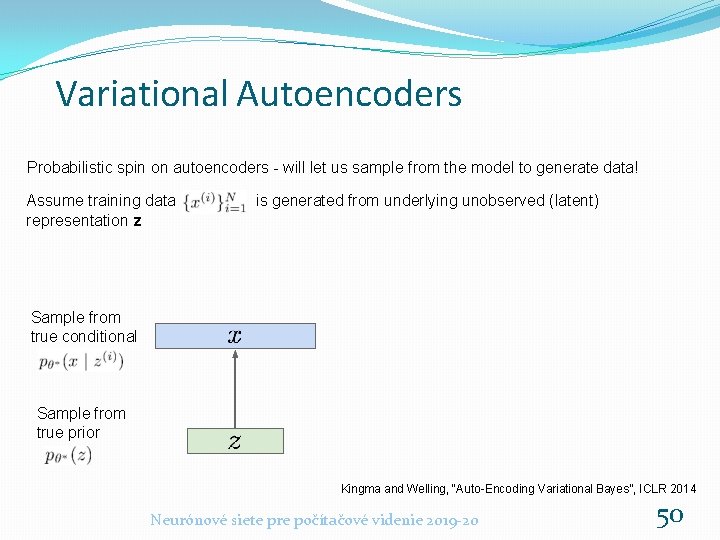

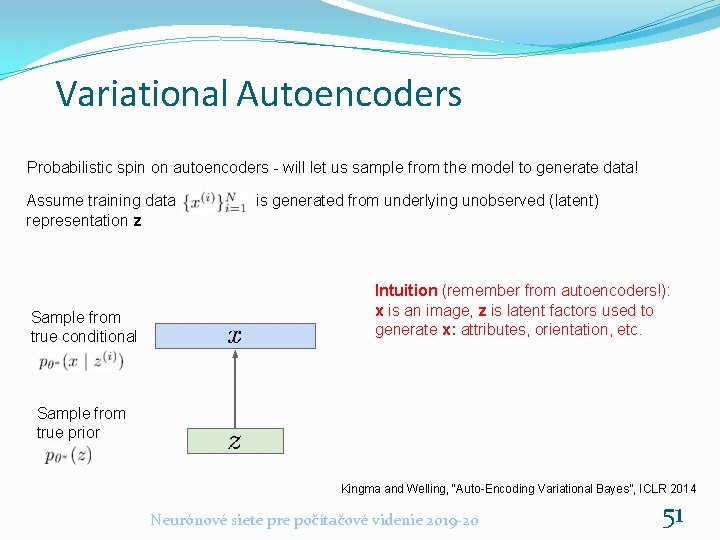

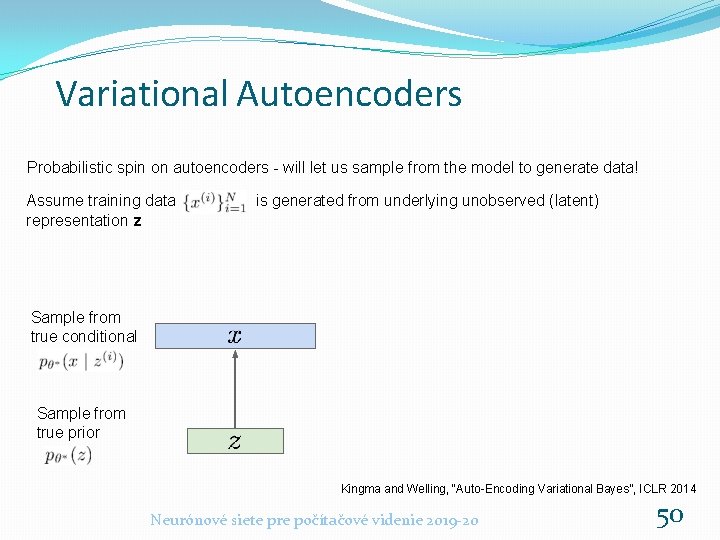

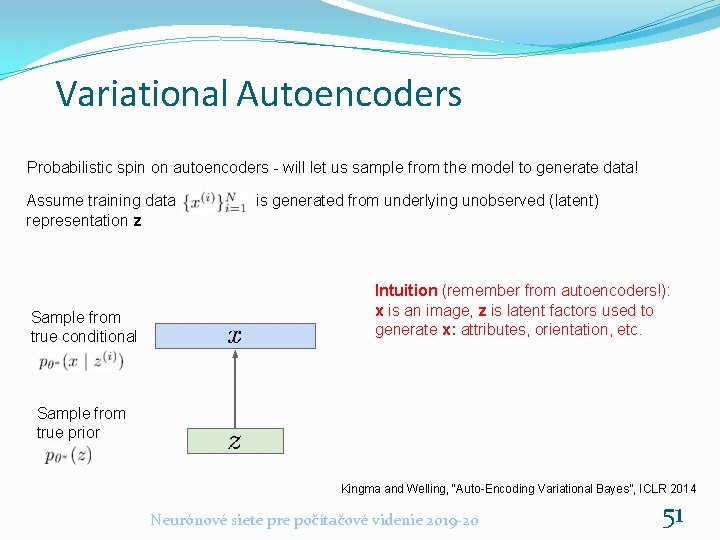

Variational Autoencoders

A probabilistic spin on autoencoders: it will let us sample from the model to generate data!

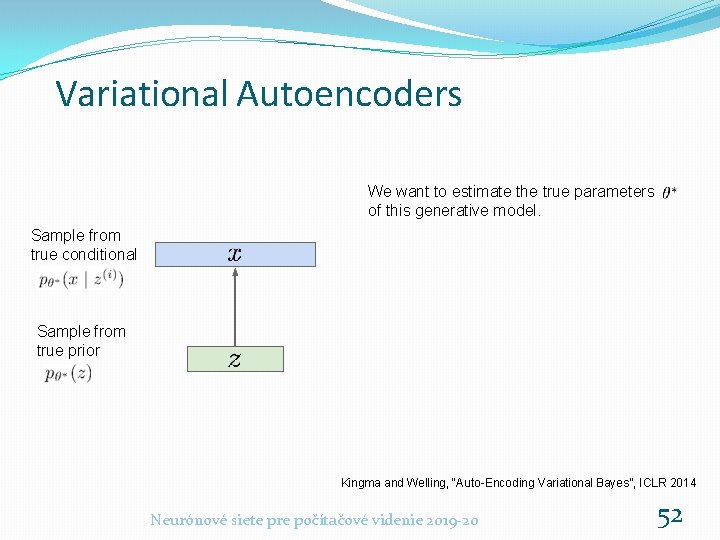

Assume the training data $\{x^{(i)}\}_{i=1}^{N}$ is generated from an underlying unobserved (latent) representation z: first sample $z^{(i)}$ from the true prior $p_{\theta^*}(z)$, then sample $x^{(i)}$ from the true conditional $p_{\theta^*}(x \mid z^{(i)})$.

Kingma and Welling, “Auto-Encoding Variational Bayes”, ICLR 2014

Intuition (remember from autoencoders!): x is an image, and z is the latent factors used to generate x: attributes, orientation, etc.

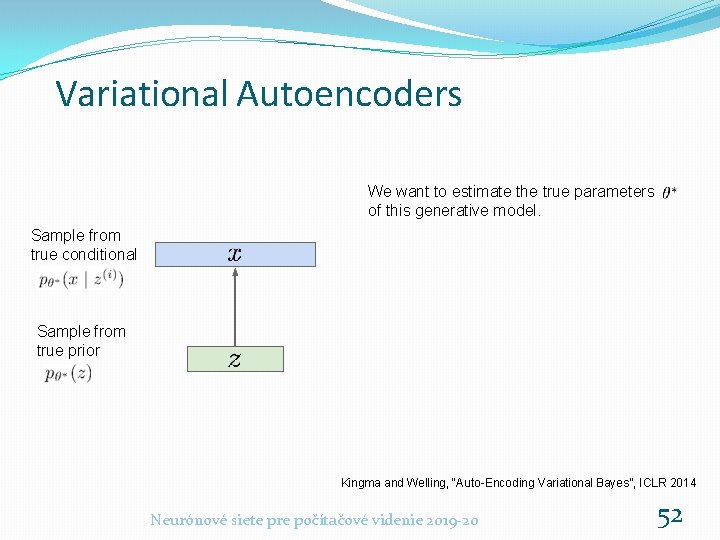

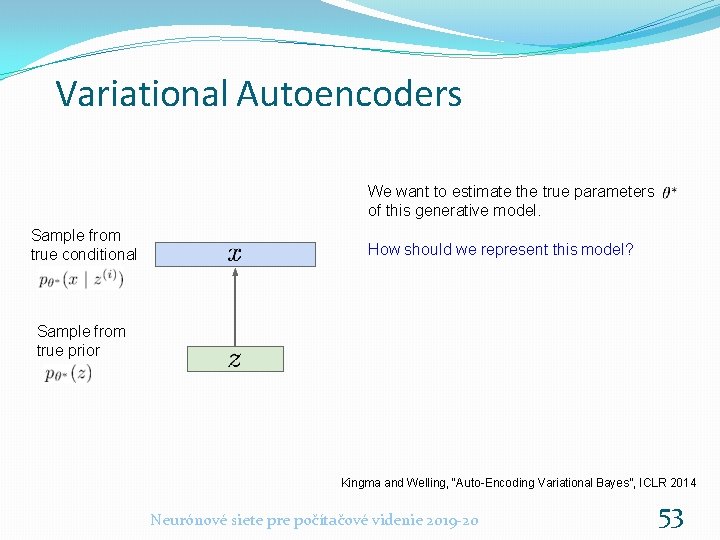

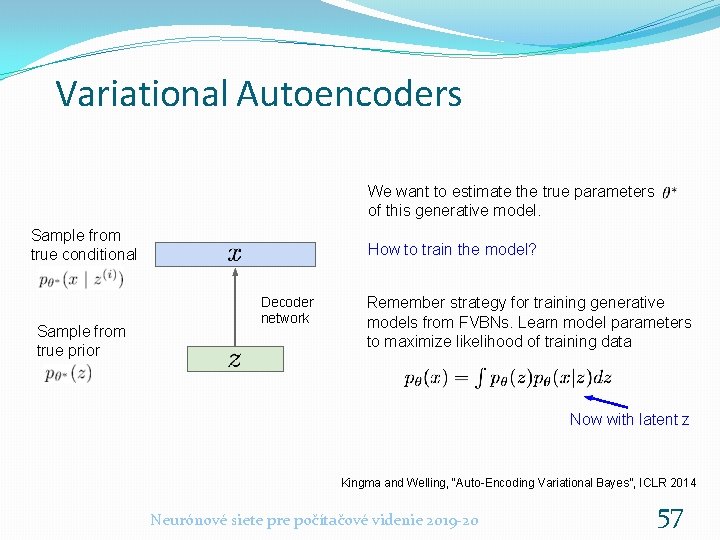

We want to estimate the true parameters $\theta^*$ of this generative model.

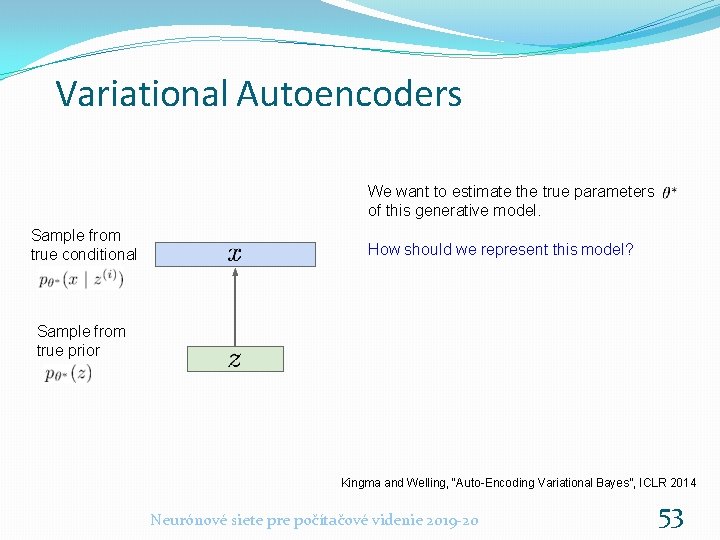

How should we represent this model?

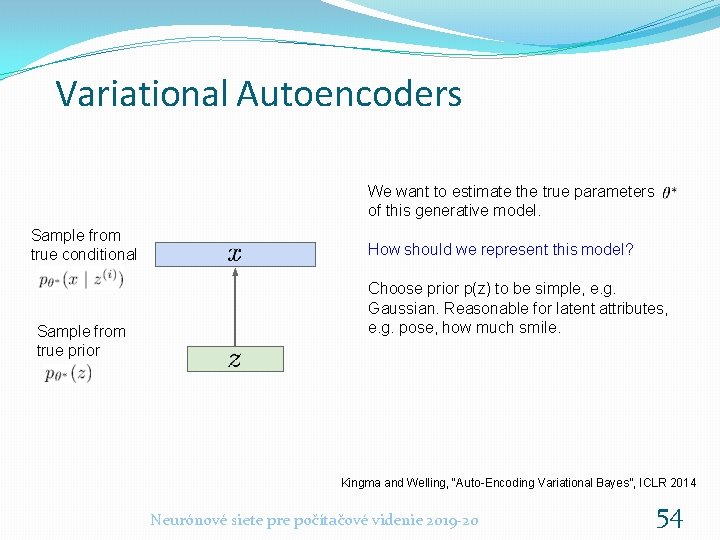

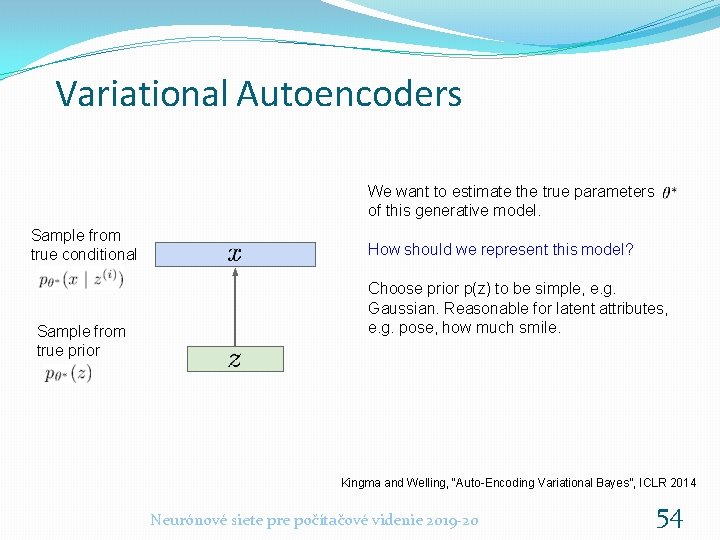

Choose the prior p(z) to be simple, e.g. Gaussian. This is reasonable for latent attributes, e.g. pose, how much smile.

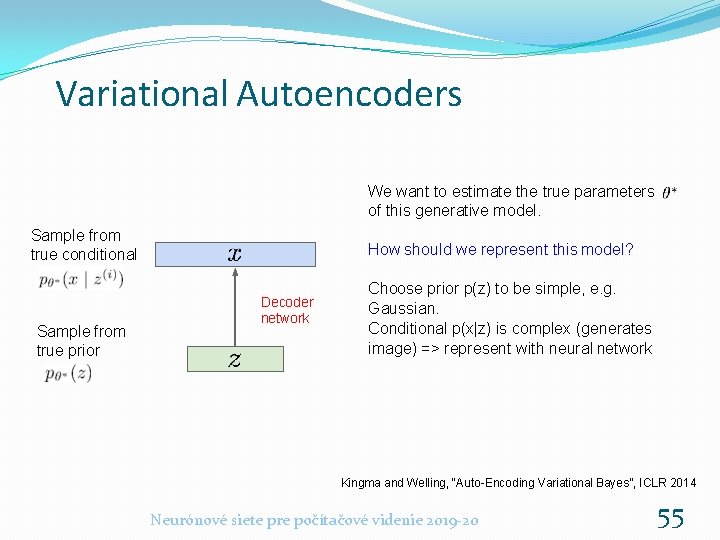

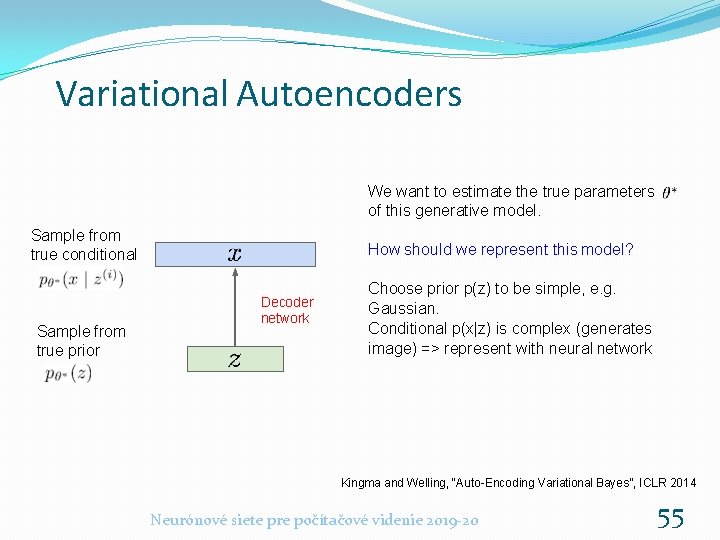

The conditional p(x|z) is complex (it generates an image) => represent it with a neural network, the decoder network.

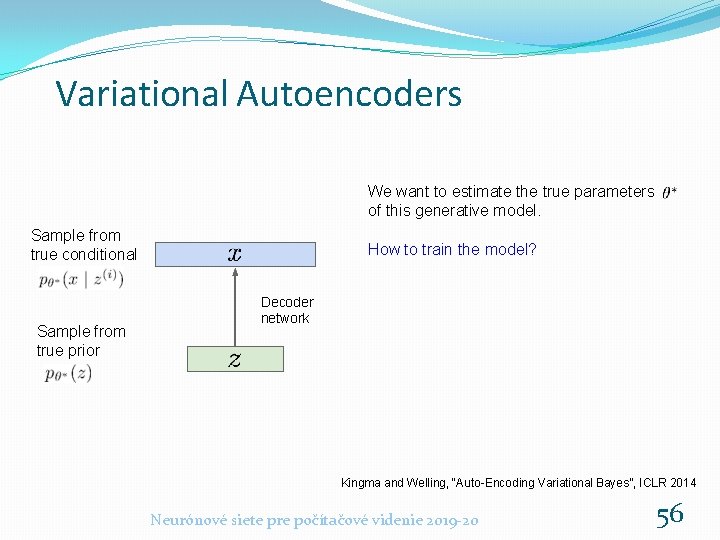

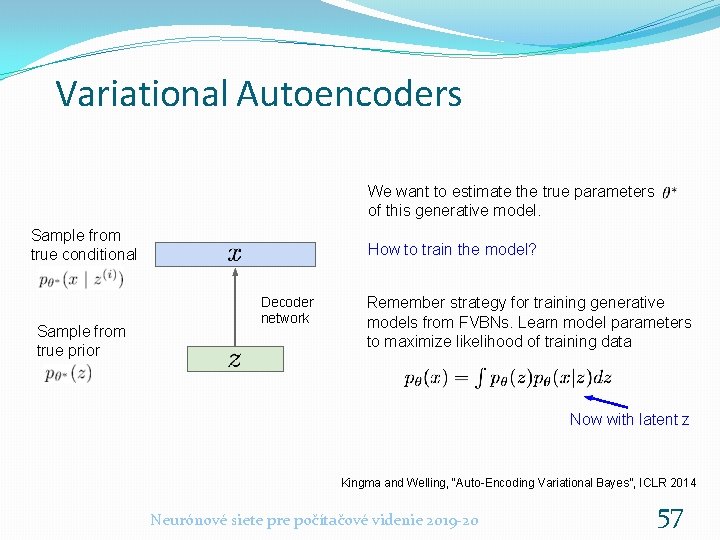

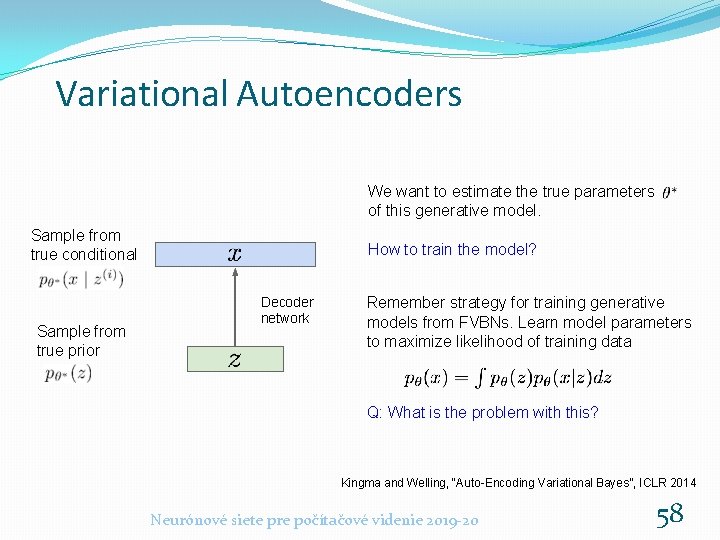

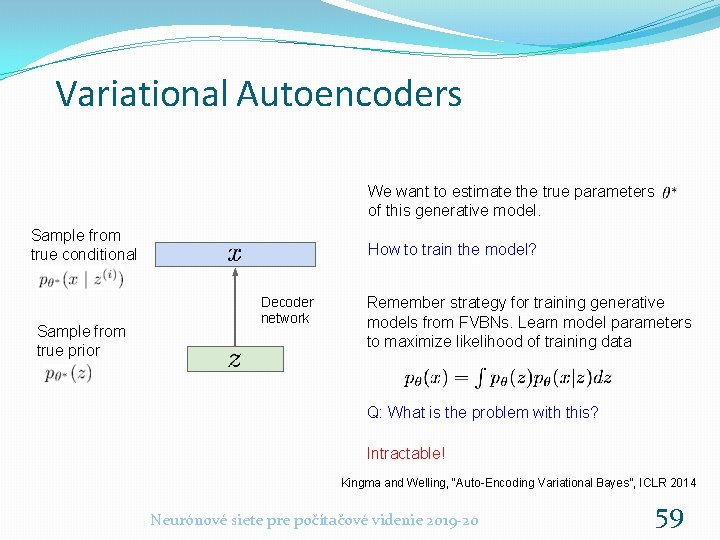

How do we train the model?

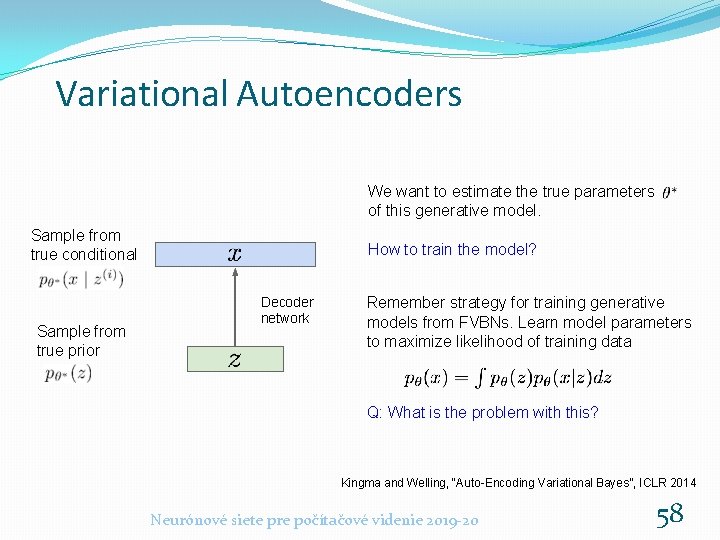

Remember the strategy for training generative models from FVBNs: learn the model parameters to maximize the likelihood of the training data. Now with latent z:

$$p_\theta(x) = \int p_\theta(z)\, p_\theta(x \mid z)\, dz$$

Q: What is the problem with this?

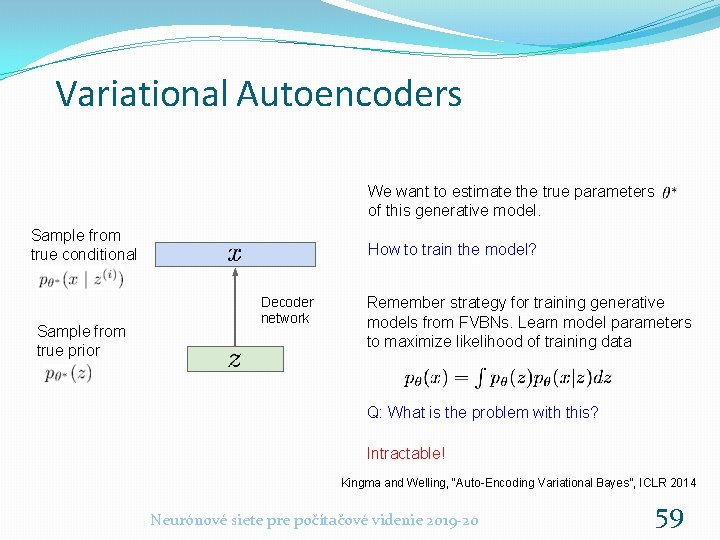

A: Intractable! The integral would require computing $p_\theta(x \mid z)$ for every z.

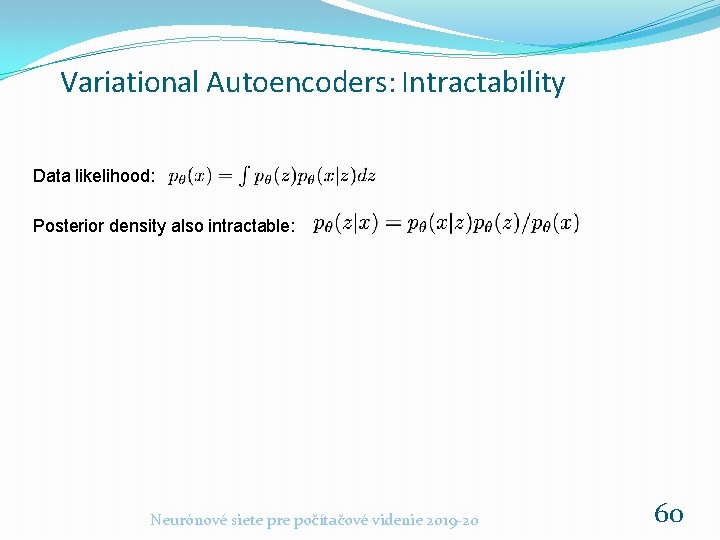

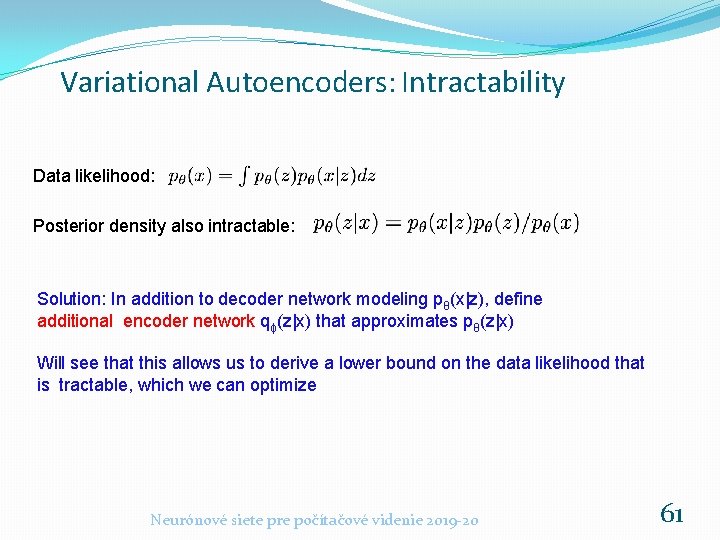

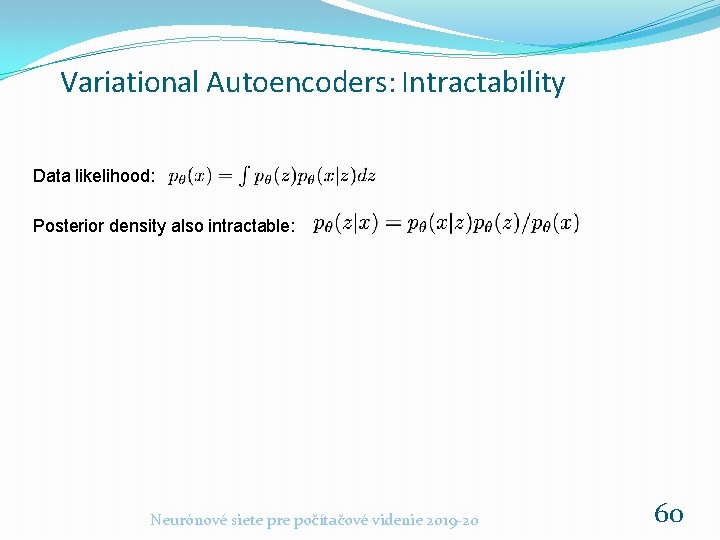

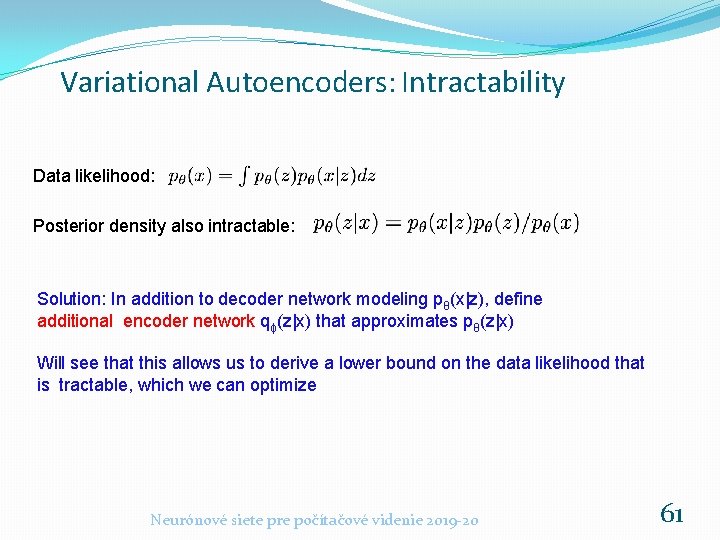

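Variational Autoencoders: Intractability

Data likelihood:

$$p_\theta(x) = \int p_\theta(z)\, p_\theta(x \mid z)\, dz$$

The prior $p_\theta(z)$ is a simple Gaussian and $p_\theta(x \mid z)$ is given by the decoder network, but it is intractable to compute $p_\theta(x \mid z)$ for every z.

The posterior density is also intractable, because it contains the intractable data likelihood in the denominator:

$$p_\theta(z \mid x) = \frac{p_\theta(x \mid z)\, p_\theta(z)}{p_\theta(x)}$$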
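In a toy model where everything is one-dimensional and Gaussian, the integral happens to have a closed form, so we can compare it with the obvious Monte Carlo estimate $p_\theta(x) \approx \frac{1}{S}\sum_{s} p_\theta(x \mid z^{(s)})$ with $z^{(s)} \sim p_\theta(z)$. The sketch uses NumPy and the standard-library `statistics.NormalDist`; the toy model $p(z) = \mathcal{N}(0, 1)$, $p(x \mid z) = \mathcal{N}(z, 1)$ and the function names are assumptions for illustration. The estimate works here, but with a neural-network decoder there is no closed form to check against, and with a high-dimensional z most prior samples give a negligible $p_\theta(x \mid z)$, so this naive estimator would need impractically many samples.

```python
from statistics import NormalDist

import numpy as np


def naive_log_likelihood(x: float, num_samples: int, rng: np.random.Generator) -> float:
    """log p(x) ≈ log (1/S) Σ_s p(x | z_s) with z_s ~ p(z), for p(z)=N(0,1), p(x|z)=N(z,1)."""
    z = rng.standard_normal(num_samples)                               # samples from the prior
    likelihoods = np.exp(-0.5 * (x - z) ** 2) / np.sqrt(2.0 * np.pi)   # p(x | z) = N(x; z, 1)
    return float(np.log(np.mean(likelihoods)))


def exact_log_likelihood(x: float) -> float:
    """This toy model is tractable: integrating out z gives x ~ N(0, 2)."""
    return float(np.log(NormalDist(mu=0.0, sigma=np.sqrt(2.0)).pdf(x)))
```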

Solution: in addition to the decoder network modeling $p_\theta(x \mid z)$, define an additional encoder network $q_\phi(z \mid x)$ that approximates $p_\theta(z \mid x)$. We will see that this allows us to derive a lower bound on the data likelihood that is tractable, which we can optimize.

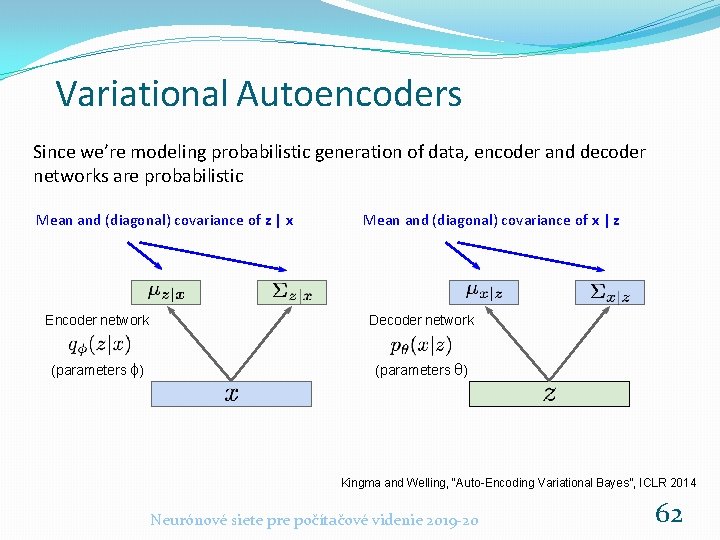

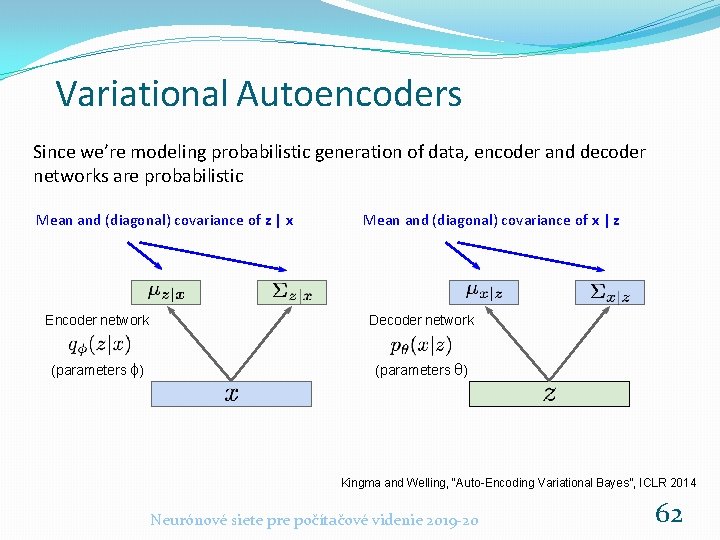

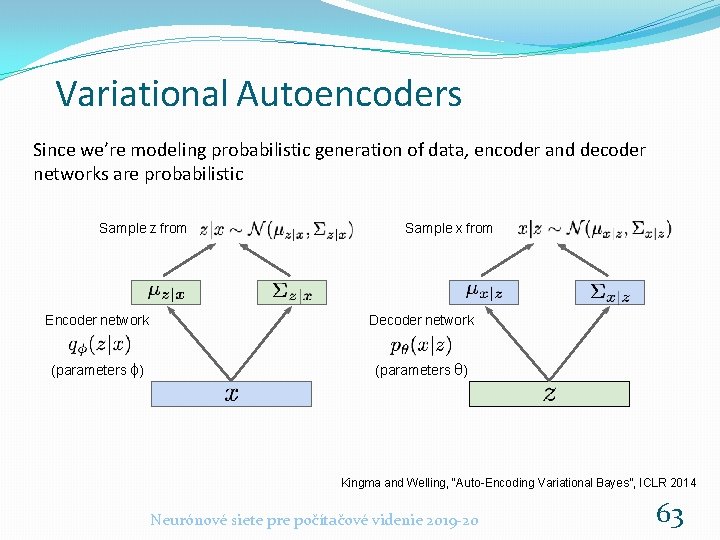

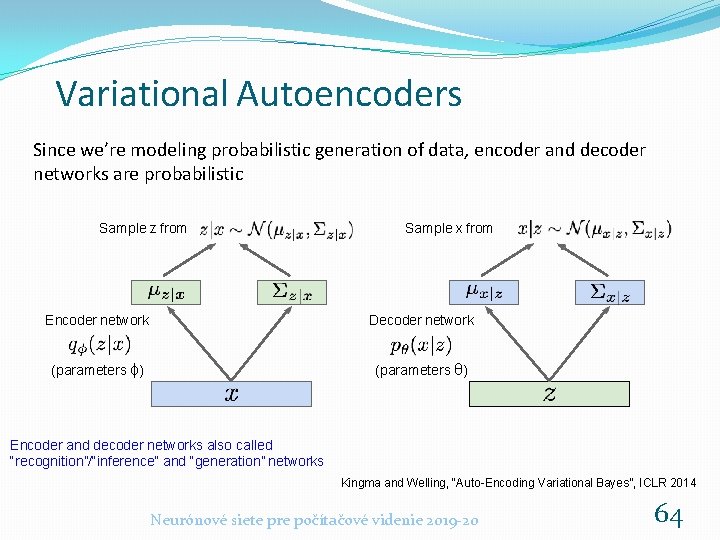

Variational Autoencoders

Since we're modeling probabilistic generation of data, the encoder and decoder networks are probabilistic:
- The encoder network $q_\phi(z \mid x)$ (parameters $\phi$) outputs the mean $\mu_{z|x}$ and (diagonal) covariance $\Sigma_{z|x}$ of $z \mid x$
- The decoder network $p_\theta(x \mid z)$ (parameters $\theta$) outputs the mean $\mu_{x|z}$ and (diagonal) covariance $\Sigma_{x|z}$ of $x \mid z$

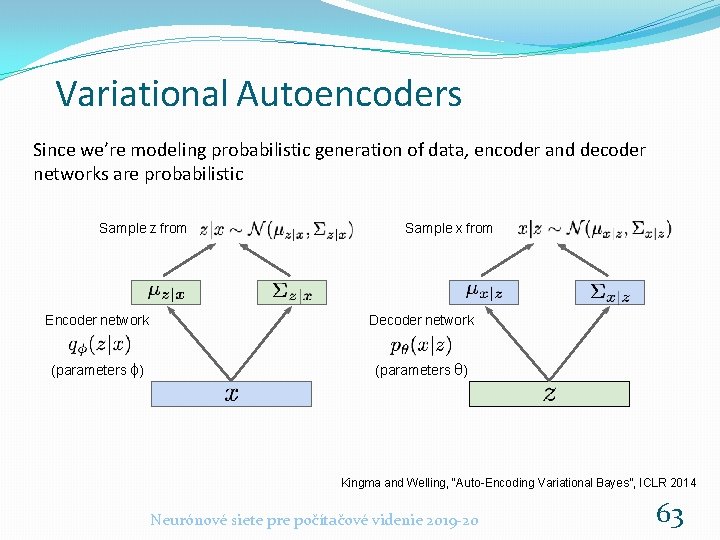

Sample z from a Gaussian with the encoder's mean and diagonal covariance, $z \mid x \sim \mathcal{N}(\mu_{z|x}, \Sigma_{z|x})$, and sample x from a Gaussian with the decoder's mean and diagonal covariance, $x \mid z \sim \mathcal{N}(\mu_{x|z}, \Sigma_{x|z})$.

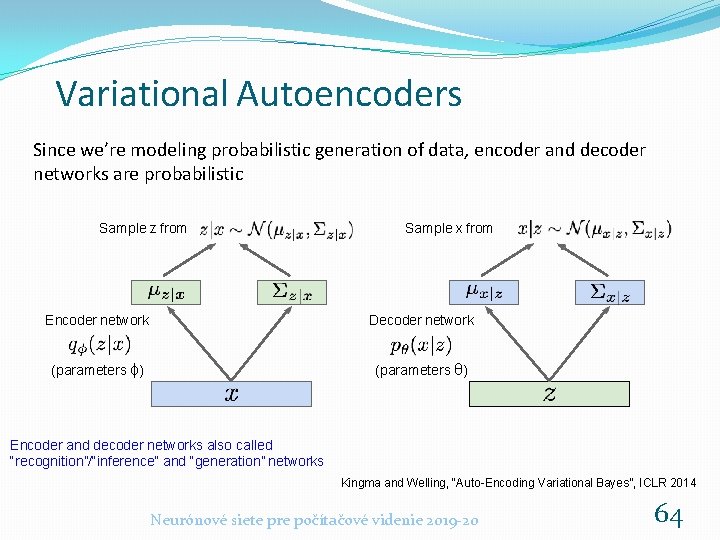

The encoder and decoder networks are also called “recognition”/“inference” and “generation” networks.

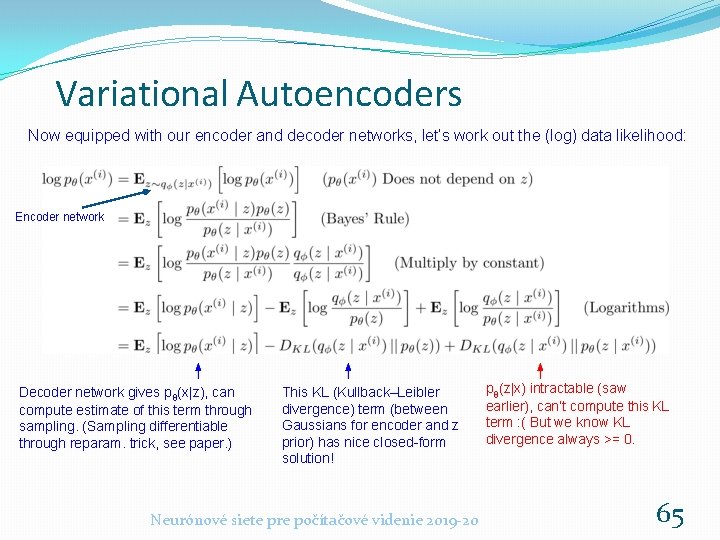

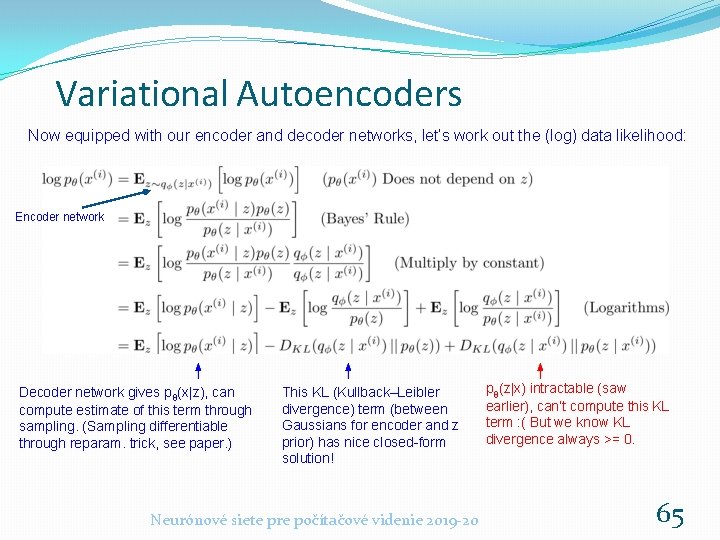

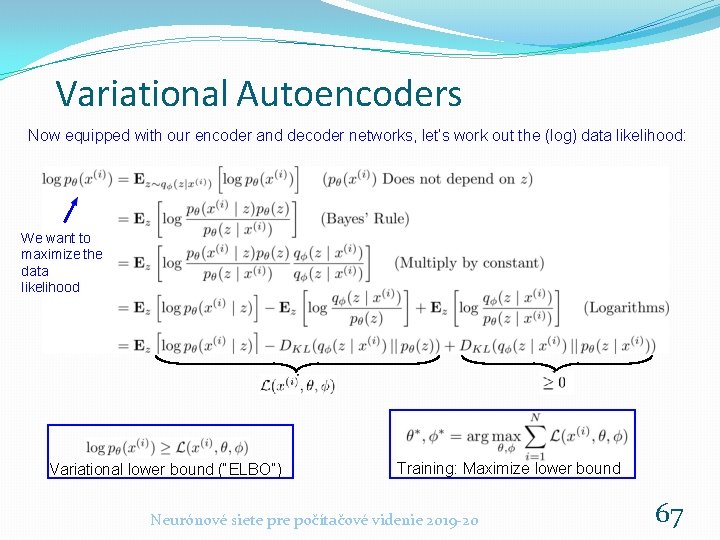

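Variational Autoencoders

Now equipped with our encoder and decoder networks, let's work out the (log) data likelihood, with all expectations taken over $z \sim q_\phi(z \mid x^{(i)})$:

$$
\begin{aligned}
\log p_\theta(x^{(i)}) &= \mathbf{E}_{z \sim q_\phi(z \mid x^{(i)})}\left[\log p_\theta(x^{(i)})\right] && (p_\theta(x^{(i)}) \text{ does not depend on } z) \\
&= \mathbf{E}_z\left[\log \frac{p_\theta(x^{(i)} \mid z)\, p_\theta(z)}{p_\theta(z \mid x^{(i)})}\right] && \text{(Bayes' rule)} \\
&= \mathbf{E}_z\left[\log \frac{p_\theta(x^{(i)} \mid z)\, p_\theta(z)}{p_\theta(z \mid x^{(i)})} \cdot \frac{q_\phi(z \mid x^{(i)})}{q_\phi(z \mid x^{(i)})}\right] && \text{(multiply by 1)} \\
&= \mathbf{E}_z\left[\log p_\theta(x^{(i)} \mid z)\right] - \mathbf{E}_z\left[\log \frac{q_\phi(z \mid x^{(i)})}{p_\theta(z)}\right] + \mathbf{E}_z\left[\log \frac{q_\phi(z \mid x^{(i)})}{p_\theta(z \mid x^{(i)})}\right] && \text{(logarithms)} \\
&= \mathbf{E}_z\left[\log p_\theta(x^{(i)} \mid z)\right] - D_{KL}\left(q_\phi(z \mid x^{(i)}) \,\|\, p_\theta(z)\right) + D_{KL}\left(q_\phi(z \mid x^{(i)}) \,\|\, p_\theta(z \mid x^{(i)})\right)
\end{aligned}
$$

- First term: the decoder network gives $p_\theta(x \mid z)$, so we can compute an estimate of this term through sampling (sampling is made differentiable through the reparameterization trick; see the paper).
- Second term: this KL (Kullback–Leibler divergence) term, between the Gaussians for the encoder and the z prior, has a nice closed-form solution!
- Third term: $p_\theta(z \mid x)$ is intractable (as seen earlier), so we can't compute this KL term :( But we know the KL divergence is always ≥ 0.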
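For a diagonal Gaussian encoder $q_\phi(z \mid x) = \mathcal{N}(\mu, \operatorname{diag}(\sigma^2))$ and the prior $p_\theta(z) = \mathcal{N}(0, I)$, the closed form of the second term is $D_{KL} = \frac{1}{2}\sum_j \left(\sigma_j^2 + \mu_j^2 - 1 - \log \sigma_j^2\right)$. A NumPy sketch, assuming the encoder outputs $\mu$ and $\log \sigma^2$ (a common parameterization, not something the slide specifies; function names are hypothetical), together with a Monte Carlo check of the formula:

```python
import numpy as np


def kl_to_standard_normal(mu: np.ndarray, log_var: np.ndarray) -> float:
    """Closed-form D_KL(N(mu, diag(exp(log_var))) || N(0, I)), summed over latent dimensions."""
    return float(0.5 * np.sum(np.exp(log_var) + mu ** 2 - 1.0 - log_var))


def kl_monte_carlo(mu: np.ndarray, log_var: np.ndarray, num_samples: int,
                   rng: np.random.Generator) -> float:
    """Sanity check: average of log q(z) - log p(z) over samples z ~ q."""
    std = np.exp(0.5 * log_var)
    z = mu + std * rng.standard_normal((num_samples, mu.size))
    log_q = -0.5 * np.sum(np.log(2 * np.pi) + log_var + ((z - mu) / std) ** 2, axis=1)
    log_p = -0.5 * np.sum(np.log(2 * np.pi) + z ** 2, axis=1)
    return float(np.mean(log_q - log_p))
```

The closed form needs no sampling and is exactly differentiable in $\mu$ and $\log \sigma^2$, which is why only the reconstruction term has to be estimated by sampling.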

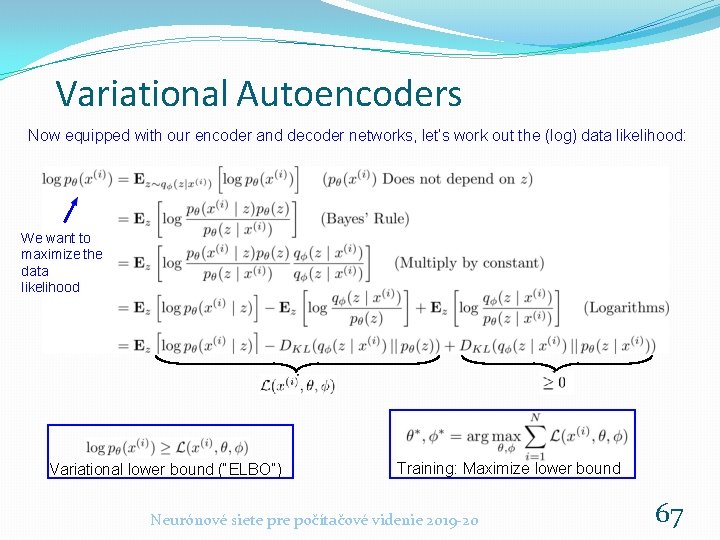

We want to maximize the data likelihood. Writing the (log) data likelihood as

$$\log p_\theta(x^{(i)}) = \underbrace{\mathbf{E}_z\left[\log p_\theta(x^{(i)} \mid z)\right] - D_{KL}\left(q_\phi(z \mid x^{(i)}) \,\|\, p_\theta(z)\right)}_{\mathcal{L}(x^{(i)}, \theta, \phi)} + \underbrace{D_{KL}\left(q_\phi(z \mid x^{(i)}) \,\|\, p_\theta(z \mid x^{(i)})\right)}_{\geq 0}$$

the first two terms form a tractable lower bound, which we can take the gradient of and optimize ($p_\theta(x \mid z)$ is differentiable, and so is the KL term), because the last KL term is always ≥ 0:

$$\log p_\theta(x^{(i)}) \geq \mathcal{L}(x^{(i)}, \theta, \phi)$$

This is the variational lower bound (“ELBO”). Its term $\mathbf{E}_z[\log p_\theta(x^{(i)} \mid z)]$ makes the model reconstruct the input data, and its term $D_{KL}(q_\phi(z \mid x^{(i)}) \,\|\, p_\theta(z))$ makes the approximate posterior distribution close to the prior.

Training: maximize the lower bound,

$$\theta^*, \phi^* = \arg\max_{\theta, \phi} \sum_{i=1}^{N} \mathcal{L}(x^{(i)}, \theta, \phi)$$

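Variational Autoencoders: putting it all together, maximizing the likelihood lower bound. For every minibatch of input data, compute this forward pass, and then backprop:

1. The encoder network $q_\phi(z \mid x)$ maps the input data x to $\mu_{z|x}$ and $\Sigma_{z|x}$; sample $z \mid x \sim \mathcal{N}(\mu_{z|x}, \Sigma_{z|x})$. The KL term makes the approximate posterior distribution close to the prior.
2. The decoder network $p_\theta(x \mid z)$ maps z to $\mu_{x|z}$ and $\Sigma_{x|z}$; sample $\hat{x} \mid z \sim \mathcal{N}(\mu_{x|z}, \Sigma_{x|z})$. The reconstruction term maximizes the likelihood of the original input being reconstructed.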
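The forward pass above can be sketched for a single input in NumPy. Everything here is an assumption for illustration: `encode` and `decode` are hypothetical callables returning a mean and a log-variance, and NumPy has no automatic differentiation, so this only shows the structure of the computation; in a framework with autodiff (e.g. PyTorch or JAX) the same steps would be backpropagated. The reparameterization writes $z = \mu + \sigma \odot \epsilon$ with $\epsilon \sim \mathcal{N}(0, I)$, so the randomness sits in $\epsilon$ and z is a deterministic, differentiable function of the encoder outputs.

```python
import numpy as np


def gaussian_log_density(x, mean, log_var) -> float:
    """log N(x; mean, diag(exp(log_var))), summed over dimensions."""
    return float(-0.5 * np.sum(np.log(2.0 * np.pi) + log_var + (x - mean) ** 2 / np.exp(log_var)))


def reparameterize(mu, log_var, rng):
    """z = mu + sigma * eps with eps ~ N(0, I)."""
    eps = rng.standard_normal(np.shape(mu))
    return mu + np.exp(0.5 * log_var) * eps


def elbo_estimate(x, encode, decode, rng, num_samples=1) -> float:
    """Monte Carlo estimate of E_q[log p(x|z)] - KL(q(z|x) || N(0, I)) for one input x."""
    mu_z, log_var_z = encode(x)                    # encoder: parameters of q(z|x)
    reconstruction = 0.0
    for _ in range(num_samples):
        z = reparameterize(mu_z, log_var_z, rng)   # sample z from q(z|x)
        mu_x, log_var_x = decode(z)                # decoder: parameters of p(x|z)
        reconstruction += gaussian_log_density(x, mu_x, log_var_x)
    reconstruction /= num_samples
    kl = 0.5 * np.sum(np.exp(log_var_z) + mu_z ** 2 - 1.0 - log_var_z)  # closed form
    return float(reconstruction - kl)
```

For example, with the toy model $p(z) = \mathcal{N}(0, 1)$, $p(x \mid z) = \mathcal{N}(z, 1)$ and an encoder that returns the exact posterior $\mathcal{N}(x/2, 1/2)$, the estimate approaches $\log p(x)$ as the number of samples grows; any other encoder gives a smaller value on average, as the bound predicts.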

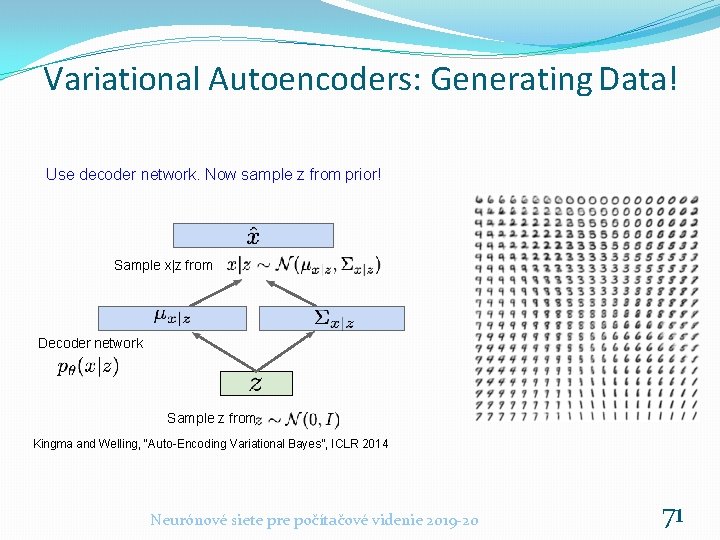

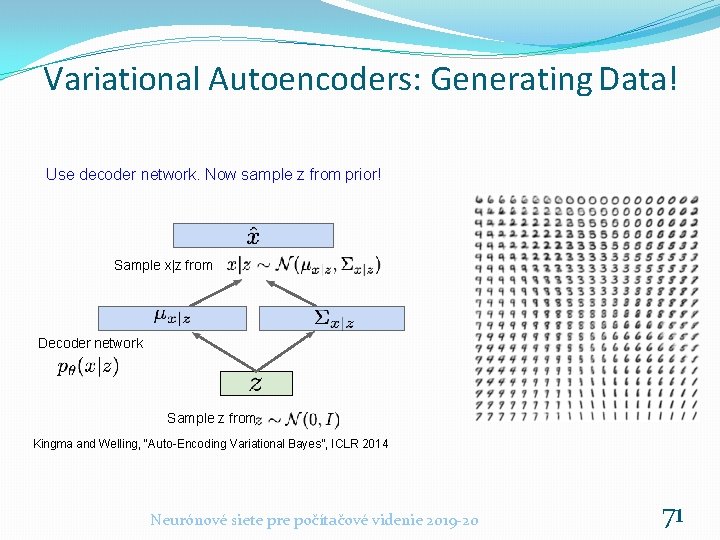

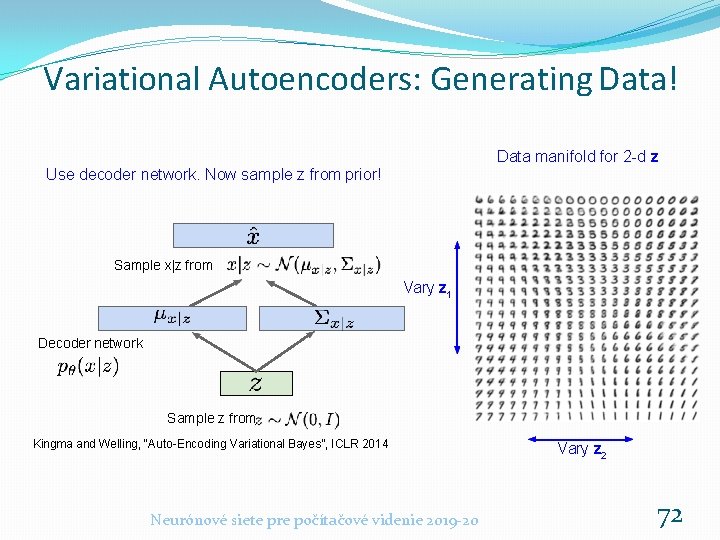

Variational Autoencoders: Generating Data!

Use the decoder network. Now sample z from the prior, $z \sim \mathcal{N}(0, I)$, and then sample x|z from the decoder's output distribution $p_\theta(x \mid z)$.


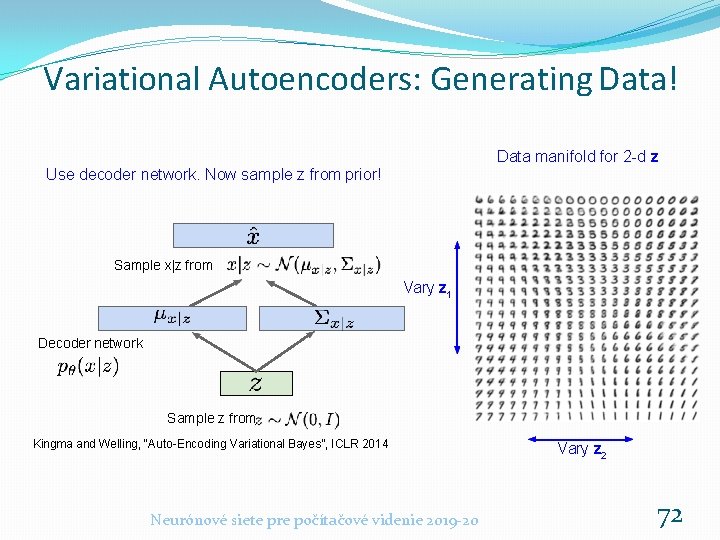

Data manifold for 2-D z: decode a grid of z values, varying $z_1$ along one axis and $z_2$ along the other.
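The manifold figure comes from decoding a regular grid of latent codes. One way to build such a grid (an assumption here, not stated on the slide) is to take evenly spaced probabilities in (0, 1) and map them through the inverse Gaussian CDF, so the grid covers the $\mathcal{N}(0, I)$ prior evenly in probability rather than in raw distance. The NumPy plus `statistics.NormalDist` sketch below builds that grid and, for random generation, draws $z \sim \mathcal{N}(0, I)$; the trained decoder itself is not shown, and the function names are hypothetical.

```python
from statistics import NormalDist

import numpy as np


def latent_grid(n_per_axis: int) -> np.ndarray:
    """(n, n, 2) grid of 2-D latent codes covering the N(0, I) prior evenly in probability."""
    probs = (np.arange(n_per_axis) + 0.5) / n_per_axis                   # evenly spaced in (0, 1)
    coords = np.array([NormalDist().inv_cdf(float(p)) for p in probs])  # inverse Gaussian CDF
    z1, z2 = np.meshgrid(coords, coords, indexing="ij")  # z1 varies along axis 0, z2 along axis 1
    return np.stack([z1, z2], axis=-1)


def sample_prior(n: int, latent_dim: int, rng: np.random.Generator) -> np.ndarray:
    """Random latent codes z ~ N(0, I) for generating new data."""
    return rng.standard_normal((n, latent_dim))


grid = latent_grid(5)
# Each grid[i, j] would be passed through a trained decoder (not shown) to draw one tile.
```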

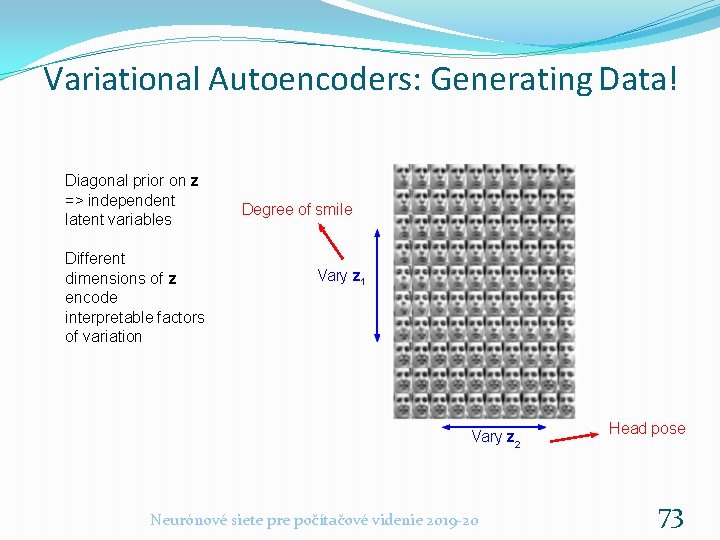

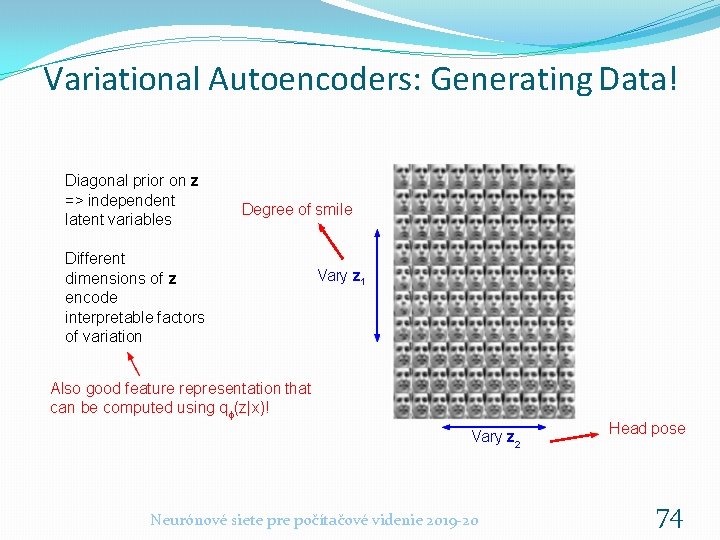

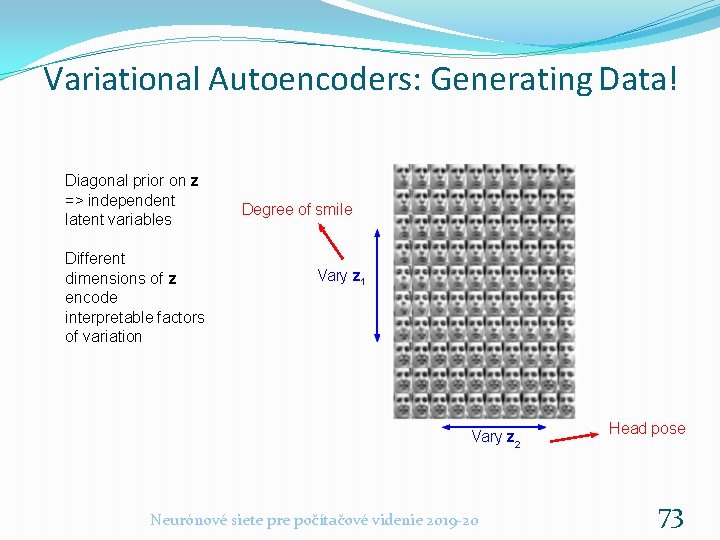

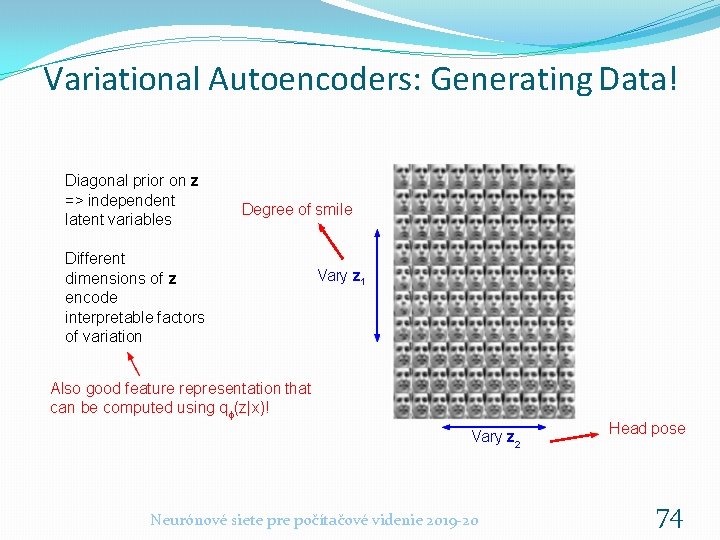

A diagonal prior on z => independent latent variables. Different dimensions of z encode interpretable factors of variation: in this example, varying $z_1$ changes the degree of smile and varying $z_2$ changes the head pose.

z is also a good feature representation that can be computed using $q_\phi(z \mid x)$!

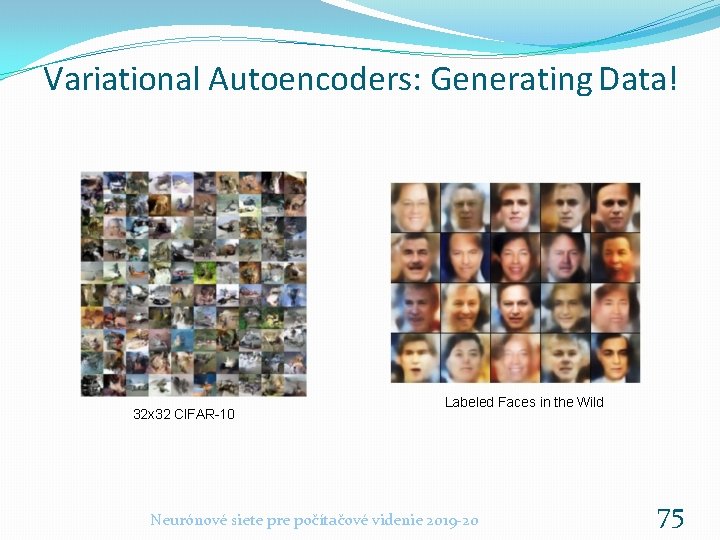

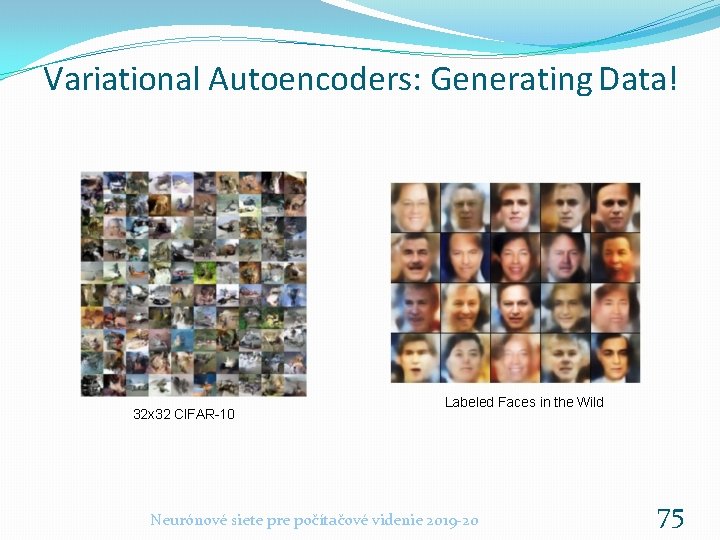

Generated samples: 32 × 32 CIFAR-10 and Labeled Faces in the Wild.

Variational Autoencoders

A probabilistic spin on traditional autoencoders => allows generating data. Defines an intractable density => derive and optimize a (variational) lower bound.

Pros:
- Principled approach to generative models
- Allows inference of $q(z \mid x)$, which can be a useful feature representation for other tasks

Cons:
- Maximizes a lower bound of the likelihood: okay, but not as good for evaluation as PixelRNN/PixelCNN
- Samples are blurrier and of lower quality compared to the state of the art (GANs)

Active areas of research:
- More flexible approximations, e.g. a richer approximate posterior instead of a diagonal Gaussian, such as Gaussian Mixture Models (GMMs)
- Incorporating structure in latent variables, e.g. categorical distributions

Generative Adversarial Networks (GAN)

So far... PixelCNNs define a tractable density function and optimize the likelihood of the training data; VAEs define an intractable density function with latent z and optimize a lower bound on the likelihood instead. What if we give up on explicitly modeling the density, and just want the ability to sample?

GANs: don't work with any explicit density function! Instead, take a game-theoretic approach: learn to generate from the training distribution through a 2-player game.

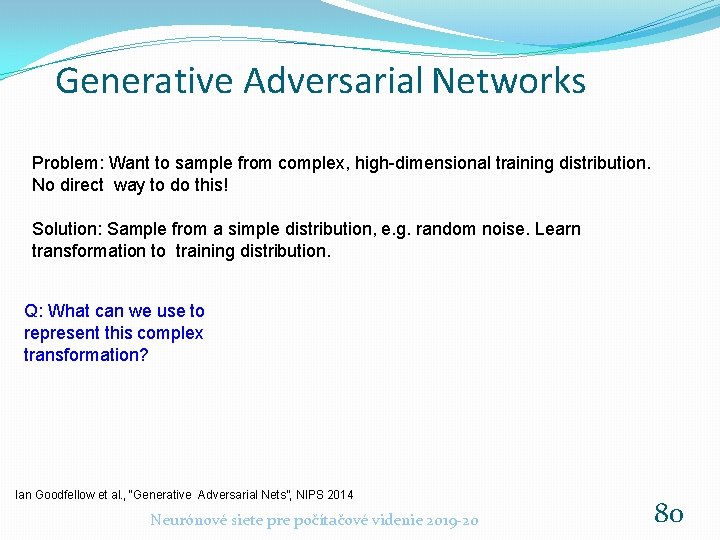

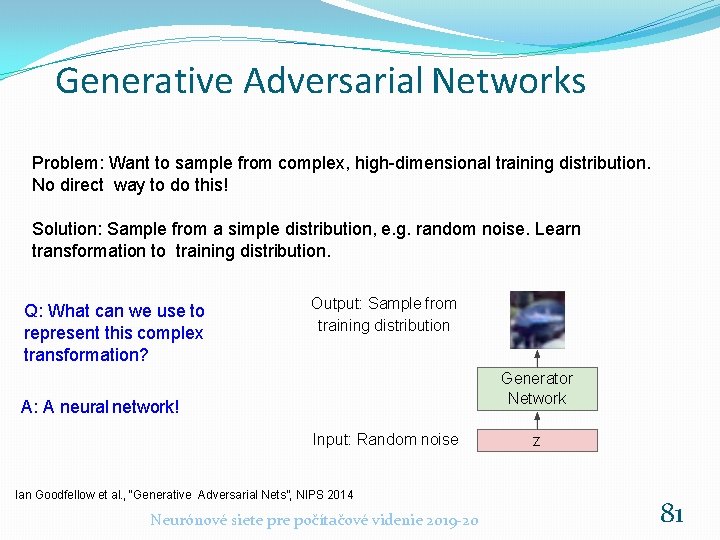

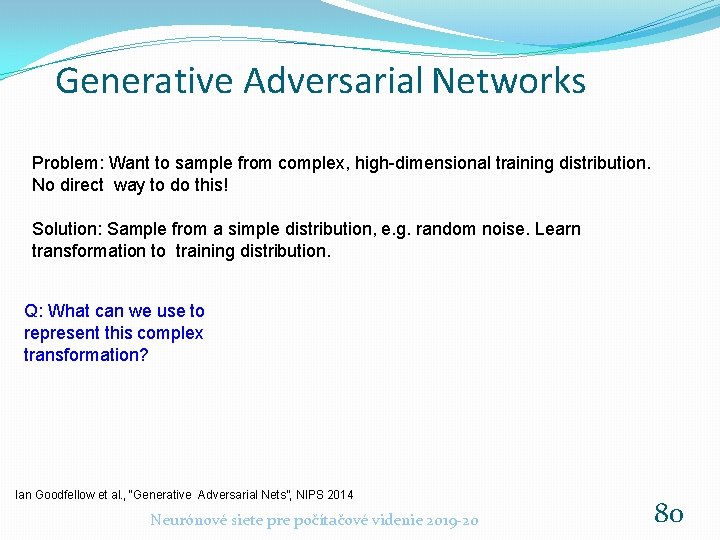

Generative Adversarial Networks

Problem: we want to sample from a complex, high-dimensional training distribution. There is no direct way to do this!

Solution: sample from a simple distribution, e.g. random noise, and learn a transformation to the training distribution.

Q: What can we use to represent this complex transformation?

Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014

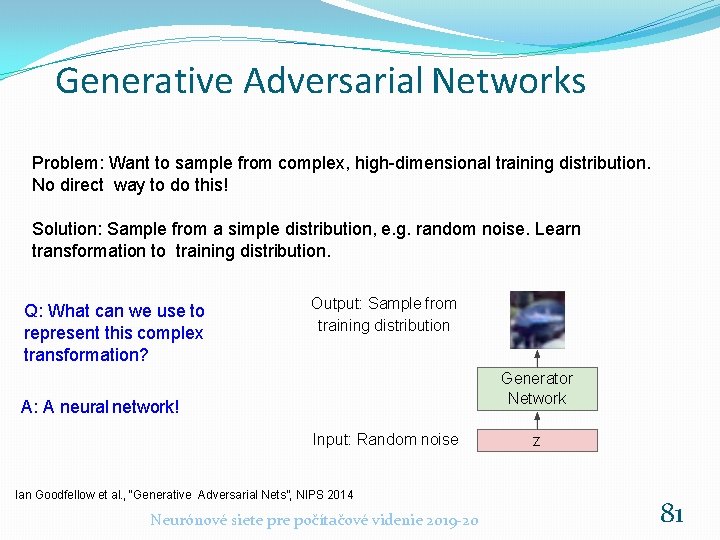

A: A neural network! The generator network takes random noise z as input and outputs a sample from the training distribution.
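Concretely, the generator is just a network that maps a noise vector z to an image. A minimal, untrained NumPy sketch; the two-layer MLP, the layer sizes, the 28 × 28 output and the tanh output range are all illustrative assumptions, and the function names are hypothetical. Only training, through the two-player game, would make its outputs resemble the training distribution.

```python
import numpy as np


def init_generator(noise_dim: int, hidden_dim: int, out_dim: int,
                   rng: np.random.Generator) -> dict:
    """Random weights for a hypothetical two-layer MLP generator."""
    return {
        "w1": rng.normal(scale=1.0 / np.sqrt(noise_dim), size=(noise_dim, hidden_dim)),
        "b1": np.zeros(hidden_dim),
        "w2": rng.normal(scale=1.0 / np.sqrt(hidden_dim), size=(hidden_dim, out_dim)),
        "b2": np.zeros(out_dim),
    }


def generator(params: dict, z: np.ndarray) -> np.ndarray:
    """G(z): map noise vectors (batch, noise_dim) to flattened images (batch, out_dim)."""
    hidden = np.maximum(0.0, z @ params["w1"] + params["b1"])  # ReLU
    return np.tanh(hidden @ params["w2"] + params["b2"])       # pixel values in [-1, 1]


rng = np.random.default_rng(0)
params = init_generator(noise_dim=100, hidden_dim=256, out_dim=28 * 28, rng=rng)
z = rng.standard_normal((16, 100))  # input: random noise from a simple distribution
fake_images = generator(params, z).reshape(16, 28, 28)
```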

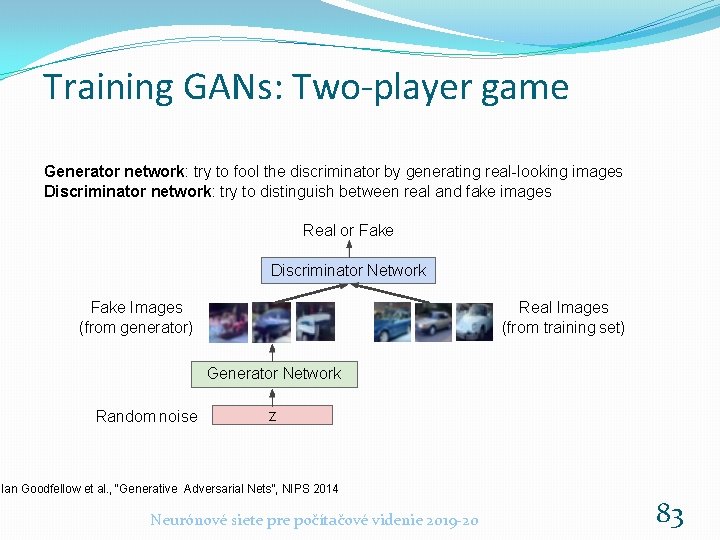

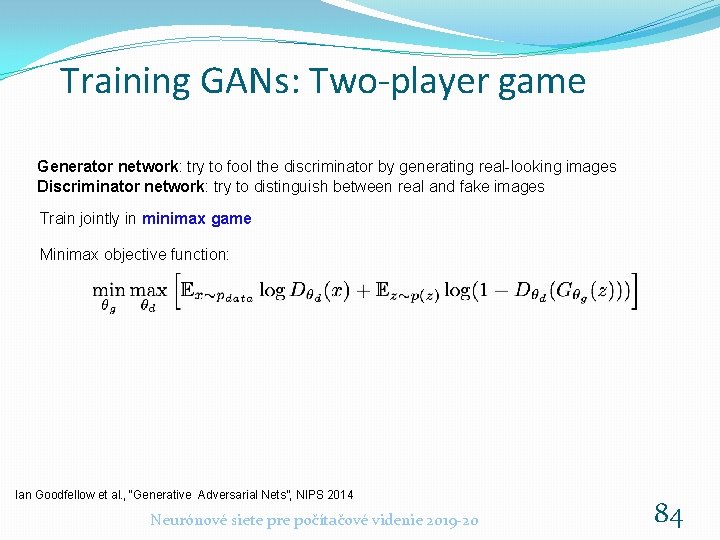

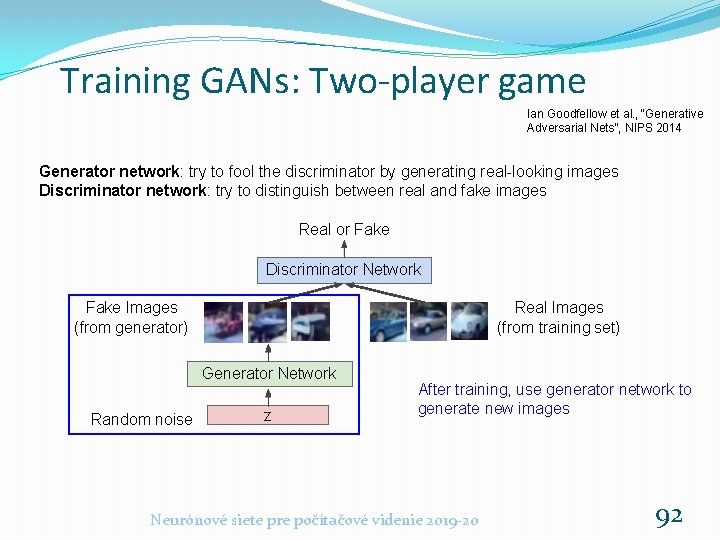

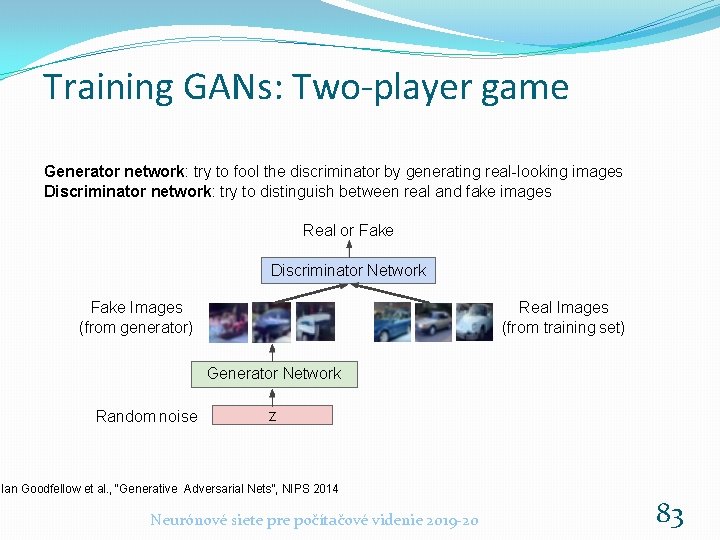

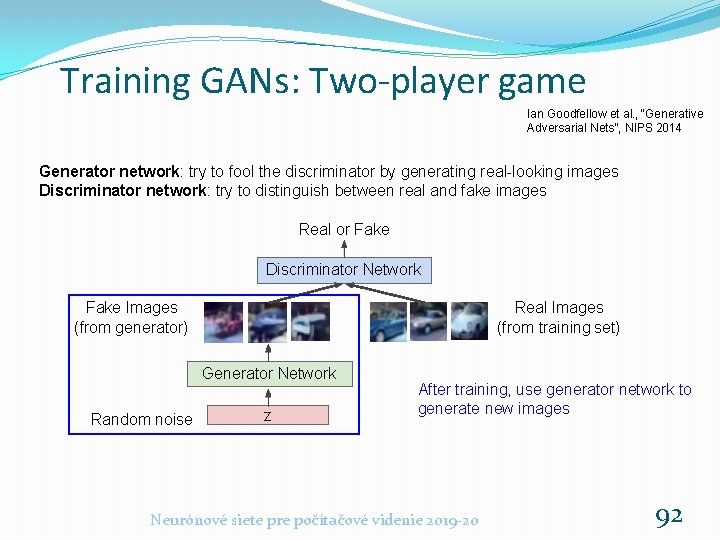


Training GANs: two-player game. Generator network: tries to fool the discriminator by generating real-looking images. Discriminator network: tries to distinguish between real and fake images. Diagram: random noise z goes into the generator network, which produces fake images; the discriminator network receives fake images (from the generator) and real images (from the training set) and outputs "real" or "fake". Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014.

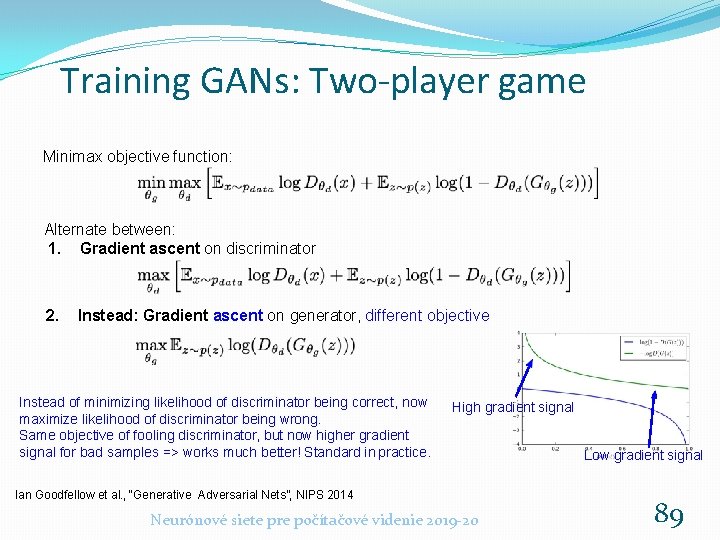

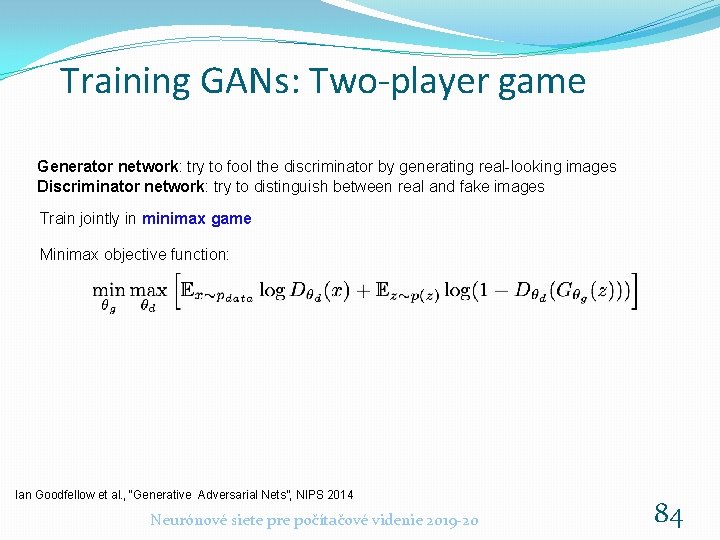

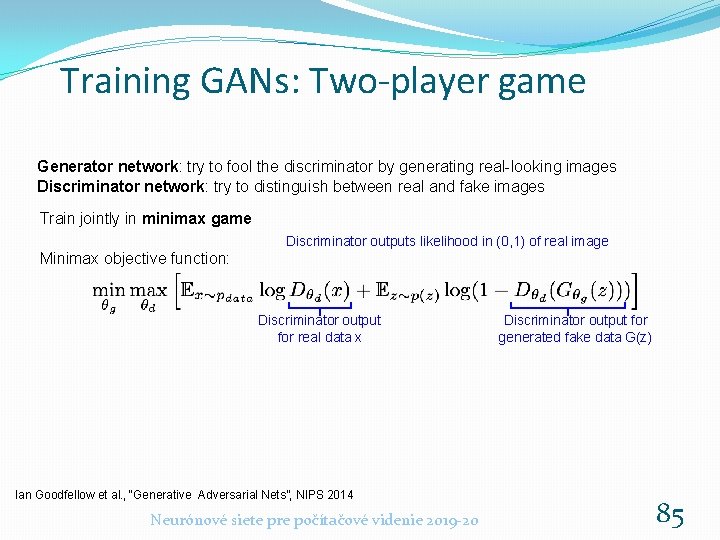

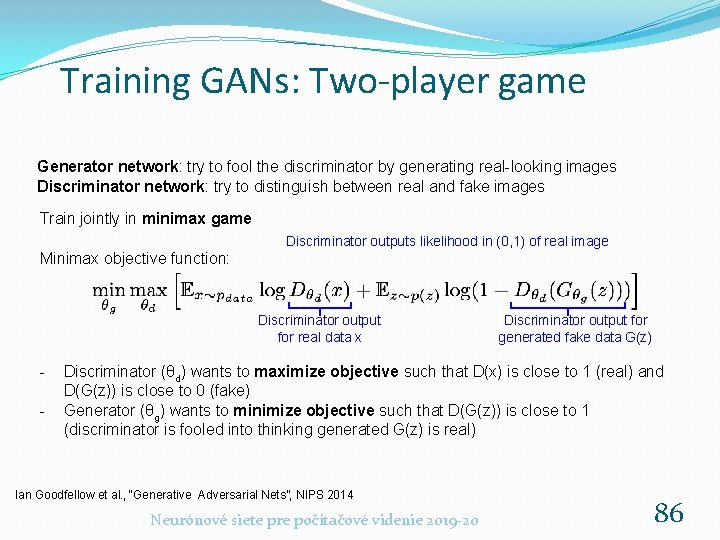


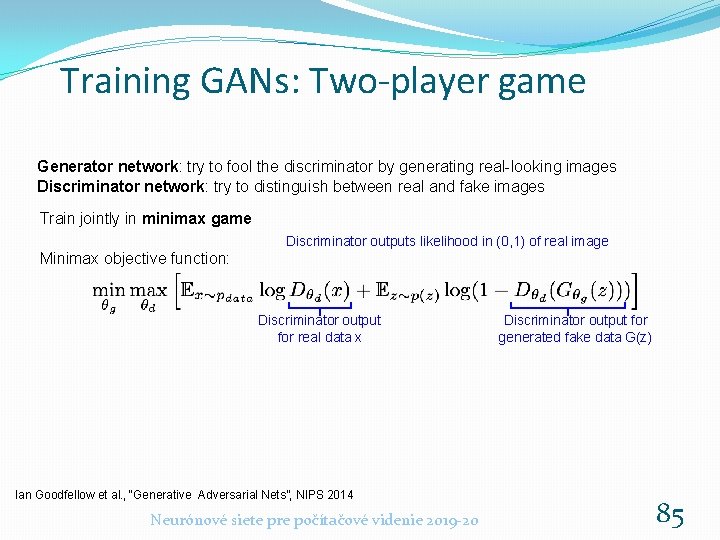


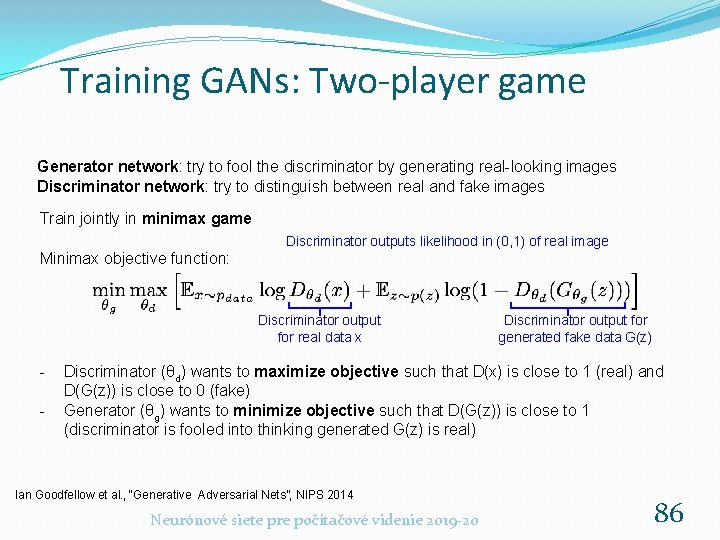

Training GANs: two-player game. Generator network: tries to fool the discriminator by generating real-looking images. Discriminator network: tries to distinguish between real and fake images. Train both jointly in a minimax game. Minimax objective function:

$$\min_{\theta_g} \max_{\theta_d} \left[ \mathbb{E}_{x \sim p_{data}} \log D_{\theta_d}(x) + \mathbb{E}_{z \sim p(z)} \log\left(1 - D_{\theta_d}(G_{\theta_g}(z))\right) \right]$$

The discriminator outputs a likelihood in (0, 1) that its input is a real image. The first term uses the discriminator output for real data $x$, the second the discriminator output for generated fake data $G(z)$. The discriminator ($\theta_d$) wants to maximize the objective so that $D(x)$ is close to 1 (real) and $D(G(z))$ is close to 0 (fake). The generator ($\theta_g$) wants to minimize the objective so that $D(G(z))$ is close to 1 (the discriminator is fooled into thinking the generated $G(z)$ is real). Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014.
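To see what the objective measures, here is a minibatch estimate of the value function from raw discriminator outputs. The inputs are hypothetical probabilities; in a real model they would come from running D on a batch of real images and a batch of generated ones.

```python
import math

def gan_value(d_real, d_fake):
    """Minibatch estimate of V(D, G) = E_x[log D(x)] + E_z[log(1 - D(G(z)))].

    d_real: discriminator outputs D(x) on real images, each in (0, 1)
    d_fake: discriminator outputs D(G(z)) on generated images, each in (0, 1)
    """
    real_term = sum(math.log(d) for d in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - d) for d in d_fake) / len(d_fake)
    return real_term + fake_term

# A discriminator that outputs 0.5 everywhere gives -log 4 (about -1.386).
print(gan_value([0.5, 0.5], [0.5, 0.5]))
# A confident, correct discriminator pushes the value up towards its maximum of 0.
print(gan_value([0.99, 0.95], [0.01, 0.05]))
```

The discriminator tries to raise this number and the generator tries to lower it; -log 4 is the value at the theoretical equilibrium where the generated distribution matches the data and D outputs 1/2 everywhere.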

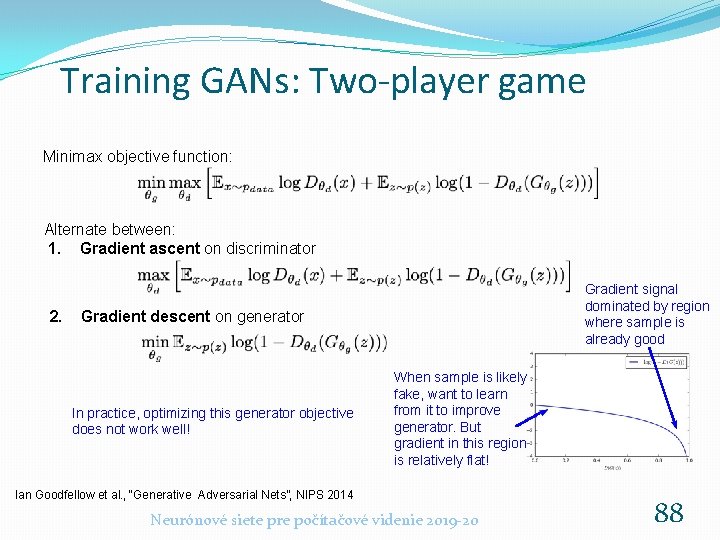


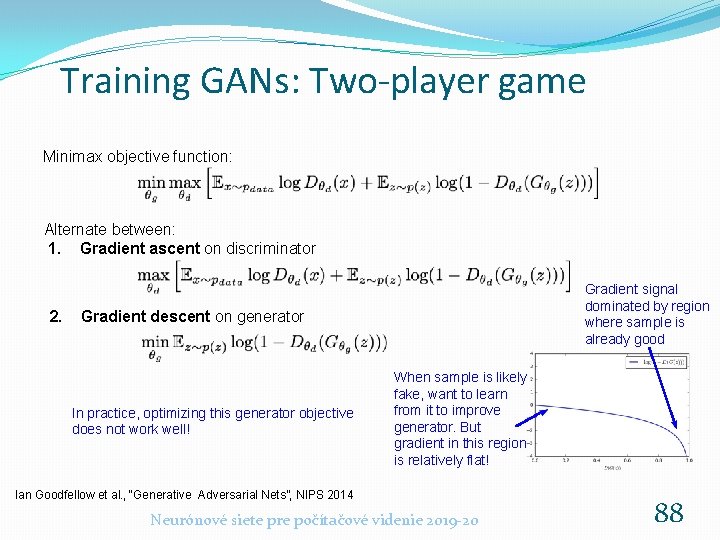

Training GANs: two-player game. Alternate between:
1. Gradient ascent on the discriminator: $\max_{\theta_d} \left[ \mathbb{E}_{x \sim p_{data}} \log D_{\theta_d}(x) + \mathbb{E}_{z \sim p(z)} \log\left(1 - D_{\theta_d}(G_{\theta_g}(z))\right) \right]$
2. Gradient descent on the generator: $\min_{\theta_g} \mathbb{E}_{z \sim p(z)} \log\left(1 - D_{\theta_d}(G_{\theta_g}(z))\right)$

In practice, optimizing this generator objective does not work well. When a sample is likely fake, we want to learn from it to improve the generator, but the gradient in this region is relatively flat: the gradient signal is dominated by the region where the sample is already good. Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014.

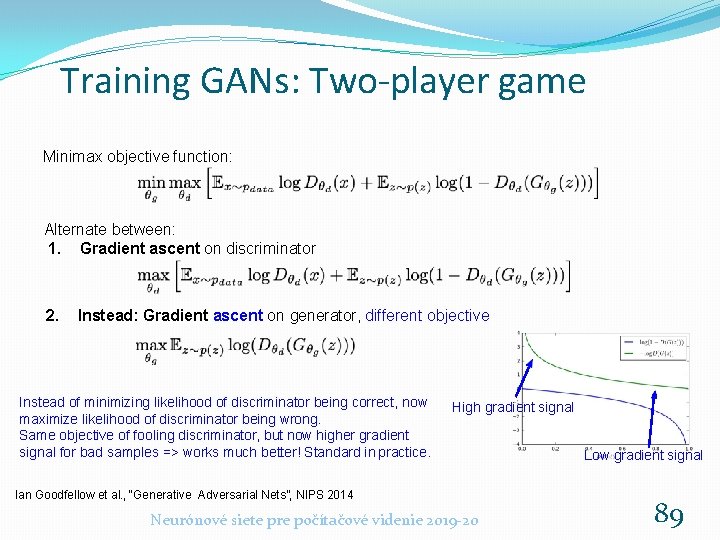

Training GANs: two-player game. Alternate between:
1. Gradient ascent on the discriminator (same objective as before).
2. Instead: gradient ascent on the generator with a different objective, $\max_{\theta_g} \mathbb{E}_{z \sim p(z)} \log D_{\theta_d}(G_{\theta_g}(z))$

Instead of minimizing the likelihood of the discriminator being correct, we now maximize the likelihood of the discriminator being wrong. The goal of fooling the discriminator is the same, but the gradient signal is now high for bad samples (where $D(G(z))$ is close to 0) and low for good ones, so this works much better. It is standard in practice. Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014.
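A quick numeric check of the two generator objectives, matching the curves on the slide: the slopes below are derivatives with respect to D(G(z)) only (the chain rule through D and G is left out for clarity).

```python
def saturating_grad(d):
    """d/dD of log(1 - D): slope of the original generator objective (minimized)."""
    return -1.0 / (1.0 - d)

def non_saturating_grad(d):
    """d/dD of log D: slope of the alternative generator objective (maximized)."""
    return 1.0 / d

for d in (0.01, 0.5, 0.99):
    print(f"D(G(z))={d:.2f}  |slope of log(1-D)|={abs(saturating_grad(d)):7.2f}"
          f"  |slope of log D|={abs(non_saturating_grad(d)):7.2f}")
```

For a bad sample (D(G(z)) = 0.01) the original objective has a slope of magnitude about 1 while log D has a slope of 100; for a good sample (0.99) the roles are reversed. That is exactly the "high gradient signal for bad samples" argument above.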

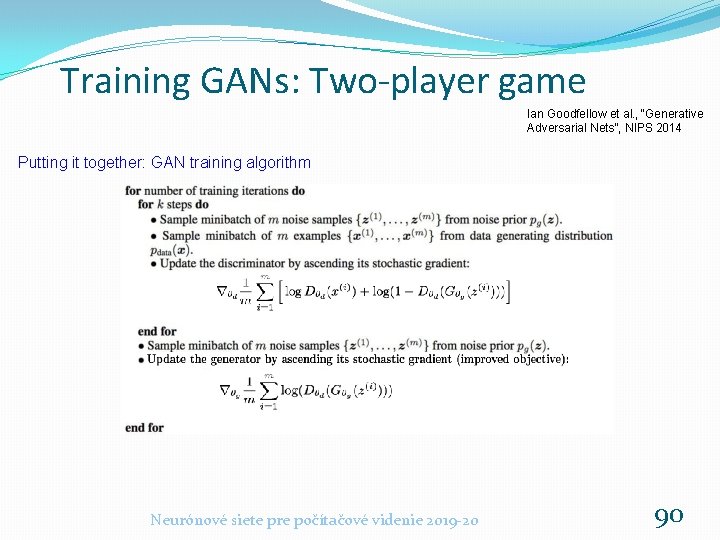

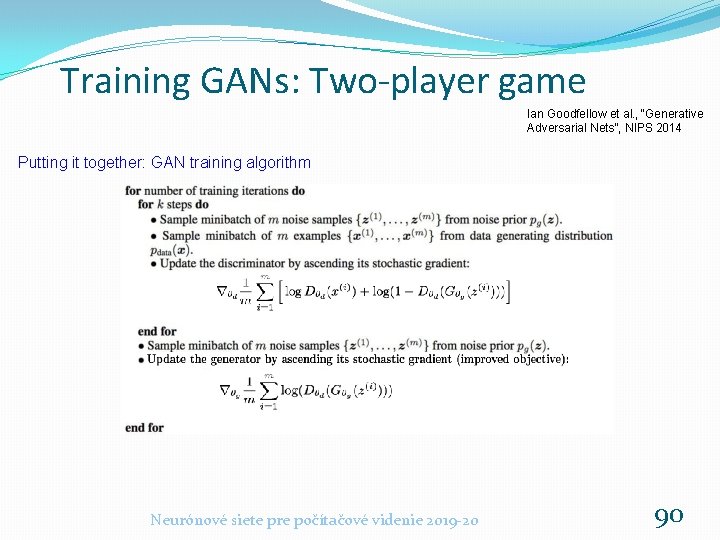


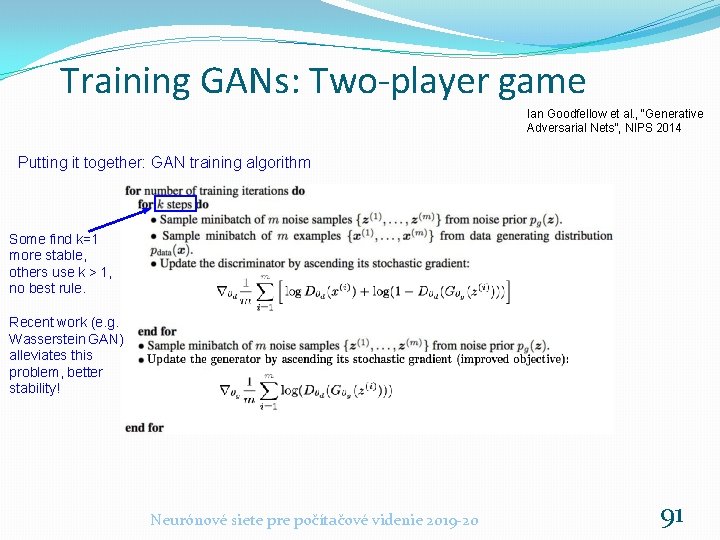

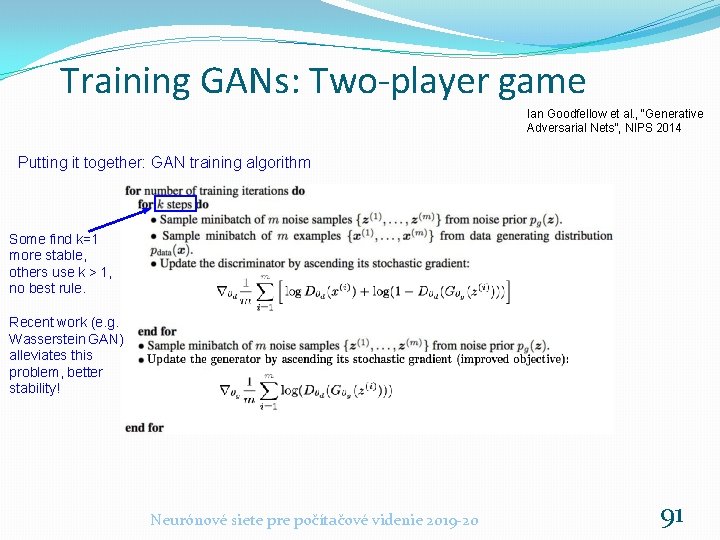

Training GANs: two-player game. Putting it together: the GAN training algorithm (Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014). In each training iteration, run k steps of gradient ascent on the discriminator, then one update of the generator. Some find k = 1 more stable, others use k > 1; there is no best rule. Recent work (e.g. Wasserstein GAN) alleviates this problem with better stability.
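The loop structure (k discriminator steps, then one generator step) can be sketched on a 1-D toy problem with hand-derived gradients. Everything about the setup is an assumption for illustration: real data x ~ N(2, 1), a one-parameter generator G(z) = z + b, a logistic discriminator D(x) = sigmoid(w·x + c), plain gradient ascent, and the non-saturating generator objective log D(G(z)) rather than the original minimax one.

```python
import math
import random

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

def train_toy_gan(steps=2000, k=1, batch=64, lr_d=0.1, lr_g=0.05, seed=0):
    """Alternating GAN updates on a hypothetical 1-D problem.

    Real data: x ~ N(2, 1).  Generator: G(z) = z + b, z ~ N(0, 1).
    Discriminator: D(x) = sigmoid(w * x + c).
    """
    rng = random.Random(seed)
    w, c, b = 0.0, 0.0, 0.0
    for _ in range(steps):
        # k steps of gradient ascent on E[log D(x)] + E[log(1 - D(G(z)))]
        for _ in range(k):
            gw = gc = 0.0
            for _ in range(batch):
                x_real = rng.gauss(2.0, 1.0)
                g = 1.0 - sigmoid(w * x_real + c)      # d/dt of log sigmoid(t)
                gw += g * x_real
                gc += g
                x_fake = rng.gauss(0.0, 1.0) + b
                g = -sigmoid(w * x_fake + c)           # d/dt of log(1 - sigmoid(t))
                gw += g * x_fake
                gc += g
            w += lr_d * gw / batch
            c += lr_d * gc / batch
        # one step of gradient ascent on the non-saturating objective E[log D(G(z))]
        gb = 0.0
        for _ in range(batch):
            x_fake = rng.gauss(0.0, 1.0) + b
            gb += (1.0 - sigmoid(w * x_fake + c)) * w  # dG/db = 1
        b += lr_g * gb / batch
    return b, w, c

b, w, c = train_toy_gan()
print(f"generator shift b = {b:.3f} (real mean is 2.0), discriminator w = {w:.3f}")
```

Because this linear discriminator can only "see" a difference in means, the generator is pushed until its mean matches the data, at which point D becomes uninformative (w near 0). Setting `k=2` gives the k > 1 variant mentioned above.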

Training GANs: two-player game (Ian Goodfellow et al., “Generative Adversarial Nets”, NIPS 2014). The generator network tries to fool the discriminator by generating real-looking images; the discriminator network tries to distinguish between real images (from the training set) and fake images (from the generator). After training, use the generator network to generate new images from random noise z.

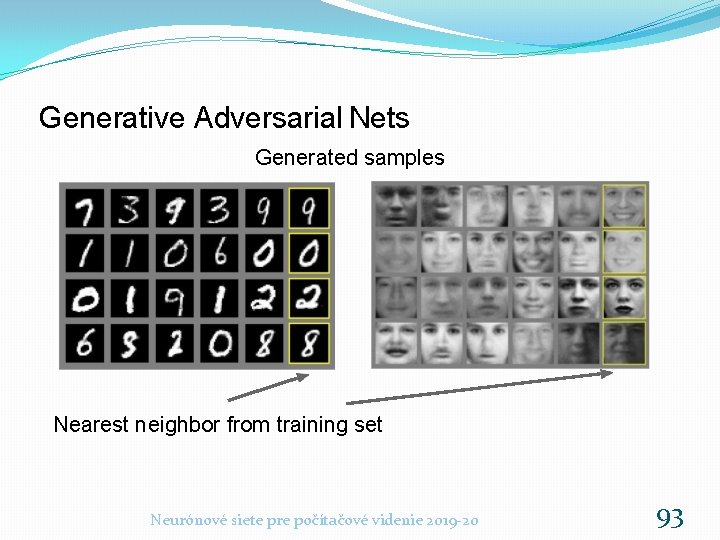

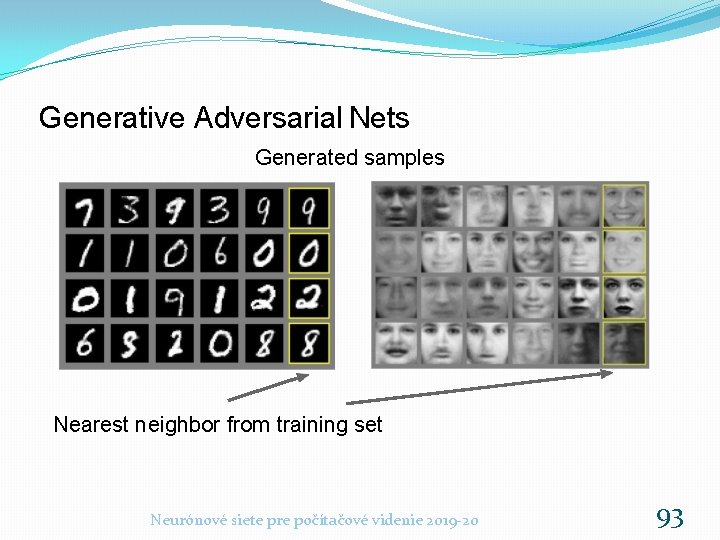

Generative Adversarial Nets. Generated samples, shown next to their nearest neighbor from the training set.

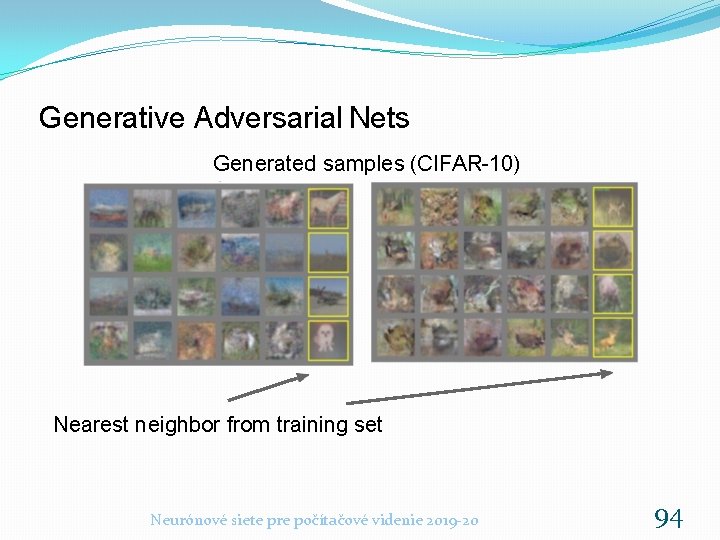

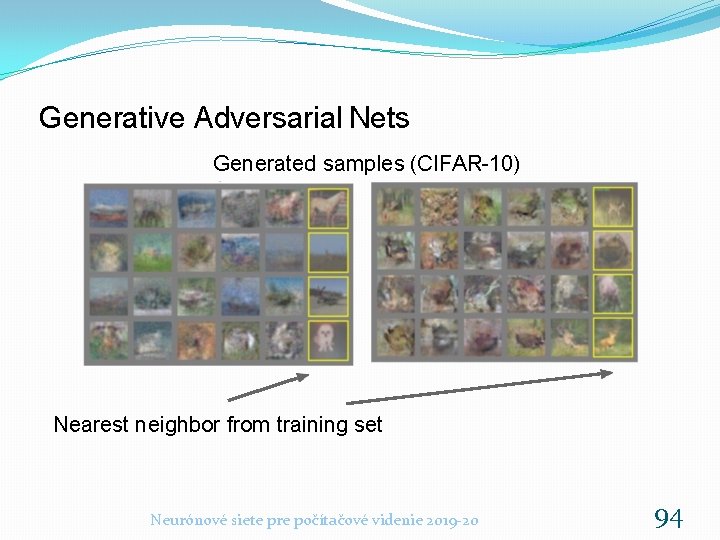

Generative Adversarial Nets. Generated samples (CIFAR-10), shown next to their nearest neighbor from the training set.
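The nearest-neighbor comparison checks that generated images are not simply memorized training images. A minimal sketch, assuming plain Euclidean (L2) distance over flattened pixel values (the slides do not state which metric was used) and using tiny hypothetical "images":

```python
import math

def nearest_neighbor(sample, training_set):
    """Return (index, distance) of the training image closest to `sample`
    in Euclidean (L2) distance over flattened pixel values."""
    best_index, best_dist = -1, math.inf
    for i, image in enumerate(training_set):
        dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(sample, image)))
        if dist < best_dist:
            best_index, best_dist = i, dist
    return best_index, best_dist

# Hypothetical 2x2 grayscale images, flattened to 4 values each.
train = [[0, 0, 0, 0], [1, 1, 1, 1], [0, 1, 0, 1]]
generated = [0.9, 1.0, 0.8, 1.0]
print(nearest_neighbor(generated, train))
```

If the closest training image is visibly different from the sample, the generator has produced something new rather than copying its training data.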

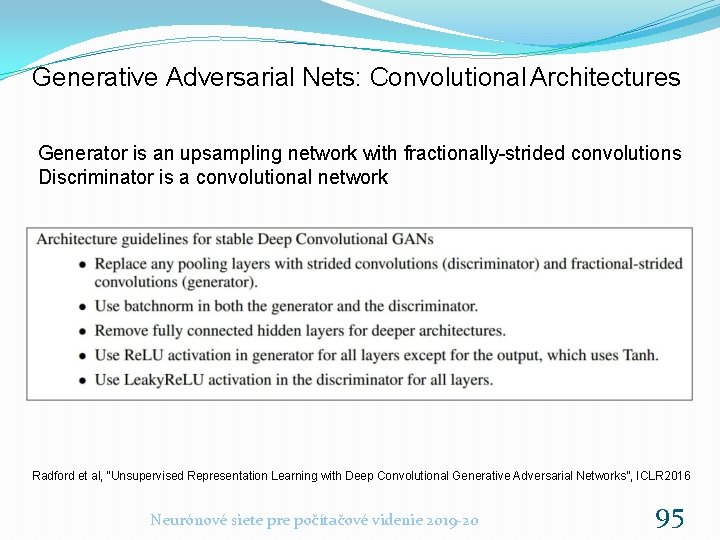

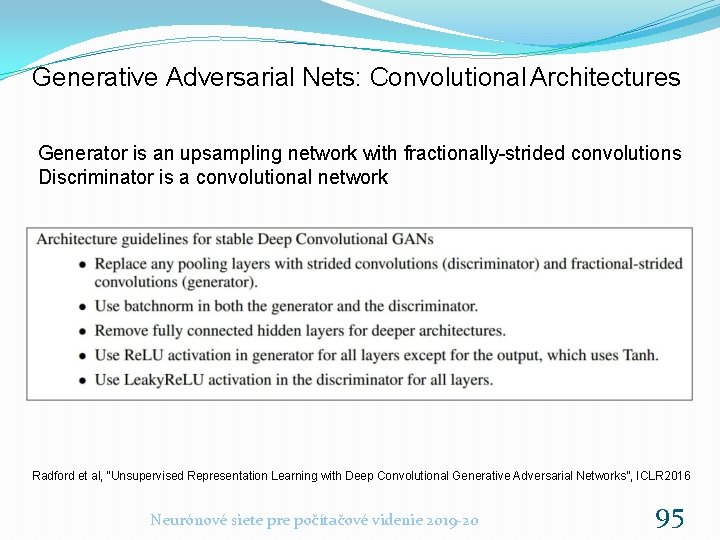

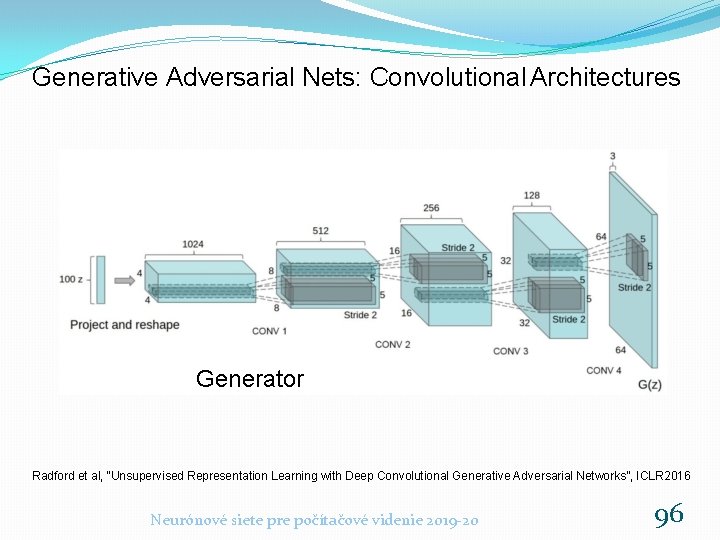

Generative Adversarial Nets: Convolutional Architectures. The generator is an upsampling network with fractionally-strided convolutions; the discriminator is a convolutional network. Radford et al., “Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks”, ICLR 2016.

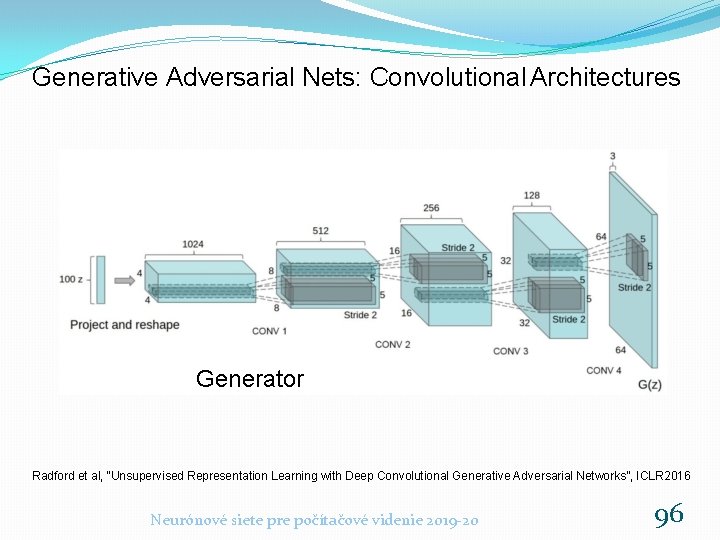

Generative Adversarial Nets: Convolutional Architectures. Generator architecture. Radford et al., “Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks”, ICLR 2016.
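A fractionally-strided (transposed) convolution upsamples by letting each input value "paint" a scaled copy of the kernel into the output, with copies placed `stride` positions apart. The 1-D sketch below shows the mechanism, and the size formula traces a DCGAN-style generator that doubles the spatial size four times, from 4×4 up to 64×64. The kernel size 4 and padding 1 used here are illustrative assumptions chosen so that each layer exactly doubles the size.

```python
def conv_transpose_1d(x, kernel, stride):
    """Fractionally-strided (transposed) 1-D convolution without padding.

    Each input value scatters a scaled copy of the kernel into the output;
    copies start `stride` positions apart, so the signal is upsampled.
    """
    out = [0.0] * ((len(x) - 1) * stride + len(kernel))
    for i, xi in enumerate(x):
        for j, kj in enumerate(kernel):
            out[i * stride + j] += xi * kj
    return out

def conv_transpose_out_size(n, kernel, stride, padding, output_padding=0):
    """Output length of a transposed convolution along one spatial axis."""
    return (n - 1) * stride - 2 * padding + kernel + output_padding

print(conv_transpose_1d([1.0, 2.0], [1.0, 1.0, 1.0], stride=2))
# [1.0, 1.0, 3.0, 2.0, 2.0]: overlapping kernel copies are summed

# Spatial sizes through four stride-2 layers (kernel 4, padding 1 assumed).
size, sizes = 4, [4]
for _ in range(4):
    size = conv_transpose_out_size(size, kernel=4, stride=2, padding=1)
    sizes.append(size)
print(sizes)  # [4, 8, 16, 32, 64]
```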

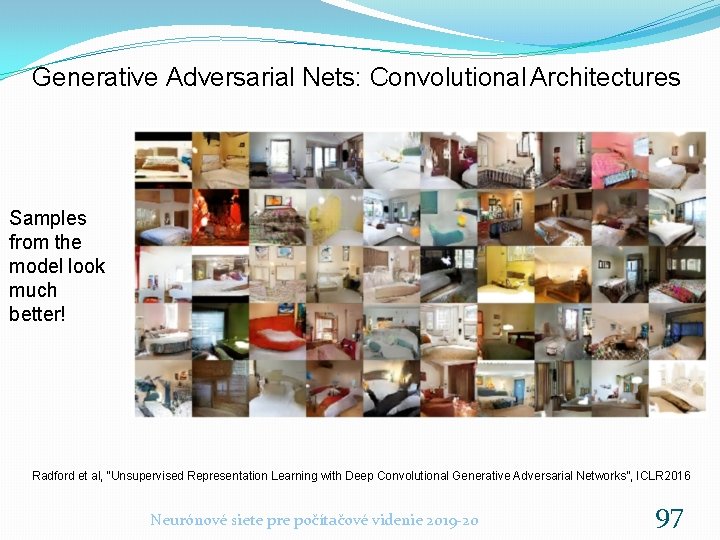

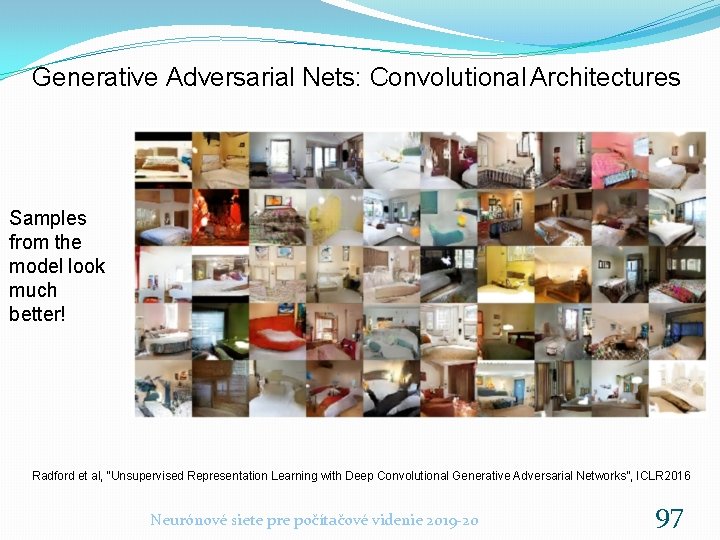

Generative Adversarial Nets: Convolutional Architectures. Samples from the model look much better! Radford et al., “Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks”, ICLR 2016.

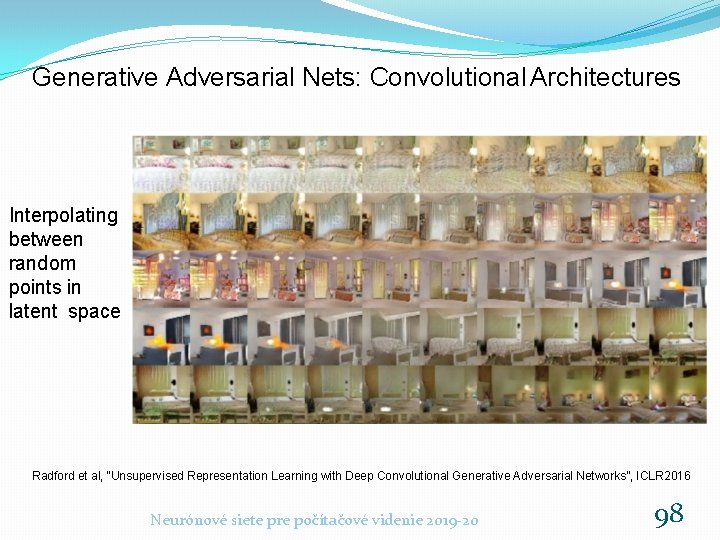

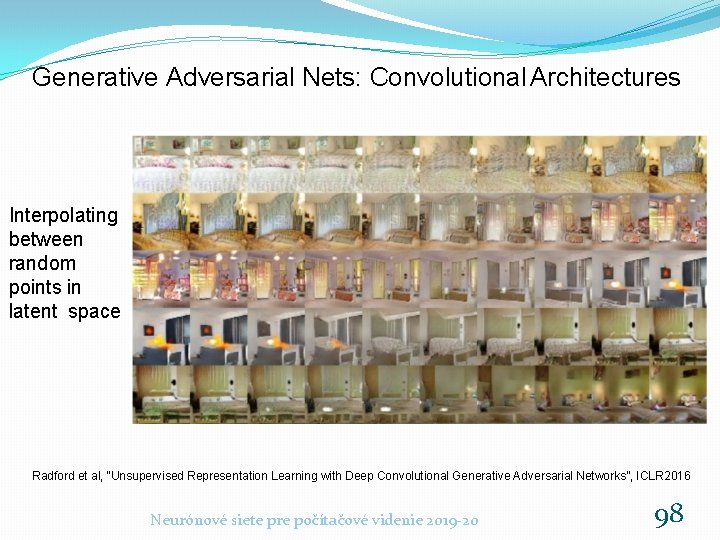

Generative Adversarial Nets: Convolutional Architectures. Interpolating between random points in latent space. Radford et al., “Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks”, ICLR 2016.
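Interpolation happens entirely in latent space: pick two random codes z0 and z1, walk along the straight line between them, and decode each intermediate code with the generator to get one frame of the image sequence. A minimal sketch using linear interpolation on hypothetical 2-D codes (the generator call itself is left out):

```python
def lerp(z0, z1, t):
    """Linear interpolation between latent vectors z0 and z1 at fraction t in [0, 1]."""
    return [(1.0 - t) * a + t * b for a, b in zip(z0, z1)]

def interpolation_path(z0, z1, steps):
    """Evenly spaced latent codes from z0 to z1 (inclusive).

    Each code would then be decoded as G(z) to obtain one image.
    """
    return [lerp(z0, z1, i / (steps - 1)) for i in range(steps)]

path = interpolation_path([0.0, 1.0], [1.0, 0.0], steps=5)
print(path)
```

Smooth changes in the decoded images along such a path suggest the generator has learned a meaningful latent space rather than memorizing individual examples.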

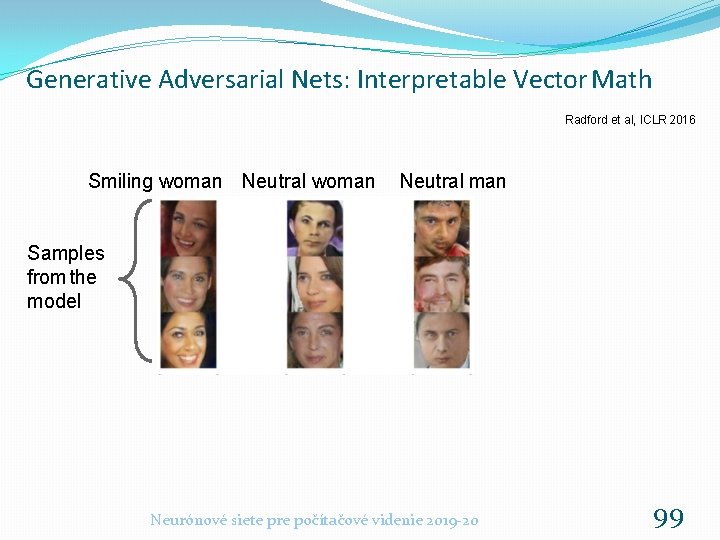

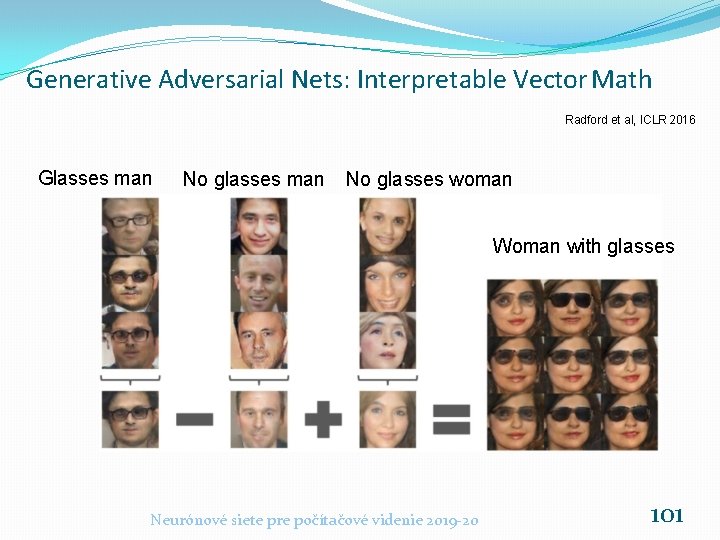

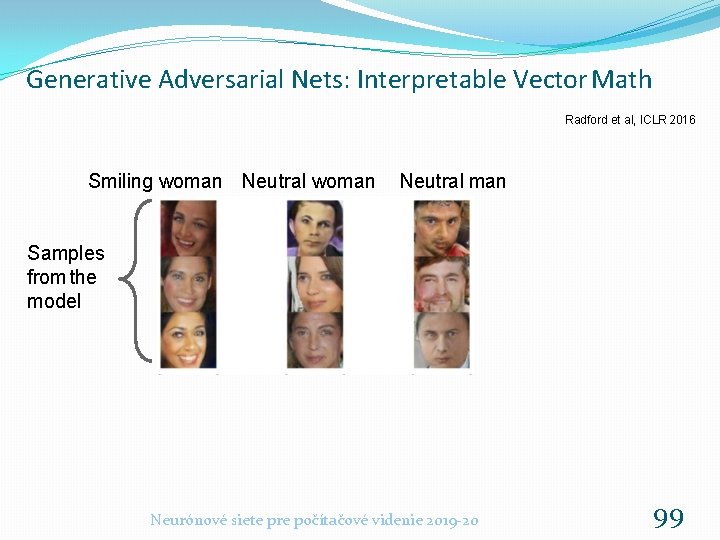

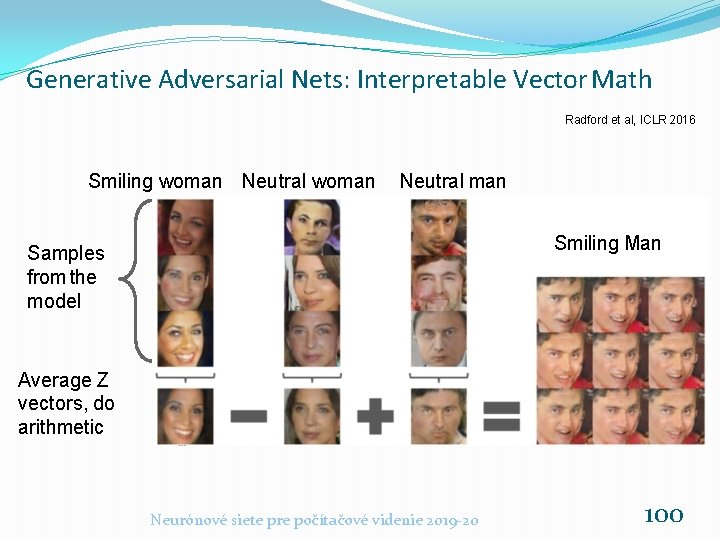


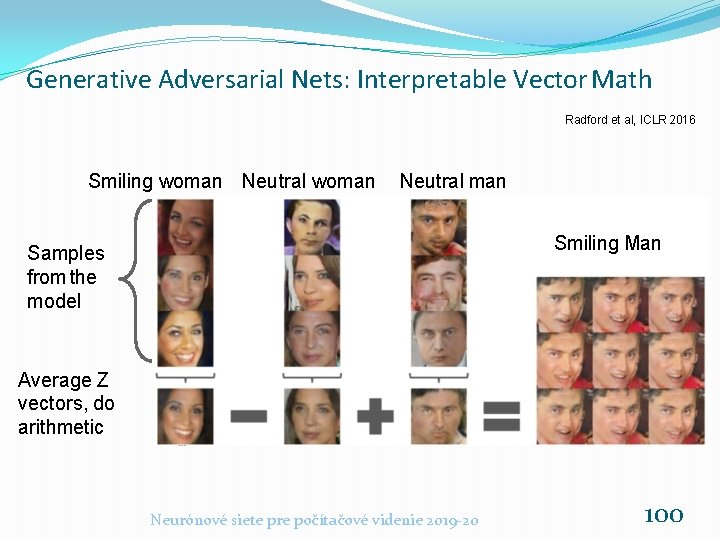

Generative Adversarial Nets: Interpretable Vector Math (Radford et al., ICLR 2016). Take samples from the model for "smiling woman", "neutral woman" and "neutral man", average the z vectors within each group, and do arithmetic: smiling woman - neutral woman + neutral man gives a smiling man.
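The arithmetic itself is simple once each concept is represented by the average of a few latent codes. A minimal sketch with hypothetical 3-D codes (three samples per concept); the result would be decoded with the generator to get the image:

```python
def mean_vector(vectors):
    """Element-wise average of a group of latent vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def latent_arithmetic(smiling_woman, neutral_woman, neutral_man):
    """smiling woman - neutral woman + neutral man, on averaged z vectors."""
    a, b, c = (mean_vector(g) for g in (smiling_woman, neutral_woman, neutral_man))
    return [x - y + w for x, y, w in zip(a, b, c)]

# Hypothetical latent codes, three samples per concept.
smiling_woman = [[1.0, 1.0, 0.0], [1.2, 0.8, 0.1], [0.8, 1.2, -0.1]]
neutral_woman = [[1.0, 0.0, 0.0], [1.1, 0.1, 0.0], [0.9, -0.1, 0.0]]
neutral_man = [[-1.0, 0.0, 0.0], [-1.1, 0.0, 0.2], [-0.9, 0.0, -0.2]]
z_new = latent_arithmetic(smiling_woman, neutral_woman, neutral_man)
print(z_new)  # roughly [-1.0, 1.0, 0.0]; decode as G(z_new) to get the image
```

Averaging several codes per concept makes the result less sensitive to the quirks of any single sample.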

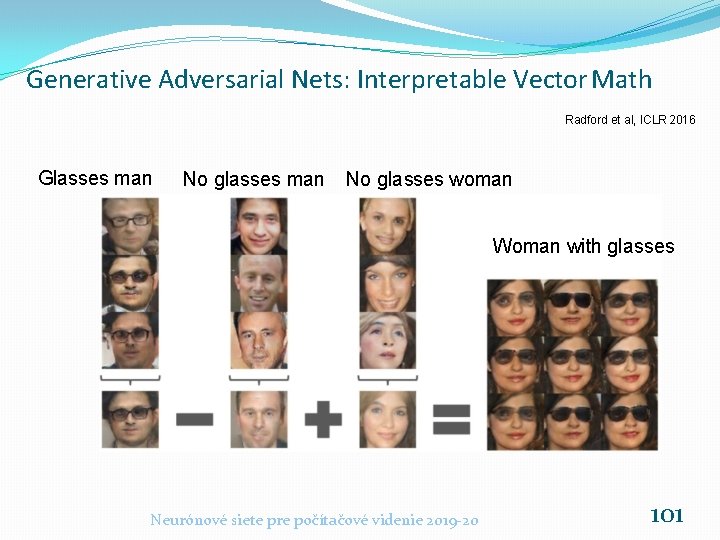

Generative Adversarial Nets: Interpretable Vector Math (Radford et al., ICLR 2016). Man with glasses - man without glasses + woman without glasses gives a woman with glasses.

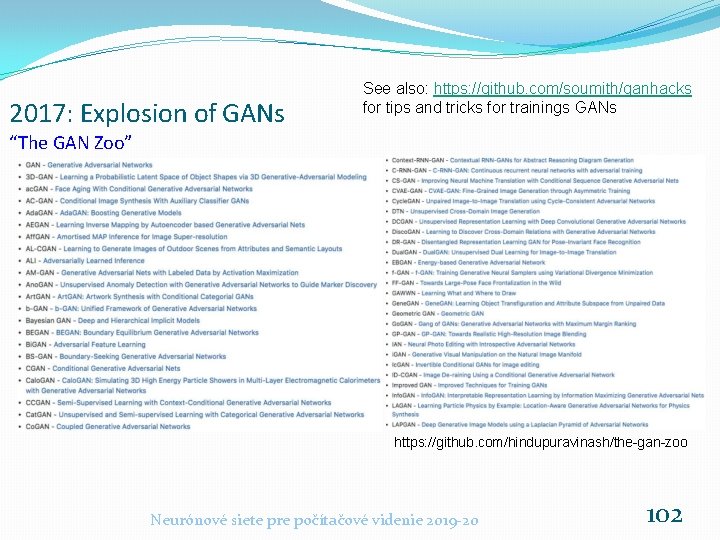

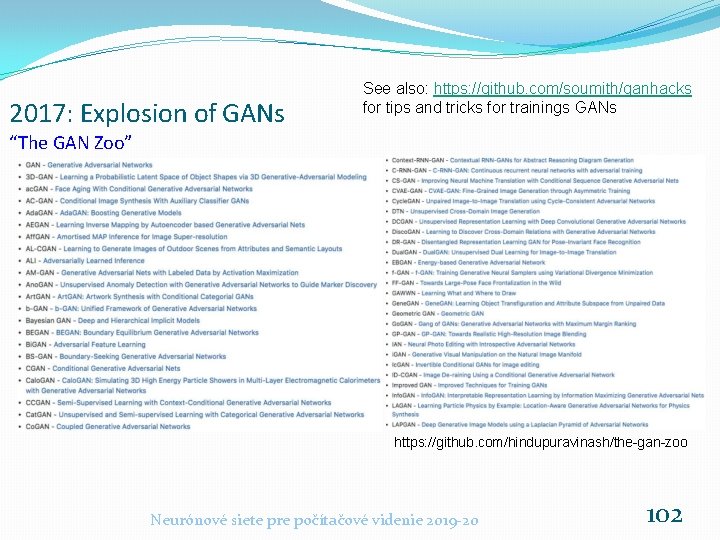

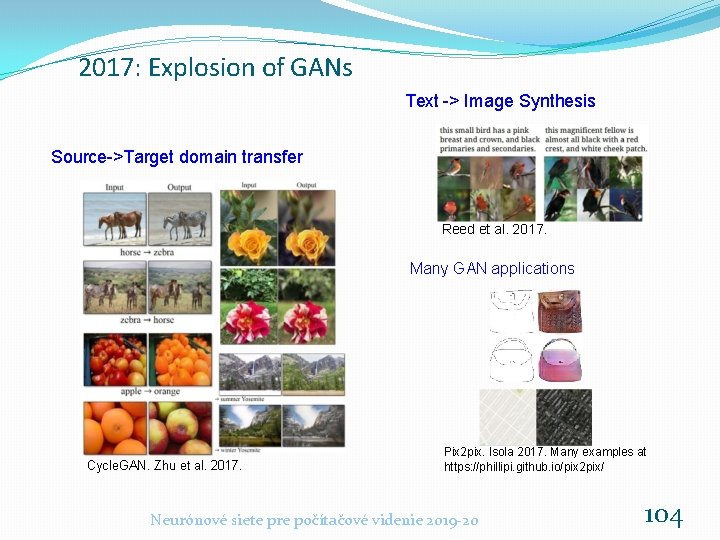

2017: Explosion of GANs. See also https://github.com/soumith/ganhacks for tips and tricks for training GANs, and “The GAN Zoo”: https://github.com/hindupuravinash/the-gan-zoo

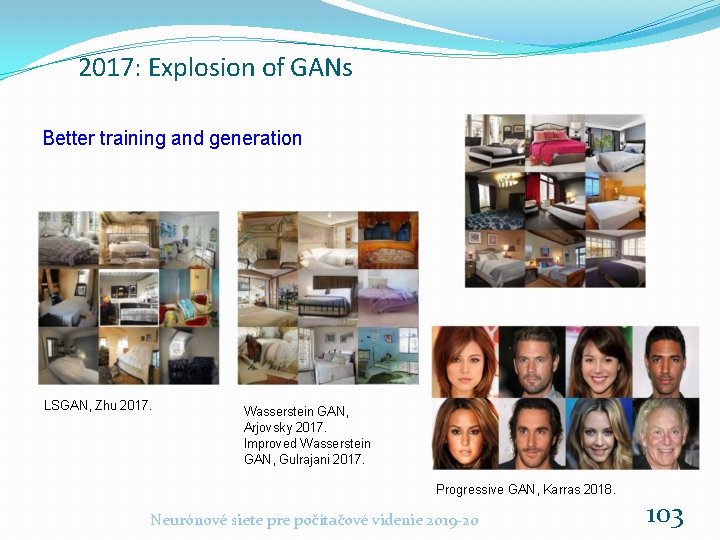

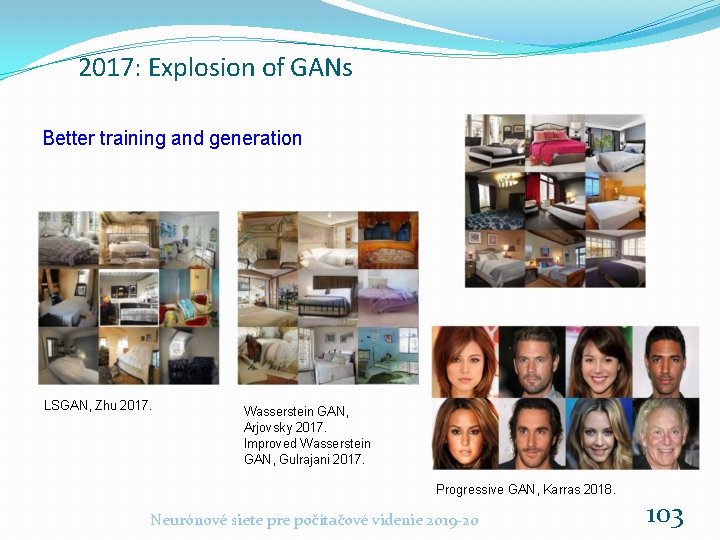

2017: Explosion of GANs. Better training and generation: LSGAN, Mao et al. 2017; Wasserstein GAN, Arjovsky 2017; Improved Wasserstein GAN, Gulrajani 2017; Progressive GAN, Karras 2018.

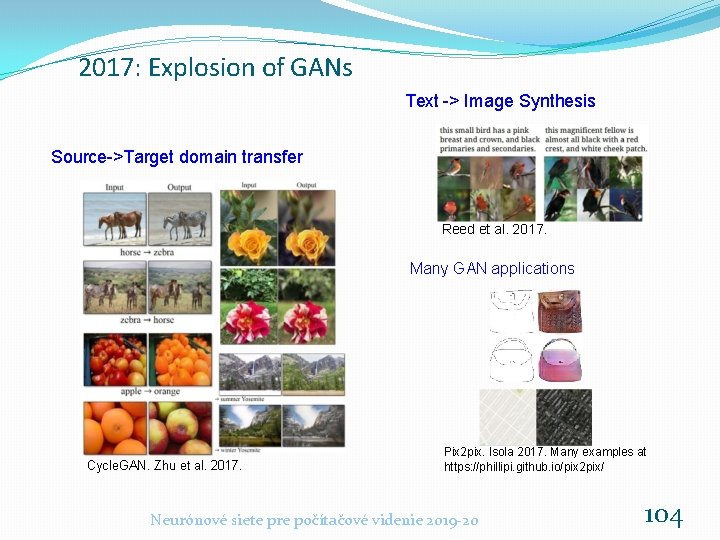

2017: Explosion of GANs. Many GAN applications: text -> image synthesis (Reed et al. 2017); source -> target domain transfer (CycleGAN, Zhu et al. 2017); pix2pix (Isola 2017). Many examples at https://phillipi.github.io/pix2pix/

2019: BigGAN. Brock et al., 2019.

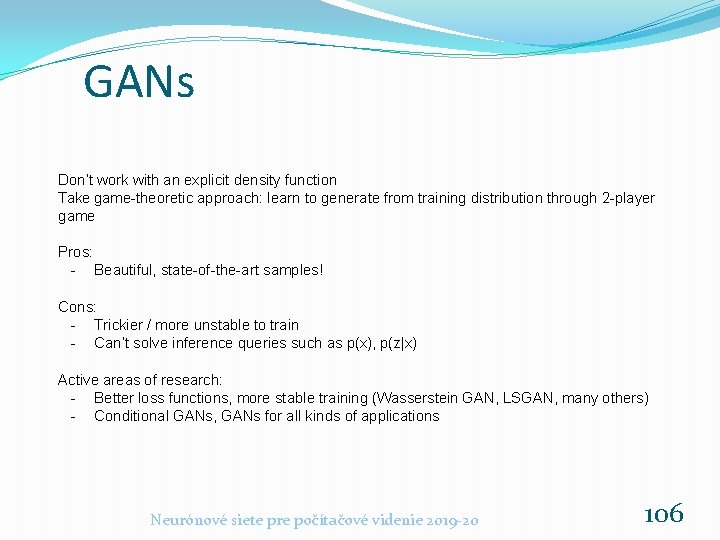

GANs
- Don't work with an explicit density function
- Take a game-theoretic approach: learn to generate from the training distribution through a 2-player game

Pros:
- Beautiful, state-of-the-art samples!

Cons:
- Trickier / more unstable to train
- Can't solve inference queries such as p(x), p(z|x)

Active areas of research:
- Better loss functions, more stable training (Wasserstein GAN, LSGAN, many others)
- Conditional GANs, GANs for all kinds of applications

Taxonomy of Generative Models
- Generative models
  - Explicit density
    - Tractable density: Fully Visible Belief Nets (NADE, MADE, PixelRNN/CNN), NICE / RealNVP, Glow, Ffjord
    - Approximate density
      - Variational: Variational Autoencoder
      - Markov Chain: Boltzmann Machine
  - Implicit density
    - Direct: GAN
    - Markov Chain: GSN

Useful Resources on Generative Models: CS 236: Deep Generative Models (Stanford); CS 294-158: Deep Unsupervised Learning (Berkeley).

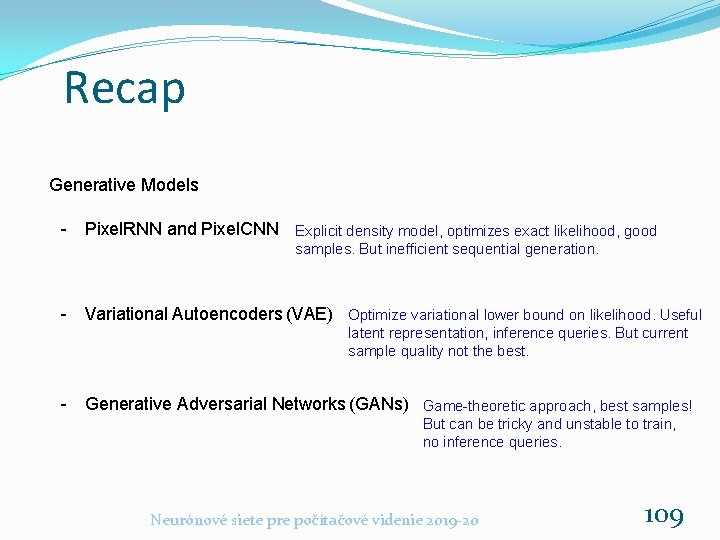

Recap: Generative Models
- PixelRNN and PixelCNN: explicit density model, optimizes the exact likelihood, good samples. But inefficient sequential generation.
- Variational Autoencoders (VAE): optimize a variational lower bound on the likelihood. Useful latent representation, inference queries. But current sample quality is not the best.
- Generative Adversarial Networks (GANs): game-theoretic approach, best samples! But can be tricky and unstable to train, no inference queries.

Hypermetriopia

Hypermetriopia Diagnostika potaov toshiba

Diagnostika potaov toshiba Diagnostika potaov hp

Diagnostika potaov hp Znacznik pre /pre jest stosowany w celu wyświetlenia

Znacznik pre /pre jest stosowany w celu wyświetlenia Pre act scores 2019

Pre act scores 2019 Tea de las siete virtudes

Tea de las siete virtudes El cuento de blancanieves y los siete enanitos

El cuento de blancanieves y los siete enanitos Falta de temor

Falta de temor Vuelan en la araña gris siete pájaros del prisma

Vuelan en la araña gris siete pájaros del prisma Siete pecados capitales gula

Siete pecados capitales gula Mateo 24:29-30

Mateo 24:29-30 Apocalipsis 1

Apocalipsis 1 Las maravillas del mundo actual

Las maravillas del mundo actual Canto 26 inferno

Canto 26 inferno Straightforward in spanish

Straightforward in spanish Corona de los siete dolores de la virgen maría

Corona de los siete dolores de la virgen maría Peticiones cortas

Peticiones cortas Uno dos tres cuatro cinco seis siete ocho nueve diez

Uno dos tres cuatro cinco seis siete ocho nueve diez Los elementos de la autoestima

Los elementos de la autoestima Da questo tutti sapranno che siete miei discepoli

Da questo tutti sapranno che siete miei discepoli 7 colinas de roma

7 colinas de roma Pecados capitales y sus virtudes contrarias

Pecados capitales y sus virtudes contrarias Pocitacove siete zakladne pojmy

Pocitacove siete zakladne pojmy Siete espejos esenios

Siete espejos esenios Sieť kužeľa

Sieť kužeľa Siete colinas

Siete colinas Significado de las 7 iglesias del apocalipsis

Significado de las 7 iglesias del apocalipsis Yo soy el alfa y el omega

Yo soy el alfa y el omega Las siete iglesias del apocalipsis en la actualidad

Las siete iglesias del apocalipsis en la actualidad Obraz kvadra

Obraz kvadra Las siete dimensiones

Las siete dimensiones Contraste de los colores

Contraste de los colores Las 9 musas

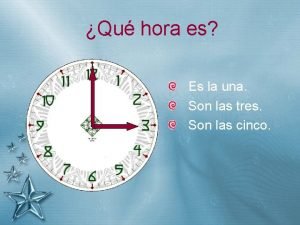

Las 9 musas Siete y cuarto

Siete y cuarto Sustantivos luna

Sustantivos luna Mensaje a las 7 iglesias

Mensaje a las 7 iglesias Las 7 iglesias del apocalipsis

Las 7 iglesias del apocalipsis Las 7 copas de la ira de dios explicación

Las 7 copas de la ira de dios explicación Son las cuatro menos veinticinco

Son las cuatro menos veinticinco Siete colinas

Siete colinas Los 7 sacramentos y sus significados

Los 7 sacramentos y sus significados Siete son

Siete son Las hermanas de sipora

Las hermanas de sipora Cuáles son las siete iglesias

Cuáles son las siete iglesias Inject.7

Inject.7 Los 7 dolores de maria

Los 7 dolores de maria Tt siet

Tt siet Un hotel va a hospedar a siete estudiantes

Un hotel va a hospedar a siete estudiantes Gravimetric analysis of calcium and hard water lab answers

Gravimetric analysis of calcium and hard water lab answers Causes of pre hepatic jaundice

Causes of pre hepatic jaundice Pré australopithecus

Pré australopithecus Searchexposure

Searchexposure Pre image

Pre image Steady state kinetics

Steady state kinetics Past simple excercises

Past simple excercises Importancia de la asistencia escolar

Importancia de la asistencia escolar Pre project activities

Pre project activities Diagram of a school bus

Diagram of a school bus Pre tracheal lymph node

Pre tracheal lymph node Preind

Preind Danielson's rubric

Danielson's rubric Kennisnetgeboortezorg

Kennisnetgeboortezorg Pre calc sequences and series

Pre calc sequences and series Four term contingency

Four term contingency Lesson 3 fitness

Lesson 3 fitness Pre reproductive age

Pre reproductive age Ipa instrument for pre-accession assistance

Ipa instrument for pre-accession assistance Pre cab

Pre cab Pre cana worksheets

Pre cana worksheets Pre saberes

Pre saberes Pre incorporation meaning

Pre incorporation meaning Epäsuora kysymyslause

Epäsuora kysymyslause Calcosferiti

Calcosferiti Pre gps wayfinding aid

Pre gps wayfinding aid Pre-test publicitario

Pre-test publicitario Since lab

Since lab Bus pretrip inspection

Bus pretrip inspection Father of modern criminology

Father of modern criminology Elementos pré textuais abnt

Elementos pré textuais abnt Ucl pre enrolment

Ucl pre enrolment Preliminary visits for expatriates

Preliminary visits for expatriates Pre production visualisation diagram

Pre production visualisation diagram Typy sopiek

Typy sopiek Pre assessment activity

Pre assessment activity Example of narrow topic

Example of narrow topic Obvod trojholnika

Obvod trojholnika Pre op checklist nursing

Pre op checklist nursing Pre las

Pre las Basic algebra formula

Basic algebra formula Pre spanish period physical education

Pre spanish period physical education Venus of brassempouy

Venus of brassempouy Pre-modern society

Pre-modern society Persia before 1935

Persia before 1935 The most dangerous game by richard connell theme

The most dangerous game by richard connell theme What is visitor pre registration in picme

What is visitor pre registration in picme Pre split drilling

Pre split drilling Reader response journal prompts

Reader response journal prompts Pre planning example

Pre planning example 1984 pre reading questions

1984 pre reading questions Among the hidden pre reading activities

Among the hidden pre reading activities Php stands for hypertext pre-processor. *

Php stands for hypertext pre-processor. * Psp mvr

Psp mvr Agogische vakken betekenis

Agogische vakken betekenis Efsweb

Efsweb Grandmother by sameeneh shirazie

Grandmother by sameeneh shirazie Pre-australopiths

Pre-australopiths Prt cern

Prt cern Myschoolfees huntsville city schools

Myschoolfees huntsville city schools Cuatro etapas del catecumenado

Cuatro etapas del catecumenado