Memory is used to store programs and data

- Slides: 46

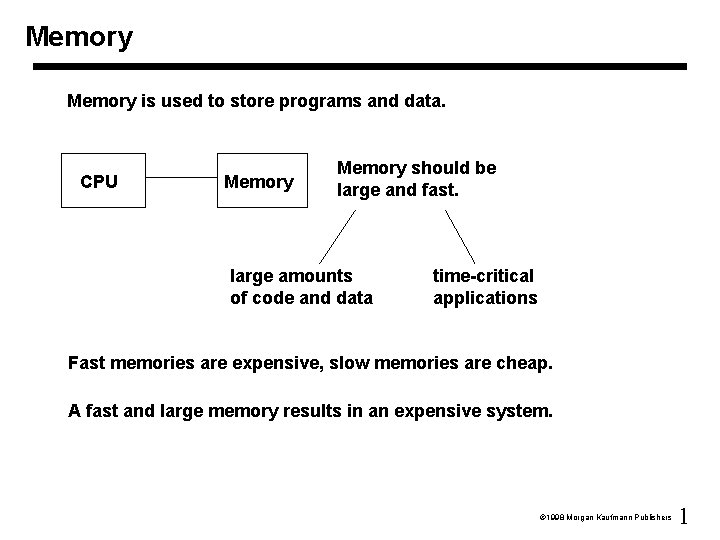

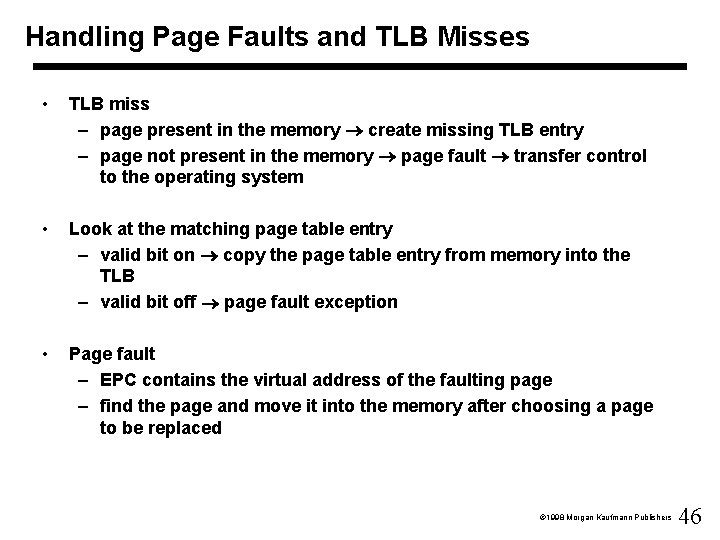

Memory is used to store programs and data.

- Memory should be large, to hold large amounts of code and data.
- Memory should be fast, for time-critical applications.
- Fast memories are expensive; slow memories are cheap. A memory that is both fast and large results in an expensive system.

1998 Morgan Kaufmann Publishers

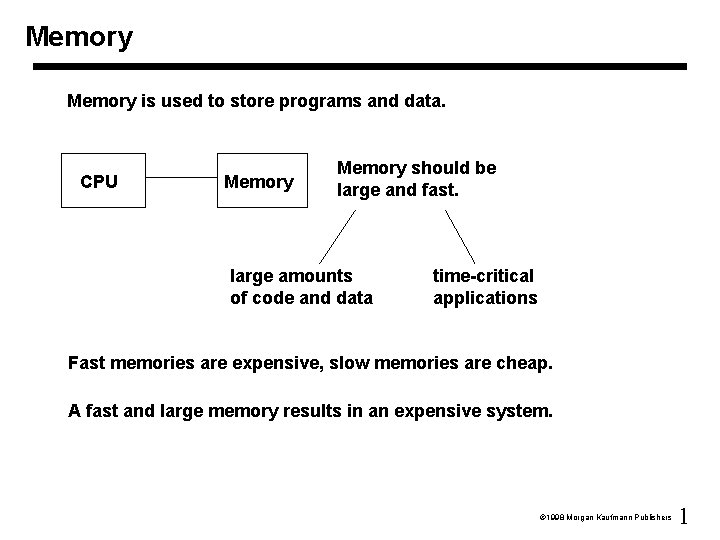

Main Memory Types

- The CPU uses the main memory on the instruction level.
- RAM (Random Access Memory): can be read and written.
  - Static
  - Dynamic
- ROM (Read-Only Memory): can only be read.
  - For most types, the contents can nevertheless be changed.
- Characteristics:
  - Access time
  - Price
  - Volatility

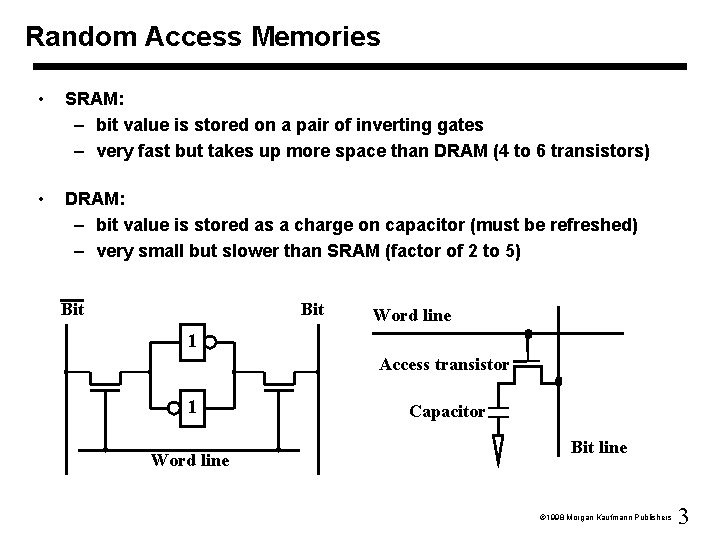

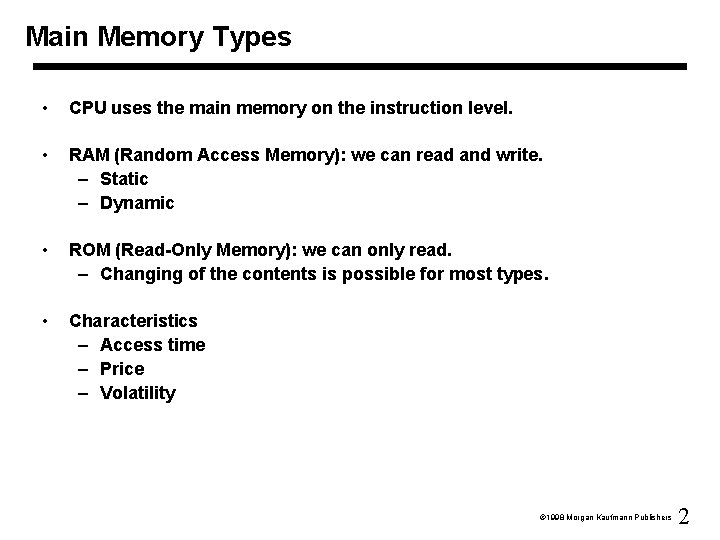

Random Access Memories

- SRAM:
  - the bit value is stored on a pair of inverting gates
  - very fast, but takes up more space than DRAM (4 to 6 transistors per bit)
- DRAM:
  - the bit value is stored as a charge on a capacitor (must be refreshed)
  - very small, but slower than SRAM (by a factor of 2 to 5)

(Figure: SRAM and DRAM cells; the one-transistor DRAM cell consists of an access transistor, controlled by the word line, that connects a capacitor to the bit line.)

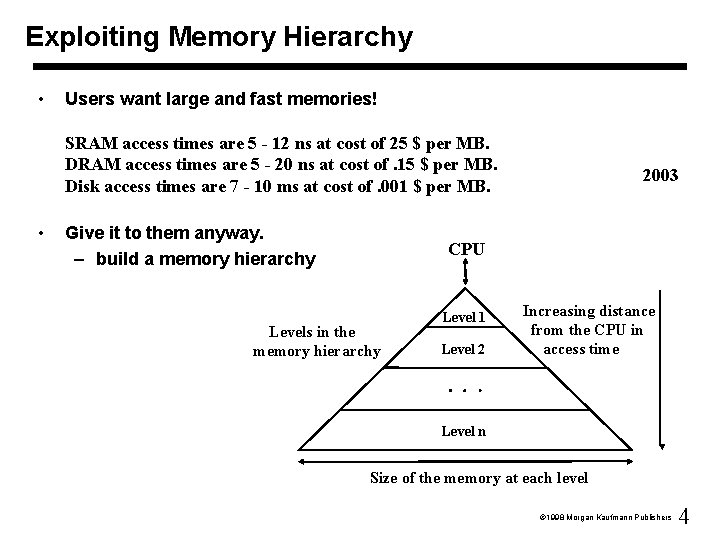

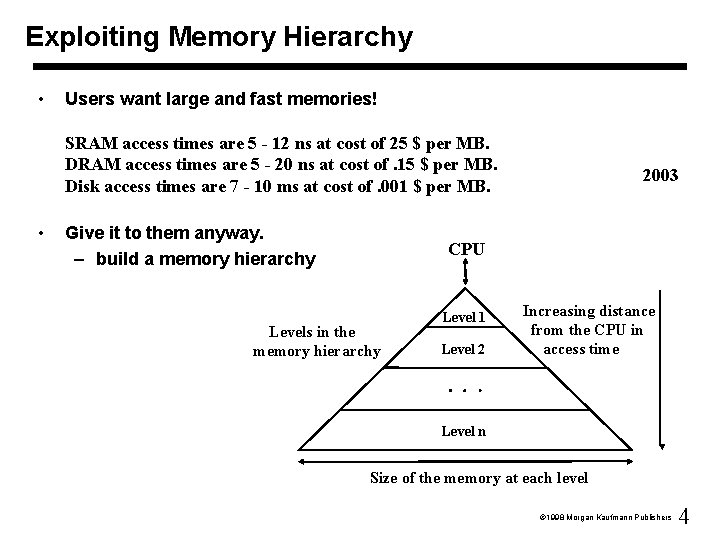

Exploiting Memory Hierarchy

- Users want large and fast memories! Figures as of 2003:
  - SRAM access times are 5–12 ns at a cost of $25 per MB.
  - DRAM access times are 5–20 ns at a cost of $0.15 per MB.
  - Disk access times are 7–10 ms at a cost of $0.001 per MB.
- Give it to them anyway: build a memory hierarchy.

(Figure: levels in the memory hierarchy, from Level 1 next to the CPU down to Level n; both the access time and the size of the memory at each level grow with increasing distance from the CPU.)

Locality

- A principle that makes having a memory hierarchy a good idea.
- If an item is referenced:
  - temporal locality: it will tend to be referenced again soon;
  - spatial locality: nearby items will tend to be referenced soon.
- Why does code have locality?
  - loops
  - instructions accessed sequentially
  - arrays, records
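A minimal sketch of spatial locality, under hypothetical parameters (a 64 × 64 array of 4-byte words stored row by row and 16-byte blocks, neither taken from the slides): it counts how often consecutive references in an address trace move to a different block. The helper names `address` and `block_changes` are illustrative.

```python
# Hypothetical parameters: a 64 x 64 array of 4-byte words, stored row by row,
# and 16-byte (four-word) blocks.
ROWS, COLS, WORD_BYTES, BLOCK_BYTES = 64, 64, 4, 16

def address(i, j, base=0):
    """Byte address of element [i][j] in a row-major array."""
    return base + (i * COLS + j) * WORD_BYTES

def block_changes(trace):
    """Count references that fall into a different block than the previous one."""
    changes, previous = 0, None
    for addr in trace:
        block = addr // BLOCK_BYTES
        if block != previous:
            changes += 1
        previous = block
    return changes

row_order = [address(i, j) for i in range(ROWS) for j in range(COLS)]
column_order = [address(i, j) for j in range(COLS) for i in range(ROWS)]

print(block_changes(row_order))     # 1024: a new block every four references
print(block_changes(column_order))  # 4096: a new block on every reference
```

Traversing the array in the order it is stored touches a new block only every fourth reference; traversing it column by column moves to a new block on every reference.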

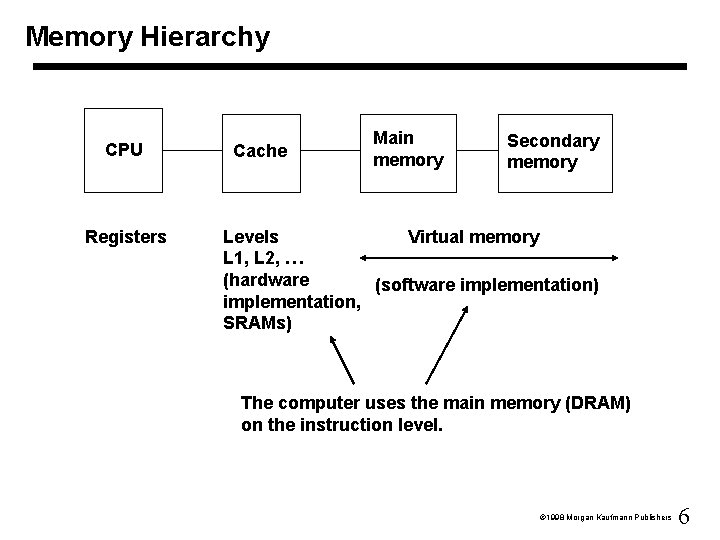

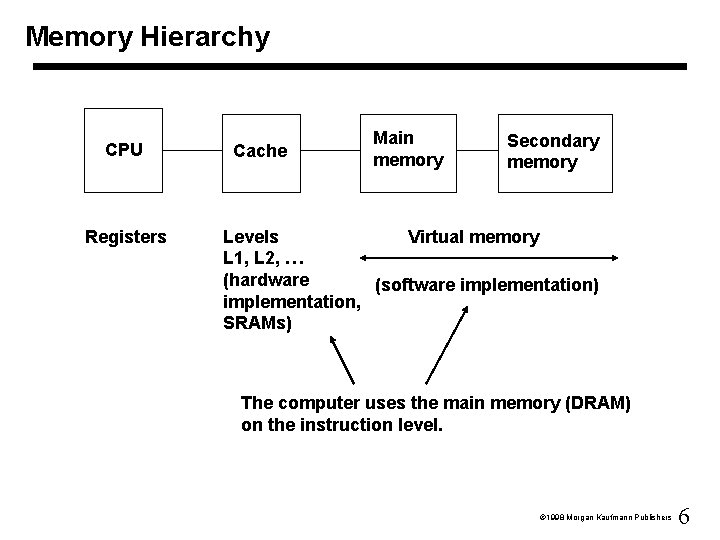

Memory Hierarchy

- CPU registers
- Cache: levels L1, L2, … (hardware implementation, SRAMs)
- Main memory
- Secondary memory

Virtual memory (software implementation) operates between main memory and secondary memory. The computer uses the main memory (DRAM) on the instruction level.

Memory Hierarchy

A pair of levels in the memory hierarchy:

- two levels: upper and lower
- block: the minimum unit of data transferred between the upper and lower level
- hit: the requested data is in the upper level
  - hit rate: the fraction of accesses that hit
- miss: the requested data is not in the upper level
  - miss rate: the fraction of accesses that miss (1 − hit rate)
- miss penalty: depends mainly on the access time of the lower level

Cache Basics

- What information goes into the cache in addition to the referenced item?
- How do we know whether a data item is in the cache?
- If it is, how do we find it?

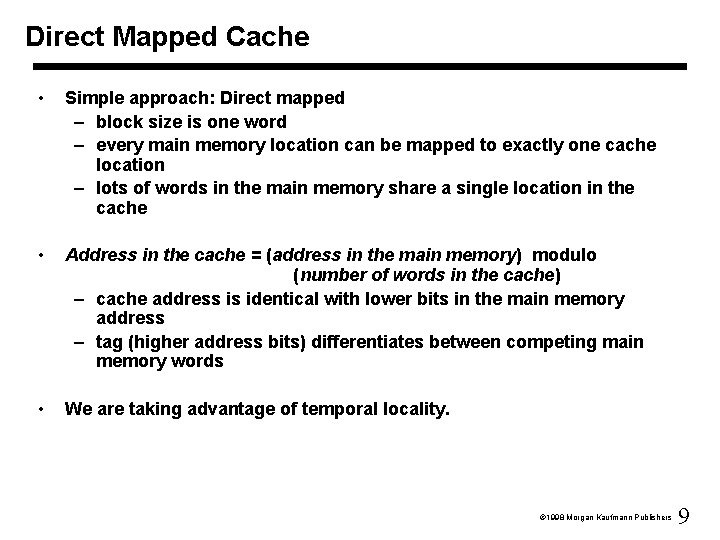

Direct Mapped Cache

- Simple approach: direct mapped
  - block size is one word
  - every main memory location can be mapped to exactly one cache location
  - many words in main memory share a single location in the cache
- Address in the cache = (address in main memory) modulo (number of words in the cache)
  - when the number of words in the cache is a power of two, the cache address equals the lower bits of the main memory address
  - the tag (the higher address bits) differentiates between competing main memory words
- We are taking advantage of temporal locality.
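The mapping rule can be sketched as a tiny simulator, assuming word addresses, one-word blocks, and a hypothetical class name `DirectMappedCache`; the reference trace is made up for illustration.

```python
class DirectMappedCache:
    """Minimal direct-mapped cache model: one-word blocks, word addresses."""

    def __init__(self, num_words):
        self.num_words = num_words
        self.valid = [False] * num_words
        self.tags = [0] * num_words

    def access(self, word_addr):
        """Return True on a hit; on a miss, install the word and return False."""
        index = word_addr % self.num_words   # the lower address bits
        tag = word_addr // self.num_words    # the remaining higher bits
        if self.valid[index] and self.tags[index] == tag:
            return True
        # Miss: fetch the word from main memory, write the tag, set the valid bit.
        self.valid[index] = True
        self.tags[index] = tag
        return False

cache = DirectMappedCache(8)
trace = [22, 26, 22, 26, 16, 3, 16, 18]   # hypothetical word addresses
results = [cache.access(a) for a in trace]
print(results)  # [False, False, True, True, False, False, True, False]
```

Addresses 26 and 18 both map to index 2 (26 mod 8 = 18 mod 8 = 2), so the last reference misses and replaces 26, while the repeated references to 22 and 16 hit.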

Direct Mapped Cache: Simple Example

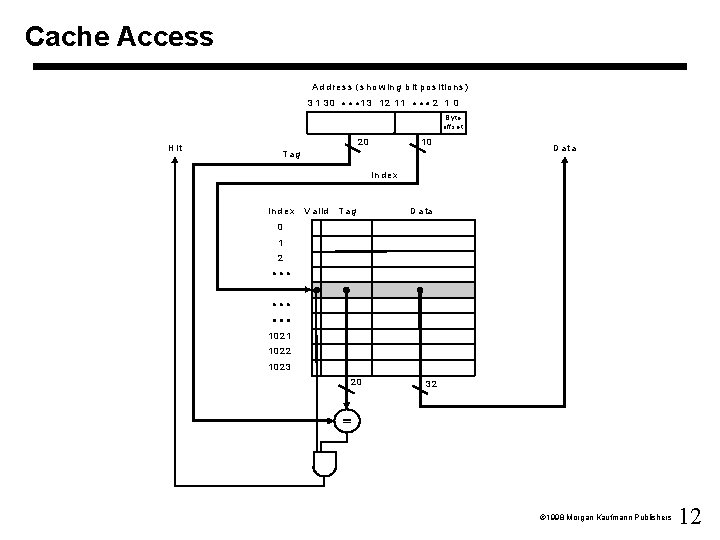

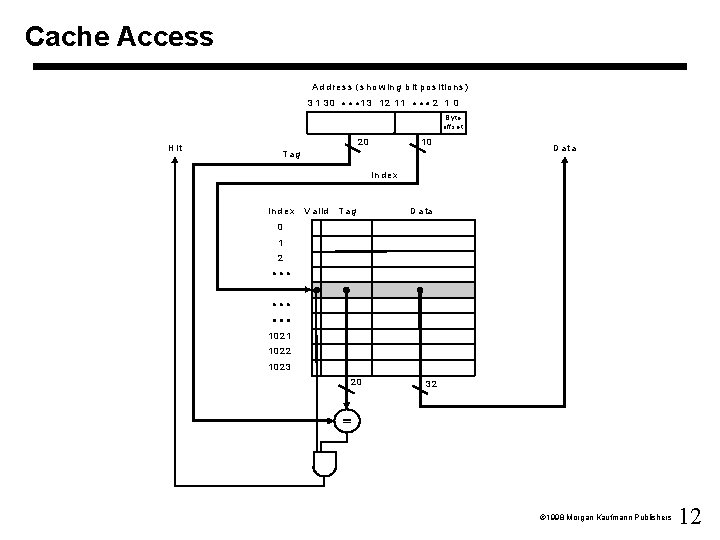

A More Realistic Example

- 32-bit word length
- 32-bit address
- 1K-word cache
- block size: 1 word
- 10-bit cache index
- 20-bit tag
- 2-bit byte offset (word alignment assumed)
- valid bit

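Cache Access

(Figure: the address, showing bit positions, is divided into a 20-bit tag (bits 31–12), a 10-bit index (bits 11–2), and a 2-bit byte offset (bits 1–0). The index selects one of 1024 entries (0–1023), each holding a valid bit, a 20-bit tag, and 32 bits of data; the stored tag is compared with the address tag, and a match on a valid entry signals Hit.)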
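The field widths of this example follow from its parameters. A minimal sketch, assuming power-of-two sizes; `field_widths` and `split_address` are hypothetical helper names:

```python
def field_widths(address_bits, cache_words, word_bytes):
    """Return (tag, index, byte_offset) widths for a one-word-block direct-mapped cache."""
    offset_bits = (word_bytes - 1).bit_length()   # log2(word_bytes) for powers of two
    index_bits = (cache_words - 1).bit_length()   # log2(cache_words)
    return address_bits - index_bits - offset_bits, index_bits, offset_bits

TAG_BITS, INDEX_BITS, OFFSET_BITS = field_widths(32, 1024, 4)   # 20, 10, 2

def split_address(addr):
    """Split a 32-bit byte address into (tag, index, byte_offset)."""
    byte_offset = addr & ((1 << OFFSET_BITS) - 1)
    index = (addr >> OFFSET_BITS) & ((1 << INDEX_BITS) - 1)
    tag = addr >> (OFFSET_BITS + INDEX_BITS)
    return tag, index, byte_offset

print(field_widths(32, 1024, 4))                     # (20, 10, 2)
print([hex(f) for f in split_address(0x12345678)])   # ['0x12345', '0x19e', '0x0']
```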

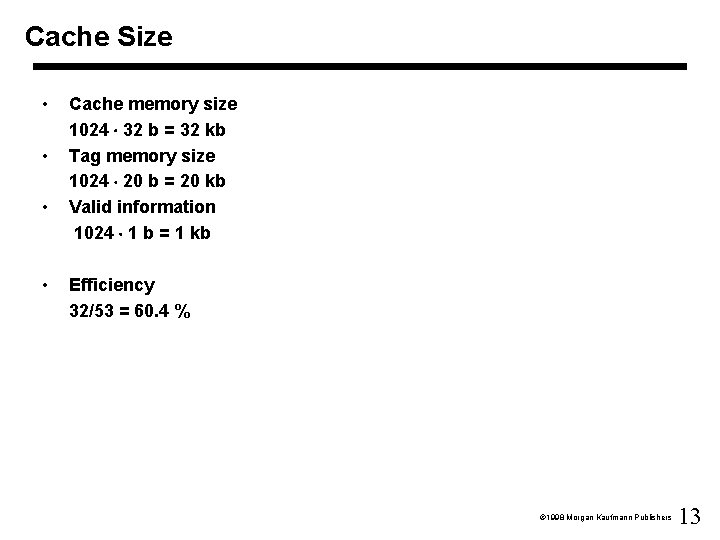

Cache Size

- Cache memory size: 1024 × 32 bit = 32 Kbit
- Tag memory size: 1024 × 20 bit = 20 Kbit
- Valid information: 1024 × 1 bit = 1 Kbit
- Efficiency (data bits per entry / total bits per entry): 32/53 = 60.4%
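The same arithmetic as a small helper (hypothetical name `cache_storage_bits`), assuming one valid bit per entry:

```python
def cache_storage_bits(entries, data_bits, tag_bits, valid_bits=1):
    """Return data, tag, valid, and total storage in bits, plus the data efficiency."""
    data = entries * data_bits
    tag = entries * tag_bits
    valid = entries * valid_bits
    total = data + tag + valid
    return data, tag, valid, total, data / total

data, tag, valid, total, efficiency = cache_storage_bits(1024, 32, 20)
print(data, tag, valid, total, f"{efficiency:.1%}")  # 32768 20480 1024 54272 60.4%
```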

Cache Hits and Misses

- Cache hit: continue
  - access the cache
- Cache miss:
  - stall the CPU
  - get the information from main memory
  - write the information into the cache: data, tag, and set the valid bit
  - resume execution

Hits and Misses in More Detail

- Read hits: this is what we want!
- Read misses: stall the CPU, fetch the block from memory, deliver it to the cache, restart.
- Write hits:
  - replace the data in both the cache and memory (write-through), or
  - write the data only into the cache and write it to main memory later (write-back).
- Write misses:
  - read the entire block into the cache, then write the word.

Write Through and Write Back

- Write through
  - update the cache and main memory at the same time
  - may result in extra main memory writes
  - requires a write buffer, which holds data while it waits to be written to memory
- Write back
  - update main memory only when a block is replaced
  - replacement may be slower
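A rough way to see the extra main memory writes of write-through is to count memory writes for a direct-mapped cache under both policies. This is a hypothetical model, assuming block addresses and that a write miss first allocates the block (the write-miss rule from the previous slide); the write buffer is not modeled, and dirty blocks still in the cache at the end are not counted.

```python
def memory_writes(trace, num_blocks, policy):
    """Count main memory writes for a direct-mapped cache.

    trace: list of (block_address, is_write) pairs.
    policy: "write-through" or "write-back".
    """
    tags = [None] * num_blocks
    dirty = [False] * num_blocks
    writes = 0
    for block, is_write in trace:
        index, tag = block % num_blocks, block // num_blocks
        if tags[index] != tag:                 # miss: the block is (re)placed
            if policy == "write-back" and dirty[index]:
                writes += 1                    # copy the replaced dirty block back
            tags[index], dirty[index] = tag, False
        if is_write:
            if policy == "write-through":
                writes += 1                    # every write also goes to memory
            else:
                dirty[index] = True            # defer the write until replacement
    return writes

# Hypothetical trace: ten writes to block 0, then a read of block 4 (same index)
trace = [(0, True)] * 10 + [(4, False)]
print(memory_writes(trace, 4, "write-through"))  # 10
print(memory_writes(trace, 4, "write-back"))     # 1
```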

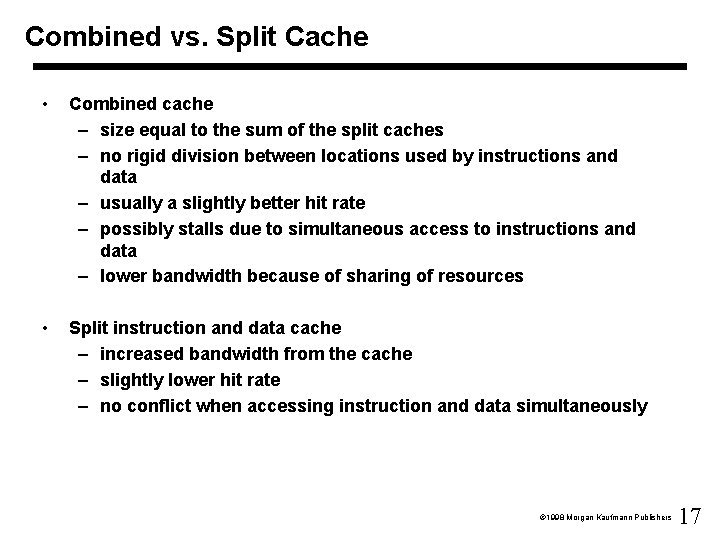

Combined vs. Split Cache

- Combined cache
  - size equal to the sum of the split caches
  - no rigid division between locations used by instructions and data
  - usually a slightly better hit rate
  - possible stalls due to simultaneous access to instructions and data
  - lower bandwidth because resources are shared
- Split instruction and data caches
  - increased bandwidth from the cache
  - slightly lower hit rate
  - no conflict when accessing instructions and data simultaneously

Taking Advantage of Spatial Locality

- The cache described so far:
  - simple
  - block size of one word
  - only temporal locality exploited
- Spatial locality:
  - block size longer than one word
  - when a miss occurs, multiple adjacent words are fetched

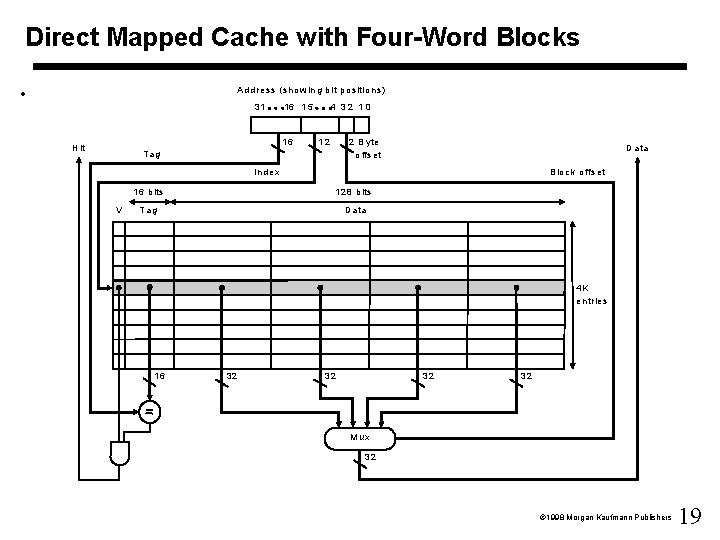

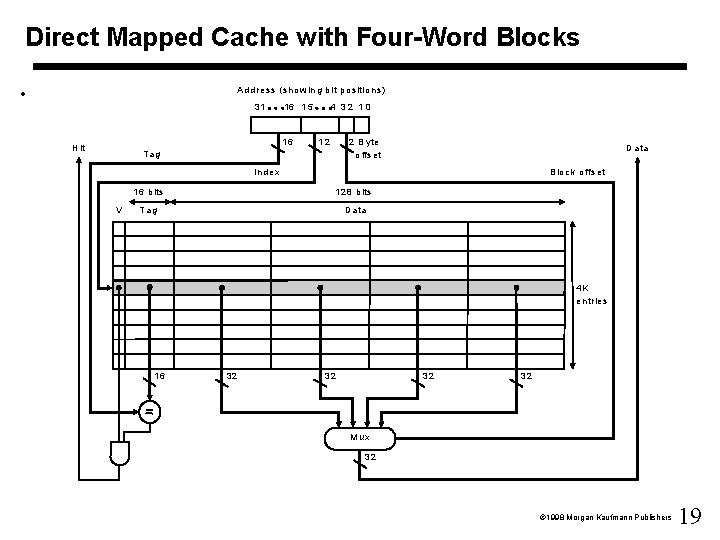

Direct Mapped Cache with Four-Word Blocks

(Figure: the address is divided into a 16-bit tag (bits 31–16), a 12-bit index (bits 15–4), a 2-bit block offset (bits 3–2), and a 2-bit byte offset (bits 1–0). The index selects one of 4K entries, each holding a valid bit, a 16-bit tag, and 128 bits of data; on a hit, a multiplexor uses the block offset to select one of the four 32-bit words.)
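A sketch of how the four fields of this cache (16-bit tag, 12-bit index, 2-bit block offset, 2-bit byte offset) are extracted with shifts and masks, assuming byte addresses; `decode` is a hypothetical helper:

```python
BYTE_OFFSET_BITS = 2    # 4 bytes per word
BLOCK_OFFSET_BITS = 2   # 4 words per block
INDEX_BITS = 12         # 4K entries

def decode(addr):
    """Split a 32-bit byte address into (tag, index, block_offset, byte_offset)."""
    byte_offset = addr & ((1 << BYTE_OFFSET_BITS) - 1)
    block_offset = (addr >> BYTE_OFFSET_BITS) & ((1 << BLOCK_OFFSET_BITS) - 1)
    index = (addr >> (BYTE_OFFSET_BITS + BLOCK_OFFSET_BITS)) & ((1 << INDEX_BITS) - 1)
    tag = addr >> (BYTE_OFFSET_BITS + BLOCK_OFFSET_BITS + INDEX_BITS)   # upper 16 bits
    return tag, index, block_offset, byte_offset

# Four consecutive words: same tag and index, so they share one 128-bit entry;
# only the block offset (the multiplexor select) changes.
for addr in (0x00012340, 0x00012344, 0x00012348, 0x0001234C):
    print(decode(addr))
```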

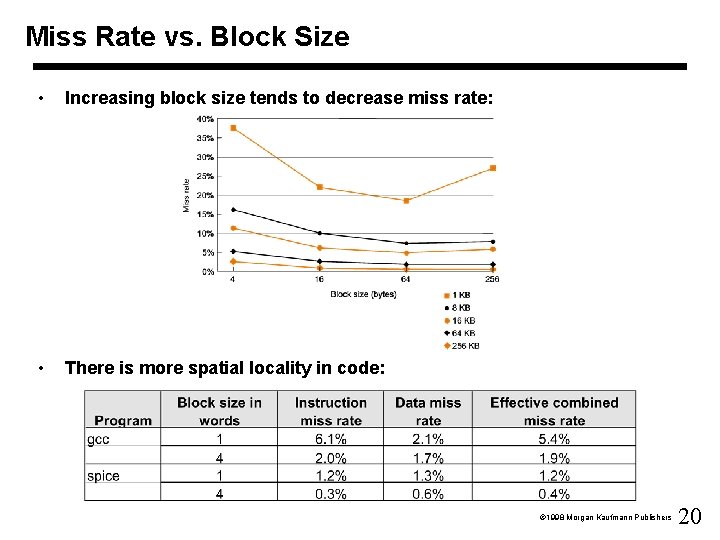

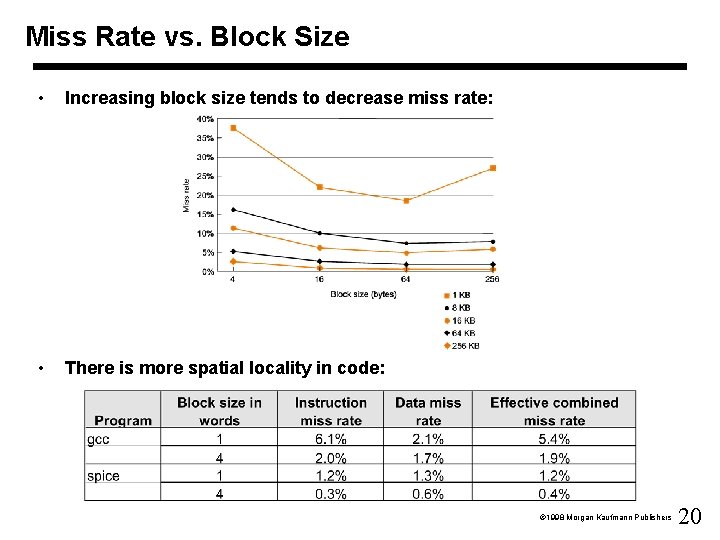

Miss Rate vs. Block Size

- Increasing the block size tends to decrease the miss rate:
- There is more spatial locality in code:
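A toy experiment, assuming a 1024-word direct-mapped cache and a single sequential sweep over 4096 words (a hypothetical trace with perfect spatial locality and no reuse): the miss rate falls as the block size grows, because each miss brings in more of the words that are about to be used.

```python
def miss_rate(trace, cache_words, block_words):
    """Miss rate of a direct-mapped cache of cache_words words with block_words-word blocks."""
    num_blocks = cache_words // block_words
    tags = [None] * num_blocks
    misses = 0
    for word_addr in trace:
        block = word_addr // block_words
        index, tag = block % num_blocks, block // num_blocks
        if tags[index] != tag:      # miss: fetch the whole block
            misses += 1
            tags[index] = tag
    return misses / len(trace)

sweep = list(range(4096))   # hypothetical trace: one pass over 4096 consecutive words
for block_words in (1, 2, 4, 8, 16):
    print(block_words, miss_rate(sweep, cache_words=1024, block_words=block_words))
```

Real programs show diminishing returns, and in a cache of fixed size very large blocks eventually raise the miss rate because fewer blocks fit.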

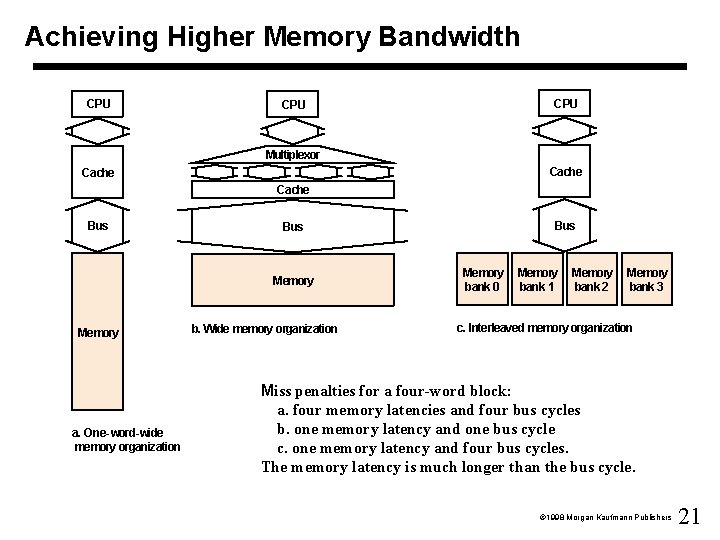

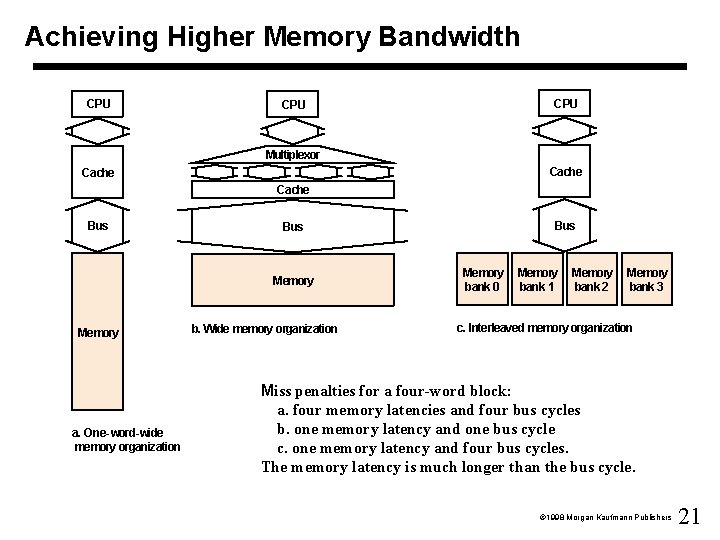

Achieving Higher Memory Bandwidth

(Figure: three memory organizations connecting the CPU, cache, bus, and memory: a. one-word-wide memory organization; b. wide memory organization, with a multiplexor between the cache and the CPU; c. interleaved memory organization, with memory banks 0 to 3.)

Miss penalties for a four-word block:

- a. four memory latencies and four bus cycles
- b. one memory latency and one bus cycle
- c. one memory latency and four bus cycles

The memory latency is much longer than the bus cycle.
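The three miss penalties can be compared numerically. The timing values below (15 cycles per memory latency, 1 cycle per bus transfer, 4-byte words) are hypothetical assumptions, chosen only so that the latency is much longer than the bus cycle:

```python
# Hypothetical timing, not from the slide: 15 clock cycles per memory latency
# and 1 clock cycle per bus transfer; words are 4 bytes, so a block is 16 bytes.
LATENCY_CYCLES, BUS_CYCLES, BLOCK_BYTES = 15, 1, 16

def miss_penalty(latencies, bus_cycles):
    """Cycles to fetch one four-word block."""
    return latencies * LATENCY_CYCLES + bus_cycles * BUS_CYCLES

# (memory latencies, bus cycles) per four-word block, as listed on the slide
organizations = {
    "a. one-word-wide": (4, 4),
    "b. wide": (1, 1),
    "c. interleaved": (1, 4),
}

for name, (latencies, bus) in organizations.items():
    penalty = miss_penalty(latencies, bus)
    print(f"{name}: {penalty} cycles, {BLOCK_BYTES / penalty:.2f} bytes per cycle")
```

With these numbers, interleaving comes close to the wide organization (19 vs. 16 cycles) without needing a wide bus, because the single memory latency dominates.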

Performance

Simplified model:

$$\text{execution time} = (\text{execution cycles} + \text{stall cycles}) \times \text{cycle time}$$

$$\text{stall cycles} = \text{number of instructions} \times \text{miss rate} \times \text{miss penalty}$$

- The model is more complicated for writes than for reads (write-through vs. write-back, write buffers).
- Two ways of improving performance:
  - decreasing the miss rate
  - decreasing the miss penalty
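The simplified model translates directly into code. A sketch with a hypothetical workload (10^6 instructions, execution CPI of 1.0, 2 ns cycle time, 2% misses per instruction, 100-cycle miss penalty); the miss rate is taken per instruction, as in the formula:

```python
def execution_time_ns(instructions, execution_cpi, miss_rate, miss_penalty, cycle_ns):
    """Simplified model from the slide; miss_rate counts misses per instruction."""
    execution_cycles = instructions * execution_cpi
    stall_cycles = instructions * miss_rate * miss_penalty
    return (execution_cycles + stall_cycles) * cycle_ns

# Hypothetical workload: 10**6 instructions, execution CPI 1.0, 2 ns cycle time
base = execution_time_ns(10**6, 1.0, 0.02, 100, 2)
lower_miss_rate = execution_time_ns(10**6, 1.0, 0.01, 100, 2)
lower_penalty = execution_time_ns(10**6, 1.0, 0.02, 50, 2)
print(base / 1e6, lower_miss_rate / 1e6, lower_penalty / 1e6)  # times in ms
```

With these numbers the program takes 6 ms; halving either the miss rate or the miss penalty halves the stall cycles and brings it down to 4 ms.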

Flexible Placement of Cache Blocks

- Direct mapped cache
  - a memory block can go in exactly one place in the cache
  - the tag identifies the referenced word
  - easy to implement
- Fully associative cache
  - a memory block can be placed in any location in the cache
  - all entries in the cache are searched in parallel
  - expensive to implement (a comparator is associated with each cache entry)
- Set-associative cache
  - a memory block can be placed in a fixed number of locations
  - n locations: n-way set-associative cache
  - a block is mapped to a set; it can be placed in any element of that set
  - the elements of the set are searched
  - implementation is simpler than for a fully associative cache

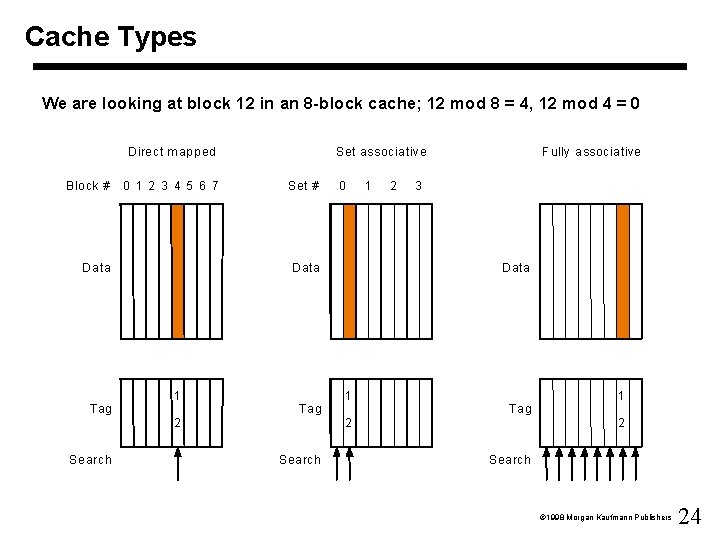

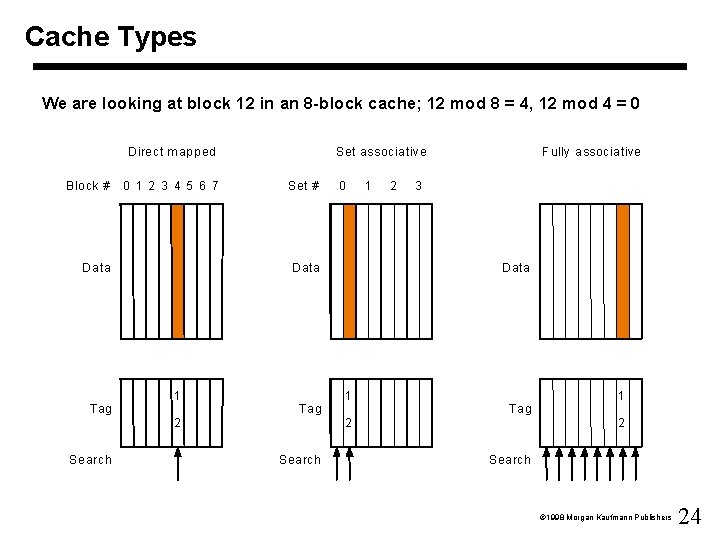

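Cache Types

We are looking at block 12 in an 8-block cache. In the direct-mapped cache it goes to block 12 mod 8 = 4; in the two-way set-associative cache (4 sets) it goes to set 12 mod 4 = 0; in the fully associative cache it can go anywhere.

(Figure: direct mapped with blocks 0–7; two-way set associative with sets 0–3, whose two tags per set are searched; fully associative, where all tags are searched.)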
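The placement rule generalizes to any associativity. A minimal sketch, assuming (for illustration only) that set s occupies consecutive cache slots s·ways to s·ways + ways − 1; `candidate_slots` is a hypothetical name:

```python
def candidate_slots(block, num_blocks, ways):
    """Slots where `block` may be placed in an n-way set-associative cache.

    Assumed layout: set s occupies slots s*ways .. s*ways + ways - 1.
    ways=1 is direct mapped; ways=num_blocks is fully associative.
    """
    num_sets = num_blocks // ways
    set_index = block % num_sets
    first = set_index * ways
    return list(range(first, first + ways))

for ways in (1, 2, 8):
    print(ways, candidate_slots(12, 8, ways))
```

For block 12 in the 8-block cache this gives slot 4 (direct mapped), slots 0–1 (two-way, set 0), and all eight slots (fully associative).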

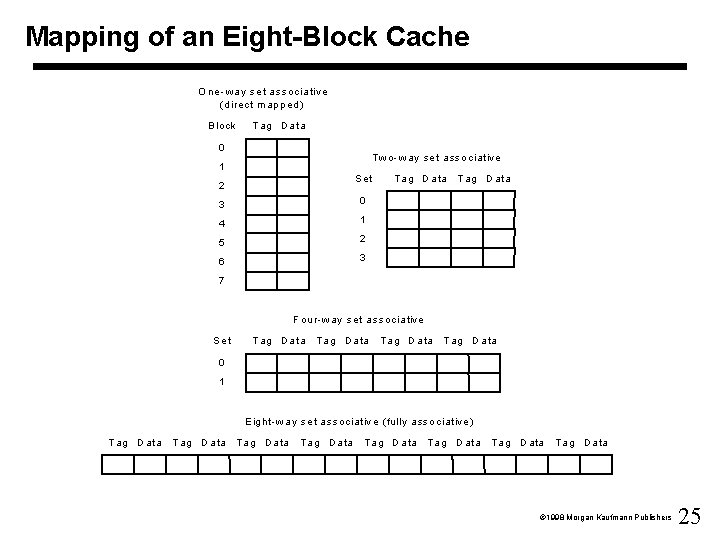

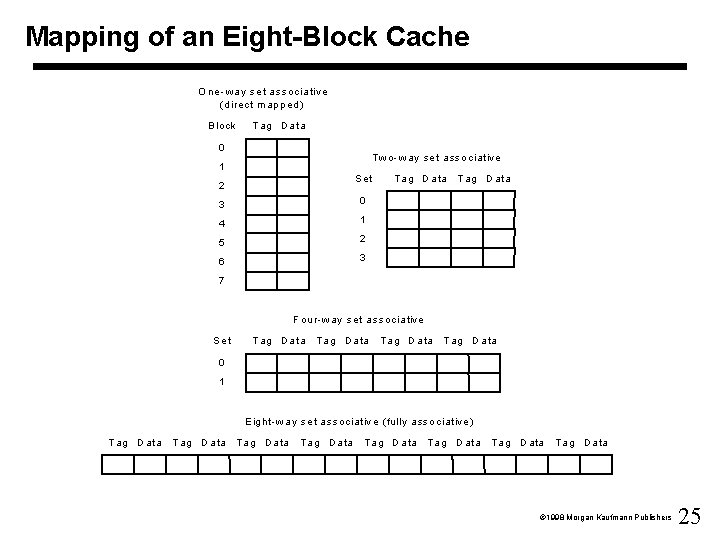

Mapping of an Eight-Block Cache

(Figure: the same eight-block cache organized four ways: one-way set associative, i.e. direct mapped, with blocks 0–7; two-way set associative with sets 0–3; four-way set associative with sets 0–1; and eight-way set associative, i.e. fully associative. Every entry holds a tag and data.)

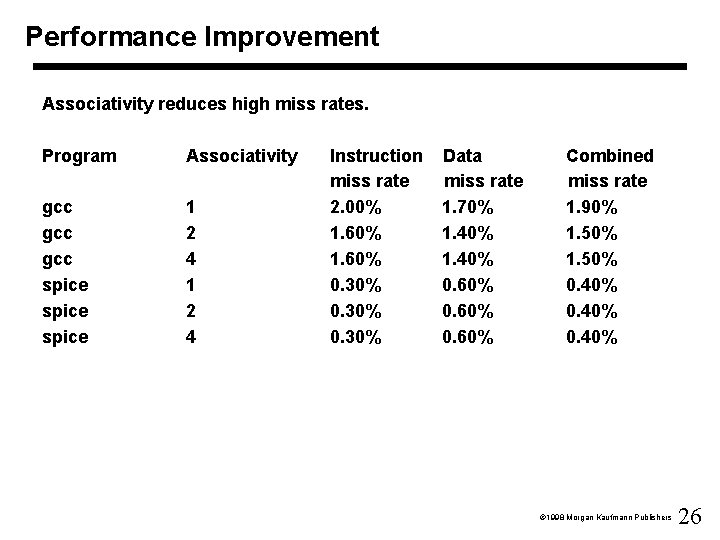

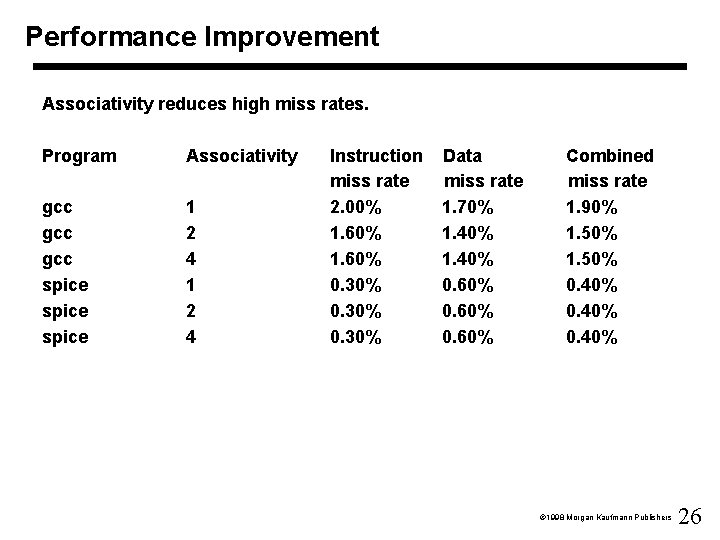

Performance Improvement

Associativity reduces high miss rates.

| Program | Associativity | Instruction miss rate | Data miss rate | Combined miss rate |
|---|---|---|---|---|
| gcc | 1 | 2.00% | 1.70% | 1.90% |
| gcc | 2 | 1.60% | 1.40% | 1.50% |
| spice | 4 | 0.30% | 0.60% | 0.40% |

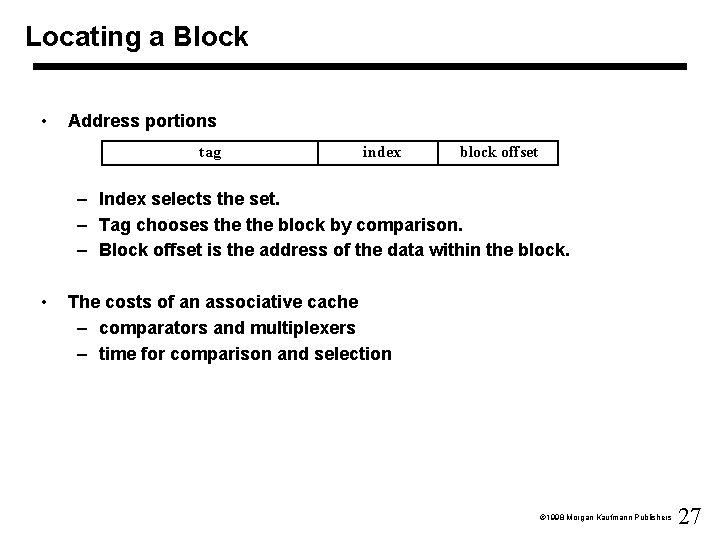

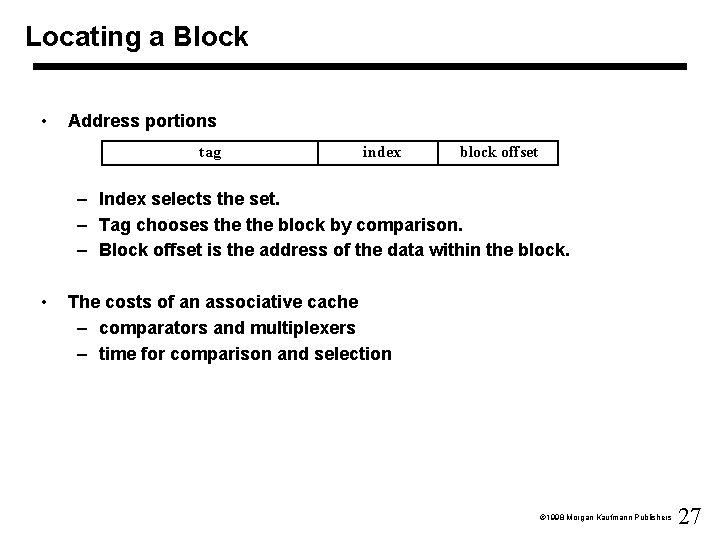

Locating a Block

- Address portions: tag, index, block offset
  - The index selects the set.
  - The tag chooses the block by comparison.
  - The block offset is the address of the data within the block.
- The costs of an associative cache:
  - comparators and multiplexors
  - time for comparison and selection

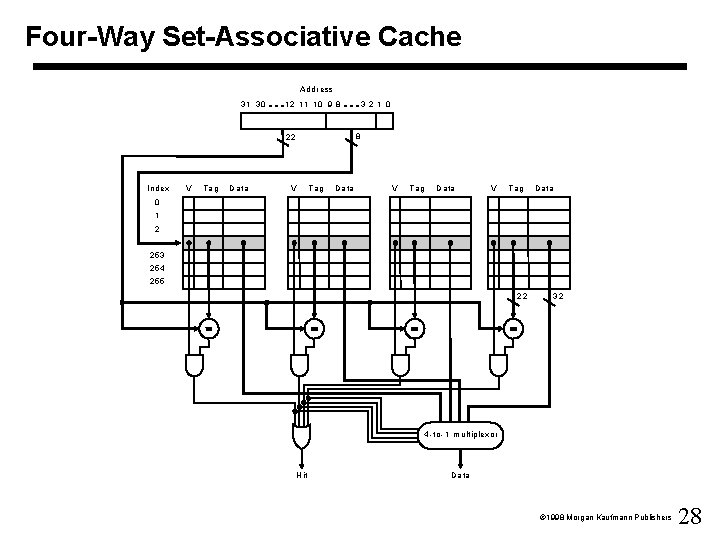

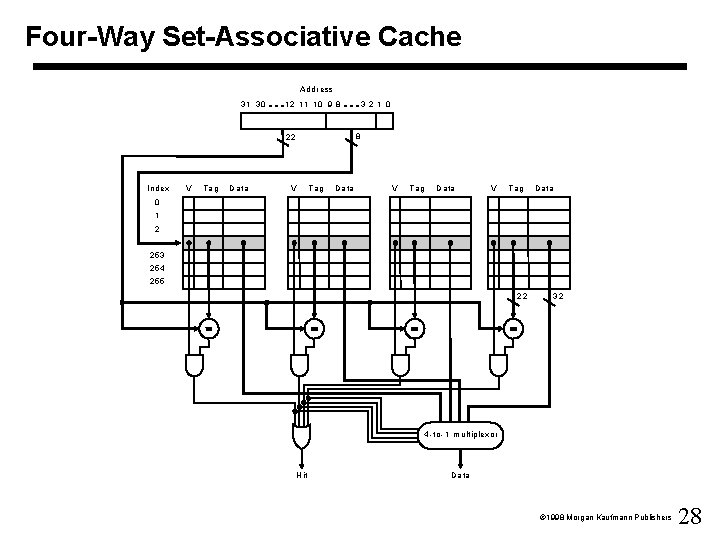

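Four-Way Set-Associative Cache

(Figure: the address is divided into a 22-bit tag (bits 31–10), an 8-bit index (bits 9–2), and the byte offset (bits 1–0). The index selects one of 256 sets (0–255); each set holds four entries of valid bit, 22-bit tag, and 32-bit data. The four tags are compared in parallel, and a 4-to-1 multiplexor selects the data on a hit.)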
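A sketch of the lookup for this cache (256 sets, four ways, 22-bit tag, 8-bit index), assuming byte addresses and a hypothetical list-of-lists layout; the loop stands in for the four parallel comparators and the 4-to-1 multiplexor:

```python
NUM_SETS, WAYS = 256, 4
BYTE_OFFSET_BITS, INDEX_BITS = 2, 8

# cache[set][way] = [valid, tag, data]; every entry starts invalid
cache = [[[False, 0, 0] for _ in range(WAYS)] for _ in range(NUM_SETS)]

def fields(addr):
    """Return (tag, index) for a 32-bit byte address."""
    index = (addr >> BYTE_OFFSET_BITS) & (NUM_SETS - 1)
    tag = addr >> (BYTE_OFFSET_BITS + INDEX_BITS)   # upper 22 bits
    return tag, index

def lookup(addr):
    """Return (hit, data); hardware compares the four tags in parallel."""
    tag, index = fields(addr)
    for valid, stored_tag, data in cache[index]:
        if valid and stored_tag == tag:
            return True, data
    return False, None

# Install one word in way 2 of its set, then probe it and a conflicting address.
addr = 0x12345678
tag, index = fields(addr)
cache[index][2] = [True, tag, 42]
print(lookup(addr))          # (True, 42)
print(lookup(addr + 1024))   # (False, None): same set, different tag
```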

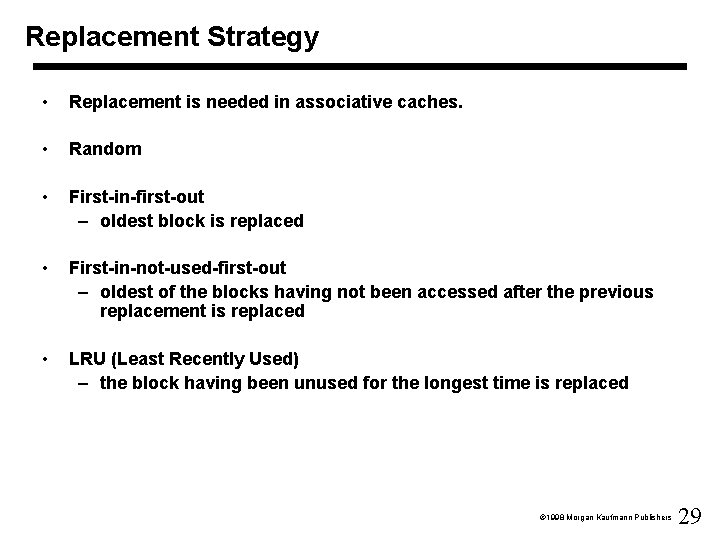

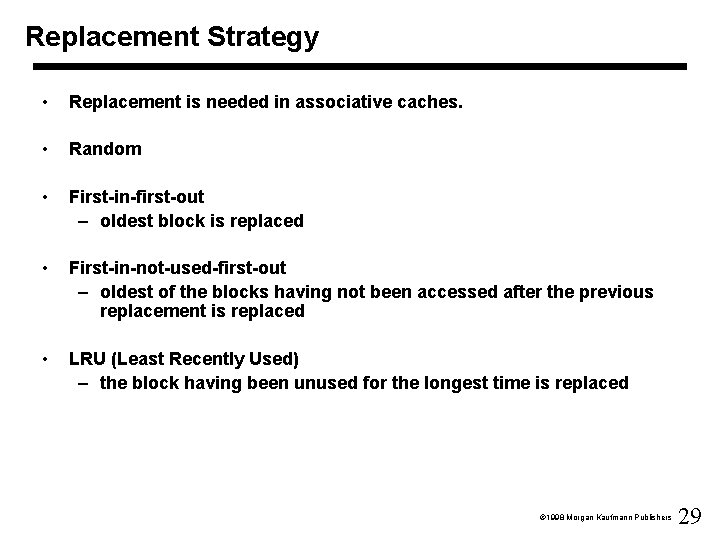

Replacement Strategy

- Replacement is needed in associative caches.
- Random
- First-in-first-out (FIFO)
  - the oldest block is replaced
- First-in-not-used-first-out
  - the oldest of the blocks that have not been accessed since the previous replacement is replaced
- LRU (Least Recently Used)
  - the block that has been unused for the longest time is replaced
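The policies can be compared on a single set. This sketch models FIFO, LRU, and random replacement for one four-way set, on a hypothetical trace of block tags; first-in-not-used-first-out is not modeled, and `count_misses` is an illustrative name:

```python
import random

def count_misses(trace, ways, policy, seed=0):
    """Simulate one cache set with `ways` entries; return the number of misses."""
    rng = random.Random(seed)
    blocks = []        # resident tags; index 0 is the oldest (FIFO) or least recent (LRU)
    misses = 0
    for tag in trace:
        if tag in blocks:
            if policy == "LRU":
                blocks.remove(tag)
                blocks.append(tag)      # move to the most recently used end
            continue
        misses += 1
        if len(blocks) == ways:
            if policy == "random":
                blocks.pop(rng.randrange(ways))
            else:
                blocks.pop(0)           # FIFO: oldest arrival; LRU: least recently used
        blocks.append(tag)
    return misses

trace = [0, 1, 2, 3, 0, 4, 0, 1]   # hypothetical block tags mapping to one set
for policy in ("FIFO", "LRU", "random"):
    print(policy, count_misses(trace, ways=4, policy=policy))
```

On this trace LRU misses 6 times and FIFO 7 times, because FIFO evicts block 0 even though it was just reused; random replacement gives between 5 and 7 misses, depending on which block it happens to evict.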

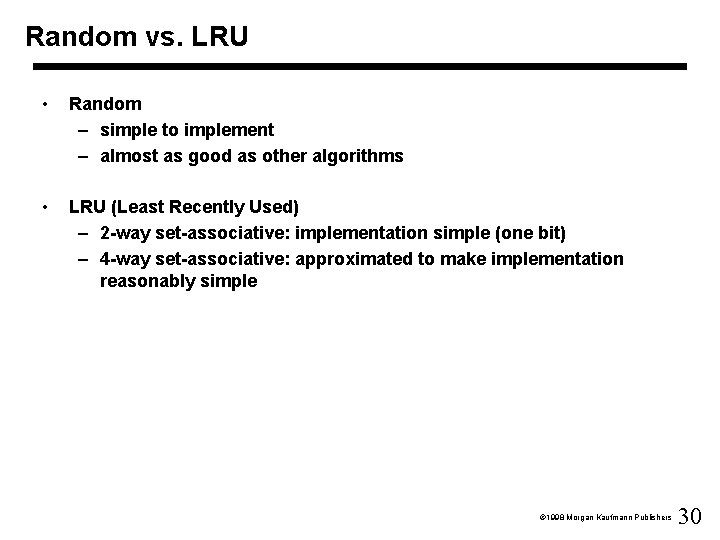

Random vs. LRU

- Random
  - simple to implement
  - almost as good as other algorithms
- LRU (Least Recently Used)
  - 2-way set-associative: simple implementation (one bit per set)
  - 4-way set-associative: approximated to keep the implementation reasonably simple
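For two ways, true LRU needs only one bit per set: the bit names the way that was not used most recently. A minimal sketch (hypothetical class `TwoWaySet`):

```python
class TwoWaySet:
    """One set of a 2-way set-associative cache with a single LRU bit."""

    def __init__(self):
        self.tags = [None, None]
        self.lru = 0          # the way to replace on the next miss

    def access(self, tag):
        """Return True on a hit; on a miss, replace the LRU way."""
        for way in (0, 1):
            if self.tags[way] == tag:
                self.lru = 1 - way    # the other way is now least recently used
                return True
        way = self.lru
        self.tags[way] = tag
        self.lru = 1 - way            # the newly filled way is most recently used
        return False

s = TwoWaySet()
print([s.access(t) for t in (5, 9, 5, 7, 9)])  # [False, False, True, False, False]
print(s.tags)                                   # [9, 7]
```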

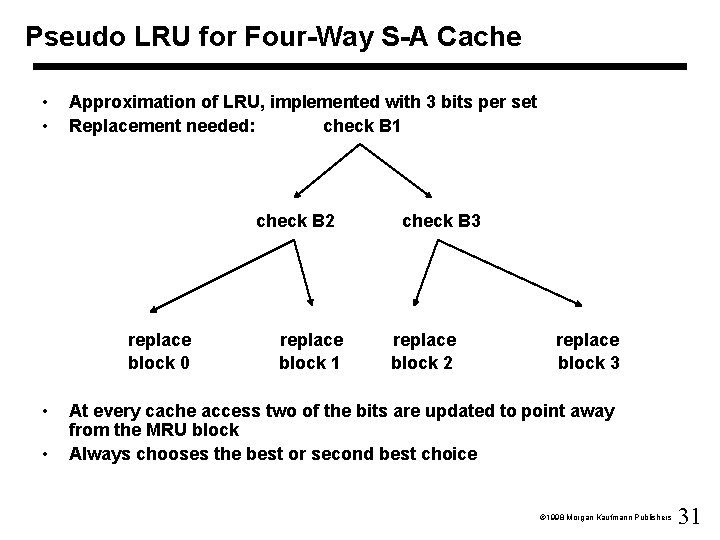

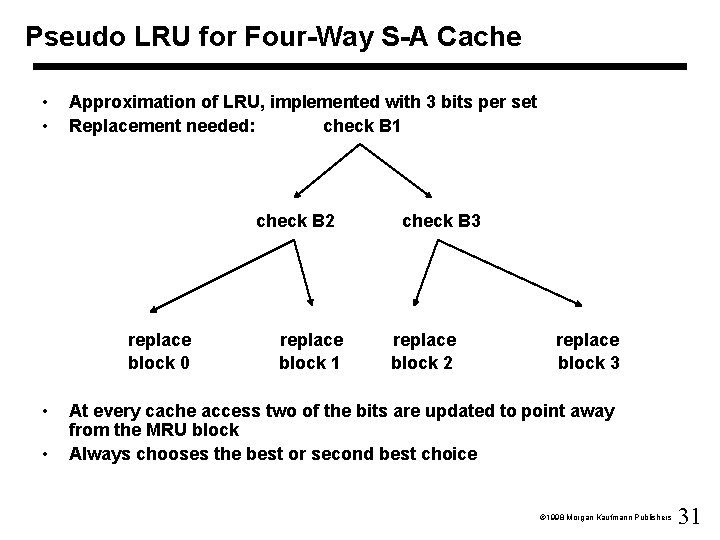

Pseudo-LRU for a Four-Way Set-Associative Cache

- Approximation of LRU, implemented with 3 bits per set (B1, B2, B3)
- When a replacement is needed:
  - check B1 to choose between blocks 0/1 and blocks 2/3
  - for blocks 0/1, check B2: replace block 0 or block 1
  - for blocks 2/3, check B3: replace block 2 or block 3
- At every cache access, two of the bits are updated to point away from the most recently used (MRU) block.
- Always chooses the best or second-best block to replace.
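A sketch of the three-bit tree, assuming each bit names the side to replace next (0 = left, 1 = right); that encoding and the class name `TreePLRU4` are illustrative choices, not taken from the slide:

```python
class TreePLRU4:
    """Tree pseudo-LRU state for one 4-way set: bits B1, B2, B3.

    Assumed encoding: each bit names the side to replace next (0 = left, 1 = right).
    B1 chooses between blocks {0, 1} and {2, 3}; B2 chooses within {0, 1};
    B3 chooses within {2, 3}.
    """

    def __init__(self):
        self.b1 = self.b2 = self.b3 = 0

    def victim(self):
        """Follow the bits from B1 down to the block to replace."""
        if self.b1 == 0:
            return self.b2              # block 0 or 1
        return 2 + self.b3              # block 2 or 3

    def touch(self, block):
        """On an access, update two bits to point away from the MRU block."""
        if block < 2:
            self.b1 = 1                 # next victim comes from {2, 3}
            self.b2 = 1 - block         # the other block of {0, 1}
        else:
            self.b1 = 0                 # next victim comes from {0, 1}
            self.b3 = 1 - (block - 2)   # the other block of {2, 3}

p = TreePLRU4()
for block in (0, 1, 2, 3):
    p.touch(block)
print(p.victim())   # 0
p.touch(0)
print(p.victim())   # 2
```

After accesses to blocks 0, 1, 2, 3 the victim is block 0, the true LRU block. After one more access to block 0 the true LRU block is 1, but the tree picks block 2, the second-best choice.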

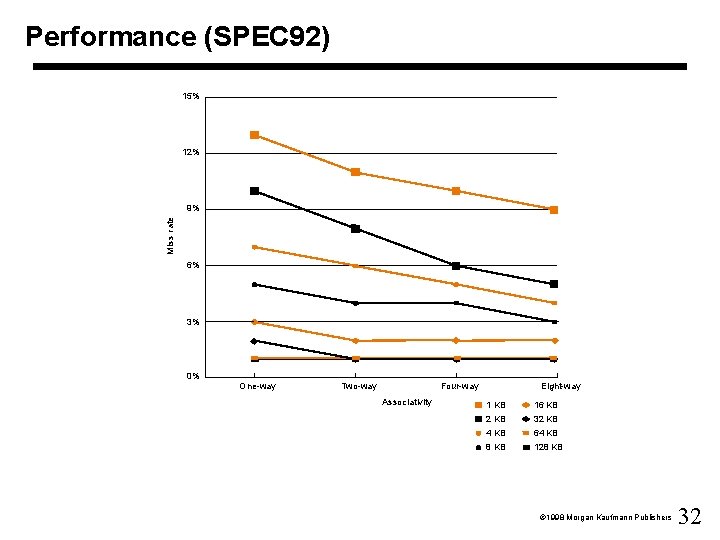

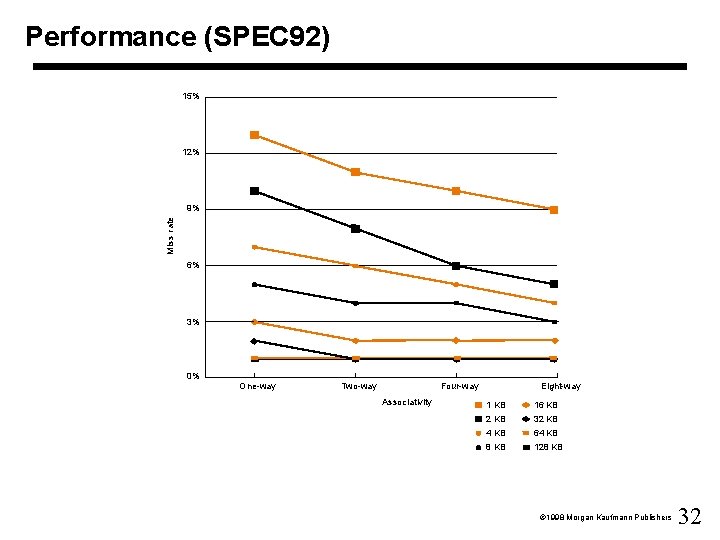

Performance (SPEC92)

(Figure: miss rate, from 0% to 15%, versus associativity (one-way, two-way, four-way, eight-way) for cache sizes of 1 KB, 2 KB, 4 KB, 8 KB, 16 KB, 32 KB, 64 KB, and 128 KB.)

Multilevel Caches

- Usually two levels:
  - the L1 cache is often on the same chip as the processor
  - the L2 cache is usually off-chip
  - the miss penalty goes down if the data is in the L2 cache
- Example:
  - CPI of 1.0 on a 500 MHz machine (2 ns clock cycle) with a 200 ns main memory access time: miss penalty of 100 clock cycles
  - add a second-level cache with a 20 ns access time: miss penalty of 10 clock cycles
- Using multilevel caches:
  - minimise the hit time on L1
  - minimise the miss rate on L2
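The cycle counts follow from the 2 ns clock period of a 500 MHz machine. The sketch below also estimates the CPI with and without the L2 cache; the miss rates are hypothetical (5% of instructions miss in L1, 2% miss in both levels), and the model assumes every L1 miss pays the L2 access time, with L2 misses additionally paying the main memory access time:

```python
def to_cycles(time_ns, clock_mhz):
    """Convert an access time to clock cycles (500 MHz gives a 2 ns cycle)."""
    return time_ns * clock_mhz / 1000

CLOCK_MHZ = 500
MAIN_MEMORY_PENALTY = to_cycles(200, CLOCK_MHZ)   # 100 cycles
L2_PENALTY = to_cycles(20, CLOCK_MHZ)             # 10 cycles

# Hypothetical miss rates (per instruction), not from the slide
L1_MISSES = 0.05      # references that miss in L1
BOTH_MISS = 0.02      # references that miss in L1 and in L2

cpi_l1_only = 1.0 + L1_MISSES * MAIN_MEMORY_PENALTY
cpi_with_l2 = 1.0 + L1_MISSES * L2_PENALTY + BOTH_MISS * MAIN_MEMORY_PENALTY
print(f"{cpi_l1_only:.2f} {cpi_with_l2:.2f}")   # 6.00 3.50
```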

Virtual Memory

- Main memory can act as a “cache” for secondary storage:
  - a large virtual address space is used in each program
  - a smaller main memory
- Motivations
  - efficient and safe sharing of memory among multiple programs
  - removing the programming burden of a small main memory
- Advantages
  - the illusion of having more physical memory
  - program relocation
  - protection

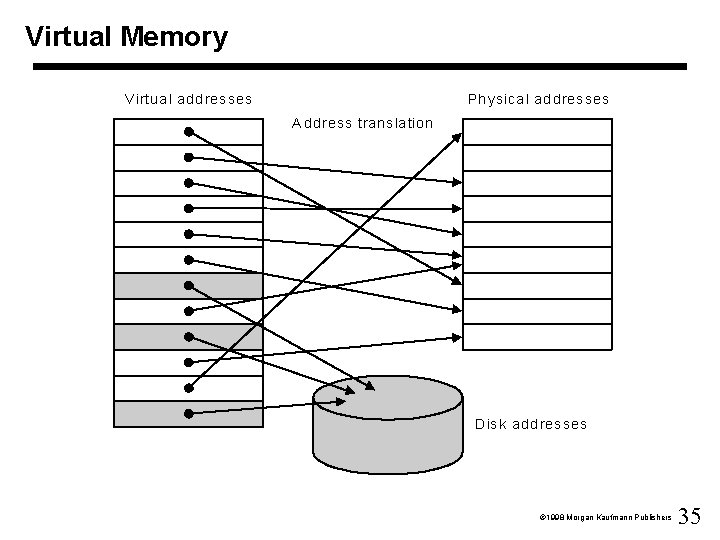

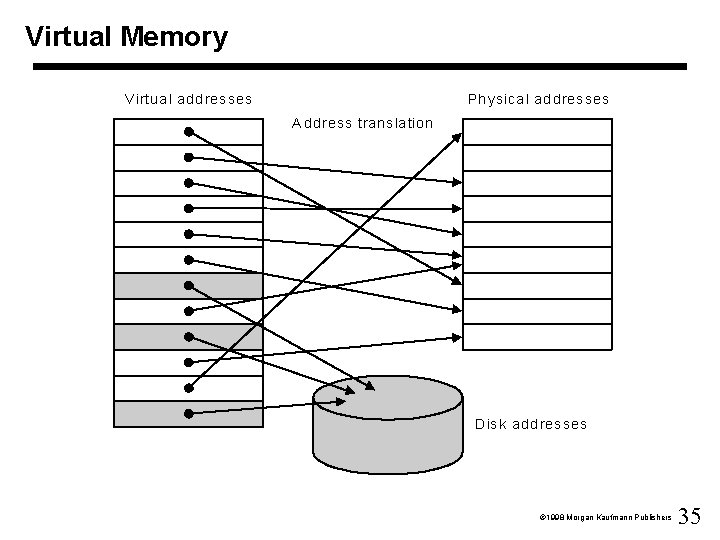

Virtual Memory

(Figure: address translation maps virtual addresses either to physical addresses or to disk addresses.)

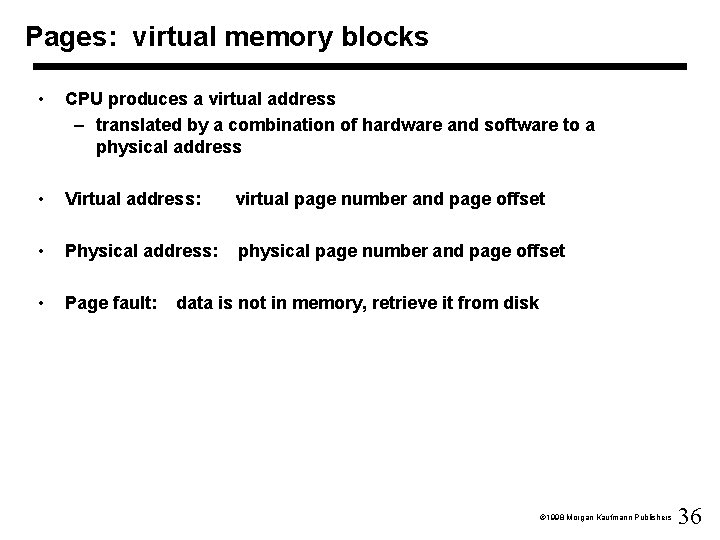

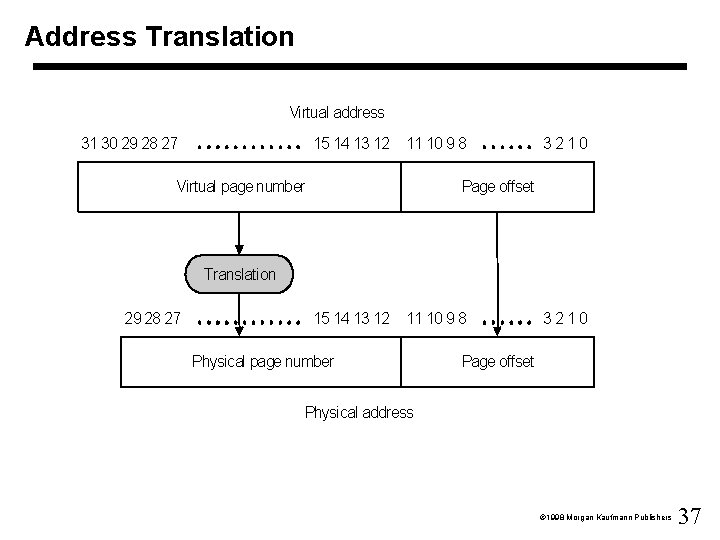

Pages: Virtual Memory Blocks

- The CPU produces a virtual address
  - translated to a physical address by a combination of hardware and software
- Virtual address: virtual page number and page offset
- Physical address: physical page number and page offset
- Page fault: the data is not in memory and must be retrieved from disk

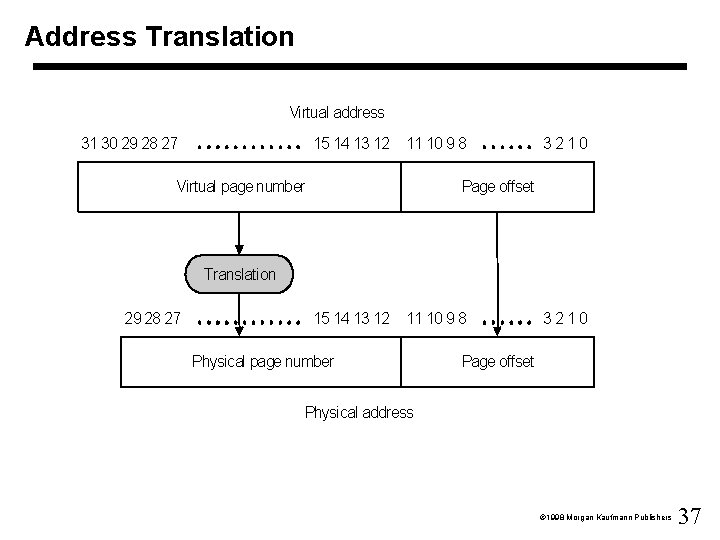

Address Translation

(Figure: the virtual address consists of a virtual page number (bits 31–12) and a page offset (bits 11–0). Translation replaces the virtual page number with a physical page number (bits 29–12); the page offset is copied unchanged into the physical address.)

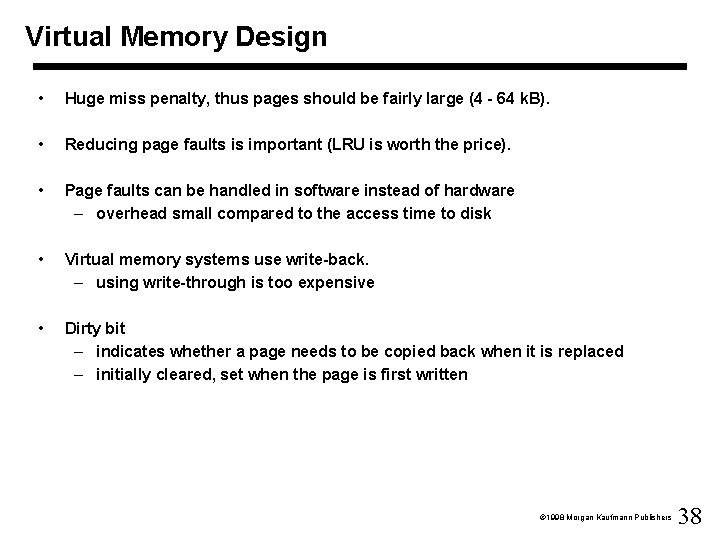

Virtual Memory Design

- The miss penalty is huge, so pages should be fairly large (4–64 KB).
- Reducing page faults is important (LRU is worth the price).
- Page faults can be handled in software instead of hardware
  - the overhead is small compared to the disk access time
- Virtual memory systems use write-back
  - write-through is too expensive
- Dirty bit
  - indicates whether a page needs to be copied back when it is replaced
  - initially cleared, set when the page is first written

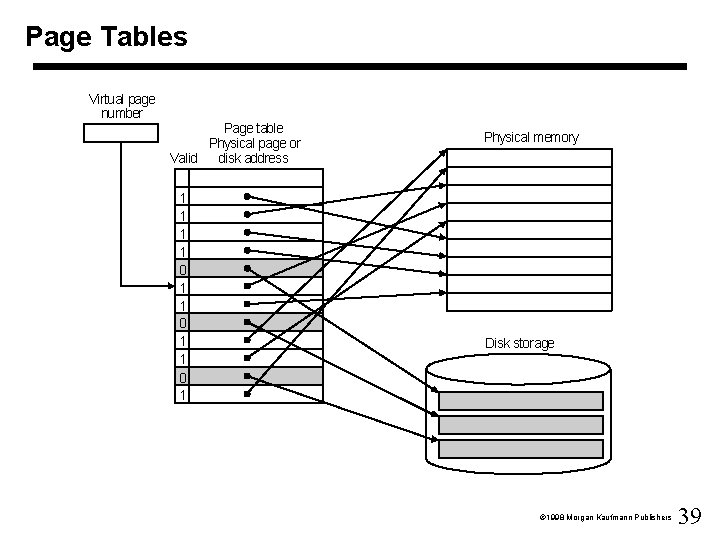

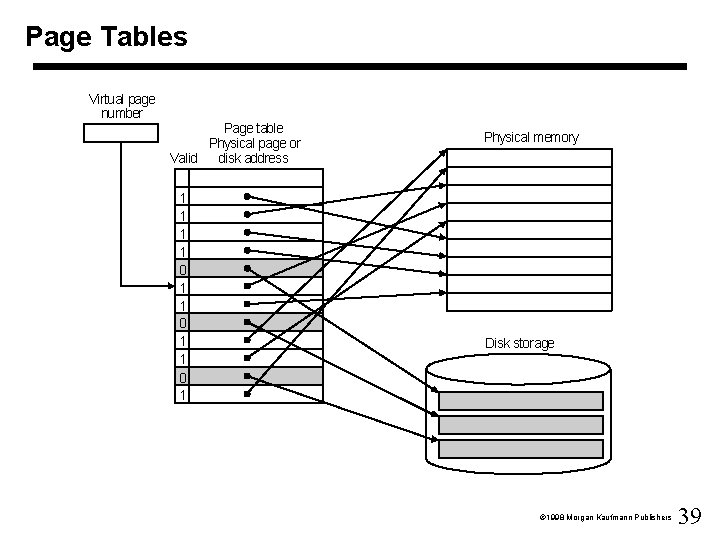

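Page Tables

(Figure: the page table is indexed by the virtual page number; each entry holds a valid bit and either a physical page number or a disk address. Entries with the valid bit set point to pages in physical memory; the others point to disk storage.)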

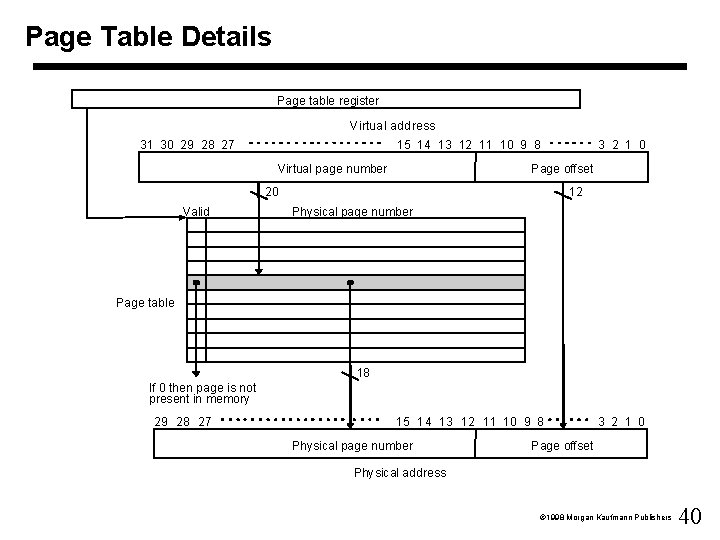

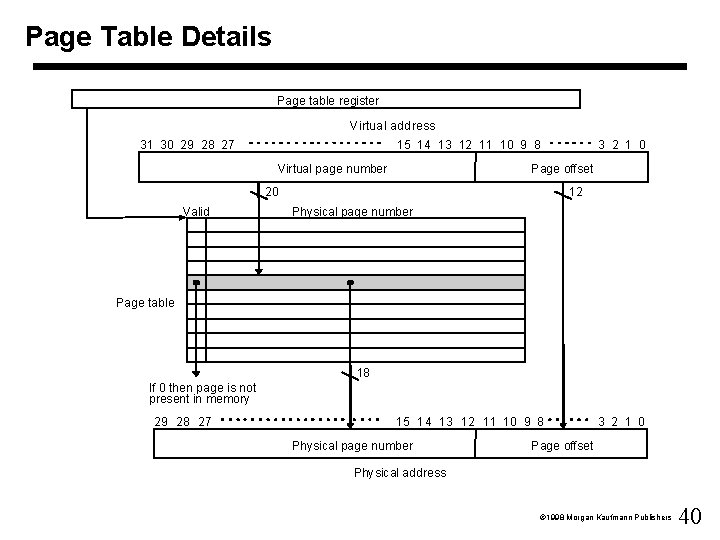

Page Table Details

(Figure: the page table register points to the page table. The 20-bit virtual page number (bits 31–12 of the virtual address) indexes the page table, whose entries hold a valid bit and an 18-bit physical page number; if the valid bit is 0, the page is not present in memory. The physical address is the physical page number (bits 29–12) followed by the unchanged 12-bit page offset (bits 11–0).)
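A sketch of this lookup, assuming a 12-bit page offset and a page table given as a Python list of (valid, physical page number) pairs indexed by virtual page number; the list plays the role of the table that the page table register points to, and `PageFault` and `translate` are hypothetical names:

```python
PAGE_OFFSET_BITS = 12   # 4 KB pages

class PageFault(Exception):
    """Raised when the valid bit of the page table entry is 0."""

def translate(vaddr, page_table):
    """Map a virtual address to a physical address.

    page_table: list indexed by virtual page number of (valid, ppn) pairs.
    """
    vpn = vaddr >> PAGE_OFFSET_BITS
    offset = vaddr & ((1 << PAGE_OFFSET_BITS) - 1)
    valid, ppn = page_table[vpn]
    if not valid:
        raise PageFault(f"virtual page {vpn} is not present in memory")
    return (ppn << PAGE_OFFSET_BITS) | offset   # the offset is copied unchanged

page_table = [(True, 5), (False, 0), (True, 3)]   # toy table for virtual pages 0-2
print(hex(translate(0x2ABC, page_table)))        # 0x3abc
# translate(0x1ABC, page_table) would raise PageFault (valid bit is 0)
```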

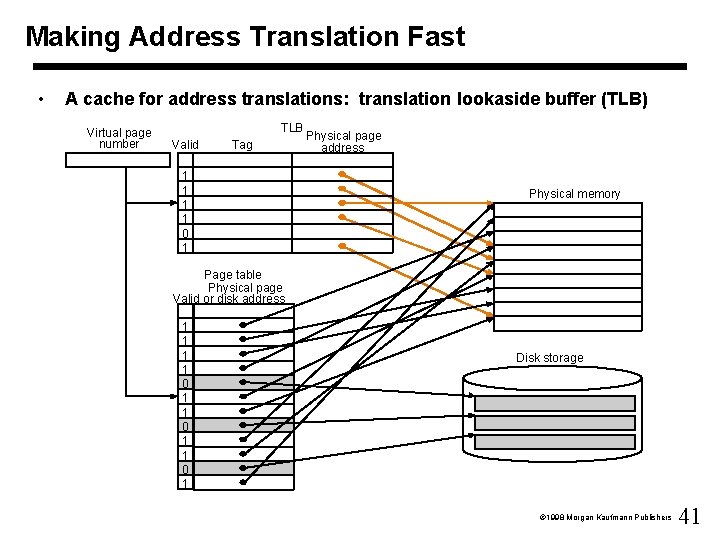

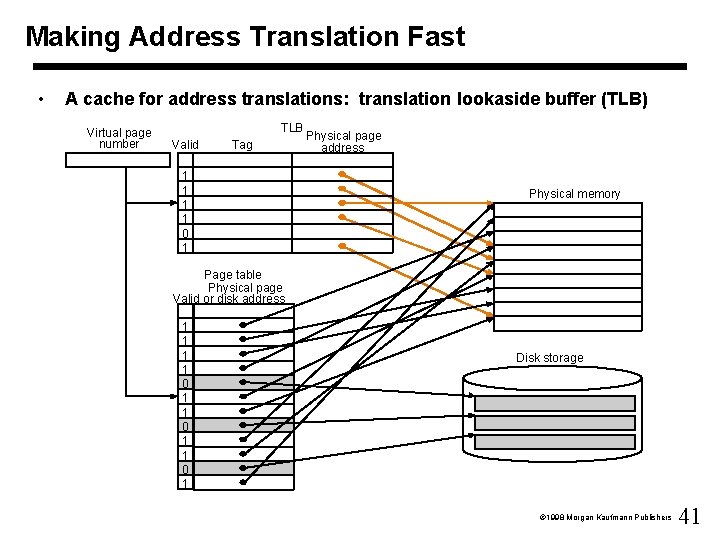

Making Address Translation Fast

- A cache for address translations: the translation lookaside buffer (TLB)

(Figure: the TLB, indexed by the virtual page number, holds a valid bit, a tag, and a physical page address for each entry, pointing into physical memory. The page table holds a valid bit and a physical page or disk address for each entry, pointing into physical memory or disk storage.)
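A sketch of a TLB in front of the page table, assuming 4 KB pages, a tiny fully associative TLB with LRU replacement, and a dict as the page table; the class name, sizes, and mappings are hypothetical:

```python
from collections import OrderedDict

PAGE_OFFSET_BITS = 12   # 4 KB pages

class TLB:
    """Tiny fully associative TLB with LRU replacement."""

    def __init__(self, entries, page_table):
        self.entries = entries
        self.page_table = page_table      # vpn -> physical page number
        self.map = OrderedDict()          # vpn -> ppn, least recently used first
        self.hits = self.misses = 0

    def translate(self, vaddr):
        vpn = vaddr >> PAGE_OFFSET_BITS
        offset = vaddr & ((1 << PAGE_OFFSET_BITS) - 1)
        if vpn in self.map:
            self.hits += 1
            self.map.move_to_end(vpn)     # mark as most recently used
        else:
            self.misses += 1
            ppn = self.page_table[vpn]    # a KeyError stands in for a page fault
            if len(self.map) == self.entries:
                self.map.popitem(last=False)   # evict the LRU entry
            self.map[vpn] = ppn
        return (self.map[vpn] << PAGE_OFFSET_BITS) | offset

page_table = {0x10: 0x2, 0x11: 0x7}   # hypothetical mappings
tlb = TLB(entries=2, page_table=page_table)
for vaddr in range(0x10000, 0x12000, 4):   # sweep two 4 KB pages word by word
    tlb.translate(vaddr)
print(tlb.hits, tlb.misses)   # 2046 2
```

Sweeping two pages word by word costs only two TLB misses for 2048 references, which is the locality argument applied to address translations.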

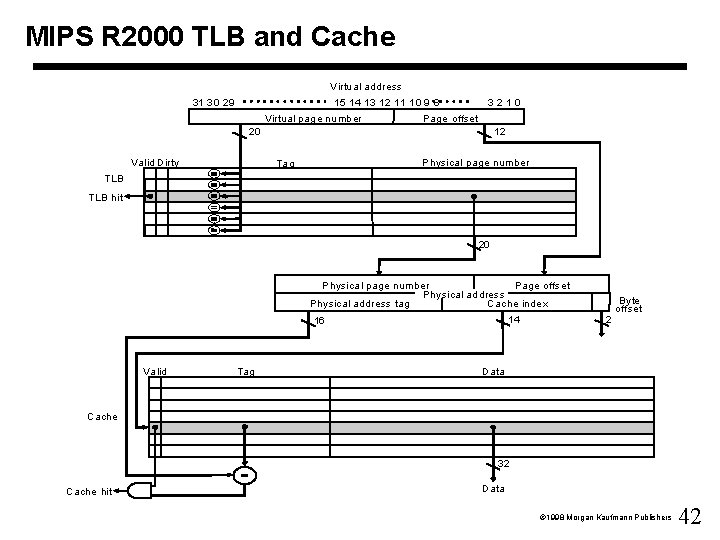

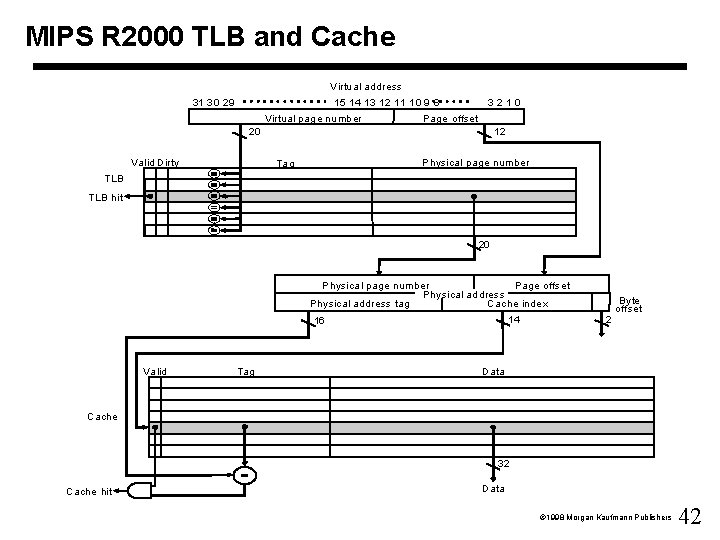

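MIPS R2000 TLB and Cache

(Figure: the 32-bit virtual address consists of a 20-bit virtual page number (bits 31–12) and a 12-bit page offset. Each TLB entry holds valid and dirty bits, a tag, and a 20-bit physical page number; on a TLB hit, the physical page number and the page offset form the physical address. The physical address is divided into a 16-bit physical address tag, a 14-bit cache index, and a 2-bit byte offset; each cache entry holds a valid bit, a 16-bit tag, and 32 bits of data, and a tag match signals a cache hit.)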

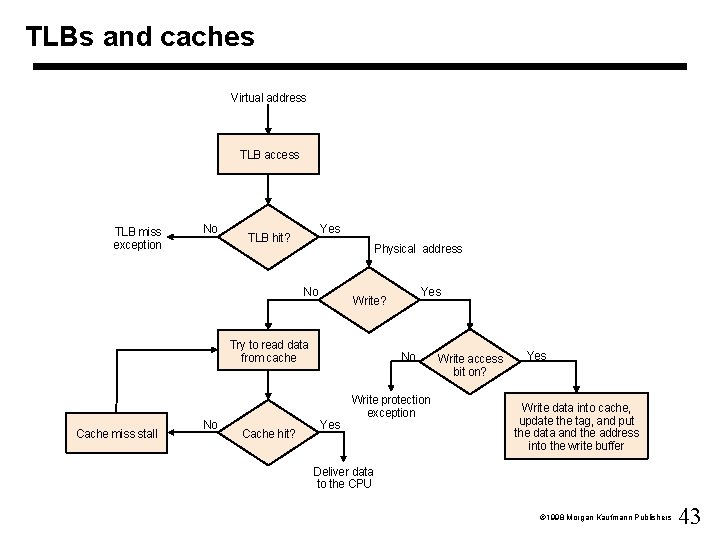

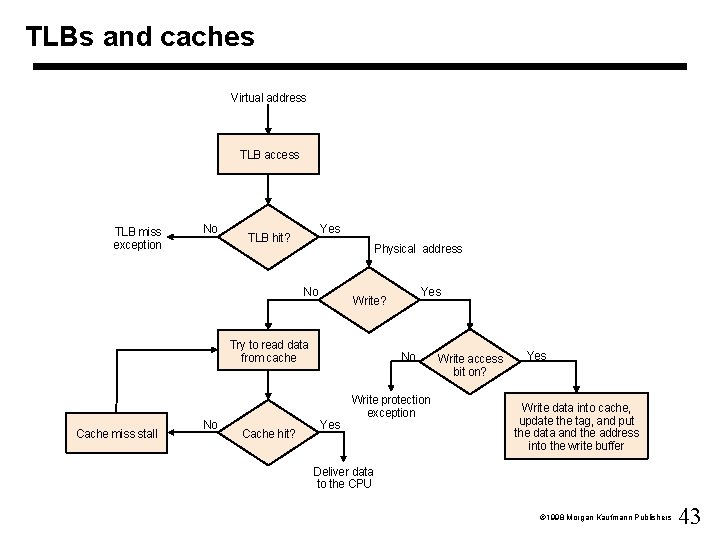

TLBs and Caches

Processing a memory reference (flowchart):

- Virtual address → TLB access → TLB hit?
  - No: TLB miss exception.
  - Yes: physical address → Write?
    - No: try to read data from the cache → Cache hit?
      - No: cache miss stall.
      - Yes: deliver data to the CPU.
    - Yes: write access bit on?
      - No: write protection exception.
      - Yes: write data into the cache, update the tag, and put the data and the address into the write buffer.
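The flowchart can be traced as a function. This is a sketch under stated assumptions: 4 KB pages, the TLB as a dict from virtual page number to (physical page number, write access bit), the cache as a dict from physical address to data, and a list as the write buffer; all names are hypothetical.

```python
PAGE_OFFSET_BITS = 12

class TLBMissException(Exception):
    """No TLB entry for the virtual page (handled by the operating system)."""

class WriteProtectionException(Exception):
    """Write to a page whose write access bit is off."""

def memory_access(vaddr, tlb, cache, write_buffer, write=False, data=None):
    """Follow the flowchart for one reference.

    Returns (hit, data) for a read and (True, None) for a completed write.
    """
    vpn = vaddr >> PAGE_OFFSET_BITS
    offset = vaddr & ((1 << PAGE_OFFSET_BITS) - 1)
    if vpn not in tlb:                          # TLB hit? No
        raise TLBMissException(hex(vaddr))
    ppn, write_access = tlb[vpn]
    paddr = (ppn << PAGE_OFFSET_BITS) | offset  # physical address
    if not write:
        if paddr in cache:                      # cache hit: deliver data to the CPU
            return True, cache[paddr]
        return False, None                      # cache miss: the CPU stalls
    if not write_access:
        raise WriteProtectionException(hex(vaddr))
    cache[paddr] = data                         # write into the cache, update the tag
    write_buffer.append((paddr, data))          # data and address go to the write buffer
    return True, None

tlb = {0x10: (0x2, True), 0x11: (0x3, False)}   # hypothetical entries
cache, write_buffer = {0x2004: 99}, []
print(memory_access(0x10004, tlb, cache, write_buffer))   # (True, 99)
print(memory_access(0x10008, tlb, cache, write_buffer))   # (False, None)
memory_access(0x10008, tlb, cache, write_buffer, write=True, data=7)
print(write_buffer)                                       # [(8200, 7)]
```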

Protection and Virtual Memory

- Multiple processes and the operating system
  - share a single main memory
  - memory protection is provided
- A user process cannot access other processes’ data.
- The operating system takes care of system administration
  - page tables, TLBs

Hardware Requirements for Protection

- At least two operating modes
  - user process
  - operating system process (also called kernel, supervisor, or executive process)
- A portion of the CPU state that a user process can read but not write
  - user/supervisor mode bit
  - page table pointer
  - TLB
- Mechanisms for going from user mode to supervisor mode, and vice versa
  - system call exception
  - return from exception

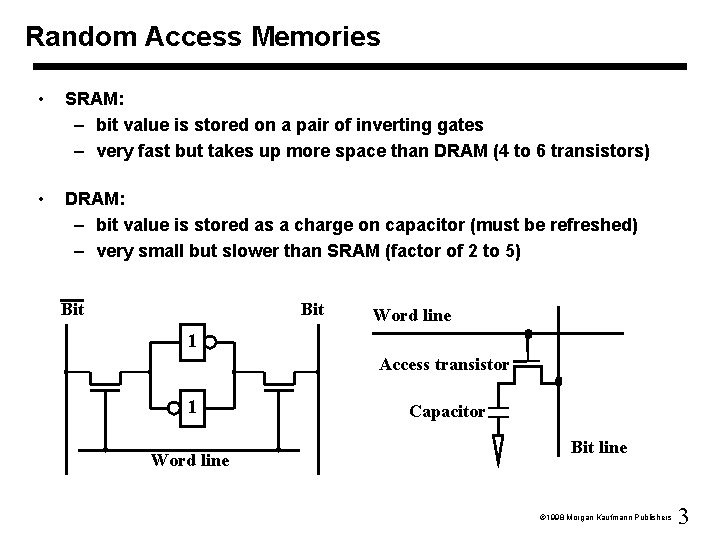

Handling Page Faults and TLB Misses

- TLB miss
  - page present in memory: create the missing TLB entry
  - page not present in memory: page fault; transfer control to the operating system
- Look at the matching page table entry
  - valid bit on: copy the page table entry from memory into the TLB
  - valid bit off: page fault exception
- Page fault
  - EPC contains the virtual address of the instruction that caused the fault
  - choose a page to be replaced, then find the faulting page and move it into memory
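A toy model of the three cases on this slide, assuming page-granular accesses, LRU choice of the page to replace (as recommended on the Virtual Memory Design slide), and an unbounded TLB; disk transfers are only counted, and all names are hypothetical:

```python
class ToyVM:
    """Toy model of TLB-miss and page-fault handling.

    page_table: vpn -> {"valid", "frame", "dirty"}; tlb: vpn -> frame.
    """

    def __init__(self, num_frames):
        self.page_table = {}
        self.tlb = {}
        self.free_frames = list(range(num_frames))
        self.lru = []               # resident vpns, least recently used first
        self.page_faults = 0
        self.disk_writes = 0

    def access(self, vpn, write=False):
        """Return the physical frame for `vpn`, handling misses on the way."""
        if vpn not in self.tlb:                      # TLB miss
            pte = self.page_table.get(vpn)
            if pte is None or not pte["valid"]:      # valid bit off: page fault
                self.handle_page_fault(vpn)
                pte = self.page_table[vpn]
            self.tlb[vpn] = pte["frame"]             # copy the entry into the TLB
        if write:
            self.page_table[vpn]["dirty"] = True     # set when the page is written
        if vpn in self.lru:
            self.lru.remove(vpn)
        self.lru.append(vpn)
        return self.tlb[vpn]

    def handle_page_fault(self, vpn):
        self.page_faults += 1
        if not self.free_frames:                     # choose a page to replace
            victim = self.lru.pop(0)
            old = self.page_table[victim]
            if old["dirty"]:
                self.disk_writes += 1                # write the dirty page back
            old["valid"] = False
            self.tlb.pop(victim, None)               # drop the stale translation
            self.free_frames.append(old["frame"])
        frame = self.free_frames.pop(0)              # reading from disk is not modeled
        self.page_table[vpn] = {"valid": True, "frame": frame, "dirty": False}

vm = ToyVM(num_frames=2)
vm.access(1, write=True)   # page fault: page 1 -> frame 0, marked dirty
vm.access(2)               # page fault: page 2 -> frame 1
vm.access(3)               # page fault: evicts LRU page 1, written back since dirty
print(vm.page_faults, vm.disk_writes, sorted(vm.tlb))   # 3 1 [2, 3]
vm.tlb.clear()             # e.g. after a TLB flush
vm.access(2)               # TLB miss, but the valid bit is on: no page fault
print(vm.page_faults)      # 3
```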