
Memory hierarchy

Memory

- The operating system and the CPU's memory management unit give each process the “illusion” of a uniform, dedicated memory space
  - e.g. 0x0 to 0xFFFFFFFFFFFFFFFF for the 64-bit address space of x86-64
- Allows easy multitasking
- Hides the underlying memory organization

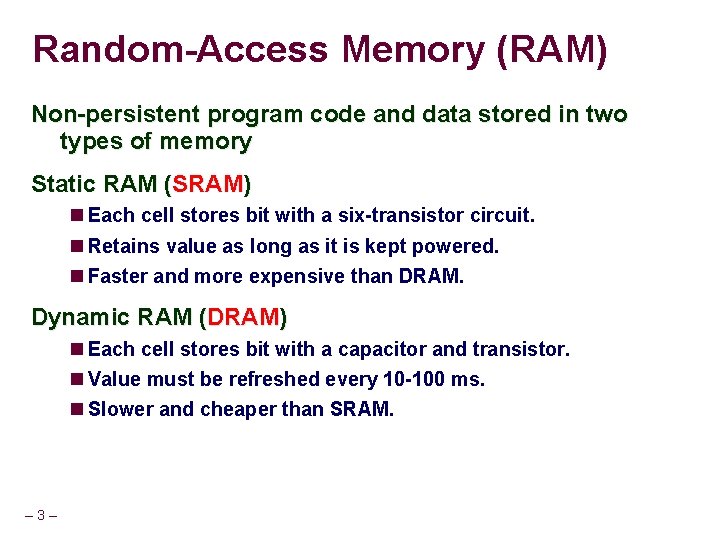

Random-Access Memory (RAM)

- Non-persistent program code and data are stored in two types of memory
- Static RAM (SRAM)
  - Each cell stores a bit with a six-transistor circuit.
  - Retains its value as long as it is kept powered.
  - Faster and more expensive than DRAM.
- Dynamic RAM (DRAM)
  - Each cell stores a bit with a capacitor and a transistor.
  - Value must be refreshed every 10-100 ms.
  - Slower and cheaper than SRAM.

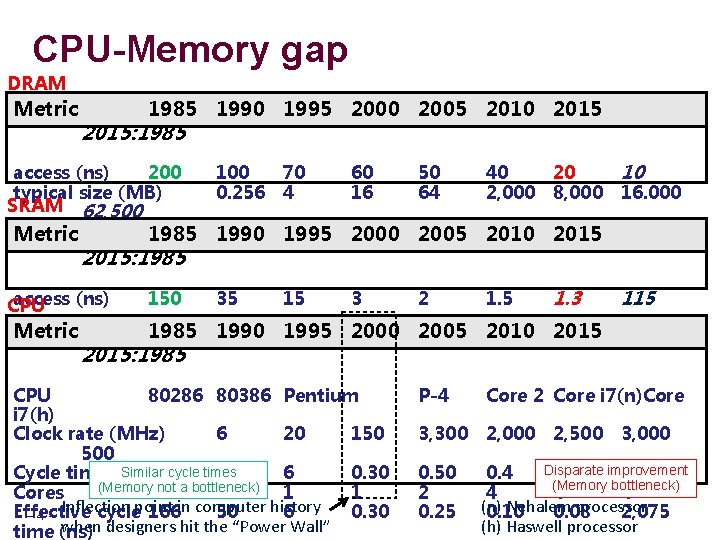

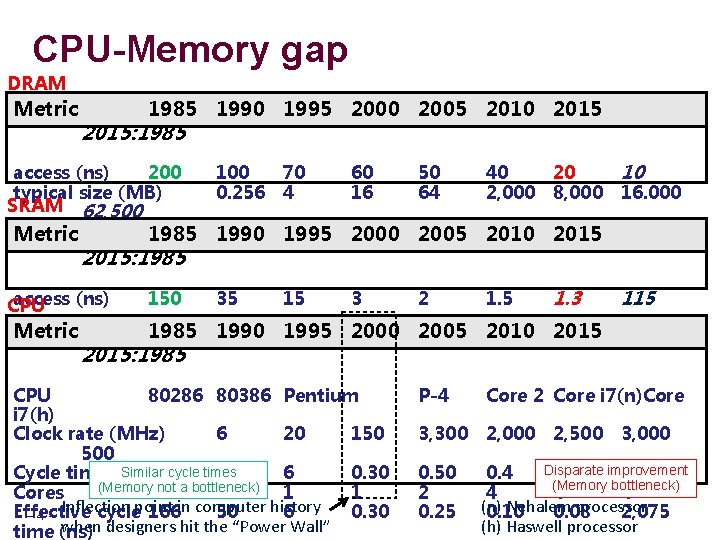

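CPU-Memory gap

DRAM

| Metric | 1985 | 1990 | 1995 | 2000 | 2005 | 2010 | 2015 | 2015:1985 |
|---|---|---|---|---|---|---|---|---|
| access (ns) | 200 | 100 | 70 | 60 | 50 | 40 | 20 | 10 |
| typical size (MB) | 0.256 | 4 | 16 | 64 | 2,000 | 8,000 | 16,000 | 62,500 |

SRAM

| Metric | 1985 | 1990 | 1995 | 2000 | 2005 | 2010 | 2015 | 2015:1985 |
|---|---|---|---|---|---|---|---|---|
| access (ns) | 150 | 35 | 15 | 3 | 2 | 1.5 | 1.3 | 115 |

CPU

| Metric | 1985 | 1990 | 1995 | 2000 | 2005 | 2010 | 2015 | 2015:1985 |
|---|---|---|---|---|---|---|---|---|
| CPU | 80286 | 80386 | Pentium | P-4 | Core 2 | Core i7 (n) | Core i7 (h) | |
| Clock rate (MHz) | 6 | 20 | 150 | 3,300 | 2,000 | 2,500 | 3,000 | 500 |
| Cycle time (ns) | 166 | 50 | 6 | 0.30 | 0.50 | 0.4 | 0.33 | 500 |
| Cores | 1 | 1 | 1 | 1 | 2 | 4 | 4 | 4 |
| Effective cycle time (ns) | 166 | 50 | 6 | 0.30 | 0.25 | 0.10 | 0.08 | 2,075 |

- (n) Nehalem processor, (h) Haswell processor
- 1985: CPU and memory have similar cycle times (memory is not a bottleneck)
- 2015: disparate improvement (memory is a bottleneck)
- The P-4 column marks the inflection point in computer history when designers hit the “Power Wall”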

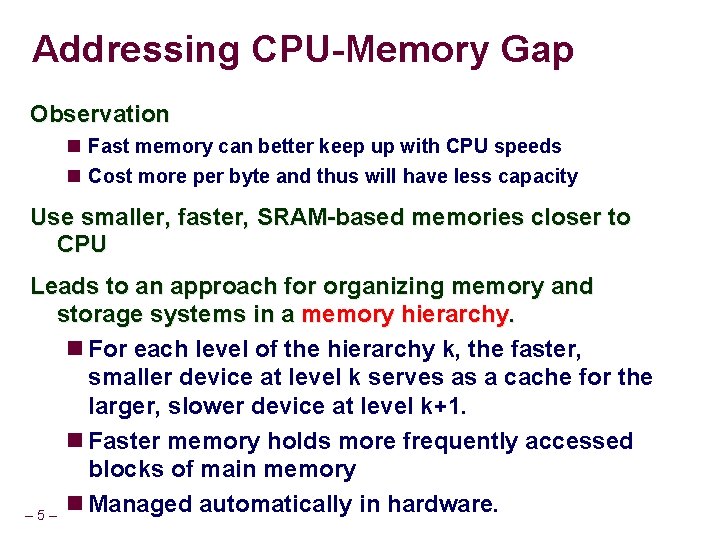

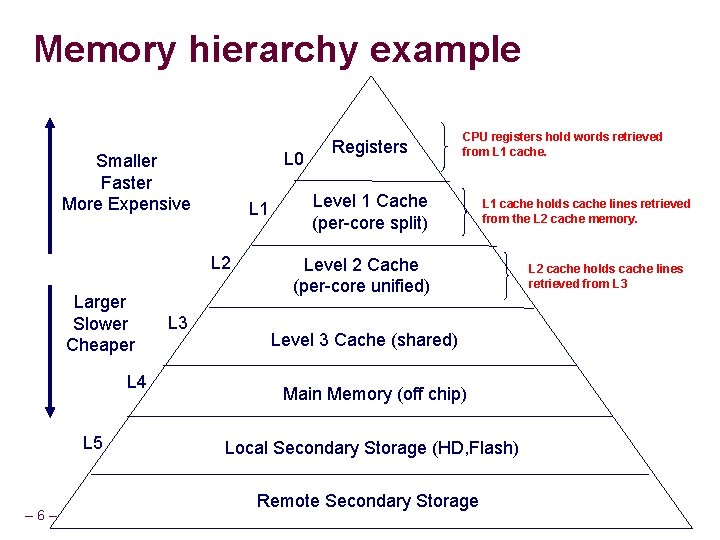

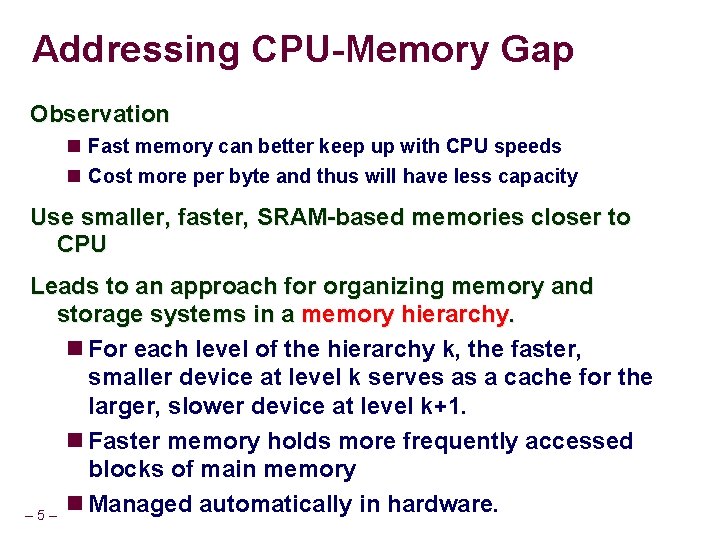

Addressing CPU-Memory Gap

- Observation
  - Fast memory can better keep up with CPU speeds
  - But it costs more per byte and thus has less capacity
- Use smaller, faster, SRAM-based memories closer to the CPU
- This leads to organizing memory and storage systems as a memory hierarchy
  - For each level k of the hierarchy, the faster, smaller device at level k serves as a cache for the larger, slower device at level k+1
  - Faster memory holds the more frequently accessed blocks of main memory
  - Managed automatically in hardware

Memory hierarchy example

- Devices near the top (L0) are smaller, faster, and more expensive; devices near the bottom are larger, slower, and cheaper
- L0: Registers. CPU registers hold words retrieved from the L1 cache.
- L1: Level 1 cache (per-core, split). The L1 cache holds cache lines retrieved from the L2 cache.
- L2: Level 2 cache (per-core, unified). The L2 cache holds cache lines retrieved from L3.
- L3: Level 3 cache (shared)
- L4: Main memory (off chip)
- L5: Local secondary storage (HD, Flash)
- Remote secondary storage

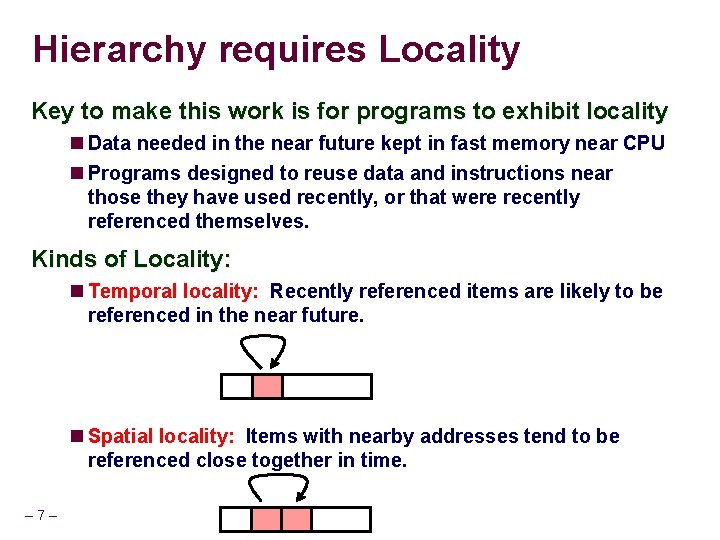

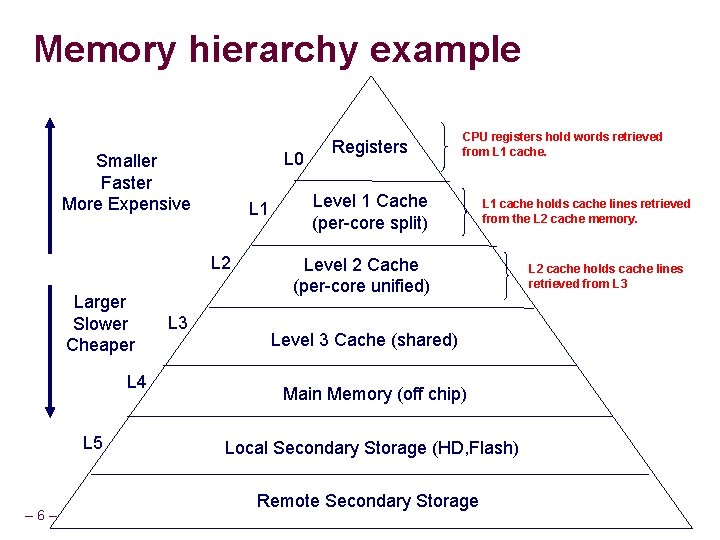

Hierarchy requires Locality

- The key to making this work is for programs to exhibit locality
  - Data needed in the near future is kept in fast memory near the CPU
  - Programs tend to reuse data and instructions that they referenced recently, or that sit at addresses near recently referenced ones
- Kinds of locality
  - Temporal locality: recently referenced items are likely to be referenced again in the near future
  - Spatial locality: items with nearby addresses tend to be referenced close together in time

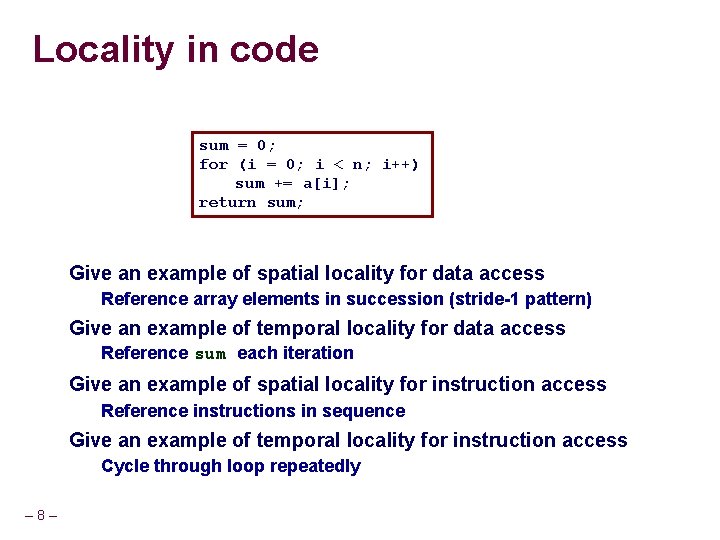

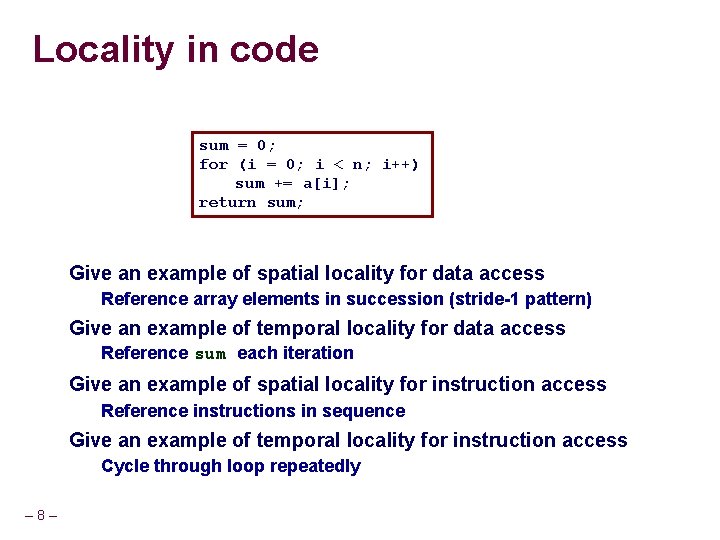

Locality in code

    sum = 0;
    for (i = 0; i < n; i++)
        sum += a[i];
    return sum;

- Give an example of spatial locality for data access
  - Reference array elements in succession (stride-1 pattern)
- Give an example of temporal locality for data access
  - Reference sum each iteration
- Give an example of spatial locality for instruction access
  - Reference instructions in sequence
- Give an example of temporal locality for instruction access
  - Cycle through the loop repeatedly


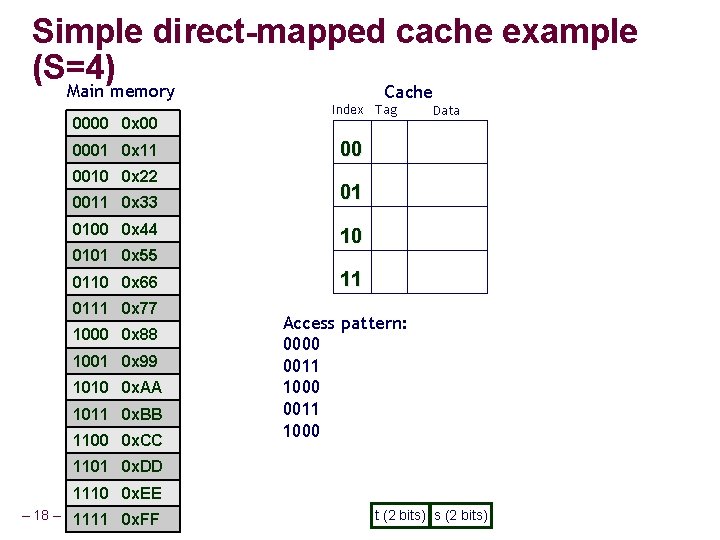

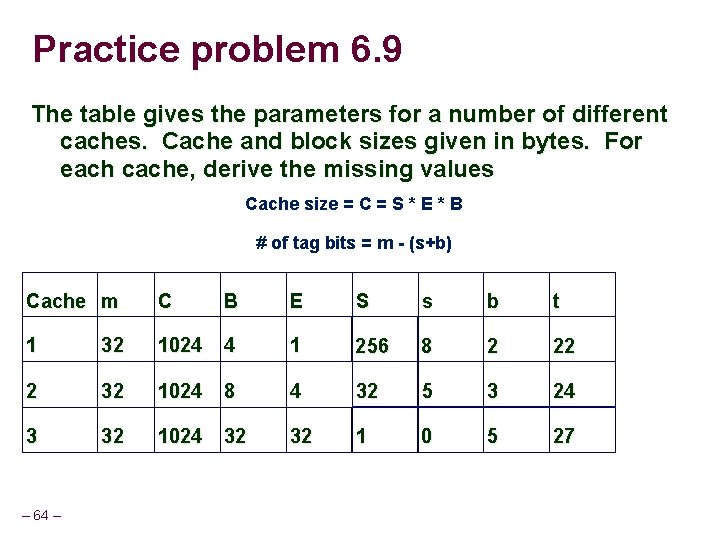

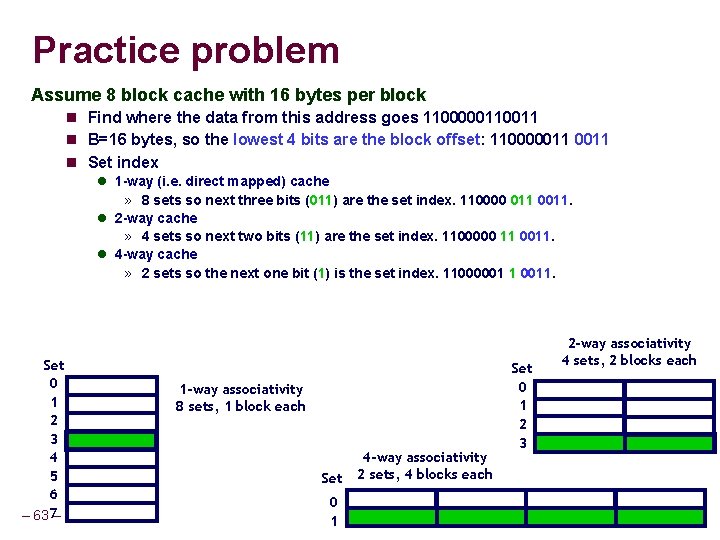

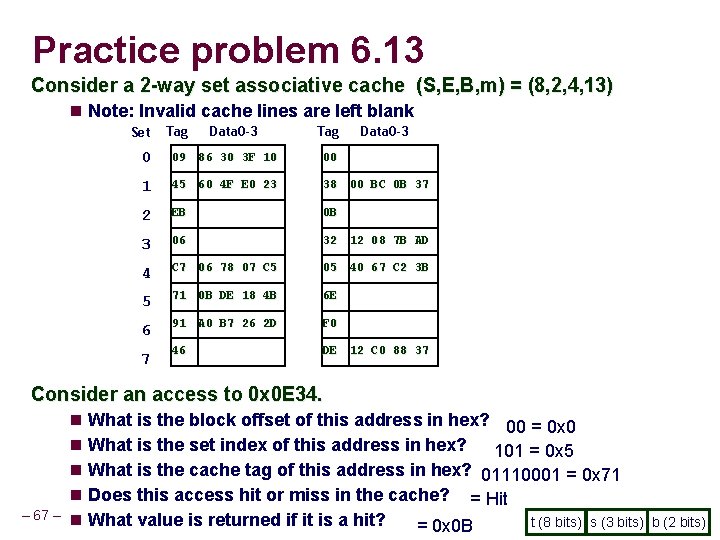

Locality example

Which function has better locality?

    int sumarrayrows(int a[M][N])
    {
        int i, j, sum = 0;

        /* Inner loop walks along a row */
        for (i = 0; i < M; i++)
            for (j = 0; j < N; j++)
                sum += a[i][j];
        return sum;
    }

    int sumarraycols(int a[M][N])
    {
        int i, j, sum = 0;

        /* Inner loop walks down a column */
        for (j = 0; j < N; j++)
            for (i = 0; i < M; i++)
                sum += a[i][j];
        return sum;
    }
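One way to compare the two loop orders is to measure how far apart consecutive inner-loop accesses land in memory. The sketch below does that for a small array; the dimensions (M = 4, N = 8) and the helper names are illustrative assumptions, not part of the slide.

```c
#include <stddef.h>

enum { M = 4, N = 8 };   /* hypothetical dimensions, chosen for illustration */

/* Byte distance between a[i][j] and a[i][j+1]:
   the step taken by the inner loop of sumarrayrows. */
ptrdiff_t inner_stride_rows(int a[M][N])
{
    return (char *)&a[0][1] - (char *)&a[0][0];
}

/* Byte distance between a[i][j] and a[i+1][j]:
   the step taken by the inner loop of sumarraycols. */
ptrdiff_t inner_stride_cols(int a[M][N])
{
    return (char *)&a[1][0] - (char *)&a[0][0];
}
```

Because C stores arrays in row-major order, the row-wise inner loop advances by `sizeof(int)` bytes per access (a stride-1 pattern), while the column-wise inner loop advances by `N * sizeof(int)` bytes.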

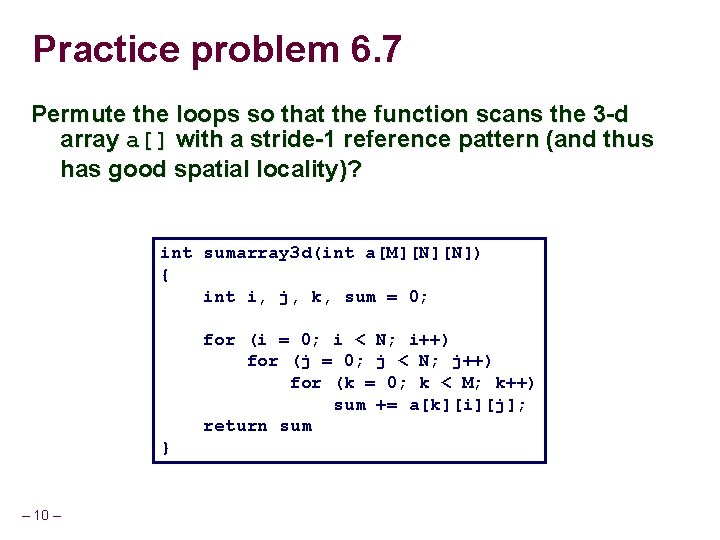

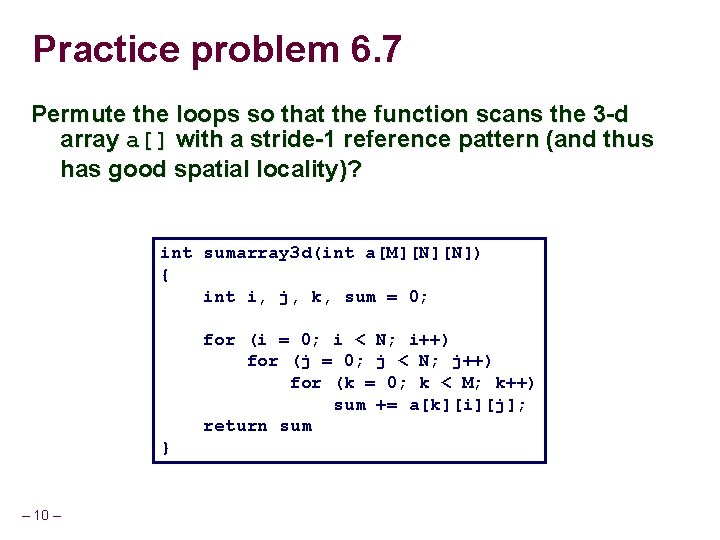

Practice problem 6.7

Permute the loops so that the function scans the 3-d array a[] with a stride-1 reference pattern (and thus has good spatial locality).

    int sumarray3d(int a[M][N][N])
    {
        int i, j, k, sum = 0;

        for (i = 0; i < N; i++)
            for (j = 0; j < N; j++)
                for (k = 0; k < M; k++)
                    sum += a[k][i][j];
        return sum;
    }

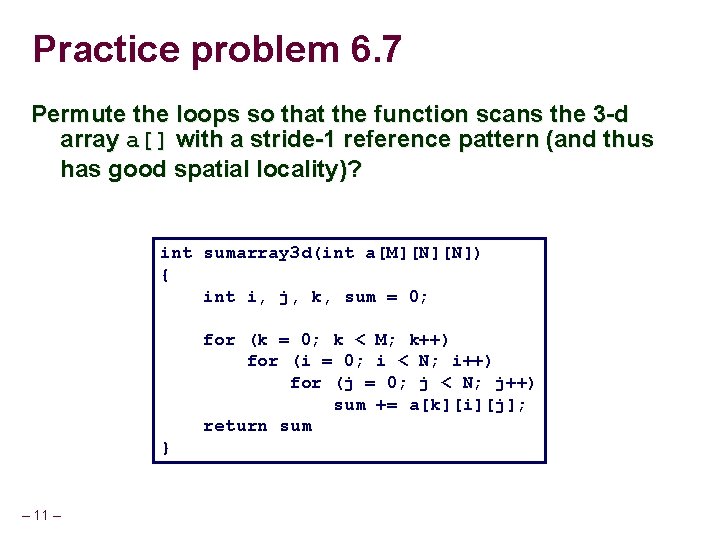

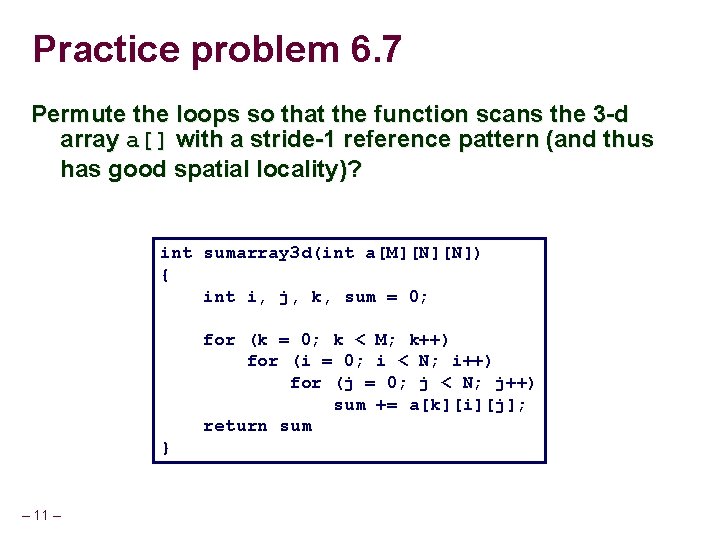

Practice problem 6.7 (solution)

Make the loop order match the index order of a[k][i][j]: k outermost, j innermost.

    int sumarray3d(int a[M][N][N])
    {
        int i, j, k, sum = 0;

        for (k = 0; k < M; k++)
            for (i = 0; i < N; i++)
                for (j = 0; j < N; j++)
                    sum += a[k][i][j];
        return sum;
    }

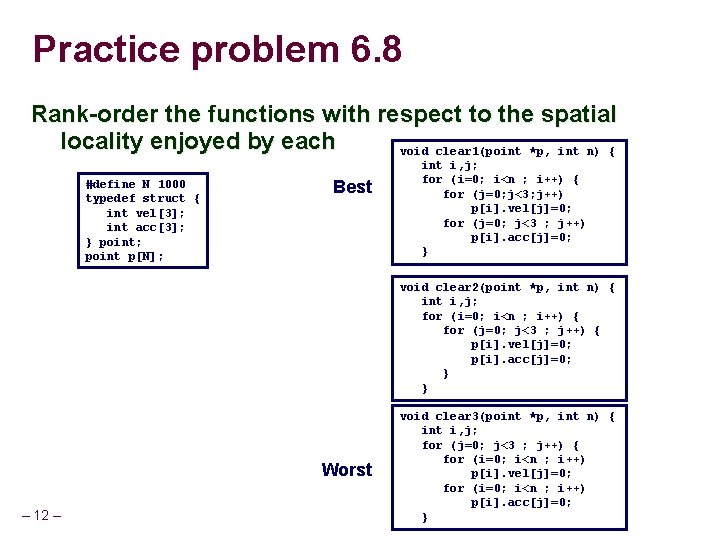

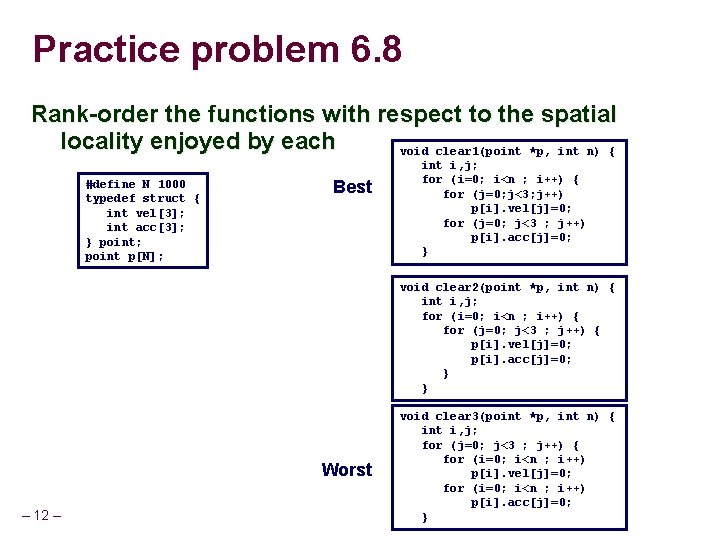

Practice problem 6.8

Rank-order the functions with respect to the spatial locality enjoyed by each.

    #define N 1000

    typedef struct {
        int vel[3];
        int acc[3];
    } point;

    point p[N];

    /* Best */
    void clear1(point *p, int n)
    {
        int i, j;
        for (i = 0; i < n; i++) {
            for (j = 0; j < 3; j++)
                p[i].vel[j] = 0;
            for (j = 0; j < 3; j++)
                p[i].acc[j] = 0;
        }
    }

    void clear2(point *p, int n)
    {
        int i, j;
        for (i = 0; i < n; i++) {
            for (j = 0; j < 3; j++) {
                p[i].vel[j] = 0;
                p[i].acc[j] = 0;
            }
        }
    }

    /* Worst */
    void clear3(point *p, int n)
    {
        int i, j;
        for (j = 0; j < 3; j++) {
            for (i = 0; i < n; i++)
                p[i].vel[j] = 0;
            for (i = 0; i < n; i++)
                p[i].acc[j] = 0;
        }
    }

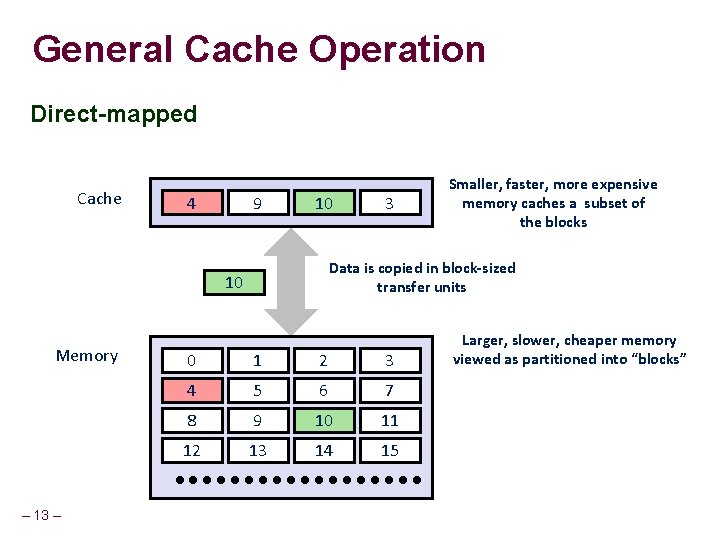

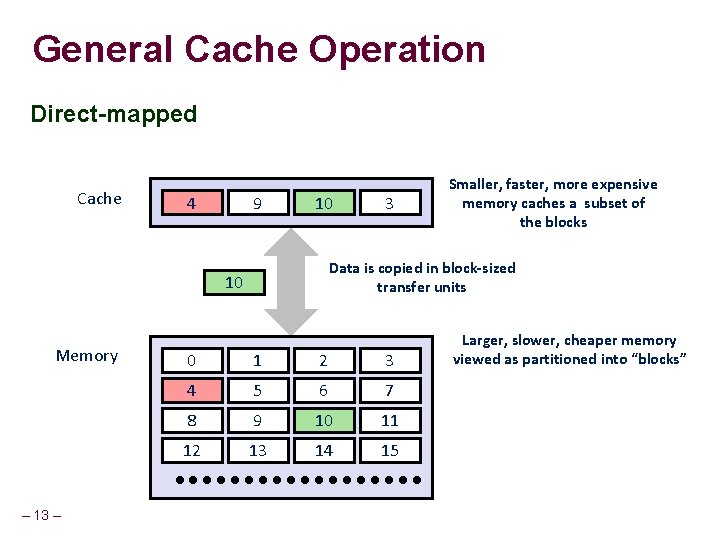

General Cache Operation

- Larger, slower, cheaper memory is viewed as partitioned into “blocks” (numbered 0 to 15 in the figure)
- Smaller, faster, more expensive memory caches a subset of the blocks (8, 9, 14, and 3 in the figure)
- Data is copied in block-sized transfer units (blocks 4 and 10 in the figure)

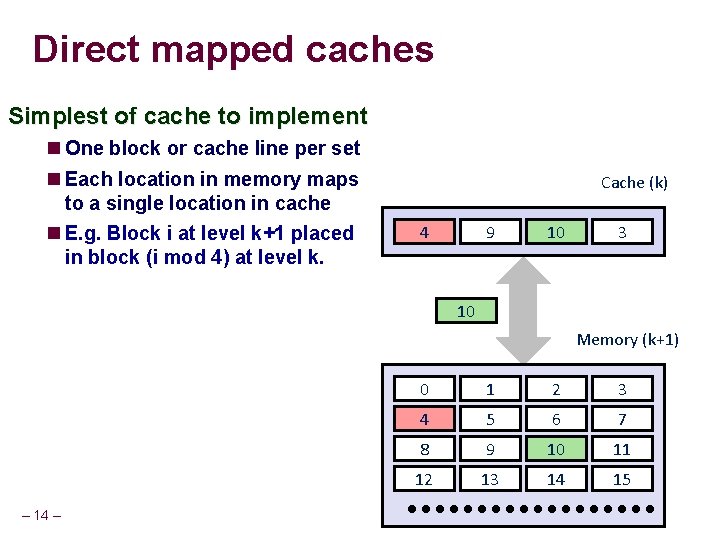

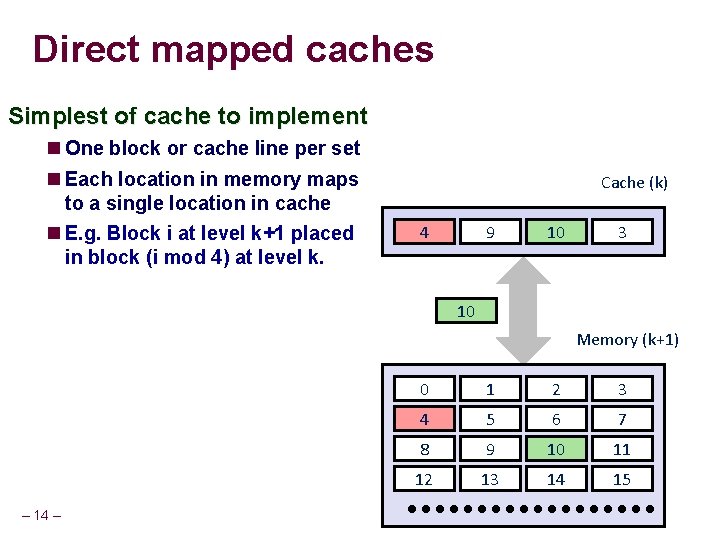

Direct mapped caches

- Simplest kind of cache to implement
- One block (cache line) per set
- Each location in memory maps to a single location in the cache
- E.g. block i at level k+1 is placed in block (i mod 4) at level k
- Figure: a 4-block cache at level k in front of a 16-block memory (blocks 0 to 15) at level k+1

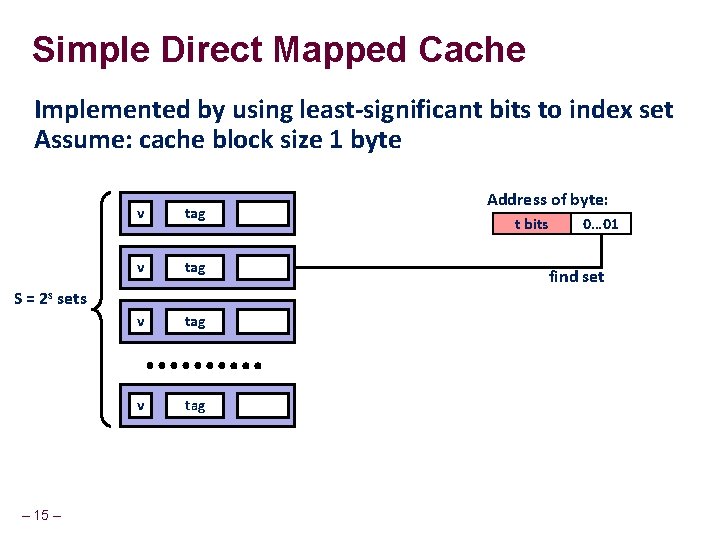

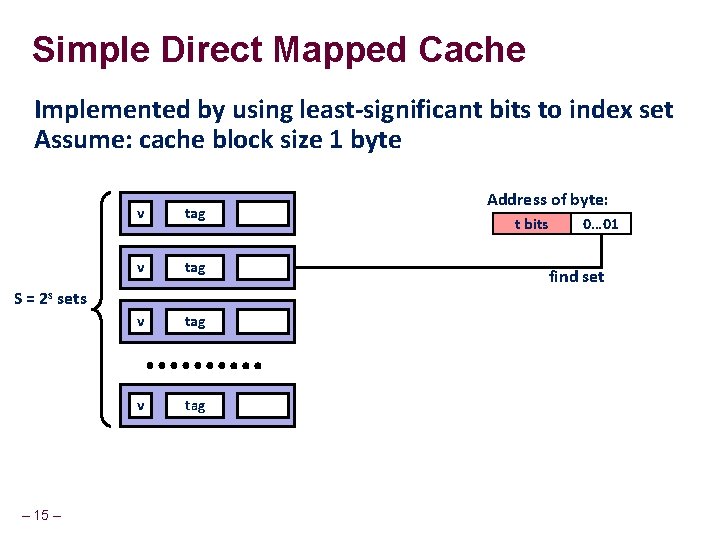

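Simple Direct Mapped Cache

- Implemented by using the least-significant address bits to index the set
- Assume: cache block size is 1 byte
- $S = 2^s$ sets, each holding one line with a valid bit (v) and a tag
- Address of byte: t tag bits, then s set-index bits (0…01 in the figure)
- Step 1: use the set-index bits to find the set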

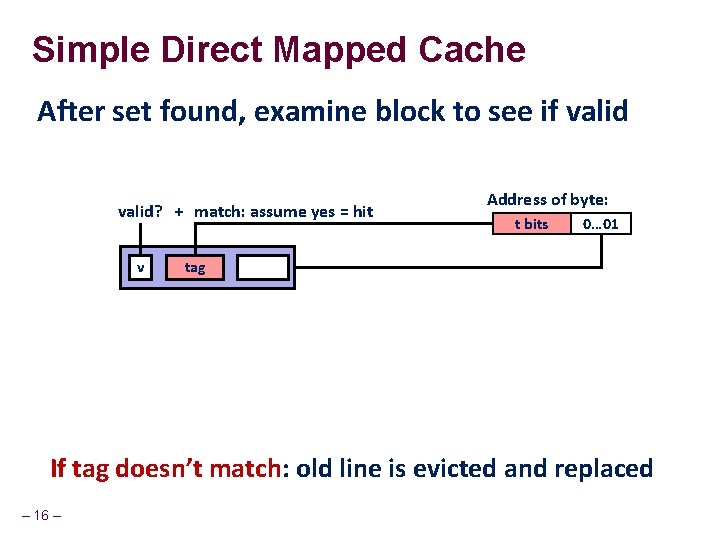

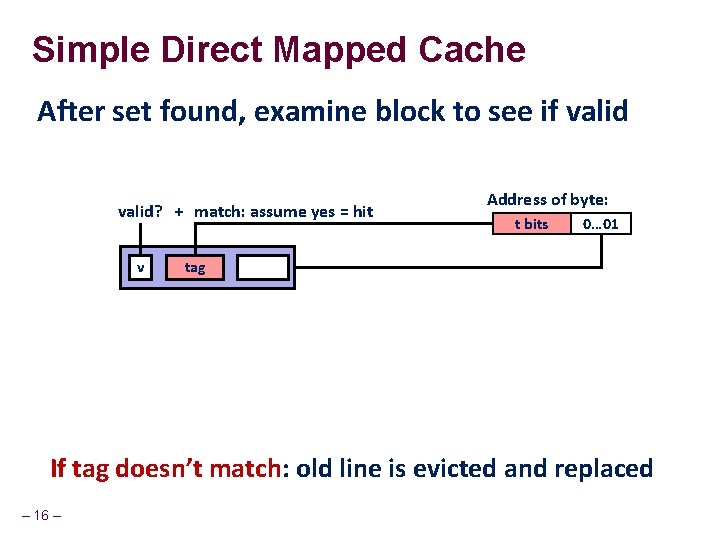

Simple Direct Mapped Cache

- After the set is found, examine its block: valid? + tag match (assume yes) = hit
- Address of byte: t tag bits, then s set-index bits (0…01 in the figure)
- If the tag doesn't match: the old line is evicted and replaced

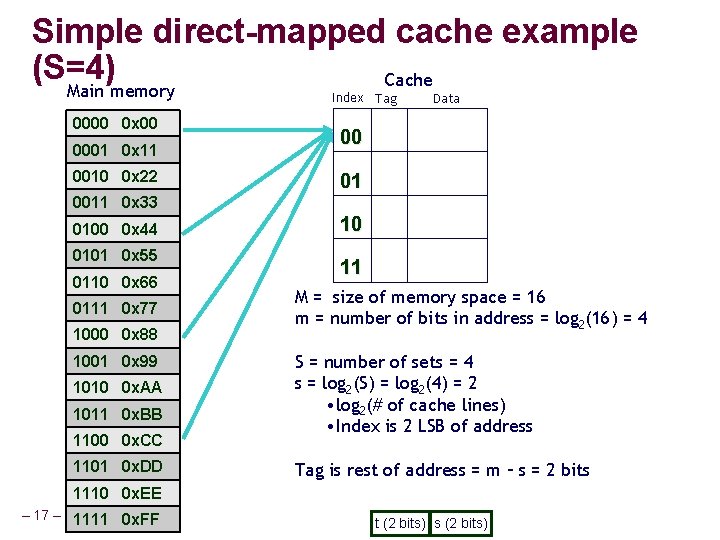

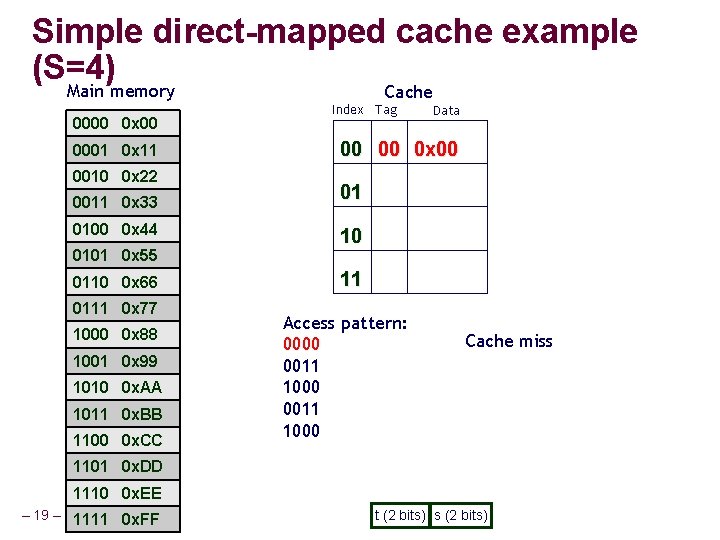

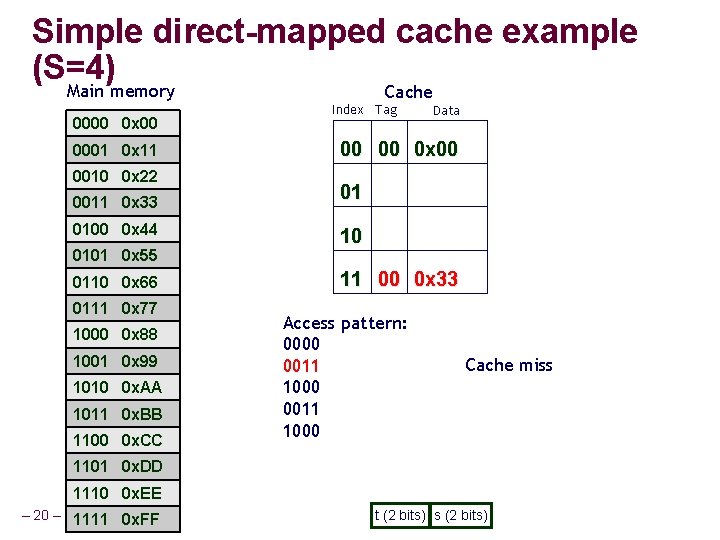

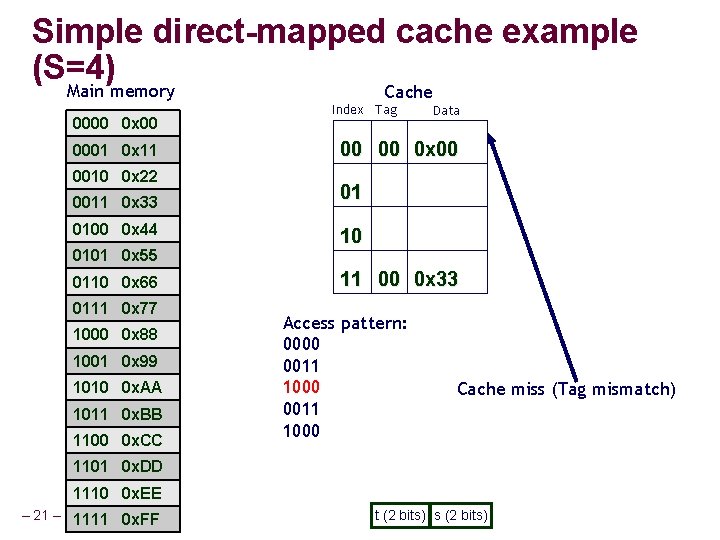

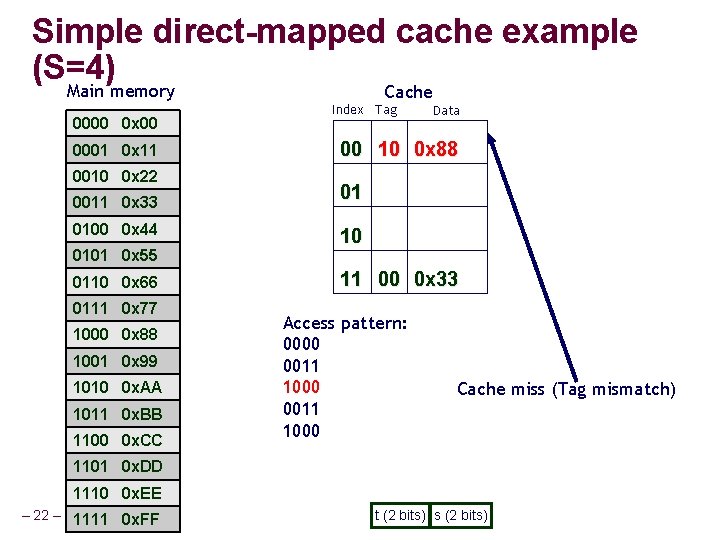

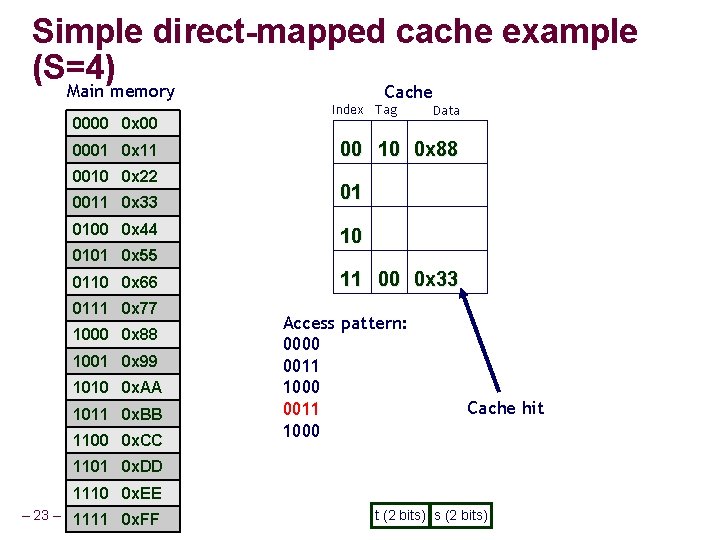

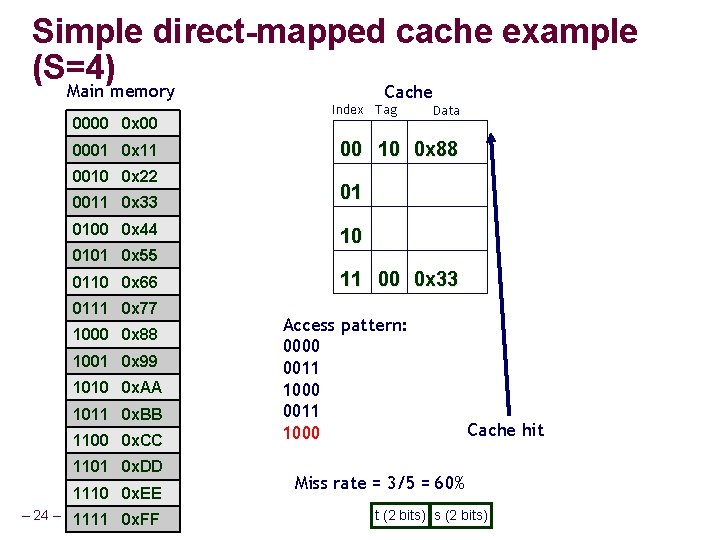

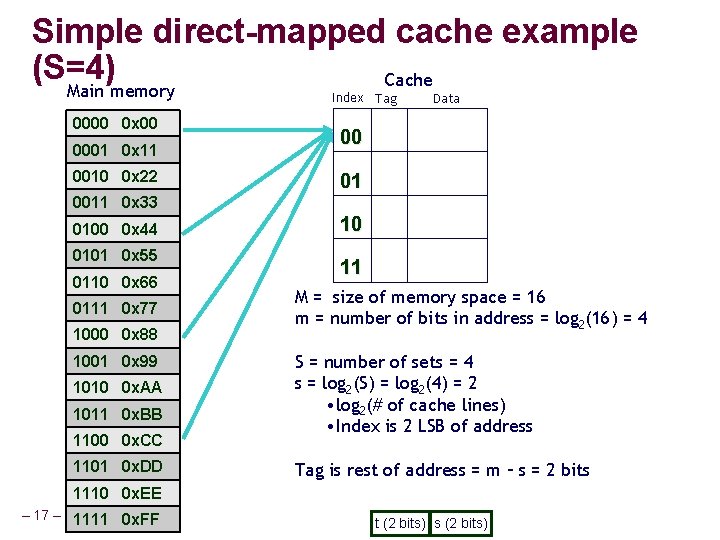

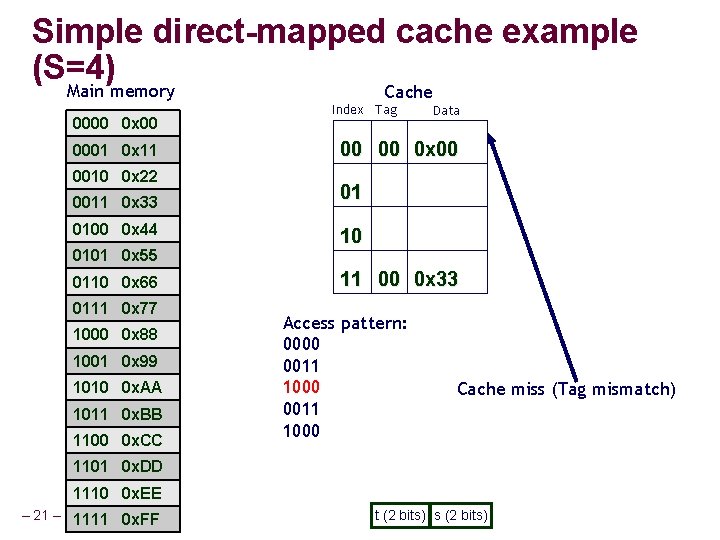

Simple direct-mapped cache example (S=4)

- Main memory: 16 one-byte locations; each 4-bit address holds the byte formed by repeating its hex digit (0000 → 0x00, 0001 → 0x11, 0010 → 0x22, …, 1111 → 0xFF)
- Cache: 4 lines, indexed 00, 01, 10, 11, each with a tag and one data byte
- M = size of memory space = 16
- m = number of bits in address = $\log_2(16) = 4$
- S = number of sets = 4
- s = $\log_2(S) = \log_2(4) = 2$
  - s = $\log_2$(# of cache lines)
  - Index is the 2 LSBs of the address
- Tag is the rest of the address: t = m - s = 2 bits
- Address layout: t (2 bits) | s (2 bits)

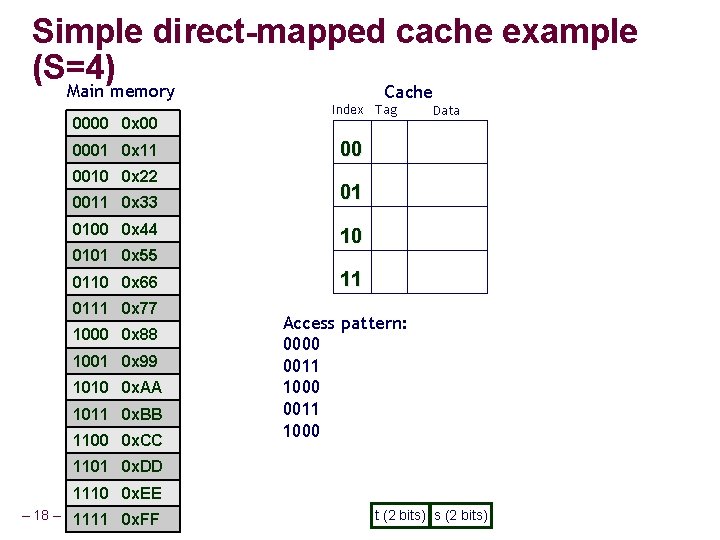

Simple direct-mapped cache example (S=4)

- Same main memory and address layout: t (2 bits) | s (2 bits)
- Access pattern: 0000 0011 1000
- Cache is initially empty (lines 00, 01, 10, 11)

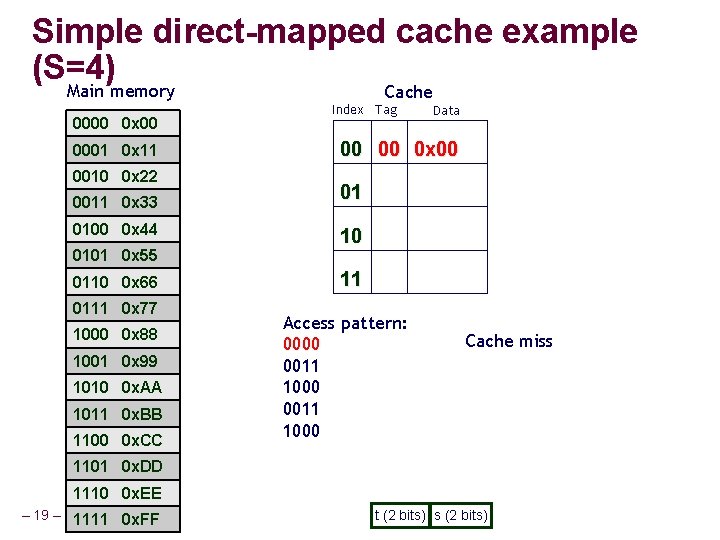

Simple direct-mapped cache example (S=4)

- Access pattern: 0000 0011 1000
- Access 0000: cache miss
- Line 00 is loaded with tag 00, data 0x00

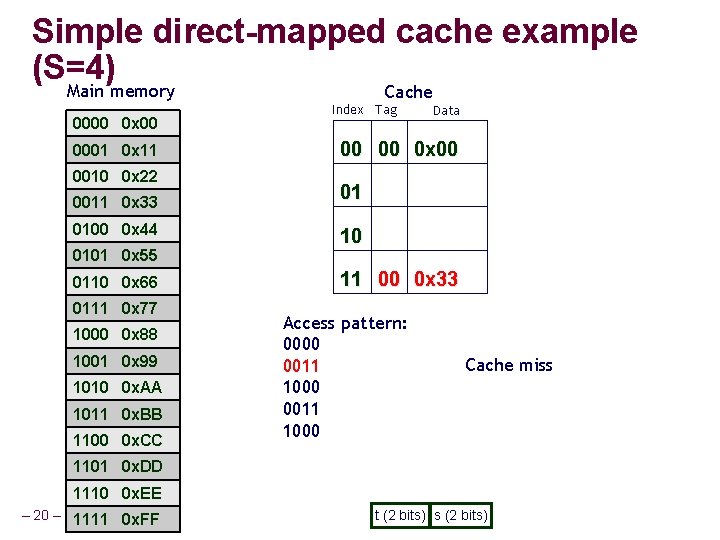

Simple direct-mapped cache example (S=4)

- Access pattern: 0000 0011 1000
- Access 0011: cache miss
- Line 11 is loaded with tag 00, data 0x33; line 00 still holds tag 00, data 0x00

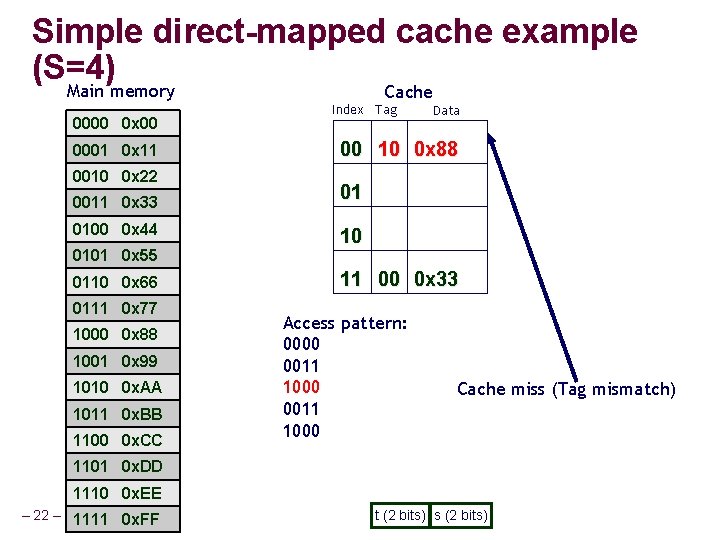

Simple direct-mapped cache example (S=4)

- Access pattern: 0000 0011 1000
- Access 1000: cache miss (tag mismatch)
- Line 00 holds tag 00 (data 0x00), but the address has tag 10

Simple direct-mapped cache example (S=4)

- Access pattern: 0000 0011 1000
- After the miss on 1000 (tag mismatch), line 00 is replaced with tag 10, data 0x88
- Line 11 still holds tag 00, data 0x33

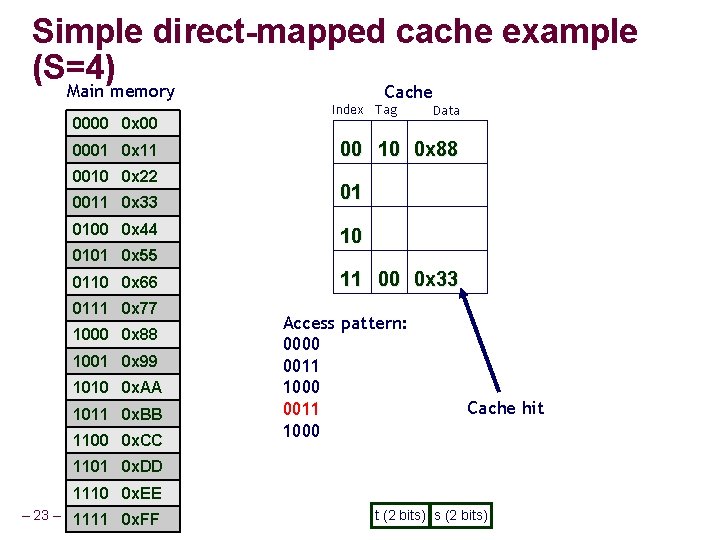

Simple direct-mapped cache example (S=4)

- Access pattern: 0000 0011 1000
- Next access: cache hit (the referenced byte is already in the cache)
- Cache state is unchanged: line 00 holds tag 10, data 0x88; line 11 holds tag 00, data 0x33

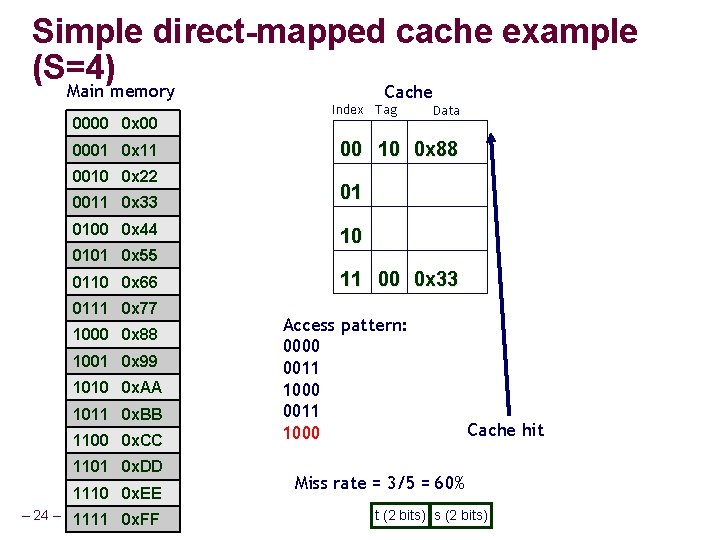

Simple direct-mapped cache example (S=4)

- Access pattern: 0000 0011 1000
- Final access: cache hit
- Final cache state: line 00 holds tag 10, data 0x88; line 11 holds tag 00, data 0x33
- Three misses followed by two hits: miss rate = 3/5 = 60%
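The walkthrough above can be replayed with a small simulator. This is a minimal sketch, not the slides' own code: `count_misses` and `MAX_SETS` are hypothetical names, and the block size is left as a parameter so the same function also covers caches with multi-byte blocks. The slide lists only three addresses; a trace such as 0000, 0011, 1000, 0011, 1000 is one assumption consistent with the two hits and the stated 3/5 miss rate.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define MAX_SETS 64   /* illustrative upper bound, not taken from the slides */

/* Count misses for a direct-mapped cache with S sets and B bytes per block.
   S and B must be powers of two; the cache starts with every line invalid. */
size_t count_misses(unsigned S, unsigned B, const unsigned *trace, size_t n)
{
    bool valid[MAX_SETS] = { false };
    unsigned tag[MAX_SETS] = { 0 };
    size_t misses = 0;

    assert(S > 0 && S <= MAX_SETS && B > 0);
    assert((S & (S - 1)) == 0 && (B & (B - 1)) == 0);

    for (size_t k = 0; k < n; k++) {
        unsigned block = trace[k] / B;   /* drop the block-offset bits */
        unsigned set = block % S;        /* low-order bits select the set */
        unsigned t = block / S;          /* remaining high bits form the tag */

        if (!valid[set] || tag[set] != t) {
            misses++;                    /* invalid line or tag mismatch: load */
            valid[set] = true;
            tag[set] = t;
        }
    }
    return misses;
}
```

Calling `count_misses(4, 1, trace, 5)` with the assumed trace `{0x0, 0x3, 0x8, 0x3, 0x8}` reproduces the three misses counted on the slide.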

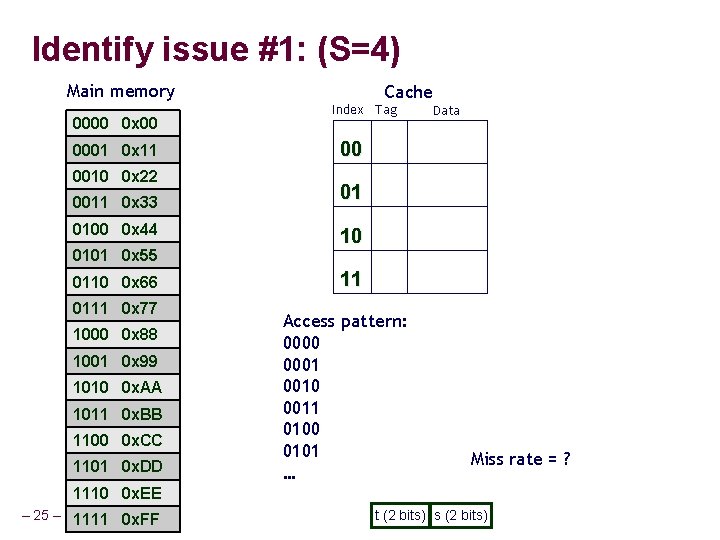

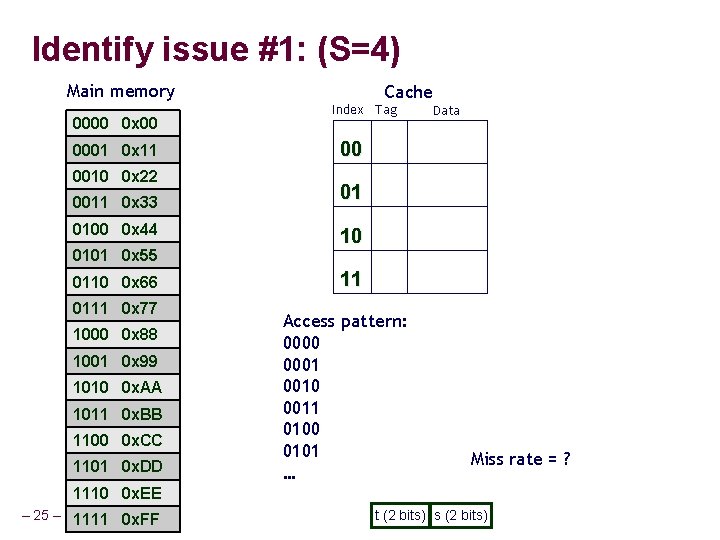

Identify issue #1: (S=4)

- Main memory: 16 one-byte locations (0000 → 0x00, 0001 → 0x11, …, 1111 → 0xFF)
- Cache: 4 lines (indices 00, 01, 10, 11), one data byte each, initially empty
- Address layout: t (2 bits) | s (2 bits)
- Access pattern: 0000 0001 0010 0011 0100 0101 …
- Miss rate = ?

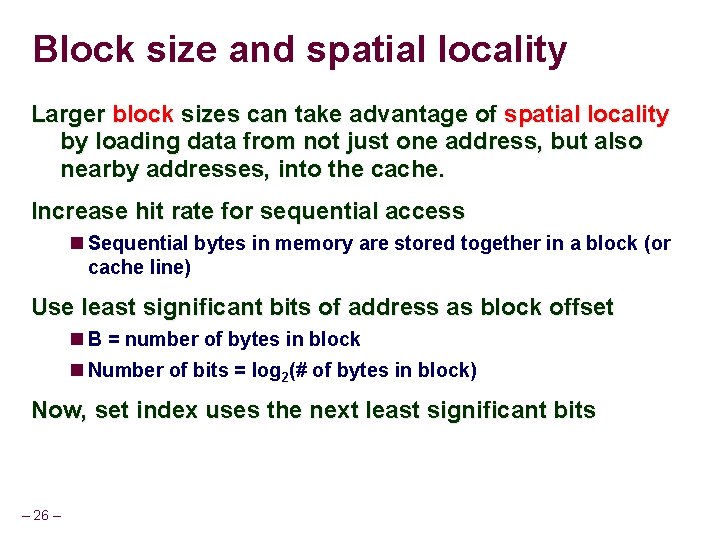

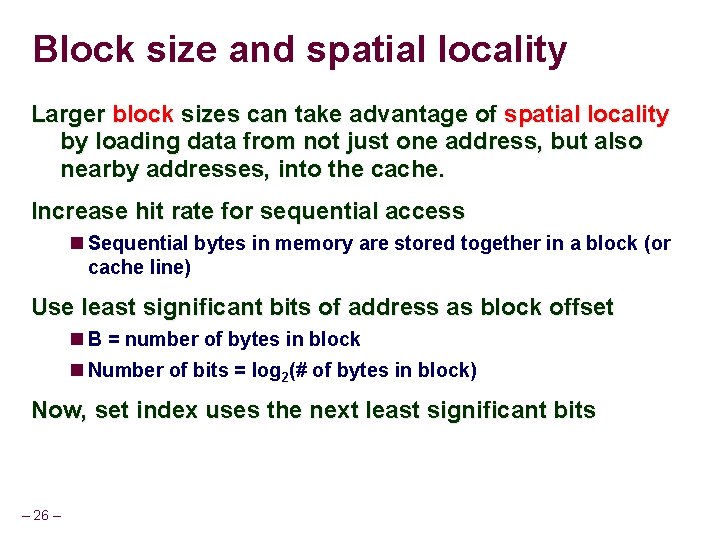

Block size and spatial locality

- Larger block sizes can take advantage of spatial locality by loading data from not just one address, but also nearby addresses, into the cache
  - Increases the hit rate for sequential access
- Sequential bytes in memory are stored together in a block (or cache line)
- Use the least significant bits of the address as the block offset
  - B = number of bytes in block
  - Number of offset bits = $\log_2$(# of bytes in block)
- The set index now uses the next least significant bits

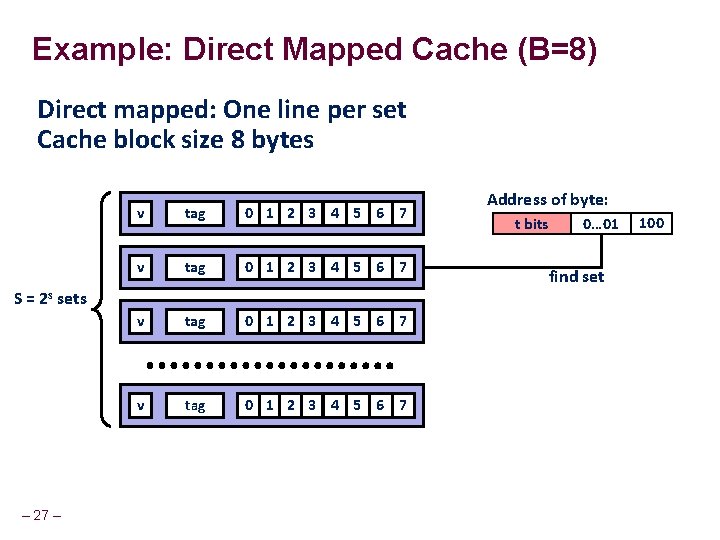

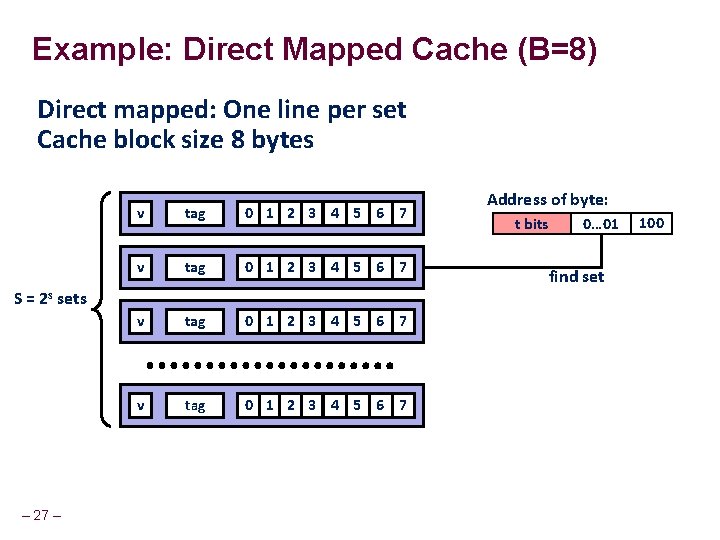

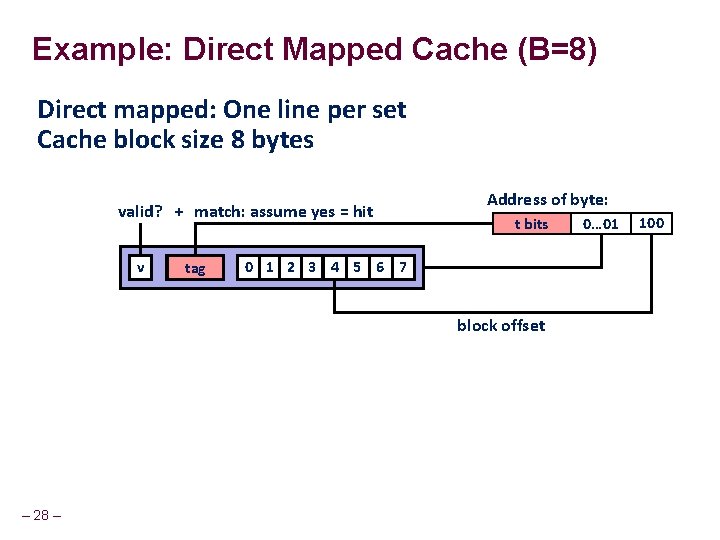

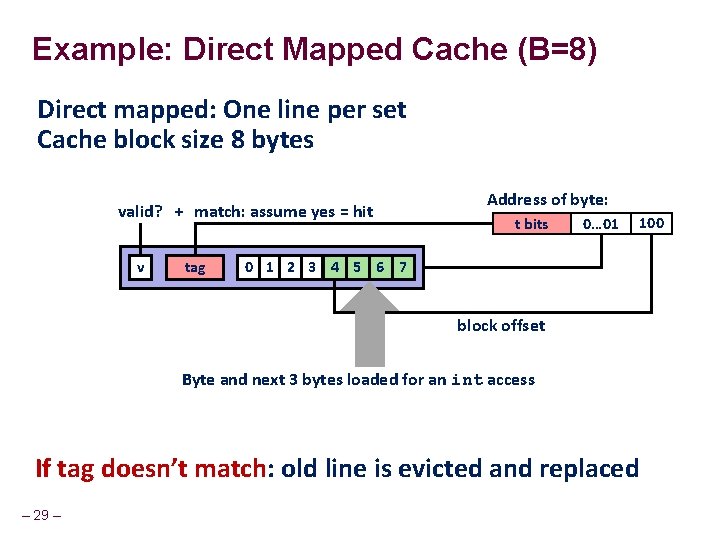

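Example: Direct Mapped Cache (B=8)

- Direct mapped: one line per set
- Cache block size: 8 bytes
- $S = 2^s$ sets; each line holds a valid bit (v), a tag, and bytes 0 to 7
- Address of byte: t tag bits | set index 0…01 | block offset 100
- Step 1: use the set-index bits to find the set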

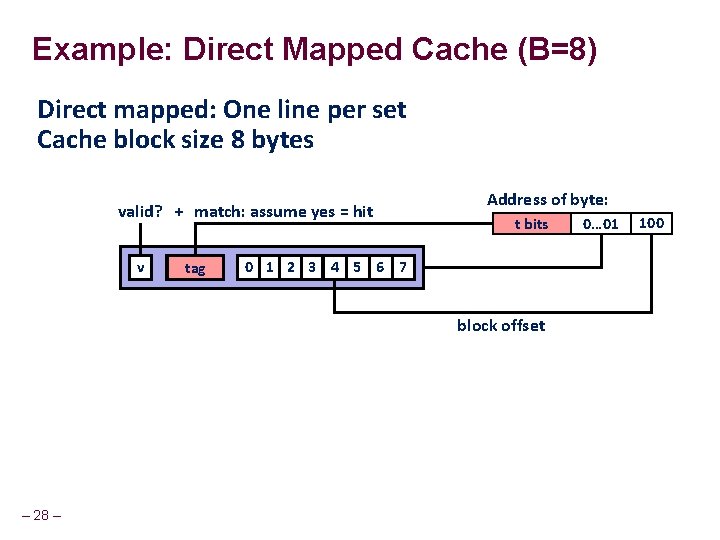

Example: Direct Mapped Cache (B=8)

- Direct mapped: one line per set
- Cache block size: 8 bytes
- Address of byte: t tag bits | set index 0…01 | block offset 100
- Step 2: valid? + tag match (assume yes) = hit
- Step 3: the block offset selects the byte within bytes 0 to 7 of the line

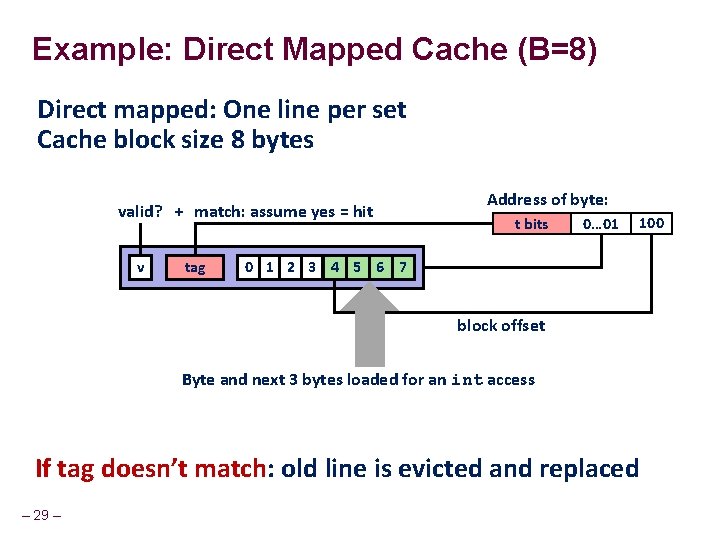

Example: Direct Mapped Cache (B=8)

- Direct mapped: one line per set
- Cache block size: 8 bytes
- Address of byte: t tag bits | set index 0…01 | block offset 100
- valid? + tag match (assume yes) = hit
- For an int access, the byte at the block offset and the next 3 bytes are loaded
- If the tag doesn't match: the old line is evicted and replaced

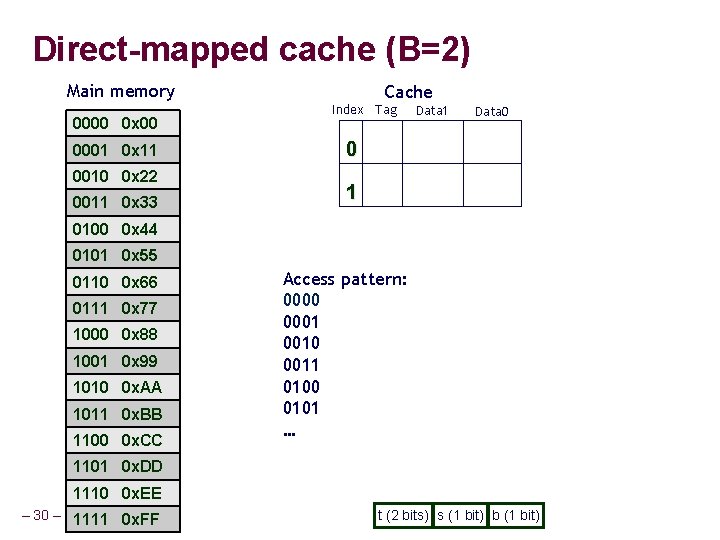

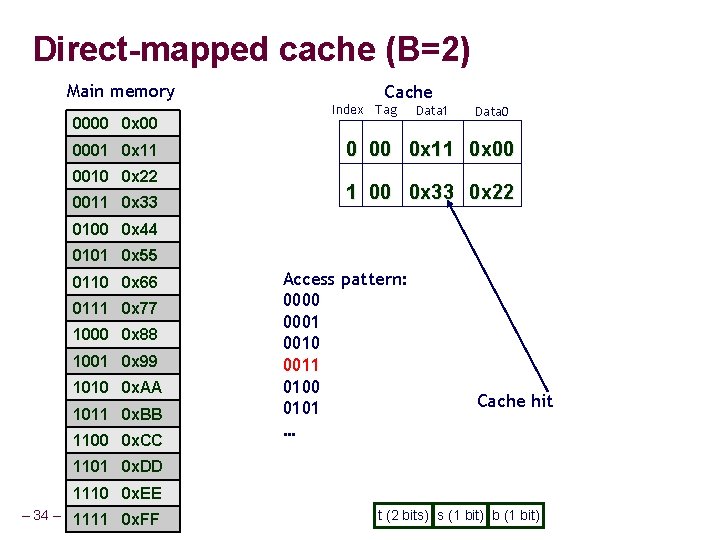

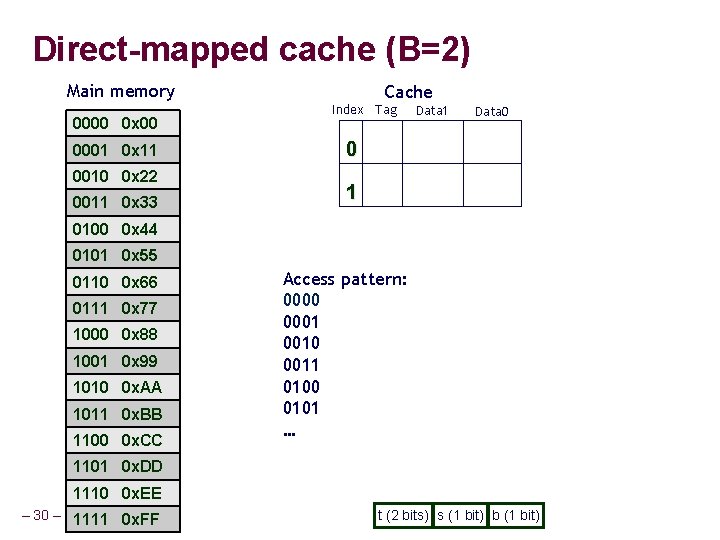

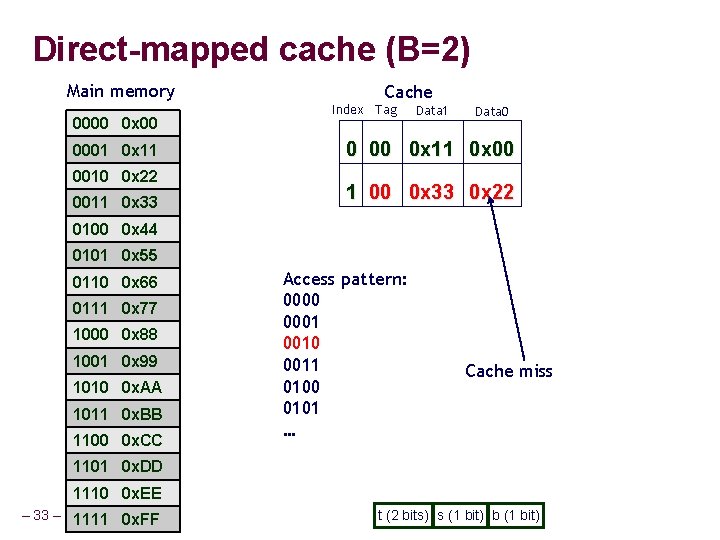

Direct-mapped cache (B=2)

- Main memory: 16 one-byte locations (0000 → 0x00, 0001 → 0x11, …, 1111 → 0xFF)
- Cache: 2 sets (index 0 and 1); each line holds a tag and two data bytes (Data 1, Data 0)
- Address layout: t (2 bits) | s (1 bit) | b (1 bit)
- Access pattern: 0000 0001 0010 0011 0100 0101 …
- Cache is initially empty

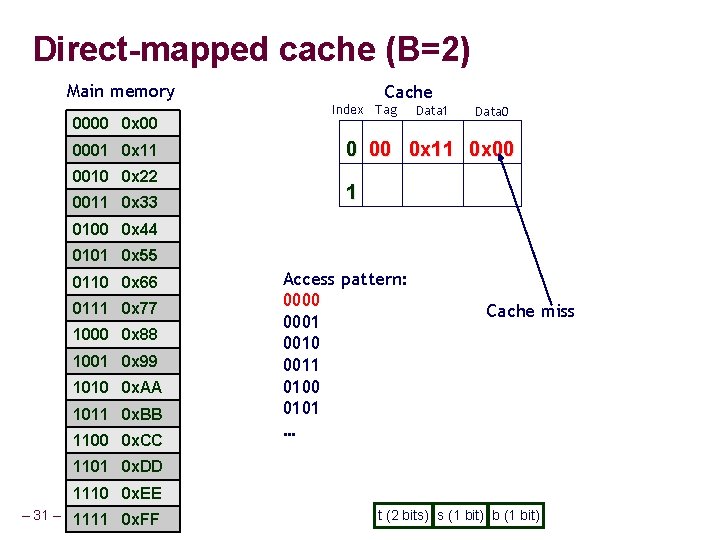

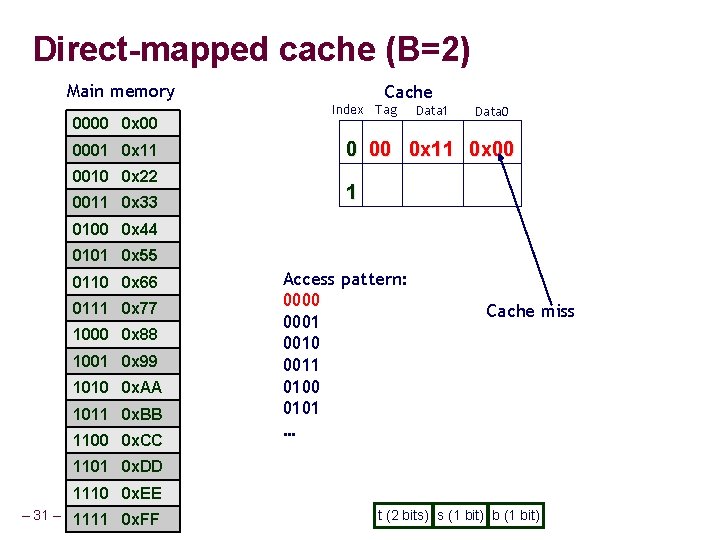

Direct-mapped cache (B=2)

- Access pattern: 0000 0001 0010 0011 0100 0101 …
- Access 0000: cache miss
- Set 0 is loaded with tag 00, Data 1 = 0x11, Data 0 = 0x00

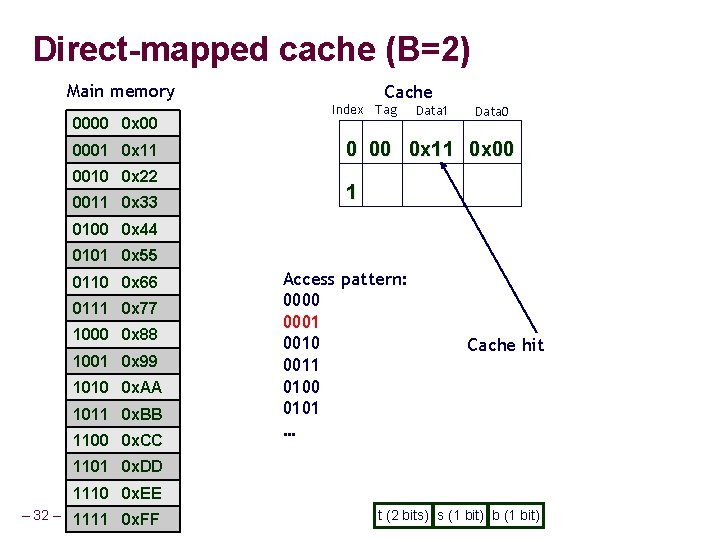

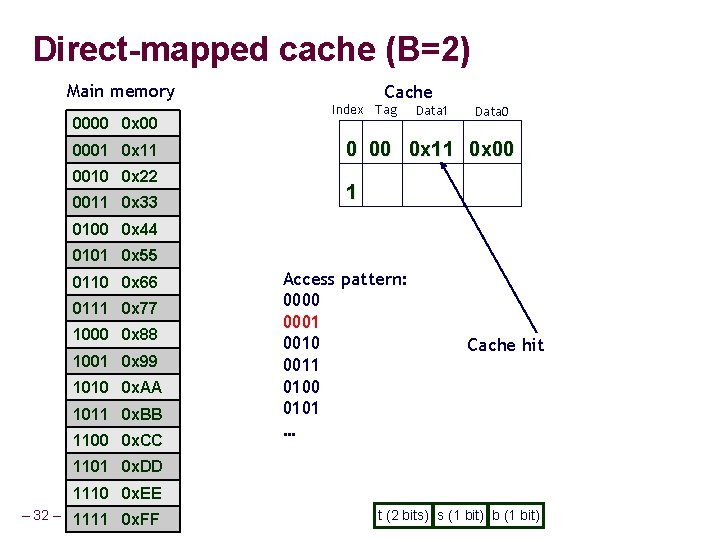

Direct-mapped cache (B=2)

- Access pattern: 0000 0001 0010 0011 0100 0101 …
- Access 0001: cache hit (same block as 0000)
- Set 0 holds tag 00, Data 1 = 0x11, Data 0 = 0x00

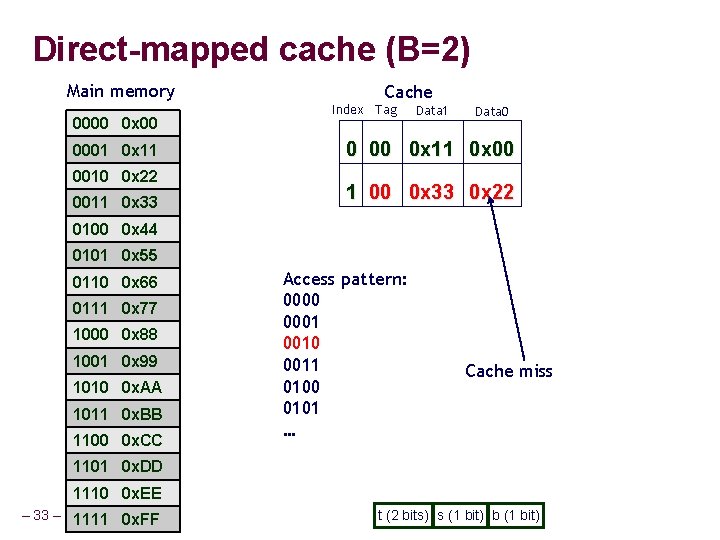

Direct-mapped cache (B=2)

- Access pattern: 0000 0001 0010 0011 0100 0101 …
- Access 0010: cache miss
- Set 1 is loaded with tag 00, Data 1 = 0x33, Data 0 = 0x22; set 0 still holds tag 00, 0x11, 0x00

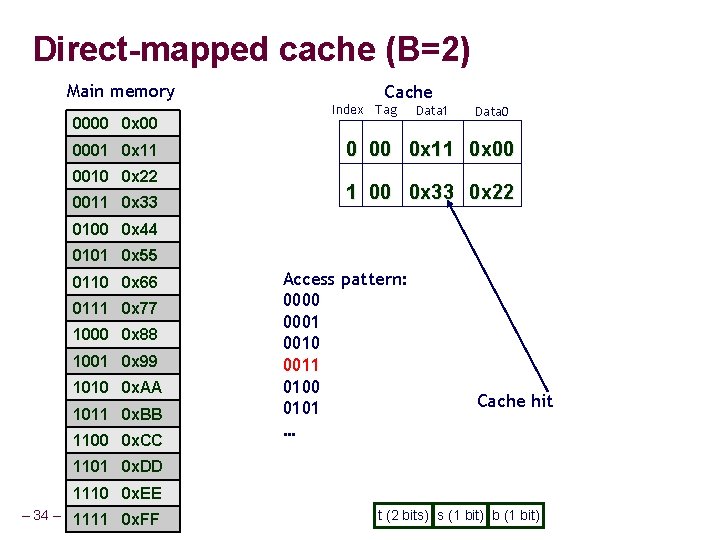

Direct-mapped cache (B=2)

- Access pattern: 0000 0001 0010 0011 0100 0101 …
- Access 0011: cache hit (same block as 0010)
- Set 0 holds tag 00, 0x11, 0x00; set 1 holds tag 00, 0x33, 0x22

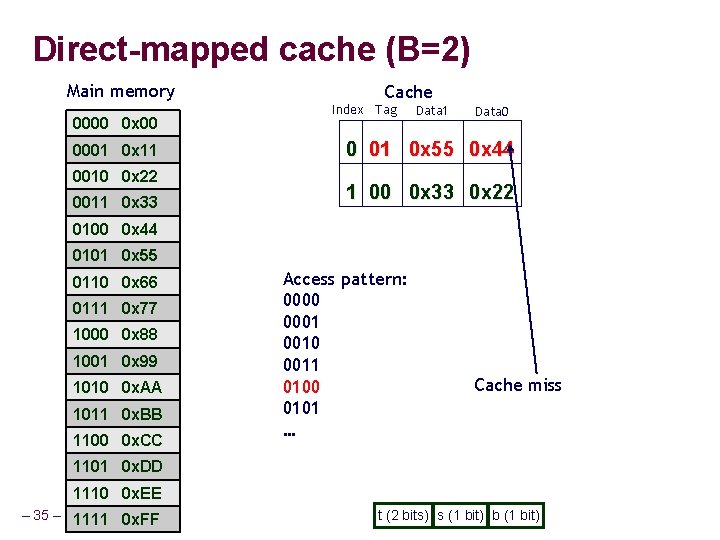

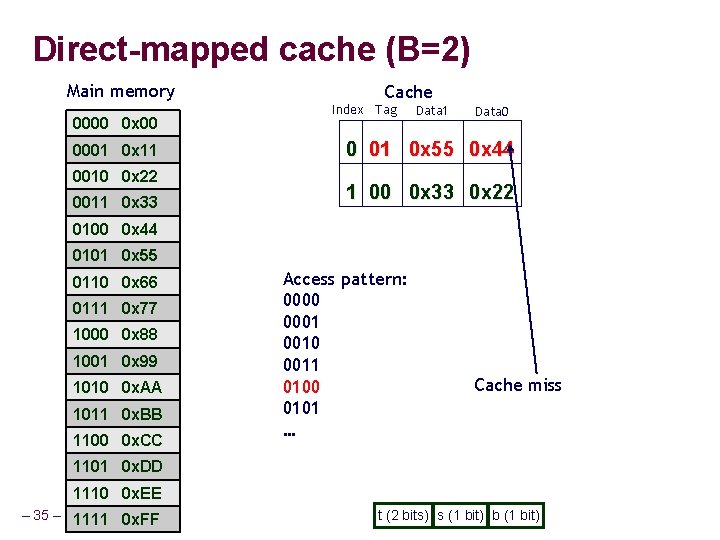

Direct-mapped cache (B=2)

- Access pattern: 0000 0001 0010 0011 0100 0101 …
- Access 0100: cache miss
- Set 0 is replaced with tag 01, Data 1 = 0x55, Data 0 = 0x44; set 1 still holds tag 00, 0x33, 0x22

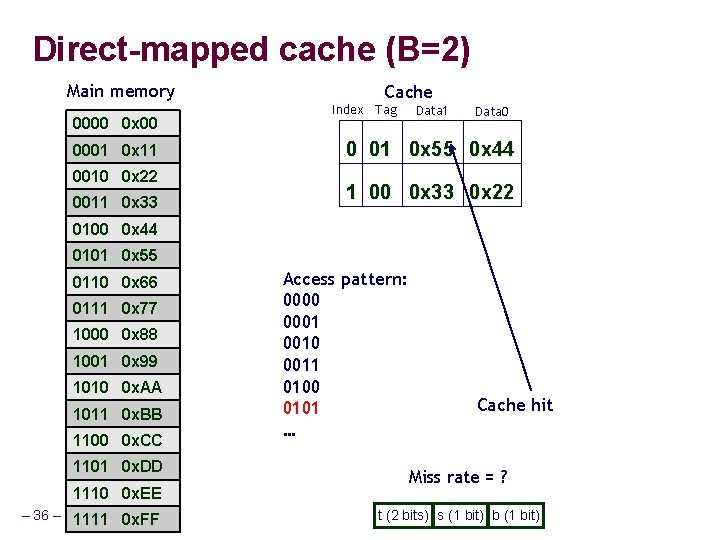

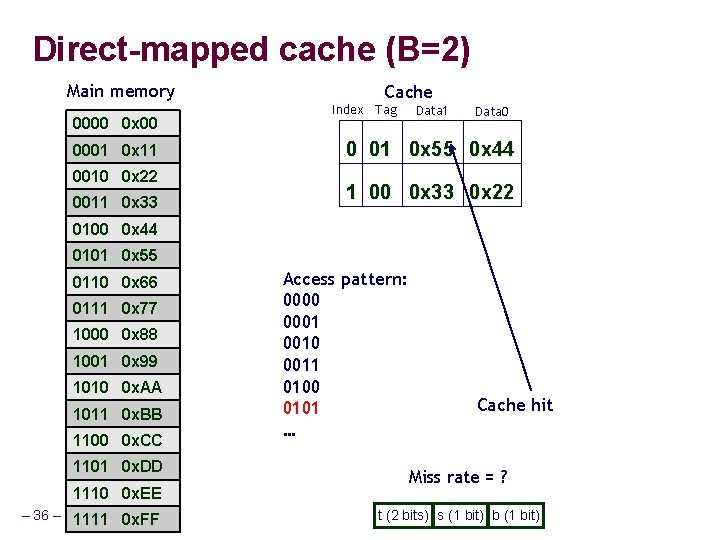

Direct-mapped cache (B=2)

- Access pattern: 0000 0001 0010 0011 0100 0101 …
- Access 0101: cache hit (same block as 0100)
- Set 0 holds tag 01, 0x55, 0x44; set 1 holds tag 00, 0x33, 0x22
- Miss rate = ?

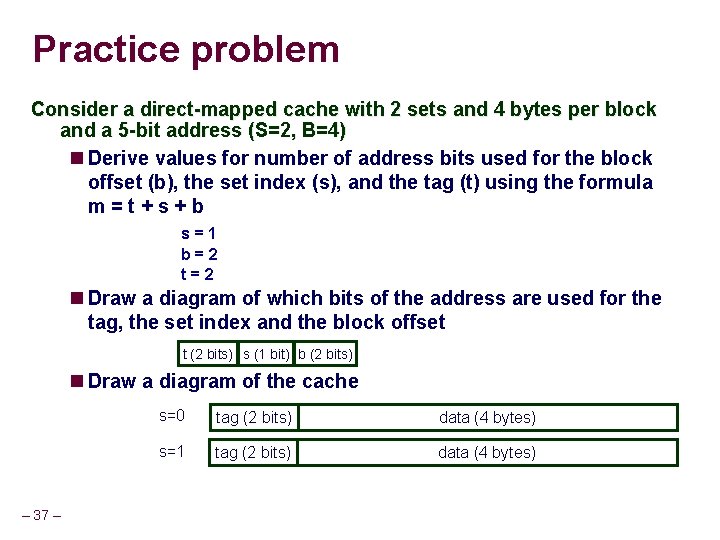

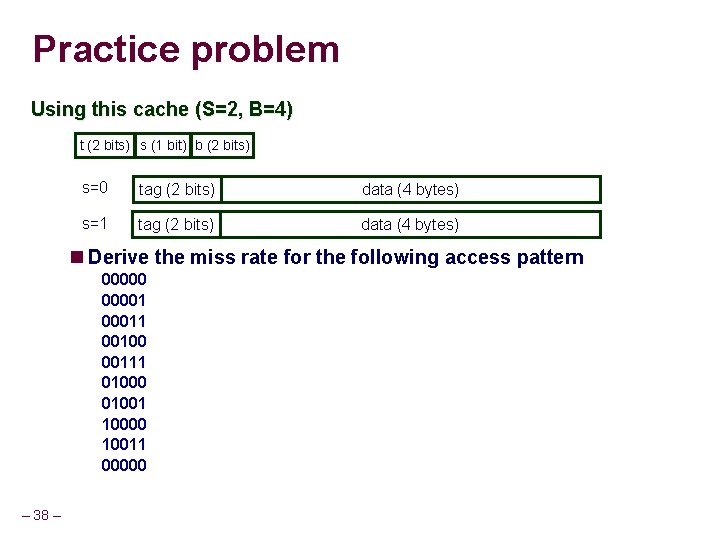

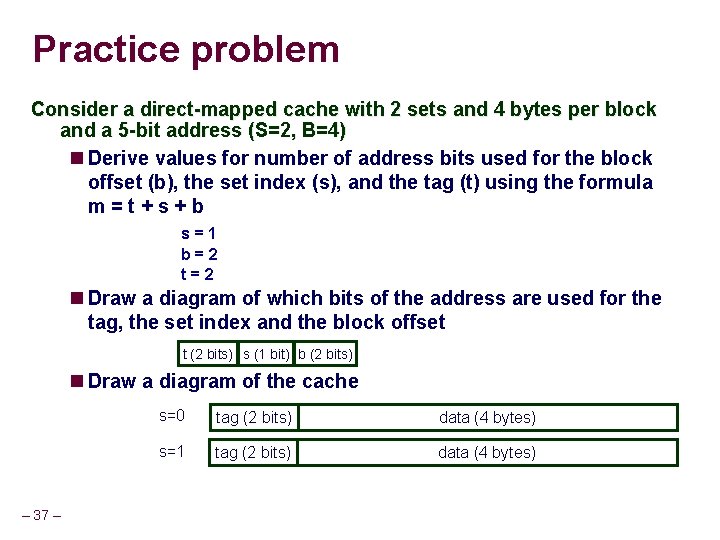

Practice problem

- Consider a direct-mapped cache with 2 sets and 4 bytes per block and a 5-bit address (S=2, B=4)
- Derive the number of address bits used for the block offset (b), the set index (s), and the tag (t) using the formula m = t + s + b
  - s = 1, b = 2, t = 2
- Draw a diagram of which bits of the address are used for the tag, the set index, and the block offset
  - t (2 bits) | s (1 bit) | b (2 bits)
- Draw a diagram of the cache
  - s=0: tag (2 bits), data (4 bytes)
  - s=1: tag (2 bits), data (4 bytes)

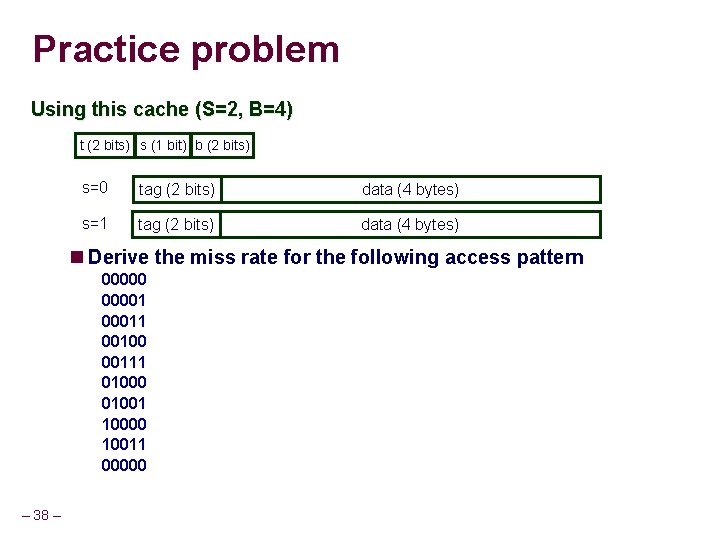

Practice problem

- Using this cache (S=2, B=4); address layout t (2 bits) | s (1 bit) | b (2 bits)
  - s=0: tag (2 bits), data (4 bytes)
  - s=1: tag (2 bits), data (4 bytes)
- Derive the miss rate for the following access pattern: 00000, 00001, 00011, 00100, 00111, 01000, 01001, 10000, 10011, 00000

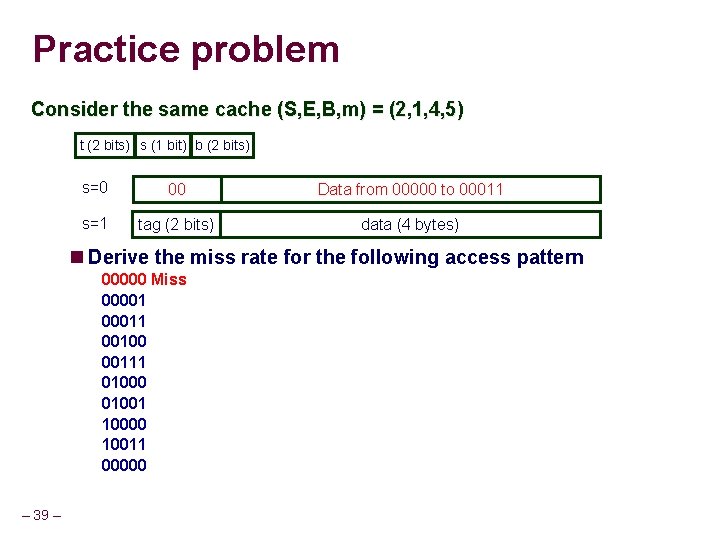

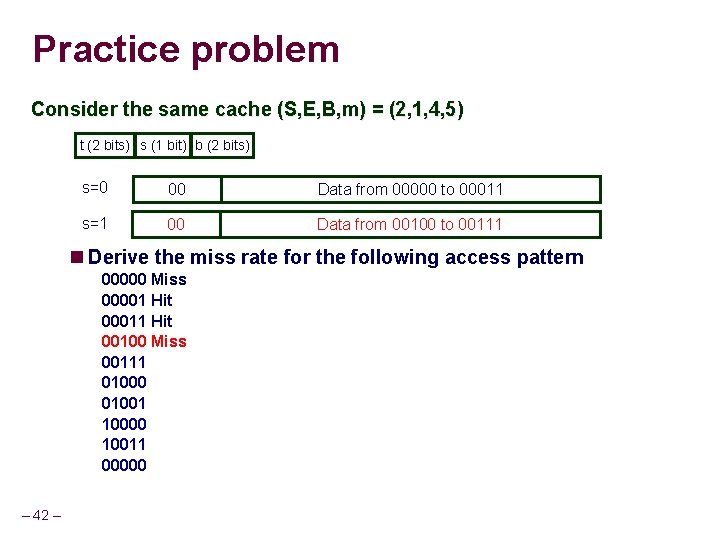

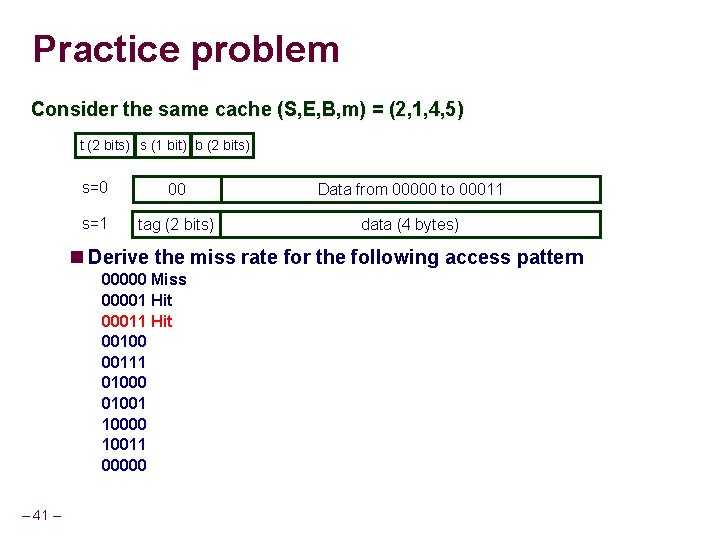

Practice problem

- Same cache: (S, E, B, m) = (2, 1, 4, 5); address layout t (2 bits) | s (1 bit) | b (2 bits)
- Cache state: s=0 holds tag 00, data from 00000 to 00011; s=1 is empty
- Access pattern: 00000 (Miss), 00001, 00011, 00100, 00111, 01000, 01001, 10000, 10011, 00000

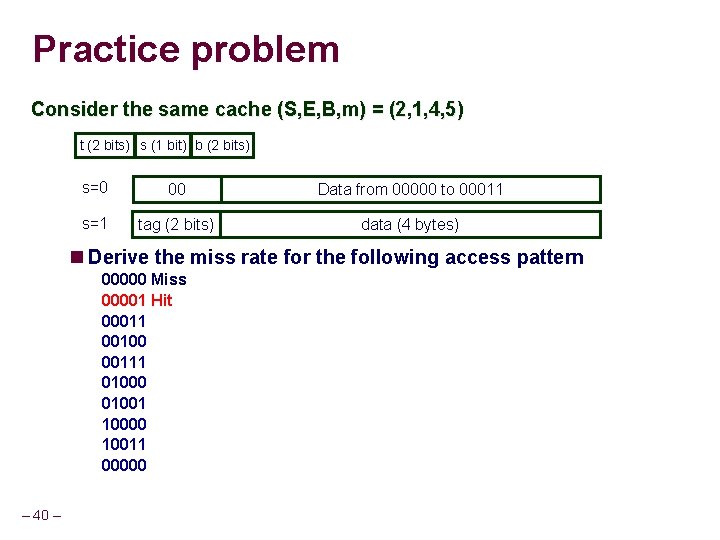

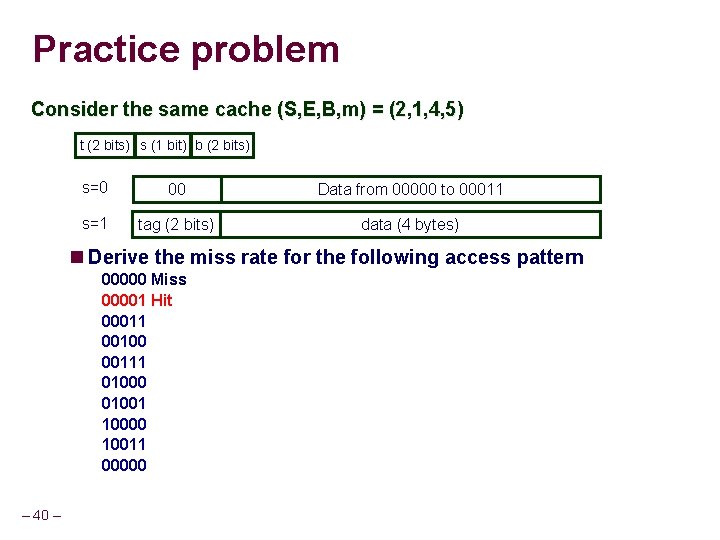

Practice problem

- Same cache: (S, E, B, m) = (2, 1, 4, 5); address layout t (2 bits) | s (1 bit) | b (2 bits)
- Cache state: s=0 holds tag 00, data from 00000 to 00011; s=1 is empty
- Access pattern: 00000 (Miss), 00001 (Hit), 00011, 00100, 00111, 01000, 01001, 10000, 10011, 00000

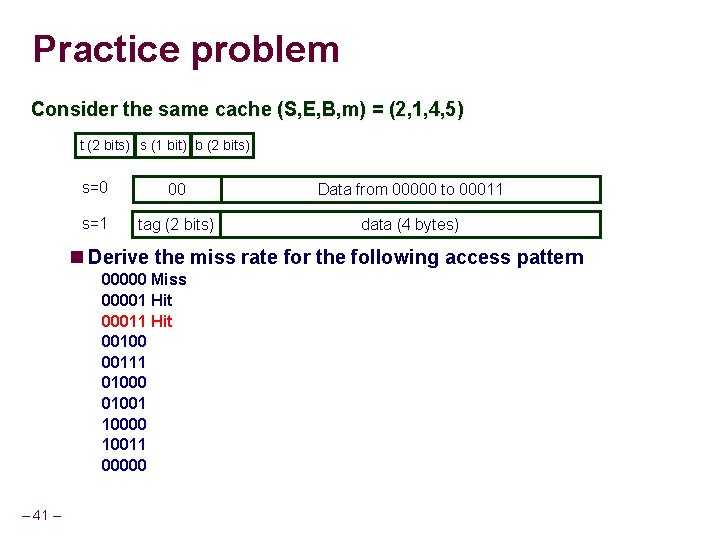

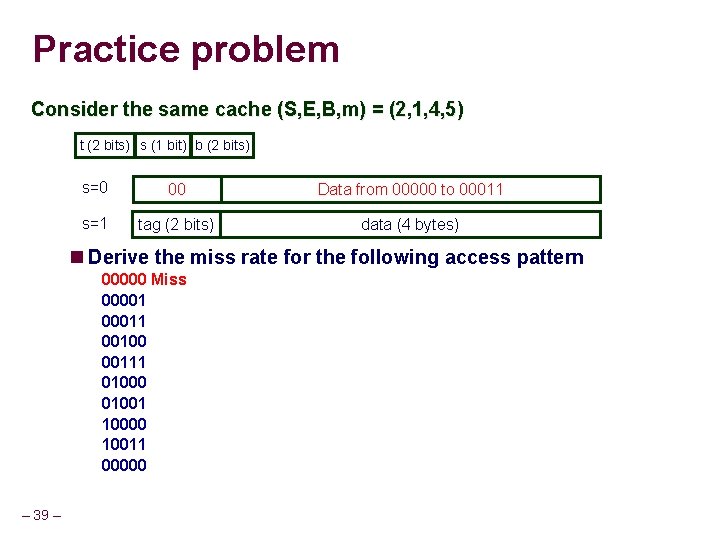

Practice problem

- Same cache: (S, E, B, m) = (2, 1, 4, 5); address layout t (2 bits) | s (1 bit) | b (2 bits)
- Cache state: s=0 holds tag 00, data from 00000 to 00011; s=1 is empty
- Access pattern: 00000 (Miss), 00001 (Hit), 00011 (Hit), 00100, 00111, 01000, 01001, 10000, 10011, 00000

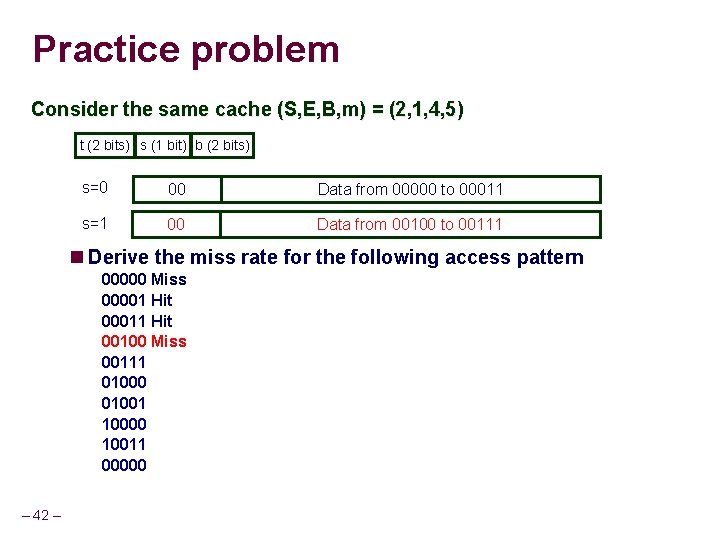

Practice problem

- Same cache: (S, E, B, m) = (2, 1, 4, 5); address layout t (2 bits) | s (1 bit) | b (2 bits)
- Cache state: s=0 holds tag 00, data from 00000 to 00011; s=1 holds tag 00, data from 00100 to 00111
- Access pattern: 00000 (Miss), 00001 (Hit), 00011 (Hit), 00100 (Miss), 00111, 01000, 01001, 10000, 10011, 00000

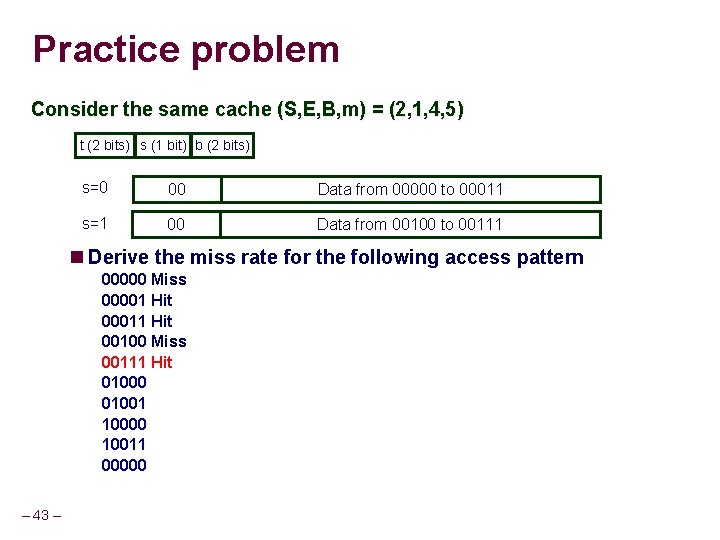

Practice problem

- Same cache: (S, E, B, m) = (2, 1, 4, 5); address layout t (2 bits) | s (1 bit) | b (2 bits)
- Cache state: s=0 holds tag 00, data from 00000 to 00011; s=1 holds tag 00, data from 00100 to 00111
- Access pattern: 00000 (Miss), 00001 (Hit), 00011 (Hit), 00100 (Miss), 00111 (Hit), 01000, 01001, 10000, 10011, 00000

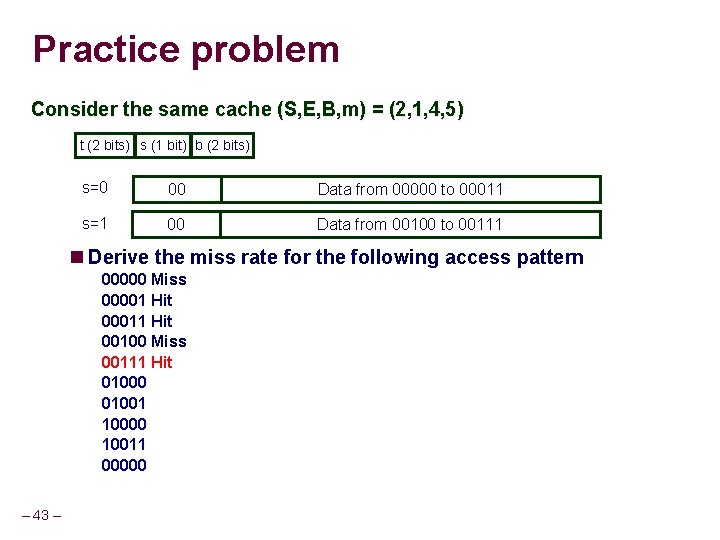

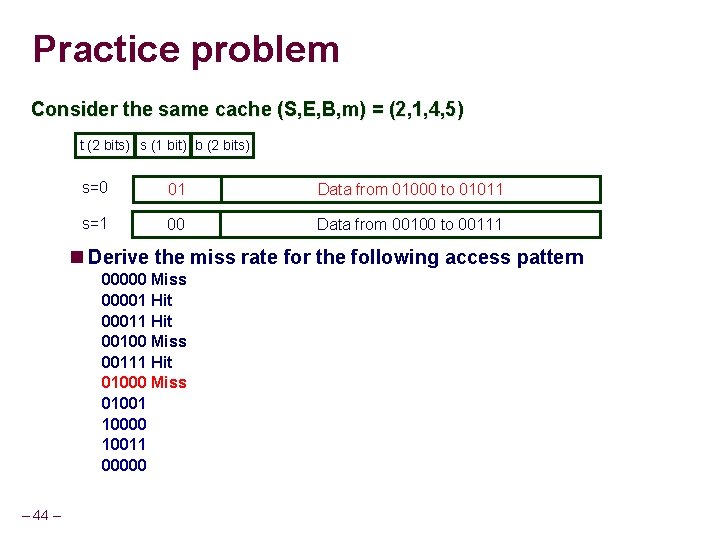

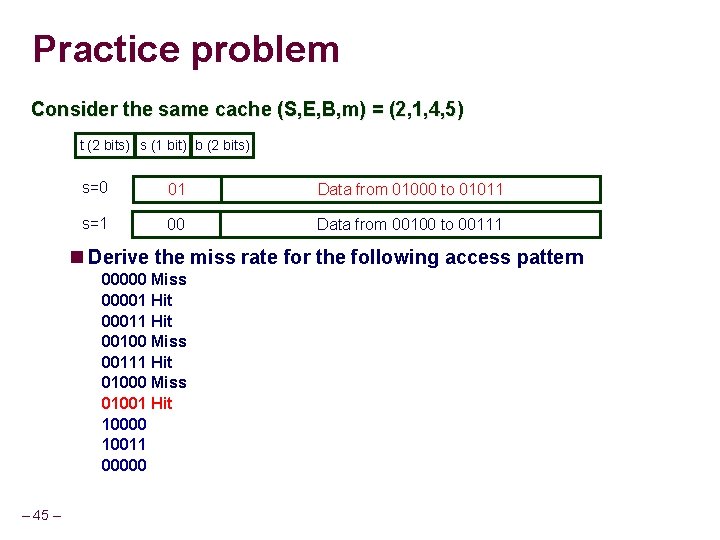

Practice problem

- Same cache: (S, E, B, m) = (2, 1, 4, 5); address layout t (2 bits) | s (1 bit) | b (2 bits)
- Cache state: s=0 now holds tag 01, data from 01000 to 01011; s=1 holds tag 00, data from 00100 to 00111
- Access pattern: 00000 (Miss), 00001 (Hit), 00011 (Hit), 00100 (Miss), 00111 (Hit), 01000 (Miss), 01001, 10000, 10011, 00000

Practice problem

- Same cache: (S, E, B, m) = (2, 1, 4, 5); address layout t (2 bits) | s (1 bit) | b (2 bits)
- Cache state: s=0 holds tag 01, data from 01000 to 01011; s=1 holds tag 00, data from 00100 to 00111
- Access pattern: 00000 (Miss), 00001 (Hit), 00011 (Hit), 00100 (Miss), 00111 (Hit), 01000 (Miss), 01001 (Hit), 10000, 10011, 00000

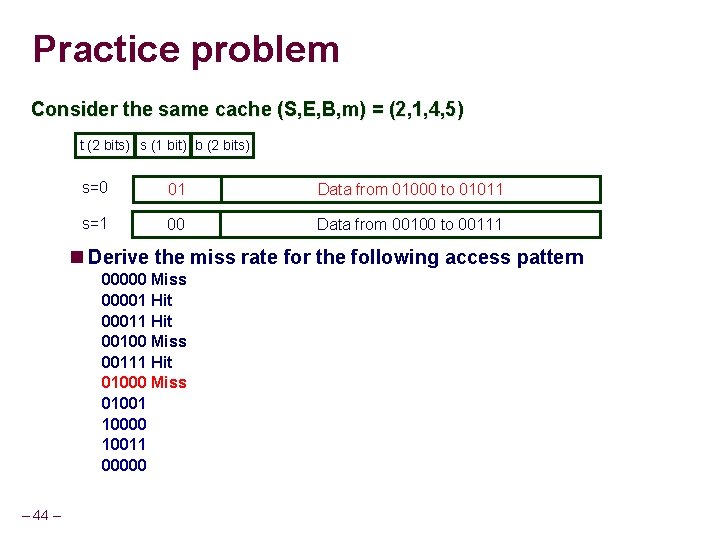

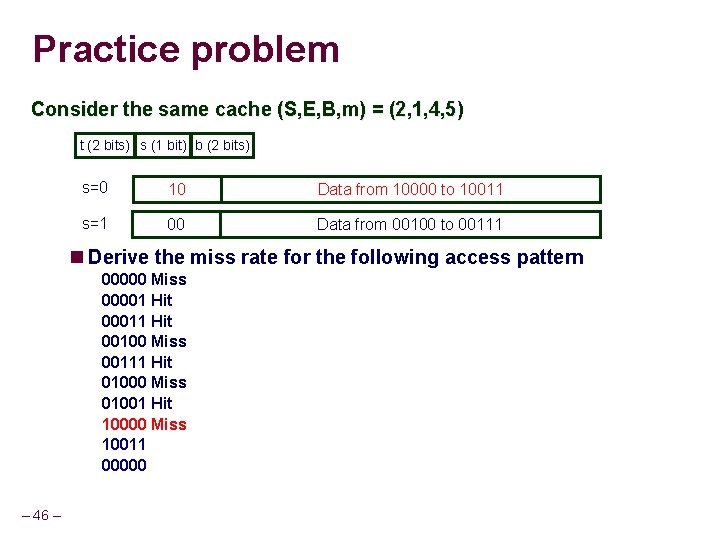

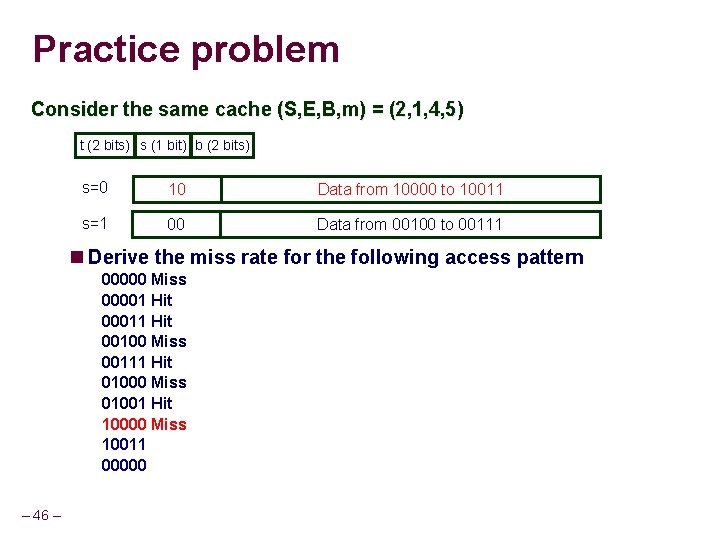

Practice problem

- Same cache: (S, E, B, m) = (2, 1, 4, 5); address layout t (2 bits) | s (1 bit) | b (2 bits)
- Cache state: s=0 now holds tag 10, data from 10000 to 10011; s=1 holds tag 00, data from 00100 to 00111
- Access pattern: 00000 (Miss), 00001 (Hit), 00011 (Hit), 00100 (Miss), 00111 (Hit), 01000 (Miss), 01001 (Hit), 10000 (Miss), 10011, 00000

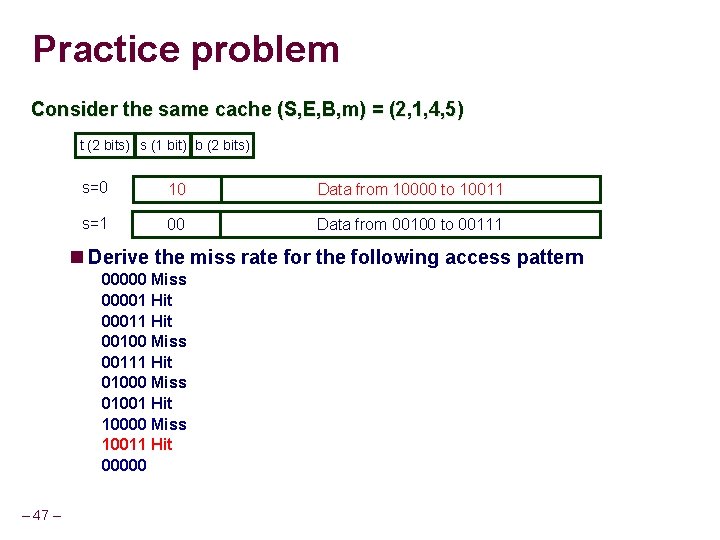

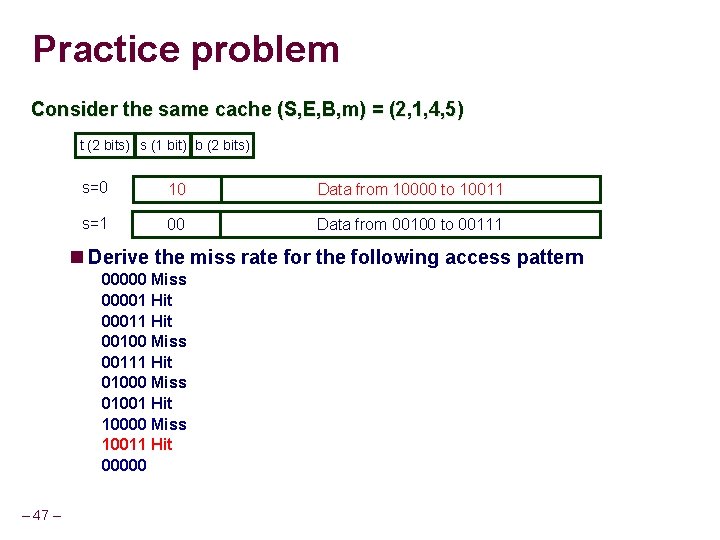

Practice problem

- Same cache: (S, E, B, m) = (2, 1, 4, 5); address layout t (2 bits) | s (1 bit) | b (2 bits)
- Cache state: s=0 holds tag 10, data from 10000 to 10011; s=1 holds tag 00, data from 00100 to 00111
- Access pattern: 00000 (Miss), 00001 (Hit), 00011 (Hit), 00100 (Miss), 00111 (Hit), 01000 (Miss), 01001 (Hit), 10000 (Miss), 10011 (Hit), 00000

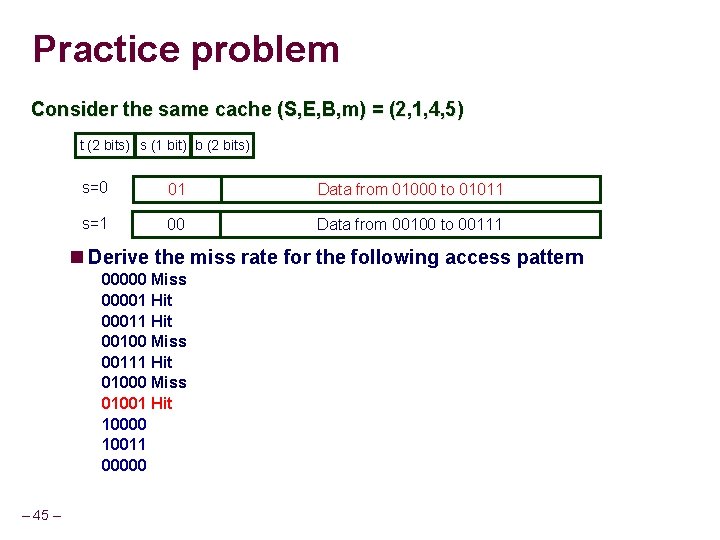

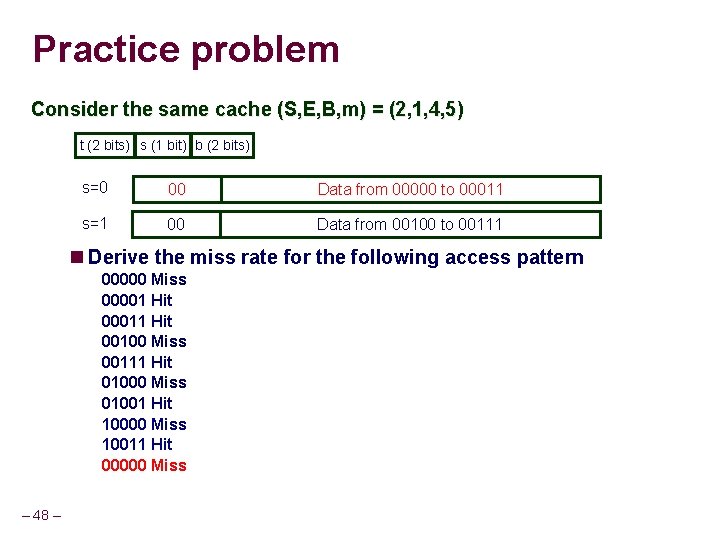

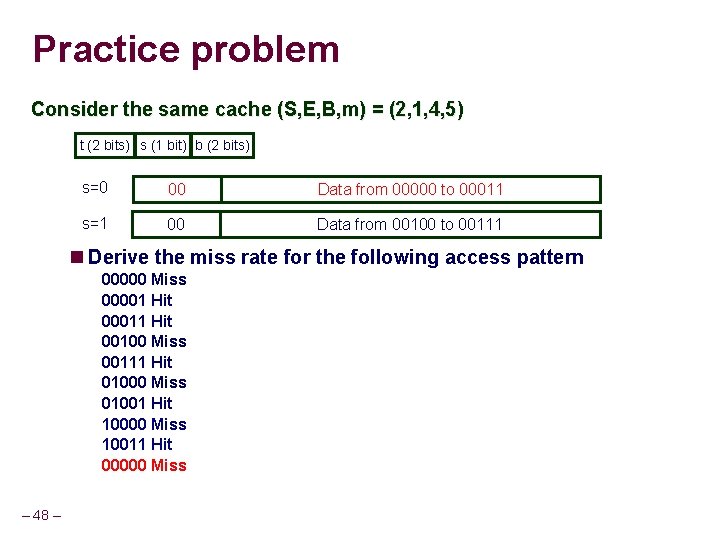

Practice problem

- Same cache: (S, E, B, m) = (2, 1, 4, 5); address layout t (2 bits) | s (1 bit) | b (2 bits)
- Cache state: s=0 holds tag 00 again, data from 00000 to 00011; s=1 holds tag 00, data from 00100 to 00111
- Access pattern: 00000 (Miss), 00001 (Hit), 00011 (Hit), 00100 (Miss), 00111 (Hit), 01000 (Miss), 01001 (Hit), 10000 (Miss), 10011 (Hit), 00000 (Miss)
- Miss rate = 5/10 = 50%

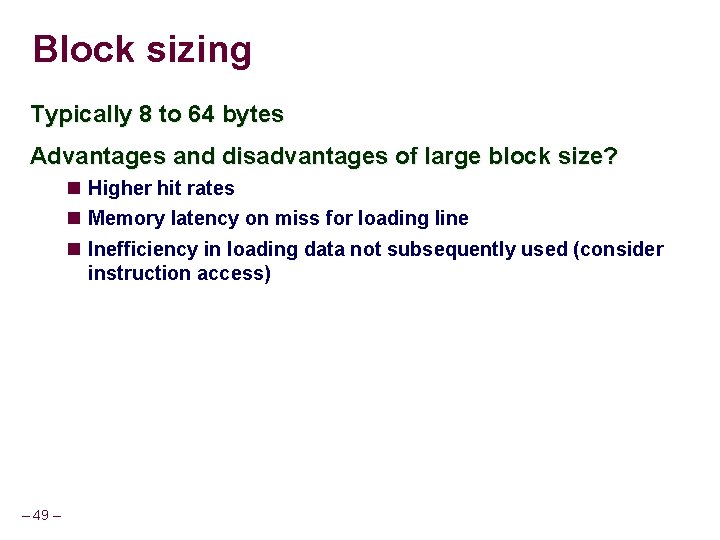

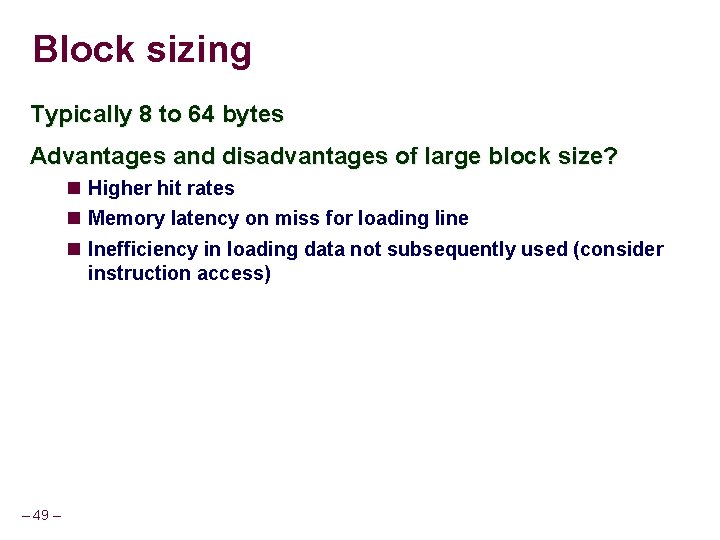

Block sizing

- Typically 8 to 64 bytes
- Advantages and disadvantages of a large block size?
  - Advantage: higher hit rates
  - Disadvantage: memory latency on a miss for loading the line
  - Disadvantage: inefficiency in loading data that is not subsequently used (consider instruction access)

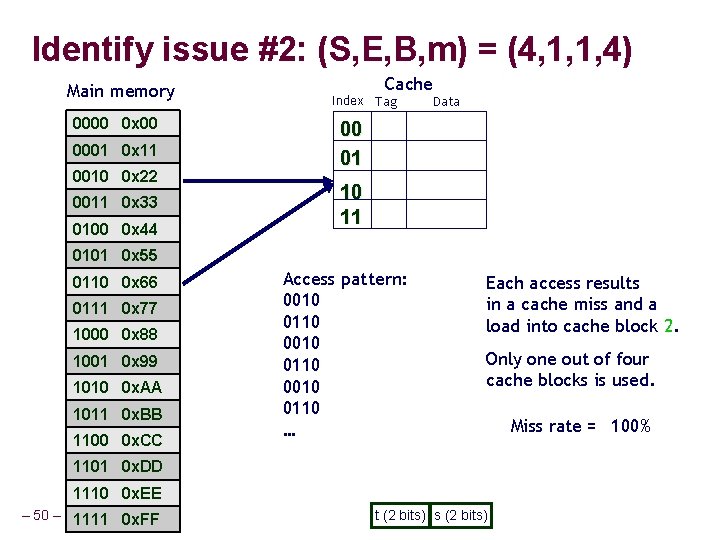

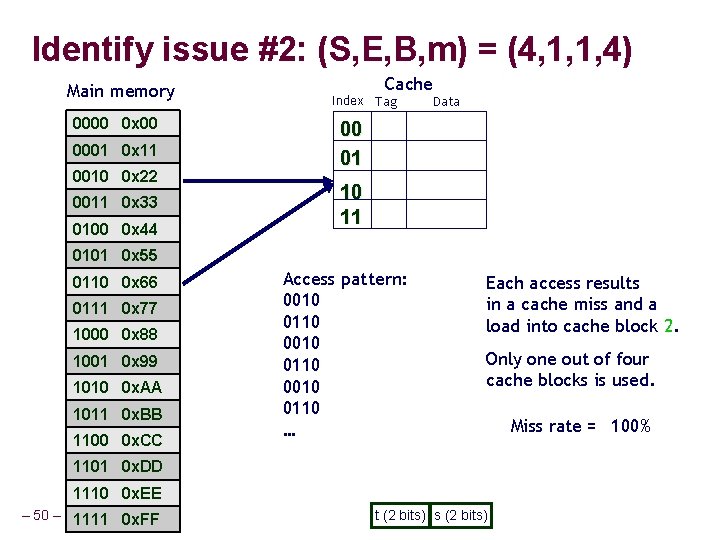

Identify issue #2: (S, E, B, m) = (4, 1, 1, 4)

- Main memory: 16 one-byte locations (0000 → 0x00, 0001 → 0x11, …, 1111 → 0xFF)
- Cache: 4 lines (indices 00, 01, 10, 11), one data byte each
- Address layout: t (2 bits) | s (2 bits)
- Access pattern: 0010 0110 …
- Each access results in a cache miss and a load into cache block 2 (index 10)
- Only one out of four cache blocks is used
- Miss rate = 100%

Direct mapping and conflict misses

- The direct-mapped cache is simple
  - Each memory address belongs in exactly one block in the cache
  - Indices and offsets can be computed with simple bit operators
- But it has problems when multiple blocks map to the same entry
  - This causes thrashing in the cache

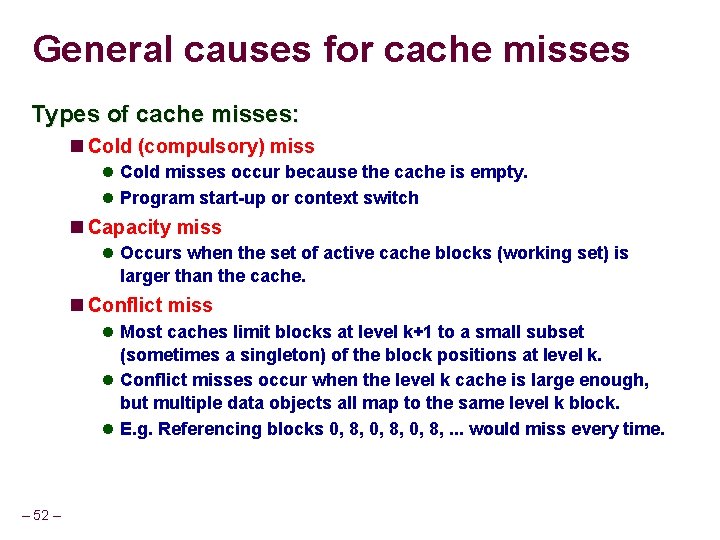

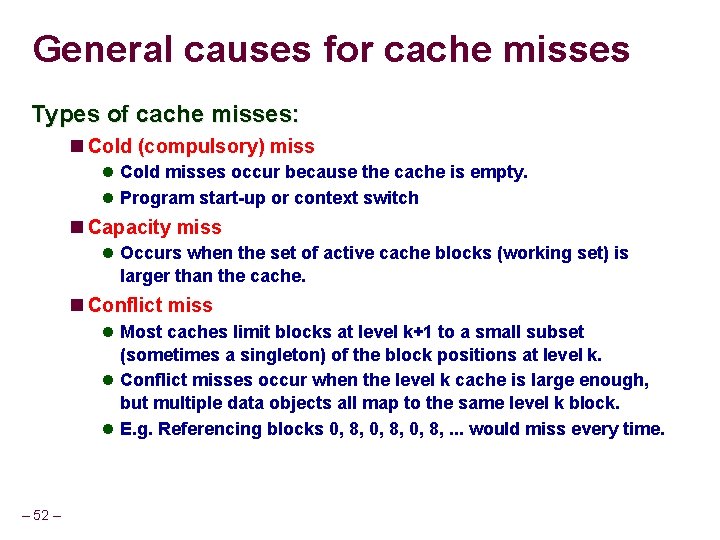

General causes for cache misses

- Cold (compulsory) miss
  - Cold misses occur because the cache is empty
  - E.g. at program start-up or after a context switch
- Capacity miss
  - Occurs when the set of active cache blocks (the working set) is larger than the cache
- Conflict miss
  - Most caches limit blocks at level k+1 to a small subset (sometimes a singleton) of the block positions at level k
  - Conflict misses occur when the level k cache is large enough, but multiple data objects all map to the same level k block
  - E.g. referencing blocks 0, 8, … would miss every time

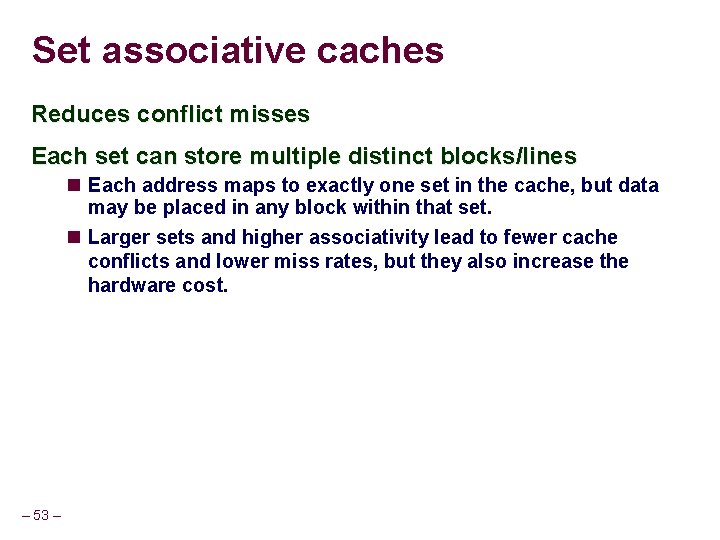

Set associative caches

- Reduce conflict misses
- Each set can store multiple distinct blocks/lines
- Each address maps to exactly one set in the cache, but data may be placed in any block within that set
- Larger sets and higher associativity lead to fewer cache conflicts and lower miss rates, but they also increase the hardware cost

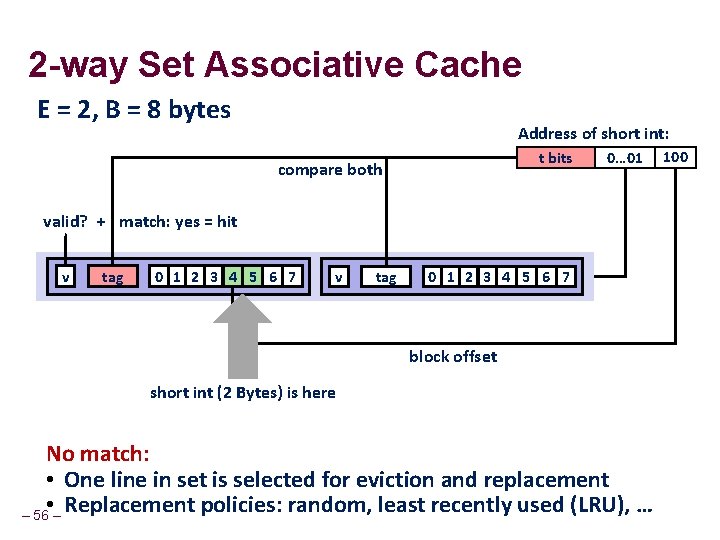

E-way Set Associative Cache (E = 2)

- E = 2: two lines per set
- Assume: cache block size is 8 bytes
- Each line holds a valid bit (v), a tag, and bytes 0 to 7
- Address of short int: t tag bits | set index 0…01 | block offset 100
- Step 1: use the set-index bits to find the set

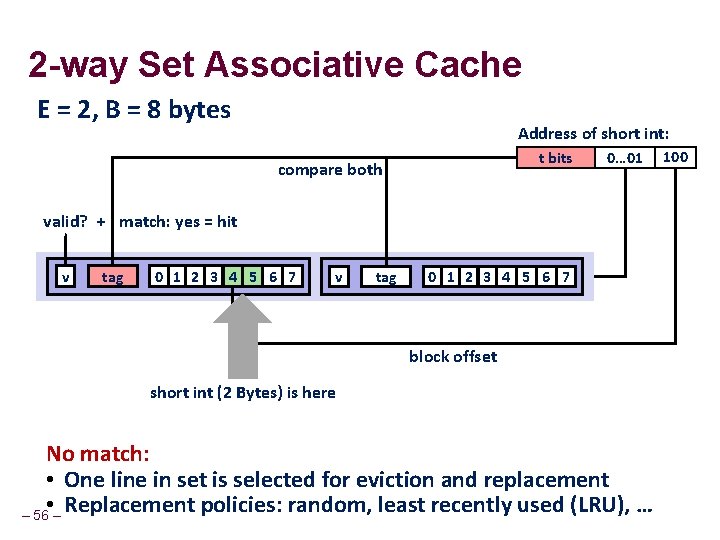

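2-way Set Associative Cache

- E = 2, B = 8 bytes
- Address of short int: t tag bits | set index 0…01 | block offset 100
- Step 2: compare both lines in the set: valid? + tag match: yes = hit
- Step 3: the block offset selects the data within bytes 0 to 7 of the matching line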

2-way Set Associative Cache

- E = 2, B = 8 bytes
- Address of short int: t tag bits | set index 0…01 | block offset 100
- Compare both lines in the set: valid? + tag match: yes = hit
- The short int (2 bytes) is located at the block offset
- No match:
  - One line in the set is selected for eviction and replacement
  - Replacement policies: random, least recently used (LRU), …

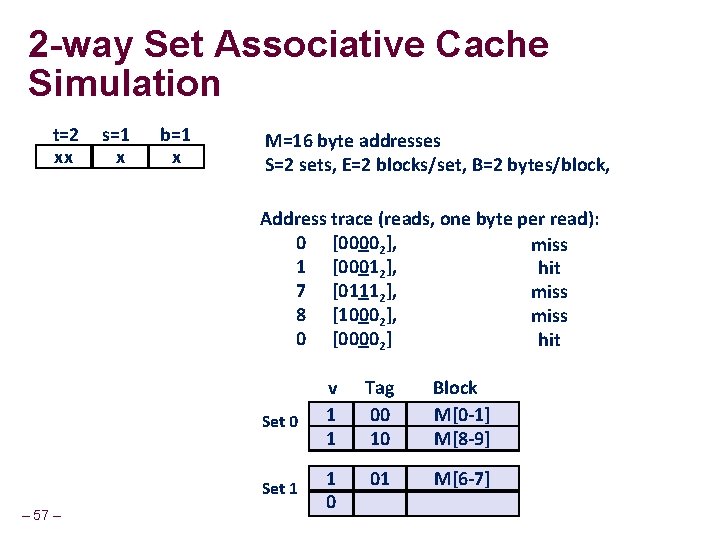

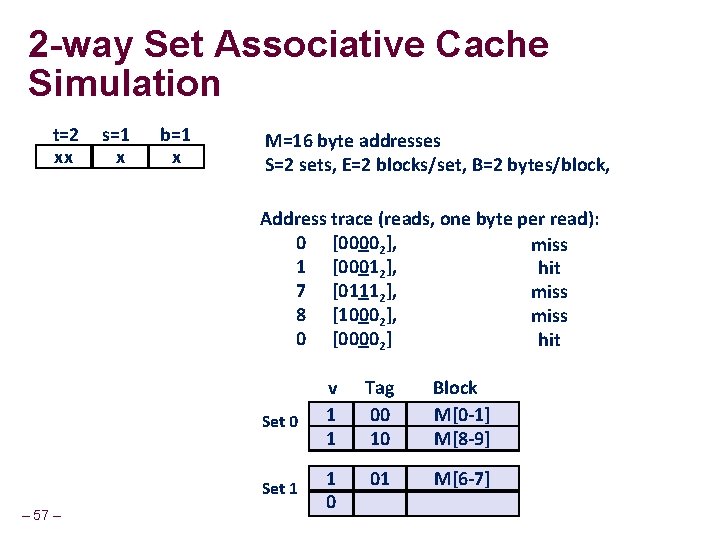

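2-way Set Associative Cache Simulation

- M = 16 byte addresses, S = 2 sets, E = 2 blocks/set, B = 2 bytes/block
- Address layout: t = 2 bits (xx) | s = 1 bit (x) | b = 1 bit (x)
- Address trace (reads, one byte per read):

| Address | Binary | Result |
|---|---|---|
| 0 | $0000_2$ | miss |
| 1 | $0001_2$ | hit |
| 7 | $0111_2$ | miss |
| 8 | $1000_2$ | miss |
| 0 | $0000_2$ | hit |

- Final cache state:

| Set | v | Tag | Block |
|---|---|---|---|
| 0 | 1 | 00 | M[0-1] |
| 0 | 1 | 10 | M[8-9] |
| 1 | 1 | 01 | M[6-7] |
| 1 | 0 | | |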
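The trace above can be replayed in code. The following is a sketch under stated assumptions: the constants mirror the slide's (S, E, B) = (2, 2, 2), LRU is assumed as the replacement policy (this trace never evicts, so the policy does not affect the result), and `cache_line` and `simulate_two_way` are hypothetical names.

```c
#include <stdbool.h>
#include <stddef.h>
#include <string.h>

enum { SETS = 2, WAYS = 2, BLOCK_BYTES = 2 };   /* S, E, B from the slide */

typedef struct {
    bool valid;
    unsigned tag;
    unsigned long last_used;   /* time of most recent access, used for LRU */
} cache_line;

/* Replays a read trace; hit[k] records whether access k hit.
   LRU replacement is an assumption; the slide does not fix a policy. */
void simulate_two_way(const unsigned *trace, size_t n, bool *hit)
{
    cache_line cache[SETS][WAYS];
    memset(cache, 0, sizeof cache);              /* all lines start invalid */

    for (size_t k = 0; k < n; k++) {
        unsigned block = trace[k] / BLOCK_BYTES; /* strip the block offset */
        unsigned set = block % SETS;             /* set-index bits */
        unsigned tag = block / SETS;             /* remaining high bits */
        cache_line *lines = cache[set];
        cache_line *victim = &lines[0];

        /* Compare the tag against every valid line in the set. */
        hit[k] = false;
        for (int w = 0; w < WAYS; w++) {
            if (lines[w].valid && lines[w].tag == tag) {
                lines[w].last_used = k + 1;
                hit[k] = true;
                break;
            }
        }
        if (hit[k])
            continue;

        /* Miss: fill an invalid line if one exists, else evict the LRU line. */
        for (int w = 0; w < WAYS; w++) {
            if (!lines[w].valid) { victim = &lines[w]; break; }
            if (lines[w].last_used < victim->last_used) victim = &lines[w];
        }
        victim->valid = true;
        victim->tag = tag;
        victim->last_used = k + 1;
    }
}
```

Running it on the addresses 0, 1, 7, 8, 0 gives miss, hit, miss, miss, hit: address 8 lands in the second line of set 0 instead of evicting M[0-1], which is why the final access to 0 still hits.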

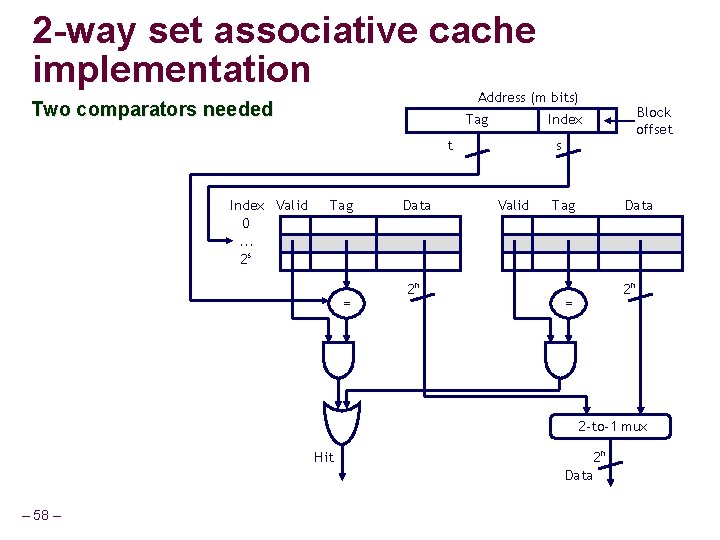

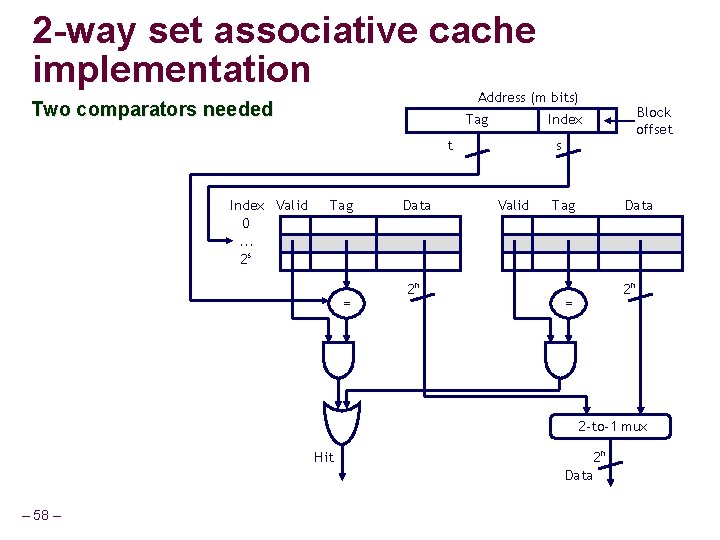

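2-way set associative cache implementation

- Figure: the m-bit address is split into tag (t bits), index (s bits), and block offset
- The index selects one of the $2^s$ sets; each set has two lines, each with Valid, Tag, and Data fields
- Two comparators are needed, one per line, to match the address tag against the stored tags
- A 2-to-1 mux selects the data of the matching line
- Outputs: Hit and Data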

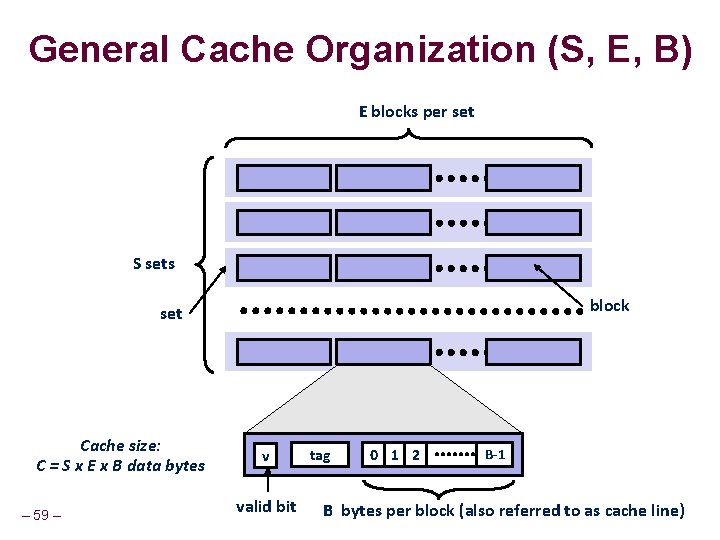

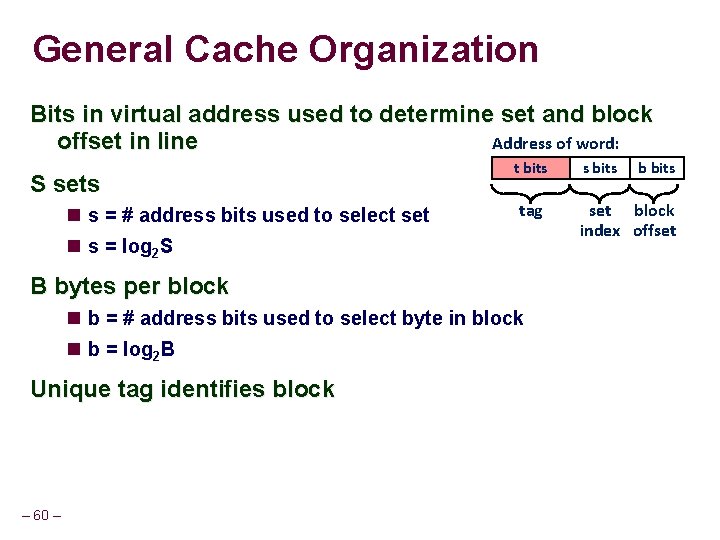

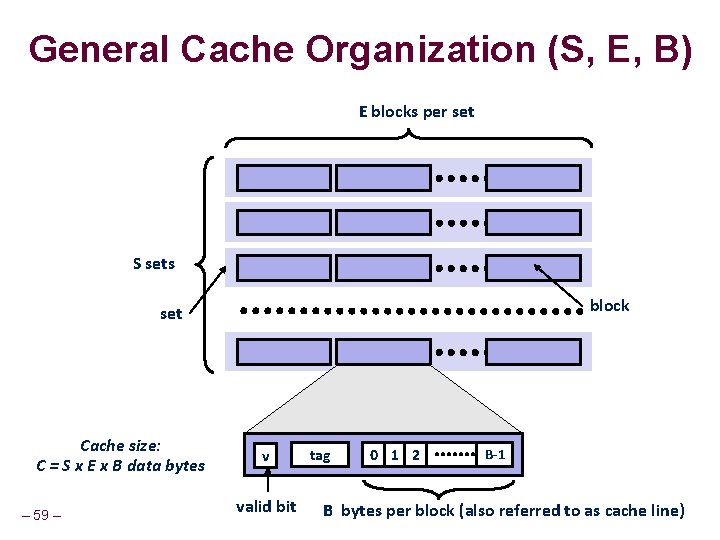

General Cache Organization (S, E, B)

- S sets
- E blocks per set
- B bytes per block (a block is also referred to as a cache line)
- Each line holds a valid bit (v), a tag, and data bytes 0, 1, 2, …, B-1
- Cache size: C = S x E x B data bytes

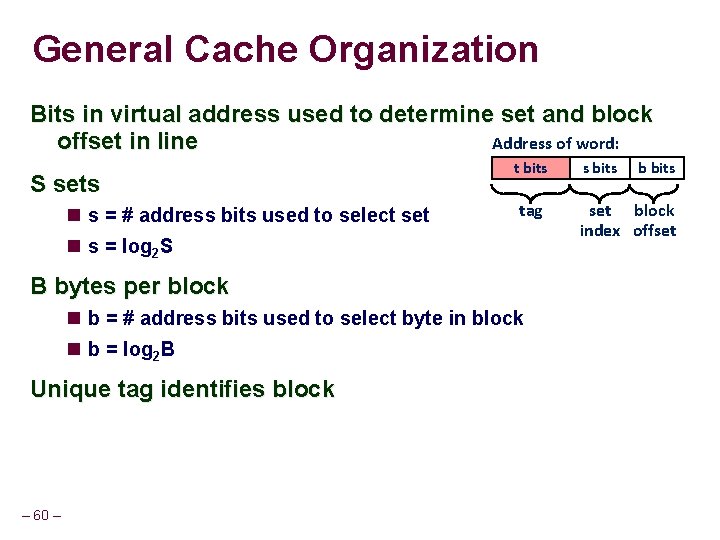

General Cache Organization

- Bits in the virtual address are used to determine the set and the block offset in the line
- Address of word: t bits (tag) | s bits (set index) | b bits (block offset)
- S sets: s = # address bits used to select the set, $s = \log_2 S$
- B bytes per block: b = # address bits used to select the byte in the block, $b = \log_2 B$
- A unique tag identifies the block

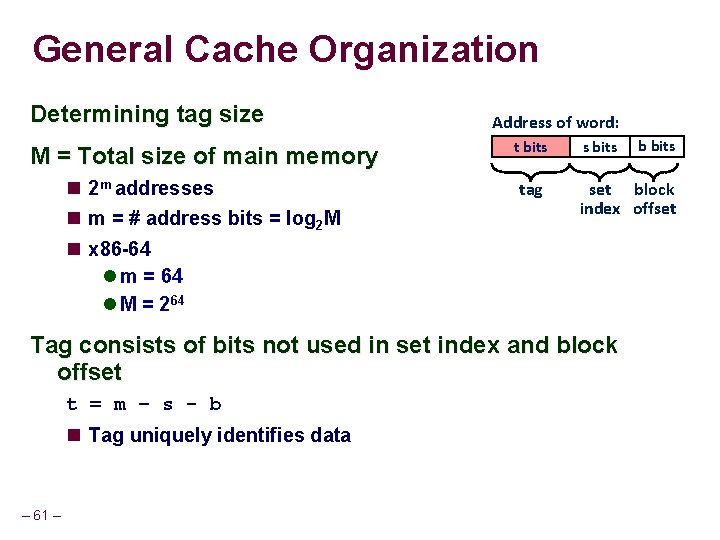

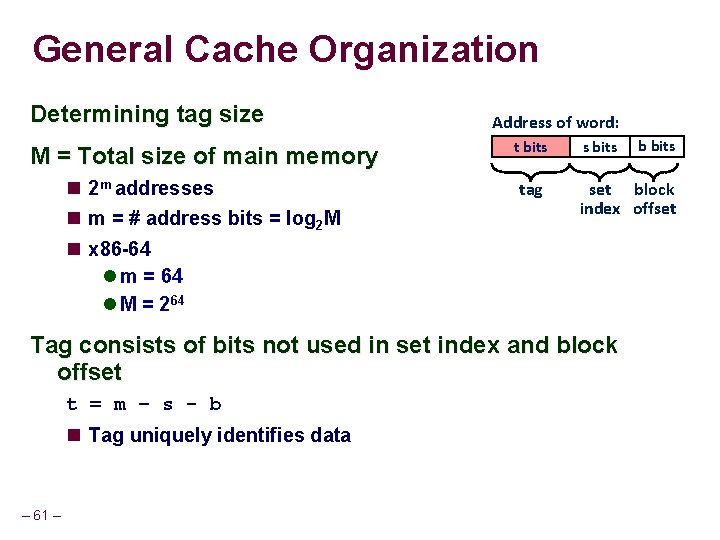

General Cache Organization

- Determining tag size
- Address of word: t bits (tag) | s bits (set index) | b bits (block offset)
- M = total size of main memory = $2^m$ addresses
- m = # address bits = $\log_2 M$
  - x86-64: m = 64, $M = 2^{64}$
- The tag consists of the bits not used in the set index and block offset: t = m - s - b
- The tag uniquely identifies the data

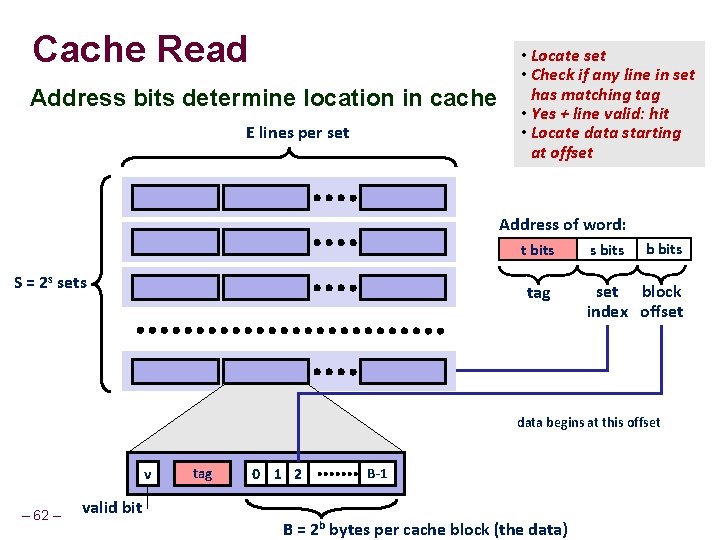

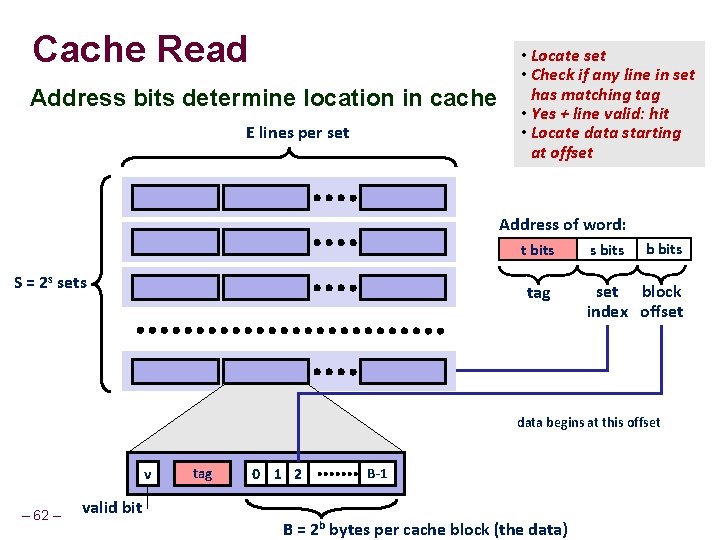

Cache Read

- Address bits determine the location in the cache
- Address of word: t bits (tag) | s bits (set index) | b bits (block offset)
- $S = 2^s$ sets, E lines per set, $B = 2^b$ bytes per cache block (the data)
- Each line holds a valid bit (v), a tag, and bytes 0, 1, 2, …, B-1
- Steps:
  - Locate the set
  - Check if any line in the set has a matching tag
  - Yes + line valid: hit
  - Locate the data starting at the block offset

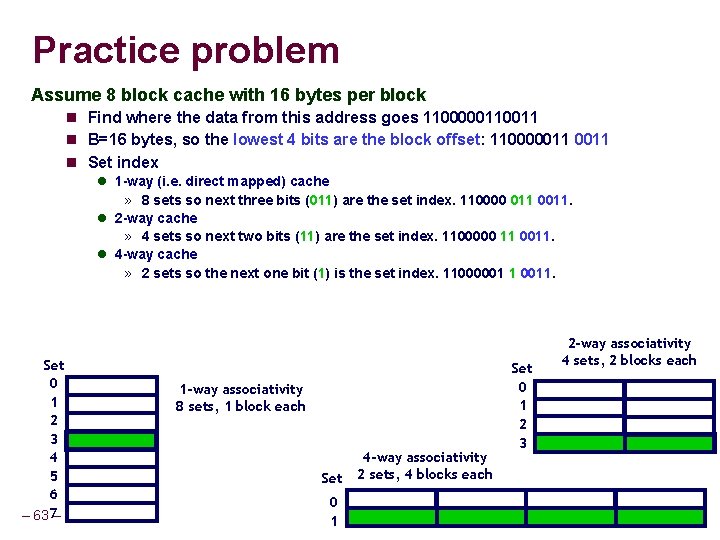

Practice problem

- Assume an 8-block cache with 16 bytes per block
- Find where the data from this address goes: 1100000110011
- B = 16 bytes, so the lowest 4 bits (0011) are the block offset
- Set index
  - 1-way (i.e. direct-mapped) cache: 8 sets, 1 block each, so the next three bits (011) are the set index: 110000 011 0011
  - 2-way cache: 4 sets, 2 blocks each, so the next two bits (11) are the set index: 1100000 11 0011
  - 4-way cache: 2 sets, 4 blocks each, so the next one bit (1) is the set index: 11000001 1 0011

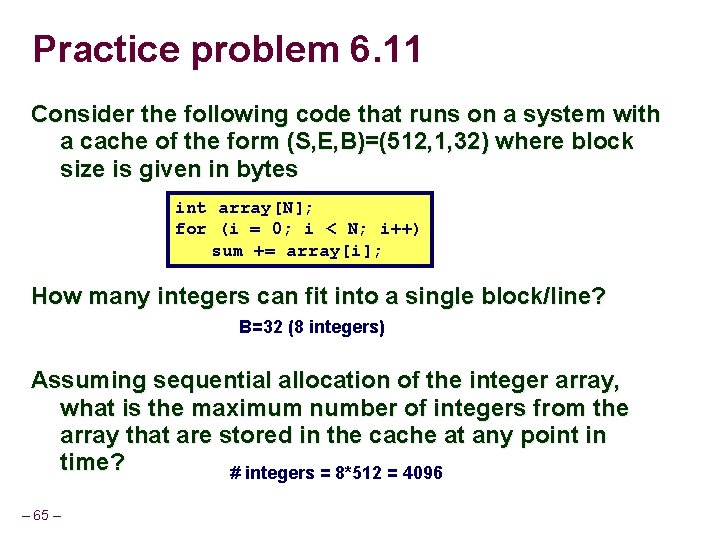

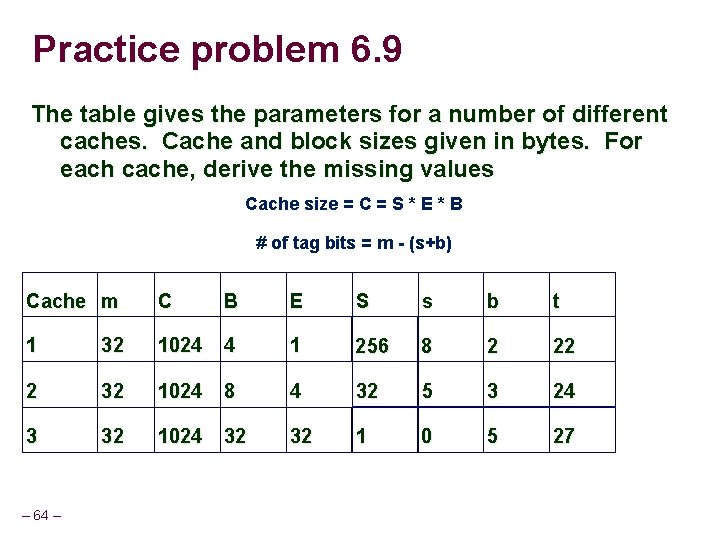

Practice problem 6.9

- The table gives the parameters for a number of different caches. Cache and block sizes are given in bytes.
- For each cache, derive the missing values
  - Cache size = C = S * E * B
  - # of tag bits = m - (s + b)

| Cache | m | C | B | E | S | s | b | t |
|---|---|---|---|---|---|---|---|---|
| 1 | 32 | 1024 | 4 | 1 | 256 | 8 | 2 | 22 |
| 2 | 32 | 1024 | 8 | 4 | 32 | 5 | 3 | 24 |
| 3 | 32 | 1024 | 32 | 32 | 1 | 0 | 5 | 27 |
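The derivation in the table follows mechanically from the two formulas. As a minimal sketch (the `cache_params` struct and the function names are hypothetical, and all sizes are assumed to be powers of two):

```c
#include <assert.h>

typedef struct {
    unsigned S;   /* number of sets */
    unsigned s;   /* set-index bits */
    unsigned b;   /* block-offset bits */
    unsigned t;   /* tag bits */
} cache_params;

/* Base-2 logarithm of a power of two. */
static unsigned log2u(unsigned x)
{
    unsigned n = 0;
    while (x > 1) {
        x >>= 1;
        n++;
    }
    return n;
}

/* Derive S, s, b, t from address width m, cache size C (bytes),
   block size B (bytes), and associativity E. */
cache_params derive_params(unsigned m, unsigned C, unsigned B, unsigned E)
{
    cache_params p;

    assert(B > 0 && E > 0 && C % (B * E) == 0);
    p.S = C / (B * E);         /* from C = S * E * B */
    p.s = log2u(p.S);
    p.b = log2u(B);
    p.t = m - (p.s + p.b);     /* tag is whatever the index and offset leave */
    return p;
}
```

For cache 1, `derive_params(32, 1024, 4, 1)` yields S = 256, s = 8, b = 2, t = 22, matching the first row of the table.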

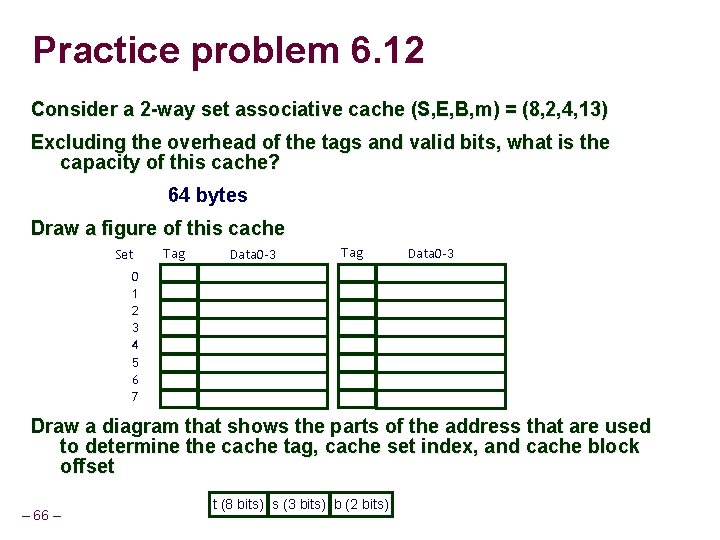

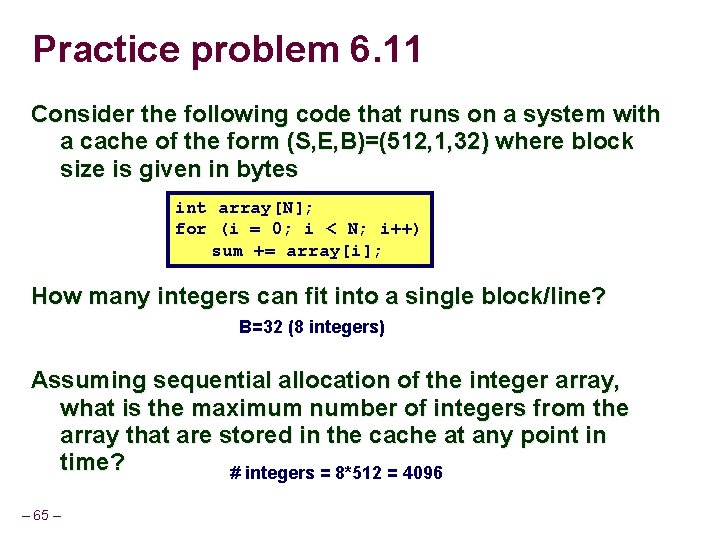

Practice problem 6.11

Consider the following code, which runs on a system with a cache of the form (S, E, B) = (512, 1, 32), where the block size is given in bytes.

    int array[N];
    for (i = 0; i < N; i++)
        sum += array[i];

- How many integers can fit into a single block/line?
  - B = 32 bytes, so 8 integers (4 bytes each)
- Assuming sequential allocation of the integer array, what is the maximum number of integers from the array that are stored in the cache at any point in time?
  - # integers = 8 * 512 = 4096

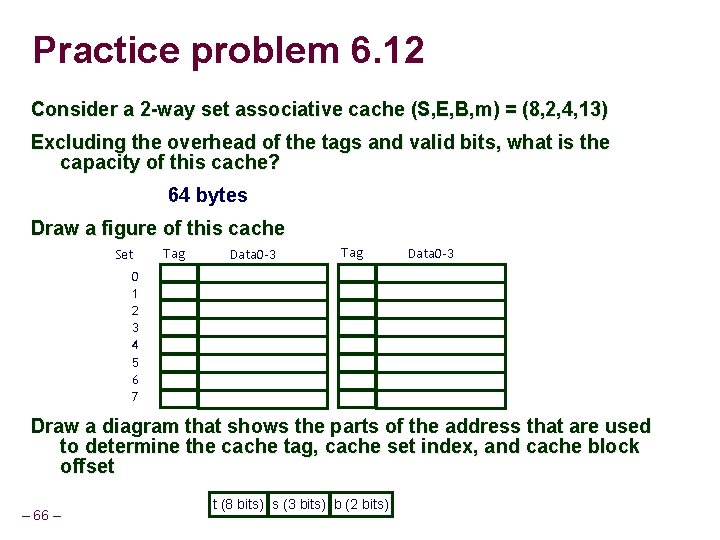

Practice problem 6.12

- Consider a 2-way set associative cache: (S, E, B, m) = (8, 2, 4, 13)
- Excluding the overhead of the tags and valid bits, what is the capacity of this cache?
  - 8 * 2 * 4 = 64 bytes
- Draw a figure of this cache
  - Sets 0 to 7, each with two lines; each line holds a tag and data bytes 0-3
- Draw a diagram that shows the parts of the address that are used to determine the cache tag, cache set index, and cache block offset
  - t (8 bits) | s (3 bits) | b (2 bits)

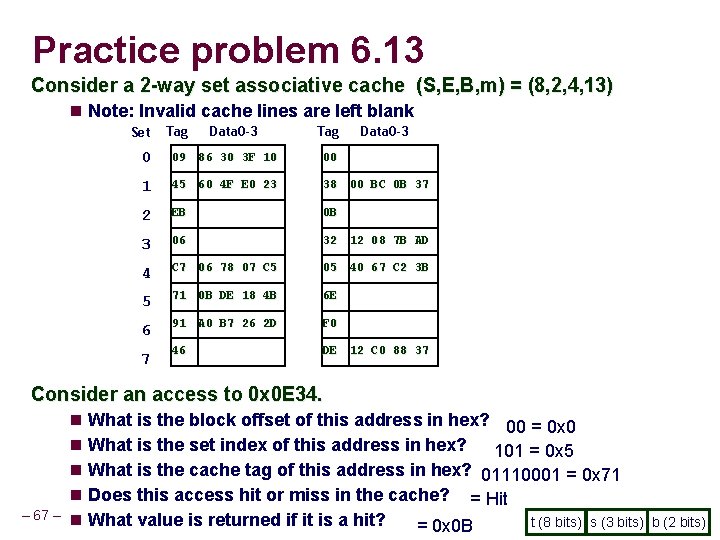

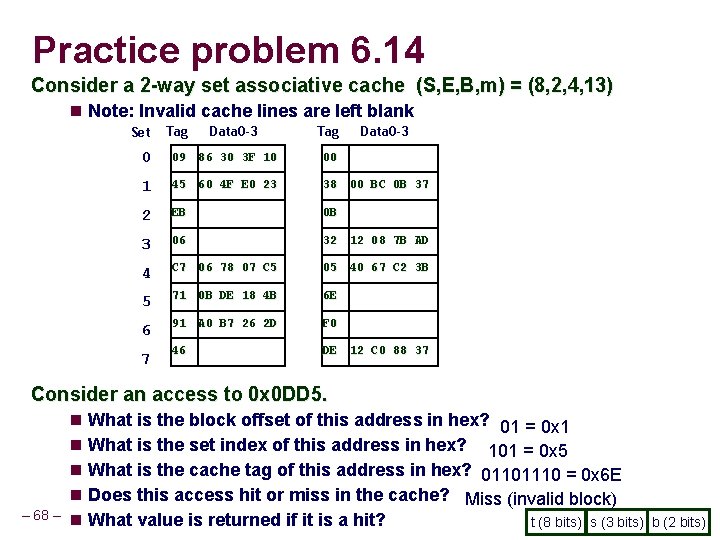

Practice problem 6.13

- Consider a 2-way set associative cache: (S, E, B, m) = (8, 2, 4, 13)
- Address layout: t (8 bits) | s (3 bits) | b (2 bits)
- Note: invalid cache lines are left blank

| Set | Tag | Data 0-3 | Tag | Data 0-3 |
|---|---|---|---|---|
| 0 | 09 | 86 30 3F 10 | 00 | |
| 1 | 45 | 60 4F E0 23 | 38 | 00 BC 0B 37 |
| 2 | EB | | 0B | |
| 3 | 06 | | 32 | 12 08 7B AD |
| 4 | C7 | 06 78 07 C5 | 05 | 40 67 C2 3B |
| 5 | 71 | 0B DE 18 4B | 6E | |
| 6 | 91 | A0 B7 26 2D | F0 | |
| 7 | 46 | | DE | 12 C0 88 37 |

- Consider an access to 0x0E34
  - What is the block offset of this address in hex? 00 = 0x0
  - What is the set index of this address in hex? 101 = 0x5
  - What is the cache tag of this address in hex? 01110001 = 0x71
  - Does this access hit or miss in the cache? Hit
  - What value is returned if it is a hit? 0x0B
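The field extraction used in this problem is a pair of shifts and masks. A minimal sketch, assuming the address fits in 32 bits and that s + b is smaller than the word width; `addr_fields` and `split_address` are hypothetical names:

```c
#include <assert.h>
#include <stdint.h>

typedef struct {
    uint32_t tag;
    uint32_t set;
    uint32_t offset;
} addr_fields;

/* Split an address into tag, set index, and block offset,
   given s set-index bits and b block-offset bits. */
addr_fields split_address(uint32_t addr, unsigned s, unsigned b)
{
    addr_fields f;

    assert(s + b < 32);
    f.offset = addr & ((1u << b) - 1u);          /* lowest b bits */
    f.set = (addr >> b) & ((1u << s) - 1u);      /* next s bits */
    f.tag = addr >> (s + b);                     /* everything above */
    return f;
}
```

With s = 3 and b = 2, `split_address(0x0E34, 3, 2)` gives offset 0x0, set 0x5, and tag 0x71, the values worked out by hand above.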

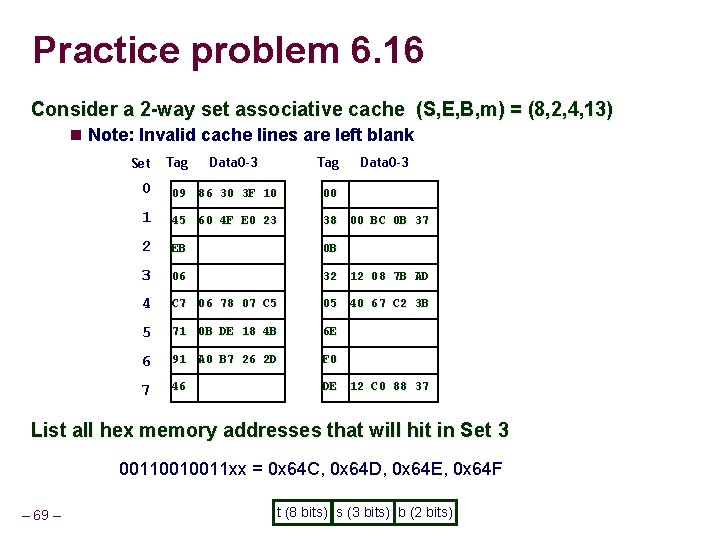

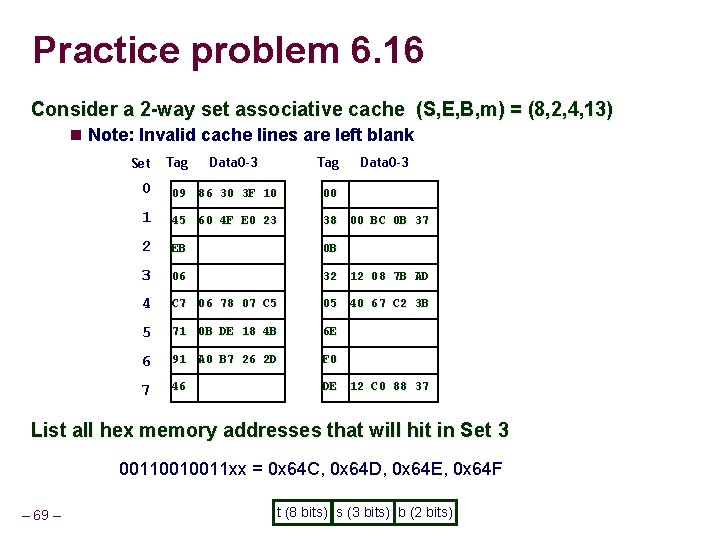

Practice problem 6.14

- Consider a 2-way set associative cache: (S, E, B, m) = (8, 2, 4, 13), with the same contents as in Practice problem 6.13
- Address layout: t (8 bits) | s (3 bits) | b (2 bits)
- Relevant set: set 5 holds tag 71 (data 0B DE 18 4B) and tag 6E (invalid, left blank)
- Consider an access to 0x0DD5
  - What is the block offset of this address in hex? 01 = 0x1
  - What is the set index of this address in hex? 101 = 0x5
  - What is the cache tag of this address in hex? 01101110 = 0x6E
  - Does this access hit or miss in the cache? Miss (invalid block)
  - What value is returned if it is a hit? Not applicable, since the access misses

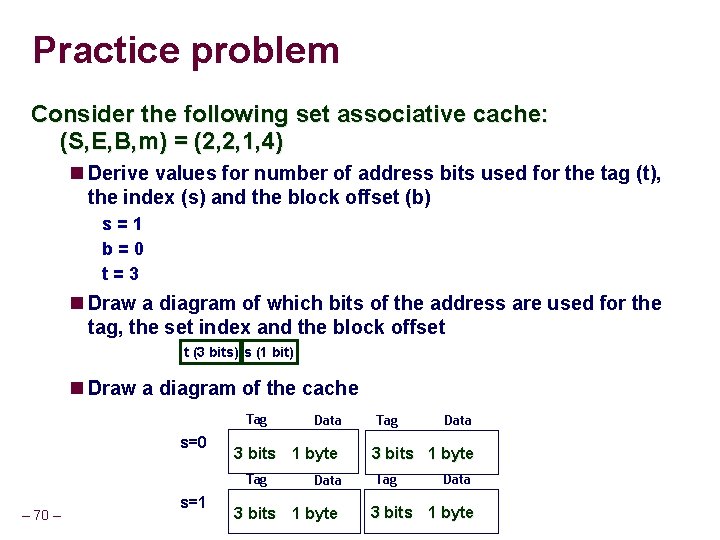

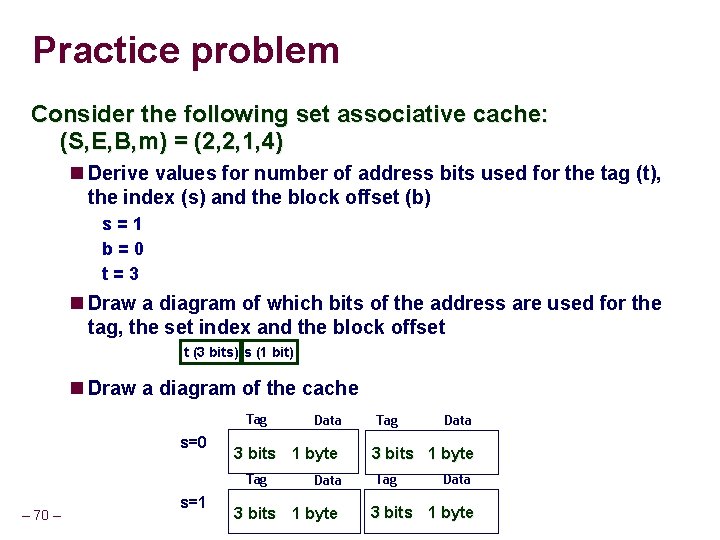

Practice problem 6.16

- Consider a 2-way set associative cache: (S, E, B, m) = (8, 2, 4, 13), with the same contents as in Practice problem 6.13
- Address layout: t (8 bits) | s (3 bits) | b (2 bits)
- Relevant set: set 3 holds tag 06 (invalid, left blank) and tag 32 (data 12 08 7B AD)
- List all hex memory addresses that will hit in set 3
  - 00110010 011 xx = 0x64C, 0x64D, 0x64E, 0x64F

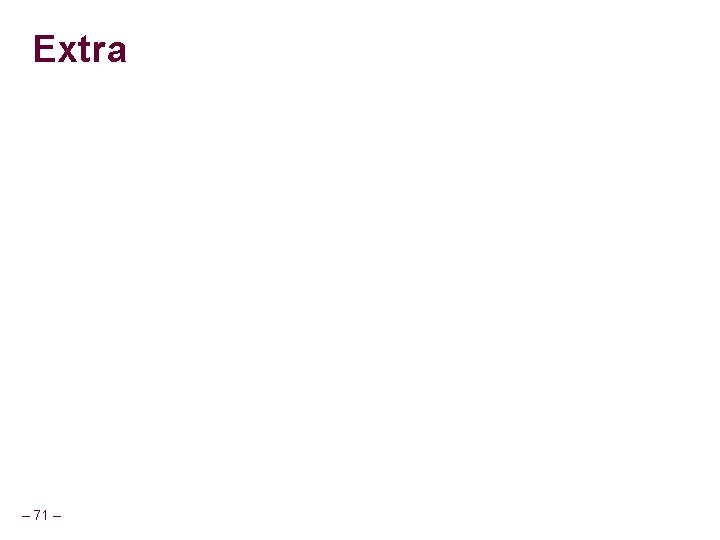

Practice problem

- Consider the following set associative cache: (S, E, B, m) = (2, 2, 1, 4)
- Derive the number of address bits used for the tag (t), the index (s), and the block offset (b)
  - s = 1, b = 0, t = 3
- Draw a diagram of which bits of the address are used for the tag, the set index, and the block offset
  - t (3 bits) | s (1 bit)
- Draw a diagram of the cache
  - s=0: two lines, each with a 3-bit tag and 1 byte of data
  - s=1: two lines, each with a 3-bit tag and 1 byte of data

Extra

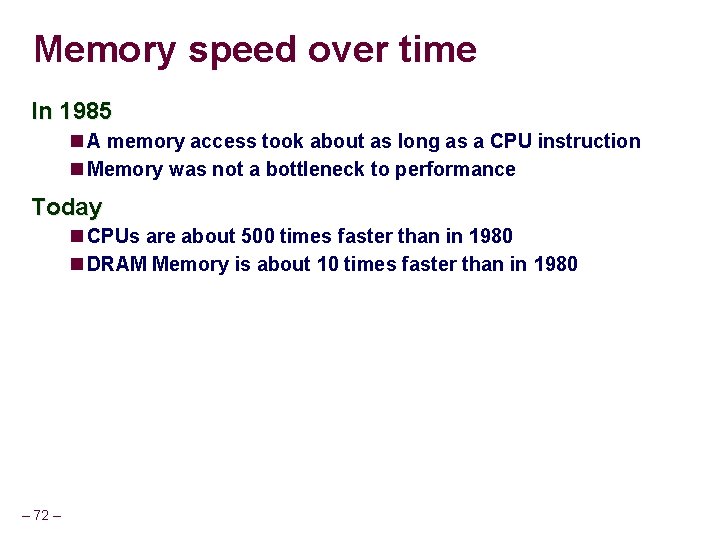

Memory speed over time

- In 1985
  - A memory access took about as long as a CPU instruction
  - Memory was not a bottleneck to performance
- Today
  - CPUs are about 500 times faster than in 1985
  - DRAM memory is about 10 times faster than in 1985

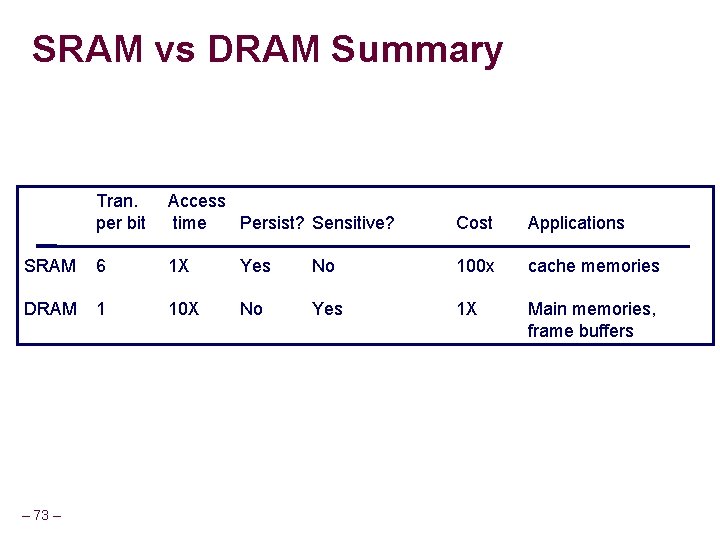

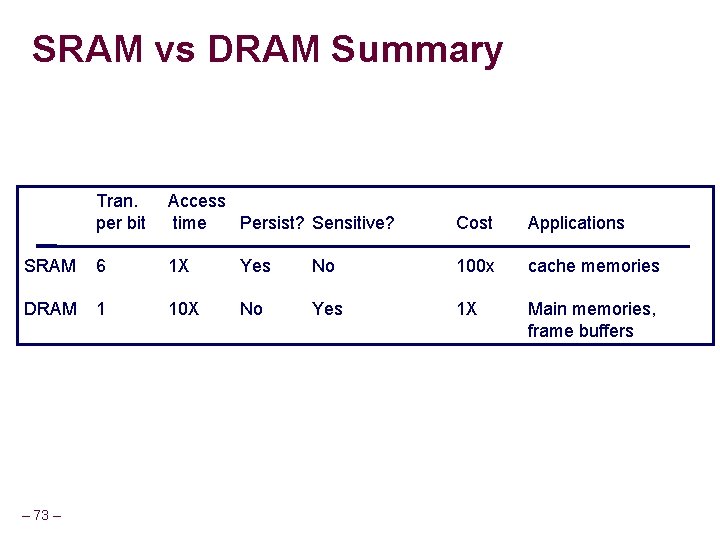

SRAM vs DRAM Summary

| | Trans. per bit | Access time | Persist? | Sensitive? | Cost | Applications |
|---|---|---|---|---|---|---|
| SRAM | 6 | 1X | Yes | No | 100X | Cache memories |
| DRAM | 1 | 10X | No | Yes | 1X | Main memories, frame buffers |

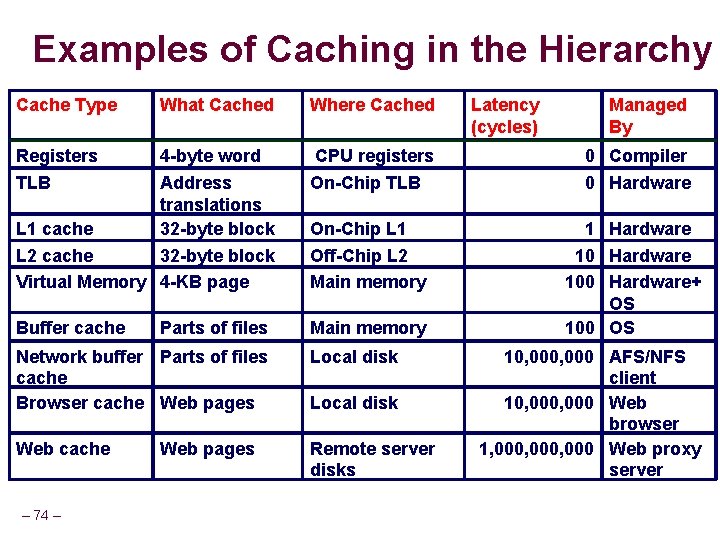

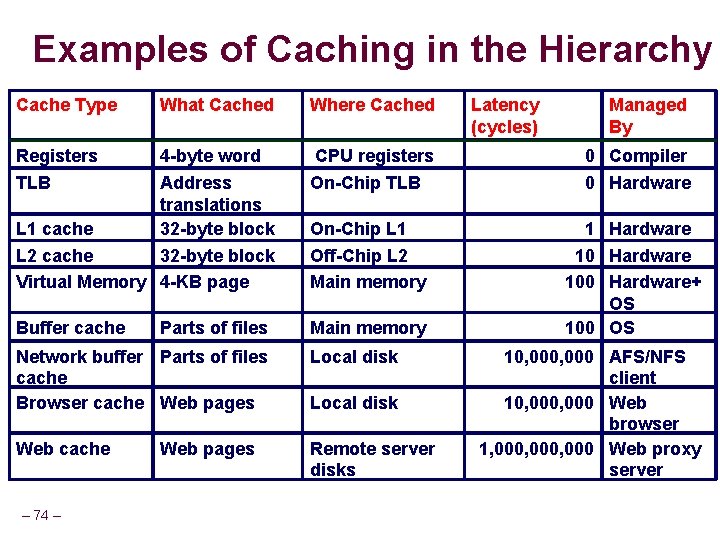

Examples of Caching in the Hierarchy

| Cache Type | What Cached | Where Cached | Latency (cycles) | Managed By |
|---|---|---|---|---|
| Registers | 4-byte word | CPU registers | 0 | Compiler |
| TLB | Address translations | On-Chip TLB | 0 | Hardware |
| L1 cache | 32-byte block | On-Chip L1 | 1 | Hardware |
| L2 cache | 32-byte block | Off-Chip L2 | | |
| Virtual Memory | 4-KB page | Main memory | 100 | Hardware + OS |
| Buffer cache | Parts of files | Main memory | 100 | OS |
| Network buffer cache | Parts of files | Local disk | 10,000 | AFS/NFS client |
| Browser cache | Web pages | Local disk | 10,000 | Web browser |
| Web cache | Web pages | Remote server disks | 1,000,000 | Web proxy server |

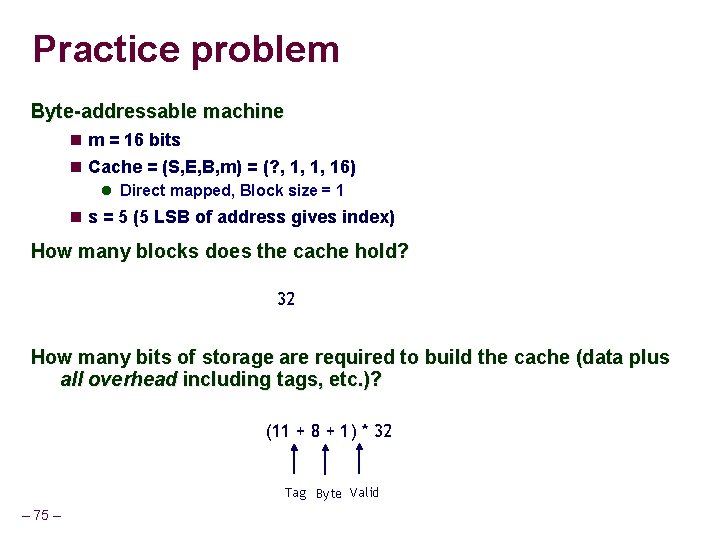

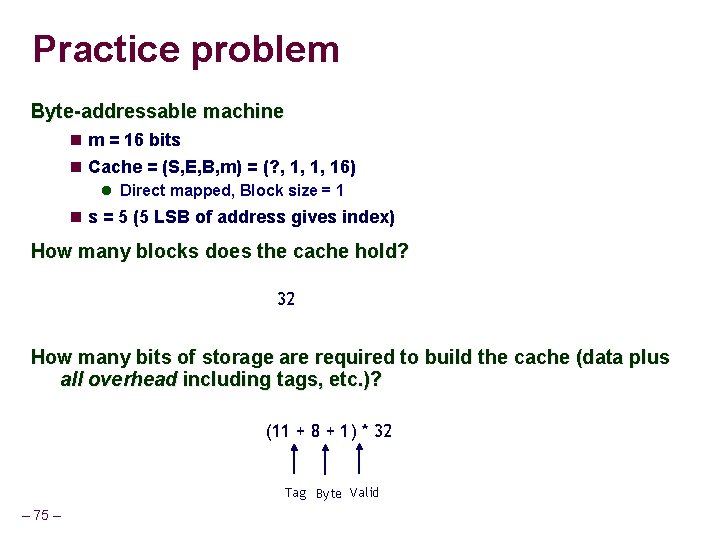

Practice problem

- Byte-addressable machine, m = 16 bits
- Cache = (S, E, B, m) = (?, 1, 1, 16)
  - Direct mapped, block size = 1
  - s = 5 (the 5 LSBs of the address give the index)
- How many blocks does the cache hold?
  - $2^5 = 32$
- How many bits of storage are required to build the cache (data plus all overhead including tags, etc.)?
  - Per line: 11 tag bits + 8 data bits (one byte) + 1 valid bit
  - Total: (11 + 8 + 1) * 32 = 640 bits
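The storage calculation generalizes to any (s, b, E, m). A small sketch, with `cache_storage_bits` as a hypothetical helper name and one valid bit per line as the only overhead besides the tag (no dirty or replacement bits, which is an assumption matching this problem):

```c
/* Total storage in bits for a cache with 2^s sets, E lines per set,
   2^b bytes per block, and m-bit addresses.
   Each line stores: 1 valid bit + t tag bits + 8 * 2^b data bits. */
unsigned long cache_storage_bits(unsigned m, unsigned s, unsigned b, unsigned E)
{
    unsigned long lines = (1ul << s) * E;       /* S * E lines in total */
    unsigned long tag_bits = m - s - b;         /* t = m - s - b */
    unsigned long data_bits = 8ul * (1ul << b); /* 8 bits per data byte */

    return lines * (1ul + tag_bits + data_bits);
}
```

For the cache in this problem, `cache_storage_bits(16, 5, 0, 1)` evaluates (1 + 11 + 8) * 32 = 640 bits.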

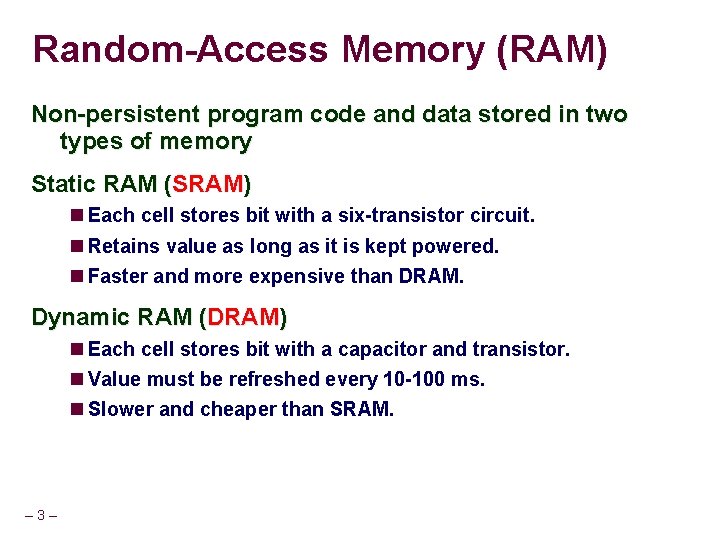

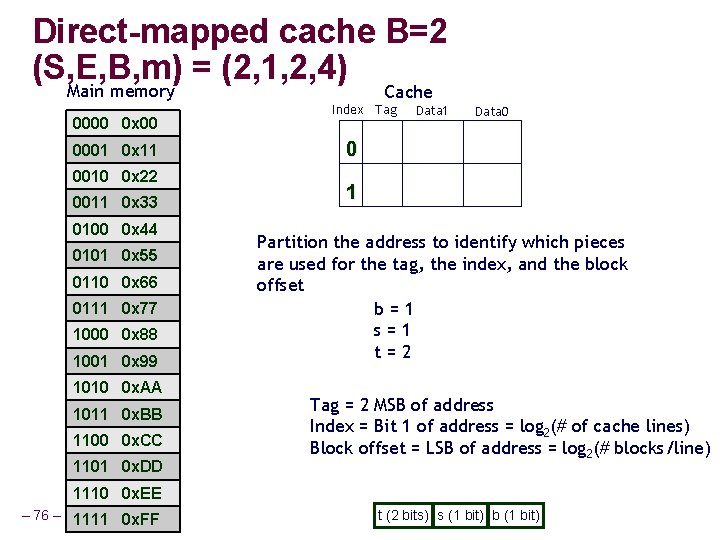

Direct-mapped cache B=2

- (S, E, B, m) = (2, 1, 2, 4)
- Main memory: 16 one-byte locations (0000 → 0x00, 0001 → 0x11, …, 1111 → 0xFF)
- Cache: 2 sets (index 0 and 1); each line holds a tag and two data bytes (Data 1, Data 0)
- Partition the address to identify which pieces are used for the tag, the index, and the block offset
  - b = 1, s = 1, t = 2
  - Tag = 2 MSBs of the address
  - Index = bit 1 of the address; s = $\log_2$(# of sets)
  - Block offset = LSB of the address; b = $\log_2$(# of bytes per block)
- Address layout: t (2 bits) | s (1 bit) | b (1 bit)