Internet Transport Glenford Mapp Digital Technology Group DTG

- Slides: 61

Internet Transport Glenford Mapp Digital Technology Group (DTG) http://www.cl.cam.ac.uk/Research/DTG/~gem11

Myths about Internet Transport • TCP/IP was always around • All packet networks work using TCP/IP • TCP/IP inherently superior to other protocols

TCP/IP was not dominant in the late 70’s and early 80’s • Most computer vendors were developing their own protocol suites • Mainframe and minicomputer vendors – IBM - SNA Architecture – DEC - DECnet - See Ethernet Frame Types – Xerox - XNS

PC manufacturers • Apple - AppleTalk • Novell - Netware Suite • Microsoft Networking - SMB, NetBIOS and NetBEUI

Big Telecoms • X.25 - Packet-based data communication – specified by the CCITT - part of the ITU – Virtual Circuit Technology (Telephone people understood it) – Connection oriented so there were definite phases of connection • CONNECTION ESTABLISHED • DATA TRANSFER • CONNECTION TERMINATION

X.25 used as a data-connect technology • Two main ways – Connect sites using X.25 – Link in terminals using X.25 • Interface between the X.25 concentrator/MUX and the terminal is called X.3 – Mainframe in a building and you have hundreds/thousands of terminals using links to X.25 concentrators • Credit card/financial industry - big users

X.25 • Connection represented by a Virtual Circuit Number (VCI), carried in every packet. Each X.25 switch a packet passes through maps the incoming VCI to an outgoing VCI. – X.25 gave rise to Frame Relay – Frame Relay influenced ATM • X.25 links still exist today – The idea that TCP/IP has obliterated everything before it is not true
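The VCI mapping done by a virtual-circuit switch can be sketched as a lookup table. This is a minimal illustration only: the class name `VcSwitch`, the port numbers and the method names are hypothetical and are not taken from the X.25 specification.

```python
from typing import Dict, Tuple

VcKey = Tuple[int, int]  # (port, VCI)


class VcSwitch:
    """Toy virtual-circuit switch: maps (in port, in VCI) to (out port, out VCI)."""

    def __init__(self) -> None:
        self.table: Dict[VcKey, VcKey] = {}

    def connect(self, in_port: int, in_vci: int, out_port: int, out_vci: int) -> None:
        # CONNECTION ESTABLISHED: install the mapping in both directions.
        self.table[(in_port, in_vci)] = (out_port, out_vci)
        self.table[(out_port, out_vci)] = (in_port, in_vci)

    def forward(self, in_port: int, in_vci: int, payload: bytes):
        # DATA TRANSFER: rewrite the VCI and pick the outgoing port.
        out_port, out_vci = self.table[(in_port, in_vci)]
        return out_port, out_vci, payload

    def disconnect(self, in_port: int, in_vci: int) -> None:
        # CONNECTION TERMINATION: remove both directions of the circuit.
        other = self.table.pop((in_port, in_vci))
        self.table.pop(other, None)
```

The three methods line up with the three connection phases listed on the earlier X.25 slide; a packet only carries the short VCI, not full source and destination addresses.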

But why did TCP/IP win? • Fragmented Opposition – Networks were being used to sell hardware and software applications. They were not being used to connect different systems together – TCP/IP was designed to connect different systems together

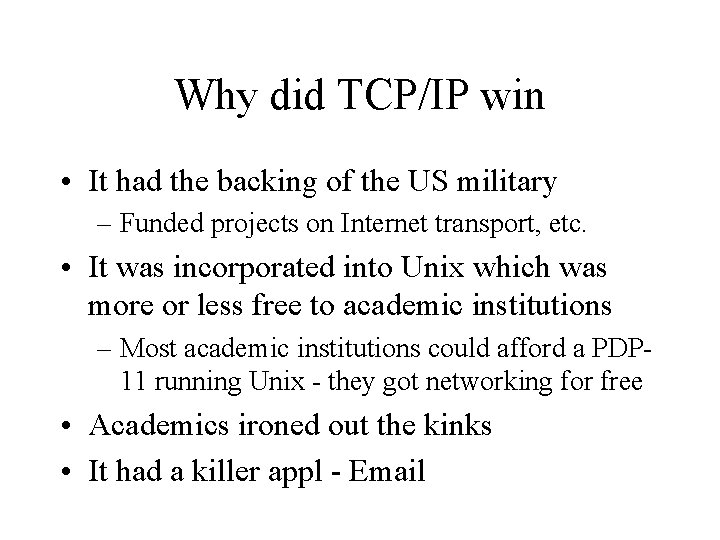

Why did TCP/IP win • It had the backing of the US military – Funded projects on Internet transport, etc. • It was incorporated into Unix, which was more or less free to academic institutions – Most academic institutions could afford a PDP-11 running Unix - they got networking for free • Academics ironed out the kinks • It had a killer app - Email

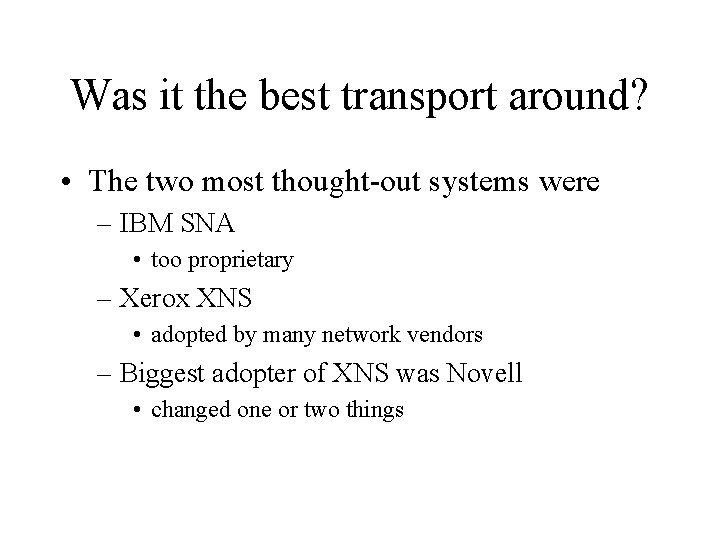

Was it the best transport around? • The two most thought-out systems were – IBM SNA • too proprietary – Xerox XNS • adopted by many network vendors – Biggest adopter of XNS was Novell • changed one or two things

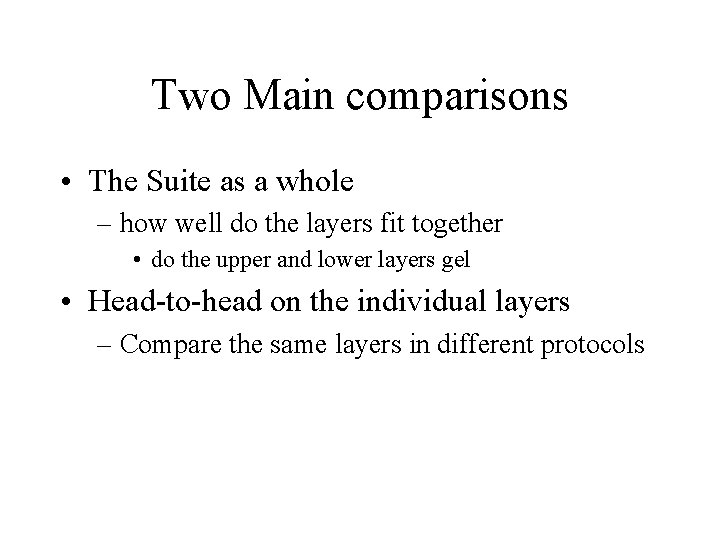

Two Main comparisons • The Suite as a whole – how well do the layers fit together • do the upper and lower layers gel • Head-to-head on the individual layers – Compare the same layers in different protocols

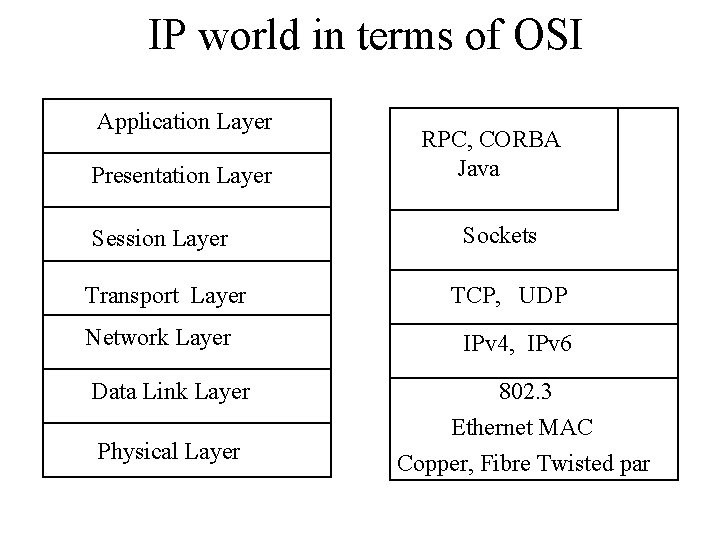

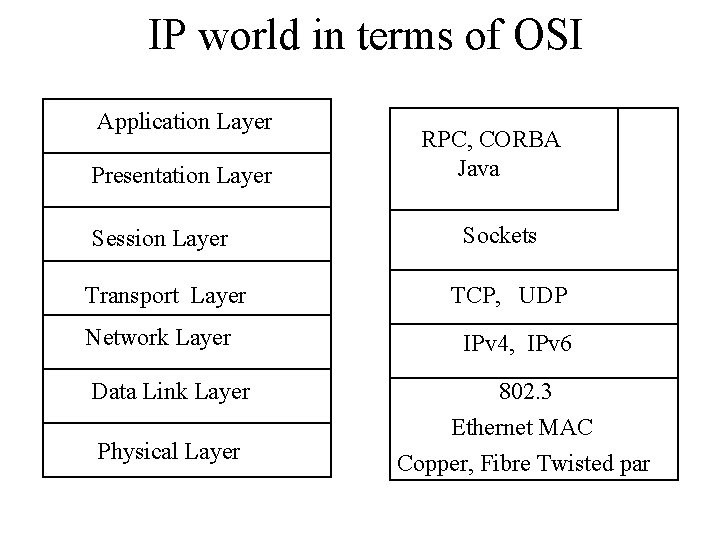

IP world in terms of OSI • Application Layer: RPC, CORBA • Presentation Layer: Java • Session Layer: Sockets • Transport Layer: TCP, UDP • Network Layer: IPv4, IPv6 • Data Link Layer: 802.3 Ethernet MAC • Physical Layer: Copper, Fibre, Twisted pair

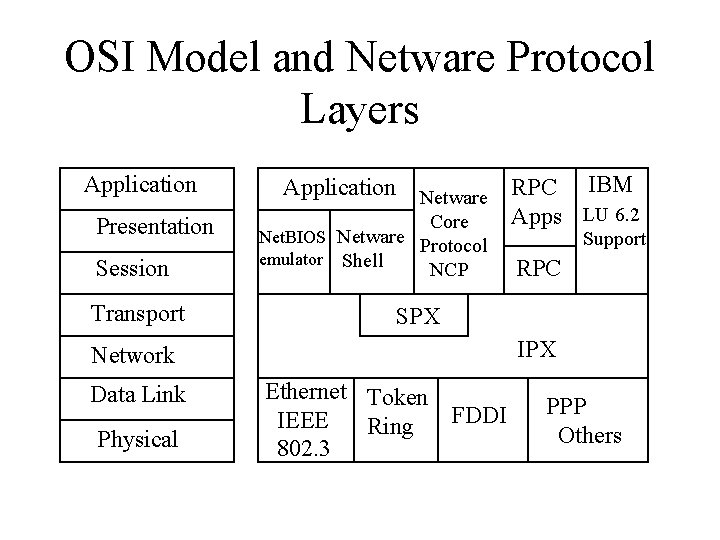

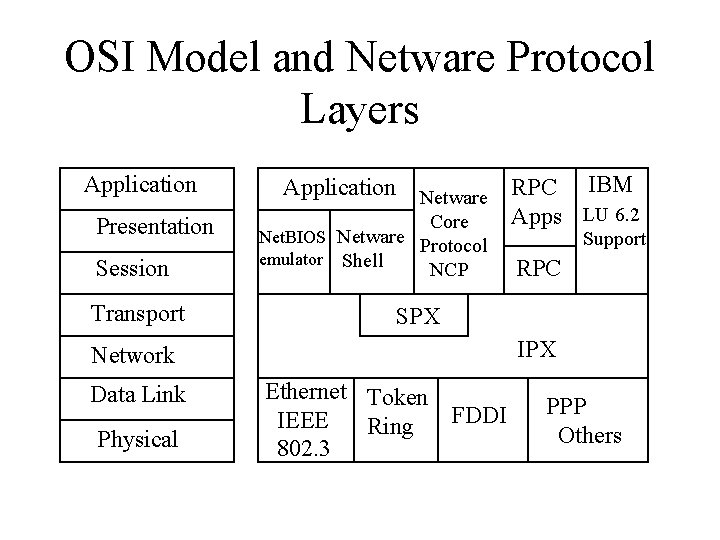

OSI Model and Netware Protocol Layers (diagram) • OSI layers: Application, Presentation, Session, Transport, Network, Data Link, Physical • Netware upper layers: applications, Netware shell, Netware Core Protocol (NCP), NetBIOS emulator, RPC, IBM LU 6.2 support • Transport: SPX • Network: IPX • Data Link and Physical: Ethernet / IEEE 802.3, Token Ring, FDDI, PPP, others

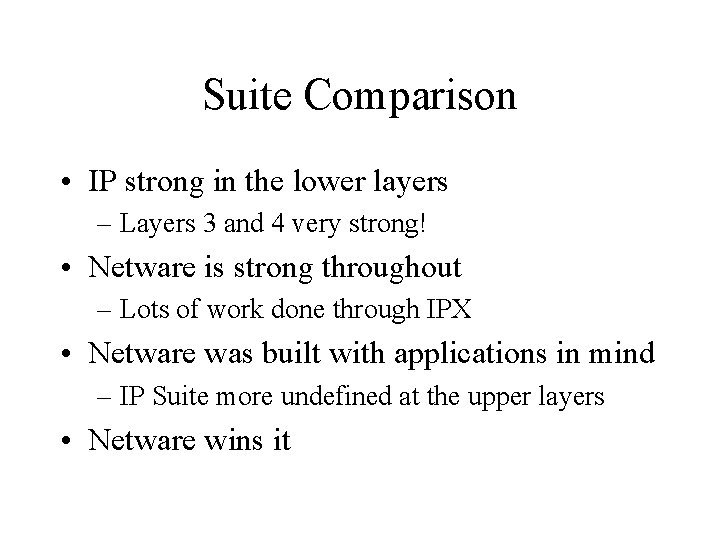

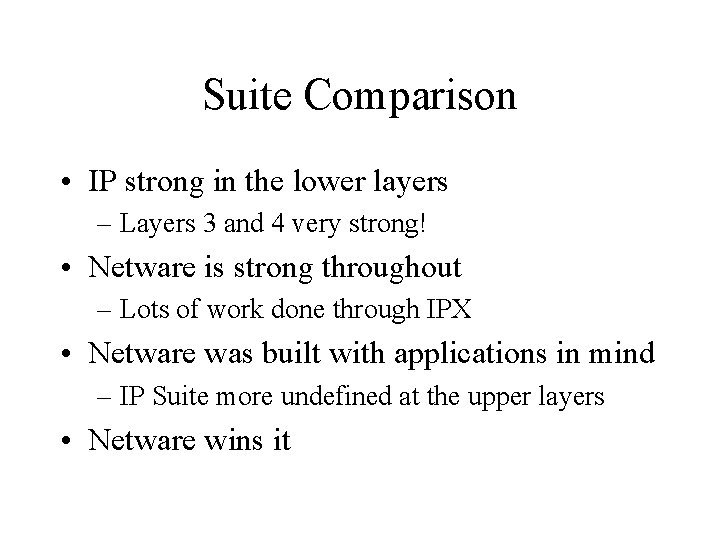

Suite Comparison • IP strong in the lower layers – Layers 3 and 4 very strong! • Netware is strong throughout – Lots of work done through IPX • Netware was built with applications in mind – IP Suite more undefined at the upper layers • Netware wins it

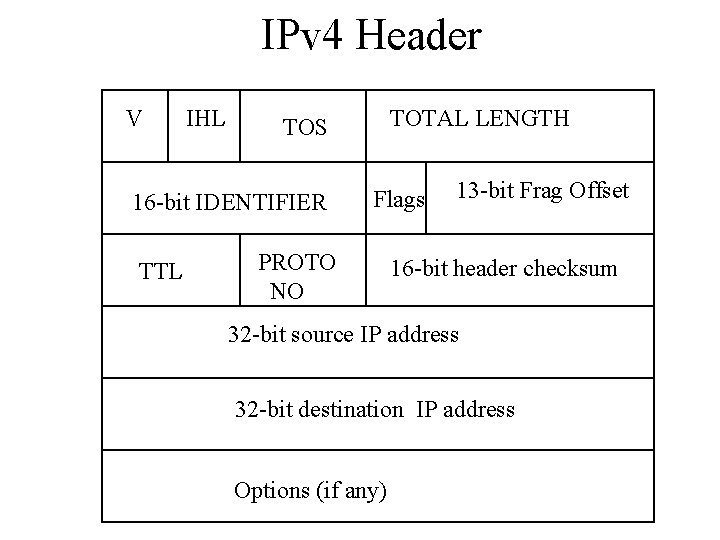

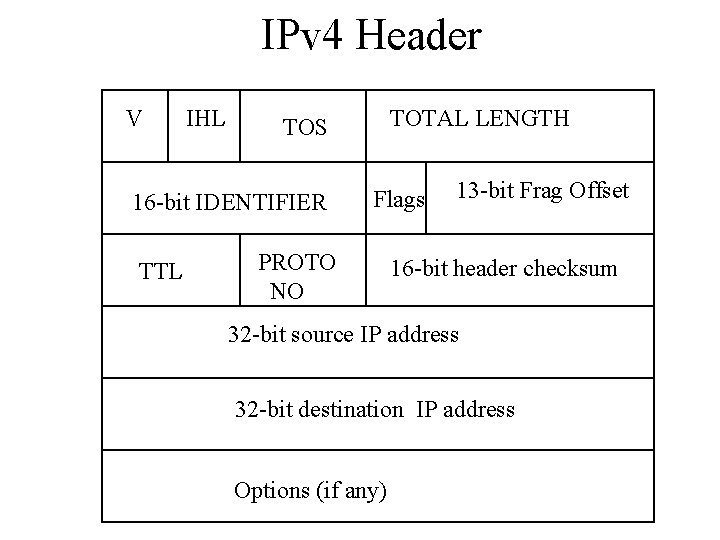

IPv4 Header (32 bits per row) • V, IHL, TOS, TOTAL LENGTH • 16-bit IDENTIFIER, Flags, 13-bit Frag Offset • TTL, PROTO NO, 16-bit header checksum • 32-bit source IP address • 32-bit destination IP address • Options (if any)
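As an illustration of the header layout on this slide, the fixed 20-byte part can be unpacked with Python's standard `struct` module. This is a sketch: the function name `parse_ipv4_header` and the dictionary keys are chosen here for illustration, and no checksum verification is attempted.

```python
import socket
import struct


def parse_ipv4_header(data: bytes) -> dict:
    """Unpack the fixed 20-byte IPv4 header (network byte order)."""
    (ver_ihl, tos, total_length, identifier, flags_frag,
     ttl, proto, checksum, src, dst) = struct.unpack("!BBHHHBBH4s4s", data[:20])
    ihl_words = ver_ihl & 0x0F            # header length in 32-bit words
    return {
        "version": ver_ihl >> 4,
        "header_bytes": ihl_words * 4,
        "tos": tos,
        "total_length": total_length,
        "identifier": identifier,
        "flags": flags_frag >> 13,          # top 3 bits
        "frag_offset": flags_frag & 0x1FFF,  # low 13 bits
        "ttl": ttl,
        "protocol": proto,
        "checksum": checksum,
        "src": socket.inet_ntoa(src),
        "dst": socket.inet_ntoa(dst),
        "options": data[20:ihl_words * 4],  # empty when IHL is 5
    }
```

For example, the bytes `45 00 00 73 00 00 40 00 40 11 b8 61 c0 a8 00 01 c0 a8 00 c7` decode to a version 4, 20-byte header carrying protocol 17 (UDP) from 192.168.0.1 to 192.168.0.199.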

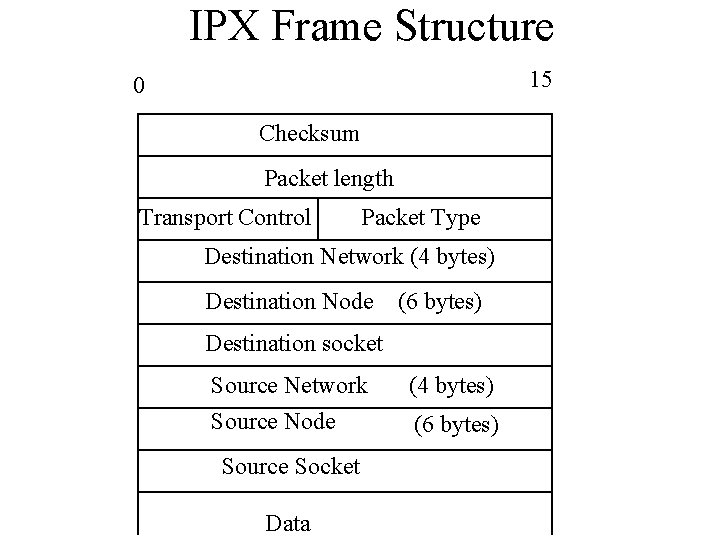

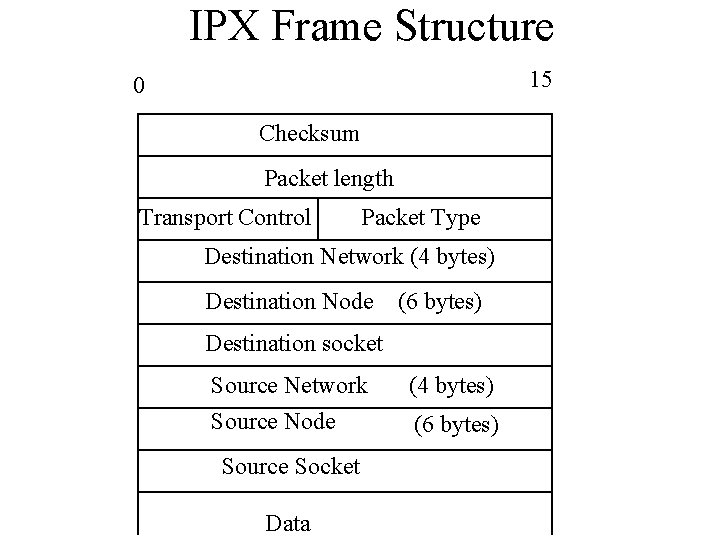

IPX Frame Structure (16 bits wide, bit 15 down to bit 0) • Checksum • Packet length • Transport Control, Packet Type • Destination Network (4 bytes) • Destination Node (6 bytes) • Destination Socket • Source Network (4 bytes) • Source Node (6 bytes) • Source Socket • Data

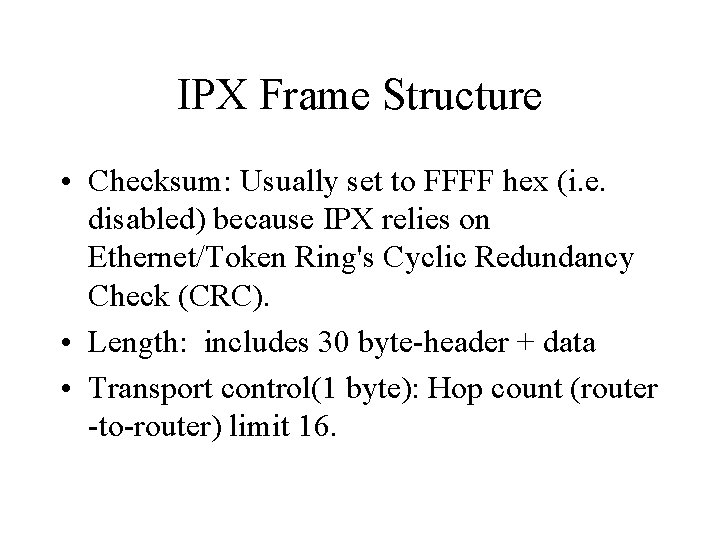

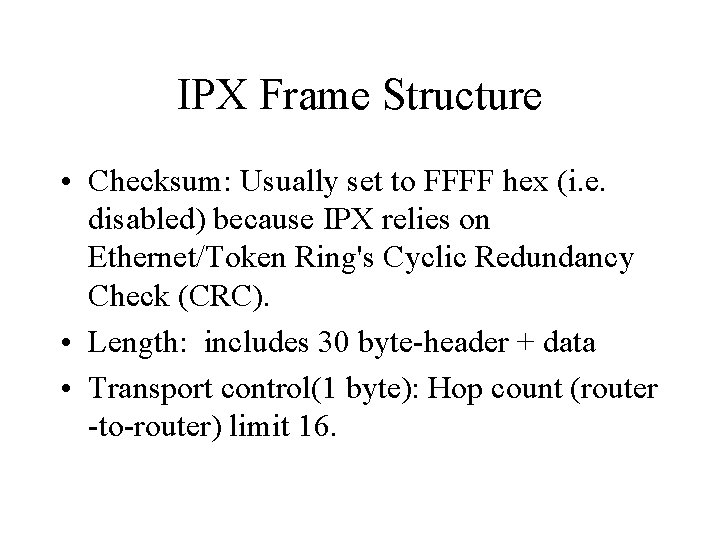

IPX Frame Structure • Checksum: Usually set to FFFF hex (i.e. disabled) because IPX relies on the Ethernet/Token Ring Cyclic Redundancy Check (CRC) • Length: includes the 30-byte header + data • Transport control (1 byte): hop count (router-to-router), limit 16

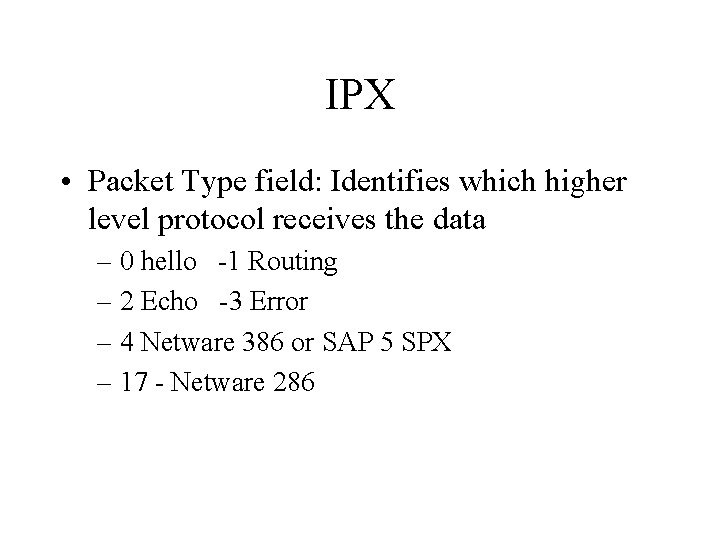

IPX • Packet Type field: Identifies which higher level protocol receives the data – 0 - Hello – 1 - Routing – 2 - Echo – 3 - Error – 4 - Netware 386 or SAP – 5 - SPX – 17 - Netware 286

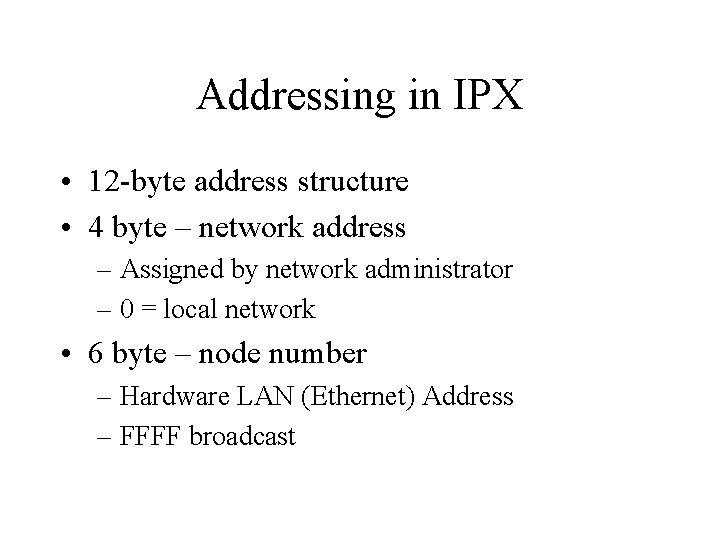

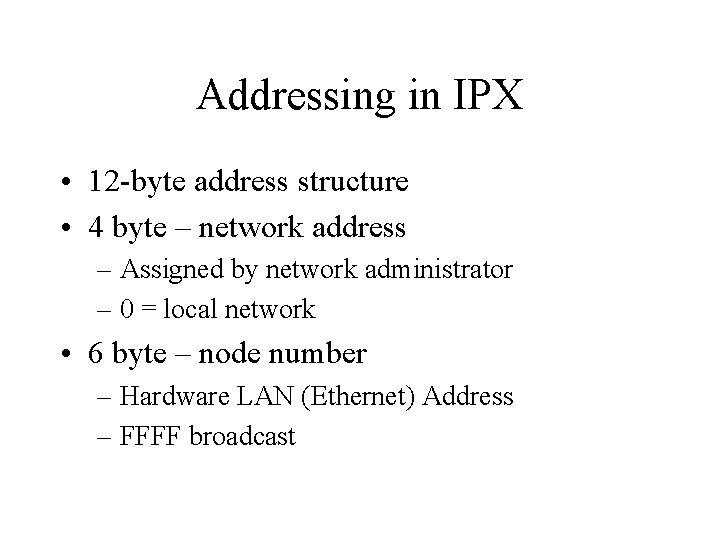

Addressing in IPX • 12-byte address structure • 4 byte – network address – Assigned by network administrator – 0 = local network • 6 byte – node number – Hardware LAN (Ethernet) Address – all FF (hex) = broadcast

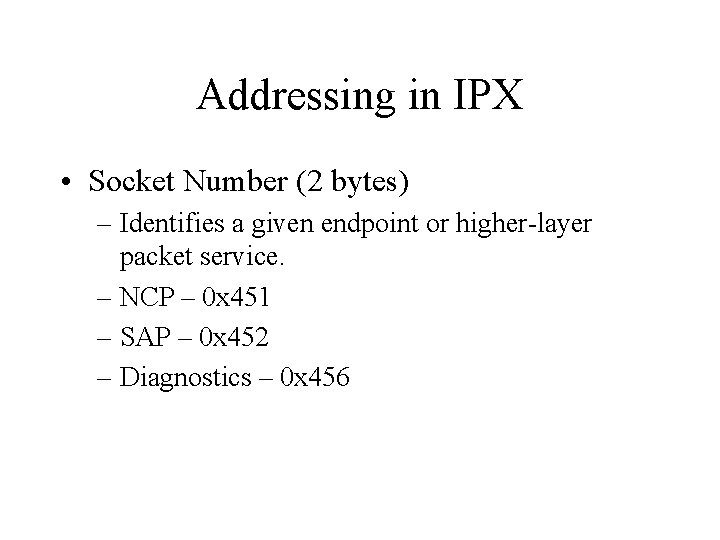

Addressing in IPX • Socket Number (2 bytes) – Identifies a given endpoint or higher-layer packet service – NCP – 0x451 – SAP – 0x452 – Diagnostics – 0x456
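The IPX header and its 12-byte addresses (network, node, socket) can be unpacked in the same way. A minimal sketch: the names `parse_ipx` and `BROADCAST_NODE` are hypothetical, and the broadcast test assumes that the broadcast node number is the all-ones (FF) address.

```python
import struct

# Layout from the frame-structure slide: 30 bytes, network byte order.
# checksum, length, transport control, packet type,
# dst network/node/socket, src network/node/socket
IPX_HEADER = struct.Struct("!HHBB4s6sH4s6sH")
BROADCAST_NODE = b"\xff" * 6  # assumption: broadcast is the all-ones node


def parse_ipx(packet: bytes) -> dict:
    """Split an IPX packet into header fields and payload."""
    (checksum, length, transport_control, packet_type,
     dst_net, dst_node, dst_socket,
     src_net, src_node, src_socket) = IPX_HEADER.unpack(packet[:IPX_HEADER.size])
    return {
        "checksum_enabled": checksum != 0xFFFF,  # FFFF means disabled
        "length": length,                        # header + data
        "hops": transport_control,
        "packet_type": packet_type,
        "dst": (dst_net.hex(), dst_node.hex(), dst_socket),
        "src": (src_net.hex(), src_node.hex(), src_socket),
        "dst_is_broadcast": dst_node == BROADCAST_NODE,
        "payload": packet[IPX_HEADER.size:length],
    }
```

Note that the socket number travels in the network-layer header itself, which is why IPX can identify endpoints without a separate UDP-style layer.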

IP vs IPX • IP is small compared to IPX • IPX does more than just networking – uniquely identifies endpoints as well as interfaces • Really does the job of IP plus UDP - an unreliable datagram service

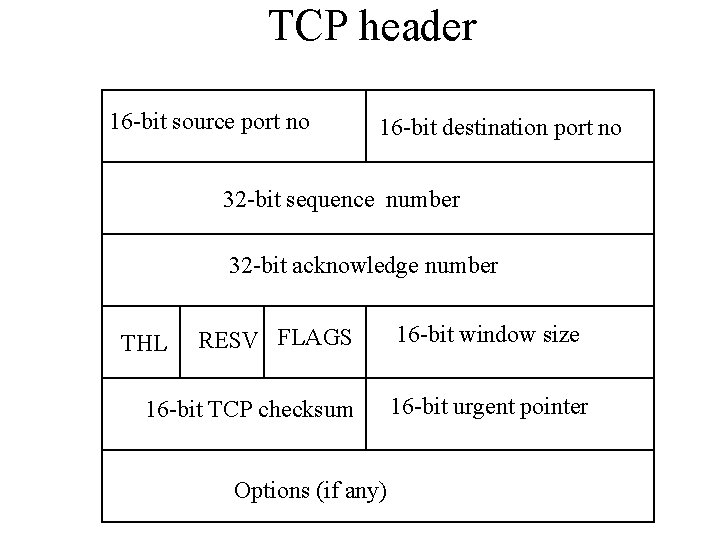

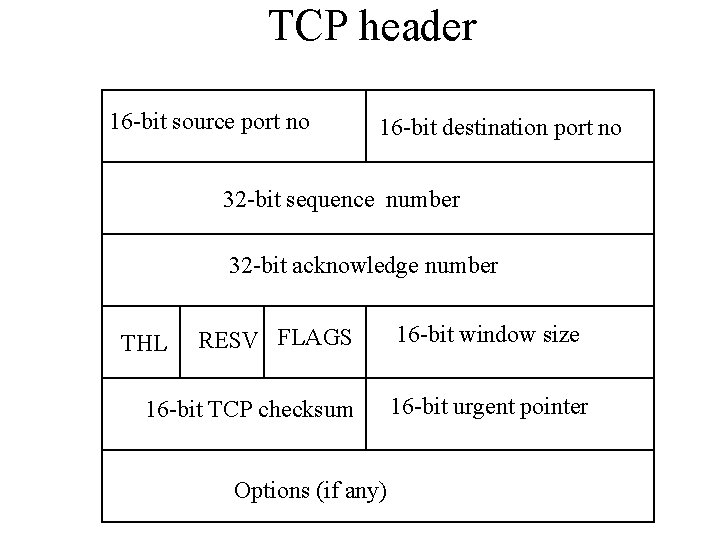

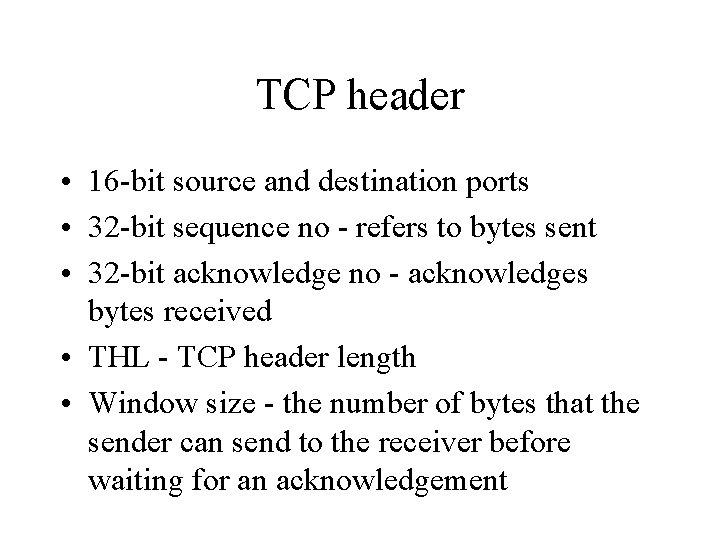

TCP header (32 bits per row) • 16-bit source port no, 16-bit destination port no • 32-bit sequence number • 32-bit acknowledge number • THL, RESV, FLAGS, 16-bit window size • 16-bit TCP checksum, 16-bit urgent pointer • Options (if any)

TCP header • 16-bit source and destination ports • 32-bit sequence no - refers to bytes sent • 32-bit acknowledge no - acknowledges bytes received • THL - TCP header length • Window size - the number of bytes that the sender can send to the receiver before waiting for an acknowledgement
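The TCP header fields described above can be unpacked with the same approach. A sketch only: `parse_tcp_header` and the key names are illustrative, the flag names follow the standard TCP flag bits, and the checksum is not verified.

```python
import struct

# Standard TCP flag bits, lowest bit first.
FLAG_BITS = [("FIN", 0x01), ("SYN", 0x02), ("RST", 0x04), ("PSH", 0x08),
             ("ACK", 0x10), ("URG", 0x20), ("ECE", 0x40), ("CWR", 0x80)]


def parse_tcp_header(segment: bytes) -> dict:
    """Unpack the fixed 20-byte TCP header (network byte order)."""
    (src_port, dst_port, seq, ack, offset_flags,
     window, checksum, urgent) = struct.unpack("!HHIIHHHH", segment[:20])
    header_bytes = (offset_flags >> 12) * 4   # THL is counted in 32-bit words
    flags = offset_flags & 0xFF
    return {
        "src_port": src_port,
        "dst_port": dst_port,
        "seq": seq,                 # byte-based sequence number
        "ack": ack,                 # next byte expected from the peer
        "header_bytes": header_bytes,
        "flags": [name for name, bit in FLAG_BITS if flags & bit],
        "window": window,           # bytes the peer may send before an ACK
        "checksum": checksum,
        "urgent": urgent,
        "payload": segment[header_bytes:],
    }
```

The connection itself is not named by any single field: the receiver has to combine the ports here with the IP addresses from the IP header to find the endpoint.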

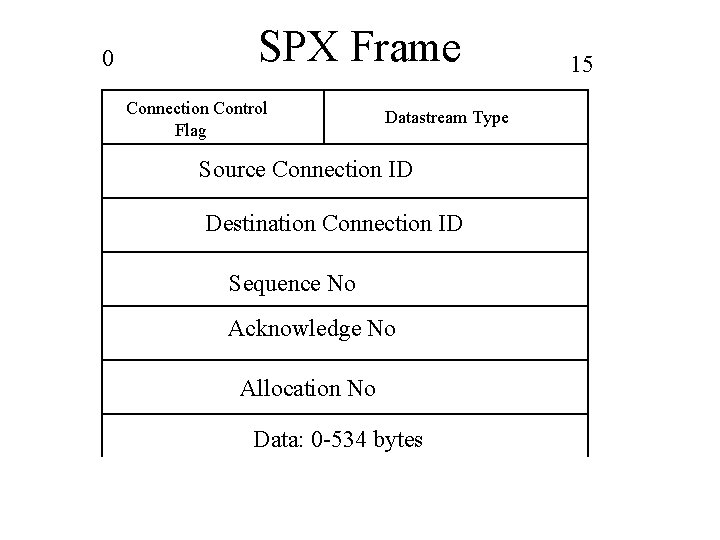

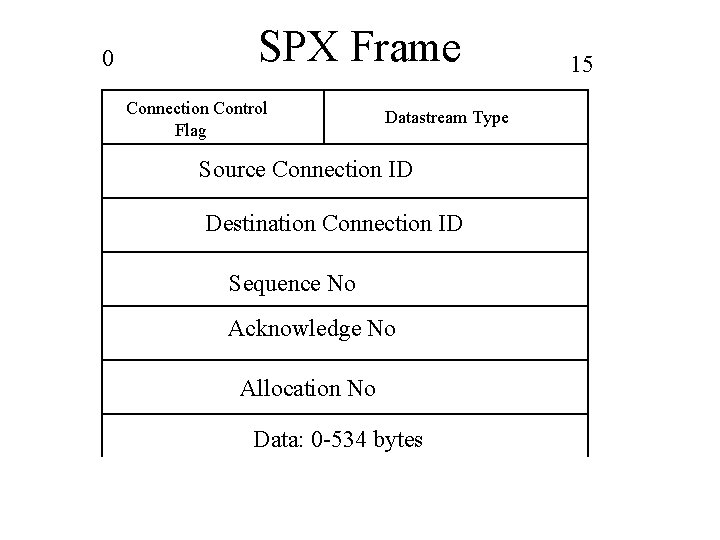

SPX Frame (16 bits wide, bits 0 to 15) • Connection Control Flag, Datastream Type • Source Connection ID • Destination Connection ID • Sequence No • Acknowledge No • Allocation No • Data: 0-534 bytes

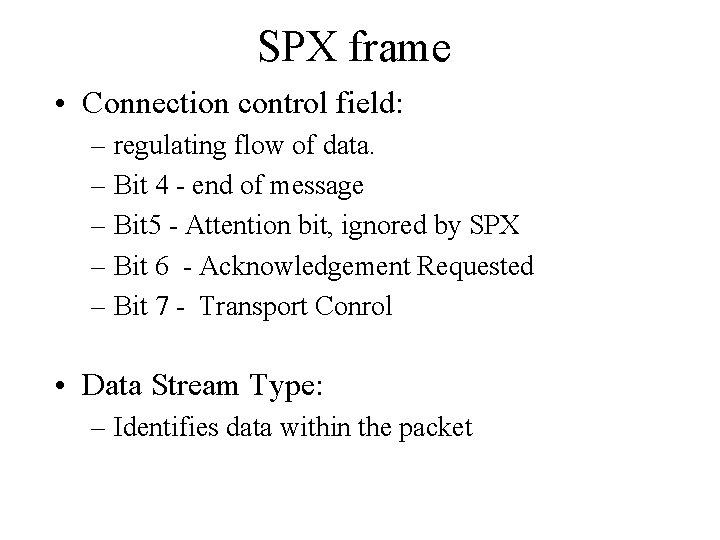

SPX frame • Connection control field: – regulates the flow of data – Bit 4 - end of message – Bit 5 - Attention bit, ignored by SPX – Bit 6 - Acknowledgement Requested – Bit 7 - Transport Control • Data Stream Type: – Identifies data within the packet

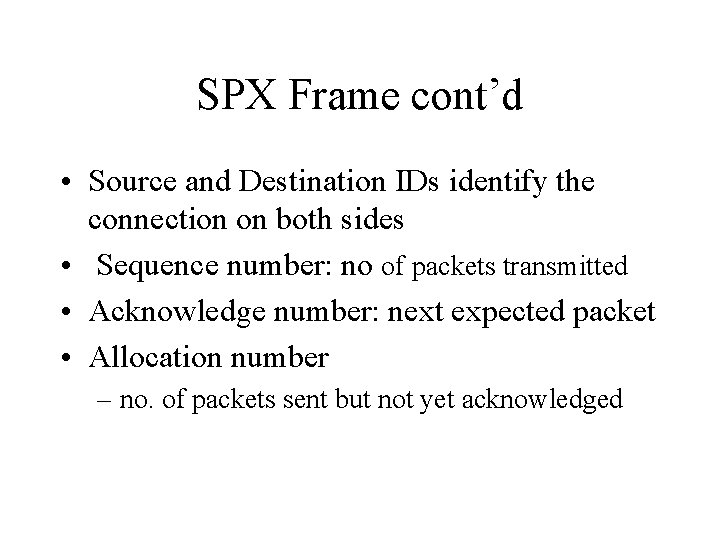

SPX Frame cont’d • Source and Destination IDs identify the connection on both sides • Sequence number: no of packets transmitted • Acknowledge number: next expected packet • Allocation number – no. of packets sent but not yet acknowledged
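Taking the SPX fields from the slides at face value, the 12-byte SPX header that follows the IPX header could be decoded as below. This is a sketch: `parse_spx_header` and the key names are hypothetical, and the bit meanings are those listed on the slide (bit 4 to bit 7 of the connection control byte).

```python
import struct

# Connection control bits as listed on the slide.
END_OF_MESSAGE = 1 << 4
ATTENTION = 1 << 5
ACK_REQUESTED = 1 << 6
TRANSPORT_CONTROL = 1 << 7


def parse_spx_header(data: bytes) -> dict:
    """Unpack the 12-byte SPX header carried inside an IPX packet."""
    (conn_ctrl, datastream_type, src_conn_id, dst_conn_id,
     seq_no, ack_no, alloc_no) = struct.unpack("!BBHHHHH", data[:12])
    return {
        "end_of_message": bool(conn_ctrl & END_OF_MESSAGE),
        "attention": bool(conn_ctrl & ATTENTION),
        "ack_requested": bool(conn_ctrl & ACK_REQUESTED),
        "transport_control": bool(conn_ctrl & TRANSPORT_CONTROL),
        "datastream_type": datastream_type,
        "src_conn_id": src_conn_id,
        "dst_conn_id": dst_conn_id,
        "seq_no": seq_no,      # counts packets, not bytes
        "ack_no": ack_no,      # next packet expected
        "alloc_no": alloc_no,
        "payload": data[12:],
    }
```

Unlike TCP, the sequence and acknowledge numbers count packets, and the connection is named directly by the two connection IDs.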

Sequenced Packet Exchange II (SPX II) • Introduced to provide improvements over the SPX protocol in – window flow control (sending several packets before an ack) – larger packet sizes (>576 bytes) – improved negotiation of network options – safer method of closing connections • Packet: added a 2-byte Negotiation size field

Comparisons • TCP is much bigger than SPX • TCP has to do de-multiplexing of packets to find the connection endpoints • Endpoints are specified in IPX and the actual connection is specified in SPX packet • SPX basically gives reliability but is built on the datagram service provided by IPX

The winner is • It’s a draw! – IPX/SPX = IP/UDP/TCP • The IPX/SPX split is probably the correct way to do it, but it adds a lot to the network layer – IP is a pure network layer, IPX is not – TCP is built directly on IP so it is more complicated than SPX

Challenges TCP/IP faced • Congestion – Late 80’s: TCP/IP getting going • huge blackouts begin to occur – TCP is not reacting to congestion • Van Jacobson comes up with an algorithm called slow start

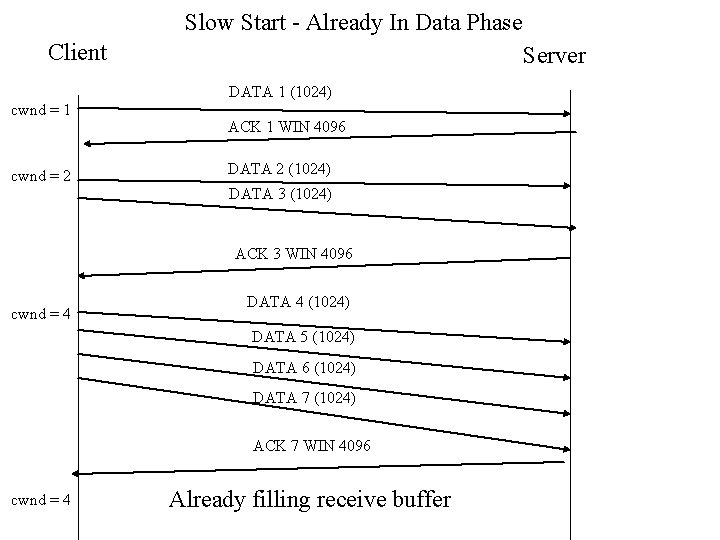

Handling Congestion Slow Start Algorithm • TCP attempts to avoid causing congestion • Slow Start implemented at the start of the connection • The connection now has a congestion window; cwnd.

Slow Start cont’d • At the start, cwnd is set to 1 and only one packet is sent • If the segment is successfully acknowledged then cwnd is increased to 2 and so now two packets are sent • If these are successfully acknowledged, then 4 packets are sent, etc.

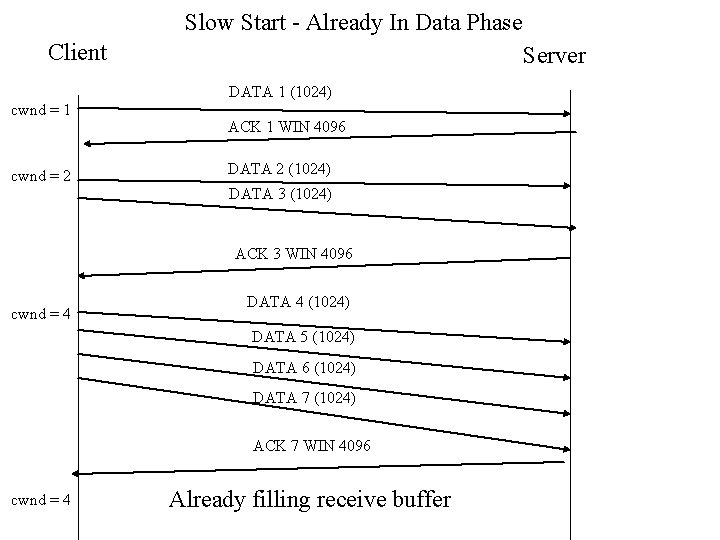

Slow Start - Already In Data Phase (Client sends, Server acknowledges) • cwnd = 1: Client sends DATA 1 (1024); Server replies ACK 1 WIN 4096 • cwnd = 2: Client sends DATA 2 (1024), DATA 3 (1024); Server replies ACK 3 WIN 4096 • cwnd = 4: Client sends DATA 4 (1024), DATA 5 (1024), DATA 6 (1024), DATA 7 (1024); Server replies ACK 7 WIN 4096 • cwnd = 4: Already filling receive buffer

Slow Start Cont’d • We continue to double the number of packets sent until: – we reach the size of the receive buffer as in the last slide – we see packet loss • very likely for large transfers going very long distances • even though it starts slowly, slow start is in fact growing exponentially, so it’s very aggressive for large window sizes

Slow Start - Packet Loss • When we see packet loss we do the following: – Set the maximum size to aim for as half the current value of cwnd: • ssthresh = cwnd/2 – Set cwnd back to 1 and repeat slow start – Once cwnd is greater than or equal to ssthresh, we increase cwnd by 1 for every successful transmission, i.e. linear instead of exponential growth
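The slow start rules on these slides can be traced with a small round-by-round simulation. This is a simplified sketch rather than a real TCP implementation: the function name `simulate_slow_start` is hypothetical, cwnd is counted in packets, one step of the loop stands for one round trip, the initial ssthresh is assumed to equal the receive window, and exponential growth is capped at ssthresh.

```python
def simulate_slow_start(rounds, receive_window, loss_rounds=frozenset()):
    """Return the cwnd (in packets) used in each round trip.

    loss_rounds holds the indices of rounds in which a loss is detected.
    """
    cwnd = 1
    ssthresh = receive_window   # assumption: no better initial estimate
    history = []
    for rnd in range(rounds):
        history.append(cwnd)
        if rnd in loss_rounds:
            # Packet loss: aim for half the current window, restart from 1.
            ssthresh = max(cwnd // 2, 1)
            cwnd = 1
        elif cwnd < ssthresh:
            # Slow start: double every round (exponential growth).
            cwnd = min(cwnd * 2, ssthresh, receive_window)
        else:
            # At or above ssthresh: grow by one per round (linear growth).
            cwnd = min(cwnd + 1, receive_window)
    return history
```

With a receive window of 4 packets (4096 bytes of 1024-byte segments, as in the data-phase diagram), `simulate_slow_start(4, 4)` gives `[1, 2, 4, 4]`. With a window of 16 and a loss in round 5, the trace is `[1, 2, 4, 8, 16, 16, 1, 2, 4, 8, 9, 10]`: exponential up to ssthresh = 8, then linear.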

Reaction to Retransmission • TCP uses a Go-back-n retransmission policy • All packets starting from the first missing packet must be retransmitted – even if packets later in the sequence arrived OK on the first transmission, they must still be retransmitted

Problems with Standard TCP • Didn’t work well on satellite links or over distances with large RTT • Main reason: retransmission using the Go-back-n approach is too costly. The pipe contains a lot of packets, and having to retransmit all of them if, say, the first packet gets corrupted is too expensive

Selective Retransmission • Introduced in RFC 2018. This defined two new TCP options • SACK-permitted – indicates that selective acknowledgements are allowed • SACK – Sender only retransmits packets not received
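The difference in cost between the two retransmission policies can be shown by computing what each one resends. A minimal sketch with hypothetical function names; packets are identified by simple integer sequence numbers rather than TCP byte ranges.

```python
def go_back_n_retransmit(sent, received):
    """Go-back-n: resend everything from the first missing packet onwards."""
    missing = [seq for seq in sent if seq not in received]
    if not missing:
        return []
    first_missing = min(missing)
    return [seq for seq in sent if seq >= first_missing]


def selective_retransmit(sent, received):
    """Selective retransmission: resend only the packets not received."""
    return [seq for seq in sent if seq not in received]
```

If packets 1 to 8 are in flight and only packet 3 is lost, go-back-n resends 3, 4, 5, 6, 7 and 8, while selective retransmission resends just 3. On a long pipe holding many packets the gap grows accordingly.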

Present Issues: Problems with Slow Start • Key assumption of TCP is that packet loss is due to congestion. – Clumsy indicator at best. – Dead wrong at worst. • Better to let the network indicate congestion explicitly

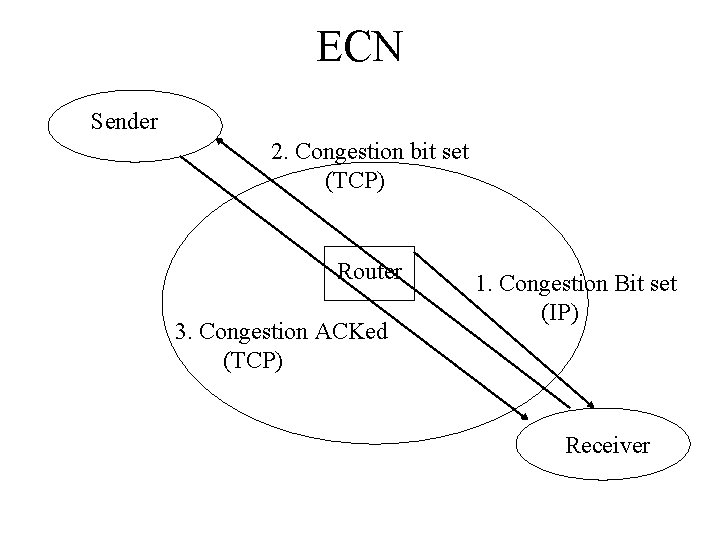

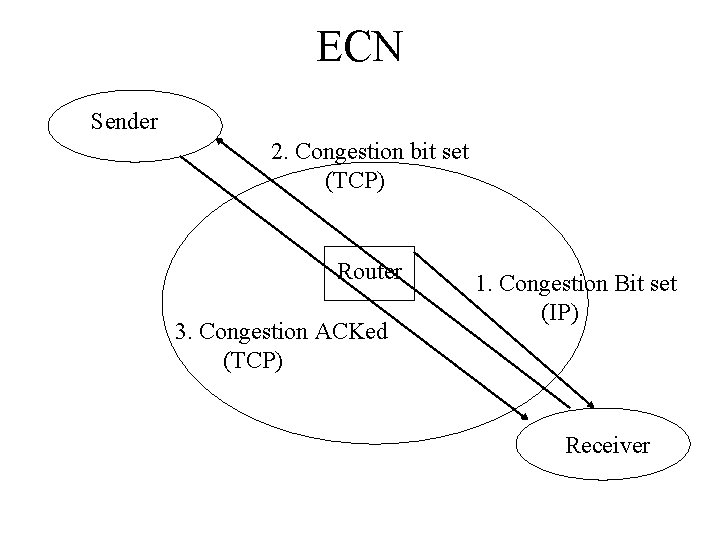

Explicit Congestion Notification (ECN) • With ECN, we use 2 bits in the IP header and 2 bits in TCP header to explicitly indicate to the sender and receiver that packets on this connection have experienced congestion • So when there is congestion in the network IP routers set a bit in the IP header saying that this packet has been through a congested area

ECN cont’d • When the packet reaches the receiver, the IP processing engine notes the congestion and sets the appropriate bit in the TCP header • TCP receive engine sends a TCP ACK packet to the sender saying that congestion has been experienced on this connection • Sender reduces sending rate and signals to the receiver that appropriate action has been taken

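ECN (diagram: Sender, Router, Receiver) • 1. Congestion bit set (IP), by the Router on the way to the Receiver • 2. Congestion bit set (TCP), from the Receiver back to the Sender • 3. Congestion ACKed (TCP), from the Sender to the Receiver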
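The three ECN steps can be sketched as three small functions, one per party. The bit values used here are the ones defined in RFC 3168 (the two ECN bits in the IP TOS byte, and the ECE and CWR flags in TCP), which the slides do not spell out. The function names are hypothetical, and halving cwnd is an assumption about how the sender "reduces sending rate".

```python
# Two ECN bits in the low end of the IP TOS / traffic class byte (RFC 3168).
NOT_ECT, ECT1, ECT0, CE = 0b00, 0b01, 0b10, 0b11
# Two TCP flags used by ECN (RFC 3168).
TCP_ACK, TCP_ECE, TCP_CWR = 0x10, 0x40, 0x80


def router_forward(tos: int, congested: bool) -> int:
    """Step 1: a congested router marks ECN-capable packets instead of dropping."""
    if congested and (tos & 0b11) in (ECT0, ECT1):
        return tos | CE
    return tos


def receiver_ack_flags(tos: int) -> int:
    """Step 2: the receiver echoes the congestion mark in its TCP ACK."""
    flags = TCP_ACK
    if (tos & 0b11) == CE:
        flags |= TCP_ECE
    return flags


def sender_on_ack(ack_flags: int, cwnd: int):
    """Step 3: the sender slows down and reports that it has reacted (CWR)."""
    if ack_flags & TCP_ECE:
        return max(cwnd // 2, 1), TCP_CWR   # assumption: halve the window
    return cwnd, 0
```

A packet that was not marked ECN-capable by the sender passes through `router_forward` unchanged; in that case the router has to fall back to dropping, and the sender falls back to treating loss as congestion.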

Present Issues: Slow Start and Wireless Networks • Key assumption of TCP is that packet loss is due to congestion – hence the slow start algorithm – true in wired networks with good link quality • In wireless networks, where there is handoff and channel fading, packet loss is very temporary – slow start represents drastic action which cuts the bandwidth of the connection

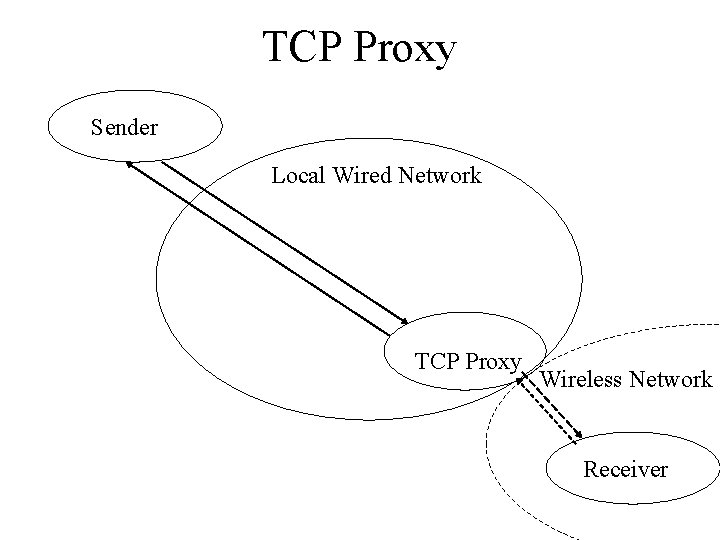

Slow Start and Wireless networks • Must avoid TCP going into slow start on wireless networks • Solution: – have normal TCP for the wired core network – different kind of TCP for wireless last-mile part – Proxy TCP server in the middle

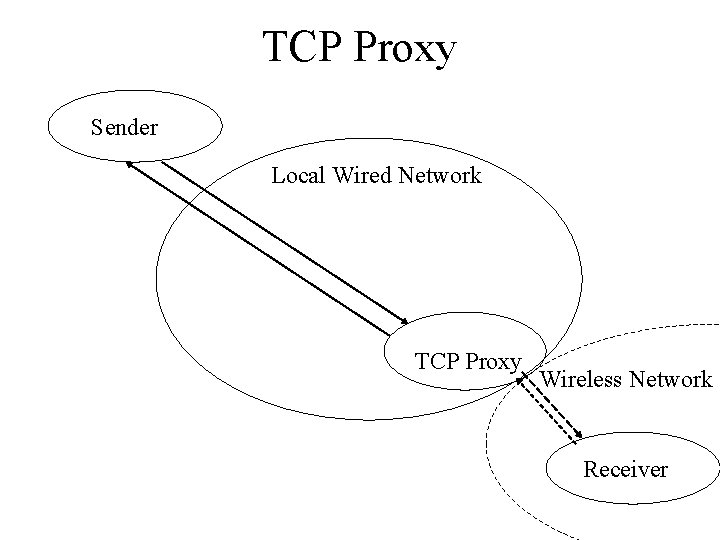

TCP Proxy (diagram) • Sender - Local Wired Network - TCP Proxy - Wireless Network - Receiver

TCP Proxy • TCP Proxy – can buffer packets and retransmit them locally when the mobile node has lost packets due to channel fading and handoff – Splits the connection into 2 connections – Big issue: do you try to maintain end-to-end semantics? • Yes, then the sender sees what is happening - slower response • No, then the sender can presume things about the connection, e.g. Round-Trip-Time and Window control, that are not true

M-TCP • Splits the connection in two but maintains the end-to-end semantics – Proxy does not perform caching/retransmission – Geared to handling long periods of disconnection – Closes the window, hence stops the sender, when the receiver loses contact – prevents slow start when the connection is reestablished

I-TCP • Also splits the connection • Breaks end-to-end semantics • Packets from the sender are acknowledged by the TCP Proxy and forwarded on a different connection to the mobile node • TCP proxy handles buffering and retransmission

Key Issues for the future • New applications require a low-latency environment – Voice over IP – Networked games – Multimedia • TCP is too heavyweight – most of these applications do not need the bytestream paradigm

Network implications • To engineer low latency, a lot of people are pushing for the development of a super-fast core: ATM, MPLS. • Traditional routers replaced by very fast switches. All intelligence on routing and connections will be pushed to the edge

Transport Support for Low latency • New approach is to go back to the Netware style, so we use UDP/IP as a data-carrying substrate and build our protocol on that – flexible – protocols can run in user-space – new low-latency NICs support memory mapping in user-space

User-Space Transport Protocols • Easier to test and implement • Also avoids multiplexing and cross talk in the kernel • Since the process, not the kernel, implements the protocol, some issues arise: – Timers – Packet Handling

Timers • Since the process only runs when it is scheduled, it cannot be too dependent on timers since they will be imprecise without hardware support – TCP is very timer-dependent – User-level TCP hasn’t performed well • needs a lot of hardware timer support

Packet Handling • Since the protocol is running in user-space when the process is finally called there may be lots of packets waiting to be processed; data from the remote side, ACKs or NACKs for data that you sent, etc. • Don’t have to treat them in FIFO order

Packet Handling • Treat NACKs first, retransmit the packet – allows the other end to get on with it • Treat ACKs next, frees up local buffers that you might need • For data stream, treat retransmitted packets first • Have a priority bit to indicate which packets you want treated first
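The processing order suggested on this slide can be expressed as a sort key over the packets that have queued up while the process was not running. A sketch only: the `Packet` class and its fields are hypothetical, and the priority bit is assumed to break ties within a category rather than to override the NACK/ACK/data ordering.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    kind: str                    # "NACK", "ACK" or "DATA"
    seq: int
    retransmitted: bool = False
    priority: bool = False       # sender-set priority bit


def processing_order(pending):
    """Order queued packets: NACKs, ACKs, retransmitted data, then new data."""
    def rank(pkt):
        if pkt.kind == "NACK":
            category = 0         # retransmit at once so the peer can get on
        elif pkt.kind == "ACK":
            category = 1         # frees local buffers we may need
        elif pkt.retransmitted:
            category = 2         # fills holes in the data stream
        else:
            category = 3
        # Within a category, priority packets first; sorted() is stable,
        # so arrival order is otherwise preserved.
        return (category, not pkt.priority)
    return sorted(pending, key=rank)
```

For a queue of DATA 5, ACK 2, retransmitted DATA 3, NACK 7 and priority DATA 6, the resulting order is NACK 7, ACK 2, DATA 3, DATA 6, DATA 5.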

A1 - Transport • Developed at AT&T Laboratories Cambridge Ltd • User-space protocol developed to support multimedia applications – very flexible – supported QoS vectors • Performance over 155 Mbits/s ATM link – 111 Mbits/s (reliable), 130 Mbits/s Raw

Newer Transport Protocols (NTP) • NTPs running directly on top of IP – Compete with both TCP and UDP • Applications – streaming, low-latency – QoS, congestion issues • Support for mobility and/or multi-homing • Security – easier mechanisms to set up security • Some are gathering a following

NTP Cont’d • Datagram Congestion Control Protocol (DCCP) – Berkeley Institute 2003 – Driven by Media-Streaming Applications – Combines unreliable delivery with Congestion Control – Supports ECN and congestion negotiation on setup

NTP Cont’d • The Stream Control Transmission Protocol (SCTP) – Originally used as the transport protocol on the SS7 Signalling Network – Multi-streaming – One logical connection is used to support a number of streams, not just one – Multi-homing support

SCTP cont’d • Support for mobility – Uses a different mechanism than Mobile IP – Associate a set of local addresses with a set of remote addresses and you can add new IP addresses and delete old ones as you move around • Support for security – Verification tag / cookie – Specifies IPsec for strong security if required

NTP cont’d • eXplicit Control Protocol (XCP) – Precise congestion signalling – XCP congestion header • Sender uses header to request higher QoS – Routers know about XCP header and can modify fields based on the congestion they are seeing – Run different congestion algorithms – XCP-i – for inter-networking