
Information Technology Infrastructure Committee (ITIC) Report to the NAC

March 8, 2012

Larry Smarr, Chair, ITIC

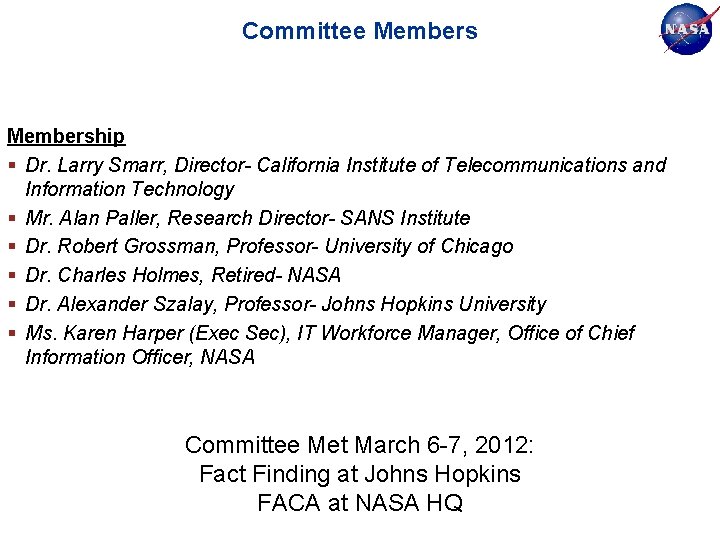

Committee Membership

- Dr. Larry Smarr, Director, California Institute for Telecommunications and Information Technology
- Mr. Alan Paller, Research Director, SANS Institute
- Dr. Robert Grossman, Professor, University of Chicago
- Dr. Charles Holmes, Retired, NASA
- Dr. Alexander Szalay, Professor, Johns Hopkins University
- Ms. Karen Harper (Exec Sec), IT Workforce Manager, Office of the Chief Information Officer, NASA

The committee met March 6-7, 2012: fact-finding at Johns Hopkins and a FACA meeting at NASA HQ.

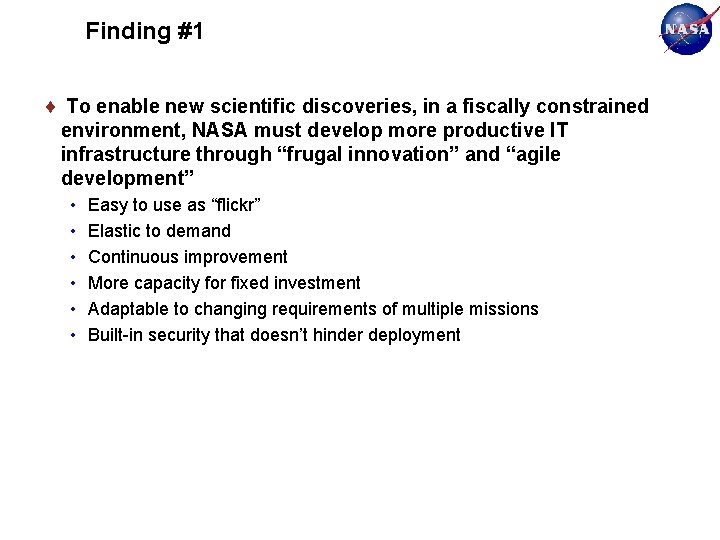

Finding #1

To enable new scientific discoveries in a fiscally constrained environment, NASA must develop more productive IT infrastructure through “frugal innovation” and “agile development”:

- As easy to use as “Flickr”
- Elastic to demand
- Continuous improvement
- More capacity for fixed investment
- Adaptable to changing requirements of multiple missions
- Built-in security that doesn’t hinder deployment

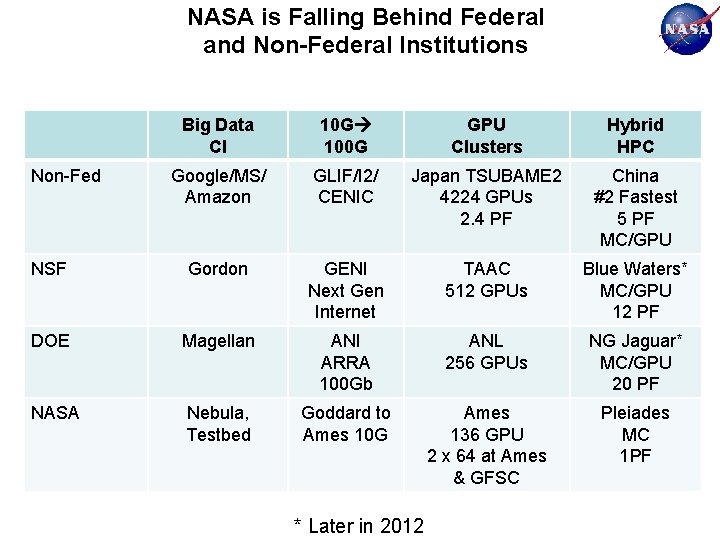

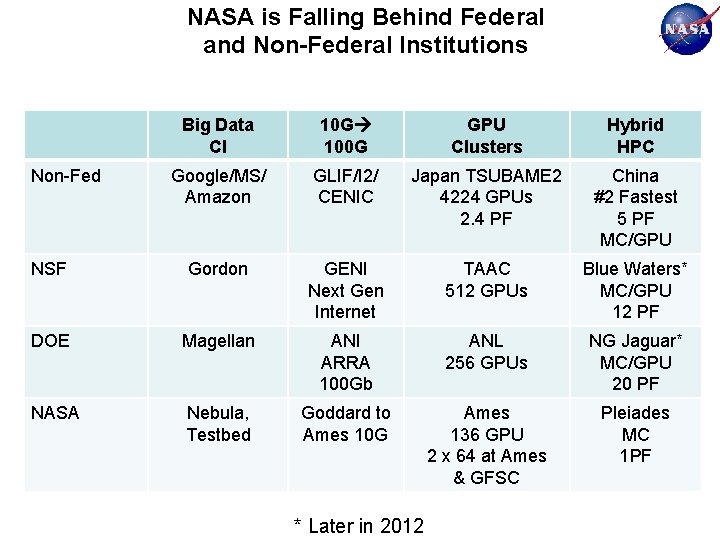

NASA Is Falling Behind Federal and Non-Federal Institutions

| | Big Data CI | 10G / 100G Networks | GPU Clusters | Hybrid HPC |
|---|---|---|---|---|
| Non-Fed | Google/MS/Amazon | GLIF/I2/CENIC | Japan TSUBAME 2: 4,224 GPUs, 2.4 PF | China #2 fastest: 5 PF MC/GPU |
| NSF | Gordon | GENI Next-Gen Internet | TACC: 512 GPUs | Blue Waters*: MC/GPU, 12 PF |
| DOE | Magellan | ANI ARRA 100 Gb | ANL: 256 GPUs | NG Jaguar*: MC/GPU, 20 PF |
| NASA | Nebula, Testbed | Goddard to Ames 10G | Ames 136 GPUs; 2 x 64 at Ames & GSFC | Pleiades: MC, 1 PF |

\* Later in 2012

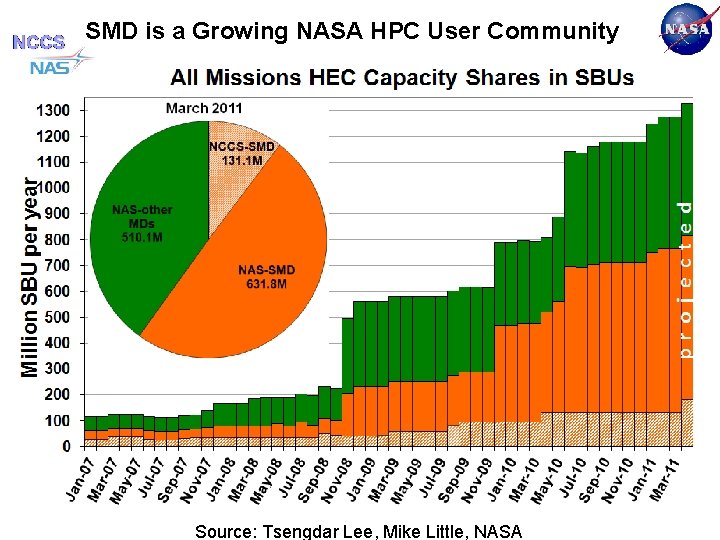

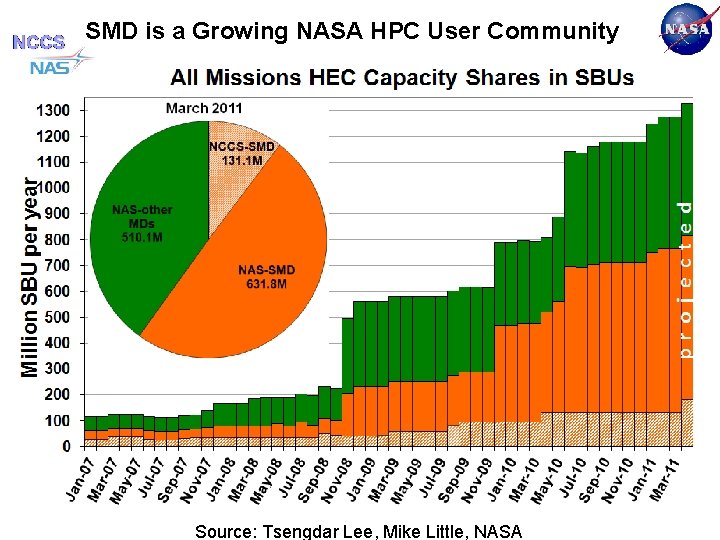

SMD Is a Growing NASA HPC User Community (chart includes projected usage)

Source: Tsengdar Lee, Mike Little, NASA

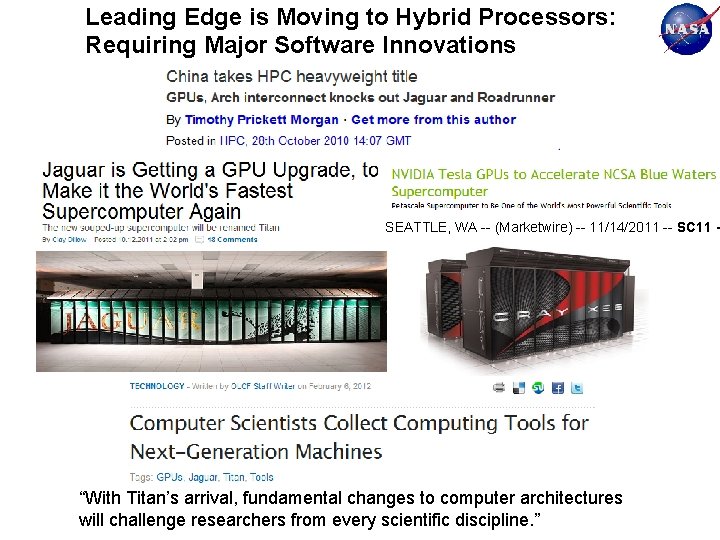

The Leading Edge Is Moving to Hybrid Processors, Requiring Major Software Innovations

SEATTLE, WA (Marketwire), 11/14/2011, SC11: “With Titan’s arrival, fundamental changes to computer architectures will challenge researchers from every scientific discipline.”

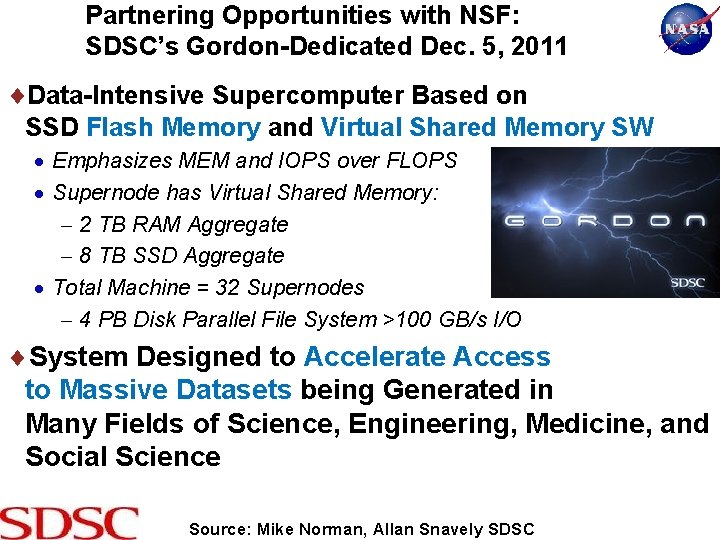

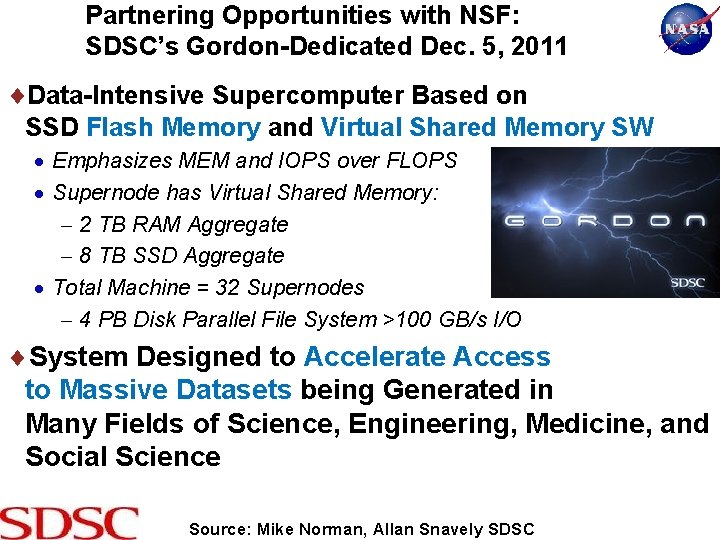

Partnering Opportunities with NSF: SDSC’s Gordon, Dedicated Dec. 5, 2011

- Data-intensive supercomputer based on SSD flash memory and virtual shared memory software
- Emphasizes MEM and IOPS over FLOPS
- Each supernode has virtual shared memory: 2 TB RAM aggregate, 8 TB SSD aggregate
- Total machine = 32 supernodes
- 4 PB disk parallel file system, >100 GB/s I/O
- System designed to accelerate access to massive datasets being generated in many fields of science, engineering, medicine, and social science

Source: Mike Norman, Allan Snavely, SDSC
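The per-supernode figures for Gordon can be turned into machine-wide totals with simple arithmetic. This is an illustrative sketch only: it assumes decimal units (1 TB = 10^12 bytes) and treats the quoted “>100 GB/s” as exactly 100 GB/s, so the full-disk scan time it reports is an upper bound, not a measured value.

```python
SUPERNODES = 32
RAM_PER_SUPERNODE_TB = 2   # aggregate RAM per supernode, from the slide
SSD_PER_SUPERNODE_TB = 8   # aggregate flash per supernode, from the slide
DISK_PB = 4                # parallel file system capacity, from the slide
IO_GB_PER_S = 100          # slide says ">100 GB/s"; using 100 gives an upper bound on scan time

ram_tb = SUPERNODES * RAM_PER_SUPERNODE_TB
ssd_tb = SUPERNODES * SSD_PER_SUPERNODE_TB
# Hours to stream the whole file system once, assuming decimal units.
scan_hours = DISK_PB * 1e15 / (IO_GB_PER_S * 1e9) / 3600

print(f"{ram_tb} TB RAM, {ssd_tb} TB SSD, full-disk scan <= {scan_hours:.1f} h")
```

Under these assumptions the machine holds 64 TB of RAM and 256 TB of flash, and one pass over the 4 PB file system takes at most about 11 hours.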

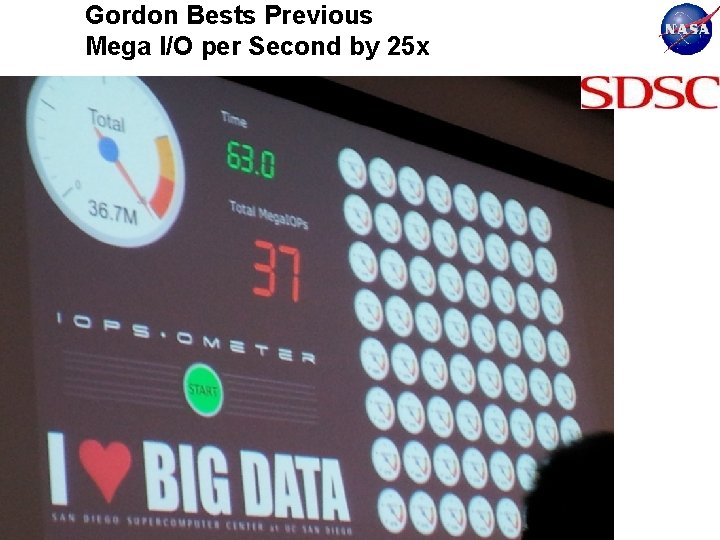

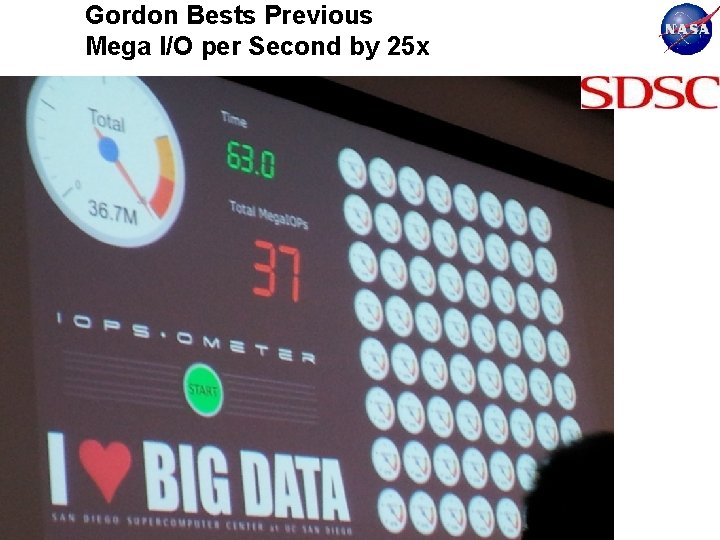

Gordon Bests Previous Mega I/O per Second by 25x

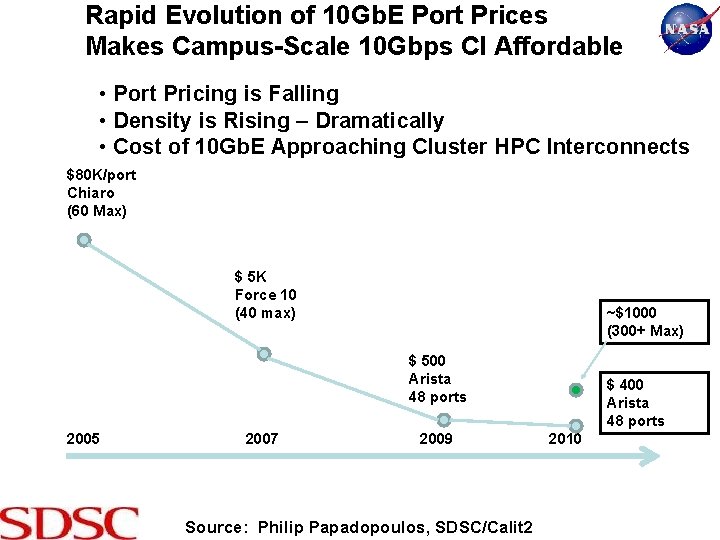

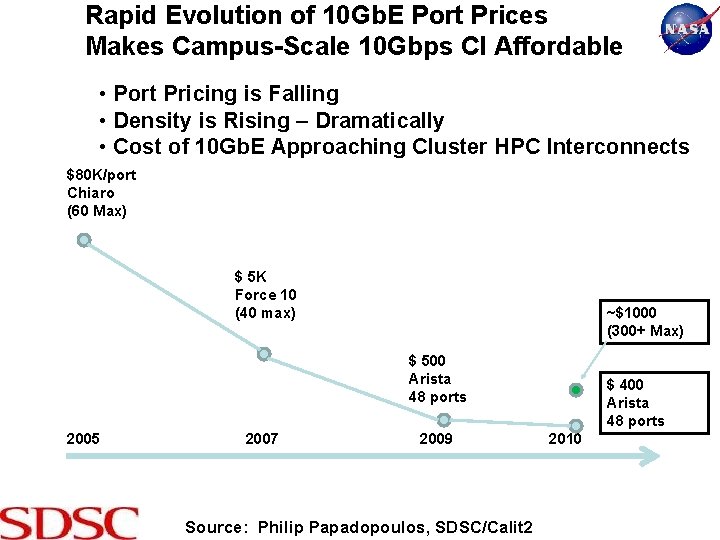

Rapid Evolution of 10 GbE Port Prices Makes Campus-Scale 10 Gbps CI Affordable

- Port pricing is falling
- Density is rising dramatically
- Cost of 10 GbE is approaching that of cluster HPC interconnects

Price per port (maximum ports per switch in parentheses):

- 2005: $80K/port, Chiaro (60 max)
- 2007: $5K, Force 10 (40 max)
- 2009: ~$1,000 (300+ max); $500, Arista, 48 ports
- 2010: $400, Arista, 48 ports

Source: Philip Papadopoulos, SDSC/Calit2
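One rough way to read the port-price trend is as a compound annual decline between its first and last price points. This sketch assumes the 2005 Chiaro and 2010 Arista prices are comparable per-port prices; it ignores the intermediate points and any differences in density or features between the switches.

```python
# Endpoint prices per 10GbE port, as read from the slide (USD).
# Intermediate points (Force 10, ~$1000, $500 Arista) are deliberately left out.
START = (2005, 80_000)  # Chiaro
END = (2010, 400)       # Arista, 48-port


def price_decline(start, end):
    """Return (overall price ratio, compound annual fractional decline)."""
    (y0, p0), (y1, p1) = start, end
    factor = p0 / p1
    # Solve p0 * (1 - r) ** (y1 - y0) == p1 for r.
    annual = 1 - (p1 / p0) ** (1 / (y1 - y0))
    return factor, annual


factor, annual = price_decline(START, END)
print(f"{factor:.0f}x cheaper overall, about {annual:.0%} cheaper each year")
```

A 200x drop over five years corresponds to prices falling by roughly two thirds every year.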

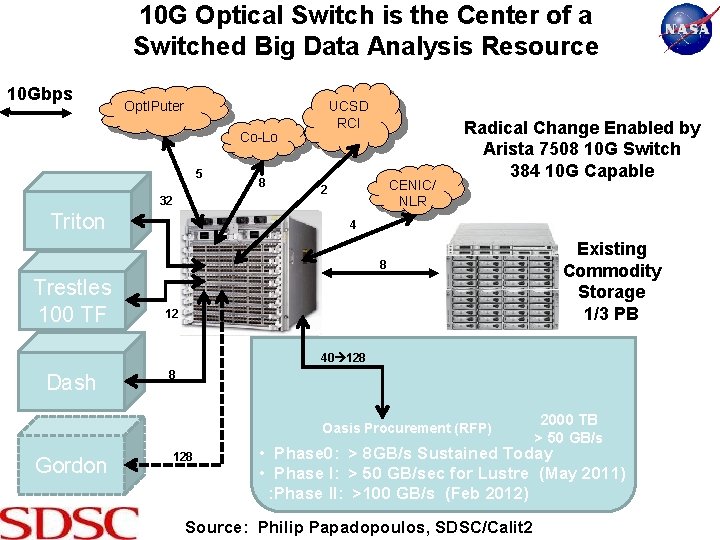

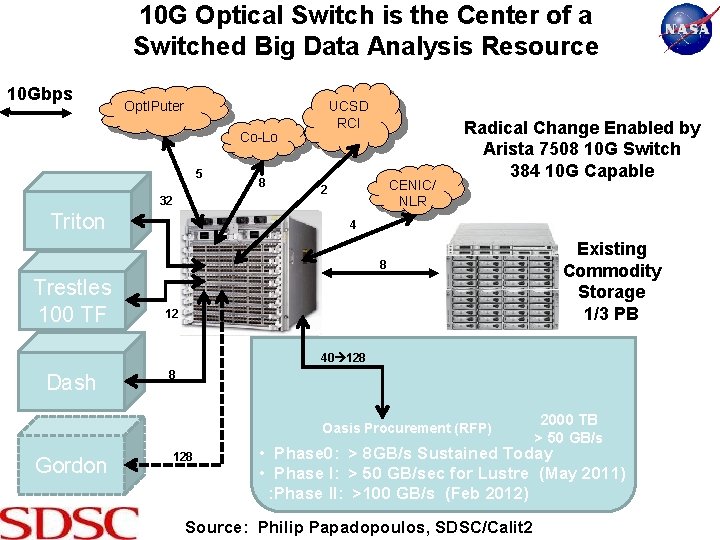

10G Optical Switch Is the Center of a Switched Big Data Analysis Resource

[Diagram: radical change enabled by an Arista 7508 10G switch (384 10G-capable ports) connecting the OptIPuter, UCSD RCI, Co-Lo, CENIC/NLR, Triton, Trestles (100 TF), Dash, Gordon, existing commodity storage (1/3 PB), and the Oasis procurement (RFP; 2000 TB, >50 GB/s).]

- Phase 0: >8 GB/s sustained today
- Phase I: >50 GB/s for Lustre (May 2011)
- Phase II: >100 GB/s (Feb 2012)

Source: Philip Papadopoulos, SDSC/Calit2

The Next Step for Data-Intensive Science: Pioneering the HPC Cloud

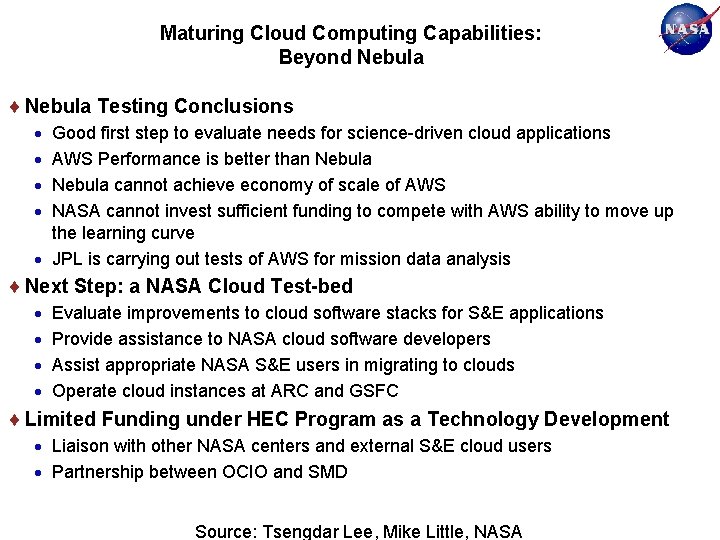

Maturing Cloud Computing Capabilities: Beyond Nebula

Testing conclusions:

- Nebula was a good first step to evaluate needs for science-driven cloud applications
- AWS performance is better than Nebula’s
- Nebula cannot achieve the economy of scale of AWS
- NASA cannot invest sufficient funding to compete with AWS’s ability to move up the learning curve
- JPL is carrying out tests of AWS for mission data analysis

Next step: a NASA cloud testbed

- Evaluate improvements to cloud software stacks for S&E applications
- Provide assistance to NASA cloud software developers
- Assist appropriate NASA S&E users in migrating to clouds
- Operate cloud instances at ARC and GSFC
- Limited funding under the HEC Program as a technology development
- Liaison with other NASA centers and external S&E cloud users
- Partnership between OCIO and SMD

Source: Tsengdar Lee, Mike Little, NASA

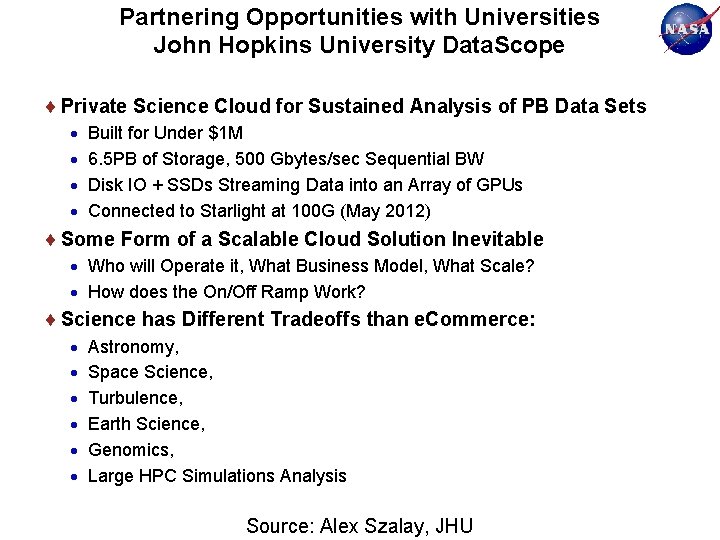

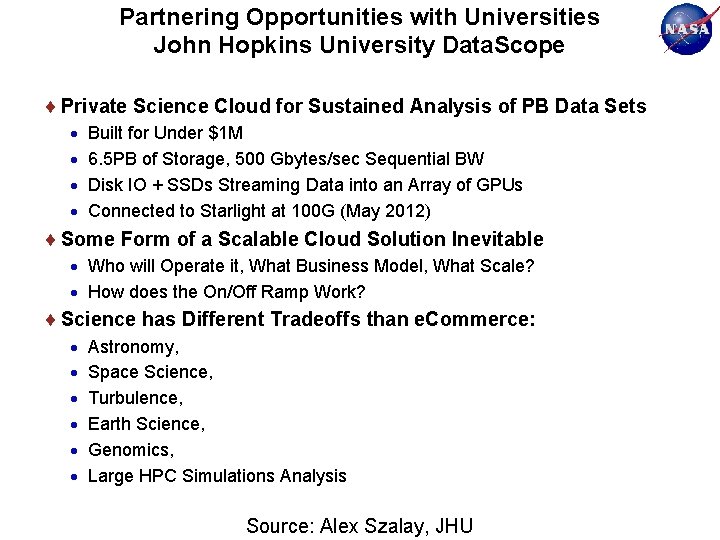

Partnering Opportunities with Universities: Johns Hopkins University Data-Scope

- Private science cloud for sustained analysis of PB data sets
- Built for under $1M
- 6.5 PB of storage, 500 GB/s sequential disk I/O bandwidth
- Disk I/O + SSDs streaming data into an array of GPUs
- Connected to StarLight at 100G (May 2012)

Some form of a scalable cloud solution is inevitable:

- Who will operate it, with what business model, at what scale?
- How does the on/off ramp work?
- Science has different tradeoffs than e-commerce: astronomy, space science, turbulence, Earth science, genomics, analysis of large HPC simulations

Source: Alex Szalay, JHU
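To make “sustained analysis of PB data sets” concrete, this sketch estimates how long one full sequential pass over the Data-Scope’s storage would take at the quoted bandwidth. It assumes decimal units and that 500 GB/s could be sustained across the entire 6.5 PB, which real workloads would rarely achieve; the helper name `full_scan_hours` is hypothetical.

```python
def full_scan_hours(capacity_pb: float, bandwidth_gb_per_s: float) -> float:
    """Hours to read `capacity_pb` once at a sustained sequential rate (decimal units)."""
    seconds = capacity_pb * 1e15 / (bandwidth_gb_per_s * 1e9)
    return seconds / 3600


DATASCOPE_STORAGE_PB = 6.5    # from the slide
DATASCOPE_SEQ_GB_PER_S = 500  # from the slide; assumed sustainable end to end

hours = full_scan_hours(DATASCOPE_STORAGE_PB, DATASCOPE_SEQ_GB_PER_S)
print(f"one full pass over {DATASCOPE_STORAGE_PB} PB takes about {hours:.1f} hours")
```

At the idealized rate the entire archive can be streamed in under four hours, which is what makes repeated whole-dataset analysis practical.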

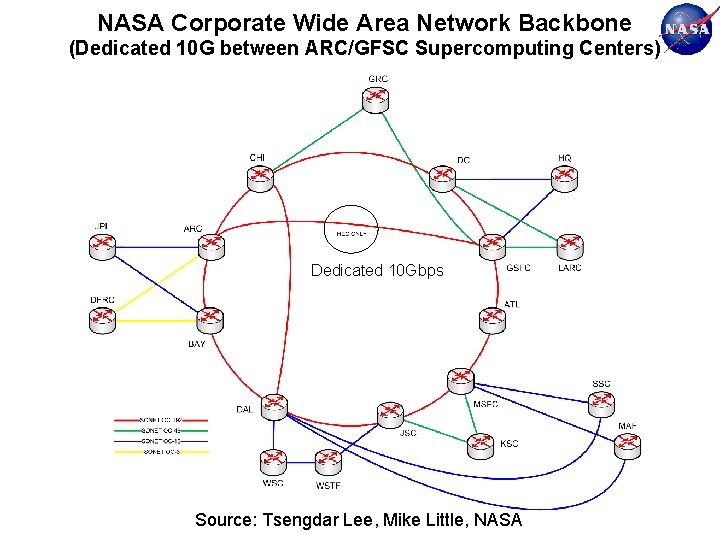

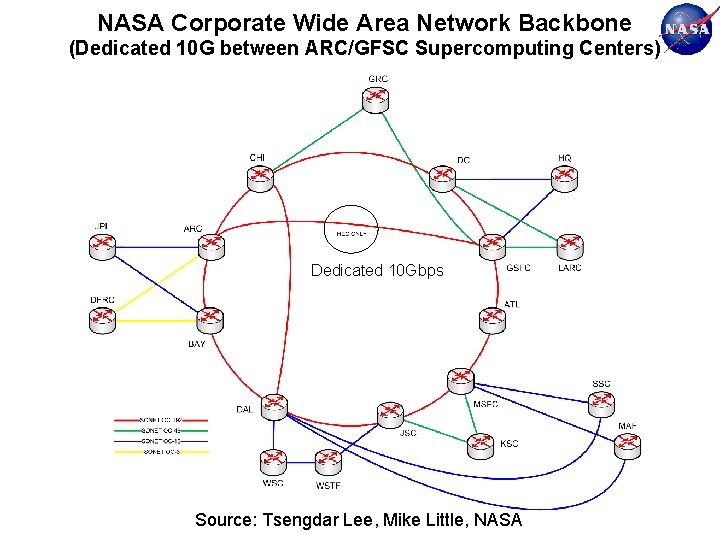

NASA Corporate Wide Area Network Backbone (Dedicated 10 Gbps between ARC/GSFC Supercomputing Centers)

Source: Tsengdar Lee, Mike Little, NASA

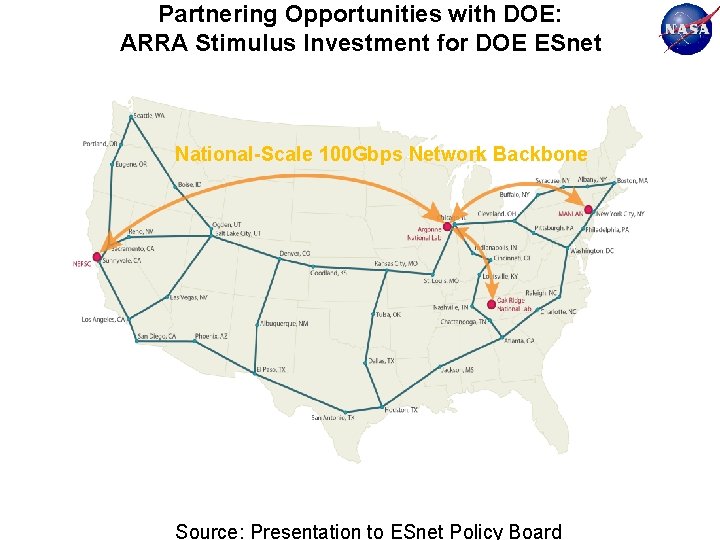

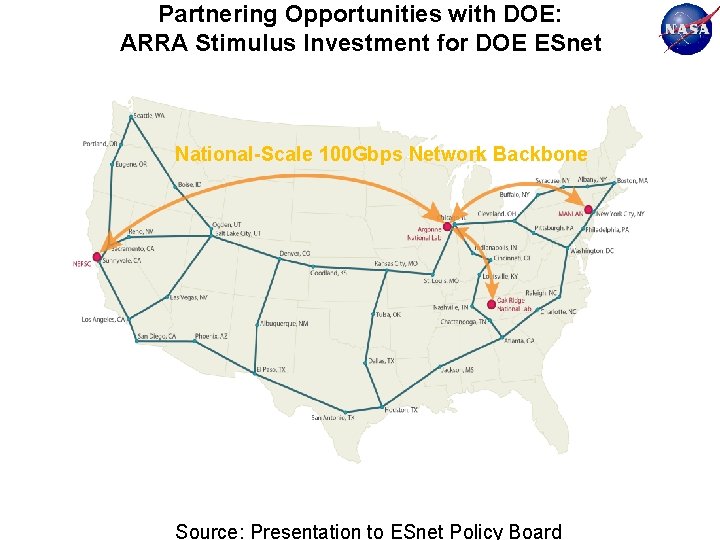

Partnering Opportunities with DOE: ARRA Stimulus Investment for DOE ESnet National-Scale 100 Gbps Network Backbone

Source: Presentation to ESnet Policy Board

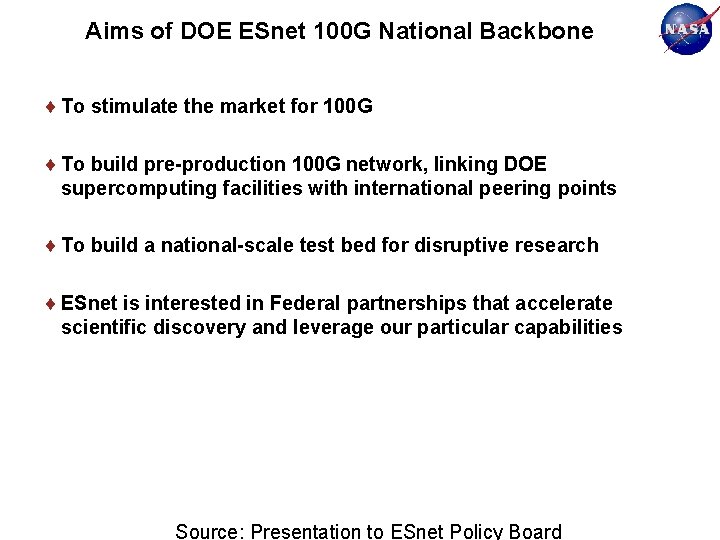

Aims of DOE ESnet 100G National Backbone

- To stimulate the market for 100G
- To build a pre-production 100G network linking DOE supercomputing facilities with international peering points
- To build a national-scale testbed for disruptive research

ESnet is interested in Federal partnerships that accelerate scientific discovery and leverage our particular capabilities.

Source: Presentation to ESnet Policy Board
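The case for moving from 10G to 100G links is easiest to see as transfer time. This sketch computes how long a petabyte takes at each line rate; the `efficiency` parameter is a hypothetical knob for protocol and host overheads, and its default of 1.0 (full line rate) is an idealized assumption.

```python
SECONDS_PER_DAY = 86_400


def transfer_days(dataset_bytes: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Days to move `dataset_bytes` over a link; `efficiency` is an assumed fraction of line rate."""
    seconds = dataset_bytes * 8 / (link_gbps * 1e9 * efficiency)
    return seconds / SECONDS_PER_DAY


PETABYTE = 1e15  # decimal petabyte
for gbps in (10, 100):
    print(f"{gbps:>3} Gbps: {transfer_days(PETABYTE, gbps):.2f} days per PB at full line rate")
```

Even under ideal conditions a 10 Gbps link needs more than nine days per petabyte, while 100 Gbps brings that under one day.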

Global Partnering Opportunities: The Global Lambda Integrated Facility

Research Innovation Labs Linked by 10 Gbps Dedicated Networks

www.glif.is/publications/maps/GLIF_5-11_World_2k.jpg

NAC Committee on IT Infrastructure Recommendation #1

Recommendation: To enable NASA to gain experience with emerging leading-edge IT technologies such as data-intensive cyberinfrastructure, 100 Gbps networking, GPU clusters, and hybrid HPC architectures, we recommend that NASA aggressively pursue partnerships with other Federal agencies, specifically NSF and DOE, as well as public/private opportunities. We believe joint agency program calls for end users to develop innovative applications will help keep NASA at the leading edge of capabilities and enable training of NASA staff to support NASA researchers as these technologies become mainstream.

NAC Committee on IT Infrastructure Recommendation #1 (Continued)

Major Reasons for the Recommendation: NASA has fallen behind the leading edge, compared to other Federal agencies and international centers, in key emerging information and networking technologies. In a budget-constrained fiscal environment, it is unlikely that NASA will be able to catch up by internal efforts. Partnering, as was historically done in HPCC, seems an attractive option.

Consequences of No Action on the Recommendation: Within a few more years, the gap between NASA’s internally driven efforts and the U.S. and global best-of-breed will become too large to bridge. This will severely undercut NASA’s ability to make progress in a number of critical application arenas.

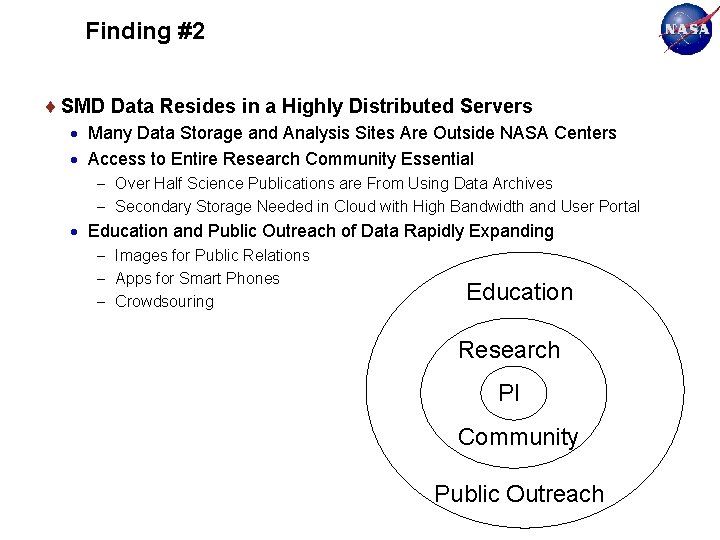

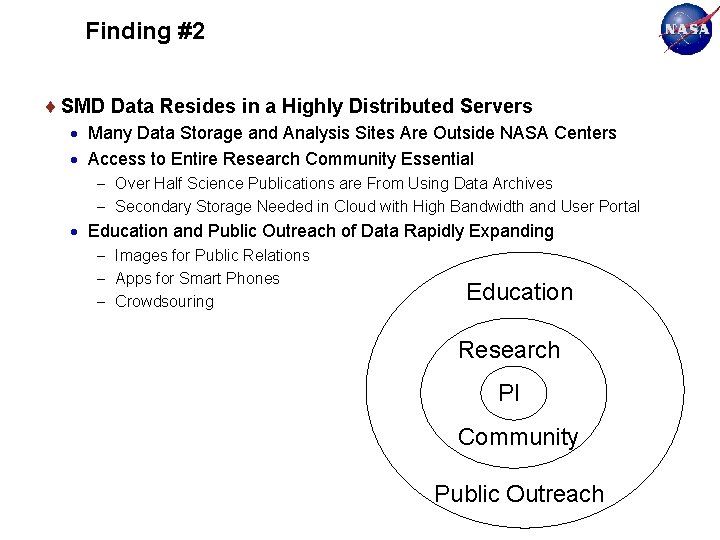

Finding #2

SMD data resides on highly distributed servers:

- Many data storage and analysis sites are outside NASA centers
- Access for the entire research community is essential
- Over half of science publications come from using data archives
- Secondary storage is needed in the cloud, with high bandwidth and a user portal

Education and public outreach use of data is rapidly expanding:

- Images for public relations
- Apps for smartphones
- Crowdsourcing

(Diagram labels: Education, Research PI Community, Public Outreach)

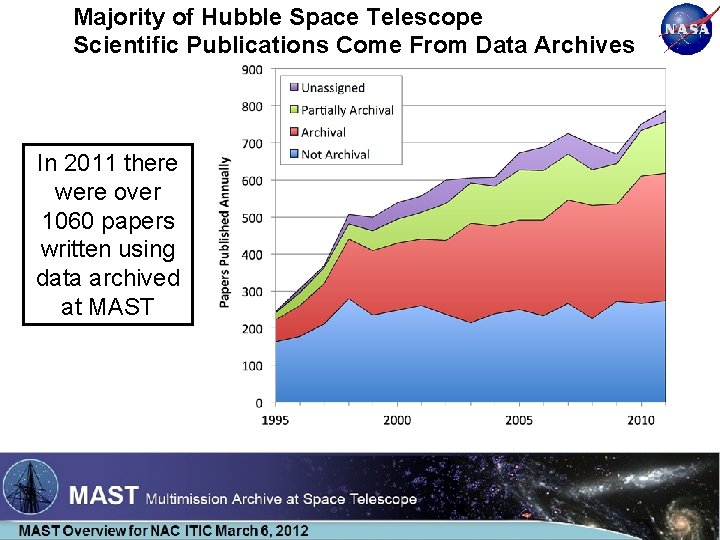

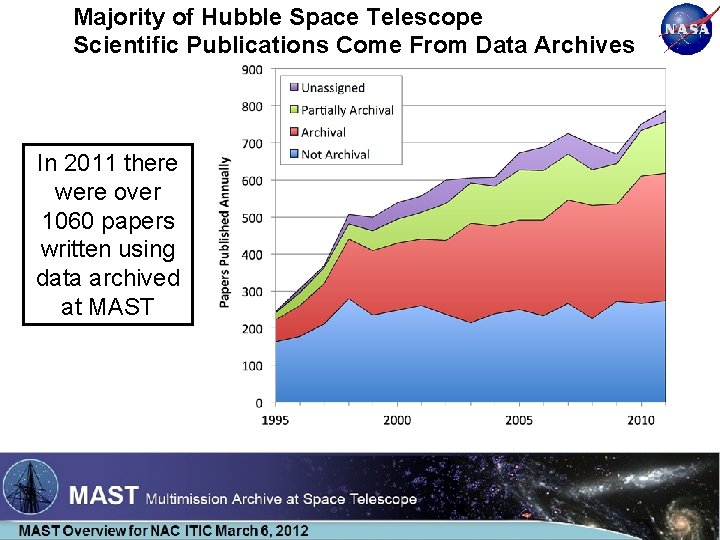

Majority of Hubble Space Telescope Scientific Publications Come From Data Archives

In 2011 there were over 1,060 papers written using data archived at MAST.

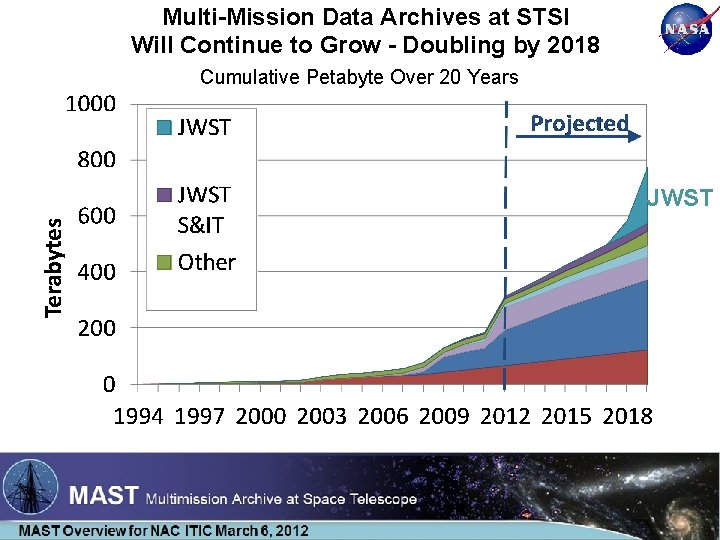

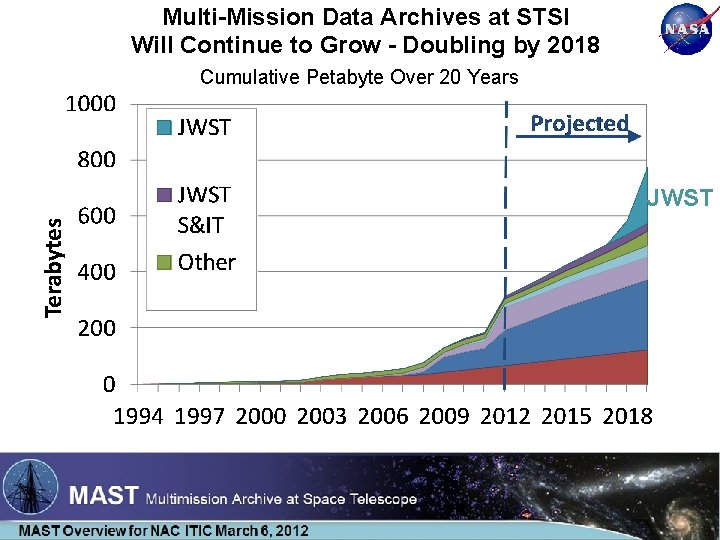

Multi-Mission Data Archives at STScI Will Continue to Grow, Doubling by 2018

[Chart: cumulative archive size in petabytes over 20 years, including JWST]
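A doubling target can be restated as an equivalent steady annual growth rate. This sketch assumes the doubling is measured from the year of this report (2012) to 2018; the slide does not state its baseline year, so the resulting rate is illustrative only.

```python
def implied_annual_growth(multiple: float, years: int) -> float:
    """Constant annual growth rate g such that (1 + g) ** years == multiple."""
    return multiple ** (1 / years) - 1


# Assumption: the doubling is measured from 2012 (the report year) to 2018.
g = implied_annual_growth(2.0, 2018 - 2012)
print(f"about {g:.1%} growth per year")
```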

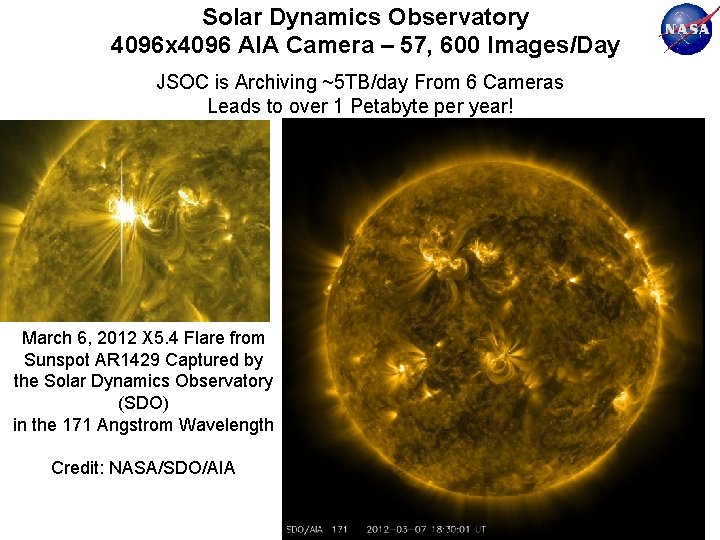

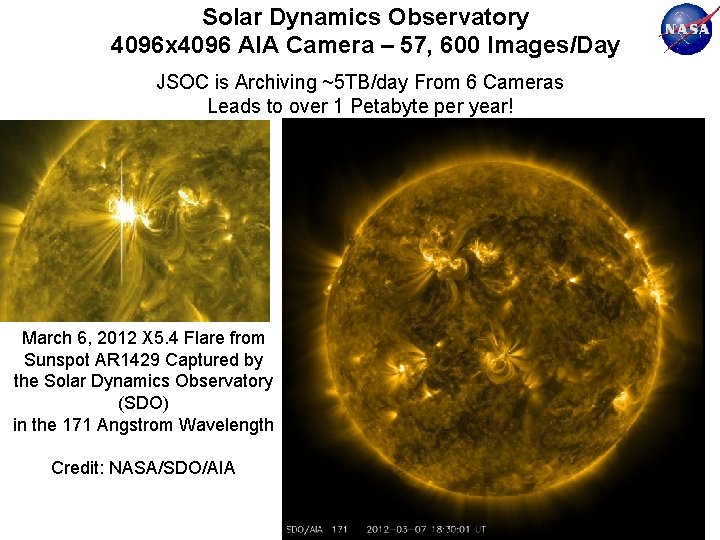

Solar Dynamics Observatory

- 4096 x 4096 AIA camera, 57,600 images/day
- JSOC is archiving ~5 TB/day from 6 cameras, leading to over 1 petabyte per year!

March 6, 2012: X5.4 flare from sunspot AR 1429, captured by the Solar Dynamics Observatory (SDO) in the 171 Angstrom wavelength. Credit: NASA/SDO/AIA
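The SDO figures can be cross-checked with a few lines of arithmetic. This sketch uses only the slide’s numbers plus standard time constants; the image cadence it computes is an average aggregated over all AIA channels, and decimal units (1 PB = 1000 TB) are assumed.

```python
SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365

AIA_IMAGES_PER_DAY = 57_600  # from the slide
JSOC_TB_PER_DAY = 5          # "~5 TB/day" from the slide
PIXELS_PER_IMAGE = 4096 * 4096

# Average time between AIA images, aggregated over all channels.
cadence_s = SECONDS_PER_DAY / AIA_IMAGES_PER_DAY
# Yearly archive growth, assuming decimal units (1 PB = 1000 TB).
pb_per_year = JSOC_TB_PER_DAY * DAYS_PER_YEAR / 1000

print(f"{PIXELS_PER_IMAGE:,} pixels per image")
print(f"one image every {cadence_s} s on average")
print(f"about {pb_per_year:.1f} PB archived per year")
```

At roughly 1.8 PB per year, the slide’s “over 1 petabyte per year” is a conservative statement.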

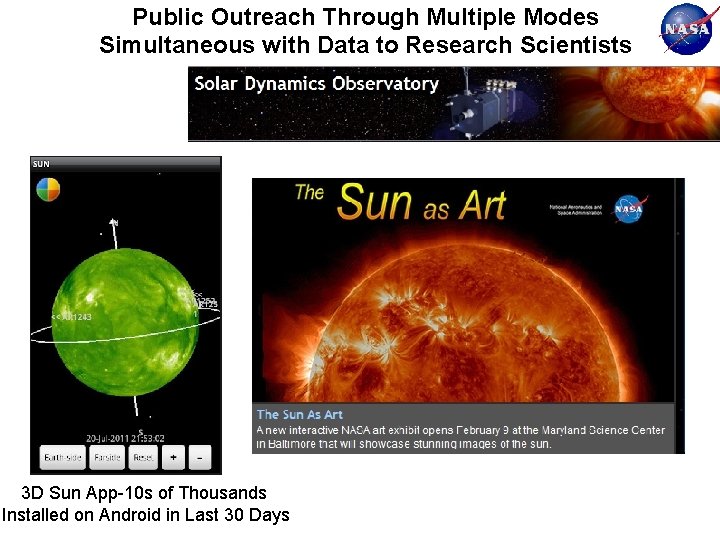

Public Outreach Through Multiple Modes, Simultaneous with Data to Research Scientists

3D Sun App: tens of thousands installed on Android in the last 30 days

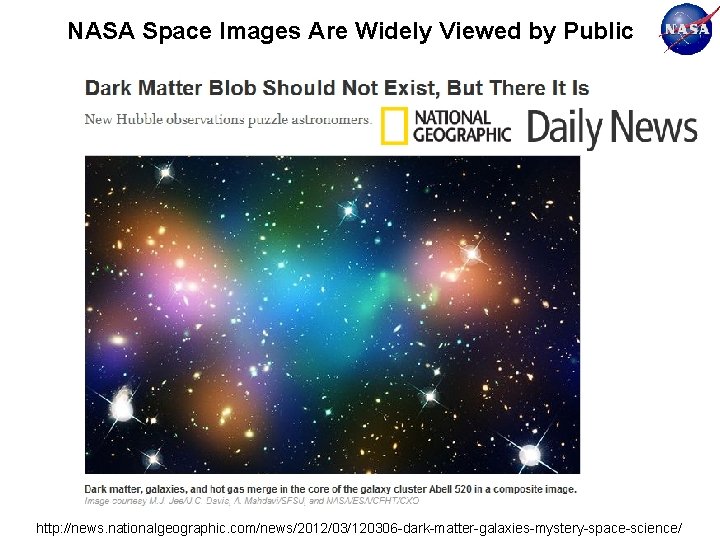

NASA Space Images Are Widely Viewed by the Public

http://news.nationalgeographic.com/news/2012/03/120306-dark-matter-galaxies-mystery-space-science/

32 of the 200+ Apps in the Apple App Store Returned by a Search for “NASA”

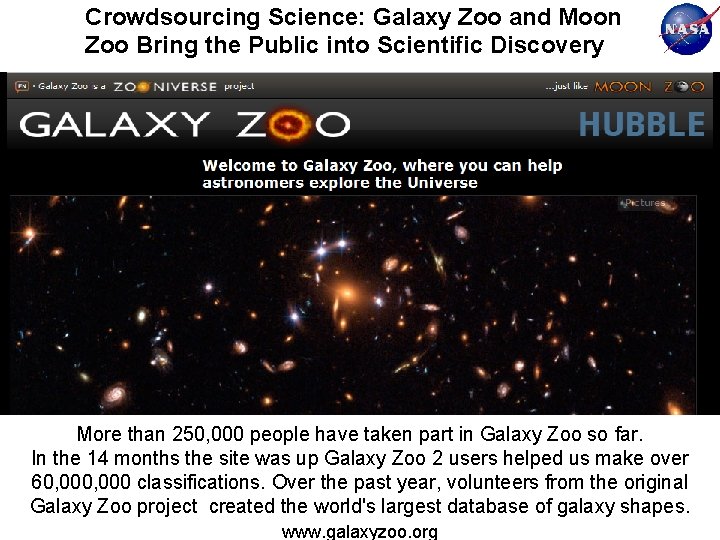

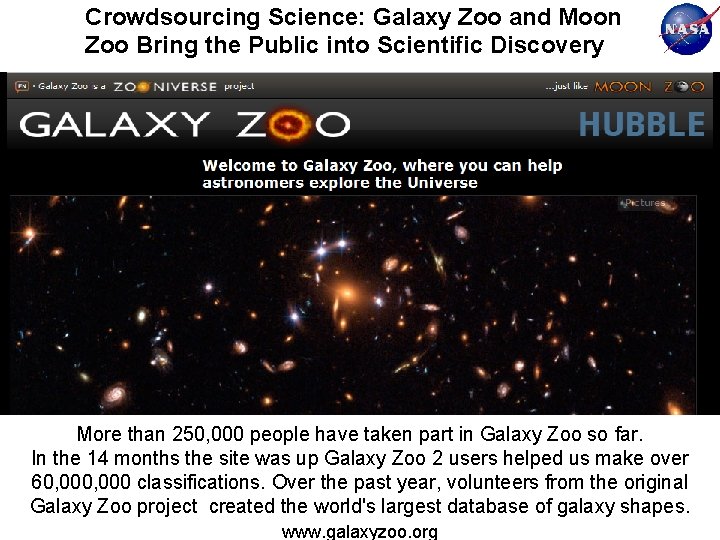

Crowdsourcing Science: Galaxy Zoo and Moon Zoo Bring the Public into Scientific Discovery

- More than 250,000 people have taken part in Galaxy Zoo so far.
- In the 14 months the site was up, Galaxy Zoo 2 users helped us make over 60,000 classifications.
- Over the past year, volunteers from the original Galaxy Zoo project created the world's largest database of galaxy shapes.

www.galaxyzoo.org

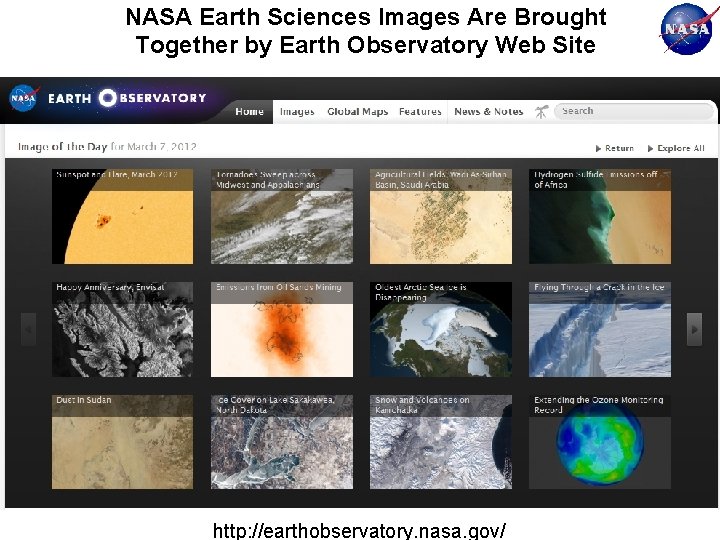

NASA Earth Science Images Are Brought Together by the Earth Observatory Web Site

http://earthobservatory.nasa.gov/

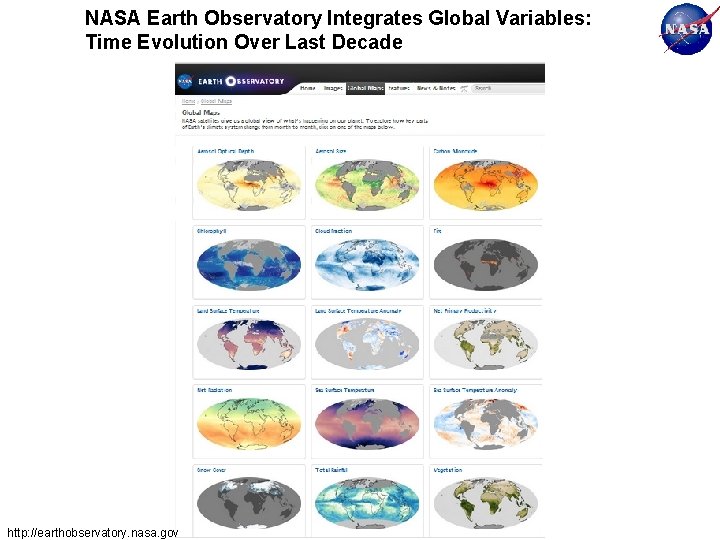

NASA Earth Observatory Integrates Global Variables: Time Evolution Over the Last Decade

http://earthobservatory.nasa.gov

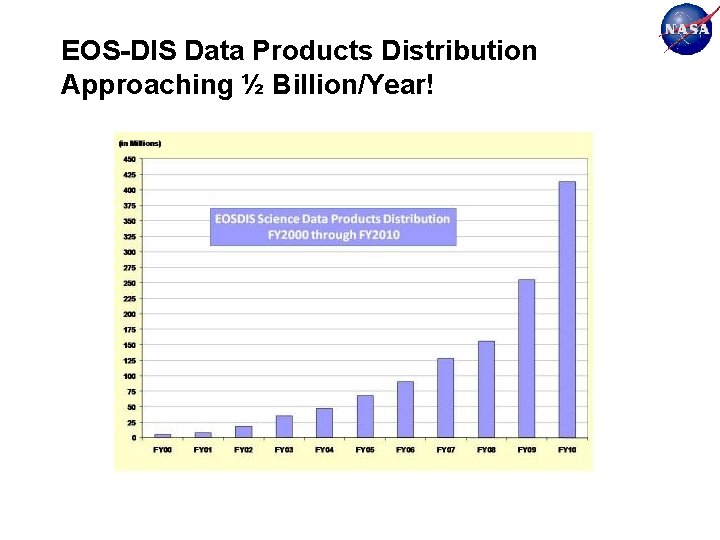

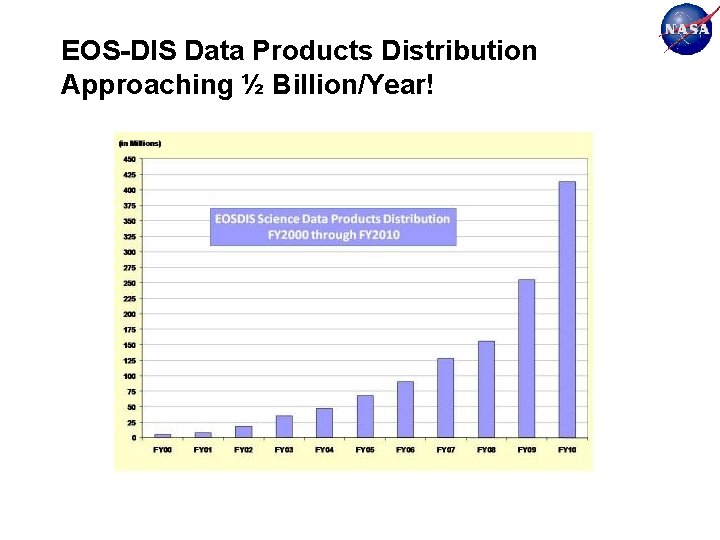

EOS-DIS Data Products Distribution Approaching ½ Billion/Year!
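Half a billion products per year is easier to grasp as a daily or per-second rate. This sketch treats “approaching ½ billion/year” as exactly 5 × 10^8 and assumes a uniform distribution rate over a 365-day year.

```python
PRODUCTS_PER_YEAR = 5e8  # "approaching 1/2 billion/year", taken as exactly 0.5 billion
DAYS_PER_YEAR = 365
SECONDS_PER_DAY = 86_400

per_day = PRODUCTS_PER_YEAR / DAYS_PER_YEAR
per_second = per_day / SECONDS_PER_DAY
print(f"about {per_day:,.0f} products per day, or about {per_second:.0f} per second")
```

That is well over a million products delivered every day, or roughly 16 every second around the clock.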

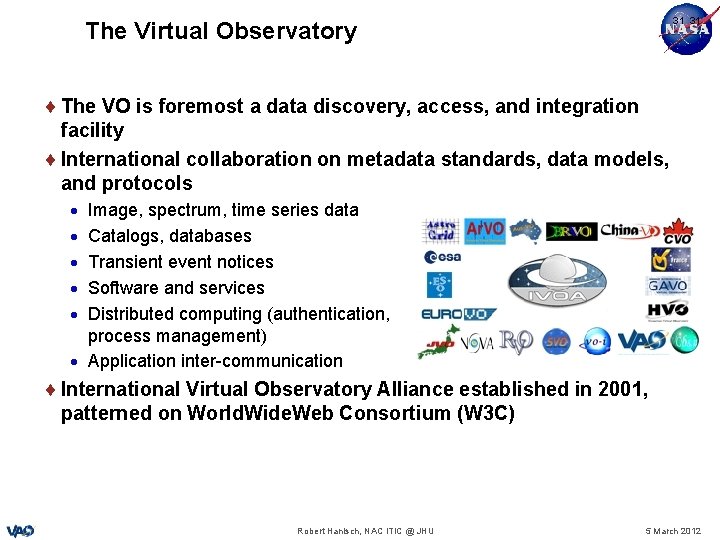

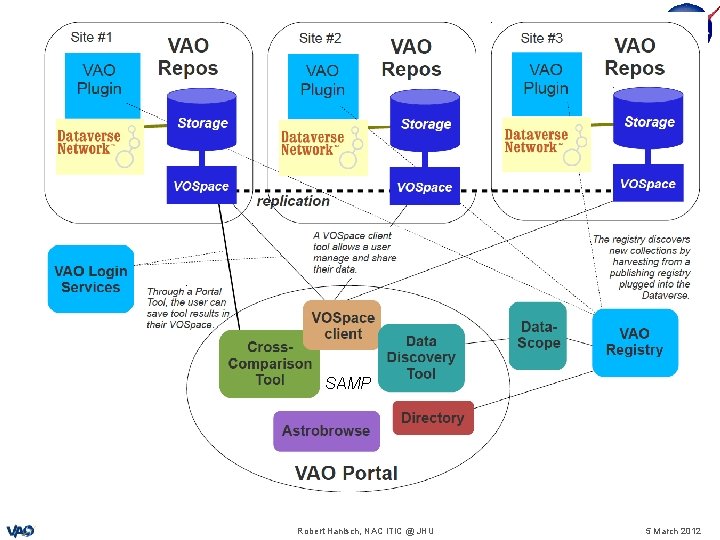

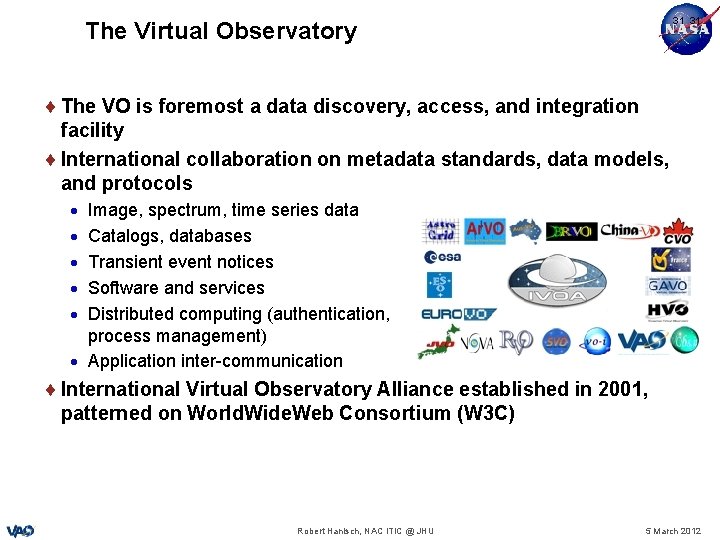

The Virtual Observatory

- The VO is foremost a data discovery, access, and integration facility
- International collaboration on metadata standards, data models, and protocols:
  - Image, spectrum, and time series data
  - Catalogs, databases
  - Transient event notices
  - Software and services
  - Distributed computing (authentication, authorization, process management)
  - Application inter-communication
- International Virtual Observatory Alliance established in 2001, patterned on the World Wide Web Consortium (W3C)

Robert Hanisch, NAC ITIC @ JHU, 5 March 2012
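As an illustration of the kind of protocol the VO standardizes, this sketch builds an IVOA Simple Cone Search request, which asks a catalog service for sources within a radius `SR` of a sky position (`RA`, `DEC`), all in decimal degrees. The endpoint URL and the helper name `cone_search_url` are hypothetical; a real client would discover services through a VO registry and parse the VOTable response, which this sketch does not do.

```python
from urllib.parse import urlencode

# Hypothetical service endpoint; real Cone Search services are found via a VO registry.
BASE_URL = "https://example.org/vo/conesearch"


def cone_search_url(base_url: str, ra_deg: float, dec_deg: float, radius_deg: float) -> str:
    """Build a Simple Cone Search request URL with RA/DEC/SR in decimal degrees."""
    if not (0.0 <= ra_deg < 360.0 and -90.0 <= dec_deg <= 90.0 and radius_deg >= 0.0):
        raise ValueError("position or radius out of range")
    # Append to any query string the endpoint already carries.
    sep = "&" if "?" in base_url else "?"
    return base_url + sep + urlencode({"RA": ra_deg, "DEC": dec_deg, "SR": radius_deg})


url = cone_search_url(BASE_URL, 10.684, 41.269, 0.1)  # roughly the position of M31
print(url)
```

Keeping the request to three positional parameters is what lets one client query many independently operated archives in the same way.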

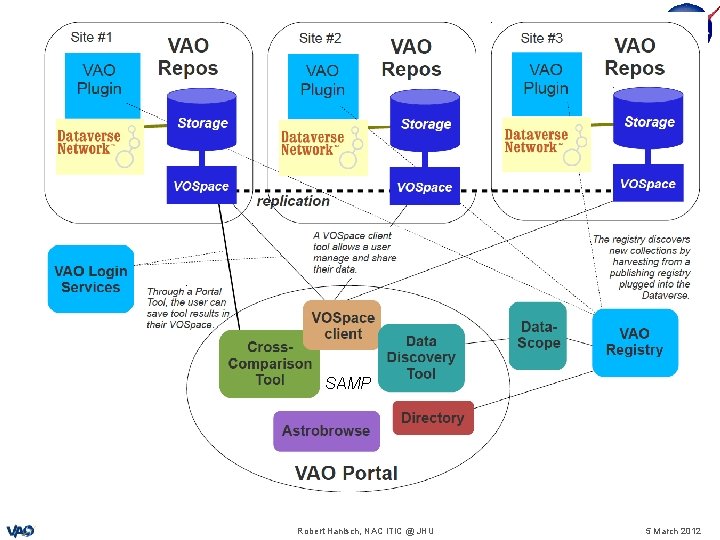

SAMP (Simple Application Messaging Protocol)

Robert Hanisch, NAC ITIC @ JHU, 5 March 2012

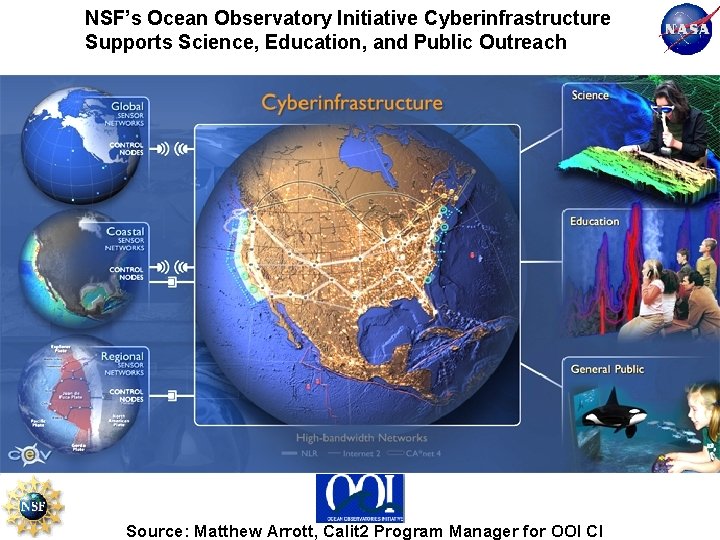

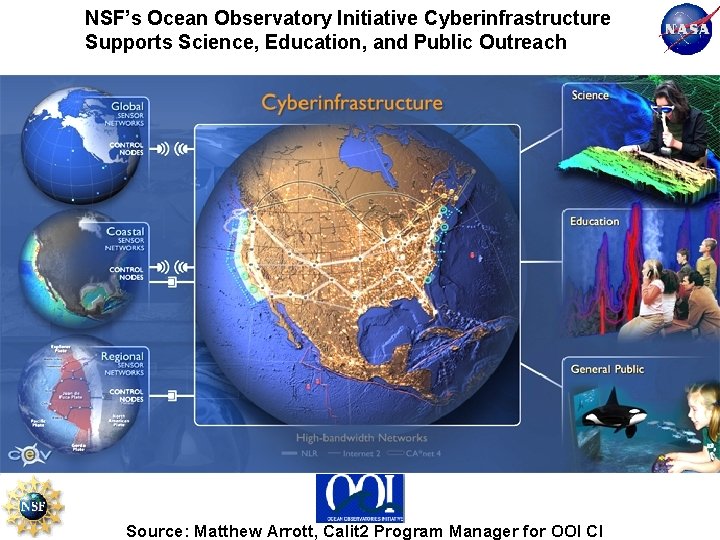

NSF’s Ocean Observatories Initiative Cyberinfrastructure Supports Science, Education, and Public Outreach

Source: Matthew Arrott, Calit2 Program Manager for OOI CI

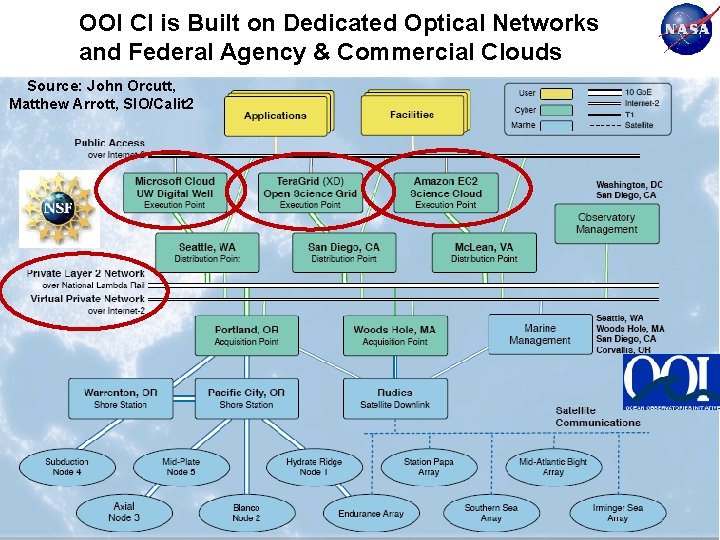

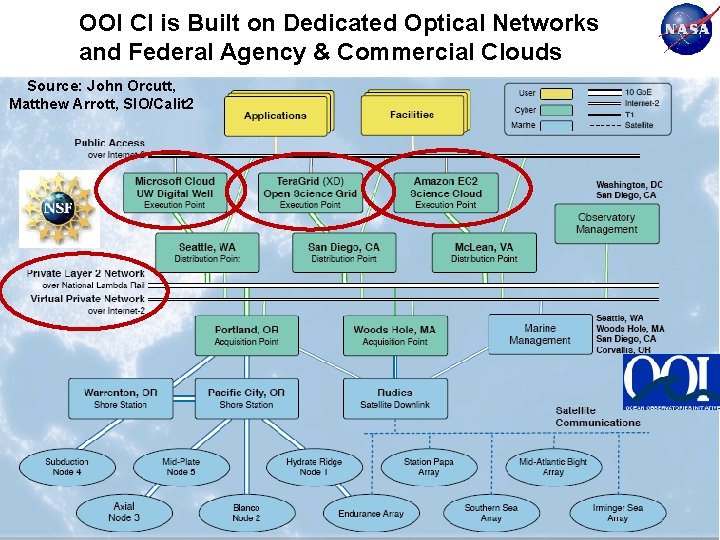

OOI CI Is Built on Dedicated Optical Networks and Federal Agency & Commercial Clouds

Source: John Orcutt, Matthew Arrott, SIO/Calit2

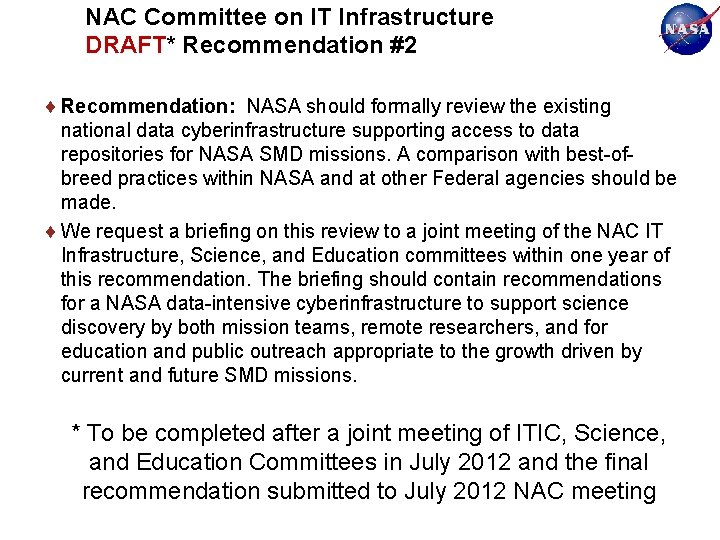

NAC Committee on IT Infrastructure DRAFT* Recommendation #2

Recommendation: NASA should formally review the existing national data cyberinfrastructure supporting access to data repositories for NASA SMD missions. A comparison with best-of-breed practices within NASA and at other Federal agencies should be made. We request a briefing on this review to a joint meeting of the NAC IT Infrastructure, Science, and Education committees within one year of this recommendation. The briefing should contain recommendations for a NASA data-intensive cyberinfrastructure to support scientific discovery by mission teams and remote researchers, as well as education and public outreach, appropriate to the growth driven by current and future SMD missions.

\* To be completed after a joint meeting of the ITIC, Science, and Education Committees in July 2012, with the final recommendation submitted to the July 2012 NAC meeting.

NAC Committee on IT Infrastructure Recommendation #2 (Continued)

Major Reasons for the Recommendation: NASA data repository and analysis facilities for SMD missions are distributed across NASA centers and throughout U.S. universities and research facilities. There is considerable variation across SMD subdivisions in the sophistication of the integrated cyberinfrastructure supporting scientific discovery, educational reuse, and public outreach. The rapid rise over the last decade in “mining data archives” by groups other than those funded by specific missions implies a need for a national-scale cyberinfrastructure architecture that allows data to flow freely to where it is needed. Programs at other agencies, specifically NSF’s Ocean Observatories Initiative Cyberinfrastructure program, should be used as a benchmark for NASA’s data-intensive architecture.

Consequences of No Action on the Recommendation: The science, education, and public outreach potential of NASA’s investment in SMD space missions will not be realized.
