GPFS: short overview of architecture, installation, configuration and troubleshooting


- Slides: 44

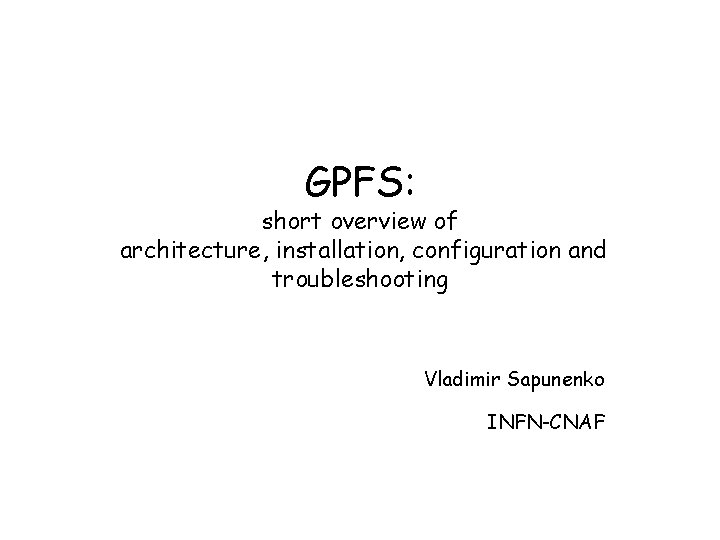

GPFS: short overview of architecture, installation, configuration and troubleshooting

Vladimir Sapunenko, INFN-CNAF

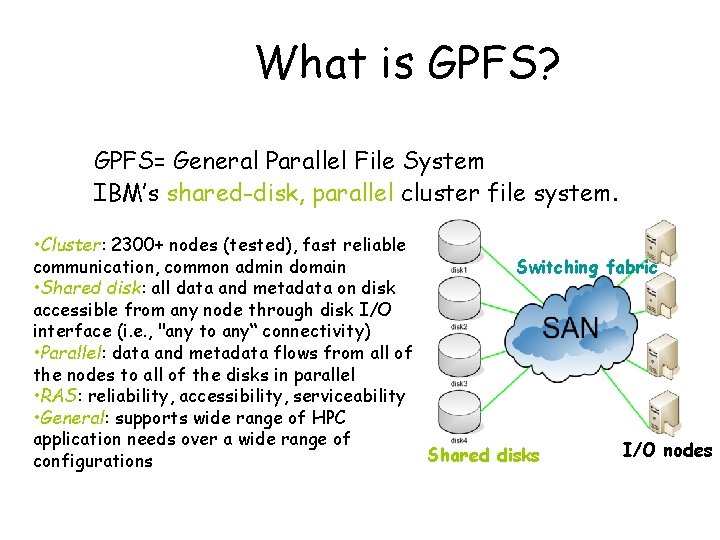

What is GPFS?

GPFS = General Parallel File System: IBM's shared-disk, parallel cluster file system.

- Cluster: 2300+ nodes (tested), fast reliable communication, common admin domain
- Shared disk: all data and metadata on disk are accessible from any node through the disk I/O interface (i.e., "any to any" connectivity)
- Parallel: data and metadata flow from all of the nodes to all of the disks in parallel
- RAS: reliability, availability, serviceability
- General: supports a wide range of HPC application needs over a wide range of I/O nodes

Figure: shared disk configurations (nodes and disks connected through a switching fabric)

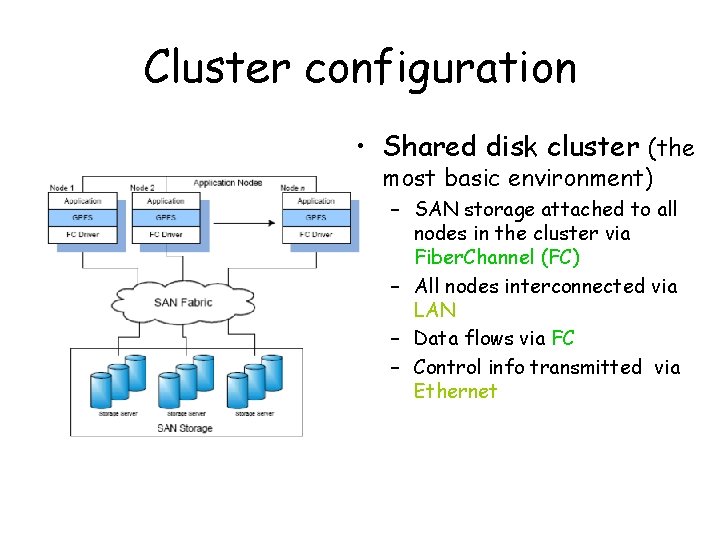

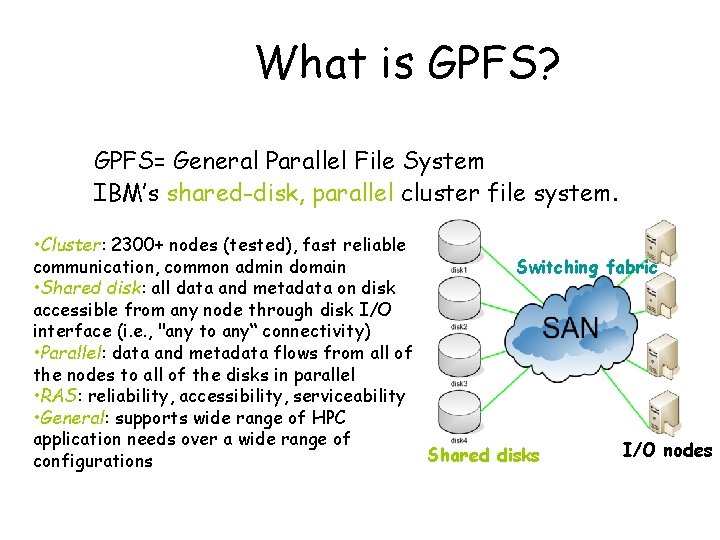

Cluster configuration

- Shared disk cluster (the most basic environment)
  - SAN storage attached to all nodes in the cluster via Fibre Channel (FC)
  - All nodes interconnected via LAN
  - Data flows via FC
  - Control info transmitted via Ethernet

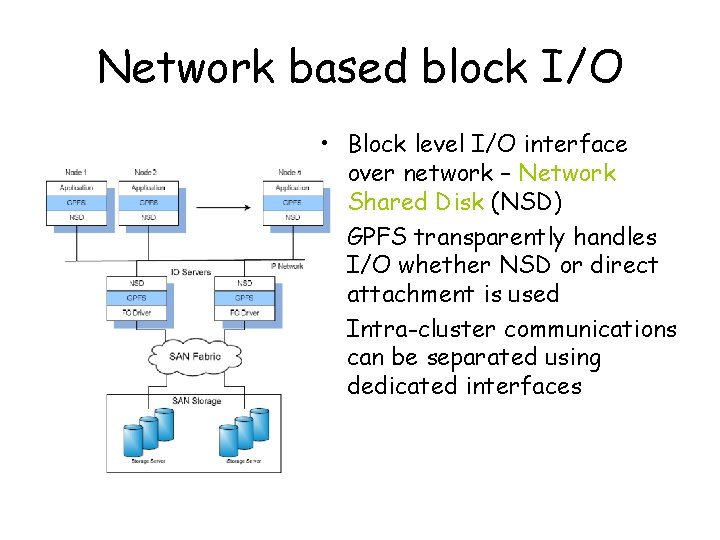

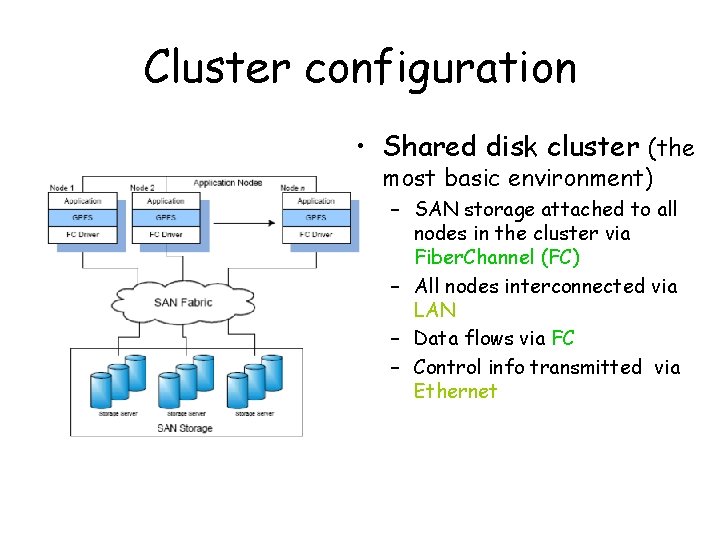

Network based block I/O

- Block-level I/O interface over the network: Network Shared Disk (NSD)
- GPFS transparently handles I/O whether NSD or direct attachment is used
- Intra-cluster communications can be separated using dedicated interfaces

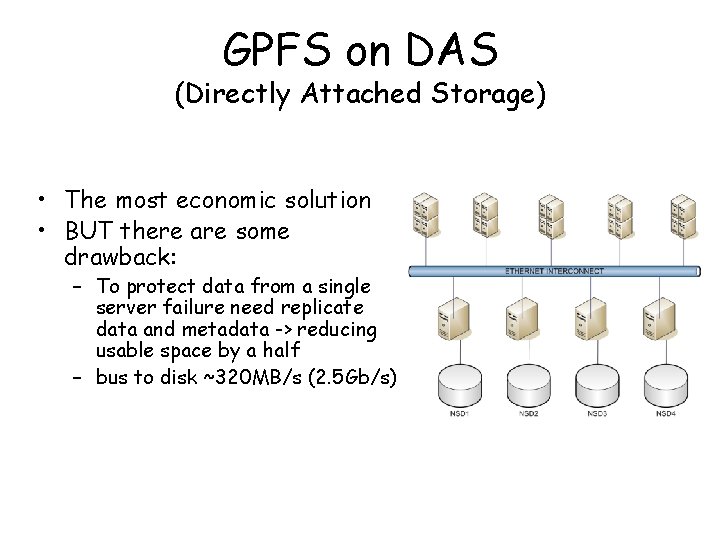

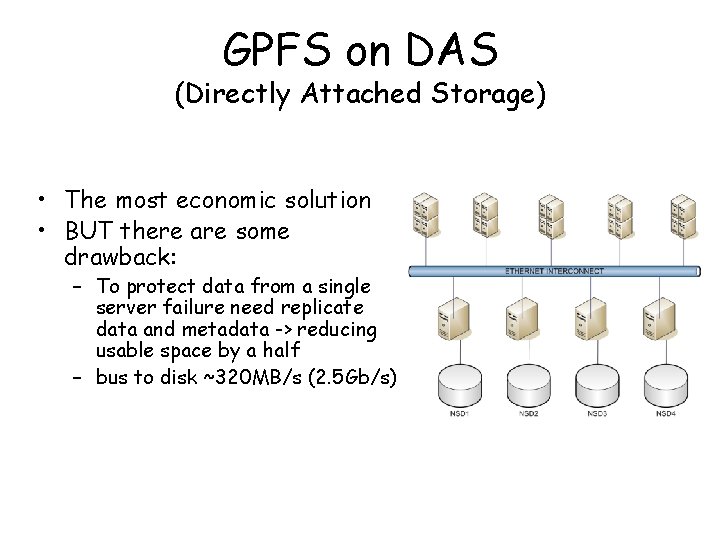

GPFS on DAS (Directly Attached Storage)

- The most economical solution
- BUT there are some drawbacks:
  - To protect data from a single server failure you need to replicate data and metadata, which halves the usable space
  - Bus to disk ~320 MB/s (2.5 Gb/s)
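Both DAS figures are simple arithmetic. A minimal sketch, assuming replication factor 2 for data and metadata and decimal units (1 Gb = 1000 Mb); the server count and per-server capacity in the example are hypothetical:

```python
def usable_capacity_tb(raw_tb: float, replicas: int) -> float:
    """Usable space when every data and metadata block is stored `replicas` times."""
    if replicas not in (1, 2):
        # mmcrfs in this deck accepts 1 or 2 copies
        raise ValueError("replication factor must be 1 or 2")
    return raw_tb / replicas


def mbytes_to_gbits(mb_per_s: float) -> float:
    """Convert MB/s to Gb/s using decimal units and 8 bits per byte."""
    return mb_per_s * 8 / 1000


# Hypothetical example: 4 servers with 2.5 TB of DAS each, everything replicated twice.
print(usable_capacity_tb(4 * 2.5, 2))  # 5.0
print(round(mbytes_to_gbits(320), 2))  # 2.56
```

The 2.56 Gb/s result matches the ~2.5 Gb/s bus figure quoted above.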

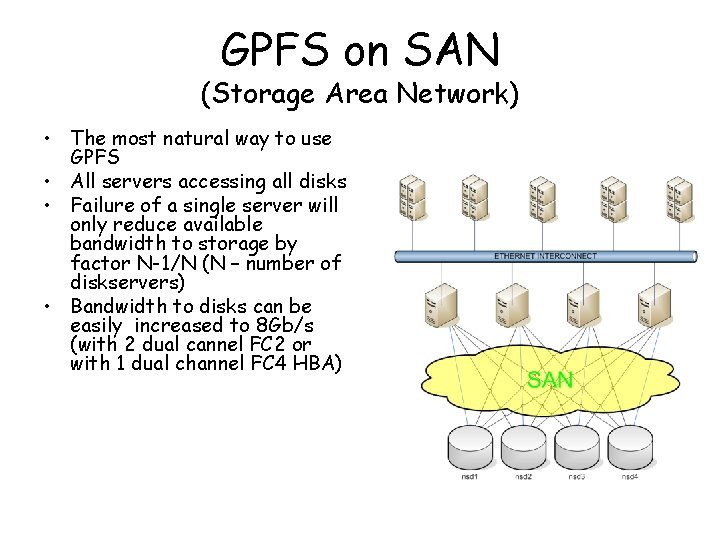

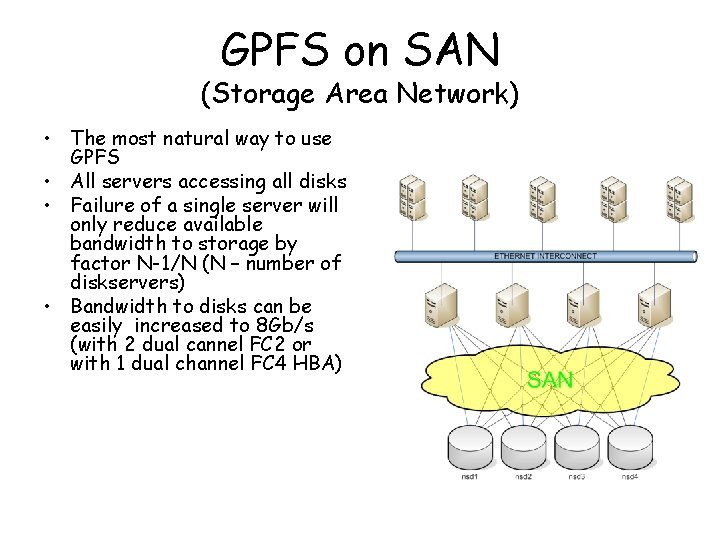

GPFS on SAN (Storage Area Network)

- The most natural way to use GPFS
- All servers access all disks
- Failure of a single server only reduces the available bandwidth to storage to (N-1)/N of the total (N = number of disk servers)
- Bandwidth to disks can easily be increased to 8 Gb/s per server (with 2 dual-channel 2 Gb/s FC HBAs or with 1 dual-channel 4 Gb/s FC HBA)
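The (N-1)/N rule and the 8 Gb/s HBA figure can be checked with a small sketch. It assumes the load is spread evenly over the disk servers and that each FC port runs at its nominal speed; the 4-server configuration is hypothetical:

```python
def remaining_bandwidth(total_gbps: float, n_servers: int, failed: int = 1) -> float:
    """Aggregate bandwidth left when `failed` of `n_servers` equally loaded servers are down."""
    if not 0 <= failed < n_servers:
        raise ValueError("at least one server must survive")
    return total_gbps * (n_servers - failed) / n_servers


def hba_bandwidth_gbps(cards: int, ports_per_card: int, port_gbps: float) -> float:
    """Aggregate Fibre Channel bandwidth of the HBAs installed in one server."""
    return cards * ports_per_card * port_gbps


per_server = hba_bandwidth_gbps(1, 2, 4)        # one dual-channel 4 Gb/s HBA
print(per_server, hba_bandwidth_gbps(2, 2, 2))  # 8 8
print(remaining_bandwidth(4 * per_server, 4))   # 24.0
```

With four servers at 8 Gb/s each, losing one leaves 3/4 of the 32 Gb/s aggregate.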

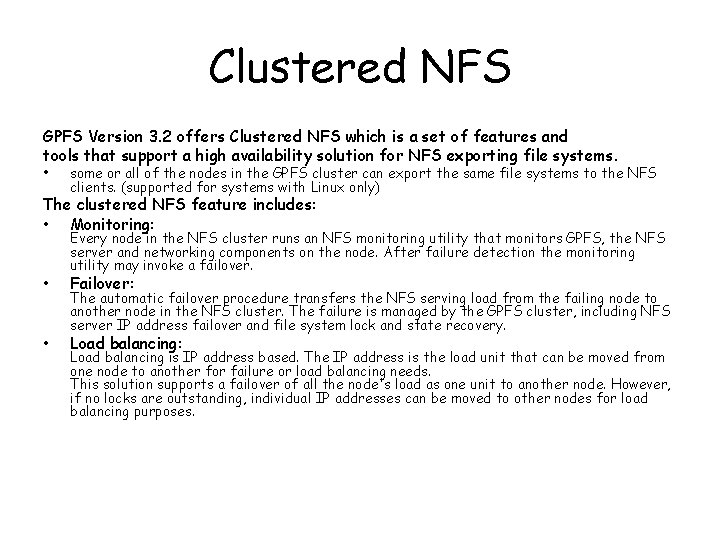

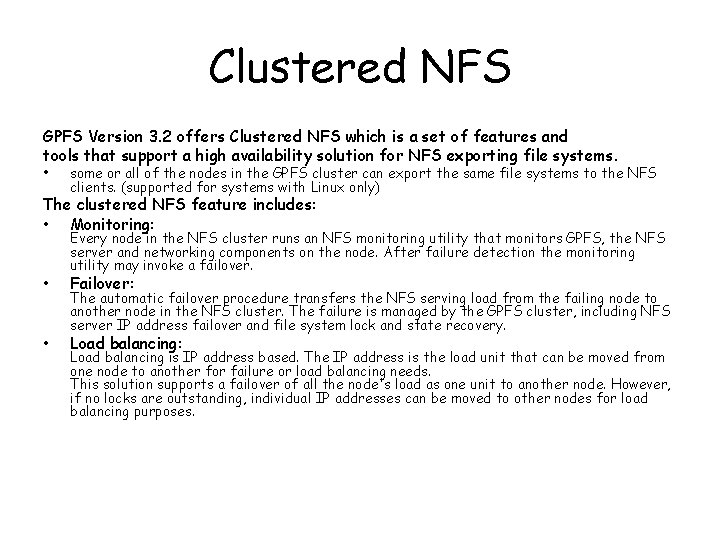

Clustered NFS

GPFS version 3.2 offers Clustered NFS, a set of features and tools that support a high-availability solution for NFS-exporting file systems.

- Some or all of the nodes in the GPFS cluster can export the same file systems to the NFS clients (supported only on Linux systems).

The clustered NFS feature includes:

- Monitoring: every node in the NFS cluster runs an NFS monitoring utility that monitors GPFS, the NFS server and the networking components on the node. After detecting a failure, the monitoring utility may invoke a failover.
- Failover: the automatic failover procedure transfers the NFS serving load from the failing node to another node in the NFS cluster. The failure is managed by the GPFS cluster, including NFS server IP address failover and file system lock and state recovery.
- Load balancing: load balancing is IP-address based. The IP address is the load unit that can be moved from one node to another for failure or load-balancing needs. This solution supports a failover of all of a node's load as one unit to another node. However, if no locks are outstanding, individual IP addresses can be moved to other nodes for load-balancing purposes.
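The IP-address-as-load-unit idea can be illustrated with a toy model. This is not the Clustered NFS implementation: the choice of "survivor with the fewest IPs" as failover target is an assumption, and the node names and addresses are hypothetical:

```python
from typing import Dict, List


def fail_over(assignment: Dict[str, List[str]], failed: str) -> Dict[str, List[str]]:
    """Move all IPs of `failed` as one unit to the survivor serving the fewest IPs."""
    survivors = {node: list(ips) for node, ips in assignment.items() if node != failed}
    if not survivors:
        raise RuntimeError("no surviving NFS node")
    # Ties are broken by node name so the result is deterministic.
    target = min(survivors, key=lambda node: (len(survivors[node]), node))
    survivors[target].extend(assignment.get(failed, []))
    return survivors


def move_ip(assignment: Dict[str, List[str]], ip: str, src: str, dst: str,
            locks_outstanding: bool) -> Dict[str, List[str]]:
    """Move a single IP for load balancing; refused while locks are outstanding."""
    if locks_outstanding:
        raise RuntimeError("individual IPs move only when no locks are outstanding")
    result = {node: list(ips) for node, ips in assignment.items()}
    result[src].remove(ip)
    result[dst].append(ip)
    return result


nodes = {"nfs1": ["10.0.0.1"], "nfs2": ["10.0.0.2", "10.0.0.3"], "nfs3": ["10.0.0.4"]}
print(fail_over(nodes, "nfs1"))
# {'nfs2': ['10.0.0.2', '10.0.0.3'], 'nfs3': ['10.0.0.4', '10.0.0.1']}
```

Whole-node failover moves every address at once, while `move_ip` models the finer-grained rebalancing that is only allowed when no locks are held.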

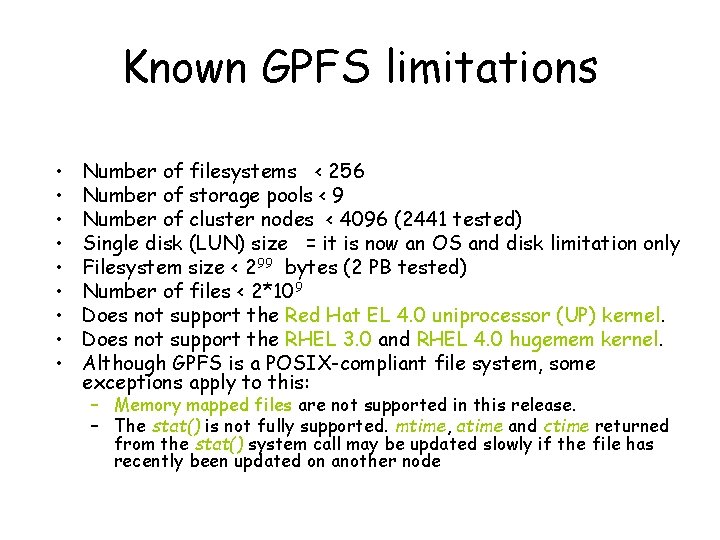

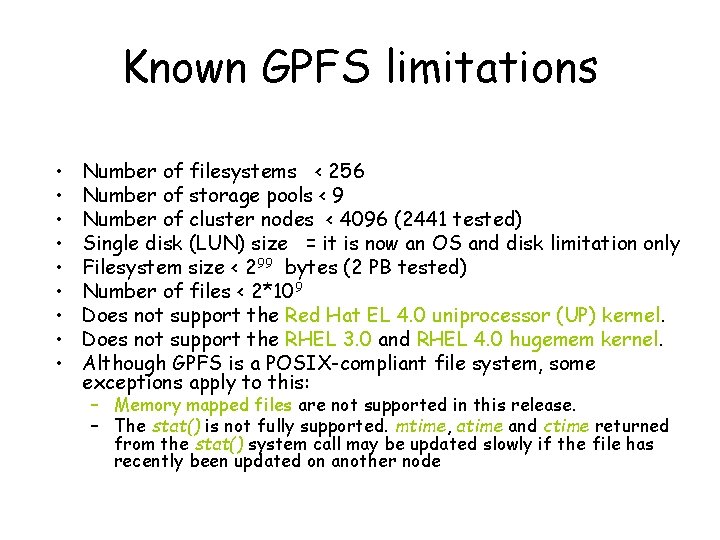

Known GPFS limitations

- Number of file systems < 256
- Number of storage pools < 9
- Number of cluster nodes < 4096 (2441 tested)
- Single disk (LUN) size: now only an OS and disk limitation
- File system size < $2^{99}$ bytes (2 PB tested)
- Number of files < $2 \times 10^{9}$
- Does not support the Red Hat EL 4.0 uniprocessor (UP) kernel.
- Does not support the RHEL 3.0 and RHEL 4.0 hugemem kernels.
- Although GPFS is a POSIX-compliant file system, some exceptions apply:
  - Memory-mapped files are not supported in this release.
  - stat() is not fully supported: mtime, atime and ctime returned by the stat() system call may be updated slowly if the file has recently been updated on another node.

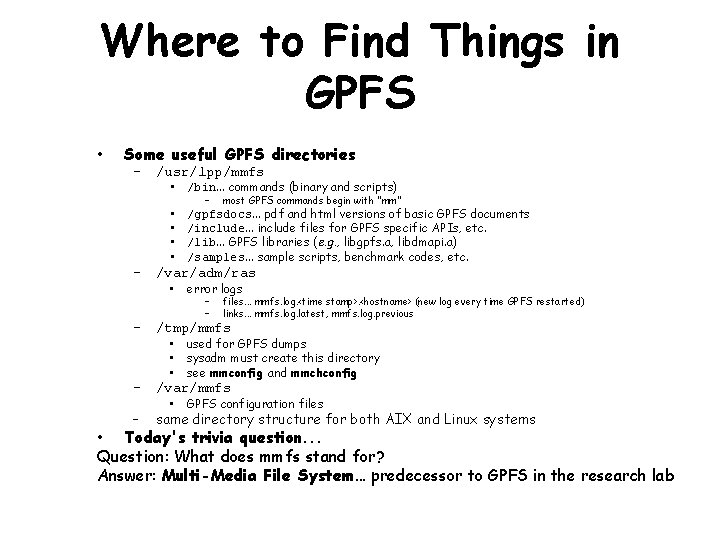

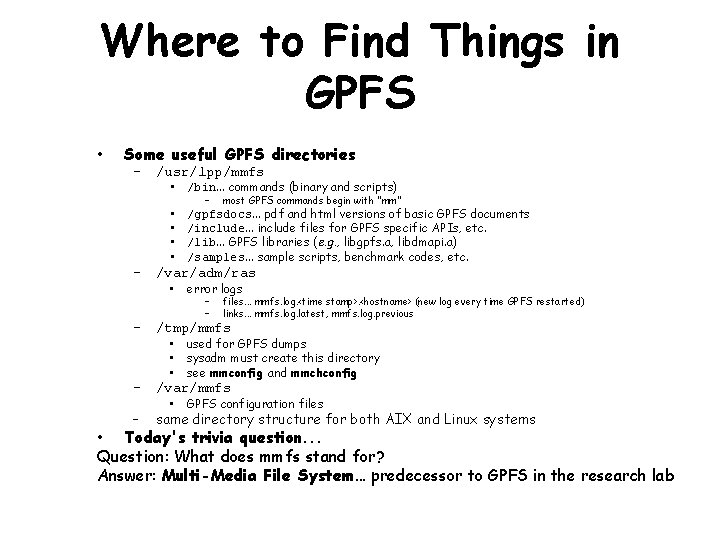

Where to Find Things in GPFS

- Some useful GPFS directories
  - /usr/lpp/mmfs
    - /bin: commands (binaries and scripts); most GPFS commands begin with "mm"
    - /gpfsdocs: PDF and HTML versions of basic GPFS documents
    - /include: include files for GPFS-specific APIs, etc.
    - /lib: GPFS libraries (e.g., libgpfs.a, libdmapi.a)
    - /samples: sample scripts, benchmark codes, etc.
  - /var/adm/ras: error logs
    - files: `mmfs.log.<time stamp>.<hostname>` (a new log every time GPFS is restarted)
    - links: mmfs.log.latest, mmfs.log.previous
  - /tmp/mmfs: used for GPFS dumps
    - the sysadmin must create this directory
    - see mmconfig and mmchconfig
- GPFS configuration files
  - /var/mmfs
  - same directory structure for both AIX and Linux systems
- Today's trivia question... Question: What does mmfs stand for? Answer: Multi-Media File System... the predecessor to GPFS in the research lab

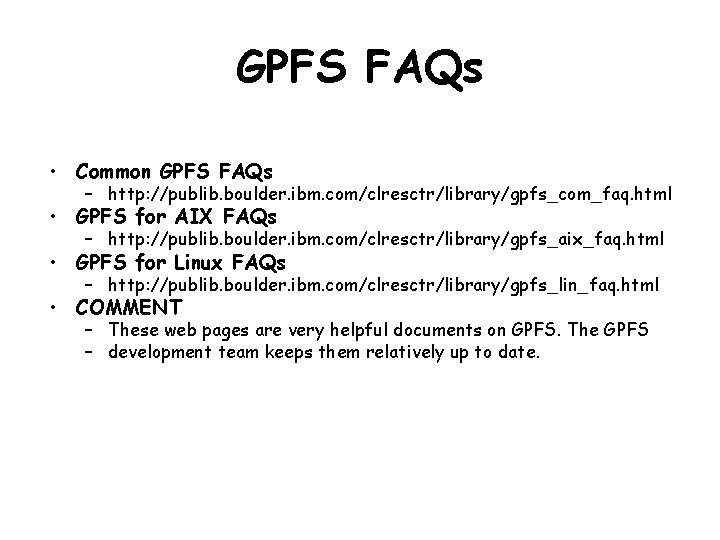

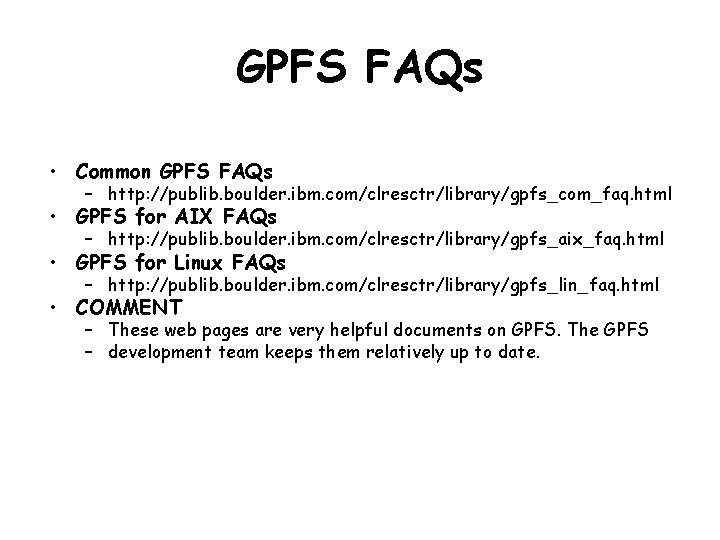

GPFS FAQs

- Common GPFS FAQs: http://publib.boulder.ibm.com/clresctr/library/gpfs_com_faq.html
- GPFS for AIX FAQs: http://publib.boulder.ibm.com/clresctr/library/gpfs_aix_faq.html
- GPFS for Linux FAQs: http://publib.boulder.ibm.com/clresctr/library/gpfs_lin_faq.html
- COMMENT: these web pages are very helpful documents on GPFS. The GPFS development team keeps them relatively up to date.

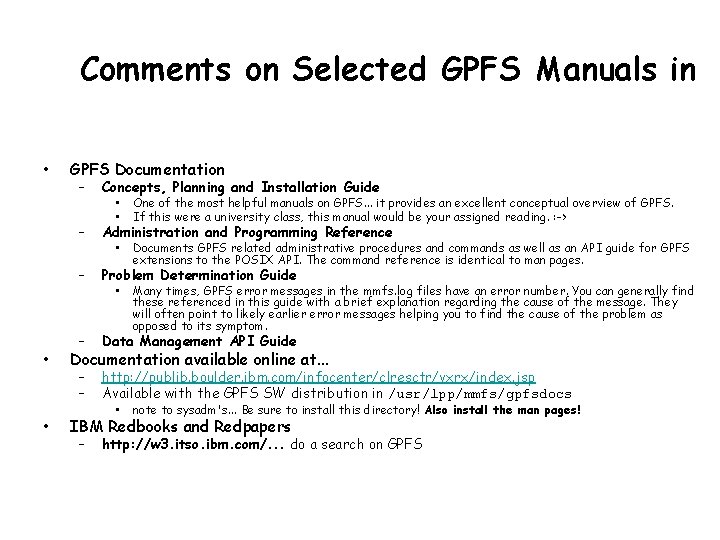

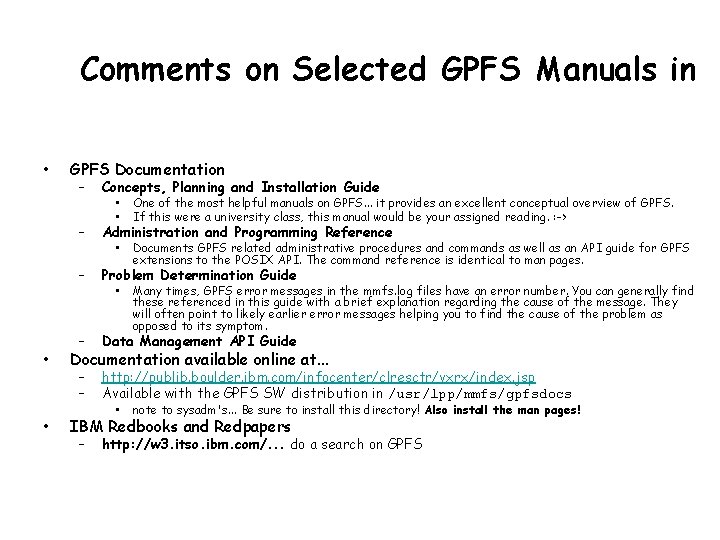

Comments on Selected GPFS Manuals

- GPFS Documentation
  - Concepts, Planning and Installation Guide: one of the most helpful manuals on GPFS... it provides an excellent conceptual overview of GPFS. If this were a university class, this manual would be your assigned reading. :->
  - Administration and Programming Reference: documents GPFS-related administrative procedures and commands, as well as an API guide for GPFS extensions to the POSIX API. The command reference is identical to the man pages.
  - Problem Determination Guide: GPFS error messages in the mmfs.log files often carry an error number. You can generally find these referenced in this guide with a brief explanation of the cause of the message. The explanations often point to likely earlier error messages, helping you find the cause of the problem as opposed to its symptom.
  - Data Management API Guide
- Documentation available online at http://publib.boulder.ibm.com/infocenter/clresctr/vxrx/index.jsp
- Available with the GPFS SW distribution in /usr/lpp/mmfs/gpfsdocs
  - Note to sysadmins... Be sure to install this directory! Also install the man pages!
- IBM Redbooks and Redpapers: http://w3.itso.ibm.com/ ... do a search on GPFS

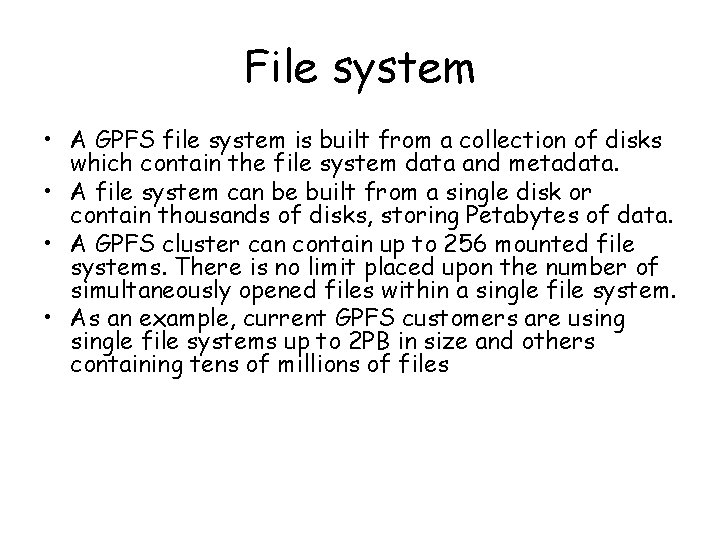

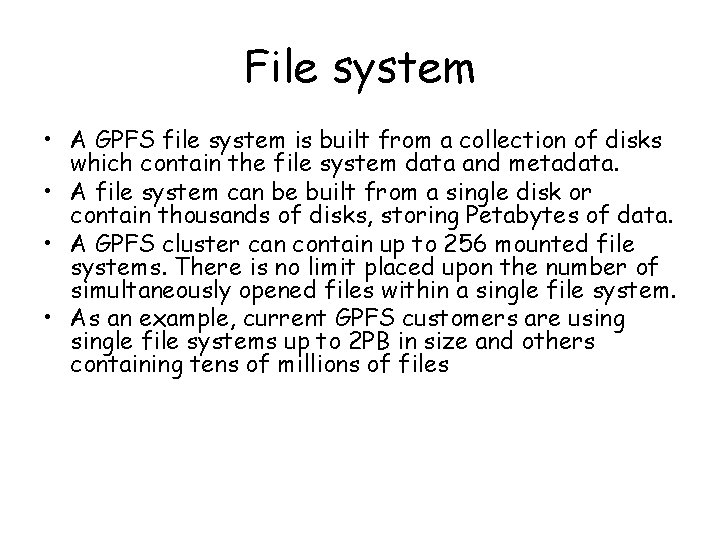

File system

- A GPFS file system is built from a collection of disks which contain the file system data and metadata.
- A file system can be built from a single disk or contain thousands of disks, storing petabytes of data.
- A GPFS cluster can contain up to 256 mounted file systems. There is no limit on the number of simultaneously open files within a single file system.
- As an example, current GPFS customers are using single file systems up to 2 PB in size, and others are using file systems containing tens of millions of files.

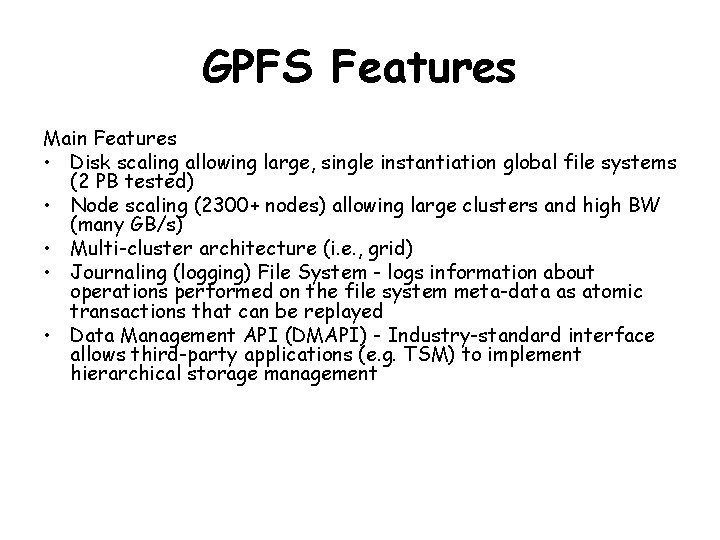

GPFS Features

Main features:

- Disk scaling allowing large, single-instantiation global file systems (2 PB tested)
- Node scaling (2300+ nodes) allowing large clusters and high bandwidth (many GB/s)
- Multi-cluster architecture (i.e., grid)
- Journaling (logging) file system: logs information about operations performed on the file system metadata as atomic transactions that can be replayed
- Data Management API (DMAPI): an industry-standard interface that allows third-party applications (e.g., TSM) to implement hierarchical storage management

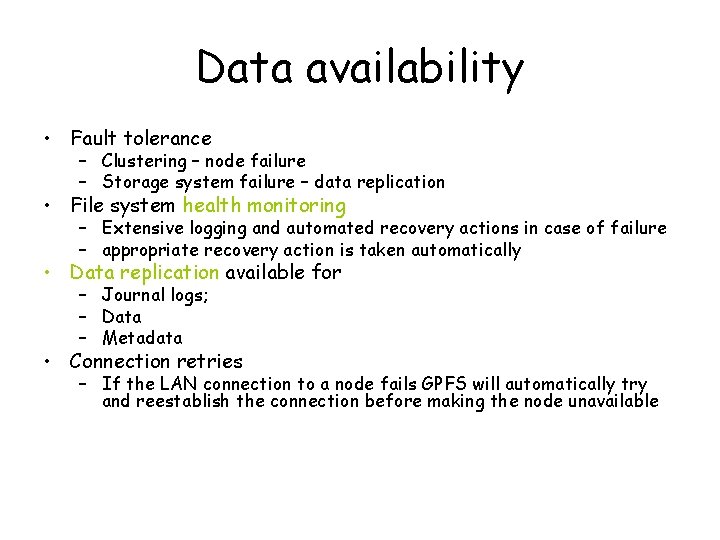

Data availability

- Fault tolerance
  - Clustering: node failure
  - Storage system failure: data replication
- File system health monitoring
  - Extensive logging and automated recovery actions in case of failure
  - The appropriate recovery action is taken automatically
- Data replication available for
  - Journal logs
  - Data
  - Metadata
- Connection retries
  - If the LAN connection to a node fails, GPFS automatically tries to re-establish the connection before making the node unavailable

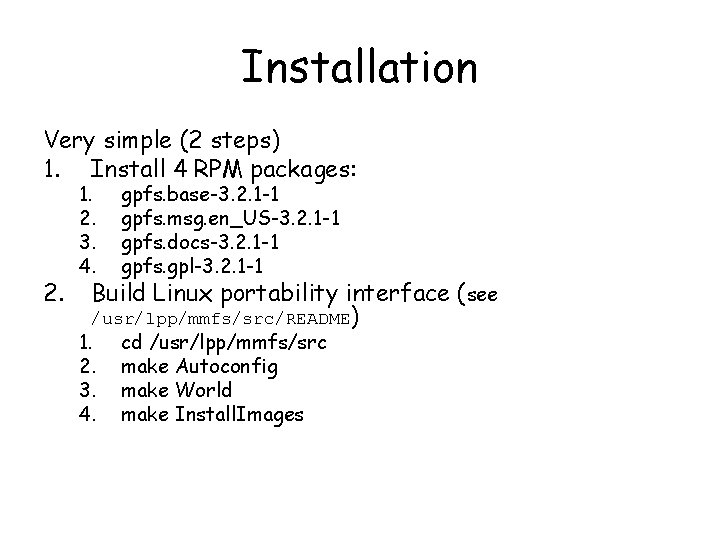

Installation

Very simple (2 steps):

1. Install 4 RPM packages:
   1. gpfs.base-3.2.1-1
   2. gpfs.msg.en_US-3.2.1-1
   3. gpfs.docs-3.2.1-1
   4. gpfs.gpl-3.2.1-1
2. Build the Linux portability interface (see /usr/lpp/mmfs/src/README):
   1. `cd /usr/lpp/mmfs/src`
   2. `make Autoconfig`
   3. `make World`
   4. `make InstallImages`

Installation (comments)

- Updates are freely available from the official GPFS site
- Passwordless access is needed from any node to any node within the cluster
  - rsh or ssh must be configured accordingly
- Dependencies
  - compat-libstdc++
  - xorg-x11-devel (imake is required for Autoconfig)
- No need to repeat the portability layer build on all hosts. Once compiled, copy the binaries (5 kernel modules) to all other nodes (with the same kernel and arch/hardware)
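Since the portability layer only has to be built once per kernel/architecture combination, a quick inventory tells you where to build and where to copy. A minimal sketch; the kernel release strings and architectures in the inventory are hypothetical (on each node the pair would come from `uname -r` and `uname -m`):

```python
import platform
from collections import defaultdict
from typing import Dict, List, Tuple


def build_groups(nodes: Dict[str, Tuple[str, str]]) -> Dict[Tuple[str, str], List[str]]:
    """Group nodes by (kernel release, machine architecture).

    Build the portability layer once per group, then copy the
    resulting kernel modules to the other members of that group.
    """
    groups: Dict[Tuple[str, str], List[str]] = defaultdict(list)
    for name in sorted(nodes):
        groups[nodes[name]].append(name)
    return dict(groups)


# Hypothetical inventory for the testbed nodes.
inventory = {
    "gpfs-01-01": ("2.6.9-67.ELsmp", "i686"),
    "gpfs-01-02": ("2.6.9-67.ELsmp", "i686"),
    "gpfs-01-03": ("2.6.9-67.ELsmp", "i686"),
    "TSM-TEST-1": ("2.6.9-67.ELsmp", "x86_64"),
}
for (kernel, arch), members in build_groups(inventory).items():
    print(f"{kernel}/{arch}: build on {members[0]}, copy to {members[1:]}")

# The same key for the machine running this script:
print((platform.release(), platform.machine()))
```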

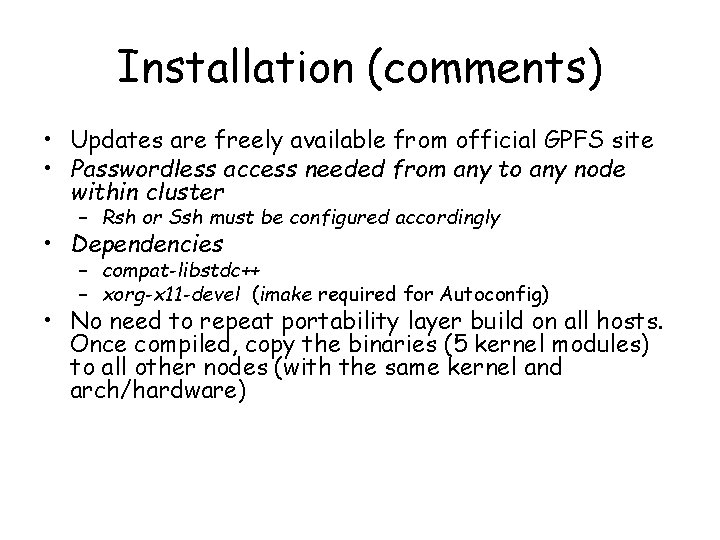

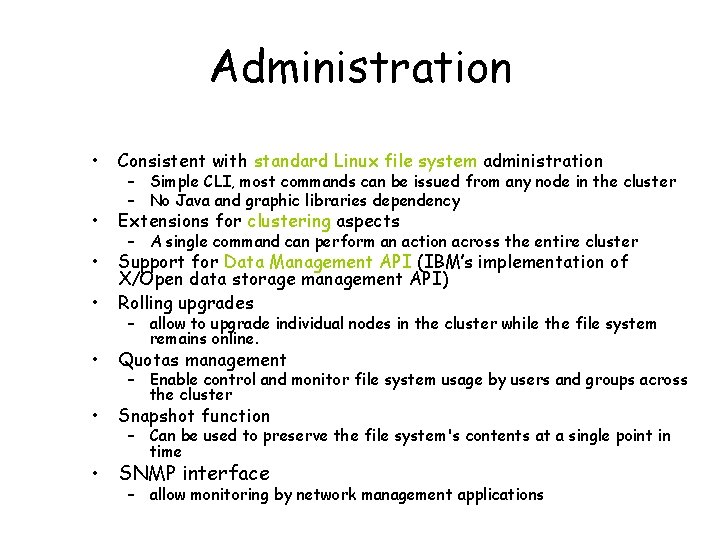

Administration

- Consistent with standard Linux file system administration
  - Simple CLI; most commands can be issued from any node in the cluster
  - No dependency on Java or graphics libraries
- Extensions for clustering aspects
  - A single command can perform an action across the entire cluster
- Support for Data Management API (IBM's implementation of the X/Open data storage management API)
- Rolling upgrades
  - Allow upgrading individual nodes in the cluster while the file system remains online
- Quota management
  - Enables control and monitoring of file system usage by users and groups across the cluster
- Snapshot function
  - Can be used to preserve the file system's contents at a single point in time
- SNMP interface
  - Allows monitoring by network management applications

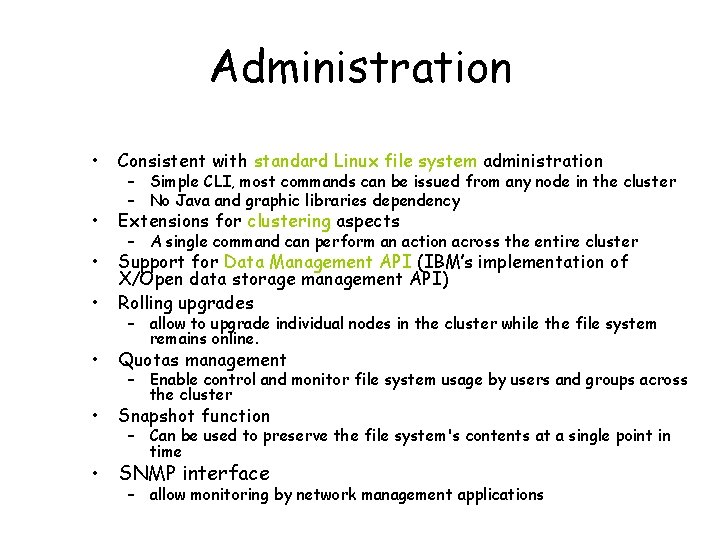

"mm list" commands GPFS provides a number of commands to list parameter settings, configuration components and other things. COMMENT: By default, nearly all of the mm commands require root authority to execute. However, many sysadmins reset the permissions on mmls commands to allow programmers and others to execute them as they are very useful for the purposes of problem determination and debugging.

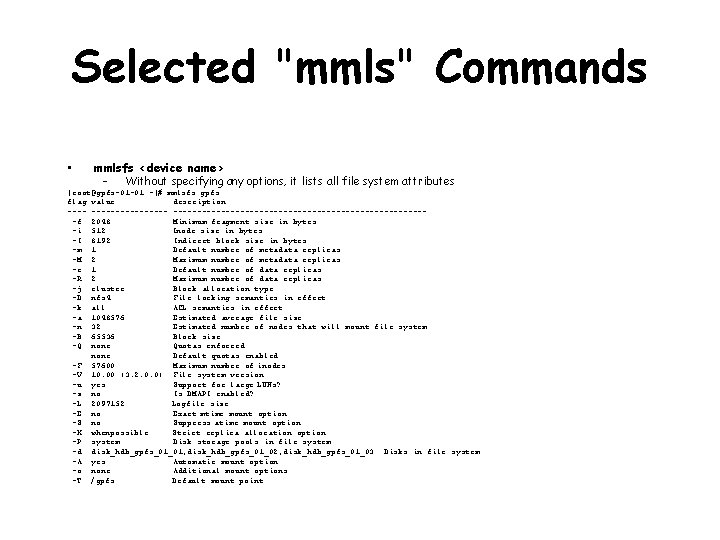

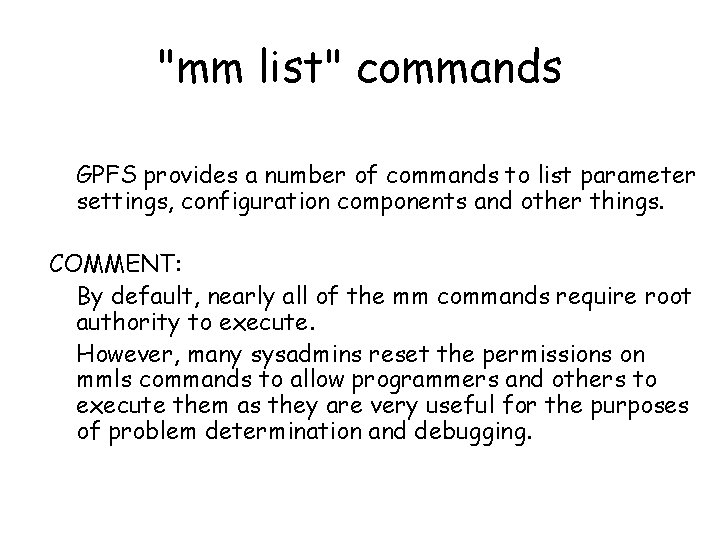

Selected "mmls" Commands

- `mmlsfs <device name>`: without any options, it lists all file system attributes

`[root@gpfs-01-01 ~]# mmlsfs gpfs`

| flag | value | description |
|---|---|---|
| -f | 2048 | Minimum fragment size in bytes |
| -i | 512 | Inode size in bytes |
| -I | 8192 | Indirect block size in bytes |
| -m | 1 | Default number of metadata replicas |
| -M | 2 | Maximum number of metadata replicas |
| -r | 1 | Default number of data replicas |
| -R | 2 | Maximum number of data replicas |
| -j | cluster | Block allocation type |
| -D | nfs4 | File locking semantics in effect |
| -k | all | ACL semantics in effect |
| -a | 1048576 | Estimated average file size |
| -n | 32 | Estimated number of nodes that will mount file system |
| -B | 65536 | Block size |
| -Q | none | Quotas enforced |
| | none | Default quotas enabled |
| -F | 57600 | Maximum number of inodes |
| -V | 10.00 (3.2.0.0) | File system version |
| -u | yes | Support for large LUNs? |
| -z | no | Is DMAPI enabled? |
| -L | 2097152 | Logfile size |
| -E | no | Exact mtime mount option |
| -S | no | Suppress atime mount option |
| -K | whenpossible | Strict replica allocation option |
| -P | system | Disk storage pools in file system |
| -d | disk_hdb_gpfs_01_01;disk_hdb_gpfs_01_02;disk_hdb_gpfs_01_03 | Disks in file system |
| -A | yes | Automatic mount option |
| -o | none | Additional mount options |
| -T | /gpfs | Default mount point |
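When mmlsfs output feeds a script, a small parser is handy. A minimal sketch, assuming the "flag value description" layout shown above, with continuation lines (like the second `-Q` line) that carry no flag; values containing spaces (e.g. `-V`) keep only their first token:

```python
from typing import Dict, List

SAMPLE = """\
flag value          description
---- -------------- -----------------------------------------
 -f  2048           Minimum fragment size in bytes
 -m  1              Default number of metadata replicas
 -B  65536          Block size
 -Q  none           Quotas enforced
     none           Default quotas enabled
 -T  /gpfs          Default mount point
"""


def parse_mmlsfs(text: str) -> Dict[str, List[str]]:
    """Map each mmlsfs flag to its value(s); continuation lines belong to the previous flag."""
    result: Dict[str, List[str]] = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("flag", "---")):
            continue  # header, separator or blank line
        if line.startswith("-"):
            parts = line.split(None, 2)  # flag, value, description
            if len(parts) < 2:
                continue
            current = parts[0]
            result[current] = [parts[1]]
        elif current is not None:
            result[current].append(line.split(None, 1)[0])
    return result


fs = parse_mmlsfs(SAMPLE)
print(fs["-Q"])                                 # ['none', 'none']
print(int(fs["-B"][0]) // int(fs["-f"][0]))     # 32
```

The block-size to fragment-size ratio of 32 reflects the 32 subblocks into which GPFS 3.x divides each block.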

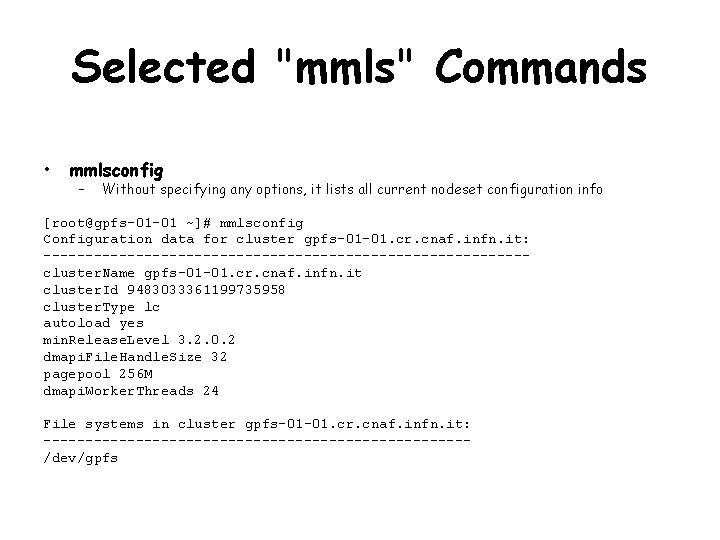

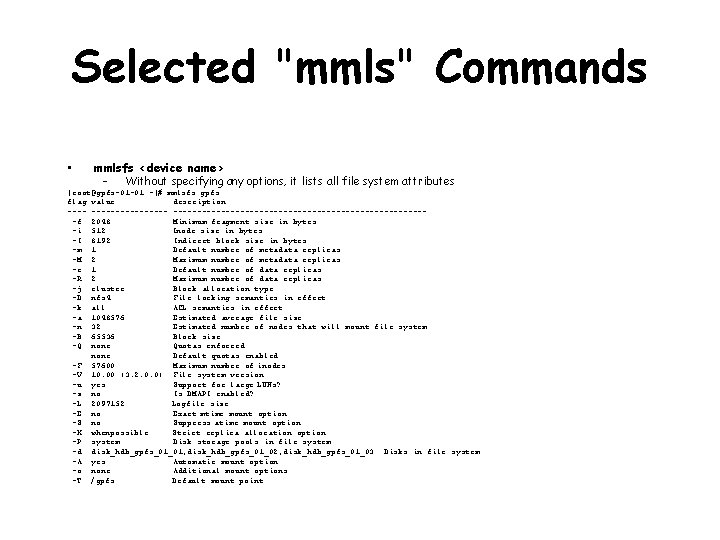

Selected "mmls" Commands

- mmlsconfig: without any options, it lists all current nodeset configuration info

`[root@gpfs-01-01 ~]# mmlsconfig`

Configuration data for cluster gpfs-01-01.cr.cnaf.infn.it:

| attribute | value |
|---|---|
| clusterName | gpfs-01-01.cr.cnaf.infn.it |
| clusterId | 9483033361199735958 |
| clusterType | lc |
| autoload | yes |
| minReleaseLevel | 3.2.0.2 |
| dmapiFileHandleSize | 32 |
| pagepool | 256M |
| dmapiWorkerThreads | 24 |

File systems in cluster gpfs-01-01.cr.cnaf.infn.it: /dev/gpfs
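The same approach works for mmlsconfig. A minimal sketch, assuming one "attribute value" pair per line and that the file system list starts with the "File systems in cluster" line; size values such as `pagepool 256M` are assumed to use binary (1024-based) units:

```python
import re
from typing import Dict

_UNITS = {"": 1, "K": 1024, "M": 1024 ** 2, "G": 1024 ** 3}

SAMPLE = """\
Configuration data for cluster gpfs-01-01.cr.cnaf.infn.it:
-----------------------------------------------------------
clusterName gpfs-01-01.cr.cnaf.infn.it
clusterId 9483033361199735958
autoload yes
pagepool 256M

File systems in cluster gpfs-01-01.cr.cnaf.infn.it:
----------------------------------------------------
/dev/gpfs
"""


def parse_mmlsconfig(text: str) -> Dict[str, str]:
    """Collect 'attribute value' lines, stopping at the file system list."""
    config: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if line.startswith("File systems in cluster"):
            break
        if not line or line.startswith(("Configuration data", "---")):
            continue
        key, _, value = line.partition(" ")
        config[key] = value.strip()
    return config


def parse_size(value: str) -> int:
    """Convert '256M'-style values to bytes (binary units assumed)."""
    m = re.fullmatch(r"(\d+)\s*([KMG]?)", value.strip().upper())
    if m is None:
        raise ValueError(f"not a size: {value!r}")
    return int(m.group(1)) * _UNITS[m.group(2)]


cfg = parse_mmlsconfig(SAMPLE)
print(cfg["autoload"], parse_size(cfg["pagepool"]))  # yes 268435456
```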

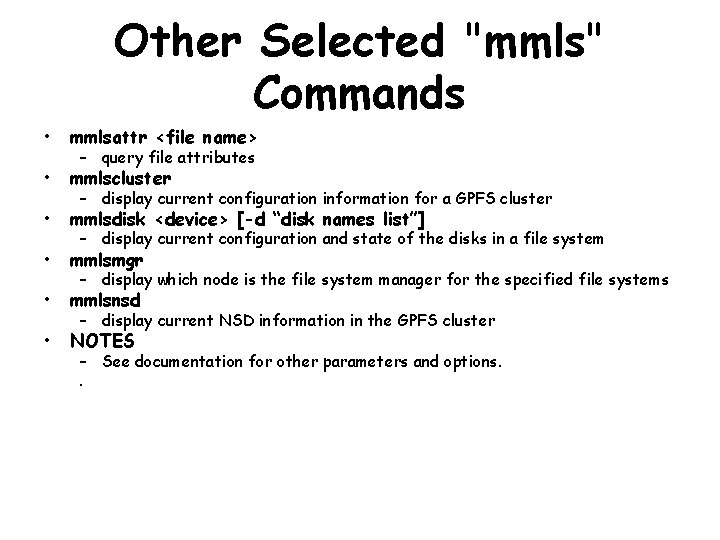

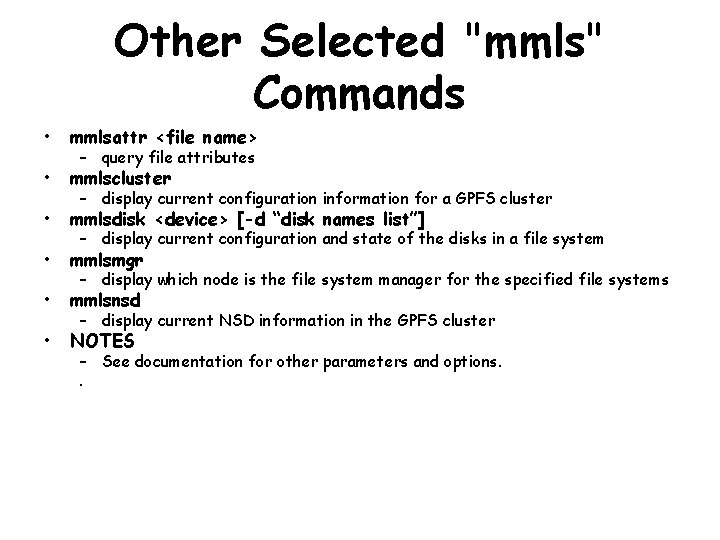

Other Selected "mmls" Commands

- `mmlsattr <file name>`: query file attributes
- mmlscluster: display current configuration information for a GPFS cluster
- `mmlsdisk <device> [-d "disk names list"]`: display current configuration and state of the disks in a file system
- mmlsmgr: display which node is the file system manager for the specified file systems
- mmlsnsd: display current NSD information in the GPFS cluster
- NOTES: see the documentation for other parameters and options.

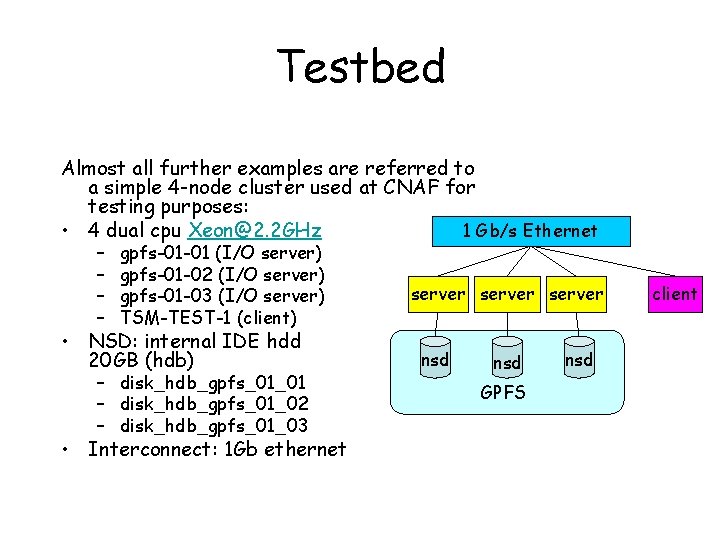

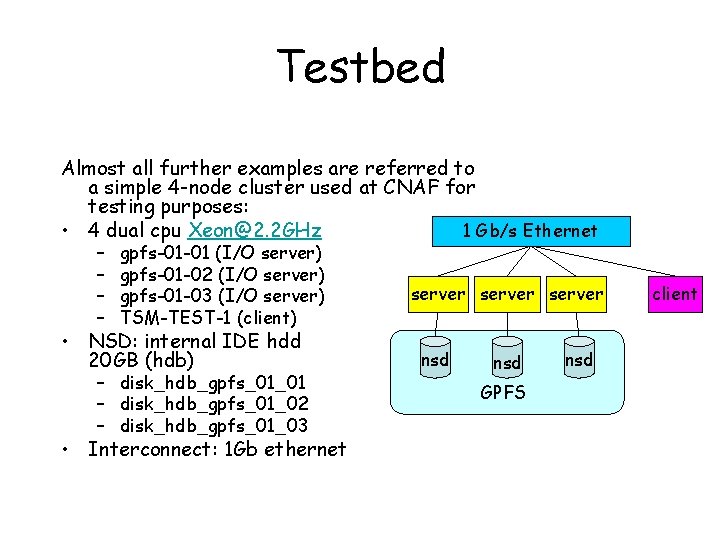

Testbed

Almost all further examples refer to a simple 4-node cluster used at CNAF for testing purposes:

- 4 dual-CPU Xeon @ 2.2 GHz nodes, 1 Gb/s Ethernet
  - gpfs-01-01 (I/O server)
  - gpfs-01-02 (I/O server)
  - gpfs-01-03 (I/O server)
  - TSM-TEST-1 (client)
- NSD: internal IDE HDD, 20 GB (hdb)
  - disk_hdb_gpfs_01_01
  - disk_hdb_gpfs_01_02
  - disk_hdb_gpfs_01_03
- Interconnect: 1 Gb Ethernet

Figure: NSD servers and the GPFS NSD client

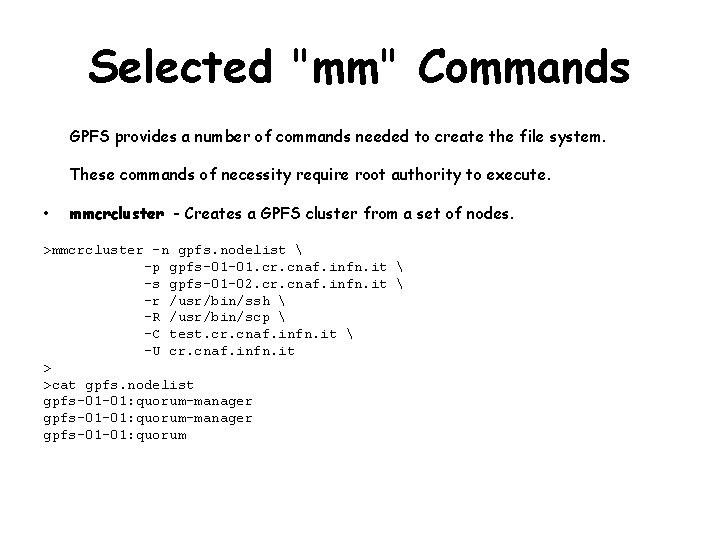

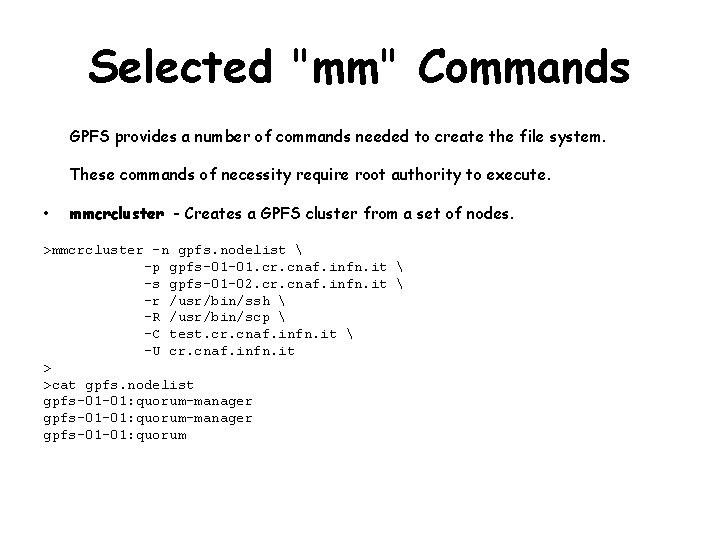

Selected "mm" Commands

GPFS provides a number of commands needed to create the file system. These commands necessarily require root authority to execute.

- mmcrcluster: creates a GPFS cluster from a set of nodes.

`> mmcrcluster -n gpfs.nodelist -p gpfs-01-01.cr.cnaf.infn.it -s gpfs-01-02.cr.cnaf.infn.it -r /usr/bin/ssh -R /usr/bin/scp -C test.cr.cnaf.infn.it -U cr.cnaf.infn.it`

`> cat gpfs.nodelist`

`gpfs-01-01:quorum-manager`

`gpfs-01-01:quorum`
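Each node-file line pairs a node name with its designations. A minimal sketch that parses `NodeName:Designations` entries and counts how many quorum nodes must be up for node quorum (a majority of the quorum nodes, without tiebreaker disks). The 4-line node file is hypothetical, and any fields after the designations are ignored:

```python
from typing import List, Tuple


def parse_node_descriptor(line: str) -> Tuple[str, List[str]]:
    """Split 'NodeName:Designations' (e.g. 'gpfs-01-01:quorum-manager') into name and roles."""
    fields = line.strip().split(":")
    roles = fields[1].split("-") if len(fields) > 1 and fields[1] else []
    return fields[0], roles


def quorum_needed(nodelist: List[str]) -> int:
    """Quorum nodes that must be up: a majority of the nodes designated 'quorum'."""
    quorum = [name for name, roles in map(parse_node_descriptor, nodelist)
              if "quorum" in roles]
    return len(quorum) // 2 + 1


# Hypothetical node file for the 4-node testbed.
nodelist = ["gpfs-01-01:quorum-manager", "gpfs-01-02:quorum",
            "gpfs-01-03:quorum", "TSM-TEST-1"]
print(parse_node_descriptor(nodelist[0]))  # ('gpfs-01-01', ['quorum', 'manager'])
print(quorum_needed(nodelist))             # 2
```

With three quorum nodes, the cluster keeps quorum as long as any two of them are active.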

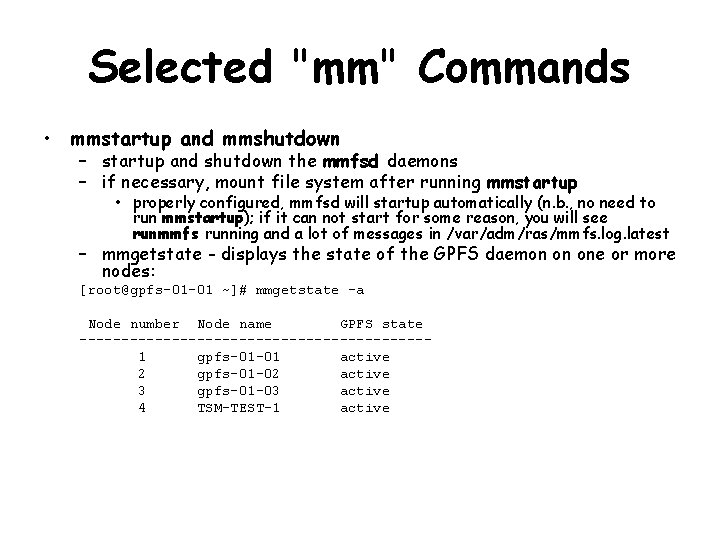

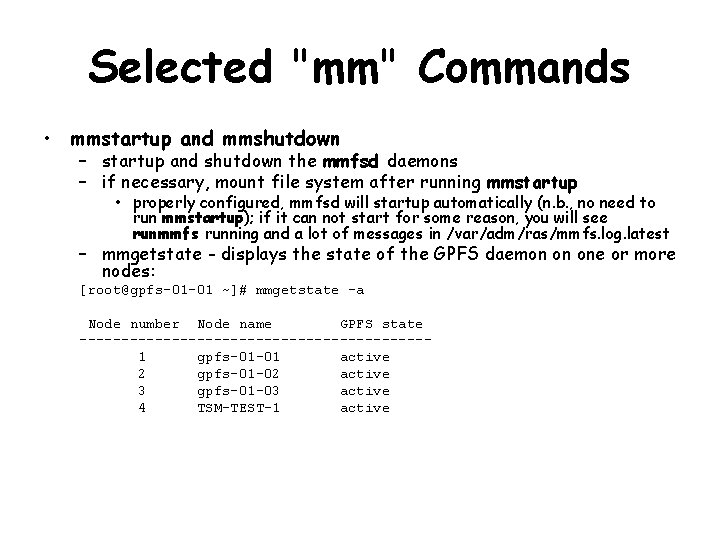

Selected "mm" Commands

- mmstartup and mmshutdown: start up and shut down the mmfsd daemons
  - if necessary, mount the file system after running mmstartup
  - properly configured, mmfsd will start up automatically (n.b., no need to run mmstartup); if it cannot start for some reason, you will see runmmfs running and a lot of messages in /var/adm/ras/mmfs.log.latest
- mmgetstate: displays the state of the GPFS daemon on one or more nodes:

`[root@gpfs-01-01 ~]# mmgetstate -a`

| Node number | Node name | GPFS state |
|---|---|---|
| 1 | gpfs-01-01 | active |
| 2 | gpfs-01-02 | active |
| 3 | gpfs-01-03 | active |
| 4 | TSM-TEST-1 | active |
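A monitoring script can flag nodes that are not active. A minimal sketch, assuming each data row of `mmgetstate -a` starts with the node number and ends with the state, as in the table above; the "down" node in the sample is hypothetical:

```python
from typing import Dict, List

SAMPLE = """\
 Node number  Node name        GPFS state
------------------------------------------
       1      gpfs-01-01       active
       2      gpfs-01-02       active
       3      gpfs-01-03       down
       4      TSM-TEST-1       active
"""


def parse_mmgetstate(text: str) -> Dict[str, str]:
    """Map node name -> GPFS state for every row that starts with a node number."""
    states: Dict[str, str] = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) >= 3 and parts[0].isdigit():
            states[parts[1]] = parts[-1]
    return states


def not_active(states: Dict[str, str]) -> List[str]:
    """Nodes whose daemon is in any state other than 'active'."""
    return sorted(node for node, state in states.items() if state != "active")


print(not_active(parse_mmgetstate(SAMPLE)))  # ['gpfs-01-03']
```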

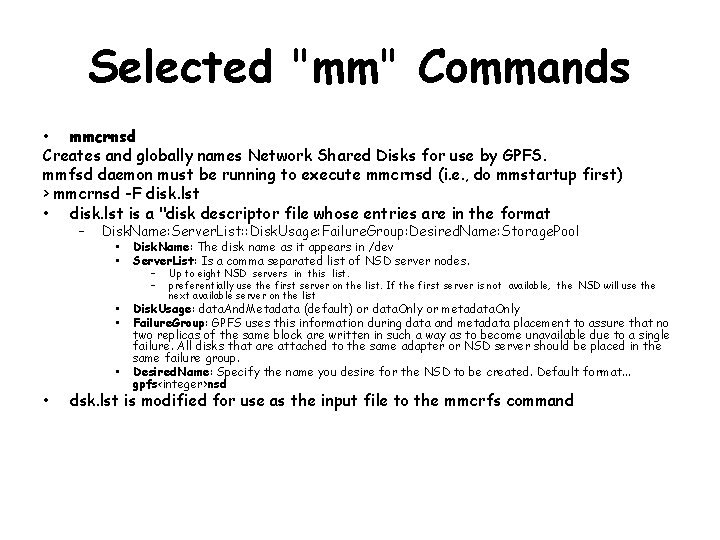

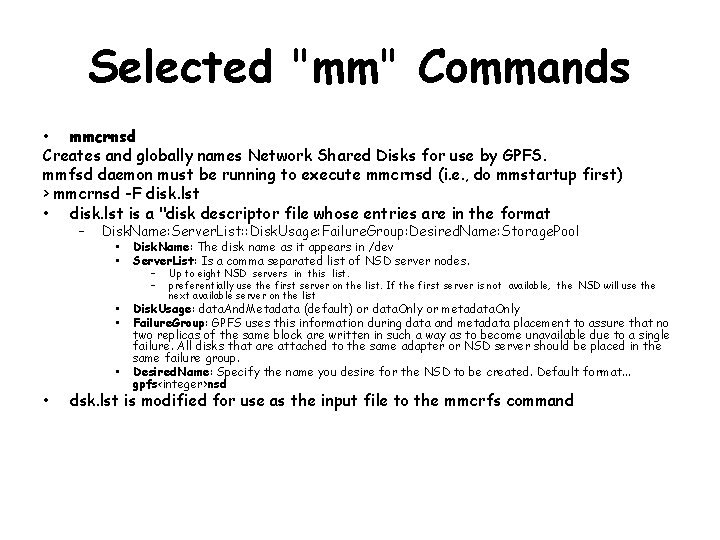

Selected "mm" Commands

- mmcrnsd: creates and globally names Network Shared Disks for use by GPFS. The mmfsd daemon must be running to execute mmcrnsd (i.e., do mmstartup first).

`> mmcrnsd -F disk.lst`

- disk.lst is a "disk descriptor file" whose entries are in the format `DiskName:ServerList::DiskUsage:FailureGroup:DesiredName:StoragePool`
  - DiskName: the disk name as it appears in /dev
  - ServerList: a comma-separated list of NSD server nodes
    - Up to eight NSD servers in this list.
    - The NSD preferentially uses the first server on the list. If the first server is not available, the NSD uses the next available server on the list.
  - DiskUsage: dataAndMetadata (default), dataOnly or metadataOnly
  - FailureGroup: GPFS uses this information during data and metadata placement to ensure that no two replicas of the same block are written in such a way as to become unavailable due to a single failure. All disks that are attached to the same adapter or NSD server should be placed in the same failure group.
  - DesiredName: the name you want for the NSD to be created. Default format: `gpfs<integer>nsd`
- disk.lst is modified by mmcrnsd for use as the input file to the mmcrfs command
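Descriptor lines are easy to get wrong by one colon. A minimal sketch of a builder that follows the field order given above and drops trailing empty fields (assumption: GPFS applies its defaults to omitted trailing fields); `nsd_descriptor` is a hypothetical helper, not part of GPFS:

```python
from typing import Optional, Sequence

USAGES = ("", "dataAndMetadata", "dataOnly", "metadataOnly")


def nsd_descriptor(disk: str, servers: Sequence[str] = (), usage: str = "",
                   failure_group: Optional[int] = None, desired_name: str = "",
                   pool: str = "") -> str:
    """Build DiskName:ServerList::DiskUsage:FailureGroup:DesiredName:StoragePool."""
    if not disk:
        raise ValueError("DiskName is required")
    if len(servers) > 8:
        raise ValueError("at most eight NSD servers per disk")
    if usage not in USAGES:
        raise ValueError(f"unknown disk usage {usage!r}")
    fields = [disk, ",".join(servers), "", usage,
              "" if failure_group is None else str(failure_group),
              desired_name, pool]
    while fields and fields[-1] == "":
        fields.pop()  # omit trailing empty fields
    return ":".join(fields)


print(nsd_descriptor("/dev/hdb", ["gpfs-01-01.cr.cnaf.infn.it"],
                     desired_name="disk_hdb_gpfs_01_01"))
# /dev/hdb:gpfs-01-01.cr.cnaf.infn.it::::disk_hdb_gpfs_01_01
print(nsd_descriptor("disk_hdb_gpfs_01_03", usage="dataOnly", pool="data"))
# disk_hdb_gpfs_01_03:::dataOnly:::data
```

The second call reproduces the descriptor passed to mmadddisk later in this deck, where an existing NSD is placed in the "data" storage pool.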

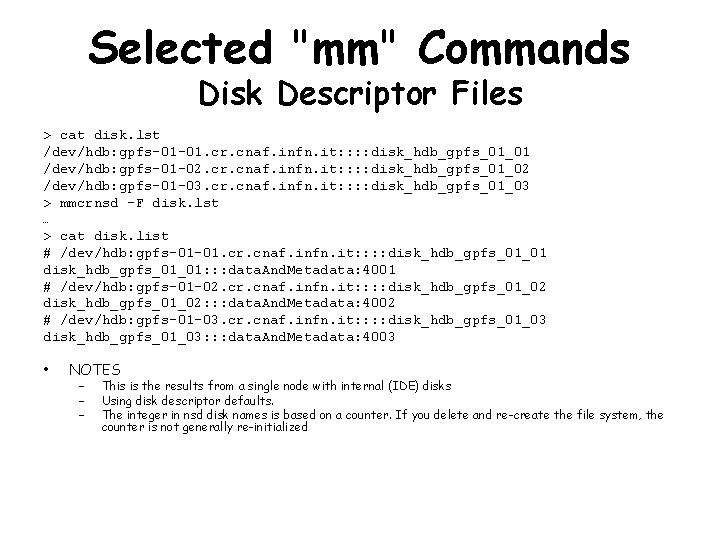

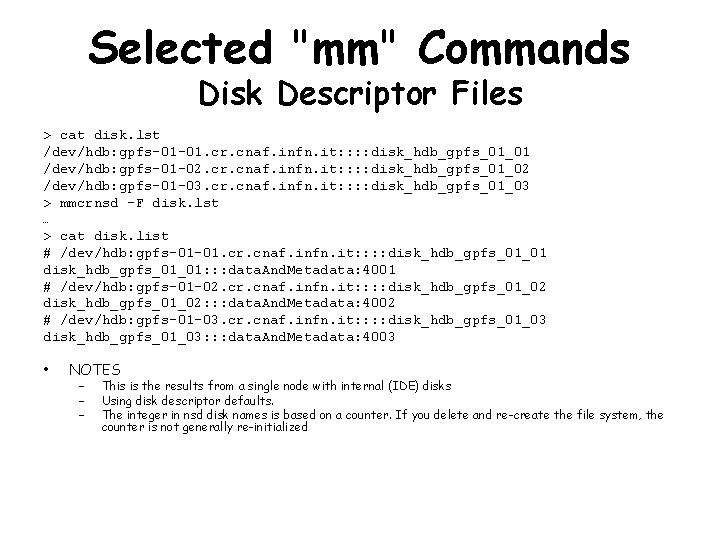

Selected "mm" Commands

Disk Descriptor Files

`> cat disk.lst`

`/dev/hdb:gpfs-01-01.cr.cnaf.infn.it::disk_hdb_gpfs_01_01`

`/dev/hdb:gpfs-01-02.cr.cnaf.infn.it::disk_hdb_gpfs_01_02`

`/dev/hdb:gpfs-01-03.cr.cnaf.infn.it::disk_hdb_gpfs_01_03`

`> mmcrnsd -F disk.lst`

`…`

`> cat disk.lst`

`# /dev/hdb:gpfs-01-01.cr.cnaf.infn.it::disk_hdb_gpfs_01_01:::dataAndMetadata:4001`

`# /dev/hdb:gpfs-01-02.cr.cnaf.infn.it::disk_hdb_gpfs_01_02:::dataAndMetadata:4002`

`# /dev/hdb:gpfs-01-03.cr.cnaf.infn.it::disk_hdb_gpfs_01_03:::dataAndMetadata:4003`

- NOTES
  - This is the result from a single node with internal (IDE) disks.
  - Using disk descriptor defaults.
  - The integer in NSD disk names is based on a counter. If you delete and re-create the file system, the counter is generally not re-initialized.

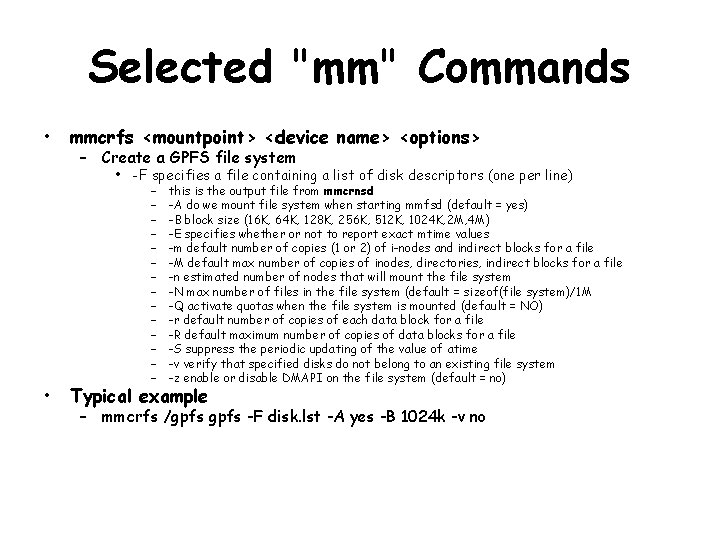

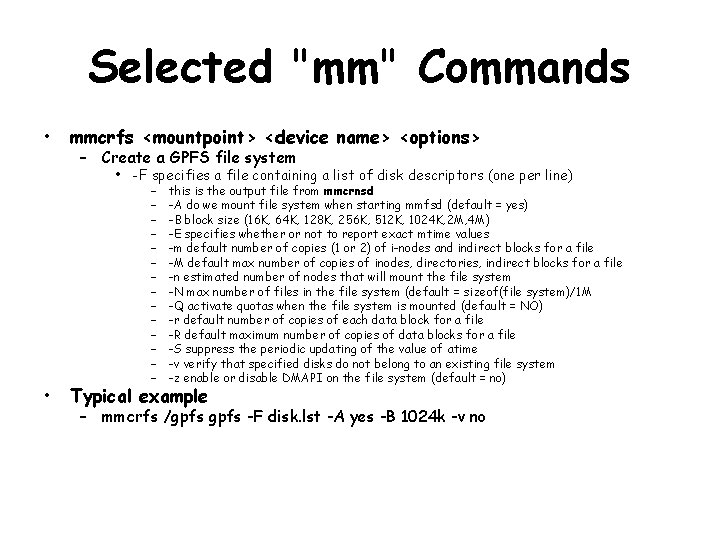

Selected "mm" Commands

- `mmcrfs <mountpoint> <device name> <options>`: create a GPFS file system
  - -F specifies a file containing a list of disk descriptors (one per line); this is the output file from mmcrnsd
  - -A whether to mount the file system when starting mmfsd (default = yes)
  - -B block size (16K, 64K, 128K, 256K, 512K, 1024K, 2M, 4M)
  - -E specifies whether or not to report exact mtime values
  - -m default number of copies (1 or 2) of inodes and indirect blocks for a file
  - -M maximum number of copies of inodes, directories and indirect blocks for a file
  - -n estimated number of nodes that will mount the file system
  - -N maximum number of files in the file system (default = sizeof(file system)/1M)
  - -Q activate quotas when the file system is mounted (default = no)
  - -r default number of copies of each data block for a file
  - -R maximum number of copies of data blocks for a file
  - -S suppress the periodic updating of the value of atime
  - -v verify that the specified disks do not belong to an existing file system
  - -z enable or disable DMAPI on the file system (default = no)
- Typical example: `mmcrfs /gpfs gpfs -F disk.lst -A yes -B 1024k -v no`

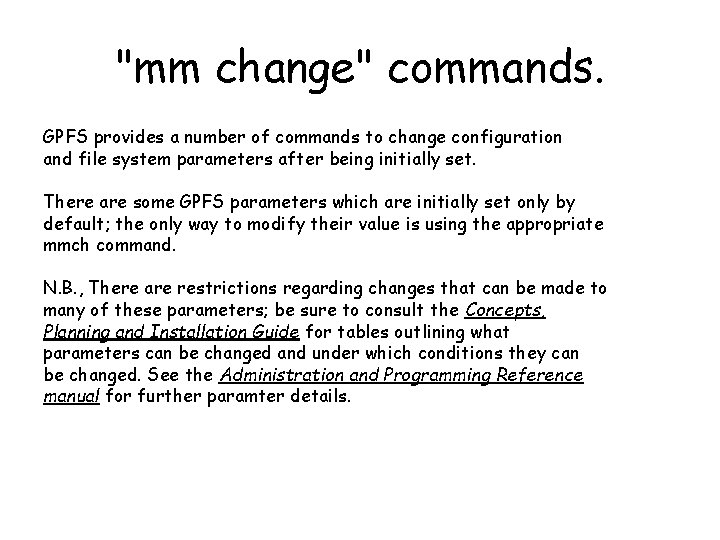

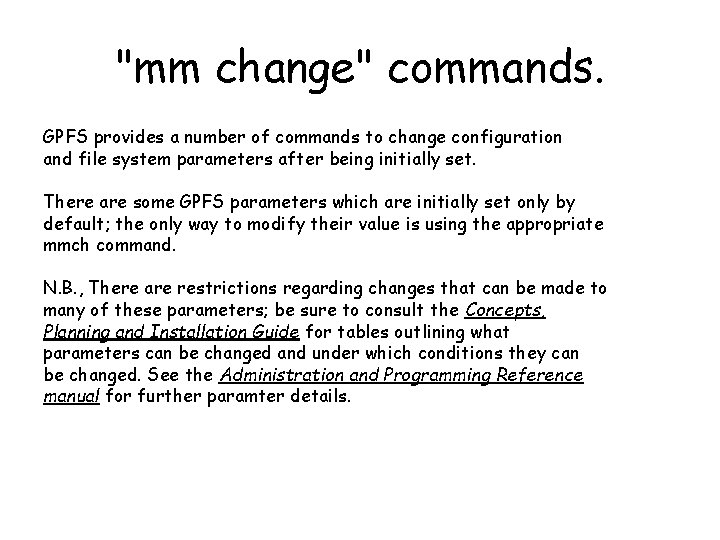

"mm change" commands. GPFS provides a number of commands to change configuration and file system parameters after being initially set. There are some GPFS parameters which are initially set only by default; the only way to modify their value is using the appropriate mmch command. N. B. , There are restrictions regarding changes that can be made to many of these parameters; be sure to consult the Concepts, Planning and Installation Guide for tables outlining what parameters can be changed and under which conditions they can be changed. See the Administration and Programming Reference manual for further paramter details.

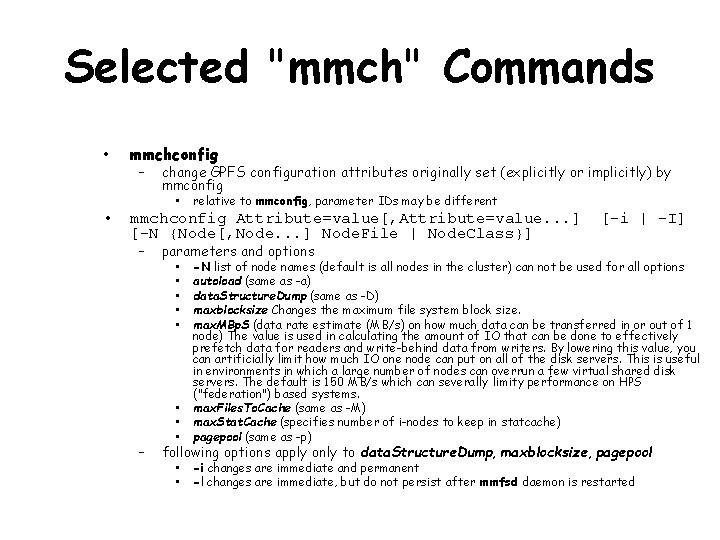

Selected "mmch" Commands

- mmchconfig: change GPFS configuration attributes originally set (explicitly or implicitly) by mmconfig
  - relative to mmconfig, parameter IDs may be different
  - `mmchconfig Attribute=value[,Attribute=value...] [-N {Node[,Node...] | NodeFile | NodeClass}] [-i | -I]`
- Parameters and options
  - -N: list of node names (default is all nodes in the cluster); cannot be used for all options
  - autoload (same as -a)
  - dataStructureDump (same as -D)
  - maxblocksize: changes the maximum file system block size
  - maxMBpS: data rate estimate (MB/s) of how much data can be transferred in or out of one node. The value is used in calculating the amount of I/O that can be done to effectively prefetch data for readers and write-behind data from writers. By lowering this value, you can artificially limit how much I/O one node can put on all of the disk servers. This is useful in environments in which a large number of nodes can overrun a few virtual shared disk servers. The default is 150 MB/s, which can severely limit performance on HPS ("Federation") based systems.
  - maxFilesToCache (same as -M)
  - maxStatCache: specifies the number of inodes to keep in the stat cache
  - pagepool (same as -p)
  - -i: changes are immediate and permanent
  - -I: changes are immediate, but do not persist after the mmfsd daemon is restarted
  - -i and -I apply only to the following attributes: dataStructureDump, maxblocksize, pagepool
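The mmchconfig syntax above maps directly onto an argument list. A minimal sketch of a hypothetical `mmchconfig_args` helper; the `pagepool=512M` value and node names in the example are illustrative, and whether `-i`/`-I` is accepted for a given attribute still has to be checked against the manual:

```python
from typing import Dict, List, Optional, Sequence


def mmchconfig_args(attrs: Dict[str, str], nodes: Optional[Sequence[str]] = None,
                    mode: Optional[str] = None) -> List[str]:
    """Build: mmchconfig Attribute=value[,...] [-N Node[,Node...]] [-i | -I].

    mode: None (no flag), "i" (immediate and permanent) or
    "I" (immediate, lost when mmfsd restarts).
    """
    if not attrs:
        raise ValueError("at least one attribute is required")
    if mode not in (None, "i", "I"):
        raise ValueError("mode must be None, 'i' or 'I'")
    args = ["mmchconfig", ",".join(f"{key}={value}" for key, value in attrs.items())]
    if nodes:
        args += ["-N", ",".join(nodes)]
    if mode is not None:
        args.append("-" + mode)
    return args


print(mmchconfig_args({"pagepool": "512M"}, ["gpfs-01-01", "gpfs-01-02"], mode="i"))
# ['mmchconfig', 'pagepool=512M', '-N', 'gpfs-01-01,gpfs-01-02', '-i']
```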

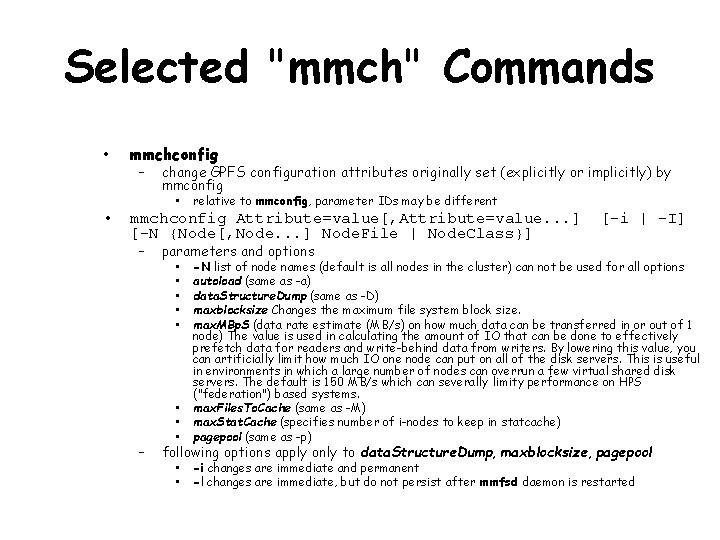

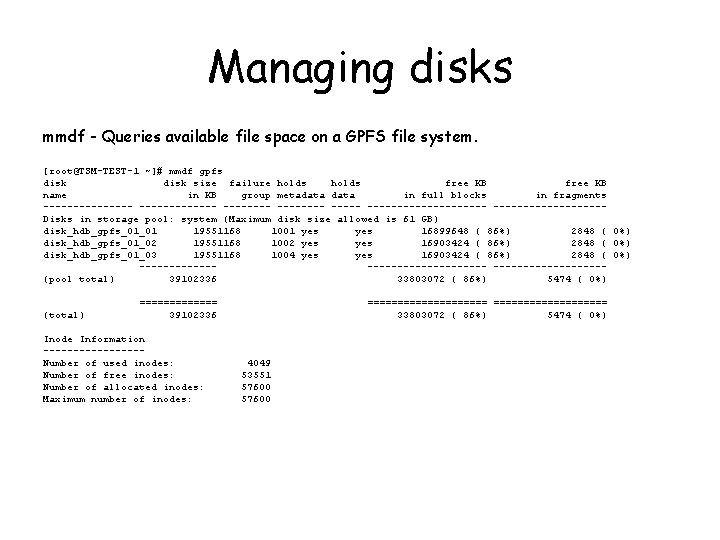

Managing disks

mmdf: queries available file space on a GPFS file system.

`[root@TSM-TEST-1 ~]# mmdf gpfs`

Disks in storage pool: system (Maximum disk size allowed is 61 GB)

| disk name | disk size in KB | failure group | holds metadata | holds data | free KB in full blocks | free KB in fragments |
|---|---|---|---|---|---|---|
| disk_hdb_gpfs_01_01 | 19551168 | 1001 | yes | yes | 16899648 (86%) | 2848 (0%) |
| disk_hdb_gpfs_01_02 | 19551168 | 1002 | yes | yes | 16903424 (86%) | 2848 (0%) |
| disk_hdb_gpfs_01_03 | 19551168 | 1004 | yes | yes | 16903424 (86%) | 2848 (0%) |
| (pool total) | 39102336 | | | | 33803072 (86%) | 5474 (0%) |
| (total) | 39102336 | | | | 33803072 (86%) | 5474 (0%) |

Inode Information

- Number of used inodes: 4049
- Number of free inodes: 53551
- Number of allocated inodes: 57600
- Maximum number of inodes: 57600
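Free-space checks are a common reason to script mmdf. A minimal sketch, assuming per-disk rows laid out as in the table above (name, size, failure group, two yes/no columns, then free full-block and fragment values with percentages); summary rows such as "(pool total)" are skipped:

```python
import re
from typing import List, NamedTuple


class DiskFree(NamedTuple):
    name: str
    size_kb: int
    failure_group: int
    free_full_kb: int
    free_frag_kb: int


# name, size, failure group, holds metadata, holds data, free full (pct), free frag (pct)
ROW = re.compile(r"(\S+)\s+(\d+)\s+(\d+)\s+(?:yes|no)\s+(?:yes|no)\s+"
                 r"(\d+)\s+\(\s*\d+%\)\s+(\d+)\s+\(\s*\d+%\)")


def parse_mmdf_disks(text: str) -> List[DiskFree]:
    """Return one DiskFree per disk row; summary and separator lines do not match."""
    rows = []
    for line in text.splitlines():
        m = ROW.match(line.strip())
        if m:
            name, size, fg, full, frag = m.groups()
            rows.append(DiskFree(name, int(size), int(fg), int(full), int(frag)))
    return rows


def percent_free(disk: DiskFree) -> int:
    return 100 * disk.free_full_kb // disk.size_kb


SAMPLE = """\
disk_hdb_gpfs_01_01  19551168  1001 yes  yes  16899648 ( 86%)  2848 ( 0%)
disk_hdb_gpfs_01_02  19551168  1002 yes  yes  16903424 ( 86%)  2848 ( 0%)
                     --------                --------------   ------------
(pool total)         39102336                33803072 ( 86%)  5474 ( 0%)
"""
disks = parse_mmdf_disks(SAMPLE)
print([percent_free(d) for d in disks])    # [86, 86]
print(sum(d.free_full_kb for d in disks))  # 33803072
```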

![Deleting a disk: mmdeldisk gpfs "disk_hdb_gpfs_01_03"](https://slidetodoc.com/presentation_image/019df3afe2dff00e46b1e0b36471ddd7/image-31.jpg)

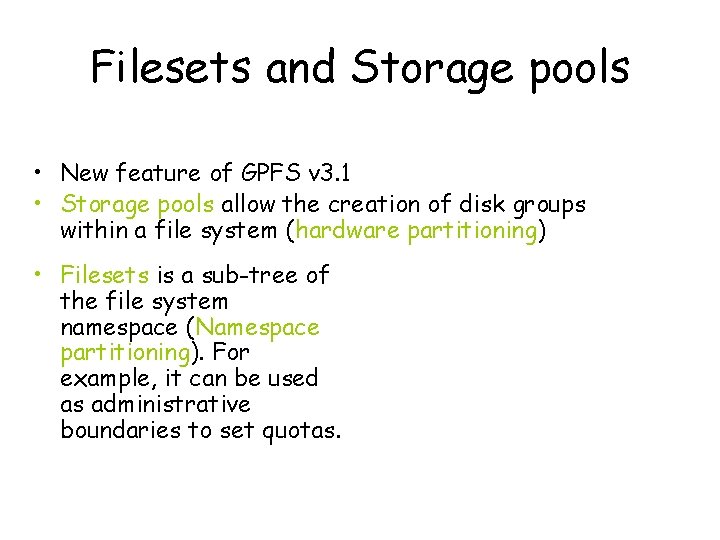

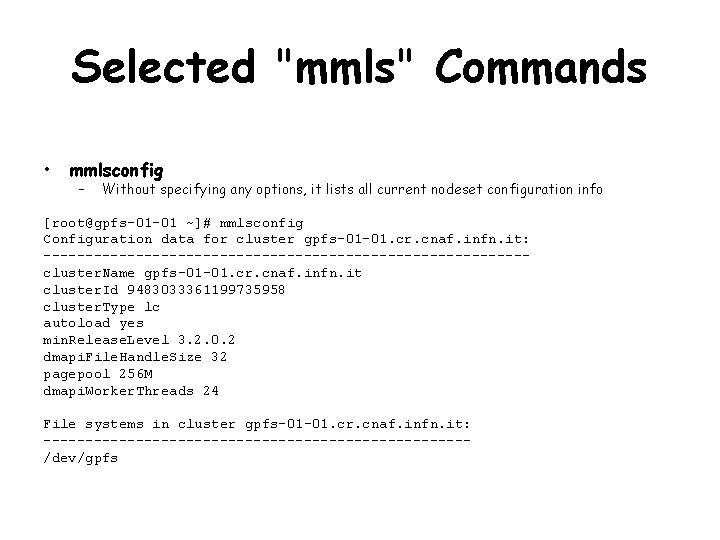

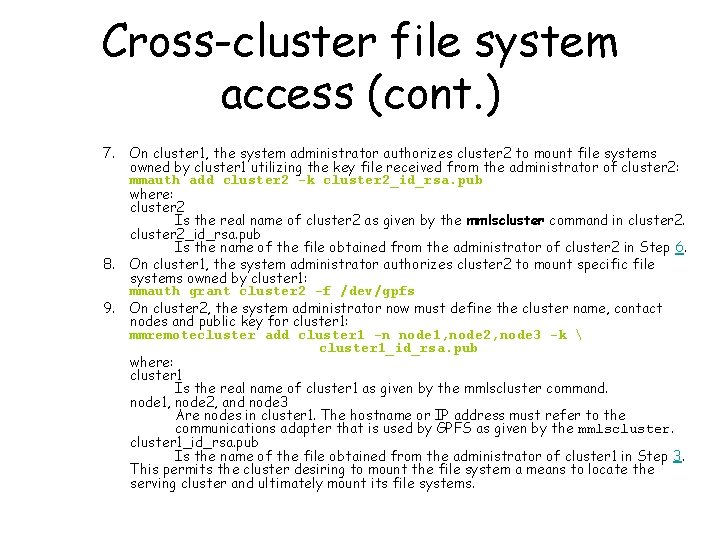

Deleting a disk

`[root@gpfs-01-01 ~]# mmdeldisk gpfs "disk_hdb_gpfs_01_03"`

- Deleting disks...
- Scanning system storage pool
- Scanning file system metadata, phase 1... Scan completed successfully.
- Scanning file system metadata, phase 2... Scan completed successfully.
- Scanning file system metadata, phase 3... Scan completed successfully.
- Scanning file system metadata, phase 4... Scan completed successfully.
- Scanning user file metadata... 100 % complete on Wed Jun 4 17:22:35 2008
- Scan completed successfully.
- Checking Allocation Map for storage pool 'system'
- tsdeldisk completed.
- mmdeldisk: Propagating the cluster configuration data to all affected nodes. This is an asynchronous process.

`[root@gpfs-01-01 ~]# mmdf gpfs`

Disks in storage pool: system (Maximum disk size allowed is 61 GB)

| disk name | disk size in KB | failure group | holds metadata | holds data | free KB in full blocks | free KB in fragments |
|---|---|---|---|---|---|---|
| disk_hdb_gpfs_01_01 | 19551168 | 1001 | yes | yes | 15919616 (81%) | 3104 (0%) |
| disk_hdb_gpfs_01_02 | 19551168 | 1002 | yes | yes | 15919936 (81%) | 2994 (0%) |
| (pool total) | 39102336 | | | | 31839552 (81%) | 6098 (0%) |
| (total) | 39102336 | | | | 31839552 (81%) | 6098 (0%) |

Inode Information

- Number of used inodes: 4039
- Number of free inodes: 53561
- Number of allocated inodes: 57600
- Maximum number of inodes: 57600
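mmdeldisk has to move the blocks of the removed disk elsewhere, so a rough pre-check is whether the remaining disks have enough free space. A minimal sketch using the "free KB in full blocks" figures from the mmdf output taken before the deletion; it deliberately ignores replication, failure groups, fragments and storage pools, so treat it only as a sanity check:

```python
from typing import Dict, Tuple


def can_delete(disks: Dict[str, Tuple[int, int]], victim: str) -> bool:
    """disks maps name -> (size_kb, free_kb).

    Rough check: can the free space on the remaining disks absorb the
    blocks currently used on `victim`?
    """
    size_kb, free_kb = disks[victim]
    used_kb = size_kb - free_kb
    spare_kb = sum(free for name, (_, free) in disks.items() if name != victim)
    return used_kb <= spare_kb


before = {  # (disk size in KB, free KB in full blocks) before mmdeldisk
    "disk_hdb_gpfs_01_01": (19551168, 16899648),
    "disk_hdb_gpfs_01_02": (19551168, 16903424),
    "disk_hdb_gpfs_01_03": (19551168, 16903424),
}
print(can_delete(before, "disk_hdb_gpfs_01_03"))  # True
```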

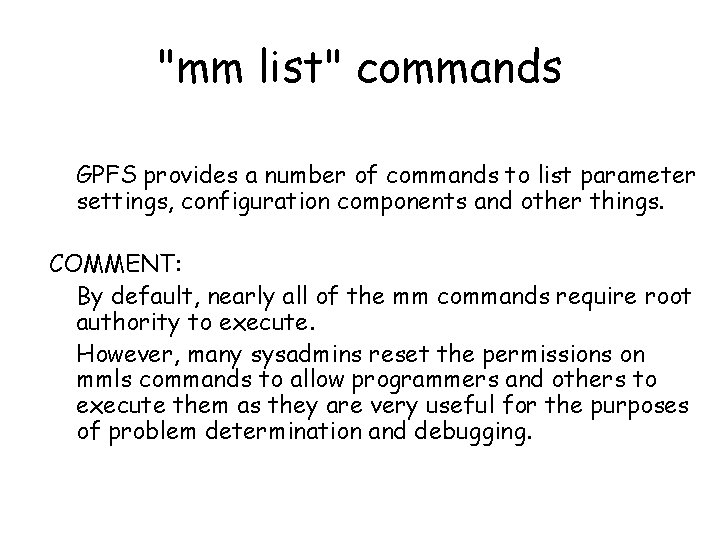

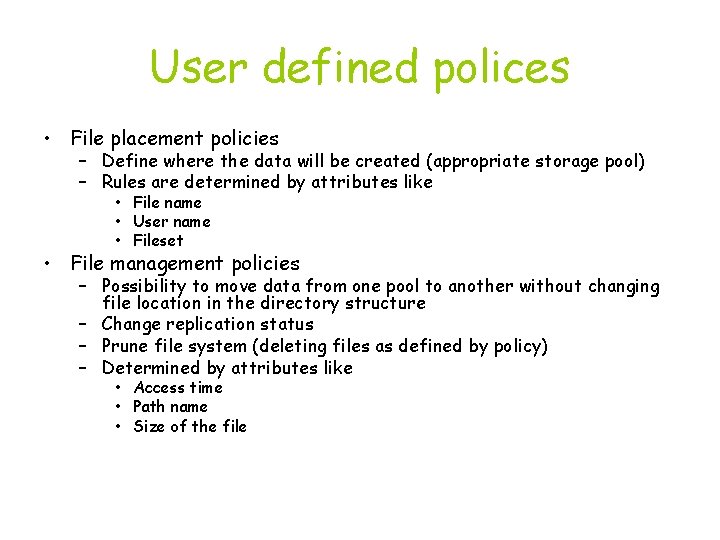

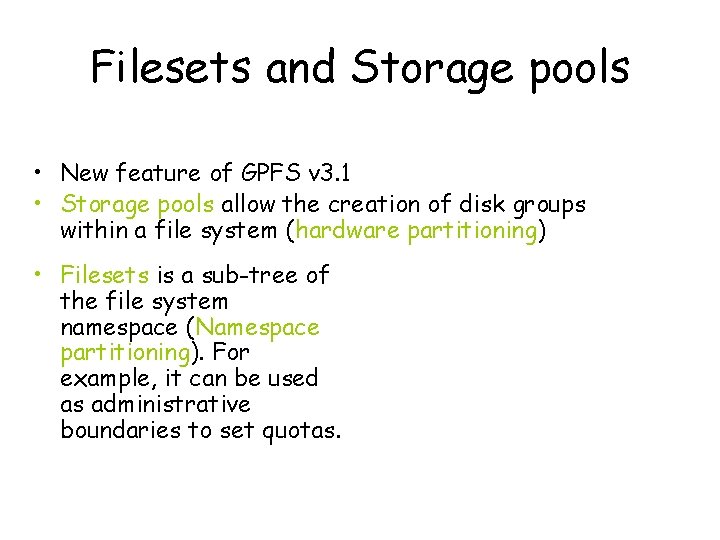

Filesets and Storage pools

- New feature of GPFS v3.1
- Storage pools allow the creation of disk groups within a file system (hardware partitioning)
- A fileset is a sub-tree of the file system namespace (namespace partitioning). For example, filesets can be used as administrative boundaries to set quotas.

![Adding a disk (and a storage pool): mmadddisk gpfs "disk_hdb_gpfs_01_03:::dataOnly:::data"](https://slidetodoc.com/presentation_image/019df3afe2dff00e46b1e0b36471ddd7/image-33.jpg)

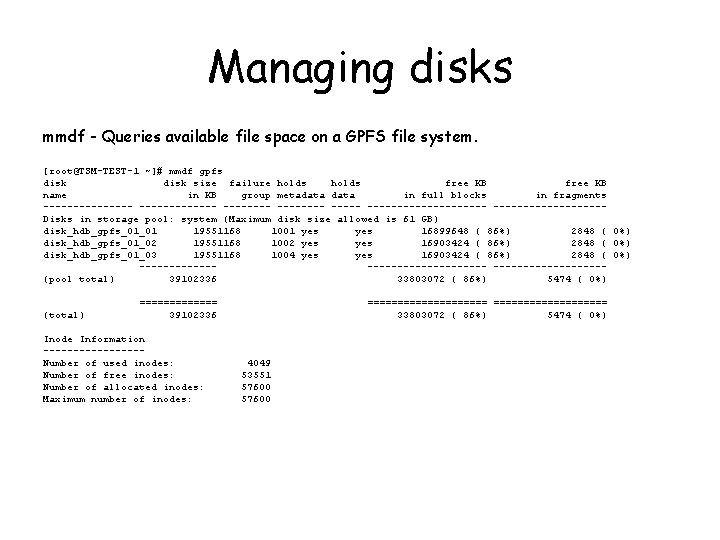

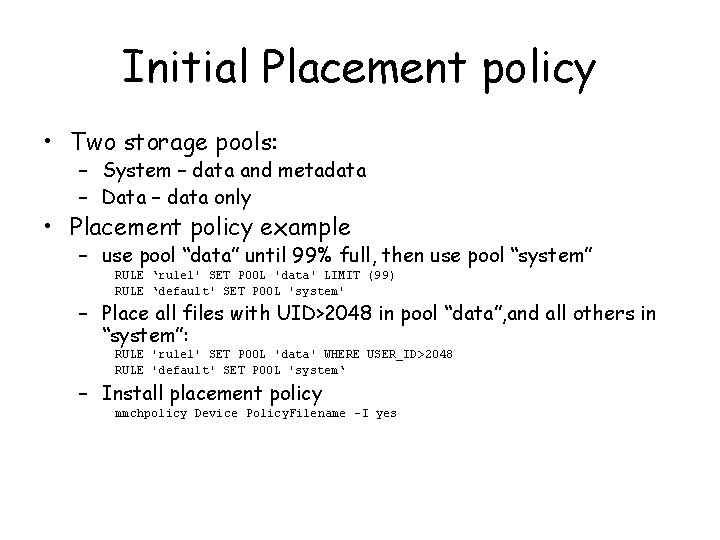

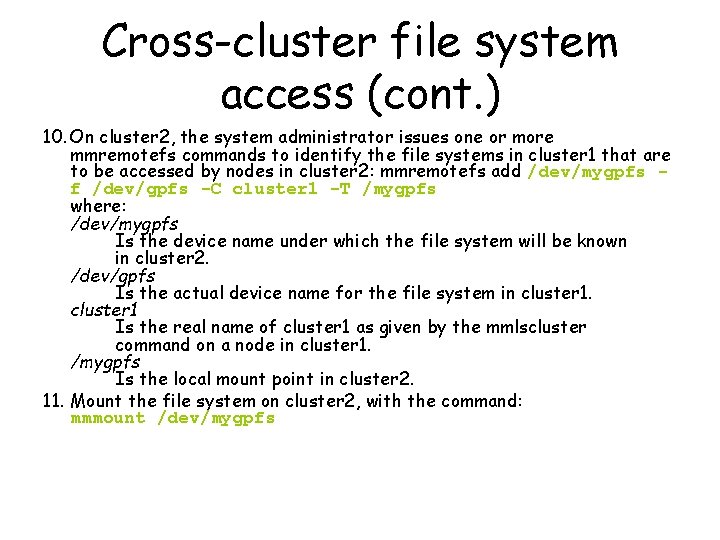

Adding a disk (and a storage pool)

`[root@gpfs-01-03 ~]# mmadddisk gpfs "disk_hdb_gpfs_01_03:::dataOnly:::data"`

- The following disks of gpfs will be formatted on node gpfs-01-01.cr.cnaf.infn.it: disk_hdb_gpfs_01_03: size 19551168 KB
- Extending Allocation Map
- Creating Allocation Map for storage pool 'data'
- Flushing Allocation Map for storage pool 'data'
- Disks up to size 52 GB can be added to storage pool 'data'.
- Checking Allocation Map for storage pool 'data'
- Completed adding disks to file system gpfs.
- mmadddisk: Propagating the cluster configuration data to all affected nodes. This is an asynchronous process.

`[root@gpfs-01-03 ~]# mmdf gpfs`

| disk name | disk size in KB | failure group | holds metadata | holds data | free KB in full blocks | free KB in fragments |
|---|---|---|---|---|---|---|
| Disks in storage pool: system (Maximum disk size allowed is 61 GB) | | | | | | |
| disk_hdb_gpfs_01_01 | 19551168 | 1001 | yes | yes | 12713728 (65%) | 4728 (0%) |
| disk_hdb_gpfs_01_02 | 19551168 | 1002 | yes | yes | 12713920 (65%) | 4562 (0%) |
| (pool total) | 39102336 | | | | 25427648 (65%) | 9290 (0%) |
| Disks in storage pool: data (Maximum disk size allowed is 52 GB) | | | | | | |
| disk_hdb_gpfs_01_03 | 19551168 | 4003 | no | yes | 19549056 (100%) | 62 (0%) |
| (pool total) | 19551168 | | | | 19549056 (100%) | 62 (0%) |
| (data) | 58653504 | | | | 44976704 (77%) | 9352 (0%) |
| (metadata) | 39102336 | | | | 25427648 (65%) | 9290 (0%) |
| (total) | 58653504 | | | | 44976704 (77%) | 9352 (0%) |

Inode Information

- Number of used inodes: 4040
- Number of free inodes: 53560
- Number of allocated inodes: 57600
- Maximum number of inodes: 57600

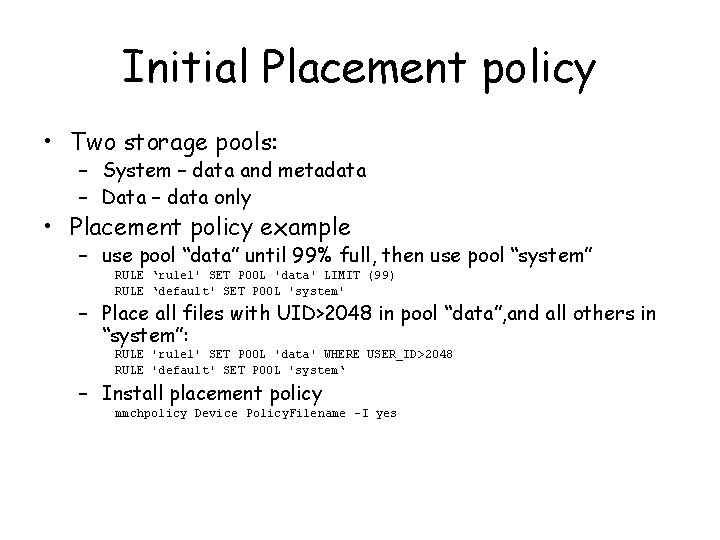

Initial Placement policy

- Two storage pools:
  - system: data and metadata
  - data: data only
- Placement policy examples
  - Use pool "data" until it is 99% full, then use pool "system":
    - `RULE 'rule1' SET POOL 'data' LIMIT(99)`
    - `RULE 'default' SET POOL 'system'`
  - Place all files with UID > 2048 in pool "data", and all others in "system":
    - `RULE 'rule1' SET POOL 'data' WHERE USER_ID > 2048`
    - `RULE 'default' SET POOL 'system'`
- Install the placement policy: `mmchpolicy Device PolicyFilename -I yes`
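The two examples rely on rules being evaluated in order, with the first matching rule deciding the pool. The following is a toy Python model of that behaviour, not the GPFS policy engine; the assumption that a `LIMIT(n)` rule is skipped once the pool has reached n% occupancy, and the occupancy figures, are illustrative:

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional


@dataclass
class Rule:
    name: str
    pool: str
    where: Callable[[dict], bool] = lambda attrs: True  # no WHERE clause
    limit: Optional[float] = None  # LIMIT(n): skip the rule once the pool is n% full


def place(attrs: dict, rules: List[Rule], occupancy: Dict[str, float]) -> str:
    """Return the pool of the first applicable rule; rules are evaluated in order."""
    for rule in rules:
        if not rule.where(attrs):
            continue
        if rule.limit is not None and occupancy[rule.pool] >= rule.limit:
            continue
        return rule.pool
    raise LookupError("no placement rule matched")


fill_data_first = [Rule("rule1", "data", limit=99), Rule("default", "system")]
by_uid = [Rule("rule1", "data", where=lambda a: a["uid"] > 2048), Rule("default", "system")]

print(place({}, fill_data_first, {"data": 42.0, "system": 10.0}))  # data
print(place({}, fill_data_first, {"data": 99.5, "system": 10.0}))  # system
print(place({"uid": 500}, by_uid, {}))                              # system
```

The trailing `'default'` rule matters: without it, a file matching no rule would have nowhere to go.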

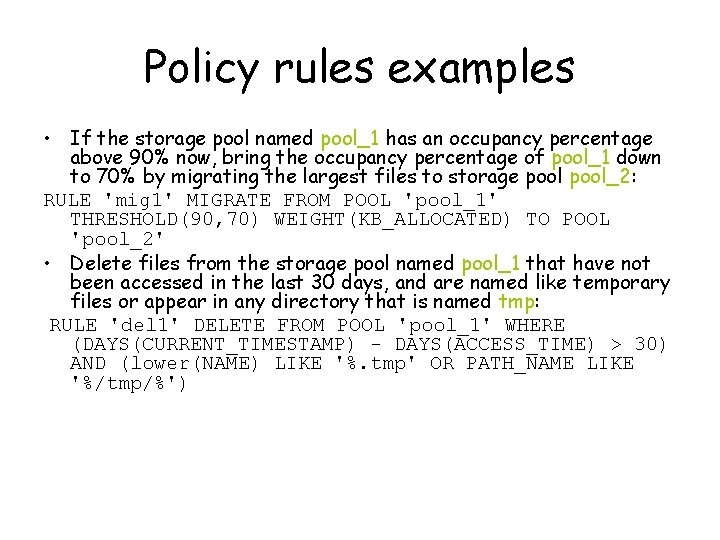

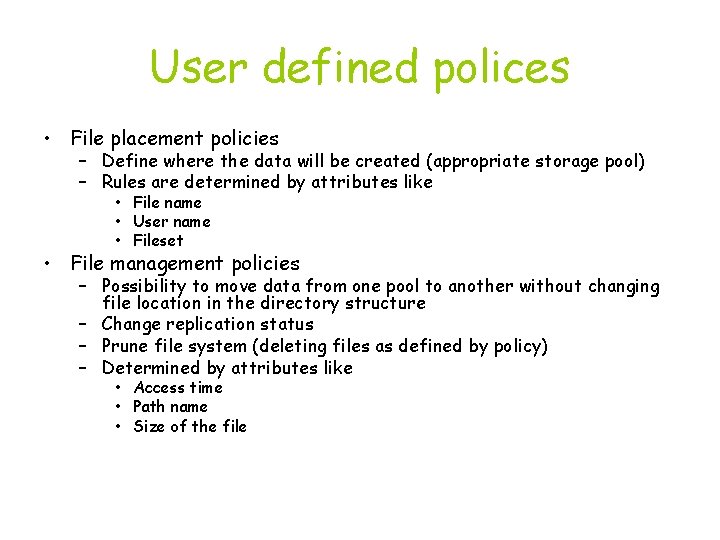

User defined policies

- File placement policies
  - Define where the data will be created (appropriate storage pool)
  - Rules are determined by attributes like
    - File name
    - User name
    - Fileset
- File management policies
  - Possibility to move data from one pool to another without changing the file location in the directory structure
  - Change replication status
  - Prune the file system (deleting files as defined by policy)
  - Determined by attributes like
    - Access time
    - Path name
    - Size of the file

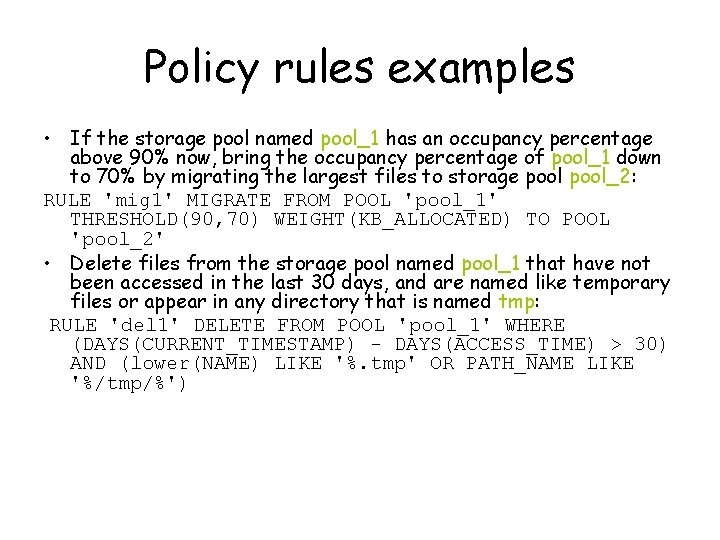

Policy rules examples

- If the storage pool named pool_1 has an occupancy percentage above 90% now, bring the occupancy percentage of pool_1 down to 70% by migrating the largest files to storage pool pool_2:
  - `RULE 'mig1' MIGRATE FROM POOL 'pool_1' THRESHOLD(90,70) WEIGHT(KB_ALLOCATED) TO POOL 'pool_2'`
- Delete files from the storage pool named pool_1 that have not been accessed in the last 30 days and are named like temporary files or appear in any directory that is named tmp:
  - `RULE 'del1' DELETE FROM POOL 'pool_1' WHERE (DAYS(CURRENT_TIMESTAMP) - DAYS(ACCESS_TIME) > 30) AND (lower(NAME) LIKE '%.tmp' OR PATH_NAME LIKE '%/tmp/%')`
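To see what the two rules select, here is a Python model of their semantics; it is an illustration, not how GPFS evaluates policies internally. `pick_migrations` models THRESHOLD(90,70) with WEIGHT(KB_ALLOCATED) by taking the largest files until occupancy drops to the low mark, and `matches_del1` transcribes the WHERE clause of `del1`. The file names, sizes and dates are hypothetical:

```python
from datetime import datetime
from typing import List, Tuple


def pick_migrations(files: List[Tuple[str, int]], pool_kb: int, used_kb: int,
                    high: float = 90, low: float = 70) -> List[str]:
    """Above `high`% occupancy, choose the largest files until occupancy is `low`% or below."""
    if 100 * used_kb / pool_kb <= high:
        return []
    chosen = []
    for name, kb in sorted(files, key=lambda f: f[1], reverse=True):
        if 100 * used_kb / pool_kb <= low:
            break
        chosen.append(name)
        used_kb -= kb
    return chosen


def matches_del1(name: str, path: str, access_time: datetime, now: datetime) -> bool:
    """WHERE (DAYS(now) - DAYS(atime) > 30) AND (name LIKE '%.tmp' OR path LIKE '%/tmp/%')."""
    stale = (now.date() - access_time.date()).days > 30
    temporary = name.lower().endswith(".tmp") or "/tmp/" in path
    return stale and temporary


files = [("a.dat", 30), ("b.dat", 10), ("c.dat", 5)]  # hypothetical sizes in KB
print(pick_migrations(files, pool_kb=100, used_kb=95))  # ['a.dat']
print(matches_del1("job.TMP", "/gpfs/u1/job.TMP",
                   datetime(2008, 5, 1), datetime(2008, 6, 4)))  # True
```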

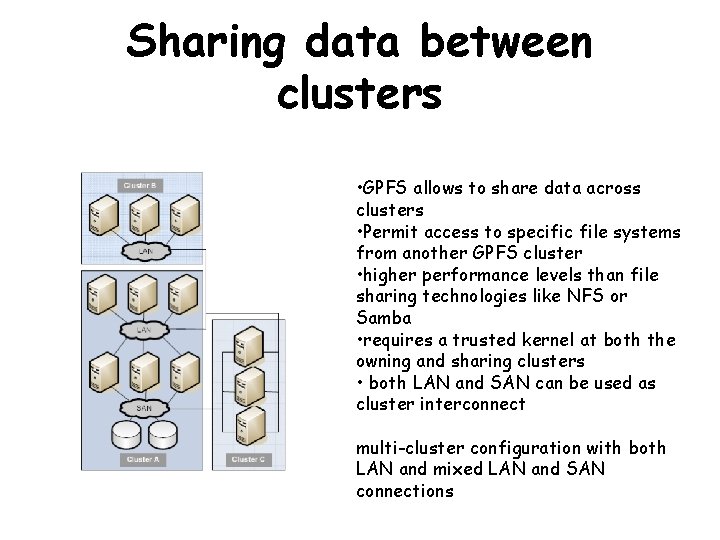

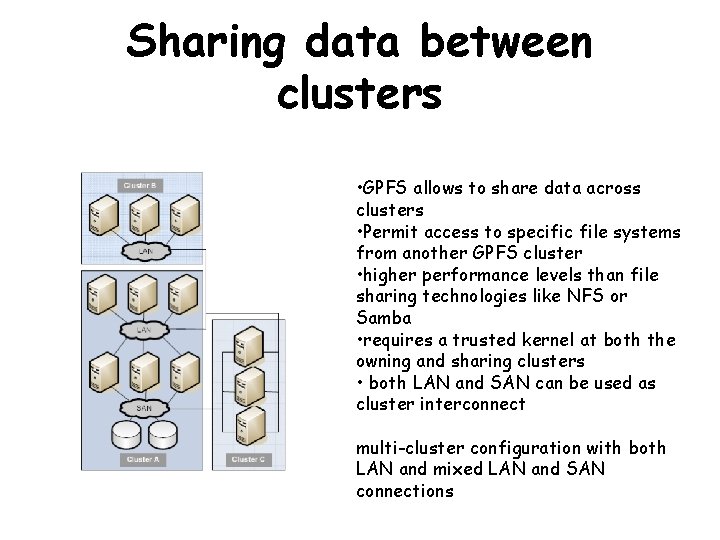

Sharing data between clusters

- GPFS allows sharing data across clusters
- Permits access to specific file systems from another GPFS cluster
- Higher performance levels than file sharing technologies like NFS or Samba
- Requires a trusted kernel at both the owning and sharing clusters
- Both LAN and SAN can be used as the cluster interconnect

Figure: multi-cluster configuration with both LAN and mixed LAN and SAN connections

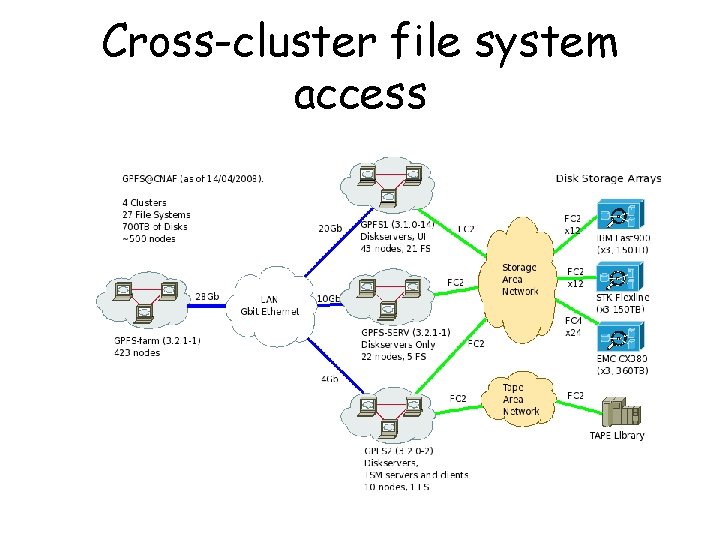

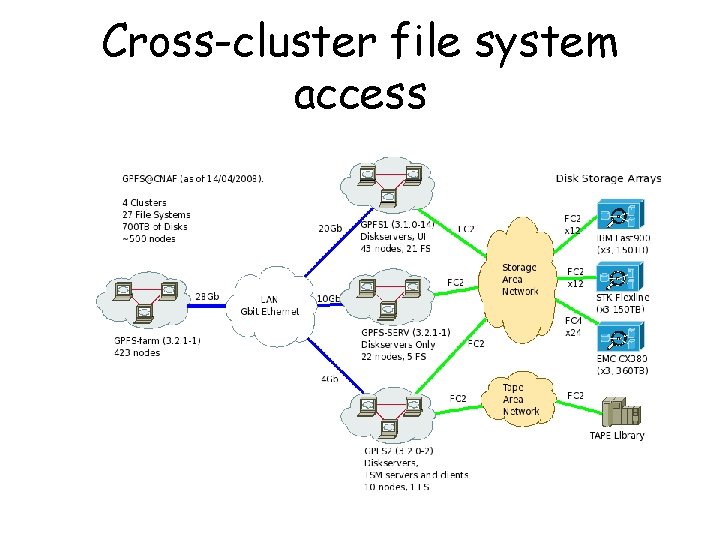

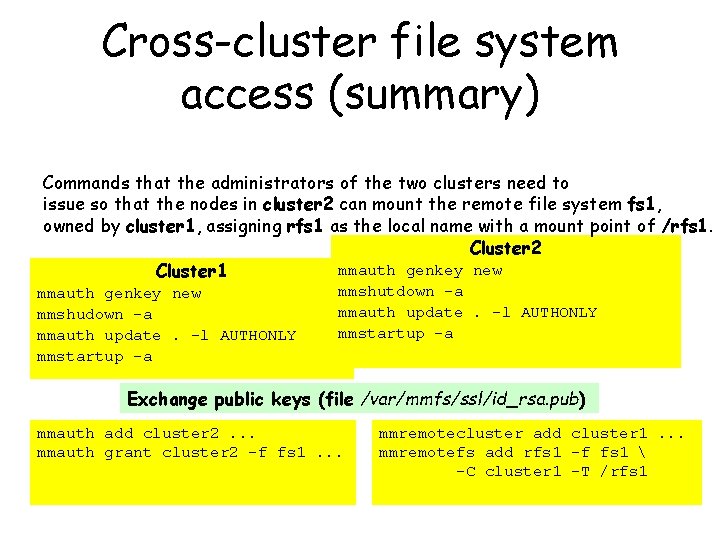

Cross-cluster file system access

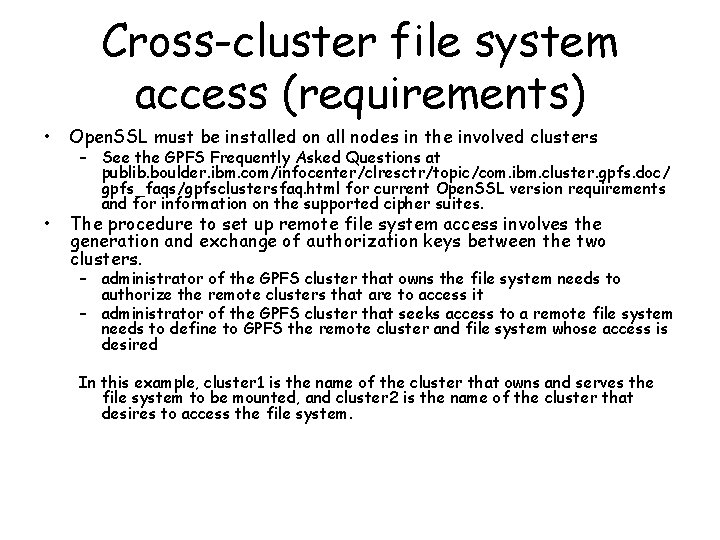

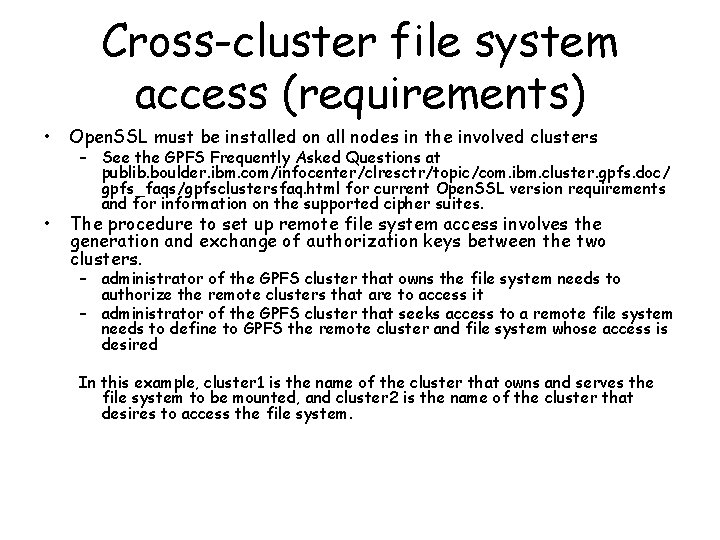

Cross-cluster file system access (requirements)

- OpenSSL must be installed on all nodes in the involved clusters
- The procedure to set up remote file system access involves the generation and exchange of authorization keys between the two clusters.
  - See the GPFS Frequently Asked Questions at publib.boulder.ibm.com/infocenter/clresctr/topic/com.ibm.cluster.gpfs.doc/gpfs_faqs/gpfsclustersfaq.html for current OpenSSL version requirements and for information on the supported cipher suites.
  - The administrator of the GPFS cluster that owns the file system needs to authorize the remote clusters that are to access it.
  - The administrator of the GPFS cluster that seeks access to a remote file system needs to define to GPFS the remote cluster and file system whose access is desired.

In this example, cluster1 is the name of the cluster that owns and serves the file system to be mounted, and cluster2 is the name of the cluster that desires to access the file system.

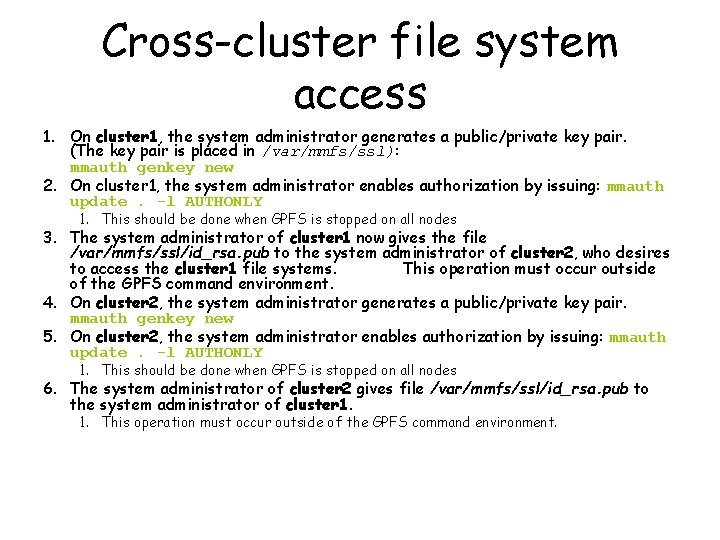

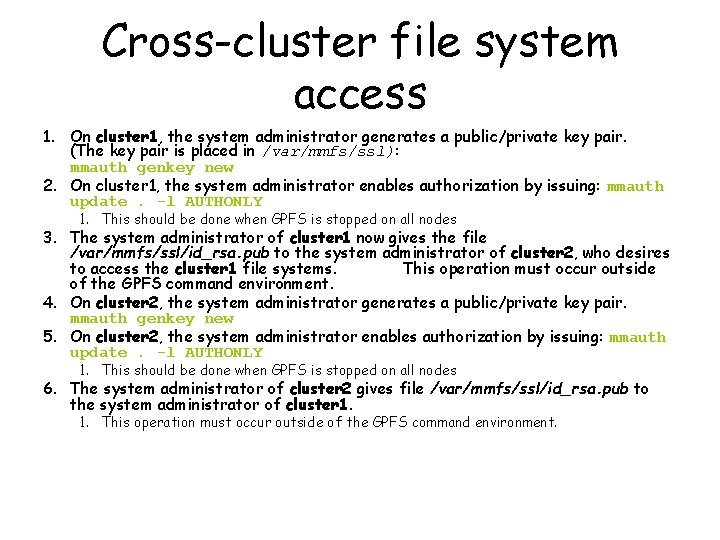

Cross-cluster file system access

1. On cluster1, the system administrator generates a public/private key pair (the key pair is placed in /var/mmfs/ssl): `mmauth genkey new`
2. On cluster1, the system administrator enables authorization by issuing `mmauth update . -l AUTHONLY`. This should be done when GPFS is stopped on all nodes.
3. The system administrator of cluster1 now gives the file /var/mmfs/ssl/id_rsa.pub to the system administrator of cluster2, who desires to access the cluster1 file systems. This operation must occur outside of the GPFS command environment.
4. On cluster2, the system administrator generates a public/private key pair: `mmauth genkey new`
5. On cluster2, the system administrator enables authorization by issuing `mmauth update . -l AUTHONLY`. This should be done when GPFS is stopped on all nodes.
6. The system administrator of cluster2 gives the file /var/mmfs/ssl/id_rsa.pub to the system administrator of cluster1. This operation must occur outside of the GPFS command environment.

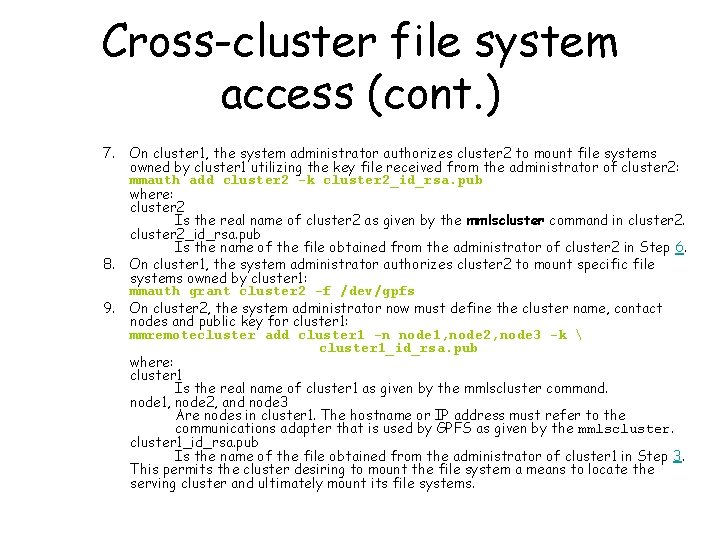

Cross-cluster file system access (cont.)

7. On cluster1, the system administrator authorizes cluster2 to mount file systems owned by cluster1, using the key file received from the administrator of cluster2: `mmauth add cluster2 -k cluster2_id_rsa.pub`, where:
   - cluster2 is the real name of cluster2 as given by the mmlscluster command in cluster2.
   - cluster2_id_rsa.pub is the name of the file obtained from the administrator of cluster2 in Step 6.
8. On cluster1, the system administrator authorizes cluster2 to mount specific file systems owned by cluster1: `mmauth grant cluster2 -f /dev/gpfs`
9. On cluster2, the system administrator now must define the cluster name, contact nodes and public key for cluster1: `mmremotecluster add cluster1 -n node1,node2,node3 -k cluster1_id_rsa.pub`, where:
   - cluster1 is the real name of cluster1 as given by the mmlscluster command.
   - node1, node2 and node3 are nodes in cluster1. The hostname or IP address must refer to the communications adapter that is used by GPFS as given by the mmlscluster command on a node in cluster1.
   - cluster1_id_rsa.pub is the name of the file obtained from the administrator of cluster1 in Step 3.

   This gives the cluster that wants to mount the file system a means to locate the serving cluster and ultimately mount its file systems.

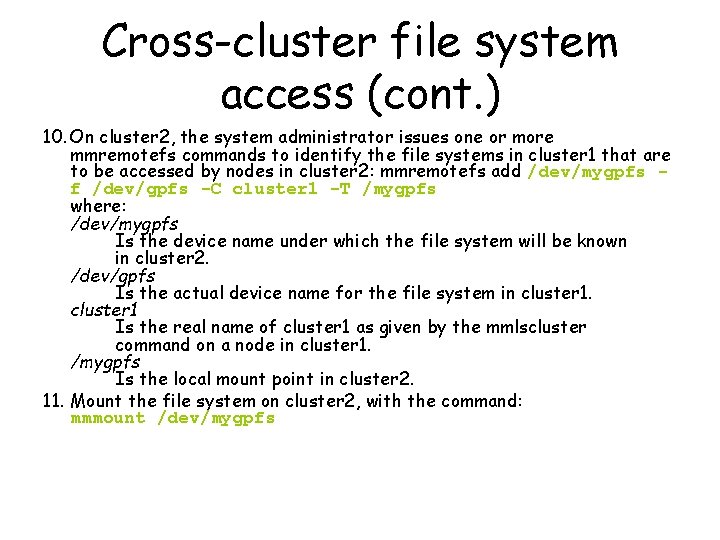

Cross-cluster file system access (cont.)

10. On cluster2, the system administrator issues one or more mmremotefs commands to identify the file systems in cluster1 that are to be accessed by nodes in cluster2: `mmremotefs add /dev/mygpfs -f /dev/gpfs -C cluster1 -T /mygpfs`, where:
    - /dev/mygpfs is the device name under which the file system will be known in cluster2.
    - /dev/gpfs is the actual device name for the file system in cluster1.
    - cluster1 is the real name of cluster1 as given by the mmlscluster command on a node in cluster1.
    - /mygpfs is the local mount point in cluster2.
11. Mount the file system on cluster2 with the command: `mmmount /dev/mygpfs`

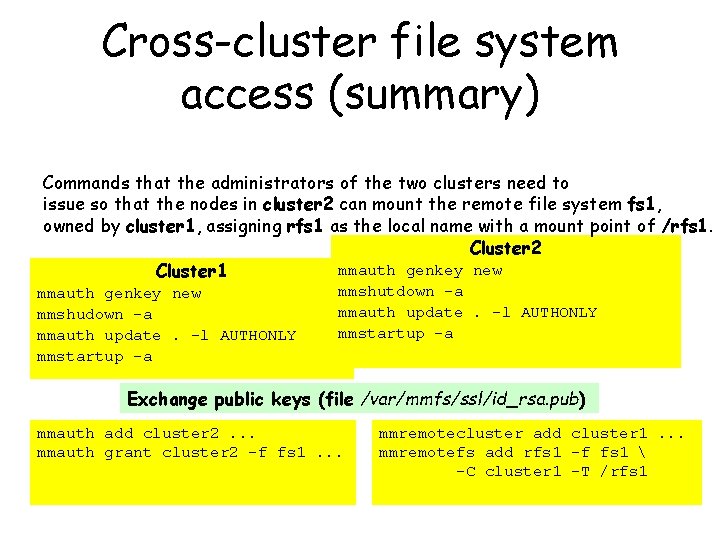

Cross-cluster file system access (summary)

Commands that the administrators of the two clusters need to issue so that the nodes in cluster2 can mount the remote file system fs1, owned by cluster1, assigning rfs1 as the local name with a mount point of /rfs1:

| Cluster 1 | Cluster 2 |
|---|---|
| mmauth genkey new | mmauth genkey new |
| mmshutdown -a | mmshutdown -a |
| mmauth update . -l AUTHONLY | mmauth update . -l AUTHONLY |
| mmstartup -a | mmstartup -a |
| Exchange public keys (file /var/mmfs/ssl/id_rsa.pub) | Exchange public keys (file /var/mmfs/ssl/id_rsa.pub) |
| mmauth add cluster2 ... | mmremotecluster add cluster1 ... |
| mmauth grant cluster2 -f fs1 ... | mmremotefs add rfs1 -f fs1 -C cluster1 -T /rfs1 |
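Because both administrators run almost the same sequence with the names swapped, generating the per-cluster checklist avoids transcription mistakes. A minimal sketch that only prints commands (it executes nothing); `remote_mount_plan` is a hypothetical helper, the key exchange still happens out of band, and the cluster, node and file system names are the placeholders from the steps above:

```python
from typing import Dict, List


def remote_mount_plan(owner: str, accessor: str, fs: str, local_fs: str,
                      mountpoint: str, contact_nodes: List[str]) -> Dict[str, List[str]]:
    """Per-cluster command list following the summary table (keys exchanged out of band)."""
    setup = ["mmauth genkey new", "mmshutdown -a",
             "mmauth update . -l AUTHONLY", "mmstartup -a"]
    return {
        owner: setup + [
            f"mmauth add {accessor} -k {accessor}_id_rsa.pub",
            f"mmauth grant {accessor} -f {fs}",
        ],
        accessor: setup + [
            f"mmremotecluster add {owner} -n {','.join(contact_nodes)} -k {owner}_id_rsa.pub",
            f"mmremotefs add {local_fs} -f {fs} -C {owner} -T {mountpoint}",
            f"mmmount {local_fs}",
        ],
    }


plan = remote_mount_plan("cluster1", "cluster2", "fs1", "rfs1", "/rfs1",
                         ["node1", "node2", "node3"])
for cluster, commands in plan.items():
    print(f"# on {cluster}")
    print("\n".join(commands))
```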

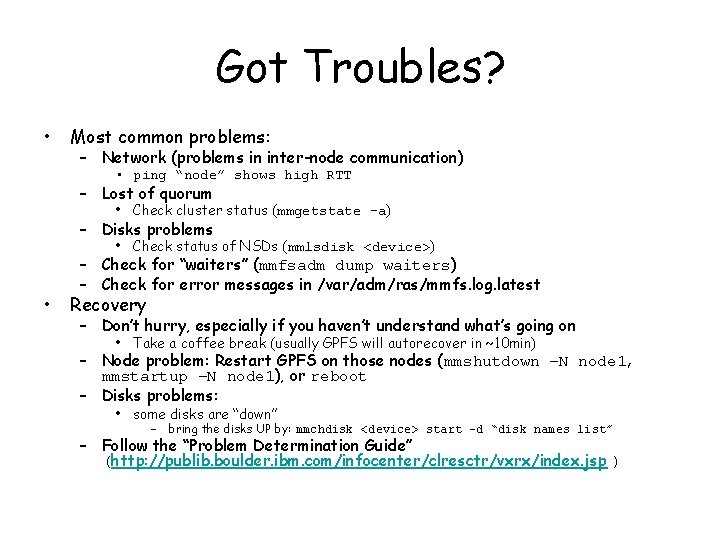

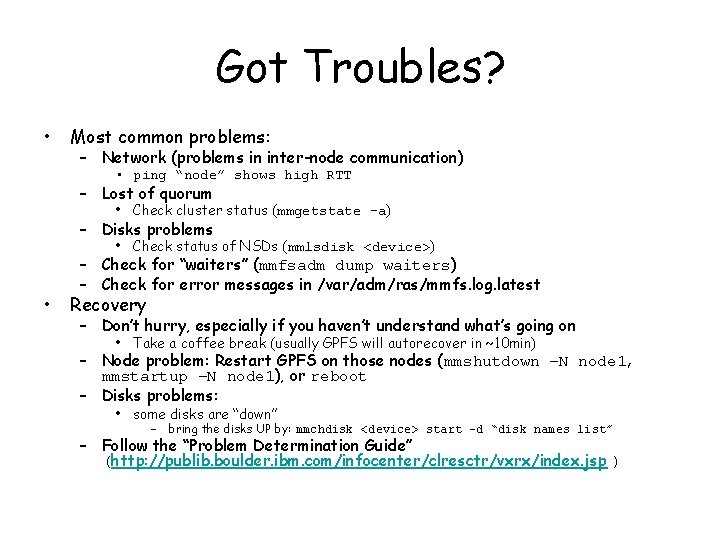

Got Troubles?

- Most common problems:
  - Network (problems in inter-node communication)
    - ping "node" shows high RTT
  - Loss of quorum
    - Check cluster status (mmgetstate -a)
  - Disk problems
    - Check the status of NSDs (`mmlsdisk <device>`)
  - Check for "waiters" (mmfsadm dump waiters)
  - Check for error messages in /var/adm/ras/mmfs.log.latest
- Recovery
  - Don't hurry, especially if you haven't understood what's going on
    - Take a coffee break (usually GPFS will auto-recover in ~10 min)
  - Node problem: restart GPFS on those nodes (mmshutdown -N node1, mmstartup -N node1), or reboot
  - Disk problems:
    - some disks are "down": bring the disks up with `mmchdisk <device> start -d "disk names list"`
  - Follow the "Problem Determination Guide" (http://publib.boulder.ibm.com/infocenter/clresctr/vxrx/index.jsp)
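The "disks are down" case can be partly scripted: find the disks whose availability is "down" and build the matching mmchdisk command. A minimal sketch; the mmlsdisk column layout below (name, driver type, sector size, failure group, holds metadata, holds data, status, availability, storage pool) is an assumption, as is the "nsd" driver type used to recognise data rows, and the down disk in the sample is hypothetical:

```python
from typing import List

SAMPLE = """\
disk                driver   sector failure holds    holds                            storage
name                type       size   group metadata data  status        availability pool
------------------- -------- ------ ------- -------- ----- ------------- ------------ -------
disk_hdb_gpfs_01_01 nsd         512    1001 yes      yes   ready         up           system
disk_hdb_gpfs_01_02 nsd         512    1002 yes      yes   ready         down         system
"""


def down_disks(text: str) -> List[str]:
    """Names of disks whose availability column reads 'down'."""
    names = []
    for line in text.splitlines():
        parts = line.split()
        # assumed row: name driver sector failure-group metadata data status availability pool
        if len(parts) >= 9 and parts[1] == "nsd" and parts[7] == "down":
            names.append(parts[0])
    return names


def mmchdisk_start_args(device: str, disks: List[str]) -> List[str]:
    """Argument vector for mmchdisk with a ';'-separated list of disk names."""
    return ["mmchdisk", device, "start", "-d", ";".join(disks)]


print(mmchdisk_start_args("gpfs", down_disks(SAMPLE)))
# ['mmchdisk', 'gpfs', 'start', '-d', 'disk_hdb_gpfs_01_02']
```

In keeping with the advice above, review the list (and the logs) before actually running the command.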

Long and short

Long and short Gpfs architecture

Gpfs architecture Gpfs vs lustre

Gpfs vs lustre Mmdelnode force

Mmdelnode force Absolute vs relative configuration

Absolute vs relative configuration Chiral or achiral

Chiral or achiral Electron configuration vs noble gas configuration

Electron configuration vs noble gas configuration Relative configuration

Relative configuration Architecture review template

Architecture review template Stylistic overview of architecture

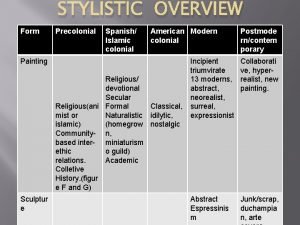

Stylistic overview of architecture Gsm architecture in mobile communication

Gsm architecture in mobile communication Overview of grid architecture

Overview of grid architecture Overview of oracle architecture

Overview of oracle architecture Logical network perimeter

Logical network perimeter Windchill bulk migrator

Windchill bulk migrator Plc installation troubleshooting and maintenance

Plc installation troubleshooting and maintenance Service and installation rules

Service and installation rules Estimation of power wiring systems

Estimation of power wiring systems Plc troubleshooting and maintenance

Plc troubleshooting and maintenance Return architecture

Return architecture Introduction of the story

Introduction of the story Heterozygous tabby x stripeless

Heterozygous tabby x stripeless Tru count air clutch

Tru count air clutch Mc cable uses not permitted

Mc cable uses not permitted Tema configuration

Tema configuration Technisonic avionics installation

Technisonic avionics installation Solar company summit county

Solar company summit county Nexusiq

Nexusiq Unifi installation charges

Unifi installation charges Smoke detector mounting tape

Smoke detector mounting tape Floor framing unit 42

Floor framing unit 42 Permastore tanks and silos

Permastore tanks and silos Interlock installation nm

Interlock installation nm National electrical installation standards

National electrical installation standards We energies meter removal

We energies meter removal Refnet joint installation

Refnet joint installation Spectrum self install

Spectrum self install Solceller timrå

Solceller timrå Tv aerial installation wellington

Tv aerial installation wellington Knx installation

Knx installation Gree versati 3

Gree versati 3 Gps lockbox

Gps lockbox Fusioncompute installation steps

Fusioncompute installation steps Free space optics

Free space optics Winfeed installation key

Winfeed installation key