StoRM & GPFS on Tier-2 Milan, Massimo Pistolese (mi.infn)

- Slides: 11

StoRM & GPFS on Tier-2 Milan
Massimo.Pistolese@mi.infn.it, Francesco.Prelz@mi.infn.it
Workshop CCR, May 14 2009

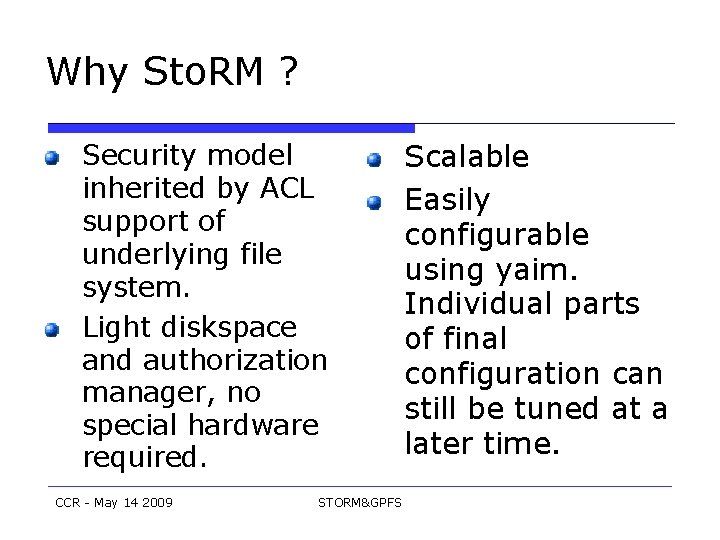

Why StoRM?
- Security model inherited from the ACL support of the underlying file system.
- Lightweight disk-space and authorization manager; no special hardware required.
- Scalable.
- Easily configurable using YAIM; individual parts of the final configuration can still be tuned later.

CCR - May 14 2009 STORM&GPFS

Why StoRM?
- SOAP web service; the roles of all its parts can be assigned separately: front-end, back-end, GridFTP, MySQL.
- Request rate handled: up to 40 Hz.
- GridFTP throughput: 120 MB/s on a 1 Gb/s LAN.
- Well matched with the GPFS architecture.
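As a quick sanity check of the GridFTP figure, assuming MB means 10^6 bytes and ignoring protocol overhead, a 1 Gb/s link carries at most 125 MB/s, so 120 MB/s is about 96% of line rate. A minimal sketch of that arithmetic (the helper names are illustrative):

```python
def line_rate_mb_per_s(gbit_per_s: float) -> float:
    """Convert a link speed in Gb/s to MB/s, using decimal units (1 MB = 10**6 bytes)."""
    return gbit_per_s * 1e9 / 8 / 1e6


def link_utilisation(measured_mb_per_s: float, gbit_per_s: float) -> float:
    """Fraction of the raw line rate reached by a measured throughput."""
    return measured_mb_per_s / line_rate_mb_per_s(gbit_per_s)


if __name__ == "__main__":
    limit = line_rate_mb_per_s(1.0)
    util = link_utilisation(120.0, 1.0)
    print(f"1 Gb/s line rate: {limit:.0f} MB/s; GridFTP at 120 MB/s uses {util:.0%}")
```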

Why GPFS?
- Visible as a local file system; no remote protocols needed.
- Cluster-structured: slave clustering, redundancy, scalability, abstraction layer, robustness.
- High performance: concurrent reads from 16 clients at 380 MB/s (against a physical network limit of 412 MB/s); concurrent writes at 320 MB/s.
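To relate the GPFS figures to the quoted 412 MB/s network limit, the sketch below uses only the numbers on this slide (helper names are hypothetical): reads reach about 92% of the limit, writes about 78%, and the aggregate read works out to 23.75 MB/s per client on average. The client count for the write test is not given, so no per-client write figure is computed.

```python
NETWORK_LIMIT_MB_S = 412.0  # physical network limit quoted on the slide
READ_CLIENTS = 16           # number of concurrent readers quoted on the slide


def fraction_of_limit(throughput_mb_s: float, limit_mb_s: float = NETWORK_LIMIT_MB_S) -> float:
    """Share of the network limit reached by an aggregate throughput."""
    return throughput_mb_s / limit_mb_s


def per_client(aggregate_mb_s: float, clients: int = READ_CLIENTS) -> float:
    """Average throughput per client, assuming the load is spread evenly."""
    return aggregate_mb_s / clients


if __name__ == "__main__":
    read, write = 380.0, 320.0
    print(f"read:  {fraction_of_limit(read):.1%} of the network limit, "
          f"{per_client(read):.2f} MB/s per client on average")
    print(f"write: {fraction_of_limit(write):.1%} of the network limit")
```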

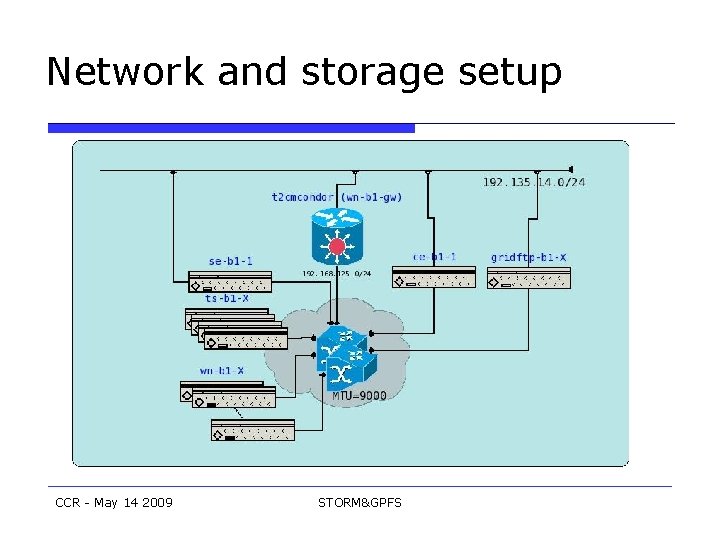

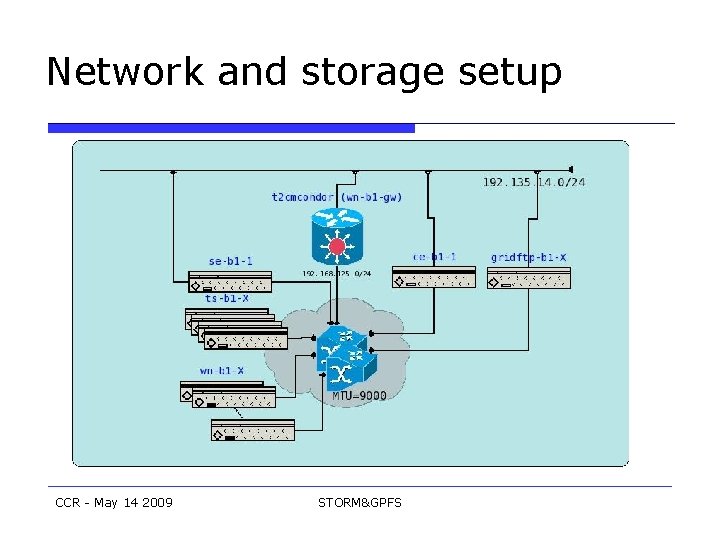

Network and storage setup

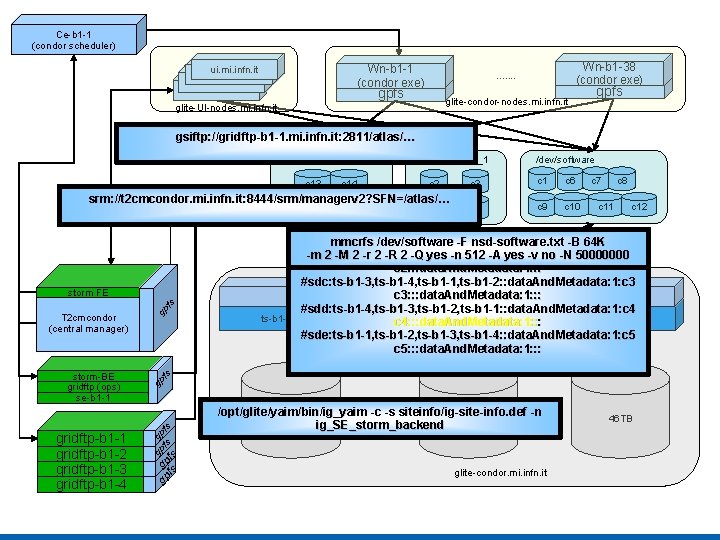

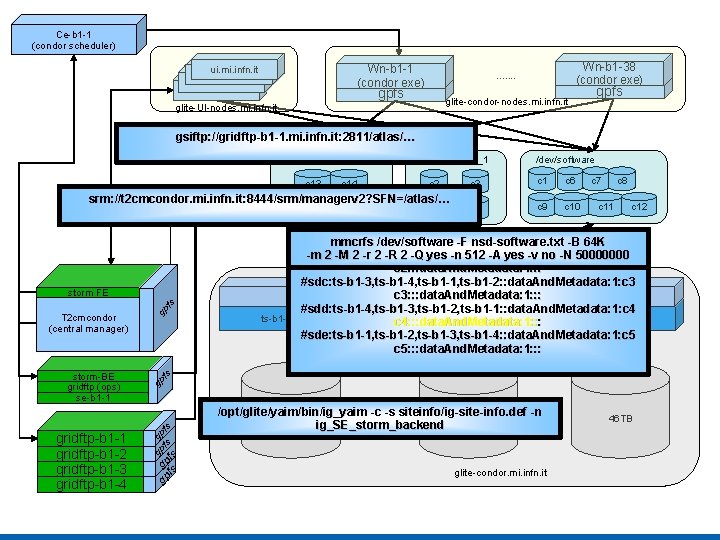

Diagram of the network and storage setup; recoverable labels:
- Condor: ce-b1-1 (Condor scheduler), wn-b1-1 … wn-b1-38 (Condor execute nodes), t2cmcondor (central manager), glite-condor.mi.infn.it, glite-condor-nodes.mi.infn.it
- User interface: ui.mi.infn.it, glite-UI-nodes.mi.infn.it
- StoRM: storm FE, storm-BE, gridftp (ops), se-b1-1; GridFTP servers gridftp-b1-1, gridftp-b1-2, gridftp-b1-3, gridftp-b1-4
- GPFS disk servers: ts-b1-1 … ts-b1-4, ts-b1-5, ts-b1-6, ts-b1-7, ts-b1-8; Fibre Channel with FC multipath; disks labelled c1 … c17
- GPFS file systems: /dev/storage_1, /dev/storage_2, /dev/software; capacity labels 40 TB, 46 TB, 46 TB
- Access URLs: `srm://t2cmcondor.mi.infn.it:8444/srm/managerv2?SFN=/atlas/…` and `gsiftp://gridftp-b1-1.mi.infn.it:2811/atlas/…`

File system definition for /dev/software:
- `mmcrfs ## /dev/software -F nsd-software.txt -B 64K -m 2 -M 2 -r 2 -R 2 -Q yes -n 512 -A yes -v no -N 50000000`

Disk descriptors in nsd-software.txt:
- `#sdb:ts-b1-2,ts-b1-1,ts-b1-4,ts-b1-3::dataAndMetadata:1:c2` then `c2:::dataAndMetadata:1:::`
- `#sdc:ts-b1-3,ts-b1-4,ts-b1-1,ts-b1-2::dataAndMetadata:1:c3` then `c3:::dataAndMetadata:1:::`
- `#sdd:ts-b1-4,ts-b1-3,ts-b1-2,ts-b1-1::dataAndMetadata:1:c4` then `c4:::dataAndMetadata:1:::`
- `#sde:ts-b1-1,ts-b1-2,ts-b1-3,ts-b1-4::dataAndMetadata:1:c5` then `c5:::dataAndMetadata:1:::`

StoRM back-end configuration:
- `/opt/glite/yaim/bin/ig_yaim -c -s siteinfo/ig-site-info.def -n ig_SE_storm_backend`
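The server lists in the slide's disk descriptors change which of ts-b1-1 … ts-b1-4 comes first for each disk, presumably to spread NSD-serving load across the four servers. One ordering rule that reproduces the four lines exactly is to XOR the first server's index with 0, 1, 2, 3. The sketch below generates the lines in the same `DiskName:ServerList::DiskUsage:FailureGroup:DesiredName` layout; the function names are hypothetical and the XOR rule is a reconstruction, not necessarily how the site built the file. On the slide each line also appears commented out with `#` and followed by a shortened `cN:::dataAndMetadata:1:::` line; the sketch emits only the uncommented long form.

```python
# Hypothetical helper: rebuild the disk descriptor lines shown on the slide.
# Layout: DiskName:ServerList::DiskUsage:FailureGroup:DesiredName
SERVERS = ["ts-b1-1", "ts-b1-2", "ts-b1-3", "ts-b1-4"]
DISKS = ["sdb", "sdc", "sdd", "sde"]


def server_order(primary: int) -> list[str]:
    """Order servers by XOR-ing the first server's index with 0, 1, 2, 3.

    XOR with every k in range(n) is a permutation of range(n) only when n is a
    power of two, which holds for the four servers here.
    """
    return [SERVERS[primary ^ k] for k in range(len(SERVERS))]


def descriptor_lines(disks: list[str], first_nsd: int = 2) -> list[str]:
    lines = []
    for i, disk in enumerate(disks):
        primary = (i + 1) % len(SERVERS)  # sdb -> ts-b1-2, ..., sde -> ts-b1-1
        servers = ",".join(server_order(primary))
        nsd_name = f"c{first_nsd + i}"    # names c2..c5, as on the slide
        lines.append(f"{disk}:{servers}::dataAndMetadata:1:{nsd_name}")
    return lines


if __name__ == "__main__":
    for line in descriptor_lines(DISKS):
        print(line)
```

Running it prints the four descriptors in the order sdb, sdc, sdd, sde, matching the server lists on the slide.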

Issues with first production run
- Project outline: analyse MSSM A/H → ττ (lepton-hadron channel) at 14 TeV to obtain the discovery potential for this channel in ATLAS (result to be published as a PUB Note).
- To do this, we must produce ALL our own datasets.
- Production target: 35 M events in total; Milano share: 3.2 M events.

Issues with first production run
- Job specification: Atlfast II simulation using the job transform csc_simul_reco_trf.py.
- Input: evgen, copied with lcg-cp from SE INFN-MILANO_LOCALGROUPDISK.
- Output: AOD, copied with lcg-cp to SE INFN-MILANO_LOCALGROUPDISK.
- Events per job: 250; total jobs: 12800.
- Requirements: 2 GB RAM / 2 GB swap.
- Running time: 6 hours on Intel(R) Xeon(R) CPU L5420 @ 2.50 GHz, cache size 6144 KB (TYPE 1); 18 hours on Intel(R) Xeon(TM) CPU 3.06 GHz, cache size 512 KB (TYPE 2).
- Cluster performance: 48 CPUs (TYPE 1): 192 jobs/day; 124 CPUs (TYPE 2): 165 jobs/day.
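The job numbers on this slide are mutually consistent: 3.2 M events at 250 events per job gives 12800 jobs, and the per-type rates follow from CPUs × 24 h / hours per job (165.3 for TYPE 2, quoted as 165). A short check, assuming one job per CPU running around the clock with no idle time and no failures, also gives an ideal duration of about 36 days for the Milano share:

```python
MILANO_EVENTS = 3_200_000
EVENTS_PER_JOB = 250

# (number of CPUs, hours per job) for the two CPU types on the slide
CPU_TYPES = {"TYPE 1": (48, 6), "TYPE 2": (124, 18)}


def jobs_needed(events: int, per_job: int) -> int:
    return -(-events // per_job)  # ceiling division


def jobs_per_day(cpus: int, hours_per_job: float) -> float:
    # Assumption: one job per CPU, 24 h/day, no idle slots and no failures.
    return cpus * 24 / hours_per_job


if __name__ == "__main__":
    total_jobs = jobs_needed(MILANO_EVENTS, EVENTS_PER_JOB)
    rates = {name: jobs_per_day(*spec) for name, spec in CPU_TYPES.items()}
    daily = sum(rates.values())
    print(f"jobs: {total_jobs}")
    for name, rate in rates.items():
        print(f"{name}: {rate:.1f} jobs/day")
    print(f"ideal wall-clock time: {total_jobs / daily:.1f} days")
```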

Issues with first production run
- Failure rate: 50%, caused by environment setup and tuning of variables, and by machine requirements (a large amount of memory is needed).
- When functioning correctly, production runs at the typical ATLAS failure rate of ~3%.
- The problems were mainly with setup, GPFS and storage.
- GPFS integrates seamlessly with StoRM: no special settings are required, and new clients can be added easily.
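To see what a 50% failure rate costs compared with ~3%, assume failed jobs are simply resubmitted and that each attempt fails independently (an assumption; setup failures are often correlated in practice). The number of attempts per successful job is then geometric with mean 1/(1 - f), so the 12800 jobs need about 25,600 submissions instead of about 13,200. A minimal sketch:

```python
def expected_attempts(failure_rate: float) -> float:
    """Mean attempts per successful job if each attempt fails independently."""
    if not 0.0 <= failure_rate < 1.0:
        raise ValueError("failure rate must be in [0, 1)")
    return 1.0 / (1.0 - failure_rate)


def total_submissions(jobs: int, failure_rate: float) -> float:
    return jobs * expected_attempts(failure_rate)


if __name__ == "__main__":
    jobs = 12_800  # total jobs from the job specification
    for f in (0.03, 0.50):
        print(f"failure rate {f:.0%}: {expected_attempts(f):.2f} attempts/job, "
              f"~{total_submissions(jobs, f):,.0f} submissions")
```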

Issues with first production run
- GPFS caching is bounded by OS limits (2 GB of virtual memory per process on 32-bit nodes); 64-bit clients can accommodate a larger cache.
- C-NFS (clustered NFS) is good for providing fault-tolerant, read-only NFS mounts with better, faster caching, but special care must be taken: it is better to keep the disk servers separate from the NFS servers and to set GPFS redundancy for the related file systems.
- Local hosts should resolve the GridFTP servers, to prevent StoRM from generating too much internal traffic (VLANs could also be used).
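One way to act on the last point, assuming "local hosts should resolve the GridFTP servers" means each node maps the GridFTP server names to addresses on the internal (private) network, for example through /etc/hosts, is to check the local hosts table. The sketch below parses hosts-file text and reports servers that are missing or mapped to a non-private address. The full hostnames for gridftp-b1-2 … gridftp-b1-4 and all 10.x addresses are hypothetical placeholders, not the site's real values.

```python
import ipaddress

# Assumption: all four servers share the mi.infn.it domain of gridftp-b1-1.
GRIDFTP_SERVERS = [f"gridftp-b1-{n}.mi.infn.it" for n in range(1, 5)]


def parse_hosts(text: str) -> dict[str, str]:
    """Map each hostname/alias in hosts-file text to its IP address."""
    table: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and blank lines
        if not line:
            continue
        addr, *names = line.split()
        for name in names:
            table.setdefault(name, addr)     # keep the first mapping seen
    return table


def unresolved_or_public(hosts_text: str, servers: list[str]) -> list[str]:
    """Return servers missing from the table or mapped to a non-private address."""
    table = parse_hosts(hosts_text)
    bad = []
    for name in servers:
        addr = table.get(name)
        if addr is None or not ipaddress.ip_address(addr).is_private:
            bad.append(name)
    return bad


SAMPLE_HOSTS = """
127.0.0.1   localhost
10.1.0.11   gridftp-b1-1.mi.infn.it gridftp-b1-1   # hypothetical addresses
10.1.0.12   gridftp-b1-2.mi.infn.it gridftp-b1-2
10.1.0.13   gridftp-b1-3.mi.infn.it gridftp-b1-3
"""

if __name__ == "__main__":
    print(unresolved_or_public(SAMPLE_HOSTS, GRIDFTP_SERVERS))
```

With the sample table, which deliberately omits gridftp-b1-4, the check reports only that server.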

Conclusions
- Most issues were found in the GPFS and worker-node setup.
- The GPFS cluster architecture allows better organization of pools of similar machines, with central steering.
- GPFS performance can easily be scaled up in a SAN: head nodes can be added as needed.
- StoRM scalability: it can manage as many front-end and GridFTP servers as needed.