Finite-State Methods: 600.465 Intro to NLP, J. Eisner

- Slides: 41

Finite-State Methods

600.465 - Intro to NLP - J. Eisner

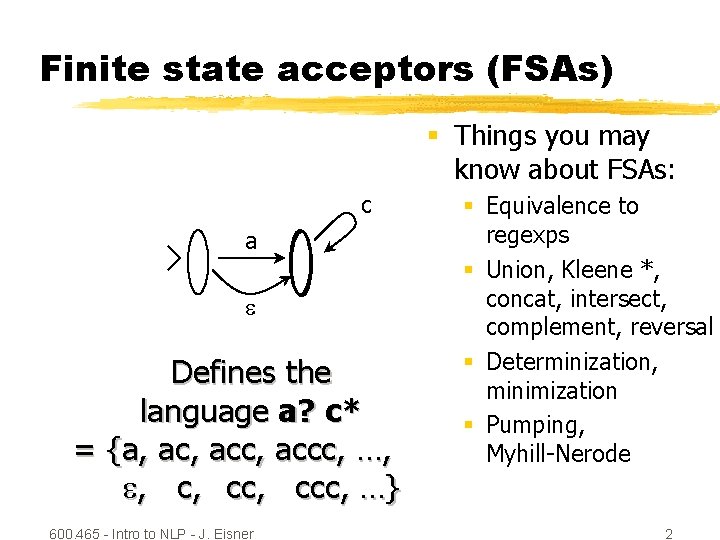

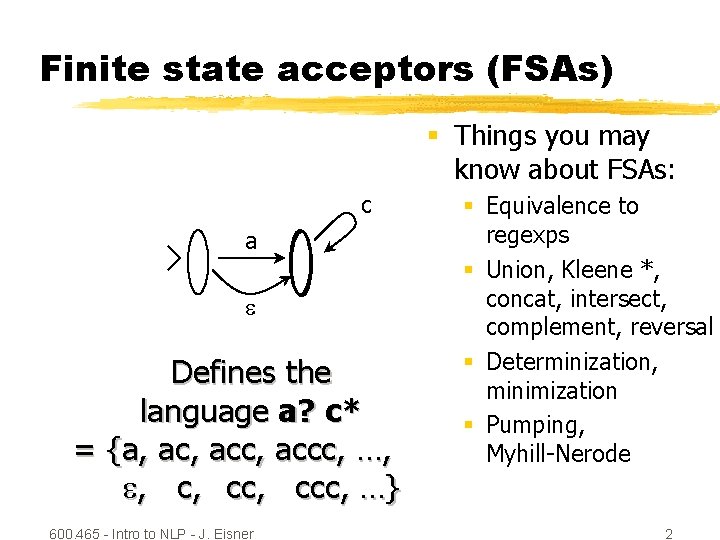

Finite-state acceptors (FSAs)

- Example (diagram: an FSA with arcs labeled a and c): defines the language a? c* = {a, ac, acc, accc, …, ε, c, cc, ccc, …}
- Things you may know about FSAs:
  - Equivalence to regexps
  - Union, Kleene *, concat, intersect, complement, reversal
  - Determinization, minimization
  - Pumping, Myhill-Nerode
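A minimal sketch of an acceptor for the language a? c*, assuming a two-state deterministic machine. The state numbering, the `TRANSITIONS` table, and the `accepts` helper are illustrative names, not taken from the slides.

```python
# Hypothetical encoding of a deterministic FSA for a? c*.
# State 0 is the start state; both states are final.
TRANSITIONS = {
    (0, "a"): 1,  # the optional leading a
    (0, "c"): 1,  # or skip the a and start reading c's
    (1, "c"): 1,  # loop on c
}
FINAL_STATES = {0, 1}


def accepts(string):
    """Follow one arc per symbol; reject as soon as no arc applies."""
    state = 0
    for symbol in string:
        key = (state, symbol)
        if key not in TRANSITIONS:
            return False
        state = TRANSITIONS[key]
    return state in FINAL_STATES


for s in ["", "a", "accc", "ccc", "ca", "aa"]:
    print(repr(s), accepts(s))
```

The empty string is accepted because the start state is final, which is why ε belongs to the language.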

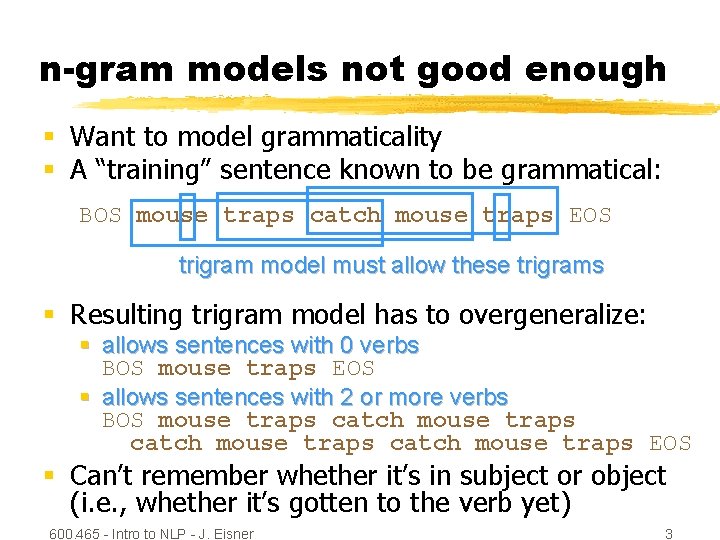

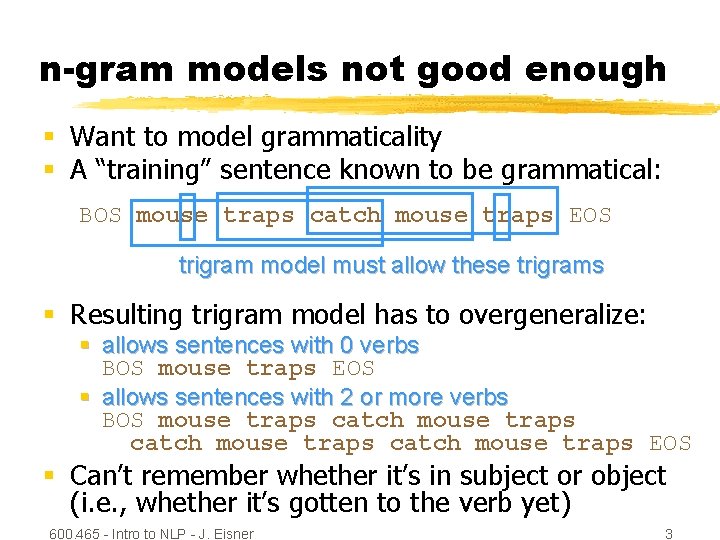

n-gram models not good enough

- Want to model grammaticality
- A “training” sentence known to be grammatical: BOS mouse traps catch mouse traps EOS
  - The trigram model must allow these trigrams
- The resulting trigram model has to overgeneralize:
  - allows sentences with 0 verbs: BOS mouse traps EOS
  - allows sentences with 2 or more verbs: BOS mouse traps catch mouse traps catch mouse traps EOS
- It can’t remember whether it’s in the subject or the object (i.e., whether it’s gotten to the verb yet)
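The overgeneralization can be checked mechanically. The sketch below treats an unweighted trigram model as the set of trigrams seen in training; that simplification, and the helper names, are assumptions made for illustration.

```python
def trigrams(tokens):
    """Set of all consecutive token triples in a sentence."""
    return {tuple(tokens[i:i + 3]) for i in range(len(tokens) - 2)}


training = "BOS mouse traps catch mouse traps EOS".split()
allowed = trigrams(training)


def trigram_model_allows(sentence):
    """True if every trigram of the sentence was seen in training."""
    return trigrams(sentence.split()) <= allowed


no_verb = "BOS mouse traps EOS"
two_verbs = "BOS mouse traps catch mouse traps catch mouse traps EOS"
print(trigram_model_allows(no_verb))    # both sentences reuse only
print(trigram_model_allows(two_verbs))  # trigrams from the training sentence
```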

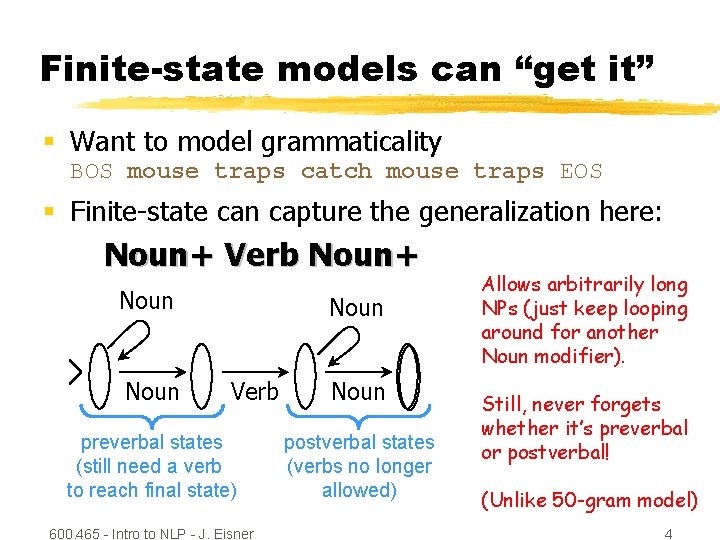

Finite-state models can “get it”

- Want to model grammaticality: BOS mouse traps catch mouse traps EOS
- Finite-state can capture the generalization here: Noun+ Verb Noun+
- [Diagram: preverbal states (still need a verb to reach final state) with Noun arcs, then a Verb arc, then postverbal states (verbs no longer allowed) with Noun arcs]
- Allows arbitrarily long NPs (just keep looping around for another Noun modifier).
- Still, never forgets whether it’s preverbal or postverbal! (Unlike 50-gram model)
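As a rough illustration, the pattern Noun+ Verb Noun+ can be tested with an ordinary regular expression over a sequence of part-of-speech tags. The one-letter tags `N` and `V` and the `grammatical` helper are assumptions for this sketch.

```python
import re

# Noun+ Verb Noun+ over space-separated tags, e.g. "N N V N N".
NOUN_VERB_NOUN = re.compile(r"(N )+V( N)+")


def grammatical(tags):
    """True if the whole tag sequence matches Noun+ Verb Noun+."""
    return NOUN_VERB_NOUN.fullmatch(" ".join(tags)) is not None


print(grammatical(["N", "N", "V", "N", "N"]))  # mouse traps catch mouse traps
print(grammatical(["N", "N"]))                 # no verb
print(grammatical(["N"] * 60 + ["V", "N"]))    # very long preverbal NP
```

However long the preverbal noun sequence gets, the match still requires exactly one verb, which is the property a fixed-length n-gram window cannot guarantee.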

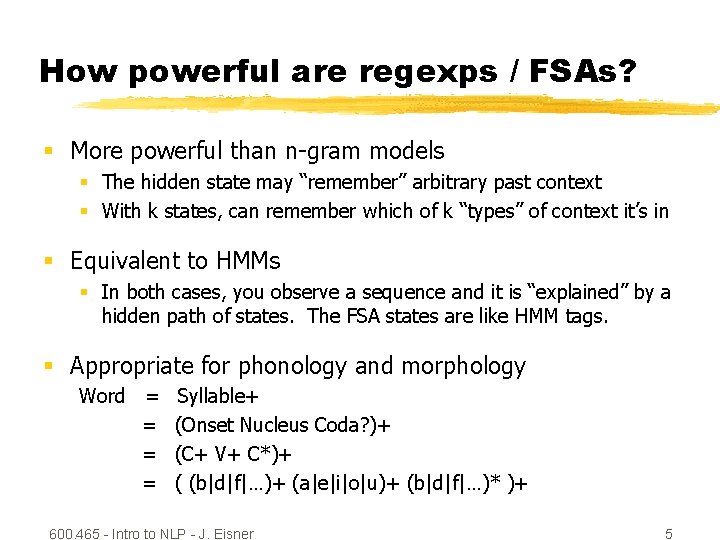

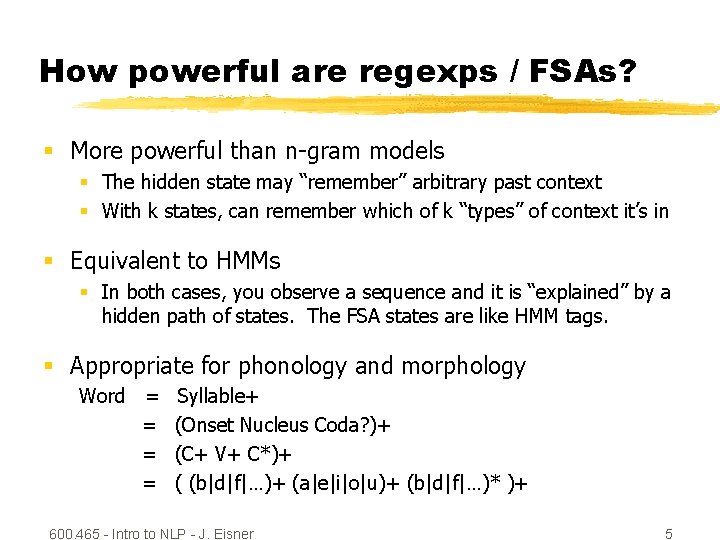

How powerful are regexps / FSAs?

- More powerful than n-gram models
  - The hidden state may “remember” arbitrary past context
  - With k states, can remember which of k “types” of context it’s in
- Equivalent to HMMs
  - In both cases, you observe a sequence and it is “explained” by a hidden path of states. The FSA states are like HMM tags.
- Appropriate for phonology and morphology:
  - Word = Syllable+
  - = (Onset Nucleus Coda?)+
  - = (C+ V+ C*)+
  - = ( (b|d|f|…)+ (a|e|i|o|u)+ (b|d|f|…)* )+
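The last line of the Word definition translates almost directly into a regular expression. This sketch assumes lowercase ASCII spelling stands in for phonemes and that every non-vowel letter (including y) counts as a consonant; both are simplifications, and the names are illustrative.

```python
import re

CONSONANT = "[bcdfghjklmnpqrstvwxyz]"  # assumption: y is treated as a consonant
VOWEL = "[aeiou]"
SYLLABLE = f"{CONSONANT}+{VOWEL}+{CONSONANT}*"  # C+ V+ C*
WORD = re.compile(f"(?:{SYLLABLE})+")           # Syllable+


def is_word(s):
    """True if s can be split into one or more C+ V+ C* syllables."""
    return WORD.fullmatch(s) is not None


for s in ["banana", "strengths", "apple", "xyz"]:
    print(s, is_word(s))
```

Because the pattern as written requires a non-empty onset (C+), a vowel-initial string such as "apple" is rejected.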

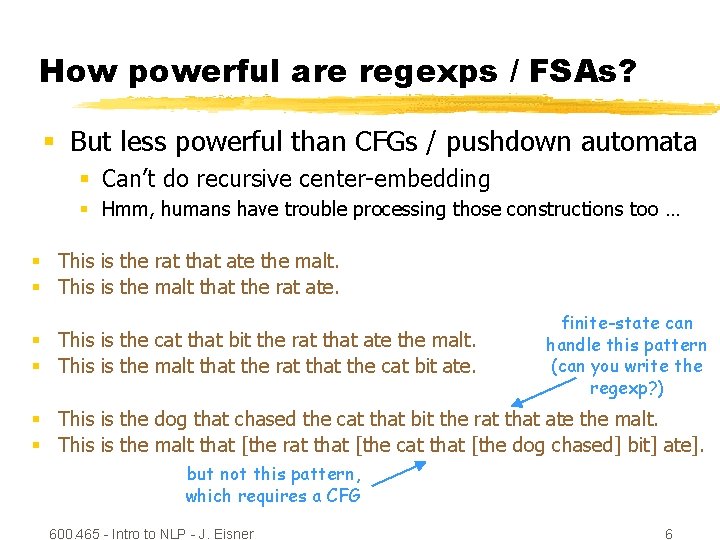

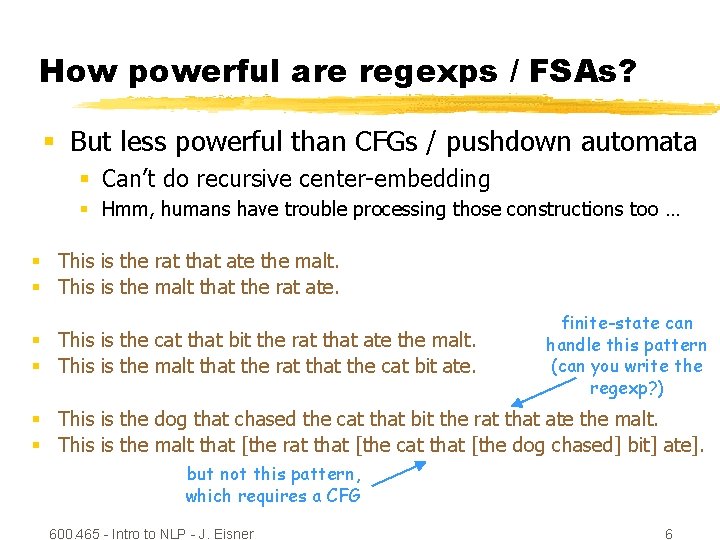

How powerful are regexps / FSAs?

- But less powerful than CFGs / pushdown automata
- Can’t do recursive center-embedding
  - Hmm, humans have trouble processing those constructions too …
- Right-branching; finite-state can handle this pattern (can you write the regexp?):
  - This is the rat that ate the malt.
  - This is the cat that bit the rat that ate the malt.
  - This is the dog that chased the cat that bit the rat that ate the malt.
- Center-embedded; but not this pattern, which requires a CFG:
  - This is the malt that the rat ate.
  - This is the malt that the rat the cat bit ate.
  - This is the malt that [the rat that [the cat that [the dog chased] bit] ate].
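One possible answer to “can you write the regexp?”, as a sketch restricted to the nouns and verbs that appear in the example sentences. The vocabulary lists and the pattern name are assumptions.

```python
import re

NOUN = "(?:rat|malt|cat|dog)"
VERB = "(?:ate|bit|chased)"

# "This is the N" followed by any number of "that V the N" clauses.
RIGHT_BRANCHING = re.compile(
    rf"This is the {NOUN}(?: that {VERB} the {NOUN})*\."
)

sentences = [
    "This is the rat that ate the malt.",
    "This is the dog that chased the cat that bit the rat that ate the malt.",
    "This is the malt that the rat the cat bit ate.",
]
for s in sentences:
    print(RIGHT_BRANCHING.fullmatch(s) is not None, s)
```

The loop adds clauses only at the right edge, so no count has to be carried across the sentence. The center-embedded sentence needs as many verbs at the end as there are nouns stacked up in the middle, which a single finite pattern cannot enforce for unbounded depth.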

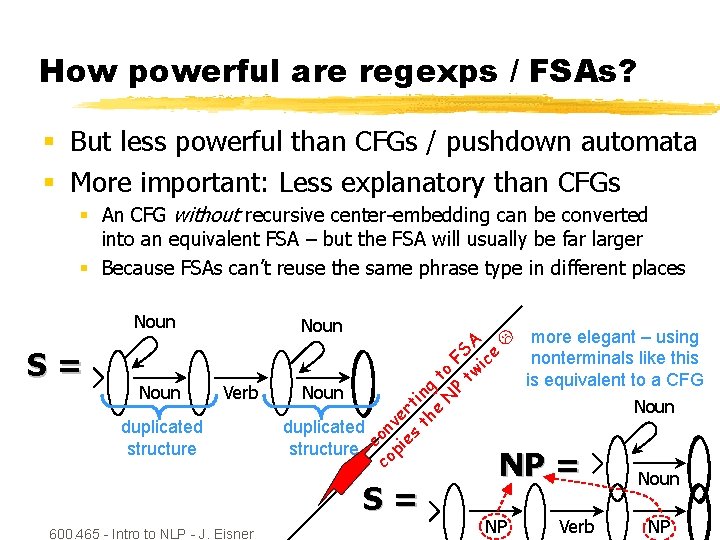

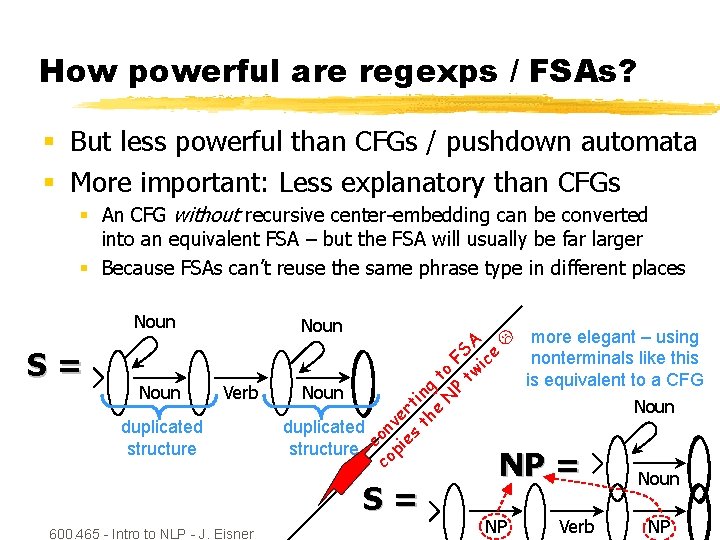

How powerful are regexps / FSAs?

- But less powerful than CFGs / pushdown automata
- More important: less explanatory than CFGs
  - A CFG without recursive center-embedding can be converted into an equivalent FSA, but the FSA will usually be far larger
  - Because FSAs can’t reuse the same phrase type in different places
- [Diagram: an FSA for S in which the Noun structure appears once before the Verb arc and again after it (duplicated structure); converting to FSA copies the NP twice]
- More elegant: S = NP Verb NP, with NP defined once as its own small Noun automaton; using nonterminals like this is equivalent to a CFG

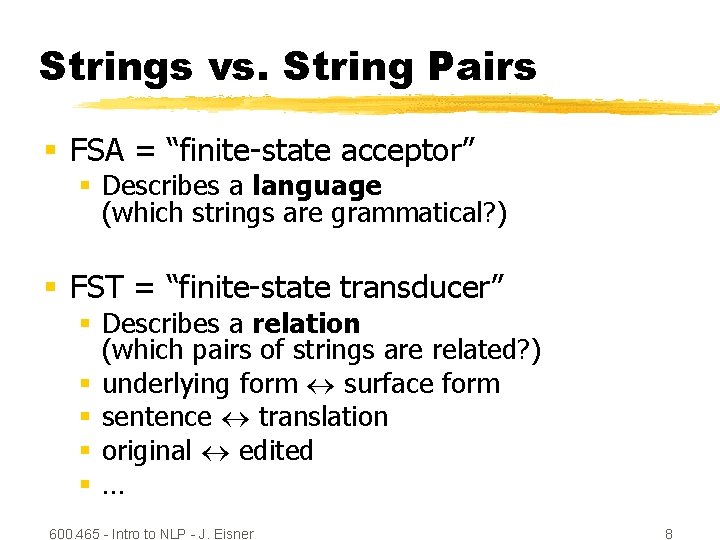

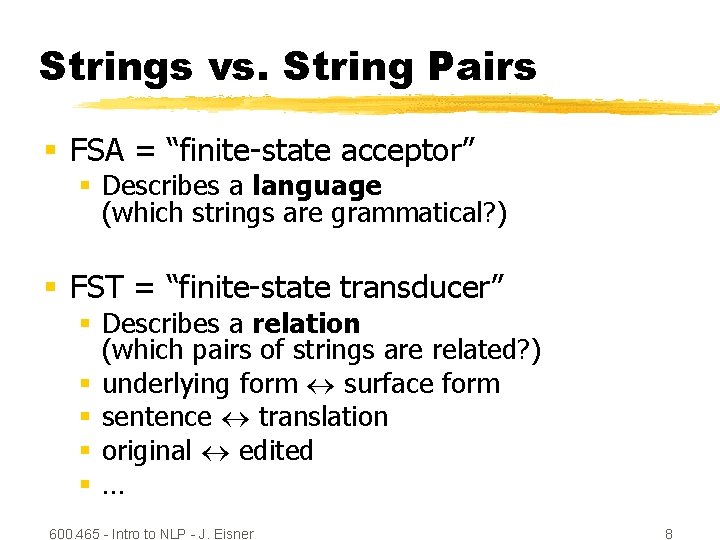

Strings vs. String Pairs

- FSA = “finite-state acceptor”
  - Describes a language (which strings are grammatical?)
- FST = “finite-state transducer”
  - Describes a relation (which pairs of strings are related?)
  - underlying form ↔ surface form
  - sentence ↔ translation
  - original ↔ edited
  - …

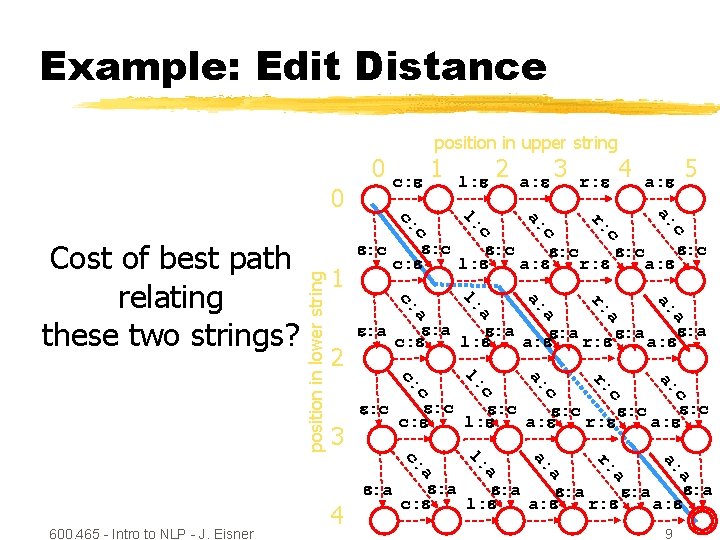

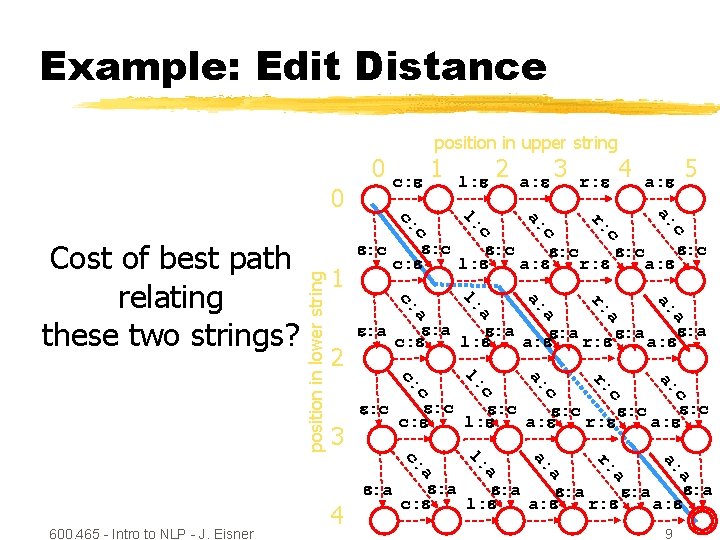

Example: Edit Distance

- [Figure: a lattice of states indexed by position in upper string (0 to 5) and position in lower string (0 to 4); arcs pair a symbol of the upper string (c, l, a, r) with a symbol of the lower string (c, a), or pair either one with ε]
- Cost of best path relating these two strings?
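The best-path question can be answered with the standard dynamic program over the same grid of (upper position, lower position) states. This is a sketch assuming unit costs for insertion, deletion, and substitution; the slide's actual arc costs are not recoverable from the text, and the strings `clara` and `caca` are a guess based on the arc labels in the figure.

```python
def edit_distance(upper, lower, ins_cost=1, del_cost=1, sub_cost=1):
    """Cost of the cheapest path through the (upper, lower) position grid."""
    rows, cols = len(upper) + 1, len(lower) + 1
    best = [[0] * cols for _ in range(rows)]
    for i in range(1, rows):                 # delete every upper symbol
        best[i][0] = best[i - 1][0] + del_cost
    for j in range(1, cols):                 # insert every lower symbol
        best[0][j] = best[0][j - 1] + ins_cost
    for i in range(1, rows):
        for j in range(1, cols):
            match = 0 if upper[i - 1] == lower[j - 1] else sub_cost
            best[i][j] = min(
                best[i - 1][j] + del_cost,   # arc x:ε
                best[i][j - 1] + ins_cost,   # arc ε:y
                best[i - 1][j - 1] + match,  # arc x:y
            )
    return best[-1][-1]


print(edit_distance("clara", "caca"))
```

Each cell corresponds to one lattice state, and the three terms of the `min` correspond to the three kinds of arcs entering it.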

![Example Morphology VP headvouloir Vheadvouloir tensePresent numSG Example: Morphology VP [head=vouloir, . . . ] V[head=vouloir, . . . tense=Present, num=SG,](https://slidetodoc.com/presentation_image/35a88029a1d703585ed33e34d454df35/image-10.jpg)

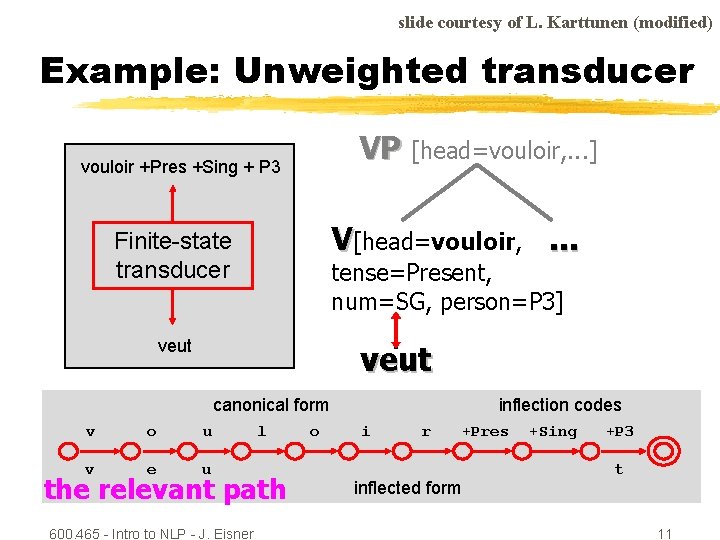

Example: Morphology

- VP [head=vouloir, ...]
- V [head=vouloir, ..., tense=Present, num=SG, person=P3]
- Surface form: veut

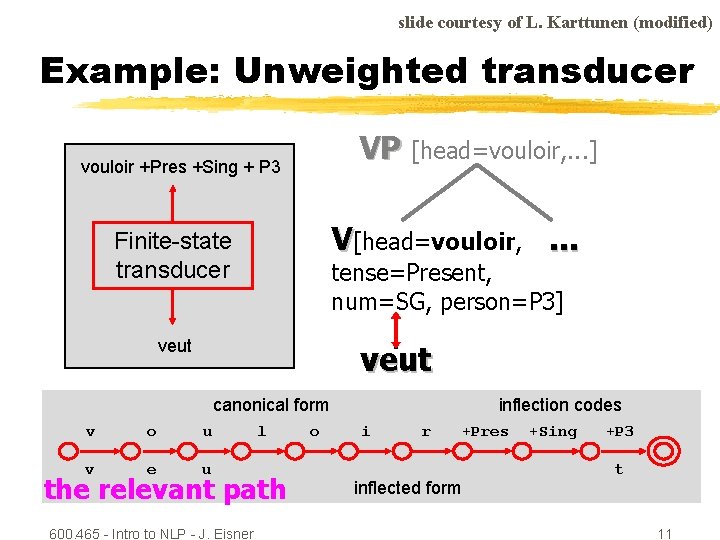

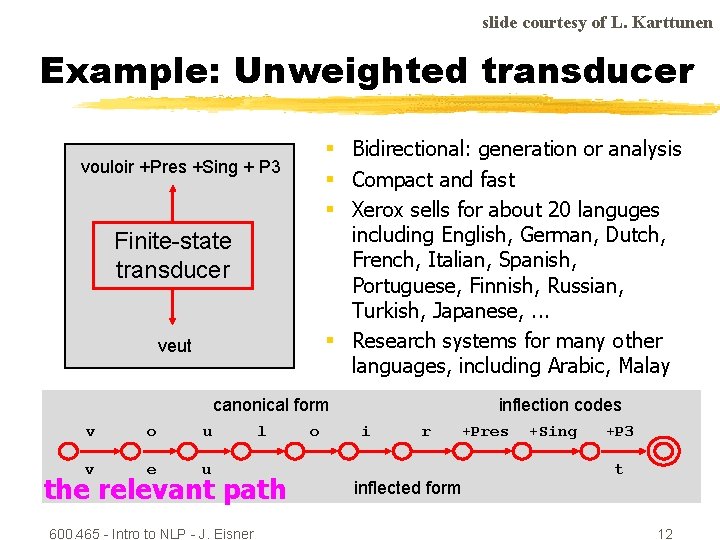

Example: Unweighted transducer (slide courtesy of L. Karttunen, modified)

- VP [head=vouloir, ...] dominates V [head=vouloir, ..., tense=Present, num=SG, person=P3], realized as veut
- A finite-state transducer relates canonical form plus inflection codes (vouloir +Pres +Sing +P3) to the inflected form (veut)
- [Diagram: the relevant path, with upper side v o u l o i r +Pres +Sing +P3 and lower side v e u t]

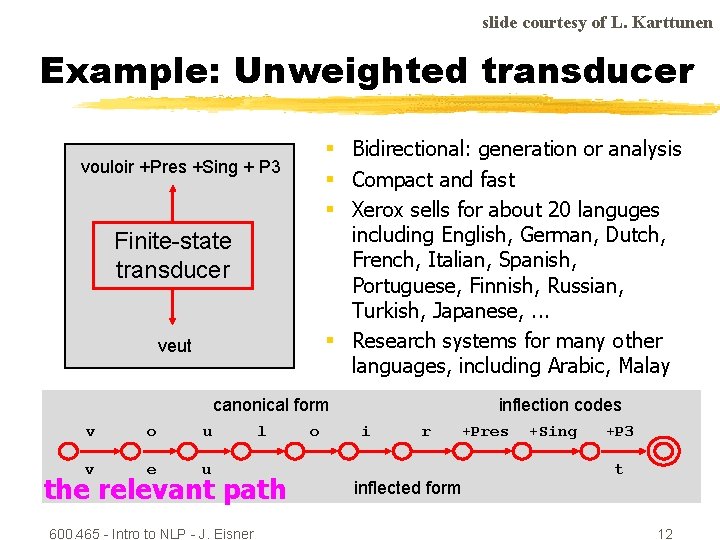

Example: Unweighted transducer (slide courtesy of L. Karttunen)

- Bidirectional: generation or analysis
- Compact and fast
- Xerox sells for about 20 languages including English, German, Dutch, French, Italian, Spanish, Portuguese, Finnish, Russian, Turkish, Japanese, ...
- Research systems for many other languages, including Arabic, Malay
- [Diagram: a finite-state transducer relating canonical form plus inflection codes (vouloir +Pres +Sing +P3) to the inflected form (veut); the relevant path has upper side v o u l o i r +Pres +Sing +P3 and lower side v e u t]

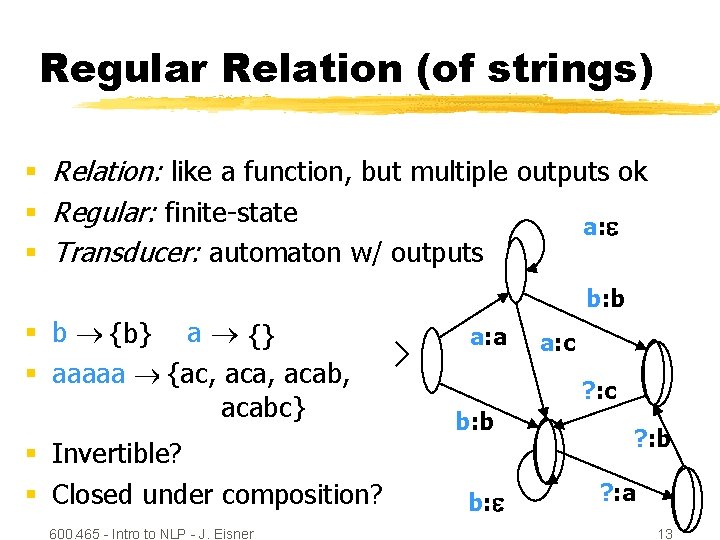

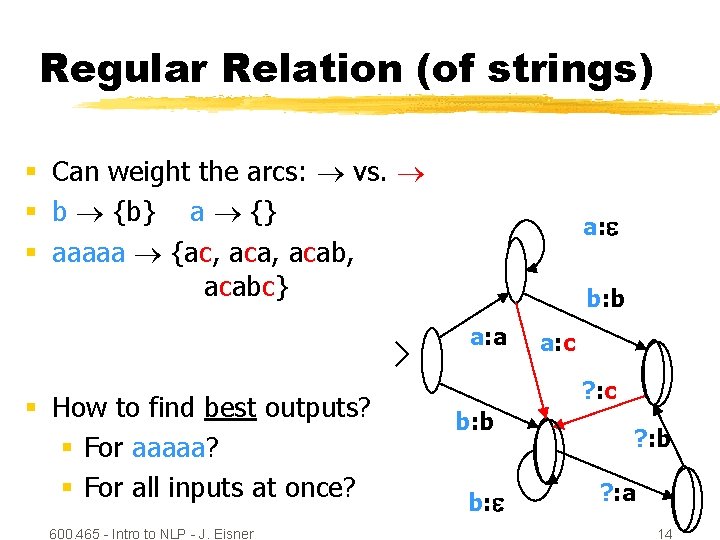

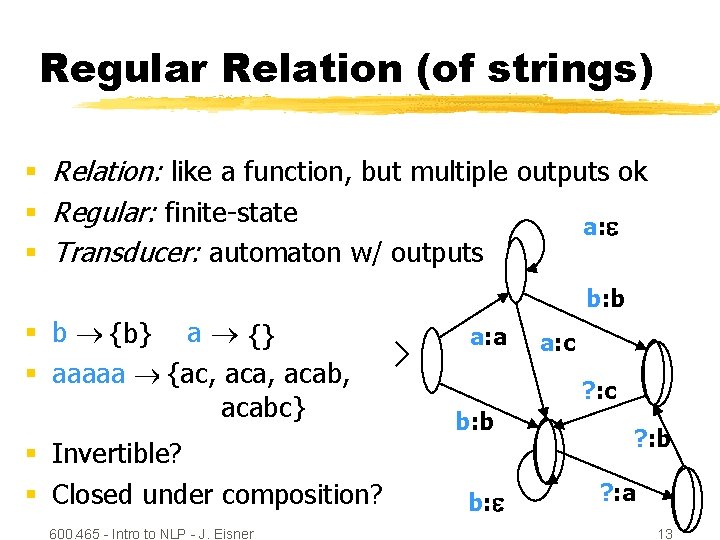

Regular Relation (of strings)

- Relation: like a function, but multiple outputs ok
- Regular: finite-state
- Transducer: automaton w/ outputs
  - b → {b}
  - a → {}
  - aaaaa → {ac, aca, acabc}
- Invertible?
- Closed under composition?
- [Diagram: a transducer with arcs labeled a:ε, b:b, a:a, a:c, ?:c, b:b, b:ε, ?:b, ?:a]

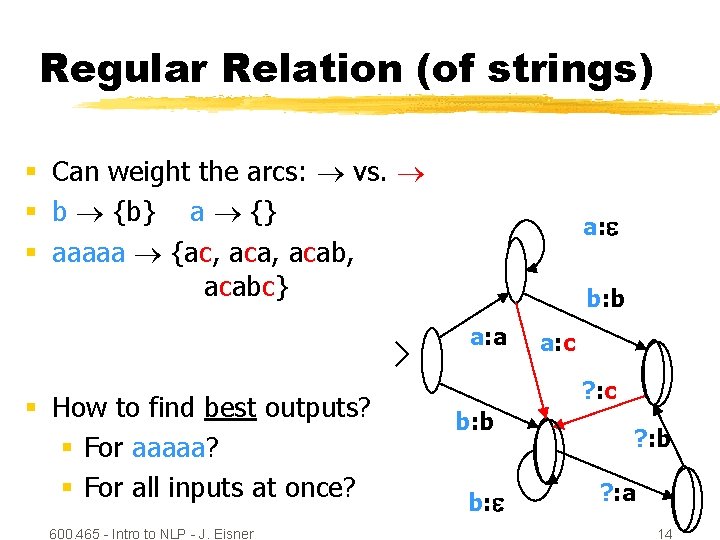

Regular Relation (of strings)

- Can weight the arcs
  - b → {b}
  - a → {}
  - aaaaa → {ac, acab, acabc}
- How to find best outputs?
  - For aaaaa?
  - For all inputs at once?
- [Diagram: a transducer with arcs labeled a:ε, b:b, a:a, a:c, ?:c, b:b, b:ε, ?:b, ?:a]

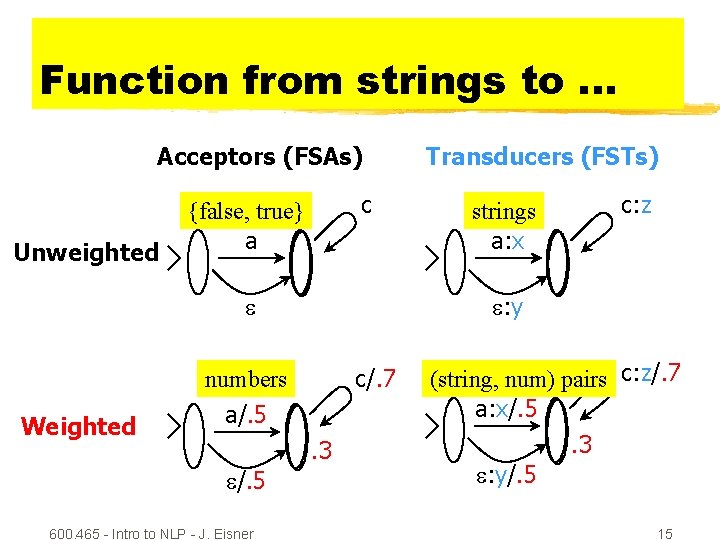

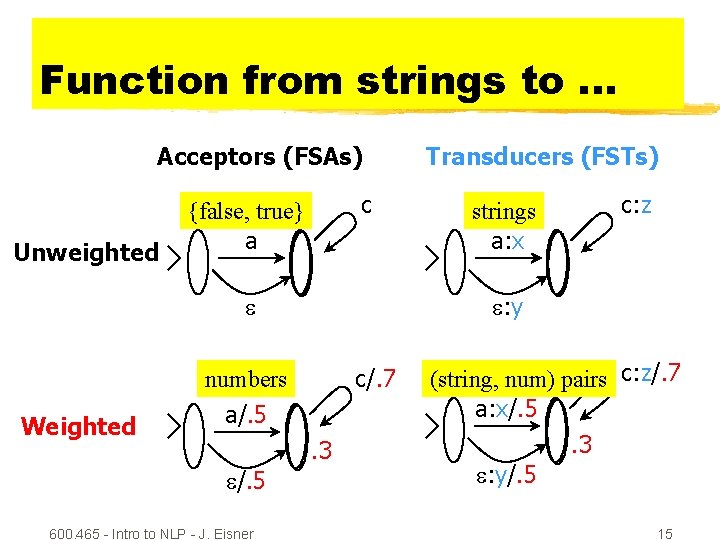

Function from strings to ...

| | Acceptors (FSAs) | Transducers (FSTs) |
|---|---|---|
| Unweighted | {false, true} (example arcs: a, c, ε) | strings (example arcs: a:x, c:z, ε:y) |
| Weighted | numbers (example arcs: a/.5, c/.7, ε/.5, plus a weight of .3) | (string, num) pairs (example arcs: a:x/.5, c:z/.7, ε:y/.5, plus a weight of .3) |

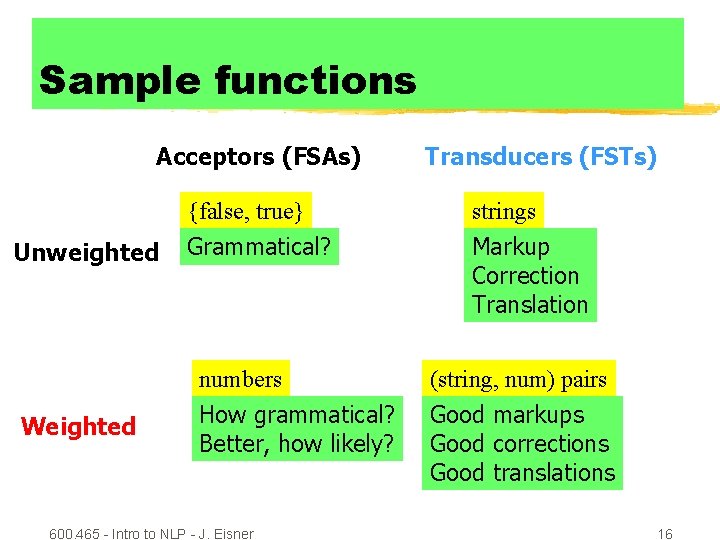

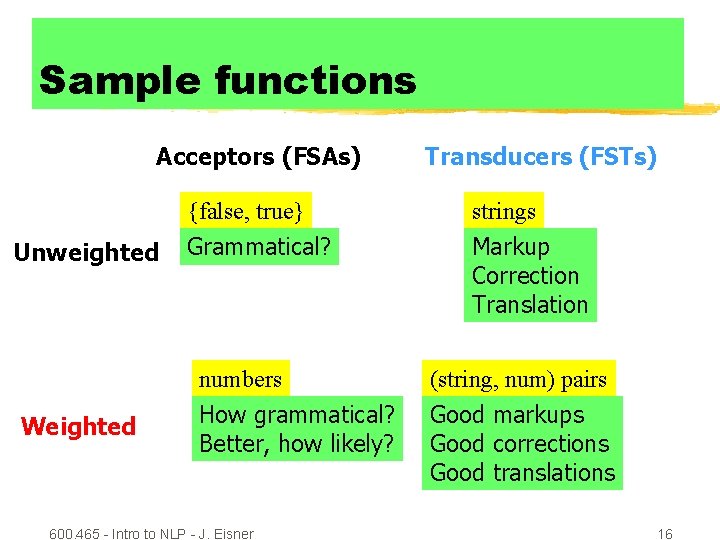

Sample functions

| | Acceptors (FSAs) | Transducers (FSTs) |
|---|---|---|
| Unweighted | {false, true}: Grammatical? | strings: Markup, Correction, Translation |
| Weighted | numbers: How grammatical? Better, how likely? | (string, num) pairs: Good markups, Good corrections, Good translations |

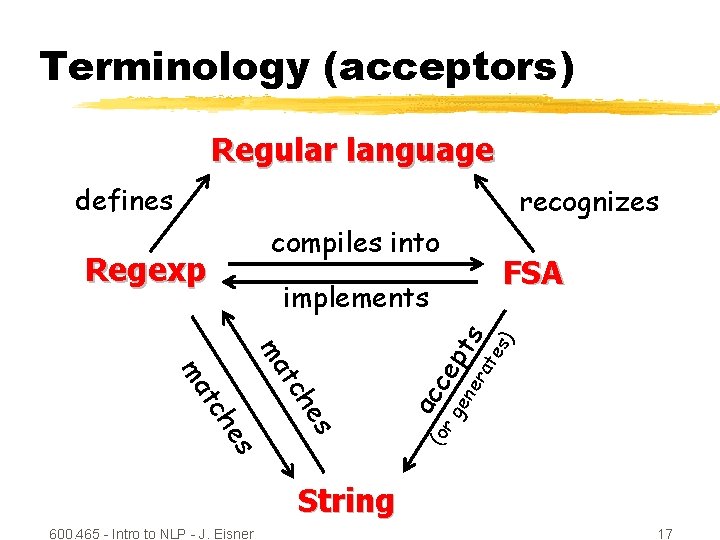

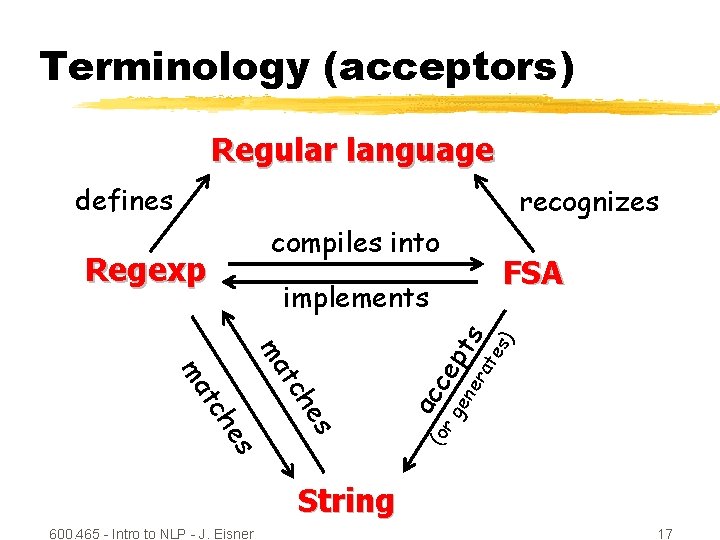

Terminology (acceptors)

- Regexp defines a regular language
- FSA recognizes a regular language
- Regexp compiles into FSA
- FSA implements Regexp
- Regexp matches a String
- FSA accepts (or generates) a String

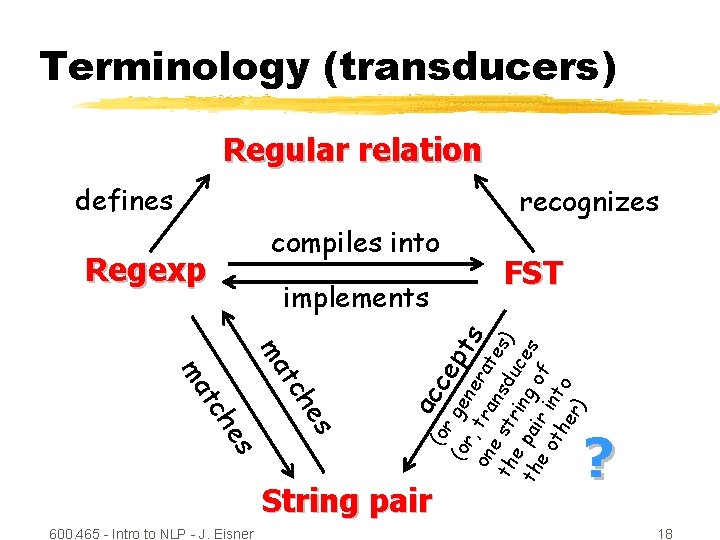

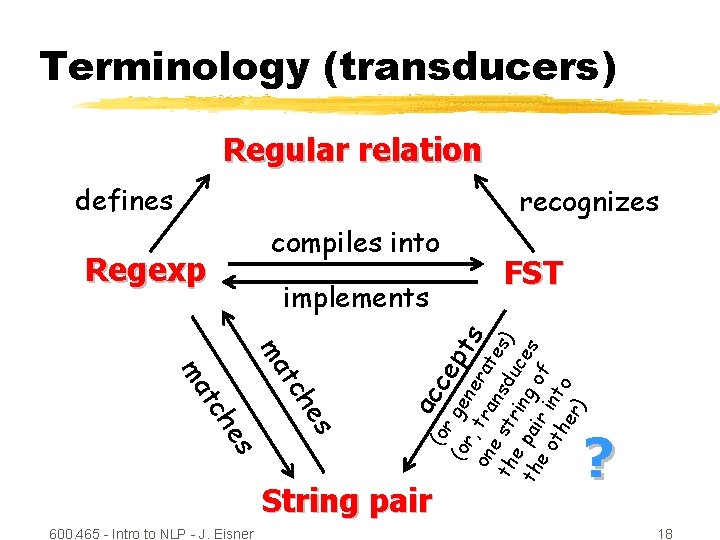

Terminology (transducers)

- Regexp defines a regular relation
- FST recognizes a regular relation
- Regexp compiles into FST
- FST implements Regexp
- Regexp matches a String pair
- FST accepts (or generates) the pair (or, transduces one string of the pair into the other)

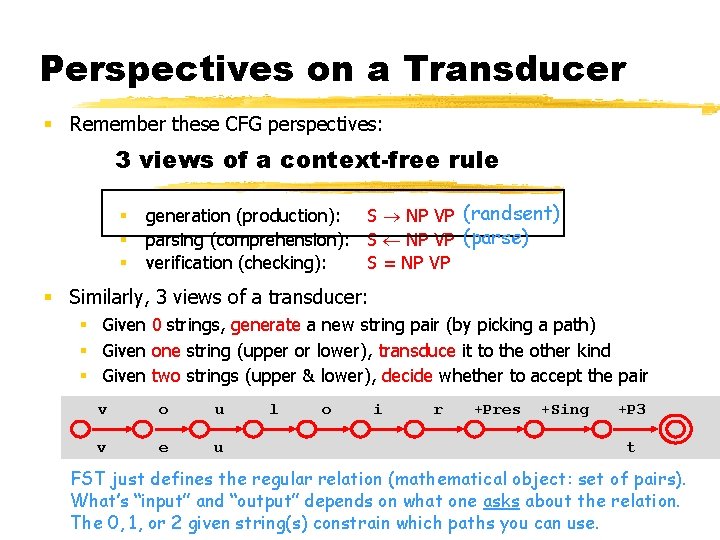

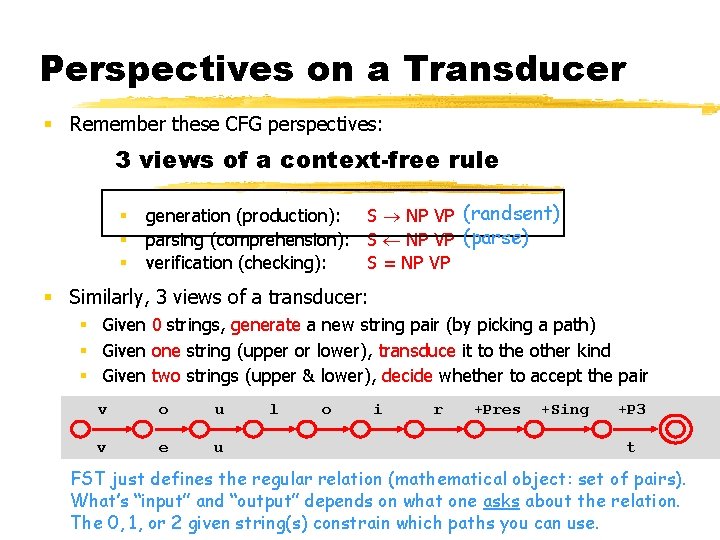

Perspectives on a Transducer

- Remember these CFG perspectives: 3 views of a context-free rule
  - generation (production): S → NP VP (randsent)
  - parsing (comprehension): S ← NP VP (parse)
  - verification (checking): S = NP VP
- Similarly, 3 views of a transducer:
  - Given 0 strings, generate a new string pair (by picking a path)
  - Given one string (upper or lower), transduce it to the other kind
  - Given two strings (upper & lower), decide whether to accept the pair
- [Diagram: the path with upper side v o u l o i r +Pres +Sing +P3 and lower side v e u t]
- FST just defines the regular relation (mathematical object: set of pairs). What’s “input” and “output” depends on what one asks about the relation. The 0, 1, or 2 given string(s) constrain which paths you can use.
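The three views can be made concrete by treating the relation as a plain set of (upper, lower) pairs. This is only a sketch of the mathematical object, not of an FST; the `RELATION` contents are a tiny illustrative sample and the function names are assumptions.

```python
# A toy regular relation, written out as an explicit finite set of pairs.
RELATION = {
    ("vouloir+Pres+Sing+P3", "veut"),
    ("suivre+IndP+SG+P1", "suis"),
    ("être+IndP+SG+P1", "suis"),
}


def generate():
    """Given 0 strings: enumerate the string pairs in the relation."""
    return set(RELATION)


def transduce_down(upper):
    """Given the upper string: all lower strings it is related to."""
    return {low for up, low in RELATION if up == upper}


def transduce_up(lower):
    """Given the lower string: all upper strings it is related to."""
    return {up for up, low in RELATION if low == lower}


def accepts(upper, lower):
    """Given both strings: is the pair in the relation?"""
    return (upper, lower) in RELATION


print(transduce_down("vouloir+Pres+Sing+P3"))
print(transduce_up("suis"))
print(accepts("vouloir+Pres+Sing+P3", "suis"))
```

All four functions consult the same set; “input” and “output” exist only in how the question is posed. A real FST answers the same questions without listing the pairs, which matters once the relation is infinite.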

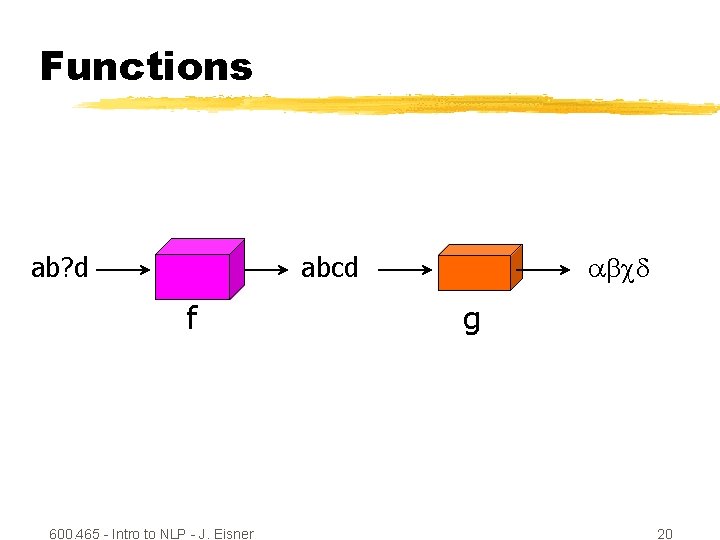

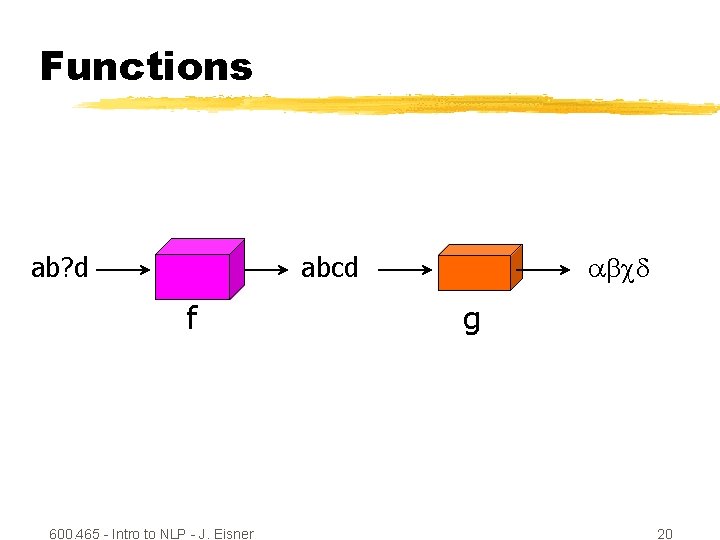

Functions

- [Diagram: two functions f and g applied in sequence; f maps ab?d to abcd]

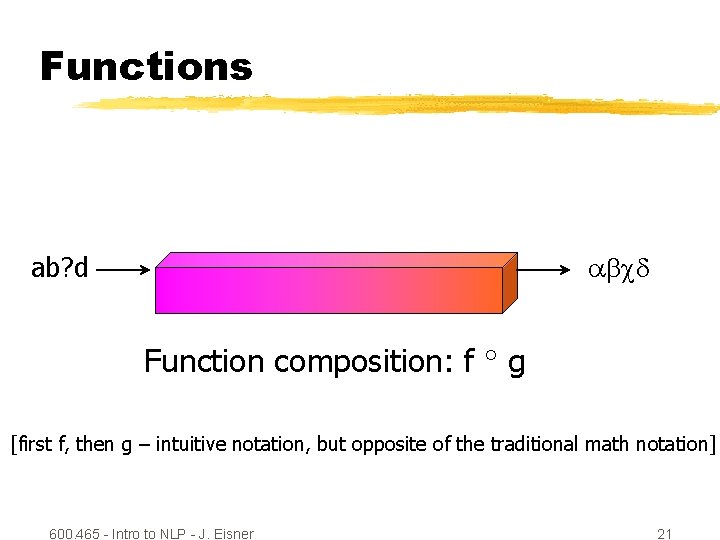

Functions

- [Diagram: ab?d is mapped to abcd]
- Function composition: f ∘ g [first f, then g: intuitive notation, but opposite of the traditional math notation]

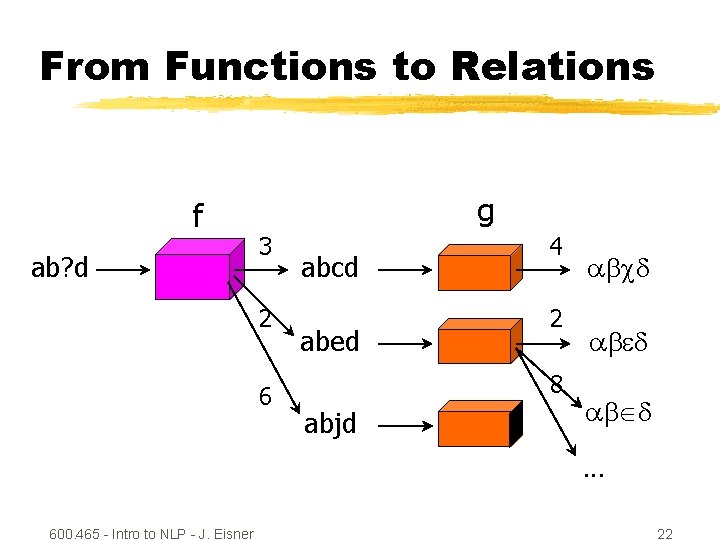

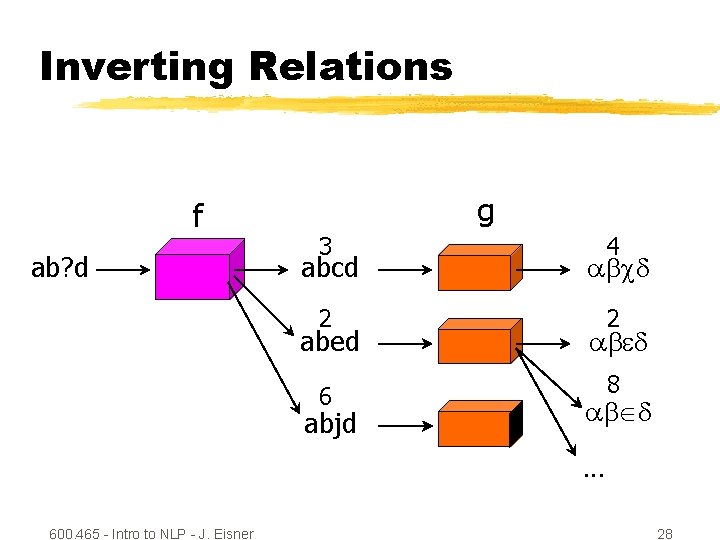

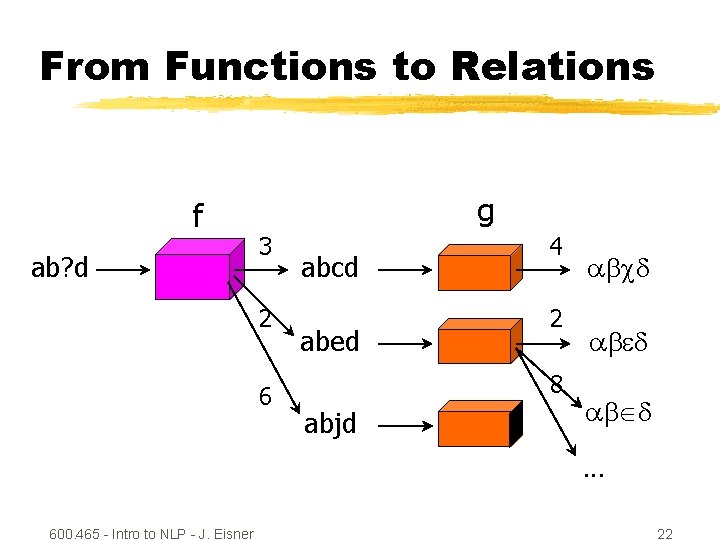

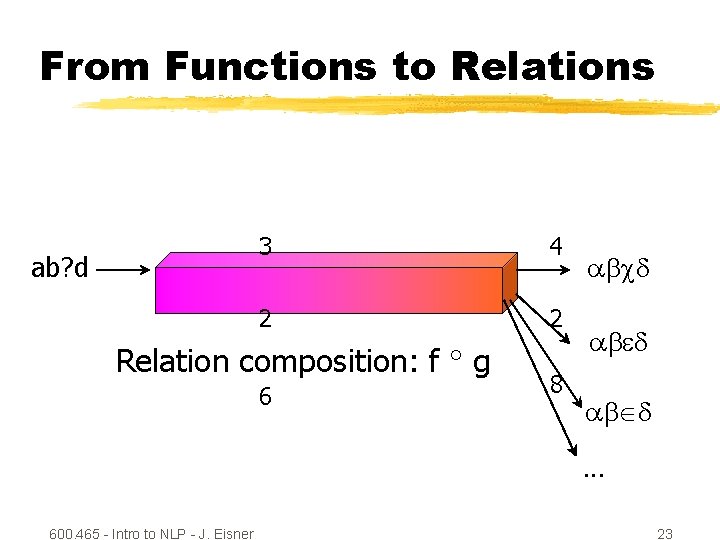

From Functions to Relations

- [Diagram: relation f maps ab?d to abcd (cost 3), abed (cost 2), abjd (cost 6), ...; relation g maps these onward with costs 4, 2, 8 to outputs abcd, ab d, ...]

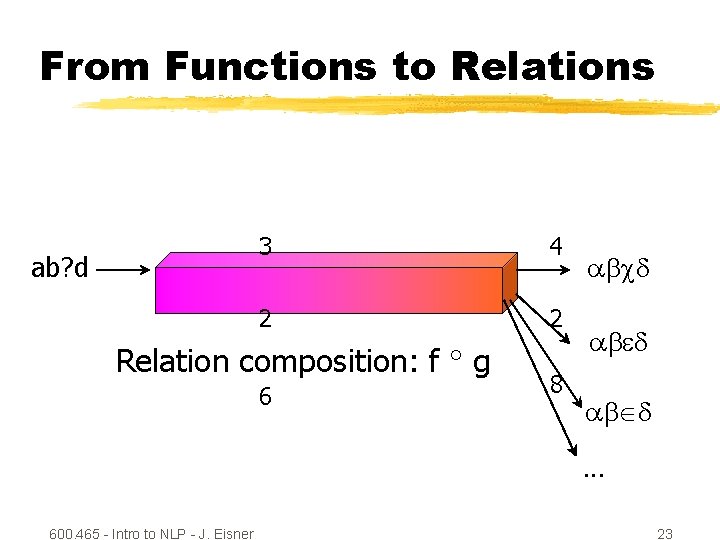

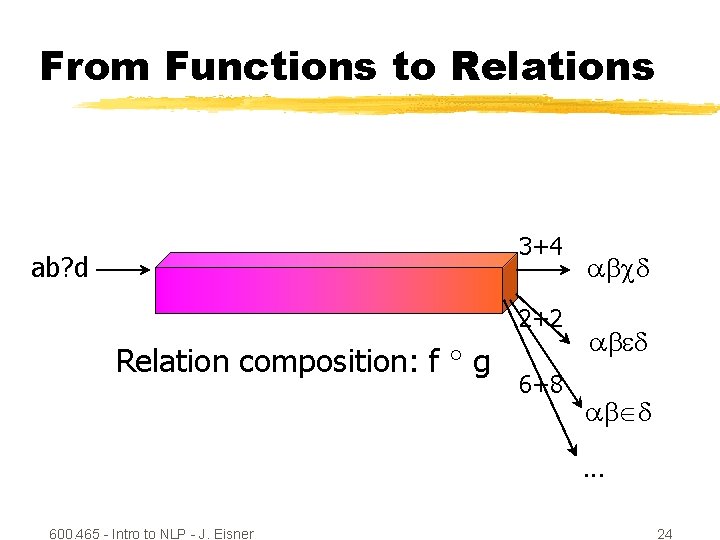

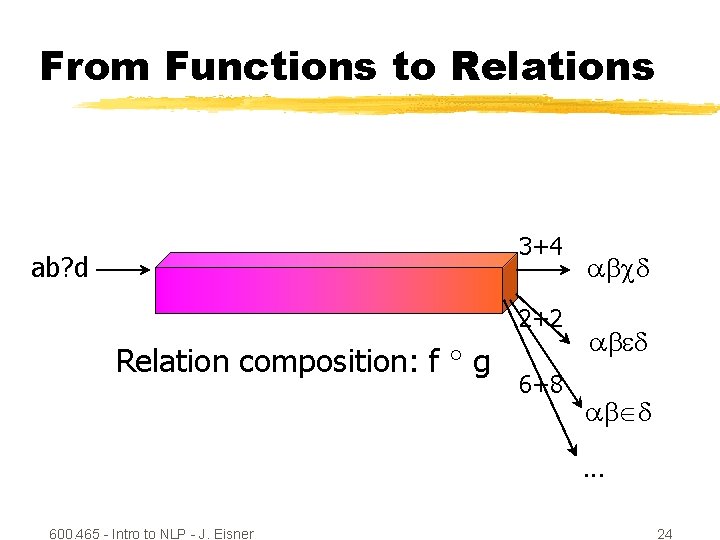

From Functions to Relations

- Relation composition: f ∘ g
- [Diagram: ab?d is related to abcd via costs 3 and 4, to ab d via costs 2 and 2, and to ... via costs 6 and 8]

From Functions to Relations

- Relation composition: f ∘ g
- [Diagram: ab?d is related to abcd with cost 3+4, to ab d with cost 2+2, and to ... with cost 6+8]

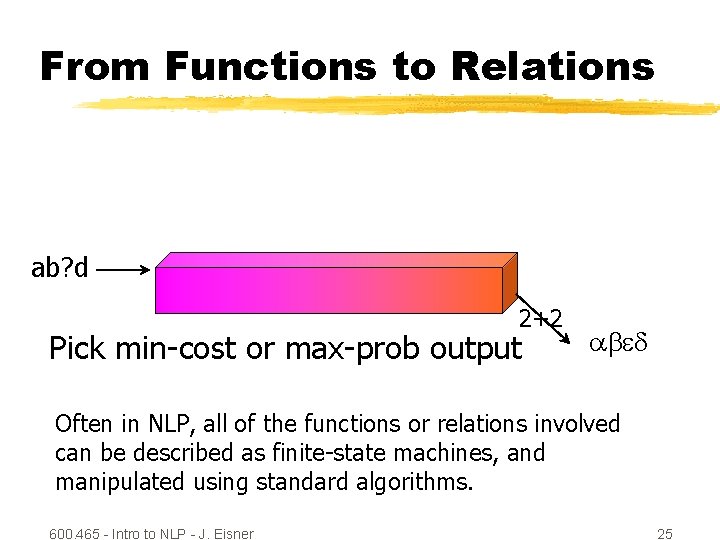

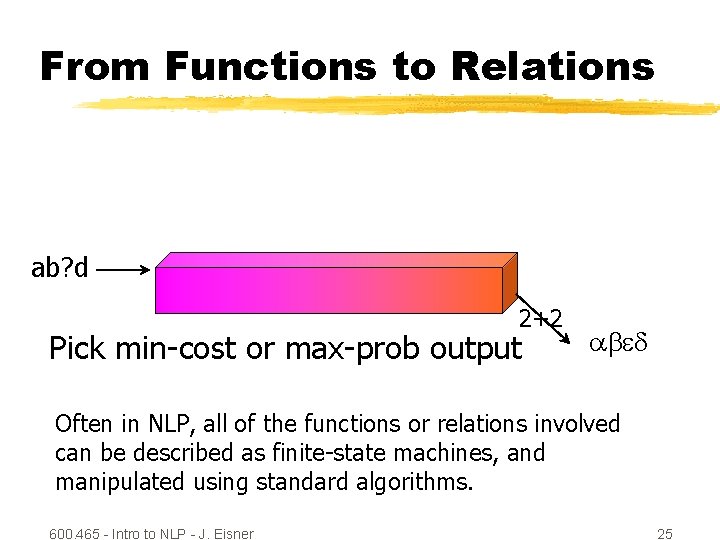

From Functions to Relations

- Pick min-cost or max-prob output
- [Diagram: ab?d is related to ab d with cost 2+2, the cheapest of the composed paths]
- Often in NLP, all of the functions or relations involved can be described as finite-state machines, and manipulated using standard algorithms.
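A small sketch of weighted relation composition followed by min-cost selection, using nested dictionaries rather than finite-state machines. The costs 3, 2, 6 and 4, 2, 8 come from the slides; the pairing of g's arcs with intermediate strings, and the third output of g (elided on the slide, here the placeholder `abjd`), are assumptions.

```python
# relation[x][y] = cost of relating x to y
F = {"ab?d": {"abcd": 3, "abed": 2, "abjd": 6}}
G = {
    "abcd": {"abcd": 4},
    "abed": {"ab d": 2},
    "abjd": {"abjd": 8},  # hypothetical output; the slide shows only "..."
}


def compose(f, g):
    """First f, then g; path costs add, and the cheapest path per pair wins."""
    result = {}
    for x, mids in f.items():
        for mid, cost_f in mids.items():
            for out, cost_g in g.get(mid, {}).items():
                total = cost_f + cost_g
                outs = result.setdefault(x, {})
                if out not in outs or total < outs[out]:
                    outs[out] = total
    return result


def best_output(relation, x):
    """Pick the min-cost output for input x."""
    outs = relation[x]
    return min(outs, key=outs.get)


FG = compose(F, G)
print(FG["ab?d"])
print(best_output(FG, "ab?d"))
```

With real transducers the same result comes from composing the machines and running a shortest-path search, without enumerating intermediate strings.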

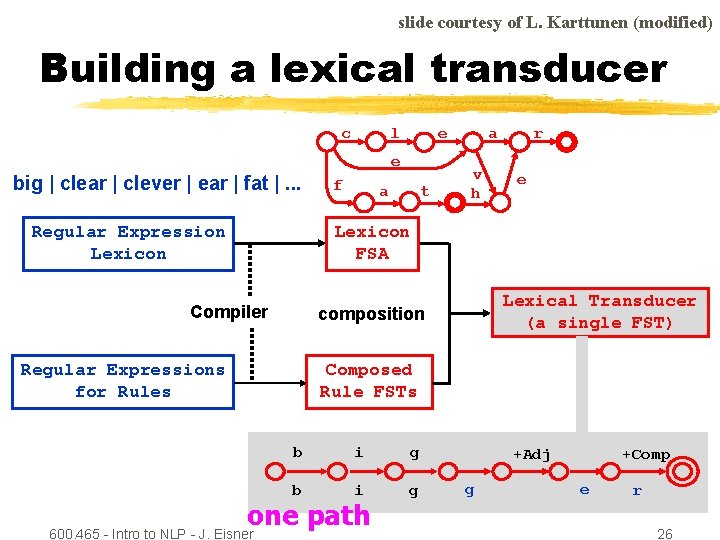

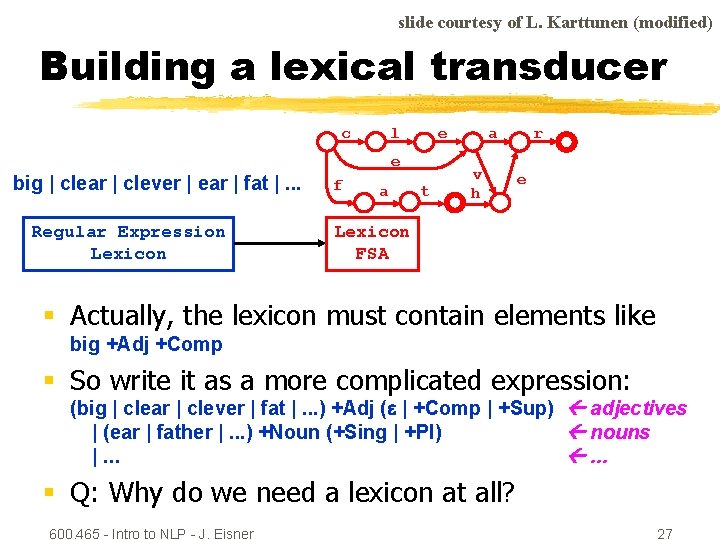

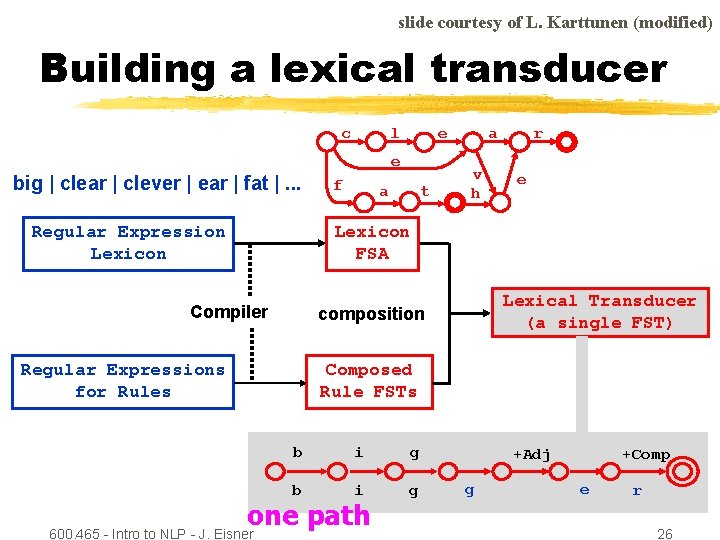

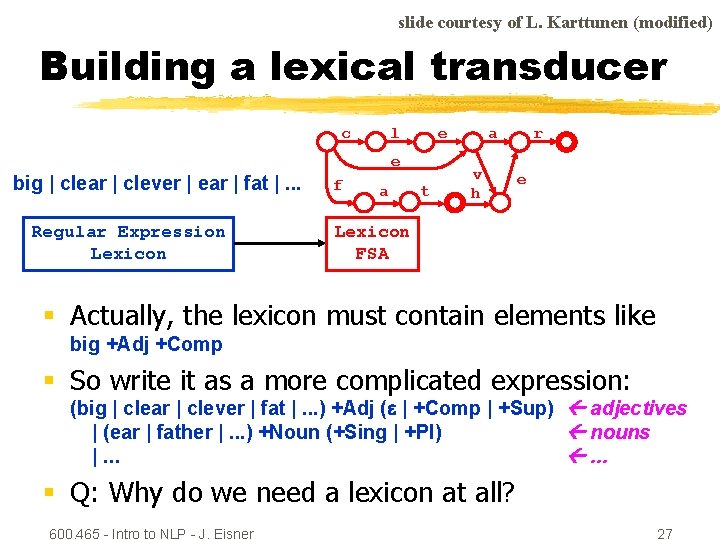

Building a lexical transducer (slide courtesy of L. Karttunen, modified)

- Regular Expression Lexicon: big | clear | clever | ear | fat | ...
- The Compiler turns the Regular Expression Lexicon into a Lexicon FSA
- The Compiler turns the Regular Expressions for Rules into Composed Rule FSTs
- Composition of the Lexicon FSA with the Composed Rule FSTs yields the Lexical Transducer (a single FST)
- [Diagram: one path of the Lexical Transducer, with upper side b i g +Adj +Comp and lower side b i g g e r]

Building a lexical transducer (slide courtesy of L. Karttunen, modified)

- Regular Expression Lexicon: big | clear | clever | ear | fat | ...
- [Diagram: the Lexicon FSA, a letter-by-letter automaton for these words]
- Actually, the lexicon must contain elements like big +Adj +Comp
- So write it as a more complicated expression:
  - adjectives: (big | clear | clever | fat | ...) +Adj (ε | +Comp | +Sup)
  - nouns: | (ear | father | ...) +Noun (+Sing | +Pl)
  - | ...
- Q: Why do we need a lexicon at all?
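The “more complicated expression” denotes a finite set of upper-side strings, which can be enumerated directly. A sketch, assuming only the stems named on the slide (the slide's "..." entries are left out) and using an empty string for the ε alternative:

```python
from itertools import product

ADJECTIVES = ["big", "clear", "clever", "fat"]
NOUNS = ["ear", "father"]


def lexicon_entries():
    """Expand stem lists and tag alternatives into full lexicon strings."""
    entries = []
    # (big | clear | ...) +Adj (ε | +Comp | +Sup)
    for stem, degree in product(ADJECTIVES, ["", "+Comp", "+Sup"]):
        entries.append(f"{stem}+Adj{degree}")
    # (ear | father | ...) +Noun (+Sing | +Pl)
    for stem, number in product(NOUNS, ["+Sing", "+Pl"]):
        entries.append(f"{stem}+Noun{number}")
    return entries


for entry in lexicon_entries():
    print(entry)
```

Compiling the regular expression to an FSA represents the same set while sharing prefixes and suffixes, instead of storing every string separately.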

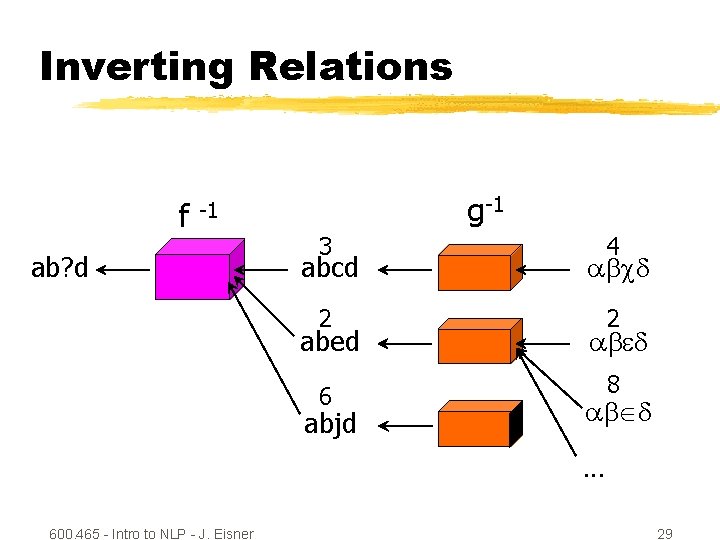

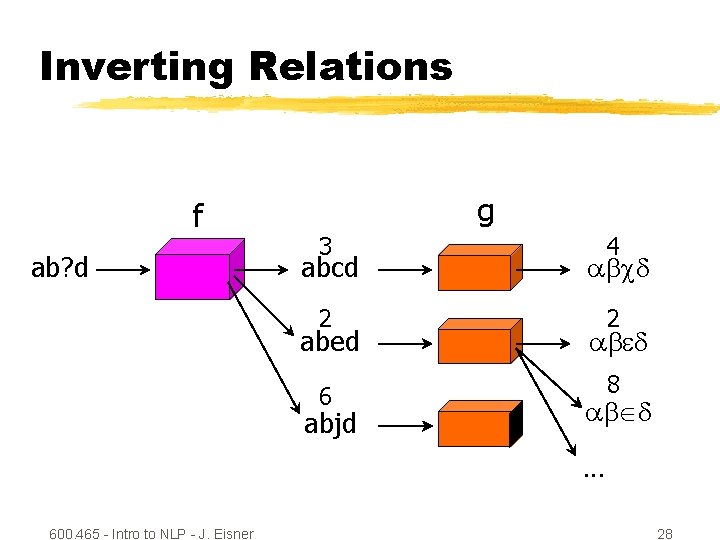

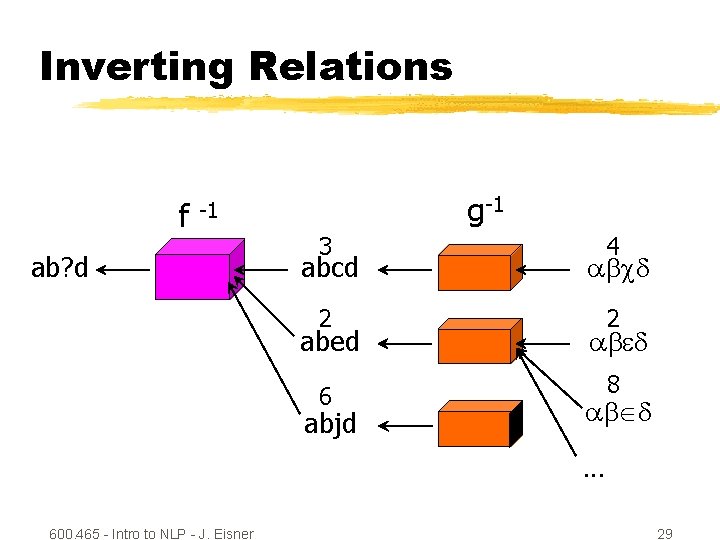

Inverting Relations

- [Diagram: relation f maps ab?d to abcd (cost 3), abed (cost 2), abjd (cost 6); relation g maps these onward with costs 4, 2, 8 to outputs abcd, ab d, ...]

Inverting Relations

- [Diagram: the same arcs reversed, giving f⁻¹ and g⁻¹; the costs 3, 2, 6 and 4, 2, 8 are unchanged]

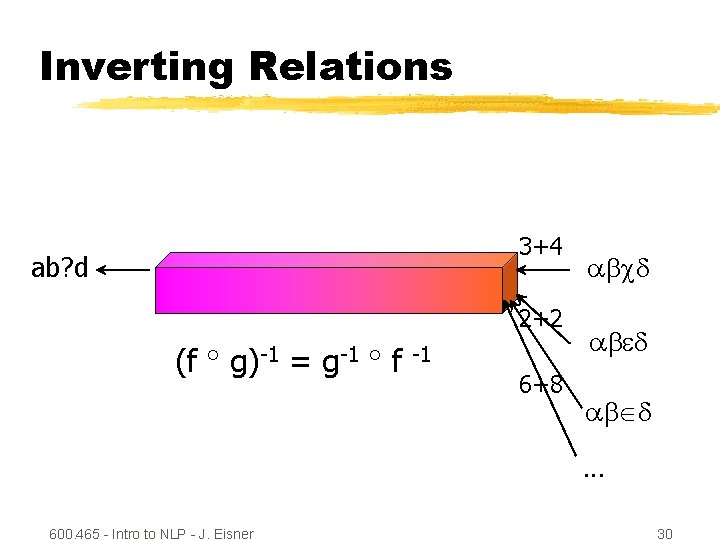

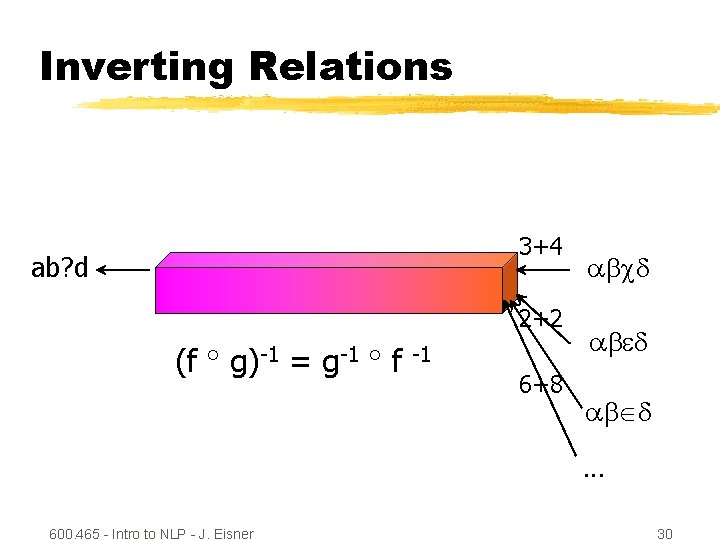

Inverting Relations

- (f ∘ g)⁻¹ = g⁻¹ ∘ f⁻¹
- [Diagram: the composed relation reversed, with costs 3+4, 2+2, 6+8 between ab?d and the outputs abcd, ab d, ...]
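The identity (f ∘ g)⁻¹ = g⁻¹ ∘ f⁻¹ can be checked on a small example by storing each weighted relation as a set of (input, output, cost) triples. The triples reuse the slide's costs; the third output of g is a labeled placeholder, and the set-of-triples representation is an assumption for this sketch.

```python
F = {("ab?d", "abcd", 3), ("ab?d", "abed", 2), ("ab?d", "abjd", 6)}
G = {
    ("abcd", "abcd", 4),
    ("abed", "ab d", 2),
    ("abjd", "abjd", 8),  # hypothetical output; elided on the slide
}


def invert(relation):
    """Swap input and output of every triple; costs stay the same."""
    return {(y, x, cost) for (x, y, cost) in relation}


def compose(f, g):
    """First f, then g: join on the shared middle string and add costs."""
    return {
        (x, z, cost_f + cost_g)
        for (x, y, cost_f) in f
        for (y2, z, cost_g) in g
        if y == y2
    }


left = invert(compose(F, G))
right = compose(invert(G), invert(F))
print(left == right)
print(sorted(left))
```

Inversion reverses the order of composition because a reversed path leaves through g's arcs first and then f's.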

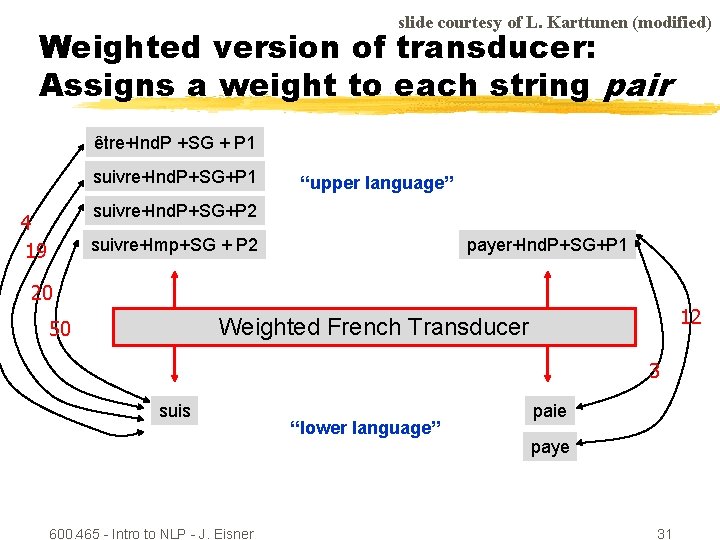

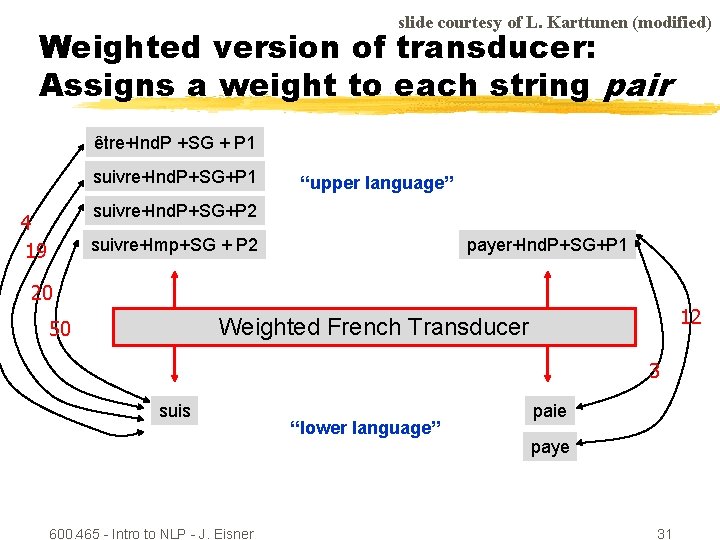

Weighted version of transducer: assigns a weight to each string pair (slide courtesy of L. Karttunen, modified)

- “Upper language”: être+IndP+SG+P1, suivre+IndP+SG+P1, suivre+IndP+SG+P2, suivre+Imp+SG+P2, payer+IndP+SG+P1
- “Lower language”: suis, paie, paye
- [Diagram: the Weighted French Transducer links upper strings to lower strings; the links carry the weights 4, 19, 20, 50, 12, 3]

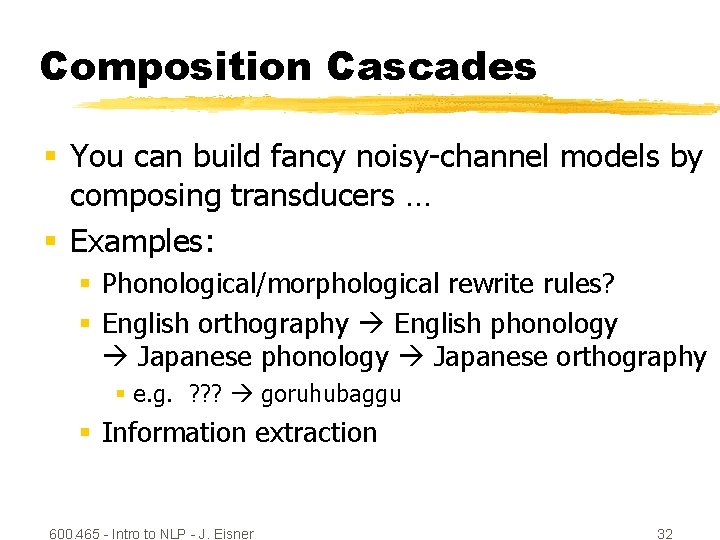

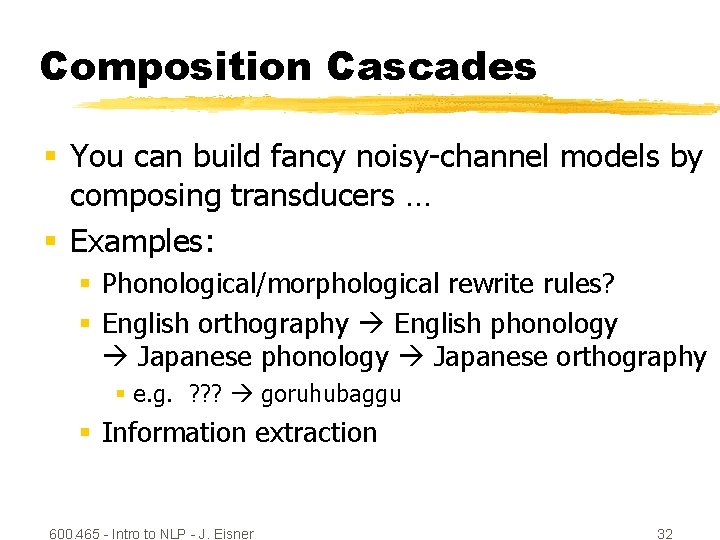

Composition Cascades

- You can build fancy noisy-channel models by composing transducers …
- Examples:
  - Phonological/morphological rewrite rules?
  - English orthography → English phonology → Japanese orthography
    - e.g. ??? → goruhubaggu
  - Information extraction

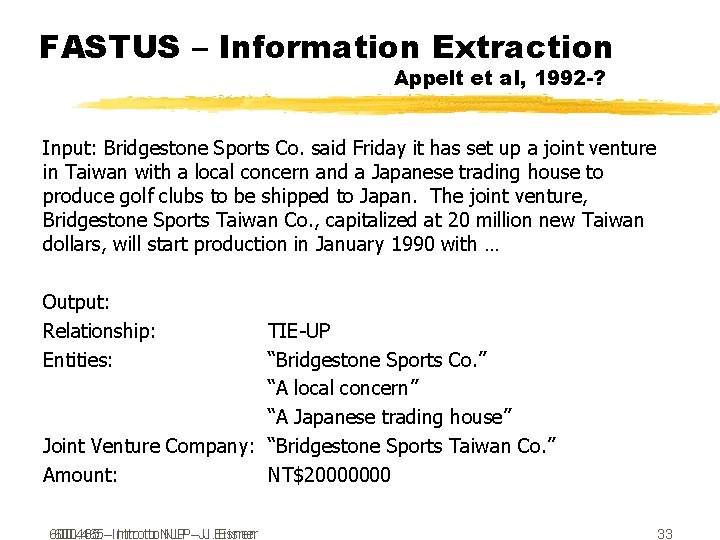

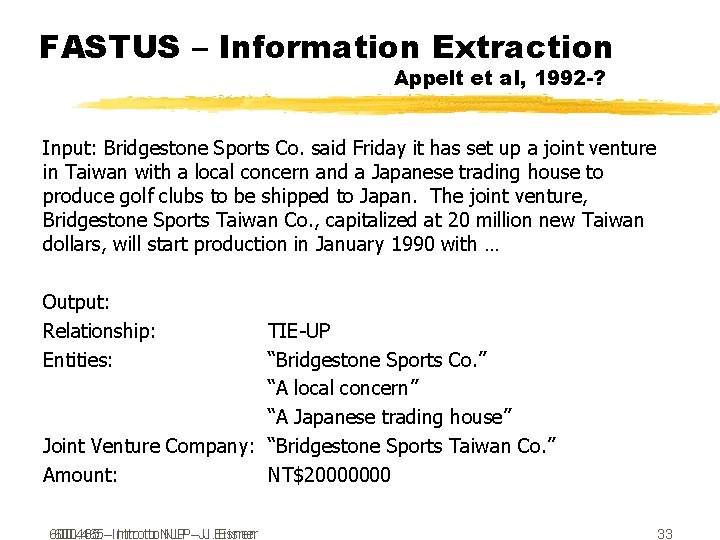

FASTUS: Information Extraction (Appelt et al., 1992-?)

Input: Bridgestone Sports Co. said Friday it has set up a joint venture in Taiwan with a local concern and a Japanese trading house to produce golf clubs to be shipped to Japan. The joint venture, Bridgestone Sports Taiwan Co., capitalized at 20 million new Taiwan dollars, will start production in January 1990 with …

Output:

- Relationship: TIE-UP
- Entities: “Bridgestone Sports Co.”, “A local concern”, “A Japanese trading house”
- Joint Venture Company: “Bridgestone Sports Taiwan Co.”
- Amount: NT$20000000

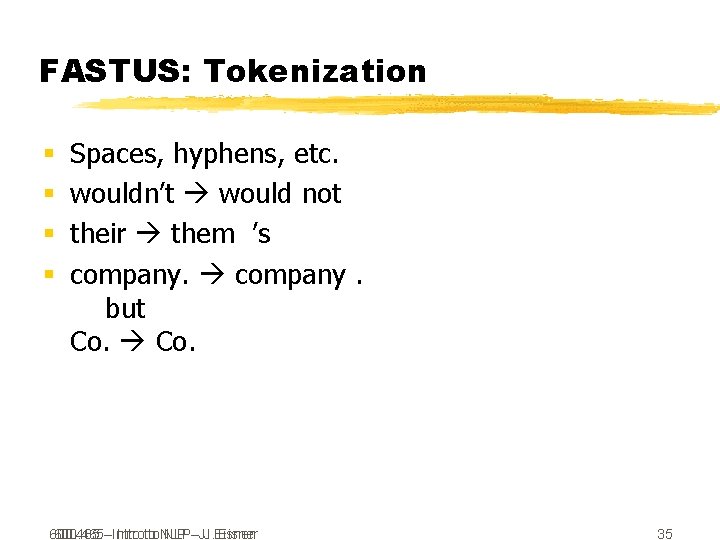

FASTUS: Successive Markups (details on subsequent slides)

- Tokenization
- .o. Multiwords
- .o. Basic phrases (noun groups, verb groups …)
- .o. Complex phrases
- .o. Semantic Patterns
- .o. Merging different references
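A toy sketch of the cascade idea, with the `.o.` compositions replaced by ordinary function chaining. The two stages below are plain Python functions rather than finite-state transducers, and their rules (one contraction, the abbreviation `Co.`, two multiwords) are illustrative assumptions, not the FASTUS rules.

```python
import re
from functools import reduce


def tokenize(text):
    """Expand one contraction, keep 'Co.' whole, split off other punctuation."""
    text = text.replace("wouldn't", "would not")
    return re.findall(r"\bCo\.|\w+|[^\w\s]", text)


MULTIWORDS = [("set", "up"), ("joint", "venture")]


def join_multiwords(tokens):
    """Merge known two-word expressions into single tokens."""
    out, i = [], 0
    while i < len(tokens):
        pair = tuple(tokens[i:i + 2])
        if pair in MULTIWORDS:
            out.append("_".join(pair))
            i += 2
        else:
            out.append(tokens[i])
            i += 1
    return out


def cascade(*stages):
    """Chain stages left to right: the output of one feeds the next."""
    return lambda x: reduce(lambda value, stage: stage(value), stages, x)


pipeline = cascade(tokenize, join_multiwords)
print(pipeline("Bridgestone Sports Co. said it has set up a joint venture."))
```

When every stage is a transducer, the whole cascade can be composed ahead of time into a single machine instead of running the stages one after another.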

FASTUS: Tokenization

- Spaces, hyphens, etc.
- wouldn’t → would not
- their → them ’s
- company. (split off the period) but Co. (keep the abbreviation whole)

FASTUS: Multiwords

- “set up”
- “joint venture”
- “San Francisco Symphony Orchestra,” “Canadian Opera Company”
  - … use a specialized regexp to match musical groups.
  - ... what kind of regexp would match company names?

![FASTUS Basic phrases Output looks like this no nested brackets NG it FASTUS : Basic phrases Output looks like this (no nested brackets!): … [NG it]](https://slidetodoc.com/presentation_image/35a88029a1d703585ed33e34d454df35/image-37.jpg)

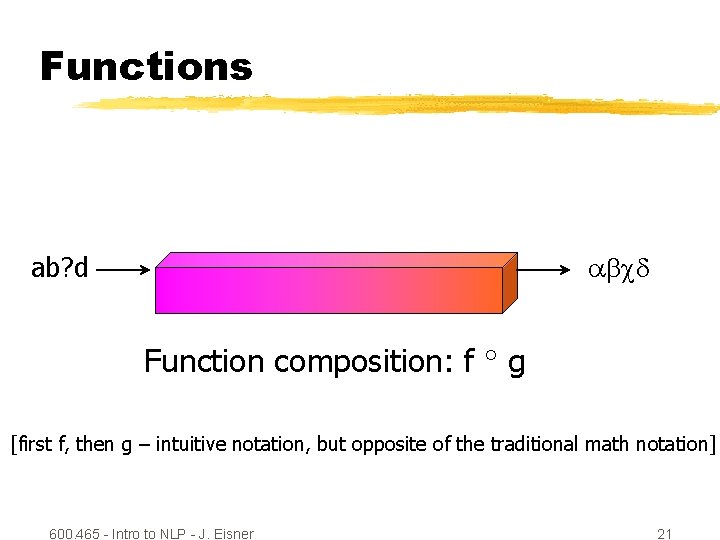

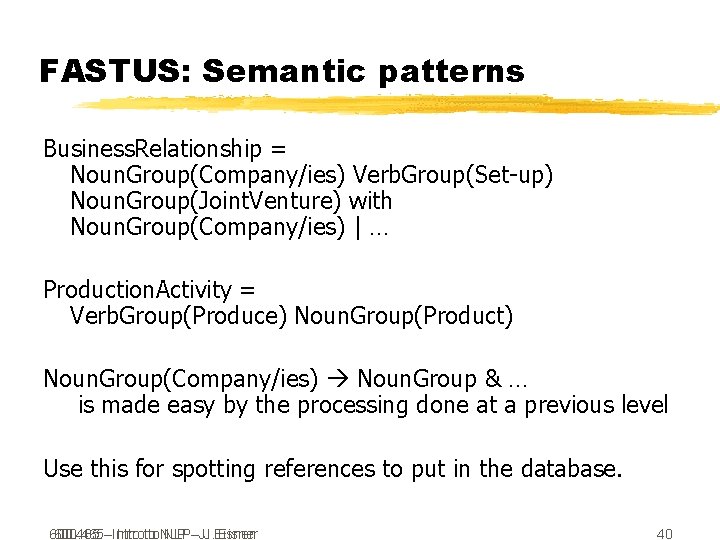

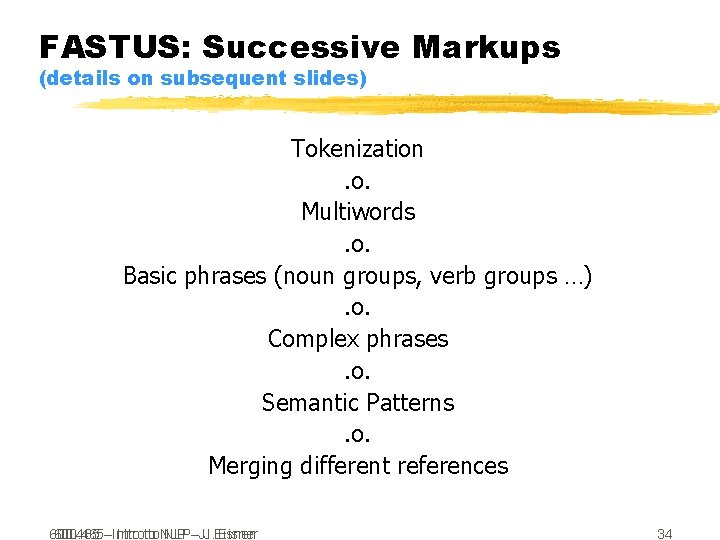

FASTUS: Basic phrases

- Output looks like this (no nested brackets!): … [NG it] [VG had set_up] [NP a joint_venture] [Prep in] …
- [Figure: the sentence “Bridgestone Sports Co. said Friday it had set up a joint venture in Taiwan with a local concern” segmented into phrases with labels including Company Name, Verb Group, Noun Group, Preposition, Location]

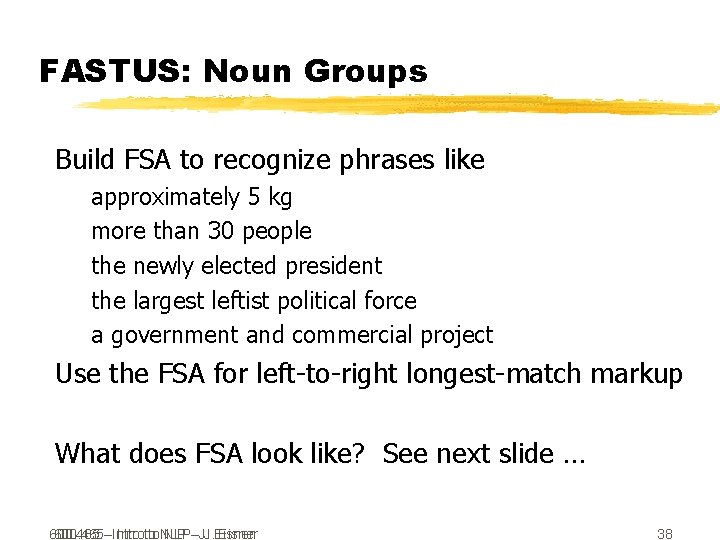

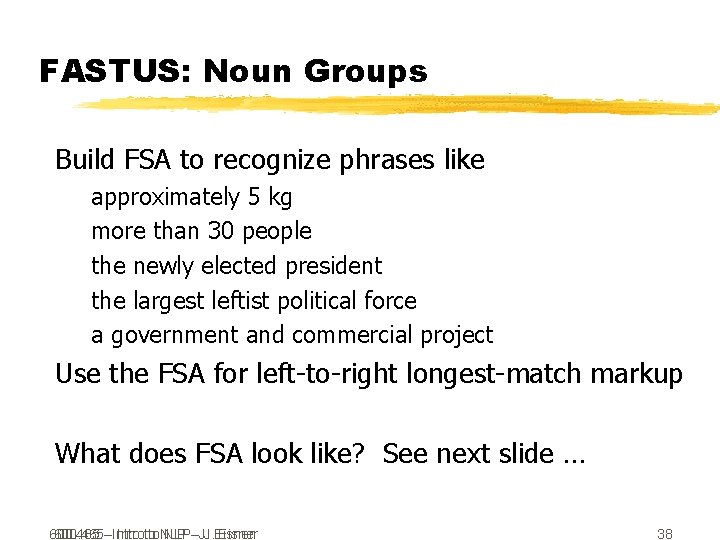

FASTUS: Noun Groups

- Build FSA to recognize phrases like
  - approximately 5 kg
  - more than 30 people
  - the newly elected president
  - the largest leftist political force
  - a government and commercial project
- Use the FSA for left-to-right longest-match markup
- What does FSA look like? See next slide …

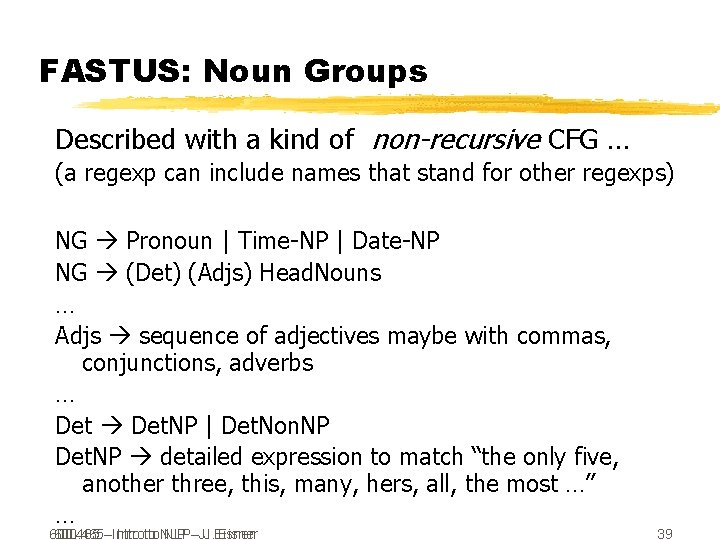

FASTUS: Noun Groups

- Described with a kind of non-recursive CFG … (a regexp can include names that stand for other regexps)
  - NG → Pronoun | Time-NP | Date-NP
  - NG → (Det) (Adjs) HeadNouns
  - …
  - Adjs → sequence of adjectives maybe with commas, conjunctions, adverbs
  - …
  - Det → DetNP | DetNonNP
  - DetNP → detailed expression to match “the only five, another three, this, many, hers, all, the most …”
  - …
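A much-reduced sketch of left-to-right longest-match markup for noun groups, working over (word, tag) pairs. The tag set (`PRON`, `DET`, `ADJ`, `ADV`, `N`, `V`) and the simplified pattern Pronoun | (Det) (Adj or Adv)* Noun+ are assumptions; the real FASTUS grammar is far more detailed.

```python
def match_noun_group(tags, start):
    """Return the end index of the longest noun group at start, or start if none."""
    if tags[start] == "PRON":
        return start + 1
    i = start
    if i < len(tags) and tags[i] == "DET":              # optional determiner
        i += 1
    while i < len(tags) and tags[i] in ("ADJ", "ADV"):  # optional modifiers
        i += 1
    head_start = i
    while i < len(tags) and tags[i] == "N":             # one or more head nouns
        i += 1
    return i if i > head_start else start


def mark_up(tagged):
    """Scan left to right, bracketing the longest noun group at each position."""
    words = [w for w, _ in tagged]
    tags = [t for _, t in tagged]
    out, i = [], 0
    while i < len(tagged):
        end = match_noun_group(tags, i)
        if end > i:
            out.append("[NG " + " ".join(words[i:end]) + "]")
            i = end
        else:
            out.append(words[i])
            i += 1
    return " ".join(out)


sentence = [("the", "DET"), ("newly", "ADV"), ("elected", "ADJ"),
            ("president", "N"), ("spoke", "V")]
print(mark_up(sentence))
```

After a match the scan resumes right after the bracketed span, so brackets never nest, in line with the flat output FASTUS produces.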

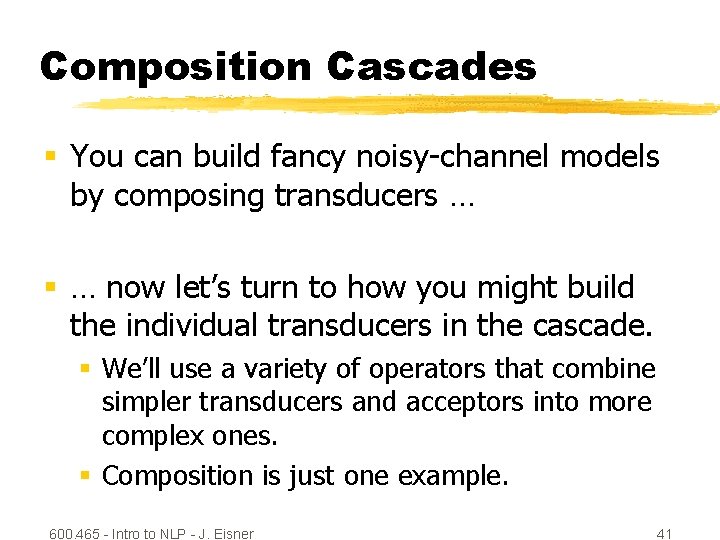

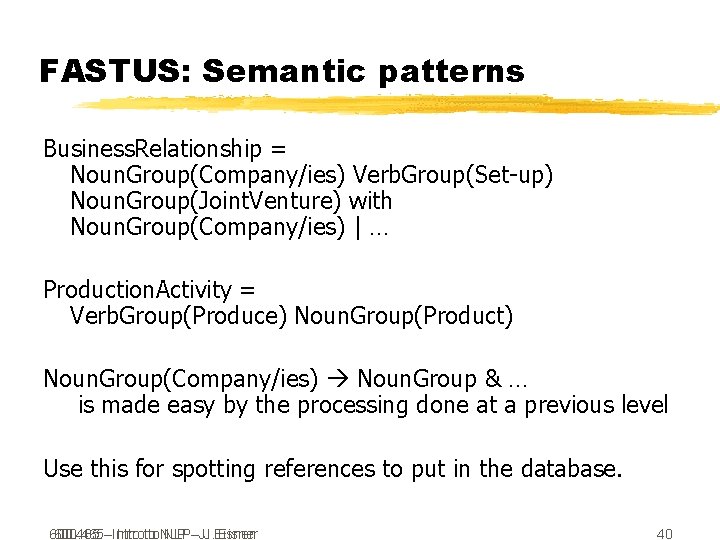

FASTUS: Semantic patterns

- BusinessRelationship = NounGroup(Company/ies) VerbGroup(Set-up) NounGroup(JointVenture) with NounGroup(Company/ies) | …
- ProductionActivity = VerbGroup(Produce) NounGroup(Product)
- NounGroup(Company/ies) NounGroup & … is made easy by the processing done at a previous level
- Use this for spotting references to put in the database.

Composition Cascades

- You can build fancy noisy-channel models by composing transducers …
- … now let’s turn to how you might build the individual transducers in the cascade.
  - We’ll use a variety of operators that combine simpler transducers and acceptors into more complex ones.
  - Composition is just one example.