
Natural Language Processing (NLP) techniques for structuring large volumes of human text data

Alessandra Sozzi, Kimberley Brett, Office for National Statistics

Overview
• Introduction to NLP and context of use within ONS
• Property data: an example of NLP and machine learning
• Sentiment analysis of text:
  • Automating internal feedback
  • Understanding daily public satisfaction

What is Natural Language Processing (NLP)?
• Using computer algorithms and code to understand, and sometimes classify, large volumes of unstructured human text
• Can help to automate analysis previously done by hand
• Useful in government, where many free-text fields contain rich information

Property websites: Zoopla

Project: Intelligence from housing data
• Supplement address register information to provide insight for census field staff
• Pilot (Karen Gask): used the Zoopla API to identify caravan properties
• Caravans are inconsistently recorded in other data sources
• Natural Language Processing and machine learning approaches in Python

Training
• Binary features created from the property description and property type
• Data split into 80% training, 20% testing
• Machine learning algorithms compared: logistic regression, decision trees, random forests, support vector machines (SVMs)
• Evaluation: F1 scores and cross-validation
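The training steps above can be sketched in plain Python. This is a minimal illustration, not the pilot's code: the keyword list `VOCABULARY`, the categories in `PROPERTY_TYPES` and all function names are hypothetical, and the classifiers themselves are left out. It shows how a listing becomes a binary feature vector, how an 80/20 split is drawn, and how k-fold cross-validation partitions the training set.

```python
import random
import re

# Hypothetical keyword vocabulary and property types (not taken from the pilot).
VOCABULARY = ["caravan", "static", "park", "pitch", "holiday", "garden"]
PROPERTY_TYPES = ["park_home", "detached", "flat"]


def tokenise(text):
    """Lower-case the text and split it into alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def binary_features(description, property_type):
    """One 0/1 flag per keyword present in the description, plus a one-hot property type."""
    tokens = set(tokenise(description))
    word_flags = [1 if word in tokens else 0 for word in VOCABULARY]
    type_flags = [1 if property_type == t else 0 for t in PROPERTY_TYPES]
    return word_flags + type_flags


def train_test_split(rows, test_fraction=0.2, seed=42):
    """Shuffle a copy of the rows and hold out test_fraction of them (80/20 by default)."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def k_fold_indices(n, k=5):
    """Yield (train_idx, validation_idx) pairs for k-fold cross-validation."""
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        validation = list(range(start, start + size))
        train = [i for i in range(n) if i < start or i >= start + size]
        yield train, validation
        start += size
```

For example, `binary_features("Static caravan on a quiet holiday park", "park_home")` gives `[1, 1, 1, 0, 1, 0, 1, 0, 0]`. In practice a library such as scikit-learn covers the same ground (`train_test_split(..., test_size=0.2)`, `cross_val_score(..., scoring="f1")`, and estimators such as `LogisticRegression`, `DecisionTreeClassifier`, `RandomForestClassifier` and `SVC`).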

Testing
• Support vector machines performed best in training
• SVM evaluated on the test set, attaining an F1 score of ~0.917
• Of the 51 properties identified:
  • 34 at the exact location on the address register
  • 11 at a nearby location
  • 6 not on the address register: valuable additions
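For reference, these are the standard definitions behind the F1 score quoted above (TP, FP and FN denote true positives, false positives and false negatives; the slides do not report the underlying counts), together with the arithmetic of the 51-property breakdown:

```latex
P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}, \qquad F_1 = \frac{2PR}{P + R}

34 + 11 + 6 = 51, \qquad \frac{34}{51} \approx 66.7\%, \quad \frac{11}{51} \approx 21.6\%, \quad \frac{6}{51} \approx 11.8\%
```

Because F1 is a harmonic mean, it stays high only when precision and recall are both high.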

Pilot extended
• Acquired larger Zoopla data and, using similar methods, focused on the SVM approach
• Census test areas: Blackpool, Barnsley & Sheffield, Southwark, West Dorset & South Somerset, Northern Powys
• Further investigation:
  • Whether a caravan is a residential or holiday home
  • Gated communities and retirement properties

Issues
• Data not available for the whole of the UK, as not all properties are advertised via Zoopla
• Not all listings have a description
• Census test areas: other local authorities (LAs) may be more or less likely to have these property types
• Time taken to acquire the data, clean it, etc.
• Estate agents embellish descriptions
• Spelling: data may have been input in a rush

Sentiment analysis: Projects
• Project with Eurostat: sentiment analysis of public forums
  • Blogs, comments on news sites, social media
  • Undertaken by ONS colleagues Alessandra Sozzi and Charles Morris
• Internal project:
  • Sentiment analysis of feedback responses from an internal talk

Sentiment analysis
• A type of Natural Language Processing
• Positive or negative sentiment
• Analyse different emotions
  • Plutchik's eight emotions: anger, disgust, trust, fear, joy, sadness, surprise, anticipation
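Emotion analysis with a lexicon amounts to counting, for each of Plutchik's eight emotions, how many tokens are associated with it. The sketch below assumes a word-to-emotion mapping of the kind an emotion lexicon such as NRC provides; the handful of entries in `EMOTION_LEXICON` are hypothetical placeholders, not real lexicon data.

```python
import re

PLUTCHIK_EMOTIONS = ("anger", "disgust", "trust", "fear",
                     "joy", "sadness", "surprise", "anticipation")

# Hypothetical word-to-emotion associations; a real lexicon has thousands of entries.
EMOTION_LEXICON = {
    "furious": {"anger"},
    "attack": {"anger", "fear"},
    "delicious": {"joy"},
    "reliable": {"trust"},
    "waiting": {"anticipation"},
    "shocked": {"surprise"},
    "grief": {"sadness"},
    "rotten": {"disgust"},
}


def emotion_counts(text):
    """Count how many tokens in the text are associated with each of the eight emotions."""
    counts = {emotion: 0 for emotion in PLUTCHIK_EMOTIONS}
    for token in re.findall(r"[a-z]+", text.lower()):
        # A single word may carry several emotions, so each one is incremented.
        for emotion in EMOTION_LEXICON.get(token, ()):
            counts[emotion] += 1
    return counts
```

For instance, "Shocked and furious after the attack" scores 2 for anger and 1 each for fear and surprise.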

Approaches
• Lexicon-based
  • Corpus of words rated by the sentiment they express
  • Text is run through this corpus and given ratings
• Machine learning
  • Builds on the lexicon-based approach, learning from ratings in a training set
• Clerically reviewed gold standards: essential to evaluate performance
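A minimal sketch of the lexicon-based approach and its evaluation against a gold standard follows. The ratings in `LEXICON` are hypothetical, and summing ratings is only one common scoring rule (individual packages differ). Note how a text with no rated words falls through to "neutral".

```python
import re

# Hypothetical word ratings on a -1..1 scale.
LEXICON = {"great": 0.8, "useful": 0.5, "clear": 0.4,
           "boring": -0.6, "confusing": -0.5, "bad": -0.7}


def lexicon_score(text):
    """Sum the ratings of every token found in the lexicon; unknown tokens contribute 0."""
    return sum(LEXICON.get(token, 0.0) for token in re.findall(r"[a-z]+", text.lower()))


def label(score):
    """Turn a numeric score into a coarse sentiment label."""
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def accuracy_against_gold(texts, gold_labels):
    """Share of texts whose lexicon label matches a clerically reviewed gold-standard label."""
    predicted = [label(lexicon_score(t)) for t in texts]
    return sum(p == g for p, g in zip(predicted, gold_labels)) / len(gold_labels)
```

With texts `["Great talk", "Boring", "It was fine"]` and gold labels `["positive", "negative", "positive"]`, the accuracy is 2/3: "fine" is not in the lexicon, so the third text is labelled neutral.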

Different lexicons
• Many different lexicons exist; the following have been used in our analysis:
  • NRC: very popular; contains about 14,000 rated words; scale between -1 and 1
  • Bing: contains around 6,000 words; scale between -1 and 1
  • AFINN: contains about 4,000 words; scale from -5 to 5
  • Syuzhet
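Because the lexicons use different scales (-1 to 1 versus -5 to 5), scores must be put on a common range before they can be compared. The slides do not say how this was done, so the two options below are assumptions. `rescale` maps a lexicon's known range onto [-1, 1], which keeps 0 as neutral for symmetric scales. `minmax_to_unit_range` instead stretches a whole series so that its own minimum and maximum land on -1 and 1.

```python
def rescale(value, low, high):
    """Linearly map a value from [low, high] onto [-1, 1]."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return 2 * (value - low) / (high - low) - 1


def minmax_to_unit_range(series):
    """Rescale a series so its minimum maps to -1 and its maximum to 1."""
    low, high = min(series), max(series)
    if high == low:
        # A constant series carries no spread to stretch; treat it as neutral.
        return [0.0 for _ in series]
    return [rescale(v, low, high) for v in series]
```

For example, an AFINN-scale score of 2.5 maps to `rescale(2.5, -5, 5) == 0.5`. The min-max variant is convenient for overlaying trajectories, but it no longer guarantees that 0 means neutral.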

VADER
• Problem with other lexicons: negations and boosters (e.g. "not good", "very good")
• VADER: a Python-based lexicon and sentiment analysis package. It contains only ~6,000 rated words, but it does handle negations and boosters
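The sketch below is a simplified toy showing why negations and boosters matter, written in the spirit of VADER's rules. It is not VADER's implementation. The word ratings are hypothetical, and `NEGATION_SCALAR` and the booster increments are illustrative values that echo constants in VADER's source. A booster directly before a rated word pushes its valence away from zero (or towards zero, for a dampener such as "slightly"). A negation within the three preceding tokens flips and shrinks it.

```python
import re

# Hypothetical ratings and illustrative constants.
LEXICON = {"good": 1.9, "great": 3.1, "bad": -2.5}
NEGATIONS = {"not", "never", "no", "don't", "isn't"}
BOOSTERS = {"very": 0.293, "extremely": 0.293, "slightly": -0.293}
NEGATION_SCALAR = -0.74


def vader_style_score(text):
    """Sum word valences, adjusting each for a preceding booster and nearby negation."""
    tokens = re.findall(r"[a-z']+", text.lower())
    total = 0.0
    for i, token in enumerate(tokens):
        if token not in LEXICON:
            continue
        valence = LEXICON[token]
        # Booster immediately before the word: shift away from zero (dampeners shift towards it).
        if i >= 1 and tokens[i - 1] in BOOSTERS:
            boost = BOOSTERS[tokens[i - 1]]
            valence += boost if valence > 0 else -boost
        # Negation among the previous three tokens flips and dampens the valence.
        if any(t in NEGATIONS for t in tokens[max(0, i - 3):i]):
            valence *= NEGATION_SCALAR
        total += valence
    return total
```

A plain lexicon sum scores "not good" as positive, whereas this toy gives it a negative score. With the real package, `SentimentIntensityAnalyzer().polarity_scores(text)` from `vaderSentiment.vaderSentiment` returns a dict with `neg`, `neu`, `pos` and `compound` scores.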

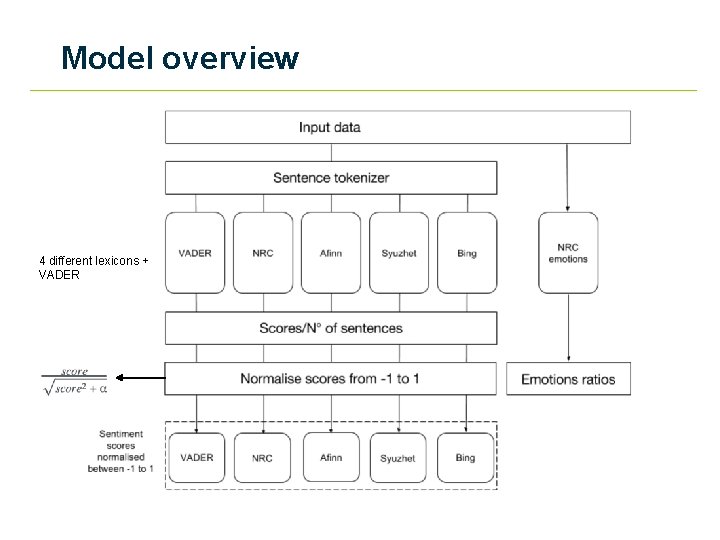

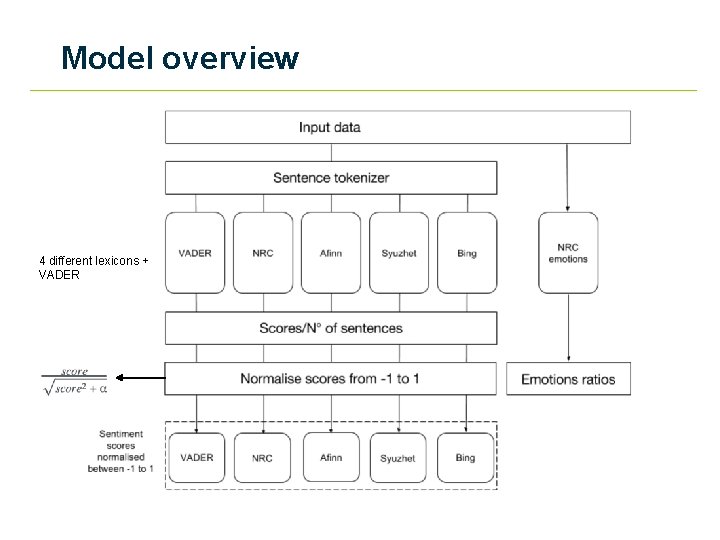

Model overview: 4 different lexicons + VADER

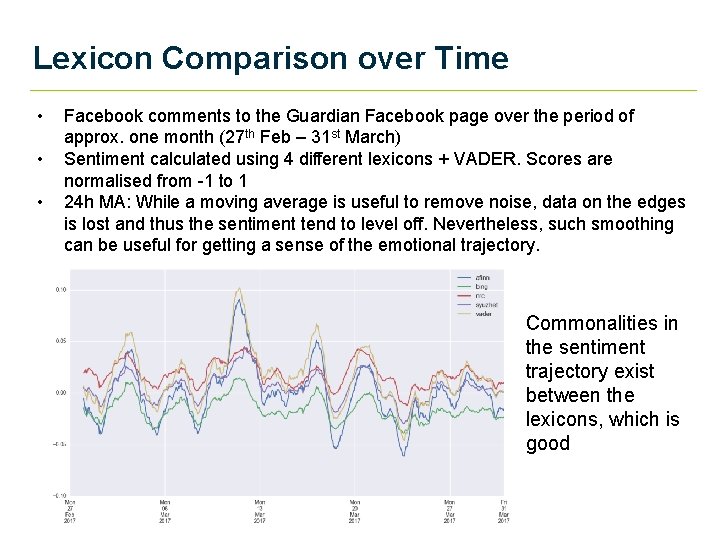

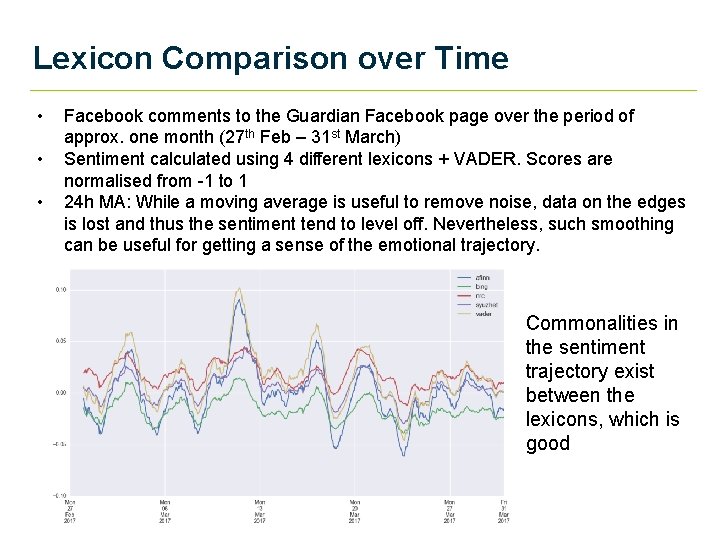

Lexicon Comparison over Time
• Facebook comments on the Guardian's Facebook page over a period of approximately one month (27th Feb to 31st March)
• Sentiment calculated using 4 different lexicons + VADER; scores are normalised to the range -1 to 1
• 24 h MA: a moving average is useful for removing noise, but data at the edges is lost, so the sentiment tends to level off. Nevertheless, such smoothing can give a sense of the emotional trajectory
• The lexicons share commonalities in their sentiment trajectories, which is encouraging
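The 24 h moving average and its edge loss can be sketched as a trailing "valid" moving average. This assumes that comment sentiment has already been aggregated into one score per hour; the slides do not describe that bucketing. Each output averages one complete window, so a series of n hourly points yields n - 23 smoothed points, and the first 23 hours have no smoothed value.

```python
def moving_average(values, window=24):
    """Trailing moving average over complete windows only (window - 1 edge points are lost)."""
    if not 1 <= window <= len(values):
        raise ValueError("window must be between 1 and len(values)")
    running = sum(values[:window])
    out = [running / window]
    for i in range(window, len(values)):
        # Slide the window one step: add the newest value, drop the oldest.
        running += values[i] - values[i - window]
        out.append(running / window)
    return out
```

For example, `moving_average([1, 2, 3, 4, 5], window=2)` returns `[1.5, 2.5, 3.5, 4.5]`, and 48 hourly scores shrink to 25 smoothed points.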

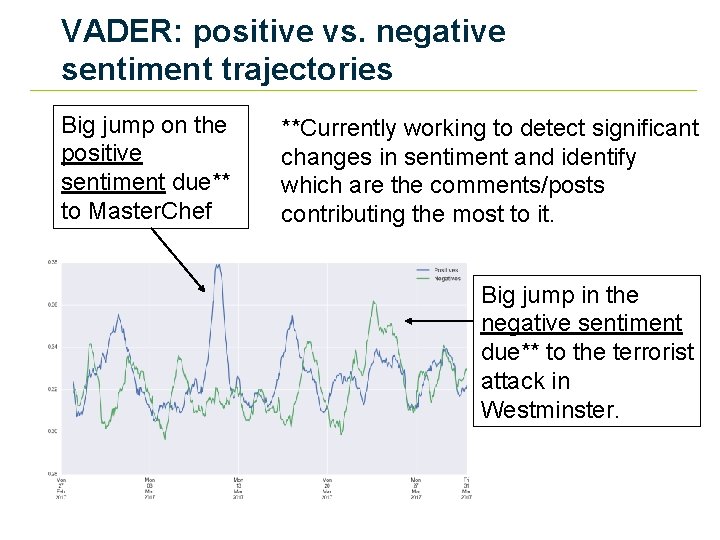

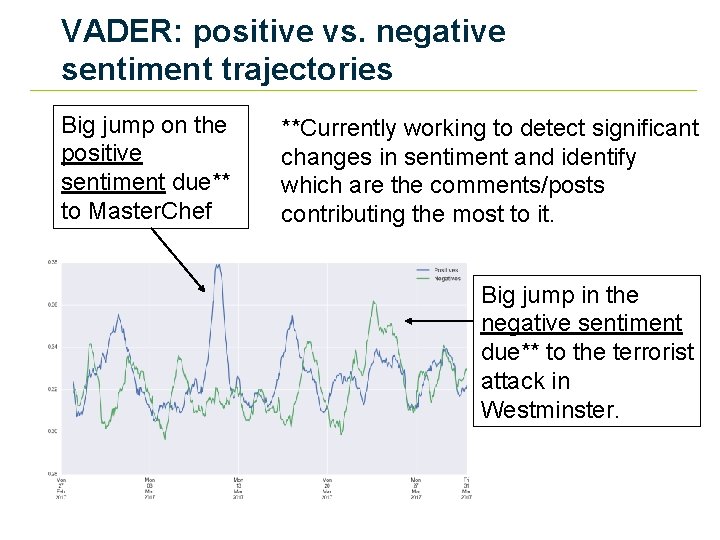

VADER: positive vs. negative sentiment trajectories
• Big jump in positive sentiment due to MasterChef
• Big jump in negative sentiment due to the terrorist attack in Westminster
• Currently working to detect significant changes in sentiment and to identify which comments/posts contribute most to them
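The slides describe change detection as work in progress without naming a method. One simple possibility, assumed here for illustration, is a rolling z-score. `flag_jumps` marks any hour whose score lies more than `threshold` standard deviations from the mean of the preceding `window` hours. `top_contributors` then ranks the comments in a flagged hour by the magnitude of their individual scores. Both function names and the default parameters are hypothetical.

```python
from statistics import mean, pstdev


def flag_jumps(series, window=24, threshold=3.0):
    """Indices whose value deviates from the mean of the previous `window` values
    by more than `threshold` standard deviations (a rolling z-score)."""
    flagged = []
    for i in range(window, len(series)):
        history = series[i - window:i]
        mu, sigma = mean(history), pstdev(history)
        deviation = abs(series[i] - mu)
        # With a perfectly flat history, any change at all counts as a jump.
        if (sigma == 0 and deviation > 0) or (sigma > 0 and deviation / sigma > threshold):
            flagged.append(i)
    return flagged


def top_contributors(comments, n=3):
    """From (comment_text, score) pairs in one time bucket, return the n largest by |score|."""
    return sorted(comments, key=lambda pair: abs(pair[1]), reverse=True)[:n]
```

A z-score is easy to explain but assumes that the recent history is roughly stable. A sustained shift will stop being flagged once it enters the window.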

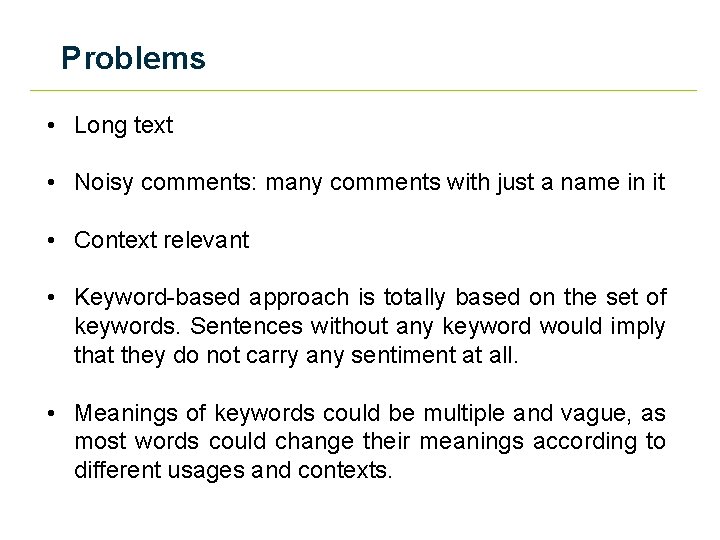

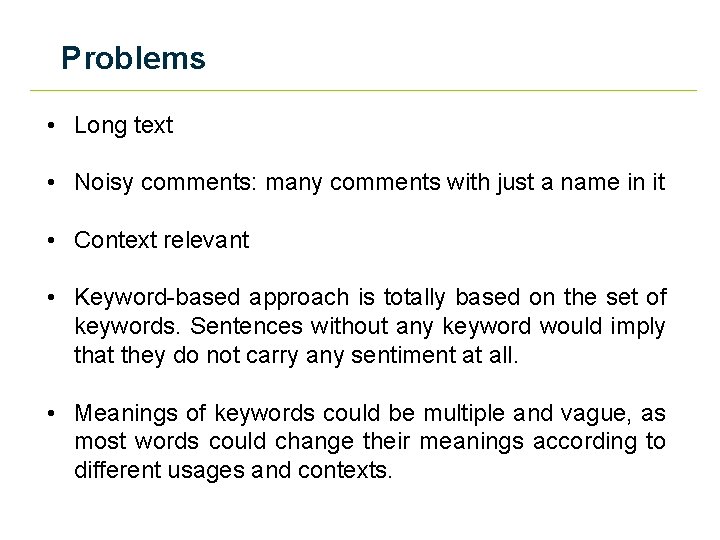

Problems
• Long text
• Noisy comments: many comments contain just a name
• Context dependence
• A keyword-based approach depends entirely on its set of keywords: a sentence containing none of them is treated as carrying no sentiment at all
• Keywords can have multiple, vague meanings, as most words change meaning with usage and context
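Two of these problems can be partly mitigated in code. The sketch below is a hypothetical heuristic, not a method from the slides. `looks_like_name_only` treats a short comment made only of capitalised words that are absent from the lexicon (for example, tagging a friend) as noise. `score_or_unknown` returns `None` rather than 0 when no keyword is present, so that "no keywords" is not silently reported as "neutral". `DEMO_LEXICON` is a placeholder.

```python
import re

DEMO_LEXICON = {"awful": -0.8, "great": 0.8}  # hypothetical ratings


def looks_like_name_only(comment, lexicon, max_tokens=3):
    """Heuristic: a few capitalised alphabetic words, none of them sentiment keywords."""
    words = comment.split()
    return (0 < len(words) <= max_tokens
            and all(w.isalpha() and w[0].isupper() for w in words)
            and not any(w.lower() in lexicon for w in words))


def score_or_unknown(comment, lexicon):
    """Sum keyword ratings, or return None when the comment contains no keyword."""
    hits = [lexicon[t] for t in re.findall(r"[a-z]+", comment.lower()) if t in lexicon]
    return sum(hits) if hits else None
```

The name heuristic will misfire on short capitalised phrases that are not names, so it is better used to flag comments for review than to delete them outright.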

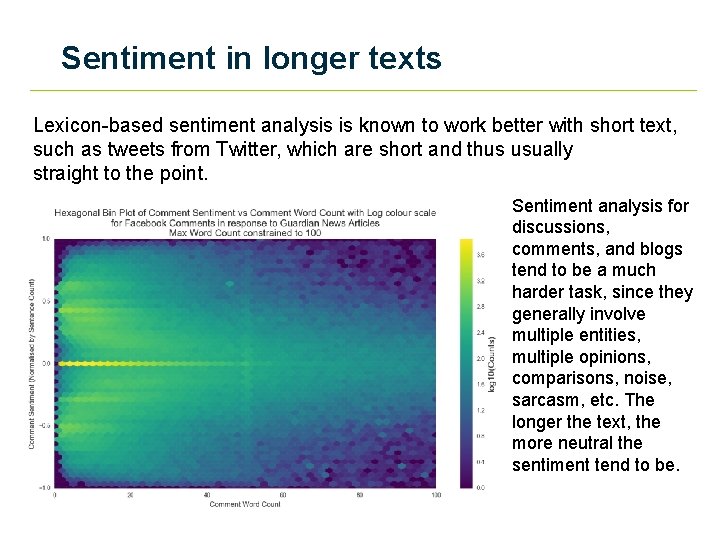

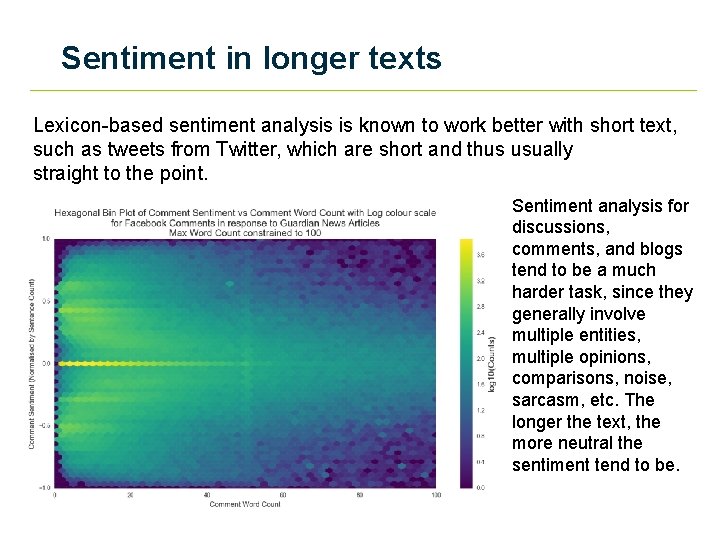

Sentiment in longer texts
Lexicon-based sentiment analysis is known to work better with short texts such as tweets, which are brief and usually straight to the point. Sentiment analysis of discussions, comments and blogs tends to be much harder, since these generally involve multiple entities, multiple opinions, comparisons, noise, sarcasm, etc. The longer the text, the more neutral the sentiment tends to be.
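One plausible mechanism behind "longer text, more neutral sentiment" is easy to demonstrate: if scores are averaged over tokens, unrated words dilute the score, and opposing opinions cancel out. The sketch below uses hypothetical ratings. It contrasts a whole-text average with per-sentence scoring, which keeps mixed opinions visible.

```python
import re

LEXICON = {"love": 0.9, "hate": -0.9, "great": 0.8, "awful": -0.8}  # hypothetical ratings


def tokens(text):
    """Lower-case alphabetic tokens."""
    return re.findall(r"[a-z]+", text.lower())


def mean_token_score(text):
    """Average rating over all tokens; unrated tokens count as 0, so long texts drift towards 0."""
    toks = tokens(text)
    return sum(LEXICON.get(t, 0.0) for t in toks) / len(toks) if toks else 0.0


def sentence_scores(text):
    """Score each sentence separately so that mixed opinions are not averaged away."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    return [sum(LEXICON.get(t, 0.0) for t in tokens(s)) for s in sentences]
```

"Love it" averages 0.45 per token, while "Love it, although the queue at the entrance was long" drops to 0.09. "I love the food. I hate the parking." averages exactly 0, yet its sentence scores are `[0.9, -0.9]`.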

Internal feedback responses
• Lexicon approach had only moderate success, as domain-specific text does not always contain sentiment keywords
• Machine learning (NLTK):
  1. Pre-processing
  2. Feature extraction
  3. Classification
  4. Evaluation
• 15-20% improvement on the lexicon approach
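The four machine learning steps above can be sketched end to end in plain Python. The slides do not name the classifier, so a multinomial Naive Bayes with add-one smoothing is assumed here. The stop-word list and all names are hypothetical.

```python
import math
import re
from collections import Counter, defaultdict

STOPWORDS = {"the", "a", "an", "and", "was", "is", "it", "of", "to", "i"}  # hypothetical


def preprocess(text):
    """Step 1: lower-case, tokenise and drop stop words."""
    return [t for t in re.findall(r"[a-z]+", text.lower()) if t not in STOPWORDS]


def extract_features(tokens):
    """Step 2: bag-of-words counts."""
    return Counter(tokens)


class NaiveBayes:
    """Step 3: multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, feature_sets, labels):
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        for features, label in zip(feature_sets, labels):
            self.word_counts[label].update(features)
        self.vocabulary = {w for counts in self.word_counts.values() for w in counts}
        return self

    def predict(self, features):
        total_docs = sum(self.class_counts.values())
        best_label, best_logp = None, -math.inf
        for label, n_docs in self.class_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocabulary)
            logp = math.log(n_docs / total_docs)  # log prior
            for word, n in features.items():
                if word in self.vocabulary:  # ignore words never seen in training
                    logp += n * math.log((counts[word] + 1) / denom)
            if logp > best_logp:
                best_label, best_logp = label, logp
        return best_label


def accuracy(model, feature_sets, labels):
    """Step 4: share of correct predictions on held-out data."""
    return sum(model.predict(f) == y for f, y in zip(feature_sets, labels)) / len(labels)
```

With NLTK itself, `nltk.NaiveBayesClassifier.train(train_set)` accepts a list of `(feature_dict, label)` pairs, and `nltk.classify.accuracy(classifier, test_set)` performs the evaluation step.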

Where to now?
• Further exploration using scikit-learn
• Distributional semantics (word2vec, GloVe) using the Python packages gensim / spaCy
• Deep learning: https://blog.openai.com/unsupervised-sentiment-neuron/
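Distributional semantics represents a word by the contexts it appears in, so that words used in similar contexts get similar vectors. word2vec and GloVe learn dense vectors for this purpose. The sketch below swaps in the simplest count-based version, co-occurrence counts within a window compared by cosine similarity, to illustrate the idea. It is not how gensim or spaCy compute their vectors.

```python
import math
from collections import Counter, defaultdict


def cooccurrence_vectors(sentences, window=2):
    """Represent each word by counts of the words within `window` positions of it."""
    vectors = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for i, word in enumerate(words):
            for j in range(max(0, i - window), min(len(words), i + window + 1)):
                if j != i:
                    vectors[word][words[j]] += 1
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(u[k] * v[k] for k in u if k in v)
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0
```

On the toy corpus "the talk was great", "the talk was excellent" and "the queue was long", "great" and "excellent" share every context word (similarity about 1.0), while "great" and "long" share only "was" (about 0.5). In gensim 4, `Word2Vec(sentences, vector_size=100, window=5, min_count=1)` followed by `model.wv.similarity("great", "excellent")` plays the same role with learned vectors.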

Further Information
• Big Data Team: www.ons.gov.uk/datasciencecampus
• Big Data Team GitHub: https://github.com/ONSBigData
• Emails:
  • ons.big.data.project@ons.gov.uk
  • Alessandra.sozzi@ons.gsi.gov.uk
  • kimberley.brett@ons.gov.gsi.uk
• With thanks to Theodore Manassis, Charles Morris and Karen Gask