600.465 Connecting the dots - I (NLP in Practice)

- Slides: 35

600.465 Connecting the dots - I (NLP in Practice)

Delip Rao (delip@jhu.edu)

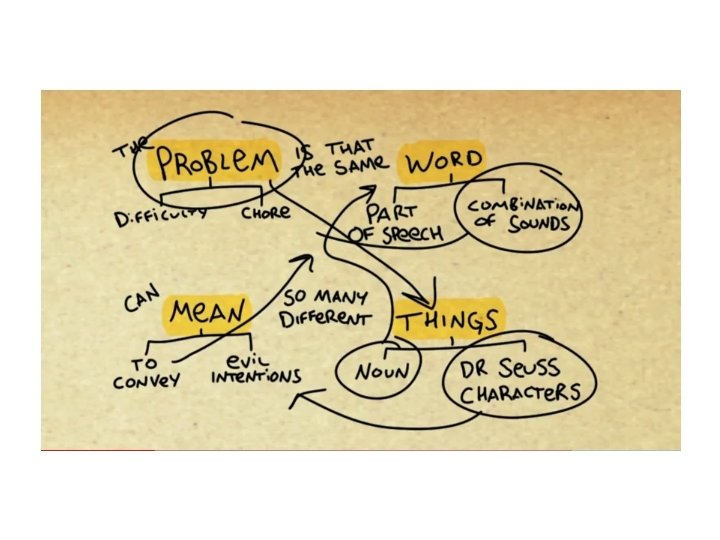

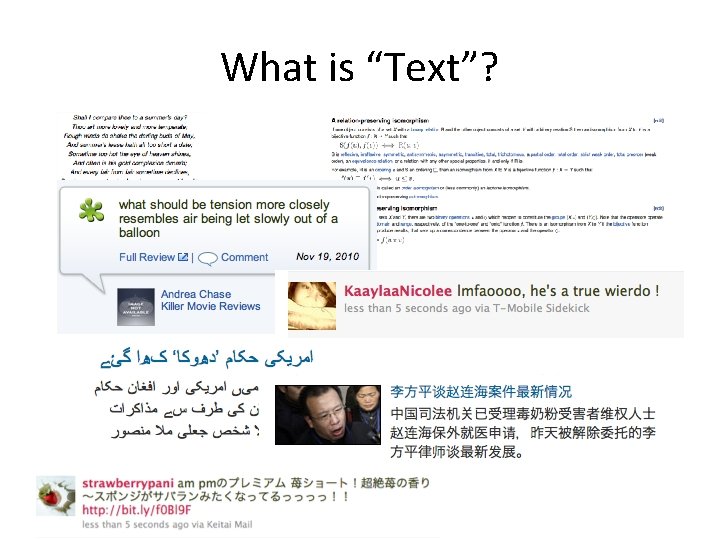

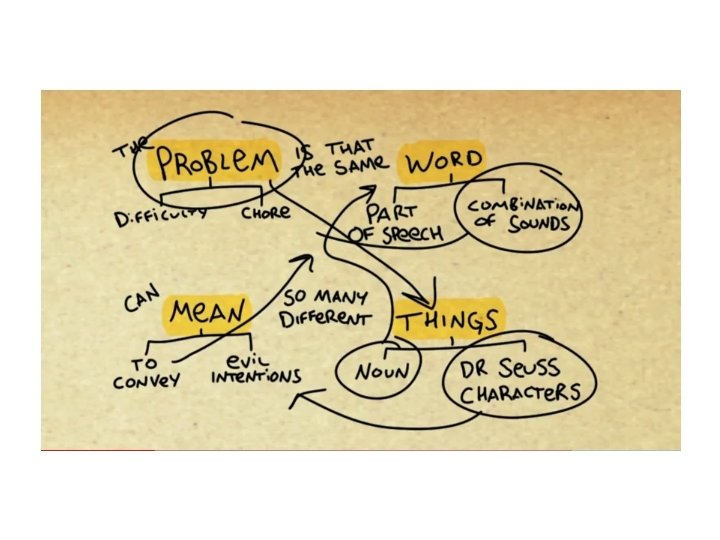

What is “Text”?

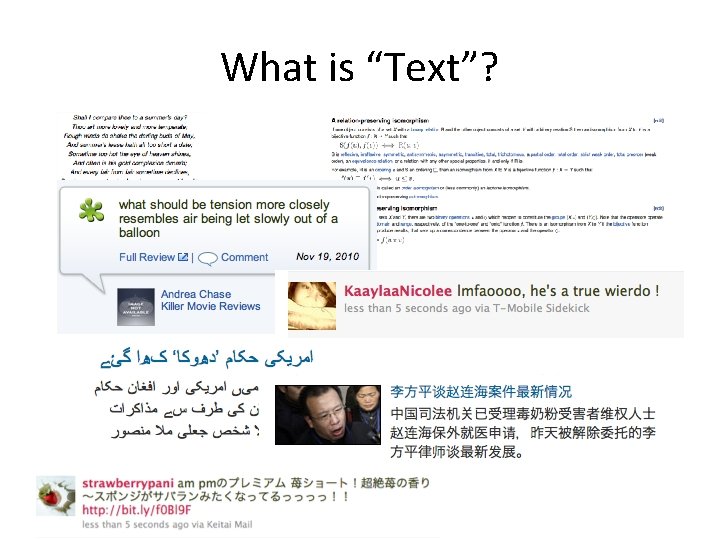

What is “Text”?

“Real” World

- Tons of data on the web
- A lot of it is text
- In many languages
- In many genres

Language by itself is complex. The Web further complicates language.

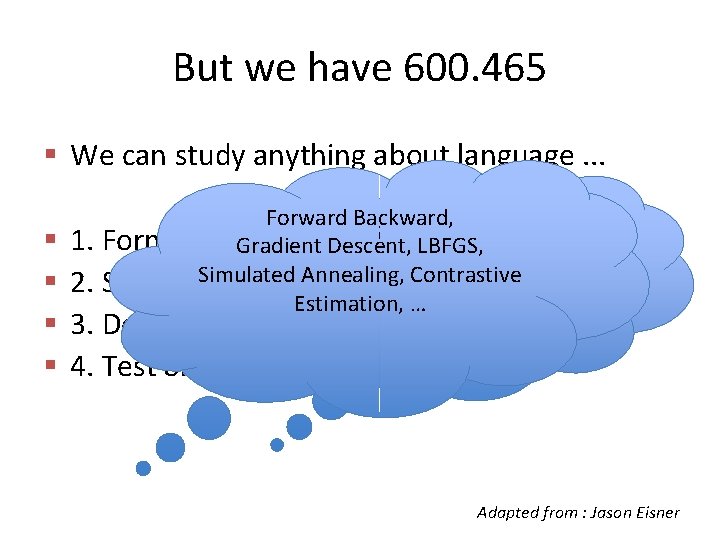

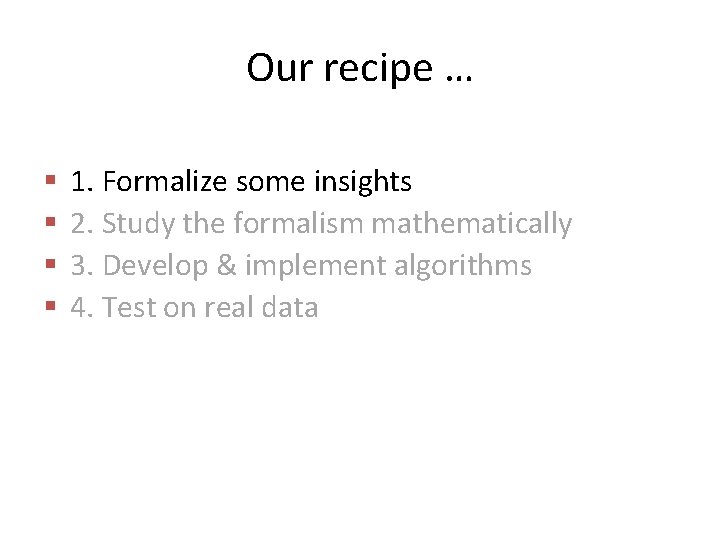

But we have 600.465

We can study anything about language...

1. Formalize some insights (feature functions! e.g. f(w_i = off, w_{i+1} = the), f(w_i = obama, y_i = NP))
2. Study the formalism mathematically
3. Develop & implement algorithms (Forward-Backward, Gradient Descent, LBFGS, Simulated Annealing, Contrastive Estimation, …)
4. Test on real data

Adapted from: Jason Eisner

NLP for fun and profit

- Making NLP more accessible
  - Provide APIs for common NLP tasks
  - `var text = document.get(…);`
  - `var entities = agent.markNE(text);`
- Big $$$$
- Backend to intelligent processing of text

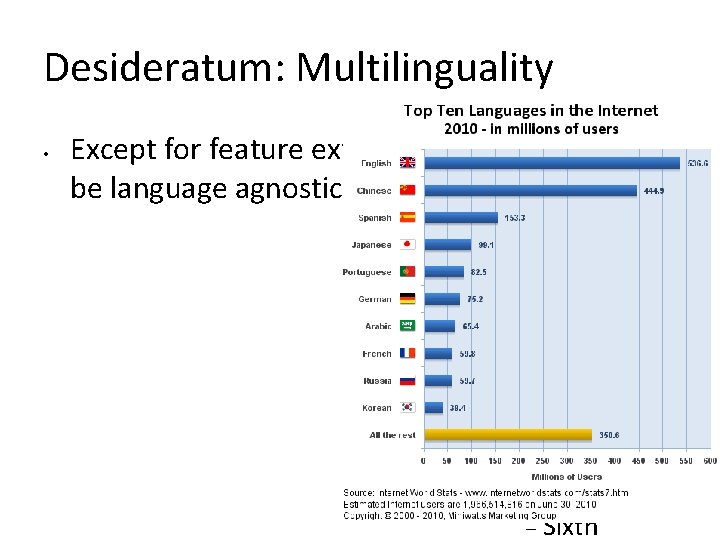

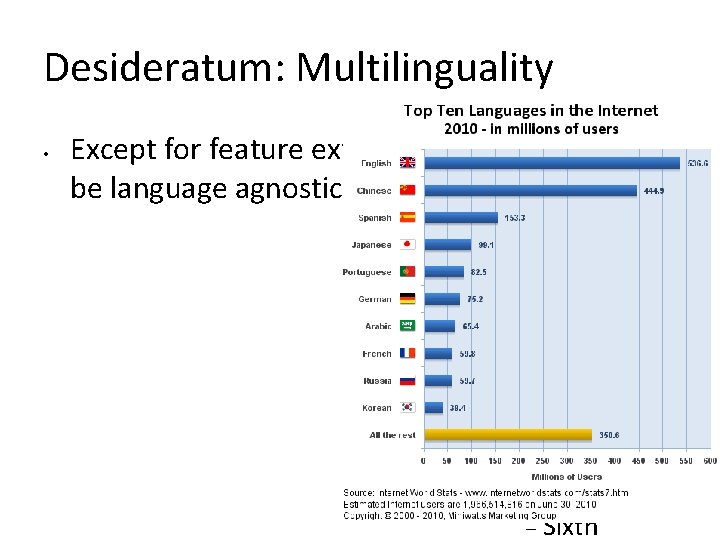

Desideratum: Multilinguality

- Except for feature extraction, systems should be language agnostic

In this lecture

- Understand how to solve and ace NLP tasks
- Learn general methodology or approaches
- End-to-end development using an example task
- Overview of (un)common NLP tasks

Case study: Named Entity Recognition

Case study: Named Entity Recognition

- Demo: http://viewer.opencalais.com
- How do we build something like this?
- How do we find out how well we are doing?
- How can we improve?

Case study: Named Entity Recognition

- Define the problem
  - Say, PERSON, LOCATION, ORGANIZATION

The UN secretary general met president Obama at Hague.

Case study: Named Entity Recognition

- Collect data to learn from
  - Sentences with words marked as PER, ORG, LOC, NONE
- How do we get this data?

Pay the experts

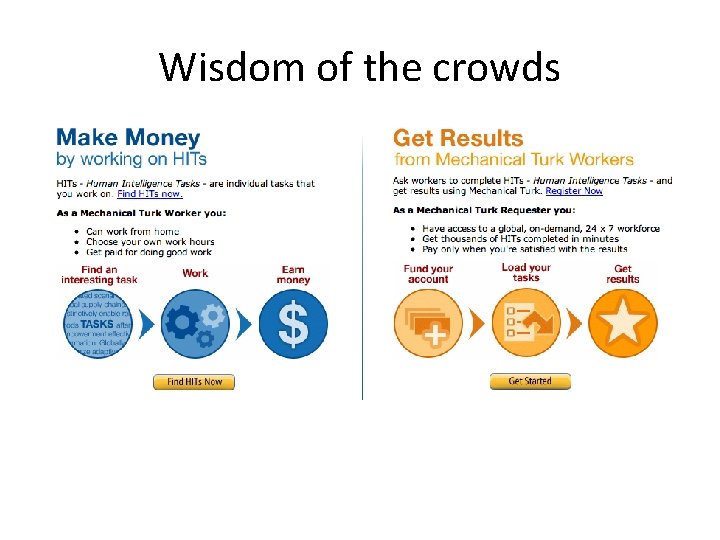

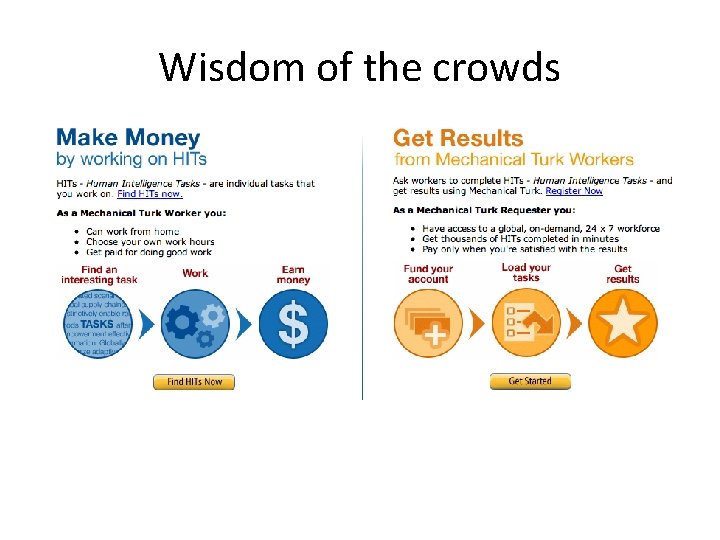

Wisdom of the crowds

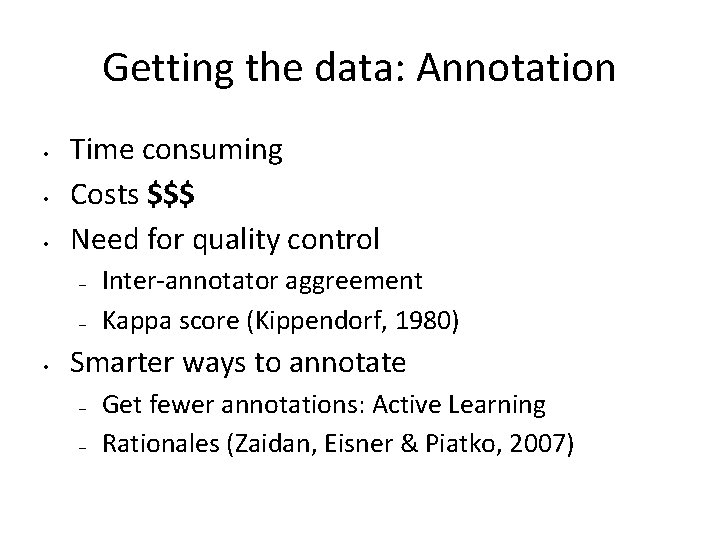

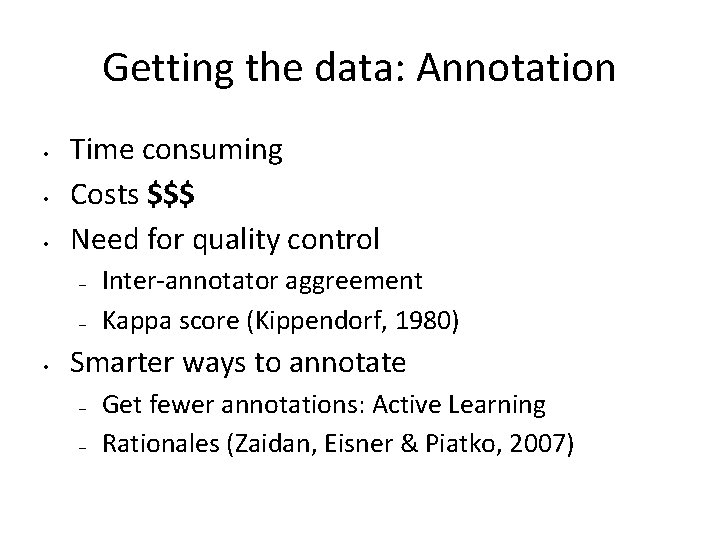

Getting the data: Annotation

- Time consuming
- Costs $$$
- Need for quality control
  - Inter-annotator agreement
  - Kappa score (Krippendorff, 1980)
- Smarter ways to annotate
  - Get fewer annotations: Active Learning
  - Rationales (Zaidan, Eisner & Piatko, 2007)
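To make the quality-control step concrete, here is a minimal sketch of an agreement score for two annotators. It computes Cohen's kappa, which is one common kappa variant and an assumption on our part, since the slide does not say which formulation was used. The function name and the toy label lists are hypothetical.

```python
from collections import Counter


def cohen_kappa(labels_a, labels_b):
    """Cohen's kappa for two annotators who labeled the same items."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equal-length, non-empty label lists")
    n = len(labels_a)
    # Observed agreement: fraction of items given the same label by both.
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # Chance agreement: probability that both pick the same label if each
    # annotator labeled independently according to their own label frequencies.
    count_a = Counter(labels_a)
    count_b = Counter(labels_b)
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if expected == 1.0:
        # Both annotators used one identical label everywhere.
        return 1.0
    return (observed - expected) / (1.0 - expected)


# Toy annotations (made up for illustration), one label per token.
annotator_1 = ["PER", "NONE", "LOC", "NONE", "ORG", "NONE"]
annotator_2 = ["PER", "NONE", "LOC", "NONE", "NONE", "NONE"]
kappa = cohen_kappa(annotator_1, annotator_2)
print(round(kappa, 3))
```

Kappa corrects raw agreement for chance: here the annotators agree on 5 of 6 tokens, but because NONE dominates, part of that agreement would be expected anyway.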

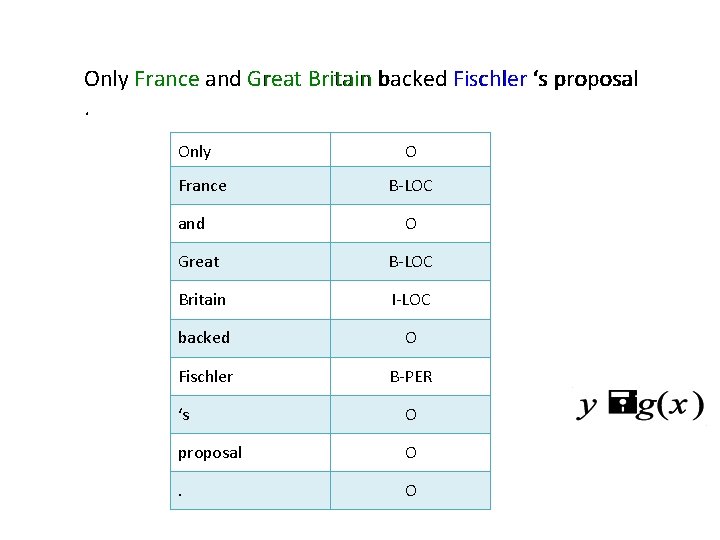

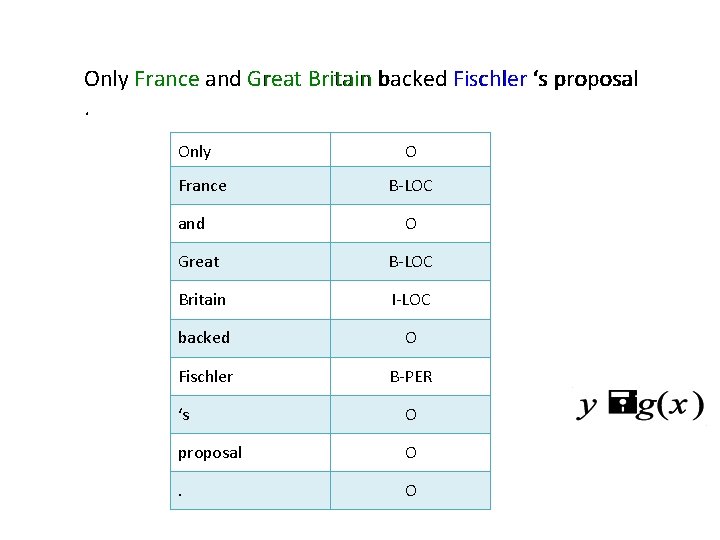

Only France and Great Britain backed Fischler ‘s proposal.

| Token | Tag |
| --- | --- |
| Only | O |
| France | B-LOC |
| and | O |
| Great | B-LOC |
| Britain | I-LOC |
| backed | O |
| Fischler | B-PER |
| ‘s | O |
| proposal | O |
| . | O |
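The B-/I-/O tags above encode entity spans: B- opens an entity, I- continues it, O is outside. A small sketch of decoding such a tag sequence back into entities follows. The function name is hypothetical, and treating a stray I- tag as the start of a new entity is a leniency we chose, not something the slides specify.

```python
def bio_to_spans(tokens, tags):
    """Turn parallel token/BIO-tag lists into (label, start, end, text) spans.

    `end` is exclusive. An I- tag that does not continue the current entity
    is treated as starting a new one (a lenient choice for noisy output).
    """
    spans = []
    start, label = None, None
    # The extra "O" is a sentinel that flushes an entity ending at the last token.
    for i, tag in enumerate(list(tags) + ["O"]):
        continues = tag.startswith("I-") and label == tag[2:]
        if start is not None and not continues:
            spans.append((label, start, i, " ".join(tokens[start:i])))
            start, label = None, None
        if tag.startswith("B-") or (tag.startswith("I-") and start is None):
            start, label = i, tag[2:]
    return spans


tokens = ["Only", "France", "and", "Great", "Britain", "backed",
          "Fischler", "'s", "proposal", "."]
tags = ["O", "B-LOC", "O", "B-LOC", "I-LOC", "O", "B-PER", "O", "O", "O"]
spans = bio_to_spans(tokens, tags)
for span in spans:
    print(span)
```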

Our recipe …

1. Formalize some insights
2. Study the formalism mathematically
3. Develop & implement algorithms
4. Test on real data

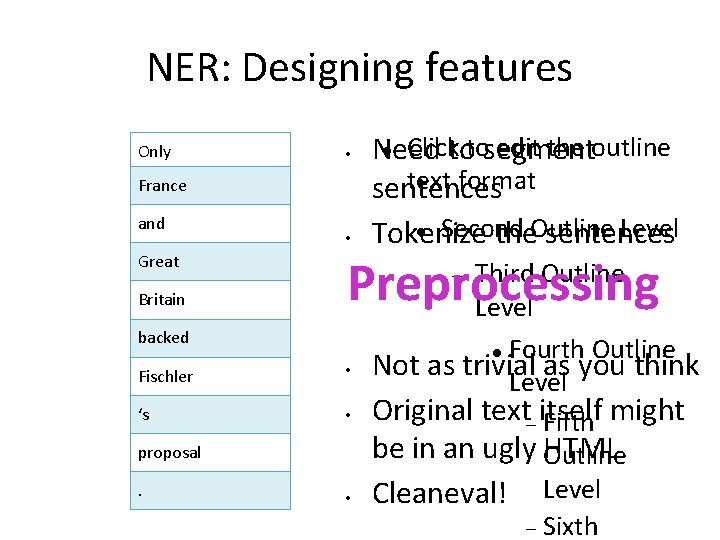

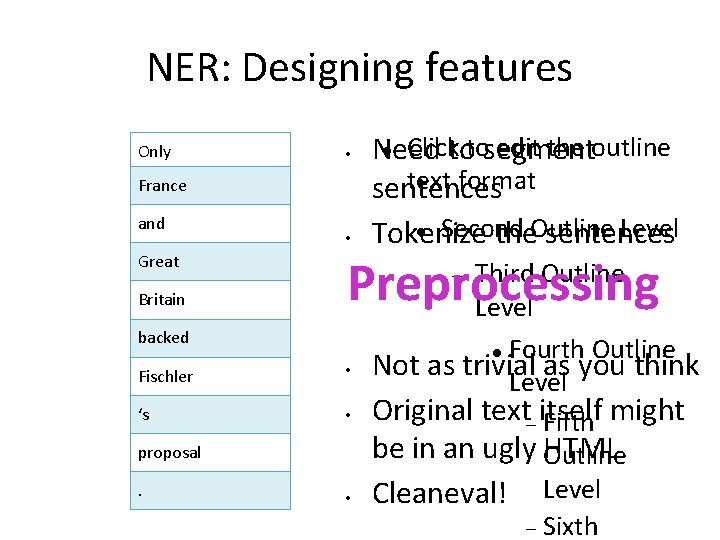

NER: Designing features

- Preprocessing: not as trivial as you think
  - Original text itself might be in ugly HTML
  - Cleaneval!
- Need to segment sentences
- Tokenize the sentences

Only France and Great Britain backed Fischler ‘s proposal.
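The preprocessing steps on this slide (clean the HTML, segment sentences, tokenize) can be sketched with the standard library alone. This is a deliberately naive illustration: the regular expressions and function names are our own assumptions, and real pipelines need far more care with abbreviations, malformed markup, and other languages.

```python
import html
import re


def strip_html(raw):
    """Crudely remove markup: drop script/style bodies, tags, and entities."""
    text = re.sub(r"<(script|style)\b.*?</\1>", " ", raw, flags=re.I | re.S)
    text = re.sub(r"<[^>]+>", " ", text)
    return re.sub(r"\s+", " ", html.unescape(text)).strip()


def split_sentences(text):
    """Naive rule: break after . ! or ? when an uppercase letter follows."""
    return re.split(r"(?<=[.!?])\s+(?=[A-Z])", text)


def tokenize(sentence):
    """Split into clitics such as 's, words (hyphens kept), and punctuation."""
    return re.findall(r"'\w+|\w+(?:-\w+)*|[^\w\s]", sentence)


raw = ("<p>Only France and Great Britain backed Fischler's proposal. "
       "The UN secretary general met president Obama at Hague.</p>")
sentences = split_sentences(strip_html(raw))
tokenized = [tokenize(s) for s in sentences]
for toks in tokenized:
    print(toks)
```

The sentence rule fails on cases like "Dr. Smith", which is one reason the slide warns that preprocessing is not as trivial as it looks.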

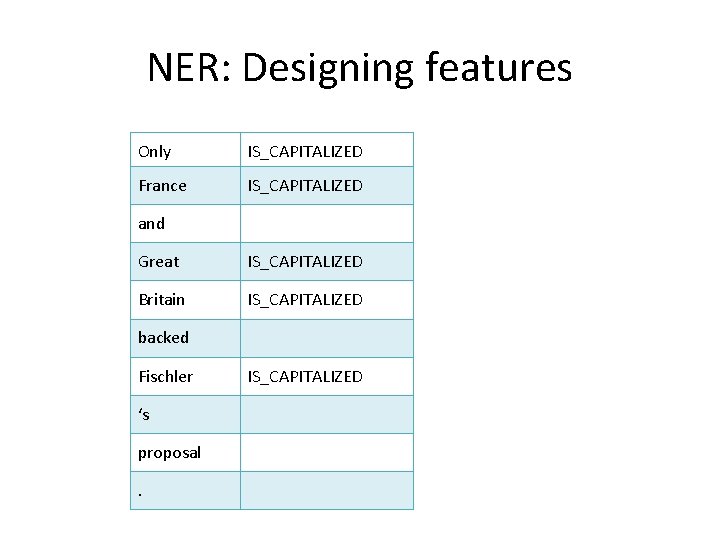

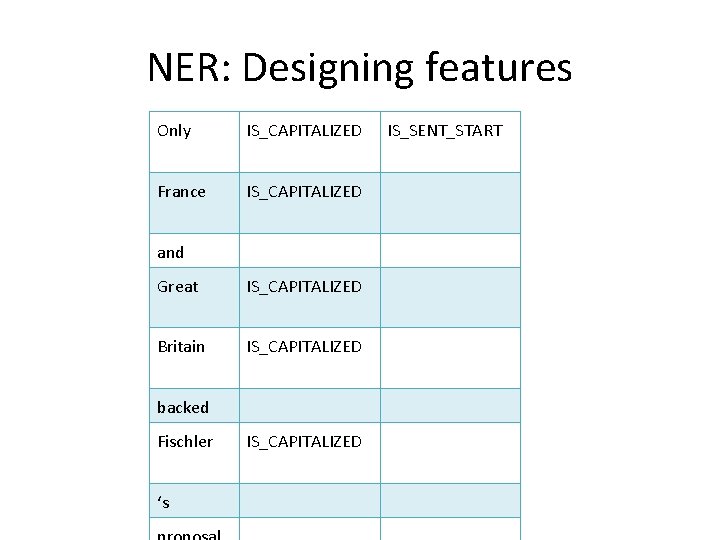

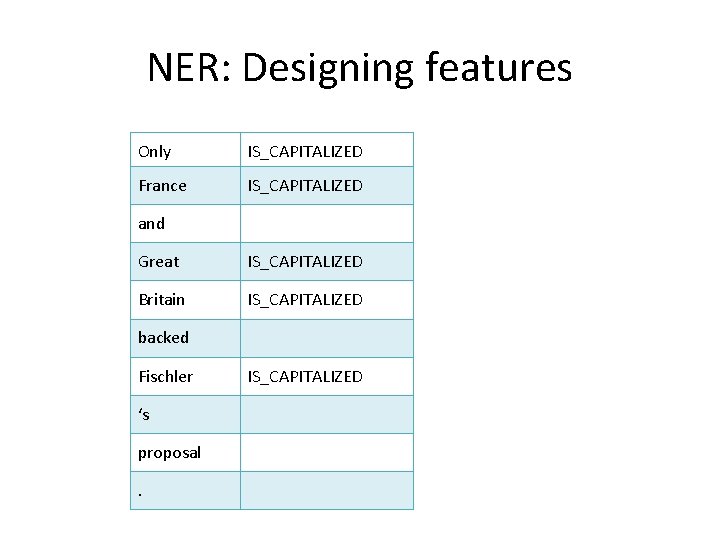

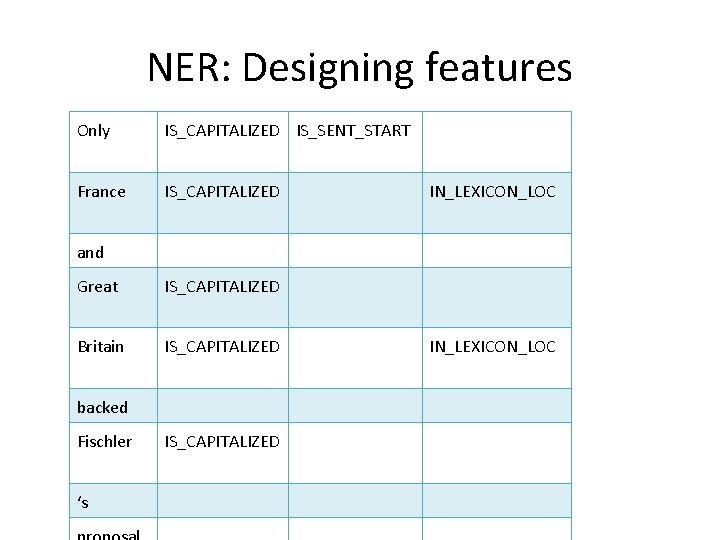

NER: Designing features

| Token | Features |
| --- | --- |
| Only | IS_CAPITALIZED |
| France | IS_CAPITALIZED |
| and | |
| Great | IS_CAPITALIZED |
| Britain | IS_CAPITALIZED |
| backed | |
| Fischler | IS_CAPITALIZED |
| ‘s | |
| proposal. | |

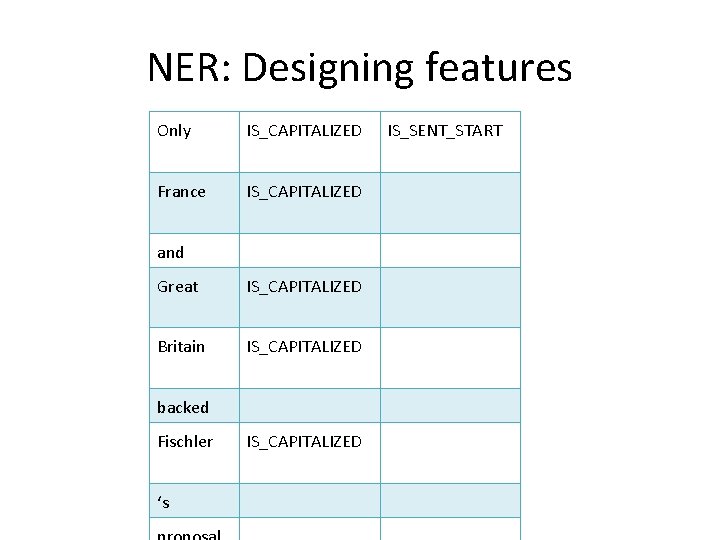

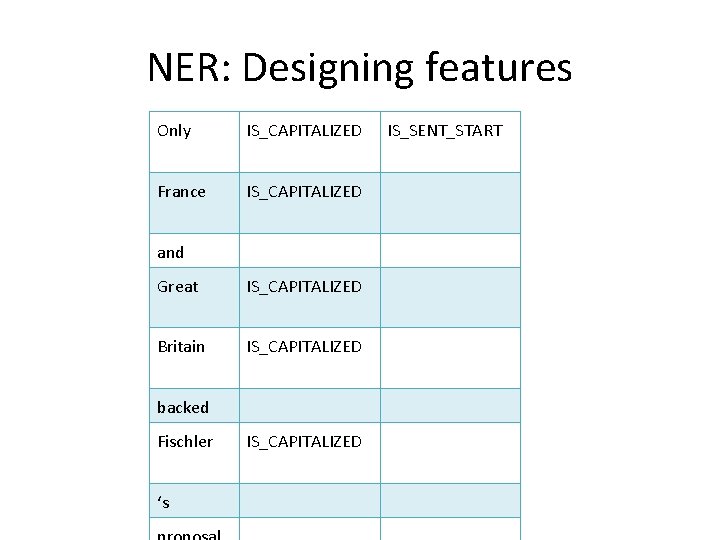

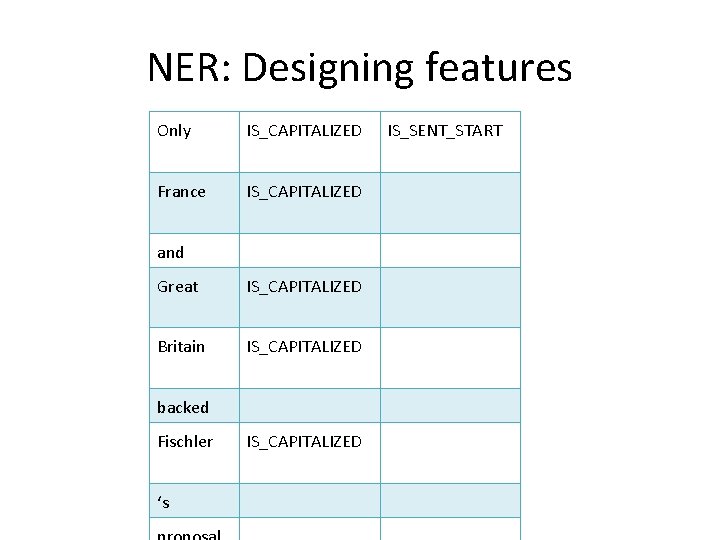

NER: Designing features

| Token | Features |
| --- | --- |
| Only | IS_CAPITALIZED, IS_SENT_START |
| France | IS_CAPITALIZED |
| and | |
| Great | IS_CAPITALIZED |
| Britain | IS_CAPITALIZED |
| backed | |
| Fischler | IS_CAPITALIZED |
| ‘s | |

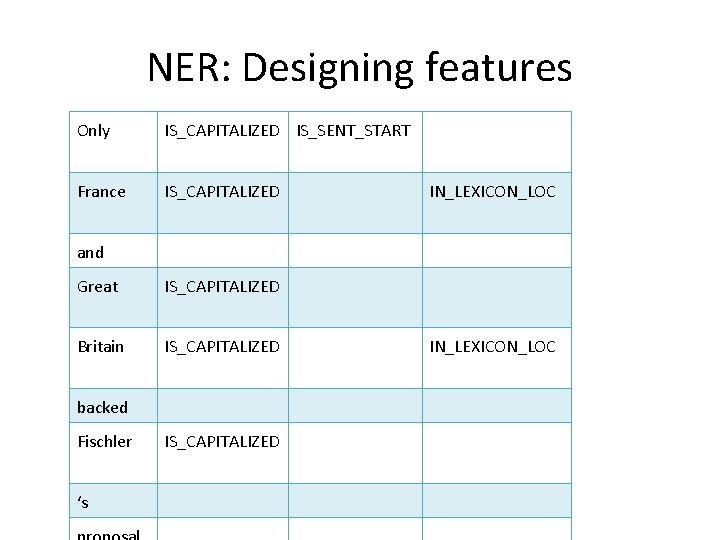

NER: Designing features

| Token | Features |
| --- | --- |
| Only | IS_CAPITALIZED, IS_SENT_START |
| France | IS_CAPITALIZED, IN_LEXICON_LOC |
| and | |
| Great | IS_CAPITALIZED |
| Britain | IS_CAPITALIZED, IN_LEXICON_LOC |
| backed | |
| Fischler | IS_CAPITALIZED |
| ‘s | |

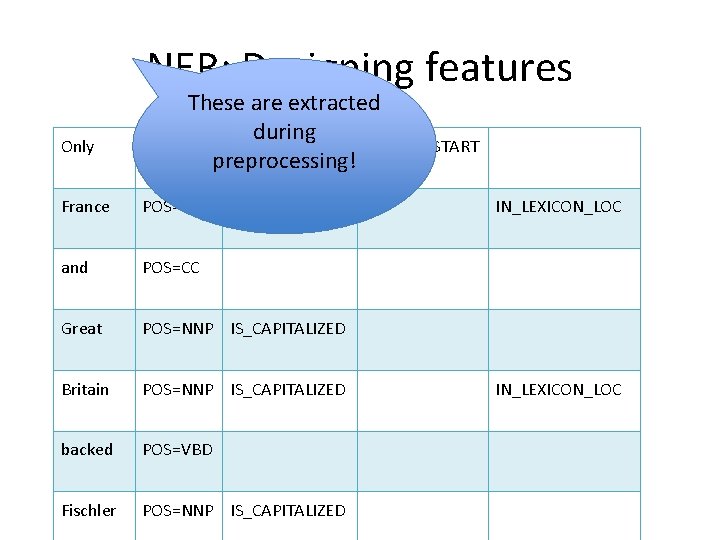

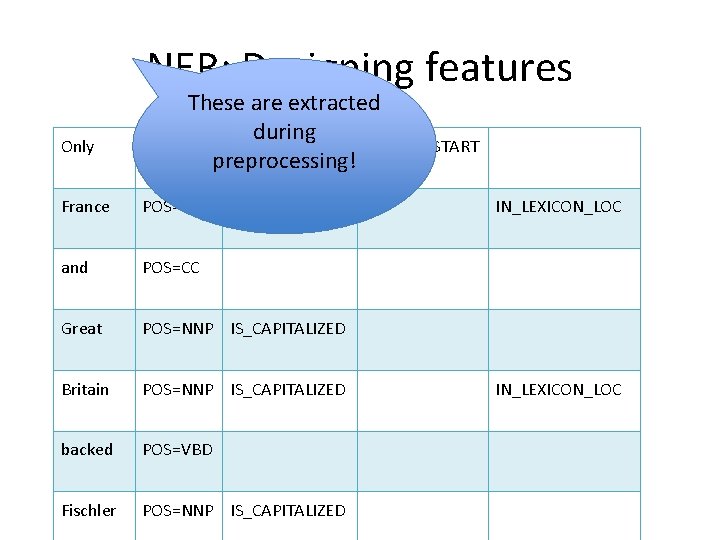

NER: Designing features

These are extracted during preprocessing!

| Token | POS | Other features |
| --- | --- | --- |
| Only | POS=RB | IS_CAPITALIZED, IS_SENT_START |
| France | POS=NNP | IS_CAPITALIZED, IN_LEXICON_LOC |
| and | POS=CC | |
| Great | POS=NNP | IS_CAPITALIZED |
| Britain | POS=NNP | IS_CAPITALIZED |
| backed | POS=VBD | |
| Fischler | POS=NNP | IS_CAPITALIZED |

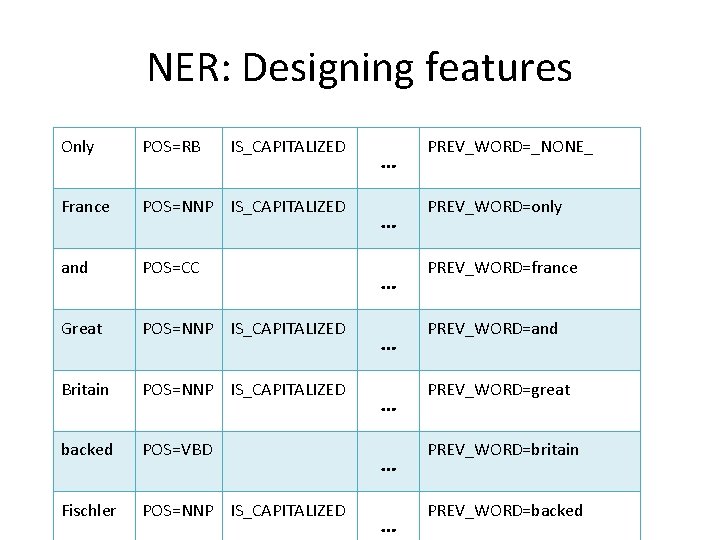

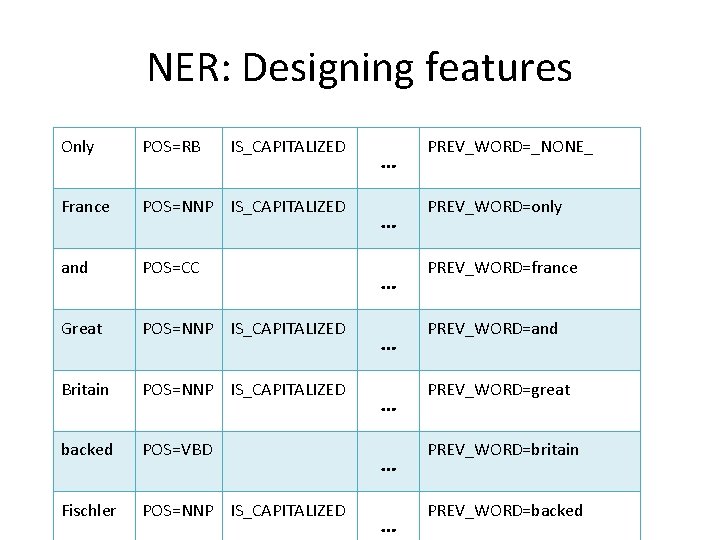

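NER: Designing features

| Token | POS | Capitalization | … | PREV_WORD |
| --- | --- | --- | --- | --- |
| Only | POS=RB | IS_CAPITALIZED | … | PREV_WORD=_NONE_ |
| France | POS=NNP | IS_CAPITALIZED | … | PREV_WORD=only |
| and | POS=CC | | … | PREV_WORD=france |
| Great | POS=NNP | IS_CAPITALIZED | … | PREV_WORD=and |
| Britain | POS=NNP | IS_CAPITALIZED | … | PREV_WORD=great |
| backed | POS=VBD | | … | PREV_WORD=britain |
| Fischler | POS=NNP | IS_CAPITALIZED | … | PREV_WORD=backed |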

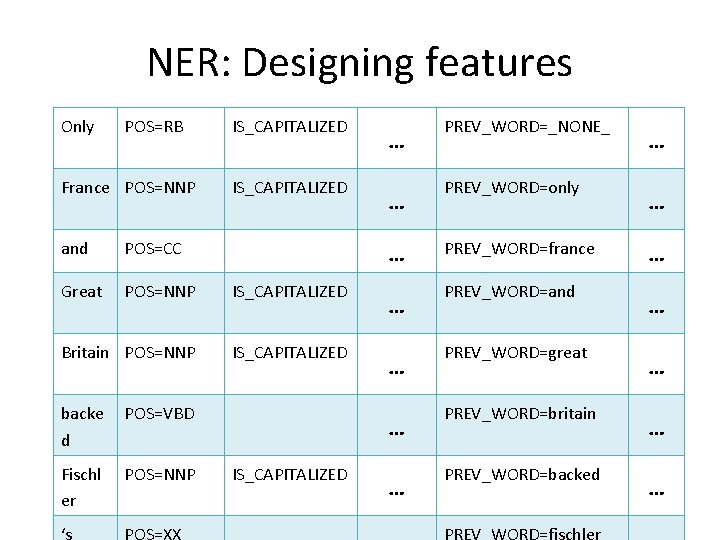

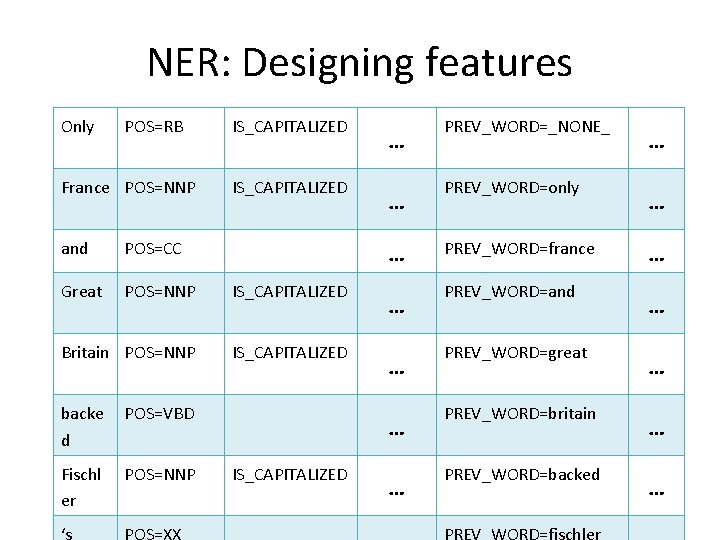

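NER: Designing features

| Token | POS | Capitalization | … | PREV_WORD | … |
| --- | --- | --- | --- | --- | --- |
| Only | POS=RB | IS_CAPITALIZED | … | PREV_WORD=_NONE_ | … |
| France | POS=NNP | IS_CAPITALIZED | … | PREV_WORD=only | … |
| and | POS=CC | | … | PREV_WORD=france | … |
| Great | POS=NNP | IS_CAPITALIZED | … | PREV_WORD=and | … |
| Britain | POS=NNP | IS_CAPITALIZED | … | PREV_WORD=great | … |
| backed | POS=VBD | | … | PREV_WORD=britain | … |
| Fischler | POS=NNP | IS_CAPITALIZED | … | PREV_WORD=backed | … |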

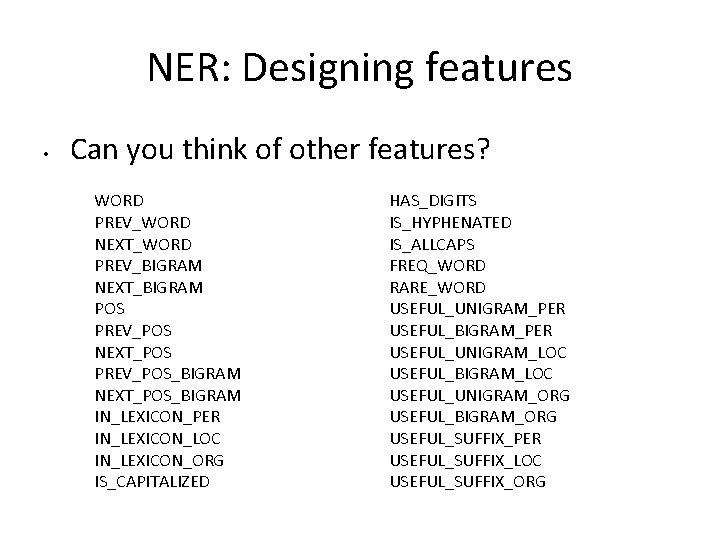

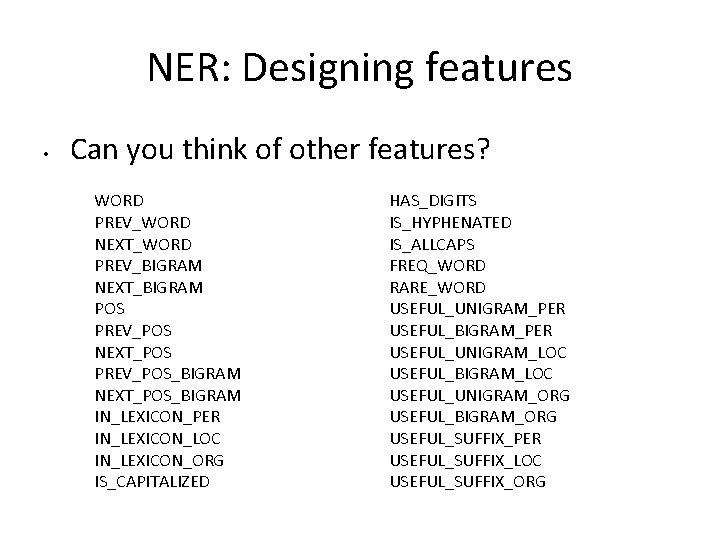

NER: Designing features

- Can you think of other features?

- WORD, PREV_WORD, NEXT_WORD, PREV_BIGRAM, NEXT_BIGRAM
- POS, PREV_POS, NEXT_POS, PREV_POS_BIGRAM, NEXT_POS_BIGRAM
- IN_LEXICON_PER, IN_LEXICON_LOC, IN_LEXICON_ORG
- IS_CAPITALIZED, HAS_DIGITS, IS_HYPHENATED, IS_ALLCAPS
- FREQ_WORD, RARE_WORD
- USEFUL_UNIGRAM_PER, USEFUL_BIGRAM_PER, USEFUL_UNIGRAM_LOC, USEFUL_BIGRAM_LOC, USEFUL_UNIGRAM_ORG, USEFUL_BIGRAM_ORG
- USEFUL_SUFFIX_PER, USEFUL_SUFFIX_LOC, USEFUL_SUFFIX_ORG
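A few of the listed features are easy to compute directly from the token sequence. The sketch below covers only those that need no external tools. POS features are left out because they require a tagger, and the tiny location lexicon is a made-up stand-in for a real gazetteer. The function name and the exact feature string formats are our assumptions.

```python
# Hypothetical toy gazetteer; a real lexicon would be much larger.
LOC_LEXICON = {"france", "britain"}


def token_features(tokens, i, loc_lexicon=LOC_LEXICON):
    """Return the set of active feature names for tokens[i]."""
    word = tokens[i]
    prev_word = tokens[i - 1].lower() if i > 0 else "_NONE_"
    next_word = tokens[i + 1].lower() if i + 1 < len(tokens) else "_NONE_"
    feats = {
        "WORD=" + word.lower(),
        "PREV_WORD=" + prev_word,
        "NEXT_WORD=" + next_word,
    }
    if word[:1].isupper():
        feats.add("IS_CAPITALIZED")
    if i == 0:
        feats.add("IS_SENT_START")
    if word.lower() in loc_lexicon:
        feats.add("IN_LEXICON_LOC")
    if any(ch.isdigit() for ch in word):
        feats.add("HAS_DIGITS")
    if "-" in word and len(word) > 1:
        feats.add("IS_HYPHENATED")
    if len(word) > 1 and word.isupper():
        feats.add("IS_ALLCAPS")
    return feats


tokens = ["Only", "France", "and", "Great", "Britain", "backed",
          "Fischler", "'s", "proposal", "."]
features = [token_features(tokens, i) for i in range(len(tokens))]
for tok, feats in zip(tokens, features):
    print(tok, sorted(feats))
```

Pairing IS_CAPITALIZED with IS_SENT_START lets a model discount the capital letter on "Only", which is capitalized for position rather than because it is a name.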

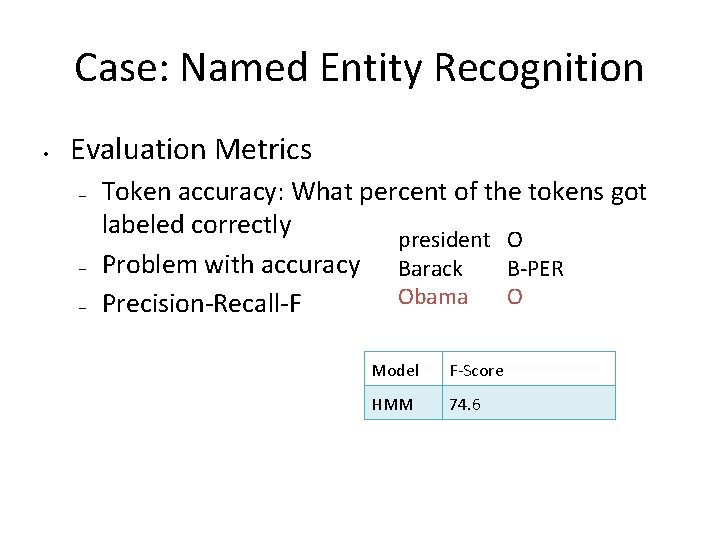

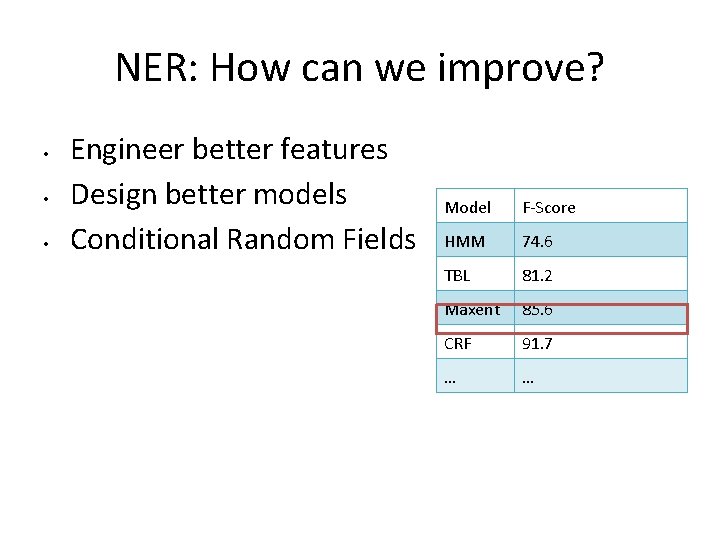

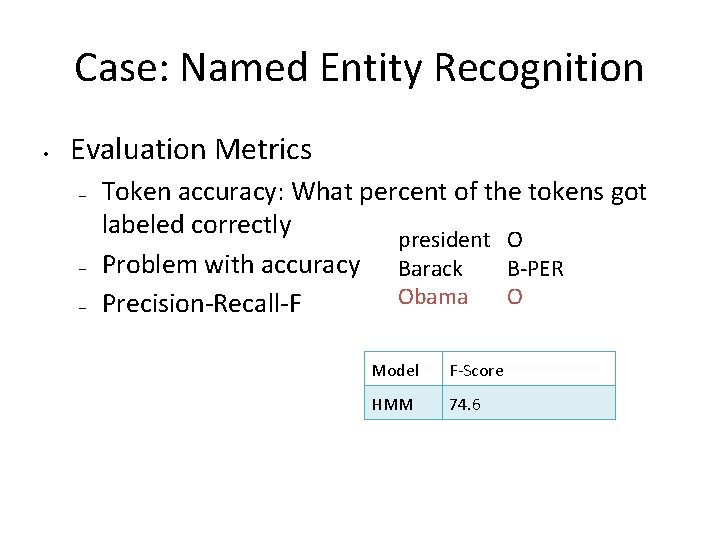

Case: Named Entity Recognition

- Evaluation Metrics
  - Token accuracy: What percent of the tokens got labeled correctly
  - Problem with accuracy: president O, Barack B-PER, Obama O
  - Precision-Recall-F

| Model | F-Score |
| --- | --- |
| HMM | 74.6 |
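The "president / Barack / Obama" example shows why token accuracy can mislead: most tokens are right, yet the entity is wrong. A minimal sketch comparing token accuracy with entity-level precision, recall, and F follows. Exact-match scoring of (label, start, end) spans is one common convention and an assumption here, as is the gold tagging `O B-PER I-PER`, which the slide does not show. All function names are hypothetical.

```python
def extract_entities(tags):
    """Return the set of (label, start, end) spans in a BIO tag sequence."""
    entities, start, label = set(), None, None
    # Trailing "O" sentinel closes an entity that runs to the end.
    for i, tag in enumerate(list(tags) + ["O"]):
        continues = tag.startswith("I-") and label == tag[2:]
        if start is not None and not continues:
            entities.add((label, start, i))
            start, label = None, None
        if tag.startswith("B-") or (tag.startswith("I-") and start is None):
            start, label = i, tag[2:]
    return entities


def token_accuracy(gold, pred):
    """Fraction of tokens whose predicted tag equals the gold tag."""
    return sum(g == p for g, p in zip(gold, pred)) / len(gold)


def precision_recall_f1(gold, pred):
    """Entity-level scores: a predicted span counts only on exact match."""
    gold_ents, pred_ents = extract_entities(gold), extract_entities(pred)
    correct = len(gold_ents & pred_ents)
    precision = correct / len(pred_ents) if pred_ents else 0.0
    recall = correct / len(gold_ents) if gold_ents else 0.0
    total = precision + recall
    f1 = 2 * precision * recall / total if total else 0.0
    return precision, recall, f1


# Tokens: president Barack Obama
gold = ["O", "B-PER", "I-PER"]  # assumed gold annotation
pred = ["O", "B-PER", "O"]      # system output from the slide
accuracy = token_accuracy(gold, pred)
scores = precision_recall_f1(gold, pred)
print(accuracy, scores)
```

Under these assumptions two of three tokens are correct, but the predicted span "Barack" does not match the gold span "Barack Obama", so the entity-level scores are all zero.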

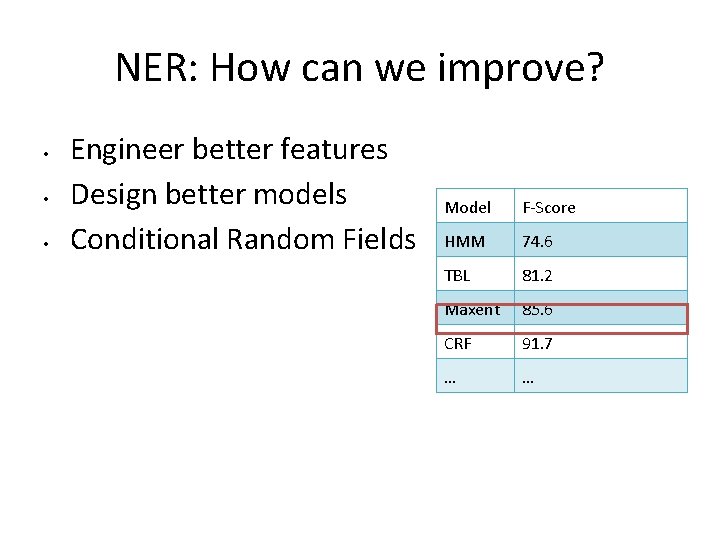

NER: How can we improve?

- Engineer better features
- Design better models
- Conditional Random Fields

| Model | F-Score |
| --- | --- |
| HMM | 74.6 |
| TBL | 81.2 |
| Maxent | 85.6 |
| CRF | 91.7 |
| … | … |

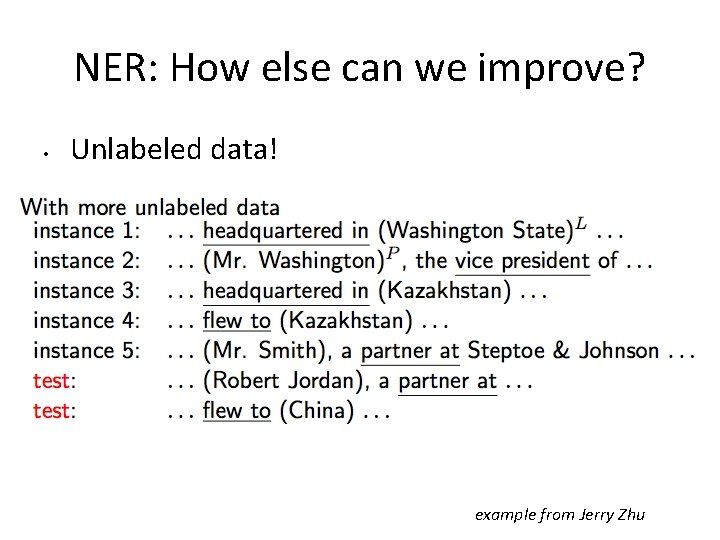

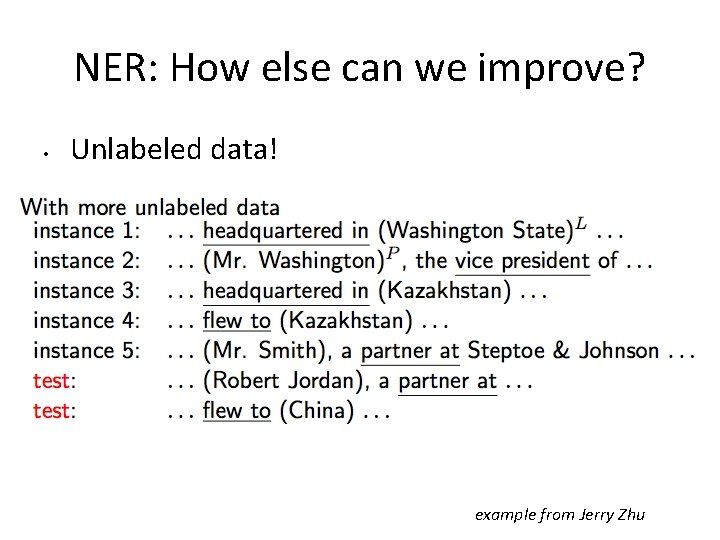

NER: How else can we improve?

- Unlabeled data! (example from Jerry Zhu)

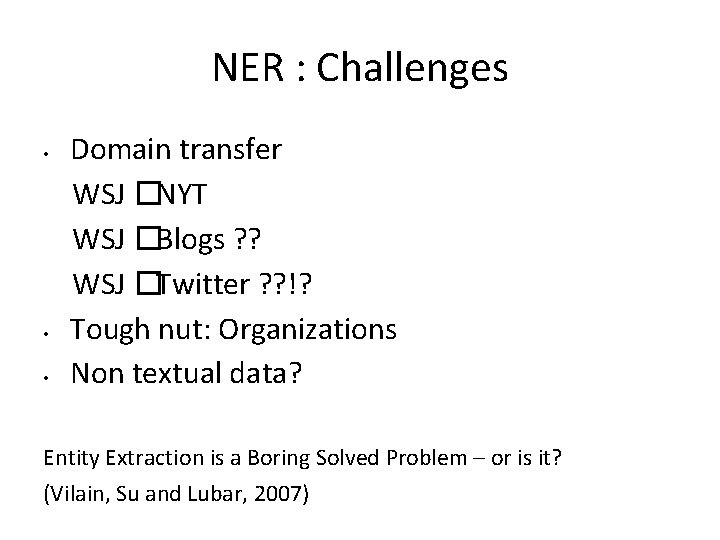

NER: Challenges

- Domain transfer
  - WSJ → NYT
  - WSJ → Blogs ??
  - WSJ → Twitter ??!?
- Tough nut: Organizations
- Non textual data?

Entity Extraction is a Boring Solved Problem – or is it? (Vilain, Su and Lubar, 2007)

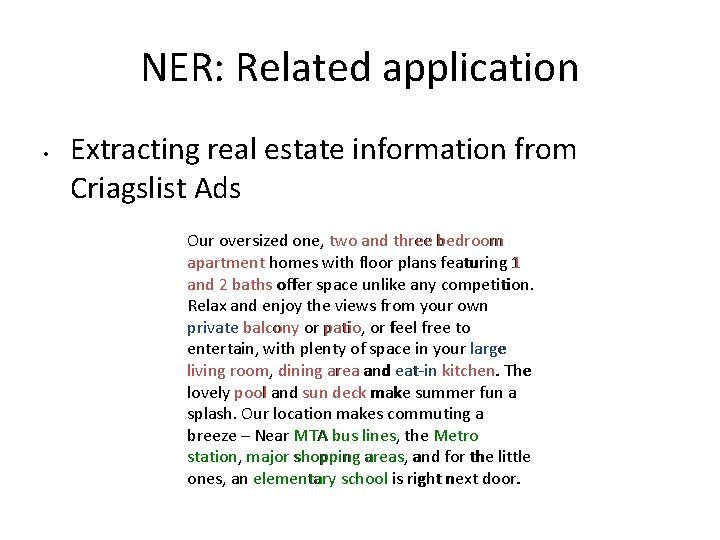

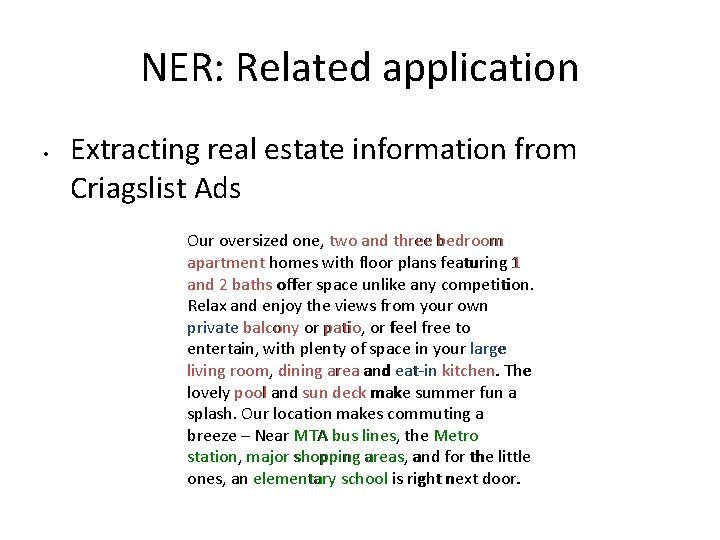

NER: Related application

- Extracting real estate information from Craigslist Ads

Our oversized one, two and three bedroom apartment homes with floor plans featuring 1 and 2 baths offer space unlike any competition. Relax and enjoy the views from your own private balcony or patio, or feel free to entertain, with plenty of space in your large living room, dining area and eat-in kitchen. The lovely pool and sun deck make summer fun a splash. Our location makes commuting a breeze – Near MTA bus lines, the Metro station, major shopping areas, and for the little ones, an elementary school is right next door.

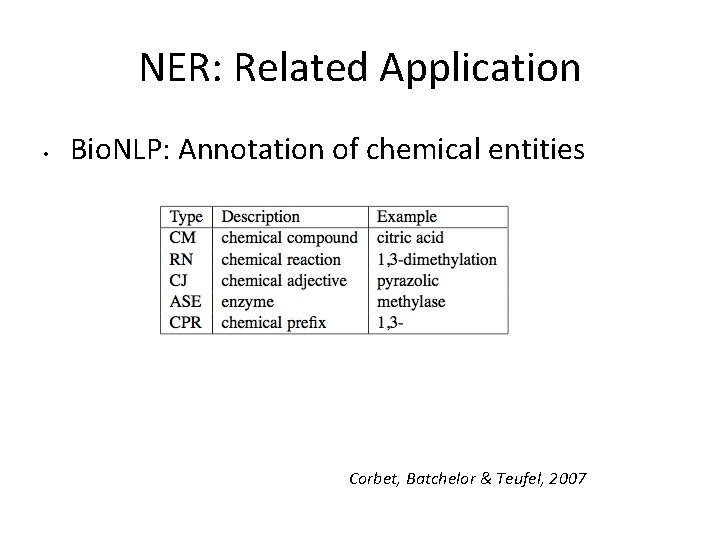

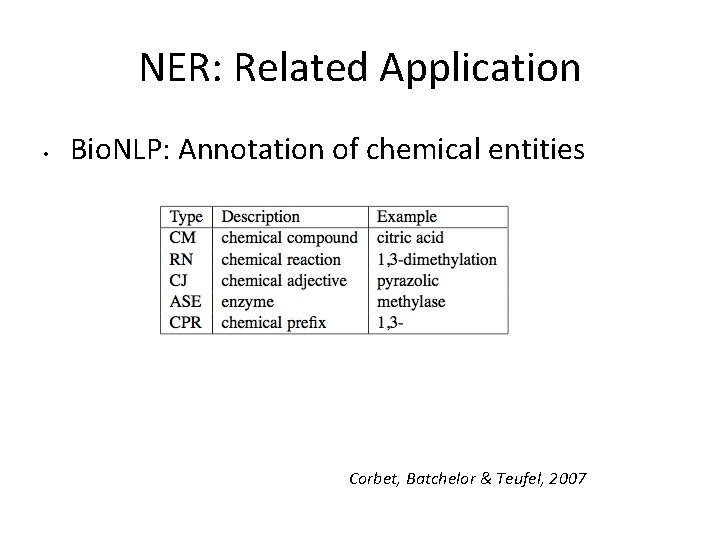

NER: Related Application

- BioNLP: Annotation of chemical entities

Corbett, Batchelor & Teufel, 2007

Shared Tasks: NLP in practice

- Shared Task
  - Everybody works on a (mostly) common dataset
  - Evaluation measures are defined
  - Participants get ranked on the evaluation measures
- Advance the state of the art
- Set benchmarks
- Tasks involve common hard problems or new interesting problems
