Fake news, no GANs: Do Deep Generative Models Know What They Don't Know?

- Slides: 48

*Fake news, no GANs:* Do Deep Generative Models Know What They Don't Know?
Eric Nalisnick, Akihiro Matsukawa, Yee Whye Teh, Dilan Gorur, Balaji Lakshminarayanan (DeepMind), ICLR 2019
Presented by: Julius Hietala

TL;DR

*In some interesting cases, normalizing flows, VAEs, and PixelCNNs aren't reliable enough to detect out-of-distribution data.*

Outline
• Paper introduction
• Some notes
• How do normalizing flows work?
• Paper experiments
• Paper findings
• Conclusions
• Discussion

Paper introduction
• Density estimation is used in many applications (anomaly detection, transfer learning, etc.)
• These applications have sparked interest in deep generative models
• Currently popular choices are VAEs, GANs, autoregressive models, and invertible latent variable models
• The latter two are interesting because they allow exact likelihood calculation
• Main question of the paper: can these models be used for anomaly detection?

Some notes
• The authors report results for VAEs, PixelCNNs, and normalizing flows
• Only normalizing flows are discussed and studied in depth
• Is their analysis applicable to all of these model types?

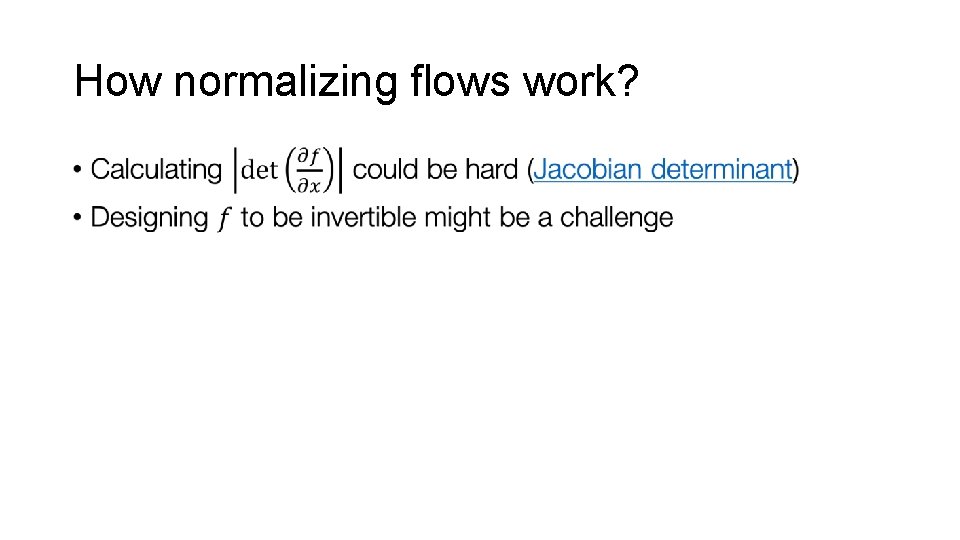

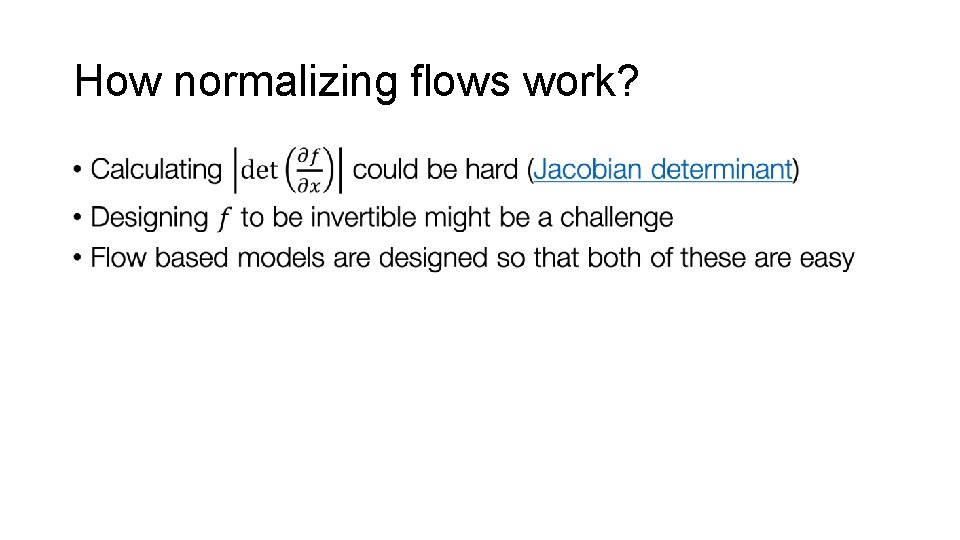

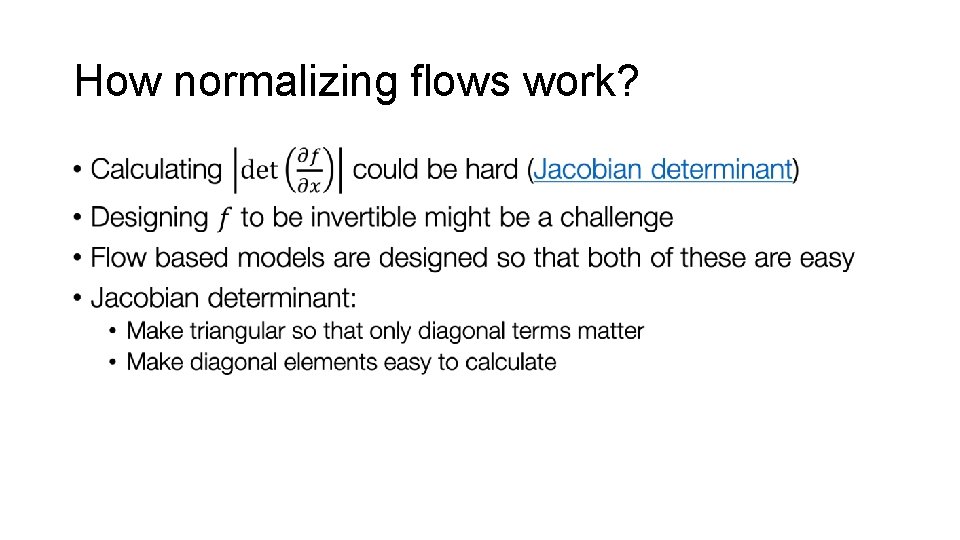

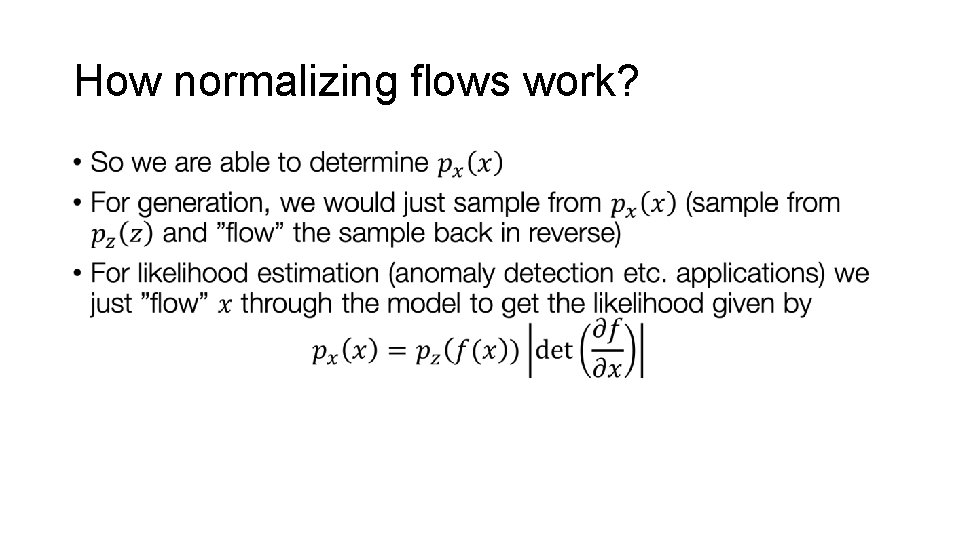

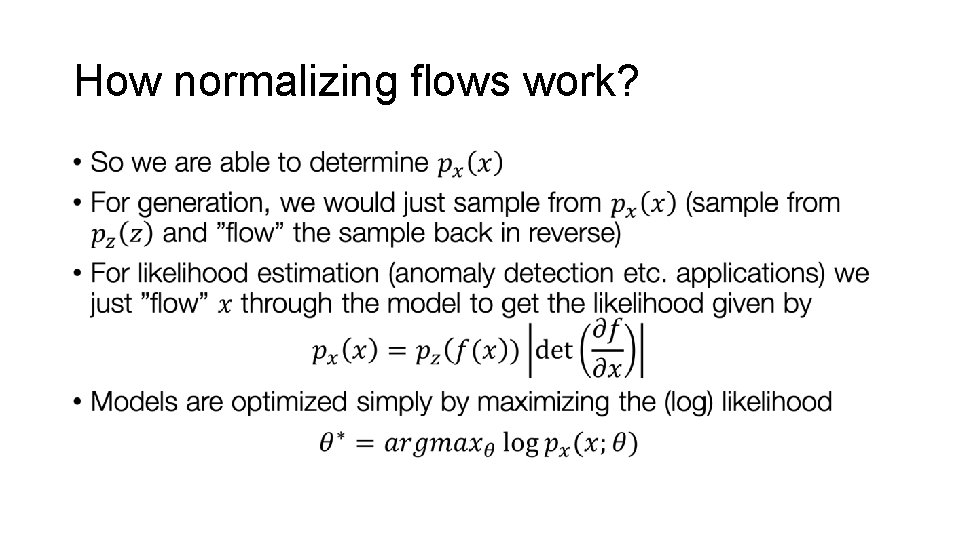

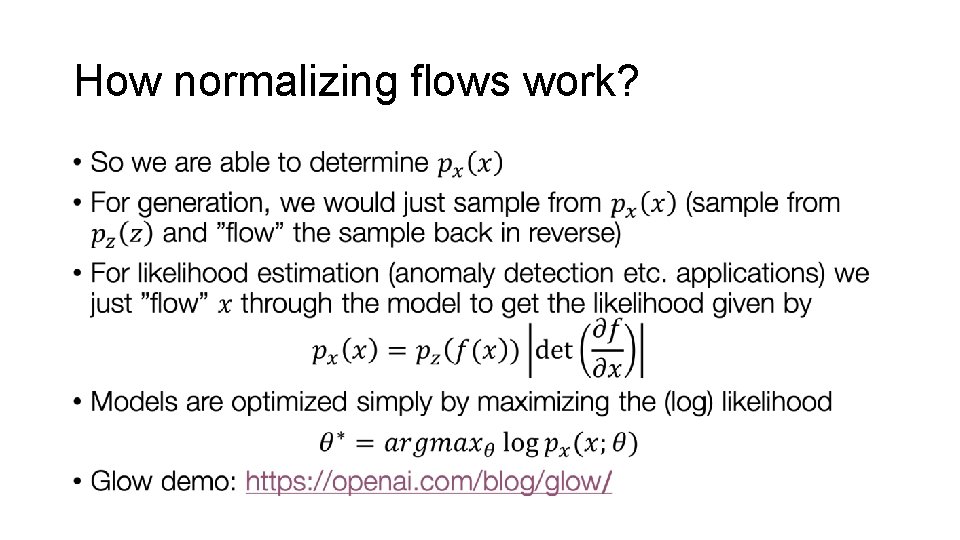

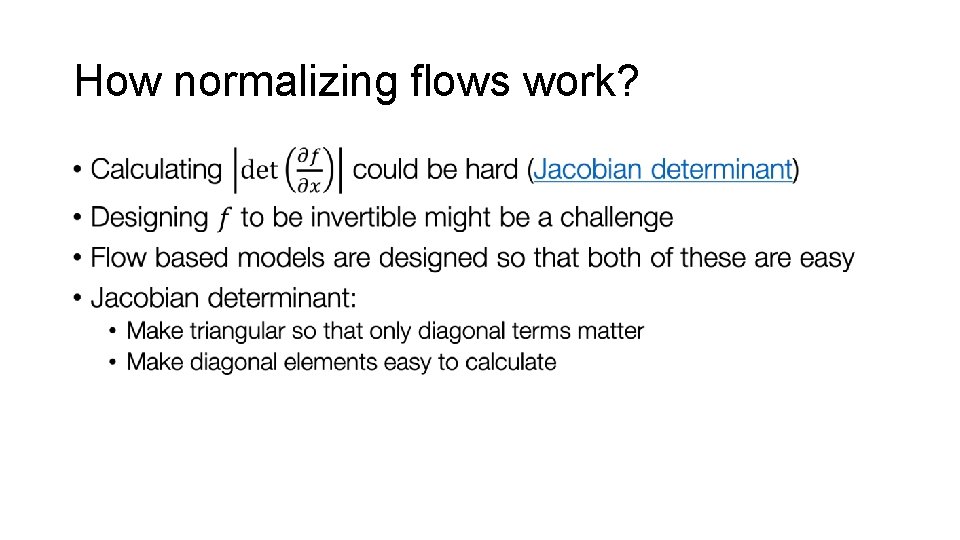

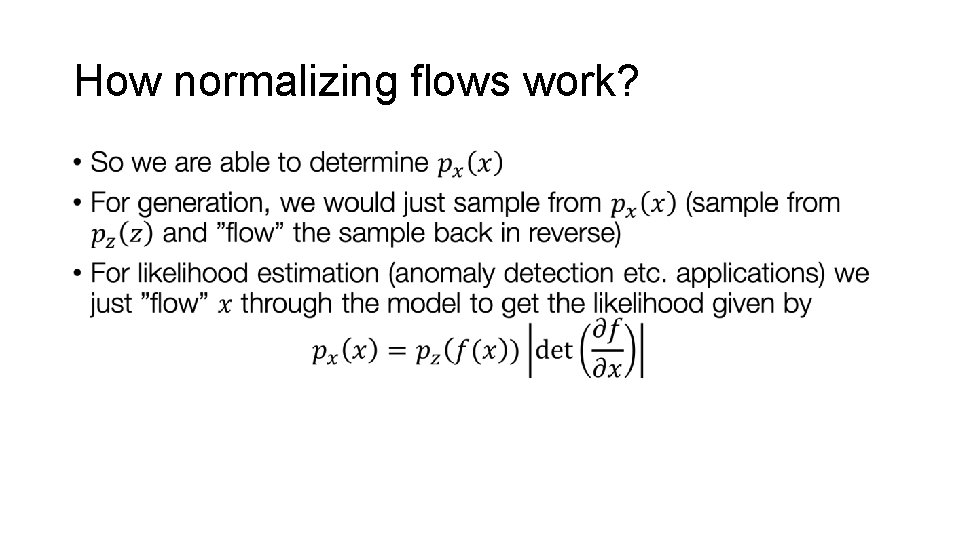

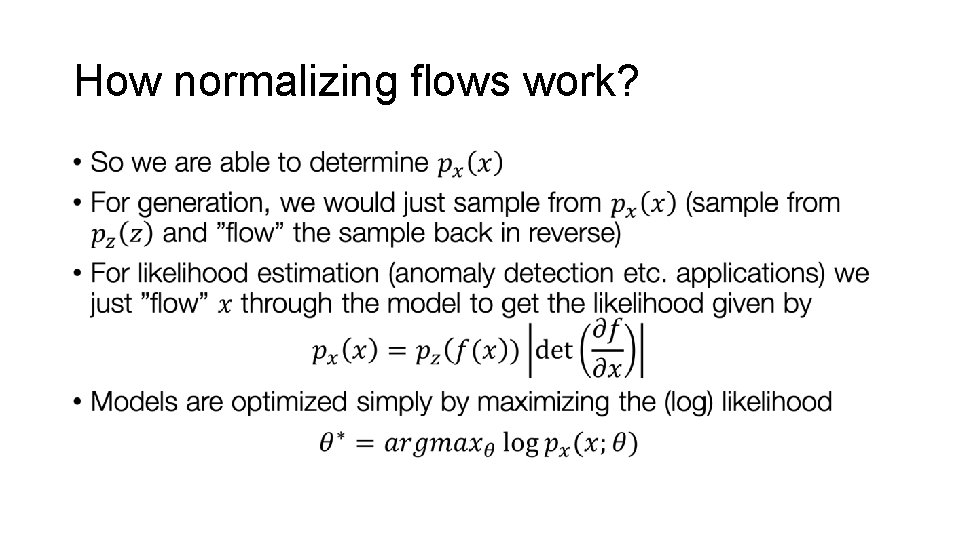

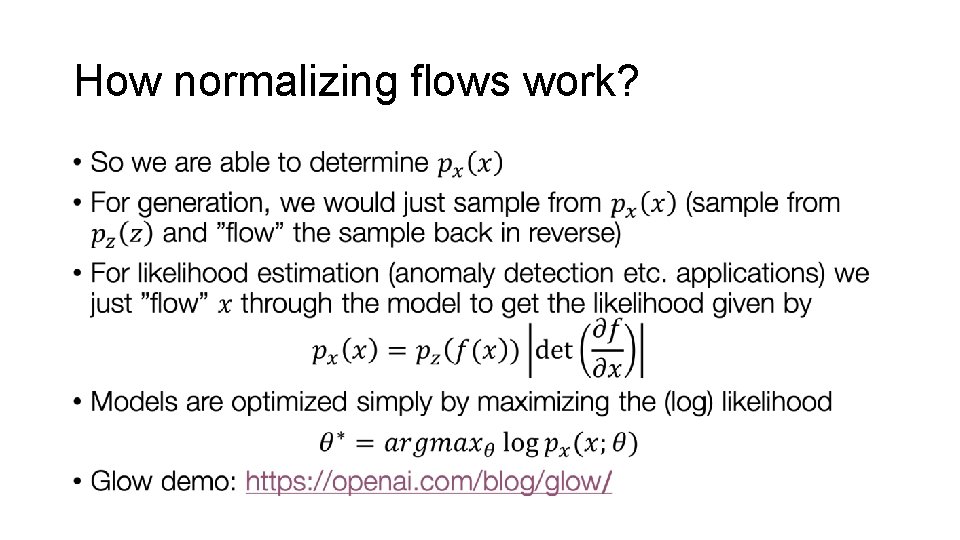

How do normalizing flows work?

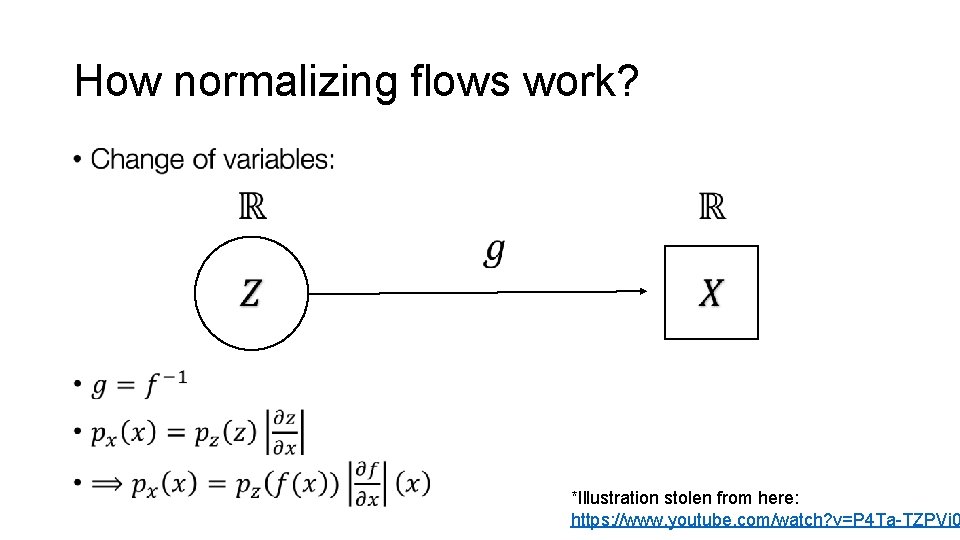

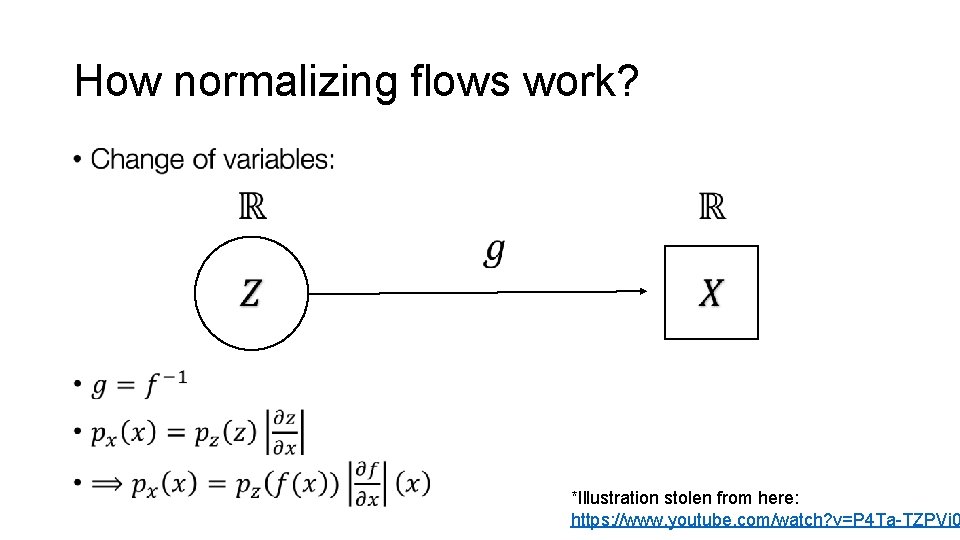

How do normalizing flows work? • *Illustration taken from: https://www.youtube.com/watch?v=P4Ta-TZPVi0

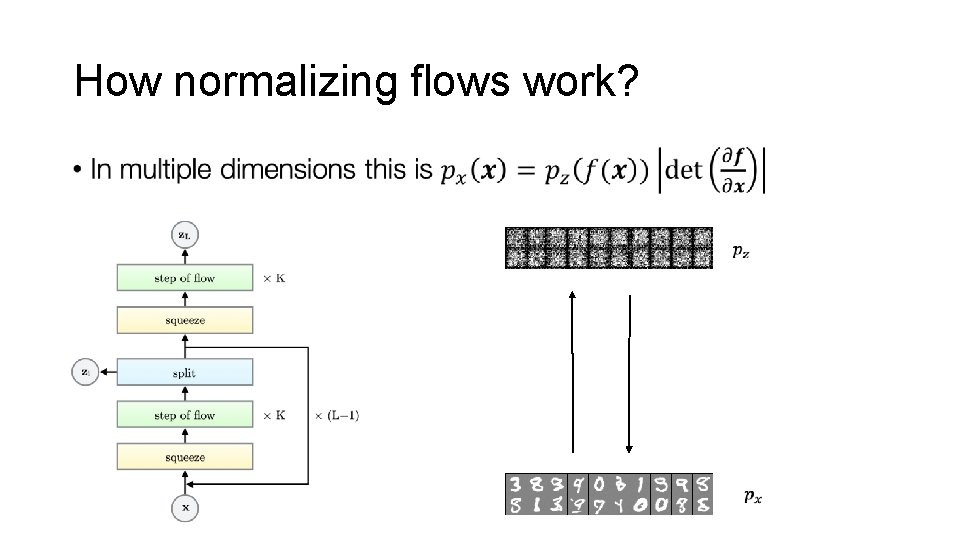

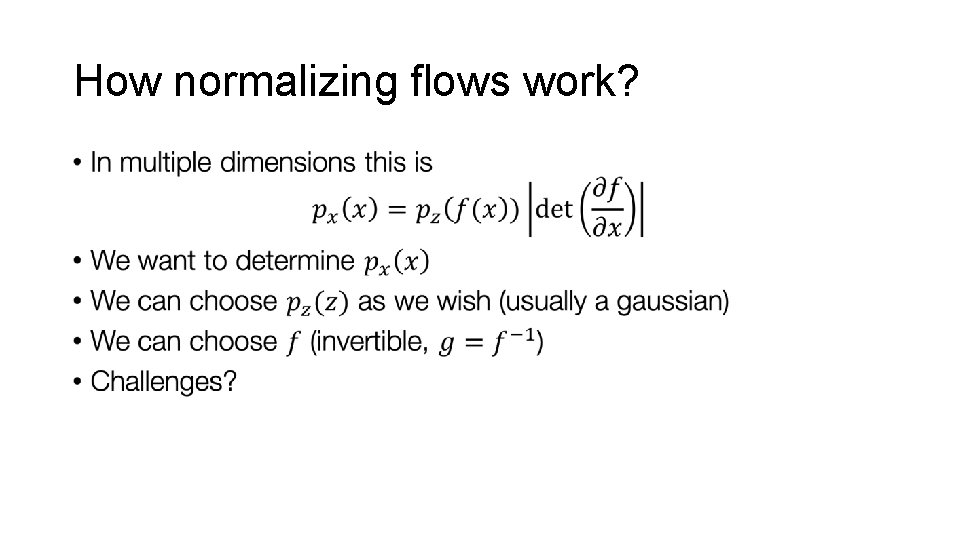

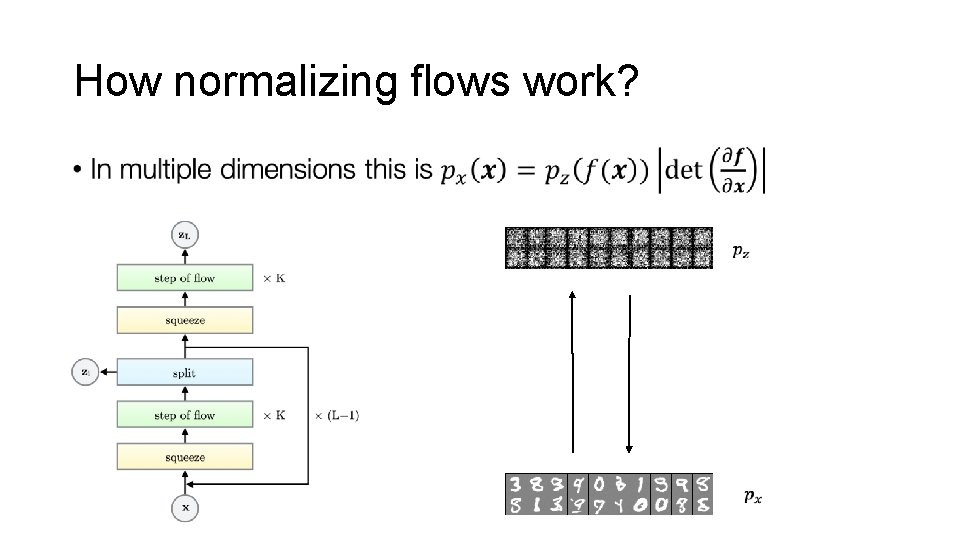

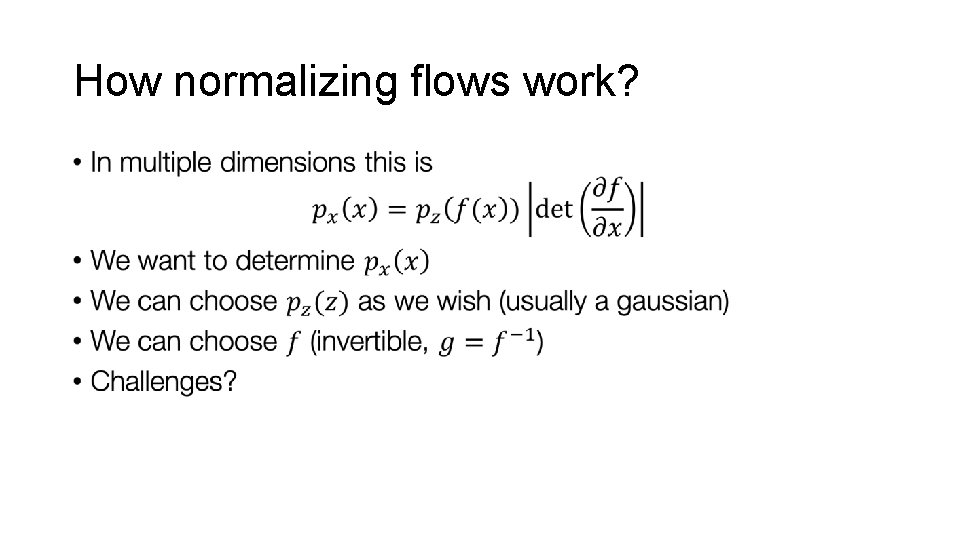

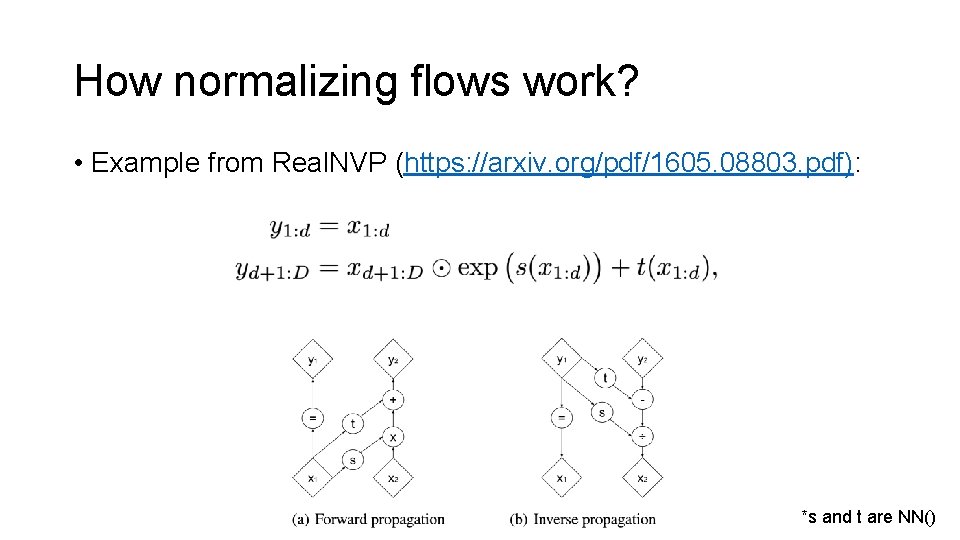

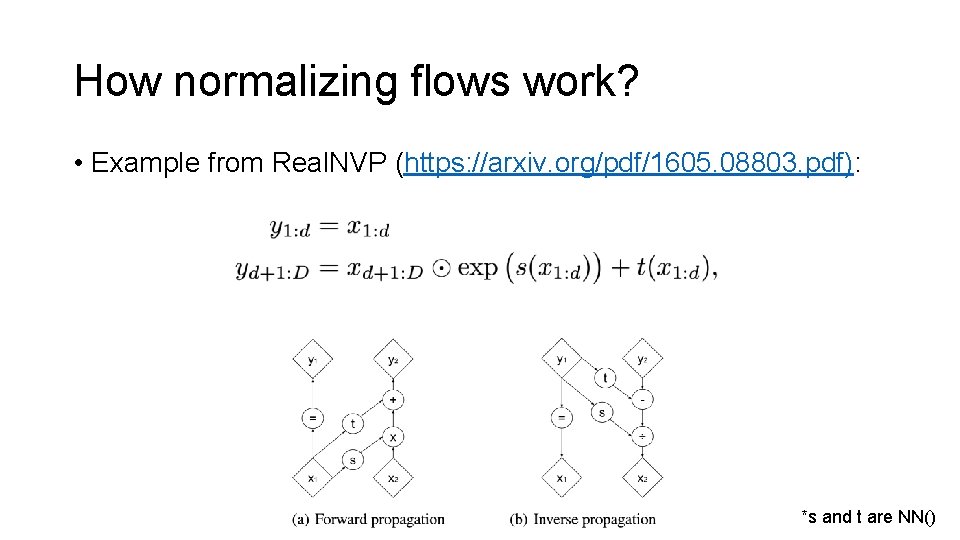

How do normalizing flows work? • Example from RealNVP (https://arxiv.org/pdf/1605.08803.pdf), where *s* and *t* are neural networks
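The RealNVP example above is built from affine coupling layers: the first `d` dimensions pass through unchanged, and the rest are scaled and shifted by functions `s` and `t` of the unchanged part. The following is a minimal sketch of one such layer, not the authors' implementation. The helper `make_toy_net` and the sizes `D` and `d` are hypothetical, and the random tanh networks only stand in for learned `s` and `t`.

```python
import math
import random

random.seed(0)

def make_toy_net(d_in, d_out, hidden=8):
    """Random one-hidden-layer tanh network; a stand-in for a learned s or t."""
    w1 = [[random.gauss(0, 0.5) for _ in range(d_in)] for _ in range(hidden)]
    w2 = [[random.gauss(0, 0.5) for _ in range(hidden)] for _ in range(d_out)]

    def net(v):
        h = [math.tanh(sum(w * vi for w, vi in zip(row, v))) for row in w1]
        return [sum(w * hi for w, hi in zip(row, h)) for row in w2]

    return net

def coupling_forward(x, s, t, d):
    """y[:d] = x[:d]; y[d:] = x[d:] * exp(s(x[:d])) + t(x[:d])."""
    x1, x2 = x[:d], x[d:]
    log_scale, shift = s(x1), t(x1)
    y2 = [xi * math.exp(ls) + sh for xi, ls, sh in zip(x2, log_scale, shift)]
    # The Jacobian is triangular, so log|det J| is just the sum of s(x1).
    log_det = sum(log_scale)
    return x1 + y2, log_det

def coupling_inverse(y, s, t, d):
    """Invert the layer without ever inverting s or t themselves."""
    y1, y2 = y[:d], y[d:]
    log_scale, shift = s(y1), t(y1)
    x2 = [(yi - sh) * math.exp(-ls) for yi, ls, sh in zip(y2, log_scale, shift)]
    return y1 + x2

D, d = 6, 3  # hypothetical total and pass-through dimensions
s_net, t_net = make_toy_net(d, D - d), make_toy_net(d, D - d)
x = [random.gauss(0, 1) for _ in range(D)]
y, log_det = coupling_forward(x, s_net, t_net, d)
x_rec = coupling_inverse(y, s_net, t_net, d)
max_err = max(abs(a - b) for a, b in zip(x, x_rec))
print(f"log|det J| = {log_det:.4f}, max reconstruction error = {max_err:.2e}")
```

Because `s` and `t` are only ever evaluated, never inverted, they can be arbitrary networks while the layer stays exactly invertible with a cheap log-determinant.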

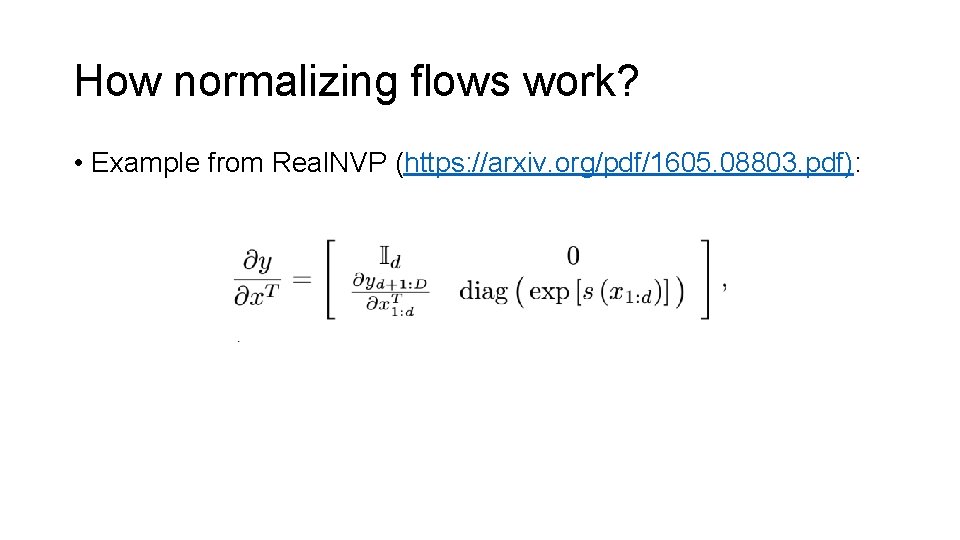

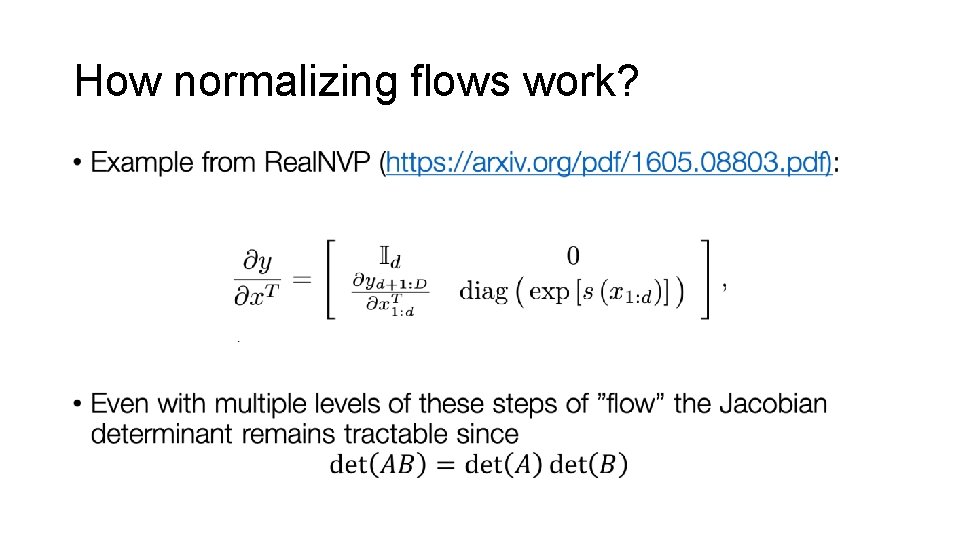

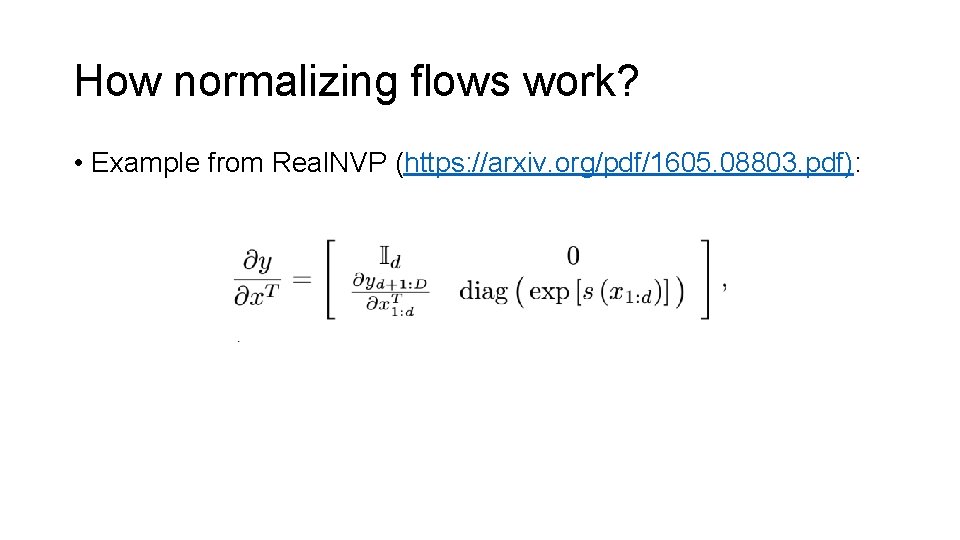

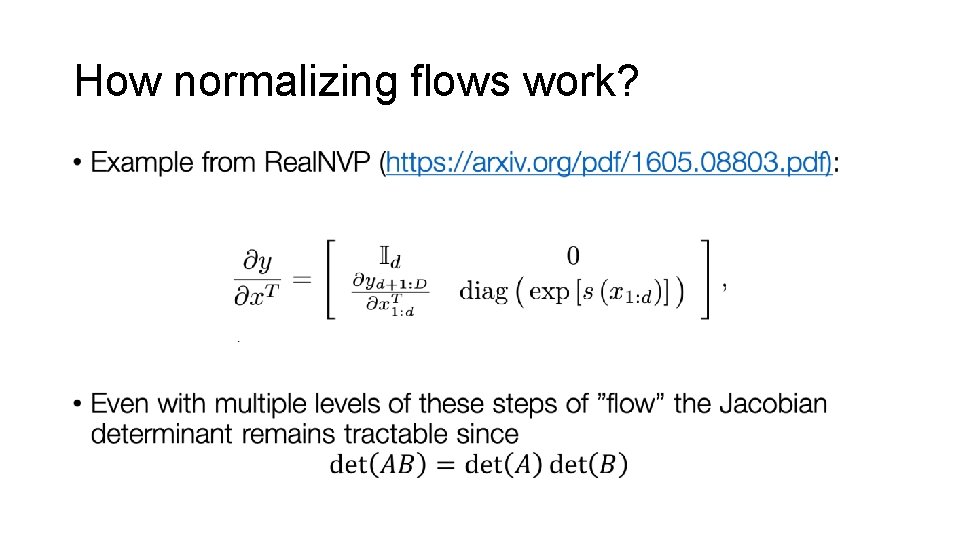

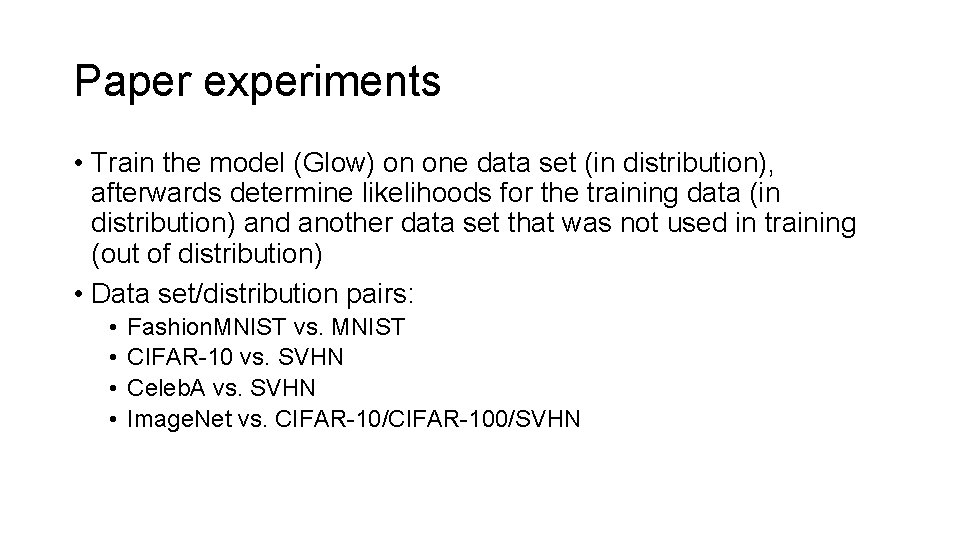

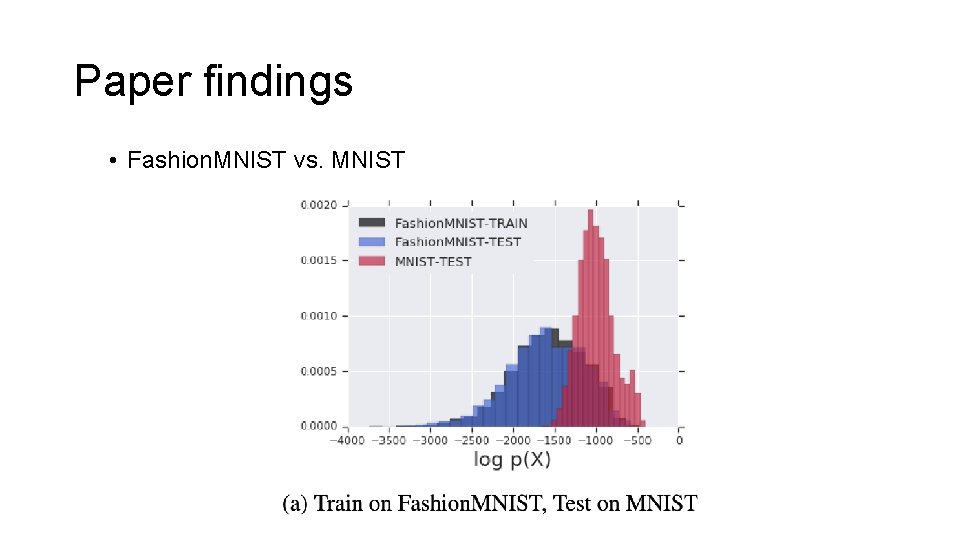

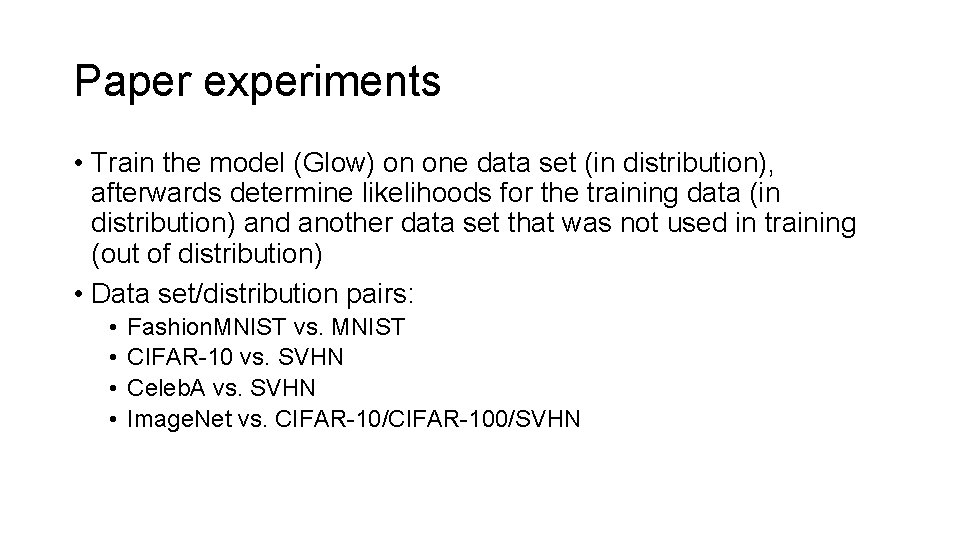

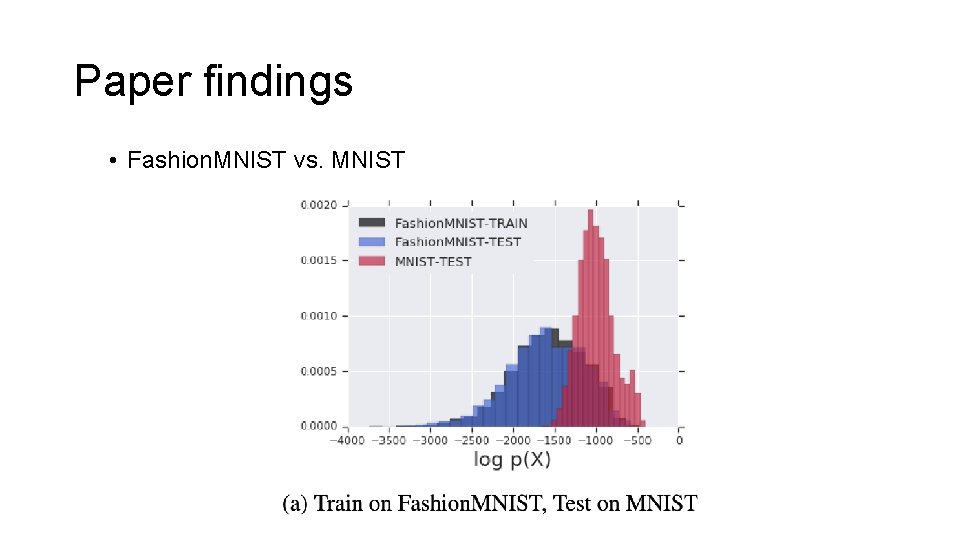

Paper experiments
• Train the model (Glow) on one data set (in distribution), then compute likelihoods for the training data (in distribution) and for another data set that was not used in training (out of distribution)
• Data set/distribution pairs:
  • FashionMNIST vs. MNIST
  • CIFAR-10 vs. SVHN
  • CelebA vs. SVHN
  • ImageNet vs. CIFAR-10/CIFAR-100/SVHN
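One way to summarize the comparison described above is sketched here, assuming per-image log-likelihoods in nats are already available from a trained model. The numbers are made up for illustration and are not results from the paper. The helper names `bits_per_dim` and `auroc` are hypothetical, and AUROC is a common summary for out-of-distribution detection rather than something the slides specify.

```python
import math

def bits_per_dim(log_likelihood_nats, num_dims):
    """Convert a log-likelihood in nats to bits per dimension (lower = more likely)."""
    return -log_likelihood_nats / (num_dims * math.log(2))

def auroc(in_scores, out_scores):
    """Probability that a random in-distribution score exceeds a random
    out-of-distribution score, with ties counted as one half."""
    wins = sum((a > b) + 0.5 * (a == b) for a in in_scores for b in out_scores)
    return wins / (len(in_scores) * len(out_scores))

# Made-up log-likelihoods (nats), for illustration only.
in_dist_ll = [-2100.0, -2050.0, -2200.0, -2150.0]
ood_ll = [-1500.0, -1600.0, -2120.0, -1450.0]
num_dims = 32 * 32 * 3  # e.g. a 32x32 RGB image

mean_in_bpd = sum(bits_per_dim(ll, num_dims) for ll in in_dist_ll) / len(in_dist_ll)
mean_ood_bpd = sum(bits_per_dim(ll, num_dims) for ll in ood_ll) / len(ood_ll)
detection_auroc = auroc(in_dist_ll, ood_ll)

print(f"in-distribution: {mean_in_bpd:.3f} bits/dim")
print(f"out-of-distribution: {mean_ood_bpd:.3f} bits/dim")
print(f"AUROC using log-likelihood as the score: {detection_auroc:.3f}")
```

An AUROC near 1 would mean likelihood separates the two data sets well; a value below 0.5, as in this toy input, means the out-of-distribution data tends to receive the higher likelihood.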

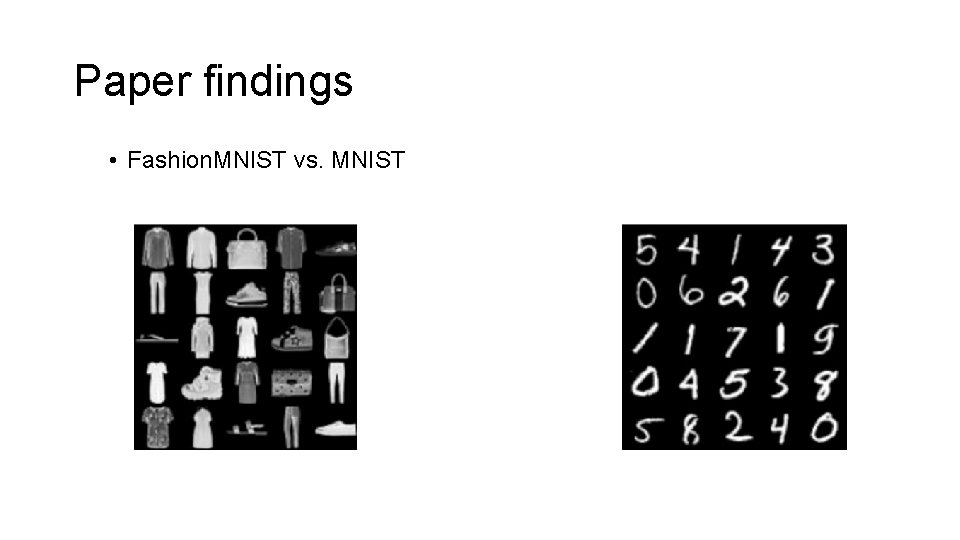

Paper findings • FashionMNIST vs. MNIST

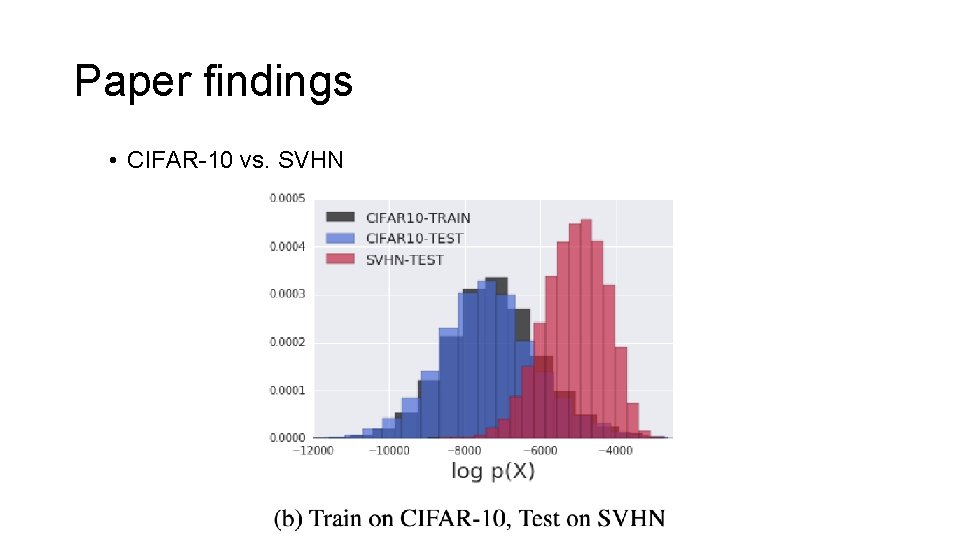

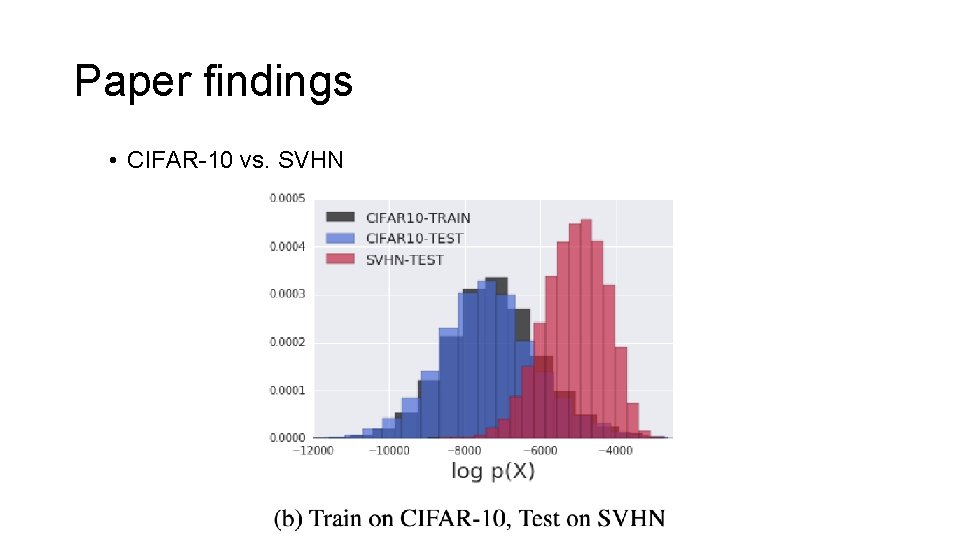

Paper findings • CIFAR-10 vs. SVHN

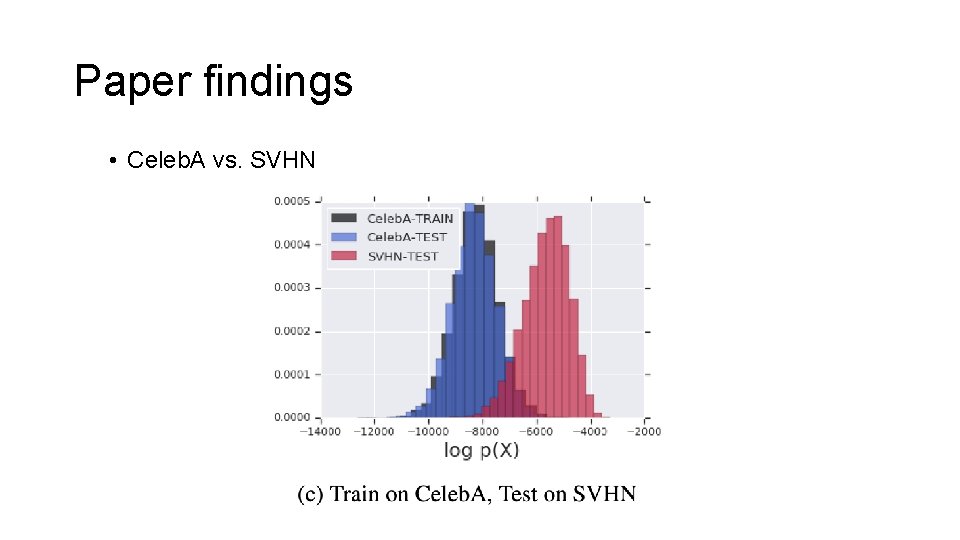

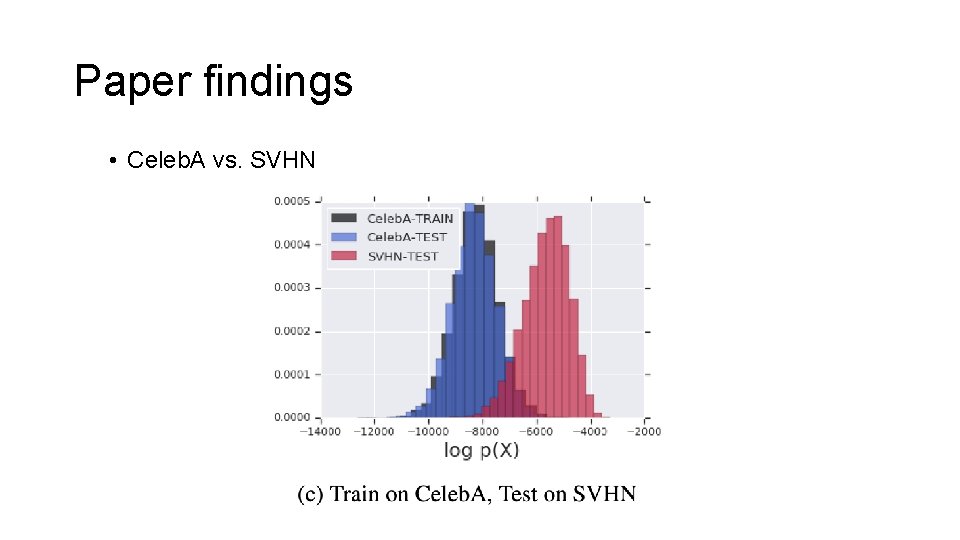

Paper findings • CelebA vs. SVHN

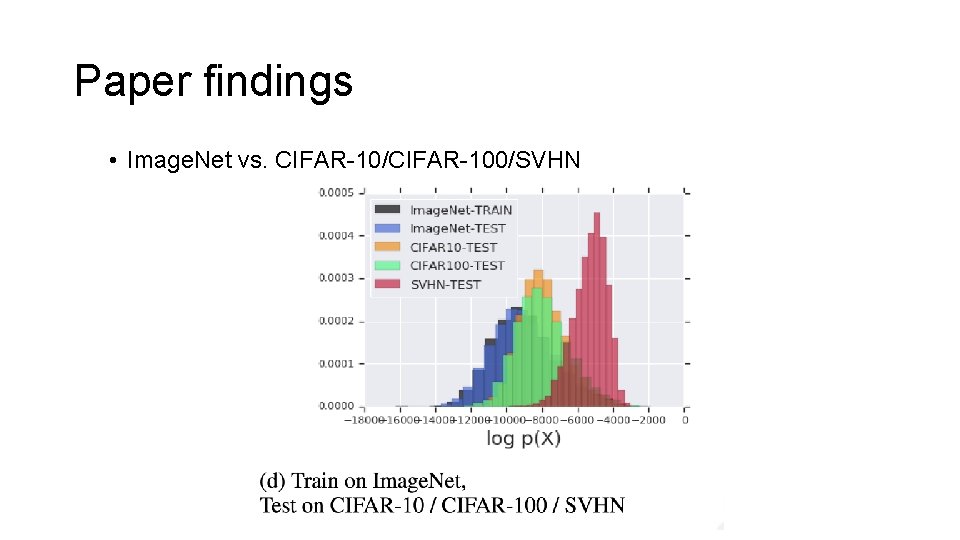

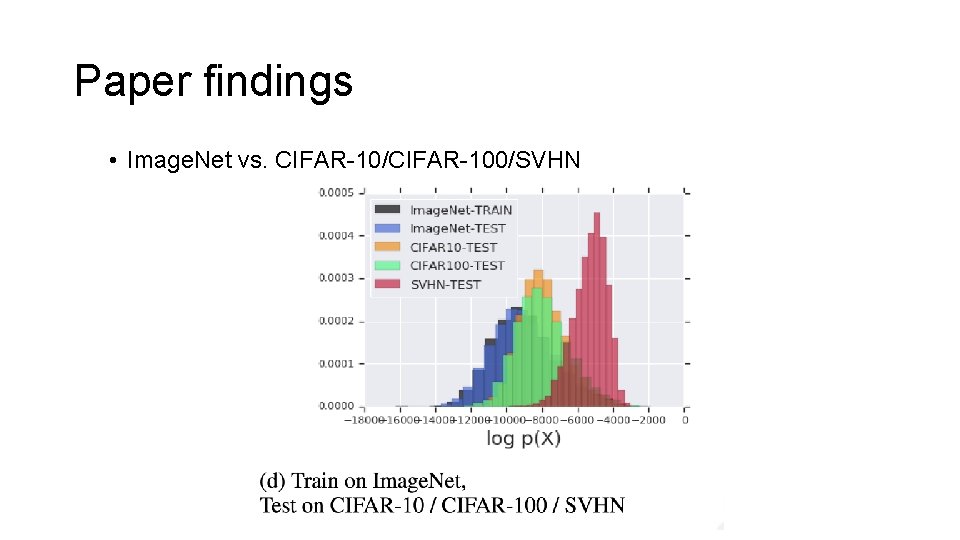

Paper findings • ImageNet vs. CIFAR-10/CIFAR-100/SVHN

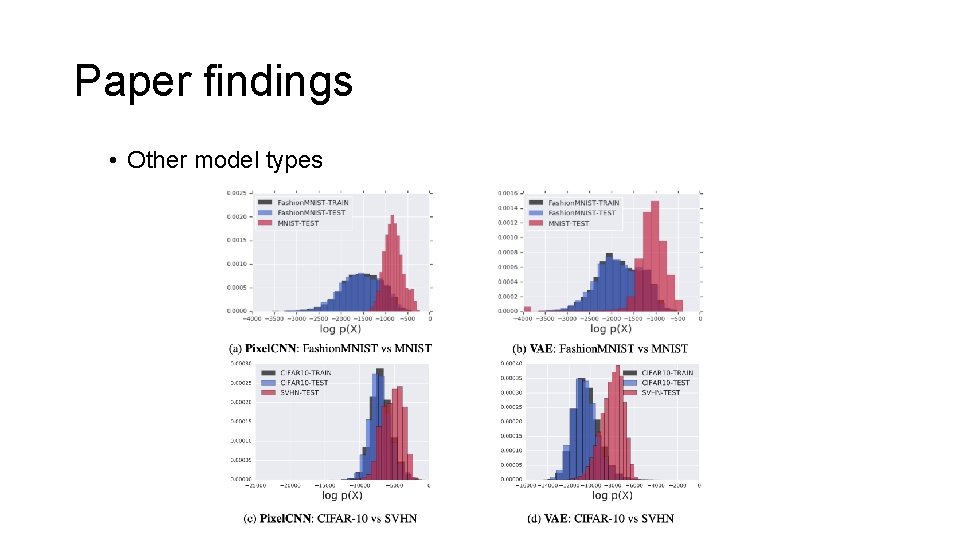

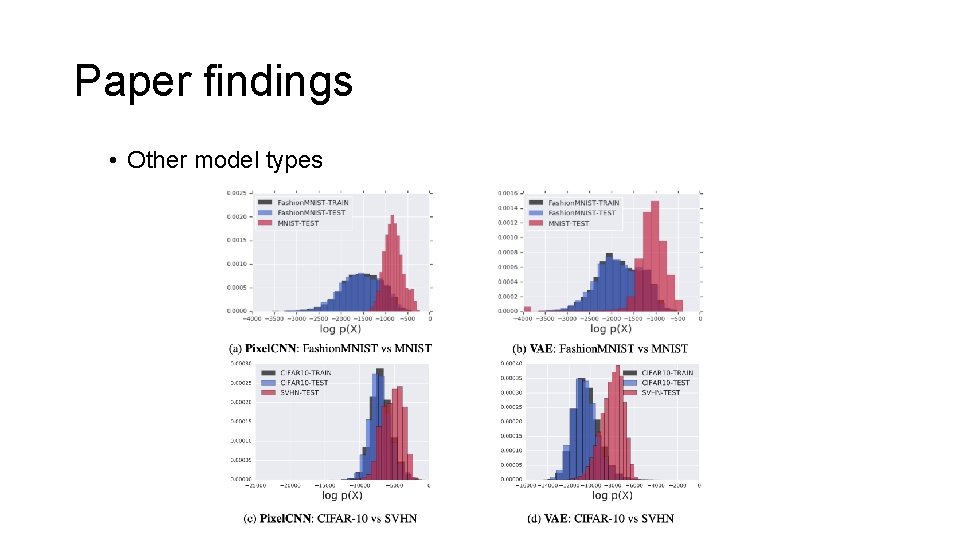

Paper findings • Other model types

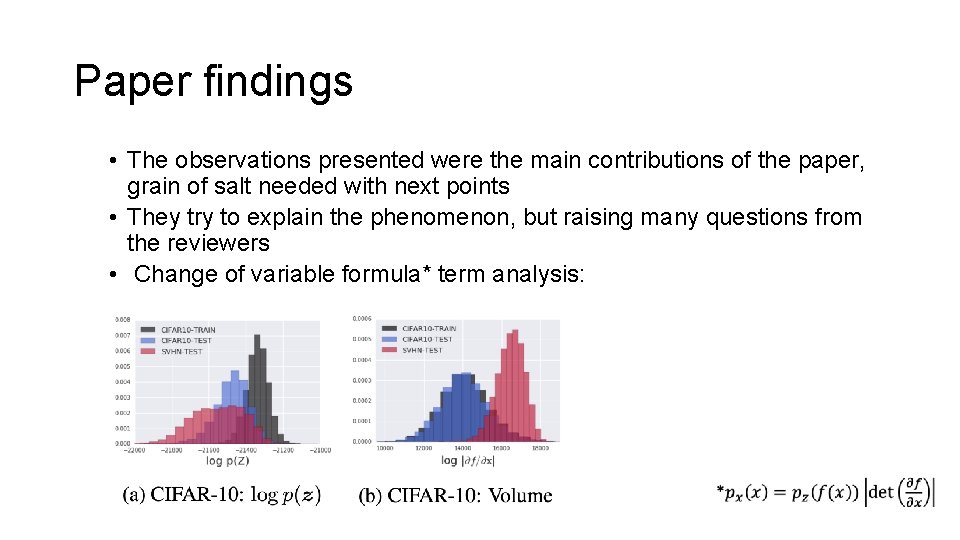

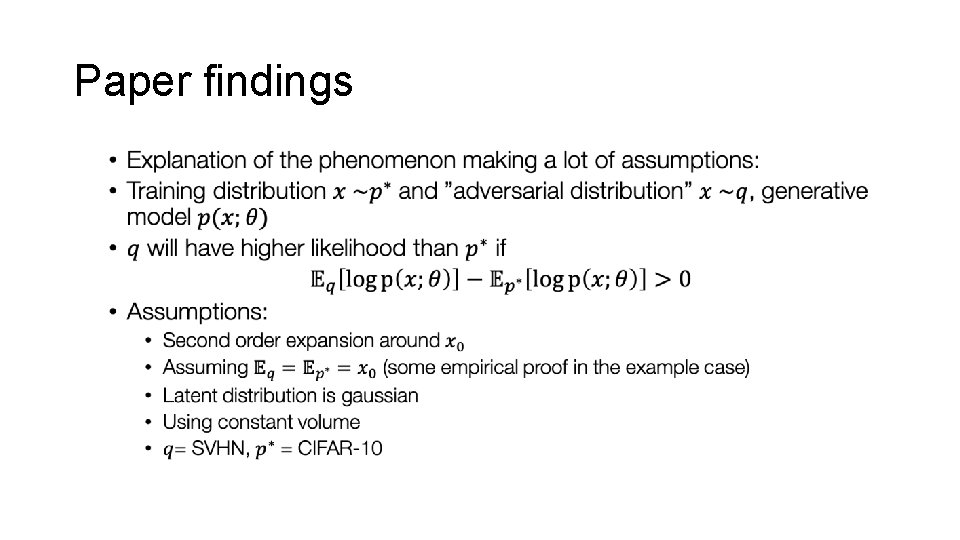

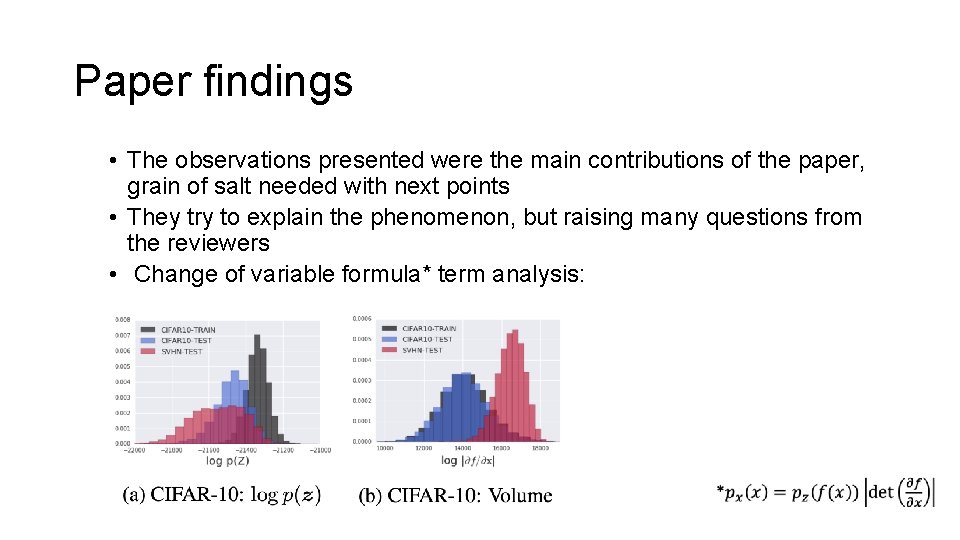

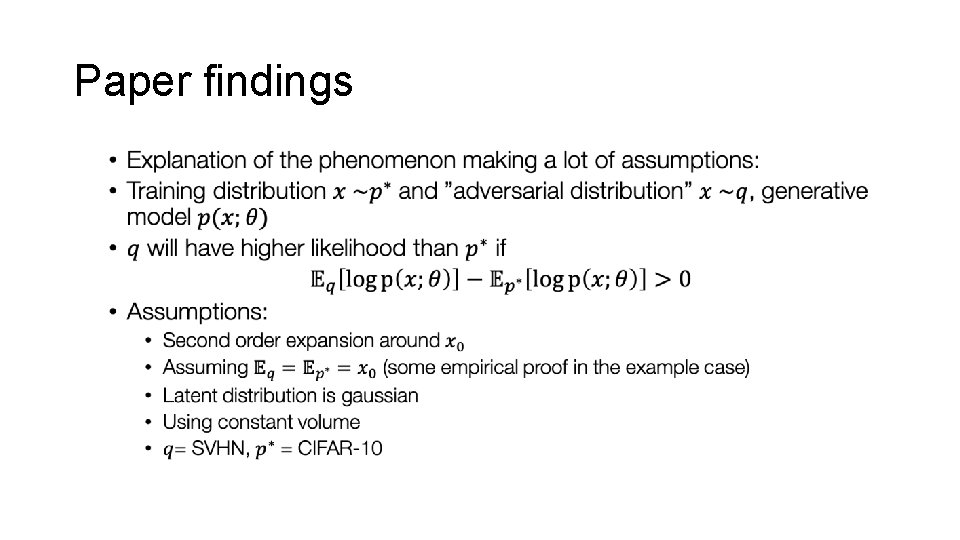

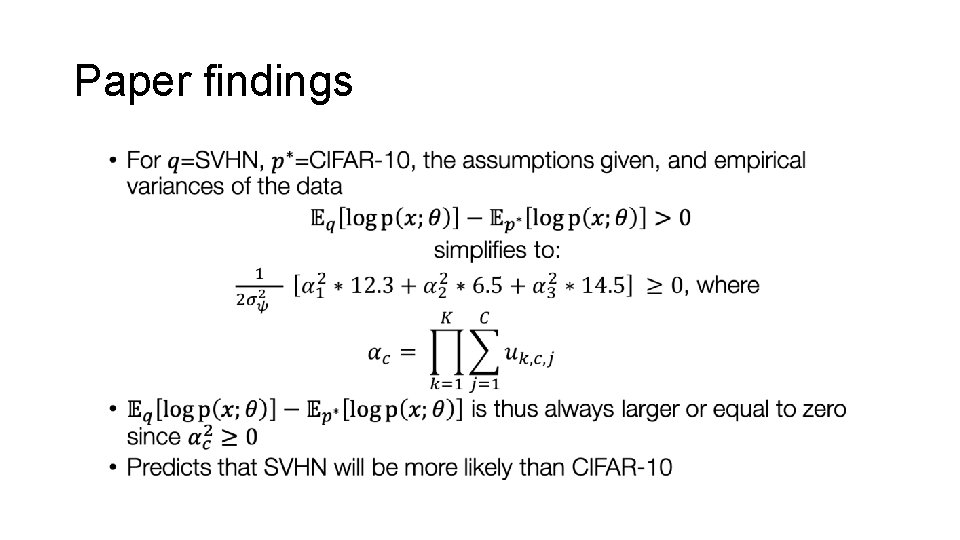

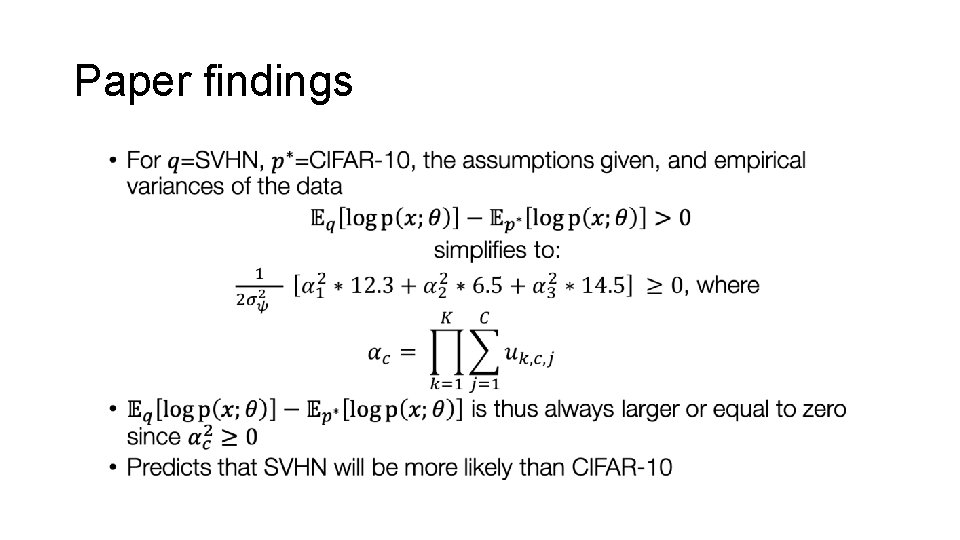

Paper findings
• The observations presented so far are the paper's main contribution; take the following points with a grain of salt
• The authors try to explain the phenomenon, but their explanation raised many questions from the reviewers
• Term-by-term analysis of the change-of-variables formula*:
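For reference, the change-of-variables formula for a flow is usually written as below. The notation is an assumption rather than something taken from the slides: $f$ is the invertible map from data $x$ to latent $z = f(x)$, and $p_z$ is the base (latent) density.

$$
\log p_x(x) = \underbrace{\log p_z\big(f(x)\big)}_{\text{latent density term}} + \underbrace{\log \left| \det \frac{\partial f(x)}{\partial x} \right|}_{\text{volume (Jacobian) term}}
$$

A term-by-term analysis looks at how each of the two contributions differs between in-distribution and out-of-distribution inputs.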

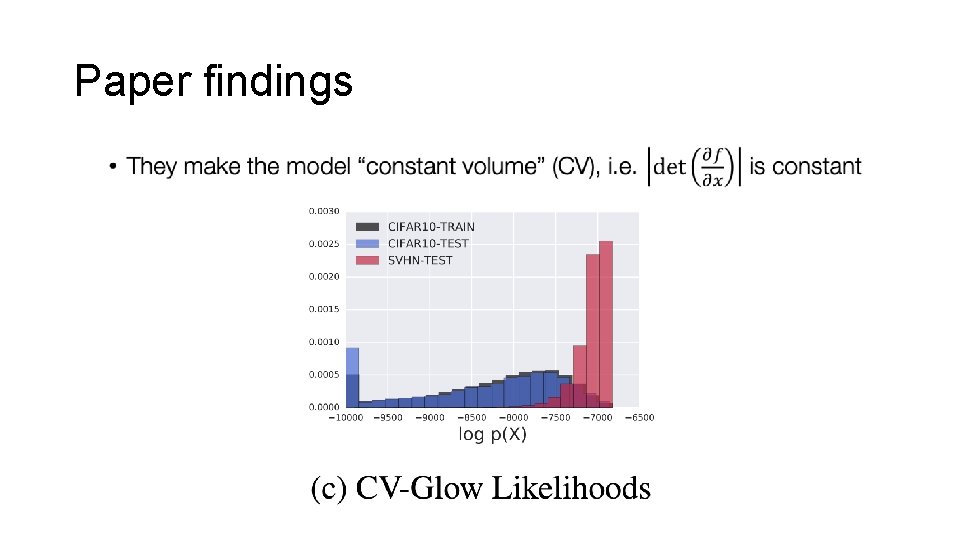

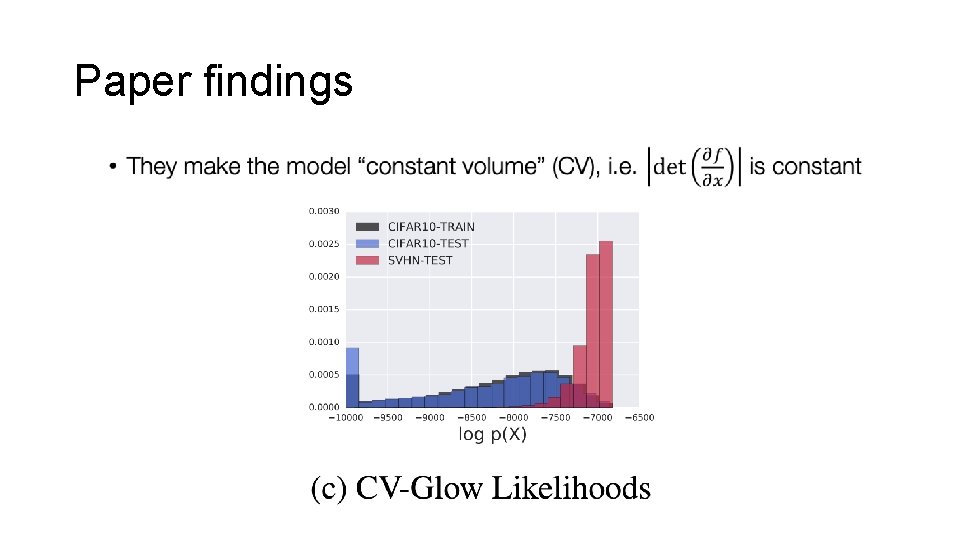

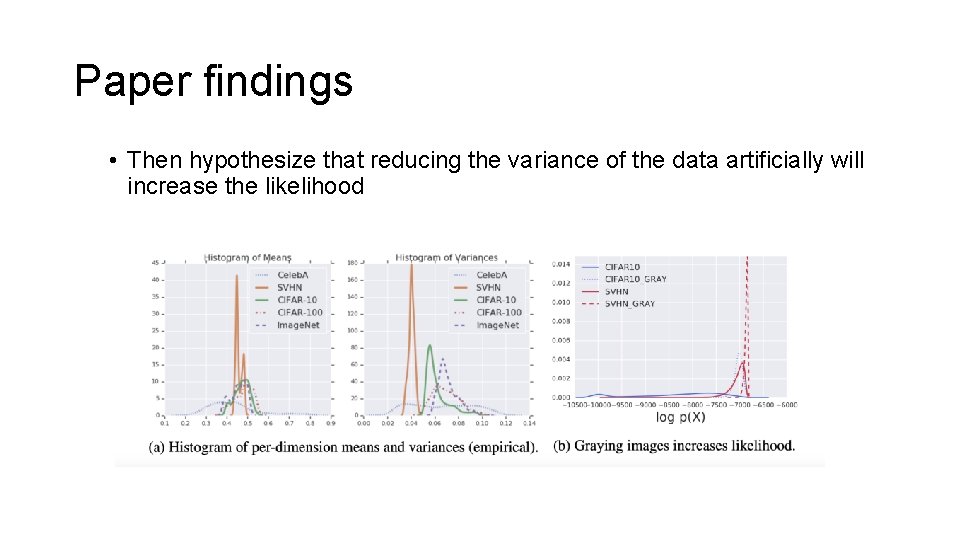

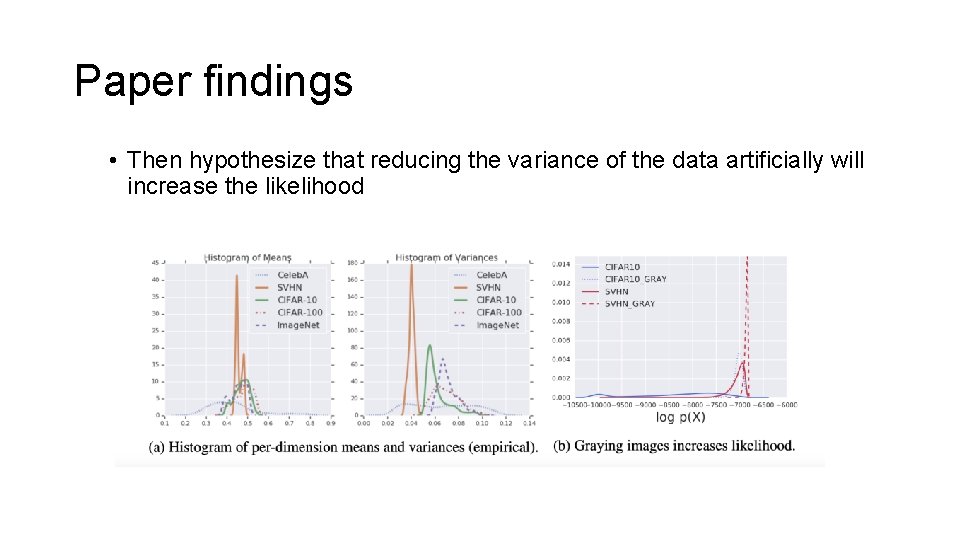

Paper findings • The authors then hypothesize that artificially reducing the variance of the data will increase its likelihood
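The intuition behind this hypothesis can be seen in a toy setting. The sketch below is not the paper's analysis: a one-dimensional Gaussian fitted by maximum likelihood stands in for the flow, and the two synthetic data sets are assumptions chosen to share a mean but differ in variance.

```python
import math
import random

random.seed(0)

def gaussian_log_pdf(x, mean, var):
    """Log density of a univariate Gaussian."""
    return -0.5 * (math.log(2 * math.pi * var) + (x - mean) ** 2 / var)

def mean_log_likelihood(samples, mean, var):
    """Average log-likelihood of the samples under the fitted Gaussian."""
    return sum(gaussian_log_pdf(x, mean, var) for x in samples) / len(samples)

n = 20000
train = [random.gauss(0.0, 1.0) for _ in range(n)]    # "in distribution"
low_var = [random.gauss(0.0, 0.5) for _ in range(n)]  # same mean, smaller variance

# Maximum-likelihood fit on the training data only.
mu = sum(train) / n
var = sum((x - mu) ** 2 for x in train) / n

ll_train = mean_log_likelihood(train, mu, var)
ll_low_var = mean_log_likelihood(low_var, mu, var)

print(f"mean log-likelihood, training data:      {ll_train:.3f}")
print(f"mean log-likelihood, low-variance data:  {ll_low_var:.3f}")
```

The low-variance data concentrates near the mode of the fitted density, so it scores a higher average log-likelihood than the data the model was fitted on, even though the model never saw it.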

Conclusions
• Cause for pause when using generative models for anomaly detection
• A second-order analysis is provided (applicable only to a certain type of flow, and under many assumptions)
• The authors urge further study of the subject

Discussion
• How valid/applicable is their analysis?
• Why don't the model's samples look like the OOD images if those images receive higher likelihood?