- Slides: 31

On Unifying Deep Generative Models. Zhiting Hu, Carnegie Mellon University

On Unifying Deep Generative Models; Texar: A text generation library. Zhiting Hu, Carnegie Mellon University

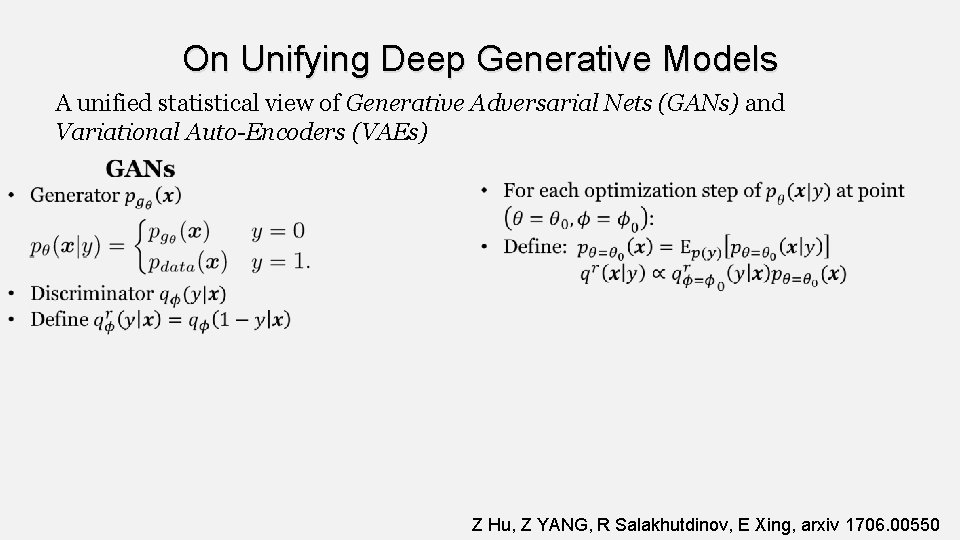

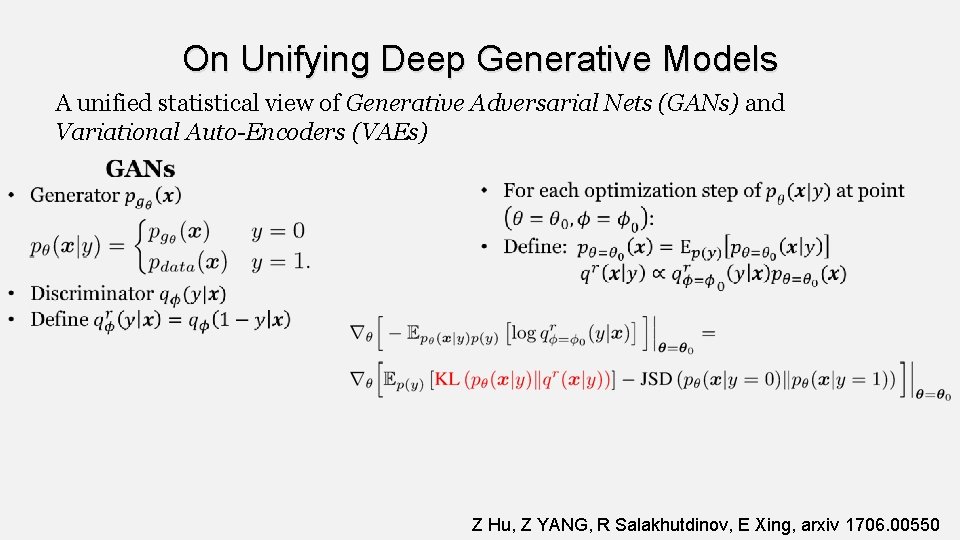

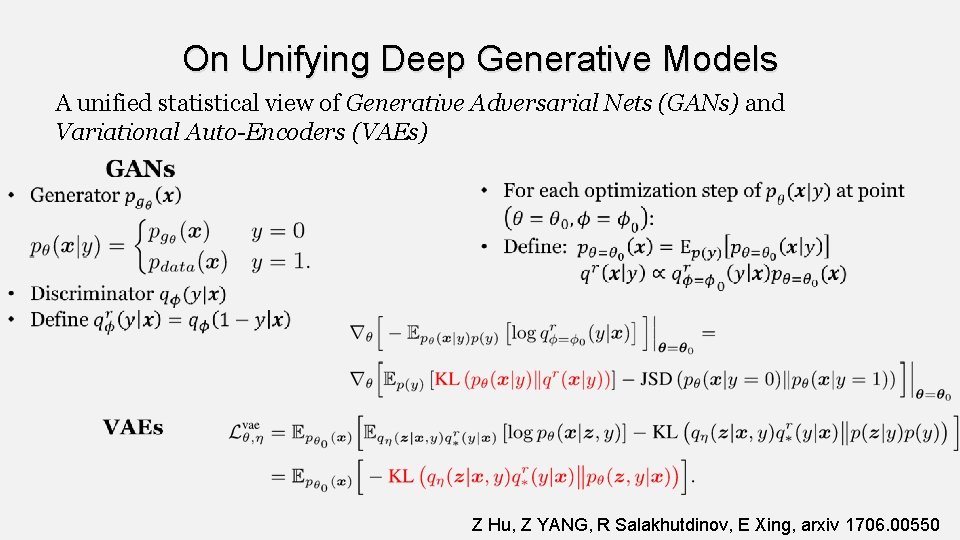

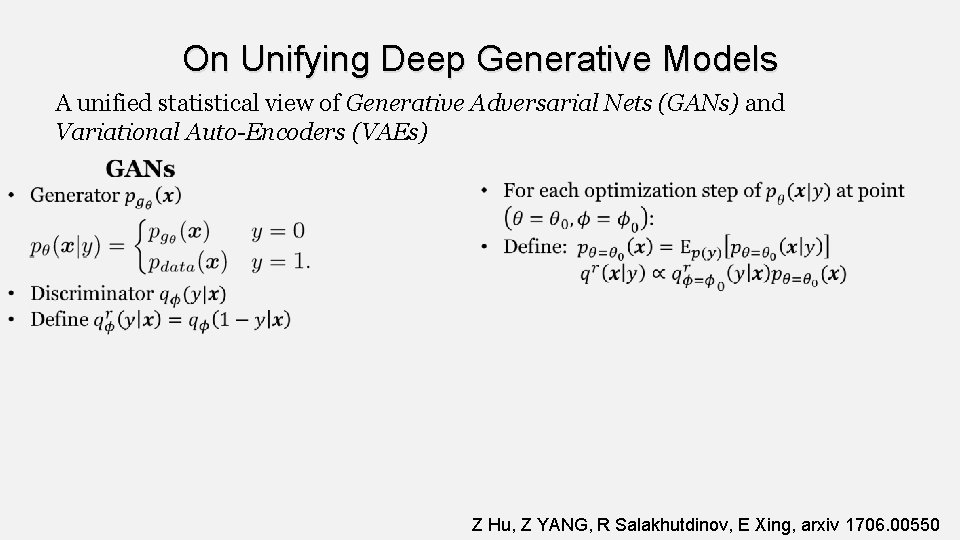

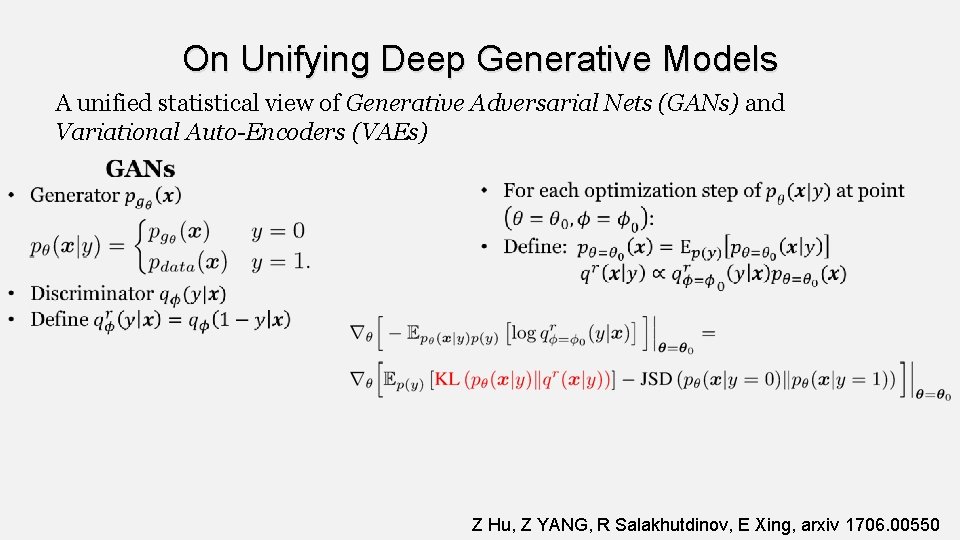

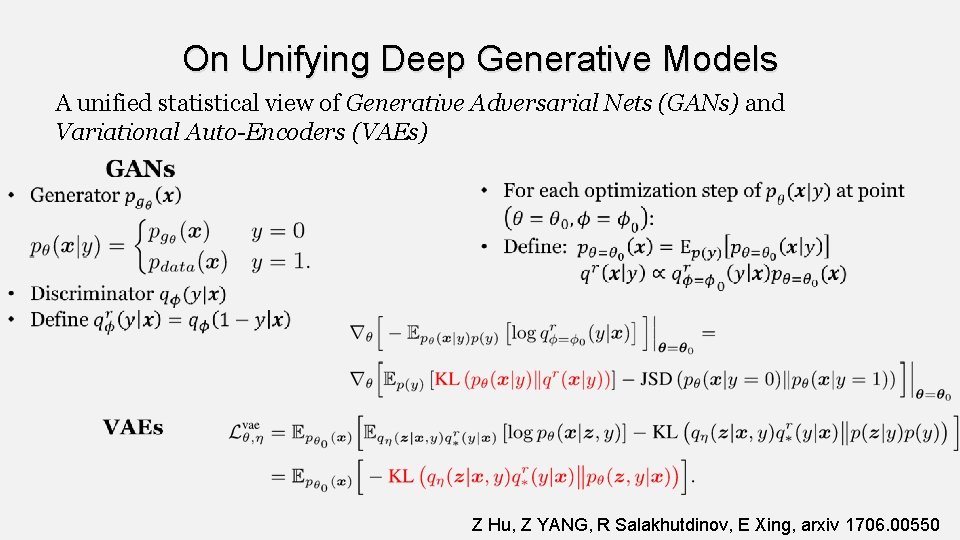

On Unifying Deep Generative Models: a unified statistical view of Generative Adversarial Nets (GANs) and Variational Auto-Encoders (VAEs). Z Hu, Z Yang, R Salakhutdinov, E Xing, arXiv:1706.00550

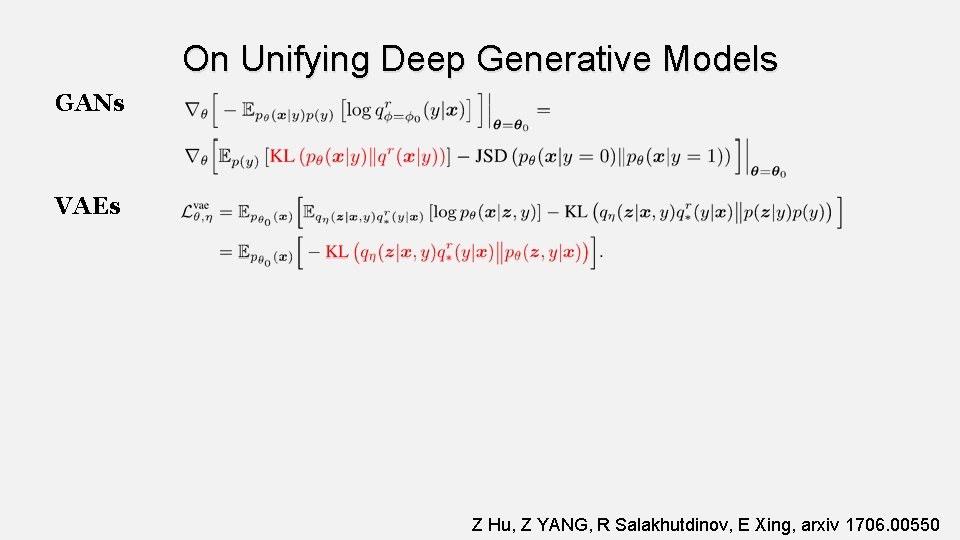

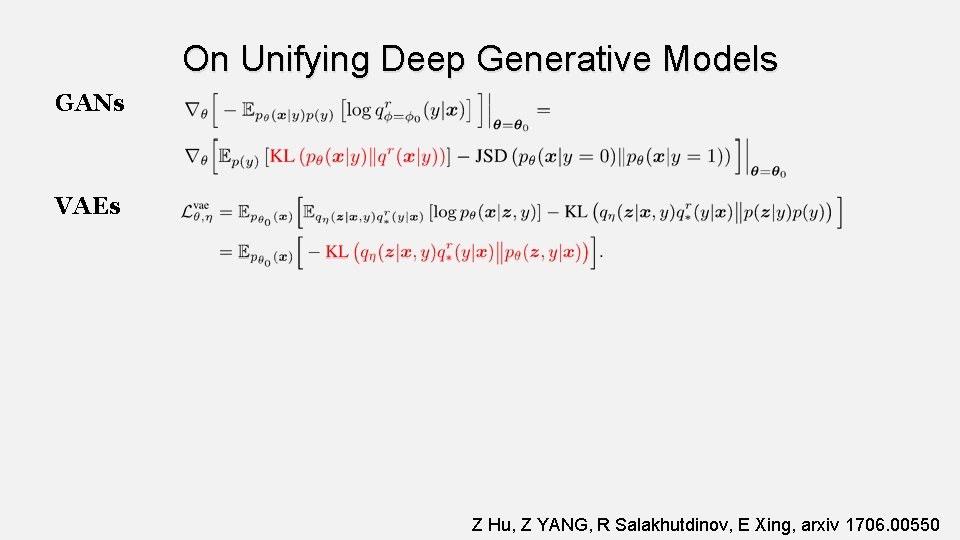

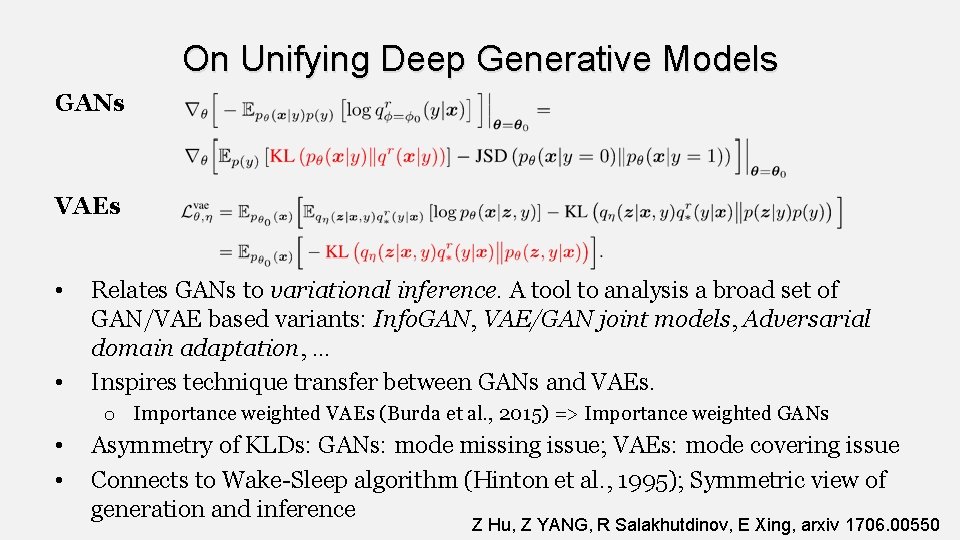

On Unifying Deep Generative Models: GANs and VAEs. Z Hu, Z Yang, R Salakhutdinov, E Xing, arXiv:1706.00550

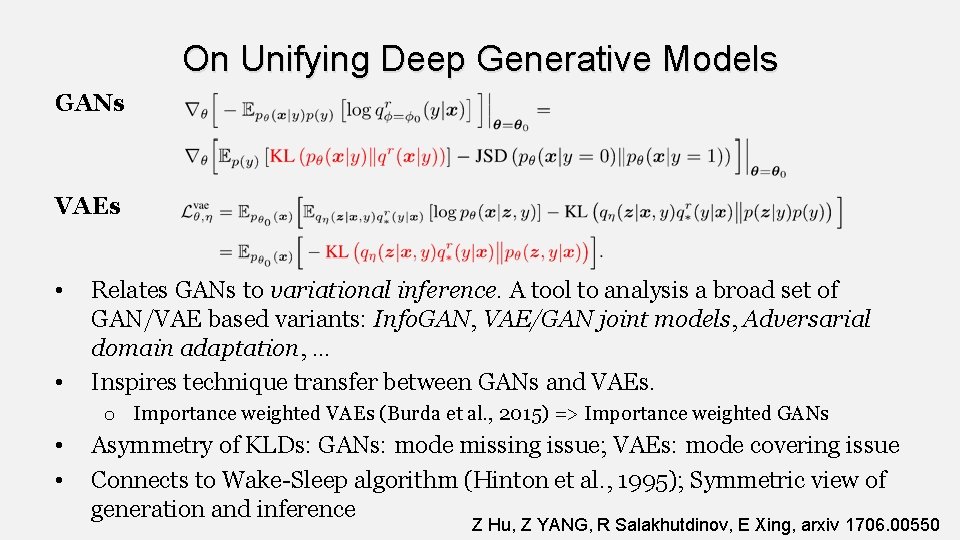

On Unifying Deep Generative Models: GANs and VAEs (Z Hu, Z Yang, R Salakhutdinov, E Xing, arXiv:1706.00550)
- Relates GANs to variational inference.
- A tool to analyze a broad set of GAN/VAE-based variants: InfoGAN, VAE/GAN joint models, adversarial domain adaptation, …
- Inspires technique transfer between GANs and VAEs.
  - Importance weighted VAEs (Burda et al., 2015) => importance weighted GANs
- Asymmetry of KLDs: GANs have a mode missing issue; VAEs have a mode covering issue.
- Connects to the Wake-Sleep algorithm (Hinton et al., 1995); symmetric view of generation and inference.
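The asymmetry of the two KL directions can be illustrated with a small numerical experiment. The sketch below is not taken from the talk: the bimodal target, the grid, and the single-Gaussian model family are all illustrative assumptions. It fits one Gaussian to a two-mode target under each direction. Placing the model on the left, KL(model || target), tends to lock onto a single mode (the "mode missing" behavior), while KL(target || model) tends to spread over both modes (the "mode covering" behavior).

```python
import math

def normal_pdf(x, mu, sigma):
    """Density of a 1-D Gaussian N(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))

def normalize(weights):
    """Rescale non-negative weights so they sum to one."""
    total = sum(weights)
    return [w / total for w in weights]

# Discretization grid on [-8, 8] (an illustrative choice).
GRID = [-8.0 + 0.05 * i for i in range(321)]

# Bimodal target: equal mixture of Gaussians centered at -3 and +3.
TARGET = normalize(
    [0.5 * normal_pdf(x, -3.0, 0.7) + 0.5 * normal_pdf(x, 3.0, 0.7) for x in GRID]
)

def kl(a, b):
    """Discrete KL(a || b); the floor on b guards against log of zero."""
    return sum(ai * math.log(ai / max(bi, 1e-300)) for ai, bi in zip(a, b) if ai > 0.0)

def fit(objective):
    """Grid-search the single Gaussian (mu, sigma) that minimizes the objective."""
    best = None
    for mu_step in range(-8, 9):         # mu in [-4, 4], step 0.5
        for sigma_step in range(2, 17):  # sigma in [0.5, 4.0], step 0.25
            mu, sigma = 0.5 * mu_step, 0.25 * sigma_step
            model = normalize([normal_pdf(x, mu, sigma) for x in GRID])
            score = objective(model)
            if best is None or score < best[0]:
                best = (score, mu, sigma)
    return best[1], best[2]

# KL(target || model): the model must put mass wherever the target has mass.
covering_mu, covering_sigma = fit(lambda model: kl(TARGET, model))
# KL(model || target): the model is penalized for mass where the target has none.
missing_mu, missing_sigma = fit(lambda model: kl(model, TARGET))

print("KL(target || model) fit:", covering_mu, covering_sigma)
print("KL(model || target) fit:", missing_mu, missing_sigma)
```

The first fit should come out wide and centered between the modes, the second narrow and centered on one of them.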

A modularized, versatile, extensible library for text generation

Overview: a soon-to-be open-source library to support text generation research and industry use
- Currently based on TensorFlow
- Tentative release date: Jan 2018
- Contributors: Zhiting Hu, Zichao Yang, Tiancheng Zhao, Junxian He, Di Wang, Max Ma, Hector Liu, Mansi Gupta, Qizhe Xie, Xiaodan Liang, Haoran Shi, Lianhui Qin, Bowen Tan, Jiacheng, Chenyu Wang, …

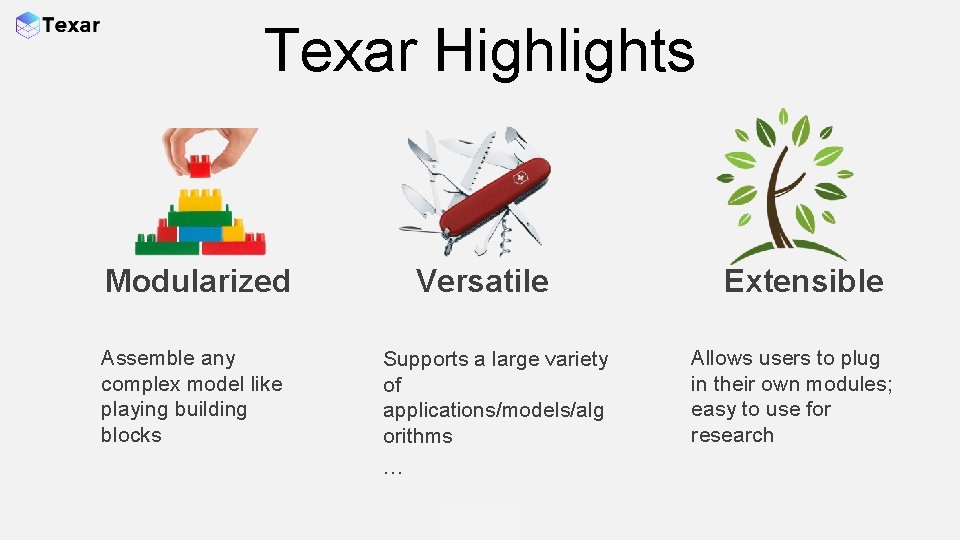

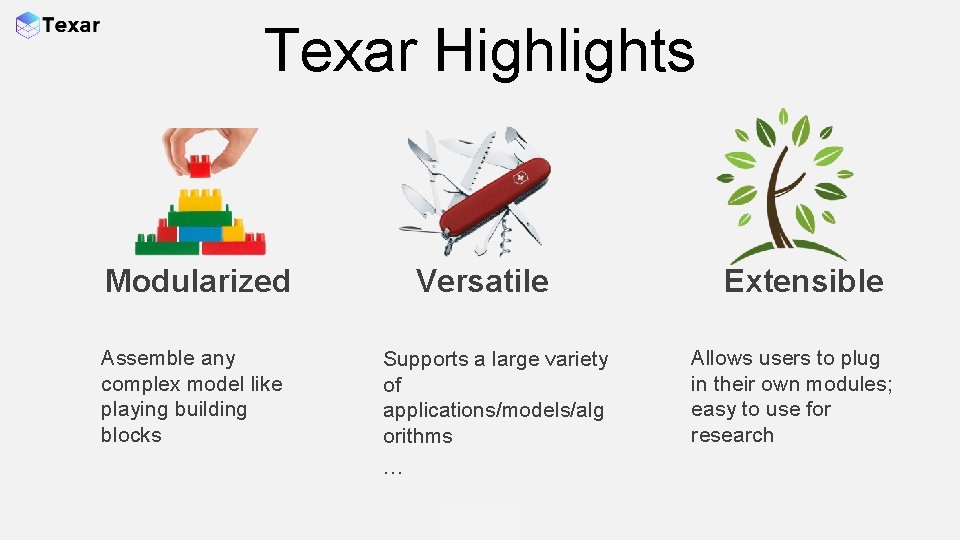

Texar Highlights
- Modularized: assemble any complex model like playing with building blocks
- Versatile: supports a large variety of applications/models/algorithms …
- Extensible: allows users to plug in their own modules; easy to use for research

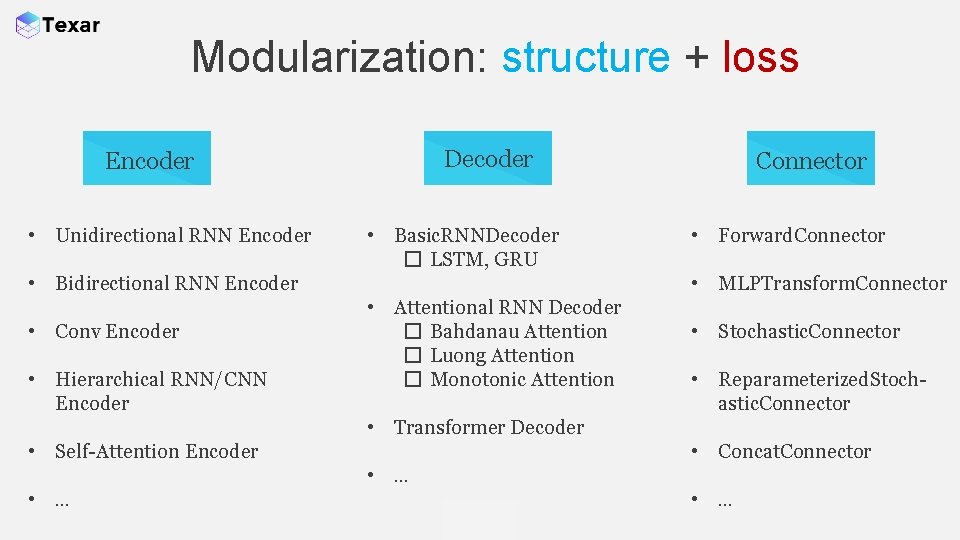

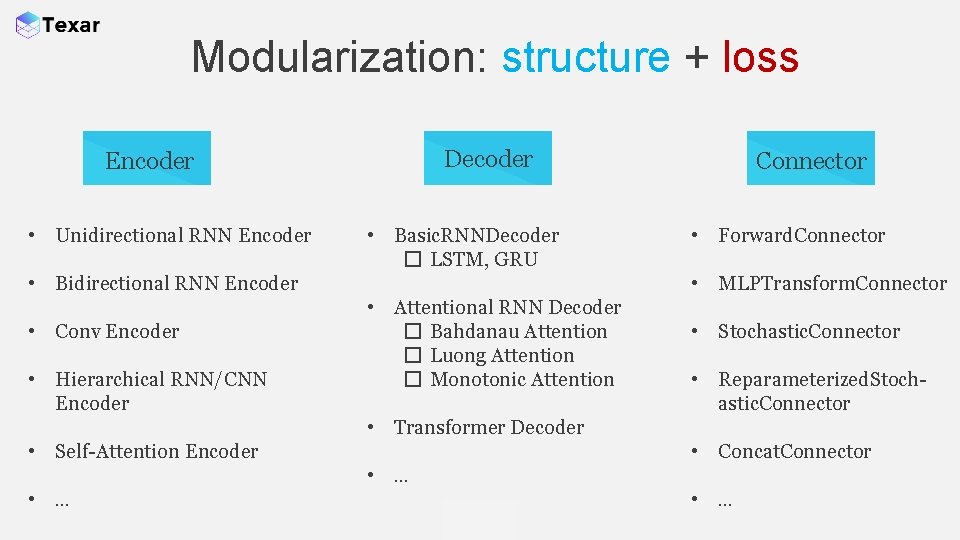

Modularization: structure + loss
- Encoder
  - Unidirectional RNN Encoder
  - Bidirectional RNN Encoder
  - Conv Encoder
  - Hierarchical RNN/CNN Encoder
  - Self-Attention Encoder
- Decoder
  - BasicRNNDecoder (LSTM, GRU)
  - Attentional RNN Decoder (Bahdanau Attention, Luong Attention, Monotonic Attention)
  - Transformer Decoder
- Connector
  - ForwardConnector
  - MLPTransformConnector
  - StochasticConnector
  - ReparameterizedStochasticConnector
  - ConcatConnector
  - …

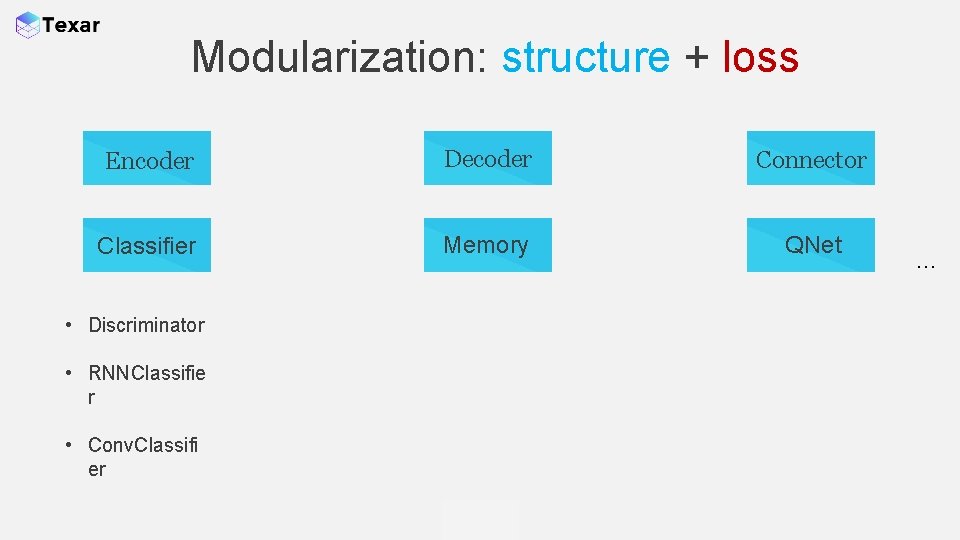

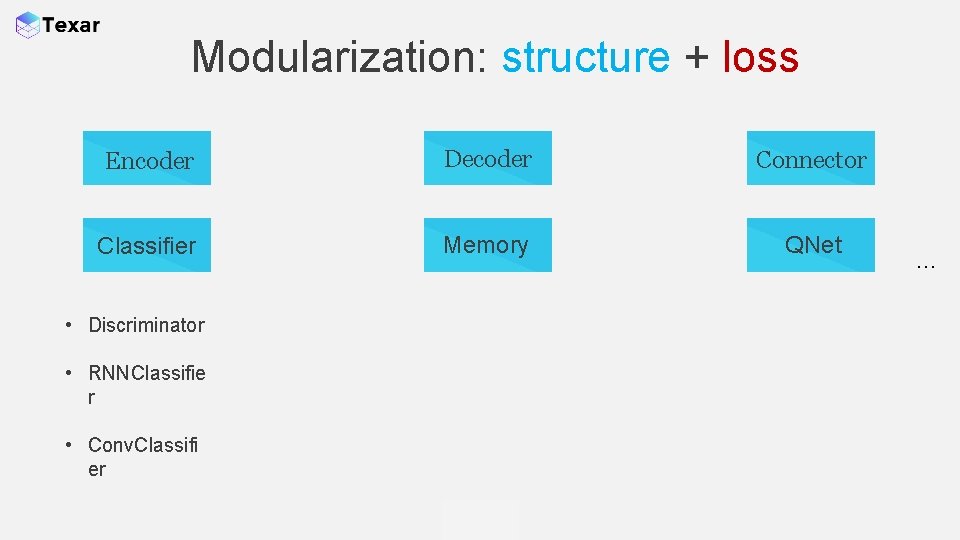

Modularization: structure + loss
- Modules: Encoder, Decoder, Connector, Classifier, Memory, QNet
- Classifier
  - Discriminator
  - RNNClassifier
  - ConvClassifier
  - …

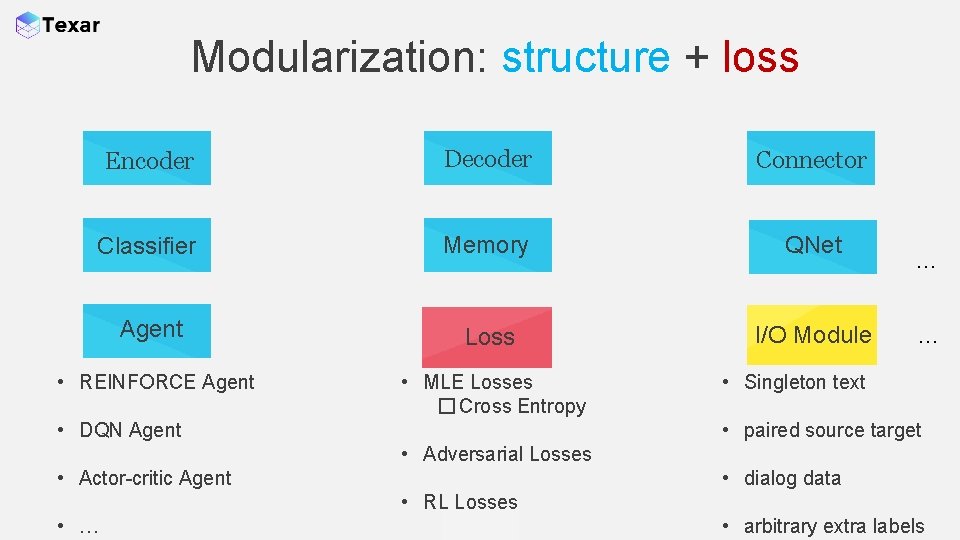

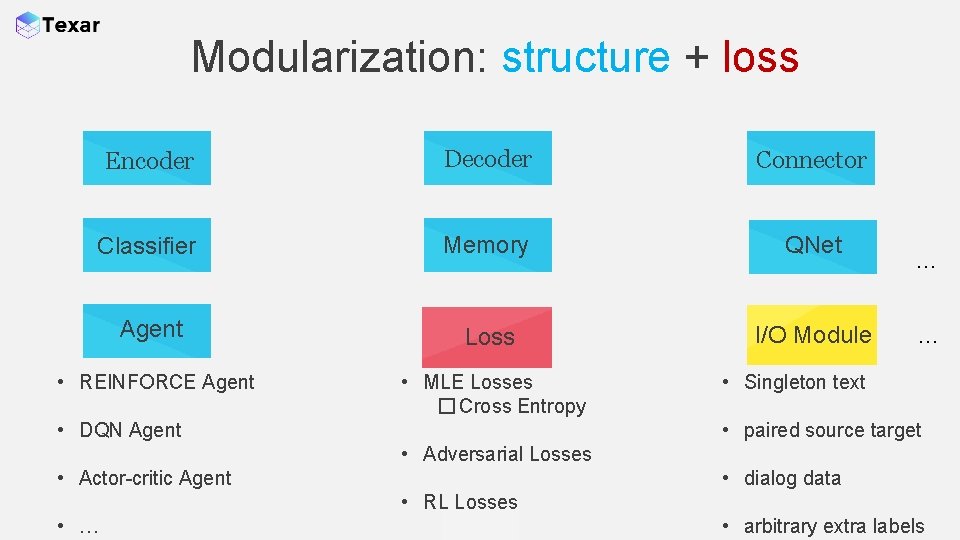

Modularization: structure + loss
- Modules: Encoder, Decoder, Connector, Classifier, Memory, QNet, Agent, Loss, I/O Module
- Agent
  - REINFORCE Agent
  - DQN Agent
  - Actor-critic Agent
  - …
- Loss
  - MLE Losses (Cross Entropy, …)
  - Adversarial Losses
  - RL Losses
  - …
- I/O Module
  - Singleton text
  - Paired source-target
  - Dialog data
  - Arbitrary extra labels
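The "structure + loss" decomposition can be pictured as a chain of interchangeable callables: encoder, connector, decoder, then a loss. The sketch below is a toy illustration in plain Python, not the library's API. Every class and function name in it (`ToyMeanEncoder`, `ToyForwardConnector`, `ToyScaleConnector`, `ToySoftmaxDecoder`, `Model`) is hypothetical and chosen only to mirror the module categories on the slides.

```python
import math

class ToyMeanEncoder:
    """Hypothetical encoder: averages token embeddings into one state vector."""
    def __init__(self, embeddings):
        self.embeddings = embeddings  # token -> vector

    def __call__(self, tokens):
        vectors = [self.embeddings[t] for t in tokens]
        return [sum(column) / len(vectors) for column in zip(*vectors)]

class ToyForwardConnector:
    """Hypothetical connector: passes the encoder state through unchanged."""
    def __call__(self, state):
        return list(state)

class ToyScaleConnector:
    """Hypothetical connector: rescales the encoder state."""
    def __init__(self, scale):
        self.scale = scale

    def __call__(self, state):
        return [self.scale * s for s in state]

class ToySoftmaxDecoder:
    """Hypothetical decoder: maps a state to one distribution over tokens."""
    def __init__(self, output_weights):
        self.output_weights = output_weights  # token -> weight vector

    def __call__(self, state):
        logits = {t: sum(w * s for w, s in zip(ws, state))
                  for t, ws in self.output_weights.items()}
        top = max(logits.values())  # subtract the max for numerical stability
        exps = {t: math.exp(l - top) for t, l in logits.items()}
        total = sum(exps.values())
        return {t: e / total for t, e in exps.items()}

def cross_entropy(probs, target):
    """Negative log-probability assigned to the target token."""
    return -math.log(probs[target])

class Model:
    """Structure (encoder, connector, decoder) plus a loss, all swappable."""
    def __init__(self, encoder, connector, decoder, loss):
        self.encoder, self.connector = encoder, connector
        self.decoder, self.loss = decoder, loss

    def loss_for(self, source_tokens, target_token):
        state = self.connector(self.encoder(source_tokens))
        return self.loss(self.decoder(state), target_token)

encoder = ToyMeanEncoder({"hi": [1.0, 0.0], "there": [0.0, 1.0]})
decoder = ToySoftmaxDecoder({"yes": [1.0, 1.0], "no": [0.0, 0.0]})

# Swapping only the connector changes the model without touching other parts.
forward_model = Model(encoder, ToyForwardConnector(), decoder, cross_entropy)
zeroed_model = Model(encoder, ToyScaleConnector(0.0), decoder, cross_entropy)

forward_loss = forward_model.loss_for(["hi", "there"], "yes")
zeroed_loss = zeroed_model.loss_for(["hi", "there"], "yes")
print(forward_loss, zeroed_loss)
```

The design point is that each slot only has to honor a small calling convention, so a module can be replaced without rewriting its neighbors.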

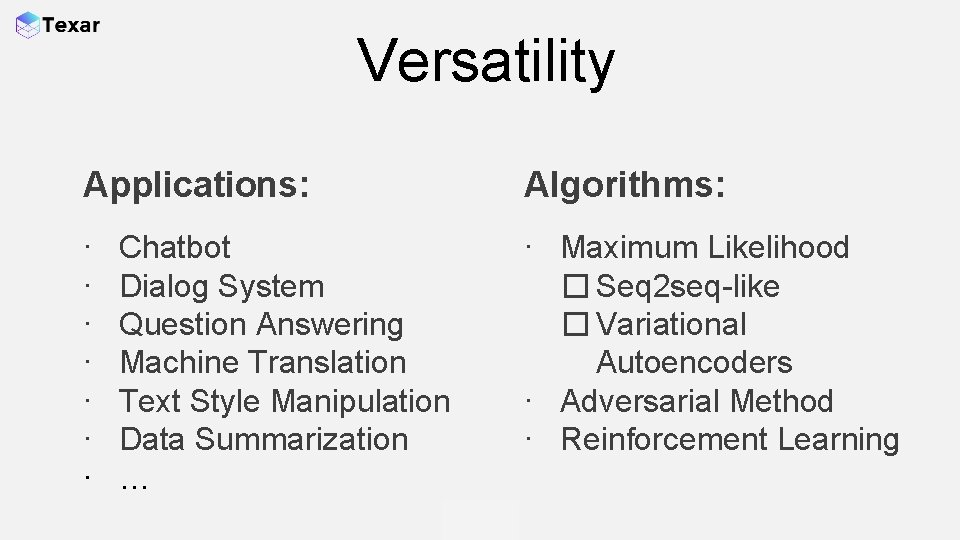

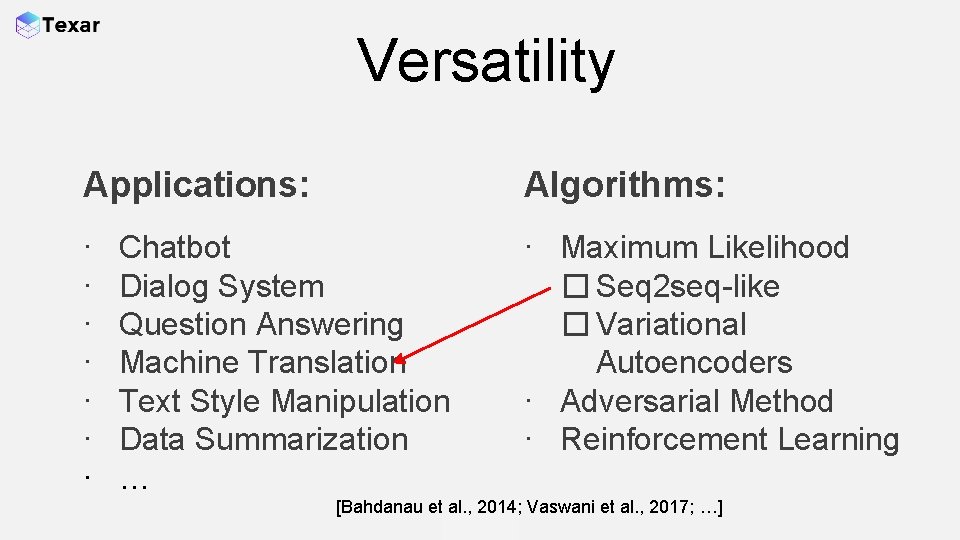

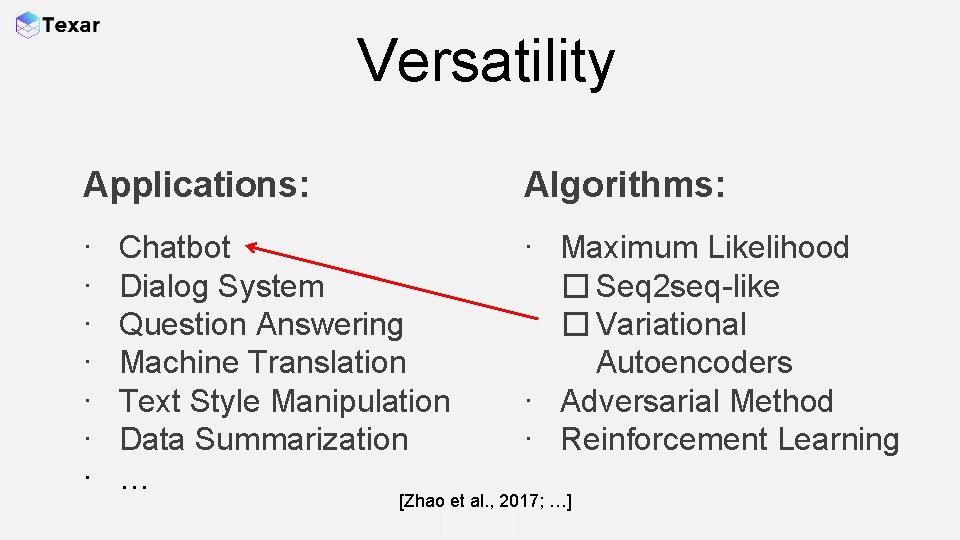

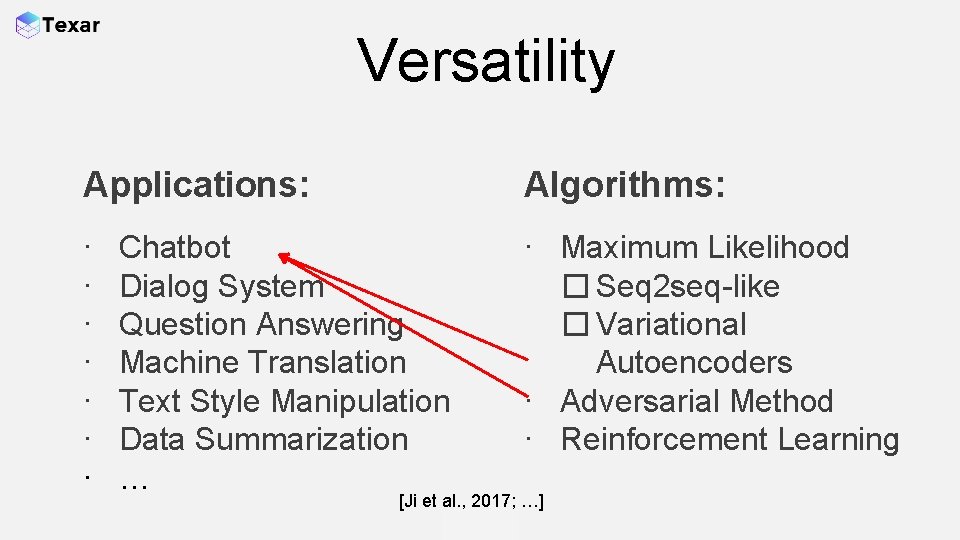

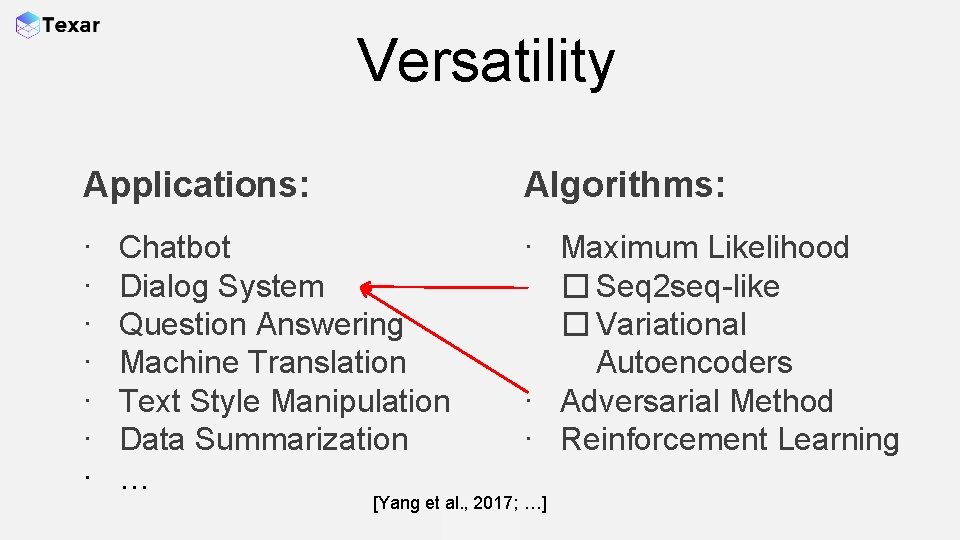

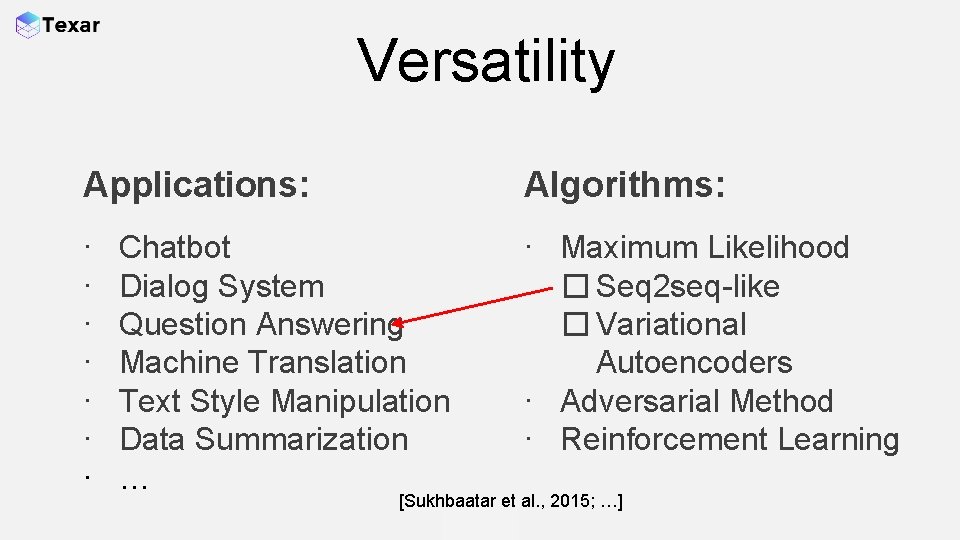

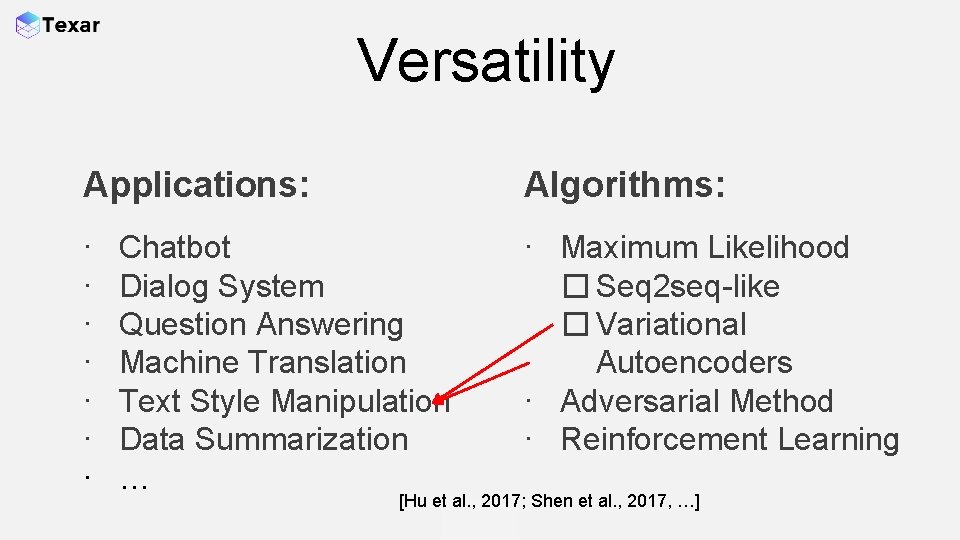

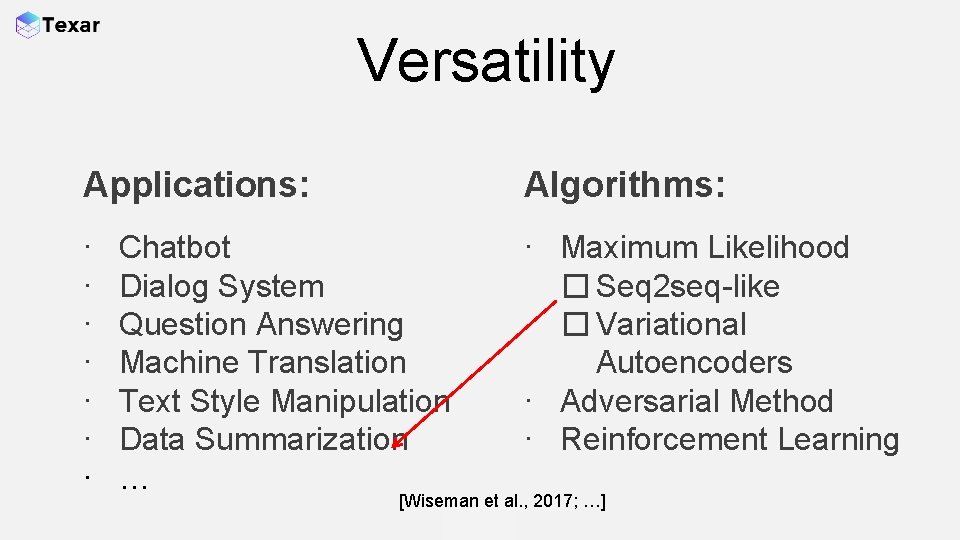

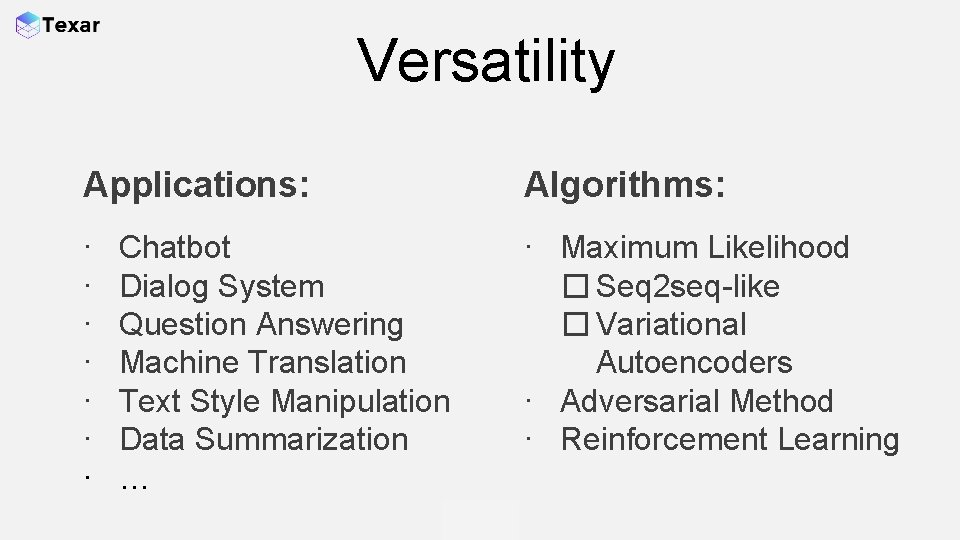

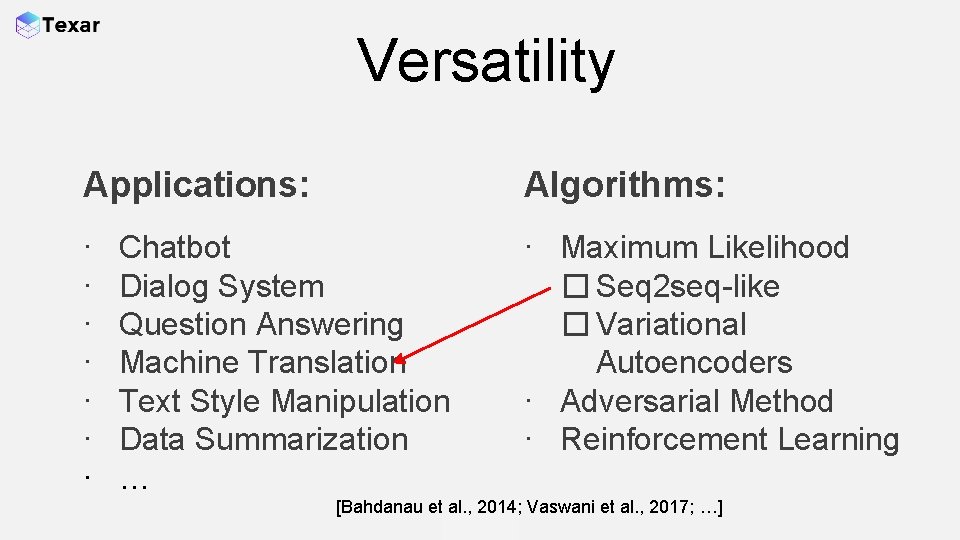

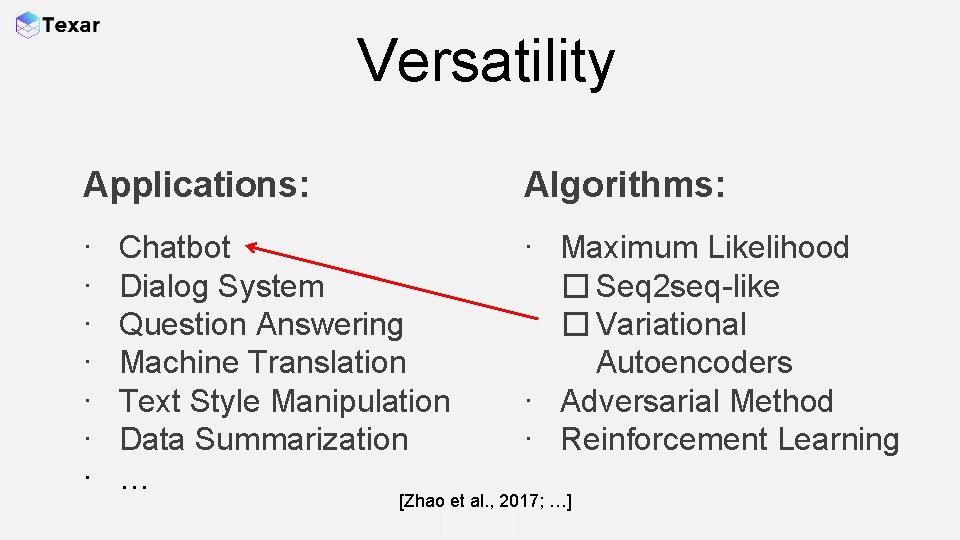

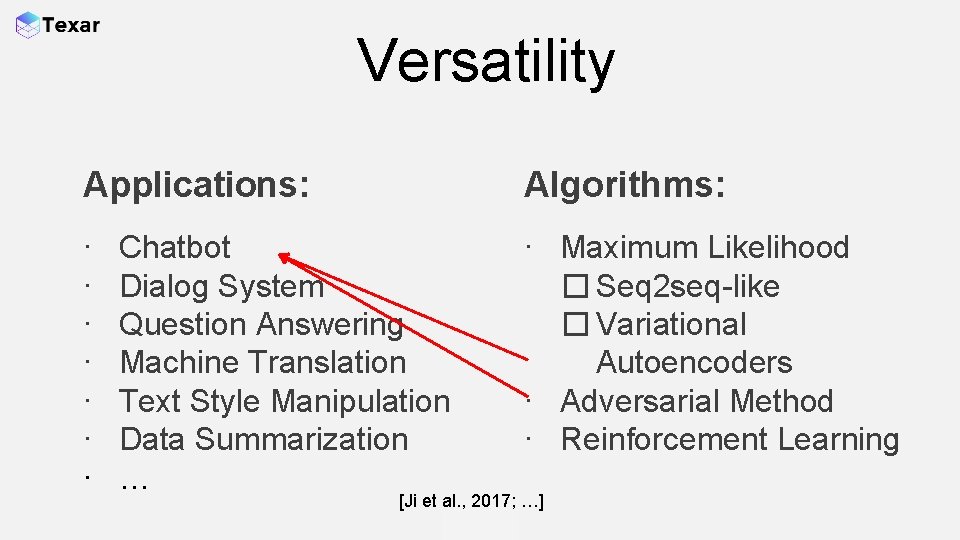

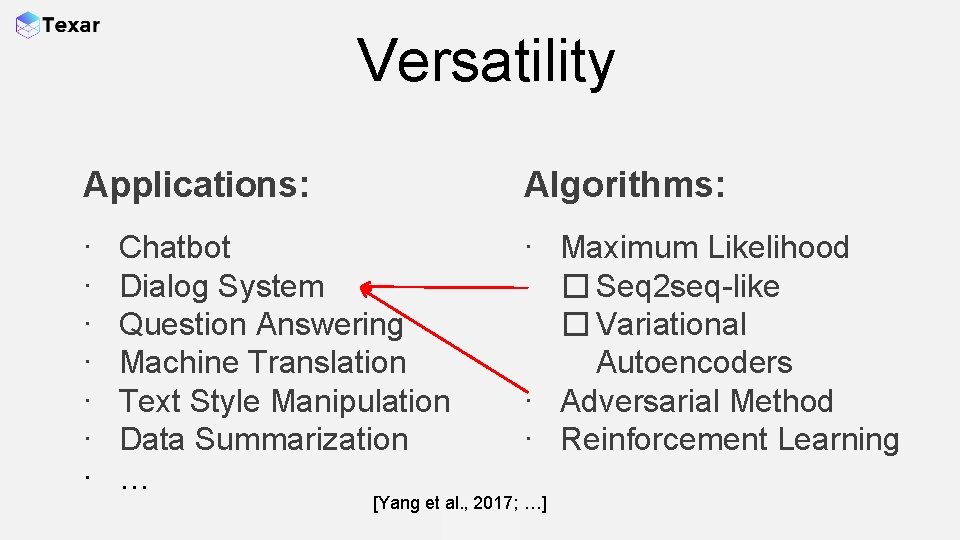

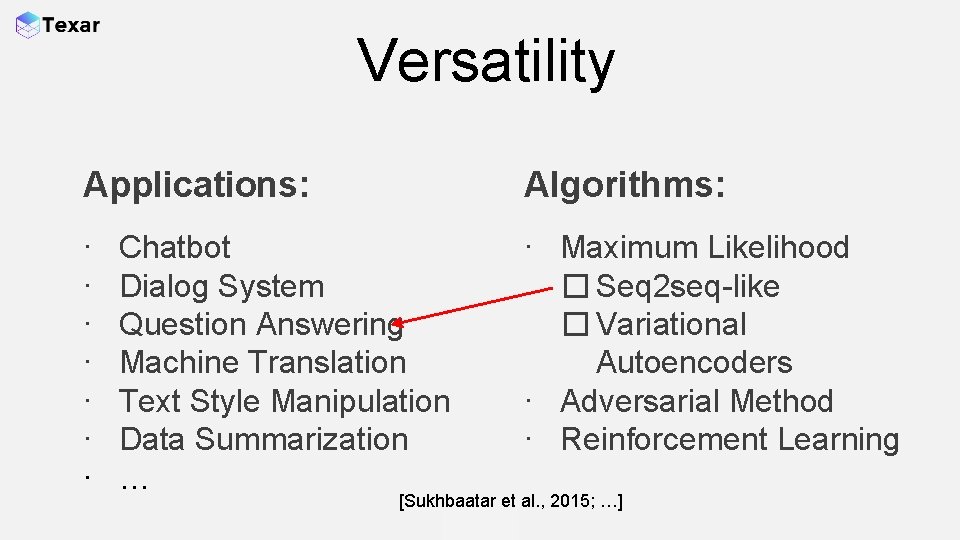

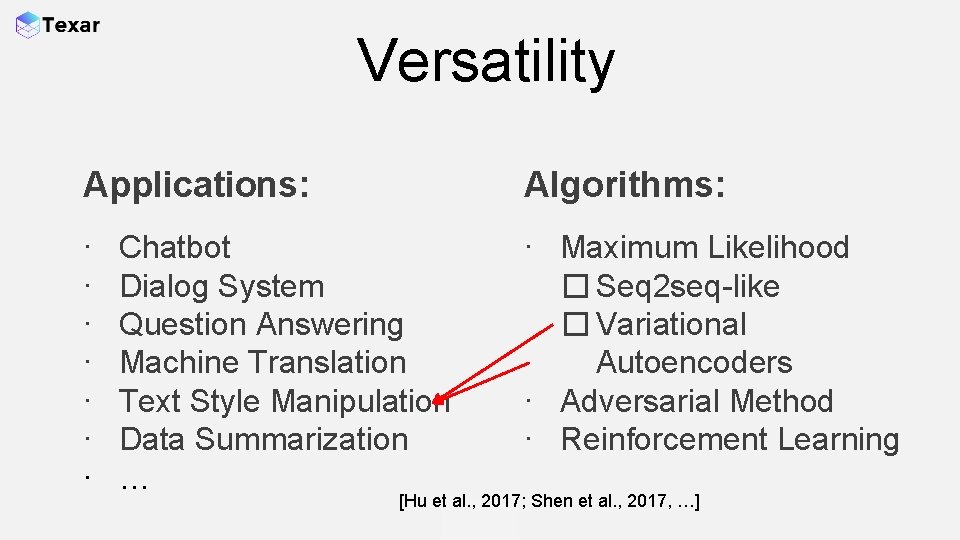

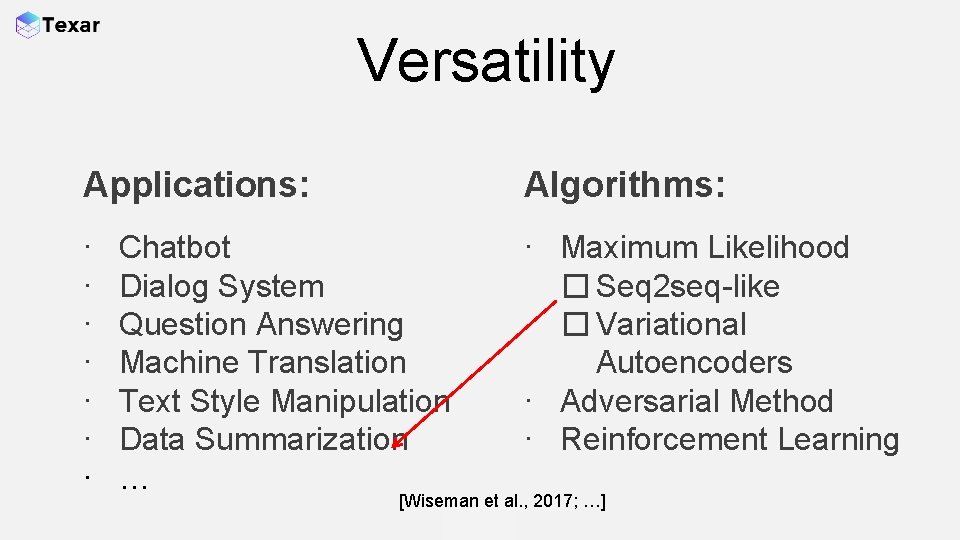

Versatility
- Algorithms:
  - Maximum Likelihood
    - Seq2seq-like
    - Variational Autoencoders
  - Adversarial Method
  - Reinforcement Learning
- Applications:
  - Chatbot
  - Dialog System
  - Question Answering
  - Machine Translation
  - Text Style Manipulation
  - Data Summarization
  - …
- References shown across the builds of this slide: [Bahdanau et al., 2014; Vaswani et al., 2017; …], [Zhao et al., 2017; …], [Ji et al., 2017; …], [Yang et al., 2017; …], [Sukhbaatar et al., 2015; …], [Hu et al., 2017; Shen et al., 2017; …], [Wiseman et al., 2017; …]

Machine Translation (MT). The task: "This is the best movie I've ever seen" => "这是我看过最好看的电影"

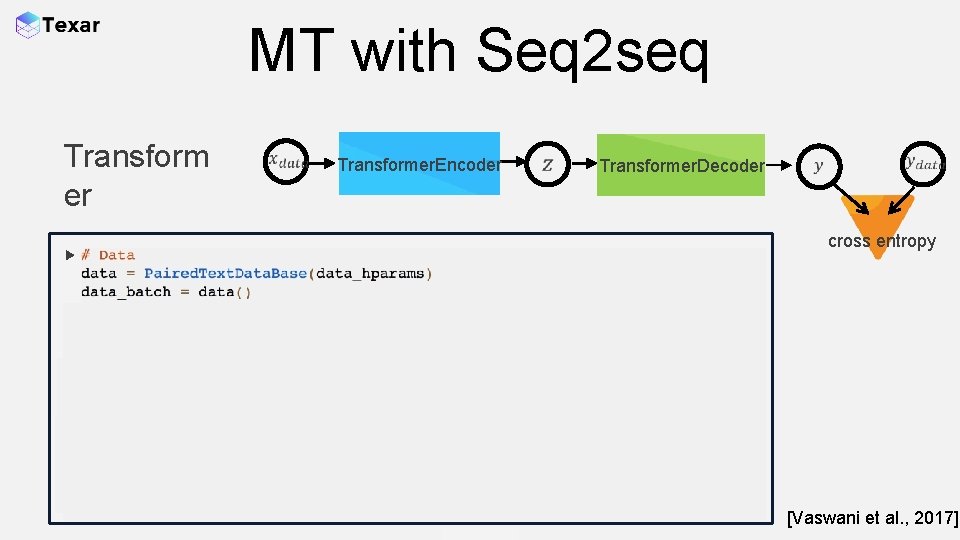

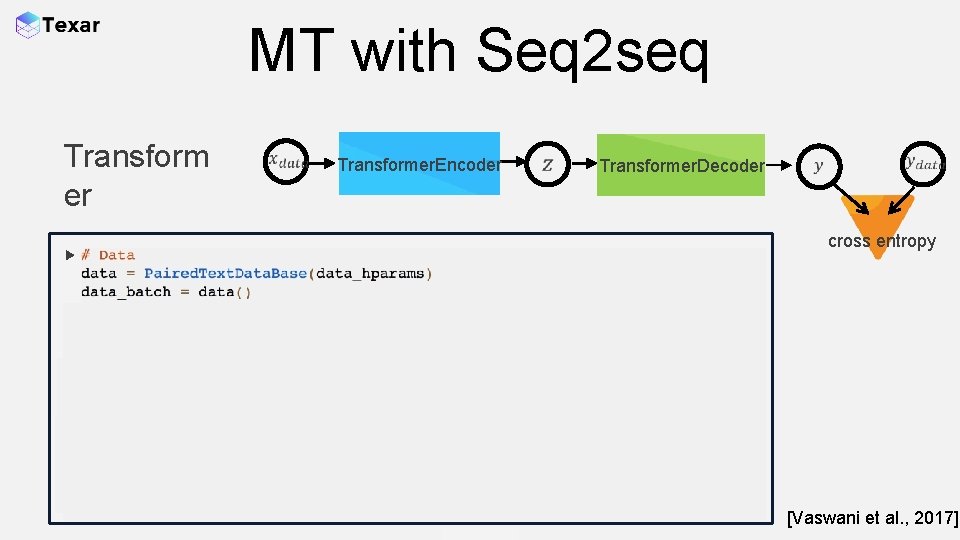

MT with Seq2seq Transformer [Vaswani et al., 2017]
- Modules: TransformerEncoder, TransformerDecoder
- Loss: cross entropy
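For readers unfamiliar with the Transformer, its central operation is scaled dot-product attention, softmax(QK^T / sqrt(d_k)) V, as defined in Vaswani et al. (2017). The sketch below implements that formula on plain Python lists for a single attention head. It only illustrates the operation and is not the library's TransformerEncoder or TransformerDecoder; the function names are chosen here for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of scores."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def scaled_dot_product_attention(queries, keys, values):
    """For each query, return the attention-weighted average of the values."""
    d_k = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors, one output dimension at a time.
        outputs.append([sum(w * v[j] for w, v in zip(weights, values))
                        for j in range(len(values[0]))])
    return outputs

# With identical keys the weights are uniform, so the output is the mean value.
attended = scaled_dot_product_attention(
    queries=[[1.0, 0.0]],
    keys=[[1.0, 1.0], [1.0, 1.0]],
    values=[[0.0, 2.0], [4.0, 6.0]],
)
print(attended)
```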

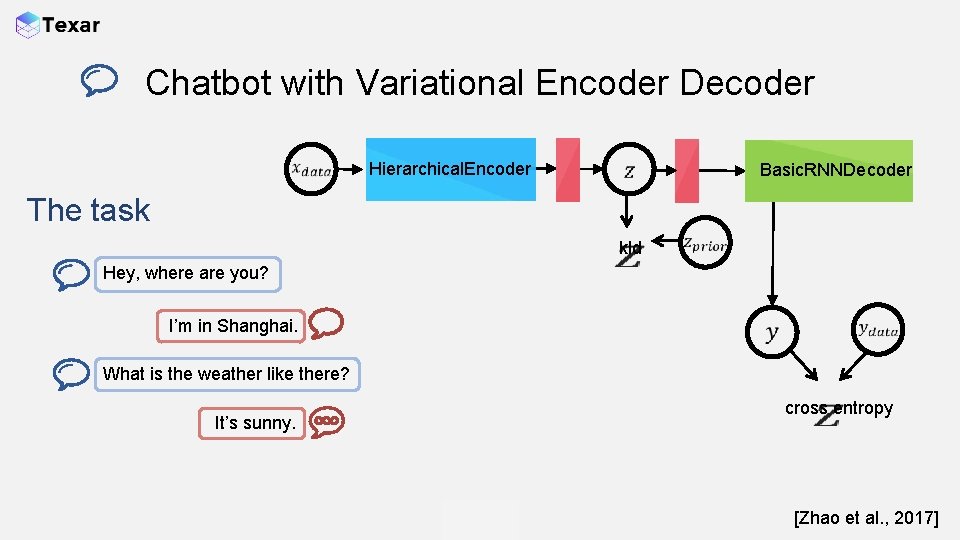

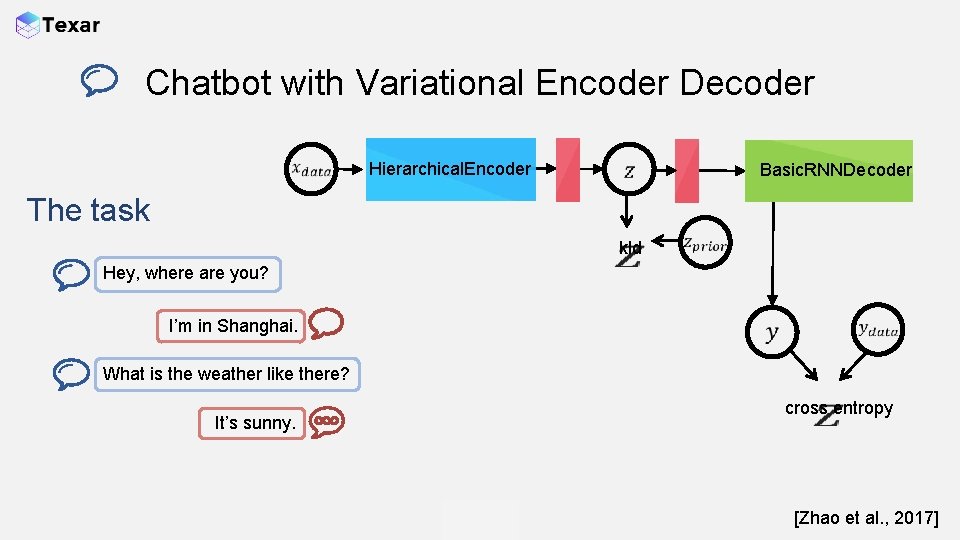

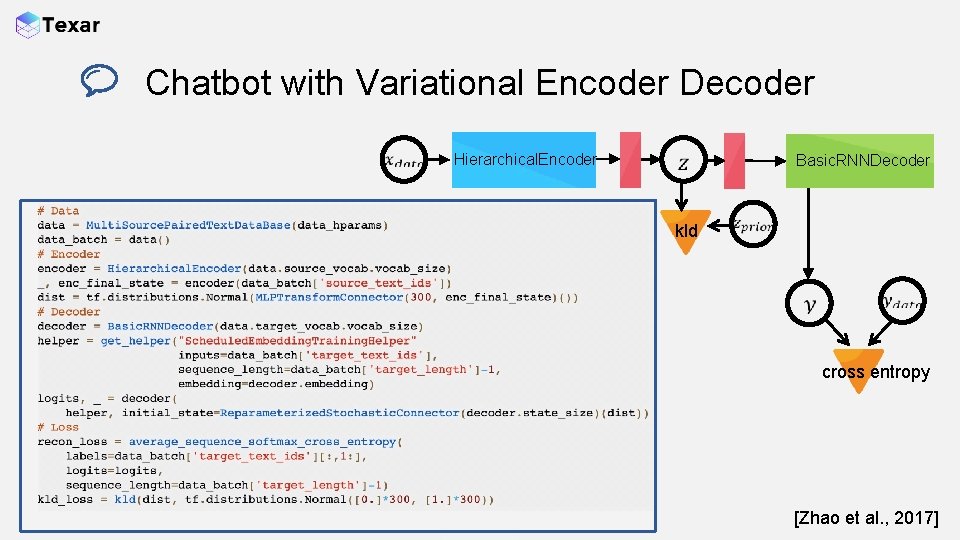

Chatbot with Variational Encoder Decoder [Zhao et al., 2017]
- The task (example dialog): "Hey, where are you? I'm in Shanghai. What is the weather like there? It's sunny."
- Modules: HierarchicalEncoder, BasicRNNDecoder
- Losses: kld, cross entropy
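The two losses on this slide combine into the usual variational objective: a reconstruction cross entropy plus a KL divergence term. The sketch below assumes a diagonal Gaussian posterior and a standard normal prior, which is the textbook VAE setting and an assumption here; the conditional model of Zhao et al. (2017) may use a different prior. Function names and the `kl_weight` parameter are illustrative, not library identifiers.

```python
import math
import random

def gaussian_kld(mean, log_var):
    """KL( N(mean, diag(exp(log_var))) || N(0, I) ) in closed form."""
    return 0.5 * sum(math.exp(lv) + m * m - 1.0 - lv for m, lv in zip(mean, log_var))

def reparameterize(mean, log_var, rng):
    """Sample z = mean + sigma * eps with eps ~ N(0, I)."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0) for m, lv in zip(mean, log_var)]

def variational_loss(reconstruction_ce, mean, log_var, kl_weight=1.0):
    """Cross entropy of the decoder output plus the (optionally weighted) KLD."""
    return reconstruction_ce + kl_weight * gaussian_kld(mean, log_var)

rng = random.Random(0)
latent = reparameterize([0.0, 1.0], [0.0, -2.0], rng)  # latent code fed to the decoder
loss = variational_loss(reconstruction_ce=2.0, mean=[1.0], log_var=[0.0])
print(latent, loss)
```

A `kl_weight` below 1 early in training is a common way to anneal the KL term, although the slide does not say whether that is used here.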

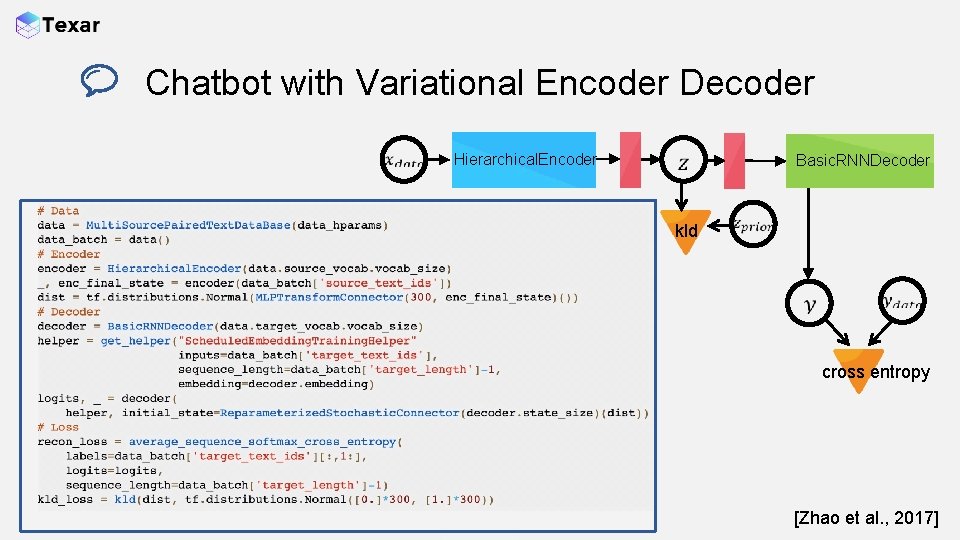

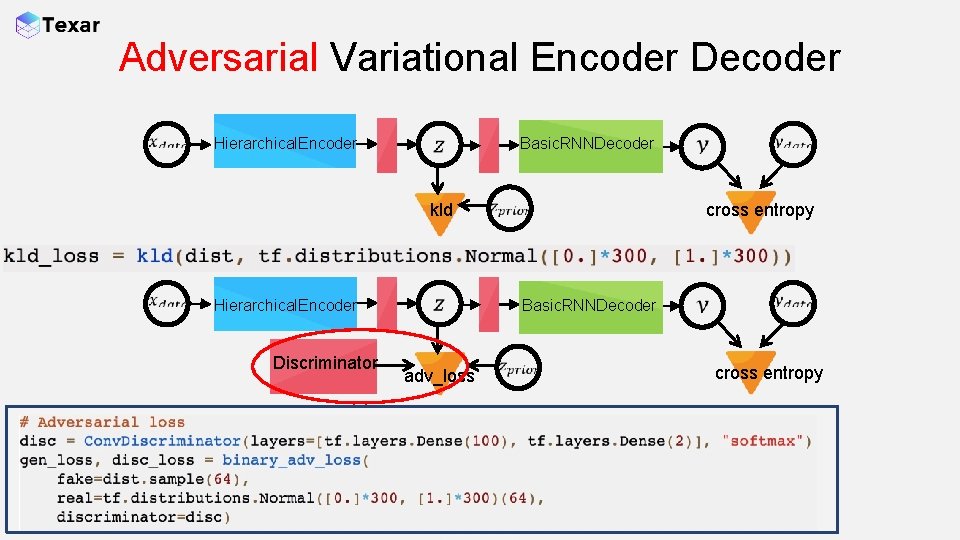

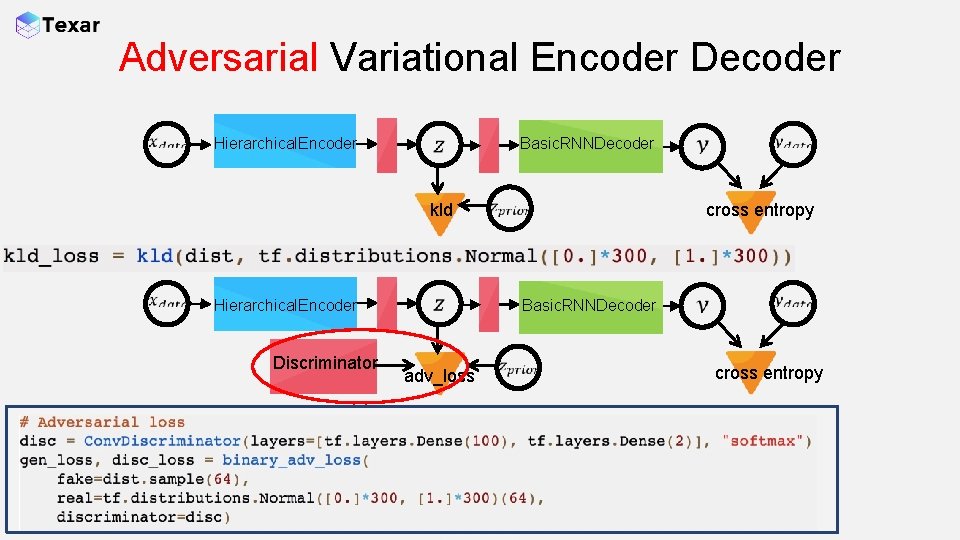

Adversarial Variational Encoder Decoder
- Modules: HierarchicalEncoder, BasicRNNDecoder, Discriminator
- Losses: kld, cross entropy, adv_loss
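The slide does not spell out how `adv_loss` is defined. One common choice, assumed here purely for illustration, is the standard GAN pairing: binary cross entropy for the discriminator and the non-saturating loss for the generator (here, the decoder). The function names below are hypothetical, and the inputs are discriminator outputs strictly between 0 and 1.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross entropy: real samples labeled 1, generated samples labeled 0."""
    real_term = sum(-math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(-math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_adv_loss(d_fake):
    """Non-saturating loss: lower when the discriminator scores fakes near 1."""
    return sum(-math.log(p) for p in d_fake) / len(d_fake)

# Discriminator scores (probability of "real") for a small batch.
scores_on_real = [0.9, 0.8]
scores_on_generated = [0.3, 0.1]
print(discriminator_loss(scores_on_real, scores_on_generated))
print(generator_adv_loss(scores_on_generated))
```

In a model like the one on this slide, the generator-side term would be added to the kld and cross entropy terms, with the relative weights left as a tuning choice.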

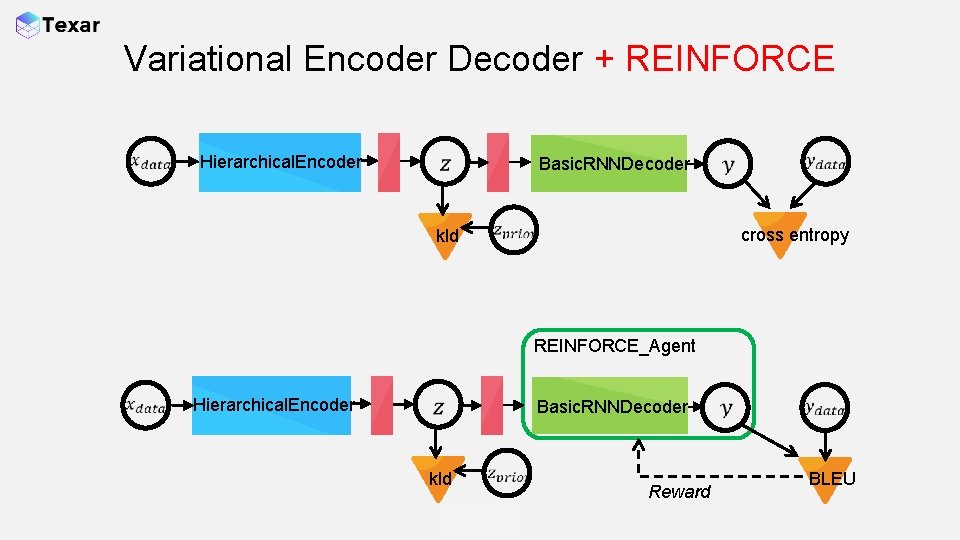

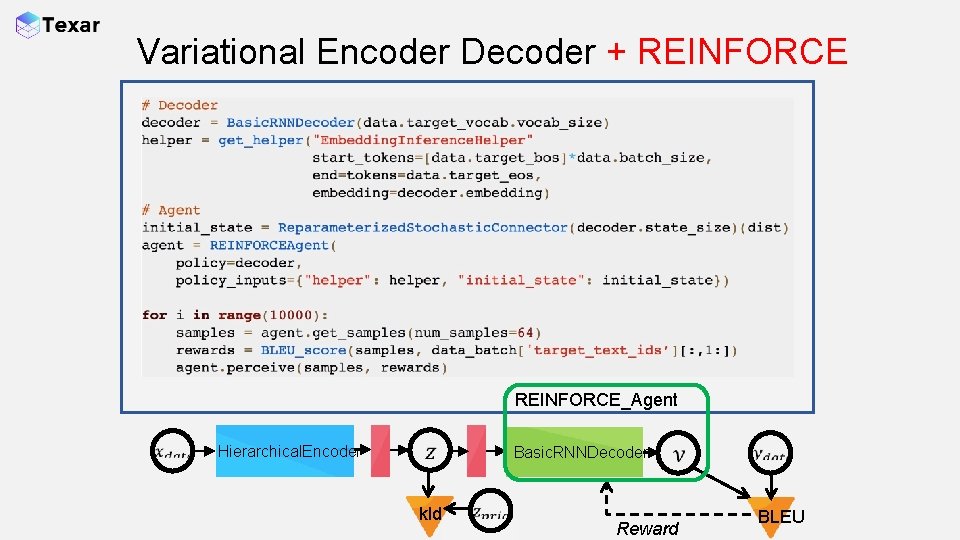

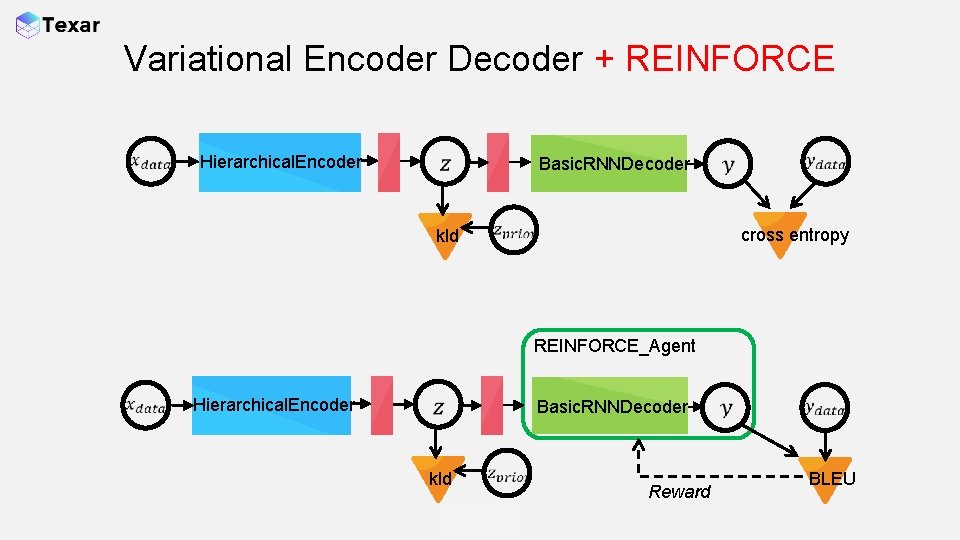

Variational Encoder Decoder + REINFORCE
- Modules: HierarchicalEncoder, BasicRNNDecoder, REINFORCE_Agent
- Losses: kld, cross entropy
- Reward: BLEU
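In REINFORCE, a sampled output sequence is scored by a reward, and the log-probability of the sample is scaled by that reward (minus an optional baseline) to form a surrogate loss. The sketch below makes two simplifying assumptions that should be read as such: it uses clipped unigram precision, which is only one ingredient of BLEU, as a stand-in reward, and it treats the baseline as a given constant. The function names are illustrative.

```python
import math
from collections import Counter

def modified_unigram_precision(candidate, reference):
    """Clipped unigram precision: a simplified stand-in for the BLEU reward."""
    if not candidate:
        return 0.0
    candidate_counts = Counter(candidate)
    reference_counts = Counter(reference)
    # Each candidate token is credited at most as often as it occurs in the reference.
    clipped = sum(min(count, reference_counts[token])
                  for token, count in candidate_counts.items())
    return clipped / len(candidate)

def reinforce_loss(token_log_probs, reward, baseline=0.0):
    """Surrogate loss whose gradient is the REINFORCE policy-gradient estimate."""
    return -(reward - baseline) * sum(token_log_probs)

sampled = ["it", "is", "sunny", "sunny"]
reference = ["it", "is", "sunny"]
reward = modified_unigram_precision(sampled, reference)

# Log-probabilities the decoder assigned to two sampled tokens (toy values).
loss = reinforce_loss([math.log(0.5), math.log(0.5)], reward, baseline=0.25)
print(reward, loss)
```

Subtracting a baseline does not change the expected gradient but usually reduces its variance, which is why it appears as a parameter.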

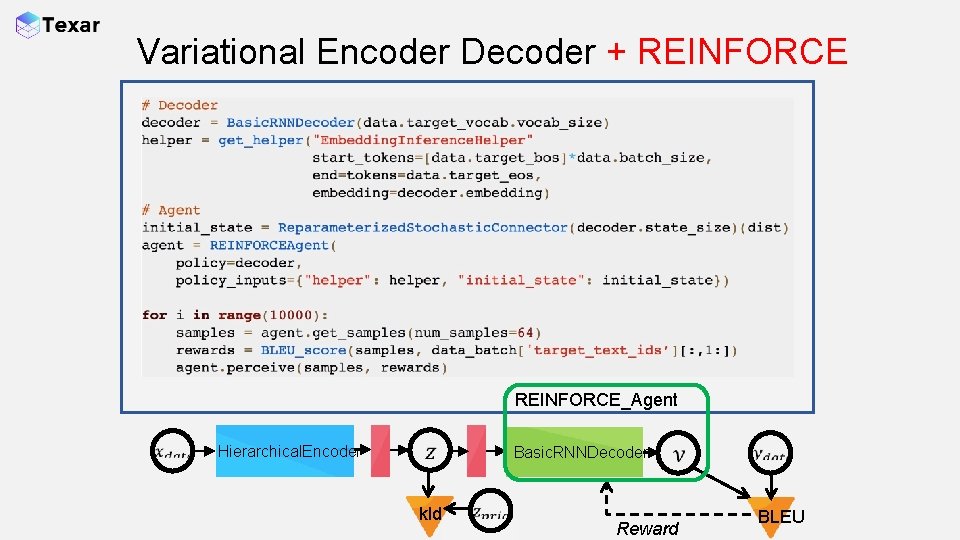

Extensibility
- Write configuration files to specify templated models
- Code to assemble modules freely
- Plug in your own modules easily
  - Implement key functions of a module template, e.g., Decoder::step()
  - Implement new modules with compatible interfaces
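The two extension routes listed here, configuration-driven assembly and implementing a template's key function, can be sketched as follows. This is a hypothetical illustration of the pattern, not the library's actual interface: `DecoderTemplate`, `CountdownDecoder`, `REGISTRY`, `build_from_config`, and the config keys are all invented for the example.

```python
from abc import ABC, abstractmethod

class DecoderTemplate(ABC):
    """Hypothetical module template: subclasses only need to implement step()."""
    def __init__(self, bos, eos, max_length=10):
        self.bos, self.eos, self.max_length = bos, eos, max_length

    @abstractmethod
    def step(self, prev_token, state):
        """Return (next_token, new_state) given the previous token and state."""

    def decode(self, initial_state):
        """Shared decoding loop: call step() until EOS or max_length tokens."""
        tokens, prev, state = [], self.bos, initial_state
        for _ in range(self.max_length):
            prev, state = self.step(prev, state)
            if prev == self.eos:
                break
            tokens.append(prev)
        return tokens

class CountdownDecoder(DecoderTemplate):
    """User-defined module: emits the remaining count until it reaches zero."""
    def step(self, prev_token, state):
        if state <= 0:
            return self.eos, state
        return str(state), state - 1

# Hypothetical registry mapping config names to module classes.
REGISTRY = {"countdown": CountdownDecoder}

def build_from_config(config):
    """Instantiate a module from a plain-dict configuration."""
    module_class = REGISTRY[config["type"]]
    return module_class(**config.get("kwargs", {}))

config = {"type": "countdown",
          "kwargs": {"bos": "<BOS>", "eos": "<EOS>", "max_length": 5}}
decoder = build_from_config(config)
print(decoder.decode(3))
```

Because the decoding loop lives in the template, a new decoder written this way automatically works with any code that calls `decode()`.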

Thank you!