Image-to-Image Translation using GANs

Shashank Verma

- Slides: 9

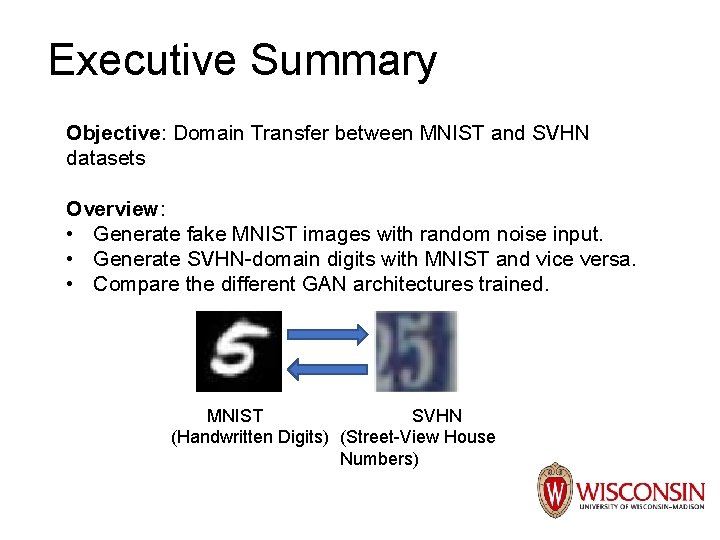

Executive Summary

Objective: domain transfer between the MNIST and SVHN datasets.

Overview:

- Generate fake MNIST images from random noise input.
- Generate SVHN-domain digits from MNIST digits, and vice versa.
- Compare the different GAN architectures trained.

Sample images: MNIST (handwritten digits) and SVHN (Street View House Numbers).

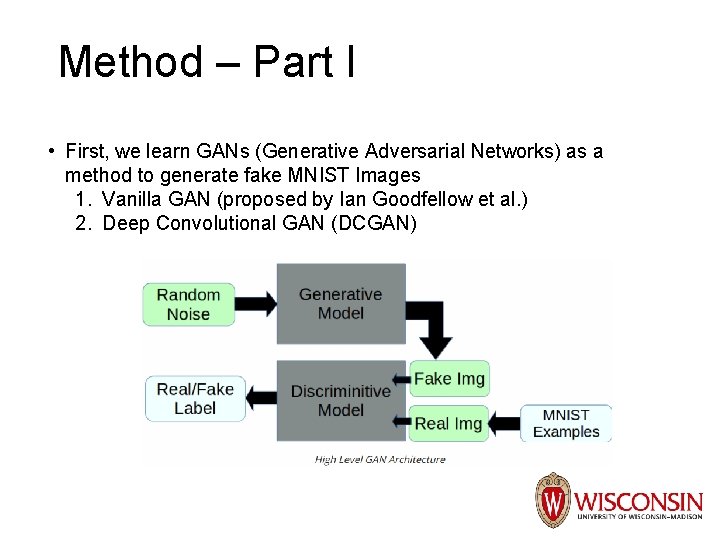

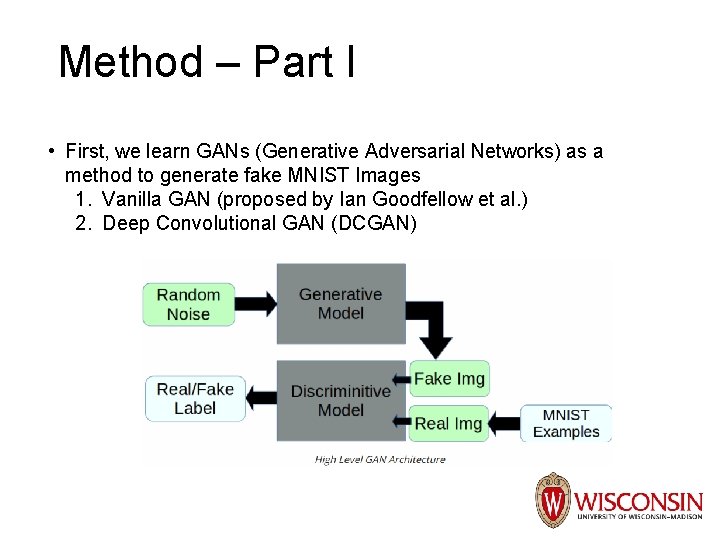

Method – Part I

First, we study GANs (Generative Adversarial Networks) as a method for generating fake MNIST images:

1. Vanilla GAN (proposed by Ian Goodfellow et al.)
2. Deep Convolutional GAN (DCGAN)

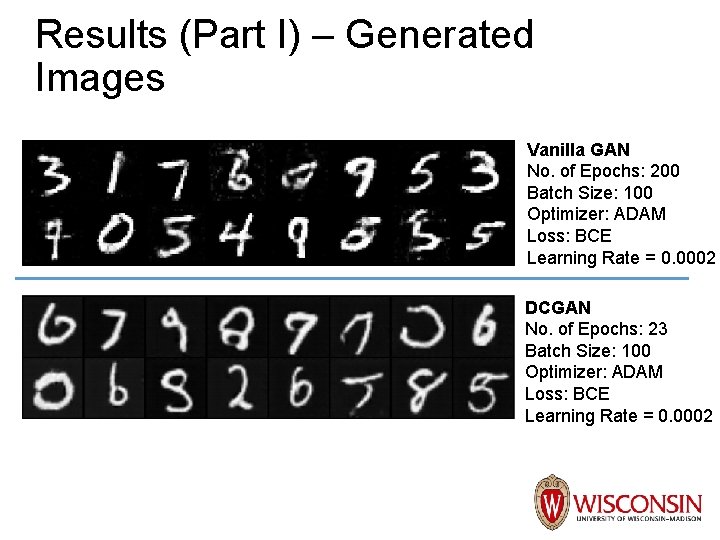

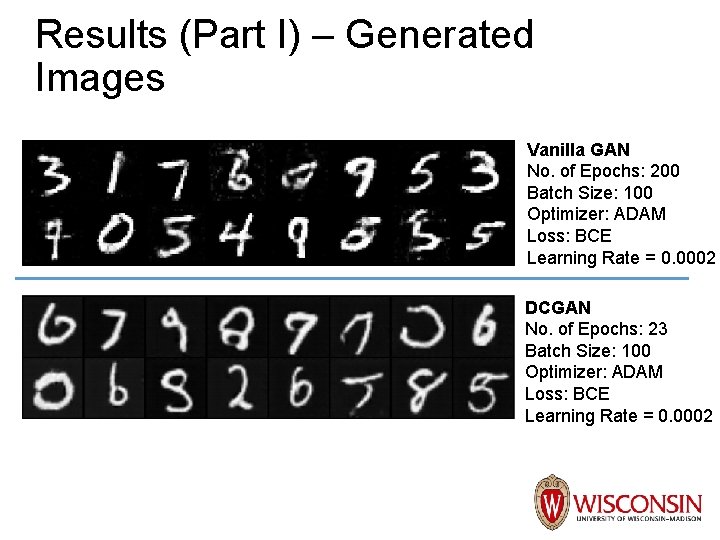
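Both models are trained with the same adversarial objective. The discriminator D learns to assign a high probability to real MNIST images and a low probability to generated ones, while the generator G learns to fool D. Below is a minimal plain-Python sketch of the binary cross-entropy (BCE) form of this objective. It assumes D's outputs are already probabilities in (0, 1). The generator uses the non-saturating loss suggested by Goodfellow et al., which is an assumption about this project's exact formulation. A PyTorch implementation would compute the same quantities on tensors, for example with `torch.nn.BCELoss`.

```python
import math

def bce(p, target, eps=1e-12):
    """Binary cross-entropy for a single predicted probability p and a 0/1 target."""
    p = min(max(p, eps), 1.0 - eps)  # clamp so log() never sees 0
    return -(target * math.log(p) + (1 - target) * math.log(1 - p))

def discriminator_loss(d_real, d_fake):
    """D should output 1 on real images and 0 on generated images."""
    real_term = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake_term = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """Non-saturating generator loss: G is rewarded when D labels its samples as real."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)

# When D cannot tell real from fake, it outputs 0.5 everywhere:
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))  # 2 * ln 2, about 1.386
print(generator_loss([0.5, 0.5]))                   # ln 2, about 0.693
```

The Vanilla GAN and the DCGAN share this loss. They differ in the networks that produce `d_real` and `d_fake`: fully connected layers versus convolutional layers.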

Results (Part I) – Generated Images

| | Vanilla GAN | DCGAN |
|---|---|---|
| No. of epochs | 200 | 23 |
| Batch size | 100 | 100 |
| Optimizer | Adam | Adam |
| Loss | BCE | BCE |
| Learning rate | 0.0002 | 0.0002 |

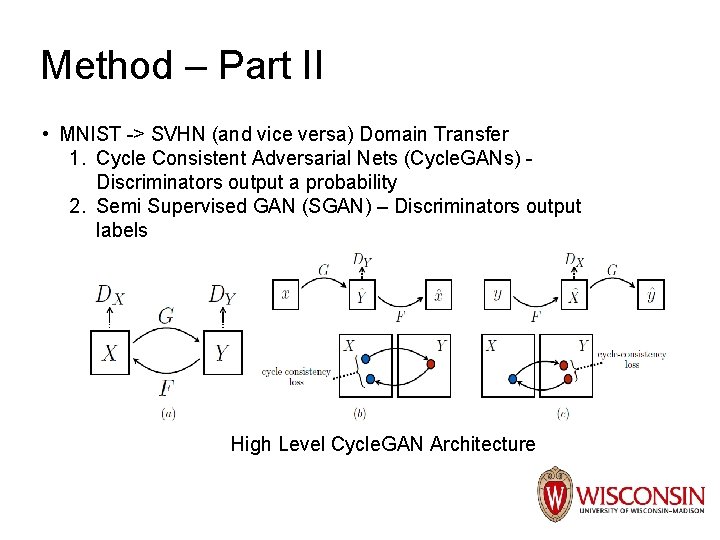

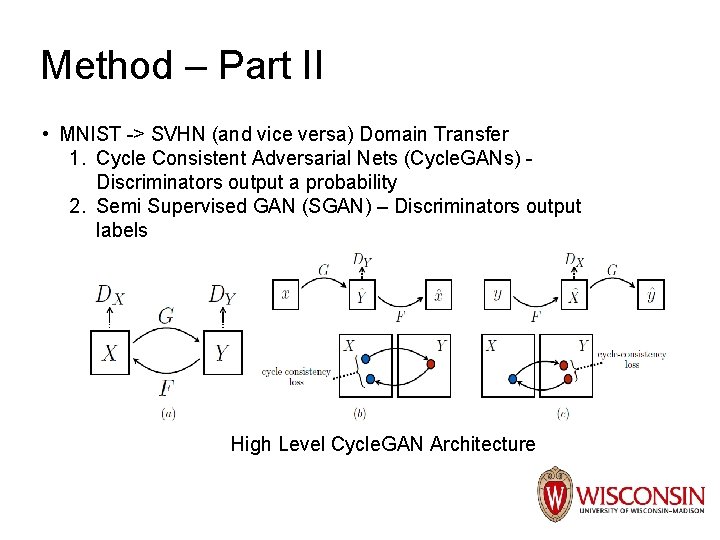
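Both models were trained with Adam at a learning rate of 0.0002. The scalar sketch below shows the Adam update rule with that learning rate. It assumes β1 = 0.5 and β2 = 0.999, because Part I does not list β1. Part II does list a "momentum" of 0.5, and β1 = 0.5 is the setting recommended for DCGAN training. In PyTorch, the corresponding optimizer would be built with `torch.optim.Adam(params, lr=0.0002, betas=(0.5, 0.999))`.

```python
import math

def adam_step(theta, grad, state, lr=0.0002, beta1=0.5, beta2=0.999, eps=1e-8):
    """Apply one Adam update to a scalar parameter and return the new value.

    `state` carries the running first moment m, second moment v and step
    count t between calls.
    """
    state["t"] += 1
    state["m"] = beta1 * state["m"] + (1 - beta1) * grad       # momentum-like average
    state["v"] = beta2 * state["v"] + (1 - beta2) * grad ** 2  # average squared gradient
    m_hat = state["m"] / (1 - beta1 ** state["t"])             # bias correction for m
    v_hat = state["v"] / (1 - beta2 ** state["t"])             # bias correction for v
    return theta - lr * m_hat / (math.sqrt(v_hat) + eps)

state = {"m": 0.0, "v": 0.0, "t": 0}
theta = adam_step(0.0, 3.0, state)
print(theta)  # about -0.0002: the first step has magnitude ~lr whatever the gradient scale
```

Because Adam normalizes each step by the running gradient magnitude, the learning rate roughly bounds how far a parameter moves per step. This is one reason a small value such as 0.0002 works for both GANs.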

Method – Part II

MNIST -> SVHN (and vice versa) domain transfer:

1. Cycle-Consistent Adversarial Networks (CycleGANs): the discriminators output a probability (real vs. fake).
2. Semi-Supervised GAN (SGAN): the discriminators output labels.

High-level CycleGAN architecture:

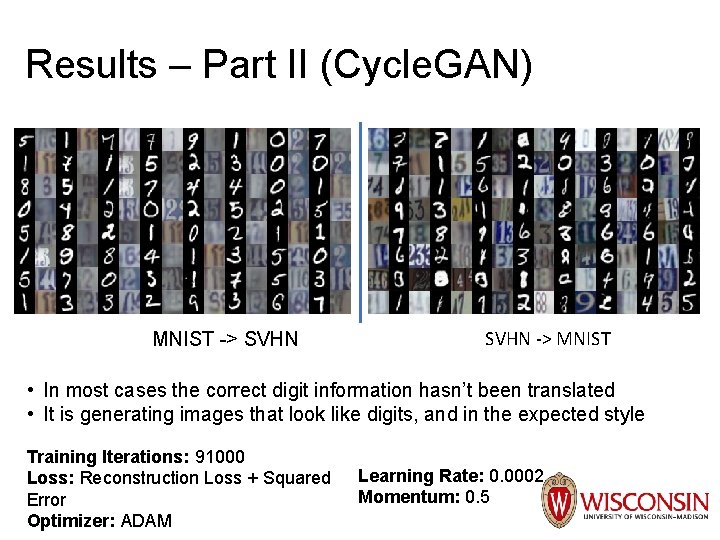

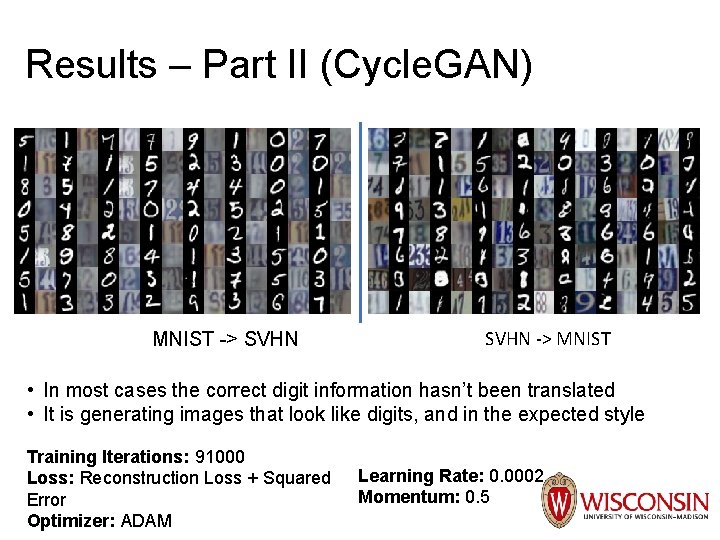
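The two approaches differ mainly in what the discriminator predicts. Below is a minimal plain-Python sketch of the two output heads, operating on raw scores (logits) from an otherwise identical network. The 11-way layout (10 digit classes plus one "fake" class) is the standard semi-supervised GAN formulation and is an assumption about this project's SGAN. All names here are hypothetical.

```python
import math

NUM_DIGITS = 10
FAKE_CLASS = NUM_DIGITS  # hypothetical index of the extra "generated image" class

def sigmoid(logit):
    """Numerically stable logistic function."""
    if logit >= 0:
        return 1.0 / (1.0 + math.exp(-logit))
    e = math.exp(logit)
    return e / (1.0 + e)

def softmax(logits):
    m = max(logits)  # subtract the max logit for numerical stability
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cyclegan_discriminator_head(logit):
    """One score per image -> probability that the image is a real sample."""
    return sigmoid(logit)

def sgan_discriminator_head(logits):
    """NUM_DIGITS + 1 scores per image -> (predicted label, class probabilities).

    Labels 0-9 mean "real image of this digit"; FAKE_CLASS means "generated".
    """
    if len(logits) != NUM_DIGITS + 1:
        raise ValueError("expected one score per digit plus one for 'fake'")
    probs = softmax(logits)
    label = max(range(len(probs)), key=lambda i: probs[i])
    return label, probs
```

The CycleGAN head only judges realism. The SGAN head must also recognize which digit a real image shows, so the discriminator carries class information that the generator can be trained against.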

Results – Part II (CycleGAN): MNIST -> SVHN -> MNIST

- In most cases, the correct digit was not preserved by the translation.
- The model does generate images that look like digits, in the expected style.

Training setup:

- Training iterations: 91,000
- Loss: reconstruction loss + squared error
- Optimizer: Adam
- Learning rate: 0.0002
- Momentum: 0.5

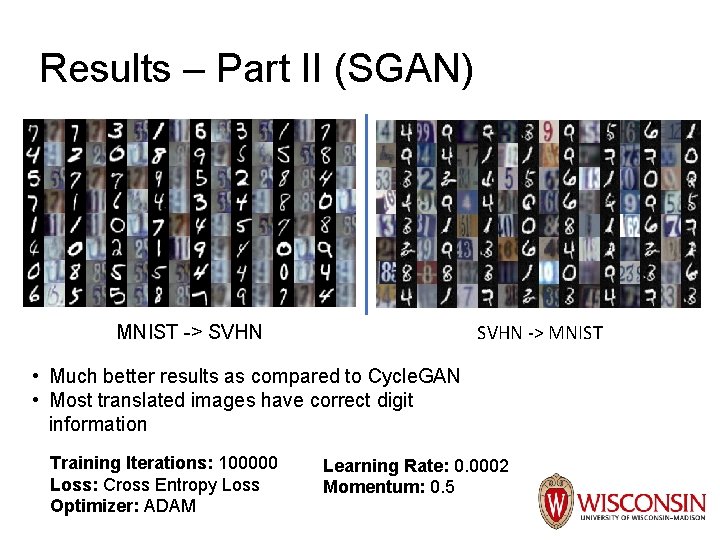

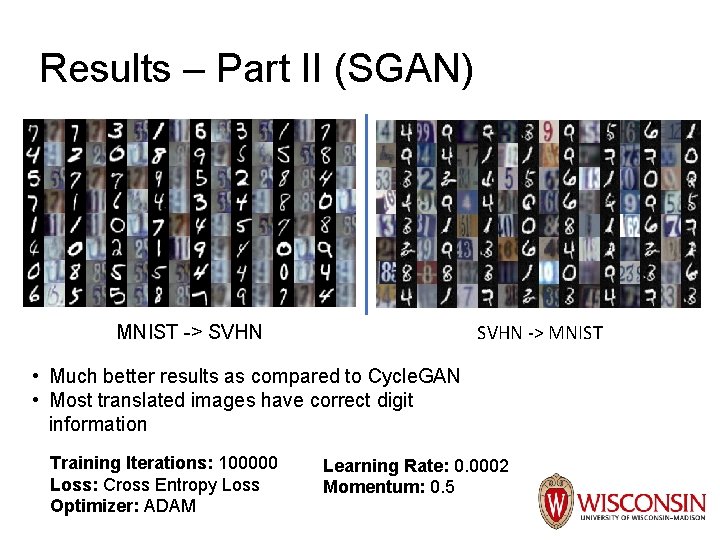
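The CycleGAN loss combines two terms, a squared-error adversarial term and a reconstruction term. Below is a plain-Python sketch for the MNIST -> SVHN -> MNIST direction. `G_MS` and `G_SM` denote hypothetical MNIST-to-SVHN and SVHN-to-MNIST generators. The sketch assumes a least-squares adversarial term and a mean-squared reconstruction term with equal weight (`recon_weight=1.0`). Both the weighting and the squared (rather than L1) reconstruction error are assumptions; the original CycleGAN paper uses an L1 cycle-consistency loss.

```python
def mean(values):
    return sum(values) / len(values)

def squared_error_adv_loss(d_outputs, target):
    """Least-squares adversarial loss: push discriminator outputs toward
    target (1.0 = real, 0.0 = fake)."""
    return mean([(d - target) ** 2 for d in d_outputs])

def reconstruction_loss(images, reconstructions):
    """Mean squared pixel error between each image x and its round trip
    G_SM(G_MS(x)); images are flat lists of pixel values."""
    return mean([
        mean([(a - b) ** 2 for a, b in zip(x, x_rec)])
        for x, x_rec in zip(images, reconstructions)
    ])

def cycle_generator_loss(d_on_translated, images, reconstructions, recon_weight=1.0):
    """Generator loss for MNIST -> SVHN -> MNIST: fool the SVHN discriminator
    and reconstruct the original MNIST image."""
    adv = squared_error_adv_loss(d_on_translated, 1.0)
    return adv + recon_weight * reconstruction_loss(images, reconstructions)

def discriminator_loss(d_on_real, d_on_translated):
    """Discriminator: real samples toward 1, translated samples toward 0."""
    return (squared_error_adv_loss(d_on_real, 1.0)
            + squared_error_adv_loss(d_on_translated, 0.0))
```

Nothing in this objective requires the intermediate SVHN image to show the same digit. The reconstruction term only asks that the round trip recover the input, which is consistent with the digit errors observed above.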

Results – Part II (SGAN): SVHN -> MNIST -> SVHN

- Much better results than CycleGAN.
- Most translated images preserve the correct digit.

Training setup:

- Training iterations: 100,000
- Loss: cross-entropy loss
- Optimizer: Adam
- Learning rate: 0.0002
- Momentum: 0.5
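Below is a plain-Python sketch of an SGAN-style cross-entropy objective. It assumes an 11-way discriminator (10 digits plus a "fake" class at index 10). It also assumes that the generator is trained to make its translation be classified as the *source* image's digit. That label-matching term is one plausible reason the digit survives translation, and it is where labeled data enters. However, it is an assumption about this project's code, not a description of it.

```python
import math

FAKE_CLASS = 10  # assumption: classes 0-9 are digits, class 10 is "generated"

def cross_entropy(logits, target):
    """Softmax cross-entropy for one sample, via the log-sum-exp trick."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]

def sgan_d_loss(real_logits, real_labels, fake_logits):
    """D: classify real images as their digit and translated images as FAKE_CLASS."""
    real = sum(cross_entropy(l, y) for l, y in zip(real_logits, real_labels)) / len(real_logits)
    fake = sum(cross_entropy(l, FAKE_CLASS) for l in fake_logits) / len(fake_logits)
    return real + fake

def sgan_g_loss(fake_logits, source_labels):
    """G: each translated image should be classified as its source image's digit."""
    return sum(cross_entropy(l, y) for l, y in zip(fake_logits, source_labels)) / len(fake_logits)

# An uninformative discriminator (all logits equal) gives ln(11) per term:
print(sgan_g_loss([[0.0] * 11], [7]))  # about 2.398
```

Unlike the CycleGAN objective, the generator loss here depends on the digit label. A translation that changes the digit is therefore penalized directly.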

Discussion

- DCGAN performed better than the Vanilla GAN at generating fake MNIST images.
- DCGANs have more stable training dynamics than Vanilla GANs. (Use convolution layers where you can!)
- SGAN performed better than CycleGAN on the MNIST-SVHN domain transfer task: it retained the correct digit more often.
- SGAN is a semi-supervised method and requires labeled data!
- GANs are hard to train (especially the Vanilla GAN)! They suffer from problems such as "mode collapse" and "vanishing gradients".
- One way to compare domain transfer outputs quantitatively is to run an off-the-shelf classifier on the generated images.
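The classifier-based comparison in the last point can be sketched as follows. Each source image is translated, and the result is classified with a classifier trained only on the target domain. The metric is how often the predicted digit matches the source label. The sketch assumes such a classifier is available as a Python callable that returns an integer label. `digit_preservation_accuracy` is a hypothetical helper, not part of the project's code.

```python
from typing import Callable, Sequence

def digit_preservation_accuracy(classifier: Callable[[object], int],
                                translated_images: Sequence[object],
                                source_labels: Sequence[int]) -> float:
    """Fraction of translated images that a target-domain classifier assigns
    to the same digit as the corresponding source image."""
    if len(translated_images) != len(source_labels):
        raise ValueError("need exactly one source label per translated image")
    if not source_labels:
        raise ValueError("need at least one image")
    correct = sum(1 for img, y in zip(translated_images, source_labels)
                  if classifier(img) == y)
    return correct / len(source_labels)

# Toy usage: a stand-in "classifier" that treats each image as its own prediction.
print(digit_preservation_accuracy(lambda img: img, [1, 2, 3, 4], [1, 2, 0, 4]))  # 0.75
```

Running the same metric on CycleGAN and SGAN outputs, with the same classifier, would turn the qualitative comparison above into a single number per model.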

Appendix

- Framework: PyTorch 0.4.1
- Hardware: Euler Cluster (Intel® Haswell CPU with 4 cores in use, plus 2× Nvidia GeForce GTX 1080)
- Code location: https://github.com/shashank3959/GAN