Fake News & Digital Literacy


- Slides: 25

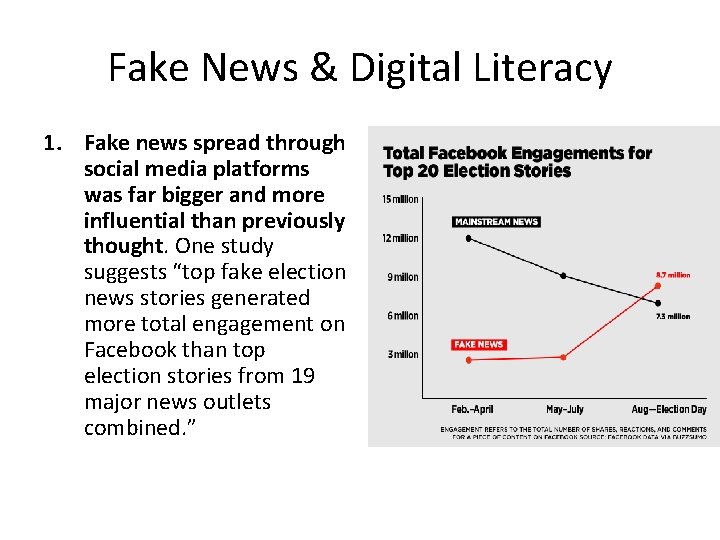

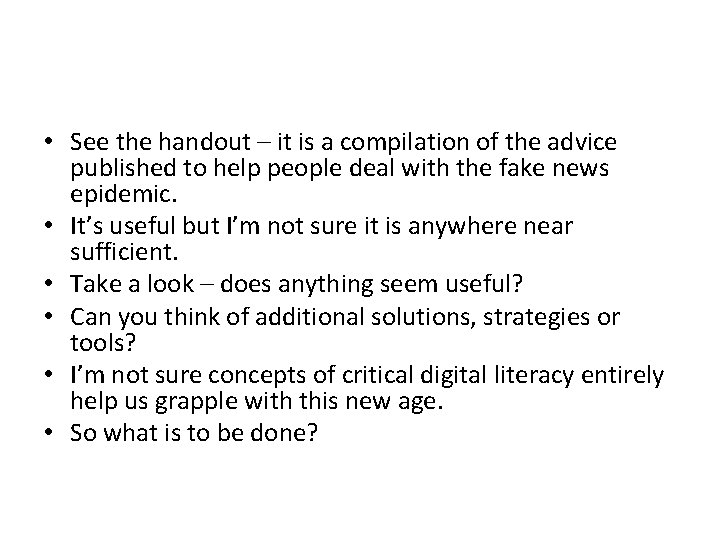

Fake News & Digital Literacy 1. Fake news spread through social media platforms was far bigger and more influential than previously thought. One study suggests “top fake election news stories generated more total engagement on Facebook than top election stories from 19 major news outlets combined.”

• Do automated AI Twitter “swarms” or “commentbots” appear whenever topics like climate change, gun control, or other hot-button issues come up? Some evidence for this is emerging. • In a way these echo fabricated letters to the editor, astroturf groups, and other strategies from the past. However, at present, they are harder to identify and track.

Fake News & Digital Literacy 2. It is becoming an international phenomenon.

• The degree of the problem is suggested by the fact that some major political figures could not tell fake news from real news. Two prominent examples were Trump and General Flynn, the new national security advisor.

• A day after a black activist was kicked and punched by voters at a Donald Trump rally in Alabama, Trump tweeted an image packed with racially loaded and incorrect murder statistics.

• People we have been reading this semester have been in the news as reporters try to make sense of the issue. For example, this article quotes Clay Shirky:

• “Why is this all happening now? Clay Shirky, a professor at New York University who has studied the effects of social networks, suggested a few reasons. • One is the ubiquity of Facebook, which has reached a truly epic scale. Last month the company reported that about 1.8 billion people now log on to the service every month. Because social networks feed off the various permutations of interactions among people, they become strikingly more powerful as they grow. With about a quarter of the world’s population now on Facebook, the possibilities are staggering. “When the technology gets boring, that’s when the crazy social effects get interesting,” Mr. Shirky said. • One of those social effects is what Mr. Shirky calls the “shifting of the Overton Window,” a term coined by the researcher Joseph P. Overton to describe the range of subjects that the mainstream media deems publicly acceptable to discuss.

• From about the early 1980s until the very recent past, it was usually considered unwise for politicians to court views deemed by most of society to be out of the mainstream, things like overt calls to racial bias. • “White ethnonationalism was kept at bay because of pluralistic ignorance,” Mr. Shirky said. “Every person who was sitting in their basement yelling at the TV about immigrants or was willing to say white Christians were more American than other kinds of Americans — they didn’t know how many others shared their views.” • Thanks to the internet, now each person with once-maligned views can see that he’s not alone. And when these people find one another, they can do things — create memes, publications and entire online worlds that bolster their worldview, and then break into the mainstream. The groups also become ready targets for political figures like Mr. Trump, who recognize their energy and enthusiasm and tap into it for real-world victories.

• Mr. Shirky notes that the Overton Window isn’t just shifting on the right. We see it happening on the left, too. Mr. Sanders campaigned on an anti-Wall Street platform that would have been unthinkable for a Democrat just a decade ago. • The upshot is further unforeseen events. “We’re absolutely going to get more of these insurgent candidates, and more crazy social effects,” Mr. Shirky said. • Mr. Trump is just the tip of the iceberg. Prepare for interesting times.”

From an era of “Truthiness” to a “Post-truth” age? • Oxford Dictionaries selected “post-truth” as 2016's international word of the year. The dictionary defines “post-truth” as “relating to or denoting circumstances in which objective facts are less influential in shaping public opinion than appeals to emotion and personal belief.”

• In a 2008 book, I argued that the internet would usher in a “post-fact” age. Eight years later, in the death throes of an election that features a candidate who once led the campaign to lie about President Obama’s birth, there is more reason to despair about truth in the online age. Why? Because if you study the dynamics of how information moves online today, pretty much everything conspires against truth. Farhad Manjoo, “How the Internet Is Loosening Our Grip on the Truth”

• “The internet is distorting our collective grasp on the truth. Polls show that many of us have burrowed into our own echo chambers of information. In a recent Pew Research Center survey, 81 percent of respondents said that partisans not only differed about policies, but also about ‘basic facts.’” (Manjoo)

Fake News: Institutionalized and “Weaponized” • “Where hoaxes before were shared by your great-aunt who didn’t understand the internet, the misinformation that circulates online is now being reinforced by political campaigns, by political candidates or by amorphous groups of tweeters working around the campaigns,” said Caitlin Dewey, a reporter at The Washington Post who once wrote a column called “What Was Fake on the Internet This Week.” Ms. Dewey’s column began in 2014, but by the end of last year, she decided to hang up her fact-checking hat because she had doubts that she was convincing anyone.

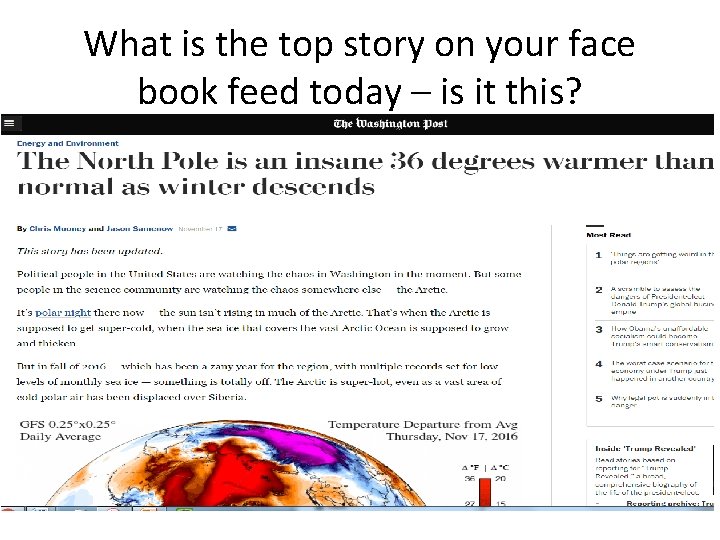

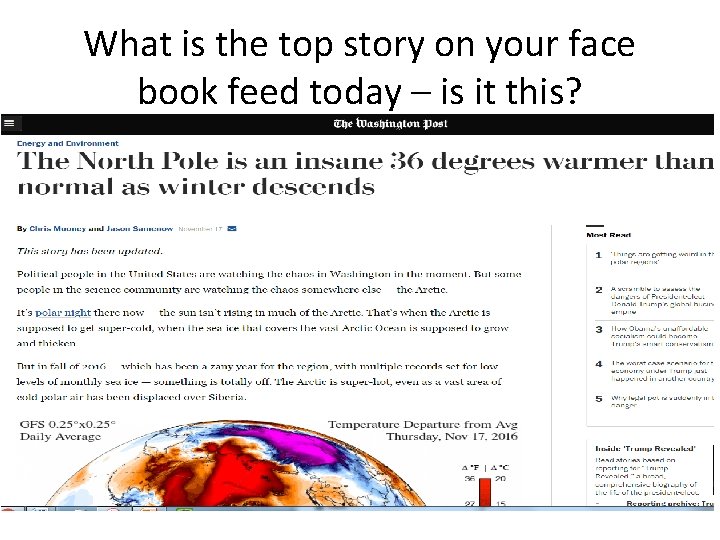

What is the top story on your Facebook feed today – is it this?

• What is to be done? • Teaching critical digital literacy may be needed more urgently (and more comprehensively) than we imagined. But what else?

• Critical digital literacy is thus far more important than we had assumed. • So what is to be done?

![One option might be to empower users to improve their online communities • [One] option might be to empower users to improve their online communities](https://slidetodoc.com/presentation_image_h2/67deb757681845765d3cb92fdae3681c/image-19.jpg)

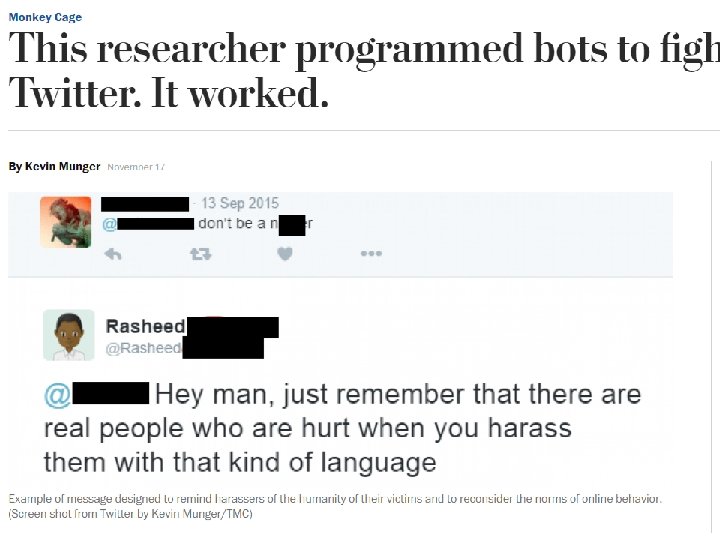

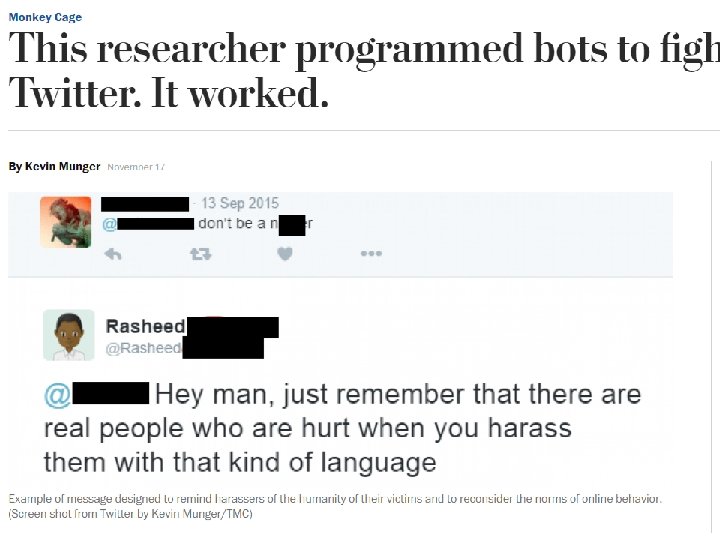

• [One] option might be to empower users to improve their online communities through peer-to-peer sanctioning. To test this hypothesis, I used Twitter accounts I controlled (“bots”) to send messages designed to remind harassers of the humanity of their victims and to reconsider the norms of online behavior. • I sent every harasser the same message: – @[subject] Hey man, just remember that there are real people who are hurt when you harass them with that kind of language

• I used a racial slur as the search term because I thought of it as the strongest evidence that a tweet might contain racist harassment. I restricted the sample to users who had a history of using offensive language, and I only included subjects who appeared to be a white man or who were anonymous.

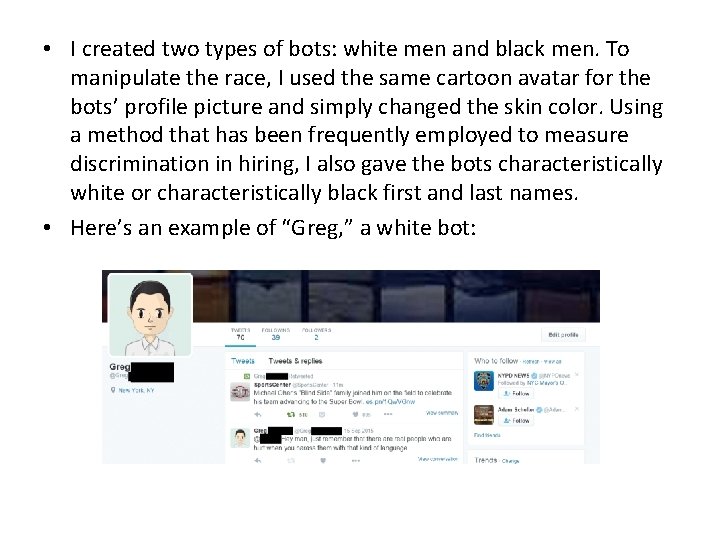

• I created two types of bots: white men and black men. To manipulate the race, I used the same cartoon avatar for the bots’ profile picture and simply changed the skin color. Using a method that has been frequently employed to measure discrimination in hiring, I also gave the bots characteristically white or characteristically black first and last names. • Here’s an example of “Greg,” a white bot:

• “Overall, I had four types of bots: High Follower/White; Low Follower/White; High Follower/Black; and Low Follower/Black. • Results? Only one of the four types of bots caused a significant reduction in the subjects’ rate of tweeting slurs: the white bots with 500 followers. • Overall, I found that it is possible to cause people to use less harassing language. This change seems to be most likely when both individuals share a social identity. Unsurprisingly, high status people are also more likely to cause a change.”
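The four bot types described above form a two-by-two design: bot identity crossed with follower level. As a rough illustration only, that design could be enumerated as in the sketch below. The function names `bot_conditions` and `build_message` are hypothetical and chosen here for clarity, and the code does not reproduce the researcher's actual tooling; only the condition labels and the message wording come from the slides.

```python
from itertools import product

# The two factors named in the slides (labels copied from the text).
BOT_IDENTITIES = ("White", "Black")
FOLLOWER_LEVELS = ("High Follower", "Low Follower")

# The single message quoted in the slides; "@[subject]" there is a
# placeholder for the account being addressed.
MESSAGE_TEMPLATE = (
    "@{subject} Hey man, just remember that there are real people "
    "who are hurt when you harass them with that kind of language"
)


def bot_conditions():
    """Return the four condition labels, in the order the slides list them."""
    return [
        f"{followers}/{identity}"
        for identity, followers in product(BOT_IDENTITIES, FOLLOWER_LEVELS)
    ]


def build_message(subject_handle):
    """Fill the quoted message with a (hypothetical) subject handle."""
    return MESSAGE_TEMPLATE.format(subject=subject_handle)


if __name__ == "__main__":
    for label in bot_conditions():
        print(label)
    print(build_message("example_user"))
```

Because every subject receives the same message, any difference in outcomes across the four conditions can be attributed to the bot's apparent identity and follower count rather than to the wording.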

• Many people are already engaged in sanctioning bad behavior online, but they sometimes do so in a way that can backfire. If people call out bad behavior in a way that emphasizes the social distance between themselves and the person they’re calling out, my research suggests that the sanctioning is less likely to be effective. • Physical distance, anonymity and partisan bubbles online can lead to extremely nasty behavior, but if we remember that there’s a real person behind every online encounter and emphasize what we have in common rather than what divides us, we might be able to make the Internet a better place.

• But action can be risky – a professor who, with her students, created a list of fake sites and criteria for evaluating the credibility of sites was targeted, threatened, and doxxed, and took the material down.

• See the handout – it is a compilation of the advice published to help people deal with the fake news epidemic. • It’s useful but I’m not sure it is anywhere near sufficient. • Take a look – does anything seem useful? • Can you think of additional solutions, strategies or tools? • I’m not sure concepts of critical digital literacy entirely help us grapple with this new age. • So what is to be done?
