Dynamic Cache Reconfiguration for Soft Real-Time Embedded Systems

Weixun Wang and Prabhat Mishra
Embedded Systems Lab, Computer and Information Science and Engineering, University of Florida

Ann Gordon-Ross
Electrical and Computer Engineering

Presented by: Kanika Chawla, Sowmith Boyanpalli, Parth Shah
University of Florida

Motivation

- Decrease energy consumption and improve performance using dynamic cache reconfiguration

Introduction

- What are real-time systems?
- Types:
  1. Hard real-time systems
  2. Firm real-time systems
  3. Soft real-time systems

Previous Work

- Embedded systems run on batteries
- It is important to make these applications energy efficient
- Techniques used:
  1. Dynamic Power Management: put the processor into sleep mode when it is idle
  2. Dynamic Voltage Scaling: lower the supply voltage and clock frequency so that tasks run slower and use less power. This is a tradeoff between performance and energy consumed.

Reconfigurable Cache

- Modern techniques use reconfigurable parameters that are tuned dynamically to improve system performance
- The cache is one such parameter: modern computing requirements call for large caches, which consume more than half of the power
- Hence, using different cache configurations for different parts of the program can decrease energy consumption

Cache in Real-Time Systems

- Misses due to preemption
- Prevention:
  1. Cache locking
  2. Cache partitioning

Reconfigurable Cache for RTS

- Generally not appropriate for real-time systems: conditions change at run time, so it is unclear which configuration is good at which point
- In hard real-time systems, the system fails if cache reconfiguration causes a missed deadline
- Hence it is used only for soft real-time systems, where the time constraints are less strict
- This paper discusses reconfiguration only for L1 caches

Reconfigurable Cache

- Using cache configurations suited to the application can save 62% of energy
- Cache reconfiguration does not add performance overhead because it is handled by a lightweight coprocessor with its own register set

Profiling

- Goal: choose the best cache configuration at run time
- Intrusive method: run every cache configuration in turn and choose the best one. This adds overhead.
- Auxiliary method: evaluate all configurations at the same time on an auxiliary system. There is no performance overhead, but the auxiliary system is very power hungry.

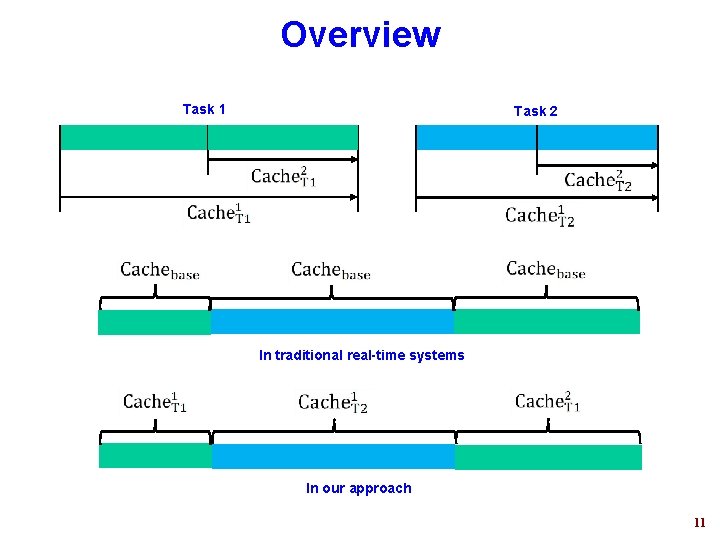

Overview

- Base cache: the cache configuration best suited to the entire task with respect to energy and performance
- Phase: the period of time between a predefined potential preemption point and task completion
- What happens on an interrupt?

Overview

[Figure: execution of Task 1 and Task 2 in traditional real-time systems versus in our approach]

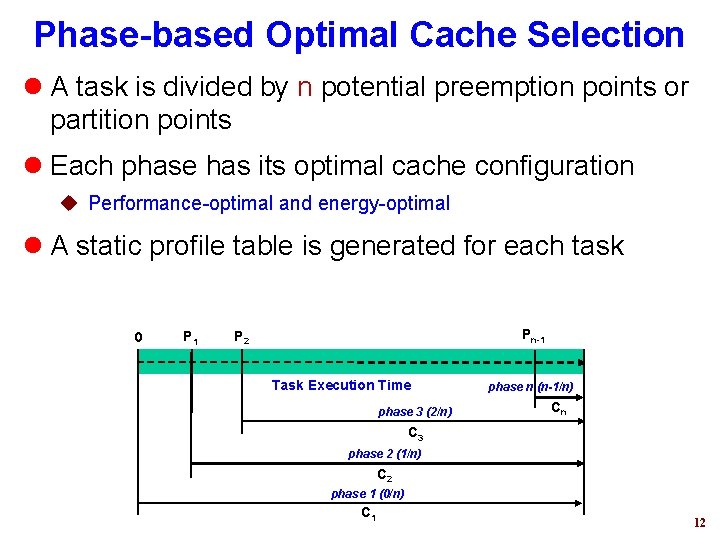

Phase-based Optimal Cache Selection

- A task is divided by n potential preemption points, or partition points
- Each phase has its optimal cache configuration
  - Performance-optimal and energy-optimal
- A static profile table is generated for each task

[Figure: task execution time from 0 to completion, divided at partition points P1, P2, ..., Pn-1. Phase 1 (0/n) uses C1, phase 2 (1/n) uses C2, phase 3 (2/n) uses C3, ..., phase n ((n-1)/n) uses Cn.]
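The static profile table described above could be represented as follows. This is a minimal sketch under assumptions, not the paper's data layout: the names `partition_points` and `build_profile_table`, the dictionary keys, and the configuration strings are hypothetical, and partition points are assumed to be evenly spaced in instruction count.

```python
def partition_points(total_instructions: int, n: int) -> list[int]:
    """Instruction counts at which the n phases start (0/n, 1/n, ..., (n-1)/n)."""
    if n < 1:
        raise ValueError("n must be at least 1")
    return [total_instructions * i // n for i in range(n)]


def build_profile_table(total_instructions, energy_opt, perf_opt):
    """Pair every phase with its energy-optimal and performance-optimal configuration."""
    if len(energy_opt) != len(perf_opt):
        raise ValueError("need one configuration of each kind per phase")
    n = len(energy_opt)
    starts = partition_points(total_instructions, n)
    return [
        {
            "phase": f"{i}/{n}",          # phase label as used on the slide
            "start": starts[i],           # first instruction of the phase
            "energy_opt": energy_opt[i],  # hypothetical configuration name
            "perf_opt": perf_opt[i],      # hypothetical configuration name
        }
        for i in range(n)
    ]


# Hypothetical task with 1000 dynamic instructions and n = 4 phases.
table = build_profile_table(
    1000,
    energy_opt=["2KB_1W_16B", "2KB_1W_32B", "1KB_1W_16B", "1KB_1W_16B"],
    perf_opt=["4KB_2W_32B", "4KB_2W_32B", "4KB_1W_32B", "2KB_1W_32B"],
)
for row in table:
    print(row)
```

One row per phase keeps the table small, which matters because the table size grows with the number of partition points.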


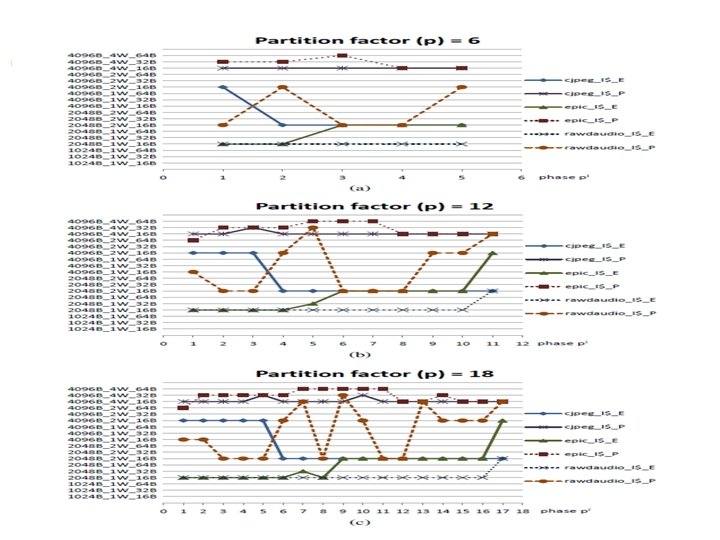

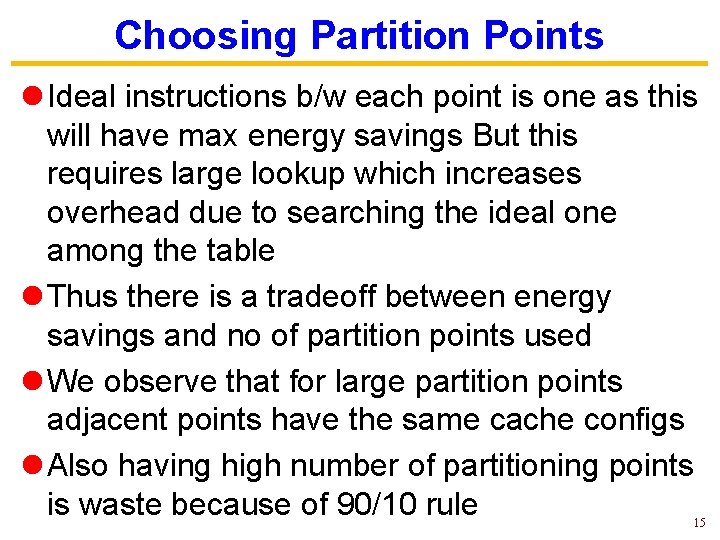

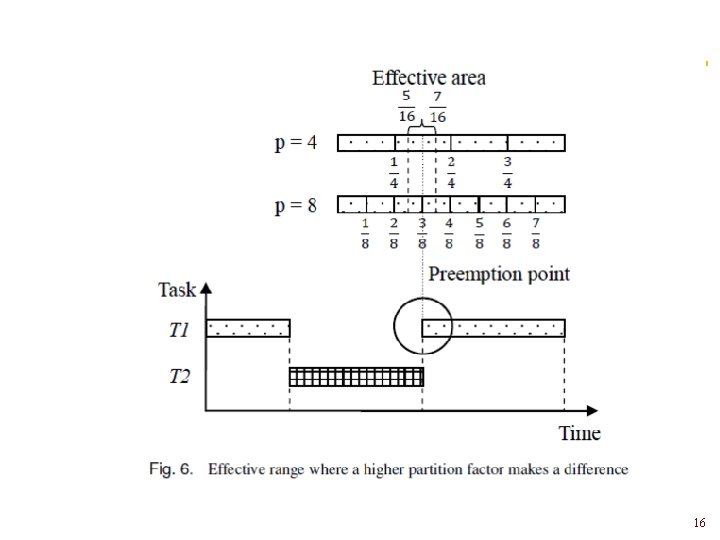

Choosing Partition Points

- Ideally, adjacent partition points are one instruction apart, since this gives the maximum energy savings. However, this requires a large lookup table, and searching the table for the right entry increases overhead.
- Thus there is a tradeoff between energy savings and the number of partition points used
- We observe that when the number of partition points is large, adjacent points have the same cache configuration
- A high number of partition points is also wasteful because of the 90/10 rule (a program spends about 90% of its execution time in about 10% of its code)


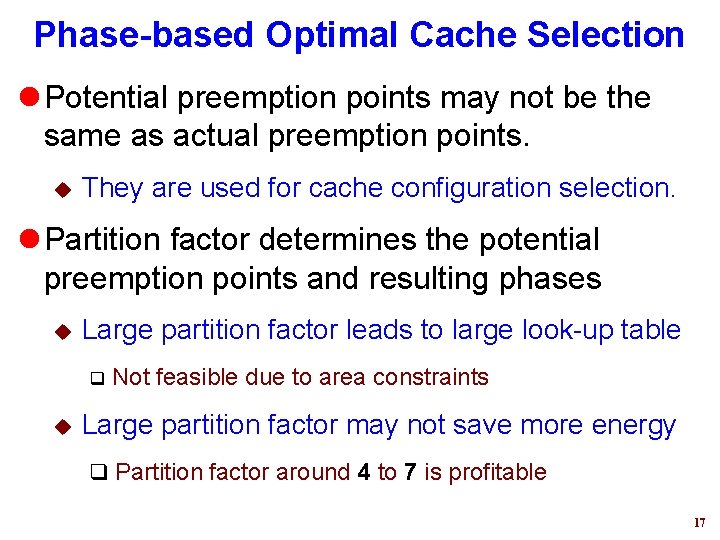

Phase-based Optimal Cache Selection

- Potential preemption points may not be the same as actual preemption points
  - They are used for cache configuration selection
- The partition factor determines the potential preemption points and the resulting phases
  - A large partition factor leads to a large look-up table
    - Not feasible due to area constraints
  - A large partition factor may not save more energy
    - A partition factor of around 4 to 7 is profitable

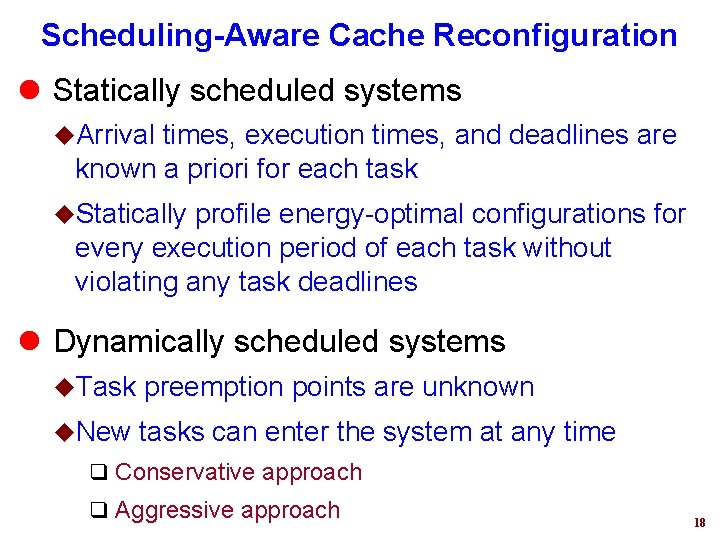

Scheduling-Aware Cache Reconfiguration

- Statically scheduled systems
  - Arrival times, execution times, and deadlines are known a priori for each task
  - Statically profile energy-optimal configurations for every execution period of each task without violating any task deadlines
- Dynamically scheduled systems
  - Task preemption points are unknown
  - New tasks can enter the system at any time
    - Conservative approach
    - Aggressive approach

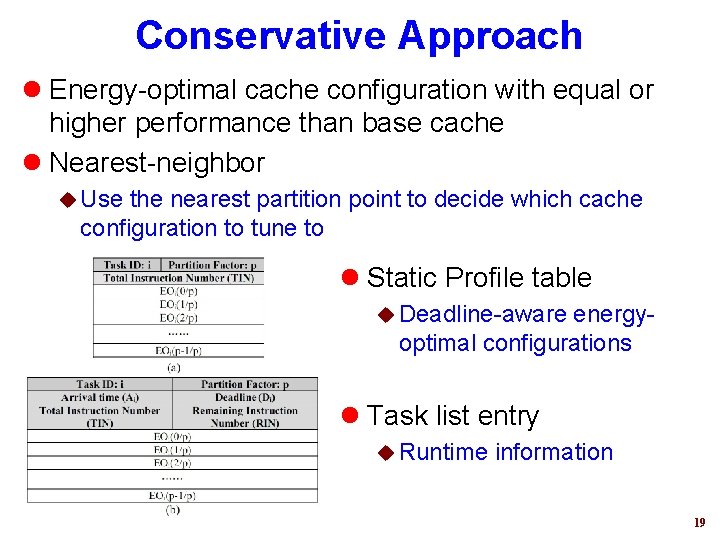

Conservative Approach

- Energy-optimal cache configuration with equal or higher performance than the base cache
- Nearest-neighbor
  - Use the nearest partition point to decide which cache configuration to tune to
- Static profile table
  - Deadline-aware energy-optimal configurations
- Task list entry
  - Runtime information

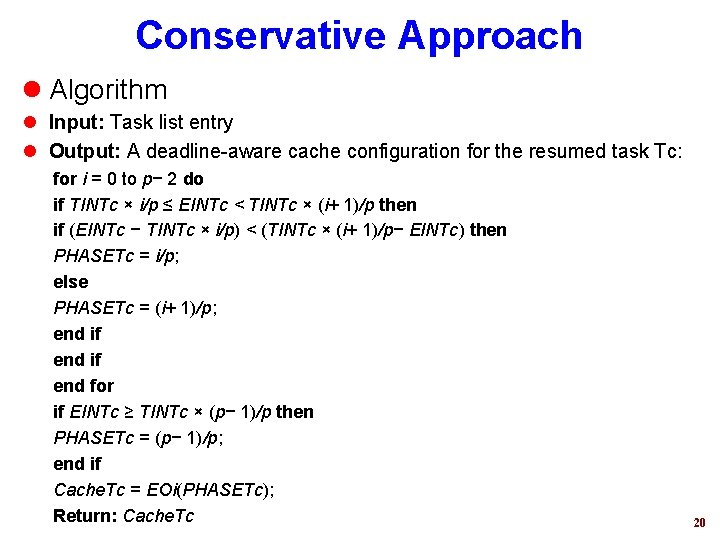

Conservative Approach

- Input: task list entry
- Output: a deadline-aware cache configuration for the resumed task Tc

Algorithm:

    for i = 0 to p − 2 do
        if TIN_Tc × i/p ≤ EIN_Tc < TIN_Tc × (i+1)/p then
            if (EIN_Tc − TIN_Tc × i/p) < (TIN_Tc × (i+1)/p − EIN_Tc) then
                PHASE_Tc = i/p;
            else
                PHASE_Tc = (i+1)/p;
            end if
        end if
    end for
    if EIN_Tc ≥ TIN_Tc × (p−1)/p then
        PHASE_Tc = (p−1)/p;
    end if
    Cache_Tc = EO_i(PHASE_Tc);
    return Cache_Tc
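The nearest-neighbor selection above can be sketched in Python. This is a minimal sketch, not the authors' implementation: it assumes EIN is the number of instructions the task had executed when it was preempted, TIN is the task's total instruction count, and p is the partition factor. The names `nearest_phase_index` and `select_cache` and the configuration strings are hypothetical.

```python
def nearest_phase_index(ein: int, tin: int, p: int) -> int:
    """Return i such that partition point i/p is nearest to the resume position."""
    if p < 1 or tin <= 0 or ein < 0:
        raise ValueError("expected p >= 1, tin > 0, ein >= 0")
    x = ein * p  # compare in units of 1/p to stay in integer arithmetic
    # At or beyond the last partition point: use the last phase.
    if x >= tin * (p - 1):
        return p - 1
    for i in range(p - 1):  # i = 0 .. p-2, as in the slide
        lower, upper = tin * i, tin * (i + 1)
        if lower <= x < upper:
            # Strictly closer to the lower point picks i; ties go to i + 1.
            return i if (x - lower) < (upper - x) else i + 1
    return p - 1  # not reached for valid input; kept as a safe default


def select_cache(energy_opt_by_phase, ein: int, tin: int) -> str:
    """Look up the deadline-aware energy-optimal configuration for the resumed task."""
    p = len(energy_opt_by_phase)
    return energy_opt_by_phase[nearest_phase_index(ein, tin, p)]


# Hypothetical profile with p = 4 phases for a task of 1000 instructions.
profile = ["cfg_phase_0", "cfg_phase_1", "cfg_phase_2", "cfg_phase_3"]
print(select_cache(profile, ein=600, tin=1000))
```

With this profile, a task resumed after 600 of 1000 instructions is closer to the 2/4 point (500) than to the 3/4 point (750), so the entry for phase 2/4 is selected.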

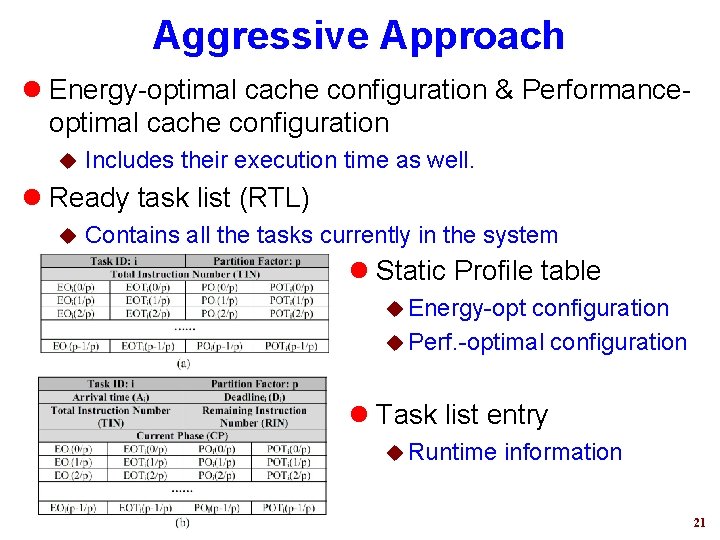

Aggressive Approach

- Energy-optimal cache configuration and performance-optimal cache configuration
  - Includes their execution times as well
- Ready task list (RTL)
  - Contains all the tasks currently in the system
- Static profile table
  - Energy-optimal configuration
  - Performance-optimal configuration
- Task list entry
  - Runtime information

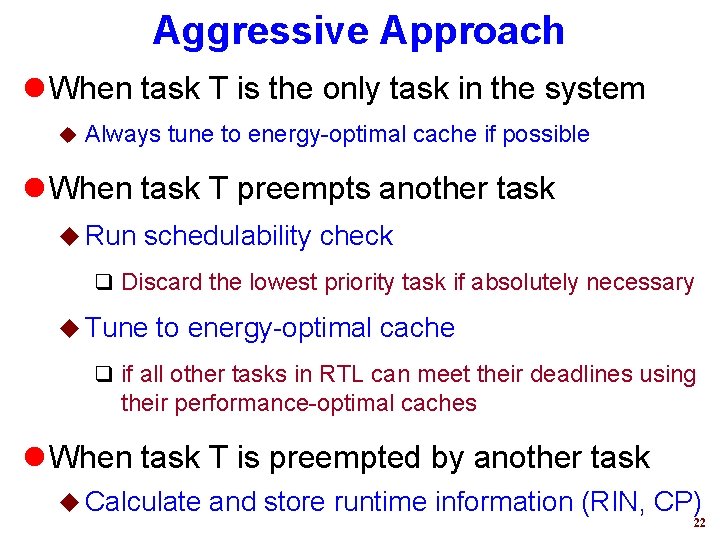

Aggressive Approach

- When task T is the only task in the system
  - Always tune to the energy-optimal cache if possible
- When task T preempts another task
  - Run a schedulability check
    - Discard the lowest-priority task if absolutely necessary
  - Tune to the energy-optimal cache
    - If all other tasks in the RTL can meet their deadlines using their performance-optimal caches
- When task T is preempted by another task
  - Calculate and store runtime information (RIN, CP)
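The decision taken when task T preempts another task can be illustrated with a simplified schedulability check. This is a sketch under stated assumptions rather than the paper's algorithm: it assumes the remaining ready tasks run back to back in earliest-deadline order after T, and the `Task` fields and the `choose_cache` function are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    deadline: float              # absolute deadline
    remaining_energy_opt: float  # remaining time with the energy-optimal cache
    remaining_perf_opt: float    # remaining time with the performance-optimal cache


def choose_cache(now: float, current: Task, others: list):
    """Pick the cache mode for `current`, or None if no mode is schedulable.

    The energy-optimal cache is tried first. It is accepted only if `current`
    and every other ready task (using its performance-optimal cache) still
    meet their deadlines.
    """
    candidates = (
        ("energy", current.remaining_energy_opt),
        ("performance", current.remaining_perf_opt),
    )
    for mode, run_time in candidates:
        finish = now + run_time
        feasible = finish <= current.deadline
        for task in sorted(others, key=lambda t: t.deadline):
            finish += task.remaining_perf_opt
            feasible = feasible and finish <= task.deadline
        if feasible:
            return mode
    return None  # caller may have to discard the lowest-priority task


t = Task("T", deadline=10, remaining_energy_opt=6, remaining_perf_opt=4)
print(choose_cache(0, t, [Task("A", 12, 7, 5)]))  # slack allows the energy-optimal cache
print(choose_cache(0, t, [Task("A", 10, 7, 5)]))  # tighter deadline forces the faster cache
```

Returning `None` corresponds to the case on the slide where the lowest-priority task may have to be discarded.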

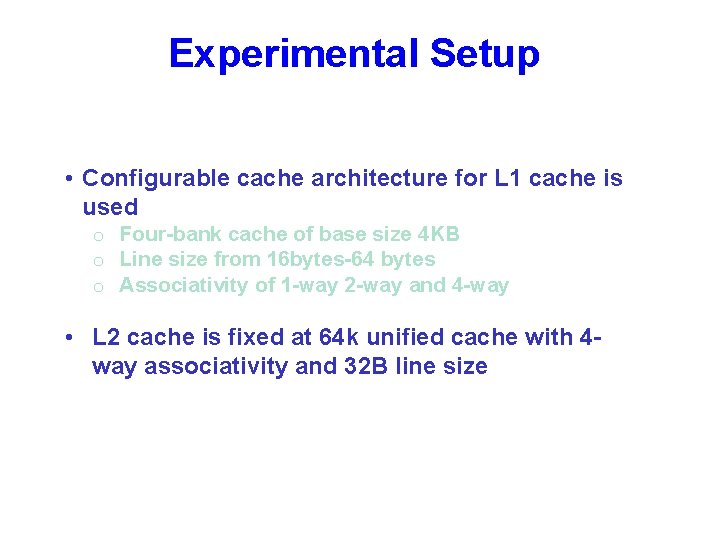

Experimental Setup

- A configurable cache architecture is used for the L1 cache
  - Four-bank cache with a base size of 4 KB
  - Line sizes from 16 bytes to 64 bytes
  - Associativity of 1-way, 2-way, and 4-way
- The L2 cache is fixed as a 64 KB unified cache with 4-way associativity and a 32 B line size
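As a rough illustration of the L1 configuration space, the sketch below enumerates candidate configurations. Several details are assumptions not stated on the slide: that bank shutdown yields sizes of 1 KB, 2 KB, and 4 KB, that the line sizes are 16, 32, and 64 bytes, and that each way needs at least one 1 KB bank. Under those assumptions the count comes to 18, which matches the number of configurations the Hardware Overhead slide says the profile table stores.

```python
from itertools import product

SIZES_KB = (1, 2, 4)         # assumed sizes reachable by shutting down banks
LINE_SIZES_B = (16, 32, 64)  # slide gives the 16 to 64 byte range; 32 is assumed
ASSOCIATIVITIES = (1, 2, 4)  # from the slide
BANK_KB = 1                  # assumed: 4 KB base size split over four banks


def valid_configs():
    """List (size_kb, ways, line_bytes) tuples allowed by the assumed bank rule."""
    configs = []
    for size, ways, line in product(SIZES_KB, ASSOCIATIVITIES, LINE_SIZES_B):
        # Assumed constraint: every way occupies at least one whole bank.
        if ways * BANK_KB <= size:
            configs.append((size, ways, line))
    return configs


configs = valid_configs()
print(len(configs))
for size, ways, line in configs:
    print(f"{size} KB, {ways}-way, {line} B lines")
```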

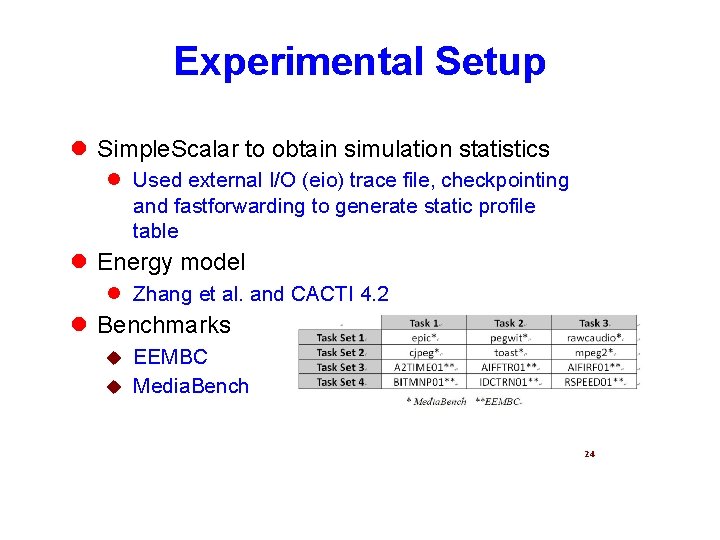

Experimental Setup

- SimpleScalar is used to obtain simulation statistics
- External I/O (eio) trace files, checkpointing, and fast-forwarding are used to generate the static profile table
- Energy model
  - Zhang et al. and CACTI 4.2
- Benchmarks
  - EEMBC
  - MediaBench

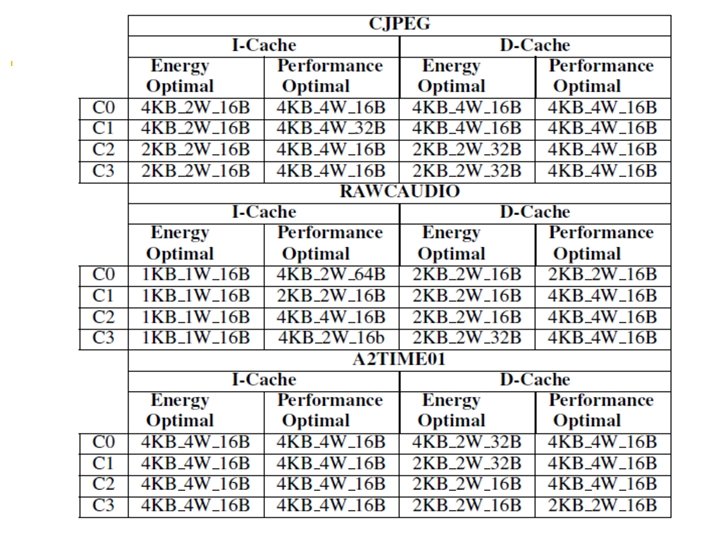

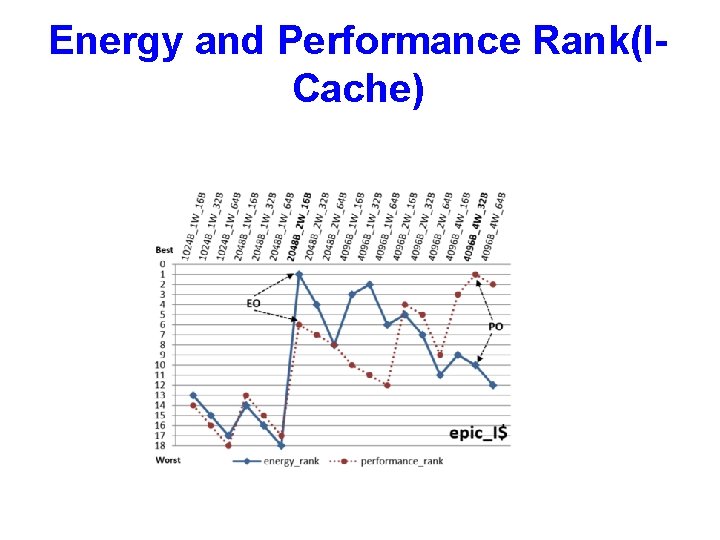

Energy and Performance Rank (I-Cache)

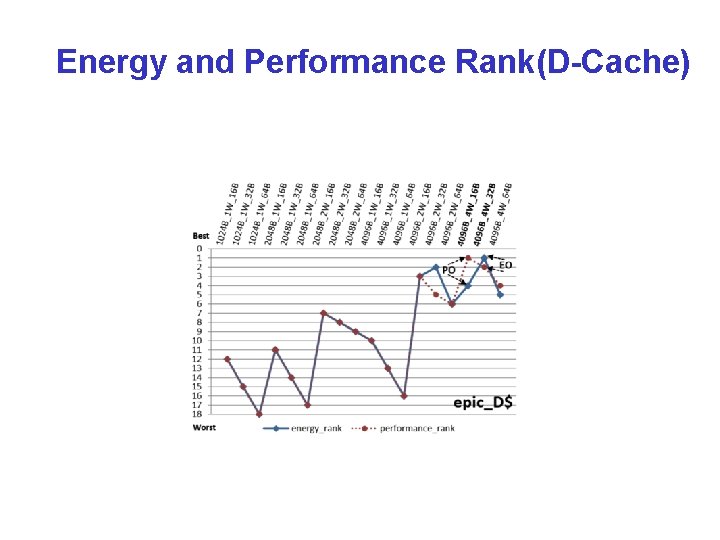

Energy and Performance Rank (D-Cache)

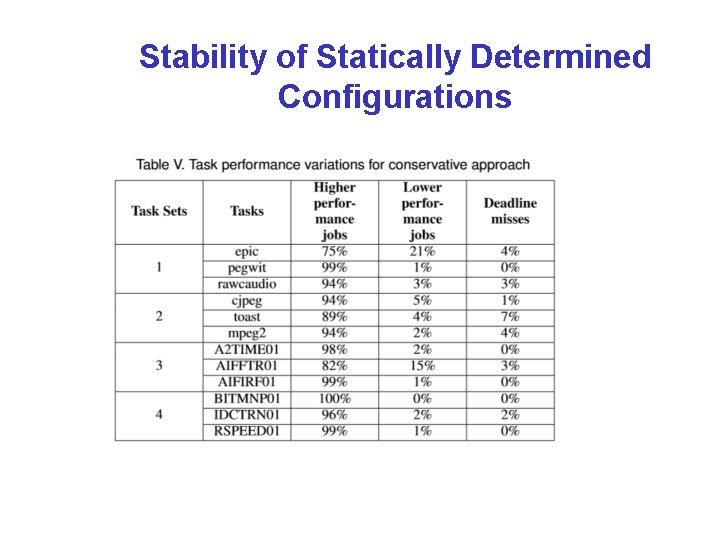

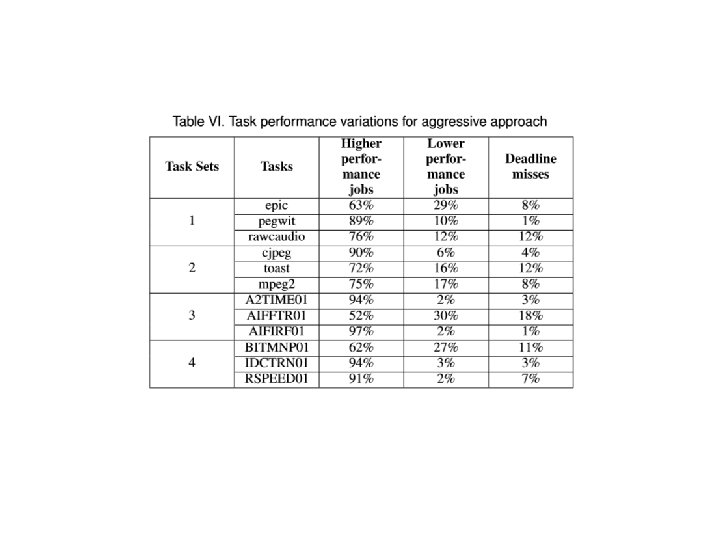

Stability of Statically Determined Configurations

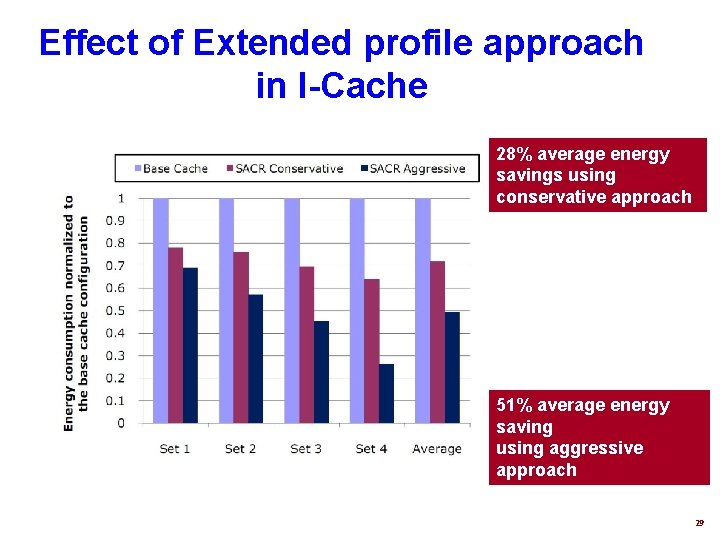

Effect of Extended Profile Approach in I-Cache

- 28% average energy savings using the conservative approach
- 51% average energy savings using the aggressive approach

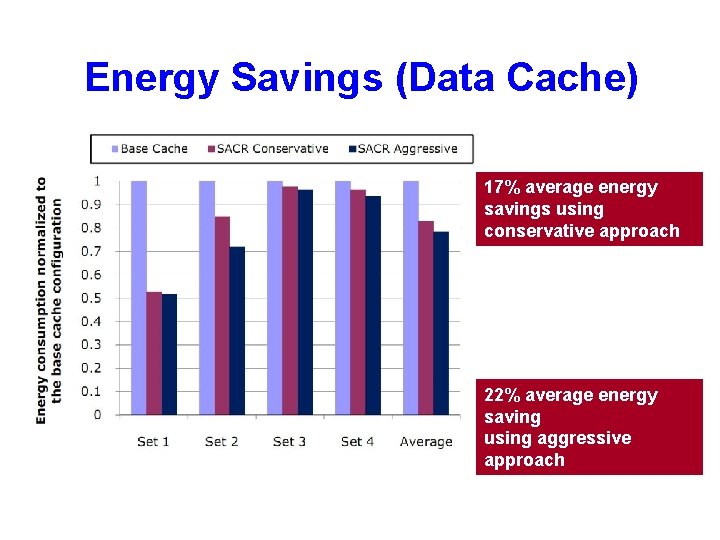

Energy Savings (Data Cache)

- 17% average energy savings using the conservative approach
- 22% average energy savings using the aggressive approach

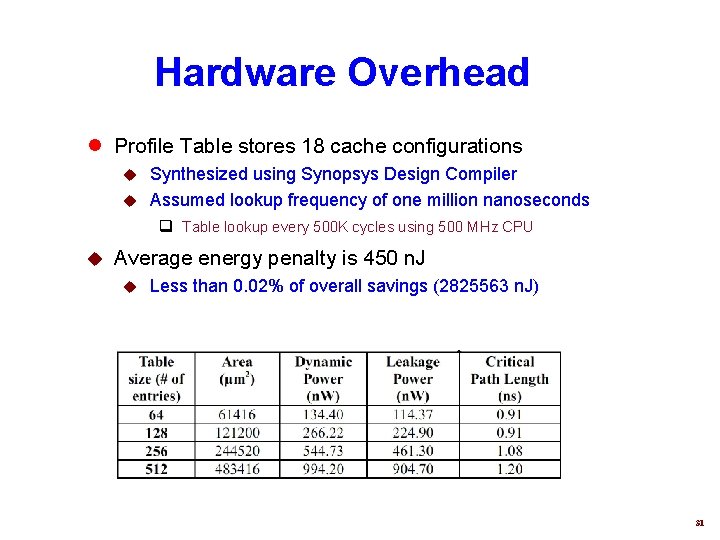

Hardware Overhead

- Profile table stores 18 cache configurations
  - Synthesized using Synopsys Design Compiler
  - Assumed one lookup every one million nanoseconds
    - Table lookup every 500 K cycles using a 500 MHz CPU
  - Average energy penalty is 450 nJ
  - Less than 0.02% of overall savings (2825563 nJ)
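The figures on this slide can be cross-checked with a few lines of arithmetic. The sketch below only restates the numbers given above; the variable names are illustrative.

```python
CPU_HZ = 500_000_000     # 500 MHz CPU
LOOKUP_CYCLES = 500_000  # one table lookup every 500 K cycles
PENALTY_NJ = 450         # average energy penalty
SAVINGS_NJ = 2_825_563   # overall savings

# Time between lookups: cycles divided by frequency, expressed in nanoseconds.
lookup_period_ns = LOOKUP_CYCLES * 1_000_000_000 // CPU_HZ

# Energy penalty as a percentage of the overall savings.
overhead_percent = 100 * PENALTY_NJ / SAVINGS_NJ

print(lookup_period_ns)
print(f"{overhead_percent:.4f}%")
```

The lookup period works out to one million nanoseconds (1 ms), and the penalty is roughly 0.016% of the savings, consistent with the "less than 0.02%" claim.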

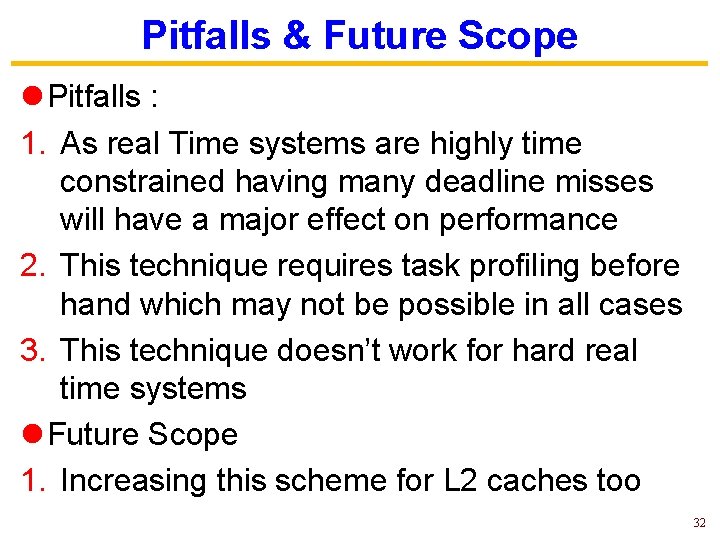

Pitfalls & Future Scope

- Pitfalls:
  1. Real-time systems are highly time constrained, so many deadline misses will have a major effect on performance
  2. This technique requires task profiling beforehand, which may not be possible in all cases
  3. This technique doesn’t work for hard real-time systems
- Future scope:
  1. Extending this scheme to L2 caches

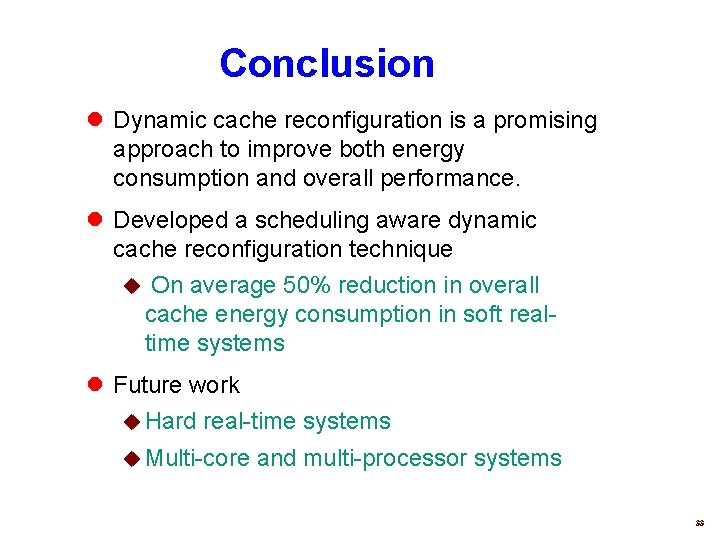

Conclusion

- Dynamic cache reconfiguration is a promising approach to improve both energy consumption and overall performance
- Developed a scheduling-aware dynamic cache reconfiguration technique
  - On average, a 50% reduction in overall cache energy consumption in soft real-time systems
- Future work
  - Hard real-time systems
  - Multi-core and multi-processor systems

THANK YOU
