Cache Organization of Pentium

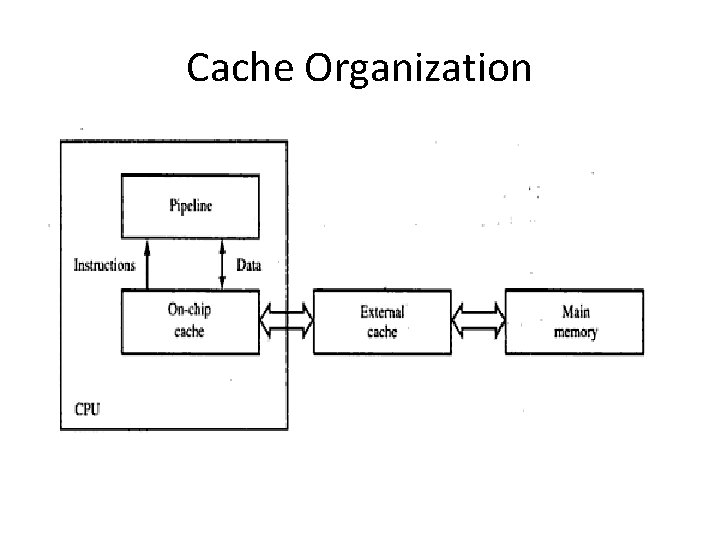

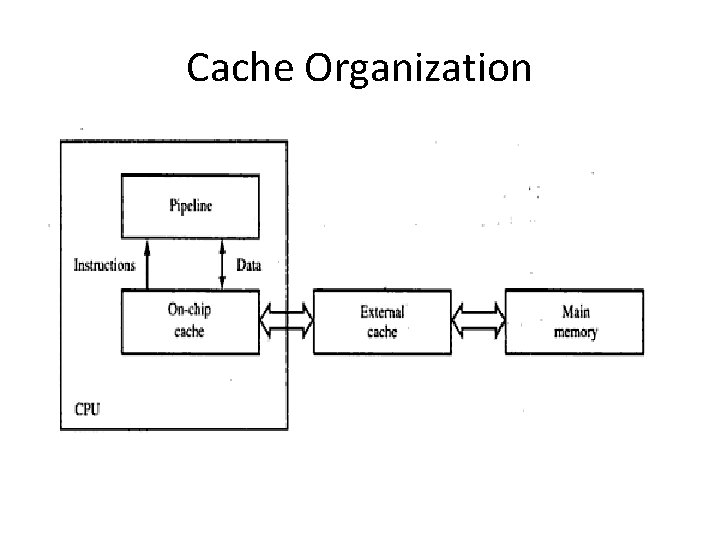

Cache Organization

- A cache is a special type of high-speed RAM that speeds up memory accesses and reduces traffic on the processor's buses.
- There are two types: internal and external.
- The internal (on-chip) cache feeds instructions and data to the CPU's pipeline.
- When an instruction or data item is required, the on-chip cache is searched first, and the external cache is examined next.
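The search order described above can be sketched as a small lookup function. This is a minimal model, assuming each cache level is just a set of the addresses it currently holds; the function name `lookup` and the sample addresses are hypothetical, and the final fallback to main memory (when both caches miss) is implied rather than stated on the slide.

```python
def lookup(address, on_chip, external):
    """Return the level that services a request; levels are searched in order."""
    if address in on_chip:        # internal (on-chip) cache is searched first
        return "on-chip cache"
    if address in external:       # external cache is examined next
        return "external cache"
    return "main memory"          # both caches missed

# Hypothetical cache contents, for illustration only.
on_chip = {0x100, 0x104}
external = {0x100, 0x104, 0x200}

for addr in (0x104, 0x200, 0x300):
    print(hex(addr), "->", lookup(addr, on_chip, external))
```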

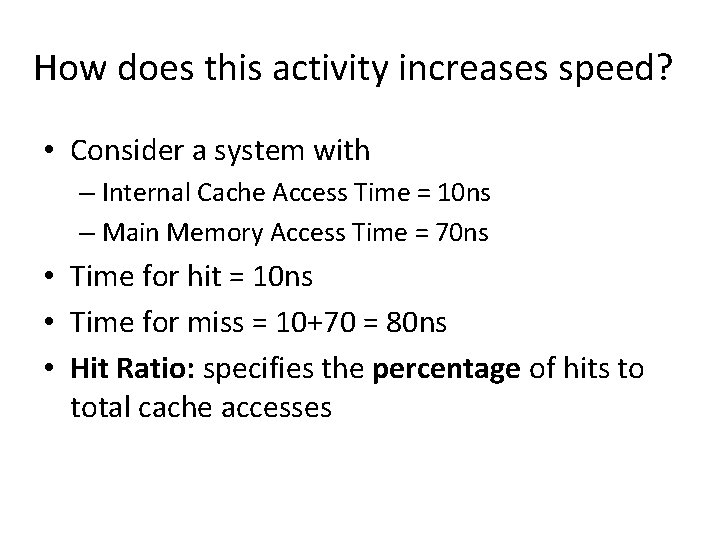

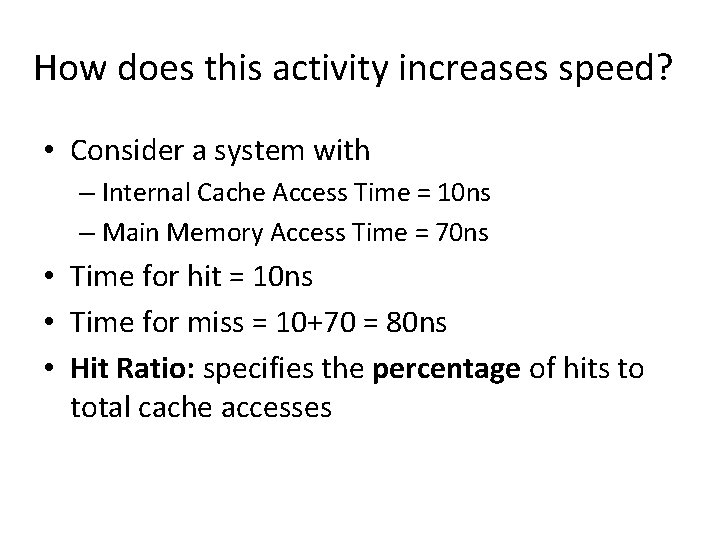

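How does this activity increase speed?

Consider a system with:

- internal cache access time = 10 ns
- main memory access time = 70 ns

A hit then takes 10 ns, while a miss takes 10 + 70 = 80 ns, because the cache is searched first and main memory is accessed only after the miss. The hit ratio is the fraction of all cache accesses that are hits (for example, 0.9 means 90% of accesses hit).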
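As a quick check of these numbers, the sketch below takes a hypothetical trace of hit/miss outcomes, charges 10 ns per hit and 10 + 70 = 80 ns per miss, and reports the hit ratio together with the total time. The trace and the helper names `access_time` and `summarize` are invented for illustration.

```python
T_CACHE_NS = 10   # internal cache access time (from the slide)
T_RAM_NS = 70     # main memory access time (from the slide)

def access_time(hit):
    # A miss pays for the failed cache lookup plus the main memory access.
    return T_CACHE_NS if hit else T_CACHE_NS + T_RAM_NS

def summarize(trace):
    """trace: list of booleans, True for a hit and False for a miss."""
    hits = sum(trace)
    hit_ratio = hits / len(trace)
    total_ns = sum(access_time(h) for h in trace)
    return hit_ratio, total_ns

trace = [True, True, False, True]   # hypothetical: 3 hits, 1 miss
print(summarize(trace))             # (0.75, 110)
```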

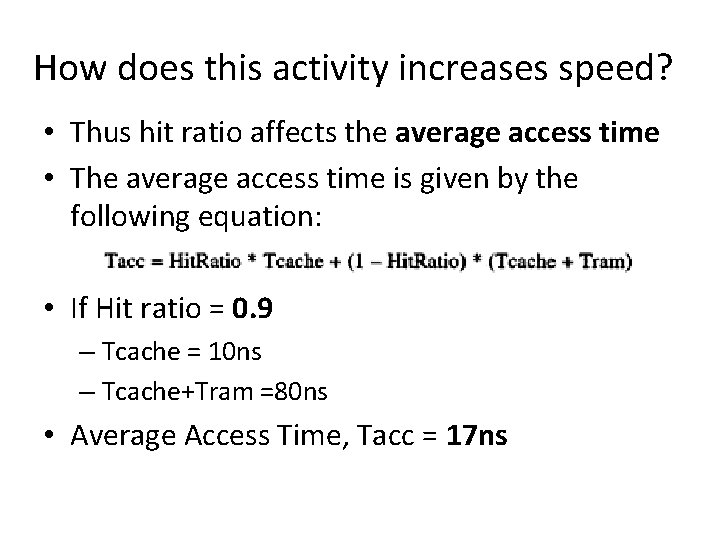

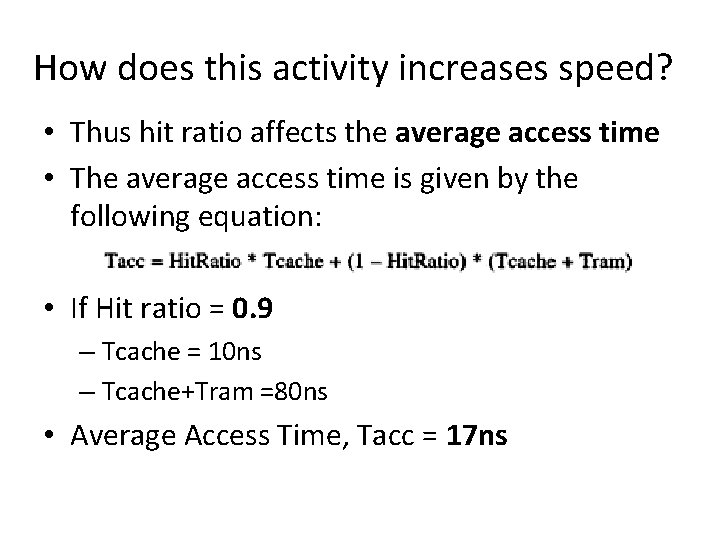

The hit ratio $H$ therefore determines the average access time, which is given by:

$$T_{acc} = H \cdot T_{cache} + (1 - H)\,(T_{cache} + T_{RAM})$$

With $H = 0.9$, $T_{cache} = 10$ ns and $T_{cache} + T_{RAM} = 80$ ns, the average access time is

$$T_{acc} = 0.9 \times 10 + 0.1 \times 80 = 9 + 8 = 17\ \text{ns}$$
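The average access time is $H \cdot T_{cache} + (1 - H)(T_{cache} + T_{RAM})$: hits cost one cache access, and misses cost the cache access plus the main memory access. The sketch below evaluates this formula with a hypothetical function `average_access_time`. The loop shows how quickly the average degrades as the hit ratio falls, using the slide's 10 ns and 70 ns figures.

```python
def average_access_time(hit_ratio, t_cache, t_ram):
    """T_acc = H * T_cache + (1 - H) * (T_cache + T_ram), all times in ns."""
    return hit_ratio * t_cache + (1 - hit_ratio) * (t_cache + t_ram)

print(round(average_access_time(0.9, 10, 70), 2))   # 17.0

for h in (0.5, 0.8, 0.9, 0.99):
    print(f"H = {h:.2f}: T_acc = {average_access_time(h, 10, 70):.1f} ns")
```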

The hit ratio depends on many factors, such as:

- the size of the program
- the type and amount of data the program uses
- the pattern of memory addressing during execution

Two characteristics of running programs improve performance when a cache is used:

- Temporal locality: when a memory location is accessed, there is a good chance it will be accessed again soon.
- Spatial locality: when one location is accessed, there is a good chance the next location will be accessed too.

Together, these are called locality of reference.
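Both kinds of locality can be seen in a toy simulation. The sketch assumes an unbounded cache that holds whole lines of a hypothetical 4-byte size, which is much smaller than real line sizes. It counts hits for three access patterns: one sequential pass, two sequential passes, and a stride that touches a new line on every access. The helper `count_hits` is hypothetical.

```python
LINE_SIZE = 4  # hypothetical line size in bytes, chosen small for illustration

def count_hits(addresses, line_size=LINE_SIZE):
    """Count hits in an unbounded cache that loads a whole line on each miss."""
    cached_lines = set()
    hits = 0
    for addr in addresses:
        line = addr // line_size
        if line in cached_lines:
            hits += 1
        else:
            cached_lines.add(line)   # miss: bring in the whole line
    return hits

sequential = list(range(16))
print(count_hits(sequential))         # 12: spatial locality, one miss per line
print(count_hits(sequential * 2))     # 28: the second pass always hits (temporal locality)
print(count_hits(range(0, 64, 4)))    # 0: stride of one line, no reuse at all
```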

Instruction cache access. Consider the following loop:

```asm
        MOV  CX, 1000    ; run the loop body 1000 times
        SUB  AX, AX      ; AX = 0
NEXT:   ADD  AX, [SI]    ; AX += word at [SI]
        MOV  [SI], AX    ; store AX back to [SI]
        INC  SI          ; SI += 1
        LOOP NEXT        ; CX -= 1; jump to NEXT while CX != 0
```

If the cache is initially empty, the instruction fetches of the first pass miss and fill the cache, and every instruction fetch in the remaining 999 passes is a hit.
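The hit count claimed above can be reproduced with a minimal model. It assumes an instruction cache large enough that nothing is evicted and counts one fetch per instruction, ignoring line fills for now. Instructions are identified by their position in the listing, and the helper `simulate` is hypothetical.

```python
SETUP = ["MOV CX, 1000", "SUB AX, AX"]                          # executed once
BODY = ["ADD AX, [SI]", "MOV [SI], AX", "INC SI", "LOOP NEXT"]  # executed CX times

def simulate(passes=1000):
    """Return (hits, misses) for the instruction fetches of the loop."""
    trace = list(range(len(SETUP)))                  # fetch the setup instructions
    body = range(len(SETUP), len(SETUP) + len(BODY))
    for _ in range(passes):
        trace.extend(body)                           # one fetch per body instruction per pass
    cache, hits, misses = set(), 0, 0
    for pc in trace:
        if pc in cache:
            hits += 1
        else:
            misses += 1
            cache.add(pc)                            # first fetch misses and fills the cache
    return hits, misses

hits, misses = simulate()
print(hits, misses, f"{hits / (hits + misses):.4f}")  # 3996 6 0.9985
```

Only the six first-time fetches miss, so the hit ratio for instruction fetches is about 0.9985.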

When a miss occurs, the cache reads a group of consecutive locations from main memory, called a line. So once the first instruction has been fetched, the rest of the loop (as long as it lies in the same line) is already in the cache, and in the prefetch buffer, before the first pass finishes.
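To see why a line fill helps, the sketch below counts first-pass instruction misses with and without line fills. It assumes the loop starts at offset 0 and uses byte offsets derived from the usual 16-bit encodings of these instructions (3, 2, 2, 2, 1 and 2 bytes), which should be treated as illustrative. It also uses the Pentium's 32-byte line size. The helper name `first_pass_misses` is hypothetical.

```python
LINE_SIZE = 32  # bytes per line in the Pentium's on-chip caches

# Start offset of each instruction, assuming the code begins at offset 0
# and the 16-bit encodings (3, 2, 2, 2, 1, 2 bytes).
OFFSETS = [0, 3, 5, 7, 9, 10]

def first_pass_misses(line_size):
    """Count misses while fetching each instruction once into an empty cache."""
    cached_lines, misses = set(), 0
    for offset in OFFSETS:
        line = offset // line_size
        if line not in cached_lines:
            misses += 1
            cached_lines.add(line)   # a miss loads the whole line
    return misses

print(first_pass_misses(LINE_SIZE))  # 1: a single line fill brings in the whole loop
print(first_pass_misses(1))          # 6: with 1-byte "lines", every instruction misses
```

If the loop happened to straddle a 32-byte boundary, two line fills would be needed instead of one, but the conclusion is the same.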

Data cache access. The loop also accesses data operands, in ADD AX, [SI] and MOV [SI], AX. Here too, a miss reads a whole line of data from main memory, so the accesses that follow to nearby addresses (SI advances by one each pass) are serviced quickly from the cache.
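Here is a rough count for the data side, as a minimal sketch. It assumes SI starts at the line-aligned address 0, tracks only the first byte of each access, uses 32-byte lines, and treats the write in MOV [SI], AX as a cache access like the read. Whether that write also goes to main memory depends on the write policy, which this model ignores. The helper `data_accesses` is hypothetical.

```python
LINE_SIZE = 32   # bytes per line, as in the Pentium's on-chip data cache
PASSES = 1000    # CX = 1000

def data_accesses(start_si=0):
    """Return (hits, misses) for the data reads and writes made by the loop."""
    cached_lines, hits, misses = set(), 0, 0
    si = start_si
    for _ in range(PASSES):
        # ADD AX, [SI] reads the location, then MOV [SI], AX writes it.
        for _kind in ("read", "write"):
            line = si // LINE_SIZE
            if line in cached_lines:
                hits += 1
            else:
                misses += 1
                cached_lines.add(line)   # miss: fetch the whole line from main memory
        si += 1                          # INC SI
    return hits, misses

print(data_accesses())   # (1968, 32): only the first touch of each line misses
```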

![Data writes: MOV [SI], AX](https://slidetodoc.com/presentation_image_h2/cd9336502785ec749d226e8eb22d1c3c/image-11.jpg)

Data writes (MOV [SI], AX), with a cache access time of 10 ns and a main memory access time of 70 ns: whether the result is written only to the cache or also to main memory depends on the cache write policy used by the particular system. There are two policies:

- writeback
- writethrough

- Writeback: results are written only to the cache.
  - Advantage: faster writes.
  - Disadvantage: main memory holds out-of-date data until the modified line is written back.
- Writethrough: results are written to both the cache and main memory.
  - Advantage: main memory always holds valid data.
  - Disadvantage: longer write times.
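The trade-off can be made concrete by counting how many write operations reach main memory for the 1000 stores made by MOV [SI], AX. This is a simplified sketch. It assumes every write hits in the cache, 32-byte lines, and SI running from 0 to 999. It also assumes that under writeback each modified (dirty) line is written back exactly once, when it is eventually evicted. The helper `memory_writes` is hypothetical.

```python
LINE_SIZE = 32

def memory_writes(addresses, policy):
    """Count write operations that reach main memory (simplified model)."""
    if policy == "writethrough":
        return len(addresses)               # every store also goes to main memory
    if policy == "writeback":
        dirty_lines = {a // LINE_SIZE for a in addresses}
        return len(dirty_lines)             # one line write-back per dirty line
    raise ValueError(f"unknown policy: {policy}")

stores = list(range(1000))                  # addresses written by MOV [SI], AX
print(memory_writes(stores, "writethrough"))  # 1000
print(memory_writes(stores, "writeback"))     # 32
```

The writeback figure counts whole-line transfers rather than single words. Until those write-backs happen, main memory holds stale data.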

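Replacement policy. When the cache is full and a new line must be brought in, an existing line (the victim) must be replaced. The victim can be chosen in several ways; one common algorithm is LRU (least recently used), which evicts the line that has gone unused for the longest time.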
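LRU can be sketched with Python's `collections.OrderedDict`, which keeps keys in insertion order and can move a key to the end on each use. This is a toy, fully associative model with a hypothetical `LRUCache` class that holds line identifiers only. A set-associative cache applies the same idea within each set.

```python
from collections import OrderedDict

class LRUCache:
    """Fully associative cache of `capacity` lines with LRU replacement."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.lines = OrderedDict()   # ordered from least to most recently used

    def access(self, line):
        if line in self.lines:
            self.lines.move_to_end(line)       # hit: now the most recently used
            return "hit"
        if len(self.lines) >= self.capacity:
            self.lines.popitem(last=False)     # full: evict the least recently used victim
        self.lines[line] = True                # miss: load the new line
        return "miss"

cache = LRUCache(capacity=2)
print([cache.access(x) for x in ["A", "B", "A", "C", "B"]])
# ['miss', 'miss', 'hit', 'miss', 'miss']  (C evicts B, then B evicts A)
print(list(cache.lines))   # ['C', 'B']
```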