Cache Design


Cache Design

- Cache parameters (organization and placement)
- Cache replacement policy
- Cache performance evaluation method

2021/10/29, course cpeg 324-05F, Topic 7c

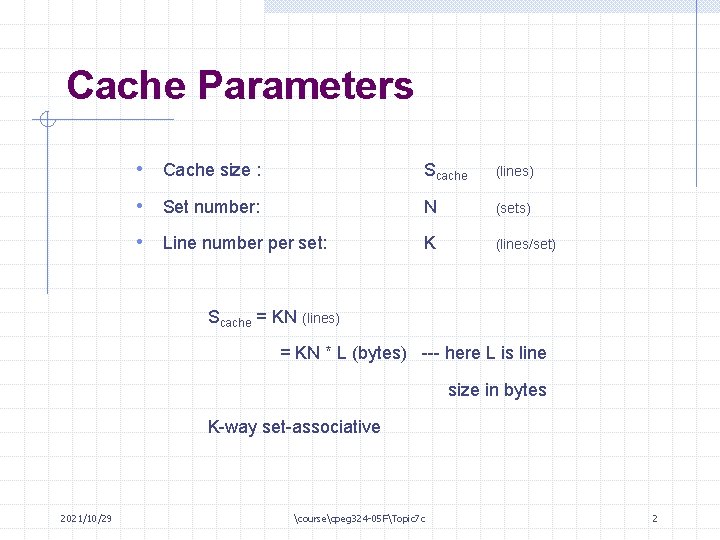

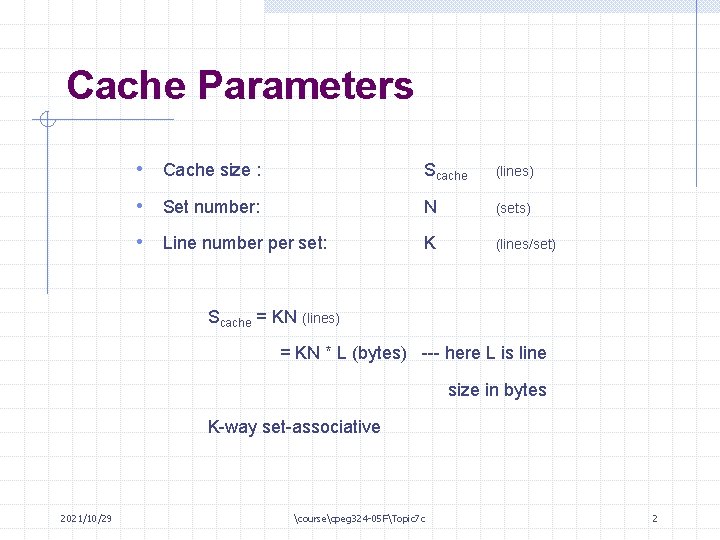

Cache Parameters

- Cache size: $S_{cache}$ (lines)
- Number of sets: $N$ (sets)
- Number of lines per set: $K$ (lines/set)

$S_{cache} = KN$ (lines) $= KNL$ (bytes), where $L$ is the line size in bytes. Such a cache is $K$-way set-associative.
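As a quick check of the size relation, the sketch below computes the cache size in lines and in bytes from K, N and L. The function name `cache_size` and the sample values (4-way, 128 sets, 64-byte lines) are illustrative assumptions, not taken from the slides.

```python
def cache_size(k: int, n: int, line_bytes: int) -> tuple[int, int]:
    """Return (size in lines, size in bytes) for a K-way cache with N sets."""
    lines = k * n                       # S_cache = K * N (lines)
    return lines, lines * line_bytes    # ... = K * N * L (bytes)


# Hypothetical example: 4-way set-associative, 128 sets, 64-byte lines.
lines, size_bytes = cache_size(k=4, n=128, line_bytes=64)
print(lines, size_bytes)  # 512 lines, 32768 bytes
```

Setting K = 1 gives a direct-mapped cache, and setting N = 1 gives a fully associative one.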

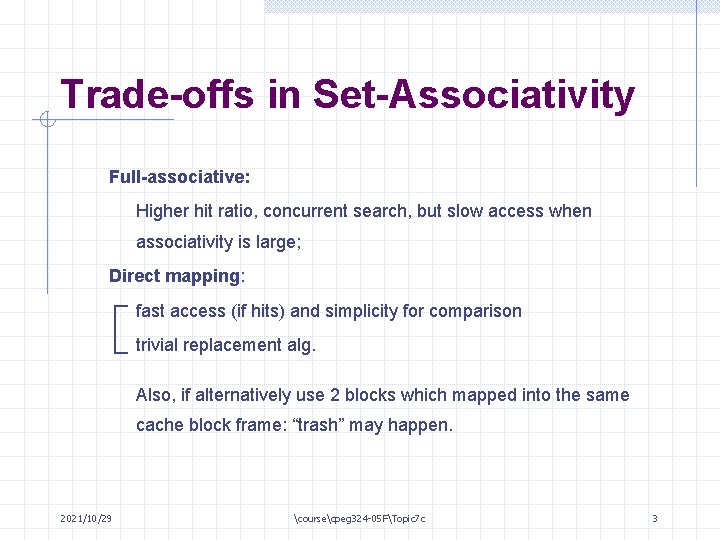

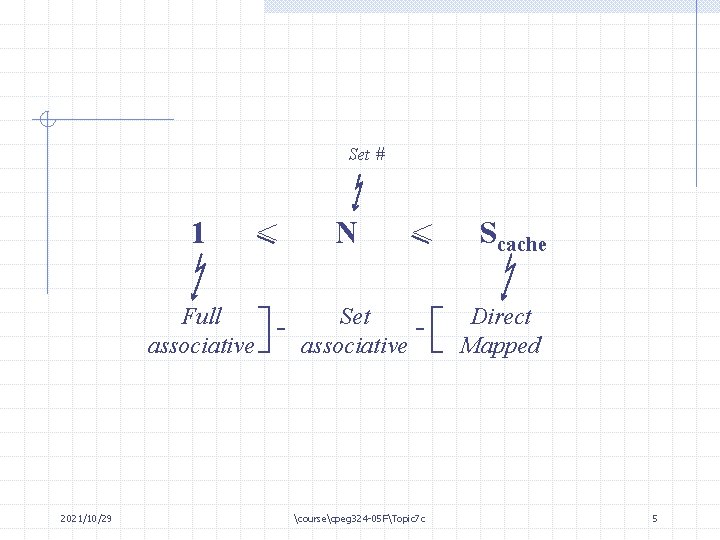

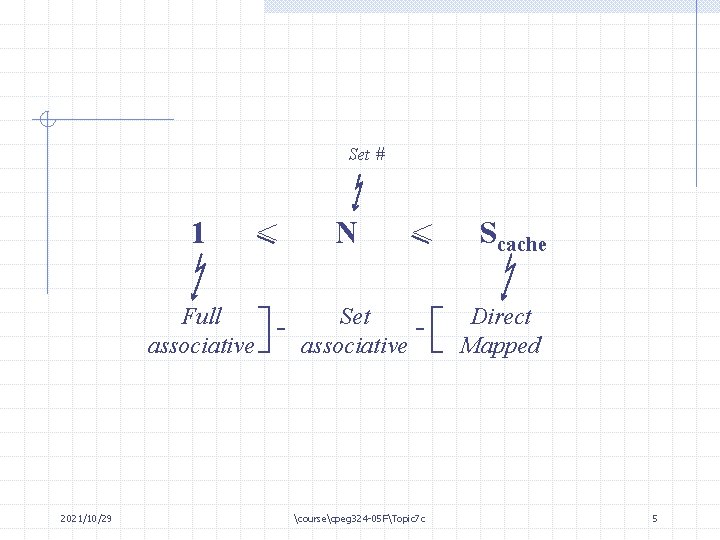

Trade-offs in Set-Associativity

- Fully associative: higher hit ratio and concurrent search, but slow access when the associativity is large.
- Direct mapped: fast access (on a hit), simple comparison, and a trivial replacement algorithm. However, if a program alternates between two blocks that map to the same cache block frame, thrashing may occur.
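The thrashing case can be illustrated with a minimal sketch of a direct-mapped cache. It assumes the common placement rule "block number modulo number of frames", which the slides do not state explicitly; the function name and the block numbers are hypothetical.

```python
def direct_mapped_misses(block_trace, num_frames):
    """Count misses in a direct-mapped cache with `num_frames` block frames."""
    frames = [None] * num_frames          # one resident block per frame
    misses = 0
    for block in block_trace:
        frame = block % num_frames        # assumed placement: block number mod frame count
        if frames[frame] != block:        # a single comparison, no search
            misses += 1
            frames[frame] = block         # trivial replacement: overwrite the frame
    return misses


conflicting = [0, 8] * 10   # blocks 0 and 8 both map to frame 0 of 8 frames
friendly = [0, 1] * 10      # blocks 0 and 1 map to different frames
print(direct_mapped_misses(conflicting, 8))  # 20: every access misses
print(direct_mapped_misses(friendly, 8))     # 2: only the first touch of each block
```

Both traces touch only two blocks, yet the conflicting pair never hits because each access evicts the other block.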

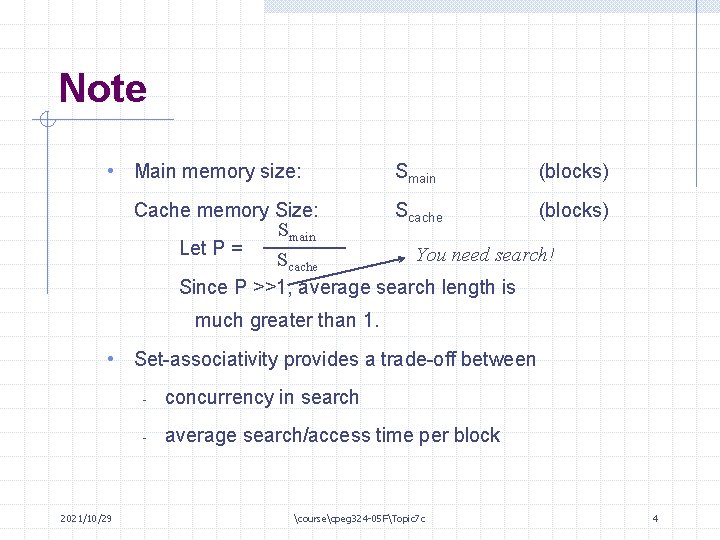

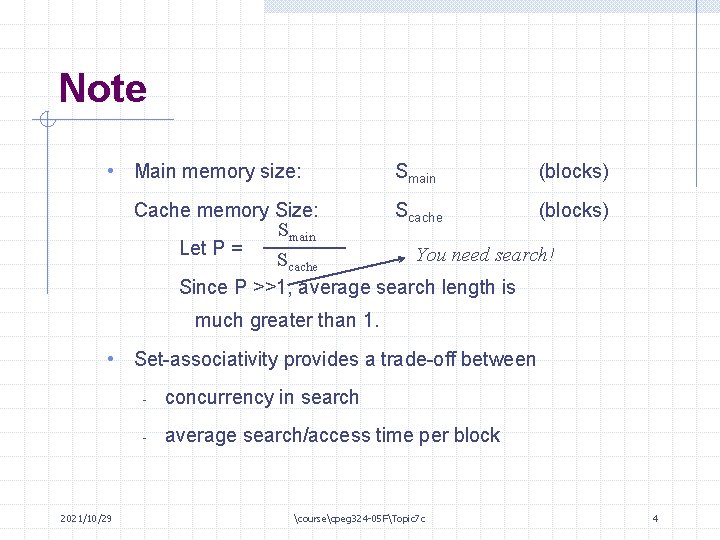

Note

- Main memory size: $S_{main}$ (blocks)
- Cache memory size: $S_{cache}$ (blocks)
- Let $P = S_{main} / S_{cache}$. Since $P \gg 1$, a search is needed, and the average search length is much greater than 1.
- Set-associativity provides a trade-off between:
  - concurrency in search
  - average search/access time per block

Number of sets $N$: $1$ (fully associative) $< N <$ $S_{cache}$ (direct mapped); values in between give a set-associative cache.

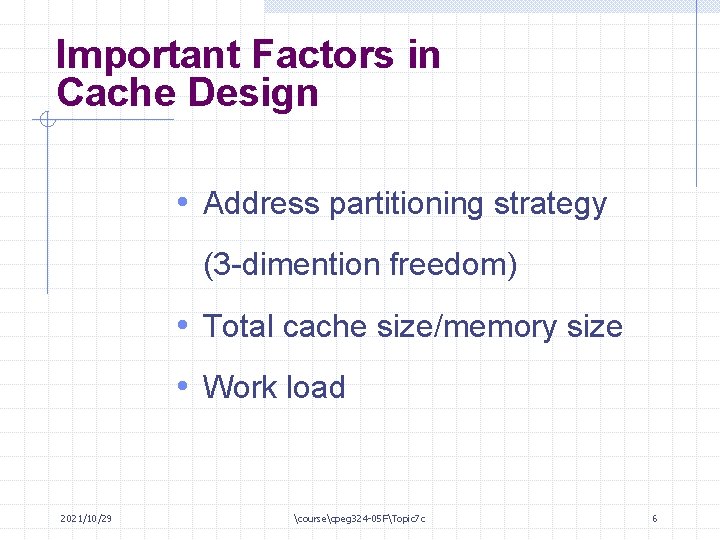

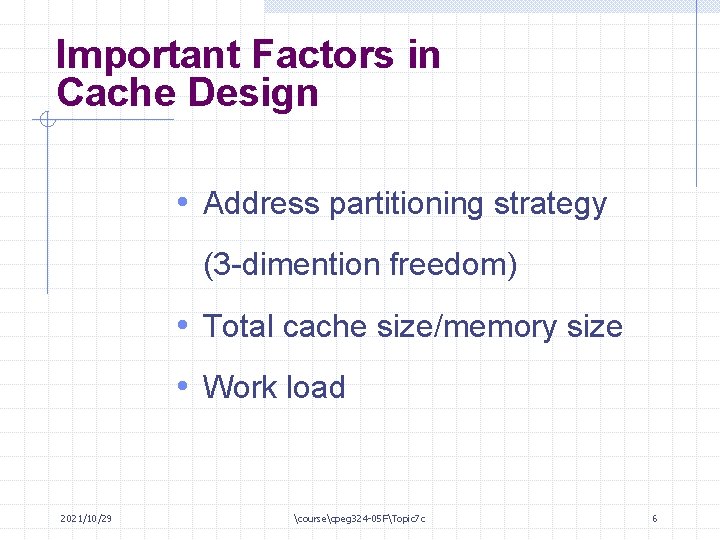

Important Factors in Cache Design

- Address partitioning strategy (three dimensions of freedom)
- Total cache size / memory size
- Workload

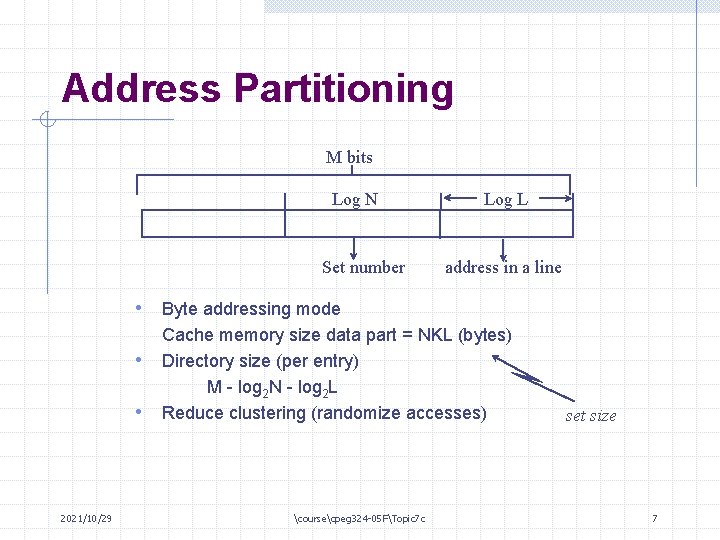

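Address Partitioning

An $M$-bit address is partitioned into three fields: the set number ($\log_2 N$ bits), the address within a line ($\log_2 L$ bits), and the remaining $M - \log_2 N - \log_2 L$ bits, which are kept in the directory entry.

- Byte addressing mode
- Cache memory size (data part) = $NKL$ (bytes), where $K$ is the set size
- Directory size (per entry) = $M - \log_2 N - \log_2 L$ bits
- Reduce clustering (randomize accesses)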
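The partitioning can be sketched in a few lines. This assumes N and L are powers of two and that the fields are laid out, from high to low bits, as directory bits (called `tag` here, a name not used on the slide), set number, and address within the line. The sample address and parameters are hypothetical.

```python
def split_address(addr: int, num_sets: int, line_bytes: int) -> tuple[int, int, int]:
    """Split a byte address into (tag, set number, offset within line).

    Assumes num_sets (N) and line_bytes (L) are powers of two.
    """
    offset_bits = line_bytes.bit_length() - 1   # log2 L
    set_bits = num_sets.bit_length() - 1        # log2 N
    offset = addr & (line_bytes - 1)
    set_number = (addr >> offset_bits) & (num_sets - 1)
    tag = addr >> (offset_bits + set_bits)      # remaining M - log2 N - log2 L bits
    return tag, set_number, offset


# Hypothetical example: N = 256 sets, L = 64-byte lines, 32-bit address.
tag, set_number, offset = split_address(0x12345678, num_sets=256, line_bytes=64)
print(hex(tag), hex(set_number), hex(offset))  # 0x48d1 0x59 0x38
```

With M = 32, the directory entry in this example holds 32 - 8 - 6 = 18 bits.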

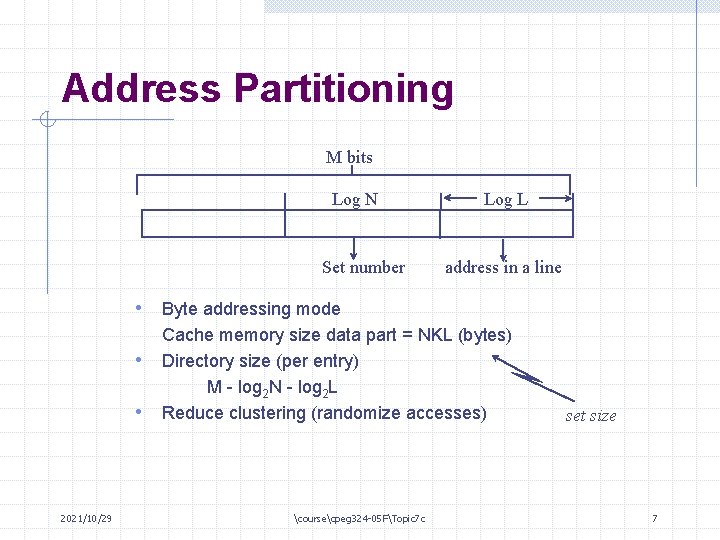

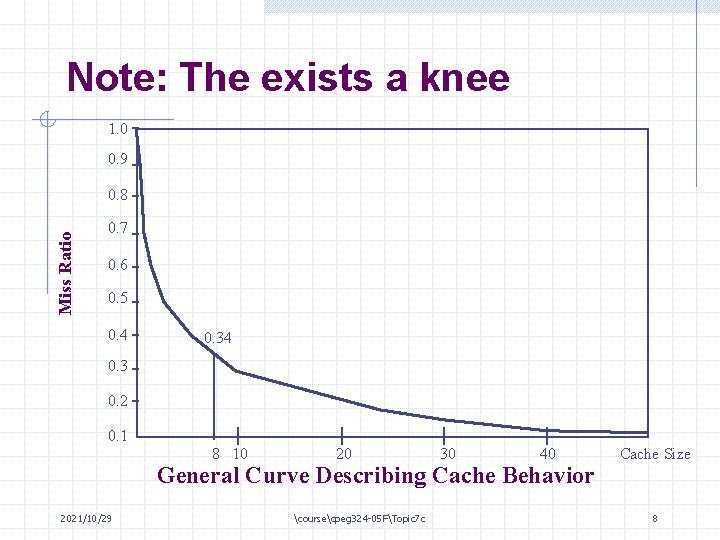

[Figure: General curve describing cache behavior. Miss ratio (0.1 to 1.0) versus cache size. Note: there exists a knee in the curve.]

… the data are sketchy and highly dependent on the method of gathering …

… designer must make critical choices using a combination of “hunches, skills, and experience” as supplement …

Hunch: “a strong intuitive feeling concerning a future event or result.”

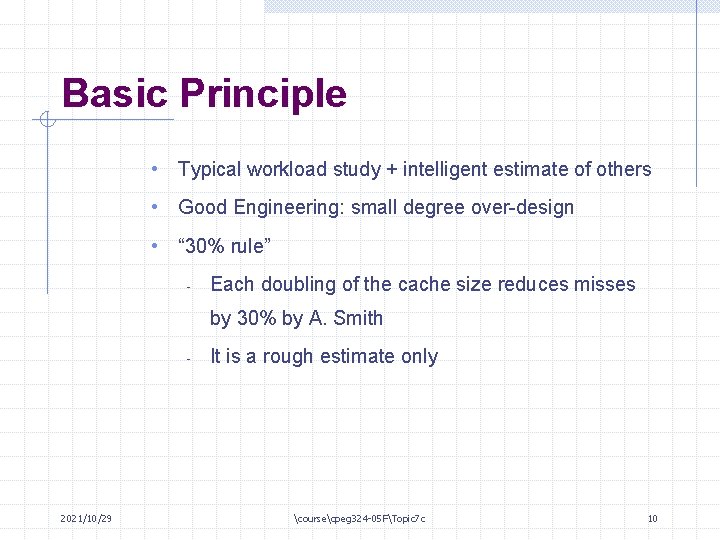

Basic Principle

- Typical workload study + intelligent estimate of others
- Good engineering: a small degree of over-design
- “30% rule” (A. Smith): each doubling of the cache size reduces misses by 30%
  - It is a rough estimate only
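Read literally, the 30% rule multiplies the miss count by 0.7 for every doubling of the cache size. The sketch below applies that reading; the function name and the baseline of 1000 misses at 8 KiB are made-up numbers for illustration, and the result is only as rough as the rule itself.

```python
import math


def estimated_misses(base_misses: float, base_size: int, new_size: int) -> float:
    """Scale a miss count by the rough "30% rule": x0.7 per doubling of cache size."""
    doublings = math.log2(new_size / base_size)
    return base_misses * 0.7 ** doublings


# Hypothetical baseline: 1000 misses with an 8 KiB cache.
for size_kib in (8, 16, 32, 64):
    print(size_kib, round(estimated_misses(1000, 8, size_kib)))
# 8 -> 1000, 16 -> 700, 32 -> 490, 64 -> 343
```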

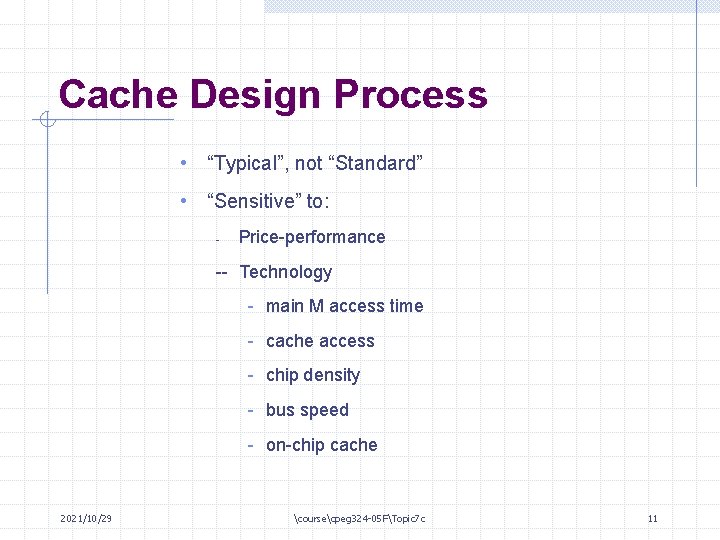

Cache Design Process

- “Typical”, not “standard”
- “Sensitive” to:
  - Price-performance
  - Technology:
    - main memory access time
    - cache access
    - chip density
    - bus speed
    - on-chip cache

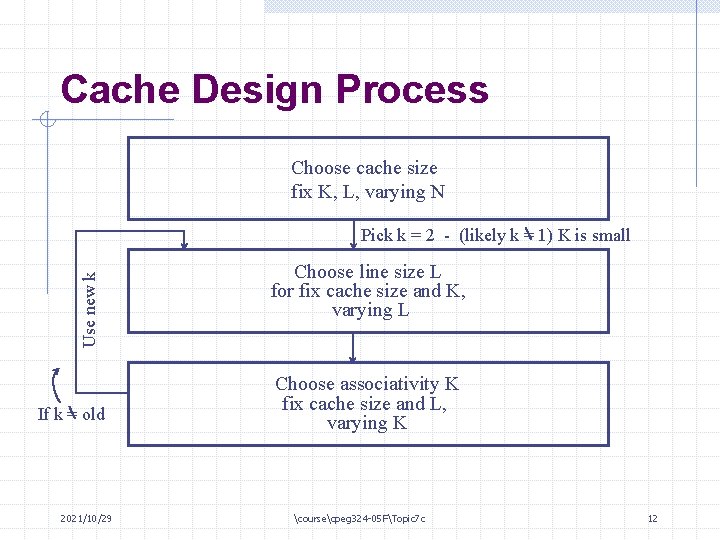

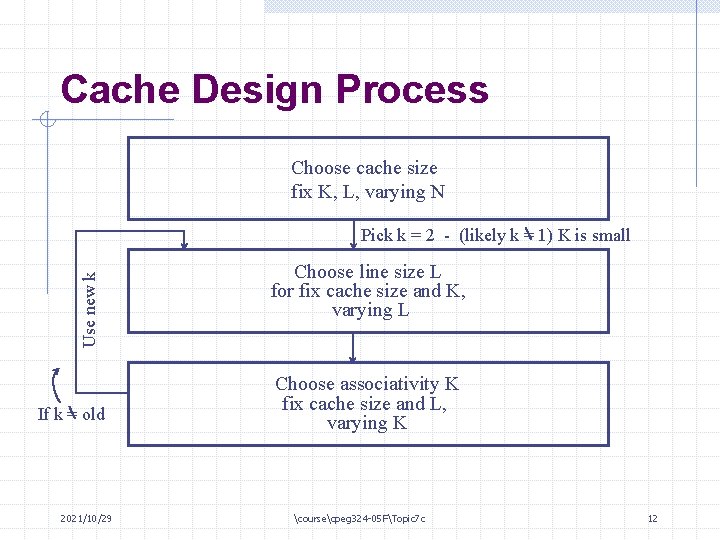

Cache Design Process

1. Start with a small $K$: pick $K = 2$ (or, likely, $K = 1$).
2. Choose the cache size: fix $K$ and $L$, vary $N$.
3. Choose the line size $L$: fix the cache size and $K$, vary $L$.
4. Choose the associativity $K$: fix the cache size and $L$, vary $K$.
5. If the chosen $K$ equals the old $K$, the process ends; otherwise use the new $K$ and repeat from step 2.

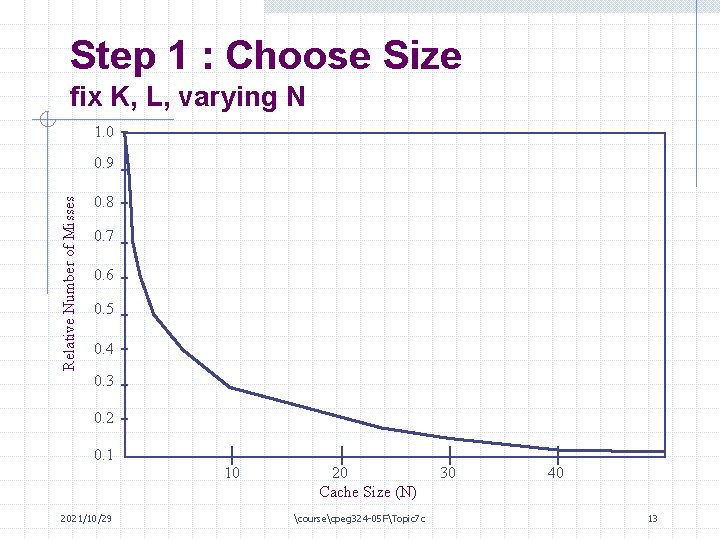

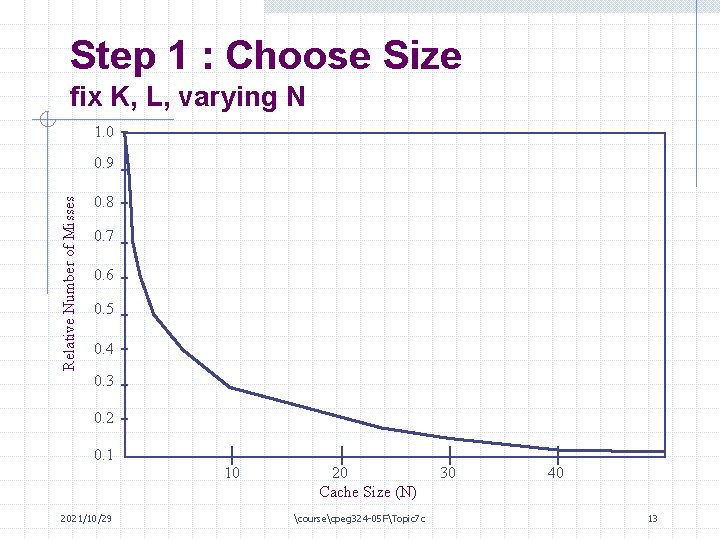

Step 1: Choose Size

Fix $K$ and $L$, vary $N$.

[Figure: relative number of misses (0.1 to 1.0) versus cache size ($N$).]

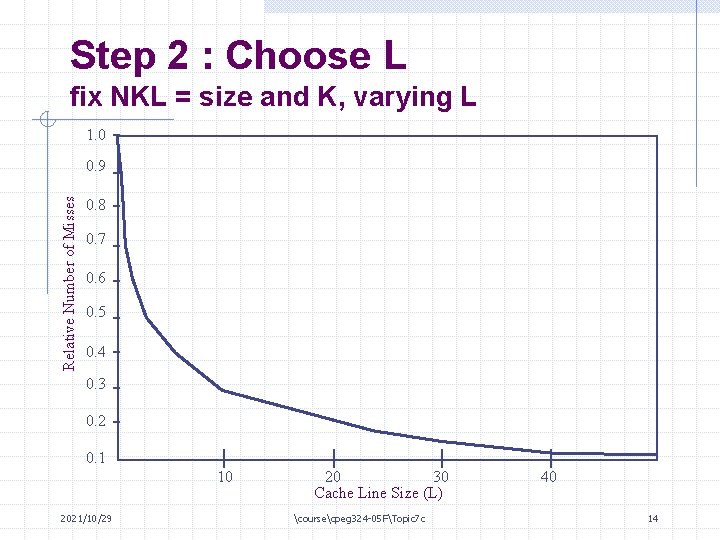

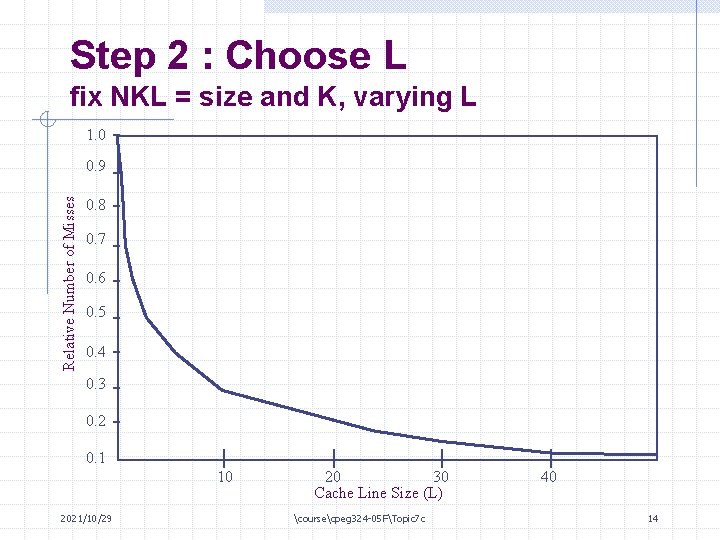

Step 2: Choose L

Fix the cache size ($NKL$) and $K$, vary $L$.

[Figure: relative number of misses (0.1 to 1.0) versus cache line size ($L$).]

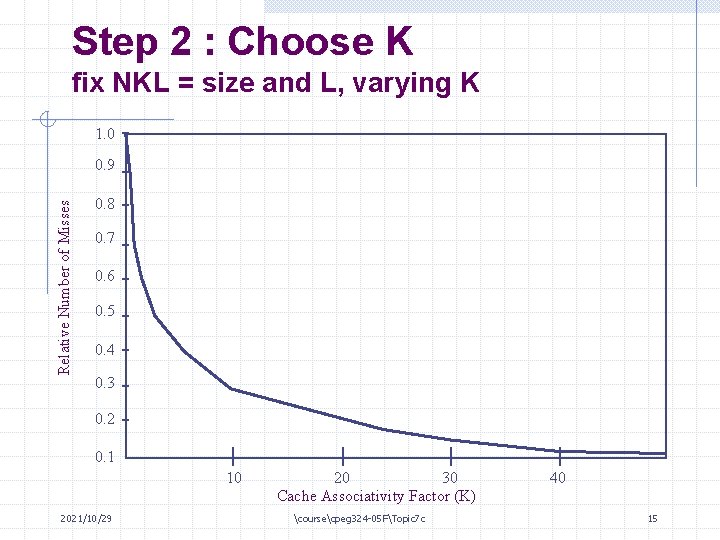

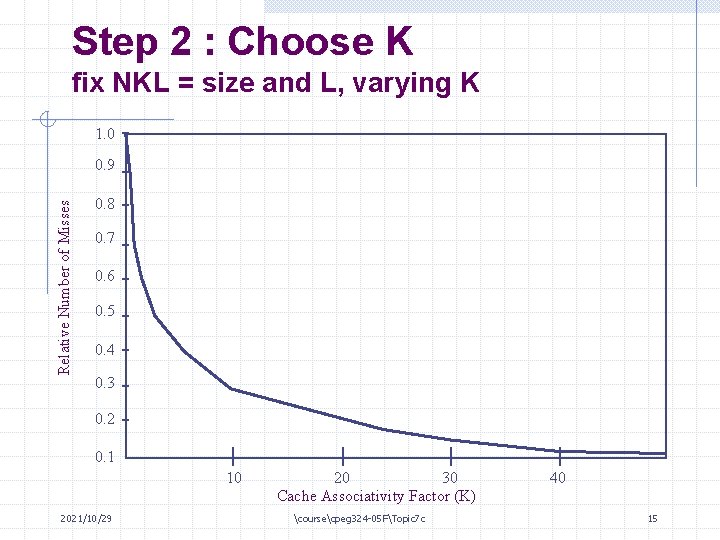

Step 3: Choose K

Fix the cache size ($NKL$) and $L$, vary $K$.

[Figure: relative number of misses (0.1 to 1.0) versus cache associativity factor ($K$).]

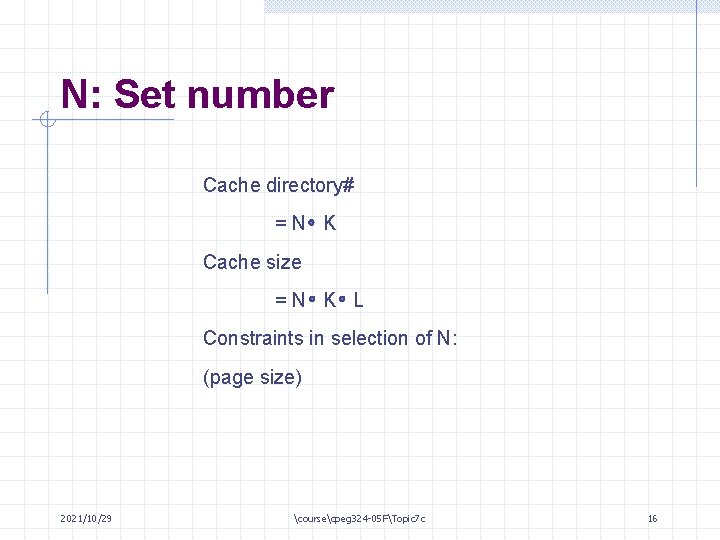

N: Number of Sets

- Number of cache directory entries = $NK$
- Cache size = $NKL$
- Constraint in the selection of $N$: page size

K: Associativity

- Bigger: better miss ratio
- Smaller is better in that it is:
  - faster
  - cheaper
  - simpler
- 4 ~ 8 gets the best miss ratio

L: Line Size

- Atomic unit of transmission
- Affects the miss ratio
- Smaller:
  - larger average delay
  - less traffic
  - larger average hardware cost for associative search
  - larger possibility of “line crossers”
- Workload dependent
- 16 ~ 128 bytes

Cache Replacement Policy

- FIFO (first-in-first-out)
- LRU (least recently used)
- OPT (furthest-future used): do not retain lines whose next occurrence is in the most distant future

Note: LRU performance is close to OPT for frequently encountered program structures.
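The three policies can be compared on a small reference string with the sketch below. It models a single fully associative set; the function name, the reference string and the capacity of 3 are hypothetical choices, and OPT is implemented by scanning the rest of the string, which is only possible in a simulation where the future is known.

```python
def count_misses(refs, capacity, policy):
    """Count misses for one fully associative set under "FIFO", "LRU" or "OPT"."""
    resident = []   # FIFO: oldest load first; LRU: least recently used first
    misses = 0
    for i, line in enumerate(refs):
        if line in resident:
            if policy == "LRU":          # a hit refreshes recency only under LRU
                resident.remove(line)
                resident.append(line)
            continue
        misses += 1
        if len(resident) == capacity:
            if policy == "OPT":
                future = refs[i + 1:]
                # Evict the line whose next use is furthest away (or never comes).
                victim = max(resident, key=lambda r: future.index(r)
                             if r in future else len(future))
            else:
                victim = resident[0]     # head of the FIFO / LRU order
            resident.remove(victim)
        resident.append(line)
    return misses


refs = list("ABCABDABEABC")   # hypothetical reference string with a reused pair A, B
for policy in ("FIFO", "LRU", "OPT"):
    print(policy, count_misses(refs, capacity=3, policy=policy))
# FIFO 8, LRU 6, OPT 6
```

On this string, which keeps returning to the same two lines, LRU matches OPT while FIFO evicts the reused lines simply because they were loaded first.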

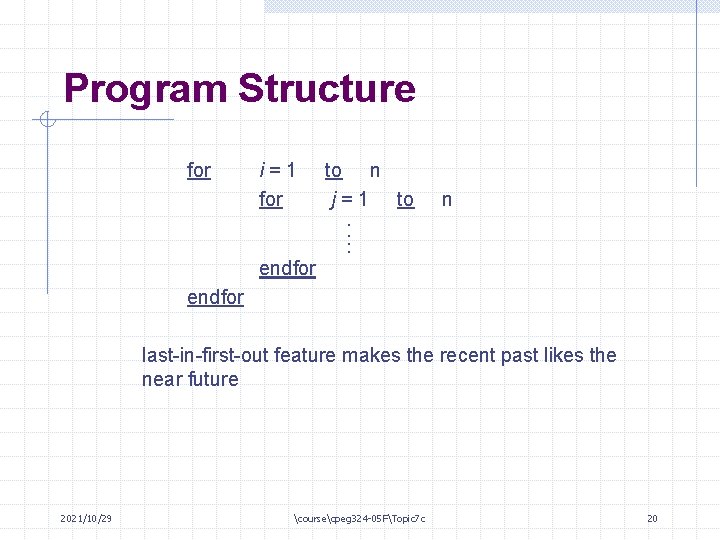

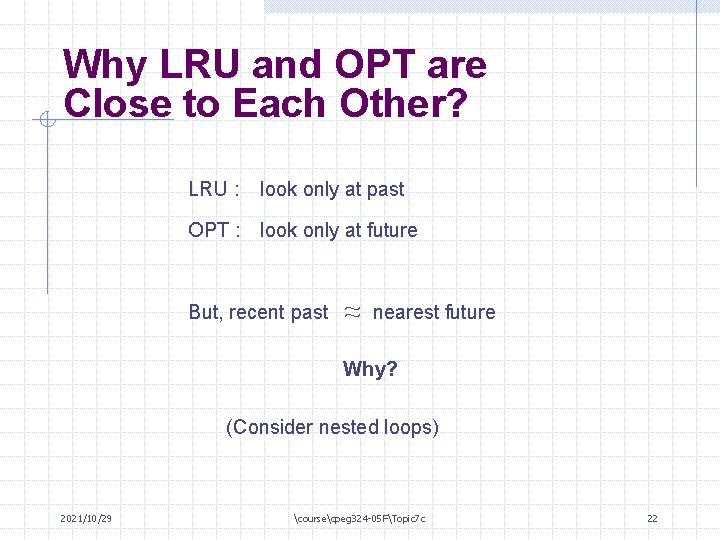

Program Structure

    for i = 1 to n
        for j = 1 to n
            ….
        endfor
    endfor

The last-in-first-out feature makes the recent past look like the near future.

![A B C D E F G H a Nearest Future Access Furthest Future A B C D E F G H [a] Nearest Future Access Furthest Future](https://slidetodoc.com/presentation_image_h2/f6192ce861c7470c4660617acbad4efa/image-21.jpg)

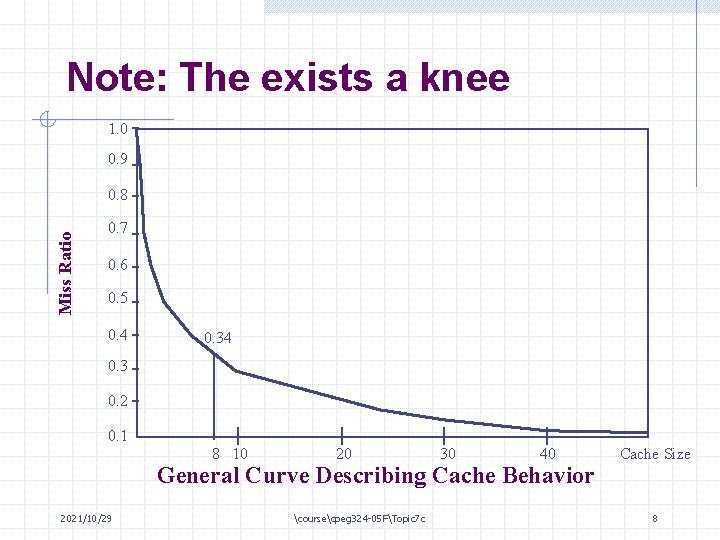

Directory order, from nearest future access (left) to furthest future access (right):

- [a] A B C D E F G H
- [b] B C A D E F G H
- [c] B Z C A D E F G

An eight-way cache directory maintained with the OPT policy: [a] initial state for the future reference string AZBZCADEFGH; [b] after the cache hit to Line A; and [c] after the cache miss to Line Z.
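The three directory states can be reproduced with a short sketch that keeps the resident lines sorted by their next reference. The helper names `opt_order` and `opt_access` are hypothetical, and the miss path assumes the directory is already full, as it is in the figure.

```python
def opt_order(resident, future):
    """Sort resident lines from nearest to furthest next reference."""
    def next_use(line):
        # Lines never referenced again sort last.
        return future.index(line) if line in future else len(future)
    return sorted(resident, key=next_use)


def opt_access(resident, future):
    """Process future[0] under OPT; return (new directory order, remaining future)."""
    line, rest = future[0], future[1:]
    if line not in resident:
        # Miss (directory assumed full): drop the furthest-future line, add the new one.
        resident = opt_order(resident, future)[:-1] + [line]
    return opt_order(resident, rest), rest


future = list("AZBZCADEFGH")
state_a = opt_order(list("ABCDEFGH"), future)
state_b, future = opt_access(state_a, future)   # hit on A
state_c, future = opt_access(state_b, future)   # miss on Z, H is replaced
print("".join(state_a), "".join(state_b), "".join(state_c))
# ABCDEFGH BCADEFGH BZCADEFG
```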

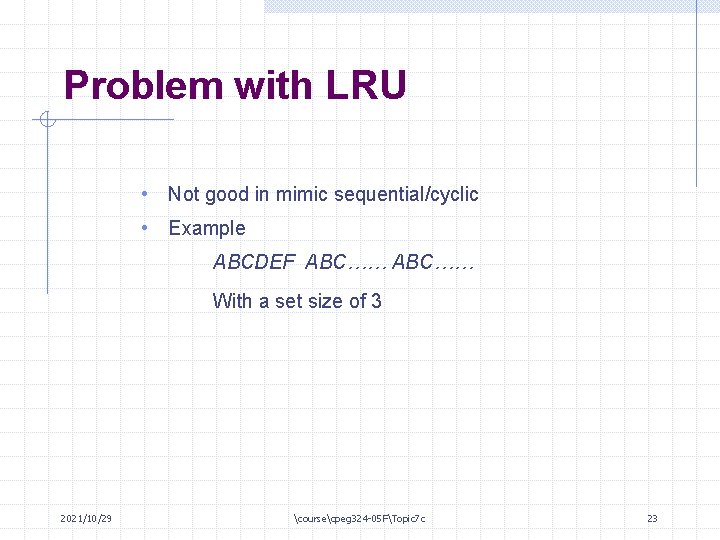

Why Are LRU and OPT Close to Each Other?

- LRU: looks only at the past
- OPT: looks only at the future

But recent past ≈ nearest future. Why? (Consider nested loops.)

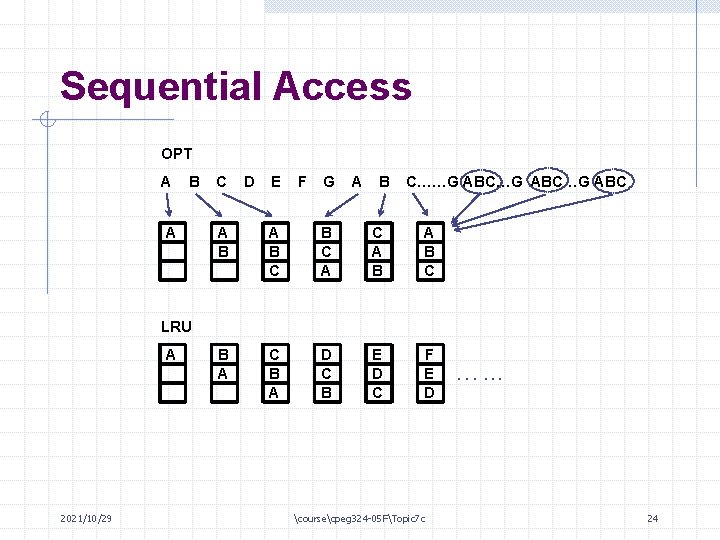

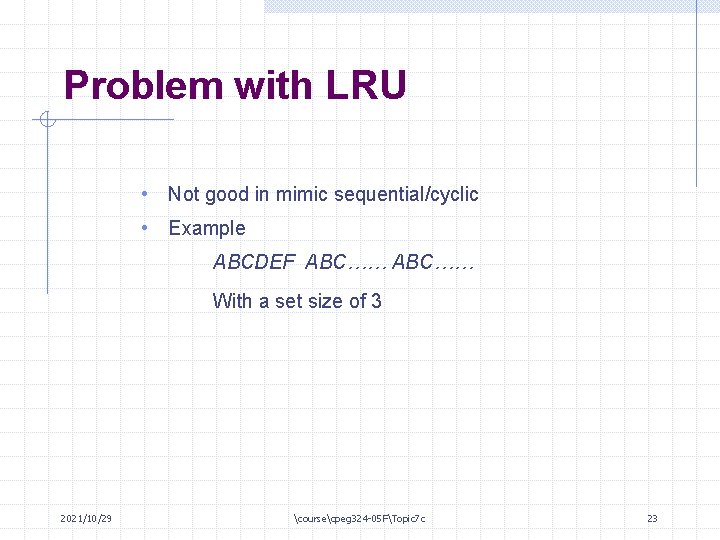

Problem with LRU

- Does not mimic OPT well on sequential/cyclic access patterns
- Example: A B C D E F A B C … with a set size of 3

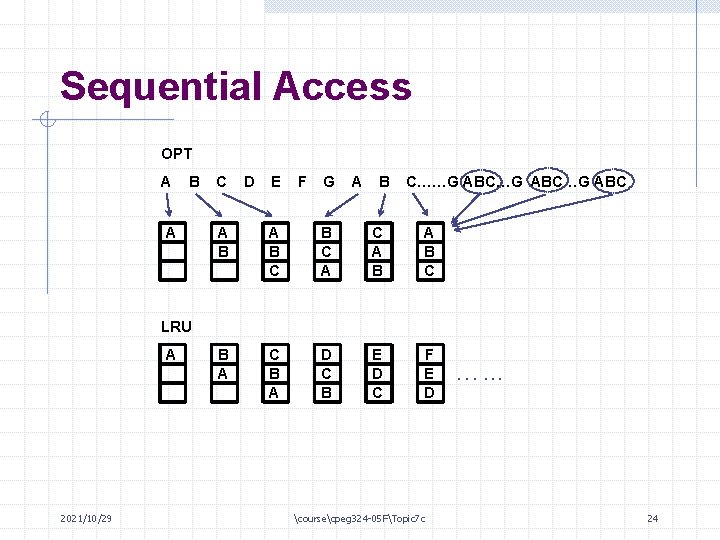

Sequential Access

[Figure: set contents under OPT and under LRU for the cyclic reference string A B C … G, A B C … G, A B C …. Under LRU the set holds, in turn: A; B A; C B A; D C B; E D C; F E D; …]
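The LRU behaviour on a cyclic reference string can be traced step by step. The sketch below keeps an LRU stack with the most recently used line first; the function name is hypothetical, and the string A to F with a set size of 3 is used as the example.

```python
def lru_stack_trace(refs, capacity):
    """Return (misses, stack after each reference), most recently used line first."""
    stack, states, misses = [], [], 0
    for line in refs:
        if line in stack:
            stack.remove(line)      # hit: pull the line out, re-insert at the top
        else:
            misses += 1
            if len(stack) == capacity:
                stack.pop()         # miss on a full set: drop the bottom (LRU) line
        stack.insert(0, line)
        states.append("".join(stack))
    return misses, states


misses, states = lru_stack_trace("ABCDEFABCDEF", capacity=3)
print(states[:6])   # ['A', 'BA', 'CBA', 'DCB', 'EDC', 'FED']
print(misses)       # 12: every reference misses
```

Each line is evicted just before the cycle returns to it, so LRU never hits when the cycle is longer than the set.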

Empirical Data

OPT can gain about 10% ~ 30% improvement over LRU (in terms of miss reduction).

A Comparison

- OPT has two candidates for replacement:
  - the most recently referenced line
  - the line furthest to be referenced in the future
- LRU has only one, the least recently used line
  - it never replaces the most recently referenced line
  - dead line in LRU

Performance Evaluation Methods for Workload

- Analytical modeling
- Simulation
- Measuring

Cache Analysis Methods

- Hardware monitoring
  - fast and accurate
  - not fast enough (for high-performance machines)
  - cost
  - flexibility/repeatability

Cache Analysis Methods (cont’d)

- Address traces and machine simulator
  - slow
  - accuracy/fidelity
  - cost advantage
  - flexibility/repeatability
  - OS/other impacts: how to put them in?

Trace-Driven Simulation for Cache

- Workload dependence
  - difficulty in characterizing the load
  - no generally accepted model
- Effectiveness
  - possible simulation for many parameters
  - repeatability
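A minimal trace-driven simulator shows why one trace can be replayed for many parameter settings. The sketch assumes LRU replacement within each set and block placement by "block number modulo N". The trace is synthetic (two interleaved array sweeps), invented for illustration and not a real workload.

```python
from collections import OrderedDict


def simulate(trace, num_sets, ways, line_bytes):
    """Return the miss count of an LRU set-associative cache on a byte-address trace."""
    sets = [OrderedDict() for _ in range(num_sets)]
    misses = 0
    for addr in trace:
        block = addr // line_bytes
        lines = sets[block % num_sets]
        if block in lines:
            lines.move_to_end(block)          # hit: now most recently used
        else:
            misses += 1
            if len(lines) == ways:
                lines.popitem(last=False)     # evict the least recently used line
            lines[block] = None
    return misses


# Synthetic trace (an assumption): 50 passes over a 4 KiB array of 4-byte words,
# interleaved word by word with a second array located 8 KiB away.
trace = [base + 4 * i for _ in range(50) for i in range(1024) for base in (0, 8192)]

# Same trace, same 8 KiB of data storage, different associativity K.
for ways in (1, 2, 4):
    num_sets = 8192 // (ways * 64)
    print(ways, simulate(trace, num_sets, ways, line_bytes=64), len(trace))
# 1 102400 102400
# 2 128 102400
# 4 128 102400
```

Because the trace is fixed, each run is repeatable, and only the cache parameters change between runs.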

Problems in Address Traces

- Representativeness of the actual workload (hard)
  - traces cover only milliseconds of real workload
  - diversity of user programs
- Initialization transient
  - use long enough traces to absorb the impact
- Inability to properly model multiprocessor effects

An Example

- Assume a two-way set-associative cache with 256 sets: $S_{cache} = 2 \times 256$ lines.
- Assume that the difficulty of deciding whether or not to count initialization causes 512 more misses than actually required.
- Assume a trace of length 100,000 with a hit rate of 0.99. Then 1,000 misses are generated, and the extra 512 make a big difference!
- If we want the 512 misses to account for less than 5% of the total, then total misses = 512 / 5% = 10,240. Thus, with a hit rate of 0.99, the required trace length is > 1,024,000!
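The arithmetic of this example can be written as a small helper. The function name is hypothetical, and the ratios are passed as decimal strings so that `Fraction` keeps the division exact. The result is the boundary length at which the start-up misses are exactly the given share, so a strictly smaller share needs a longer trace.

```python
from fractions import Fraction
import math


def min_trace_length(startup_misses: int, max_share: str, hit_ratio: str) -> int:
    """Trace length at which start-up misses are `max_share` of all misses."""
    total_misses = startup_misses / Fraction(max_share)   # e.g. 512 / 5% = 10,240
    miss_ratio = 1 - Fraction(hit_ratio)                  # e.g. 1 - 0.99 = 0.01
    return math.ceil(total_misses / miss_ratio)


print(min_trace_length(512, "0.05", "0.99"))  # 1024000
```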

One may not know the cache parameters beforehand. What to do? Make the trace longer than the minimum acceptable length!

- 100,000? Too small.
- (10 ~ 100) × $10^6$: OK?
- 1000 × $10^6$ or more is being used now.