
Data Structures & Algorithms Lecture 4: Linear-time Sorting

Solving recurrences one more time …

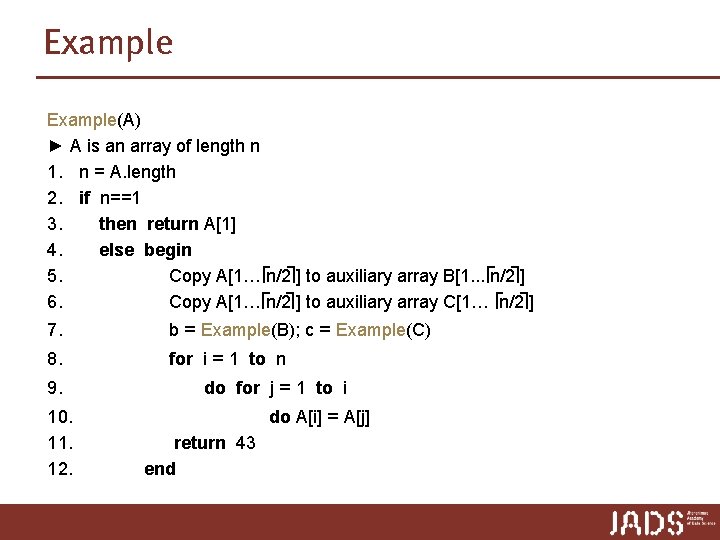

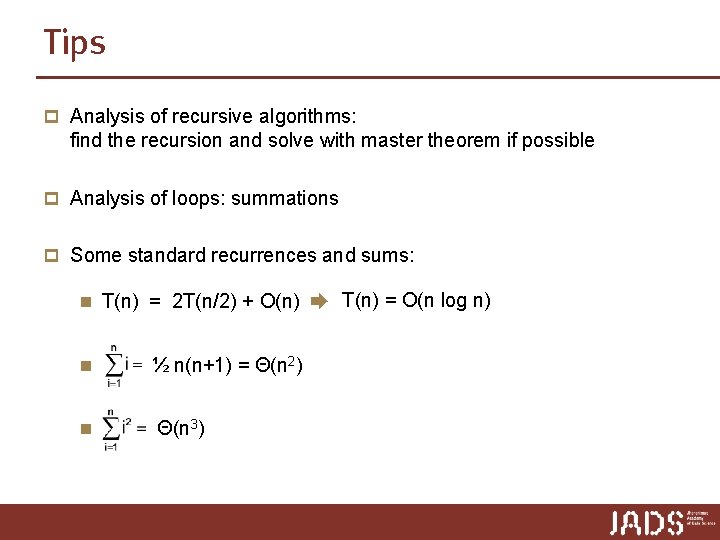

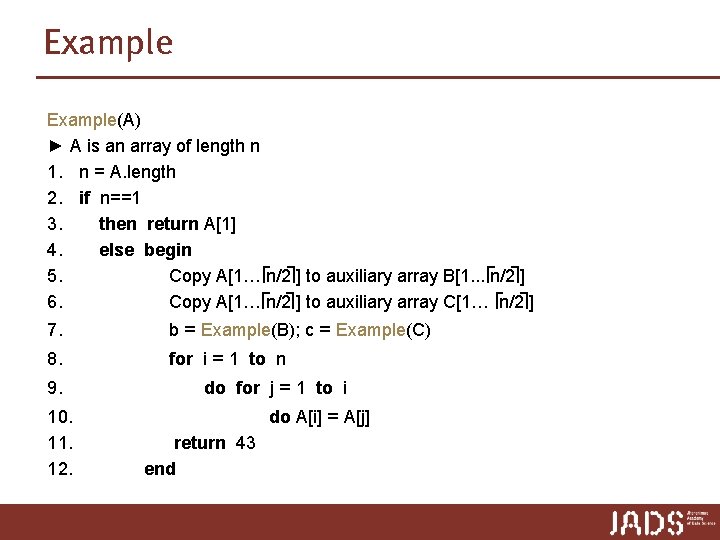

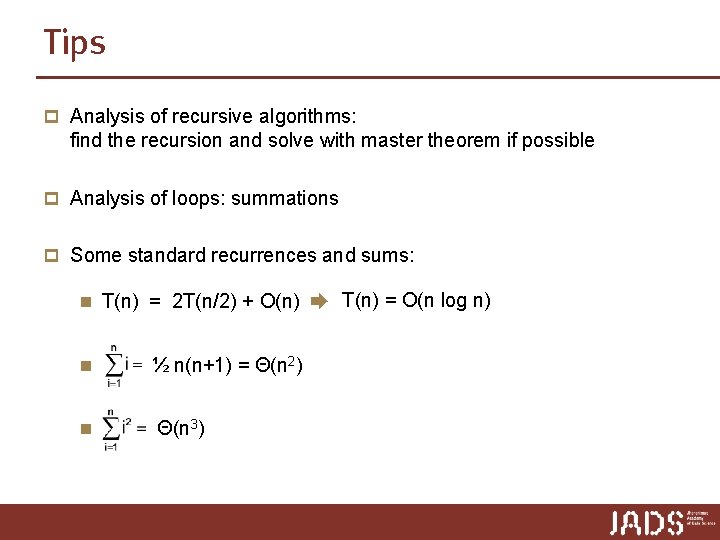

Solving recurrences

Use one of the following methods:

1. Substitution method: guess the solution and use induction to prove that your guess is correct.
2. Carefully evaluate the recursion tree.
3. Use the master theorem (caveat: not always applicable).

How to guess:

1. Expand the recursion.
2. Draw a recursion tree.
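To make "expand the recursion" concrete, here is a minimal Python sketch (the helper name `recursion_tree_levels` is ours, not from the lecture) that lists the total cost of each level of the recursion tree of T(n) = aT(n/b) + f(n). It assumes n is a power of b and charges every leaf f(1).

```python
from typing import Callable, List


def recursion_tree_levels(a: int, b: int, f: Callable[[int], int], n: int) -> List[int]:
    """Total cost of each level of the recursion tree of T(n) = a*T(n/b) + f(n).

    Level j has a**j nodes, each working on a subproblem of size n / b**j.
    Assumptions of this sketch: n is a power of b, and each leaf (size 1) costs f(1).
    """
    if a < 1 or b < 2 or n < 1:
        raise ValueError("need a >= 1, b >= 2 and n >= 1")
    levels = []
    size, nodes = n, 1
    while True:
        levels.append(nodes * f(size))  # work done by all nodes on this level
        if size == 1:
            return levels
        size //= b                      # exact because n is assumed to be a power of b
        nodes *= a


# MergeSort-like recurrence T(n) = 2T(n/2) + n: every level costs n.
print(recursion_tree_levels(2, 2, lambda m: m, 8))  # [8, 8, 8, 8]
```

With log2(n) + 1 levels of cost n each, summing the levels gives n(log n + 1), the Θ(n log n) behaviour of the standard recurrence T(n) = 2T(n/2) + O(n).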

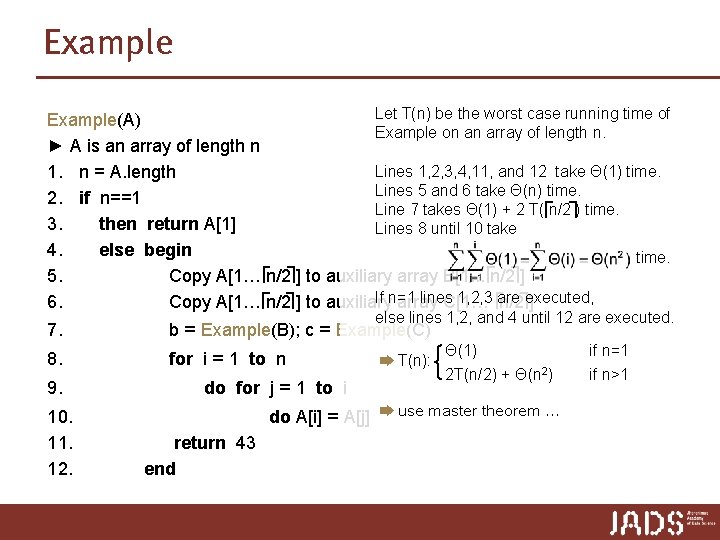

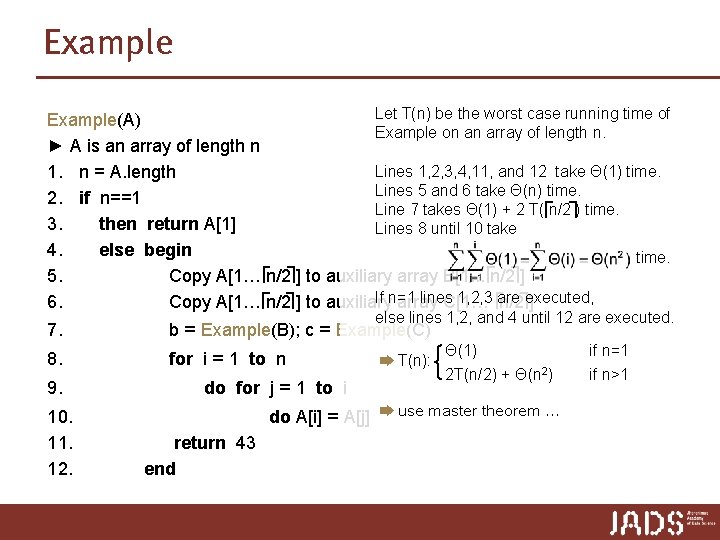

Example(A) ► A is an array of length n

1. n = A.length
2. if n == 1
3. then return A[1]
4. else begin
5. Copy A[1…n/2] to auxiliary array B[1…n/2]
6. Copy A[1…n/2] to auxiliary array C[1…n/2]
7. b = Example(B); c = Example(C)
8. for i = 1 to n
9. do for j = 1 to i
10. do A[i] = A[j]
11. return 43
12. end

Example

Let T(n) be the worst-case running time of Example on an array of length n.

- Lines 1, 2, 3, 4, 11, and 12 take Θ(1) time.
- Lines 5 and 6 take Θ(n) time.
- Line 7 takes Θ(1) + 2T(n/2) time.
- Lines 8 until 10 take $\sum_{i=1}^{n} \Theta(i) = \Theta(n^2)$ time.

If n = 1, lines 1, 2, 3 are executed; otherwise lines 1, 2, and 4 until 12 are executed. Hence

$$T(n) = \begin{cases} \Theta(1) & \text{if } n = 1,\\ 2T(n/2) + \Theta(n^2) & \text{if } n > 1. \end{cases}$$

➨ use the master theorem …
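As a sanity check of this recurrence, a minimal Python sketch evaluates it exactly. It assumes (our choice, not the lecture's) that n is a power of 2 and that every Θ-term is replaced by its leading term with constant 1, i.e. T(1) = 1 and T(n) = 2T(n/2) + n². Unrolling then gives the closed form T(n) = 2n² - n, so T(n) = Θ(n²).

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def T(n: int) -> int:
    """Recurrence of Example(A) with all constants set to 1 (an assumption):
    T(1) = 1 and T(n) = 2*T(n/2) + n**2 for n a power of 2."""
    if n == 1:
        return 1
    return 2 * T(n // 2) + n * n


for n in (1, 2, 4, 8, 16, 1024):
    # Level j costs 2**j * (n / 2**j)**2 = n**2 / 2**j; summing the levels
    # and adding the n leaves gives 2*n**2 - n.
    assert T(n) == 2 * n * n - n
    print(n, T(n), T(n) / (n * n))  # the ratio tends to 2, so T(n) = Theta(n^2)
```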

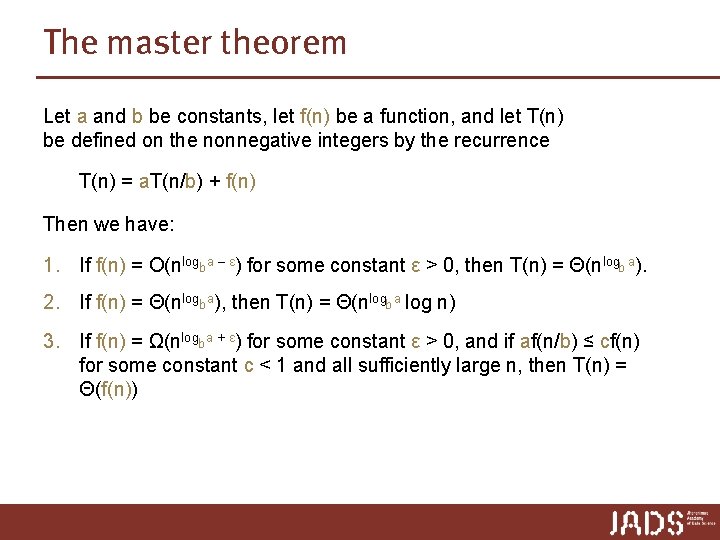

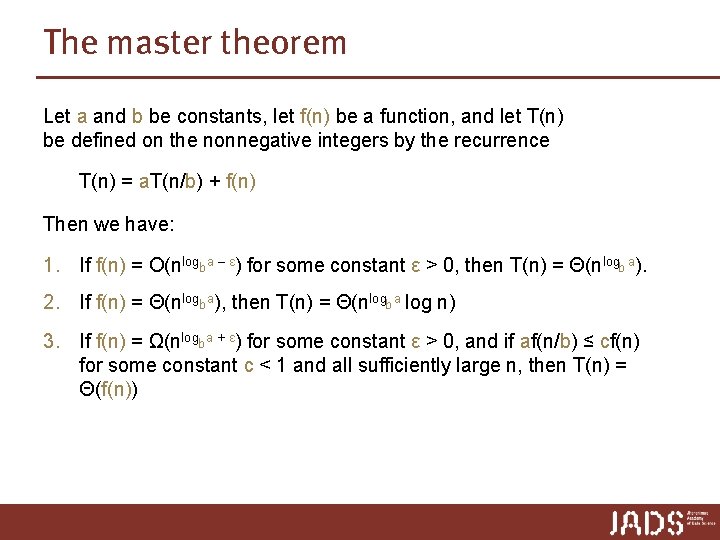

The master theorem

Let $a \ge 1$ and $b > 1$ be constants, let $f(n)$ be a function, and let $T(n)$ be defined on the nonnegative integers by the recurrence $T(n) = a\,T(n/b) + f(n)$. Then we have:

1. If $f(n) = O(n^{\log_b a - \varepsilon})$ for some constant $\varepsilon > 0$, then $T(n) = \Theta(n^{\log_b a})$.
2. If $f(n) = \Theta(n^{\log_b a})$, then $T(n) = \Theta(n^{\log_b a} \log n)$.
3. If $f(n) = \Omega(n^{\log_b a + \varepsilon})$ for some constant $\varepsilon > 0$, and if $a\,f(n/b) \le c\,f(n)$ for some constant $c < 1$ and all sufficiently large $n$, then $T(n) = \Theta(f(n))$.
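For the common special case f(n) = Θ(n^e), the three cases reduce to comparing the exponent e with log_b a. The Python sketch below does exactly that; the function and parameter names are our own, and restricting f to a polynomial is an assumption of the sketch. That restriction is also why case 3's regularity condition needs no separate check: a(n/b)^e = (a/b^e)·n^e, and a/b^e < 1 whenever e > log_b a.

```python
import math


def _power(e: float) -> str:
    """Render n^e, simplifying the exponents 0 and 1."""
    if math.isclose(e, 0.0, abs_tol=1e-9):
        return "1"
    if math.isclose(e, 1.0):
        return "n"
    return f"n^{e:g}"


def master_polynomial(a: float, b: float, e: float) -> str:
    """Solve T(n) = a*T(n/b) + Theta(n^e) with the master theorem.

    Assumes f(n) = Theta(n^e); then case 3's regularity condition holds
    automatically with c = a / b**e < 1.
    """
    if a < 1 or b <= 1:
        raise ValueError("the master theorem needs a >= 1 and b > 1")
    crit = math.log(a, b)                    # log_b a
    if math.isclose(e, crit, abs_tol=1e-9):  # case 2: f(n) = Theta(n^(log_b a))
        p = _power(e)
        return "Theta(log n)" if p == "1" else f"Theta({p} log n)"
    if e < crit:                             # case 1: f is polynomially smaller
        return f"Theta({_power(crit)})"
    return f"Theta({_power(e)})"             # case 3: f is polynomially larger


print(master_polynomial(4, 2, 3))  # Theta(n^3)
print(master_polynomial(9, 3, 2))  # Theta(n^2 log n)
```

The recurrence of Example, 2T(n/2) + Θ(n²), falls into case 3: `master_polynomial(2, 2, 2)` returns `Theta(n^2)`.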

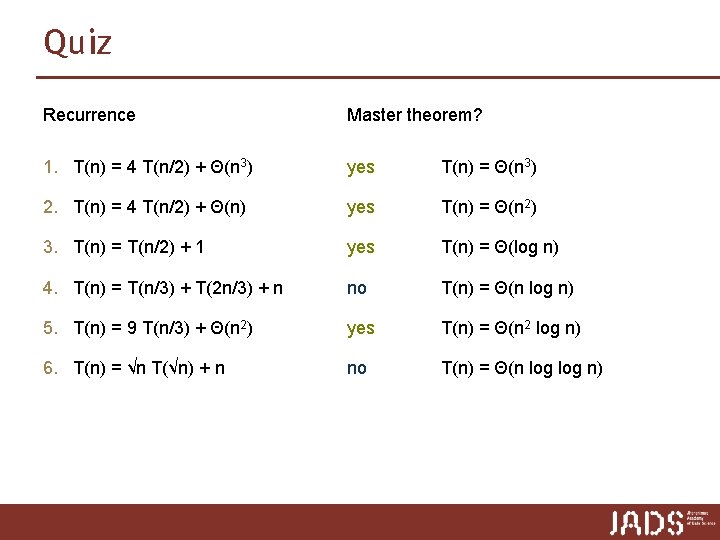

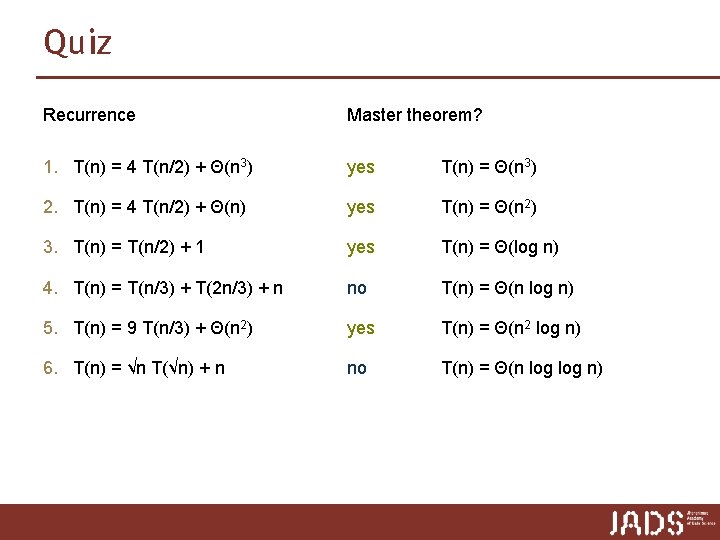

Quiz

| # | Recurrence | Master theorem? | Solution |
|---|---|---|---|
| 1 | T(n) = 4T(n/2) + Θ(n³) | yes | T(n) = Θ(n³) |
| 2 | T(n) = 4T(n/2) + Θ(n) | yes | T(n) = Θ(n²) |
| 3 | T(n) = T(n/2) + 1 | yes | T(n) = Θ(log n) |
| 4 | T(n) = T(n/3) + T(2n/3) + n | no | T(n) = Θ(n log n) |
| 5 | T(n) = 9T(n/3) + Θ(n²) | yes | T(n) = Θ(n² log n) |
| 6 | T(n) = √n T(√n) + n | no | T(n) = Θ(n log log n) |
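Item 6 can be checked numerically. Dividing T(n) = √n T(√n) + n by n gives S(n) = S(√n) + 1 for S(n) = T(n)/n, and each square root halves log n, so S(n) grows like log log n. The sketch below assumes a base case T(2) = 2 (our choice) and only evaluates n = 2^(2^k), where every square root is an exact integer; for these n it confirms T(n) = n(log log n + 1).

```python
import math


def t_sqrt(n: int) -> int:
    """T(n) = sqrt(n) * T(sqrt(n)) + n with the assumed base case T(2) = 2.

    Only valid for n = 2**(2**k), so that every square root taken is an exact integer.
    """
    if n == 2:
        return 2
    r = math.isqrt(n)
    if r * r != n:
        raise ValueError("n must be of the form 2**(2**k)")
    return r * t_sqrt(r) + n


for k in range(1, 6):
    n = 2 ** (2 ** k)                # log2(log2(n)) == k
    assert t_sqrt(n) == n * (k + 1)  # T(n) = n (log log n + 1) = Theta(n log log n)
    print(n, t_sqrt(n))
```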

Tips

- Analysis of recursive algorithms: find the recursion and solve it with the master theorem if possible.
- Analysis of loops: summations.
- Some standard recurrences and sums:
  - T(n) = 2T(n/2) + O(n) ➨ T(n) = O(n log n)
  - $\sum_{i=1}^{n} i = \frac{1}{2}n(n+1) = \Theta(n^2)$
  - $\sum_{i=1}^{n} i^2 = \Theta(n^3)$

Sorting in linear time

The sorting problem

Input: a sequence of n numbers $\langle a_0, a_1, \dots, a_{n-1} \rangle$

Output: a permutation $\langle a_{i_0}, \dots, a_{i_{n-1}} \rangle$ of the input such that $a_{i_0} \le \dots \le a_{i_{n-1}}$

Why do we care so much about sorting?

- sorting is used by many applications
- it is the (first) step of many algorithms
- many techniques can be illustrated by studying sorting

Can we sort faster than Θ(n log n)?

Worst-case running time of sorting algorithms:

- SelectionSort: O(n²)
- InsertionSort: O(n²)
- MergeSort: O(n log n)

Can we do this faster? Θ(n log log n)? Θ(n)?

Upper and lower bounds

Upper bound: How do you show that a problem (for example sorting) can be solved in Θ(f(n)) time? ➨ Give an algorithm that solves the problem in Θ(f(n)) time.

Lower bound: How do you show that a problem (for example sorting) cannot be solved faster than in Θ(f(n)) time? ➨ Prove that every possible algorithm that solves the problem needs Ω(f(n)) time.

Lower bounds

Lower bound: How do you show that a problem (for example sorting) cannot be solved faster than in Θ(f(n)) time? ➨ Prove that every possible algorithm that solves the problem needs Ω(f(n)) time.

Model of computation: which operations is the algorithm allowed to use? Bit manipulations? Random access (array indexing) vs. pointer machines?

Comparison-based sorting

SelectionSort(A, n)

1. for i = 0 to n-2:
2. set smallest to i
3. for j = i+1 to n-1:
4. if A[j] < A[smallest]: set smallest to j
5. swap A[i] with A[smallest]

Which steps the algorithm executes, and hence which element ends up where, depends only on the results of comparisons between the input elements.
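A Python version of SelectionSort (a sketch, 0-indexed like the pseudocode) that also records the outcome of every comparison. Two inputs with the same relative order produce the same outcome sequence, and therefore the same swaps, which is the observation the decision-tree argument builds on.

```python
from typing import List


def selection_sort(A: List[int]) -> List[bool]:
    """Sort A in place with SelectionSort; return the outcomes of all comparisons A[j] < A[smallest]."""
    n = len(A)
    outcomes = []
    for i in range(n - 1):                     # line 1: i = 0 .. n-2
        smallest = i                           # line 2
        for j in range(i + 1, n):              # line 3: j = i+1 .. n-1
            is_less = A[j] < A[smallest]       # line 4: the only place input values are compared
            outcomes.append(is_less)
            if is_less:
                smallest = j
        A[i], A[smallest] = A[smallest], A[i]  # line 5
    return outcomes


a, b = [3, 1, 2], [30, 10, 20]
print(selection_sort(a), selection_sort(b))  # same outcomes for both inputs
print(a, b)                                  # [1, 2, 3] [10, 20, 30]
```

SelectionSort always performs exactly n(n-1)/2 comparisons, whatever their outcomes.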

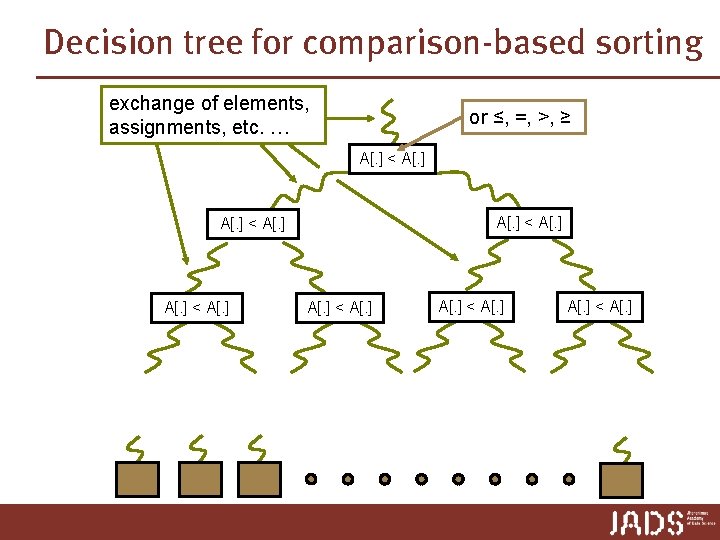

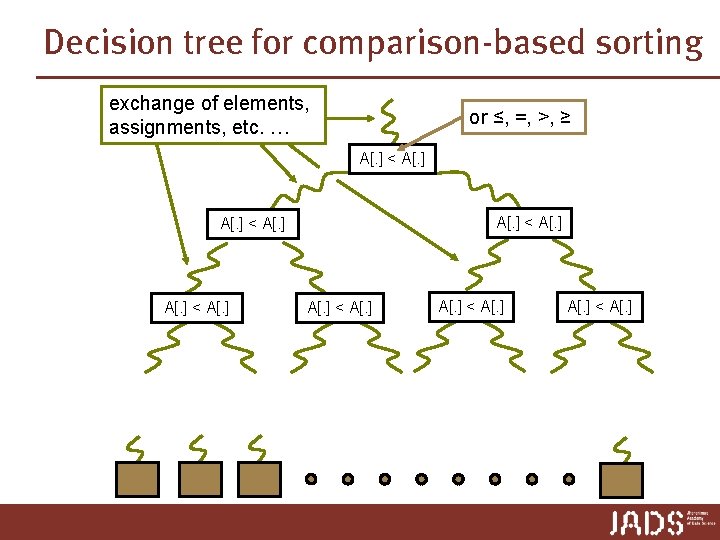

[Figure: decision tree for comparison-based sorting. Internal nodes are comparisons such as A[·] < A[·] (or ≤, =, >, ≥); the other steps (exchange of elements, assignments, etc.) do not branch.]

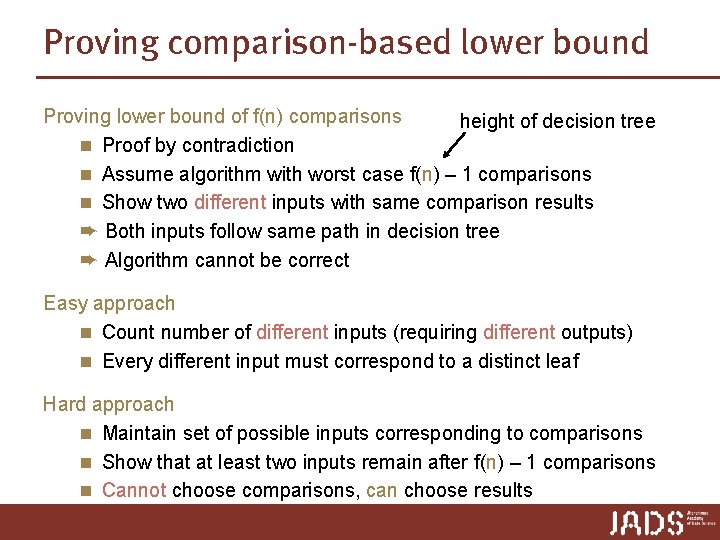

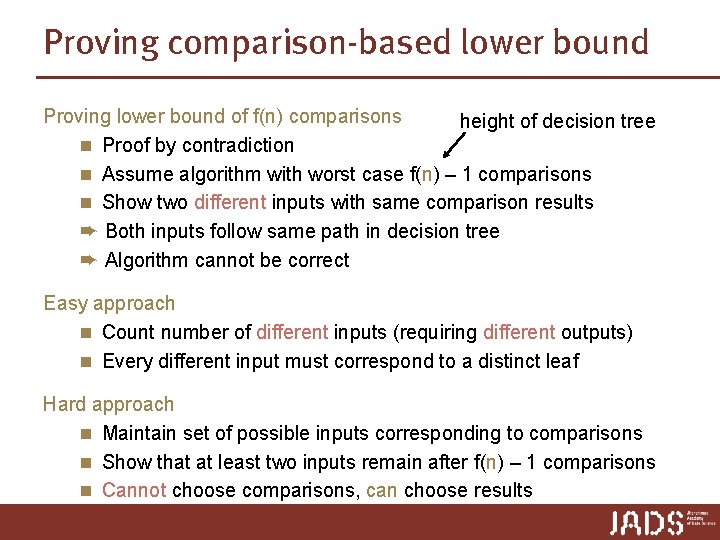

Proving a comparison-based lower bound

Proving a lower bound of f(n) comparisons means proving a lower bound of f(n) on the height of the decision tree.

- Proof by contradiction:
  - Assume an algorithm with worst case f(n) - 1 comparisons.
  - Show two different inputs (requiring different outputs) with the same comparison results.
  - ➨ Both inputs follow the same path in the decision tree.
  - ➨ The algorithm cannot be correct.
- Easy approach:
  - Count the number of different inputs (requiring different outputs).
  - Every different input must correspond to a distinct leaf.
- Hard approach:
  - Maintain the set of possible inputs consistent with the comparisons made so far.
  - Show that at least two inputs remain after f(n) - 1 comparisons.
  - You cannot choose the comparisons, but you can choose their results.

Comparison-based sorting

- Every permutation of the input follows a different path in the decision tree ➨ the decision tree has at least n! leaves.
- The height of a binary tree with n! leaves is at least log(n!).
- Worst-case running time ≥ longest path from root to leaf = the height of the tree ≥ log(n!) = Ω(n log n), since $n! \ge (n/2)^{n/2}$ gives $\log(n!) \ge \frac{n}{2}\log\frac{n}{2}$.
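The bound can be evaluated exactly for small n: since a decision tree for n distinct elements needs at least n! leaves, some input forces at least ⌈log2(n!)⌉ comparisons. A small Python sketch (the helper name is ours) computes this with integer arithmetic only:

```python
import math


def comparison_lower_bound(n: int) -> int:
    """Smallest possible worst-case number of comparisons for sorting n distinct elements.

    A decision tree with at least n! leaves has height >= log2(n!), and the height is
    an integer, so the bound is ceil(log2(n!)).
    """
    m = math.factorial(n)
    return (m - 1).bit_length()  # exact ceil(log2(m)) for an integer m >= 1, no floating point


for n in (4, 8, 16, 64):
    print(n, comparison_lower_bound(n), round(n * math.log2(n)))
```

The second column stays below n log2 n by roughly a linear term, consistent with log(n!) = Θ(n log n).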

Lower bound for comparison-based sorting

Theorem: Any comparison-based sorting algorithm requires Ω(n log n) comparisons in the worst case.

➨ The worst-case running time of MergeSort is optimal among comparison-based sorting algorithms.

Sorting in linear time …

Two algorithms which are faster:

1. CountingSort
2. RadixSort

Neither is comparison-based; both make assumptions on the input.

![CountingSort: input and assumption](https://slidetodoc.com/presentation_image_h2/7787e6b4f489c22dfda9f899b2249c69/image-20.jpg)

CountingSort

Input: array A[0..n-1] of numbers

Assumption: the input elements are integers in the range 0 to k, for some k

Main idea: count for every A[i] the number of elements less than A[i] ➨ position of A[i] in the output array. Beware of elements that have the same value!

position(i) = number of elements less than A[i] in A[0..n-1] + number of elements equal to A[i] in A[0..i-1]

![CountingSort: position(i)](https://slidetodoc.com/presentation_image_h2/7787e6b4f489c22dfda9f899b2249c69/image-21.jpg)

CountingSort

position(i) = number of elements less than A[i] in A[0..n-1] + number of elements equal to A[i] in A[0..i-1]

[Figure: an example input array and its sorted output. For the third 5 from the left in the input, position = (# elements less than 5) + 2.]

![CountingSort: position(i)](https://slidetodoc.com/presentation_image_h2/7787e6b4f489c22dfda9f899b2249c69/image-22.jpg)

CountingSort

position(i) = number of elements less than A[i] in A[0..n-1] + number of elements equal to A[i] in A[0..i-1]

Lemma: If every element A[i] is placed on position(i), then the array is sorted and the sorted order is stable: numbers with the same value appear in the same order in the output array as they do in the input array.
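The position formula and the lemma can be tried out directly. The following Python sketch (helper names `position` and `place_by_position` are ours) computes position(i) naively in O(n) per element, so it is only an illustration, not CountingSort itself. The optional `key` lets us attach labels to equal values and observe stability.

```python
def position(A, i, key=lambda x: x):
    """position(i) = (# elements less than A[i] in A[0..n-1])
                   + (# elements equal to A[i] in A[0..i-1]), comparing by key."""
    k = key(A[i])
    less = sum(1 for x in A if key(x) < k)
    equal_before = sum(1 for x in A[:i] if key(x) == k)
    return less + equal_before


def place_by_position(A, key=lambda x: x):
    """Put every A[i] on position(i); by the lemma the result is sorted and stable."""
    B = [None] * len(A)
    for i in range(len(A)):
        B[position(A, i, key)] = A[i]
    return B


print(place_by_position([5, 3, 10, 5, 4, 5]))  # [3, 4, 5, 5, 5, 10]
records = [(5, "a"), (3, "b"), (5, "c"), (3, "d")]
print(place_by_position(records, key=lambda r: r[0]))
# [(3, 'b'), (3, 'd'), (5, 'a'), (5, 'c')]
```

In the first call, the third 5 (index 5) gets position 2 + 2 = 4: two smaller elements (3 and 4) plus two earlier 5s.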

![CountingSort(A, k): C[i] will contain the number of elements ≤ i](https://slidetodoc.com/presentation_image_h2/7787e6b4f489c22dfda9f899b2249c69/image-23.jpg)

CountingSort

C[i] will contain the number of elements ≤ i.

CountingSort(A, k)

► Input: array A[0..n-1] of integers in the range 0..k

► Output: array B[0..n-1] which contains the elements of A, sorted

1. for i = 0 to k do C[i] = 0
2. for j = 0 to A.length-1 do C[A[j]] = C[A[j]] + 1
3. ► C[i] now contains the number of elements equal to i
4. for i = 1 to k do C[i] = C[i] + C[i-1]
5. ► C[i] now contains the number of elements less than or equal to i
6. for j = A.length-1 downto 0
7. do B[C[A[j]] - 1] = A[j]; C[A[j]] = C[A[j]] - 1
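A direct Python transcription of this pseudocode, as a sketch: lists are 0-indexed like the slides, and values outside 0..k are not checked.

```python
from typing import List


def counting_sort(A: List[int], k: int) -> List[int]:
    """CountingSort(A, k): stable sort of integers in the range 0..k."""
    C = [0] * (k + 1)                    # line 1
    for j in range(len(A)):              # line 2
        C[A[j]] += 1
    # C[i] now contains the number of elements equal to i
    for i in range(1, k + 1):            # line 4
        C[i] += C[i - 1]
    # C[i] now contains the number of elements less than or equal to i
    B = [0] * len(A)
    for j in range(len(A) - 1, -1, -1):  # lines 6/7: right to left keeps equal keys in input order
        B[C[A[j]] - 1] = A[j]
        C[A[j]] -= 1
    return B


print(counting_sort([2, 5, 3, 0, 2, 3, 0, 3], k=5))  # [0, 0, 2, 2, 3, 3, 3, 5]
```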

![CountingSort(A, k)](https://slidetodoc.com/presentation_image_h2/7787e6b4f489c22dfda9f899b2249c69/image-24.jpg)

Correctness of lines 6/7 of CountingSort(A, k)

Invariant Inv(j):

- for j+1 ≤ i < n: B[position(i)] contains A[i]
- for 0 ≤ v ≤ k: C[v] = (# numbers smaller than v) + (# numbers equal to v in A[0..j])

Inv(n-1) holds before the loop is executed, and if Inv(j) holds before the iteration for j, then Inv(j-1) holds afterwards. After the loop, Inv(-1) states that every A[i] is on position(i), so by the lemma B is sorted and the order is stable.
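The invariant can also be checked mechanically on small inputs. This Python sketch (our own test harness, not part of the lecture) runs CountingSort and asserts Inv(j) before every iteration of lines 6/7 and Inv(-1) after the loop. The checks cost far more than the sort itself, so it is meant only for experimenting.

```python
from typing import List, Optional


def counting_sort_checked(A: List[int], k: int) -> List[Optional[int]]:
    """CountingSort(A, k) that asserts the invariant Inv(j) around lines 6/7."""
    n = len(A)
    C = [0] * (k + 1)
    for x in A:
        C[x] += 1
    for v in range(1, k + 1):
        C[v] += C[v - 1]

    def position(i: int) -> int:
        # (# elements less than A[i]) + (# elements equal to A[i] in A[0..i-1])
        return sum(x < A[i] for x in A) + sum(x == A[i] for x in A[:i])

    B: List[Optional[int]] = [None] * n

    def inv(j: int) -> bool:
        placed = all(B[position(i)] == A[i] for i in range(j + 1, n))
        counts = all(
            C[v] == sum(x < v for x in A) + sum(x == v for x in A[: j + 1])
            for v in range(k + 1)
        )
        return placed and counts

    for j in range(n - 1, -1, -1):
        assert inv(j)          # Inv(j) holds before the iteration for j
        B[C[A[j]] - 1] = A[j]  # line 7
        C[A[j]] -= 1
    assert inv(-1)             # after the loop: every A[i] sits at position(i)
    return B


print(counting_sort_checked([2, 0, 2, 1, 0], k=2))  # [0, 0, 1, 2, 2]
```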

![CountingSort: running time](https://slidetodoc.com/presentation_image_h2/7787e6b4f489c22dfda9f899b2249c69/image-25.jpg)

CountingSort: running time

Per line of the CountingSort(A, k) pseudocode:

- line 1: $\sum_{0 \le i \le k} \Theta(1) = \Theta(k)$
- line 2: $\sum_{0 \le j \le n-1} \Theta(1) = \Theta(n)$
- line 4: $\sum_{1 \le i \le k} \Theta(1) = \Theta(k)$
- lines 6/7: $\sum_{0 \le j \le n-1} \Theta(1) = \Theta(n)$

Total: Θ(n + k) ➨ Θ(n) if k = O(n)

CountingSort

Theorem: CountingSort is a stable sorting algorithm that sorts an array of n integers in the range 0..k in Θ(n + k) time.

![RadixSort: input and assumption](https://slidetodoc.com/presentation_image_h2/7787e6b4f489c22dfda9f899b2249c69/image-27.jpg)

RadixSort

Input: array A[0..n-1] of numbers

Assumption: the input elements are integers with d digits

Example (d = 4): 3288, 1193, 9999, 0654, 7243, 4321. Digits are numbered from right to left: the 1st digit is the least significant (rightmost) one, the dth digit the most significant (leftmost) one.

RadixSort(A, d)

1. for i = 1 to d
2. do use a stable sort to sort array A on digit i

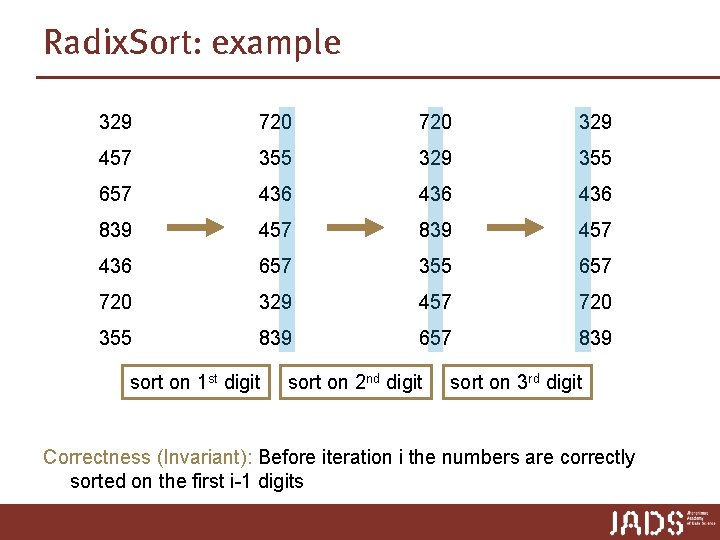

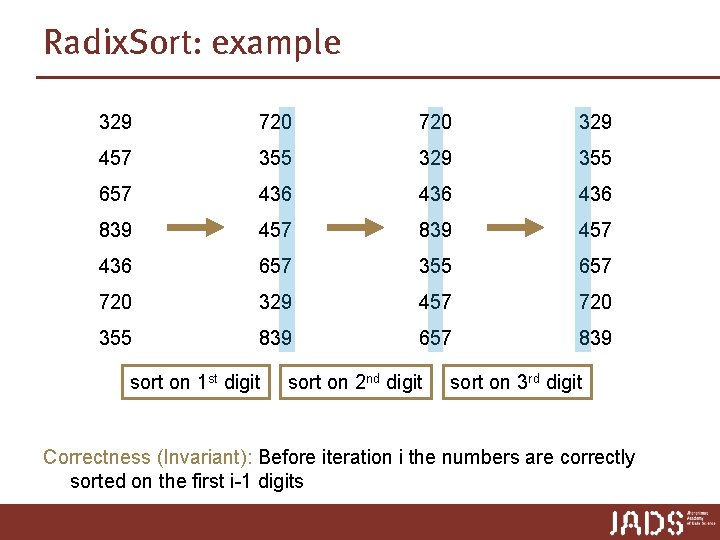

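RadixSort: example

| input | after sorting on 1st digit | after sorting on 2nd digit | after sorting on 3rd digit |
|---|---|---|---|
| 329 | 720 | 720 | 329 |
| 457 | 355 | 329 | 355 |
| 657 | 436 | 436 | 436 |
| 839 | 457 | 839 | 457 |
| 436 | 657 | 355 | 657 |
| 720 | 329 | 457 | 720 |
| 355 | 839 | 657 | 839 |

Correctness (invariant): before iteration i, the numbers are correctly sorted on their first i-1 digits, i.e. on digits 1..i-1 (the i-1 least significant ones).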
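The passes in this example can be reproduced with a few lines of Python. As a sketch, it uses the built-in `sorted()`, which Python guarantees to be stable, as the per-digit stable sort; the function name is ours.

```python
from typing import List


def radix_sort_passes(A: List[int], d: int) -> List[List[int]]:
    """RadixSort(A, d) in base 10, returning the array after each pass.

    Digit 1 is the least significant digit; sorted() is stable, so it can
    serve as the stable sort required by line 2 of RadixSort.
    """
    passes = []
    for i in range(1, d + 1):
        place = 10 ** (i - 1)
        A = sorted(A, key=lambda x: (x // place) % 10)  # stable sort on digit i
        passes.append(A)
    return passes


for p in radix_sort_passes([329, 457, 657, 839, 436, 720, 355], 3):
    print(p)
# [720, 355, 436, 457, 657, 329, 839]
# [720, 329, 436, 839, 355, 457, 657]
# [329, 355, 436, 457, 657, 720, 839]
```

Replacing `sorted()` with an unstable sort can break the invariant: equal digits in pass i must not undo the order established by the earlier passes.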

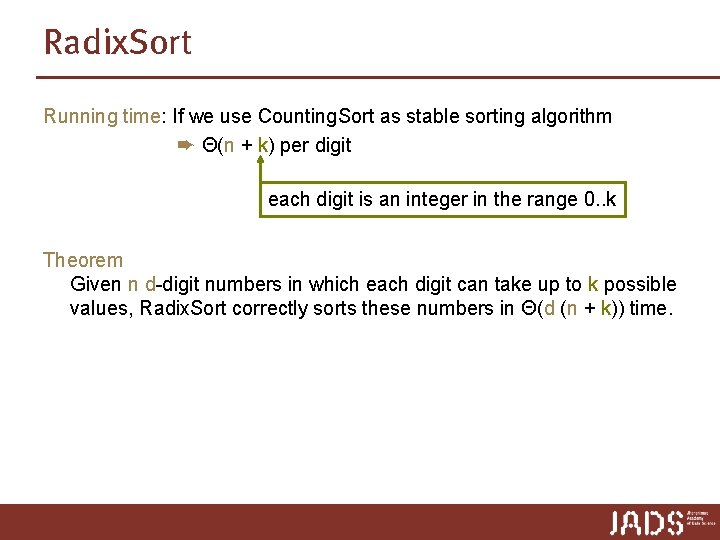

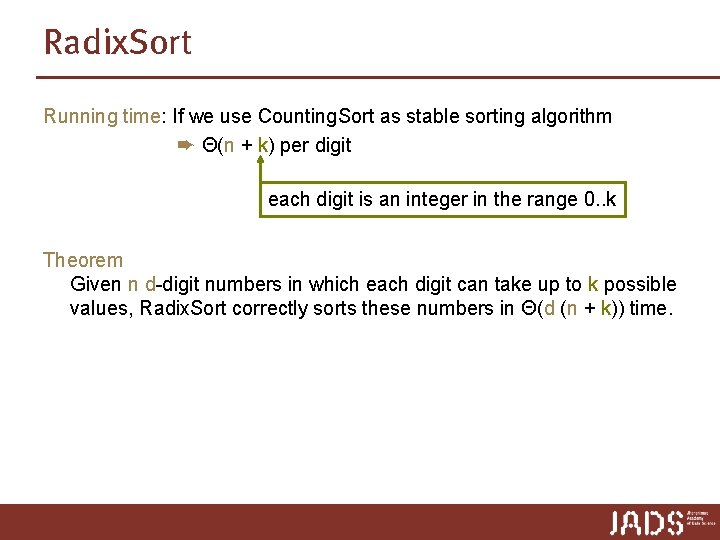

RadixSort

Running time: if we use CountingSort as the stable sorting algorithm ➨ Θ(n + k) per digit, where each digit is an integer in the range 0..k.

Theorem: Given n d-digit numbers in which each digit can take up to k possible values, RadixSort correctly sorts these numbers in Θ(d(n + k)) time.
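A minimal Python sketch of RadixSort with CountingSort as the per-digit stable sort. It assumes non-negative integers, and the `base` parameter (our addition) sets the number of possible digit values, so each pass costs Θ(n + base).

```python
from typing import List


def counting_sort_on_digit(A: List[int], i: int, base: int) -> List[int]:
    """Stable CountingSort of A on digit i (i = 1 is the least significant digit).

    Each digit lies in 0..base-1, so the count array has base entries.
    """
    def digit(x: int) -> int:
        return (x // base ** (i - 1)) % base

    C = [0] * base
    for x in A:                          # C[v] = number of elements whose digit is v
        C[digit(x)] += 1
    for v in range(1, base):             # C[v] = number of elements whose digit is <= v
        C[v] += C[v - 1]
    B = [0] * len(A)
    for j in range(len(A) - 1, -1, -1):  # right to left, so equal digits keep their order
        B[C[digit(A[j])] - 1] = A[j]
        C[digit(A[j])] -= 1
    return B


def radix_sort(A: List[int], d: int, base: int = 10) -> List[int]:
    """RadixSort for non-negative integers with at most d digits in the given base."""
    for i in range(1, d + 1):
        A = counting_sort_on_digit(A, i, base)
    return A


print(radix_sort([3288, 1193, 9999, 654, 7243, 4321], d=4))
# [654, 1193, 3288, 4321, 7243, 9999]
```

With `base=256` and `d=4`, the same function sorts 32-bit unsigned integers in four passes of Θ(n + 256) each.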

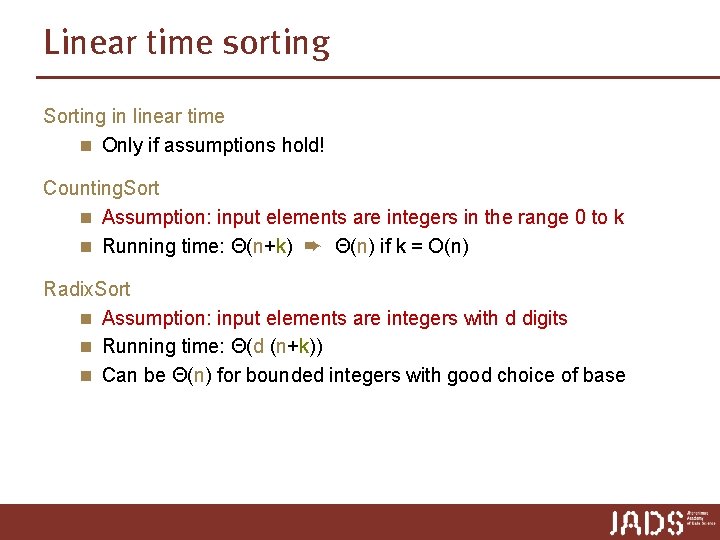

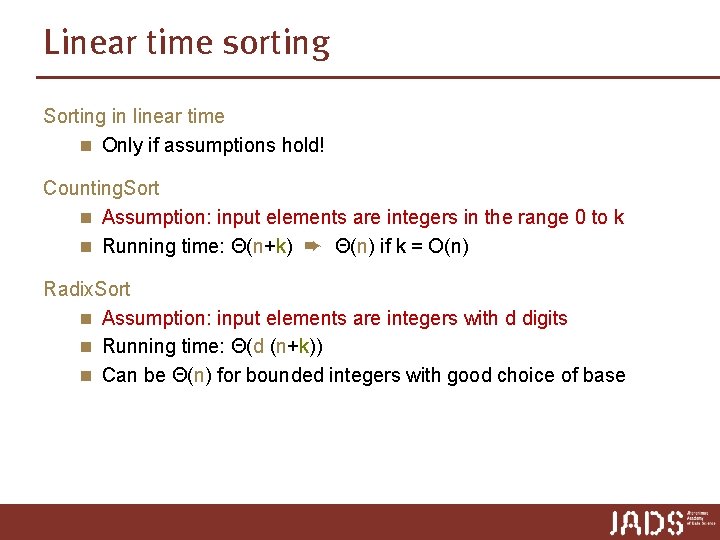

Linear-time sorting

Sorting in linear time is possible only if the assumptions hold!

- CountingSort
  - Assumption: input elements are integers in the range 0 to k
  - Running time: Θ(n + k) ➨ Θ(n) if k = O(n)
- RadixSort
  - Assumption: input elements are integers with d digits
  - Running time: Θ(d(n + k))
  - Can be Θ(n) for bounded integers with a good choice of base
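One standard way to obtain Θ(n) for RadixSort, worked out under the assumption (ours) that the n keys are integers in the range 0..n^c - 1 for some constant c: write every key in base n. Then each key has d = c digits and each digit takes n possible values, so the RadixSort theorem gives

$$\Theta\big(d\,(n + k)\big) = \Theta\big(c\,(n + n)\big) = \Theta(n).$$

For example, 10^6 keys below 10^12 need only two CountingSort passes in base 10^6.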

Recap and preview

Today:

- Models of computation and lower bounds
- Comparison-based sorting is in Ω(n log n)
- Linear-time sorting under assumptions
  - Counting sort
  - Radix sort

Next lecture(s):

- Data Structures