- Slides: 62

Cosc 5/4730 Android media Part 2: Pictures and Video

Emulators and Samsung
• The emulators all have problems; much of the following code has been tested on actual phones.
• Video playback code works, but the video may not always display in the emulators.
• Video/picture capture does not work as documented.
• Samsung hardware!
  – Some Samsung devices have many problems, apparently related to their hardware. Search for "video" and "samsung" for some possible answers.

Android android.media PLAY MEDIA

Supported formats
• In general, Android's support is consistent with other mobile phones.
• It supports the 3GP (.3gp) and MPEG-4 (.mp4) file formats.
  – 3GP is a video standard derived from MPEG-4 specifically for use by mobile devices.
• As far as codecs go, Android supports H.263, a codec designed for low-latency and low-bitrate videoconferencing applications. H.263 video is supported in either MPEG-4 (.mp4) or 3GP (.3gp) files. Android also supports MPEG-4 Simple Profile in 3GP files (.3gp), as well as H.264.

Android
• First method
  – For greater flexibility you will need to use the MediaPlayer and a SurfaceView (or a TextureView, API 14+).
  – Use the MediaPlayer as with audio, and a SurfaceView to display the video.
  – There are examples in the API demos, plus several of the books.
• The second method uses a VideoView to display the video.
  – The VideoView uses a MediaController to start and stop the video and to provide functions to control playback.
  – As with the audio MediaPlayer: prepare(), then start().
  – This is the one I'll cover in this lecture.

VideoView
• VideoView is a View that has video playback capabilities and can be used directly in a layout.
• We can then add controls (play, pause, forward, back, etc.) with the MediaController.

View example
• Get the View out of the layout

    vv = (VideoView) this.findViewById(R.id.VideoView);

• Set up where the file to play is

    Uri videoUri = Uri.parse(Environment.getExternalStorageDirectory().getPath() + "/example.mp4");
    vv.setVideoURI(videoUri);

• Play the video

    vv.start();

Adding media controllers.
• Very simple

    vv = (VideoView) this.findViewById(R.id.VideoView);
    vv.setMediaController(new MediaController(this));

• Now media controls will show up on the screen.

Using native media player
• Create an Intent and send it.

    Intent intent = new Intent(Intent.ACTION_VIEW);  // ask the system for an app that can view the data
    Uri data = Uri.parse(VideoFile);
    intent.setDataAndType(data, "video/mp4");
    startActivity(intent);

• Remember, your app is now in the background.
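The MIME type passed to setDataAndType should match the file's container. As a minimal sketch, assuming only the two containers named on the Supported formats slide, a plain Java helper could pick the type from the file extension. The class and method names here are hypothetical and are not part of the VideoPlay example code.

```java
import java.util.Locale;

// Hypothetical helper for illustration; not part of the course example code.
final class VideoMimeTypes {
    private VideoMimeTypes() {}

    /** Returns a MIME type for .mp4 and .3gp file names, or the generic "video/*" otherwise. */
    static String forFileName(String fileName) {
        String lower = fileName.toLowerCase(Locale.ROOT);
        if (lower.endsWith(".mp4")) {
            return "video/mp4";
        }
        if (lower.endsWith(".3gp")) {
            return "video/3gpp";
        }
        // Assumption: for anything else, let the receiving app work out the details.
        return "video/*";
    }
}
```

With this in place, the intent call would read intent.setDataAndType(data, VideoMimeTypes.forFileName(VideoFile)).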

Example code
• The VideoPlay example code
  – This will play a video from the internet.
  – You can uncomment the code to have it play the video from the sdcard, but you will need to copy the file to the sdcard first.

DISPLAYING A PICTURE

Displaying Pictures
  – See the code already covered for how to display pictures.
    • ImageView, for example…

Android TAKING A PICTURE

Camera vs Camera2 API
• Starting in API 21 (Lollipop) there is a new set of APIs.
  – There is no backward compatibility either.
• So first we look at the Camera v1 APIs
• And then the Camera2 APIs
  – These are more flexible and should allow for "filters" to be added on the fly to the image.
• Finally, you can just use an intent to have the native camera app take the picture.

Taking A Picture CAMERA V1 APIS

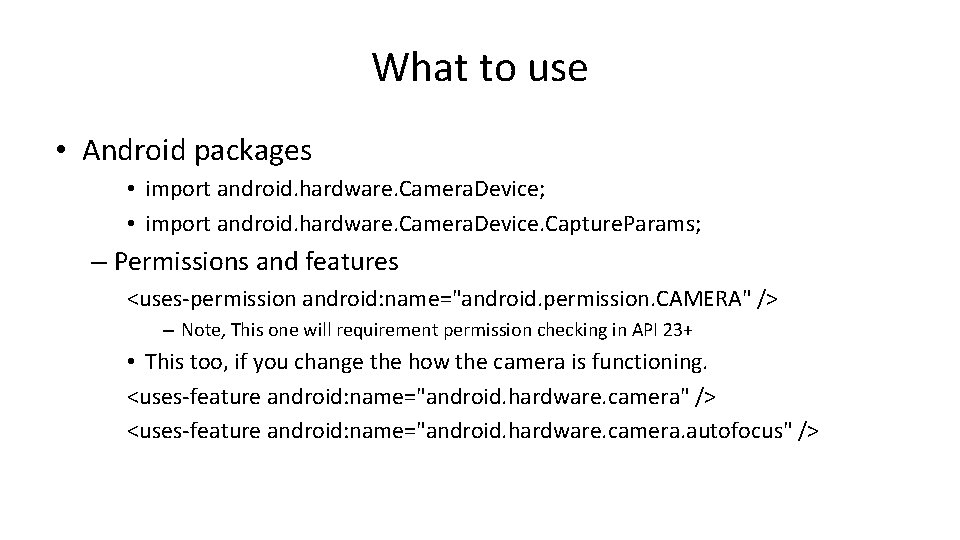

What to use
• Android packages
  • import android.hardware.Camera;
  • import android.hardware.Camera.Parameters;
• Permissions and features

    <uses-permission android:name="android.permission.CAMERA" />

  – Note: this one requires runtime permission checking in API 23+.
  • These too, if you change how the camera is functioning.

    <uses-feature android:name="android.hardware.camera" />
    <uses-feature android:name="android.hardware.camera.autofocus" />

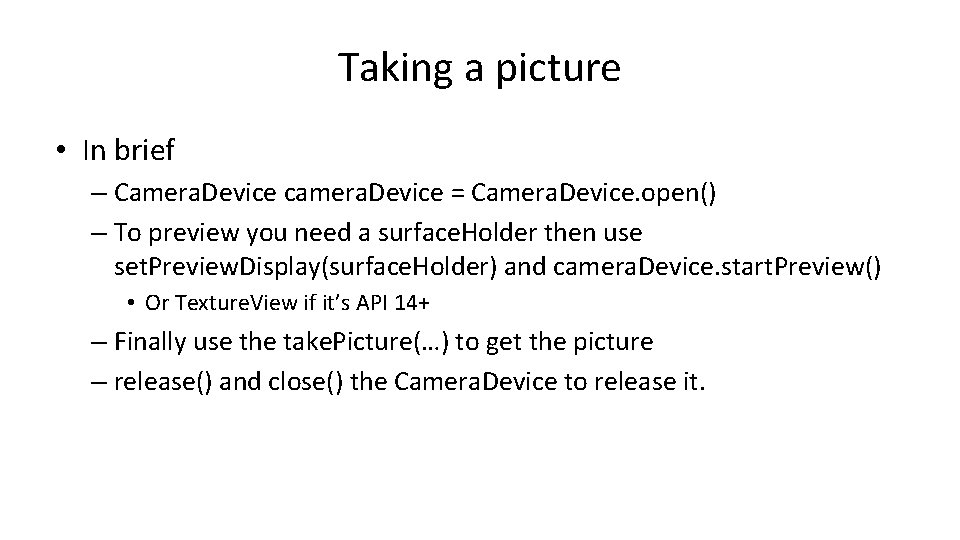

Taking a picture
• In brief
  – Camera camera = Camera.open()
  – To preview you need a SurfaceHolder; then use camera.setPreviewDisplay(surfaceHolder) and camera.startPreview()
    • Or a TextureView if it's API 14+
  – Finally, use takePicture(…) to get the picture
  – Call release() on the Camera when you are done with it.

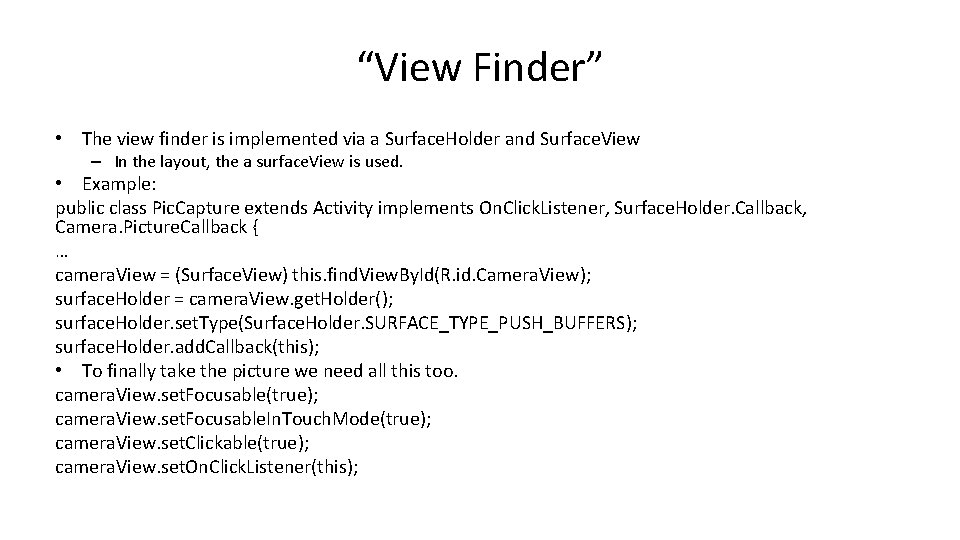

“View Finder”
• The view finder is implemented via a SurfaceHolder and a SurfaceView
  – In the layout, a SurfaceView is used.
• Example:

    public class PicCapture extends Activity implements OnClickListener,
            SurfaceHolder.Callback, Camera.PictureCallback {
        …
        cameraView = (SurfaceView) this.findViewById(R.id.CameraView);
        surfaceHolder = cameraView.getHolder();
        surfaceHolder.setType(SurfaceHolder.SURFACE_TYPE_PUSH_BUFFERS);
        surfaceHolder.addCallback(this);

• To finally take the picture we need all this too.

        cameraView.setFocusable(true);
        cameraView.setFocusableInTouchMode(true);
        cameraView.setClickable(true);
        cameraView.setOnClickListener(this);

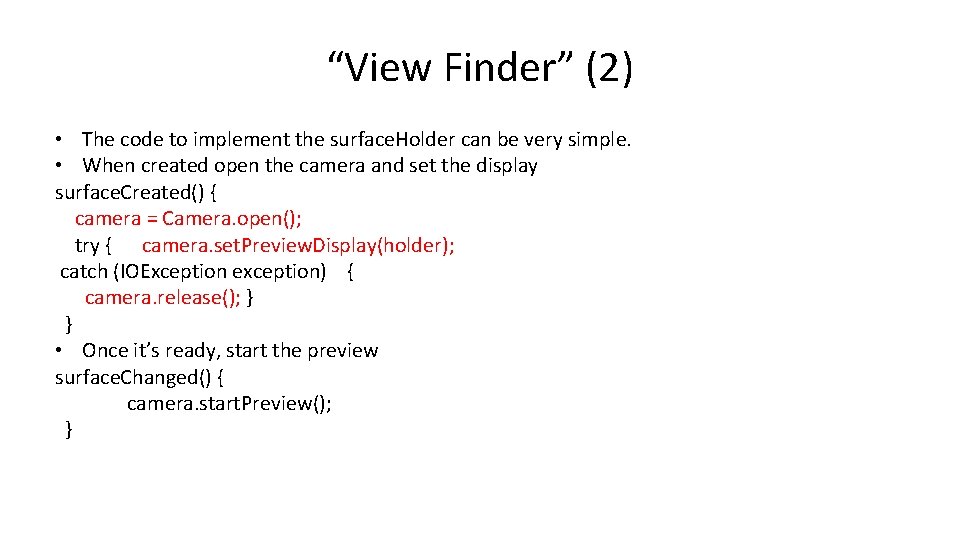

“View Finder” (2)
• The code to implement the SurfaceHolder callbacks can be very simple.
• When created, open the camera and set the display

    public void surfaceCreated(SurfaceHolder holder) {
        camera = Camera.open();
        try {
            camera.setPreviewDisplay(holder);
        } catch (IOException exception) {
            camera.release();  // could not attach the preview, so give the camera back
        }
    }

• Once it's ready, start the preview

    public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
        camera.startPreview();
    }

“View Finder” (3)
• When we are done

    public void surfaceDestroyed(SurfaceHolder holder) {
        camera.stopPreview();
        camera.release();
    }

Get the Picture
• Using the Camera.PictureCallback we implement, we can get the data for the picture and decide what to do with it.
• In its simplest form, which does nothing with the picture

    public void onPictureTaken(byte[] data, Camera camera) {
        // byte[] data is the picture that was taken
        // this just restarts the preview.
        camera.startPreview();
    }

Get the Picture (2)
• To take the picture we use the camera.takePicture method in the onClick method for the SurfaceView

    public void onClick(View v) {
        camera.takePicture(null, null, null, this);  // only the JPEG callback is supplied
    }

• takePicture(Camera.ShutterCallback shutter, Camera.PictureCallback raw, Camera.PictureCallback postview, Camera.PictureCallback jpeg)
• We only need the last one to get the picture, and it is shown on the previous slide.
  – shutter
    • the callback for the image capture moment, or null
  – raw
    • the callback for raw (uncompressed) image data, or null
  – postview
    • the callback with postview image data, may be null
  – jpeg
    • the callback for JPEG image data, or null

Taking A Picture CAMERA V2 APIS

Camera2 APIs
• Like v1, this breaks up into 2 major pieces
  – Viewfinder
    • Connect it to a SurfaceView or TextureView.
  – Taking the picture

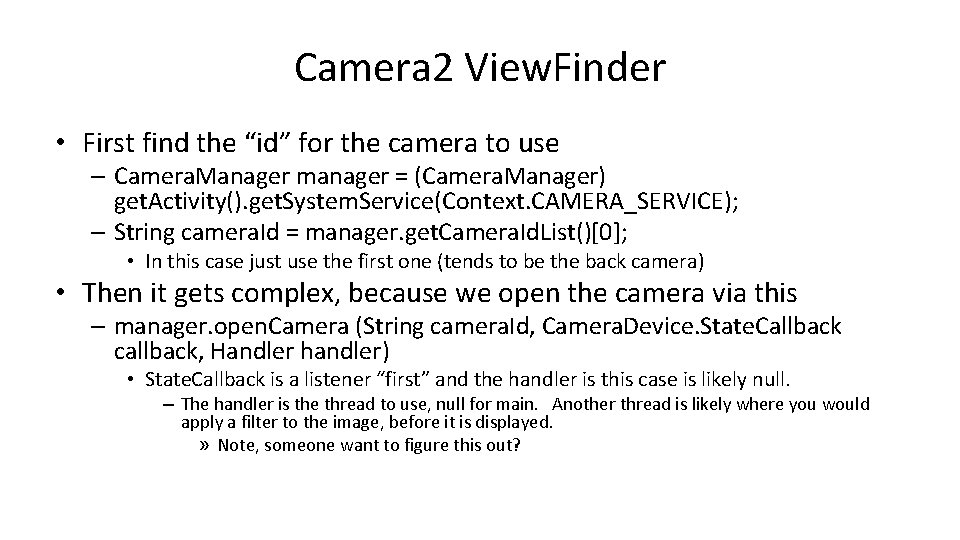

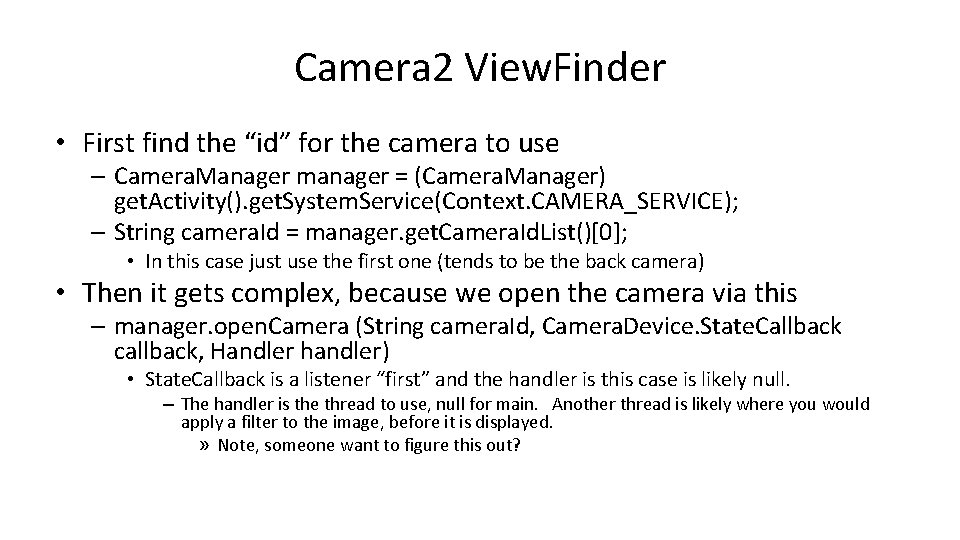

Camera2 ViewFinder
• First find the "id" for the camera to use
  – CameraManager manager = (CameraManager) getActivity().getSystemService(Context.CAMERA_SERVICE);
  – String cameraId = manager.getCameraIdList()[0];
    • In this case just use the first one (tends to be the back camera)
• Then it gets complex, because we open the camera via this
  – manager.openCamera(String cameraId, CameraDevice.StateCallback callback, Handler handler)
    • The StateCallback is the listener and comes first; the handler in this case is likely null.
      – The handler selects the thread to use, null for main. Another thread is likely where you would apply a filter to the image before it is displayed.
        » Note: does someone want to figure this out?

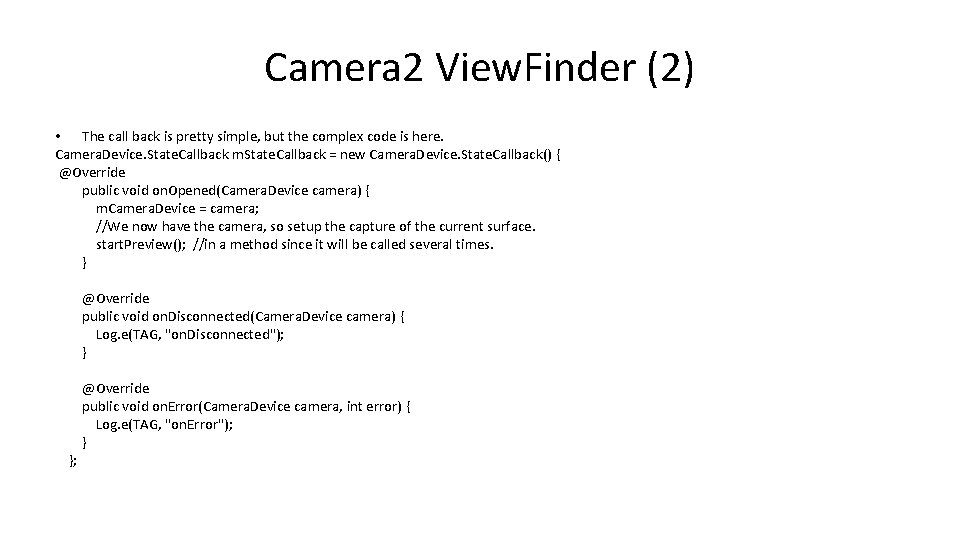

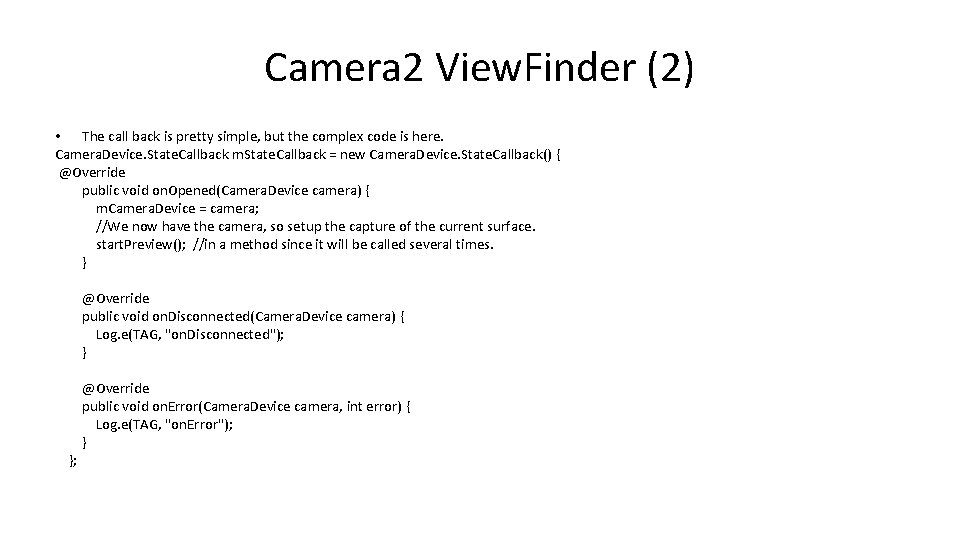

Camera2 ViewFinder (2)
• The callback is pretty simple, but the complex code starts from here.

    CameraDevice.StateCallback mStateCallback = new CameraDevice.StateCallback() {
        @Override
        public void onOpened(CameraDevice camera) {
            mCameraDevice = camera;
            // We now have the camera, so set up the capture of the current surface.
            startPreview();  // in a method, since it will be called several times.
        }
        @Override
        public void onDisconnected(CameraDevice camera) {
            Log.e(TAG, "onDisconnected");
        }
        @Override
        public void onError(CameraDevice camera, int error) {
            Log.e(TAG, "onError");
        }
    };

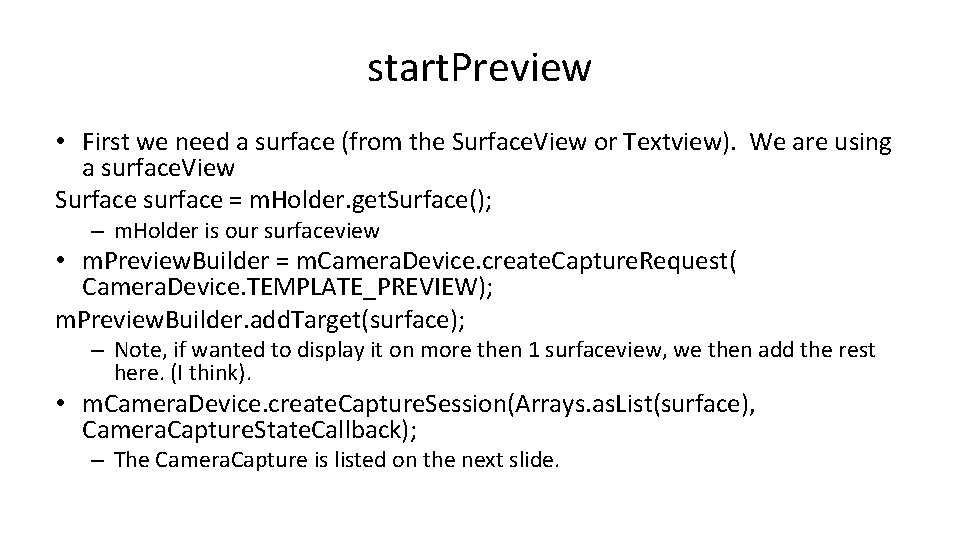

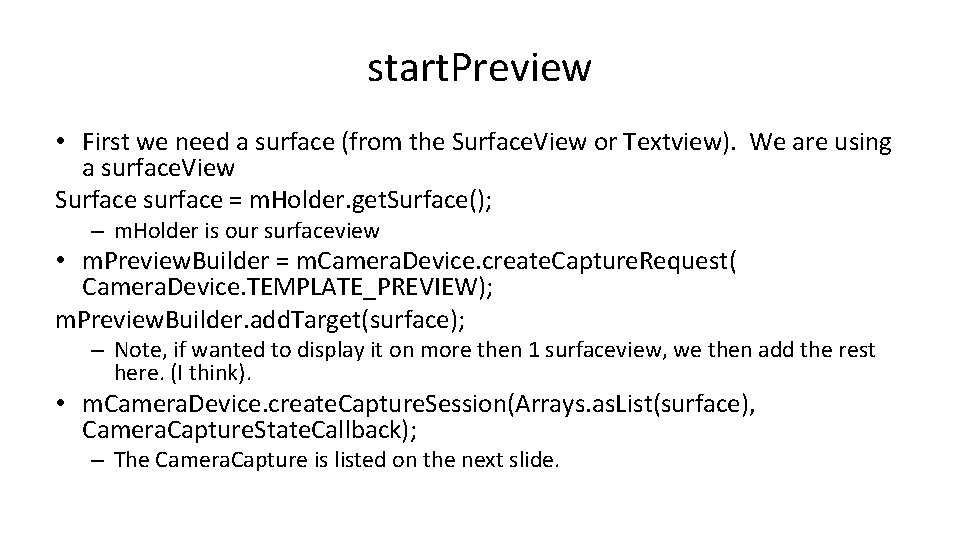

startPreview
• First we need a surface (from the SurfaceView or TextureView). We are using a SurfaceView.

    Surface surface = mHolder.getSurface();

  – mHolder is the SurfaceHolder for our SurfaceView
•
    mPreviewBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_PREVIEW);
    mPreviewBuilder.addTarget(surface);

  – Note: if we wanted to display it on more than one SurfaceView, we would add the rest here (I think).
•
    mCameraDevice.createCaptureSession(Arrays.asList(surface), CameraCaptureStateCallback, null);

  – The CameraCaptureStateCallback is listed on the next slide. The final null is for a handler we don't need.

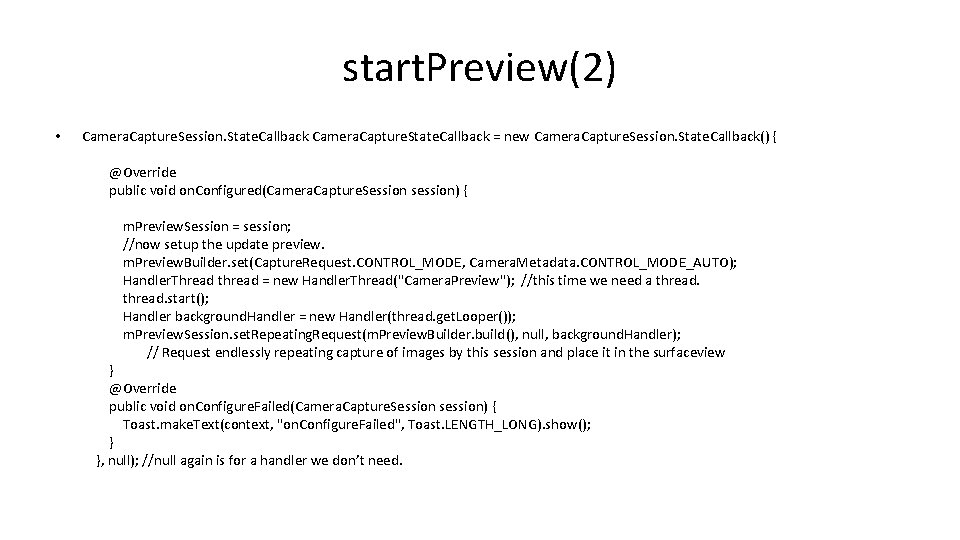

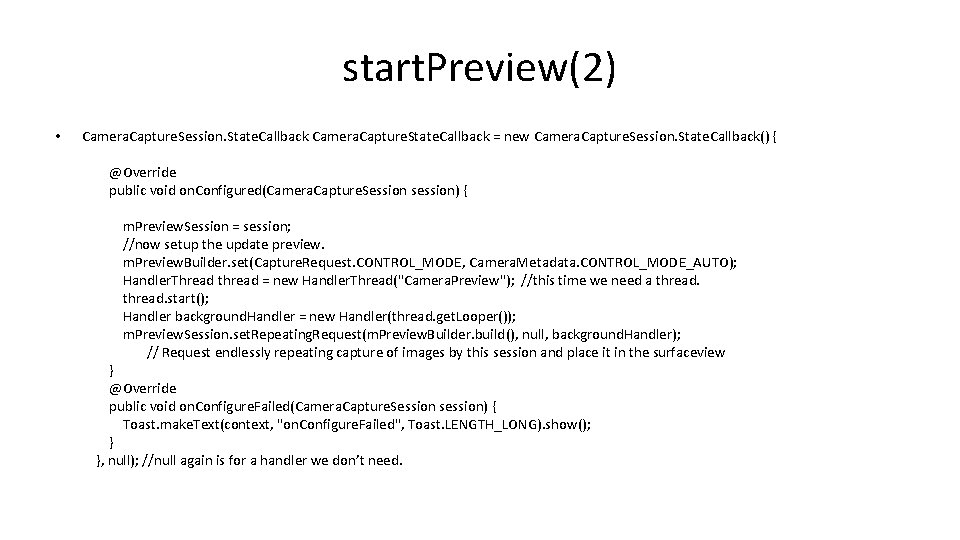

startPreview (2)
•
    CameraCaptureSession.StateCallback CameraCaptureStateCallback = new CameraCaptureSession.StateCallback() {
        @Override
        public void onConfigured(CameraCaptureSession session) {
            mPreviewSession = session;
            // now set up the repeating preview request.
            mPreviewBuilder.set(CaptureRequest.CONTROL_MODE, CameraMetadata.CONTROL_MODE_AUTO);
            HandlerThread thread = new HandlerThread("CameraPreview");  // this time we need a thread.
            thread.start();
            Handler backgroundHandler = new Handler(thread.getLooper());
            try {
                // Request endlessly repeating capture of images by this session; they are placed in the SurfaceView.
                mPreviewSession.setRepeatingRequest(mPreviewBuilder.build(), null, backgroundHandler);
            } catch (CameraAccessException e) {
                e.printStackTrace();
            }
        }
        @Override
        public void onConfigureFailed(CameraCaptureSession session) {
            Toast.makeText(context, "onConfigureFailed", Toast.LENGTH_LONG).show();
        }
    };

• When this callback is handed to createCaptureSession, the last argument is null again, for a handler we don't need.

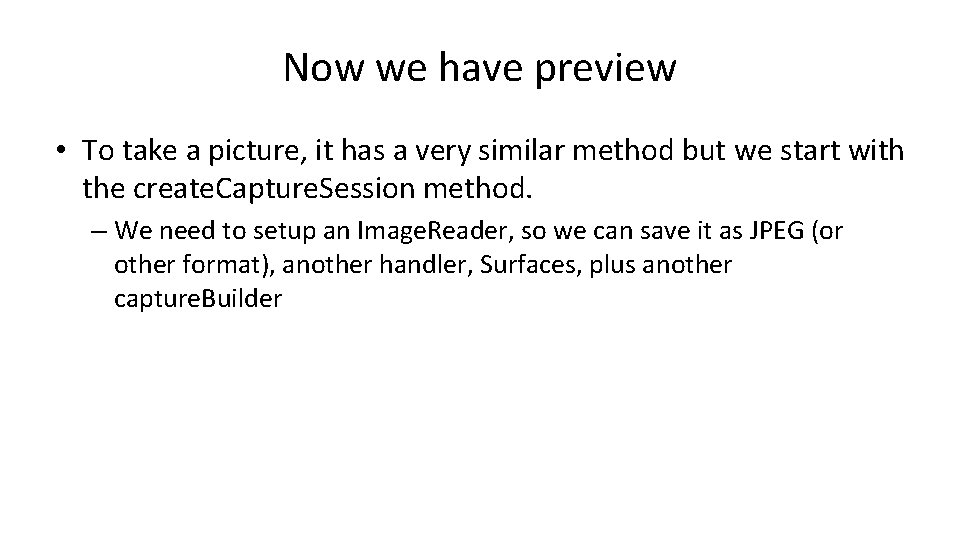

Now we have preview
• Taking a picture works in a very similar way, but we start with the createCaptureSession method.
  – We need to set up an ImageReader so we can save the picture as JPEG (or another format), another handler, Surfaces, plus another captureBuilder.

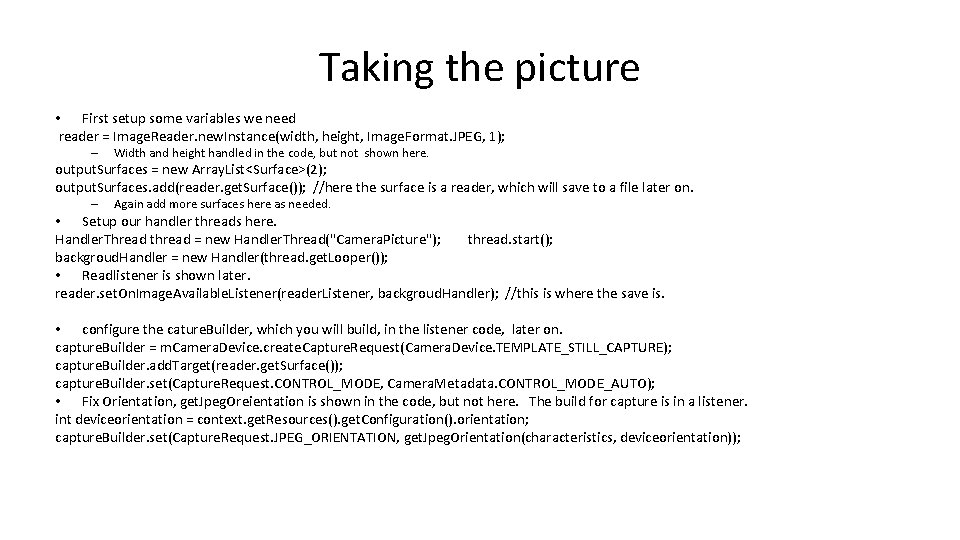

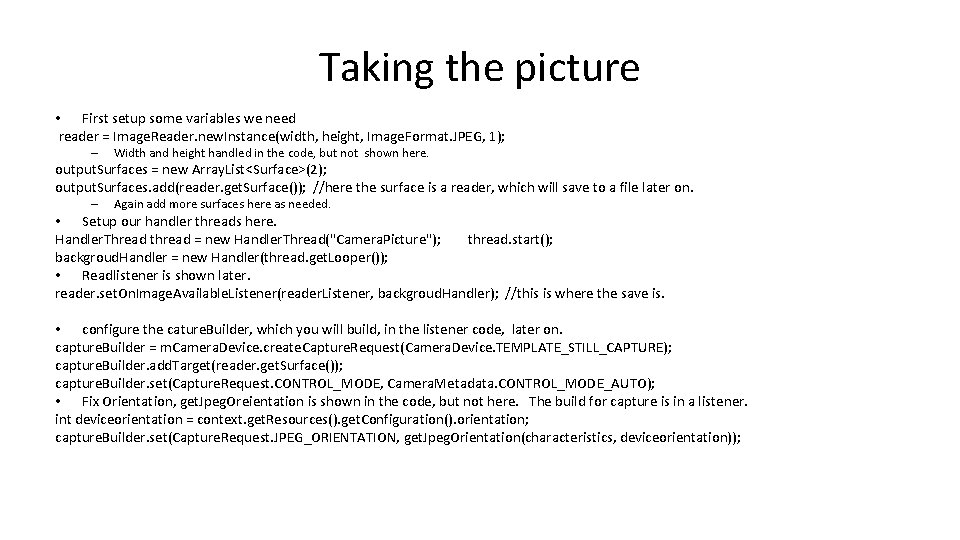

Taking the picture
• First set up some variables we need

    reader = ImageReader.newInstance(width, height, ImageFormat.JPEG, 1);

  – Width and height are handled in the code, but not shown here.

    outputSurfaces = new ArrayList<Surface>(2);
    outputSurfaces.add(reader.getSurface());  // here the surface is a reader, which will save to a file later on.

  – Again, add more surfaces here as needed.
• Set up our handler thread here.

    HandlerThread thread = new HandlerThread("CameraPicture");
    thread.start();
    backgroudHandler = new Handler(thread.getLooper());

• The readerListener is shown later.

    reader.setOnImageAvailableListener(readerListener, backgroudHandler);  // this is where the save is.

• Configure the captureBuilder, which you will build in the listener code later on.

    captureBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_STILL_CAPTURE);
    captureBuilder.addTarget(reader.getSurface());
    captureBuilder.set(CaptureRequest.CONTROL_MODE, CameraMetadata.CONTROL_MODE_AUTO);

• Fix the orientation. getJpegOrientation is shown in the code, but not here. The build for the capture is in a listener.

    int deviceorientation = context.getResources().getConfiguration().orientation;
    captureBuilder.set(CaptureRequest.JPEG_ORIENTATION, getJpegOrientation(characteristics, deviceorientation));
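The slides do not show getJpegOrientation, so the following is only a sketch of the arithmetic such a helper typically performs, modeled on the calculation described in the Android reference for CaptureRequest.JPEG_ORIENTATION. It is written as plain Java so it can be tried off-device. The class name and the three-argument signature are hypothetical, and it assumes the device orientation is given in degrees. In a real app the sensor orientation and lens facing would be read from the CameraCharacteristics (SENSOR_ORIENTATION and LENS_FACING).

```java
// Hypothetical stand-in for the getJpegOrientation helper that the slides do not show.
final class JpegOrientation {
    private JpegOrientation() {}

    /**
     * @param sensorOrientation degrees the camera sensor is rotated relative to the device (0, 90, 180, 270)
     * @param deviceOrientation current device rotation in degrees (0 to 359); an assumption of this sketch
     * @param frontFacing       true for a front-facing camera
     * @return the clockwise rotation, in degrees, to request for the JPEG
     */
    static int compute(int sensorOrientation, int deviceOrientation, boolean frontFacing) {
        // Round the device orientation to the nearest multiple of 90 degrees.
        int rounded = ((deviceOrientation + 45) / 90) * 90;
        // A front-facing camera sees the rotation in the opposite direction.
        if (frontFacing) {
            rounded = -rounded;
        }
        // Combine with the sensor mounting angle and normalize into 0..359.
        return (sensorOrientation + rounded + 360) % 360;
    }
}
```

For example, a back camera whose sensor is mounted at 90 degrees, on a device held upright (0 degrees), gives compute(90, 0, false) == 90.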

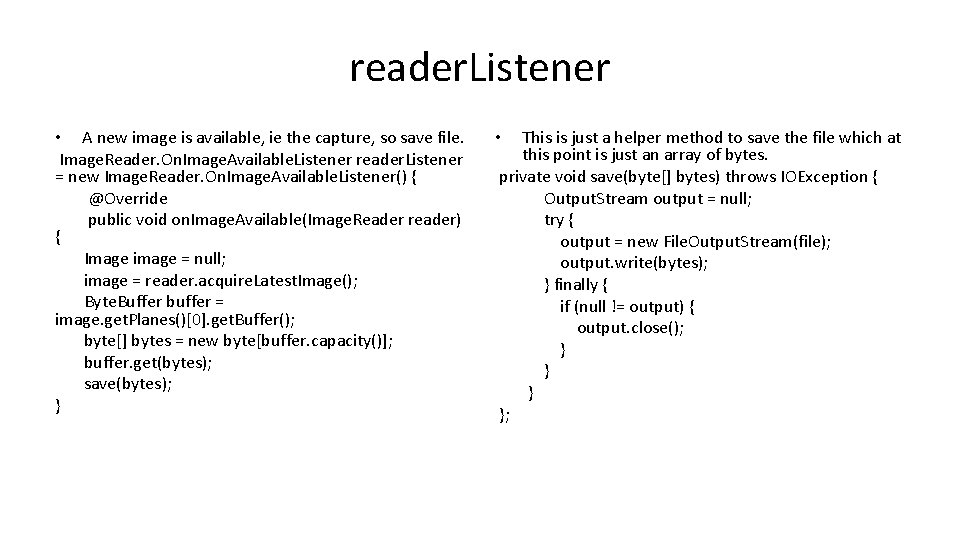

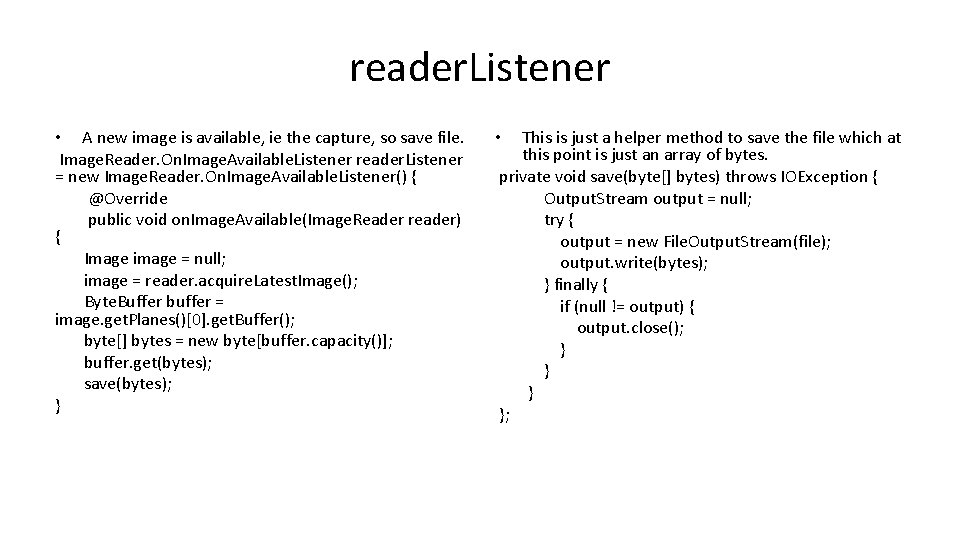

readerListener
• A new image is available, i.e. the capture, so save the file.

    ImageReader.OnImageAvailableListener readerListener = new ImageReader.OnImageAvailableListener() {
        @Override
        public void onImageAvailable(ImageReader reader) {
            Image image = reader.acquireLatestImage();
            ByteBuffer buffer = image.getPlanes()[0].getBuffer();
            byte[] bytes = new byte[buffer.capacity()];
            buffer.get(bytes);
            try {
                save(bytes);
            } catch (IOException e) {
                e.printStackTrace();
            } finally {
                image.close();  // hand the image back to the reader
            }
        }

        // This is just a helper method to save the file, which at this point is just an array of bytes.
        private void save(byte[] bytes) throws IOException {
            OutputStream output = null;
            try {
                output = new FileOutputStream(file);
                output.write(bytes);
            } finally {
                if (null != output) {
                    output.close();
                }
            }
        }
    };
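The byte-copy and save steps above do not depend on Android, so they can be tried in plain Java. The sketch below is one possible way to write them, not the example project's code: JpegFileWriter is a hypothetical name, it sizes the array with remaining() so that only unread bytes are copied, and it uses try-with-resources in place of the explicit finally block.

```java
import java.io.File;
import java.io.FileOutputStream;
import java.io.IOException;
import java.io.OutputStream;
import java.nio.ByteBuffer;

// Hypothetical helper for illustration; the example project keeps this logic in the listener.
final class JpegFileWriter {
    private JpegFileWriter() {}

    /** Copies the unread bytes of the buffer into a new array. */
    static byte[] toBytes(ByteBuffer buffer) {
        byte[] bytes = new byte[buffer.remaining()];
        buffer.get(bytes);
        return bytes;
    }

    /** Writes the bytes to the file; the stream is closed even if the write fails. */
    static void save(byte[] bytes, File file) throws IOException {
        try (OutputStream output = new FileOutputStream(file)) {
            output.write(bytes);
        }
    }
}
```

In the listener, the call would then look like JpegFileWriter.save(JpegFileWriter.toBytes(buffer), file), still wrapped in a try/catch for the IOException.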

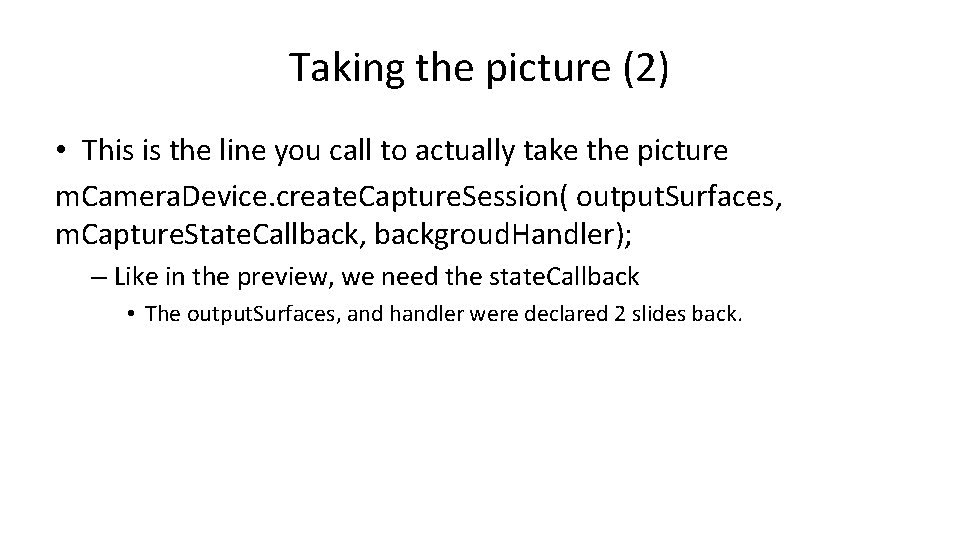

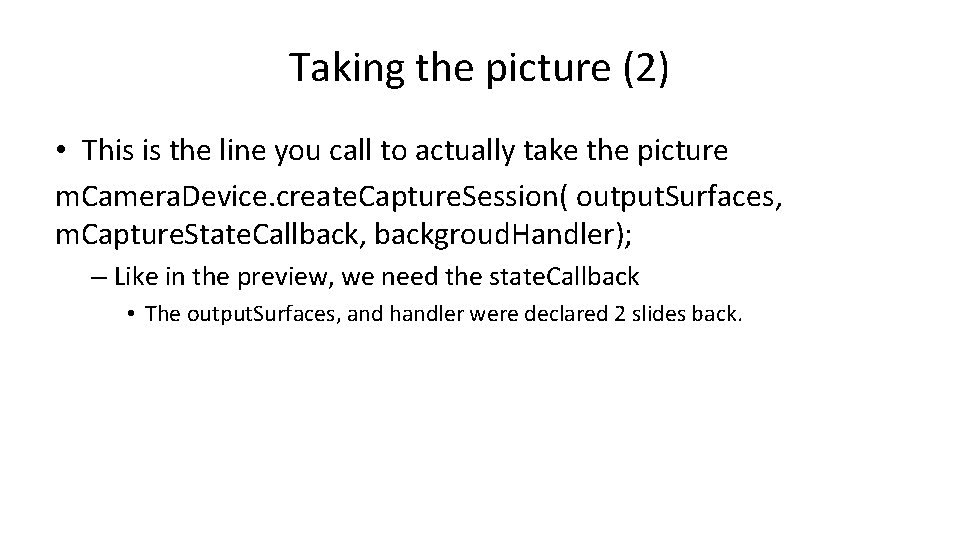

Taking the picture (2)
• This is the line you call to actually take the picture

    mCameraDevice.createCaptureSession(outputSurfaces, mCaptureStateCallback, backgroudHandler);

  – Like in the preview, we need the StateCallback
    • The outputSurfaces and handler were declared 2 slides back.

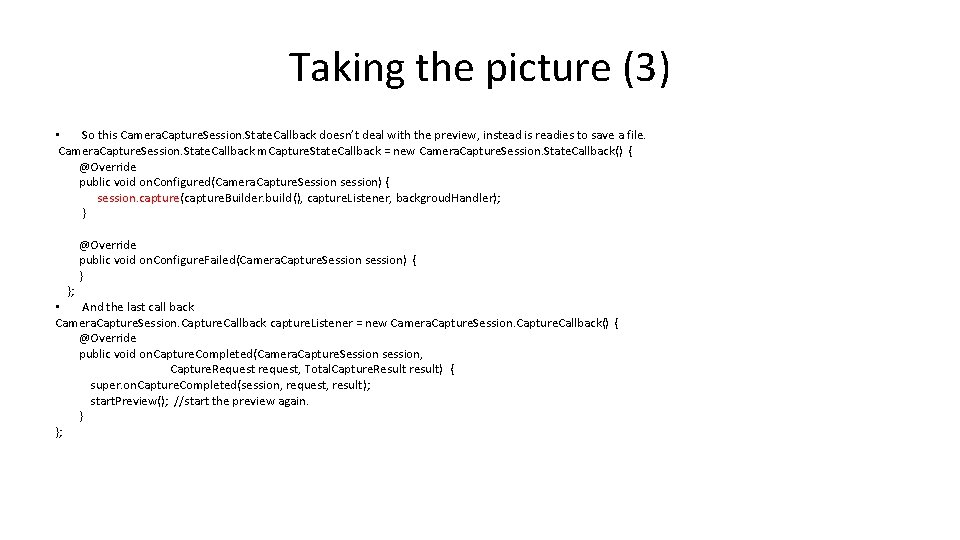

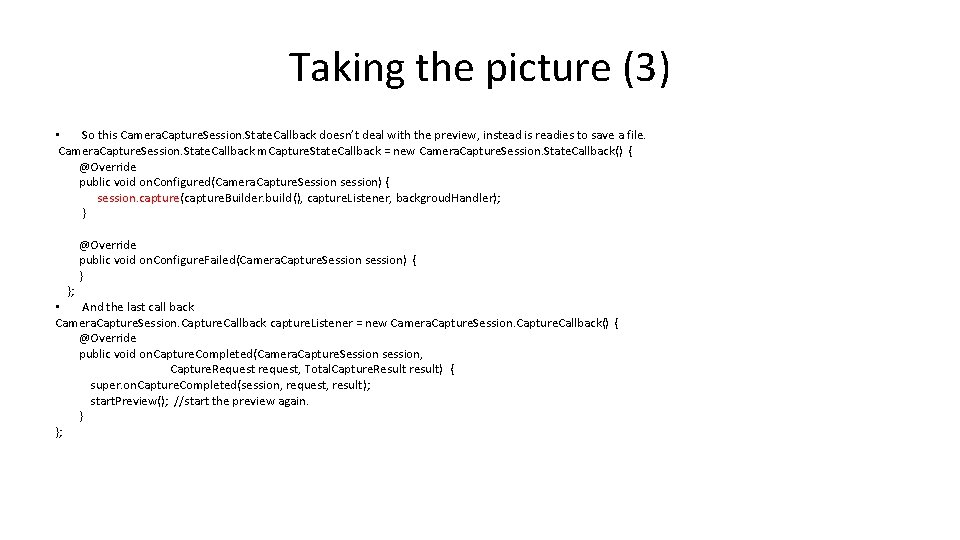

Taking the picture (3)
• This CameraCaptureSession.StateCallback doesn't deal with the preview; instead it gets ready to save a file.

    CameraCaptureSession.StateCallback mCaptureStateCallback = new CameraCaptureSession.StateCallback() {
        @Override
        public void onConfigured(CameraCaptureSession session) {
            try {
                session.capture(captureBuilder.build(), captureListener, backgroudHandler);
            } catch (CameraAccessException e) {
                e.printStackTrace();
            }
        }
        @Override
        public void onConfigureFailed(CameraCaptureSession session) { }
    };

• And the last callback

    CameraCaptureSession.CaptureCallback captureListener = new CameraCaptureSession.CaptureCallback() {
        @Override
        public void onCaptureCompleted(CameraCaptureSession session, CaptureRequest request, TotalCaptureResult result) {
            super.onCaptureCompleted(session, request, result);
            startPreview();  // start the preview again.
        }
    };

Taking A Picture WITH AN INTENT

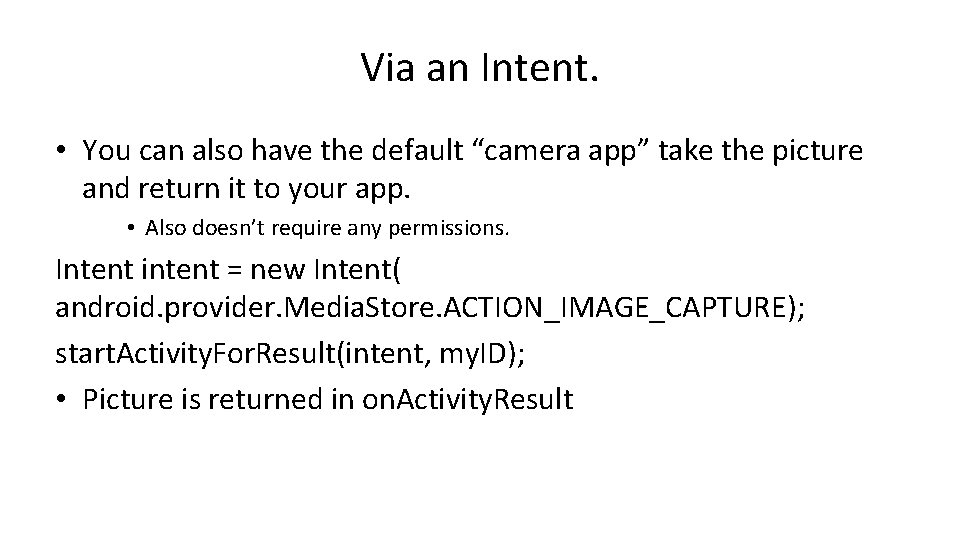

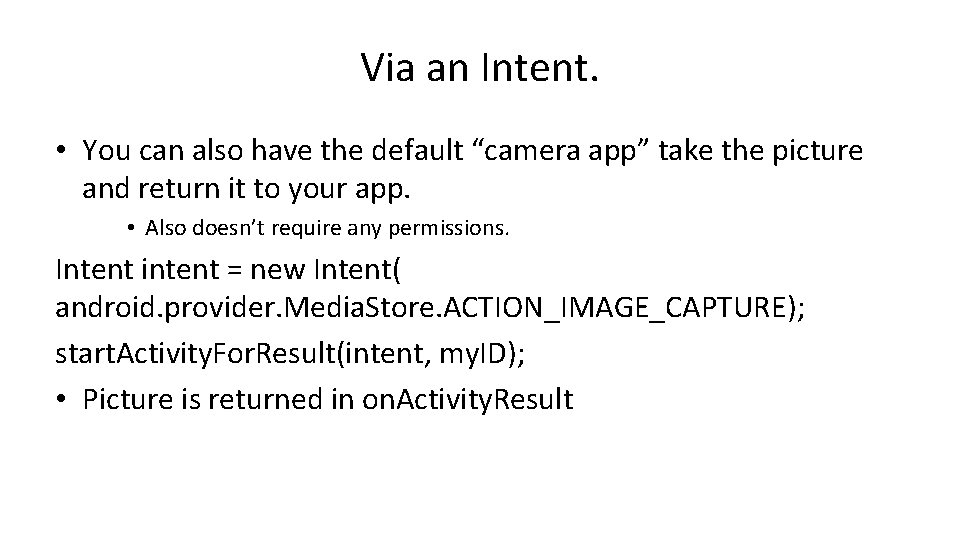

Via an Intent.
• You can also have the default "camera app" take the picture and return it to your app.
• This also doesn't require any permissions.

    Intent intent = new Intent(android.provider.MediaStore.ACTION_IMAGE_CAPTURE);
    startActivityForResult(intent, myID);

• The picture is returned in onActivityResult

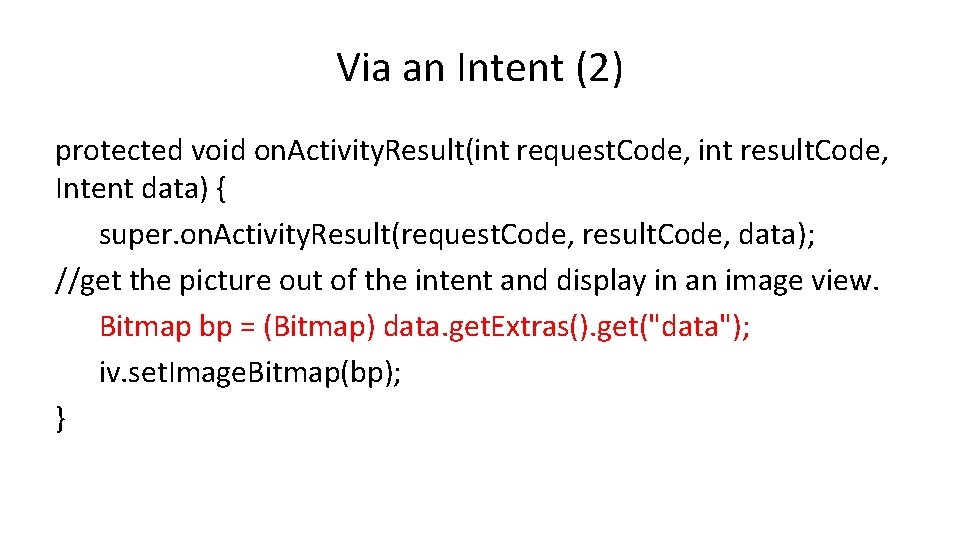

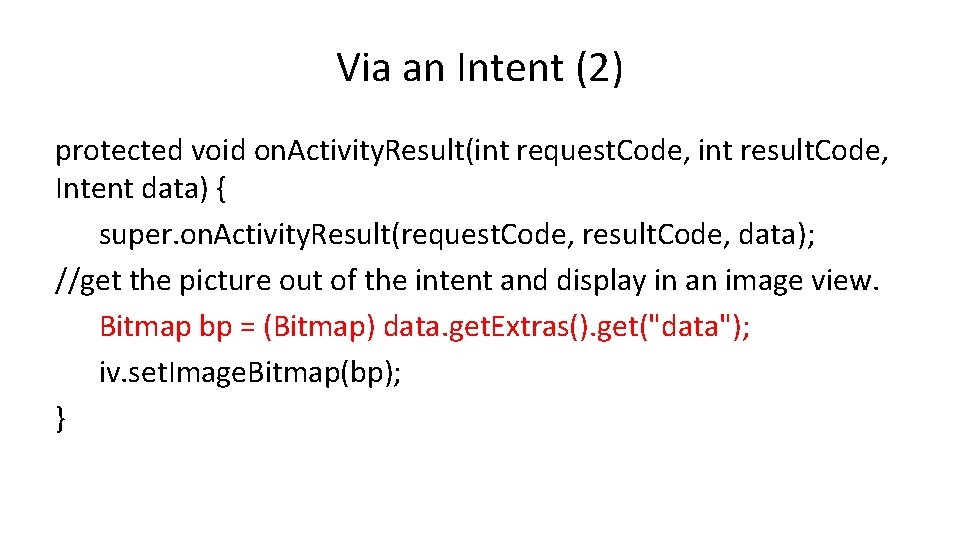

Via an Intent (2)

    protected void onActivityResult(int requestCode, int resultCode, Intent data) {
        super.onActivityResult(requestCode, resultCode, data);
        // data is null if the user backed out of the camera app, so check first.
        if (requestCode == myID && resultCode == RESULT_OK && data != null) {
            // get the picture out of the intent and display it in an ImageView.
            Bitmap bp = (Bitmap) data.getExtras().get("data");
            iv.setImageBitmap(bp);
        }
    }

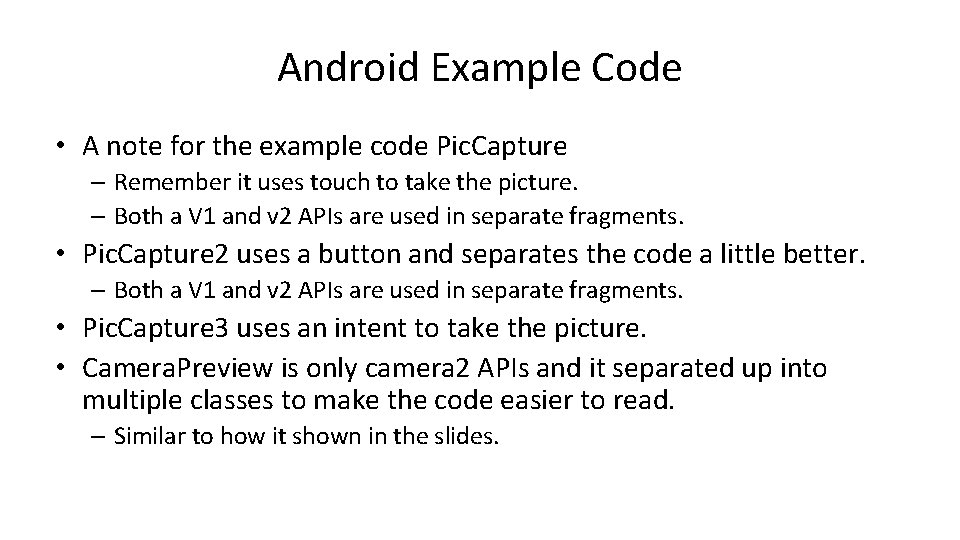

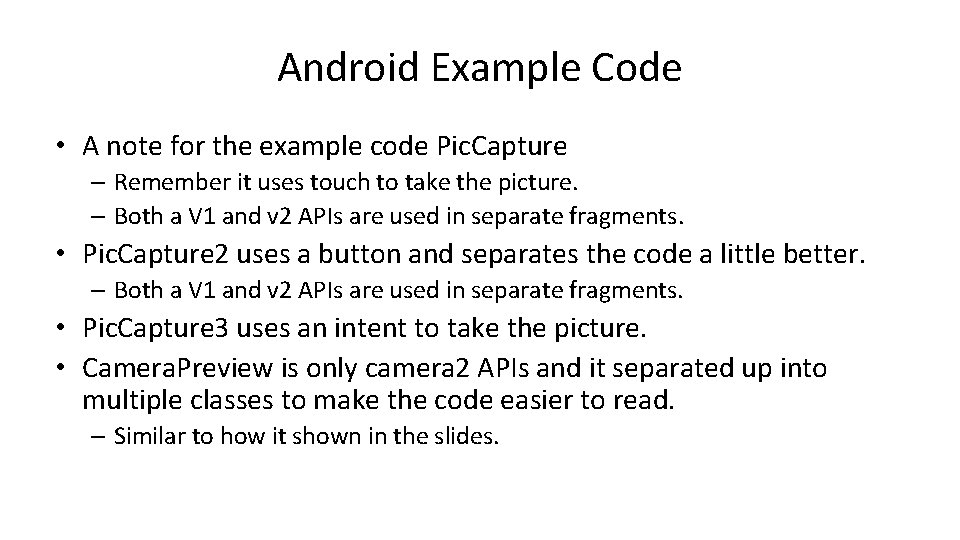

Android Example Code
• A note for the example code PicCapture
  – Remember, it uses touch to take the picture.
  – Both the v1 and v2 APIs are used, in separate fragments.
• PicCapture2 uses a button and separates the code a little better.
  – Both the v1 and v2 APIs are used, in separate fragments.
• PicCapture3 uses an intent to take the picture.
• CameraPreview uses only the Camera2 APIs, and it is separated into multiple classes to make the code easier to read.
  – Similar to how it is shown in the slides.

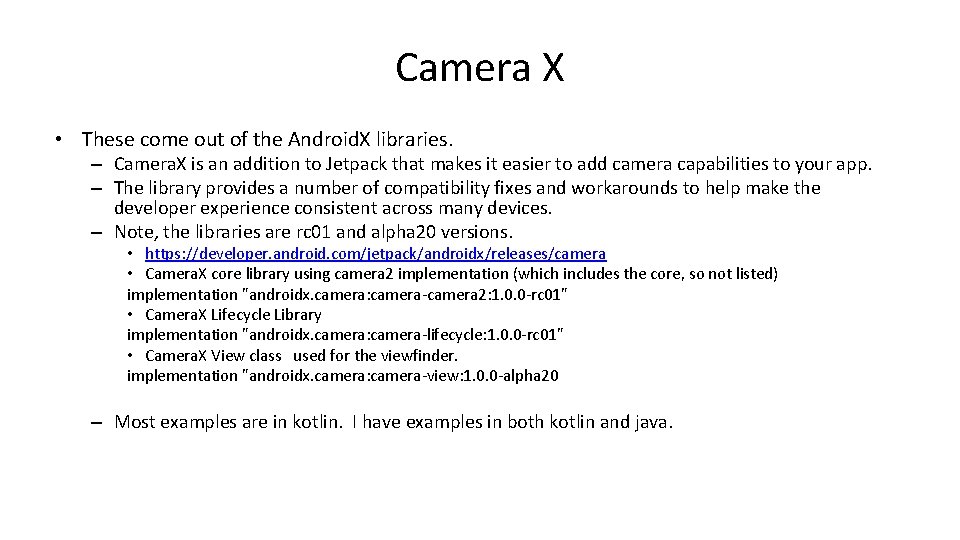

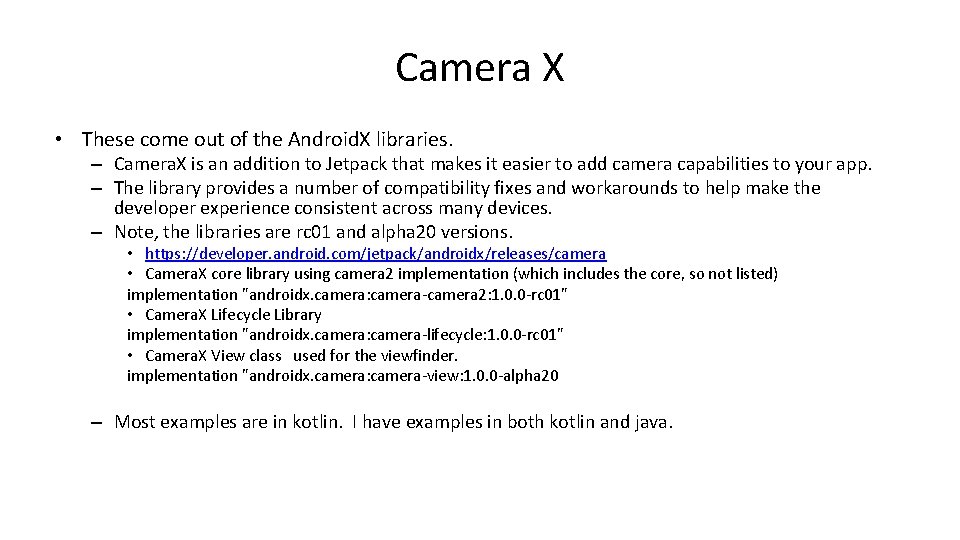

CameraX
• These come out of the AndroidX libraries.
  – CameraX is an addition to Jetpack that makes it easier to add camera capabilities to your app.
  – The library provides a number of compatibility fixes and workarounds to help make the developer experience consistent across many devices.
  – Note: the libraries are at the rc01 and alpha20 versions.
    • https://developer.android.com/jetpack/androidx/releases/camera
• CameraX core library using the camera2 implementation (which includes the core, so it is not listed)

    implementation "androidx.camera:camera-camera2:1.0.0-rc01"

• CameraX Lifecycle library

    implementation "androidx.camera:camera-lifecycle:1.0.0-rc01"

• CameraX View class, used for the viewfinder.

    implementation "androidx.camera:camera-view:1.0.0-alpha20"

  – Most examples are in Kotlin. I have examples in both Kotlin and Java.

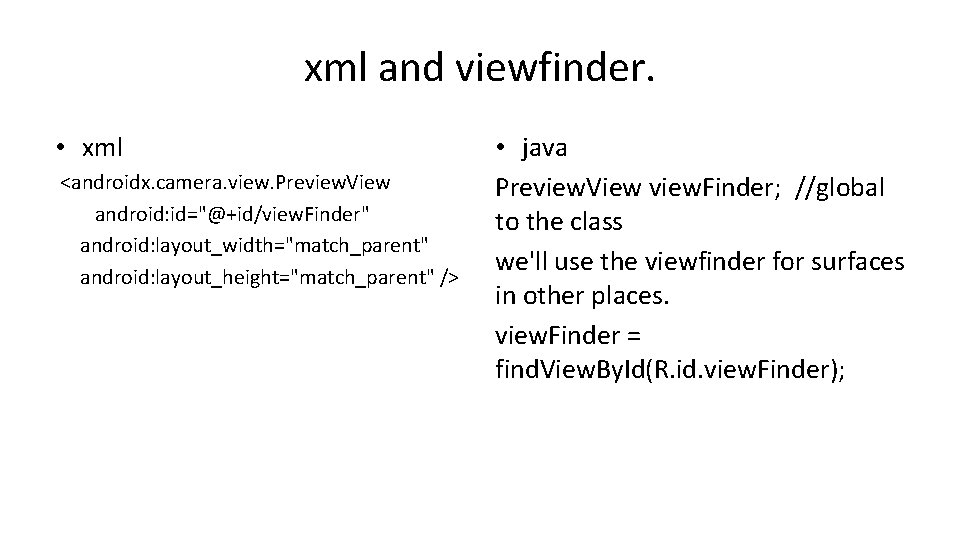

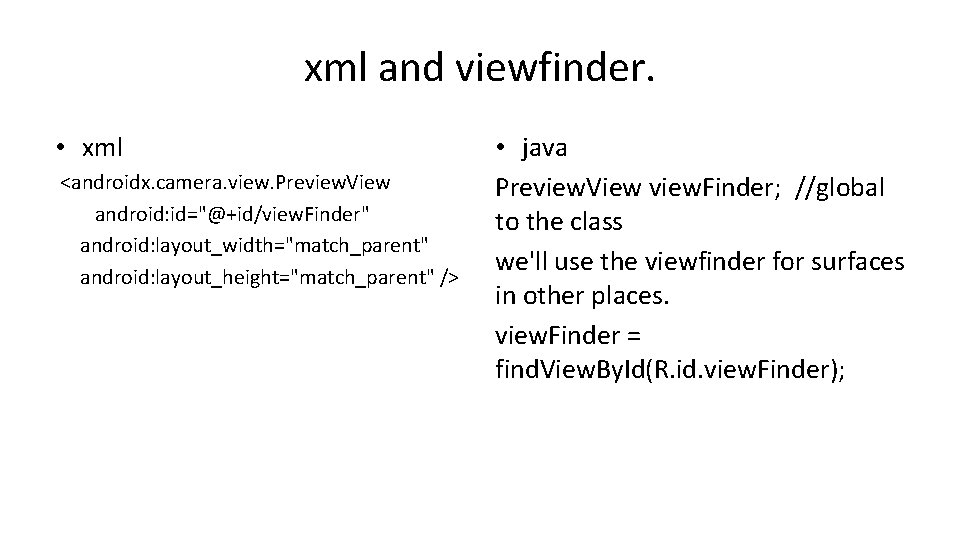

xml and viewfinder.
• xml

    <androidx.camera.view.PreviewView
        android:id="@+id/viewFinder"
        android:layout_width="match_parent"
        android:layout_height="match_parent" />

• java

    PreviewView viewFinder;  // global to the class; we'll use the viewfinder for surfaces in other places.
    viewFinder = findViewById(R.id.viewFinder);

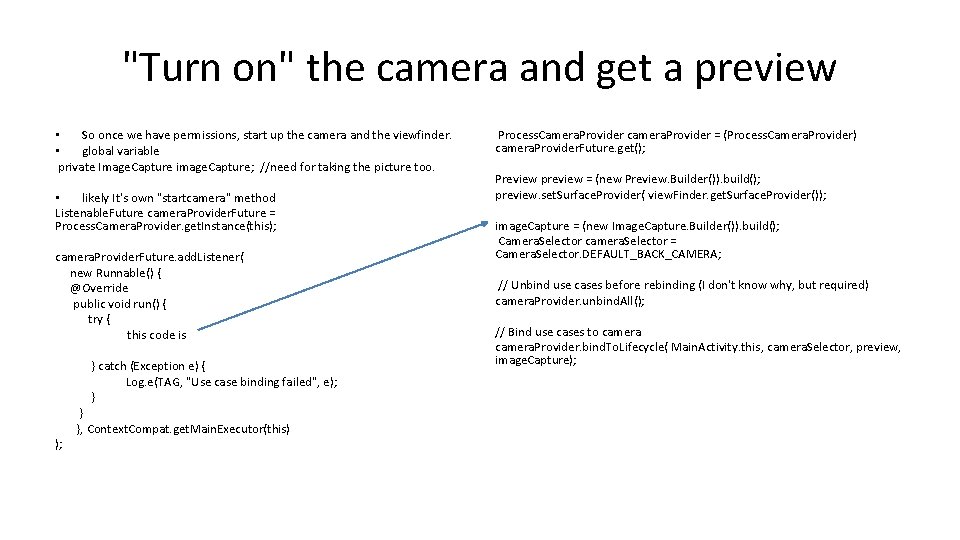

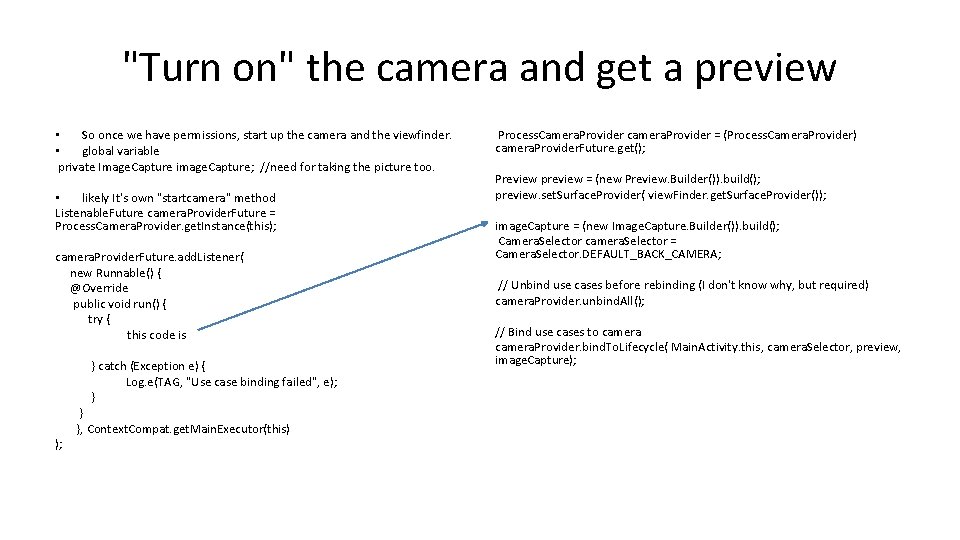

"Turn on" the camera and get a preview • So once we have permissions, start up the camera and the viewfinder. • global variable private Image. Capture image. Capture; //need for taking the picture too. • likely It's own "startcamera" method Listenable. Future camera. Provider. Future = Process. Camera. Provider. get. Instance(this); camera. Provider. Future. add. Listener( new Runnable() { @Override public void run() { try { this code is } catch (Exception e) { Log. e(TAG, "Use case binding failed", e); } ); } }, Context. Compat. get. Main. Executor(this) Process. Camera. Provider camera. Provider = (Process. Camera. Provider) camera. Provider. Future. get(); Preview preview = (new Preview. Builder()). build(); preview. set. Surface. Provider( view. Finder. get. Surface. Provider()); image. Capture = (new Image. Capture. Builder()). build(); Camera. Selector camera. Selector = Camera. Selector. DEFAULT_BACK_CAMERA; // Unbind use cases before rebinding (I don't know why, but required) camera. Provider. unbind. All(); // Bind use cases to camera. Provider. bind. To. Lifecycle( Main. Activity. this, camera. Selector, preview, image. Capture);

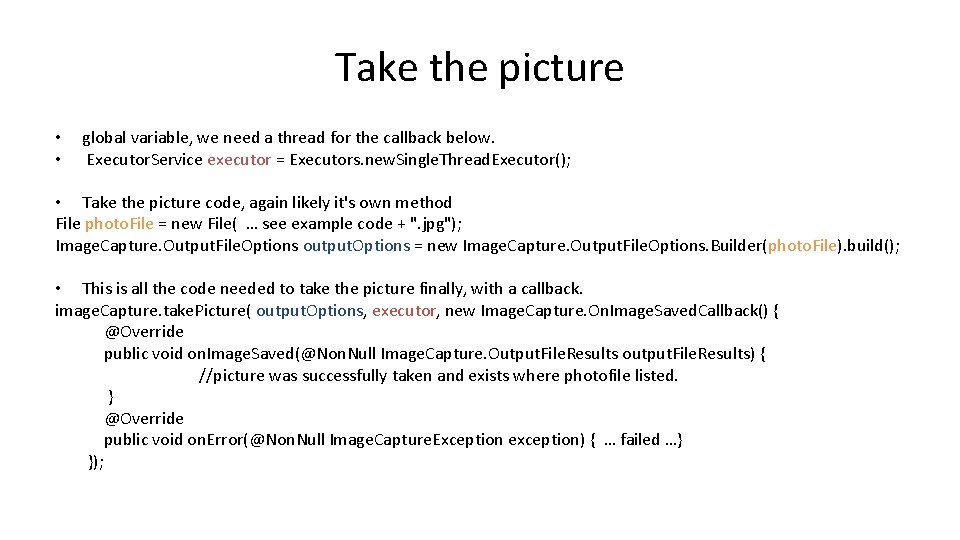

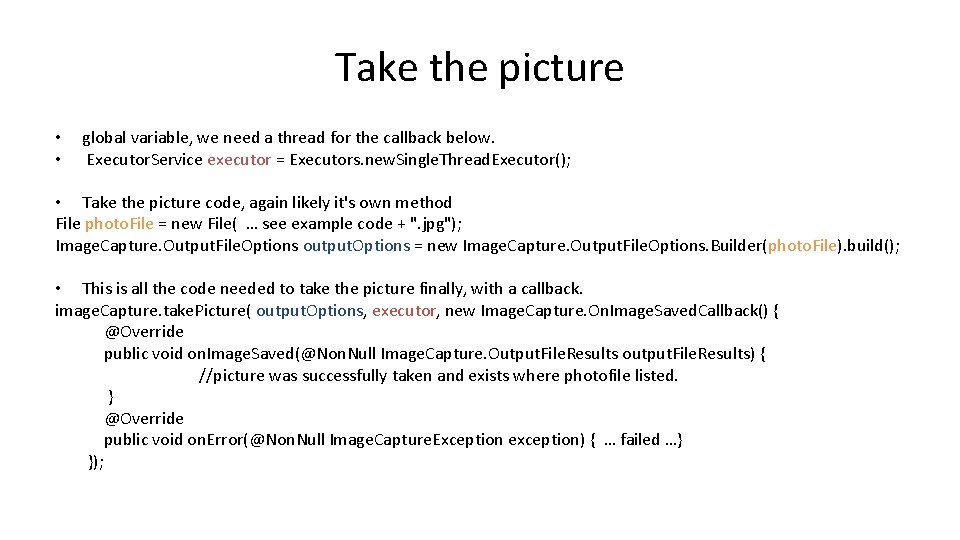

Take the picture
• Global variable; we need a thread for the callback below.

    ExecutorService executor = Executors.newSingleThreadExecutor();

• The take-the-picture code, again likely its own method

    File photoFile = new File( … see example code + ".jpg");
    ImageCapture.OutputFileOptions outputOptions = new ImageCapture.OutputFileOptions.Builder(photoFile).build();

• This is all the code needed to finally take the picture, with a callback.

    imageCapture.takePicture(outputOptions, executor,
        new ImageCapture.OnImageSavedCallback() {
            @Override
            public void onImageSaved(@NonNull ImageCapture.OutputFileResults outputFileResults) {
                // picture was successfully taken and exists where photoFile is listed.
            }
            @Override
            public void onError(@NonNull ImageCaptureException exception) {
                … failed …
            }
        });
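The executor decides which thread the saved/error callback runs on. The plain Java sketch below illustrates only that point. SavedCallback and fakeTakePicture are hypothetical stand-ins for ImageCapture.OnImageSavedCallback and takePicture, and nothing here touches the camera. The practical consequence is that the callback does not run on the calling (main) thread, so any view updates inside it have to be posted back to the main thread.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Illustration only: shows that a callback handed to a single-thread executor
// runs on the executor's worker thread, not on the caller's thread.
final class CallbackThreadDemo {
    private CallbackThreadDemo() {}

    /** Hypothetical stand-in for ImageCapture.OnImageSavedCallback. */
    interface SavedCallback {
        void onSaved(String threadName);
    }

    /** Hypothetical stand-in for takePicture(): invokes the callback on the given executor. */
    static void fakeTakePicture(ExecutorService executor, SavedCallback callback) {
        executor.execute(() -> callback.onSaved(Thread.currentThread().getName()));
    }

    /** Returns the name of the thread the callback ran on. */
    static String callbackThreadName() throws InterruptedException {
        ExecutorService executor = Executors.newSingleThreadExecutor();
        String[] seen = new String[1];
        CountDownLatch done = new CountDownLatch(1);
        fakeTakePicture(executor, name -> {
            seen[0] = name;
            done.countDown();
        });
        done.await(5, TimeUnit.SECONDS);  // wait for the callback to finish
        executor.shutdown();              // release the worker thread when done
        return seen[0];
    }
}
```

The same cleanup applies to the real executor: shut it down when the activity no longer needs the camera.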

Android Example Code
• CameraXdemo is a Java version
• CameraXdemo_kt is a Kotlin version
• These are both the "same" example. It brings up a preview with a button to take the picture.
  – The picture goes into the public app directory for the example.

Android android.media RECORDING VIDEO

Recording VIDEO CAMERA V1 APIS

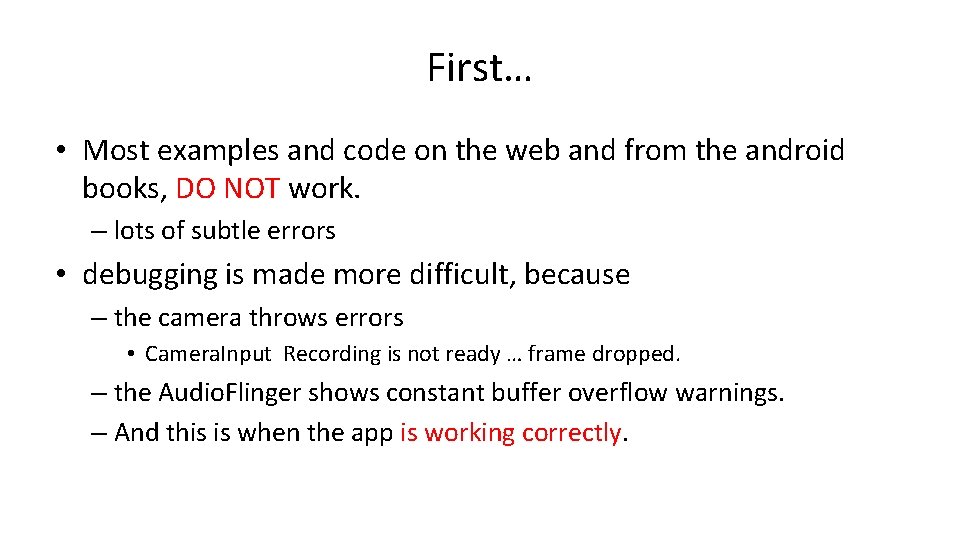

First…
• Most examples and code on the web and from the Android books DO NOT work.
  – lots of subtle errors
• Debugging is made more difficult, because
  – the camera throws errors
    • CameraInput: Recording is not ready … frame dropped.
  – the AudioFlinger shows constant buffer overflow warnings.
  – And this is when the app is working correctly.

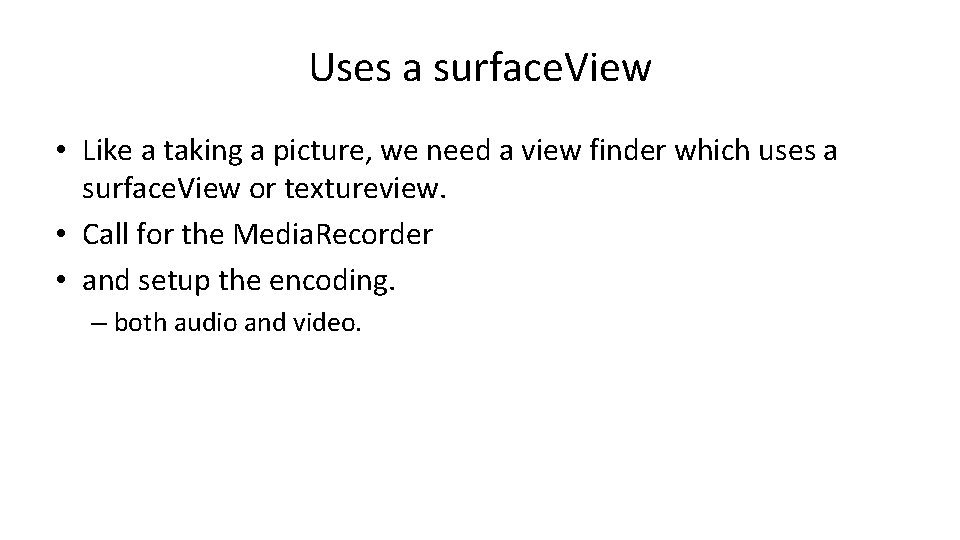

Uses a SurfaceView
• Like taking a picture, we need a view finder, which uses a SurfaceView or TextureView.
• Create the MediaRecorder
• and set up the encoding.
  – both audio and video.

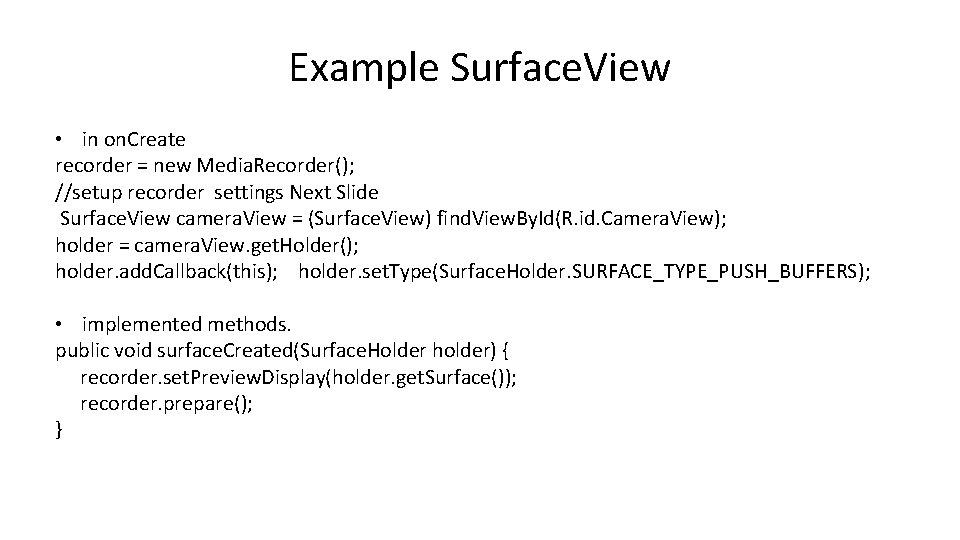

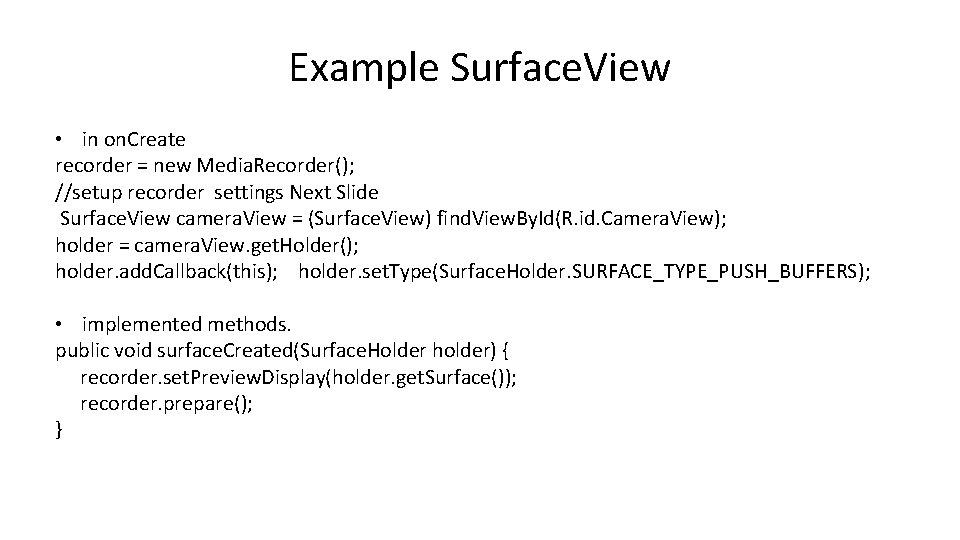

Example SurfaceView
• in onCreate

    recorder = new MediaRecorder();
    // set up the recorder settings (see the Recorder Settings slides)
    SurfaceView cameraView = (SurfaceView) findViewById(R.id.CameraView);
    holder = cameraView.getHolder();
    holder.addCallback(this);
    holder.setType(SurfaceHolder.SURFACE_TYPE_PUSH_BUFFERS);

• implemented methods.

    public void surfaceCreated(SurfaceHolder holder) {
        recorder.setPreviewDisplay(holder.getSurface());
        try {
            recorder.prepare();
        } catch (IOException e) {
            e.printStackTrace();  // prepare() can fail, e.g. if the output file cannot be opened
        }
    }

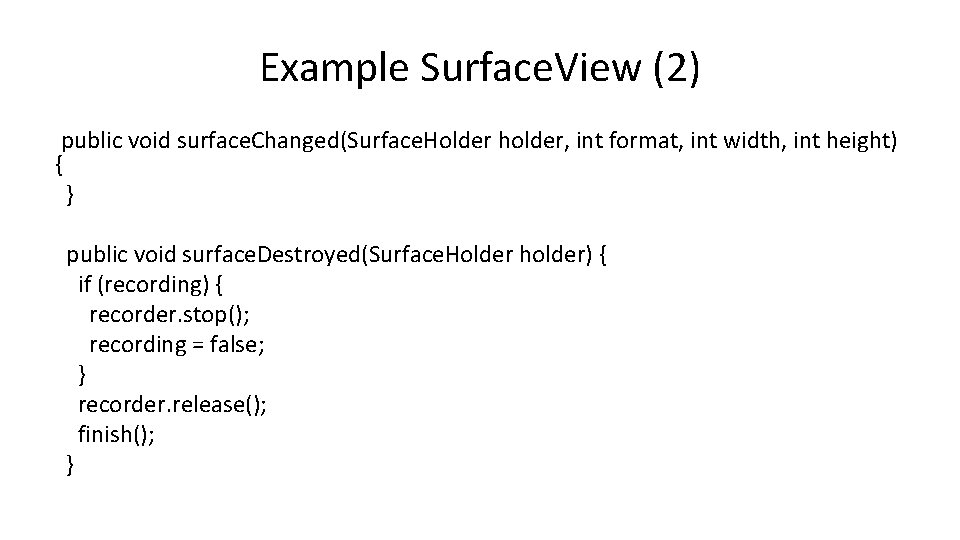

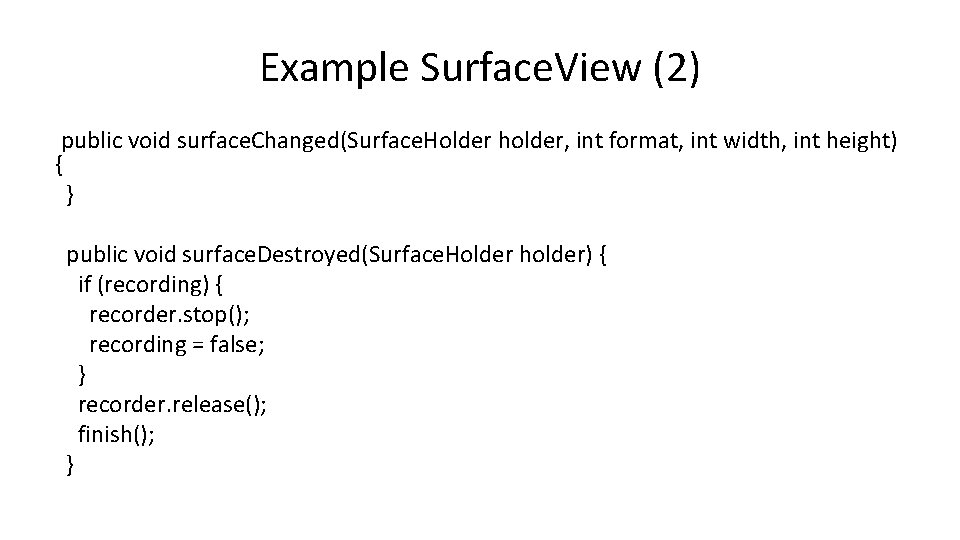

Example SurfaceView (2)

    public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) { }

    public void surfaceDestroyed(SurfaceHolder holder) {
        if (recording) {
            recorder.stop();
            recording = false;
        }
        recorder.release();
        finish();
    }

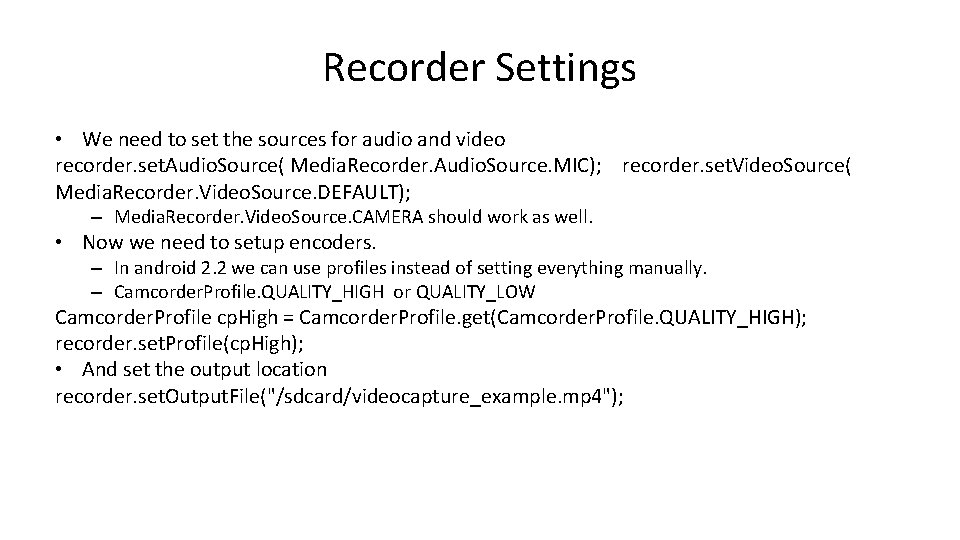

Recorder Settings
• We need to set the sources for audio and video

    recorder.setAudioSource(MediaRecorder.AudioSource.MIC);
    recorder.setVideoSource(MediaRecorder.VideoSource.DEFAULT);

  – MediaRecorder.VideoSource.CAMERA should work as well.
• Now we need to set up the encoders.
  – Starting in Android 2.2 we can use profiles instead of setting everything manually.
  – CamcorderProfile.QUALITY_HIGH or QUALITY_LOW

    CamcorderProfile cpHigh = CamcorderProfile.get(CamcorderProfile.QUALITY_HIGH);
    recorder.setProfile(cpHigh);

• And set the output location

    recorder.setOutputFile("/sdcard/videocapture_example.mp4");

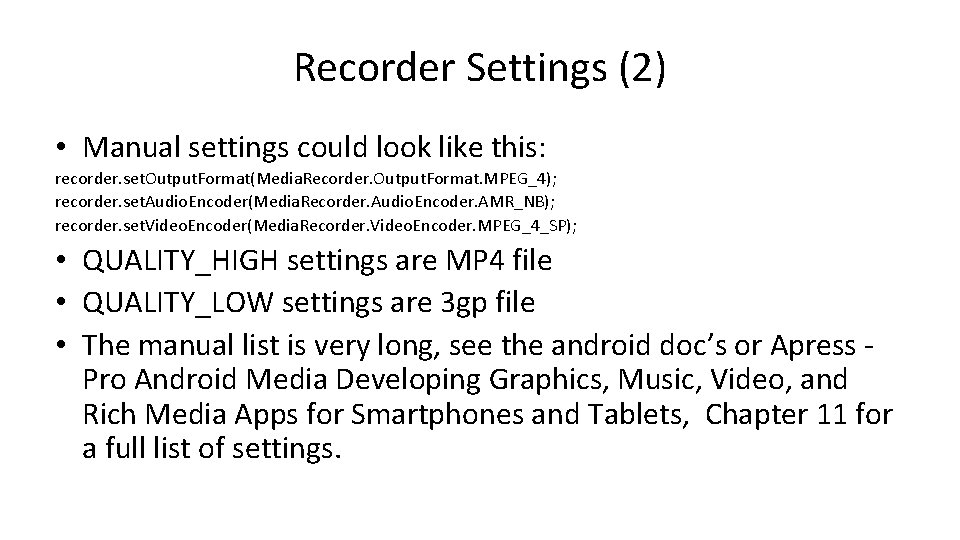

Recorder Settings (2)
• Manual settings could look like this:

    recorder.setOutputFormat(MediaRecorder.OutputFormat.MPEG_4);
    recorder.setAudioEncoder(MediaRecorder.AudioEncoder.AMR_NB);
    recorder.setVideoEncoder(MediaRecorder.VideoEncoder.MPEG_4_SP);

• QUALITY_HIGH settings produce an MP4 file
• QUALITY_LOW settings produce a 3gp file
• The manual list is very long; see the Android docs or Apress, Pro Android Media: Developing Graphics, Music, Video, and Rich Media Apps for Smartphones and Tablets, Chapter 11, for a full list of settings.

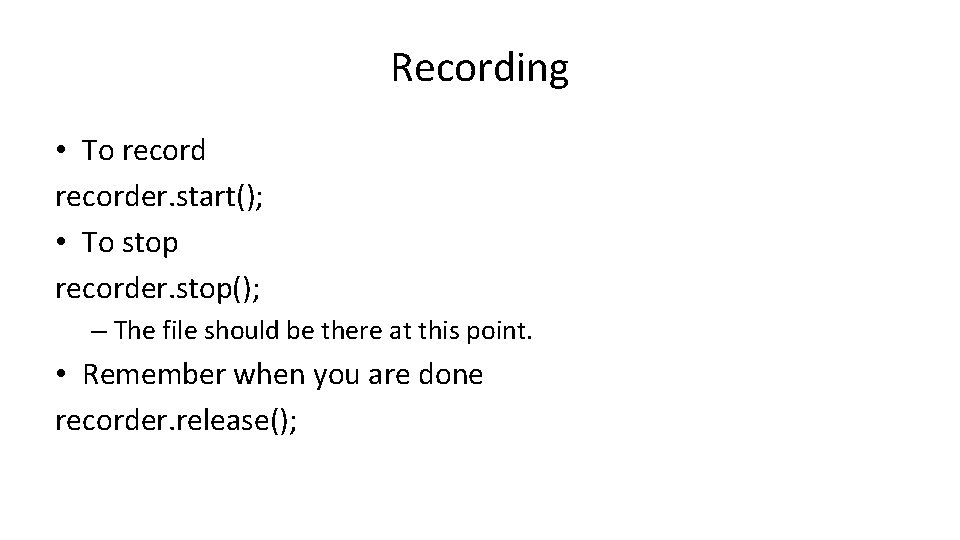

Recording
• To start: recorder.start();
• To stop: recorder.stop();
  – The file should be there at this point.
• Remember, when you are done: recorder.release();

Example code
• The example code
  – You need an sdcard to test the code, and the file will be located at /sdcard/videocapture_example.mp4
• The code uses an extended SurfaceView called captureSurface, instead of just a SurfaceView
  – The code is all there, but rearranged from the slides.
    • Honestly, the code just didn't work without an extended SurfaceView
• The code starts up with the viewfinder; touch the screen to start recording, and again to stop recording. It will then launch the native media player to replay the video.
• Note: using this on API 23+ (Marshmallow), the video is likely upside down.

Recording VIDEO CAMERA V2 APIS

Camera2 APIs.
• Recording video with the Camera2 API is very similar to taking a picture.
• Except…
  – Change the capture to a repeating request.
    • Why? Because we need more than one frame.
  – This changes how some of the code works.
  – As a note, on at least API 22 (Lollipop 5.1) the example code works once, then fails.
  – But it works great on API 23 without any problems.

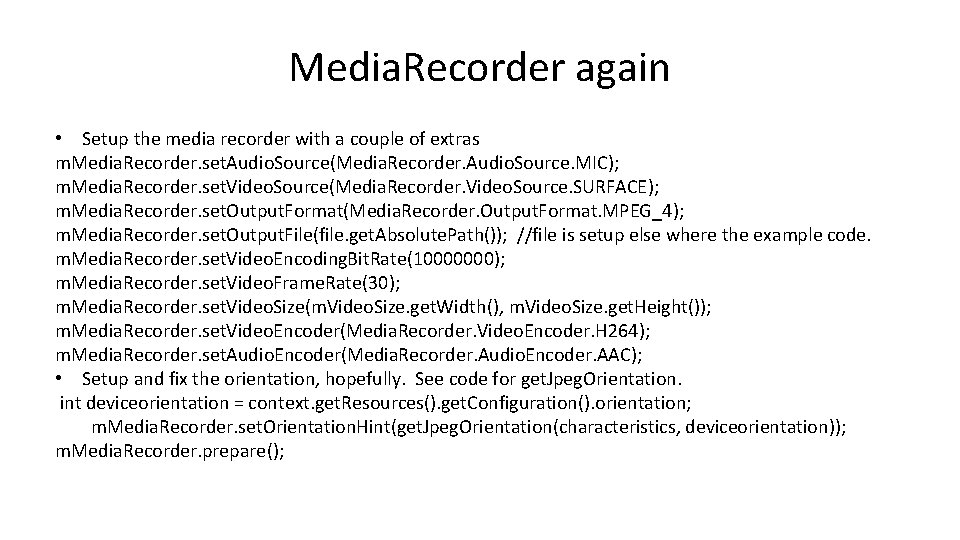

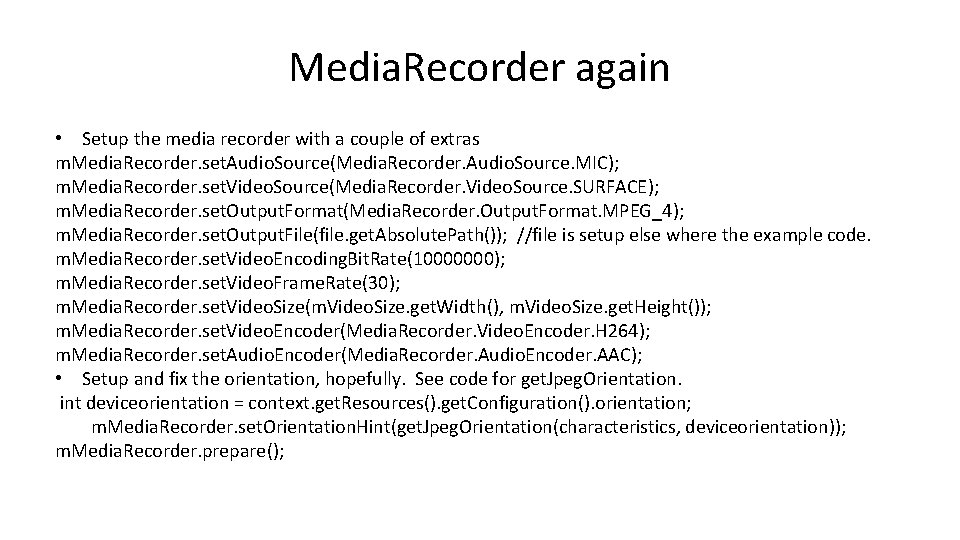

MediaRecorder again
• Set up the media recorder with a couple of extras

    mMediaRecorder.setAudioSource(MediaRecorder.AudioSource.MIC);
    mMediaRecorder.setVideoSource(MediaRecorder.VideoSource.SURFACE);
    mMediaRecorder.setOutputFormat(MediaRecorder.OutputFormat.MPEG_4);
    mMediaRecorder.setOutputFile(file.getAbsolutePath());  // file is set up elsewhere in the example code.
    mMediaRecorder.setVideoEncodingBitRate(10000000);
    mMediaRecorder.setVideoFrameRate(30);
    mMediaRecorder.setVideoSize(mVideoSize.getWidth(), mVideoSize.getHeight());
    mMediaRecorder.setVideoEncoder(MediaRecorder.VideoEncoder.H264);
    mMediaRecorder.setAudioEncoder(MediaRecorder.AudioEncoder.AAC);

• Set up and fix the orientation, hopefully. See the code for getJpegOrientation.

    int deviceorientation = context.getResources().getConfiguration().orientation;
    mMediaRecorder.setOrientationHint(getJpegOrientation(characteristics, deviceorientation));
    mMediaRecorder.prepare();

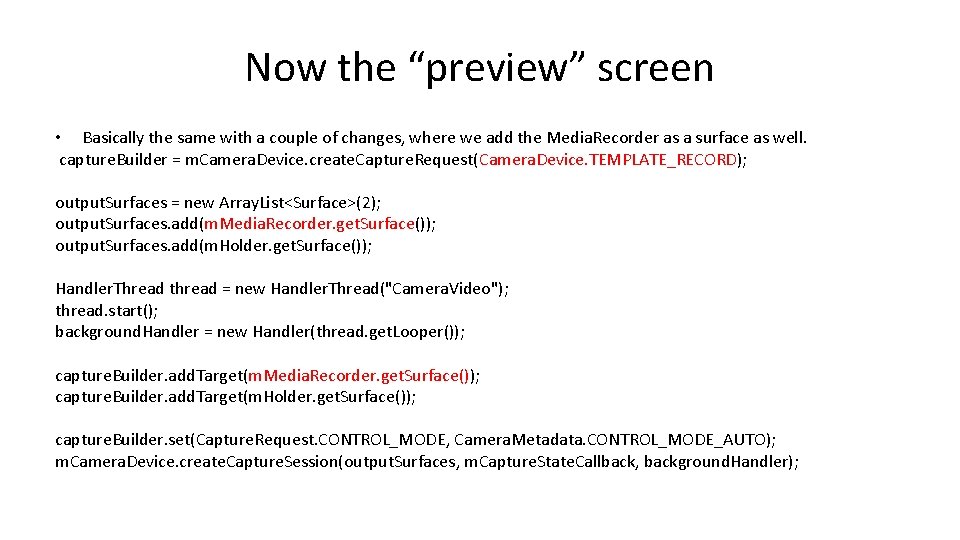

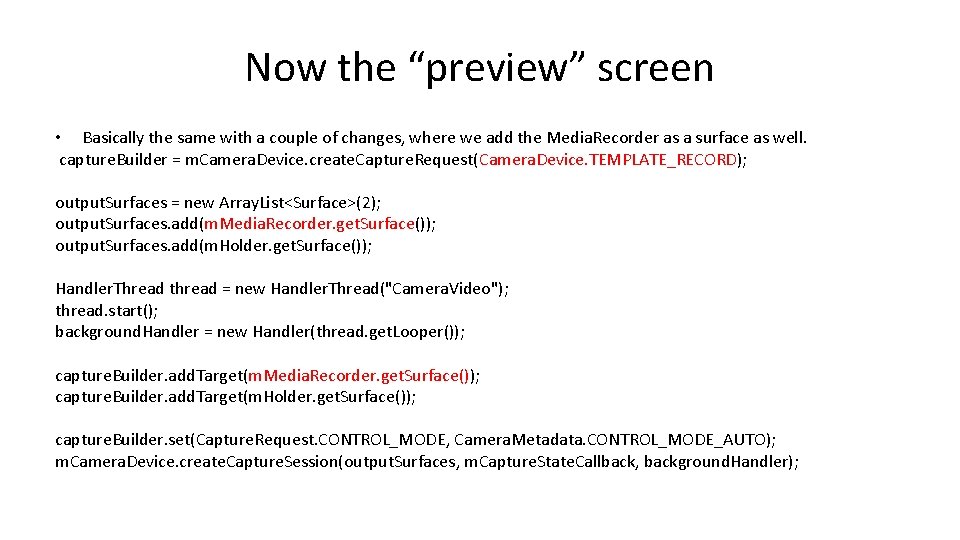

Now the “preview” screen
• Basically the same, with a couple of changes where we add the MediaRecorder as a surface as well.

    captureBuilder = mCameraDevice.createCaptureRequest(CameraDevice.TEMPLATE_RECORD);
    outputSurfaces = new ArrayList<Surface>(2);
    outputSurfaces.add(mMediaRecorder.getSurface());
    outputSurfaces.add(mHolder.getSurface());
    HandlerThread thread = new HandlerThread("CameraVideo");
    thread.start();
    backgroundHandler = new Handler(thread.getLooper());
    captureBuilder.addTarget(mMediaRecorder.getSurface());
    captureBuilder.addTarget(mHolder.getSurface());
    captureBuilder.set(CaptureRequest.CONTROL_MODE, CameraMetadata.CONTROL_MODE_AUTO);
    mCameraDevice.createCaptureSession(outputSurfaces, mCaptureStateCallback, backgroundHandler);

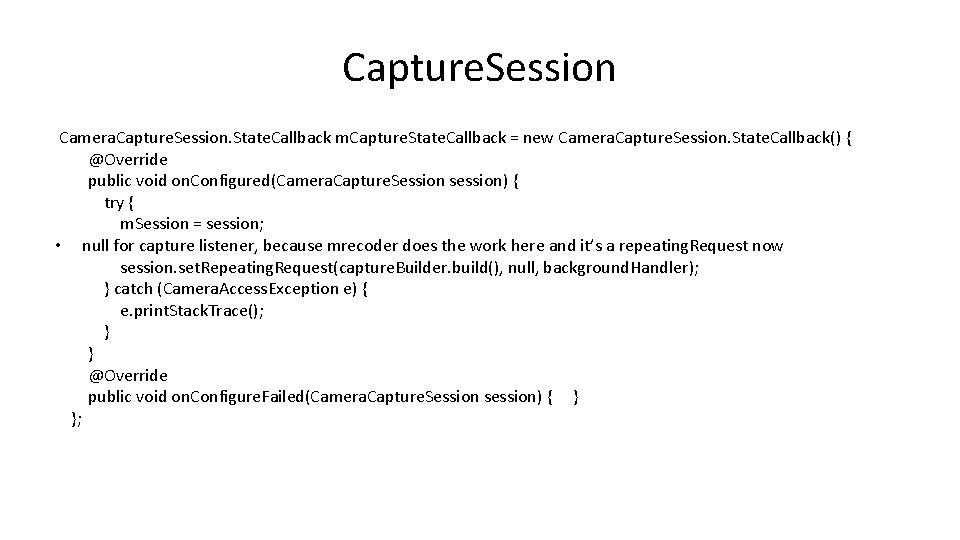

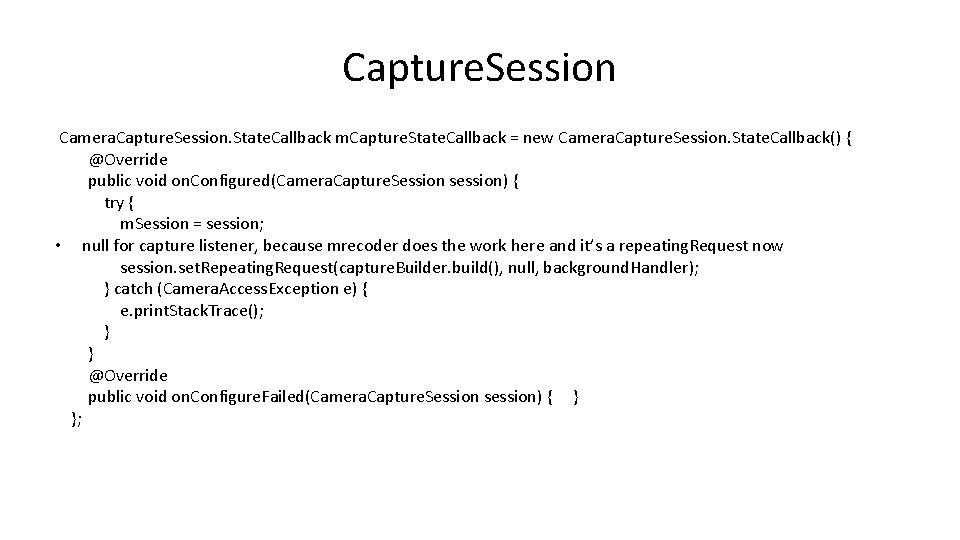

CaptureSession

    CameraCaptureSession.StateCallback mCaptureStateCallback = new CameraCaptureSession.StateCallback() {
        @Override
        public void onConfigured(CameraCaptureSession session) {
            try {
                mSession = session;
                // null for the capture listener, because the MediaRecorder does the work here,
                // and it's a repeating request now.
                session.setRepeatingRequest(captureBuilder.build(), null, backgroundHandler);
            } catch (CameraAccessException e) {
                e.printStackTrace();
            }
        }
        @Override
        public void onConfigureFailed(CameraCaptureSession session) { }
    };

Recording a video
• Finally, to start recording:

    mMediaRecorder.start();

• And to stop

    mMediaRecorder.stop();
    mMediaRecorder.reset();

  – The file is now stored.

Example code
• VideoCapture3 uses Camera2 and the material covered here.
  – It will check if you are running Lollipop or Marshmallow.
    • If Lollipop, it will exit, since the app dies anyway.
• CameraPreview is an attempt to put all of this (preview, picture taking, and video taking) together.
  – Again, it works great in Marshmallow and fails in Lollipop.

References
• http://developer.android.com/intl/zh-CN/guide/topics/media/index.html
• http://www.brighthub.com/mobile/google-android/articles/43414.aspx (a difficult example to follow, and it's for 1.6)
• Apress, Pro Android Media: Developing Graphics, Music, Video, and Rich Media Apps for Smartphones and Tablets
  – Chapter 2 for taking pictures, Chapter 9 for video playback.
• API examples, com.example.android.apis.media
• Camera2
  – https://github.com/googlesamples/android-Camera2Video
  – http://developer.android.com/reference/android/hardware/camera2/package-summary.html

CameraX and video.
• At version 1.0.0-rc01, the video functions are not public, but they are usable.
  – Primary differences
    • Use a VideoCapture object (instead of an ImageCapture)
    • VideoCapture.startRecording(first 2 parameters are the same, VideoCallback)
    • VideoCapture.stopRecording()  // when done.
• See the CameraXVideoDemo

Q&A

Universal pictures columbia pictures

Universal pictures columbia pictures Columbia paramount

Columbia paramount Cosc 4p61

Cosc 4p61 Cosc 4p42

Cosc 4p42 Cosc 3p91

Cosc 3p91 Cosc 1306

Cosc 1306 Cosc 1306

Cosc 1306 Cosc 4368

Cosc 4368 Cosc 4p41

Cosc 4p41 Cosc 4p41

Cosc 4p41 Cosc

Cosc Cosc 3340

Cosc 3340 Cosc 320

Cosc 320 Cosc parameters

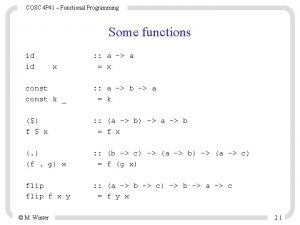

Cosc parameters Cosc 4p41

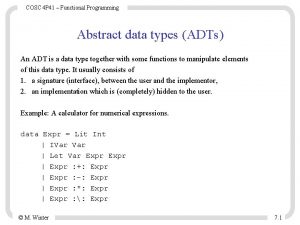

Cosc 4p41 Adt functional programming

Adt functional programming Cosc 3340

Cosc 3340 68030 pinout

68030 pinout Cosc 121

Cosc 121 Cosc 1p02

Cosc 1p02 Cosc 2p12

Cosc 2p12 Cosc 3p92

Cosc 3p92 Cosc 3p92

Cosc 3p92 Cosc 121

Cosc 121 Cosc 121

Cosc 121 Lc3 trap vector table

Lc3 trap vector table Cosc 121

Cosc 121 Cosc -cos d

Cosc -cos d Cosc 1306

Cosc 1306 Cosc 3p94

Cosc 3p94 Cosc101

Cosc101 Is etm recognizable

Is etm recognizable Cosc 3340

Cosc 3340 Fce speaking part 2 pictures

Fce speaking part 2 pictures Example of people as media?

Example of people as media? What is the picture all about

What is the picture all about Part whole model subtraction

Part whole model subtraction Part to part ratio definition

Part to part ratio definition Part part whole

Part part whole Technical description meaning

Technical description meaning Parts

Parts The phase of the moon you see depends on ______.

The phase of the moon you see depends on ______. Part to part variation

Part to part variation Hot cold media

Hot cold media Hot media and cold media

Hot media and cold media Benefits of transferring data over a wired network

Benefits of transferring data over a wired network Luhan hot

Luhan hot What is an important part of mass media

What is an important part of mass media Difference selective and differential media

Difference selective and differential media Jadi

Jadi Media decisions

Media decisions Invasiones en la alta edad media

Invasiones en la alta edad media Caracteristicas de la alta edad media

Caracteristicas de la alta edad media Difference between differential and selective media

Difference between differential and selective media Eduqas a level media

Eduqas a level media Seni visual stpm penggal 1

Seni visual stpm penggal 1 Moyens hors médias

Moyens hors médias Media jadi dan media rancangan

Media jadi dan media rancangan New media vs old media

New media vs old media Look at picture and answer the question

Look at picture and answer the question Android ril architecture

Android ril architecture Android motion sensor

Android motion sensor Main components android

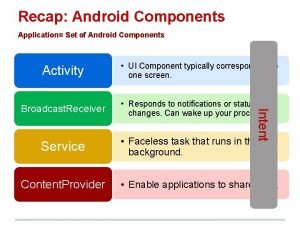

Main components android