
Connectionism

ASSOCIATIONISM

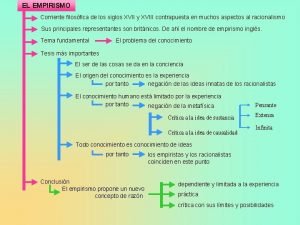

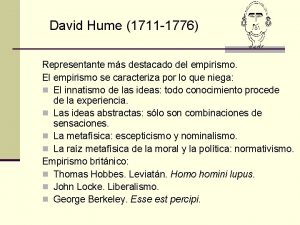

Associationism

David Hume (1711–1776) was one of the first philosophers to develop a detailed theory of mental processes.

“There is a secret tie or union among particular ideas, which causes the mind to conjoin them more frequently together, and makes the one, upon its appearance, introduce the other.”

Three Principles

1. Resemblance
2. Contiguity in space and time
3. Cause and effect

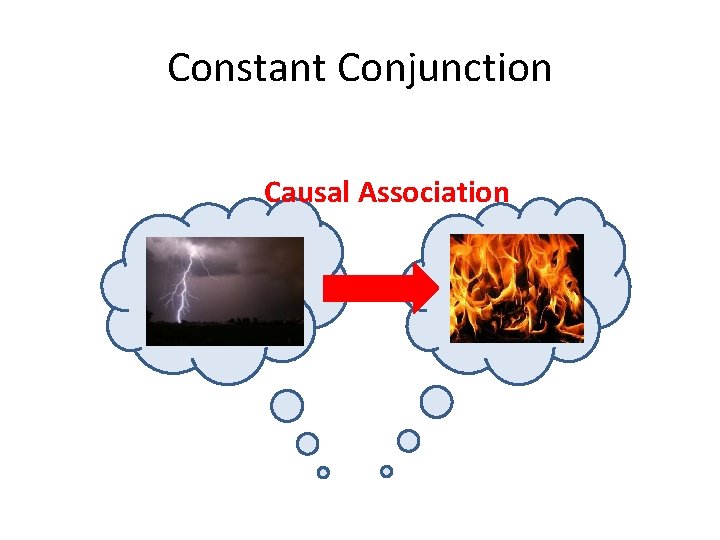

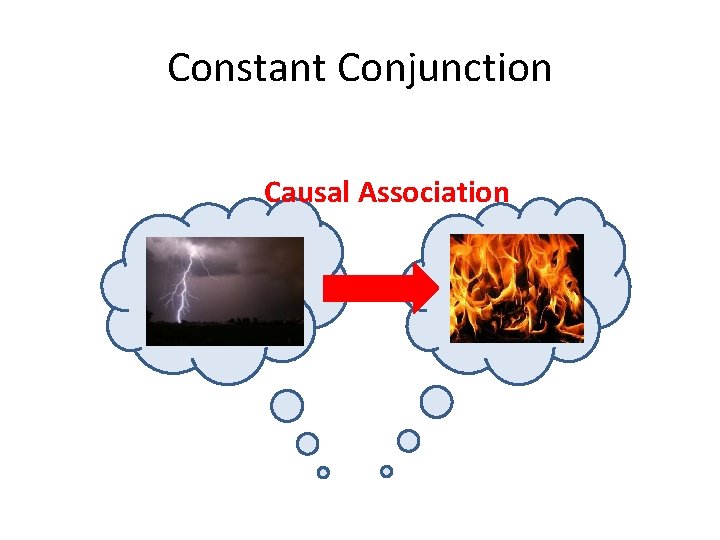

Constant Conjunction


Constant Conjunction Causal Association

Vivacity

Hume thought that the different ideas you have differ in their level of “vivacity”: how clear or lively they are. (Compare seeing an elephant to remembering an elephant.)

Belief

For Hume, to believe an idea was for that idea to be very vivacious. Importantly, causal association is vivacity-preserving: if you believe the cause, then you believe its effect.


Hume’s Non-Rational Mind

Hume thus had a model of mental processes that was non-rational. Associative principles aren’t truth-preserving; they are vivacity-preserving. (Hume thought this was a positive feature, because he thought that causal reasoning could not be rationally justified.)

Classical Conditioning

As we saw before, the associationist paradigm continued into psychology once psychology became a science.

Connectionism is the “new” associationism.

CONNECTIONISM

Names

• Connectionist Network
• Artificial Neural Network
• Parallel Distributed Processors

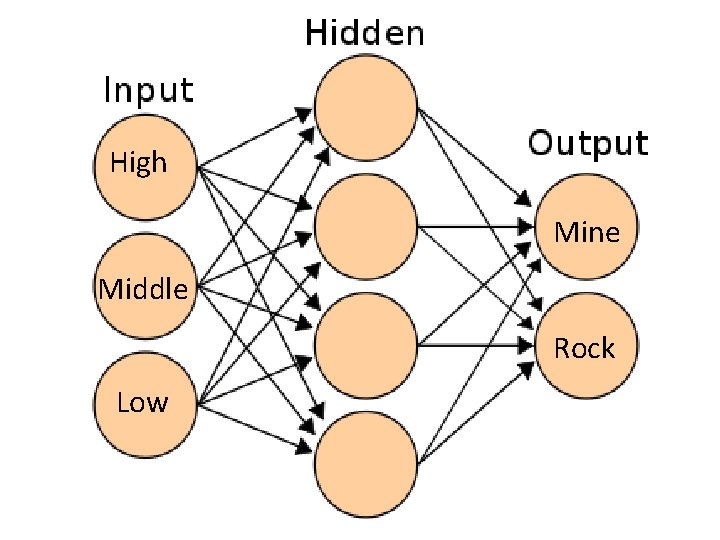

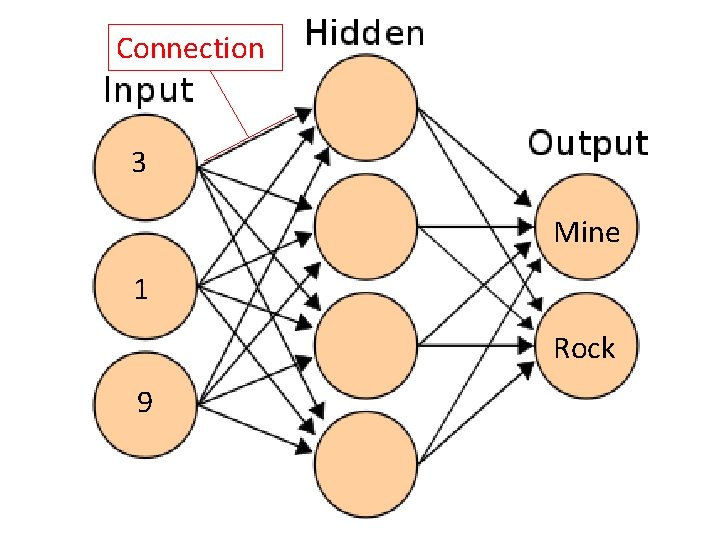

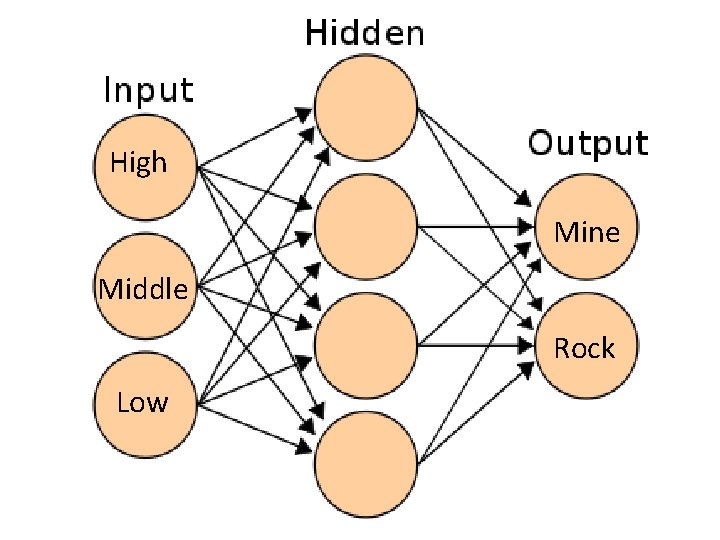

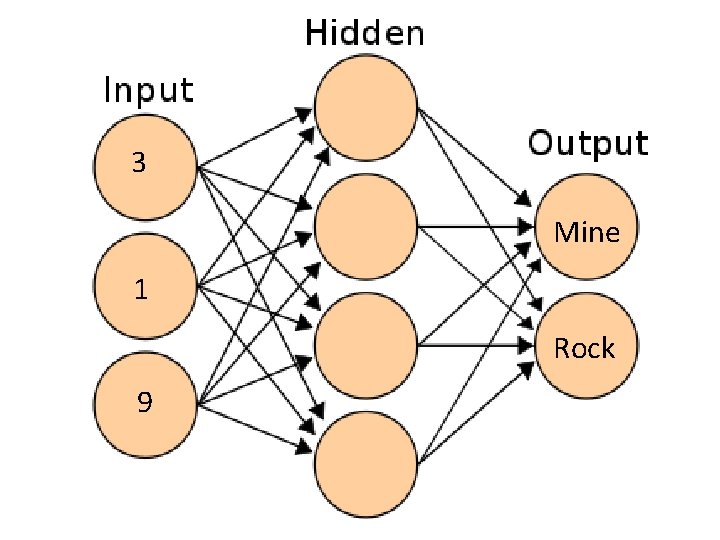

Figure: a network with input nodes High, Middle, and Low and output nodes Mine and Rock.

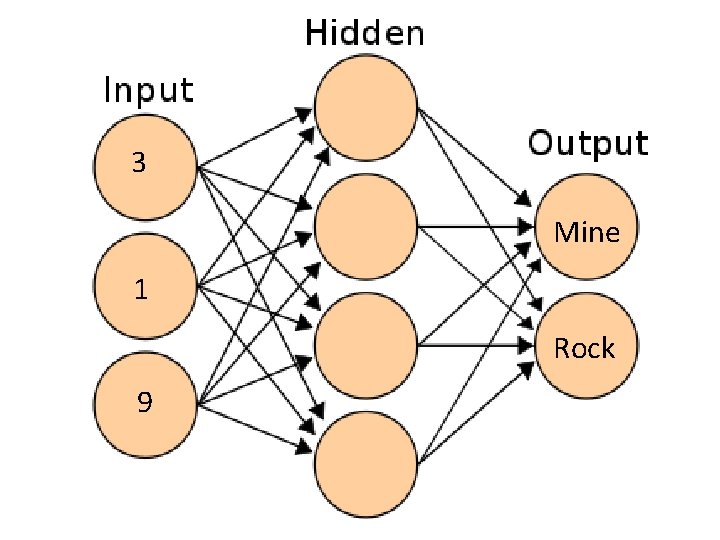

Figure: an echo is fed in, giving the input nodes the values 3, 1, and 9; the output nodes are Mine and Rock.

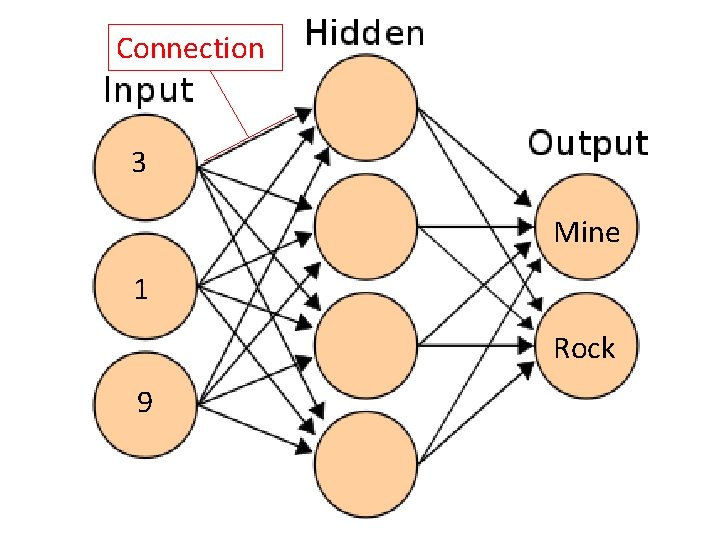

Figure: a single connection, running from an input node to an output node.

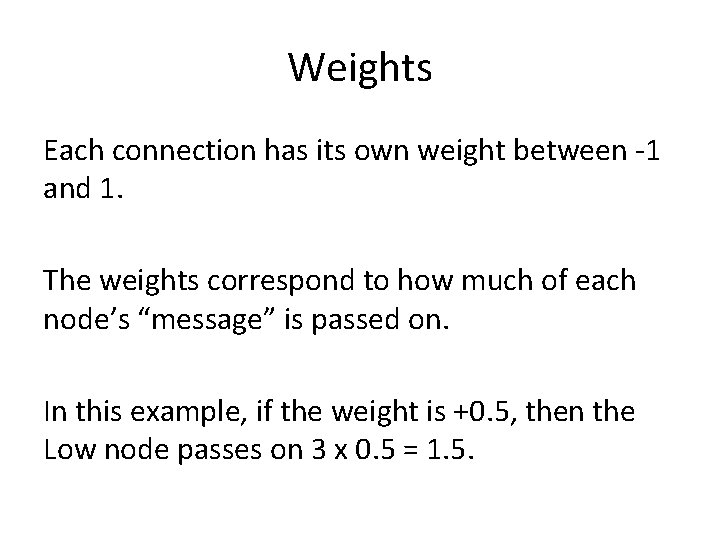

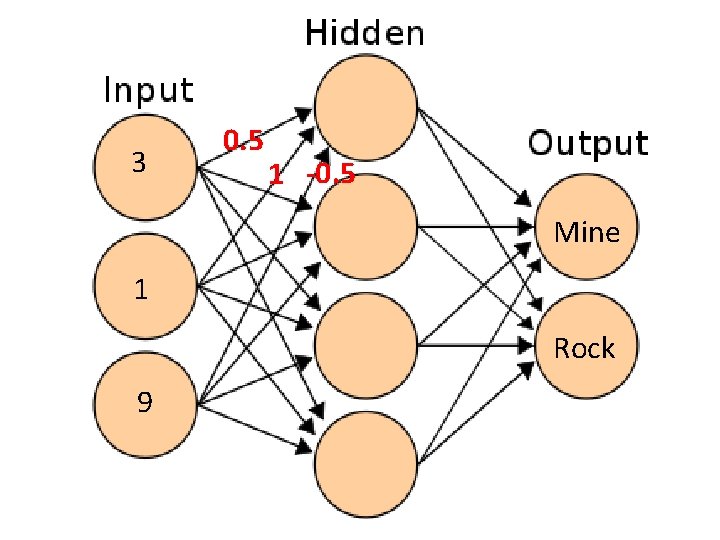

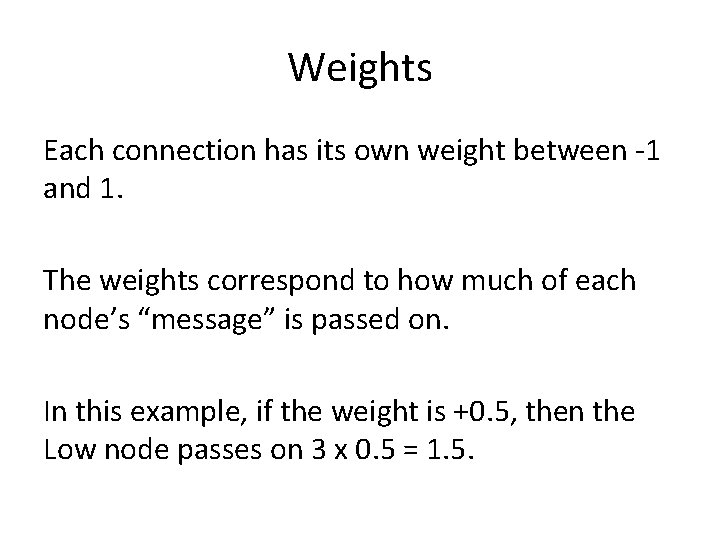

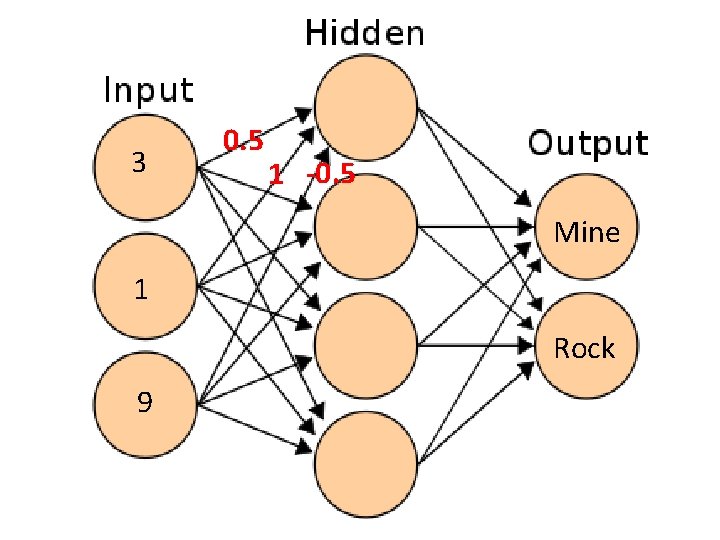

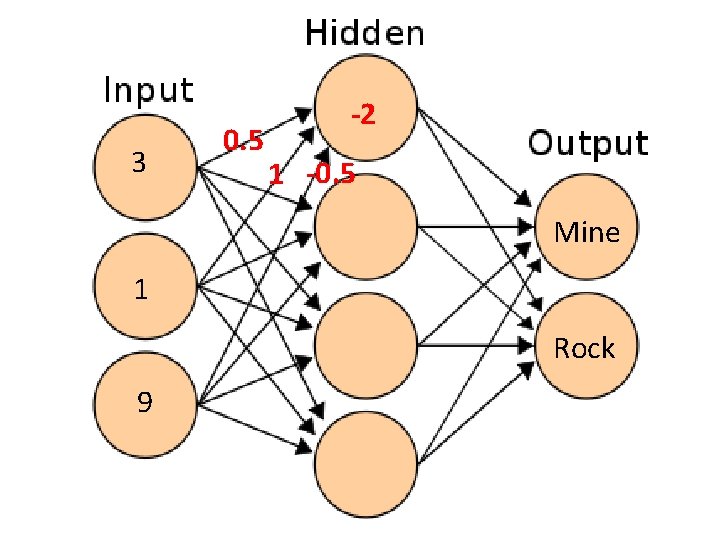

Weights

Each connection has its own weight between -1 and 1. The weights correspond to how much of each node’s “message” is passed on. In this example, if the weight is +0.5, then the Low node passes on 3 × 0.5 = 1.5.
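To make the arithmetic concrete, here is a minimal Python sketch (the slides contain no code, so the language and the function names `message` and `net_input` are illustrative, hypothetical choices). A connection multiplies the sending node’s activation by its weight, and the receiving node adds up everything it is sent. The second call assumes two extra connections (input 1 with weight 1, input 9 with weight -0.5) purely for illustration.

```python
def message(activation: float, weight: float) -> float:
    """The part of a node's activation passed along one weighted connection."""
    return activation * weight


def net_input(activations: list[float], weights: list[float]) -> float:
    """Sum of all the weighted messages arriving at one receiving node."""
    if len(activations) != len(weights):
        raise ValueError("need exactly one weight per incoming connection")
    return sum(message(a, w) for a, w in zip(activations, weights))


# The slide's example: the Low node sends 3 along a connection weighted +0.5.
print(message(3, 0.5))  # 1.5

# Hypothetical extra connections (assumed values) feeding the same node.
print(net_input([3, 1, 9], [0.5, 1, -0.5]))  # -2.0
```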

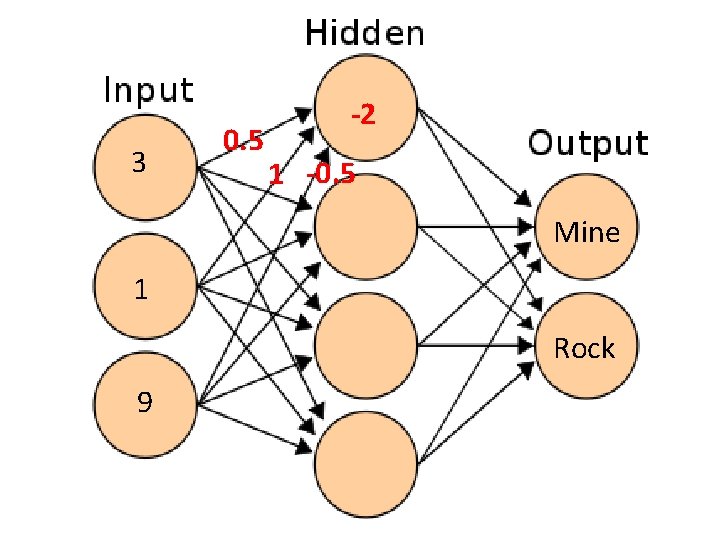

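Figure: the inputs 3, 1, and 9 are multiplied by their connection weights 0.5, 1, and -0.5 and summed at the Mine node: 3 × 0.5 + 1 × 1 + 9 × (-0.5) = -2. The Mine node then computes f(-2).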

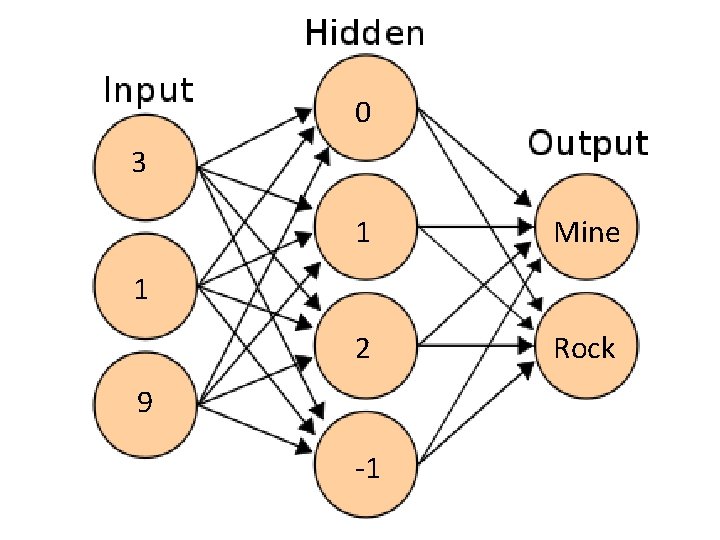

Activation Function

Each non-input node has an activation function, which tells it how active to be given the sum of its inputs. Often the activation function is just on/off:

f(x) = 1 if x > 0; otherwise f(x) = 0
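The on/off rule can be sketched the same way. This is a minimal illustration, not a library API: `step` and `node_output` are hypothetical names, and the weights 0.5, 1, and -0.5 on the inputs 3, 1, and 9 are assumed example values.

```python
def step(x: float) -> int:
    """On/off activation: 1 if the summed input is positive, otherwise 0."""
    return 1 if x > 0 else 0


def node_output(activations, weights, activation=step) -> int:
    """A non-input node: weight its inputs, add them up, apply its activation function."""
    total = sum(a * w for a, w in zip(activations, weights))
    return activation(total)


print(step(-2))   # 0: a summed input of -2 leaves the node off
print(step(1.5))  # 1
print(node_output([3, 1, 9], [0.5, 1, -0.5]))  # 0, since the sum is -2
```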

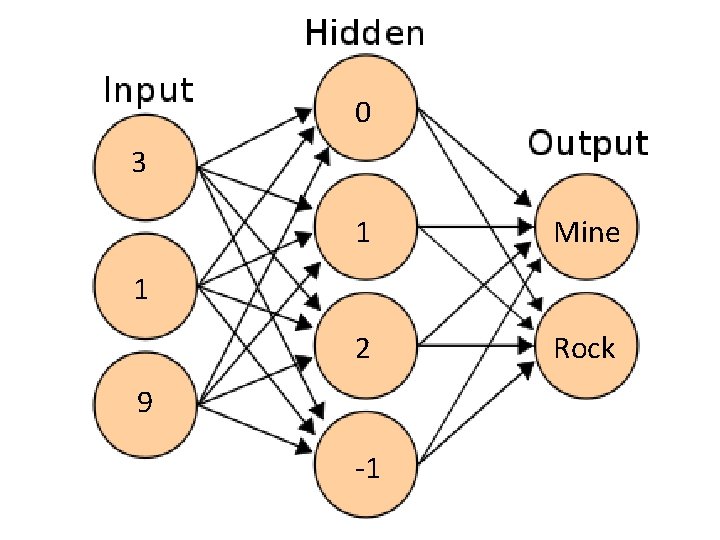

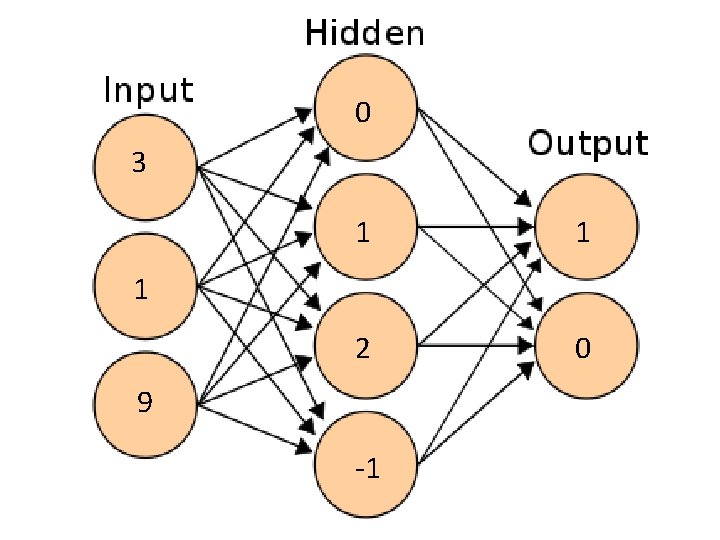

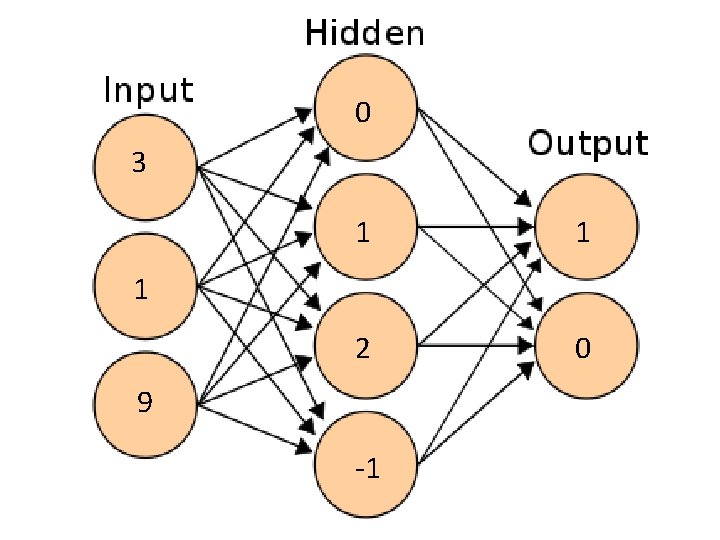

Figure: a complete network with a weight on every connection; after the echo is processed, the output nodes end up at Mine = 1 and Rock = 0.

Training a Connectionist Network

STEP 1: Assign weights to the connections at random.

STEP 2: Gather a very large number of categorization tasks to which you know the answer. For example, collect a large number of echoes for which you know whether they came from rocks or from mines. This is the “training set.”

STEP 3: Randomly select one echo from the training set and give it to the network.

Back Propagation

STEP 4: If the network gets the answer right, do nothing. If it gets the answer wrong, find all the connections that supported the wrong answer and adjust them down slightly. Find all the ones that supported the right answer and adjust them up slightly.

Repeat!

STEP 5: Repeat the testing-and-adjusting thousands of times. Now you have a trained network.
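The five steps can be sketched as a short Python training loop. Several things here are assumptions: the echoes are invented (three made-up band strengths, with “mine” simply declared whenever the low band beats the high band), the network is a single Mine node with no hidden layer (Mine off means rock), and STEP 4 is implemented with the classic perceptron learning rule, which nudges each weight in proportion to its input. Genuine backpropagation instead passes error gradients back through hidden layers of units with smooth activation functions, so treat this as a simplified cousin of it, not the real algorithm.

```python
import random


def make_training_set(n: int, rng: random.Random) -> list[tuple[tuple[int, int, int], int]]:
    """Hypothetical stand-in for sonar data: (high, middle, low) on a 0-9 scale."""
    examples = []
    while len(examples) < n:
        high, middle, low = (rng.randint(0, 9) for _ in range(3))
        if high == low:
            continue  # skip ambiguous echoes so the toy task has a clear answer
        examples.append(((high, middle, low), 1 if low > high else 0))
    return examples


def step(x: float) -> int:
    return 1 if x > 0 else 0


def predict(weights: list[float], echo) -> int:
    return step(sum(w * x for w, x in zip(weights, echo)))


def train(examples, rng: random.Random, rate: float = 0.1, max_epochs: int = 2000):
    # STEP 1: random starting weights between -1 and 1.
    weights = [rng.uniform(-1, 1) for _ in range(3)]
    for _ in range(max_epochs):
        mistakes = 0
        # STEP 3: present the echoes one at a time, in random order.
        for echo, target in rng.sample(examples, len(examples)):
            if predict(weights, echo) != target:
                # STEP 4: push each weight up if the answer should have been
                # "mine", down if it should not, in proportion to its input.
                sign = 1 if target == 1 else -1
                weights = [w + sign * rate * x for w, x in zip(weights, echo)]
                mistakes += 1
        # STEP 5: repeat until a full pass through the training set is error-free.
        if mistakes == 0:
            return weights, True
    return weights, False


rng = random.Random(0)                  # fixed seed so the run is repeatable
examples = make_training_set(200, rng)  # STEP 2: a training set with known answers
weights, converged = train(examples, rng)
accuracy = sum(predict(weights, e) == t for e, t in examples) / len(examples)
print(converged, accuracy)  # True 1.0
```

Because the invented task is perfectly separable by a single node, the perceptron convergence theorem guarantees this loop stops; real sonar data, and real backpropagation, come with no such promise.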

Important Properties of Connectionist Networks

1. Connectionist networks can learn. (But only if they have access to thousands of right answers and someone is around to adjust the weights of their connections. As soon as they stop being “trained,” they never learn anything new again.)

Learning

Even if we suppose that networks could train themselves (and no one knows how this could happen), learning is still a problem: the system, though it can learn, can’t remember. In altering its connections, it alters the traces of its former experiences.
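The forgetting problem can be shown in a deliberately extreme sketch (again hypothetical Python, with invented echoes, the same simplified perceptron-style update, and zero starting weights so the run is repeatable). The network first learns one rule, then learns a second rule that contradicts it over the very same echoes. Since both “experiences” have to live in the same three weights, learning the new rule wipes out the old one completely. Less extreme versions of this effect in real networks are usually called catastrophic interference or catastrophic forgetting.

```python
def step(x: float) -> int:
    return 1 if x > 0 else 0


def predict(weights, echo) -> int:
    return step(sum(w * x for w, x in zip(weights, echo)))


def train(weights, examples, rate=0.1, max_epochs=5000):
    """Perceptron-style updates until one full pass makes no mistakes."""
    weights = list(weights)
    for _ in range(max_epochs):
        mistakes = 0
        for echo, target in examples:
            if predict(weights, echo) != target:
                sign = 1 if target == 1 else -1
                weights = [w + sign * rate * x for w, x in zip(weights, echo)]
                mistakes += 1
        if mistakes == 0:
            return weights
    raise RuntimeError("training did not converge")


def accuracy(weights, examples) -> float:
    return sum(predict(weights, e) == t for e, t in examples) / len(examples)


# Hypothetical echoes: (high, middle, low) on a 0-9 scale, skipping ties.
echoes = [(h, (h + l) % 10, l) for h in range(10) for l in range(10) if h != l]
# Old experience: "mine" (1) when the low band is stronger than the high band.
task_a = [(e, 1 if e[2] > e[0] else 0) for e in echoes]
# New experience: a deliberately conflicting rule over the same echoes.
task_b = [(e, 1 - t) for e, t in task_a]

weights = train([0.0, 0.0, 0.0], task_a)
print(accuracy(weights, task_a))  # 1.0 after the first round of training
weights = train(weights, task_b)
print(accuracy(weights, task_b))  # 1.0 on the new task...
print(accuracy(weights, task_a))  # 0.0: nothing of the old training survives
```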

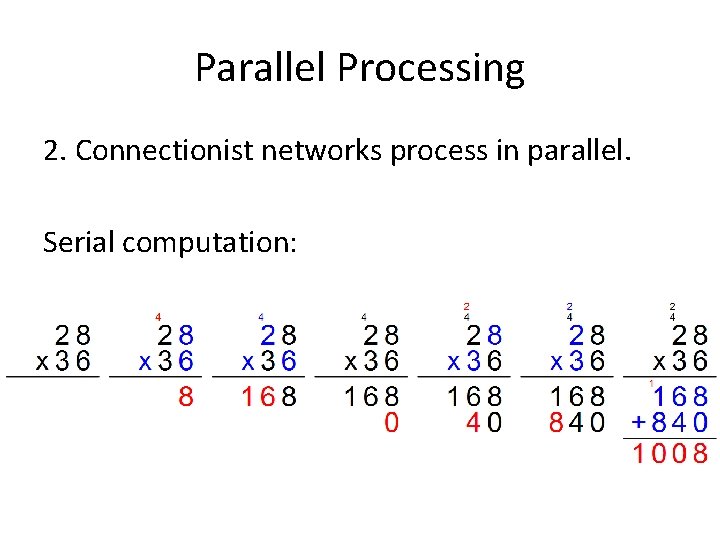

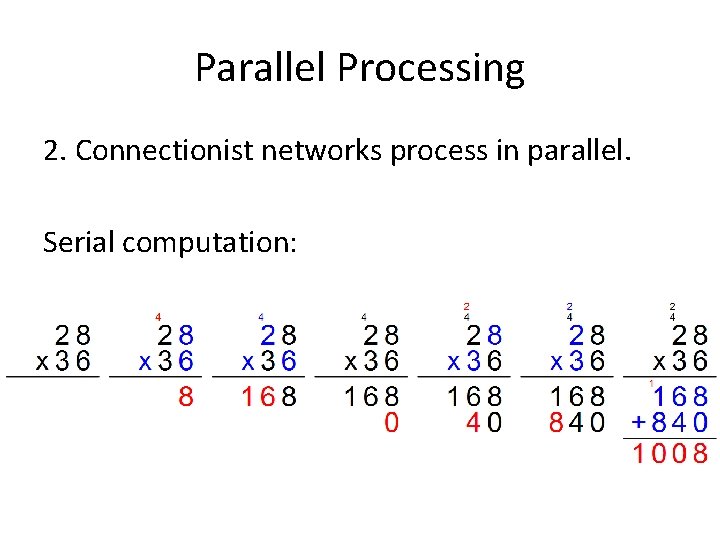

Parallel Processing

2. Connectionist networks process in parallel. Compare serial computation, in which a single processor carries out one step at a time:

A parallel computation might work like this: I want to solve a really complicated math problem, so I assign small parts of it to each student in the class. They work “in parallel,” and together we solve the problem faster than one processor working serially.
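Here is a small Python illustration of why a layer of nodes is naturally parallel: each output node needs only the shared inputs and its own incoming weights, so the nodes can be handed to separate workers and the answer comes out the same as computing them one after another. The weights are hypothetical. In CPython, threads will not actually speed up this arithmetic (the global interpreter lock lets only one thread run Python bytecode at a time), so the sketch shows that the nodes are independent, not a real speed-up.

```python
from concurrent.futures import ThreadPoolExecutor


def step(x: float) -> int:
    return 1 if x > 0 else 0


def node_output(inputs: list[float], weights: list[float]) -> int:
    # A node needs only the shared inputs and its own incoming weights.
    return step(sum(a * w for a, w in zip(inputs, weights)))


inputs = [3, 1, 9]
# Hypothetical incoming weights for the two output nodes.
layer = {"Mine": [0.5, 1, -0.5], "Rock": [-0.5, 0.2, 0.5]}

# Serial: one node after another.
serial = {name: node_output(inputs, w) for name, w in layer.items()}

# Parallel: every node in the layer is handed to a worker at once.
with ThreadPoolExecutor() as pool:
    futures = {name: pool.submit(node_output, inputs, w) for name, w in layer.items()}
    parallel = {name: f.result() for name, f in futures.items()}

print(serial == parallel)  # True
```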

Distributed Representations

3. Representations in connectionist networks are distributed. Information about the ‘shape’ of the object (in sonar echoes) is encoded not in any one node or connection, but across all the nodes and connections.

Local Processing

4. Processing in a connectionist network is local. There is no central processor controlling what happens in a connectionist network. The only things that determine whether a node activates are its activation function and its inputs. There’s no program telling it what to do.

Graceful Degradation

5–6. Connectionist networks tolerate low-quality inputs, and they can still work even as some of their parts begin to fail. Since computation and representation are distributed throughout the network, even if part of it is destroyed or isn’t receiving input, the whole will still work pretty well.
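A toy Python comparison, under heavy assumptions, shows the idea behind properties 3 and 5–6. A single Mine node judges echoes described by a high and a low band reading (invented values, with “mine” declared whenever low > high). In the “local” version each band has exactly one input node; in the “distributed” version each band is spread across three input nodes, a crude, hypothetical stand-in for real distributed coding. Cutting one connection in each network shows the difference.

```python
def step(x: float) -> int:
    return 1 if x > 0 else 0


def accuracy(weights, encode, echoes) -> float:
    """Fraction of (high, low) echoes the single Mine node gets right."""
    correct = 0
    for high, low in echoes:
        inputs = encode(high, low)
        guess = step(sum(w * x for w, x in zip(weights, inputs)))
        correct += guess == (1 if low > high else 0)
    return correct / len(echoes)


def local(high, low):
    return [low, high]  # one input node per band


def distributed(high, low):
    return [low, low, low, high, high, high]  # each band spread over three nodes


echoes = [(h, l) for h in range(10) for l in range(10) if h != l]

local_w = [1, -1]
dist_w = [1, 1, 1, -1, -1, -1]

# Damage: cut the first connection in each network.
local_damaged = [0] + local_w[1:]
dist_damaged = [0] + dist_w[1:]

print(accuracy(local_w, local, echoes), accuracy(local_damaged, local, echoes))  # 1.0 0.5
print(accuracy(dist_w, distributed, echoes), accuracy(dist_damaged, distributed, echoes))
```

Cutting one connection drops the local network to 0.5 (every mine is now missed), while the distributed one still gets 78 of the 90 echoes right.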

CONNECTIONISM AND THE BRAIN

Brain = Neural Network?

One of the main attractions of connectionism is the idea that the human brain might be a connectionist network.

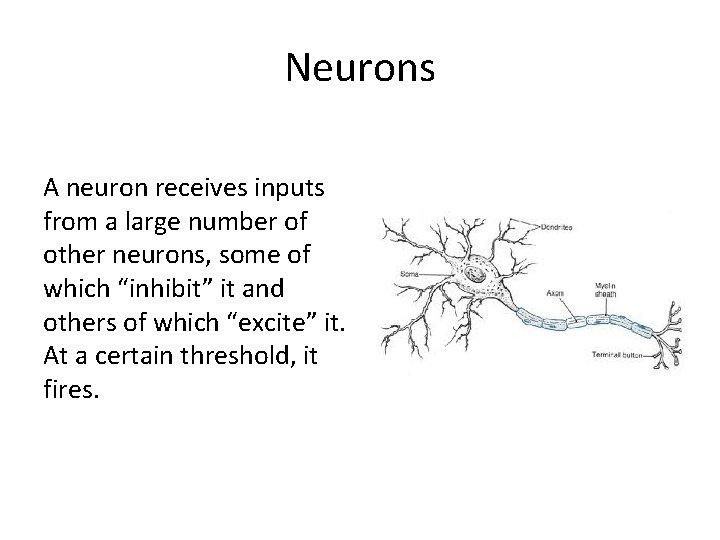

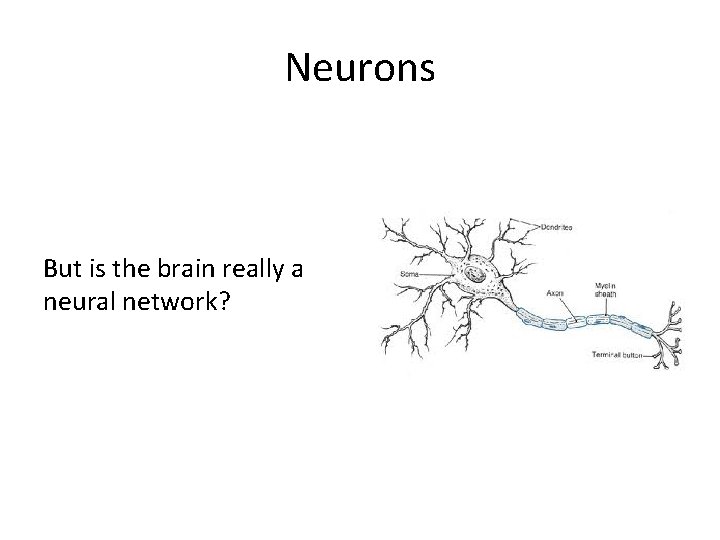

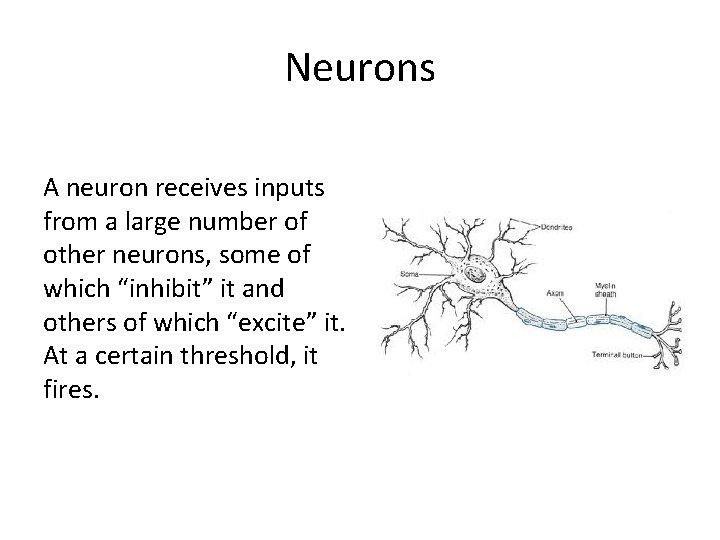

Neurons

A neuron receives inputs from a large number of other neurons, some of which “inhibit” it and others of which “excite” it. When its net excitation reaches a certain threshold, it fires.

Neurons are hooked up ‘in parallel’: different chains of activation and inhibition can operate independently of one another.

But is the brain really a neural network?

Spike Trains

Neurons fire in ‘spikes’, and many brain researchers think they communicate through the frequency of spikes over time. That’s not part of connectionism.

(Another hypothesis is that they communicate information by firing in the same patterns as other neurons.)

Back Propagation

There’s also no evidence of connectionist-style training. The brain has no (known) means of changing the connections between neurons that “contribute to the wrong answer.”

Close Enough

An alternative view might be that while brains aren’t neural networks, they are like neural networks. Furthermore, they are more like neural networks than they are like universal computers because (so the argument goes) neural networks are good at what we’re good at and bad at what we’re bad at.

PROBLEM CASES

Logic, Math

Universal computers can solve logic or math problems with very high accuracy and in very few steps. Neural networks need extensive training and a large number of nodes to achieve even moderate accuracy on such tasks.

Bechtel & Abrahamsen

Ravenscroft describes a case in which Bechtel & Abrahamsen built a connectionist network that was supposed to tell whether an argument was valid or invalid (out of 12 possible argument forms). For instance, it might be given: (P → Q), Q ⊢ P
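By contrast, a conventional program settles validity for these propositional forms exactly, by checking every row of the truth table (2^n rows for n sentence letters). The argument in the example, (P → Q), Q ⊢ P, is the fallacy of affirming the consequent, so it should come out invalid. This minimal Python sketch encodes premises as functions, an illustrative choice that has nothing to do with how Bechtel & Abrahamsen set up their network.

```python
from itertools import product


def implies(p: bool, q: bool) -> bool:
    return (not p) or q


def is_valid(premises, conclusion, n_vars: int) -> bool:
    """Valid iff no assignment makes every premise true and the conclusion false."""
    for values in product([True, False], repeat=n_vars):
        if all(prem(*values) for prem in premises) and not conclusion(*values):
            return False  # found a counterexample row
    return True


# (P → Q), Q ⊢ P: affirming the consequent
affirming_consequent = is_valid([implies, lambda p, q: q], lambda p, q: p, n_vars=2)
# (P → Q), P ⊢ Q: modus ponens
modus_ponens = is_valid([implies, lambda p, q: p], lambda p, q: q, n_vars=2)
print(affirming_consequent, modus_ponens)  # False True
```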

The Logic Network

• After ½ million training sessions, it was 76% accurate.
• After 2.5 million training sessions, it was 84% accurate.

I’ve known students who were 99% accurate, for a larger range of problems, after a couple dozen examples.

Language

Language poses a problem for the same reason: it is structured and rule-governed. Human spoken languages have a structure similar to that of computer programming languages (that’s intentional). So it’s very hard to get a connectionist network that can speak grammatically.

IMPLEMENTATION

Simulation

Every universal computer can simulate every connectionist network. In fact, almost no connectionist networks exist as physical hardware. When you read about researchers “designing” networks, they are virtually designing them on a universal computer. And when they “train” the networks, the computer trains them.

So the mind could be a universal computer that simulates a neural network. But that would be strange and wasteful: why throw away all your computational power to simulate something weaker?

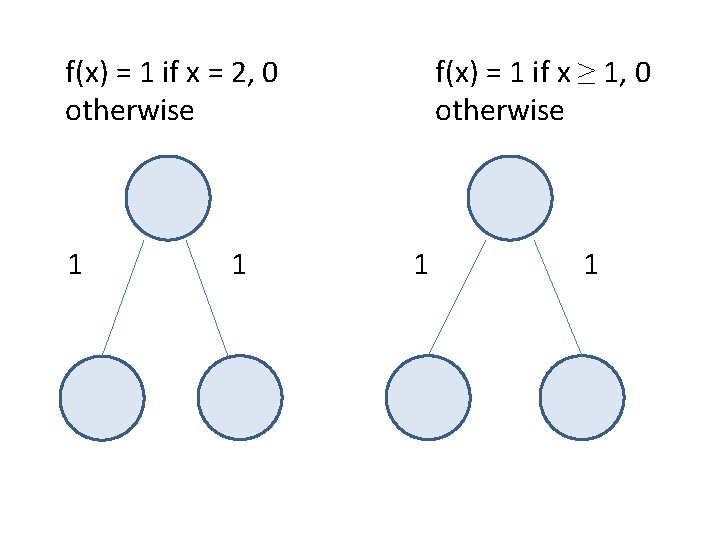

A more interesting idea is that the mind might be a universal computer implemented by a connectionist network of neurons. Most connectionist networks are not universal computers, but some are.

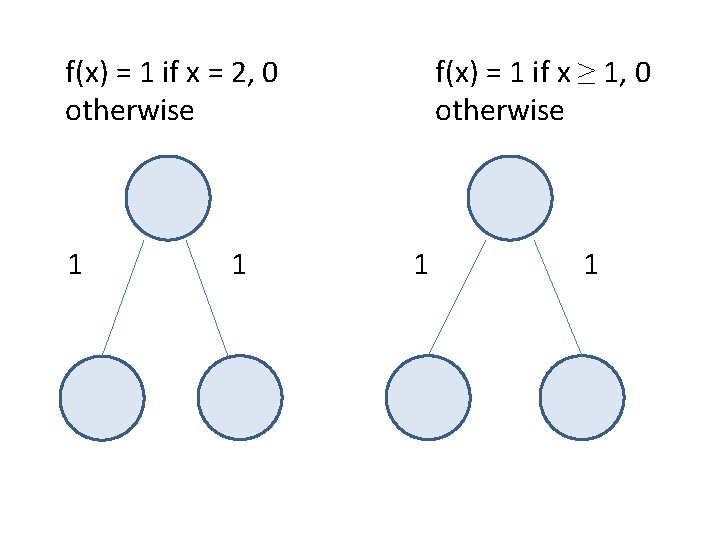

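Figure: two units, each receiving two inputs with weight 1; one uses the activation function f(x) = 1 if x = 2, 0 otherwise, and the other uses f(x) = 1 if x ≥ 1, 0 otherwise.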
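With 0/1 inputs and weights of 1, the first unit in the figure fires only when both inputs are on (an AND gate), and the second fires when at least one is on (an OR gate). The Python sketch below adds a NOT unit (an assumption; it is not on the slide). AND, OR, and NOT together can build any Boolean function; XOR, which no single threshold unit can compute, is wired from them as an example. Gates alone are not a universal computer, which also needs memory and a way to repeat steps, so read this as one ingredient of the idea rather than the whole of it.

```python
def unit(weights, fires):
    """A threshold unit: weighted sum of its inputs, then an on/off activation."""
    def output(*inputs: int) -> int:
        total = sum(w * x for w, x in zip(weights, inputs))
        return 1 if fires(total) else 0
    return output


AND = unit([1, 1], lambda x: x == 2)  # f(x) = 1 if x = 2, 0 otherwise
OR = unit([1, 1], lambda x: x >= 1)   # f(x) = 1 if x >= 1, 0 otherwise
NOT = unit([-1], lambda x: x >= 0)    # assumed extra unit: fires only when its input is 0


def XOR(a: int, b: int) -> int:
    # Built only from the units above: "a or b, but not both".
    return AND(OR(a, b), NOT(AND(a, b)))


for a in (0, 1):
    for b in (0, 1):
        print(a, b, AND(a, b), OR(a, b), XOR(a, b))
```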

David hume 1711 a 1776

David hume 1711 a 1776 Levítico 1711

Levítico 1711 Thorndike connectionism

Thorndike connectionism Connectionism theory of learning

Connectionism theory of learning Socrates response to cebes

Socrates response to cebes Associationism

Associationism Associationism

Associationism David hume escepticismo

David hume escepticismo Inconsistent triad

Inconsistent triad Is constant and consistent the same

Is constant and consistent the same Ekip çalışması için gerekli yönetsel koşullar

Ekip çalışması için gerekli yönetsel koşullar David hume problem of evil

David hume problem of evil Fisolofica

Fisolofica David hume nationality

David hume nationality David hume naturalism

David hume naturalism The bundle

The bundle Stie cirebon

Stie cirebon Correntes filosóficas

Correntes filosóficas Hume aesthetics

Hume aesthetics David hume iq

David hume iq Conocimiento segun hume

Conocimiento segun hume May 1775

May 1775 Symptoms of misinformed conscience

Symptoms of misinformed conscience January 10th 1776

January 10th 1776 1787-1776

1787-1776 What experiences from 1763 to 1776

What experiences from 1763 to 1776 1776

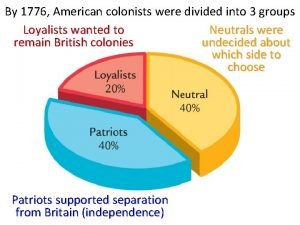

1776 1776 divided by 3

1776 divided by 3 The united states in the caribbean 1776 to 1985

The united states in the caribbean 1776 to 1985 1776-1607

1776-1607 July 12 1776

July 12 1776 July 16 1776

July 16 1776 Middle colonies timeline

Middle colonies timeline Hume foreclosures

Hume foreclosures Locke berkeley and hume

Locke berkeley and hume Odontoclasia definition

Odontoclasia definition Ubytki wg blacka

Ubytki wg blacka Hume rothery ratio

Hume rothery ratio Pxi-2599

Pxi-2599 Hume city library

Hume city library Art as a disinterested judgement examples

Art as a disinterested judgement examples Hume contexto historico

Hume contexto historico Hume guillotine

Hume guillotine Rory hume

Rory hume Millers liquefaction foci

Millers liquefaction foci Hume rothery rules

Hume rothery rules Hume's fork examples

Hume's fork examples Plagiarism is deceitful because it is dishonest

Plagiarism is deceitful because it is dishonest Contents of dentinal tubules

Contents of dentinal tubules Odontoclasia definition

Odontoclasia definition Edingburgo

Edingburgo Montesquieu pronunciation in french

Montesquieu pronunciation in french Hume center vt

Hume center vt Metodo inductivo hume

Metodo inductivo hume Hume main ideas

Hume main ideas Mount and hume classification

Mount and hume classification Falacia de afirmación del consecuente

Falacia de afirmación del consecuente Kant sensibilidad

Kant sensibilidad Madi hume

Madi hume Regla de hume rothery

Regla de hume rothery Empiricist

Empiricist Solid solution

Solid solution Hume winzar

Hume winzar