ATLAS Software Development Environment for Hardware Transactional Memory

Sewook Wee, Computer Systems Lab, Stanford University

The Parallel Programming Crisis

- Multi-cores for scalable performance
  - No faster single core any more
- Parallel programming is a must, but still hard
  - Multiple threads access shared memory
  - Correct synchronization is required
- Conventional: lock-based synchronization
  - Coarse-grain locks: serialize system
  - Fine-grain locks: hard to be correct

![Alternative: Transactional Memory (TM)](https://slidetodoc.com/presentation_image_h/16c7698464e957a6b00accae544ee6c3/image-3.jpg)

Alternative: Transactional Memory (TM)

- Memory transactions [Knight ’86][Herlihy & Moss ’93]
  - An atomic & isolated sequence of memory accesses
  - Inspired by database transactions
- Atomicity (all or nothing)
  - At commit, all memory updates take effect at once
  - On abort, none of the memory updates appear to take effect
- Isolation
  - No other code can observe memory updates before commit
- Serializability
  - Transactions seem to commit in a single serial order

Advantages of TM

- As easy to use as coarse-grain locks
  - Programmer declares the atomic region
  - No explicit declaration or management of locks
  - System implements synchronization
- As good performance as fine-grain locks
  - Optimistic concurrency [Kung ’81]
  - Slow down only on true conflicts (R-W or W-W)
  - Fine-grain dependency detection
- No trade-off between performance & correctness

![Implementation of TM](https://slidetodoc.com/presentation_image_h/16c7698464e957a6b00accae544ee6c3/image-5.jpg)

Implementation of TM

- Software TM [Harris ’03][Saha ’06][Dice ’06]
  - Versioning & conflict detection in software
  - No hardware change, flexible
  - Poor performance (up to 8x slower)
- Hardware TM [Herlihy & Moss ’93][Hammond ’04][Moore ’06]
  - Modifying data cache hardware
  - High performance
  - Correctness: strong isolation

![Software Environment for HTM](https://slidetodoc.com/presentation_image_h/16c7698464e957a6b00accae544ee6c3/image-6.jpg)

Software Environment for HTM

- Programming language [Carlstrom ’07]
  - Parallel programming interface
- Operating system
  - Provides virtualization, resource management, …
  - Challenges for TM
    - Interaction of active transaction and OS
- Productivity tools
  - Correctness and performance debugging tools
  - Build upon TM features

Contributions

- An operating system for hardware TM
- Productivity tools for parallel programming
- Full-system prototyping & evaluation

Agenda

- Motivation
- Background
- Operating System for HTM
- Productivity Tools for Parallel Programming
- Conclusions

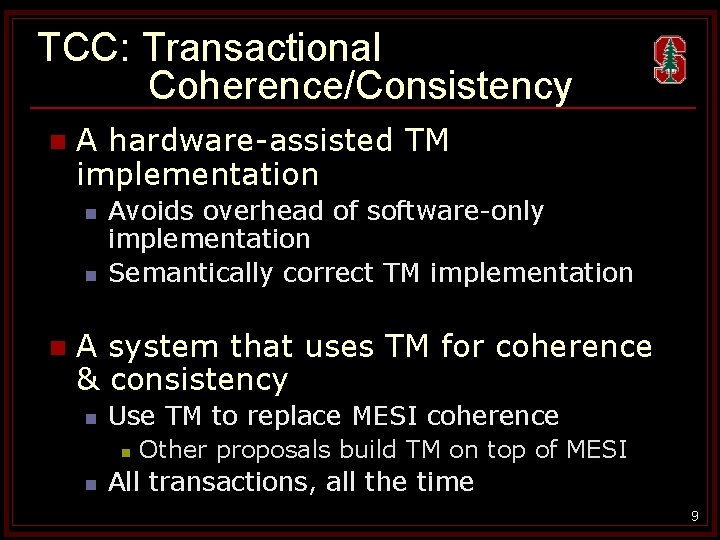

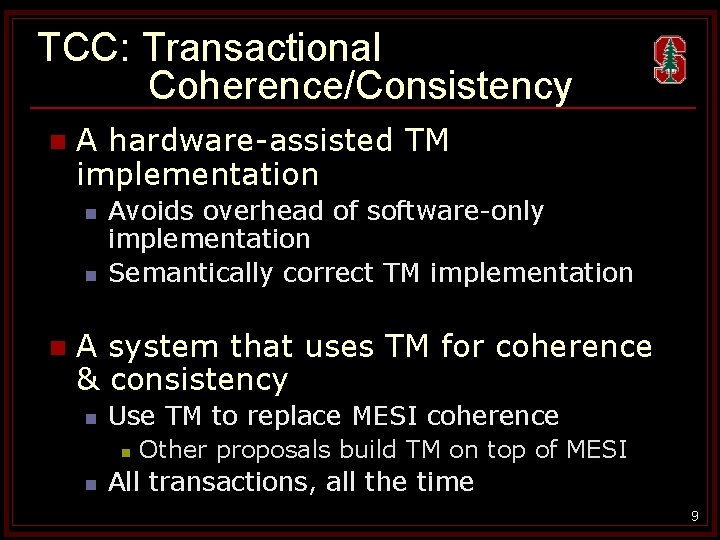

TCC: Transactional Coherence/Consistency

- A hardware-assisted TM implementation
  - Avoids overhead of software-only implementation
  - Semantically correct TM implementation
- A system that uses TM for coherence & consistency
  - Use TM to replace MESI coherence
    - Other proposals build TM on top of MESI
  - All transactions, all the time

TCC Execution Model

(Timeline diagram for CPU 0, CPU 1, and CPU 2. Each CPU executes code, with accesses such as `ld 0xabdc`, `ld 0xe4e4`, `st 0xcccc`, `ld 0x5678`, `ld 0x1234`, and `ld 0xcccc`, then arbitrates and commits. When a commit of 0xcccc arrives, a CPU that had already loaded 0xcccc undoes its work and re-executes its code, reloading 0xcccc.)

See [ISCA ’04] for details

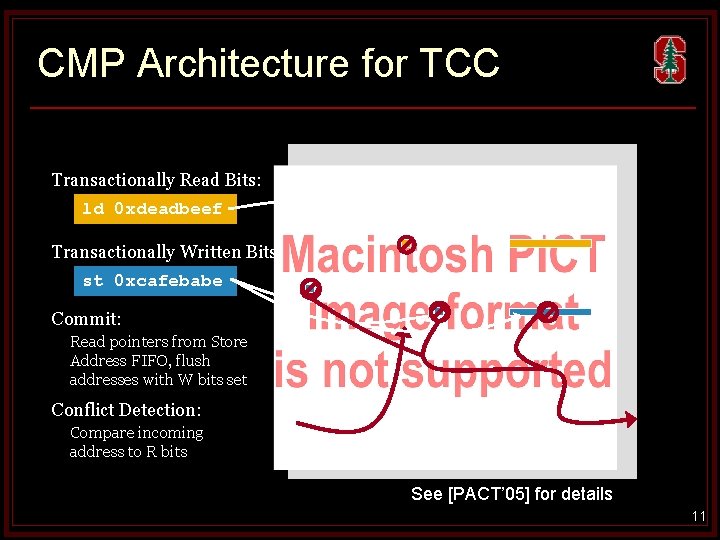

CMP Architecture for TCC

- Transactionally Read Bits: `ld 0xdeadbeef`
- Transactionally Written Bits: `st 0xcafebabe`
- Commit: read pointers from Store Address FIFO, flush addresses with W bits set
- Conflict Detection: compare incoming address to R bits

See [PACT ’05] for details
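The R/W bits, the store-address FIFO, and the commit and conflict-detection steps can be sketched in software. This is a toy model under stated assumptions, not the ATLAS hardware: the class `TccCache`, its method names, and the dictionary standing in for main memory are all hypothetical, and tracking is per address rather than per cache line.

```python
from collections import deque


class TccCache:
    """Toy model of one CPU's TCC cache (hypothetical names throughout)."""

    def __init__(self):
        self.read_bits = set()      # addresses whose R bit is set
        self.write_buf = {}         # address -> speculative value (W bit set)
        self.store_fifo = deque()   # written addresses, in first-store order

    def load(self, memory, addr):
        self.read_bits.add(addr)
        # A transaction sees its own speculative stores first.
        return self.write_buf.get(addr, memory.get(addr, 0))

    def store(self, addr, value):
        if addr not in self.write_buf:
            self.store_fifo.append(addr)
        self.write_buf[addr] = value

    def commit(self, memory):
        """Walk the store-address FIFO and flush every written address."""
        committed = list(self.store_fifo)
        for addr in committed:
            memory[addr] = self.write_buf[addr]
        self.reset()
        return committed

    def conflicts_with(self, committed_addrs):
        """Conflict detection: compare incoming addresses to the R bits."""
        return any(addr in self.read_bits for addr in committed_addrs)

    def reset(self):
        self.read_bits.clear()
        self.write_buf.clear()
        self.store_fifo.clear()


memory = {0xCCCC: 1}
cpu0, cpu2 = TccCache(), TccCache()

cpu2.load(memory, 0xCCCC)           # CPU 2 speculatively reads 0xcccc
cpu0.store(0xCCCC, 42)              # CPU 0 speculatively writes it
committed = cpu0.commit(memory)     # CPU 0 wins arbitration and commits

if cpu2.conflicts_with(committed):
    cpu2.reset()                    # undo the speculative work
    value = cpu2.load(memory, 0xCCCC)   # re-execute and see the committed value
```

Memory is untouched until commit, so an aborted transaction only has to clear its own bits and buffer.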

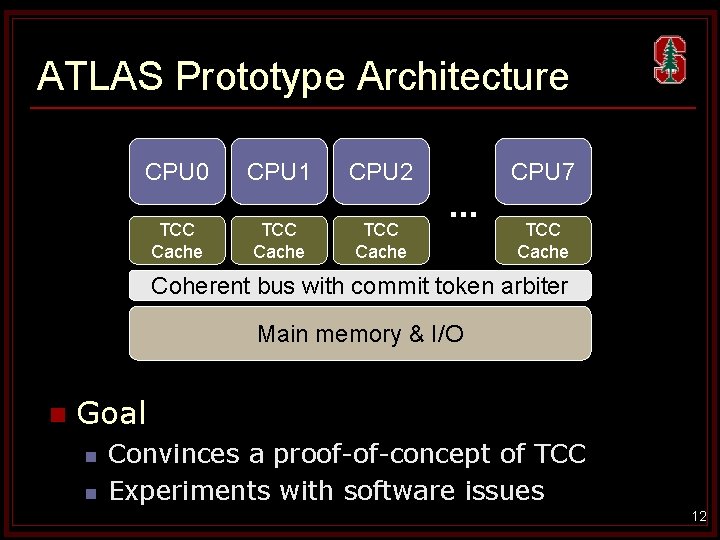

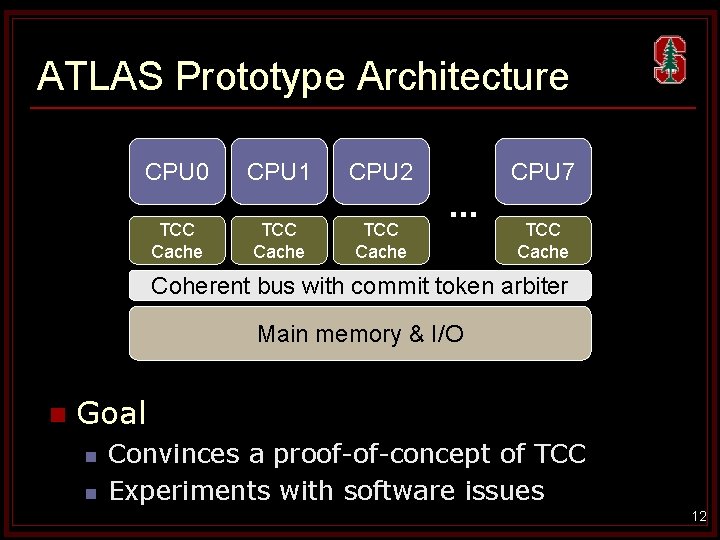

ATLAS Prototype Architecture

(Diagram: CPU 0, CPU 1, CPU 2, …, CPU 7, each with a TCC cache, attached to a coherent bus with commit token arbiter, which connects to main memory & I/O.)

- Goal
  - A convincing proof-of-concept of TCC
  - Experiments with software issues

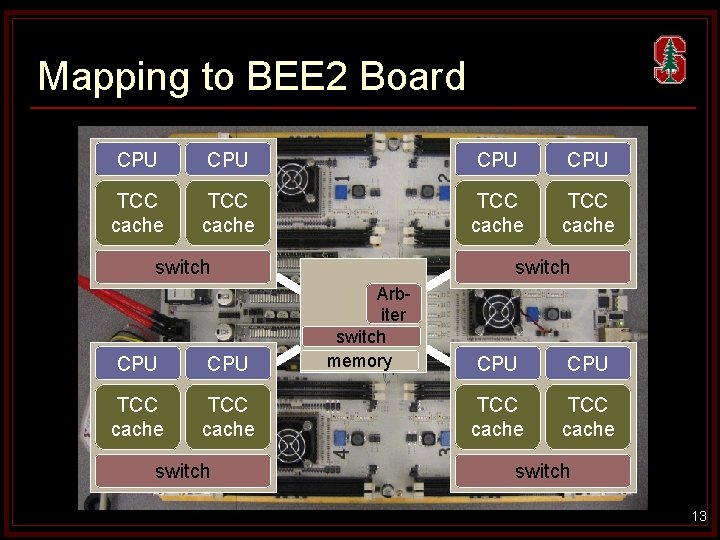

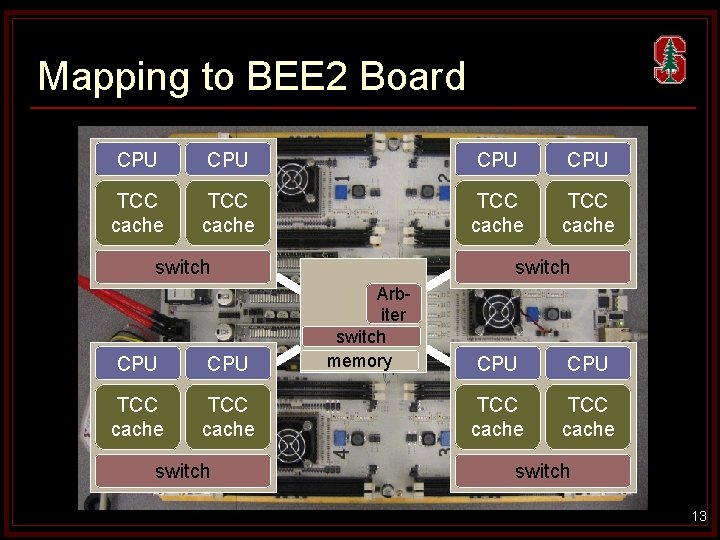

Mapping to BEE2 Board

(Diagram: CPUs with TCC caches, switches, the arbiter, and memory placed on the BEE2 board.)

Agenda

- Motivation
- Background
- Operating System for HTM
- Productivity Tools for Parallel Programming
- Conclusions

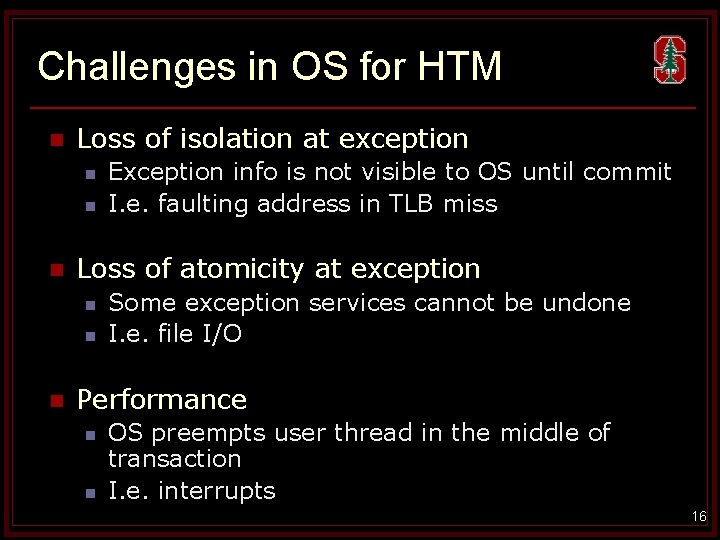

Challenges in OS for HTM

What should we do if the OS needs to run in the middle of a transaction?

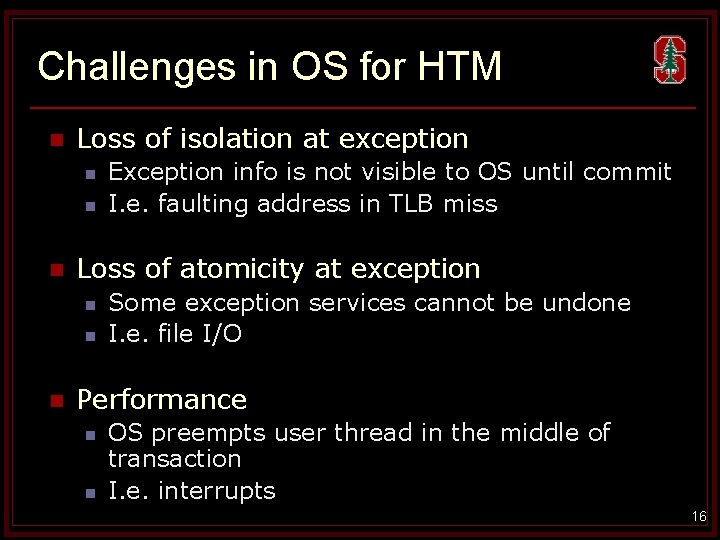

Challenges in OS for HTM

- Loss of isolation at exception
  - Exception info is not visible to OS until commit
  - E.g., faulting address in TLB miss
- Loss of atomicity at exception
  - Some exception services cannot be undone
  - E.g., file I/O
- Performance
  - OS preempts user thread in the middle of transaction
  - E.g., interrupts

Practical Solutions

- Performance
  - A dedicated CPU for operating system
  - No need to preempt user thread in the middle of transaction
- Loss of isolation at exception
  - Mailbox: separate communication layer between application and OS
- Loss of atomicity at exception
  - Serialize system for irrevocable exceptions

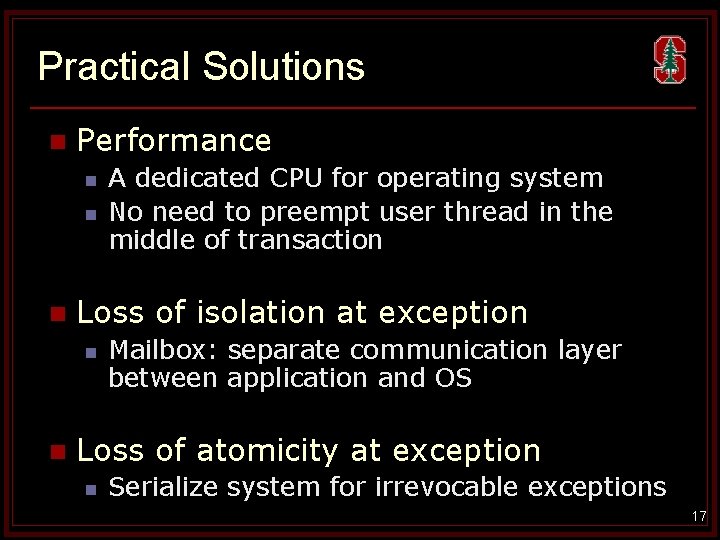

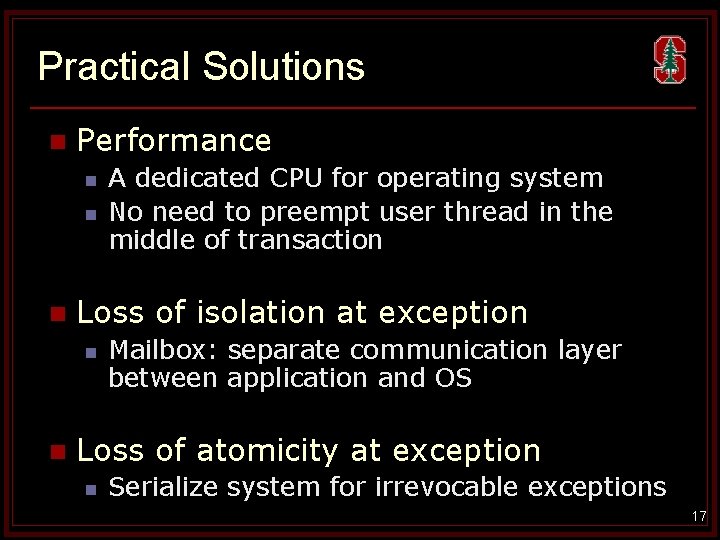

Architecture Update

(Diagram labels: application CPUs with TCC caches, `$` and `M` blocks, and a proxy kernel; a separate CPU running Linux; switches, arbiter, and memory.)

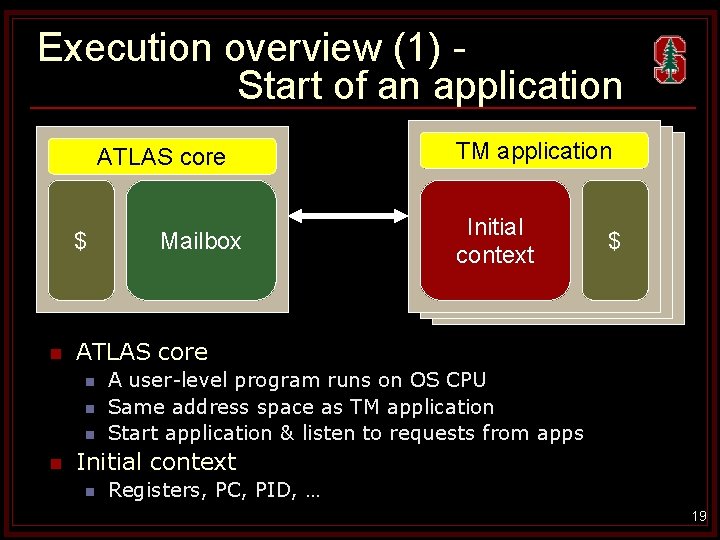

Execution overview (1): Start of an application

(Diagram: the OS CPU runs the operating system and the ATLAS core; the application CPU runs the bootloader and then the TM application; the initial context travels through the mailbox.)

- ATLAS core
  - A user-level program that runs on the OS CPU
  - Same address space as TM application
  - Starts the application & listens to requests from apps
- Initial context
  - Registers, PC, PID, …

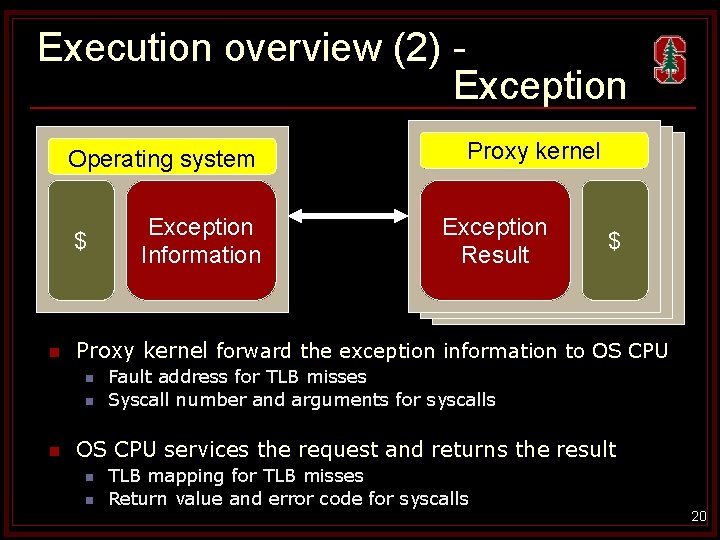

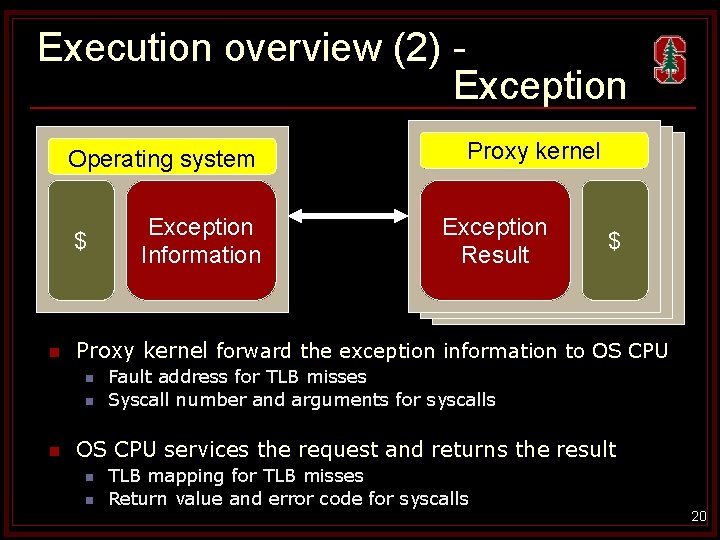

Execution overview (2): Exception

(Diagram: the proxy kernel on the application CPU posts exception information to the mailbox; the OS CPU returns the result through the mailbox.)

- Proxy kernel forwards the exception information to OS CPU
  - Fault address for TLB misses
  - Syscall number and arguments for syscalls
- OS CPU services the request and returns the result
  - TLB mapping for TLB misses
  - Return value and error code for syscalls
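The request/result exchange described above can be sketched as a pair of queues. This is only an illustration under assumptions: `Mailbox`, `Request`, `proxy_kernel_forward`, `os_cpu_service_one`, the 4 KB page size, and the `PAGE_TABLE` contents are hypothetical names and values, and the syscall branch is a stand-in rather than a real system call.

```python
from dataclasses import dataclass, field
from queue import Queue


@dataclass
class Request:
    kind: str        # "tlb_miss" or "syscall"
    payload: dict    # exception information forwarded by the proxy kernel


@dataclass
class Mailbox:
    requests: Queue = field(default_factory=Queue)
    results: Queue = field(default_factory=Queue)


# Hypothetical virtual-page -> physical-page map held on the OS side.
PAGE_TABLE = {0x4000: 0x9000}


def proxy_kernel_forward(mailbox, kind, **payload):
    """Application-CPU side: post the exception information."""
    mailbox.requests.put(Request(kind, payload))


def os_cpu_service_one(mailbox):
    """OS-CPU side: service one request and post the result."""
    req = mailbox.requests.get()
    if req.kind == "tlb_miss":
        page = req.payload["fault_addr"] & ~0xFFF   # assumes 4 KB pages
        result = {"mapping": (page, PAGE_TABLE[page])}
    elif req.kind == "syscall":
        # Stand-in for the real service: report the argument count.
        result = {"retval": len(req.payload["args"]), "errno": 0}
    else:
        raise ValueError(f"unknown request kind: {req.kind}")
    mailbox.results.put(result)


mailbox = Mailbox()
proxy_kernel_forward(mailbox, "tlb_miss", fault_addr=0x4ABC)
os_cpu_service_one(mailbox)
tlb_result = mailbox.results.get()

proxy_kernel_forward(mailbox, "syscall", number=4, args=(1, "hi", 2))
os_cpu_service_one(mailbox)
syscall_result = mailbox.results.get()
```

Because the exchange goes through a separate channel rather than through transactional memory state, the OS side can see the fault address without waiting for the transaction to commit.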

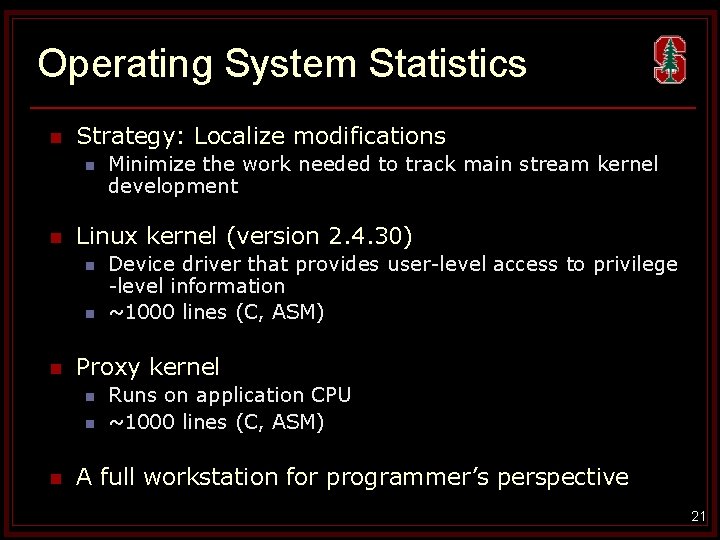

Operating System Statistics

- Strategy: Localize modifications
  - Minimize the work needed to track mainstream kernel development
- Linux kernel (version 2.4.30)
  - Device driver that provides user-level access to privilege-level information
  - ~1000 lines (C, ASM)
- Proxy kernel
  - Runs on application CPU
  - ~1000 lines (C, ASM)
- A full workstation from the programmer’s perspective

System Performance

- Total execution time scales
- OS time scales, too

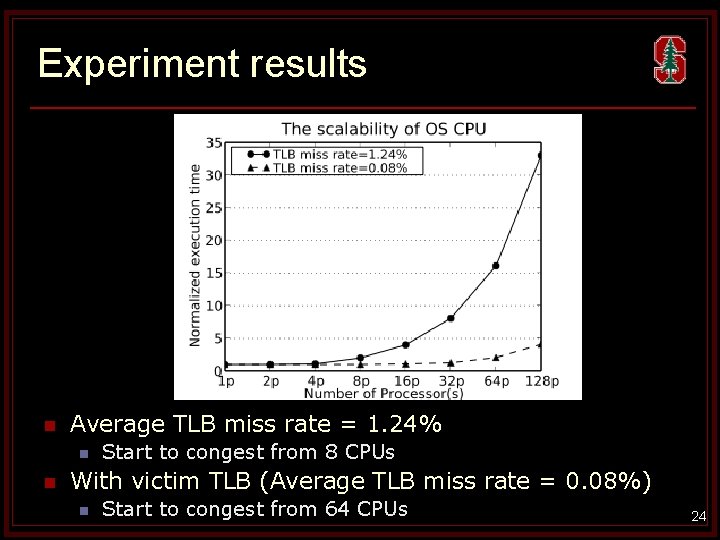

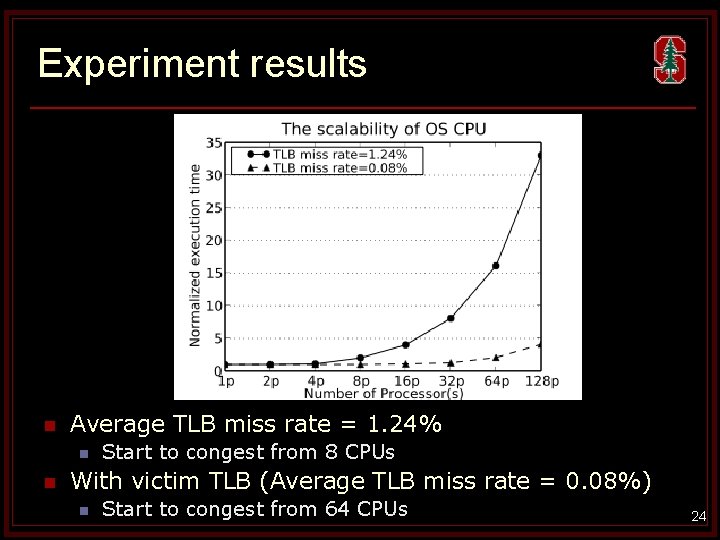

Scalability of OS CPU

- Single CPU for operating system
  - Eventually, it will become a bottleneck as system scales
  - Multiple CPUs for OS will need to run SMP OS
- Micro-benchmark experiment
  - Simultaneous TLB miss requests
  - Controlled injection ratio
  - Looking for the number of application CPUs that saturates OS CPU

Experiment results

- Average TLB miss rate = 1.24%
  - Starts to congest from 8 CPUs
- With victim TLB (average TLB miss rate = 0.08%)
  - Starts to congest from 64 CPUs

Agenda

- Motivation
- Background
- Operating System for HTM
- Productivity Tools for Parallel Programming
- Conclusions

Challenges in Productivity Tools for Parallel Programming

- Correctness
  - Nondeterministic behavior
    - Related to a thread interleaving
  - Need to track an entire interleaving
    - Very expensive in time/space
- Performance
  - Detailed information of the performance bottleneck events
  - Light-weight monitoring
    - Do not disturb the interleaving

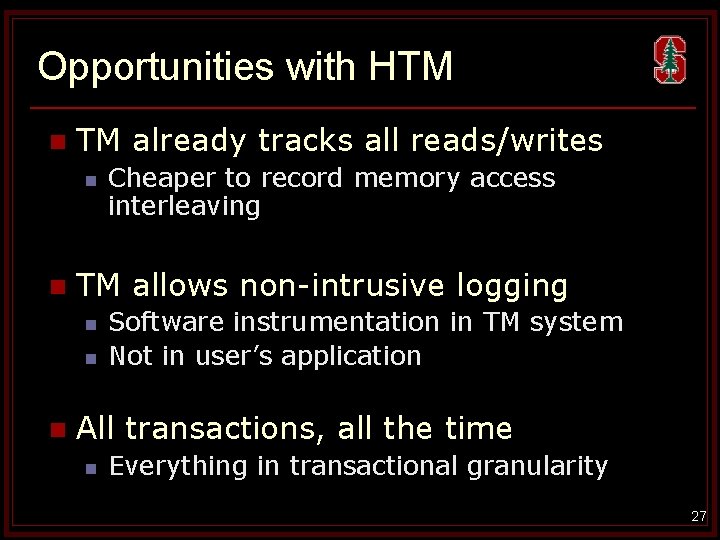

Opportunities with HTM

- TM already tracks all reads/writes
  - Cheaper to record memory access interleaving
- TM allows non-intrusive logging
  - Software instrumentation in TM system
  - Not in user’s application
- All transactions, all the time
  - Everything in transactional granularity

Tool 1: ReplayT: Deterministic Replay

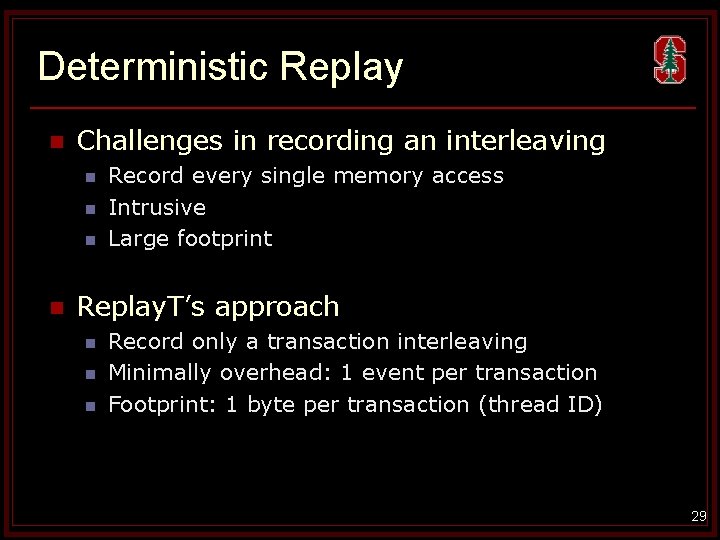

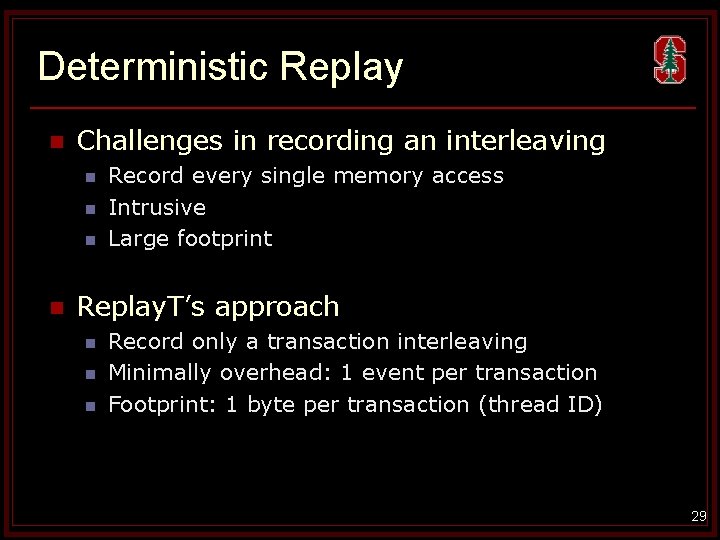

Deterministic Replay

- Challenges in recording an interleaving
  - Record every single memory access
  - Intrusive
  - Large footprint
- ReplayT’s approach
  - Record only a transaction interleaving
  - Minimal overhead: 1 event per transaction
  - Footprint: 1 byte per transaction (thread ID)

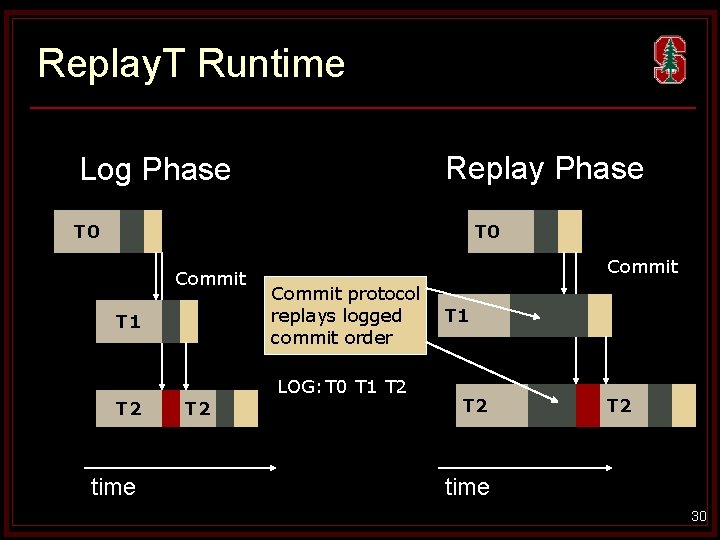

ReplayT Runtime

(Diagram: in the log phase, transactions from T0, T1, and T2 commit over time and their commit order is appended to the LOG; in the replay phase, the commit protocol replays the logged commit order.)
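The log and replay phases can be sketched with a toy commit arbiter that records one byte (the thread ID) per committed transaction and, in replay mode, grants the commit token only in the logged order. `CommitArbiter`, `run`, and the random scheduler are hypothetical stand-ins for the hardware arbiter and for real thread timing.

```python
import random


class CommitArbiter:
    """Toy commit-token arbiter (hypothetical; not the ATLAS hardware)."""

    def __init__(self, replay_log=None):
        self.log = bytearray()        # one byte per committed transaction
        self.replay_log = replay_log  # None means log mode
        self.position = 0

    def may_commit(self, thread_id):
        if self.replay_log is None:
            return True               # log mode: any ready thread may commit
        return self.replay_log[self.position] == thread_id

    def commit(self, thread_id):
        assert self.may_commit(thread_id)
        self.log.append(thread_id)
        self.position += 1


def run(pending, arbiter, rng):
    """pending maps thread ID -> number of transactions left to commit."""
    pending = dict(pending)
    while any(pending.values()):
        ready = [t for t, n in pending.items()
                 if n > 0 and arbiter.may_commit(t)]
        thread_id = rng.choice(ready)  # stands in for nondeterministic timing
        arbiter.commit(thread_id)
        pending[thread_id] -= 1
    return bytes(arbiter.log)


work = {0: 2, 1: 1, 2: 3}
logged = run(work, CommitArbiter(), random.Random(1))
# A different seed models different timing; the arbiter still forces the order.
replayed = run(work, CommitArbiter(replay_log=logged), random.Random(99))
```

Here `replayed` equals `logged` regardless of the seed used in the replay run, and the log holds exactly one byte per transaction.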

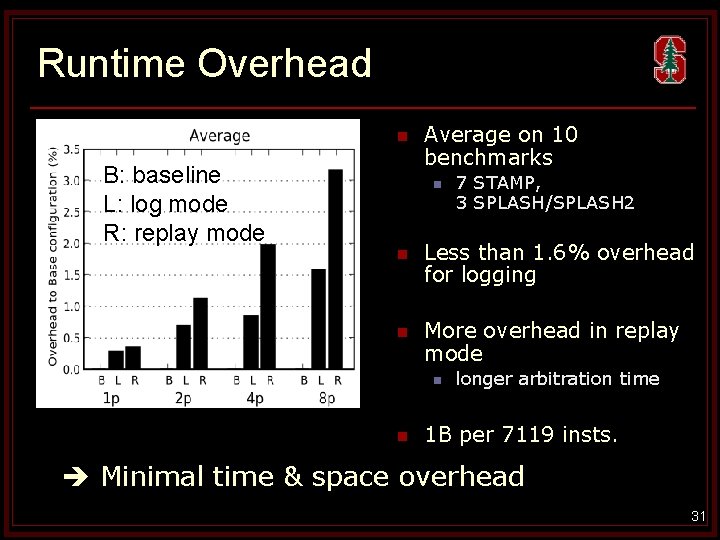

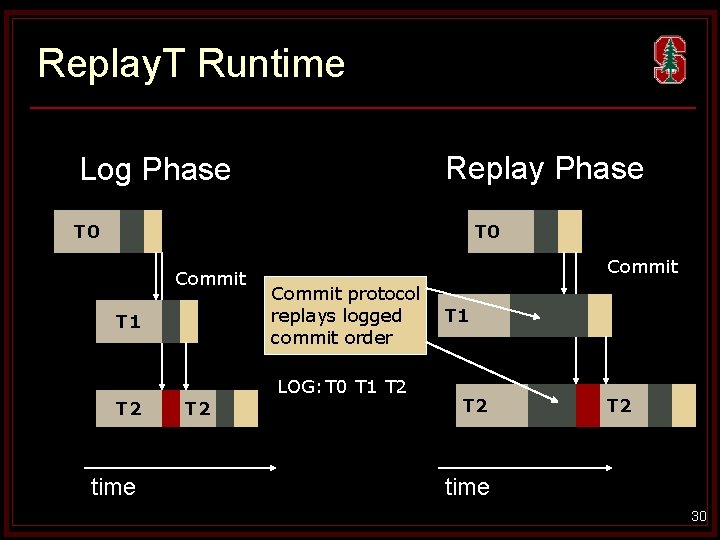

Runtime Overhead

(Chart legend: B: baseline, L: log mode, R: replay mode)

- Average on 10 benchmarks
  - 7 STAMP, 3 SPLASH/SPLASH-2
- Less than 1.6% overhead for logging
- More overhead in replay mode
  - Longer arbitration time
- 1 B per 7119 insts.
- Minimal time & space overhead

Tool 2: AVIO-TM: Atomicity Violation Detection

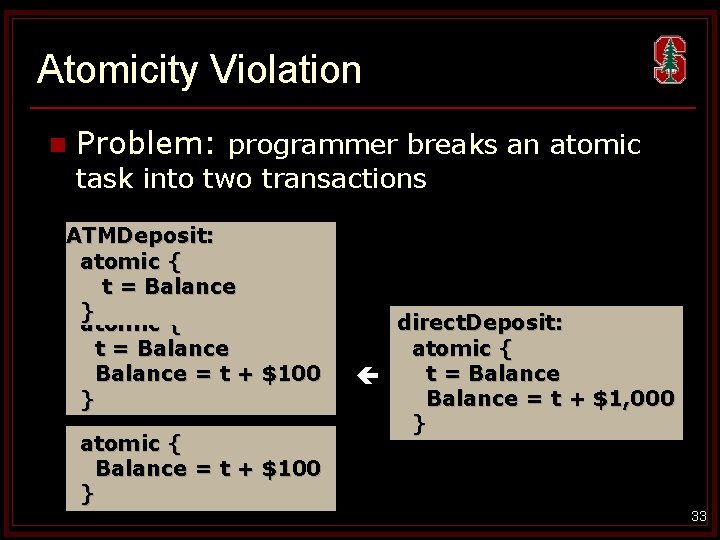

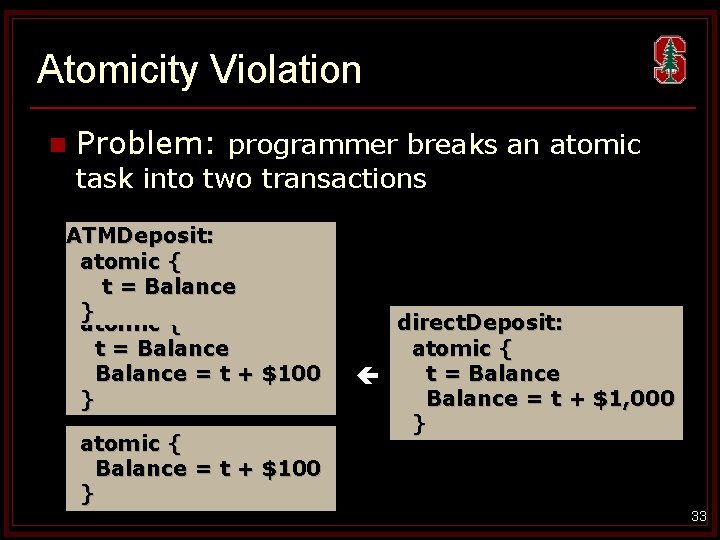

Atomicity Violation

- Problem: programmer breaks an atomic task into two transactions

Intended, as one transaction:

    ATMDeposit:
    atomic {
        t = Balance
        Balance = t + $100
    }

Broken into two transactions:

    ATMDeposit:
    atomic {
        t = Balance
    }
    atomic {
        Balance = t + $100
    }

Concurrent transaction:

    directDeposit:
    atomic {
        t = Balance
        Balance = t + $1,000
    }
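A small simulation makes the lost update concrete. It assumes transactions commit one at a time in a given serial order, which is what TM guarantees; the function names and the `run_schedule` helper are hypothetical.

```python
def run_schedule(schedule, balance=0):
    """Run transactions one at a time in the given commit order."""
    state = {"Balance": balance}
    local = {}                      # private temporaries that outlive a transaction
    for txn in schedule:
        txn(state, local)
    return state["Balance"]


def atm_read(state, local):         # first half of the split ATMDeposit
    local["atm_t"] = state["Balance"]


def atm_write(state, local):        # second half of the split ATMDeposit
    state["Balance"] = local["atm_t"] + 100


def atm_deposit(state, local):      # ATMDeposit as a single transaction
    atm_read(state, local)
    atm_write(state, local)


def direct_deposit(state, local):
    t = state["Balance"]
    state["Balance"] = t + 1000


correct = run_schedule([atm_deposit, direct_deposit])          # 1100
broken = run_schedule([atm_read, direct_deposit, atm_write])   # 100
```

Every transaction in the broken schedule is individually atomic, yet the $1,000 deposit is lost because `directDeposit` commits between the two halves.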

![Atomicity Violation Detection](https://slidetodoc.com/presentation_image_h/16c7698464e957a6b00accae544ee6c3/image-34.jpg)

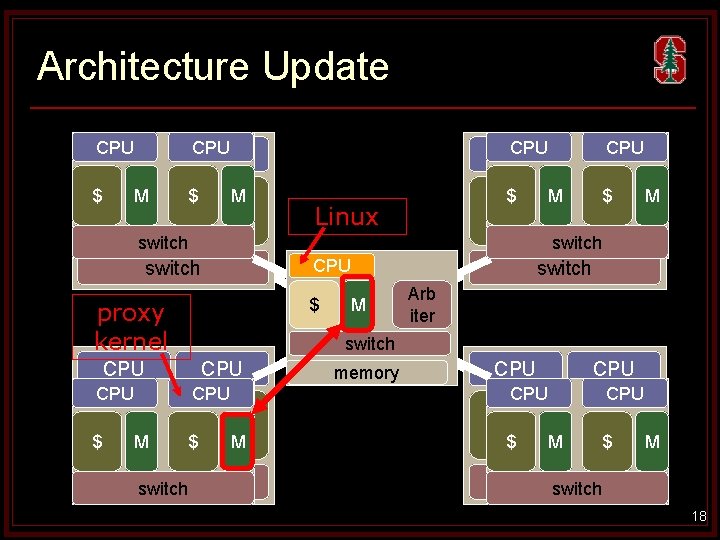

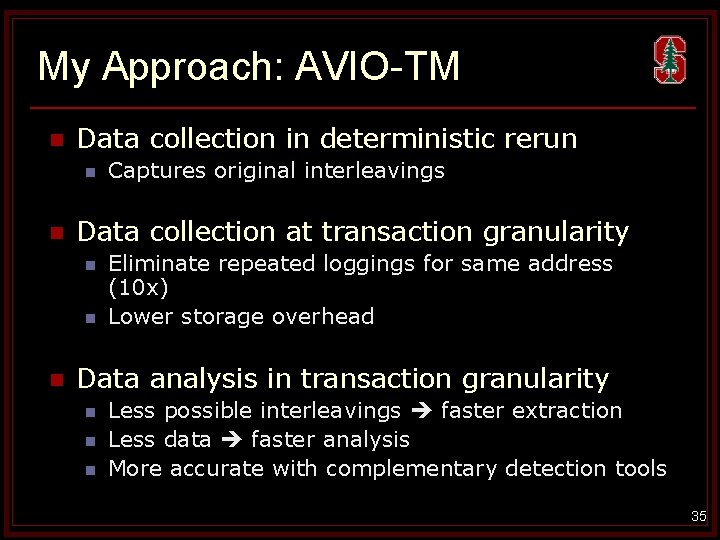

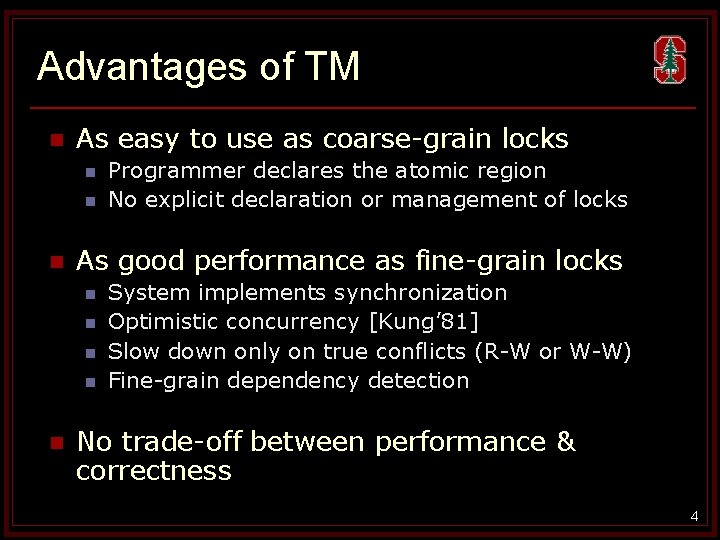

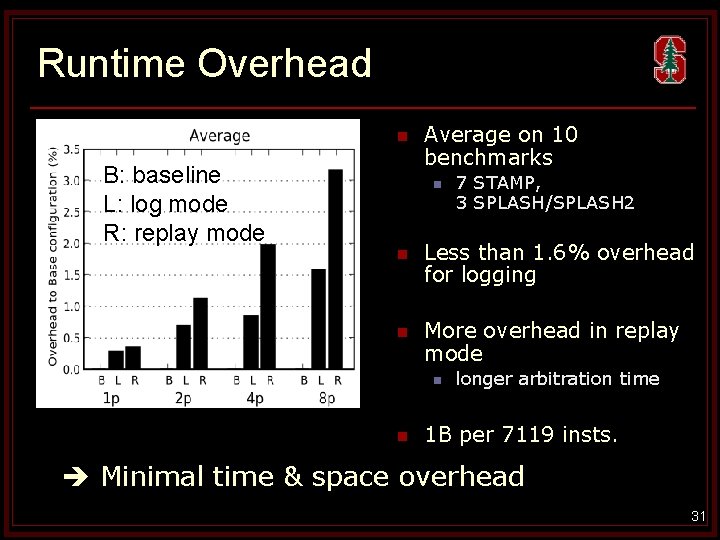

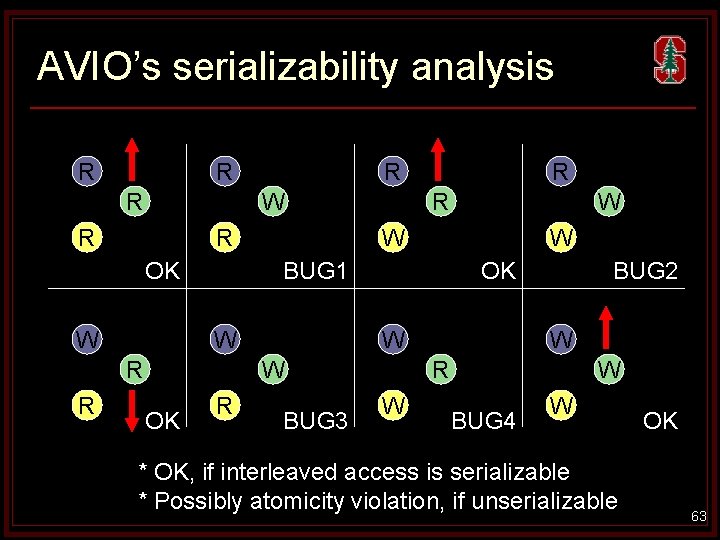

Atomicity Violation Detection

- AVIO [Lu ’06]
  - Atomic region = No unserializable interleavings
  - Extracts a set of atomic regions from correct runs
  - Detects unserializable interleavings in buggy runs
- Challenges of AVIO
  - Need to record all loads/stores in global order
    - Slow (28x)
    - Intrusive: software instrumentation
    - Storage overhead
  - Slow analysis
    - Due to the large volume of data

My Approach: AVIO-TM

- Data collection in deterministic rerun
  - Captures original interleavings
- Data collection at transaction granularity
  - Eliminates repeated logging for the same address (10x)
  - Lower storage overhead
- Data analysis at transaction granularity
  - Fewer possible interleavings → faster extraction
  - Less data → faster analysis
- More accurate with complementary detection tools

Tool 3: TAPE: Performance Bottleneck Monitor

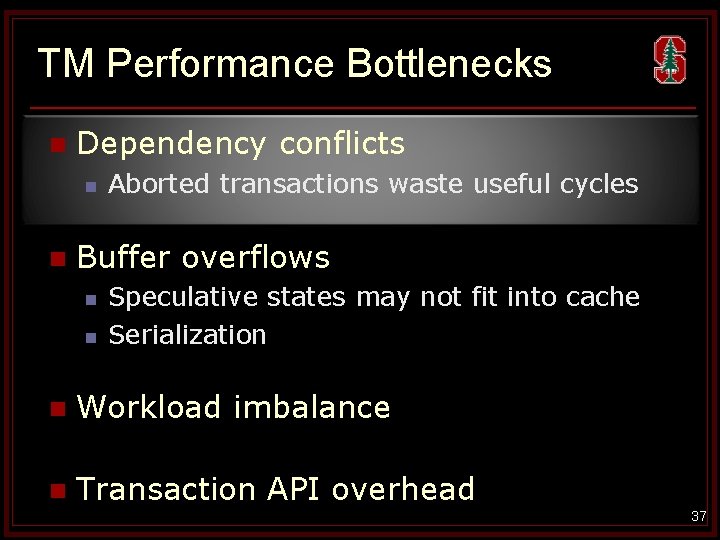

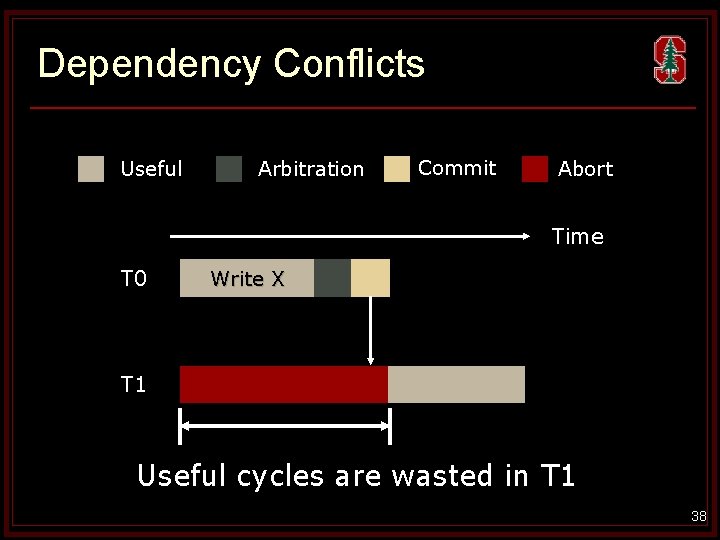

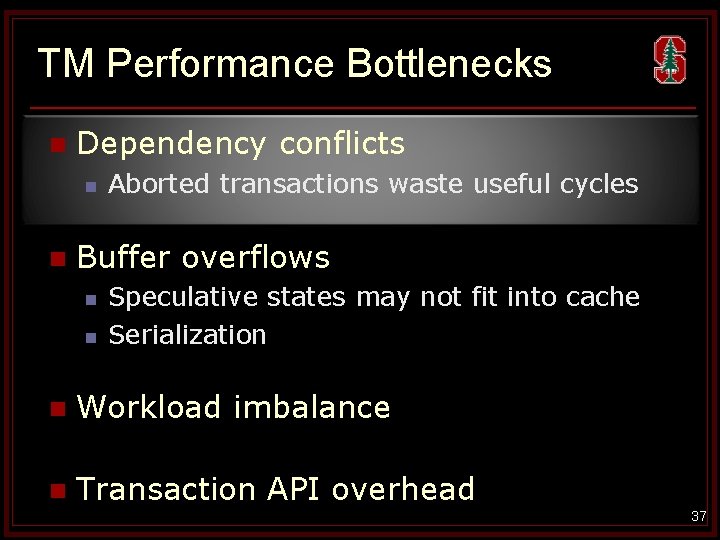

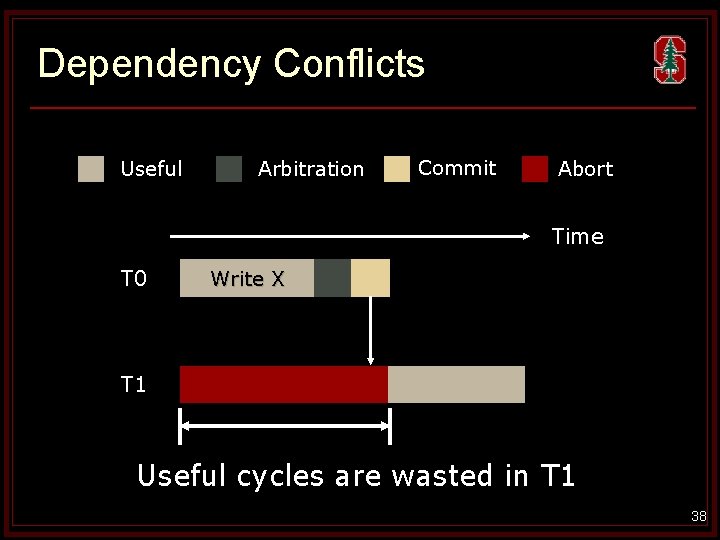

TM Performance Bottlenecks

- Dependency conflicts
  - Aborted transactions waste useful cycles
- Buffer overflows
  - Speculative states may not fit into cache
  - Serialization
- Workload imbalance
- Transaction API overhead

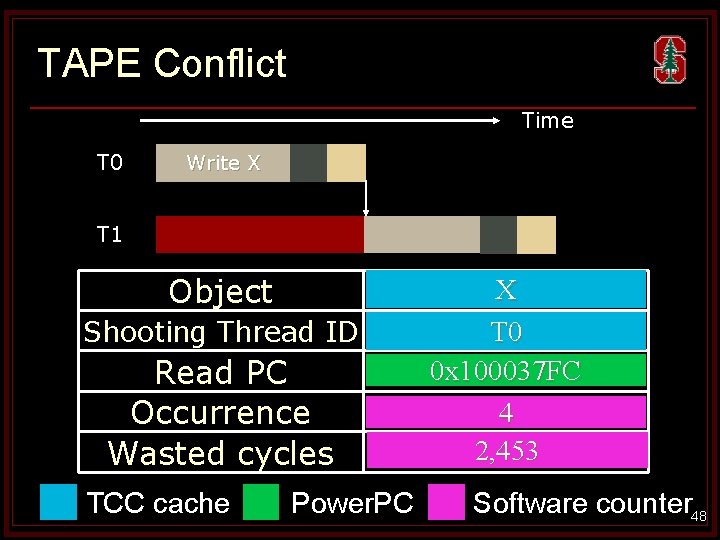

Dependency Conflicts

(Timeline diagram; legend: Useful, Arbitration, Commit, Abort. T0 writes X and T1 reads X.)

Useful cycles are wasted in T1

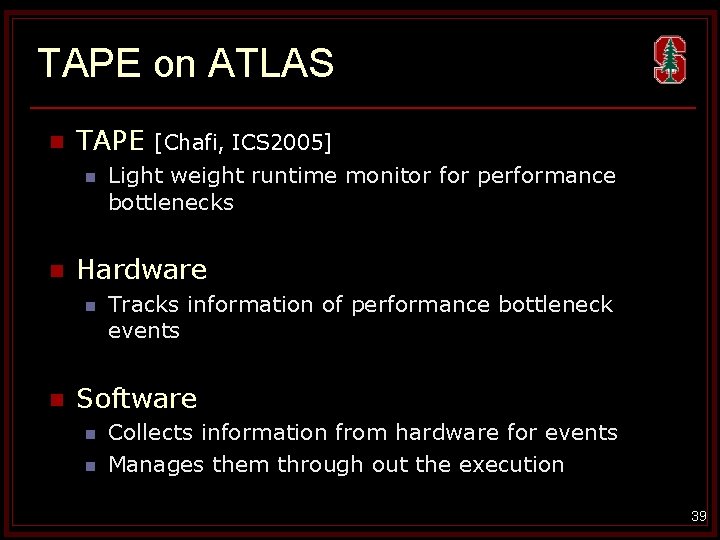

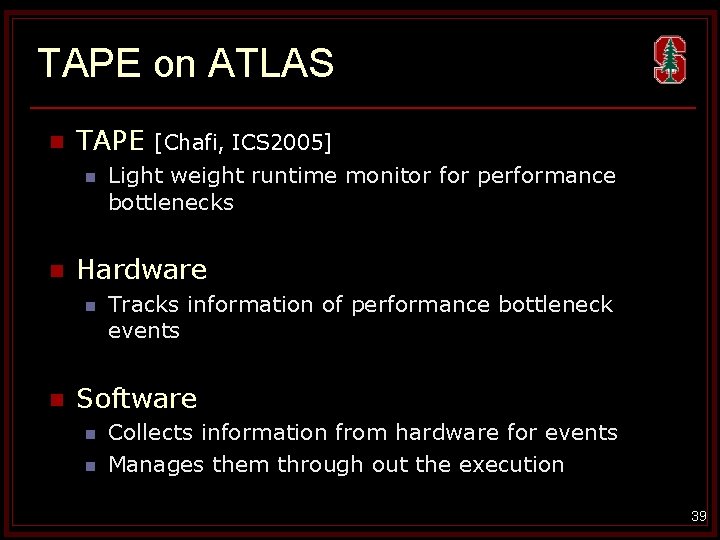

TAPE on ATLAS

- TAPE [Chafi, ICS 2005]
  - Light-weight runtime monitor for performance bottlenecks
- Hardware
  - Tracks information of performance bottleneck events
- Software
  - Collects information from hardware for events
  - Manages it throughout the execution

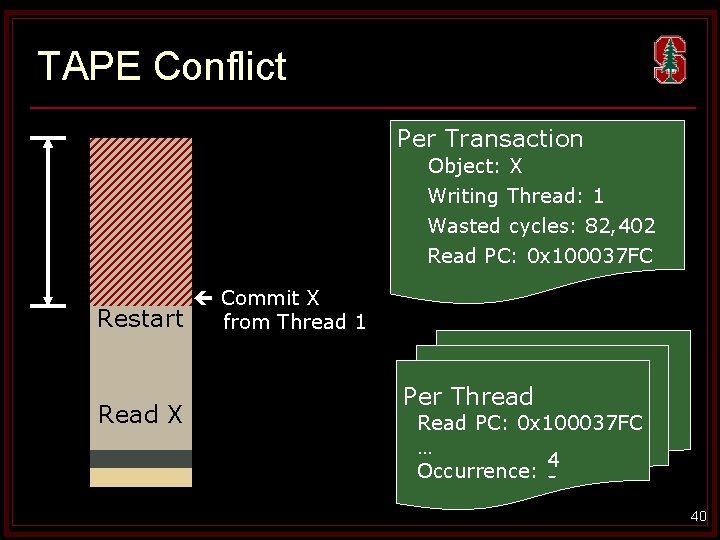

TAPE Conflict

(Diagram: T0 reads X; thread 1 commits X; T0 restarts and reads X again.)

- Per transaction
  - Object: X
  - Writing thread: 1
  - Wasted cycles: 82,402
  - Read PC: 0x100037FC
- Per thread
  - Read PC: 0x100037FC
  - …
  - Occurrence: 3 → 4

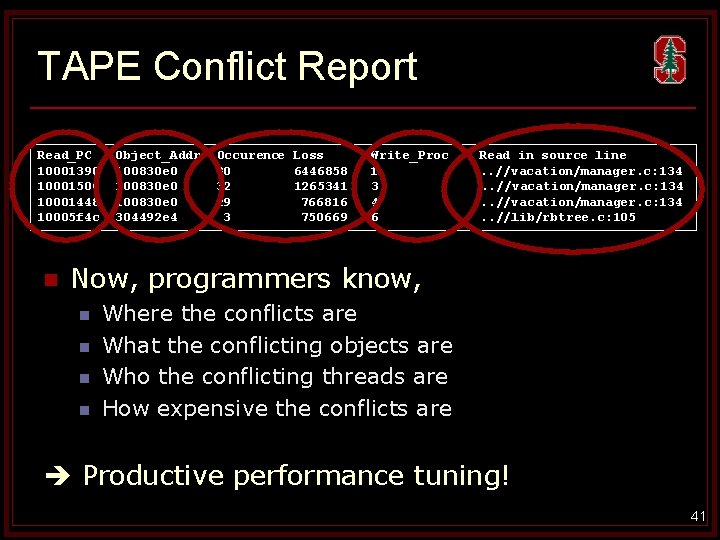

TAPE Conflict Report

| Read_PC | Occurence | Loss | Write_Proc |
|---|---|---|---|
| 10001390 | 30 | 6446858 | 1 |
| 10001500 | 32 | 1265341 | 3 |
| 10001448 | 29 | 766816 | 4 |
| 10005f4c | 3 | 750669 | 6 |

Additional columns: Object_Addr (entries shown: 100830e0, 304492e4) and Read in source line (entries shown: ..//vacation/manager.c:134, ..//lib/rbtree.c:105).

Now, programmers know

- Where the conflicts are
- What the conflicting objects are
- Who the conflicting threads are
- How expensive the conflicts are

Productive performance tuning!
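The software side of such a report amounts to folding per-transaction conflict events into per-location totals and sorting by loss. The sketch below assumes a hypothetical event tuple `(read_pc, object_addr, writer, wasted_cycles)`; the event values are made up for illustration and are not taken from the report above.

```python
from collections import defaultdict


def build_conflict_report(events):
    """Aggregate (read_pc, object_addr, writer, wasted_cycles) events.

    Returns rows of (read_pc, object_addr, occurrence, loss, writer),
    most expensive first.
    """
    totals = defaultdict(lambda: {"occurrence": 0, "loss": 0})
    for read_pc, object_addr, writer, wasted in events:
        entry = totals[(read_pc, object_addr, writer)]
        entry["occurrence"] += 1
        entry["loss"] += wasted

    rows = [(pc, obj, e["occurrence"], e["loss"], writer)
            for (pc, obj, writer), e in totals.items()]
    rows.sort(key=lambda row: row[3], reverse=True)   # sort by loss
    return rows


# Made-up events: two conflicts at one read site, one at another.
events = [
    (0x1000, 0xA000, 1, 500),
    (0x2000, 0xB000, 3, 200),
    (0x1000, 0xA000, 1, 700),
]
report = build_conflict_report(events)
for read_pc, obj, occurrence, loss, writer in report:
    print(f"{read_pc:08x} {obj:08x} {occurrence:9d} {loss:8d} {writer:10d}")
```

Sorting by accumulated loss puts the most expensive conflict first, which is the order a programmer would want for tuning.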

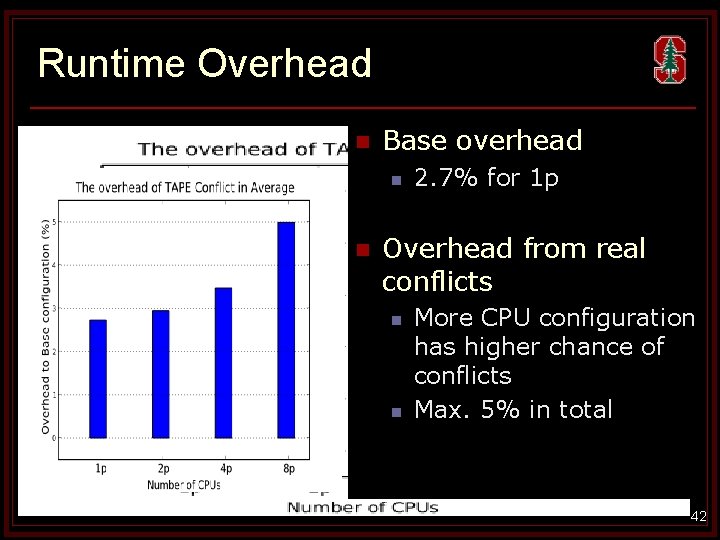

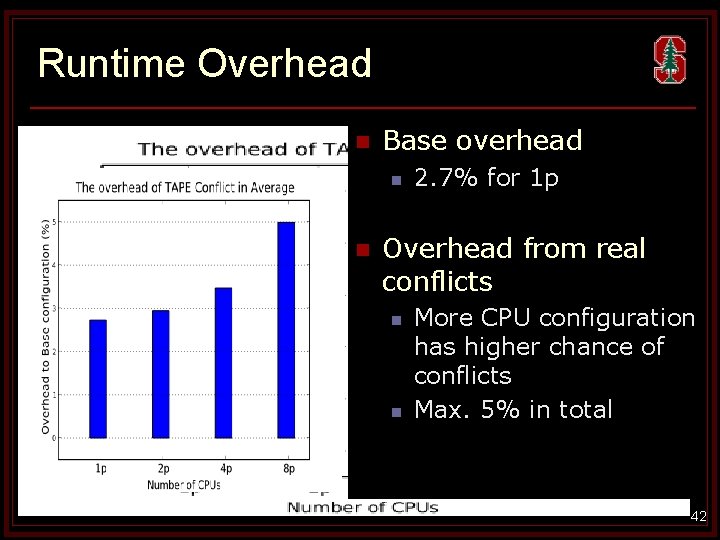

Runtime Overhead

- Base overhead
  - 2.7% for 1p
- Overhead from real conflicts
  - Configurations with more CPUs have a higher chance of conflicts
  - Max. 5% in total

Conclusion

- An operating system for hardware TM
  - A dedicated CPU for the operating system
  - Proxy kernel on application CPU
  - Separate communication channel between them
- Productivity tools for parallel programming
  - ReplayT: Deterministic replay
  - AVIO-TM: Atomicity violation detection
  - TAPE: Runtime performance bottleneck monitor
- Full-system prototyping & evaluation
  - Convincing proof-of-concept

RAMP Tutorial

- ISCA 2006 and ASPLOS 2008
- Audience of >60 people (academia & industry)
  - Including faculty from Berkeley, MIT, and UIUC
- Parallelized, tuned, and debugged apps with ATLAS
  - From speedup of 1 to ideal speedup in a few minutes
  - Hands-on experience with real system
- “most successful hands-on tutorial in last several decades” - Chuck Thacker (Microsoft Research)

Acknowledgements

- My wife So Jung and our baby (coming soon)
- My parents, who have supported me for the last 30 years
- My advisors: Christos Kozyrakis and Kunle Olukotun
- My committee: Boris Murmann and Fouad A. Tobagi
- Njuguna Njoroge, Jared Casper, Jiwon Seo, Chi Cao Minh, and all other TCC group members
- RAMP community and BEE2 developers
- Shan Lu from UIUC
- Samsung Scholarship
- All of my friends at Stanford & my Church

Backup Slides

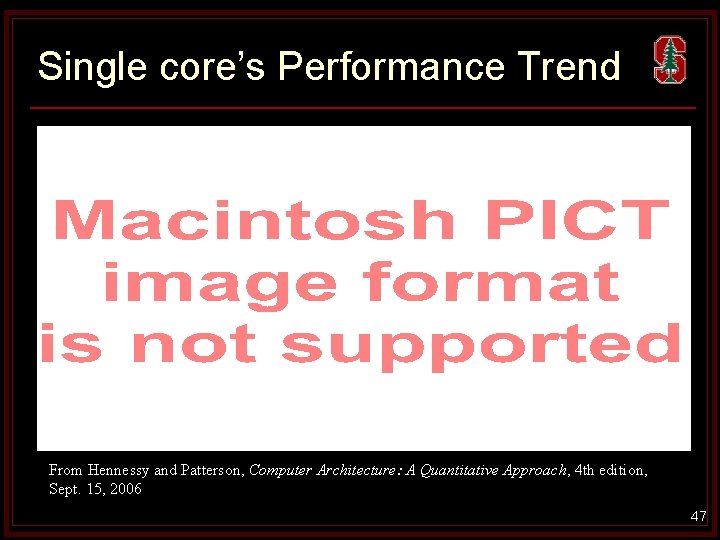

Single core’s Performance Trend

From Hennessy and Patterson, Computer Architecture: A Quantitative Approach, 4th edition, Sept. 15, 2006

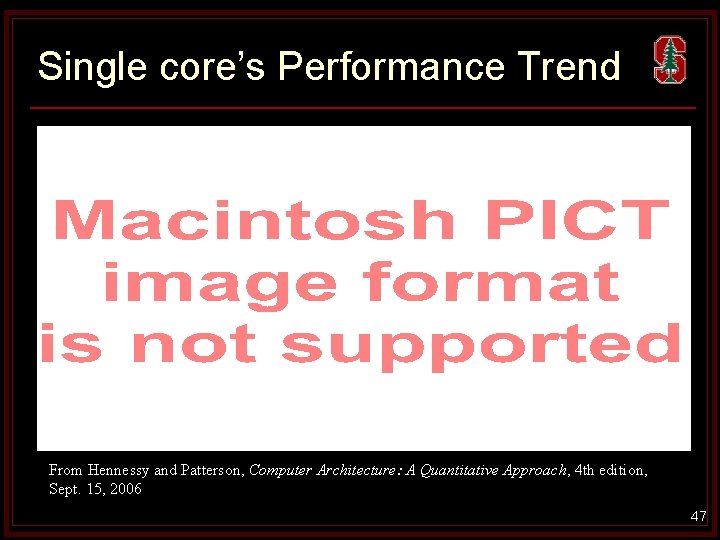

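TAPE Conflict

(Timeline diagram: T0 writes X; T1 reads X. Labels mark which fields come from the TCC cache, the PowerPC, and a software counter.)

| Object | Shooting Thread ID | Read PC | Occurrence | Wasted cycles |
|---|---|---|---|---|
| X | T0 | 0x100037FC | 4 | 2,453 |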

Memory transaction vs. Database transaction

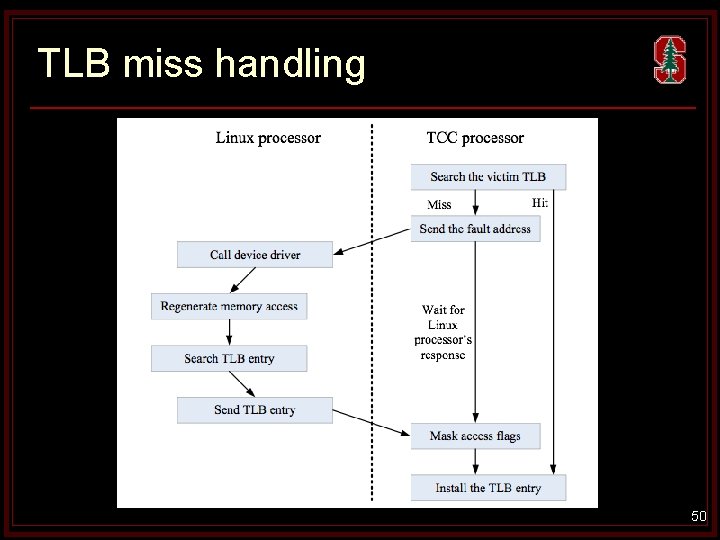

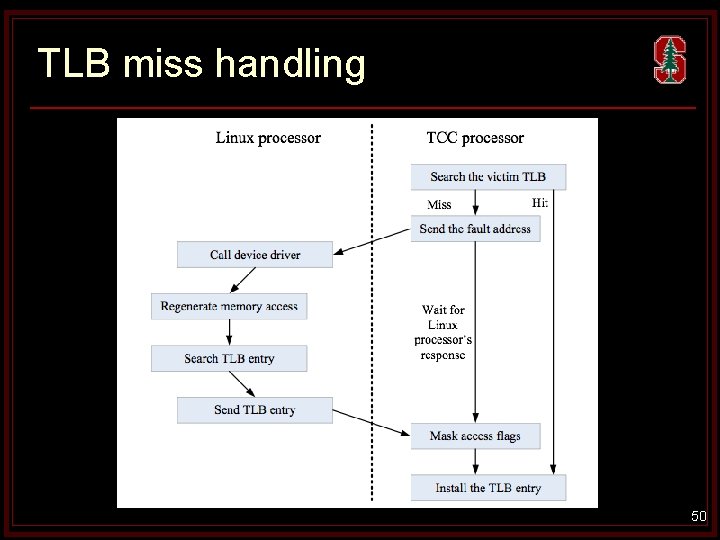

TLB miss handling

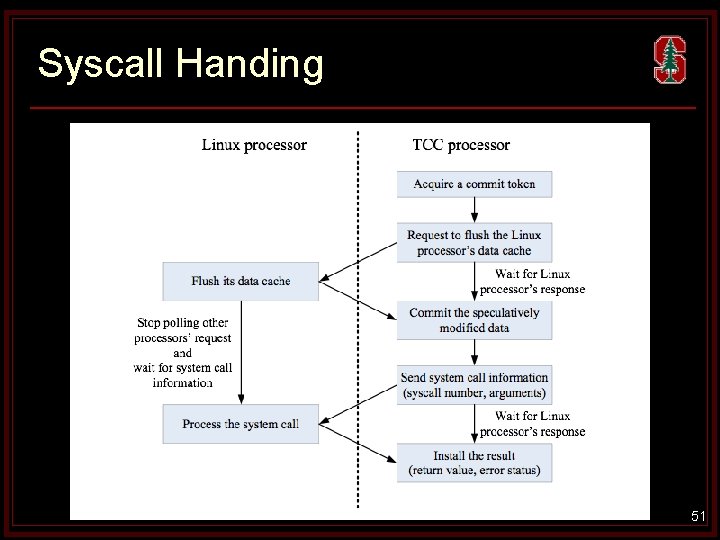

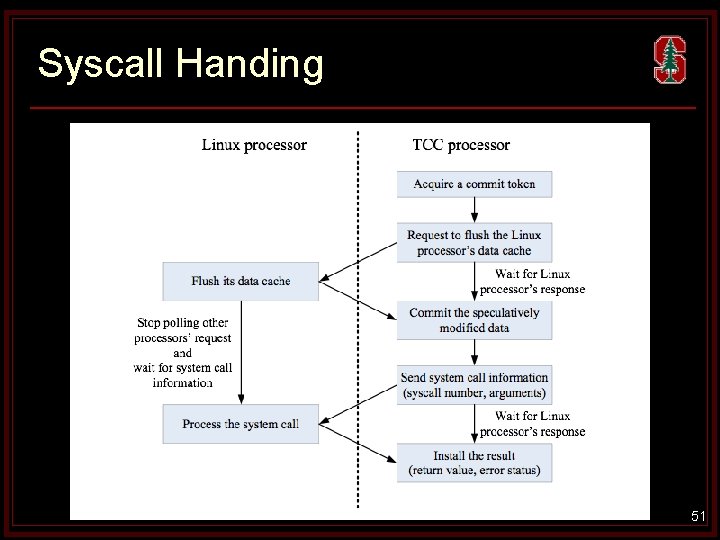

Syscall Handling

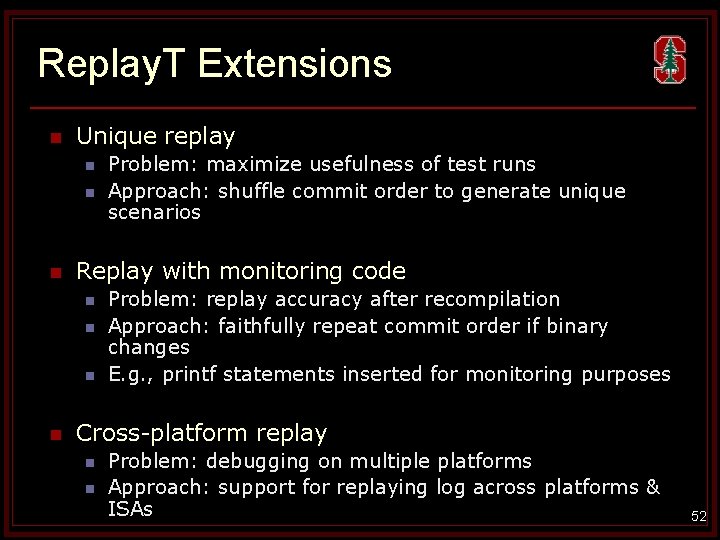

ReplayT Extensions

- Unique replay
  - Problem: maximize usefulness of test runs
  - Approach: shuffle commit order to generate unique scenarios
- Replay with monitoring code
  - Problem: replay accuracy after recompilation
  - Approach: faithfully repeat commit order if binary changes
  - E.g., printf statements inserted for monitoring purposes
- Cross-platform replay
  - Problem: debugging on multiple platforms
  - Approach: support for replaying log across platforms & ISAs

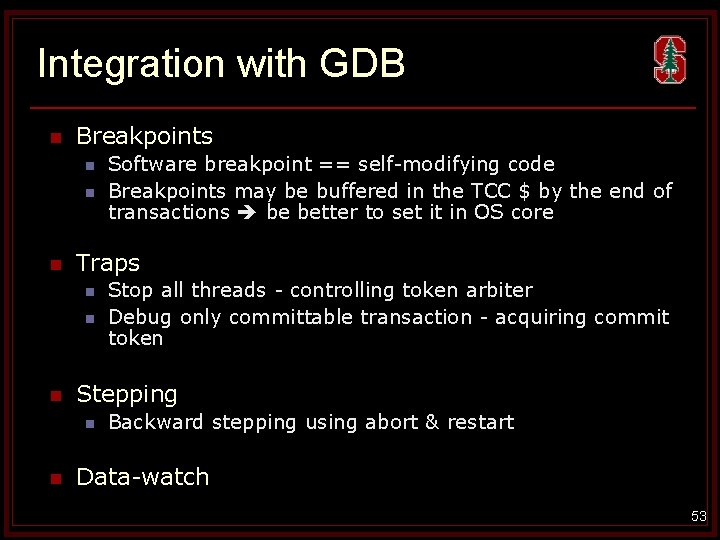

Integration with GDB

- Breakpoints
  - Software breakpoint == self-modifying code
  - Breakpoints may be buffered in the TCC $ until the end of transactions
  - Better to set them from the OS core
- Traps
  - Stop all threads: controlling token arbiter
  - Debug only committable transaction: acquiring commit token
- Stepping
  - Backward stepping using abort & restart
- Data-watch

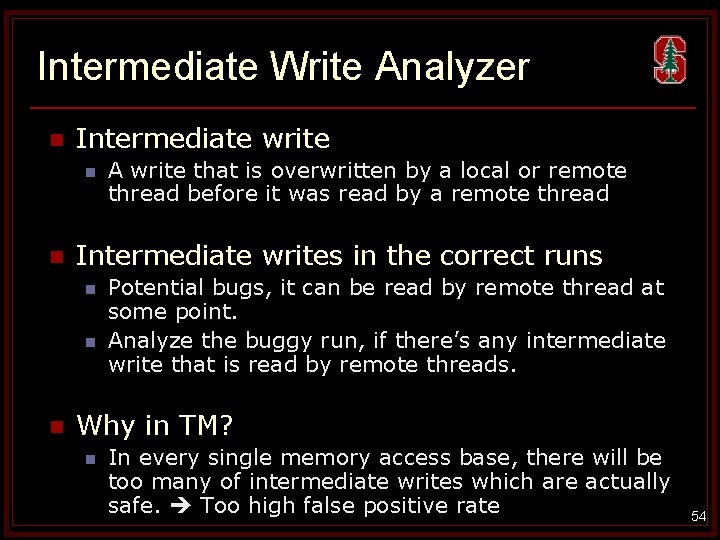

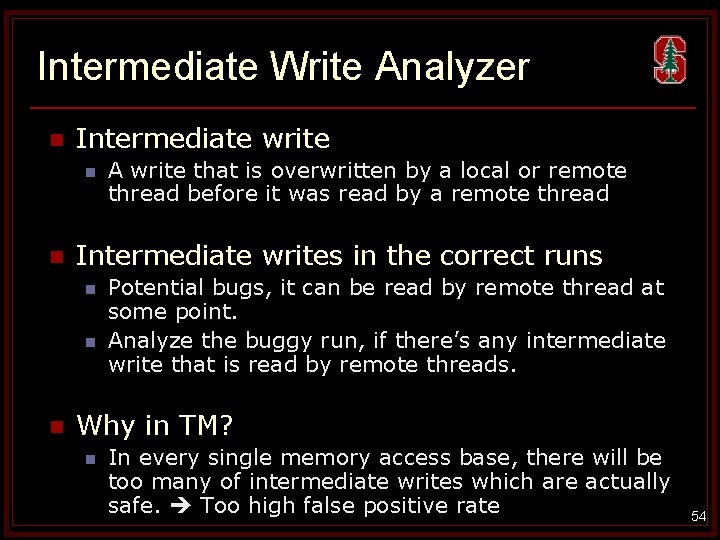

Intermediate Write Analyzer

- Intermediate write
  - A write that is overwritten by a local or remote thread before it is read by a remote thread
- Intermediate writes in the correct runs
  - Potential bugs: they could be read by a remote thread at some point
  - Analyze the buggy run for any intermediate write that is read by remote threads
- Why in TM?
  - At the granularity of individual memory accesses, there would be too many intermediate writes that are actually safe
  - Too high a false positive rate
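The definition of an intermediate write can be turned into a short trace scan. This is a sketch of one reading of that definition, not the analyzer itself: the trace format `(thread, op, addr)` and the function name are hypothetical.

```python
def intermediate_writes(trace):
    """Return indices of writes overwritten before any remote thread read them.

    trace is a list of (thread, op, addr) tuples in global order,
    where op is "R" or "W".
    """
    found = []
    pending = {}   # addr -> (index, writer) of the latest write not yet read remotely
    for i, (thread, op, addr) in enumerate(trace):
        if op == "W":
            if addr in pending:
                # The earlier write was overwritten before a remote read.
                found.append(pending[addr][0])
            pending[addr] = (i, thread)
        elif op == "R" and addr in pending and pending[addr][1] != thread:
            del pending[addr]   # a remote thread observed the write
    return found


trace = [
    ("T0", "W", "x"),   # index 0: overwritten at index 1 before any remote read
    ("T0", "W", "x"),   # index 1: read remotely at index 2
    ("T1", "R", "x"),
    ("T1", "W", "x"),   # index 3: never overwritten
]
result = intermediate_writes(trace)
```

With whole transactions as the trace elements instead of single accesses, many of the harmless overwrites inside one transaction would disappear from the scan.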

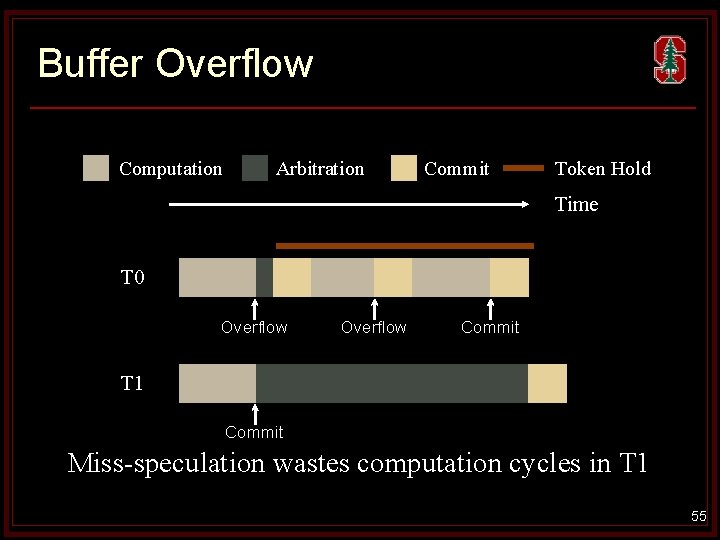

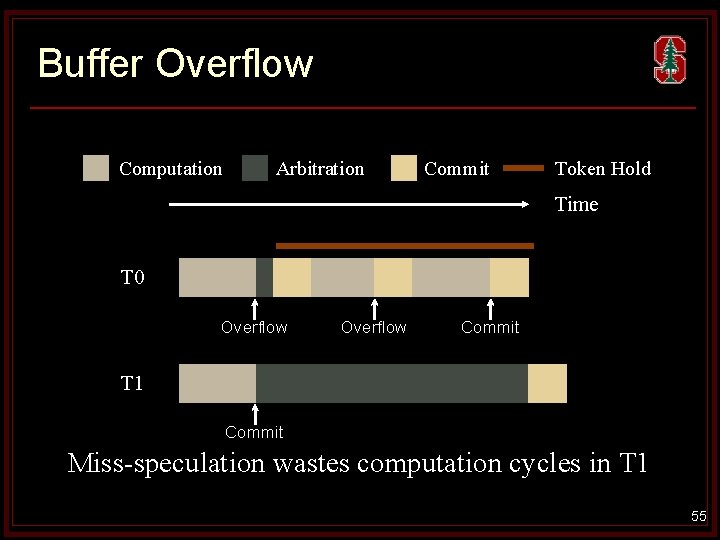

Buffer Overflow

(Timeline diagram; legend: Computation, Arbitration, Commit, Token Hold. T0 overflows and later commits; T1 commits.)

Mis-speculation wastes computation cycles in T1

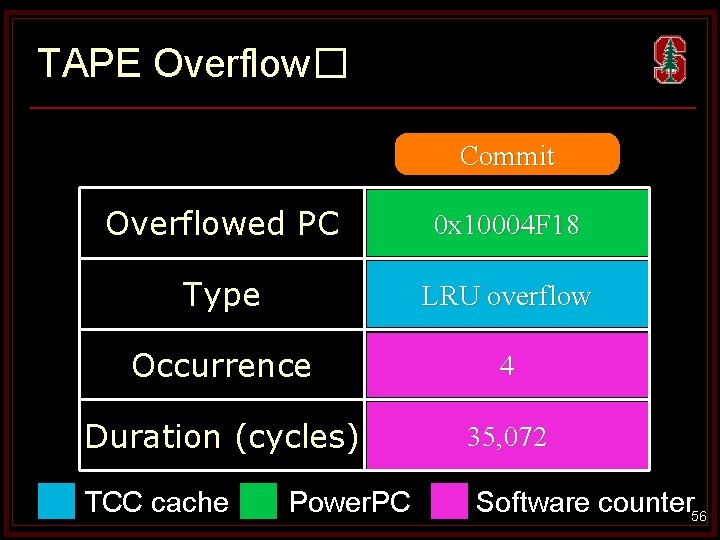

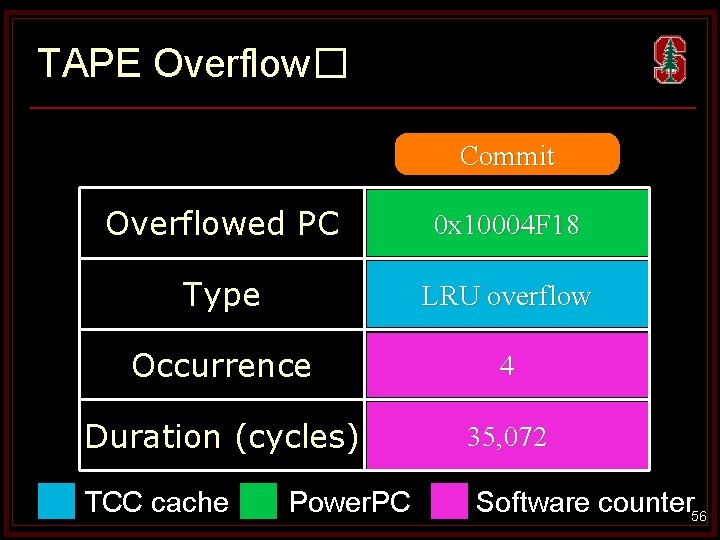

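TAPE Overflow

(Timeline diagram: Overflow, then Commit. Labels mark which fields come from the TCC cache, the PowerPC, and a software counter.)

| Overflowed PC | Type | Occurrence | Duration (cycles) |
|---|---|---|---|
| 0x10004F18 | LRU overflow | 4 | 35,072 |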

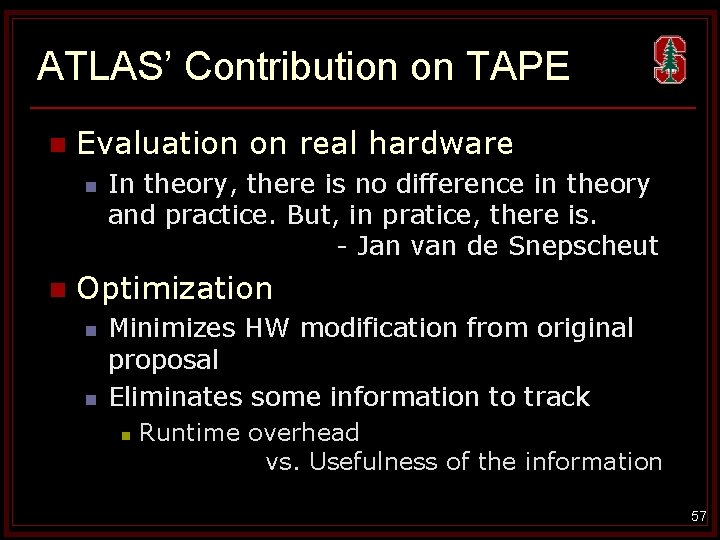

ATLAS’ Contribution on TAPE

- Evaluation on real hardware
  - “In theory, there is no difference between theory and practice. But, in practice, there is.” - Jan van de Snepscheut
- Optimization
  - Minimizes HW modification from original proposal
  - Eliminates some information to track
    - Runtime overhead vs. usefulness of the information

Why not SMP kernel?

What is strong isolation?

![TCC vs. SLE](https://slidetodoc.com/presentation_image_h/16c7698464e957a6b00accae544ee6c3/image-60.jpg)

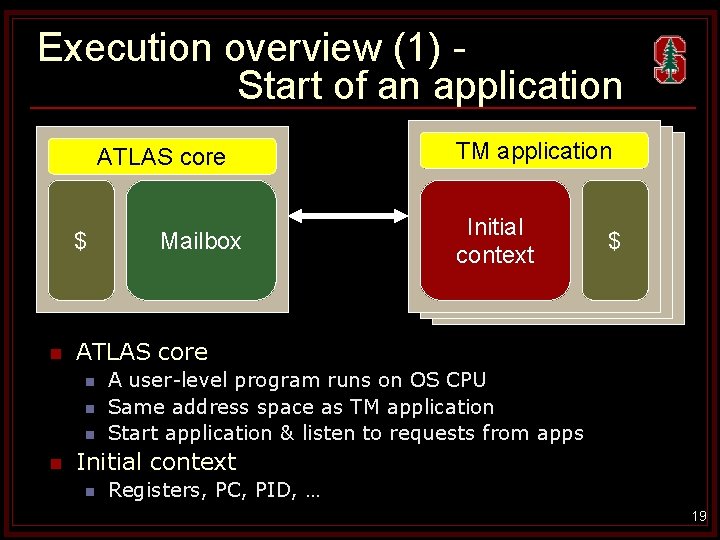

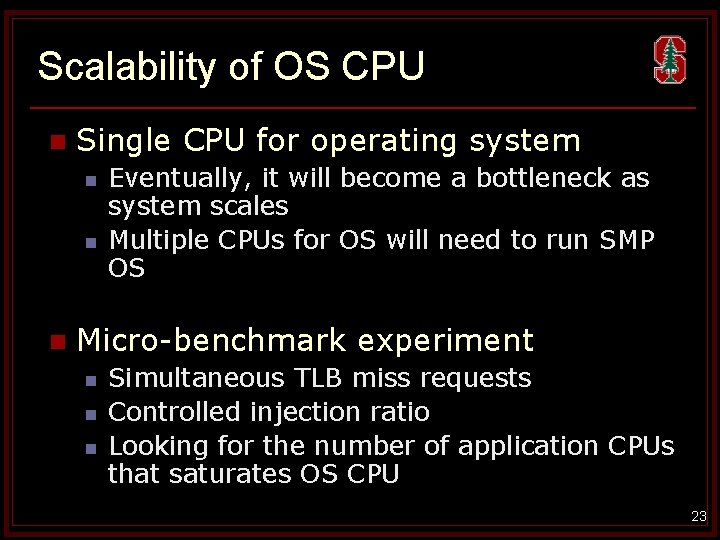

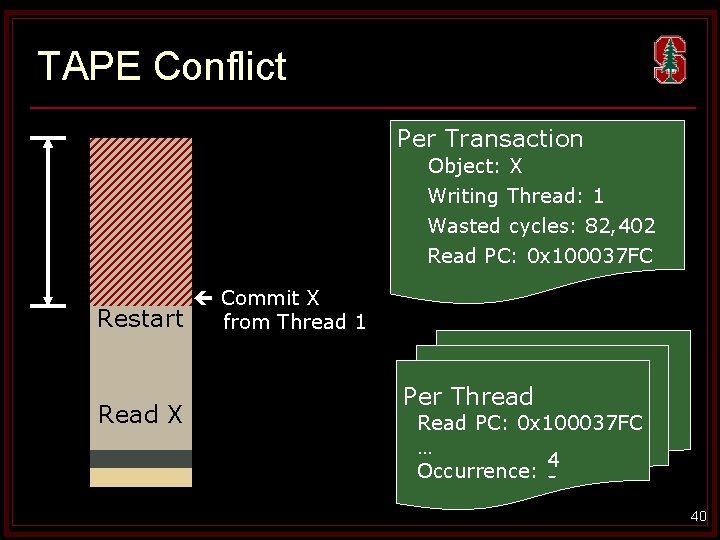

TCC vs. SLE

- Speculative Lock Elision (SLE) [Rajwar & Goodman ’01]
  - Speculate through locks
  - If a conflict is detected, it aborts ALL involved threads
  - No guarantee of forward progress
- TLR: Transactional Lock Removal [Rajwar & Goodman ’02]
  - Extended from SLE
  - Guarantees forward progress by giving priority to the oldest thread

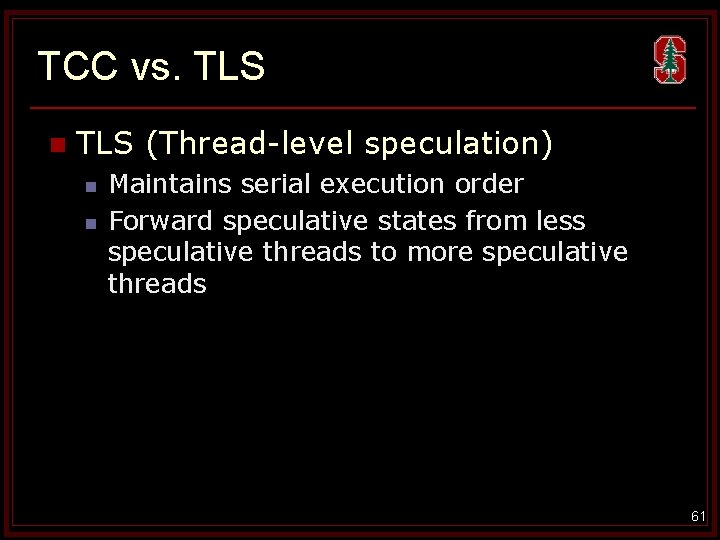

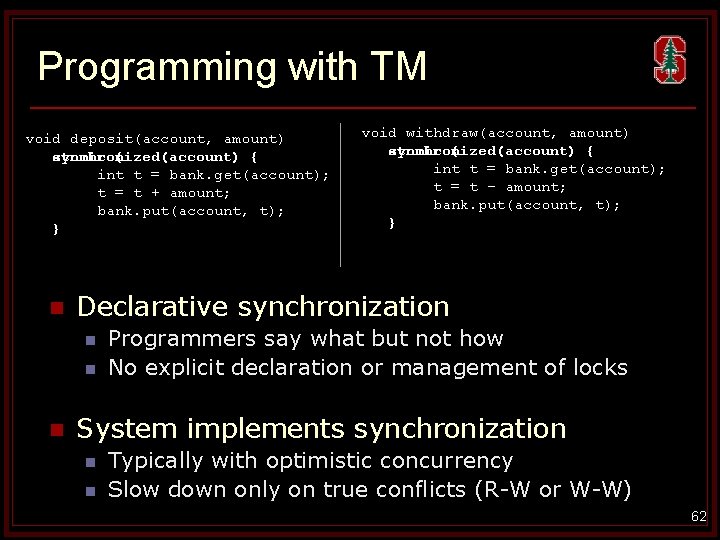

TCC vs. TLS

- TLS (Thread-level speculation)
  - Maintains serial execution order
  - Forwards speculative state from less speculative threads to more speculative threads

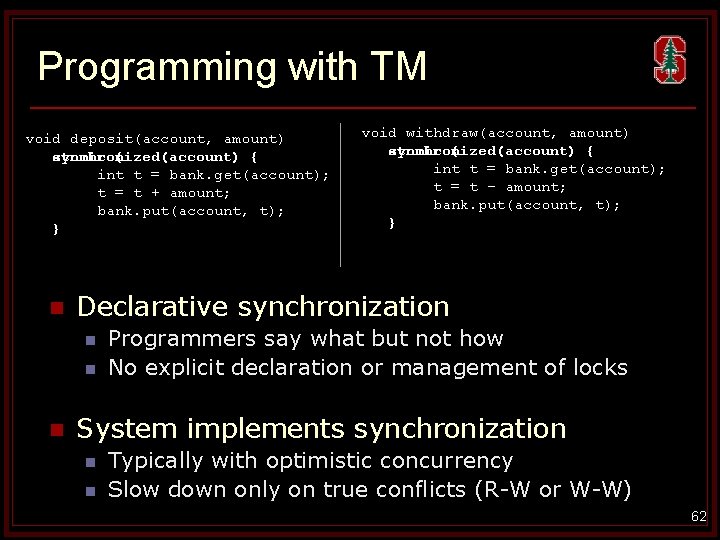

Programming with TM

    void deposit(account, amount)
    atomic {    // replaces: synchronized(account) {
        int t = bank.get(account);
        t = t + amount;
        bank.put(account, t);
    }

    void withdraw(account, amount)
    atomic {    // replaces: synchronized(account) {
        int t = bank.get(account);
        t = t - amount;
        bank.put(account, t);
    }

- Declarative synchronization
  - Programmers say what but not how
  - No explicit declaration or management of locks
- System implements synchronization
  - Typically with optimistic concurrency
  - Slow down only on true conflicts (R-W or W-W)

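AVIO’s serializability analysis

| Local access | Interleaved remote access | Local access | Result |
|---|---|---|---|
| R | R | R | OK |
| W | R | R | OK |
| R | R | W | OK |
| W | W | W | OK |
| R | W | R | BUG |
| W | W | R | BUG |
| W | R | W | BUG |
| R | W | W | BUG |

- OK, if interleaved access is serializable
- Possible atomicity violation (BUG), if unserializable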
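The eight-case analysis can be sketched as a lookup over access triples (previous local access, interleaved remote access, current local access). The set of four unserializable triples below follows the AVIO analysis as commonly described in [Lu ’06]; the trace format and the function names are hypothetical.

```python
# (previous local op, interleaved remote op, current local op)
UNSERIALIZABLE = {
    ("R", "W", "R"),   # two local reads see different values
    ("W", "W", "R"),   # local read does not see the local write
    ("W", "R", "W"),   # remote read sees an intermediate value
    ("R", "W", "W"),   # local write is based on a stale read
}


def is_serializable(prev_local, remote, cur_local):
    return (prev_local, remote, cur_local) not in UNSERIALIZABLE


def find_violations(trace):
    """trace: list of (thread, op, addr) in global order.

    Returns (prev_index, remote_index, cur_index) for every unserializable
    interleaving between two consecutive accesses by the same thread
    to the same address.
    """
    violations = []
    last_local = {}   # (thread, addr) -> index of that thread's previous access
    for i, (thread, op, addr) in enumerate(trace):
        j = last_local.get((thread, addr))
        if j is not None:
            for k in range(j + 1, i):
                r_thread, r_op, r_addr = trace[k]
                if r_addr == addr and r_thread != thread:
                    if not is_serializable(trace[j][1], r_op, op):
                        violations.append((j, k, i))
        last_local[(thread, addr)] = i
    return violations


trace = [
    ("T0", "R", "Balance"),   # ATMDeposit reads
    ("T1", "R", "Balance"),   # directDeposit reads
    ("T1", "W", "Balance"),   # directDeposit writes
    ("T0", "W", "Balance"),   # ATMDeposit writes a stale value
]
violations = find_violations(trace)
```

On this trace the only flagged triple is the R, W, W case; the remote read between the two local accesses is serializable and is not reported.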