Hardware Architecture Server Hardware Network hardware Client hardware

- Slides: 95

Hardware Architecture Server Hardware Network hardware Client hardware 1

Server Hardware l l l Server- crucial part of he DW Large hardware configuration used to: l to support large volume of database l adhoc nature of query access. Server architecture options include: l Symmetric multiprocessing l Cluster technology l Massively parallel processing l Other technologies 2

Symmetric Multi Processing Machine CPU System Bus Disk controllers l l SMP machine – a set of CPUs that share memory & disk. Shared everything environment OS allows different CPUs to access central structures- memory address tables , job schedule queues in a controlled manner. Process can run on any CPU in the machine. 3

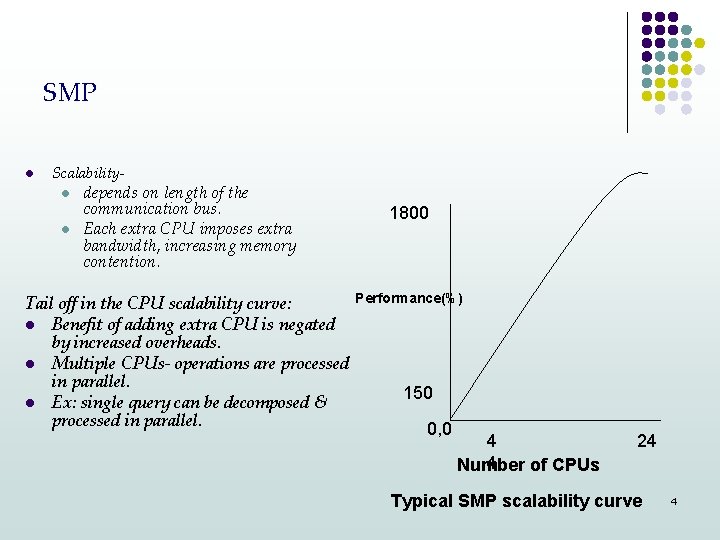

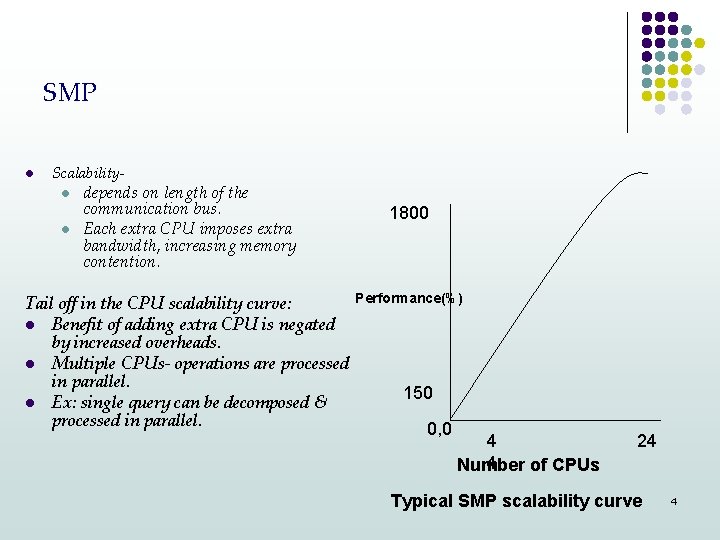

SMP l Scalabilityl l depends on length of the communication bus. Each extra CPU imposes extra bandwidth, increasing memory contention. Tail off in the CPU scalability curve: l Benefit of adding extra CPU is negated by increased overheads. l Multiple CPUs- operations are processed in parallel. l Ex: single query can be decomposed & processed in parallel. 1800 Performance(%) 150 0, 0 4 4 Number of CPUs 24 Typical SMP scalability curve 4

SMP vs MPP l l l SMP: Tightly coupled CPUs sharing memories & disks. MPP: Loosely coupled CPUs each having its own memory & disk. Emerging technologies: l high speed memory connections l non uniform memory access NUMA. 5

Cluster of SMP machines SMP nodes High speed interconnect I/O subsystem s l l Loosely coupled SMP machines connected to a high speed interconnect. Use shared disk systems-own CPU –node & memory but share disk. Shared resources managed in a distributed fashion. - by software called the distributed lock manager. Run on each machine & communicates over the high speed interconnect. Scalability l Ex: digital on VAX VMS machines, SUN cluster 1 -50 TB 6

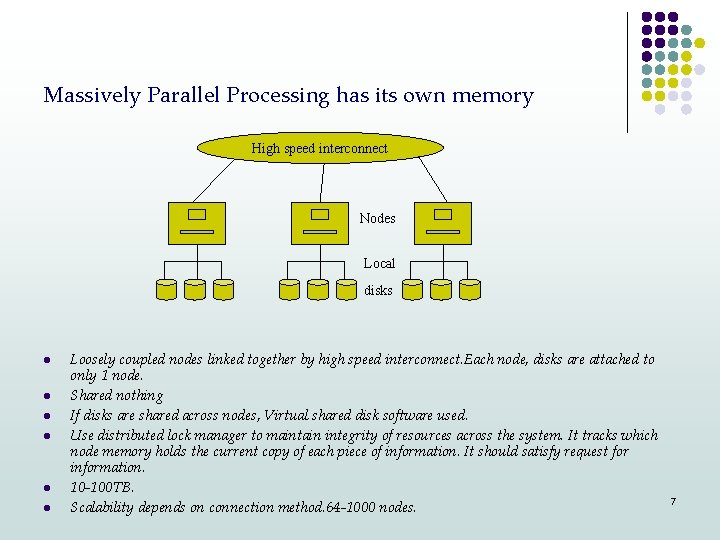

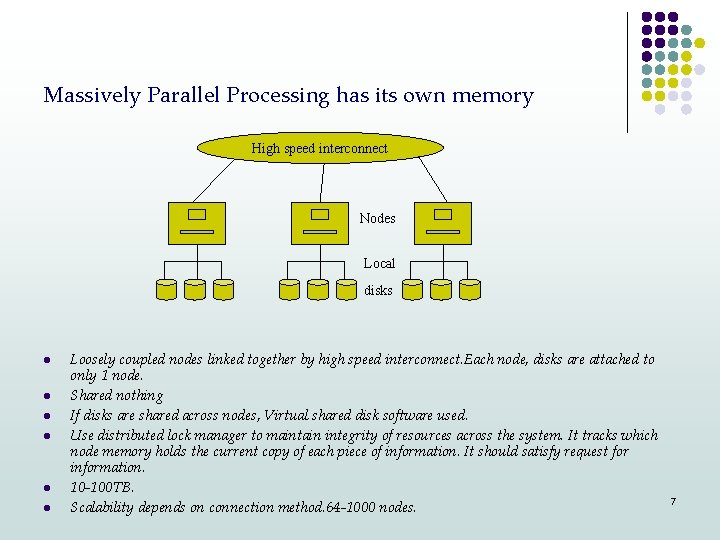

Massively Parallel Processing has its own memory High speed interconnect Nodes Local disks l l l Loosely coupled nodes linked together by high speed interconnect. Each node, disks are attached to only 1 node. Shared nothing If disks are shared across nodes, Virtual shared disk software used. Use distributed lock manager to maintain integrity of resources across the system. It tracks which node memory holds the current copy of each piece of information. It should satisfy request for information. 10 -100 TB. Scalability depends on connection method. 64 -1000 nodes. 7

Emerging technologies l l l NUMA- tightly coupled cluster of SMP nodes & extremely high speed interconnect. Nearly SMP internal speeds. Thus OS runs across the whole machine as a single machine instance. High Speed interconnect- memory channel by Digital in clustered Unix systems. Allows the cluster to act as if it has one memory address space. No need to a shared disk to be connected to each node. Both: overcome bottlenecks in cluster systems. provide scalability of disk, memory & CPU. 8

Server Management l l l DW systems are dynamic, complex & large in size. Due to changing structure, require extensive management. Need tools to allow automatic monitoring of processes & statistics. l Running out of space of key disks. l Dying process l Process using excessive resource. l Process returning error l Disks exhibiting I. /O bottleneck. l Hardware failure. l Excessive memory swapping l Low buffer cache hit ratios. 9

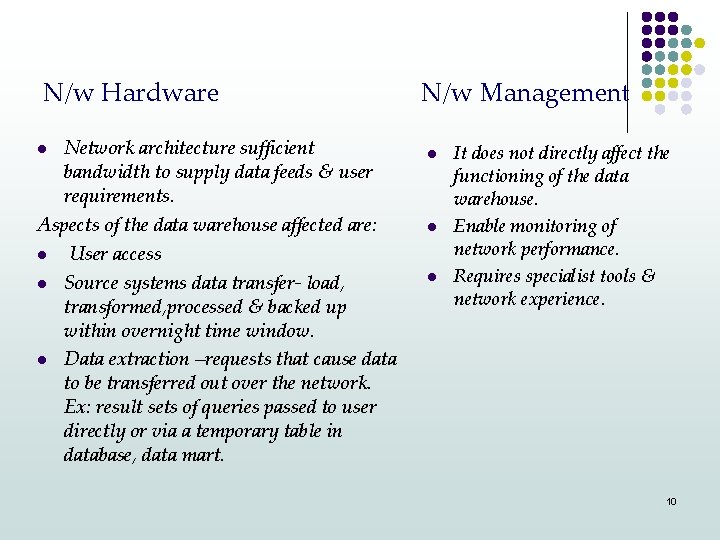

N/w Hardware Network architecture sufficient bandwidth to supply data feeds & user requirements. Aspects of the data warehouse affected are: l User access l Source systems data transfer- load, transformed, processed & backed up within overnight time window. l Data extraction –requests that cause data to be transferred out over the network. Ex: result sets of queries passed to user directly or via a temporary table in database, data mart. l N/w Management l l l It does not directly affect the functioning of the data warehouse. Enable monitoring of network performance. Requires specialist tools & network experience. 10

Client management Client tools Client Hardware l l Clients- external to the data warehouse. Client management l l l Need to know the requirements for that machine to access the data warehouse. Relay the information about multiple access paths to the server system to those responsible for the client systems. Client tools During design consider what user side tools will be used. If tools have special requirements ex: data summarized in certain ways, requirements need to be catered to. l DW should be designed to be accessible to any tool. Multiple tools can be used, should be thoroughly tested , trialed to ensure suitability to the users. l 11

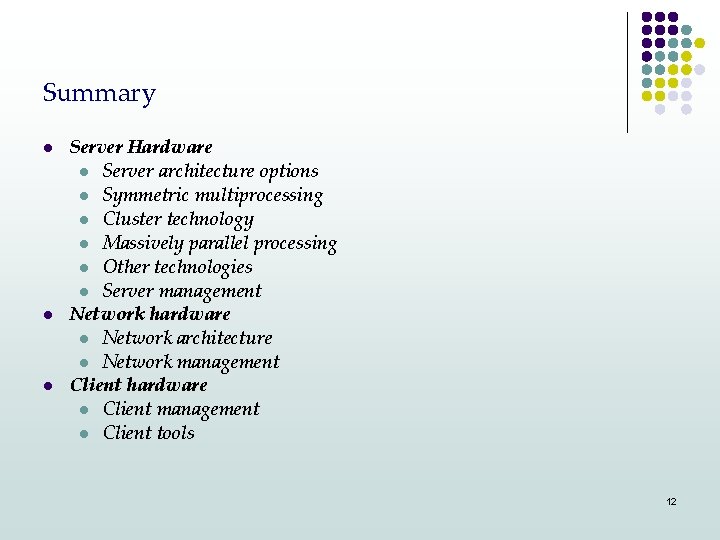

Summary l Server Hardware l l l l Network hardware l l l Server architecture options Symmetric multiprocessing Cluster technology Massively parallel processing Other technologies Server management Network architecture Network management Client hardware l l Client management Client tools 12

Security Introduction Requirements Performance impact of security Security impact on DW design 13

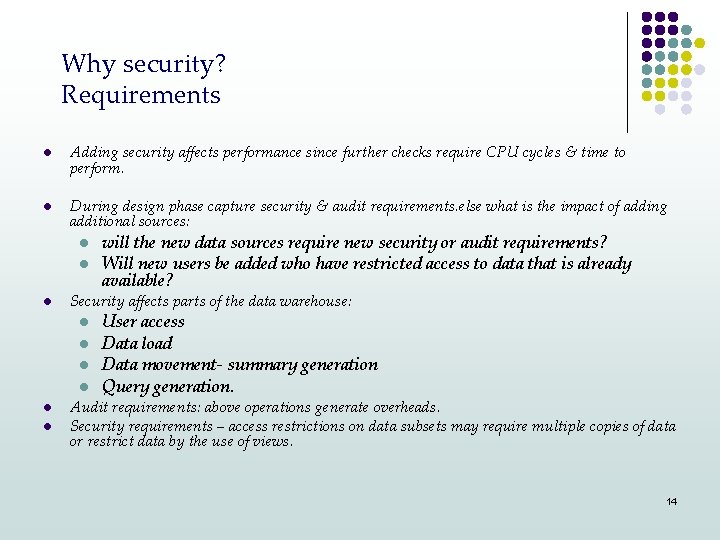

Why security? Requirements l Adding security affects performance since further checks require CPU cycles & time to perform. l During design phase capture security & audit requirements. else what is the impact of adding additional sources: l l l Security affects parts of the data warehouse: l l l will the new data sources require new security or audit requirements? Will new users be added who have restricted access to data that is already available? User access Data load Data movement- summary generation Query generation. Audit requirements: above operations generate overheads. Security requirements – access restrictions on data subsets may require multiple copies of data or restrict data by the use of views. 14

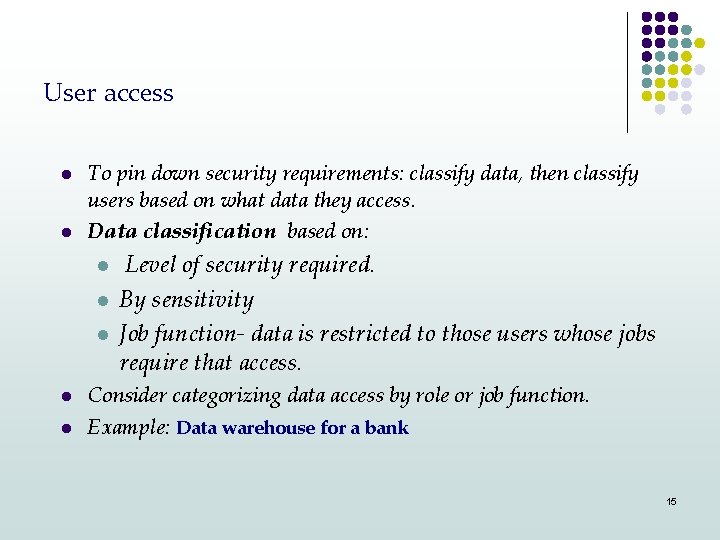

User access l l To pin down security requirements: classify data, then classify users based on what data they access. Data classification based on: l l l Level of security required. By sensitivity Job function- data is restricted to those users whose jobs require that access. Consider categorizing data access by role or job function. Example: Data warehouse for a bank 15

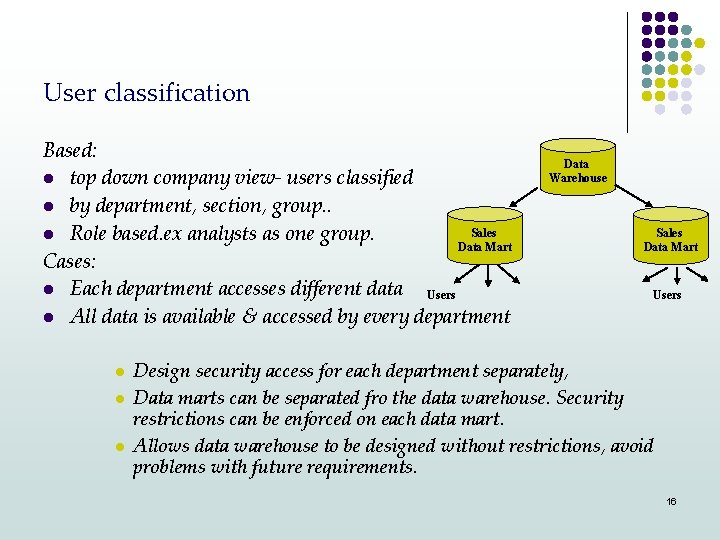

User classification Based: l top down company view- users classified l by department, section, group. . Sales l Role based. ex analysts as one group. Data Mart Cases: l Each department accesses different data Users l All data is available & accessed by every department l l l Data Warehouse Sales Data Mart Users Design security access for each department separately, Data marts can be separated fro the data warehouse. Security restrictions can be enforced on each data mart. Allows data warehouse to be designed without restrictions, avoid problems with future requirements. 16

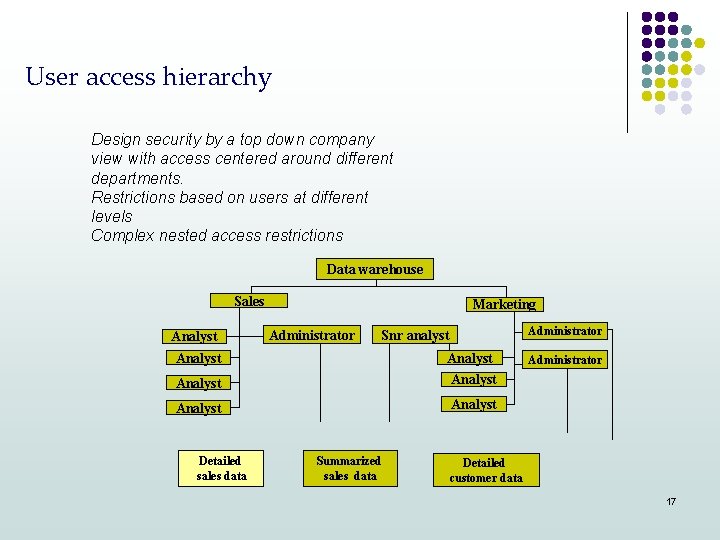

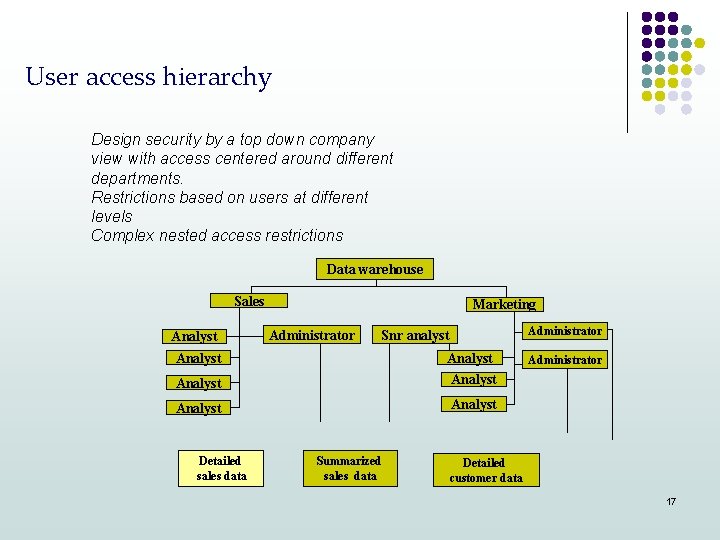

User access hierarchy Design security by a top down company view with access centered around different departments. Restrictions based on users at different levels Complex nested access restrictions Data warehouse Sales Analyst Marketing Administrator Snr analyst Analyst Analyst Detailed sales data Summarized sales data Administrator Detailed customer data 17

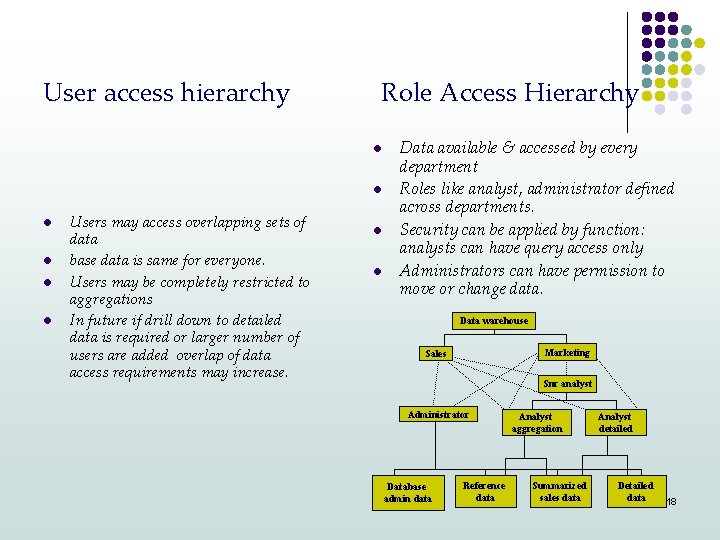

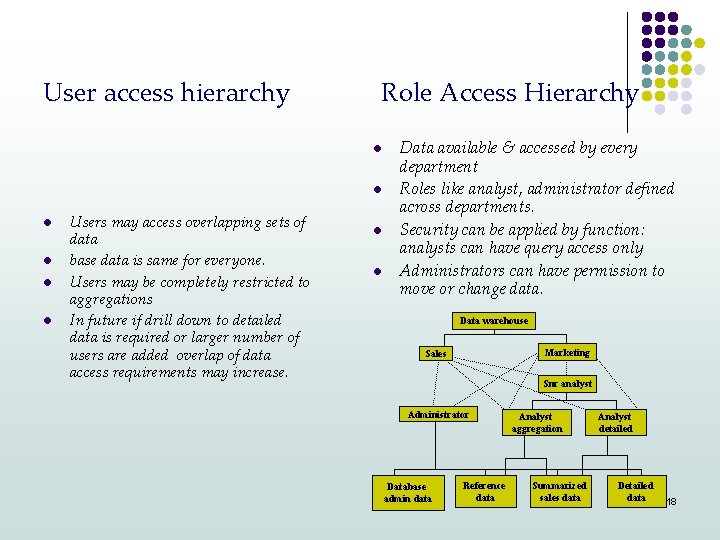

User access hierarchy Role Access Hierarchy l l l Users may access overlapping sets of data base data is same for everyone. Users may be completely restricted to aggregations In future if drill down to detailed data is required or larger number of users are added overlap of data access requirements may increase. l l Data available & accessed by every department Roles like analyst, administrator defined across departments. Security can be applied by function: analysts can have query access only Administrators can have permission to move or change data. Data warehouse Marketing Sales Snr analyst Administrator Database admin data Reference data Analyst aggregation Summarized sales data Analyst detailed Detailed data 18

Guideline l The tighter the security, more person oriented the design. The more complex the interdependencies, the more role oriented the design. l Table versus views l Data access restrictions can be implemented through views. Approach 1: l For ex: no person can access transaction data of their own account, but give access to rest of the data by creating a view. l A separate view will be required for each person who has access to that table. Also many people can have multiple accounts. l Performance overheads l How can so many views be maintained as users open & close accounts? l 19

Approach 2 l Place data in separate tables depending on its classification. l Data can be accessed directly since no view is required for user or role to filter unauthorized data. l Massive duplication, large overlaps in data the roles can access. l Overhead of disk space & processing power. Approach 3 l Load employees & non employees data in separate tables. l But a non employee could be employed as an analyst at the bank, access to data? l What about historical data in the non employees table. 20

Legal requirements l l It may be required by law to enforce restrictions. Can data be of minimal nature? Individual customer data can be subject to restrictions. Ex: Data sufficient to identify account but not owner, anonymity can be preserved, can ti be utilized for trending & profiling? Can it be used to mailshot a new product to identified account holders. can it be used to cross match customer id to customer details. Ex: Flight reservations where many airlines share a common booking system run by one of the airline services. 21

Audit requirements Subset of security. l Can cause excessive overheads on the system. More hardware is required to allow overnight processing operation to finish in time. l Audit requirements: l connections l Disconnections l Data access l Data change l Audit “success & or failure”. The latter can indicate attempted unauthorized or fraudulent access. 22

To audit data access l l Audit each access separately- A user accessed a specific table during a given session. Affects audit information held, saving disk space & I/O overheads. For data changes to be audited: note that change has occurred or capture the change. Audit requirements may be company standards but may not be required. Implement audit for genuinely required legal , company & security reasons. Network requirements l l l Security issues like: Is encryption/ decryption necessary before loading. - overhead of processing power & time. Any restrictions on network routes. -can cause bottlenecks on critical sections. 23

l l l l Security implications. Data landing in the warehouse: where is it stored Who has access to that disk space. Is it to be backed up. Query result set is also a data movement. Stored in a temporary tablewhere is it? How can it be made visible, not to non authorized users. What about summarized versions of restricted data? Processes have capacity & bandwidth implications. 24

Documentation l l l l For all security & audit requirements to enable cost justification. Contains information like: Data classification User classification Network requirement Data movement & storage requirement All auditable actions. Data Movement l l Use trusted versions of RDBMS, runs on trusted OS>, conforms to trusted levels of security. Contains functionality like row labels & security clearance Row labels: each row of data can be classified Security clearance applies to users, which data they are allowed to see. 25

Data Movement l l l l Expensive- resource & time. Bulk movements occur : data loads Aggregation creation Results temporary tables Data extracts Performance overheads; if security check & audit has to be applied. Security requirement may force generation of extra aggregation. , which needs to be maintained , consumes disk space, places pressure on the overnight window, increased CPU requirements. Needs more hardware purchases. 26

Views Restrictions: l Restricted data manipulation language operations DML l Lost query optimization paths l Restrictions on parallel processing of view projections. l Complex views: treated as read only, inserts, updates & deletes will sometimes be restricted. l Security implies maintenance overhead. l Views are enforced to restrict access data tables & aggregations , if they change then the views may change. l Number of users keep on changing. l Impose parse overhead when accessed by SQL statement. Along with statement even the view has to be merged & parsed. l Parallel operations can give required performance. 27

Auditing Each audited action requires some code to run. CPU impact. Requires disk space to store audit logs –, stored for a long time, can grow quickly. Deal with audit logs, operational task. No space for storage? l l Security impact on Design l Application development l Extra security code required for : load , warehouse & query manager. Load manager: l Require checking code to filter records, place them in specific locations based on contents. Need extra transformation rules to modify sensitive fields & hide certain data. 28

Warehouse manager: l Need code to create & maintain alll extra views & aggregations required to enforce security. Query manager: l Require changes to handle access restrictions. l Aware of all extra views & aggregations, abler to point users to correct versions of objects. Guideline: Increase complexity estimate for any piece of software that requires security code to be added. 29

Testing Complex & lengthy process. Security increases test time in two ways: l Programs added increase time required for integration & system testing. Increase number of errors found in testing & amount of recoding needs to be performed. l To test added functionality, test suite size increases, take longer to plan & develop, increases amount of time taken for testing. Database design If added security increases number of database objects- tables, views, affect database layout. Adds to complexity of database management & backup recovery plan. l 30

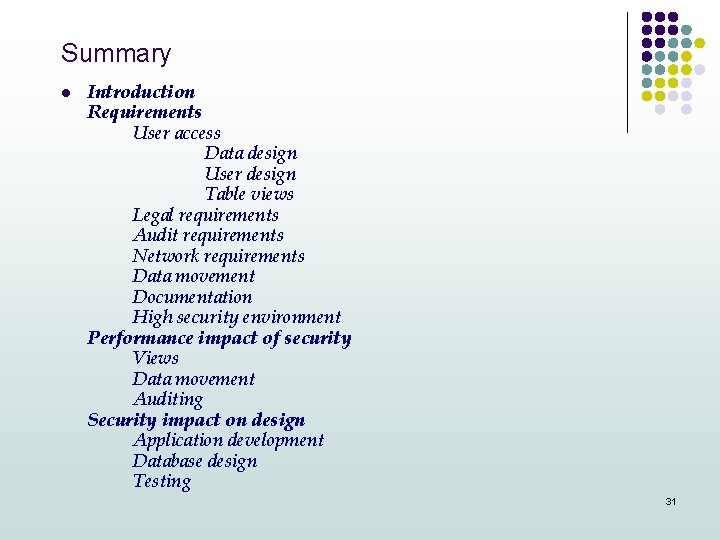

Summary l Introduction Requirements User access Data design User design Table views Legal requirements Audit requirements Network requirements Data movement Documentation High security environment Performance impact of security Views Data movement Auditing Security impact on design Application development Database design Testing 31

Backup & Recovery 32

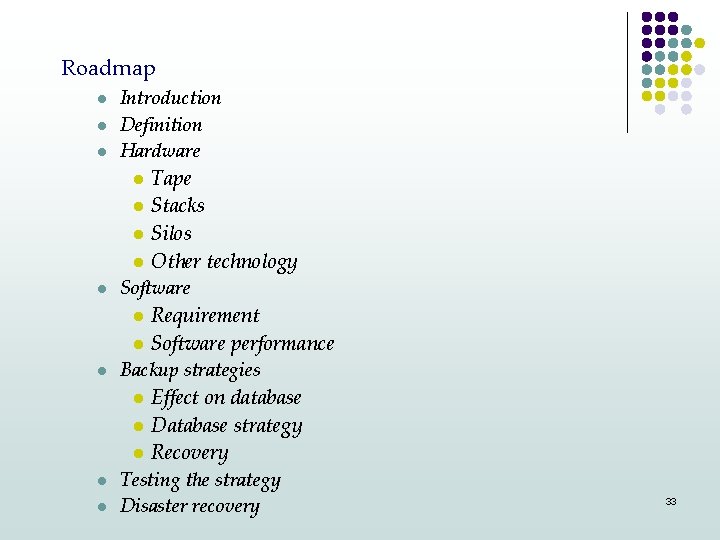

Roadmap l l l Introduction Definition Hardware Tape l Stacks l Silos l Other technology Software l Requirement l Software performance Backup strategies l Effect on database l Database strategy l Recovery Testing the strategy Disaster recovery l l l 33

Introduction Regular automated operation, documented. l Important: large volume of data, system complexity. Objective: l Discuss issues considered in designing backup strategy , pointing requirements for hardware & software. What to backup? l Os application code, user directories, database. l During design phase: Check CPU requirements to perform backup &bandwidth available. l Design together: database & application components & backup strategy. l 34

Definitions l l l Complete backup- entire database backed up at the same time. database data files, control & journal files. Partial backup ( for large databases, round robin fashion) Cold backup- when database is completely shut down. Hot backup-database engine is up & running, special requirements to be observed. Online backup: synonym for hot backup. 35

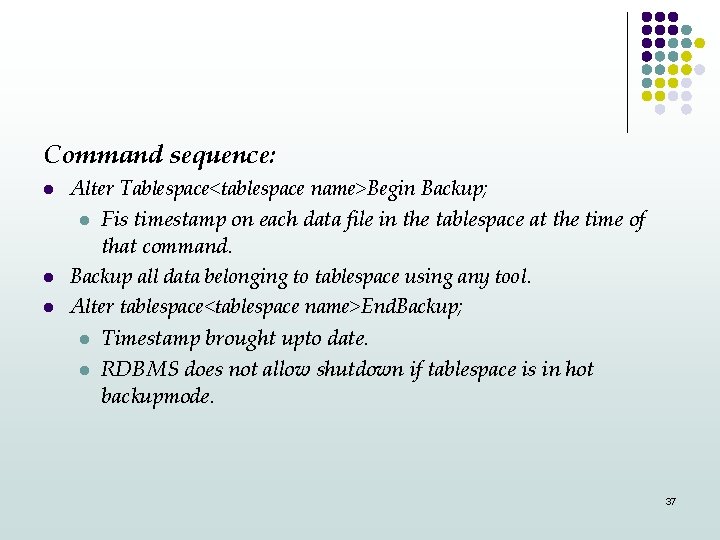

Oracle l l Journal files: redo files, reused in a round robin fashion Database is used in archivelog mode, redo files are backed up before being reused. Operation -automatic, transparent. Hot backups at table space level. For backup to be consistent all data files in tablespace must be backedup. 36

Command sequence: l l l Alter Tablespace<tablespace name>Begin Backup; l Fis timestamp on each data file in the tablespace at the time of that command. Backup all data belonging to tablespace using any tool. Alter tablespace<tablespace name>End. Backup; l Timestamp brought upto date. l RDBMS does not allow shutdown if tablespace is in hot backupmode. 37

l Hardware l Tape l l l Stacks Silos Other technology 38

Hardware l Which hardware to use for backup strategy. l Choice places upper bound on speed. Objective: l Outline hardware choices available & their comparison. 39

Tape technology l l Tape media Standalone tape drives Tape stackers Tape silos. 40

Tape media Factors to be considered are: l Reliability of medium & drive l Shelf life l Cost per unit, per drive l Scalability l Cost of upgrades to tape system 41

Standalone tape drives l l Issue of connectivity Methods of connecting tape drive : l Directly to server-How spread multiple drives across nodes for optimal performance with least disturbance to the server & least I/O latency. Effect of backup on high speed interconnect. l l As a network available device- high bandwidth available when either shared or dedicated. Remotely to another machine-resource to be controlled, server to server high bandwidth. Can have a dedicated backup server. 42

Stackers l l l Automatically dismount the current one & load the next tape into a single tape drive. Ability to perform unattended backup. Avoid error or delay. Can be controlled programmatically. Ex: restore from a recent backup that is still in the stacker. Can be used to control which tape files are copied to. Auto labeling of tapes possible with bar-coding, to ensure that correct tape is loaded. 43

Silos l l l l Large tape storage facilities up to thousands of tapes. Sealed environments with robotic arms for tape manipulation. Use multiple integrated tape drives. Use software & hardware to label & manage all tapes they store. Tape library software- accessed externally, controlled programmatically. Expensive solution. Can be shared among applications- become bottleneck. , should not lose backups. Large footprint, cannot place close to the data warehouse server. May be connected remotely or througha dedicated link. 44

Other technology l l Disk backup Disk to disk backup Mirror breaking To minimize downtime & maximize availability 45

Disk to disk backup Backup performed to disk, then backed up later to tape. Why? l Speed of initial backup & speed of restore. l Automated. Minimal disruption of access to data. l Backup overheads , depend on the nature of the usage profile of the warehouse. l 24 x 7 access is required to support long running queries. , in batch, do not contend to same disk with backup. l Online copy of latest backup available. Restore operations are speeded up. l 46

Mirror breaking l l Common OLTP technique. For resilience during the working day. One of the mirror set is broken out when it is time for backup. One set can be backed up to tape, other is still is available for use. Mirrors can be resynced when the backup is complete. While the mirror is broken, data is unprotected against media failure. Use triple mirroring- costly & difficult to manage. 47

Optical Jukeboxes Devices that allow data to be stored near line. l Uses WORM. l This technology allows large number of optical disks to be managed like a stacker or silo. l Only 1 or 2 disks can be accessed at a time. Slow write speed. l But long life , reliability. 48

l Software l l Requirement Software performance 49

Software Requirements criteria: l Degree of parallelism possible l Product scalability as more tape drives are added. l CPU hit on the server during backup. l Does the package have a client server option, does it run on the database server itself. Heavier backup load can be offloaded to a separate machine. l Work in cluster or MPP environment l Platforms supported by the package l Tape drives & tape media supported by the package l Is package database aware 50

Software l l l Need to know what data is on which tape. It is important if data is parallel streamed onto tapes. Essential to match software tape labels against physical tapes if the tapes have to be retrieved manually. 51

Performance Affected by: l I/O bottlenecks- read to disk & write to tape. l CPU bottleneck-batch processes running. l Minimize elapsed time required for backup. Rates- 10 GB – 500 Gb per hr. l CPU bandwidth if near 100%, CPU may spend more time context switching processes in & out of he CPU>- thrashing. 52

Minimize backup elapsed time: l l l Use high degree of parallelism. Create multiple backup streams/ some may multiplex backup streams onto a single tape. Parallel backup streams, but each stream writes to different tape, needs multiple tape drives. Server loaded. Maximize throughput & minimize CPU usage. Depends on : software, speed of disk I/O & tape subsystems, maintaining information on tape contents. 53

l Backup strategies l l l Effect on database Database strategy Recovery 54

Backup strategies l Effect on database design l Design of the backup strategy & database performed hand in hand. l Backup requirements affected by partitioning strategy chosen for fact data. 55

Design strategies l l l Aim is to reduce the amount of data to be backed up on a regular basis. l Bring down the effect of backup on overnight processing. Strategy to be designed around daily load. Mark certain areas as read only table spaces- cannot be changed even accidentally without bringing it to read write state. l Need to backup only once. l Can be backed up twice to protect against tape media failure, on a second tape, different tape device & different controller. 56

Design strategies l Unchanging data to be isolated in separate table spaces , so can be marked Read only. l Partitioning scheme such that bulk of the partitions can be marked read only. l Decide size of the partition. l Reduce amount of journaling of redo generated. Possible with RDBMS, turn off logging with certain operations like: data load, index creation. 57

Automation of backups l l l Accomplish large backup without operator intervention. Automate scripts that run regular backups. Should report problems or deal with them directly & restart job once the problem is fixed. Managed by warehouse manager. Should be integrated into system management routines & handled by system management & scheduling software being used. 58

Recovery strategies l l Built around backup strategy. Recovery situation implies that some failure has occurred Recovery action depends on what the failure was. Strategy consists of set of failure scenarios & their resolution. 59

Document recovery steps for a failure scenario l l l l l Instance failure Media failure Loss of damage of table space or data file. table. Redo log file Archive log file Control file Failure during data movement Recovery plan should take into account: use of operations that are not logged. 60

Document recovery steps for a failure scenario Data movement scenarios to be covered: l Data logging into staging tables l Movement from staging to fact table l Partition roll up into larger partitions. l Creation of aggregations. l Document plan to include process involved, details of tables used, views that need to be created. 61

Testing the strategy l l Check that the backup scripts are backing up intended files. For all failure scenarios suggested recovery path to be implemented. Testing to be carried out before the data warehouse goes live, also on a regular basis. Avoid testing during busy times. 62

Disaster recovery l l l It is a major site loss- damaged beyond repair. Could mean log term outage, could make it impossible to catch up with the load process. Plan minimal functionality disaster recovery. 63

Requirements for disaster recovery l l l l Replacement / standby machine Sufficient tape & disk capacity Communication link to users Communication link to data sources Copies of relevant pieces of software Backup of database Application aware systems administration & operations staff. 64

Summary l l l Introduction Definition Hardware Tape l Stacks l Silos l Other technology Software l Requirement l Software performance Backup strategies l Effect on database l Database strategy l Recovery Testing the strategy Disaster recovery l l l 65

Capacity Planning 66

Roadmap l l l Introduction Capacity Planning Process Estimate load l l l Initial Configuration CPU bandwidthl Daily processing l Overnight Processing How much memory How much disk Database Sizing Non database sizing 67

Objective l l Cover issues related to growth & performance of the DW To be able to support extended sizes without: l l Performance loss Growth of load window 68

What capacity is required by the hardware? l Knowledge of the usage profile of the users: l l l l No. of users Whether they use adhoc queries frequently/ occasionally at known/ unknown intervals at regular predictable times Average size of the query they tend to run Elapsed log in time per day Peak time of daily usage No. of queries per peak hour/ per day. Usage profiles: Change over time Useful for growth predictions & capacity planning 69

Estimating the load l Choosing hardware : l Hardware architecture l Resilience l What is going to be the ultimate size of the DW? l No re estimation if budget is a problem. l Sizing capacity should not be as per budget. l Small growth in requirements- - addition of enough hard l Adding a single byte to a fact data means adding NGB of storage for each year’s worth. 70

Initial Configuration l No history information/ statistics available. l Sizing done on predicted load. Estimate configuration based on known requirements. If there is a change, sizing can be adjusted accordingly. Allow for contingency l l l 71

How Much CPU Bandwidth? l Loads placed on system: l Daily processing l l Overnight processing l l User query processing Data transformation & load Aggregation & index creation Backup These use the most system capacity. 72

Daily Processing l l To estimate CPU requirements- estimate the time taken by each query. What @ adhoc queries? l l Estimate size of the largest likely query Query may be across every piece of data. Common query: recent month’s / week’s data. Query period can be discerned form the knowledge of the business. 73

Daily Processing l l Likely period of the query: - volume of data involved. Assume: l l Query will perform full table scan of fact data for that period. Measure of volume of data- ‘F’ MB – accessed. Know I/O characteristics of devices that the data will reside on. ‘S’ Scan rate at which the data can be readdepends on the disk speeds, throughput ratings of I/O controllers, size of F itself. 74

Daily Processing l ‘F’ is large, in GB l l l Spread across multiple disks & controllers. Assume a reasonable spread of data: ‘T’ (time in seconds to perform a full table scan)T=F/S Depending on the degree of parallelism & striping calculate: T 1 to TN. That ‘F’ is spread across. Higher degree of parallelism implies l higher throughput, but also higher I/O bottleneck. 75

Daily Processing l l l SLA specifies query response time requirement say ‘Tp’ gives the required scan rate ‘Sp’, no. of disk sets / disks that are needed to spread data across. Gives ‘P’ the required degree of parallelism to meet query response time for a single query. 76

Daily Processing l ‘Pc’- parallel scanning threads that a single CPU can support. l Varies with processor. - current- 2 -4 scanners. l CPU requirement to support single query. Cs=roundup (2 P/Ps) ‘ 2 P’- allow for query overheads/ queries that involve sorts. Minimum no. of CPUs required overall: Ct=n. Cs+1 ‘n’- number of concurrent queries that will be allowed to run. l l l 77

Overnight Processing l l Most operations are serialized tend to run one at a time, one after another. CPU bandwidth required for data transformation l l l Backup large volume of data in a short period of timel l Depends on the data processing involved. Same as aggregation & index creation operation. Large CPU bandwidth. Parallel streams speed up backupl but CPU loaded, bandwidth increases. 78

Overnight Processing l l l Data load uses massive parallelism to speed up operation. Post processing may be carried out on loaded data. l Can outweigh aggregation processing, l Use baseline as post processing & not aggregation for CPU Establish baseline How much CPU capacity the operation requires to complete in allowed time. Avoid delays in data arrival Overnight processing to be completed without running into the business day. 79

Overnight Processing l l Testing to be carried out on sufficient data. Certain operations like sorts may not scale linearly. Carry out tests on a no. of sets of varying sizes Generate a scaling factor to assist in sizing the full size system. 80

How Much Memory? l l Estimate minimum memory requirement. Factors affecting amount of memory required: l l l Database requirementsl to cache data blocks as the are used. l to cache parsed SQL statements Memory for sort space, parameterized & can be specified. In case of parallel technology, allow sort area for each sort process l important in case when multiple concurrent queries are running. 81

How Much Memory? l l l Each user connected to the system uses memory space Depends on how the machines are connected & which software they run. OS also requires memory, variable based on the features & tools used. 82

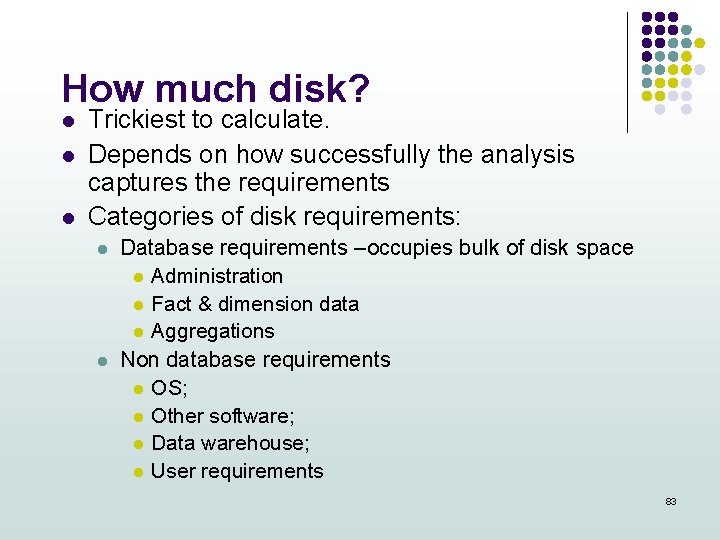

How much disk? l l l Trickiest to calculate. Depends on how successfully the analysis captures the requirements Categories of disk requirements: l l Database requirements –occupies bulk of disk space l Administration l Fact & dimension data l Aggregations Non database requirements l OS; l Other software; l Data warehouse; l User requirements 83

How much disk? l l l Make allowance for disk resilience. Use disk mirroring or RAID 5 Overheads need to be calculated & factored into your capacity plans. 84

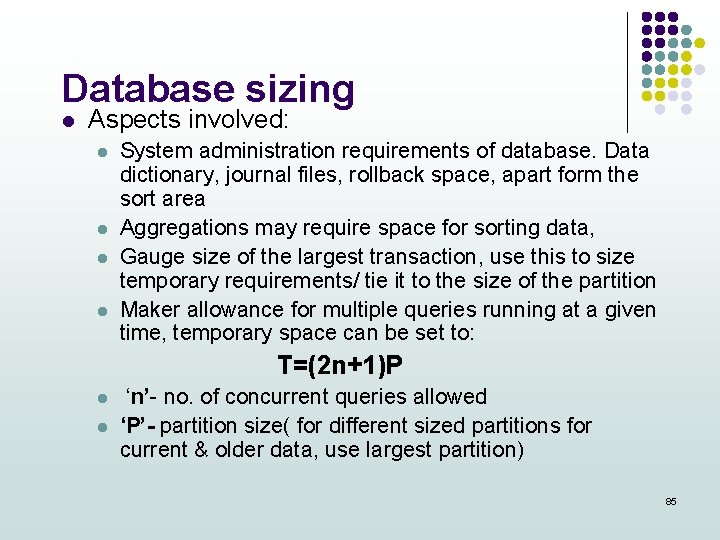

Database sizing l Aspects involved: l l System administration requirements of database. Data dictionary, journal files, rollback space, apart form the sort area Aggregations may require space for sorting data, Gauge size of the largest transaction, use this to size temporary requirements/ tie it to the size of the partition Maker allowance for multiple queries running at a given time, temporary space can be set to: T=(2 n+1)P l l ‘n’- no. of concurrent queries allowed ‘P’- partition size( for different sized partitions for current & older data, use largest partition) 85

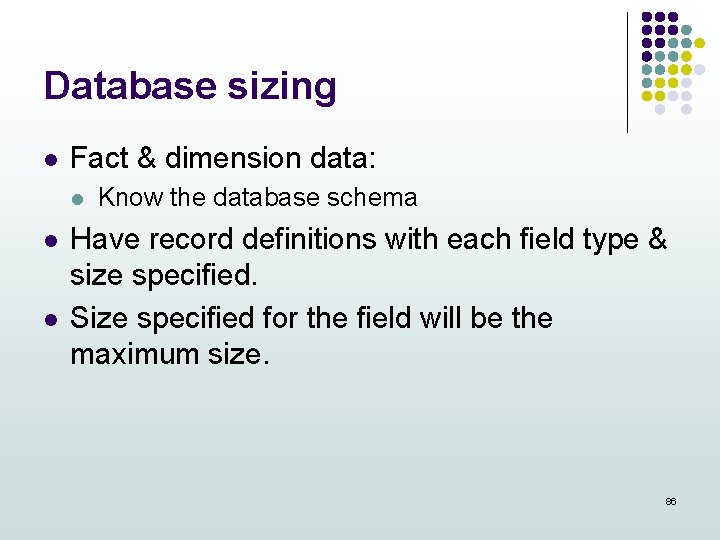

Database sizing l Fact & dimension data: l l l Know the database schema Have record definitions with each field type & size specified. Size specified for the field will be the maximum size. 86

Database sizing l Requirements to calculate the actual size: l l l Average size of data in each field-different from the maximum, can make GB difference. Use real data for estimate. Percentage occupancy of each field- empty fields take up space in a database record. Position of each data field in the table RDBMS storage format for each data type Any table, row, column overheads. 87

Database sizing l Database block or page size affects sizing calculations l l Header information at top of the block, cannot be used by data, @ 100+bytes, 2 KB / 16 KB block size Saving of 700 B of extra data space in every 16 KB. Significant space saving- Hundreds of GB. 88

Database sizing l Estimate size of index space required for fact & dimension data. l l l Lightly indexed: index occupies 15 -30% space of fact data, high maintenance cost. Ideally use a primary key index, may also use a concatenated foreign key index onto dimension tables to allow star queries to work. Majority of the users requirements are met by aggregations, no need to access fact data. 89

Database sizing l Size of the fact data: l l l Amount of data intended to keep online. Then decide on the partitioning strategy. Each partition to be large enough to accommodate peak period, may also generate space wastage. Size aggregations: make allowance Thumb rule: allow same amount of space for aggregations as the fact data kept online. 90

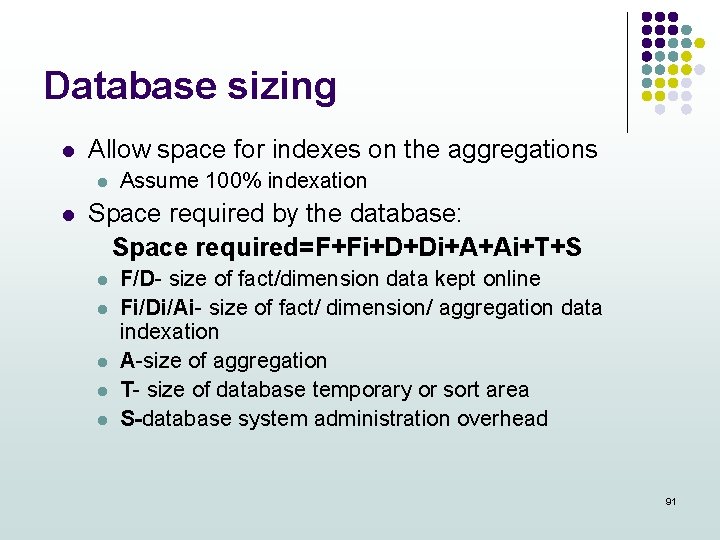

Database sizing l Allow space for indexes on the aggregations l l Assume 100% indexation Space required by the database: Space required=F+Fi+D+Di+A+Ai+T+S l l l F/D- size of fact/dimension data kept online Fi/Di/Ai- size of fact/ dimension/ aggregation data indexation A-size of aggregation T- size of database temporary or sort area S-database system administration overhead 91

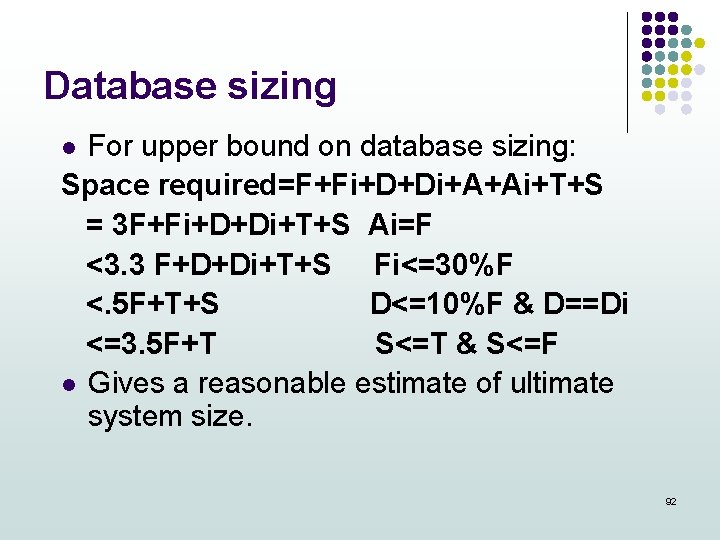

Database sizing For upper bound on database sizing: Space required=F+Fi+D+Di+A+Ai+T+S = 3 F+Fi+D+Di+T+S Ai=F <3. 3 F+D+Di+T+S Fi<=30%F <. 5 F+T+S D<=10%F & D==Di <=3. 5 F+T S<=T & S<=F l Gives a reasonable estimate of ultimate system size. l 92

Database sizing l l l Dimension data could be larger than fact data. F is the size of the ultimate size of the fact data which can be kept online Cab be correspondingly scaled back depending on how many years worth of data to be kept online. 93

Non database sizing l l Take server environment into account In a cluster/ MPP environment- allow space on each node for: l l l OS, Save space through NFS Allowance for swap space, system crash dumps Install RDBMS Third party tools, log & trace files DW application require space for code & tools, log & trace space for each of the mangers- load, warehouse & query. Users require disk space for data extracts / reports, directories to save SQL queries. 94

Summary l l l Introduction Capacity Planning Process Estimate load l l l Initial Configuration CPU bandwidthl Daily processing l Overnight Processing How much memory How much disk Database Sizing Non database sizing 95