
- Slides: 27

AI Ethics (II): Resolving Value Conflicts

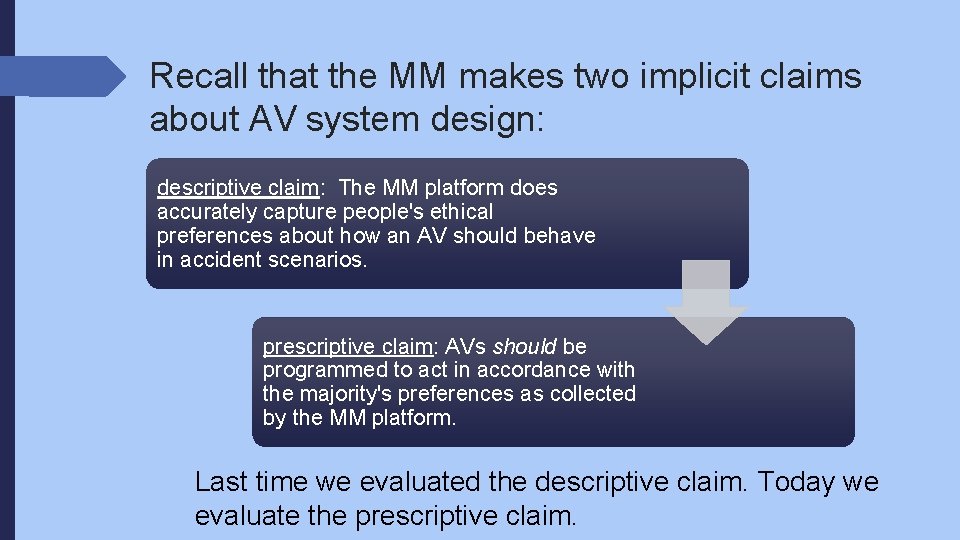

Recall that the Moral Machine (MM) experiment makes two implicit claims about AV system design:

- Descriptive claim: the MM platform accurately captures people's ethical preferences about how an AV should behave in accident scenarios.
- Prescriptive claim: AVs should be programmed to act in accordance with the majority's preferences as collected by the MM platform.

Last time we evaluated the descriptive claim. Today we evaluate the prescriptive claim.

Hypothetical case: You are the project lead for a major company's autonomous vehicle program. A team member comes to you with a dataset consisting of millions of people's preferences about how an AV should behave in various accident scenarios, gathered via an experiment similar to the MM. A qualified team tells you that the data collection and experimental design practices were pristine, with excellent controls and external auditing for hidden biases. Suppose the preferences collected turned out to be exactly the same as those collected in the MM.

Question: Should you program your autonomous vehicle to behave in accident scenarios in accordance with these preferences? Why or why not?

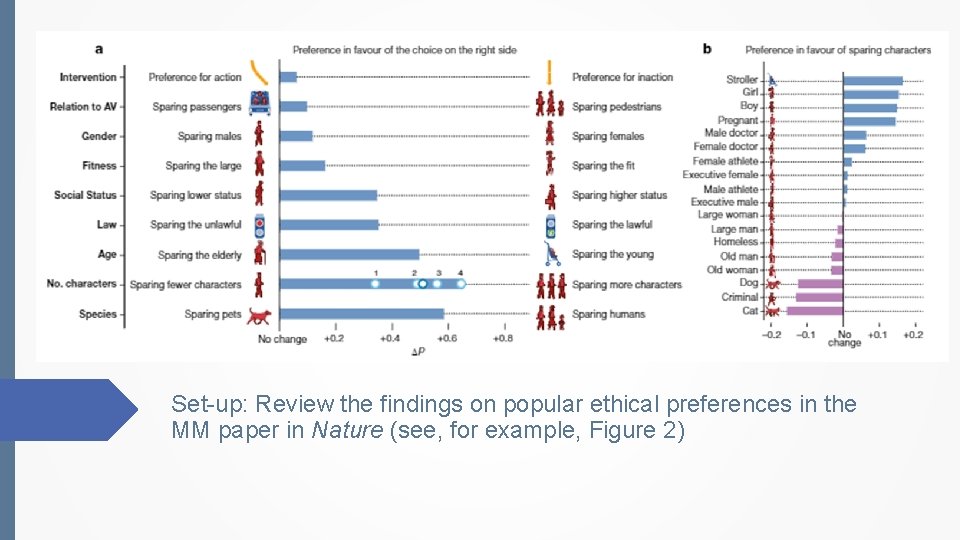

Group Discussion: Distinguishing ethically significant preferences

Have students evaluate the ethical significance of the preferences the MM experiment yields (see Figure 2) by sorting them into three categories: ethically unproblematic, somewhat ethically problematic, and ethically problematic.

Suggested timing:
- 10 minutes: students break into small groups to discuss the question.
- 5 minutes: each group writes down its answers and its reasons.
- 15 minutes: a general discussion of the groups' answers.

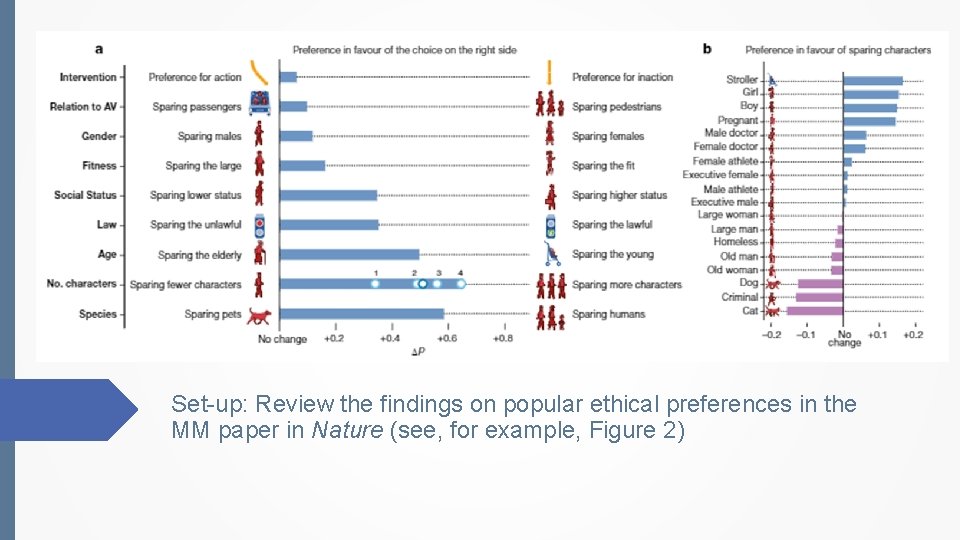

Set-up: Review the findings on popular ethical preferences in the MM paper in Nature (see, for example, Figure 2).

Aims of discussion: Some preferences in MM intuitively seem ethically problematic; others do not. Which are which?

- Problematic: favoring those of higher over lower status, females over males, the fit over the overweight, the young (e.g., children) over the elderly.
- Perhaps somewhat less problematic: favoring the law-abiding over lawbreakers, inaction over action, pedestrians over passengers.
- Unproblematic: favoring saving more lives over fewer, humans over animals.

Note that the strongest preferences in MM are the unproblematic ones.

Question: Should we take weak preferences in thought experiments like the MM seriously? If not, then the MM does not tell us much that we do not already know (viz., that we should save more rather than fewer people, and humans over animals).

Short Lecture: Principled relations between values

Preferences may have ethical significance, but only so long as they do not violate certain prior ethical conditions or principles ("red lines"). For example, my preferences that you or people in your group suffer, that I be able to use your body or property, that your freedom to speak, move, etc. be limited, or that you be treated as subordinate to me do not count ethically. Even my preferences regarding myself may not count ethically: suppose, for example, that I want to sell myself into slavery. I cannot ethically fulfill this preference. These red lines are usually defined by our rights.

If these prior conditions are met, then the majority's preferences may have ethical significance. Whether they should have it, however, is still a normative (prescriptive) question. Some normative reasons they might:

- Exercising free choice is part of flourishing as a human (virtue ethics).
- Individuals may be in the best position to know what will make them happy, so fulfilling their preferences in the aggregate may maximize happiness for everyone (consequentialism).
- We have duties to respect people's free choices, which may roughly mean respecting their preferences (deontology).

There may be a number of other important ordering and priority relations between ethical values and interests in particular cases. For example, my interest in hearing all points of view in a debate may impose natural limits on your right to speak and to interrupt others. My right to life is qualitatively more important than others' interest in being entertained; hence it would be unethical to force me to fight to the death as a gladiator in order to entertain people.

Lecture: Challenges for the Prescriptive Claim

Prescriptive claim: Autonomous vehicles should be programmed to make decisions in accident scenarios in accordance with the majority's preferences as collected by the MM platform. Today we simply assume the descriptive claim, that the MM platform is a good tool for capturing those preferences.

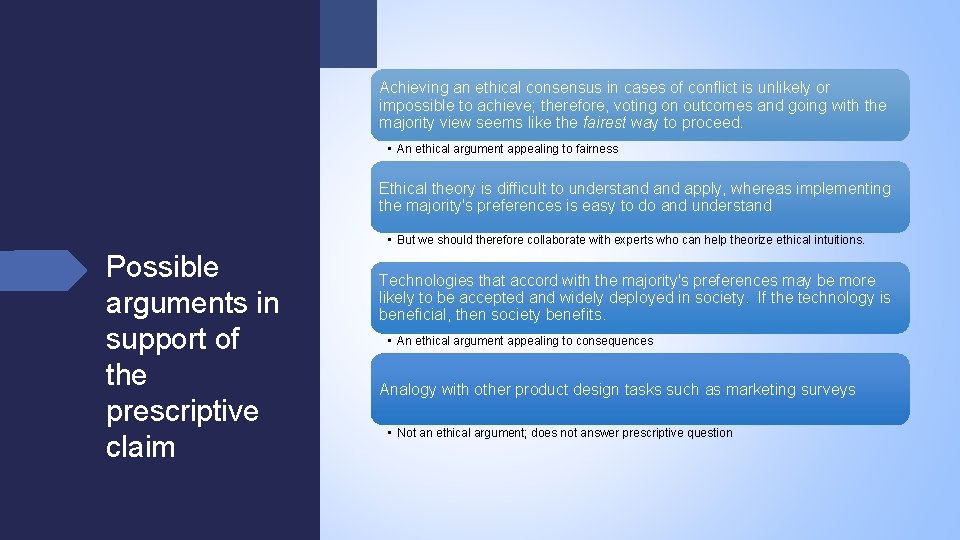

Possible arguments in support of the prescriptive claim:

- An ethical consensus in cases of conflict is unlikely or impossible to achieve; therefore, voting on outcomes and going with the majority view seems like the fairest way to proceed.
  - An ethical argument appealing to fairness.
- Ethical theory is difficult to understand and apply, whereas implementing the majority's preferences is easy to do and to understand.
  - But this suggests instead that we should collaborate with experts who can help theorize our ethical intuitions.
- Technologies that accord with the majority's preferences may be more likely to be accepted and widely deployed in society. If the technology is beneficial, then society benefits.
  - An ethical argument appealing to consequences.
- Analogy with other product design tasks, such as marketing surveys.
  - Not an ethical argument; it does not answer the prescriptive question.

Challenge 1: Fairness in ethics

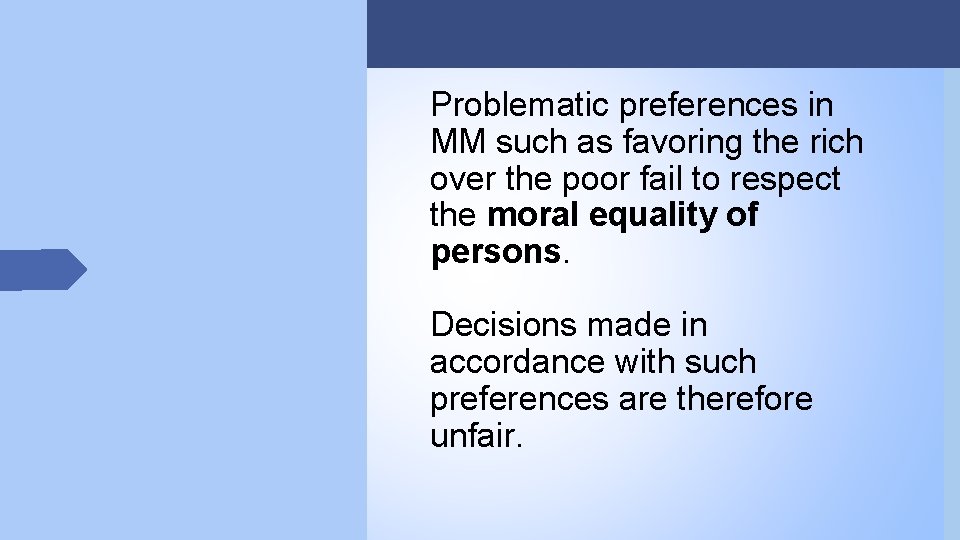

Problematic preferences in MM such as favoring the rich over the poor fail to respect the moral equality of persons. Decisions made in accordance with such preferences are therefore unfair.

Fairness means treating like cases alike. That is, any decision to treat persons differently must be rationally related to achieving the legitimate purpose for which the classification is made. For example, suppose I decide to give A's to everyone with red hair and fail everyone else. This is unfair because your hair color has nothing to do with whether you should get an A or not. Similarly, if I decide to program the AV to spare the fit over the overweight, or the rich over the poor, or females over males, then that is unfair because your fitness or wealth or gender has nothing to do with whether you should be subject to the injury that an AV might cause you in an accident. Moral equality bars consideration of such features.

The unproblematic and less problematic preferences in MM are not so obviously unfair. There is some rational reason to distinguish jaywalkers, because jaywalking contributes to causing motor vehicle accidents. There is certainly rational reason to distinguish humans from animals, and more humans from fewer, since in each case fewer persons are killed by the AV, which is the purpose of the classification.

Challenge 2: The lesson from ethical theories

Ethical theories are theories about what makes actions right or wrong. They help identify and explain the features of actions that we should be sensitive to when evaluating them.

Consequentialist vs. non-consequentialist theories:
- Consequentialism tells us to be attuned to the consequences of actions, i.e., their outcomes. Sample theory: utilitarianism.
- Non-consequentialism tells us to be attuned to things other than consequences (as well as to consequences). These might include character traits (someone acts kindly), intrinsic features of acts (this is an act of lying), or violations of rules and connections to responsibility (this person violated a rule and should be held responsible). Sample theory: simple deontology.

Ethical theories and aggregating preferences: Recall the results page of the Moral Machine. It seems to be attempting to measure our sensitivity to various kinds of considerations in ways that map onto things important in different ethical theories. Some of us tended to be attuned to consequences, others to duties or intrinsic features of acts, and the results page was attempting to help us identify patterns in our thinking. None of the ethical theories or principles that MM might help us identify in our reasoning amounts to "Let the crowd decide!": none of us clicked that.

Imagine you are presented with three options for programming a car for accident scenarios:
1. Do what a utilitarian says we should do in this circumstance.
2. Do what a deontologist says we should do in this circumstance.
3. Do what the crowd says!

You might think the best answer is (1), but still think that we should do (2) before letting the crowd decide!

The lesson: Taking stakeholders seriously does not mean turning decisions over to a vote. Just because we disagree on the principles we should use, or all use different ones, does not mean we should let the crowd decide; that conclusion follows neither from the disagreement nor from the different principles. If we think that the point of ethical theorizing is to help decide on good ethical principles, then we should think in terms of those principles, and those principles might tell us not to turn the decision over to a vote. That is, turning a decision over to a vote is itself an ethical principle, one that must be justified like any other.

Challenge 3: Legal concerns

Distinction and relationship between law and ethics:

- Distinction: not everything that is legal is therefore ethical. For example, you have a legal right under current U.S. law to protest at military funerals, but ethically you should not. Main difference: legal duties are enforceable by the state; ethical duties are not.
- Relationship: in general, you have an ethical duty to obey the law of a legitimate state. Without law adjudicating our conflicts, we would inevitably do wrong to each other; hence disobeying duly enacted law is unethical (natural law theory). Moreover, we need law to coordinate our activity: you ethically should drive on the right side of the road in the U.S., and on the left in the U.K. (positivist legal theory).

In practice, state tort law may already substantially constrain AV behavior in many of these accident scenarios. Consider the case pitting five jaywalkers against one innocent pedestrian. In a pure "contributory negligence" jurisdiction (e.g., AL, DC, MD, VA; by contrast, MA is a "comparative negligence" jurisdiction), makers of AVs who are negligent would not be held liable for killing the jaywalkers, because the jaywalkers' own negligence "contributed" to proximately causing the accident. The law in such a jurisdiction may thus determine that AVs should be programmed to kill the jaywalkers. In a "strict liability" jurisdiction, by contrast, makers of AVs would be held liable for any deaths the AV causes, regardless of who (if anyone) is at fault. The law in such a jurisdiction may therefore determine that the AV should kill the innocent pedestrian.

So from the point of view of the law, what is the point of doing an experiment like the MM? It might help establish an industry standard of care that companies must meet when designing autonomous systems, so that we as a society can hold negligent, reckless, or intentionally bad actors liable or culpable for the harms their systems cause.

Example case: Uber AV's fatal crash in Arizona. The AV's software failed to identify an object in the road as a pedestrian and to calculate its trajectory, because the pedestrian was outside a crosswalk (i.e., a jaywalker). The car struck and killed the pedestrian. (See the NTSB report.) Uber escaped criminal prosecution under Arizona's "reckless driving" statute, but settled the civil case within 10 days (likely for an amount well in excess of standard insurance limits of $1 million).

Review Questions
- What is the implicit prescriptive claim made in the MM?
- Which preferences in the MM experiment seem morally problematic? Which are unproblematic?
- Why are some of the preferences from the MM experiment ethically problematic?
- What does it mean to treat people fairly?
- Is taking a majority vote for how to resolve conflicts of values between stakeholders a way to avoid having to do ethics in such cases?
- What is the difference between law and ethics?
- What is the point of worrying about ethical AI system design from the point of view of the law?

Homework Assignment: Resolving Stakeholder Value Conflicts. See course website. Due December 3.