How to design a useful student assessment

- Slides: 87

How to design a useful student assessment: The principles of assessment

Mahmoud Kohan
Department of Operating Room, Alborz University of Medical Sciences
Ph.D. Student in Medical Education, Isfahan University of Medical Sciences

Medical education in general is characterized by the paradox of the student's need to learn by trial and error without the luxury and opportunity of making mistakes. (Woolliscroft & Schwenk, 1989)

Outline

- Why do we need to focus on Assessment?
- The importance of assessment
- Purpose of Assessment
- Direction in student assessment
- Road Map to Student Assessment
- Characteristics of Assessment Instruments

At the end of this workshop, participants will be able to:

- Explain why we need to focus on assessment
- Explain the importance of assessment
- Identify the purposes of assessment
- Explain the new directions in student assessment
- Design a road map to student assessment
- Identify the characteristics of assessment instruments

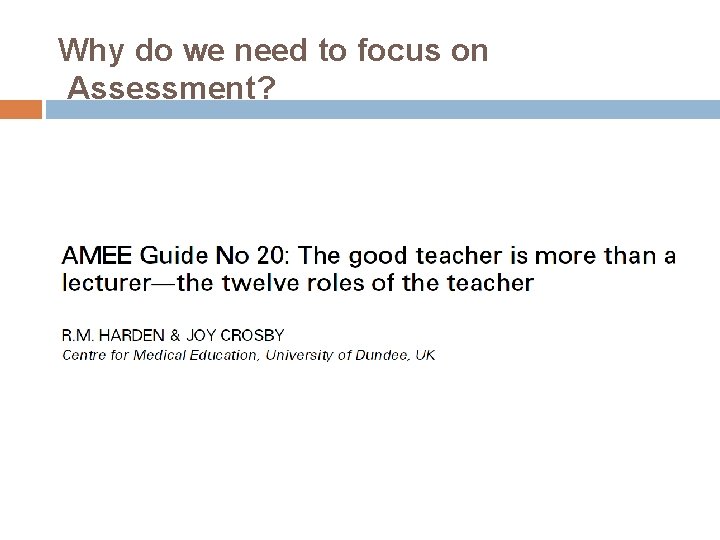

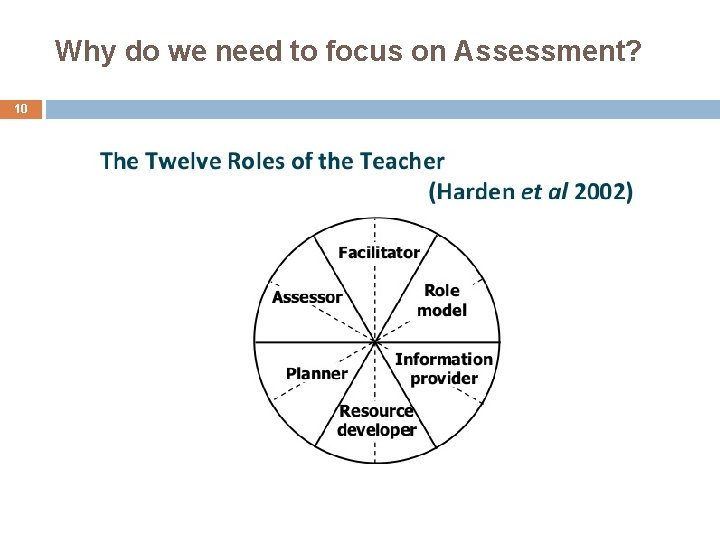

Why do we need to focus on Assessment?

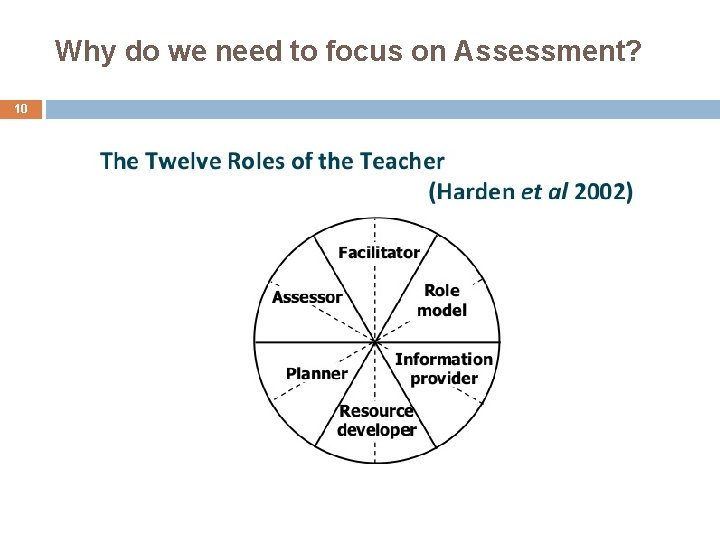

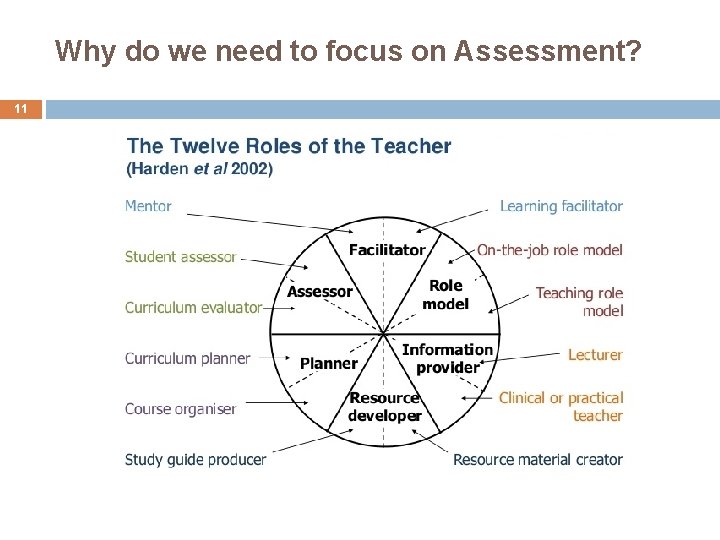

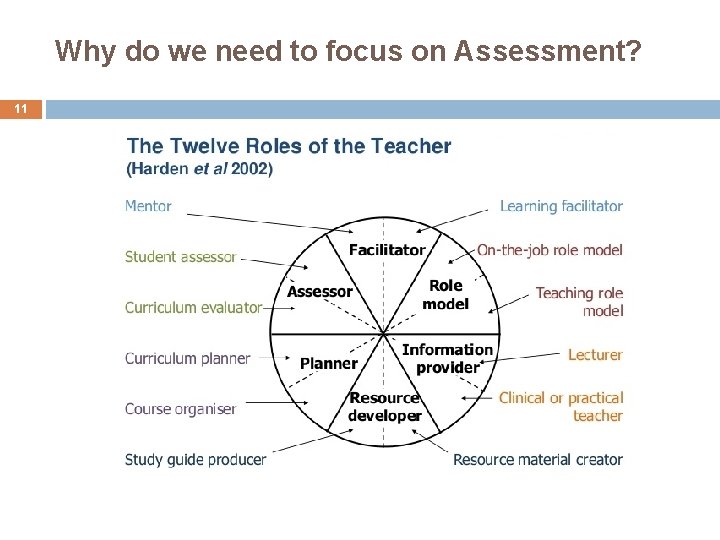

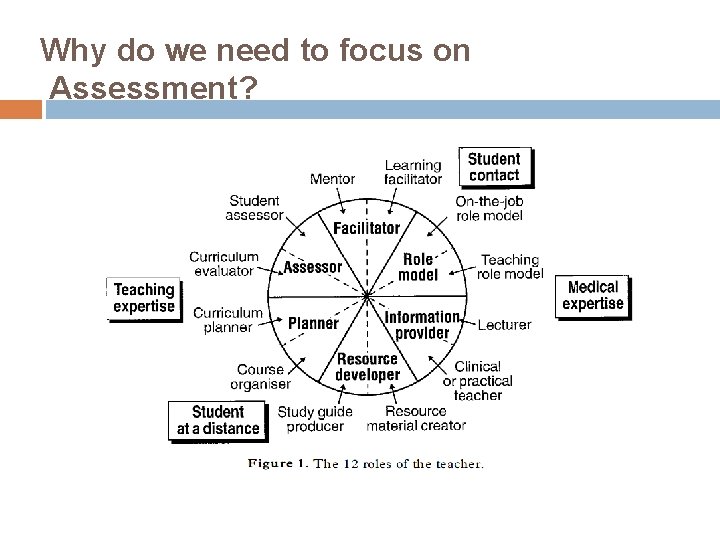

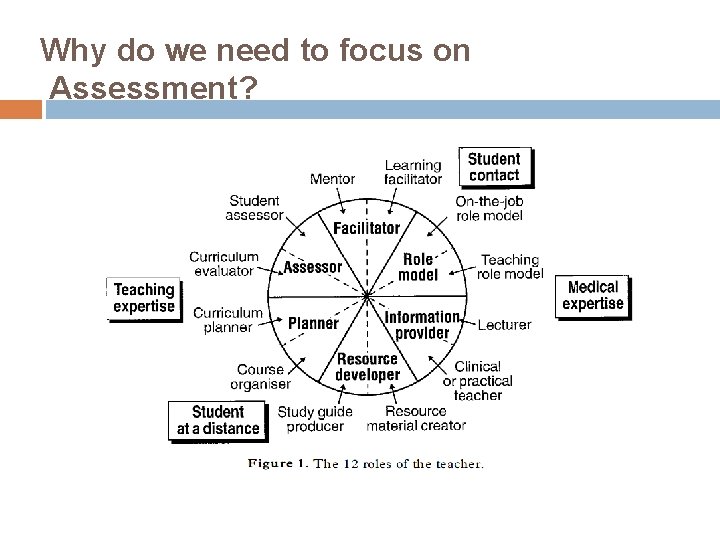

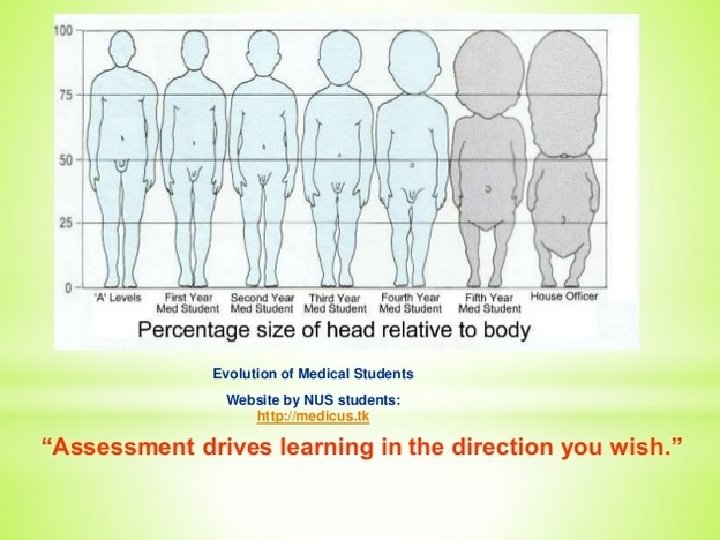

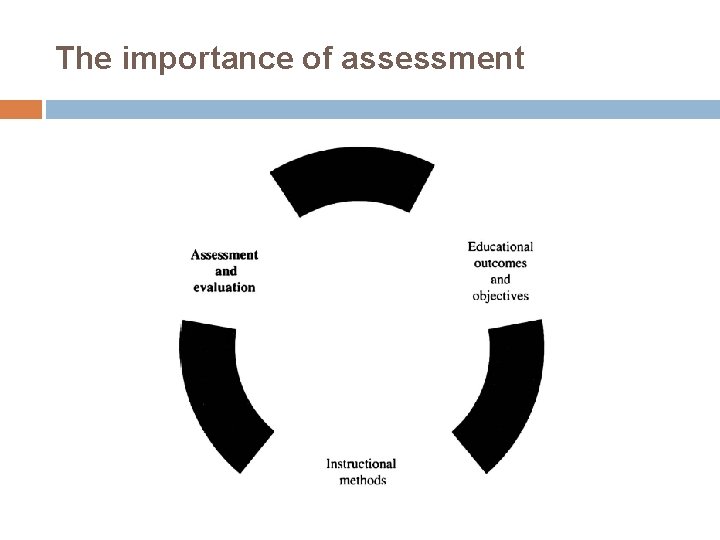

The importance of assessment

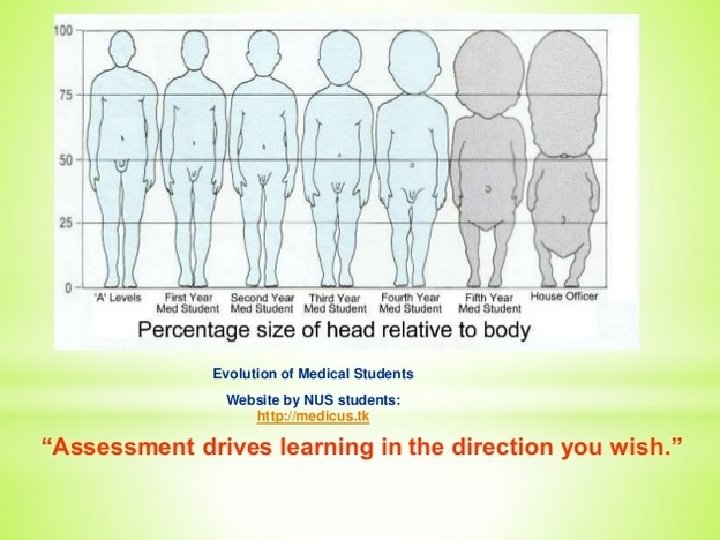

"Assessment drives learning." George E. Miller (1919-1998)

Testing is 50% of teaching.

“I believe that teaching without testing is like cooking without tasting.” an Lang

Assessment is viewed as a "necessary evil" in the curriculum.

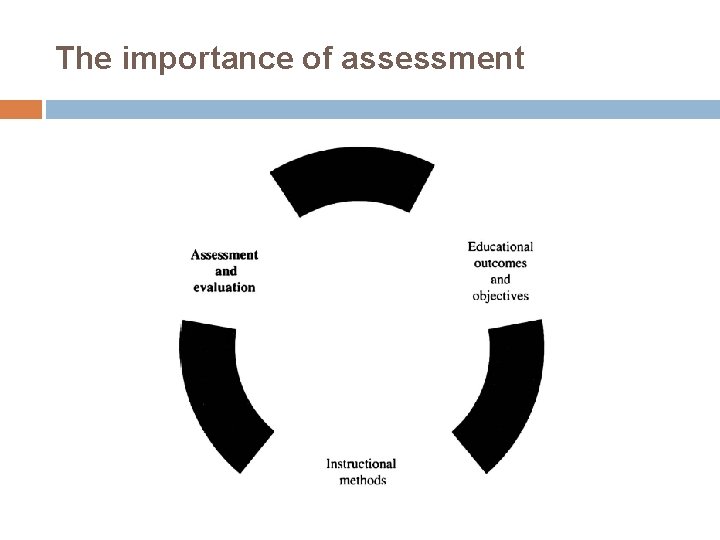

Assessment is of fundamental importance because it is central to public accountability.

Purpose of Assessment

- Determination of whether the learning outcomes have been met
- Support of student learning
- Certification and judgment of competency
- Development and evaluation of teaching programs
- Public accountability
- Understanding of the learning process
- Predicting future performance

- Principle 1: The primary purpose of assessment is to improve student learning.
- Principle 2: Assessment for other purposes also supports student learning.

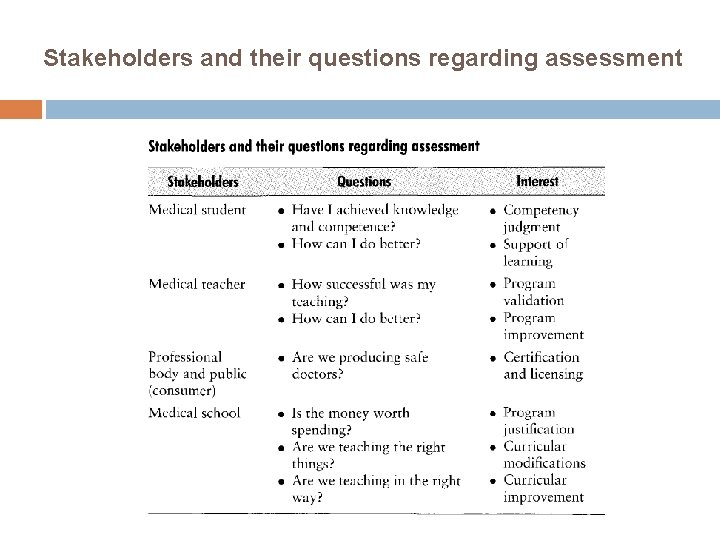

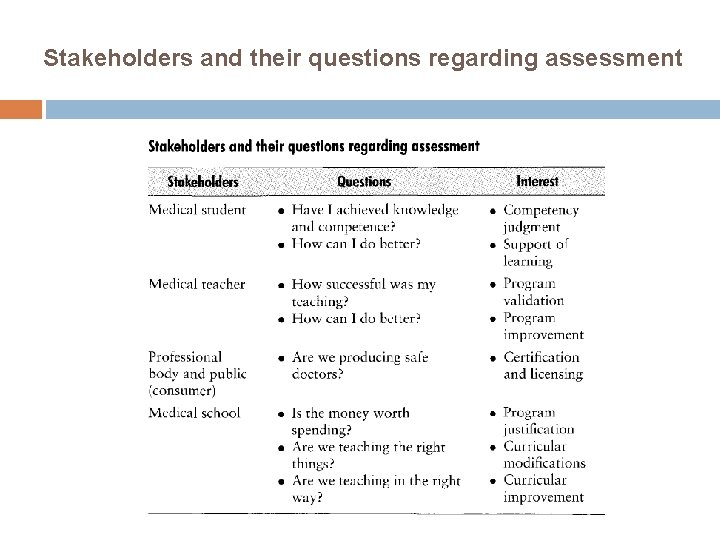

Stakeholders and their questions regarding assessment

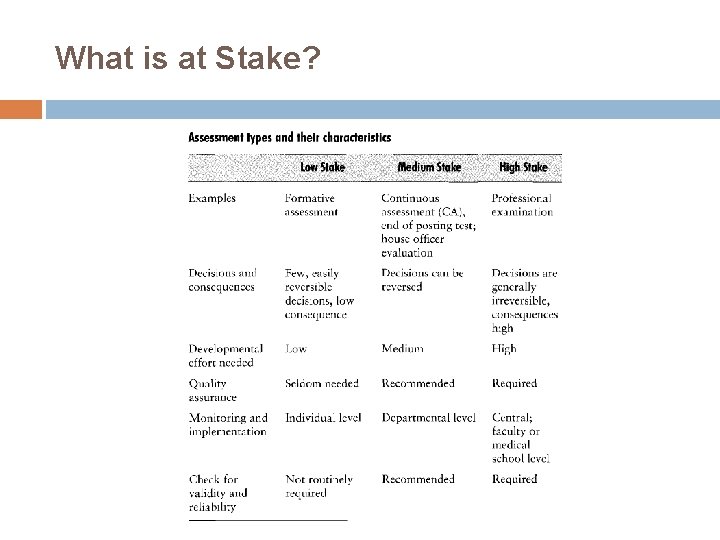

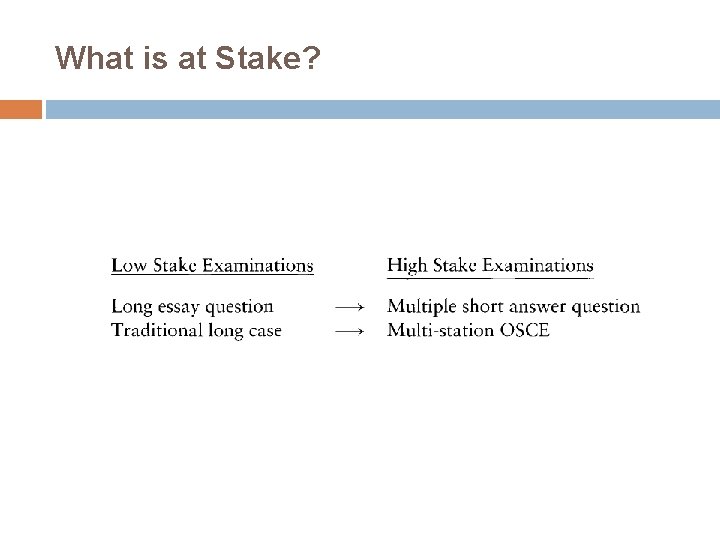

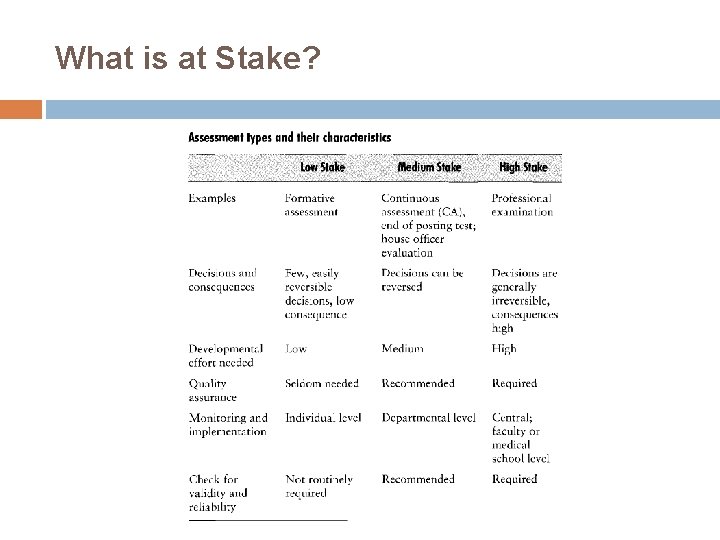

What is at Stake?

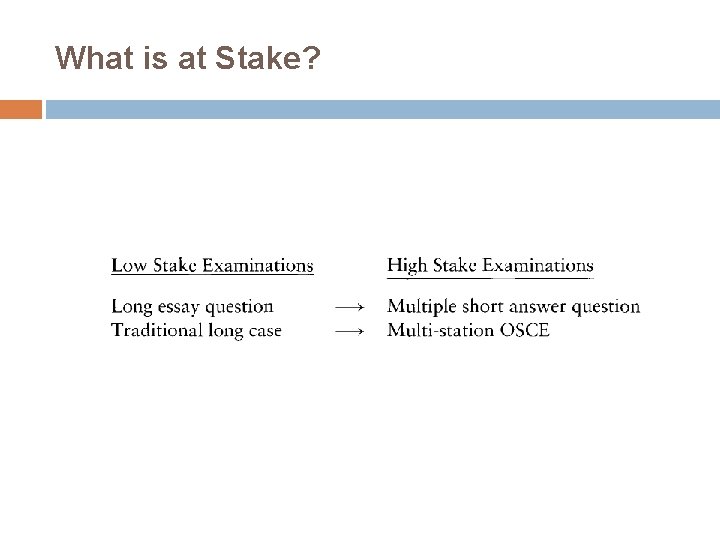

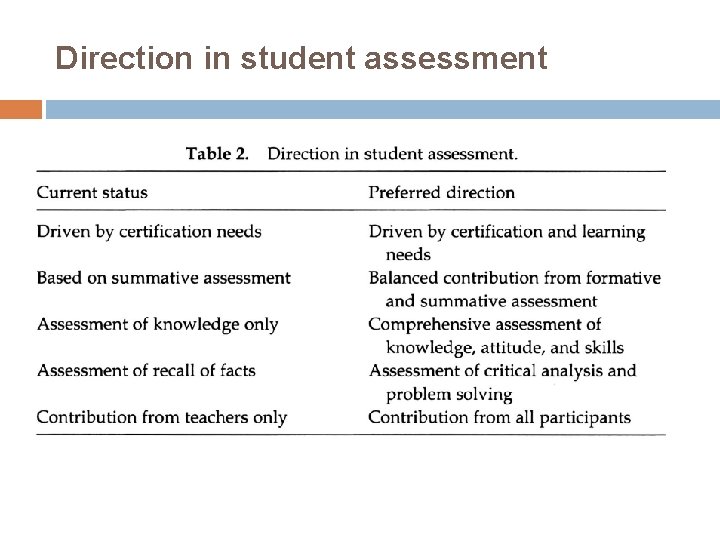

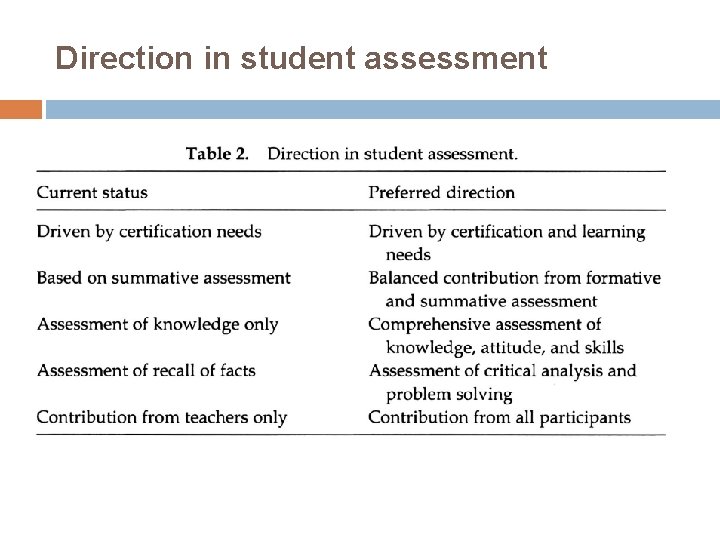

Direction in student assessment

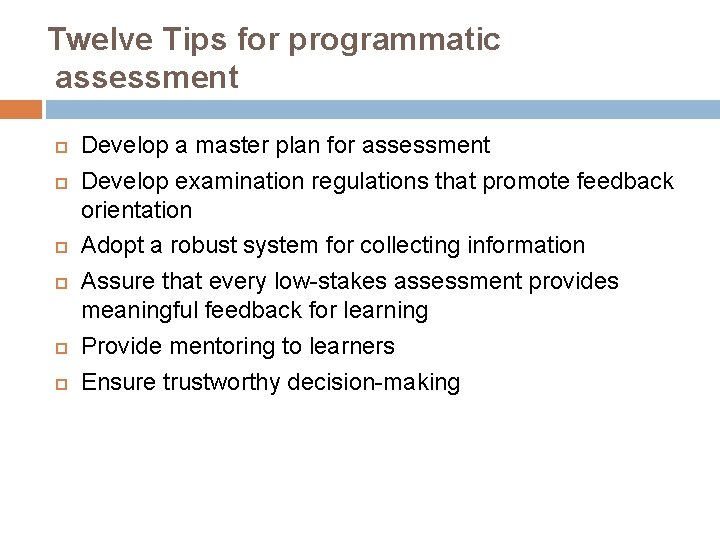

Twelve Tips for programmatic assessment

1. Develop a master plan for assessment
2. Develop examination regulations that promote feedback orientation
3. Adopt a robust system for collecting information
4. Assure that every low-stakes assessment provides meaningful feedback for learning
5. Provide mentoring to learners
6. Ensure trustworthy decision-making
7. Organise intermediate decision-making assessments
8. Encourage and facilitate personalised remediation
9. Monitor and evaluate the learning effect of the programme and adapt
10. Use the assessment process information for curriculum evaluation
11. Promote continuous interaction between the stakeholders
12. Develop a strategy for implementation

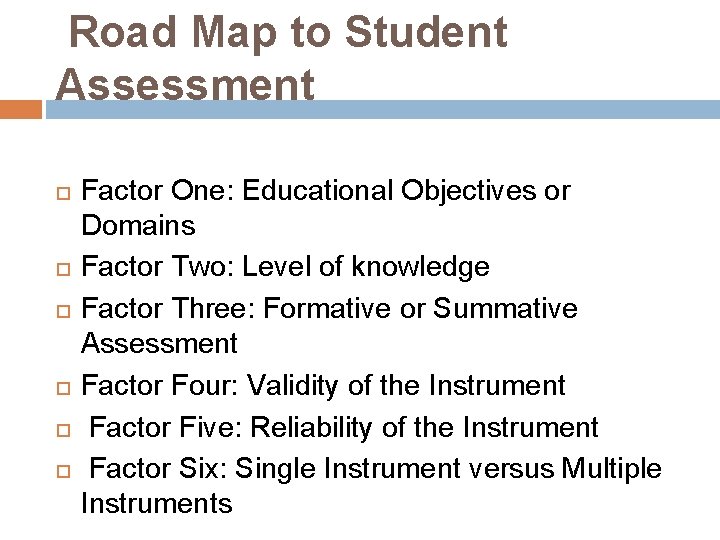

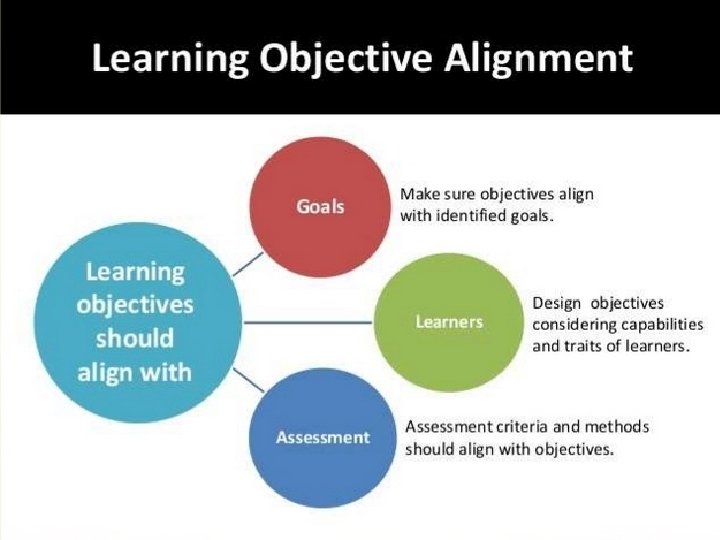

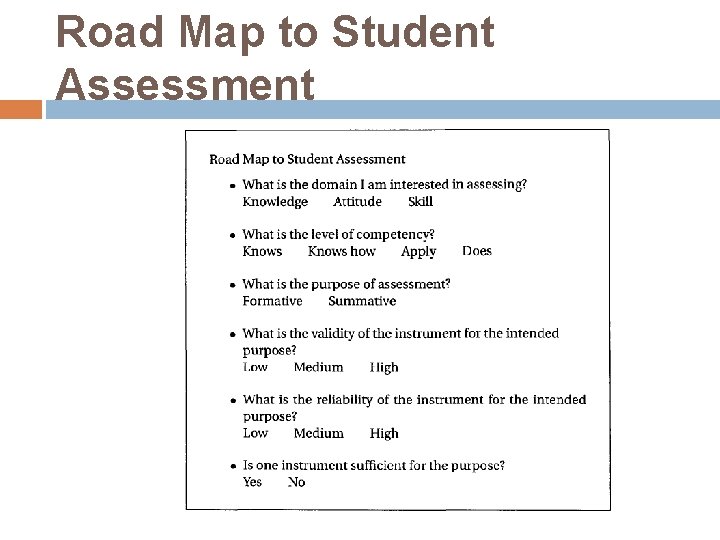

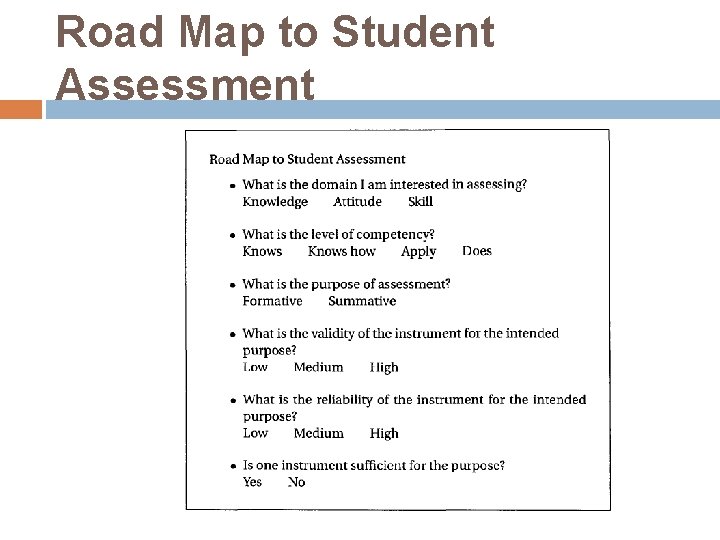

Road Map to Student Assessment

- Factor One: Educational Objectives or Domains
- Factor Two: Level of knowledge
- Factor Three: Formative or Summative Assessment
- Factor Four: Validity of the Instrument
- Factor Five: Reliability of the Instrument
- Factor Six: Single Instrument versus Multiple Instruments

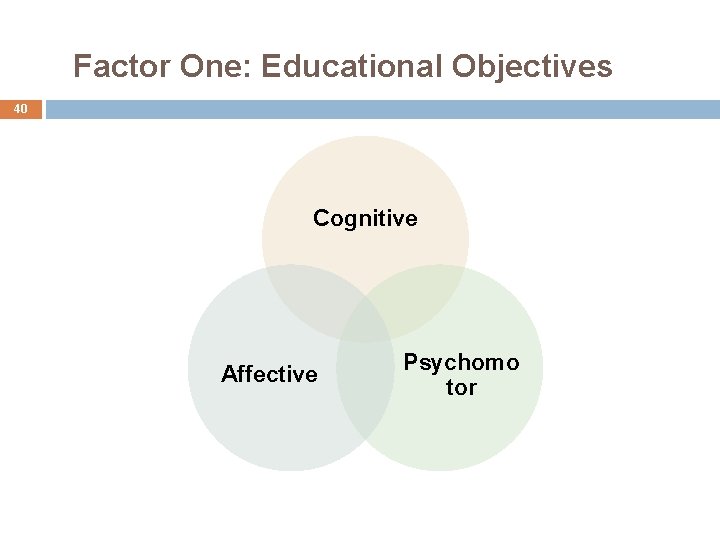

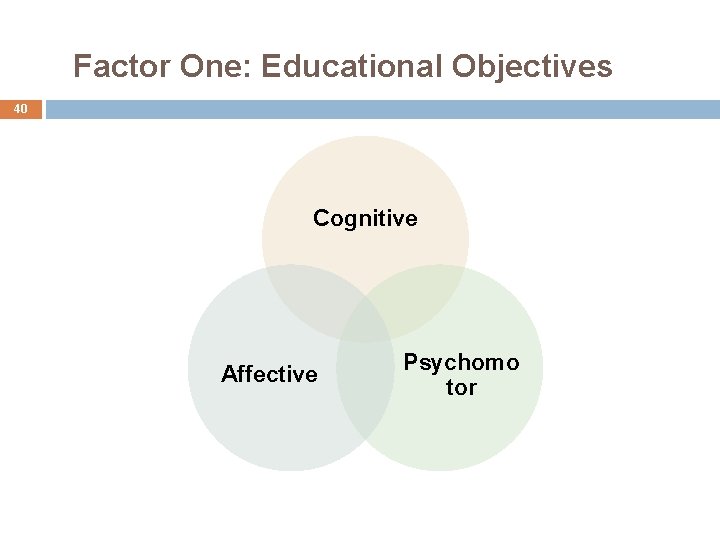

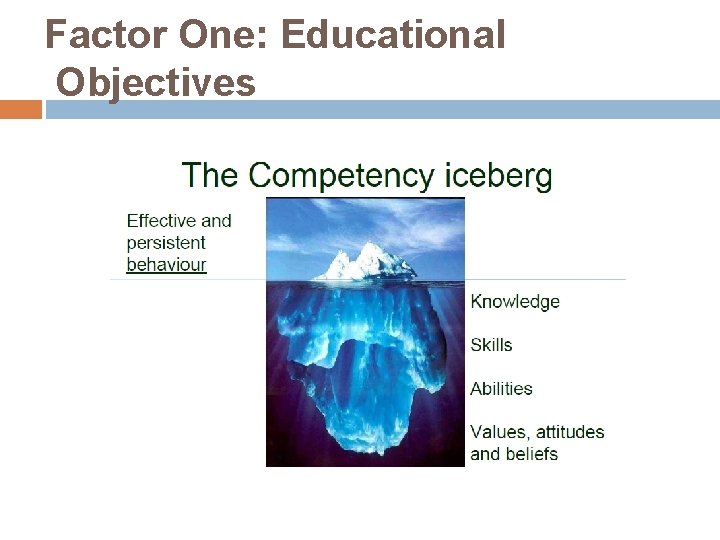

Factor One: Educational Objectives

- Cognitive
- Affective
- Psychomotor

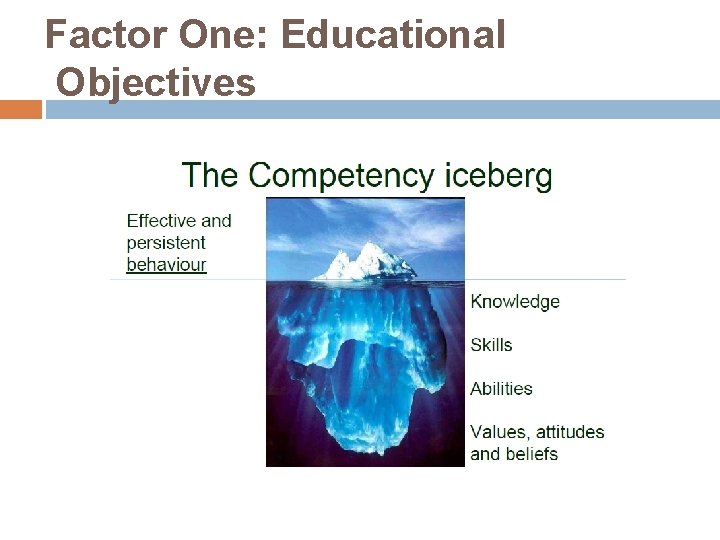

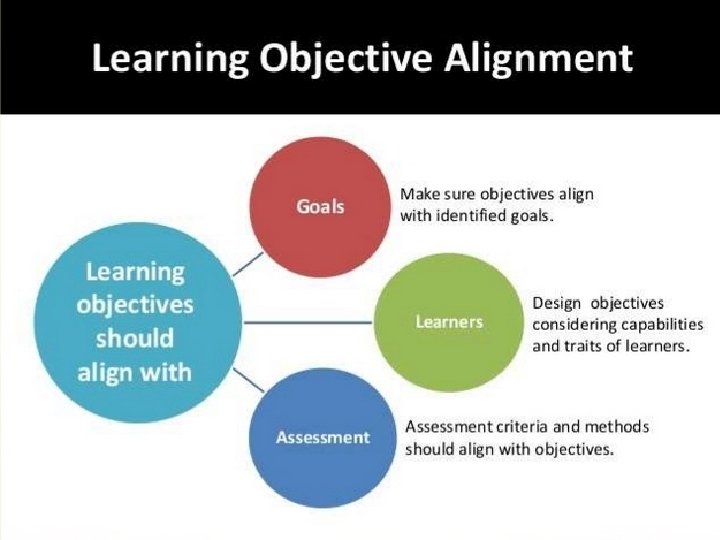

ASSESSMENT OF KNOWLEDGE

- Multiple Choice Question
- Extended Matching Item
- Essay Questions and Variations
- Oral Examination
- Key Features Examination

ASSESSMENT OF CLINICAL SKILLS

- Objective Structured Clinical Examination (OSCE)
- Short Case and Long Case
- Portfolio
- Mini Clinical Evaluation Exercise (mini-CEX)
- Direct Observation of Procedural Skills (DOPS)
- Checklist
- 360-Degree Evaluation
- Logbook

ASSESSMENT OF ATTITUDES

- Observation
- Portfolio
- Peer/self assessment
- OSCE
- Written examination
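
The three instrument lists above can be read as a lookup from what is being assessed to the instruments that suit it. The following is a minimal Python sketch of that idea, not part of the original slides: the names `INSTRUMENTS_BY_TARGET` and `candidate_instruments` are hypothetical, and long instrument names are abbreviated (OSCE, mini-CEX, DOPS) so that the same instrument matches across lists.

```python
# Instrument lists copied from the slides above. The dictionary and function
# names are illustrative assumptions, not part of the original material.
INSTRUMENTS_BY_TARGET = {
    "knowledge": [
        "Multiple Choice Question",
        "Extended Matching Item",
        "Essay Questions and Variations",
        "Oral Examination",
        "Key Features Examination",
    ],
    "clinical skills": [
        "OSCE",
        "Short Case and Long Case",
        "Portfolio",
        "mini-CEX",
        "DOPS",
        "Checklist",
        "360-Degree Evaluation",
        "Logbook",
    ],
    "attitudes": [
        "Observation",
        "Portfolio",
        "Peer/self assessment",
        "OSCE",
        "Written examination",
    ],
}


def candidate_instruments(targets):
    """Return the instruments listed for every requested target.

    The result keeps the order of the first target's list.
    """
    if not targets:
        return []
    first, *rest = targets
    return [
        name
        for name in INSTRUMENTS_BY_TARGET[first]
        if all(name in INSTRUMENTS_BY_TARGET[t] for t in rest)
    ]


shared = candidate_instruments(["clinical skills", "attitudes"])
print(shared)  # ['OSCE', 'Portfolio']
```

In these lists only the OSCE and the portfolio appear under both clinical skills and attitudes, which is one way to see why a single instrument rarely covers every domain.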

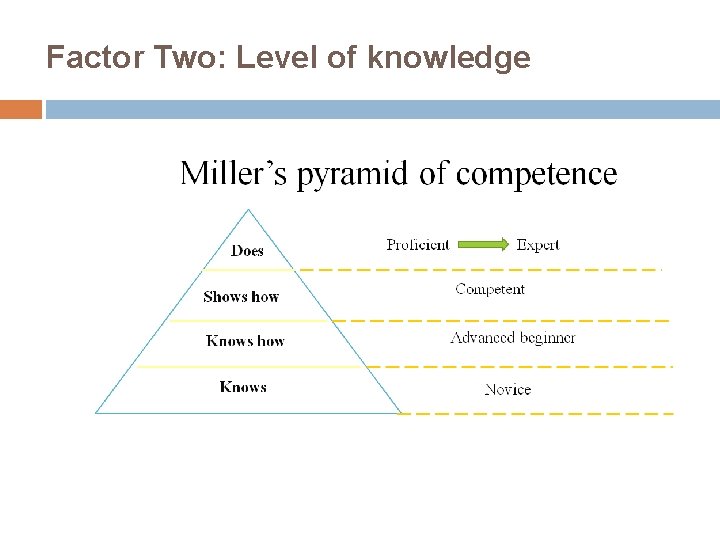

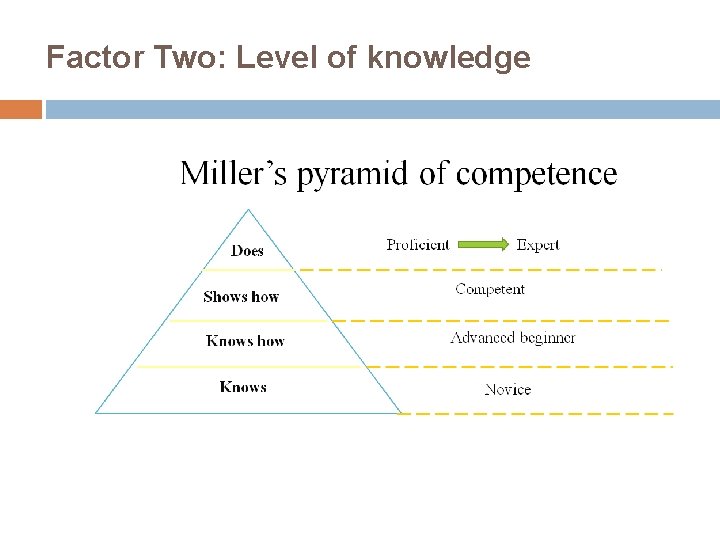

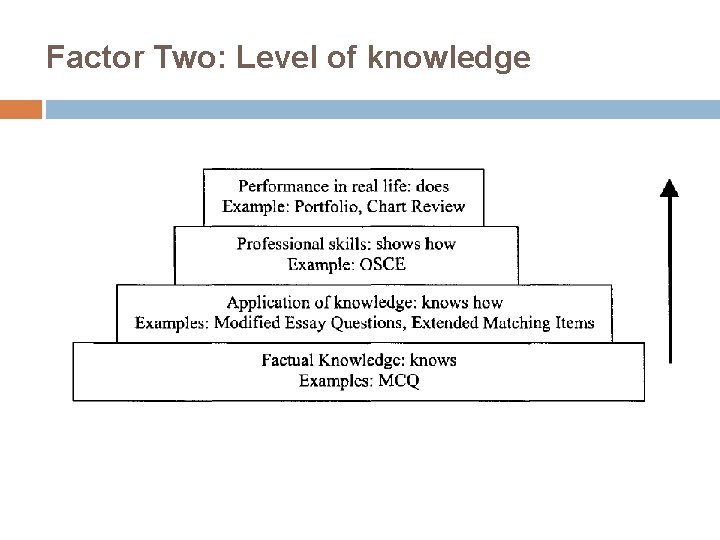

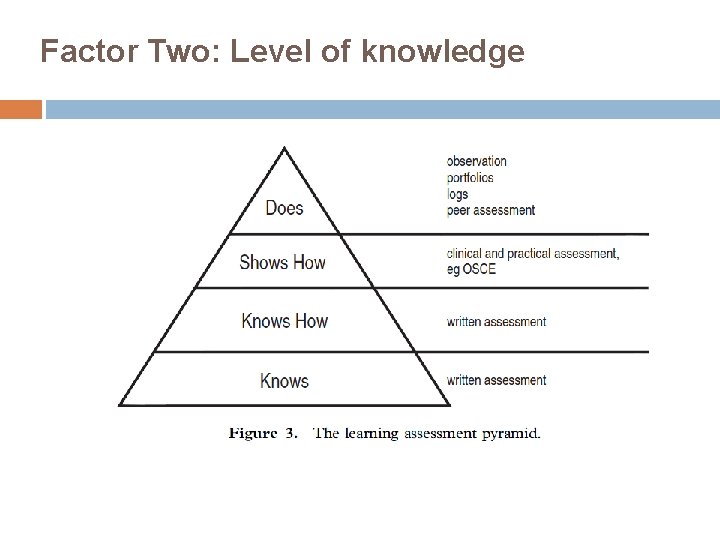

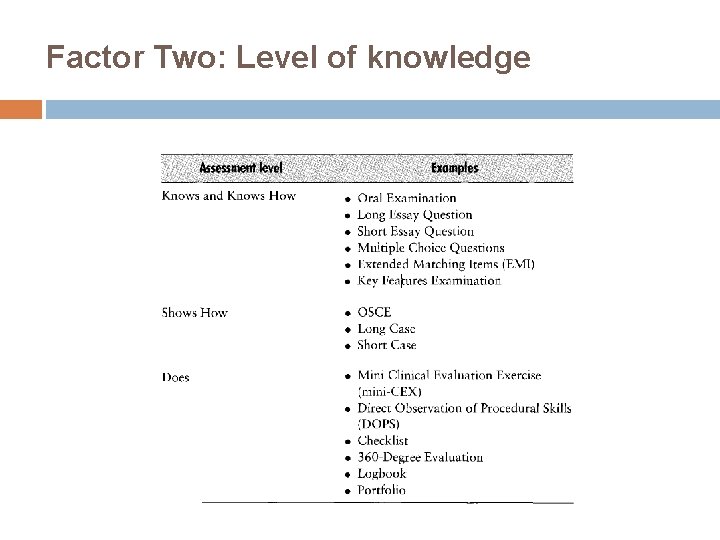

Factor Two: Level of knowledge

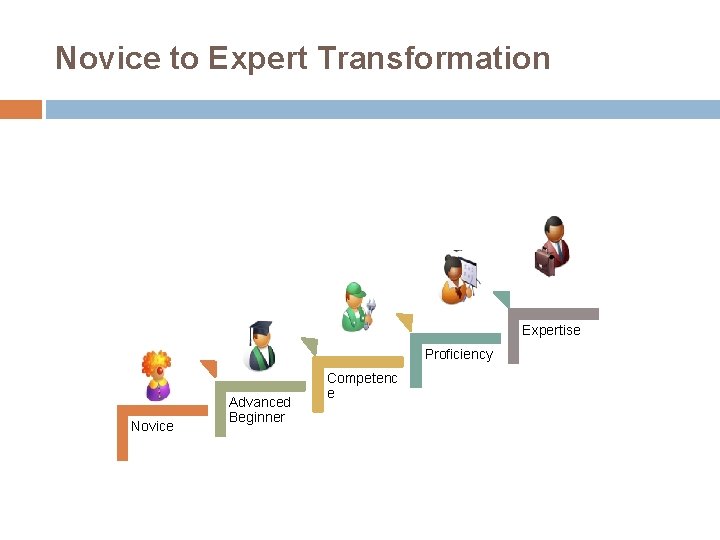

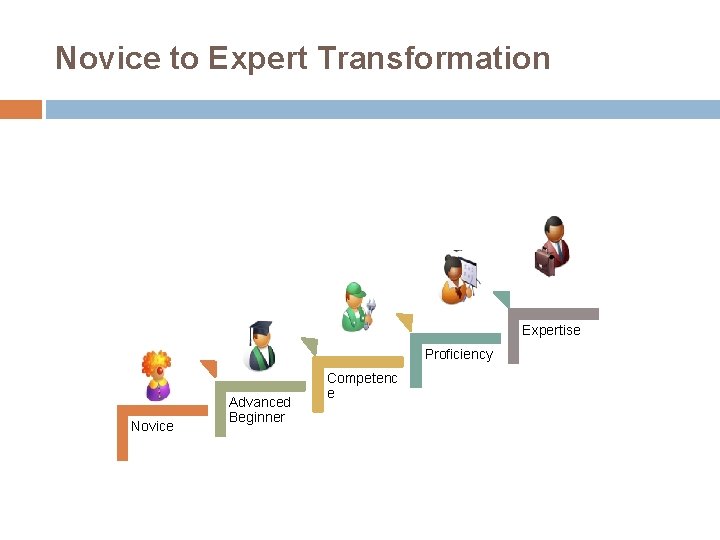

Novice to Expert Transformation: Novice, Advanced Beginner, Competence, Proficiency, Expertise

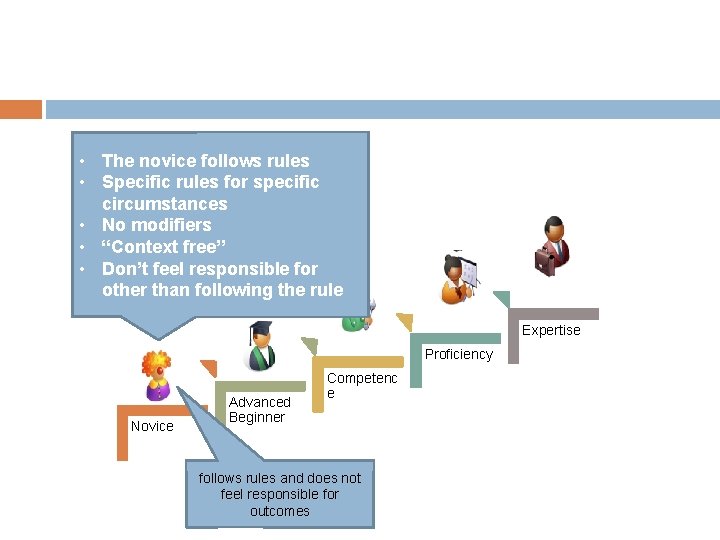

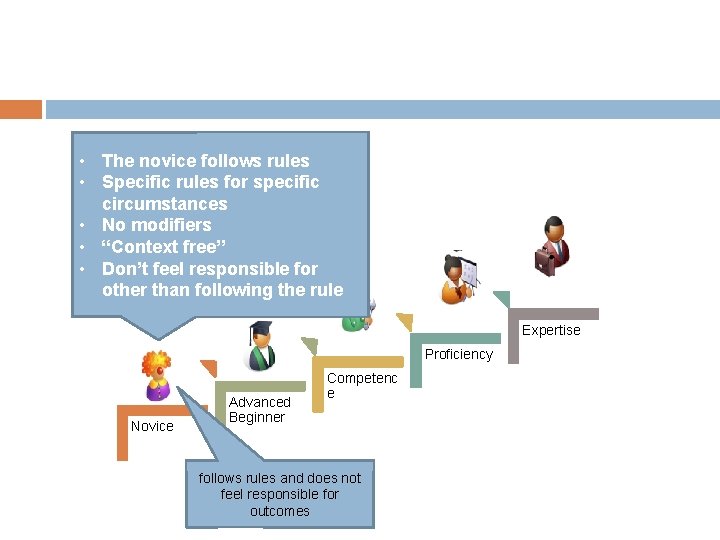

Novice

• The novice follows rules
• Specific rules for specific circumstances
• No modifiers
• “Context free”
• Does not feel responsible for anything other than following the rule

The novice follows rules and does not feel responsible for outcomes.

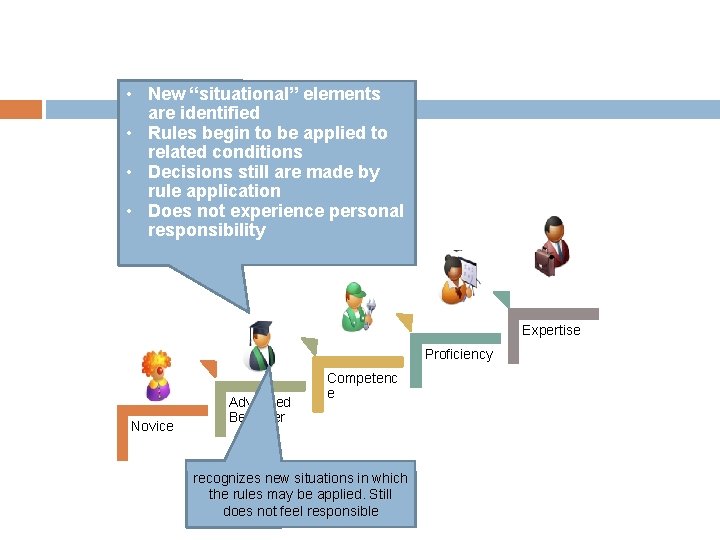

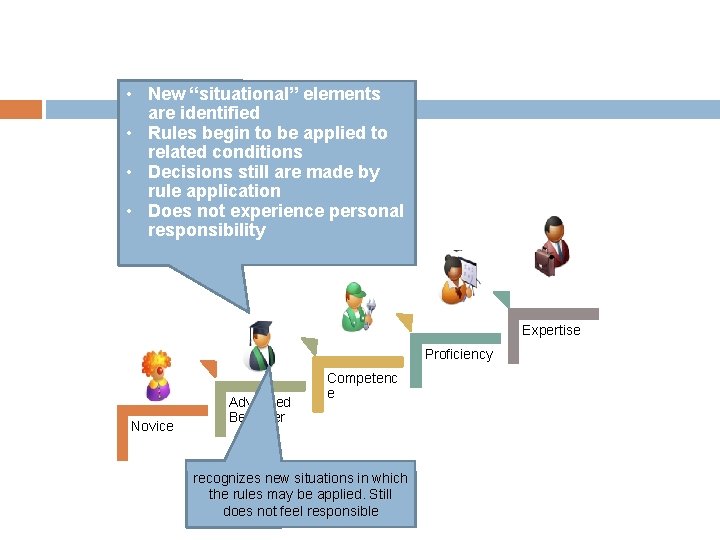

Advanced Beginner

• New “situational” elements are identified
• Rules begin to be applied to related conditions
• Decisions are still made by rule application
• Does not experience personal responsibility

The advanced beginner recognizes new situations in which the rules may be applied, but still does not feel responsible.

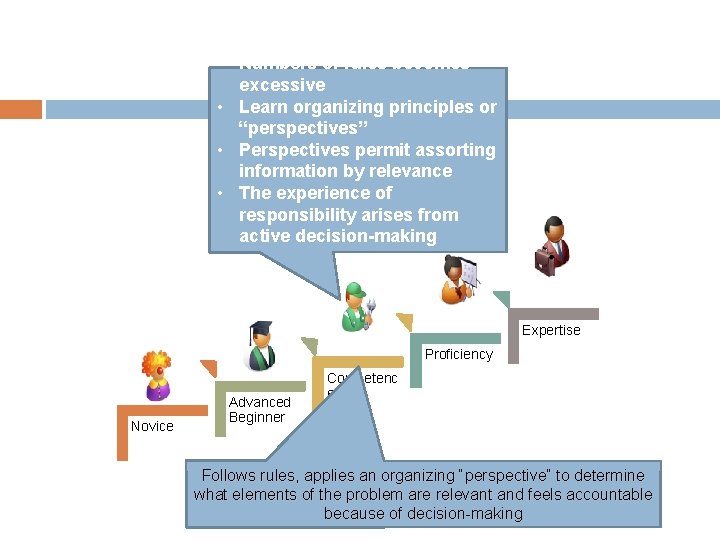

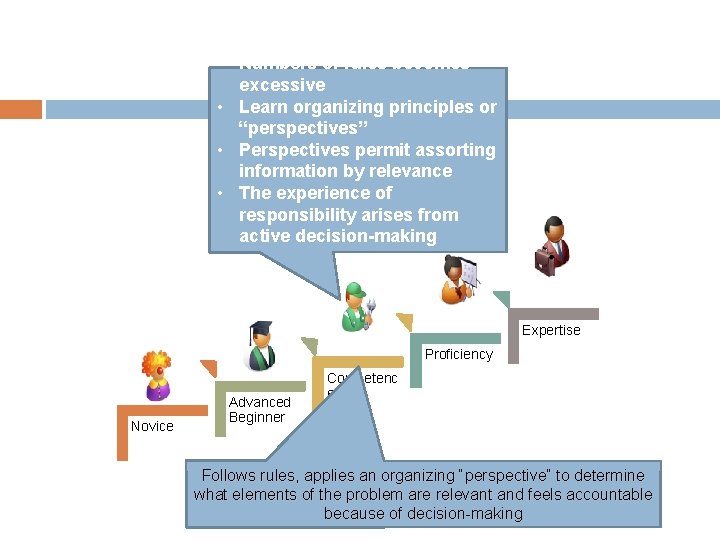

Competence

• The number of rules becomes excessive
• Learns organizing principles or “perspectives”
• Perspectives permit sorting information by relevance
• The experience of responsibility arises from active decision-making

The competent learner follows rules, applies an organizing “perspective” to determine which elements of the problem are relevant, and feels accountable because of decision-making.

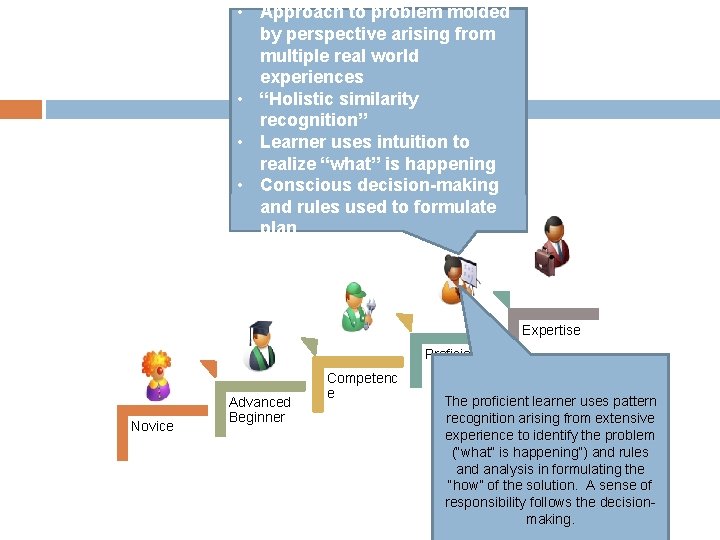

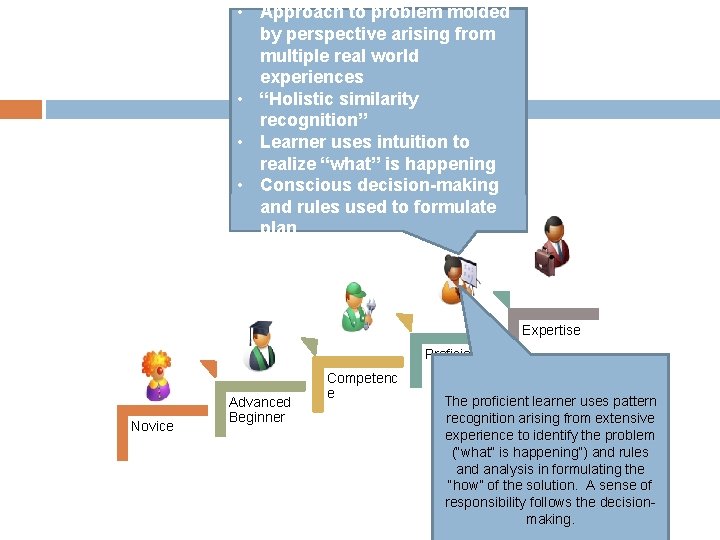

Proficiency

• Approach to the problem is molded by a perspective arising from multiple real-world experiences
• “Holistic similarity recognition”
• Learner uses intuition to realize “what” is happening
• Conscious decision-making and rules are used to formulate a plan

The proficient learner uses pattern recognition arising from extensive experience to identify the problem (“what” is happening) and uses rules and analysis in formulating the “how” of the solution. A sense of responsibility follows the decision-making.

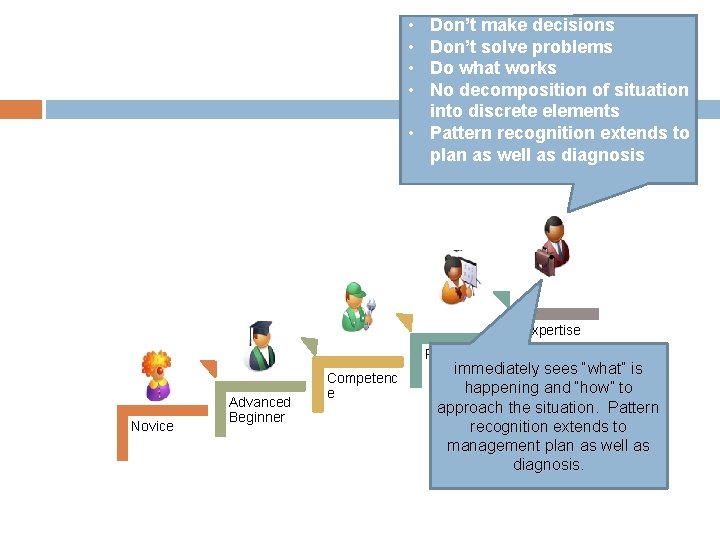

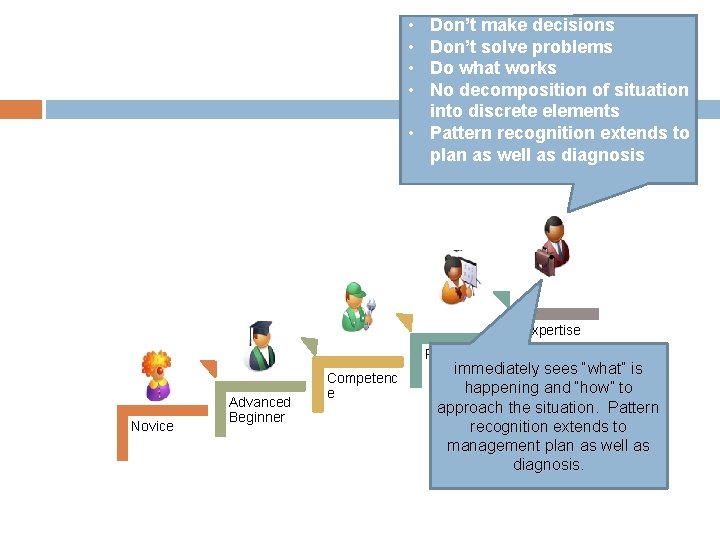

Expertise

• Don’t make decisions
• Don’t solve problems
• Do what works
• No decomposition of the situation into discrete elements
• Pattern recognition extends to plan as well as diagnosis

The expert immediately sees “what” is happening and “how” to approach the situation. Pattern recognition extends to the management plan as well as the diagnosis.

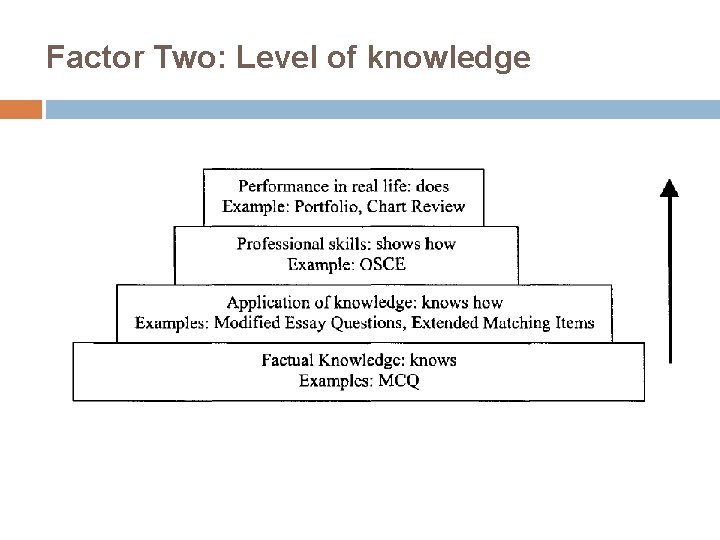

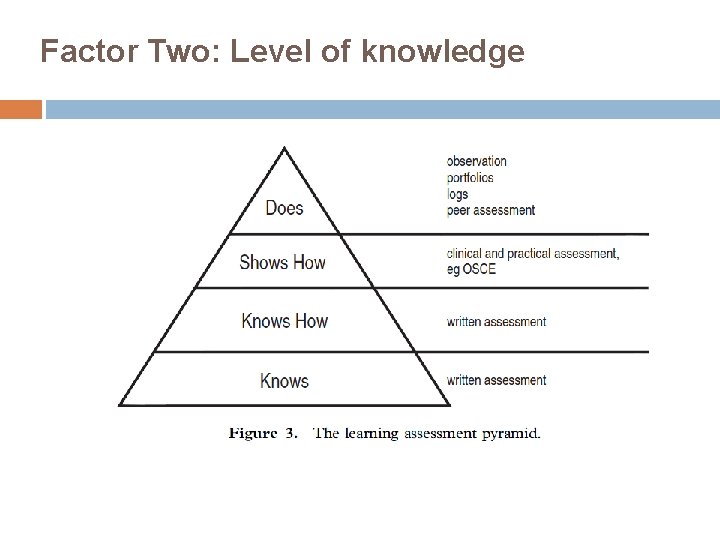

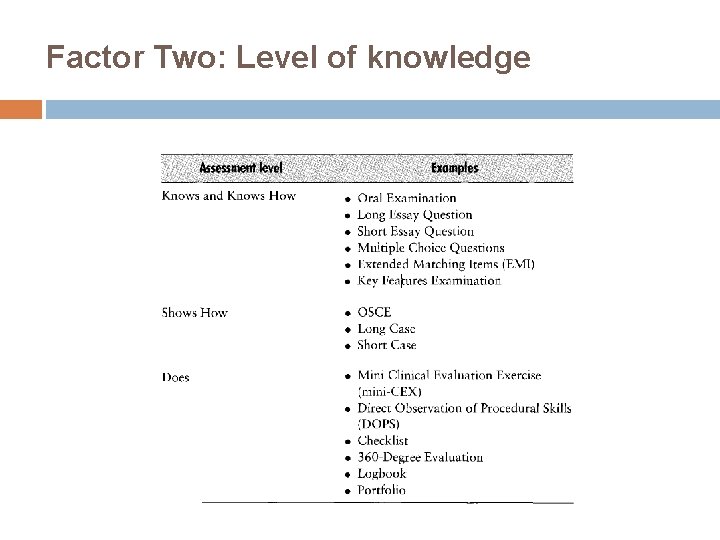

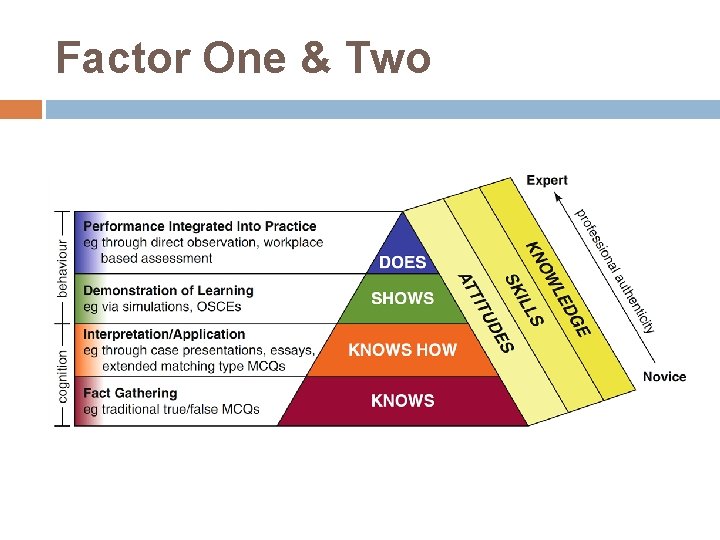

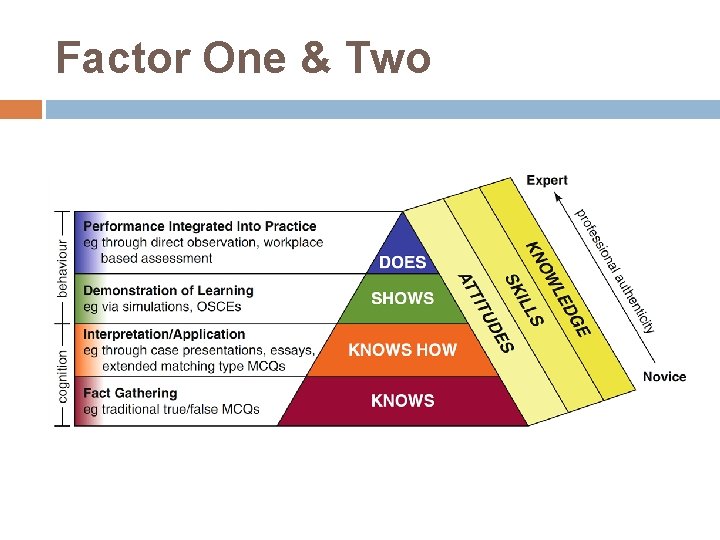

Factor One & Two

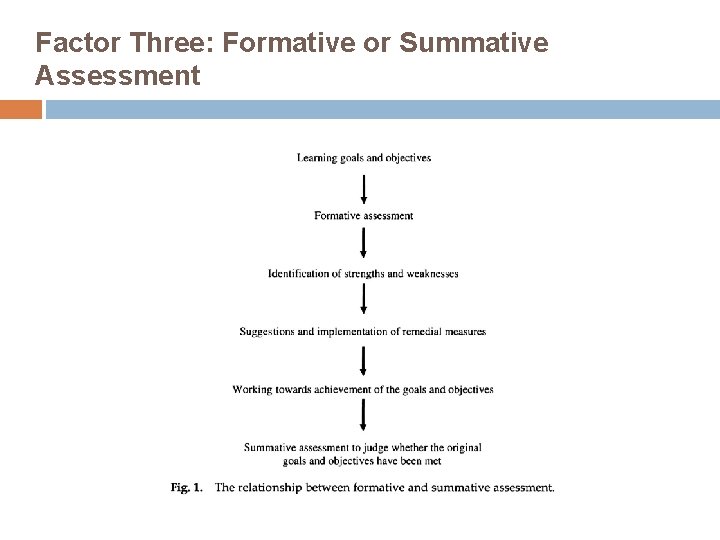

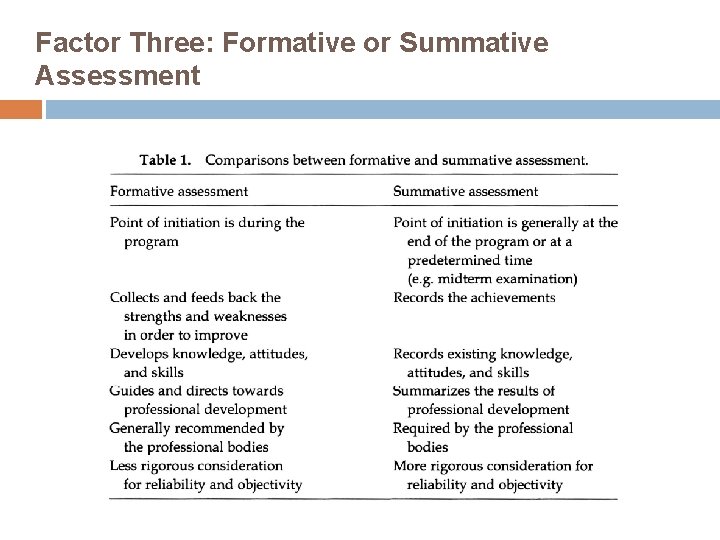

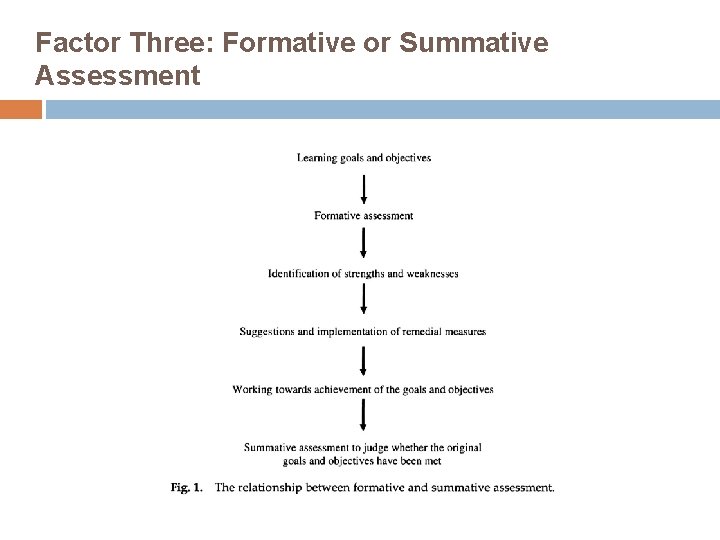

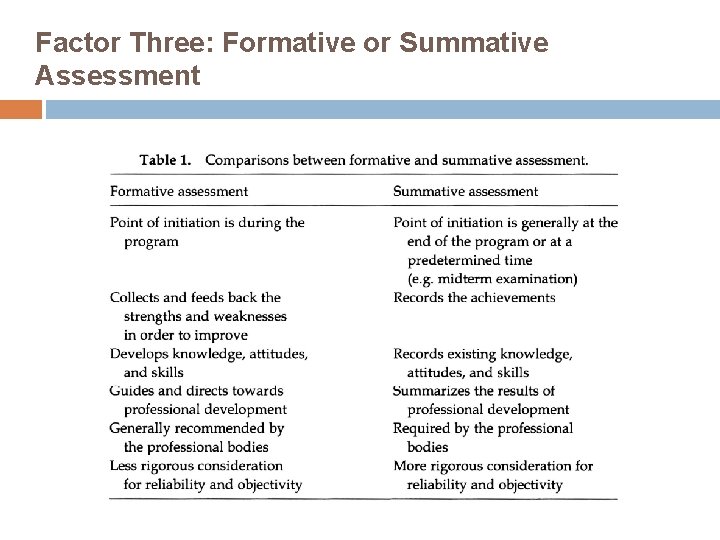

Factor Three: Formative or Summative Assessment

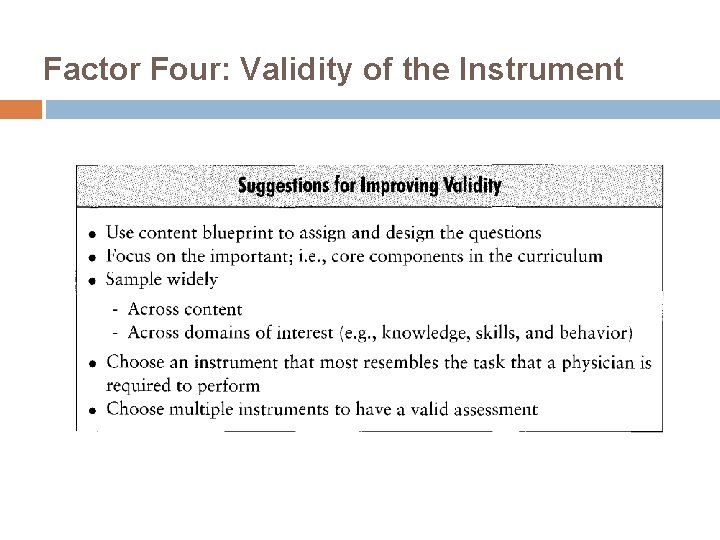

Factor Four: Validity of the Instrument

- Content validity
- Construct validity
- Predictive validity
- Face validity

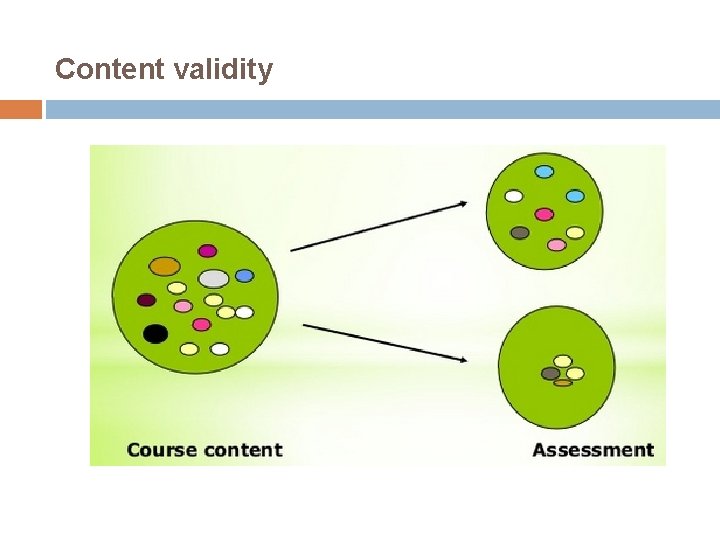

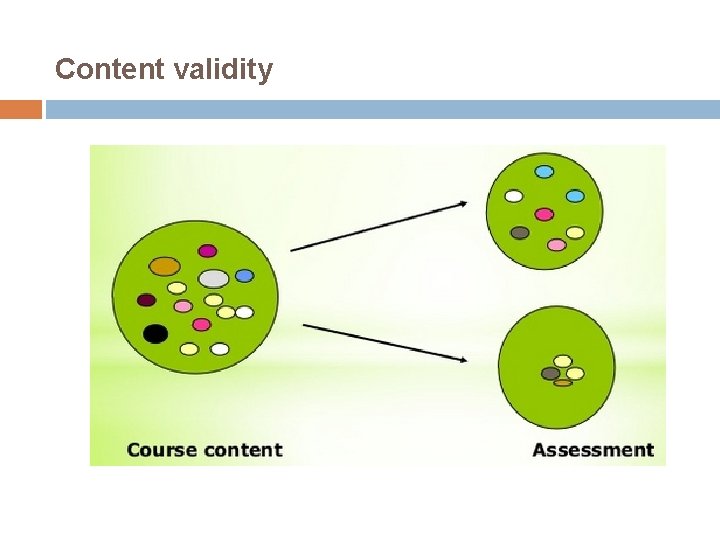

Content validity

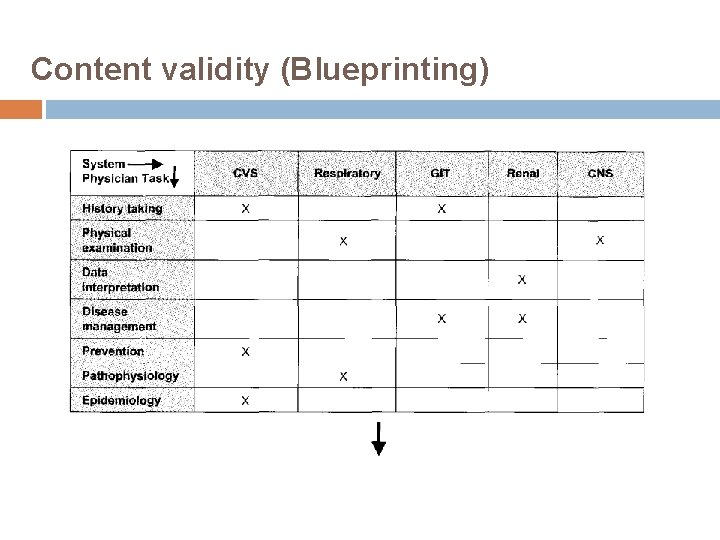

Content validity (Blueprinting)
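
A blueprint is commonly laid out as a table that lists the content areas to be examined and how many items each one receives, so that the test samples the curriculum in proportion to its emphasis. The sketch below is a minimal Python illustration under assumed data, not the method shown on the slides: the topic names, weights, and the helper `allocate_items` are all hypothetical placeholders.

```python
def allocate_items(weights, total_items):
    """Split total_items across content areas in proportion to their weights.

    Uses the largest-remainder method so the counts always sum to total_items.
    """
    weight_sum = sum(weights.values())
    # Exact (fractional) share of items for each content area.
    exact = {area: total_items * w / weight_sum for area, w in weights.items()}
    # Start from the whole-number part of each share.
    counts = {area: int(share) for area, share in exact.items()}
    leftover = total_items - sum(counts.values())
    # Hand the remaining items to the areas with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda a: exact[a] - counts[a], reverse=True)
    for area in by_remainder[:leftover]:
        counts[area] += 1
    return counts


# Hypothetical weights (for example, teaching hours per topic); not from the slides.
weights = {"Topic A": 6, "Topic B": 3, "Topic C": 2}
blueprint = allocate_items(weights, total_items=20)

for area, n_items in blueprint.items():
    print(f"{area}: {n_items} items")
```

Proportional allocation is only one possible weighting rule; a real blueprint would usually also cross content areas with the level or domain being tested.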

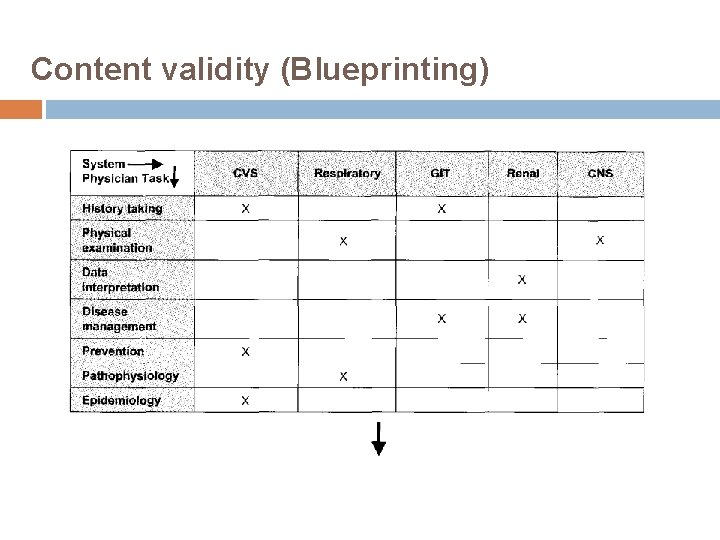

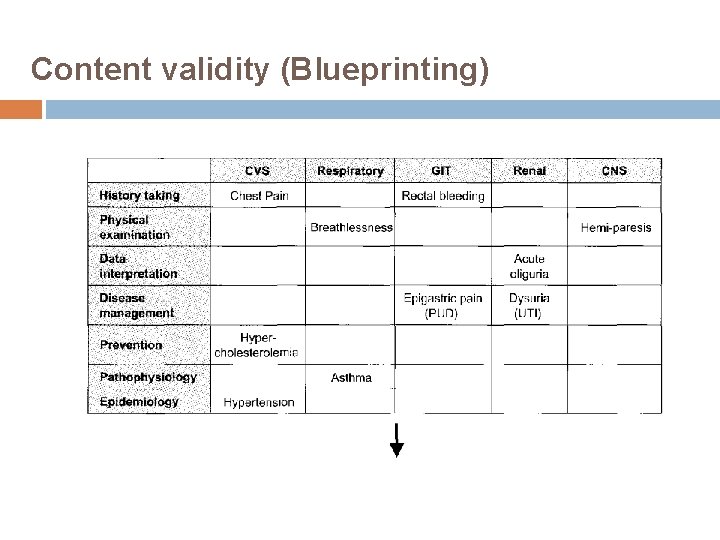

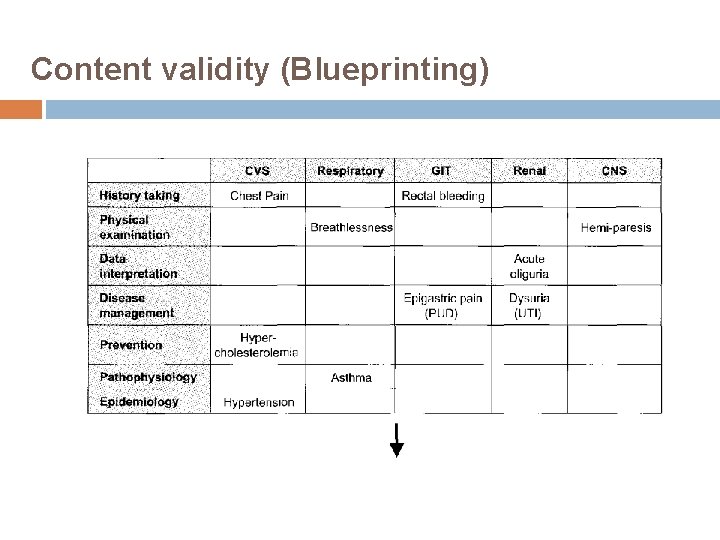

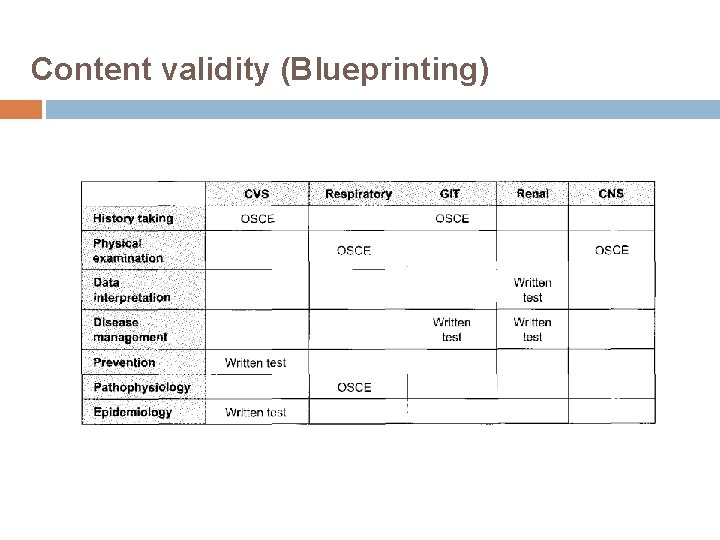

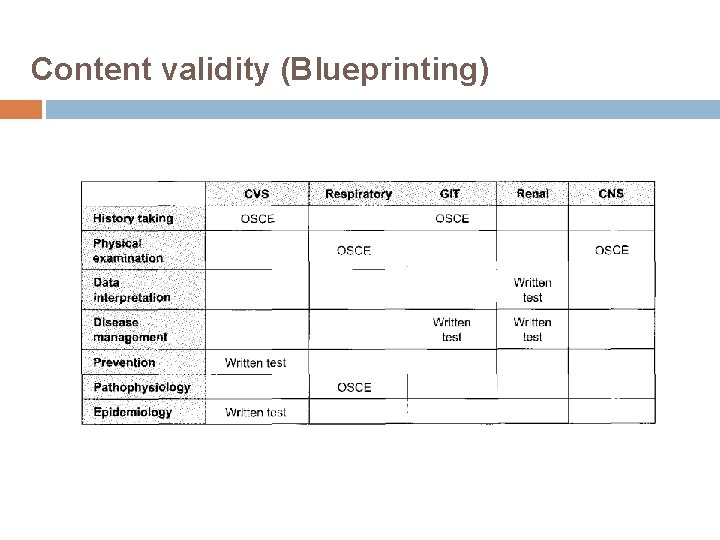

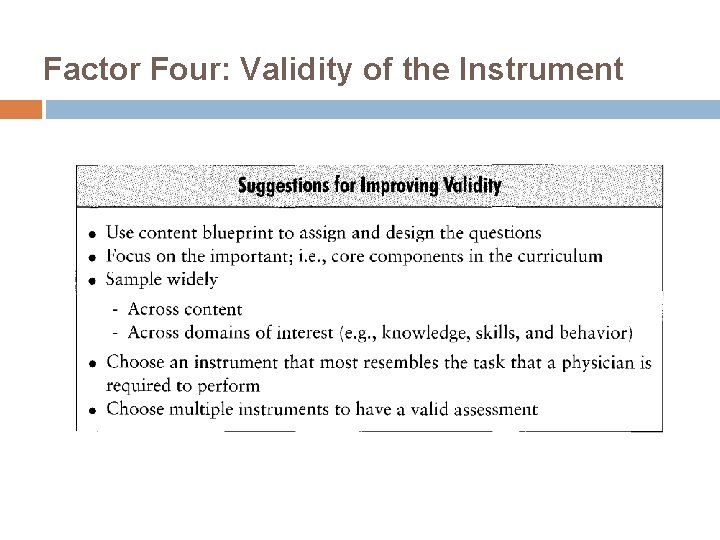

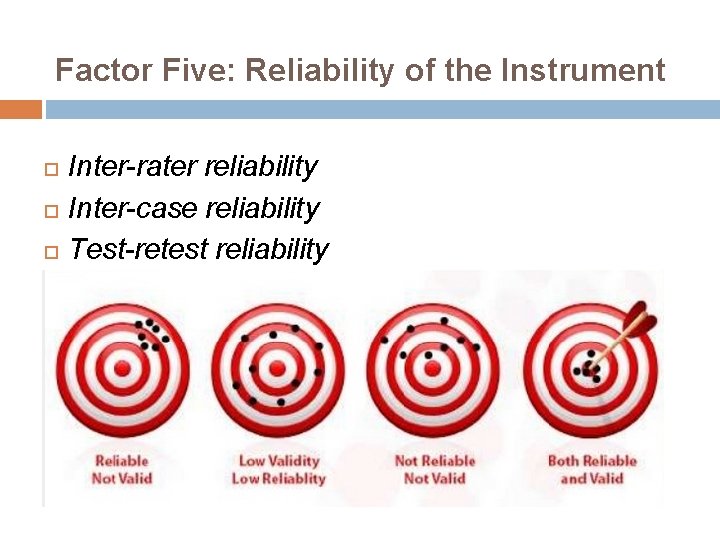

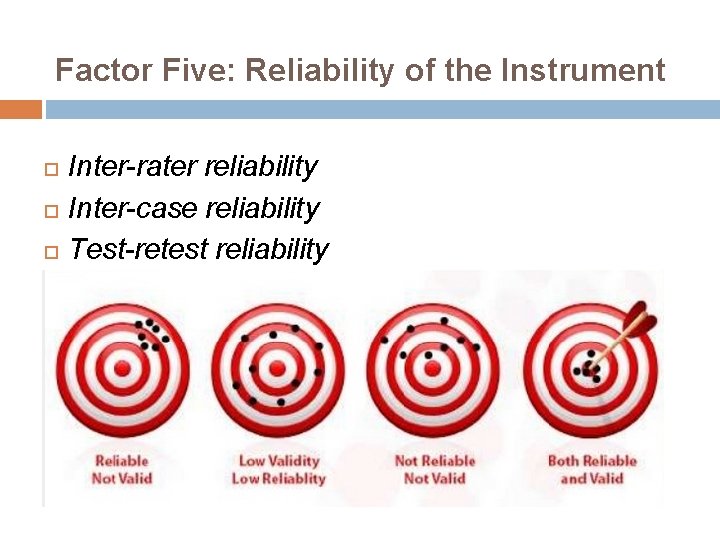

Factor Five: Reliability of the Instrument

- Inter-rater reliability
- Inter-case reliability
- Test-retest reliability
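
Of the reliability types listed above, inter-rater reliability is the easiest to illustrate numerically. The slides do not name a statistic, so the sketch below assumes Cohen's kappa, one widely used measure of agreement between two raters on categorical judgments, corrected for the agreement expected by chance. The pass/fail ratings are invented for illustration.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters scoring the same cases categorically."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must score the same non-empty set of cases.")
    n = len(rater_a)
    # Proportion of cases on which the two raters gave the same rating.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Agreement expected by chance, from each rater's category frequencies.
    freq_a = Counter(rater_a)
    freq_b = Counter(rater_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        # Both raters used one identical category throughout.
        return 1.0
    return (observed - expected) / (1 - expected)


# Invented ratings of ten candidates by two examiners; not data from the slides.
examiner_1 = ["pass", "pass", "fail", "pass", "fail",
              "pass", "fail", "fail", "pass", "pass"]
examiner_2 = ["pass", "pass", "fail", "fail", "fail",
              "pass", "fail", "pass", "pass", "pass"]

kappa = cohens_kappa(examiner_1, examiner_2)
print(f"kappa = {kappa:.2f}")
```

Here the examiners agree on 8 of 10 candidates, but because chance alone would produce agreement on about half of them, kappa comes out noticeably lower than the raw 80% agreement.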

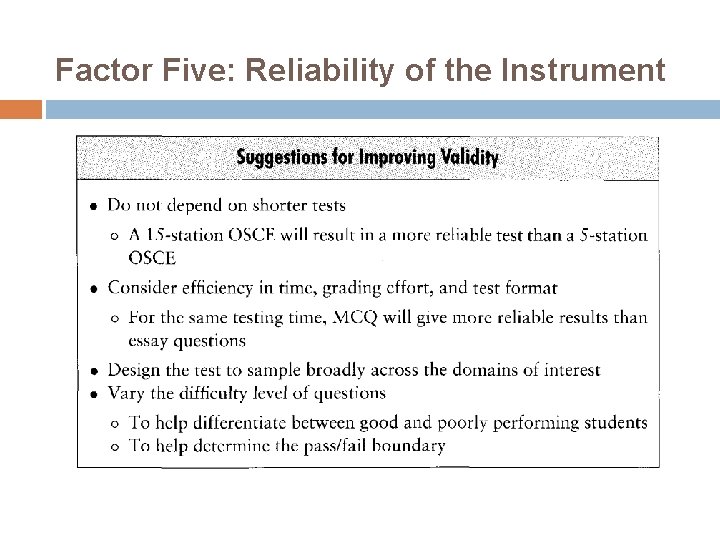

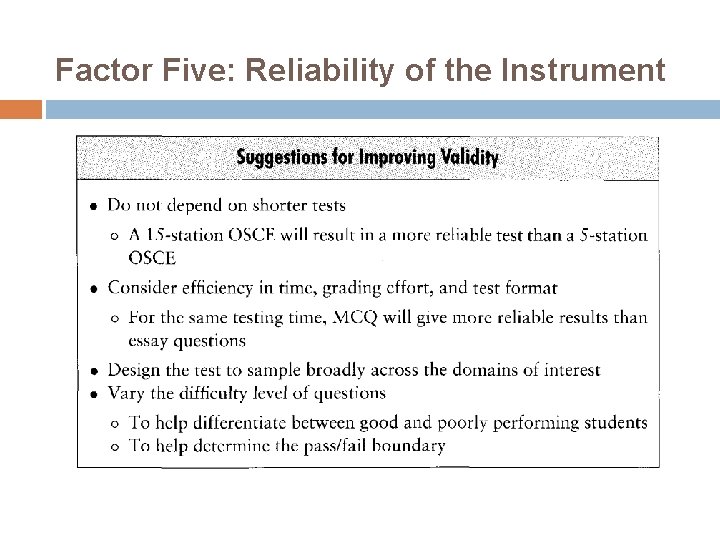

Factor Six: Single Instrument versus Multiple Instruments

It is virtually impossible to meet these different needs and purposes with a single instrument and to do so in an efficient and effective manner.

Road Map to Student Assessment

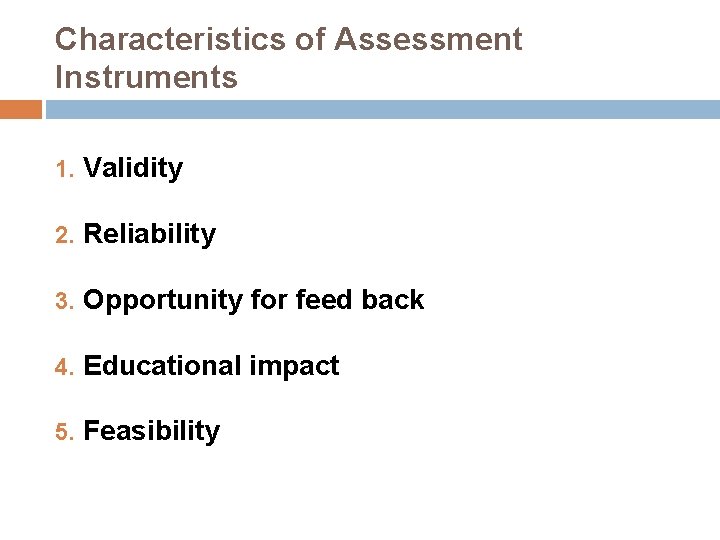

Characteristics of Assessment Instruments

1. Validity
2. Reliability
3. Opportunity for feedback
4. Educational impact
5. Feasibility

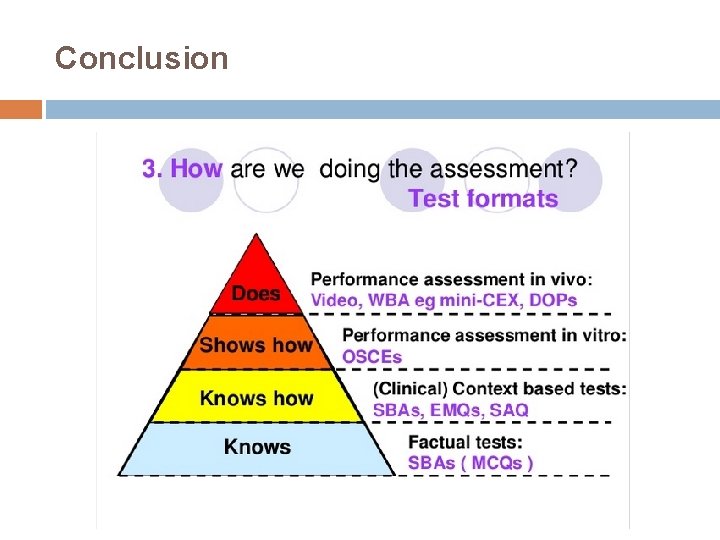

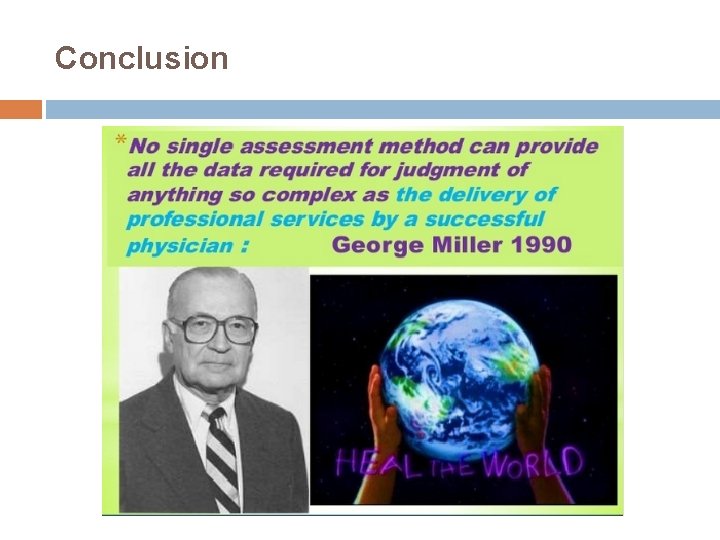

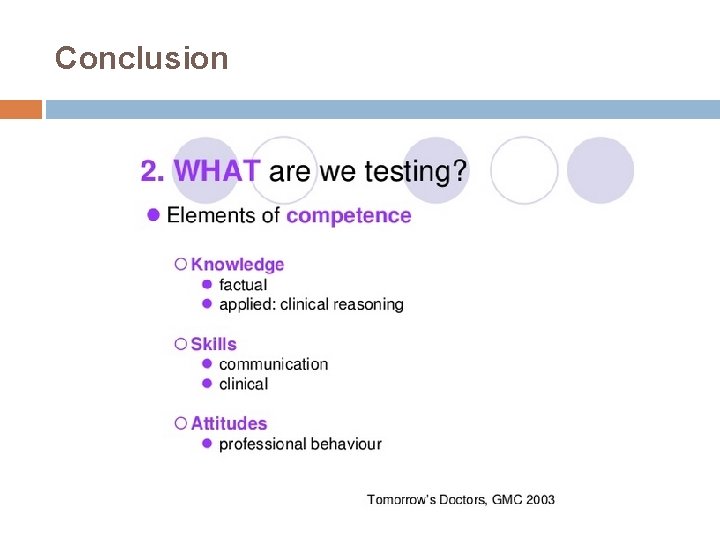

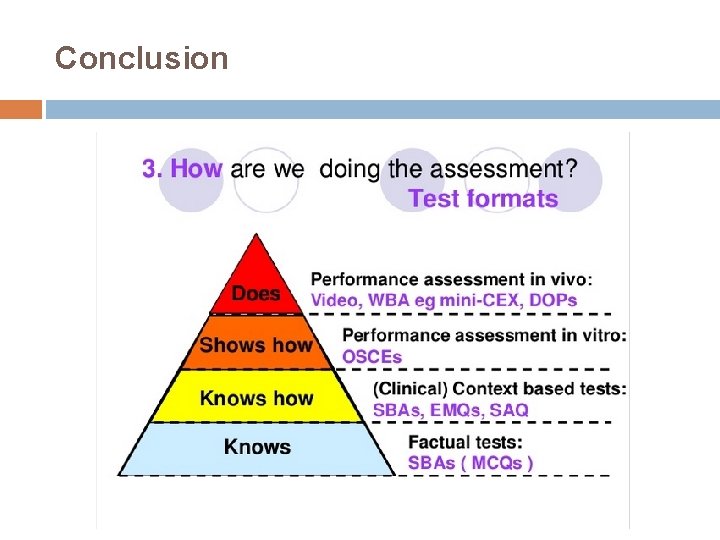

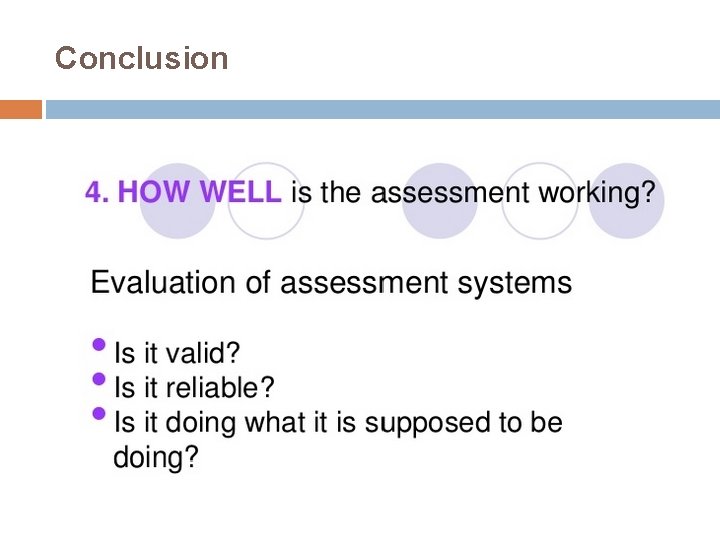

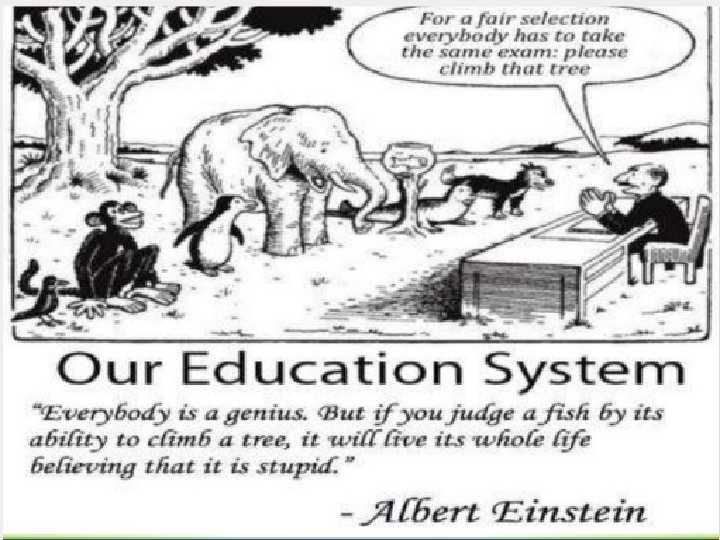

Conclusion

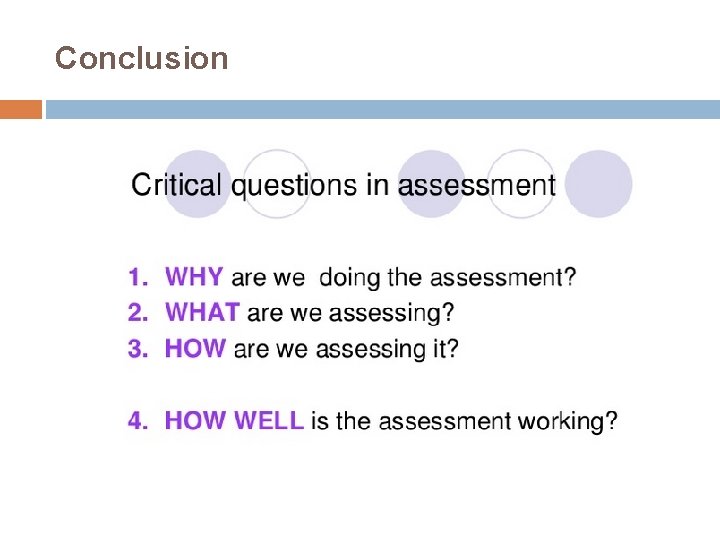

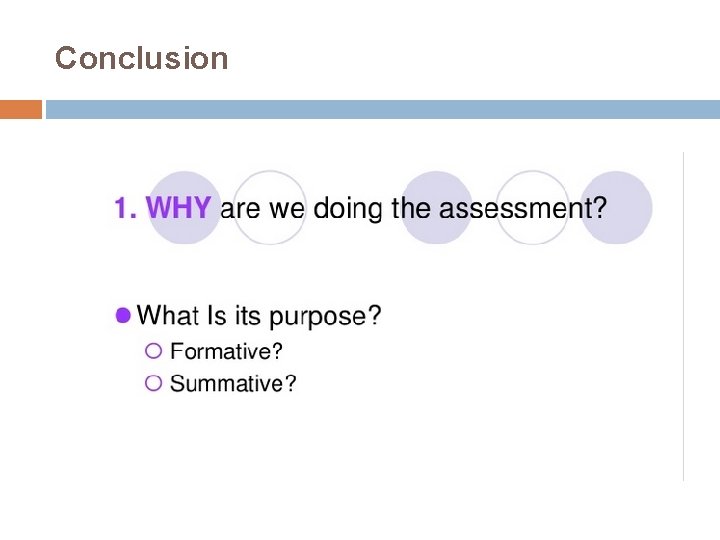

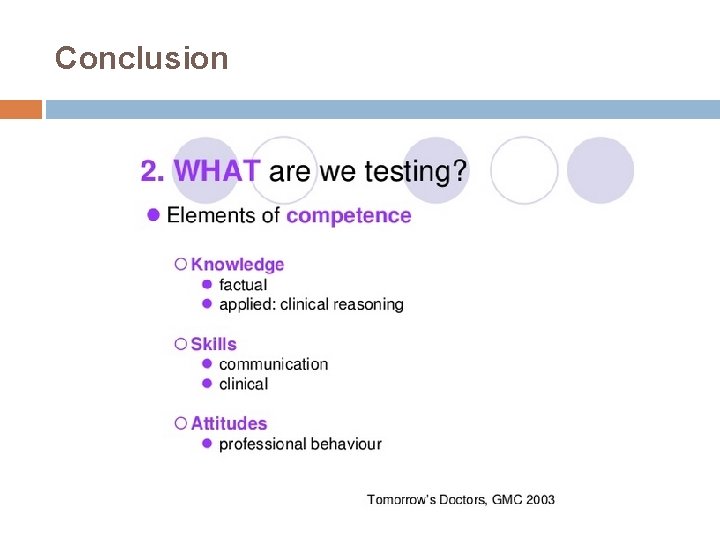

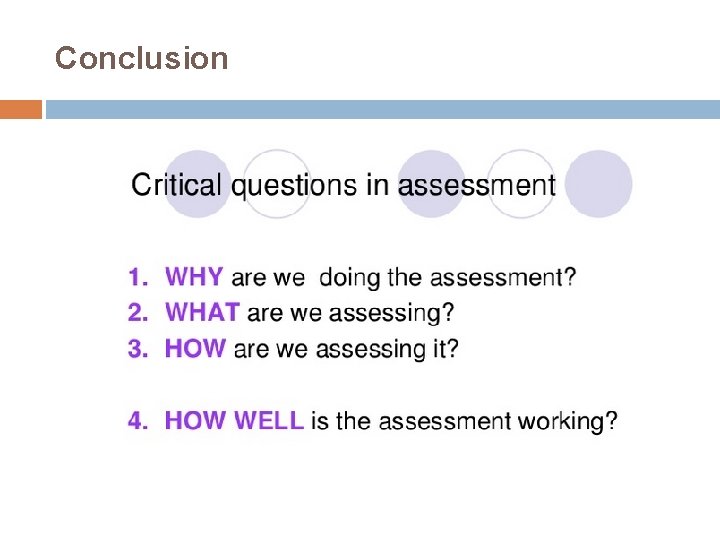

Thank you for your time