Why Randomize?

- Slides: 55

Why Randomize?

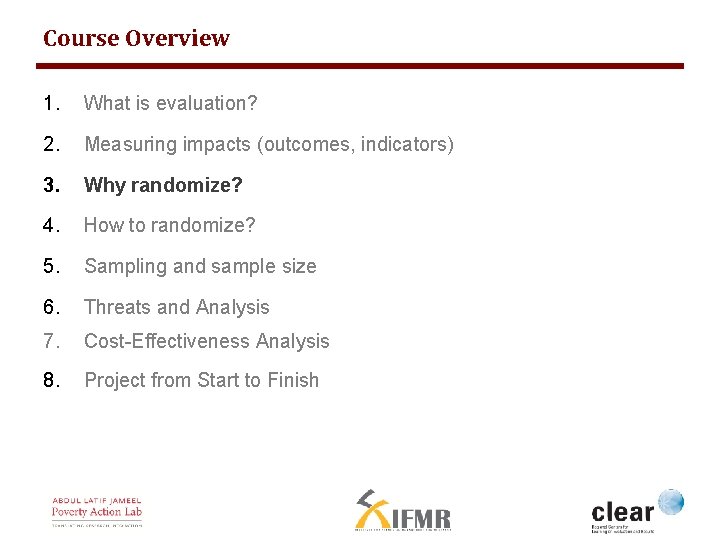

Course Overview
1. What is evaluation?
2. Measuring impacts (outcomes, indicators)
3. Why randomize?
4. How to randomize?
5. Sampling and sample size
6. Threats and Analysis
7. Cost-Effectiveness Analysis
8. Project from Start to Finish

What is the most convincing argument you have heard against RCTs?
A. Too expensive
B. Takes too long
C. Unethical
D. Too difficult to design/implement
E. Not externally valid (not generalizable)
F. Can tell us whether there is impact, and the magnitude of that impact, but not why or how (it is a black box)

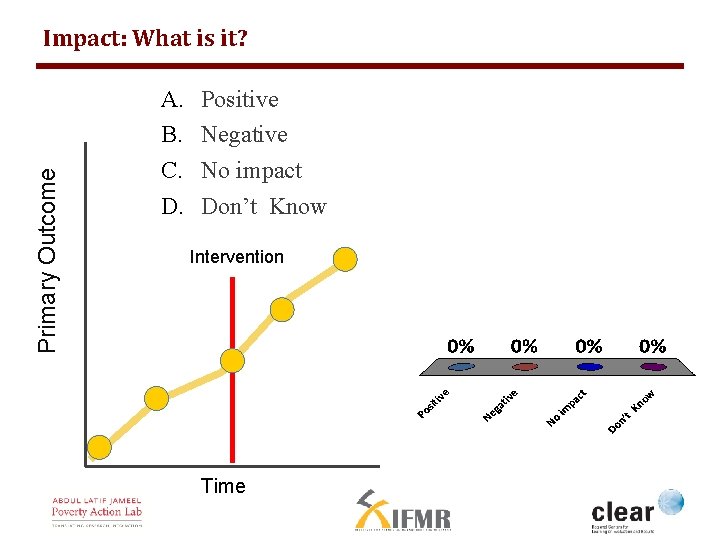

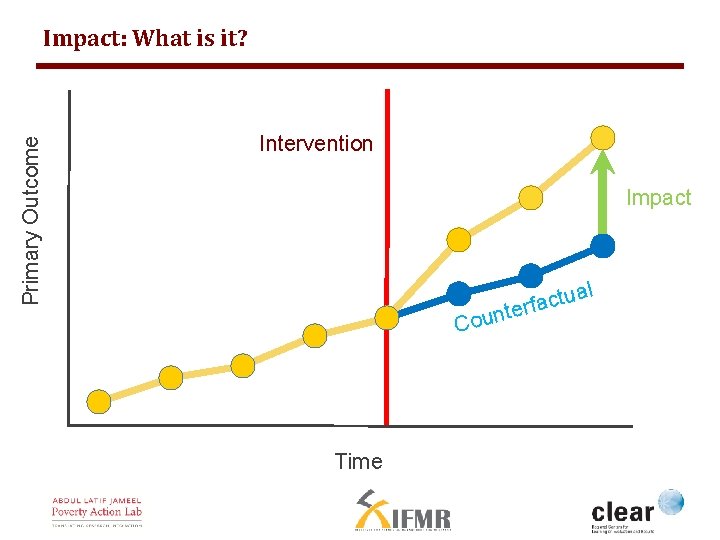

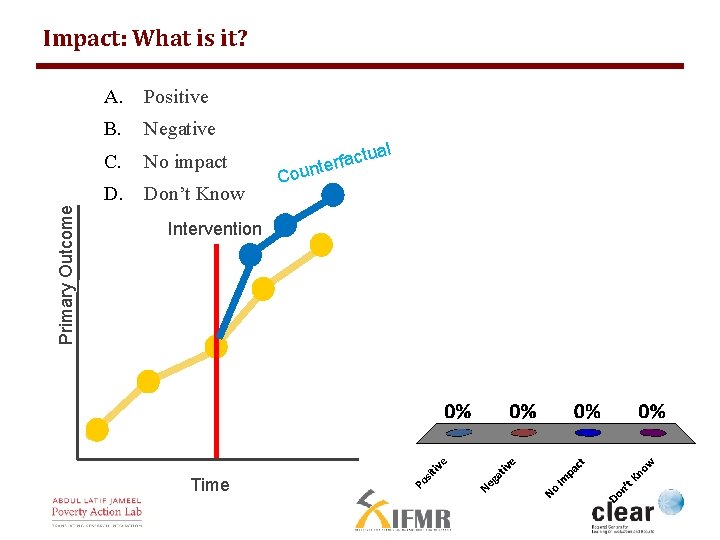

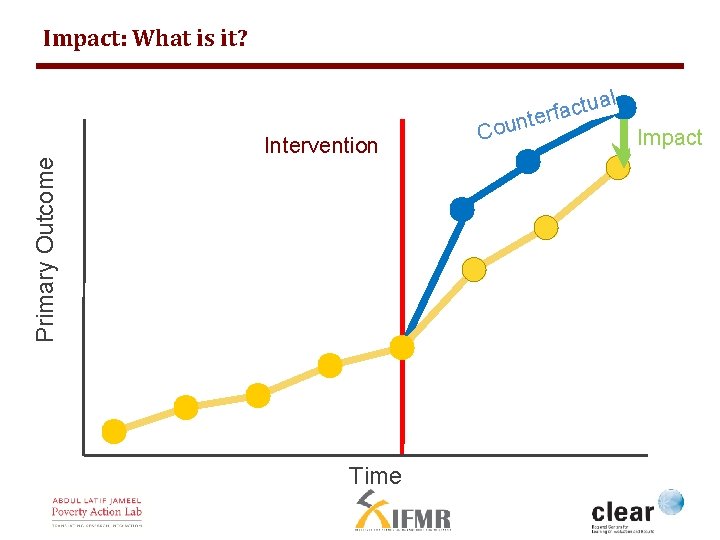

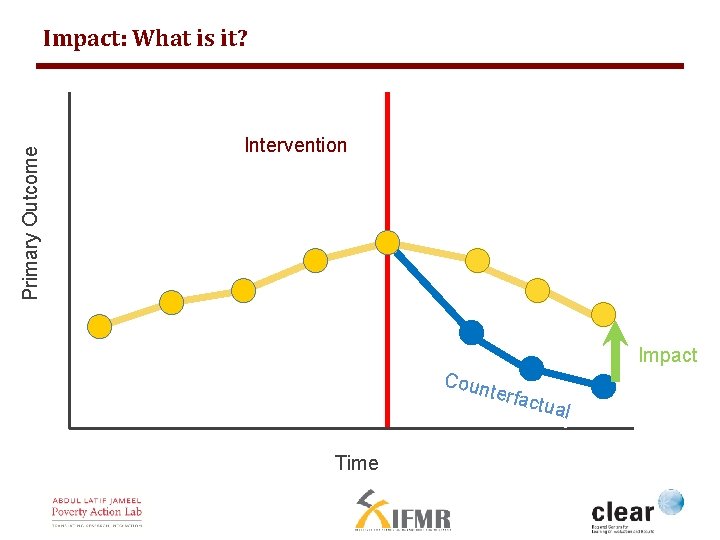

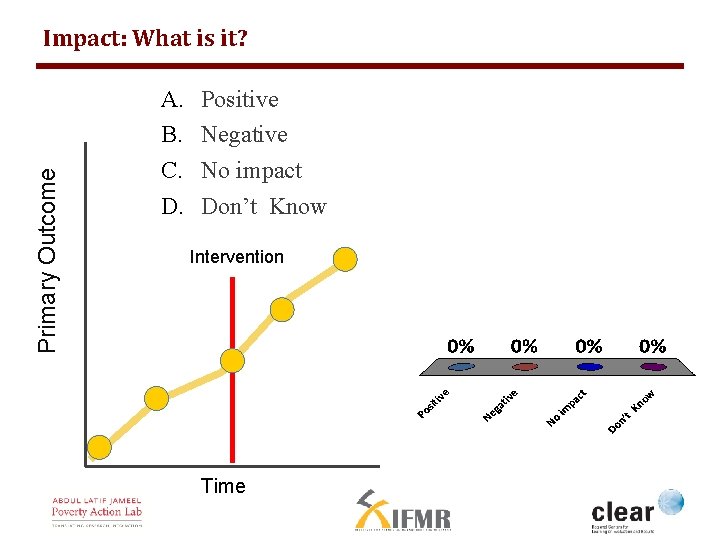

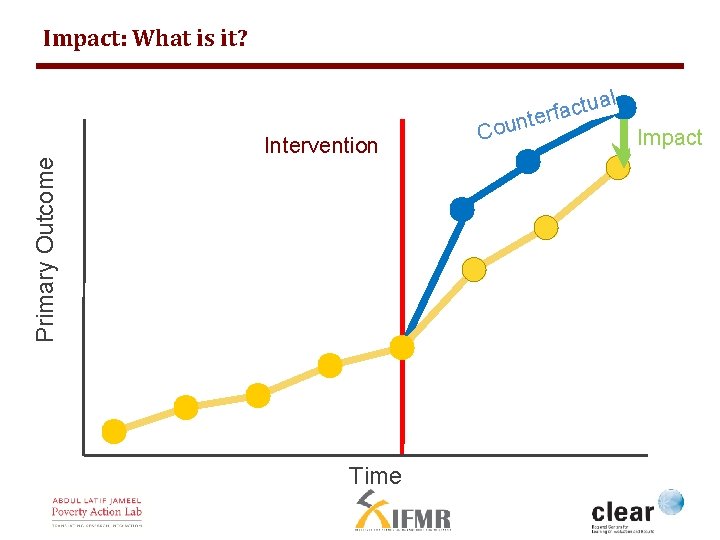

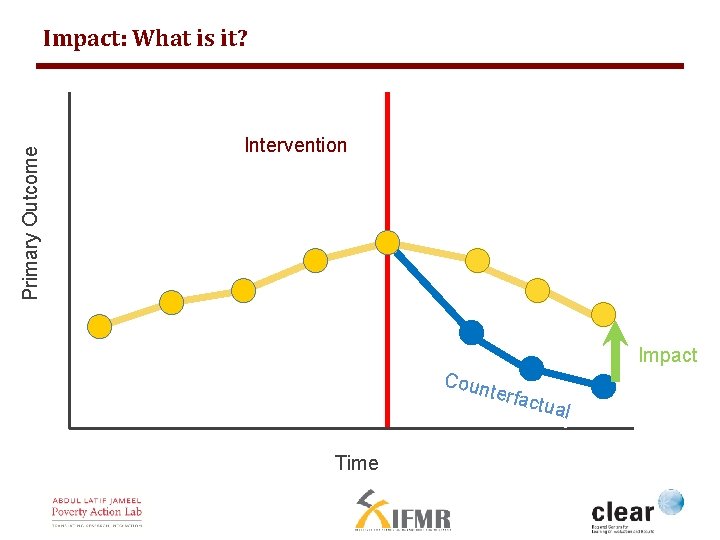

Impact: What is it?
A. Positive
B. Negative
C. No impact
D. Don’t know

[Figure: primary outcome vs. time, marking the intervention]

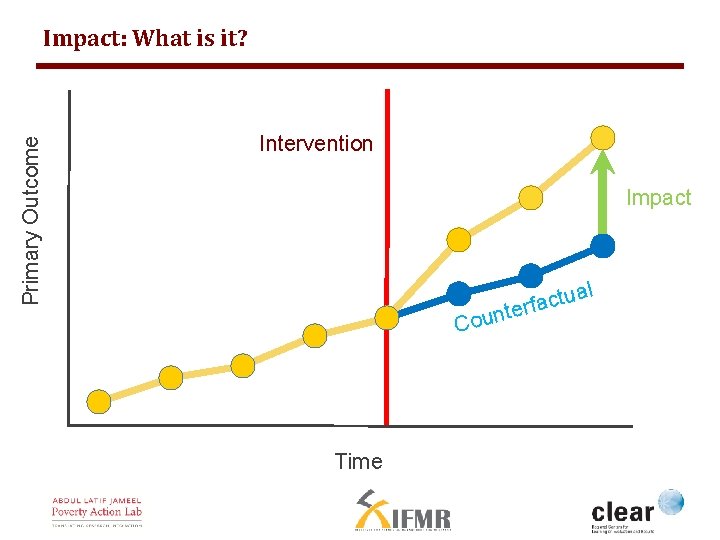

Impact: What is it?

[Figure: primary outcome vs. time, marking the intervention, the counterfactual, and the impact]

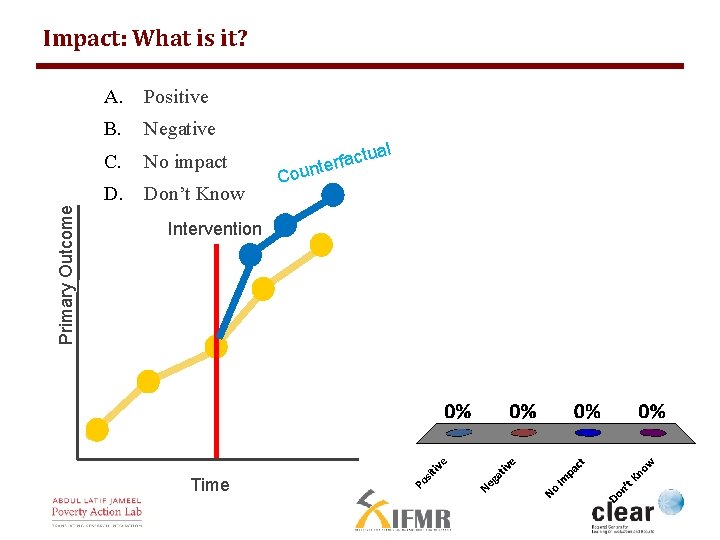

Impact: What is it?
A. Positive
B. Negative
C. No impact
D. Don’t know

[Figure: primary outcome vs. time, marking the intervention and the counterfactual]

Impact: What is it?

[Figure: primary outcome vs. time, marking the intervention, the counterfactual, and the impact]

Impact: What is it?

[Figure: primary outcome vs. time, marking the intervention, the counterfactual, and the impact]

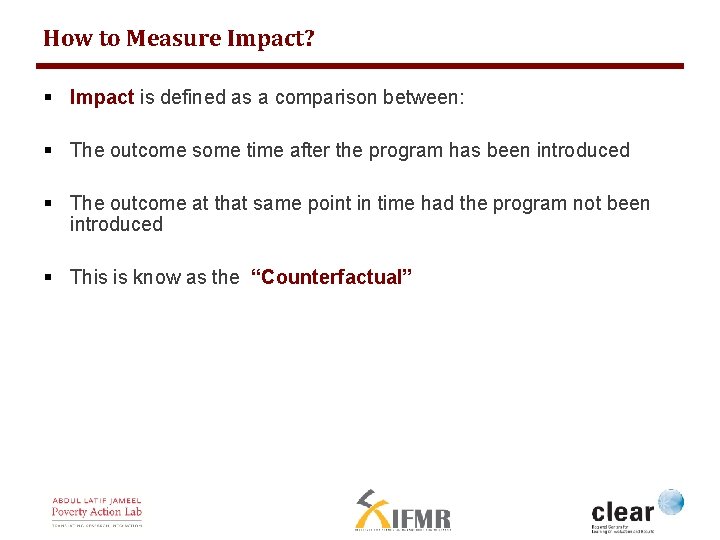

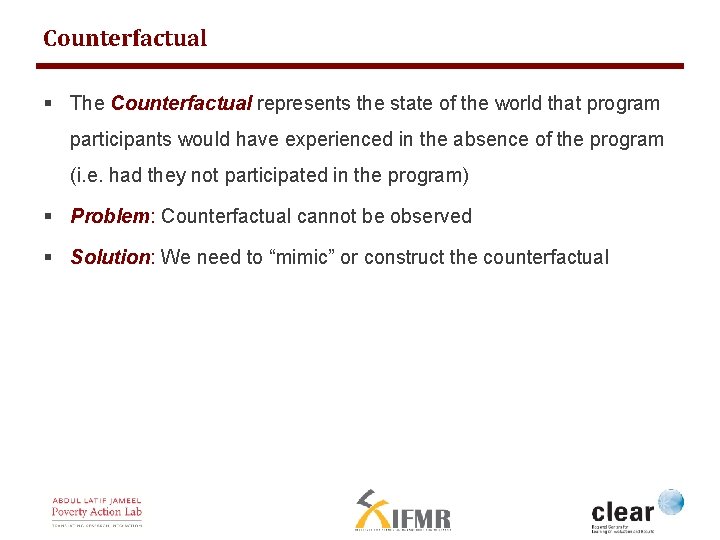

How to Measure Impact?
§ Impact is defined as a comparison between:
• The outcome some time after the program has been introduced
• The outcome at that same point in time had the program not been introduced
§ The second of these is known as the “counterfactual”

Counterfactual
§ The counterfactual represents the state of the world that program participants would have experienced in the absence of the program (i.e., had they not participated in the program)
§ Problem: the counterfactual cannot be observed
§ Solution: we need to “mimic” or construct the counterfactual
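The counterfactual problem can be stated compactly in potential-outcomes notation. This notation is an assumption of this sketch (the slides do not use it): $Y_i(1)$ is child $i$'s outcome with the program, $Y_i(0)$ the outcome without it, and $T_i = 1$ if the child participates. Only one of the two potential outcomes is ever observed for each child. The identity below shows why a naive comparison of participants and non-participants mixes the true impact with a selection-bias term:

```latex
\begin{aligned}
\text{Impact}_i &= Y_i(1) - Y_i(0) \\
\underbrace{E[Y_i \mid T_i = 1] - E[Y_i \mid T_i = 0]}_{\text{simple difference}}
  &= \underbrace{E[Y_i(1) - Y_i(0) \mid T_i = 1]}_{\text{impact on participants}}
   + \underbrace{E[Y_i(0) \mid T_i = 1] - E[Y_i(0) \mid T_i = 0]}_{\text{selection bias}}
\end{aligned}
```

Random assignment makes $T_i$ independent of $(Y_i(0), Y_i(1))$, so the selection-bias term is zero in expectation and the simple difference recovers the average impact.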

IMPACT EVALUATION METHODS

Impact Evaluation Methods 1. Randomized Experiments Also known as: § Random Assignment Studies § Randomized Field Trials § Social Experiments § Randomized Controlled Trials (RCTs) § Randomized Controlled Experiments

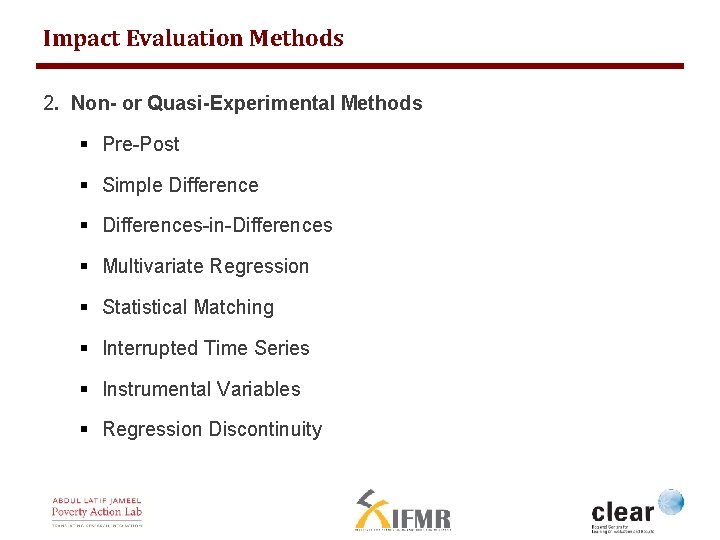

Impact Evaluation Methods 2. Non- or Quasi-Experimental Methods § Pre-Post § Simple Difference § Differences-in-Differences § Multivariate Regression § Statistical Matching § Interrupted Time Series § Instrumental Variables § Regression Discontinuity

WHAT IS A RANDOMIZED EXPERIMENT?

The Basics
§ Start with a simple case:
§ Take a sample of program applicants
• Randomly assign them to either:
• Treatment Group – is offered treatment
• Control Group – not allowed to receive treatment (during the evaluation period)

Key Advantage § Because members of the groups (treatment and control) do not differ systematically at the outset of the experiment, § Any difference that subsequently arises between them can be attributed to the program rather than to other factors.
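The key advantage can be illustrated with a minimal simulation. The applicants and their baseline scores below are hypothetical (simulated, not Balsakhi data), and `randomly_assign` is an illustrative helper, not part of any toolkit. Because assignment ignores every characteristic of the applicants, the two groups' baseline averages should differ only by chance:

```python
import random
import statistics

def randomly_assign(units, seed=0):
    """Shuffle a copy of the units and split it into two equal halves."""
    rng = random.Random(seed)
    shuffled = list(units)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]

# Hypothetical applicants, each with a simulated baseline test score.
gen = random.Random(42)
applicants = [{"id": i, "baseline": gen.gauss(30, 10)} for i in range(2000)]

treatment, control = randomly_assign(applicants, seed=1)

# Assignment ignored every characteristic, so any gap in baseline means
# is due to chance alone and shrinks as the sample grows.
mean_t = statistics.mean(a["baseline"] for a in treatment)
mean_c = statistics.mean(a["baseline"] for a in control)
print(f"treatment baseline mean: {mean_t:.2f}")
print(f"control baseline mean:   {mean_c:.2f}")
```

The same logic applies to characteristics that are never measured, which is what non-random comparisons cannot guarantee.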

WHY RANDOMIZE?

Example: Pratham’s Balsakhi Program

What was the Problem?
§ Many children in 3rd and 4th standard were not even at the 1st standard level of competency
§ Class sizes were large
§ Social distance between teacher and many of the students was large

Context and Partner
§ 124 Municipal Schools in Vadodara (Western India)
§ 2002 & 2003: Two academic years
§ ~17,000 children
§ Pratham: “Every child in school and learning well”
• Works with most states in India, reaching millions of children

Proposed Solution
§ Hire local women (Balsakhis) from the community
§ Train them to teach remedial competencies
• Basic literacy, numeracy
§ Identify lowest performing 3rd and 4th standard students
• Take these students out of class (2 hours/day)
• Balsakhi teaches them basic competencies

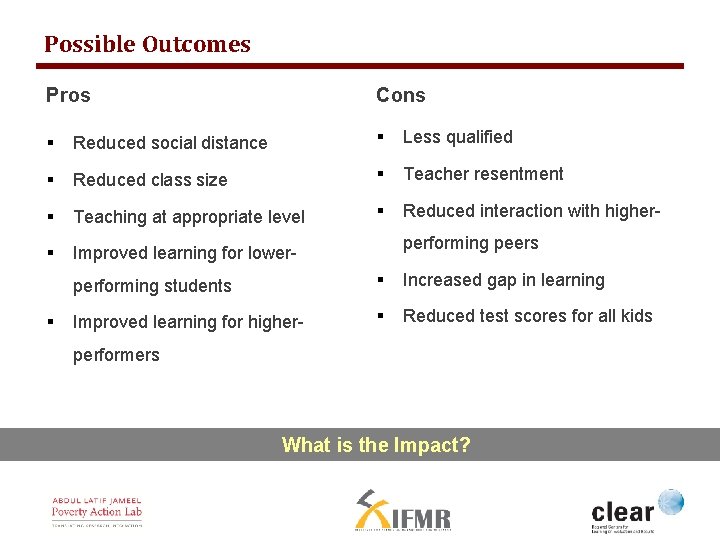

Possible Outcomes

Pros:
§ Reduced social distance
§ Reduced class size
§ Teaching at appropriate level
§ Improved learning for lower-performing students
§ Improved learning for higher-performers

Cons:
§ Less qualified
§ Teacher resentment
§ Reduced interaction with higher-performing peers
§ Increased gap in learning
§ Reduced test scores for all kids

What is the Impact?

J-PAL Conducts a Test at the End
§ Balsakhi students score an average of 51%
What can we conclude?

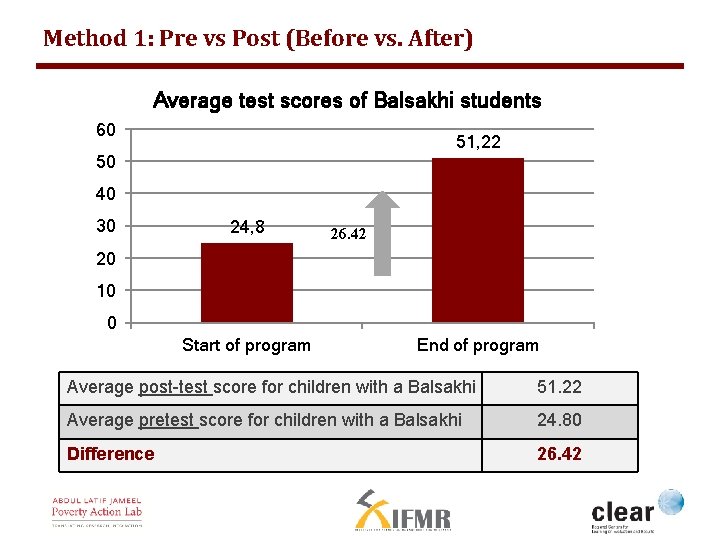

1. Pre-Post (Before vs. After)
Average change in the outcome of interest before and after the program
§ Look at average change in test scores over the school year for the Balsakhi children

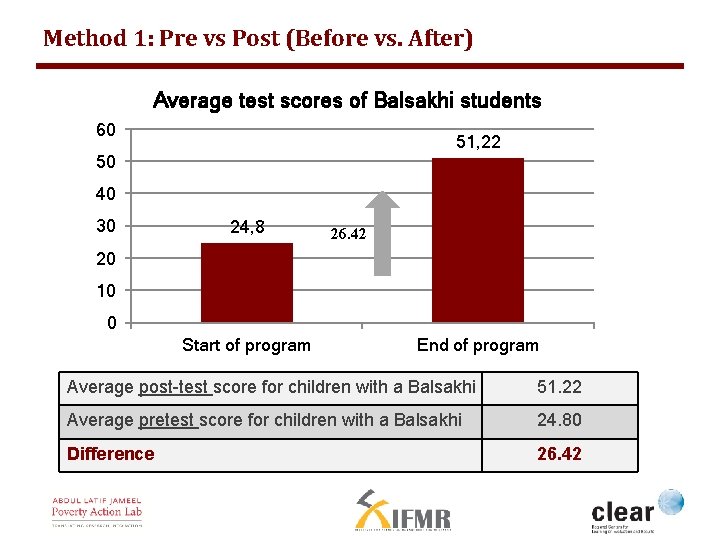

Method 1: Pre vs. Post (Before vs. After)

[Figure: average test scores of Balsakhi students at the start (24.80) and end (51.22) of the program]

| | Average score |
|---|---|
| Post-test score for children with a Balsakhi | 51.22 |
| Pretest score for children with a Balsakhi | 24.80 |
| Difference | 26.42 |
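The pre-post estimate is just the change in the participants' own average. A minimal sketch using the two averages reported above (`pre_post` is an illustrative helper name):

```python
# Average scores reported above for children with a Balsakhi.
pretest_balsakhi = 24.80
posttest_balsakhi = 51.22

def pre_post(pre_mean, post_mean):
    """Pre-post estimate: change in the participants' average outcome over time."""
    return post_mean - pre_mean

estimate = pre_post(pretest_balsakhi, posttest_balsakhi)
print(f"Pre-post estimate: {estimate:.2f}")  # Pre-post estimate: 26.42
```

The calculation uses no comparison group, so everything that changed over the school year is attributed to the program.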

Pre-Post
§ Limitations of the method
• No comparison group; doesn’t take the time trend into account (scores may rise over a school year even without the program)
What else can we do to estimate impact?

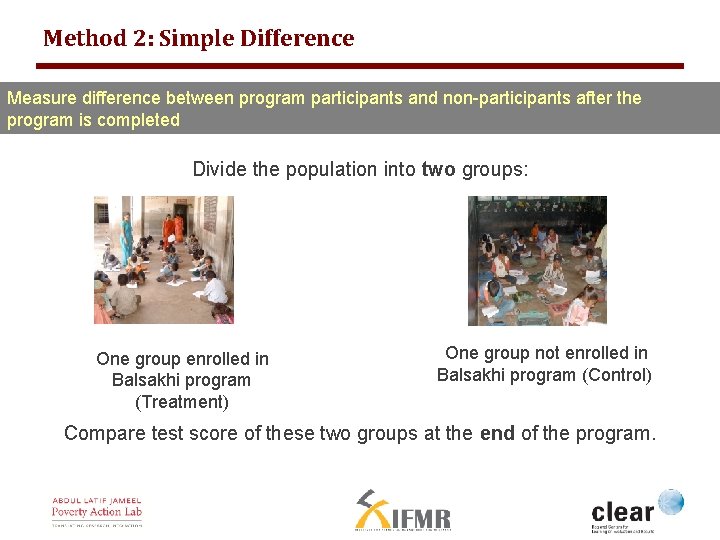

Method 2: Simple Difference
Measure the difference between program participants and non-participants after the program is completed
§ Divide the population into two groups:
• One group enrolled in Balsakhi program (Treatment)
• One group not enrolled in Balsakhi program (Control)
§ Compare the test scores of these two groups at the end of the program

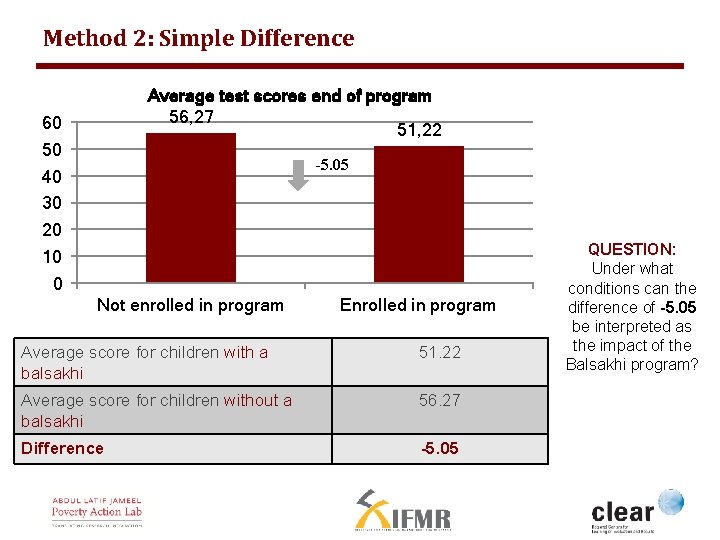

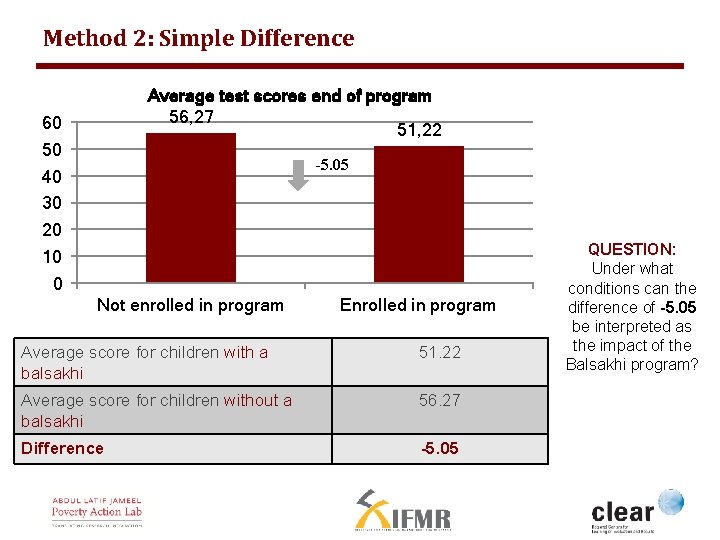

Method 2: Simple Difference

[Figure: average test scores at end of program: 56.27 for children not enrolled, 51.22 for children enrolled]

| | Average score |
|---|---|
| Children with a Balsakhi | 51.22 |
| Children without a Balsakhi | 56.27 |
| Difference | -5.05 |

QUESTION: Under what conditions can the difference of -5.05 be interpreted as the impact of the Balsakhi program?
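The simple difference compares the two groups only at the end. A minimal sketch with the averages reported above (`simple_difference` is an illustrative helper name):

```python
# End-of-program averages reported above.
with_balsakhi = 51.22
without_balsakhi = 56.27

def simple_difference(treated_mean, comparison_mean):
    """Simple difference: participants' mean minus non-participants' mean, post-program."""
    return treated_mean - comparison_mean

estimate = simple_difference(with_balsakhi, without_balsakhi)
print(f"Simple difference: {estimate:.2f}")  # Simple difference: -5.05
```

Because the program deliberately enrolled the lowest-performing children, the two groups were not comparable to begin with, and that gap is folded into this number.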

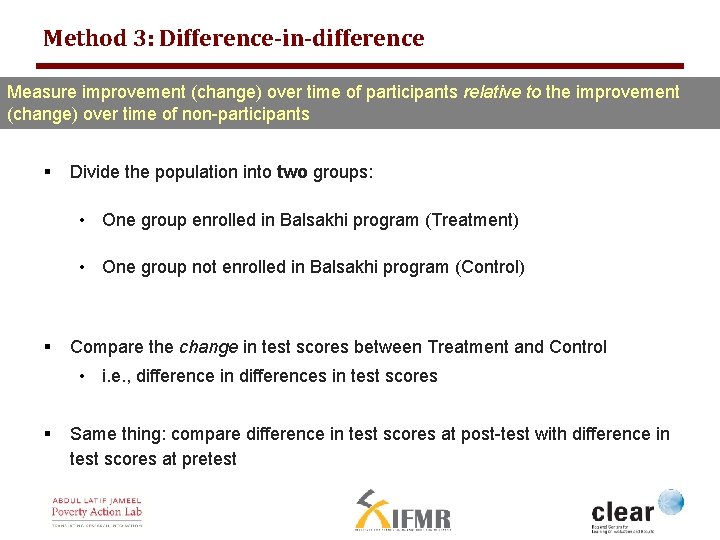

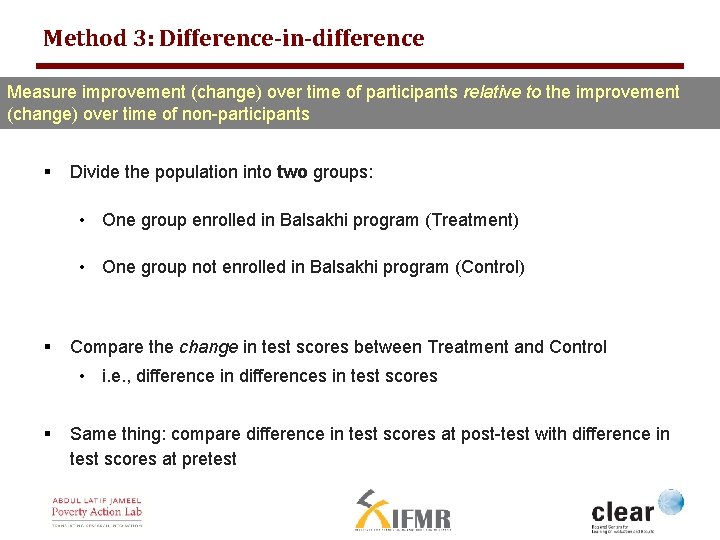

Method 3: Difference-in-Differences
Measure the improvement (change) over time of participants relative to the improvement (change) over time of non-participants
§ Divide the population into two groups:
• One group enrolled in Balsakhi program (Treatment)
• One group not enrolled in Balsakhi program (Control)
§ Compare the change in test scores between Treatment and Control
• i.e., difference in differences in test scores
§ Equivalently: compare the difference in test scores at post-test with the difference in test scores at pretest

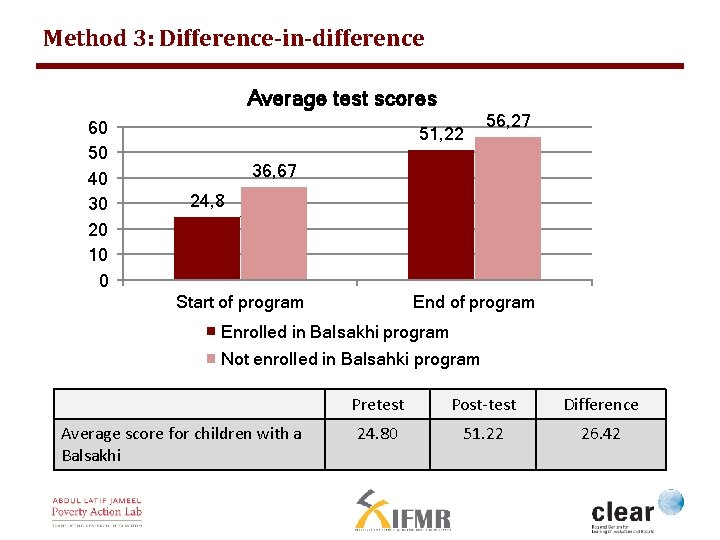

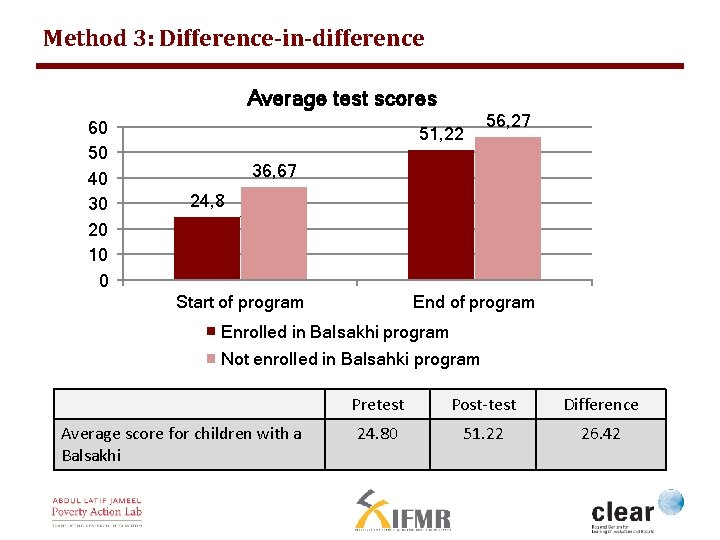

Method 3: Difference-in-Differences

[Figure: average test scores at start and end of program: children enrolled in the Balsakhi program 24.80 to 51.22; children not enrolled 36.67 to 56.27]

| | Pretest | Post-test | Difference |
|---|---|---|---|
| Average score for children with a Balsakhi | 24.80 | 51.22 | 26.42 |

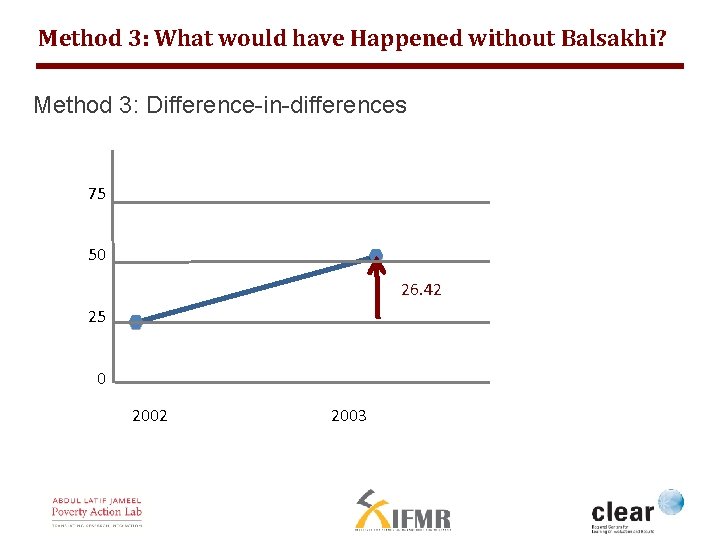

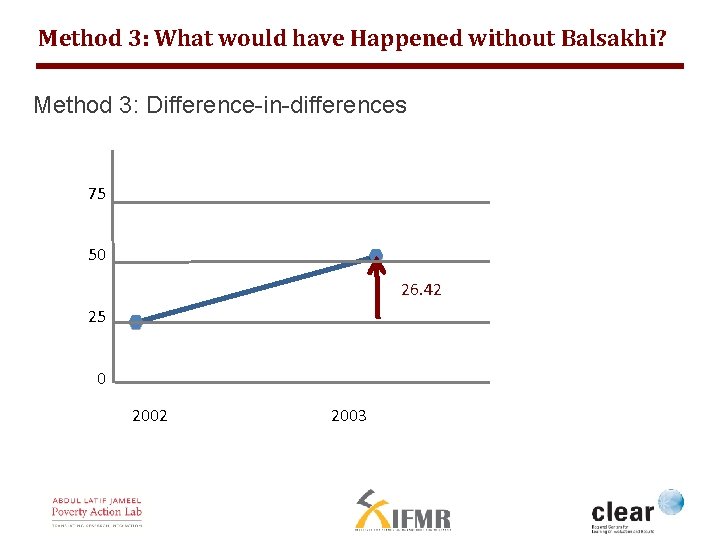

Method 3: What would have happened without Balsakhi?

[Figure: Difference-in-differences, 2002 to 2003: average score of children with a Balsakhi rose by 26.42 points]

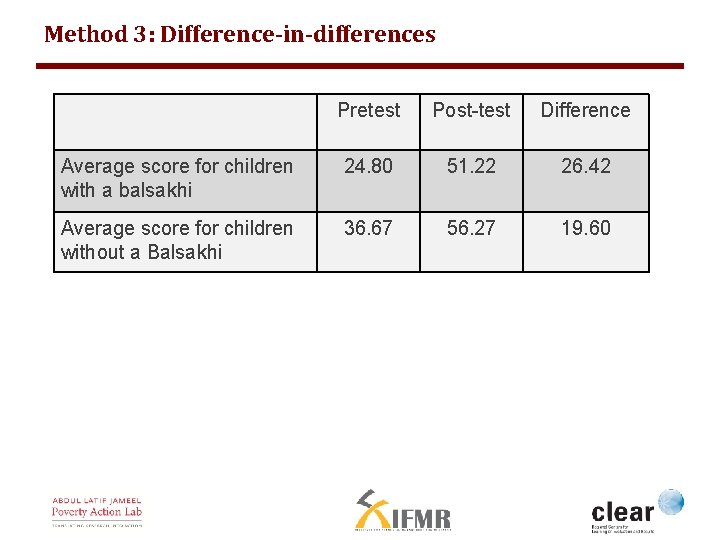

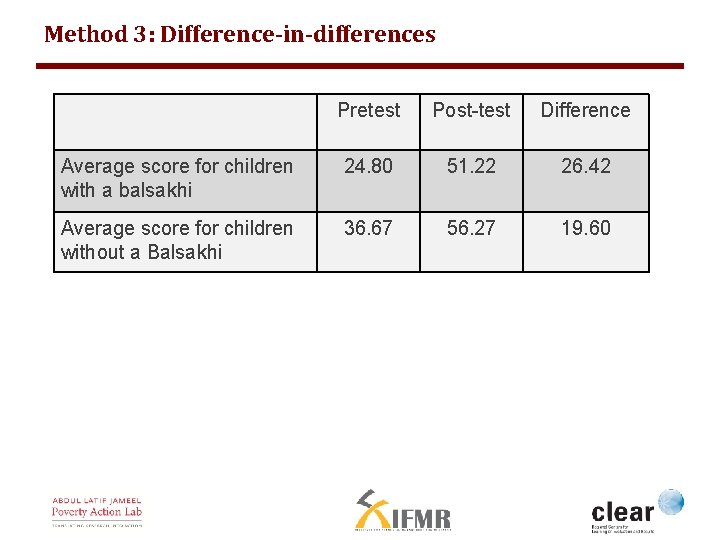

Method 3: Difference-in-Differences

| | Pretest | Post-test | Difference |
|---|---|---|---|
| Average score for children with a Balsakhi | 24.80 | 51.22 | 26.42 |
| Average score for children without a Balsakhi | 36.67 | 56.27 | 19.60 |

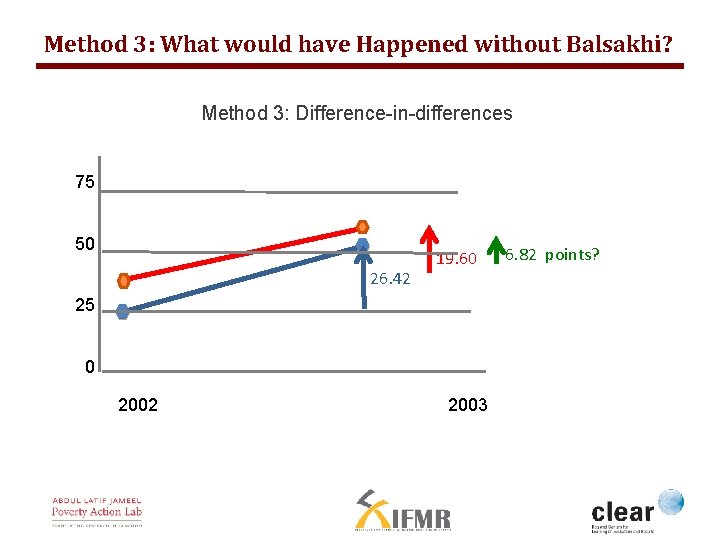

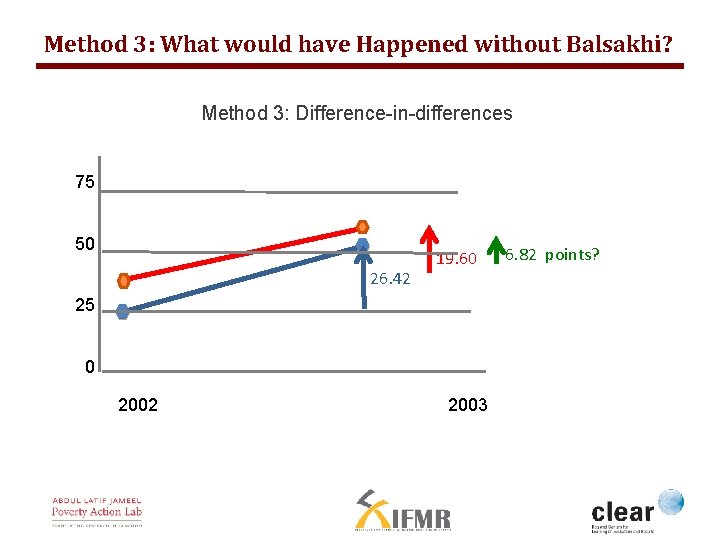

Method 3: What would have happened without Balsakhi?

[Figure: Difference-in-differences, 2002 to 2003: +26.42 points for children with a Balsakhi vs. +19.60 points for children without; impact of 6.82 points?]

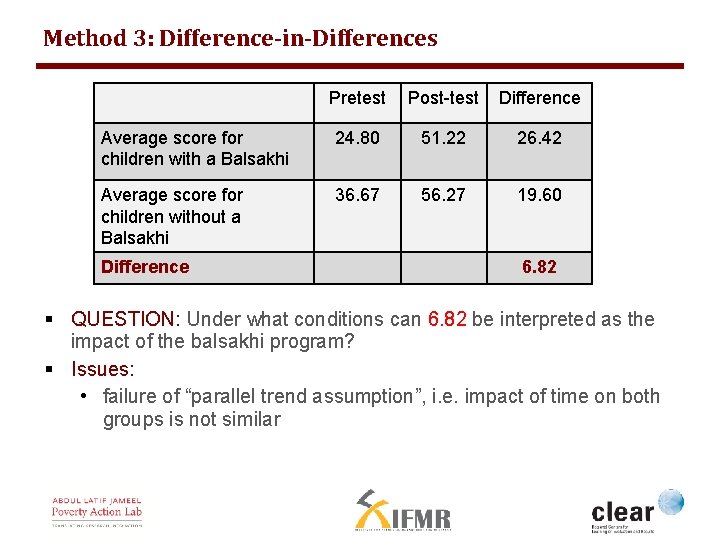

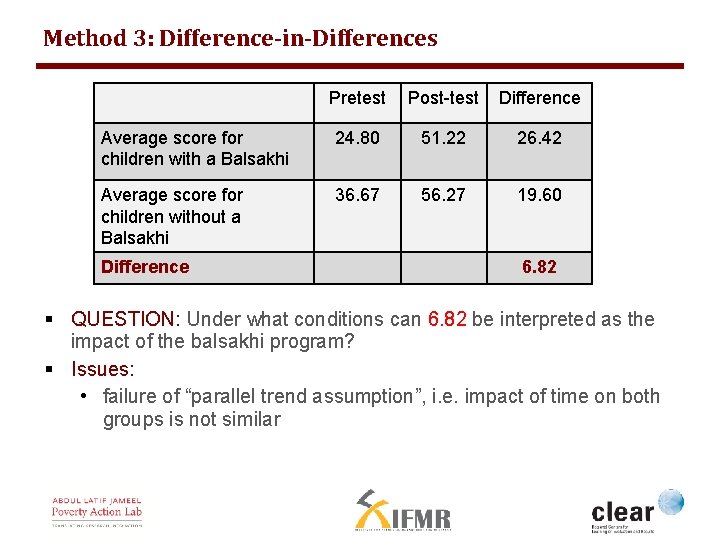

Method 3: Difference-in-Differences

| | Pretest | Post-test | Difference |
|---|---|---|---|
| Average score for children with a Balsakhi | 24.80 | 51.22 | 26.42 |
| Average score for children without a Balsakhi | 36.67 | 56.27 | 19.60 |
| Difference | | | 6.82 |

§ QUESTION: Under what conditions can 6.82 be interpreted as the impact of the Balsakhi program?
§ Issues:
• Failure of the “parallel trends” assumption, i.e., without the program, time would not have affected the two groups’ scores in the same way
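The difference-in-differences arithmetic from the table can be written as a short function. This is a minimal sketch; `diff_in_diff` is an illustrative name and the inputs are the four group averages above:

```python
def diff_in_diff(treat_pre, treat_post, comp_pre, comp_post):
    """Change for participants minus change for non-participants."""
    treat_change = treat_post - treat_pre  # 51.22 - 24.80 = 26.42 for Balsakhi children
    comp_change = comp_post - comp_pre     # 56.27 - 36.67 = 19.60 for the others
    return treat_change - comp_change

estimate = diff_in_diff(24.80, 51.22, 36.67, 56.27)
print(f"Difference-in-differences: {estimate:.2f}")  # Difference-in-differences: 6.82
```

Subtracting the comparison group's change removes any time trend the two groups share; it cannot remove a trend that differs between them, which is the parallel-trends caveat above.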

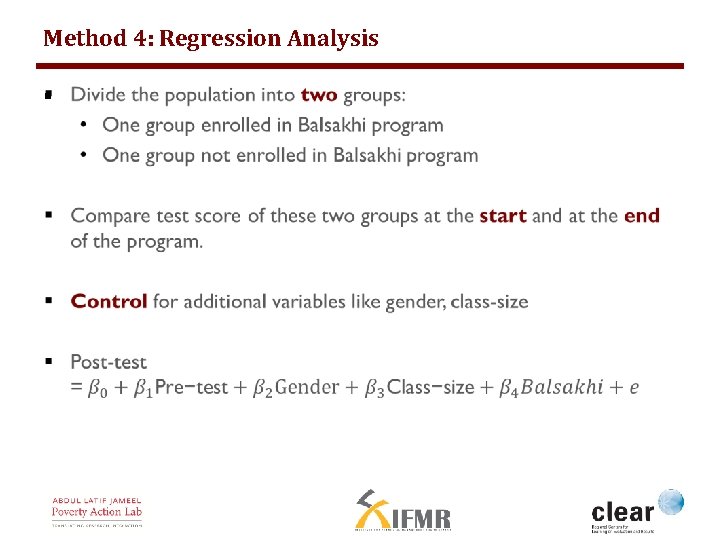

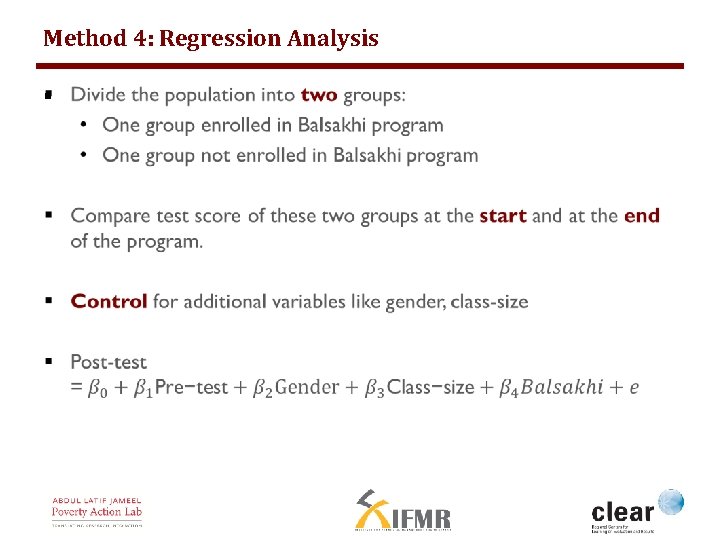

Method 4: Regression Analysis

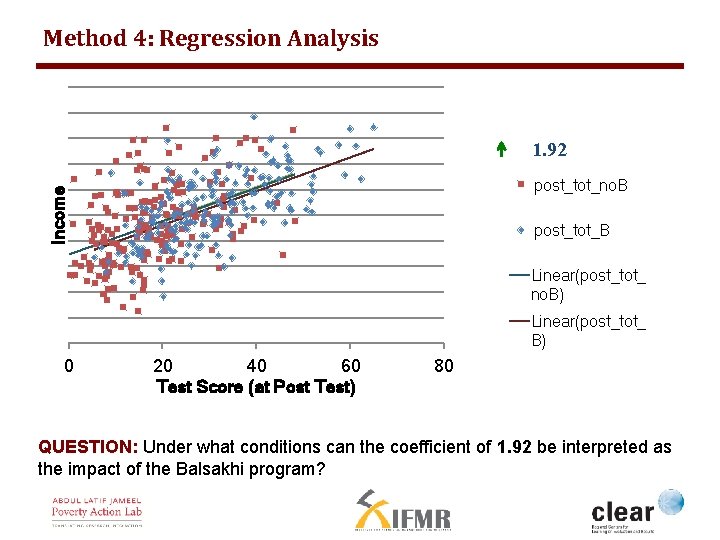

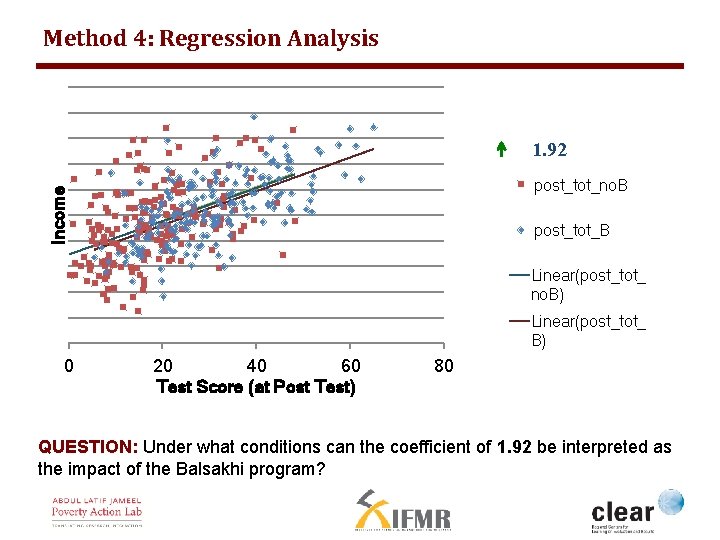

Method 4: Regression Analysis

[Figure: test score (at post-test) and income for children with a Balsakhi (post_tot_B) and without (post_tot_noB), each with a linear fit; coefficient 1.92]

QUESTION: Under what conditions can the coefficient of 1.92 be interpreted as the impact of the Balsakhi program?
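A sketch of what “regression with controls” does, on simulated data only: the Balsakhi microdata are not reproduced here, so this cannot recover the 1.92 figure. The setup is hypothetical: poorer children are more likely to be treated, and income also raises scores, so income is a confounder. The `ols` helper solves the ordinary least squares normal equations in plain Python:

```python
import random
import statistics

def ols(rows, y):
    """Solve the normal equations (X'X) b = X'y by Gauss-Jordan elimination."""
    k = len(rows[0])
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(k)] for i in range(k)]
    xty = [sum(r[i] * yi for r, yi in zip(rows, y)) for i in range(k)]
    aug = [xtx[i] + [xty[i]] for i in range(k)]
    for col in range(k):
        # Partial pivoting: use the row with the largest entry in this column.
        pivot = max(range(col, k), key=lambda row: abs(aug[row][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(k):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [a - factor * p for a, p in zip(aug[r], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]

# Hypothetical simulated data: true treatment effect is 5 points.
rng = random.Random(7)
rows, scores, treated_flags = [], [], []
for _ in range(500):
    income = rng.uniform(0, 100)
    treated = 1 if rng.random() < 1 - income / 100 else 0  # poorer => more likely treated
    score = 20 + 5 * treated + 0.3 * income + rng.gauss(0, 5)
    rows.append([1.0, treated, income])  # intercept, treatment dummy, control variable
    scores.append(score)
    treated_flags.append(treated)

intercept, treatment_coef, income_coef = ols(rows, scores)
naive = statistics.mean(s for s, t in zip(scores, treated_flags) if t) - statistics.mean(
    s for s, t in zip(scores, treated_flags) if not t
)
print(f"simple difference:       {naive:+.2f}")
print(f"regression with control: {treatment_coef:+.2f}")
```

The regression recovers the effect here only because the single confounder was built into the simulation and included as a control. If an unmeasured factor (such as ability) drove both treatment and scores, the coefficient would still be biased, which is the omitted-variables condition in the summary tables.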

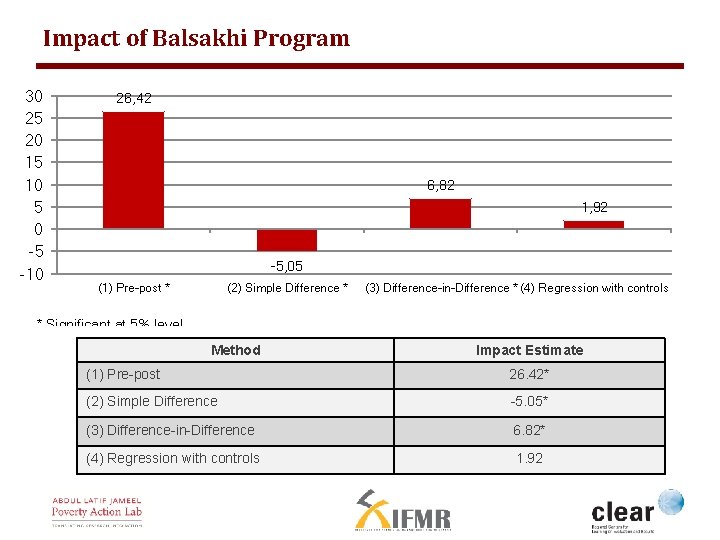

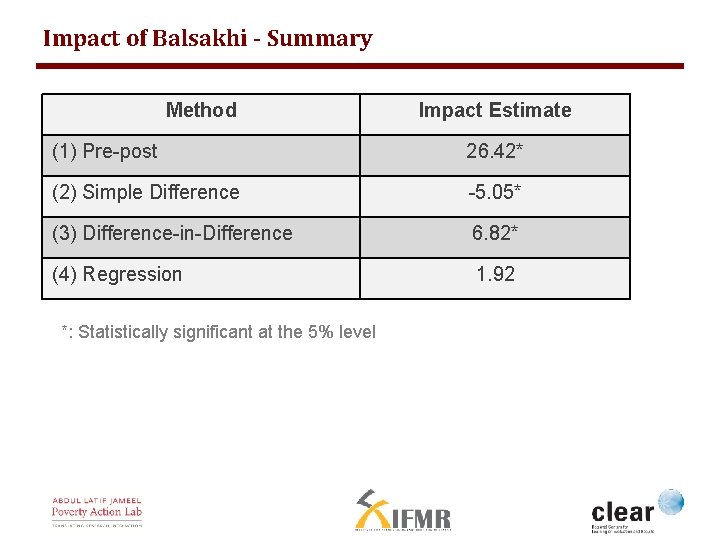

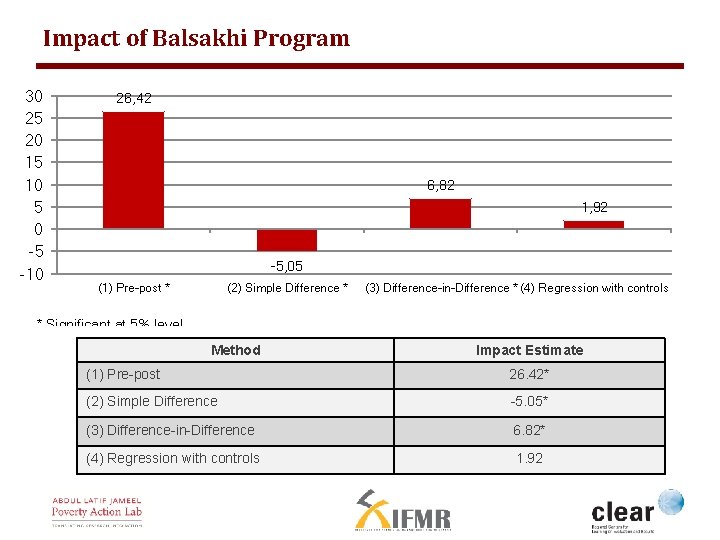

Impact of Balsakhi Program

[Figure: bar chart of impact estimates by method]

| Method | Impact Estimate |
|---|---|
| (1) Pre-post | 26.42* |
| (2) Simple Difference | -5.05* |
| (3) Difference-in-Differences | 6.82* |
| (4) Regression with controls | 1.92 |

*: Statistically significant at the 5% level

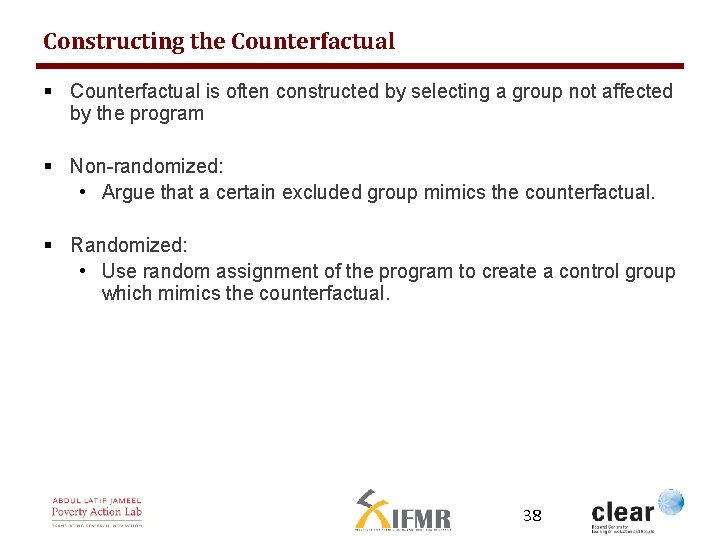

Constructing the Counterfactual
§ The counterfactual is often constructed by selecting a group not affected by the program
§ Non-randomized:
• Argue that a certain excluded group mimics the counterfactual.
§ Randomized:
• Use random assignment of the program to create a control group which mimics the counterfactual.

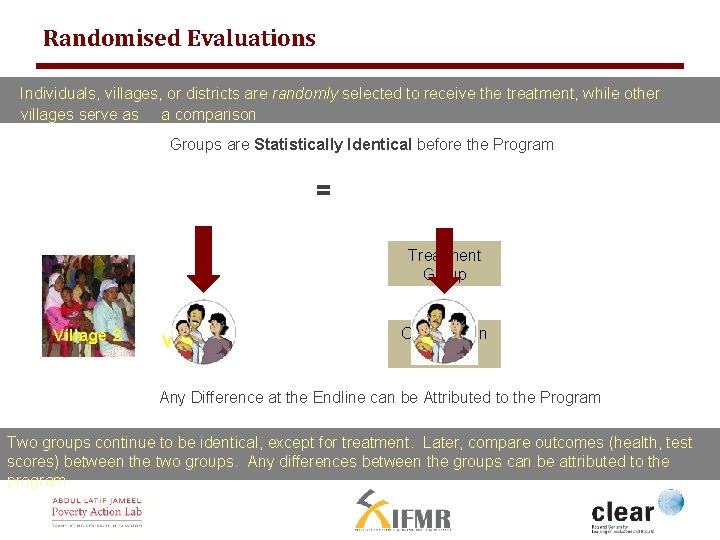

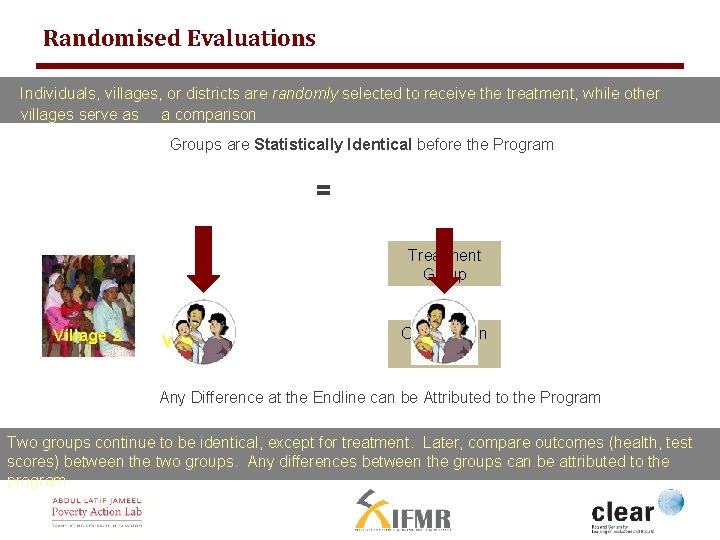

Randomized Evaluations
Individuals, villages, or districts are randomly selected to receive the treatment, while others serve as a comparison.

[Figure: Village 1 (Treatment Group) and Village 2 (Comparison Group). Groups are statistically identical before the program; any difference at the endline can be attributed to the program.]

The two groups continue to be identical, except for treatment. Later, compare outcomes (health, test scores) between the two groups. Any differences between the groups can be attributed to the program.
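A small simulation contrasts a non-random comparison with a randomized one. Everything here is hypothetical: the program adds 5 points for every child, and an unobserved “ability” drives scores. In the first case the program targets the weakest children, mirroring how Balsakhi selected students; in the second a coin flip decides:

```python
import random
import statistics

TRUE_EFFECT = 5.0  # hypothetical program effect, in test-score points

def endline_score(ability, treated, rng):
    """Endline score = baseline ability + program effect (if treated) + noise."""
    return 50 + ability + (TRUE_EFFECT if treated else 0.0) + rng.gauss(0, 5)

def difference_in_means(records):
    """Mean outcome of treated records minus mean outcome of untreated ones."""
    treated = [score for score, t in records if t]
    untreated = [score for score, t in records if not t]
    return statistics.mean(treated) - statistics.mean(untreated)

rng = random.Random(3)
# Unobserved ability for 4,000 hypothetical children.
abilities = [rng.gauss(0, 10) for _ in range(4000)]

# (a) Non-random: the program targets the weakest children (ability < 0).
targeted = [(endline_score(a, a < 0, rng), a < 0) for a in abilities]

# (b) Random: a fair coin decides who is offered the program.
coin_flips = [rng.random() < 0.5 for _ in abilities]
randomized = [(endline_score(a, t, rng), t) for a, t in zip(abilities, coin_flips)]

print(f"targeted comparison:   {difference_in_means(targeted):+.2f}")
print(f"randomized comparison: {difference_in_means(randomized):+.2f}")
```

Under these assumptions the targeted comparison comes out negative even though the program helps every child, while the randomized comparison lands close to the true effect.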

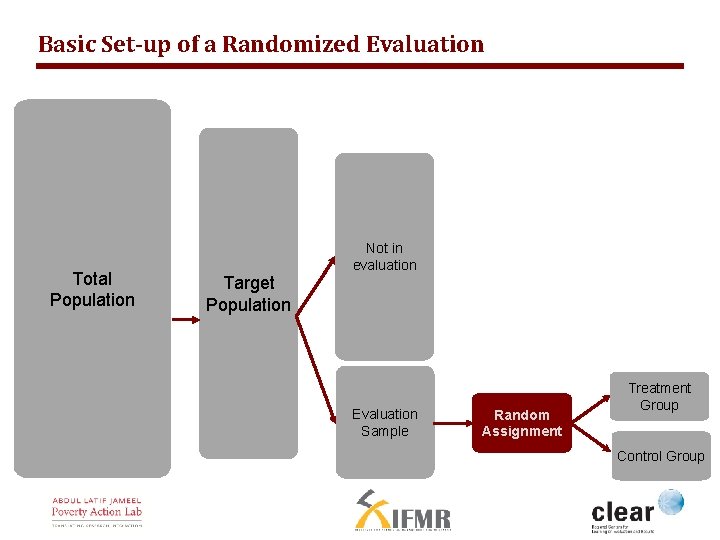

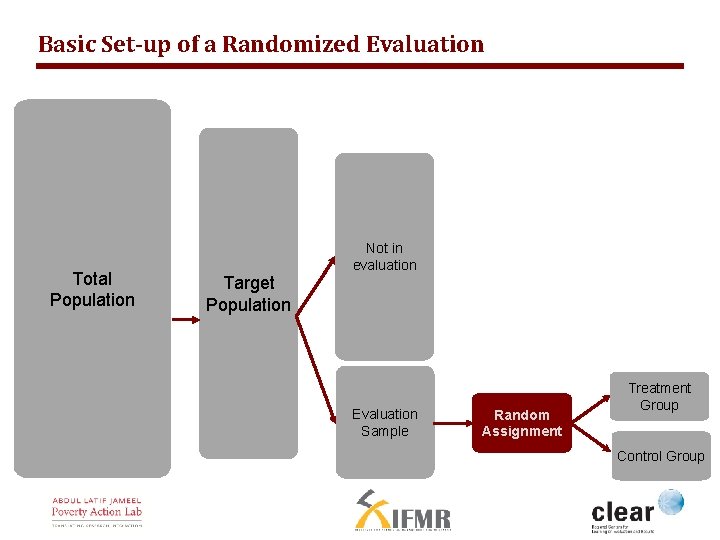

Basic Set-up of a Randomized Evaluation
§ Total Population
• Target Population
• Not in evaluation
• Evaluation Sample → Random Assignment → Treatment Group / Control Group

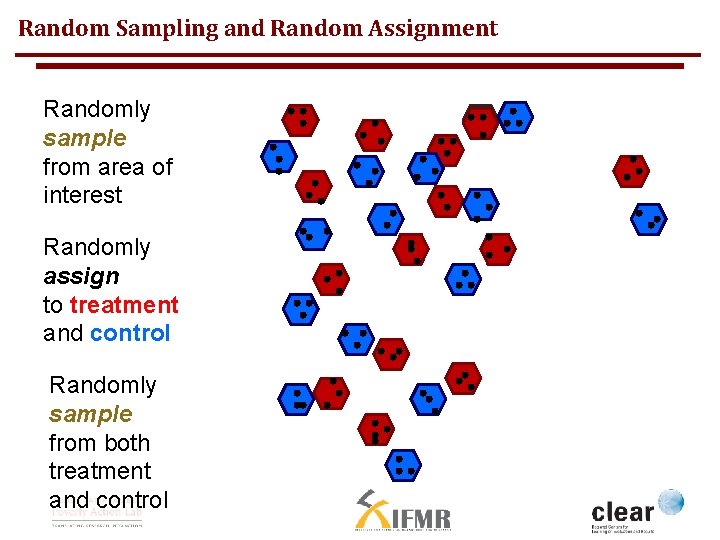

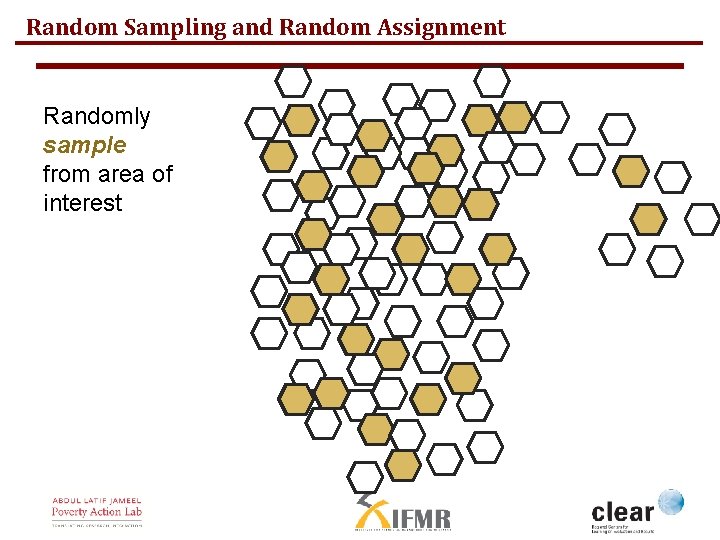

Random Sampling and Random Assignment
§ Randomly sample from area of interest

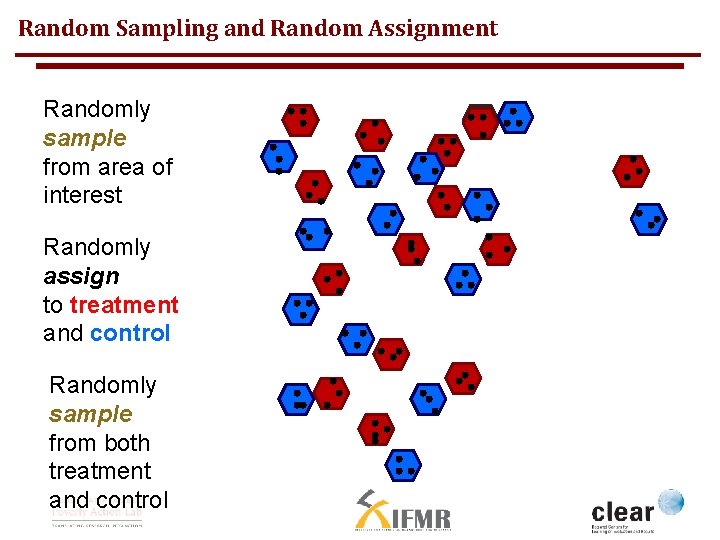

Random Sampling and Random Assignment
§ Randomly sample from area of interest
§ Randomly assign to treatment and control
§ Randomly sample from both treatment and control
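The three steps can be sketched with Python's `random` module. The area of interest (1,000 households) and the sample sizes are hypothetical choices for illustration. Random sampling makes the evaluation sample representative of the area; random assignment makes treatment and control comparable; the final draw picks who is surveyed in each group:

```python
import random

rng = random.Random(2024)

# Hypothetical area of interest: 1,000 households.
area_of_interest = [f"household_{i}" for i in range(1000)]

# Step 1: randomly sample an evaluation sample from the area of interest.
evaluation_sample = rng.sample(area_of_interest, 200)

# Step 2: randomly assign the evaluation sample to treatment and control.
shuffled = evaluation_sample[:]
rng.shuffle(shuffled)
treatment, control = shuffled[:100], shuffled[100:]

# Step 3: randomly sample units from both groups for data collection.
surveyed_treatment = rng.sample(treatment, 40)
surveyed_control = rng.sample(control, 40)

print(len(evaluation_sample), len(treatment), len(control))
print(len(surveyed_treatment), len(surveyed_control))
```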

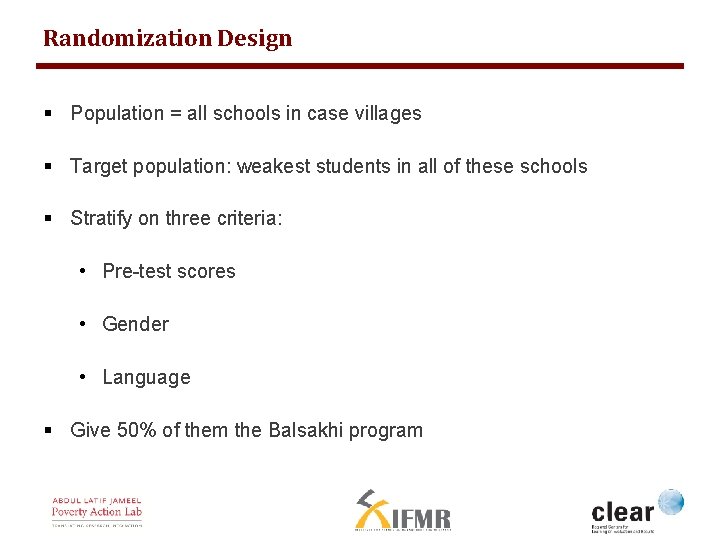

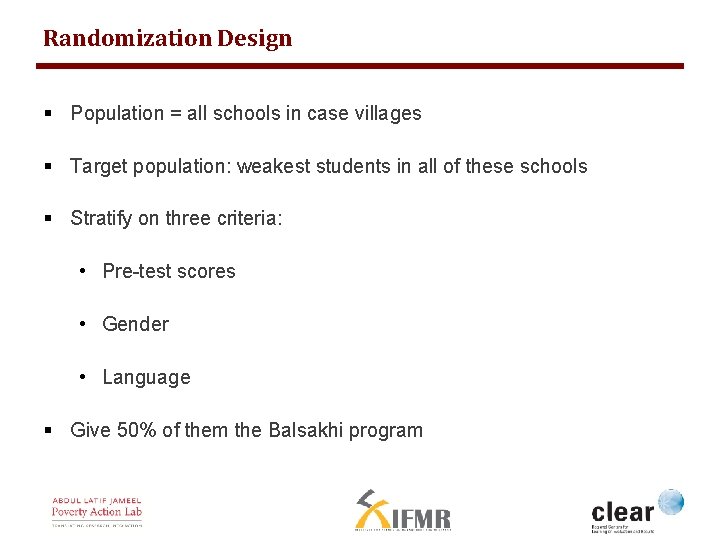

Randomization Design
§ Population = all schools in case villages
§ Target population: weakest students in all of these schools
§ Stratify on three criteria:
• Pre-test scores
• Gender
• Language
§ Give 50% of them the Balsakhi program
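Stratified randomization can be sketched as follows: group students by (pre-test band, gender, language), then randomly assign half of each group to the program. The student records, the pre-test cutoff of 20, and the language labels are all hypothetical, and this is one reasonable implementation rather than the study's actual procedure:

```python
import random
from collections import defaultdict

def stratum_of(student):
    """Stratum = (pre-test band, gender, language); the band cutoff is hypothetical."""
    band = "low" if student["pretest"] < 20 else "high"
    return (band, student["gender"], student["language"])

def stratified_assign(students, seed=0):
    """Randomly assign half of each stratum to treatment, the rest to control."""
    rng = random.Random(seed)
    strata = defaultdict(list)
    for s in students:
        strata[stratum_of(s)].append(s["id"])
    assignment = {}
    for key in sorted(strata):
        ids = strata[key][:]
        rng.shuffle(ids)
        half = len(ids) // 2  # in odd-sized strata the extra child goes to control
        for sid in ids[:half]:
            assignment[sid] = "treatment"
        for sid in ids[half:]:
            assignment[sid] = "control"
    return assignment

# Hypothetical target population: the weakest students across schools.
gen = random.Random(11)
students = [
    {
        "id": i,
        "pretest": gen.uniform(0, 40),
        "gender": gen.choice(["F", "M"]),
        "language": gen.choice(["language_A", "language_B"]),
    }
    for i in range(600)
]

assignment = stratified_assign(students, seed=5)

# Count treatment and control children within each stratum.
summary = defaultdict(lambda: {"treatment": 0, "control": 0})
for s in students:
    summary[stratum_of(s)][assignment[s["id"]]] += 1
for key in sorted(summary):
    print(key, summary[key])
```

Stratifying guarantees that each combination of pre-test band, gender, and language is split evenly, instead of relying on chance to balance them.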

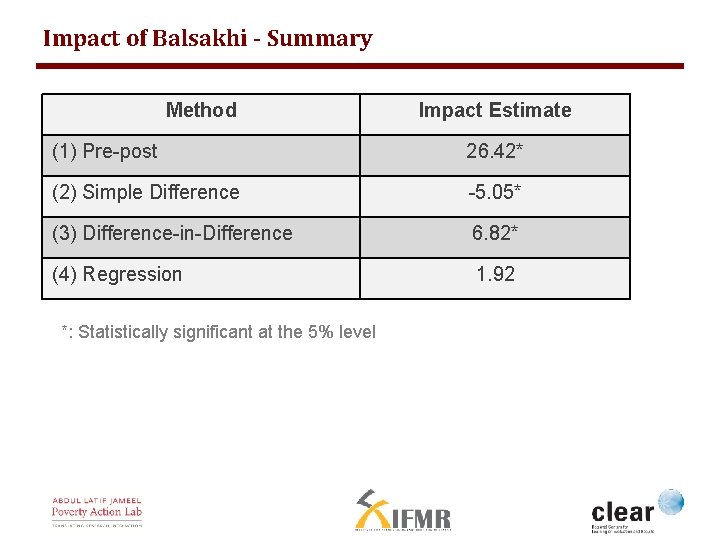

Impact of Balsakhi - Summary

| Method | Impact Estimate |
|---|---|
| (1) Pre-post | 26.42* |
| (2) Simple Difference | -5.05* |
| (3) Difference-in-Differences | 6.82* |
| (4) Regression | 1.92 |

*: Statistically significant at the 5% level

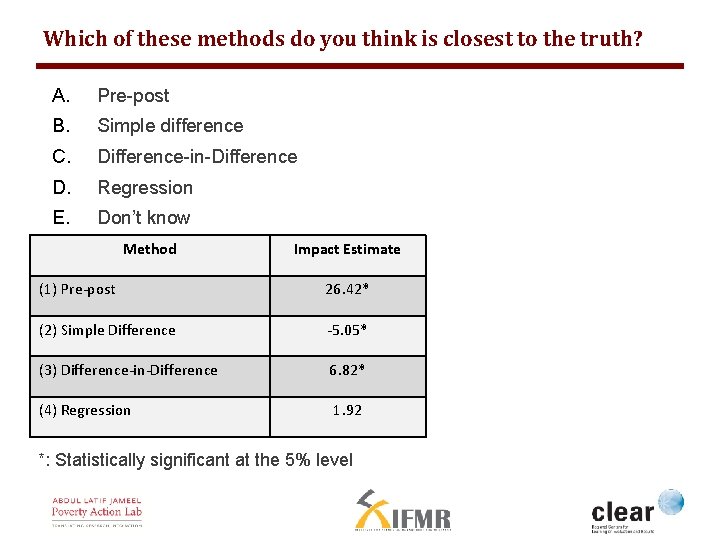

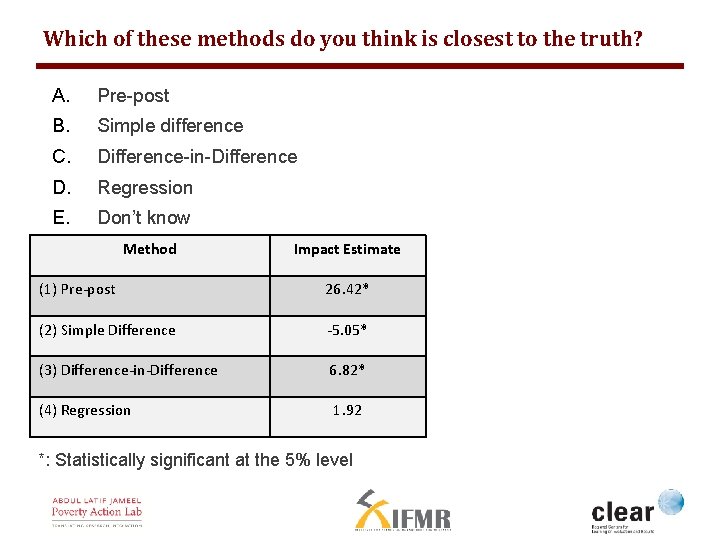

Which of these methods do you think is closest to the truth?
A. Pre-post
B. Simple difference
C. Difference-in-Differences
D. Regression
E. Don’t know

| Method | Impact Estimate |
|---|---|
| (1) Pre-post | 26.42* |
| (2) Simple Difference | -5.05* |
| (3) Difference-in-Differences | 6.82* |
| (4) Regression | 1.92 |

*: Statistically significant at the 5% level

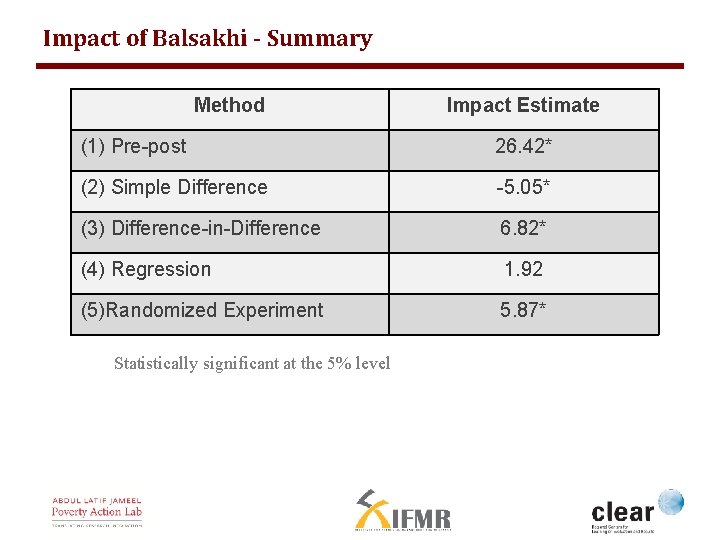

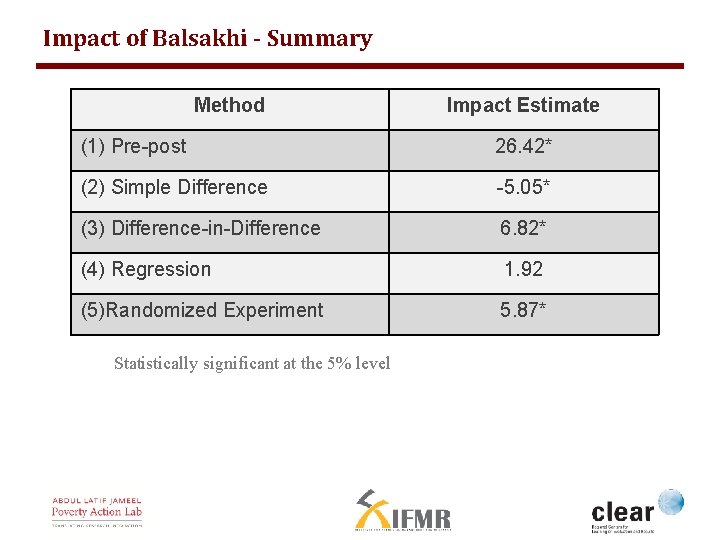

Impact of Balsakhi - Summary

| Method | Impact Estimate |
|---|---|
| (1) Pre-post | 26.42* |
| (2) Simple Difference | -5.05* |
| (3) Difference-in-Differences | 6.82* |
| (4) Regression | 1.92 |
| (5) Randomized Experiment | 5.87* |

*: Statistically significant at the 5% level
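Treating the randomized estimate as the benchmark, as this course argues, the gap for each non-experimental method is simple subtraction. A minimal sketch using the five estimates in the summary table:

```python
# Estimates from the Balsakhi summary table (test-score points).
estimates = {
    "Pre-post": 26.42,
    "Simple difference": -5.05,
    "Difference-in-differences": 6.82,
    "Regression": 1.92,
}
randomized_estimate = 5.87  # used here as the benchmark

gaps = {m: round(e - randomized_estimate, 2) for m, e in estimates.items()}
for method, gap in gaps.items():
    print(f"{method:<26} {estimates[method]:>7.2f}   gap vs. RCT: {gap:+.2f}")
```

The gaps range from about +20.6 points (pre-post) to about -10.9 points (simple difference), and they differ in sign, so the non-experimental estimates do not even agree on the direction of the error.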

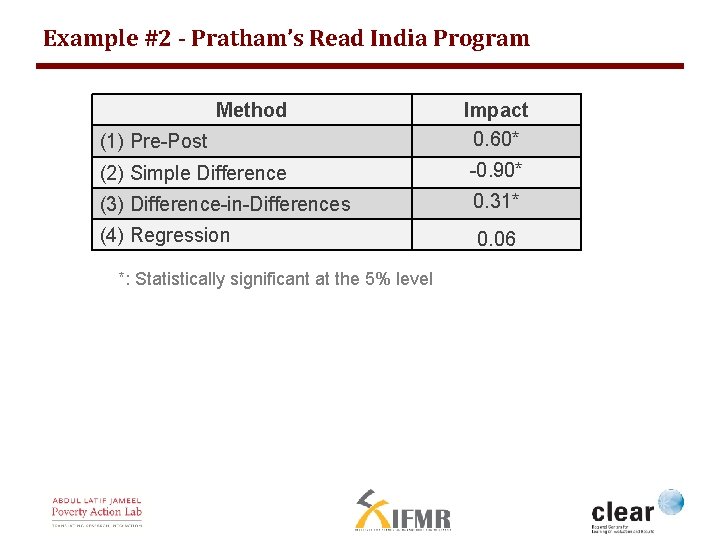

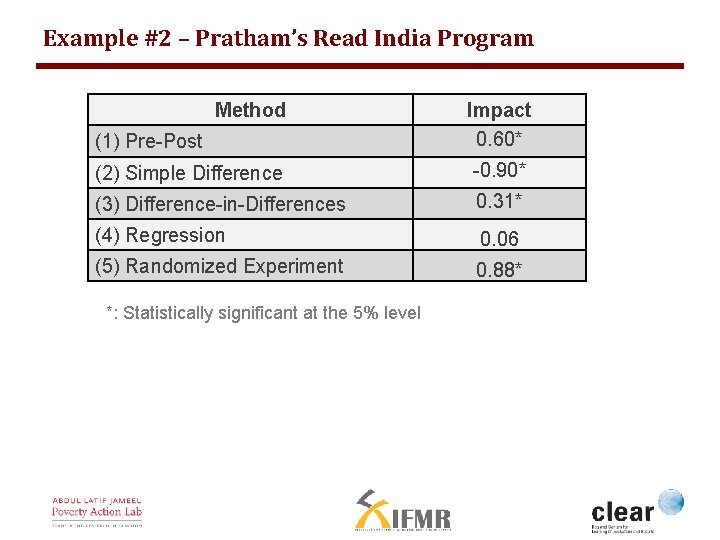

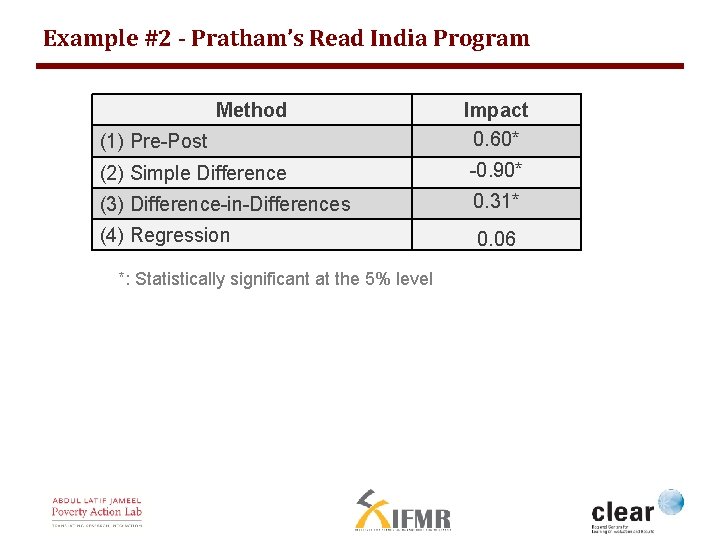

Example #2 - Pratham’s Read India Program

| Method | Impact |
|---|---|
| (1) Pre-Post | 0.60* |
| (2) Simple Difference | -0.90* |
| (3) Difference-in-Differences | 0.31* |
| (4) Regression | 0.06 |

*: Statistically significant at the 5% level

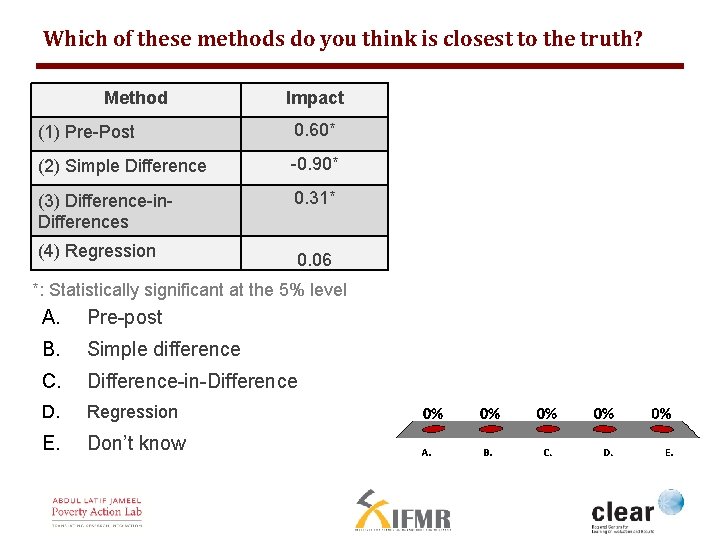

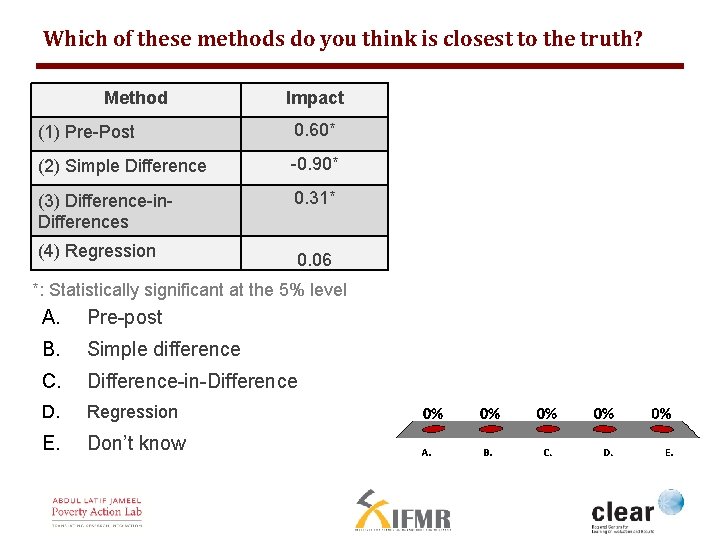

Which of these methods do you think is closest to the truth?

| Method | Impact |
|---|---|
| (1) Pre-Post | 0.60* |
| (2) Simple Difference | -0.90* |
| (3) Difference-in-Differences | 0.31* |
| (4) Regression | 0.06 |

*: Statistically significant at the 5% level

A. Pre-post
B. Simple difference
C. Difference-in-Differences
D. Regression
E. Don’t know

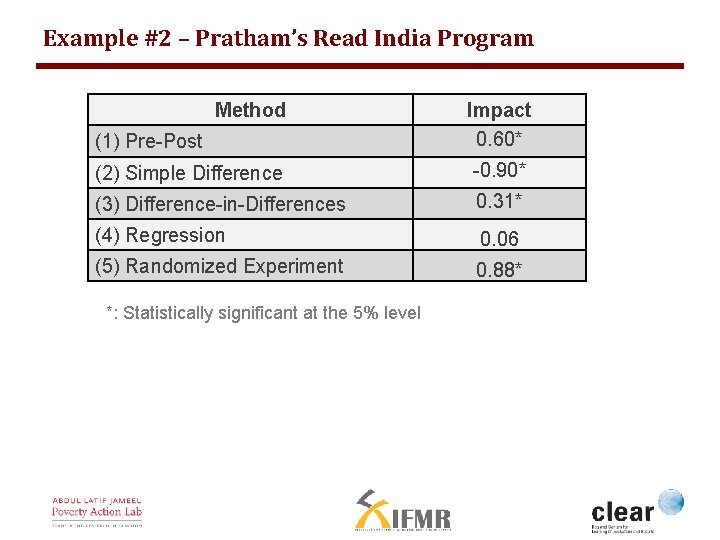

Example #2 - Pratham’s Read India Program

| Method | Impact |
|---|---|
| (1) Pre-Post | 0.60* |
| (2) Simple Difference | -0.90* |
| (3) Difference-in-Differences | 0.31* |
| (4) Regression | 0.06 |
| (5) Randomized Experiment | 0.88* |

*: Statistically significant at the 5% level

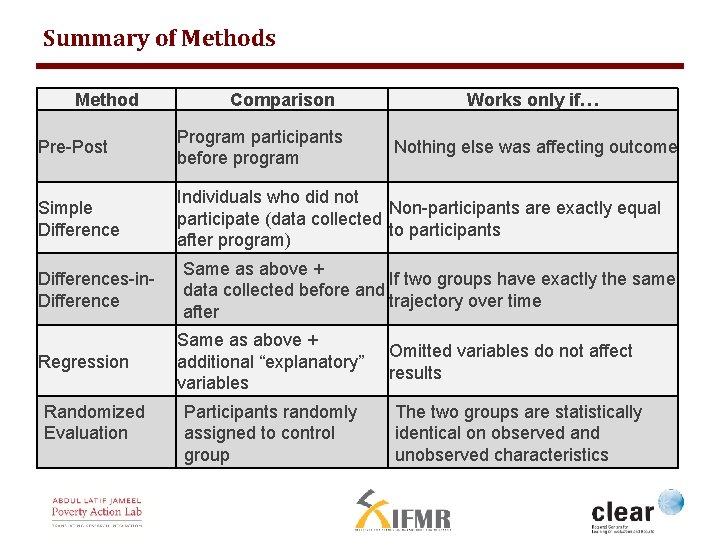

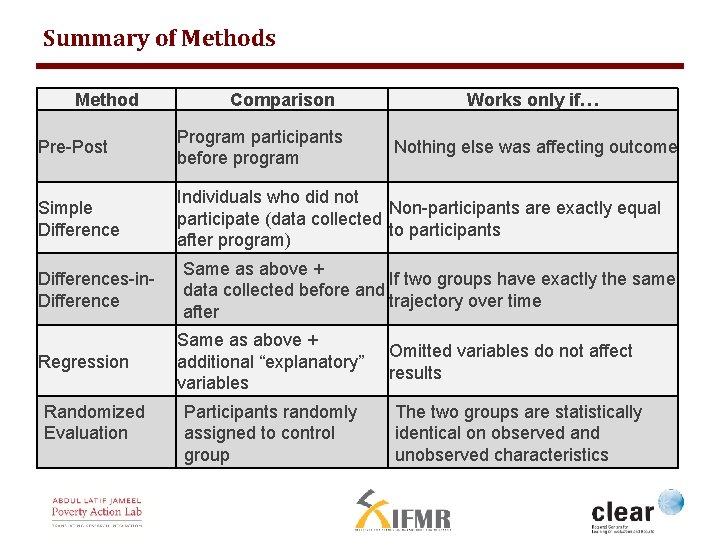

Summary of Methods

| Method | Comparison | Works only if… |
|---|---|---|
| Pre-Post | Program participants before program | Nothing else was affecting outcome |
| Simple Difference | Individuals who did not participate (data collected after program) | Non-participants are exactly equal to participants |
| Differences-in-Differences | Same as above + data collected before and after | The two groups have exactly the same trajectory over time |
| Regression | Same as above + additional “explanatory” variables | Omitted variables do not affect results |
| Randomized Evaluation | Participants randomly assigned to control group | The two groups are statistically identical on observed and unobserved characteristics |

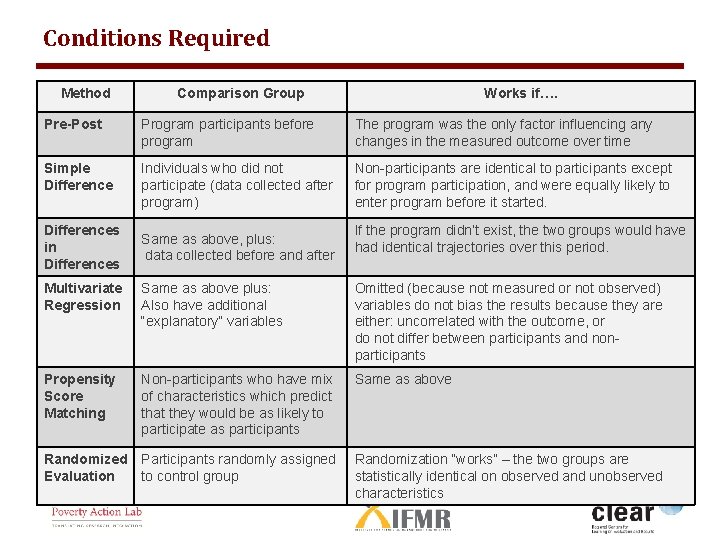

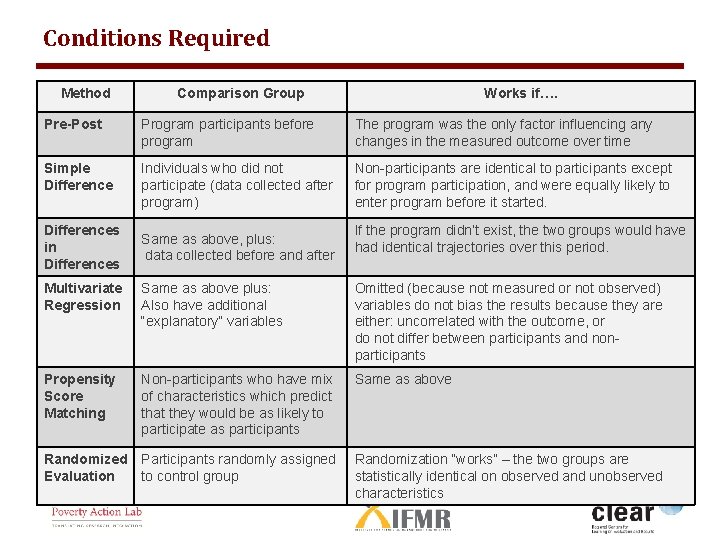

Conditions Required

| Method | Comparison Group | Works if… |
|---|---|---|
| Pre-Post | Program participants before program | The program was the only factor influencing any changes in the measured outcome over time |
| Simple Difference | Individuals who did not participate (data collected after program) | Non-participants are identical to participants except for program participation, and were equally likely to enter the program before it started |
| Differences-in-Differences | Same as above, plus: data collected before and after | If the program didn’t exist, the two groups would have had identical trajectories over this period |
| Multivariate Regression | Same as above, plus: additional “explanatory” variables | Omitted (because not measured or not observed) variables do not bias the results because they are either uncorrelated with the outcome or do not differ between participants and non-participants |
| Propensity Score Matching | Non-participants who have a mix of characteristics which predict that they would be as likely to participate as participants | Same as above |
| Randomized Evaluation | Participants randomly assigned to control group | Randomization “works”: the two groups are statistically identical on observed and unobserved characteristics |

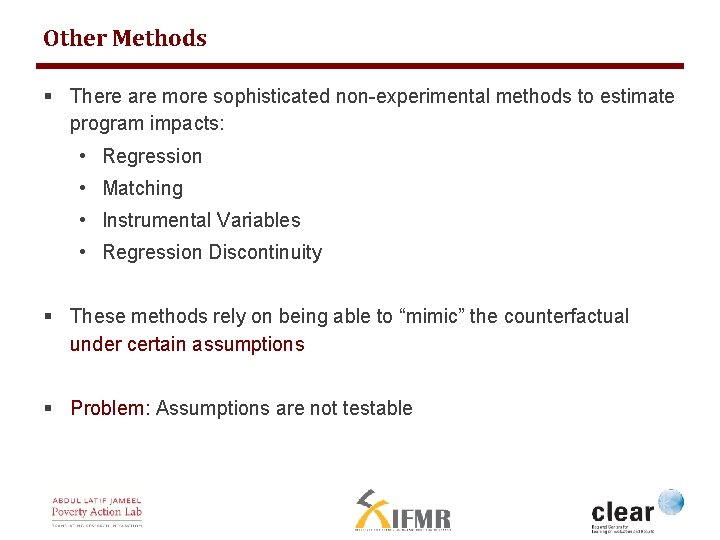

Other Methods
§ There are more sophisticated non-experimental methods to estimate program impacts:
• Regression
• Matching
• Instrumental Variables
• Regression Discontinuity
§ These methods rely on being able to “mimic” the counterfactual under certain assumptions
§ Problem: Assumptions are not testable

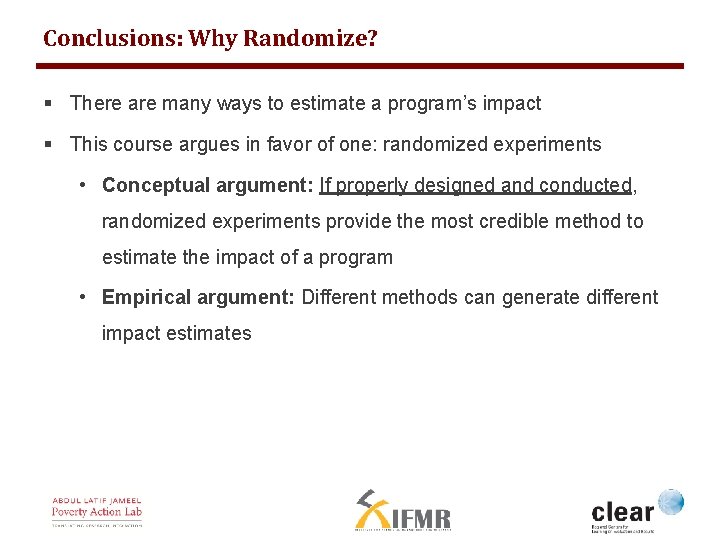

Conclusions: Why Randomize?
§ There are many ways to estimate a program’s impact
§ This course argues in favor of one: randomized experiments
• Conceptual argument: If properly designed and conducted, randomized experiments provide the most credible method to estimate the impact of a program
• Empirical argument: Different methods can generate different impact estimates

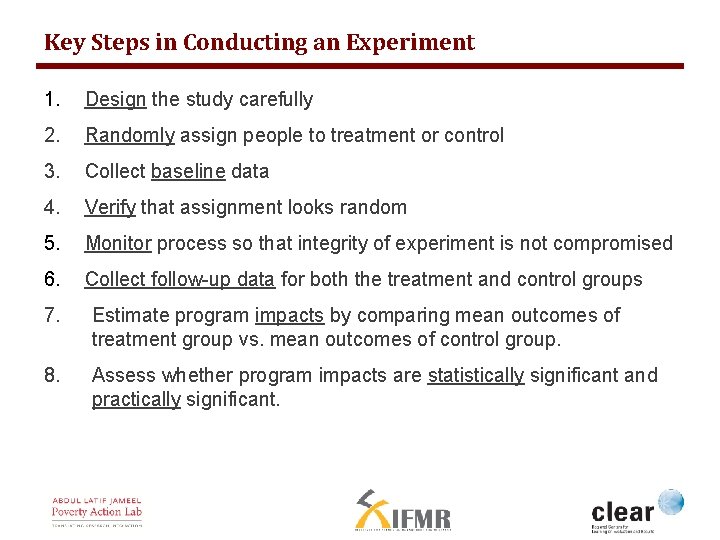

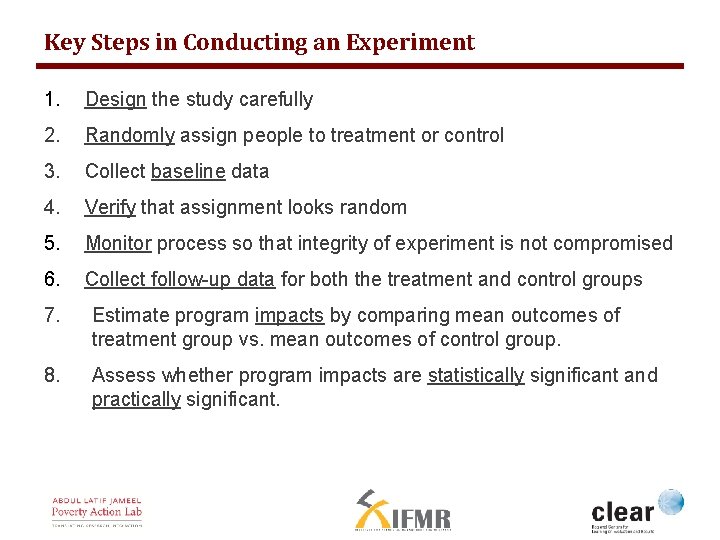

Key Steps in Conducting an Experiment
1. Design the study carefully
2. Randomly assign people to treatment or control
3. Collect baseline data
4. Verify that assignment looks random
5. Monitor process so that integrity of experiment is not compromised
6. Collect follow-up data for both the treatment and control groups
7. Estimate program impacts by comparing mean outcomes of treatment group vs. mean outcomes of control group
8. Assess whether program impacts are statistically significant and practically significant
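Steps 7 and 8 can be sketched as a difference in means with an unequal-variance standard error and a large-sample t-statistic. The follow-up scores are simulated and hypothetical, and the 1.96 cutoff is the usual normal approximation for a two-sided 5% test. Practical significance (whether the effect is large enough to matter) is a judgment the code does not make:

```python
import math
import random
import statistics

def impact_estimate(treatment_outcomes, control_outcomes):
    """Difference in mean outcomes, its standard error, and a t-statistic."""
    diff = statistics.mean(treatment_outcomes) - statistics.mean(control_outcomes)
    se = math.sqrt(
        statistics.variance(treatment_outcomes) / len(treatment_outcomes)
        + statistics.variance(control_outcomes) / len(control_outcomes)
    )
    return diff, se, diff / se

# Hypothetical follow-up test scores (simulated, not from any study).
rng = random.Random(0)
control_scores = [rng.gauss(50, 10) for _ in range(500)]
treatment_scores = [rng.gauss(55, 10) for _ in range(500)]

diff, se, t_stat = impact_estimate(treatment_scores, control_scores)
# Large-sample rule of thumb: |t| > 1.96 means significant at the 5% level.
significant = abs(t_stat) > 1.96
print(f"impact = {diff:.2f}, SE = {se:.2f}, t = {t_stat:.2f}, significant: {significant}")
```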

THANK YOU