Types of Parallel Computers


Two principal types:

• Shared memory multiprocessor
• Distributed memory multicomputer

ITCS 4/5145 Cluster Computing, UNC-Charlotte, B. Wilkinson, 2006.

Shared Memory Multiprocessor

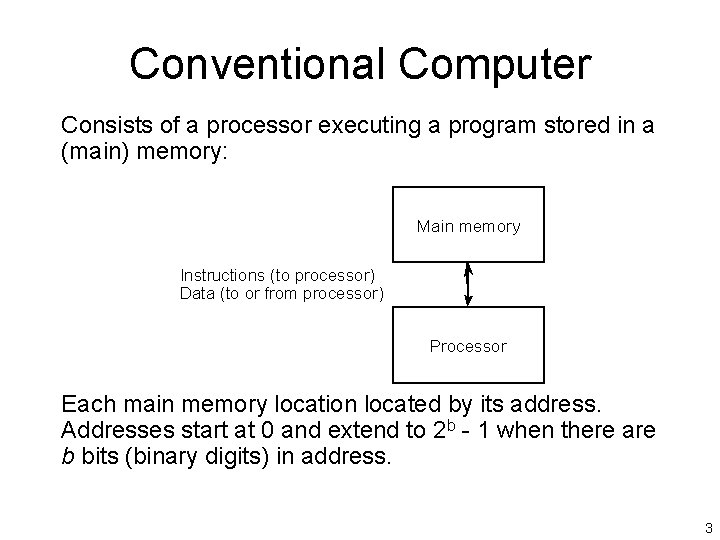

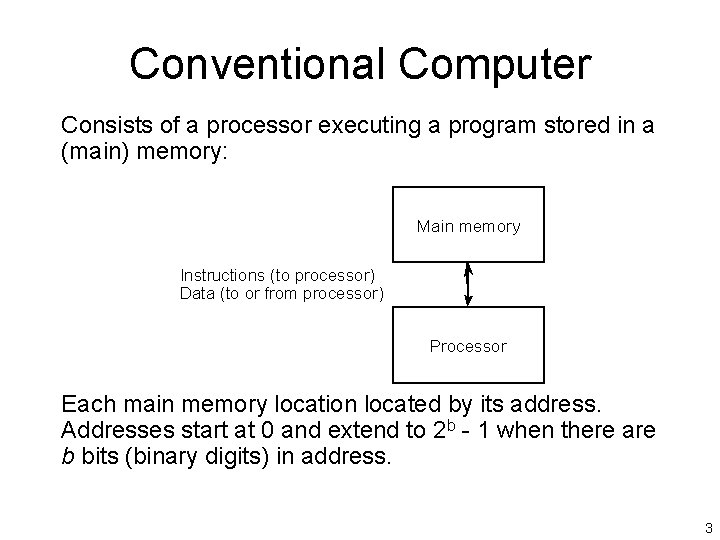

Conventional Computer

Consists of a processor executing a program stored in a (main) memory. Instructions pass from main memory to the processor; data passes to or from the processor.

Each main memory location is identified by its address. Addresses start at 0 and extend to 2^b - 1 when there are b bits (binary digits) in the address.
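As a small worked example of the addressing rule above, the following Python sketch computes the address range for a given number of address bits. The function name `address_range` is illustrative and does not come from the slides.

```python
def address_range(b):
    """Return (lowest, highest) address for a b-bit address."""
    if b < 1:
        raise ValueError("need at least one address bit")
    return 0, 2**b - 1

# Print the range for a few common address widths.
for bits in (16, 32, 64):
    low, high = address_range(bits)
    print(f"{bits}-bit addresses: {low} to {high} ({high + 1} locations)")
```

With 16 address bits, for instance, addresses run from 0 to 65535.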

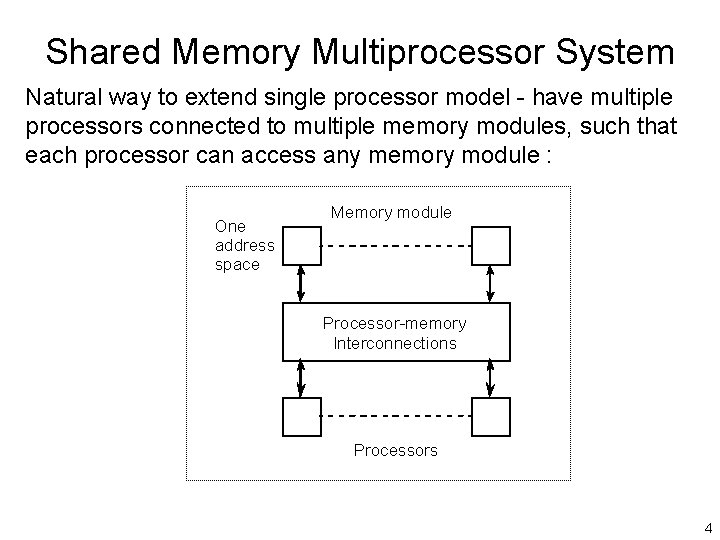

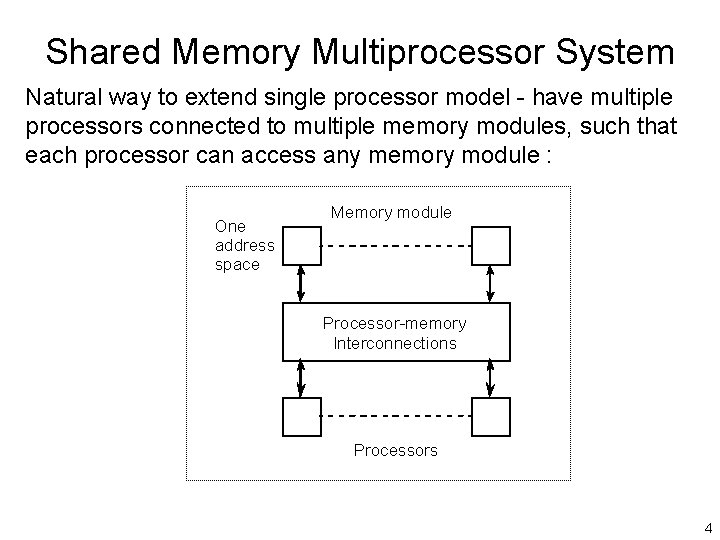

Shared Memory Multiprocessor System

A natural way to extend the single-processor model: have multiple processors connected to multiple memory modules, such that each processor can access any memory module.

[Figure labels: processors, processor-memory interconnections, memory modules, one address space.]

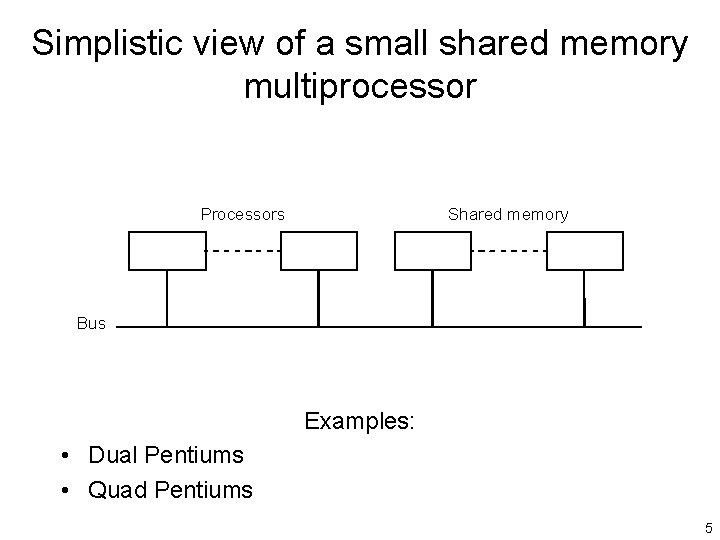

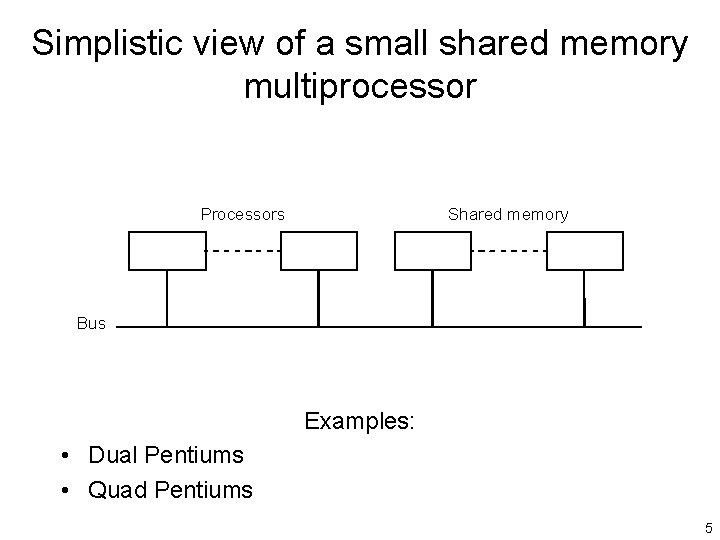

Simplistic view of a small shared memory multiprocessor

[Figure labels: processors, bus, shared memory.]

Examples:
• Dual Pentiums
• Quad Pentiums

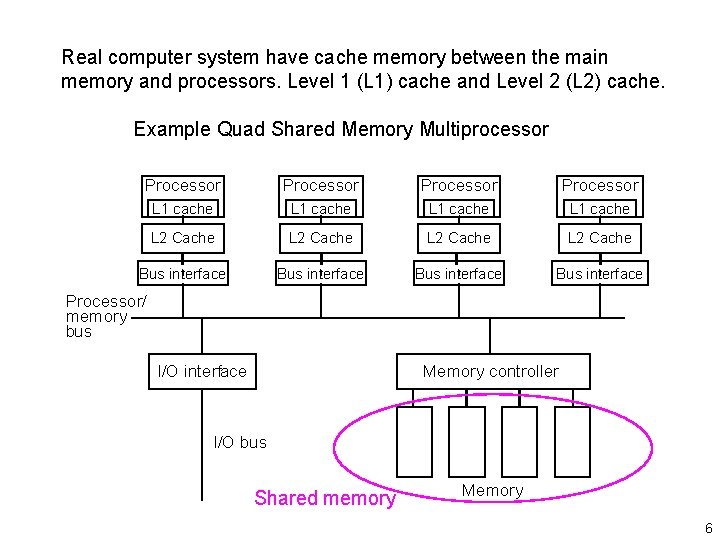

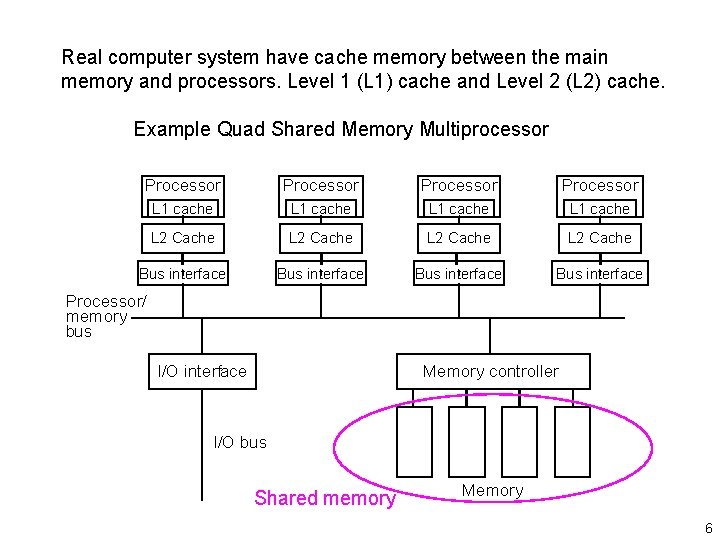

Real computer systems have cache memory between the main memory and the processors: Level 1 (L1) cache and Level 2 (L2) cache.

Example: Quad Shared Memory Multiprocessor

[Figure labels: processor, L1 cache, L2 cache, bus interface, processor/memory bus, I/O interface, I/O bus, memory controller, shared memory.]

“Recent” innovation

• Dual-core and multi-core processors
• Two or more independent processors in one package
• Actually an old idea, but not put into wide practice until recently.
• Since the L1 cache is usually inside the package and the L2 cache outside it, dual-/multi-core processors usually share the L2 cache.

Example

• Dual-core Pentiums (Intel Core™2 Duo processors): two processors in one package sharing a common L2 cache. Introduced April 2005. (Also hyper-threaded.)
• Xbox 360 game console: triple-core PowerPC microprocessor.
• PlayStation 3 Cell processor: 9-core design.

References and more information:
http://www.intel.com/products/processor/core2duo/index.htm
http://en.wikipedia.org/wiki/Dual_core

Programming Shared Memory Multiprocessors

Several possible ways:

1. Use threads: the programmer decomposes the program into individual parallel sequences (threads), each able to access shared variables declared outside the threads. Example: Pthreads.
2. Use library functions and preprocessor compiler directives with a sequential programming language to declare shared variables and specify parallelism. Example: OpenMP, an industry standard consisting of library functions, compiler directives, and environment variables. Needs an OpenMP compiler.
3. Use a modified sequential programming language, with added syntax to declare shared variables and specify parallelism. Example: UPC (Unified Parallel C). Needs a UPC compiler.
4. Use a specially designed parallel programming language, with syntax to express parallelism. The compiler automatically creates executable code for each processor (not now common).
5. Use a regular sequential programming language such as C and ask a parallelizing compiler to convert it into parallel executable code. Also not now common.
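The thread-based approach (item 1) can be sketched as follows. This is a minimal illustration that uses Python's standard `threading` module as a stand-in for Pthreads; the function name `parallel_sum` and the way the data is split are assumptions made for the example, not part of the slides.

```python
import threading

def parallel_sum(values, num_threads=4):
    """Sum `values` using threads that all update one shared accumulator."""
    shared = {"total": 0}        # shared variable, declared outside the threads
    lock = threading.Lock()      # protects the shared accumulator

    def worker(chunk):
        partial = sum(chunk)     # thread-private work on this thread's chunk
        with lock:               # only one thread at a time updates shared state
            shared["total"] += partial

    # Decompose the data into one strided slice per thread.
    threads = [
        threading.Thread(target=worker, args=(values[i::num_threads],))
        for i in range(num_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()                 # wait for every thread before reading the result
    return shared["total"]

print(parallel_sum(list(range(1, 101))))
```

The lock matters because every thread writes to the same variable; without it, concurrent updates could be lost. Note that in standard CPython builds the global interpreter lock limits how much of this work actually runs in parallel, so the sketch shows the programming model rather than a speedup.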

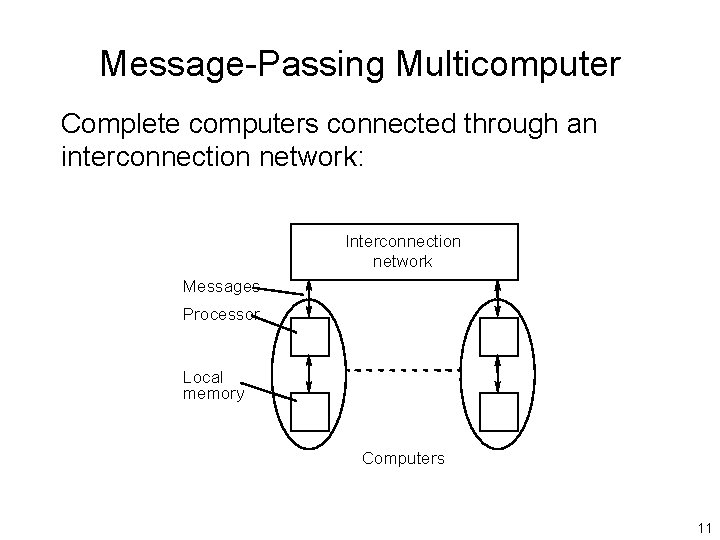

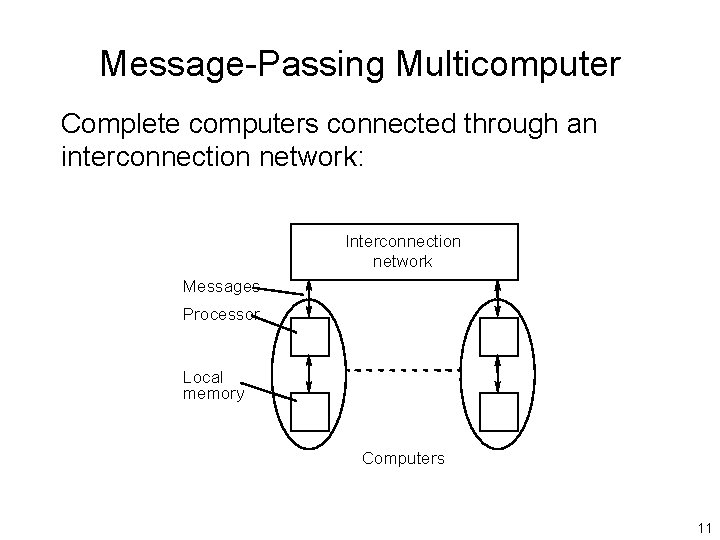

Message-Passing Multicomputer

Complete computers connected through an interconnection network.

[Figure labels: computers (each a processor with local memory), messages, interconnection network.]

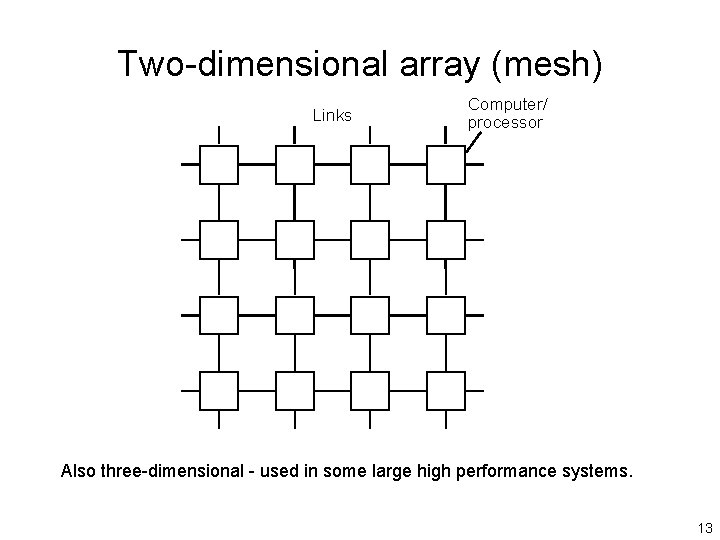

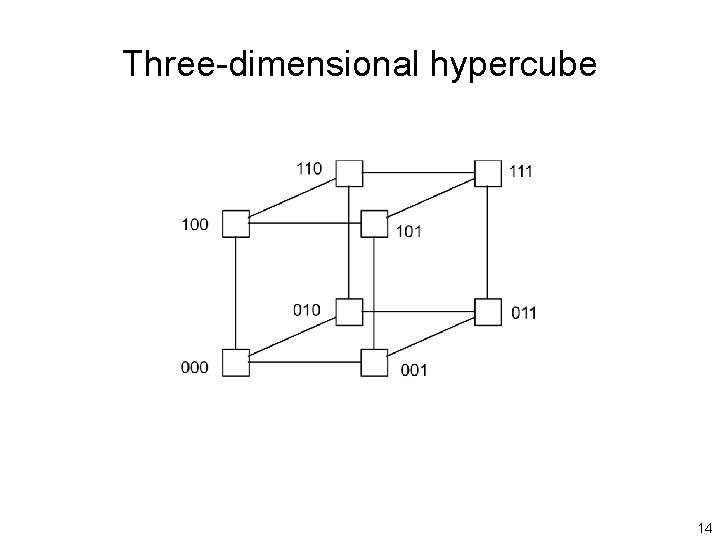

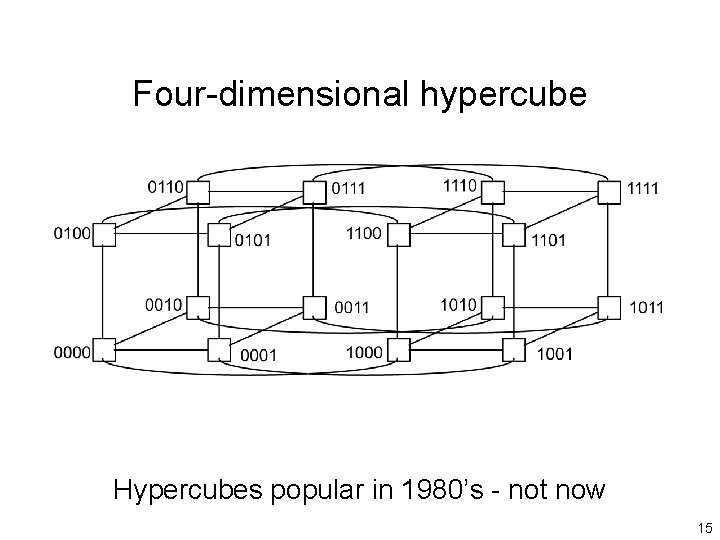

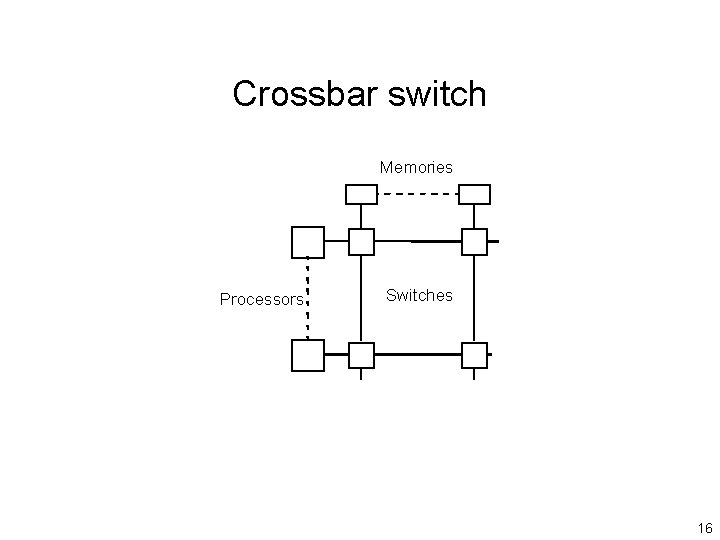

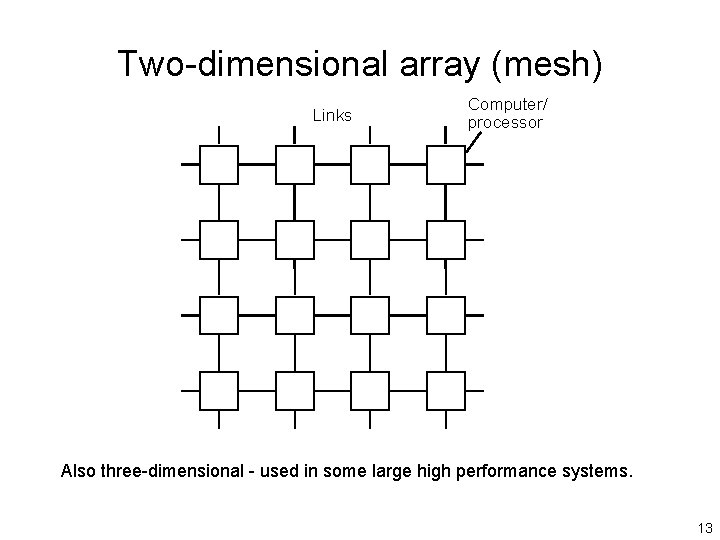

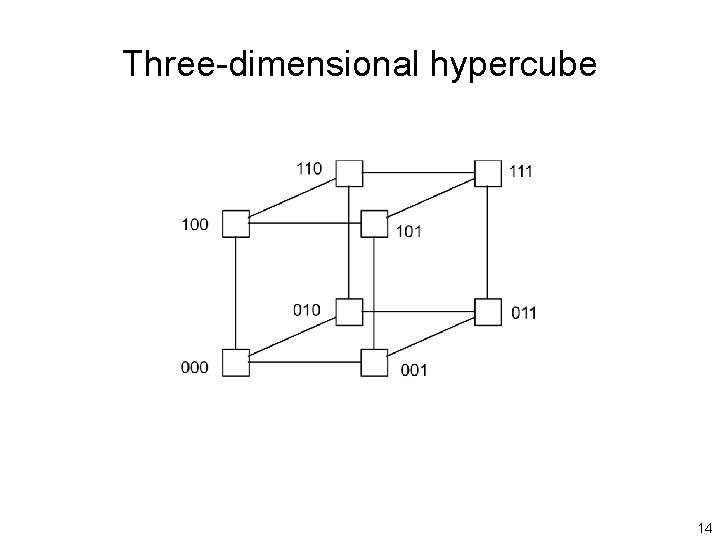

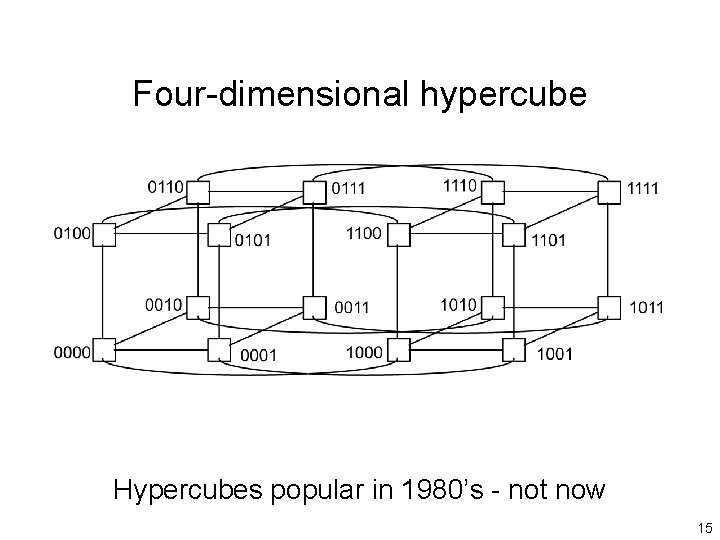

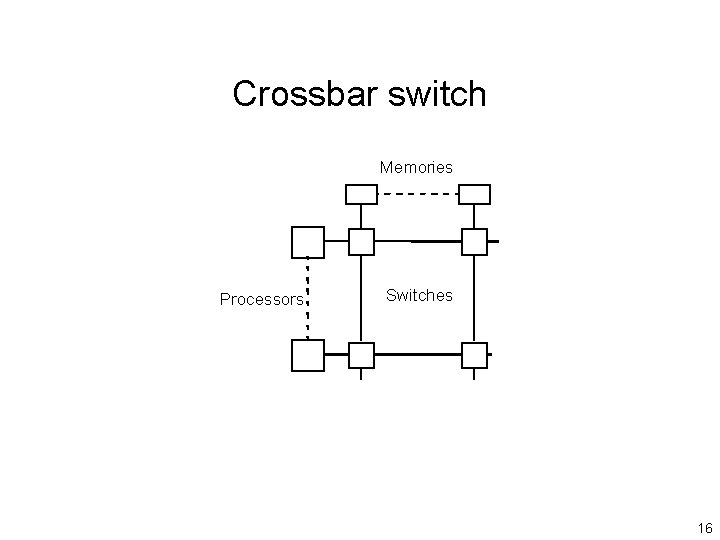

Interconnection Networks

• Limited and exhaustive interconnections
• 2- and 3-dimensional meshes
• Hypercube (not now common)
• Using switches:
  – Crossbar
  – Trees
  – Multistage interconnection networks

Two-dimensional array (mesh)

[Figure labels: computers/processors, links.]

Also three-dimensional: used in some large high-performance systems.
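To make the mesh topology concrete, the sketch below lists the direct neighbors of a node and the minimum number of links between two nodes. It assumes a plain grid without wraparound links (the slides do not say whether the edges wrap), and the names `mesh_neighbors` and `mesh_hops` are illustrative.

```python
def mesh_neighbors(row, col, rows, cols):
    """Return the nodes directly linked to (row, col) in a rows x cols mesh."""
    candidates = [(row - 1, col), (row + 1, col), (row, col - 1), (row, col + 1)]
    # Keep only positions inside the grid (assumption: no wraparound links).
    return [(r, c) for r, c in candidates if 0 <= r < rows and 0 <= c < cols]

def mesh_hops(a, b):
    """Minimum number of links between nodes a and b (Manhattan distance)."""
    return abs(a[0] - b[0]) + abs(a[1] - b[1])

print(mesh_neighbors(0, 0, 3, 3))   # a corner node has two neighbors
print(mesh_neighbors(1, 1, 3, 3))   # an interior node has four
print(mesh_hops((0, 0), (2, 2)))    # corner to opposite corner of a 3 x 3 mesh
```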

Three-dimensional hypercube

Four-dimensional hypercube

Hypercubes were popular in the 1980s but are not now.
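The slides show hypercubes only as figures. As a hedged sketch of the usual construction: in a d-dimensional hypercube each node has a d-bit address and is linked to the d nodes whose addresses differ from its own in exactly one bit. The function names below are illustrative.

```python
def hypercube_neighbors(node, d):
    """Nodes linked to `node` in a d-dimensional hypercube (flip one bit each)."""
    return [node ^ (1 << k) for k in range(d)]

def hypercube_hops(a, b):
    """Minimum links between a and b: the number of differing address bits."""
    return bin(a ^ b).count("1")

# Three-dimensional hypercube: node 000 is linked to 001, 010, and 100.
print([format(n, "03b") for n in hypercube_neighbors(0b000, 3)])
# Four-dimensional hypercube: 0000 and 1111 differ in all four bits.
print(hypercube_hops(0b0000, 0b1111))
```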

Crossbar switch

[Figure labels: processors, switches, memories.]

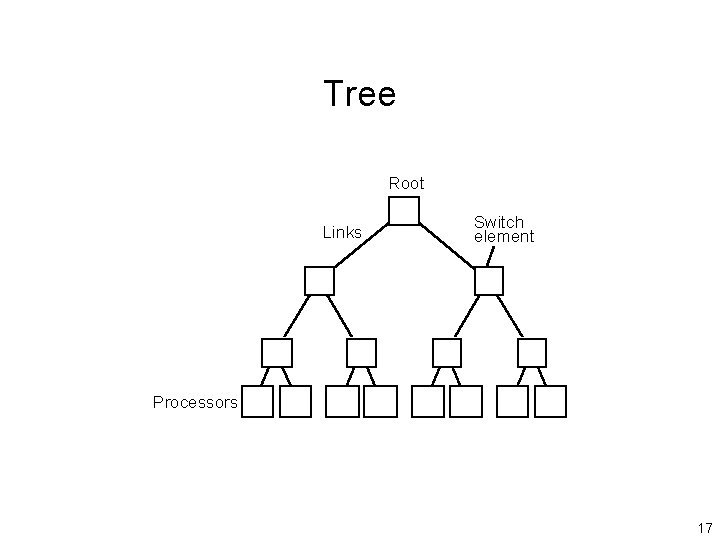

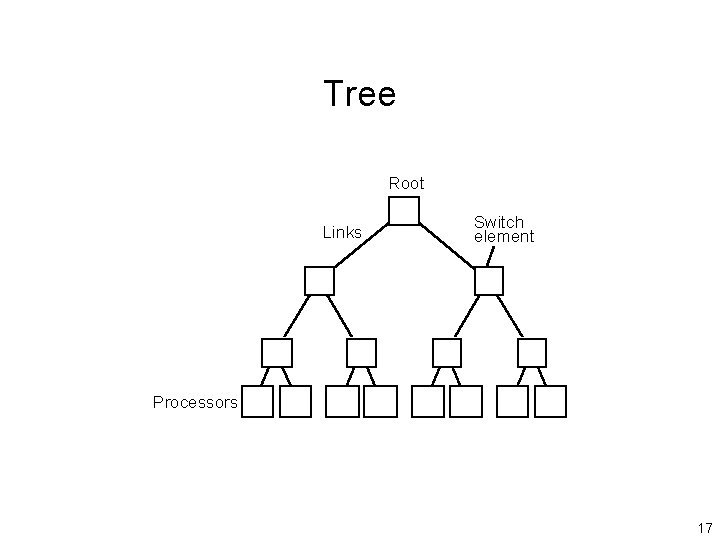

Tree

[Figure labels: root, links, switch elements, processors.]

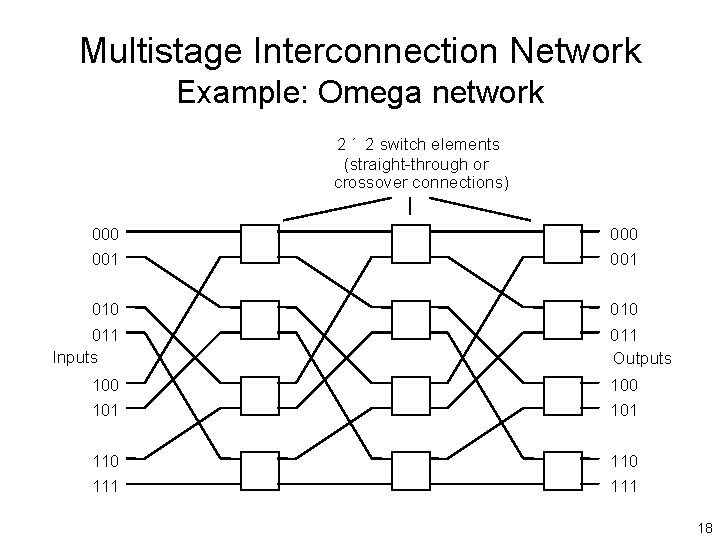

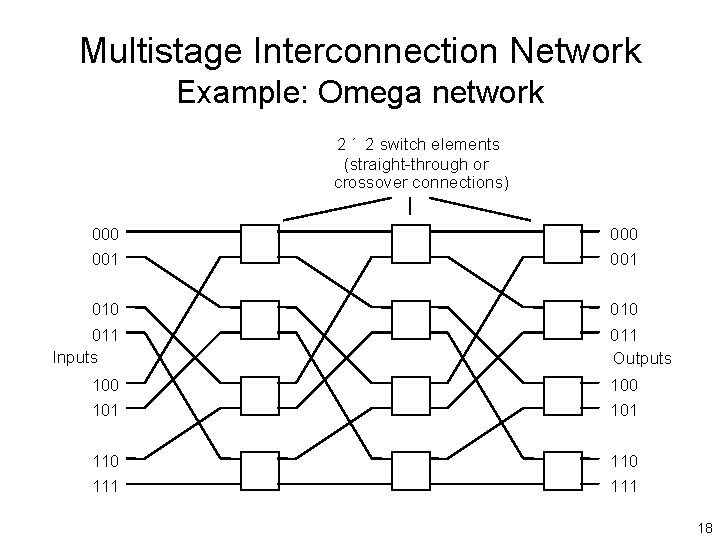

Multistage Interconnection Network

Example: Omega network, built from 2 × 2 switch elements (straight-through or crossover connections).

[Figure: inputs and outputs each labeled 000 through 111.]
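The slide does not describe how a message finds its way through the Omega network. One common scheme, shown here as an assumption rather than as the slides' method, is destination-tag routing: before each stage the lines are permuted by a perfect shuffle (a left rotation of the address bits), and each 2 × 2 switch sends the message to its upper or lower output according to one bit of the destination address, most significant bit first. The function name `omega_route` is illustrative.

```python
def omega_route(src, dst, n_bits=3):
    """Trace a message from input `src` to output `dst` in an Omega network.

    Returns one (stage, switch_setting, line_after_stage) tuple per stage.
    Assumes 2**n_bits inputs and destination-tag routing.
    """
    mask = (1 << n_bits) - 1
    pos = src
    path = []
    for stage in range(n_bits):
        # Perfect shuffle: rotate the n-bit line number left by one bit.
        pos = ((pos << 1) | (pos >> (n_bits - 1))) & mask
        # Destination bit that controls this stage (most significant first).
        bit = (dst >> (n_bits - 1 - stage)) & 1
        # Low bit of pos is the switch input port; `bit` is the output port.
        setting = "straight" if (pos & 1) == bit else "crossover"
        pos = (pos & ~1) | bit
        path.append((stage, setting, pos))
    return path

for stage, setting, line in omega_route(0b010, 0b110):
    print(stage, setting, format(line, "03b"))
```

After the last stage the line number equals the destination address, whichever input the message started from.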

Networked Computers as a Computing Platform

• In the early 1990s, a network of computers became a very attractive alternative to expensive supercomputers and parallel computer systems for high-performance computing.
• Several early projects. Notable:
  – Berkeley NOW (network of workstations) project.
  – NASA Beowulf project.

Key advantages:

• Very high performance workstations and PCs are readily available at low cost.
• The latest processors can easily be incorporated into the system as they become available.
• Existing software can be used or modified.

Beowulf Clusters*

• A group of interconnected “commodity” computers achieving high performance at low cost.
• Typically uses commodity interconnects (high-speed Ethernet) and the Linux OS.

* The name Beowulf comes from the NASA Goddard Space Flight Center cluster project.

Cluster Interconnects

• Originally Fast Ethernet on low-cost clusters
• Gigabit Ethernet: easy upgrade path

More specialized/higher performance:

• Myrinet: 2.4 Gbits/sec. Disadvantage: single vendor
• cLan
• SCI (Scalable Coherent Interface)
• QNet
• InfiniBand: may be important, as InfiniBand interfaces may be integrated on next-generation PCs

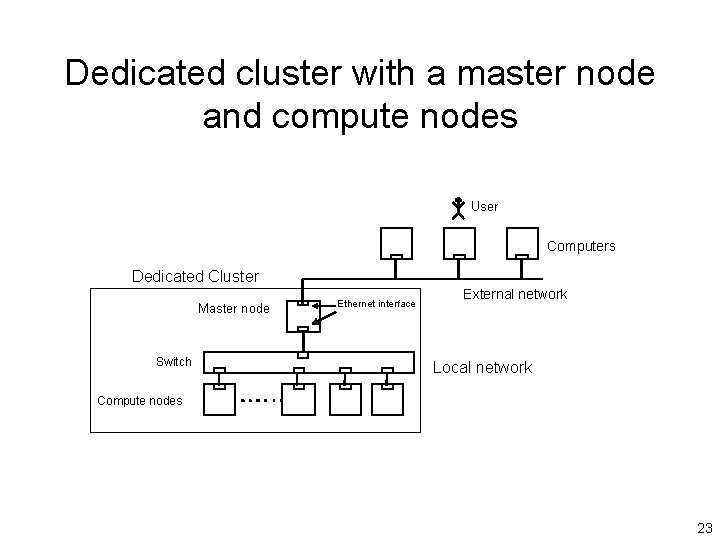

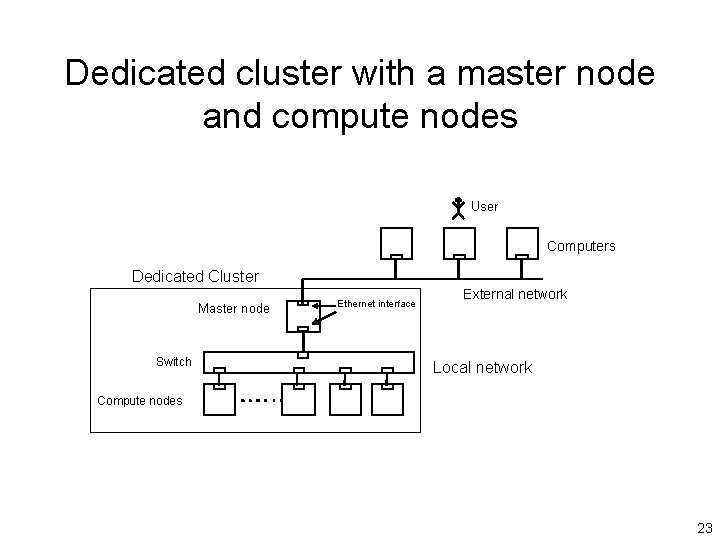

Dedicated cluster with a master node and compute nodes

[Figure labels: user computers, external network, Ethernet interface, master node, switch, local network, compute nodes, dedicated cluster.]

Software Tools for Clusters

Based upon message-passing parallel programming:

• Parallel Virtual Machine (PVM): developed in the late 1980s. Became very popular.
• Message-Passing Interface (MPI): standard defined in the 1990s.
• Both provide a set of user-level libraries for message passing, for use with regular programming languages (C, C++, ...).
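The message-passing style that PVM and MPI support can be illustrated without either library. The sketch below is a simulation only: Python threads play the role of separate computers, and `queue.Queue` objects play the role of the network. Each worker touches only the data it receives in a message, never a shared variable. The function name `master_worker_sum` and the master/worker split are assumptions made for the example; this is not PVM or MPI code.

```python
import queue
import threading

def master_worker_sum(values, num_workers=3):
    """Master sends each worker a chunk; workers send back partial sums."""
    to_worker = [queue.Queue() for _ in range(num_workers)]  # one inbox per worker
    to_master = queue.Queue()                                # the master's inbox

    def worker(rank):
        chunk = to_worker[rank].get()        # receive: blocks until a message arrives
        to_master.put((rank, sum(chunk)))    # send the result, tagged with the sender

    threads = [threading.Thread(target=worker, args=(r,)) for r in range(num_workers)]
    for t in threads:
        t.start()

    # Master: send one message (a slice of the data) to each worker.
    for rank in range(num_workers):
        to_worker[rank].put(values[rank::num_workers])

    # Master: receive one reply per worker, in whatever order they arrive.
    partials = dict(to_master.get() for _ in range(num_workers))
    for t in threads:
        t.join()
    return sum(partials.values())

print(master_worker_sum(list(range(10))))
```

The explicit send and receive steps are the point of the example: all coordination happens through messages, which is what allows the same program structure to run on computers that share no memory.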