Types of Parallel Computers


Two principal approaches:

• Shared memory multiprocessor
• Distributed memory multicomputer

ITCS 4/5145 Parallel Programming, UNC-Charlotte, B. Wilkinson, 2012. Jan 12, 2012

Shared Memory Multiprocessor

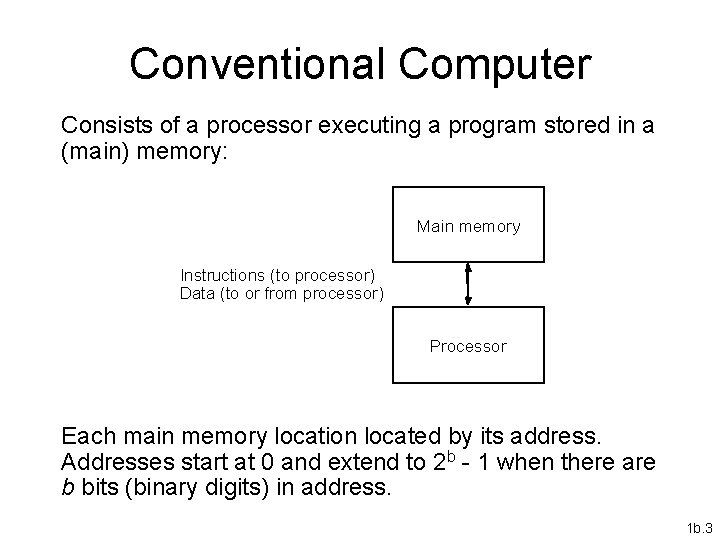

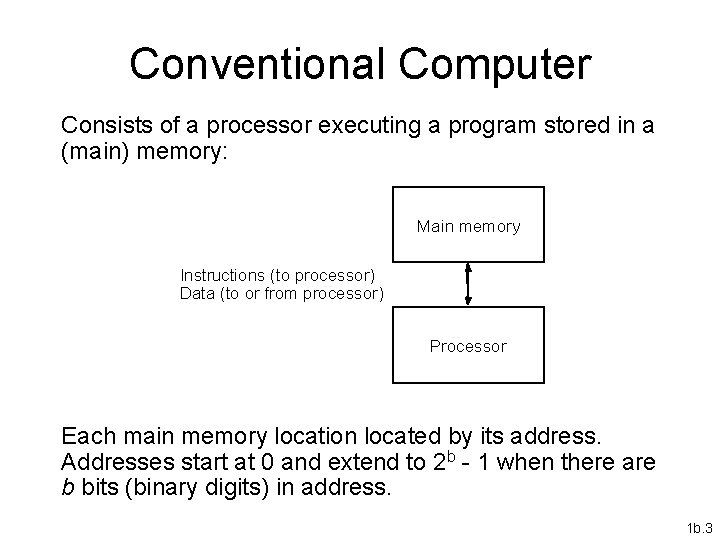

Conventional Computer

Consists of a processor executing a program stored in a (main) memory.

Figure labels: main memory; instructions (to processor); data (to or from processor); processor.

Each main memory location is located by its address. Addresses start at 0 and extend to 2^b - 1 when there are b bits (binary digits) in the address.
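The address range above can be checked with a few lines of Python. This is only an illustrative sketch; the function name `address_range` is made up for this example and does not come from the slides.

```python
def address_range(b: int) -> tuple[int, int]:
    """Return (lowest, highest) address for a memory with b-bit addresses."""
    if b < 1:
        raise ValueError("need at least one address bit")
    return 0, 2**b - 1  # 2^b distinct locations, numbered from 0


for bits in (16, 32):
    low, high = address_range(bits)
    print(f"{bits}-bit addresses: {low} .. {high} ({high + 1} locations)")
```

With 16 address bits the highest address is 65535; with 32 bits it is 4294967295.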

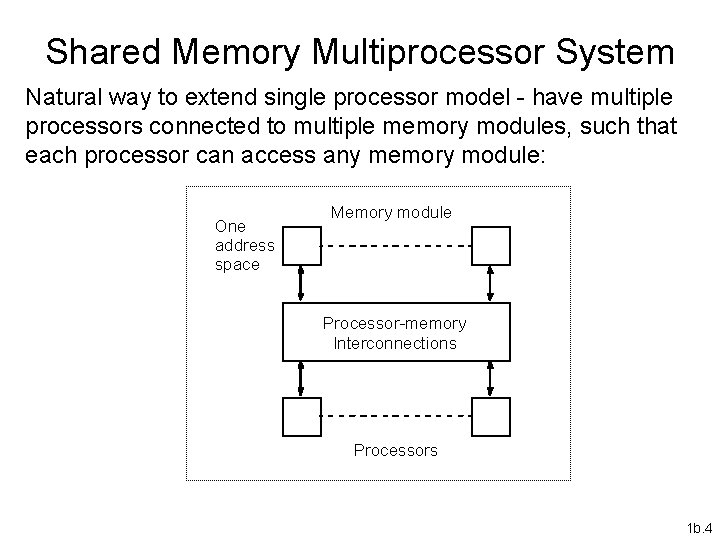

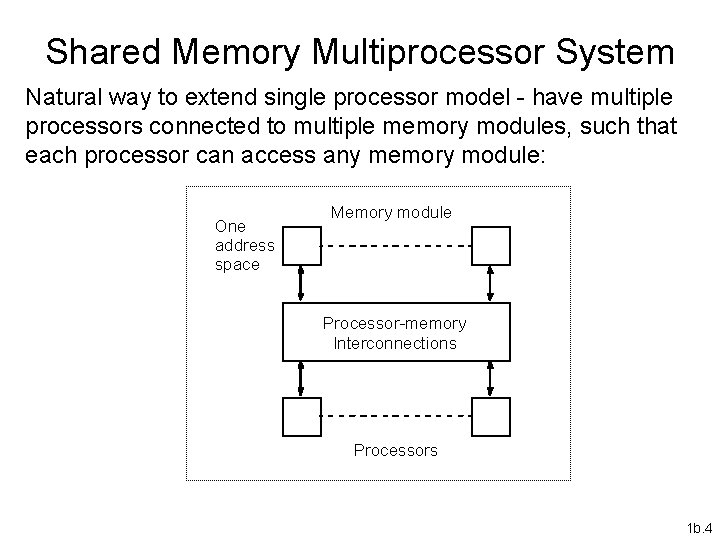

Shared Memory Multiprocessor System

A natural way to extend the single-processor model: have multiple processors connected to multiple memory modules, such that each processor can access any memory module.

Figure labels: one address space; memory modules; processor-memory interconnections; processors.

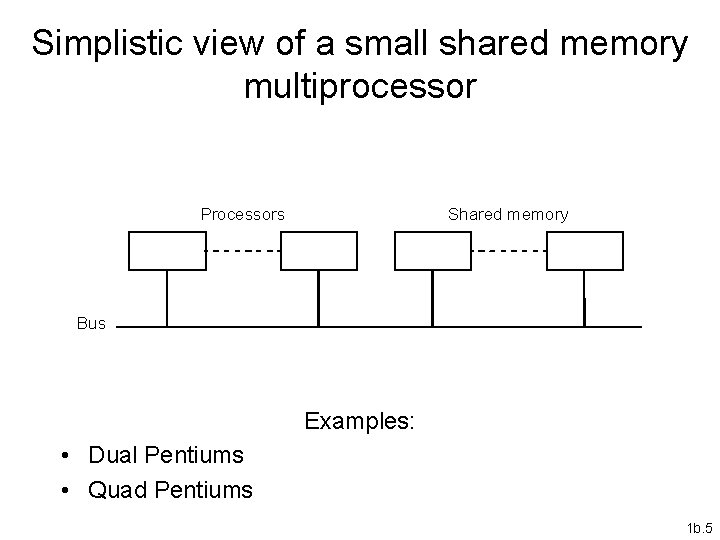

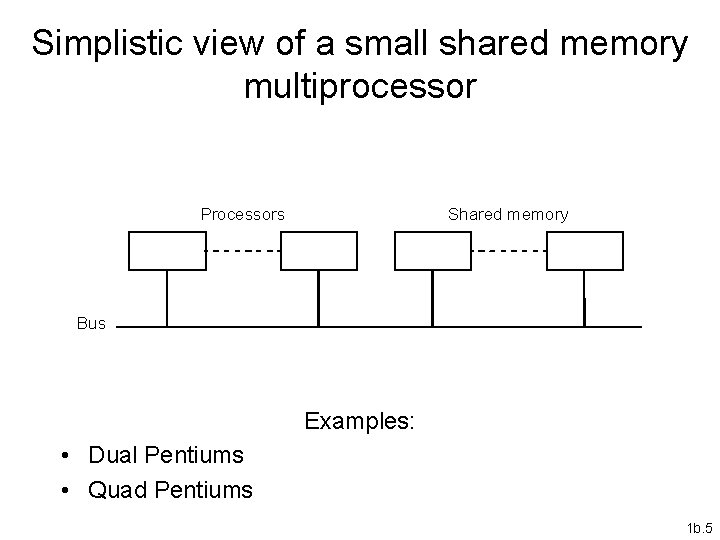

Simplistic view of a small shared memory multiprocessor

Figure labels: processors; shared memory; bus.

Examples:

• Dual Pentiums
• Quad Pentiums

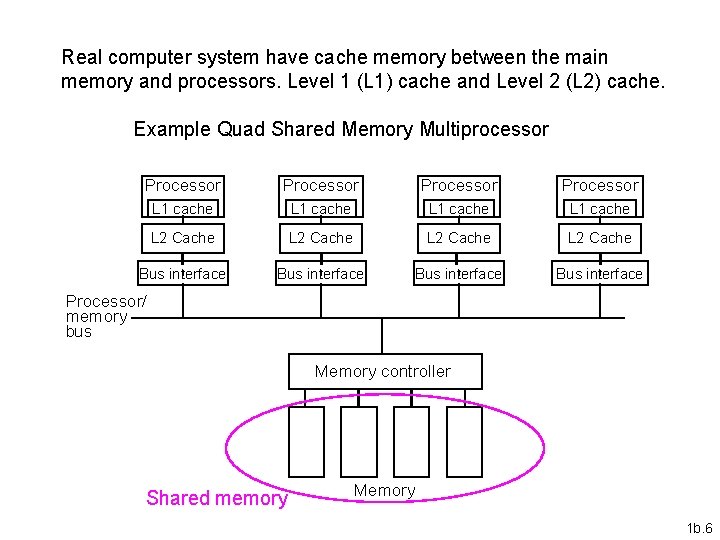

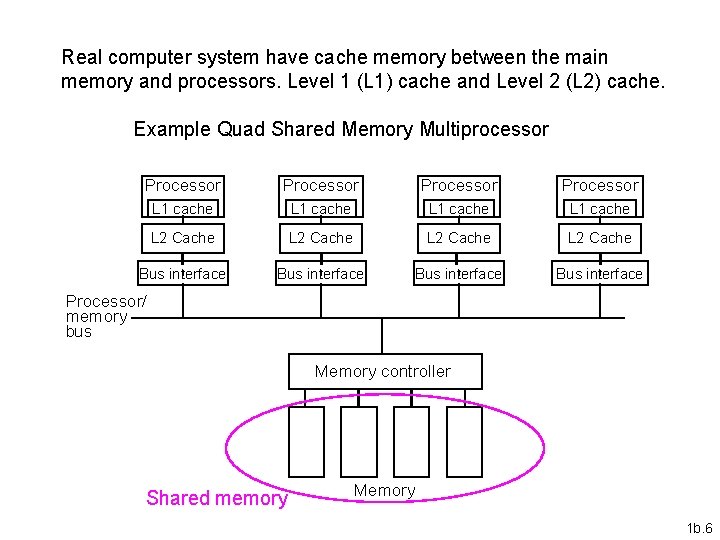

Real computer systems have cache memory between the main memory and the processors: Level 1 (L1) cache and Level 2 (L2) cache.

Example: quad shared memory multiprocessor

Figure labels: processor; L1 cache; L2 cache; bus interface; processor/memory bus; memory controller; shared memory; memory.

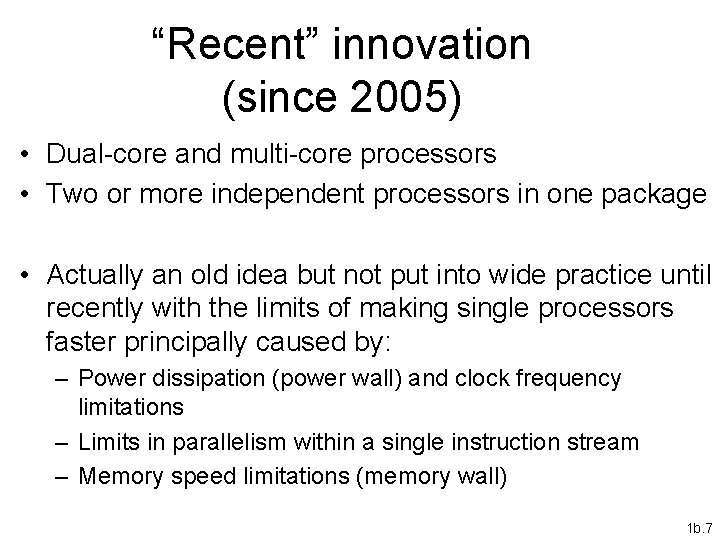

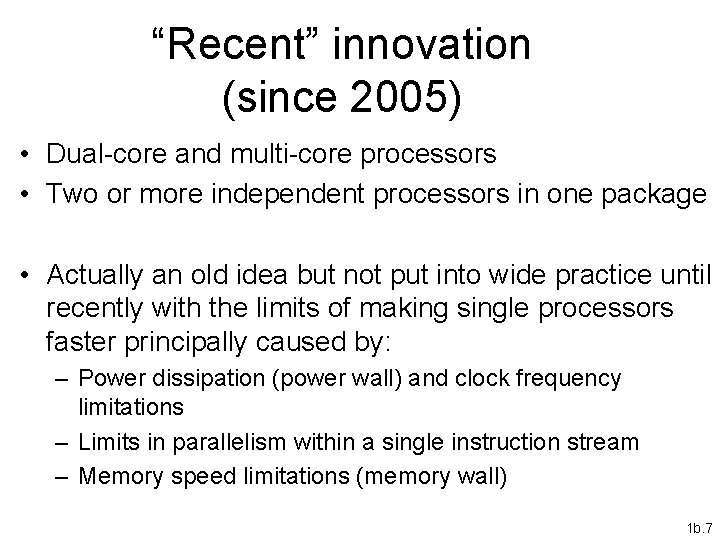

“Recent” innovation (since 2005)

• Dual-core and multi-core processors
• Two or more independent processors in one package
• Actually an old idea, but not put into wide practice until recently, when single processors became hard to make faster. The limits are principally caused by:
– Power dissipation (power wall) and clock frequency limitations
– Limits in parallelism within a single instruction stream
– Memory speed limitations (memory wall)

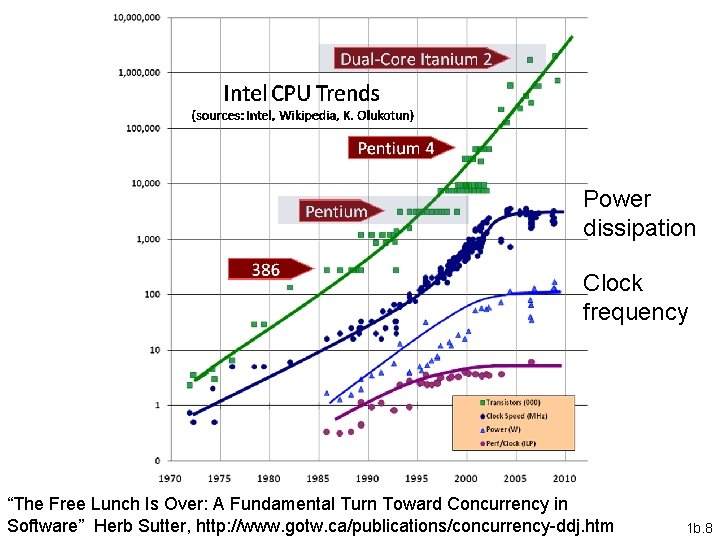

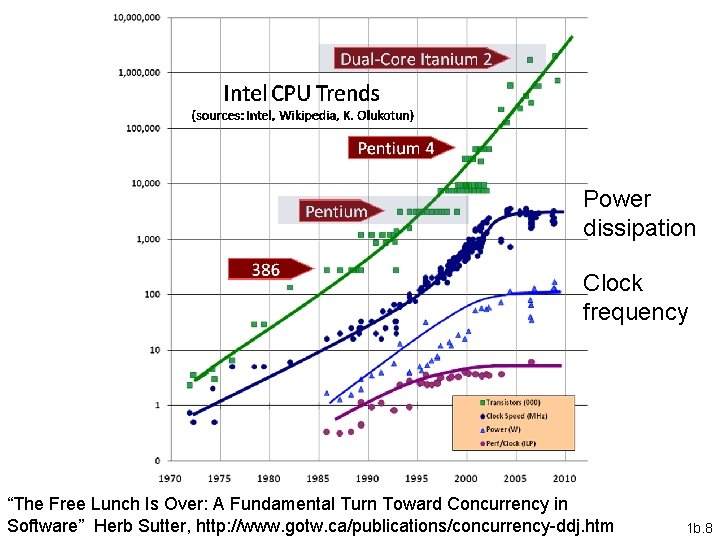

Power dissipation and clock frequency

“The Free Lunch Is Over: A Fundamental Turn Toward Concurrency in Software”, Herb Sutter, http://www.gotw.ca/publications/concurrency-ddj.htm

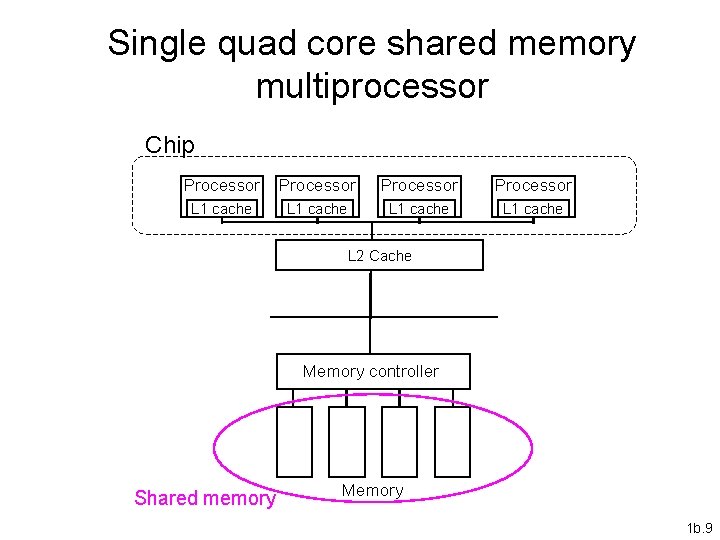

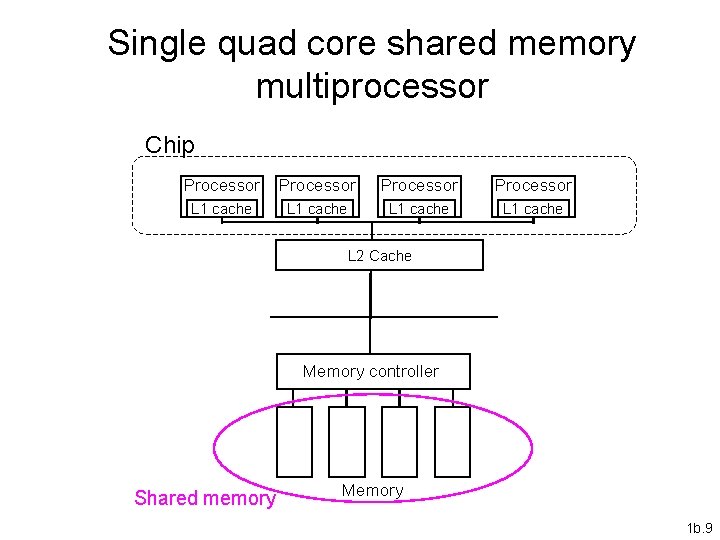

Single quad-core shared memory multiprocessor

Figure labels: chip; processor; L1 cache; L2 cache; memory controller; shared memory; memory.

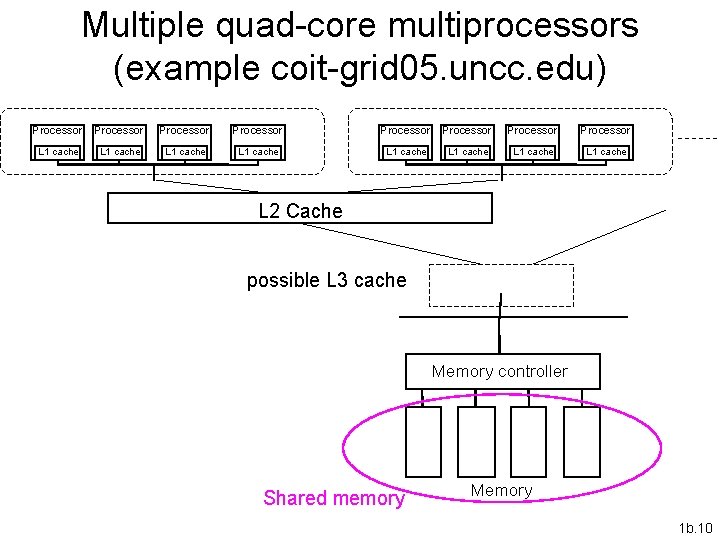

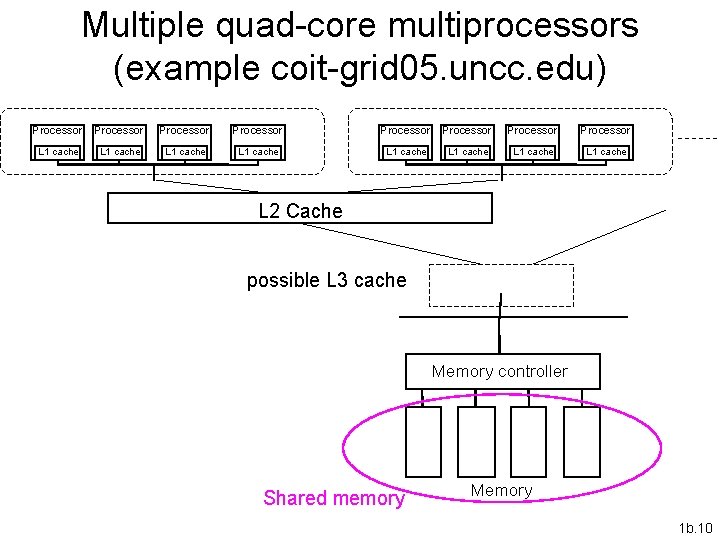

Multiple quad-core multiprocessors (example: coit-grid05.uncc.edu)

Figure labels: processor; L1 cache; L2 cache; possible L3 cache; memory controller; shared memory; memory.

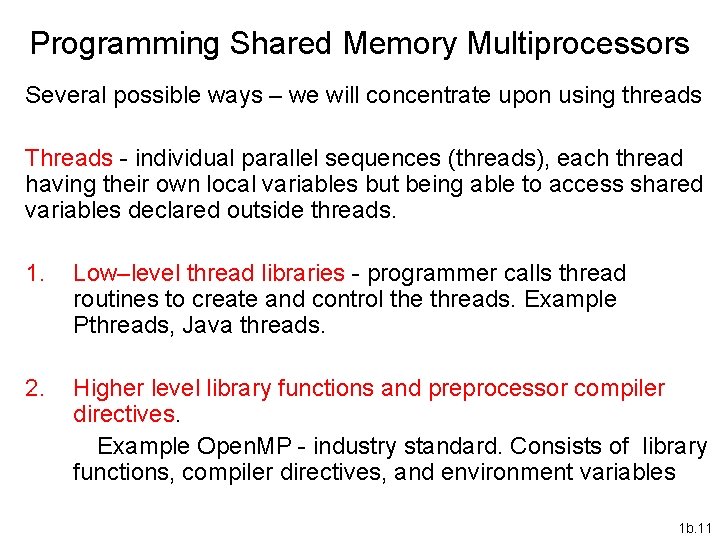

Programming Shared Memory Multiprocessors

Several possible ways; we will concentrate upon using threads.

Threads: individual parallel sequences, each thread having its own local variables but able to access shared variables declared outside the threads.

1. Low-level thread libraries: the programmer calls thread routines to create and control the threads. Examples: Pthreads, Java threads.
2. Higher-level library functions and preprocessor compiler directives. Example: OpenMP, an industry standard. Consists of library functions, compiler directives, and environment variables.

3. Tasks: rather than programming with threads, which are closely linked to the physical hardware, one can program with parallel “tasks”. Promoted by Intel with their TBB (Threading Building Blocks) tools.

Other alternatives include parallelizing compilers, which compile regular sequential programs into parallel programs, and special parallel languages (neither is now common).
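To make the difference between explicit threads (item 1) and tasks (item 3) concrete, here is a minimal sketch in Python. It is not taken from the course material: Python's standard `threading` module stands in for a low-level library such as Pthreads, and `concurrent.futures.ThreadPoolExecutor` stands in for a task-based tool such as TBB. The names `partial_sum`, `sum_with_threads`, and `sum_with_tasks` are hypothetical.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

total = 0                      # shared variable, declared outside the threads
total_lock = threading.Lock()  # guards updates to the shared variable


def partial_sum(chunk):
    """Thread body: sum one chunk, then add the result to the shared total."""
    global total
    local = 0                  # local variable, private to this thread
    for x in chunk:
        local += x
    with total_lock:           # only one thread updates `total` at a time
        total += local


def sum_with_threads(data, n_threads=4):
    """Thread style: the programmer creates and joins every thread."""
    global total
    total = 0
    chunks = [data[i::n_threads] for i in range(n_threads)]
    threads = [threading.Thread(target=partial_sum, args=(c,)) for c in chunks]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return total


def sum_with_tasks(data, n_tasks=8):
    """Task style: submit tasks and let the pool map them onto its threads."""
    chunks = [data[i::n_tasks] for i in range(n_tasks)]
    with ThreadPoolExecutor(max_workers=4) as pool:
        return sum(pool.map(sum, chunks))
```

Both functions return the same sum. In the thread version the number of threads is fixed by the programmer; in the task version there can be more tasks than threads. In standard CPython builds the global interpreter lock limits real parallel speedup for code like this, so the sketch shows the programming model rather than performance.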

Message-Passing Multicomputer

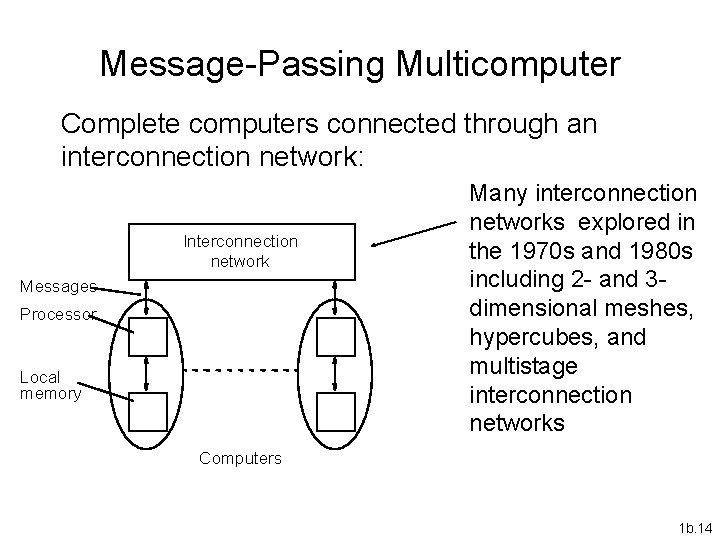

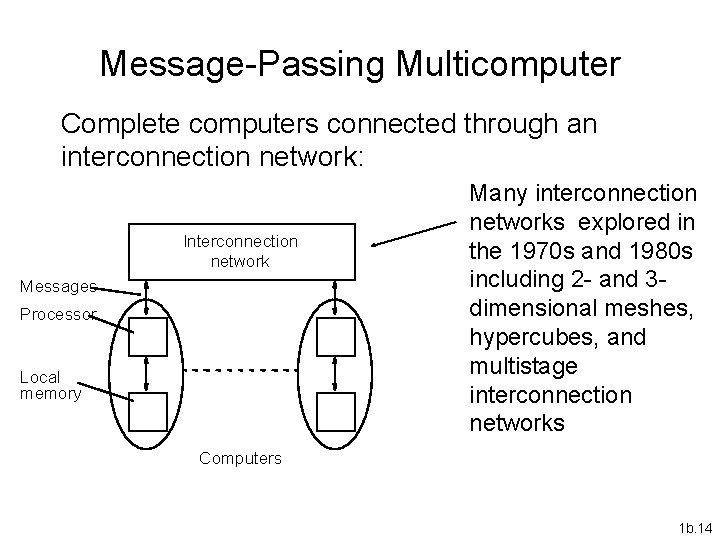

Message-Passing Multicomputer

Complete computers connected through an interconnection network.

Figure labels: interconnection network; messages; processor; local memory; computers.

Many interconnection networks were explored in the 1970s and 1980s, including 2- and 3-dimensional meshes, hypercubes, and multistage interconnection networks.
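As a rough illustration of two of these topologies, the sketch below lists the direct neighbors of a node. It assumes the usual conventions, which the slide does not spell out: hypercube nodes are numbered 0 to 2^d - 1 and are linked when their binary addresses differ in exactly one bit, and the 2-dimensional mesh has no wraparound links. The function names are hypothetical.

```python
def hypercube_neighbors(node: int, d: int) -> list[int]:
    """Neighbors of `node` in a d-dimensional hypercube of 2**d nodes."""
    if not 0 <= node < 2**d:
        raise ValueError("node id out of range")
    return [node ^ (1 << k) for k in range(d)]  # flip one address bit at a time


def mesh_neighbors(x: int, y: int, width: int, height: int) -> list[tuple[int, int]]:
    """Neighbors of node (x, y) in a width x height 2-D mesh (no wraparound)."""
    candidates = [(x - 1, y), (x + 1, y), (x, y - 1), (x, y + 1)]
    return [(i, j) for i, j in candidates if 0 <= i < width and 0 <= j < height]
```

For example, `hypercube_neighbors(5, 3)` gives `[4, 7, 1]` (binary 101 becomes 100, 111, 001), and a corner node of a mesh has only two neighbors.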

Networked Computers as a Computing Platform

• In the early 1990s, a network of computers became a very attractive alternative to expensive supercomputers and parallel computer systems for high-performance computing.
• Several early projects. Notable:
– Berkeley NOW (network of workstations) project.
– NASA Beowulf project.

Key advantages:

• Very high performance workstations and PCs are readily available at low cost.
• The latest processors can easily be incorporated into the system as they become available.
• Existing software can be used or modified.

Beowulf Clusters*

• A group of interconnected “commodity” computers achieving high performance at low cost.
• Typically using commodity interconnects (high-speed Ethernet) and the Linux OS.

* The name Beowulf was given by the NASA Goddard Space Flight Center cluster project.

Cluster Interconnects

• Originally Fast Ethernet on low-cost clusters
• Gigabit Ethernet: easy upgrade path

More specialized, higher-performance interconnects are available, including Myrinet and InfiniBand.

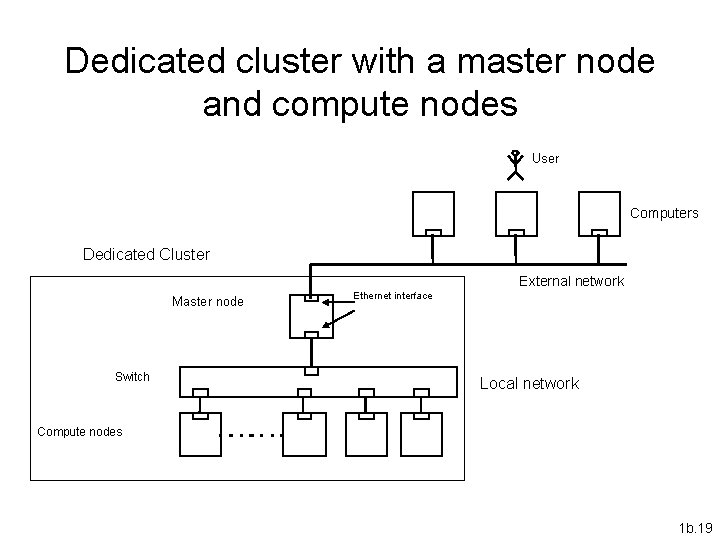

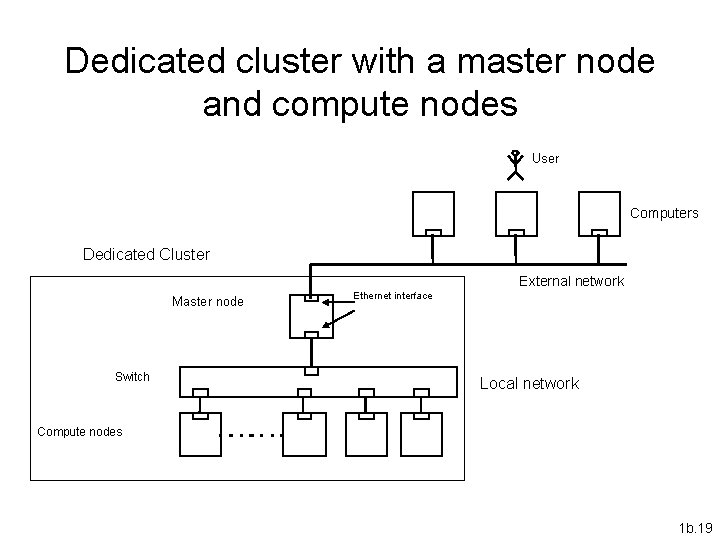

Dedicated cluster with a master node and compute nodes

Figure labels: user; computers; dedicated cluster; external network; master node; switch; Ethernet interface; local network; compute nodes.

Software Tools for Clusters

• Based upon the message-passing programming model
• User-level libraries are provided for explicitly specifying messages to be sent between executing processes on each computer.
• Used with regular programming languages (C, C++, ...).
• Can be quite difficult to program correctly, as we shall see.
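The message-passing model can be sketched without a cluster. The example below is a simulation under stated assumptions, not real cluster code: Python threads stand in for the master and compute nodes, and `queue.Queue` objects stand in for the network, so every exchange of data is an explicit send (`put`) or receive (`get`). On a real cluster the same pattern would be written with a message-passing library such as MPI. The names `worker` and `master` are hypothetical.

```python
import queue
import threading


def worker(rank, inbox, to_master):
    """Compute node: receive a chunk, work on it locally, send the result back."""
    chunk = inbox.get()                          # blocking receive from the master
    local_result = sum(x * x for x in chunk)     # uses only this node's own data
    to_master.put((rank, local_result))          # explicit send to the master


def master(data, n_workers=3):
    """Master node: scatter chunks to workers, then gather partial results."""
    inboxes = [queue.Queue() for _ in range(n_workers)]
    to_master = queue.Queue()
    nodes = [threading.Thread(target=worker, args=(r, inboxes[r], to_master))
             for r in range(n_workers)]
    for n in nodes:
        n.start()
    for r in range(n_workers):
        inboxes[r].put(data[r::n_workers])       # one message per worker
    results = dict(to_master.get() for _ in range(n_workers))  # receive all replies
    for n in nodes:
        n.join()
    return sum(results.values())
```

Calling `master(list(range(10)))` returns 285, the sum of the squares of 0 to 9. The sketch also hints at why such programs are hard to get right: if the master forgot to send one chunk, that worker would block forever in `inbox.get()` and the master would block waiting for its reply.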

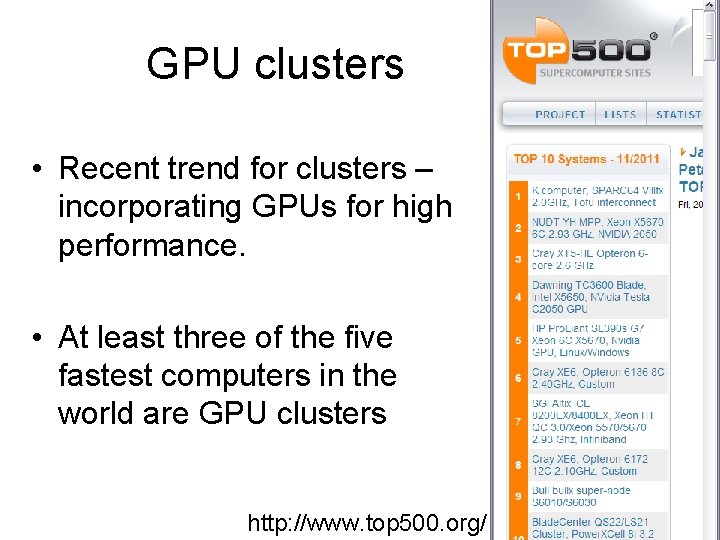

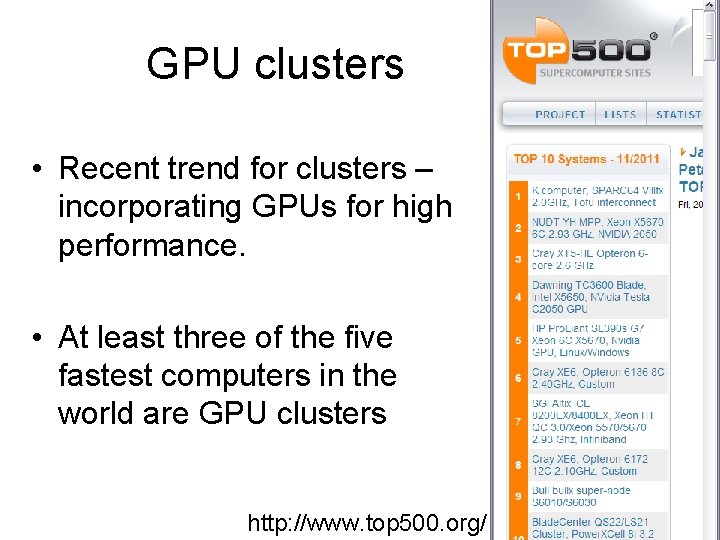

GPU clusters

• Recent trend for clusters: incorporating GPUs for high performance.
• At least three of the five fastest computers in the world are GPU clusters (http://www.top500.org/).

Next step

• Learn the message-passing programming model and some MPI routines, then write a message-passing program and test it on the cluster.