Speech and Language Processing Chapter 8 of SLP

Speech and Language Processing Chapter 8 of SLP Speech Synthesis

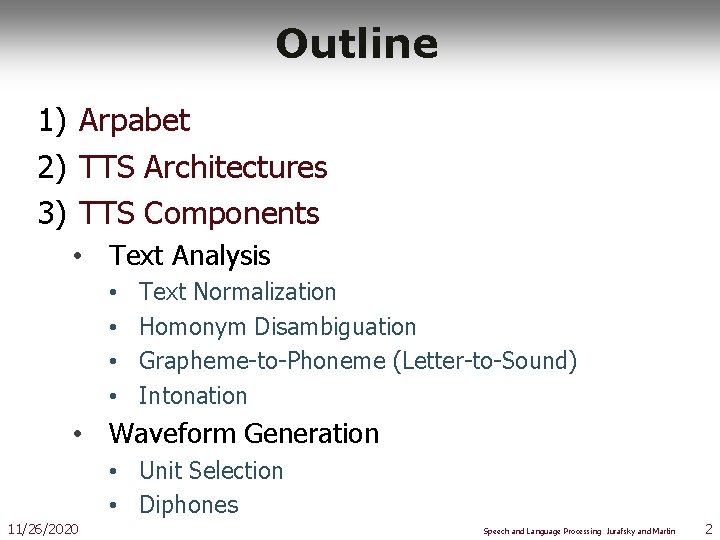

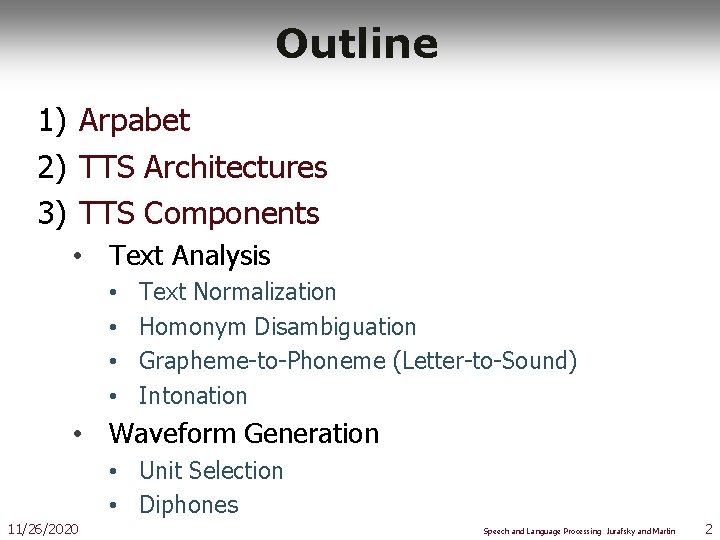

Outline

1. ARPAbet
2. TTS Architectures
3. TTS Components
   - Text Analysis
     - Text Normalization
     - Homograph Disambiguation
     - Grapheme-to-Phoneme (Letter-to-Sound)
     - Intonation
   - Waveform Generation
     - Unit Selection
     - Diphones

11/26/2020 Speech and Language Processing Jurafsky and Martin

Dave Barry on TTS

“And computers are getting smarter all the time; scientists tell us that soon they will be able to talk with us. (By "they", I mean computers; I doubt scientists will ever be able to talk to us.)”

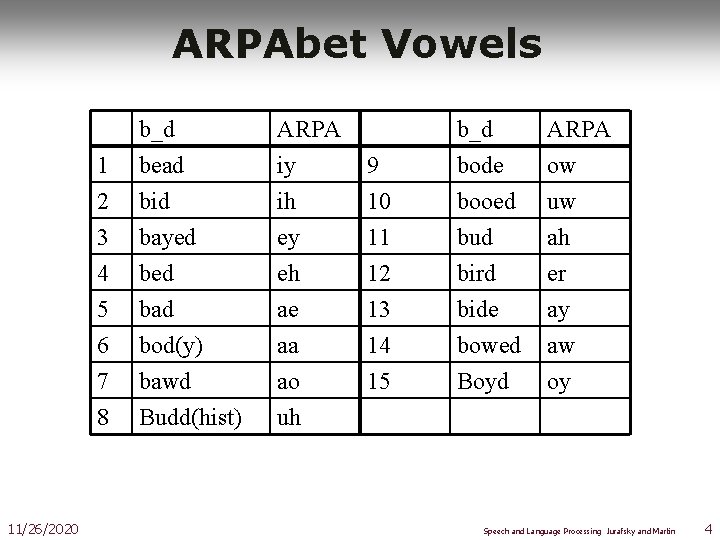

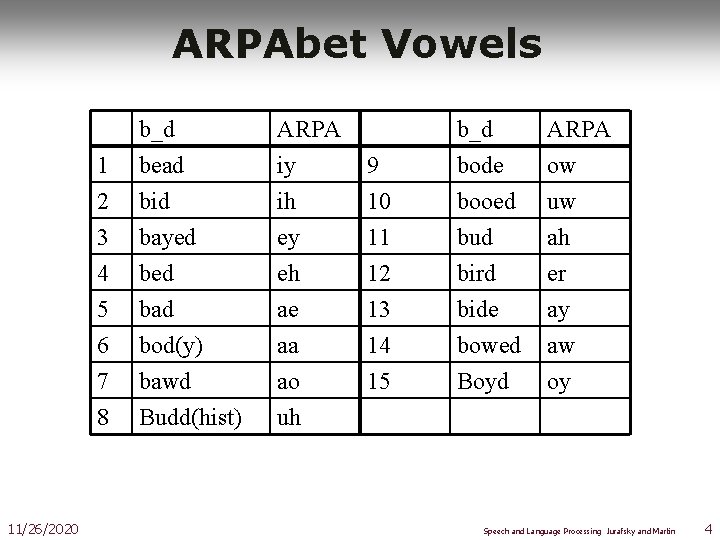

ARPAbet Vowels

| # | b_d | ARPA |
|---|---|---|
| 1 | bead | iy |
| 2 | bid | ih |
| 3 | bayed | ey |
| 4 | bed | eh |
| 5 | bad | ae |
| 6 | bod(y) | aa |
| 7 | bawd | ao |
| 8 | Budd(hist) | uh |
| 9 | bode | ow |
| 10 | booed | uw |
| 11 | bud | ah |
| 12 | bird | er |
| 13 | bide | ay |
| 14 | bowed | aw |
| 15 | Boyd | oy |

Brief Historical Interlude

- Pictures and some text from Hartmut Traunmüller’s web site: http://www.ling.su.se/staff/hartmut/kemplne.htm
- Von Kempelen, 1780 (b. Bratislava 1734, d. Vienna 1804)
- Leather resonator manipulated by the operator to copy vocal tract configuration during sonorants (vowels, glides, nasals)
- Bellows provided air stream, counterweight provided inhalation
- Vibrating reed produced periodic pressure wave

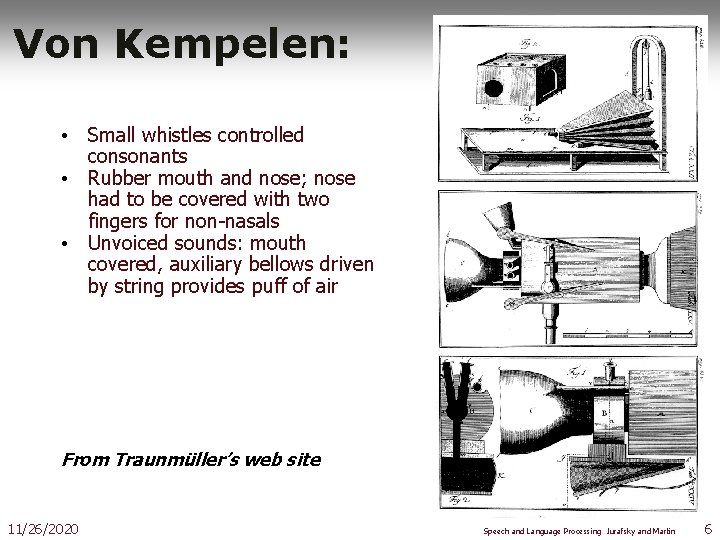

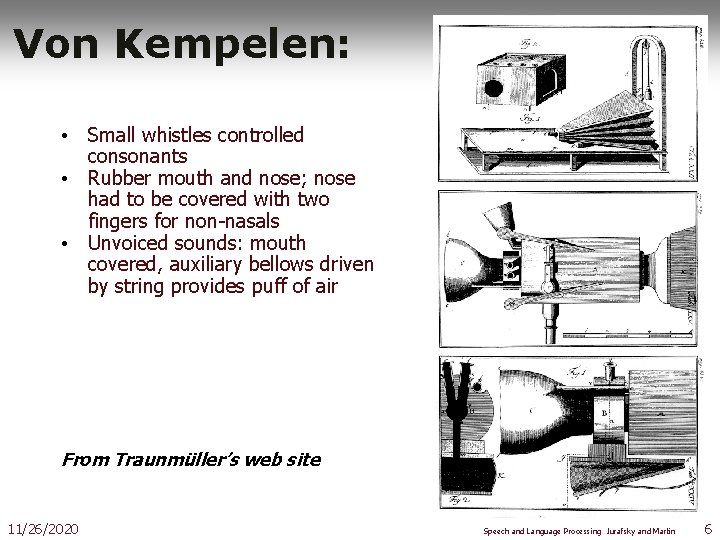

Von Kempelen:

- Small whistles controlled consonants
- Rubber mouth and nose; nose had to be covered with two fingers for non-nasals
- Unvoiced sounds: mouth covered, auxiliary bellows driven by string provides puff of air

(From Traunmüller’s web site)

Modern TTS systems

- 1960’s: first full TTS: Umeda et al. (1968)
- 1970’s
  - Joe Olive 1977: concatenation of linear-prediction diphones
  - Speak and Spell
- 1980’s
  - 1979 MITalk (Allen, Hunnicutt, Klatt)
- 1990’s-present
  - Diphone synthesis
  - Unit selection synthesis

2. Overview of TTS: Architectures of Modern Synthesis

- Articulatory Synthesis:
  - Model movements of articulators and acoustics of vocal tract
- Formant Synthesis:
  - Start with acoustics, create rules/filters to create each formant
- Concatenative Synthesis:
  - Use databases of stored speech to assemble new utterances.

(Text from Richard Sproat slides)

Formant Synthesis

- Formant synthesizers were the most common commercial systems while computers were relatively underpowered.
- 1979 MITalk (Allen, Hunnicutt, Klatt)
- 1983 DECtalk system
  - The voice of Stephen Hawking

Concatenative Synthesis

- All current commercial systems.
- Diphone Synthesis
  - Units are diphones; middle of one phone to middle of next.
  - Why? Middle of phone is steady state.
  - Record 1 speaker saying each diphone
- Unit Selection Synthesis
  - Larger units
  - Record 10 hours or more, so have multiple copies of each unit
  - Use search to find best sequence of units

TTS Demos (all are Unit-Selection)

- Festival
  - http://www-2.cs.cmu.edu/~awb/festival_demos/index.html
- Cepstral
  - http://www.cepstral.com/cgibin/demos/general
- IBM
  - http://www-306.ibm.com/software/pervasive/tech/demos/tts.shtml

Architecture

- The three types of TTS
  - Concatenative
  - Formant
  - Articulatory
- Only cover the segments + F0 + duration to waveform part.
- A full system needs to go all the way from random text to sound.

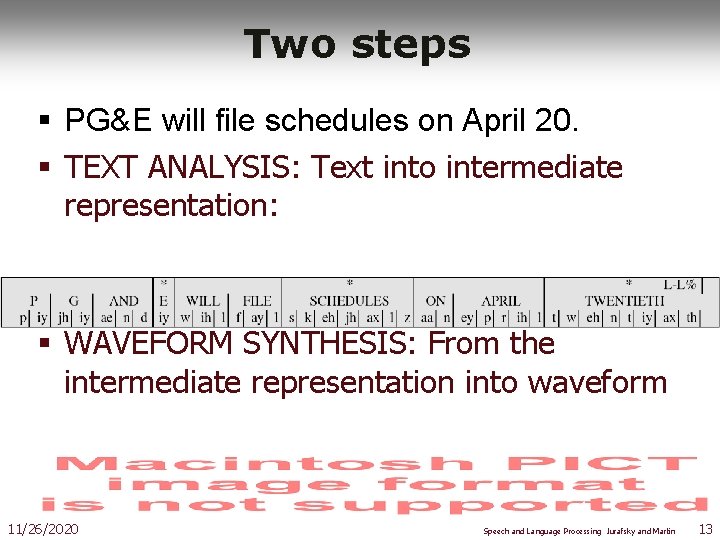

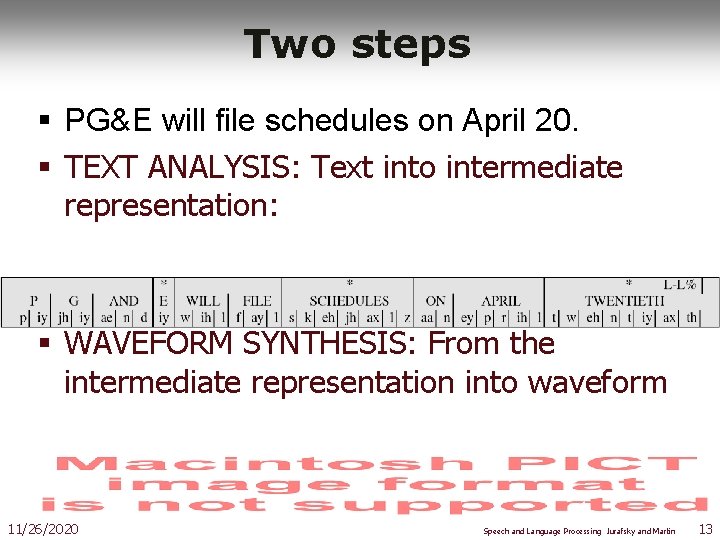

Two steps

- Example input: PG&E will file schedules on April 20.
- TEXT ANALYSIS: text into an intermediate representation
- WAVEFORM SYNTHESIS: from the intermediate representation into a waveform

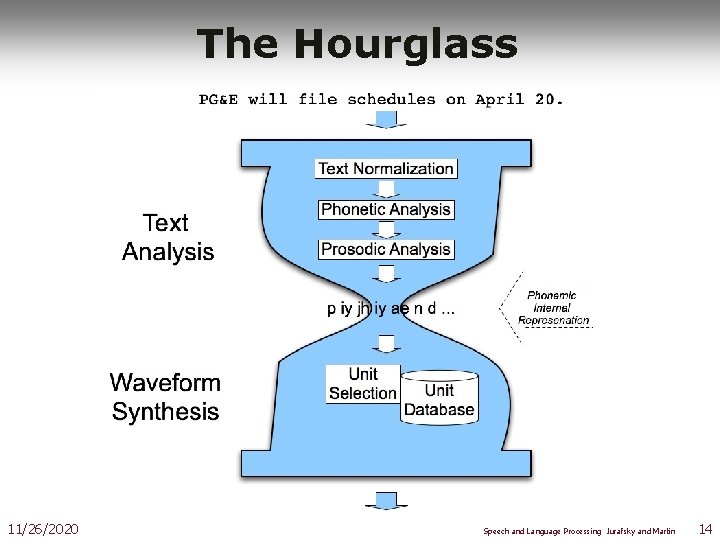

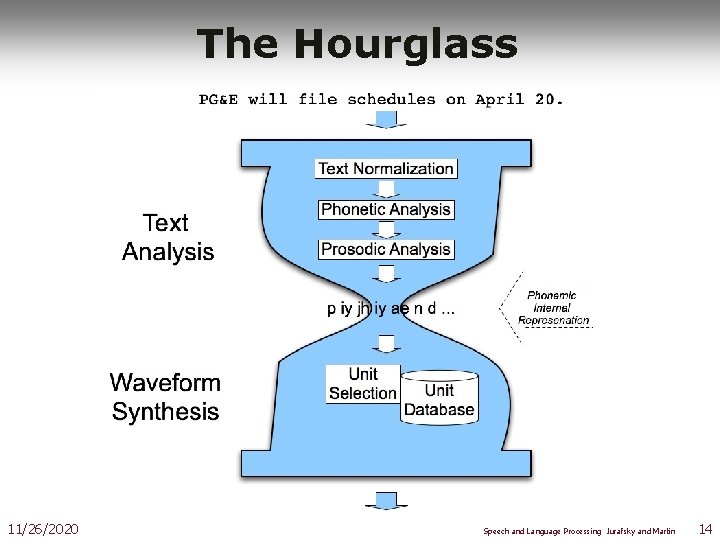

The Hourglass

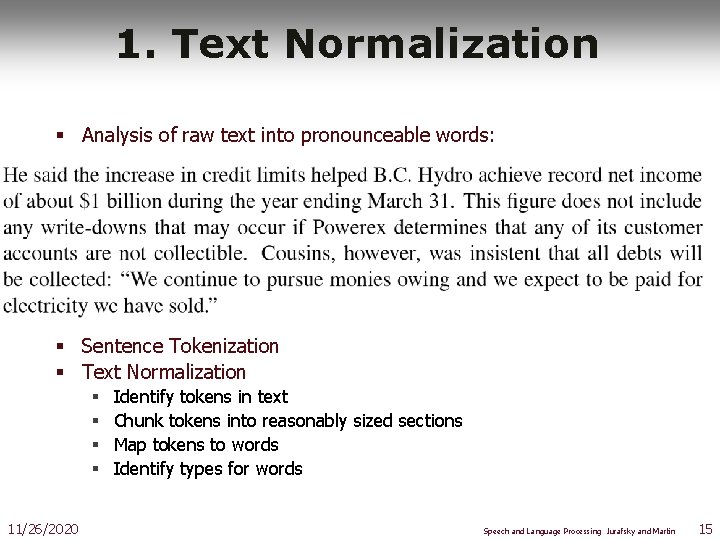

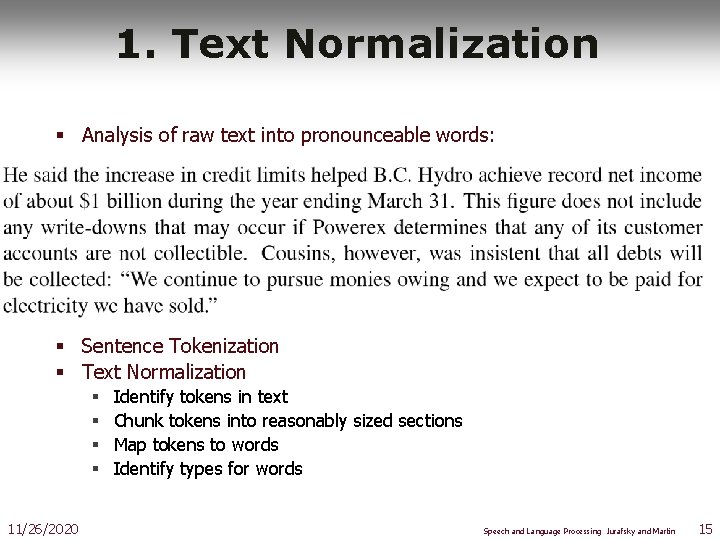

1. Text Normalization

- Analysis of raw text into pronounceable words:
  - Sentence Tokenization
  - Text Normalization
    - Identify tokens in text
    - Chunk tokens into reasonably sized sections
    - Map tokens to words
    - Identify types for words

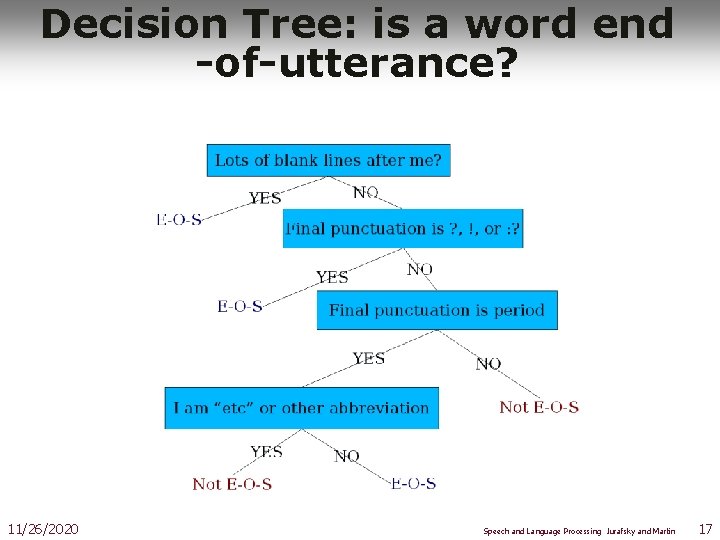

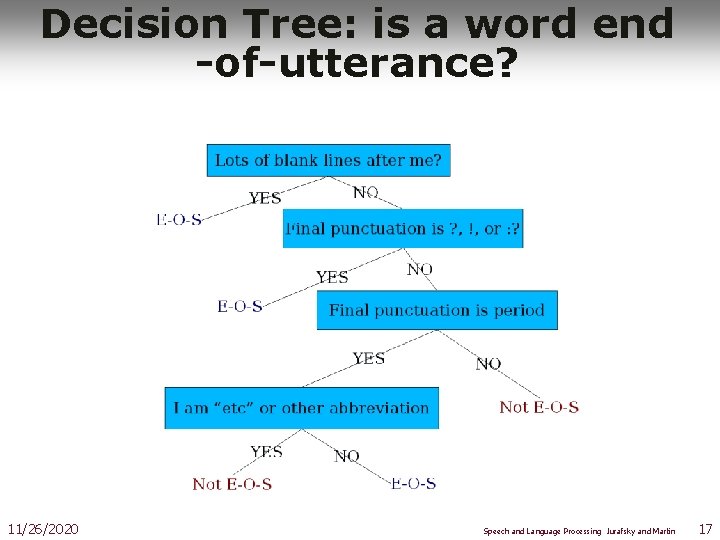

Rules for end-of-utterance detection

- A dot with one or two letters is an abbrev
- A dot with 3 cap letters is an abbrev
- An abbrev followed by 2 spaces and a capital letter is an end-of-utterance
- Non-abbrevs followed by capitalized word are breaks
- This fails for
  - Cog. Sci. Newsletter
  - Lots of cases at end of line
  - Badly spaced/capitalized sentences

(From Alan Black lecture notes)
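
The four rules above are small enough to sketch in code. This is a minimal illustration, not the Festival implementation: the names `is_abbrev` and `split_utterances` are hypothetical, and reading the slide's "2 spaces" as "two or more whitespace characters" is an assumption.

```python
import re


def is_abbrev(token: str) -> bool:
    """Rules 1-2: a dot after one or two letters, or after three capitals."""
    if not token.endswith("."):
        return False
    stem = token[:-1]
    if not stem.isalpha():
        return False
    return len(stem) <= 2 or (len(stem) == 3 and stem.isupper())


def split_utterances(text: str) -> list[str]:
    """Split text into utterances using the hand-written rules."""
    # Pair each token with the whitespace that follows it.
    pieces = re.findall(r"(\S+)(\s*)", text)
    utterances, current = [], []
    for i, (token, gap) in enumerate(pieces):
        current.append(token)
        if i + 1 == len(pieces):
            break
        if not token.endswith("."):
            continue
        next_is_capitalized = pieces[i + 1][0][0].isupper()
        if is_abbrev(token):
            # Rule 3: abbreviation + two spaces + capital letter.
            boundary = len(gap) >= 2 and next_is_capitalized
        else:
            # Rule 4: dotted non-abbreviation followed by a capitalized word.
            boundary = next_is_capitalized
        if boundary:
            utterances.append(" ".join(current))
            current = []
    if current:
        utterances.append(" ".join(current))
    return utterances


print(split_utterances("Dr. Smith arrived. He sat down."))
print(split_utterances("See the Cog. Sci. Newsletter today."))
```

The second call reproduces the failure case listed on the slide: "Cog." has three letters that are not all capitals, so the rules wrongly treat it as a sentence end.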

Decision Tree: is a word end-of-utterance?

Learning Decision Trees

- DTs are rarely built by hand
- Hand-building only possible for very simple features, domains
- Lots of algorithms for DT induction

Next Step: Identify Types of Tokens, and Convert Tokens to Words

- Pronunciation of numbers often depends on type:
  - 1776 as a date: seventeen seventy six
  - 1776 as a phone number: one seven seven six
  - 1776 as a quantifier: one thousand seven hundred (and) seventy six
  - 25 as a day: twenty-fifth

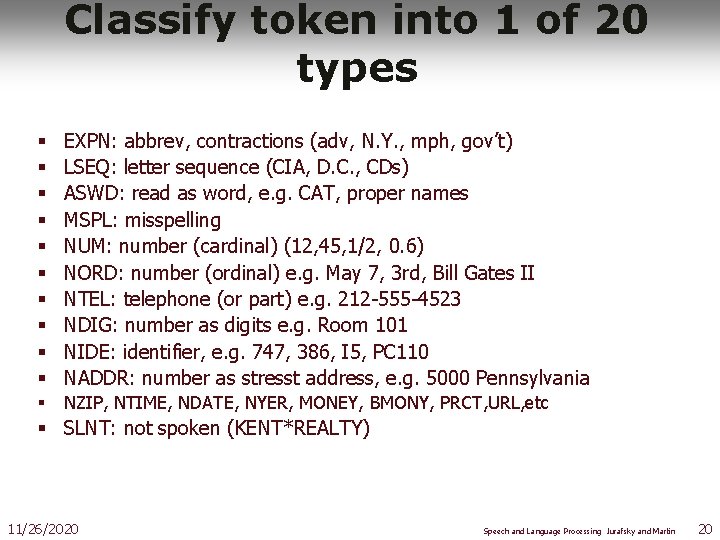

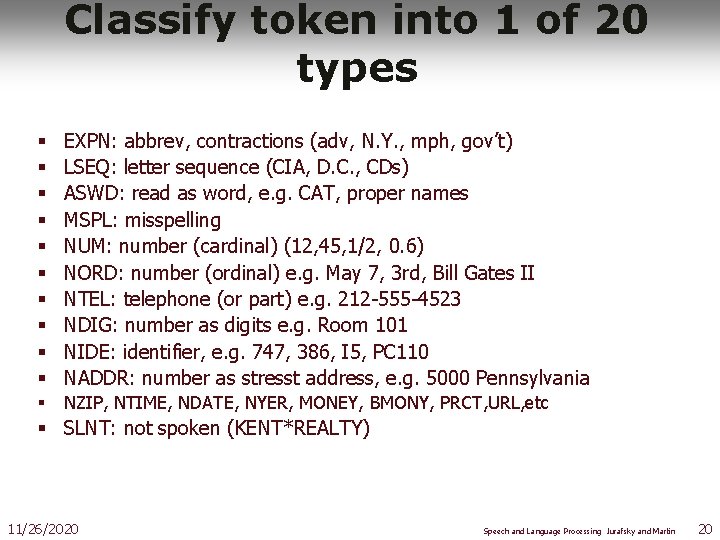

Classify token into 1 of 20 types

- EXPN: abbrev, contractions (adv, N.Y., mph, gov’t)
- LSEQ: letter sequence (CIA, D.C., CDs)
- ASWD: read as word, e.g. CAT, proper names
- MSPL: misspelling
- NUM: number (cardinal) (12, 45, 1/2, 0.6)
- NORD: number (ordinal), e.g. May 7, 3rd, Bill Gates II
- NTEL: telephone (or part), e.g. 212-555-4523
- NDIG: number as digits, e.g. Room 101
- NIDE: identifier, e.g. 747, 386, I5, PC110
- NADDR: number as street address, e.g. 5000 Pennsylvania
- NZIP, NTIME, NDATE, NYER, MONEY, BMONY, PRCT, URL, etc.
- SLNT: not spoken (KENT*REALTY)

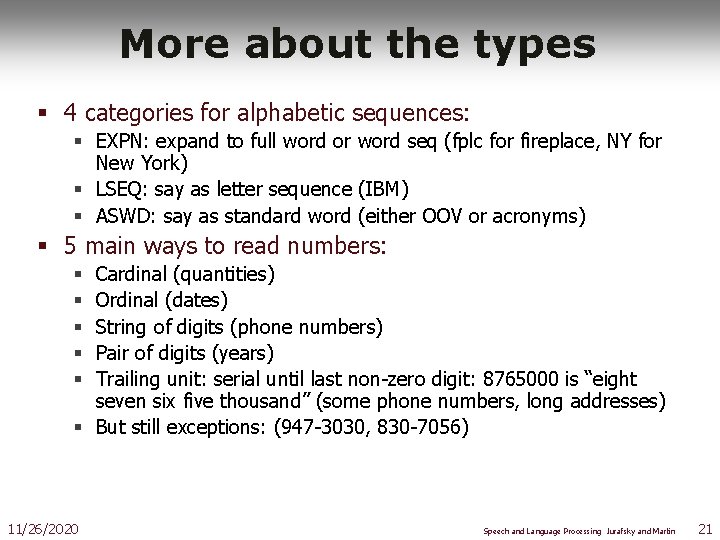

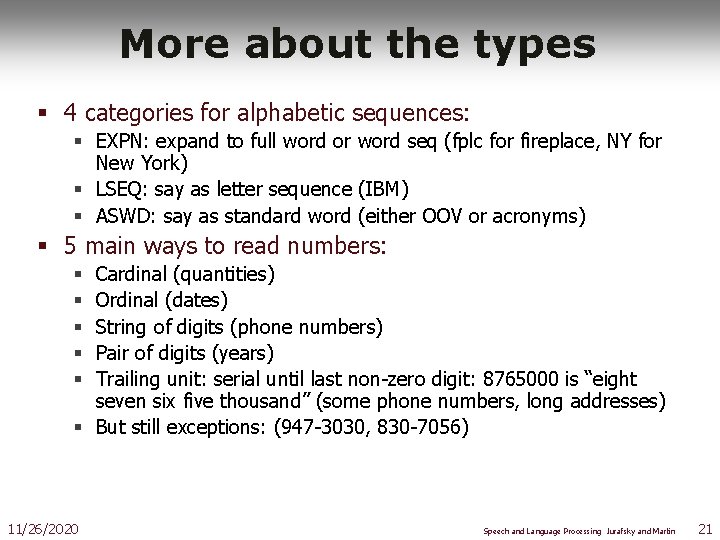

More about the types

- 4 categories for alphabetic sequences:
  - EXPN: expand to full word or word seq (fplc for fireplace, NY for New York)
  - LSEQ: say as letter sequence (IBM)
  - ASWD: say as standard word (either OOV or acronyms)
- 5 main ways to read numbers:
  - Cardinal (quantities)
  - Ordinal (dates)
  - String of digits (phone numbers)
  - Pair of digits (years)
  - Trailing unit: serial until last non-zero digit: 8765000 is “eight seven six five thousand” (some phone numbers, long addresses)
  - But still exceptions: (947-3030, 830-7056)

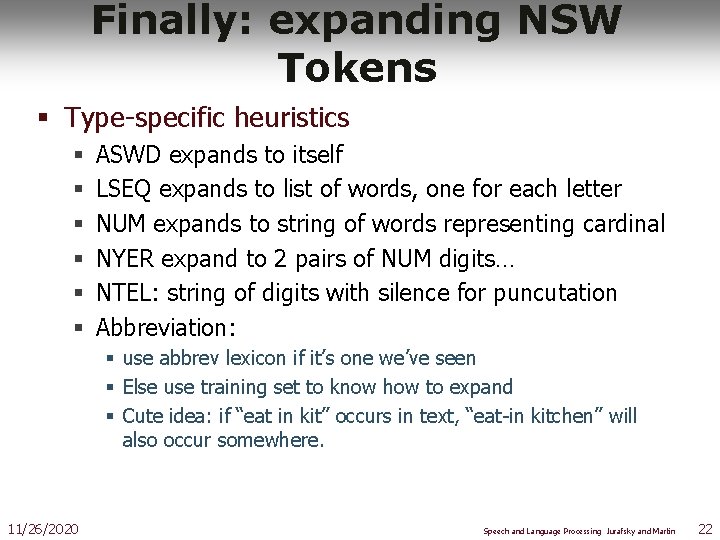

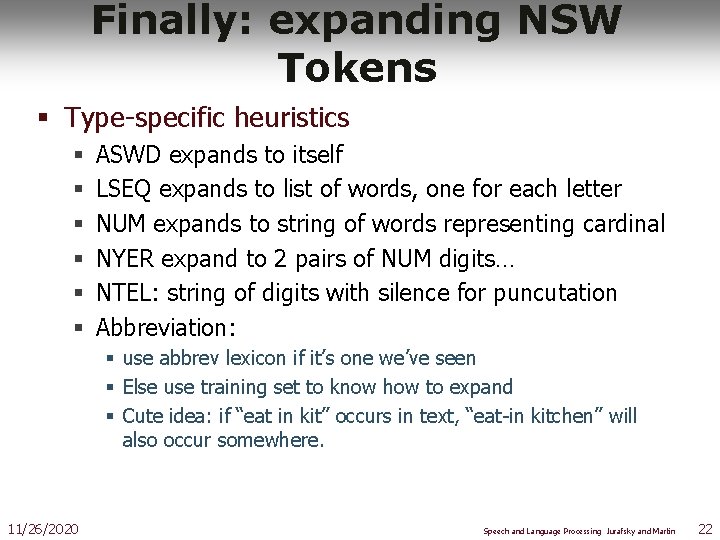

Finally: expanding NSW Tokens

- Type-specific heuristics
  - ASWD expands to itself
  - LSEQ expands to list of words, one for each letter
  - NUM expands to string of words representing cardinal
  - NYER expands to 2 pairs of NUM digits…
  - NTEL: string of digits with silence for punctuation
- Abbreviation:
  - Use abbrev lexicon if it’s one we’ve seen
  - Else use training set to know how to expand
  - Cute idea: if “eat in kit” occurs in text, “eat-in kitchen” will also occur somewhere.
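
The type-specific heuristics can be sketched as a small dispatcher. This is an illustration under stated assumptions, not the system described on the slides: the function names `cardinal` and `expand` are hypothetical, `<sil>` is an assumed marker for silence, cardinals are only handled up to 9999, and the year rule simply reads two pairs of digits (so a year like 1905 would come out as "nineteen five").

```python
ONES = ["zero", "one", "two", "three", "four", "five", "six", "seven",
        "eight", "nine", "ten", "eleven", "twelve", "thirteen", "fourteen",
        "fifteen", "sixteen", "seventeen", "eighteen", "nineteen"]
TENS = ["", "", "twenty", "thirty", "forty", "fifty",
        "sixty", "seventy", "eighty", "ninety"]


def cardinal(n: int) -> str:
    """Spell out an integer in the range 0..9999 as a cardinal number."""
    if n < 20:
        return ONES[n]
    if n < 100:
        tens, ones = divmod(n, 10)
        return TENS[tens] + (" " + ONES[ones] if ones else "")
    if n < 1000:
        hundreds, rest = divmod(n, 100)
        return ONES[hundreds] + " hundred" + (" " + cardinal(rest) if rest else "")
    thousands, rest = divmod(n, 1000)
    return cardinal(thousands) + " thousand" + (" " + cardinal(rest) if rest else "")


def expand(token: str, token_type: str) -> str:
    """Expand one non-standard-word token according to its type label."""
    if token_type == "ASWD":      # read as a word: expands to itself
        return token
    if token_type == "LSEQ":      # one word per letter
        return " ".join(ch.upper() for ch in token if ch.isalpha())
    if token_type == "NUM":       # cardinal number
        return cardinal(int(token))
    if token_type == "NYER":      # four-digit year read as two pairs
        return cardinal(int(token[:2])) + " " + cardinal(int(token[2:]))
    if token_type == "NTEL":      # digits, with silence for punctuation
        return " ".join(ONES[int(ch)] if ch.isdigit() else "<sil>" for ch in token)
    raise ValueError(f"unsupported token type: {token_type}")


print(expand("1776", "NYER"))   # seventeen seventy six
print(expand("1776", "NUM"))    # one thousand seven hundred seventy six
print(expand("212-555-4523", "NTEL"))
```

The same string "1776" yields different word sequences depending only on the type label, which is why token classification has to come before expansion.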

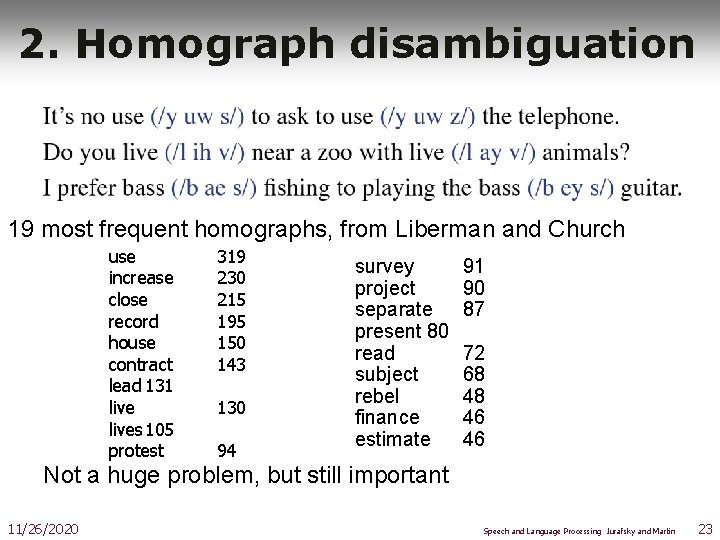

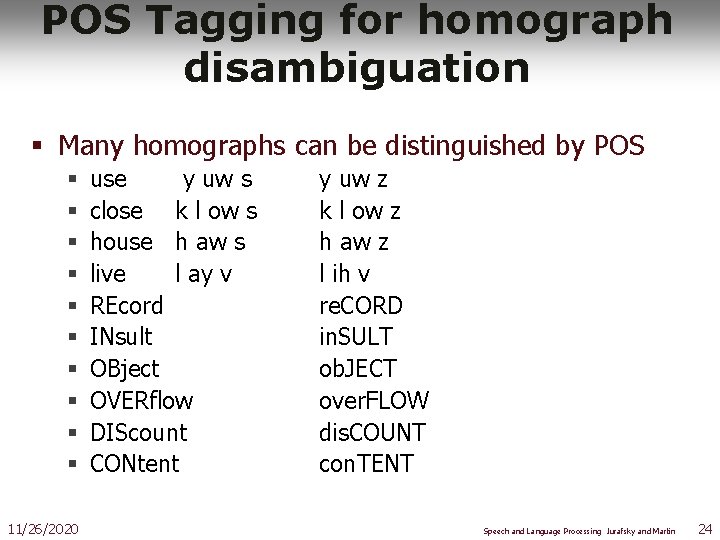

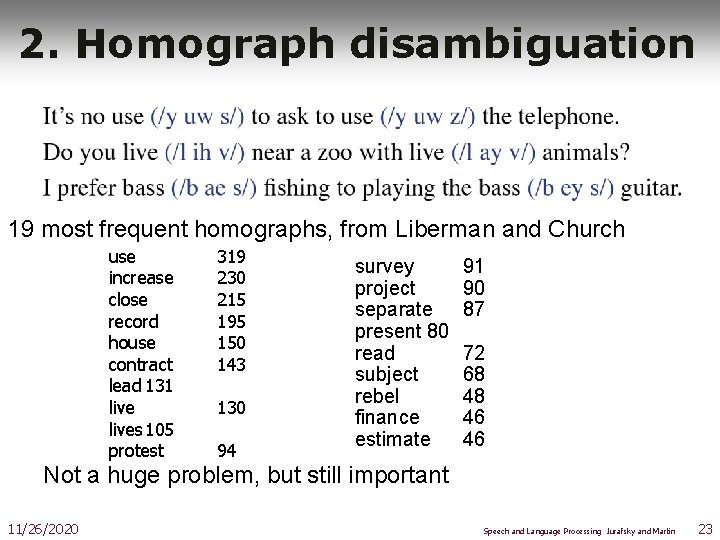

2. Homograph disambiguation

19 most frequent homographs, from Liberman and Church:

| Word | Count | Word | Count |
|---|---|---|---|
| use | 319 | survey | 91 |
| increase | 230 | project | 90 |
| close | 215 | separate | 87 |
| record | 195 | present | 80 |
| house | 150 | read | 72 |
| contract | 143 | subject | 68 |
| lead | 131 | rebel | 48 |
| live | 130 | finance | 46 |
| lives | 105 | estimate | 46 |
| protest | 94 | | |

Not a huge problem, but still important.

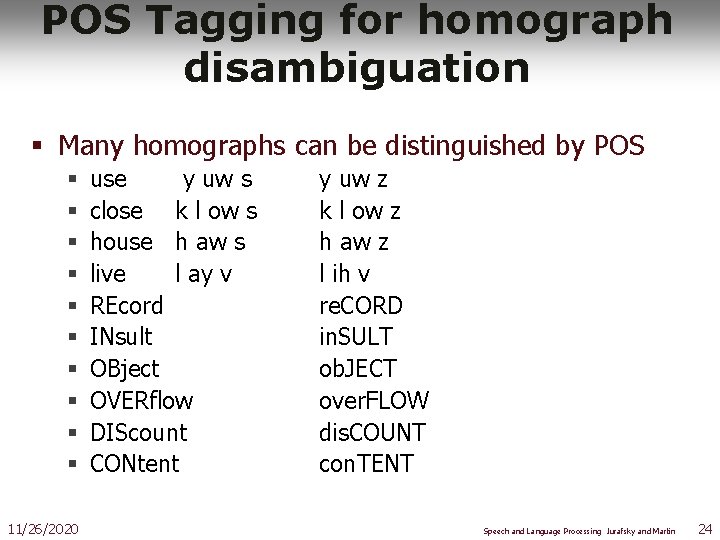

POS Tagging for homograph disambiguation

Many homographs can be distinguished by POS:

| Word | Pronunciation 1 | Pronunciation 2 |
|---|---|---|
| use | y uw s | y uw z |
| close | k l ow s | k l ow z |
| house | h aw s | h aw z |
| live | l ay v | l ih v |
| record | REcord | reCORD |
| insult | INsult | inSULT |
| object | OBject | obJECT |
| overflow | OVERflow | overFLOW |
| discount | DIScount | disCOUNT |
| content | CONtent | conTENT |
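
Once a part-of-speech tag is available, the lookup itself is trivial. The sketch below assumes the tag comes from an external POS tagger and uses a hypothetical coarse tag set (`NOUN`, `VERB`, `ADJ`); the noun/verb and adjective/verb assignments for the four words are the conventional English ones and are not spelled out on the slide.

```python
# (word, coarse POS tag) -> ARPAbet pronunciation
HOMOGRAPHS = {
    ("use", "NOUN"): "y uw s",
    ("use", "VERB"): "y uw z",
    ("close", "ADJ"): "k l ow s",
    ("close", "VERB"): "k l ow z",
    ("house", "NOUN"): "h aw s",
    ("house", "VERB"): "h aw z",
    ("live", "ADJ"): "l ay v",
    ("live", "VERB"): "l ih v",
}


def pronounce(word: str, pos: str):
    """Return the pronunciation for a (word, POS) pair, or None if unknown."""
    return HOMOGRAPHS.get((word.lower(), pos))


print(pronounce("use", "NOUN"))   # y uw s
print(pronounce("Live", "VERB"))  # l ih v
```

Returning `None` for unknown pairs lets a caller fall back to the ordinary dictionary or letter-to-sound route.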

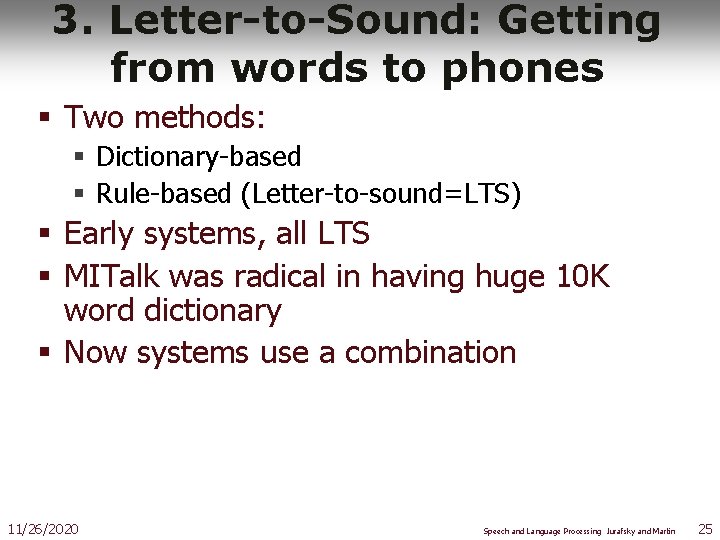

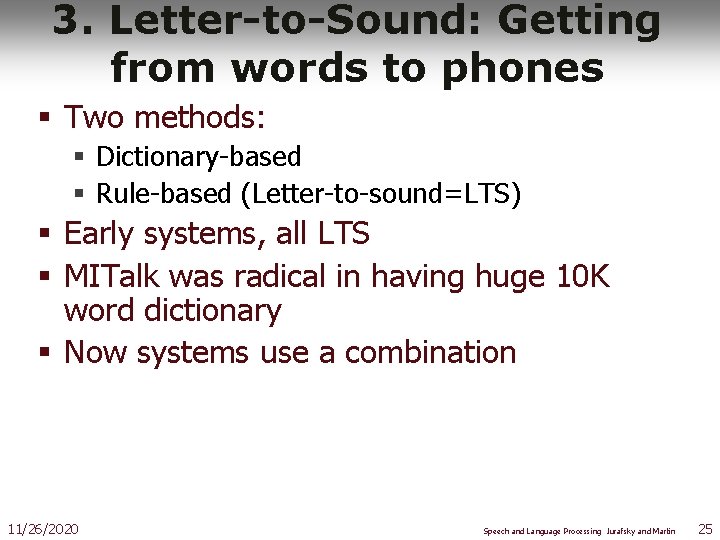

3. Letter-to-Sound: Getting from words to phones

- Two methods:
  - Dictionary-based
  - Rule-based (Letter-to-sound = LTS)
- Early systems, all LTS
- MITalk was radical in having huge 10K word dictionary
- Now systems use a combination

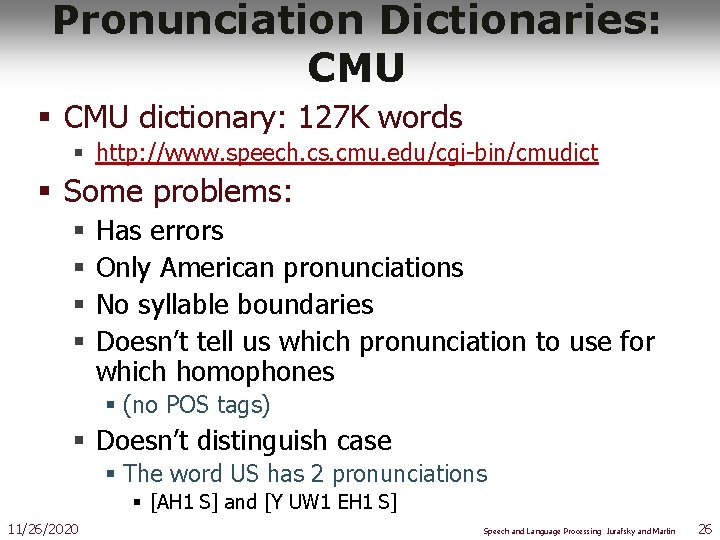

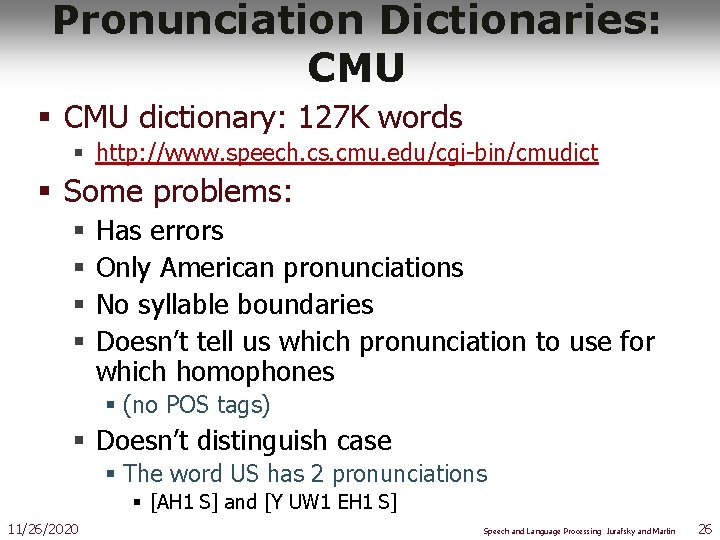

Pronunciation Dictionaries: CMU

- CMU dictionary: 127K words
  - http://www.speech.cs.cmu.edu/cgi-bin/cmudict
- Some problems:
  - Has errors
  - Only American pronunciations
  - No syllable boundaries
  - Doesn’t tell us which pronunciation to use for which homographs (no POS tags)
  - Doesn’t distinguish case
    - The word US has 2 pronunciations: [AH1 S] and [Y UW1 EH1 S]

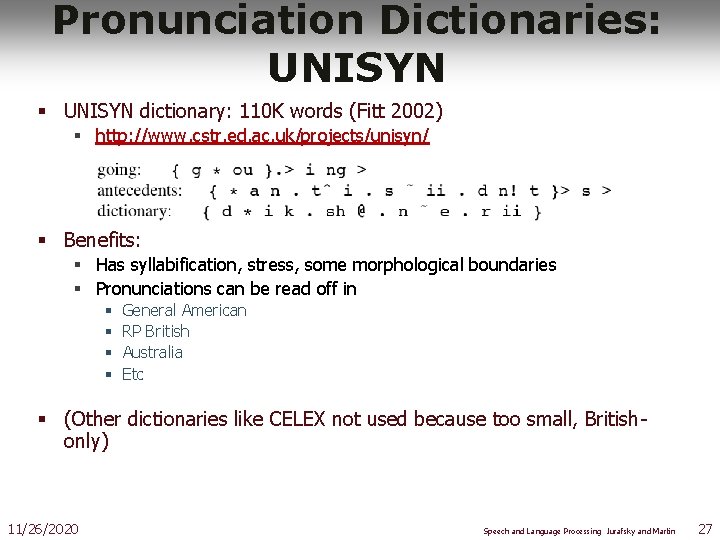

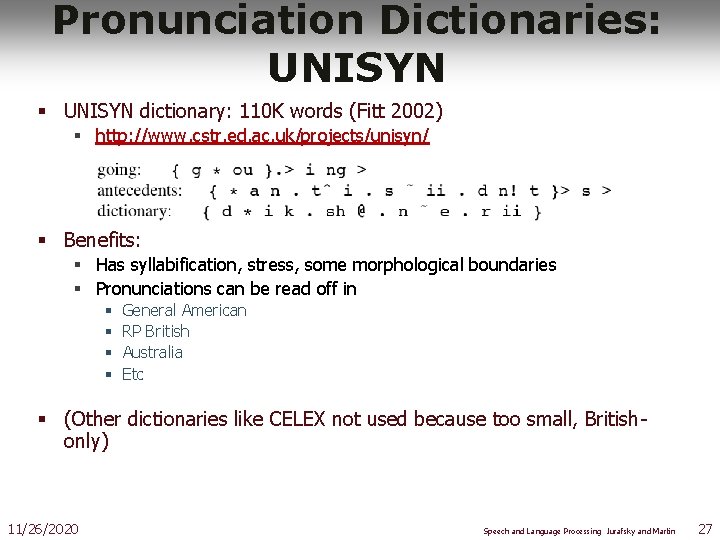

Pronunciation Dictionaries: UNISYN

- UNISYN dictionary: 110K words (Fitt 2002)
  - http://www.cstr.ed.ac.uk/projects/unisyn/
- Benefits:
  - Has syllabification, stress, some morphological boundaries
  - Pronunciations can be read off in
    - General American
    - RP British
    - Australia
    - Etc.
- (Other dictionaries like CELEX not used because too small, British-only)

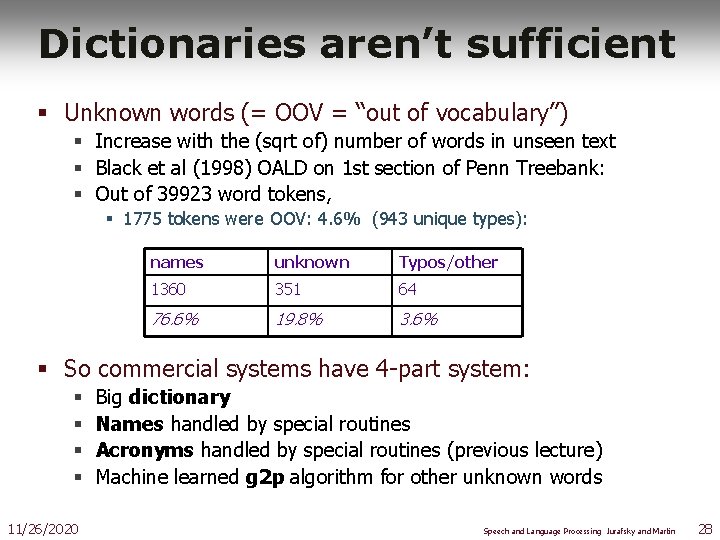

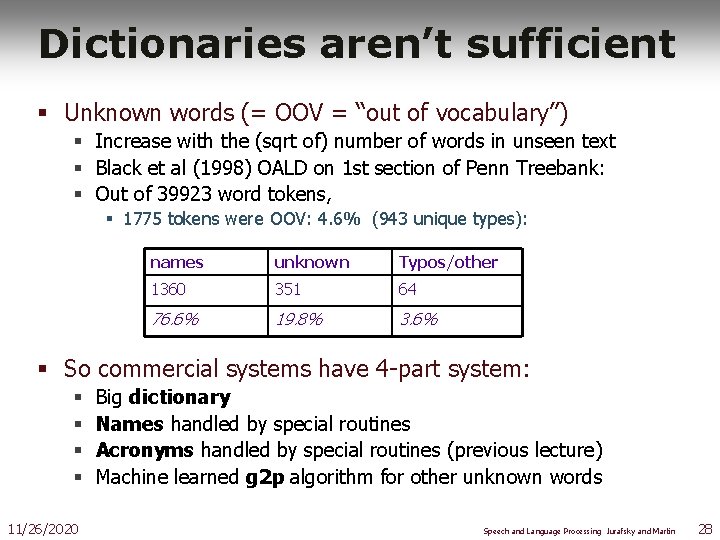

Dictionaries aren’t sufficient

- Unknown words (= OOV = “out of vocabulary”)
  - Increase with the (sqrt of) number of words in unseen text
  - Black et al. (1998): OALD on 1st section of Penn Treebank:
    - Out of 39923 word tokens, 1775 tokens were OOV: 4.6% (943 unique types):

| OOV category | Tokens | Share of OOV tokens |
|---|---|---|
| names | 1360 | 76.6% |
| unknown | 351 | 19.8% |
| Typos/other | 64 | 3.6% |

- So commercial systems have a 4-part system:
  - Big dictionary
  - Names handled by special routines
  - Acronyms handled by special routines (previous lecture)
  - Machine-learned g2p algorithm for other unknown words

Names

- Big problem area is names
- Names are common
  - 20% of tokens in typical newswire text will be names
  - 1987 Donnelly list (72 million households) contains about 1.5 million names
  - Personal names: McArthur, D’Angelo, Jiminez, Rajan, Raghavan, Sondhi, Xu, Hsu, Zhang, Chang, Nguyen
  - Company/Brand names: Infinit, Kmart, Cytyc, Medamicus, Inforte, Aaon, Idexx Labs, Bebe

Names

- Methods:
  - Can do morphology (Walters -> Walter, Lucasville)
  - Can write stress-shifting rules (Jordan -> Jordanian)
  - Rhyme analogy: Plotsky by analogy with Trotsky (replace tr with pl)
  - Liberman and Church: for 250K most common names, got 212K (85%) from these modified-dictionary methods, used LTS for rest.
  - Can do automatic country detection (from letter trigrams) and then do country-specific rules
  - Can train g2p system specifically on names
    - Or specifically on types of names (brand names, Russian names, etc.)

Acronyms

- We saw above
- Use machine learning to detect acronyms
  - EXPN
  - ASWORD
  - LETTERS
- Use acronym dictionary, hand-written rules to augment

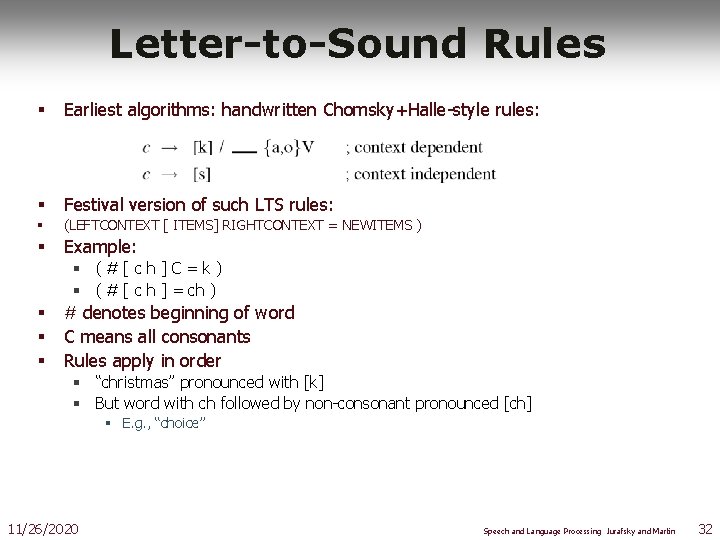

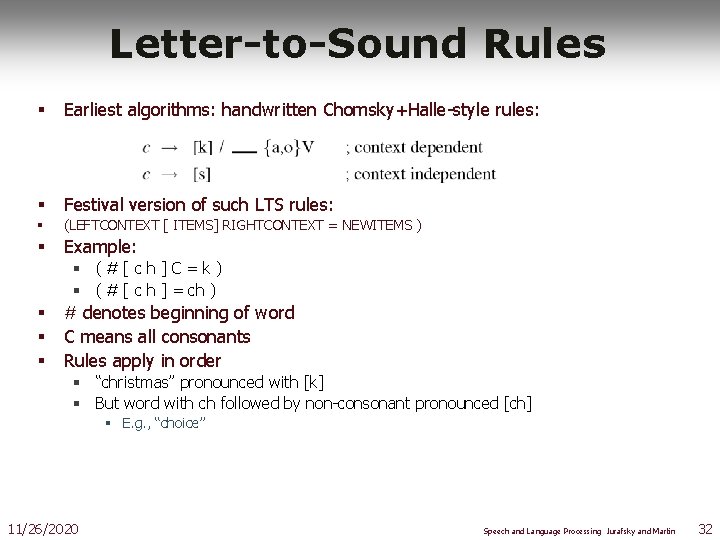

Letter-to-Sound Rules

- Earliest algorithms: handwritten Chomsky+Halle-style rules
- Festival version of such LTS rules:
  - ( LEFTCONTEXT [ ITEMS ] RIGHTCONTEXT = NEWITEMS )
- Example:
  - ( # [ c h ] C = k )
  - ( # [ c h ] = ch )
- # denotes beginning of word
- C means all consonants
- Rules apply in order
  - “christmas” pronounced with [k]
  - But word with ch followed by non-consonant pronounced [ch]
    - E.g., “choice”
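
To make the "rules apply in order" point concrete, here is a minimal sketch of an ordered rule matcher. It is not Festival's rule engine: the tuple layout, the names `RULES` and `letters_to_phones`, and the fallback of copying an unmatched letter straight to the output are all assumptions made for illustration. Only the two `ch` rules from the slide are encoded.

```python
VOWELS = set("aeiou")

# (left context, letters to rewrite, right context, output phones), tried in order.
RULES = [
    ("#", "ch", "C", ["k"]),   # ( # [ c h ] C = k )
    ("#", "ch", "", ["ch"]),   # ( # [ c h ] = ch )
]


def symbol_matches(symbol: str, char: str) -> bool:
    """'C' matches any consonant letter; any other symbol matches itself."""
    if symbol == "C":
        return char.isalpha() and char not in VOWELS
    return symbol == char


def context_matches(pattern: str, text: str) -> bool:
    return len(pattern) == len(text) and all(
        symbol_matches(s, c) for s, c in zip(pattern, text)
    )


def letters_to_phones(word: str, rules=RULES) -> list[str]:
    padded = "#" + word.lower() + "#"   # '#' marks the word boundaries
    phones, i = [], 1
    while i < len(padded) - 1:
        for left, letters, right, output in rules:
            end = i + len(letters)
            if (padded.startswith(letters, i)
                    and context_matches(left, padded[max(0, i - len(left)):i])
                    and context_matches(right, padded[end:end + len(right)])):
                phones.extend(output)
                i = end
                break
        else:
            # No rule fired: copy the letter through as a placeholder.
            phones.append(padded[i])
            i += 1
    return phones


print(letters_to_phones("christmas")[0])  # k
print(letters_to_phones("choice")[0])     # ch
```

Because the more specific rule is listed first, "christmas" is caught by the consonant-context rule before the general `ch` rule is ever tried.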

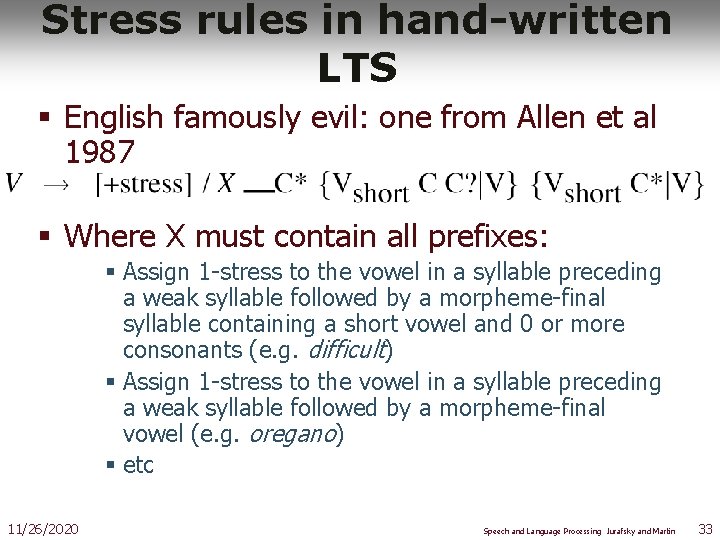

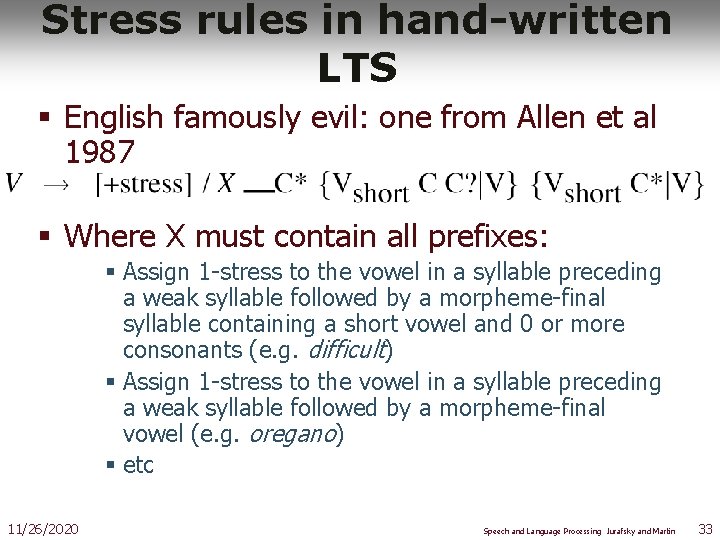

Stress rules in hand-written LTS

- English famously evil: one from Allen et al. 1987
- Where X must contain all prefixes:
  - Assign 1-stress to the vowel in a syllable preceding a weak syllable followed by a morpheme-final syllable containing a short vowel and 0 or more consonants (e.g. difficult)
  - Assign 1-stress to the vowel in a syllable preceding a weak syllable followed by a morpheme-final vowel (e.g. oregano)
  - etc.

Modern method: Learning LTS rules automatically

- Induce LTS from a dictionary of the language
- Black et al. 1998
- Applied to English, German, French
- Two steps:
  - alignment
  - (CART-based) rule-induction

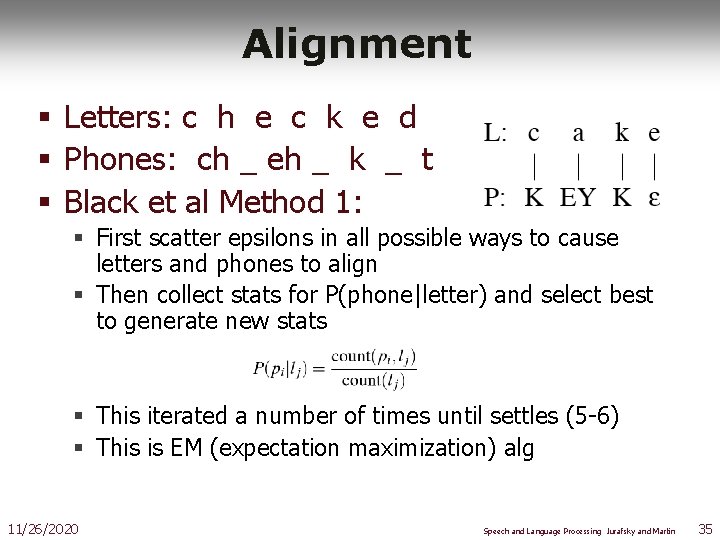

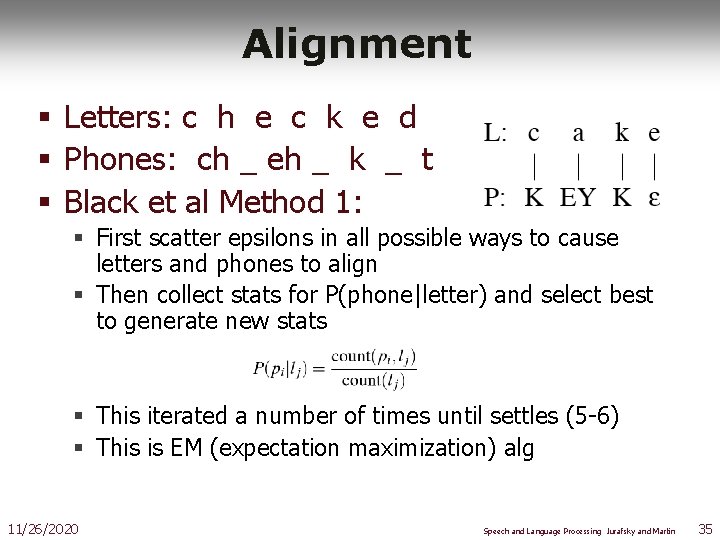

Alignment

- Letters: c h e c k e d
- Phones: ch _ eh _ k _ t
- Black et al. Method 1:
  - First scatter epsilons in all possible ways to cause letters and phones to align
  - Then collect stats for P(phone|letter) and select best to generate new stats
  - This is iterated a number of times until it settles (5-6 iterations)
  - This is the EM (expectation maximization) algorithm
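
The first step of Method 1, scattering epsilons in all possible ways, can be enumerated directly. The sketch below covers only that initialization plus one round of counting with every candidate alignment weighted equally; it does not implement the later "select best and re-estimate" iterations. It also assumes each letter maps to at most one phone, which is a simplification (a letter such as "x" would need two). Function names are hypothetical.

```python
from collections import Counter, defaultdict
from itertools import combinations


def all_alignments(letters, phones, epsilon="_"):
    """Yield every way to pad `phones` with epsilons to the length of `letters`."""
    for slots in combinations(range(len(letters)), len(phones)):
        row = [epsilon] * len(letters)
        for position, phone in zip(slots, phones):
            row[position] = phone
        yield row


def initial_phone_given_letter(pairs):
    """Estimate P(phone | letter), counting every candidate alignment equally."""
    counts = defaultdict(Counter)
    for letters, phones in pairs:
        for row in all_alignments(letters, phones):
            for letter, phone in zip(letters, row):
                counts[letter][phone] += 1
    return {
        letter: {phone: n / sum(c.values()) for phone, n in c.items()}
        for letter, c in counts.items()
    }


alignments = list(all_alignments("checked", ["ch", "eh", "k", "t"]))
print(len(alignments))  # 35 ways to place 4 phones in 7 letter slots
print(["ch", "_", "eh", "_", "k", "_", "t"] in alignments)  # True
```

With a single word the estimates are uninformative; the statistics only become useful once they are pooled over a whole dictionary, which is what lets the later iterations prefer plausible letter-phone pairings.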

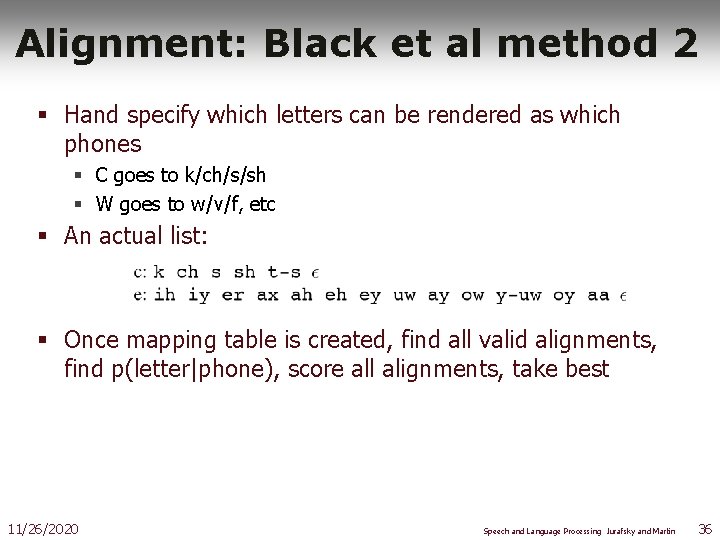

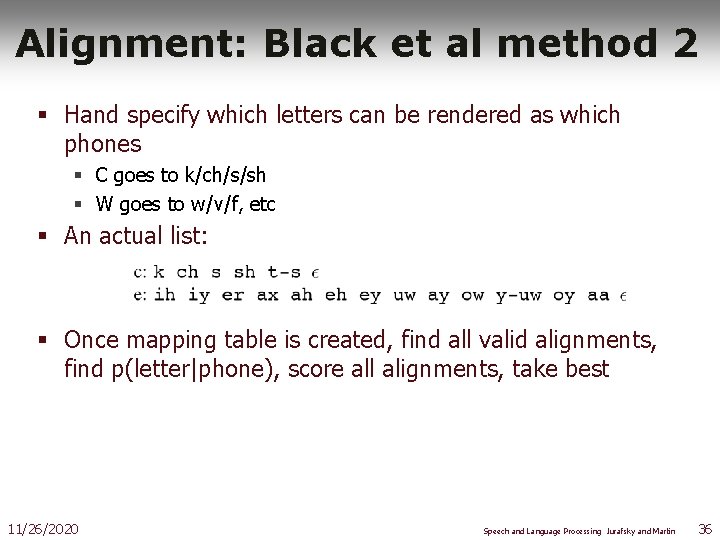

Alignment: Black et al. method 2

- Hand specify which letters can be rendered as which phones
  - C goes to k/ch/s/sh
  - W goes to w/v/f, etc.
  - An actual list:
- Once mapping table is created, find all valid alignments, find p(letter|phone), score all alignments, take best

Alignment

- Some alignments will turn out to be really bad.
- These are just the cases where pronunciation doesn’t match letters:
  - Dept: d ih p aa r t m ah n t
  - CMU: s iy eh m y uw
  - Lieutenant: l eh f t eh n ax n t (British)
- Also foreign words
- These can just be removed from alignment training

Building CART trees

- Build a CART tree for each letter in alphabet (26 plus accented) using context of ±3 letters
  - # # # c h e c -> ch
  - c h e c k e d -> _
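
The two training instances on the slide are seven-letter windows centered on the letter being predicted. A minimal sketch of that feature extraction follows; the function name `letter_windows` is hypothetical, and the aligned phone string (with `_` for epsilon) is assumed to come from the alignment step. Tree induction itself is not shown.

```python
def letter_windows(word: str, aligned_phones, width: int = 3, pad: str = "#"):
    """Return one (context window, phone) training instance per letter.

    Each window holds `width` letters on either side of the target letter,
    padded with `pad` at the word edges.
    """
    if len(word) != len(aligned_phones):
        raise ValueError("need exactly one aligned phone (or epsilon) per letter")
    padded = pad * width + word + pad * width
    return [
        (tuple(padded[i:i + 2 * width + 1]), phone)
        for i, phone in enumerate(aligned_phones)
    ]


instances = letter_windows("checked", ["ch", "_", "eh", "_", "k", "_", "t"])
print(instances[0])  # (('#', '#', '#', 'c', 'h', 'e', 'c'), 'ch')
print(instances[3])  # (('c', 'h', 'e', 'c', 'k', 'e', 'd'), '_')
```

The first and fourth instances are exactly the two rows shown on the slide: the same letter "c" maps to [ch] or to epsilon depending on its neighbors, which is the distinction the tree has to learn.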

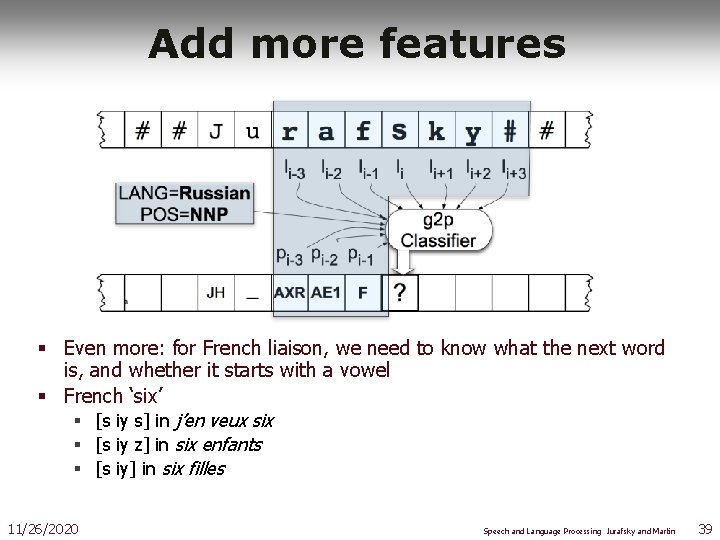

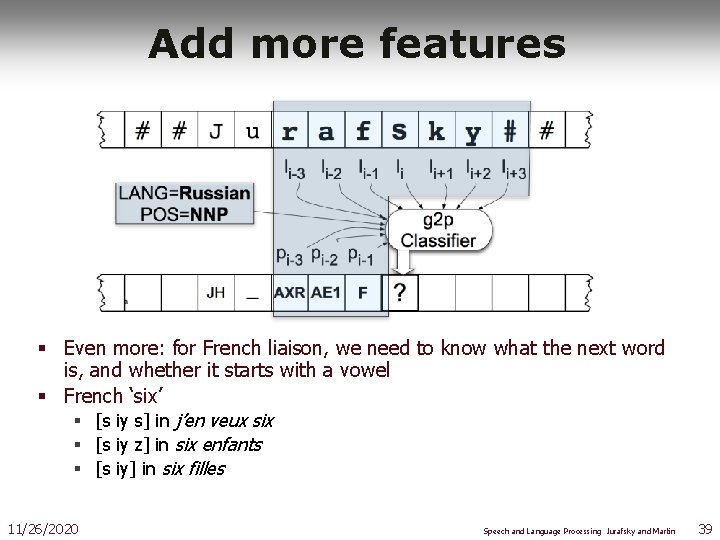

Add more features

- Even more: for French liaison, we need to know what the next word is, and whether it starts with a vowel
- French ‘six’
  - [s iy s] in j’en veux six
  - [s iy z] in six enfants
  - [s iy] in six filles

Prosody: from words+phones to boundaries, accent, F0, duration

- Prosodic phrasing
  - Need to break utterances into phrases
  - Punctuation is useful, not sufficient
- Accents:
  - Predictions of accents: which syllables should be accented
  - Realization of F0 contour: given accents/tones, generate F0 contour
- Duration:
  - Predicting duration of each phone

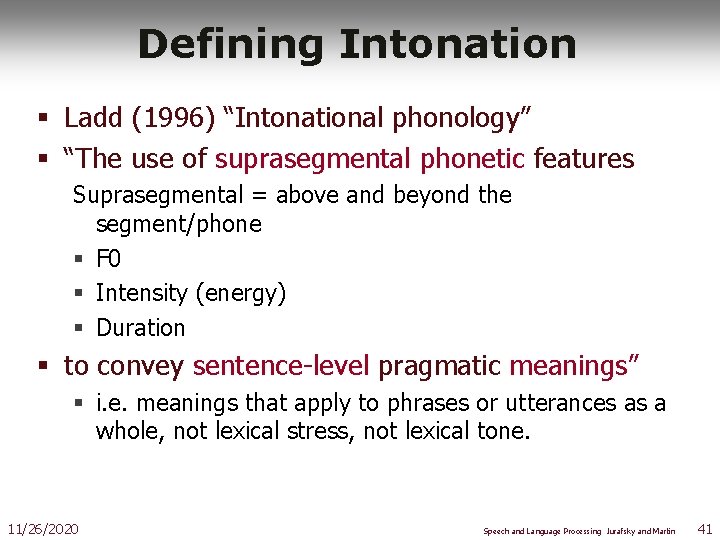

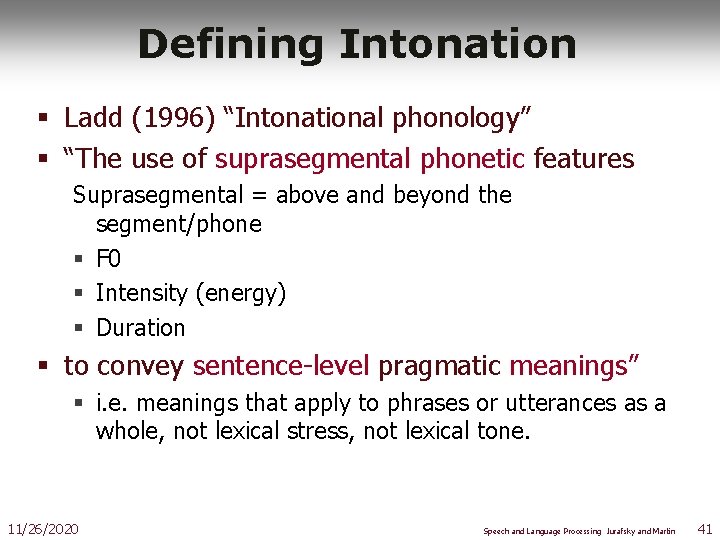

Defining Intonation

- Ladd (1996), “Intonational phonology”:
  - “The use of suprasegmental phonetic features to convey sentence-level pragmatic meanings”
- Suprasegmental = above and beyond the segment/phone
  - F0
  - Intensity (energy)
  - Duration
- Sentence-level pragmatic meanings: i.e. meanings that apply to phrases or utterances as a whole, not lexical stress, not lexical tone.

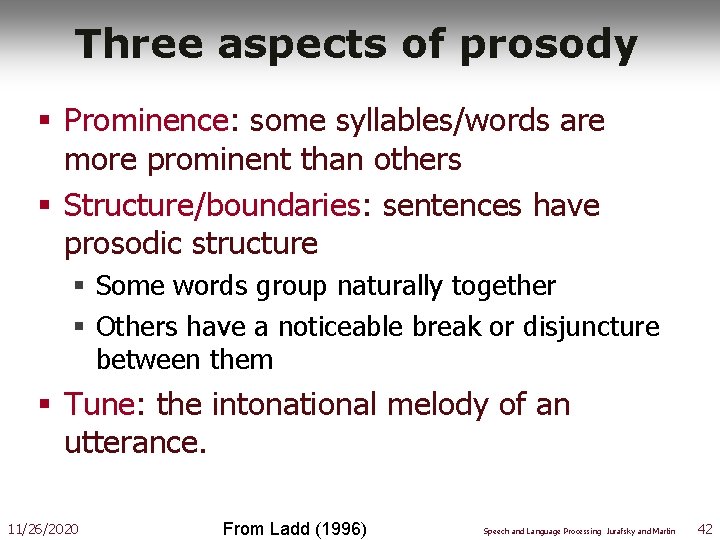

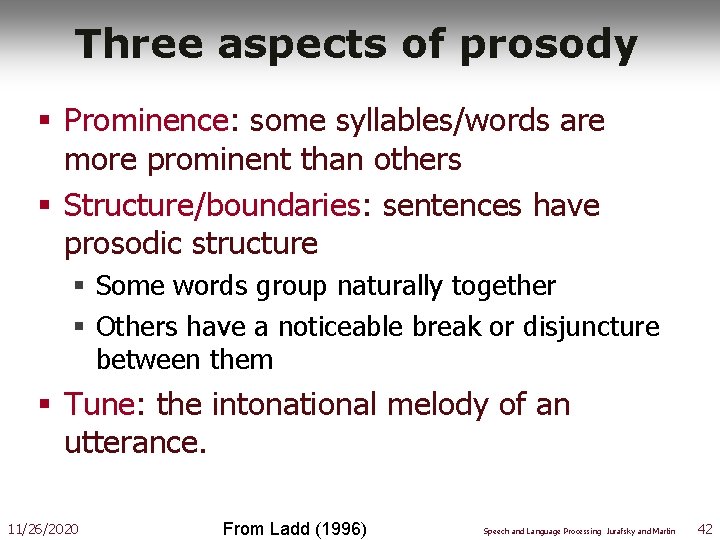

Three aspects of prosody

- Prominence: some syllables/words are more prominent than others
- Structure/boundaries: sentences have prosodic structure
  - Some words group naturally together
  - Others have a noticeable break or disjuncture between them
- Tune: the intonational melody of an utterance.

(From Ladd (1996))

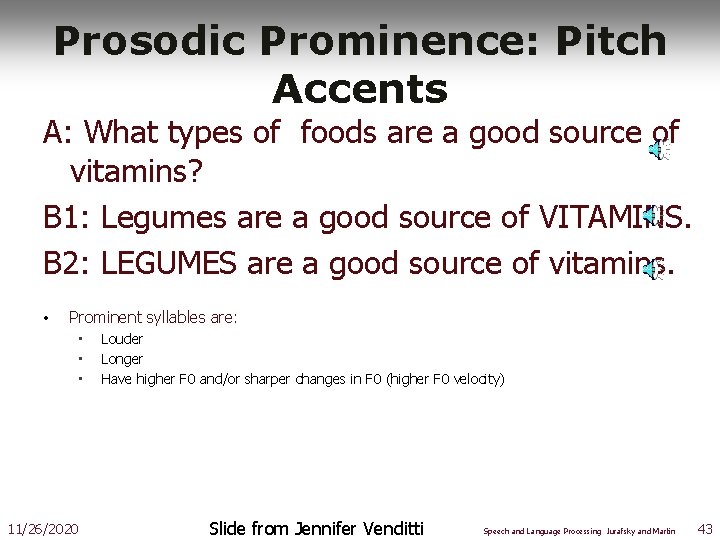

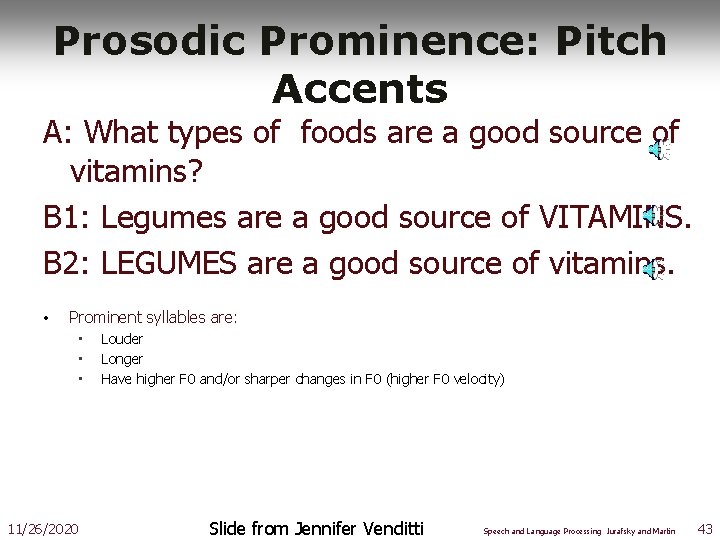

Prosodic Prominence: Pitch Accents

- A: What types of foods are a good source of vitamins?
- B1: Legumes are a good source of VITAMINS.
- B2: LEGUMES are a good source of vitamins.
- Prominent syllables are:
  - Louder
  - Longer
  - Have higher F0 and/or sharper changes in F0 (higher F0 velocity)

(Slide from Jennifer Venditti)

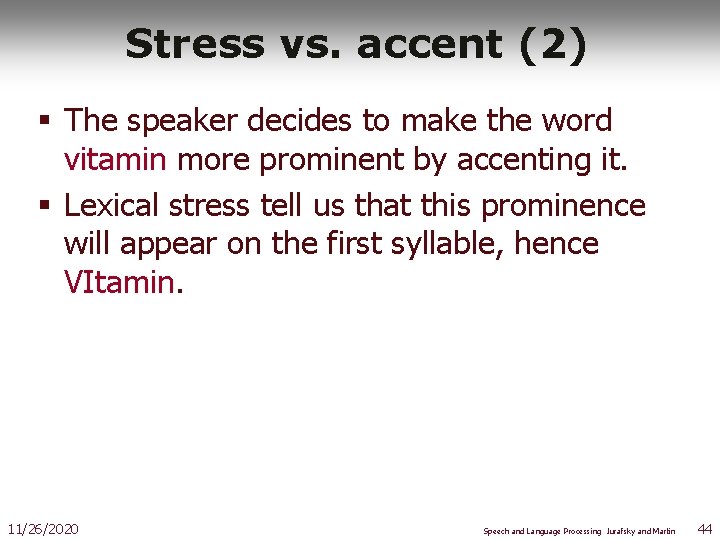

Stress vs. accent (2)

- The speaker decides to make the word vitamin more prominent by accenting it.
- Lexical stress tells us that this prominence will appear on the first syllable, hence VItamin.

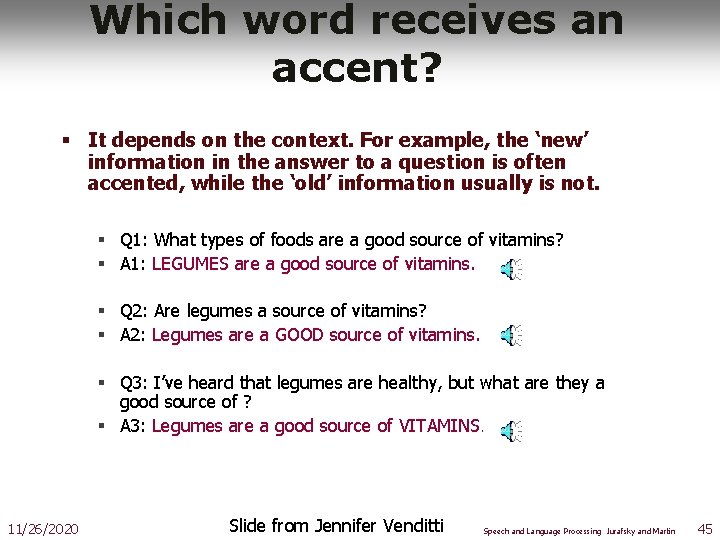

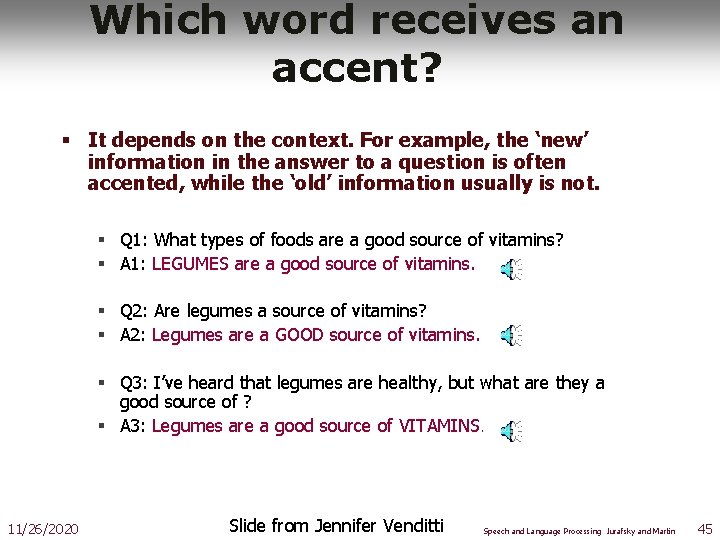

Which word receives an accent?

- It depends on the context. For example, the ‘new’ information in the answer to a question is often accented, while the ‘old’ information usually is not.
  - Q1: What types of foods are a good source of vitamins?
  - A1: LEGUMES are a good source of vitamins.
  - Q2: Are legumes a source of vitamins?
  - A2: Legumes are a GOOD source of vitamins.
  - Q3: I’ve heard that legumes are healthy, but what are they a good source of?
  - A3: Legumes are a good source of VITAMINS.

(Slide from Jennifer Venditti)

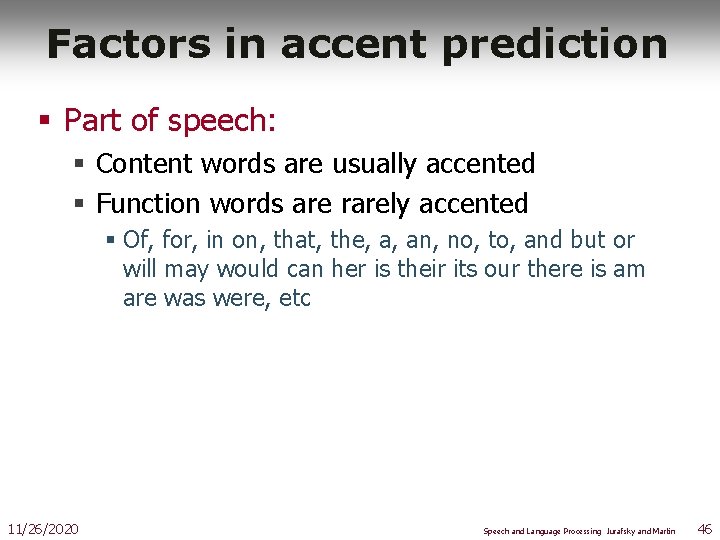

Factors in accent prediction

- Part of speech:
  - Content words are usually accented
  - Function words are rarely accented
    - of, for, in, on, that, the, a, an, no, to, and, but, or, will, may, would, can, her, is, their, its, our, there, am, are, was, were, etc.
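
The part-of-speech heuristic gives a simple baseline: accent every word that is not on the function-word list. The sketch below uses exactly the words listed on the slide; the function name `predict_accents` is hypothetical, and a real system would layer context features on top of this baseline.

```python
FUNCTION_WORDS = {
    "of", "for", "in", "on", "that", "the", "a", "an", "no", "to", "and",
    "but", "or", "will", "may", "would", "can", "her", "is", "their", "its",
    "our", "there", "am", "are", "was", "were",
}


def predict_accents(words):
    """Baseline: accent content words, leave function words unaccented."""
    return [(word, word.lower() not in FUNCTION_WORDS) for word in words]


sentence = "Legumes are a good source of vitamins".split()
print([word for word, accented in predict_accents(sentence) if accented])
```

This baseline accents "Legumes", "good", "source" and "vitamins" regardless of the question being answered, so it cannot reproduce the context-dependent patterns in the question-answer examples.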

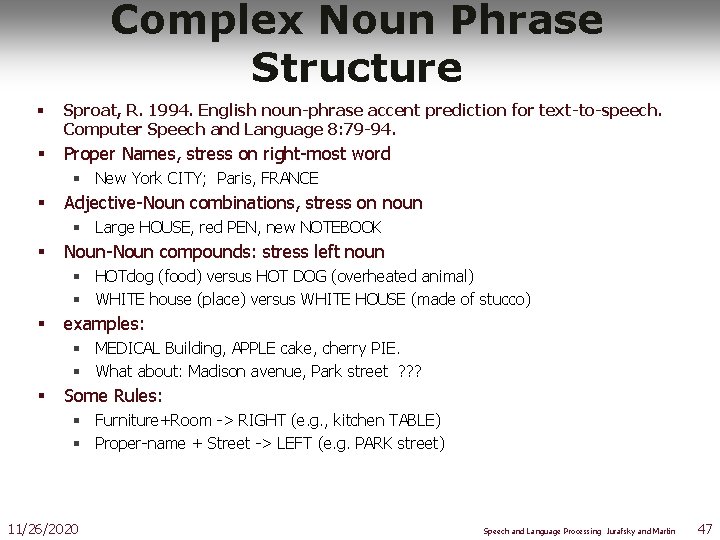

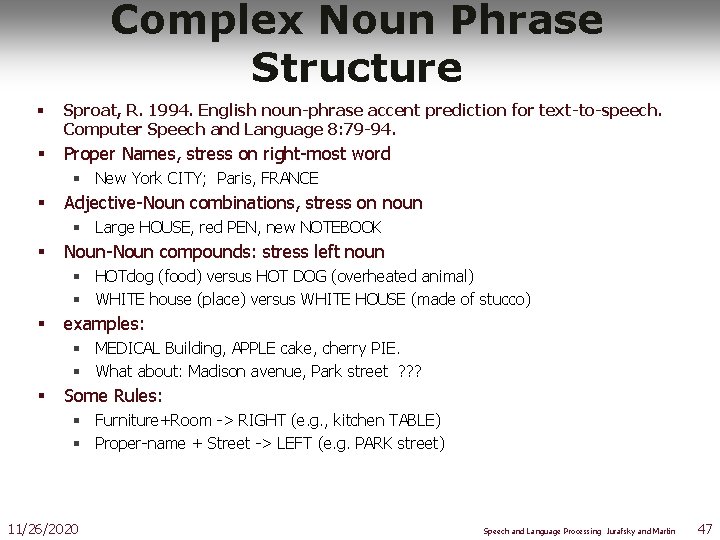

Complex Noun Phrase Structure

- Sproat, R. 1994. English noun-phrase accent prediction for text-to-speech. Computer Speech and Language 8: 79-94.
- Proper Names, stress on right-most word
  - New York CITY; Paris, FRANCE
- Adjective-Noun combinations, stress on noun
  - Large HOUSE, red PEN, new NOTEBOOK
- Noun-Noun compounds: stress left noun
  - HOTdog (food) versus HOT DOG (overheated animal)
  - WHITE house (place) versus WHITE HOUSE (made of stucco)
  - examples: MEDICAL Building, APPLE cake, cherry PIE.
  - What about: Madison avenue, Park street?
- Some Rules:
  - Furniture + Room -> RIGHT (e.g., kitchen TABLE)
  - Proper-name + Street -> LEFT (e.g. PARK street)

State of the art

- Hand-label large training sets
- Use CART, SVM, CRF, etc. to predict accent
- Lots of rich features from context (parts of speech, syntactic structure, information structure, contrast, etc.)
- Classic lit:
  - Hirschberg, Julia. 1993. Pitch Accent in context: predicting intonational prominence from text. Artificial Intelligence 63, 305-340

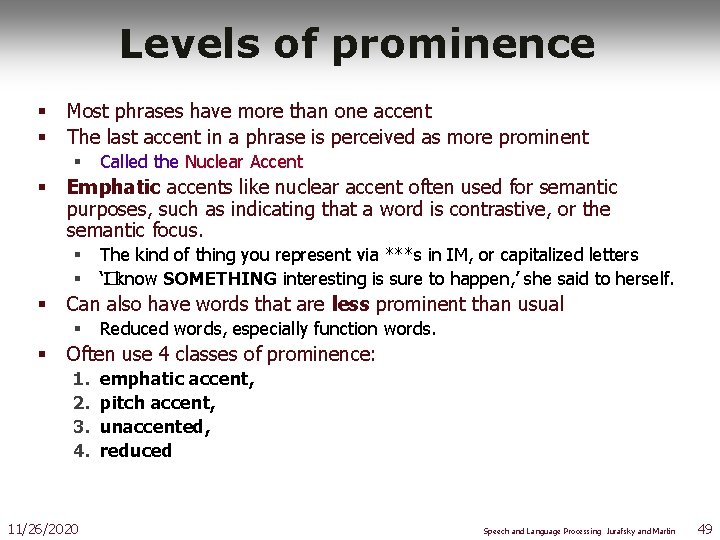

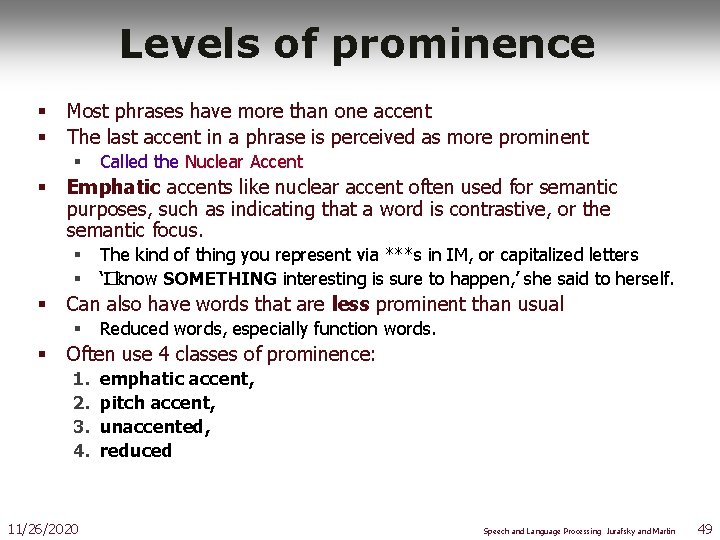

Levels of prominence

- Most phrases have more than one accent
- The last accent in a phrase is perceived as more prominent
  - Called the Nuclear Accent
- Emphatic accents like nuclear accent often used for semantic purposes, such as indicating that a word is contrastive, or the semantic focus.
  - The kind of thing you represent via ***s in IM, or capitalized letters
  - ‘I know SOMETHING interesting is sure to happen,’ she said to herself.
- Can also have words that are less prominent than usual
  - Reduced words, especially function words.
- Often use 4 classes of prominence:
  1. emphatic accent
  2. pitch accent
  3. unaccented
  4. reduced

Yes-No question

- are legumes a good source of VITAMINS
- Rise from the main accent to the end of the sentence.

(Slide from Jennifer Venditti)

![Surpriseredundancy tune How many times do I have to tell you ‘Surprise-redundancy’ tune [How many times do I have to tell you. . . ]](https://slidetodoc.com/presentation_image_h/a85a969c25406d5ba3aab32be2589ba5/image-51.jpg)

‘Surprise-redundancy’ tune

- [How many times do I have to tell you...] legumes are a good source of vitamins
- Low beginning followed by a gradual rise to a high at the end.

(Slide from Jennifer Venditti)

‘Contradiction’ tune

- “I’ve heard that linguini is a good source of vitamins.”
- linguini isn’t a good source of vitamins [...how could you think that?]
- Sharp fall at the beginning, flat and low, then rising at the end.

(Slide from Jennifer Venditti)

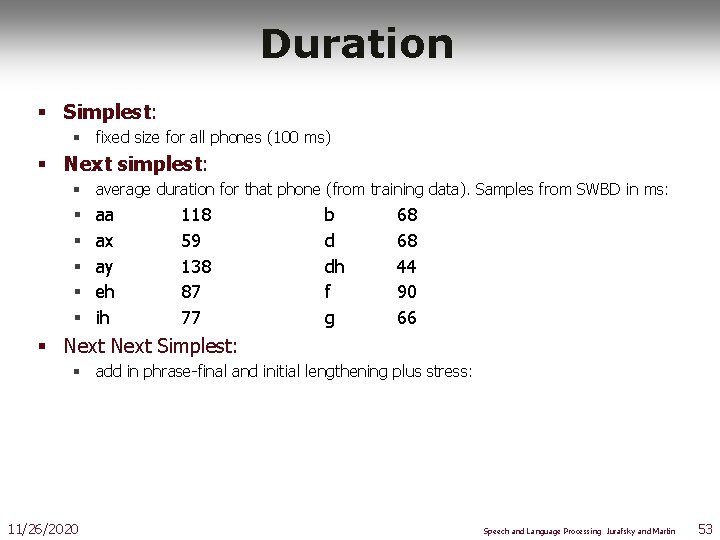

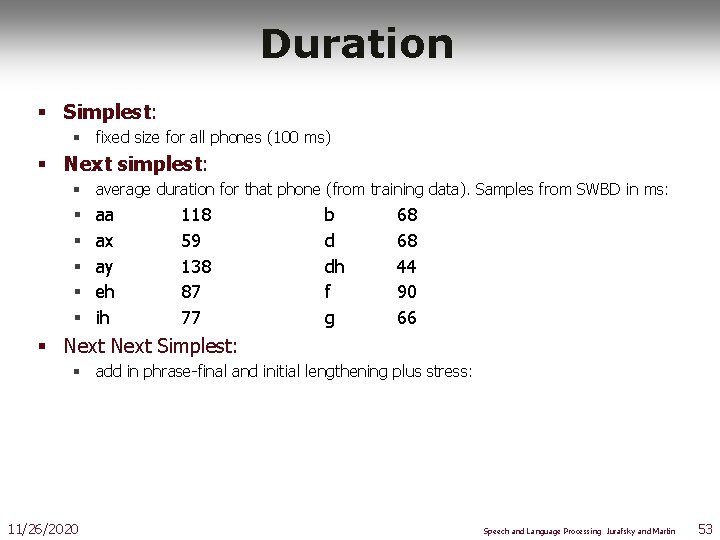

Duration

- Simplest:
  - fixed size for all phones (100 ms)
- Next simplest:
  - average duration for that phone (from training data). Samples from SWBD in ms:

| Phone | ms | Phone | ms |
|---|---|---|---|
| aa | 118 | b | 68 |
| ax | 59 | d | 68 |
| ay | 138 | dh | 44 |
| eh | 87 | f | 90 |
| ih | 77 | g | 66 |

- Next simplest:
  - add in phrase-final and initial lengthening plus stress
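
The three duration models stack naturally: a fixed default, a per-phone mean, and multiplicative adjustments. The sketch below uses the SWBD sample means from the slide; the lengthening factors `final_factor` and `stress_factor` are placeholder values chosen for illustration (the slides do not give numbers), and phrase-initial lengthening is left out.

```python
# Mean phone durations in milliseconds (samples from SWBD, as listed above).
MEAN_DURATION_MS = {
    "aa": 118, "ax": 59, "ay": 138, "eh": 87, "ih": 77,
    "b": 68, "d": 68, "dh": 44, "f": 90, "g": 66,
}
DEFAULT_MS = 100  # "simplest" model: fixed size for all phones


def predict_duration(phone, phrase_final=False, stressed=False,
                     final_factor=1.4, stress_factor=1.2):
    """Mean duration for the phone, lengthened phrase-finally and under stress.

    The two factors are hypothetical defaults, not values from the source.
    """
    duration = MEAN_DURATION_MS.get(phone, DEFAULT_MS)
    if phrase_final:
        duration *= final_factor
    if stressed:
        duration *= stress_factor
    return duration


print(predict_duration("aa"))                     # 118
print(predict_duration("zh"))                     # 100 (falls back to default)
print(predict_duration("ax", phrase_final=True))  # lengthened schwa
```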

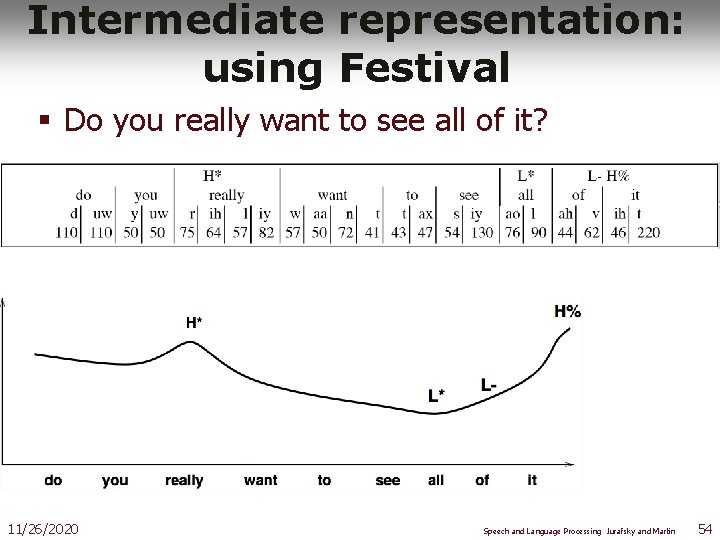

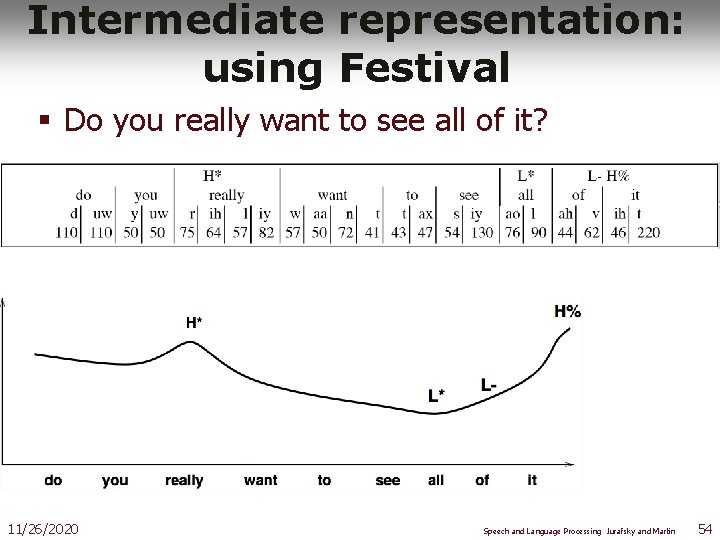

Intermediate representation: using Festival

- Do you really want to see all of it?

Waveform Synthesis

- Given:
  - String of phones
  - Prosody
    - Desired F0 for entire utterance
    - Duration for each phone
    - Stress value for each phone, possibly accent value
- Generate:
  - Waveforms

Diphone TTS architecture

- Training:
  - Choose units (kinds of diphones)
  - Record 1 speaker saying 1 example of each diphone
  - Mark the boundaries of each diphone
  - Cut each diphone out and create a diphone database
- Synthesizing an utterance:
  - Grab relevant sequence of diphones from database
  - Concatenate the diphones, doing slight signal processing at boundaries
  - Use signal processing to change the prosody (F0, energy, duration) of selected sequence of diphones

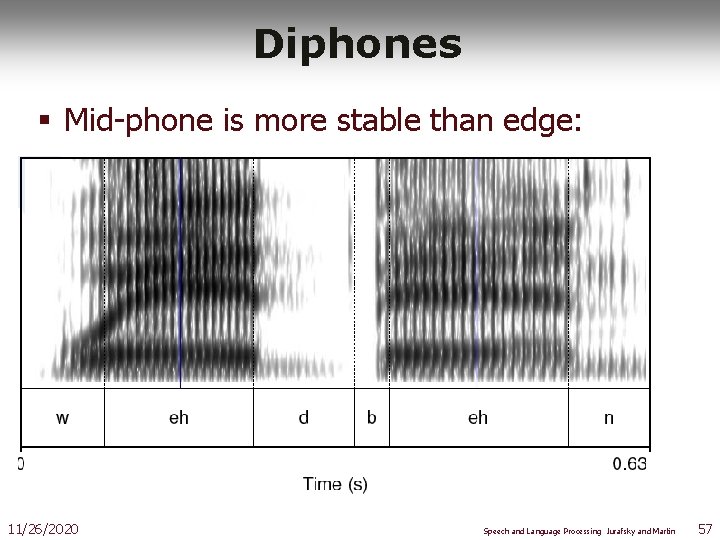

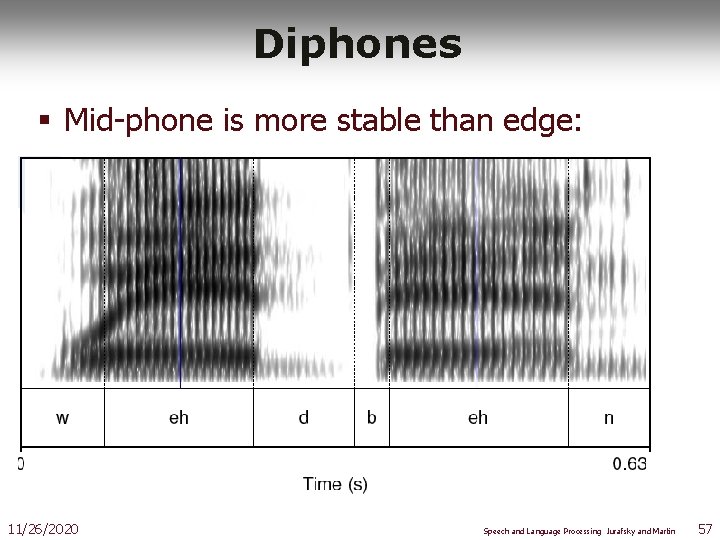

Diphones

- Mid-phone is more stable than edge:

Diphones

- Mid-phone is more stable than edge
- Need O(phones²) number of units
  - Some combinations don’t exist (hopefully)
  - ATT (Olive et al. 1998) system had 43 phones
    - 1849 possible diphones
    - Phonotactics ([h] only occurs before vowels), don’t need to keep diphones across silence
    - Only 1172 actual diphones
  - May include stress, consonant clusters
    - So could have more
  - Lots of phonetic knowledge in design
- Database relatively small (by today’s standards)
  - Around 8 megabytes for English (16 kHz, 16 bit)

(Slide from Richard Sproat)
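
The unit inventory arithmetic and the phone-to-diphone conversion are both one-liners. In this sketch the function names are hypothetical and `pau` is an assumed label for the silence at utterance edges.

```python
def to_diphones(phones, silence="pau"):
    """Turn a phone sequence into the diphone names needed to synthesize it."""
    padded = [silence] + list(phones) + [silence]
    return [f"{left}-{right}" for left, right in zip(padded, padded[1:])]


def max_inventory(n_phones: int) -> int:
    """Upper bound on distinct diphones: every ordered pair of phones."""
    return n_phones ** 2


print(to_diphones(["k", "ae", "t"]))  # ['pau-k', 'k-ae', 'ae-t', 't-pau']
print(max_inventory(43))              # 1849
```

An utterance of n phones needs n + 1 diphones, and the 43-phone inventory gives the 1849 upper bound quoted above before phonotactic gaps reduce it to 1172.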

Voice

- Speaker
  - Called a voice talent
- Diphone database
  - Called a voice

Prosodic Modification

- Modifying pitch and duration independently
- Changing sample rate modifies both:
  - Chipmunk speech
- Duration: duplicate/remove parts of the signal
- Pitch: resample to change pitch

(Text from Alan Black)

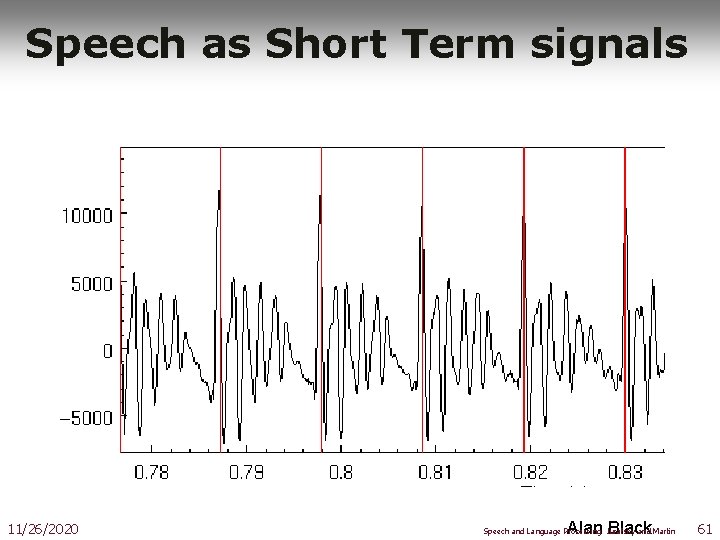

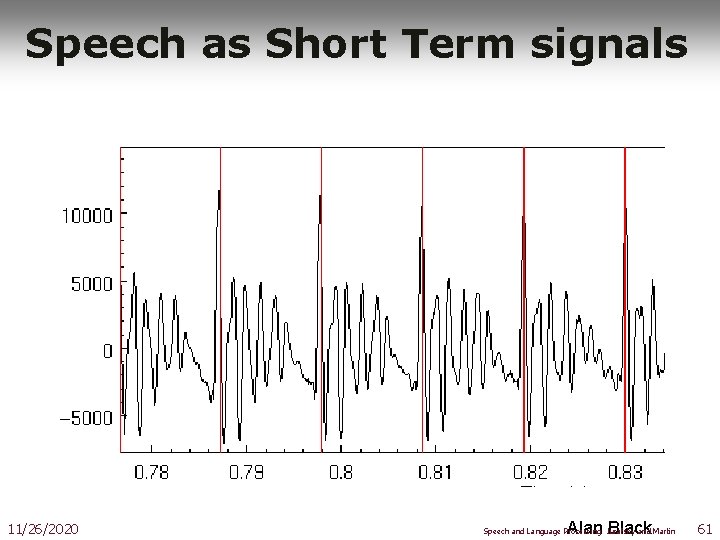

Speech as Short Term signals

(Alan Black)

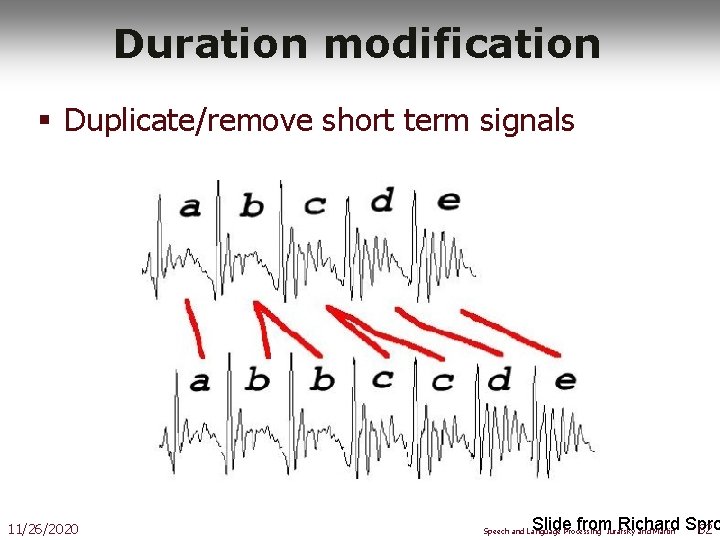

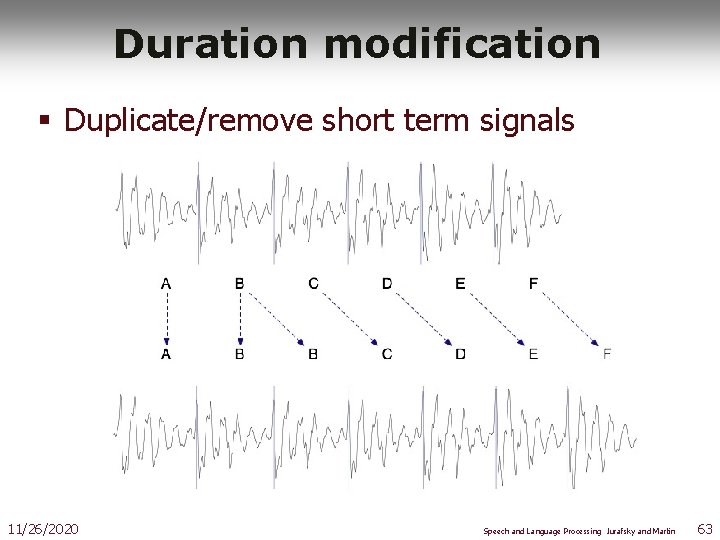

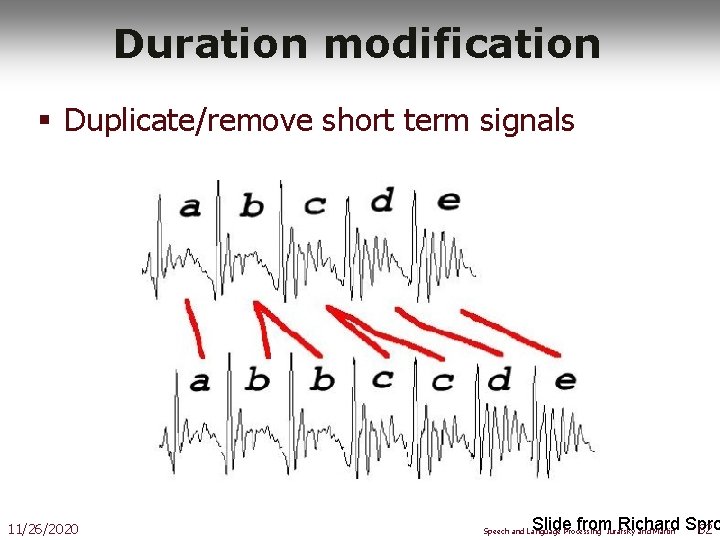

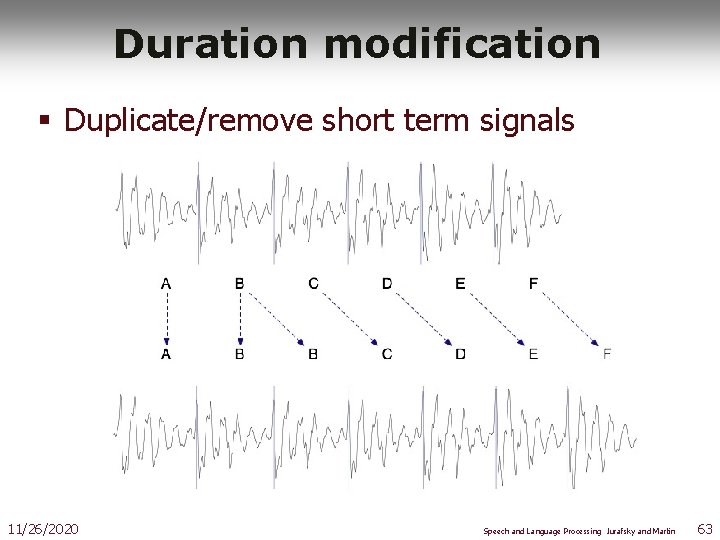

Duration modification

- Duplicate/remove short term signals

(Slide from Richard Sproat)
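
Duration change by duplicating or dropping short-term signals can be sketched as frame selection. This is deliberately simplified: frames are treated as opaque items in a list, whereas a real system would use windowed, pitch-synchronous frames and overlap-add them. The function name `change_duration` is hypothetical.

```python
def change_duration(frames, factor):
    """Stretch (factor > 1) or compress (factor < 1) a sequence of frames.

    Output frame i is taken from input position i / factor, so frames are
    repeated when stretching and skipped when compressing.
    """
    if factor <= 0:
        raise ValueError("factor must be positive")
    n_out = round(len(frames) * factor)
    last = len(frames) - 1
    return [frames[min(int(i / factor), last)] for i in range(n_out)]


frames = ["a", "b", "c", "d"]
print(change_duration(frames, 2))    # ['a', 'a', 'b', 'b', 'c', 'c', 'd', 'd']
print(change_duration(frames, 0.5))  # ['a', 'c']
```

Because whole frames are repeated or removed, the pitch of each frame is untouched, which is what makes it possible to change duration independently of F0.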

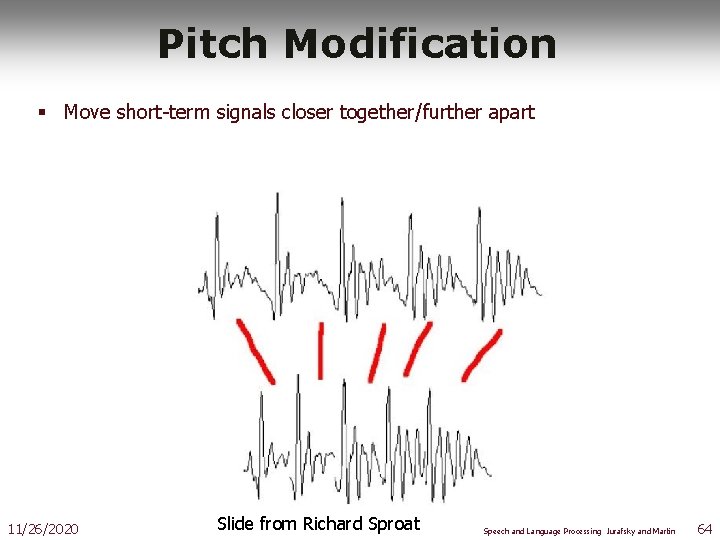

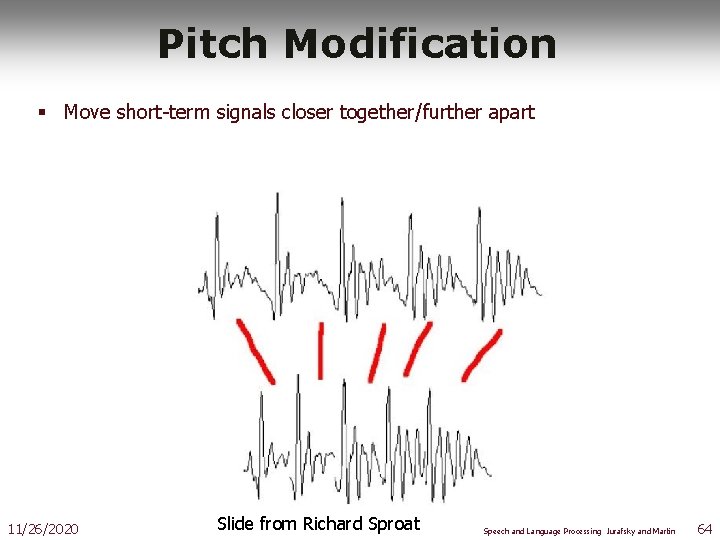

Pitch Modification

- Move short-term signals closer together/further apart

(Slide from Richard Sproat)

TD-PSOLA ™

- Time-Domain Pitch Synchronous Overlap and Add
- Patented by France Telecom (CNET)
- Very efficient
  - No FFT (or inverse FFT) required
- Can raise F0 (in Hz) by up to a factor of two, or lower it by up to half

(Slide from Richard Sproat)

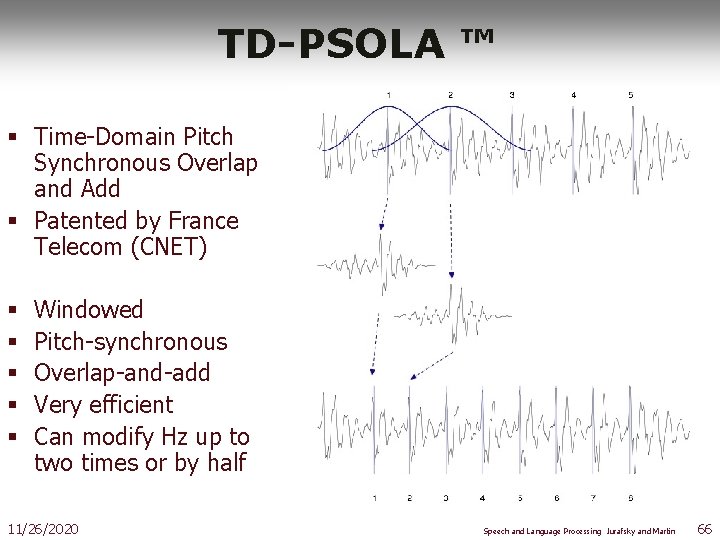

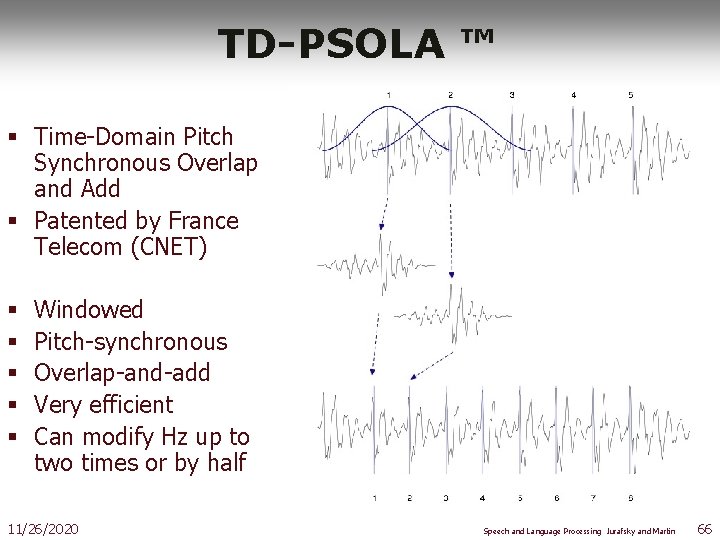

TD-PSOLA ™

- Time-Domain Pitch Synchronous Overlap and Add
- Patented by France Telecom (CNET)
- Steps:
  - Windowed
  - Pitch-synchronous
  - Overlap-and-add
- Very efficient
- Can raise F0 (in Hz) by up to a factor of two, or lower it by up to half

Unit Selection Synthesis

- Generalization of the diphone intuition
  - Larger units
    - From diphones to sentences
  - Many many copies of each unit
    - 10 hours of speech instead of 1500 diphones (a few minutes of speech)

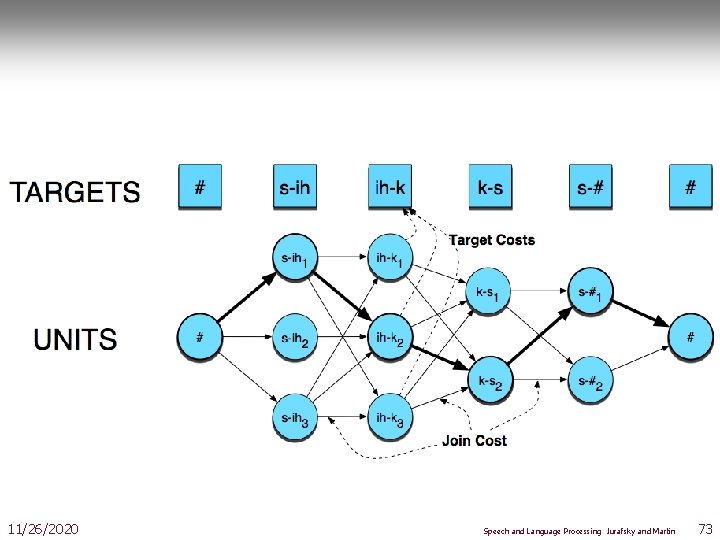

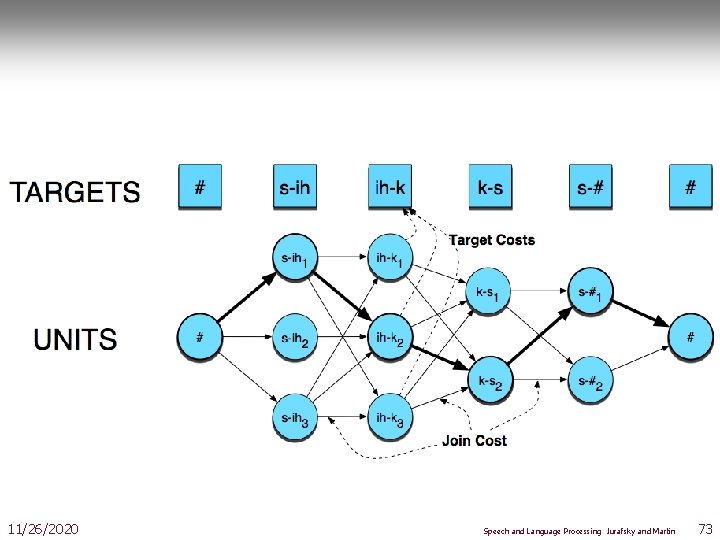

Unit Selection Intuition

- Given a big database
- Find the unit in the database that is the best to synthesize some target segment
- What does “best” mean?
  - “Target cost”: Closest match to the target description, in terms of
    - Phonetic context
    - F0, stress, phrase position
  - “Join cost”: Best join with neighboring units
    - Matching formants + other spectral characteristics
    - Matching energy
    - Matching F0

Targets and Target Costs

- Target cost T(u_t, s_t): how well the target specification s_t matches the potential unit in the database u_t
- Features, costs, and weights
- Examples:
  - /ih-t/ +stress, phrase internal, high F0, content word
  - /n-t/ -stress, phrase final, high F0, function word
  - /dh-ax/ -stress, phrase initial, low F0, word “the”

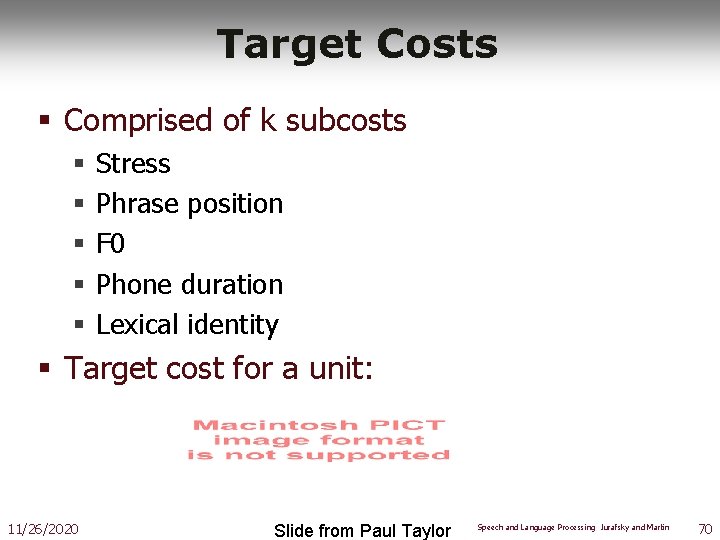

Target Costs

- Comprised of k subcosts
  - Stress
  - Phrase position
  - F0
  - Phone duration
  - Lexical identity
- Target cost for a unit:

(Slide from Paul Taylor)

Join (Concatenation) Cost

- Measure of smoothness of join
- Measured between two database units (target is irrelevant)
- Features, costs, and weights
- Comprised of k subcosts:
  - Spectral features
  - F0
  - Energy
- Join cost:

(Slide from Paul Taylor)

Total Costs

- Hunt and Black 1996
- We now have weights (per phone type) for features set between target and database units
- Find best path of units through database that minimize:
- Standard problem solvable with Viterbi search with beam width constraint for pruning

(Slide from Paul Taylor)
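
The search over units can be sketched as a plain Viterbi pass. This illustration assumes the total cost of a path is the sum of each unit's target cost plus the join cost between consecutive units, with the subcost weights already folded into the two cost functions. It omits the beam pruning mentioned above, and the toy F0-only costs in the example are invented for demonstration; `select_units` is a hypothetical name.

```python
def select_units(targets, candidates, target_cost, join_cost):
    """Return (total_cost, units) for the cheapest path through the candidates.

    candidates[i] is the list of database units that could realize targets[i].
    """
    # scores[i][j]: cheapest cost of any path ending in candidates[i][j].
    scores = [[target_cost(targets[0], unit) for unit in candidates[0]]]
    back = [[None] * len(candidates[0])]
    for i in range(1, len(targets)):
        row, pointers = [], []
        for unit in candidates[i]:
            via = [scores[i - 1][k] + join_cost(prev, unit)
                   for k, prev in enumerate(candidates[i - 1])]
            best_k = min(range(len(via)), key=via.__getitem__)
            row.append(via[best_k] + target_cost(targets[i], unit))
            pointers.append(best_k)
        scores.append(row)
        back.append(pointers)

    # Trace back from the cheapest final unit.
    j = min(range(len(scores[-1])), key=scores[-1].__getitem__)
    total = scores[-1][j]
    path = []
    for i in range(len(targets) - 1, -1, -1):
        path.append(candidates[i][j])
        j = back[i][j]
    path.reverse()
    return total, path


# Toy example: targets are desired F0 values, units are (name, F0) pairs.
targets = [100, 110]
candidates = [[("a1", 100), ("a2", 130)], [("b1", 140), ("b2", 112)]]
cost, units = select_units(
    targets, candidates,
    target_cost=lambda t, u: abs(t - u[1]),
    join_cost=lambda p, u: abs(p[1] - u[1]),
)
print(cost, units)  # 14 [('a1', 100), ('b2', 112)]
```

The dynamic program considers every pairing of adjacent candidates, so its cost grows with the square of the candidate list size per target, which is why a beam is used in practice.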

Unit Selection Summary

- Advantages
  - Quality is far superior to diphones
  - Natural prosody selection sounds better
- Disadvantages:
  - Quality can be very bad in places
    - HCI problem: mix of very good and very bad is quite annoying
  - Synthesis is computationally expensive
  - Can’t synthesize everything you want:
    - Diphone technique can move emphasis
    - Unit selection gives good (but possibly incorrect) result

(Slide from Richard Sproat)

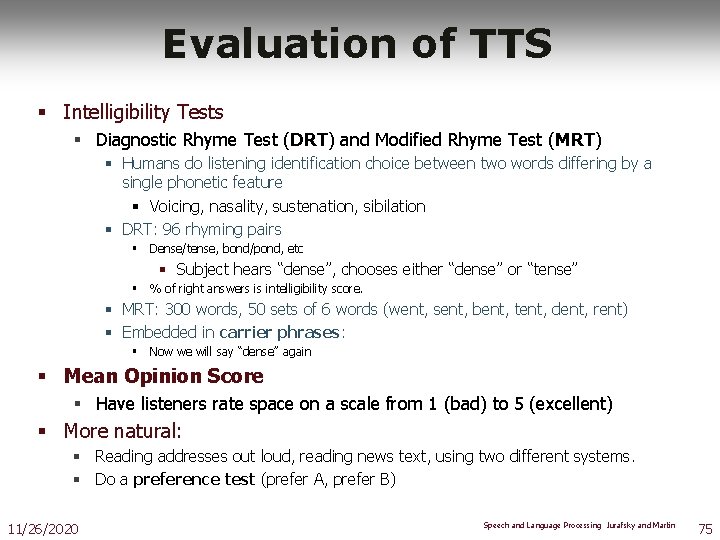

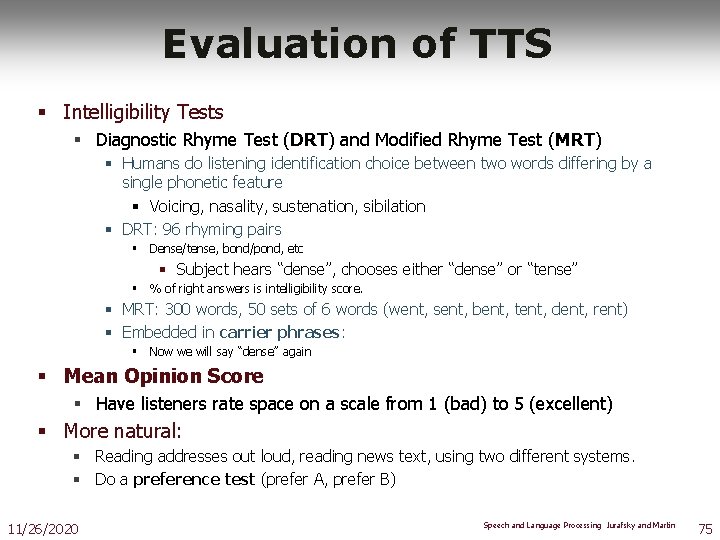

Evaluation of TTS

- Intelligibility Tests
  - Diagnostic Rhyme Test (DRT) and Modified Rhyme Test (MRT)
    - Humans do listening identification choice between two words differing by a single phonetic feature
      - Voicing, nasality, sustenation, sibilation
    - DRT: 96 rhyming pairs
      - Dense/tense, bond/pond, etc.
      - Subject hears “dense”, chooses either “dense” or “tense”
      - % of right answers is intelligibility score.
    - MRT: 300 words, 50 sets of 6 words (went, sent, bent, tent, dent, rent)
    - Embedded in carrier phrases:
      - Now we will say “dense” again
- Mean Opinion Score
  - Have listeners rate speech on a scale from 1 (bad) to 5 (excellent)
- More natural:
  - Reading addresses out loud, reading news text, using two different systems.
  - Do a preference test (prefer A, prefer B)
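
Both scores reduce to simple arithmetic over listener responses. A minimal sketch, with hypothetical function names and made-up response data:

```python
def intelligibility_score(played, chosen):
    """Percentage of trials where the listener chose the word that was played."""
    if len(played) != len(chosen) or not played:
        raise ValueError("need one response per trial and at least one trial")
    correct = sum(p == c for p, c in zip(played, chosen))
    return 100.0 * correct / len(played)


def mean_opinion_score(ratings):
    """Average of listener ratings on the 1 (bad) to 5 (excellent) scale."""
    if not ratings or any(not 1 <= r <= 5 for r in ratings):
        raise ValueError("ratings must be non-empty and lie between 1 and 5")
    return sum(ratings) / len(ratings)


print(intelligibility_score(["dense", "bond"], ["dense", "pond"]))  # 50.0
print(mean_opinion_score([4, 5, 3]))                                # 4.0
```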

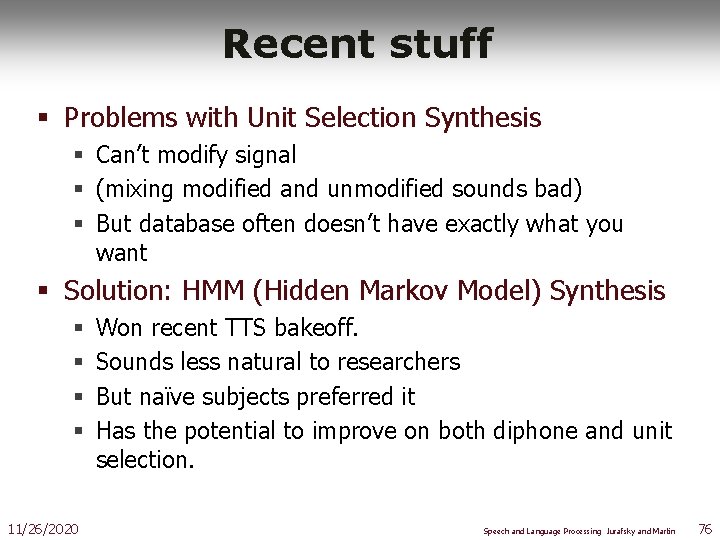

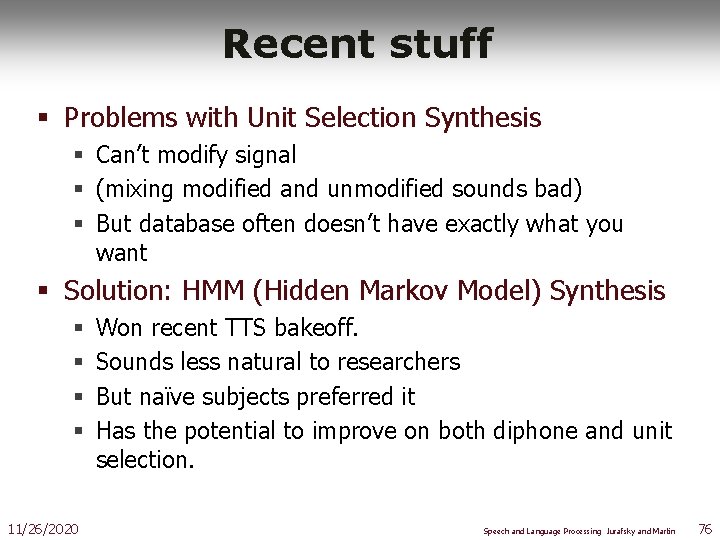

Recent stuff

- Problems with Unit Selection Synthesis
  - Can’t modify signal
    - (mixing modified and unmodified sounds bad)
  - But database often doesn’t have exactly what you want
- Solution: HMM (Hidden Markov Model) Synthesis
  - Won recent TTS bakeoff.
  - Sounds less natural to researchers
  - But naïve subjects preferred it
  - Has the potential to improve on both diphone and unit selection.

HMM Synthesis

- Unit selection (Roger)
- HMM (Roger)
- Unit selection (Nina)
- HMM (Nina)

Summary

1. ARPAbet
2. TTS Architectures
3. TTS Components
   - Text Analysis
     - Text Normalization
     - Homograph Disambiguation
     - Grapheme-to-Phoneme (Letter-to-Sound)
     - Intonation
   - Waveform Generation
     - Diphones
     - Unit Selection
     - HMM