SPARK

Outline
■ Motivating Example
■ History
■ Spark Stack
■ MapReduce Revisited
  o Linear Regression Using MapReduce
■ Iterative and Interactive Procedures in Hadoop
■ In-Memory Storage
■ Resilient Distributed Datasets

Outline (continued)
■ Operations on RDDs
  o Transformations
  o Actions
  o Lazy Evaluation
■ Lineage Graph
■ Distributed Execution
■ Caching
■ Broadcast Variables
■ Logistic Regression: Implementation and Performance

Motivating Example
■ Daytona GraySort benchmarking challenge.
■ Spark sorted 100 terabytes of data stored on solid-state drives.
■ Time taken: 23 minutes.
■ The previous winner used Hadoop and took 72 minutes.
■ This was a static dataset. The performance gain is even higher for interactive jobs.

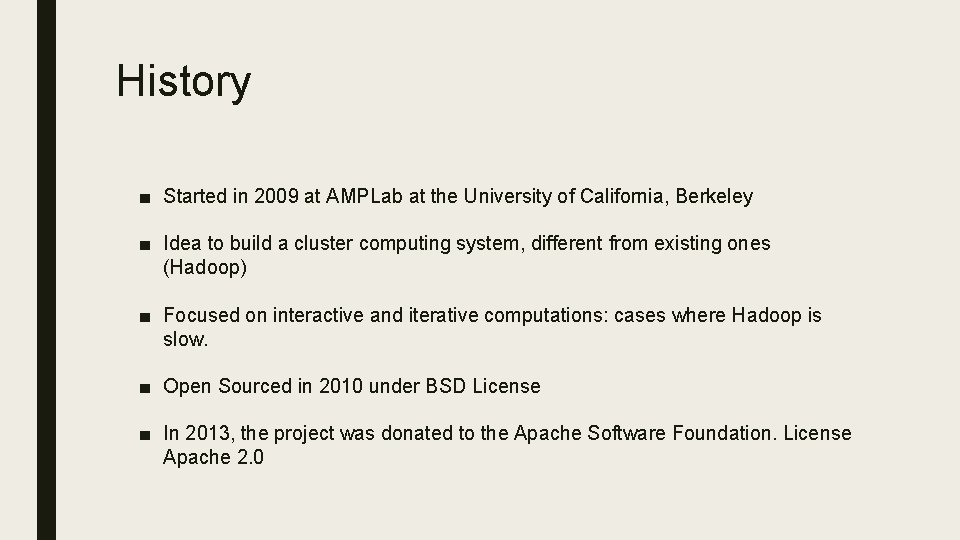

History
■ Started in 2009 at the AMPLab at the University of California, Berkeley.
■ The idea was to build a cluster computing system different from the existing ones (Hadoop).
■ Focused on interactive and iterative computations, the cases where Hadoop is slow.
■ Open sourced in 2010 under a BSD license.
■ In 2013, the project was donated to the Apache Software Foundation. License: Apache 2.0.

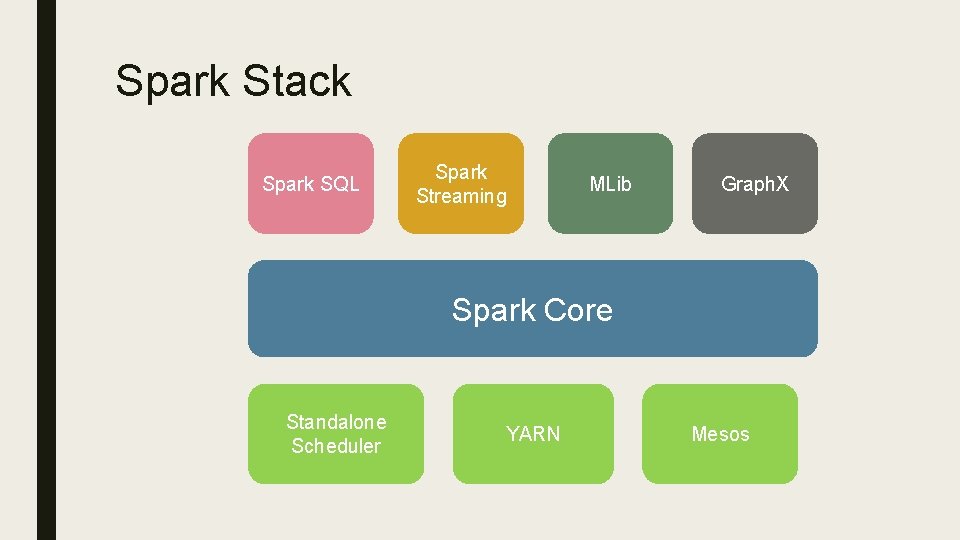

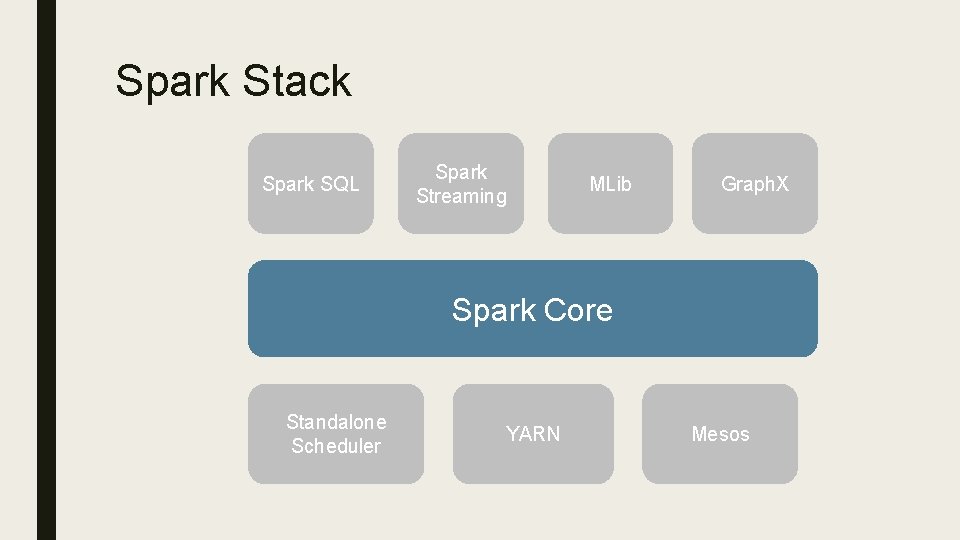

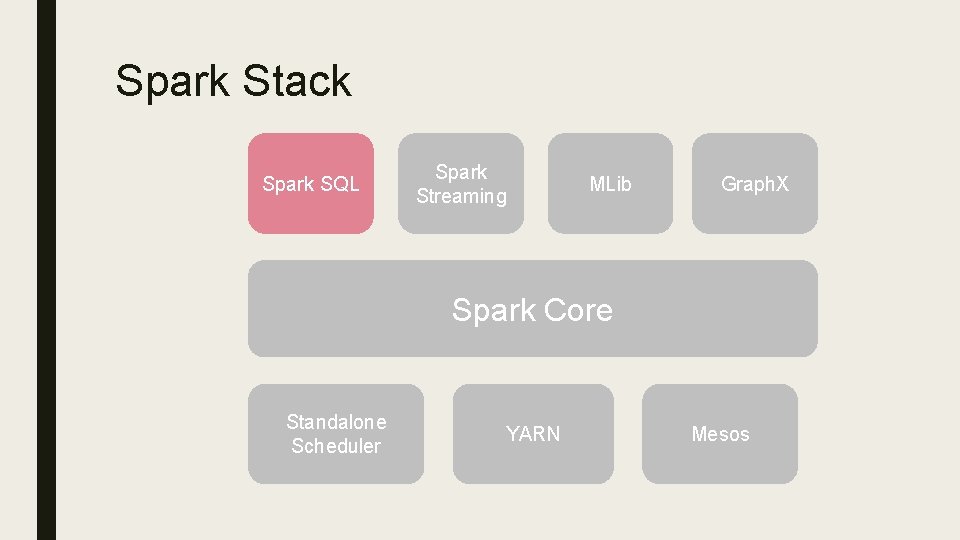

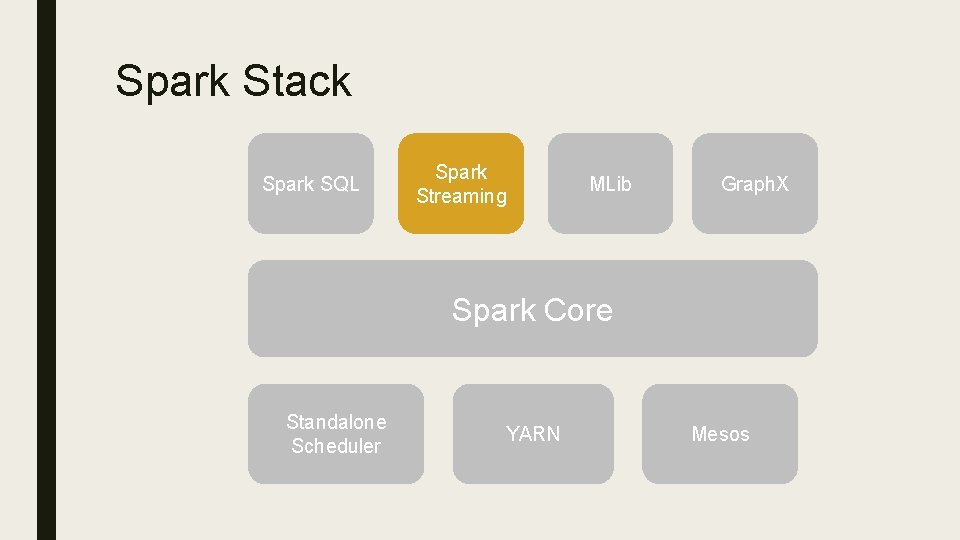

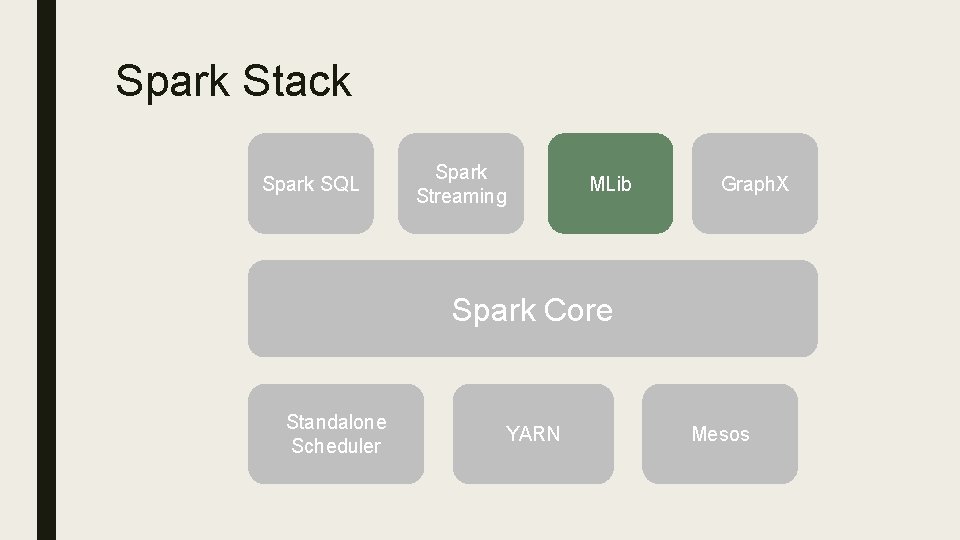

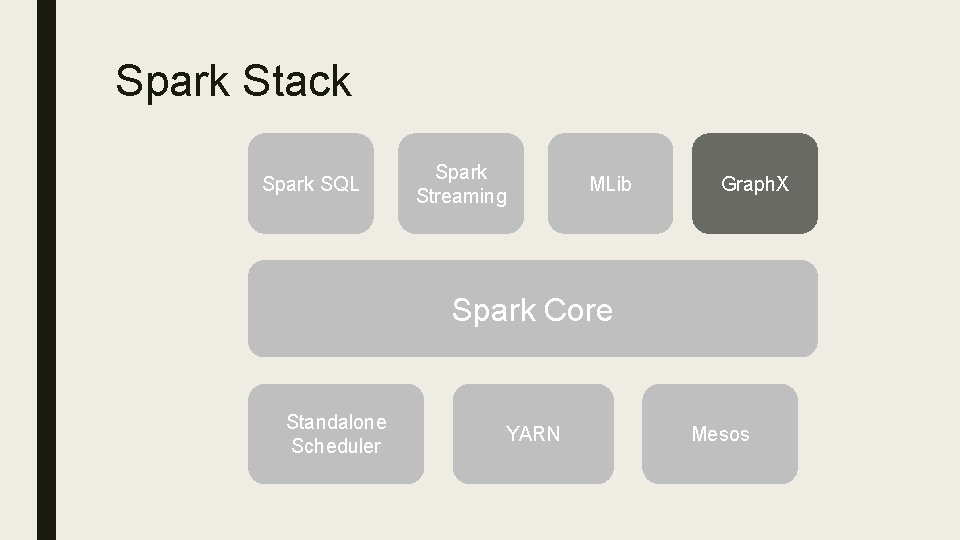

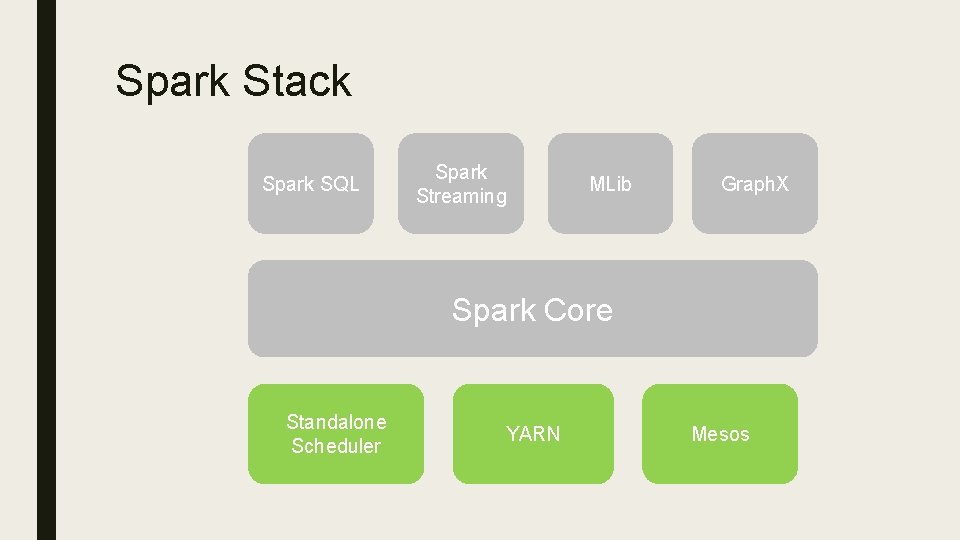

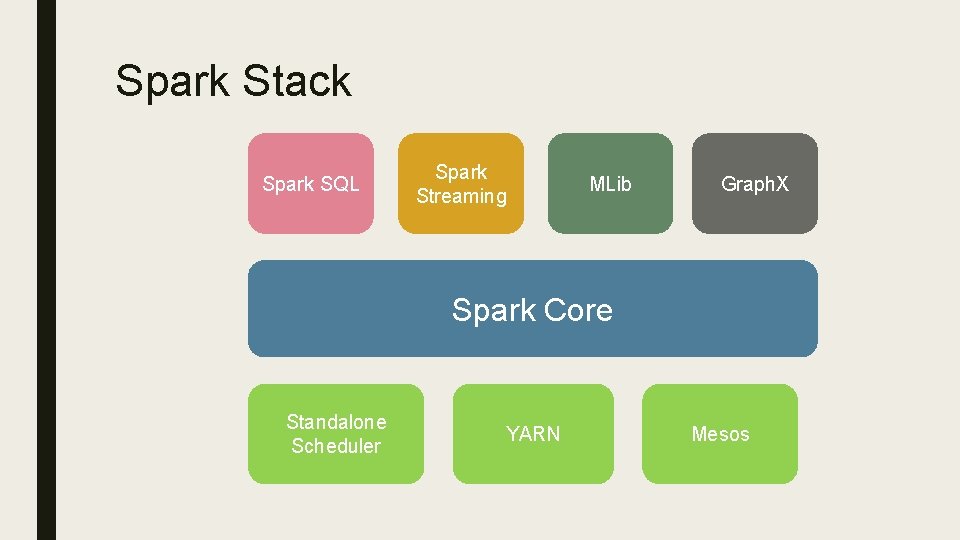

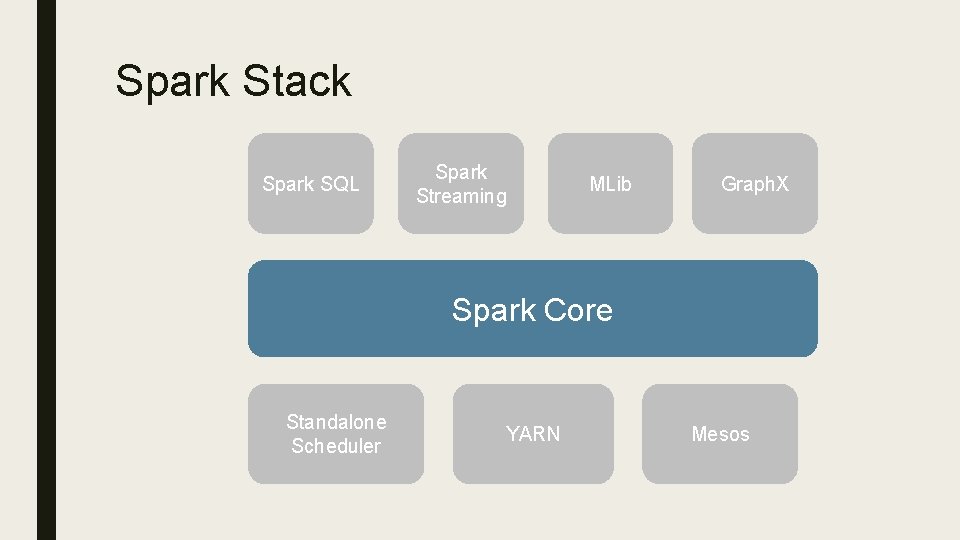

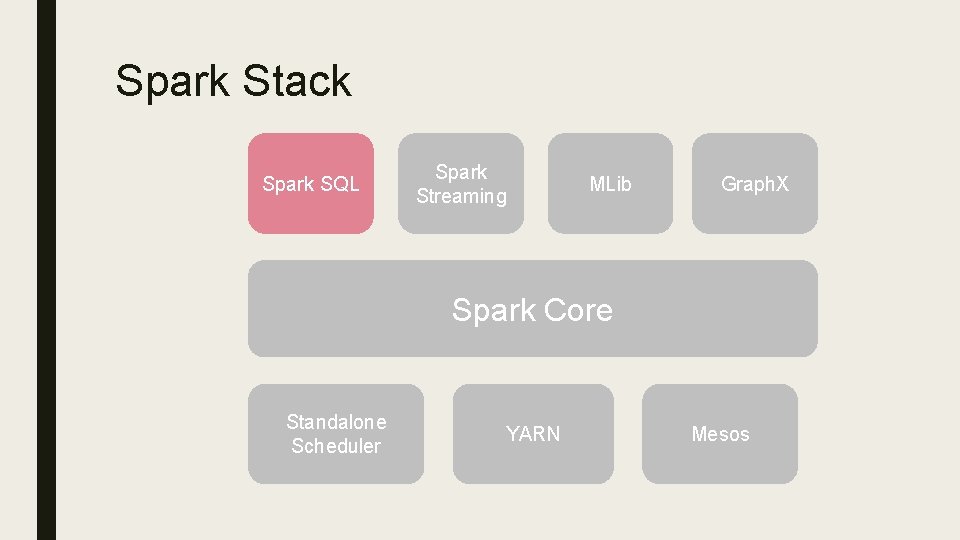

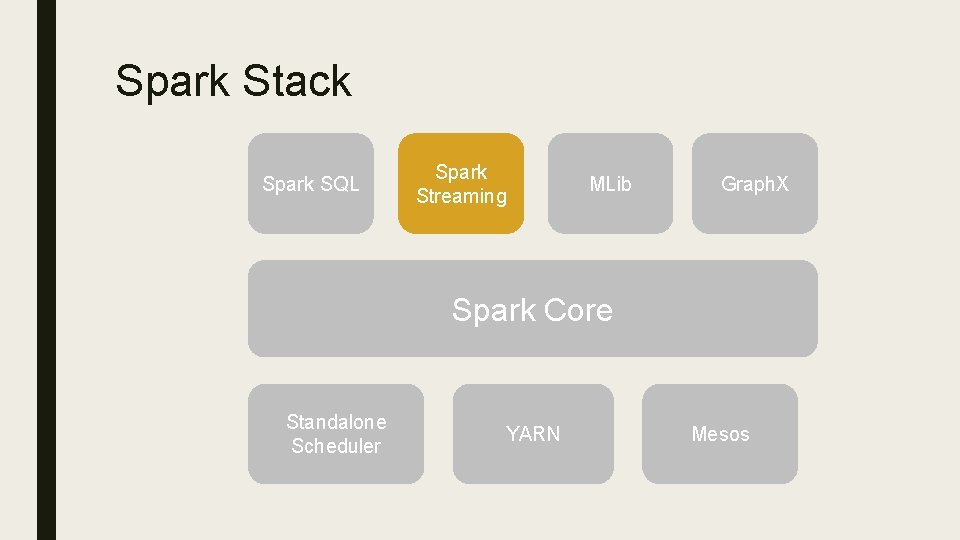

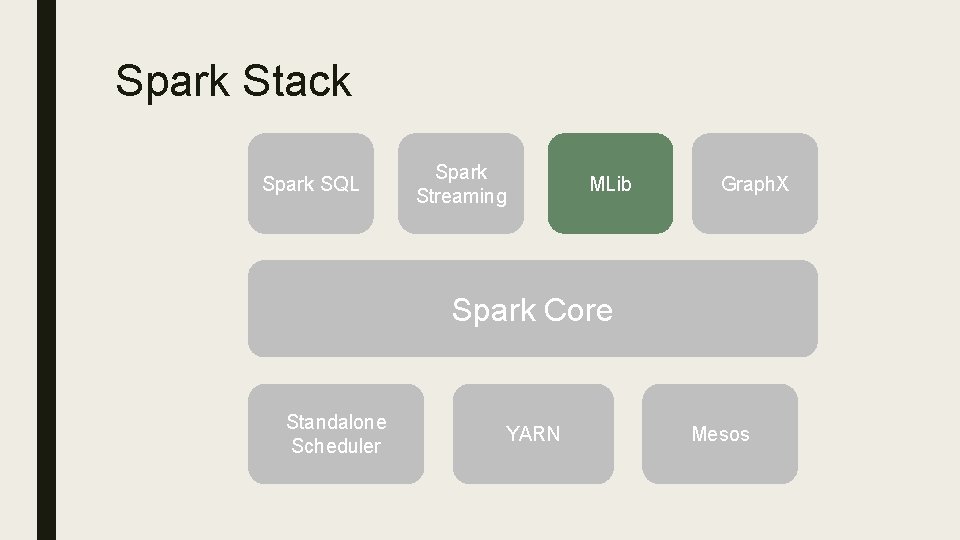

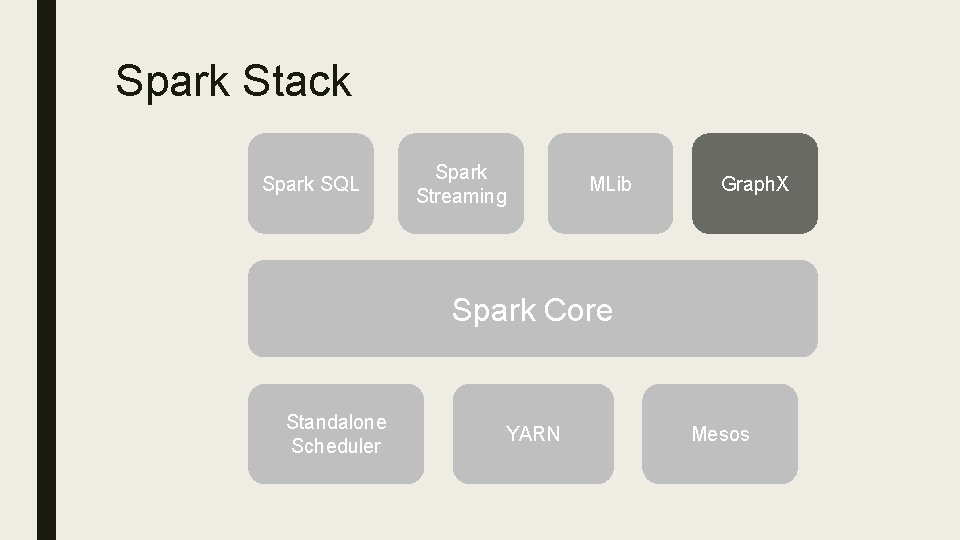

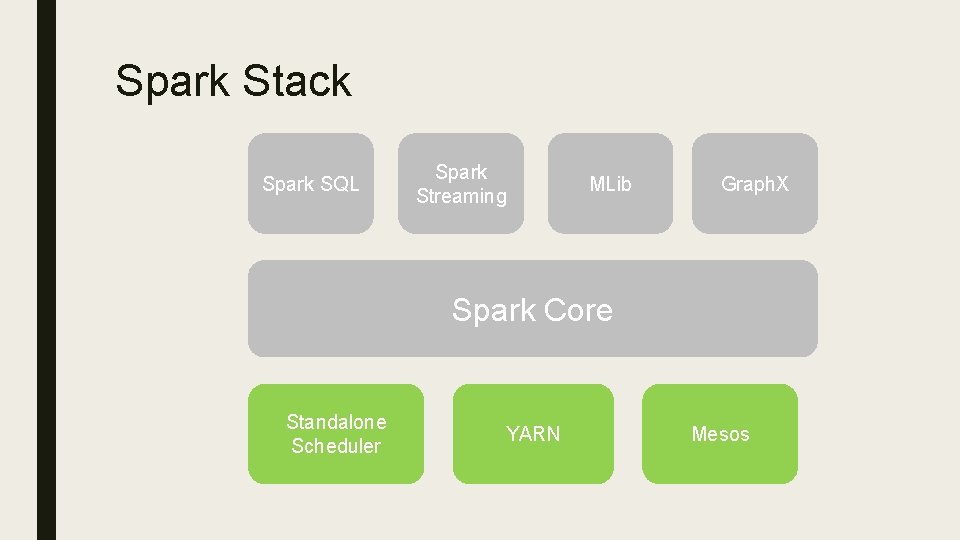

Spark Stack
■ Libraries: Spark SQL, Spark Streaming, MLlib, GraphX
■ Engine: Spark Core
■ Cluster managers: Standalone Scheduler, YARN, Mesos

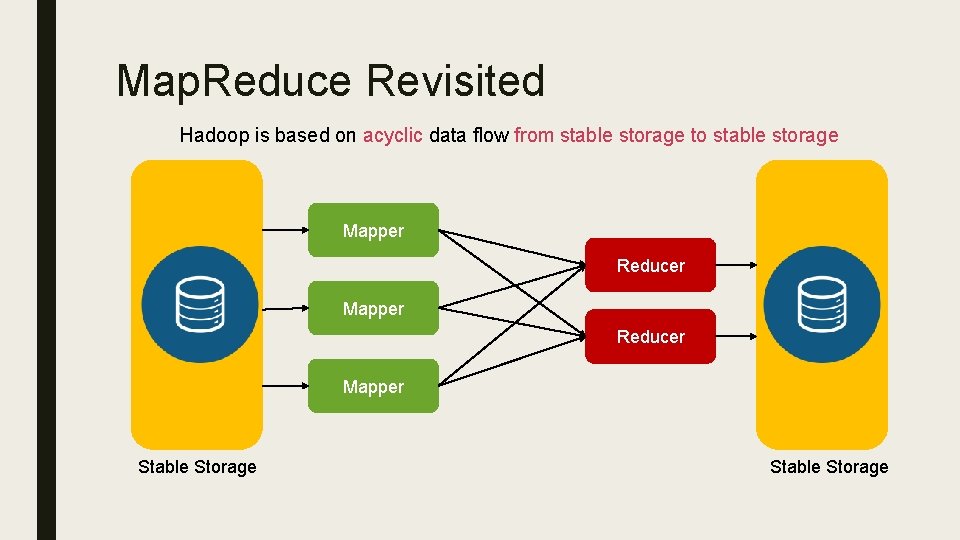

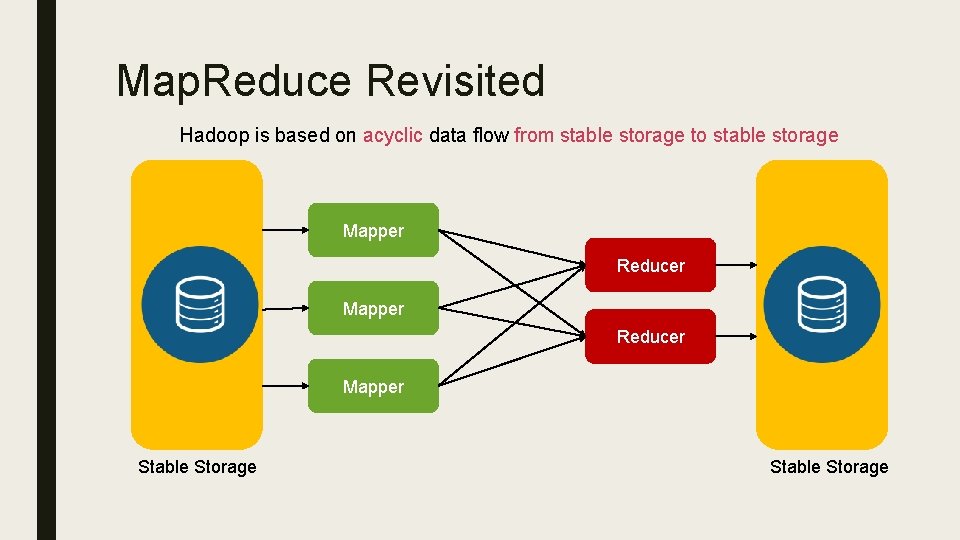

MapReduce Revisited
Hadoop is based on an acyclic data flow from stable storage to stable storage.
(Figure labels: Mapper, Reducer, Stable Storage)

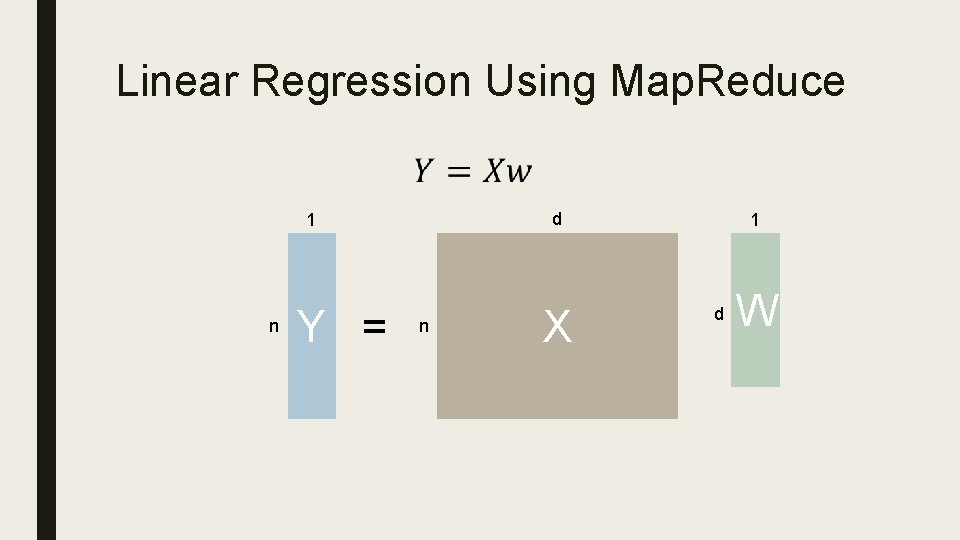

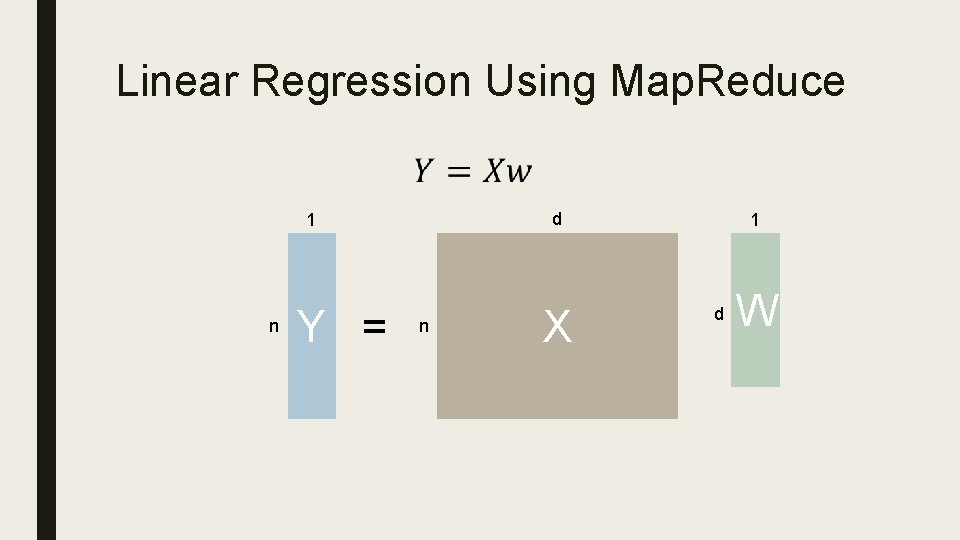

Linear Regression Using MapReduce
$Y_{n \times 1} = X_{n \times d} \, W_{d \times 1}$

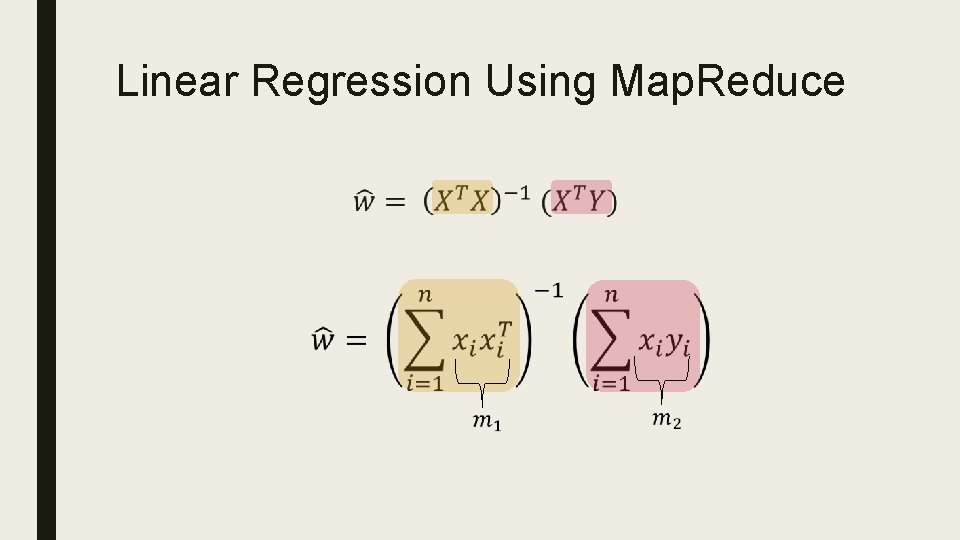

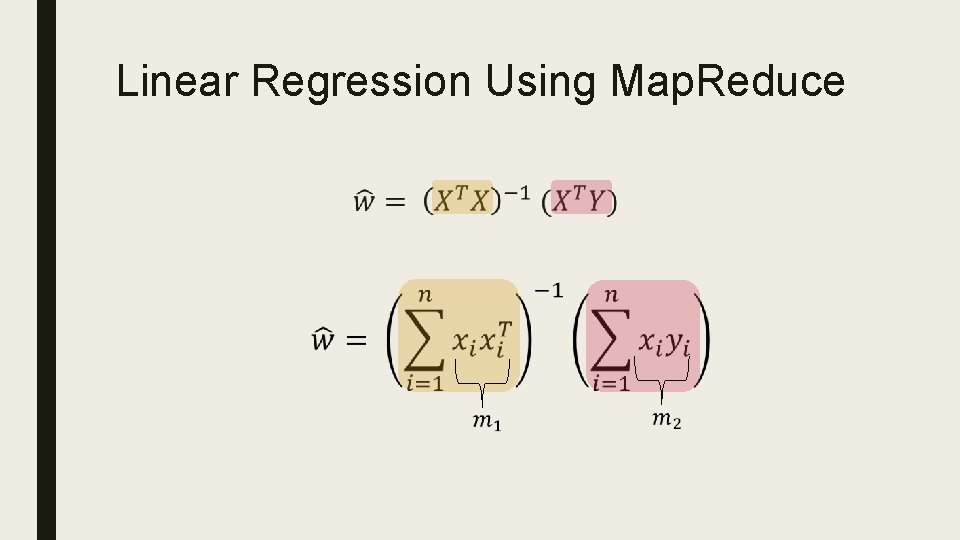

Linear Regression Using MapReduce

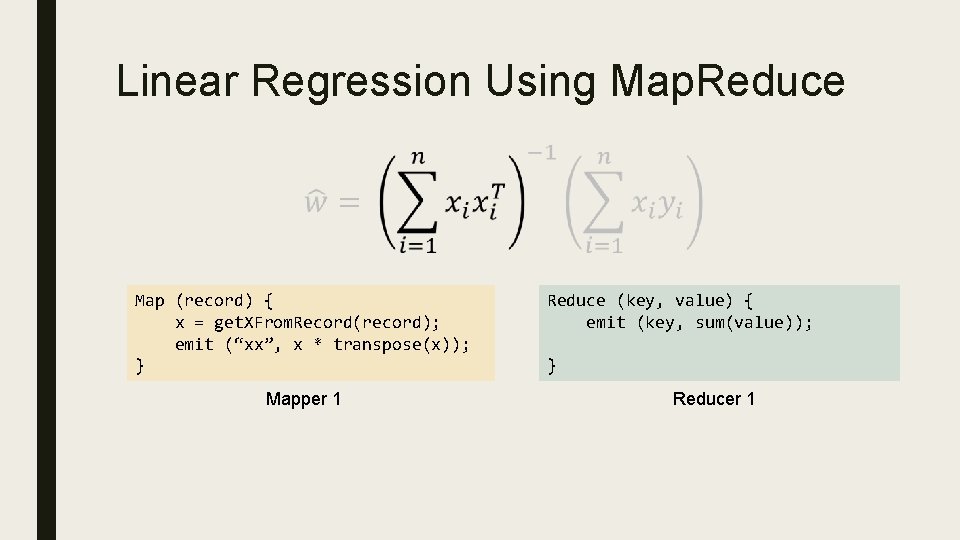

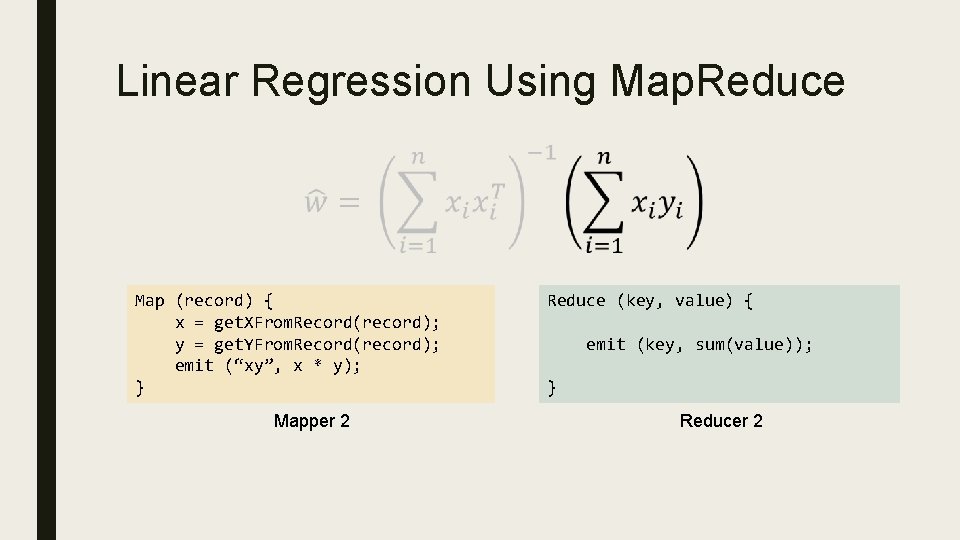

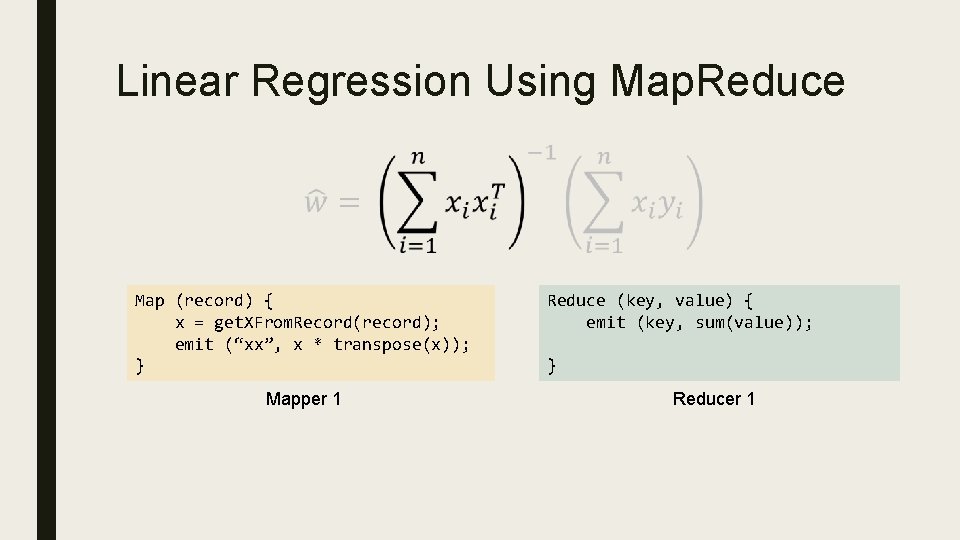

Linear Regression Using MapReduce

Mapper 1:
Map (record) {
    x = getXFromRecord(record);
    emit ("xx", x * transpose(x));
}

Reducer 1:
Reduce (key, value) {
    emit (key, sum(value));
}

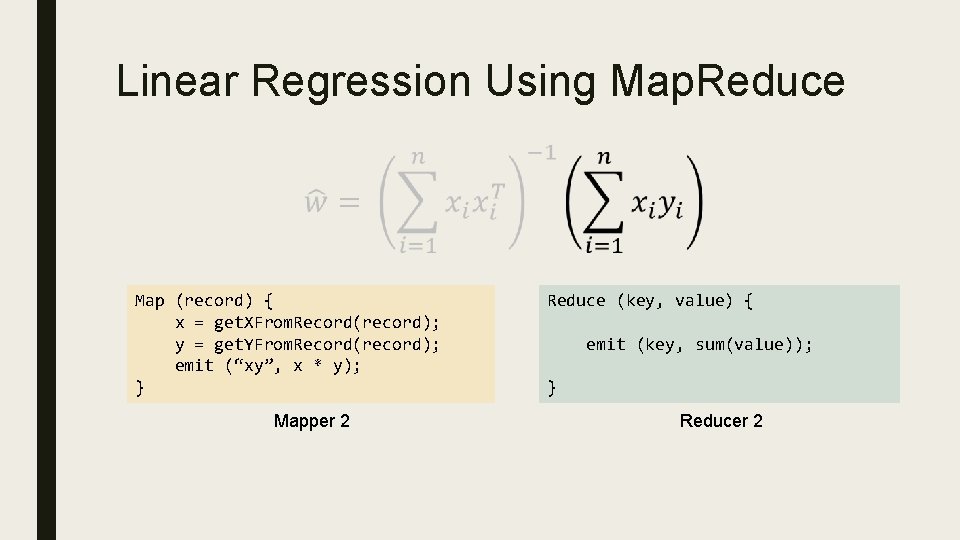

Linear Regression Using MapReduce

Mapper 2:
Map (record) {
    x = getXFromRecord(record);
    y = getYFromRecord(record);
    emit ("xy", x * y);
}

Reducer 2:
Reduce (key, value) {
    emit (key, sum(value));
}
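
The two jobs above produce the sums of `x * transpose(x)` and `x * y` over all records. A minimal plain-Python sketch of how those sums could then be turned into weights is shown here, assuming the usual normal equations $W = (X^T X)^{-1} X^T Y$. The toy records, the helper names and the 2x2 solve by Cramer's rule are illustrative assumptions, not part of the original slides.

```python
from functools import reduce

# Hypothetical toy records (x, y) with x = [1.0, feature] and y = 1 + 2 * feature.
records = [([1.0, 0.0], 1.0), ([1.0, 1.0], 3.0), ([1.0, 2.0], 5.0), ([1.0, 3.0], 7.0)]

def outer(x):
    """x * transpose(x) as a d-by-d list of lists."""
    return [[xi * xj for xj in x] for xi in x]

def add_matrices(a, b):
    """Element-wise sum of two matrices (the reducer for key "xx")."""
    return [[p + q for p, q in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]

def add_vectors(a, b):
    """Element-wise sum of two vectors (the reducer for key "xy")."""
    return [p + q for p, q in zip(a, b)]

# Job 1: map each record to x * transpose(x), then reduce by summing.
xtx = reduce(add_matrices, (outer(x) for x, _ in records))
# Job 2: map each record to x * y, then reduce by summing.
xty = reduce(add_vectors, ([xi * y for xi in x] for x, y in records))

# Solve the 2x2 system (X^T X) w = X^T y with Cramer's rule.
(a, b), (c, d) = xtx
det = a * d - b * c
w = [(d * xty[0] - b * xty[1]) / det, (a * xty[1] - c * xty[0]) / det]
print(w)  # intercept and slope of the fitted line
```

Only the two small sums need to reach a single machine, which is why the expensive part of the computation fits the map and reduce pattern.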

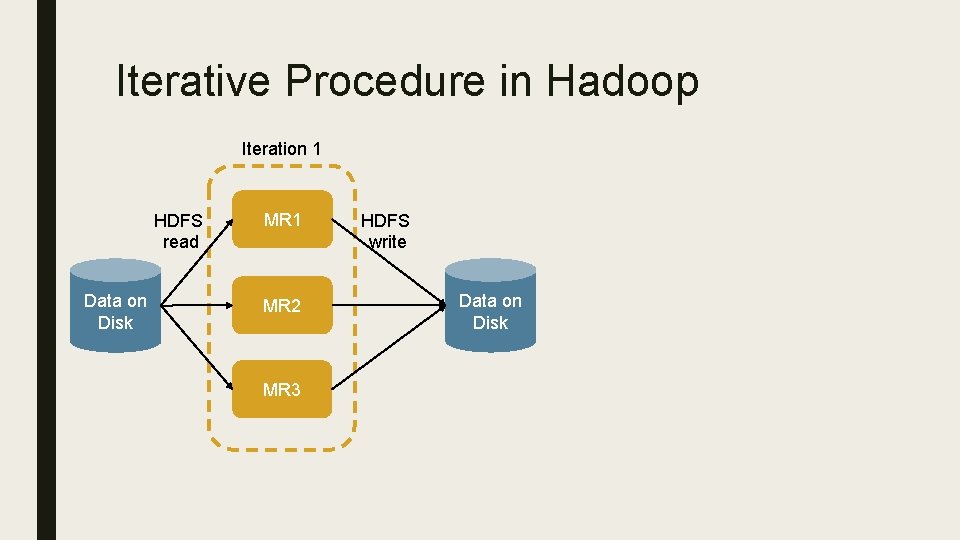

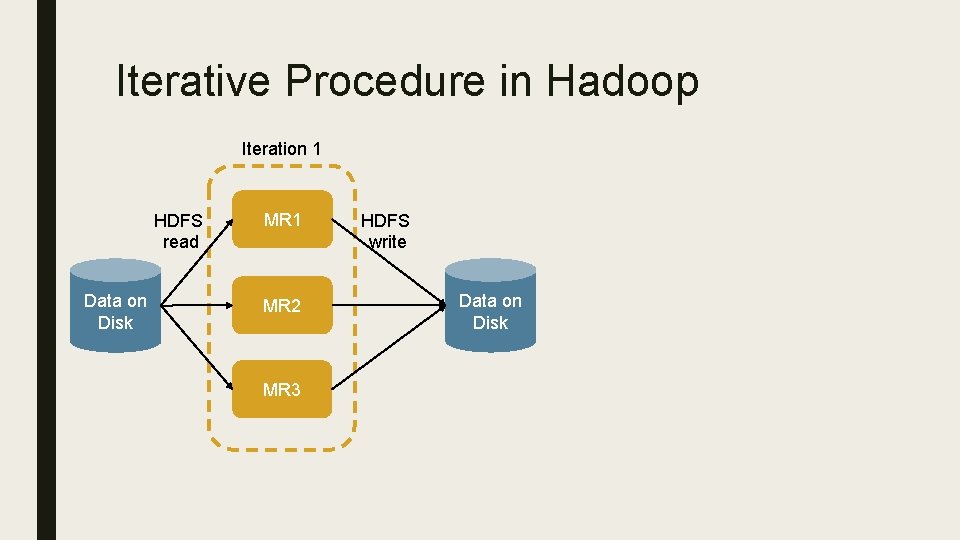

Iterative Procedure in Hadoop
Iteration 1: HDFS read (data on disk) → MR 1 → MR 2 → MR 3 → HDFS write (data on disk)

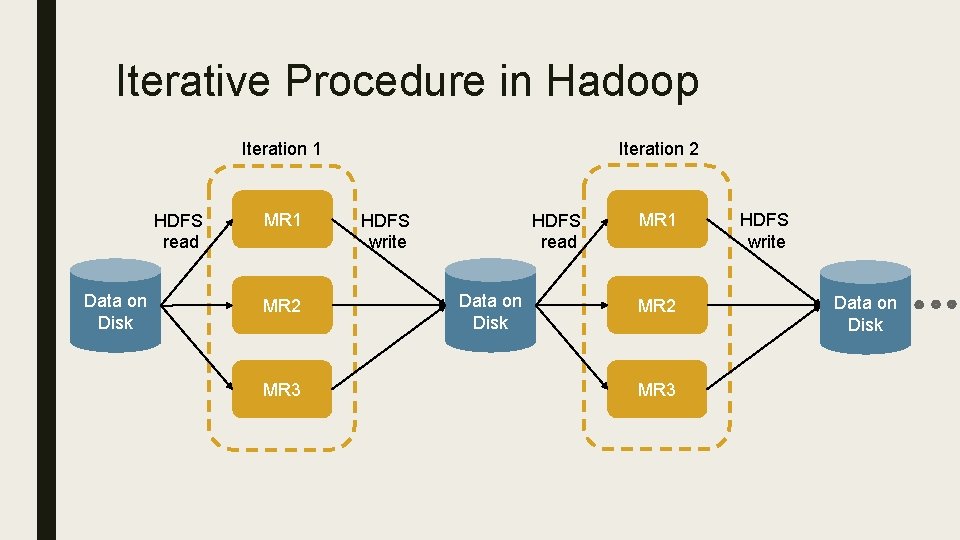

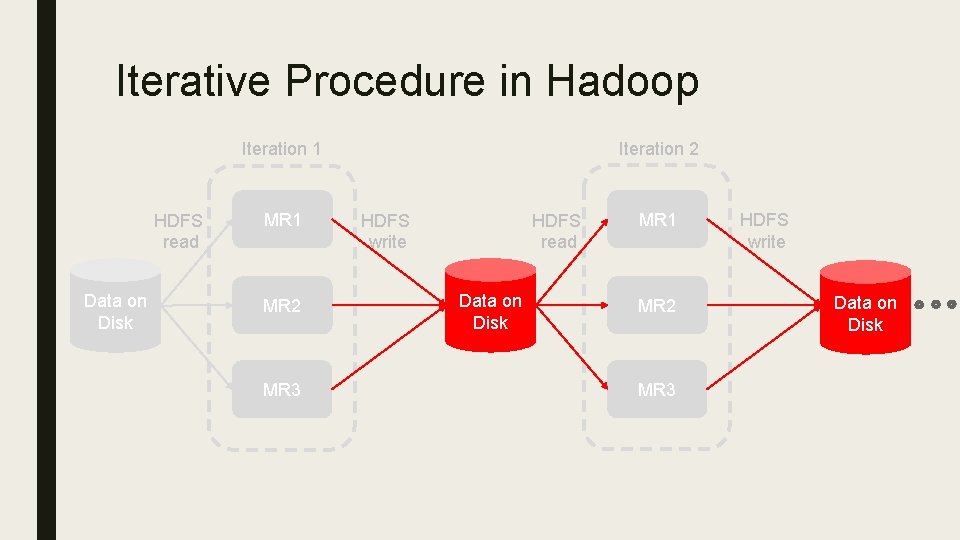

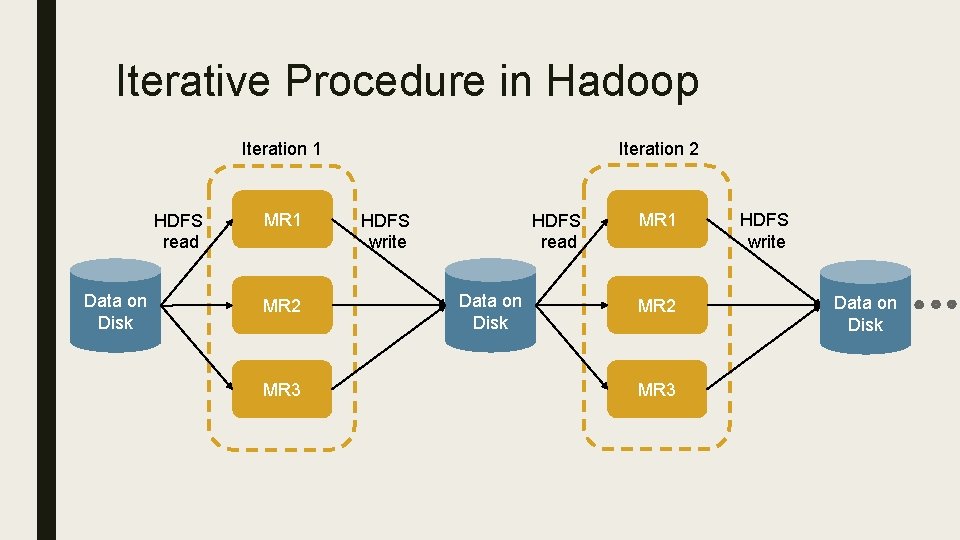

Iterative Procedure in Hadoop
Iteration 1 and Iteration 2 each repeat the same cycle: HDFS read (data on disk) → MR 1 → MR 2 → MR 3 → HDFS write (data on disk)

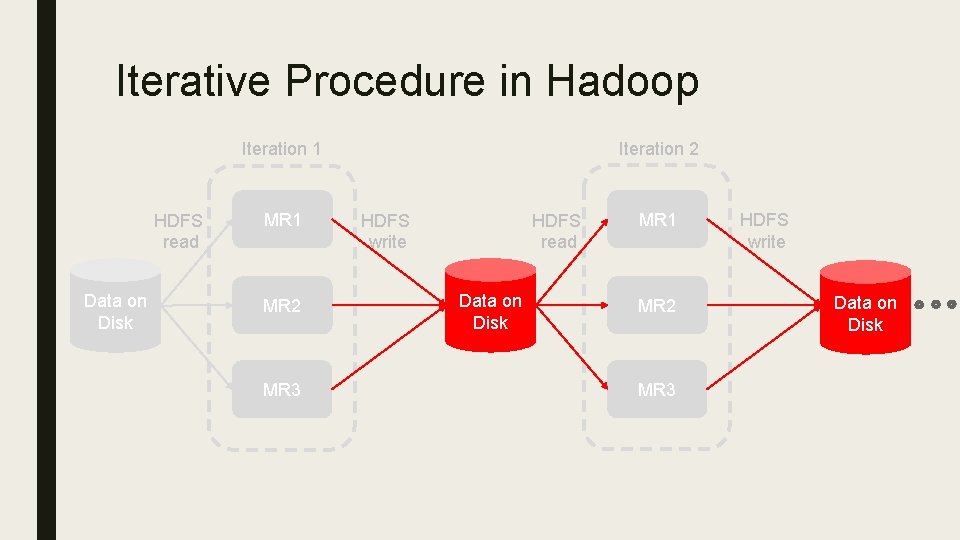

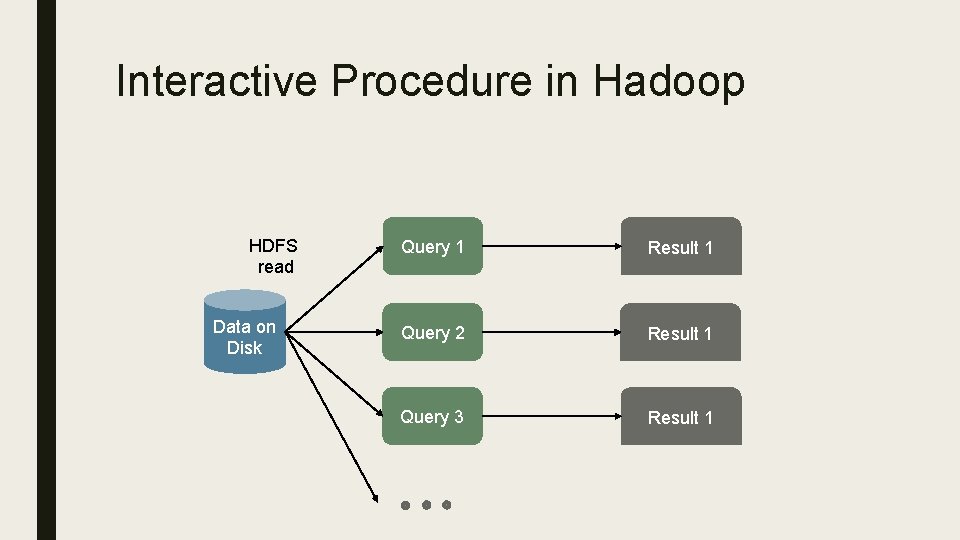

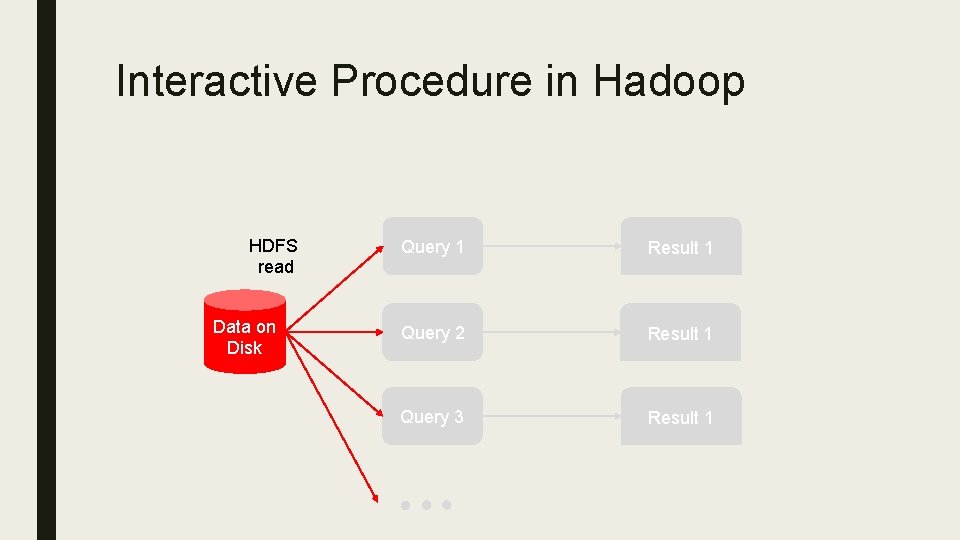

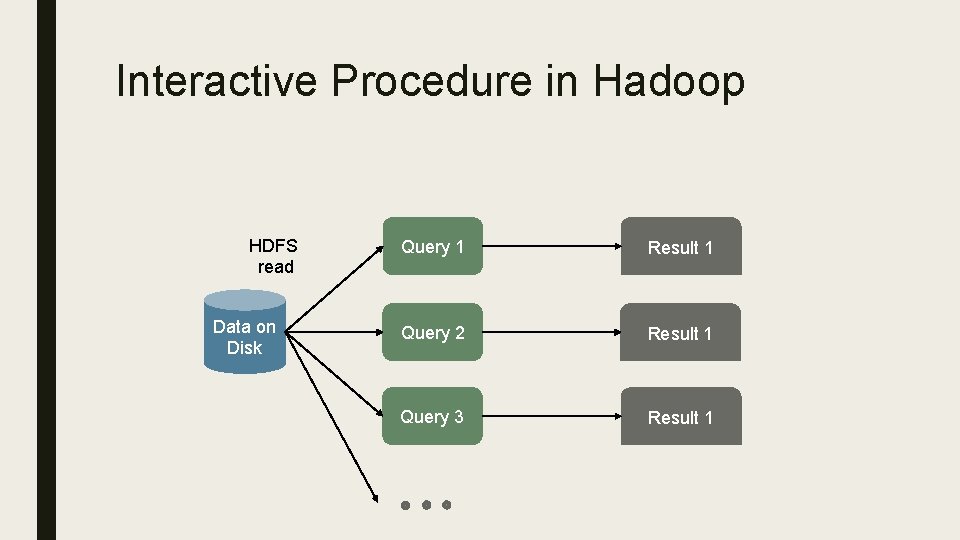

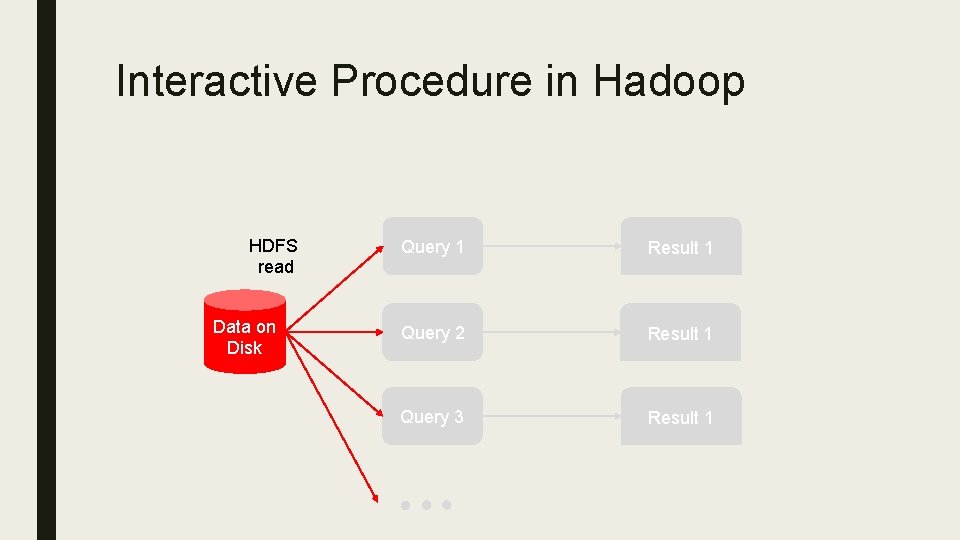

Interactive Procedure in Hadoop
Each query (Query 1, Query 2, Query 3) performs its own HDFS read of the data on disk before returning its result.

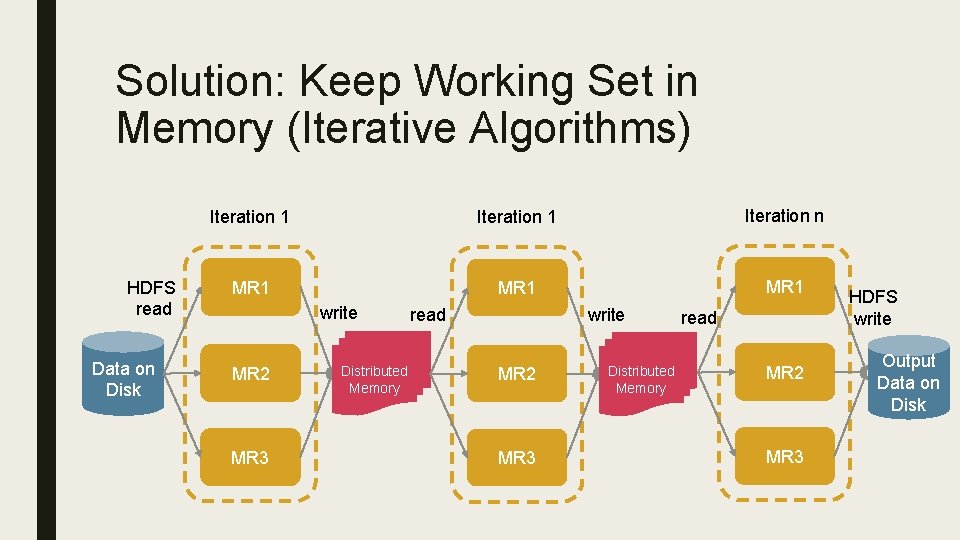

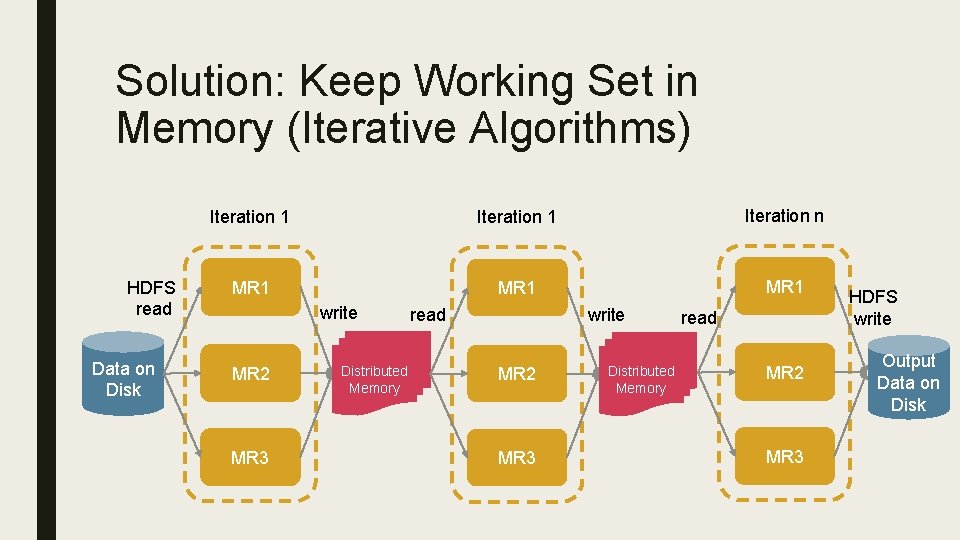

Solution: Keep Working Set in Memory (Iterative Algorithms)
(Figure: a single HDFS read loads the data from disk. In iterations 1 through n, the stages MR 1, MR 2 and MR 3 read from and write to distributed memory instead of disk. A single HDFS write stores the output data on disk at the end.)

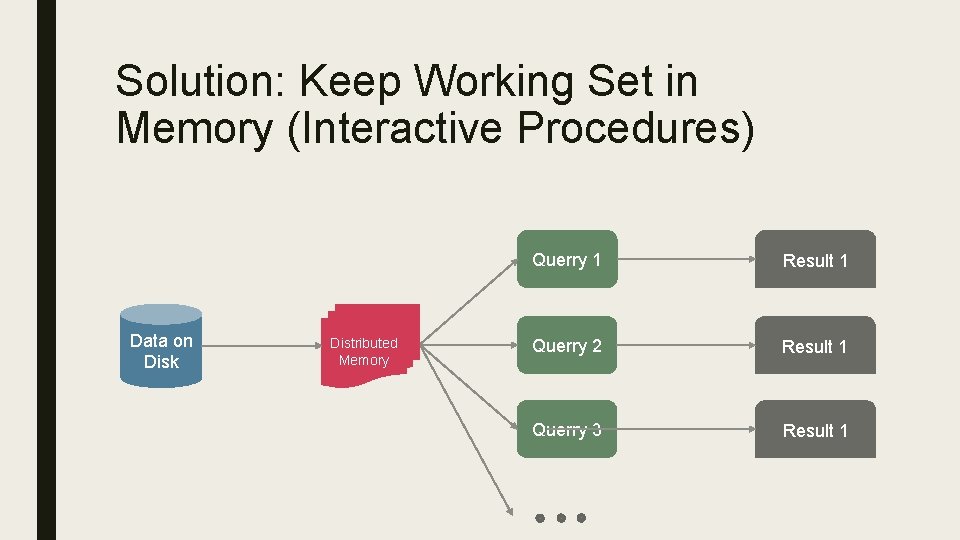

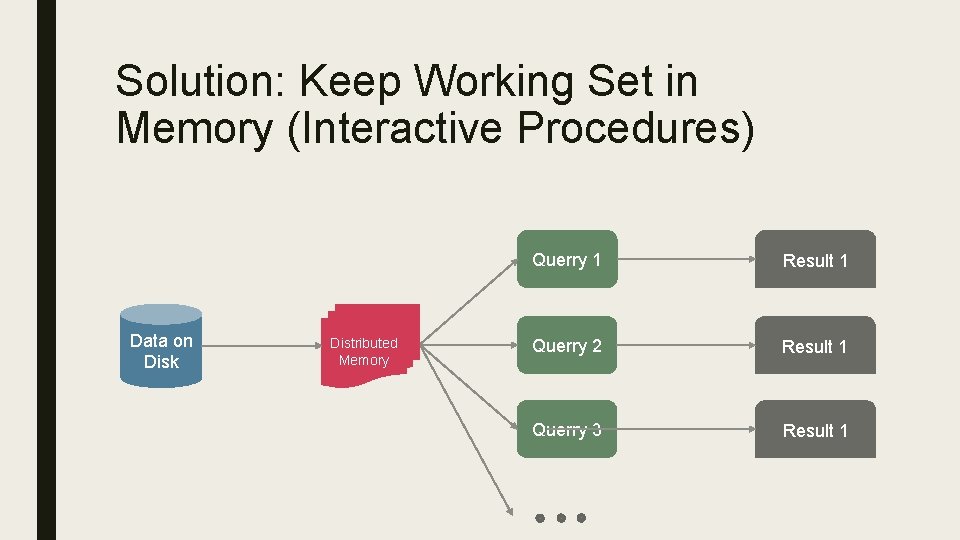

Solution: Keep Working Set in Memory (Interactive Procedures)
(Figure: the data on disk is loaded into distributed memory; Query 1, Query 2 and Query 3 are then answered from memory.)

Challenge
How to design a distributed memory abstraction that is both efficient and fault tolerant?

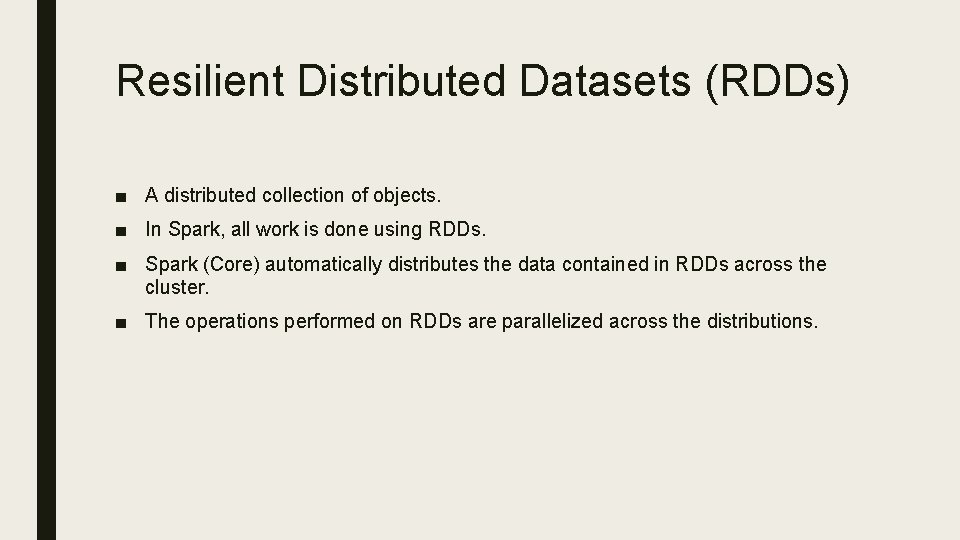

Resilient Distributed Datasets (RDDs)
■ An RDD is a distributed collection of objects.
■ In Spark, all work is done using RDDs.
■ Spark (Core) automatically distributes the data contained in RDDs across the cluster.
■ The operations performed on RDDs are parallelized across the partitions of the data.

Properties of RDDs
■ In-memory
■ Immutable
■ Lazily evaluated
■ Parallelized
■ Partitioned
■ Support any type of Scala, Java or Python objects, including user-defined ones.

Creating RDDs
■ From a file:
    rdd = sc.textFile("file.txt")
■ Using parallelize():
    rdd = sc.parallelize([1, 2, 3])

Types of Operations
■ Transformations: lazy operations that return another RDD.
■ Actions: operations that trigger computation and return values.

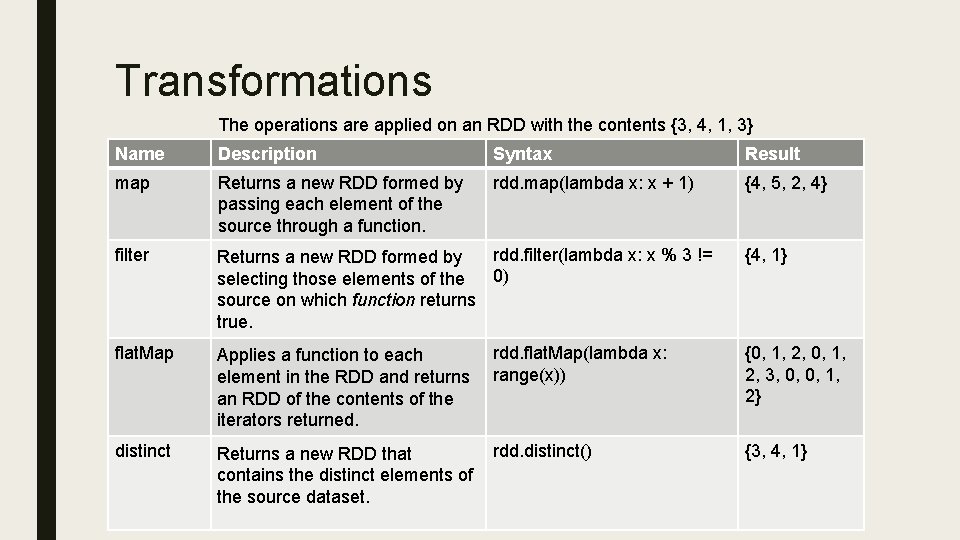

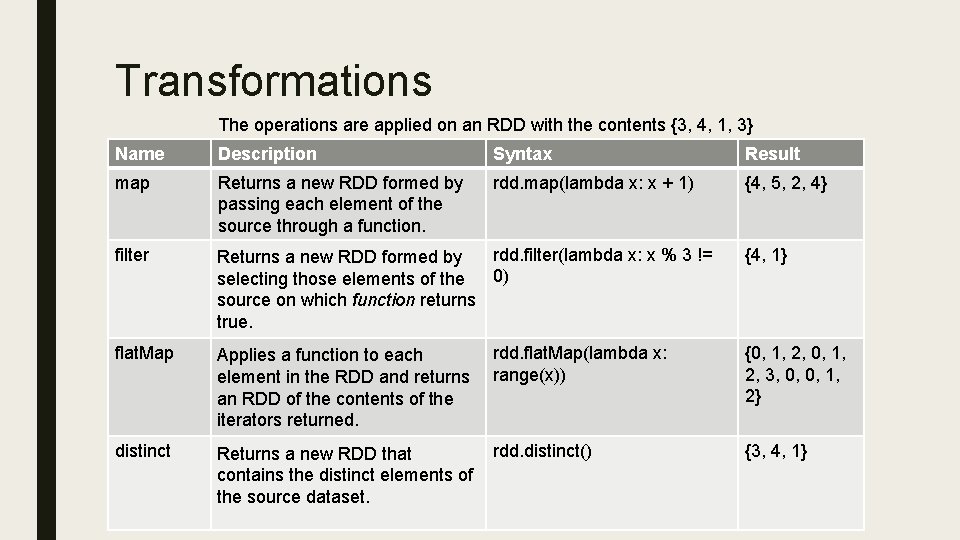

Transformations
The operations are applied on an RDD with the contents {3, 4, 1, 3}.

| Name | Description | Syntax | Result |
| --- | --- | --- | --- |
| map | Returns a new RDD formed by passing each element of the source through a function. | rdd.map(lambda x: x + 1) | {4, 5, 2, 4} |
| filter | Returns a new RDD formed by selecting those elements of the source on which the function returns true. | rdd.filter(lambda x: x % 3 != 0) | {4, 1} |
| flatMap | Applies a function to each element in the RDD and returns an RDD of the contents of the iterators returned. | rdd.flatMap(lambda x: range(x)) | {0, 1, 2, 0, 1, 2, 3, 0, 0, 1, 2} |
| distinct | Returns a new RDD that contains the distinct elements of the source dataset. | rdd.distinct() | {3, 4, 1} |
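
The four results in the table can be checked without a cluster. The sketch below reproduces each transformation on an ordinary Python list; it is a local analogue written for illustration, not Spark code, and `dict.fromkeys` is used for `distinct` only because it preserves first-seen order (Spark itself gives no ordering guarantee).

```python
from itertools import chain

data = [3, 4, 1, 3]

# map: apply a function to every element.
mapped = [x + 1 for x in data]

# filter: keep the elements for which the predicate is true.
filtered = [x for x in data if x % 3 != 0]

# flatMap: each element yields an iterable, and the results are concatenated.
flat_mapped = list(chain.from_iterable(range(x) for x in data))

# distinct: drop duplicates (order kept here only for readability).
distinct = list(dict.fromkeys(data))

print(mapped, filtered, flat_mapped, distinct)
```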

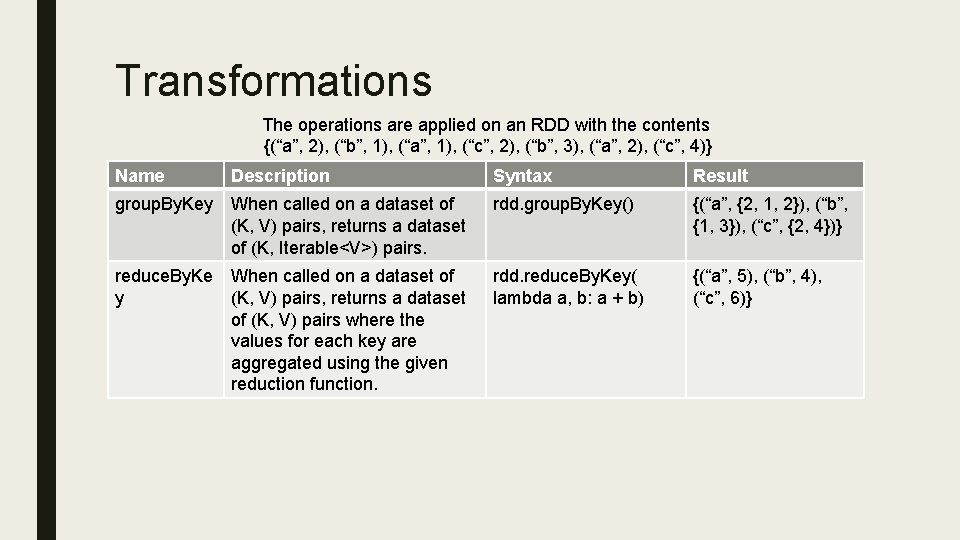

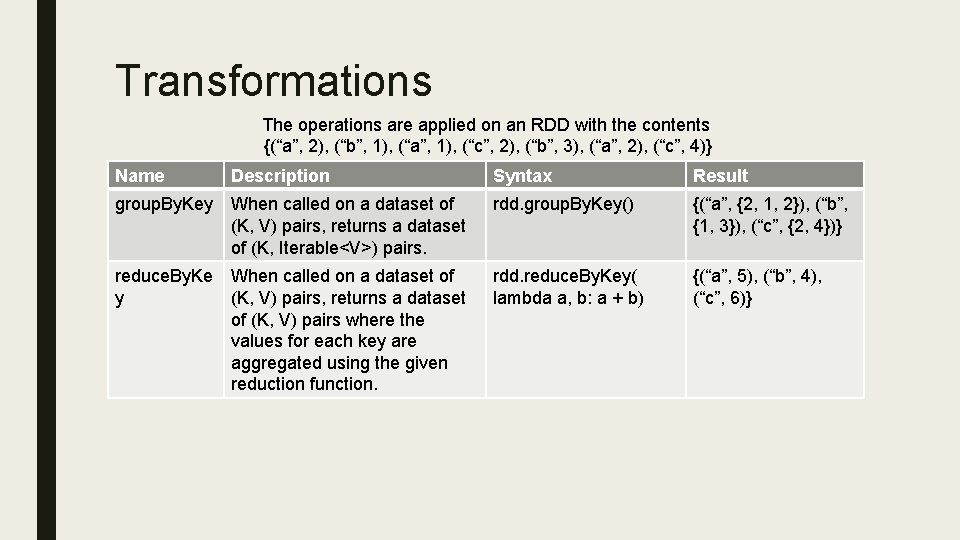

Transformations
The operations are applied on an RDD with the contents {("a", 2), ("b", 1), ("a", 1), ("c", 2), ("b", 3), ("a", 2), ("c", 4)}.

| Name | Description | Syntax | Result |
| --- | --- | --- | --- |
| groupByKey | When called on a dataset of (K, V) pairs, returns a dataset of (K, Iterable<V>) pairs. | rdd.groupByKey() | {("a", {2, 1, 2}), ("b", {1, 3}), ("c", {2, 4})} |
| reduceByKey | When called on a dataset of (K, V) pairs, returns a dataset of (K, V) pairs where the values for each key are aggregated using the given reduction function. | rdd.reduceByKey(lambda a, b: a + b) | {("a", 5), ("b", 4), ("c", 6)} |
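
To make the difference between the two key-based transformations concrete, here is a small local sketch in plain Python. The helper functions `group_by_key` and `reduce_by_key` are hypothetical stand-ins written for this example and only imitate the semantics described in the table.

```python
from collections import defaultdict

pairs = [("a", 2), ("b", 1), ("a", 1), ("c", 2), ("b", 3), ("a", 2), ("c", 4)]

def group_by_key(pairs):
    """Collect every value under its key, like groupByKey."""
    groups = defaultdict(list)
    for key, value in pairs:
        groups[key].append(value)
    return dict(groups)

def reduce_by_key(pairs, func):
    """Fold the values of each key with func, like reduceByKey."""
    totals = {}
    for key, value in pairs:
        totals[key] = func(totals[key], value) if key in totals else value
    return totals

grouped = group_by_key(pairs)
reduced = reduce_by_key(pairs, lambda a, b: a + b)
print(grouped)
print(reduced)
```

The reducing variant never holds more than one running value per key, which is one reason it is usually preferred when only an aggregate is needed.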

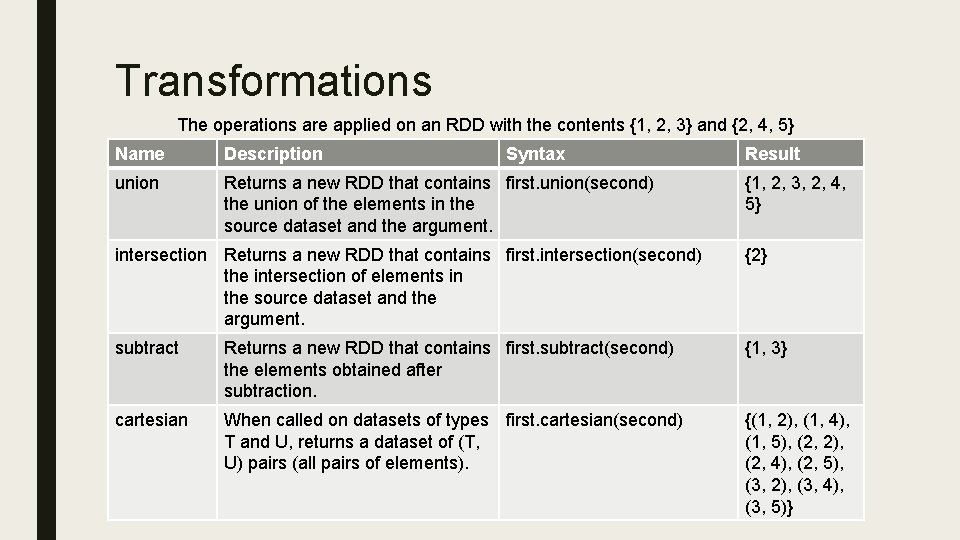

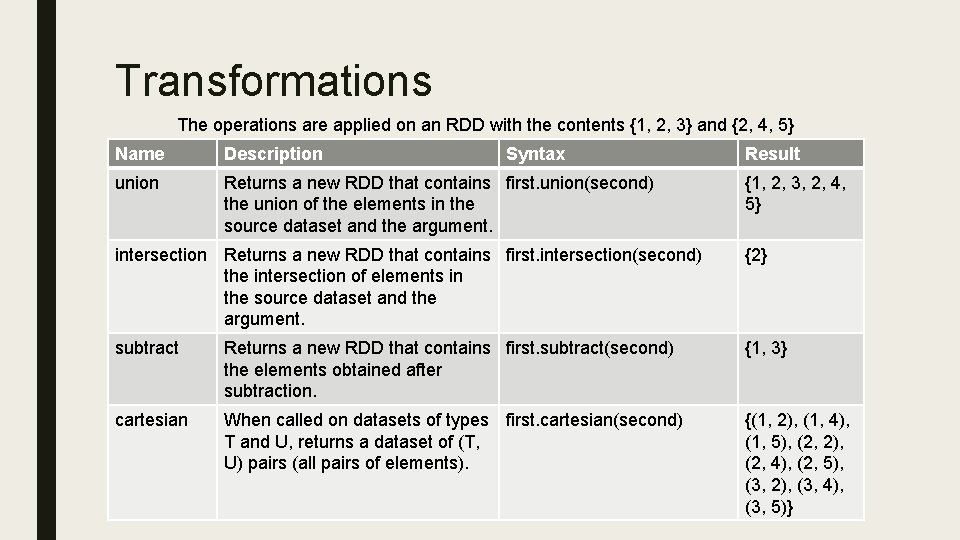

Transformations
The operations are applied on two RDDs, first with the contents {1, 2, 3} and second with the contents {2, 4, 5}.

| Name | Description | Syntax | Result |
| --- | --- | --- | --- |
| union | Returns a new RDD that contains the union of the elements in the source dataset and the argument. | first.union(second) | {1, 2, 3, 2, 4, 5} |
| intersection | Returns a new RDD that contains the intersection of elements in the source dataset and the argument. | first.intersection(second) | {2} |
| subtract | Returns a new RDD that contains the elements of the source dataset that are not in the argument. | first.subtract(second) | {1, 3} |
| cartesian | When called on datasets of types T and U, returns a dataset of (T, U) pairs (all pairs of elements). | first.cartesian(second) | {(1, 2), (1, 4), (1, 5), (2, 2), (2, 4), (2, 5), (3, 2), (3, 4), (3, 5)} |

Actions
The operations are applied on an RDD with the contents {3, 4, 1, 3}.

| Name | Description | Syntax | Result |
| --- | --- | --- | --- |
| reduce | Aggregates the elements of the RDD using a function. | rdd.reduce(lambda a, b: a * b) | 36 |
| collect | Returns all the elements of the RDD as an array at the driver program. | rdd.collect() | [3, 4, 1, 3] |
| count | Returns the number of elements in the RDD. | rdd.count() | 4 |
| first | Returns the first element of the RDD. | rdd.first() | 3 |
| take | Returns an array with the first n elements of the dataset. | rdd.take(2) | [3, 4] |

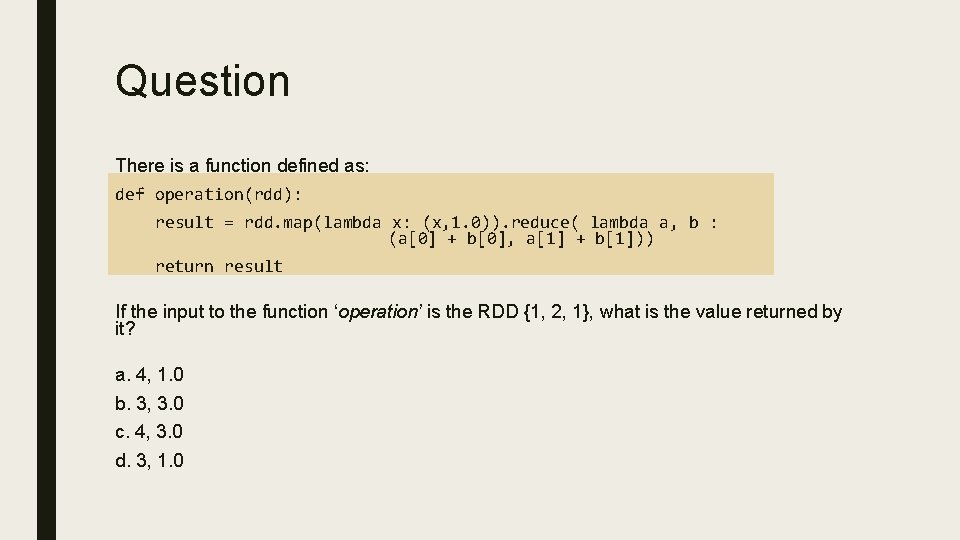

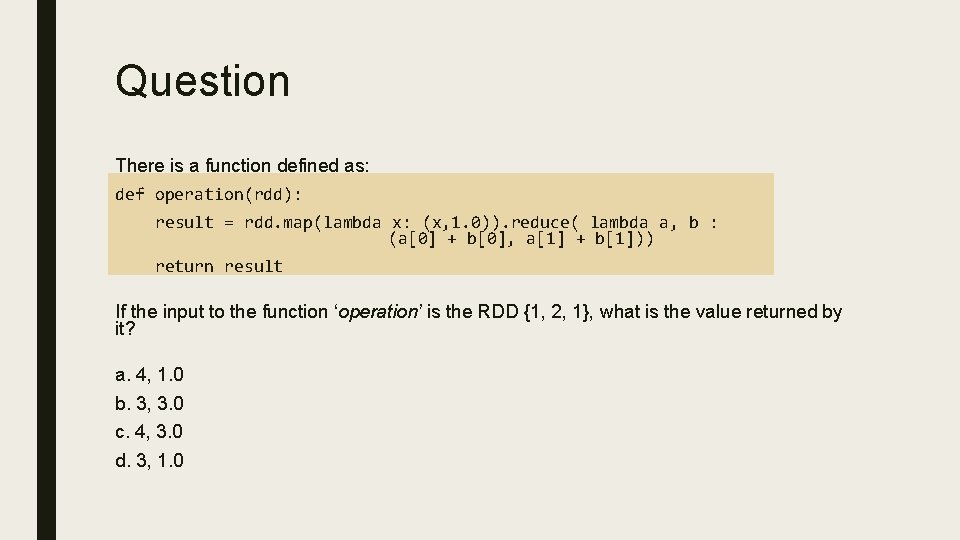

Question
There is a function defined as:

def operation(rdd):
    result = rdd.map(lambda x: (x, 1.0)).reduce(
        lambda a, b: (a[0] + b[0], a[1] + b[1]))
    return result

If the input to the function 'operation' is the RDD {1, 2, 1}, what is the value returned by it?
a. 4, 1.0
b. 3, 3.0
c. 4, 3.0
d. 3, 1.0
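
One way to check an answer to this question is to trace the same map and reduce steps on a plain Python list. The sketch below replaces the RDD with a list and uses `functools.reduce`, which is an assumption made so that it runs without Spark.

```python
from functools import reduce

def operation(elements):
    # map: pair every element with 1.0
    pairs = [(x, 1.0) for x in elements]
    # reduce: add the pairs component by component
    return reduce(lambda a, b: (a[0] + b[0], a[1] + b[1]), pairs)

result = operation([1, 2, 1])
print(result)  # (sum of the elements, number of elements as a float)
```

The first component accumulates the sum of the elements and the second counts them, so the pair is what one would need to compute a mean.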

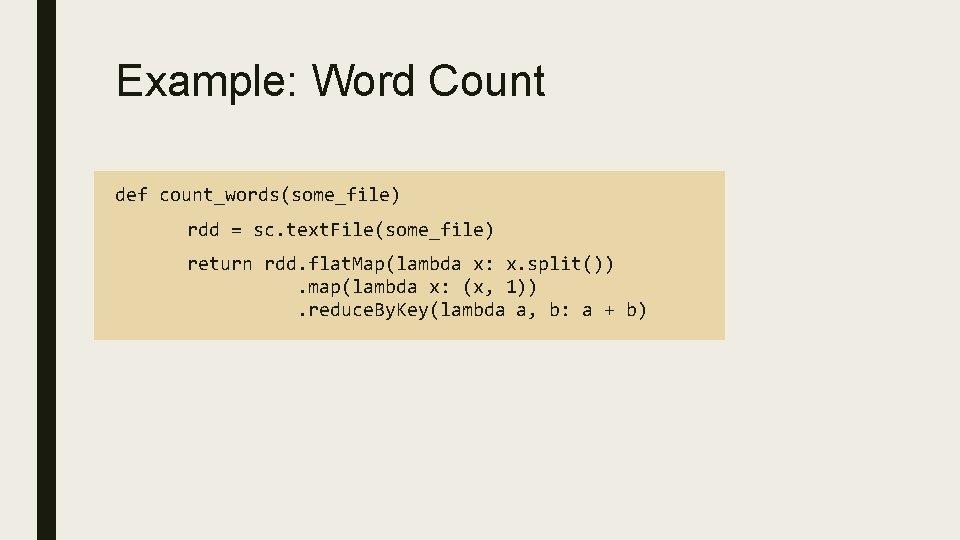

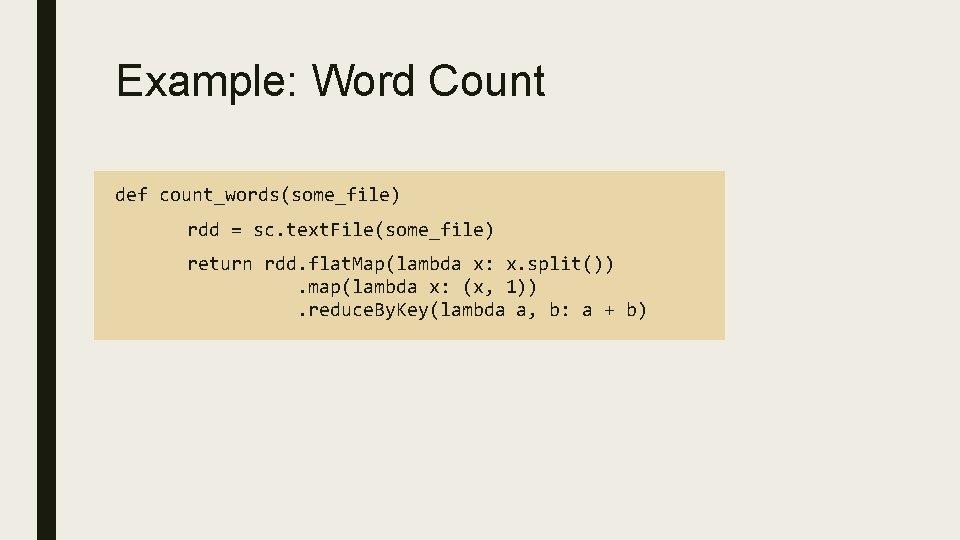

Example: Word Count

def count_words(some_file):
    rdd = sc.textFile(some_file)
    return (rdd.flatMap(lambda x: x.split())
               .map(lambda x: (x, 1))
               .reduceByKey(lambda a, b: a + b))
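
For readers without a Spark installation, the same three steps can be followed on an in-memory list of lines. This is a plain-Python analogue written for illustration; the function name `count_words_local` and the sample sentences are assumptions, and no Spark API is involved.

```python
from itertools import chain

def count_words_local(lines):
    # flatMap: split every line and concatenate the resulting word lists
    words = chain.from_iterable(line.split() for line in lines)
    # map: pair every word with the count 1
    pairs = ((word, 1) for word in words)
    # reduceByKey: add up the counts of identical words
    counts = {}
    for word, one in pairs:
        counts[word] = counts.get(word, 0) + one
    return counts

counts = count_words_local(["to be or", "not to be"])
print(counts)
```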

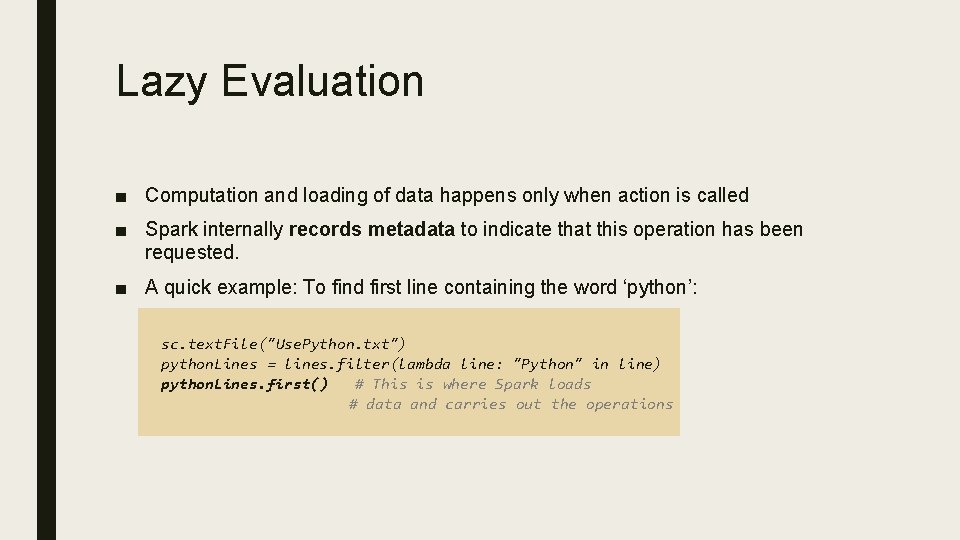

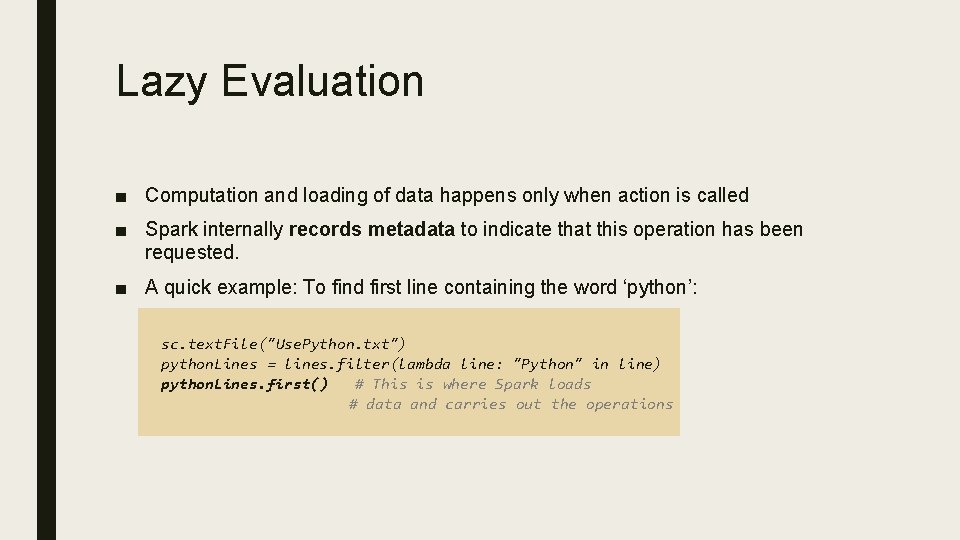

Lazy Evaluation
■ Computation and loading of data happen only when an action is called.
■ Until then, Spark internally records metadata to indicate that the operation has been requested.
■ A quick example, finding the first line that contains the word 'Python':
    lines = sc.textFile("UsePython.txt")
    pythonLines = lines.filter(lambda line: "Python" in line)
    pythonLines.first()  # This is where Spark loads the data and carries out the operations
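
The idea of recording operations first and running them only on an action can be imitated in a few lines of plain Python. The class `LazyDataset` below is a hypothetical toy written for this illustration; it is not how Spark is implemented, and it supports only `map`, `filter` and `first`.

```python
class LazyDataset:
    """Toy stand-in for an RDD: transformations are recorded, actions run them."""

    def __init__(self, load, steps=()):
        self._load = load    # zero-argument function that reads the source
        self._steps = steps  # recorded transformations, not yet executed

    def filter(self, predicate):
        step = lambda items: (x for x in items if predicate(x))
        return LazyDataset(self._load, self._steps + (step,))

    def map(self, func):
        step = lambda items: (func(x) for x in items)
        return LazyDataset(self._load, self._steps + (step,))

    def first(self):
        # Action: only now is the source read and the recorded steps applied.
        items = self._load()
        for step in self._steps:
            items = step(items)
        return next(iter(items))

loads = []  # records every time the source is actually read

def load_lines():
    loads.append("read")
    return ["Java is fine", "Python is fun", "More Python"]

lines = LazyDataset(load_lines)
python_lines = lines.filter(lambda line: "Python" in line)
assert loads == []           # the transformation alone did not touch the source
print(python_lines.first())  # the action triggers the read
assert loads == ["read"]
```

Because the steps are generators, `first()` stops as soon as one matching line is found, which mirrors the benefit the slide describes.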

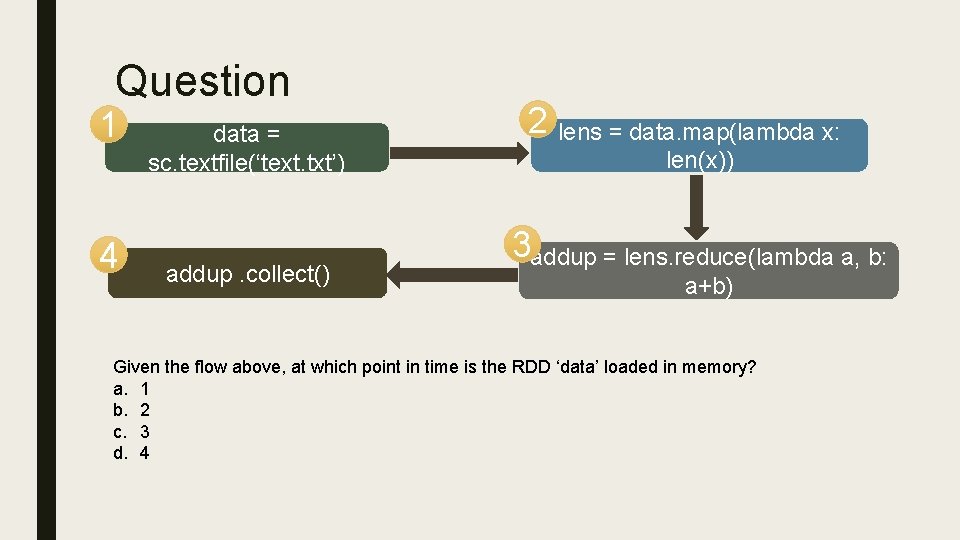

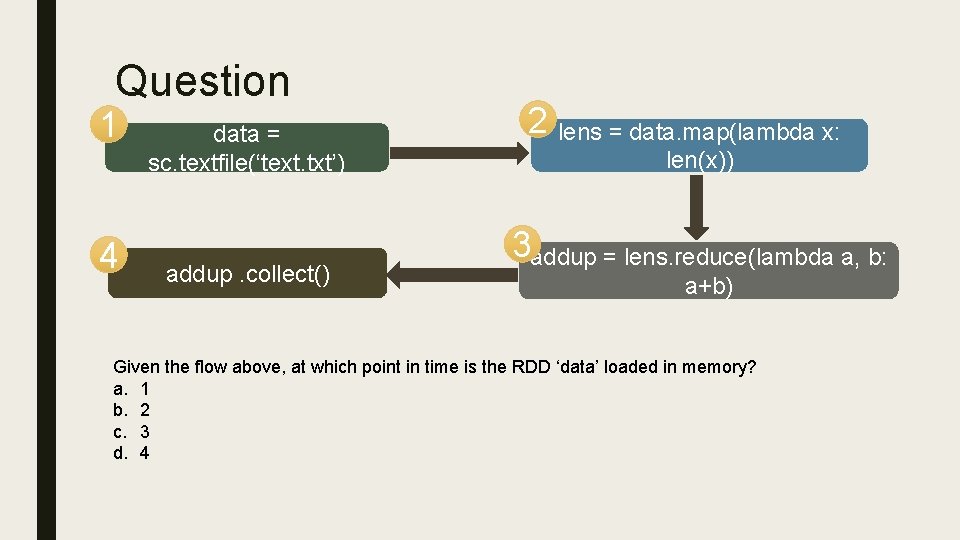

Question
1. data = sc.textFile('text.txt')
2. lens = data.map(lambda x: len(x))
3. addup = lens.reduce(lambda a, b: a + b)
4. addup.collect()

Given the flow above, at which point in time is the RDD 'data' loaded in memory?
a. 1
b. 2
c. 3
d. 4

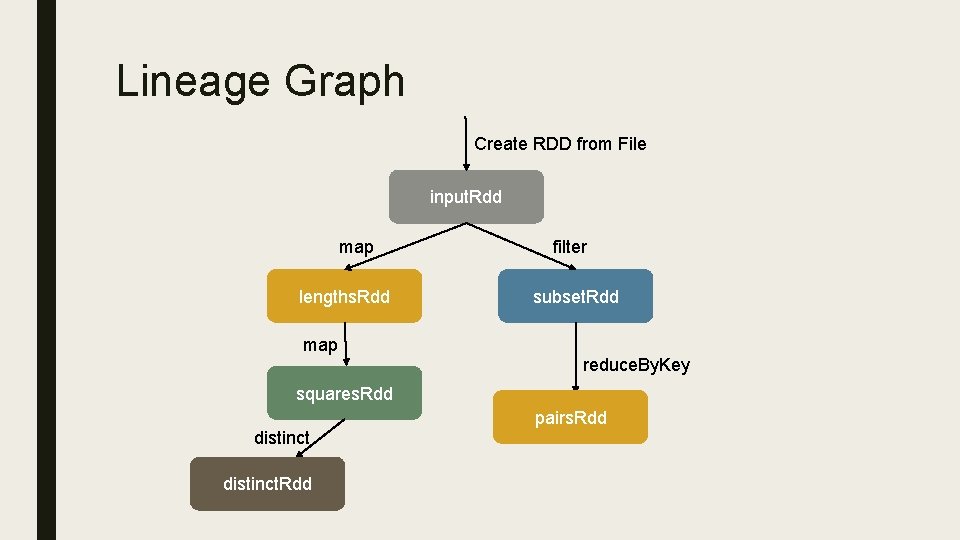

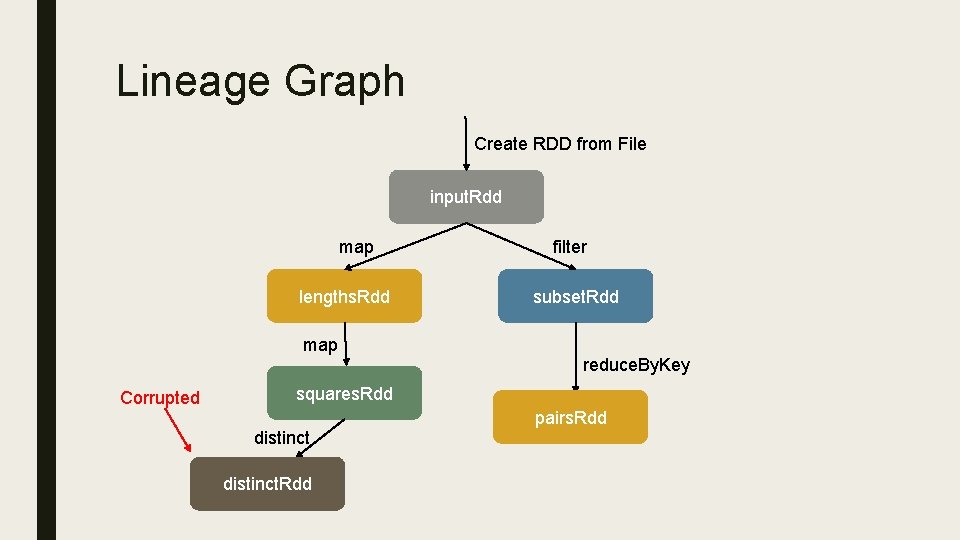

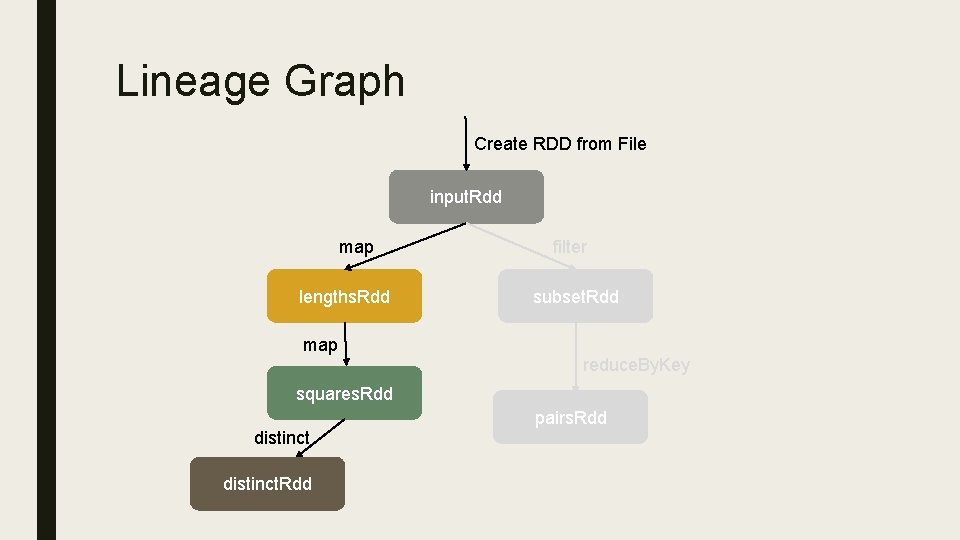

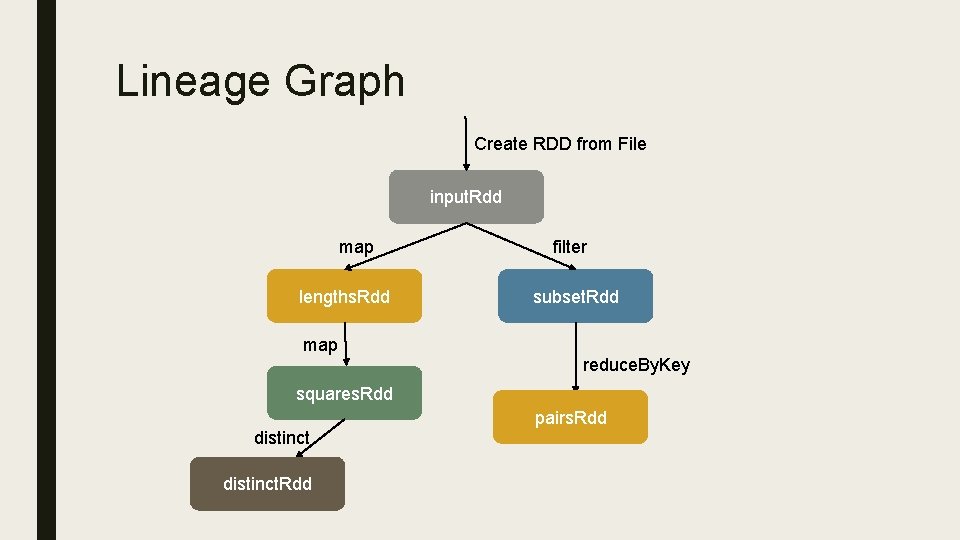

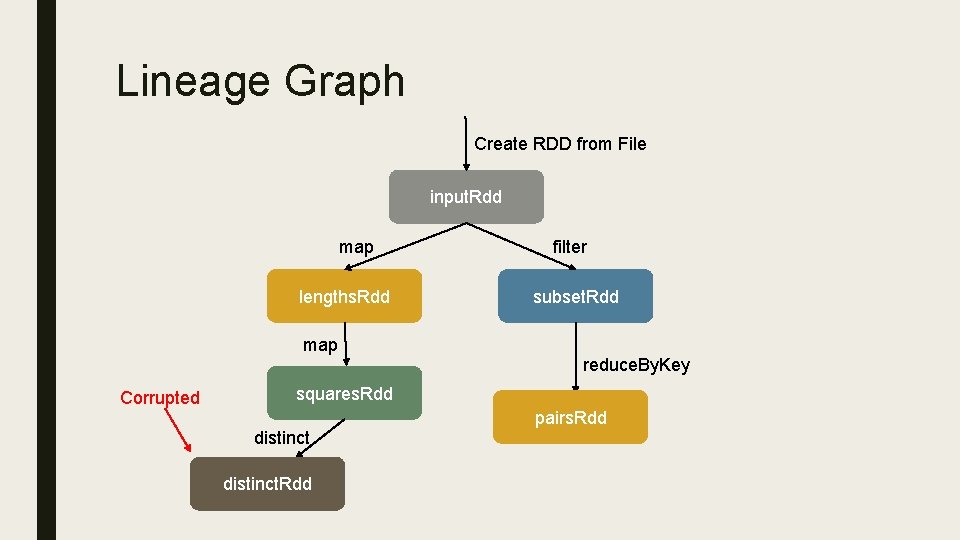

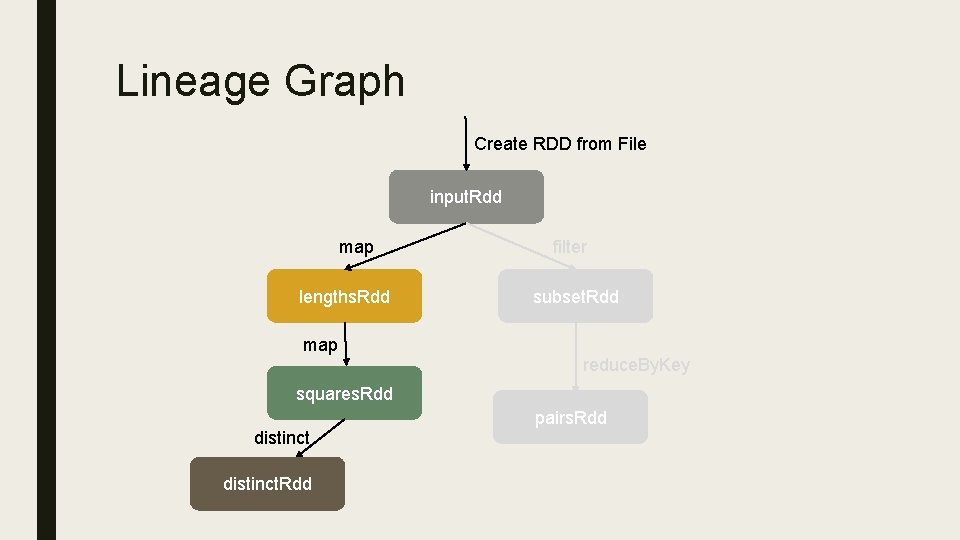

Lineage Graph
(Figure: a graph rooted at inputRdd, which is created from a file. Edges labelled map, map, filter and reduceByKey lead to the derived RDDs lengthsRdd, squaresRdd, subsetRdd, distinctRdd and pairsRdd.)

Lineage Graph
(Figure: the same graph of inputRdd, lengthsRdd, squaresRdd, subsetRdd, distinctRdd and pairsRdd, with one RDD marked as corrupted.)
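
When an RDD is lost or corrupted, it can be rebuilt by replaying the transformations recorded in its lineage. The sketch below imitates that with a hypothetical `Node` class in plain Python; the node names follow the figure, but the sample data and the chain of two map steps are assumptions made for illustration.

```python
class Node:
    """Toy lineage node: remembers its parent and the function that derives it."""

    def __init__(self, name, parent=None, func=None, source=None):
        self.name, self.parent, self.func, self.source = name, parent, func, source
        self.data = None  # materialized result; None means missing or lost

    def compute(self):
        if self.data is None:
            if self.parent is None:
                self.data = list(self.source())               # re-read stable storage
            else:
                self.data = self.func(self.parent.compute())  # replay the lineage
        return self.data

input_rdd = Node("inputRdd", source=lambda: ["spark", "rdd", "lineage"])
lengths_rdd = Node("lengthsRdd", input_rdd, lambda rows: [len(r) for r in rows])
squares_rdd = Node("squaresRdd", lengths_rdd, lambda rows: [n * n for n in rows])

before = squares_rdd.compute()

# Simulate a failure: lengthsRdd and everything derived from it is lost.
lengths_rdd.data = None
squares_rdd.data = None

after = squares_rdd.compute()  # rebuilt from inputRdd by replaying both maps
print(before, after)
```

No copy of the lost data was kept anywhere; only the recipe for producing it was, which is what makes this form of fault tolerance cheap.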

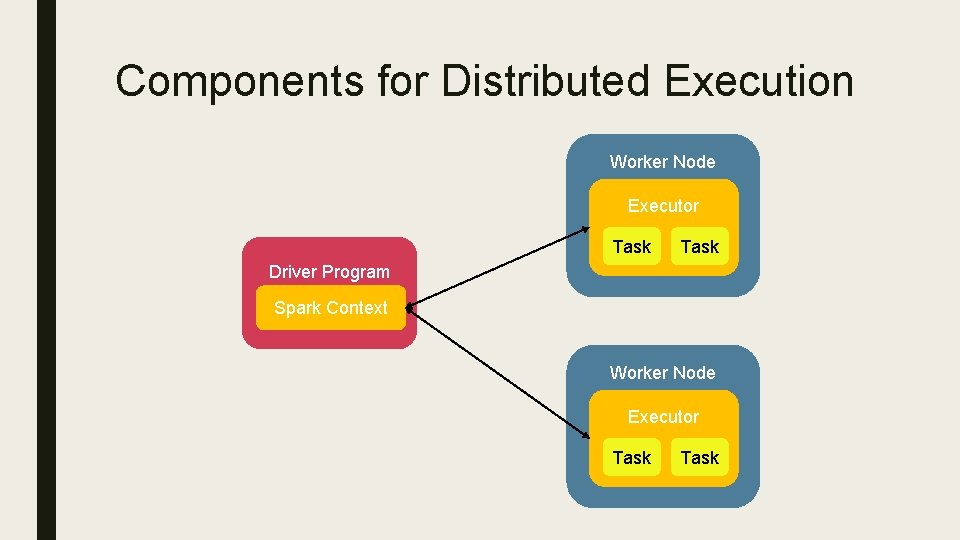

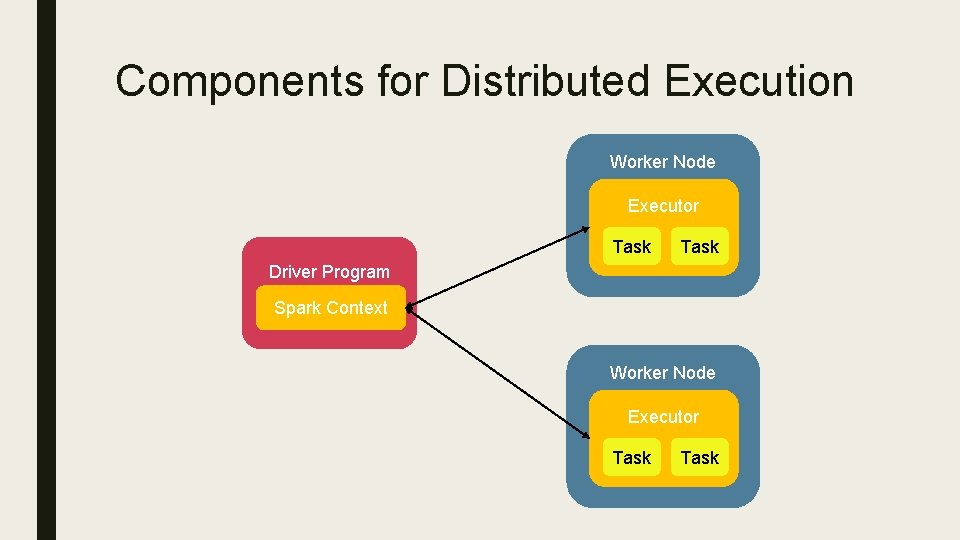

Components for Distributed Execution
(Figure: a driver program holding the SparkContext is connected to several worker nodes; each worker node runs an executor, and each executor runs tasks.)

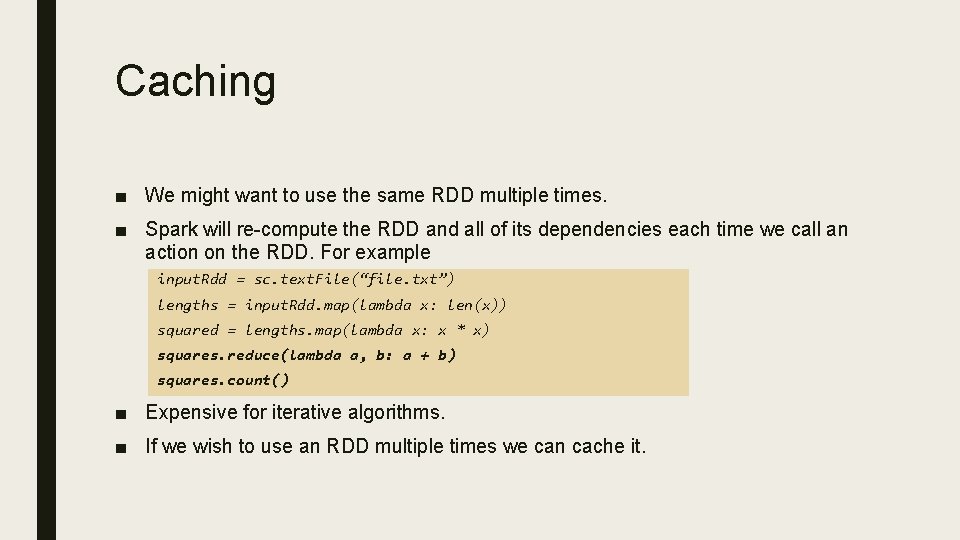

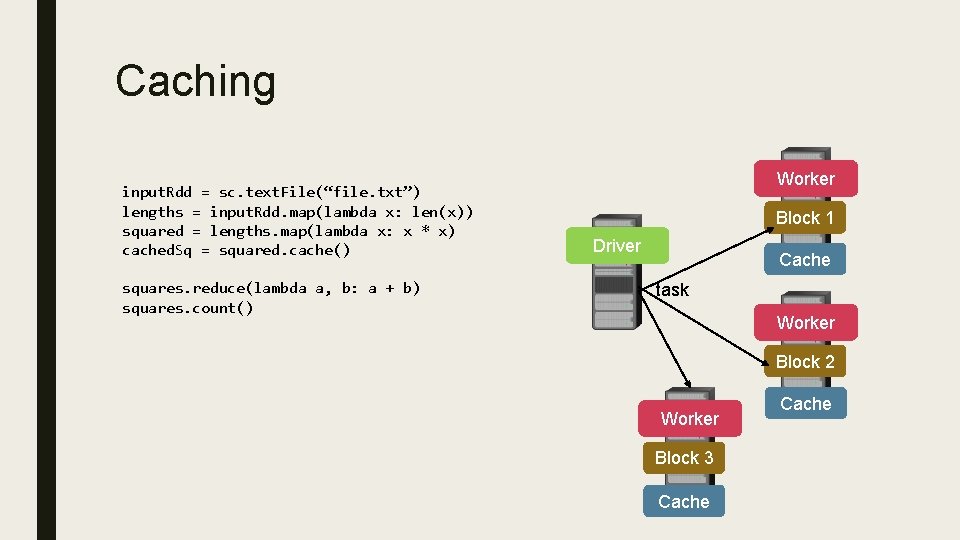

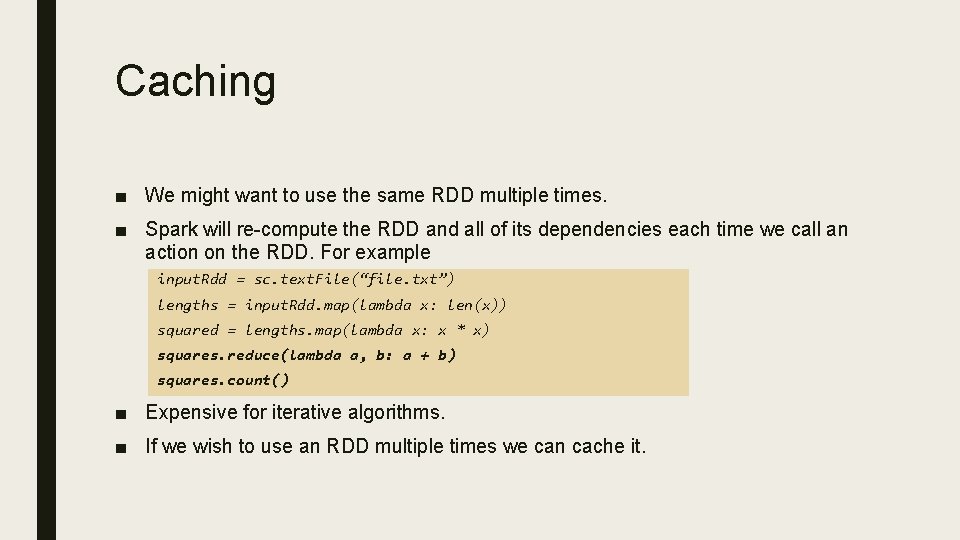

Caching
■ We might want to use the same RDD multiple times.
■ Spark will recompute the RDD and all of its dependencies each time we call an action on the RDD. For example:
    inputRdd = sc.textFile("file.txt")
    lengths = inputRdd.map(lambda x: len(x))
    squares = lengths.map(lambda x: x * x)
    squares.reduce(lambda a, b: a + b)
    squares.count()
■ This is expensive for iterative algorithms.
■ If we wish to use an RDD multiple times, we can cache it.

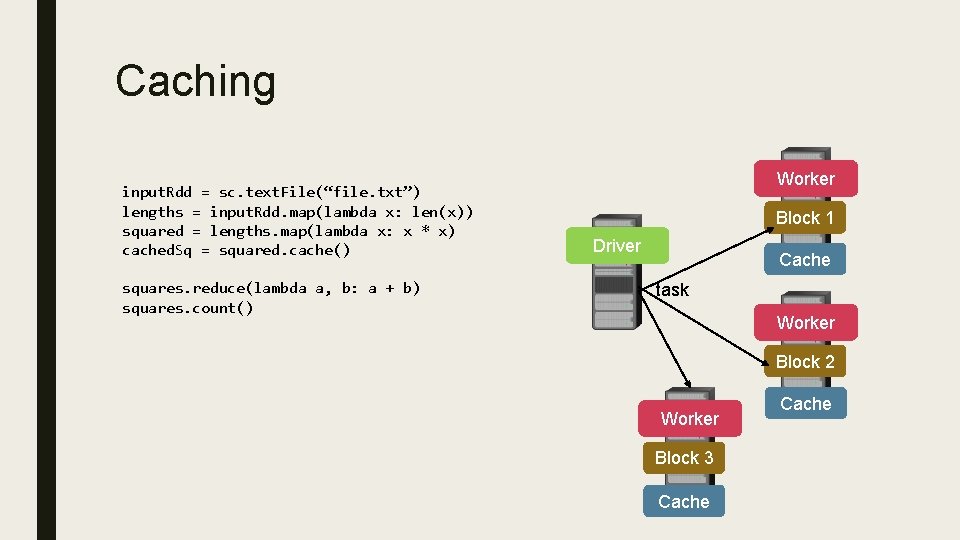

Caching
    inputRdd = sc.textFile("file.txt")
    lengths = inputRdd.map(lambda x: len(x))
    squares = lengths.map(lambda x: x * x)
    cachedSq = squares.cache()
    cachedSq.reduce(lambda a, b: a + b)
    cachedSq.count()
(Figure: the driver sends tasks to three workers; each worker holds one block, Block 1 to Block 3, in its cache.)
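
The cost of recomputation can be made visible by counting how often the innermost function runs. The following plain-Python sketch is only an analogy for the behaviour described above: the `squares()` function recomputes its whole chain on every call, and "caching" is imitated by storing its result in a variable.

```python
calls = {"len": 0}

def counted_len(line):
    """len() with a counter, so we can see how often the chain is re-run."""
    calls["len"] += 1
    return len(line)

lines = ["a", "bb", "ccc"]

def squares():
    """Recomputes the whole chain every time, like an uncached RDD."""
    return [n * n for n in (counted_len(line) for line in lines)]

# Without caching: two actions mean two full recomputations.
total, count = sum(squares()), len(squares())
uncached_calls = calls["len"]

# With caching: materialize once, then reuse the stored result.
calls["len"] = 0
cached = squares()
total_cached, count_cached = sum(cached), len(cached)
cached_calls = calls["len"]

print(uncached_calls, cached_calls)
```

With three input lines and two actions, the uncached version evaluates `counted_len` twice as often as the cached one; the gap grows with every additional action or iteration.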

Broadcast Variables
■ Broadcast variables allow the program to efficiently send a large, read-only value to all the worker nodes.

Without a broadcast variable:
    baseRdd = sc.textFile("text.txt")
    def lookup(value, table):
        return table[value]
    lookupTable = loadLookupTable()
    baseRdd.map(lambda x: lookup(x, lookupTable)).reduce(lambda a, b: a + b)

![Broadcast Variables](https://slidetodoc.com/presentation_image_h/8c39335f4197b6e07004a3140774a71a/image-45.jpg)

Broadcast Variables
With a broadcast variable:
    baseRdd = sc.textFile("text.txt")
    def lookup(value, table):
        return table[value]
    lookupTable = sc.broadcast(loadLookupTable())
    baseRdd.map(lambda x: lookup(x, lookupTable.value)).reduce(lambda a, b: a + b)
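
A rough way to see why broadcasting helps is to count how many serialized copies of the lookup table have to travel. The sketch below is a simplified accounting model, not a measurement of Spark: it assumes that a table captured in a closure is shipped once per task, that a broadcast table is shipped once per worker, and that the table size, task count and worker count are hypothetical.

```python
import pickle

# Hypothetical lookup table and cluster shape, chosen only for illustration.
lookup_table = {i: i * i for i in range(1000)}
num_tasks, num_workers = 8, 2

payload = len(pickle.dumps(lookup_table))  # bytes for one serialized copy

bytes_without_broadcast = num_tasks * payload  # one copy travels with every task
bytes_with_broadcast = num_workers * payload   # one copy per worker, then reused

print(bytes_without_broadcast // bytes_with_broadcast)  # ratio of the two volumes
```

Under these assumptions the saving is simply the ratio of tasks to workers, so it grows as a job is split into more tasks.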

Example: Logistic Regression

val data = spark.textFile(...).map(readPoint).cache()
var w = Vector.random(D)
for (i <- 1 to ITERATIONS) {
  val gradient = data.map(p =>
    ((1 / (1 + exp(-(w dot p.x)))) - p.y) * p.x
  ).reduce((a, b) => a + b)
  w -= ALPHA * gradient
}
println("w: " + w)
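
The same loop can be tried locally in plain Python. The sketch below assumes labels in {0, 1} and the gradient $(\sigma(w \cdot x) - y)\,x$ with $\sigma(z) = 1 / (1 + e^{-z})$; the toy points, the step size and the iteration count are hypothetical values chosen so that the example converges quickly, and a list stands in for the cached RDD.

```python
import math
import random
from functools import reduce

random.seed(0)

# Hypothetical toy data: x = [bias term, feature]; the label is 1 when the feature is positive.
points = [([1.0, f], 1.0 if f > 0 else 0.0) for f in [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]]

ALPHA, ITERATIONS = 0.5, 200
w = [random.random(), random.random()]

def dot(a, b):
    return sum(p * q for p, q in zip(a, b))

for _ in range(ITERATIONS):
    # map: per-point gradient (sigmoid(w . x) - y) * x
    grads = ([(1 / (1 + math.exp(-dot(w, x))) - y) * xi for xi in x] for x, y in points)
    # reduce: element-wise sum of the per-point gradients
    gradient = reduce(lambda a, b: [p + q for p, q in zip(a, b)], grads)
    w = [wi - ALPHA * gi for wi, gi in zip(w, gradient)]

predictions = [1.0 if dot(w, x) > 0 else 0.0 for x, _ in points]
print(w, predictions)
```

Every iteration scans the same `points`, which is exactly the access pattern that benefits from keeping the data in memory.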

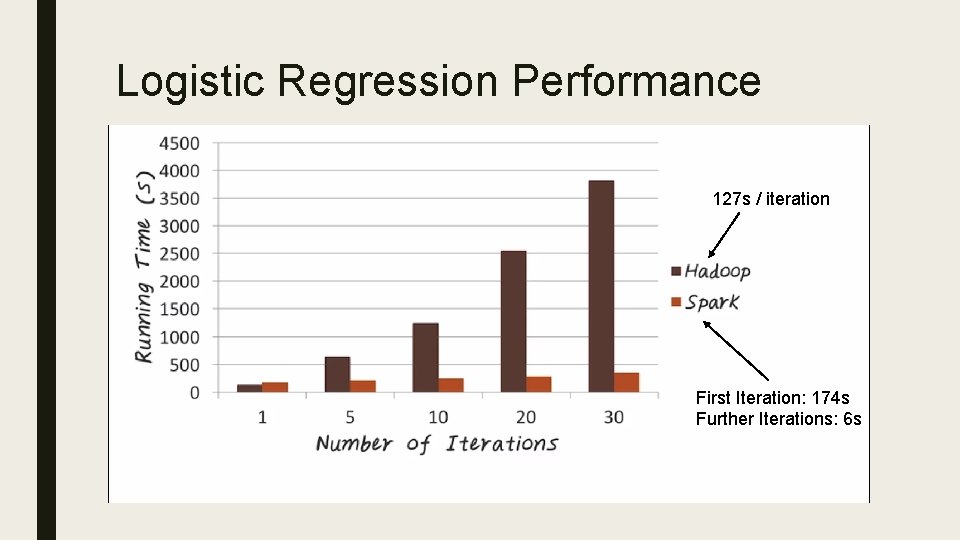

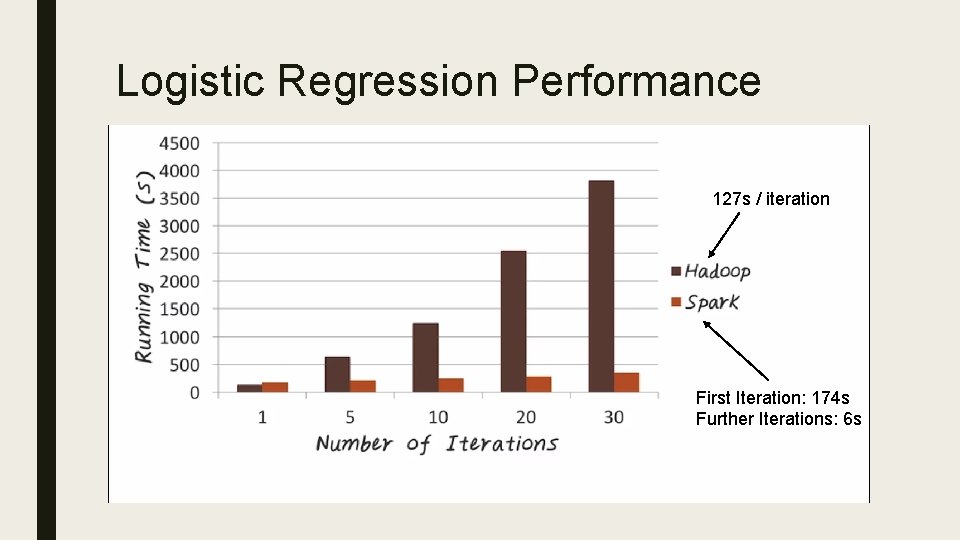

Logistic Regression Performance
■ Hadoop: 127 s / iteration
■ Spark: first iteration 174 s, further iterations 6 s

THANK YOU