Artificial Intelligence II S Russell and P Norvig

- Slides: 33

Artificial Intelligence II
S. Russell and P. Norvig, Artificial Intelligence: A Modern Approach, Chapter 11: Planning

Planning
- Planning versus problem solving
- Situation calculus
- Plan-space planning

Planning as Problem Solving
- Planning
  - Start state (S)
  - Goal state (G)
  - Set of actions
- Find a sequence of actions that gets you from start to goal

What is Planning (more generally)?
- Generate sequences of actions to perform tasks and achieve objectives.
- Search for a solution over an abstract space of plans.
- Assists humans in practical applications
  - design and manufacturing
  - military operations
  - games
  - space exploration

Planning versus Problem Solving
- Problem solving is basically state-space search, i.e., primarily an algorithm, not a representation.
  - initial state, goal test, successor function
- Planning is a combination of an algorithm (search) and a set of representations, usually situation calculus.
  - provides a way to "open up" the representation of initial state, goal test, and successor function
  - the planner is free to add actions to the plan wherever needed
  - most parts of the world are independent of other parts, i.e., the problem is nearly decomposable

Assumptions
- Atomic time
- No concurrent actions
- Deterministic actions
- Agent is sole cause of change
- Agent is omniscient
- Closed World Assumption

Simple Planning Agent Algorithm
- Generate a goal to achieve
- Construct a plan to achieve the goal from the current state
- Execute the plan until finished
- Begin again with a new goal

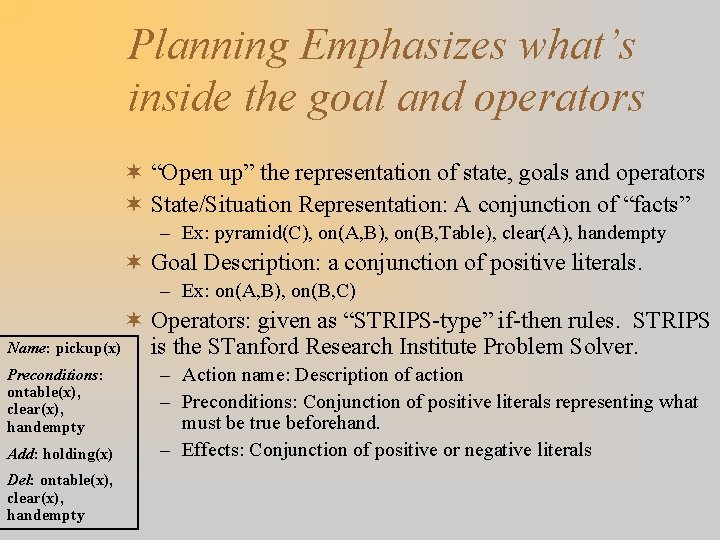

Planning Emphasizes What's Inside the Goal and Operators
- "Open up" the representation of state, goals and operators
- State/Situation Representation: a conjunction of "facts"
  - Ex: pyramid(C), on(A, B), on(B, Table), clear(A), handempty
- Goal Description: a conjunction of positive literals
  - Ex: on(A, B), on(B, C)
- Operators: given as "STRIPS-type" if-then rules. STRIPS is the STanford Research Institute Problem Solver.
  - Action name: description of the action
  - Preconditions: conjunction of positive literals representing what must be true beforehand
  - Effects: conjunction of positive or negative literals
- Example operator
  - Name: pickup(x)
  - Preconditions: ontable(x), clear(x), handempty
  - Add: holding(x)
  - Del: ontable(x), clear(x), handempty
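The pickup(x) operator above can be written down directly as data. The following is a minimal Python sketch, not the original STRIPS implementation: the `Operator` class, its field names, and the choice to encode literals as plain strings are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Operator:
    """A ground STRIPS-style operator (hypothetical helper class)."""
    name: str
    preconditions: frozenset
    add_list: frozenset
    delete_list: frozenset

    def applicable(self, state):
        # Applicable when every precondition is a fact in the state.
        return self.preconditions <= state

    def apply(self, state):
        # Successor state = (state minus delete list) plus add list.
        if not self.applicable(state):
            raise ValueError(f"{self.name} is not applicable")
        return (state - self.delete_list) | self.add_list


def pickup(x):
    """Instantiate the pickup(x) schema for one block."""
    pre = frozenset({f"ontable({x})", f"clear({x})", "handempty"})
    return Operator(f"pickup({x})", pre, frozenset({f"holding({x})"}), pre)


# Example state (an assumption): blocks A and B both on the table.
state = frozenset({"ontable(A)", "clear(A)", "ontable(B)", "clear(B)", "handempty"})
new_state = pickup("A").apply(state)
print(sorted(new_state))
```

Because a state is just a set of facts, applying an operator reduces to one set difference and one set union; anything not mentioned in the add or delete list carries over unchanged.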

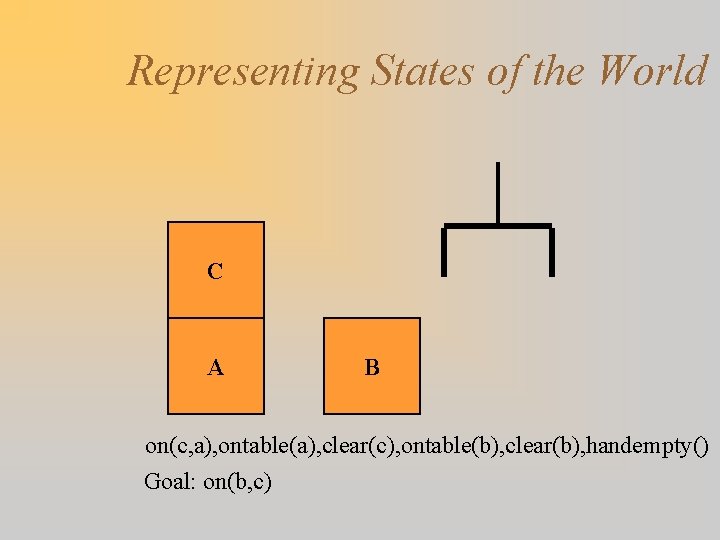

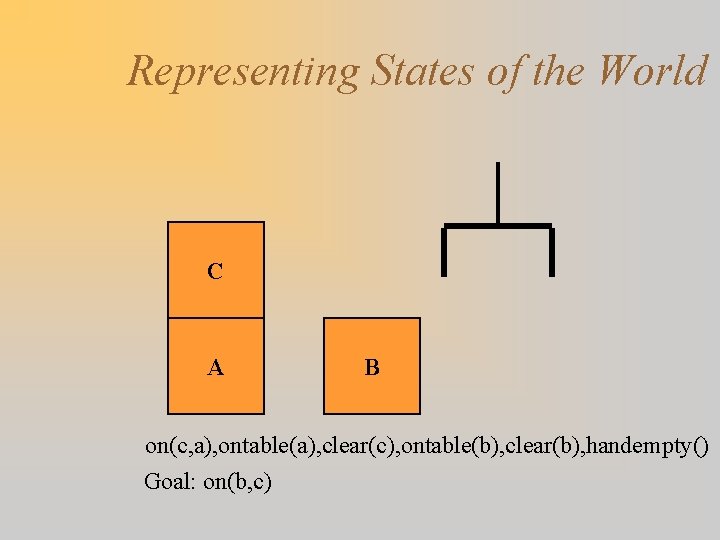

Representing States of the World
- State: on(c, a), ontable(a), clear(c), ontable(b), clear(b), handempty()
- Goal: on(b, c)

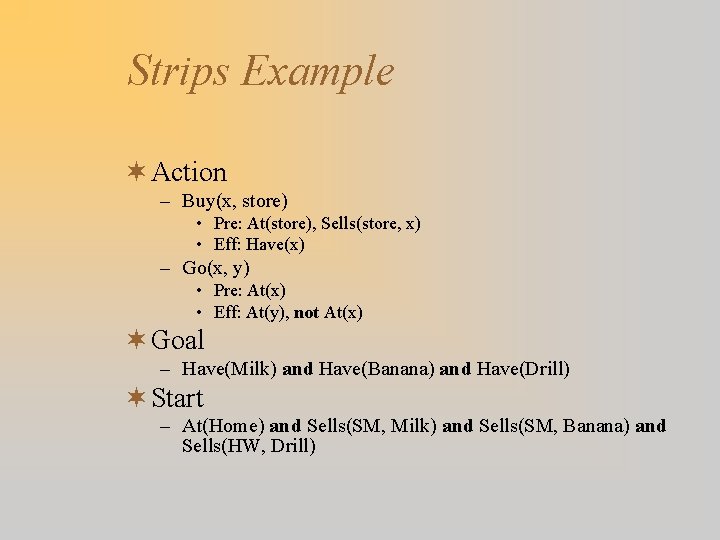

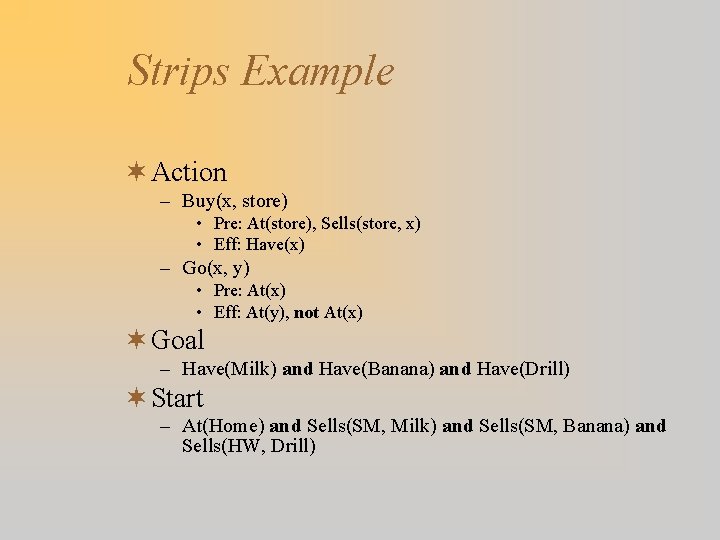

STRIPS Example
- Actions
  - Buy(x, store)
    - Pre: At(store), Sells(store, x)
    - Eff: Have(x)
  - Go(x, y)
    - Pre: At(x)
    - Eff: At(y), not At(x)
- Goal
  - Have(Milk) and Have(Banana) and Have(Drill)
- Start
  - At(Home) and Sells(SM, Milk) and Sells(SM, Banana) and Sells(HW, Drill)

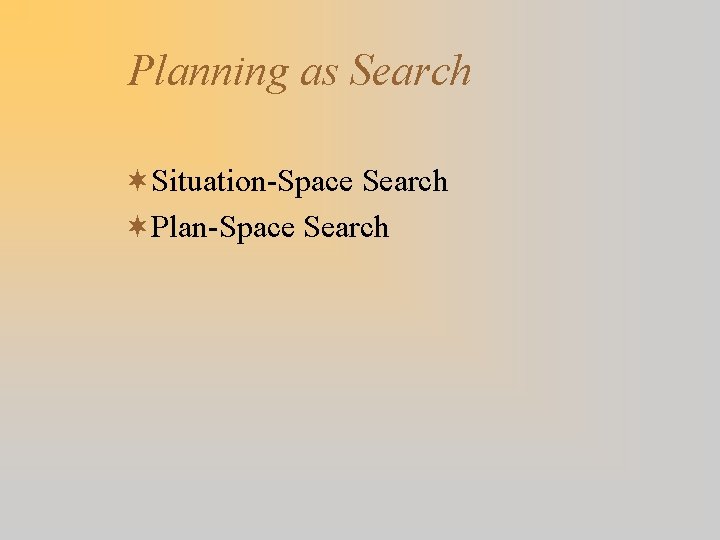

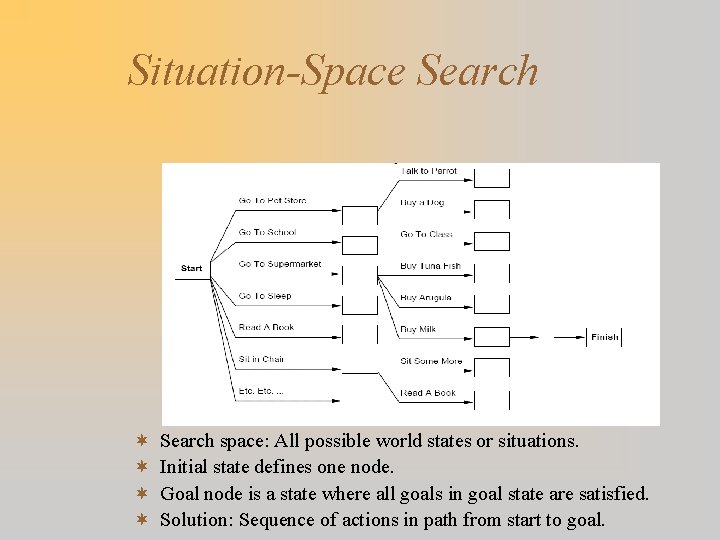

Planning as Search
- Situation-Space Search
- Plan-Space Search

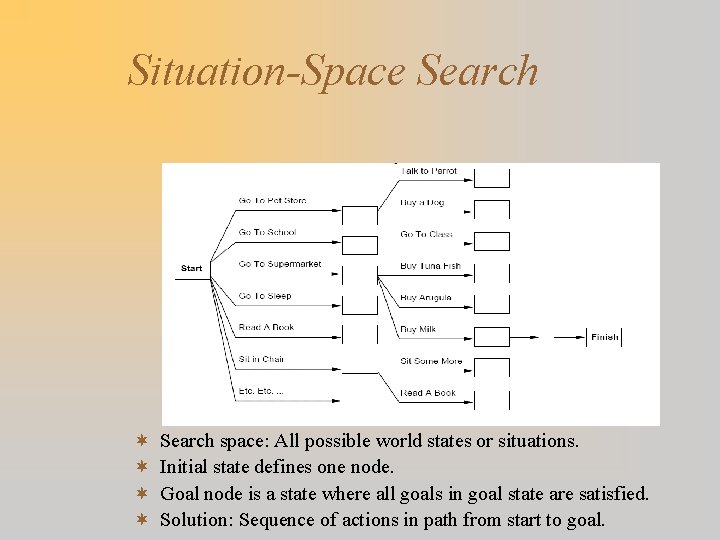

Situation-Space Search
- Search space: all possible world states or situations.
- The initial state defines one node.
- A goal node is a state where all goals in the goal state are satisfied.
- Solution: the sequence of actions on the path from start to goal.

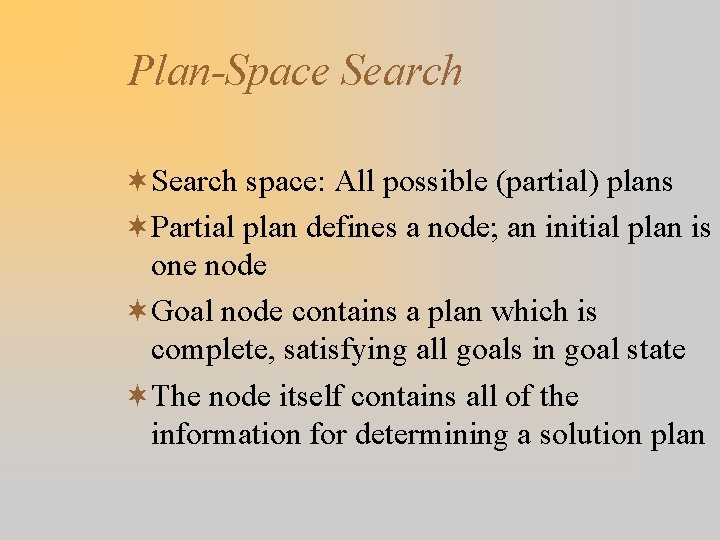

Plan-Space Search
- Search space: all possible (partial) plans
- A partial plan defines a node; the initial plan is one node
- A goal node contains a plan which is complete, satisfying all goals in the goal state
- The node itself contains all of the information for determining a solution plan

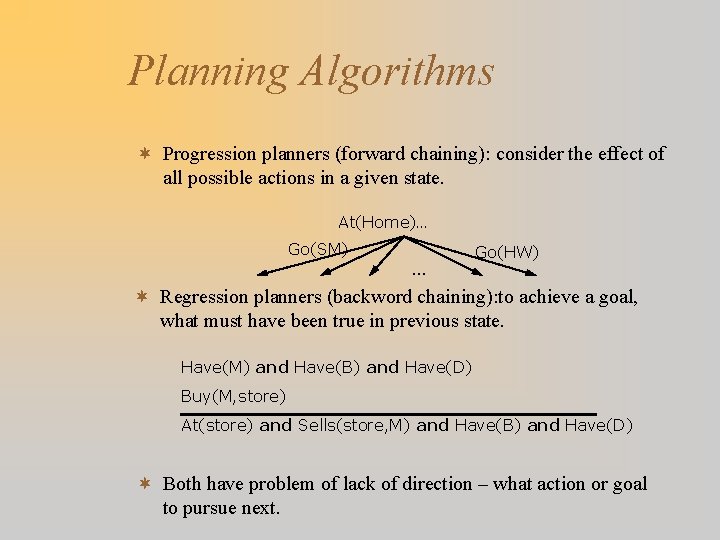

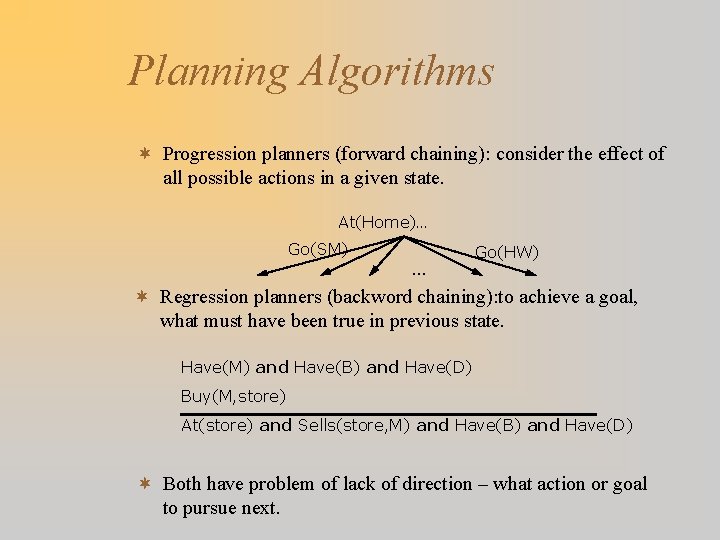

Planning Algorithms
- Progression planners (forward chaining): consider the effects of all possible actions in a given state.
  - Ex: At(Home) … Go(SM) … Go(HW)
- Regression planners (backward chaining): to achieve a goal, determine what must have been true in the previous state.
  - Ex: the goal Have(M) and Have(B) and Have(D), regressed through Buy(M, store), becomes At(store) and Sells(store, M) and Have(B) and Have(D)
- Both suffer from a lack of direction: which action or goal to pursue next.

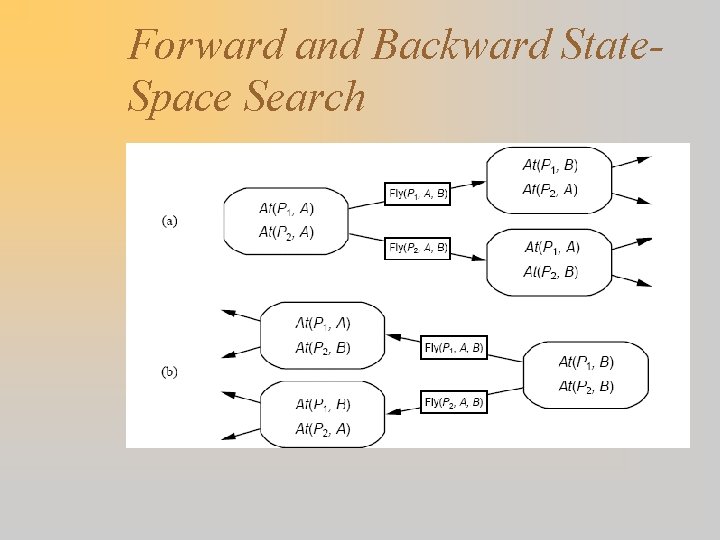

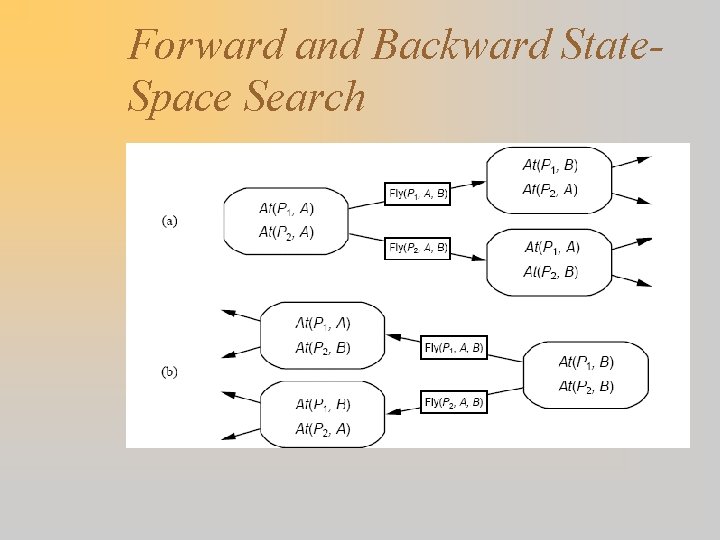

Forward and Backward State-Space Search

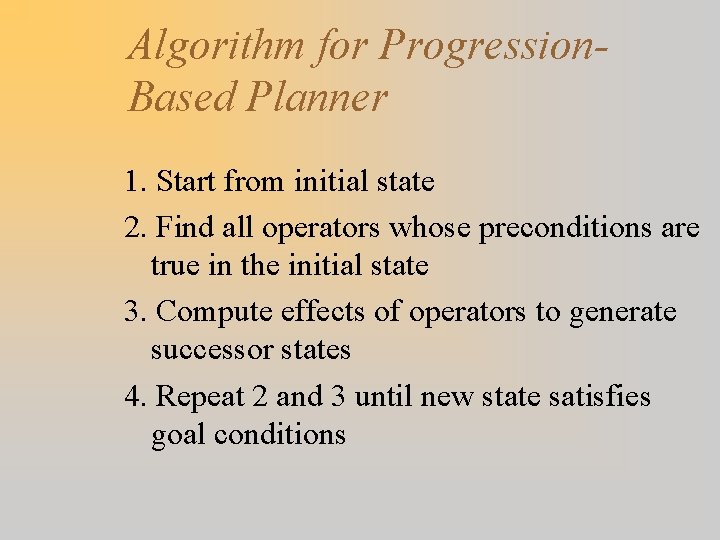

Algorithm for Progression-Based Planner
1. Start from the initial state
2. Find all operators whose preconditions are true in the current state
3. Compute the effects of those operators to generate successor states
4. Repeat 2 and 3 until a new state satisfies the goal conditions
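The four steps above can be sketched as a breadth-first forward search over the shopping domain (Buy and Go) from the earlier STRIPS example. This is an illustrative sketch under stated assumptions: breadth-first order, the string encoding of literals, and the names `ground_operators` and `progression_plan` are choices made here, not part of the slides.

```python
from collections import deque
from itertools import permutations

PLACES = ("Home", "SM", "HW")
ITEMS = ("Milk", "Banana", "Drill")


def ground_operators():
    """Ground Go(x, y) and Buy(x, store) as (name, pre, add, delete)."""
    ops = []
    for x, y in permutations(PLACES, 2):
        ops.append((f"Go({x},{y})", {f"At({x})"}, {f"At({y})"}, {f"At({x})"}))
    for store in PLACES:
        for item in ITEMS:
            pre = {f"At({store})", f"Sells({store},{item})"}
            ops.append((f"Buy({item},{store})", pre, {f"Have({item})"}, set()))
    return ops


def progression_plan(initial, goal, operators):
    """Breadth-first progression: expand applicable operators until goal holds."""
    start = frozenset(initial)
    frontier = deque([(start, [])])
    visited = {start}
    while frontier:
        state, plan = frontier.popleft()
        if goal <= state:                      # step 4: goal test
            return plan
        for name, pre, add, delete in operators:
            if pre <= state:                   # step 2: preconditions hold
                successor = (state - delete) | add   # step 3: apply effects
                if successor not in visited:
                    visited.add(successor)
                    frontier.append((successor, plan + [name]))
    return None


start = {"At(Home)", "Sells(SM,Milk)", "Sells(SM,Banana)", "Sells(HW,Drill)"}
goal = {"Have(Milk)", "Have(Banana)", "Have(Drill)"}
plan = progression_plan(start, goal, ground_operators())
print(plan)
```

Even in this tiny domain the search tries every applicable action at every state, which illustrates the "lack of direction" problem: nothing steers the planner toward the stores that actually sell the goal items.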

Progression Planning Example
- Initial state S = {clear(A), clear(B), clear(C), ontable(A), ontable(B), ontable(C), handempty}
- Operator = pickup(x) with substitution {x/A}
- Successor state T = {clear(B), clear(C), ontable(B), ontable(C), holding(A)}
- The operator instance associated with the action from S to T is pickup(A).
- Operator definition
  - Name: pickup(x)
  - Preconditions: ontable(x), clear(x), handempty
  - Add: holding(x)
  - Del: ontable(x), clear(x), handempty

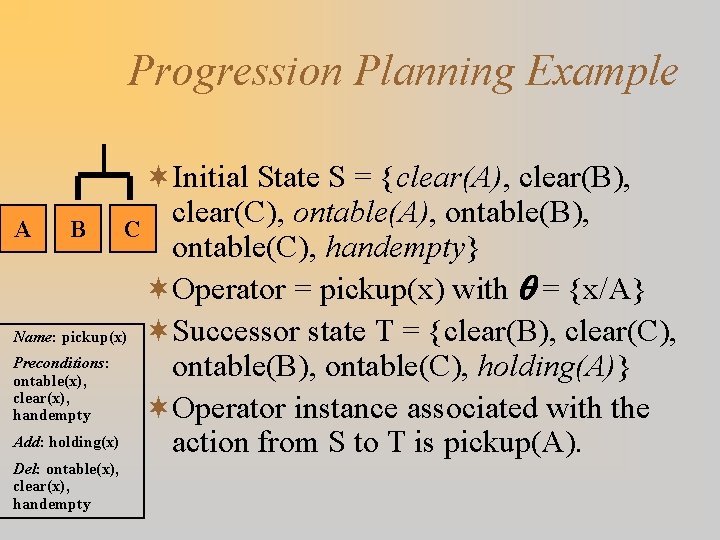

Algorithm for Regression-Based Planner
1. Start with the goal node corresponding to the goal to be achieved
2. Choose an operator that will add one of the goals
3. Replace the goal with the operator's preconditions
4. Repeat 2 and 3 until you have reached the initial state
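Steps 2 and 3 amount to regressing a goal set through one operator. A minimal sketch follows, assuming literals are strings and an operator is given by its precondition, add, and delete sets; the function name `regress` and the consistency check on the delete list are assumptions added here for illustration.

```python
def regress(goal, preconditions, add_list, delete_list):
    """Return the subgoal that must hold before the operator, or None.

    The operator is relevant only if it adds at least one goal literal,
    and it is rejected if it would delete a goal literal.
    """
    goal = frozenset(goal)
    if not (goal & add_list):
        return None          # operator achieves none of the goals
    if goal & delete_list:
        return None          # operator would undo one of the goals
    # Replace the achieved goals with the operator's preconditions.
    return (goal - add_list) | preconditions


goal = {"Have(Milk)", "Have(Banana)", "Have(Drill)"}

# Buy(Milk, SM): pre At(SM), Sells(SM, Milk); add Have(Milk); deletes nothing.
subgoal = regress(goal, {"At(SM)", "Sells(SM,Milk)"}, {"Have(Milk)"}, set())
print(sorted(subgoal))

# Go(Home, SM): pre At(Home); add At(SM); delete At(Home).
earlier = regress(subgoal, {"At(Home)"}, {"At(SM)"}, {"At(Home)"})
print(sorted(earlier))
```

The first call reproduces the regression example from the Planning Algorithms slide with store bound to SM; the second shows one more step back toward the initial state.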

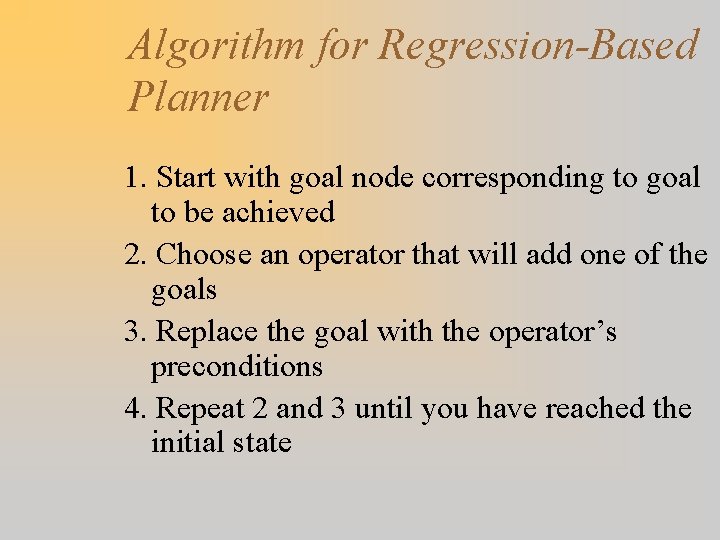

STRIPS Algorithm
- A goal-stack based regression planner: uses a goal stack to maintain the set of conjunctive goals yet to be achieved
- Maintains a description of the current state, which is initially the given initial state
- Approach:
  - Pick an order for achieving each of the goals
  - When each goal is popped from the stack, if it is not already true in the current state, push onto the stack an operator that adds that goal.
  - Push onto the stack each precondition of that operator in some order, and solve each of these sub-goals in the same fashion.
  - When all preconditions are solved (popped from the stack), re-verify that all of the preconditions are still true and apply the operator to create a new successor state description.
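The approach above can be sketched as a small goal-stack planner. This is an illustrative sketch, not the original STRIPS program. It assumes the four blocks-world operators (Pickup, Putdown, Stack, Unstack) used in this deck's worked example, encodes literals as strings, and picks among candidate operators with a simple heuristic (most preconditions already true), which is an assumption introduced here. The initial state includes ontable(A), which the later states of the worked example require. There is no backtracking, so the planner can fail or loop on harder problems; `max_steps` bounds the loop.

```python
from itertools import permutations


def ground_operators(blocks):
    """Ground blocks-world operators as (name, pre, add, delete)."""
    ops = []
    for x in blocks:
        pre = (f"ontable({x})", f"clear({x})", "handempty")
        ops.append((f"Pickup({x})", pre, {f"holding({x})"}, set(pre)))
        ops.append((f"Putdown({x})", (f"holding({x})",), set(pre), {f"holding({x})"}))
    for x, y in permutations(blocks, 2):
        held = (f"holding({x})", f"clear({y})")
        stacked = (f"clear({x})", f"on({x},{y})", "handempty")
        ops.append((f"Stack({x},{y})", held,
                    {f"on({x},{y})", f"clear({x})", "handempty"}, set(held)))
        ops.append((f"Unstack({x},{y})", stacked, set(held), set(stacked)))
    return ops


def strips(initial, goals, operators, max_steps=1000):
    """Goal-stack planning; returns (plan, final_state) or None on failure."""
    state, plan = set(initial), []
    stack = [("achieve_all", tuple(goals))]
    steps = 0
    while stack:
        steps += 1
        if steps > max_steps:
            return None
        kind, item = stack.pop()
        if kind == "achieve_all":
            if all(g in state for g in item):
                continue
            # Keep the conjunction for re-verification, then push each goal
            # so that the first goal in the tuple ends up on top.
            stack.append(("achieve_all", item))
            for goal in reversed(item):
                stack.append(("achieve", goal))
        elif kind == "achieve":
            if item in state:
                continue
            candidates = [op for op in operators if item in op[2]]
            if not candidates:
                return None
            # Assumed heuristic: prefer the operator closest to applicable.
            best = max(candidates, key=lambda op: sum(p in state for p in op[1]))
            stack.append(("apply", best))
            stack.append(("achieve_all", best[1]))
        else:  # "apply": re-verify preconditions, then update the state
            name, pre, add, delete = item
            if not all(p in state for p in pre):
                return None
            state = (state - delete) | add
            plan.append(name)
    return plan, state


initial = {"clear(B)", "clear(C)", "on(C,A)", "ontable(A)", "ontable(B)", "handempty"}
result = strips(initial, ("on(C,B)", "on(A,C)"), ground_operators("ABC"))
print(result[0])
```

The `achieve_all` entries play the role of the conjunctive `achieve(...)` items on the slides' goal stack, and the `apply` entries correspond to `apply(...)`.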

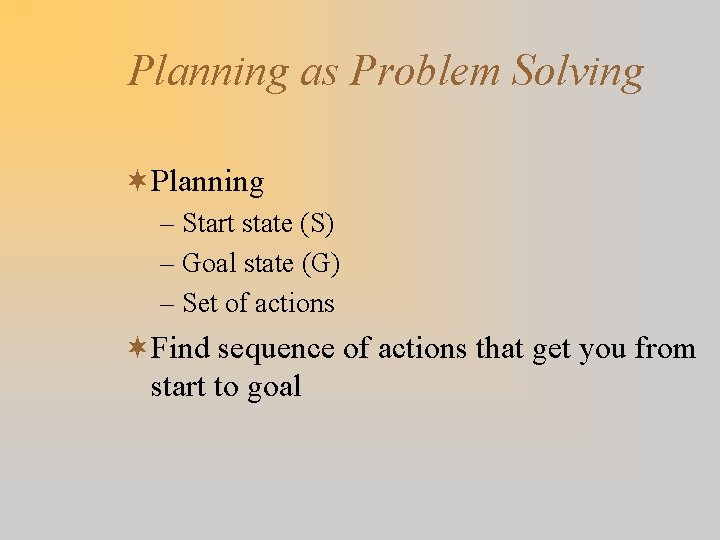

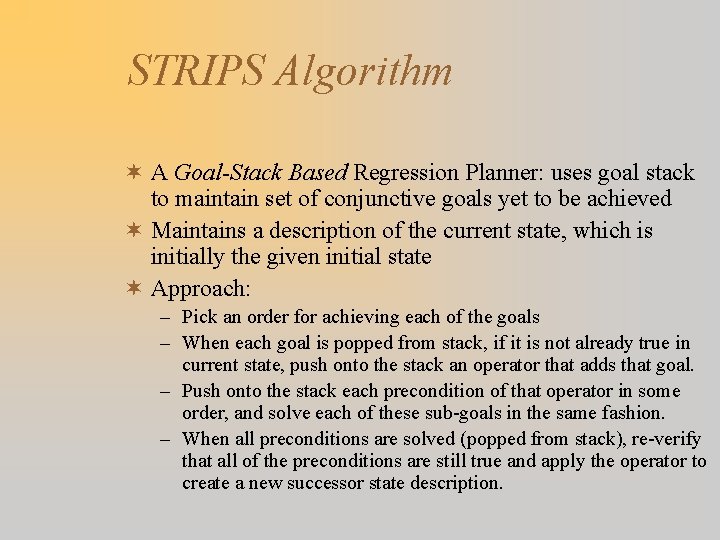

STRIPS Example
- Pickup(x)
  - P: ontable(x), clear(x), handempty
  - E: holding(x), not ontable(x), not clear(x), not handempty
- Putdown(x)
  - P: holding(x)
  - E: ontable(x), clear(x), handempty, not holding(x)
- Stack(x, y)
  - P: holding(x), clear(y)
  - E: on(x, y), clear(x), handempty, not holding(x), not clear(y)
- Unstack(x, y)
  - P: clear(x), on(x, y), handempty
  - E: holding(x), clear(y), not clear(x), not on(x, y), not handempty
- Initial State: clear(B), clear(C), on(C, A), ontable(A), ontable(B), handempty
- Goal: on(A, C), on(C, B)

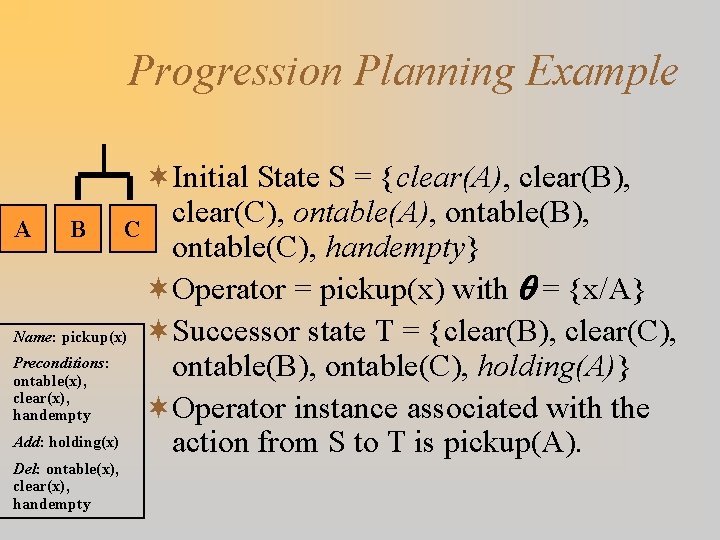

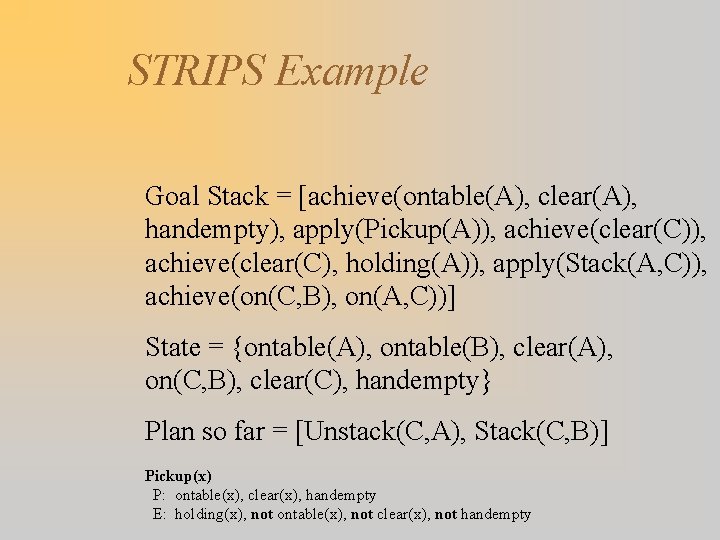

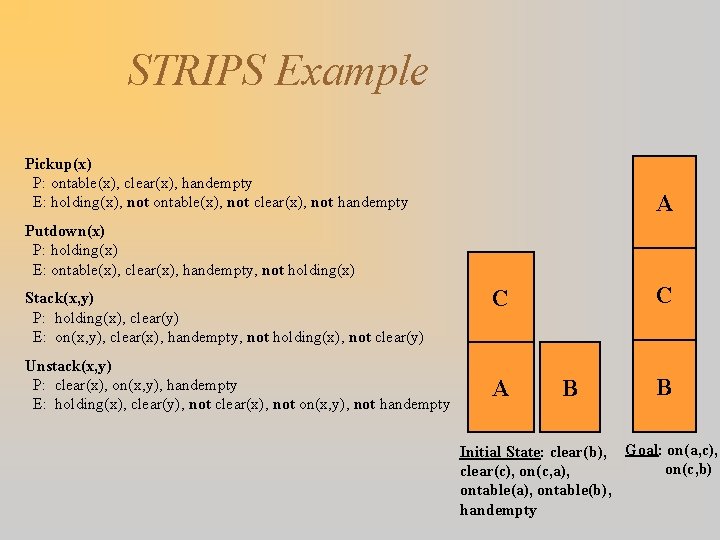

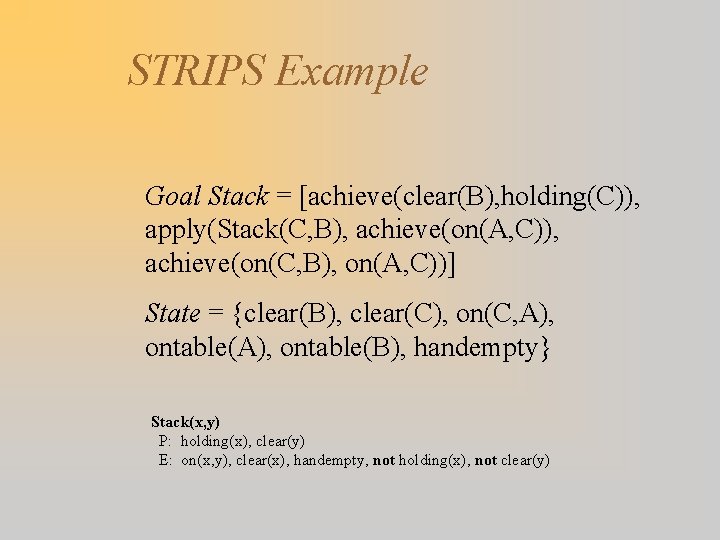

STRIPS Example
- Goal Stack = [achieve(on(C, B), on(A, C))]
- State = {clear(B), clear(C), on(C, A), ontable(A), ontable(B), handempty}

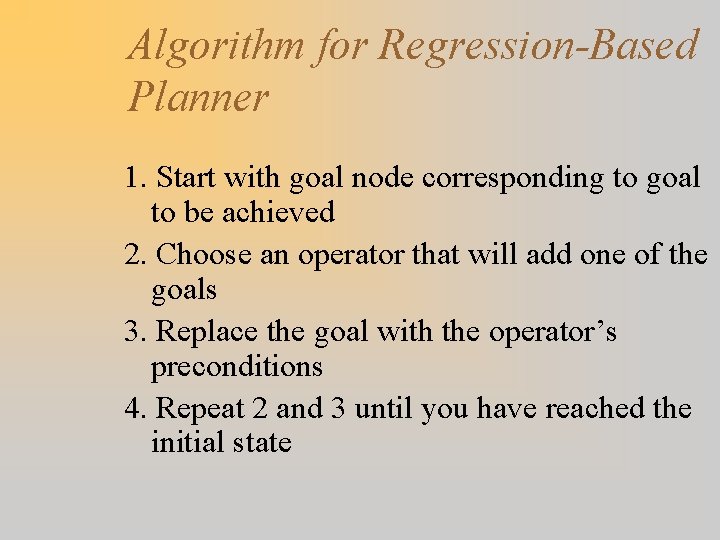

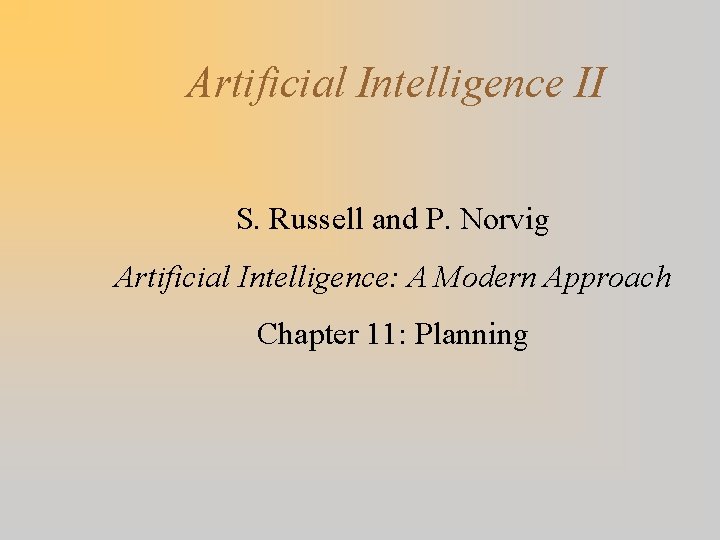

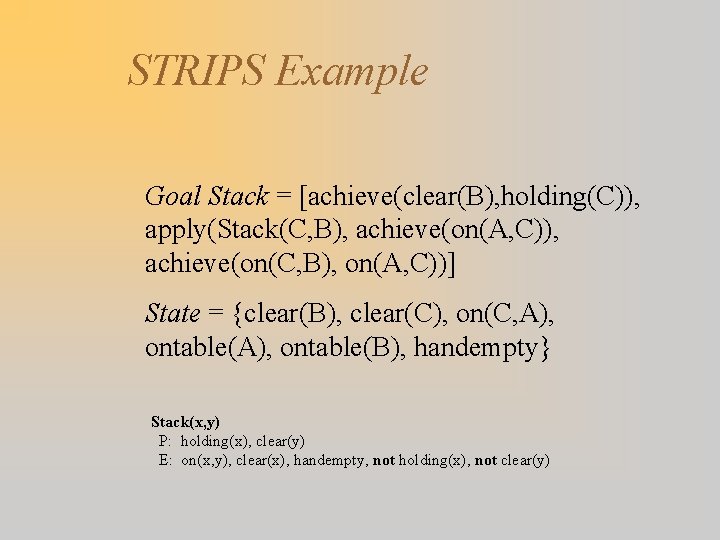

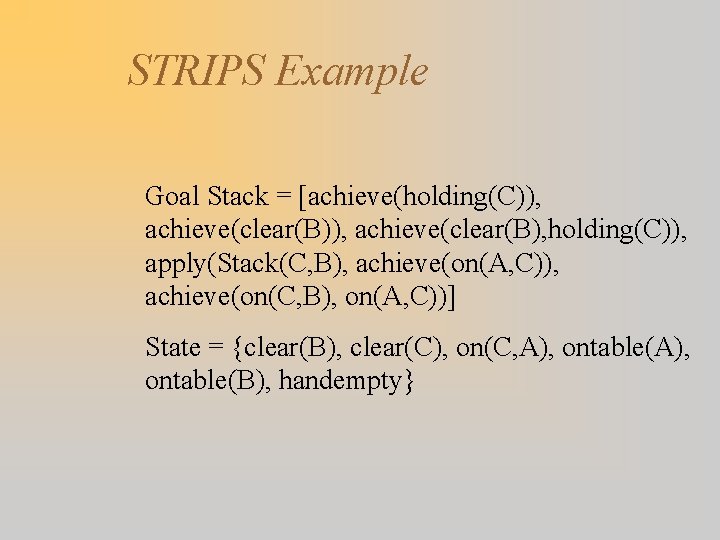

STRIPS Example
- Goal Stack = [achieve(on(C, B)), achieve(on(A, C)), achieve(on(C, B), on(A, C))]
- State = {clear(B), clear(C), on(C, A), ontable(A), ontable(B), handempty}

STRIPS Example
- Goal Stack = [achieve(clear(B), holding(C)), apply(Stack(C, B)), achieve(on(A, C)), achieve(on(C, B), on(A, C))]
- State = {clear(B), clear(C), on(C, A), ontable(A), ontable(B), handempty}
- Stack(x, y)
  - P: holding(x), clear(y)
  - E: on(x, y), clear(x), handempty, not holding(x), not clear(y)

STRIPS Example
- Goal Stack = [achieve(holding(C)), achieve(clear(B), holding(C)), apply(Stack(C, B)), achieve(on(A, C)), achieve(on(C, B), on(A, C))]
- State = {clear(B), clear(C), on(C, A), ontable(A), ontable(B), handempty}

STRIPS Example
- Goal Stack = [achieve(handempty, clear(C), on(C, y)), apply(Unstack(C, y)), achieve(clear(B), holding(C)), apply(Stack(C, B)), achieve(on(A, C)), achieve(on(C, B), on(A, C))]
- State = {clear(B), clear(C), on(C, A), ontable(A), ontable(B), handempty}
- Unstack(x, y)
  - P: clear(x), on(x, y), handempty
  - E: holding(x), clear(y), not clear(x), not on(x, y), not handempty

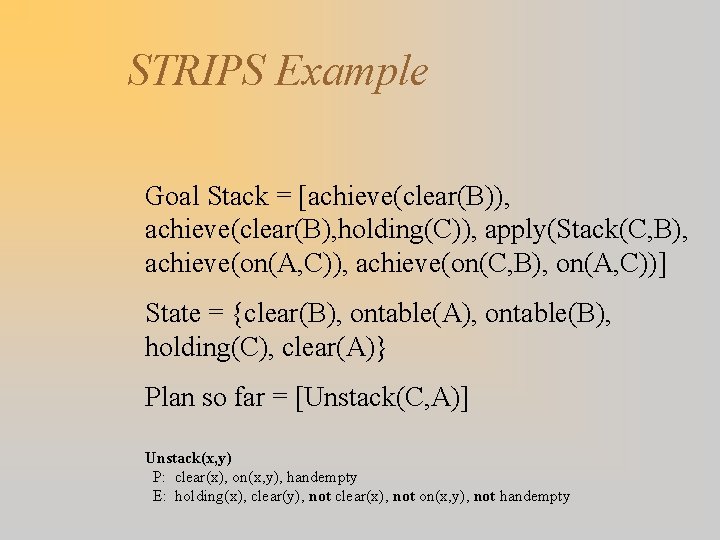

STRIPS Example
- Goal Stack = [achieve(clear(B)), achieve(clear(B), holding(C)), apply(Stack(C, B)), achieve(on(A, C)), achieve(on(C, B), on(A, C))]
- State = {clear(B), ontable(A), ontable(B), holding(C), clear(A)}
- Plan so far = [Unstack(C, A)]
- Unstack(x, y)
  - P: clear(x), on(x, y), handempty
  - E: holding(x), clear(y), not clear(x), not on(x, y), not handempty

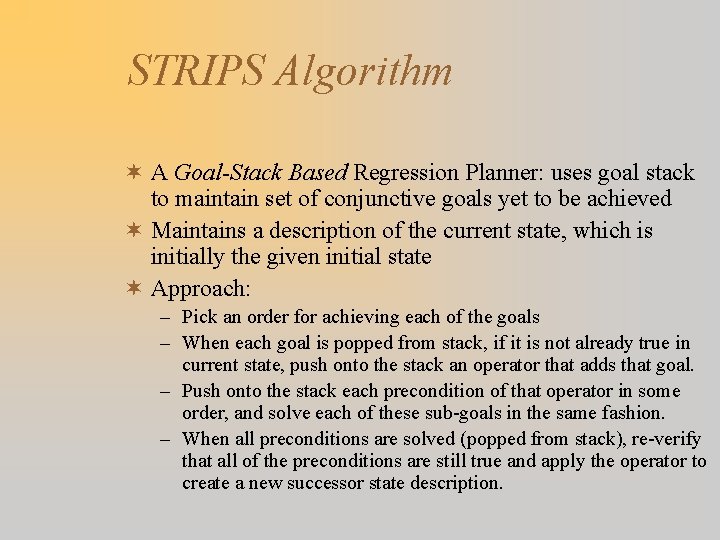

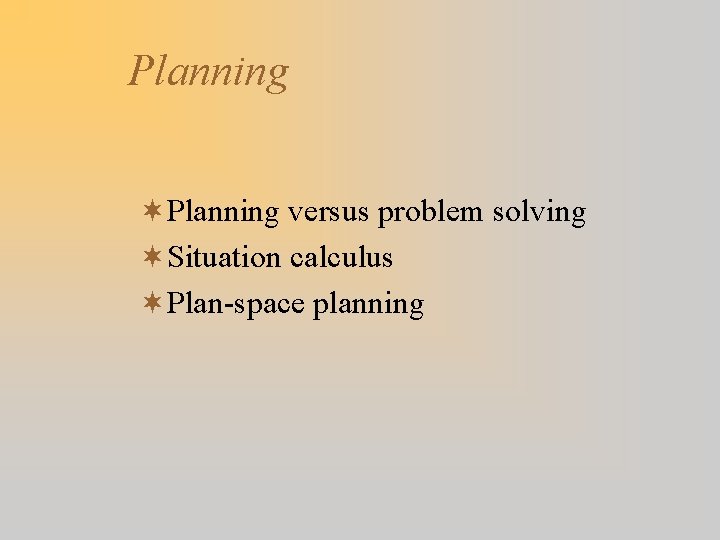

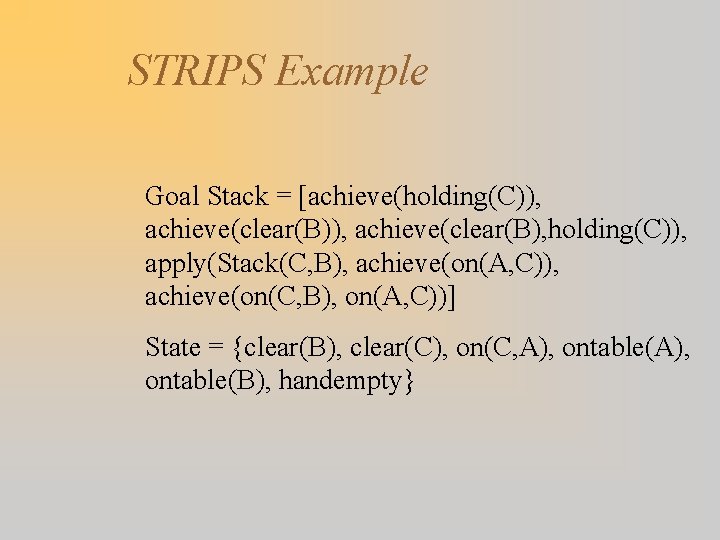

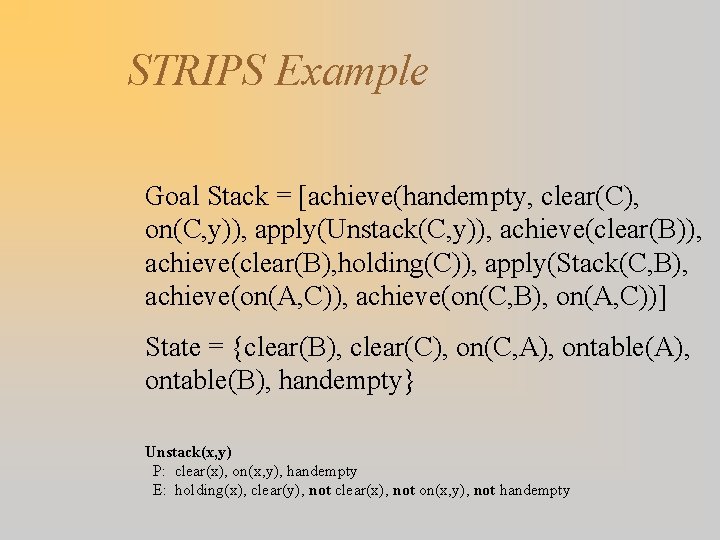

STRIPS Example
- Goal Stack = [achieve(on(A, C)), achieve(on(C, B), on(A, C))]
- State = {ontable(A), ontable(B), clear(A), on(C, B), clear(C), handempty}
- Plan so far = [Unstack(C, A), Stack(C, B)]
- Stack(x, y)
  - P: holding(x), clear(y)
  - E: on(x, y), clear(x), handempty, not holding(x), not clear(y)

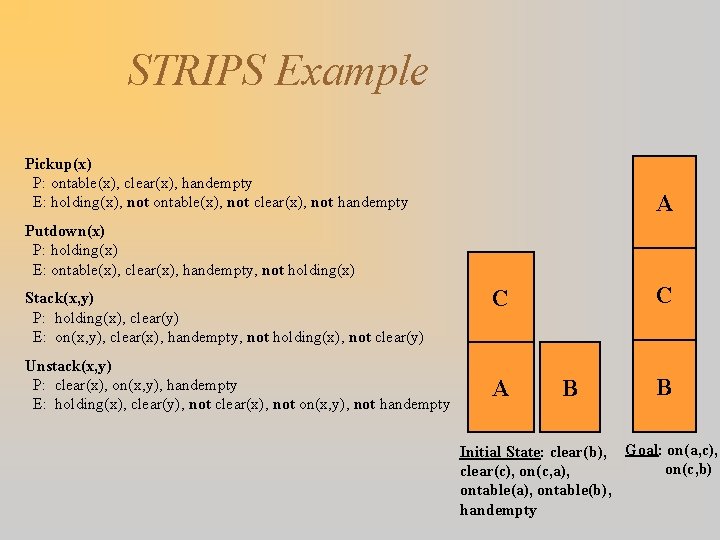

STRIPS Example
- Goal Stack = [achieve(clear(C), holding(A)), apply(Stack(A, C)), achieve(on(C, B), on(A, C))]
- State = {ontable(A), ontable(B), clear(A), on(C, B), clear(C), handempty}
- Plan so far = [Unstack(C, A), Stack(C, B)]
- Stack(x, y)
  - P: holding(x), clear(y)
  - E: on(x, y), clear(x), handempty, not holding(x), not clear(y)

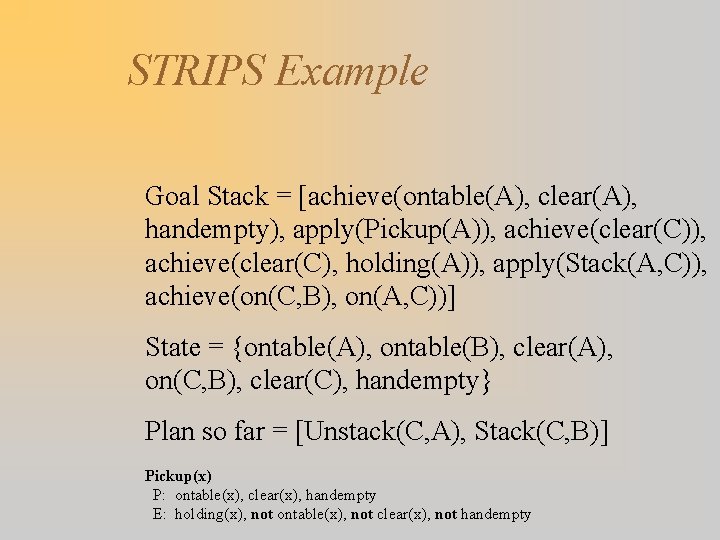

STRIPS Example
- Goal Stack = [achieve(holding(A)), achieve(clear(C), holding(A)), apply(Stack(A, C)), achieve(on(C, B), on(A, C))]
- State = {ontable(A), ontable(B), clear(A), on(C, B), clear(C), handempty}
- Plan so far = [Unstack(C, A), Stack(C, B)]

STRIPS Example
- Goal Stack = [achieve(ontable(A), clear(A), handempty), apply(Pickup(A)), achieve(clear(C), holding(A)), apply(Stack(A, C)), achieve(on(C, B), on(A, C))]
- State = {ontable(A), ontable(B), clear(A), on(C, B), clear(C), handempty}
- Plan so far = [Unstack(C, A), Stack(C, B)]
- Pickup(x)
  - P: ontable(x), clear(x), handempty
  - E: holding(x), not ontable(x), not clear(x), not handempty

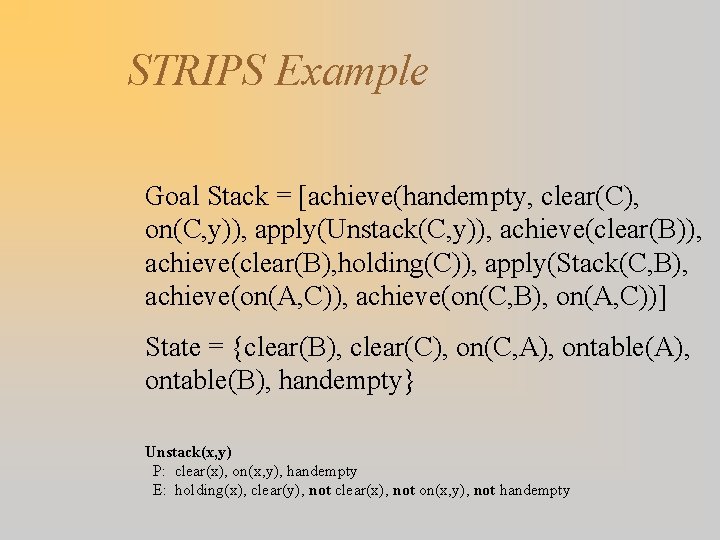

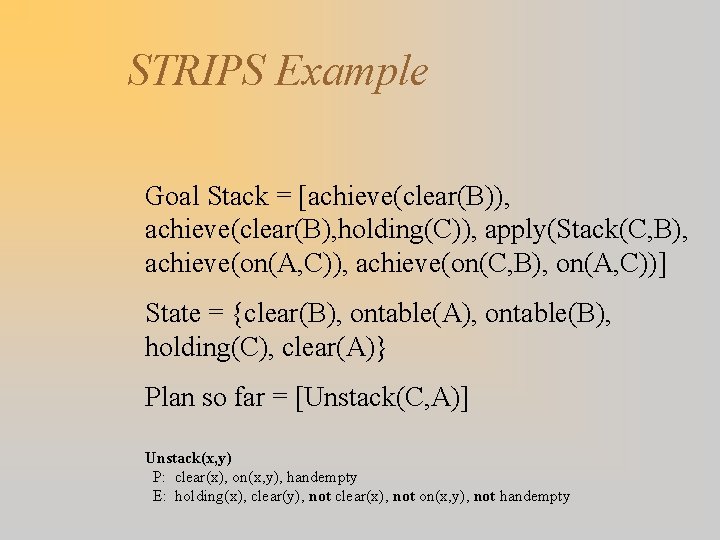

STRIPS Example
- Goal Stack = [achieve(clear(C)), achieve(clear(C), holding(A)), apply(Stack(A, C)), achieve(on(C, B), on(A, C))]
- State = {ontable(B), on(C, B), clear(C), holding(A)}
- Plan so far = [Unstack(C, A), Stack(C, B), Pickup(A)]
- Pickup(x)
  - P: ontable(x), clear(x), handempty
  - E: holding(x), not ontable(x), not clear(x), not handempty

STRIPS Example
- Goal Stack = [achieve(on(C, B), on(A, C))]
- State = {ontable(B), on(C, B), on(A, C), clear(A), handempty}
- Plan so far = [Unstack(C, A), Stack(C, B), Pickup(A), Stack(A, C)]
- Stack(x, y)
  - P: holding(x), clear(y)
  - E: on(x, y), clear(x), handempty, not holding(x), not clear(y)

STRIPS Example
- Goal Stack = []
- State = {ontable(B), on(C, B), on(A, C), clear(A), handempty}
- Plan so far = [Unstack(C, A), Stack(C, B), Pickup(A), Stack(A, C)]
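The finished plan can be checked by replaying it from the initial state, one operator at a time. The sketch below is only an illustration: the `SCHEMAS` table, `instantiate`, and `replay` are hypothetical names, literals are encoded as strings, and the initial state includes ontable(A), which the intermediate states in the trace require.

```python
# Operator schemas as (preconditions, add list, delete list) templates.
SCHEMAS = {
    "Pickup": (["ontable({x})", "clear({x})", "handempty"],
               ["holding({x})"],
               ["ontable({x})", "clear({x})", "handempty"]),
    "Putdown": (["holding({x})"],
                ["ontable({x})", "clear({x})", "handempty"],
                ["holding({x})"]),
    "Stack": (["holding({x})", "clear({y})"],
              ["on({x},{y})", "clear({x})", "handempty"],
              ["holding({x})", "clear({y})"]),
    "Unstack": (["clear({x})", "on({x},{y})", "handempty"],
                ["holding({x})", "clear({y})"],
                ["clear({x})", "on({x},{y})", "handempty"]),
}


def instantiate(name, x, y=None):
    """Substitute block names for x and y in one operator schema."""
    pre, add, delete = SCHEMAS[name]
    ground = lambda literals: {lit.format(x=x, y=y) for lit in literals}
    return ground(pre), ground(add), ground(delete)


def replay(initial, plan):
    """Apply each step in order; return the list of visited states."""
    trace = [frozenset(initial)]
    for name, *args in plan:
        pre, add, delete = instantiate(name, *args)
        if not pre <= trace[-1]:
            raise ValueError(f"{name}{tuple(args)} is not applicable")
        trace.append(frozenset((trace[-1] - delete) | add))
    return trace


initial = {"clear(B)", "clear(C)", "on(C,A)", "ontable(A)", "ontable(B)", "handempty"}
plan = [("Unstack", "C", "A"), ("Stack", "C", "B"), ("Pickup", "A"), ("Stack", "A", "C")]
trace = replay(initial, plan)
for state in trace:
    print(sorted(state))
```

Each entry of `trace` should correspond to one of the State lines in the slides, which makes it easy to compare the hand-worked trace against a mechanical one.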